- 1School of Business, Xuchang University, Xuchang, China

- 2School of Education, Xuchang University, Xuchang, China

- 3School of Civil Engineering, Xuchang University, Xuchang, China

Introduction: Blended learning helps overcome the limitations of traditional “chalk-and-talk” didactic teaching and unidirectional passive learning, and it shows significant value in cultivating students’ problem-solving skills and critical thinking and in enhancing instructional quality. However, systematic frameworks for evaluating blended learning quality in discipline-specific courses (e.g., Organizational Behavior) are still lacking.

Methods: This study developed a blended teaching quality evaluation system based on the CIPP (Context, Input, Process, Product) model, comprising four first-level indicators (context evaluation, input evaluation, process evaluation, and product evaluation) and sixteen second-level indicators, along with defining a five-level course performance rating set S (very low, low, medium, high, very high). Using the organizational behavior course repository from a public university in Henan Province as the sample, empirical analysis was conducted employing the Analytic Hierarchy Process (AHP).

Results: Empirical results indicate the course’s blended learning quality achieved an overall “High” rating. The AHP method not only effectively evaluates teaching quality but also identifies specific issues in teacher-student interactions through indicator analysis.

Discussion: The proposed CIPP-AHP integrated framework provides a practical diagnostic solution for blended learning quality (supported by 2023 data). Its indicator system and grading criteria are generalizable to similar courses, offering referential value for optimizing instructional design and teacher professional development.

1 Introduction

With the rapid development of society, countries around the world have developed diverse innovative educational concepts and practices to promote global high-quality education development. However, establishing a scientific and systematic evaluation system that promotes sustainable, high-quality educational development has become an important task for universities. The key to high-quality development in higher education lies in transforming traditional one-way knowledge delivery classrooms into interactive spaces that foster wisdom. Therefore, the teaching quality evaluation system, student learning quality evaluation, and teacher classroom effectiveness have garnered widespread attention (Xu et al., 2022; Yang et al., 2022; Zhang and Gao, 2022). Traditional evaluation systems focus solely on the teaching process and neglect talent development goals, which makes it difficult for them to guide the achievement of teaching objectives. Developing teaching evaluation models using big data and related analytical methods, studying the relationships between teaching variables in the new era, and effectively assessing teaching quality are crucial for advancing the high-quality development of higher education (Wang et al., 2022).

Evaluating teaching quality and effectiveness in universities is key to improving teaching activities and is widely practiced globally (Hu and Chen, 2024). Student evaluations of teaching are an important indicator of teaching effectiveness (Li and Yang, 2024). Effectiveness evaluation helps promote teaching outcomes, value judgments, and problem diagnosis, thereby enhancing teaching quality and efficiency (Jinxue and Li, 2023; Wang and Fu, 2022). Many studies have focused on evaluating university teaching. Liu and Wang (2022) combined static and dynamic evaluations to identify teaching issues and clarify directions for improvement. Xia and Lv (2022) used machine learning techniques including decision trees, support vector machines, Bayesian theory, and random forests to evaluate student course data, demonstrating their effectiveness and importance in higher education data mining. Chen and Lu (2022) developed an intelligent teaching evaluation system that incorporates basic qualities, teaching attitudes, teaching methods, teaching abilities, and teaching effectiveness, thereby improving evaluation accuracy. Li (2022) conducted a scientific evaluation of English teaching through the Analytic Hierarchy Process (AHP) and K-means clustering. For evaluating the quality of university physical education teaching, Wang et al. (2021) proposed a model based on grey relational analysis and multi-attribute fuzzy evaluation, addressing the complexity of evaluation factors. Wei (2022) employed a grey relational model and a variable-weight comprehensive evaluation method to enhance the reliability of English teaching effectiveness evaluation. Xia and Xu (2022) developed a machine learning-based teaching quality evaluation system that quantifies evaluation indicators and overcomes subjective factors to achieve satisfactory results. An (2022) proposed a deep learning-based evaluation model that integrates teaching data through neural networks to achieve precise assessment. Li (2022) developed a university physical education teaching quality evaluation system based on decision tree algorithms and AHP, validating its correctness and applicability through experiments.

The rapid development of computer technology has driven the transformation of teaching models, making online and offline blended teaching the mainstream approach in universities. However, its teaching effectiveness has not yet met expectations. China places great importance on research into teaching quality evaluation. Blended teaching models restructure teaching processes through information technology, combining traditional teaching with new technologies and gradually gaining attention from teachers and students. Compared to traditional methods, blended teaching enhances self-directed learning abilities and promotes a shift from passive to personalized learning (Meng et al., 2019; Wu et al., 2021; Gu, 2022). Hu and Wang (2022) proposed a blended teaching effectiveness evaluation method based on big data analysis, constructing an evaluation system from three dimensions and achieving ideal results through constrained parameter analysis. Yuan (2021) improved the Markov chain evaluation model and validated its effectiveness experimentally. Guo and Niu (2021) developed an interactive teaching system for university basketball training, combining online and offline models to improve students’ basketball skills and academic performance. Hui (2021) found that the SPOC and deep learning blended model stimulates students’ interest in English speaking and promotes self-directed learning and reflection. Cao (2022) developed a teacher-student interaction adaptability calculation model, validating the effectiveness of collaborative evaluation in blended teaching. Miao (2021) proposed an intelligent English blended teaching assistance model based on mobile information systems, integrating resources to achieve personalized teaching. Li et al. (2022) developed a blended teaching quality evaluation method using Bayesian theory, with experiments demonstrating its accuracy and discriminative power. Shao (2021) provided a feasible method for blended teaching evaluation by converting quantitative data into qualitative concepts through data mining algorithms.

Blended teaching methods are widely used in higher education; however, there remains a significant research gap in the systematic and comprehensive evaluation of their effectiveness, particularly in the context of organizational behavior courses. Existing research primarily focuses on traditional teaching models, with limited attention given to blended teaching models. Blended teaching combines the advantages of online and offline methods, necessitating the development of more appropriate evaluation systems. Although the CIPP model (Context, Input, Process, Product) is widely used in educational evaluation, its systematic application to blended teaching research is insufficient. Existing studies often focus on single dimensions (e.g., student engagement or course design), lack comprehensive consideration of the teaching process, and rarely integrate models such as CIPP for structured evaluation. Additionally, the lack of empirical research combining qualitative and quantitative indicators hinders a deeper understanding of blended teaching effectiveness.

2 Methodology

2.1 CIPP (context, input, process, product) evaluation model

The CIPP model is an educational evaluation model proposed by the renowned modern American education evaluation expert, Stufflebeam. This model consists of four evaluation stages: Context evaluation, Input evaluation, Process evaluation, and Product evaluation. It is characterized by pluralism, scientific rigor, process orientation, diagnosis, and termination.

The CIPP evaluation model is a typical educational evaluation model, primarily applicable to school and educational program evaluations. Based on the current blended teaching evaluation models and the fundamental aspects of the CIPP evaluation model, this study conducted questionnaire and interview surveys to assess the demand for using the CIPP model in blended teaching evaluation in higher education institutions. The data were analyzed using the Questionnaire Star statistical software, and recommendations were proposed for effectively applying the CIPP model in blended teaching evaluation.

To improve the quality of teaching, it is recommended that decision-makers use the CIPP evaluation model as a systematic method to evaluate all stages of an education plan, from formulation to implementation (Lei, 2024). Based on the CIPP model, effective teaching theory, and formative evaluation theory, Li and Hu (2022) developed a college teaching quality assurance indicator system and conducted an empirical study using colleges and universities as examples. Their investigation and experimental results on the current state of quality assurance show that the CIPP-based evaluation index system of college teaching quality has good applicability. Tuna and Basdal (2021) evaluated the effectiveness of tourism education undergraduate courses using the CIPP model. They used quantitative research methods and surveyed students from four universities in Türkiye. The results indicate that, according to students’ opinions, tourism undergraduate courses have some strengths and weaknesses in the basic components of the CIPP model. The CIPP model is illustrated in Figure 1.

2.2 Construction of mixed teaching quality evaluation index system based on CIPP

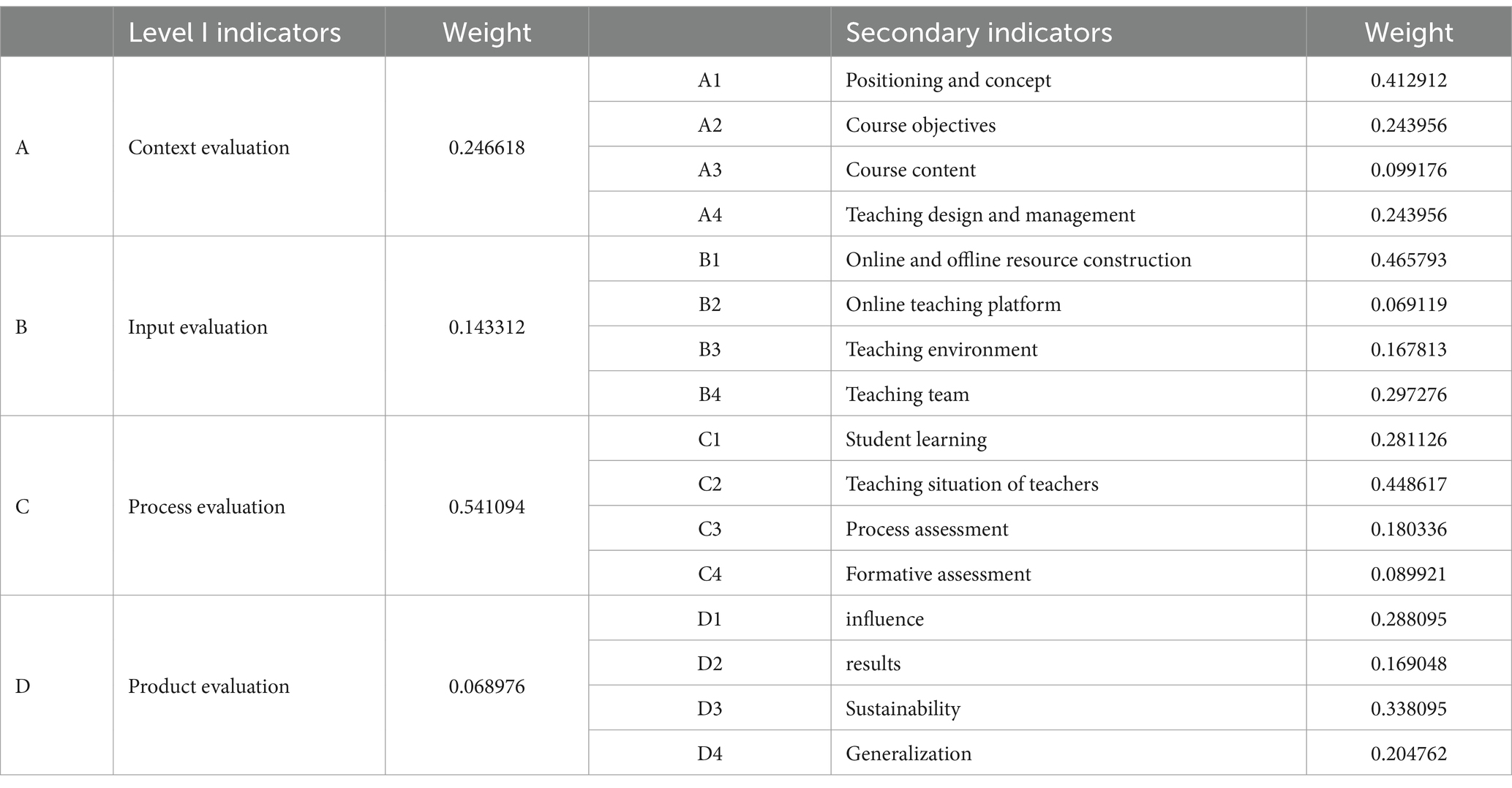

In the age of big data, blended teaching has emerged as a modern pedagogical approach, making the development of its teaching quality evaluation indicators an urgent research priority. Based on the CIPP evaluation model and the characteristics of blended teaching, the primary indicators of the teaching quality evaluation system include context evaluation, input evaluation, process evaluation, and product evaluation (see Table 1).

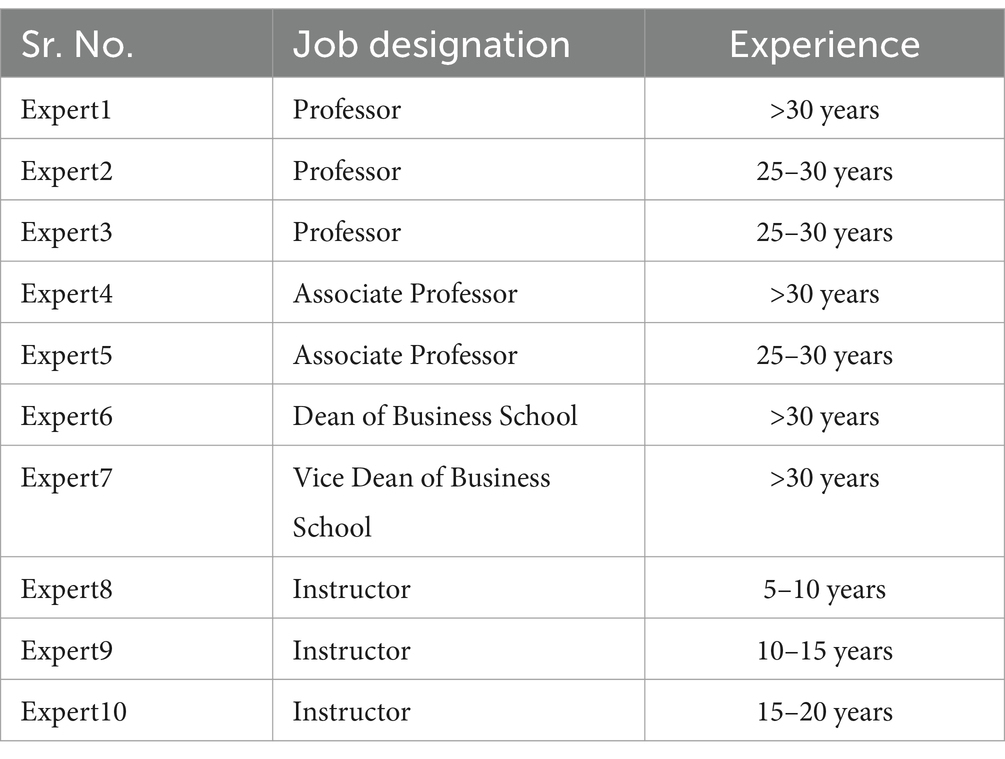

Based on the first-level indicators of the CIPP evaluation model, we determined the secondary indicators through an expert opinion survey. We selected 10 experts from the Business School of a public university in Henan Province to conduct the opinion survey, as shown in Table 2.

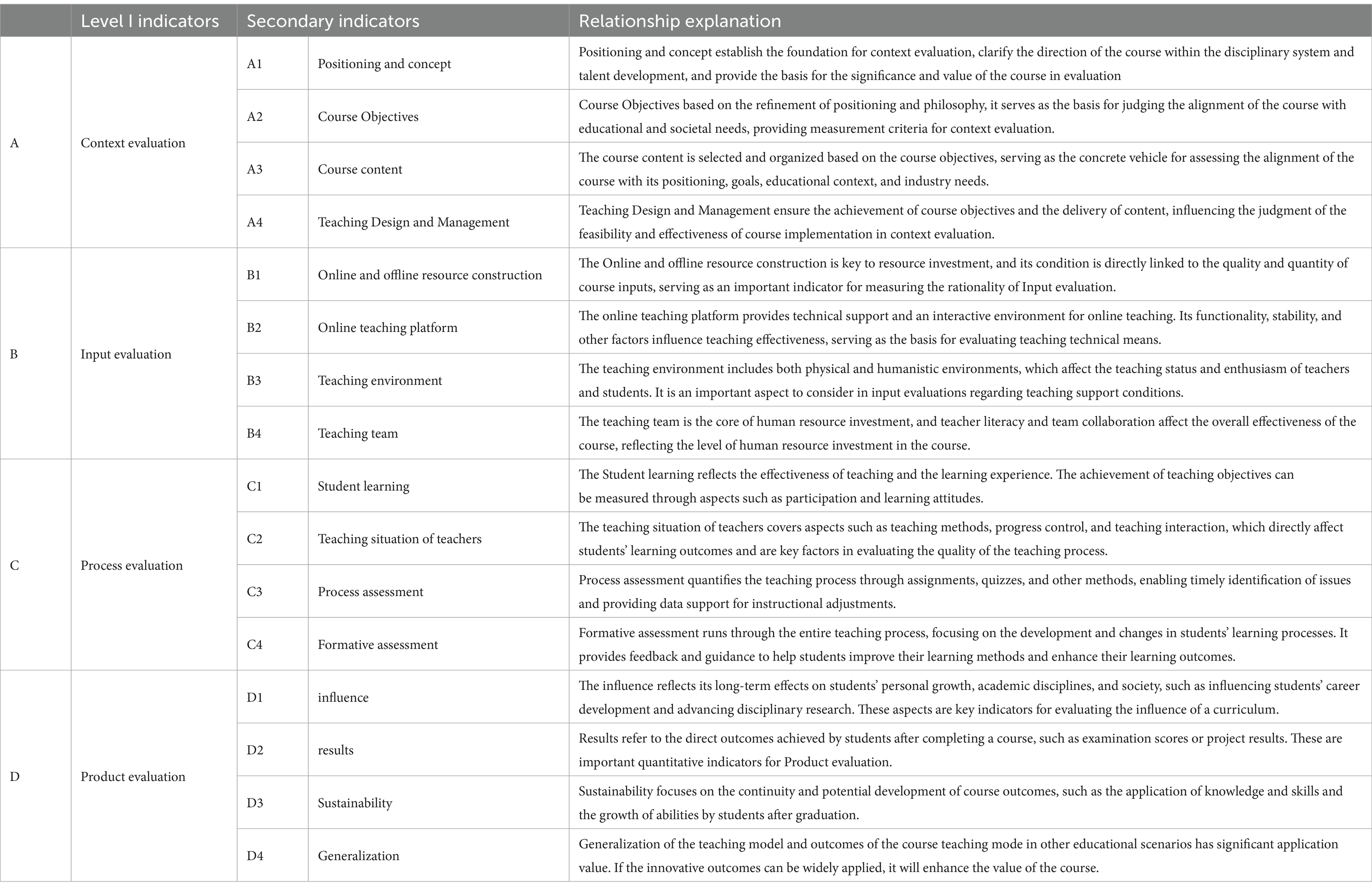

All 10 experts provided valid responses, including 1 Dean of Business, 1 Vice Dean of Business, 3 professors, 2 associate professors and 3 instructors, most of whom have 10 years or more of relevant work experience. The final secondary indicators were determined through expert consensus. Their relationships with primary indicators are demonstrated in Table 3 and Figure 2.

A Context Evaluation.

Context evaluation is the foundation of the entire teaching evaluation, used to clarify the course’s positioning, objectives, content, and design direction, providing a basis for subsequent instructional design, implementation, and evaluation. Its secondary indicators include:

A1 Positioning and Concept: This indicator clarifies the course’s direction within the disciplinary system and talent development, providing the basis for the significance and value of the course in evaluation.

A2 Course Objectives: Based on the refinement of positioning and concept, it serves as the basis for judging whether the course aligns with educational and societal needs, providing measurement criteria for context evaluation.

A3 Course Content: Selected and organized based on course objectives, serving as the concrete vehicle for assessing the alignment of the course with its positioning, goals, educational context, and industry needs.

A4 Teaching Design and Management: Ensures the achievement of course objectives and the delivery of content, influencing the judgment of the feasibility and effectiveness of course implementation in context evaluation.

B Input Evaluation.

Input evaluation provides the resources and conditions necessary for teaching implementation, serving as a critical link to ensure the smooth conduct of teaching. Its secondary indicators include:

B1 Online and Offline Resource Construction: Key to resource investment, directly linked to the quality and quantity of course inputs, and an important indicator for measuring the rationality of inputs.

B2 Online Teaching Platform: Provides technical support and an interactive environment for online teaching. Its functionality, stability, and other factors influence teaching effectiveness, serving as the basis for evaluating teaching technical means.

B3 Teaching Environment: Includes physical and virtual environments, affecting the smoothness of teaching implementation and students’ learning experiences.

B4 Teaching Team: The core of human resource investment, where the professional competence and teaching level of instructors directly impact the effectiveness of course implementation.

C Process Evaluation.

Process evaluation focuses on the dynamic performance during teaching implementation, used to assess the effectiveness of teaching activities and students’ learning outcomes. Its secondary indicators include:

C1 Student Learning: Reflects students’ learning engagement, outcomes, and experiences.

C2 Teaching Situation of Teachers: Evaluates teachers’ teaching methods, attitudes, and effectiveness.

C3 Process Assessment: Quantifies each aspect of the teaching process to ensure the achievement of teaching objectives.

C4 Formative Assessment: Adjusts teaching strategies through dynamic feedback to optimize the teaching process.

D Product Evaluation.

Product evaluation focuses on the final outcomes of teaching activities, used to measure the achievement of course objectives and the long-term impact of the course. Its secondary indicators include:

D1 Influence: Reflects the long-term impact of the course on students’ knowledge, abilities, and qualities.

D2 Results: Directly reflect students’ learning outcomes and the achievement of course objectives.

D3 Sustainability: Focuses on the future continuity of course effects, assessing the course’s support for students’ long-term development.

D4 Generalization: Considers the application value and promotion potential of course outcomes, evaluating whether the course has broad applicability.

2.2.1 Summary of the relationship between primary and secondary indicators

Primary indicators are the macro dimensions of evaluation, providing the overall framework for teaching evaluation.

Secondary indicators are the specification of primary indicators, used to refine evaluation content and provide operable assessment criteria.

Together, primary and secondary indicators form a complete evaluation system, ensuring the comprehensiveness, scientific nature and operability of teaching evaluation.

2.3 AHP

2.3.1 Origin and introduction

AHP was first proposed by Saaty in the 1970s for system decision support. It is a systematic analysis tool in which priority weights are calculated via eigenvector methods (Saaty, 1980).

The Analytic Hierarchy Process (AHP) is highly practical and convenient for decision-making and problem-solving.

1. Addressing Complex Multi-Objective Decision Problems.

The evaluation of teaching quality in higher education involves multiple dimensions and indicators (e.g., teaching attitude, teaching methods, student engagement, etc.). The hierarchical structure typically includes a goal, criteria, and alternatives. By constructing a hierarchical model, AHP can quantify qualitative issues, helping decision-makers better understand the relationships among various indicators.

2. Supports the Integration of Subjective and Objective Evaluations.

Teaching quality evaluation includes both quantitative data (e.g., student grades, attendance rates) and qualitative indicators (e.g., teaching attitude, classroom interaction). AHP translates subjective judgments into quantifiable weights via pairwise comparisons using a 1–9 scale, thereby integrating qualitative and quantitative indicators.

3. Scientific and Transparent Weight Calculation.

AHP calculates the weights of each indicator by constructing a judgment matrix, ensuring the scientific and logical nature of the evaluation process. The weight calculation process is transparent, making it easy to verify and adjust, thereby enhancing the credibility of the evaluation results.

4. Consistency Check Ensures Evaluation Reliability.

AHP provides a consistency check mechanism to detect logical inconsistencies in expert scoring or judgments, ensuring the rationality and reliability of the evaluation results. The consistency ratio (CR) must be less than 0.1 for the judgments to be considered reliable.

5. Flexibility and Adaptability.

AHP can adjust the hierarchical structure and indicators according to specific needs, making it suitable for evaluating different disciplines and teaching models. For example, in blended teaching models, AHP can flexibly integrate indicators for both online and offline teaching, such as online interaction rates (quantitative) and offline teaching effectiveness surveys (qualitative), to construct an appropriate evaluation system.

6. Wide Application and Maturity.

AHP has been widely applied in fields such as educational evaluation and management decision-making, and its maturity and effectiveness have been validated by extensive research and practice. In the evaluation of teaching quality in higher education, AHP has proven to be effective in handling complex, multi-dimensional, and multi-level problems.

7. Supports Decision Optimization.

AHP not only evaluates current teaching quality but also identifies weaknesses in teaching through weight analysis, providing a scientific basis for teaching improvement.

In summary, the AHP method, with its systematic, scientific, flexible, and widely applicable characteristics, is an ideal tool for evaluating teaching quality in higher education. It is particularly suitable for addressing multi-dimensional evaluation challenges in blended teaching models.

AHP helps simplify complex problems into a structured form and processes and analyzes data through pairwise comparisons. It is a useful tool for resolving the ambiguity and complexity of decision-making problems (Xing et al., 2024). According to the literature, AHP has several advantages. For example, AHP can help deal with compound, unstructured, and multi-criteria decision-making problems, and many researchers have applied it in various decision-making fields (Ahmed et al., 2019; Naveed et al., 2017; Naveed et al., 2017). AHP allows decision-making issues to be decomposed into components, so that a hierarchy of dimensions and standards can be developed and the level of importance associated with each dimension and standard clarified (Ramaditya et al., 2023). AHP realizes group decision-making through group consensus (Zahir, 1999). AHP is useful in situations of uncertainty or risk because it allows researchers or practitioners to develop scales when ordinary assessments cannot be applied (Millet and Wedley, 2002). AHP is based on the judgment of experts in the relevant professional field, so evaluating the model does not require a large number of samples to obtain stable results. Sometimes data can be evaluated based on the opinions of a single expert, and the results are still representative (Darko et al., 2019).

This also shows that in AHP research, sample selection is more important than sample size. This practice also proves the appropriateness of the sample data size used in this study. In this study, we use AHP to establish an evaluation model to determine the order of importance of the key factors that may affect the results of the student questionnaire.

Consistency analysis is the main method for testing the reliability of expert judgment in AHP research (Saaty, 1980). Although the data we collected are based on professional knowledge, AHP can reduce deviation through consistency analysis and ensure the reliability of the judgments by adjusting the results (Rui et al., 2023). In this study, the judgment matrices of indicators at all levels were checked for consistency to confirm that each consistency ratio is less than 0.1. The judgment matrix is constructed according to the survey and expert opinions. Because it is the basis for determining the weight order, the judgment matrix must be constructed first when using AHP, and constructing it accurately requires a detailed analysis of each constituent element.

2.3.2 Research stages and methods

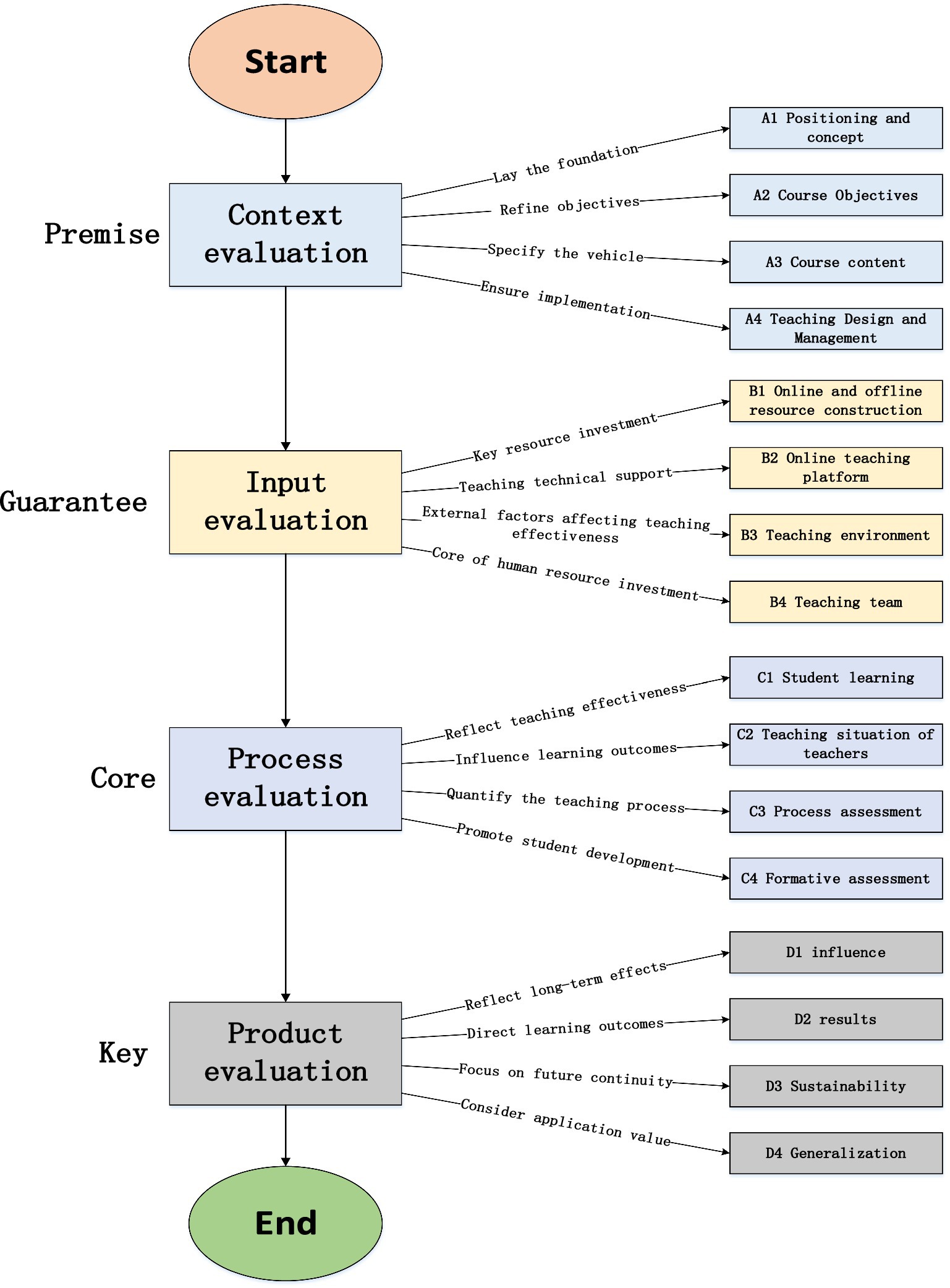

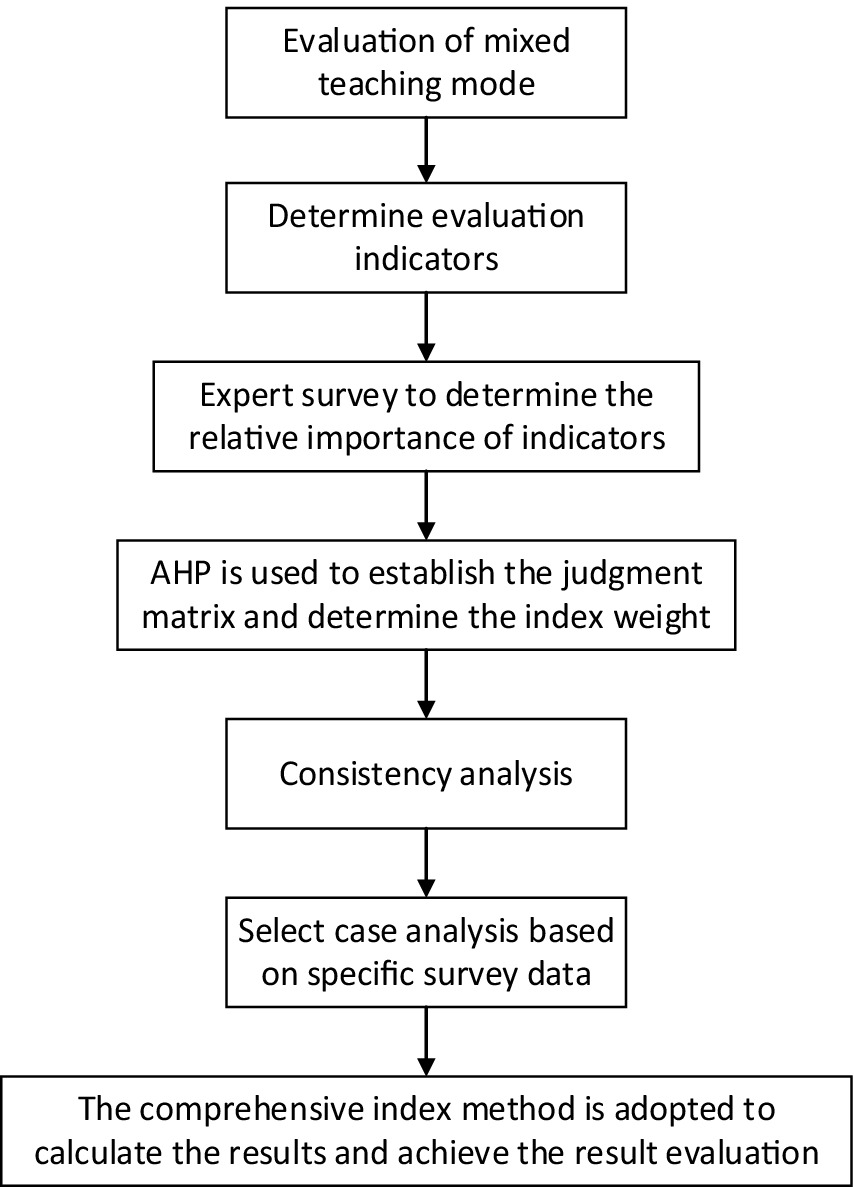

The study was divided into three stages. In the first stage, drawing on the literature review, we distributed the questionnaire by sampling to the respondents, mainly college students. We then analyzed the survey results, obtained the key factors, and used them to build the hierarchical evaluation structure for the second-stage evaluation system. In the second stage, we invited experts from diverse disciplines to participate and collected their opinions to improve the accuracy of the assessment content. We applied the AHP method to determine the relative importance and weight of the indicators (Chang et al., 2022). In the third stage, we used the established evaluation system to evaluate teaching quality at the business school of a public university in Henan Province in order to test the effectiveness of the system. The mixed teaching quality evaluation process is shown in Figure 3.

(1) In Equation 1, P represents the goal, and $i, j = 1, 2, \ldots, n$ index the factors. Each entry of P indicates the relative importance of factor $i$ compared with factor $j$, and the judgment matrix P is constructed from these pairwise values:

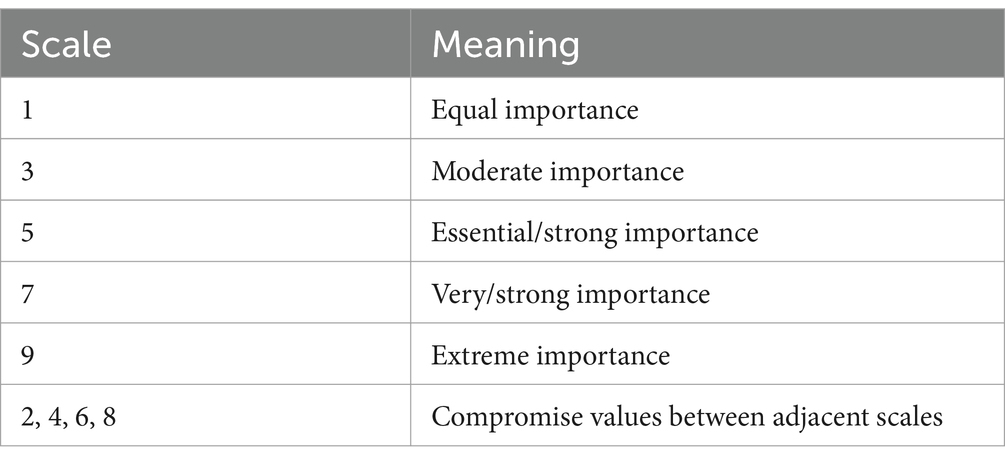

When constructing the judgment matrix, we conducted repeated pairwise comparisons between factors to confirm their relative importance under a specific criterion. The scale used ranged from 1 to 9 (Guillermo, 2022) (see Table 4). The value of each entry of P was determined through multiple rounds of inquiry, in which experts were consulted according to the 1–9 scale. Obtaining the values through multiple rounds of expert inquiry avoids large deviations in the weight coefficients of the teaching evaluation indicators caused by subjective factors such as the limits of an individual expert’s professional background.

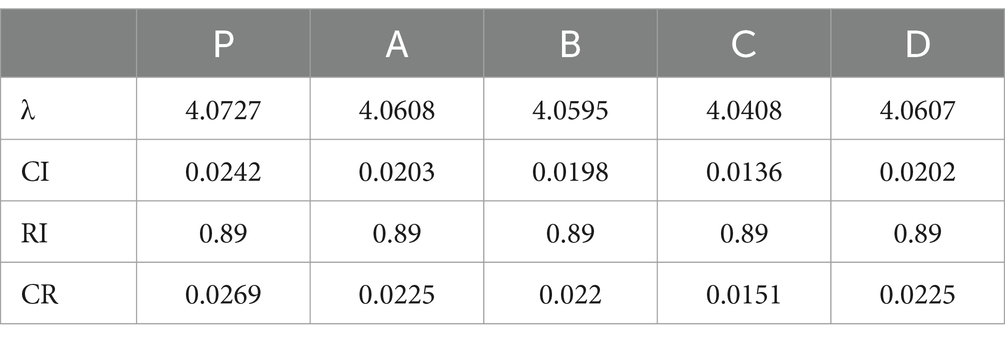

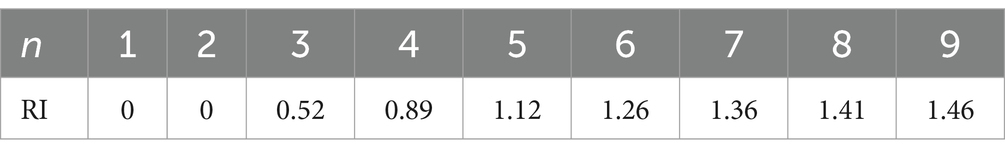
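The construction step described above can be sketched in Python. This is a minimal illustration, assuming that experts supply only the upper-triangle comparisons and that the lower triangle is filled with reciprocals; the function name `build_judgment_matrix` and the sample judgments are hypothetical and are not the scores collected in this study.

```python
from fractions import Fraction


def build_judgment_matrix(n, upper):
    """Build an n x n reciprocal pairwise-comparison matrix.

    `upper` maps (i, j) with i < j to the judged importance of factor i
    relative to factor j on the 1-9 scale (reciprocals such as 1/3 allowed).
    """
    expected_pairs = n * (n - 1) // 2
    if len(upper) != expected_pairs:
        raise ValueError(f"expected {expected_pairs} comparisons, got {len(upper)}")
    # Diagonal entries are 1: every factor is equally important to itself.
    matrix = [[Fraction(1)] * n for _ in range(n)]
    for (i, j), value in upper.items():
        if not 0 <= i < j < n:
            raise ValueError(f"invalid index pair {(i, j)}")
        value = Fraction(value)
        if not Fraction(1, 9) <= value <= 9:
            raise ValueError(f"judgment {value} is outside the 1-9 scale")
        matrix[i][j] = value
        matrix[j][i] = 1 / value  # reciprocal property of the judgment matrix
    return matrix


# Hypothetical judgments for four first-level indicators (illustrative only).
sample = build_judgment_matrix(
    4,
    {
        (0, 1): 2,
        (0, 2): Fraction(1, 3),
        (0, 3): 4,
        (1, 2): Fraction(1, 4),
        (1, 3): 2,
        (2, 3): 6,
    },
)
```

Using `Fraction` keeps the reciprocal entries exact, which makes it easy to verify that each pair of mirrored entries multiplies to 1 before any weights are computed.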

(2) Calculate the consistency of indicators. To determine the suitability of the questionnaire content, conformance testing is conducted using the consistency index (C.I.) (Equation 2) and the consistency ratio (C.R.) (Equation 3); both must be less than 0.1. Otherwise, the hierarchical factors are inconsistent, and the consistency analysis of all factors must be conducted again.
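In Saaty's standard formulation, which this sketch assumes the study follows, the two quantities are defined as below, with $\lambda_{\max}$ denoting the maximum eigenvalue of the judgment matrix and $n$ its order:

$$
CI = \frac{\lambda_{\max} - n}{n - 1} \tag{2}
$$

$$
CR = \frac{CI}{RI} \tag{3}
$$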

Where, CR is the random consistency ratio of the judgment matrix; CI is the general consistency index of the judgment matrix, and RI is the average random consistency index of the judgment matrix. See Table 5 for the value of judgment matrix of order 1–9:

(3) Composite index method (Wu et al., 2022)

Table 5. Judgement matrix of order 1–9 (Li, 2022).

Use the comprehensive index method to multiply each index by its combined weight, and then add them to get the comprehensive index of the mixed teaching-quality evaluation system. It is calculated as follows:
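Written out under the symbol definitions given for Equation 4, the weighted sum takes the following form. This is a reconstruction from the verbal description, and $m$ is an assumed symbol for the number of indicators:

$$
Z_i = \sum_{j=1}^{m} \omega_j \, y_{ij} \tag{4}
$$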

In Equation 4, $Z_i$ represents the comprehensive index of evaluation unit $i$; the higher its value, the higher the index, that is, the higher the reliability of the index. $\omega_j$ is the combined weight of indicator $j$, and $y_{ij}$ is the standard value of indicator $j$ for unit $i$.

3 Case study

(1) Judgment matrix analysis. In the hierarchical structure, the judgment matrix of the first level indicators is expressed as matrix P (Equation 5), and each second level indicator matrix is expressed as A, B, C, D (Equations 6–9). According to the previous survey, we can get the judgment matrix as follows:

(2) Normalization of judgment matrix. For each judgment matrix, find the maximum eigenvalue λ and its corresponding eigenvector ω (Equations 10–14). The normalized eigenvectors give the importance ranking of the evaluation factors, that is, the weight distribution.
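One way to obtain the normalized eigenvector and the maximum eigenvalue is sketched below. It uses power iteration as a dependency-free stand-in for an eigen-solver; the study does not state which numerical procedure was used, and the function name `ahp_weights` and the example matrix are assumptions for illustration.

```python
def ahp_weights(matrix, iterations=100, tol=1e-12):
    """Estimate the principal eigenvector (weights) and lambda_max.

    Power iteration converges for judgment matrices because all entries
    are strictly positive.
    """
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        updated = [p / total for p in product]  # normalize so the weights sum to 1
        converged = max(abs(a - b) for a, b in zip(updated, weights)) < tol
        weights = updated
        if converged:
            break
    # lambda_max is estimated as the mean of (A w)_i / w_i over all rows.
    aw = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    return weights, lambda_max


# Perfectly consistent example built from weights (0.6, 0.3, 0.1):
# the recovered weights are approximately (0.6, 0.3, 0.1) and lambda_max is approximately 3.
example = [
    [1.0, 2.0, 6.0],
    [1 / 2, 1.0, 3.0],
    [1 / 6, 1 / 3, 1.0],
]
example_weights, example_lambda = ahp_weights(example)
```

For a perfectly consistent matrix the maximum eigenvalue equals the matrix order, so any excess of `lambda_max` over `n` measures the inconsistency of the expert judgments.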

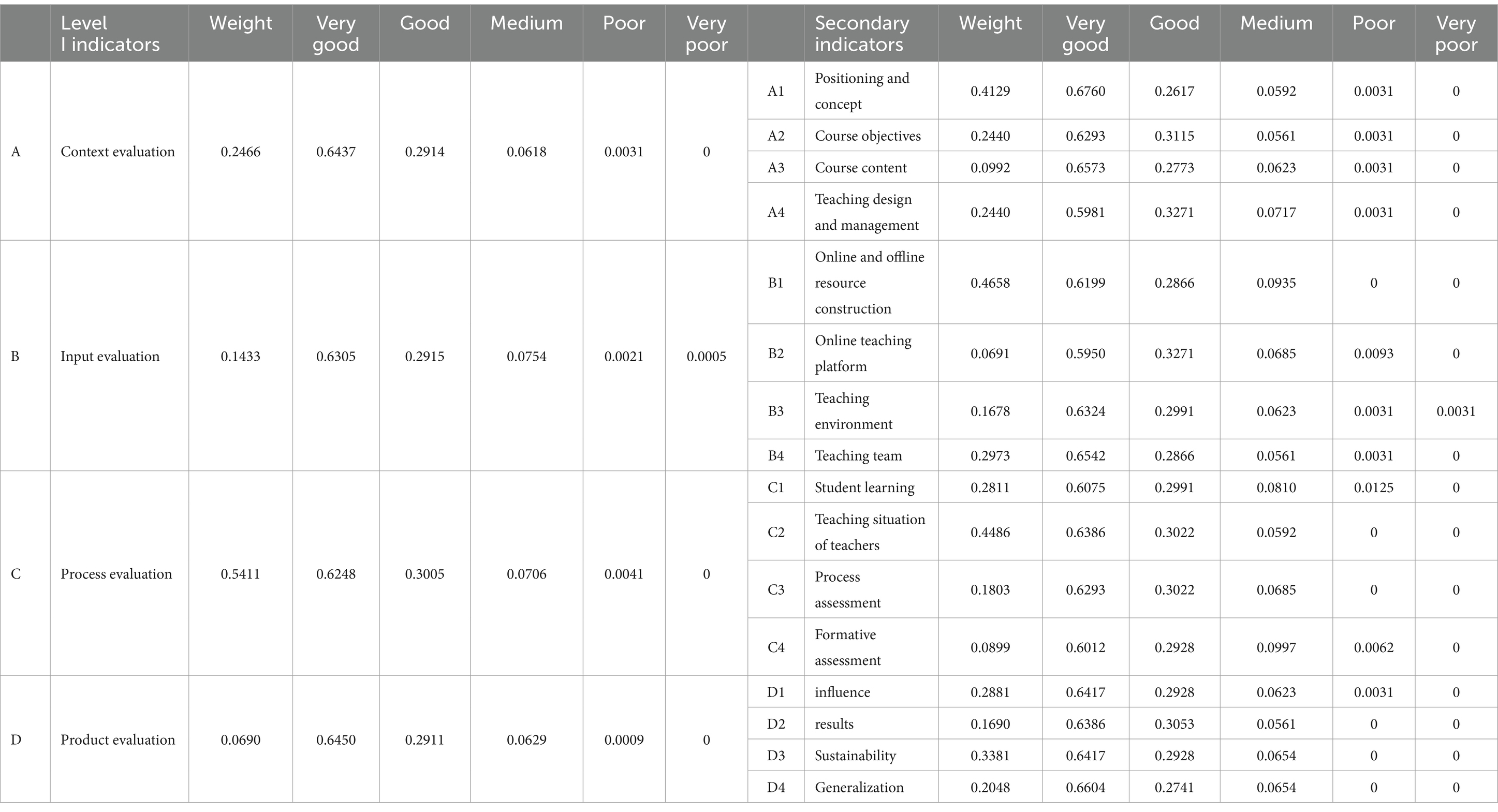

From the above data, we can get the weight distribution table of the primary and secondary indicators of the mixed curriculum quality evaluation system (see Table 6).
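How the primary and secondary weights combine, and how the composite index is then formed, can be sketched as follows. The context, process, and product weights are the values reported in this study (0.2466, 0.5411, 0.069); the input weight of 0.1433 is an assumption derived as the remainder to 1, and the secondary (local) weights and the indicator scores are purely hypothetical placeholders, not the values in Table 6.

```python
# Primary weights: A, C, D as reported in the study; B is ASSUMED (remainder to 1).
PRIMARY = {"A": 0.2466, "B": 0.1433, "C": 0.5411, "D": 0.0690}

# HYPOTHETICAL local weights of the four secondary indicators in each group.
LOCAL = {group: [0.4, 0.3, 0.2, 0.1] for group in PRIMARY}


def combined_weights(primary, local):
    """Combined weight of a secondary indicator = primary weight x local weight."""
    return {
        f"{group}{k}": primary[group] * weight
        for group, group_weights in local.items()
        for k, weight in enumerate(group_weights, start=1)
    }


def composite_index(weights, scores):
    """Weighted sum of indicator scores (the comprehensive index method)."""
    return sum(weights[name] * scores[name] for name in weights)


weights = combined_weights(PRIMARY, LOCAL)

# HYPOTHETICAL standardized scores on a 1-5 scale, identical for every indicator.
scores = {name: 4.0 for name in weights}
index = composite_index(weights, scores)
```

Because the combined weights sum to 1, the composite index stays on the same scale as the indicator scores, which makes it directly comparable with the five-level rating set.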

(3) Consistency inspection. Through calculation, we can get the consistency test data of judgment matrices P, A, B, C, D.

From Table 7, we can see that the CR values of all judgment matrices of the project are less than 0.1, which passes the consistency test.
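The consistency test can be sketched in a few lines of Python. The random index values below are the ones commonly cited from Saaty for orders 1–9; it is an assumption that Table 5 lists the same values, and the function name `consistency_ratio` and the sample eigenvalue are illustrative.

```python
# Commonly cited average random consistency index (RI) for orders 1-9.
# ASSUMPTION: these match the values listed in Table 5.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def consistency_ratio(lambda_max, n):
    """Return CR = CI / RI, where CI = (lambda_max - n) / (n - 1)."""
    if n not in RANDOM_INDEX:
        raise ValueError("RI is tabulated here only for orders 1 to 9")
    if n <= 2:
        return 0.0  # matrices of order 1 or 2 are always consistent
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


def passes_consistency_test(lambda_max, n, threshold=0.1):
    """A judgment matrix passes when its CR is below the threshold."""
    return consistency_ratio(lambda_max, n) < threshold


# Hypothetical fourth-order matrix with lambda_max = 4.12 (illustrative value).
sample_cr = consistency_ratio(4.12, 4)
sample_ok = passes_consistency_test(4.12, 4)
```

A matrix that fails this check would be returned to the experts for revised pairwise comparisons before its weights are used.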

(4) Data analysis. Our survey data are divided into five grades, as shown in Table 8. They are very good, good, medium, poor, and very poor. 326 students from the Business School of a public university in Henan Province were surveyed, and the data were collected through online questionnaires.

The evaluation system survey data in this study were collected online through an anonymous survey using the “Questionnaire Star” platform. The survey is anonymous, does not collect personally identifiable information, does not involve sensitive topics, and does not require ethical review, thus ensuring participant privacy and data security.

Questionnaire Star is a professional online survey, examination, evaluation, and voting platform that provides users with powerful yet user-friendly services for online questionnaire design, data collection, custom reports, and survey result analysis. Compared to traditional survey methods and other platforms, Questionnaire Star has distinct advantages in terms of speed, ease of use, and low cost. Typical applications include:

Enterprises: Customer satisfaction surveys, market research, employee satisfaction surveys, internal training, demand registration, talent evaluation, training management, and employee exams.

Universities: Academic research, social surveys, online registration, online voting, information collection, and online exams.

Individuals: Discussion polls, public welfare surveys, blog surveys, and fun tests.

This study employed a random sampling method to survey 326 students using Questionnaire Star. The results are considered highly reliable for the following reasons:

1. Sufficient sample size

The sample size of 326 students is generally considered sufficient in educational research. For most university course or teaching evaluation studies, 326 respondents provide adequate statistical precision. By the law of large numbers, statistics computed from a sufficiently large random sample converge toward the corresponding population values, and by the Central Limit Theorem the sampling distribution of the sample mean is approximately normal, so sampling error shrinks as the sample size grows.

2. Random sampling method

The sample was selected through random sampling, theoretically giving each student an equal chance of being selected, which effectively reduces bias introduced by human selection. Random sampling minimizes sampling bias by ensuring sample representativeness.

3. Matching of sample and population

If the sample’s gender ratio, grade distribution, and major background align with the overall student population, it can be considered representative. For example, if the gender ratio in the population is 1:1 and the sample’s gender ratio is close to 1:1, the sampling bias is minimal.

4. Research purpose and scope

The research aims to evaluate the effectiveness of a specific course or teaching model, and the sample is drawn from the entire student population of that course. Thus, the sample size of 326 students represents a significant proportion of the student population, minimizing bias.

5. Reasonableness of bias

In practical research, completely eliminating sampling bias is almost impossible. Researchers minimize bias and control its impact through statistical methods such as weighting adjustments and stratified analysis. If researchers follow scientific methods during the sampling process and analyze and discuss potential biases, the sampling bias can be considered within a reasonable range.

4 Conclusion

A random sample of 326 students is generally reasonable, provided the sample size is sufficient, the sampling method is scientific, and the sample aligns well with the population. Researchers enhance the reliability and generalizability of the results by analyzing data, optimizing methods, minimizing sampling bias, and clearly stating the study’s limitations.

Through the questionnaire survey, we obtained the basic data sets of 16 secondary indicators corresponding to 4 primary indicators. The corresponding data sets of 5 levels, namely, very good, good, medium, poor and very poor, are defined as Aj, Bj, Cj, Dj, Ej, j = 1,2,3,4. We set the evaluation set as S, then

We can get the final data by the comprehensive index method.

See Table 9 for details.

Through data analysis, we can see that the scores of the two grades of “very good” and “good” account for 92.7% of the evaluation scores for the blended teaching model, indicating that the system’s evaluation results are positive.

Research on the Evaluation of Blended Teaching Quality Based on the CIPP Model holds dual value for both educational practice and theoretical development.

(1) At the practical level:

The study reveals that the implementation of blended teaching requires optimization in three key areas:

i. Precision in resource allocation through context and input evaluation, coupled with the redesign of teaching processes to align with personalized learning needs.

ii. The constructed evaluation indicator system provides a scientific basis for educational departments to establish blended teaching standards, supporting the upgrade of quality monitoring systems.

iii. Evaluation data supports the creation of teacher competency profiles, facilitating the development of customized teacher training programs.

(2) At the theoretical level:

The study achieves three major breakthroughs:

i. It validates the applicability of the CIPP model in digital education scenarios.

ii. It creates a multi-level evaluation framework comprising 4 dimensions and 16 core indicators.

iii. The innovative introduction of teaching outcome sustainability indicators provides a research paradigm for tracking the long-term effectiveness of blended education.

This research has significant extended value:

1) Methodologically, it integrates the Analytic Hierarchy Process (AHP) with big data technology, opening new pathways for educational evaluation research.

2) Its application scope can extend to fields such as management and vocational education, promoting interdisciplinary research collaboration and innovation.

Recommendations for educational management departments:

Establish dynamic feedback mechanisms based on this research, guide teaching innovation through special funds, and build a quality assurance closed-loop system characterized by “standard formulation, process monitoring, and data-driven decision-making.”

5 Result

This study constructs a blended teaching quality evaluation system for organizational behavior courses based on the CIPP model and employs the Analytic Hierarchy Process (AHP) to determine the weights of the evaluation indicators. The results show that process evaluation holds the highest weight (0.5411) in the blended teaching quality evaluation, followed by context evaluation (0.2466), while achievement evaluation (the product dimension of CIPP) has the lowest weight (0.069). This finding is consistent with the characteristics of blended teaching, where the design and implementation of the teaching process are the core factors influencing teaching quality, while teaching outcomes may take longer to materialize.
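The weight of input evaluation is not restated in this section. Assuming the four first-level weights are normalized to sum to 1, as AHP priority vectors conventionally are, the remaining weight can be inferred from the three reported values. This is an inference under that assumption, not a figure quoted here:

```latex
w_{\text{input}} = 1 - \left(w_{\text{process}} + w_{\text{context}} + w_{\text{product}}\right)
                 = 1 - (0.5411 + 0.2466 + 0.069)
                 = 1 - 0.8567
                 = 0.1433
```

Under this assumption the ordering of the four dimensions is process (0.5411), context (0.2466), input (0.1433), and product (0.069), which agrees with the statement that the product dimension carries the lowest weight.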

The following section provides an in-depth analysis of the study’s results in the context of existing literature and highlights the innovative and unique aspects of this research.

5.1 Comparison with existing studies

Previous research on teaching quality evaluation has primarily focused on traditional teaching models. For example, Xia and Lv (2022) analyzed course evaluation data using machine learning models, but their study did not account for the unique aspects of blended teaching. In contrast, this study targets the characteristics of blended teaching models and develops an evaluation system covering four dimensions: context, input, process, and product. This approach addresses the gap in existing research on blended teaching quality evaluation. Additionally, while Chen and Lu (2022) proposed a teaching effectiveness evaluation system that includes indicators such as teaching attitude, methods, and ability, their evaluation dimensions were limited and did not fully reflect the complexity of blended teaching. The innovation of this study lies in its use of the systematic framework of the CIPP model to incorporate all aspects of blended teaching, offering a more comprehensive evaluation framework.

5.2 The central role of process evaluation

This study finds that process evaluation is the most significant factor in blended teaching quality evaluation (weight of 0.5411), which is consistent with the findings of Zhang et al., who highlighted the design and implementation of the teaching process as critical to student learning outcomes. However, unlike Zhang et al., who focused solely on classroom interactions and teaching techniques, this study expands the indicators of process evaluation to include student learning, teacher instruction, process assessment, and formative evaluation. This refinement not only enhances the scientific rigor of the evaluation system but also offers specific guidance for teachers to improve the teaching process.

5.3 The importance of context evaluation

Context evaluation holds the second-highest weight in this study (0.2466). This finding aligns with the results of Wang and Li, who emphasized the importance of clear course objectives and teaching design in ensuring teaching quality. However, the unique aspect of this study is its focus on positioning and concept as the core indicators of context evaluation (weight of 0.4129), emphasizing the importance of course positioning and teaching philosophy in blended teaching. This finding provides theoretical support for blended teaching course design, indicating that course design should prioritize clear positioning and concepts to achieve teaching objectives.
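As a worked illustration, and assuming that 0.4129 is the local weight of positioning and concept within the context dimension and that global weights are obtained by multiplying along the hierarchy (the usual AHP convention, not confirmed in this section), the global weight of this indicator would be:

```latex
w^{\text{global}}_{\text{positioning}} = w_{\text{context}} \times w^{\text{local}}_{\text{positioning}}
                                       = 0.2466 \times 0.4129
                                       \approx 0.1018
```

Under these assumptions, positioning and concept alone would account for roughly 10% of the overall score, which is larger than the entire product dimension (0.069).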

5.4 The potential value of achievement evaluation

Although achievement evaluation has the lowest weight in this study (0.069), its key indicators (sustainability, impact, and scalability) offer valuable insights for assessing the long-term effectiveness of blended teaching. This finding contrasts with the work of Liu et al., who focused mainly on short-term teaching outcomes while overlooking long-term impacts. The innovation of this study lies in its introduction of sustainability and scalability indicators, providing a theoretical foundation for the long-term development and promotion of blended teaching.

5.5 Innovation in research methodology

In terms of research methodology, this study employs the Analytic Hierarchy Process (AHP) to determine the weights of evaluation indicators. This method is more scientific and objective than traditional qualitative approaches. Unlike the single evaluation method used by Chen and Lu, the AHP method more accurately reflects the importance of each indicator in actual teaching, thus improving the reliability of the evaluation results. Additionally, by combining qualitative and quantitative indicators, this study addresses the lack of data diversity in existing research, offering a more comprehensive data basis for blended teaching evaluation.

5.6 Practical implications

The evaluation system developed in this study not only offers a scientific foundation for evaluating the quality of blended teaching in organizational behavior courses but can also be adapted for teaching evaluations in other disciplines. For example, by adjusting the specific indicators of context and process evaluation, the system can be applied to blended teaching evaluations in fields such as management and education. Furthermore, the study’s results provide specific directions for teachers to improve teaching quality, including optimizing teaching design and enhancing process assessment.

This study addresses a gap in existing research on blended teaching quality evaluation by constructing a blended teaching quality evaluation system based on the CIPP model. The results not only validate the applicability of the CIPP model in blended teaching evaluation but also determine scientific weights for each indicator through the AHP method, providing specific guidance for enhancing teaching quality. Future research could expand the application scope of the evaluation system and continuously improve the evaluation indicators based on actual teaching feedback to promote the high-quality development of blended teaching.

Other educational institutions can adopt the following systematic approach, which integrates practical recommendations with implementation steps to form a closed-loop management system:

(1) Top-Level Design and Preparation

Establish an interdisciplinary team (including education experts, teachers, and technical personnel) to define the goals of blended teaching evaluation. Conduct research on the strengths and weaknesses of existing teaching practices based on curriculum positioning. Establish a clear framework during the initial instructional design phase to ensure alignment between content, interaction methods, and teaching objectives. Simultaneously, plan teacher training programs to strengthen their ability to integrate online and offline teaching.

(2) Construction of a Scientific Evaluation System

Based on the CIPP model (Context, Input, Process, Product), design evaluation indicators across four dimensions. Use the Analytic Hierarchy Process (AHP) to calculate weights, ensuring the system’s scientific rigor. Develop tools such as questionnaires and classroom observation forms, and embed artificial intelligence and data analysis technologies to track teaching data in real time.
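The weight calculation in this step can be sketched as follows. This is a minimal illustration of the standard AHP procedure (principal eigenvector via power iteration, followed by Saaty's consistency ratio), not the authors' implementation. The pairwise comparison matrix is hypothetical and is not the judgment matrix used in the study; the function name `ahp_weights` is likewise introduced here for illustration.

```python
# Saaty's random consistency index (RI), keyed by matrix order.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix, iterations=100):
    """Return (weights, lambda_max, consistency_ratio) for a pairwise matrix."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        # Power iteration: multiply by the matrix, then renormalise to sum 1.
        aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(aw)
        w = [x / total for x in aw]
    # Estimate the principal eigenvalue from the converged vector.
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0  # consistency index
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0                     # consistency ratio
    return w, lambda_max, cr


# HYPOTHETICAL reciprocal judgments on Saaty's 1-9 scale, in the order
# context, input, process, product. Not taken from the study.
pairwise = [
    [1,     2,     1 / 3, 4],
    [1 / 2, 1,     1 / 4, 3],
    [3,     4,     1,     6],
    [1 / 4, 1 / 3, 1 / 6, 1],
]

weights, lambda_max, cr = ahp_weights(pairwise)
for name, weight in zip(["context", "input", "process", "product"], weights):
    print(f"{name:>8}: {weight:.4f}")
print(f"lambda_max = {lambda_max:.4f}, CR = {cr:.4f}")
```

A consistency ratio below 0.10 is the conventional threshold for accepting a judgment matrix; if the ratio is higher, the pairwise judgments are usually revisited before the weights are used.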

(3) Closed-Loop Implementation and Improvement

Collect diverse data (e.g., student feedback, classroom performance) to evaluate effectiveness and promptly provide feedback to teachers and management. Develop improvement plans based on evaluation results and establish long-term tracking mechanisms. Adjust teaching strategies and evaluation indicator weights each semester, focusing on the sustainability and impact of teaching practices.

(4) Technology Empowerment and Ecosystem Expansion

Deploy online learning platforms and intelligent tools (e.g., AI-based learning analytics) to encourage teachers to innovate and use big data to optimize teaching interactions. Promote validated course models and evaluation methods through inter-school collaboration and interdisciplinary seminars, driving the establishment of industry-level standards for blended teaching.

6 Limitations and future directions

While this study has advanced blended teaching quality evaluation, certain limitations warrant attention. Firstly, the sample scope (n = XXX, drawn from the Organizational Behavior major at X University) limits external validity. Future research should adopt stratified sampling across multiple disciplines and institutions to enhance generalizability. Secondly, the evaluation framework’s weight allocation needs to account for discipline-specific pedagogical requirements, as STEM courses may prioritize technical competencies while arts courses emphasize critical thinking development. Finally, given the rapid evolution of blended pedagogy, the assessment framework must incorporate adaptive mechanisms such as machine learning–powered real-time feedback analytics to ensure ongoing validity.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants or the participants’ legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

SZ: Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing. LB: Data curation, Formal analysis, Software, Supervision, Writing – original draft. BY: Software, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work received financial support from the Research and Practice Project on Higher Education Teaching Reform in Henan Province under Grant No. 2023SJGLX293Y, the Henan Province Soft Science Research Project under Grant Nos. 252400410299 and 252400410264, and the Pedagogical Research and Practice Project of Xuchang University under Grant No. XCU2023-YB-34.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ahmed, M., Qureshi, M., Mallick, J., and Ben Kahla, N. (2019). Selection of sustainable supplementary concrete materials using OSM-AHP-TOPSIS approach. Adv. Mater. Sci. Eng. 2019, 1–12. doi: 10.1155/2019/2850480

An, W. (2022). Based on the application of deep learning in college education evaluation. Mob. Inf. Syst. 2022:2959596. doi: 10.1155/2022/2959596

Cao, C. (2022). The synergy mechanism of online-offline mixed teaching based on teacher-student relationship. Int. J. Emerg. Technol. Learn. 17, 16–31. doi: 10.3991/ijet.v17i10.31539

Chang, F. K., Hung, W. H., Lin, C. P., and Chang, I. C. (2022). A self-assessment framework for global supply chain operations: case study of a machine tool manufacturer. J. Glob. Inf. Manag. 30, 1–25. doi: 10.4018/JGIM.298653

Chen, J., and Lu, H. (2022). Evaluation method of classroom teaching effect under intelligent teaching mode. Mob. Netw. Appl. 27, 1262–1270. doi: 10.1007/s11036-022-01946-2

Darko, A., Chan, A. P. C., Ameyaw, E. E., Owusu, E. K., Parn, E., and Edwards, D. J. (2019). Review of application of analytic hierarchy process (AHP) in construction. Int. J. Constr. Manag. 19, 436–452. doi: 10.1080/15623599.2018.1452098

Gu, J. H. (2022). Blended Oral English teaching based on Core competence training model. Mob. Inf. Syst. 2022:2226544. doi: 10.1155/2022/2226544

Guillermo, G. (2022). Using multi-criteria decision-making to optimise solid waste management. Curr. Opin. Green Sustain. Chem. 37:100650. doi: 10.1016/j.cogsc.2022.100650

Guo, W. B., and Niu, Y. J. (2021). Interactive teaching system of basketball action in college sports based on online to offline mixed teaching mode. Mob. Inf. Syst. 2021:9994050. doi: 10.1155/2021/9994050

Hu, P., and Chen, Y. (2024). Construction and practice of teaching quality evaluation system in higher vocational colleges and universities: based on big data technology. Occup. Prof. Educ. 9, 7–15. doi: 10.2478/amns-2024-0509

Hu, S. Y., and Wang, J. S. (2022). Evaluation algorithm of ideological and political assistant teaching effect in colleges and universities under network information dissemination. Sci. Program. 2022:3589456. doi: 10.1155/2022/3589456

Hui, Y. L. (2021). Evaluation of blended Oral English teaching based on the mixed model of SPOC and deep learning. Sci. Program. 2021:7044779. doi: 10.1155/2021/7044779

Jinxue, S., and Li, Y. (2023). Effectiveness and evaluation of online and offline blended learning for an electronic design practical training course. Int. J. Distance Educ. Technol. 21, 1–25. doi: 10.4018/IJDET.318652

Lei, Z. (2024). The application of CIPP model in the evaluation of teaching quality of college education in the context of new era. Appl. Math. Nonlinear Sci. 9, 1–17. doi: 10.2478/amns.2023.2.01568

Li, H. J. (2022). Application of fuzzy K-means clustering algorithm in the innovation of English teaching evaluation method. Wirel. Commun. Mob. Comput. 2022:7711386. doi: 10.1155/2022/7711386

Li, W. C. (2022). Research on evaluation method of physical education teaching quality in colleges and universities based on decision tree algorithm. Mob. Inf. Syst. 2022:9994983. doi: 10.1155/2022/9994983

Li, Y., and Hu, C. H. (2022). The evaluation index system of teaching quality in colleges and universities: based on the CIPP model. Math. Probl. Eng. 2022:3694744. doi: 10.1155/2022/3694744

Li, X. J., Xue, P., and Shao, Z. W. (2022). “The evaluation method of online and offline blended teaching quality of college mathematics based on Bayes theory,” in Advanced Hybrid Information Processing, Pt. II, 417, 359–370.

Li, X., and Yang, Q. (2024). Evaluation of teaching effectiveness in higher education based on social networks. Int. J. Netw. Virtual Organ. 30, 1–14. doi: 10.1504/IJNVO.2024.136771

Liu, J. L., and Wang, Y. (2022). Static and dynamic evaluations of college teaching quality. Int. J. Emerg. Technol. Learn. 17, 114–127. doi: 10.3991/ijet.v17i02.29005

Meng, X. T., Chen, W. J., Liao, L. B., Li, T., Qin, W., and Bai, S. B. (2019). Analysis of the teaching effect of the Normal human morphology with mixed teaching mode and formative evaluation in China. Int. J. Morphol. 37, 1085–1088. doi: 10.4067/S0717-95022019000301085

Miao, Y. F. (2021). Online and offline mixed intelligent teaching assistant mode of English based on Mobile information system. Mob. Inf. Syst. 2021:7074629. doi: 10.1155/2021/7074629

Millet, I., and Wedley, W. C. (2002). Modelling risk and uncertainty with the analytic hierarchy process. J. Multi-Criter. Decis. Anal. 11, 97–107. doi: 10.1002/mcda.319

Naveed, Q. N., Muhammed, A., Sanober, S., Qureshi, M. R. N., and Shah, A. (2017). Barriers effecting successful implementation of E-learning in Saudi Arabian universities. Int. J. Emerg. Technol. Learn. 12:94. doi: 10.3991/ijet.v12i06.7003

Naveed, Q. N., Qureshi, M. R. N., Alsayed, A. O., Muhammad, A., Sanober, S., and Shah, A. (2017). “Prioritizing barriers of E-learning for effective teaching–learning using fuzzy analytic hierarchy process (FAHP),” in Proceedings of the 2017 4th IEEE international conference on engineering technologies and applied sciences (ICETAS), Salmabad, Bahrain, 29 November–1 December 2017; IEEE: Piscataway, NJ, USA; pp. 1–8.

Ramaditya, M., Maarif, M. S., Affandi, J., and Sukmawati, A. (2023). Improving private higher education strategies through fuzzy analytical hierarchy process: insight from Indonesia. Int. J. Manage. Educ. 17, 415–433. doi: 10.1504/IJMIE.2023.131221

Rui, Z., Chengcheng, G., Xicheng, C., Fang, L., Dong, Y., and Yazhou, W. (2023). Genetic algorithm optimised Hadamard product method for inconsistency judgement matrix adjustment in AHP and automatic analysis system development. Expert Syst. Appl. 211:118689. doi: 10.1016/j.eswa.2022.118689

Shao, L. (2021). Evaluation method of IT English blended teaching quality based on the data mining algorithm. J. Math. 2021:3206761. doi: 10.1155/2021/3206761

Tuna, H., and Basdal, M. (2021). Curriculum evaluation of tourism undergraduate programs in Turkey: a CIPP model-based framework. J. Hosp. Leis. Sport Tour. Educ. 29:100324. doi: 10.1016/j.jhlste.2021.100324

Wang, J., and Fu, J. (2022). Convolutional neural network based network distance English teaching effect evaluation method. Math. Probl. Eng. 2022:3352426. doi: 10.1155/2022/3352426

Wang, J. W., Liu, C. S., and Gao, W. (2022). Teaching quality evaluation and feedback analysis based on big data mining. Mob. Inf. Syst. 2022:7122846. doi: 10.1155/2022/7122846

Wang, Y., Sun, C. Y., and Guo, Y. A. (2021). Multi-attribute fuzzy evaluation model for the teaching quality of physical education in colleges and its implementation strategies. Int. J. Emerg. Technol. Learn. 16, 159–172. doi: 10.3991/ijet.v16i02.19725

Wei, X. (2022). An intelligent Grey correlation model for online English teaching quality analysis. Mob. Inf. Syst. 2022:4861684. doi: 10.1155/2022/4861684

Wu, S. H., Zhang, Y. L., and Yan, J. Z. (2022). Comprehensive assessment of geopolitical risk in the Himalayan region based on the grid scale. Sustain. For. 14, 1–20. doi: 10.3390/su14159743

Wu, G. F., Zheng, J., and Zhai, J. (2021). Individualized learning evaluation model based on blended teaching. Int. J. Electr. Eng. Educ. 60, 2047–2061. doi: 10.1177/0020720920983999

Xia, X. J., and Lv, D. M. (2022). The evaluation of music teaching in colleges and universities based on machine learning. J. Math. 2022:2678303. doi: 10.1155/2022/2678303

Xia, Y., and Xu, F. M. (2022). Design and application of machine learning-based evaluation for university music teaching. Math. Probl. Eng. 2022:4081478. doi: 10.1155/2022/4081478

Xing, Q., Tan, P. H., and Gan, W. S. (2024). Constructing a student development model for undergraduate vocational universities in China using the fuzzy Delphi method and analytic hierarchy process. PloS One 19:e0301017. doi: 10.1371/journal.pone.0301017

Xu, X., Yu, S. J., Pang, N., Dou, C. X., and Li, D. (2022). Review on a big data-based innovative knowledge teaching evaluation system in universities. J. Innov. Knowl. 7:100197. doi: 10.1016/j.jik.2022.100197

Yang, B. B., Xiaoming, Z., and Dongqi, T. (2022). Evaluation of blended learning effect of engineering geology based on online surveys. Geofluids 2022:9014895. doi: 10.1155/2022/9014895

Yuan, T. Q. (2021). Algorithm of classroom teaching quality evaluation based on Markov chain. Complexity 2021:9943865. doi: 10.1155/2021/9943865

Zahir, S. (1999). Clusters in group: decision making in the vector space formulation of the analytic hierarchy process. Eur. J. Oper. Res. 112, 620–634. doi: 10.1016/S0377-2217(98)00021-6

Keywords: CIPP, evaluation model, mixed teaching quality, quality evaluation system, AHP

Citation: Zhang S, Bai L and Yang B (2025) Mixed teaching quality evaluation of organizational behavior course based on CIPP model. Front. Educ. 10:1538539. doi: 10.3389/feduc.2025.1538539

Edited by:

Anne Marie Courtney, Institute of Technology, Tralee, Ireland

Reviewed by:

John Mark R. Asio, Gordon College, Philippines

Mohammad Najib Jaffar, Islamic Science University of Malaysia, Malaysia

I. Ketut Darma, Politeknik Negeri Bali, Indonesia

Copyright © 2025 Zhang, Bai and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Binbin Yang, yangbinbin@xcu.edu.cn
