- 1 Université Grenoble Alpes, Université Savoie Mont Blanc, CNRS, LPNC, Grenoble, France

- 2 Université Grenoble Alpes, CNRS UMR 5525, VetAgro Sup, Grenoble INP, TIMC, Grenoble, France

- 3 Université Grenoble Alpes, LaRAC, Grenoble, France

- 4 Université Grenoble Alpes, SENS, Grenoble, France

- 5 Institut Universitaire de France, Paris, France

- 6 Université Grenoble Alpes, HP2, INSERM 1300, Grenoble, France

Measuring attention at school is essential given the relationship between attention, learning and school achievement. In this paper, we present a new battery called Computerized Attention Measure (CAM), which includes 8 web-based tasks we developed to measure several dimensions of attention that are crucial for school. Two studies were conducted to test the psychometric qualities of the CAM battery in preteens during school time. In the first study, we examined completion rate, reliability, validity, and overall behavioral performance in a sample of 646 preteens. A second study involving 202 preteens was conducted to replicate the main findings of study 1 and to test the psychometric qualities of a new task assessing divided attention. These evaluations of the CAM battery showed variable completion rates, but the two studies yielded comparable and overall satisfactory psychometric properties, as indicated by behavioral performance and internal consistency. Inter-task correlations supported the validity of the battery and its applicability in school settings. Future work should ensure that tasks are completed during group sessions, to reduce data loss, and should evaluate the stability of results over time through test–retest reliability. Despite some limitations, the CAM battery appears to meet several important criteria for evaluating preteens’ attentional performance in a school setting.

Introduction

Measuring attention at school is essential given the relationship with learning and academic achievement (Franceschini et al., 2012; Keller et al., 2020; Stevens and Bavelier, 2012; Trautmann and Zepf, 2012). While studies on attention have focused on its neural underpinnings with influential models (e.g., Cieslik et al., 2015; Petersen and Posner, 2012) and its dysregulation through attentional disorders (Tremolada et al., 2019), less research has been conducted on assessing students’ attention during school time in ecological settings (Gallen et al., 2023). Since students often find attention assessment tasks to be tedious (Lumsden et al., 2016), we designed a series of less cumbersome web-based gamified tasks measuring several dimensions of attention in school settings.

Although attention may be one of the most crucial cognitive abilities in daily school life (Gallen et al., 2023; Keller et al., 2020; Trautmann and Zepf, 2012), there is no consensus on its definition in the scientific literature. Attention can be categorized into two main types: bottom-up attention, which is automatic and stimulus-driven, and top-down attention, which is voluntary and goal-oriented (Corbetta and Shulman, 2002). Most models highlight the multidimensional nature of attention and commonly include three core components (Posner and Petersen, 1990): alerting (achieving and maintaining an alert state), orienting (selecting information via attentional shifts), and executive control (monitoring and resolving response conflict). Within this framework, the Attention Network Test (ANT) is a well-known task in neuroscience and cognitive science that assesses all three attentional networks with a single task (de Souza Almeida et al., 2021; Fan et al., 2002). In addition, several studies have examined goal-directed components of attention that can be related to the three attention networks. Supported by prior research (e.g., McDowd, 2007; Talalay, 2024), these components include sustained attention, the ability to maintain focus over a prolonged period; selective attention, the ability to focus on relevant information while ignoring irrelevant information; and divided attention, the ability to manage multiple sources of information or perform dual tasks.

Research on children showed that the components of attention develop at varying rates (Best and Miller, 2010; Betts et al., 2006; Woods et al., 2013). Interestingly, attention fluctuates within and between individuals depending on various factors, including, for example, sleep habits (Eichenlaub et al., 2023), physical activity (de Greeff et al., 2018), life context (Spruijt et al., 2018), attentional disorders (Pievsky and McGrath, 2018), and gender (Sobeh and Spijkers, 2012). Developmental approaches have also revealed significant changes in attentional functioning from childhood to adolescence, with different components maturing at distinct rates during this period (Mullane et al., 2016; Rueda et al., 2004). These changes occur during the school period and may impact academic learning, as supported by several studies highlighting the role of attention in meeting daily academic demands and achieving academic success (e.g., Gallen et al., 2023; Keller et al., 2020; Razza et al., 2012; Rueda et al., 2010). Therefore, measuring the various components of students’ attention in school settings is a valuable challenge that our study aims to address.

Many measures of attention have been developed for different age groups, ranging from children to older adults (e.g., Adolphe et al., 2022; Billard et al., 2021; Manly et al., 2001; Zelazo et al., 2014; Zygouris and Tsolaki, 2015). Some of these measures are considered ecological, as they aim to simulate situations encountered in daily life (Chevignard et al., 2012), and may include paper-and-pencil tasks or interviews. For instance, the TEA-Ch (Manly et al., 2001) detects attentional disorders in children up to the age of 16 through interviews and standardized tests that assess various components of attention. Other measures include questionnaires (e.g., Robaey et al., 2007), often completed by the children’s families or teachers and supplemented by a clinical interview. Computerized measures have also been developed, offering the significant advantage of automatic data recording without the need for external intervention. The Test of Attentional Performance for Children (KiTAP, Zimmermann and Fimm, 2002) is an example of a computerized battery assessing attention components in children (for a review of computerized cognitive test batteries, see Tuerk et al., 2023). Although many tests thus exist to measure students’ attention, they have several limitations. First, timing is not always well controlled, even though processing speed is known to affect cognitive performance (Su et al., 2015). Second, they generally require a fee-based license (e.g., CogState, KiTAP), are often tedious (Lumsden et al., 2016), and are not always suited to large cohorts of participants. Finally, few are web-based, and their psychometric qualities are often not considered (Bauer et al., 2012; Tuerk et al., 2023).

The rise of the internet in the 2000s enabled the development of web-based experiments (Anwyl-Irvine et al., 2021; Germine et al., 2012; Kochari, 2019; Reips, 2021). This development involves selecting a programming language (e.g., JavaScript or Python), hosting measures online via a dedicated platform (e.g., Pavlovia, pavlovia.org; Openlab, lab.js.org; JATOS, jatos.org), and, often, recruiting remote participants (e.g., Prolific or MTurk; see Sauter et al., 2020). Given the diversity of hardware and software encountered in online studies, Anwyl-Irvine et al. (2021) recently tested the quality of data obtained online. They examined how platforms such as Gorilla¹, jsPsych², Lab.js³, and PsychoPy⁴ affected stimulus presentation times and data recording. Based on a sample of 200,000 participants, their results showed that online testing platforms provide acceptable accuracy for both stimulus presentation times and recorded response times, similar to that obtained under laboratory conditions.

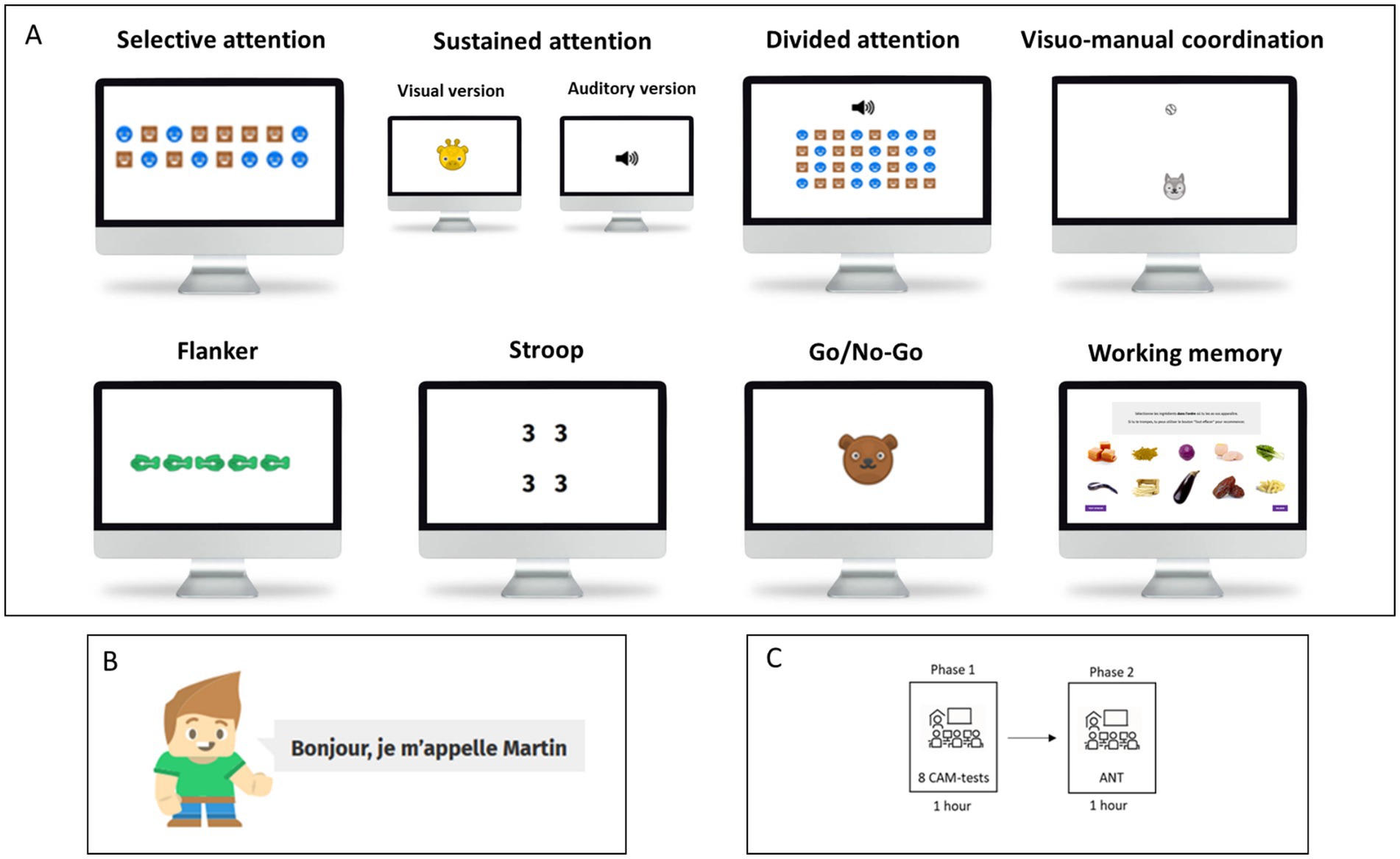

The present studies

Online studies have led to the development of numerous tasks and test batteries for assessing cognitive functions in general and attention in particular. One of the best-known and most widely used tasks in cognitive science is the ANT (Fan et al., 2002), which is available in web-based versions and measures three attention networks: alerting, orienting and executive control (de Souza Almeida et al., 2021). However, given our aim of testing students’ attentional capacities in situ, this influential test has several limitations. First, it does not allow fully independent assessment of attention components. Moreover, it does not take into account other cognitive processes such as divided attention (i.e., dual-tasking) and working memory, which is considered one of the core executive functions (Diamond, 2013). Furthermore, it was designed for laboratory protocols with adult or child participants and does not address the need for validated tools to study cognitive functions online in children and adolescents in ecological settings. The purpose of the current project was to develop accessible, easy-to-use, web-based tasks for assessing the various components of attention in preteens during school time, which trained teachers can administer in school computer rooms without specific technical skills or the presence of a researcher. Our aim was to create a test battery covering various components of attention in preteens, going beyond the scope of existing tools designed for laboratory settings. To this end, we designed a series of 8 tasks that can be used independently of each other, enabling the isolation of specific effects related to attention, executive functions and visuo-manual coordination. Moreover, each task in the battery is engaging for preteens, with coherent visuals and a story that ties all tasks together when they are administered in combination.
Finally, we conducted field tests of our battery in ecological settings directly within school environments. The developed battery, called CAM (Computerized Attention Measure; MIA in French, for “Mesure Informatique de l’Attention”), is composed of 8 tasks based on well-known cognitive paradigms and evaluates several components related to attention, executive functions and visuo-manual coordination (Figure 1). We assessed sustained, selective, and divided attention using the following tasks: a sustained attention task (Betts et al., 2006), a visual search task (Donnelly et al., 2007), and a divided attention task. Among the executive functions, we evaluated inhibitory control using three tasks: a Go/No-Go task (Casey et al., 1997), a counting Stroop task (Stroop, 1935; Windes, 1968) and a Flanker task (Eriksen and Eriksen, 1974). Working memory was assessed separately using a complex span task (Barrouillet et al., 2007; Barrouillet et al., 2011). One additional cognitive component, linked to attention and motor control, was evaluated using a visuo-manual coordination task. Two studies were conducted as part of a larger research project on student physical activity and attention in a school environment, in order to develop and evaluate the psychometric properties of the 8 web-based tasks designed to assess components of attention in an ecological setting.

Figure 1. The different tasks developed in the CAM battery (A), the cartoon character named Martin (B) and the general procedure of study 1 (C).

Regarding the completion rate, we tested the feasibility of the CAM tests in middle schools in collaboration with teachers. Since the goal was to identify any technical issues or time constraints specific to this setting, in which teachers were trained and then administered the tests, we made no assumptions about test completion outcomes. Based on previous work, we expected mean Reaction Time (RT) to differ between incongruent and congruent trials in both the Flanker and Stroop tasks (Draheim et al., 2019). We also expected the Error Rate (ER) to be higher for No-Go trials than for Go trials in the Go/No-Go task (Cragg and Nation, 2008). In the complex span task, we hypothesized better recall performance in the low cognitive load condition than in the high load condition (Barrouillet et al., 2007). For selective attention and divided attention, we predicted differences in mean ER between conditions, with the differences in the selective attention task increasing with set size (Donnelly et al., 2007; Woods et al., 2013). Additionally, we expected higher reliability for RT-based measures than for ER-based measures, and low reliability for cognitive interference measures computed as Stroop and Flanker difference scores, as previous studies have reported low reliability for these measures (Draheim et al., 2019). We also expected significant correlations between CAM battery tasks and the three attentional networks measured by the ANT, especially for tasks related to sustained attention (Oken et al., 2006), inhibitory control (as assessed by the Flanker, Go/No-Go, and Stroop tasks; Constantinidis and Luna, 2019; Diamond, 2013), and selective attention (as assessed by a visual search task; Gil-Gómez de Liaño et al., 2020; Woods et al., 2013).
To further investigate shared cognitive mechanisms, we conducted factor analyses to examine the latent structure of the CAM battery and assess whether common factors account for variability across tasks.

Study 1

Methods

Participants

Participants were 680 preteens (age: M = 11.52 years, SD = 0.51), all in the first year of lower secondary education in France (referred to as 6e, the equivalent of Grade 6 in the North American system), who were enrolled by their teachers from 13 French middle schools. Of these schools, 3 were private and 10 public, 8 were urban and 5 rural, and none was located in an educational priority network. Schools were recruited through a call for expressions of interest coordinated by a regional education inspector and disseminated to middle school principals. Interested schools volunteered by contacting the research team directly. All parents gave written informed consent for their children to take part in the experiment. This study was conducted in compliance with the Declaration of Helsinki and was approved by the local ethics committee (CER Grenoble Alpes, approval no. 2020-09-01-4). Thirty-four participants with a history of psychiatric disorders or diagnoses indicating special educational needs were excluded from the analyses, resulting in a final sample of 646 preteens (336 boys and 310 girls). At the start of each test session, we ensured that children requiring visual or auditory aids were using their corrective devices.

Materials

The tasks were written with the jsPsych library (de Leeuw, 2015) on top of the Experiment Factory⁵, a container-based platform for running online experiments (Sochat, 2018). All code related to the CAM battery is available online at: https://gitlab.com/mpinelli/CAM.

The graphical design of the tasks is suitable for middle school students (11 years on average), and features a cartoon character named Martin who visits a zoo (Figure 1B). For each task, the instructions are displayed on the screen. The tasks include training trials followed by testing trials and automatically begin one after the other in a random order. During the training trials, feedback is given by Martin, indicating whether the response is correct or not.

Testing procedure

Computerized attention measure (CAM): eight tasks of the CAM battery were used in this first study: Stroop, Flanker, Go/No-Go, selective attention, working memory, visual sustained attention, auditory sustained attention, and visuo-manual coordination (Figure 1A). The variables and measures of each task were selected, based on the literature, to reflect the cognitive processes of interest. For each task, we paired a gamified instructional approach with the cognitive task (Figure 1B). The parameters of the tasks described below can be found in the Git repository. They were defined within the framework of the project but can be modified directly in the source files of each task.

Flanker task: participants were instructed to observe fish appearing on the screen. They had to respond as quickly as possible by pressing the arrow key corresponding to the direction of the central fish, which was flanked by two fish on each side swimming either in the same direction as the central fish (congruent trials) or in the opposite direction (incongruent trials). On each trial, a row of five fish appeared at the center of the screen for 1,500 ms, preceded by a 1,000 ms interstimulus interval (ISI). The task began with 4 practice trials, followed by 50 test trials. ER and RT scores were recorded.

Stroop task: in this task, participants had to count tickets to help the head of the zoo. The task required them to count quickly the items presented in canonical patterns and to press the corresponding number key on the keypad (from 1 to 4) as quickly as possible. Importantly, the identity of the items within the patterns (digits from 1 to 4) was irrelevant; the focus was solely on accurately counting the number of items present. Each pattern was presented for 2,000 ms with an ISI of 1,000 ms. The task included congruent trials, in which the number of items matched the digits displayed, and incongruent trials, in which it did not. Four training trials preceded 50 test trials. ER and RT scores were recorded.

Go/No-Go task: participants were instructed to give a “candy” (Go trial) by pressing the space bar as quickly and accurately as possible when an animal appeared, and to withhold the response when a caretaker was shown (No-Go trial). Each image (animal or caretaker) remained on screen for 500 ms, and the ISI varied between 1,250 and 1,750 ms. The task comprised 10 training trials and 80 test trials (No-Go frequency: 25%). ER and RT scores were recorded.
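The Go/No-Go parameters above (80 test trials, 25% No-Go, 500 ms stimuli, ISI between 1,250 and 1,750 ms) can be turned into a trial list as sketched below. This is a hypothetical Python helper for illustration, not the battery’s jsPsych code (which is in the GitLab repository). Drawing the ISI uniformly in whole milliseconds and shuffling the trial order without sequence constraints are assumptions, since the text does not specify either.

```python
import random


def make_go_nogo_trials(n_trials=80, nogo_rate=0.25, isi_range=(1250, 1750), seed=None):
    """Hypothetical helper: build a shuffled Go/No-Go trial list.

    Assumptions (not stated in the paper): ISIs are drawn uniformly as whole
    milliseconds, and trial order is a plain shuffle with no sequence constraints.
    """
    rng = random.Random(seed)
    n_nogo = round(n_trials * nogo_rate)  # 80 * 0.25 -> 20 No-Go trials
    kinds = ["nogo"] * n_nogo + ["go"] * (n_trials - n_nogo)
    rng.shuffle(kinds)

    trials = []
    for kind in kinds:
        trials.append({
            "type": kind,
            # Go trials show an animal, No-Go trials show a caretaker
            "stimulus": "animal" if kind == "go" else "caretaker",
            "stimulus_ms": 500,
            "isi_ms": rng.randint(*isi_range),  # inclusive bounds
            # Go: press the space bar; No-Go: withhold the response
            "correct_response": "space" if kind == "go" else None,
        })
    return trials


trials = make_go_nogo_trials(seed=42)
n_nogo = sum(t["type"] == "nogo" for t in trials)
print(len(trials), n_nogo)  # 80 20
```

Fixing the number of No-Go trials (rather than drawing each trial with probability 0.25) guarantees that every participant sees exactly 20 No-Go trials.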

Sustained attention tasks: the tasks were adapted into two versions: a visual and an auditory one. Participants either saw or heard animals and were instructed to count them. Depending on the version, they had to press the spacebar whenever an animal was seen or heard. At the end of the task, they reported the total number of animals they had counted. The ISI varied randomly between 1,500 and 4,000 ms. Two practice sequences were followed by 30 to 40 randomly presented test trials. The number of animals counted, ER and RT scores were recorded.

Selective attention task: the aim of this task was to find a target (a brown monkey) among distractors (other monkeys). The target was a round brown monkey, and the distractors were square brown monkeys or round blue monkeys. Participants had to respond as quickly as possible by pressing the “P” key if the target was present and the “A” key if it was absent. There were 3 sets of 30 trials, with 8, 16, or 32 monkeys. The 90 test trials included target-present and target-absent trials and were preceded by 6 training trials. The stimuli remained on screen until the participant responded, with an ISI of 1,000 ms between trials. ER and RT scores were recorded.

Visuo-manual coordination task: participants had to play with an animal by sending it a ball. They had to click on the animal that appeared at the bottom of the screen and move the mouse to the ball that appeared at the top of the screen (top left, top center or top right). The animal was displayed for 5,000 ms, with a 1,000 ms delay between trials. The task involved 6 training trials and 30 test trials. Movement Time (MT) and ER scores were recorded.

Working memory task: the gamified goal of the working memory (WM) task was to feed animals and recall food items. Participants had to memorize 5 ingredients and, after each ingredient, indicate the position of 3 animals (distractors) appearing in the upper or lower part of the screen by pressing the “up” or “down” key. At the end of each sequence, children had to recall the memorized ingredients through a recognition task in which the 5 target ingredients were mixed with 5 new food items. The task involved 2 training sequences with a low cognitive load and 14 test sequences presented in random order (7 with a low cognitive load and 7 with a high cognitive load).

Attention network test: the ANT (Fan et al., 2002) was used to test the validity of the CAM tests. The ANT assesses three attentional networks (alerting, orienting, and executive control). The task and instructions were adapted for preteens in the computer rooms of middle schools. Participants had to determine the direction of a central arrow (left or right); depending on the condition, cues appeared above or below fixation, or no cue was shown. RT and ER scores were measured according to cue type (no cue, center cue, double cue, spatial cue) and flanker type (neutral, congruent, incongruent). The ANT involved 24 training trials with feedback and three experimental blocks without feedback, for a total of 288 test trials. The three network scores were computed as in Fan et al. (2002).
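Fan et al. (2002) define the three network scores as RT subtractions between conditions: alerting is no cue minus double cue, orienting is center cue minus spatial cue, and executive control is incongruent minus congruent flankers. The Python sketch below applies these subtractions to one participant’s correct trials. The trial field names are hypothetical, and using condition means rather than medians is an assumption, since the text does not state which was used.

```python
from statistics import mean


def ant_network_scores(trials):
    """Compute ANT network scores (ms) for one participant.

    Each trial is a dict with hypothetical keys:
      'cue'     in {'none', 'center', 'double', 'spatial'}
      'flanker' in {'neutral', 'congruent', 'incongruent'}
      'rt'      reaction time in ms
      'correct' True if the response was correct
    """
    correct = [t for t in trials if t["correct"]]

    def mean_rt(field, level):
        # Mean RT of correct trials in one level of one factor
        return mean(t["rt"] for t in correct if t[field] == level)

    return {
        "alerting": mean_rt("cue", "none") - mean_rt("cue", "double"),
        "orienting": mean_rt("cue", "center") - mean_rt("cue", "spatial"),
        "executive": mean_rt("flanker", "incongruent") - mean_rt("flanker", "congruent"),
    }


# Made-up trials for illustration; the incorrect trial is ignored
toy = [
    {"cue": "none", "flanker": "congruent", "rt": 600, "correct": True},
    {"cue": "double", "flanker": "congruent", "rt": 560, "correct": True},
    {"cue": "center", "flanker": "incongruent", "rt": 650, "correct": True},
    {"cue": "spatial", "flanker": "incongruent", "rt": 610, "correct": True},
    {"cue": "spatial", "flanker": "incongruent", "rt": 990, "correct": False},
]
print(ant_network_scores(toy))
```

Positive scores indicate a benefit of the warning cue (alerting), of the spatial cue (orienting), and a cost of conflicting flankers (executive control).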

General procedure

The study comprised two sessions (Figure 1C). In the first session, participants performed the 8 CAM tests, which were used to evaluate reliability and feasibility. Before this session, teachers received coaching on the testing protocol and were instructed on how to address any technical problems. Teachers received a total of 2 h of training. The first hour focused on presenting the CAM battery and the overall study protocol. The second hour involved testing the full set of tasks in each school’s computer room under the supervision of a psychology researcher. This session aimed to identify any technical issues, train the teachers in task administration, and verify that data were being properly recorded. The experiment took place in classrooms equipped with an individual computer for each student, complete with a keyboard/keypad, mouse and headphones. Session 1 lasted about 1 h, during which the 8 web-based tasks were administered in random order. Although there was no formal break during the session, a short pause occurred between tasks while the next one was loading. The second session took place in the classrooms of the middle schools that had completed session 1. During this session, participants carried out the ANT, which took about 1 h and was scheduled approximately 1 month after session 1. While the whole experiment was supervised by a psychology researcher, a volunteer teacher managed the organization of the two group sessions in each class.

Results

We examined the completion rates, performance metrics, validity, and reliability of the 8 web-based tasks designed to evaluate the attentional processes of preteens during school time. Completion rates were assessed for the entire session. Preteens’ performance was evaluated by analysing differences in results across conditions in each task (e.g., congruent vs. incongruent for the Stroop task). Reliability was measured using the split-half method. Finally, validity was assessed by comparing the CAM tests with the three dimensions of attention from the classical ANT for which psychometric properties have been considered in previous studies (e.g., Macleod et al., 2010).

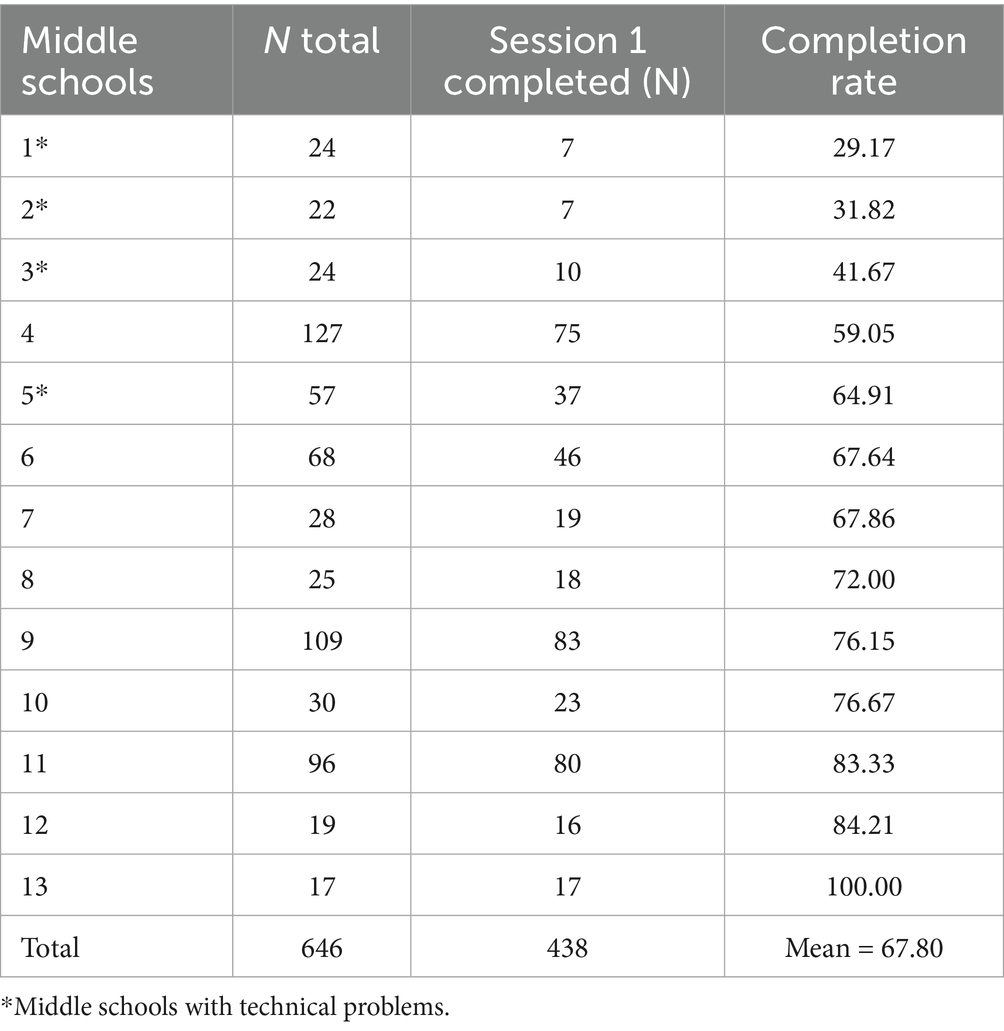

Completion rate

The completion rate, defined as the percentage of participants who completed all 8 tasks in session 1, varied across the 13 middle schools, ranging from 29.17% to 100%, with an overall mean of 67.80% (see Table 1). Failure to complete the full battery was due either to technical issues during the testing session (poor network quality that prevented tasks from loading and significantly extended the session; see the middle schools concerned in Table 1) or to insufficient time allocated by the teacher in the computer room. In session 2, a total of 100 preteens completed the ANT.

Performance

Interference scores were computed as RT differences for the Flanker and Stroop tasks (Paap et al., 2020). For the Go/No-Go and selective attention tasks, condition differences were evaluated using ER scores (Meule, 2017; Woods et al., 2013), while the cognitive cost effect in the WM task was examined using the percentage of correctly recalled items (Barrouillet et al., 2007). RTs shorter than 200 ms or more than 3 SD above the mean were excluded, along with RTs from incorrect responses. In all tasks except the MT-based and accuracy-based tasks (i.e., the selective attention, visuo-manual coordination, and WM tasks), participants with an ER exceeding 40% were excluded. Furthermore, participants who consistently pressed the same button on all trials of a given task condition were removed. Below, we present preteens’ performance in each condition of each CAM task. Because of data loss caused by technical problems, which particularly affected the selective attention and auditory sustained attention tasks, and because of the exclusion of outliers, some individual data were missing, leading to variations in sample size across tasks. The proportion of data loss was 49.07% for the auditory sustained attention task, 38.85% for the selective attention task, and ranged from 21.36% to 31.42% for the other tasks. We therefore present the performance results for each task individually. Performance data for each task are presented in Table 2.
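The trimming and exclusion rules above can be expressed as a few small functions. The Python sketch below is a hypothetical illustration, not the authors’ analysis script. It assumes that the 3 SD cut-off is applied per participant and per condition, only to slow responses, after removing errors and RTs under 200 ms; it also applies the same-button check to each condition of tasks with more than one response key. All field names (`rt`, `correct`, `key`, `condition`) are hypothetical.

```python
from statistics import mean, stdev


def clean_rts(trials, min_rt=200, sd_cut=3.0):
    """RTs kept for analysis: correct trials, >= min_rt ms, and not more than
    sd_cut SDs above the mean of the remaining RTs (assumed one-sided cut-off)."""
    rts = [t["rt"] for t in trials if t["correct"] and t["rt"] >= min_rt]
    if len(rts) < 2:
        return rts  # SD undefined; nothing more to trim
    upper = mean(rts) + sd_cut * stdev(rts)
    return [rt for rt in rts if rt <= upper]


def error_rate(trials):
    """Percentage of incorrect trials."""
    return 100.0 * sum(not t["correct"] for t in trials) / len(trials)


def keep_participant(trials, max_er=40.0, check_er=True):
    """Apply the ER > 40% rule (skip it for MT/accuracy-based tasks with
    check_er=False) and drop participants who used one key throughout a condition."""
    if check_er and error_rate(trials) > max_er:
        return False
    keys_by_condition = {}
    for t in trials:
        keys_by_condition.setdefault(t["condition"], set()).add(t["key"])
    return all(len(keys) > 1 for keys in keys_by_condition.values())


def interference_score(trials):
    """Flanker/Stroop interference: mean cleaned RT, incongruent minus congruent (ms)."""
    incongruent = clean_rts([t for t in trials if t["condition"] == "incongruent"])
    congruent = clean_rts([t for t in trials if t["condition"] == "congruent"])
    return mean(incongruent) - mean(congruent)


# Made-up Flanker trials for one participant
flanker = [
    {"condition": "congruent", "rt": 500, "correct": True, "key": "left"},
    {"condition": "congruent", "rt": 520, "correct": True, "key": "right"},
    {"condition": "congruent", "rt": 150, "correct": True, "key": "left"},  # anticipation, dropped
    {"condition": "incongruent", "rt": 560, "correct": True, "key": "right"},
    {"condition": "incongruent", "rt": 580, "correct": True, "key": "left"},
    {"condition": "incongruent", "rt": 600, "correct": False, "key": "left"},  # error, dropped
]
if keep_participant(flanker):
    print(interference_score(flanker))
```

Computing the SD cut-off within each participant and condition, rather than across the whole sample, is one common choice; the text does not say which level was used.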

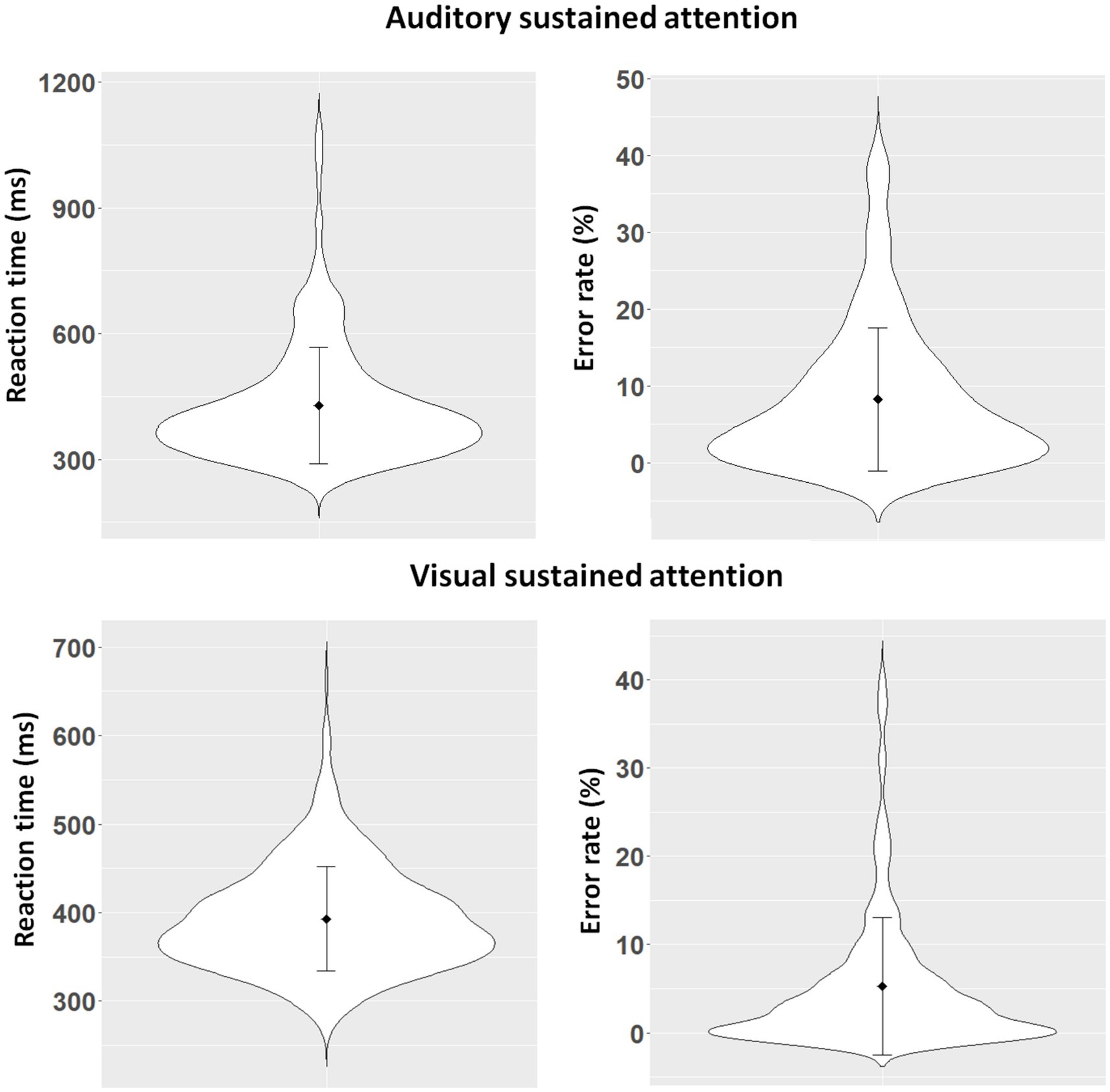

Table 2. Reaction times, error rates and condition effects for each task of the CAM battery in studies 1 and 2.

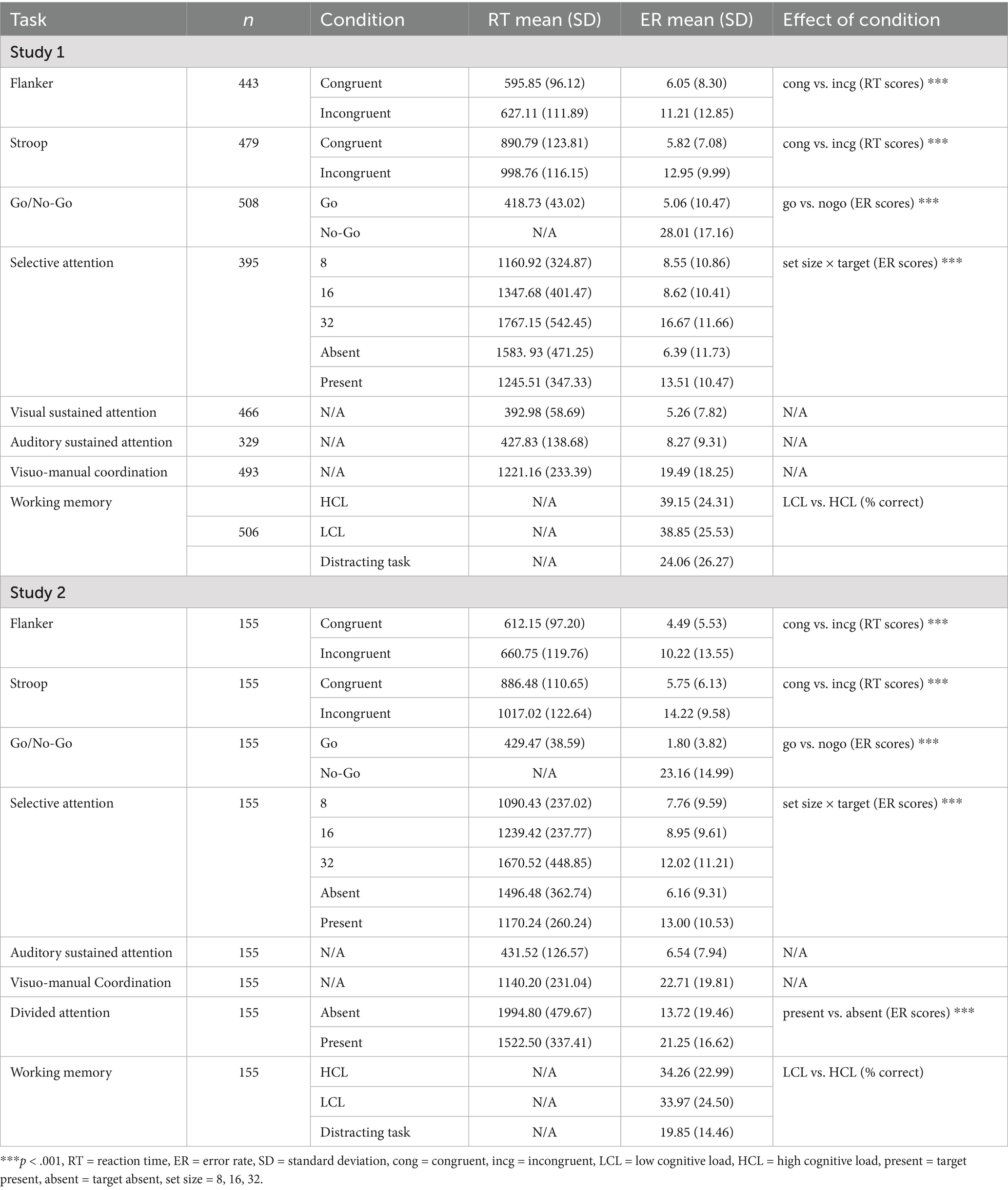

Inhibitory control was assessed with the Flanker, Stroop and Go/No-Go tasks, using ER and RT scores. As shown in Figure 2, the distribution of ER scores varied across preteens and across the Flanker, Stroop, and Go/No-Go tasks. The mean ER was 10.10% for the Stroop task, 16.54% for the Go/No-Go task and 9.15% for the Flanker task. Moreover, both the Flanker and Stroop tasks elicited a significant difference in mean RT between incongruent and congruent trials, F(1, 442) = 154.76, p < 0.001, η2p = 0.25 (mean congruent = 595.85 ms, SD = 96.12; mean incongruent = 627.11 ms, SD = 111.89; delta = 31.27 ms) and F(1, 478) = 654.76, p < 0.001, η2p = 0.58 (mean congruent = 890.79 ms, SD = 123.81; mean incongruent = 998.76 ms, SD = 116.15; delta = 107.97 ms), for Flanker and Stroop, respectively. Results also showed a significant difference in ER between Go (M = 5.06, SD = 10.47) and No-Go trials (M = 28.01, SD = 17.16) [F(1, 507) = 735.14, p < 0.001, η2p = 0.59] (Figure 2).

Figure 2. Performance distributions (error bars represent standard deviation) for the Flanker task (n = 443), the Stroop task (n = 479) and the Go/No-Go task (n = 508).
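The partial eta squared values reported with these F tests can be recovered from each F ratio and its degrees of freedom, since η2p = (F × df_effect) / (F × df_effect + df_error). For a within-subject factor with two levels, the repeated-measures F(1, n − 1) also equals the square of the paired t statistic. The Python sketch below illustrates both relations; the function names are ours and the four-participant RT vectors are made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def paired_f(cond_a, cond_b):
    """Repeated-measures F for a two-level factor, computed as the squared paired t.

    Returns (F, df_effect, df_error) with df = (1, n - 1).
    """
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t ** 2, 1, n - 1


# F values reported above for the Stroop and Go/No-Go tasks
print(round(partial_eta_squared(654.76, 1, 478), 2))  # 0.58
print(round(partial_eta_squared(735.14, 1, 507), 2))  # 0.59

# Made-up RTs (ms) for four participants: incongruent vs. congruent
f, df1, df2 = paired_f([600, 620, 640, 610], [580, 590, 600, 600])
print(round(f, 2), df1, df2, round(partial_eta_squared(f, df1, df2), 3))  # 15.0 1 3 0.833
```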

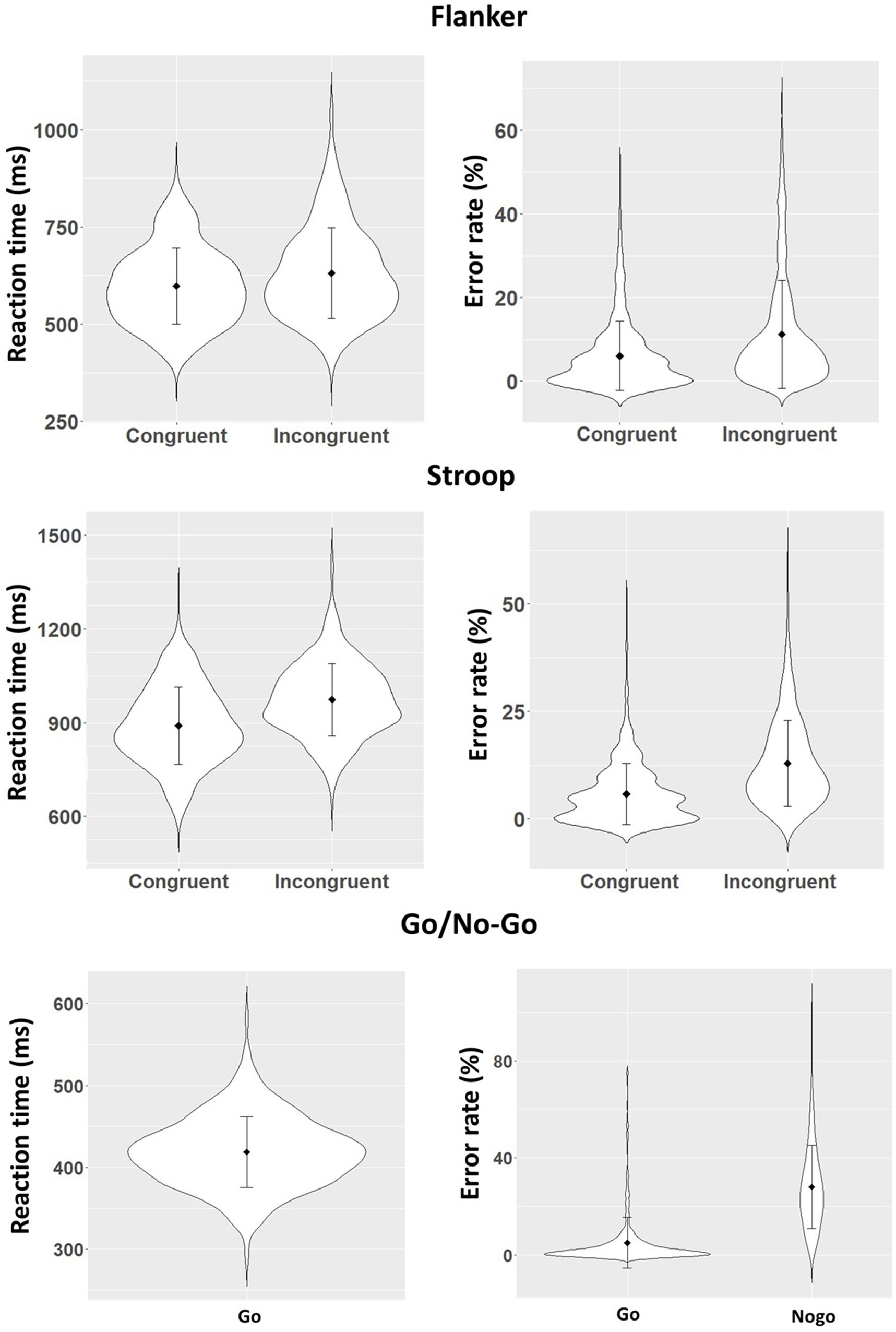

Sustained attention was evaluated with the visual and auditory sustained attention tasks, for which RT and ER were computed (Figure 3). In both tasks, the ER corresponded to the proportion of trials in which preteens missed a target (i.e., failed to press the space bar when required). The mean ER was 5.26% and 8.27% for the visual and auditory versions, respectively. However, only 40.12% of preteens reported the correct number of auditory stimuli, and only 47.42% reported the correct number of visual stimuli. We also observed a significant positive correlation between RT and ER for both the visual (r = 0.43, p < 0.001) and auditory (r = 0.17, p < 0.01) sustained attention tasks: the more targets preteens missed, the longer they took to respond (Figure 3).

Figure 3. Performance distributions (error bars represent standard deviation) for the auditory sustained attention task (n = 329) and the visual sustained attention task (n = 466).

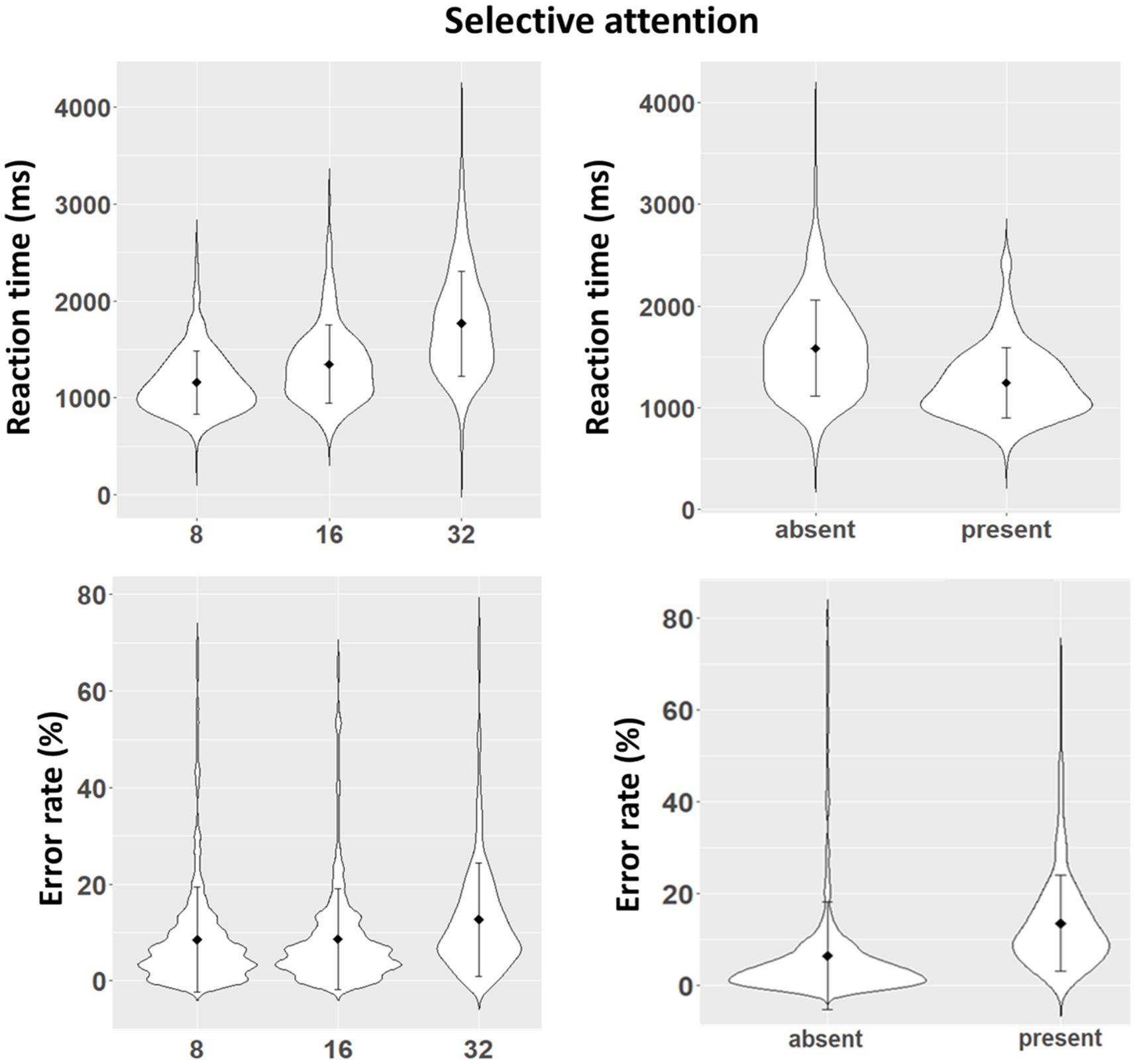

Results on the selective attention task showed a significant interaction between set size and target presence [F(2, 798) = 69.55, p < 0.001, η2p = 0.15]. The interaction was decomposed into simple effects, with Bonferroni-corrected results. There was a significant difference between the present and absent conditions for set sizes of 32 items, t = 15.52, p < 0.001 (delta presence/absence = 12.66%), 16 items, t = 8.02, p < 0.001 (delta presence/absence = 4.83%), and 8 items, t = 5.47, p < 0.001 (delta presence/absence = 3.40%). In other words, target presence had a greater impact on ER when the set size was larger (Figure 4).

Figure 4. Performance distributions (error bars represent standard deviation) for the selective attention task (n = 395).

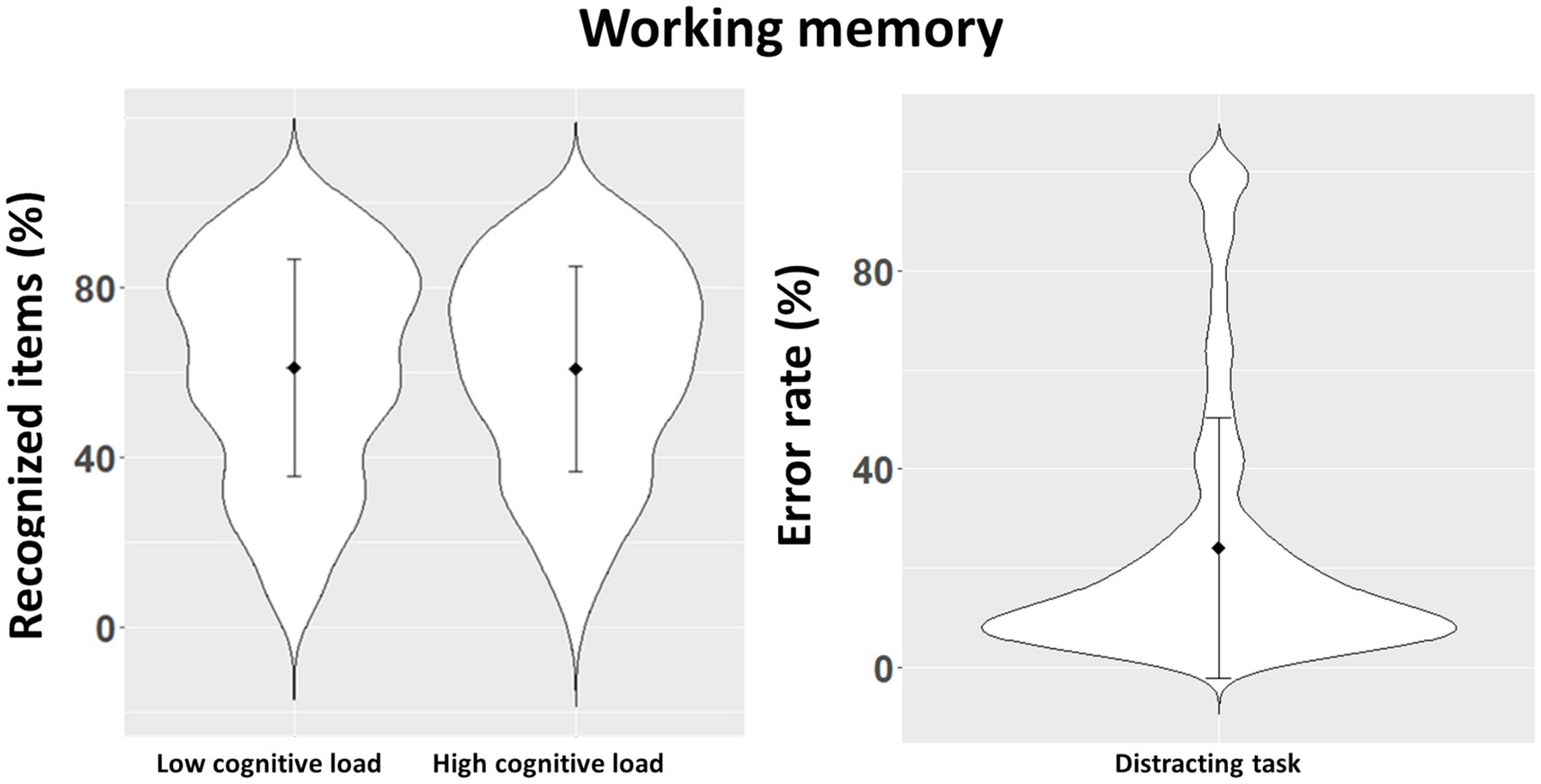

WM performance was evaluated through the mean rate of correctly recognized items in the complex span task, and the ER score measured performance on the distracting task. Both measures were computed as a function of the cognitive load imposed by the distracting task (low vs. high). The ER score of the distracting task was entered as a covariate to control for its possible influence on the relationship between cognitive load and the percentage of correctly recognized items. Memory performance did not differ between the two cognitive load conditions, with 61.15% and 60.85% of items correctly recognized in the low and high load conditions, respectively [F(1, 505) = 0.17, p = 0.67] (Figure 5). The ER score in the distracting task (24.06%) did not affect the cognitive load effect on correctly recognized items.

Figure 5. Performance distributions (error bars represent standard deviation) for the working memory task (n = 506).

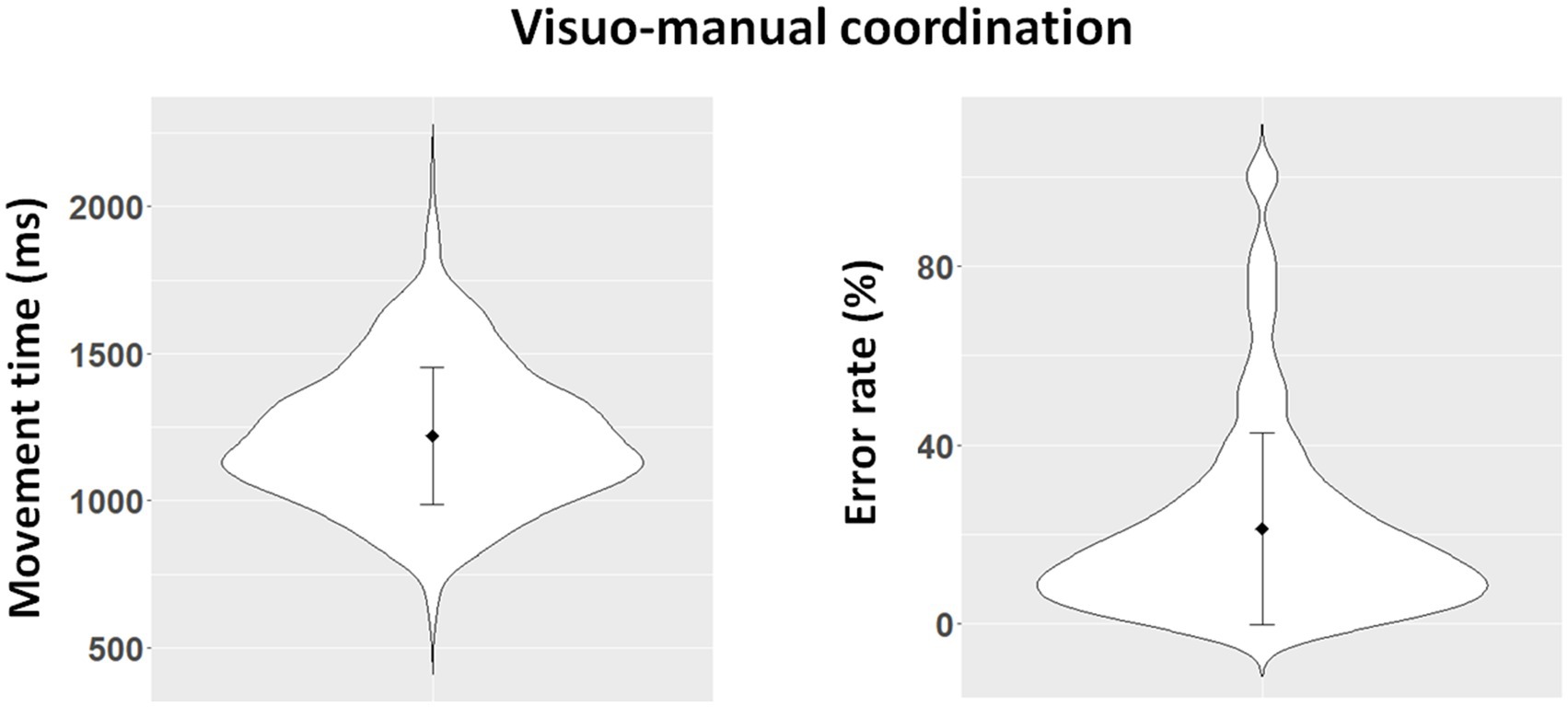

For the visuo-manual coordination task, the ER score reflected trials in which the target was missed. MT corresponded to the time taken to move from the initial position to the target, computed for each of the three target positions. The data showed large individual differences in both ER and MT (Figure 6). The mean ER was 19.49%.

Figure 6. Performance distributions (error bars represent standard deviation) for the visuo-manual coordination task (n = 493).

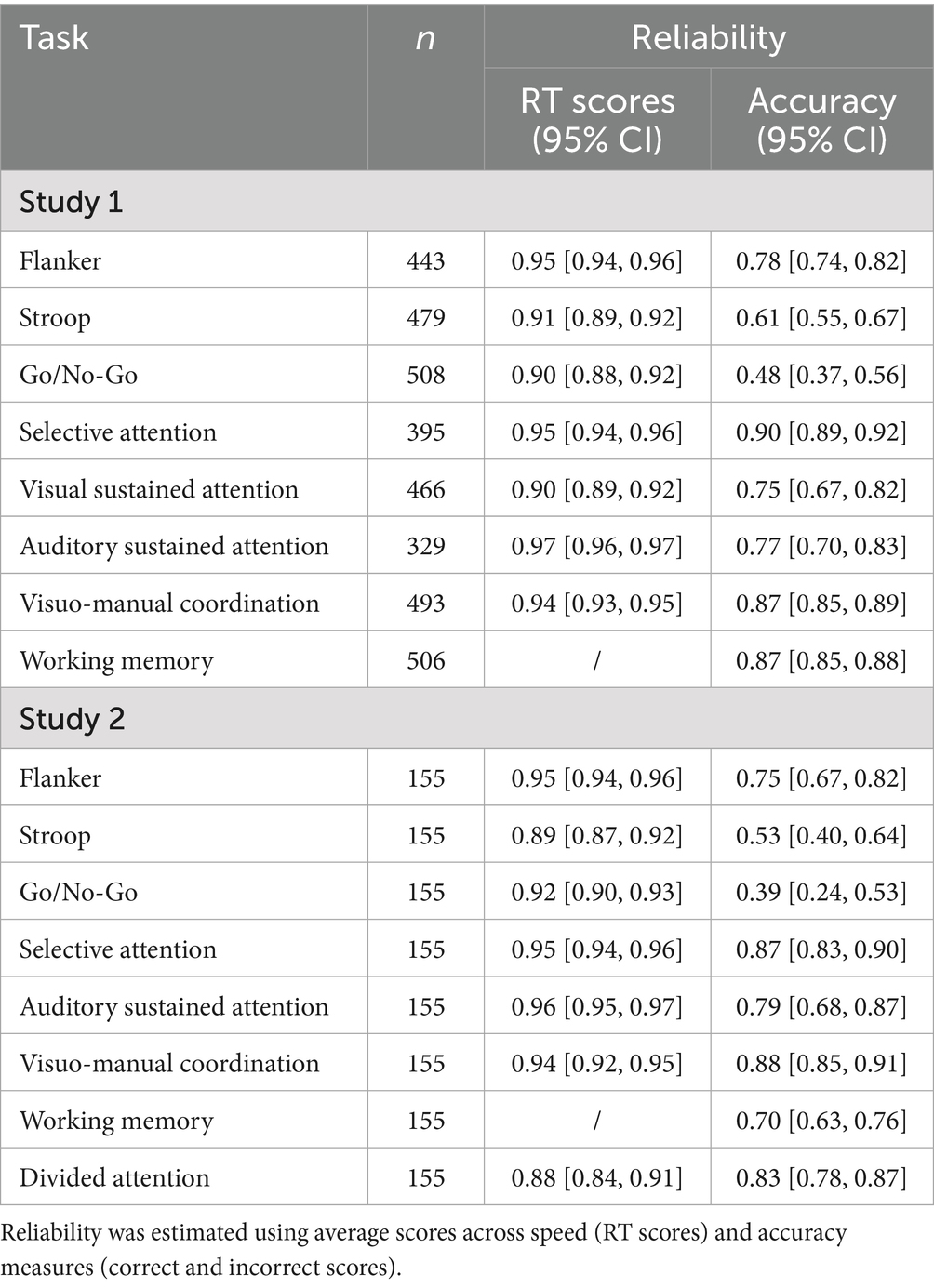

Reliability

Reliability was assessed for both response speed (mean RTs) and accuracy (correct and incorrect responses). We used a permutation-based split-half method (R package “splithalf”; Parsons, 2021), in which trials were randomly split into two halves 10,000 times. A correlation between halves was computed for each split, and the reliability estimate was obtained by averaging the 10,000 correlations and applying the Spearman–Brown formula. Split-half results for RT and ER scores are presented in Table 3. RT scores showed satisfactory reliability coefficients, ranging from 0.90 to 0.97. ER scores showed acceptable coefficients (ranging from 0.61 to 0.90), except for the Go/No-Go task, which had a low coefficient (0.48). The reliability of RT difference scores was low for the Flanker and Stroop tasks (0.29 and 0.03, respectively).

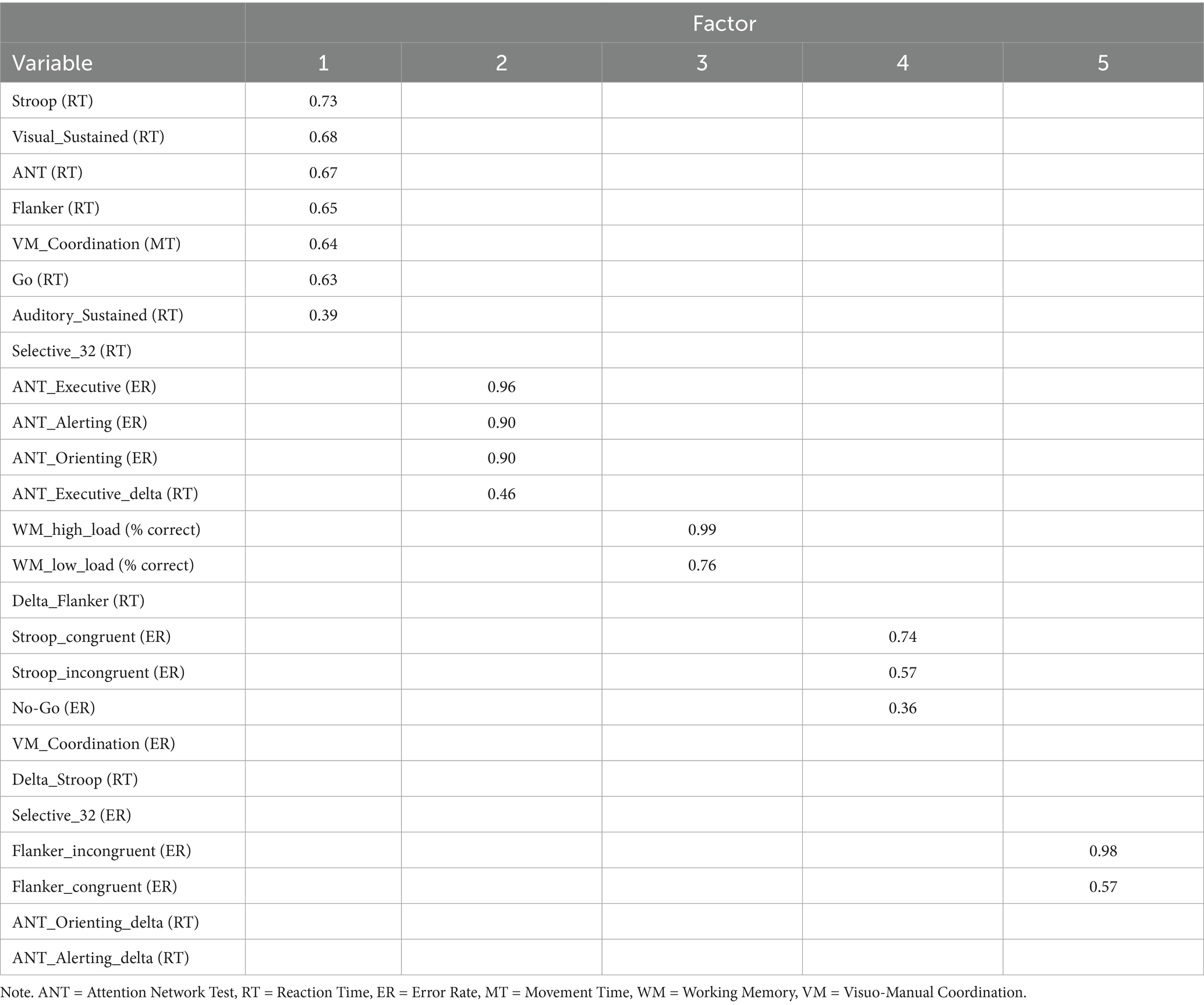

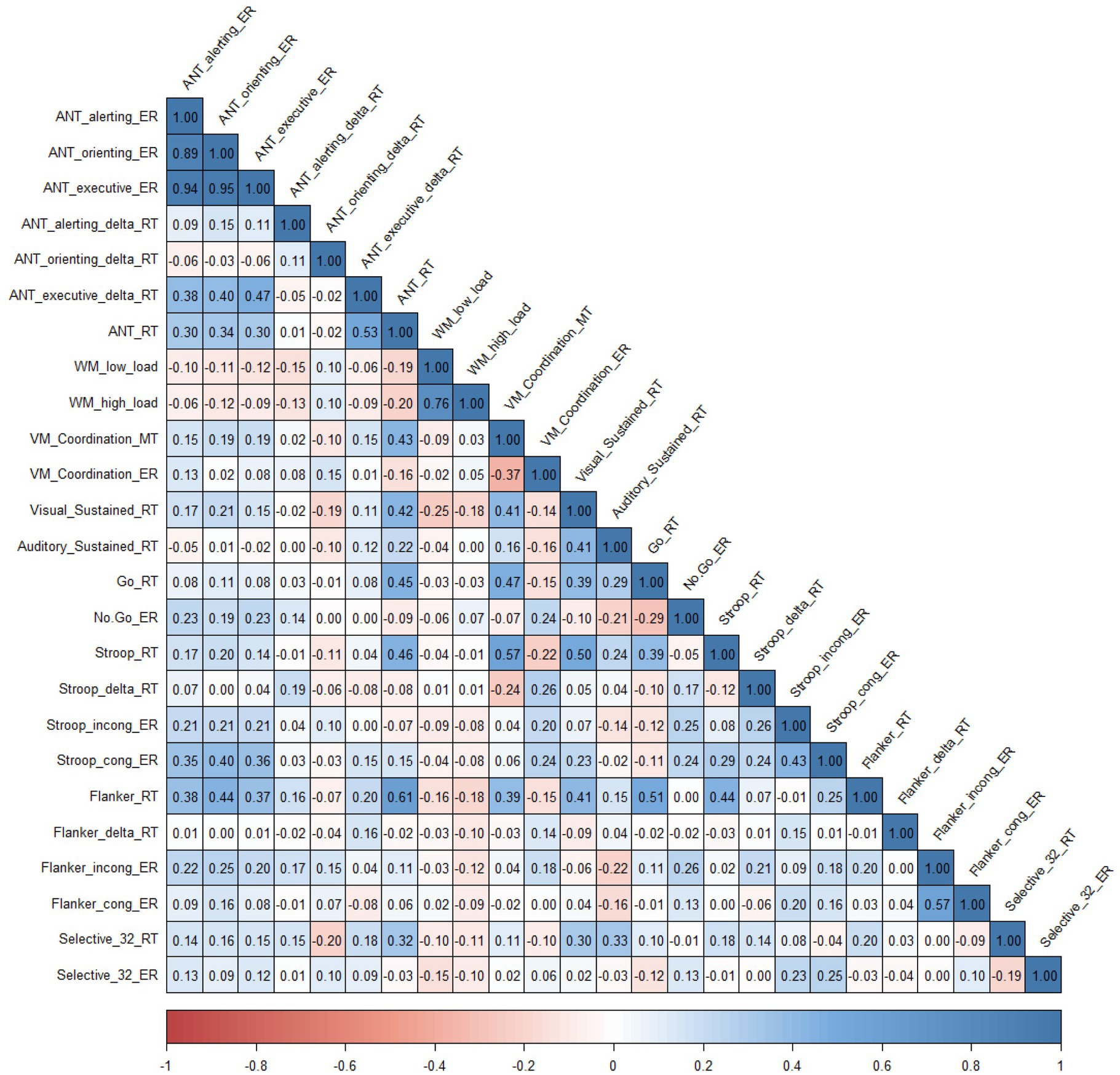

Validity

We first evaluated validity in a subsample of preteens from middle schools who completed all CAM battery tests in session 1 and then completed the ANT in session 2 (n = 100). Pearson correlations (significance threshold: α = 0.05) are presented in Figure 7. Next, we conducted an exploratory factor analysis, including the ANT measures, to study the latent structure of the CAM battery. A total of 25 variables were selected for the convergent validity and exploratory factor analyses. These variables were drawn from the tasks based on measures used in the literature and on their relevance for evaluating the cognitive processes under investigation. The selection aimed to balance speed-based and accuracy-based measures while limiting the number of variables included in the factor analysis, given the sample size (see Figure 7 and Table 4).

Figure 7. Correlation matrix between ANT and CAM tests. ANT, Attention Network Test; RT, Reaction Time; ER, Error Rate; MT, Movement Time; WM, Working Memory; VM, Visuo-Manual Coordination; cong, congruent; incong, incongruent.

Effect sizes were interpreted according to Gignac and Szodorai (2016), with r = 0.10 considered small, r = 0.20 typical, and r = 0.30 relatively large. All RT scores from the CAM battery were significantly correlated with the ANT RT score, with correlations ranging from typical to relatively large (0.22 < r < 0.61). In addition, a typical, significant correlation was observed between the ANT RT score and working memory performance in the high-load condition (r = −0.20). Regarding sustained attention tasks, the RT score for visual sustained attention showed a typical, significant correlation with the ER score from the ANT orienting component (r = 0.21). However, neither the visual nor the auditory sustained attention task correlated significantly with the ER score from the ANT alerting component or with the alerting delta score. A typical, significant negative correlation was also observed between the RT score from the selective attention task and the ANT orienting delta score (r = −0.20). Additionally, ER scores from the No-Go and Stroop tasks, as well as both RT and ER scores from the Flanker task, correlated significantly with the ER score from the ANT executive control component, with correlations ranging from typical to relatively large (0.21 < r < 0.37). Finally, the ANT executive delta score showed a typical, significant correlation with the RT score from the Flanker task (r = 0.20).
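The interpretation rule can be made explicit with a small helper. This Python sketch is illustrative only: it computes Pearson's r and the associated t statistic, t = r√(n − 2)/√(1 − r²) with n − 2 degrees of freedom, and labels |r| using the Gignac and Szodorai (2016) benchmarks quoted above, under our assumption that each benchmark acts as the lower bound of its category. The function names and the "below small" label are hypothetical; in practice, scipy.stats.pearsonr returns r together with its two-sided p-value.

```python
import math

import numpy as np


def pearson_r(x, y) -> float:
    """Pearson correlation between two equally long score vectors."""
    return float(np.corrcoef(x, y)[0, 1])


def t_statistic(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def effect_size_label(r: float) -> str:
    """Label |r| with Gignac and Szodorai's (2016) benchmarks (assumed lower bounds)."""
    a = abs(r)
    if a >= 0.30:
        return "relatively large"
    if a >= 0.20:
        return "typical"
    if a >= 0.10:
        return "small"
    return "below small"  # hypothetical label for |r| < 0.10


# With n = 100, r = 0.20 gives t(98) of about 2.02, just above the
# two-tailed 0.05 critical value of about 1.98
print(effect_size_label(-0.20), round(t_statistic(0.20, 100), 2))
```

This also shows why, with the validation subsample of 100 participants, correlations around |r| = 0.20 sit right at the edge of significance.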

Between-task correlations revealed significant associations between ER and RT scores across the Stroop, Flanker, and Go/No-Go tasks, ranging from typical to relatively large (0.24 < r < 0.51). A typical correlation was also found between the Stroop delta RT score and the ER score from the incongruent condition of the Flanker task (r = 0.21). Additionally, typical correlations were observed between ER scores from the selective attention task and ER scores from both the incongruent (r = 0.23) and congruent (r = 0.25) conditions of the Stroop task. The RT score from the selective attention task was also correlated with RT scores from the auditory and visual sustained attention tasks, with relatively large correlations (r = 0.33 and r = 0.30, respectively). A typical negative correlation was observed between the low cognitive load condition of the WM task and visual sustained attention (r = −0.25). The ER score from the visuo-manual coordination task showed typical correlations with the ER score from the No-Go condition (r = 0.24), the RT score from the Stroop task (r = −0.24), and the delta RT score from the Stroop task (r = 0.26). The MT score from the visuo-manual coordination task correlated with the RT scores from the visual sustained attention, Go condition, Stroop, and Flanker tasks (−0.24 < r < 0.57). Finally, the sustained attention tasks correlated with the six other tasks of the CAM battery, with correlations ranging from typical to relatively large (−0.25 < r < 0.50).

An exploratory factor analysis was conducted on the ANT and CAM tasks to determine their latent structure (Table 4). The number of factors was determined by parallel analysis. The analysis yielded a 5-factor solution (maximum likelihood extraction, varimax rotation) explaining 45.01% of the variance; only factor loadings greater than 0.35 are reported. Regarding the adequacy of the data for factor analysis, Bartlett’s test of sphericity was significant (p < 0.001), and sampling adequacy was acceptable (Kaiser-Meyer-Olkin = 0.60). Most RT-based variables loaded onto Factor 1. The ANT loaded onto Factor 2, the WM task onto Factor 3, the Stroop task onto Factor 4, and the Flanker task onto Factor 5. The Go/No-Go task loaded onto the same factor as the Stroop task (Factor 4). The selective attention task did not load onto any factor.
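Horn's parallel analysis, used here to choose the number of factors, compares the observed eigenvalues with those obtained from random data of the same size. The following Python/NumPy sketch uses eigenvalues of the full correlation matrix and the 95th percentile of the simulated eigenvalues as the retention threshold. These are common choices but assumptions on our part, since the software settings used in the study are not detailed here (implementations such as fa.parallel in the R psych package can also compare factor-analytic eigenvalues).

```python
import numpy as np


def parallel_analysis(data, n_iter=200, percentile=95, seed=0):
    """Horn's parallel analysis on an (n_participants x n_variables) array.

    Returns the number of factors to retain, the observed eigenvalues and the
    random-data thresholds (both in descending order).
    """
    rng = np.random.default_rng(seed)
    n, p = data.shape
    observed = np.linalg.eigvalsh(np.corrcoef(data, rowvar=False))[::-1]
    simulated = np.empty((n_iter, p))
    for i in range(n_iter):
        noise = rng.standard_normal((n, p))  # uncorrelated data of the same shape
        simulated[i] = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))[::-1]
    threshold = np.percentile(simulated, percentile, axis=0)
    # Retain factors until the first observed eigenvalue that does not beat chance
    n_factors = 0
    for obs, thr in zip(observed, threshold):
        if obs <= thr:
            break
        n_factors += 1
    return n_factors, observed, threshold


# Simulated example: 8 variables driven by 2 independent latent factors
rng = np.random.default_rng(2)
latent = rng.standard_normal((300, 2))
loadings = np.zeros((8, 2))
loadings[:4, 0] = 0.8
loadings[4:, 1] = 0.8
X = latent @ loadings.T + 0.6 * rng.standard_normal((300, 8))
k, obs, thr = parallel_analysis(X)
print("factors to retain:", k)
```

The retained factors would then be extracted (e.g., by maximum likelihood) and rotated, which this sketch does not cover.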

Discussion: study 1

The goal of this first study was to present and evaluate a new computerized battery including 8 cognitive tasks measuring attention, executive functions, and visuo-manual coordination. Although completion rates varied widely between middle schools (from 29.17% to 100%), the tasks measuring several components of attention proved effective for evaluating cognitive performance. Moreover, except for accuracy in the Go/No-Go task and RT difference scores in the Flanker and Stroop tasks, split-half reliability indicated that the tasks of the CAM battery had good internal consistency. Relationships between the tasks of the CAM battery and the ANT were observed, suggesting that they involve convergent cognitive mechanisms. Finally, the factor structure showed that the Go/No-Go and Stroop tasks loaded onto one cluster, whereas the Flanker and ANT tasks loaded onto a separate cluster. In addition, variables related to processing speed, as assessed by RT, converged onto a single cluster. In a second study, we aimed to replicate the results of study 1 with a new sample of students in order to confirm that the CAM battery constitutes a usable and useful tool for future research. Because completion rates varied owing to technical issues and to a session length that left no margin for test completion time, validity in study 1 was tested on a restricted sample of participants. This variation in completion rate could also have influenced performance results. Consequently, study 2 was carried out with fewer participants per session (groups of 15), and performance, reliability, and validity analyses were conducted on participants who had completed all 8 tasks. In addition, a new task assessing divided attention was included in the CAM battery to target an additional aspect of attention. Finally, the WM task was adapted to address the absence of a cognitive load effect and to reduce task duration in order to minimize participant attrition.

Study 2

Methods

Participants

Two hundred two preteens (mean age = 11.06 years, SD = 0.37; 99 girls and 103 boys) from French middle schools completed the CAM battery in this second study. None of the middle schools was located in an educational priority network. All middle schools were public, with 7 located in urban areas and 2 in rural areas. The recruitment procedure was similar to that of study 1. Regarding parental occupation, 16 parents (mothers = 11, fathers = 5) were retired or unemployed, 126 (mothers = 66, fathers = 60) were employees or workers, 100 (mothers = 53, fathers = 47) held intermediate professions, 51 (mothers = 20, fathers = 31) were executive professionals, 25 (mothers = 7, fathers = 18) were craftsmen, merchants, or company managers, 4 (mothers = 2, fathers = 2) were farmers, and 82 did not provide information. Written informed consent was given by parents. The data presented here correspond to students who completed all tasks of the CAM battery and did not receive any intervention. As in study 1, participants’ visual and auditory corrections were verified. Participants had no history of psychiatric disorders or diagnoses indicating special educational needs.

Materials

The materials and measures of the present study were the same as those described in study 1, with adjustments to the testing procedure. Because a new online task evaluating divided attention was introduced, the visual sustained attention task had to be dropped owing to time constraints. Moreover, the WM task was adapted to address the lack of a cognitive load effect identified in the first study. Participants were required to press the “M” key (for “up”) or the “Q” key (for “down”) to indicate the spatial position of the stimulus; this change was intended to increase the difficulty of the distracting task and thereby strengthen the cognitive load effect. In addition, the number of sequences was reduced to 8 (compared with 14 in the first study) to shorten the task duration. Finally, auditory instructions were added to each task of the CAM battery, allowing preteens to read and/or listen to the instructions as they preferred. All task materials and procedures are available in the Git repository.

Divided attention task: participants had two goals in this task, namely to find a visual target (an animal) among distractors and to count how many animal sounds they heard (Figure 1A). There were 30 test trials with a single set of 32 monkeys: 15 “present” trials and 15 “absent” trials. Six training trials preceded the 30 test trials. The stimuli were displayed for up to 5,000 ms with an ISI of 1,000 ms. Animal count, ER, and RT scores were recorded.
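To make the trial structure concrete, here is a hypothetical Python sketch of a divided attention trial list: six training trials followed by 30 test trials (15 target-present, 15 target-absent) in random order, each with the 32-item display, the 5,000-ms maximum display time, and the 1,000-ms ISI given above. The field names, the even present/absent split of the training trials, and the shuffling scheme are our assumptions; the actual task implementation is in the CAM Git repository.

```python
import random

SET_SIZE = 32          # items per display
MAX_DISPLAY_MS = 5000  # maximum display duration (assumed to end earlier on response)
ISI_MS = 1000          # inter-stimulus interval


def make_trial(phase, target_present):
    """One trial description (hypothetical field names)."""
    return {
        "phase": phase,
        "target_present": target_present,
        "set_size": SET_SIZE,
        "max_display_ms": MAX_DISPLAY_MS,
        "isi_ms": ISI_MS,
    }


def build_trial_list(seed=None, n_training=6, n_present=15, n_absent=15):
    """Training block followed by a shuffled test block (hypothetical layout)."""
    rng = random.Random(seed)
    training = [make_trial("training", i % 2 == 0) for i in range(n_training)]
    test = [make_trial("test", True) for _ in range(n_present)]
    test += [make_trial("test", False) for _ in range(n_absent)]
    rng.shuffle(training)
    rng.shuffle(test)
    return training + test


trials = build_trial_list(seed=42)
print(len(trials), sum(t["target_present"] for t in trials if t["phase"] == "test"))
```

Building each trial with a fresh dictionary (rather than repeating one object) keeps per-trial fields, such as recorded responses, independent.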

General procedure

The general procedure for administering the CAM battery was similar to that of the first study, except that groups of 15 participants were preferred and participants were provided with headphones to listen to the auditory instructions. Moreover, students completed the 8 CAM tasks in computer rooms during a single session.

Results

Performance, reliability, and validity were assessed in the sample of participants who completed all eight tasks in the battery. Data filtering and analysis followed the same procedure as in study 1. Following data filtering, the final sample included 155 preteens.

Performance

All performance results were consistent with those from study 1 (see Table 2), and all expected effects were significant except the cognitive load effect in the WM task. Technical issues with the Flanker task led to variability in the number of trials across congruence conditions, so descriptive data for this task are presented for indicative purposes only. However, results remained comparable in terms of performance, reliability, and validity (including or excluding the Flanker task did not alter the factor-analysis pattern of the other tasks). Results for the WM task showed no significant effect of cognitive load on memory performance [F(1, 154) = 0.03, p = 0.86], with 66.03% of items correctly recognized in the low cognitive load condition and 65.74% in the high cognitive load condition. Error rates in the distracting task did not influence the effect of cognitive load on item recognition.

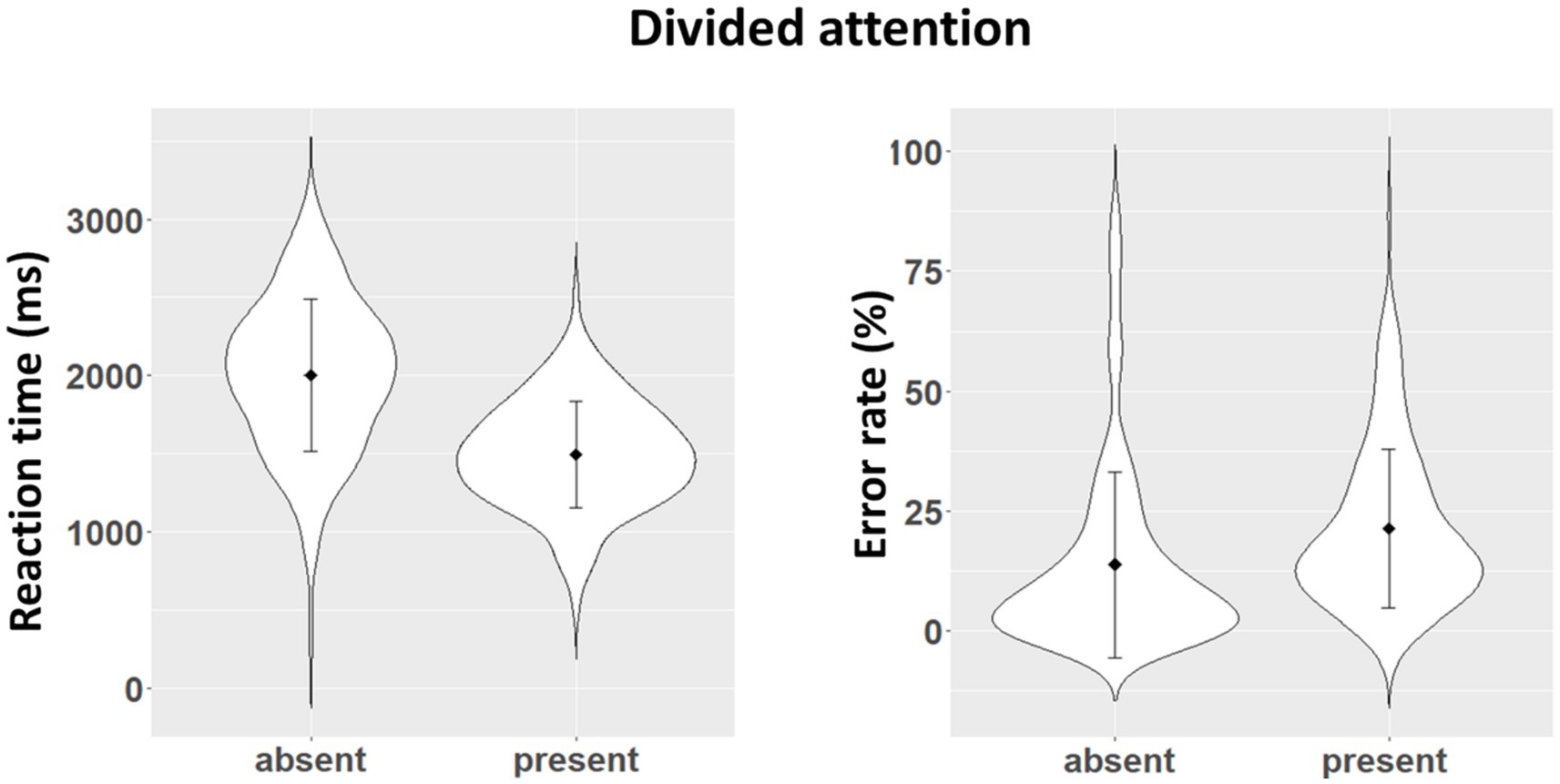

Results on the new divided attention task (Figure 8) showed a significant difference between the two conditions [F(1, 154) = 30.57, p < 0.001, η²p = 0.16]: participants made more errors when the target was present (21.25%) than when it was absent (13.72%). On average, participants reported hearing 17.80 sounds.
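For a within-participant factor with only two levels, such as target presence in the divided attention task, the repeated-measures F(1, n − 1) equals the square of the paired t statistic computed on the per-participant differences, and partial eta squared reduces to η²p = F / (F + df_error). The Python/NumPy sketch below illustrates this identity on toy data; it is not the analysis script used in the study, whose software is not specified here.

```python
import numpy as np


def two_level_rm_anova(cond_a, cond_b):
    """Repeated-measures ANOVA for a two-level within-participant factor.

    cond_a, cond_b: one score per participant in each condition (e.g., ER%).
    Returns F, its degrees of freedom and partial eta squared.
    """
    diff = np.asarray(cond_a, dtype=float) - np.asarray(cond_b, dtype=float)
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / np.sqrt(n))  # paired t statistic
    f_value = t ** 2                                    # F(1, n - 1) = t^2
    df_error = n - 1
    eta_p2 = f_value / (f_value + df_error)             # SS_effect / (SS_effect + SS_error)
    return f_value, (1, df_error), eta_p2


# Toy data for 4 participants: condition a vs condition b
F, df, eta = two_level_rm_anova([3, 5, 4, 6], [1, 2, 2, 3])
print(round(F, 2), df, round(eta, 3))
```

For the toy data, the differences are 2, 3, 2, 3, which gives F(1, 3) = 75 and η²p = 75/78.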

Figure 8. Performance distributions (error bars represent standard deviation) for the divided attention task (n = 155).

Reliability

Reliability results are presented in Table 3 and were consistent with those of study 1 for RT scores, ER scores, and interference scores. Coefficients for RT scores ranged from 0.88 to 0.96, with similar values across tasks. In contrast, ER scores showed greater variability, with coefficients ranging from 0.39 to 0.88. The divided attention task showed split-half reliabilities of 0.88 for the RT score and 0.83 for the ER score.

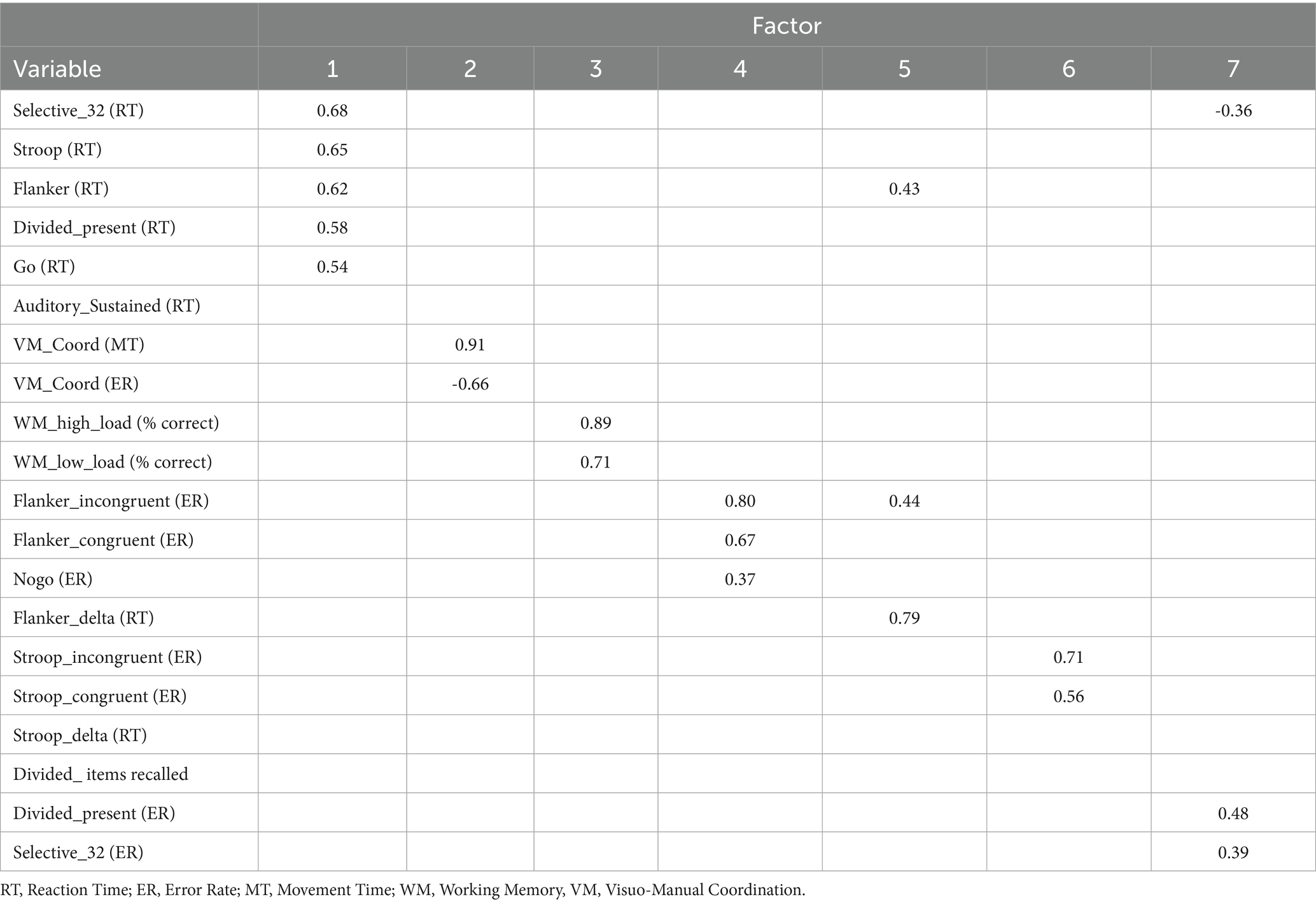

Validity

The variables from the CAM battery were similar to those in study 1, except that we included the RT and ER scores from the target-present condition of the divided attention task, as well as the recall variable (number of items recalled). Correlation analyses were conducted to examine the relationships between the divided attention task and the other tasks of the CAM battery (see also the results in the Git repository). The ER score from the divided attention task correlated significantly with the ER score from the Flanker task (r = 0.16), the RT score from the Stroop task (r = 0.16), and the ER score from the selective attention task (r = 0.18). The RT score from the divided attention task correlated significantly with all RT scores from the other CAM battery tasks (0.17 < r < 0.45), except for visuo-manual coordination.

The same procedure as in study 1 was used to determine the factor structure of the tasks in the present version of the CAM battery (parallel analysis to select the number of factors, and a factor loading cutoff of 0.35). Twenty variables were included in the exploratory factor analysis. Table 5 presents a 7-factor solution (maximum likelihood extraction, varimax rotation) accounting for 47.36% of the variance. Bartlett’s test of sphericity was significant (p < 0.001), and the Kaiser-Meyer-Olkin value was 0.57. Except for the auditory sustained attention task, all speed-based variables loaded onto Factor 1. The visuo-manual coordination task loaded onto Factor 2, WM onto Factor 3, the Flanker task onto Factors 4 and 5, and the Stroop task onto Factor 6. The divided attention and selective attention tasks both loaded onto Factor 7. The No-Go condition of the Go/No-Go task loaded onto the same factor as the Flanker task (Factor 4).
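The two adequacy checks reported in both studies can be computed directly from the correlation matrix R of the p variables. The Python/NumPy sketch below is illustrative rather than the authors' code: Bartlett's statistic is χ² = −(n − 1 − (2p + 5)/6) ln|R| with p(p − 1)/2 degrees of freedom, and the overall Kaiser-Meyer-Olkin value compares squared correlations with squared partial correlations obtained from the inverse of R. A p-value for Bartlett's test can then be taken from the chi-square survival function, for example scipy.stats.chi2.sf(chi2, df).

```python
import numpy as np


def bartlett_sphericity(data):
    """Bartlett's test statistic and degrees of freedom for an (n x p) array."""
    n, p = data.shape
    R = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(R)  # log-determinant of the correlation matrix
    chi2 = -(n - 1 - (2 * p + 5) / 6.0) * logdet
    df = p * (p - 1) // 2
    return chi2, df


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    R = np.corrcoef(data, rowvar=False)
    R_inv = np.linalg.inv(R)
    scale = np.sqrt(np.outer(np.diag(R_inv), np.diag(R_inv)))
    partial = -R_inv / scale  # partial correlations given all other variables
    off_diag = ~np.eye(R.shape[0], dtype=bool)
    r2 = np.sum(R[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + p2)


# Simulated example: 8 variables, 2 latent factors, 300 participants
rng = np.random.default_rng(3)
latent = rng.standard_normal((300, 2))
loadings = np.zeros((8, 2))
loadings[:4, 0] = 0.8
loadings[4:, 1] = 0.8
X = latent @ loadings.T + 0.6 * rng.standard_normal((300, 8))
chi2, df = bartlett_sphericity(X)
print(f"Bartlett chi2 = {chi2:.1f}, df = {df}, KMO = {kmo(X):.2f}")
```

Values of KMO close to 1 indicate that correlations are largely shared rather than pairwise-specific, which is why values around 0.6 are usually read as only moderately adequate.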

Discussion: study 2

In this study, we aimed to replicate the results observed with the CAM battery in study 1, to add a new task measuring divided attention, and to overcome the absence of a cognitive load effect in the WM task. The divided attention task showed satisfactory psychometric qualities in terms of performance and reliability, and it loaded onto the same factor as the selective attention task, suggesting a shared underlying cognitive ability. Moreover, results on performance and reliability were comparable to those of study 1 for the Flanker, Stroop, and Go/No-Go tasks, as well as for selective attention, visuo-manual coordination, and auditory sustained attention. However, the cognitive load effect still did not emerge with the new configuration of the WM task. Finally, the factor analysis revealed a 7-factor solution in which most tasks loaded onto task-specific factors, with an additional factor related to RT scores across tasks.

General discussion

The goal of the present project was to validate a new tool designed to measure several dimensions of attention that are crucial for school: the CAM battery. In two studies involving more than 800 participants, the CAM battery was administered during school time to two different samples of French preteens in order to test its psychometric qualities (i.e., completion rate, performance, reliability, and validity).

The completion rate was considered the most important usability indicator. In study 1, the completion rate varied widely across middle schools, ranging from 29.17% to 100% (mean = 67.80%), which indicates mixed feasibility. Two factors explain this variability between middle schools: (1) technical problems occurring in some cases and (2) insufficient time allocated by the teacher in the computer room because of unexpected events. Moreover, group size during testing varied across middle schools in study 1 and depended on what teachers could arrange, sometimes with a large number of preteens in the same classroom. However, previous work indicated that data quality tends to be better when no more than 15 students perform cognitive tasks collectively (e.g., Moreau et al., 2017). Future applied studies should therefore fix the group size, ideally using half-class groups (maximum of 15 students), to reduce missing data during completion.

A second indicator of the quality of the new CAM battery is its ability to reproduce well-known behavioral effects. Tasks evaluating attention and executive functions are common in the literature. For the Flanker and Stroop tasks, the present results replicated the interference effect in preteens, with longer reaction times in the incongruent than in the congruent condition (for a discussion, see Draheim et al., 2019). This pattern is consistent with previous web-based studies that replicated similar effects (Crump et al., 2013). Similarly, in the Go/No-Go task, ER depended on trial type (No-Go vs. Go), as in previous work (Cragg and Nation, 2008). In the selective attention task, set size and target presence or absence influenced ER scores. This result aligns with previous research on conjunction search, which involves shifting spatial attention and integrating perceptual features (Donnelly et al., 2007; Treisman and Gelade, 1980; Woods et al., 2013). Performance on the sustained attention tasks reflected the ability to maintain attention over a given period (Betts et al., 2006). In the visuo-manual coordination task, the ability to reach the target relies on the integration of visual perception and motor coordination, which has been linked to academic achievement (Cameron et al., 2016). In the divided attention task, the dual-task demand reduced visual search accuracy compared with the selective attention task, which involved only the visual search component. Finally, the WM task did not reveal the well-documented cognitive load effect (Barrouillet et al., 2011), possibly because the processing task was not demanding enough or because of the type of recall used: for the purposes of the collective protocol, recall took the form of recognition among fillers, whereas this paradigm usually relies on serial recall.

The construct validity of the CAM battery was assessed through the correlations observed between its subtests and the three dimensions of the ANT. Performance on the visual and auditory sustained attention tasks, assessed via RT, was related to overall RT in the ANT, suggesting a common mechanism related to processing speed. However, no significant correlations were found between sustained attention (visual or auditory) and the alerting network of the ANT. In contrast, the results revealed a significant relationship between the orienting network of the ANT and selective attention, which relies on the ability to shift attention to specific spatial locations during the visual search component of the task. We also observed correlations between the ER scores from the Go/No-Go, Stroop, and Flanker tasks and those from the three ANT components. Finally, the difference scores (i.e., interference effects) from the Flanker and Stroop tasks did not correlate with the executive control difference score from the ANT, although RT scores from the Flanker task did. Taken together, our results indicate typical to relatively large correlations between components of the ANT and those of the CAM battery.

We also found multiple correlations between tasks of the CAM battery, indicating shared variance and suggesting that these tasks assess common cognitive abilities. For example, compared with the auditory sustained attention task, the visual sustained attention task showed stronger correlations with tasks involving visual stimuli, namely the Go/No-Go, visuo-manual coordination, selective attention, WM, Flanker, and Stroop tasks. Correlations were observed among the Stroop, Flanker, and Go/No-Go tasks, which are related to executive control and inhibition mechanisms (Constantinidis and Luna, 2019; Diamond, 2013; Gratton et al., 2018; Wessel, 2018). Moreover, divided attention was related to the Stroop, Go/No-Go, and Flanker tasks, which may reflect shared executive control demands. The relationship between the divided attention task and the sustained and selective attention tasks could reflect overlapping mechanisms involved in maintaining vigilance (Oken et al., 2006) and in selecting relevant stimuli among distractors, as required in visual search tasks (Donnelly et al., 2007). In both studies, WM correlated with the sustained attention, selective attention, visuo-manual coordination, and Stroop tasks, as shown in previous work (De Fockert et al., 2001; Diamond, 2013; Rigoli et al., 2012; Unsworth and Robison, 2020).

It is common in the literature to assess internal consistency using split-half procedures (Pronk et al., 2022). Our results indicate higher reliability for RT scores than for accuracy scores across the eight tasks of the CAM battery administered in middle schools. Whereas some researchers recommend evaluating attention primarily on the RT of correct trials, treating accuracy as secondary (Langner et al., 2023), others advise including accuracy in the reliability analysis (Draheim et al., 2019). Indeed, computing reliability on ER scores can be problematic in the presence of floor effects (Zorowitz and Niv, 2023). Overall, the results observed in both studies align with previous findings showing better internal consistency for RT-based measures and lower reliability for accuracy-based measures (see Draheim et al., 2019). Additionally, a common aim when assessing executive functions is to measure the amount of conflict between incongruent and congruent trials (i.e., using a difference score) in the Flanker and Stroop tasks. Previous research supports the present findings, indicating that difference scores are unreliable for the Stroop and Flanker tasks in both adults and children (Draheim et al., 2019).
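Why a difference score can be unreliable even when each condition is measured reliably follows from a classical test theory result. Assuming the two conditions have equal variances, the reliability of D = X − Y is r_DD = ((r_XX + r_YY)/2 − r_XY) / (1 − r_XY), where r_XX and r_YY are the reliabilities of the two conditions and r_XY is their correlation. The short Python function below evaluates this formula; the input values are illustrative, not estimates from the CAM data.

```python
def difference_score_reliability(r_xx: float, r_yy: float, r_xy: float) -> float:
    """Classical test theory reliability of D = X - Y (equal-variance case)."""
    if not -1.0 < r_xy < 1.0:
        raise ValueError("r_xy must lie strictly between -1 and 1")
    return ((r_xx + r_yy) / 2.0 - r_xy) / (1.0 - r_xy)


# Two highly reliable conditions (0.95 each) with increasing between-condition correlation
for r_xy in (0.50, 0.90, 0.94):
    print(r_xy, round(difference_score_reliability(0.95, 0.95, r_xy), 2))
```

With both conditions at 0.95, the reliability of the difference falls from 0.90 to 0.50 and then to about 0.17 as r_XY rises from 0.50 to 0.90 and 0.94: when congruent and incongruent RTs are highly correlated, little reliable variance remains in their difference.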

We developed 8 different subtests, based on the literature, to measure different dimensions of children’s cognitive abilities related to attention, executive functions, and visuo-manual coordination. Exploratory factor analysis revealed a 5-factor solution in study 1 and a 7-factor solution in study 2, with some variables loading onto shared factors and others onto distinct ones. In both studies, the factor structure indicated that the WM, Stroop, and Flanker tasks rely on specific cognitive processes, while RT-based variables tended to load onto a common factor. Moreover, the Go/No-Go task loaded with the Stroop task in study 1, whereas in study 2 the Go/No-Go and Flanker tasks loaded onto the same factor. This pattern suggests that inhibition, as measured by errors in the No-Go condition, shares common underlying mechanisms with both the Flanker and Stroop tasks. In study 2, variables from the divided attention and selective attention tasks loaded onto a common factor related to processing speed, as well as onto a task-specific factor, reflecting their shared dependence on visual search components. One might have expected a structure in study 1 similar to that of the ANT, with 3 latent factors corresponding to the 3 attention networks (Fan et al., 2002) onto which each CAM battery task would load; the results did not support this pattern. This may be explained by the limited sample size in study 1 and by the fact that we employed the original version of the ANT, albeit with modified instructions and breaks during the task. Overall, the results suggest the emergence of a common factor related to processing speed, with most tasks otherwise belonging to a specific cognitive domain, particularly in study 2. This underscores the multidimensional nature of attention, which may involve components that can be assessed using task-specific norms. These findings highlight the importance of accounting for both shared and task-specific contributions when evaluating cognitive functioning.

Such an online battery offers several advantages. The first is the reproducibility of the tasks and the possibility of sharing them freely, which helps address the current replicability problem in psychological science (Klein et al., 2018). Moreover, the CAM battery combines child-friendly tasks (Lumsden et al., 2016), is web-based (Anwyl-Irvine et al., 2021; Tuerk et al., 2023), and offers the flexibility to evaluate multiple dimensions of attention through several tasks that can be used independently. The 8 tasks in the battery can be administered within a single session, for example on top of the Experiment Factory, or selected individually, either online or locally. Finally, our web-based apps are free and require few human resources: participants can follow the instructions autonomously (written or auditory) in their web browser.

Interestingly, executive control in the ANT is assessed with a single conflict task, usually a Flanker task and sometimes a variant of the Stroop or Simon task (de Souza Almeida et al., 2021). However, in real-life contexts, executive control is required in more complex activities such as learning, planning, decision-making, and overcoming habitual responses (de Souza Almeida et al., 2021; Fan et al., 2009). These situations involve not only processes similar to those assessed by the Flanker task in the ANT but also WM, another core component of executive functioning (Diamond, 2013). With the CAM battery, we introduce a series of tasks that assess various components of attention and visuomotor integration, such as selective, sustained, and divided attention, visuo-manual coordination, working memory, and inhibitory control, using established paradigms like the Stroop, Flanker, and Go/No-Go tasks. Drawing on several theoretical frameworks, the battery was designed for children and preteens within an ecological context.

Several points remain to be considered in the evaluation of the CAM battery. First, the completion rate varied primarily because of technical problems and time constraints, which also prevented us from allowing breaks during test administration. To address these issues, researchers or teachers planning to use the battery in school computer rooms should systematically allocate sufficient time (e.g., a 2-h time slot is recommended for the 8 tasks), including time for student set-up, instructions, and the variable durations students need to complete the tests. Second, preteens may experience fatigue during testing, which could affect cognitive performance. However, although there was no formal break in the middle of the session, there was a pause of a few seconds between tasks while the next test loaded. In addition, tasks were presented in random order to control for this potential bias, so that any fatigue effects were distributed across tasks rather than systematically affecting the same ones.
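Randomizing task order per participant can be made reproducible by seeding the shuffle with a participant identifier. The following Python sketch is hypothetical: the task labels are shorthand for the eight study 2 tasks, and seeding by identifier is our illustrative choice rather than a documented feature of the CAM implementation.

```python
import random

# Shorthand labels for the eight study 2 tasks (hypothetical identifiers)
CAM_TASKS = [
    "stroop", "flanker", "go_nogo", "selective_attention",
    "auditory_sustained_attention", "working_memory",
    "visuo_manual_coordination", "divided_attention",
]


def task_order(participant_id: str, tasks=CAM_TASKS):
    """Return a random but reproducible task order for one participant."""
    rng = random.Random(participant_id)  # string seeds are deterministic across runs
    order = list(tasks)
    rng.shuffle(order)
    return order


print(task_order("P001"))
```

Seeding from the identifier lets an interrupted session be resumed with the same order, while different participants still receive different orders.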

An important consideration regarding this study is the extent to which the results can be generalized. The CAM battery was tested exclusively on samples of French preteens, which limits the generalizability of the findings to more diverse populations. Validation on broader and more representative samples is necessary, particularly accounting for differences in age, educational background, and cultural context. In addition, although children in France are generally introduced to digital tools from primary school onwards, which ensures a basic level of digital literacy, variations in digital practices may still influence task performance. Previous findings have shown that participants’ experience with action video games modulated certain cognitive measures (e.g., Green and Bavelier, 2003). These results suggest that differences in digital engagement can introduce systematic variation, which should be considered when extending the use of the CAM battery to populations with different levels of access to, or familiarity with, digital environments.

Moreover, it is essential to assess the stability of CAM scores over time by establishing test–retest reliability (e.g., Aldridge et al., 2017). Test–retest reliability assesses the stability of performance across repeated administrations, indicating whether the tasks are robust to learning effects or habituation. Future research should investigate this reliability to support the use of the CAM battery in longitudinal and interventional studies, as well as in repeated assessments during training protocols. Although further studies are needed to fully evaluate the battery’s psychometric properties, the tests were designed based on well-established psychological measures in the literature, and we remain confident in the potential of the CAM battery to assess cognitive functions in preteens.
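Test–retest reliability is often summarized with an intraclass correlation. As one possible choice (an assumption, since the appropriate ICC form depends on the design), the Python/NumPy sketch below computes the two-way random-effects, absolute-agreement, single-measure ICC(2,1) in Shrout and Fleiss's notation from a participants × sessions score matrix. It is checked against their classic 6 × 4 worked example, for which ICC(2,1) is approximately 0.29.

```python
import numpy as np


def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores: (n_participants x k_sessions) array with no missing values.
    """
    x = np.asarray(scores, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)  # between participants
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)  # between sessions
    ss_total = np.sum((x - grand) ** 2)
    ss_error = ss_total - ss_rows - ss_cols               # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


example = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
           [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
print(round(icc_2_1(example), 2))
```

For a test–retest design, the matrix would have one column per administration (k = 2); the absolute-agreement form penalizes systematic session effects such as practice gains, which is relevant when checking robustness to learning effects.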

Finally, the CAM battery has direct potential for integration into educational assessment practices. In its current form, the tool provides a brief, gamified, and psychometrically sound evaluation of core attentional processes, which are foundational for learning and academic success. Its digital format allows for scalable administration by researchers as well as teachers or other educational workers, making it feasible for systematic screening of attentional profiles in school settings. Such screening could be used, for example, to tailor pedagogical interventions, particularly in the transition years (e.g., 6th grade) or complement existing educational evaluations such as those used in Réseaux d’Aides Spécialisées aux Élèves en Difficulté (RASED) in France (corresponding to specialized support networks for students with learning or behavioral difficulties).

Summary

In summary, we developed and presented the CAM battery, comprising 8 tasks that are adapted for children and assess multiple dimensions related to attention, executive functions, and visuo-manual coordination within the school setting. Although completion rates varied widely, the evaluation of the CAM battery demonstrated overall acceptable psychometric qualities in terms of performance, reliability, and validity for use in a school context. Future studies should pay particular attention to the conditions under which the battery is administered, assess its test–retest reliability in longitudinal studies, and evaluate its psychometric properties in other languages and cultures. At this point, we believe that the CAM battery meets important criteria for evaluating preteens’ attentional performance in the school context.

Data availability statement

The data for the CAM battery tasks can be found in an online repository: https://gitlab.com/mpinelli/CAM.

Ethics statement

The studies involving humans were approved by Comité d’éthique pour la recherche Grenoble Alpes. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

MP: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft. EP: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Validation, Visualization, Writing – original draft. PD: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Validation, Visualization, Writing – original draft. GJ: Conceptualization, Formal analysis, Validation, Visualization, Writing – review & editing. J-BE: Conceptualization, Formal analysis, Validation, Visualization, Writing – review & editing. JB: Conceptualization, Writing – review & editing. SP: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Validation, Visualization, Writing – original draft.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was funded by the ANR project ANR-15-IDEX-02, SFR Santé & Société, MGEN, Greco, Cercog and the pôle pilote PÉGASE, operation supported by the French state within the framework of the action “Territories of educational innovation” of the Program of investments of the future, operated by the Caisse des Dépôts.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adolphe, M., Sawayama, M., Maurel, D., Delmas, A., Oudeyer, P.-Y., and Sauzéon, H. (2022). An open-source cognitive test battery to assess human attention and memory. Front. Psychol. 13:880375. doi: 10.3389/fpsyg.2022.880375

Aldridge, V. K., Dovey, T. M., and Wade, A. (2017). Assessing test-retest reliability of psychological measures: persistent methodological problems. Eur. Psychol. 22, 207–218. doi: 10.1027/1016-9040/a000298

Anwyl-Irvine, A., Dalmaijer, E. S., Hodges, N., and Evershed, J. K. (2021). Realistic precision and accuracy of online experiment platforms, web browsers, and devices. Behav. Res. Methods 53, 1407–1425. doi: 10.3758/s13428-020-01501-5

Barrouillet, P., Bernardin, S., Portrat, S., Vergauwe, E., and Camos, V. (2007). Time and cognitive load in working memory. J. Exp. Psychol. Learn. Mem. Cogn. 33, 570–585. doi: 10.1037/0278-7393.33.3.570

Barrouillet, P., Portrat, S., and Camos, V. (2011). On the law relating processing to storage in working memory. Psychol. Rev. 118, 175–192. doi: 10.1037/a0022324

Bauer, R. M., Iverson, G. L., Cernich, A. N., Binder, L. M., Ruff, R. M., and Naugle, R. I. (2012). Computerized neuropsychological assessment devices: joint position paper of the American Academy of Clinical Neuropsychology and the National Academy of Neuropsychology. Clin. Neuropsychol. 26, 177–196. doi: 10.1080/13854046.2012.663001

Best, J. R., and Miller, P. H. (2010). A developmental perspective on executive function. Child Dev. 81, 1641–1660. doi: 10.1111/j.1467-8624.2010.01499.x

Betts, J., McKay, J., Maruff, P., and Anderson, V. (2006). The development of sustained attention in children: the effect of age and task load. Child Neuropsychol. 12, 205–221. doi: 10.1080/09297040500488522

Billard, C., Thiébaut, E., Gassama, S., Touzin, M., Thalabard, J. C., Mirassou, A., et al. (2021). The computerized adaptable test battery (BMT-i) for rapid assessment of children's academic skills and cognitive functions: a validation study. Front. Pediatr. 9:656180. doi: 10.3389/fped.2021.656180

Cameron, C. E., Cottone, E. A., Murrah, W. M., and Grissmer, D. W. (2016). How are motor skills linked to children's school performance and academic achievement? Child Dev. Perspect. 10, 93–98. doi: 10.1111/cdep.12168

Casey, B. J., Trainor, R. J., Orendi, J. L., Schubert, A. B., Nystrom, L. E., Giedd, J. N., et al. (1997). A developmental functional MRI study of prefrontal activation during performance of a go-no-go task. J. Cogn. Neurosci. 9, 835–847. doi: 10.1162/jocn.1997.9.6.835

Chevignard, M. P., Soo, C., Galvin, J., Catroppa, C., and Eren, S. (2012). Ecological assessment of cognitive functions in children with acquired brain injury: a systematic review. Brain Inj. 26, 1033–1057. doi: 10.3109/02699052.2012.666366

Cieslik, E. C., Mueller, V. I., Eickhoff, C. R., Langner, R., and Eickhoff, S. B. (2015). Three key regions for supervisory attentional control: evidence from neuroimaging meta-analyses. Neurosci. Biobehav. Rev. 48, 22–34. doi: 10.1016/j.neubiorev.2014.11.003

Constantinidis, C., and Luna, B. (2019). Neural substrates of inhibitory control maturation in adolescence. Trends Neurosci. 42, 604–616. doi: 10.1016/j.tins.2019.07.004

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Cragg, L., and Nation, K. (2008). Go or no-go? Developmental improvements in the efficiency of response inhibition in mid-childhood. Dev. Sci. 11, 819–827. doi: 10.1111/j.1467-7687.2008.00730.x

Crump, M. J., McDonnell, J. V., and Gureckis, T. M. (2013). Evaluating Amazon's Mechanical Turk as a tool for experimental behavioral research. PLoS One 8:e57410. doi: 10.1371/journal.pone.0057410

De Fockert, J. W., Rees, G., Frith, C. D., and Lavie, N. (2001). The role of working memory in visual selective attention. Science 291, 1803–1806. doi: 10.1126/science.1056496

De Greeff, J. W., Bosker, R. J., Oosterlaan, J., Visscher, C., and Hartman, E. (2018). Effects of physical activity on executive functions, attention and academic performance in preadolescent children: a meta-analysis. J. Sci. Med. Sport 21, 501–507. doi: 10.1016/j.jsams.2017.09.595

de Leeuw, J. R. (2015). jsPsych: a JavaScript library for creating behavioral experiments in a web browser. Behav. Res. Methods 47, 1–12. doi: 10.3758/s13428-014-0458-y

de Souza Almeida, R., Faria-Jr, A., and Klein, R. M. (2021). On the origins and evolution of the attention network tests. Neurosci. Biobehav. Rev. 126, 560–572. doi: 10.1016/j.neubiorev.2021.02.028

Diamond, A. (2013). Executive functions. Annu. Rev. Psychol. 64, 135–168. doi: 10.1146/annurev-psych-113011-143750

Donnelly, N., Cave, K., Greenway, R., Hadwin, J. A., Stevenson, J., and Sonuga-Barke, E. (2007). Visual search in children and adults: top-down and bottom-up mechanisms. Q. J. Exp. Psychol. 60, 120–136. doi: 10.1080/17470210600625362

Draheim, C., Mashburn, C. A., Martin, J. D., and Engle, R. W. (2019). Reaction time in differential and developmental research: a review and commentary on the problems and alternatives. Psychol. Bull. 145, 508–535. doi: 10.1037/bul0000192

Eichenlaub, J. B., Pinelli, M., and Portrat, S. (2023). Sleep habits and their relation to self-reported attention and class climate in preteens. Sleep Med. 101, 421–428. doi: 10.1016/j.sleep.2022.11.032

Eriksen, B. A., and Eriksen, C. W. (1974). Effects of noise letters upon the identification of a target letter in a nonsearch task. Percept. Psychophys. 16, 143–149. doi: 10.3758/BF03203267

Fan, J., Gu, X., Guise, K. G., Liu, X., Fossella, J., Wang, H., et al. (2009). Testing the behavioral interaction and integration of attentional networks. Brain Cogn. 70, 209–220. doi: 10.1016/j.bandc.2009.02.002

Fan, J., McCandliss, B. D., Sommer, T., Raz, A., and Posner, M. I. (2002). Testing the efficiency and independence of attentional networks. J. Cogn. Neurosci. 14, 340–347. doi: 10.1162/089892902317361886

Franceschini, S., Gori, S., Ruffino, M., Pedrolli, K., and Facoetti, A. (2012). A causal link between visual spatial attention and reading acquisition. Curr. Biol. 22, 814–819. doi: 10.1016/j.cub.2012.03.013

Gallen, C. L., Schaerlaeken, S., Younger, J. W., Anguera, J. A., and Gazzaley, A. (2023). Contribution of sustained attention abilities to real-world academic skills in children. Sci. Rep. 13:2673. doi: 10.1038/s41598-023-29427-w

Germine, L., Nakayama, K., Duchaine, B. C., Chabris, C. F., Chatterjee, G., and Wilmer, J. B. (2012). Is the web as good as the lab? Comparable performance from web and lab in cognitive/perceptual experiments. Psychon. Bull. Rev. 19, 847–857. doi: 10.3758/s13423-012-0296-9

Gignac, G. E., and Szodorai, E. T. (2016). Effect size guidelines for individual differences researchers. Pers. Individ. Differ. 102, 74–78. doi: 10.1016/j.paid.2016.06.069

Gil-Gómez de Liaño, B., Quirós-Godoy, M., Pérez-Hernández, E., and Wolfe, J. M. (2020). Efficiency and accuracy of visual search develop at different rates from early childhood through early adulthood. Psychon. Bull. Rev. 27, 504–511. doi: 10.3758/s13423-020-01712-z

Gratton, G., Cooper, P., Fabiani, M., Carter, C. S., and Karayanidis, F. (2018). Dynamics of cognitive control: theoretical bases, paradigms, and a view for the future. Psychophysiology 55:e13016. doi: 10.1111/psyp.13016

Green, C. S., and Bavelier, D. (2003). Action video game modifies visual selective attention. Nature 423, 534–537. doi: 10.1038/nature01647

Keller, A. S., Davidesco, I., and Tanner, K. D. (2020). Attention matters: how orchestrating attention may relate to classroom learning. CBE Life Sci. Educ. 19:fe5. doi: 10.1187/cbe.20-05-0106

Klein, R. A., Vianello, M., Hasselman, F., Adams, B. G., Adams, R. B. Jr., Alper, S., et al. (2018). Many labs 2: investigating variation in replicability across samples and settings. Adv. Methods Pract. Psychol. Sci. 1, 443–490. doi: 10.1177/2515245918810225

Kochari, A. R. (2019). Conducting web-based experiments for numerical cognition research. J. Cogn. 2, 1–21. doi: 10.5334/joc.85

Langner, R., Scharnowski, F., Ionta, S., Salmon, G., Piper, B. J., and Pamplona, G. S. P. (2023). Evaluation of the reliability and validity of computerized tests of attention. PLoS One 18:e0281196. doi: 10.1371/journal.pone.0281196

Lumsden, J., Edwards, E. A., Lawrence, N. S., Coyle, D., and Munafò, M. R. (2016). Gamification of cognitive assessment and cognitive training: a systematic review of applications and efficacy. JMIR Serious Games 4:e5888. doi: 10.2196/games.5888

MacLeod, J. W., Lawrence, M. A., McConnell, M. M., Eskes, G. A., Klein, R. M., and Shore, D. I. (2010). Appraising the ANT: psychometric and theoretical considerations of the attention network test. Neuropsychology 24, 637–651. doi: 10.1037/a0019803

Manly, T., Anderson, V., Nimmo-Smith, I., Turner, A., Watson, P., and Robertson, I. H. (2001). The differential assessment of children's attention: the test of everyday attention for children (TEA-Ch), normative sample and ADHD performance. J. Child Psychol. Psychiatry 42, 1065–1081. doi: 10.1111/1469-7610.00806

McDowd, J. M. (2007). An overview of attention: behavior and brain. J. Neurol. Phys. Ther. 31, 98–103. doi: 10.1097/NPT.0b013e31814d7874

Meule, A. (2017). Reporting and interpreting task performance in go/no-go affective shifting tasks. Front. Psychol. 8:701. doi: 10.3389/fpsyg.2017.00701

Moreau, D., Kirk, I. J., and Waldie, K. E. (2017). High-intensity training enhances executive function in children in a randomized, placebo-controlled trial. eLife 6:e25062. doi: 10.7554/eLife.25062

Mullane, J. C., Lawrence, M. A., Corkum, P. V., Klein, R. M., and McLaughlin, E. N. (2016). The development of and interaction among alerting, orienting, and executive attention in children. Child Neuropsychol. 22, 155–176. doi: 10.1080/09297049.2014.981252

Oken, B. S., Salinsky, M. C., and Elsas, S. (2006). Vigilance, alertness, or sustained attention: physiological basis and measurement. Clin. Neurophysiol. 117, 1885–1901. doi: 10.1016/j.clinph.2006.01.017

Paap, K. R., Anders-Jefferson, R., Zimiga, B., Mason, L., and Mikulinsky, R. (2020). Interference scores have inadequate concurrent and convergent validity: should we stop using the flanker, Simon, and spatial Stroop tasks? Cogn. Res. Princ. Implic. 5, 1–27. doi: 10.1186/s41235-020-0207-y

Parsons, S. (2021). Splithalf: robust estimates of split half reliability. J. Open Source Softw. 6:3041. doi: 10.21105/joss.03041

Petersen, S. E., and Posner, M. I. (2012). The attention system of the human brain: 20 years after. Annu. Rev. Neurosci. 35, 73–89. doi: 10.1146/annurev-neuro-062111-150525

Pievsky, M. A., and McGrath, R. E. (2018). The neurocognitive profile of attention-deficit/hyperactivity disorder: a review of meta-analyses. Arch. Clin. Neuropsychol. 33, 143–157. doi: 10.1093/arclin/acx055

Posner, M. I., and Petersen, S. E. (1990). The attention system of the human brain. Annu. Rev. Neurosci. 13, 25–42. doi: 10.1146/annurev.ne.13.030190.000325

Pronk, T., Molenaar, D., Wiers, R. W., and Murre, J. (2022). Methods to split cognitive task data for estimating split-half reliability: a comprehensive review and systematic assessment. Psychon. Bull. Rev. 29, 44–54. doi: 10.3758/s13423-021-01948-3

Razza, R. A., Martin, A., and Brooks-Gunn, J. (2012). The implications of early attentional regulation for school success among low-income children. J. Appl. Dev. Psychol. 33, 311–319. doi: 10.1016/j.appdev.2012.07.005

Reips, U.-D. (2021). Web-based research in psychology: a review. Z. Psychol. 229, 198–213. doi: 10.1027/2151-2604/a000475

Rigoli, D., Piek, J. P., Kane, R., and Oosterlaan, J. (2012). Motor coordination, working memory, and academic achievement in a normative adolescent sample: testing a mediation model. Arch. Clin. Neuropsychol. 27, 766–780. doi: 10.1093/arclin/acs061

Robaey, P., Amre, D., Schachar, R., and Simard, L. (2007). French version of the strengths and weaknesses of ADHD symptoms and normal behaviors (SWAN-F) questionnaire. J. Can. Acad. Child Adolesc. Psychiatry 16, 80–89.

Rueda, M. R., Checa, P., and Rothbart, M. K. (2010). Contributions of attentional control to socioemotional and academic development. Early Educ. Dev. 21, 744–764. doi: 10.1080/10409289.2010.510055

Rueda, M. R., Fan, J., McCandliss, B. D., Halparin, J. D., Gruber, D. B., Lercari, L. P., et al. (2004). Development of attentional networks in childhood. Neuropsychologia 42, 1029–1040. doi: 10.1016/j.neuropsychologia.2003.12.012

Sauter, M., Draschkow, D., and Mack, W. (2020). Building, hosting and recruiting: a brief introduction to running behavioral experiments online. Brain Sci. 10:251. doi: 10.3390/brainsci10040251

Sobeh, J., and Spijkers, W. (2012). Development of attention functions in 5- to 11-year-old Arab children as measured by the German test battery of attention performance (KITAP): a pilot study from Syria. Child Neuropsychol. 18, 144–167. doi: 10.1080/09297049.2011.594426

Sochat, V. (2018). The experiment factory: reproducible experiment containers. J. Open Source Softw. 3:521. doi: 10.21105/joss.00521

Spruijt, A. M., Dekker, M. C., Ziermans, T. B., and Swaab, H. (2018). Attentional control and executive functioning in school-aged children: linking self-regulation and parenting strategies. J. Exp. Child Psychol. 166, 340–359. doi: 10.1016/j.jecp.2017.09.004

Stevens, C., and Bavelier, D. (2012). The role of selective attention on academic foundations: a cognitive neuroscience perspective. Dev. Cogn. Neurosci. 2, S30–S48. doi: 10.1016/j.dcn.2011.11.001

Stroop, J. R. (1935). Studies of interference in serial verbal reactions. J. Exp. Psychol. 18, 643–662. doi: 10.1037/h0054651

Su, C. Y., Wuang, Y. P., Lin, Y. H., and Su, J. H. (2015). The role of processing speed in post-stroke cognitive dysfunction. Arch. Clin. Neuropsychol. 30, 148–160. doi: 10.1093/arclin/acu057

Talalay, I. V. (2024). The development of sustained, selective, and divided attention in school-age children. Psychol. Sch. 61, 2223–2239. doi: 10.1002/pits.23160

Trautmann, M., and Zepf, F. D. (2012). Attentional performance, age and scholastic achievement in healthy children. PLoS One 7:e32279. doi: 10.1371/journal.pone.0032279