- Department of Biology, Bioscience Education and Zoo Biology, Goethe University, Frankfurt, Germany

This study aims to explore students' associations with Artificial Intelligence (AI) and how these perceptions have evolved following the release of Chat GPT. A free word association test was conducted with 836 German high school students aged 10–20. Associations were collected before and after the release of Chat GPT, processed, cleaned, and inductively categorized into nine groups: technical association, assistance system, future, human, negative, positive, artificial, others, and no association. In total, 355 distinct terms were mentioned, with “robot” emerging as the most frequently cited, followed by “computer” and “Chat GPT,” indicating a strong connection between AI and technological applications. The release of Chat GPT had a significant impact on students' associations, with a marked increase in mentions of Chat GPT and related assistance systems, such as Siri and Snapchat AI. The results reveal a shift in students' perception of AI from abstract, futuristic concepts to more immediate, application-based associations. Network analysis further showed how terms were semantically clustered, emphasizing the prominence of assistance systems in students' conceptions. The findings underscore the importance of AI education that fosters both critical reflection and practical understanding of AI, encouraging responsible engagement with the technology. These insights are crucial for shaping the future of AI literacy in schools and universities.

1 Introduction

The increased discussion of artificial intelligence (AI) since 2009 (Fast and Horvitz, 2016) reflects the growing presence of AI in modern life. Because no universal definition comprehensively captures its essence (Brauner et al., 2023), AI acts as an umbrella term for various technologies and shapes society in many respects (Kelley et al., 2021; Makridakis, 2017). One such area is the economy, which AI has the potential to fundamentally reshape (Lee et al., 2018; O'Shaughnessy et al., 2023; Pallathadka et al., 2023). Because of its cost-cutting potential, industry strongly supports this development (Demir and Güraksin, 2022). AI also has a significant impact on medicine, where it is used, for example, in diagnostics (Abouzeid et al., 2021; Castagno and Khalifa, 2020; Stai et al., 2020). Furthermore, as Sindermann et al. (2021) note, AI is clearly noticeable in people's everyday lives, in applications ranging from smart home systems and voice control systems to self-driving cars (Andries and Robertson, 2023). Even sociopolitical goals such as sustainable development are influenced by AI (Yeh et al., 2021). In this context, Brauner et al. (2023) emphasize that society needs a basic understanding of AI in order to participate in a democratic debate about its potential and limitations.

1.1 AI in education

The growing significance of AI in daily life and the increasing demand for AI-literate professionals have drawn attention to the subject in the education sector (Lindner and Berges, 2020; Su and Zhong, 2022). AI in education (AIED) is applied in a wide variety of settings (Kim and Kim, 2022), such as detecting learning gaps, assessing assignments, and measuring learning outcomes (Chassignol et al., 2018). AI can also provide students with personalized scaffolding and recommendations (Chounta et al., 2022; Kim and Kim, 2022). Educational institutions, especially schools, can therefore play a crucial initial role in teaching AI skills (Lindner and Berges, 2020). AIED can begin as early as primary education (Chai et al., 2021; Su and Zhong, 2022) and extends beyond computer science to a wide range of subject areas. It is therefore unsurprising that the incorporation of AI into educational settings is expected to continue to increase (Zhang and Aslan, 2021). Developing AI competencies in the next generation and teaching them how to engage effectively with AI is crucial, given that today's younger generation will be significantly affected by both the positive and negative potential of this emerging technology (Jeffrey, 2020). Teaching students about AI can challenge their negative perceptions (Keles and Aydin, 2021), and, as proposed by van Brummelen et al. (2021), Grassini (2023), and Kasinidou et al. (2024a), a deeper understanding of students' perceptions of AI can make teaching the subject more effective. This involves addressing concerns and misconceptions about AI while highlighting its potential for positive impact and providing informed insights into potential risks.
Therefore, AI should be integrated into the general digital literacy that all individuals should acquire through their education (Große-Bölting and Mühling, 2020). It is essential that students grasp the fundamental principles of AI (Lee et al., 2018; Touretzky et al., 2019), as defined by Zhang et al. (2022), encompassing technical concepts and processes, ethical and societal implications, and career prospects in AI.

However, in the long term, AI can only be successfully integrated into the various areas of society if the technology is sufficiently accepted (Abouzeid et al., 2021). In education, this implies that learners will not benefit from available technologies and their advantages if they are not willing to use them (Estriegana et al., 2019). Accordingly, the successful implementation of specific technologies such as AI in education depends heavily on learners' technology acceptance and the factors that influence it (Granić and Marangunić, 2019; Ritter, 2017). For example, individuals with a high affinity for technology are more likely to accept new technologies than those with a low affinity for technology (Henrich et al., 2023). Additionally, several studies have shown that attitudes and perceptions toward AI influence technology acceptance (Cave et al., 2019; Demir and Güraksin, 2022; Kelley et al., 2021; Lee et al., 2018; O'Shaughnessy et al., 2023; Vasiljeva et al., 2021; Zhai et al., 2020). Therefore, to anticipate the potential acceptance of a technology like AI, particular attention should be paid to people's perceptions of it.

1.2 Perception of AI

In recent years, several studies have surveyed perceptions of artificial intelligence (Kelley et al., 2021). According to a meta-analysis by Fast and Horvitz (2016) of 30 years of articles on AI topics, both positive and negative sentiments toward AI have increased significantly since 2009, with the majority of articles taking an optimistic view of the technology. Vasiljeva et al. (2021) confirmed this finding. Furthermore, research shows that public opinion on AI is often contradictory, being neither exclusively positive nor exclusively negative (Haseski, 2019; Jeffrey, 2020; Keles and Aydin, 2021; Kelley et al., 2021; Kim and Kim, 2022). Researchers use different approaches to survey people's attitudes toward AI. Some use quantitative methods and develop scales for this purpose (Grassini, 2023; Schepman and Rodway, 2023; Sindermann et al., 2021), while others use qualitative approaches. For example, Bewersdorff et al. (2023) conducted a qualitative content analysis of 25 empirical studies from various countries to identify and classify learners' myths, misconceptions, and preconceptions about AI. Cave et al. (2019) collected open-text responses by asking members of the general population how they would describe AI to a friend. Lindner and Berges (2020) used a structure-laying technique to create concept images. Demir and Güraksin (2022) and Keles and Aydin (2021) conducted surveys in the education sector using metaphor analysis and independent word association tests, respectively. These qualitative studies share the aim of gaining access to learners' cognitive concepts. Compared with a closed questionnaire using, e.g., Likert scales, qualitative methods are assumed to impose fewer constraints on responses and thus to allow more direct and unrestricted access to mental representations (Wagner et al., 1996).
Furthermore, Szalay and Deese (2024) explain that human perception of a subject can usually be revealed through associations with it. Associations can take various forms, whether concrete or abstract, and they may be represented as verbs, nouns, adjectives, or even complete sentences (Kahneman, 2012).

Previous findings by Vandenberg and Mott (2023) indicate that young students in particular have a mixed understanding of what AI is, what it can do, and how they feel about it. Several studies have shown that students commonly associate artificial intelligence with robots, making this one of the most frequently mentioned terms in research on AI perception (Cave et al., 2019; Haseski, 2019; Kasinidou et al., 2024a; Nader et al., 2022). In addition to robots, assistance systems, including self-driving cars and digital assistants, are also frequently named, as observed by Lindner and Berges (2020). Further studies indicate that AI is often linked to future-related concepts, such as technological progress and modernization (Lindner and Berges, 2020; Kelley et al., 2021). Beyond these technical and future-oriented associations, AI is also frequently connected to aspects of human cognition and anthropomorphism (Bewersdorff et al., 2023; Cave et al., 2019; Demir and Güraksin, 2022; Lindner and Berges, 2020; Mertala and Fagerlund, 2022; van Brummelen et al., 2021).

The public perception of artificial intelligence can be influenced and shaped by user diversity, such as age or gender (Sindermann et al., 2021; Yigitcanlar et al., 2022), and by the portrayal of AI in the media (Gregor and Gotwald, 2021; Nader et al., 2022). These media include movies and television (Di and Wu, 2021; Jeffrey, 2020), newspapers (Di and Wu, 2021; Fast and Horvitz, 2016), and social media (Gao et al., 2020). In addition, context plays a crucial role in the perception of AI. For instance, AI applied for beneficial purposes in medicine is perceived more positively than AI in business, where it replaces human jobs (Brauner et al., 2023). Perceptions are dynamic and can evolve over time as they are influenced by new ideas, experiences, and social interactions (Moscovici, 2000).

1.3 Chat GPT

Regarding AI in general, and particularly in the context of AIED, Grassini (2023) highlights that the release of Chat GPT has the potential to heavily influence public discussions about AI. Chat GPT was launched in November 2022 and has since gained popularity and media attention (Dempere et al., 2023; Leiter et al., 2024). A systematic literature review and meta-analysis found that Chat GPT is an effective tool for engaging students in learning (Heung and Chiu, 2025), and students use it for a variety of tasks (Kasinidou et al., 2024b). However, reactions to its launch ranged from enthusiasm to concern about artificial intelligence (García-Peñalvo, 2023; Leiter et al., 2024). The benefits of Chat GPT in education include research support, grading support, and improved human-computer interaction. Furthermore, Valeri et al. (2025) found that Chat GPT is widely integrated into STEM subjects, with biology standing out as a key area where it serves primarily as a tool for improving conceptual understanding. Concerns about Chat GPT relate to the security of online tests, plagiarism, and wider societal and economic impacts, such as job displacement and the digital education gap (Dempere et al., 2023). In education, the debate centers mainly on its capability to generate texts that could pass as human-written. García-Peñalvo (2023) argues that Chat GPT should not be banned but rather consciously addressed and meaningfully integrated into the classroom. Similarly, Kasinidou et al. (2024b) highlight a consensus in favor of educational measures and against blanket prohibitions. An example of such meaningful integration is provided by Yilmaz and Yilmaz (2023), who demonstrated that students who used Chat GPT in programming classes exhibited significantly higher computational thinking skills, greater programming self-efficacy, and increased motivation than those in the control group.

1.4 Study aims and research questions

The aim of this study is to assess students' associations with artificial intelligence in order to better understand how they perceive and evaluate it. This study deliberately focuses on the secondary education sector and on learners' associations. Knowing learners' perceptions of a particular subject can help to tailor lessons to their individual needs. Furthermore, establishing a baseline makes it possible to investigate the influence of potential factors, such as instructional interventions, which have already been shown to influence the perception of AI (Keles and Aydin, 2021). Beyond identifying students' associations, this study also maps out an association network, providing a structural view of mental representations. Mapping these associations reveals how participants' perceptions of AI are interconnected and which associations were frequently mentioned together. To our knowledge, this method, which offers an innovative approach to the visual representation of data, has not previously been used in the context of artificial intelligence. In addition, this study examines the potential impact of Chat GPT's release on students' associations. To this end, additional students were surveyed after the release of Chat GPT, and potential differences in word associations were analyzed by term and by category.

This aim leads to the following research questions:

1) What associations do students have with artificial intelligence?

2) How have these associations changed, if at all, following the release of Chat GPT?

2 Materials and methods

Students' associations with artificial intelligence were collected using a free word association test. After data collection, these associations were processed, categorized, and analyzed in terms of frequency. To determine the influence of the release of Chat GPT 3 on the students' associations, data were collected at two time points, before and after the release. This study employs a mixed-methods approach that integrates frequency analysis, qualitative categorization, and network visualization, allowing for both quantitative and qualitative insights into students' perceptions of AI.

2.1 Participants

Data was collected from a total of 836 students (43.42% male; 54.07% female; 2.51% diverse and no answer). The participants were German high school students from various schools, ranging in age from 10 to 20 years (M = 14.97). Prior to the survey, the students—and parents in the case of minors—were informed about the intentions, the voluntary nature, and the anonymity of the study. The data set prior to the release of Chat GPT 3 (T1) was collected in the period from September 2021 to January 2022. It consists of 526 students (40.68% male; 57.41% female; 1.9% diverse and no answers) with an average age of 15.59 years. The data set after the release of Chat GPT 3 (T2) was collected in the period from May 2023 to June 2023 and consists of 310 students (48.06% male; 48.39% female; 3.55% diverse and no answers) with an average age of 13.86 years.

2.2 Instruments

In addition to collecting socio-demographic data on gender and age, a free word association test was administered. As described by Lo Monaco et al. (2017), this is an established method for gathering content-related associations. The technique assumes that participants respond freely to stimulus words without restricting their thoughts (Bahar et al., 1999). All applications of free word association tests aim to capture people's attitudes and perceptions toward certain objects as freely as possible (Szalay and Deese, 2024). In this study, the stimulus term was “artificial intelligence.” The association test was worded as follows: “What do you associate with the term ‘artificial intelligence’? Give three examples.” The students were given sufficient time to write their responses in a blank answer field. Consequently, our dataset does not contain full-text responses but consists of isolated keywords provided by students in this free word association task. We highlight this distinction explicitly: our analysis is based on individual terms rather than extended textual responses.

3 Analysis

The analysis first examines the entire data set to capture associations with AI across the largest possible number of participants. To assess the influence of Chat GPT, the data set is then split by survey time point (T1/T2). The data were transferred to Microsoft Excel for processing and categorization. The frequencies of individual terms and two-word combinations were analyzed using MATLAB (version 2023). MATLAB was also used to calculate changepoints, which indicate significant changes in the frequency of mentions; these changepoints served as inclusion thresholds for further analysis. Detailed rules for term consolidation and the resulting final list of terms are available in the Supplementary material. For this paper, the associations were translated from German to English using DeepL SE's online translation service (DeepL SE, 2017).
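The paper does not state which MATLAB routine or statistic produced the changepoints. One common option is `findchangepts` (Signal Processing Toolbox), which by default locates the single index at which the mean of a sequence changes most abruptly. The following is a minimal Python sketch of that idea under the assumption that a change in mean is what matters; the function name and the frequency sequence are hypothetical, and obtaining two thresholds (5 and 12 mentions) would require repeating the search, for example by binary segmentation.

```python
def single_changepoint(values):
    """Return the split index k (1 <= k < len(values)) that minimizes the
    total squared deviation of values[:k] and values[k:] from their own means.

    A simple change-in-mean search; a hypothetical stand-in for whatever
    MATLAB routine the study actually used.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to locate a changepoint")

    def sse(segment):
        # Sum of squared deviations from the segment mean.
        mean = sum(segment) / len(segment)
        return sum((v - mean) ** 2 for v in segment)

    best_k, best_cost = 1, float("inf")
    for k in range(1, n):
        cost = sse(values[:k]) + sse(values[k:])
        if cost < best_cost:  # keep the first split with the lowest cost
            best_k, best_cost = k, cost
    return best_k


# Hypothetical term frequencies, sorted from most to least mentioned.
freqs = [40, 38, 41, 39, 6, 5, 7, 6]
k = single_changepoint(freqs)
print(k, freqs[k])  # → 4 6
```

The exhaustive search is quadratic in the number of terms, which is unproblematic for a few hundred terms such as the 355 identified here.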

3.1 Cleaning

The process we defined as “cleaning” was carried out in Microsoft Excel to make the data consistent. First, the students' associations were checked for spelling and grammatical errors. To ensure consistency, all terms were converted to the singular. Duplicate mentions by an individual respondent were recorded only once. Additionally, words with the same meaning (e.g., “fast” and “speed”) and close synonyms (e.g., “mobile” and “smartphone”) were consolidated. Of all responses, 67.7% were adopted without modification, in the form the students provided. The remaining 32.3% required minor adjustments to enable quantitative analysis. These adjustments primarily involved splitting multi-word phrases into separate keywords while retaining their intended meaning. The following examples illustrate these modifications transparently. “Helps with homework” was transformed into “help” and “homework,” “develops further” into “advancement,” and “questions that are answered” into “questions-answering.” “Computers that can learn” was split into “computer” and “learning ability.” “Has answers to everything” was reduced to “omniscient,” and “a robot that helps” was coded as “robot” and “help.” “Electronic brain” was split into “electronics” and “brain,” while “technical device with its own opinion and mind” was broken down into “technology,” “device,” “mind,” and “opinion.” “Must not become too powerful” was assigned to “ethical concerns,” “smarter than humans” to “superior to humans,” and “machines with human personality” to “human-like.” For ease of reference, the cleaned associations are henceforth referred to simply as associations.
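Most of this cleaning was done by hand, and splitting multi-word phrases in particular required human judgment. The mechanical steps (singular forms, synonym consolidation, and counting each term once per respondent) can nevertheless be sketched in Python. The lookup tables below are hypothetical examples built from terms named in the text, with “fast” and “mobile phone” assumed as canonical forms; the study's actual consolidation rules are in its Supplementary material.

```python
# Hypothetical lookup tables; the study's full rules are not reproduced here.
SINGULAR = {"robots": "robot", "computers": "computer"}
SYNONYMS = {"speed": "fast", "mobile": "mobile phone", "smartphone": "mobile phone"}


def clean_response(raw_terms):
    """Normalize one respondent's associations and drop duplicate mentions."""
    cleaned, seen = [], set()
    for term in raw_terms:
        t = term.strip().lower()  # case-insensitive matching
        t = SINGULAR.get(t, t)    # adapt plural forms to the singular
        t = SYNONYMS.get(t, t)    # map synonyms onto one canonical term
        if t and t not in seen:   # record each term only once per respondent
            seen.add(t)
            cleaned.append(t)
    return cleaned


print(clean_response(["Robots", "robot", "Speed", "smartphone"]))
# → ['robot', 'fast', 'mobile phone']
```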

Subsequently, term frequencies were counted with the MATLAB function “bagOfWords,” which identified 355 different terms. The analysis of word combinations was limited to co-occurrences of two terms within a student's associations, regardless of their order or of any additional terms mentioned. Only the 1,000 most frequent combinations were considered. Plotting the term frequencies on a growth curve in MATLAB revealed changepoints at 5 and 12 mentions per term. For further analysis, only terms that met the threshold of at least 5 mentions (88 terms) or at least 12 mentions (41 terms) were considered, depending on the required level of clarity. Such reductions in the number of terms are common practice when working with associations to enhance clarity (Eylering et al., 2023; Kurt et al., 2013).
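The counting steps above map onto standard-library tools in Python; `bagOfWords` is MATLAB-specific, so `collections.Counter` stands in for it here. This sketch assumes each respondent's cleaned terms are stored as a list, and it counts a pair whenever both terms appear in the same respondent's answer, regardless of order or of other terms in that answer. The sample data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def term_frequencies(responses):
    """Count how often each term was mentioned across all respondents."""
    return Counter(term for terms in responses for term in terms)


def pair_frequencies(responses):
    """Count unordered two-term co-occurrences within each respondent's answer.

    An answer with terms a, b, c contributes the pairs (a, b), (a, c), (b, c);
    sorting makes (robot, computer) and (computer, robot) the same pair.
    """
    pairs = Counter()
    for terms in responses:
        for a, b in combinations(sorted(set(terms)), 2):
            pairs[(a, b)] += 1
    return pairs


def terms_at_least(freqs, threshold):
    """Keep terms mentioned at least `threshold` times (e.g. 5 or 12)."""
    return {t: n for t, n in freqs.items() if n >= threshold}


# Hypothetical cleaned answers of three respondents.
responses = [["robot", "computer", "siri"], ["robot", "computer"], ["future", "robot"]]
freqs = term_frequencies(responses)
pairs = pair_frequencies(responses)
top_pairs = pairs.most_common(1000)  # analogous to keeping the 1,000 most frequent pairs
print(terms_at_least(freqs, 2))      # → {'robot': 3, 'computer': 2}
print(top_pairs[0])                  # → (('computer', 'robot'), 2)
```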

3.2 Categorization

To categorize the data, we used the 88 terms that were mentioned at least five times. Starting from the raw data, we developed an inductive categorization system by coding terms with similar content, grouping them into thematic subcategories, and consolidating these into main categories. This inductive approach differs from the deductive method, in which terms are classified into an existing categorization system (Mayring, 2014). Seven main categories emerged from the terms mentioned (1. technical association, 2. assistance system, 3. future, 4. human, 5. negative, 6. positive, 7. artificial). Associations that did not fit into any of these categories were assigned to an eighth category, other. Responses such as “I don't know” formed the ninth category, no association.

The inductive categorization system was subsequently assessed for its intercoder reliability (Mayring, 2014). For this purpose, the unsorted terms mentioned at least five times were categorized by an independent person based on provided definitions of subcategories and main categories. The definitions of the categories can be found in the Supplementary material. The Kappa value was calculated to evaluate the agreement between coders (Kuckartz and Rädiker, 2019). This value can range from −1 to 1, with a Kappa value of 1 indicating complete agreement. A Kappa value between 0.61 and 0.80 is considered “substantial” or “good,” while values from 0.81 to 1 are regarded as “almost perfect” (Landis and Koch, 1977).

To improve agreement, the coders discussed discrepancies and reached a consensus on each category. Before the discussion, agreement was 75% (κ = 0.7); after the discussion, it was 92% (κ = 0.86). The discussion protocol is available upon request.
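The text does not name the kappa variant. Assuming Cohen's kappa for two coders who each assign exactly one category per term, the statistic is κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each coder's category frequencies. A minimal sketch follows; the category labels in the example are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders labeling the same items, one label each."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty list of terms")
    n = len(coder_a)
    # Observed agreement: share of terms given the same category.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of both coders' marginal proportions per category.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    if p_e == 1:
        return 1.0  # degenerate case: both coders used one identical category
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codings of four terms.
coder_a = ["technical", "technical", "assistance", "future"]
coder_b = ["technical", "assistance", "assistance", "future"]
print(round(cohens_kappa(coder_a, coder_b), 3))  # → 0.636
```

In this example the coders agree on 3 of 4 terms (p_o = 0.75) with p_e = 5/16, so κ = 7/11; kappa is thus noticeably lower than raw percentage agreement whenever chance agreement is non-trivial.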

3.3 Association network

Beyond frequency-based categorization, our study applies network analysis to provide additional semantic insight by illustrating which concepts students frequently associate with each other. To enhance clarity, only words mentioned at least 12 times and occurring in a two-word combination with another such word at least three times were considered. Associations that were named together fewer than three times therefore do not share a visible connection. The order of words in the mentions was irrelevant. The network was visualized using Gephi (version 0.9.7) with the Fruchterman-Reingold layout. Nodes and connections were weighted by frequency, with larger nodes and thicker lines indicating more frequent mentions. Finally, the terms were color-coded in Inkscape (version 1.4) according to their respective categories.
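The filtering rules for the network can be expressed compactly. The sketch below assumes term and pair counts such as those produced by a `Counter`-based tally, keeps only terms with at least 12 mentions and pairs of such terms that co-occur at least three times, and writes an undirected edge table with `Source`, `Target`, `Type`, and `Weight` columns, a layout that Gephi's spreadsheet import generally recognizes. All counts are hypothetical; the Fruchterman-Reingold layout, node sizing, and coloring were done in Gephi and Inkscape and are not reproduced.

```python
import csv
import io


def network_edges(term_freqs, pair_freqs, min_term=12, min_pair=3):
    """Return (a, b, weight) edges between frequent terms that co-occur often enough."""
    nodes = {t for t, n in term_freqs.items() if n >= min_term}
    return [
        (a, b, w)
        for (a, b), w in pair_freqs.items()
        if a in nodes and b in nodes and w >= min_pair
    ]


def edges_to_csv(edges):
    """Serialize edges as an undirected edge table, heaviest edges first."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["Source", "Target", "Type", "Weight"])
    for a, b, w in sorted(edges, key=lambda e: e[2], reverse=True):
        writer.writerow([a, b, "Undirected", w])
    return buf.getvalue()


# Hypothetical counts.
term_freqs = {"robot": 30, "computer": 20, "siri": 15, "fast": 6}
pair_freqs = {
    ("computer", "robot"): 10,
    ("robot", "siri"): 4,
    ("computer", "siri"): 2,  # dropped: co-occurs fewer than 3 times
    ("fast", "robot"): 5,     # dropped: "fast" has fewer than 12 mentions
}
edges = network_edges(term_freqs, pair_freqs)
print(edges_to_csv(edges))
```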

3.4 Influence of Chat GPT

In addition to the qualitative analysis, we conducted statistical tests of the impact of the release of Chat GPT 3 on the associations. To compare the frequency of mentions of individual terms and categories before (T1) and after (T2) the release of Chat GPT 3, we used a chi-square test on a 2 × 2 contingency table (Mention/Non-Mention × T1/T2). Based on the changepoints, we analyzed all terms mentioned at least 12 times in total across both time points. Cramer's V was calculated from the chi-square values as a measure of effect size. Values from 0.10 to 0.30 are considered weak associations, values from 0.30 to 0.50 moderate associations, and values above 0.50 strong associations (Cohen, 1988). In general, a Cramer's V close to 0 indicates no association, whereas a value close to 1 indicates a perfect association (Field, 2013).
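For a 2 × 2 table with cells a, b (mentions and non-mentions at T1) and c, d (at T2), Pearson's statistic reduces to χ² = N(ad − bc)² / [(a + b)(c + d)(a + c)(b + d)] with one degree of freedom, and Cramer's V = √(χ²/N) because min(rows, columns) − 1 = 1. The paper does not say whether a continuity correction was applied; this sketch assumes none. The counts in the example are hypothetical.

```python
import math


def chi_square_2x2(mention_t1, n_t1, mention_t2, n_t2):
    """Pearson chi-square (no continuity correction), p-value, and Cramer's V
    for a Mention/Non-Mention x T1/T2 contingency table."""
    a, b = mention_t1, n_t1 - mention_t1  # T1: mentioned, not mentioned
    c, d = mention_t2, n_t2 - mention_t2  # T2: mentioned, not mentioned
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / denom
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    cramers_v = math.sqrt(chi2 / n)     # min(rows, cols) - 1 == 1 for a 2x2 table
    return chi2, p, cramers_v


# Hypothetical: 100 of 500 students name a term at T1, 100 of 300 at T2.
chi2, p, v = chi_square_2x2(100, 500, 100, 300)
print(round(chi2, 2), p < 0.001, round(v, 3))  # → 17.78 True 0.149
```

By the thresholds cited above, V ≈ 0.15 in this example would count as a weak association despite the very small p-value, which is why effect sizes are reported alongside significance.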

4 Results

4.1 Associations

The following results pertain to the entire data set of 836 students, who mentioned 355 distinct associations a total of 2,543 times. This equates to an average of 3.04 mentions per person (SD = 1.01). Only 3.95% of the surveyed students (n = 33) had no association with artificial intelligence. After cleaning the data and applying the changepoint threshold of at least five mentions, 2,149 mentions of 88 different associations remained; with a threshold of at least 12 mentions, 1,809 mentions of 41 different associations remained. Thus, 84.51% and 71.14% of all mentions, respectively, were included in further analysis and statistical evaluation. Table 1 presents the five most frequently mentioned associations with their absolute frequency (f0), their relative frequency in relation to the total number of mentions (f1), and the proportion of all students who mentioned them (f2). Together, the five most frequently mentioned associations account for 34.72% of all mentions.
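The two relative frequencies in Table 1 differ only in their denominators: f1 divides a term's absolute frequency f0 by all 2,543 mentions, whereas f2 divides it by all 836 students. Because duplicate mentions by the same respondent were counted once during cleaning, f0 also equals the number of students who named the term. The sketch below recomputes the summary figures reported above; the f0 value in the final line is hypothetical.

```python
TOTAL_MENTIONS = 2543
TOTAL_STUDENTS = 836


def relative_frequencies(f0, total_mentions=TOTAL_MENTIONS, total_students=TOTAL_STUDENTS):
    """Return (f1, f2) in percent, rounded to two decimals."""
    f1 = 100 * f0 / total_mentions  # share of all mentions
    f2 = 100 * f0 / total_students  # share of students (one mention per student per term)
    return round(f1, 2), round(f2, 2)


# Summary figures from the text.
mentions_per_student = round(TOTAL_MENTIONS / TOTAL_STUDENTS, 2)  # 3.04
share_no_association = round(100 * 33 / TOTAL_STUDENTS, 2)        # 3.95
share_kept_at_5 = round(100 * 2149 / TOTAL_MENTIONS, 2)           # 84.51
share_kept_at_12 = round(100 * 1809 / TOTAL_MENTIONS, 2)          # 71.14

print(relative_frequencies(100))  # hypothetical term with f0 = 100 → (3.93, 11.96)
```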

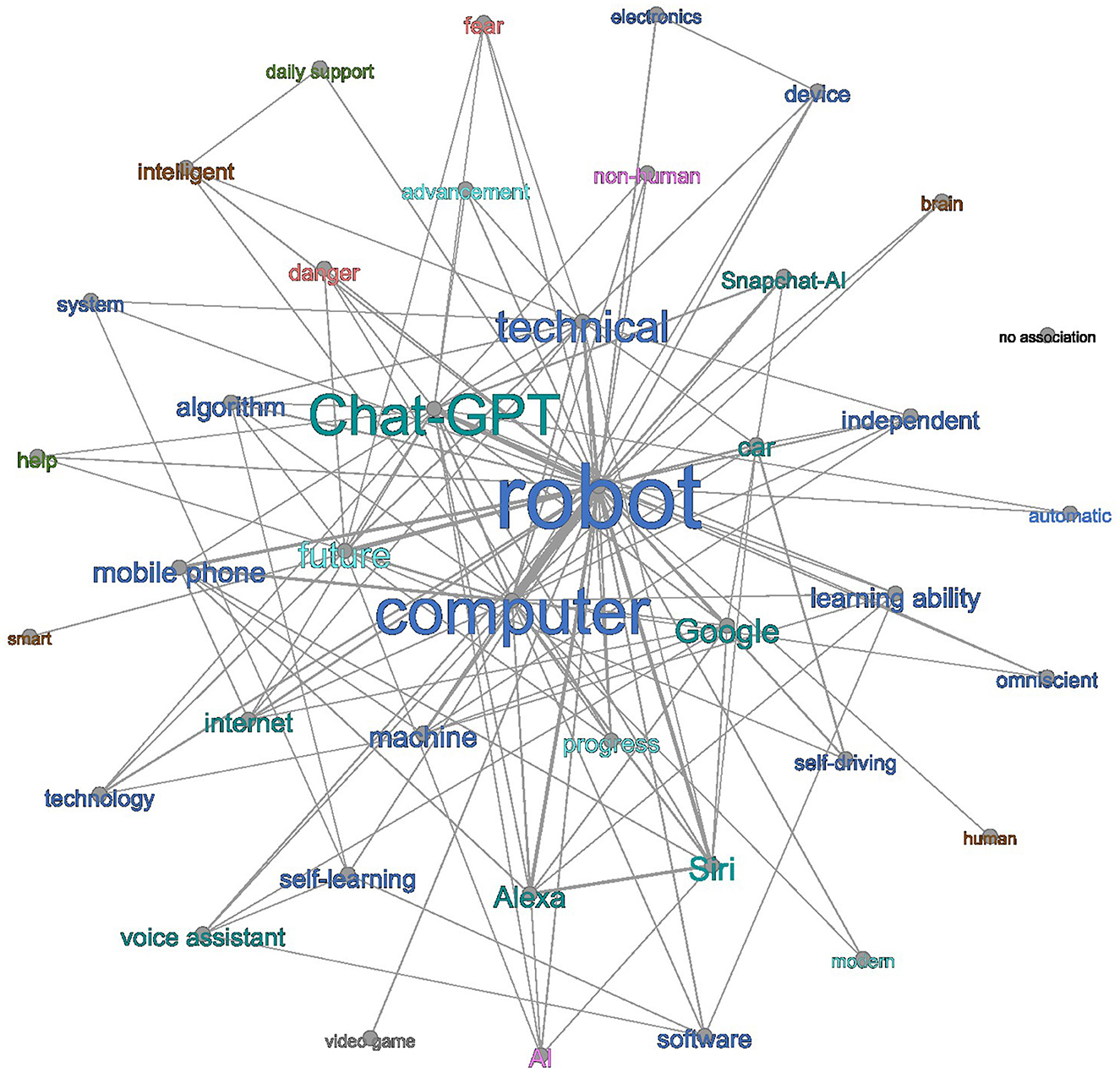

Table 1. The five most frequently mentioned associations, with their absolute frequency (f0), their relative frequency in relation to the total number of mentions (f1), and the proportion of all students who mentioned them (f2).

4.2 Categorization

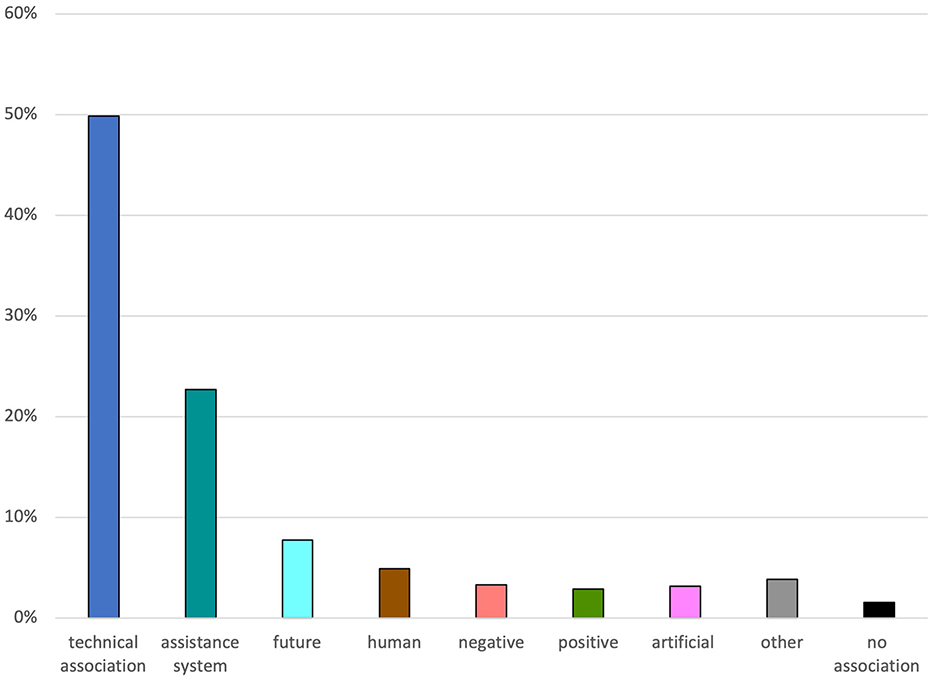

To form the inductive categories, the associations mentioned at least five times were considered. These 88 associations are classified into nine inductive main categories. Technical associations refer to terms that either directly mention technology or refer to technical devices (24 associations, e.g., robot, computer). Assistance systems encompass associations that specifically reference systems designed to aid or assist users (19 associations, e.g., Chat GPT, Siri). Future associations reflect the perception of artificial intelligence as a key component of future developments, often evaluating AI as a positive advancement that will shape the time to come (8 associations, e.g., future, progress). Human associations relate to characteristics, qualities, or aspects intrinsic to human beings (6 associations, e.g., intelligent, smart). Negative associations express concerns or highlight disadvantages of AI (5 associations, e.g., danger, fear). In contrast, positive associations represent affirmative or approving views that focus on the benefits and potential advantages of AI (6 associations, e.g., daily support, fast). Artificial associations describe artificial intelligence as an unnatural construct, recreated through technical means and modeled to emulate natural processes (8 associations, e.g., non-human, artificial). Other associations did not fit into any of the identified categories (11 associations, e.g., video game, science). Responses such as “I don't know” form the ninth category, no association. The Supplementary material lists the categories with all associations mentioned at least five times, the number of different associations per category, and the absolute frequency of mentions per category.
Figure 1 shows the distribution of relative frequencies across categories in relation to the total number of mentions. Technical association was the most frequent category, with 49.88% of mentions, followed by assistance system (22.71%) and future (7.77%). Each of the remaining categories accounts for less than 5% of mentions.

4.3 Association network

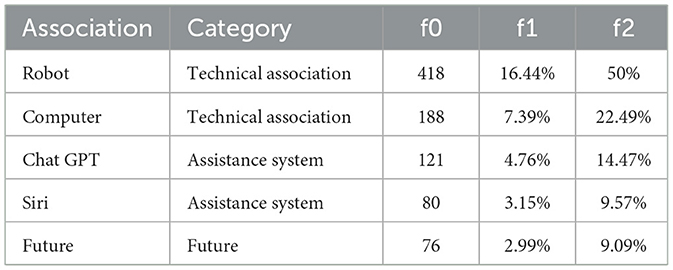

To form the association network, the 41 terms mentioned at least 12 times were considered, and among these, only word combinations that occurred at least three times were included. This resulted in 131 distinct two-word combinations. The most frequent combinations were “robot and computer” (mentioned by 14.35% of students), “robot and Chat GPT” (6.22%), “robot and Siri” (5.26%), and “robot and Alexa” (4.78%), followed by “robot and mobile phone,” “robot and future,” and “robot and technology,” each mentioned by 4.42% of students. The term “robot” is thus part of every one of the most frequent word combinations. The complete association network is presented in Figure 2.

Figure 2. Association network showing the connections of the 41 terms that were mentioned at least 12 times and that occurred in two-word combinations with other terms at least three times. The size of the terms and the thickness of the connecting lines correlate positively with the frequency of mentions. The color of each term corresponds to the category it belongs to.

The network also reveals patterns in the associations between categories. Combinations in which both terms belong to assistance system were frequent (26.72% of all two-word combinations), as were combinations in which both terms are technical associations (9.16%). Combinations of technical association and assistance system (27.48%), technical association and future (9.92%), and technical association and human (6.87%) were also particularly frequent.
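Shares like these can be derived by mapping each network edge to the unordered pair of categories of its two terms. The percentages reported above all equal whole-number counts divided by the 131 distinct combinations (for example, 35/131 ≈ 26.72% and 36/131 ≈ 27.48%), which suggests that each combination was counted once; the sketch below makes that assumption, and an alternative would weight each edge by its co-occurrence frequency. The term-to-category mapping and edges in the example are hypothetical.

```python
from collections import Counter


def category_pair_shares(edges, category_of):
    """Percentage of edges falling into each unordered pair of categories.

    Each edge (distinct two-word combination) counts once; sorting the two
    category labels makes (technical, assistance) equal to (assistance, technical).
    """
    if not edges:
        return {}
    counts = Counter(tuple(sorted((category_of[a], category_of[b]))) for a, b in edges)
    total = sum(counts.values())
    return {pair: round(100 * n / total, 2) for pair, n in counts.items()}


# Hypothetical category assignments and network edges.
category_of = {
    "robot": "technical association",
    "computer": "technical association",
    "siri": "assistance system",
    "alexa": "assistance system",
    "future": "future",
}
edges = [("computer", "robot"), ("robot", "siri"), ("alexa", "siri"),
         ("future", "robot"), ("alexa", "robot")]
shares = category_pair_shares(edges, category_of)
print(shares[("assistance system", "technical association")])  # → 40.0
```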

4.4 Influence of Chat GPT 3

The preceding results pertain to the entire data set of 836 participants. When the data set is divided into T1 (before the release of Chat GPT) and T2 (after the release), the two cohorts differ in the associations mentioned, the frequency of the categories, and the frequency of the two-word combinations.

4.4.1 Influence on associations

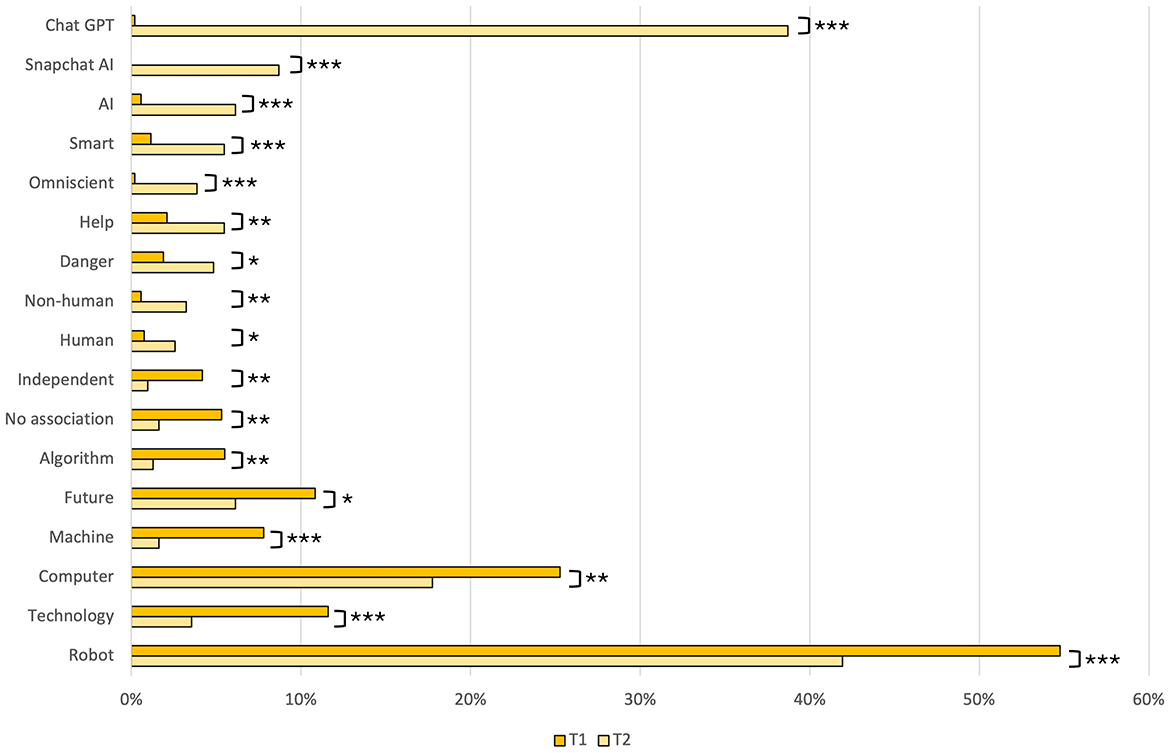

To evaluate how associations changed, a chi-square test was conducted for each association mentioned at least 12 times, examining whether the frequency of commonly named associations was related to the release of Chat GPT. Significant effects were found for 17 of the 41 terms, with effect sizes (Cramer's V) ranging from 0.07 to 0.53, that is, from negligible to strong. The remaining 24 terms showed no significant relationship with the release of Chat GPT. The exact values for the significant terms can be found in the Supplementary material. Figure 3 visualizes, for all significant changes, the differences in the proportion of students who mentioned each association. Before the release of Chat GPT, the five most frequently mentioned terms were “robot” (18.2% of all terms; associated by 54.75% of students), “computer” (8.41% of all terms; associated by 25.29% of students), “technical” (3.86% of all terms; associated by 11.6% of students), “future” (3.6% of all terms; associated by 10.84% of students), and “Siri” (2.6% of all terms; associated by 10.46% of students). After the release of Chat GPT, “robot” (13.5% of all terms; associated by 41.49% of students) and “computer” (5.71% of all terms; associated by 17.74% of students) remained among the five most frequently mentioned associations. New entries in the top five after the release include “Chat GPT” (12.46% of all terms; associated by 38.71% of students) and “Snapchat AI” (2.8% of all terms; associated by 8.71% of students). Both are virtual assistants categorized under assistance systems and represent specific examples of practical applications. In contrast, “future” and “technical,” which were among the top five associations before the release, no longer appear among the most frequently mentioned terms afterward.
The changes in the associations “Chat GPT,” “Snapchat AI,” “AI,” “smart,” “omniscient,” “machine,” “technical,” and “robot” are particularly pronounced (Cramer's V > 0.12; p < 0.001).
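A minimal sketch of such a per-term test follows. It assumes that each term is evaluated in a 2×2 table of time point (T1, T2) by whether a student mentioned the term. Using only the Python standard library, it computes Pearson's chi-square without continuity correction, the df = 1 p-value and Cramer's V. The counts in the example are hypothetical. Note that `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2×2 tables by default, so its statistic would differ slightly. This section does not state which variant the study used.

```python
import math


def chi2_cramers_v_2x2(mentioned_t1, n_t1, mentioned_t2, n_t2):
    """Pearson chi-square (no continuity correction), p-value and Cramer's V
    for a 2x2 table: time point (T1, T2) x term mentioned (yes, no)."""
    a, b = mentioned_t1, n_t1 - mentioned_t1
    c, d = mentioned_t2, n_t2 - mentioned_t2
    n = a + b + c + d
    # Closed form of Pearson's chi-square for a 2x2 table.
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With df = 1, P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    # For a 2x2 table min(rows, cols) - 1 = 1, so V = sqrt(chi2 / n).
    v = math.sqrt(chi2 / n)
    return chi2, p, v


# Hypothetical counts: 50 of 200 students name a term at T1, 80 of 200 at T2.
chi2, p, v = chi2_cramers_v_2x2(mentioned_t1=50, n_t1=200, mentioned_t2=80, n_t2=200)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}, Cramer's V = {v:.3f}")
```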

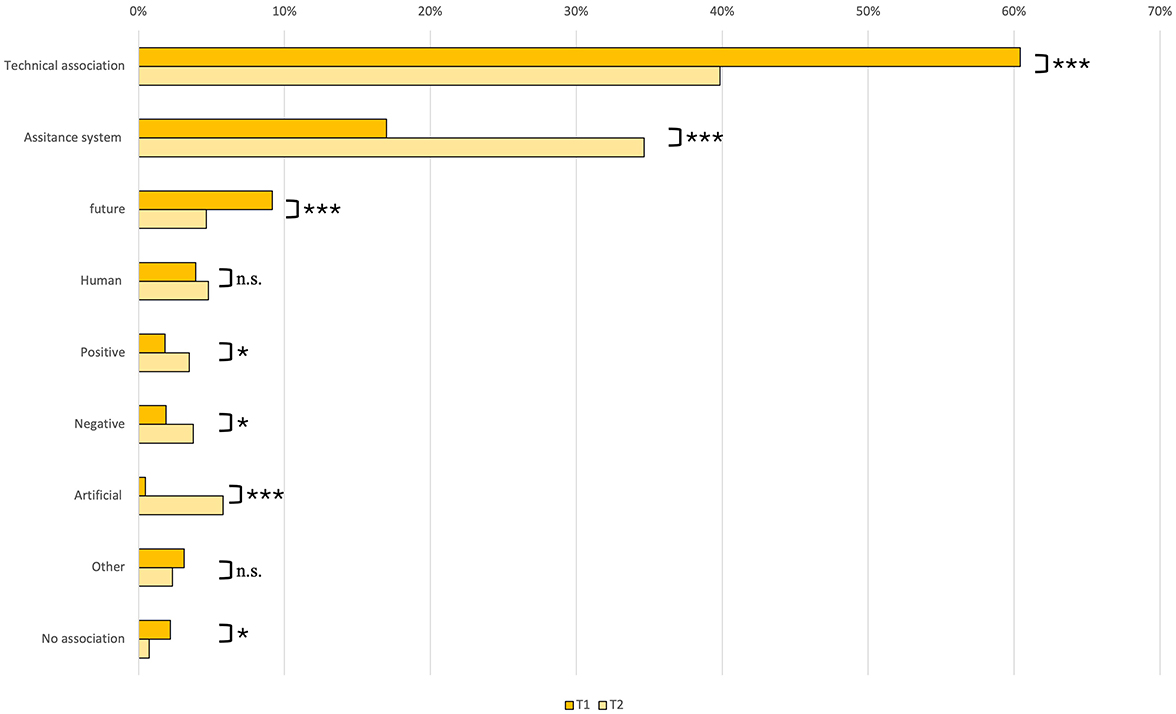

Figure 3. Changes in the frequency of terms mentioned by students before (T1) and after the release of Chat GPT (T2). Significance levels are indicated as follows: *** p < 0.001, * p < 0.05.

4.4.2 Influence on categories

To evaluate how categories evolved, a chi-square test was conducted. An analysis of the frequency of inductive categories reveals major changes between the two time points, T1 and T2. The exact values of the categories and their relative frequencies in relation to the total number of mentions at time points T1 and T2 can be found in the Supplementary material. The relative frequency is based on the total number of mentions for terms that occur at least five times.
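The category-level relative frequencies described above could be computed roughly as follows. This Python sketch makes two assumptions: the five-mention threshold applies across both time points combined, and every retained term maps to exactly one category. The term-to-category dictionary and the mention lists are hypothetical illustrations, not the study's coding scheme or data.

```python
from collections import Counter

# Hypothetical term-to-category mapping using category names from this study;
# the actual coding is documented in the Supplementary material.
CATEGORY = {
    "robot": "technical association",
    "computer": "technical association",
    "Chat GPT": "assistance system",
    "Siri": "assistance system",
    "Alexa": "assistance system",
    "future": "future",
}


def category_shares(mentions_by_time, min_count=5):
    """Percentage of mentions per category at each time point.

    Only terms mentioned at least `min_count` times across all time points
    are kept (assumption about how the threshold is applied).
    """
    overall = Counter(t for mentions in mentions_by_time.values() for t in mentions)
    kept = {t for t, c in overall.items() if c >= min_count}
    shares = {}
    for time_point, mentions in mentions_by_time.items():
        per_category = Counter(CATEGORY[t] for t in mentions if t in kept)
        total = sum(per_category.values())
        shares[time_point] = {cat: 100 * c / total for cat, c in per_category.items()}
    return shares


# Hypothetical mention lists for two time points.
mentions = {
    "T1": ["robot"] * 6 + ["computer"] * 3 + ["future"] * 4 + ["Siri"] * 2 + ["Alexa"],
    "T2": ["robot"] * 4 + ["computer"] * 2 + ["Chat GPT"] * 6 + ["future"] + ["Alexa"],
}
for time_point, pcts in category_shares(mentions).items():
    print(time_point, {cat: round(pct, 1) for cat, pct in pcts.items()})
```

In this toy example, “Siri” and “Alexa” fall below the threshold and are excluded. The assistance system share at T1 is therefore zero even though those terms were mentioned, which is worth keeping in mind when comparing category shares with raw term counts.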

Before the release of Chat GPT (T1), the top three categories were technical association (60.42%), assistance system (17.01%), and future (9.17%). After the release of Chat GPT (T2), the top three categories were technical association (39.86%), assistance system (34.64%), and artificial (5.8%). While the top two categories kept their ranks, the relative frequency shifted significantly toward the category assistance system, whose share doubled. In addition, the positive and negative categories were each mentioned twice as often at T2 as at T1, with no difference in frequency between the two. Mentions of associations in the category future halved, whereas mentions describing AI as something artificial increased significantly. The categories human and others showed no visible changes. The proportion of students with no association with artificial intelligence also decreased, from 2.19% to 1.61%. The differences in the relative frequencies of all significant changes in category mentions are visualized in Figure 4.

Figure 4. Changes in the frequency of category mentions before (T1) and after the release of Chat GPT (T2). Significance levels are indicated as follows: *** p < 0.001, ** p < 0.01, * p < 0.05, n.s., non-significant.

4.4.3 Influence on two-word combinations

The release of Chat GPT is also reflected in the two-word combinations. The five most frequently occurring word pairs before the release of Chat GPT were: “robot and computer” (referenced by 16.16% of students), “robot and Siri” (referenced by 6.27% of students), “robot and technical” (referenced by 6.08% of students), “robot and future” (referenced by 5.7% of students), as well as “robot and Alexa” (referenced by 5.13% of students). After the release of Chat GPT, the five most frequently occurring word pairs are: “robot and Chat GPT” (referenced by 16.77% of students), “robot and computer” (referenced by 11.29% of students), “robot and Snapchat AI” (referenced by 5.16% of students), “Chat GPT and Snapchat AI” (referenced by 4.84% of students), and “Chat GPT and computer” (referenced by 4.84% of students). While “robot” and “computer” remain frequently mentioned in the top word pairs, “Chat GPT” and “Snapchat AI” are newly prominent.
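In principle, the word-pair shares reported above can be reproduced by counting how many students named both terms of each unordered pair. The Python sketch below assumes that a pair counts once per student, regardless of the order in which the terms were written. The responses are hypothetical.

```python
from collections import Counter
from itertools import combinations


def pair_shares(responses):
    """Percentage of students whose answer contains both terms of each pair."""
    pair_counts = Counter()
    for answer in responses:
        # Deduplicate and sort so each unordered pair is counted once per student.
        terms = sorted(set(answer))
        pair_counts.update(combinations(terms, 2))
    n_students = len(responses)
    return {pair: 100 * c / n_students for pair, c in pair_counts.most_common()}


def share_of(shares, term_a, term_b):
    """Look up a pair regardless of the order in which it is given."""
    return shares.get(tuple(sorted((term_a, term_b))), 0.0)


# Hypothetical answers for illustration only.
responses = [
    ["robot", "Chat GPT", "computer"],
    ["Chat GPT", "Snapchat AI", "robot"],
    ["robot", "Chat GPT"],
    ["computer", "future"],
]
shares = pair_shares(responses)
print(share_of(shares, "robot", "Chat GPT"))
```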

5 Discussion

This study pursued two main objectives to gain a deeper understanding of the perception of Artificial Intelligence (AI) among students. First, the focus was on capturing the associations that students have with the term “Artificial Intelligence.” This involved analyzing which terms and word combinations were mentioned most frequently to obtain a comprehensive picture. Second, the study aimed to investigate whether and, if so, how these associations have changed since the introduction of Chat GPT. This approach builds on the established method of free word association tests, which have already been applied to various educational topics, such as “Diffusion” (Kurt et al., 2013), “Electric Fields” (Türkkan, 2017), “Biodiversity” (Eylering et al., 2023), “Insects” (Vlasák-Drücker et al., 2022), and “Sustainability” (Barone et al., 2020).

5.1 Associations with artificial intelligence

In the present study, students mentioned a total of 355 different terms, revealing a highly diverse spectrum of associations. This diversity may also reflect the absence of a universally accepted definition of AI that would allow a clear delineation of its constituent elements (Brauner et al., 2023). Across the entire data set, the most prevalent association with artificial intelligence is “robot,” a finding that is consistent with other studies (Haseski, 2019; Kasinidou et al., 2024a). Compared with previous studies, however, the term is considerably more prevalent in our sample. Whereas Nader et al. (2022) and Cave et al. (2019) reported shares of 36.7% and 25%, respectively, for the term “robot,” it was mentioned by 50% of respondents in our sample. The high frequency of “robot,” together with the second most frequent term, “computer” (22.49% of all respondents), explains why the inductively formed category of technical associations is the most frequently represented overall. Furthermore, “robot” consistently appears among the five most frequent two-word combinations. The extraordinary dominance of “robot” in our study may be explained in part by its presence in the media and films (Di and Wu, 2021; Gregor and Gotwald, 2021; Jeffrey, 2020). Robots are often central characters in science fiction films, where they are portrayed as concrete and tangible examples of artificial intelligence.

The third most common association, “Chat GPT,” and the fourth most common, “Siri,” belong to the “assistance systems” category, which is the second most common category overall. The prominent role of assistance systems among students could be due to their high level of integration into everyday life, as people increasingly interact with AI in their daily lives (Sindermann et al., 2021). The category of assistance systems also includes associations such as self-driving cars. Such everyday applications were also frequently mentioned in the study by Lindner and Berges (2020), for example.

The association “future” is the fifth most common term in our study. Together with terms such as “progress” and “modern,” it makes up the category “future,” the third most common category in our study. Future-related categories can also be found in the studies by Lindner and Berges (2020) and Kelley et al. (2021).

Artificial intelligence is also commonly associated with aspects of human cognition and with anthropomorphism (Cave et al., 2019; Demir and Güraksin, 2022; Lindner and Berges, 2020; van Brummelen et al., 2021; Bewersdorff et al., 2023). This is reflected in the category designated “human,” which includes terms such as “intelligent,” “smart,” and “human.” As Bewersdorff et al. (2023) and van Brummelen et al. (2021) note, even children tend to anthropomorphize AI, a tendency that is also evident in our sample of 10- to 20-year-olds. It is particularly noteworthy that the Cambridge Dictionary defines artificial intelligence as “the study of how to produce computers that have some of the qualities of the human mind.” Because it does not mark this idea as a misconception, such a definition may reinforce the belief that artificial intelligence can function, think, or feel like a human brain. At the same time, the category “artificial” also emerged in our study, associating AI with something artificial. This aspect also appears in the study by Nader et al. (2022), for example. To counter these broad, sometimes contradictory associations and individual misconceptions, it is important to define the term AI more clearly. A clearer definition could help students delineate what does and does not count as AI.

In addition to these factual associations, there are also evaluative associations that can be categorized as either negative or positive. The public's attitude toward AI is frequently ambivalent, lacking a discernible positive or negative valence (Haseski, 2019; Jeffrey, 2020; Keles and Aydin, 2021; Kelley et al., 2021; Kim and Kim, 2022). Our study confirms these results: positive and negative terms occurred with equal frequency, indicating that they carry equal weight in the students' associations. However, the association network shows that, among the 41 most frequent terms, these two evaluative categories were never mentioned together. It can therefore be inferred that individual students tend to hold either a positive or a negative attitude toward AI. Negative associations may arise from the fact that the decision to use AI is not always at the discretion of the user, which distinguishes the implementation of AI from the adoption of laptops, for example (Schepman and Rodway, 2023). In a study by Ghotbi et al. (2022) with college students, “unemployment” was identified as the most prevalent negative association. However, as the participants in our study were school students whose everyday lives still have little contact with the world of work, we did not identify any specific concerns related to employment. Instead, we observed more general negative terms, such as “danger” and “fear.” Furthermore, in a survey of healthcare staff, Castagno and Khalifa (2020) found no support for the hypothesis that respondents were concerned about losing their jobs: 72% of respondents indicated that they were not afraid of this. The positive associations are largely confined to AI's supportive functions. For instance, students perceive AI as a source of assistance at work or in everyday life.
This perception may be shaped by the fact that the students surveyed lack experience in the workforce and are therefore less able to envisage the potential consequences of job loss. Instead, they tend to focus on the labor-saving benefits they already enjoy through AI-powered assistants such as Siri or Alexa.
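The inference that no student combined positive and negative terms rests on the absence of edges between the two evaluative categories in the co-occurrence network. The Python sketch below shows one way such a check could be implemented; it is not the study's network analysis. Two terms are assumed to be linked whenever the same student named both, and the network is restricted to the 41 most frequent terms, as in the text. The category dictionary and the responses are hypothetical.

```python
from collections import Counter
from itertools import product


def evaluative_edges(responses, categories, top_k=41):
    """Weighted edges between positive and negative terms in a co-occurrence
    network restricted to the top_k most frequent terms."""
    # Count each term once per student, then keep the top_k terms.
    counts = Counter(term for answer in responses for term in set(answer))
    top = {term for term, _ in counts.most_common(top_k)}
    edges = Counter()
    for answer in responses:
        terms = set(answer) & top
        positive = sorted(t for t in terms if categories.get(t) == "positive")
        negative = sorted(t for t in terms if categories.get(t) == "negative")
        # Every positive-negative pair named by the same student adds one edge.
        edges.update(product(positive, negative))
    return edges


# Hypothetical labels and answers for illustration only.
categories = {
    "helpful": "positive",
    "practical": "positive",
    "danger": "negative",
    "fear": "negative",
    "robot": "technical association",
}
responses = [
    ["robot", "helpful"],
    ["robot", "danger", "fear"],
    ["practical", "robot"],
    ["danger", "helpful"],
]
print(evaluative_edges(responses, categories))      # last student mixes both
print(evaluative_edges(responses[:3], categories))  # no student mixes both
```

An empty result, as in the second call, corresponds to the pattern described in the text.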

The low number of negative associations, coupled with the considerable number of technical associations and concrete application examples, suggests that the students surveyed have a generally positive attitude toward artificial intelligence. This matters because research indicates that the perception of AI is a crucial determinant of the acceptance of this novel technology (Cave et al., 2019; Demir and Güraksin, 2022; Kelley et al., 2021; Lee et al., 2018; O'Shaughnessy et al., 2023; Vasiljeva et al., 2021; Zhai et al., 2020).

5.2 Influence of the Chat GPT release

In addition to examining students' basic associations with AI, our study also addressed whether these associations changed following the release of Chat GPT. Our results corroborate Grassini's (2023) hypothesis that the release of Chat GPT could have a profound impact on the public discourse surrounding AI. This is exemplified by the considerable surge in mentions of “Chat GPT” and “Snapchat AI” following the release. The surge underscores the substantial impact of this particular AI application on how AI as a whole is conceptualized and perceived. This shift is statistically significant, indicating that Chat GPT is not merely a technical instrument but is also influencing the perception and discourse surrounding AI in the public domain. “Chat GPT” was mentioned by 38.71% of students 6 months after its release and was even one of the five most frequently mentioned terms across the entire survey period, which indicates the rapid spread and high relevance of this technology. The prominence of the term “Chat GPT” also directly influenced the frequency distribution of the categories: the relative frequency of the category “assistance systems,” to which Chat GPT is assigned, increased significantly. Conversely, the category “technical associations” became less prominent, as terms such as “robot,” “computer,” “technical,” “machine,” “algorithm,” and “independent” were mentioned less often. It should be noted, however, that these decreases correspond only to weak effects (Cramer's V ranged from 0.09 to 0.14). Taken together, these shifts indicate that the associations have moved from a relatively abstract, technical perception toward tangible application examples.
This suggests that students are increasingly identifying specific applications and interactions with AI technologies in their everyday experiences (Sindermann et al., 2021). Furthermore, the release of Chat GPT had a notable impact on the formation of two-word combinations. Following the introduction of Chat GPT, three of the five most frequent two-word combinations were formed with “Chat GPT.” This highlights the considerable influence that this particular application has had on linguistic patterns and the thematic focus within associations with AI.
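A change can be statistically significant and still weak because, for a 2×2 table, Cramer's V and the chi-square statistic are linked through the sample size. The rough check below assumes that each term was tested in a 2×2 table of time point by mention over all N = 836 students. It uses only the rounded effect sizes reported above, not the exact cell counts in the Supplementary material.

$$
V = \sqrt{\frac{\chi^2}{N\,\bigl(\min(r,c)-1\bigr)}}
\quad\Longrightarrow\quad
\chi^2 = N V^2 \qquad (r = c = 2)
$$

$$
V = 0.09:\quad \chi^2 \approx 836 \times 0.09^2 \approx 6.8 > 3.84 = \chi^2_{1,\,0.95},
\qquad
V_{\min} = \sqrt{3.84 / 836} \approx 0.068
$$

Under this assumption, any term-level effect with V above roughly 0.07 reaches the 5% significance level in a sample of this size. This matches the lower bound of the effect sizes reported in Section 4.4.1, so significance alone says little about the magnitude of these shifts.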

The concept of AI as a phenomenon of the future has also undergone a significant transformation, as evidenced both by the frequency of the term “future” itself and by the inductively formed category “future.” Prior to the release of Chat GPT, the category “future” accounted for 9.17% of mentions, which is comparable to the 11% reported for the term by Nader et al. (2022). Following the release of Chat GPT, however, this share declined significantly to 4.64%. This suggests a shift in the perception of AI: it is no longer regarded as a future phenomenon but as a technology that is already present. Chat GPT appears to play a decisive role here, as the application is perceived as representative of contemporary AI. Moreover, the growing incorporation of AI into daily life may diminish interest in futuristic visions, as the focus shifts toward immediate benefits and interactions. The decline in the number of students who reported no associations also suggests that the technology has become more deeply embedded in everyday life, making it difficult to remain unconnected to it. AI has become a pervasive presence for young people and plays a significant and expanding role in their daily lives. It seems plausible that students were better informed as a result of the public discussion about Chat GPT, which may have influenced how often evaluative terms were mentioned. A similar effect was observed by Jeffrey (2020). Following the release of Chat GPT, the frequency of both negative and positive terms increased to a similar extent. It is important to note, however, that these categories accounted for only 2.89% (positive) and 3.30% (negative) of the terms mentioned in the overall analysis and thus carry relatively little weight overall.
Furthermore, although the observed change in these categories is statistically significant, the effect is very small (Cramer's V = 0.05; p < 0.05). In conclusion, our data indicate that the release of Chat GPT has markedly altered how students perceive AI. Chat GPT has become a pivotal reference point in their discourse. As a result, students associate AI with concrete applications and perceive it as a tangible, present-day technology.

5.3 Implications for the education sector

It is encouraging that a significant proportion of students already possess a basic understanding of artificial intelligence (AI), which can facilitate more effective teaching and learning. Educational programmes would benefit from emphasizing specific applications and their practical benefits in order to foster a deeper comprehension and greater acceptance of AI. As Lindner and Berges (2020) state, educational institutions, particularly schools, play a pivotal role in teaching about AI. In doing so, it is essential to identify and correct misconceptions. For instance, whether robotics and AI should be considered synonymous remains a subject of debate, despite the numerous interfaces between them (Cave et al., 2019). In a study conducted by Abouzeid et al. (2021), only 7% of respondents were aware of the distinction between these two concepts. However, students' misconceptions about AI tend to be superficial rather than deep-rooted (Mertala and Fagerlund, 2022). The functioning of AI should therefore be presented in an intelligible manner in specialized educational programmes, with a view to fostering a deeper comprehension of the subject matter. Concrete application examples are particularly important, as they play a significant role in shaping students' associations. The variety of associations and categories also calls for a multi-perspective approach, as this is the most effective way to build on students' existing ideas. It is also crucial to begin teaching students about artificial intelligence at an early age, as even children already anthropomorphize AI (van Brummelen et al., 2021).

The differentiated understanding of positive and negative associations provides a basis for ethical discussions in the classroom. A critical stance toward AI, encompassing both its positive and negative aspects, is regarded as a fundamental aspect of AI literacy (Su and Zhong, 2022). Extremely positive or extremely negative attitudes can hinder the adoption of AI (Cave et al., 2019). The objective of education is therefore not to foster exclusively positive associations among all students. Rather, it is to cultivate a realistic and differentiated perception of AI and to equip learners to engage in critical discourse about AI applications (Shrivastava et al., 2024).

5.4 Implications for further research

Social representations should not be interpreted as static or fixed (Moscovici, 2000). The findings of our study therefore represent students' associations with AI at the time of the survey. These associations can change as AI applications develop further and become more widely implemented, so further surveys are needed to keep the data up to date. This dynamic was already evident during our study period, with the introduction of Chat GPT. It would be interesting to repeat the survey over the next few years to observe how associations with AI continue to develop, particularly in the context of further significant developments and events in the field. Furthermore, the present study focuses on a particular cohort of students, which may limit the generalizability of the findings. Future studies would benefit from a more diverse sample and from quantitative methods that provide a deeper understanding of the underlying reasons for the observed associations. Additionally, our study is methodologically limited in terms of qualitative analysis. Because student responses consist, on average, of three isolated terms, certain advanced text-based analytical methods, such as Latent Dirichlet Allocation (LDA) or WordNet-based semantic analysis, are not applicable. LDA requires more extensive text input per document to generate meaningful topic distributions. WordNet, in turn, fails to classify many AI-related terms (e.g., “Chat GPT,” “Alexa”) and does not effectively capture the semantic relationships within our dataset. These limitations should be taken into account in future research that aims to explore semantic structures and conceptual relationships in AI-related perceptions.
To integrate AI into the classroom sustainably, teachers must also possess the requisite skills to incorporate the technology into their teaching practice (Forero-Corba and Negre Bennasar, 2024; Sanusi et al., 2024). However, many teachers find this process overwhelming and stressful (Fernández-Batanero et al., 2021). To support teachers effectively, it is essential to build on their existing knowledge and understanding of AI, which requires an understanding of their current concepts and perceptions (Lindner and Berges, 2020). Consequently, future research should also examine teachers' associations with and perceptions of AI.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethics Committee of the Science Didactic Institutes and Departments (FB 13, 14, 15) of the Goethe University Frankfurt am Main. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

Author contributions

MH: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. SF-Z: Conceptualization, Formal analysis, Investigation, Methodology, Validation, Visualization, Writing – review & editing. SS: Formal analysis, Investigation, Validation, Writing – review & editing. PD: Funding acquisition, Investigation, Supervision, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was financially supported by Klaus Tschira Stiftung gGmbH (project funding number 00.007.2020).

Acknowledgments

The authors thank the Klaus Tschira Stiftung gGmbH, which contributed to the success of the project through its financial support.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1543746/full#supplementary-material

References

Abouzeid, H. L., Chaturvedi, S., Abdelaziz, K. M., Alzahrani, F. A., AlQarni, A. A. S., and Alqahtani, N. M. (2021). Role of robotics and artificial intelligence in oral health and preventive dentistry – knowledge, perception and attitude of dentists. Oral Health Prev. Dent. 19, 353–363. doi: 10.3290/j.ohpd.b1693873

Andries, V., and Robertson, J. (2023). Alexa doesn't have that many feelings: Children's understanding of AI through interactions with smart speakers in their homes. Comput. Educ. Artif. Intell. 5:100176. doi: 10.1016/j.caeai.2023.100176

Bahar, M., Johnstone, A. H., and Sutcliffe, R. G. (1999). Investigation of students' cognitive structure in elementary genetics through word association tests. J. Biol. Educ. 33, 134–141. doi: 10.1080/00219266.1999.9655653

Barone, B., Rodrigues, H., Nogueira, R. M., Guimarães, K. R. L. S. L. de Q., and Behrens, J. H. (2020). What about sustainability? Understanding consumers' conceptual representations through free word association. Int. J. Consum. Stud. 44, 44–52. doi: 10.1111/ijcs.12543

Bewersdorff, A., Zhai, X., Roberts, J., and Nerdel, C. (2023). Myths, mis- and preconceptions of artificial intelligence: a review of the literature. Comput. Educ. Artif. Intell. 4:100143. doi: 10.1016/j.caeai.2023.100143

Brauner, P., Hick, A., Philipsen, R., and Ziefle, M. (2023). What does the public think about artificial intelligence?—a criticality map to understand bias in the public perception of AI. Front. Comput. Sci. 5:1113903. doi: 10.3389/fcomp.2023.1113903

Castagno, S., and Khalifa, M. (2020). Perceptions of artificial intelligence among healthcare staff: a qualitative survey study. Front. Artif. Intell. 3:578983. doi: 10.3389/frai.2020.578983

Cave, S., Coughlan, K., and Dihal, K. (2019). “Scary robots,” in Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society (AIES '19), Honolulu, HI, USA (New York, NY: ACM), 331–337.

Chai, C. S., Lin, P.-Y., Jong, M. S.-Y., Dai, Y., Chiu, T. K. F., and Qin, J. (2021). Perceptions of and behavioral intentions towards learning artificial intelligence in primary school students. Educ. Technol. Soc. 24, 89–101.

Chassignol, M., Khoroshavin, A., Klimova, A., and Bilyatdinova, A. (2018). Artificial intelligence trends in education: a narrative overview. Procedia Comput. Sci. 136, 16–24. doi: 10.1016/j.procs.2018.08.233

Chounta, I.-A., Bardone, E., Raudsep, A., and Pedaste, M. (2022). Exploring teachers' perceptions of artificial intelligence as a tool to support their practice in Estonian K-12 education. Int. J. Artif. Intell. Educ. 32, 725–755. doi: 10.1007/s40593-021-00243-5

DeepL SE (2017). DeepL Translator. Retrieved from: https://www.deepl.com

Demir, K., and Güraksin, G. E. (2022). Determining middle school students' perceptions of the concept of artificial intelligence: a metaphor analysis. Particip. Educ. Res. 9, 297–312. doi: 10.17275/per.22.41.9.2

Dempere, J., Modugu, K., Hesham, A., and Ramasamy, L. K. (2023). The impact of ChatGPT on higher education. Front. Educ. 8:1206936. doi: 10.3389/feduc.2023.1206936

Di, C., and Wu, F. (2021). The influence of media use on public perceptions of artificial intelligence in China: evidence from an online survey. Inf. Dev. 37, 45–57. doi: 10.1177/0266666919893411

Estriegana, R., Medina-Merodio, J.-A., and Barchino, R. (2019). Student acceptance of virtual laboratory and practical work: an extension of the technology acceptance model. Comput. Educ. 135, 1–14. doi: 10.1016/j.compedu.2019.02.010

Eylering, A., Neufeld, K., Kottmann, F., Holt, S., and Fiebelkorn, F. (2023). Free word association analysis of German laypeople's perception of biodiversity and its loss. Front. Psychol. 14:1112182. doi: 10.3389/fpsyg.2023.1112182

Fast, E., and Horvitz, E. (2016). Long-term trends in the public perception of artificial intelligence. arXiv. doi: 10.1609/aaai.v31i1.10635

Fernández-Batanero, J.-M., Román-Graván, P., Reyes-Rebollo, M.-M., and Montenegro-Rueda, M. (2021). Impact of educational technology on teacher stress and anxiety: a literature review. Int. J. Environ. Res. Public Health 18:548. doi: 10.3390/ijerph18020548

Field, A. (2013). Discovering Statistics Using IBM SPSS Statistics (4th ed.). London: SAGE Publications.

Forero-Corba, W., and Negre Bennasar, F. (2024). Técnicas y aplicaciones del Machine Learning e Inteligencia Artificial en educación: una revisión sistemática. RIED Rev. Iberoam. Educ. Distancia 27, 209–253. doi: 10.5944/ried.27.1.37491

Gao, S., He, L., Chen, Y., Li, D., and Lai, K. (2020). Public perception of artificial intelligence in medical care: Content analysis of social media. J. Med. Internet Res. 22:e16649. doi: 10.2196/16649

García-Peñalvo, F. J. (2023). La percepción de la inteligencia artificial en contextos educativos tras el lanzamiento de ChatGPT: disrupción o pánico. Educ. Knowl. Soc. 24:e31279. doi: 10.14201/eks.31279

Ghotbi, N., Ho, M. T., and Mantello, P. (2022). Attitude of college students towards ethical issues of artificial intelligence in an international university in Japan. AI Soc. 37, 283–290. doi: 10.1007/s00146-021-01168-2

Granić, A., and Marangunić, N. (2019). Technology acceptance model in educational context: a systematic literature review. Br. J. Educ. Technol. 50, 2572–2593. doi: 10.1111/bjet.12864

Grassini, S. (2023). Development and validation of the AI attitude scale (AIAS-4): a brief measure of general attitude toward artificial intelligence. Front. Psychol. 14:1191628. doi: 10.3389/fpsyg.2023.1191628

Gregor, B., and Gotwald, B. (2021). Perception of artificial intelligence by customers of science centers. Przegl. Zarzadz. 1, 29–38. doi: 10.7172/1644-9584.91.2

Große-Bölting, G., and Mühling, A. (2020). “Students' perception of the inner workings of learning machines,” in International Conference on Learning and Teaching in Computing and Engineering (LaTICE) Vol. 5 (Ho Chi Minh City: IEEE Computer Society).

Haseski, H. I. (2019). What do Turkish pre-service teachers think about artificial intelligence? Int. J. Comput. Sci. Educ. Sch. 3, 3–23. doi: 10.21585/ijcses.v3i2.55

Henrich, M., Formella-Zimmermann, S., Gübert, J., and Dierkes, P. W. (2023). Students' technology acceptance of computer-based applications for analyzing animal behavior in an out-of-school lab. Front. Educ. 8:1216318. doi: 10.3389/feduc.2023.1216318

Heung, Y. M. E., and Chiu, T. K. F. (2025). How ChatGPT impacts student engagement from a systematic review and meta-analysis study. Comput. Educ. Artif. Intell. 8:100361. doi: 10.1016/j.caeai.2025.100361

Jeffrey, T. (2020). Understanding college student perceptions of artificial intelligence. Syst. Cybern. Inform. 18, 8–13.

Kasinidou, M., Kleanthous, S., and Otterbacher, J. (2024a). “AI is a robot that knows many things: cypriot children's perception of AI,” in Interaction Design and Children (IDC'24), June 17–20, 2024, Delft, Netherlands, 897–901. doi: 10.1145/3628516.3659414

Kasinidou, M., Kleanthous, S., and Otterbacher, J. (2024b). “We have to learn to work with such systems: students' perceptions of ChatGPT after a short educational intervention on NLP,” in SIGCSE Virtual 2024, December 5–8, 2024 (New York, NY: Association for Computing Machinery), 74–80. doi: 10.1145/3649165.3690113

Keles, P. U., and Aydin, S. (2021). University students' perceptions about artificial intelligence. Shanlax Int. J. Educ. 9, 212–220. doi: 10.34293/education.v9iS1-May.4014

Kelley, P. G., Yang, Y., Heldreth, C., Moessner, C., Sedley, A., Kramm, A., et al. (2021). “Exciting, useful, worrying, futuristic: public perception of artificial intelligence in 8 countries,” in Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society. AIES '21: AAAI/ACM Conference on AI, Ethics, and Society. Virtual Event USA, eds. M. Fourcade, B. Kuipers, S. Lazar and D. Mulligan (New York, NY, USA: ACM), 627–637.

Kim, N. J., and Kim, M. K. (2022). Teacher's perceptions of using an artificial intelligence-based educational tool for scientific writing. Front. Educ. 7:755914. doi: 10.3389/feduc.2022.755914

Kuckartz, U., and Rädiker, S. (2019). Analyzing Qualitative Data with MAXQDA. Cham: Springer International Publishing. doi: 10.1007/978-3-030-15671-8

Kurt, H., Ekici, G., Aktas, M., and Aksu, O. (2013). Determining biology student teachers' cognitive structure on the concept of “diffusion” through the free word-association test and the drawing-writing technique. Int. Educ. Stud. 6:187. doi: 10.5539/ies.v6n9p187

Landis, J. R., and Koch, G. G. (1977). An application of hierarchical kappa-type statistics in the assessment of majority agreement among multiple observers. Biometrics 33, 363–374. doi: 10.2307/2529786

Lee, J., Davari, H., Singh, J., and Pandhare, V. (2018). Industrial artificial intelligence for Industry 4.0-based manufacturing systems. Manuf. Lett. 18, 20–23. doi: 10.1016/j.mfglet.2018.09.002

Leiter, C., Zhang, R., Chen, Y., Belouadi, J., Larionov, D., Fresen, V., et al. (2024). ChatGPT: a meta-analysis after 2.5 months. Mach. Learn. Appl. 16:100541. doi: 10.1016/j.mlwa.2024.100541

Lindner, A., and Berges, M. (2020). "Can you explain AI to me? Teachers' pre-concepts about artificial intelligence," in IEEE Frontiers in Education Conference (FIE) (Uppsala: IEEE), 1–9. doi: 10.1109/FIE44824.2020.9274136

Lo Monaco, G., Piermattéo, A., Rateau, P., and Tavani, J. L. (2017). Methods for studying the structure of social representations: a critical review and agenda for future research. J. Theory Soc. Behav. 47, 306–331. doi: 10.1111/jtsb.12124

Makridakis, S. (2017). The forthcoming artificial intelligence (AI) revolution: its impact on society and firms. Futures 90, 46–60. doi: 10.1016/j.futures.2017.03.006

Mayring, P. (2014). Qualitative content analysis: theoretical foundation, basic procedures and software solution. Klagenfurt.

Mertala, P., and Fagerlund, J. (2022). Finnish 5th and 6th graders' misconceptions about artificial intelligence. Comput. Educ. Artif. Intell. 3:100095. doi: 10.1016/j.caeai.2022.100095

Moscovici, S. (2000). “Social representations,” in Explorations in Social Psychology. Cambridge: Polity Press.

Nader, K., Toprac, P., Scott, S., and Baker, S. (2022). Public understanding of artificial intelligence through entertainment media. AI Soc. 39, 1–14. doi: 10.1007/s00146-022-01427-w

O'Shaughnessy, M. R., Schiff, D. S., Varshney, L. R., Rozell, C. J., and Davenport, M. A. (2023). What governs attitudes toward artificial intelligence adoption and governance? Sci. Public Policy 50, 161–176. doi: 10.1093/scipol/scac056

Pallathadka, H., Ramirez-Asis, E. H., Loli-Poma, T. P., Kaliyaperumal, K., Ventayen, R. J. M., and Naved, M. (2023). Applications of artificial intelligence in business management, e-commerce and finance. Mater. Today Proc. 80, 2610–2613. doi: 10.1016/j.matpr.2021.06.419

Ritter, N. L. (2017). Technology acceptance model of online learning management systems in higher education: a meta-analytic structural equation model. Int. J. Learn. Manag. Syst. 5, 1–16. doi: 10.18576/ijlms/050101

Sanusi, I. T., Ayanwale, M. A., and Chiu, T. K. F. (2024). Investigating the moderating effects of social good and confidence on teachers' intention to prepare school students for artificial intelligence education. Educ. Inf. Technol. 29, 273–295. doi: 10.1007/s10639-023-12250-1

Schepman, A., and Rodway, P. (2023). The general attitudes towards artificial intelligence scale (GAAIS): confirmatory validation and associations with personality, corporate distrust, and general trust. Int. J. Hum. Comput. Interact. 39, 2724–2741. doi: 10.1080/10447318.2022.2085400

Shrivastava, V., Sharma, S., Chakraborty, D., and Kinnula, M. (2024). "Is a sunny day bright and cheerful or hot and uncomfortable? Young children's exploration of ChatGPT," in Nordic Conference of Human-Computer Interaction (NordiCHI 2024), October 13–16, 2024, Uppsala, Sweden (New York, NY: Association for Computing Machinery), 1–15. doi: 10.1145/3679318.3685397

Sindermann, C., Sha, P., Zhou, M., Wernicke, J., Schmitt, H. S., Li, M., et al. (2021). Assessing the attitude towards artificial intelligence: Introduction of a short measure in German, Chinese, and English language. Künstl. Intell. 35, 109–118. doi: 10.1007/s13218-020-00689-0

Stai, B., Heller, N., McSweeney, S., Rickman, J., Blake, P., Vasdev, R., et al. (2020). Public perceptions of artificial intelligence and robotics in medicine. J. Endourol. 34, 1041–1048. doi: 10.1089/end.2020.0137

Su, J., and Zhong, Y. (2022). Artificial intelligence (AI) in early childhood education: curriculum design and future directions. Comput. Educ. Artif. Intell. 3:100072. doi: 10.1016/j.caeai.2022.100072

Touretzky, D., Gardner-McCune, C., Martin, F., and Seehorn, D. (2019). “Envisioning AI for K-12: what should every child know about AI?” in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33, 9795–9799. doi: 10.1609/aaai.v33i01.33019795

Türkkan, E. (2017). Investigation of physics teacher candidates' cognitive structures about “electric field”: a free word association test study. J. Educ. Teach. Sci. 5:146. doi: 10.11114/jets.v5i11.2683

Valeri, F., Nilsson, P., and Cederqvist, A.-M. (2025). Exploring students' experience of ChatGPT in STEM education. Comput. Educ. Artif. Intell. 8:100360. doi: 10.1016/j.caeai.2024.100360

van Brummelen, J., Tabunshchyk, V., and Heng, T. (2021). "Alexa, can I program you?: student perceptions of conversational artificial intelligence before and after programming Alexa," in Interaction Design and Children. IDC '21: Interaction Design and Children. Athens, Greece, eds. M. Roussou, S. Shahid, J. A. Fails and M. Landoni (New York, NY, USA: ACM), 305–313.

Vandenberg, J., and Mott, B. (2023). “AI teaches itself: exploring young learners' perspectives on artificial intelligence for instrument development,” in Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V.1 (ITiCSE 2023), July 8–12, 2023, Turku, Finland, 485–490.

Vasiljeva, T., Kreituss, I., and Lulle, I. (2021). Artificial intelligence: the attitude of the public and representatives of various industries. JRFM 14:339. doi: 10.3390/jrfm14080339

Vlasák-Drücker, J., Eylering, A., Drews, J., Hillmer, G., Carvalho Hilje, V., and Fiebelkorn, F. (2022). Free word association analysis of Germans' attitudes toward insects. Conserv. Sci. Pract. 4:e12766. doi: 10.1111/csp2.12766

Wagner, W., Valencia, J., and Elejabarrieta, F. (1996). Relevance, discourse and the 'hot' stable core of social representations: a structural analysis of word associations. Br. J. Soc. Psychol. 35, 331–351. doi: 10.1111/j.2044-8309.1996.tb01101.x

Yeh, S.-C., Wu, A.-W., Yu, H.-C., Wu, H. C., Kuo, Y.-P., and Chen, P.-X. (2021). Public perception of artificial intelligence and its connections to the sustainable development goals. Sustainability 13:9165. doi: 10.3390/su13169165

Yigitcanlar, T., Degirmenci, K., and Inkinen, T. (2022). Drivers behind the public perception of artificial intelligence: Insights from major Australian cities. AI Soc. 39, 1–21. doi: 10.1007/s00146-022-01566-0

Yilmaz, R., and Yilmaz, F. G. K. (2023). The effect of generative artificial intelligence (AI)-based tool use on students' computational thinking skills, programming self-efficacy and motivation. Comput. Educ. Artif. Intell. 4:100147. doi: 10.1016/j.caeai.2023.100147

Zhai, Y., Yan, J., Zhang, H., and Lu, W. (2020). Tracing the evolution of AI: conceptualization of artificial intelligence in mass media discourse. Inf. Discov. Deliv. 48, 137–149. doi: 10.1108/IDD-01-2020-0007

Zhang, H., Lee, I., Ali, S., DiPaola, D., Cheng, Y., and Breazeal, C. (2022). Integrating ethics and career futures with technical learning to promote AI literacy for middle school students: an exploratory study. Int. J. Artif. Intell. Educ. 33, 1–35. doi: 10.1007/s40593-022-00293-3

Keywords: artificial intelligence (AI), student perceptions, Chat GPT, AI associations, free word association, AI in education

Citation: Henrich M, Formella-Zimmermann S, Schneider S and Dierkes PW (2025) Free word association analysis of students' perception of artificial intelligence. Front. Educ. 10:1543746. doi: 10.3389/feduc.2025.1543746

Received: 11 December 2024; Accepted: 30 April 2025;

Published: 21 May 2025.

Edited by:

Eugène Loos, Utrecht University, Netherlands

Reviewed by:

Styliani Kleanthous, Open University of Cyprus, Cyprus

Muhammad Bello Nawaila, Federal College of Education, Nigeria

Copyright © 2025 Henrich, Formella-Zimmermann, Schneider and Dierkes. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marvin Henrich, Henrich@bio.uni-frankfurt.de