- Department of Technology Education, Palestine Technical University-Kadoorie, Tulkarem, Palestine

Introduction: This study investigates the potential, ethical use, and challenges of AI at Palestine Technical University-Kadoorie (PTUK).

Methods: A mixed-methods approach was used to examine the perceptions of college instructors from different disciplines. A pre-existing scale of attitudes toward the ethics of artificial intelligence (AT-EAI) was used to assess the attitudes of 88 college instructors towards the ethical use of AI. For the qualitative data, a phenomenological approach was adopted: semi-structured interviews were conducted with 17 college instructors who use AI in their teaching. A one-sample t-test was used to investigate attitudes towards ethical use.

Results: Attitudes on the justice, transparency, determination and non-maleficence, and responsibility dimensions were statistically significant at a moderate level. Privacy was also found at a moderate degree. Qualitative analysis yielded three themes for potential (academic productivity, accessibility, and multimodal teaching) and three themes for challenges (quality of AI services, lack of interaction, and the need to adapt teaching philosophy).

Discussion: The researcher recommends that further research and collaboration be pursued to maximize the benefits of AI in education and ensure its seamless integration into teaching practices.

1 Introduction

In the field of education, the integration of AI has sparked considerable interest among both researchers and educators. As a new information technology innovation, AI has brought radical changes not only to everyday life but also to higher education institutions. As AI continues to advance, there is a growing exploration, development, and adoption of practical applications of this technology to enhance information exchange (Adov et al., 2020). Consequently, a substantial reduction is predicted in the workforce over the next 5 years, with automated establishments expected to replace current employees (Ferikoğlu and Akgün, 2022). It is therefore crucial to be aware of the requirements that technological advancements impose on people’s future path. The degree to which individuals will embrace or resist this transformation is a subject of debate. In essence, during the process of change, people form perceptions by evaluating the impacts of these changes and weighing their advantages and disadvantages (Ferikoğlu and Akgün, 2022).

Several research studies have thoroughly explored the significant advantages associated with incorporating AI systems and chatbots into educational settings, as noted by Chien and Hwang (2023). Among the diverse benefits attributed to AI, one particularly stands out: a substantial enhancement of research precision and efficiency, as highlighted by Zhang and Aslan (2021). For example, ChatGPT demonstrates an impressive capability to rapidly analyze vast amounts of data, adeptly identifying intricate patterns and correlations that may escape human perception. Consequently, this invaluable tool empowers researchers to improve their overall productivity and effectiveness, enabling them to shift their attention towards addressing more challenging and innovative tasks, as discussed by Dignum (2018).

Although artificial intelligence (AI) has the potential to aid instructors and transform academic research by facilitating the analysis and interpretation of extensive data sets and by generating simulations and scenarios, it also raises issues such as biased data and the misuse of algorithms, which pose threats to social cohesion, democratic principles, and human rights (Yang et al., 2021). Moreover, since AI systems are trained on data, and this data may contain implicit or explicit biases, they can discriminate against certain groups, such as minorities and women. This can perpetuate existing societal inequalities and compromise the principles of equal opportunity and fairness in education (Alshorman, 2024). Additionally, AI can generate content that appears credible but is entirely false, a phenomenon known as hallucination. This makes AI-generated content unreliable, and both faculty and students must develop the skills to critically assess these responses.

Notably, generative AI is designed to produce text resembling human conversation and can engage in discussions on various subjects. Trained on human-human conversation data, it is proficient at generating appropriate responses to queries and autonomously completing discussions, rendering it a valuable asset for natural language processing (NLP) research. ChatGPT, a language model developed by OpenAI, has found widespread use in diverse fields, including language translation, chatbots, and NLP. Beyond its utility in psychology, sociology, and education, generative AI tools contribute to the automation of some labor-intensive and time-consuming research processes across multiple domains.

Despite the substantial body of research on chatbots and artificial intelligence (AI) in educational contexts, such as the works of Hiremath et al. (2018); Kim et al. (2021); Okonkwo and Ade-Ibijola (2021), there remains a noticeable gap in understanding the potential, challenges, and ethical use of AI tools in the teaching process.

This study seeks to explore the role of AI in education from college instructors’ perspectives, including its potential, its challenges, and attitudes towards AI ethics. The study was conducted at Palestine Technical University-Kadoorie (PTUK), a public university in Palestine characterized by its emphasis on technical education. While PTUK has made substantial efforts towards implementing AI in education, the deployment of AI-based learning outcomes at PTUK is still in its early phases. As a result, there is a research gap in understanding the unique potential and challenges that exist at PTUK for incorporating AI in education.

1.1 Significance of the study

The outcomes of this study have the potential to benefit the advancement of AI in education for college instructors, researchers, and policymakers. The findings provide recommendations on creating and enhancing teacher professional development programs for AI and on improving AI-focused teaching within higher education institutions.

Moreover, as artificial intelligence (AI) becomes increasingly widespread, the attention directed towards AI ethics also intensifies. To address concerns regarding AI ethics, this study also focuses on the ethical use of AI.

1.2 Problem statement

The integration of artificial intelligence (AI) in education is of significant importance, particularly in higher education institutions, and has prompted a notable shift in scientific advancements (Maydi and Majed, 2023). El-Dahshan (2019) draws a parallel between the crucial value that AI will hold in the foreseeable future and the past significance of oil. The urgent need to develop the educational system in alignment with the technological revolution brought about by AI has become apparent (Khlaif, 2023).

Consequently, AI serves as a solution that should fit users’ characteristics. The incorporation of AI captures the attention of both teachers and learners, motivating them to engage more actively in the teaching-learning process (Karataş et al., 2024). Additionally, it assists in achieving educational objectives, better managing them, and analyzing data for each user, facilitating access to their strengths and weaknesses (Park and Kwon, 2023). Investigating its potential and challenges is a necessity in the higher education context.

Additionally, in the constantly changing realm of education, it is crucial to understand and address the ethical use of AI, particularly in light of the technological progress influencing the field (Ray, 2023). To ensure the responsible and proper utilization of AI tools, the educational community should encourage open communication, offer thorough guidelines, and participate in meaningful ethical conversations (Pressman et al., 2024). Within these considerations, ethics emerges as a pivotal factor, encompassing concerns related to academic honesty, integrity, and the ethical implications of employing AI-powered technology in educational assessments and interactions (Ali and Aysan, 2025). Specifically, the incorporation of AI into educational practices requires a thoughtful examination of fairness, transparency, and the protection of privacy, involving both students and educators (Mhlanga, 2023). Few studies discuss AI ethical issues in education; it is therefore imperative to bridge this research gap and contribute to the literature, with emphasis on integrating AI into the educational domain (Chen et al., 2025). Continuous research on the potential, challenges, and ethical use of AI will contribute to a deep comprehension of the adoption and use of AI in educational contexts. Moreover, investigating faculty members’ perceptions of AI would reveal their strengths and weaknesses in using AI in their profession. It would also provide insights for college instructors and policymakers on exploiting AI in education.

Objectives of the study are to:

1. Describe the opportunities that AI usage offers college instructors at PTUK.

2. Examine the attitudes towards ethical considerations of employing artificial intelligence (AI) systems within higher educational settings at PTUK.

3. Identify challenges of AI from college instructors’ perspectives.

The results of this study are anticipated to assist in the identification of potential biases and ethical considerations related to the utilization of AI in education. These insights are crucial for informing future developments and evaluating the implications of integrating AI into education across various topics and multidisciplinary fields. In the context of this investigation, AI could be a valuable tool that can expedite research productivity within specific areas of field research. Hence, the study aims to address the following research questions:

1.3 Research questions

1. What are the potentials AI offers for college instructors at PTUK in higher education settings?

2. What are the attitudes of college instructors at PTUK towards AI ethics?

3. What are the challenges of using AI by college instructors at PTUK?

1.4 Literature review: potentials of artificial intelligence (AI) in education

Artificial intelligence (AI) is generally defined as technologies capable of performing tasks typically requiring human intelligence (Gignac and Szodorai, 2024). These technologies exhibit a degree of autonomy, the capacity to learn and adapt, and the ability to process large volumes of data (Cheng et al., 2023; Obidovna, 2024; Rahmatizadeh et al., 2020). AI can also be described as the capacity of machines to read and rapidly analyze vast amounts of data (Sira, 2022), learn from them, and leverage them to achieve certain educational goals.

The integration of AI in education has sparked debate and controversy among academics (Duran, 2024; Williamson and Eynon, 2020). Some researchers express optimism, emphasizing potential benefits such as enhanced learning efficiency and quality. They believe AI can offer personalized learning experiences, allowing students to progress at their own pace, while teachers can assess outcomes in real time and create adaptive curricula. Proponents also argue that AI enhances learning effectiveness (Luckin and Cukurova, 2019) by offering personalized and efficient learning experiences with interactive elements (Aguilar et al., 2021). This approach could streamline tasks like assessment, allowing teachers to focus on crucial aspects of guidance and direction. Additionally, AI may improve education accessibility for students in remote areas or with disabilities, providing equal access to learning content and resources (Sun et al., 2021). Another aspect of the teaching process is adapting AI to the curriculum by generating customized learning materials and providing adaptive learning experiences (Karataş and Yüce, 2024). In this way, AI gradually enables personalized and adaptive learning experiences. With its capacity to analyze vast amounts of data and discern patterns, AI promises to revolutionize how knowledge is imparted and acquired (Dwivedi et al., 2021; Greenstein, 2022). Generative AI is also used in academic research for purposes such as creating research ideas, locating the research gap in specific topics, summarizing large numbers of published articles, and analyzing data and conclusions (Khlaif, 2023).

This paradigm shift is not merely about incorporating technology into classrooms; it entails fundamentally reimagining the educational ecosystem, where AI catalyzes innovation and inclusivity (Sriwijayanti, 2020).

1.5 Challenges of AI in education

The AI boom has shown great promise in education. However, several challenges arise when AI is used. One challenge, reported by Grzybowski et al. (2024), is AI algorithm bias, which can stem from skewed training data or decision-making processes and result in unequal learning outcomes. Tackling this bias involves thoroughly examining the data used to train AI models and applying strategies to reduce bias during algorithm development. Additionally, transparency poses a significant challenge, as AI systems often function as black boxes, making it hard to comprehend how decisions are made. Moreover, Crompton et al. (2024) investigated the challenges of AI in education and identified negative instructor perceptions stemming from a lack of technology skills, ethical concerns, and issues with the ease of use and design of AI tools.

Similarly, concerns are raised by other academics who fear that AI might exacerbate educational inequalities (Vinuesa et al., 2020) and lead to overreliance on technology, diminishing interpersonal skills and creativity essential for the workforce. Although AI integration in curriculum adaptation has some benefits, it presents challenges as well. For example, while AI can enhance teaching efficiency and personalization (Nazaretsky et al., 2022), the reliability of AI-generated content has raised concerns (Karataş et al., 2024).

Moreover, academics with negative perspectives worry about AI’s potential drawbacks, such as reduced human interaction during the learning process (Ikedinachi et al., 2019). They express concerns about AI replacing teachers or diminishing student-teacher interaction, impacting the quality of education. Privacy issues related to student data collection also arise, with fears that excessive and detailed data collection could jeopardize student privacy (Holmes et al., 2021). Additionally, when generative AI tools such as ChatGPT are used to produce text that assists researchers in scientific research, ethical concerns arise, including transparency issues, AI bias, privacy concerns, and users’ accountability for AI-generated text (Khlaif, 2023). On the other hand, AI is capable of democratizing education by bridging the gap between accessibility and quality. For example, Asfahani et al. (2023) clarified the role of AI-powered platforms and tools in facilitating access for learners from diverse geographical locations or socioeconomic backgrounds by tailoring learning experiences to their individual needs and preferences. This democratization nurtures a more equitable educational setting, in which learning barriers are dismantled and opportunities for advancement are widely shared.

Furthermore, AI fortifies educators’ role by empowering them with rich resources to carry out more effective teaching practices. For example, Sebsibe et al. (2023) explained how AI-driven analytics give educators opportunities to gain a deeper understanding of students’ learning patterns and needed improvements, enabling them to customize instruction accordingly.

However, amidst the optimism surrounding AI’s potential in education, ethical concerns regarding data privacy and algorithmic bias loom large. As AI applications become more ingrained in the educational landscape, it becomes crucial to address these ethical considerations and concerns to ensure that AI usage aligns with fair guidelines, transparency, and accountability (Abdurahman et al., 2023). Striking a balance between innovation and ethical stewardship is essential to harnessing the full potential of AI in educational progress.

1.6 Attitudes towards AI ethics

Attitude reflects an individual’s belief about a behavior, indicating whether they view it positively or negatively (Ellore et al., 2015). Research has been carried out to understand users’ attitudes towards AI ethics (Ikkatai et al., 2022; Chocarro et al., 2023; Choi et al., 2024). Although the ethical use of AI has been investigated previously (Ray, 2023; Ryan and Stahl, 2020), previous studies showed that it is imperative to explicitly address concerns related to fairness, accountability, transparency, bias, autonomy, agency, and inclusion (Jang et al., 2022). Using the final instrument they developed, Jang et al. (2022) identified five dimensions of AI ethics: fairness, transparency, privacy, responsibility, and non-maleficence.

The fairness dimension encompassed elements like taking diversity into account during the collection of data for AI development and ensuring universal disclosure of the developed AI without any form of discrimination.

The transparency dimension comprised items gauging attitudes regarding the importance of AI explainability. In the privacy dimension, there were inquiries about attitudes toward safeguarding privacy during the collection and utilization of data for creating artificial intelligence. The responsibility dimension involved items that sought opinions on whether responsibilities should be allocated based on social consensus in situations involving AI-related issues or if specific groups, such as developers and users, should bear complete responsibility. Lastly, the non-maleficence dimension comprised items that gathered responses on the significance of preventing abuse by various agencies associated with AI (Ryan and Stahl, 2020).

On a broader scale, it is crucial to investigate the conflicting opinions among academics regarding AI in education which could impede progress in AI integration in the classroom. Therefore, this study is essential to find solutions and agreements that align with the goals of quality education, since the integration of AI in education highlights the need to transform pedagogical methods, accessibility, sustainability, and the fundamental principles of education to expedite progress toward achieving SDG 4.

2 Methodology

This study investigates the potential of AI, attitudes toward AI ethics, and the challenges college instructors face at PTUK. A mixed design combining qualitative and quantitative research methods was adopted.

Research designs of phenomenology and descriptive research were considered and adopted as they align well with the study objectives. The rationale for adopting a mixed-method design lies in the recognition that relying solely on either quantitative or qualitative approaches fails to comprehensively address the research issue. Morse (2016) advocates for the integration of qualitative data to validate quantitative findings, justifying this methodological choice.

Moreover, the qualitative component of the study employs a phenomenological approach, chosen for its ability to delve into the lived experiences of a specific group or object, providing a more profound understanding of the phenomenon (Patten and Newhart, 2017), which in this study is the experience of using AI.

Quantitative methods rely on self-reported scales from college instructors, and scales may lack depth in the information they provide. Thus, a qualitative approach complements quantitative data by offering additional insights, perspectives, and validation or expansion of the results derived from scales (Creswell and Clark, 2017). Likert-type scales, named after Rensis Likert, are among the most commonly employed methods for measuring attitudes. Likert introduced these summed or attitude scales in his pivotal 1932 article, “A Technique for the Measurement of Attitudes” (Likert, 1932). Accordingly, a scale was used in this study to assess attitudes towards ethical use. The use of different methods and data sources introduces methodological and data triangulation (Turner et al., 2017). The researcher triangulates results from both quantitative and qualitative analyses to elucidate college instructors’ attitudes toward AI ethics.

2.1 Participants and settings

The population of the study comprises all college instructors who teach at PTUK, about 334 instructors. A sample of 88 college instructors was chosen randomly to fill out the attitudes towards ethics of AI scale, which was distributed via Google Forms. After the scale was completed, 17 participants were purposively chosen from the 88 instructors for the qualitative phase based upon their extensive knowledge and usage of AI in their teaching practices; more experienced college instructors were selected for the interviews. Two tools were used: a pre-existing scale of attitudes towards AI and semi-structured interviews.

The interview participants were informed about the purpose and significance of the study, with an assurance that their input would be kept confidential and used solely for research purposes. The researcher expressed gratitude for their willingness to participate, and the interviews were conducted in a conducive environment with respectful and comfortable interaction between the researcher and participants. Participants were notified that their responses would be accurately recorded for thorough documentation and direct quotations. Interview responses were transcribed objectively and without bias, with clarifications provided when participants faced difficulties understanding questions. After the interviews, participants were allowed to review and amend their responses. Qualitative data were reviewed multiple times, and reasons were categorized and prioritized based on their frequency in the study sample.

2.1.1 Data collection tools and instruments

2.1.1.1 Semi-structured interviews

Semi-structured interviews were conducted to collect data from participants’ responses in relation to the aforementioned research questions. Interviews were considered suitable for this phenomenological research design (Adhabi and Anozie, 2017). Unlike scales, interviews provide detailed information, address information conflicts, and allow the exclusion of contradictory information. This tool was also selected to gain more depth and detail on the investigated topic (Lune and Berg, 2017). Individual interview data in this study made three major contributions: first, an initial model guided the exploration of individual opinions, while subsequent data from individual studies further enriched the conceptualization of the phenomenon; second, the individual and contextual circumstances surrounding the phenomenon, which is AI experience in this context, were identified, adding richness to the interpretation of its structure; third, the trustworthiness of the findings was enhanced by a convergence of the central characteristics of the phenomenon across individual interviews (Lambert and Loiselle, 2008). The semi-structured interviews were conducted via Zoom and, in some cases, face-to-face, based on interviewee preferences. They involved 17 college instructors who volunteered after filling out the scale. Each interview lasted 25–40 min. The interview questions were as follows:

1. What do you think about your artificial intelligence (AI) experience in your academic profession?

2. Describe the advantages of using artificial intelligence in your teaching process.

3. What did you like the most about this experience?

4. Tell me about disappointments you have had with artificial intelligence in your academic profession.

2.1.1.2 Scale respondents

This study centered on PTUK college instructors who teach in different departments. A total of 88 college instructors were randomly selected from the population of instructors at Palestine Technical University-Kadoorie (PTUK).

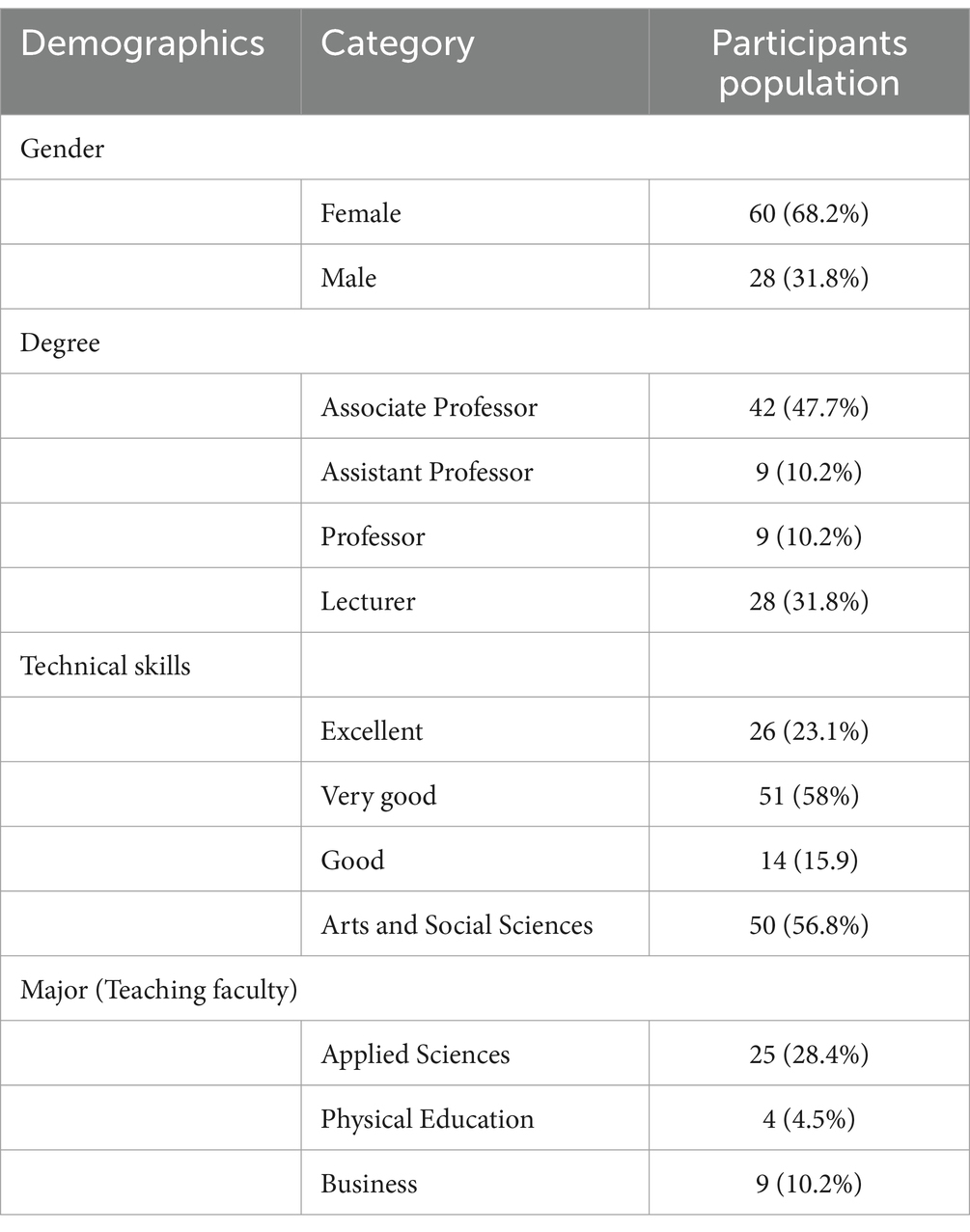

Respondents voluntarily participated in the research, and demographic details can be found in Table 1.

For RQ2, the researcher adapted a pre-existing scale of attitudes toward AI ethics to the study context (Jang et al., 2022). The first part of the scale consisted of 4 demographic items: gender, degree, technical skills, and teaching faculty. The second part explored college instructors’ attitudes toward AI ethics on a 5-point Likert scale. The original scale covered five domains of AI ethics. The first is fairness, which states that access to technology should be provided fairly and without discrimination. The second is transparency: the use of technology should be transparent, with clear explanations of how it works. The third is non-maleficence, which means technologies should be implemented in a way that is effective, safe, and does not cause harm to individuals. The fourth is responsibility, which means individuals who use technology should take responsibility for any misuse, and there should be procedures in place for individuals to challenge incorrect results. The fifth is privacy, which means personal data should be collected confidentially, and measures should be taken to ensure data security. The original scale consisted of 17 items. A sixth dimension was added: human oversight and determination, which means AI systems do not displace ultimate human responsibility and accountability, with no harm caused to human beings. The adjusted scale thus comprised 19 items across six dimensions. Exploratory factor analysis was conducted for factor extraction, and 14 items were extracted across the six dimensions.

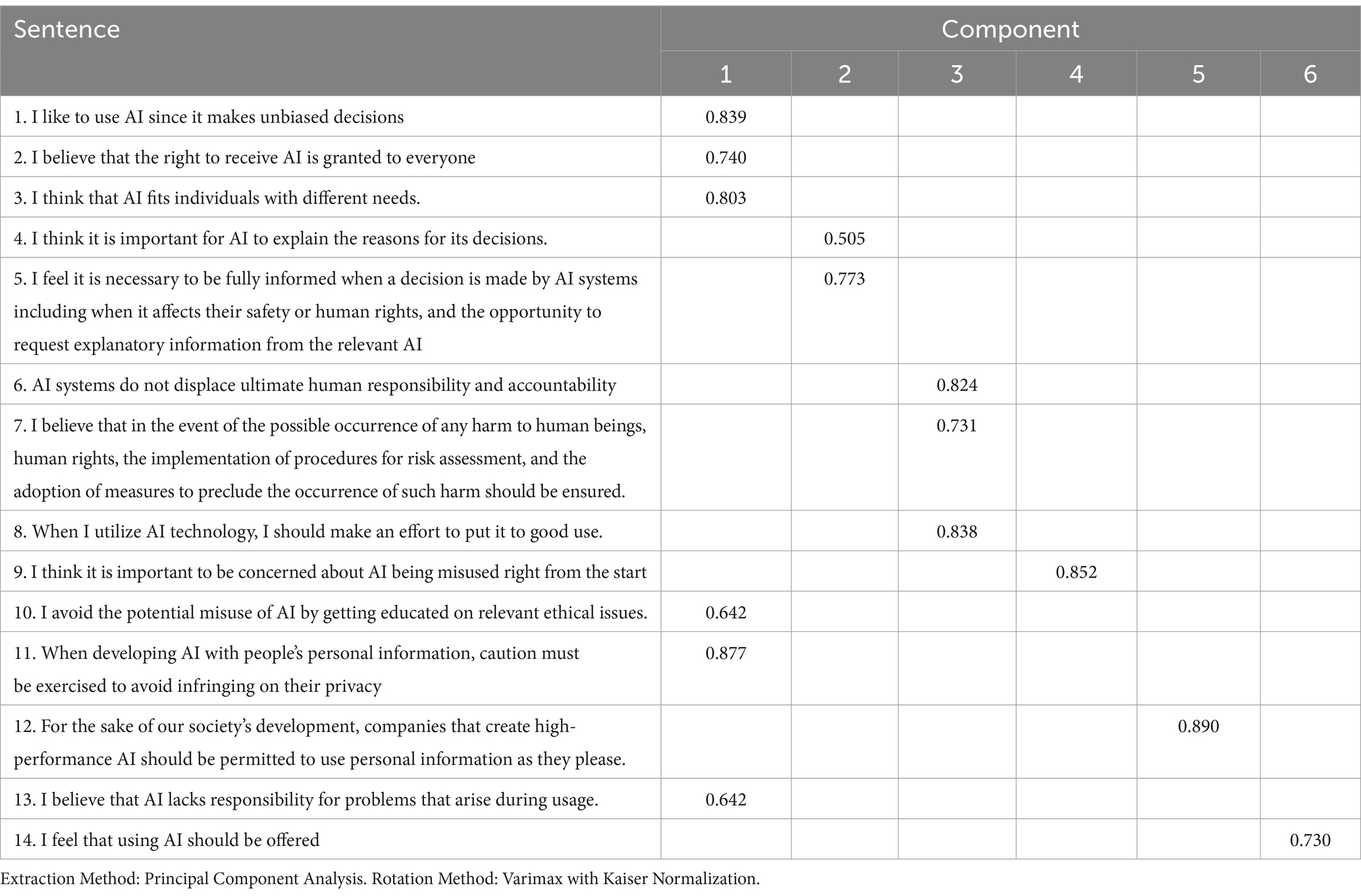

2.1.2 Validity and reliability of the scale

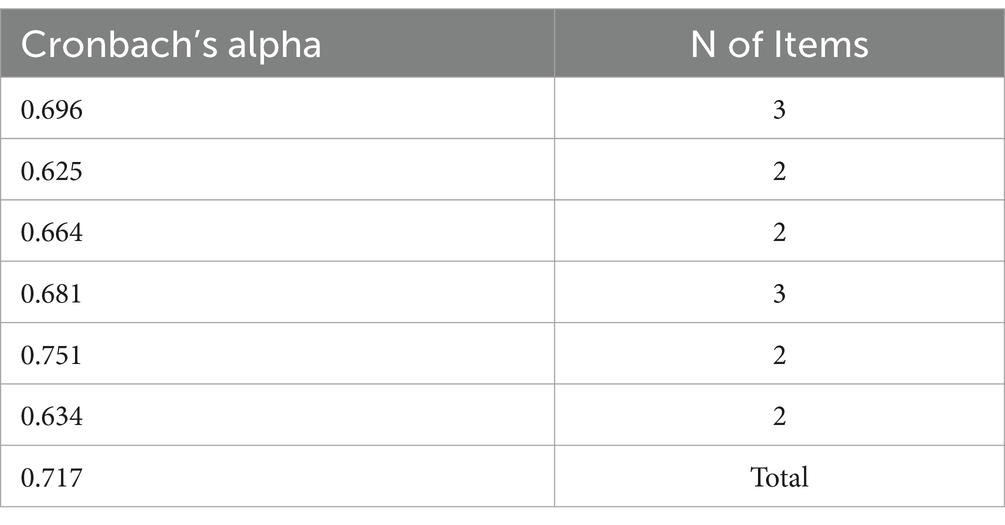

The validity of the scale was confirmed through several steps. Initially, face validity was assessed by administering the translated scale to professors specializing in educational psychology, educational technology, and curriculum and instruction. Construct validity was then evaluated using principal component analysis with Varimax rotation, following the recommendation by Wang et al. (2022). To further assess construct validity, exploratory factor analysis was conducted on the 19 attitudes towards AI ethics items of the scale, using data from a pilot study involving 60 students. Table 2 shows the results of the exploratory factor analysis. Each item on the scale needed to exhibit a correlation of at least 0.3 with another item. The Kaiser-Meyer-Olkin measure of sampling adequacy, at 0.742, surpassed the typically recommended value of 0.6, and Bartlett’s test of sphericity yielded a significant result (χ² = 776.381, p < 0.001), indicating that the data were suitable for factor analysis (Cheng and Shao, 2022). Using principal component analysis (PCA), as shown in Table 3, the items were divided into six factors, and the component loadings in the table were used to name each factor.
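
For readers less familiar with the two adequacy checks reported here, their standard textbook forms are sketched below. The notation is an assumption introduced for illustration and does not come from the study: n is the number of respondents, p the number of items, R the item correlation matrix with entries r_ij, and a_ij the partial correlations between items. The study does not report the degrees of freedom or the software used.

```latex
% Bartlett's test of sphericity (H0: R is an identity matrix)
\chi^2 = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R\rvert,
\qquad
df = \frac{p(p-1)}{2}

% Kaiser-Meyer-Olkin measure of sampling adequacy
\mathrm{KMO} = \frac{\sum_{i \neq j} r_{ij}^{2}}
                    {\sum_{i \neq j} r_{ij}^{2} + \sum_{i \neq j} a_{ij}^{2}}
```

Under the assumption that all p = 19 items entered the analysis, the test would have 19 × 18 / 2 = 171 degrees of freedom. A KMO value close to 1 indicates that partial correlations are small relative to the raw correlations, which is why values above 0.6 are commonly treated as adequate.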

To ascertain the reliability of the scale, Cronbach’s alpha was computed for each of the six dimensions of the scale, as shown in Table 3, to assess internal consistency. Specifically, Cronbach’s alpha values were 0.696 for fairness items, 0.625 for transparency items, 0.664 for human oversight and determination items, 0.681 for non-maleficence items, 0.751 for privacy items, and 0.634 for responsibility items. These findings indicate an acceptable level of internal consistency for the overall scale, with a Cronbach’s alpha of 0.717, as presented in Alnahdi (2020).
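
As an illustration of how a reliability coefficient of this kind is obtained, the following is a minimal sketch of Cronbach’s alpha using only the Python standard library. The function name `cronbach_alpha` and the response matrix are hypothetical and introduced here for illustration; they are not the study’s data or the software the researcher used.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    responses: list of rows, one per respondent, each a list of k item scores.
    Uses sample variances (n - 1 denominator) throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Transpose rows (respondents) into columns (items).
    items = list(zip(*responses))
    item_variance_sum = sum(variance(col) for col in items)
    # Variance of each respondent's total score across the k items.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 5-point Likert responses: 5 respondents x 3 items.
hypothetical_responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(hypothetical_responses)
print(f"Cronbach's alpha = {alpha:.3f}")
```

In practice the same calculation would be run once per dimension (on that dimension’s items only) and once on all retained items to obtain the overall coefficient.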

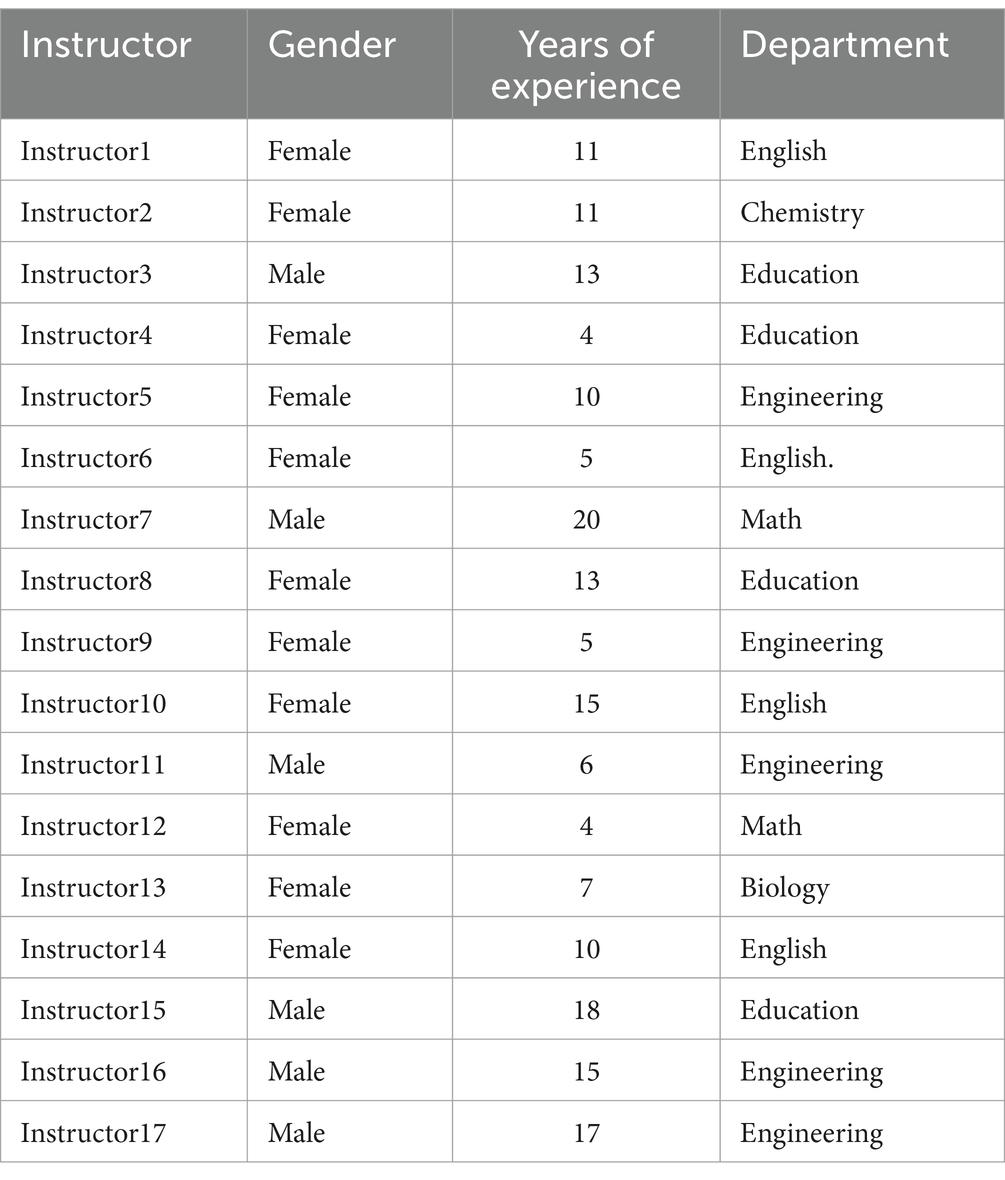

2.1.2.1 Semi-structured interviewees

The primary aim of this study was to investigate the experiences of PTUK college instructors who use AI in their teaching practices. Consequently, participants for the qualitative phase were selected purposefully from this group following their experience with AI. Creswell and Clark (2017) suggest a phenomenological study should involve 5–25 participants, while other sources propose a range of 6–20 participants for such research. Following Creswell and Clark’s recommendation, this study included 17 college instructors who actively participated in this phenomenological investigation. All participants possessed a minimum of 5 years of teaching experience; however, they differed in their prior exposure to educational tools based on AI technology. The background details of the participating educators can be found in Table 4.

The interview participants were briefed on the study’s objectives and the importance of their contributions, with assurances of confidentiality and exclusive use for research purposes. Gratitude was expressed for their participation, and a conducive interview environment was provided. Respectful and comfortable interaction prevailed between the researcher and participants. Participants were notified of the accurate recording of their responses for thorough documentation and direct quotations.

Objective transcription of participant responses without bias was ensured. Clarifications were offered when participants encountered question difficulties. Participants had the opportunity to review and amend their responses. Qualitative data underwent multiple reviews, with reasons categorized and prioritized based on frequency within the sample.

Four validation criteria were employed to ensure the trustworthiness of the qualitative data: credibility, dependability, transferability, and confirmability. Responses from the semi-structured interviews were cross-referenced with additional field experts during the coding process, achieving an 85% agreement rate, which surpasses the acceptable threshold of 80%. Credibility was further ensured by cross-checking codes and outcomes, verifying original data, and confirming the absence of discrepancies in the coding system. Triangulation, involving various research tools such as interviews and focus group discussions, was employed to enhance credibility. Dependability was addressed through a code-recode strategy, in which the same dataset was independently coded twice, with a two-week interval between coding instances, and the outcomes of the two coding rounds were compared for similarity or disparity. Additionally, a stepwise replication approach involved independent analysis by both researchers, who then compared their respective results. For transferability, purposive sampling based on specific criteria was used. Confirmability was maintained by meticulously documenting the researchers’ procedures and deriving conclusions from participant narratives and language without researcher biases. A double-check process was carried out consistently throughout the study to verify data accuracy.
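
The agreement rate reported for coding can be illustrated with a simple percent-agreement calculation. The sketch below assumes that agreement was computed as the share of segments given the same code by two coders (or in two coding rounds); the study does not state the exact formula, and the function name, threshold constant, and coded segments are hypothetical.

```python
ACCEPTABLE_THRESHOLD = 80.0  # percent, the threshold cited in the text


def percent_agreement(codes_a, codes_b):
    """Share of segments (in percent) assigned the same code in both lists."""
    if len(codes_a) != len(codes_b):
        raise ValueError("both coders must code the same segments")
    if not codes_a:
        raise ValueError("no coded segments provided")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Hypothetical codes for 20 interview segments from two coders.
coder_a = ["productivity"] * 8 + ["accessibility"] * 6 + ["multimodal"] * 6
coder_b = list(coder_a)
# Introduce three hypothetical disagreements.
coder_b[0] = "accessibility"
coder_b[9] = "multimodal"
coder_b[15] = "productivity"

agreement = percent_agreement(coder_a, coder_b)
meets_threshold = agreement >= ACCEPTABLE_THRESHOLD
print(f"agreement = {agreement:.1f}%, acceptable = {meets_threshold}")
```

The same function applies to the code-recode strategy by passing the first-round and second-round codes of a single coder. Percent agreement does not correct for chance agreement, which is a known limitation of this simple measure.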

2.1.3 Ethical consideration

This study received ethical approval from PTUK, and informed consent was obtained from all participants. Participants’ identities were kept confidential, and their information was securely stored on a private computer accessible only to the researcher.

2.2 Data analysis

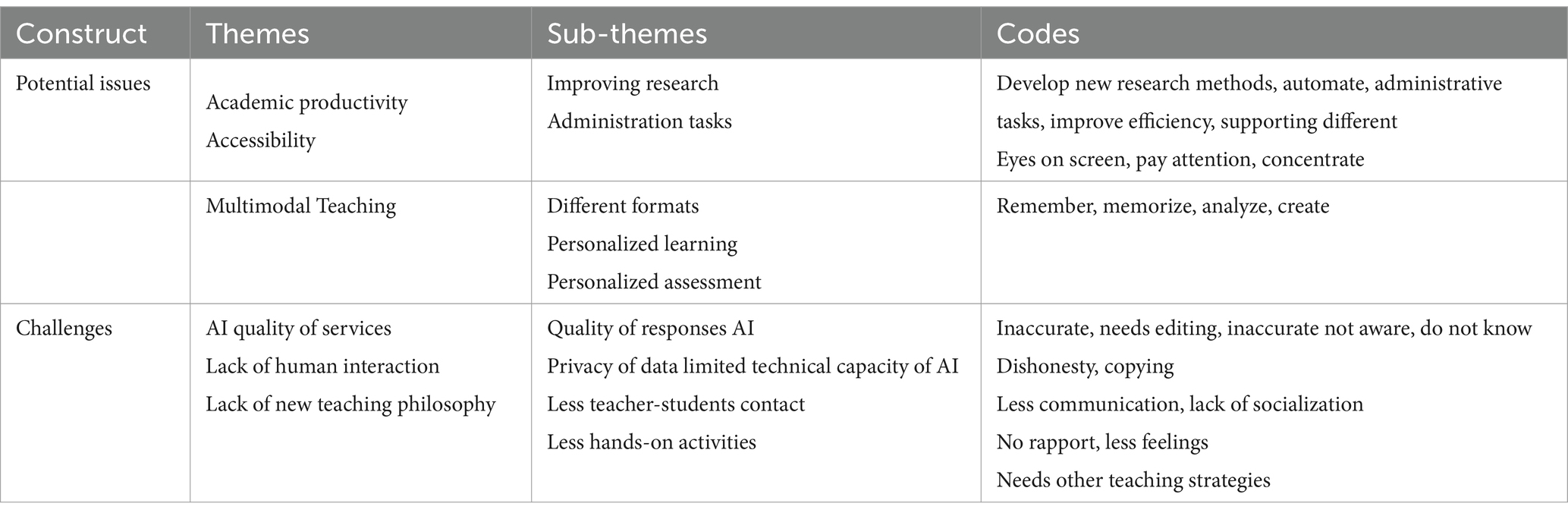

To answer the first and third questions, thematic analysis was conducted to extract themes from the interview data. Thematic analysis is a method for identifying and reporting patterns of meaning in qualitative data; it interprets textual data and converts scattered data into rich, detailed data that reveal trends and connections relevant to the research questions (Braun and Clarke, 2014). The coding process followed the steps outlined by Braun and Clarke (2014). First, the researcher read the transcribed interviews repeatedly to gain a deep understanding of the content, noting initial ideas. Second, initial codes were generated manually by working methodically through the data set and creating concise labels (codes) for significant features; these codes highlight interesting aspects of the data that may form the basis of recurring patterns (themes). Third, the codes were organized into potential themes. Fourth, the themes were reviewed to ensure that they worked in relation to both the coded extracts and the entire data set, which involved splitting, combining, or discarding initial themes so that they accurately reflected the data. Fifth, the themes were defined and named, as shown in Table 5. Throughout the process, a flexible approach was maintained, with earlier steps revisited as necessary, and each step was documented to maintain transparency and rigor.
The first and third questions asked what potentials AI offers college instructors in higher education settings and what challenges they face. The identified themes and corresponding codes are documented in Table 5, which serves as a code book for reference.
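The step of organizing codes into themes and tallying how often each theme occurs can be sketched in a few lines. This is a minimal illustration rather than the procedure used in the study, where coding was done manually: the code-to-theme mapping borrows the theme and subtheme names reported in the results sections, while the name `CODE_BOOK`, the function `theme_frequencies`, and the list of coded segments are hypothetical.

```python
from collections import Counter

# Illustrative code book: each code (subtheme) maps to its parent theme.
CODE_BOOK = {
    "improving research": "academic productivity",
    "administration tasks": "academic productivity",
    "enhancing personalized learning": "multimodal teaching",
    "instructional support": "multimodal teaching",
    "response quality": "AI service quality",
    "AI data privacy": "AI service quality",
    "limited technical capacity": "AI service quality",
}


def theme_frequencies(coded_segments):
    """Count how many coded segments fall under each theme, most frequent first."""
    counts = Counter()
    for code in coded_segments:
        # A KeyError here means the segment carries a code missing from the code book.
        counts[CODE_BOOK[code]] += 1
    return counts.most_common()


# Hypothetical codes assigned to five interview segments.
segments = [
    "improving research",
    "response quality",
    "administration tasks",
    "instructional support",
    "improving research",
]

for theme, count in theme_frequencies(segments):
    print(f"{theme}: {count}")
```

Keeping the code book as an explicit mapping makes the review step easier to audit: splitting or merging a theme becomes a change to the mapping, and the tallies can be regenerated from the same coded segments.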

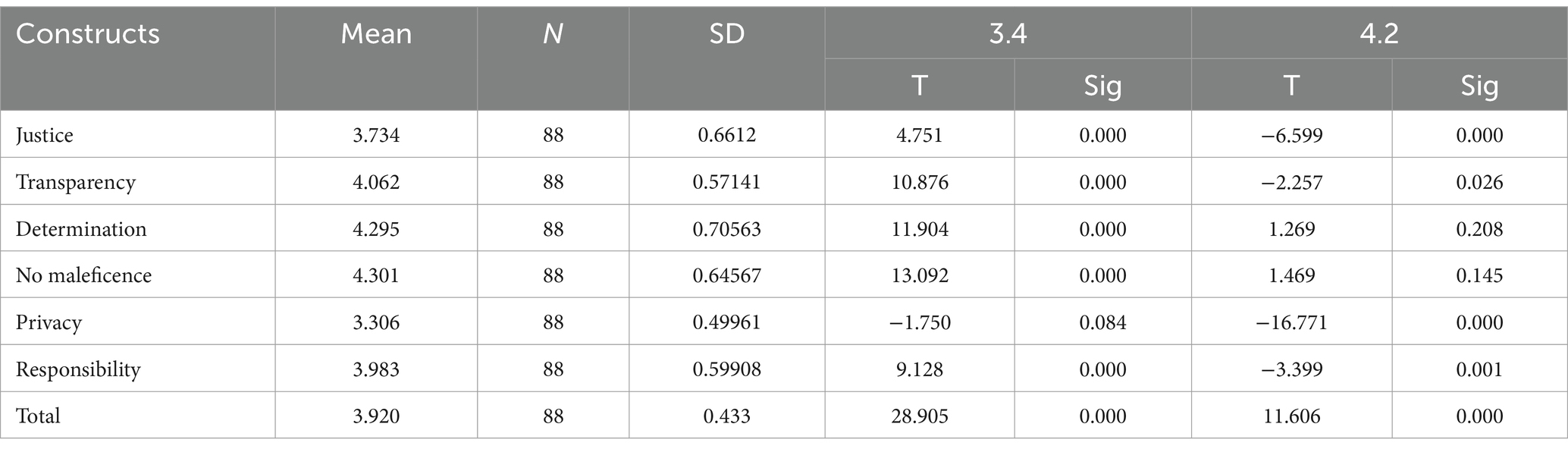

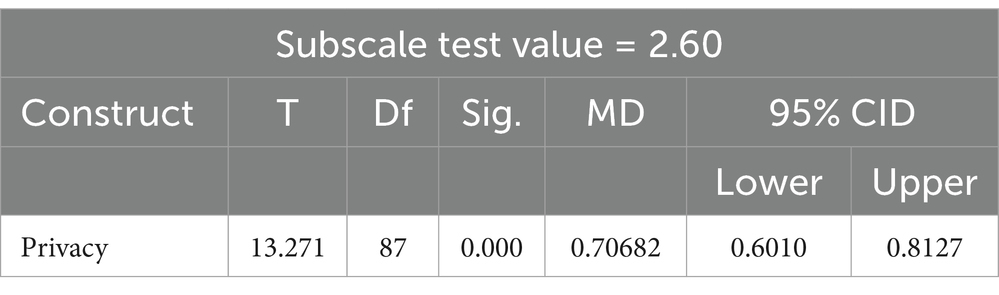

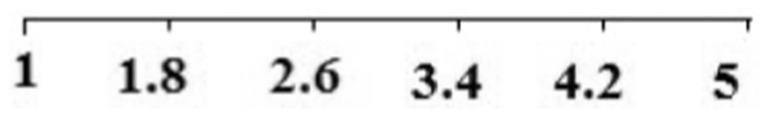

For the second question, regarding attitudes towards ethical use, SPSS 23 was used. Means and standard deviations were calculated, and a one-sample t-test was used to assess attitudes toward the ethical use of AI. Mean attitude scores were computed for each dimension and then compared with a scale formulated by Daher (2019). On this scale, scores from 0.8 to 1.8 are classified as “very weak attitudes scores,” scores from 1.8 to 2.6 as “weak attitudes scores,” scores from 2.6 to 3.4 as “moderate attitudes scores,” scores from 3.4 to 4.2 as “strong attitudes scores,” and scores from 4.2 to 5 as “very strong attitudes scores.” The one-sample t-test compared the mean scores against predetermined benchmarks taken from Daher’s scale, as depicted in Figure 1.

Figure 1. Interpretation scale of the mean scores (Daher, 2019, p. 5).
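The interpretation scale and the one-sample t-test described above can be sketched as follows. The analysis in the study was run in SPSS 23; this standard-library Python version is only an illustration. The function names `classify_mean` and `one_sample_t` are hypothetical, the sample scores are invented, and assigning a boundary value (for example, exactly 3.4) to the lower band is an assumption, since the text does not say how boundary scores are handled.

```python
import math
import statistics

# Upper bound of each band on the interpretation scale (Daher, 2019),
# paired with its label. Boundary values fall into the lower band (assumption).
BANDS = [
    (1.8, "very weak"),
    (2.6, "weak"),
    (3.4, "moderate"),
    (4.2, "strong"),
    (5.0, "very strong"),
]


def classify_mean(mean_score):
    """Map a mean attitude score to its label on the interpretation scale."""
    for upper_bound, label in BANDS:
        if mean_score <= upper_bound:
            return label
    raise ValueError("score lies above the top of the 5-point scale")


def one_sample_t(scores, test_value):
    """Return (t, degrees of freedom) for a one-sample t-test against test_value."""
    n = len(scores)
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation (n - 1 denominator)
    t = (mean - test_value) / (sd / math.sqrt(n))
    return t, n - 1


# Invented scores for one subscale, tested against the benchmark of 3.4.
sample = [3.0, 3.5, 4.0, 4.5, 5.0]
t_value, df = one_sample_t(sample, 3.4)
print(f"t({df}) = {t_value:.3f}, band: {classify_mean(statistics.mean(sample))}")
```

A positive t indicates a sample mean above the test value and a negative t a mean below it, which is how the comparisons against 3.4 and 4.2 are read in the results. Obtaining the p-value requires the t distribution, for example via `scipy.stats.ttest_1samp`, if SciPy is available.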

3 Results for the first question

3.1 Academic productivity

Many respondents emphasized that AI tools like ChatGPT have increased their academic productivity by improving their research and administration.

3.1.1 Improving research

Instructor 3 stated, “I started to focus on the methodology aspect of my research, concentrating on elements such as research design, tool development, and in-depth data analysis by using ChatGPT.”

It seems that this approach aims to derive robust theoretical and practical implications, thereby bringing about a transformative impact on scientific research in the age of AI.

3.1.2 Administration tasks

Many respondents reported that AI applications support their administrative tasks, such as planning, improving communication, grading and assessment, and transcribing video meetings. Instructor 1 mentioned that “several existing AI applications like Turnitin helped me to check the authenticity of essays submitted by students in language arts courses.”

3.2 Accessibility

AI systems, such as ChatGPT, hold the potential to improve digital inclusivity by enabling natural language interactions for individuals with disabilities. According to Instructor 6, ChatGPT could be instrumental in implementing features like converting text to speech, thereby enhancing information accessibility for individuals with visual impairments.

3.3 Multimodal teaching

AI technologies facilitate multimodal learning experiences by integrating various formats such as text, audio, video, and interactive components.

3.3.1 Enhancing personalized learning

Artificial intelligence in education is accessible to both educators and students, and it aids teachers in customizing courses to meet individual needs. It deepens understanding of technological advancements and provides students with alternative paths tailored to their requirements. As mentioned by Instructor 15, AI can emulate human abilities, including language translation, speech-to-text conversion, text-to-speech synthesis, human-computer dialogue, and visual information processing through computer vision for image and text recognition. AI also contributes significantly to learning analytics, which involves analyzing students’ knowledge and learning engagement strategies. This analysis allows instructors to create effective personalized learning pathways, while decision support systems use predictive analytics to forecast future student outcomes and present the information visually through graphs and tables. Educators can also leverage AI in education to assess specific students’ achievements.

Conversely, personalized learning systems focus on analyzing students’ mastery of skills, offering tailored educational activities, and promoting self-paced learning as students progress toward their learning objectives. Personalized learning establishes a robust foundation for continuous growth and development.

3.3.2 Instructional support

AI tools can assist and support teaching within the educational context by providing teachers with suggested lesson plans, games, instructional resources, and assignments. According to Instructor 5, AI chatbots, in particular, are valuable tools that save time and effort, offering a variety of educational games for the Chemistry classes they teach.

4 Results for the third question: challenges college instructors face when using AI

4.1 AI service quality

4.1.1 Response quality

The successful integration of AI, such as Chatbots, in educational settings heavily relies on the quality of their responses. In this study, a majority of participants found ChatGPT’s conversation quality and information accuracy to be satisfactory. One instructor pointed out occasional inaccuracies and limited data availability, while another acknowledged the general dependability of ChatGPT’s responses but noted instances of misleading information, emphasizing the need for continuous refinement. An IT instructor provided an example of incorrect code generation, highlighting occasional errors. Despite this, participants recognized ChatGPT as an efficient virtual assistant for knowledge and product creation, though some mentioned the need to rely on personal experience in certain situations.

Some participants emphasized that the accuracy of ChatGPT’s responses is contingent upon the precision of user queries. Questions that are too recent or inadequately specific may result in less informative answers due to ChatGPT’s limited context.

4.1.2 AI data privacy

Ensuring the privacy of user data is crucial to prevent the misuse of AI systems for manipulation or biased treatment. AI systems using personal data for decision-making should prioritize transparency and responsibility in handling this information. An instructor expressed concern about big data and its privacy implications, underscoring the importance of data management in AI tool design. Misuse or inadequate design of AI systems could impact students’ ethical guidelines. Instructors emphasized the role of AI education in instilling ethical considerations in students. They advocated for including AI ethics in the curriculum to help students make informed decisions when using AI.

4.1.3 Limited technical capacity

Instructors incorporating AI tools in education noticed the limited technical capacity of AI. Participants explained that AI is sometimes unable to handle specific tasks, such as processing graphics or images together with text. Instructor 4 mentioned, “AI assessment tools sometimes failed to assess the texts when the images are included.”

4.2 Lack of human interaction

While many participants acknowledged the potential benefits of AI in enhancing teaching and learning, some expressed concerns about reduced instructor-student interaction. They foresaw challenges in the psychological and social aspects of teaching, suggesting that the introduction of AI should be balanced to maintain a human touch in education.

4.3 Need for teaching philosophy adaptation

AI is undeniably reshaping education, requiring educators to enhance their skills and approaches to align with technological demands. In the future, using AI for essay writing will not pose a significant challenge for students, even those lacking prior knowledge of a particular subject.

Consequently, teachers are urged to reconsider their teaching philosophies to evaluate their students effectively, for example by adopting methods like oral debates to assess students’ logical and critical thinking.

An engineering instructor added that AI minimizes the experiential learning that electrical engineering requires. In this sense, AI could be utilized to give students access to simulations and VR settings that are both realistic and interesting to them.

Moreover, as expressed by one of the interviewees, “Various applications utilizing generative AI can stimulate novel thought processes in knowledge processing. In learning environments with conversational AI, teachers may aim to facilitate students’ construction of mental frameworks from information fragments, fostering critical thinking that judiciously assesses the quality of information derived from AI. Given the recent emergence of teachers’ guides for AI, there will likely be a growing necessity to overhaul traditional lecture-based classroom setups.”

5 Results for the second question: what are college instructors’ attitudes towards the ethical use of AI in their teaching process?

To answer the second question, the subscale means, the overall attitude towards AI ethics, and one-sample t-tests were calculated, as shown in Tables 6, 7.

Tables 6, 7 show that the means of the six subscales of AI ethics range between 3.306 and 4.295 and that the total mean score is 3.920, which is considered high. The subscale means are as follows: determination (M = 4.295), no maleficence (M = 4.301), transparency (M = 4.062), justice (M = 3.734), responsibility (M = 3.983), and privacy (M = 3.306). Instructors’ attitudes appear to be highest on the determination subscale and lowest on the privacy subscale.

To test whether the sample means differed significantly from the comparison values, a one-sample t-test was conducted. The results indicate statistically significant differences at the 0.05 significance level between the subscale means and the test value of 4.2, the threshold for very strong attitudes, for justice, transparency, privacy, and responsibility.

The value of t was statistically significant and negative, which indicates that attitudes towards the ethical use of AI from the point of view of college instructors at PTUK were below 4.2 in four constructs: justice, transparency, privacy, and responsibility. By contrast, the value of t was statistically significant and positive for all constructs except privacy, which indicates that attitudes towards the ethical use of AI from the point of view of college instructors at PTUK were above 3.4.

Also, with the test value of 3.4, there are statistically significant differences at the 0.05 significance level for the following constructs: justice, transparency, determination and no maleficence, and responsibility. This indicates a moderate attitude in these subscales. Unlike privacy, all other subscales range between moderate and high.

For the privacy subscale, there was a statistically significant difference (p < 0.005) between the mean score and the test value of 2.6, which indicates that attitudes towards the ethical use of AI with respect to privacy are weak. The overall score of attitudes towards the ethical use of AI is considered high.

6 Discussion

RQ1: What potentials does AI offer college instructors in higher education settings? The analysis of respondents’ answers about their AI use pointed to several advantages of AI, and the thematic coding revealed three categories of these advantages. Most of the study participants were experienced college instructors who use contemporary technologies to support their teaching practice, such as Moodle learning management systems to plan their courses and to communicate with their students. On the other hand, they have basic knowledge of using AI in their academic tasks.

Results revealed that college instructors at PTUK use AI applications as a supportive tool for academic productivity. They mentioned how AI tools help them to focus more on the methodology part of their research and save time with AI-generated texts in other parts. These results align with recent studies advocating the use of AI tools in research (Khlaif et al., 2023), which indicate that generative AI tools, as an instance of AI-generated content, can help researchers conduct data analysis. Participants in this study also viewed AI as a tool to aid them in accessing educational resources, arranging their lessons based on content and timing, and assessing homework tasks. This finding is in line with Celik et al. (2022) and Chounta et al. (2022), who reported that AI assists teachers with work duties like grading homework and handling routine administrative duties such as reporting. Additionally, AI could be beneficial in helping with lesson planning, both in terms of scheduling and content, as well as in monitoring students’ progress. This concurs with the results of Igbokwe (2023), who reported that AI can support instructors in tasks such as assessment, grading, lesson planning, and feedback provision.

Moreover, college instructors mentioned that one potential of using AI in education is its accessibility to students from different disciplines, such as special education students, who use these tools to support their learning. This is in line with Jang et al. (2022), who recommended that identifiable and discriminatory biases should be eliminated when using AI. They also recommended that AI systems should be user-centric and designed so that anyone, regardless of age, gender, ability, or characteristic, can use AI products or services. For example, fairness, justice, and equality should be maintained to provide equitable learning benefits.

Additionally, it was found that college instructors find AI applications useful in supporting multimodal teaching through personalized learning and in augmenting instructors’ performance by offering instructional support, owing to their potential integration into educational settings. While the extent of this perception shift varied among participants, the most notable perceptions were observed in college instructors with less teaching experience, who are more willing to use AI in their classrooms. This finding supports a previous study (Hwang et al., 2020), which showed how AI supports and assists students according to their learning progress, preferences, or individual characteristics. These results differ from the benefits of AI usage reported by Park and Kwon (2023), such as enhanced interest in technology, career aspirations in technology, increased social impact of AI, and AI performance, which showed the largest increase.

Upon analyzing the results of participants’ responses to AI implementation in education, it was found that many college instructors seem interested in using AI in their profession. It seems that implementing AI in education offers positive experiences that influence college instructors’ perception of AI tools.

For RQ2, results revealed that the overall attitudes of college instructors at PTUK towards the ethical use of AI are strong. This finding could be explained by the trend that newer-generation instructors, who have greater exposure to educational technology, show a strong interest in using novel technological tools and incorporating them into their teaching, and are familiar with AI ethics guidelines. This finding aligns with similar research studies that highlight new teachers’ preference for implementing novel educational technology tools (Semerci and Aydin, 2018; Trujillo-Torres et al., 2020).

The ethical guidelines of fairness, transparency, and responsibility are, on average, equally important to college teachers. It appears that, since college instructors are using AI for academic purposes, their attitudes were high towards the responsibility dimension of AI, which asked whether responsibilities should be divided according to social consensus when problems involving AI occur, or whether specific groups such as developers and users should bear all responsibility. For fairness and transparency, college instructors show moderate attitudes towards the ethical use of AI. The fairness dimension encompassed elements such as accounting for diversity during data collection for AI advancement and ensuring that developed AI is accessible to all individuals without bias. The transparency dimension included factors gauging perspectives on the importance of AI explainability. College instructors seem to have moderate attitudes toward transparent AI, since AI algorithms should be understandable and defensible for particular educational objectives. These results are consistent with the finding of Jang et al. (2022), who explained that a lack of transparency might hinder teachers from effectively utilizing AI in education and promptly identifying issues with students’ behavior and learning performance.

Moreover, college instructors’ attitudes towards the human oversight and determination dimension and the non-maleficence dimension were very strong. These dimensions included items that elicited responses regarding AI systems that lack human responsibility and accountability and the importance of preventing abuse by various agencies associated with AI. This result differs from Huriye (2023), who found that bias, privacy, accountability, and transparency are the main ethical considerations facing users of AI technology.

Attitudes towards privacy were found to be weak among college instructors. AI users’ privacy has to be protected and ensured. This ethical principle is far more important than fairness, transparency, or responsibility. Since data are required when using AI, college teachers’ information becomes publicly accessible when they upload it online, and identifying who uses specific information, and for what purposes, is quite challenging. This result is in line with Borenstein and Howard (2021), who mentioned that AI contributes to privacy erosion, which is intensifying with tools that make information posted online freely available for anyone to scrub. Meanwhile, a study conducted by Wang et al. (2024) explained how generative artificial intelligence can foster users’ positive perceptions of privacy protection and security, which may enhance perceived AI ethics and increase its usage.

RQ3 asked what challenges teachers faced when using AI for education.

Three challenges emerged: AI quality of services, less human interaction, and the need for a new teaching philosophy.

One of the most observed subthemes for the AI quality of services challenges is the limited technical capacity of AI. For example, AI may not be effective for scoring graphics or figures and text. Ma et al. (2020) reported that an AI-based system failed to assess the complexity of texts when they included images.

Response quality and data privacy are other subthemes of AI quality of services. Regarding response quality, participants reported that AI responses lack trustworthiness and reliability. Furthermore, some college instructors noted instances where AI tools like ChatGPT produced random inaccuracies and lacked clarity on relevant subjects, leading them to occasionally question the reliability of the information. These participants felt that the output generated by AI tools like ChatGPT resembled an opinion lacking proper references. This finding is consistent with a recent study conducted by Kung et al. (2023), who reported that the precision of ChatGPT hovered at approximately 60%.

This highlights the necessity for a thorough evaluation of its generated content before utilization. Consequently, there is a need for further investigation into guaranteeing impartiality, precision, and fairness for students employing chatbots in a broader sense, with specific attention directed towards ChatGPT. There are also issues concerning assessments and tests: students could misuse AI-generated content to cheat on online exams and assessments by submitting AI-generated answers, which reduces originality and creativity, and college students could misapply AI-generated text to fabricate content without making the effort to formulate their own ideas.

Another subtheme that emerged is the lack of ethical consideration in AI usage, including the exposure of users’ data and unethical uses of information such as plagiarism. College instructors are also highly concerned about AI’s potential to diminish innovative and critical thinking skills. Further ethical concerns raised by participants include AI’s potential to undermine academic integrity and promote cheating, the possibility of fostering laziness among students, and the susceptibility to errors such as presenting biased or false information.

The absence of interaction among students themselves and between students and instructors is another theme reported by the participants. It appears that AI can provide information and answers, but it lacks a nuanced understanding of human emotions and needs. For example, it lacks teacher-student interactions, which results in less personalized support that takes into account individual learning styles and difficulties. This result concurs with Aguilar et al. (2021), who believed that AI can offer personalized learning experiences, allowing students to progress at their own pace, while teachers can assess outcomes in real time and create adaptive curricula.

Additionally, AI is characterized by reduced human interaction. These findings are in line with previous studies (Tlili et al., 2023) confirming that excessive reliance on AI interactions could reduce face-to-face interactions between teachers and students, which are valuable for building relationships, mentorship, and holistic development. This finding also concurs with Seo et al. (2021). This could be explained by students’ preference for the anonymity of AI tools, which makes them less afraid to ask questions.

College instructors also mentioned that the lack of an adapted teaching philosophy is another challenge. The utilization of AI in the education sector holds promise for tailoring learning experiences and expanding access to a broader array of educational resources. This has the potential to steer away from traditional teaching approaches that aim for universal applicability across all students. However, it is crucial to acknowledge the potential downsides of AI in education, including the risk of reducing the sense of personal connection and interpersonal interaction in the learning process. These results differ from those reported by Lérias et al. (2024) and Salhab (2024), who found that AI literacy is a challenge facing college instructors, whether in implementing teaching practices or in an AI-integrated curriculum.

Additionally, concerns arise about potential biases in AI-driven educational resources and disparities in access to these resources (Lee, 2023). Also, findings related to AI ethics show that educators should integrate AI ethics into their teachings. Specifically, these findings can inform the design of AI ethics curricula and educational resources. Through this study, six essential dimensions of AI ethics were used to gauge college instructors’ attitudes toward these dimensions. Consequently, educators can tailor their AI ethics curriculum to align with these identified dimensions.

6.1 Conclusions, recommendations, and implications

The increasing interest in the utilization of AI has led to a rise in research focusing on instructors’ integration of AI into their practices in recent years. However, there remains a need for further exploration to gain a deeper understanding of how college instructors utilize AI and to explore their attitudes toward its ethical use and the challenges they face. As AI becomes more prevalent in educational settings, it is expected that more research will delve into its integration into teaching practices.

As highlighted in the discussion, college instructors are concerned about ethical issues, mainly privacy. A crucial element in addressing these challenges is helping developers realize that the technology they are creating is inherently linked to ethical considerations. Developers must understand their significant role and responsibility in addressing these ethical aspects.

Additionally, this study identified a gap concerning the limited diversity of methods and data sources utilized in AI-based systems within education. Current AI applications in education predominantly rely on self-reported and observational data, neglecting the potential benefits of incorporating multimodal data sources.

Integrating diverse data types could provide deeper insights into teaching and learning processes, enabling teachers to devise more effective interventions, offer timely feedback, and conduct more accurate assessments of students’ cognitive and emotional states.

Furthermore, this study revealed that teachers have minimal interaction with their students while using AI. The development of AI systems is crucial, beyond mere algorithm training, to ensure that these systems align with the needs and preferences of educators. Collaboration between AI developers, software companies, and teachers should be encouraged to enhance the relevance and effectiveness of AI technologies in educational settings.

Overall, this study indicates that AI holds significant potential for enhancing teachers’ instructional practices, aiding in various aspects such as planning, implementation, and assessment. However, further research and collaboration are necessary to maximize the benefits of AI in education and ensure its seamless integration into teaching practices.

6.2 Implications

AI is transforming our lives in ways that are hard to predict and comprehend. To guide this technology in a more socially responsible direction, it is crucial to focus on AI ethics education. The computing community must integrate ethics as a fundamental aspect of its identity. Practically, this is also important as job opportunities in AI ethics are beginning to arise.

The results of this study support utilizing AI in education in various ways and encourage college instructors to integrate AI further into their classrooms. They also provide a knowledge base for instructors to design interactive activities with effective learning strategies that create a meaningful learning environment while using AI. The study also provides a launching point for taking AI ethics more seriously rather than treating it as an exercise. A pathway towards increasing that likelihood is making sure that ethics has a central place in AI educational efforts.

The findings of this study can be applied to educators and educational institutions that wish to teach students about AI ethics by guiding the development of AI ethics curricula and educational materials.

Finally, given the positive results of this experience, policymakers may move forward in decision-making regarding the process of integrating AI into traditional classroom teaching.

6.3 Limitations and future work

While the study had a mixed design, the ability to generalize the results may be constrained due to the voluntary sampling technique employed (Tiit, 2021). Moreover, this investigation identified various limitations and obstacles associated with the use of AI by teachers, including its limited reliability, technical capabilities, and adaptability across different educational settings. Addressing these challenges necessitates further empirical inquiry. The study’s findings suggest that the development of AI systems capable of significantly enhancing education quality across diverse learning environments remains an ongoing endeavor. Achieving this goal requires collaborative efforts across multiple disciplines, involving stakeholders such as AI developers, pedagogical experts, teachers, and students.

Another limitation arises from the dataset being specific to a particular country. This is supported by the findings of the Moral Machine Experiment, which highlight the significant influence of national and cultural factors on AI ethics (Awad et al., 2018), thus potentially limiting the generalizability of the results. Future research could explore the factors impacting students’ attitudes toward AI ethics, and further investigations could delve into understanding the factors contributing to gender disparities in these attitudes.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

RS: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Palestine Technical University-Kadoorie has supported the publication of this article.

Acknowledgments

Thanks to Palestine Technical University-Kadoorie for supporting this study.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author declares that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdurahman, A., Marzuki, K., Yahya, M. D., Asfahani, A., Pratiwi, E. A., and Adam, K. A. (2023). The effect of smartphone use and parenting style on the honest character and responsibility of elementary school students. Jurnal Prima Edukasia 11, 247–257. doi: 10.21831/jpe.v11i2.60987

Adhabi, E., and Anozie, C. (2017). Literature review for the type of interview in qualitative research. Int. J. Educ. 9, 86–97. doi: 10.5296/ije.v9i3.11483

Adov, L., Pedaste, M., Leijen, Ä., and Rannikmäe, M. (2020). Does it have to be easy, and useful, or do we need something else? STEM teachers’ attitudes towards mobile device use in teaching. J. Technol. Pedag. 29, 511–526. doi: 10.1080/1475939X.2020.1785928

Aguilar, J., Garces-Jimenez, A., R-moreno, M., and García, R. (2021). A systematic literature review on the use of artificial intelligence in energy self-management in smart buildings. Renew. Sustain. Energy Rev. 151:111530. doi: 10.1016/j.rser.2021.111530

Ali, H., and Aysan, A. F. (2025). Ethical dimensions of generative AI: a cross-domain analysis using machine learning structural topic modeling. Int. J. Ethics Syst. 41, 3–34. doi: 10.1108/IJOES-04-2024-0112

Alnahdi, G. H. (2020). The construct validity of the Arabic version of the Chedoke-McMaster attitudes towards children with handicaps scale. Cogent Educ. 10:1745540. doi: 10.3389/fpsyg.2019.02924, PMCID: PMC6992574

Alshorman, S. M. (2024). The readiness to use AI in teaching science: science teachers’ perspective. J. Balt. Sci. Educ. 23, 432–448. doi: 10.33225/jbse/24.23.432

Asfahani, A., El-Farra, S. A., and Iqbal, K. (2023). International benchmarking of teacher training programs: lessons learned from diverse education systems. EDUJAVARE: Int. J. Educ. Res. 4, 65–75. doi: 10.37680/absorbent_mind.v4i1.4904

Awad, E., Dsouza, S., Kim, R., Schulz, J., Henrich, J., Shariff, A., et al. (2018). The moral machine experiment. Nature 563, 59–64. doi: 10.1038/s41586-018-0637-6

Borenstein, J., and Howard, A. (2021). Emerging challenges in AI and the need for AI ethics education. AI Ethics 1, 61–65. doi: 10.1007/s43681-020-00002-7

Braun, V., and Clarke, V. (2014). What can “thematic analysis” offer health and well-being researchers? Int. J. Qual. Stud. Health Well Being 9:26152. doi: 10.3402/qhw.v9.26152

Celik, I., Dindar, M., Muukkonen, H., and Järvelä, S. (2022). The promises and challenges of artificial intelligence for teachers: A systematic review of research. TechTrends 66, 616–630. doi: 10.1007/s11528-022-00715-y

Chen, K., Tallant, A. C., and Selig, I. (2025). Exploring generative AI literacy in higher education: student adoption, interaction, evaluation and ethical perceptions. Inf. Learn. Sci. 126, 132–148. doi: 10.1108/ils-10-2023-0160

Cheng, G., and Shao, Y. (2022). Influencing factors of accounting practitioners’ acceptance of mobile learning. Int. J. Emerg. Technol. Learn. 17, 90–101. doi: 10.3991/ijet.v17i01.28465

Cheng, Y., Zhao, C., Neupane, P., Benjamin, B., Wang, J., and Zhang, T. (2023). Applicability and trend of the artificial intelligence (AI) on bioenergy research between 1991–2021: A bibliometric analysis. Energies 16:1235. doi: 10.3390/en16031235

Chien, S.-Y., and Hwang, G.-J. (2023). A research proposal for an AI chatbot as a virtual patient agent to improve nursing students’ clinical inquiry skills. ICAIE 2023, 13.

Chocarro, R., Cortiñas, M., and Marcos-Matás, G. (2023). Teachers’ attitudes towards chatbots in education: a technology acceptance model approach considering the effect of social language, bot proactiveness, and users’ characteristics. Educ. Stud. 49, 295–313. doi: 10.1080/03055698.2020.1850426

Choi, J. I., Yang, E., and Goo, E. H. (2024). The effects of an ethics education program on artificial intelligence among middle school students: analysis of perception and attitude changes. Appl. Sci. 14:1588. doi: 10.3390/app14041588

Chounta, I. A., Bardone, E., Raudsep, A., and Pedaste, M. (2022). Exploring teachers’ perceptions of artificial intelligence as a tool to support their practice in Estonian K-12 education. Int. J. Artif. Intell. Educ. 32, 725–755. doi: 10.1007/s40593-021-00243-5

Creswell, J. W., and Clark, V. L. P. (2017). Designing and conducting mixed methods research. Thousand Oaks, CA: Sage Publications.

Crompton, H., Jones, M. V., and Burke, D. (2024). Affordances and challenges of artificial intelligence in K-12 education: A systematic review. J. Res. Technol. Educ. 56, 248–268. doi: 10.1080/15391523.2022.2121344

Daher, W. (2019). Assessing students’ perceptions of democratic practices in the mathematics classroom. In Eleventh Congress of the European Society for Research in Mathematics Education (no. 6). Freudenthal Group; Freudenthal Institute; ERME. hal-02421242. Available online at: https://staff.najah.edu/media/conference/2020/06/18/Assessing_students_perceptions_of_democratic_practices.pdf

Dignum, V. (2018). Ethics in artificial intelligence: introduction to the special issue. Ethics Inf. Technol. 20, 1–3. doi: 10.1007/s10676-018-9450-z

Duran, V. (2024). Analyzing teacher candidates’ arguments on AI integration in education via different chatbots. Digit. Educ. Rev. 45, 68–83. doi: 10.1344/der.2024.45.68-83

Dwivedi, Y. K., Hughes, L., Ismagilova, E., Aarts, G., Coombs, C., Crick, T., et al. (2021). Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 57:101994.

El-Dahshan, G. A. J. E. J. (2019). Developing teacher preparation programs to cope with the requirements of the Fourth Industrial Revolution. Suhag Education. 68, 3153–3199. doi: 10.21608/EDUSOHAG.2019.90237

Ellore, V. P. K., Mohammed, M., Taranath, M., Ramagoni, N. K., Kumar, V., and Gunjalli, G. (2015). Children and parent’s attitude and preferences of dentist’s attire in pediatric dental practice. Int. J. Clin. Pediatr. Dent. 8, 102–107. doi: 10.5005/jp-journals-10005-1293, PMCID: PMC4562041

Ferikoğlu, D., and Akgün, E. (2022). An investigation of teachers’ artificial intelligence awareness: a scale development study. Malays. Online J. Educ. Technol. 10, 215–231. doi: 10.52380/mojet.2022.10.3.407

Gignac, G. E., and Szodorai, E. T. (2024). Defining intelligence: bridging the gap between human and artificial perspectives. Intelligence 104:101832. doi: 10.1016/j.intell.2024.101832

Greenstein, S. (2022). Preserving the rule of law in the era of artificial intelligence (AI). Artif. Intell. Law 30, 291–323.

Grzybowski, A., Jin, K., and Wu, H. (2024). Challenges of artificial intelligence in medicine and dermatology. Clin. Dermatol. 42, 210–215. doi: 10.1016/j.clindermatol.2023.12.013

Hiremath, G., Hajare, A., Bhosale, P., Nanaware, R., and Wagh, K. S. (2018). Chatbot for education system. Int. J. Adv. Res. Ideas Innov. Technol. 4, 37–43. Available online at: https://docs.metea.org.uk/lib/QS6AWFUA

Holmes, W., Porayska-Pomsta, K., Holstein, K., Sutherland, E., Baker, T., Shum, S. B., et al. (2021). Ethics of AI in education: Towards a community-wide framework. Int. J. Artif. Intell. Educ. 32, 504–526. doi: 10.1007/s40593-021-00239-1

Huriye, A. Z. (2023). The ethics of artificial intelligence: examining the ethical considerations surrounding the development and use of AI. Am. J. Technol. 2, 37–45. doi: 10.58425/ajt.v2i1.142

Hwang, G. J., Xie, H., Wah, B. W., and Gašević, D. (2020). Vision, challenges, roles, and research issues of artificial intelligence in education. Comput. Educ. Artif. Intell. 1:100001. doi: 10.1016/j.caeai.2020.100001

Igbokwe, I. C. (2023). Application of artificial intelligence (AI) in educational management. Int. J. Sci. Res. Publ. 13, 300–307. doi: 10.29322/IJSRP.13.03.2023.p13536

Ikedinachi, A. P., Misra, S., Assibong, P. A., Olu-Owolabi, E. F., Maskeliūnas, R., and Damasevicius, R. (2019). Artificial intelligence, smart classrooms and online education in the 21st century: implications for human development. J. Cases Inf. Technol. 21, 66–79. doi: 10.4018/JCIT.2019070105

Ikkatai, Y., Hartwig, T., Takanashi, N., and Yokoyama, H. M. (2022). Octagon measurement: public attitudes toward AI ethics. Int. J. Hum.–Comput. Interact. 38, 1589–1606. doi: 10.1080/10447318.2021.2009669

Jang, Y., Choi, S., and Kim, H. (2022). Development and validation of an instrument to measure undergraduate students’ attitudes toward the ethics of artificial intelligence (AT-EAI) and analysis of its difference by gender and experience of AI education. Educ. Inf. Technol. 27, 11635–11667. doi: 10.1007/s10639-022-11086-5

Karataş, F., Eriçok, B., and Tanrikulu, L. (2024). Reshaping curriculum adaptation in the age of artificial intelligence: mapping teachers’ AI-driven curriculum adaptation patterns. Br. Educ. Res. J. 51, 154–180. doi: 10.1002/berj.4068

Karataş, F., and Yüce, E. (2024). AI and the future of teaching: preservice teachers’ reflections on the use of artificial intelligence in open and distributed learning. Int. Rev. Res. Open Distance Learn. 25, 304–325. doi: 10.19173/irrodl.v25i3.7785

Khlaif, Z. N. (2023). Ethical concerns about using AI-generated text in scientific research. doi: 10.2139/ssrn.4387984

Khlaif, Z. N., Mousa, A., Hattab, M. K. I., Tmazi, J., Hassan, A. A., Sanmugam, M., et al. (2023). The potential and concerns of using artificial intelligence in scientific research: the case of ChatGPT. JMIR Med. Educ. 9:e47049. doi: 10.2196/47049, PMCID: PMC10636627

Kim, H.-S., Kim, N. Y., and Cha, Y. (2021). Is it beneficial to use AI chatbots to improve learners’ speaking performance? J. AsiaTEFL 18, 161–178. doi: 10.18823/asiatefl.2021.18.1.10.161

Kung, T. H., Cheatham, M., Medenilla, A., Sillos, C., De Leon, L., Elepaño, C., et al. (2023). Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit. Health 2:e0000198. doi: 10.1371/journal.pdig.0000198

Lambert, S. D., and Loiselle, C. (2008). Combining individual interviews and focus groups to enhance data richness. J. Adv. Nurs. 62, 228–237. doi: 10.1111/j.1365-2648.2007.04559.x

Lee, S. E. (2023). Rather than teaching by artificial intelligence. J. Philos. Educ. 57, 553–570. doi: 10.1093/jopedu/qhad019

Lérias, E., Guerra, C., and Ferreira, P. (2024). Literacy in artificial intelligence as a challenge for teaching in higher education: A case study at Portalegre Polytechnic University. Information 15:205. doi: 10.3390/info15040205

Luckin, R., and Cukurova, M. (2019). Designing educational technologies in the age of AI: A learning sciences-driven approach. Br. J. Educ. Technol. 50, 2824–2838. doi: 10.1111/bjet.12861

Lune, H., and Berg, B. L. (2017). Qualitative research methods for the social sciences. London: Pearson.

Ma, Z. H., Hwang, W. Y., and Shih, T. K. (2020). Effects of a peer tutor recommender system (PTRS) with machine learning and automated assessment on vocational high school students’ computer application operating skills. J. Comput. Educ. 7, 435–462. doi: 10.1007/s40692-020-00162-9

Maydi, T. A., and Majed, A. (2023). Attitudes of teachers of students with learning disabilities towards training programs based on artificial intelligence. J. Posit. School Psychol. 4, 85–92. doi: 10.54536/ajet.v4i1.2455

Mhlanga (2023). Open AI in education, the responsible and ethical use of ChatGPT towards lifelong learning. doi: 10.2139/ssrn.4354422

Nazaretsky, T., Ariely, M., Cukurova, M., and Alexandron, G. (2022). Teachers’ trust in AI-powered educational technology and a professional development program to improve it. Br. J. Educ. Technol. 53, 914–931. doi: 10.1111/bjet.13232

Obidovna, D. Z. (2024). The pedagogical-psychological aspects of artificial intelligence technologies in integrative education. Int. J. Lit. Lang. 4, 13–19. doi: 10.37547/ijll/Volume04Issue03-03

Okonkwo, C. W., and Ade-Ibijola, A. (2021). Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2:100033. doi: 10.1016/j.caeai.2021.100033

Park, W., and Kwon, H. (2023). Implementing artificial intelligence education for middle school technology education in Republic of Korea. Int. J. Technol. Des. Educ. 34, 109–135. doi: 10.1007/s10798-023-09812-2

Patten, M. L., and Newhart, M. (2017). Understanding research methods: An overview of the essentials. London: Routledge.

Pressman, S. M., Borna, S., Gomez-Cabello, C. A., Haider, S. A., Haider, C., and Forte, A. J. (2024). AI and ethics: a systematic review of the ethical considerations of large language model use in surgery research. Healthcare 12:825. doi: 10.3390/healthcare12080825, PMCID: PMC11050155

Rahmatizadeh, S., Valizadeh-Haghi, S., and Dabbagh, A. (2020). The role of artificial intelligence in management of critical COVID-19 patients. J. Cell. Mol. Anesth. 5, 16–22. doi: 10.22037/jcma.v5i1.29752

Ray, P. P. (2023). ChatGPT: A comprehensive review of background, applications, key challenges, bias, ethics, limitations, and future scope. J. Internet Things Cyber-Phys. Syst. 3, 121–154. doi: 10.1016/j.iotcps.2023.04.003

Ryan, M., and Stahl, B. C. (2020). Artificial intelligence ethics guidelines for developers and users: clarifying their content and normative implications. J. Inf. Commun. Ethics Soc. 19, 61–86. doi: 10.1108/JICES-12-2019-0138

Salhab, R. (2024). AI literacy across curriculum design: investigating college Instructors’ perspectives. Online Learn. 28:n2. doi: 10.24059/olj.v28i2.4426

Sebsibe, A. S., Argaw, A. S., Bedada, T. B., and Mohammed, A. A. (2023). Swaying pedagogy: A new paradigm for mathematics teachers education in Ethiopia. Soc. Sci. Humanit. Open 8:100630. doi: 10.1016/j.ssaho.2023.100630

Semerci, A., and Aydin, M. K. (2018). Examining high school teachers’ attitudes towards ICT use in education. Int. J. Progress. Educ. 14, 93–105. doi: 10.29329/ijpe.2018.139.7

Seo, K., Tang, J., Roll, I., Fels, S., and Yoon, D. (2021). The impact of artificial intelligence on learner–instructor interaction in online learning. Int. J. Educ. Technol. High. Educ. 18:54. doi: 10.1186/s41239-021-00292-9

Sira, M. S. (2022). Artificial intelligence and its application in business management. Scientific Papers of Silesian University of Technology 165. doi: 10.29119/1641-3466.2022.165.23

Sriwijayanti, I. (2020). Christian Education in the Information of Era Openness with a Faith of Community Approach. ICCIRS 2019: Proceedings of the First International Conference on Christian and Inter Religious Studies, ICCIRS 2019, December 11-14 2019, Manado, Indonesia, 435.

Sun, Z., Anbarasan, M., and Praveen Kumar, D. (2021). Design of online intelligent English teaching platform based on artificial intelligence techniques. Comput. Intell. 37, 1166–1180. doi: 10.1111/coin.12351

Tiit, E. M. (2021). Impact of voluntary sampling on estimates. Pap. Anthropol. 30, 9–13. doi: 10.12697/poa.2021.30.2.01

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., et al. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 10:15. doi: 10.1186/s40561-023-00237-x