- 1Department of English Philology, Kuban State University, Krasnodar, Russia

- 2Department of Pedagogy of Higher Education, Kazan (Volga region) Federal University, Kazan, Russia

- 3Scientific and Educational Center “Person in Communication”, Pyatigorsk State University, Pyatigorsk, Russia

- 4Department of English for Professional Communication, Financial University under the Government of the Russian Federation, Moscow, Russia

- 5Department of Foreign Language, Peoples’ Friendship University of Russia named after Patrice Lumumba, Moscow, Russia

- 6Department of Chemistry, I.M. Sechenov First Moscow State Medical University (Sechenov University), Moscow, Russia

The rapid implementation of generative artificial intelligence (AI) in higher education has made it necessary to understand the factors that shape its acceptance among students. This study examines the influence of digital media literacy on attitudes toward generative AI acceptance in higher education. The study used a quantitative methodology with a cross-sectional survey design. The sample comprised 451 undergraduate students from Kazan Federal University. Digital media literacy was assessed across five sub-dimensions: Device Access, Content Access, Technical Understanding, Critical Understanding, and Create. Acceptance of generative AI was assessed using a scale adapted from prior studies. Structural equation modeling was used to analyze the relationships between the sub-dimensions of digital media literacy and attitudes toward accepting generative AI. The results indicated that Content Access and Create had a noteworthy positive influence on attitudes toward accepting generative AI, whereas Technical Understanding had a considerable negative effect. Device Access had a modest yet noteworthy positive effect, while Critical Understanding had no substantial effect. This study contributes to the expanding body of literature on digital media literacy and its influence on the acceptance of technology in higher education. The results underscore the significance of cultivating digital media literacy skills, particularly those related to content access and creation, to prepare students for the complexities and opportunities presented by generative AI in higher education. The study offers recommendations for educators, policymakers, and academics while acknowledging its limitations and suggesting directions for future research.

1 Introduction

According to Tala et al. (2024), generative artificial intelligence (Gen-AI) is increasingly being integrated into higher education contexts, offering numerous applications and potential benefits. Gen-AI is having a significant impact on assessment, where its ability to generate human-like responses to questions is being put to use (Michel-Villarreal et al., 2023). The use of Gen-AI has the potential to enhance the evaluation process by offering tailored feedback to students, improving the effectiveness and accuracy of assessments, and enabling teachers to customize their teaching strategies in response to the specific needs of each student (Owan et al., 2023). Furthermore, ongoing research is exploring the educational applications of Gen-AI tools such as ChatGPT, Claude, and Gemini, evaluating the impact of these tools on academic integrity and the overall educational experience (Alasadi and Baiz, 2023; Damiano et al., 2024).

Digital media literacy is essential for influencing individuals’ perspectives on embracing Gen-AI technologies. Research has demonstrated that digital literacy significantly impacts attitudes and behaviors in various areas, such as healthcare, education, and business. Studies have shown that individuals with advanced digital literacy are more likely to exhibit greater confidence in utilizing technology (Khare et al., 2022; Kuek and Hakkennes, 2020). This self-assurance can lead to a more positive disposition toward adopting Gen-AI technology, as people become more comfortable using sophisticated digital tools. Studies by Long et al. (2022), Nyaaba and Zhai (2024), and Su and Yang (2024) have shown that incorporating AI literacy curriculum interventions in educational settings can foster favorable attitudes toward engineering and science among learners. Early integration of AI literacy instruction enables students to develop a foundational understanding of AI technology, thereby shaping their future perspectives and attitudes toward these tools.

Although Gen-AI has the potential to benefit higher education, several challenges must be addressed (Alier et al., 2024). Educators currently struggle to effectively harness the pedagogical advantages of Gen-AI on a larger scale, despite its potential to profoundly transform teaching and learning methods (Akinwalere and Ivanov, 2022). Institutions must address ethical and societal factors when using AI in education to ensure responsible and ethical use (Lainjo and Tmouche, 2023). Furthermore, it is necessary to bridge the gap between educators’ comprehension of AI and its potential to revolutionize education (Kusters et al., 2020). In the context of higher education, how students perceive Gen-AI is of significant importance. Students must acquire AI literacy, which involves comprehending the fundamental workings, benefits, drawbacks, and diverse applications of Gen-AI in academia (Chan and Hu, 2023). Despite these potential benefits, concerns exist regarding the ethical use of Gen-AI, particularly in assessment contexts where it might facilitate academic dishonesty through the misrepresentation of generated content as original work (Dehouche, 2021).

Although earlier studies have examined how digital literacy affects technology acceptance in other areas, there is a lack of studies particularly investigating the connection between digital media literacy and attitudes toward accepting generative AI in higher education. This study seeks to fill this research gap by exploring the impact of various sub-dimensions of digital media literacy on attitudes toward accepting generative AI in a higher education setting.

The significance of this study lies in its capacity to provide valuable insights into the determinants that influence attitudes toward the acceptability of Gen-AI in higher education. By recognizing the importance of digital media literacy in this context, educators and policymakers can develop targeted interventions and policies to enhance students’ digital competencies and foster positive attitudes toward Gen-AI technologies. Furthermore, the results of this study can contribute to the broader discussion on the conscientious and ethical incorporation of AI in education, providing guidance for optimal methods and directing future investigations in this field.

Aims of the study:

1) To investigate the relationship between the sub-dimensions of digital media literacy (Device Access, Content Access, Technical Understanding, Critical Understanding, and Create) and attitudes toward Gen-AI acceptance in higher education.

2) To determine the impact of each sub-dimension of digital media literacy on attitudes toward Gen-AI acceptance in higher education.

This study aims to investigate how various aspects of digital media literacy impact attitudes toward the acceptance of Gen-AI in higher education. The goal is to comprehensively understand this relationship to develop effective strategies for integrating AI technologies into educational settings.

2 Literature review

2.1 Digital media literacy

Digital media literacy refers to a set of basic skills necessary for efficiently navigating the digital environment. It encompasses abilities such as identifying digital resources, managing and evaluating content, creating new media formats, and interacting with others through digital platforms (Ozdamar-Keskin et al., 2015). This literacy is not an isolated concept; rather, it is a construct derived from media literacy and incorporates a dynamic set of skills essential in the digital era (Pangrazio et al., 2020). Digital literacy is necessary for individuals to effectively and safely collect, process, understand, integrate, and communicate information using digital technologies (Anggraeni et al., 2023).

Digital media literacy describes the ability to effectively use and create material using digital media. It includes skills such as accessing, understanding, and producing digital content. This concept emphasizes the importance of not only using digital platforms but also understanding the content presented and contributing valuable content within these environments. It is important to consider digital media literacy as a complex concept that includes understanding both the media content and the equipment used to access it (Heiss et al., 2023; Park, 2012). Furthermore, digital literacy emerges as a distinct process within the educational system, intersecting with media and information literacy (Miteva, 2022). This integration reflects the changing nature of literacy in the digital era, as people need to navigate a wide range of media sources and information channels. Hobbs defines media and digital literacy as a collection of vital skills necessary for active engagement in our media-saturated society. These abilities include critical media analysis, content creation, responsible decision-making, reflective practices, and engagement in socially meaningful activities (Botturi et al., 2018). This definition emphasizes the proactive role that individuals have in interacting with digital media and the obligations that accompany it.

Within the field of education, digital literacy encompasses the ability to securely access content, effectively utilize new technologies, critically assess knowledge, and generate digital content (Erol, 2021). This concept highlights the significance of not only passively absorbing digital information but also actively engaging with it through critical thinking and content creation. Digital literacies refer to the necessary abilities required to effectively read and write in digitally mediated settings, utilizing various digital technologies for communication (Zheng et al., 2013). This approach highlights the changing nature of literacy in the digital era, where conventional reading and writing methods are interconnected with digital tools and platforms.

Essential elements of digital literacy encompass critical thinking, creativity, information assessment, and proficient utilization of digital media (Perdana et al., 2019). Digital literacy equips individuals with the skills to effectively retrieve, evaluate, and generate information, thereby enhancing their comprehension of technology and its practical uses (Ussarn et al., 2022). Furthermore, digital literacy encompasses a range of literacies including information literacy, computer literacy, media literacy, communication literacy, visual literacy, and technological literacy, with this broad skill set enabling individuals to efficiently process knowledge and communicate effectively (Park et al., 2021; Wuyckens et al., 2022).

Digital literacy is essential for improving learning in educational settings. Students can analyze content thoughtfully, produce digital media, and express their thoughts effectively (Pertiwi, 2022). By incorporating digital literacy into instructional methods, educators can enable students to navigate the digital domain with assurance and proficiency (Afrilyasanti et al., 2022). Moreover, fostering digital literacy skills in students is crucial for their academic achievement, as it provides them with the requisite abilities to efficiently access and utilize information (Abbas et al., 2019; Jalil et al., 2021; Udeogalanya, 2022).

Digital literacy is essential not only in educational environments but also in broader social contexts. Developing this competency is increasingly crucial across various fields and industries, as it enables individuals to adapt to the demands of the digital era (Bejaković and Mrnjavac, 2020; Khan et al., 2022). Furthermore, enhancing digital literacy develops essential cognitive skills such as critical thinking and evaluation, which are crucial for employment in the digital age (Letigio and Balijon, 2022). By improving their digital literacy, individuals can skillfully navigate the complexities of the digital environment and make well-informed decisions (Balaban-Sali, 2012).

The development of digital media literacy has grown closely connected with media literacy, emphasizing the significance of comprehending and critically engaging with media messages in contemporary society (Miteva, 2022). The incorporation of digital media literacy highlights the importance of enabling individuals to responsibly understand and produce media content (Balaban-Sali, 2012). With the continuous advancement of technology, it is increasingly important to access, analyze, and convey information through digital platforms (Reddy et al., 2019). Hence, nurturing digital media literacy is imperative for individuals to actively participate in the digital age with depth and significance.

To summarize, digital media literacy includes a wide array of abilities, ranging from technical proficiency to analytical reasoning and innovative communication. It encompasses not only accessing and comprehending digital content but also actively participating in it, critically assessing information, and creating significant digital works. Individuals must continually adjust to new technologies, effectively navigate intricate media environments, and engage ethically in digital domains due to the ever-changing nature of digital literacy. Digital media literacy enables individuals to excel in the digital world by combining critical perspectives, practical abilities, and innovative methods.

2.2 Generative AI

Gen-AI is a specialized branch of AI dedicated to generating novel content such as images, text, or music, rather than solely analyzing existing data or making decisions based on predetermined rules. One of the main techniques utilized in Gen-AI is generative adversarial networks (GANs), which involve two neural networks—a generator and a discriminator—that work competitively to produce realistic outputs (Huang and Le, 2021). This method enables the generator to produce more convincing results by incorporating feedback from the discriminator, resulting in generated content that closely matches real data. Gen-AI has found applications in various fields, including medicine, where it has been applied to medical imaging tasks such as image acquisition, processing, and assisted reporting, demonstrating its potential to transform healthcare practices (Pesapane et al., 2018). Furthermore, within the design discipline, researchers have explored Generative AI and its potential effects on the future of design processes, providing a deeper understanding of its theoretical foundations and possible impacts on the field (Thoring et al., 2023). In the telecommunications industry, AI technologies such as natural language processing and machine learning have played an important role in improving data analysis capabilities, leading to enhanced management, planning, and operational efficiency (Chen et al., 2021).

Improvements in microprocessor architecture have been instrumental in implementing AI, with artificial neural networks (ANNs) emerging as promising algorithms for independent learning (Khan et al., 2021). These artificial neural networks, which draw inspiration from the human brain, serve as the foundation for numerous machine learning techniques that drive generative AI systems. The use of AI in creative fields like marketing has been increasing, with AI facilitating automation and fostering innovation in marketing strategies (Ameen et al., 2022).

Incorporating AI capabilities into the creative process has revolutionized marketing practices, enabling the generation of innovative ideas. In addition, AI has been utilized in the development of symmetric cryptographic primitives, showcasing its adaptability in guaranteeing secure communication and data protection (Mariot et al., 2022). When it comes to ethics and responsibility, concerns arise regarding the accountability and decision-making processes of AI systems (Dastani and Yazdanpanah, 2023). Recognizing the significance of AI decisions and promoting responsible AI development are crucial factors to consider as AI technologies progress and become more integrated into various aspects of society.

Gen-AI is increasingly integrated into higher education settings, offering a variety of applications and potential advantages. Gen-AI is transforming assessments with its ability to generate responses to questions that are remarkably humanlike. This use of Gen-AI can improve the assessment process through personalized student feedback, increased efficiency and accuracy, and enabling teachers to tailor their teaching strategies to individual student needs (Owan et al., 2023). In addition, researchers are currently examining the potential of Gen-AI tools like ChatGPT in education, evaluating their impact on academic integrity and the overall learning experience (Damiano et al., 2024). Studies have shown that using an AI-driven ODT app can significantly improve children’s interest in learning and help them retain information better, suggesting it could be a valuable tool for teachers in the classroom (Liu and Chen, 2023).

In higher education, students’ perceptions of Gen-AI matter considerably. Students need to develop AI literacy, which involves understanding the basic principles of Gen-AI, its benefits, limitations, and various academic applications (Chan and Hu, 2023). However, concerns exist regarding the ethical use of Gen-AI, particularly in assessments where students might present AI-generated responses as their own work (Dehouche, 2021).

In addition, the implementation of Gen-AI in higher education is not without its challenges and concerns (Alier et al., 2024). Gen-AI has the ability to change the way we teach and learn, but teachers are still figuring out how to leverage its educational benefits on a larger scale (Akinwalere and Ivanov, 2022). According to Lainjo and Tmouche (2023), educational institutions must consider the societal and ethical implications of AI implementation to ensure responsible use. Furthermore, it is necessary to bridge the gap between educators’ comprehension of AI and its capacity to bring about significant changes in education (Kusters et al., 2020). Before using AI in educational technology, it is essential to weigh the pros and cons. The advantages include large data sets and benefits, while the disadvantages encompass risks and limitations. To justify the implementation of AI, it should provide substantial capabilities or efficiencies that would not be possible without it. The mere existence of AI does not necessarily validate its use (Gillani et al., 2023).

Despite these challenges, the benefits of integrating AI in higher education are substantial. AI technologies have the potential to enhance the student learning experience, offer customized training, facilitate adaptive learning, and improve evaluation methodologies (Mahligawati et al., 2023). Through utilizing Gen-AI technologies, such as text-to-image generators, students can produce innovative designs while maintaining academic integrity. Moreover, educational assessment technologies that utilize artificial intelligence can enhance the effectiveness and efficiency of assessments, providing advantages for both students and instructors (Owan et al., 2023).

In conclusion, integrating Gen-AI into higher education presents a cutting-edge opportunity to improve teaching and learning processes. Although challenges exist that must be addressed, the potential benefits of artificial intelligence in academia are substantial. It is essential for educators and institutions to carefully manage these challenges, ensuring that AI is used ethically and responsibly to fully leverage its positive influence on higher education.

2.3 Relation between digital media literacy and Gen-AI

Digital media literacy is a critical factor influencing attitudes toward accepting Gen-AI technologies. It has been shown that digital literacy affects how people think and act in various areas, such as business, education, and healthcare. According to studies, those who are more digitally literate also typically have greater confidence when utilizing technology (Khare et al., 2022; Kuek and Hakkennes, 2020). People who are more comfortable using advanced digital tools may have a more positive view of Gen-AI technologies. These perceptions regarding AI’s usefulness and ability to create new things affect how entrepreneurs feel about using AI in their businesses (Upadhyay et al., 2022).

Developing digital literacy programs can improve entrepreneurs’ comprehension of AI capabilities, positively influencing their attitudes toward utilizing AI tools in their enterprises. Similarly, the digital literacy levels of owner-managers in small and medium enterprises influence their attitudes toward using digital media for stakeholder engagement (Camilleri, 2019). This emphasizes the correlation between digital literacy and attitudes toward technology use in work environments. Studies by Long et al. (2022) and Su and Yang (2024) have demonstrated that incorporating AI literacy curriculum interventions in educational settings can foster positive attitudes toward engineering and science among young learners. By introducing AI literacy classes early on, children can gain a basic understanding of AI technologies, influencing their perceptions of these tools in the future.

Individuals with digital literacy in home settings may find it easier to establish online businesses, significantly increasing their household income. According to a prior study conducted by Alakrash and Razak (2021), digital literacy has the potential to improve overall welfare, particularly among the Indonesian population. Proficiency in digital media literacy is essential not just for influencing individuals’ attitudes toward technology acceptance but also for affecting broader societal outcomes. Studies suggest that possessing advanced media literacy skills positively impacts the cultivation of critical thinking and the promotion of cultural diversity (Tugtekin and Koc, 2020).

Moreover, advancements in digital technology, driven by the digital economy, are expected to significantly affect economic growth, job creation, and living standards in developing countries (Parianom and Arrafi, 2022). Misusing digital media, such as spreading misinformation and offensive content, can lead to societal division and trust issues. This underscores the importance of learning how to use technology responsibly (Gunawan and Ratmono, 2020; Raya et al., 2021). Additionally, research on higher education administrative staff shows that digital literacy directly affects effort expectations and indirectly influences technology acceptance through effort and performance expectations. This highlights the importance of continuous learning and support for developing digital literacy skills (Dyanggi et al., 2022). An increasing number of fields, including marketing (Gonçalves et al., 2023), are investigating the ethical issues raised by AI technologies, such as Gen-AI. Understanding attitudes toward AI acceptance requires consideration of social issues and strategies for building trust in AI systems (Du and Xie, 2021). This highlights the significance of not only improving digital literacy but also cultivating critical thinking abilities to assess the ethical implications of AI technology (Maznev et al., 2024).

To summarize, the literature emphasizes the complex and multifaceted nature of digital media literacy and its potential impact on attitudes toward Gen-AI acceptance in higher education. Digital media literacy encompasses a broad spectrum of skills, including the ability to access devices and content, understand technical aspects, critically analyze information, and create content. These skills are essential for individuals to effectively navigate and engage with digital technologies, critically analyze information, and produce meaningful digital content. Literature also discusses how Gen-AI is becoming increasingly important in higher education and how it could transform teaching and learning. However, for generative AI to be successfully integrated into education, it is crucial to understand the factors that influence acceptance, such as the importance of digital media literacy. This study aims to examine the relationship between various dimensions of digital media literacy and individuals’ attitudes toward Gen-AI acceptance. Its objective is to address the existing research gap and provide significant insights for educators, policymakers, and researchers. The results of this study can assist researchers in developing targeted interventions and strategies to enhance students’ digital media literacy skills and foster positive attitudes toward Gen-AI technologies in higher education. This will ultimately promote the responsible and ethical use of AI in higher education institutions.

3 Methodology

The aim of this research is to examine how digital media literacy affects individuals’ attitudes toward accepting Gen-AI in university education. To achieve this, we use a quantitative research method with a cross-sectional survey design (Creswell and Clark, 2017). This approach enables the collection of extensive data from a large sample at a single point in time, allowing for the examination of relationships between variables (Cohen et al., 1994).

3.1 Sample

The initial sample consisted of 476 undergraduate students at Kazan Federal University in Russia. After data cleaning, the final sample comprised 451 participants, of whom 76.5% were female and 23.5% were male. Participants were recruited through convenience sampling, that is, by approaching individuals who were easily accessible and willing to participate. While this sampling method has recognized limitations regarding representativeness and generalizability (Etikan et al., 2016; Jager et al., 2017), it is appropriate for an exploratory study that examines relationships between variables rather than making population-level inferences (Bornstein et al., 2013). It was also a practical and efficient way to reach students at Kazan Federal University. According to Wolf et al. (2013), the minimum sample size in SEM analyses should be ten times the number of items. With 30 items, the minimum is 30 × 10 = 300, so a sample of 451 is deemed sufficient. Although the sample contains more females than males, this is typical for Kazan Federal University. The sample may not represent all students at Kazan Federal University or in Russia; however, because the objective is to explore how digital media literacy relates to attitudes toward Gen-AI, we consider it suitable for providing insights into these issues at Kazan Federal University.
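The sample-size reasoning above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `min_sample_size` is hypothetical, the ten-cases-per-item rule is taken as stated in the text, and the item count assumes five literacy sub-scales of five items each plus a five-item attitude scale, as described for the instruments.

```python
def min_sample_size(n_items: int, cases_per_item: int = 10) -> int:
    """Minimum sample size under a cases-per-item rule of thumb."""
    return n_items * cases_per_item


# Item counts as described for the two instruments (assumed layout):
# five literacy sub-scales with five items each, plus five attitude items.
n_items = 5 * 5 + 5

initial_n = 476  # students surveyed
final_n = 451    # students retained after data cleaning

required = min_sample_size(n_items)
removed = initial_n - final_n

print(f"items: {n_items}, required N: {required}")
print(f"cases removed in cleaning: {removed}")
print(f"final N sufficient: {final_n >= required}")
```

Under these assumptions the final sample exceeds the rule-of-thumb minimum by 151 cases.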

3.2 Data collection tools

The research employs two primary instruments for data collection: the digital media literacy scale developed by Okela (2023) and the attitude toward Gen-AI acceptance scale created by Chatterjee and Bhattacharjee (2020). The Digital Media Literacy Scale assesses users’ digital media literacy across five areas: Device Access (five questions), Content Access (five questions), Technical Understanding (five questions), Critical Understanding (five questions), and Create (five questions). The attitude toward generative AI acceptance scale consists of five items that evaluate participants’ attitudes toward the acceptance of generative AI in a higher education context. Both assessments employ a five-point Likert scale, ranging from 1 (indicating strong disagreement or never) to 5 (indicating strong agreement or very frequent occurrence).

An online survey questionnaire was created to combine the Digital Media Literacy Scale with the Attitude toward Gen-AI Acceptance Scale, along with a section for collecting demographic information. To ensure comprehension among Russian participants, the survey questionnaire was translated from English to Russian, following a simplified version of the cross-cultural research criteria provided by van der Linden and Hambleton (1997). The translation process involved a bilingual expert translating the original English scales into Russian, followed by another bilingual expert who, without seeing the original English, translated the Russian back into English. The research team conducted a comparison between the back-translated version and the original English scales, discussing the differences and seeking to refine the Russian scales. A pilot test of the Russian scales was conducted with a sample of 15 Russian individuals to assess clarity and comprehensibility, determining if further modifications were needed based on feedback. Finally, the Russian scales were finalized. This translation procedure ensured that the data collection instruments maintained equivalent meaning in both English and Russian, thereby enhancing the reliability and validity of the study results. The questionnaire was distributed to participants via an online form.

3.3 Data analysis

The data obtained were analyzed using Jamovi (version 2.5.4), an open-source statistical software that offers a user-friendly interface for executing a comprehensive array of statistical evaluations (The Jamovi Project, 2021). The data analysis methodology involved multiple stages, including data cleansing, descriptive statistics, correlation assessment, confirmatory factor analysis (CFA), and structural equation modeling (SEM).

3.3.1 Data cleaning

The dataset was carefully examined for missing values, outliers, and other errors before executing the main analysis. Appropriate techniques, such as listwise deletion or multiple imputation, were used to handle missing data based on the degree and type of missingness (Schafer and Graham, 2002).

3.3.2 Descriptive statistics

Descriptive statistics (means, standard deviations, skewness, and kurtosis) were computed for all study variables. These statistics helped evaluate the normality assumption required for subsequent analyses and provided an overview of the data distribution (Field, 2009).
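As a rough illustration of these four statistics, the following sketch computes them from scratch. It is not the procedure used in Jamovi: the function name `describe` and the scores are hypothetical, and the skewness and kurtosis use simple moment-based formulas, which can differ slightly from the bias-adjusted estimators that statistical packages often report.

```python
import math


def describe(values):
    """Return mean, sample SD, skewness, and excess kurtosis of a list."""
    n = len(values)
    mean = sum(values) / n
    # Central moments (dividing by n).
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return {
        "mean": mean,
        "sd": math.sqrt(m2 * n / (n - 1)),  # sample SD (n - 1 denominator)
        "skewness": m3 / m2 ** 1.5,         # 0 for a symmetric distribution
        "kurtosis": m4 / m2 ** 2 - 3.0,     # excess kurtosis; 0 for a normal
    }


# Hypothetical composite scores on a 1-5 Likert scale (not study data).
scores = [1, 2, 3, 4, 5]
summary = describe(scores)
print(summary)
```

Skewness and excess kurtosis values close to zero are consistent with approximate normality; the cutoffs applied in practice vary by source.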

3.3.3 Correlation analysis

Pearson’s correlation coefficients were calculated to investigate the bivariate associations among the sub-dimensions of digital media literacy (Device Access, Content Access, Technical Understanding, Critical Understanding, and Create) and attitudes toward the acceptance of generative AI. The correlation matrix provided an initial insight into the interrelationships among the variables under study.
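For readers unfamiliar with the coefficient, a minimal sketch of Pearson's r is given below. The function name `pearson_r` and both score lists are hypothetical; they are not data from this study.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations, and sums of squared deviations.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical mean scores for six respondents (not study data).
content_access = [2.0, 3.0, 3.5, 4.0, 4.5, 5.0]
attitude = [2.5, 2.0, 3.5, 3.0, 4.5, 4.0]

r = pearson_r(content_access, attitude)
print(round(r, 3))
```

The coefficient ranges from -1 to 1; applying the function to every pair of variables yields the correlation matrix described above.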

3.3.4 Confirmatory factor analysis

The CFA model defined the relationships between observable indicators (items) and their corresponding latent constructs (sub-dimensions of digital media literacy and attitudes toward generative AI acceptance). A range of indices, including the chi-square to degrees of freedom ratio (χ²/df), Comparative Fit Index (CFI), Tucker–Lewis Index (TLI), standardized root mean square residual (SRMR), and root mean square error of approximation (RMSEA), was used to evaluate model fit (Hu and Bentler, 1999). The internal consistency reliability of the scales was also assessed using Cronbach’s alpha and McDonald’s omega coefficients. The correlations between the sub-dimensions of digital media literacy and attitudes toward generative AI acceptance were investigated.
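Of the two reliability coefficients mentioned, Cronbach's alpha has a simple closed form: α = k/(k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. The sketch below implements that formula only; McDonald's omega requires factor loadings and is not shown. The function name `cronbach_alpha` and the response matrix are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha; rows holds one list of item scores per respondent."""
    k = len(rows[0])                 # number of items in the scale
    items = list(zip(*rows))         # transpose to one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical responses of five people to a five-item scale (not study data).
responses = [
    [4, 5, 4, 4, 5],
    [2, 2, 3, 2, 2],
    [3, 3, 3, 4, 3],
    [5, 5, 4, 5, 5],
    [1, 2, 1, 2, 1],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Alpha approaches 1 when items vary together across respondents, which is the sense in which it reflects internal consistency.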

3.3.5 Structural equation modeling (SEM)

The SEM model articulated the direct influences of Device Access, Content Access, Technical Understanding, Critical Understanding, and Create on attitudes toward the acceptance of generative AI. Model fit was evaluated employing the same indices as those used in the CFA. Path coefficients, along with their statistical significance and standardized estimates, were scrutinized to ascertain the magnitude and directionality of the relationships. All statistical analyses were performed at a significance threshold of 0.05, and the findings were documented in accordance with APA style guidelines.

4 Findings

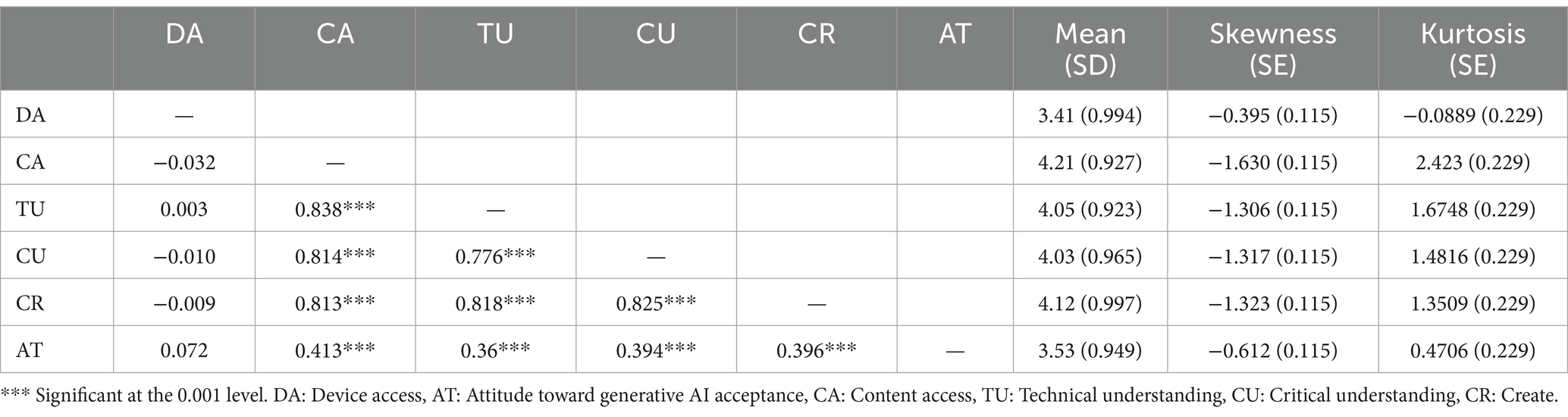

From the results in Table 1, Device Access (DA) has a very small positive relation with Attitude toward generative AI acceptance (AT) (r = 0.072), but it is not statistically significant. This suggests that access to digital devices is not meaningfully associated with how a person feels about generative AI in university. However, Content Access (CA), Technical Understanding (TU), Critical Understanding (CU), and Create (CR) have medium positive relations with attitude toward generative AI acceptance (AT) (r = 0.413, 0.360, 0.394, and 0.396, respectively; all p < 0.001). This indicates that individuals with higher abilities in accessing content, understanding technology, thinking critically, and creating digital content tend to have more positive attitudes toward generative AI in higher education.
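The significance statements for these correlations can be checked approximately from r and the sample size alone. The sketch below converts a correlation into a t statistic and, as a simplifying assumption, uses the normal approximation for the two-tailed p-value, which is close to the exact t-based value at this sample size. It assumes all 451 participants reported in the abstract contributed to each correlation; the function names are hypothetical.

```python
import math

def correlation_t(r, n):
    """t statistic for testing H0: rho = 0 with n paired observations."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

def approx_two_tailed_p(t):
    """Two-tailed p-value from the normal approximation to the t distribution."""
    return math.erfc(abs(t) / math.sqrt(2))

n = 451  # sample size from the abstract; assumed to be complete cases

t_da = correlation_t(0.072, n)  # Device Access with attitude
p_da = approx_two_tailed_p(t_da)

t_ca = correlation_t(0.413, n)  # Content Access with attitude
p_ca = approx_two_tailed_p(t_ca)
```

Under these assumptions the Device Access correlation falls short of the 0.05 threshold, while the Content Access correlation is far beyond the 0.001 level, in line with the pattern reported in Table 1.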

Additionally, the dimensions of Digital Media Literacy (CA, TU, CU, and CR) have strong positive relations with each other (r > 0.7, p < 0.001). This means these components are closely connected; a person who is strong in one area is likely to be strong in the others as well. In summary, the Content Access, Technical Understanding, Critical Understanding, and Create dimensions of Digital Media Literacy have moderate positive correlations with attitude toward generative AI acceptance in higher education, while Device Access has a negligible, non-significant association.

Average scores for all components are quite high, ranging from 3.41 to 4.21. This indicates that participants generally demonstrate good levels of digital media literacy. The highest mean score is for Content Access (CA) at 4.21, suggesting participants are particularly proficient at accessing digital content. The lowest mean score is for Device Access (DA) at 3.41, which, while still above the midpoint, suggests participants may have relatively lower access to devices compared to other digital literacy skills. The average score for attitude toward generative AI acceptance in university (AT) is 3.53, above the midpoint, indicating that people generally have a positive view of the idea of generative AI in university.

Standard deviations (SD) for all variables are relatively small, ranging from 0.923 to 0.997. This indicates that most scores cluster around their respective means. All variables display negative skewness, indicating distributions skewed to the left, with longer tails on the left. This suggests that more people have high scores in digital media literacy and a favorable view of generative AI.

Kurtosis values for DA and AT are closer to zero than those of the other variables, suggesting that their distributions more closely approximate a normal bell curve. Larger positive kurtosis values, by contrast, correspond to distributions with higher peaks and longer tails than a normal bell shape.

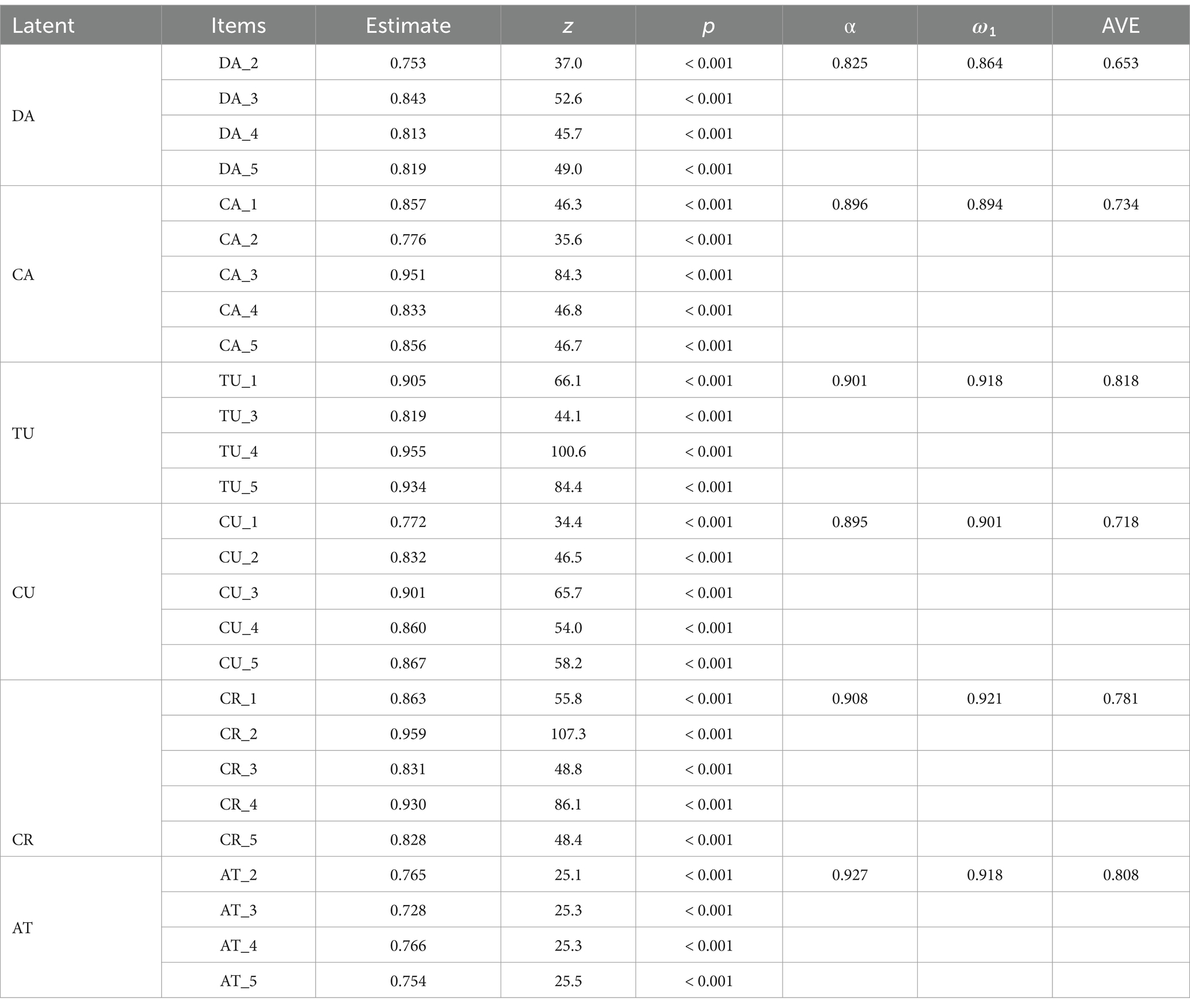

Table 2 presents strong factor loadings (>0.7) for items in all sub-dimensions and the AT scale, indicating that the items are strongly related to their respective constructs. Z-values and p-values (<0.001) confirm statistical significance. Cronbach’s alpha and McDonald’s omega values exceed 0.8 for all sub-dimensions and the AT scale, demonstrating high internal consistency and reliability, indicating that items within each scale measure the same construct.

AVE values are >0.5 for sub-dimensions and the AT scale. Items explain a good amount of variance in constructs, indicating good convergent validity. Three items (DA_1, TU_2, AT_1) were removed because their factor loadings were below 0.7, improving the model fit. The results demonstrate the reliability and validity of both the Digital Media Literacy sub-dimensions (DA, CA, TU, CU, CR) and the attitude toward generative AI acceptance in higher education (AT) scale. The high factor loadings, strong internal consistency, and good convergent validity indicate that these scales are appropriate for measuring their respective constructs in this study examining the impact of digital media literacy on attitude toward generative AI acceptance in higher education.
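The link between the 0.7 loading cutoff and the 0.5 AVE criterion can be seen from the usual definition of average variance extracted as the mean of the squared standardized loadings. The sketch below is illustrative only: the function name and the loadings are hypothetical and are not values from Table 2.

```python
def average_variance_extracted(loadings):
    """Mean of squared standardized factor loadings for one construct."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)

# Hypothetical standardized loadings for a three-item construct.
loadings = [0.72, 0.80, 0.85]
ave = average_variance_extracted(loadings)

# A construct whose items all load at exactly 0.7 falls just below 0.5,
# because 0.7 squared is 0.49.
ave_at_cutoff = average_variance_extracted([0.7, 0.7, 0.7])
```

Because each item contributes its squared loading, items loading below 0.7 pull the AVE toward or below 0.5, which is one rationale for removing them.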

In addition, CFA was applied for each scale. For the Digital Media Literacy scale, CFI (0.947), TLI (0.939), SRMR (0.0335), and RMSEA (0.0675) were calculated. For the AT scale, CFI (0.999), TLI (0.996), SRMR (0.00858), and RMSEA (0.0810) were calculated. All indices are acceptable for both scales.

To establish configural invariance, confirmatory factor analysis (CFA) was conducted separately for each gender group. This analysis evaluates whether the same factor structure holds across different groups without imposing equality constraints on any parameters.

For the attitude toward Gen-AI acceptance scale, the female group demonstrated excellent model fit with CFI = 0.996, TLI = 0.988, SRMR = 0.00880, and RMSEA = 0.0802. The male group showed an exceptional fit with CFI = 1.0, TLI = 1.0, SRMR = 0.00742, and RMSEA = 0.0001. These values indicate that the measurement model for attitudes toward generative AI acceptance fits well for both gender groups, with particularly strong fit indices for males.

For the Digital Media Literacy Scale, the female group demonstrated good model fit with CFI = 0.930, TLI = 0.920, SRMR = 0.0383, and RMSEA = 0.0756. Similarly, the male group showed comparable fit indices with CFI = 0.936, TLI = 0.920, SRMR = 0.0495, and RMSEA = 0.0881. These results suggest that the factor structure of the Digital Media Literacy Scale is appropriate for both gender groups, as all fit indices meet acceptable thresholds (CFI and TLI > 0.90, SRMR < 0.08, RMSEA < 0.10).

The consistent pattern of good model fit across both gender groups establishes configural invariance, confirming that the basic factor structure of both scales is equivalent for male and female participants. This is a fundamental prerequisite for subsequent invariance testing steps and indicates that the conceptual understanding of the measured constructs is similar across gender groups.

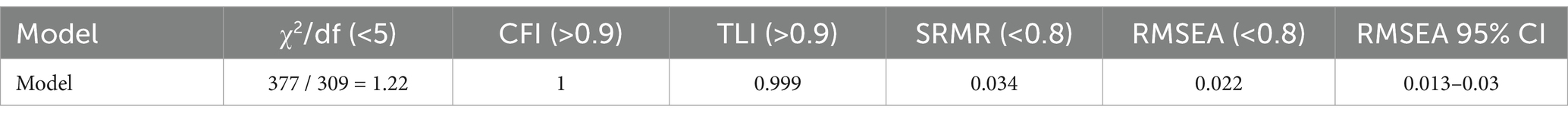

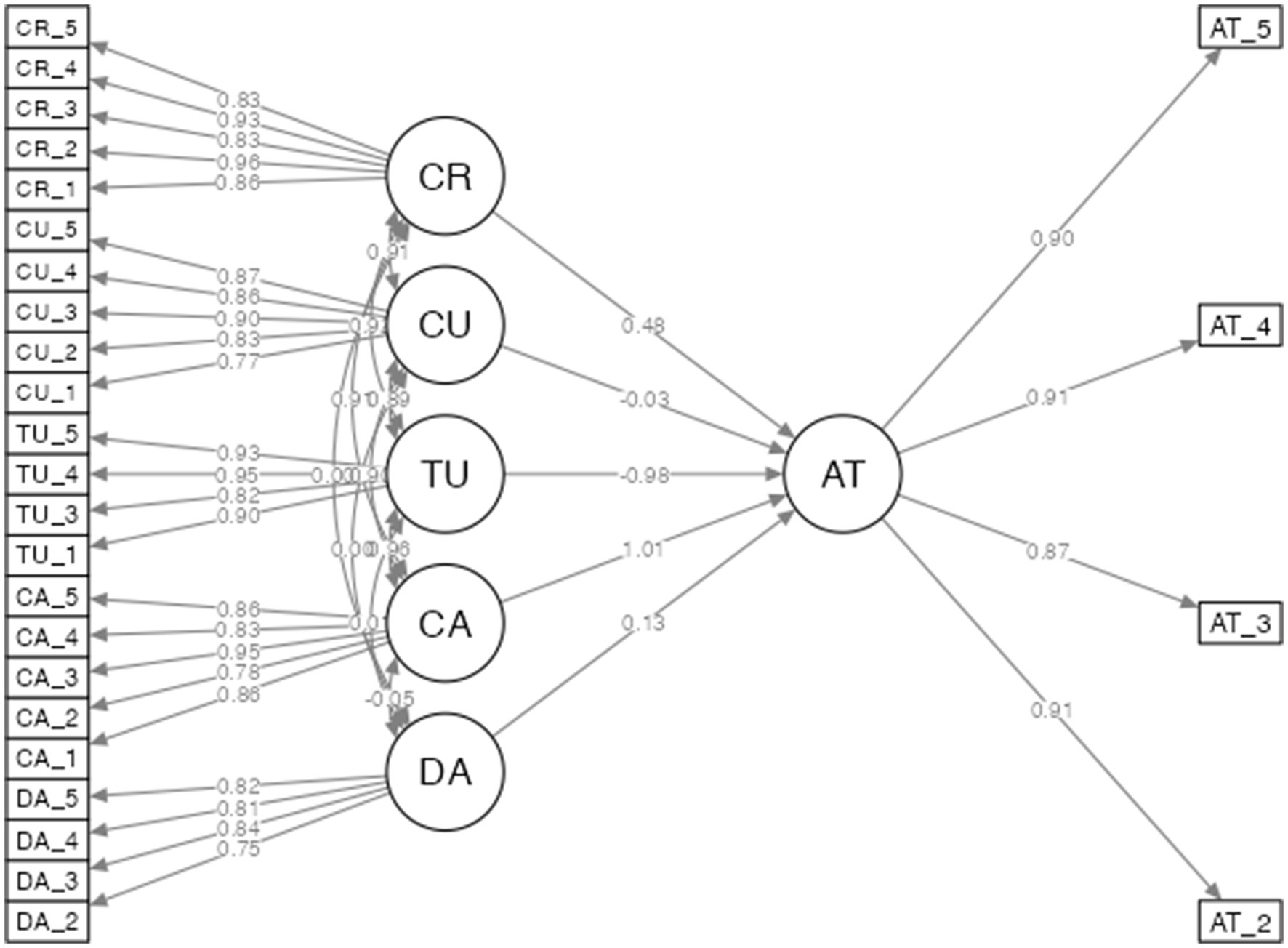

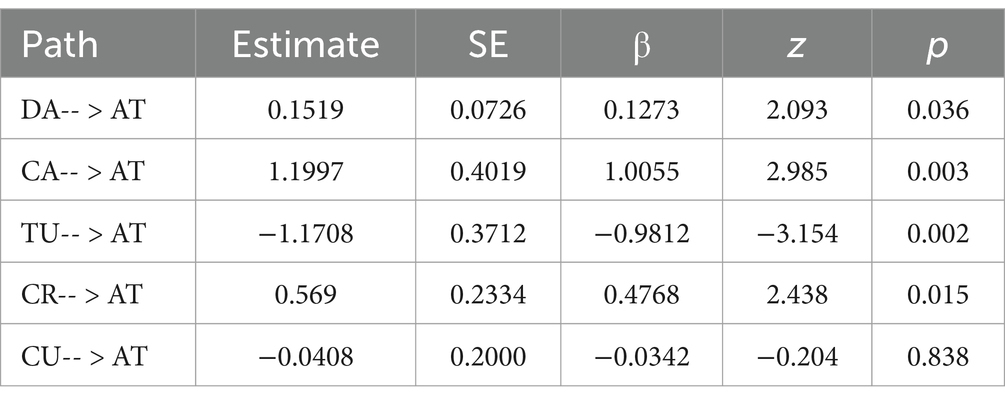

Model fit indices in Table 3 were used to evaluate how well the proposed model fits the observed data. The chi-square to degrees of freedom ratio (χ2/df) is 1.22, which is less than the recommended 5, showing that the model has a good fit to the data. The Comparative Fit Index (CFI) is 1 and the Tucker-Lewis Index (TLI) is 0.999, both greater than the recommended threshold of 0.9, indicating the model fits the data well compared to the baseline model. The standardized root mean square residual (SRMR) is 0.034, which is less than the recommended 0.08. The root mean square error of approximation (RMSEA) is 0.022, also less than the recommended 0.08, with a 95% confidence interval of 0.013 to 0.03, further supporting good model fit. As a result, all model fit indices (χ2/df, CFI, TLI, SRMR, RMSEA) suggest the proposed model has a good fit to the observed data. This means it represents relationships between variables well and supports the validity of the model and conclusions drawn from it (see Figure 1 and Table 4).
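The comparison of the reported indices against their cutoffs can be written out as a small check. The sketch below uses the values quoted from Table 3 and the thresholds named in the text, with SRMR evaluated against the 0.08 cutoff used in the invariance analysis. The dictionary layout and the function name `check_fit` are hypothetical.

```python
# Cutoffs as described in the text: "max" means the index should be below
# the limit, "min" means it should be above the limit.
CUTOFFS = {
    "chi2_df": ("max", 5.0),
    "CFI": ("min", 0.90),
    "TLI": ("min", 0.90),
    "SRMR": ("max", 0.08),
    "RMSEA": ("max", 0.08),
}

# Values reported for the structural model in Table 3.
reported = {"chi2_df": 1.22, "CFI": 1.0, "TLI": 0.999, "SRMR": 0.034, "RMSEA": 0.022}

def check_fit(indices, cutoffs=CUTOFFS):
    """Return a dict mapping each index name to True if it meets its cutoff."""
    results = {}
    for name, value in indices.items():
        kind, limit = cutoffs[name]
        results[name] = value < limit if kind == "max" else value > limit
    return results

fit_ok = check_fit(reported)
```

With the reported values every entry of `fit_ok` is true, which matches the conclusion that all indices point to good fit.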

For the path from DA to AT, the estimate is 0.1519, SE is 0.0726, and β is 0.1273. The z-value is 2.093 with a p-value of 0.036, which is less than 0.05. This indicates that DA has a small but significant positive effect on AT. The path from CA to AT shows larger coefficients: estimate 1.1997, SE 0.4019, and β 1.0055. The z-value is 2.985 with a p-value of 0.003, which is very small (less than 0.01). This indicates that CA has a strong positive effect on AT.

The results for the path from TU to AT differ. The estimate is −1.1708, SE is 0.3712, and β is −0.9812. The z-value is −3.154 with a p-value of 0.002 (less than 0.01). The negative coefficient indicates that TU has a strong negative effect on AT, which is unexpected. For CR to AT, the numbers are: estimate 0.569, SE 0.2334, β 0.4768. The z-value is 2.438, and the p-value is 0.015 (less than 0.05). This shows that CR has a medium positive effect on AT.

Finally, the path from CU to AT shows non-significant results. The estimate is −0.0408, SE is 0.2, and β is −0.0342. The z-value is −0.204 with a p-value of 0.838, which is greater than 0.05. This indicates that CU has no significant effect on AT.
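The z-values and p-values reported for the five paths follow from the estimates and standard errors: each z is the estimate divided by its SE, and the two-tailed p-value comes from the standard normal distribution. The sketch below reproduces this arithmetic from the figures quoted above; the function name `wald_test` and the dictionary layout are hypothetical, and small differences from the reported values reflect rounding of the published estimates.

```python
import math

# (unstandardized estimate, standard error) for each path to AT, as reported.
paths = {
    "DA": (0.1519, 0.0726),
    "CA": (1.1997, 0.4019),
    "TU": (-1.1708, 0.3712),
    "CR": (0.569, 0.2334),
    "CU": (-0.0408, 0.2),
}

def wald_test(estimate, se):
    """Return the z statistic and its two-tailed normal p-value."""
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

results = {name: wald_test(est, se) for name, (est, se) in paths.items()}

# Paths that are significant at the 0.05 threshold used in the study.
significant = sorted(name for name, (_, p) in results.items() if p < 0.05)
```

Running the check flags DA, CA, TU, and CR as significant and CU as non-significant, consistent with the results described above.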

In conclusion, the analysis indicates that CA and CR have significant positive effects on AT. TU has a strong negative effect, which is surprising. DA has a small positive effect, while CU has no significant effect on AT in higher education. These results enhance our understanding of how different dimensions of digital media literacy influence attitudes toward generative AI acceptance.

5 Discussion

The objective of this study was to examine the influence of digital media literacy on attitudes toward accepting generative AI in higher education. The results offer valuable insights into the relationship among the different aspects of digital media literacy (Device Access, Content Access, Technical Understanding, Critical Understanding, and Create) and attitudes toward accepting generative AI among undergraduate students at Kazan Federal University in Russia.

The descriptive statistics revealed that participants generally had a high level of digital media literacy across all sub-dimensions, with the highest mean value observed for Content Access and the lowest for Device Access. This suggests that participants have a strong ability to access digital content but may have relatively lower access to digital devices compared to other sub-dimensions. The mean value for attitudes toward generative AI acceptance in higher education was above the neutral point, indicating that participants generally have a positive attitude toward the acceptance of generative AI in higher education. These findings are consistent with previous research highlighting the growing importance of digital media literacy in the context of higher education and the increasing acceptance of generative AI technologies (Damiano et al., 2024; Owan et al., 2023).

The correlation analysis revealed that Content Access, Technical Understanding, Critical Understanding, and Create had moderate positive correlations with attitudes toward generative AI acceptance, while Device Access had a very weak and non-significant correlation. These findings suggest that individuals with higher levels of digital media literacy in terms of accessing and creating digital content, understanding technical aspects, and critically evaluating information tend to have more positive attitudes toward generative AI acceptance in higher education. This is in line with previous studies indicating that higher levels of digital literacy are associated with greater confidence in using technology and a more positive attitude toward embracing advanced digital tools (Khare et al., 2022; Kuek and Hakkennes, 2020).

CFA provided evidence for the reliability and validity of the digital media literacy sub-dimensions and the attitude toward the generative AI acceptance scale. The high factor loadings, internal consistency, and convergent validity suggest that these scales are appropriate for measuring their respective constructs in the context of investigating the impact of digital media literacy on attitudes toward generative AI acceptance in higher education. The good model fit indices further supported the validity of the proposed model, adequately representing the relationships among the variables.

The structural equation modeling (SEM) results revealed that Content Access and Create had significant positive impacts on attitudes toward generative AI acceptance, while Technical Understanding had a significant negative impact. Device Access had a small but significant positive impact, and Critical Understanding did not have a significant impact. These findings provide a more nuanced understanding of the relationships between the sub-dimensions of digital media literacy and attitude toward generative AI acceptance in higher education.

The positive impact of Content Access and Create on attitudes toward generative AI acceptance highlights the importance of individuals’ ability to access and create digital content in shaping their attitudes toward advanced technologies like generative AI. This is consistent with previous research emphasizing the role of digital literacy in fostering positive attitudes toward technology adoption (Long et al., 2022; Su and Yang, 2024).

The negative impact of Technical Understanding on attitudes toward generative AI acceptance was unexpected and warrants further investigation. It is possible that individuals with a deeper technical understanding of generative AI may have concerns about its potential limitations or ethical implications, leading to a more cautious attitude toward its acceptance in higher education (Dehouche, 2021; Gonçalves et al., 2023). This may be due to students with more technical understanding being more aware of the negative aspects of Gen-AI. For example, one of the biggest criticisms of Gen-AI is that it hallucinates (Mahmoud et al., 2025; Sun et al., 2024). Students with high technical knowledge may not fully trust the information produced by Gen-AI. In a study conducted by Chan and Hu (2023), university students stated that the information produced by Gen-AI should be confirmed. AI also raises concerns about the trustworthiness of information (Acosta-Enriquez et al., 2024; Stone, 2024). In the study by Farhi et al. (2023), it is noted that the use of AI by university students affects their concerns about ethics.

The small but significant positive impact of Device Access on attitudes toward generative AI acceptance suggests that access to digital devices, while not as influential as other sub-dimensions of digital media literacy, still plays a role in shaping attitudes toward technology acceptance. This finding aligns with previous research highlighting the importance of access to digital resources in fostering positive attitudes toward technology (Alakrash and Razak, 2021).

The non-significant impact of Critical Understanding on the attitude toward generative AI acceptance was surprising, given the importance of critical thinking skills in evaluating the implications of AI technologies (Maznev et al., 2024). This finding may suggest that other factors, such as ethical considerations or trust in AI systems, play a more significant role in shaping attitudes toward generative AI acceptance in higher education (Du and Xie, 2021).

The results of the study can also be interpreted through Parasuraman’s (2000) Technology Readiness Index theory. This theory comprises four dimensions—optimism, innovativeness, discomfort, and insecurity—that influence people’s inclination to embrace new technology (Wu and Lim, 2024). Our results indicate that the positive impact of the Content Access and Content Creation aspects on AI adoption suggests that these students may be quite optimistic and innovative about technology (Flavián et al., 2022). Conversely, the unexpected negative impact of the Technical Understanding component on AI acceptance can be explained by the “discomfort” and “insecurity” dimensions of the Technology Readiness Index. Oc et al. (2024) found that individuals with high technical expertise may be more aware of the potential risks of technology, leading to a careful attitude toward new technologies. Similarly, Klimova et al. (2023) and Kamalov et al. (2023) found that students become more aware of the limitations and ethical concerns of AI technology as their technical expertise increases, which may influence their level of adoption. In this regard, the Technology Readiness Index offers a valuable theoretical framework for comprehending the diverse impacts of various aspects of digital media literacy on artificial intelligence acceptance.

The findings of our study can also be evaluated within the framework of Ajzen’s (1991) Theory of Planned Behavior. This theory suggests that behavioral intentions are determined by attitudes, subjective norms, and perceived behavioral control. In our research, the positive effect of the Content Access and Content Creation dimensions on AI acceptance can be associated with the concept of perceived behavioral control in the Theory of Planned Behavior. Ivanov et al. (2024) and Wang et al. (2024) stated that students with strong digital skills feel more competent in controlling and managing AI technologies, which increases their level of acceptance. In addition, studies by Galindo-Domínguez et al. (2024) and Vitezić and Perić (2024) showed that individuals with high digital competence developed more positive attitudes toward AI technologies. Conversely, the negative effect of the Technical Understanding dimension can be explained by the fact that students with high technical knowledge are more aware of the potential limitations of AI technologies, which affects their behavioral intentions, as suggested by Park (2024). In this context, the theory of planned behavior provides a valuable theoretical framework for understanding the effects of digital media literacy on AI acceptance.

The present study contributes to the growing body of literature on digital media literacy and its impact on technology acceptance in higher education. By investigating the relationships between the sub-dimensions of digital media literacy and attitudes toward generative AI acceptance, this study provides valuable insights for educators, policymakers, and researchers. The findings suggest that fostering digital media literacy, particularly in terms of content access and creation, can lead to more positive attitudes toward generative AI acceptance in higher education. This highlights the importance of integrating digital literacy education into higher education curricula to prepare students for the challenges and opportunities presented by advanced technologies like generative AI (Chan and Hu, 2023; Long et al., 2022).

This study aimed to examine the relationships between digital media literacy and Gen-AI acceptance from an exploratory perspective. However, experimental study designs are needed to fully reveal the causal relationships between these variables. İlhan et al. (2024) and Alnuaim (2024) demonstrated that experimental interventions are effective in developing digital literacy skills. Similarly, Förster (2024) and Sinha and Bag (2023) emphasized that experimental designs more strongly explain causal relationships in technology acceptance studies. To better understand the adoption process of AI technologies in education, intervention programs can be designed to provide students with specific digital literacy skills, and the effects of these interventions on AI acceptance can be measured (Markus et al., 2024; Sinha and Bag, 2023). This study has revealed important relationships that will form the basis for future empirical research. Future research can examine how training programs to improve content access and content creation skills affect students’ attitudes toward Gen-AI technologies (Dangprasert, 2023). Such experimental studies will contribute to a deeper understanding of the relationships between digital literacy and technology acceptance.

Our findings have important implications for educational policies and AI literacy frameworks in higher education. The positive relationship of Content Access and Content Creation with AI acceptance aligns with AI literacy frameworks proposed by Long and Magerko (2020), which emphasize the importance of not only understanding AI but also actively engaging with it through content creation. Chan (2023) contends that educational policy should prioritize the development of students’ competencies in accessing, evaluating, and generating AI-enhanced content over mere technical skills. The unexpected negative relationship between Technical Understanding and AI acceptance suggests that current educational methodologies may need to find a balance among technical literacy, ethical considerations, and practical relevance. Higher education institutions should develop comprehensive AI literacy frameworks that cover both the technical and social dimensions of artificial intelligence (Zawacki-Richter et al., 2019). These frameworks should incorporate the five elements of digital media literacy from our study and prioritize content access and content creation skills. Our results support Crawford’s (2021) view that educational policy should support a thorough approach to artificial intelligence literacy that recognizes both the advantages and limitations of Gen-AI technologies. Higher education institutions can better equip students for critical engagement with Gen-AI by integrating these elements into instructional strategies and AI literacy frameworks.

However, the study also reveals the need for a more comprehensive understanding of the factors influencing attitudes toward generative AI acceptance in higher education. The negative impact of Technical Understanding and the non-significant impact of Critical Understanding on attitudes toward generative AI acceptance underscore the complexity of the relationships between digital media literacy and technology acceptance. Future research should explore these relationships further, considering additional factors such as ethical concerns, trust in AI systems, and the potential limitations of generative AI technologies.

In conclusion, the present study demonstrates the significant impact of digital media literacy on attitudes toward generative AI acceptance in higher education. The findings emphasize the importance of content access and creation in fostering positive attitudes toward generative AI acceptance, while also revealing the complex relationships between technical understanding, critical understanding, and technology acceptance. As higher education institutions continue to navigate the challenges and opportunities presented by generative AI, fostering digital media literacy among students becomes increasingly crucial. By empowering students with the skills to access, create, and critically evaluate digital content, educators can contribute to developing a more informed and proactive approach to the integration of generative AI in higher education.

6 Conclusion

The present study investigated the impact of digital media literacy on attitudes toward Gen-AI acceptance in higher education among undergraduate students at Kazan Federal University in Russia. The findings revealed that digital media literacy, particularly in terms of content access and creation, plays a significant role in shaping positive attitudes toward Gen-AI acceptance. The study highlights the importance of fostering digital media literacy skills among students to prepare them for the challenges and opportunities presented by advanced technologies like generative AI in higher education.

SEM results provided insights into the relationships between the sub-dimensions of digital media literacy and attitudes toward generative AI acceptance. Content Access and Create had significant positive impacts on attitudes toward generative AI acceptance, emphasizing the importance of individuals’ ability to access and create digital content in shaping their attitudes toward advanced technologies. Device Access had a small but significant positive impact, while Technical Understanding had a significant negative impact on attitudes toward generative AI acceptance. The non-significant impact of Critical Understanding on attitudes toward generative AI acceptance was unexpected and warrants further investigation.

The study contributes to the growing body of literature on digital media literacy and its impact on technology acceptance in higher education. The findings have important implications for educators, policymakers, and researchers, highlighting the need to integrate digital literacy education into higher education curricula to prepare students for the challenges and opportunities presented by generative AI. By fostering digital media literacy skills, particularly in terms of content access and creation, educators can help develop a more informed and proactive approach to integrating generative AI in higher education.

6.1 Recommendations

1) Higher education institutions should prioritize the integration of digital literacy education into their curricula to prepare students for the challenges and opportunities presented by generative AI and other advanced technologies.

2) Educators should focus on developing students’ skills in accessing and creating digital content, as these sub-dimensions of digital media literacy have been shown to have a significant positive impact on the attitudes toward generative AI acceptance.

3) Policymakers should support initiatives that promote digital media literacy education and the responsible integration of generative AI in higher education, taking into account the ethical and societal considerations associated with these technologies.

4) Researchers should conduct further studies to explore the complex relationships between digital media literacy and technology acceptance, considering additional factors such as ethical concerns, trust in AI systems, and the potential limitations of generative AI technologies.

5) Future research could include variables such as trust in AI, perceived usefulness, ethical awareness, and AI anxiety as mediators or moderators to enrich the model explaining the relationship between digital media literacy and AI acceptance. Incorporating such psychological mechanisms into the model will lead to a clearer understanding of the complex relationships between digital literacy and AI acceptance. In particular, it may be valuable to examine the mediating role of variables such as trust in AI technologies and ethical concerns to explain the unexpected negative effect of the Technical Understanding dimension on AI acceptance.

6.2 Limitations

1) We used convenience sampling, so the results may not generalize to all undergraduate students in Russia or other countries. Future studies should employ probability-based sampling methods to improve the generalizability of the findings.

2) Our study is cross-sectional, meaning we collected data at only one point in time. This makes it difficult to determine whether digital media literacy genuinely changes views toward the acceptance of generative AI or whether other factors are involved, and we cannot observe how these relationships might change over time. Longitudinal studies or experiments could better demonstrate whether digital media literacy truly affects technology acceptance.

3) Since we only studied students from one university in Russia, our findings might not apply to students in other countries or educational settings. Different cultural backgrounds, teaching approaches, and access to technology could lead to varying results elsewhere. Future research should involve students from various universities and countries and examine how digital media literacy influences attitudes toward generative AI acceptance in different cultures and educational settings.

4) We use self-reported data, which may not always be accurate because people want to present themselves favorably or may forget details. Future studies could use other methods, such as actual tests of digital media literacy or observing how individuals use technology, to validate our findings.

In conclusion, our study offers valuable insights into how digital media literacy affects attitudes toward generative AI acceptance in universities. We find that content access and content creation are important for fostering positive perceptions of generative AI, whereas technical understanding and critical understanding have more complex effects. With the increasing prevalence of generative AI in academia, it is essential to educate students in digital media literacy. Students can leverage generative AI more effectively in their studies if they can access digital resources, create content, and engage in critical analysis. Future research should further investigate these topics, address the limitations of our study, and explore the relationship between digital media literacy and technology adoption across various educational environments.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by the Institute of Psychology and Education at Kazan Federal University on September 17, 2024. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

OS: Conceptualization, Formal analysis, Investigation, Supervision, Visualization, Writing – review & editing. AM: Writing – original draft, Writing – review & editing. MZ: Conceptualization, Formal analysis, Investigation, Methodology, Supervision, Validation, Visualization, Writing – original draft. LC: Conceptualization, Investigation, Methodology, Software, Writing – original draft. LL: Conceptualization, Formal analysis, Investigation, Visualization, Writing – original draft. AL: Conceptualization, Methodology, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1563148/full#supplementary-material

References

Abbas, Q., Hussain, S., and Rasool, S. (2019). Digital literacy effect on the academic performance of students at higher education level in Pakistan. Glob. Soc. Sci. Rev. 4, 108–116. doi: 10.31703/gssr.2019(iv-i).14

Acosta-Enriquez, B. G., Arbulú Ballesteros, M. A., Huamaní Jordan, O., López Roca, C., and Saavedra Tirado, K. (2024). Analysis of college students’ attitudes toward the use of ChatGPT in their academic activities: effect of intent to use, verification of information and responsible use. BMC Psychol. 12:255. doi: 10.1186/s40359-024-01764-z

Afrilyasanti, R., Basthomi, Y., and Zen, E. L. (2022). The implications of instructors’ digital literacy skills for their attitudes to teach critical media literacy in EFL classrooms. Int. J. Media Inf. Lit. 7, 283–292. doi: 10.13187/ijmil.2022.2.283

Ajzen, I. (1991). The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50, 179–211. doi: 10.1016/0749-5978(91)90020-T

Akinwalere, S. N., and Ivanov, V. (2022). Artificial intelligence in higher education: challenges and opportunities. Border Crossing 12, 1–15. doi: 10.33182/bc.v12i1.2015

Alakrash, H. M., and Razak, N. A. (2021). Technology-based language learning: investigation of digital technology and digital literacy. Sustainability 13:12304. doi: 10.3390/su132112304

Alasadi, E. A., and Baiz, C. R. (2023). Generative AI in education and research: opportunities, concerns, and solutions. J. Chem. Educ. 100, 2965–2971. doi: 10.1021/acs.jchemed.3c00323

Alier, M., García-Peñalvo, F. J., and Camba, J. D. (2024). Generative artificial intelligence in education: from deceptive to disruptive. Int. J. Interact. Multimed. Artif. Intell. 8, 5–14. doi: 10.9781/ijimai.2024.02.011

Alnuaim, A. (2024). The impact and acceptance of gamification by learners in a digital literacy course at the undergraduate level: randomized controlled trial. JMIR Serious Games 12:e52017. doi: 10.2196/52017

Ameen, N., Sharma, G. D., Tarba, S., Rao, A., and Chopra, R. (2022). Toward advancing theory on creativity in marketing and artificial intelligence. Psychol. Mark. 39, 1802–1825. doi: 10.1002/mar.21699

Anggraeni, F. K. A., Nuraini, L., Harijanto, A., Prastowo, S. H. B., Subiki, S., Supriadi, B., et al. (2023). Student digital literacy analysis in physics learning through implementation digital-based learning media. J. Phys. Conf. Ser. 2623:012023. doi: 10.1088/1742-6596/2623/1/012023

Balaban-Sali, J. (2012). New media literacies of communication students. Contemp. Educ. Technol. 3, 265–277. doi: 10.30935/cedtech/6083

Bejaković, P., and Mrnjavac, Ž. (2020). The importance of digital literacy on the labour market. Empl. Relat. 42, 921–932. doi: 10.1108/ER-07-2019-0274

Bornstein, M. H., Jager, J., and Putnick, D. L. (2013). Sampling in developmental science: situations, shortcomings, solutions, and standards. Dev. Rev. 33, 357–370. doi: 10.1016/j.dr.2013.08.003

Botturi, L., Geronimi, M., and Kamanda, Y. (2018). One-frame movie: smartphones and movies for digital literacy development among teens and pre-teens. INTED2018 Proceedings, 4497–4503.

Camilleri, M. A. (2019). The SMEs’ technology acceptance of digital media for stakeholder engagement. J. Small Bus. Enterp. Dev. 26, 504–521. doi: 10.1108/JSBED-02-2018-0042

Chan, C. K. Y. (2023). A comprehensive AI policy education framework for university teaching and learning. Int. J. Educ. Technol. High. Educ. 20:38. doi: 10.1186/s41239-023-00408-3

Chan, C. K. Y., and Hu, W. (2023). Students’ voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 20:43. doi: 10.1186/s41239-023-00411-8

Chatterjee, S., and Bhattacharjee, K. K. (2020). Adoption of artificial intelligence in higher education: a quantitative analysis using structural equation modelling. Educ. Inf. Technol. 25, 3443–3463. doi: 10.1007/s10639-020-10159-7

Chen, H., Li, L., and Chen, Y. (2021). Explore success factors that impact artificial intelligence adoption on telecom industry in China. J. Manag. Anal. 8, 36–68. doi: 10.1080/23270012.2020.1852895

Cohen, L., Manion, L., and Morrison, K. (1994). Educational research methodology. Athens: Metaixmio.

Crawford, K. (2021). The atlas of AI: Power, politics, and the planetary costs of artificial intelligence. United States of America: Yale University Press.

Creswell, J. W., and Clark, V. L. P. (2017). Designing and conducting mixed methods research. 3rd Edn. Thousand Oaks, California: Sage.

Damiano, A. D., Lauría, E. J. M., Sarmiento, C., and Zhao, N. (2024). Early perceptions of teaching and learning using generative AI in higher education. J. Educ. Technol. Syst. 52, 346–375. doi: 10.1177/00472395241233290

Dangprasert, S. (2023). The development of a learning activity model for promoting digital technology and digital content development skills. Int. J. Inf. Educ. Technol. 13, 1242–1250. doi: 10.18178/ijiet.2023.13.8.1926

Dastani, M., and Yazdanpanah, V. (2023). Responsibility of AI systems. AI Soc. 38, 843–852. doi: 10.1007/s00146-022-01481-4

Dehouche, N. (2021). Plagiarism in the age of massive generative pre-trained transformers (GPT-3). Ethics Sci. Environ. Polit. 21, 17–23. doi: 10.3354/esep00195

Du, S., and Xie, C. (2021). Paradoxes of artificial intelligence in consumer markets: ethical challenges and opportunities. J. Bus. Res. 129, 961–974. doi: 10.1016/j.jbusres.2020.08.024

Dyanggi, A. A. M. C., Islam, N., and Rian, R. (2022). Digital literacy as an effort to build national resilience in defending the country in the digital field. J. Digit. Law Policy 1, 121–130. doi: 10.58982/jdlp.v1i3.202

Erol, H. (2021). A review of social studies course books regarding digital literacy and media literacy. Int. J. High. Educ. 10:101. doi: 10.5430/ijhe.v10n5p101

Etikan, I., Musa, S. A., and Alkassim, R. S. (2016). Comparison of convenience sampling and purposive sampling. Am. J. Theor. Appl. Stat. 5:1. doi: 10.11648/j.ajtas.20160501.11

Farhi, F., Jeljeli, R., Aburezeq, I., Dweikat, F. F., Al-shami, S. A., and Slamene, R. (2023). Analyzing the students’ views, concerns, and perceived ethics about chat GPT usage. Comput. Educ. Artif. Intell. 5:100180. doi: 10.1016/j.caeai.2023.100180

Flavián, C., Pérez-Rueda, A., Belanche, D., and Casaló, L. V. (2022). Intention to use analytical artificial intelligence (AI) in services – the effect of technology readiness and awareness. J. Serv. Manag. 33, 293–320. doi: 10.1108/JOSM-10-2020-0378

Förster, K. (2024). Extending the technology acceptance model and empirically testing the conceptualised consumer goods acceptance model. Heliyon 10:e27823. doi: 10.1016/j.heliyon.2024.e27823

Galindo-Domínguez, H., Delgado, N., Campo, L., and Losada, D. (2024). Relationship between teachers’ digital competence and attitudes towards artificial intelligence in education. Int. J. Educ. Res. 126:102381. doi: 10.1016/j.ijer.2024.102381

Gillani, N., Eynon, R., Chiabaut, C., and Finkel, K. (2023). Unpacking the “black box” of AI in education. Educ. Technol. Soc. 26, 99–111. doi: 10.30191/ETS.202301_26(1).0008

Gonçalves, A. R., Pinto, D. C., Rita, P., and Pires, T. (2023). Artificial intelligence and its ethical implications for marketing. Emerg. Sci. J. 7, 313–327. doi: 10.28991/ESJ-2023-07-02-01

Gunawan, B., and Ratmono, B. M. (2020). Social media, cyberhoaxes and national security: threats and protection in Indonesian cyberspace. Int. J. Netw. Secur. 22, 93–101. doi: 10.6633/IJNS.202001_22(1).09

Heiss, R., Nanz, A., and Matthes, J. (2023). Social media information literacy: conceptualization and associations with information overload, news avoidance and conspiracy mentality. Comput. Hum. Behav. 148:107908. doi: 10.1016/j.chb.2023.107908

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Huang, S.-C., and Le, T.-H. (2021). “Generative adversarial network” in Principles and labs for deep learning. eds. S.-C. Huang and T.-H. Le (London, UK: Elsevier), 255–281.

İlhan, A., Aslaner, R., and Yaşaroğlu, C. (2024). Development of digital literacy skills of 21st century mathematics teachers and prospective teachers through technology assisted education. Educ. Inf. Technol. doi: 10.1007/s10639-024-13283-w

Ivanov, S., Soliman, M., Tuomi, A., Alkathiri, N. A., and Al-Alawi, A. N. (2024). Drivers of generative AI adoption in higher education through the lens of the theory of planned behaviour. Technol. Soc. 77:102521. doi: 10.1016/j.techsoc.2024.102521

Jager, J., Putnick, D. L., and Bornstein, M. H. (2017). II. More than just convenient: the scientific merits of homogeneous convenience samples. Monogr. Soc. Res. Child Dev. 82, 13–30. doi: 10.1111/mono.12296

Jalil, A., Tohara, T., Shuhidan, S. M., Diana, F., Bahry, S., Nordin, N. B., et al. (2021). Exploring digital literacy strategies for students with special educational needs in the digital age. Turkish J. Comput. Math. Educ. 12, 3345–3358. doi: 10.17762/turcomat.v12i9.5741

Kamalov, F., Santandreu Calonge, D., and Gurrib, I. (2023). New era of artificial intelligence in education: towards a sustainable multifaceted revolution. Sustainability 15:12451. doi: 10.3390/su151612451

Khan, F. H., Pasha, M. A., and Masud, S. (2021). Advancements in microprocessor architecture for ubiquitous AI—an overview on history, evolution, and upcoming challenges in AI implementation. Micromachines 12:665. doi: 10.3390/mi12060665

Khan, N., Sarwar, A., Chen, T. B., and Khan, S. (2022). Connecting digital literacy in higher education to the 21st century workforce. Knowl. Manag. E-Learn. 14, 46–61. doi: 10.34105/j.kmel.2022.14.004

Khare, A., Jain, K., Laxmi, V., and Khanna, P. (2022). Impact of digital literacy levels of health care professionals on perceived quality of care. Iproceedings 8:e41561. doi: 10.2196/41561

Klimova, B., Pikhart, M., and Kacetl, J. (2023). Ethical issues of the use of AI-driven mobile apps for education. Front. Public Health 10:1118116. doi: 10.3389/fpubh.2022.1118116

Kuek, A., and Hakkennes, S. (2020). Healthcare staff digital literacy levels and their attitudes towards information systems. Health Informatics J. 26, 592–612. doi: 10.1177/1460458219839613

Kusters, R., Misevic, D., Berry, H., Cully, A., Le Cunff, Y., Dandoy, L., et al. (2020). Interdisciplinary research in artificial intelligence: challenges and opportunities. Front. Big Data 3:577974. doi: 10.3389/fdata.2020.577974

Lainjo, B., and Tmouche, H. (2023). The impact of artificial intelligence on higher learning institutions. Int. J. Educ. Teach. Soc. Sci. 3, 96–113. doi: 10.47747/ijets.v3i2.1028

Letigio, C. J. S., and Balijon, D. P. (2022). Digital literacy readiness of graduates of Philippine higher education institution. Int. J. Res. Publ. 115, 207–213. doi: 10.47119/ijrp10011511220224298

Liu, P. L., and Chen, C. J. (2023). Using an AI-based object detection translation application for English vocabulary learning. Educ. Technol. Soc. 26, 5–20. doi: 10.30191/ETS.202307_26(3).0002

Long, D., and Magerko, B. (2020). What is AI literacy? Competencies and design considerations. Conference on Human Factors in Computing Systems-Proceedings.

Long, D., Teachey, A., and Magerko, B. (2022). Family learning talk in AI literacy learning activities. Conference on Human Factors in Computing Systems-Proceedings.

Mahligawati, F., Allanas, E., Butarbutar, M. H., and Nordin, N. A. N. (2023). Artificial intelligence in physics education: a comprehensive literature review. J. Phys. Conf. Ser. 2596:012080. doi: 10.1088/1742-6596/2596/1/012080

Mahmoud, A. B., Kumar, V., and Spyropoulou, S. (2025). Identifying the public’s beliefs about generative artificial intelligence: a big data approach. IEEE Trans. Eng. Manag. 72, 827–841. doi: 10.1109/TEM.2025.3534088

Mariot, L., Jakobovic, D., Bäck, T., and Hernandez-Castro, J. (2022). “Artificial intelligence for the design of symmetric cryptographic primitives” in Security and artificial intelligence: A crossdisciplinary approach. eds. L. Batina, T. Bäck, I. Buhan, and S. Picek (Cham, Switzerland: Springer International Publishing), 3–24.

Markus, A., Pfister, J., Carolus, A., Hotho, A., and Wienrich, C. (2024). Effects of AI understanding-training on AI literacy, usage, self-determined interactions, and anthropomorphization with voice assistants. Comput. Educ. Open 6:100176. doi: 10.1016/j.caeo.2024.100176

Maznev, P., Stützer, C., and Gaaw, S. (2024). AI in higher education: booster or stumbling block for developing digital competence? Zeitschrift Für Hochschulentwicklung 19, 109–126. doi: 10.21240/zfhe/19-01/06

Michel-Villarreal, R., Vilalta-Perdomo, E., Salinas-Navarro, D. E., Thierry-Aguilera, R., and Gerardou, F. S. (2023). Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Educ. Sci. 13:856. doi: 10.3390/educsci13090856

Miteva, K. (2022). Digital media literacy in the training of student midwives. Int. J. Sci. Res. Manag. 10, 652–656. doi: 10.18535/ijsrm/v10i07.mp01

Nyaaba, M., and Zhai, X. (2024). Generative AI professional development needs for teacher educators. J. AI 8, 1–13. doi: 10.61969/jai.1385915

Oc, Y., Gonsalves, C., and Quamina, L. T. (2024). Generative AI in higher education assessments: examining risk and tech-savviness on student’s adoption. J. Mark. Educ. doi: 10.1177/02734753241302459

Okela, A. H. (2023). Egyptian university students’ smartphone addiction and their digital media literacy level. J. Media Lit. Educ. 15, 44–57. doi: 10.23860/JMLE-2023-15-1-4

Owan, V. J., Abang, K. B., Idika, D. O., Etta, E. O., and Bassey, B. A. (2023). Exploring the potential of artificial intelligence tools in educational measurement and assessment. Eurasia J. Math. Sci. Technol. Educ. 19:em2307. doi: 10.29333/ejmste/13428

Ozdamar-Keskin, N., Zeynep Ozata, F., Banar, K., and Royle, K. (2015). Examining digital literacy competences and learning habits of open and distance learners. Contemp. Educ. Technol. 6, 74–90. doi: 10.30935/cedtech/6140

Pangrazio, L., Godhe, A.-L., and Ledesma, A. G. L. (2020). What is digital literacy? A comparative review of publications across three language contexts. E-Learn. Digit. Media 17, 442–459. doi: 10.1177/2042753020946291

Parasuraman, A. (2000). Technology readiness index (TRI) a multiple-item scale to measure readiness to embrace new technologies. J. Serv. Res. 2, 307–320. doi: 10.1177/109467050024001

Parianom, R., Arrafi, J., and Desmintari, D. (2022). The impact of digital technology literacy and life skills on poverty reduction. Devot. J. Res. Comm. Serv. 3, 2002–2007. doi: 10.36418/dev.v3i12.247

Park, S. (2012). Dimensions of digital media literacy and the relationship with social exclusion. Media Int. Aust. 142, 87–100. doi: 10.1177/1329878X1214200111

Park, H. E. (2024). The double-edged sword of generative artificial intelligence in digitalization: an affordances and constraints perspective. Psychol. Mark. 41, 2924–2941. doi: 10.1002/mar.22094

Park, H., Kim, H. S., and Park, H. W. (2021). A Scientometric study of digital literacy, ICT literacy, information literacy, and media literacy. J. Data Inf. Sci. 6, 116–138. doi: 10.2478/jdis-2021-0001

Perdana, R., Yani, R., Jumadi, J., and Rosana, D. (2019). Assessing students’ digital literacy skill in senior high school Yogyakarta. JPI (Jurnal Pendidikan Indonesia) 8:169. doi: 10.23887/jpi-undiksha.v8i2.17168

Pertiwi, I. (2022). Digital literacy in EFL learning: university students’ perspectives. JEES (Journal of English Educators Society) 7, 197–204. doi: 10.21070/jees.v7i2.1670