- ¹Center for Excellence in Learning and Teaching, King Saud University, Riyadh, Saudi Arabia

- ²Kayyali Chair for Pharmaceutical Industries, Department of Pharmaceutics, College of Pharmacy, King Saud University, Riyadh, Saudi Arabia

- ³Department of Curriculum and Instruction, College of Education, King Saud University, Riyadh, Saudi Arabia

- ⁴Department of Plant Production, College of Food and Agriculture Sciences, King Saud University, Riyadh, Saudi Arabia

Background: The landscape of higher education is evolving with an emphasis on teaching excellence and student-centered learning, driven by technological advancements and societal changes. This study aimed to comprehensively evaluate the impact of the grant Program for Excellence in Learning and Teaching (PELT) at King Saud University, the first of its kind in Saudi Arabia.

Methods: This study used a mixed-methods design that combined quantitative and qualitative data collection and analysis techniques. Faculty and student surveys were conducted to assess the program’s impact on teaching practices, student engagement, and skills development. Additionally, student academic performance data, including self-reported PELT course grades and university-reported final exam scores, were analyzed. The study examined the influence of various factors, such as academic discipline and grant theme, on the program’s outcomes. Textual feedback from faculty and students was subjected to AI-assisted thematic and sentiment analysis to uncover key themes and insights.

Results: The PELT program showed a positive impact on faculty’s pedagogical skills, teaching methods, and perceptions of student learning. Faculty members showed a stronger preference for the “Student Professional Development” grant theme, while students valued the “Curriculum and Course Content Development” and “Excellence in Teaching and Assessment Strategies” themes more. The student textual feedback highlighted the need for more dynamic, student-focused, and practically oriented educational approaches. The innovative use of AI-powered sentiment analysis offered valuable insights into student experiences that complemented traditional survey-based methods. The analysis of student-reported academic performance revealed that PELT course grades were slightly higher than overall GPA, with the Humanities discipline demonstrating significant positive impacts. Importantly, the analysis of university-reported scores further showed that courses emphasizing student-faculty interactions and student professional development (T2 and T3 themes) had significantly higher scores in the PELT group than in the control group, whereas the T1 (Curriculum and Course Content Development) theme showed the opposite pattern.

Conclusion: The study’s findings underscore the importance of aligning educational interventions with approaches that prioritize active learning, collaborative experiences, and authentic student-faculty interactions. Furthermore, this study provides a robust foundation for assessing the impact of faculty development programs and guiding data-driven decisions to enhance the quality of higher education.

1 Introduction

The landscape of higher education is evolving with a strong emphasis on teaching excellence and student-centered learning, driven by technological advancements, societal changes, and the need for innovative educational practices (Sointu et al., 2019; Nygren and Sjöberg, 2023; Kayyali, 2024). In response, world-class universities have established a range of professional development programs to support teaching, research, and student learning. Grounded in constructivist learning theories, these programs aim to foster active, learner-centered approaches that engage learners in the co-construction of knowledge (Hyde and Nanis, 2006; Weimer, 2013; Ross et al., 2019). The scholarship of teaching and learning further emphasizes the importance of continual reflection and evidence-based refinement of pedagogical practices to improve student learning outcomes (Trigwell et al., 2000; Bernstein and Ginsberg, 2009; Hutchings et al., 2011; Cruz, 2013). These programs are vital for adopting innovative teaching methodologies, maintaining academic excellence, and applying best practices in program development and resource management (Steinert, 2010; James Jacob et al., 2015; Carney et al., 2016; Jacob et al., 2019).

The importance of faculty development programs in enhancing educational quality across institutions is well-established in the literature. Systematic reviews highlight the critical role of these programs in empowering educators, ensuring institutions maintain educational excellence, and significantly improving teaching methodologies and faculty job satisfaction (James Jacob et al., 2015; Kohan et al., 2023; Khadar and Kansra, 2024). Previous studies have consistently shown that teaching grant programs can significantly enhance faculty members’ pedagogical skills, encourage innovation in teaching methods, and foster a culture of continuous improvement within higher education institutions (Gruppen et al., 2003; Steinert et al., 2006; Bilal Guraya and Chen, 2019). Furthermore, research indicates high satisfaction rates among participating faculty, with reported improvements in teaching effectiveness and increased academic output (Steinert et al., 2016). While the evidence supports the effectiveness of faculty development programs, more rigorous evaluations are needed to understand their long-term impacts on institutional practices and student learning outcomes (Steinert, 2017).

In addition to faculty development initiatives, research projects and grants are considered among the most important practices for improving education, as they often have comprehensive goals and represent unique opportunities to build a scholarly community focused on faculty development (Silver, 2013). These research-based programs may focus on developing the skills of faculty members, students, or both, and may directly aim to improve the educational environment, thereby enhancing educational outcomes.

The strategic use of small grant project schemes has been widely recognized as an effective means of promoting innovation, increasing motivation for teaching, and supporting continuing professional development (Morris and Fry, 2006). These targeted grant programs offer a valuable complement to standard faculty training initiatives, empowering educators to pursue self-directed projects and experimentation that can drive meaningful improvements in teaching and learning.

For instance, several well-known universities such as the National University of Singapore, Yale University, Stanford University, and the University of Auckland have implemented teaching grant programs to promote professional development, encourage innovative educational practices, and explore new directions for course and curriculum development (Carney et al., 2016; Ossevoort et al., 2024). Similarly, King Fahd University of Petroleum and Minerals in Saudi Arabia has the Academic Professional Development Program to support faculty in developing their knowledge and learning skills.

These examples demonstrate the global recognition of the importance of investing in faculty professional development and pedagogical innovation to enhance the quality of higher education and student learning experiences. Teaching grant programs play a crucial role in enhancing faculty pedagogical skills, promoting innovative teaching practices, and establishing a culture of continuous improvement in higher education.

Digital transformation and technological advancements have fundamentally reshaped teaching and learning approaches on a global scale (Mhlanga, 2022). Recent studies have acknowledged that generative AI has a complex impact on student engagement and performance, with both positive and negative implications. While some studies highlight its potential to enhance engagement and improve academic outcomes (Bulawan Aieron et al., 2024; Lo et al., 2025), others indicate that reliance on these tools may hinder learning and performance (Bulawan Aieron et al., 2024; Wecks et al., 2024). This duality calls for careful consideration by educators and policymakers regarding its integration into educational practices.

In response to these global trends, King Saud University initiated the grant Program for Excellence in Learning and Teaching (PELT), the first of its kind in Saudi universities, which has been coordinated by the Center for Excellence in Learning and Teaching (CELT-KSU). This pioneering program in Saudi Arabia aims to improve student learning experiences and skills, enhance academic practices, and promote a culture of quality in learning and teaching.

The current study focuses on the latest (7th) cycle of the PELT initiative (2023–2024), which has evolved to center on three key intervention domains: Students as Partners, GPT Tools in Education, and Innovation in Educational Practices. These intervention domains reflect the program’s adaptability to emerging trends in higher education and its commitment to addressing contemporary challenges in teaching and learning.

While these programs aim to enhance teaching quality, it has been argued that the focus on funding and awards may inadvertently create competition rather than collaboration among educators, potentially undermining the collective goal of improving student learning outcomes (O’Leary and Wood, 2019). Therefore, these initiatives should be continuously assessed through comprehensive evaluations, including teaching portfolios and student feedback, to foster a culture of improvement in teaching practices (Malfroy and Willis, 2018).

This study aims to conduct a comprehensive evaluation of the impact of the PELT initiative on faculty teaching practices, student engagement, skills development, as well as student academic achievements. By analyzing data from faculty and student surveys, as well as student performance metrics, the study seeks to address the following specific research questions:

A- Faculty Surveys:

• What is the faculty’s perception of the PELT program and its impact on their teaching practices?

• How do faculty members’ views on the PELT program vary across different demographic factors, academic disciplines, and grant themes?

B- Student Surveys:

• How has the PELT program impacted student engagement and skills development?

• What are the students’ perceptions of the teaching methodologies used in PELT-supported courses?

C- Sentiment Analysis:

• Can AI-powered sentiment analysis of student feedback provide additional insights into their satisfaction and experiences with the PELT program?

• How do the AI-estimated sentiment scores align with the students’ self-reported satisfaction measures?

D- Academic Performance Analysis:

• How does the academic performance of students in PELT-implemented courses compare with their overall GPA and with that of non-PELT control groups?

• What contextual factors, such as academic disciplines and grant themes, influence the PELT program’s impact on student academic outcomes?

By addressing these research questions, the study aims to provide a comprehensive assessment of the PELT program’s impact, encompassing both subjective experiences (faculty and student perceptions) and objective academic outcomes. The findings from this study are expected to contribute to the growing body of knowledge on learning and teaching excellence programs and their impact on higher education quality, particularly in the context of Saudi Arabian universities.

2 Materials and methods

2.1 Study design and methodology

This study utilized a mixed-methods approach to comprehensively evaluate the impact of the PELT initiative at King Saud University. The research design combined quantitative and qualitative data collection and analysis techniques to provide a holistic assessment of the program’s influence on faculty and students (Schoonenboom and Johnson, 2017; Shahba et al., 2022). To control for the potential influence of confounding variables, the study design and analysis incorporated several strategies. First, the surveys collected data on a range of faculty/student demographics and academic characteristics, including academic discipline, academic level, GPA, and sex. Additionally, the study examined the effect of PELT on educational outcomes at both the overall and subgroup levels. This subgroup analysis, considering factors like academic discipline, grant theme, and individual course types, helped to minimize the influence of course-level confounding factors such as content, assessments, and instructors. Finally, the use of both student-reported and university-reported performance data, as well as the integration of qualitative student feedback, provided a more comprehensive approach to understanding the PELT program’s impact while accounting for potential confounding influences.

2.2 Participant recruitment and study setting

During the 1st semester of 2023/2024, CELT-KSU issued a public call for voluntary participation in the 7th cycle of the PELT program, directed at all university faculty members. Of the 38 faculty applicants, 11 passed a rigorous review process and subsequently implemented the program in their courses during the 2nd semester of 2024.

Faculty members who actively participated in the program and incorporated the review comments received financial support to cover the required expenses and to compensate them for their administrative and intellectual efforts in the program.

2.3 Study demographics

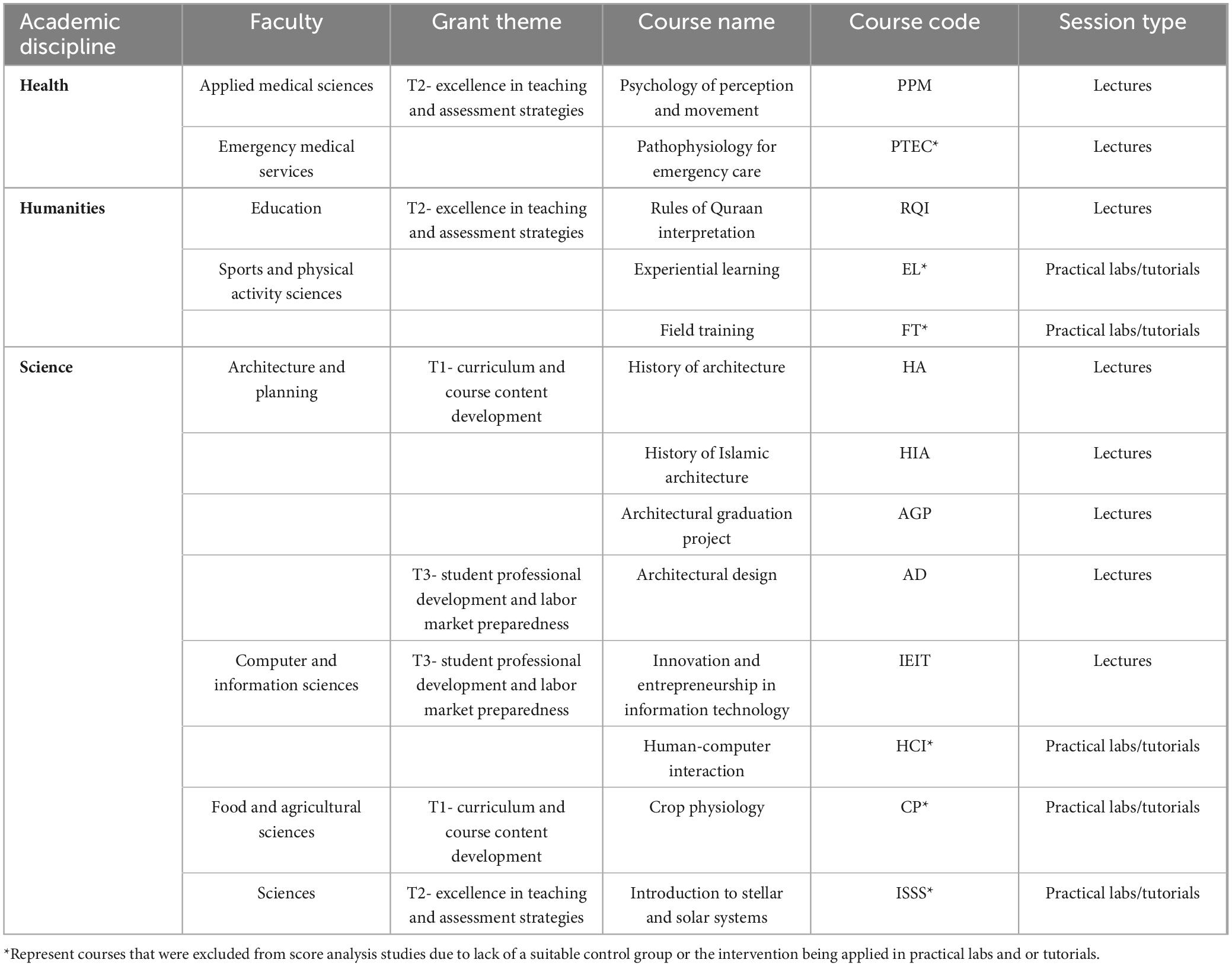

The current study covered the 7th cycle of the PELT initiative, which was implemented in the 2nd semester of 2024. It involved 13 undergraduate courses spanning three academic disciplines and eight different faculties, benefiting a total of 445 students (Table 1). Regarding session type, 8 courses implemented PELT in theoretical lecture classrooms, while 5 courses applied it in practical labs and tutorials.

2.3.1 Emergent grant themes and thematic foci

The implemented PELT grants were retrospectively categorized into three themes that emerged from an AI-assisted post-hoc analysis and careful examination of the funded projects and their thematic foci: T1: Curriculum and Course Content Development, T2: Excellence in Teaching and Assessment Strategies, and T3: Student Professional Development and Labor Market Preparedness (Table 1). Although some grants may have encompassed multiple themes, only the single most prominent theme was assigned to each funded course.

2.4 Data collection

2.4.1 Questionnaires to evaluate faculty and student perceptions of PELT

At the end of the semester, faculty and students who participated in PELT were invited to complete a voluntary survey providing feedback on their perceptions of the program and/or course quality (Altwijri et al., 2022; Shahba et al., 2022).

2.4.1.1 Faculty survey

The faculty survey was conducted through voluntary, in-depth phone interviews in Arabic and comprised four main sections (the English-translated version of the faculty survey is provided in Supplementary Appendix 1). The first section collected general respondent information, the second covered the impact of PELT on faculty skills, the third addressed the impact of PELT on students, and the last consisted of open-ended questions for textual recommendations and suggestions. The survey targeted all 11 participating faculty members and achieved a 100% response rate.

2.4.1.2 Student survey

The student survey was administered in Arabic using Google Forms®, and the survey link was distributed to students via email (Shahba and Sales, 2021). Two follow-up emails were also sent to encourage participation. The student survey comprised five main sections (the English-translated version of the student survey is provided in Supplementary Appendix 2). The first collected general respondent information, including the courses taken, the scores obtained in those courses, and GPA. The second and third sections addressed the impact of the teaching methodology on student achievement and skills, respectively. The fourth and fifth sections covered students’ perceptions of course instruction and textual recommendations to improve PELT. A total of 445 students were surveyed, with a response rate of 13.7%. Responses with inappropriate and/or missing data were excluded from the study.

2.4.2 Student academic performance

2.4.2.1 Student-reported PELT course grades vs. GPA

These two metrics were collected through the student survey to analyze the program’s influence on students’ performance in courses where PELT was implemented compared to their overall GPA (Supplementary Table S1).

2.4.2.2 University-reported final course scores

Anonymized data on final exam scores were obtained from KSU’s Deanship of Admission and Registration Affairs. This dataset included the total number of students enrolled in each section, as well as the number of students who withdrew, actively participated, or were prohibited from the course. Additionally, the data detailed the number of students who passed or failed the exams, along with their distribution across the grade categories, from D to A+. Course sections that implemented PELT were assigned to the PELT group, whereas the corresponding non-PELT sections were assigned to the control group.

The data was then restructured using Python, focusing on three key metrics: Pass rate (%), Student scores, and Average section scores. The pass rate was calculated as the percentage of students who earned a grade of D or higher, while the average section and student scores were determined based on KSU’s grading scale, which assigns numerical values from 1 (DN or F) to 5 (A+) (Shahba et al., 2023).
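The metric definitions above can be illustrated with a minimal Python sketch. It assumes a hypothetical input format (one dictionary of grade counts per section) and uses the common KSU 5-point values for intermediate grades (A = 4.75, B+ = 4.5, and so on); the text itself confirms only the endpoints, 1 for DN/F and 5 for A+. It also assumes that the pass-rate denominator is all graded students, including DN.

```python
# Minimal sketch: section-level metrics from a grade distribution.
# ASSUMPTION: intermediate grade points follow the common KSU 5-point scale;
# the text only confirms the endpoints (DN/F = 1, A+ = 5).
GRADE_POINTS = {
    "A+": 5.0, "A": 4.75, "B+": 4.5, "B": 4.0,
    "C+": 3.5, "C": 3.0, "D+": 2.5, "D": 2.0,
    "F": 1.0, "DN": 1.0,
}
# Grades counted as passing ("D or higher").
PASSING = {"A+", "A", "B+", "B", "C+", "C", "D+", "D"}


def section_metrics(grade_counts: dict[str, int]) -> tuple[float, float, list[float]]:
    """Return (pass rate %, average section score, per-student scores)."""
    # Expand the counts into one numeric score per student.
    student_scores = [
        GRADE_POINTS[grade]
        for grade, count in grade_counts.items()
        for _ in range(count)
    ]
    n_students = len(student_scores)
    n_passed = sum(c for g, c in grade_counts.items() if g in PASSING)
    pass_rate = 100.0 * n_passed / n_students
    average_score = sum(student_scores) / n_students
    return pass_rate, average_score, student_scores
```

For a hypothetical section with grade counts `{"A+": 2, "B": 3, "D": 1, "F": 2}`, this gives a pass rate of 75.0% and an average section score of 3.25. The per-student list is what a student-level comparison (e.g. a Mann-Whitney U test) would consume.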

In the score analysis, one course (PTEC) was excluded because it was delivered only in the second semester with PELT implemented across all sections, leaving no viable control group for comparison. In addition, five courses in which PELT was implemented only in labs/tutorials were excluded, because the program’s impact was limited to the practical sessions, which typically contribute at most 30% of the overall course grade. These six courses were nonetheless included in the other analyses, such as student-reported PELT course grades, faculty and student surveys, and the textual feedback analysis.

The seven remaining courses provided suitable conditions for analyzing university-reported final course scores. For most of these courses, the non-PELT (control) group was drawn from the first semester and the PELT group from the second semester, with assignment based on whether PELT was implemented in each course.

2.5 Ethical considerations

The study protocol and surveys were reviewed and approved by the Standing Committee for Scientific Research Ethics (Ref No. KSU-HE-24-153). The student scores and feedback used in the retrospective analyses were collected as part of normal course assessment. Informed consent was obtained electronically from surveyed students.

2.6 Data analysis

The current study employed both quantitative and qualitative analyses of the study parameters.

Quantitative analysis:

• Descriptive statistics to examine the distribution and trends in faculty and student survey responses.

• Comparative analysis of student performance metrics between PELT-implemented and control course sections.

• Subgroup analysis to examine the influence of confounding factors, such as academic discipline, grant theme, and sex.

Qualitative analysis:

• Thematic analysis of faculty and student textual feedback to uncover key themes and insights (Supplementary Table S1).

• AI-estimated sentiment analysis to generate a sentiment score for each textual feedback entry.

• Integration of qualitative findings with quantitative results to provide a comprehensive understanding of the PELT program’s impact.

2.6.1 Methodological approach for AI-estimated sentiment analysis

The sentiment analysis was conducted using DATAIKU (version 11.1.3) and its sentiment analysis plugin (version 1.5.0). This plugin utilized the FastText library to provide a pre-trained model for classifying the sentiment polarity (positive/negative) of English text data. The process involved several critical pre-processing steps to ensure accurate sentiment estimation.

Initially, the raw student feedback texts underwent rigorous text preprocessing and cleaning, including:

• Translation: Converting all text from Arabic to the English language.

• Decapitalization: Converting all text to lowercase to standardize the input.

• Stemming: Reducing words to their root form to normalize linguistic variations.

• Tokenization: Breaking down text into individual meaningful units.

• Stopword removal: Eliminating common, non-informative words.

• Punctuation and special character cleaning.
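A minimal, dependency-free Python sketch of the cleaning steps listed above is shown below. It is not DATAIKU’s implementation: it assumes the Arabic-to-English translation has already been done, uses a small hypothetical stopword list, and substitutes a crude suffix-stripping stemmer for a full algorithm such as Porter (available, for example, as NLTK’s `PorterStemmer`). The steps are applied in a conventional order (lowercase, strip punctuation, tokenize, remove stopwords, stem), which may differ from the order used in the study.

```python
import re
import string

# Hypothetical, deliberately tiny stopword list (illustration only).
STOPWORDS = {
    "the", "a", "an", "and", "or", "is", "are", "was", "were",
    "to", "of", "in", "it", "this", "that", "for", "with", "very",
}


def toy_stem(token: str) -> str:
    """Crude suffix-stripping stemmer (NOT the Porter algorithm)."""
    for suffix in ("ing", "ed", "es", "s"):
        # Strip only when a stem of at least 3 characters remains.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Clean one (already translated) feedback text into stemmed tokens."""
    text = text.lower()                                              # decapitalization
    text = text.translate(str.maketrans("", "", string.punctuation)) # punctuation cleaning
    text = re.sub(r"[^a-z0-9\s]", " ", text)                         # other special characters
    tokens = text.split()                                            # whitespace tokenization
    tokens = [t for t in tokens if t not in STOPWORDS]               # stopword removal
    return [toy_stem(t) for t in tokens]                             # stemming
```

For example, `preprocess("The labs were very engaging, and the quizzes helped!")` yields `["lab", "engag", "quizz", "help"]`. The truncated stems show why stemming is a normalization step rather than a way of producing dictionary words.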

After these preparatory steps, the preprocessed text was fed into DATAIKU’s sentiment analysis module, which applies the pre-trained natural language processing (NLP) model described above to generate sentiment scores. The resulting sentiment scores were expressed on a 1–5 scale, where 1 represents highly negative sentiment and 5 represents highly positive sentiment.

The use of AI-driven sentiment analysis in educational contexts is supported by recent studies highlighting its effectiveness in analyzing student feedback (Kumar and Kumar, 2024; Prakash et al., 2024). Drawing on a published methodological framework (Lo et al., 2025), the current AI-assisted sentiment analysis adopted a collaborative model of intelligence augmentation, in which AI complements rather than replaces human analytical capabilities. The tool was used to complement other quantitative and qualitative survey metrics, offering administrators a more nuanced understanding of student experiences. To assess the validity of the sentiment analysis, the generated sentiment scores were compared with the overall student satisfaction scores (Supplementary Table S1), and the correlation coefficient between the two was calculated. This comparison helps verify that the AI-generated sentiment scores align with overall student satisfaction.
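The validation step can be sketched with SciPy’s `spearmanr`, consistent with the Spearman correlation named in the statistical analysis section. The function name `validate_sentiment` is illustrative, and the paired values are hypothetical placeholders, not study data.

```python
from scipy.stats import spearmanr


def validate_sentiment(sentiment_scores, satisfaction_scores, alpha=0.05):
    """Spearman correlation between AI sentiment and self-reported satisfaction.

    Returns (rho, p-value, whether p <= alpha).
    """
    rho, p_value = spearmanr(sentiment_scores, satisfaction_scores)
    return rho, p_value, p_value <= alpha


# Hypothetical paired values on 1-5 scales (not study data).
sentiment = [4.2, 3.1, 4.8, 2.5, 3.9, 4.5]
satisfaction = [4.0, 3.8, 5.0, 2.0, 3.5, 4.6]
rho, p, significant = validate_sentiment(sentiment, satisfaction)
print(f"rho = {rho:.3f}, p = {p:.4f}, significant = {significant}")
```

Spearman’s rank correlation is a reasonable choice here because both measures are ordinal-like scores, and it does not assume a linear relationship between them.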

2.6.2 Sampling

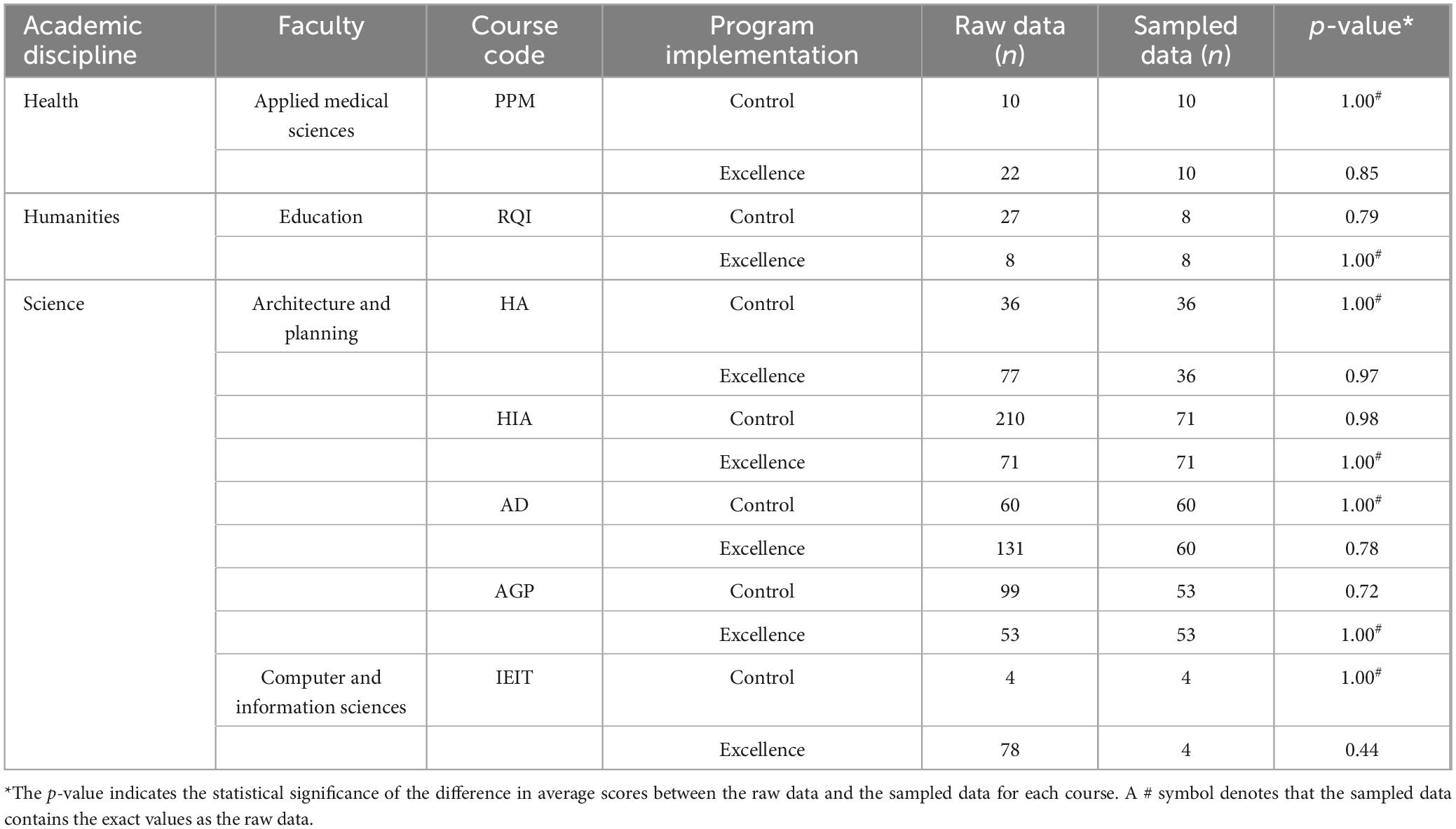

The preliminary analysis of student scores revealed a substantial discrepancy in group sizes across the targeted courses. Because the course is the smallest building block of the study and aggregates into larger groupings (e.g., college, study level, and academic discipline) that could act as confounding factors, random sampling was performed to balance the number of students in the PELT and control groups within each course (Table 2; Shahba et al., 2023). This sampling was conducted using the sample function from the pandas Python library, ensuring that the average score of the sampled data was not significantly different from that of the raw unsampled data (p > 0.05) (Table 2). The subsequent statistical analyses of pass rate (%) and student scores were therefore performed on this balanced dataset.
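The per-course balancing described above can be sketched with pandas’ `DataFrame.sample`. The column names (`course`, `group`, `score`), the fixed random seed, and the use of a two-sided Mann-Whitney U test to compare sampled and raw scores are assumptions; the text states only that the sampled averages did not differ significantly from the raw data (p > 0.05). Because the sampled data are a subset of the raw data, this comparison is a pragmatic sanity check rather than a test of independent samples.

```python
import pandas as pd
from scipy.stats import mannwhitneyu


def balance_groups(df, course_col="course", group_col="group", seed=42):
    """Downsample each group within a course to the size of the smallest group."""
    parts = []
    for _, course_df in df.groupby(course_col):
        n_min = int(course_df[group_col].value_counts().min())
        for _, grp in course_df.groupby(group_col):
            parts.append(grp.sample(n=n_min, random_state=seed))
    return pd.concat(parts, ignore_index=True)


def sampling_check(raw, sampled, group_col="group", score_col="score", alpha=0.05):
    """For each group, True if sampled scores do not differ from raw (p > alpha)."""
    results = {}
    for g in raw[group_col].unique():
        _, p = mannwhitneyu(
            raw.loc[raw[group_col] == g, score_col],
            sampled.loc[sampled[group_col] == g, score_col],
            alternative="two-sided",
        )
        results[g] = bool(p > alpha)
    return results


# Hypothetical toy data: unequal PELT/control sizes within two courses.
raw = pd.DataFrame({
    "course": ["C1"] * 6 + ["C2"] * 5,
    "group": ["PELT"] * 4 + ["control"] * 2 + ["PELT"] * 2 + ["control"] * 3,
    "score": [5, 4, 4, 3, 4, 2, 3, 5, 4, 4, 2],
})
balanced = balance_groups(raw)
```

Balancing within each course, rather than across the whole dataset, keeps course-level differences (content, assessments, instructors) from being confounded with group size when results are later aggregated by college or discipline.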

2.6.3 Software

The data analysis for this study was primarily conducted using the Python programming language (version 3.9.20) within a Jupyter Notebook environment (jupyter_core: 5.7.2, notebook: 7.2.2). Several Python packages, including pandas, numpy, seaborn, matplotlib, itertools, and statannotations, were employed for tasks such as data presentation, grouping, validation, data frame manipulation, and visualization generation (Shahba et al., 2023).

Additionally, some parts of the manuscript and several Python scripts were drafted and/or revised with the assistance of POE chatbots, such as Anthropic’s Claude-3.5-Sonnet and Claude-3-Haiku. While these AI tools provided some guidance for the associated data analysis and interpretation, the authors maintained full accountability for the concepts explored, the content covered, and the final form of the manuscript.

2.6.4 Statistical analysis

The normality of the data distribution was systematically evaluated using the Shapiro-Wilk test implemented in the scipy.stats Python module (Mishra et al., 2019). For normally distributed data, such as the faculty survey data on the effects of academic discipline, grant theme, and scientific rank on faculty overall satisfaction, one-way ANOVA followed by Tukey’s post-hoc test was used. Additionally, independent t-tests were conducted to analyze the difference in satisfaction between male and female faculty (Hazra and Gogtay, 2016; Manikandan and Ramachandran, 2023). For non-normally distributed data, such as the student survey data on the effects of academic discipline and grant theme on overall satisfaction, Kruskal-Wallis tests followed by Dunn’s post-hoc test with Bonferroni correction were applied. The Mann-Whitney U test was used to analyze the difference in satisfaction between male and female students, as well as the effect of PELT implementation on student scores (Nahm, 2016; Hoag and Kuo, 2017). Finally, the effect of PELT implementation on student-reported academic performance (PELT course grades vs. GPA) was analyzed using the Wilcoxon signed-rank test for paired samples.
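The normality-driven choice between parametric and non-parametric tests can be sketched with `scipy.stats`. This is an illustrative outline, not the authors’ script: the function names are hypothetical, `tukey_hsd` is only available in SciPy 1.8 or later (hence the guard), and Dunn’s post-hoc test with Bonferroni correction is not part of SciPy (third-party packages such as scikit-posthocs provide it), so it is omitted.

```python
from scipy import stats


def compare_groups(groups, alpha=0.05):
    """ANOVA (+ Tukey HSD) if every group passes Shapiro-Wilk, else Kruskal-Wallis.

    groups: list of 1-D sequences, e.g. satisfaction scores per grant theme.
    Shapiro-Wilk needs at least 3 observations per group.
    """
    all_normal = all(stats.shapiro(g).pvalue > alpha for g in groups)
    if all_normal:
        omnibus = stats.f_oneway(*groups)  # one-way ANOVA
        # Tukey HSD post-hoc comparisons (SciPy >= 1.8 only).
        posthoc = stats.tukey_hsd(*groups) if hasattr(stats, "tukey_hsd") else None
        return "ANOVA", omnibus.pvalue, posthoc
    omnibus = stats.kruskal(*groups)  # Kruskal-Wallis H test
    # Dunn's post-hoc test is not in SciPy and is left out of this sketch.
    return "Kruskal-Wallis", omnibus.pvalue, None


def paired_course_vs_gpa(course_grades, gpas):
    """Wilcoxon signed-rank test on paired (PELT course grade, GPA) values."""
    return stats.wilcoxon(course_grades, gpas).pvalue
```

Usage on hypothetical data: `compare_groups([[1, 2, 3, 4, 5], [2, 3, 4, 5, 6], [10, 11, 12, 13, 14]])` takes the ANOVA branch because each evenly spaced group passes Shapiro-Wilk, whereas heavily skewed groups such as `[1, 1, 1, 1, 10]` route to Kruskal-Wallis. With very small groups, the Shapiro-Wilk test has little power, so this branching rule should be read as a screening heuristic.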

Correlations between student overall satisfaction and academic level, course grade, and AI-estimated sentiment scores were assessed using Spearman’s rank correlation test implemented in SciPy. For all statistical tests, a p-value of 0.05 or less was considered statistically significant (Schober et al., 2018).

To further assess the practical significance of the findings, several effect size measures were calculated, including Cohen’s d (Cohen, 2013), the common language effect size (Vargha-Delaney’s A) (Vargha and Delaney, 2000), and Rosenthal’s correlation effect size r (Rosenthal, 1984; Gullickson and Ramser, 1993).
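The three effect size measures can be computed with a short standard-library sketch. Cohen’s d divides the mean difference by the pooled standard deviation. Vargha-Delaney’s A estimates the probability that a randomly chosen value from one group exceeds one from the other, counting ties as one half. Rosenthal’s r divides the standardized test statistic Z by the square root of the total sample size. This sketch assumes Z is already available, for example from the normal approximation of a Mann-Whitney U or Wilcoxon test, and the sample values in the usage note are hypothetical.

```python
import math
from statistics import mean, stdev


def cohens_d(x, y):
    """Cohen's d using the pooled (sample) standard deviation."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * stdev(x) ** 2 + (ny - 1) * stdev(y) ** 2) / (nx + ny - 2)
    return (mean(x) - mean(y)) / math.sqrt(pooled_var)


def vargha_delaney_a(x, y):
    """P(X > Y) + 0.5 * P(X == Y) over all (x, y) pairs."""
    greater = sum(1 for xi in x for yi in y if xi > yi)
    ties = sum(1 for xi in x for yi in y if xi == yi)
    return (greater + 0.5 * ties) / (len(x) * len(y))


def rosenthal_r(z, n_total):
    """Rosenthal's r = Z / sqrt(N) for a rank-based test statistic."""
    return z / math.sqrt(n_total)
```

For hypothetical scores `x = [4, 5, 5, 3]` and `y = [3, 3, 4, 2]`, A = 0.84375, meaning a randomly chosen x exceeds a randomly chosen y about 84% of the time, and d ≈ 1.40. A = 0.5 would indicate no difference between the groups.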

3 Results

3.1 Faculty survey

3.1.1 General survey findings

The faculty survey was conducted to gather in-depth insights into the perspectives and experiences of the instructors who participated in the PELT program. The survey explored various aspects of the grant’s impact on faculty skills, teaching practices, and perceptions of student learning outcomes.

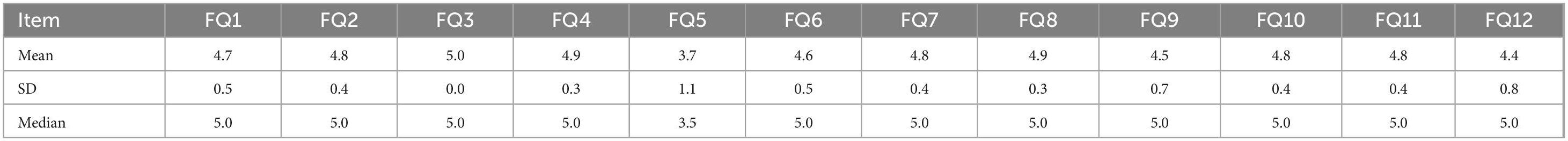

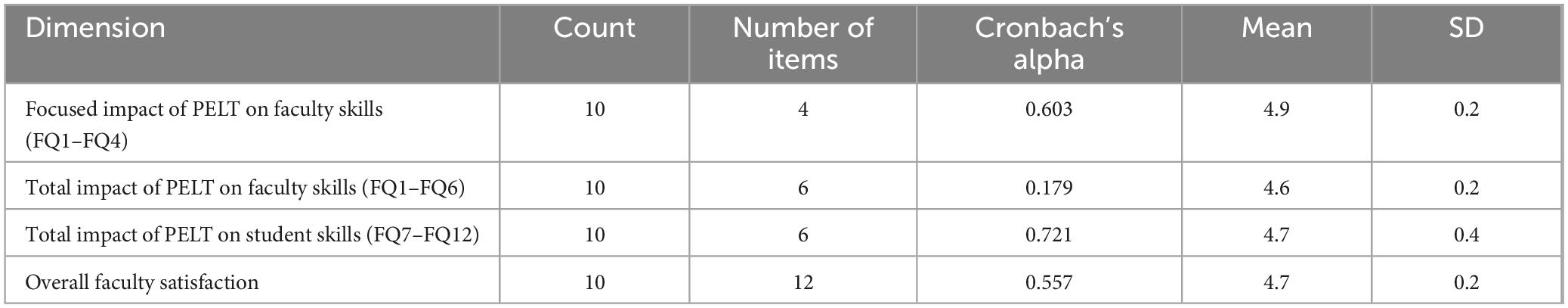

3.1.1.1 Focused impact of PELT on faculty skills (FQ1–FQ4)

The faculty survey results indicate a strong positive impact of PELT on faculty members’ skills and knowledge related to educational and teaching research (FQ1), use of modern educational technologies (FQ2), development of teaching and active learning strategies (FQ3), and development of course and student evaluation methods (FQ4) (Table 3). The high mean score (4.9) and low standard deviation (0.2) suggest a consistently positive perception among faculty (Table 4). The Cronbach’s Alpha of 0.603 for this dimension indicates a modest but acceptable level of internal consistency for a four-item scale, supporting the cohesiveness of these faculty-focused questions.
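For reference, Cronbach’s Alpha for k items is α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where σ²ᵢ is the variance of item i and σ²ₜ is the variance of respondents’ total scores. The following minimal NumPy sketch assumes a respondents × items matrix of Likert responses, which is a hypothetical input layout. With only a few items and a small sample, such as 11 faculty respondents, the estimate can vary considerably.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a 2-D array: rows = respondents, columns = items."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]                             # number of items
    item_vars = x.var(axis=0, ddof=1)          # sample variance of each item
    total_var = x.sum(axis=1).var(ddof=1)      # variance of respondents' total scores
    return (k / (k - 1)) * (1 - item_vars.sum() / total_var)
```

Two extreme cases show how the formula behaves. Perfectly consistent items, such as `[[1, 1], [2, 2], [3, 3]]`, give α = 1. Items whose variation cancels out in the total, such as `[[5, 4], [4, 5], [5, 5], [4, 4]]`, give α = 0. Adding loosely related items lowers α, which is consistent with the drop in α reported when FQ5 and FQ6 are included.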

3.1.1.2 Impact of PELT on faculty publishing attempts and use beyond the grant scope (FQ5–FQ6)

The survey results show that FQ5 and FQ6 behave differently from FQ1–FQ4. While FQ1–FQ4 focused on the direct impact of the grant on faculty skills and teaching methods, FQ5 and FQ6 addressed more peripheral aspects of the grant’s influence. This is supported by the drop in Cronbach’s Alpha to 0.179 when FQ5 and FQ6 were combined with FQ1–FQ4 in a single dimension (Table 4).

3.1.1.2.1 FQ5-impact on faculty publishing attempts

This item had a relatively low mean score of 3.7 compared with the other faculty-focused questions, suggesting that the grant program may have been less effective in supporting faculty participation and publication in educational research. This could be an area for improvement, as disseminating research findings is an important aspect of professional development and knowledge sharing.

3.1.1.2.2 FQ6-use beyond the grant scope

The mean score for this question is 4.6, indicating that faculty members reported that they were able to apply or expect to apply the skills and techniques learned through the grant in contexts beyond the specific courses targeted by the grant. This suggests a positive transfer of knowledge and a broader impact of the grant program on faculty practices.

3.1.1.3 Impact of PELT on students’ skills (FQ7–FQ12)

The results also indicate a positive impact of the grant on various aspects of the student learning experience, including increased student interaction and participation (FQ7), improved academic performance (FQ8), enhanced classroom learning environment (FQ9), increased student satisfaction (FQ10), greater student involvement in the educational process (FQ11), and the acquisition of new professional skills (FQ12). The mean score of 4.7 and Cronbach’s Alpha of 0.721 suggest a strong and consistent positive perception of the grant’s impact on students.

3.1.1.4 Overall faculty satisfaction (FQ1–FQ12)

Overall faculty satisfaction with the grant program, measured by the combined scores of all 12 questions, was positive, with a mean score of 4.66 and a standard deviation of 0.2. The Cronbach’s Alpha of 0.557 suggests a moderate level of internal consistency, indicating that the questions in this set could be used collectively, with some caution, to measure overall satisfaction.

3.1.2 Factors influencing overall faculty satisfaction

3.1.2.1 Effect of scientific discipline on overall faculty satisfaction

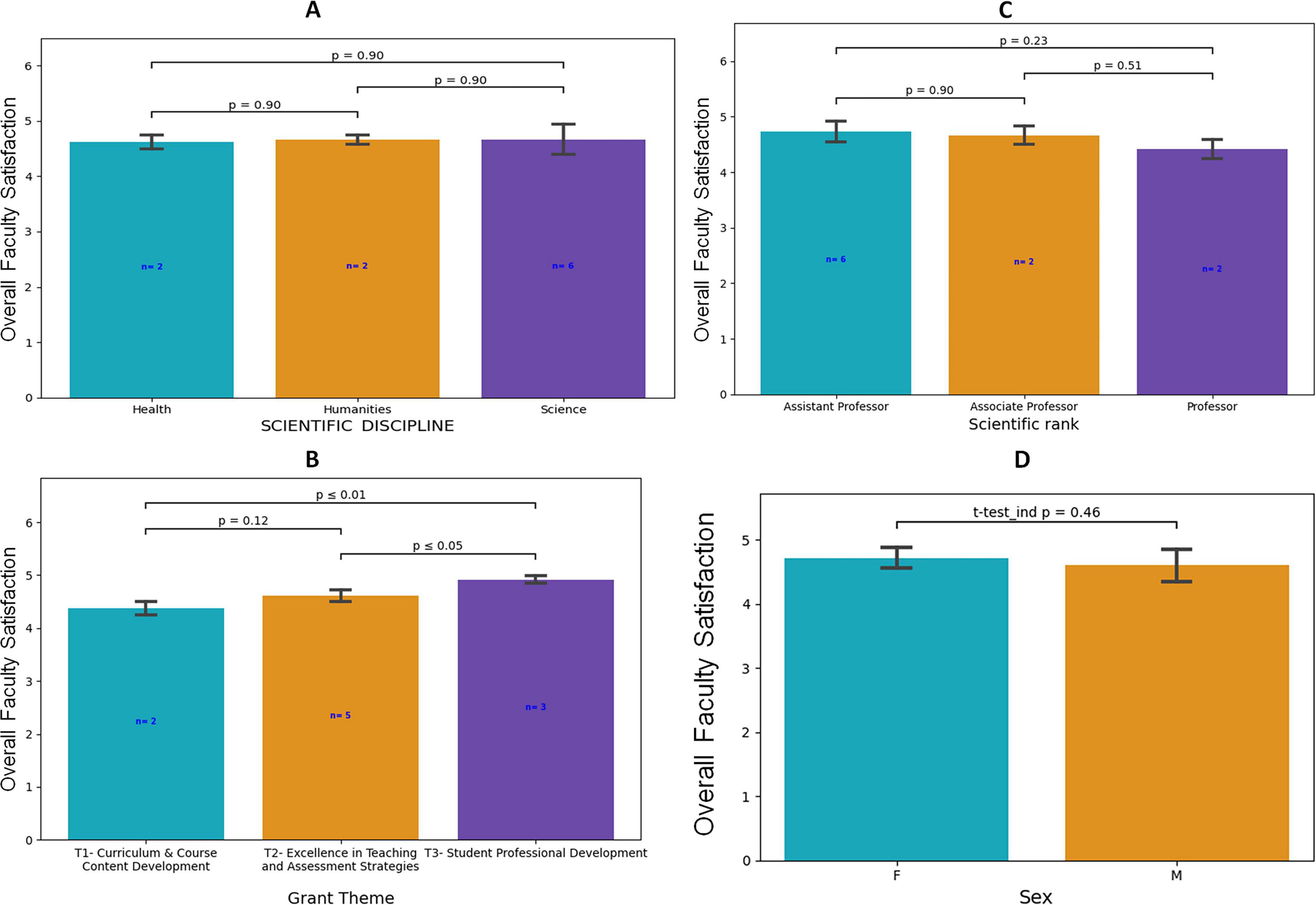

The study found no statistically significant differences in overall satisfaction among faculty from the Health, Humanities, and Science disciplines (Figure 1A). This suggests that faculty members’ overall satisfaction with the PELT program did not differ detectably by disciplinary background, although the small sample (n = 11) limits the power to detect such differences.

Figure 1. Effect of (A) academic discipline, (B) grant theme, (C) scientific rank, and (D) sex on faculty overall satisfaction with PELT. Statistical analyses were performed using one-way ANOVA followed by Tukey’s post-hoc test for (A–C), while an independent t-test was used for (D). A p-value of ≤ 0.05 was considered statistically significant.

3.1.2.2 Effect of grant theme on overall faculty satisfaction

In contrast, the analysis revealed a statistically significant effect of grant theme on faculty members’ overall satisfaction (ANOVA, p = 0.006). Notably, the “T3- Student Professional Development and Labor Market Preparedness” theme showed significantly higher faculty satisfaction than “T2- Excellence in Teaching and Assessment Strategies” (p < 0.05) and “T1- Curriculum and Course Content Development” (p < 0.01) (Figure 1B). This finding suggests that faculty members place greater value on student professional development and career advancement opportunities.

3.1.2.3 Effect of scientific rank on overall faculty satisfaction

On the other hand, the faculty’s scientific rank (Assistant Professor, Associate Professor, and Professor) did not show a statistically significant effect on their overall satisfaction with the grant program (Figure 1C).

3.1.2.4 Effect of sex on overall faculty satisfaction

Similarly, the analysis revealed no statistically significant difference in overall satisfaction between female and male faculty members (Figure 1D).

3.1.3 Faculty members’ textual feedback and recommendations to improve PELT

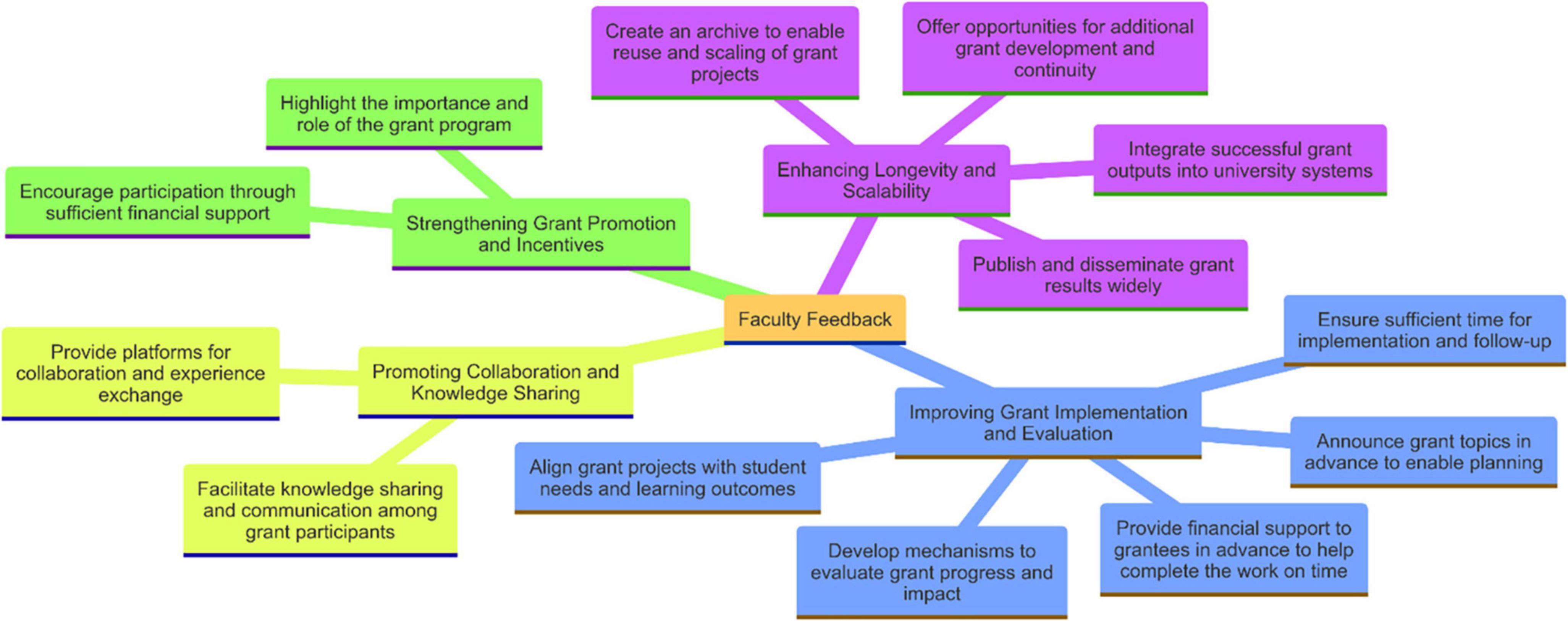

The faculty’s textual feedback was systematically analyzed using an AI-assisted qualitative thematic analysis approach. Through iterative analysis and refinement, four primary thematic areas emerged, capturing the nuanced perspectives and strategic recommendations for improving the PELT program (Figure 2).

3.1.3.1 Enhancing longevity and scalability

The faculty’s recommendations in this dimension demonstrate their desire for the PELT program to have a lasting and widespread impact. Suggestions to publish and disseminate the grant results widely, integrate successful outputs into the university’s systems, and create an archive for reuse and scaling indicate a focus on institutionalizing the program’s successes. Additionally, the recommendation to offer opportunities for continued grant development signals the faculty’s interest in building upon the program’s momentum and ensuring its long-term viability.

3.1.3.2 Improving grant implementation and evaluation

The faculty’s feedback in this area emphasizes improving the execution and assessment of the grant projects. Recommendations to announce grant topics in advance, provide timely financial support, allow sufficient time for implementation and follow-up, and develop robust evaluation mechanisms point to a strong focus on grant management and accountability. The recommendation to align grant projects with student needs and learning outcomes further shows the faculty’s desire to ensure the practical relevance and impact of the funded initiatives.

3.1.3.3 Promoting collaboration and knowledge sharing

The faculty’s recommendations in this dimension address the importance of fostering a collaborative and knowledge-sharing environment among the grant participants. Facilitating communication and providing platforms for experience exchange can help create a sense of community, enable the cross-pollination of ideas, and amplify the program’s reach and impact. This emphasis on collaboration aligns with the broader goal of leveraging the collective expertise and resources of the faculty for the benefit of the program.

3.1.3.4 Strengthening grant promotion and incentives

The faculty’s feedback in this thematic area suggests the need for more targeted efforts to promote the PELT program and incentivize faculty participation. Highlighting the importance and role of the grant program, as well as providing sufficient financial support, can help raise awareness, build enthusiasm, and encourage broader engagement from the faculty. Addressing these aspects can contribute to the overall success and longevity of the PELT initiative.

Overall, the faculty’s feedback presents a well-rounded set of recommendations that address both the strategic and operational aspects of the PELT grant program. The faculty’s input reflects a good understanding of the program’s needs and a commitment to enhancing its long-term impact, implementation effectiveness, collaborative spirit, and ability to attract and retain high-quality faculty involvement.

3.2 Student survey results

3.2.1 General survey findings

The survey results include responses from 58 students, with information on their academic level, GPA, and course grades. The academic level of the respondents ranges from 2 to 10, with a mean of 7.0 ± 2.3 (Table 5), so the sample spans lower- to upper-level undergraduates.

The cumulative GPA of the respondents ranges from 3.2 to 5.0, with a mean of 4.4 ± 0.4. Similarly, the student grade in courses where PELT was implemented ranges from 2.0 to 5.0, with a mean of 4.5 ± 0.6. These indicators suggest that the sample consists largely of high-achieving students, with most respondents having a GPA and course grade of 4.0 or above (Table 5).

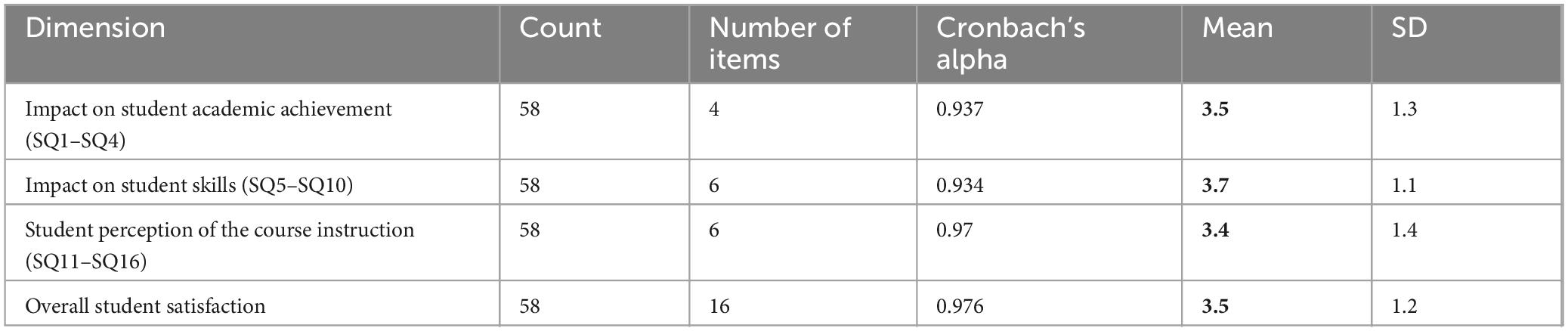

The survey items are grouped into four sections: Section 1: Impact on Student Academic Achievement (SQ1–SQ4); Section 2: Impact on Student Skills (SQ5–SQ10); Section 3: Student Perception of the Course Instruction (SQ11–SQ16); and Section 4: Recommendations (SQ17–SQ18). Responses to the Likert-scale items span the full 1–5 range, indicating that the sample included both very positive and very negative perceptions (Table 5).

3.2.1.1 Section 1: impact on student academic achievement (SQ1–SQ4)

The mean scores for the four survey items in this section range from 3.3 to 3.6, suggesting that students generally perceive the implemented teaching method to have a positive impact on their enthusiasm for studying (SQ1), their preparation and readiness for lectures (SQ2), their focus during lectures (SQ3), and their understanding of the course content (SQ4) (Table 5). The section mean was 3.5 ± 1.3, suggesting that students saw the teaching approaches as moderately successful in engaging them and supporting their academic progress. The Cronbach’s alpha for this section is 0.937, indicating excellent internal consistency and reliability of the survey items (Table 6).
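Cronbach’s alpha, reported for each survey section, compares the sum of the individual item variances with the variance of respondents’ total scores. The following is a minimal sketch of the standard formula; the five response rows are invented and are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix of Likert scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers of five students to four items (e.g., SQ1-SQ4)
section1 = [
    [4, 4, 5, 4],
    [3, 3, 3, 4],
    [2, 2, 1, 2],
    [5, 4, 5, 5],
    [1, 2, 1, 1],
]
print(round(cronbach_alpha(section1), 3))
```

Because items that move together inflate the total-score variance relative to the summed item variances, strongly consistent sections such as those in Table 6 produce values close to 1.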

3.2.1.2 Section 2: impact on student skills (SQ5–SQ10)

The mean scores for the six survey items in this section range from 3.5 to 4.2, suggesting that students perceive the implemented teaching method to have a positive impact on the development of their skills, such as research and analysis (SQ5), discussion (SQ6), technology use (SQ7), teamwork (SQ8), creativity (SQ9), and professional competencies (SQ10) (Table 5). The section mean was 3.7 ± 1.1, suggesting that students perceived the teaching approaches as helpful in developing skills relevant to their future success and employability. The Cronbach’s alpha for this section is 0.934, also indicating excellent internal consistency and reliability (Table 6).

3.2.1.3 Section 3: student perception of the course instruction (SQ11–SQ16)

The students reported that the teaching methods used in the PELT-supported courses were generally superior to those used in other courses they had taken (SQ11), that they experienced more interaction and participation in course activities (SQ12 and SQ14), and that they were more satisfied with the teaching methods (SQ13) (Table 5). This aligns with the findings from the first two sections, where the students reported positive impacts on their academic achievement and skill development.

However, they were more hesitant to endorse generalizing the approach to other courses (SQ15) and were neutral about discussing it with peers in other sections (SQ16) (Table 5).

The section mean was 3.4 ± 1.4, suggesting moderately positive perceptions of course instruction and of the overall learning experience in the PELT-supported courses. The Cronbach’s alpha for this section is 0.97, indicating excellent internal consistency and reliability of the survey items (Table 6).

3.2.1.4 Overall student satisfaction

The overall mean score for student satisfaction, calculated as the mean of all 16 survey items, is 3.5 ± 1.2. This suggests that, on average, students have a moderately positive perception of the teaching methodology, its impact on their academic achievement and skills, and the course instruction. The Cronbach’s alpha across all 16 items is 0.976, indicating excellent internal consistency and reliability; accordingly, these items could be used collectively to measure overall satisfaction.

3.2.2 Factors influencing overall student satisfaction in a PELT program

3.2.2.1 Effect of academic discipline on overall student satisfaction

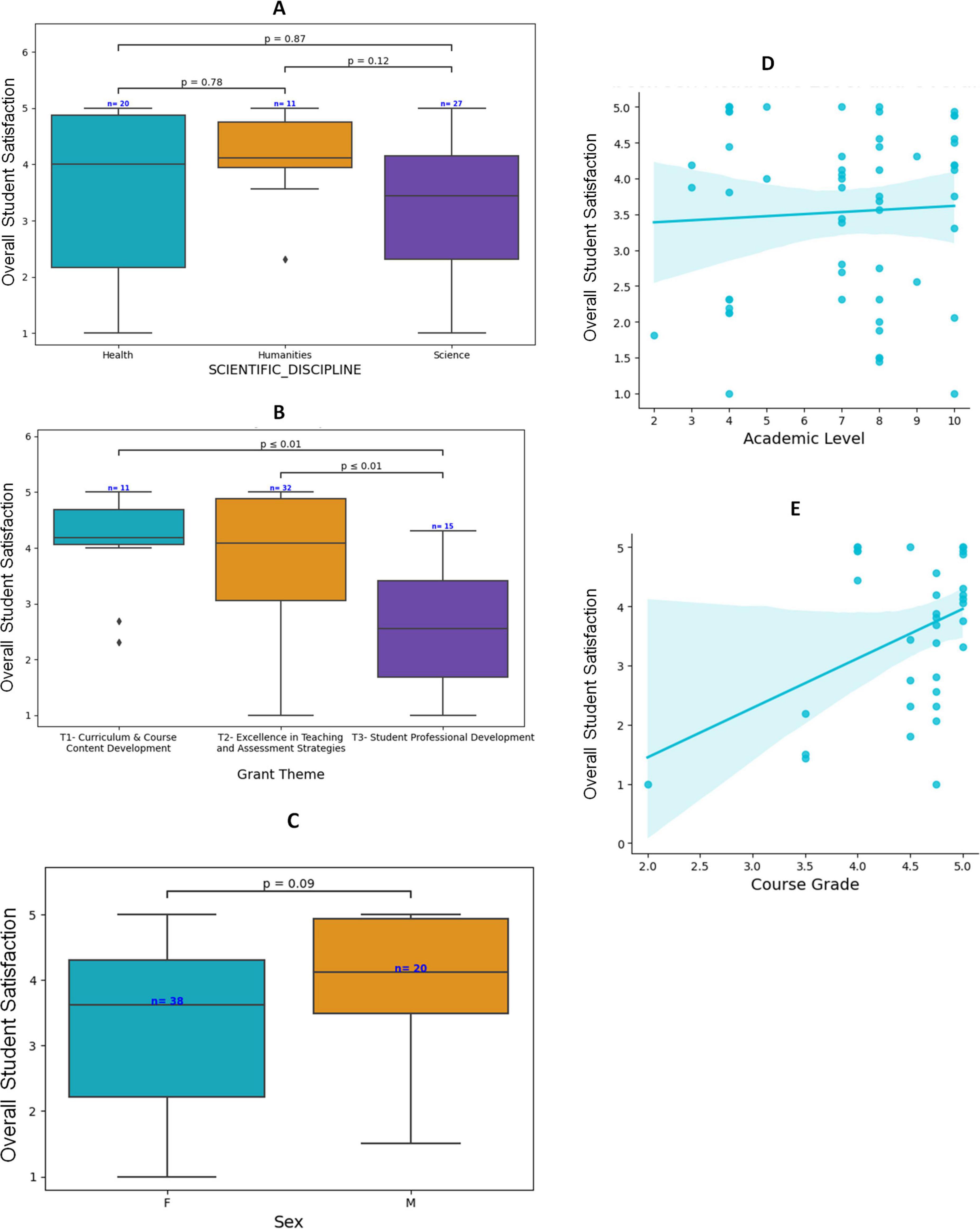

Overall student satisfaction was numerically higher for the Humanities and Health disciplines than for Science; however, the differences were not statistically significant (p > 0.05) (Figure 3A). This suggests that academic discipline did not substantially influence overall student satisfaction.

Figure 3. Effect of (A) academic discipline, (B) grant theme, (C) sex, (D) academic level, and (E) course grade on student overall satisfaction with PELT. Statistical analyses were performed using Kruskal-Wallis tests followed by Dunn’s post-hoc test for (A,B), while the Mann-Whitney U test was used for (C), and Spearman’s rank correlation was used for (D,E). A p-value of ≤ 0.05 was considered statistically significant.

3.2.2.2 Effect of grant theme on overall student satisfaction

Overall satisfaction was significantly higher for the “T1- Curriculum and Course Content Development” and “T2- Excellence in Teaching and Assessment Strategies” themes than for the “T3- Student Professional Development and Labor Market Preparedness” theme (p ≤ 0.01) (Figure 3B). This finding indicates that students valued curriculum-focused and teaching-oriented grant themes more highly than career development initiatives.
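The Kruskal-Wallis test used for Figure 3B compares groups through the ranks of the pooled scores rather than the raw values. The sketch below implements the tie-corrected H statistic; the satisfaction values are invented, and Dunn’s post-hoc step is not shown. For exactly three groups (df = 2), the chi-square survival function reduces to exp(−H/2), so a p-value can be obtained without external libraries; for other group counts the function returns `None` and a library routine such as `scipy.stats.kruskal` would be needed.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Tie-corrected Kruskal-Wallis H; p-value returned only for 3 groups."""
    values = [x for g in groups for x in g]
    n = len(values)
    ranks = average_ranks(values)
    rank_term, start = 0.0, 0
    for g in groups:
        group_ranks = ranks[start:start + len(g)]
        rank_term += sum(group_ranks) ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    # Likert-type scores produce many ties, so apply the standard tie correction
    tie_sum = sum(t ** 3 - t for t in Counter(values).values())
    h /= 1 - tie_sum / (n ** 3 - n)
    df = len(groups) - 1
    # For df = 2 the chi-square survival function is exactly exp(-H / 2)
    p = math.exp(-h / 2) if df == 2 else None
    return h, df, p


# Hypothetical per-student overall satisfaction by theme
t1 = [4.2, 3.9, 4.5, 4.1, 3.8]
t2 = [4.0, 4.4, 3.7, 4.3, 4.6]
t3 = [2.5, 3.1, 2.8, 3.4, 2.2]
h, df, p = kruskal_wallis([t1, t2, t3])
print(f"H = {h:.2f}, df = {df}, p = {p:.4f}")
```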

3.2.2.3 Effect of sex on overall student satisfaction

Overall satisfaction was marginally higher for male than for female students, but the difference did not reach statistical significance (p = 0.09) (Figure 3C). Student sex therefore showed only a non-significant trend toward an effect on overall satisfaction.

3.2.2.4 Effect of academic level on overall student satisfaction

Academic level was essentially uncorrelated with overall satisfaction (Spearman’s rho = 0.02, p = 0.88), suggesting that a student’s academic progression does not meaningfully affect their satisfaction with the program (Figure 3D).

3.2.2.5 Effect of PELT course grade on overall student satisfaction

Course grade showed a weak, non-significant positive correlation with overall satisfaction (Spearman’s rho = 0.29, p = 0.09), suggesting at most a subtle relationship between course performance and overall student satisfaction (Figure 3E).
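Spearman’s rho, used for Figures 3D and 3E and later for the sentiment analysis in Figure 5, is the Pearson correlation computed on ranks, with tied values given their average rank. A minimal stdlib sketch follows; the level and satisfaction values are invented, and p-values are left to a library routine such as `scipy.stats.spearmanr`.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho = Pearson correlation of the (tie-averaged) ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical academic level vs. overall satisfaction for six students
level = [2, 4, 6, 7, 9, 10]
satisfaction = [3.8, 2.9, 4.1, 3.5, 3.0, 4.0]
print(round(spearman_rho(level, satisfaction), 2))
```

Because only ranks enter the calculation, any monotonic relationship (not just a linear one) gives |rho| = 1, which suits ordinal Likert and grade data.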

3.2.3 Survey student textual feedback analysis

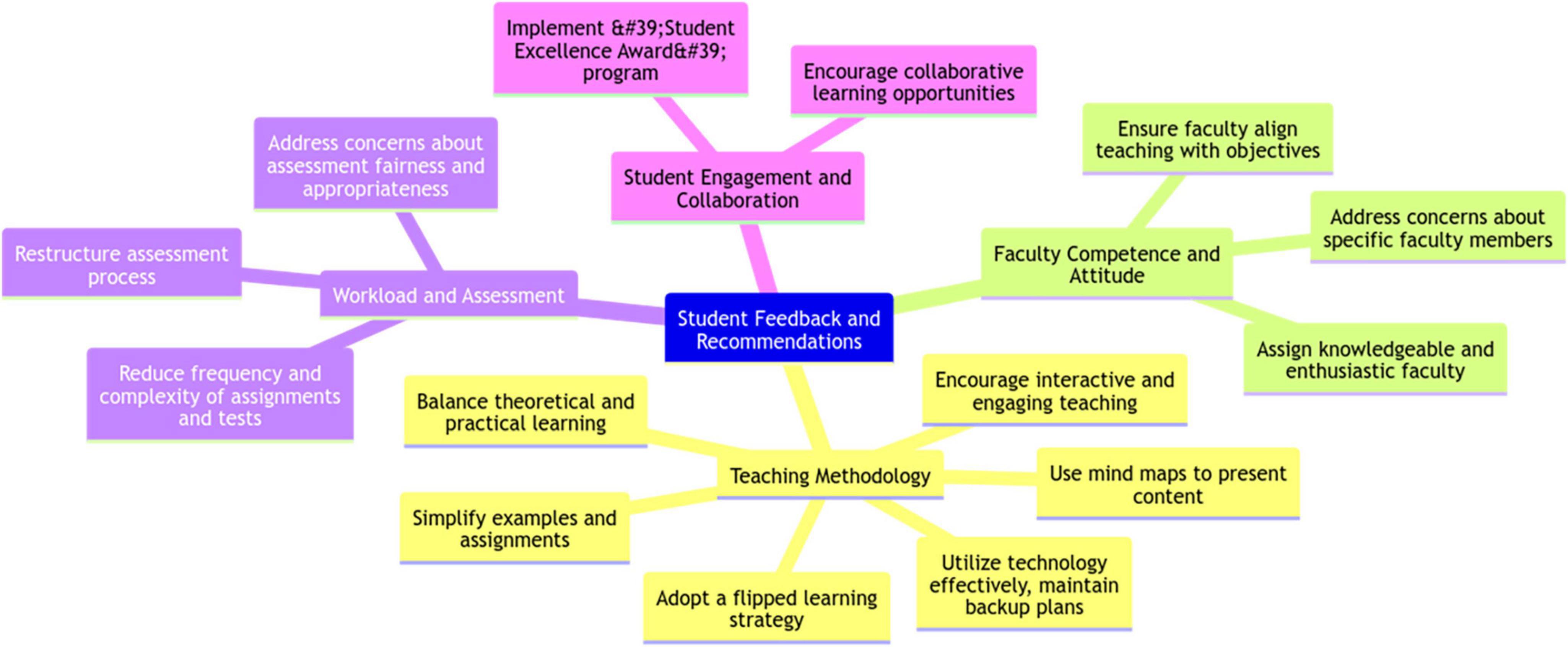

3.2.3.1 Students’ textual feedback and recommendations to improve PELT

Students’ textual feedback and recommendations were analyzed using an AI-assisted qualitative thematic analysis approach. Through iterative analysis and refinement, four key areas for improvement emerged: Teaching Methodology, Faculty Competence and Attitude, Workload and Assessment, and Student Engagement and Collaboration (Figure 4).

3.2.3.1.1 Teaching methodology

The students provided several constructive suggestions to enhance the teaching methodology. They recommended adopting a flipped learning strategy, using mind maps to present content, and simplifying examples and assignments. This feedback suggests that students prefer a more dynamic and engaging learning experience that moves away from purely didactic lectures. They also emphasized the importance of using technology effectively and having backup plans to mitigate technical issues. Their emphasis on integrating theoretical and practical learning indicates that students value a well-rounded educational experience.

3.2.3.1.2 Faculty competence and attitude

The students highlighted the need for knowledgeable and enthusiastic faculty members who can align their teaching methods with the course objectives and with students’ level of understanding. Some students asked that concerns be addressed about specific faculty members whose teaching style they perceived as distracting or misaligned with student needs. This feedback underscores the central role of faculty members in shaping the student experience and the importance of ongoing faculty development and evaluation.

3.2.3.1.3 Workload and assessment

The students suggested reducing the frequency and complexity of assignments and tests and allowing more flexibility in deadlines. They also requested a restructuring of the assessment process, such as a single midterm exam instead of frequent short tests, and mechanisms for project corrections and grade redistribution. This feedback indicates that students are seeking a more holistic and equitable assessment approach that is aligned with the teaching and learning objectives.

3.2.3.1.4 Student engagement and collaboration

One student proposed a “Student Excellence Award” program to recognize and incentivize outstanding student performance and foster a more interactive educational environment. In addition, students expressed a desire for more interactive and collaborative learning opportunities, such as group projects and experience sharing among students. This feedback highlights students’ wish for a more interconnected learning experience that promotes meaningful peer-to-peer interaction and a stronger sense of community within the course.

Overall, the student feedback and recommendations provide valuable insights into areas of the PELT program that require attention and improvement, and they reflect a high level of maturity and constructive criticism. Incorporating this feedback could lead to meaningful improvements in the educational experience for both students and faculty.

3.2.3.2 AI-estimated sentiment score of student textual feedback

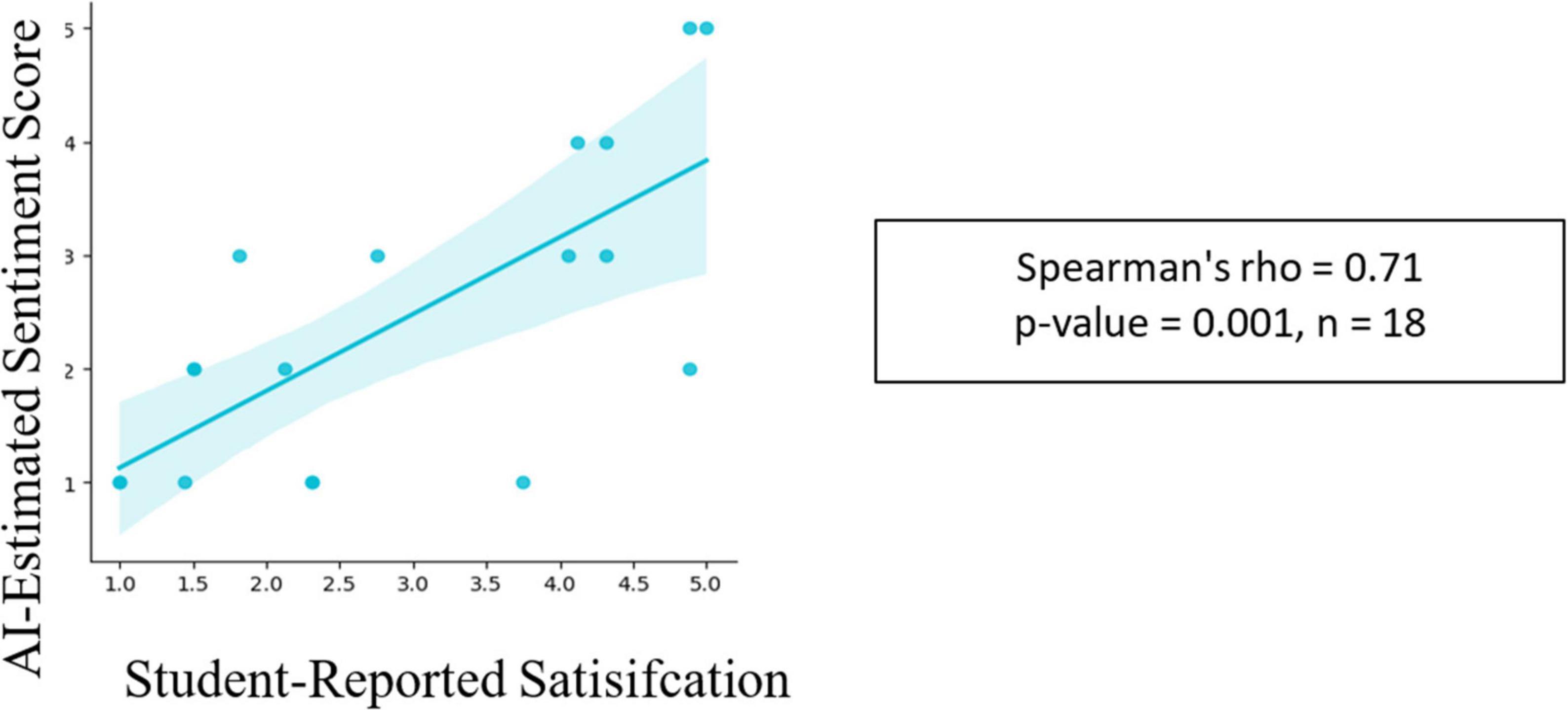

The current study examined the relationship between AI-estimated sentiment scores derived from student textual feedback and student-reported overall satisfaction. This approach aimed to provide a quantitative lens on qualitative aspects of the student experience (Figure 5).

Figure 5. Correlation analysis of student overall satisfaction and AI-estimated sentiment scores derived from textual feedback. Statistical analysis was performed using Spearman’s rank correlation. A p-value of ≤ 0.05 was considered statistically significant. The blue translucent bands around the regression line represent the 95% confidence interval for the regression estimate.

The analysis revealed a strong and statistically significant correlation between overall satisfaction scores and AI-estimated sentiment scores (Spearman’s rho = 0.71, p < 0.01), indicating close alignment between the sentiment expressed in students’ textual feedback and their self-reported satisfaction. This strong correlation suggests that AI-driven sentiment analysis can capture meaningful emotional and experiential dimensions of student feedback.

Furthermore, representative quotes from students’ textual feedback are included to illustrate the findings of the sentiment analysis and to provide concrete examples of student opinions. When students were asked about potential improvements to the teaching and learning methods, and suggestions to enhance the overall educational experience, one student’s positive comment “None, it was beautiful” is reflected in the AI-estimated sentiment score of 5.0 (highly positive). Conversely, a negative sentiment, such as “It was possible to involve the student in many matters instead of teaching him boring scientific lessons.” is captured by an AI-estimated score of 1.0 (highly negative).

By providing a systematic and consistent way of processing qualitative feedback, this approach offers a quantitative framework for capturing nuanced student experiences. The results support AI sentiment analysis as a potentially reliable and scalable approach to understanding student perceptions, bridging quantitative measurements and qualitative insights.

3.2.4 Effect of PELT implementation on student-reported academic performance (PELT courses vs. GPA)

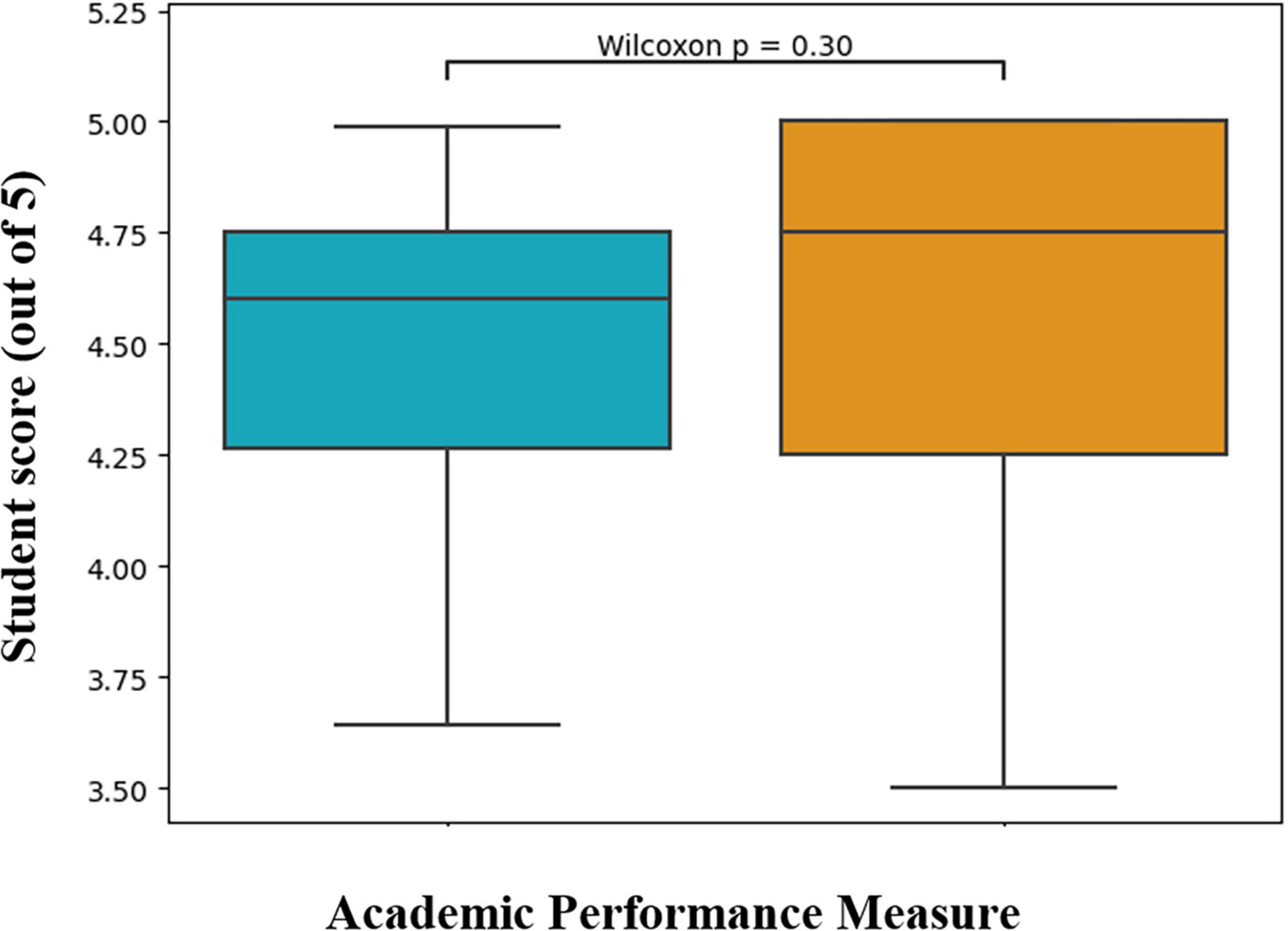

This analysis examines the impact of PELT implementation on students’ self-reported PELT Course Grades compared with their Overall GPAs across various factors. Although students’ grades in PELT-implemented courses were numerically higher than their corresponding GPAs, the difference was not statistically significant (Wilcoxon p = 0.30) (Figure 6). Furthermore, Cohen’s d was 0.06, indicating a very small effect size (Cohen, 2013). The common language effect size (Vargha-Delaney’s A) was 0.61, suggesting a small effect (Vargha and Delaney, 2000). The Rosenthal correlation effect size (r) was 0.174, indicating a very low effect size (Rosenthal, 1984; Gullickson and Ramser, 1993).

Figure 6. The overall impact of PELT implementation on student-reported academic performance. Statistical analysis was performed using Wilcoxon signed-rank test (paired samples). A p-value of ≤ 0.05 was considered statistically significant.
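The paired comparison above combines a Wilcoxon signed-rank test with three effect sizes. The text does not state which Cohen’s d variant or which N was used for Rosenthal’s r, so the sketch below makes labeled assumptions: d uses the average of the two variances as the pooled variance, r = |Z|/√N takes N as the number of non-zero pairs, and the p-value comes from a normal approximation without continuity correction, which may differ from the exact or corrected p-value reported by the authors’ software (e.g., `scipy.stats.wilcoxon`). The grade values are invented.

```python
import math
from collections import Counter
from statistics import mean, stdev


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Two-sided signed-rank test, normal approximation with tie-corrected variance."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_diffs = [abs(d) for d in diffs]
    ranks = average_ranks(abs_diffs)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mu = n * (n + 1) / 4
    tie_term = sum(t ** 3 - t for t in Counter(abs_diffs).values()) / 48
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24 - tie_term)
    z = (w_plus - mu) / sigma
    return z, math.erfc(abs(z) / math.sqrt(2)), n


def rosenthal_r(z, n):
    """ASSUMPTION: N = number of non-zero pairs (conventions vary)."""
    return abs(z) / math.sqrt(n)


def cohens_d(x, y):
    """ASSUMPTION: pooled SD = sqrt of the mean of the two sample variances."""
    pooled_sd = math.sqrt((stdev(x) ** 2 + stdev(y) ** 2) / 2)
    return (mean(x) - mean(y)) / pooled_sd


def vargha_delaney_a(x, y):
    """Probability that a value from x exceeds one from y, counting ties as 0.5."""
    greater = sum(1 for a in x for b in y if a > b)
    ties = sum(1 for a in x for b in y if a == b)
    return (greater + 0.5 * ties) / (len(x) * len(y))


# Hypothetical PELT course grades and overall GPAs for six students
pelt = [4.75, 5.0, 4.5, 4.0, 5.0, 4.5]
gpa = [4.52, 4.81, 4.60, 4.10, 4.72, 4.33]
z, p, n = wilcoxon_signed_rank(pelt, gpa)
print(f"z = {z:.2f}, p = {p:.3f}, r = {rosenthal_r(z, n):.3f}")
print(f"d = {cohens_d(pelt, gpa):.2f}, A = {vargha_delaney_a(pelt, gpa):.2f}")
```

With real grade data, floating-point differences may need rounding before ranking so that genuinely equal differences are treated as ties.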

However, examining the results across different factors revealed some interesting patterns. Regarding academic discipline, the Humanities group had a significantly higher PELT Course Grade than Overall GPA (p ≤ 0.05) (Figure 7A), whereas the Health and Science disciplines showed no statistically significant differences. Across all three themes (T1- Curriculum Development, T2- Teaching Strategies, T3- Professional Development), the median PELT Course Grades were higher than the Overall GPA, but the differences were not statistically significant (Figure 7B). Similarly, both female and male students showed higher median PELT Course Grades, although the differences were not statistically significant (Figure 7C).

Figure 7. The differentiated impact of PELT implementation on student-reported academic performance across (A) academic discipline, (B) grant theme, and (C) sex. Statistical analyses between each pair were performed using the Wilcoxon signed-rank test (paired samples). A p-value of ≤ 0.05 was considered statistically significant.

3.3 Comprehensive score analysis study (based on university academic records)

3.3.1 Performance metric findings

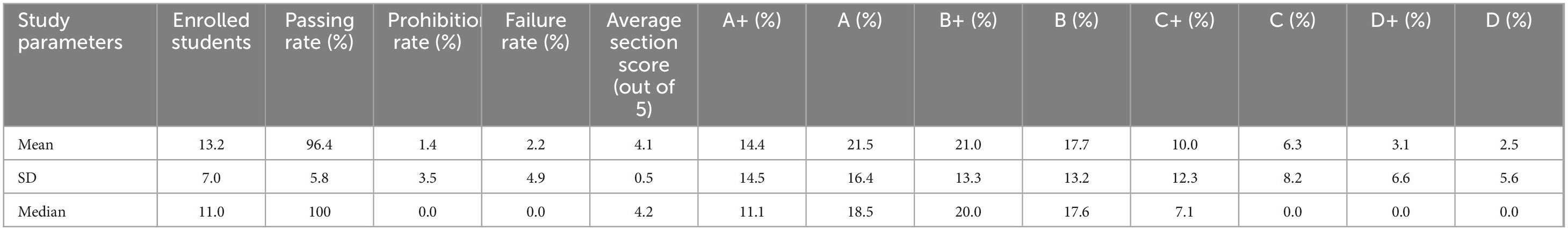

The analysis of academic performance metrics across course sections reveals distinct patterns in student achievement and grade distribution. Overall academic performance was strong, with a mean passing rate of 96.4% and low prohibition and failure rates of 1.4% and 2.2%, respectively. The average section score was 4.1 out of 5 (± 0.5), indicating consistently high achievement across sections (Table 7).

The grade distribution analysis shows a concentration in the higher grade brackets, with A+ and A grades accounting for 14.4 and 21.5% of grades respectively. B+ and B grades were also well-represented at 21.0 and 17.7% respectively. Lower grades showed progressively decreasing frequencies, with C+ at 10.0%, C at 6.3%, D+ at 3.1%, and D at 2.5%. Extreme values show that some sections had notably high proportions of top grades, with up to 59.1% achieving A+ and 76.0% achieving A grades in certain sections.
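The section-level metrics in Table 7 (passing, failure, and prohibition rates, grade shares, and a mean score out of 5) can be derived from each section’s list of final grades. This is a hypothetical sketch: the letter-to-point mapping, the `"prohibited"` label, and the choice to exclude prohibited students from the mean score are assumptions rather than details given in the text, and the example grades are invented.

```python
from collections import Counter

# ASSUMPTION: points per letter grade on a 5-point scale (not stated in the text)
GRADE_POINTS = {
    "A+": 5.0, "A": 4.75, "B+": 4.5, "B": 4.0,
    "C+": 3.5, "C": 3.0, "D+": 2.5, "D": 2.0, "F": 1.0,
}
PASSING = {"A+", "A", "B+", "B", "C+", "C", "D+", "D"}
PROHIBITED = "prohibited"  # hypothetical label for a prohibited student


def section_summary(grades):
    """Percentages and mean score for one course section's final grades."""
    total = len(grades)
    counts = Counter(grades)
    # Shares are taken over all students, so passing shares sum to the pass rate
    distribution = {g: 100 * counts[g] / total for g in GRADE_POINTS}
    graded = [GRADE_POINTS[g] for g in grades if g in GRADE_POINTS]
    return {
        "pass_rate": 100 * sum(counts[g] for g in PASSING) / total,
        "failure_rate": 100 * counts["F"] / total,
        "prohibition_rate": 100 * counts[PROHIBITED] / total,
        "distribution": distribution,
        # ASSUMPTION: the mean score excludes prohibited students
        "mean_score": sum(graded) / len(graded) if graded else float("nan"),
    }


section = ["A+", "A", "A", "B+", "B", "B", "C+", "D", "F", "prohibited"]
summary = section_summary(section)
print(summary["pass_rate"], summary["failure_rate"], summary["prohibition_rate"])
```

Taking shares over all students is consistent with the reported figures, where the passing-grade percentages (14.4 + 21.5 + 21.0 + 17.7 + 10.0 + 6.3 + 3.1 + 2.5 = 96.5%) match the 96.4% passing rate up to rounding.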

3.3.2 Demographics of score analysis study

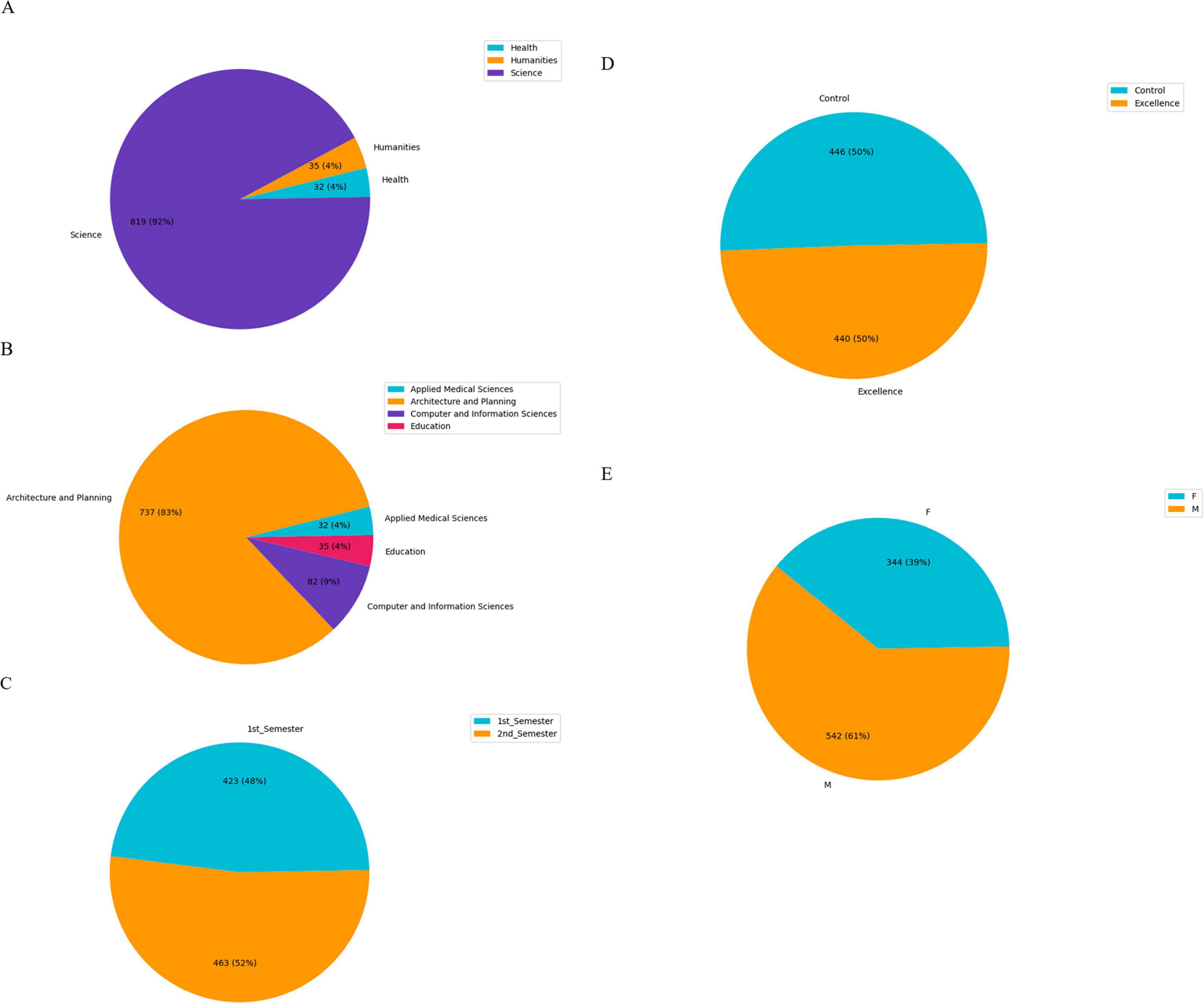

The demographic analysis of the university-collected student scores shows how the program’s implementation was distributed. The sample has a strong STEM orientation, with science disciplines accounting for 92% of participants (n = 819), while humanities and health sciences each represent 4% (Figure 8A). At the college level, the College of Architecture and Planning accounts for the majority of participants (83%, n = 737), followed by Computer and Information Sciences (9%, n = 82), while Education and Applied Medical Sciences each comprise 4% (Figure 8B). A distinctive feature of the study design is the temporal distribution of control and PELT groups: first-semester students (48%, n = 423) were all assigned to the control group, whereas the second-semester cohort (52%, n = 463) predominantly constituted the PELT group, with only a small portion serving as additional controls (Figure 8C). This design was necessitated by PELT implementation across all sections of most courses in the second semester, which made concurrent control sections impractical. The study therefore adopted a temporal comparison, using first-semester sections as control groups, on the assumption that there were no inherent performance differences between semesters. The resulting sample was balanced between the control and PELT groups (Figure 8D) and between male and female students (Figure 8E).

Figure 8. The distribution of students according to (A) academic discipline, (B) faculty, (C) semester, (D) program implementation, and (E) sex.

3.3.3 Comprehensive PELT implementation score analysis

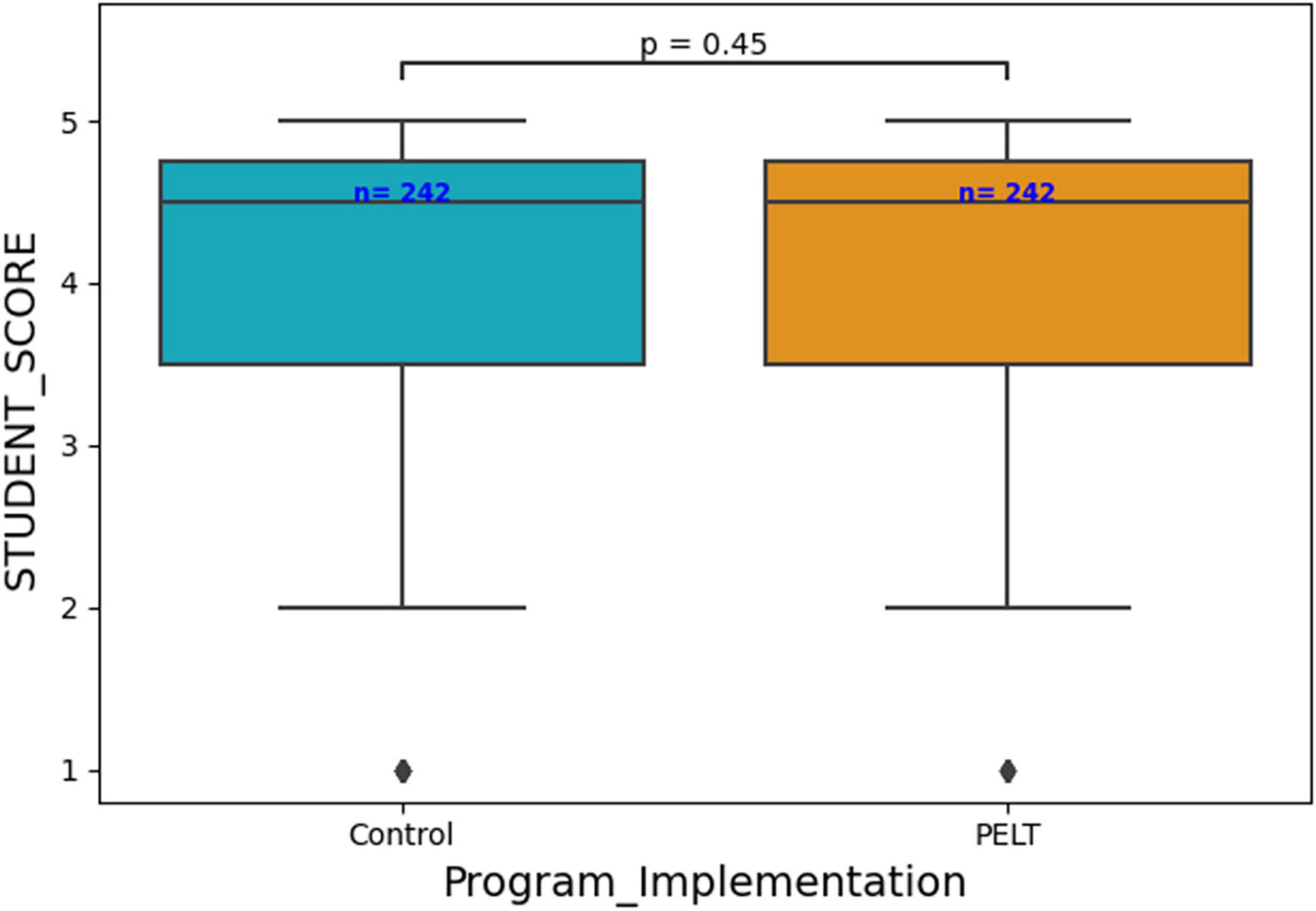

3.3.3.1 Overall effect of PELT implementation on student scores

The overall comparison between the PELT and control groups did not reveal a statistically significant difference in student scores (p = 0.45) (Figure 9). In addition, the Cohen’s d was 0.11, indicating a very small effect size (Cohen, 2013). The common language effect size (Vargha-Delaney’s A) was 0.52, suggesting a negligible effect size (Vargha and Delaney, 2000). The effect size Rosenthal correlation (r) was 0.064, indicating a very low effect size (Rosenthal, 1984; Gullickson and Ramser, 1993). This suggests a limited program impact on student performance when considering the entire student population.

Figure 9. The overall effect of PELT implementation on student exam scores. Statistical analysis was performed using the Mann-Whitney U test. A p-value of ≤ 0.05 was considered statistically significant.
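For the independent PELT and control groups, the Mann-Whitney U test replaces the signed-rank test, and Vargha-Delaney’s A follows directly from U as U/(n₁n₂). The sketch below uses a tie-corrected normal approximation and takes N in r = |Z|/√N as the total number of students; both are assumptions about the authors’ exact settings, and the scores are invented. `scipy.stats.mannwhitneyu` offers exact and asymptotic variants for comparison.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Two-sided Mann-Whitney U (normal approximation, tie-corrected)
    with Vargha-Delaney A and the Rosenthal effect-size r."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    n = n1 + n2
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # U statistic for sample x
    mu = n1 * n2 / 2
    tie_sum = sum(t ** 3 - t for t in Counter(pooled).values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_sum / (n * (n - 1))))
    if sigma == 0:
        raise ValueError("all scores are identical")
    z = (u1 - mu) / sigma
    return {
        "U": u1,
        "z": z,
        "p": math.erfc(abs(z) / math.sqrt(2)),
        "A": u1 / (n1 * n2),  # equals P(X > Y) + 0.5 * P(X = Y)
        "r": abs(z) / math.sqrt(n),  # ASSUMPTION: N = total number of students
    }


# Hypothetical exam scores (out of 5) for PELT and control students
pelt = [4.5, 4.0, 4.75, 3.5, 5.0, 4.0, 4.5]
control = [4.0, 4.5, 3.5, 4.75, 3.0, 4.0, 4.5]
print(mann_whitney(pelt, control))
```

An A close to 0.5, like the reported 0.52, means a randomly chosen PELT student outscores a randomly chosen control student about half the time.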

However, when the results were broken down by other factors, notable variations emerged.

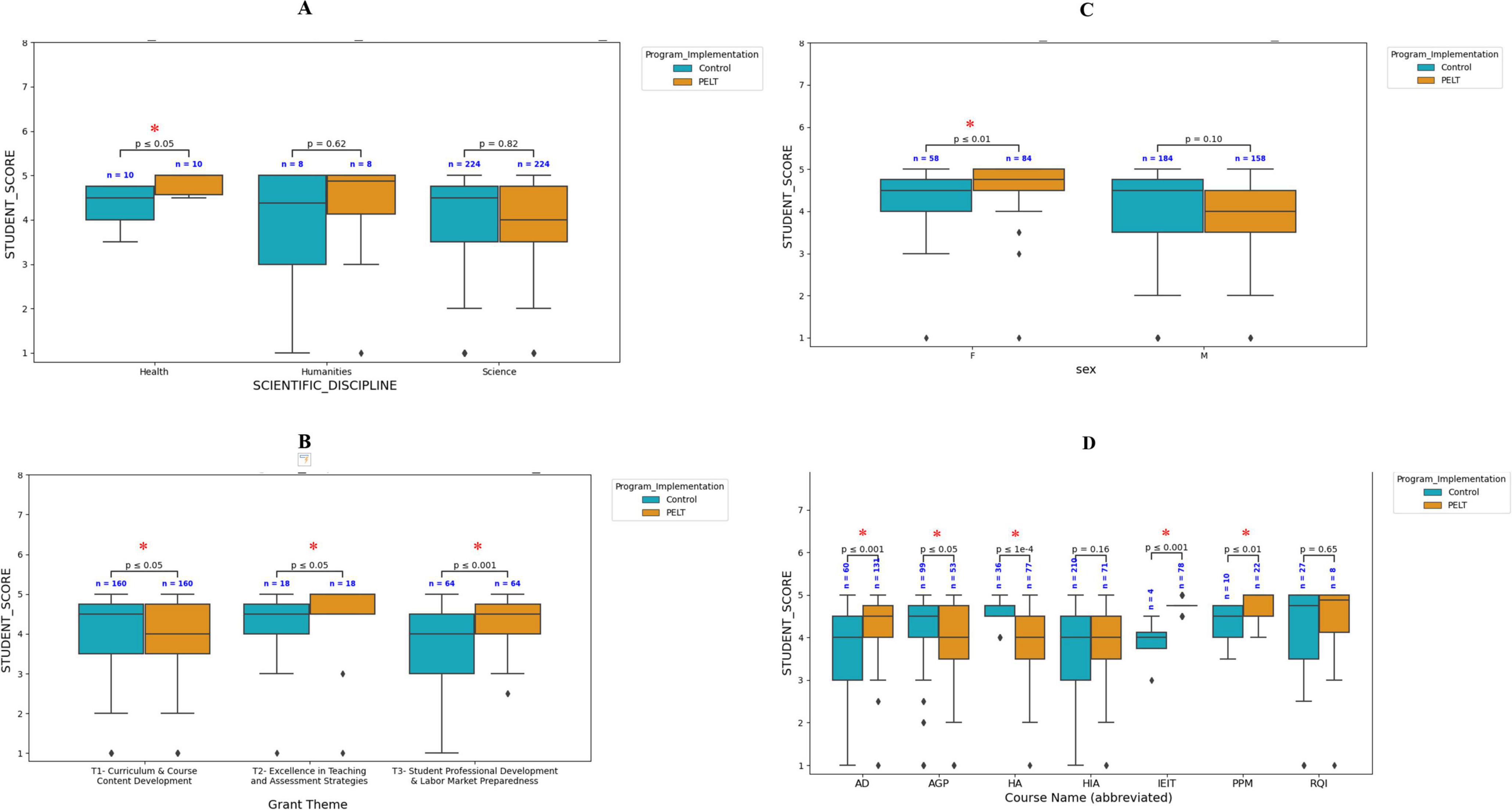

3.3.3.2 Subgroup effect of PELT implementation across academic discipline

Comparative analysis across disciplines revealed that Humanities and Science showed no significant score differences. Conversely, in the Health discipline, the PELT group demonstrated significantly higher scores compared to the control group (p ≤ 0.05) (Figure 10A). This suggests that the PELT program may have been particularly effective in enhancing student performance within the Health field.

Figure 10. Differentiated effect of PELT implementation on student exam scores across different confounding factors including (A) academic discipline, (B) grant theme, (C) sex, and (D) course type. Statistical analyses between each pair were performed using Mann-Whitney U test. A p-value of ≤ 0.05 was considered statistically significant and was denoted by a red asterisk (*). AD, Architectural Design; AGP, Architectural Graduation Project; HA, History of Architecture; HIA, History of Islamic Architecture; IEIT, Innovation and Entrepreneurship in Information Technology; PPM, Psychology of Perception and Movement; RQI, Rules of Quraan Interpretation.

3.3.3.3 Subgroup effect of PELT implementation across grant themes

The examination of grant themes provided further insights. For the T2 (Excellence in Teaching and Assessment Strategies) and T3 (Student Professional Development and Labor Market Preparedness) themes, the PELT group demonstrated significantly higher scores compared to the control group (p < 0.05 and p < 0.001, respectively). However, for the T1 (Curriculum and Course Content Development) theme, the PELT group had significantly lower scores than the control group (p < 0.05) (Figure 10B). These results suggest that the PELT program may have been particularly beneficial in enhancing student scores in the context of improving Teaching and Assessment Strategies as well as Student Professional Development and Labor Market Preparedness.

3.3.3.4 Subgroup effect of PELT implementation across sex

The effect of PELT differed by student sex. Female students in the PELT group scored significantly higher than their counterparts in the control group (p < 0.01). In contrast, male students in the PELT group scored lower than the control group, but the difference was not statistically significant (Figure 10C). This suggests that the PELT program had a more pronounced positive impact on female students’ performance.

3.3.3.5 Subgroup effect of PELT implementation across different courses

The course-level analysis revealed clear contrasts. For three courses (AD, IEIT, and PPM), the PELT group showed significantly higher scores than the control group. Conversely, for two courses (AGP and HA), the PELT group showed significantly lower scores than the control group. The remaining courses (HIA and RQI) showed no statistically significant difference in scores between the PELT and control groups (Figure 10D).
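The subgroup analyses in Figure 10 repeat the PELT-versus-control comparison within each level of a factor (discipline, theme, sex, or course). A hypothetical sketch of that stratification follows; the record fields (`"course"`, `"group"`, `"score"`), the course labels, and the scores are invented, and it reports medians and Vargha-Delaney’s A per subgroup rather than reproducing the authors’ tests. Because each subgroup is tested separately, an adjustment for multiple comparisons (for example Holm or Bonferroni) is often considered; the text does not state whether one was applied.

```python
from collections import defaultdict
from statistics import median


def vargha_delaney_a(x, y):
    """Probability that a value from x exceeds one from y, counting ties as 0.5."""
    greater = sum(1 for a in x for b in y if a > b)
    ties = sum(1 for a in x for b in y if a == b)
    return (greater + 0.5 * ties) / (len(x) * len(y))


def subgroup_summary(records, key):
    """Compare PELT and Control scores within each level of `key`."""
    buckets = defaultdict(lambda: {"PELT": [], "Control": []})
    for rec in records:
        buckets[rec[key]][rec["group"]].append(rec["score"])
    summary = {}
    for level, arms in sorted(buckets.items()):
        pelt, ctrl = arms["PELT"], arms["Control"]
        if not pelt or not ctrl:
            continue  # comparison is undefined when one arm is empty
        summary[level] = {
            "n_pelt": len(pelt),
            "n_control": len(ctrl),
            "median_pelt": median(pelt),
            "median_control": median(ctrl),
            "A": vargha_delaney_a(pelt, ctrl),
        }
    return summary


# Invented toy records for three hypothetical courses
records = [
    {"course": "C1", "group": "PELT", "score": 4.5},
    {"course": "C1", "group": "PELT", "score": 5.0},
    {"course": "C1", "group": "Control", "score": 4.0},
    {"course": "C1", "group": "Control", "score": 3.5},
    {"course": "C2", "group": "PELT", "score": 3.0},
    {"course": "C2", "group": "Control", "score": 4.0},
    {"course": "C3", "group": "PELT", "score": 4.0},
]
for course, stats in subgroup_summary(records, "course").items():
    print(course, stats)
```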

4 Discussion

The current study offers a multifaceted assessment of the PELT initiative, drawing on faculty surveys, student surveys, and academic performance data to provide a holistic evaluation of the program’s effectiveness and areas for improvement. The results suggest that the PELT program has had a positive impact on both faculty and student experiences, although the effects vary across factors.

The faculty survey results reveal a clearly positive perception of the grant program’s impact on faculty members’ skills and on the student learning experience. The findings indicate that the program delivered an equitably beneficial experience for male and female faculty across diverse disciplines and academic ranks. These findings are consistent with previous studies that position faculty development programs as pivotal mechanisms for enhancing faculty professional competencies, student learning outcomes, and the overall educational ecosystem (Morris and Fry, 2006; Bilal Guraya and Chen, 2019). Notably, among the three grant themes, faculty showed a stronger preference for the “T3” theme, which focused on developing students’ professional skills and career readiness. This aligns with the program’s goal of preparing students for the workforce and bridging the gap between academic knowledge and real-world competencies. In addition, the faculty’s textual feedback reflects a desire for a more systemic, long-term approach to the grant program, in which its impacts are amplified and embedded within the university’s processes and culture (Doherty, 2012).

On the other hand, the student survey results indicate that students generally have a fairly positive perception of the teaching methodology used in the course, with the impact on their skills being rated slightly higher than the impact on their academic achievement and their overall perception of the course instruction. The survey findings also suggest that while students valued the methodology in the context of the PELT-supported course, they were less enthusiastic about its broader applicability or sharing their experiences with others.

As with faculty, grant theme appears to be the most influential factor in overall student satisfaction with the PELT program. However, students preferred the T1 and T2 themes (which focused on curriculum development and teaching excellence, respectively) over the T3 theme (which focused on student professional development and labor market preparedness).

Notably, grant theme affected faculty and student satisfaction in opposite directions. Whereas student satisfaction was highest for the “T1- Curriculum and Course Content Development” and “T2- Excellence in Teaching and Assessment Strategies” themes, faculty showed significantly higher satisfaction with the “T3- Student Professional Development and Labor Market Preparedness” theme than with the other two. One possible explanation for this divergence is that faculty members take a longer-term and more holistic view of student development. They recognize the importance of nurturing students’ professional skills and employability, which may not be immediately reflected in students’ overall satisfaction. Students, in contrast, may prioritize more immediate academic experiences, such as the quality of course content and teaching methods, as observed in the student satisfaction analysis. This difference highlights the need to consider the distinct perspectives and priorities of faculty and students when designing and implementing educational programs like PELT.

Student textual feedback indicates a strong desire for more dynamic, student-focused, and practically oriented educational approaches (Shahba and Sales, 2021). Students seek meaningful learning experiences that balance theoretical knowledge with practical skills and interactive engagement. The feedback also highlighted that faculty teaching effectiveness, assessment methods, and grading criteria are critical areas for improvement.

To analyze these qualitative insights comprehensively, the current study employed an iterative AI-human collaborative approach. This methodology harnessed the speed and scalability of AI-based techniques while ensuring that the insights generated were grounded in a deep understanding of the context. This approach aligns with contemporary intelligence augmentation frameworks, which foster collaboration between AI and human intellect to enhance, rather than replace, human capabilities (Kasepalu et al., 2022; Lo et al., 2025).

Building on this methodological foundation, the ability of the AI-powered sentiment analysis tool to detect students’ overall satisfaction from the tone and language of their textual feedback is particularly valuable. It suggests that AI-based sentiment analysis can complement traditional survey methods, offering administrators a more nuanced understanding of student experiences.

However, these technological advantages must be weighed against important limitations. While sentiment analysis demonstrates high classification accuracy reflecting context validity (Kumar and Kumar, 2024), its insensitivity to cultural and gender influences on sentiment expression (Grimalt-Álvaro and Usart, 2024) and its limited analytical depth (Knoth et al., 2024; Lo et al., 2025) necessitate human validation. These findings echo recent research on generative AI in education (Lo et al., 2025), in which improved learning outcomes coexist with emotional variability. In the current study, the meaningful correlation between AI-derived sentiment scores and student satisfaction metrics provides empirical support for the model’s validity while acknowledging its limitations.

This tension between AI’s benefits and challenges reflects larger debates about technology’s impact on pedagogical equity, feedback personalization, and emotional engagement. AI has the potential to level the academic playing field by providing personalized learning experiences tailored to diverse student needs (Donnell et al., 2024; Londoño, 2024). However, disparities in access to AI resources can exacerbate existing inequalities, particularly for underprivileged students (Donnell et al., 2024). Institutions must ensure equitable access to AI technologies to avoid widening the educational gap (Green et al., 2022). While AI enhanced student performance and engagement, emotional responses remained mixed, reflecting the complexity of technology adoption in education (Lo et al., 2025). Critics also caution that overreliance on AI may diminish essential human interactions, potentially hindering the development of socioemotional competencies (Donnell et al., 2024).

Within this complex landscape, the PELT implementation showed modestly positive effects on student-reported academic performance. PELT course grades were generally slightly higher than overall GPA across most categories, although these differences were mostly not statistically significant. The factor-level analysis showed a significant positive effect only in the Humanities discipline.

Similarly, the findings from the university-reported score analysis highlight the nuanced nature of the PELT program’s effectiveness, with certain academic disciplines and grant themes showing more substantial positive impacts on student scores. These highly contrasting results across courses underline the importance of evaluating the PELT program’s effectiveness at the individual course level rather than only in aggregate.

A contextual evaluation of the program’s implementation is therefore essential. Closer investigation of the specific characteristics, pedagogical approaches, and grant themes associated with each course revealed several insights.

The courses that demonstrated significantly lower scores in the PELT group, namely AGP and HA, were both linked to the T1 (Curriculum and Course Content Development) grant theme. The analysis suggests that the focus of this theme was primarily on developing online resources, digitizing course content, and enhancing the technological aspects of course delivery, with less emphasis on fostering genuine student-faculty interactions within the learning environment.

In contrast, the courses that showed significantly higher scores in the PELT group, such as AD, IEIT, and PPM, were associated with the T2 (Excellence in Teaching and Assessment Strategies) and T3 (Student Professional Development and Labor Market Preparedness) grant themes. These themes placed greater emphasis on enhancing student-student and student-faculty interactions, both within and outside the lecture setting, to develop both course-related and professional skills.

The HA and HIA courses, which focused on developing 3D virtual reality models of historical buildings and architectural monuments, aligned with the T1 theme. The AGP course, in turn, aimed to create a digital repository of student graduation projects, which also falls under the T1 theme. These grant priorities, centered on developing online resources and digital tools, may have been less effective in promoting meaningful student engagement and learning outcomes than the approaches emphasized in the T2 and T3 themes. These findings suggest that the PELT impact may have been more pronounced in courses that fostered stronger student-faculty and student-student interactions than in those that primarily emphasized content development and technological enhancements.
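The course-level PELT-versus-control contrasts discussed above can be illustrated with a two-sample comparison. Because the control group comes from earlier semesters and group sizes and variances may differ, the sketch below assumes Welch’s unequal-variance t-test; this section does not state which test the study used. The function `welch_t`, the label `course_X`, and the scores are hypothetical and do not reproduce the study’s analysis.

```python
import math
import statistics
from typing import Sequence


def welch_t(pelt: Sequence[float], control: Sequence[float]) -> tuple[float, float]:
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n1, n2 = len(pelt), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    # Squared standard error of each group mean (sample variance / n)
    se1 = statistics.variance(pelt) / n1
    se2 = statistics.variance(control) / n2
    if se1 + se2 == 0:
        raise ValueError("t is undefined when both groups have zero variance")
    t = (statistics.mean(pelt) - statistics.mean(control)) / math.sqrt(se1 + se2)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical final-exam scores for one course (NOT the study's data)
scores = {
    "course_X": {"pelt": [78, 82, 85, 88, 90], "control": [70, 74, 75, 79, 81]},
}

for course, groups in scores.items():
    t, df = welch_t(groups["pelt"], groups["control"])
    print(f"{course}: t = {t:.2f}, df = {df:.1f}")
```

`scipy.stats.ttest_ind(pelt, control, equal_var=False)` runs the same Welch test and also returns a p-value. Testing each course separately matches the course-level evaluation argued for in the text, although comparisons across many courses would call for a multiple-comparison correction.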

Compared with other faculty development programs, PELT shares many features with similar initiatives at leading universities worldwide. Like these programs, PELT was established to foster active, learner-centered approaches and uses small grant schemes to promote teaching innovation. A key shared goal is investment in faculty development and pedagogical innovation (James Jacob et al., 2015; Carney et al., 2016; Kohan et al., 2023; Ossevoort et al., 2024).

What sets the PELT program apart is its pioneering status in Saudi higher education and its adaptability to emerging trends, with a focus on domains such as Students as Partners and GPT Tools in Education. Its integration of emerging technologies, particularly generative AI and AI-assisted text analysis, echoes the recent transformative shift in pedagogical innovation toward student-centered teaching and self-directed learning (Lo et al., 2025). The comprehensive, multi-dimensional evaluation, combining faculty and student surveys, sentiment analysis, and academic performance data, provides a level of rigor beyond typical program assessments (Hum et al., 2015; Kohan et al., 2023). However, the moderate overall student satisfaction score and inconsistent performance impacts across courses point to areas for further optimization in line with best practices at other successful faculty development programs (Reid et al., 2015). Moreover, the PELT program does not currently provide workshops or learning communities, which could further foster collaboration, knowledge sharing, and sustained professional growth (James Jacob et al., 2015).

The findings from this study have important practical implications for the future development and implementation of PELT, as well as for similar active learning initiatives at other higher education institutions. PELT’s varied outcomes underscore the importance of designing educational interventions that go beyond traditional instructional methods, emphasizing authentic active learning, meaningful student engagement, and alignment with both academic and professional development goals (Sivan et al., 2000). Based on the factors found to influence the effectiveness of the PELT approach, the program could be revised to incorporate new grant themes, modify faculty performance indicators, and prioritize student engagement and buy-in. Furthermore, scaling up the program will require robust faculty training, ongoing support, strong institutional commitment, and adequate resource allocation. These principles could guide other colleges and universities seeking to adapt the PELT model to their own contexts.

Moving forward, program administrators and educators should carefully consider the specific grant themes, pedagogical approaches, and implementation strategies employed in each course context. By aligning the PELT program’s focus with approaches that prioritize active learning, collaborative experiences, and authentic student-faculty interactions, the potential for a positive impact on student outcomes can be amplified. This nuanced, context-specific evaluation and optimization of the PELT program can lead to more consistent and impactful improvements in academic performance across diverse course offerings. Overall, the practical implications of this research extend well beyond the specific PELT program, offering valuable guidance and inspiration for the design and implementation of active learning initiatives that can drive positive change in higher education.

Furthermore, the findings from this evaluation of the PELT program have broader theoretical implications for understanding faculty professional development and student-centered learning. The positive faculty perceptions of the program’s impact on their teaching practices and student engagement provide empirical support for learner-centered pedagogy and constructivist learning theories, which emphasize the importance of coherent, applied professional development opportunities for enhancing instructional quality and student-centered approaches (Hyde and Nanis, 2006; Weimer, 2013; Moate and Cox, 2015; Ross et al., 2019). These frameworks emphasize knowledge construction through collaboration, personal growth, and critical reflection to enhance the overall learning experience (Msonde, 2023).

However, the moderate overall student satisfaction score of 3.5 suggests that more work may be needed to fully optimize the student-centered learning environment through the PELT program. These results could inform the scholarship of teaching and learning, highlighting the need to closely align faculty development and pedagogical practices with measurable improvements in the student experience (Trigwell et al., 2000; Bernstein and Ginsberg, 2009; Hutchings et al., 2011; Cruz, 2013). In addition, ensuring that student input is incorporated at the grant proposal stage is a key tenet of learner-centered pedagogy, as it helps align the program with learners’ needs and preferences (Moate and Cox, 2015; Msonde, 2023).

In addition, the study findings suggest that the effects of the PELT program on academic performance may vary with grant theme and disciplinary context. In particular, the PELT impact may have been more pronounced in courses that fostered stronger student-faculty and student-student interactions than in those that primarily emphasized content development and technological enhancements. This aligns with constructivist learning theories, which position social interaction and collaborative knowledge construction as fundamental to meaningful learning (Hyde and Nanis, 2006; Weimer, 2013; Ross et al., 2019; Qambaday and Mwila, 2022). The results also suggest that when courses are designed around these principles, students can better construct knowledge through meaningful interactions with both peers and instructors. This observation reinforces the core premise of learner-centered pedagogy, derived from constructivist theory, which places students’ active knowledge construction above rote content mastery (Moate and Cox, 2015).

Beyond these theoretical implications, the results carry important considerations for educational policy and the long-term sustainability of faculty development programs like PELT. The observed disciplinary and thematic variations in PELT’s impact suggest that policymakers and institutional leaders should consider providing more targeted, differentiated support for faculty development initiatives. For example, the divergent preferences of faculty and students regarding the grant themes highlight the need to balance curriculum enhancement with professional skill development. Funding models and program designs should be adapted to the unique needs and priorities of different academic disciplines.

Long-term sustainability is already an essential condition for accepting PELT grants: the funded initiative or project must be sustainable in nature. This includes the possibility of continuing implementation with the course students in each academic semester and presenting the experience to the academic department council. The program also encourages sharing its outputs more widely within the university through scientific presentations or academic publications. Faculty members in other colleges and departments can then benefit from these experiences and adapt them to their own contexts, which could enhance the program’s institutional impact and long-term benefits.

In future programs, it would be valuable to explore ways of integrating the pedagogical approaches and resources developed through PELT into ongoing faculty support and development initiatives at the university. This could include cultivating faculty learning communities, securing dedicated funding sources, and aligning the program’s objectives with institutional priorities around teaching excellence and student success.

Exploring these broader policy implications and sustainability factors is crucial for ensuring that impactful faculty development programs like PELT can have long-lasting effects on instructional quality and student learning experience.

4.1 Limitations of the study

While the current study offers valuable insights into the PELT program’s implementation and outcomes, the limitations of its research design must be acknowledged. First, because the research was conducted at a single institution, King Saud University, the findings may not generalize to other higher education contexts. Second, as a non-experimental study, it does not allow definitive causal claims linking participation in the PELT program to the observed outcomes. The voluntary nature of the program precluded a controlled experimental design with random student assignment, and the control group, composed of course sections from previous semesters, introduces potential confounding variables such as variations in exam difficulty, instructional styles, and contextual changes unrelated to PELT implementation. Third, the research faced significant challenges in measuring the program’s impact comprehensively. Although final course grades provided an objective performance metric, they captured the program’s holistic influence only incompletely. Notably, in courses with substantial lab or practical components (up to 30% of final scores), the final scores may not fully reflect the contributions of PELT interventions focused on theoretical content.

While recognizing the potential of AI-assisted methodologies, the study acknowledges critical considerations surrounding bias mitigation, cultural relevance, and the need to maintain human oversight of technological interventions. These considerations resonate with recent research advocating for preserving human agency in AI-enhanced educational environments (Lo et al., 2025).

Despite these limitations, the study benefits from an integrated, multifaceted methodology. By combining quantitative and qualitative data analysis, the research offered a comprehensive assessment of faculty and student perspectives. The innovative use of AI-powered sentiment analysis, together with a detailed comparison of course-specific and overall academic performance, provided a rich, contextual understanding of the program’s effectiveness.

4.2 Future research

Future research should develop more sophisticated methodologies that address the current study’s constraints. Replicating this study across diverse university settings would help validate its insights and assess their broader applicability. Where feasible, randomly assigning students to PELT and control groups would allow researchers to better isolate the program’s specific effects and account for potential confounding variables. Subsequent investigations should also explore comprehensive assessment techniques that capture multidimensional learning outcomes and investigate the contextual factors that shape educational interventions.

In addition, future studies should consider a longitudinal design. A multi-year study tracking faculty and students, including changes in student academic performance, engagement, retention, and post-graduation outcomes, could shed light on the lasting and transformative potential of the PELT intervention. Insights from such an analysis could inform strategies for successfully scaling up and implementing the PELT program in higher education settings.

Building on current research in AI-enhanced education (Lo et al., 2025), future studies should examine the application of faculty development programs and AI-assisted interventions across diverse disciplines, age groups, and geographic regions to assess broader applicability.