- Department of Design, Media and Educational Science, University of Southern Denmark, Sønderborg, Denmark

Introduction: Despite their many advantages, group activities can result in participation imbalance, especially when a participant joins the activity remotely. In this experimental study, we address the issue of imbalance in small groups, which consist of two co-situated participants and one remote student on a telepresence robot. The aim is to investigate whether using a robot to moderate group activities can affect engagement and balance participation.

Methods: 84 participants were recruited and assigned to two conditions, namely baseline (without a robot moderator) and experimental (with a robot moderator). As participants engaged in completing a language learning task, the moderator implemented verbal and nonverbal interventions.

Results: Data analysis shows that while nonverbal interventions mostly failed, verbal interventions had a success rate of 88.24% in encouraging participants to start speaking. Regarding the talking time of group members, results show significant differences between the mean scores in both conditions, indicating a more balanced participation of group members in the experimental condition. Participants also formed positive attitudes toward the robot moderator.

Discussion: In conclusion, using the robot moderator positively affected group dynamics by encouraging quiet participants to be more active and making the interaction more balanced.

1 Introduction

In language learning contexts, group work activities have various benefits for students. They can increase engagement of learners by providing more opportunities for them to interact and socialize (Forslund Frykedal and Hammar Chiriac, 2018; Meyers, 1997). Furthermore, such activities have been shown to reduce affective barriers and enhance learner autonomy (Liu et al., 2018). A meta-analysis of about 170 research studies shows that group activities can improve learners’ achievements and reasoning skills and result in longer retention of information compared to individual tasks (Johnson et al., 2014).

Nevertheless, multiparty interactions can foster imbalanced participation of group members, especially in cases where one of the participants is more dominant or some group members do not find enough opportunities to contribute to the communication (Gillet et al., 2021). This issue might become more conspicuous in cases where one of the team members participates in the group activity remotely—for example via a telepresence robot. In such cases, the telepresence robot user tends to participate less than others and experience more task difficulty compared to co-situated participants (Stoll et al., 2018).

Nonetheless, improving participation and engagement of remote students in multiparty interactions is important for a number of reasons. First, when all students have an equal opportunity to participate and contribute to class discussions and activities, a more inclusive and equitable learning environment is created for everyone. This can be especially beneficial for students who are shy, have difficulty speaking up, or are joining the class virtually. Second, when students are included in learning and are actively engaged in class and/or group activities, they have a higher chance of learning and retaining information (see Turnbull et al., 2004). Third, improving the interaction quality of remote students can lead to better outcomes for the group as a whole. When all participants are engaged and contributing to the task, the group is more likely to generate creative ideas, solve problems effectively, and make informed decisions (Charteris et al., 2024). Finally, since social interaction is essential for human development (Filia et al., 2018), improving the participation and social inclusion of remote students can help to improve their social and emotional well-being. This can be especially beneficial for students who are homebound or hospitalized.

Previous research suggests that group participation can be balanced when it is moderated by a robot or robotic object (Skantze, 2017; Tennent et al., 2019; Weisswange et al., 2023). In such cases, the moderator can emulate a variety of verbal and nonverbal behaviors used in human-human interactions, such as turning its head and/or gaze toward the desired addressee, using gestures, asking directed questions, and calling the name of the next speaker. Such interventions can be implemented during different stages of interaction. For example, the robot moderator might wait for a speaker to finish their turn and then select the next speaker, or it may interrupt someone’s talk to allocate the turn to another person. There is a methodological advantage of studying group moderation using a robot moderator: human moderators generally make such decisions intuitively, possibly aided by—often loosely attended to—a set of rules, such as limited talking time. It is therefore quite difficult to see what strategies work and which ones do not actually facilitate group interaction. For robot moderators, the turn-allocation rules must be set in advance and the robot’s program should clearly state, for instance, whether it should intervene after a certain amount of time, during pauses, when turn-yielding signals are produced by the speaker, etc.

In this article, we therefore address the issue of participation imbalance in hybrid group interactions using a robot as moderator. The study investigates whether a robot moderator can balance group member participation by employing two types of intervention behaviors:

• Nonverbal Intervention: The robot turns its head and gaze toward the least vocal participant to encourage participation.

• Verbal Intervention: The robot poses opinion questions, inviting responses from quieter participants.

These interventions aim to encourage the least vocal participant in each group to be more active and engage in interactions. The success of these interventions is measured by assessing changes in participants’ total speaking time. Moderation is managed by a JD humanoid robot, which guides each group through a communicative language activity. Therefore, to examine the effectiveness of a robot moderator in facilitating balanced engagement, we address the following research questions:

• RQ 1: In robot-mediated interactions, do nonverbal interventions from a robot moderator increase participant engagement?

• RQ 2: In robot-mediated interactions, do verbal interventions from a robot moderator increase participant engagement?

• RQ 3: Do verbal and nonverbal interventions effectively balance speaking times of participants?

• RQ 4: Do participants form a positive attitude toward the robot moderator?

An experimental study was conducted to address the research questions. In the following sections, we will discuss relevant past research, present the experiment in detail, and discuss the results of the study.

2 Previous work

In this section, we will present core concepts and past research related to the dynamics of group work in learning contexts, telepresence robots in remote learning, and the role of robot moderation in interactions.

2.1 Group work

Group work in education has multifaceted benefits. In language learning, for example, group work provides more chances for students to practice linguistic skills, build social connections, and reduce affective barriers (Hansen, 2006; Jones, 2007; Moreland and Myaskovsky, 2000). Another advantage of group work is its potential to improve critical problem solving and experiential learning (Marder et al., 2021). In addition, group work tends to create a supportive social context, which can positively influence individual learning outcomes (Hammar Chiriac, 2014). Moreover, group discussions enable students to talk about their thoughts or negotiate complex ideas, with a higher chance of deeper learning through authentic interactions (Scott, 2017; Utha and Tshering, 2021).

The dynamics of group work can also promote cooperative learning and improve students’ engagement in collaborative tasks (Herrera-Pavo, 2021; Schwarz et al., 2021). Cooperative learning environments allow weaker students to learn from their more capable peers and improve their overall learning outcomes (Alghamdi and Gillies, 2013). Similarly, Kirschner et al. (2011) argue that collaborative learning can distribute cognitive load among group members and allow them to focus on problem-solving tasks.

Overall, past research shows that group work can be a powerful pedagogical tool to improve individual learning and create a supportive environment. However, despite the advantages of group work, it can be challenging to maintain balanced participation among members. In group dynamics, some individuals may naturally dominate the conversation, while others remain passive due to personality traits, confidence levels, or perceived expertise differences (Cohen and Lotan, 2014; Hodges, 2018). This uneven distribution of participation might hinder the overall effectiveness of group activities. For example, members who contribute less may feel undervalued or excluded from the task. This issue becomes particularly problematic when imbalances continue across multiple sessions or group tasks, because it can create a pattern in which quieter participants feel discouraged from sharing and become reluctant to participate in future collaborative tasks. Participation imbalance can also negatively affect group outcomes. For example, when certain members dominate discussions, the group may miss out on the opinions of quieter members. Additionally, participation imbalance can discourage collaboration and make group members feel marginalized (see Strauß and Rummel, 2021).

The issue of participation imbalance can also create some challenges for students who participate remotely. Remote learners often struggle to integrate fully into group activities. Tang et al. (2004) point out that communication through technology can negatively affect engagement and social presence by reducing the sense of personal connection and emotional intimacy. For students, mediated communication can negatively affect participation and the feeling of connectedness to their classmates and instructor (Gray and DiLoreto, 2016). Furthermore, in cases where there is not a strong sense of actual and perceived presence of others as well as a shared social context, communication can be negatively impacted by the presence of mediating technology (Jourdan, 2006).

Overall, hybrid teamwork has several benefits. However, the brief review of the literature shows that remote students face the challenge of low participation rates and are at risk of being passive participants in class activities. The present study aims to address this challenge by adding a moderator to encourage their participation and increase their chances for engagement with peers.

2.2 Telepresence robots

While remote learning allows students to remain connected to their education, it comes with various challenges. In recent years, telepresence robots have emerged as a tool to improve mediated communication. A telepresence robot is a robot that is controlled by a person from a remote location. It is equipped with a camera, microphone, speakers, and usually a screen that displays the operator’s face. Through this device, the operator can remotely interact with others and navigate through the physical environment. Telepresence robots come in different forms with different capabilities, each of which offers some advantages over other means of mediated communication such as video conferencing programs. For example, a Double 3 telepresence robot enables its operator to tilt the camera, change height, and navigate automatically. A GoBe telepresence robot has a large screen with wide-angle and high-quality cameras and allows users to adjust its speed. AV1 telepresence robots are desktop devices with a robotic face and different notification lights, indicating whether their users are idle or active. In a study by Johannessen et al. (2023), homebound children (N = 37) participated in their classes via AV1 telepresence robots. Interview data collected from the participants, their parents, and the teachers show that the robot facilitated the participants’ interaction with their classmates and teachers, and that the students felt less lonely and isolated and had a lower level of uncertainty and anxiety during the experiment.

Integrating telepresence robots into education has been a major area of research over the past few years, especially since there are many students who are unable to attend school. Studies in this area suggest that this technology can help students maintain a virtual connection with their schools, classmates, and teachers (Liao and Lu, 2018; Newhart et al., 2016; Powell et al., 2021; Weibel et al., 2023a; Weibel et al., 2023b). As Cha et al. (2017) put it, attending school via a telepresence robot improves learning outcomes and reduces the issue of social isolation and emotional barriers that occur due to lack of contact between an individual and society. Telepresence robots can also increase learners’ access to native language teachers, authentic contexts, and even learners from a different region (Kwon et al., 2010). Kornfield et al. (2021), for example, compared video-mediated and robot-mediated interactions and found that local participants perceived telepresence robot users as more present compared to participants in video-mediated interactions. Similarly, Schouten et al. (2022) found that in hybrid activities, a telepresence robot can improve the feelings of social presence compared to videoconferencing.

Despite their benefits, telepresence robots have some limitations. Cha et al. (2017), for example, explore the use of a Beam Plus telepresence robot by middle school students. They found that since remote students cannot physically mingle with their peers, they are mostly bystanders during group activities and might feel isolated and excluded. In another study, Gleason and Greenhow (2017) examined the role of physical embodiment and social presence for doctoral students in a hybrid class. Although they reported positive results regarding facilitated interaction and physical presence, they found that collaborations between online and co-present participants were challenging due to the limited field of vision through the robot and incomprehensibility of communication in noisy contexts.

Stoll et al. (2018) examined whether the distribution of information among remote and co-located group members affects their participation. To do so, they designed teams that consisted of two co-located members and one person on a telepresence robot. The teams were instructed to solve a translation puzzle collaboratively. The study had three conditions: (a) all members had access to the translation key, (b) the co-located members had access to the translation key, and (c) only the remote user had access to the translation key. The findings of the study show that remote members were considered less trustworthy by co-located members in all three conditions. Remote participants also participated less than other members and experienced more task difficulty compared to co-located participants. Stoll et al. (2018) suggest that one way to compensate for the social and communicative limitations of the robot-mediated interaction is through giving robot users more access to resources and materials needed to complete a task compared to co-located members.

In sum, telepresence robots facilitate remote participation in group settings. However, participation and group dynamics are likely to be negatively affected, since participants tend to participate less or be perceived as less trustworthy. It is therefore essential to address these challenges to help enhance meaningful connections and collaboration among participants and promote the overall experiences of telepresence robot operators (Thompson and Chaivisit, 2021). Past research has shown that these challenges can be mitigated through facilitators who actively encourage all members to share their thoughts and ideas (Skantze, 2017). Thus, in this paper, we investigate whether using a social robot moderator can reduce participation imbalance among group members. The reason why a robot moderator was chosen instead of a human moderator is that robots are consistent in making cues and interventions across groups. In the current work’s controlled experimental environment, consistency is a key factor that can ensure the reliability of the findings.

2.3 Robot moderation

Previous research shows that robots, robotic objects, and virtual agents can affect participation when they moderate group interactions. For example, Tennent et al. (2019) studied whether the nonverbal behavior of a microphone-shaped robotic object, called Micbot, can positively affect the engagement and performance of participants in groups of three. The researchers asked each group to perform two problem-solving tasks. In the meantime, Micbot, which was placed on a table between the participants, performed one of the following behaviors: (a) it turned toward the speaker and stayed in that direction, while every 3 min, it turned toward the least vocal person for 15 s and then returned to its neutral position before starting its next move; (b) it moved randomly; or (c) it stood still on the table. The findings of the study show that Micbot’s behavior in the first condition made the conversational dynamics more balanced by encouraging passive participants to engage more in the group discussion. Moreover, Druckman et al. (2021) compared the effect of different types of mediators (a teleoperated robot vs. a human vs. a computer screen vs. no mediation) on negotiation outcomes. The mediators used an identical script to analyze the communication and give advice on how to resolve an impasse in a simulated negotiation scenario. The results show that mediation from the robot resulted in more agreements and a higher level of satisfaction compared to the other conditions.

In face-to-face communication, the direction of gaze can be used to signal conversational roles (such as speaker or addressee) and facilitate turn-taking and turn-yielding attempts of participants (Mutlu et al., 2012; Sacks et al., 1974). For example, a speaker’s gaze direction can imply the selection of the next speaker, while the lack of gaze toward a person can mean that the individual is not the desired recipient of the next speaking turn (Schegloff, 1996). Skantze et al. (2014) examined the role of human-like coordination cues such as gaze in a one-on-one interaction between a participant and a Furhat robot. They manipulated the robot’s gaze and turn-taking behavior and programmed it to guide participants to draw a route on a map. The researchers compared the systematic gaze behavior of Furhat with a condition in which the robot produced random gaze cues and a condition in which the robot spoke to the participant while it was hiding behind a paper board. They reported that participants benefited from the robot’s coordinated gaze behavior and that it affected the participants’ gaze and verbal behavior. Skantze et al. (2014) argue that participants were able to use the robot’s gaze to understand references to different spots on the map.

Skantze (2017) explored turn-taking and participation equality during a collaborative game between a Furhat robot and pairs of participants. While the participants and the robot discussed the task with each other, the robot used gaze, turned its head, and asked either a directed question (aimed at a specific participant) or an open question (not aimed at a specific participant). The findings suggest that by using gaze and changing the addressee, participation equality improved in favor of the less active participants. Gillet et al. (2021) examined whether a Furhat robot can balance the degree of participation by using adaptive gaze behaviors versus non-adaptive gaze behavior. In doing so, when the more active participant was talking, the robot gazed at both participants equally. However, during the less-vocal participant’s talking time, Furhat shifted its gaze depending on the relative amounts of speaking time. They paired up a native Swedish speaker with a learner of Swedish language and instructed them to play a language game with the robot. In this word-guessing game, the participants were expected to work together to provide verbal cues until the robot could guess the target word. It was found that the robot’s adaptive gaze behavior resulted in a more even participation compared to the non-adaptive gaze condition.

Bohus and Horvitz (2010) examined the role of verbal and non-verbal cues including gaze, gesture, and speech of a 2D conversational avatar in the flow of conversations in multiparty interaction. The interaction occurred in the form of a quiz game, where the virtual agent asked participants a series of questions. The questions were either addressed to one participant or all participants. To take or yield turns, the avatar used gaze and simple facial expressions and asked directed questions such as ‘is that correct’. The results suggest that the virtual agent’s synchronized speech, gaze, and gesture was successful in shaping addressee roles, allocating speaking turns, and affecting the flow of conversation in multiparty interaction. The researchers also found that the participants judged abilities of the moderator as favorable (Bohus and Horvitz, 2010).

These studies show that robots can be effective as group moderators. Robots also have the advantage that their behavior can be fully controlled, so that we can identify the effects of particular strategies without the fear of confounding factors. Overall, robot moderation can positively influence interaction among a pair or groups of participants. As discussed in the previous section, when telepresence robot users join a group, they are more likely to speak less than their co-located groupmates. Thus, using a robot to moderate the interaction might be an effective way of balancing participation and increasing the engagement of remote users.

3 Materials and methods

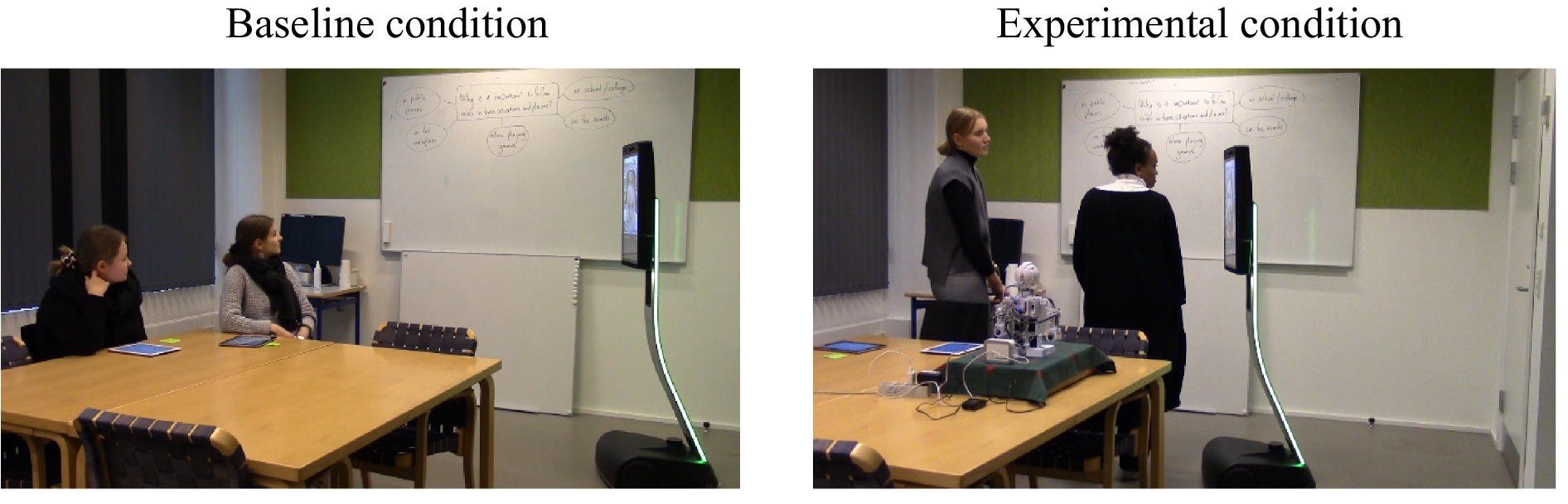

This study uses a between-participants experimental design with two conditions. Each condition involves three-person groups consisting of two co-located participants and one telepresence robot user. In the experimental condition, group members engage in a language learning activity facilitated by a robot moderator. In the baseline condition, there is no robot to moderate the experiment. Instead, participants follow text-based instructions delivered on iPads (for co-located participants) and computers (for telepresence robot users). Both conditions receive identical instructions to ensure that task content is consistent. The primary aim is to assess whether robot moderation influences participant engagement and balances participation.
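To make the notion of balanced participation concrete, the following minimal Python sketch computes each group member's share of the total speaking time and a simple imbalance score. The function names, the turn-log format, and the use of the population standard deviation of the shares as an imbalance score are illustrative assumptions and not the measure reported in this study.

```python
from statistics import pstdev


def speaking_shares(turns, participants):
    """Return each participant's share of the total speaking time.

    turns: list of (participant_id, duration_in_seconds) tuples (assumed format).
    participants: all group members, so that silent members receive a share of 0.
    """
    totals = {p: 0.0 for p in participants}
    for speaker, duration in turns:
        totals[speaker] += duration
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {p: 0.0 for p in participants}
    return {p: t / grand_total for p, t in totals.items()}


def imbalance(shares):
    """Population standard deviation of the shares (assumed score).

    A value of 0 means all members spoke for the same amount of time;
    larger values mean a more uneven distribution.
    """
    return pstdev(list(shares.values()))


# Hypothetical three-person group: two co-located members and one remote member.
group = ["local_1", "local_2", "remote"]
log = [("local_1", 60), ("local_2", 30), ("remote", 10)]
shares = speaking_shares(log, group)
print(shares, imbalance(shares))
```

Under this assumed score, a group in which the remote member speaks far less than the co-located members yields a higher value than a group with equal speaking times, which is the pattern a moderator would be expected to reduce.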

There are two main reasons why a robot moderator was used instead of a human moderator. First, robots can maintain a high degree of consistency and control in their interventions (Fischer, 2016). For example, factors such as tone of voice, gaze direction, head movements, and other nonverbal cues can be replicated across all participant groups. This consistency can minimize the potential variability in a human moderator’s behavior (e.g., smiles, hesitations, fatigue, etc.). Second, robots have a consistent behavior even when not actively intervening. A human moderator might unintentionally show some nonverbal cues (e.g., nodding, shifting posture, etc.) while observing the discussion. These cues might be interpreted differently by participants and potentially affect their interaction patterns. Thus, the use of a robot moderator for targeted interventions can help us measure their effects more consistently and improve the replicability of the experiment.

3.1 Participants

Eighty-four individuals, recruited through convenience sampling, participated in this study. Once recruited, the participants were assigned to groups of three, resulting in 28 groups overall. Of these, 18 groups (N = 54) were assigned to the robot-moderated condition, while the remaining 10 groups (N = 30) were assigned to the baseline condition. Within each group, participants selected a member to operate a telepresence robot for remote participation during the experiment session.

The sample included 56 males, aged 18 to 55 (M = 21.92, SD = 5.61), and 28 females, aged 18 to 30 (M = 20.71, SD = 2.85). The telepresence robot operators included four females and 14 males in the experimental and two females and eight males in the baseline condition. No one reported any past experience with telepresence robots. The study was conducted on Campus Sønderborg at the University of Southern Denmark, and all participants were students at the university. Participants were students from different fields of study, including Business Administration, European Studies, Technology Innovation, Electronics, and Mechatronics. They volunteered to participate in the experiment in their free time and received a bar of chocolate for their participation.

3.2 Human ethics statement

The experiment received ethical approval from The University of Southern Denmark’s Research & Innovation Organisation (SDU RIO) (notification number: 11.612). Participants reviewed and signed informed consent forms and thereby confirmed their voluntary participation in the experiment. They were reassured that their data would remain anonymous. They could also withdraw from the study at any time.

3.3 Experiment task

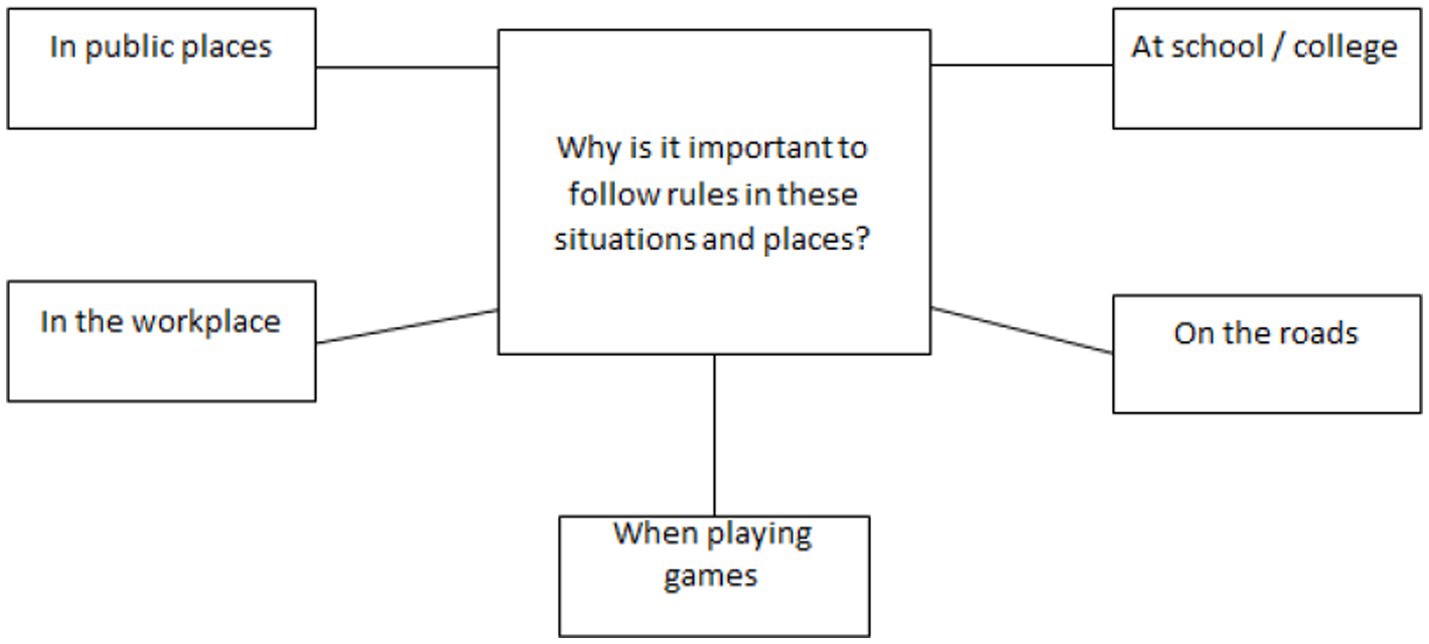

During the experiment, participants completed an interactive speaking activity adapted from the C1 Advanced Exam by Cambridge Assessment English. The activity consisted of a primary communicative task (Figure 1), followed by two discussion questions.

After question 1, participants received the following questions for further discussion:

• Are there occasions when we should question the rules and not simply obey? Why or why not?

• Do you think we have to follow too many rules in our lives? Why or why not?

Participants were instructed to discuss each part of the task for 3 min, though they were not interrupted if they exceeded the time limit and were allowed to finish at their own pace.

This task was selected due to its design and standardization by Cambridge Assessment English for interactive communication between two or three participants. The primary aim of this task is to assess collaborative interaction. Thus, it can encourage participants to engage in meaningful exchanges rather than focusing solely on linguistic form (see Brown and Lee, 2015).

3.4 Robots

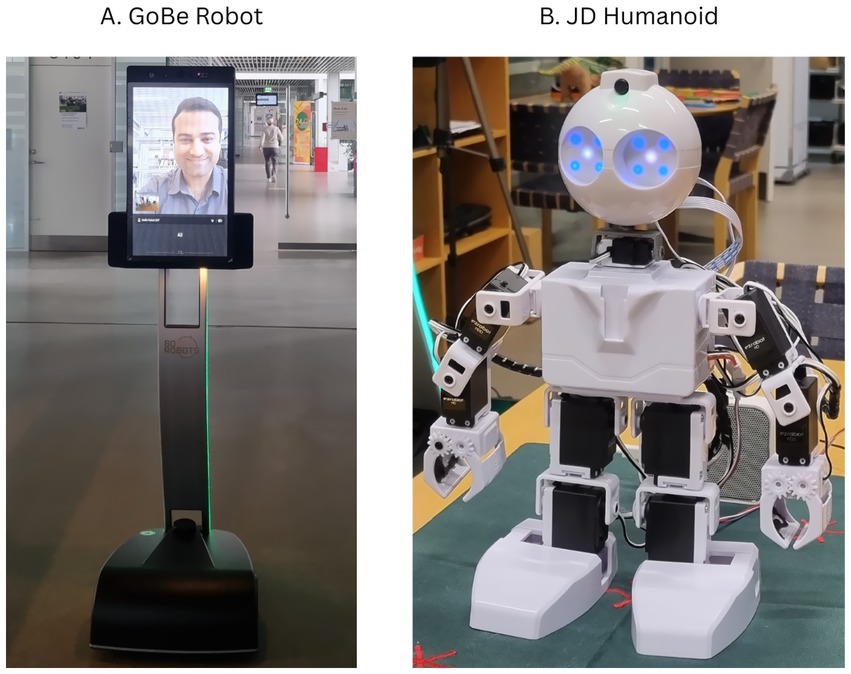

Two different types of robots were used in the study:

Telepresence robot: The GoBe robot (Figure 2A), manufactured by Blue Ocean Robotics in Denmark, served as the telepresence device for remote participants. GoBe is a mobile robot, which is 161 centimeters tall and weighs approximately 40 kilograms. It has a 21.5-inch touchscreen display, a high-definition webcam, and high-quality speakers. The robot takes approximately 4 h to fully charge and has a runtime of up to 8 h. It allows users to drive the robot around, adjust volume, increase or decrease speed, type messages on its screen for co-located participants, and communicate with others.

Social robot: JD Humanoid robot (Figure 2B) was used to moderate the experimental sessions. JD is 33 centimeters tall and weighs about 1.3 kilograms. Among its capabilities, JD Humanoid can engage in verbal communication, perform hand gestures and body movements, walk, and autonomously track motions, colors, and faces. It runs on a software program called Advanced Robotic Controller (ARC), which is compatible with Microsoft Windows, Android, and iOS platforms.

3.5 Robot dialogs

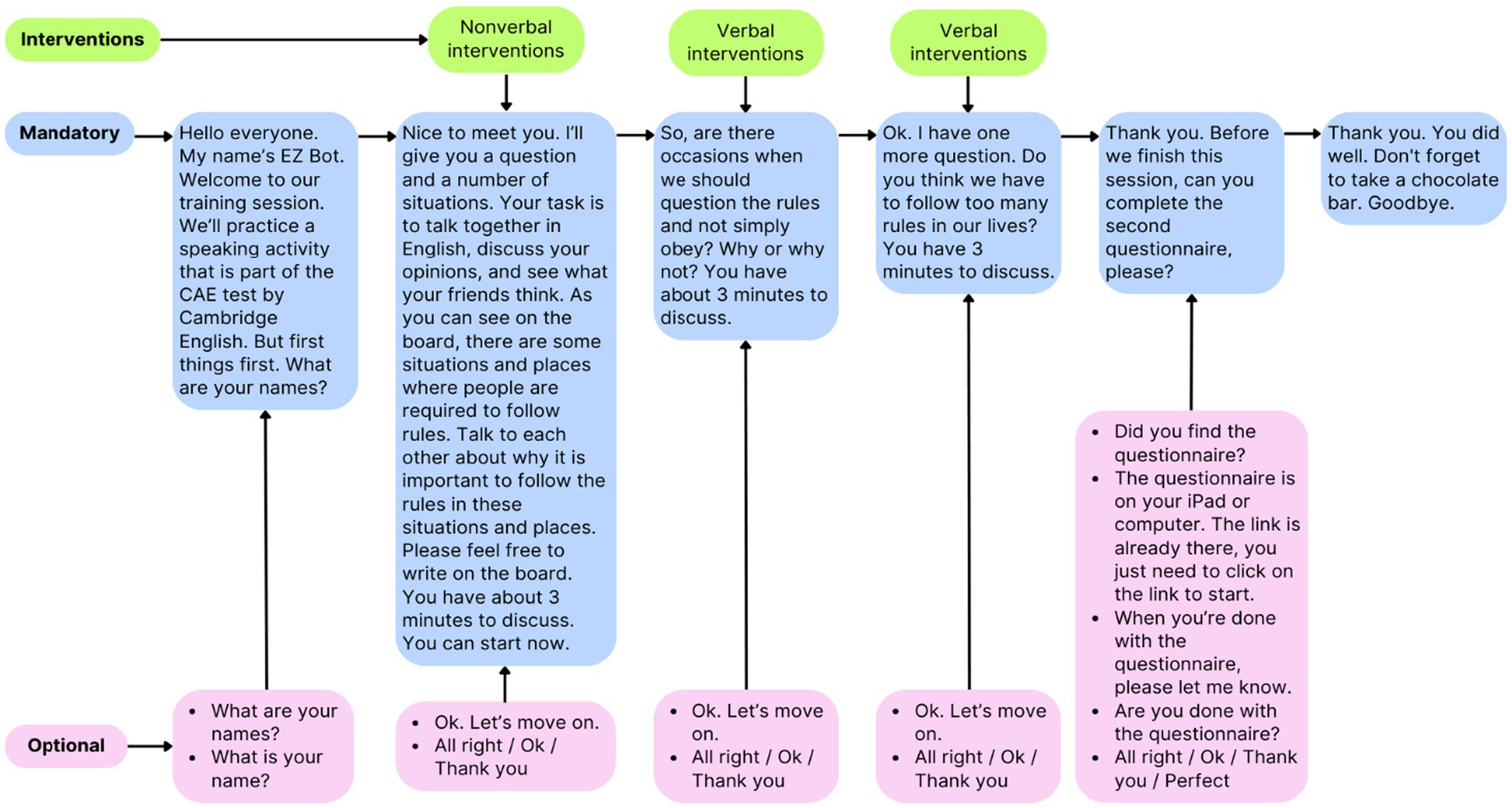

The robot moderator’s script (Figure 3) consists of three types of utterances:

a. Mandatory statements. These statements include essential utterances that the robot must produce during the session. Mandatory statements are pre-defined experiment instructions which cannot be skipped or altered to ensure the completeness of the experiment.

b. Optional dialogue lines. These statements include additional utterances that the robot may use depending on the conversational context and flow. Since the robot moderates the session without human intervention, these lines help maintain seamless interaction with the robot. For example, the robot has been programmed to ask participants if they can find the questionnaire questions to ensure smooth communication without disruptions.

c. Interventions. These include both nonverbal and verbal cues (see the following section), which aim to encourage engagement and balance participation. For nonverbal interventions, the robot shifts its gaze by turning its head toward a participant, while verbal interventions involve opinion questions.

Figure 3. Robot moderator’s script. In addition to these statements, there were a few more utterances on raising empathy and questionnaire completion. But since these utterances occurred after the speaking task and had no effect on the results of this study, they were left out. The data on empathy were partly published in 2023 IEEE International Conference on Advanced Robotics and Its Social Impacts (Asadi and Fisher, 2023a).

To ensure consistency across experimental sessions, all dialogue lines and gestures were delivered exactly as written in the script, with no additions or deletions. To facilitate natural and seamless interactions, the Wizard-of-Oz (WoZ) technique was used to control the robot’s utterances and gestures. WoZ is an experimental method in which participants think they are engaging with an autonomous robot, although in reality a human operator (i.e., the wizard) remotely controls some or all aspects of the robot’s behavior (Riek, 2012). In this study, the first author acted as the wizard.

There were three reasons behind choosing the WoZ technique. First, the robot moderator has limited speech recognition capabilities. The speech recognition can be unreliable, particularly in dynamic settings where participants may speak simultaneously or use colloquial language. Second, interaction between humans tends to be unpredictable and spontaneous. This spontaneity makes it challenging to pre-determine when the robot should respond or intervene. Third, the WoZ technique helps maintain a controlled and consistent interaction experience across participants. By pre-defining the robot’s dialogue lines and gestures, the experiment created consistent robot behaviors and prompts. Thus, it made it possible to conduct a systematic examination of the robot’s influence on participants’ communication and participation, even in a dynamic interaction situation.

3.6 Interventions

The robot moderator used two types of interventions: verbal and nonverbal. The aim was to encourage participation of less vocal participants and balance the speaking time among group members. The interventions are described below:

3.6.1 Nonverbal intervention

The nonverbal intervention was based on a gaze behavior adapted from Tennent et al. (2019). To implement this intervention, as soon as participants start to interact, the robot moderator turns its head to look at the speaker. If a participant speaks for more than 30 s, the robot shifts its gaze away from the speaker to the quietest participant and looks at them for 15 s. If the silent participant does not begin speaking within this time, the robot moves its gaze back to the active speaker. However, if the targeted participant starts speaking, the robot maintains eye contact with them for 30 s before redirecting its gaze to another less talkative group member. If no participant exceeds the 30-s speaking threshold, the robot keeps looking at the speakers. Additionally, if a participant remains silent for over 90 s (even if other participants do not reach the 30-s limit during their turns), the robot directs its gaze to the quietest participant for 15 s.

The robot initiates gazing at speakers as soon as the task of the experiment starts. However, the nonverbal intervention starts only after two rounds of speaking turns, which are needed to identify the quietest participant. The robot’s gaze behavior was manually controlled by the first author. It should be noted that JD Humanoid’s eyes cannot move independently of its head. Therefore, to make eye contact with a participant, it has to turn its head toward them.
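The gaze rules described in this section can be summarized as a small decision function. The sketch below is only an illustration: in the study the gaze was operated manually by the first author, and the function name `choose_gaze`, the `GazeDecision` type, and the timer inputs are hypothetical. It also assumes that "quietest" means the non-speaking participant with the least cumulative talking time. It covers the two trigger conditions (a turn longer than 30 s, or a silence longer than 90 s) and leaves out the follow-up behavior after the 15 s gaze and the initial two rounds of turns.

```python
from dataclasses import dataclass

SPEAKING_LIMIT_S = 30  # continuous speaking time that triggers a gaze shift
SILENCE_LIMIT_S = 90   # silence that triggers a gaze shift on its own
GAZE_HOLD_S = 15       # how long the robot looks at the quiet participant


@dataclass
class GazeDecision:
    target: str         # participant the robot should face
    hold_s: int         # seconds to hold the gaze; 0 means "keep following the speaker"
    intervention: bool  # True when the gaze is meant to invite a quiet participant


def choose_gaze(speaker, speaking_for_s, silent_for_s, total_talk_s):
    """Pick a gaze target from the current speaker and per-participant timers.

    speaker:        id of the participant currently speaking
    speaking_for_s: seconds the current speaker has held the floor
    silent_for_s:   dict id -> seconds since that participant last spoke
    total_talk_s:   dict id -> cumulative talking time so far
    """
    others = [p for p in total_talk_s if p != speaker]
    # Assumption: the quietest participant is the non-speaker who has talked least.
    quietest = min(others, key=lambda p: total_talk_s[p])

    long_turn = speaking_for_s > SPEAKING_LIMIT_S
    long_silence = any(silent_for_s[p] > SILENCE_LIMIT_S for p in others)
    if long_turn or long_silence:
        return GazeDecision(quietest, GAZE_HOLD_S, True)
    # Default behavior: keep looking at whoever is speaking.
    return GazeDecision(speaker, 0, False)


talk = {"CP1": 60, "CP2": 5, "TPR": 20}
decision = choose_gaze("CP1", 35, {"CP1": 0, "CP2": 40, "TPR": 10}, talk)
print(decision)
```

Keeping the rule as a pure function of timers makes it easy to check each threshold separately, whether the gaze is driven by a human operator or by an automated controller.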

3.6.2 Verbal intervention

The verbal interventions were selected based on the following recommendations:

• According to Skantze (2017), the use of directed questions and the addressee’s name can enhance the effectiveness of robot interventions in group interactions.

• Bohus and Horvitz (2010) suggest that including the deictic pronoun ‘you’ in the prompts (e.g., ‘Do you agree?’) makes the verbal intervention more effective compared to a general impersonalized prompt such as ‘is that correct?’ in multiparty interactions.

• Cambridge Language Assessment1 instructs its speaking examiners to ask questions such as “what do you think?” to elicit responses during certain speaking activities.

In developing the verbal interventions, we took all the said recommendations into account, except for the use of participants’ names. The main reason for excluding names was limitations in the robot’s text-to-speech system, which occasionally mispronounced local or uncommon names. Thus, to avoid inconsistencies across participants, names were excluded from the verbal interventions. Based on these guidelines, the robot moderator used one of the three directed prompts, namely “Do you agree?,” “What do you think?,” and “What’s your opinion?,” under the following conditions:

• when a participant remained silent for approximately 90 s without attempting to speak;

• when a participant did not engage in the discussion after six speaking turns; or

• when there was a pause of more than 5 s between speaking turns.
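The three trigger conditions above can be expressed as a single check. This is a minimal sketch under stated assumptions: the function names `should_prompt` and `pick_prompt` are hypothetical, "approximately 90 s" is treated as a threshold of 90 s, and the prompt is chosen at random because the text does not say how one of the three prompts was selected.

```python
import random

# The three directed prompts quoted in the text.
PROMPTS = ("Do you agree?", "What do you think?", "What's your opinion?")

SILENCE_LIMIT_S = 90  # "approximately 90 s" of silence (assumed as a hard threshold)
TURN_LIMIT = 6        # speaking turns that passed without the participant engaging
PAUSE_LIMIT_S = 5     # pause between speaking turns


def should_prompt(silent_for_s, turns_without_engaging, pause_s):
    """Return True if any of the three trigger conditions holds."""
    return (
        silent_for_s >= SILENCE_LIMIT_S
        or turns_without_engaging >= TURN_LIMIT
        or pause_s > PAUSE_LIMIT_S
    )


def pick_prompt(rng=random):
    """Choose one of the prompts; random selection is an assumption."""
    return rng.choice(PROMPTS)


if should_prompt(silent_for_s=92, turns_without_engaging=3, pause_s=1.0):
    print(pick_prompt())
```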

During this intervention phase, the robot used automatic motion, face, and color tracking to autonomously follow participants. The participants treated the robot moderator as if it were an autonomous partner. This was evident from their interactions with it, in which, for example, they asked the robot to repeat a question (e.g., “Hey, robot! I did not hear you. Can you repeat [the question], please?”) or clarify a point (e.g., “What do you mean by that?”).

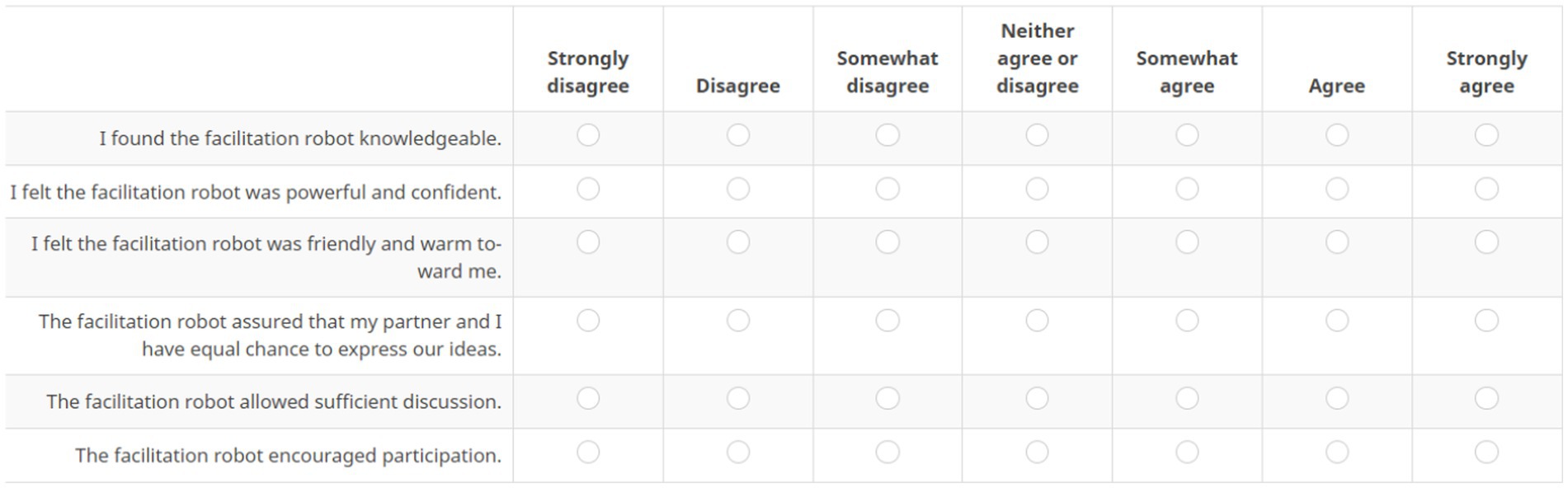

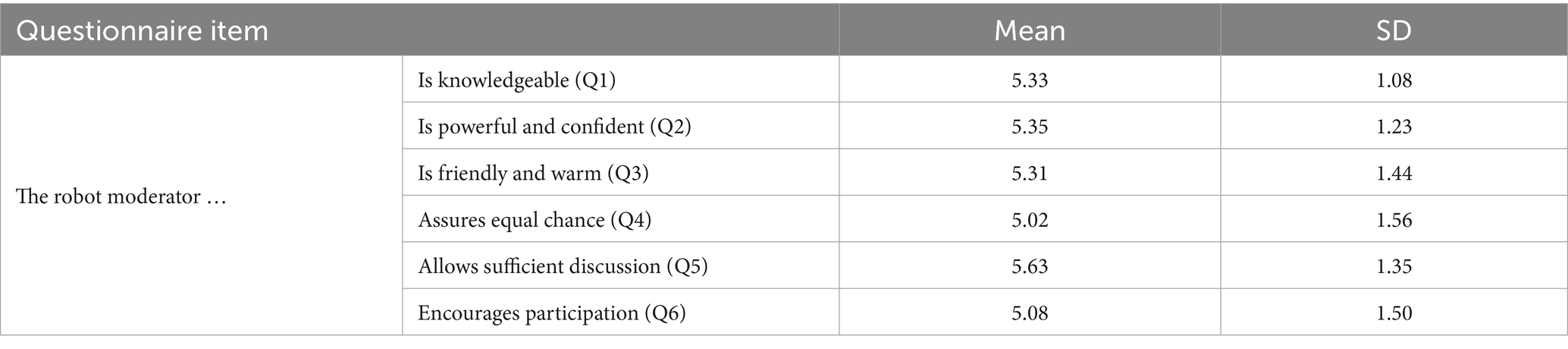

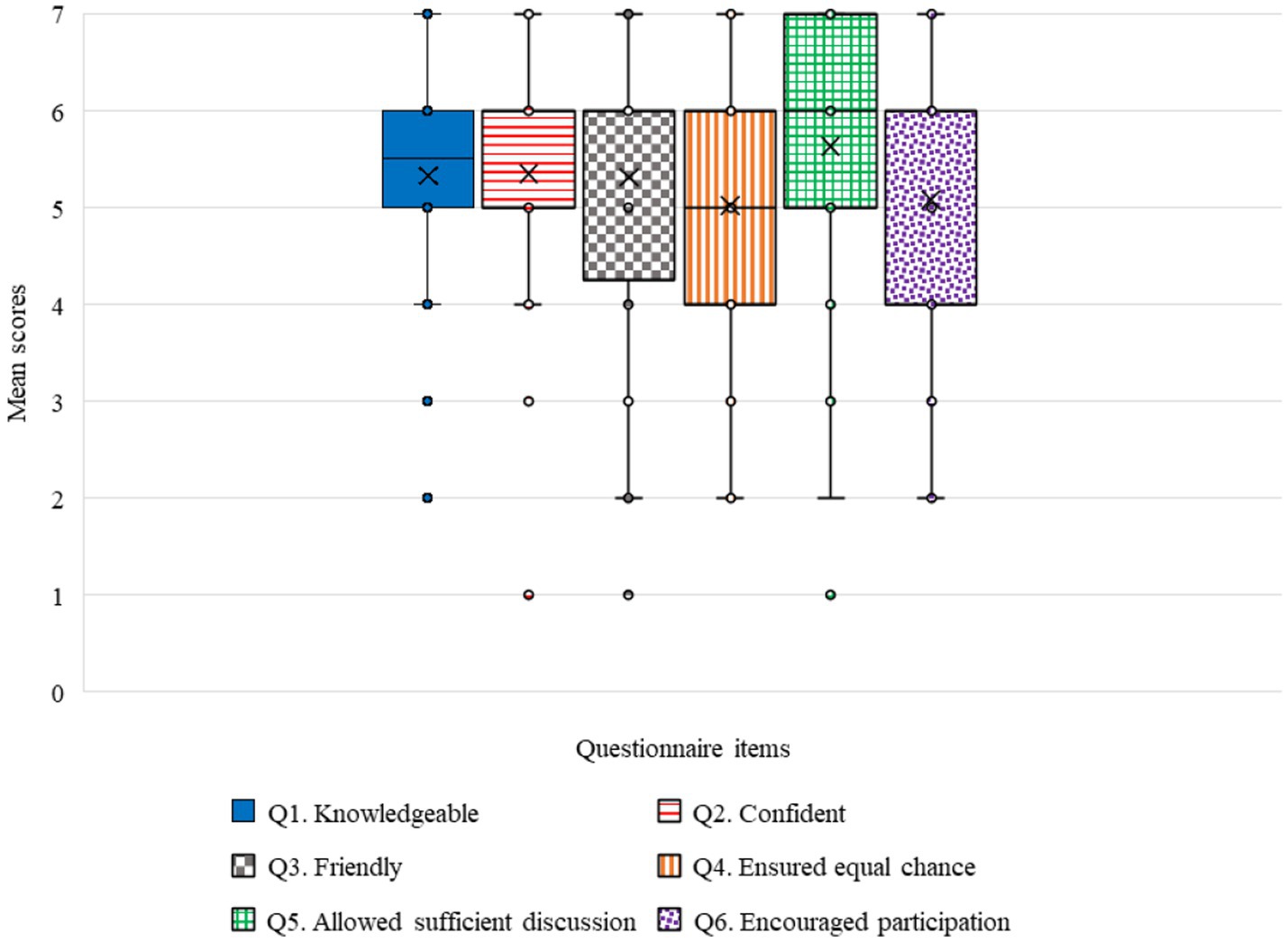

3.7 Questionnaire

To assess participants’ attitude and perception toward the effectiveness of the robot moderator in the experimental condition, a six-item questionnaire was administered at the end of the session (Figure 4). This questionnaire was adopted from Shamekhi and Bickmore (2019). Responses were recorded on a 7-point Likert scale, ranging from “strongly disagree” (1) to “strongly agree” (7).

3.8 Procedure

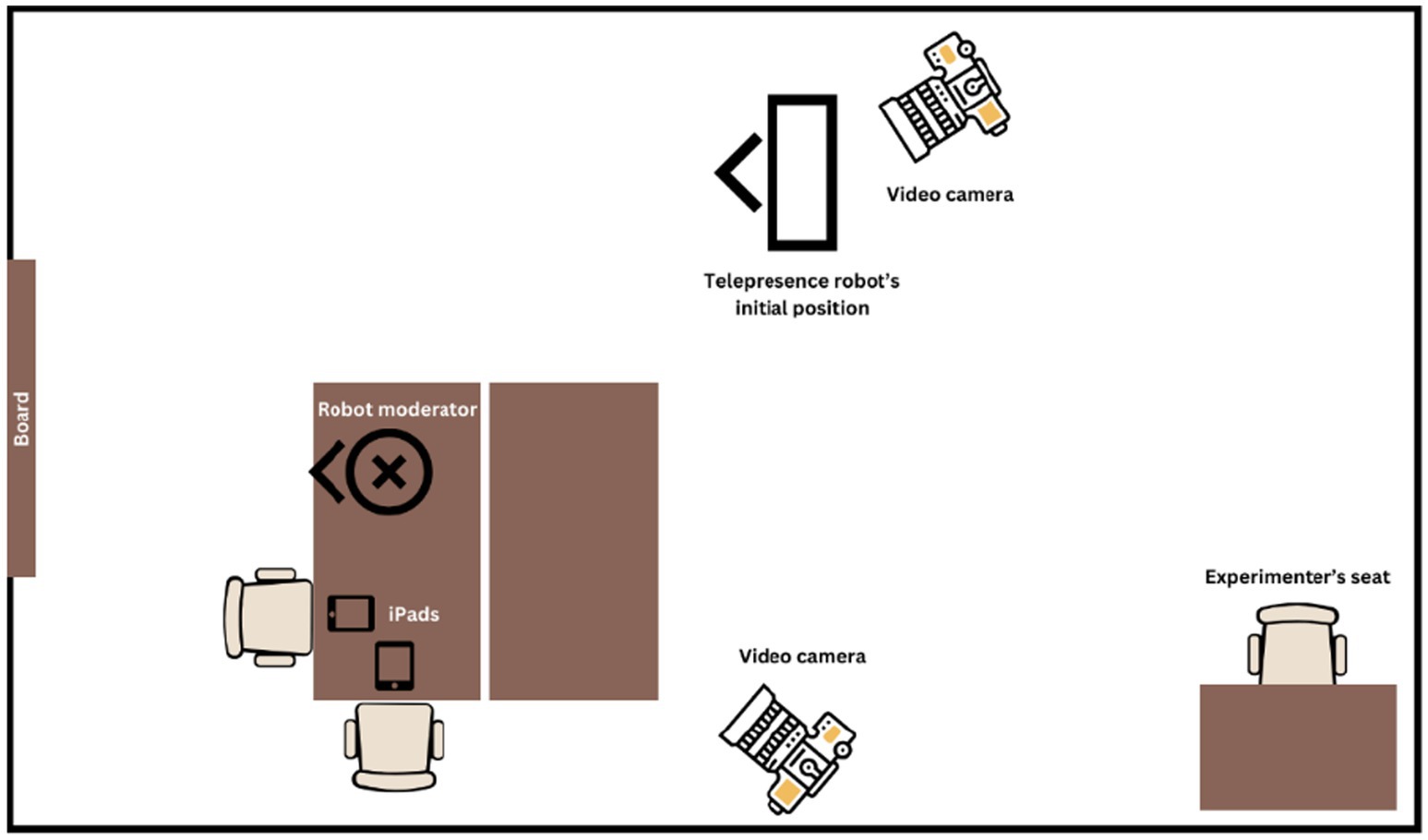

Participants were recruited three at a time. They were welcomed into the Human-Robot Interaction laboratory and given a comprehensive briefing on the study’s objectives. Each group then selected a volunteer to operate the telepresence robot. The selected telepresence robot user was escorted to a nearby room, where they received brief training on using the robot via a desktop computer.

In the experimental condition, participants were informed that the robot moderator would take charge of the session and provide them with instructions once the experiment began. In the baseline condition, participants were instructed to follow the experiment steps as outlined on their iPads (for co-located participants) and computers (for the telepresence robot operator). Prior to starting the experiment, it was clearly communicated that participants were free to move around the lab.

Once participants were ready to begin, two video cameras were turned on to record the session. Then, the experimenter positioned himself at the opposite end of the laboratory, facing away from participants and orienting toward his laptop to minimize interference. Figure 5 shows the setup for the experimental condition. The setup in the baseline condition was identical except that the humanoid robot was absent.

In the experimental condition, the robot moderator initiated the session by greeting participants and asking for their names. It then introduced the language activity and instructed participants to discuss the first question. In the baseline condition, similar instructions were displayed as text on participants’ iPads or computers. In both conditions, the first question was written on a large whiteboard visible to the group. To make sure that the remote participant could clearly see the question, a printed copy was also placed on their desk, next to the computer by which they operated the telepresence robot. Participants were informed that they could use the whiteboard for notetaking if desired.

As participants engaged in discussing the first question, the robot moderator remained silent and only interacted with them through nonverbal interventions. In the baseline condition, no intervention was made. Figure 6 shows participants engaged in the first part of the activity in both conditions.

After participants completed the first question, the robot moderator provided the second and third questions in the experimental condition. In this phase, it made verbal interventions. In the baseline condition, participants read the questions on their devices and discussed them without any intervention. Each question was displayed on a separate screen page, so participants could only see one question at a time.

After completing the third question, participants in the experimental condition filled out a questionnaire about their attitudes toward the robot moderator. In the baseline condition, participants did not receive a questionnaire on moderation. Finally, participants were thanked, and each received a chocolate bar for their participation.

3.9 Methods of data analysis

The dependent variables of the study include participants’ relative talking time, reactions to the robot moderator’s interventions, and their responses to the questionnaire. The independent variables are robot moderator or no moderator on the one hand and type of robot intervention, verbal versus non-verbal, on the other.

Talking time was calculated by counting the number of seconds that each participant spoke. Participants’ responses to the robot’s interventions were determined in a qualitative analysis of the video footage and by subsequent quantification of the results. Specifically, to study the effectiveness of the robot moderator’s verbal and non-verbal behaviors, we searched the videos for those instances in which the robot either used a head-turn or a verbal intervention and coded whether the respective addressee took the turn or not.

The data elicited from the video recordings were then normalized. According to Dornyei (2007), in studies with unequal sample sizes, the condition with more participants can have a greater influence on the overall statistics, which might lead to biased conclusions. Normalization helps mitigate this bias by adjusting for the differences in sample size and ensures that conclusions are based on the actual data patterns rather than artifacts of sample size differences (Mackey and Gass, 2015). To normalize the data, the following formula was used:

For example, in one baseline group, co-located participant 1 spoke for 171 s, co-located participant 2 for 20 s, and the telepresence user for 51 s, totaling 242 s. The normalized data are as follows:

These calculations show that co-located participant 1 took more than 70% of the speaking time of the whole group, while co-located participant 2 and the telepresence robot user spoke about 8% and 21% of the time, respectively.
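The worked example is consistent with expressing each participant's talking time as a percentage of the group's total talking time. The sketch below reproduces the example under that assumption; the function name `normalize_talking_time` and the participant labels are illustrative, and the formula is inferred from the reported figures rather than quoted from the study.

```python
def normalize_talking_time(seconds_by_participant):
    """Convert raw talking times (s) into percentages of the group total."""
    total = sum(seconds_by_participant.values())
    if total == 0:
        raise ValueError("group has no recorded talking time")
    return {p: 100 * s / total for p, s in seconds_by_participant.items()}


# Baseline group from the example: 171 s, 20 s, and 51 s (242 s in total).
group = {"CP1": 171, "CP2": 20, "TPR": 51}
shares = normalize_talking_time(group)
for participant, share in shares.items():
    print(f"{participant}: {share:.2f}%")
# CP1: 70.66%
# CP2: 8.26%
# TPR: 21.07%
```

Because every group's shares sum to 100, groups that talked for different total durations become directly comparable.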

All quantified data were analyzed statistically using t-tests. The aim was to find statistically significant differences between the mean scores.

4 Results

4.1 Verbal and nonverbal interventions

The first and second research questions inquired whether the robot moderator’s nonverbal and verbal interventions can improve participation. The results of the data analysis are discussed below.

4.1.1 Nonverbal interventions

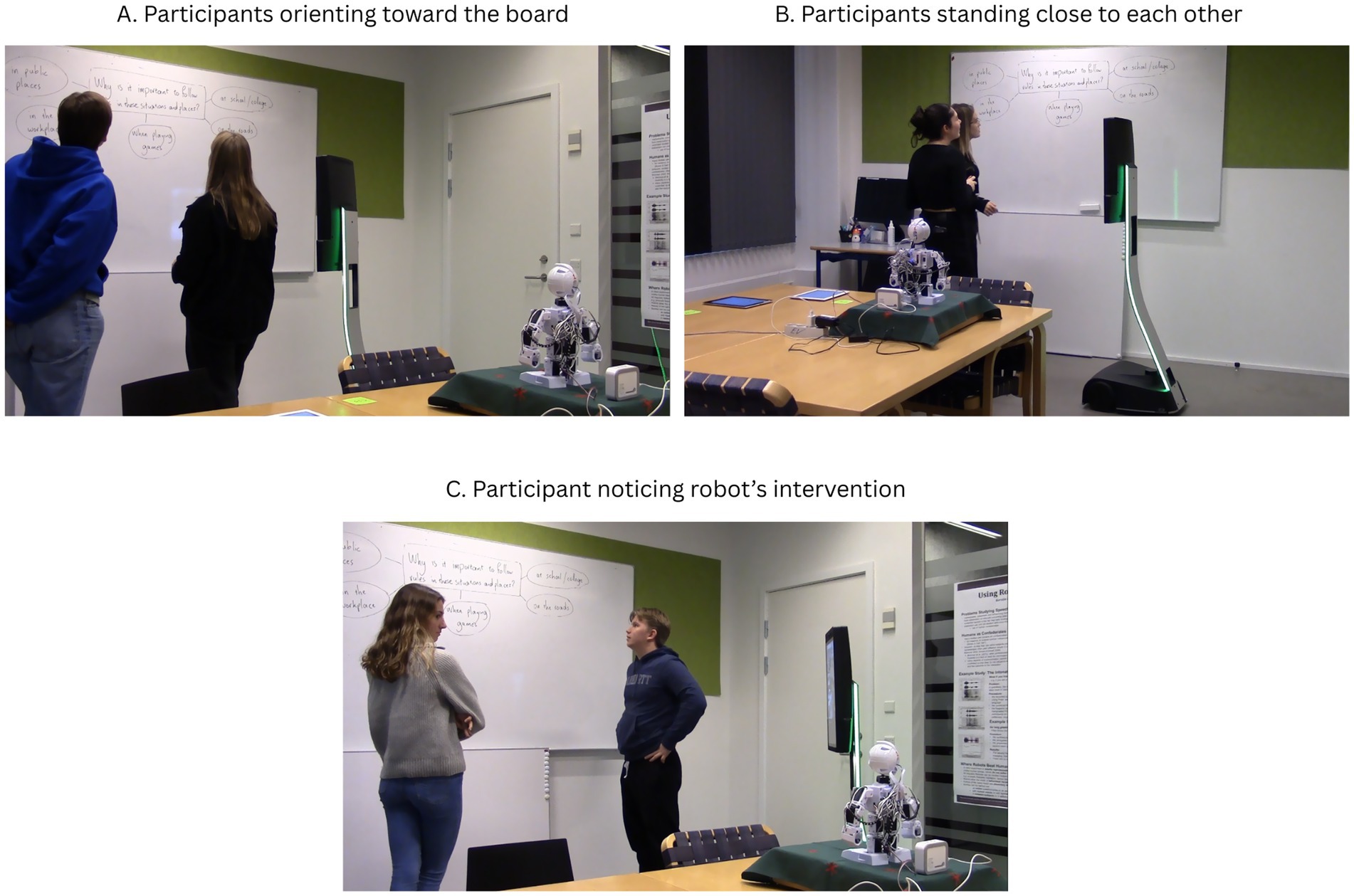

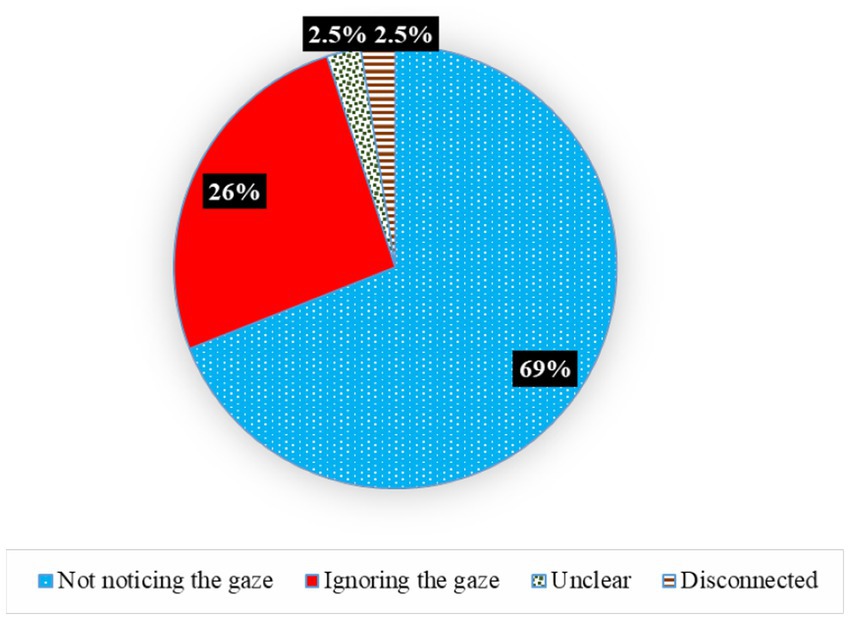

Across the 18 groups in the experimental condition, the robot moderator made 43 nonverbal intervention attempts. Of these, 97.6% failed to elicit responses from the target participants, while only 2.4% were successful.

As for the failed attempts of the robot moderator, in 69.05% of cases (29 attempts), target participants were facing away from the robot moderator, as they were engaged in discussing the question with each other. Therefore, they did not notice the robot’s gaze. In 26.19% of cases (11 attempts), target participants noticed the robot’s gaze but chose to remain silent. In one failed attempt, it was unclear whether the remote participant noticed the robot’s gaze. During another intervention, the telepresence robot disconnected (Figure 7).

Figure 7. Reasons why nonverbal interventions failed. (A) Participants orienting toward the board. (B) Participants standing close to each other. (C) Participant noticing robot’s intervention.

Observations from the video footage suggest several factors for the low success rate of nonverbal interventions. First, as participants were not assigned fixed seating positions, they often stood facing the whiteboard or orienting toward each other, with their backs to the robot moderator (Figure 8A). This positioning limited their ability to notice the robot’s gaze. In addition, co-located participants sometimes stood next to or very close to one another (Figure 8B). So, even if they did notice the intervention, it may not have been easy for them to tell at whom the robot moderator was looking. Finally, in cases where participants had little to contribute or there was a prolonged pause, they often ignored the robot’s gaze and remained silent, even when they noticed it (Figure 8C).

4.1.2 Verbal interventions

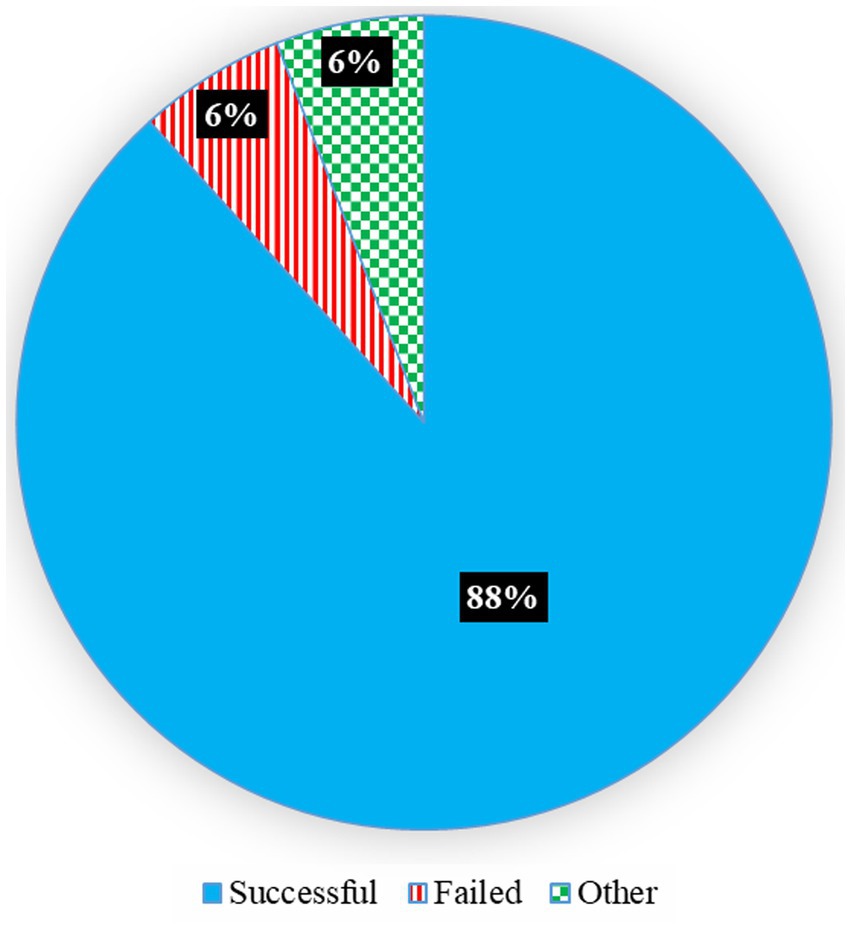

Video analysis shows that the robot moderator made a total of 34 verbal interventions. As mentioned in Section 3.6, the verbal interventions consisted of opinion questions (e.g., “What do you think?”) and did not involve any follow-up prompts or acknowledgements from the robot. The overall efficiency of these interventions was first assessed based on whether they elicited any verbal response. Of the 34 interventions, 88.24% (N = 30) successfully prompted the target participant to speak. In 5.88% (N = 2) of cases, the verbal intervention failed to elicit a response, while in another 5.88% (N = 2) of cases, the robot’s question coincided with a participant self-selecting a turn. Due to the slight delay in executing the robot’s command, these two cases were counted separately as technical limitations rather than successful or failed interventions. Figure 9 illustrates the success rate of verbal interventions.
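The percentages above follow directly from the coded counts. As a minimal sketch (the labels `response`, `no_response`, and `overlap` and the function name `outcome_rates` are illustrative and not taken from the study's coding scheme), the tally can be reproduced as follows:

```python
from collections import Counter


def outcome_rates(coded_outcomes):
    """Return the share of each coded outcome as a percentage (2 decimals)."""
    counts = Counter(coded_outcomes)
    total = sum(counts.values())
    return {label: round(100 * n / total, 2) for label, n in counts.items()}


# Counts reported in the text: 30 responses, 2 non-responses, 2 overlaps.
coded = ["response"] * 30 + ["no_response"] * 2 + ["overlap"] * 2
rates = outcome_rates(coded)
print(rates)
# {'response': 88.24, 'no_response': 5.88, 'overlap': 5.88}
```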

Of the 30 interventions that elicited a response, participants provided long answers (≥ 3 s) in 56.67% of cases (N = 17), expanding or elaborating on their responses. In 43.33% of cases (N = 13), participants provided short answers (< 3 s), such as ‘Yes, I agree’ or ‘I do’, to the moderator’s questions. Further analysis of addressees also showed that 57% of the interventions prompted the intended addressee to speak (33.33% directed at co-located participants and 23.33% at the telepresence robot user). In 17% of successful interventions, a different group member spoke, sometimes interrupting the target participant. In two cases, a co-located participant noticed the robot addressing the remote participant and informed them, leading the telepresence user to respond.

The results show that verbal interventions proved more effective than nonverbal interventions in eliciting responses from participants. Thus, it can be concluded that in the context of this experiment, addressing participants verbally was more effective in increasing engagement than simply using gaze.

4.2 Balancing participation

Research question 3 asked whether the interventions reduced participation imbalance among group members. Given the limited success rate of nonverbal interventions, it was assumed that they had no major impact on the flow and dynamics of group interaction. Thus, this analysis focuses solely on the verbal intervention data from the second and third discussion questions, in which the verbal interventions were implemented.

Video analysis was used to calculate each participant’s total talking time. In the baseline condition (N = 30), participants spoke for a total of 3,975 s, while participants in the experimental condition (N = 54) spoke for 4,331 s. To carry out fair and meaningful comparisons between the conditions of the study, the total talking time of participants was normalized.

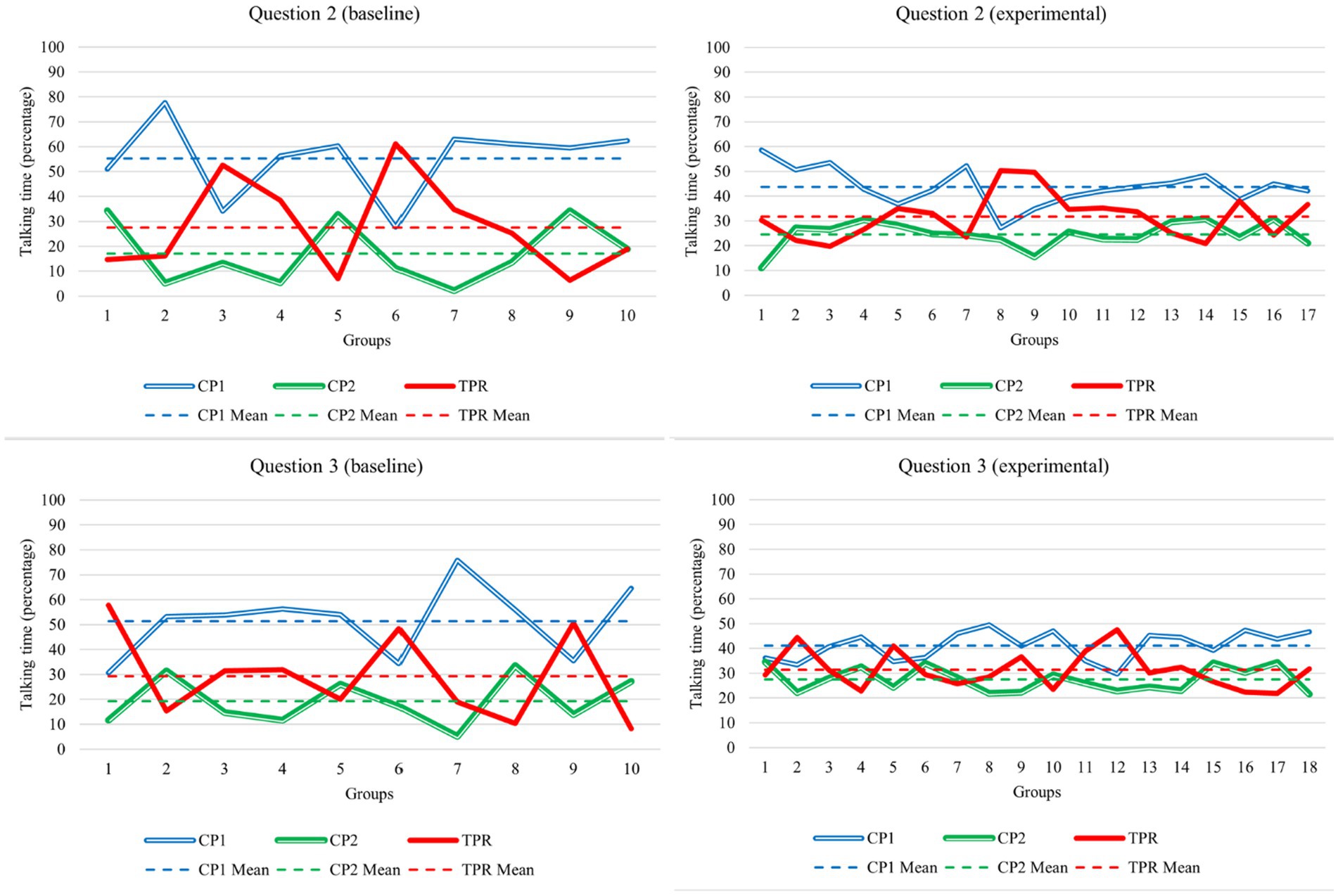

Figure 10 shows the normalized talking time for each group.

Figure 10. Normalized talking time per question (in seconds) by groups (1–10 in the baseline and 1–18 in the experimental condition). The first group in the experimental condition experienced a technical issue while discussing question two. Therefore, it was excluded from the final calculations. CP represents co-located participants (CP1 = more talkative & CP2 = less talkative participant) and TPR the telepresence user. The Y axis shows the percentage of each participant’s talking time.

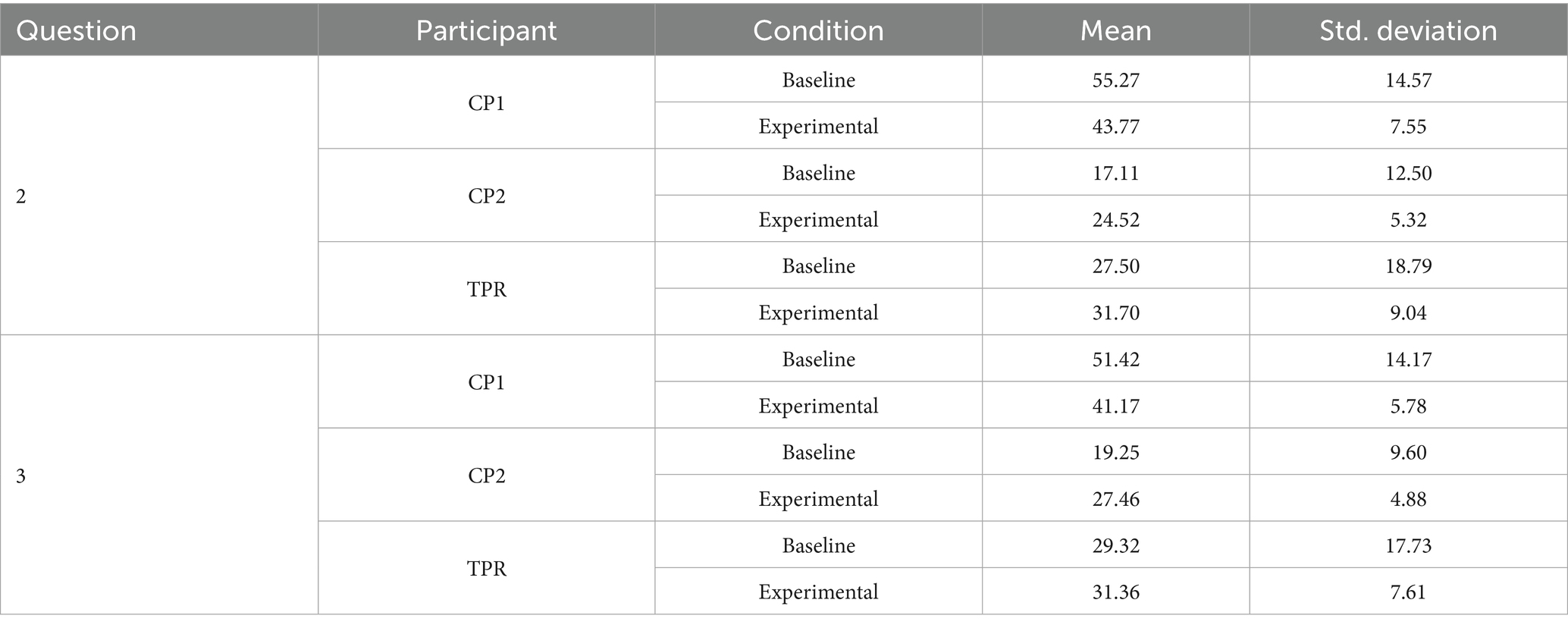

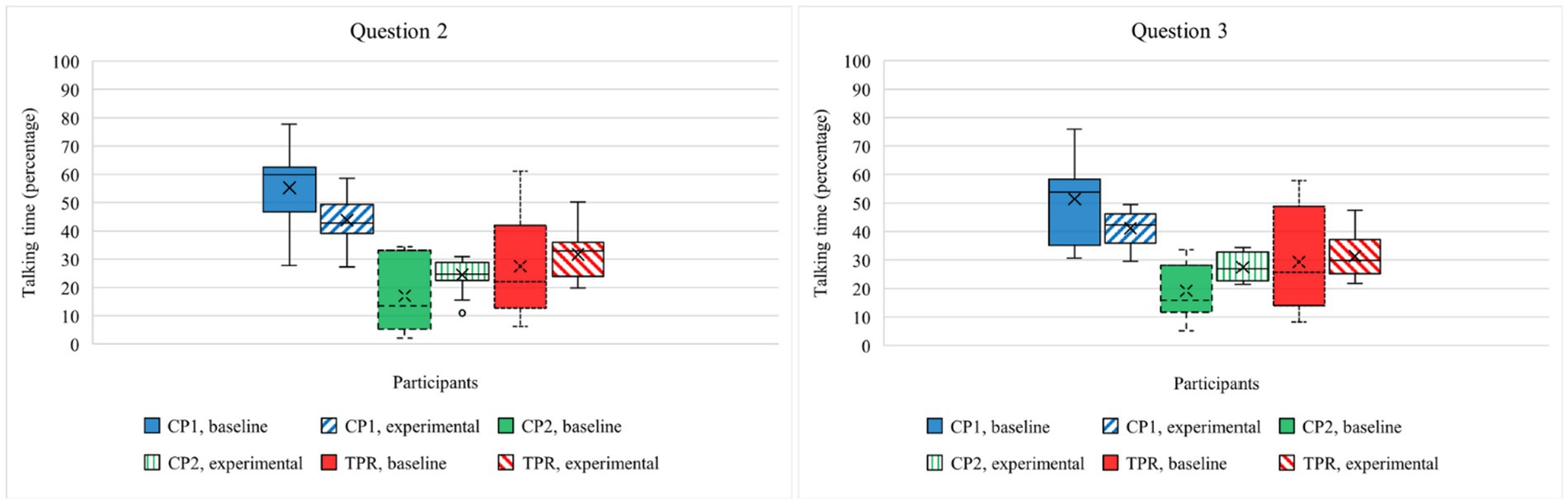

In the experimental condition, mean scores are closer to each other and are less scattered compared to the baseline, especially when discussing question 3. In addition, variance values decreased in this condition compared to the baseline condition (Figure 11). This suggests that robot moderation had a positive effect on making conversations more balanced.

Figure 11. Mean and variance of talking time data per question (in seconds). CP1, more talkative co-located participant; CP2, less talkative co-located participant; TPR, telepresence user.

The Shapiro–Wilk test was used to determine normality of the data in each condition—i.e., baseline and experimental. The results show that the data in both conditions were normally distributed (Baseline: W (30) = 0.92, p = 0.07; Experimental: W (54) = 0.96, p = 0.14). Thus, an independent samples t-test was conducted to determine if the mean differences in the normalized talking time data are statistically significant. Table 1 shows the descriptive statistics of talking times in both conditions.

The results of the t-test show that, except for the difference between the mean scores of CP1s in question two, all differences between the two conditions were statistically significant. That is, for question two, the mean scores of the quiet co-located participants [t (26) = −2.15, p = 0.002, Cohen’s d = 0.85] and telepresence robot users (TPR) [t (26) = −0.78, p = 0.007, Cohen’s d = 0.31] differed significantly between the conditions. In addition, for question three, the mean scores of the talkative co-located participants [t (26) = 2.72, p = 0.012, Cohen’s d = 1.07], less vocal co-located participants [t (26) = −3.02, p = 0.002, Cohen’s d = 1.19] and telepresence robot users [t (26) = −0.42, p = 0.001, Cohen’s d = 0.16] differed significantly between the baseline and the experimental condition. These significant differences suggest that robot moderation was effective in producing more balanced interactions.
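For readers who want to see how such statistics are obtained, the sketch below computes an independent samples t statistic and Cohen's d with a pooled standard deviation. It is an illustration only: the function names are hypothetical, the two small samples are invented for the example and are not study data, and the pooled-variance form is an assumption, chosen because the reported 26 degrees of freedom match 10 baseline and 18 experimental groups (10 + 18 − 2).

```python
from math import sqrt
from statistics import mean, variance


def pooled_sd(a, b):
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))


def independent_t(a, b):
    """Student's t statistic and degrees of freedom (equal variances assumed)."""
    na, nb = len(a), len(b)
    t = (mean(a) - mean(b)) / (pooled_sd(a, b) * sqrt(1 / na + 1 / nb))
    return t, na + nb - 2


def cohens_d(a, b):
    """Standardized mean difference; its sign follows mean(a) - mean(b)."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


# Hypothetical normalized talking times (%), NOT taken from the study.
baseline = [10, 12, 14]
experimental = [20, 22, 24]

t, df = independent_t(baseline, experimental)
d = cohens_d(baseline, experimental)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

The effect sizes in the text are positive even where t is negative, which suggests they are reported as absolute values. Obtaining a p-value additionally requires the t distribution, which a statistics library such as SciPy (`scipy.stats.ttest_ind`) provides.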

4.3 Attitude toward robot moderator

Research question 4 investigated participants’ attitudes toward the robot moderator in the experimental condition. Descriptive statistics (Table 2) reveal that all items had mean scores above 5.00 on a 7-point Likert scale (min = 5.02, max = 5.63). This indicates that participants had an overall positive view toward the robot.

Figure 12 illustrates the mean scores of participants’ attitudes toward the robot moderator.

A t-test was also conducted to compare the attitudes of co-located participants and telepresence users. Results showed no significant differences between the groups, suggesting that both remote and co-located participants had a generally positive perception of the robot moderator.

5 Discussion

This study examined the effectiveness of robot moderation in encouraging engagement and balancing participation among group members. The first and second research questions explored whether a robot’s verbal and nonverbal behaviors could encourage the least vocal participant to engage in the conversation. For the nonverbal interventions, where the robot turned its head and gazed at the quieter group member for 15 s, results indicate that most of the interventions did not elicit engagement from the participants. These results contrast with the findings of Tennent et al. (2019), who reported that turning the robotic object toward the least vocal participant would encourage them to participate in the conversation. A key reason for this discrepancy may be the current study’s setup, where participants were not confined to fixed seating positions and were free to move around the lab. The results show that in approximately 70% of cases, participants did not notice the robot moderator’s gaze because they were facing away from it, fully engaged in the task.

The robot moderator also used verbal interventions to prompt quiet participants to start speaking. These interventions were successful 88.24% of the time. This is in line with the findings of Skantze (2017), who found that a robot moderator’s verbal interventions can positively affect participation. In the present study, however, we allowed the participants to move around freely in order to simulate a group work activity and explore whether moderation would be effective in such situations. The results of the current study show that robot moderators may also be effective in hybrid scenarios, in which an initial imbalance is likely.

The third research question asked whether the interventions effectively balanced participation among all participants. Results indicate that in both conditions, one co-located participant dominated the discussion, while the telepresence robot user spoke significantly less, and the second co-located participant spoke the least. However, in the baseline condition, the mean differences between the talking time of all these participants were significantly larger than in the experimental condition. This means that robot moderation successfully balanced out the talking time of group members in the experimental condition.

Finally, the questionnaire assessing participants’ attitudes toward the robot moderator suggested that participants in the experimental condition generally held positive views about the robot moderator. This is important because if the participants do not form a favorable perception of the robot moderator, the moderation might not have the desired impact (Huang et al., 2021).

While using a social robot to moderate group activities may not be feasible in every classroom or during regular school hours, there are two main advantages to doing so. First, a robot moderator can consistently apply interventions and gestures across all experimental sessions. This consistency is difficult to achieve with human moderators, who may unintentionally vary their facial expressions, body language, posture, tone of voice, and even backchanneling feedback depending on the group they are moderating. Second, the interventions used by the robot moderator are not confined to the experimental context. They can be directly applied in real-world settings, where teachers can adopt them to balance participation and increase engagement in mediated interactions.

A possible limitation of this study is that the participant pool consisted solely of a specific subset of adults. In this sense, a more diverse sample that includes people from different age groups, social statuses, educational backgrounds, etc. could result in a richer dataset. Moreover, replicating this study in different settings and across various cultural contexts would enhance the generalizability of the findings. Another factor that may limit the applicability of these findings is that the telepresence robot users in our study were healthy volunteers. Future studies could benefit from recruiting participants who genuinely rely on telepresence robots for access to education – such as homebound learners, students recovering from illness, or people with physical disabilities who attend classes remotely. These participants are more representative of the contexts in which telepresence robots are currently deployed in educational settings. Thus, the data collected from such samples could help us understand more about the nuanced ways in which robot moderators might support or facilitate their participation and engagement. To do so, one should recognize the ethical and logistical challenges of recruiting participants with health or learning needs, such as a smaller number of participants, the need for more support during participation, the emotional impact of the experiment on participants, etc.

The study’s findings may also vary with different types of telepresence robots. For example, previous research has found that factors like screen size (Reeves et al., 1992), robot height (Rae et al., 2013) and robot movement (Asadi and Fischer, 2023b) can shape participants’ perceptions of the telepresence robot user. Thus, using robots with different screen dimensions or heights may affect interaction in different ways.

Finally, future research could investigate whether robot moderation remains effective in groups with multiple telepresence robot users or with different types of questions, materials, and activities. Such inquiries would deepen understanding of robot moderation’s applicability across varied contexts and interaction structures.

6 Conclusion

The results of this study show that robots can successfully regulate participation in hybrid student teams. Our findings show that verbal interventions by a robot moderator lead to more equal participation. There is little reason to assume that these findings do not carry over to interactions moderated by human facilitators. In contrast, our findings regarding nonverbal interventions may not necessarily replicate with human facilitators since human gaze, unlike the robot’s head pose, is likely to be a much stronger social cue (e.g., Meltzoff et al., 2010). Still, whether human moderators can regulate participation by means of gaze only is essentially an empirical question whose answer is unknown and methodologically difficult to assess. To sum up, a robot moderator is a suitable tool to regulate speaking time and participation in hybrid student teams.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

AA: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Visualization, Writing – original draft, Writing – review & editing. KF: Conceptualization, Funding acquisition, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by Honda Research Institute as part of the project ‘Facilitating Inclusive Interactions: Encouraging Mediators’.

Acknowledgments

This work is based on data collected for AA’s PhD thesis, which is available online at https://findresearcher.sdu.dk/ws/portalfiles/portal/255910962/AliAsadi_Thesis.pdf.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

References

Alghamdi, R., and Gillies, R. (2013). The impact of cooperative learning in comparison to traditional learning (small groups) on EFL learners' outcomes when learning English as a foreign language. Asian Soc. Sci. 9:19. doi: 10.5539/ass.v9n13p19

Asadi, A., and Fischer, K. (2023a). The effect of an empathy-eliciting intervention on the perception of telepresence robot users. In 2023 IEEE international conference on advanced robotics and its social impacts (ARSO). IEEE. (pp. 90–94).

Asadi, A., and Fischer, K. (2023b). The effect of performance features of telepresence robots on the personality perception of their users. In 2023 IEEE international conference on metrology for eXtended reality, artificial intelligence and neural engineering (metro XRAINE) Milano, Italy (pp. 1161–1165). IEEE.

Bohus, D., and Horvitz, E. (2010). Facilitating multiparty dialog with gaze, gesture, and speech. In International conference on multimodal interfaces and the workshop on machine learning for multimodal interaction (1–8).

Brown, H. D., and Lee, H. (2015). Teaching by principles: An interactive approach to language pedagogy. (3rd ed.). Pearson Longman: San Francisco Public University.

Cha, E., Chen, S., and Mataric, M. J. (2017). Designing telepresence robots for K-12 education. In 2017 26th IEEE international symposium on robot and human interactive communication (RO-MAN) Lisbon, Portugal (683–688). IEEE.

Charteris, J., Berman, J., and Page, A. (2024). Virtual inclusion through telepresence robots: an inclusivity model and heuristic. Int. J. Incl. Educ. 28, 2475–2489. doi: 10.1080/13603116.2022.2112769

Cohen, E. G., and Lotan, R. A. (2014). Designing groupwork: Strategies for the heterogeneous classroom. 3rd Edn. Columbia University, New York, NY: Teachers College Press.

Dornyei, Z. (2007). Research methods in applied linguistics. New York: Oxford University Press. 336.

Druckman, D., Adrian, L., Damholdt, M. F., Filzmoser, M., Koszegi, S. T., Seibt, J., et al. (2021). Who is best at mediating a social conflict? Comparing robots, screens and humans. Group Decis. Negot. 30, 395–426. doi: 10.1007/s10726-020-09716-9

Filia, K. M., Jackson, H. J., Cotton, S. M., Gardner, A., and Killackey, E. J. (2018). What is social inclusion? A thematic analysis of professional opinion. Psychiatr. Rehabil. J. 41, 183–195. doi: 10.1037/PRJ0000304

Fischer, K. (2016). “Robots as confederates: how robots can and should support research in the humanities” in What social robots can and should do: proceedings of Robophilosophy 2016/TRANSOR 2016. eds. J. Seibt, M. Nørskov, and S. S. Andersen (IOS Press), 60–66.

Forslund Frykedal, K., and Hammar Chiriac, E. (2018). Student collaboration in group work: inclusion as participation. Int. J. Disabil. Dev. Educ. 65, 183–198. doi: 10.1080/1034912X.2017.1363381

Gillet, S., Cumbal, R., Pereira, A., Lopes, J., Engwall, O., and Leite, I. (2021). Robot gaze can mediate participation imbalance in groups with different skill levels. In Proceedings of the 2021 ACM/IEEE international conference on human-robot interaction (pp. 303–311).

Gleason, B., and Greenhow, C. (2017). Hybrid learning in higher education: The potential of teaching and learning with robot-mediated communication. Online Learn. J. 21, 159–176. doi: 10.24059/olj.v21i4.1276

Gray, J. A., and DiLoreto, M. (2016). The effects of student engagement, student satisfaction, and perceived learning in online learning environments. Int. J. Educ. Leadersh. Prep. 11:n1.

Hammar Chiriac, E. (2014). Group work as an incentive for learning–students’ experiences of group work. Front. Psychol. 5:558. doi: 10.3389/fpsyg.2014.00558

Hansen, R. S. (2006). Benefits and problems with student teams: suggestions for improving team projects. J. Educ. Bus. 82, 11–19. doi: 10.3200/JOEB.82.1.11-19

Herrera-Pavo, M. Á. (2021). Collaborative learning for virtual higher education. Learn. Cult. Soc. Interact. 28:100437. doi: 10.1016/j.lcsi.2020.100437

Hodges, L. C. (2018). Contemporary issues in group learning in undergraduate science classrooms: a perspective from student engagement. CBE Life Sci. Educ. 17:es3. doi: 10.1187/cbe.17-11-0239

Huang, H. L., Cheng, L. K., Sun, P. C., and Chou, S. J. (2021). The effects of perceived identity threat and realistic threat on the negative attitudes and usage intentions toward hotel service robots: the moderating effect of the robot’s anthropomorphism. Int. J. Social Robot. 13, 1599–1611. doi: 10.1007/s12369-021-00752-2

Johannessen, L. E., Rasmussen, E. B., and Haldar, M. (2023). Student at a distance: exploring the potential and prerequisites of using telepresence robots in schools. Oxf. Rev. Educ. 49, 153–170. doi: 10.1080/03054985.2022.2034610

Johnson, D. W., Johnson, R. T., and Smith, K. A. (2014). Cooperative learning: improving university instruction by basing practice on validated theory. J. Excell. Coll. Teach. 25. Available online at: https://celt.miamioh.edu/index.php/JECT/article/view/454

Jones, R. W. (2007). Learning and teaching in small groups: characteristics, benefits, problems and approaches. Anaesth. Intensive Care 35, 587–592. doi: 10.1177/0310057x0703500420

Jourdan, J. S. (2006). Perceived presence in mediated communication: antecedents and effects. Austin, Texas: The University of Texas at Austin.

Kirschner, F., Paas, F., Kirschner, P. A., and Janssen, J. (2011). Differential effects of problem-solving demands on individual and collaborative learning outcomes. Learn. Instr. 21, 587–599. doi: 10.1016/j.learninstruc.2011.01.001

Kornfield, R., Rae, I., and Mutlu, B. (2021). So close and yet so far: how embodiment shapes the effects of distance in remote collaboration. Commun. Stud. 72, 967–993. doi: 10.1080/10510974.2021.2011362

Kwon, O. H., Koo, S. Y., Kim, Y. G., and Kwon, D. S. (2010). Telepresence robot system for English tutoring. In 2010 IEEE workshop on advanced robotics and its social impacts. Seoul, South Korea (152–155). IEEE.

Liao, J., and Lu, X. (2018). Exploring the affordances of telepresence robots in foreign language learning. Lang. Learn. Technol. 22, 20–32.

Liu, M. C., Huang, Y. M., and Xu, Y. H. (2018). Effects of individual versus group work on learner autonomy and emotion in digital storytelling. Educ. Technol. Res. Dev. 66, 1009–1028. doi: 10.1007/s11423-018-9601-2

Mackey, A., and Gass, S. M. (2015). Second language research: methodology and design. 2nd Edn. NY: Routledge.

Marder, B., Ferguson, P., Marchant, C., Brennan, M., Hedler, C., Rossi, M., et al. (2021). ‘Going agile’: exploring the use of project management tools in fostering psychological safety in group work within management discipline courses. Int. J. Manag. Educ. 19:100519. doi: 10.1016/j.ijme.2021.100519

Meltzoff, A. N., Brooks, R., Shon, A. P., and Rao, R. P. (2010). “Social” robots are psychological agents for infants: a test of gaze following. Neural Netw. 23, 966–972. doi: 10.1016/j.neunet.2010.09.005

Meyers, S. A. (1997). Increasing student participation and productivity in small-group activities for psychology classes. Teach. Psychol. 24, 105–115. doi: 10.1207/s15328023top2402_5

Moreland, R. L., and Myaskovsky, L. (2000). Exploring the performance benefits of group training: transactive memory or improved communication? Organ. Behav. Hum. Decis. Process. 82, 117–133. doi: 10.1006/obhd.2000.2891

Mutlu, B., Kanda, T., Forlizzi, J., Hodgins, J., and Ishiguro, H. (2012). Conversational gaze mechanisms for humanlike robots. ACM Trans. Interact. Intell. Syst. 1, 1–33. doi: 10.1145/2070719.2070725

Newhart, V. A., Warschauer, M., and Sender, L. (2016). Virtual inclusion via telepresence robots in the classroom: an exploratory case study. Int. J. Technol. Learn. 23, 9–25. doi: 10.18848/2327-0144/CGP/v23i04/9-25

Powell, T., Cohen, J., and Patterson, P. (2021). Keeping connected with school: implementing telepresence robots to improve the wellbeing of adolescent cancer patients. Front. Psychol. 12:749957. doi: 10.3389/fpsyg.2021.749957

Rae, I., Takayama, L., and Mutlu, B. (2013). The influence of height in robot-mediated communication. In 2013 8th ACM/IEEE international conference on human-robot interaction (HRI) (pp. 1–8). IEEE.

Reeves, B., Lombard, M., and Melwani, G. (1992). Faces on the screen: pictures or natural experience. Miami, FL: Mass Communication Division of the International Communication Association.

Riek, L. D. (2012). Wizard of Oz studies in HRI: a systematic review and new reporting guidelines. J. Hum. Robot Interact. 1, 119–136. doi: 10.5898/JHRI.1.1.Riek

Sacks, H., Schegloff, E. A., and Jefferson, G. (1974). A simplest systematics for the organization of turn-taking for conversation. Language 50, 696–735. doi: 10.1353/lan.1974.0010

Schegloff, E. A. (1996). Turn organization: one intersection of grammar and interaction. In Interaction and grammar. New York, NY: Cambridge University Press, 52–133.

Schouten, A. P., Portegies, T. C., Withuis, I., Willemsen, L. M., and Mazerant-Dubois, K. (2022). Robomorphism: examining the effects of telepresence robots on between-student cooperation. Comput. Human Behav. 126:106980. doi: 10.1016/j.chb.2021.106980

Schwarz, B. B., Swidan, O., Prusak, N., and Palatnik, A. (2021). Collaborative learning in mathematics classrooms: can teachers understand progress of concurrent collaborating groups? Comput. Educ. 165:104151. doi: 10.1016/j.compedu.2021.104151

Scott, G. W. (2017). Active engagement with assessment and feedback can improve group-work outcomes and boost student confidence. High. Educ. Pedagog. 2, 1–13. doi: 10.1080/23752696.2017.1307692

Shamekhi, A., and Bickmore, T. (2019). A multimodal robot-driven meeting facilitation system for group decision-making sessions. In 2019 international conference on multimodal interaction (pp. 279–290).

Skantze, G. (2017). Predicting and regulating participation equality in human-robot conversations: effects of age and gender. In Proceedings of the 2017 ACM/IEEE international conference on human-robot interaction (pp. 196–204).

Skantze, G., Hjalmarsson, A., and Oertel, C. (2014). Turn-taking, feedback and joint attention in situated human–robot interaction. Speech Comm. 65, 50–66. doi: 10.1016/j.specom.2014.05.005

Stoll, B., Reig, S., He, L., Kaplan, I., Jung, M. F., and Fussell, S. R. (2018). Wait, can you move the robot? Examining telepresence robot use in collaborative teams. In Proceedings of the 2018 ACM/IEEE international conference on human-robot interaction (pp. 14–22).

Strauß, S., and Rummel, N. (2021). Promoting regulation of equal participation in online collaboration by combining a group awareness tool and adaptive prompts. But does it even matter? Int. J. Comput.-Support. Collab. Learn. 16, 67–104. doi: 10.1007/s11412-021-09340-y

Tang, A., Boyle, M., and Greenberg, S. (2004). Display and presence disparity in mixed presence groupware. Australasian User Interface Conference. Australian Computer Society, Inc., pp. 73–82.

Tennent, H., Shen, S., and Jung, M. (2019). Micbot: a peripheral robotic object to shape conversational dynamics and team performance. In 2019 14th ACM/IEEE international conference on human-robot interaction (HRI), Daegu, South Korea (pp. 133–142). IEEE.

Thompson, P., and Chaivisit, S. (2021). Telepresence robots in the classroom. J. Educ. Technol. Syst. 50, 201–214. doi: 10.1177/00472395211034778

Turnbull, A. P., Turnbull, R., Shank, M., and Smith, S. J. (2004). Exceptional lives: Special education in today's schools. 4th Edn. Upper Saddle River, NJ: Pearson.

Utha, K., and Tshering, T. (2021). Effectiveness of group work in the colleges of Royal University of Bhutan. Bhutan J. Res. Dev. 10, 69–115. doi: 10.17102/bjrd.rub.10.2.007

Weibel, M., Hallström, I. K., Skoubo, S., Bertel, L. B., Schmiegelow, K., and Larsen, H. B. (2023a). Telepresence robotic technology support for social connectedness during treatment of children with cancer. Child. Soc. 37, 1392–1417. doi: 10.1111/chso.12776

Weibel, M., Skoubo, S., Handberg, C., Bertel, L. B., Steinrud, N. C., Schmiegelow, K., et al. (2023b). Telepresence robots to reduce school absenteeism among children with cancer, neuromuscular diseases, or anxiety—the expectations of children and teachers: a qualitative study in Denmark. Comput. Hum. Behav. Rep. 10:100280. doi: 10.1016/j.chbr.2023.100280

Weisswange, T. H., Javed, H., Dietrich, M., Pham, T. V., Parreira, M. T., Sack, M., et al. (2023). What could a social mediator robot do? Lessons from real-world mediation scenarios. In IEEE International Conference on Robotics and Automation (ICRA), 29 May – 2 June 2023, London, UK, pp. 1–8. arXiv.

Keywords: group work, balancing participation, students’ talking time, telepresence robot, robot moderation

Citation: Asadi A and Fischer K (2025) Not just another quiet student: reducing participation imbalance through robot moderation. Front. Educ. 10:1581175. doi: 10.3389/feduc.2025.1581175

Edited by: Milad Nazarahari, University of Alberta, Canada

Reviewed by: Silvio Marcello Pagliara, University of Cagliari, Italy; Janika Leoste, Tallinn University, Estonia

Copyright © 2025 Asadi and Fischer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ali Asadi
