- Instituto de Ciencias Naturales, Universidad de Las Américas, Santiago, Chile

The growing number of students in higher education has raised challenges in inclusion and equity due to the diversity of students’ backgrounds and entry-level skills. Educational institutions must adapt teaching-learning strategies to ensure the achievement of learning outcomes. In this context, Information and Communication Technologies (ICTs), combined with gamification, offer tools such as Labster™, which enable interactive scientific simulations and promote active learning. This study used a quasi-experimental design with self-selected participation to evaluate the impact of Labster in a “Biological Foundations of Psychology” course across nine nationwide sections. The sample consisted of 315 first-year Psychology students, 237 in the experimental group and 78 in the control group. Given the non-normal distribution of academic data (Shapiro-Wilk test, p < 0.05), non-parametric tests were applied. A Kruskal-Wallis test on learning gain (final minus initial grade) showed that students using Labster achieved significantly greater academic improvement than the control group (H = 12.347, p = 0.00004). A multiple linear regression, controlling for baseline performance (cat_1, ej_1, ta_1), confirmed that participation in the intervention predicted higher final grades (β = 0.3891, p = 0.0053; adjusted R² = 0.216). No significant differences were found between the two types of simulations used (Kruskal-Wallis, p = 0.2814). A perception survey was administered to 237 Labster users, of whom 87 responded (response rate: 36.7%). The results revealed positive evaluations regarding usability, academic relevance, and motivational impact. In summary, Labster-based virtual simulations enhance academic performance and individual progress in scientific learning among psychology students, especially in diverse and non-science-oriented populations.

1 Introduction

The massification of access to higher education has grown steadily worldwide since the beginning of the twenty-first century (United Nations Educational, Scientific and Cultural Organization [UNESCO], 2020). This phenomenon has enabled individuals from increasingly diverse socioeconomic, cultural, and academic backgrounds to enter universities, leading to profound institutional transformations. One of the principal consequences of this expansion has been the rise in student heterogeneity, which introduces new challenges concerning educational quality, inclusion, and equity (Saiz Sánchez et al., 2020). The increasing diversity in academic abilities, prior preparation, and learning styles has compelled higher education institutions to make their teaching and learning methodologies more flexible, seeking adaptive strategies that promote academic success without compromising quality standards (Noui, 2020). In this context, the incorporation of ICTs has emerged as a fundamental pillar for addressing these challenges, facilitating the personalization of learning and broadening access to innovative educational experiences (Keshavarz and Mirmoghtadaie, 2023).

The continual evolution of ICTs has profoundly reshaped educational processes, fostering the development of new collaborative and individual learning environments that promote the acquisition of both disciplinary and transversal competencies (Mariaca Garron et al., 2022; García-Sánchez et al., 2018). This phenomenon has encouraged the proliferation of active learning approaches, in which students assume a central and participatory role in constructing their own knowledge through interactive and reflective activities. Within this wave of pedagogical innovation, gamification has become a particularly effective strategy for enhancing student motivation and engagement. Defined as the integration of game-design elements into non-gaming contexts (Irwanto et al., 2023), gamification seeks to make educational experiences more attractive and meaningful. Recent empirical evidence indicates that its implementation, in both online and face-to-face modalities, increases intrinsic motivation, fosters academic satisfaction, and promotes the adoption of deep learning strategies (Aşıksoy, 2018; Asiksoy and Canbolat, 2021; Bencsik et al., 2021; Aguiar-Castillo et al., 2021).

Furthermore, the impact of gamification and virtual simulations can be theoretically supported by cognitive load theory (Sweller, 1988), which proposes that instructional design should make efficient use of learners’ limited cognitive resources. By breaking down complex scientific processes into manageable segments, virtual laboratories such as Labster can reduce extraneous cognitive load and promote germane load, facilitating more efficient learning. More specifically, recent studies have shown that gamification through immersive virtual simulations not only increases student motivation but also significantly enhances learning outcomes, particularly when virtual reality technologies are employed to foster sensory and cognitive immersion (Tsirulnikov et al., 2023). Dual coding theory (Clark and Paivio, 1991) further explains these outcomes, postulating that the simultaneous activation of verbal and visual coding systems strengthens memory retention. In the context of Labster, the integration of textual explanations, visual animations, and interactive tasks promotes dual coding, thereby enhancing the encoding and retrieval of complex biological knowledge.

In addition, student engagement theory (Fredricks et al., 2004) provides a lens for understanding the observed effects. Engagement, conceptualized through behavioral, emotional, and cognitive dimensions, is critically enhanced by gamified simulations, which encourage active participation, emotional involvement, and cognitive investment in learning activities.

Virtual laboratories represent a tangible manifestation of these technological innovations, enabling students to engage with scientific concepts and procedures within controlled digital environments (Akinola and Oladejo, 2020). Such tools transcend the physical limitations of traditional laboratories by eliminating barriers associated with cost, specialized equipment, and the inherent risks of certain experimental practices (Byukusenge et al., 2022; Kennepohl, 2021; Potkonjak et al., 2016).

Empirical studies have demonstrated that virtual laboratories not only bolster students’ motivation and autonomy but also reinforce conceptual understanding and facilitate the transfer of knowledge to practical contexts, provided that they are grounded in robust pedagogical frameworks (de Vries and May, 2019). Nonetheless, there is widespread consensus that virtual laboratories should be conceived as complementary rather than as absolute substitutes for real experimental experiences, particularly in disciplines that necessitate the development of fine procedural skills (Navarro et al., 2024). Within this context, Labster has emerged as a leading platform in the domain of gamified scientific simulations. With a portfolio of over 250 simulations across fields such as biology, chemistry, physics, and biotechnology, Labster offers immersive scenarios wherein students can conduct experiments, solve scientific problems, and apply theoretical knowledge in simulations that faithfully emulate real-world conditions (Tripepi, 2022; Navarro et al., 2024).

Empirical evidence indicates that the use of Labster enhances student motivation, self-efficacy, knowledge retention, and academic performance, in both desktop-based and highly immersive virtual reality environments (Tsirulnikov et al., 2023). Furthermore, students frequently report positive evaluations of the platform’s usability, the academic relevance of its simulations, and its contribution to fostering autonomous learning practices. However, the effectiveness of such tools may vary according to the disciplinary background of the student body. Students enrolled in science programs have traditionally shown greater affinity for laboratory simulations. Conversely, in disciplines such as Psychology, where engagement with the basic sciences tends to be less direct, it is important to explore how these technologies affect student motivation, academic performance, and perceptions of the learning process.

1.1 Purpose and contribution of the study

The principal contribution of this study lies in the application of the Labster platform within a Biological Foundations course for first-year Psychology students. Although Labster has proven effective in teaching natural and biological sciences, its implementation in a course aimed at first-year Psychology students, approximately 70% of whom are first-generation university students, represents a significant educational innovation.

This research provides new insights into how digital simulation tools, originally designed for scientific education, can enhance the understanding of biological concepts among diverse student populations. By exploring this application, the study contributes to pedagogical innovation, demonstrating how immersive virtual laboratories can foster active and autonomous learning. Moreover, the study highlights the potential of digital simulations to reduce educational inequalities by offering accessible, high-quality learning experiences, in alignment with international objectives for equitable and inclusive education (UNESCO, 2023; OECD, 2021). By situating the use of Labster within a mass higher education context, the study reinforces the role of emerging technologies in narrowing gaps related to technological access and learning opportunities. This work comprehensively evaluates the impact of Labster on teaching practices, focusing on academic performance and students’ perceptions regarding the platform’s usability and relevance. Ultimately, the study seeks to inform and enhance pedagogical strategies in higher education, particularly in contexts where digital transformation and educational innovation are essential to ensuring more equitable, accessible, and competency-oriented learning environments.

2 Materials and methods

2.1 Sample description

The sample consisted of 315 first-year Psychology students enrolled in the “Biological Foundations of Psychology” course at Universidad de Las Américas, distributed across three university campuses. The educational intervention, implemented during the first academic semester of 2023 (2023–10), involved a mixed pedagogical strategy combining laboratory simulations through the Labster platform and traditional face-to-face activities. All students, in both the experimental group (Labster users) and the control group, had equal access to the same face-to-face classes throughout the semester. The experimental group, consisting of 237 students, voluntarily participated in the use of the Labster platform after giving their informed consent. Specifically, 130 students used the simulation “Gross Function of the Nervous System: Let your brain learn about itself” (Labster A), and 107 used “Sensory transduction: Learn why you feel pain when you get hit by a rock” (Labster B). The control group, consisting of 78 students, voluntarily chose not to use the virtual simulations but attended the same face-to-face sessions. Thus, the key distinction between the groups was the additional opportunity to complete Labster simulations asynchronously, outside class hours, while all students participated in the same scheduled face-to-face activities.

To evaluate baseline academic performance before the implementation of the intervention, students completed an initial in-class workshop (ta_1) and a test (ej_1) focused on foundational content during the first weeks of the course. Additionally, they took an initial written exam (cat_1) within the first month. These three assessments served as initial academic indicators to compare the control and experimental groups before the intervention and were later used to calculate net learning gains based on the final exam scores (cat_4).

2.2 Design and architecture of the educational intervention

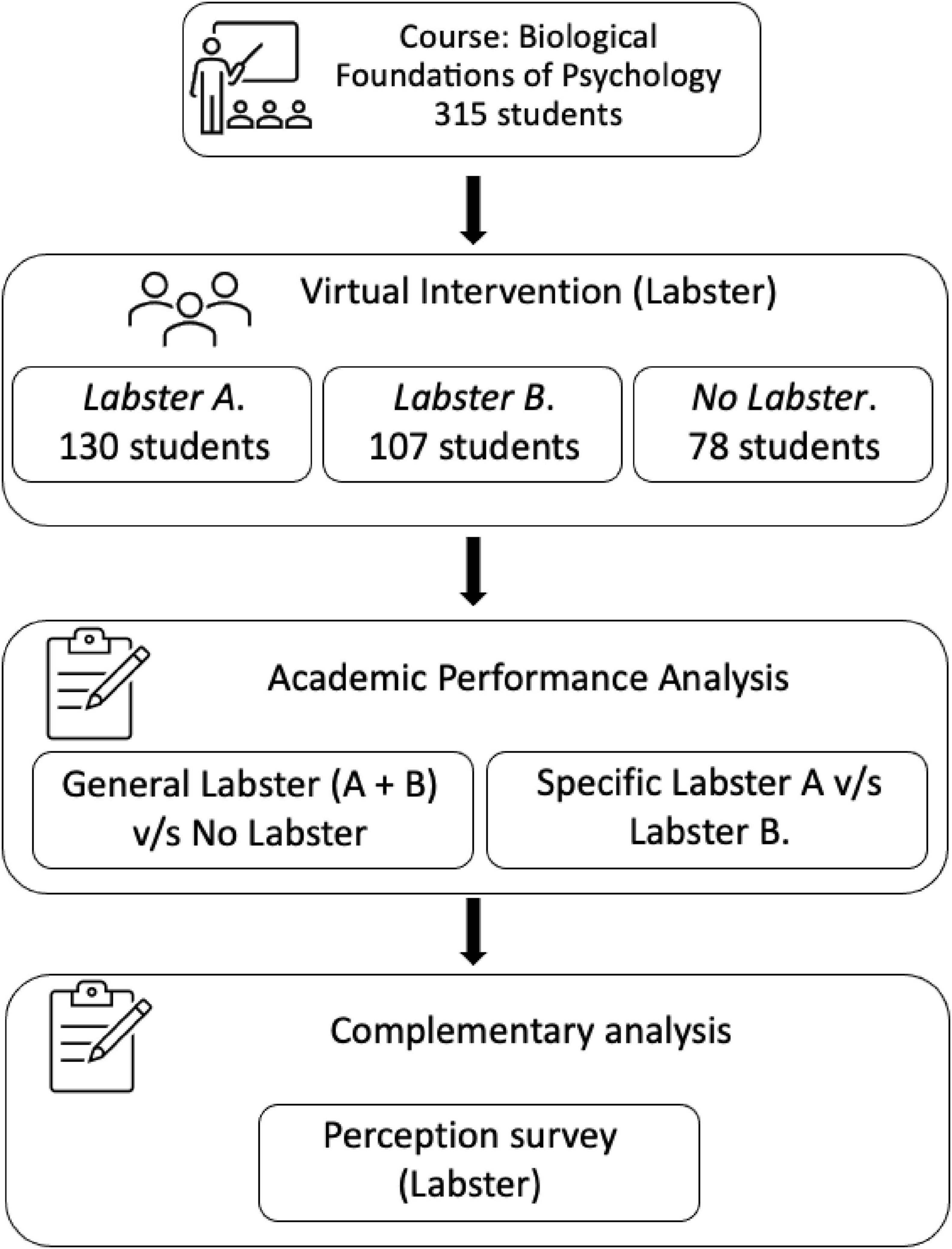

The design of this educational intervention was structured to measure the impact of virtual laboratories in the face-to-face course “Biological Foundations of Psychology”, taught at Universidad de Las Américas. As summarized in Figure 1, the intervention was proposed as a complement to the regular face-to-face classes and focused on two virtual activities delivered through the scientific simulation platform Labster© (Somerville, MA, United States), aligned with the learning outcomes of the last unit of the course (Unit IV). The course maintained its face-to-face structure, and all students regularly attended classes. As part of this complementary intervention, Labster virtual laboratories were introduced, which participating students completed asynchronously, outside class hours. These laboratories were not a replacement but an optional, additional resource designed to offer interactive exposure to biological concepts addressed in the classroom. Two virtual laboratories, called Labster A and Labster B, were implemented; each took a slightly different approach in terms of practical content, but both were aligned with the same learning outcomes of the subject. The intervention was deployed during the last part of the semester, after the first three units of the course had been completed, and coincided with Unit IV, which introduced new theoretical content not previously evaluated.

Figure 1. Flowchart summarizing the virtual intervention (Labster) and the analyses performed in the study.

The study design included two levels of comparison. First, a comparison was made to evaluate the overall impact of using the Labster platform with 237 students who completed virtual laboratories A or B (experimental group) and 78 students who did not complete the virtual activities (control group). This general comparison allowed us to assess whether access to the simulations was associated with differences in performance on the final exam (cat_4), which measured the learning outcomes of Unit IV. Additionally, the equivalence between the experimental and control groups at baseline was assessed through the analysis of three initial academic performance measures: the score on the first workshop (ta_1), the initial exam score (cat_1), and the first test (ej_1). Given the differences found in these initial measures, the analysis strategy incorporated the use of multivariate models to control for baseline performance and isolate the effect of the intervention.

In a second stage of analysis, we evaluated more specifically whether there were differences between students who completed Labster A (130 students) and those who completed Labster B (107 students). This analysis allowed us to observe whether the two virtual laboratories differentially influenced student performance, or whether both approaches were equally effective in achieving the expected learning outcomes. Importantly, the final assessment (cat_4) focused on new content that was not included in the initial evaluations. These new topics were addressed during face-to-face sessions for all students and were additionally made available to the experimental group through Labster simulations as part of the educational intervention. Furthermore, individual learning gain throughout the course was estimated for each student by subtracting the initial exam score (cat_1) from the final exam score (cat_4), providing an additional metric of academic progress related to the intervention. Finally, students’ perceptions of Labster were evaluated: only students who participated in the virtual activities were invited to answer a perception survey about their experience, using a previously validated instrument that measured usability, academic relevance, and motivation/satisfaction with the use of Labster.

2.3 Questionnaire design

The Assessment Instruments (AI) used in this study were developed from question banks prepared and refined over several semesters of application. These question banks were built from specification tables that explicitly described the expected learning outcomes for students. Bloom’s taxonomy served as the reference framework, ensuring that the questions were aligned with the cognitive levels of “remembering” and “understanding.” The development and validation of the questions was thorough and involved the entire teaching team. Under the supervision of the subject’s academic leader, all questions were reviewed to ensure their validity and reliability. This collaborative approach ensured consistency across assessments and equity in measuring student performance. The AIs combined single-choice and written-response questions; the final evaluation (cat_4) comprised 30 single-choice questions and one written-response question. The initial exam (cat_1) shared a similar structure and response format, facilitating structural comparability between the initial and final assessments. However, in line with Bloom’s taxonomy, the cognitive demand of the questions was expected to increase throughout the course, progressing from the lowest level (remembering) toward the next level (understanding). In contrast, the initial assessments ta_1 and ej_1 were different types of academic activities, oriented toward formative tasks and other learning processes, and therefore differed in format and structure from the final examination. Finally, the data obtained were organized and stored in Excel databases, which facilitated the quantitative analysis of the results and the subsequent comparison of the study groups.

2.4 Perception survey

To assess students’ perceptions of the use of Labster simulations in their learning, a perception survey was designed. The survey was subjected to a content validation process using Aiken’s V coefficient. This method was used to assess the validity of the items according to the assessments of a panel of 14 expert judges. The judges evaluated each item on a scale of 1 to 4, where 1 represents “completely disagree” and 4 “completely agree.” Only items that reached an Aiken V value greater than 0.80 were selected, indicating a high degree of consensus among the judges regarding the relevance of the items (Maulita et al., 2019).
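For reference, Aiken’s V for a single item is usually computed as V = S / [n(c − 1)], where S is the sum over judges of each rating minus the lowest possible rating, n is the number of judges, and c is the number of rating categories. The sketch below assumes this standard formulation with the 14-judge, 1 to 4 scale and the 0.80 cut-off described above; the function name `aikens_v` and the example ratings are hypothetical and are not the panel’s actual data.

```python
from typing import Sequence


def aikens_v(ratings: Sequence[int], lowest: int = 1, categories: int = 4) -> float:
    """Aiken's V = S / (n * (c - 1)), with S = sum of (rating - lowest)."""
    n = len(ratings)
    if n == 0:
        raise ValueError("at least one rating is required")
    highest = lowest + categories - 1
    if any(r < lowest or r > highest for r in ratings):
        raise ValueError(f"ratings must lie between {lowest} and {highest}")
    s = sum(r - lowest for r in ratings)
    return s / (n * (categories - 1))


# Hypothetical ratings from 14 judges for two items (illustrative, not study data).
item_ratings = {
    "item_a": [4] * 12 + [3] * 2,
    "item_b": [4] * 5 + [3] * 5 + [2] * 4,
}

THRESHOLD = 0.80  # retention criterion stated in the text
for item, ratings in item_ratings.items():
    v = aikens_v(ratings)
    print(f"{item}: V = {v:.3f}, retained = {v > THRESHOLD}")
```

With these made-up ratings, item_a gives V = 40/42 ≈ 0.952 and would be retained, while item_b gives V = 29/42 ≈ 0.690 and would be dropped.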

The final survey consisted of 11 items, organized into three key dimensions: (1) Usability, which assessed the ease of access and navigation through the platform; (2) Academic relevance, focused on the relationship of the simulations with the course content and their application in practical contexts; and (3) Motivation and satisfaction, which measured the interest and enjoyment generated by the use of Labster, as well as the willingness to recommend its use. In addition to the theoretical construction of these dimensions, an exploratory factor analysis (EFA) was conducted to evaluate the internal structure of the instrument. The analysis used the principal components method with Varimax rotation. The Kaiser-Meyer-Olkin (KMO) index was 0.90, and Bartlett’s test of sphericity was significant (χ² = 1161.62; p < 0.001), indicating that the data were adequate for factor analysis. Although an initial one-factor solution explained a large proportion of the variance, the three-factor model aligned more closely with the theoretical foundation and revealed interpretable groupings of items consistent with the predefined dimensions. To assess internal consistency, Cronbach’s alpha was calculated for each dimension, yielding excellent reliability: Usability (α = 0.90), Academic relevance (α = 0.93), and Motivation/Satisfaction (α = 0.95).
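Cronbach’s alpha for one dimension follows the usual formula α = k/(k − 1) · (1 − Σ item variances / variance of the total score), with k items. The following is a minimal sketch of that formula in NumPy, assuming sample variances (ddof = 1) and a respondents × items matrix; the function name and the five-respondent example are hypothetical and do not reproduce the study’s responses.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for one dimension.

    items: 2-D array with one row per respondent and one column per item.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)),
    using sample variances (ddof=1) throughout.
    """
    x = np.asarray(items, dtype=float)
    if x.ndim != 2 or x.shape[1] < 2:
        raise ValueError("expected a 2-D array with at least two item columns")
    k = x.shape[1]
    item_variances = x.var(axis=0, ddof=1)
    total_variance = x.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1.0 - item_variances.sum() / total_variance)


# Hypothetical 5-point Likert answers from five respondents to a three-item dimension.
responses = np.array([
    [5, 5, 4],
    [4, 4, 4],
    [3, 4, 3],
    [5, 4, 5],
    [2, 3, 3],
])
print(f"alpha = {cronbach_alpha(responses):.3f}")  # prints alpha = 0.860
```

The same function applies unchanged to the final exam (cat_4), treating each question’s score as an item column.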

The survey was administered online through the Google Forms platform at the end of the students’ participation in the simulations. Students confirmed that their participation was voluntary before completing the questionnaire. Each item was answered on a 5-point Likert scale: (1) completely disagree, (2) disagree, (3) neither agree nor disagree, (4) agree, and (5) completely agree. The survey ended with an optional open-ended comments section.
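The absolute and relative frequencies reported for each item, and the “agree or completely agree” percentages used in the Results, can be tabulated as in the pandas sketch below. It assumes that categories 4 and 5 count as agreement, 1 and 2 as disagreement, and 3 as neutral; the helper names and the ten example answers are hypothetical.

```python
import pandas as pd

# Response labels of the 5-point scale described above.
LABELS = {
    1: "Completely disagree",
    2: "Disagree",
    3: "Neither agree nor disagree",
    4: "Agree",
    5: "Completely agree",
}


def likert_frequencies(responses: pd.Series) -> pd.DataFrame:
    """Absolute (n) and relative (%) frequencies for every category, including empty ones."""
    valid = responses.dropna().astype(int)
    counts = valid.value_counts().reindex(range(1, 6), fill_value=0)
    table = pd.DataFrame({"n": counts, "percent": 100 * counts / counts.sum()})
    table.index = [LABELS[code] for code in table.index]
    return table


def agreement_shares(responses: pd.Series) -> tuple[float, float]:
    """Percent answering 4-5 (agreement) and 1-2 (disagreement); 3 counts as neutral."""
    valid = responses.dropna()
    return 100 * (valid >= 4).mean(), 100 * (valid <= 2).mean()


# Hypothetical answers to one usability item (not the study's data).
item = pd.Series([5, 5, 4, 4, 4, 3, 2, 1, 5, 4])
print(likert_frequencies(item))
agree, disagree = agreement_shares(item)
print(f"agree: {agree:.1f}%, disagree: {disagree:.1f}%")  # agree: 70.0%, disagree: 20.0%
```

Reindexing over 1 to 5 keeps categories that nobody selected in the table, so every item is reported on the same five rows.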

2.5 Statistical analysis

To assess the impact of an educational intervention based on virtual simulations (Labster) on the academic performance of university students, a multivariate statistical approach was applied. Because the quasi-experimental design did not assign students to groups at random, baseline equivalence between the groups could not be assumed and had to be tested. The Shapiro-Wilk normality test was first applied to the baseline academic performance variables to check the assumptions required for parametric tests. Since the variables did not follow a normal distribution, the non-parametric Kruskal-Wallis test was used to evaluate initial homogeneity between the control and experimental groups on initial workshop 1 (ta_1), the initial test (ej_1), and the initial exam (cat_1). Additionally, the Mann-Whitney U test was applied to compare the distributions of these evaluations between the two groups. The significance criterion was p < 0.05. In all cases, the H or U statistic, as appropriate, and the associated p-value were reported.
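The baseline comparison described above (Shapiro-Wilk, then Kruskal-Wallis and Mann-Whitney for two groups) maps directly onto `scipy.stats`. The sketch below is illustrative only: the grades are simulated, the 1.0 to 7.0 grading scale is an assumption, and `compare_baseline` is a hypothetical helper, not the authors’ script.

```python
import numpy as np
from scipy import stats


def compare_baseline(control, experimental, alpha=0.05):
    """Normality check, then Kruskal-Wallis H and Mann-Whitney U for two groups."""
    control = np.asarray(control, dtype=float)
    experimental = np.asarray(experimental, dtype=float)
    # Shapiro-Wilk per group: p < alpha means normality is rejected.
    normal = all(stats.shapiro(g).pvalue >= alpha for g in (control, experimental))
    kw = stats.kruskal(control, experimental)
    mw = stats.mannwhitneyu(control, experimental, alternative="two-sided")
    return {
        "normal": bool(normal),
        "H": kw.statistic,
        "p_H": kw.pvalue,
        "U": mw.statistic,  # U for the first sample passed (control)
        "p_U": mw.pvalue,
    }


# Hypothetical, left-skewed baseline grades on an assumed 1.0-7.0 scale.
rng = np.random.default_rng(2023)
control = np.round(1 + 6 * rng.beta(5, 2, size=78), 1)
experimental = np.round(1 + 6 * rng.beta(6, 2, size=237), 1)

result = compare_baseline(control, experimental)
for name, value in result.items():
    print(name, value)
```

With exactly two groups the Kruskal-Wallis and Mann-Whitney tests are closely related rank tests, so they usually lead to the same conclusion. Note that SciPy returns U for the first sample passed; swapping the arguments gives n₁n₂ − U, so a reported U is only interpretable together with the group order.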

For the analysis of the effect of the educational intervention on final academic performance (cat_4), a multiple linear regression model was constructed. This model included baseline grades (ta_1, ej_1, cat_1) and group membership (control or experimental) as predictor variables. Including these covariates allowed for statistical control of pre-existing differences in academic performance, reducing (although not eliminating) potential selection bias. A significance level of p < 0.05 was applied to all coefficients, and standardized beta coefficients (β), p-values, the model F-statistic, and the R² coefficient were reported as indicators of the model’s fit and explanatory power. To evaluate the internal consistency of the final evaluation instrument (cat_4), Cronbach’s alpha coefficient was calculated, yielding a value of 0.87, which indicates high reliability of the instrument for measuring student performance.
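A regression of this form can be specified with the statsmodels formula interface, as in the sketch below. The data and the data-generating process are invented for illustration, and the formula is an assumed equivalent of the model described, not the authors’ code. Note that statsmodels reports unstandardized coefficients; standardized betas would require z-scoring the continuous variables before fitting.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf

# Hypothetical data that mimic the study's variables (not the real grades).
rng = np.random.default_rng(7)
n_control, n_experimental = 78, 237
n = n_control + n_experimental
df = pd.DataFrame({
    "group": ["control"] * n_control + ["experimental"] * n_experimental,
    "cat_1": rng.normal(4.5, 1.0, n),
    "ej_1": rng.normal(5.0, 1.0, n),
    "ta_1": rng.normal(5.5, 0.8, n),
})
# Assumed data-generating process: cat_4 depends on cat_1, ta_1 and group.
df["cat_4"] = (
    1.0
    + 0.4 * df["cat_1"]
    + 0.2 * df["ta_1"]
    + 0.4 * (df["group"] == "experimental")
    + rng.normal(0.0, 0.8, n)
)

# "control" sorts first, so it is the reference level of C(group).
model = smf.ols("cat_4 ~ cat_1 + ej_1 + ta_1 + C(group)", data=df).fit()

print(f"F({model.df_model:.0f}, {model.df_resid:.0f}) = {model.fvalue:.2f}, p = {model.f_pvalue:.2g}")
print(f"R2 = {model.rsquared:.3f}, adjusted R2 = {model.rsquared_adj:.3f}")
print(model.params.round(4))
print(model.pvalues.round(4))
```

The coefficient labelled `C(group)[T.experimental]` is the adjusted difference in cat_4 between the experimental and control groups, holding the three baseline grades constant.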

In the descriptive analysis of the student perception survey, absolute and relative frequencies of the responses in each category of the 11 items were calculated and presented to characterize participants’ perceptions quantitatively. For the structural validation of the perception instrument, an exploratory factor analysis (EFA) was conducted using a factor analysis library in Python, in order to identify the latent structures underlying the responses and assess the construct validity of the instrument. The Kaiser-Meyer-Olkin (KMO) index was used as an indicator of sampling adequacy, and Bartlett’s test of sphericity (χ² and its p-value) was used to evaluate the feasibility of factor analysis. A KMO > 0.80 and p < 0.05 in Bartlett’s test were adopted as acceptability criteria. Additionally, Cronbach’s alpha coefficients were calculated for each identified dimension, with values greater than 0.90 considered indicative of excellent internal consistency, according to the psychometric standards proposed by Nunnally and Bernstein (1994). All statistical analyses were conducted using Python 3.11, with the scipy, statsmodels, and pandas packages.
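The two adequacy checks can be computed from the item correlation matrix alone. The sketch below implements the standard formulas in NumPy and SciPy: Bartlett’s χ² = −(n − 1 − (2p + 5)/6) ln|R| with p(p − 1)/2 degrees of freedom, and the overall KMO as the ratio of squared correlations to squared correlations plus squared partial correlations. The simulated 87 × 11 matrix, its 3/4/4 split of items across factors, and the helper names are hypothetical. If the study’s library was the `factor_analyzer` package (an assumption; the text does not name it), its `calculate_kmo` and `calculate_bartlett_sphericity` functions report the same statistics. The Varimax-rotated extraction itself is not reproduced here.

```python
import numpy as np
from scipy import stats


def bartlett_sphericity(data):
    """Bartlett's test that the item correlation matrix is an identity matrix."""
    x = np.asarray(data, dtype=float)
    n, p = x.shape
    corr = np.corrcoef(x, rowvar=False)
    sign, logdet = np.linalg.slogdet(corr)
    if sign <= 0:
        raise ValueError("correlation matrix is not positive definite")
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    dof = p * (p - 1) / 2
    return chi2, stats.chi2.sf(chi2, dof)


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    x = np.asarray(data, dtype=float)
    corr = np.corrcoef(x, rowvar=False)
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(scale, scale)  # partial correlations (anti-image)
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    a2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + a2)


# Hypothetical 87 x 11 response matrix driven by three latent factors.
rng = np.random.default_rng(11)
latent = rng.normal(size=(87, 3))
loadings = np.zeros((11, 3))
loadings[0:3, 0] = 0.8   # items 1-3 -> factor 1 (assumed split)
loadings[3:7, 1] = 0.8   # items 4-7 -> factor 2 (assumed split)
loadings[7:11, 2] = 0.8  # items 8-11 -> factor 3 (assumed split)
items = latent @ loadings.T + rng.normal(scale=0.5, size=(87, 11))

chi2, p_value = bartlett_sphericity(items)
print(f"KMO = {kmo(items):.2f}; Bartlett chi2 = {chi2:.1f}, p = {p_value:.3g}")
```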

2.6 Ethical approval and consent to participate

This research was approved by the scientific committee of the Universidad de Las Américas, guaranteeing high ethical and scientific standards. In relation to the perception survey, students were informed that participation was voluntary, that no personally identifiable information would be collected, and that all responses would remain anonymous. Data collection was conducted online through secure institutional platforms, ensuring the encryption and restricted access of the information. Confidentiality and anonymity were strictly maintained throughout the study, in compliance with current data protection regulations.

2.7 Theoretical framework

The selection of literature for this review followed a narrative approach, focusing on peer-reviewed studies and official international reports published between 2014 and 2024, identified through databases such as Scopus and Web of Science. The focus was on works addressing virtual laboratories, gamification, digital equity, and competency-based education.

3 Results

The results of the study are presented in two main sections. First, the academic performance of the students is analyzed, comparing those who participated in the educational intervention with virtual simulations (Labster) (experimental group) with those who did not use it (control group). This section includes both initial performance comparisons between the groups and multivariate analyses that isolate the specific effect of the intervention, as well as internal comparisons between the different types of Labster used (A and B). Second, the results of the student perception survey are presented, which evaluates the subjective experience of the participants with the Labster tool, addressing dimensions such as usability, academic relevance, motivation, and overall satisfaction with the intervention.

3.1 Analysis of academic performance based on the educational intervention with Labster

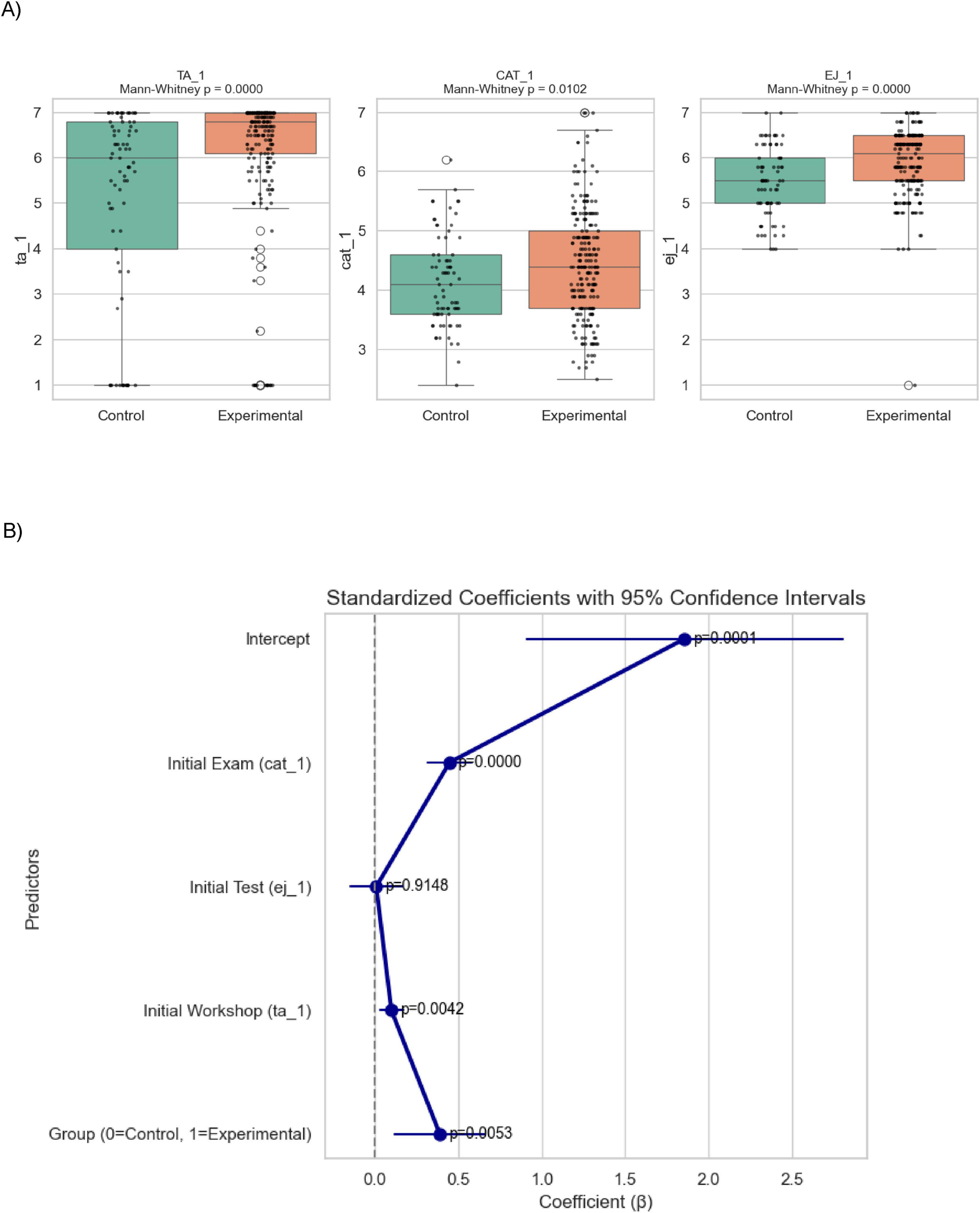

To verify the homogeneity between the control group (n = 78) and the experimental group (n = 237) prior to the implementation of the educational intervention, non-parametric tests were applied to three baseline academic performance variables: initial workshop 1 (ta_1), initial exam (cat_1) and initial test 1 (ej_1). First, the Kruskal-Wallis test was used due to the non-normal nature of the data (determined by the Shapiro-Wilk test) and the ordinal nature of the variables. The results indicated statistically significant differences between the control group and the experimental group in all three initial measures (ta_1: H = 24.34, p < 0.0001; cat_1: H = 6.61, p = 0.0102; ej_1: H = 19.35, p < 0.0001). Additionally, the Mann-Whitney U test was applied to confirm these differences between the two groups, yielding U = 5573 for ta_1, U = 7092 for cat_1, and U = 5883 for ej_1. These results show a lack of baseline equivalence between the study groups (Figure 2A), which justified the use of multivariate analyses that account for these initial differences, in order to avoid bias in estimating the effect of the intervention.

Figure 2. (A) Comparison between the control group (n = 78) and the experimental group (n = 237) on three assessments conducted at the beginning of the course using the Mann-Whitney test. The results show the standard dispersion of the data for the variables ta_1, cat_1, and ej_1. (B) Multiple linear regression analysis to predict the final grade (cat_4) using the variables cat_1, ej_1, ta_1, and group as predictors. The model was evaluated using the F-statistic, and the p-value is reported to determine the significance of the included variables.

To estimate the specific effect of the Labster-based educational intervention on the students’ final exam (cat_4), a multiple linear regression model was fitted with baseline grades (cat_1, ej_1, and ta_1) and group membership (control or experimental) as predictors. This approach allowed for statistical control of the initial heterogeneity between the groups and isolated the specific effect of the intervention. The overall model was statistically significant [F(4, 301) = 21.95, p < 0.0001], with an R² of 0.226 and an adjusted R² of 0.216, indicating that approximately 21.6% of the variance in the final grade was explained by the variables included in the model (Figure 2B). Within the model, membership in the experimental group was a significant predictor of final performance (β = 0.3891, p = 0.0053), indicating that participation in the educational intervention was associated with better academic performance even after adjusting for initial differences. The initial exam grade (cat_1, p < 0.001) was also a significant predictor, suggesting that students with better initial performance tended to achieve higher final grades. Workshop 1 (ta_1, p = 0.0042) was likewise a significant predictor, with a positive effect on final performance. In contrast, the initial test (ej_1) showed no statistically significant association (p = 0.9148).
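The reported fit statistics can be cross-checked with the standard formulas for adjusted R² and the overall F-test, where k is the number of predictors and n the number of observations. Taking k = 4 from the model and n − k − 1 = 301 from the reported degrees of freedom implies n = 306 observations rather than the full 315 students; the text does not explain this difference.

$$
R^2_{\mathrm{adj}} = 1 - (1 - R^2)\,\frac{n - 1}{n - k - 1} = 1 - 0.774 \times \frac{305}{301} \approx 0.216
$$

$$
F = \frac{R^2 / k}{(1 - R^2)/(n - k - 1)} = \frac{0.226 / 4}{0.774 / 301} \approx 21.97
$$

Both values agree with the reported adjusted R² = 0.216 and F = 21.95 to within the rounding of R² to three decimals.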

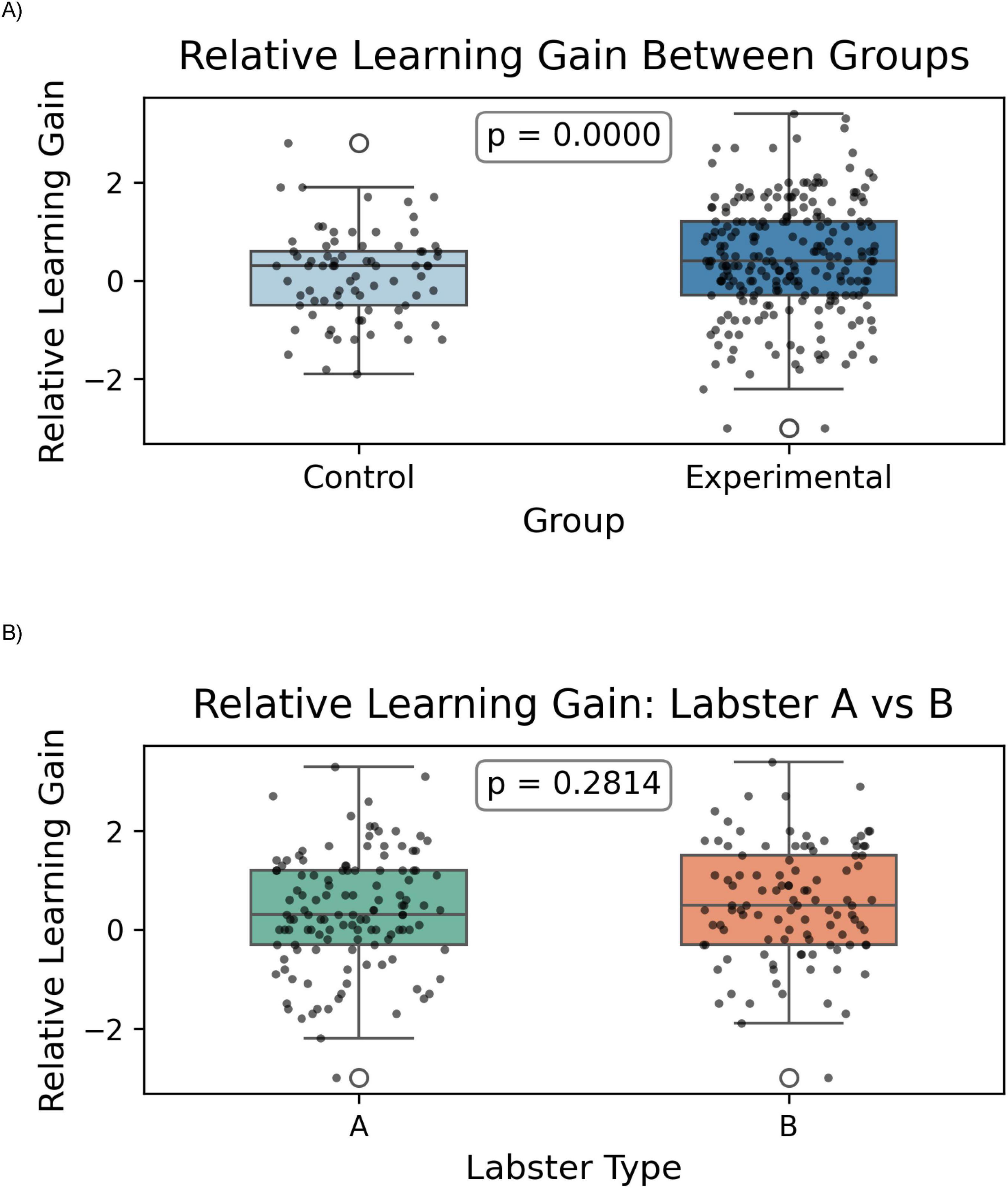

Finally, to evaluate individual progress throughout the course, each student’s relative learning gain was calculated as the final grade (cat_4) minus the initial grade (cat_1). Because this difference variable was not normally distributed, groups were compared with the Kruskal-Wallis test. Students in the experimental group showed significantly greater improvement than those in the control group (H = 12.347, p = 0.00004), indicating that the intervention was associated not only with the final outcome but also with a greater positive change in academic performance over the course (Figure 3A). This relative gain reinforces the argument that virtual simulations can enhance individual learning as well as final results, which is particularly relevant from a pedagogical and formative perspective. Lastly, an analysis within the experimental group compared students who used Labster A with those who used Labster B and found no significant difference in academic gain between the two subgroups (p = 0.2814; Figure 3B). This finding suggests that the two simulations had comparable effects on the content assessed, supporting the idea that the specific simulation was not the determining factor, but rather the use of the virtual tools themselves.
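The gain analysis can be expressed in a few lines of pandas and SciPy: compute cat_4 − cat_1 per student, then run a Kruskal-Wallis test across the levels of a grouping column, once for control versus experimental and once for Labster A versus B within the experimental group. The data frame below is simulated with the study’s group sizes; the column name `version` and both helper functions are hypothetical.

```python
import numpy as np
import pandas as pd
from scipy import stats


def learning_gain(df: pd.DataFrame) -> pd.Series:
    """Relative learning gain as defined in the text: cat_4 - cat_1."""
    return df["cat_4"] - df["cat_1"]


def compare_gains(df: pd.DataFrame, group_col: str) -> tuple[float, float]:
    """Kruskal-Wallis H and p-value for learning gain across the levels of group_col."""
    gain = learning_gain(df)
    levels = sorted(df[group_col].dropna().unique())
    samples = [gain[df[group_col] == level].dropna() for level in levels]
    result = stats.kruskal(*samples)
    return float(result.statistic), float(result.pvalue)


# Hypothetical grades (not the study's data); "version" is A/B for Labster users only.
rng = np.random.default_rng(42)
n_control, n_a, n_b = 78, 130, 107
df = pd.DataFrame({
    "group": ["control"] * n_control + ["experimental"] * (n_a + n_b),
    "version": [None] * n_control + ["A"] * n_a + ["B"] * n_b,
    "cat_1": rng.normal(4.5, 1.0, n_control + n_a + n_b),
})
df["cat_4"] = df["cat_1"] + rng.normal(0.2, 0.8, len(df)) + 0.4 * (df["group"] == "experimental")

print(learning_gain(df).groupby(df["group"]).median())
print("control vs experimental:", compare_gains(df, "group"))
labster_users = df[df["group"] == "experimental"]
print("Labster A vs B:", compare_gains(labster_users, "version"))
```

Because Kruskal-Wallis compares rank distributions rather than means, group medians are a more natural companion summary than average gains.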

Figure 3. Comparison of academic gain between the control group and the experimental group to assess the impact of the Labster intervention. (A) Comparison between the control (n = 78) and experimental (n = 237) groups. (B) Comparison between the Labster A (n = 130) and Labster B (n = 107) subgroups within the experimental group. In both cases, the relative academic gain was evaluated by the difference between the final grade (cat_4) and the initial grade (cat_1). The Kruskal-Wallis test was used to compare the distributions of academic gains between the groups and subgroups, with a p-value < 0.05 as the significance criterion.

3.2 Results of the student perception survey on Labster use

Of the 237 students who used Labster, 87 completed the perception survey (36.7%). According to the demographic questions at the start of the survey, 90% of respondents were between 18 and 21 years old, 80% were female, and 87% had some prior experience with virtual games or simulations. The results for each dimension are presented below. The internal consistency of the dimensions was high, with Cronbach’s alpha values of 0.903 for Dimension 1 (usability), 0.926 for Dimension 2 (academic relevance), and 0.950 for Dimension 3 (motivation and satisfaction).

Dimension 1 analysis: Usability, which evaluated ease of access and navigation through the platform, was assessed with three items of the perception survey, detailed in Table 1.

Table 1. Psychology students’ responses to the perception survey items on the usability of the Labster platform in the Biological Foundations of Psychology course.

Usability was evaluated through three key items: ease of access to simulations, effectiveness of use and navigation, and appropriateness of simulation duration. For ease of access, 75.5% of students agreed or completely agreed that accessing the simulations was easy, with 44.4% in complete agreement. However, 16.7% indicated some level of disagreement, suggesting that although most students perceived access as intuitive, a small percentage experienced technical difficulties or access barriers. Consistent with this, some students reported that inadequate access or platform instability negatively affected their experience. Comments such as “Suddenly you cannot access the Labster page properly…” or “it takes you out of the simulation several times and you have to start over” reflect the frustration of a few students who were unable to complete activities because of technical failures. This finding is relevant for future improvements to the user interface or to the systems for accessing the platform, in order to ensure a more homogeneous experience among students.

Regarding navigation and effective use of the platform, 77.8% of respondents agreed or completely agreed that they were able to navigate efficiently through the simulations. Only 13.3% expressed some degree of disagreement, which may reflect problems with the interface or internet connectivity, as the comments above indicate. As for the adequacy of simulation duration, 77.8% of students considered the duration of the activities appropriate to cover the content, whereas 15.5% did not share this view, stating that the duration was not entirely adequate for their learning. Overall, these results reinforce the idea that Labster is a generally accessible and easy-to-use tool, although the factors behind the negative experience of a small group of students merit more in-depth analysis.

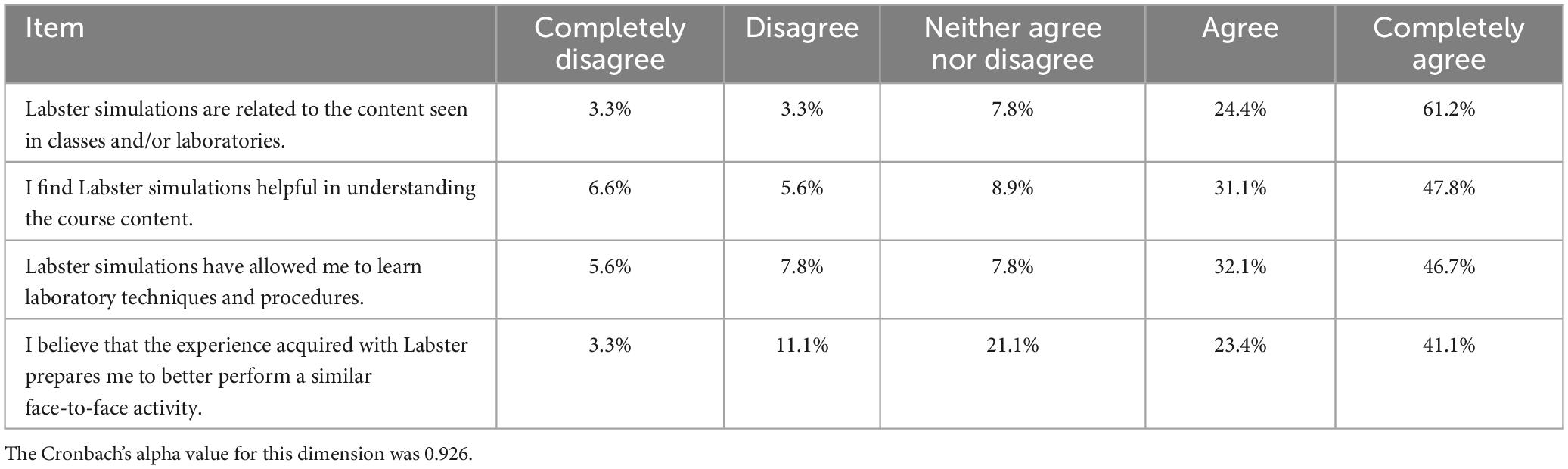
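The percentages in this section collapse the five response options into three groups:

- agreement (agree or completely agree);
- neutrality;
- disagreement (disagree or completely disagree).

A small helper makes that arithmetic explicit. It is a sketch under stated assumptions. The items are assumed to use a five-point scale ordered from completely disagree to completely agree, and percentages are computed over the number of students who answered the item. The function name and the example counts in the test are hypothetical.

```python
def likert_summary(counts):
    """Collapse five-point Likert counts into percentage groups.

    counts[0] = completely disagree, ..., counts[4] = completely agree.
    Percentages use the number of responses to the item as the denominator.
    """
    if len(counts) != 5:
        raise ValueError("expected counts for exactly five response options")
    total = sum(counts)
    pct = [100 * c / total for c in counts]
    return {
        "disagree": round(pct[0] + pct[1], 1),
        "neutral": round(pct[2], 1),
        "agree": round(pct[3] + pct[4], 1),
        "completely_agree": round(pct[4], 1),
    }
```

Because each group is rounded separately, the three summary percentages may not add up to exactly 100.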

Dimension 2 analysis: Academic relevance, focused on the relationship of the simulations with the course content and their application in practical contexts, was assessed with four items from the perception survey, detailed in Table 2.

Table 2. Psychology students’ responses to the perception survey items on the academic relevance of the Labster platform in the Biological Foundations of Psychology course.

First, the item on the relationship between the simulations and the content covered in class yielded highly positive results: 85.6% of students agreed or completely agreed that the Labster simulations were adequately related to the topics covered in lectures and laboratories, with 61.2% in complete agreement, while only 6.6% expressed disagreement or complete disagreement. On the second item, which assessed whether the simulations helped them understand the course content, 78.9% of students indicated that Labster helped them better understand the course topics, with 47.8% in complete agreement. On the item exploring whether the simulations allowed them to learn laboratory techniques and procedures, 78.8% of students indicated that Labster contributed to their knowledge of experimental techniques, with 46.7% in complete agreement; only 13.4% expressed disagreement. Comments such as “excellent virtual reality or lab simulator to be able to learn quickly and in an entertaining way” and “a very good way to reinforce and review the material seen in class” underline the perception that Labster not only complements traditional teaching but also adds a dynamic component that can increase student interest.

Finally, the item on preparation for similar face-to-face activities showed a more moderate level of agreement than the other items. 64.5% of students agreed or completely agreed that the experience with Labster prepared them to better perform a similar face-to-face activity, whereas 21.1% were neutral and 14.4% expressed some degree of disagreement. In addition, several students mentioned that the simulations helped them prepare for assessments, with comments such as “it serves to review for the test and thus achieve a better understanding of the material.” This perception is consistent with the survey results above, in which a large majority of students stated that the simulations helped them better understand the course content. Overall, these results indicate that Labster simulations are highly valued for their academic relevance: they are aligned with the course content, help students understand it, and offer a useful, complementary introduction to laboratory techniques.

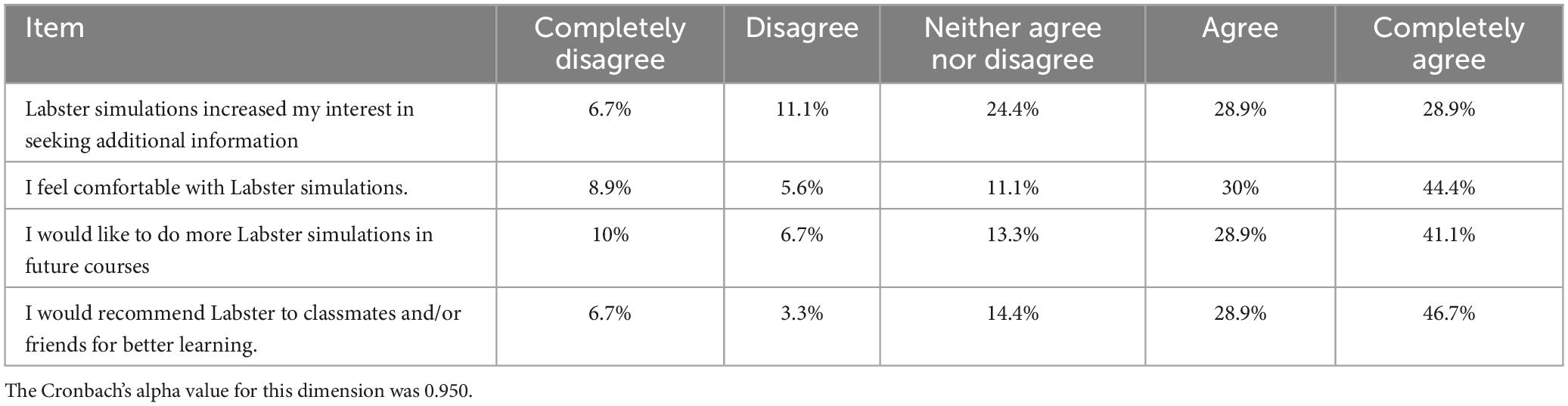

Dimension 3 analysis: Motivation and satisfaction, covering the interest and enjoyment generated by the use of Labster as well as the willingness to recommend it, was assessed with four items from the perception survey, detailed in Table 3.

Table 3. Psychology students’ responses to the perception survey items on motivation and satisfaction with the Labster platform in the Biological Foundations of Psychology course.

Regarding whether the Labster simulations increased students’ interest in seeking additional information, opinions were more divided than for the other items: 57.8% of students agreed or completely agreed with this statement, 24.4% were neutral, and 17.8% expressed some degree of disagreement. These results indicate that, although Labster has a positive effect on the motivation to explore the subject further, a considerable proportion of students did not experience increased academic curiosity after using the simulations. This suggests a need to incorporate more engaging or challenging elements into the simulations to encourage independent inquiry related to each student’s area of interest.

Regarding overall satisfaction, 74.4% of students agreed or completely agreed that they were satisfied with the Labster simulations. A small percentage (14.5%) expressed disagreement, which may be attributable to the length of some simulations, as illustrated by comments such as “Labster’s length in some cases is very long…” and “… LABSTER is very effective in learning but some are extremely long and with too much information, which often causes the interest to drop…”

Regarding willingness to use Labster in future courses, 70% of students expressed interest in doing more simulations, with 41.1% in complete agreement. This result is consistent with comments such as “Labster should be used in all subjects as it helps to reinforce and have a study tool for lectures and exercises.” and “I think that Labster worked adequately in Biological Fundamentals, if they could be integrated into courses that have a mandatory exam, to help better understand the subject…”. In contrast, 16.7% of students disagreed or completely disagreed with this item. These data suggest that most students value the simulations positively and would be willing to use them in the future, although a group of students, for various reasons, would not want to repeat the experience.

Finally, in the item on whether students would recommend Labster to their peers as a learning tool, 75.6% of students would recommend the platform to others, with 46.7% completely agreeing to do so. Only 10% expressed disagreement on this item, which reflects a high overall satisfaction with Labster, considering it a useful tool to improve learning.

3.3 Discussion

This study investigated the effects of gamification through Labster virtual laboratories on the academic performance and perceptions of first-year psychology students. Students who engaged with the Labster simulations achieved significantly higher academic performance than those who did not. In addition, students’ responses to a Likert-scale perception survey were predominantly positive regarding usability, academic relevance, and motivation and satisfaction.

The increasing integration of ICTs in education, accelerated by the COVID-19 pandemic, has fostered the development of diverse tools aimed at enhancing access to information, promoting knowledge construction, and improving student engagement. Among these, gamification has gained prominence for its potential to promote deeper learning (Koivisto and Hamari, 2019; Lampropoulos and Kinshuk, 2024). While positive effects have been reported, particularly when gamification complements traditional instruction (Bonde et al., 2014; Limniou and Mansfield, 2018), its effectiveness may vary depending on educational context, content alignment, and individual learner characteristics (Lo and Hew, 2018; Coleman and Smith, 2019; Dung, 2020).

In the present study, quantitative analyses revealed a significant improvement (p < 0.001) in academic performance among students who voluntarily participated in Labster virtual laboratories compared to those who did not. This finding aligns with previous research highlighting the benefits of interactive and dynamic environments in fostering deeper learning, increasing engagement, and promoting greater self-management of knowledge—factors that directly influence academic success (Aguiar-Castillo et al., 2021; Barber and Smutzer, 2017; Schechter et al., 2024; Tambiga et al., 2024). However, it is important to consider potential limitations related to the study design. As participation in Labster was voluntary, a self-selection bias may have influenced the results (Ryan and Deci, 2000). Students who opted to engage with the virtual simulations may have initially exhibited higher motivation, technological affinity, or academic self-efficacy, which could partially explain their superior performance. Moreover, external factors such as prior familiarity with the content or individual learning styles might also have contributed (Hassan et al., 2019).

To mitigate these potential biases, prior academic performance variables (cat_1, ej_1, ta_1) were included as covariates in the regression analyses. Notably, cat_1 was prioritized because it is equivalent to cat_4 (the final exam) in structure and weighting, providing a meaningful baseline for adjustment. This statistical control strengthened the internal validity of the findings. It allows the observed effects to be attributed more reliably to the educational intervention rather than to pre-existing differences in academic performance between groups. Comparative analyses between the two Labster simulations (A and B) revealed no significant differences in academic performance, suggesting pedagogical equivalence across modules. This finding reinforces the consistency and versatility of the Labster platform when it is aligned with specific learning objectives. Nonetheless, certain limitations must be acknowledged. The absence of additional control variables, such as cumulative GPA, sociodemographic information, and prior technological experience, limits the ability to fully characterize group differences and to explore moderating effects. Future studies should address these aspects to refine our understanding of how virtual simulations affect diverse student profiles.
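The covariate adjustment described above amounts to an ordinary least squares model in which the final grade is regressed on a participation indicator plus the baseline scores. The following pure-Python sketch fits such a model through the normal equations and reports adjusted R². It rests on assumptions that are not confirmed by the text:

- the outcome is the final grade (cat_4);
- participation is coded 1 for Labster users and 0 otherwise;
- predictor rows follow the hypothetical order (labster, cat_1, ej_1, ta_1).

The function names are illustrative, and this is not the authors’ script. The sketch also omits the standard errors and p-values that a statistics package (for example, statsmodels’ `OLS`) would provide.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(rows, y):
    """Fit y = b0 + b1*x1 + ... + bk*xk by least squares (normal equations).

    rows: one tuple of predictor values per student, e.g. the hypothetical
    order (labster, cat_1, ej_1, ta_1) with labster coded 1/0.
    Returns (coefficients, adjusted R^2); coefficients[0] is the intercept.
    """
    X = [[1.0] + list(r) for r in rows]  # prepend intercept column
    p = len(X[0])
    xtx = [[sum(xi[a] * xi[b] for xi in X) for b in range(p)] for a in range(p)]
    xty = [sum(xi[a] * yi for xi, yi in zip(X, y)) for a in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, xi)) for xi in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    n, k = len(y), p - 1  # k = number of predictors excluding the intercept
    adj_r2 = 1 - (ss_res / (n - k - 1)) / (ss_tot / (n - 1))
    return beta, adj_r2
```

Notes on using and interpreting the sketch:

- With rows in the order above, `beta[1]` is the participation coefficient adjusted for the three baseline scores. It would correspond to the reported β only if the model was specified this way.
- Solving the normal equations is adequate for a small illustration. QR-based solvers are numerically preferable for real data.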

Despite these constraints, the present findings contribute to the growing body of evidence supporting the pedagogical potential of virtual laboratories in enhancing conceptual understanding, motivation, and engagement, particularly in disciplines or contexts where access to physical laboratories is limited or unequal (Freeman et al., 2014; Sung et al., 2016; Slater et al., 2010). The results also suggest that Labster can be an effective tool for improving academic performance among students traditionally less oriented towards the sciences, thus supporting the broader development of interdisciplinary skills in higher education.

Additionally, our results coincide with studies that have found similar benefits of virtual simulations for promoting active learning in disciplines such as microbiology (Tripepi, 2022), cell biology (Navarro et al., 2024), and biomedicine (Soraya et al., 2022), among others. However, another stream of research suggests that the effects of gamification may depend on the educational context and on students’ individual characteristics (DeLozier and Rhodes, 2017). Some studies have produced contradictory results, showing that gamification does not always improve academic performance. This is especially so when the design of the tool is not aligned with the specific learning objectives or when students have difficulty adapting to more autonomous learning environments (Aguiar-Castillo et al., 2021; Barber and Smutzer, 2017; Lamb et al., 2014). In our case, data from the student perception survey indicate that the virtual labs were aligned with the course’s learning outcomes, which may partly explain the performance results. Moreover, because psychology students do not always have an affinity for the study of science, it is essential to further explore the factors that modulate the effectiveness of Labster and other gamification tools, such as learning style, self-regulation capacity, and students’ prior expectations. It would likewise be valuable to compare the long-term academic performance of students who use virtual labs with that of students who follow more traditional learning methods, considering not only their immediate grades but also their retention of knowledge and skills over time.

The results of the student perception survey on the use of Labster simulations in the Biological Foundations of Psychology course show a generally positive acceptance of the platform in terms of usability, academic relevance, and motivation and satisfaction, although some aspects warrant discussion.

Several educational theories provide frameworks for interpreting the present findings. According to cognitive load theory (Sweller, 1988), Labster likely enhanced learning by reducing extraneous cognitive load through the structured segmentation of complex biological content, facilitating more efficient cognitive processing. Furthermore, the platform’s multimodal design, which combines textual explanations, visual representations, and interactive experimentation, aligns with dual coding theory (Clark and Paivio, 1991). That theory holds that activating both verbal and visual cognitive channels promotes stronger memory retention. The positive student perceptions of motivation and engagement are consistent with student engagement theory (Fredricks et al., 2004), which highlights the critical role of behavioral, emotional, and cognitive engagement in academic success.

From a psychometric perspective, the three-dimensional structure of the survey instrument—addressing usability, academic relevance, and motivation and satisfaction—was theoretically grounded in these same educational frameworks. Usability was conceptualized under cognitive load theory, emphasizing that intuitive platforms minimize extraneous load and maximize learning efficiency. Academic relevance reflects the core aspects of student engagement theory, wherein alignment between learning activities and academic goals fosters deeper commitment.

Finally, motivation and satisfaction were framed within the self-determination theory (Ryan and Deci, 2000), which underscores the importance of intrinsic motivation, driven by experiences of competence and autonomy, for sustaining active and autonomous learning. Thus, both the interpretation of learning outcomes and the validation of the survey instrument are coherently anchored in well-established theoretical models that explain the effectiveness of educational interventions based on digital technologies and gamification.

The results of the exploratory factor analysis (EFA) provide strong support for the structural validity of the instrument. Sampling adequacy was excellent (KMO = 0.90), and Bartlett’s test of sphericity was significant (χ2 = 1161.62; p < 0.001), justifying the use of EFA (Nunnally and Bernstein, 1994). The unidimensional solution explained a high proportion of variance with strong factor loadings, suggesting a cohesive structure. However, given the theoretical basis of the instrument, a three-factor solution was also explored. This solution generally aligned with the proposed dimensions, although some cross-loadings were observed, indicating potential conceptual overlap among dimensions. Although the Cronbach’s alpha values for each dimension were very high (0.90 to 0.95), indicating strong internal consistency, they may also reflect redundancy among items. Moreover, because principal component analysis may overestimate explained variance, alternative approaches such as common factor analysis or confirmatory factor analysis are recommended for future studies.
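For reference, the two EFA diagnostics cited above have standard textbook definitions, reproduced below. The symbols are:

- R, the p × p correlation matrix of the survey items, with determinant |R| and off-diagonal entries r_ij;
- s_ij, the entries of the inverse of R;
- n, the number of respondents.

The text does not state which software the authors used or any implementation details it adds.

$$
\chi^2 = -\left[(n - 1) - \frac{2p + 5}{6}\right]\ln\lvert R\rvert,
\qquad \mathrm{df} = \frac{p(p - 1)}{2}
$$

$$
\mathrm{KMO} = \frac{\sum_{i \neq j} r_{ij}^{2}}{\sum_{i \neq j} r_{ij}^{2} + \sum_{i \neq j} a_{ij}^{2}},
\qquad a_{ij} = -\frac{s_{ij}}{\sqrt{s_{ii}\,s_{jj}}},
\qquad S = (s_{ij}) = R^{-1}
$$

Assume all 11 items of the instrument entered the analysis (3 usability, 4 academic relevance, and 4 motivation and satisfaction items). Then p = 11, and Bartlett’s test has 11 × 10 / 2 = 55 degrees of freedom. KMO approaches 1 when the partial correlations a_ij are small relative to the zero-order correlations r_ij, that is, when the items share substantial common variance.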

Regarding usability, most students (75.5%) considered Labster accessible and easy to use. This coincides with recent studies highlighting the importance of easy access and simplicity of use in Labster virtual simulations (Navarro et al., 2024). However, 16.7% expressed some level of disagreement regarding accessibility, citing recurring technical difficulties such as disconnection from the simulation or platform instability. These problems may stem from external factors such as internet connectivity or system incompatibilities, which the literature recognizes as common challenges for virtual simulation platforms (Soraya et al., 2022). Notably, 87% of survey respondents reported having played virtual games or used online simulations, which may partly explain why only a small percentage experienced technical difficulties or access barriers.

Regarding relevance to academic content, 85.6% of students found the Labster simulations to be aligned with course content, suggesting that the simulations were effectively integrated within the pedagogical framework. This finding is in line with previous research highlighting that well-designed virtual simulations can enhance student understanding by connecting theory with practical applications (Mikropoulos and Natsis, 2011). Furthermore, 78.9% of respondents stated that Labster helped them better understand course content, reinforcing the idea that these platforms can support active learning and information retention (Aguiar-Castillo et al., 2021; Barber and Smutzer, 2017). On the other hand, a smaller percentage of students (13.4%) did not consider that the simulations improved their understanding of laboratory techniques and procedures. This group may include students whose learning preferences lean towards face-to-face practice, or students who did not find the simulations sufficiently interactive or realistic. Some students also perceived that simulations are limited in preparing them for face-to-face activities. This reflects an ongoing discussion in the literature, which argues that although simulations can be valuable, they do not always fully replace the experience of a physical laboratory (Akinola and Oladejo, 2020; Byukusenge et al., 2022; Kennepohl, 2021; Potkonjak et al., 2016).

Regarding motivation and satisfaction, 74.4% of students stated that they were satisfied with the simulations, although some noted that the length of certain simulations was an issue. This suggests that, while the content is valuable, the duration could be adjusted to maintain interest and avoid cognitive overload, a phenomenon that research on virtual simulations has linked to reduced student motivation (Parra-Medina and Álvarez-Cervera, 2021).

Regarding willingness to continue using Labster in future courses, approximately 70% of students expressed interest in completing more simulations in other courses. This result is consistent with studies indicating that students value learning tools that combine interactive and visual elements with traditional teaching (Akinola and Oladejo, 2020; Byukusenge et al., 2022; Kennepohl, 2021; Potkonjak et al., 2016). However, 16.7% showed no interest in repeating the experience, suggesting that for some students the simulations did not match their expectations or learning style (Hassan et al., 2019). Finally, 75.6% of students would recommend Labster to their peers, reflecting high satisfaction with the tool. This finding is consistent with recent research suggesting that virtual simulations can improve student engagement and satisfaction, especially in areas where experiential learning is critical (Aguiar-Castillo et al., 2021; Barber and Smutzer, 2017).

4 Conclusion

This study provides evidence of a positive impact of Labster virtual laboratories on the academic performance of first-year psychology students: those who participated in the simulations achieved significantly better results than those who did not. In addition, students’ perceptions of usability, academic relevance, motivation, and satisfaction were predominantly favorable, reinforcing the viability of Labster as an effective pedagogical tool in higher education. These findings have important pedagogical implications. Educators and institutions should consider integrating virtual laboratories not merely as supplementary tools, but as core components of active, student-centered learning strategies. To maximize their effectiveness, it is essential to align virtual simulations with clear learning objectives, ensure their relevance to curricular outcomes, and design complementary activities that foster reflective and collaborative learning.

Institutional strategies should also be developed to support students in technology-enhanced learning environments. Specifically, training in digital literacy and self-regulated learning strategies could help reduce technological barriers and improve students’ capacity to engage autonomously with virtual tools. Moreover, reliable technical infrastructure and responsive technical support are critical to guaranteeing equitable access for all students. Despite the promising results, certain limitations must be acknowledged. The self-selection of participants may have introduced bias, potentially overestimating the impact of the intervention. External factors such as differences in learning styles, technological familiarity, or prior content knowledge could also have influenced outcomes.

Future research should address these issues through randomized controlled trials that eliminate self-selection biases and enable stronger causal inferences. Additionally, longitudinal studies are recommended to examine the lasting effects of virtual laboratories on knowledge retention, skill development, and academic achievement over time, beyond immediate assessment performance. By advancing this research agenda and implementing supportive institutional practices, educational institutions can better leverage virtual simulations to promote deeper learning, inclusivity, and academic success across diverse student populations.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Scientific Ethics Committee (Comité ético científico) of the Research Directorate, Academic Vice-Rectorate, Universidad de Las Américas. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

CC: Conceptualization, Data curation, Investigation, Methodology, Project administration, Supervision, Validation, Writing – review and editing. FC: Conceptualization, Formal Analysis, Validation, Writing – original draft, Writing – review and editing. FAC: Conceptualization, Investigation, Methodology, Validation, Writing – review and editing. CA: Conceptualization, Investigation, Methodology, Validation, Writing – review and editing. LL: Conceptualization, Investigation, Methodology, Validation, Writing – review and editing. PF: Conceptualization, Methodology, Resources, Supervision, Validation, Writing – review and editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

We would like to express our gratitude to the academics who taught the Biological Foundations of Psychology course for their collaboration in data collection and their continuous feedback: Miguel Ávila, Cristian Morales, Elsa Fritz, Viviana Pavez, Paulina Muñoz, Valesca Cid, Ana Cortes, Andro Montoya, Jessica Sánchez, and Julieta Geisse.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1587639/full#supplementary-material

Supplementary Figure 1 | Timeline and flow of the educational intervention conducted during Unit 4 of the Biological Foundations of Psychology course. All students participated in face-to-face classes across Units 1 to 4. During Unit 4, students had voluntary access to Labster simulations. Group A completed the “Gross Function of the Nervous System” simulation; Group B completed the “Sensory Transduction” simulation; and Group C consisted of students who voluntarily chose not to engage with the virtual simulations despite having access. Participation was monitored through Blackboard Ultra. The final assessment and a perception survey were administered, followed by statistical analysis.

Abbreviations

ICTs, Information and Communication Technologies; AI, Assessment Instruments.

References

Aguiar-Castillo, L., Clavijo-Rodriguez, A., Hernández-López, L., De Saa-Pérez, P., and Pérez-Jiménez, R. (2021). Gamification and deep learning approaches in higher education. J. Hospital. Leisure Sport Tour. Educ. 29:100290. doi: 10.1016/j.jhlste.2020.100290

Akinola, V. O., and Oladejo, A. I. (2020). Virtual Laboratory: A viable and sustainable alternative to traditional physical laboratory. J. Educ. Res. Dev. 16, 1–7.

Aşıksoy, G. (2018). The effects of the gamified flipped classroom environment (GFCE) on students’ motivation, learning achievements and perception in a physics course. Qual. Quant. 52, 129–145. doi: 10.1007/s11135-017-0597-1

Asiksoy, G., and Canbolat, S. (2021). The effects of the gamified flipped classroom method on petroleum engineering students’ pre-class online behavioural engagement and achievement. Int. J. Eng. Pedagogy 11, 19–36. doi: 10.3991/ijep.v11i5.21957

Barber, C., and Smutzer, K. (2017). Leveling for success: Gamification in IS education. Atlanta, GA: Association for Information Systems (AIS).

Bencsik, A., Mezeiova, A., and Oszene, B. (2021). Gamification in higher education (case study on a management subject). Int. J. Learn. Teach. Educ. Res. 20, 211–231. doi: 10.26803/ijlter.20.5.12

Bonde, M. T., Makransky, G., Wandall, J., Larsen, M. V., Morsing, M., Jarmer, H., et al. (2014). Improving biotech education through gamified laboratory simulations. Nat. Biotechnol. 32, 694–697. doi: 10.1038/nbt.2955

Byukusenge, C., Nsanganwimana, F., and Tarmo, A. P. (2022). Effectiveness of virtual laboratories in teaching and learning biology: A review of literature. Int. J. Learn. Teach. Educ. Res. 21, 1–17. doi: 10.26803/ijlter.21.6.1

Clark, J. M., and Paivio, A. (1991). Dual coding theory and education. Educ. Psychol. Rev. 3, 149–210. doi: 10.1007/BF01320076

Coleman, S. K., and Smith, C. L. (2019). Evaluating the benefits of virtual training for bioscience students. High. Educ. Pedagogies 4, 287–299. doi: 10.1080/23752696.2019.1599689

de Vries, S., and May, M. (2019). Virtual laboratory simulation in the education of laboratory technicians—motivation and study intensity. Biochem. Mol. Biol. Educ. 47, 655–662. doi: 10.1002/bmb.21221

DeLozier, S. J., and Rhodes, M. G. (2017). Flipped classrooms: A review of key ideas and recommendations for practice. Educ. Psychol. Rev. 29, 141–151. doi: 10.1007/s10648-015-9356-9

Dung, D. T. H. (2020). The advantages and disadvantages of virtual learning. IOSR J. Res. Method Educ. 10, 45–48. doi: 10.9790/7388-1003054548

Fredricks, J. A., Blumenfeld, P. C., and Paris, A. H. (2004). School engagement: Potential of the concept, state of the evidence. Rev. Educ. Res. 74, 59–109. doi: 10.3102/003465430740010

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. U.S.A. 111, 8410–8415. doi: 10.1073/pnas.1319030111

García-Sánchez, M., Reyes, J., and Godínez, G. (2018). Las Tic en la educación superior, innovaciones y retos / The ICT in higher education, innovations and challenges. RICSH Rev. Iberoamericana Las Ciencias Soc. Human. 6, 299–316. doi: 10.23913/ricsh.v6i12.135

Hassan, M. A., Habiba, U., Majeed, F., and Shoaib, M. (2019). Adaptive gamification in e-learning based on students’ learning styles. Interact. Learn. Environ. 29, 545–565. doi: 10.1080/10494820.2019.1588745

Irwanto, I., Wahyudiati, D., Saputro, A. D., and Laksana, S. D. (2023). Research trends and applications of gamification in higher education: A bibliometric analysis spanning 2013-2022. Int. J. Emerg. Technol. Learn. (IJET) 18, 19–41. doi: 10.3991/ijet.v18i05.37021

Kennepohl, D. (2021). Laboratory activities to support online chemistry courses: A literature review. Can. J. Chem. 99, 851–859. doi: 10.1139/cjc-2020-0506

Keshavarz, M., and Mirmoghtadaie, Z. (2023). Book review: Teaching in a digital age: Guidelines for designing teaching and learning–third edition, authored by Anthony William (Tony) Bates (Tony Bates Associates Ltd., 2022). Int. Rev. Res. Open Distrib. Learn. 24, 192–195. doi: 10.19173/irrodl.v24i2.7063

Koivisto, J., and Hamari, J. (2019). The rise of motivational information systems: A review of gamification research. Int. J. Inform. Manag. 45, 191–210. doi: 10.2139/ssrn.3226221

Lamb, R. L., Annetta, L., Vallett, D. B., and Sadler, T. D. (2014). Cognitive diagnostic like approaches using neural-network analysis of serious educational videogames. Comp. Educ. 70, 92–104. doi: 10.1016/j.compedu.2013.08.008

Lampropoulos, G., and Kinshuk. (2024). Virtual reality and gamification in education: A systematic review. Educ. Technol. Res. Dev. 72, 1691–1785. doi: 10.1007/s11423-024-10351-3

Limniou, M., and Mansfield, R. (2018). “Traditional learning approach versus gamification: An example from psychology,” in Proceedings of the 4th International Conference on Higher Education Advances (HEAd’18). Liverpool. doi: 10.4995/head18.2018.7912

Lo, C. K., and Hew, K. F. (2018). A comparison of flipped learning with gamification, traditional learning, and online independent study: The effects on students’ mathematics achievement and cognitive engagement. Interact. Learn. Environ. 28, 464–481. doi: 10.1080/10494820.2018.1541910

Mariaca Garron, M. C., Zagalaz Sánchez, M. L., Campoy Aranda, T. J., and González González de Mesa, C. (2022). Revisión bibliográfica sobre el uso de las tic en la educación. Rev. Int. Invest. Ciencias Soc. 18, 23–40. doi: 10.18004/riics.2022.junio.23

Maulita, S. R., Sukarmin, and Marzuki, A. (2019). The content validity: Two-tier multiple choices instrument to measure higher-order thinking skills. J. Phys. Conf. Ser. 1155:012042. doi: 10.1088/1742-6596/1155/1/012042

Mikropoulos, T. A., and Natsis, A. (2011). Educational virtual environments: A ten-year review of empirical research (1999-2009). Comp. Educ. 56, 769–780. doi: 10.1016/j.compedu.2010.10.020

Navarro, C., Arias-Calderón, M., Henríquez, C. A., and Riquelme, P. (2024). Assessment of student and teacher perceptions on the use of virtual simulation in cell biology laboratory education. Educ. Sci. 14:243. doi: 10.3390/educsci14030243

Noui, R. (2020). Higher education between massification and quality. Higher Educ. Eval. Dev. 14, 93–103. doi: 10.1108/heed-04-2020-0008

Nunnally, J. C., and Bernstein, I. H. (1994). Psychometric theory, 3rd Edn. New York, NY: McGraw-Hill.

OECD (2021). The state of school education: One year into the COVID pandemic. Paris: OECD Publishing.

Parra-Medina, L. E., and Álvarez-Cervera, F. J. (2021). Síndrome de la sobrecarga informativa: Una revisión bibliográfica. Rev. Neurol. 73, 421–428. doi: 10.33588/rn.7312.2021113

Potkonjak, V., Gardner, M., Callaghan, V., Mattila, P., Guetl, C., Petrović, V. M., et al. (2016). Virtual laboratories for education in science, technology, and engineering: A review. Comp. Educ. 95, 309–327. doi: 10.1016/j.compedu.2016.02.002

Ryan, R. M., and Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 55, 68–78. doi: 10.1037/0003-066X.55.1.68

Saiz Sánchez, C., Fernández Rivas, S., and Almeida, L. S. (2020). Los cambios necesarios en la Enseñanza Superior que seguro mejorarían la calidad de la educación. E-PSI: Rev. Electrón. Psicol. Educ. Saúde 9, 9–26.

Schechter, R., Gross, R., and Cai, J. (2024). “Exploring nationwide student engagement and performance in virtual lab simulations with Labster,” in Proceedings of the EdMedia + Innovate Learning, ed. T. Bastiaens (Jacksonville, FL: Association for the Advancement of Computing in Education (AACE)), 871–878.

Slater, M., Spanlang, B., Sanchez-Vives, M. V., and Blanke, O. (2010). First person experience of body transfer in virtual reality. PLoS ONE 5:e10564. doi: 10.1371/journal.pone.0010564

Soraya, G. V., Astari, D. E., Natzir, R., Yustisia, I., Kadir, S., Hardjo, M., et al. (2022). Benefits and challenges in the implementation of virtual laboratory simulations (vLABs) for medical biochemistry in Indonesia. Biochem. Mol. Biol. Educ. 50, 261–272. doi: 10.1002/bmb.21613

Sung, Y. T., Chang, K. E., and Liu, T. C. (2016). The effects of integrating mobile devices with teaching and learning on students’ learning performance: A meta-analysis and research synthesis. Comp. Educ. 94, 252–275. doi: 10.1016/j.compedu.2015.11.008

Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cogn. Sci. 12, 257–285. doi: 10.1207/s15516709cog1202_4

Tambiga, J. M., Revelo, J. M., Tulba, D. F. G., Bazar, J. S., and Baluyos, G. R. (2024). Virtual laboratory learning environment and the students’ performance. U. Int. J. Res. Technol. 5, 139–150.

Tripepi, M. (2022). Microbiology laboratory simulations: From a last-minute resource during the Covid-19 Pandemic to a valuable learning tool to retain—a semester microbiology laboratory curriculum that uses Labster as prelaboratory activity. J. Microbiol. Biol. Educ. 23:e00269-21. doi: 10.1128/jmbe.00269-21

Tsirulnikov, D., Suart, C., Abdullah, R., Vulcu, F., and Mullarkey, C. E. (2023). Game on: Immersive virtual laboratory simulation improves student learning outcomes and motivation. FEBS Open Bio 13, 396–407. doi: 10.1002/2211-5463.13567

Keywords: higher education, gamification, Information and Communication Technologies, academic performance, Labster

Citation: Carrasco CM, Cabezas F, Contreras FA, Aracena C, Laroze L and Figueroa P (2025) Enhancing learning: impact of virtual reality simulations on academic performance and perception in biological sciences in psychology students. Front. Educ. 10:1587639. doi: 10.3389/feduc.2025.1587639

Received: 04 March 2025; Accepted: 08 May 2025; Published: 02 September 2025.

Edited by:

Alberto Ruiz-Ariza, University of Jaén, Spain

Reviewed by:

Guilhermina Lobato Miranda, Universidade de Lisboa, Portugal

Daniar Setyo Rini, Jakarta State University, Indonesia

Ronald Reyes, Centro Escolar University, Philippines

Boonrat Plangsorn, Kasetsart University, Thailand

Copyright © 2025 Carrasco, Cabezas, Contreras, Aracena, Laroze and Figueroa. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Carlos M. Carrasco, ccarrascop@udla.cl

†These authors have contributed equally to this work

‡ORCID: Carlos M. Carrasco, orcid.org/0009-0004-3531-2001; Felipe Cabezas, orcid.org/0009-0008-1116-9529
