- English Department, Universidad Católica de Valencia San Vicente Mártir, Valencia, Spain

Introduction: This study investigates the effectiveness of simulation combined with video-assisted feedback as a pedagogical approach to improve linguistic competence, metacognitive awareness, and professional communication skills in psychology students learning English.

Methods: A mixed-methods research design was employed involving 80 psychology students who participated in three iterative simulation cycles consisting of briefing sessions, recorded simulations, and structured video-assisted debriefings. Quantitative data were collected through a validated Likert-scale questionnaire and analyzed using Gaussian Graphical Models (GGM) to examine evolving relationships among professional experience, language proficiency, teamwork, motivation, and perceived utility of video-based feedback. Qualitative data were gathered from reflective reports, open-ended questions, and video observations and analyzed through thematic analysis.

Results: Initial findings indicated students primarily focused on overcoming linguistic barriers, perceiving language proficiency as a critical challenge. This indicates that language concerns were a primary impediment, potentially distracting from their ability to fully engage with the simulation’s learning objectives, such as mastering psychological terminology or practicing active listening skills. However, as the simulation cycles progressed, video-assisted feedback became central, with students actively seeking it to improve their interview techniques and diagnostic accuracy. This suggests that students increasingly viewed feedback as a valuable tool for self-assessment and skill development. For instance, qualitative data showed they began specifically requesting feedback on their ability to establish rapport with simulated clients and formulate accurate diagnoses, demonstrating increased metacognitive reflection. Thematic analysis of reflective reports corroborated these quantitative findings. Students’ comments shifted from initial anxieties about language to focused requests for feedback on specific professional skills. For example, students noted how watching their videos helped them identify nonverbal cues that conveyed empathy or areas where their questioning techniques could be improved, directly impacting their ability to regulate their learning and enhance professional performance.

Discussion: The findings underline the importance and effectiveness of integrating simulation with structured video-based feedback, demonstrating a clear shift from initial concerns about linguistic competence toward advanced metacognitive self-regulation and professional collaboration. These outcomes provide empirical support for simulation-based pedagogies and offer practical implications for educational practices in psychology curricula.

1 Introduction

Simulation, as a pedagogical strategy, offers a controlled yet realistic environment for students to apply theoretical knowledge and develop practical skills. In psychology education, simulation recreates complex clinical scenarios, enabling students to practice essential skills in a safe setting. The integration of video-assisted feedback further enhances this learning experience, providing opportunities for self-observation and reflection on performance. In line with this, recent research emphasizes the growing significance of video in educational settings, with educators using recorded lessons to enhance teaching practices and foster student reflection (Flavell, 1979; Lo and Wong, 2023).

The present study investigates the combined impact of simulation and video-assisted feedback on psychology students learning English for professional purposes.

This study employs a mixed-methods approach to explore the impact of this pedagogical strategy, seeking to address the following research questions:

1. How does the integration of simulation and video-assisted feedback impact the development of specific linguistic skills (e.g., fluency, accuracy, professional jargon) in psychology students learning English for professional purposes?

2. What are the key factors influencing students’ perceptions and utilization of video-assisted feedback within a simulation-based learning environment, and how do these factors (e.g., prior experience, language proficiency, self-efficacy) interact to affect learning outcomes and engagement?

To ensure methodological robustness, a validated Likert-scale questionnaire (Levin et al., 2023) was employed to assess students’ perceptions and experiences with simulation and video-assisted feedback. This allowed for a structured and empirical evaluation of the impact of these pedagogical strategies.

Building upon the recognized benefits of simulation in education, this study investigates the use of simulation combined with video-assisted feedback to enhance specific skills. As highlighted earlier, simulation creates realistic scenarios that allow students to bridge the gap between theory and practice, fostering experiential learning and knowledge transfer.

In this study, the simulation serves as a crucial platform for psychology students to develop both linguistic and professional skills, by providing a safe and relevant context for language practice in professional settings.

2 Theoretical background

Simulation, in the educational context, is defined as a methodology that recreates real situations or scenarios to allow students to apply theoretical knowledge, develop practical skills, and experience the consequences of their decisions in a safe and controlled environment (Angelini, 2021). In essence, it provides a bridge between the classroom and the real world, facilitating experiential learning and knowledge transfer.

Within the field of Psychology training, simulation is increasingly used to prepare students for the challenges of professional practice (Gibbs and Simpson, 2005; Issenberg and Scalese, 2008). It allows for the recreation of complex clinical situations, interactions with patients, scenarios of psychological assessment, and other situations relevant to the profession. By participating in simulations, students can practice interview skills, apply therapeutic techniques, make ethical decisions, and develop their clinical judgment, all without the risk of causing harm to real patients.

The effectiveness of simulation in Psychology training lies in its ability to provide an active and participatory learning environment, where students can experiment, reflect, and receive feedback on their performance (Levin, 2022). Simulation can also help students develop problem-solving skills, critical thinking, and teamwork, which are essential for success in professional practice.

Simulation, as a pedagogical tool, becomes a fundamental catalyst for the development of linguistic and professional communication skills in psychology students. Simulation offers a realistic and relevant context for language practice in professional situations. The need to interact with simulated “patients,” present case studies, participate in debates, and negotiate solutions requires students to use language effectively, both in terms of accuracy and clarity and in terms of adapting to the context and the interlocutor.

In this sense, simulation not only provides a space for linguistic practice but also encourages reflection on the use of language. By participating in simulations, students are forced to consider how their language affects others, how they can improve their communication to be more effective, and how they can adapt their communication style to different situations and audiences. This reflection, combined with the feedback received (especially through video), can lead to a greater awareness of one’s own linguistic strengths and weaknesses, as well as a greater commitment to the development of professional communication skills.

Feedback, defined in general terms as the information provided to an individual about their performance with the intention of improving their future learning and performance (Hattie and Timperley, 2007; Sadler, 2014), acquires a particularly powerful dimension when integrated with video recording technology. Video-based feedback refers to the process of recording a student’s performance (in this case, in a simulation), and then providing specific and detailed feedback based on the joint review of the video (Nicol and Macfarlane-Dick, 2006). This modality goes beyond traditional feedback (oral or written), offering a unique opportunity for self-observation, reflection, and continuous improvement (Boud, 2015).

Building on the established benefits of video-based feedback in enhancing self-awareness and metacognition (Kasperski et al., 2025; Ledger et al., 2022; Fischetti et al., 2022), we argue that it holds particular promise for accelerating language development within professional contexts. Video feedback contributes to language learning in a distinctive way: it allows students to observe their verbal and nonverbal communication simultaneously from an external perspective. This is especially critical in professional settings, where subtle nuances in language and body language can significantly impact rapport, trust, and client outcomes (Ivey et al., 2011, 2018; Aafjes-van Doorn et al., 2022; Gossman and Miller, 2012). Students can identify specific instances where their language may have been unclear, insensitive, or culturally inappropriate, and then experiment with alternative phrasing in subsequent simulations.

Video provides instructors with a concrete record of student performance, enabling them to provide more specific and targeted feedback on linguistic features such as pronunciation, grammar, fluency, and professional jargon. This level of detail is often difficult to achieve with traditional feedback methods.

Video empowers students to take control of their own language development by providing them with a tool for self-assessment, goal-setting, and progress monitoring (Burke and Mancuso, 2012; Waldner and Olson, 2007). Students can revisit their video recordings to track their progress over time, identify areas where they continue to struggle, and develop personalized learning strategies.

This perspective aligns with Swain’s (1985) Output Hypothesis, which emphasizes the importance of language production for language development. Video feedback provides students with a structured opportunity to reflect on their output, identify areas for improvement, and then refine their production skills in subsequent simulations. It also builds on sociocultural theories (Vygotsky and Cole, 1978) of learning, as the instructor’s feedback can be a means of scaffolding the student to greater competence.

3 Materials and methods

This study employed a mixed-methods design to investigate the effectiveness of simulation combined with video-assisted feedback in enhancing linguistic and professional skills among psychology students. A pilot study was conducted addressing the research question: “Is simulation combined with video feedback an effective tool for improving linguistic and professional skills in psychology students?”

The participants were 80 first-year psychology students aged 18–24, a demographic profile that is likely to have shaped their linguistic and metacognitive development during the simulation-based training. As first-year university students, many participants were likely at the beginning stages of their academic and professional journeys, with limited prior exposure to clinical or professional environments.

The students enrolled in a mandatory Scientific English course as part of their first-year university curriculum. All students enrolled in this course during the study period participated, and no specific selection criteria were applied beyond their enrollment in the course. Participation was compulsory and the simulations formed part of their overall course grade.

As part of the university’s admission requirements, all students are required to demonstrate English proficiency at a B1 or B2 level on the Common European Framework of Reference for Languages (CEFR) prior to enrolling in degree programs taught in English. Students typically provide evidence of this proficiency through standardized tests such as the IELTS or TOEFL. However, precise data on individual students’ baseline English proficiency scores were not collected as part of this study.

Participants engaged in simulation-based training that consisted of three phases: briefing/preparation, recorded simulation, and debriefing. Over the course of the semester, students completed three full simulation cycles. The debriefing phase involved reviewing video recordings of their simulated performance, allowing them to self-assess their skills. Qualitative data were collected from student responses (N = 80) to the open-ended question: “Comment on your experience in the simulations.”

A mixed-methods approach was employed to explore the development of metacognitive, linguistic, and professional communication skills in psychology students. To provide a comprehensive analysis, qualitative findings were complemented with quantitative network analysis, allowing for an in-depth examination of how relationships between key variables evolved over time. To enhance replicability, the full questionnaire items are provided in Supplementary A, and a detailed description of the qualitative data analysis coding procedures is provided in Supplementary B.

To assess students’ perceptions of simulation-based learning and video-assisted feedback, a validated Likert-scale questionnaire (Levin et al., 2023) was utilized. This instrument systematically captured data on professional experience, prior training, English language proficiency, teamwork, video-based feedback, and motivation. Its validity and reliability have been well established in prior research on simulation-enhanced learning environments, ensuring the robustness of the findings.

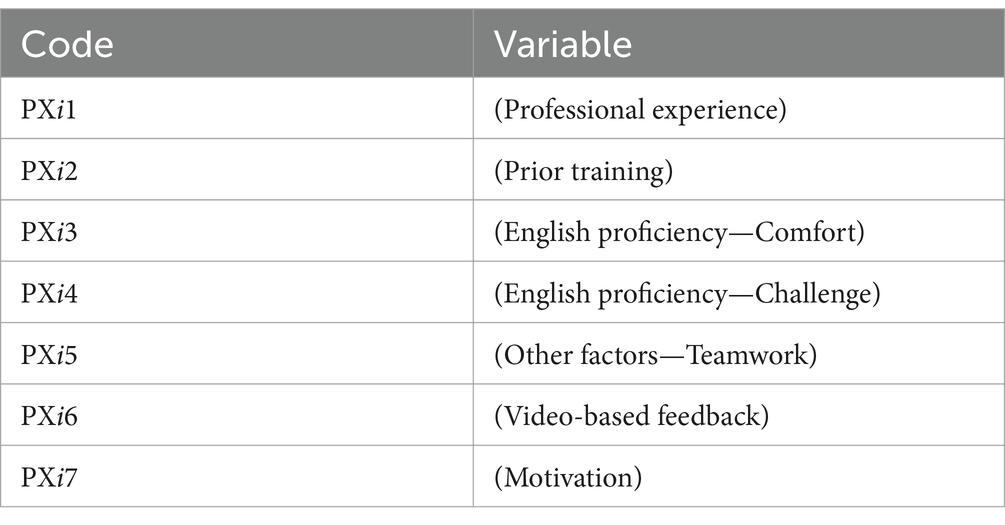

The quantitative research, utilizing network analysis, aimed to evaluate the evolution of relationships between key variables over time. Data were collected at three distinct time points during the training process. The sample consisted of the same N = 80 students who participated in structured simulation and evaluation activities. The following variables were analyzed at each phase of the study:

1. Professional experience: Perceived impact of prior professional experience on students’ confidence during simulations.

2. Prior training: Students’ perception of their need for additional training to fully benefit from the simulations.

3. English language proficiency—Comfort: Level of comfort communicating in English during the simulations.

4. English language proficiency—Challenge: Perception of using English as an additional challenge in the simulation.

5. Teamwork: Comfort level with teamwork during the simulation.

6. Video-based feedback: Perceived utility of video-based feedback for learning.

7. Motivation: Motivation to participate in simulations and video-based assessment activities.

These variables were assessed at three time points: P1 (beginning of the process), P2 (mid-point of the process), and P3 (end of the process) to capture the evolution of relationships between these factors throughout the intervention.

These three moments of the training process are characterized as follows:

• P1 (beginning of the process): first exposure to simulation with videotaped feedback.

• P2 (middle of the process): intermediate moment with more simulation experience.

• P3 (end of the process): further exposure to simulations and accumulated feedback.

In Table 1 we present variables included in the analysis, where i represents the point in time (i = 1, 2, 3).

3.1 Data analysis

Network analysis was performed using two complementary tools: the R statistical software (R Core Team, 2023) and the JAMOVI statistical software (JAMOVI Project, 2023). R enabled the execution of advanced network analyses using the qgraph package (Epskamp et al., 2012), while JAMOVI facilitated the visualization and interpretation of the networks and centrality metrics. The Gaussian Graphical Model (GGM) method with glasso regularization and model selection based on the extended Bayesian information criterion (EBICglasso) was employed. In addition, two centrality metrics, closeness and betweenness, were analyzed to evaluate the structural role of each variable in the networks obtained at the three time points (P1, P2, P3).
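For readers who want the estimation criterion in symbols, the regularization and model selection steps named above can be summarized as follows. This is the standard textbook formulation, given here as a sketch rather than as the exact configuration used in this study: the symbols S (sample covariance or correlation matrix), Θ (precision matrix), λ (penalty parameter), and γ (EBIC hyperparameter) are notation introduced for illustration, and the values of λ and γ are not reported in the text. The penalty is written over off-diagonal entries, which is one common convention.

```latex
% Graphical lasso: penalized maximum likelihood estimate of the precision matrix
\hat{\Theta}_{\lambda}
  = \arg\max_{\Theta \succ 0}
    \Big\{ \log\det\Theta - \operatorname{tr}(S\Theta)
           - \lambda \sum_{j \neq k} \lvert \theta_{jk} \rvert \Big\}

% Extended BIC used to choose \lambda (smaller is better)
\mathrm{EBIC}_{\gamma}(\lambda)
  = -2\,\ell(\hat{\Theta}_{\lambda}) + \lvert E \rvert \log n
    + 4\gamma\,\lvert E \rvert \log p
```

Here ℓ is the Gaussian log-likelihood, |E| is the number of non-zero edges in the estimated network, n is the sample size (80 in this study), and p is the number of nodes (7 per time point).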

Qualitative data were analyzed using Dedoose version 9.2.005 (SocioCultural Research Consultants, LLC, 2023). This software helped identify the most common and dominant themes within the data, which served as the basis for further analysis and interpretation. Qualitative research is of particular interest in the social sciences, where the role of the participants and their perceptions are captured through their own discourse (Goetz and Le Compte, 1988; Vallés, 1997, 2002; Sandín, 2003; Harris, 2005; Twining et al., 2017, among others).

The integration of these qualitative and quantitative approaches allowed for triangulation of the results, ensuring that both the emerging trends in the qualitative data and the structural patterns observed in the network were supported by a solid empirical foundation.

3.1.1 Network analysis

To model the interrelationships between the measured variables across the three time points, we employed Gaussian Graphical Models (GGM) with graphical lasso (glasso) regularization and Extended Bayesian Information Criterion (EBIC) for model selection. Unlike traditional correlation analysis, this approach estimates partial correlation networks, which highlight the most significant direct connections between variables while filtering out indirect or spurious associations. This provides a more accurate and interpretable visualization of the global structure of the learning system and how it evolves over the course of the simulation-based learning process.
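To illustrate how a partial correlation differs from an ordinary correlation, the following minimal sketch computes partial correlations from a small correlation matrix by inverting it and rescaling the off-diagonal entries (ρ_jk = −θ_jk / √(θ_jj θ_kk), where θ denotes entries of the inverse matrix). It is not the study's analysis: no glasso regularization is applied, the three variables A, B, and C and their correlations are hypothetical, and the function names are introduced only for this example.

```python
from math import sqrt


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity on the right-hand side.
    aug = [
        [float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    # The right half now holds the inverse.
    return [row[n:] for row in aug]


def partial_correlations(corr):
    """Partial correlation of each pair, controlling for all other variables."""
    theta = invert(corr)  # precision matrix
    n = len(theta)
    return [
        [
            1.0 if j == k else -theta[j][k] / sqrt(theta[j][j] * theta[k][k])
            for k in range(n)
        ]
        for j in range(n)
    ]


# Hypothetical correlations: A-B = 0.5, B-C = 0.5, A-C = 0.25.
toy_corr = [
    [1.0, 0.5, 0.25],
    [0.5, 1.0, 0.5],
    [0.25, 0.5, 1.0],
]
pcor = partial_correlations(toy_corr)
print(round(pcor[0][1], 4), round(abs(pcor[0][2]), 4))
```

In this toy example A and C are correlated (0.25), but their partial correlation is zero once B is controlled for, so a partial correlation network would draw no edge between them. This is the sense in which the approach filters out indirect associations.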

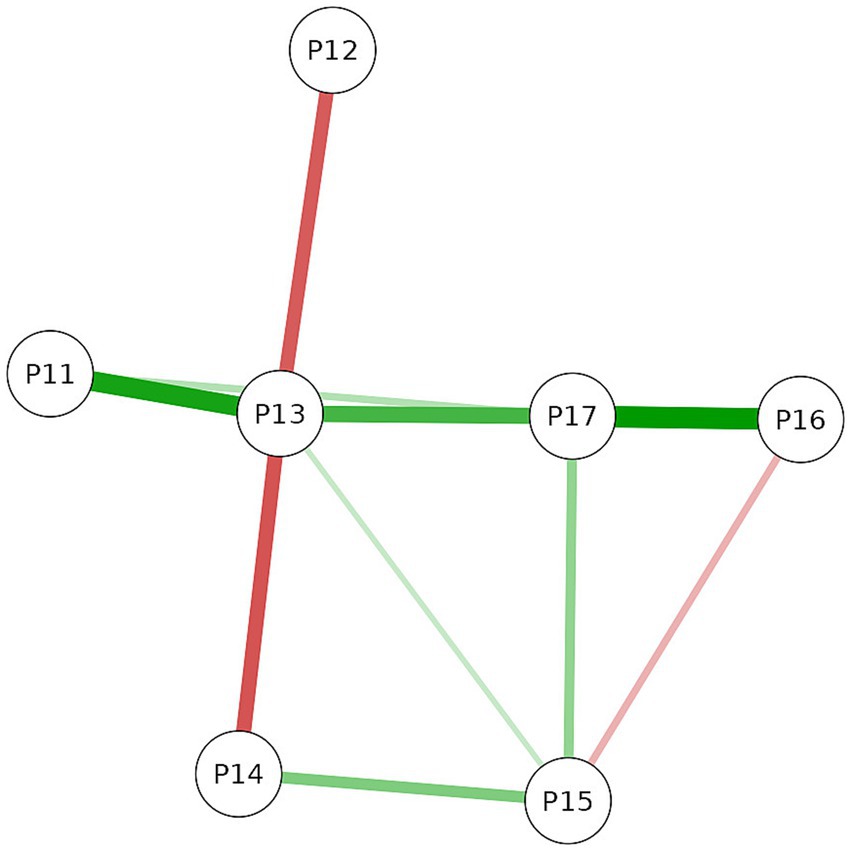

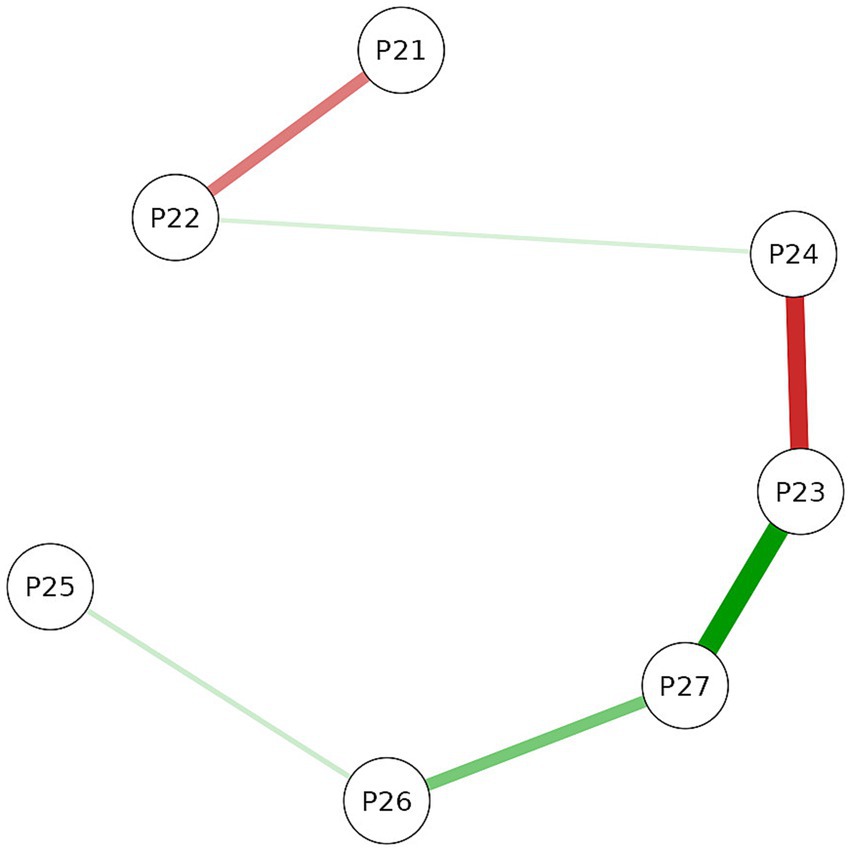

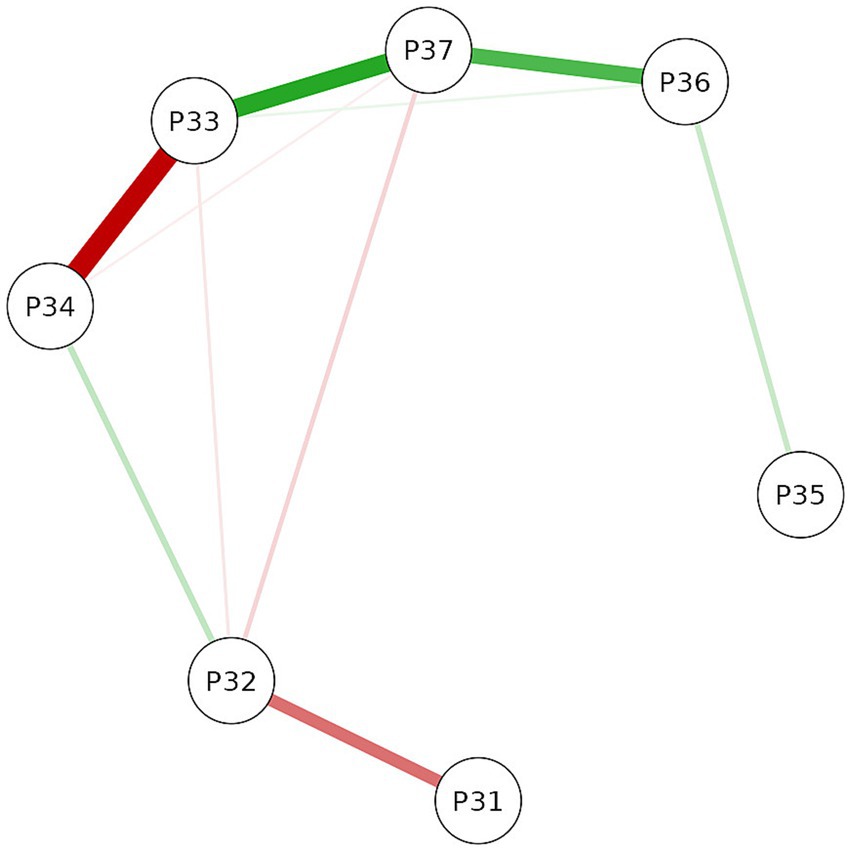

Each network is composed of nodes and edges, where each node represents a specific variable (PXiYj, abbreviated in the figures as Pij; for example, P13 is the third variable at the first time point), with i ranging from 1 to 3 according to the time point, and j varying from 1 to 7 according to the variable. The edges represent the associations retained in the estimated network, with the thickness reflecting the magnitude of the association. The color of the connections indicates their direction: green for positive relationships, where an increase in one variable is associated with an increase in another, and red for negative relationships, where an increase in one variable is related to a decrease in another.

Partial correlation networks were generated for each time point using glasso, which shrinks weak connections to zero and retains only the most relevant interactions. In addition, the following centrality metrics were calculated to analyze the structural role of each variable in the network and to help identify the most influential variables in the learning process at each stage of the simulation:

• Closeness: the inverse of the average distance from a node to all other nodes, reflecting its accessibility in the network.

• Betweenness: the number of times a node acts as a bridge on the shortest path between other nodes, showing its role in information transmission.

To assist readers unfamiliar with network analysis, it is useful to clarify key centrality metrics used in this study. Betweenness indicates the extent to which a variable serves as a connector or bridge between other nodes in the network. A high betweenness score suggests the variable plays a pivotal role in linking otherwise unconnected constructs. Closeness measures how quickly a node can interact with all other nodes in the network, reflecting how central or accessible it is within the system. Together, these metrics help us understand how students’ perceptions shift across learning phases and which variables gain prominence in shaping learning behavior.
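The two metrics can be made concrete with a small sketch. It makes several assumptions that are not stated in the text: edge distance is taken as 1/|weight| (a common convention for weighted networks), closeness is computed as the inverse of the sum of shortest-path distances (which differs from the inverse of the average distance only by a constant factor), and betweenness counts each unordered pair of nodes once. The four-node network and its weights are hypothetical, and software such as qgraph or JAMOVI may scale or standardize the reported values differently.

```python
from itertools import combinations

EPS = 1e-9
INF = float("inf")


def centrality(nodes, weights):
    """Return (closeness, betweenness) dicts for an undirected weighted network."""
    # Stronger associations (larger |weight|) correspond to shorter distances.
    edge_dist = {}
    for (a, b), w in weights.items():
        edge_dist[(a, b)] = edge_dist[(b, a)] = 1.0 / abs(w)

    # Floyd-Warshall: all-pairs shortest-path distances.
    dist = {
        a: {b: 0.0 if a == b else edge_dist.get((a, b), INF) for b in nodes}
        for a in nodes
    }
    for k in nodes:
        for a in nodes:
            for b in nodes:
                if dist[a][k] + dist[k][b] < dist[a][b]:
                    dist[a][b] = dist[a][k] + dist[k][b]

    # sigma[s][t]: number of distinct shortest paths from s to t,
    # accumulated over targets in order of increasing distance from s.
    sigma = {s: {t: 0 for t in nodes} for s in nodes}
    for s in nodes:
        sigma[s][s] = 1
        for t in sorted(nodes, key=lambda x: dist[s][x]):
            if t == s or dist[s][t] == INF:
                continue
            for u in nodes:
                step = edge_dist.get((u, t))
                if step is not None and abs(dist[s][u] + step - dist[s][t]) < EPS:
                    sigma[s][t] += sigma[s][u]

    # Closeness: inverse of the summed distance to every other node.
    closeness = {}
    for v in nodes:
        total = sum(dist[v][t] for t in nodes if t != v)
        closeness[v] = 1.0 / total if 0 < total < INF else 0.0

    # Betweenness: share of shortest paths between other pairs that pass through v.
    betweenness = {v: 0.0 for v in nodes}
    for s, t in combinations(nodes, 2):
        if sigma[s][t] == 0:
            continue
        for v in nodes:
            if v in (s, t):
                continue
            if abs(dist[s][v] + dist[v][t] - dist[s][t]) < EPS:
                betweenness[v] += sigma[s][v] * sigma[v][t] / sigma[s][t]
    return closeness, betweenness


# Hypothetical star-shaped network: B is connected to A, C, and D.
toy_nodes = ["A", "B", "C", "D"]
toy_weights = {("A", "B"): 0.5, ("B", "C"): -0.25, ("B", "D"): 0.5}
closeness, betweenness = centrality(toy_nodes, toy_weights)
print(closeness)
print(betweenness)
```

In this toy network every shortest path between A, C, and D runs through B, so B has the highest betweenness and closeness. This is the pattern the text describes as a "bridging" node. The sign of a weight affects only edge color in the figures, not distance, which is why the absolute value is used.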

It should be noted that each network generated represents a “snapshot” of interactions at a specific point in time. While this analysis is descriptive in nature and does not allow us to infer causal relationships, it does allow us to identify changes in the structure of the network and the evolution of the importance of certain nodes over time, which provides key information on the progression of metacognitive and communicative processes in simulation-based learning.

3.1.2 Ethical considerations

This study was conducted entirely within the framework of a curricular activity included in the official syllabus of the university course “Inglés Científico” (Scientific English). As such, formal approval from an institutional ethics committee was not required. All participants were enrolled students who engaged in the learning activities as part of their standard coursework. Participation in reflections, simulations, and feedback sessions was mandatory, and all collected data were anonymized prior to analysis.

4 Results

4.1 Results of the network study

A. Linguistic Competence and Self-Confidence: in the early stages of the simulation experience (P1), students highlighted the language barrier as an initial challenge. They expressed difficulties in professional communication in English and noted that this affected their confidence in the simulation. Video-based feedback was seen as a useful tool for identifying errors but was not yet fully integrated as a learning tool.

B. Metacognitive Learning and Self-Regulation: as the process progressed (P2 and P3), students began to report a change in their perception of learning. The ability to visualize their performance through video allowed them to reflect on their mistakes, adjust their discourse, and improve their verbal and non-verbal expression. This evidence suggests that video-based feedback facilitated metacognitive regulation processes, reinforcing self-awareness about one’s own performance and promoting a progressive improvement in interaction in simulations.

C. Collaboration and Construction of Professional Identity: in P3, students emphasized the importance of teamwork and collaborative learning. They reported that the simulation not only favored their individual performance but also allowed them to learn from their peers by comparing communication strategies and sharing reflections on the feedback received. This finding suggests that simulation with video-based feedback not only reinforces autonomy in learning but also promotes the construction of a professional identity through interaction with others.

In the following sections, the networks obtained at each time point (P1, P2, P3) are presented, analyzing the connectivity between the nodes, the changes in the centrality of the variables, and their role in the regulation of learning and the development of metacognitive and communication skills.

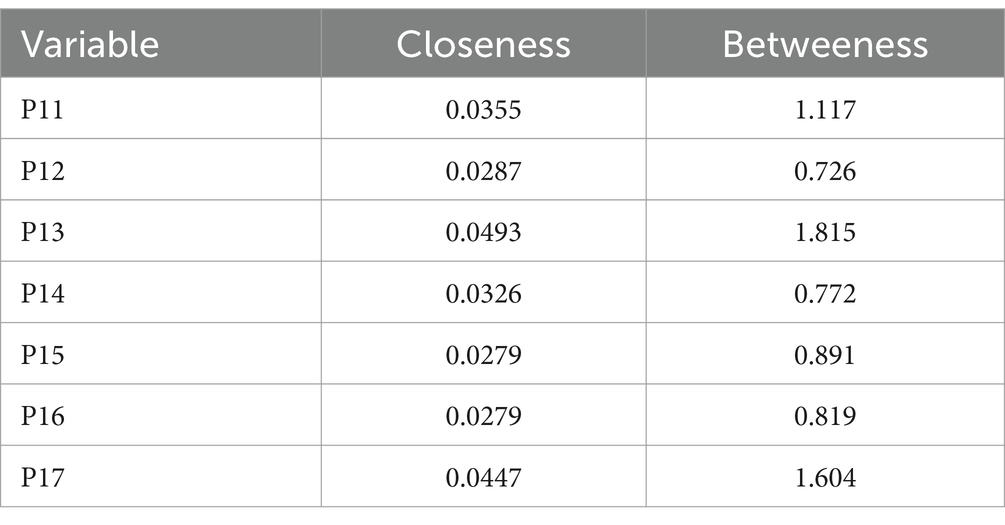

4.1.1 Moment P1: focus on linguistic competence

The network structure observed at the initial measurement point (Figure 1) exhibits moderate connectivity, characterized by distinct yet limited interactions among the variables under investigation. An analysis of the centrality metrics, as summarized in Table 2, reveals that the variable “English Proficiency-Comfort” (P13) demonstrates the highest values for closeness (0.0493) and betweenness (1.815), underscoring its pivotal role as a bridging node within the network structure. Specifically, P13 serves as a mediator in the relationships between variables such as professional experience (P11) and prior training (P12).

Figure 1. Network representation in P1. Gaussian graphical model showing the relationships between the seven measured variables at the initial stage of simulation-based learning. Node labels: P11 (Professional experience), P12 (Prior training), P13 (English proficiency—Comfort), P14 (English proficiency—Challenge), P15 (Teamwork), P16 (Video-based feedback), P17 (Motivation). Green edges indicate positive associations; red edges indicate negative associations. Thicker edges represent stronger relationships.

A notable finding is the negative association between prior training (P12) and comfort in English proficiency (P13): higher scores on prior training were associated with lower comfort communicating in English at this initial stage. This inverse relationship provides insight into the potential impact of preparatory experiences on students’ perceived linguistic competence within the simulation context.

Several potential explanations can be offered for this unexpected relationship. One possibility is that students with more prior training developed higher expectations for their own performance, including their English language proficiency. As a result, they may have been more acutely aware of their linguistic limitations and therefore felt less comfortable. Also, more experienced students might possess a deeper understanding of the nuances of professional communication, including the importance of precise language and cultural sensitivity. This heightened awareness could lead them to be more self-critical of their English language skills.

It is also possible that the students’ prior training did not adequately prepare them for the specific linguistic demands of the simulations, such as using professional jargon or communicating with simulated clients from diverse backgrounds. This could have led them to feel less comfortable despite their prior training.

This negative association may have important implications for the design of future training programs. These programs should provide targeted language support that addresses the specific linguistic challenges faced by students with varying levels of prior training. This could involve offering specialized language workshops, providing opportunities for peer tutoring, or incorporating language-focused feedback into the simulation debriefings. Also, instructors should encourage students to adopt a self-compassionate approach to their language learning. This involves acknowledging their limitations, treating themselves with kindness, and recognizing that making mistakes is a natural part of the learning process. Training programs should ensure that the objectives align with the linguistic demands of the simulations and that students are adequately prepared for the specific communication tasks they will be asked to perform.

Furthermore, the network analysis reveals a particularly robust positive connection between video-based feedback (P16) and motivation (P17). However, it is important to note that despite this strong association, these variables appear to occupy relatively peripheral positions within the network at this initial stage of measurement. This observation suggests that while the relationship between video-based feedback and motivation is significant, their overall influence on the network structure may be limited at this early point in the study.

Figure 1 provides a visual representation of the network structure at the initial measurement point (P1), offering a comprehensive overview of the interconnections between variables. Table 2 complements this visual data by presenting the centrality metrics (closeness and betweenness) for each variable at this stage, allowing for a more nuanced understanding of their relative importance within the network.

This analysis provides valuable insights into the initial dynamics of the variables under study, setting the stage for further examination of how these relationships may evolve over subsequent measurement points.

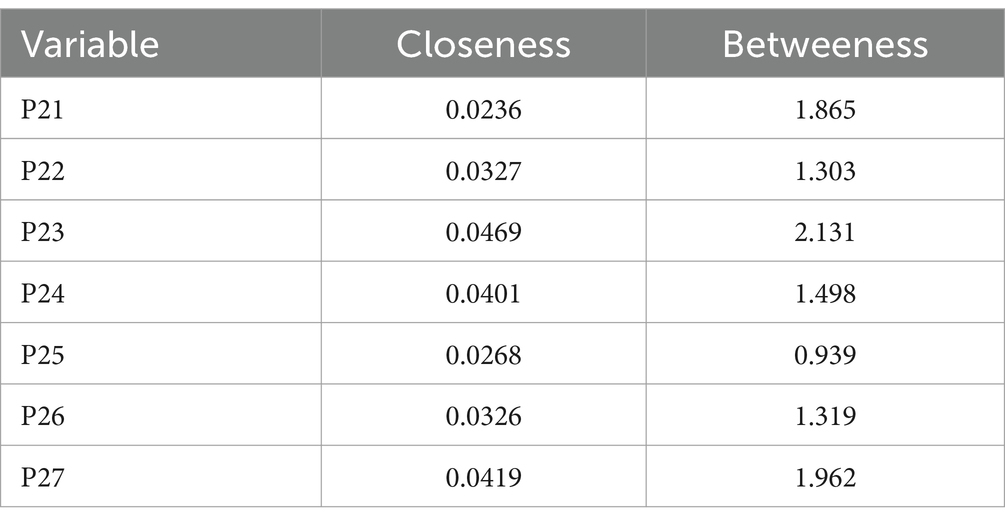

4.1.2 Moment P2: transition toward collaboration and feedback

At this intermediate stage, the network shows significant structural changes compared to the initial measurement (P1).

Compared to the previous network, connectivity has become reorganized, exhibiting new direct relationships between variables. Particularly notable is the strong positive connection between motivation (P27) and English proficiency-comfort (P23), suggesting that greater linguistic comfort is closely associated with increased student motivation. Conversely, a strong negative relationship emerges between English proficiency-comfort (P23) and English proficiency-challenge (P24), indicating that students experiencing comfort in using English perceive fewer linguistic challenges during simulations. Furthermore, video-based feedback (P26) connects positively, although moderately, with motivation (P27), indicating that students started recognizing video feedback as a valuable resource to maintain motivation and engage in reflective practice. The teamwork variable (P25), contrary to the initial interpretation, appears less central at this stage, showing only a weak connection with video-based feedback (P26).

Table 3 supports these observations, revealing English proficiency-comfort (P23) as the variable with the highest betweenness (2.131), reinforcing its central bridging role at this intermediate stage. Motivation (P27) also attains a high betweenness score (1.962), further highlighting its structural importance. In contrast, teamwork (P25) has the lowest betweenness centrality (0.939), indicating a peripheral position at this stage. Video-based feedback (P26), although only moderately central, shows increased betweenness (1.319) compared to its initial role (P16 at P1: 0.819), which is consistent with a growing perception among students of feedback’s role in self-regulated learning and professional skill development.

Collectively, these results reflect a clear shift from initial linguistic concerns toward an intermediate stage marked by a focus on motivation and reflection, facilitated by growing comfort with language and the perceived usefulness of video-based feedback.

Figure 2 illustrates the network structure at the second measurement point (P2).

Figure 2. Network representation in P2. Gaussian graphical model showing the evolving structure of variable relationships during the second simulation cycle. Node labels: P21–P27, same coding as in Figure 1. Note the emerging connections surrounding teamwork and motivation. Green edges denote positive connections; red edges indicate negative ones.

Table 3 presents the centrality metrics (closeness and betweenness) for each variable at this intermediate stage (P2).

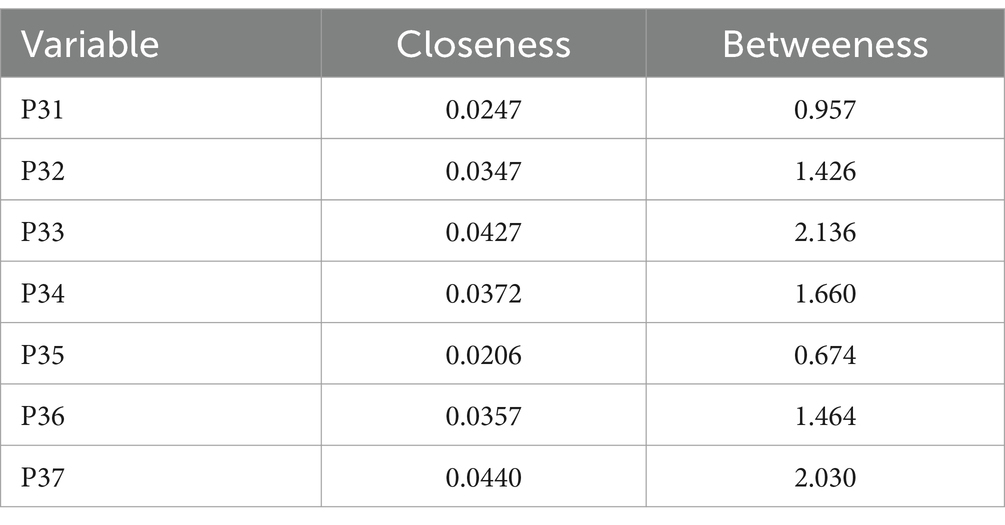

4.1.3 Moment P3: consolidation of metacognitive learning

At this advanced stage of the study, the network demonstrates enhanced cohesion, characterized by more robust and discernible connections among variables. Video-based feedback (P36) emerges as a pivotal node, exhibiting high centrality, particularly evident through its elevated betweenness (1.464). This underscores its critical role as a facilitator of interactions and a central mechanism for promoting metacognitive reflection and self-regulated learning. Moreover, motivation (P37) and teamwork (P35) display high closeness centrality values (0.0440 and 0.0427, respectively), indicating their integration as central and closely interconnected factors within students’ learning networks.

The strong positive association between motivation (P37) and video-based feedback (P36) highlights that students who perceive video feedback as beneficial also experience heightened motivation, actively incorporating feedback into their professional skill development. Conversely, prior training (P31) and English proficiency as a challenge (P34) exhibit lower centrality measures, suggesting their diminished significance at this advanced stage of training.

These findings corroborate that, by the final stage, students actively engage with video feedback as a resource for critical self-assessment, refining their professional and communicative competencies, and significantly advancing their metacognitive strategies. This engagement reflects a sophisticated level of self-regulated learning and metacognitive awareness among participants.

Figure 3 provides a visual representation of the network structure at the third analysis point (P3), offering insights into the complex interrelationships between variables at this stage. Table 4 presents the centrality metrics (closeness and betweenness) for each variable at the third stage, quantifying their relative importance within the network structure.

Figure 3. Network representation in P3. Gaussian graphical model showing the graph after the third simulation cycle. Node labels: P31–P37, same coding as in previous figures. Video-based feedback and teamwork appear more central. Edge color and thickness represent direction and strength of conditional associations.
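The edges in a Gaussian graphical model are conditional associations, conventionally expressed as partial correlations derived from the inverse covariance (precision) matrix. The short Python sketch below shows that conversion and the mapping from sign to edge color used in the figures. The precision matrix is hypothetical, and the function names are chosen for illustration rather than taken from the study's software.

```python
import math

def partial_correlations(precision):
    """Convert a precision matrix K into partial correlations.

    rho_ij = -K_ij / sqrt(K_ii * K_jj); the diagonal is left at 0 (no self-edges).
    """
    n = len(precision)
    rho = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                scale = math.sqrt(precision[i][i] * precision[j][j])
                rho[i][j] = -precision[i][j] / scale
    return rho

def edge_color(weight, tol=1e-12):
    """Green for a positive edge, red for a negative edge, None when no edge is drawn."""
    if weight > tol:
        return "green"
    if weight < -tol:
        return "red"
    return None

# Hypothetical 3-variable precision matrix (symmetric, positive definite).
K = [
    [2.0, -1.0, 0.0],
    [-1.0, 2.0, 0.5],
    [0.0, 0.5, 2.0],
]

rho = partial_correlations(K)
for i in range(len(K)):
    for j in range(i + 1, len(K)):
        print(i, j, round(rho[i][j], 3), edge_color(rho[i][j]))
```

A zero entry in the precision matrix yields no edge, meaning the two variables are conditionally independent given the others. Edge thickness in the figures would then correspond to the absolute value of the partial correlation.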

The evolution of centrality across the three time points further supports this interpretation. In P1, comfort with English proficiency acted as a structural bridge, indicating that linguistic fluency was central to early student engagement. By P3, video-based feedback emerged as the most central node, indicating a shift in students’ learning behavior toward reflection and metacognitive regulation. These findings demonstrate that simulation with video feedback is effective for overcoming communication barriers and also for cultivating self-regulated learning strategies, thereby enhancing instructional effectiveness and long-term competency development.

4.2 Results of the qualitative analysis

The qualitative analysis, conducted with Dedoose software (SocioCultural Research Consultants, LLC, 2023), identified the main patterns in students’ perceptions of the simulation with videotaped feedback. Through a thematic coding process, several key themes emerged, which are organized into three main dimensions: linguistic competence, metacognitive learning and professional collaboration.

The qualitative study yielded five categories of analysis:

1. Awareness of Learning and Performance

2. Learning Regulation

3. Decision Making

4. Self-Efficacy and Confidence

5. Language Skills

4.2.1 Analysis category “awareness of learning and performance”

This category explores how students become aware of their own learning and performance processes through simulation and video feedback.

This finding confirms students’ ability to reflect on their own thinking and learning. The qualitative study identifies three subcategories of analysis: identification of strengths and weaknesses, content knowledge and specific skills, and understanding of own emotions and reactions during the simulation.

It is the students themselves who identify their strengths and weaknesses. Simulation, in this sense, promotes awareness of their current capabilities. Recognizing strengths provides confidence and reinforces effective strategies. Identifying weaknesses is crucial to focus improvement efforts and overcome problem areas. We can affirm that video feedback has enabled students to “identify discrepancies” between their intention and how the message is conveyed, promoting self-awareness.

“I have noticed that I need to practice speaking more because, even though I understand almost everything, I do not feel comfortable or confident enough to speak fluently. This means I cannot show my full potential.” (S5)

Regarding the subcategory of analysis “Content knowledge and specific skills,” some students recognize that they lack sufficient knowledge to be able to diagnose certain cases, although they are familiar with theoretical aspects of the issues addressed.

“I have studied all the addictions in the course material, but I could not figure out a treatment that would work for the simulation case. I’m not happy with the decision we made as a group.” (S22)

“I find that I’m better at identifying patient diagnoses, but I’m unsure about treatment plans. I need to research this more deeply.” (S31)

The subcategory “understanding one’s own emotions and reactions during the simulation” recognizes that emotions can significantly influence simulation performance. Students report being aware of their own emotional reactions (anxiety, nervousness, confidence) and how they affect their behavior. Video feedback has enabled students to “observe their own non-verbal cues,” revealing how emotions manifest themselves in body language and tone of voice.

“After the simulation, I listened to the audio and noticed that my English speaking skills are much better than I thought. I had never done a simulation before, and at first I was nervous because I did not know how to handle it, but my team helped me with my stress.” (S7)

“I realized that in the recording I look unsure of myself. I feel that my classmates are more confident when they speak, but I’m grateful to work with them because it’s easy to feel supported.” (S11)

4.2.2 Category of analysis: regulation of learning

This category focuses on how students use the feedback obtained by recording their performance to adjust their learning strategies, set goals, and monitor their progress. We can affirm that the study promotes taking control of one’s own learning process. Students have been able to plan, monitor, and evaluate progress toward learning goals, adjusting strategies as necessary. The following subcategories break down this self-regulation process into specific components: planning and preparation for future simulations; adjustment of communication and language strategies; searching for additional resources to improve knowledge; and time management and organization of information.

Regarding “planning and preparation for future simulations,” students recognize aspects that they should improve for the following simulations after watching the videos. Some state that it would be helpful to set clear goals for the next simulation and design a plan to achieve those goals: “I have prepared more for this one, based on what I saw that I was not good at” (testimony 12). This conscious planning process confirms that the simulation and the contribution of the recordings favor self-regulated learning.

In the context of simulation in a foreign language, the ability to adjust communication and language strategies is essential. This study shows how demanding the simulations were for psychology students, who had to adapt vocabulary, grammar, communication style, and listening skills to interact in the simulation scenarios.

“I need to improve my speaking skills and practice more to have a more fluent conversation in English.” (S10)

Simulation-based learning is directly linked to “improved language skills” where “targeted linguistic feedback” is mentioned as a key element. We can affirm that the present methodological approach contributes to significant progress in language-related areas such as vocabulary, pronunciation, variety of expression and grammar.

“I prepared more for this one, based on what I saw I wasn’t good at before.” (S1)

“I think this time I felt more relaxed doing the simulation and better prepared to talk with my group. I also noticed that they were calmer than last time.” (S3)

The search for additional resources to improve knowledge reflects the formative demand of the proposal and the recognition of one’s own frustration (Valley of Despair, Van Laere et al., 2021). This proactive search for knowledge is a distinctive characteristic of self-regulated learners.

“I already have the knowledge of the therapies, but my biggest difficulty is translating it into English, which is a language I am not fluent in. I have had to look for articles in Spanish to be able to go deeper and help myself with translators to organise the information and thus contribute to my team” (S14).

Time management and organization of information in the context of simulations involves the ability to prioritize tasks, allocate sufficient time for preparation and organize information logically so that it is easy to remember and apply. A significant number of students state that after the first simulation, they have improved their time management in subsequent simulations. The strategy they identify as most important is the organization of information about different therapies that are repeated for various diagnoses.

“I am aware that I have made big mistakes in the simulation due to my anxiety. I am learning to work in a group and to organise a lot of information in a short time. For the next simulations, I will prepare the material more in advance and review it with my colleagues.” (S32)

“I was able to study in more depth for the last simulation. It was easier for me because the treatments of some disorders are similar and I was able to synthesise the information better.” (S43)

4.2.3 Category of analysis: decision-making

This category examines how students analyze their decisions and actions during the simulation, understanding the reasoning behind them and considering alternatives. It focuses on the development of critical thinking and the ability of clinical reasoning in students. It is not enough to apply a protocol or choose a treatment; it is essential to understand why that decision is made and how the situation could be affected. The following subcategories break down this process of reflection: analysis of treatment choices and their justification; consideration of alternative perspectives; evaluation of the impact of one’s own actions on interaction with peers.

Regarding the subcategory “analysis of treatment choices and their justification,” some students recognize that the simulation demanded reasoned justifications about the selection of a treatment. It aligns with the “Development of Professional Skills,” especially “critical thinking.” Justifying treatment choices requires evaluating the evidence, considering the alternatives, and reasoning logically.

“The simulations were very demanding for me, especially for two reasons, my lack of experience diagnosing cases with a wide variety of treatments; and because English made it difficult for me to express myself more naturally.” (S24)

In psychological practice, there is rarely a single “right answer.” The subcategory “Consideration of alternative perspectives” encourages mental flexibility and the ability to consider different points of view. It involves exploring alternative treatment approaches, recognizing the limitations of one’s own perspective, and being open to the possibility that other options may be more appropriate in certain circumstances.

“I am aware of the therapies but I found it difficult to justify them because I consider several as possible useful treatments.” (S34)

The practice of psychology is inherently interpersonal. The subcategory “evaluating the impact of one’s own actions in interaction with peers” confirms the impact of watching the post-simulation videos on one’s own performance. Some students note how ideas were communicated, how disagreements were handled and how they contributed to the overall learning environment. Video feedback has allowed for the assessment of interpersonal skills.

“I liked to see my peers discuss. Most of them did it in a polite way and with reasonable arguments on the cases of alcoholism and the social normalisation of alcoholism.” (S5)

“I have a lot to improve. I had to switch to Spanish to be able to argue. I think I have done quite well in terms of knowledge. English is another matter” (S16)

“Professional skills development” relates specifically to “assessing interpersonal skills” and “collaborative skills.”

“I think that both my colleagues and I have improved our performance and our fluency in speaking English and being able to attend to patients in the last simulation quite a lot. We have learned after the first simulation” (S56).

Together, these subcategories promote a more reflective, critical and patient-centered decision-making process. By analyzing their choices, considering alternatives and evaluating the impact of their actions, students show evidence of having developed essential skills for professional practice.

4.2.4 Category of analysis: “self-efficacy and confidence”

This category highlights how simulation and video feedback contribute to students’ self-efficacy, their confidence in their abilities, and their willingness to face future challenges. “Self-efficacy and confidence” directly influences students’ motivation, effort, and persistence. Subcategories of analysis are identified as perceptions of competence and capacity; reduction of anxiety and fear of making mistakes; and increased motivation to participate in learning activities.

Perceptions of competence and capacity focus on how students perceive their own level of skill and competence after participating in the simulations and after watching the videos. It relates to “Building Self-Efficacy and Reducing Anxiety,” where it is mentioned that feedback can “Highlight strengths and achievements,” increasing confidence.

“I think I’ve been getting better in the simulations. I’ve become more fluent, although I do not think I speak English as well as I would like to.” (S20)

“I do not look very professional in the videos. I hesitate a lot and I prefer others to make the decisions. But I understand the topics and I have studied a lot.” (S23)

Regarding the reduction of anxiety and fear of making mistakes, one group of students initially acknowledges fear of failure and of failing the subject. As they progress through the simulations, this fear and anxiety seem to dissipate for some.

“I have noticed that I have improved in the simulations thanks to the previous preparation and the help of my colleagues. The first simulation was very complicated because I missed the previous classes and I did not prepare the content. The other simulations were better because I studied more and I knew that I was not a candidate to fail the subject if I made an effort.” (S40)

“I dared to speak English even though I know I am not very good at it. Attending to the patient was the important thing and I think I succeeded. I enjoyed the case.” (S61)

We can see that video feedback, by providing constructive criticism and fostering a growth mindset, can help students overcome their fears. It is related to “building self-efficacy and reducing anxiety,” where it is stated that feedback can “Foster a growth mindset,” which helps to reduce anxiety.

“I know I made some serious mistakes because of my anxiety in speaking English in front of my colleagues in the first two simulations. I tried to do better in the last simulation and I am more satisfied.” (S19)

Increased motivation to engage in learning activities is also observed as they become more confident in the simulation, as corroborated by previous studies on cyclic and iterative simulation (Angelini et al., 2024). In this case, simulation and video feedback, by providing positive and personalized learning experiences, have contributed to increasing students’ intrinsic motivation. Students are more likely to engage with feedback when they are motivated, which reinforces self-regulated learning strategies.

Evidence is gathered from the students’ own testimonies: “I started to see that I was not bad at it and that encouraged me.” This relates to “increased engagement and motivation,” where it is highlighted that video feedback can “provide personalised and practical ideas,” increasing engagement.

“I was motivated to see that my English improved somewhat in the simulations, I even used words I had read in the material I looked up.” (S8)

“I prepared a lot for this subject. I liked its practical approach and seeing myself playing the role of a professional clinic manager made me insecure at first but I solved it by studying the cases in depth. I would like to continue doing simulations.” (S13)

“In this simulation I felt more confident and secure, and I think I did better than in the previous one.” (S17)

“In this simulation I felt much better and more prepared. I think it went better because I did it more calmly and with more enthusiasm.” (S33)

“Now I am prepared to do all the simulations with more self-confidence and thinking that I have studied.” (S34)

“I was a bit more relaxed this time, which was a good thing. It was great to see that all my team members were contributing their part and their interest in the simulation.” (S58)

4.2.5 Analysis category “linguistic skills”

The “Linguistic Skills” category identifies the ability to communicate effectively in English in a controlled environment. Students have had to prepare content related to addictions and obsessive-compulsive disorders (theory) and interact with their peers in a simulation scenario to diagnose and plan treatments using various therapies. The video-based feedback has facilitated the analysis of the students’ own linguistic skills.

The following subcategories break down linguistic skills into key components: vocabulary and grammar; pronunciation; and fluency.

A group of students acknowledges that they lack a broad and precise vocabulary to understand and express complex psychological concepts. Students need to know the specific terminology of the discipline to participate in debates, read research, and communicate with colleagues. As the students’ comments aptly point out:

“I think I could have done better if I had studied more psychology vocabulary, I still lack vocabulary.” (S31)

“I made a lot of mistakes in speaking but my classmates understood me. I have to study English.” (S42)

“I have to improve my speaking and practice more to become more fluent” (S75).

Some learners admit to having clear, correct but improvable pronunciation. We can affirm that simulation-based learning contributes to significant progress in language-related areas such as pronunciation. The recorded component allows the learner to observe weaknesses.

“I do not like to hear myself speaking English. My level is below that of my classmates and that blocks me.” (S44)

However, videotaped feedback allows instructors to show students how to improve their pronunciation:

“The corrections of my classmates and the teacher by watching some videos helped me even though I repeated some mistakes again.” (S21)

“Comparatively, I spoke more in simulation 2 than in the last simulation, I think I was more comfortable with the profile of the novice psychologist than that of the patient in simulation 3.” (S39)

In relation to fluency, some indicate that they lack confidence in speaking English regardless of the preparation of the content:

“My problem is that I do not like to speak in front of others in English. I have no problem studying, reading or understanding what my classmates speak. Anyway, I felt quite comfortable in the simulations, especially in the last one. I think I was able to speak more.” (S18)

5 Discussion

The findings from this mixed-methods study demonstrate meaningful shifts in students’ learning processes through simulation and video-assisted feedback, highlighting clear developmental patterns supported by both quantitative network analysis and qualitative thematic analysis.

At the initial stage (P1), the network revealed moderate connectivity, prominently focused on linguistic competence. Specifically, students’ perceived comfort with English proficiency emerged as the most central node, mediating interactions between their previous professional experience and prior training. The strong negative relationship observed between prior training and linguistic comfort indicates that students with limited previous experience faced significant linguistic challenges.

The demographic profile of the participants in this study—80 first-year psychology students aged 18–24—played a significant role in shaping their linguistic and metacognitive development during the simulation-based training. Due to their limited prior experience, many students initially struggled with using English in a professional context, which they identified as a significant obstacle. This challenge with “English language proficiency” was a primary concern at the beginning of the study.

The younger age range (18–24) also suggests variability in cognitive development, particularly in abstract reasoning and metacognitive capacities, which are crucial for self-regulated learning and reflection.

The age distribution within this cohort further influenced learning trajectories. Students in the 18–21 age group, who likely represented the majority of first-years, appeared to benefit more from repeated exposure to video feedback and structured debriefing sessions. These elements facilitated deeper self-observation and reflection, which are critical components of metacognitive growth. In contrast, older students (22–24) demonstrated more advanced self-regulation strategies earlier in the process, potentially due to greater maturity or prior educational experiences. These students were also quicker to integrate professional jargon into their communication, reflecting a more immediate alignment with the course’s objectives.

The structured simulation cycles provided an equalizing platform for all participants, regardless of age or initial proficiency levels. Younger students, who may have initially struggled with foreign language anxiety or unfamiliarity with professional contexts, showed significant improvement over time. The iterative nature of the simulations allowed them to build confidence and competence incrementally. For older students, the simulations served as a means to refine existing skills and adapt them to the specific demands of professional psychology communication.

Quantitative network analysis revealed that age and experience mediated the relationships between key variables over time. For instance, younger students exhibited a stronger correlation between motivation and perceived utility of video feedback as the training progressed, suggesting that these tools were particularly effective in fostering engagement and self-improvement within this subgroup. Older participants, on the other hand, demonstrated earlier connections between teamwork and professional experience, indicating a readiness to collaborate effectively within simulated clinical scenarios.

Qualitative data consistently mirrored these results, highlighting initial anxieties related to language proficiency. Although video-based feedback was positively linked to motivation, it initially occupied a peripheral position within the learning network, reflecting students’ early-stage unfamiliarity with reflective practices.

In the intermediate stage (P2), substantial network reorganization was evident. The quantitative results indicated that perceived comfort with English proficiency maintained its role as a crucial bridging node. Importantly, motivation emerged as a more centrally integrated variable, strongly associated with linguistic comfort. Simultaneously, video-based feedback gained moderate centrality, suggesting that students began recognizing its utility in facilitating self-assessment and reflective learning practices. Qualitative findings reinforced these observations, revealing that students increasingly employed video feedback to regulate their learning processes and refine professional communication skills. Contrary to initial expectations, teamwork remained relatively peripheral, suggesting collaborative dynamics were still developing during this intermediate stage.

By the third simulation cycle (P3), the network structure exhibited significantly greater cohesion, with video-assisted feedback clearly consolidating its role as a central node. The quantitative findings indicated video feedback’s prominent position in facilitating metacognitive reflection, motivation, and teamwork. Students reporting higher motivation and more effective teamwork frequently highlighted video feedback as essential for enhancing their professional competencies. The qualitative results supported this, with participants consistently reporting increased self-awareness, more effective regulation of learning strategies, improved emotional management, and stronger collaboration fostered through structured reflection on recorded simulations.

The observed transition aligns closely with previous research indicating that students progressively shift their focus from initial concerns about linguistic abilities toward self-efficacy and collaborative engagement (Fischetti et al., 2022; Harteveld et al., 2011; Ledger et al., 2022). Additionally, recent research has underscored the role of simulation in enhancing student satisfaction and learning perceptions by creating dynamic and engaging educational experiences, increasing self-awareness, and promoting deeper reflection and commitment to improvement (Kasperski et al., 2025).

Furthermore, several studies have emphasized the critical role simulation plays in developing metacognitive and self-regulation skills in educational contexts (Álvarez, 2023; Burke and Mancuso, 2012; Fischetti et al., 2022). The progressive structural changes identified through network analyses and the qualitative insights from this study are consistent with existing literature, which suggests that iterative simulation experiences facilitate a shift in students’ attention, from initial performance anxiety toward continuous improvement and enhanced metacognitive regulation (Angelini et al., 2024; Van Laere et al., 2021).

These findings have several important implications for the design and implementation of simulation-based courses in psychology and related fields.

Our study confirms that language proficiency can be a significant barrier for students, particularly at the beginning of their training. To mitigate this, instructors should offer resources such as glossaries of key psychological terms, sentence stems for common clinical interactions, and opportunities for low-stakes language practice before the simulations begin. This could involve role-playing exercises focused on specific communication skills (e.g., asking open-ended questions, summarizing client concerns) in a supportive environment. It should be highlighted that the initial focus is on developing skills and comfort with the simulation process, not on achieving perfect language or performance. Thus, instructors should create a safe learning environment where mistakes are viewed as opportunities for growth.

The centrality of video-assisted feedback in our network analysis underscores its potential as a powerful learning tool. Established feedback frameworks are useful to guide the debriefing process. These frameworks help students systematically analyze their performance, identify areas for improvement, and develop action plans. A relevant strategy is to encourage students to self-assess their performance before receiving the instructor’s feedback. Guiding questions that prompt students to analyze their communication skills, nonverbal behavior, and clinical reasoning help them develop metacognitive skills. Peer feedback sessions in which students provide constructive criticism to one another have proven to work well in debriefing practices. This not only enhances students’ analytical skills but also fosters a sense of community and collaborative learning.

Our findings also suggest that students’ metacognitive skills evolve over time with repeated exposure to simulation and feedback. Instructors should teach students about metacognitive concepts such as self-monitoring, self-regulation, and reflection.

Simulation also provides a valuable opportunity to bridge the gap between theory and practice. Instructors should ensure that the simulation scenarios relate directly to the concepts and theories being taught in the course. This helps students see the relevance of the material and apply it in a meaningful context.

By implementing these strategies, educators can harness the full potential of simulation-based learning to enhance students’ linguistic skills, metacognitive awareness, and professional competence.

Finally, integrating simulation with structured video-assisted feedback significantly promotes metacognitive reflection, enhances linguistic proficiency, and develops professional communication skills.

6 Limitations

While this study provides valuable insights into the effectiveness of simulation and video-assisted feedback, several limitations should be acknowledged. To begin with, the data collected through the Likert-scale questionnaire and reflective reports rely on students’ self-perceptions and subjective experiences. This raises the possibility of social desirability bias, where students may have been inclined to provide responses that they believed were more favorable or aligned with the expectations of the instructors. While efforts were made to ensure anonymity and encourage honest responses, this potential bias cannot be entirely ruled out.

The lack of a control group limits our ability to draw definitive causal conclusions about the intervention’s effectiveness. While the study demonstrates positive changes in students’ perceptions and skills over time, it is possible that these changes could have occurred independently of the simulation and video-assisted feedback. The study follows Morales Vallejo’s guidelines on quasi-experimental research (2008) in which a single-group design provides an in-depth examination of changes within participants as they progressed through the simulation and feedback intervention. Without a control group, we cannot definitively rule out the possibility that other factors, such as maturation or external learning experiences, contributed to the observed improvements (Morales Vallejo, 2008).

Moreover, the study was conducted with psychology students in Spain, which may limit the generalizability of the findings to other cultural contexts. Students’ learning styles, communication preferences, and attitudes toward feedback may be influenced by their cultural background.

Finally, because the simulations formed part of student assessment and performance translated into grades, anxiety was likely to be prevalent among participants.

Despite these limitations, the findings offer several practical implications for educators seeking to implement similar simulation-feedback models. For instance, implementing simulation-based learning requires careful allocation of resources. Educators need access to appropriate simulation equipment (e.g., video cameras, recording software, simulated client scenarios), as well as dedicated time for briefing sessions, simulations, debriefing, and video review. Institutions should invest in these resources to support effective simulation-based learning.

Educators need specific training on how to design and facilitate effective simulation scenarios, provide constructive video-assisted feedback, and manage the technical aspects of video recording and editing. Training programs should cover topics such as simulation design principles, feedback techniques, video editing software, and ethical considerations related to recording and sharing student performance data.

Simulation-based learning should be carefully integrated into the curriculum to ensure that it aligns with learning objectives and complements other instructional methods. Simulation activities should be designed to build upon theoretical knowledge and provide opportunities for students to apply their skills in authentic contexts.

Educators should be aware that simulation can be anxiety-provoking for some students, particularly those who are less confident in their language skills or who are uncomfortable being recorded on video. To mitigate anxiety, instructors should create a supportive and non-judgmental learning environment, provide clear instructions and expectations, and offer opportunities for students to practice in low-stakes simulations before participating in graded assessments.

Institutions should make ethical guidelines available and ensure that they are read to all students. Video recordings carry a risk of social embarrassment if shared, whether intentionally or not.

Future research should consider incorporating a comparative design to strengthen causal inferences. Potential designs include a wait-list control group, where a comparable group of students receives the intervention after a delay, or a comparison group receiving traditional instruction. These designs, in conjunction with the detailed network analysis employed in this study, would provide a more comprehensive understanding of the unique impact of simulation and video feedback on linguistic and metacognitive development.

7 Conclusion

The research identifies the benefits of simulation-based training in developing metacognitive aspects related to linguistic skills and professional communication in psychology. Among the advantages of video-based feedback, the following stand out:

a) Enhanced personal reflection and metacognitive awareness. Studies by Ledger et al. (2022) and Fischetti et al. (2022) argue that students who use simulations develop a greater awareness of what they have learned and how they can learn more. Video-based feedback amplifies this effect. Students can see themselves performing tasks (e.g., conducting a simulated therapy session, presenting a case study, or participating in a debate) and then receive instructor-led video feedback.

According to Harteveld et al. (2011) and Kasperski et al. (2025), simulation plays a key role in learning and increases student satisfaction by making learning more dynamic and engaging. Video-based feedback makes students more aware of their own engagement and helps them improve it, partly in their effort to pass the course but mainly because it lets them observe their limitations clearly.

b) Building self-efficacy and reducing anxiety. Burke and Mancuso (2012) corroborate that the debriefing or reflection phase in simulation environments builds self-efficacy and self-regulation.

This study also documents some preconceptions about the first simulation and its role in evaluating learning. Some students perceived the simulation as a threatening task because it was conducted in English, which may have generated greater anxiety, lower motivation, and less commitment to the activity. This resulted in less fluent and accurate linguistic performance, as well as a reduced ability to demonstrate key professional skills (such as critical thinking, collaboration, and effective communication). According to the testimonies collected, taking part in a cycle of simulations helped most students overcome these constraints.

We can therefore affirm that simulation and video feedback are effective as a methodology for evaluating experiential learning in psychology training. Among the aspects to highlight is the impact of personal reflection and metacognition. Simulation as a teaching-learning methodology has allowed students to analyze their performance, identify areas for improvement, and develop self-assessment skills. Simulation has provided the opportunity to practice language use in a realistic context and receive specific feedback on grammar, vocabulary, and pronunciation. Likewise, simulation has allowed students to practice essential skills such as critical thinking, problem-solving, interpersonal communication, and team collaboration. This methodology has become, according to students, “an engaging and motivating learning experience,” especially when combined with personalized feedback and the opportunity to improve on their own earlier performance. Simulation provides a safe environment to practice and make mistakes, which has helped students gain confidence in their abilities and reduce fear of failure.

Finally, viewing recorded simulations during debriefings allowed students to identify strengths and areas for improvement in their professional performance. Specifically, students gained awareness of critical aspects of communication, such as body language, facial expressions, and tone of voice that often remain unnoticed in traditional feedback methods. This self-observation facilitated a deeper reflection on their decision-making, actions, and communication strategies during simulations, promoting the development of metacognitive and critical thinking skills.

This study provides compelling evidence for the effectiveness of integrating simulation with structured video-based feedback as a pedagogical approach to enhance linguistic skills, metacognitive awareness, and professional communication skills in psychology students learning English. Our findings demonstrate a clear shift from initial concerns about linguistic competence toward advanced metacognitive self-regulation and professional collaboration, highlighting the transformative potential of this approach.

More broadly, this research contributes to the growing body of literature supporting simulation-based learning as a valuable tool for bridging the gap between theory and practice in higher education. By providing students with opportunities to actively engage in realistic scenarios, reflect on their performance, and receive targeted feedback, simulation fosters deeper learning and skill development than traditional instructional methods alone. The findings also underscore the importance of video-assisted feedback as a means of enhancing self-awareness, promoting self-regulation, and accelerating language development in professional contexts. Student perceptions are also consistent with the network analysis, which shows the weights attributed to language and professional skills, lending further support to these findings.

The implications of this study extend beyond the field of psychology education. Simulation and video feedback can be adapted and applied to a wide range of disciplines, including medicine, nursing, business, and education. By creating immersive learning experiences that mimic real-world challenges, educators can better prepare students for the demands of their future professions.

Looking ahead, future research should explore the long-term impact of simulation-based learning on students’ professional success. Longitudinal studies could track students’ career trajectories, assess their on-the-job performance, and examine the extent to which the skills and knowledge they acquired during simulation training translate into real-world settings. Research should also identify best practices for instructors and provide explicit, evidence-based teaching guidelines, such as rubrics. Cultural factors that can affect the success of a teaching environment should be examined as well. By continuing to investigate the effectiveness of simulation-based learning and refining our pedagogical approaches, we can ensure that students are well-equipped to meet the challenges of an increasingly complex and interconnected world.

Data availability statement

The data supporting the findings of this study are available from the IGALA Research Group. However, restrictions apply to the availability of these data, which were used under license for the current study and are therefore not publicly available. Data are available from the authors upon reasonable request and with the explicit permission of the IGALA Research Group.

Ethics statement

Ethical approval was not required for the studies involving humans because this study was conducted entirely within the framework of a curricular activity included in the official syllabus of the university course “Inglés Científico” (Scientific English). As such, it did not require formal approval from an institutional ethics committee. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements because all participants were enrolled students who engaged in the learning activities as part of their standard coursework. Participation in reflections, simulations, and feedback sessions was voluntary, and data were anonymized prior to analysis.

Author contributions

RD: Conceptualization, Investigation, Methodology, Resources, Software, Visualization, Writing – original draft, Writing – review & editing. MA: Conceptualization, Funding acquisition, Investigation, Methodology, Resources, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Ingenio Project UCV-2024-25 grant (20,000 euros).

Acknowledgments

The authors gratefully acknowledge Universidad Católica de Valencia San Vicente Mártir (UCV) for providing the financial support that made this study possible.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1597291/full#supplementary-material

References

Aafjes-van Doorn, K., Liu, A., and Kamsteeg, C. (2022). Videorecorded treatment sessions for professional development. Couns. Psychol. Rev. 37, 4–20. doi: 10.53841/bpscpr.2022.37.1.4

Álvarez, M. N. (2023). Aprendizaje visible y consciente a través de “lesson study” y “debriefing” en la formación inicial docente. (Doctoral dissertation): Universidad Católica de Valencia.

Angelini, M. L. (2021). Learning through simulations: Ideas for educational practitioners. Switzerland: Springer Nature.

Angelini, M. L., Diamanti, R., and Jiménez-Rodriguez, M. Á. (2024). La simulación telemática y su impacto en la formación inicial de profesorado. Revista Iberoamericana de Educación 94, 55–82. doi: 10.35362/rie9415942

Boud, D. (2015). Feedback: ensuring that it leads to enhanced learning. Clin. Teach. 12, 3–7. doi: 10.1111/tct.12345

Burke, H., and Mancuso, L. (2012). Social cognitive theory, metacognition, and simulation learning in nursing education. J. Nurs. Educ. 51, 543–548. doi: 10.3928/01484834-20120820-02

Epskamp, S., Cramer, A. O., Waldorp, L. J., Schmittmann, V. D., and Borsboom, D. (2012). Qgraph: network visualizations of relationships in psychometric data. J. Stat. Softw. 48, 1–18. doi: 10.18637/jss.v048.i04

Fischetti, J., Ledger, S., Lynch, D., and Donnelly, D. (2022). Practice before practicum: simulation in initial teacher education. Teach. Educ. 57, 155–174. doi: 10.1080/08878730.2021.1973167

Flavell, J. H. (1979). Metacognition and cognitive monitoring: a new area of cognitive–developmental inquiry. Am. Psychol. 34, 906–911. doi: 10.1037/0003-066X.34.10.906

Gibbs, G., and Simpson, C. (2005). Conditions under which assessment supports students’ learning. Learn. Teach. Higher Educ. 1, 3–31.

Goetz, J. P., and Le Compte, M. D. (1988). “Conceptualización del proceso de investigación: teoría y diseño”, in Etnografía y diseño cualitativo en investigación educativa. Madrid: Morata.

Gossman, M., and Miller, J. H. (2012). “The third person in the room”: recording the counselling interview for the purpose of counsellor training–barrier to relationship building or effective tool for professional development? Couns. Psychother. Res. 12, 25–34. doi: 10.1080/14733145.2011.582649

Harris, K. S. (2005). Teachers’ perceptions of modular technology education laboratories. J. Ind. Teach. Educ. 42, 52–71.

Harteveld, C., Thij, E. T., and Copier, M. (2011). Design for engaging experience and social interaction. Simul. Gaming 42, 590–595. doi: 10.1177/1046878111426960

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Issenberg, S. B., and Scalese, R. J. (2008). Simulation in health care education. Perspect. Biol. Med. 51, 31–46. doi: 10.1353/pbm.2008.0004

Ivey, A. E., D’Andrea, M. J., and Ivey, M. B. (2011). Theories of counseling and psychotherapy: a multicultural perspective (No. 1). Sage.

Ivey, A. E., Ivey, M. B., and Zalaquett, C. P. (2018). Intentional interviewing and counseling: facilitating client development in a multicultural society. 9th Edn. Boston: Cengage Learning.

JAMOVI Project (2023). Jamovi (version 2.3) [computer software]. Available online at: https://www.jamovi.org (Accessed December 09, 2023).

Kasperski, R., Levin, O., and Hemi, M. E. (2025). Systematic literature review of simulation-based learning for developing teacher SEL. Educ. Sci. 15:129. doi: 10.3390/educsci15020129

Ledger, S., Burgess, M., Rappa, N., Power, B., Wong, K. W., Teo, T., et al. (2022). Simulation platforms in initial teacher education: past practice informing future potentiality. Comput. Educ. 178:104385. doi: 10.1016/j.compedu.2021.104385

Levin, O. (2022). Reflective processes in clinical simulations from the perspective of the simulation actors. Reflective Pract. 23, 635–650. doi: 10.1080/14623943.2022.2103106

Levin, O., Frei-Landau, R., and Goldberg, C. (2023). Development and validation of a scale to measure the simulation-based learning outcomes in teacher education. Front. Educ. 8:1116626. doi: 10.3389/feduc.2023.1116626

Lo, N. P. K., and Wong, A. M. H. (2023). “Reimagining teaching and learning in higher education in the post-COVID-19 era: the use of recorded lessons from teachers’ perspectives” in Critical reflections on ICT and education. eds. A. W. B. Tso, W. W. L. Chan, S. K. K. Ng, T. S. Bai, and N. P. K. Lo (Singapore: Educational Communications and Technology Yearbook. Springer).

Morales Vallejo, P. (2008). Estadística aplicada a las Ciencias Sociales. Madrid: Universidad Pontificia Comillas, 1–363.

Nicol, D. J., and Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud. High. Educ. 31, 199–218. doi: 10.1080/03075070600572090

R Core Team (2023). R: a language and environment for statistical computing. R Foundation for Statistical Computing. Available online at: https://www.R-project.org/ (Accessed March 14, 2025).