- 1 Faculty of Informatics, Institute of Digital Technology, Eszterhazy Karoly Catholic University, Eger, Hungary

- 2 Institute of Computer Science, University of Dunaújváros, Dunaújváros, Hungary

- 3 Kandó Kálmán Faculty of Electrical Engineering, Institute of Electronics and Communication Systems, Óbuda University, Budapest, Hungary

- 4 GAMF Faculty of Engineering and Computer Science, John von Neumann University, Kecskemét, Hungary

1 Introduction

Homework has long been a basic element of teaching and learning. It is usually seen as a tool for consolidating learning, building discipline, and fostering independence in young minds. Historically, homework has served as a critical link between formal learning in the classroom and independent learning (Epstein and Van Voorhis, 2001). It has been incorporated into both behavioral theory and constructivist pedagogy (Cooper, 1989; Piaget and Inhelder, 1969; Vygotsky, 1978) and has played a key facilitating role. However, the recent explosion of generative AI tools such as ChatGPT has upset this balance. These systems can now produce high-quality answers to difficult scientific problems in seconds, whether solving multi-step mathematical problems or composing entire essays.

This transformation raises a fundamental dilemma: will students continue to use homework as a tool for learning at home, or will they outsource the cognitive effort to machines? As the distinction between aid and substitution blurs, instructors must evaluate whether current homework assignments still support effective learning. This study contends that homework must shift from a model centered on repetition to one emphasizing logic, feedback, and reflection. Rather than simply banning AI tools, teachers should design thoughtful, creative assignments that integrate AI purposefully, and should avoid simple tasks that AI can solve in seconds. The aim is to design tasks that both promote learning and make use of technology.

2 Homework: pros and cons in the AI era

Homework provides students with opportunities to consolidate classroom knowledge and foster independence. In mathematics in particular, repeated exposure and varied application are essential for mastering procedures and concepts.

At its best, homework builds autonomy and mental flexibility. It provides space for experimentation, errors, and reflection, especially when the tasks are well designed.

However, these benefits depend on meaningful design and context. Overburdening students with repetitive or overly difficult tasks can demotivate them (Deci and Ryan, 2008), exacerbate inequalities (especially where support at home is lacking), and drive them toward mechanical or AI-based simplification. If homework is not discussed in class, if it does not consider changing abilities, or if it is viewed as unnecessary work, then its benefits cannot be realized.

Technologies such as ChatGPT and Photomath offer attractive shortcuts, particularly when tasks are easily automatable (Tulak, 2024). Students are likely to hand their homework to AI if they see it as irrelevant or extremely difficult. On the other hand, AI, when appropriately incorporated into assignments, can assist students by providing hints, comments, or simulations. Homework should be seen as a double-edged educational instrument: it can enhance learning or devolve into meaningless work. Striking the right balance in the era of AI relies on intentional design, explicit communication of the homework's value, and ongoing feedback. Only through this approach can homework become an inclusive, reflective, and adaptable learning environment.

3 The core dilemma: learning vs. outsourcing

With the development of artificial intelligence tools, teachers must now consider not only the content and amount of homework but also its susceptibility to technological shortcuts. This development raises a basic pedagogical and ethical question: are students still learning when they complete assignments using artificial intelligence tools, or are they outsourcing the fundamental cognitive tasks required for meaningful learning?

Examining what homework is expected to achieve helps to address this question. Ideally, it provides a low-stakes environment for students to make mistakes, reflect on their knowledge, and apply it in novel settings. It is the struggle with the problem, the so-called “desirable difficulty,” that advances learning, not merely the right answer itself (Bjork and Bjork, 2011). Still, the line separating help from replacement is not always obvious. Artificial intelligence can be a cognitive assistant for students, helping them to visualize abstract ideas, get real-time explanations, and validate their work. A student who uses Wolfram Alpha to confirm the result of an integral after first attempting it by hand, for instance, is learning actively. On the other hand, copying an essay produced by ChatGPT without reading or editing it amounts to passive consumption, arguably closer to academic dishonesty than to instructional support.

The key is intention, openness, and reflection. Unfortunately, most current homework assignments do not distinguish between these uses. The conventional paradigm assumes a clean separation between student work and outside help, and that assumption no longer holds. Teachers now face a new task: how to adapt homework to incorporate artificial intelligence as a resource without compromising the instructional benefits of autonomous effort.

4 From solving to prompting

Where traditional homework stressed repetition and problem-solving, today's students increasingly engage in prompt engineering, crafting questions for AI tools to obtain correct or optimal responses. AI has restructured the cognitive economy of learning. When used carefully, artificial intelligence may scaffold learning, much as calculators redefined mathematical fluency in the 1980s. But there is a thin line separating scaffolding from replacement. Tools like ChatGPT shine in tasks low on Bloom's taxonomy, such as remembering and applying, but fall short in analyzing, evaluating, and creating (Gonsalves, 2024). If students rely on artificial intelligence to finish assignments rather than to understand them, the result may be automation without internalization. Teachers must therefore create assignments that demand personal interpretation, meta-level thinking, or originality (Kovari, 2025), which artificial intelligence cannot readily complete. The goal is to guide students toward safe artificial intelligence use while preserving the cognitive friction that drives learning.

Crucially, this is not a fringe phenomenon. According to Pew Research Center surveys, 13% of American teenagers aged 13 to 17 had used ChatGPT for schoolwork in 2023, and the proportion doubled to 26% by 2024 (Sidotti et al., 2025); other studies also report a notable increase (Picton and Clark, 2024). Teachers find it harder than ever to discern a genuine student voice in work shaped by generative AI tools (Luther, 2025).

The debate over what counts as “cheating” in homework has always shifted alongside technology. Calculators, Wikipedia, and now generative artificial intelligence have repeatedly challenged teachers to rethink the line separating acceptable assistance from dishonest activity. Students can use ChatGPT today to create summaries, check answers, or paraphrase. Is this still cheating? The answer depends on context, intention, and openness. If a student uses artificial intelligence to clarify a confusing method, this may be akin to using a textbook or peer support. But if artificial intelligence completes the work entirely, bypassing the learning, it enters ethically questionable ground. Teachers must now teach not only subject matter but also AI literacy, including how and when to use digital tools safely (Picton and Clark, 2024). The difficulty is creating a culture in which artificial intelligence is included as a tool rather than a replacement.

5 Rethinking homework design

As generative artificial intelligence systems become more advanced, teachers face a serious challenge: how can we make sure that homework remains a meaningful learning opportunity instead of a mechanical chore delegated to machines? Banning artificial intelligence is neither practical nor pedagogically wise. Rather, good homework should discourage shortcutting and promote thought, logic, and appropriate use of technology.

Current classroom practice offers a useful model. Using Google Classroom, students must turn in scanned or photographed homework under clear deadlines and precise expectations. Crucially, even unfinished work must be uploaded, and students are urged to record where they encountered difficulties. This framework transforms homework from a product to be assessed into a process of learning and feedback, thereby discouraging shallow use of artificial intelligence.

Task design determines whether homework is AI-resilient. Assignments aimed at rote computation or fact recall are most easily automated. Tasks involving metacognition, conceptual thinking, and student voice, on the other hand, provide settings where artificial intelligence can be a help rather than a replacement. For example:

• Ask students to compare two solution methods (one possibly AI-generated) and justify which is more effective.

• Assign error analysis tasks, such as identifying and correcting mistakes in AI-generated solutions.

• Encourage self-generated problems, where students design and solve a math question modeled on class examples.

• Use explain-your-reasoning prompts to reveal thought processes and discourage copying.

Metacognitive prompts, such as “What did you find difficult and why?” or “How would you improve your solution now?”, are particularly effective because they are personal, reflective, and hard to automate. When homework is reviewed at the start of class and students expect to take part in discussions based on their contributions, the stakes become social and intellectual rather than merely procedural.

Explicitly incorporating artificial intelligence into the task is another promising approach. Students might be instructed to ask ChatGPT for a solution, critique the output, and evaluate its reasoning. Such tasks help students to see the tool as an imperfect partner that needs critical oversight rather than as a magic box, thereby promoting AI literacy.

Finally, feedback is essential. Homework that is never discussed becomes low-stakes and therefore a perfect target for delegation. Consistent feedback, integrated into classroom activities, tells students that their effort and ideas count for more than accuracy. This reinforces that homework is a cognitive space rather than a compliance task.

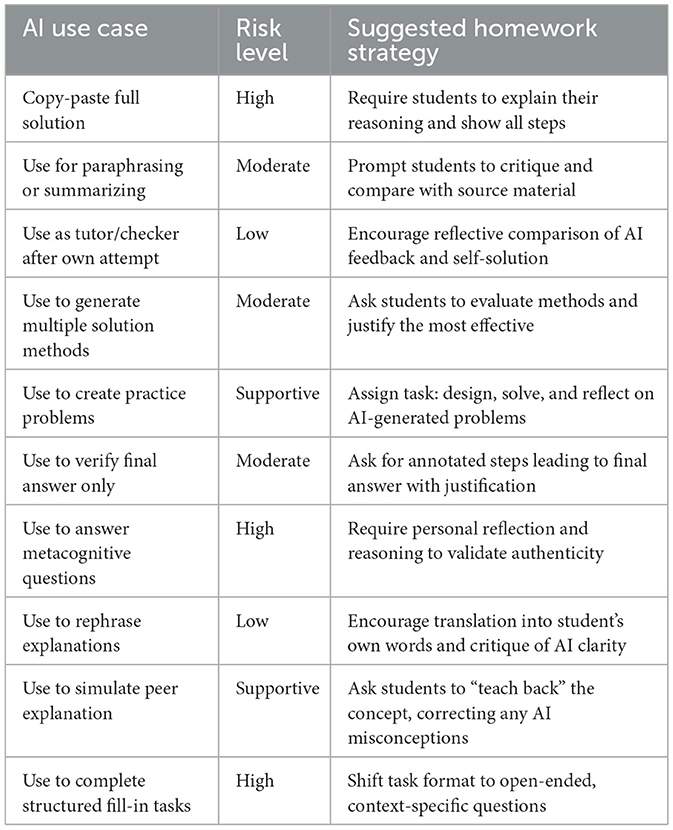

Table 1 summarizes typical AI-assisted student behaviors and suggests related instructional strategies that minimize shortcutting while preserving learning value. It is based on the authors' practical experience and classroom-level implementation of AI-aware homework systems.

6 Discussion

The development of generative artificial intelligence tools calls into question the conventional view of homework as a reliable gauge of individual knowledge and effort. Instead of announcing the end of homework, we should acknowledge the end of a limited view of it, one that values results over reflection and accuracy over process. The fundamental question is not whether students use artificial intelligence but how they use it. A tool that replaces thinking reduces learning; one that enables explanation and reflection deepens it.

Stopping shortcutting calls for far more than detection or rules. It demands task designs that value metacognition, creativity, and reasoning. Feedback systems such as the one described in this study, in which students must attempt each assignment and review it in class, encourage genuine engagement and lessen the appeal of automation.

From a more general standpoint, academic integrity guidelines have to change. As with calculators in prior decades, schools should teach their students to use artificial intelligence properly. Rather than making homework obsolete, AI encourages us to rethink it as a dialogic, reflexive, and ethical component of learning.

Author contributions

EG: Writing – original draft, Writing – review & editing, Conceptualization, Methodology. AK: Writing – original draft, Writing – review & editing, Conceptualization, Methodology.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bjork, E. L., and Bjork, R. A. (2011). “Making things hard on yourself, but in a good way: creating desirable difficulties to enhance learning,” in Psychology and the Real World: Essays Illustrating Fundamental Contributions to Society, eds. M. A. Gernsbacher, R. W. Pew, L. M. Hough and J. R. Pomerantz (New York, NY: Worth Publishers), 56–64.

Deci, E. L., and Ryan, R. M. (2008). Facilitating optimal motivation and psychological well-being across life's domains. Can. Psychol. 49, 14–23. doi: 10.1037/0708-5591.49.1.14

Epstein, J. L., and Van Voorhis, F. L. (2001). More than minutes: teachers' roles in designing homework. Educ. Psychol. 36, 181–193. doi: 10.1207/S15326985EP3603_4

Gonsalves, C. (2024). Generative AI's impact on critical thinking: revisiting Bloom's taxonomy. J. Mark. Educ. doi: 10.1177/02734753241305980 [Epub ahead of print].

Kovari, A. (2025). Ethical use of ChatGPT in education—best practices to combat AI-induced plagiarism. Front. Educ. 9:1465703. doi: 10.3389/feduc.2024.1465703

Luther, M. (2025). How this Teacher Feels About All This AI Stuff: The Path Forward Needs to be One of Imagination. The Important Work. Available online at: https://theimportantwork.substack.com/p/how-this-teacher-feels-about-all (accessed April 11, 2025).

Picton, I., and Clark, C. (2024). Children and Young People's Use of Generative AI to Support Literacy in 2024. National Literacy Trust. Available online at: https://nlt.cdn.ngo/media/documents/Children_and_young_peoples_use_of_AI_to_support_literacy_in_2024_vFSWEyC.pdf (accessed April 11, 2025).

Sidotti, O., Park, E., and Gottfried, J. (2025). About a Quarter of U.S. Teens Have Used ChatGPT for Schoolwork—Double the Share in 2023. Pew Research Center. Available online at: https://pewrsr.ch/4g3Jqt0 (accessed April 11, 2025).

Tulak, T. (2024). Optimizing mathematics learning outcomes using artificial intelligence technology. MaPan: J. Matem. Pembelajaran 12, 160–170. doi: 10.24252/mapan.2024v12n1a11

Keywords: ChatGPT, generative AI, homework design, plagiarism, ethical AI use

Citation: Gogh E and Kovari A (2025) Homework in the AI era: cheating, challenge, or change? Front. Educ. 10:1609518. doi: 10.3389/feduc.2025.1609518

Received: 14 April 2025; Accepted: 04 June 2025;

Published: 18 June 2025.

Edited by:

Maka Eradze, University of L'Aquila, Italy

Reviewed by:

Guilherme Foscolo, Federal University of Southern Bahia, Brazil

Copyright © 2025 Gogh and Kovari. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Attila Kovari

Elod Gogh, Attila Kovari