1 Introduction

The aim of this theoretical article is to explore frameworks contributing to reasonable and applicable definitions of artificial intelligence (AI), human intelligence, and generative artificial intelligence (GenAI), as well as propose a framework for an efficient, transparent, and scalable approach to assess and address inappropriate use of GenAI resources in an academic environment.

The integration of artificial intelligence technologies in academic environments has transformed how students engage with learning materials, complete assignments, and demonstrate formative knowledge. The opportunities AI-enhanced resources offer for personalized learning and robust academic experiences are co-mingled with significant challenges related to appropriate student use. Although automated content generators can enhance productivity and understanding, they also raise complex questions about academic integrity and the role educators must play in shaping their ethical use.

AI-powered tools provide students with grammar correction, citation management, language translation, research abstracts, and a wide range of enhanced learning support when used appropriately (Dwivedi et al., 2021). These tools also assist non-native speaking students with accessibility to learning modalities throughout the world (Karakas, 2023). It is when these tools are used to circumvent academic effort that concerns quickly emerge. Large language models (LLMs) capable of generating human-quality text and creative content have blurred the lines of authorship and academic integrity. Students have unprecedented access to resources capable of completing assignments, writing essays, and solving complex problems with minimal personal effort. This raises strong opinions about the assessment of student learning (Luo, 2024), determination of plagiarism (Bittle and El-Gayar, 2025), and efficacy of performance outcomes (Weng et al., 2024).

Luo's critical policy analysis, published in 2024, examined the institutional frameworks guiding the use of GenAI in assessment at 20 world-leading universities. Employing Bacchi's “What's the problem represented to be” (WPR) methodology, the research sought to critically analyze how these institutions articulate the challenges posed by GenAI within an evolving academic landscape.

The core critique identified a dominant, nearly universal policy paradigm that frames GenAI as a potential threat to academic integrity and the intellectual originality of student submissions. By designating these resources as a form of external assistance separate from the student's contribution, this prevailing policy structure suggests a critical silence regarding the increasingly distributed and collaborative nature of modern, technology-mediated knowledge production (Luo, 2024). This research suggests a redefinition of “originality” to effectively accommodate and integrate human-AI collaborative endeavors.

The potential effects of these prevailing integrity-focused policies are significant: they risk stigmatizing students utilizing GenAI resources for legitimate purposes and may transform faculty into punitive “gatekeepers” whose focus is policing misconduct rather than empowering robust student learning experiences (Luo, 2024). Notwithstanding its theoretical utility, Luo's study exhibits key research gaps. A primary limitation is the absence of empirical data concerning the lived effects of the policies, specifically lacking both student perspectives on their resultant impact and real-world faculty implementation data across varied disciplines. This reliance on a limited, purposeful sample of elite universities undermines the generalizability of findings, neglecting distinct policy challenges faced by diverse institutional types, such as community colleges, and proprietary and non-profit institutions. Finally, a key absence is the inclusion of alternative policy models, specifically in-depth case studies on institutions that have successfully moved beyond the punitive model to implement progressive, integrated GenAI policies.

A systematic literature review was conducted to assess how GenAI technologies influence the demonstration of formative knowledge, balancing both educational benefits and associated risks to academic honesty (Bittle and El-Gayar, 2025). This comprehensive analysis encompassed 41 studies gathered from key databases, including IEEE Xplore and JSTOR.

Core findings suggest a profound impact of GenAI resources in higher education environments. The opportunities AI-enhanced resources offer for customized learning and robust educational engagement are co-mingled with significant challenges related to appropriate student use. Large language models capable of generating human-quality assignments and creative content have blurred the lines of authorship, enabling the evasion of conventional plagiarism tools and raising complex questions about academic integrity.

This review suggests that immediate actions are required to effectively manage GenAI's influence. This primarily involves enhancing digital literacy among both students and faculty and developing more robust detection tools that can assess and address AI-generated content. The goal of this review was to guide future efforts in developing evidence-based practices for the responsible integration of these transformative tools.

The current body of research lacks diversity in study design and scope, often exhibiting a bias toward studies originating in Western contexts and potentially missing perspectives from social science or humanities-focused databases (Bittle and El-Gayar, 2025). Comprehensive analysis that fully balances the beneficial pedagogical aspects of GenAI against its potential for academic dishonesty remains limited. Additionally, the field lacks empirical data regarding the long-term efficacy of countermeasures, specifically studies that examine the long-term impact of new policies on student knowledge retention and the continued reliability of detection tools as GenAI models evolve.

A further review by Weng et al. (2024) explored how educators are currently assessing student learning in environments where GenAI is a factor, identified new learning outcomes that have emerged, and determined the predominant research methods deployed in this evolving academic environment. This analysis involved collecting and coding 34 relevant studies using a rigorous five-stage framework.

The review's findings concerning assessment approaches identified three primary models. The first, traditional assessment, is often found to be ineffective in addressing the capabilities of GenAI resources. In response, educators are adopting a second model of innovative and refocused assessment strategies, such as oral examinations and authentic project-based tasks. The final model involves GenAI-incorporated assessment, where AI-enhanced resources are a deliberate and integral part of the assignment (Weng et al., 2024).

The integration of artificial intelligence technologies has necessitated the emergence of new and refocused learning outcomes. Essential skills are increasingly centered on career-driven competencies and lifelong learning skills, including critical reasoning, AI literacy, and digital ethics necessary to navigate a technology-mediated professional landscape. However, current research trends reveal that the majority of studies exploring this intersection remain qualitatively oriented.

The research reveals a critical methodological imbalance. There is a need for quantitative or mixed methods studies to move beyond exploratory, descriptive work and provide empirical evidence concerning the impact of GenAI on student assessment. Additionally, the literature lacks sufficient research on the efficacy of innovative assessment designs and how to effectively integrate different assessment approaches into a coherent, holistic strategy. Most importantly, while new outcomes (such as AI literacy) are identified, a gap remains in rigorous exploration of the relationship between assessment and these new competencies, requiring further conceptual studies to precisely define and create measurable standards for these essential skills (Weng et al., 2024).

Assessing and addressing appropriate student use of AI technologies should follow a structured and systematic conceptual approach, hereby identified as the Six Domains of Academic Integrity Reporting (AIR): Limitations, Policy Parameters and Enforcement, Literacy Education, Intent, Issue-Based Coaching/Remediation, and Reporting/Monitoring.

Plagiarism extends beyond direct, uncited copying to include mosaic plagiarism, improper summary, and misuse of intellectual property, all violating core ethical scholarship principles (Drisko, 2023). Developing fair responses requires understanding root causes, such as academic anxiety, cultural differences regarding intellectual property, and poor instruction on source integration.

Drisko's study details practical identification strategies. While software-based similarity checks serve as initial screens, the emphasis is on faculty-level critical reading to spot anomalies. Educators look for inconsistent writing quality, abrupt shifts in voice, or complex, unattributed technical jargon. This ensures a holistic assessment distinguishing genuine citation misunderstanding from deliberate deception.

Additionally, Drisko (2023) advocates moving from a punitive disciplinary model to a developmental, preventative approach. Recommended interventions include structured, recurring lessons on paraphrasing, summarizing, and establishing academic voice. Explicit training in citation styles and ethics discussions fosters an integrity culture, preparing future social work professionals to maintain ethical standards in academic and clinical settings (Drisko, 2023).

A survey of academics found a high recognition of various forms of academic dishonesty, suggesting a strong awareness of plagiarism (Mostofa et al., 2021). The study also explored plagiarism detection software. Plagiarism detection tools like Turnitin are widely used and acknowledged for identifying textual similarities. However, the use of these tools alone does not guarantee a decrease in plagiaristic behavior within the research community (Mostofa et al., 2021).

A key challenge is the gap between knowing what plagiarism is and consistently avoiding it, both in academics' own work and in their students' work (Mostofa et al., 2021). The authors propose that combating plagiarism effectively requires institutions to move beyond detection tools and assumed awareness. Instead, they recommend a focus on educational interventions. These interventions must emphasize the ethical imperative of originality and teach practical skills in proper citation, paraphrasing, and summarizing techniques (Mostofa et al., 2021). This shift is essential for transforming knowledge into responsible research practices.

To move from general concern to effective policy, understanding the root causes is essential. The Kampa et al. (2025) review synthesizes global literature to quantify primary “perceived reasons” for student plagiarism. This systematic review of 166 articles (from the Scopus database) focused on 19 studies, with a pooled quantitative synthesis from four, revealing a clear hierarchy of factors.

The most significant driver is the easy accessibility of electronic resources, exacerbated by tools like GenAI, which facilitate easy, unattributed content acquisition. The second factor is unawareness of instructions, highlighting a critical gap in communicating academic expectations and citation guidelines. Other factors include busy schedules, homework overload, and general laziness. Poor knowledge of research writing and the lack of a serious penalty also contribute. For systemic policy development, these factors are grouped into environmental, pedagogical, and motivational categories.

Unawareness often reflects an opportunity for improvement in curriculum design or instructional delivery, not explicit malice. This is common among freshmen and certain populations, like STEM students. Punitive action alone will fail if the underlying cause is inadequate instruction in research ethics, citation, and paraphrasing. Institutions must mandate foundational integrity instruction (Kampa et al., 2025).

The identification of a “lack of serious penalty” points to failures in institutional governance and enforcement. Minimal perceived risk or punishment neutralizes deterrence. This often stems from procedural failures: cumbersome or misunderstood reporting mechanisms, especially for part-time or online faculty who may lack training. This inconsistency creates loopholes, lowering the perceived risk.

The study recommended translating empirical findings into proactive integrity promotion rather than relying on reactive punishment. Suggestions include: a) introducing dedicated, credit-bearing introductory modules focusing explicitly on citation mechanics; b) teaching paraphrasing and ethical source integration; and c) shifting from high-volume, low-stakes research to scaffolded assignments requiring complex, integrative analysis or unique application. These design recommendations reduce the appeal of easy electronic copying by demanding original, synthesized thought. Practical application and policy recommendations include developing simplified, standardized reporting protocols and mandating training for all faculty (full-time, part-time, online), which removes systemic reporting barriers and ensures consistency, and establishing a widely communicated tiered penalty structure. Consequences for academic dishonesty must be explicit, differentiating between unintentional mistakes and deliberate cheating to ensure fairness and provide a credible deterrent (Kampa et al., 2025).

Scholarly output faces global threats from easy digital access and intense professional competition. Plagiarism (wrongful appropriation of another person's ideas/language) is often seen as deliberate misconduct, but studies suggest it frequently stems from systemic pedagogical shortcomings. A functional research environment requires not just ethical awareness but also advanced skills to produce original, synthesized works.

Sharaf and Kadeeja (2021) surveyed 101 research scholars at Farook College, India, investigating awareness, perceptions of plagiarism acts, detection software use, citation knowledge, and punishment awareness. A key finding was that the vast majority of students have a strong basic awareness of plagiarism and its consequences.

This finding suggests a strategic pivot in prevention. Institutional efforts have raised basic awareness, but data show a persistent, critical deficit in operational research and writing skills (Sharaf and Kadeeja, 2021). The primary challenge is not ignorance, but functional incompetence. Effective intervention must transition from general lectures and punitive compliance to targeted, mandatory, discipline-specific training that remediates advanced scholarly communication skills. The study reveals that high awareness does not translate into effective avoidance. The dominant reason scholars cited for plagiarism was “lacking research skills.”

The primary risk factor is not dishonesty, but a functional inability to execute scholarly tasks like effective synthesis and accurate attribution (Sharaf and Kadeeja, 2021). This interpretation refutes the common administrative assumption that plagiarism is primarily a moral issue. Essentially, it is a skill deficiency. Because scholars cite “lacking research skills” as the cause, institutional policy should shift from a punitive policing model to a comprehensive, developmental pedagogy. Remediation must be integrated into the core curriculum, not treated as an auxiliary event. The goal should be to equip scholars with the technical and cognitive skills to manage, synthesize, and ethically represent knowledge under real-world constraints.

Sharaf and Kadeeja (2021) offer several actionable recommendations: (a) implementing mandatory, discipline-tailored workshops focused on advanced academic writing, synthesis, distillation, and avoiding patch-writing; (b) mitigating risk from poor language skills by integrating specialized language support workshops to reduce dependence on source text phrasing and facilitate a distinct scholarly voice; (c) formalizing the library's role as the central hub for delivering consistent, practical training on citation tools, detection software, and information management; (d) developing internal review protocols involving close mentorship and formative feedback on early drafts, with faculty trained to identify poor synthesis and intervene early, treating it as a correctable methodological flaw rather than disciplinary grounds; and (e) conducting follow-up longitudinal studies to measure the efficacy of revised training. The key metric for success is a reduction in the percentage of students challenged by ethical paraphrasing. Policies that focus only on compliance or increased punishment will fail to address the root cause: a pedagogical failure to teach effective knowledge transformation.

2 Methodology

This theoretical article adopts a qualitative, practice-based research methodology, integrating scholarly literature, institutional policy analysis, extensive professional experience, and a conceptual framework to investigate and resolve areas of opportunity in the use of GenAI by students within the scope of academic integrity in higher education. The methodological approach is designed to examine not only what current research and institutional policies state but also how these inform academic environments undergoing rapid technological and academic change.

This article is grounded in a comprehensive review of 37 peer-reviewed, research-based articles addressing academic integrity, instructional ethics, policy enforcement, and the implications of emerging technologies in educational contexts. Its aim is to explore frameworks contributing to reasonable and applicable definitions of artificial intelligence (AI), human intelligence, and generative artificial intelligence (GenAI), as well as propose a framework for an efficient, transparent, and scalable approach to assess and address inappropriate use of GenAI resources in an academic environment.

A detailed analysis was conducted of 24 academic integrity policies drawn from a random sampling of post-secondary institutions. This analysis seeks to identify common themes, gaps, and institutional responses related to issues such as plagiarism, misuse of GenAI, and the integration of artificial intelligence tools in academic settings.

Supporting this research is more than two decades of direct professional engagement with academic integrity practices as a college instructor and academic administrator across multiple post-secondary institutions. This experience includes the development and enforcement of academic integrity policies, the conduct of formal plagiarism investigations, and leadership in curriculum design that embeds ethical, responsible, and outcome-based learning.

To inform this article's conceptual framework, definitions that articulate and distinguish the constructs of human intelligence, artificial intelligence, and generative artificial intelligence were drawn upon. These definitions are used to critically assess how institutions are responding to the andragogical and ethical challenges presented by GenAI technologies. Through this triangulated methodological approach of literature review, policy analysis, and practitioner insight, this article seeks to produce a contextually rich, evidence-informed understanding of modern academic integrity challenges, with solid recommendations for policy implementation.

3 Defining artificial intelligence, human intelligence, and generative artificial intelligence

3.1 Artificial intelligence (AI)

One of the earliest studies to examine the processes of information transfer in the human brain was conducted in 1943 by neurophysiologist Warren McCulloch and logician Walter Pitts, who used a mathematical model to demonstrate how neural networks interact and perform logical functions (McCulloch and Pitts, 1943). This foundational understanding set the groundwork for replicating nervous system (brain) processes through computational algorithms. Published in 1948, Norbert Wiener's research explored similarities between machine and animal self-regulating mechanisms and feedback loops (Wiener, 1948), and within 2 years, the first measure of machine intelligence was introduced as the Turing Test (Turing, 1950).

The collective definition of “artificial intelligence” is rooted in the concept of machine learning. This evolution began with computers designed to perform complex calculations powered by high-speed processors, which allowed for expedited expression in mathematical terms. The computer was not learning, but merely following a set of instructions. These instructions became algorithms that allowed for the extraction of large amounts of data and the application of rules for classification and prediction. This was the beginning of machine learning (Cohen, 2025). Ultimately, machine learning gave rise to large language models (LLMs), which implement algorithms loosely modeled after brain architecture to solve problems, capitalizing on interconnectivity and computational layering.

More recent scientific working definitions propose unique identifying traits, such as integration and allocation of internal and external sources (Gao et al., 2025), ability to reason and adapt based on sets of rules which mimic human intelligence (Ng et al., 2021), knowledge representation and reinforcement learning (Samoili et al., 2020), pragmatic abilities to perform actions that replace human cognitive processes (de Zúñiga et al., 2023), computer algorithms that enable perception, reasoning, and action (Marandi, 2025), and computational systems capable of performing cognitive tasks traditionally reserved for humans while functioning within socio-technical ecosystems (Fu et al., 2024).

For the purpose of this article, a working definition of artificial intelligence, informed by peer-reviewed research and models and aggregated identified traits, will be

“an engineered system, driven by a computational agent or algorithm, that perceives, learns, reasons, and performs in such a way that replicates human cognitive function. Its intelligence will be measured by task performance, decision-making autonomy, and demonstrated ability to adapt across contexts without explicit human instruction.” (Gao et al., 2025; de Zúñiga et al., 2023; Fu et al., 2024; Marandi, 2025; Ng et al., 2021; Samoili et al., 2020)

3.2 Human intelligence (HI)

Alfred Binet defined intelligence as

“the capacity to judge well, to reason well, and to comprehend well.” (Binet and Simon, 1905)

Various foundational theories of intelligence inform the modern framework definition. Spearman's Two-Factor Theory posits the existence of universal inborn ability and acquired knowledge from environmental influences (Spearman, 1961); Thurstone's (1946) Theory of Primary Mental Abilities states there are six primary factors contributing to measurement of human intelligence, which resulted in the “Test of Primary Abilities”; Guilford's (1988) Model of Structure of Intellect frames intellect as a product of content, the mental operation involved, and the product resulting from the operation; Cattell's (1963) Fluid and Crystallized Theory credits experiences, learning, and environment for intellectual capacity; Gardner's (1987) Theory of Multiple Intelligences champions the recognition of multiple forms of intelligence, which are subjective to the individual; and Goleman's (1995) Theory of Emotional Intelligence characterizes emotional intelligence as knowledge of one's own emotions, managing emotions, self-motivation, recognition of emotions in others, and handling relationships. The evolution of the definition carries with it many different facets of subjectivity, such as the capability of abstract reasoning, mental representation, problem solving and decision making, the ability to learn, emotional knowledge, creativity, and adaptive qualities to meet environmental demands (Ruhl, 2024).

For the purpose of this article, a working definition of human intelligence, informed by historical context and expansion/evolution of a broadened academic definition, will be

“the mental capacity enabling individuals the ability to learn from experience, adapt to new situations, understand and handle abstract concepts, and use acquired knowledge to manipulate their environment and solve problems.” (Gardner, 1987; Goleman, 1995; Ruhl, 2024; Spearman, 1961)

3.3 Generative artificial intelligence (GenAI)

An important feature that distinguishes generative artificial intelligence from traditional forms of artificial intelligence is its highly intuitive interaction capabilities, which enable more natural, human-like engagement (Ronge et al., 2025). Unlike earlier AI systems that were narrowly focused on specific tasks such as image recognition, data analysis, or recommendation engines, GenAI demonstrates unprecedented versatility across a wide range of contexts, from creative writing and real-time conversation to problem-solving. The functionality of this tool, coupled with the ability to generate original content and respond adaptively to nuanced prompts, marks a qualitative shift in the evolution of AI technologies (Ronge et al., 2025). GenAI has demonstrated an emerging capacity not merely to execute pre-defined operations, but to understand, reason, and create in ways that more closely mirror human cognition. Specifically, GenAI analyzes patterns and information from a data pool utilizing machine learning, neural networks, and other proprietary techniques to generate new content at the behest of a human prompt (Ooi et al., 2025).

GenAI, through the lens of general integration, has introduced unique advancements that separate it from traditional AI applications: a predictive approach to natural language processing that is based on deep neural networks, references existing datasets, and generates new words via large language model (LLM) connections (Zhang et al., 2023); generic user interface capabilities which prevent specific context functionality limitations; and complementary search engine interface functionality which draws equally from all available search engines due to its predictive approach (Dubin et al., 2023).

For the purpose of this article, a working definition of generative artificial intelligence, aggregated from the literature and narrowed in technical specificity for a broad application, will be

“an engineered, data-driven subset of artificial intelligence designed to autonomously produce content based on learned patterns from large datasets, operating through rule-based and statistical models and grounded in probabilistic associations.” (Dubin et al., 2023; Ronge et al., 2025; Ooi et al., 2025; Zhang et al., 2023)

3.4 Key differences between AI, HI, and GenAI

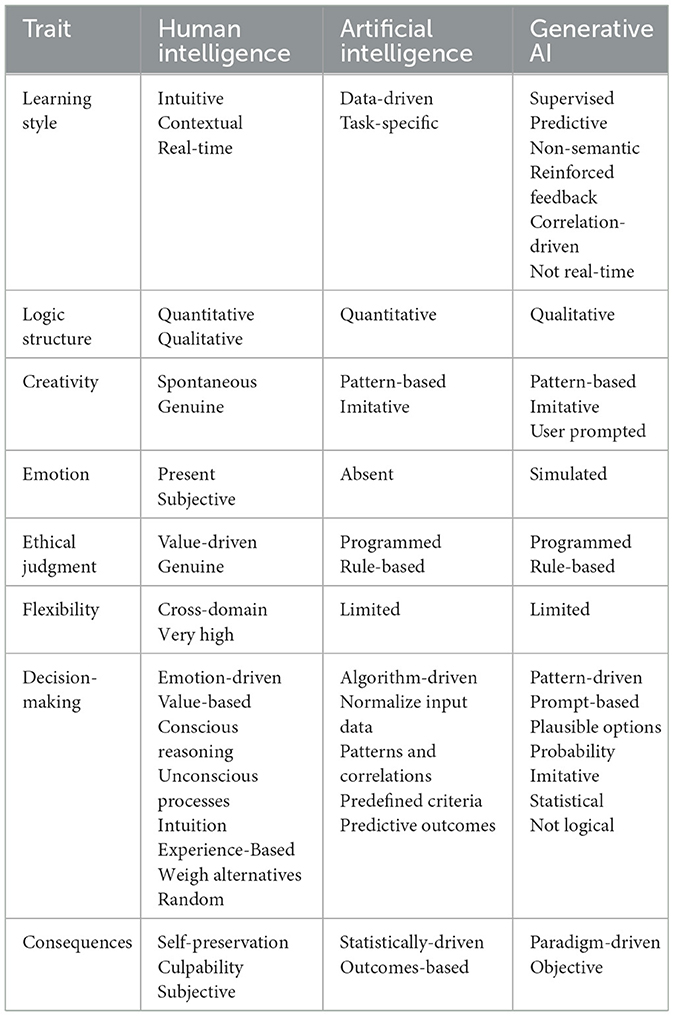

Understanding the substantive differences and functionality between these three categorizations of intelligence (HI, AI, and GenAI) is an important decision-making element when differentiating between intentional academic dishonesty and uninformed misuse of GenAI. Based on a synthesis of the literature and key characteristics identified therein, Table 1 provides a snapshot of distinguishing comparative traits of the three intelligences discussed.

4 A conceptual framework—Six domains of academic integrity reporting (AIR)

4.1 Domain I: limitations

A fundamental understanding of the limitations of artificially intelligent algorithms and related AI technologies is an important first step in implementing an efficacious institutional academic integrity plan. Commercially available AI-driven tools are hosted, meaning they are owned, operated, programmed, and maintained by an individual or a company. As such, these proprietary stakeholders are capable of using filters to parse, limit, or otherwise direct information and research findings to an alignment or protocol as they see fit. Specific concerns and key limitations articulated through both research and public opinion include legal, ethical, and privacy concerns; accuracy and reliability; limited critical thinking and problem-solving output; biased and multifaceted impact on learning and development; and technical constraints of data input and related disorganization of output (Cong-Lem et al., 2025). Racial disparities in equitable learning can occur when insufficient importance is placed on unbiased coding. When programmers do not identify, examine, and control their internal biases, AI-generated bias can manifest and create both theoretical and applicable misinformation and misdirection (Wright, 2025). It is equally important to remember that the bulk of information gathered by commercial AI technologies comes from the internet: not necessarily the repository of scholarly works and vetted data mines, but every corner of the World Wide Web where opinions, anecdotes, intentionally false and misleading information, and purely fictional creations exist.

Because text-generative AI programs function on learned patterns from large datasets, are grounded in probabilistic associations, are predictive in the nature of their outcomes, and rely on statistical models, they are unable to produce responses based on personal experience, subjective emotion, and verbal expression characteristically unique to that of the individual respondent. Human speech and expression patterns are more linear, whereas machine logic follows a categorical expression. Artificially generated responses often do not follow the subjective, conventional grammatical expression that provides a seamless discourse aligning question with answer. These responses can present themselves as a broad overview and more of a conjecture instead of a succinct, personalized, or experiential response. As an example, if a question requires a response based on an individual's experience or opinion (What academic resources do you have available to you as a student of ABC University?), a machine-generated response may be, “Students at ABC University have access to….” A lack of human inflection and cognitive logic structure is a marker of a GenAI response (Saba, 2023). This is also evidenced by responses that lead with, “This article will.” Student responses are not articles. They are essays, compositions, theses, reviews of studies, etc.

As generative text tools create textual responses based on mathematical logic, imitative correlations, and algorithm-driven rules of organization, the outputs follow traditionally efficient data presentation formats. This formatting is evidenced by overuse of bullet points, bulleted lists that would normally be expressed as paragraphs, inappropriate use of headings and subheadings, and non-contextual introductory and conclusion paragraphs. Presentation of traditional essay-style writing as a response to what would normally be expressed as a well-constructed paragraph is another machine logic creation.
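The formatting markers described above can be tallied before a closer reading. The following is a minimal sketch in Python, assuming the submission is available as plain text in which bullets begin with `-`, `*`, `•`, or a number and headings begin with `#`; the function name `formatting_profile` and both patterns are illustrative assumptions, not part of any cited study. The tally is only a prompt for faculty-level critical reading and is not evidence of misuse.

```python
import re

# Assumed plain-text conventions (hypothetical): bullets start with -, *, •, or "1." / "1)";
# headings start with one to six # characters.
BULLET = re.compile(r"^\s*(?:[-*•]|\d+[.)])\s+")
HEADING = re.compile(r"^\s*#{1,6}\s+\S")


def formatting_profile(text: str) -> dict:
    """Return the share of non-empty lines that are bullets or headings."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    total = len(lines)
    bullets = sum(1 for ln in lines if BULLET.match(ln))
    headings = sum(1 for ln in lines if HEADING.match(ln))
    return {
        "lines": total,
        "bullet_share": bullets / total if total else 0.0,
        "heading_share": headings / total if total else 0.0,
    }


# Invented sample text, used only to show the call.
sample = "# Overview\n- point one\n- point two\nA closing sentence."
print(formatting_profile(sample))
```

The sketch reports proportions only and sets no threshold, since what counts as overuse depends on the assignment and the discipline.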

Generative text tools will also strive to emulate or replicate conventional human speech and dialogue patterns. As such, there can be a lack of cohesive readability, such as incorrect verb tense, tacit grammatical errors, and the use of slang verbiage or “texting” sentence construction. Replicated statements also occur, as well as an overuse of buzzwords or hook phrases and a verbose replication of generated thesis statements throughout the document.

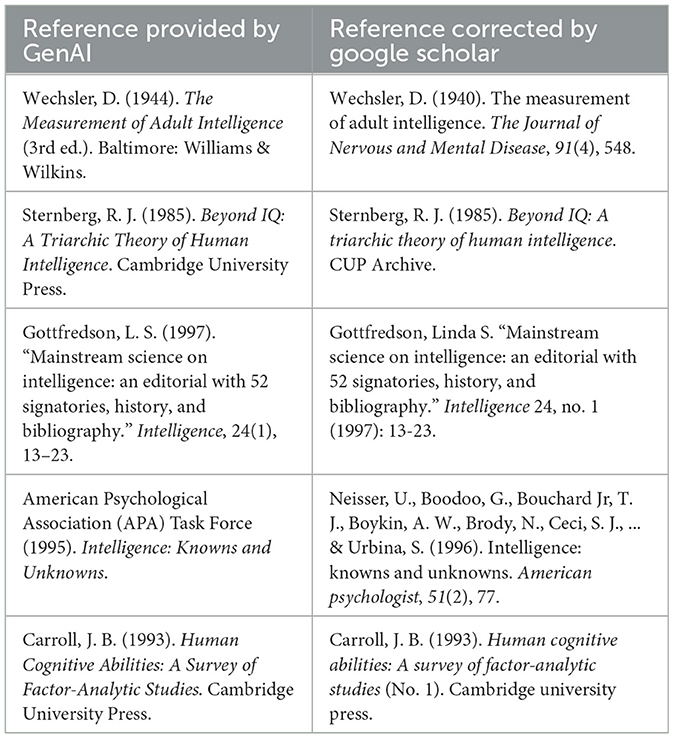

Generative large language models (LLMs) have a tendency to hallucinate and present inaccurate information (Hyyryläinen, 2024). This often presents itself in incongruent references and in-text citations: the in-text citations do not match the references accurately, or the references are ghost creations, are not in APA format, or are missing information. Research has cautioned against using generated references without verifying the source and correcting in-text usage (Clelland et al., 2024). A best practice is to verify the accuracy of the references through Google Scholar or a university library. Ghost creations do not return a result when pasted into the authoritative search bar. In other cases, the reference returns a result but the year is different, or the search returns a reference that differs from the one provided in the student's response, usually with proper formatting, capitalization, and italicization and with different volume, edition, or page numbers.
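The verification outcomes described above (no result, a different source, or a matching source with differing details) can be sketched as a small classification routine. This is a minimal illustration rather than a prescribed tool: the `Reference` fields, the `classify_reference` function, and the sample entries are hypothetical, and the "located" record is assumed to be entered by hand after a manual search in Google Scholar or a library catalog.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Reference:
    """Minimal bibliographic record; the fields are illustrative assumptions."""
    authors: str
    year: int
    title: str
    volume: Optional[str] = None
    pages: Optional[str] = None


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so cosmetic differences are ignored."""
    return " ".join(text.lower().split())


def classify_reference(submitted: Reference, located: Optional[Reference]) -> str:
    """Compare a submitted reference with the record found by a manual search.

    `located` is None when the search returned no result.
    """
    if located is None:
        return "ghost"  # no matching source could be found
    if normalize(submitted.title) != normalize(located.title):
        return "different source"  # the search led to a different work
    mismatches = [
        name
        for name in ("authors", "year", "volume", "pages")
        if getattr(submitted, name) != getattr(located, name)
    ]
    if mismatches:
        return "mismatch: " + ", ".join(mismatches)
    return "verified"


# Hypothetical entries for illustration only; not drawn from any real submission.
submitted = Reference("Doe, J.", 2021, "A Hypothetical Study of Learning", "12", "1-10")
located = Reference("Doe, J.", 2019, "A hypothetical study of learning", "12", "1-10")

print(classify_reference(submitted, located))  # mismatch: year
print(classify_reference(submitted, None))     # ghost
```

A result other than "verified" is only a prompt for dialogue with the student; it does not by itself establish intent.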

To support this example of inaccurate references, a query prompt was entered into OpenAI: “Provide a definition of intelligence with five scholarly references.” Five references were provided, and each was cut-and-pasted into Google Scholar. The references provided by GenAI were not correctly formatted, they were not in APA format, and there were no in-text citations. Only one of the GenAI references was correct (Table 2).

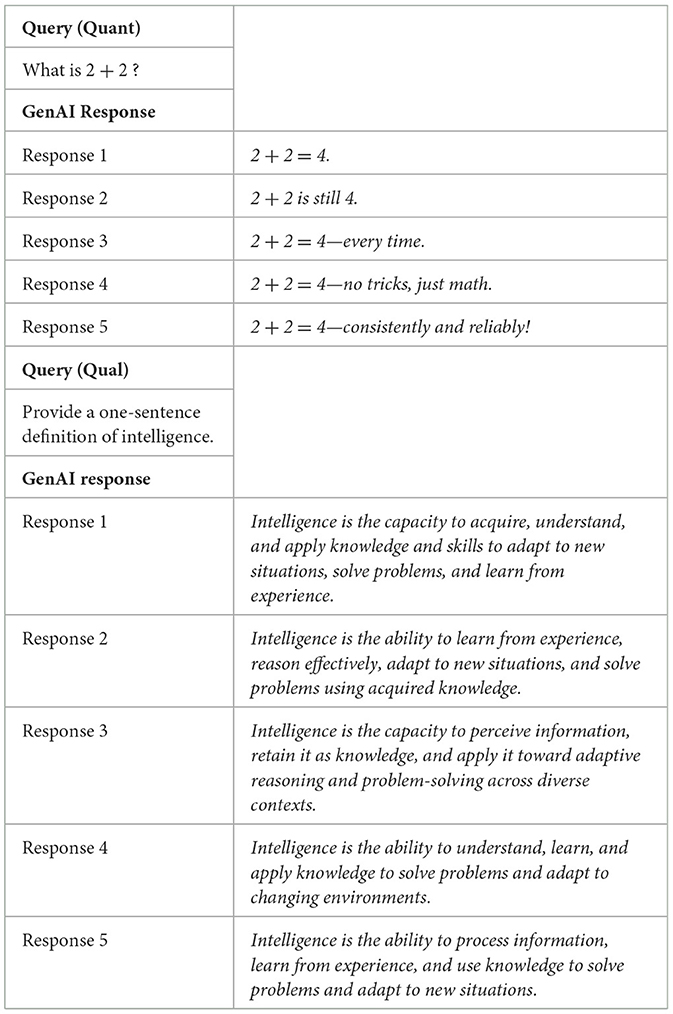

GenAI has a qualitative output response structure, meaning that a single inquiry prompt will return different results on consecutive attempts. This feature is what renders GenAI detection software ineffective, because the predictive and pattern-based responses change as their referenced datasets update, and this update happens very quickly. To illustrate how the qualitative logic of GenAI is more of a dynamic output (imitative and fluid) structure vs. a quantitative static output (fixed machine logic) structure, two separate query prompts (one quantitative and one qualitative) were entered into OpenAI, each repeated five times consecutively (repeated immediately one after another), and the results were recorded (Table 3).
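The repeated-prompt comparison can be summarized by counting how many distinct responses each prompt produced across its five runs. The sketch below assumes the responses have already been copied into lists by hand; the `summarize_repeats` helper and the sample strings are hypothetical placeholders and are not the data recorded in Table 3.

```python
from collections import Counter


def summarize_repeats(responses: list[str]) -> dict:
    """Count distinct responses after ignoring case and extra whitespace."""
    normalized = [" ".join(r.lower().split()) for r in responses]
    counts = Counter(normalized)
    return {
        "runs": len(responses),
        "distinct": len(counts),
        "all_identical": len(counts) == 1,
    }


# Hypothetical placeholder outputs for a quantitative and a qualitative prompt.
quantitative_runs = ["4", "4", "4", "4", "4"]
qualitative_runs = [
    "Intelligence is the ability to learn.",
    "Intelligence means adapting to new situations.",
    "Intelligence is the capacity to reason.",
    "Intelligence is the ability to learn.",
    "Intelligence involves solving problems.",
]

print(summarize_repeats(quantitative_runs))
print(summarize_repeats(qualitative_runs))
```

Under these placeholder inputs, the quantitative prompt yields one distinct response and the qualitative prompt yields four, which is the static vs. dynamic contrast the paragraph describes.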

4.2 Domain II: policy parameters and enforcement

Institutions must establish clear, transparent, comprehensive, and dynamic policies and procedures that define acceptable and unacceptable uses of artificial intelligence, autonomous agents, and other related forms of machine intelligence in academic coursework, as well as protocols for the review and consequences of violations of academic integrity as defined by the institution's Academic Integrity Reporting (AIR) Policies. These policies should provide guidance for both students and educators, be communicated early and at regular intervals throughout a program or calendar year, and be updated regularly to keep pace with the rapid advancements of AI technology. Instead of a blanket ban on the use of this technology, AIR policies should differentiate between the use of machine intelligence as a learning aid vs. as a tool for academic dishonesty. These policies should neither rely on veiled threats of unenforceable punitive actions, nor should they contain verbiage that is accusatory in nature. It is important to remember that it is not what the educator knows to be plagiarism, but what the educator can prove to be plagiarism. Much like a court of law, the burden of proof requires evidence.

Documentation of a potential violation of the institution's academic integrity policy should be filed whenever there is an overt or explicit demonstration of intentional plagiarism or academic dishonesty. Such a demonstration may come from an institutionally designated plagiarism detector; an internet search that identifies the original or another creator; a side-by-side comparison of two submitted deliverables from the same student with obvious differences in writing styles (assuming this has been brought to the student's attention, they have been afforded the opportunity to provide an explanation, and the explanation is not deemed acceptable); explicit evidence within the course (e.g., one student's work is identical to another's, or the submission contains personal details other than those of the creator); or a repeat of the same infraction after the student has been afforded the opportunity for issue-based coaching/remediation. This documentation should be sent to the institutional designee, who will independently investigate the claim of academic dishonesty and make a final determination that is communicated directly to the student. If appropriate, the faculty will be instructed to provide a grade change, rectify a grading discrepancy, or take additional action as necessary.

A review of the academic integrity policies of randomly-selected (based on an internet query) 4-year public universities and private proprietary post-secondary schools of allied health/nursing was conducted to inspect the details of the institutional policies regarding the use of GenAI by their students. Due to proprietary internal policies and documentation, the subject institutions were de-identified. The public universities offered traditional degrees, and the allied health/nursing schools offered certificate, diploma, associate, and baccalaureate degree programs. Twenty-four institutions were selected from four regions of the United States (Northeast, Southeast, Southwest, and Northwest). Four public universities and two allied health/nursing schools were chosen from each region, and their academic integrity/plagiarism policies were reviewed to identify commonalities and specific requirements. All 16 public universities had a structured academic policy in place with specific details regarding student use of AI, and although all eight of the allied health/nursing schools had academic integrity policies documented in their academic catalogs, only four specifically mentioned guidelines related to inappropriate use of AI. The public university policies were specific in identifying GenAI, while the allied health/nursing schools used the generic reference of AI.

Policies of the reviewed public universities specifically mentioned that trademarked and copyrighted material of the institution may not be used, altered, repurposed, or otherwise manipulated by AI tools. This stipulation was not evident in the reviewed allied health/nursing schools. The most prevalent and common policy theme across all reviewed institutions was that missing or incorrect referencing/citations in documents created with AI or GenAI would be considered plagiarism. Ten university policies explicitly stated that content that is completely AI-generated will not be accepted; four stated that, prior to submission, students should review AI-derived content to ensure accuracy and synthesis of information (representative of the student's voice); and all represented the common theme that quoted material and paraphrasing from artificially generated content must be properly cited and attributed to avoid plagiarism. Fourteen university policies allowed individual faculty members to establish parameters and restrictions for AI use in the classroom. All institutional policies reviewed clarified that any questions regarding the proper use of AI or GenAI should first be directed to established policy or discussed with the appropriate instructor prior to submission.
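For readers who want the review counts as proportions, the arithmetic can be checked with a short tally. The sketch assumes that the counts reported for "university policies" use the 16 public universities as their denominator; the source does not state this explicitly, and the variable names are illustrative.

```python
# Sampling design reported in the review.
regions = 4
public_per_region = 4
allied_per_region = 2

public_total = regions * public_per_region   # 16 public universities
allied_total = regions * allied_per_region   # 8 allied health/nursing schools
institutions_total = public_total + allied_total


def share(count: int, total: int) -> float:
    """Return a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


# Assumption: "university policies" counts are out of the 16 public universities.
findings = {
    "allied health/nursing policies mentioning AI": share(4, allied_total),
    "university policies rejecting fully AI-generated content": share(10, public_total),
    "university policies requiring student review of AI content": share(4, public_total),
    "university policies deferring to faculty parameters": share(14, public_total),
}

print(institutions_total)
for label, percent in findings.items():
    print(f"{label}: {percent}%")
```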

4.3 Domain III: literacy education

It is essential to educate students about AI-powered tools, specifically their capabilities, limitations, ethical implications, data bias, authorship requirements, and appropriate use in academic contexts. This includes teaching them how to critically evaluate AI-generated content, understand the principles of academic integrity in the digital age, and use AI tools responsibly and ethically as learning aids rather than substitutes for their own work. Such instruction empowers students to make informed choices and to appreciate the importance of, and adhere to, academic integrity standards (Kovari, 2025). A best practice would be to educate students on the very criteria faculty are trained to use to identify and assess inappropriate integration of automated content generators.

Research suggests that specific training on basic artificial intelligence concepts and practices better prepares students to properly integrate GenAI tools into their course deliverables (Chen and Zhang, 2025). A separate study demonstrated this through a 7-hour AI literacy course given to 120 university students, which produced a statistically significant outcome, suggesting that this approach not only fosters content literacy and bridges differences in gender and program of study but also eliminates skills gaps among those with different educational backgrounds (Kong et al., 2021).

4.4 Domain IV: intent

“Plagiarism is the act of presenting the words, ideas, or images of another as your own; it denies authors or creators of content the credit they are due” (American Psychological Association, 2022, Sections 8.2–8.3). Whether deliberate or unintentional, plagiarism violates standards of ethics in scholarship and scholarly writing. This raises the question as to whether the use of AI-powered tools is, in fact, plagiarism. Making that assessment requires the determination of intent. In a learning environment, submitting a plagiarized document must first be subject to the litmus test of intentional vs. careless. It is important to note that professional settings, however, elevate the scrutiny of an author's culpable intent as there are financial and career-driven gains to be made, and as such there is no pardon for factual misrepresentation or misappropriation of scholarly works. AI-induced plagiarism falls within the parameters of both educational and professional submissions and should be assessed accordingly. Domain IV specifically applies to the academic environment.

Plagiarism is regarded as an individual's intent to either take credit or represent work or ideas that belong to, were created by, or originated from someone else. Plagiarism is the failure to cite or provide appropriate credit to the originator of the work. Plagiarism is not incorrectly citing or failing to apply appropriate APA guidelines regarding in-text citations or reference pages, as consideration should be given that the novice learner simply made a careless error. This error can be a teachable event with the understanding that future discrepancies will not occur. This is not unlike learning a new math problem and using incorrect computational methods. The appropriate methods are instructed, and the assumption is that they will be applied correctly moving forward. This would be an opportunity for issue-based coaching.

The required elements for demonstrating an act of plagiarism are evidence and intent. If either element is missing, the claim is speculative. One must ask the question: Is it academic dishonesty? Or is it carelessness or being uninformed?

The key difference between academic dishonesty and uninformed writing lies in the writer's intent and the root cause of the error. Academic dishonesty is an ethical violation rooted in deception, while uninformed writing is a functional failure stemming from a lack of skill or knowledge.

Academic dishonesty, primarily encompassing plagiarism, is defined as a violation of core ethical scholarship principles (Drisko, 2023). It extends far beyond simple, direct, uncited copying. This categorization includes more subtle acts such as mosaic plagiarism (which involves weaving together phrases from a source without proper attribution), improper summary, and the general misuse of intellectual property (Drisko, 2023). The defining characteristic is the deliberate intent to present another's work or ideas as one's own.

To detect deliberate deception, educators should perform a holistic assessment. They should look for distinct red flags that suggest the student is attempting to mask the use of external sources. These signs include inconsistent writing quality, abrupt shifts in voice, or the sudden appearance of complex, unattributed technical jargon (Drisko, 2023). While acknowledging that root causes for such behavior can include factors like academic anxiety or cultural differences regarding intellectual property, the focus remains on the ethical imperative of originality (Drisko, 2023; Mostofa et al., 2021). Corrective measures for dishonesty often emphasize this ethical difference (Mostofa et al., 2021).

As a functional skill gap, uninformed writing is characterized not by malice but by a functional inability to execute scholarly tasks like effective synthesis and accurate attribution (Sharaf and Kadeeja, 2021). The primary risk factor is thus a skill deficit, not dishonesty. This arises from factors that reflect a lack of training or proper guidance.

A major contributing factor is unawareness of instructions and citation guidelines, which highlights a misalignment in communication regarding academic expectations (Kampa et al., 2025). This unawareness often reflects an opportunity for improvement in curriculum design or instructional delivery rather than explicit ill will. It is particularly common among vulnerable populations, such as freshmen and certain STEM students, who may not have received adequate foundational instruction (Kampa et al., 2025). Other contributing elements to this functional failure include poor instruction on source integration, improper knowledge of research writing, busy schedules, homework overload, and general laziness (Drisko, 2023; Kampa et al., 2025).

The requisite action to address improper writing is fundamentally educational, not purely punitive. As punitive action alone will fail if the underlying cause is inadequate instruction in research ethics, citation, and paraphrasing, institutions must mandate foundational integrity instruction (Kampa et al., 2025). Interventions must focus on teaching practical skills in proper citation, paraphrasing, and summarizing techniques to transform knowledge into responsible research practices (Mostofa et al., 2021).

4.5 Domain V: issue-based coaching/remediation

An institution's designated academic compliance review entity reviews valid concerns of plagiarism, and the results of its investigations can ultimately lead to school- or university-level sanctions against the student. Assessing and addressing student use of machine intelligence and autonomous digital agents, therefore, must rise to the level of objectivity, with clear and convincing evidence of deliberate intent to commit a violation of the institution's academic integrity standards. Implementing a process of issue-based coaching and remediation for students as an instructional tool is of paramount importance to ensure fairness and equity of the learning experience without hastily compromising a student's academic future. This step should occur in lieu of an AIR Review. An AIR Review should be reserved for cases in which issue-based coaching and remediation have not been successful and the student has demonstrated a repeated pattern of concern.

Guidelines to follow:

• Grade according to a rubric. Grammar, formatting, and reference criteria will capture the majority of Domain I cut-and-paste submission concerns.

• Do not be accusatory. Engage in a documented dialogue with the student about anomalies and concerns within the submitted assignment and ask for their perspective on what occurred. This is the first step in determining the presence or absence of intent. Ensure this is done prior to submitting the final grade of the deliverable.

• Issue-based coaching/remediation is appropriate under the following circumstances: improperly cited references, absence of a reference page, grammatical errors, and careless or poor writing. These are circumstances where faculty can guide the student and are not academic integrity concerns.

• Issue-based coaching/remediation is appropriate when elements of Domain I (limitations) are evident in the student's submission, and this is a first-time occurrence. Depending on the student response, the determination can be made to escalate to an AIR Review if warranted. Lack of a student response should be considered an incomplete initial faculty review, without a resolution of the concern, and reported as an AIR Review for further investigation.

• Faculty do not make the final determination of whether plagiarism has occurred. This is the role of the institution's designated academic compliance review entity. Ensure dialogue is kept neutral and inquiry-based at the classroom level.

• Refrain from making statements that the student has plagiarized, has violated standards of academic integrity, intentionally misused AI, is going to be investigated for plagiarism, etc. This verbiage is reserved for the final disposition that should be shared directly with the student from the appropriate institutional designee. Instead use verbiage such as, “Thank you for providing your input on xxx. Based on our dialogue/conversation, you have been provided guidance on how to properly address xxx, have been given an opportunity to resubmit the assignment (if applicable), and your grade will reflect xxx. Moving forward, the expectation is that these concerns will not resurface. Thank you for making the appropriate corrections.”

• It is vitally important that the concern is brought to the attention of the student in a formal, documented manner with a deadline for their response, that the student response itself is documented, and that any opportunity (if applicable) to correct and resubmit the assignment is clearly identified and agreed upon.

It is a best practice to report any issue-based coaching/remediation to the institution's designated academic compliance review entity as a non-punitive measure and for documentation purposes. The purpose is to demonstrate the faculty member's due diligence in addressing potential academic integrity concerns, the faculty member's discretion in identifying this as an isolated event and belief that it is successfully handled within the classroom, and that the student has been provided the opportunity to learn from this guidance, with the assumption that it will not occur again. In the event that future plagiarism concerns within the same course or subsequent courses occur, there is formal documentation of it being previously addressed, and as such, it provides supportive evidence for escalation to a formal AIR Review.

4.6 Domain VI: reporting/monitoring

The formal AIR review should be reserved for one of the following scenarios: (a) previous issue-based coaching/remediation efforts have demonstrated continued non-compliant behavior, (b) lack of a student response to an initial attempt at coaching/remediation, or (c) a valid concern of plagiarism is evidenced by an independent source. This decision should be clearly articulated to the student in a neutral and non-accusatory manner. A suggested example of verbiage is, “Due to xxx, this concern is being reported for institutional academic compliance review and any resulting action will be communicated directly with you.”

It would be a best practice to enter a grade of zero for the deliverable, with the understanding that any subsequent adjustment to the grade will be based on the result of the formal investigation and communicated to the faculty member. This action demonstrates that a concern has been identified and is under review before the assignment of a final grade. Continued monitoring of student academic performance should be intentional, and additional academic integrity concerns should result in additional AIR review submissions, as issue-based coaching/remediation has not proven successful in the past.
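The three escalation scenarios for a formal AIR review can be expressed as a simple routing rule, with issue-based coaching/remediation as the default. The sketch below is a hypothetical decision aid, not an institutional system: the `Concern` fields, the `Route` values, and the `route_concern` function are illustrative names, and faculty judgment and documented dialogue with the student remain prerequisites.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    COACHING = "issue-based coaching/remediation"
    AIR_REVIEW = "formal AIR review"


@dataclass(frozen=True)
class Concern:
    """Facts documented by faculty about one submission (illustrative fields)."""
    prior_coaching_same_issue: bool  # scenario (a): coaching already attempted
    student_responded: bool          # scenario (b) applies when this is False
    independent_evidence: bool       # scenario (c): e.g., an identified original source


def route_concern(concern: Concern) -> Route:
    """Return the next step suggested by scenarios (a) through (c)."""
    if concern.prior_coaching_same_issue:
        return Route.AIR_REVIEW  # (a) continued non-compliant behavior
    if not concern.student_responded:
        return Route.AIR_REVIEW  # (b) no response to the initial outreach
    if concern.independent_evidence:
        return Route.AIR_REVIEW  # (c) plagiarism evidenced by an independent source
    return Route.COACHING        # first-time, unresolved-intent concerns


first_time = Concern(prior_coaching_same_issue=False, student_responded=True,
                     independent_evidence=False)
no_reply = Concern(prior_coaching_same_issue=False, student_responded=False,
                   independent_evidence=False)

print(route_concern(first_time).value)
print(route_concern(no_reply).value)
```

Keeping the rule this small is deliberate: the final determination of plagiarism stays with the institution's designated review entity, and the routine only records which path the documented facts point to.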

5 Discussion

As artificial intelligence becomes increasingly embedded in educational systems, its dual potential to enhance learning and complicate the landscape of academic integrity must be acknowledged and addressed with intentionality. The integration of AI in academia is not merely a technological advancement; it is an andragogical and ethical turning point that challenges long-held assumptions about authorship, originality, scholarship, and responsibility.

Schools and universities benefit from a proactive, transparent, and objective approach to assessing and addressing student use of machine intelligence and autonomous digital agents in their institutions. Artificial intelligence is a tremendous tool to enhance personalized learning, educational outcomes, and efficient delivery of content. The critical element to be addressed is ensuring its use is appropriate. Supporting academic integrity, promoting organic student learning, and upholding the standard of academic rigor are foundational elements that everyone wants to be evident in the field of academia.

The Six Domains of Academic Integrity Reporting (AIR) offer a comprehensive framework for institutions seeking to address this rapidly evolving environment by creating policies that are not only responsive and enforceable but also academically appropriate. By shifting from punitive models to proactive strategies, institutions can better uphold academic standards while also fostering student growth. Literacy education around AI tools empowers learners to make informed decisions and develop the skills necessary for ethical engagement with emerging technologies. Policy and practice-driven approaches that emphasize intent and remediation rather than assumption and punishment create space for fair and equitable learning.

The successful integration of AI in education lies not in avoidance or restriction, but in thoughtful engagement. Institutions must lead with clarity, compassion, and accountability while providing the tools, structures, and expectations necessary to empower students to use AI-powered tools ethically and effectively.

6 Recommendations

In addition to providing clear and concise policies related to expectations for students' GenAI use and creating a robust and scalable academic integrity reporting system, institutions would benefit from restructuring existing curriculum through an andragogically innovative lens that requires students to provide more subjective, personal, and experiential responses.

Large language and cognitive computing models understand and interpret data through machine learning, simulated natural language processing, and pattern recognition. Deep-learning models use extensive datasets of existing information to construct recognizable output, often resulting in factual inaccuracies, the absence of critical analysis, and inconsistent logic flow (Yang, 2024). From an institutional perspective, curricular strategies that are not easily outsourced to machine intelligence algorithms can lessen opportunities for student misuse of artificial intelligence tools. These include employing structured grading parameters, providing more student-centered learning experiences, promoting critical thinking, and integrating real-world experiential application.

6.1 Create a robust grading rubric that supplements content-focused criteria

Examples of integrating quantitative measurements include Authentic Voice/Tone, Task-Specific Execution, Evidence of Process, Contextual Relevance, Personal Connection/Original Thought, Reflective Component, Appropriate Integration of Sources, Grammatically-Correct Presentation, and Proper APA Formatting.

In an empirical study quantitatively assessing the efficacy of integrating rubrics to enhance student writing and stimulate the critical thinking required for successful achievement of student learning outcomes, the research employed a t-test methodology to compare student performance on two distinct writing assignments (Reaction Papers and Discussion Boards) under conditions of rubric vs. non-rubric implementation (Weatherspoon, 2022). The integration of the rubric on Reaction Papers successfully transformed how students engaged with the assignment, demonstrating a statistically significant difference in assignment scores. This key finding suggests that the structural clarity of the rubric successfully facilitated improved critical thinking, as evidenced by demonstrably higher performance metrics. The evidence suggests that when students are provided with clear instructional tools, they can effectively meet and exceed expectations for assignments that require higher-order cognitive skills.
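Assuming the rubric vs. non-rubric comparison is a two-sample t-test, the test statistic can be illustrated with a short calculation. The source does not specify the variant, so Welch's unequal-variance form is used here as an assumption, and the scores are invented placeholders rather than data from the cited study.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a: list[float], group_b: list[float]) -> tuple[float, float]:
    """Return Welch's t statistic and its approximate degrees of freedom."""
    var_a, var_b = variance(group_a), variance(group_b)  # sample variances
    n_a, n_b = len(group_a), len(group_b)
    se_sq = var_a / n_a + var_b / n_b                    # squared standard error
    t_stat = (mean(group_a) - mean(group_b)) / sqrt(se_sq)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    dof = se_sq ** 2 / (
        (var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1)
    )
    return t_stat, dof


# Hypothetical assignment scores for illustration only.
rubric_scores = [88, 92, 85, 90, 95]
non_rubric_scores = [78, 82, 80, 75, 85]

t_stat, dof = welch_t(rubric_scores, non_rubric_scores)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

Converting the statistic to a p-value requires the t distribution, which a statistics library would normally supply; that step is omitted here to keep the sketch dependency-free.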

This particular methodology, however, is commingled with significant research challenges and conceptual gaps. As an embedded, single-instructor study, the generalizability of the findings to broader academic environments is constrained. More critically, the research lacks the necessary qualitative data to establish a definitive causal mechanism, leaving open the question of whether score improvement reflects genuine critical thinking advancement or merely heightened compliance with assessment criteria. Furthermore, the study omits the details regarding the specific rubric design, hindering replicability. To address the missing link between feedback and student metacognition, a proposed framework for future investigation involves the integration of reflective writing exercises. It is when these exercises are used in conjunction with rubric-based feedback that a deeper assessment of the learning process (student self-regulation and the transferability of critical thinking) can be achieved, moving beyond a singular focus on the product (assignment score).

6.2 Integrate reflective writing exercises

This encourages students to analyze their own emotions, experiences and progress during learning or task completion.

Reed et al. (2024) conducted a quasi-experimental study comparing the impact of a progressive laboratory writing treatment vs. a traditional quiz-based assessment on the critical thinking performance of general education biology students. The integration of progressive writing assignments, such as lab reports and complex papers, in the academic environment has transformed how students engage with scientific concepts and demonstrate formative knowledge. The opportunities these writing-intensive resources offer for robust intellectual experiences are comingled with significant challenges related to measuring the direct mechanism of skill gain. The research utilized a pretest/post-test methodology, employing the California Critical Thinking Skills Test (CCTST) to isolate the effect of the writing treatment.

The key finding demonstrated that students engaging in the writing treatment significantly improved their overall critical thinking skills from pretest to post-test, while the quiz-based group did not. Specifically, the writing group showed statistically significant gains in the critical thinking sub-skills of analysis and inference. Although improved, gains in evaluation skills were not statistically significant, creating a specific gap for future design focus. Prior critical thinking ability and the instructing faculty member were found to significantly affect final performance, raising complex questions about instructional fidelity. It is when these assignments are used to facilitate communication about complex scientific ideas that concerns quickly emerge regarding the specific instructional process driving the gains. Future research must incorporate qualitative data (e.g., text analysis or interviews) to determine how the progressive writing sequence and feedback enhance cognitive abilities and whether these gains are transferable across academic disciplines.

6.3 Integrate community-based research projects

Students articulate a conceptual understanding through collaboration and real-world application of civic engagement.

The aim of a collected work by Beckman and Long (2023) was to explore frameworks contributing to reasonable and applicable models for Community-Based Research (CBR), as well as propose strategies for an efficient, transparent, and scalable approach to integrating CBR with the central goal of fostering community impact and social change. The integration of CBR into academic environments has transformed how students engage with learning materials, complete assignments, and demonstrate formative knowledge by conducting collaborative investigations with non-academic partners. The opportunities CBR offers for robust academic experiences and for addressing local social challenges are co-mingled with significant challenges related to measuring its long-term success.

Although the detailed case studies and frameworks, such as the “Power Model” and chapters on stakeholder perspectives, can enhance pedagogical practice and community understanding, they also raise complex questions about their structural sustainability and true causal impact. The provided literature offers faculty and students a wide range of enhanced support for integrating CBR into diverse curricula, from STEM to international projects. It is when these projects are used to simply fulfill student requirements that concerns quickly emerge about the true value for the community. The book implicitly addresses challenges, but a core gap lies in the lack of longitudinal studies capable of measuring community change metrics for several years post-project. Furthermore, there is a persistent research gap concerning the complex power dynamics and equity inherent in university-community partnerships. It was suggested that future work focus on systematic reform of faculty reward structures to truly value this time-intensive work, ensuring that institutional support for community engagement is commensurate with its intended social aim.

The integration of Community-Based Participatory Research (CBPR) into academic and non-academic environments has transformed how research is conceptualized, legitimizing both scientific and experiential forms of knowledge (Amauchi et al., 2022). The opportunities CBPR offers for generating knowledge that is both scientifically rigorous and locally relevant are comingled with significant challenges related to equitable partnership and power dynamics. Although its methodology, advocating for a “slow” praxis prioritizing relationship-building, can enhance community relevance, it also raises complex questions about its operationalization and the role researchers must play in shaping a decolonizing practice.

The research conducted provided researchers with a synthesis of core CBPR principles and a wide range of enhanced understanding via three case studies illustrating practical implementation. It is when the ideal of equal sharing of control between academics and community members is used without critical reflection on power dynamics that concerns quickly emerge. The article suggests that CBPR is a powerful methodology for addressing complex social issues and leading to meaningful transitions. However, the field's effectiveness is hampered by structural limitations. Research is needed on how institutional policies can address the conflict between the “slow,” iterative nature of CBPR and the rapid constraints of academic funding cycles. Most critically, a significant gap remains in developing and validating standardized impact metrics capable of quantitatively assessing the degree of social transformation achieved across diverse CBPR projects (Amauchi et al., 2022).

6.4 Formulate student-created research questions and open-ended hypotheses

This promotes critical thinking, inquiry skills, engagement, and interaction through exploratory intellectual curiosity.

The integration of student-created assessment in academic environments has the potential to transform how students engage with learning materials, complete assignments, and demonstrate formative knowledge, as demonstrated by an empirical study that explored a pedagogical framework contributing to an effective and applicable assessment method for English grammar and proposed an approach in which students are actively involved in designing their own test questions within a specific Jordanian context (Malkawi et al., 2023). The opportunities this active learning resource offers for enhanced critical thinking and self-regulated learning are co-mingled with significant challenges related to generalizability and implementation fidelity.

Although the core principle (the creation of high-quality questions requiring students to analyze and synthesize content) can enhance performance and metacognition, it also raises questions about the specific cognitive mechanisms at play. When research focuses only on the improved final score, concerns emerge about the lack of qualitative depth. While the study suggests improved performance, a critical gap is the need for an efficient, transparent, and scalable approach to assess the quality of the student-created questions themselves. Research should systematically evaluate the Bloom's Taxonomy level of these questions to establish whether it correlates with final grammar scores (Malkawi et al., 2023). Furthermore, the single-context nature of the case study limits its generalizability, necessitating future studies across diverse educational settings to confirm the strategy's broad effectiveness.

The integration of Student-Created Case Studies (SCCS) in academic environments, particularly within an online, flipped-classroom setting, has transformed how students engage with primary literature, complete assignments, and demonstrate formative knowledge. The opportunities SCCS offer for student-led learning and robust academic experiences are co-mingled with significant challenges related to isolating the specific causal mechanisms of learning gain. Although the two-stage intervention involving iterative writing to create a case and subsequent peer teaching/assessment can enhance knowledge and self-reported confidence, it also raises concerns about the true source of improved performance.

The study provides data supporting the efficacy of the SCCS method, with student performance metrics and course evaluations indicating success (Bindelli et al., 2021). When these assessments are used to measure learning, however, concerns emerge about which specific components drive the improvement. The research reports that iterative writing enhanced student performance but does not definitively establish a unique causal effect of SCCS creation over the general benefits of iterative writing-to-learn. A significant gap, therefore, lies in the need for a transparent and scalable approach to isolate the effective components, distinguishing the learning benefit gained from creating the case from the benefit derived from completing a peer's case. The specialized nature of the neurovirology content limits generalizability to other disciplines or introductory courses, requiring further empirical investigation across diverse academic environments.

6.5 Institute peer assessment of deliverables

This develops evaluative and feedback skillsets and encourages accountability and appreciation of outside perspectives.

Hadyaoui and Cheniti-Belcadhi (2025) authored an empirical study exploring frameworks contributing to an efficient and scalable approach to project-based collaborative learning (PBCL) to enhance both peer feedback quality and project performance. The integration of AI technologies in academic environments, specifically for Intelligent Team Formation and Strategic Peer Pairing, has transformed how students engage with collaborative materials and complete complex assignments (Hadyaoui and Cheniti-Belcadhi, 2025). The opportunities AI-enhanced resources offer for personalized learning and robust academic experiences are accompanied by significant challenges related to generalizability and algorithmic transparency.

The framework's core innovations, which rely on cognitive, behavioral, and interpersonal learner data for clustering, provide students with an enhanced learning support system. When these complex algorithms rely on “interpersonal data,” concerns emerge about ethical and accurate measurement. A significant gap lies in the need for an efficient, transparent, and scalable approach to define and justify exactly how this sensitive data is ethically captured and weighted by the algorithm. Because the framework was validated in a single domain, its contextual validity is limited, leaving the transferability to non-technical or humanities-based PBCL environments unproven (Hadyaoui and Cheniti-Belcadhi, 2025). Finally, the study does not address questions about the burden placed on teachers and systems or the computational demands required for maintenance, and it does not provide a critical cost-benefit analysis for institutions considering adopting this advanced system.

The integration of peer assessment and self-assessment in academic environments has transformed how students engage with learning materials and demonstrate formative knowledge. Although the peer assessment exhibited relatively high reliability, supporting its feasibility, both peer and self-assessment scores demonstrated a consistent overestimation bias when compared to the expert's score, as well as challenges related to score reliability and calibration.

An analysis conducted by Power and Tanner (2023) provided educators with data on score alignment, suggesting that the reliability of self-assessment is notably low. When students use the provided rubrics for self-calibration and assessment, questions emerge about their ability to accurately evaluate their own work. The research found that the quality of the feedback provided during peer assessment showed a strong positive relationship with student performance, indicating the value of the peer interaction itself. However, a significant gap lies in the need for an efficient, transparent, and scalable approach to developing instructional interventions that can reduce the self-overestimation bias, thereby improving self-assessment accuracy over the course of the engineering degree. The study does not address questions about the causal mechanism behind the feedback quality link or the generalizability of these calibration issues beyond this specific engineering sub-discipline (Power and Tanner, 2023).

6.6 Creative output assignments based on personal experience

This promotes individual expression, self-awareness, and personal reflection.

A theoretical article by Saleem et al. (2021) explored frameworks contributing to reasonable and applicable definitions of social constructivism and proposed a model for an efficient, transparent, and scalable approach to developing deeper understanding in an academic environment. The integration of social constructivism in educational settings has transformed how students engage with learning materials, complete assignments, and demonstrate formative knowledge by positing that knowledge is actively constructed through social interaction, language, and culture (Saleem et al., 2021). This learner-centered paradigm offers opportunities for robust academic experiences and knowledge co-creation. Although the philosophy advocates for a necessary shift where the teacher becomes a facilitator, employing collaborative approaches to discourage memorization, it also brings to light the extent of the role educators must play in shaping its effective use.

The principles provide students with an enhanced learning support system, utilizing discussion and reflection to deepen understanding. When these principles are proposed without supporting empirical data, concerns emerge. A significant research opportunity lies in the need for an efficient, transparent, and scalable approach to quantify the efficacy of social constructivist practices across diverse educational contexts and subject domains. The theoretical framework does not address implementation barriers, and it does not detail the teacher professional development required to successfully transition from a traditional lecturer to a proficient constructivist facilitator. The reliance on principles alone blurs the lines of accountability, suggesting the need for validated assessment methods to reliably measure the quality of the “knowledge constructed” in collaborative settings.

6.7 Utilize proctored assessments

This ensures academic integrity under controlled conditions.

The use of proctored examinations as an efficient and scalable method for deterring cheating in distance learning environments has received much attention. The integration of proctoring technologies in academic environments has transformed how students engage with remote examinations, complete assignments, and demonstrate formative knowledge. The opportunities these surveillance-enhanced resources offer for upholding academic integrity are co-mingled with significant challenges related to privacy and student stress (Alvarez et al., 2022). The study found a high prevalence of self-reported cheating, with stress and worry cited as the primary causes, and brought into question the role educators must play in shaping ethical assessment. The finding that homework was the evaluation type that most enabled dishonesty suggests a flaw in assessment design.

The research provides educators with data indicating that students perceive proctored examinations, whether synchronous or asynchronous, as enhanced support for monitoring remote learning. Because the research relies on self-reported intentions (such as “less likely to cheat”), concerns emerge about the reliability of the deterrence claim. It is therefore important that objective approaches with structured proctoring methods in a neutral testing environment be employed (Alvarez et al., 2022).

Research by Alin et al. (2023) suggested a framework contributing to an efficient, transparent, and scalable approach to assessing and addressing academic dishonesty, utilizing a comprehensive synthesis of mitigation strategies for virtual proctored examinations. The integration of remote proctoring technologies in academic environments has transformed how students demonstrate formative knowledge, but cheating persists despite the use of anti-cheating software. The framework offers opportunities for upholding academic integrity, provided that ethical and unbiased implementation practices are employed (Alin et al., 2023).

The proposed framework synthesizes mitigation strategies into three core pillars: Assessment Design, Monitoring, and Resource Limitation. Although the authors advocate for combining these strategies, they also raise questions about the role educators must play in shaping a fundamental reform of assessment. The framework provides educators with a wide range of enhanced support for deterrence.

The integration of online technologies in academic environments continues to raise questions concerning academic integrity. The opportunities online platforms offer for flexible learning are accompanied by the need to proactively address challenges related to misconduct, cheating, and collusion (Sabrina et al., 2022). This research provides educators with a wide range of support strategies, categorized by assessment design, technical measures, and policy and pedagogy. There exists a need for an efficient, transparent, and scalable approach to assess and address the inappropriate use of GenAI resources, which bypass traditional similarity checking by creating non-plagiarized and user-prompted computer-generated responses. Opportunities exist for empirical validation, cost-benefit analysis for institutions, and the establishment of policies and protocols that address student-reported psychological and equity impacts associated with heavy reliance on surveillance (Sabrina et al., 2022).

6.8 Integrate frequent formative assessments

This improves learning outcomes by identifying learning gaps early in the academic journey and providing timely and contextual feedback.

The opportunities formative assessments offer for student achievement and social development are well-articulated, but they depend on effective policies related to teacher comprehension, implementation, and associated training (Abd Halim et al., 2024). This research offered practices related to assessment diversity and assessment strategies, but also called into question the scalability of shifting away from traditional, exam-oriented assessment practices. The research also provided educational leaders with a wide range of support, categorized into three main themes gleaned from an analysis of 19 articles: Assessment Diversity, Assessment Strategies, and Student Learning Development. Areas of opportunity include the fidelity of implementation and the mechanism of impact, as well as the need for an efficient, transparent, and scalable approach to systematically study the quality of feedback and its effect on both teacher workload and student learning.

A study by Na et al. (2021) investigated frameworks for developing an efficient and applicable instructional strategy utilizing Formative Assessment (FA) within an online educational modality. A secondary objective was to propose a systematic approach to question development, grounded in Bloom's Taxonomy. The incorporation of FA approaches has demonstrated an influence on student engagement with learning materials, completion of assignments, and demonstration of formative knowledge. This structure facilitates personalized learning and enhanced metacognitive experiences (Na et al., 2021).

While students reported that the FA strategy enhanced concentration and enabled the identification of learning gaps, the assessment items predominantly targeted the lower levels of Bloom's Taxonomy (Knowledge, Comprehension, and Application). The immediate explanation of answers by the instructor demonstrated an effective integration of instruction and assessment. Nevertheless, when assessments are restricted to lower-order skills, concerns emerge regarding student preparedness for the rigorous cognitive demands of clinical practice.

An opportunity exists for an efficient, transparent, and scalable methodology for designing, implementing, and assessing FA items that effectively target Higher-Order Cognitive Skills (HOCS), specifically Analysis, Evaluation, and Creation. These skills are non-negotiable for success in clinical settings. Notably, the available literature neither poses complex questions about nor provides empirical evidence for a link between this FA structure and improved summative course outcomes or the long-term retention of knowledge in subsequent medical courses (Na et al., 2021).