- 1 School of Distance Education, Universiti Sains Malaysia, Gelugor, Penang, Malaysia

- 2 School of General Education, Hunan University of Information Technology, Changsha, Hunan, China

- 3 School of Graduate Studies, Lingnan University, Tuen Mun, Hong Kong SAR, China

Maintaining learning motivation and achieving academic success in English language learning remains a challenge for many university students, particularly those with lower proficiency. Conventional teacher-centered classrooms are often characterized by passive learners with limited personalized support. In contrast, blended and artificial intelligence (AI)-assisted learning have emerged as promising alternatives to address motivational and performance challenges in English as a foreign language (EFL) contexts. However, empirical comparisons of these instructional approaches remain limited. Grounded in Self-Determination Theory (SDT) and cognitive constructivism, this study examined the comparative effects of conventional, blended, and AI-blended instructional approaches on Chinese university students’ goal orientation, self-efficacy, instructional support, and English academic achievement. The AI-blended approach integrated tools such as automated writing evaluation (AWE), automated speech recognition (ASR), and the chatbot DouBao to support pre-class learning. A 1.5-year longitudinal within-subject design was employed with 43 first-year EFL students at a Chinese university. Participants experienced all three instructional approaches sequentially, with data collected via motivational questionnaires and achievement tests. Repeated measures analyses, including ANOVA and Friedman tests, were conducted. Results indicated that both blended and AI-blended instruction significantly improved students’ motivation and academic performance relative to conventional instruction. The AI-blended approach produced the most substantial gains in self-efficacy, instructional support, and key language skills such as listening comprehension, translation, and writing. These findings inform ongoing discussions on the integration of AI in EFL pedagogy and provide practical implications for instructional design, teacher preparation, and education policy innovation. 
The study’s limitations, including the small sample size, limited demographic diversity, and constraints of a within-subject design, should be addressed in future research.

1 Introduction

An effective instructional approach in English Language Teaching (ELT) is fundamental to fostering meaningful learning experiences. In recent years, ELT has witnessed a substantial shift from traditional teacher-centered instruction toward more student-centered instructional approaches that actively involve learners in the educational process and encourage meaningful engagement (Rezai et al., 2025). Traditional teacher-centered classrooms are typically characterized as a one-person show with uninvolved learners, relying heavily on direct instruction while offering minimal opportunities for student interaction (Umida et al., 2020). Some studies have suggested that conventional offline instruction may yield better academic outcomes than online learning (Rachmah, 2020), and Chinese scholars have similarly underscored the effectiveness of face-to-face classroom activities and handwritten assessments in traditional learning environments (Yang et al., 2022; Li et al., 2025). Nevertheless, this instructional approach has been widely criticized for its limitations (Khan and Khan, 2024).

With the advancement of information technology and the increasing prevalence of mobile devices, blended learning has emerged as a promising alternative, combining traditional face-to-face instruction with digital learning to provide learners with greater flexibility and access to educational resources anytime and anywhere (Yang et al., 2022). Empirical studies have demonstrated its effectiveness in enhancing academic achievement (Sankar et al., 2022), increasing learner engagement, and enabling more individualized learning experiences (Macaruso et al., 2020; McCarthy et al., 2020).

Despite its potential, blended learning is not without challenges. Learners accustomed to conventional face-to-face instruction often struggle with the transition to online learning (Dewaele et al., 2024). A systematic review by Boelens et al. (2017) identified four major challenges in implementing a blended instructional approach: incorporating flexibility and autonomy, stimulating social interaction, facilitating learning processes, and fostering an affective learning climate. Other studies have further noted practical difficulties, such as reduced interaction (Pham et al., 2023), increased distractions in digital environments (Yu et al., 2022), and a lack of self-regulation among learners (Sun et al., 2024). For example, when instructors shift instructional tasks to pre-class activities, students may experience confusion or frustration if adequate support and scaffolding are not provided.

In recent years, AI has become increasingly pervasive in educational contexts, including ELT. Park and Doo (2024) reviewed empirical studies on AI-supported blended learning in ELT, indicating its potential to address many of the limitations inherent in traditional blended instructional approaches. AI tools have been shown to improve accessibility, engagement, and personalized instruction, and are being widely used to enhance course delivery and learning adaptation (Alshahrani, 2023). Kizilcec (2023) highlighted that AI holds great promise for optimizing the effectiveness of blended learning environments.

As instructional approaches become increasingly shaped by technology, growing attention has been directed toward their impact on learners’ motivation, a key factor influencing second or foreign language acquisition (Ellis, 1994). With the increasing use of AI in ELT, researchers have begun to examine how AI learning environments impact learners’ motivational constructs (Yuan and Liu, 2025). In the exam-oriented Chinese ELT context, the educational system significantly affects students’ goal orientations. Prior studies have typically categorized goal orientation into two broad dimensions: learning (or mastery) goals and performance goals (Ames, 1992; Button et al., 1996). Studies have shown that Chinese university students tend to prioritize performance goals (Yang, 2024; Zhong and Zhan, 2024). Li et al. (2022) further confirmed that performance goals were particularly salient among Chinese university EFL learners, as they were strongly linked to students’ perceptions of academic success and career advancement.

Another key construct influencing learner motivation is self-efficacy, an individual’s belief in their ability to perform a task successfully. Self-efficacy develops through experience, learning, and feedback (Gist and Mitchell, 1992) and plays a critical role in regulating motivation, cognitive effort, and persistence (Wood and Bandura, 1989). In the ELT context, students with higher self-efficacy are more willing to engage in English-speaking tasks, thereby improving both language proficiency and academic achievement (Zhang et al., 2020). Notably, recent studies suggest that self-efficacy also plays a mediating role in reducing AI-related anxiety and shaping positive attitudes toward AI in language learning (Chen D. et al., 2024).

Beyond learners’ internal factors, instructional support has a significant influence on students’ learning performance. Although not traditionally categorized as a motivational construct, instructional support is critical in promoting students’ academic motivation and achievement in both blended and online learning contexts (Fowler, 2018). Supportive and responsive teachers play a pivotal role in shaping students’ learning experiences (Dewey, 1986; ter Vrugte and de Jong, 2012). Tools such as ChatGPT are increasingly being integrated into higher education to provide real-time support in language learning, helping students overcome barriers, adapt to diverse cultural and academic learning environments, and maintain learning motivation (Lo et al., 2024). However, emerging research also points to the potential negative emotional effects of AI-based interactions on learners’ motivation and engagement, especially in Chinese ELT settings (Xin and Derakhshan, 2025).

As part of the national push for educational digitalization, the Ministry of Education of the People’s Republic of China has launched a strategic action plan to develop smart education platforms (China National Academy of Edu, 2023). This initiative has notably accelerated the integration of emerging technologies, particularly AI, into higher education. Therefore, it is crucial to examine not only the effects of instructional innovations on students’ key psychological constructs such as goal orientation, self-efficacy, and instructional support, but also their influence on academic achievement.

2 Literature review

2.1 Instructional approaches and learning motivation

Instructional approaches play a pivotal role in English Language Teaching (ELT), influencing learners’ motivation and attitudes (Rezai et al., 2025). In recent years, the implementation of blended and AI instructional approaches has drawn increasing attention for their potential to promote more confident, motivated and actively engaged learners.

Blended learning has been found to enhance students’ motivation and engagement (McCarthy et al., 2020). Sun et al. (2024) compared traditional and flipped classrooms and found that the flipped approach fostered goal-oriented learning and enhanced reflective thinking. Hao and Fang (2024), in a study involving 132 Chinese undergraduates, showed that students in the blended learning group reported significantly higher speaking self-efficacy than those in conventional classrooms. Similarly, Guo et al. (2023) incorporated WeChat-based assignments and scaffolding strategies into blended learning and found significant improvements in both language proficiency and self-efficacy among 232 university students.

Recent research has also explored the motivational effects of AI instruction. In a systematic review of 70 empirical studies, Lo et al. (2024) found that ChatGPT was frequently used to enhance learner motivation, overcome linguistic barriers, and improve academic engagement in ELT. Wang and Xue (2024), in a study of Chinese university students, found that AI-driven chatbots significantly increased academic engagement by promoting behavioral, cognitive, and emotional involvement. However, Xin and Derakhshan (2025) found that while AI instruction provided valuable learning support, it also triggered anxiety, frustration, and stress in some students. Similarly, Zhang et al. (2023), using questionnaires, eye-tracking, writing tasks, and interviews, examined the effects of AI writing tools on university students and reported limited improvements in writing self-efficacy, largely due to learners’ low confidence in adapting to diverse learning styles and strategies.

In blended and flipped learning contexts, AI has been applied to further enhance student motivation. Huang et al. (2023) used AI-driven personalized video recommendations in a flipped programming course, reporting that learners in the AI-enhanced blended condition demonstrated significantly higher levels of motivation and engagement.

2.2 Instructional approaches and English academic achievement

Learners’ academic performance and language proficiency are significantly shaped by instructional approaches (Li and Chan, 2024). Given the increasing integration of technology in ELT, researchers have compared technology-enhanced instructional approaches with conventional face-to-face instruction. For example, Kashefian-Naeeini et al. (2023) found that students receiving web-based instruction showed significantly greater improvement in vocabulary acquisition compared to those taught through traditional approaches. Similarly, Khan and Khan (2024) reported that Saudi university students in the online learning group outperformed those in the conventional classroom. In contrast, Rachmah (2020) found that offline instruction led to better academic performance, suggesting that traditional approaches still hold value in ELT.

In addition, blended learning has received growing attention for its potential to enhance academic achievement in ELT. For example, Sankar et al. (2022) demonstrated that blended learning significantly improved academic achievement in higher education. Macaruso et al. (2020) conducted a large-scale study with kindergarten students and found that those in the blended instruction group made greater reading gains than their peers receiving traditional instruction.

However, in the Chinese ELT context, findings regarding the effectiveness of blended learning have been more nuanced. Yang et al. (2022) compared conventional approaches (textbook instruction, stage presentations, handwritten exams) with blended approaches (video-based lessons, letter exchanges, online exams), and emphasized the continued importance of classroom-based activities and handwritten assessments for promoting academic achievement. Teng and Zeng (2022) reported that while blended learning improved oral fluency and accuracy among junior middle school students, it had limited effects on oral complexity. Similarly, Tao et al. (2024) noted that the effectiveness of blended instruction varied by proficiency level, with significant benefits observed for lower-proficiency learners, but limited gains for more advanced students.

More recently, the integration of AI into instructional approaches has opened new avenues for personalized feedback and adaptive learning. However, empirical findings regarding its impact on academic achievement remain inconclusive. In a six-week repeated-measures quasi-experimental study, Escalante et al. (2023) compared AI-generated feedback (via ChatGPT) with human tutor feedback in a university writing course, and found no consistent advantage associated with AI intervention. Taken together, the studies reviewed above point to the dual impact of instructional technology on learner motivation and academic achievement in ELT.

2.3 Theoretical framework

The theoretical framework underpinning this study draws on Self-Determination Theory (SDT) and cognitive constructivism to explain how instructional approaches affect learners’ motivation and academic achievement. SDT is a widely recognized theory of human motivation that emphasizes the importance of fulfilling three basic psychological needs (namely autonomy, competence, and relatedness) in order to foster sustained engagement, personal growth, and well-being (Ryan and Deci, 2022). Autonomy concerns a sense of initiative and ownership in one’s actions; competence represents the need to feel capable of achieving success and mastering challenges; and relatedness describes the need for meaningful social connections and a sense of belonging (Ryan and Deci, 2020). When these needs are supported, research shows that individuals tend to exhibit greater persistence, improved performance, enhanced social functioning, and overall psychological well-being (Ryan and Deci, 2022). In educational settings, SDT offers a practical and coherent framework for understanding learner motivation and engagement (Anderman, 2020). In the context of this study, these three psychological needs correspond, respectively, to the constructs of goal orientation, self-efficacy, and perceived instructional support, representing learners’ learning purposes, sense of competence, and perceptions of relatedness within instructional environments.

Piaget’s cognitive constructivism posits that knowledge is not passively received, but actively constructed by learners through direct interaction with their environment, including viewing, listening, reading, and hands-on experiences (Piaget, 2005). Learning is a process of meaning-making shaped by the mechanisms of assimilation, which involves integrating new information into existing cognitive structures, and accommodation, which refers to adjusting those structures to incorporate novel insights. When these processes operate in tandem, learners achieve cognitive equilibrium and develop more sophisticated ways of thinking (Mohammed and Kinyo, 2020). From this perspective, instructional approaches that prioritize learner-centered engagement, such as blended and AI-blended approaches, can be understood as constructivist in nature. These approaches are designed to activate prior knowledge and support the construction of meaning, all of which are essential for academic development.

Grounded in SDT and cognitive constructivism, this study aims to longitudinally examine the effectiveness of conventional, blended, and AI-blended instructional approaches in influencing students’ perceptions of goal orientation, self-efficacy, and instructional support, as well as their overall English academic achievement. Accordingly, two research questions have been formulated to guide the investigation.

Research question 1: To what extent do conventional, blended and AI-blended instructional approaches lead to significant changes in students’ perceptions of goal orientation, self-efficacy and instructional support, and are there any significant differences across these instructional approaches?

Research question 2: To what extent do the conventional, blended, and AI-blended instructional approaches impact students’ English academic achievement?

3 Methodology

A longitudinal within-subject experimental design was adopted to investigate the effects of conventional, blended, and AI-blended instructional approaches on non-English major EFL learners at a university in Hunan, China. A repeated-measures design is appropriate for within-subjects studies where the same participants are exposed to multiple conditions (Field, 2013). Compared to between-subjects designs, this design offers greater precision by reducing variability associated with individual differences (Clifford et al., 2021). A total of 43 students in one intact experimental class sequentially experienced all three instructional approaches. The instructional sequence was pedagogically scaffolded over three consecutive semesters, each lasting 17 weeks. The conventional instructional approach, designed to establish baseline learning performance, was delivered from September 2023 to January 2024, comprising 24 face-to-face sessions. The blended instructional approach, implemented from March to July 2024, maintained the same duration and session count, transitioning students into a digitally supported learning environment that combined pre-class online learning with in-class activities. The AI-blended instructional approach, conducted from September 2024 to January 2025, introduced personalized learning features through AI tools and included 16 sessions due to minor scheduling constraints. The sequence of instructional approaches was determined to align with the structure of the academic calendar and to allow for balanced implementation across semesters. Additionally, it mirrors a natural learning curve in instructional technology adoption, progressing from familiar (conventional) to transitional (blended) and then to innovative (AI-blended) instructional approaches. Each instructional approach was delivered as an independent phase and evaluated in relation to its own timeframe.
Outcome variables were measured at the end of each phase to assess the effects of each instructional approach. To reduce the potential influence of cumulative exposure and temporal maturation, natural breaks of 1–2 months occurred between each instructional phase, functioning as washout intervals that helped mitigate carryover effects commonly associated with repeated-measures designs. Furthermore, the use of the Newstart College English Books 1–3 across the three phases provided a controlled and standardized curriculum framework. The structure, learning objectives, and instructional focus of the materials remained consistent across instructional phases, ensuring that only the instructional delivery approaches varied.

Three instructional approaches were developed based on the Dick and Carey instructional system (Dick, 1996), Constructive Alignment (Biggs, 1996), and Bloom’s Taxonomy of cognitive learning objectives (Momen et al., 2022). This foundation ensured alignment between learning objectives, instructional activities, and assessment methods, thereby fostering a coherent and structured learning environment across all instructional approaches.

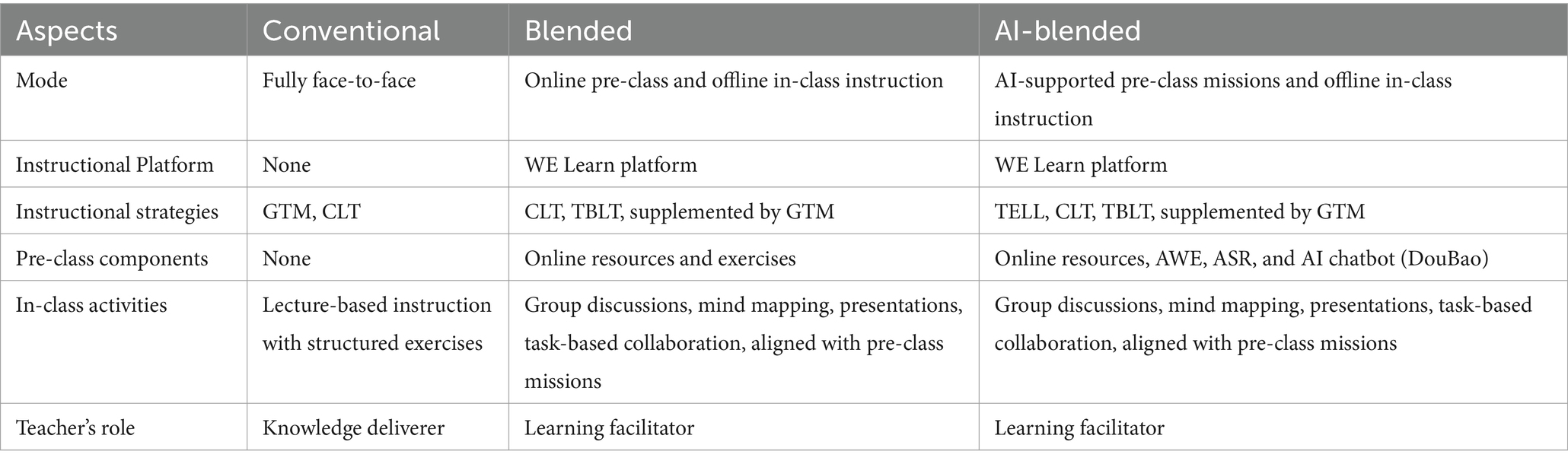

Table 1 provides a comparative overview of the three instructional approaches. The conventional instructional approach referred to a fully face-to-face, teacher-led classroom setting, where structured learning activities were delivered through direct instruction (Hartikainen et al., 2023). This approach excluded any pre-course online learning and relied primarily on lectures and textbook-based instruction. Instructional strategies centered around the Grammar-Translation Method (GTM) and Communicative Language Teaching (CLT), offering both explicit grammar instruction and opportunities for communicative practice.

The blended instructional approach combined online and offline instruction to create a flexible and interactive learning environment (Ashraf et al., 2022). Prior to in-class sessions, students engaged in pre-class online learning through the WE Learn platform, which provided materials such as videos, multimedia resources, and exercises. Clear instructions on accessing and navigating the platform were provided at the beginning of the first session to ensure all students could participate effectively. In-class sessions were then devoted to interactive activities including group discussions, mind mapping, and collaborative tasks. This instructional approach primarily employed Communicative Language Teaching and Task-Based Language Teaching (TBLT), while incorporating the Grammar-Translation Method when necessary to address specific language issues, thereby fostering active participation, critical thinking, and peer collaboration.

The AI-blended instructional approach was built upon blended learning by incorporating AI tools into pre-class learning. Students were assigned online learning resources with AI-driven learning missions, which served to enhance their preparation for subsequent in-class activities. AI tools such as AWE and ASR on the WE Learn platform automatically assessed learners’ writing and speaking performances. Meanwhile, the AI chatbot DouBao was introduced to provide personalized feedback, extend learning opportunities, and offer contextualized missions before face-to-face instruction. All learning activities were intentionally and synchronously aligned between pre-class and in-class phases to support instructional coherence. A dedicated course introduction at the beginning of the first session was conducted to guide students in the use of AI tools. Grounded in the principles of Technology-Enhanced Language Learning (TELL), this approach combined Communicative Language Teaching and Task-Based Language Teaching, creating a highly personalized and student-centered learning environment. It allowed for adaptive scaffolding, real-time feedback, and greater learner autonomy (Lechuga and Doroudi, 2023).

3.1 Participants

The study adopted a convenience sampling strategy and was conducted at a university in Hunan, China. A total of 43 first-year non-English major students (aged 18–19) who enrolled in the 2023 academic year voluntarily participated in the study. Although the sample was not randomly selected, convenience sampling is commonly used in educational research and can still yield sufficiently representative samples (Golzar et al., 2022). Furthermore, the decision to use 43 participants was guided by Chen Y. J. et al. (2024), who implemented a repeated-measures design with a similar sample size and noted that samples exceeding 30 are typically sufficient for such designs. All participants were freshmen at a similar stage in their English learning journey, as college English courses in China are typically completed during the first two academic years. Eligibility criteria included having no prior experience with college-level English instruction and no history of long-term study abroad.

All three instructional approaches were implemented sequentially with the same intact class over a period of 1.5 years. To control for instructor-related confounding variables, all instructional sessions were conducted by the same College English teacher, who had over 3 years of teaching experience in higher education. The teacher collaborated with the researcher in designing the instructional interventions.

Participation in the study was fully voluntary. Students were informed of their right to withdraw at any point without academic penalty. All procedures involving human participants were reviewed and approved by the Ethical Board of the researcher’s university.

3.2 Instruments

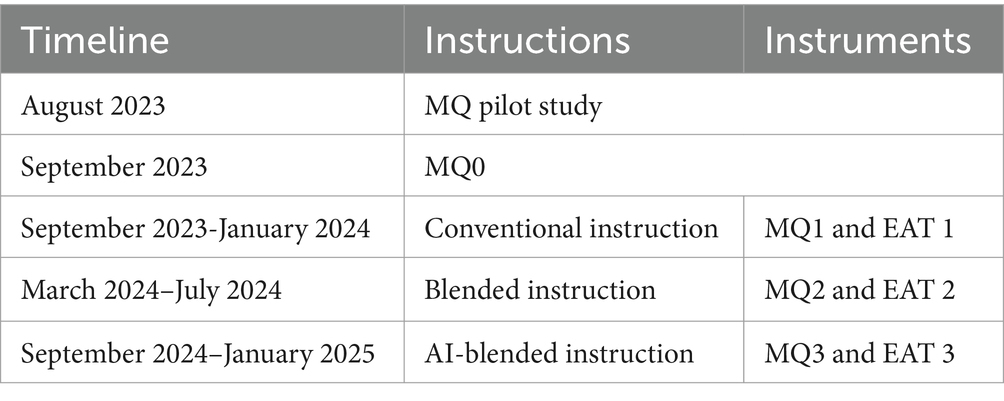

As outlined in Table 2, two research instruments were employed, both provided in the Supplementary material: the motivational questionnaire (MQ) and the English Achievement Test (EAT). These instruments were administered across all three instructional approaches over an 18-month period, which included regular academic breaks. The MQ assessed students’ perceptions of goal orientation, self-efficacy, and instructional support, while the EAT evaluated their English academic achievement. A baseline pre-test of the MQ (MQ0) was administered prior to any instructional intervention. In total, students completed four rounds of MQ assessments and three EAT administrations, with one MQ and one EAT administered at the end of each instructional approach.

3.2.1 Motivational questionnaire

To assess three motivational constructs (goal orientation, self-efficacy, and instructional support), a motivational questionnaire was developed by adapting and integrating items from three well-established instruments. The first was the Motivated Strategies for Learning Questionnaire (MSLQ) developed by Pintrich et al. (1993), a self-report instrument designed to measure university students’ motivational orientations toward a course. The MSLQ is modular in nature, allowing its sections and subscales to be adapted to specific research contexts. The second instrument was the Motivation to Learn Online Questionnaire (MLOQ) by Fowler (2018), which focuses specifically on motivational factors such as goal orientation, self-efficacy, and instructional support within online learning environments. The third was the Academic Self-Efficacy Scale (ASES) developed by Byrne et al. (2014), which measures students’ confidence in their academic capabilities.

The integrated questionnaire used a 5-point Likert scale, ranging from 1 (“totally disagree”) to 5 (“totally agree”), enabling students to indicate the extent of their agreement with each item. The initial version consisted of 36 items, designed to capture students’ perceptions of the three focal motivational constructs. The draft questionnaire was reviewed by an expert to ensure content clarity and construct alignment. A pilot study was then conducted with a separate sample of students to assess the instrument’s reliability and validity. Based on item-total correlation analysis, three items were removed due to low corrected item-total correlations (0.054, 0.082, and 0.095). The final version contained 33 items and demonstrated high internal consistency, with a Cronbach’s alpha coefficient of 0.949.
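As a rough illustration of the reliability checks described above, the following Python sketch computes Cronbach’s alpha and corrected item-total correlations from a respondents-by-items matrix. It is not the authors’ analysis: the function names and the small `ratings` matrix are hypothetical, and the pilot data are not reproduced here.

```python
from statistics import mean, variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of respondents, each a list of item ratings."""
    k = len(ratings[0])
    item_vars = [variance(col) for col in zip(*ratings)]
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def corrected_item_total(ratings):
    """Correlate each item with the sum of the remaining items."""
    totals = [sum(row) for row in ratings]
    return [
        pearson(col, [t - v for t, v in zip(totals, col)])
        for col in zip(*ratings)
    ]


# Hypothetical 5-point Likert responses: 6 respondents x 4 items.
ratings = [
    [4, 5, 4, 2],
    [3, 3, 3, 5],
    [5, 5, 4, 1],
    [2, 2, 3, 4],
    [4, 4, 5, 3],
    [1, 2, 1, 3],
]

print("alpha:", round(cronbach_alpha(ratings), 3))
for index, r in enumerate(corrected_item_total(ratings), start=1):
    print(f"item {index}: corrected item-total r = {r:.3f}")
```

In this made-up matrix the fourth item runs against the other three, so its corrected item-total correlation is the lowest; an item behaving this way would be a candidate for removal in a pilot analysis of the kind described above.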

3.2.2 English achievement tests

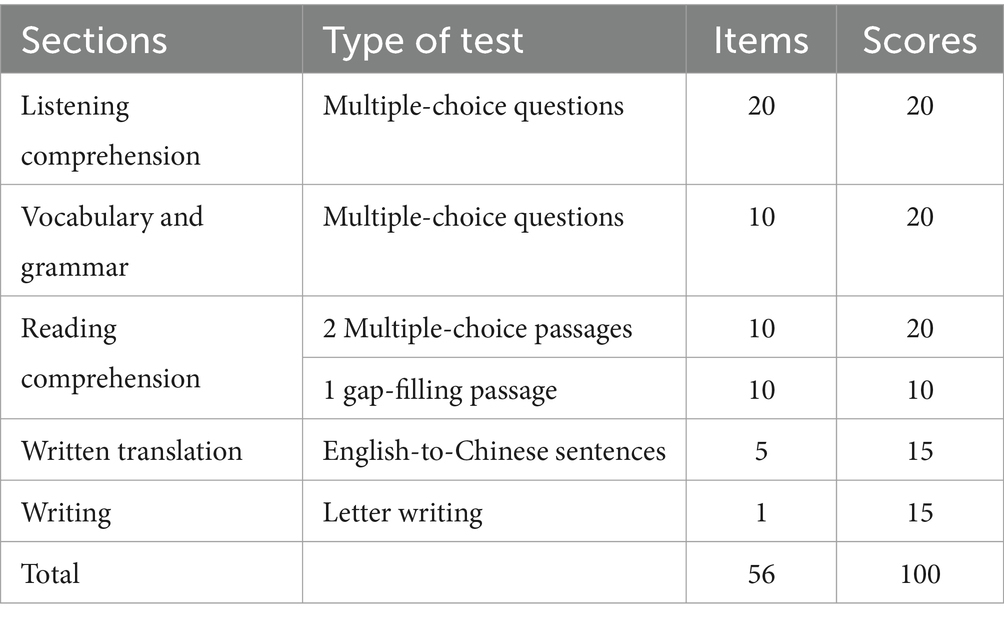

The English achievement tests were developed and marked by a team of professional English lecturers at the experimental university, who taught the same academic level and followed a standardized national College English curriculum, ensuring consistency in instructional content. Each EAT functioned as an official end-of-course examination and was designed as a criterion-referenced assessment, which evaluated students against predefined learning objectives rather than against the performance of their peers (Cohen et al., 2018). To enhance scoring reliability, a segmented marking approach was adopted, in which each instructor was responsible for grading a specific section of the exam. As detailed in Table 3, each EAT was a 100-point assessment divided into five sections: (1) Listening Comprehension (20 multiple-choice questions, sourced from official College English examination materials); (2) Vocabulary and Grammar (10 multiple-choice questions, derived directly from the course content); (3) Reading Comprehension (two multiple-choice reading passages and one gap-filling passage, with a total of 20 items drawn from the textbook); (4) Written Translation (five English-to-Chinese translation sentences, all based on instructional content); and (5) Writing (one letter-writing task, requiring students to produce a text of 120–180 words). In total, the test included 56 items, covering a range of language skills and ensuring alignment with the instructional objectives. Content validity was supported by aligning all items with the national College English curriculum and instructional objectives, and consistent grading rubrics were used across all instructors.

3.3 Data analysis

The collected data included four administrations of the motivational questionnaire and three administrations of the English achievement test. These data were analyzed to examine differences in students’ motivation (goal orientation, self-efficacy, and instructional support) and English academic achievement across the conventional, blended, and AI-blended instructional approaches.

Prior to statistical testing, assumptions of normality and sphericity were assessed to determine the appropriateness of parametric testing (Verma, 2015). Repeated-measures ANOVA or its non-parametric equivalent, the Friedman test, was employed depending on whether the outcome variables met the assumptions of normality. The Shapiro–Wilk test was used to examine the normality of the distribution of data and Mauchly’s Test of Sphericity was applied to assess the assumption of sphericity for repeated measures ANOVA. Where sphericity was violated, Greenhouse–Geisser corrections were applied. All statistical analyses were designed to assess within-subject differences in the magnitude of change across instructional phases, thereby enabling a focused examination of the relative impact of each instructional approach. Analyses were conducted using SPSS Statistics (Version 29).
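The Greenhouse–Geisser correction mentioned above rescales the ANOVA degrees of freedom by an estimate of sphericity, usually written as epsilon. The sketch below shows one standard way to obtain that estimate from the double-centered covariance matrix of the repeated measures. It is an illustration in plain Python rather than the SPSS procedure used in the study, and the `scores` values and function names are hypothetical.

```python
def covariance_matrix(scores):
    """Sample covariance matrix of the columns (conditions) of `scores`."""
    n, k = len(scores), len(scores[0])
    means = [sum(row[j] for row in scores) / n for j in range(k)]
    return [
        [
            sum((row[i] - means[i]) * (row[j] - means[j]) for row in scores) / (n - 1)
            for j in range(k)
        ]
        for i in range(k)
    ]


def greenhouse_geisser_epsilon(scores):
    """Greenhouse-Geisser epsilon; 1.0 means sphericity holds exactly."""
    cov = covariance_matrix(scores)
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    grand_mean = sum(row_means) / k
    # Double-centre the (symmetric) covariance matrix.
    centred = [
        [cov[i][j] - row_means[i] - row_means[j] + grand_mean for j in range(k)]
        for i in range(k)
    ]
    trace = sum(centred[i][i] for i in range(k))
    sum_sq = sum(value * value for row in centred for value in row)
    return trace ** 2 / ((k - 1) * sum_sq)


# Hypothetical mean questionnaire scores: 6 students x 4 measurement points.
scores = [
    [3.1, 3.0, 3.4, 3.9],
    [2.8, 2.9, 3.3, 3.5],
    [3.5, 3.2, 3.6, 4.2],
    [2.9, 3.1, 3.2, 3.8],
    [3.3, 3.0, 3.7, 4.0],
    [3.0, 2.7, 3.1, 3.6],
]

n, k = len(scores), len(scores[0])
eps = greenhouse_geisser_epsilon(scores)
print("epsilon:", round(eps, 3))
print("adjusted df:", round(eps * (k - 1), 2), round(eps * (k - 1) * (n - 1), 2))
```

Epsilon lies between 1/(k − 1) and 1 for k conditions; the further it falls below 1, the more the degrees of freedom are reduced and the more conservative the resulting test becomes.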

4 Results

4.1 Preliminary tests

The preliminary tests of normality and sphericity were first conducted in the analysis of both motivational questionnaires and English achievement tests.

4.1.1 Motivational questionnaire

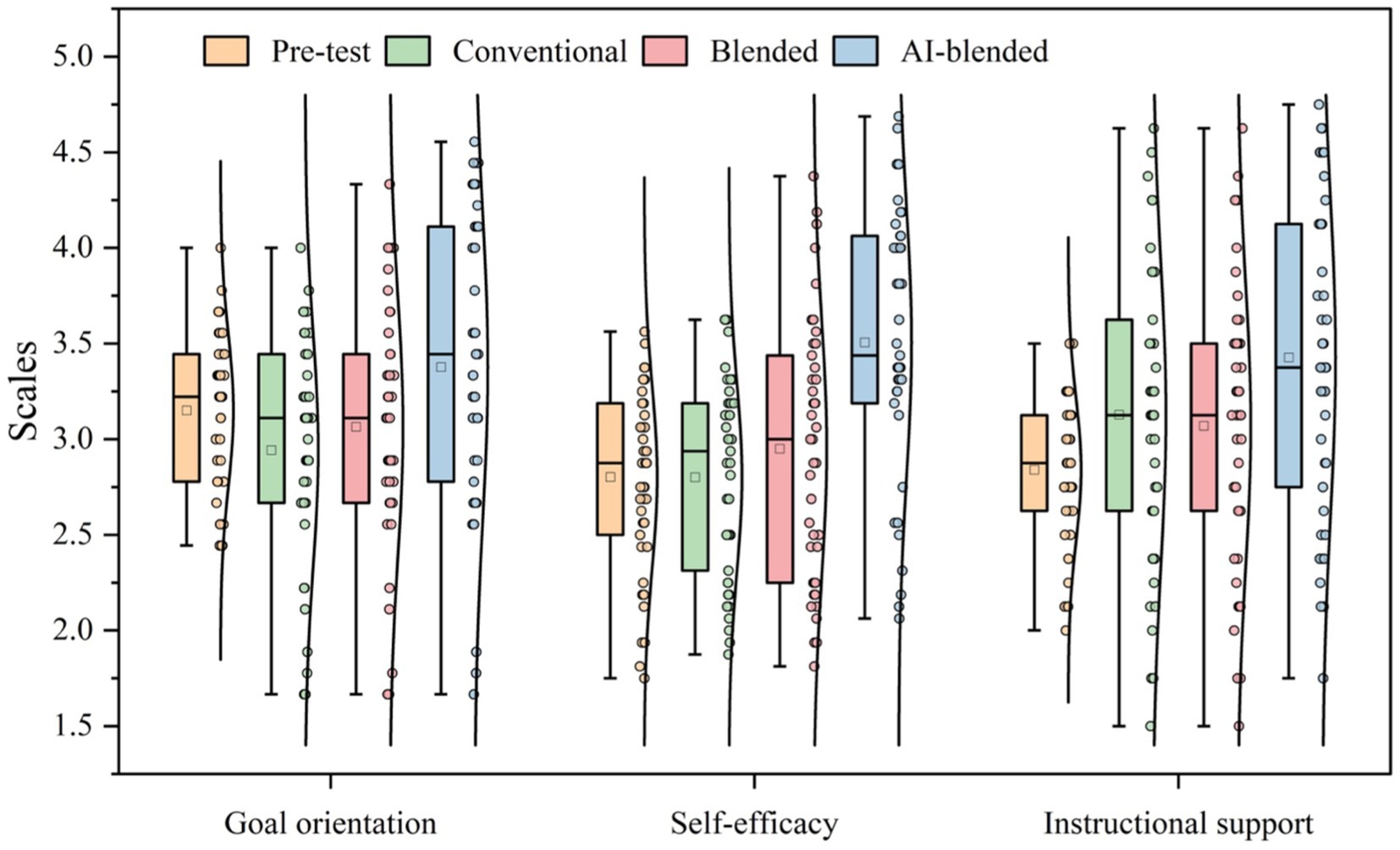

Descriptive analysis of the motivational questionnaire responses across the instructional approaches revealed distinct patterns across the three measured constructs. For goal orientation, the most notable improvements were observed under the AI-blended instructional approach, particularly in stimulating curiosity (Item 26), where 18.6% of participants selected “strongly agree,” compared to none in both the pre-test and conventional instruction. In contrast, self-efficacy levels were relatively low across the instructional approaches. Many items lacked responses in the “strongly agree” category, suggesting a general absence of strong learning confidence. Notably, this response category disappeared entirely after the conventional instruction, while the proportion of “strongly disagree” responses remained below 10%, indicating a reduction in extreme negative perceptions. Regarding instructional support, while a large proportion of responses clustered around “neutral” and “agree,” very few students selected “strongly agree,” especially before and after the conventional instructional approach. However, following the AI-blended instructional approach, an increased proportion of high-scale responses was observed, reflecting more positive perceptions of guidance and learning support.

The Shapiro–Wilk test indicated that goal orientation after the conventional (p = 0.019) and AI-blended (p = 0.037) instructional approaches violated the normality assumption. Similarly, self-efficacy after the blended instructional approach failed to meet the normality assumption (p = 0.034). Consequently, non-parametric Friedman tests were applied to analyze these two constructs. For instructional support, all four measurement points passed the normality test (p > 0.05), allowing a parametric repeated measures ANOVA to be conducted. Because the sphericity assumption was not met for this construct, the Greenhouse–Geisser correction was applied to adjust the degrees of freedom.
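The selection rule described above can be sketched as a small function. This is an illustration of the decision logic only. The function name is hypothetical, the goal orientation and self-efficacy p-values are the ones reported in the text, and the instructional support values are placeholders, since the text states only that all four exceeded 0.05.

```python
ALPHA = 0.05


def choose_test(shapiro_p_values, alpha=ALPHA):
    """Pick the Friedman test if any time point violates normality, else RM-ANOVA."""
    if any(p < alpha for p in shapiro_p_values):
        return "friedman"
    return "rm_anova"


# Reported Shapiro-Wilk p-values for the time points that violated normality.
goal_orientation = [0.019, 0.037]
self_efficacy = [0.034]
# Placeholder values: the article reports only that all four were above 0.05.
instructional_support = [0.20, 0.31, 0.12, 0.45]

for name, p_values in [
    ("goal orientation", goal_orientation),
    ("self-efficacy", self_efficacy),
    ("instructional support", instructional_support),
]:
    print(name, "->", choose_test(p_values))
```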

4.1.2 English achievement tests

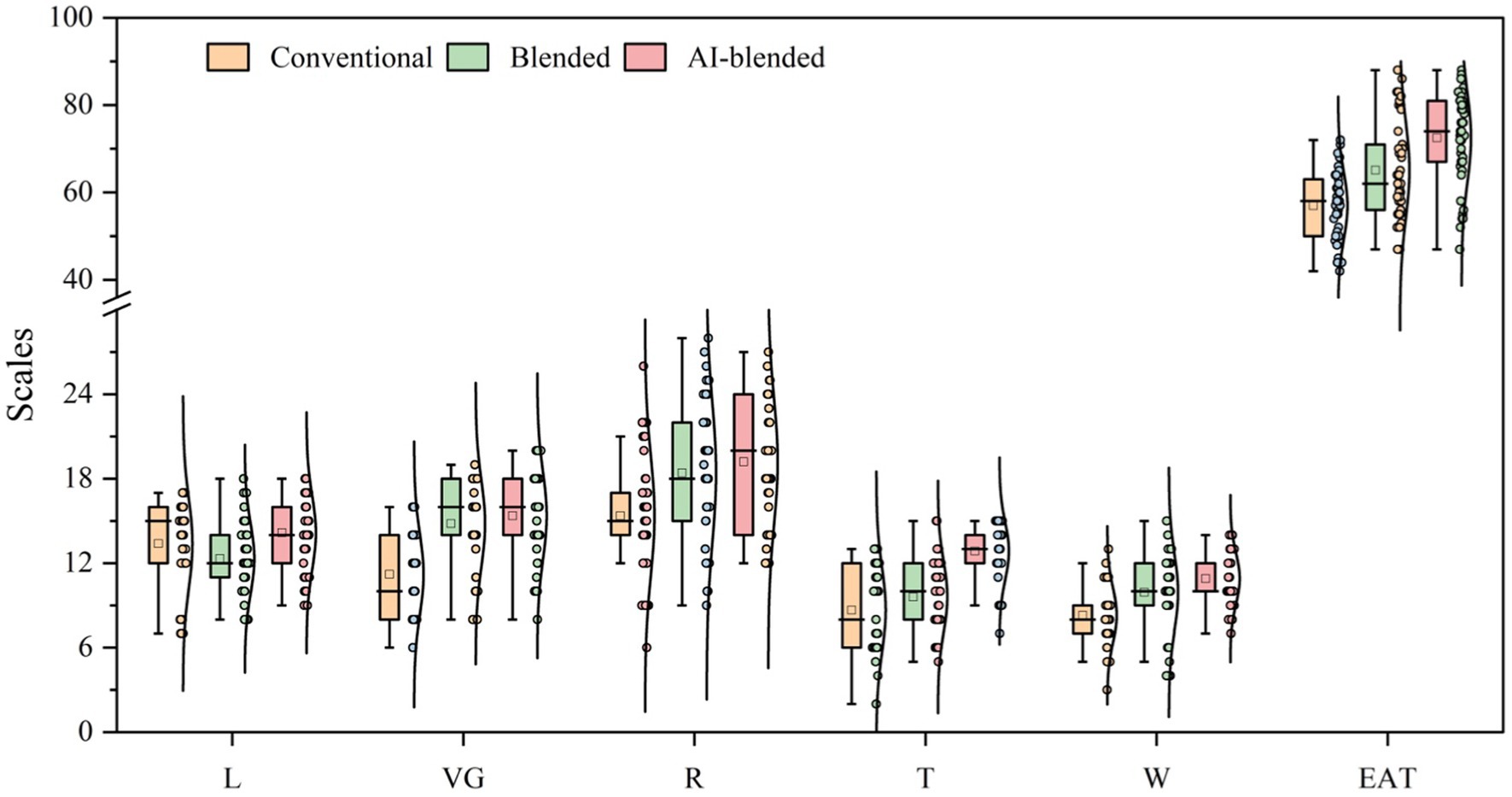

Descriptive analysis of the English achievement test results across the five sections showed overall improvement under the AI-blended instructional approach. In particular, both minimum and maximum scores increased in most sections, indicating gains among both lower- and higher-achieving students. Mean scores also showed upward trends across sections, with the AI-blended instructional approach yielding the highest performance overall. For instance, Vocabulary and Grammar showed notable gains, with the mean increasing from 11.209 under the conventional instructional approach to 15.372 under the AI-blended instructional approach, accompanied by improvements in both minimum and maximum scores (from 6 to 8 and from 16 to 20, respectively). Reading demonstrated the largest absolute increase in mean score, from 15.372 to 19.209, although the standard deviation remained high, suggesting continued variability in reading proficiency. Translation scores became more concentrated around the mean under the AI-blended instructional approach, with the standard deviation decreasing from 3.022 to 2.042.

Due to violations of the normality assumption, as indicated by significant Shapiro–Wilk test results (p < 0.05) for at least one point in each section (including total score), non-parametric Friedman tests were conducted to examine differences across conventional, blended, and AI-blended instructional approaches.

4.2 Results for research question 1

To what extent do conventional, blended and AI-blended instructional approaches lead to significant changes in students’ perceptions of goal orientation, self-efficacy and instructional support, and are there any significant differences across these instructional approaches?

4.2.1 Goal orientation

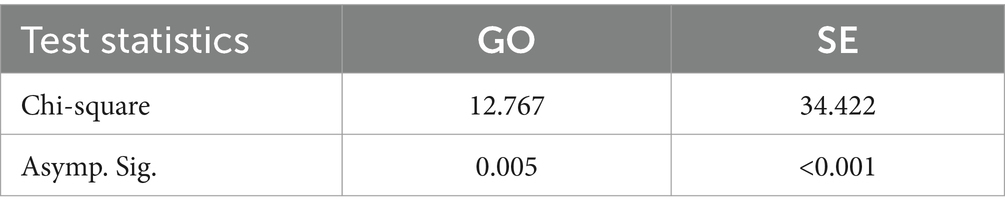

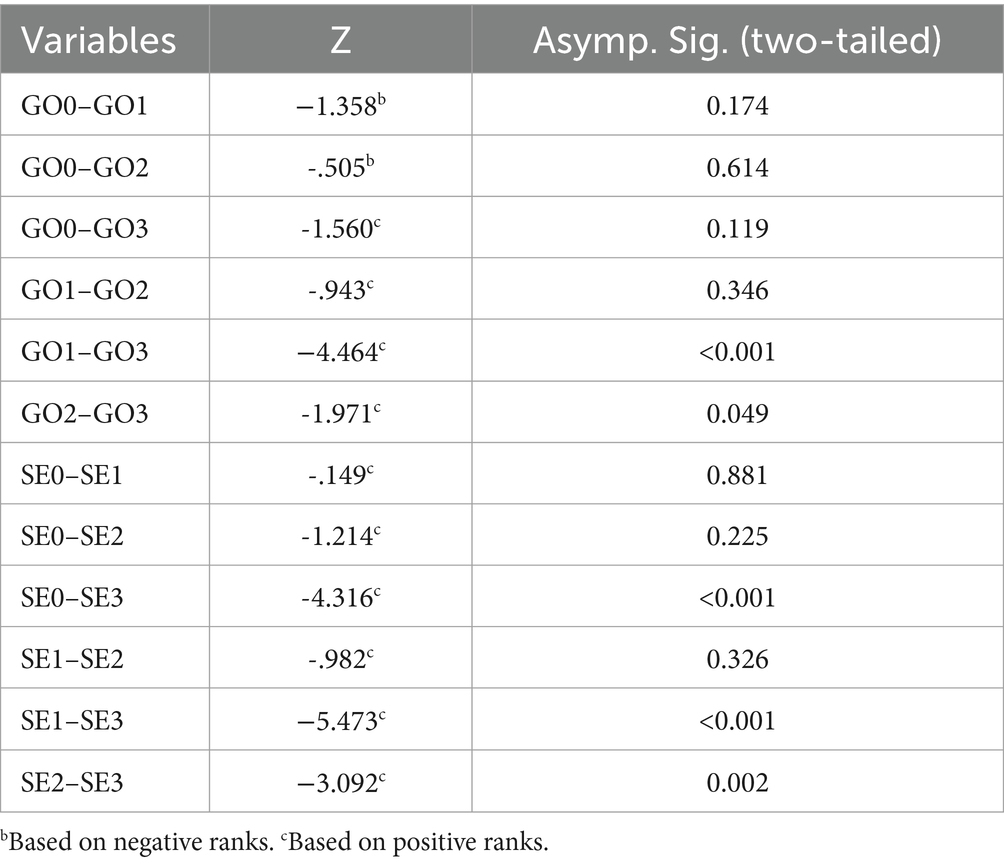

The Friedman test revealed a statistically significant difference in students’ goal orientation (GO) across the four time points, χ²(3) = 12.767, p = 0.005, as shown in Table 4. Post-hoc Wilcoxon Signed-Rank Tests were conducted to identify pairwise differences. As shown in Table 5, students reported significantly higher goal orientation scores under the AI-blended instructional approach (GO3) compared to both the conventional approach (GO1), Z = −4.464, p < 0.001, and the blended approach (GO2), Z = −1.971, p = 0.049. These results indicate that the AI-blended instructional approach substantially improved students’ goal orientation.
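As a rough illustration of how a Friedman statistic and its p-value are obtained, the sketch below ranks each participant's scores across conditions, computes the tie-corrected chi-square, and evaluates the upper tail of the chi-square distribution. It is not the SPSS procedure used in the study. The function names and the three-participant data set are hypothetical, and the code assumes at least one participant has scores that are not all tied.

```python
import math


def average_ranks(values):
    """Rank values from 1 to k, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_statistic(rows):
    """Tie-corrected Friedman chi-square and df; rows holds k scores per participant."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    tie_term = 0.0
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
        for v in set(row):
            t = row.count(v)
            tie_term += t ** 3 - t
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    correction = 1.0 - tie_term / (n * k * (k * k - 1))
    return q / correction, k - 1


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df."""
    half = x / 2.0
    if df % 2 == 0:
        return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))
    m = (df - 1) // 2
    tail = sum(half ** (j - 0.5) / math.gamma(j + 0.5) for j in range(1, m + 1))
    return math.erfc(math.sqrt(half)) + math.exp(-half) * tail


# Hypothetical scores: three participants, three conditions (illustration only).
toy_rows = [[1, 2, 3], [2, 3, 5], [3, 5, 6]]
q, df = friedman_statistic(toy_rows)
print(f"toy data: chi-square({df}) = {q:.3f}, p = {chi2_sf(q, df):.4f}")

# Consistency check on the reported goal orientation statistic.
print(f"reported chi-square(3) = 12.767 gives p = {chi2_sf(12.767, 3):.3f}")
```

The final line recomputes the p-value from the reported statistic alone, which offers a quick check that the chi-square value and p = 0.005 agree.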

4.2.2 Self-efficacy

The Friedman test also indicated significant differences in self-efficacy (SE) across the four measurement points, χ²(3) = 34.422, p < 0.001. Follow-up Wilcoxon tests showed that self-efficacy under the AI-blended instructional approach (SE3) was significantly higher than the pre-test (SE0), Z = −4.316, p < 0.001, the conventional instructional approach (SE1), Z = −5.473, p < 0.001, and the blended instructional approach (SE2), Z = −3.092, p = 0.002. These findings demonstrate a consistent enhancement in students’ confidence in learning under the AI-blended instructional approach.
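The Z values reported for the Wilcoxon Signed-Rank Tests come from a normal approximation to the signed-rank statistic. The sketch below shows one common form of that approximation (zero differences dropped, tie correction applied, no continuity correction) together with the conversion from Z to a two-sided p-value. It is an illustration under those assumptions and not necessarily identical to the SPSS computation. The function names and paired scores are hypothetical.

```python
import math


def two_sided_p_from_z(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def wilcoxon_z(before, after):
    """Normal-approximation Z for paired scores, based on the smaller rank sum."""
    diffs = [a - b for a, b in zip(after, before) if a != b]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48)
    return (min(w_plus, w_minus) - mean) / sd


# Hypothetical paired scores for six participants (illustration only).
toy_before = [1, 2, 3, 4, 5, 6]
toy_after = [2, 4, 6, 8, 10, 12]
z = wilcoxon_z(toy_before, toy_after)
print(f"toy data: Z = {z:.3f}, p = {two_sided_p_from_z(z):.3f}")

# Consistency check on Z values reported in the text.
for reported_z in (-1.971, -3.092):
    print(f"Z = {reported_z} gives p = {two_sided_p_from_z(reported_z):.3f}")
```

Because the statistic is built from the smaller of the two rank sums, Z is never positive, which matches the sign of the values reported here.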

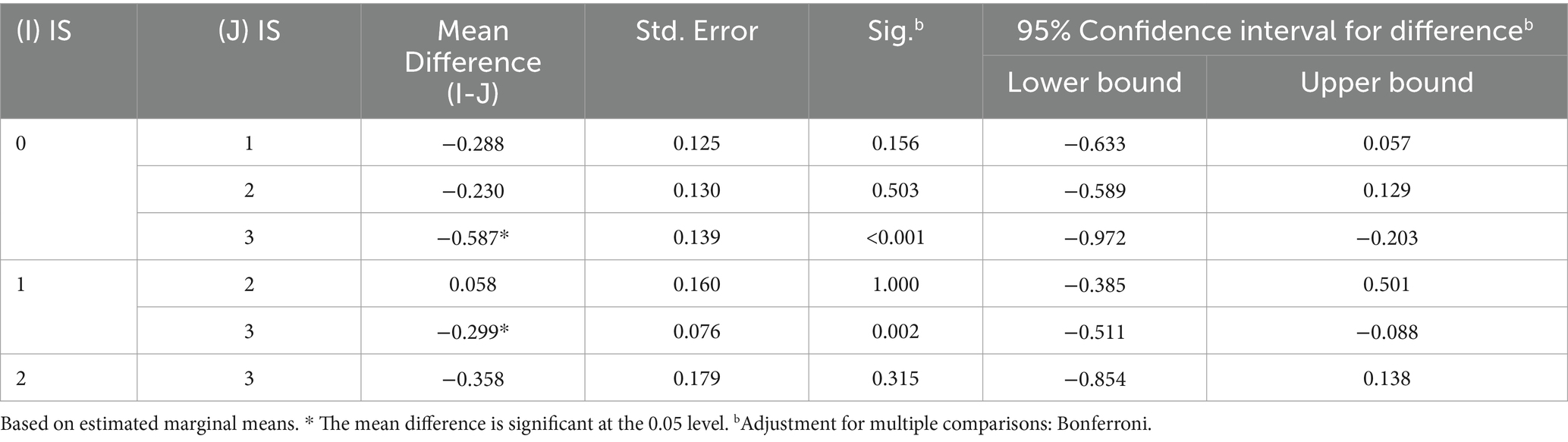

4.2.3 Instructional support

Since the instructional support (IS) data met the normality assumption, a repeated measures ANOVA was performed. The analysis yielded a statistically significant effect (F = 8.349, p < 0.001), indicating differences across the four time points. Paired-sample t-tests were used for pairwise comparisons (see Table 6). Results showed that instructional support significantly improved under the AI-blended instructional approach (IS3) when compared to the pre-test (IS0) (p < 0.001) and the conventional instructional approach (IS1) (p = 0.002), highlighting students’ enhanced perceptions of guidance and support.
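To make the F statistic concrete, the following sketch computes a one-way repeated measures ANOVA by partitioning the total sum of squares into condition, participant, and error components. It is a simplified illustration without any sphericity correction, not the SPSS analysis itself. The function name and the three-participant data set are hypothetical.

```python
def rm_anova_f(rows):
    """One-way repeated measures ANOVA: returns (F, df_conditions, df_error)."""
    n, k = len(rows), len(rows[0])
    grand = sum(sum(r) for r in rows) / (n * k)
    cond_means = [sum(r[j] for r in rows) / n for j in range(k)]
    subj_means = [sum(r) / k for r in rows]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    # Error is what remains after removing condition and participant effects.
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error


# Hypothetical scores: three participants measured under three conditions.
toy_rows = [[1, 2, 4], [2, 3, 4], [3, 5, 6]]
f_value, df1, df2 = rm_anova_f(toy_rows)
print(f"F({df1}, {df2}) = {f_value:.3f}")
```

Removing the between-participant sum of squares from the error term is what gives the within-subject design its sensitivity: stable differences between students do not count as noise.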

To address research question 1, repeated measures analyses were conducted across four time points of motivational questionnaires, examining goal orientation, self-efficacy and instructional support. The results demonstrated that students’ motivational perceptions significantly differed among instructional approaches, as illustrated in Figure 1. In particular, the AI-blended instructional approach led to statistically significant improvements in all three motivational constructs compared to conventional and blended instructional approaches. These findings suggest that the AI-blended instructional approach holds greater potential to foster students’ motivation in EFL learning contexts.

4.3 Results for research question 2

To what extent do the conventional, blended, and AI-blended instructional approaches impact students’ English academic achievement?

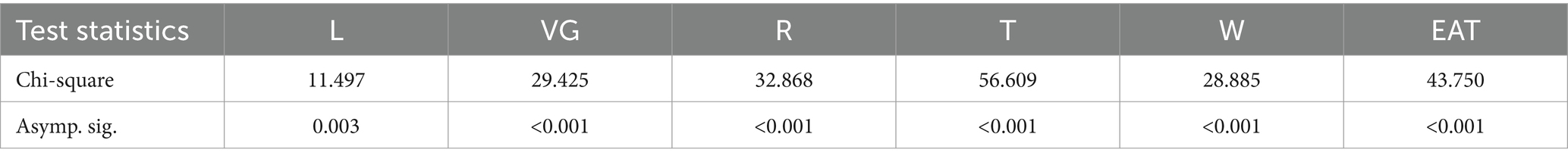

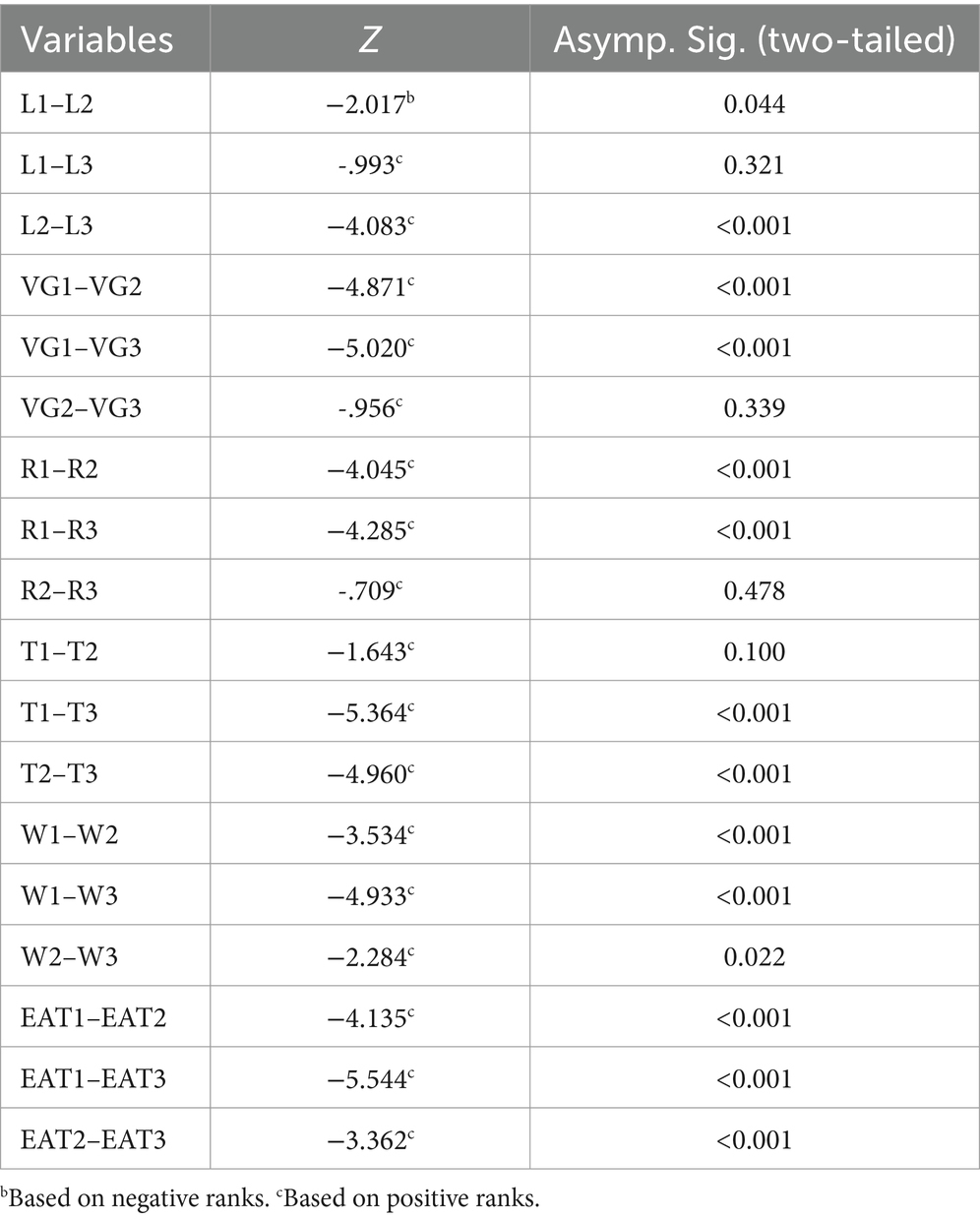

Given the violations of the normality assumption, the analysis proceeded with Friedman Tests to evaluate differences across the conventional, blended, and AI-blended instructional approaches for each section and the total score of the English Achievement Test (EAT). As shown in Table 7, the Friedman tests yielded statistically significant chi-square values (p < 0.05) for all five sections, namely Listening Comprehension (L), Vocabulary and Grammar (VG), Reading Comprehension (R), Written Translation (T), and Writing (W), as well as the total EAT scores, indicating overall differences across the three instructional approaches.

To further examine the pairwise differences, Wilcoxon Signed-Rank Tests were conducted (see Table 8). A significant improvement was observed in listening comprehension from the blended (L2) to the AI-blended instructional approach (L3) (Z = −4.083, p < 0.001), indicating a strong positive effect of AI integration. In vocabulary and grammar, significant improvements were observed from conventional (VG1) to both blended (VG2) and AI-blended (VG3) instructional approaches (p < 0.001), although the difference between blended and AI-blended was not statistically significant. This may be due to the close alignment of the vocabulary and grammar section with the course content, limiting the added value of AI assistance. For reading comprehension, significant improvements were also observed between conventional (R1) and both blended (R2) and AI-blended (R3) instructional approaches. However, similar to the VG section, the AI-blended instructional approach did not produce a statistically significant advantage over the blended instructional approach. In the case of written translation, no significant difference was found between conventional (T1) and blended (T2) (Z = −1.643, p = 0.100), but the AI-blended instructional approach (T3) showed statistically significant improvements over both previous instructional approaches (p < 0.001). Writing scores also improved significantly across all approaches, with noticeable gains from conventional to blended, and further to the AI-blended instructional approach (p < 0.05).

To answer research question 2, the Friedman Test on total EAT scores confirmed a consistent upward trend across instructional approaches, indicating that both blended and AI-blended instructional approaches significantly improved students’ overall English academic achievement compared to the conventional one, as illustrated in Figure 2. Overall, although the AI-blended instructional approach did not produce statistically significant improvements over the conventional instructional approach in listening comprehension, nor over the blended instructional approach in vocabulary and grammar, and reading comprehension, its integration nonetheless appears to facilitate a more comprehensive and balanced development of English language skills.

5 Discussion

University students, particularly those with lower English proficiency, often face substantial challenges in maintaining learning motivation and achieving satisfactory academic success. This study provides nuanced insights into how conventional, blended, and AI-blended instructional approaches support or hinder students’ goal orientation, self-efficacy, and instructional support, as well as their English academic achievement across key English language skills.

The motivational questionnaire results showed that the AI-blended instructional approach significantly enhanced students’ perceptions of self-efficacy and instructional support. These findings align with previous research highlighting the positive role of AI tools in language learning environments. For example, Zhang et al. (2024) found that ChatGPT-supported writing instruction improved students’ self-efficacy in English argumentative writing, while Xu et al. (2024) reported increased foreign language enjoyment and confidence among Chinese learners using an AI learning system. The adaptive, real-time, and personalized feedback enabled by AI likely contributed to learners’ stronger sense of support and competence (Alshahrani, 2023).

Significant improvements were also observed in goal orientation under the AI-blended instructional approach compared to both conventional and blended learning. This implies that AI integration supports both mastery goals by tracking personal progress and offering constructive feedback, and performance goals by creating visible opportunities for success. This is important since goal orientation fosters enthusiasm for learning and contributes to improved academic performance (Izadpanah, 2023), as well as engagement in writing (Yang, 2024; Zhong and Zhan, 2024). Compared to goal orientation, the even greater improvement in self-efficacy under the AI-blended instructional approach underscores the transformative potential of intelligent tools in boosting learner confidence and autonomy (Xu et al., 2024).

The analysis of the English achievement test indicated that both the blended and AI-blended instructional approaches significantly improved students’ overall English academic achievement compared to the conventional instructional approach. These findings support the growing body of research suggesting that technology-enhanced instruction improves grammar performance (Khodabandeh, 2024) and reading skills (Tao et al., 2024), by offering greater flexibility, increased exposure to learning materials, and diverse choices of engagement.

However, a more detailed examination revealed nuanced differences across specific skills. For instance, in listening comprehension, the AI-blended instructional approach led to significant improvement over the blended one, suggesting that AI-enhanced tools may offer better support for developing auditory processing through features like repeated input, real-time feedback, or ASR. In contrast, the blended instructional approach alone did not significantly outperform the conventional one, a finding that diverges from prior research (Pyo and Lee, 2024; Sujatha and Rajasekaran, 2024), which emphasized the benefits of mobile-assisted blended learning for distinguishing relevant information, conceptualizing audio content, and predicting meaning in listening tasks. One possible explanation is that students in this study lacked the learner autonomy needed to engage effectively in online listening tasks, a challenge previously noted by Boelens et al. (2017).

In the vocabulary and grammar and reading comprehension sections, both blended and AI-blended instructional approaches significantly outperformed conventional instruction, consistent with earlier findings (Khodabandeh, 2024; Tao et al., 2024). However, the difference between AI-blended and blended instructional approaches was not statistically significant in this study, possibly due to test item alignment with course materials, which may have limited the added value of AI-enhanced personalization in these sections.

The translation section revealed a particularly strong effect of the AI-blended instructional approach, which significantly outperformed both conventional and blended approaches. While blended learning has been reported to improve translation competence when students held positive attitudes (Peng et al., 2023) and achieved flexible peer collaboration (Chen, 2022), the present findings suggest that AI-integrated instruction can scaffold complex productive tasks, such as sentence restructuring or meaning transfer, by offering targeted feedback and examples.

In terms of writing, both blended and AI-blended instructional approaches led to significant improvements. This aligns with studies by Chen (2023) and Wei et al. (2023), who found that AWE systems not only provided timely feedback but also enhanced students’ writing fluency. In the present study, the writing gains under the AI-blended instructional approach may reflect the dual advantage of immediate feedback and personalized revision guidance, creating a more structured yet adaptive writing environment.

While the blended instructional approach generally produced better achievement than the conventional instructional approach, it did not yield consistent advantages across all skills, particularly in listening comprehension and translation, nor did it demonstrate significantly greater improvements in student motivation constructs. One possible explanation lies in the limited autonomous learning capacity of university students, especially those with lower English proficiency. In traditional Chinese classrooms, learning has long been teacher-centered, with students accustomed to passive knowledge reception rather than self-directed learning (Li, 2022). Hence, students under the blended instructional approach may have struggled to manage online pre-class learning tasks independently. This aligns with Boelens et al. (2017), who emphasized that blended learning must be carefully scaffolded to support learner autonomy and affective climate.

These results highlight that the blended instructional approach alone is not universally effective because its success depends on how well the online component is integrated, the degree of learner readiness, and the scaffolding provided by instructors. In contrast, the AI-blended instructional approach appeared to compensate for these limitations by embedding adaptive support into the online learning.

6 Conclusion, limitations, implications and recommendations

The present study investigated the effectiveness of conventional, blended and AI-blended instructional approaches on university students’ perceptions of goal orientation, self-efficacy and instructional support, and their English academic achievement. The findings demonstrated that both blended and AI-blended instructional approaches improved students’ motivation and academic performance compared to the conventional approach. Notably, the AI-blended instructional approach yielded the most substantial gains in self-efficacy, instructional support, and key language skills such as listening comprehension, translation, and writing, highlighting its potential to support more effective and engaging EFL learning in higher education contexts.

Several limitations should be acknowledged. First, the study was conducted at a single university with a single cohort of first-year non-English majors, which may limit the generalizability of the findings. Second, the longitudinal design spanning 1.5 years may have introduced confounding factors related to students’ natural academic and emotional development. The observed improvements in motivation and achievement may have partially resulted from maturation effects beyond instructional influences.

To implement the AI-blended instructional approach effectively, educators must carefully select AI tools that are both technically accessible and pedagogically aligned with instructional goals. Tools should support multiple language skills, offer clear usage guidance, and foster student output through collaborative activities such as group projects and in-class discussions. Instructional design should strategically integrate pre-class and in-class learning, going beyond merely introducing AI as a novelty and instead cultivating meaningful learning engagement.

The successful integration of AI also depends on teachers’ readiness to adopt technology-enhanced pedagogies. Thus, ongoing professional development is critical, not only to strengthen technical competence but also to foster teachers’ ability to align AI with learner needs, instructional objectives, and ethical standards. Additionally, clear institutional and national guidelines on the responsible use of AI in education are essential to ensure that implementation is instructionally sound and ethically compliant.

Future research should extend this investigation to diverse educational levels, including primary and secondary schools, to explore how an AI-blended instructional approach influences younger learners’ motivation and achievement in EFL contexts. Moreover, school leaders and administrators should take an active role in establishing structured frameworks for the selection and integration of digital tools, providing a foundation upon which teachers can choose appropriate, accessible, and pedagogically sound AI technologies.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Jawatankuasa Etika Penyelidikan Manusia Universiti Sains Malaysia (JEPeM-USM). Approval number: USM/JEPeM/PP/24080739. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

JL: Formal analysis, Writing – original draft, Methodology, Conceptualization, Investigation, Writing – review & editing. HA: Supervision, Writing – review & editing, Methodology. XB: Formal analysis, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors would like to thank the students who participated in the study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1614388/full#supplementary-material

References

Alshahrani, A. (2023). The impact of ChatGPT on blended learning: current trends and future research directions. Int. J. Data Netw. Sci. 7, 2029–2040. doi: 10.5267/j.ijdns.2023.6.010

Ames, C. (1992). Classrooms: goals, structures, and student motivation. J. Educ. Psychol. 84, 261–271. doi: 10.1037/0022-0663.84.3.261

Anderman, E. M. (2020). Achievement motivation theory: balancing precision and utility. Contemp. Educ. Psychol. 61:101864. doi: 10.1016/j.cedpsych.2020.101864

Ashraf, M. A., Mollah, S., Perveen, S., Shabnam, N., and Nahar, L. (2022). Pedagogical applications, prospects, and challenges of blended learning in Chinese higher education: a systematic review. Front. Psychol. 12:772322. doi: 10.3389/fpsyg.2021.772322

Biggs, J. (1996). Enhancing teaching through constructive alignment. High. Educ. 32, 347–364. doi: 10.1007/BF00138871

Boelens, R., De Wever, B., and Voet, M. (2017). Four key challenges to the design of blended learning: a systematic literature review. Educ. Res. Rev. 22, 1–18. doi: 10.1016/j.edurev.2017.06.001

Button, S. B., Mathieu, J. E., and Zajac, D. M. (1996). Goal orientation in organizational research: a conceptual and empirical foundation. Organ. Behav. Hum. Decis. Process. 67, 26–48. doi: 10.1006/obhd.1996.0063

Byrne, M., Flood, B., and Griffin, J. (2014). Measuring the academic self-efficacy of first-year accounting students. Account. Educ. 23, 407–423. doi: 10.1080/09639284.2014.931240

Chen, J. (2022). Effectiveness of blended learning to develop learner autonomy in a Chinese university translation course. Educ. Inf. Technol. 27, 12337–12361. doi: 10.1007/s10639-022-11125-1

Chen, P.-J. (2023). Looking for the right blend: a blended EFL university writing course. Comput. Assist. Lang. Learn. 36, 1147–1176. doi: 10.1080/09588221.2021.1974052

Chen, Y. J., Hsu, L., and Lu, S. W. (2024). How does emoji feedback affect the learning effectiveness of EFL learners? Neuroscientific insights for CALL research. Comput. Assist. Lang. Learn. 37, 1857–1880. doi: 10.1080/09588221.2022.2126498

Chen, D., Liu, W., and Liu, X. (2024). What drives college students to use AI for L2 learning? Modeling the roles of self-efficacy, anxiety, and attitude based on an extended technology acceptance model. Acta Psychol. 249:104442. doi: 10.1016/j.actpsy.2024.104442

China National Academy of Edu (2023). Report on China smart education 2022: Digital transformation of Chinese education towards smart education. Singapore: Springer.

Clifford, S., Sheagley, G., and Piston, S. (2021). Increasing precision without altering treatment effects: repeated measures designs in survey experiments. Am. Polit. Sci. Rev. 115, 1048–1065. doi: 10.1017/S0003055421000241

Cohen, L., Manion, L., and Morrison, K. (2018). Research methods in education. New York: Routledge.

Dewaele, J.-M., Albakistani, A., and Ahmed, I. K. (2024). Levels of foreign language enjoyment, anxiety and boredom in emergency remote teaching and in in-person classes. Lang. Learn. J. 52, 117–130. doi: 10.1080/09571736.2022.2110607

Dick, W. (1996). The Dick and Carey model: will it survive the decade? Educ. Technol. Res. Dev. 44, 55–63. doi: 10.1007/BF02300425

Escalante, J., Pack, A., and Barrett, A. (2023). AI-generated feedback on writing: insights into efficacy and ENL student preference. Int. J. Educ. Technol. High. Educ. 20:57. doi: 10.1186/s41239-023-00425-2

Fowler, K. S. (2018). The motivation to learn online questionnaire (Doctoral dissertation, University of Georgia). University of Georgia Electronic Theses and Dissertations. Available online at: https://getd.libs.uga.edu/pdfs/fowler_kevin_s_201805_phd.pdf (Accessed June 05, 2025).

Gist, M. E., and Mitchell, T. R. (1992). Self-efficacy: a theoretical analysis of its determinants and malleability. Acad. Manag. Rev. 17, 183–211. doi: 10.2307/258770

Golzar, J., Noor, S., and Tajik, O. (2022). Convenience sampling. Int. J. Educ. Lang. Stud. 1, 72–77. doi: 10.22034/ijels.2022.162981

Guo, Y., Wang, Y., and Ortega-Martín, J. L. (2023). The impact of blended learning-based scaffolding techniques on learners’ self-efficacy and willingness to communicate. Porta Linguar. Rev. Interuniv. Didáct. Leng. Extranj. 40, 253–273. doi: 10.30827/portalin.vi40.27061

Hao, X., and Fang, F. (2024). Learners’ speaking self-efficacy, self-efficacy sources and their relations in the traditional and flipped instructional modes. Asia Pacific J. Educ. 44, 1–16. doi: 10.1080/02188791.2024.2414051

Hartikainen, J., Haapala, E. A., Poikkeus, A.-M., Sääkslahti, A., Laukkanen, A., Gao, Y., et al. (2023). Classroom-based physical activity and teachers’ instructions on students’ movement in conventional classrooms and open learning spaces. Learn. Environ. Res. 26, 177–198. doi: 10.1007/s10984-022-09411-3

Huang, A. Y., Lu, O. H., and Yang, S. J. (2023). Effects of artificial intelligence–enabled personalized recommendations on learners’ learning engagement, motivation, and outcomes in a flipped classroom. Comput. Educ. 194:104684. doi: 10.1016/j.compedu.2022.104684

Izadpanah, S. (2023). The mediating role of academic passion in determining the relationship between academic self-regulation and goal orientation with academic burnout among English foreign language (EFL) learners. Front. Psychol. 13:933334. doi: 10.3389/fpsyg.2022.933334

Kashefian-Naeeini, S., Hosseini, S. A., Dabiri, A., Rezaei, S., and Kustati, M. (2023). A comparison of the effects of web-based vocabulary instruction vs. the conventional method on EFL learners’ level of L2 lexical knowledge. Forum Linguist. Stud. 6:1947. doi: 10.59400/fls.v6i1.1947

Khan, M. O., and Khan, S. (2024). Influence of online versus traditional learning on EFL listening skills: a blended mode classroom perspective. Heliyon 10:e28510. doi: 10.1016/j.heliyon.2024.e28510

Khodabandeh, F. (2024). Analyzing the influence of ambiguity tolerance on grammar acquisition in EFL learners across face-to-face, blended, and flipped learning environments. J. Psycholinguist. Res. 53:57. doi: 10.1007/s10936-024-10096-3

Kizilcec, R. F. (2023). To advance AI use in education, focus on understanding educators. Int. J. Artif. Intell. Educ. 34, 12–19. doi: 10.1007/s40593-023-00351-4

Lechuga, C. G., and Doroudi, S. (2023). Three algorithms for grouping students: a bridge between personalized tutoring system data and classroom pedagogy. Int. J. Artif. Intell. Educ. 33, 843–884. doi: 10.1007/s40593-022-00309-y

Li, B. (2022). Research on correlation between English writing self-efficacy and psychological anxiety of college students. Front. Psychol. 13:957664. doi: 10.3389/fpsyg.2022.957664

Li, Q., and Chan, K. K. (2024). The effect of teaching critical thinking on EFL speaking competence: a meta-analysis. Engl. Teach. Learn. 48, 1–18. doi: 10.1007/s42321-024-00191-y

Li, Z., Dai, Z., Li, J., and Guan, P. (2025). Does the instructional approach really matter? A comparative study of the impact of online and in-person instruction on learner engagement. Acta Psychol. 253:104772. doi: 10.1016/j.actpsy.2025.104772

Li, B., Turner, J., Xue, J., and Liu, J. (2022). When are performance-approach goals more adaptive for Chinese EFL learners? It depends on their underlying reasons. Int. Rev. Appl. Linguist. Lang. Teach. 61, 1607–1638. doi: 10.1515/iral-2021-0208

Lo, C. K., Yu, P. L. H., Xu, S., Ng, D. T. K., and Jong, M. S. Y. (2024). Exploring the application of ChatGPT in ESL/EFL education and related research issues: a systematic review of empirical studies. Smart Learn. Environ. 11:50. doi: 10.1186/s40561-024-00342-5

Macaruso, P., Wilkes, S., and Prescott, J. E. (2020). An investigation of blended learning to support reading instruction in elementary schools. Educ. Technol. Res. Dev. 68, 2839–2852. doi: 10.1007/s11423-020-09785-2

McCarthy, E. M., Liu, Y., and Schauer, K. L. (2020). Strengths-based blended personalized learning: an impact study using virtual comparison group. J. Res. Technol. Educ. 52, 353–370. doi: 10.1080/15391523.2020.1716202

Mohammed, S. H., and Kinyo, L. (2020). The role of constructivism in the enhancement of social studies education. J. Crit. Rev. 7, 249–256. doi: 10.31838/jcr.07.07.41

Momen, A., Ebrahimi, M., and Hassan, A. (2022). Importance and implications of theory of Bloom’s taxonomy in different fields of education. Proceedings of the 2nd International Conference on Emerging Technologies and Intelligent Systems, 573, 515–525. doi: 10.1007/978-3-031-20429-6_47

Park, Y., and Doo, M. Y. (2024). Role of AI in blended learning: a systematic literature review. Int. Rev. Res. Open Distrib. Learn. 25, 164–196. doi: 10.19173/irrodl.v25i1.7566

Peng, W., Hu, M., and Bi, P. (2023). Investigating blended learning mode in translation competence development. SAGE Open 13:21582440231218628. doi: 10.1177/21582440231218628

Pham, A. T., Abdullah, M. R. T. L., and Ching, P. W. (2023). Investigating the challenges of blended MOOCs for English language learning: a pilot study. Proceedings of the 2023 6th International Conference on Educational Technology Management.

Pintrich, P. R., Smith, D. A., Garcia, T., and McKeachie, W. J. (1993). Reliability and predictive validity of the motivated strategies for learning questionnaire (MSLQ). Educ. Psychol. Meas. 53, 801–813. doi: 10.1177/0013164493053003024

Pyo, J., and Lee, C. H. (2024). Developing learner autonomy and EFL listening skills through mobile-assisted blended learning. Innov. Lang. Learn. Teach. 18, 1–16. doi: 10.1080/17501229.2024.2372068

Rachmah, N. (2020). Effectiveness of online vs offline classes for EFL classroom: a study case in a higher education. J. Engl. Teach. Appl. Linguist. Lit. 3, 19–26. doi: 10.20527/jetall.v3i1.7703

Rezai, A., Ahmadi, R., Ashkani, P., and Hosseini, G. H. (2025). Implementing active learning approach to promote motivation, reduce anxiety, and shape positive attitudes: a case study of EFL learners. Acta Psychol. 253:104704. doi: 10.1016/j.actpsy.2025.104704

Ryan, R. M., and Deci, E. L. (2020). Intrinsic and extrinsic motivation from a self-determination theory perspective: definitions, theory, practices, and future directions. Contemp. Educ. Psychol. 61:101860. doi: 10.1016/j.cedpsych.2020.101860

Ryan, R. M., and Deci, E. L. (2022). Self-Determination Theory. Encyclopedia of Quality of Life and Well-Being Research, 1–7. doi: 10.1007/978-3-319-69909-7_2630-2

Sankar, J. P., Kalaichelvi, R., Elumalai, K. V., and Alqahtani, M. S. M. (2022). Effective blended learning in higher education during COVID-19. Inf. Technol. Learn. Tools 88:214. doi: 10.33407/itlt.v88i2.4438

Sujatha, U., and Rajasekaran, V. (2024). Optimising listening skills: analysing the effectiveness of a blended model with a top-down approach through cognitive load theory. MethodsX 12:102630. doi: 10.1016/j.mex.2024.102630

Sun, X., Mollaee, F., and Izadpanah, S. (2024). Impact of flipped learning on reflective thinking and goal orientation among English foreign language learners. Comput. Assist. Lang. Learn. 37, 1–22. doi: 10.1080/09588221.2024.2430718

Tao, Y., Yu, L., Luo, L., and Zhang, H. (2024). Effect of blended teaching on college students’ EFL acquisition. Front. Educ. 9:1264573. doi: 10.3389/feduc.2024.1264573

Teng, X., and Zeng, Y. (2022). The effects of blended learning on foreign language learners’ oral English competence. Theory Pract. Lang. Stud. 12, 281–291. doi: 10.17507/tpls.1202.09

ter Vrugte, J., and de Jong, T. (2012). How to adapt games for learning: The potential role of instructional support. Joint Conference on Serious Games, 1–5

Umida, K., Dilora, A., and Umar, E. (2020). Constructivism in teaching and learning process. Eur. J. Res. Reflect. Educ. Sci. 8:134.

Verma, J. (2015). Repeated measures design for empirical researchers. Hoboken, New Jersey: John Wiley & Sons.

Wang, Y., and Xue, L. (2024). Using AI-driven chatbots to foster Chinese EFL students’ academic engagement: an intervention study. Comput. Human Behav. 159:108353. doi: 10.1016/j.chb.2024.108353

Wei, P., Wang, X., and Dong, H. (2023). The impact of automated writing evaluation on second language writing skills of Chinese EFL learners: a randomized controlled trial. Front. Psychol. 14:1249991. doi: 10.3389/fpsyg.2023.1249991

Wood, R., and Bandura, A. (1989). Impact of conceptions of ability on self-regulatory mechanisms and complex decision making. J. Pers. Soc. Psychol. 56, 407–415. doi: 10.1037//0022-3514.56.3.407

Xin, Z., and Derakhshan, A. (2025). From excitement to anxiety: exploring English as a foreign language learners’ emotional experiences in the artificial intelligence-powered classrooms. Eur. J. Educ. 60:e12845. doi: 10.1111/ejed.12845

Xu, S., Chen, P., and Zhang, G. (2024). Exploring the impact of the use of ChatGPT on foreign language self-efficacy among Chinese students studying abroad: the mediating role of foreign language enjoyment. Heliyon 10:e39845. doi: 10.1016/j.heliyon.2024.e39845

Yang, M. (2024). Fostering EFL university students’ motivation and self-regulated learning in writing: a socio-constructivist approach. System 124:103386. doi: 10.1016/j.system.2024.103386

Yang, C.-H., Huang, Y., and Huang, P. (2022). A comparison of the learning efficiency of business English between the blended teaching and conventional teaching for college students. Engl. Lang. Teach. 15:44. doi: 10.5539/elt.v15n9p44

Yu, Z., Xu, W., and Sukjairungwattana, P. (2022). Meta-analyses of differences in blended and traditional learning outcomes and students' attitudes. Front. Psychol. 13:926947. doi: 10.3389/fpsyg.2022.926947

Yuan, L., and Liu, X. (2025). The effect of artificial intelligence tools on EFL learners’ engagement, enjoyment, and motivation. Comput. Hum. Behav. 162:108474. doi: 10.1016/j.chb.2024.108474

Zhang, X., Ardasheva, Y., and Austin, B. W. (2020). Self-efficacy and English public speaking performance: a mixed method approach. Engl. Specif. Purp. 59, 1–16. doi: 10.1016/j.esp.2020.02.001

Zhang, R., Zou, D., and Cheng, G. (2023). Chatbot-based training on logical fallacy in EFL argumentative writing. Innov. Lang. Learn. Teach. 17, 932–945. doi: 10.1080/17501229.2023.2197417

Zhang, R., Zou, D., Cheng, G., and Xie, H. (2024). Flow in ChatGPT-based logic learning and its influences on logic and self-efficacy in English argumentative writing. Comput. Hum. Behav. 162:108457. doi: 10.1016/j.chb.2024.108457

Keywords: AI, instructional approach, motivation, achievement, blended, EFL

Citation: Liu J, Hamid HA and Bao X (2025) Motivation and achievement in EFL: the power of instructional approach. Front. Educ. 10:1614388. doi: 10.3389/feduc.2025.1614388

Edited by:

Md. Kamrul Hasan, United International University, Bangladesh

Reviewed by:

Xia Hao, Nanjing University of Information Science and Technology, China

Latisha Asmaak Shafie, Universiti Teknologi Mara Perlis Branch, Malaysia

Copyright © 2025 Liu, Hamid and Bao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hazrul Abdul Hamid, hazrul@usm.my
