- Rabdan Academy, Abu Dhabi, United Arab Emirates

Introduction: This study investigates faculty perceptions of academic integrity policies addressing traditional and Generative Artificial Intelligence (GenAI) plagiarism at an internationalized higher education institution in the UAE.

Methodology: Employing a mixed-methods approach, quantitative data were collected from 71 faculty members representing 37 nations via an online survey, supplemented by qualitative insights from 17 semi-structured interviews. The research assessed perceptions across five dimensions—availability, visibility, clarity, adequacy, and effectiveness—based on Bretag et al.’s (2011) framework.

Results: Results revealed significant disparities between traditional and GenAI policies, with both rated only moderately effective. Faculty demonstrated a preference for educative over punitive approaches while identifying workload constraints, insufficient institutional support, detection challenges, cultural leniency, and systemic limitations as barriers to policy enforcement. A hierarchical perception of GenAI plagiarism emerged, with direct content copying considered most serious and AI-assisted practices involving minimal student contribution viewed less severely. Lower response rates to GenAI scenarios reflected faculty uncertainty amid insufficient guidelines.

Discussion: The findings underscore the necessity for agile, comprehensive policies that address technological advancements while emphasizing faculty engagement and contextual support. This research contributes novel insights into underexplored scenarios including language assistance, translation, peer idea sharing, and citation errors, illuminating the evolving landscape of academic integrity in digital and collaborative environments. The study advocates for balanced frameworks integrating educative strategies, technological tools, and cultural sensitivities to maintain academic integrity standards in the AI era.

Introduction

Plagiarism is a well-documented challenge facing higher education institutions across the globe. The advent of Generative Artificial Intelligence (GenAI) has further compounded this challenge, prompting institutions to create additional layers of policy instruments to manage its inherent risks. However, such institutional regulatory frameworks have added to the increasingly intricate higher education professional landscape wherein faculty members are expected to balance their mission as educators with their obligation as policy enforcers (Chan, 2024; Kim et al., 2025; Watermeyer et al., 2024). In this vein, the literature points to a wide range of challenges facing faculty as they navigate academic policy instruments regulating the different forms of plagiarism, including the workload generated by integrity policies (e.g., Eaton et al., 2020; Eaton et al., 2019; Harper et al., 2019), lack of institutional support (e.g., Bretag, 2005; Malak, 2015), burden of proof (e.g., Coalter et al., 2007; Malak, 2015), fear of backlash and concerns about job security (e.g., Eaton et al., 2020; Malak, 2015), detection challenges (e.g., Alshurafat et al., 2024; Lodge et al., 2023), and faculty and students’ lack of training and awareness (e.g., Amigud and Pell, 2021; Brown and Howell, 2001; Walker and White, 2014). The scholarly discourse surrounding Gen-AI lacks adequate multicultural perspectives on its impact and complications in higher education settings (Yusuf et al., 2024). Along these lines, Fadlelmula and Qadhi (2024) observe that there is a dearth of research investigating the impact of AI in Arab universities, especially those in the Gulf Cooperation Council.
As such, this empirical paper aims to examine the perceptions of a diverse group of faculty members about policies and practices regulating traditional and Gen-AI plagiarism at an internationalized institution in the UAE, where 90% of its faculty population is of diverse non-UAE background (MoE, 2023), shedding light on their experiences with navigating this recent yet evolving development. In doing so, this paper presents an opportunity to add to the emerging conversation on the impact of Gen-AI not only on academic integrity but also on faculty experiences. It also has the potential to provide insights to policy makers in the UAE on how to better position academic integrity policies in ways that serve their announced goals, and to international institutions where, as in the UAE, faculty members come from diverse cultural backgrounds.

To achieve its objectives, the study reviews the literature on traditional and Gen-AI plagiarism, policy instruments regulating these infractions, and barriers to effective policy implementation. This is followed by the research questions and methodology. The findings and discussion are presented before outlining the contributions of the study and its conclusions.

Plagiarism: impact and prevalence

Plagiarism, a key form of academic misconduct, is one of today’s leading challenges facing higher education institutions across the globe (Awasthi, 2019; Miranda-Rodríguez et al., 2024; Stuhmcke et al., 2016; Yu et al., 2018). Pickard (2006) found that 72% of faculty at a UK institution reported having encountered plagiarism cases in their work in the last academic year (p. 224). Harper et al. (2019) found, after surveying over 1,000 faculty members in Australia, that about 68% of faculty have “encountered a written task they suspected was written by someone other than the student who submitted it” (p. 1860). In the context of the UAE, very little research has shed light on the impact of plagiarism on faculty experiences. For example, Aljanahi et al. (2024) found that contract cheating results in faculty feeling that their and their students’ rights were being violated (pp. 8–9). They also reported that faculty sensed a lack of support from their institutions (p. 12). While the term plagiarism has been problematized by many scholars (Eaton, 2023; Howard, 2000), Fishman (2009) argues that it occurs when an individual “uses words, ideas, or word products attributable to another identifiable person or source without attributing the work to the source from which it was obtained in a situation where there is a legitimate expectation of original authorship in order to obtain some benefit, credit, or gain which need not be monetary” (p. 5). Pecorari and Petrić (2014) argue that plagiarism has been viewed traditionally as a form of “moral transgression and a reflection of decay” (p. 271) and as “literary theft that needs to be punished” (p. 272). However, the authors contend that academic integrity policies addressing plagiarism should consider intentionality as a critical factor, as instances of textual attribution may stem from insufficient knowledge of citation practices rather than deliberate misconduct.
This consideration is particularly salient for non-native English speakers who may face additional challenges in navigating the conventions of academic writing in a second language. Following Chan’s (2024) work, this paper refers to plagiarism that does not involve Gen-AI as “traditional plagiarism.”

Gen-AI plagiarism: neo-plagiarism

The emergence and rapid proliferation of Gen-AI has taken academic institutions across the globe by surprise, intensifying the long-standing concerns about plagiarism and the integrity of the learning mission of these institutions (Alshurafat et al., 2024; Chan, 2024; Crompton and Burke, 2024; Karkoulian et al., 2024; Kim et al., 2025; Sánchez-Vera et al., 2024). Sánchez-Vera et al. (2024) surveyed faculty members across Spain and found that the top three risks associated with Gen-AI were deterioration of essential student skills, excessive dependence on technology, and plagiarism (p. 20). They also found that 75% of faculty members have encountered Gen-AI plagiarism in their institutions. These concerns reflect broader apprehensions about the impact of Gen-AI on educational outcomes and integrity. Hostetter et al. (2024) examined perceptions of Gen-AI in academic writing among 83 students and 82 faculty at a Midwestern liberal arts college. Results revealed significant detection challenges, with only 29% of faculty and 27% of students being able to correctly identify AI-generated content. A clear ethical hierarchy emerged: spell checkers and citation generators were deemed most ethical, followed by AI use for outlines or specific sections, with whole-paper generation considered least ethical. Notably, perceived ethicality increased significantly when scenarios included student verification, editing, and acknowledgment of AI use (average increase: 0.79 points on a 4-point scale). Rather than implementing blanket bans, the authors recommend open discussions about the appropriate use of Gen-AI and its impact on learning. These findings suggest educational institutions should develop adaptive and balanced policies acknowledging the varying impacts of different AI applications while emphasizing student critical engagement with AI-generated content.

Submitting AI-generated work and claiming it as one’s own can be argued to be a new form of cyber-pseudepigraphy, which Page (2004) defines as “using the Internet to have another person write an academic essay or paper, without this authorship being acknowledged” (p. 429). This paper argues that the definition can still encompass Gen-AI by adding to the definition “or a Gen-AI engine” after “another person.” The author goes on to claim that cyber-pseudepigraphy is more serious than traditional plagiarism as “[w]ith plagiarism, there can be some doubt that the writer has unconsciously borrowed ideas that he/she might have read before, while with pseudepigraphy, there is no doubt that the action is fraudulent” (p. 431). Another study by Kim et al. (2025) found that half of the students believed it was ethical and appropriate to use Gen-AI to develop responses to assignments. In the same vein, Longoni et al. (2023) demonstrate that individuals perceive plagiarizing AI-generated content as less unethical than plagiarizing human-generated content. They further contend that the real challenge with Large Language Models (such as ChatGPT) lies in their availability, user-friendly interfaces, and the difficulty in detecting their output (p. 3). Low detectability of Gen-AI plagiarism provides students with the opportunity to cheat as the risk of being caught is smaller than that in the case of traditional plagiarism, with the exception of contract cheating where detectability has proven to be challenging to educators (Walker and Townley, 2012, p. 31).

Chan (2024) coins the term “AI-giarism” to refer to Gen-AI plagiarism and defines it as “[t]he unethical practice of using artificial intelligence technologies, particularly generative language models, to generate content that is plagiarized either from original human-authored work or directly from AI-generated content, without appropriate acknowledgement of the original sources or AI’s contribution” (p. 3). Like many definitions of traditional plagiarism, this definition overlooks intent on the part of students or the nature of the contribution by AI. It also seems to disagree with the findings of her own study where faculty ranked Gen-AI practices from most to least serious.

Academic integrity policies

Academic integrity policies are important instruments that “strategically guide the [institutional] management of plagiarism” (Gullifer and Tyson, 2014, p. 1204) and are argued to help deter students from engaging in plagiarism (Bretag and Mahmud, 2016; Levine and Pazdernik, 2018). Bretag and her associates’ (2011) seminal work provided a comprehensive typology of the core components of effective academic policy instruments, including access, approach, responsibility, detail, and support. Cullen and Murphy (2025) point to a scholarly consensus supporting the robustness of this framework (pp. 5–6). Bretag et al. (2011) argue that for an academic integrity policy to be effective it should first be accessible to its target users and employ clear language. In a later work, Bretag and Mahmud (2016) recommended the use of clear policies that guide faculty with managing plagiarism starting from suspicion of misconduct and ending with imposition of sanctions. Similarly, a report commissioned by the Australian Universities Teaching Committee recommended “highly visible [institutional] procedures for monitoring and detecting cheating” (James et al., 2002, p. 37). However, availability and visibility of academic integrity policies alone do not necessarily correlate with reduced plagiarism on the part of students or increased enforcement on the part of faculty as found by Gullifer and Tyson (2014). For this reason, Bretag et al. (2011) posit that an effective policy should follow an educative approach. This corroborates the findings of Brown and Howell (2001) who concluded that educative policies are more likely to be effective. Third, they argue that an effective policy should clearly define all the stakeholders involved in academic integrity. This aligns with Gullifer and Tyson (2014), who called for active engagement with stakeholders to maximize the benefits of a policy instrument (p. 1214).
Fourth, they contend that an effective policy instrument provides adequate details, allowing users to navigate the different subtleties of plagiarism. This comports with Niraula (2024) who calls for comprehensive policies that cover the different aspects of plagiarism, especially Gen-AI. Finally, they point to the importance of an institutional support apparatus enabling the implementation of the policy.

Bretag et al.’s (2011) framework has been used by many scholars (e.g., Cullen and Murphy, 2025; Stoesz et al., 2019) to evaluate written policy documents. However, it has not been used to examine faculty perceptions of policy documents. As such, this paper extends the scope of this framework to cover not only written policy but also faculty perceptions of these policies.

The next section of this paper outlines the research questions this study aims to answer.

Research questions

This paper aims to further our understanding of academic plagiarism in the era of Gen-AI by answering the following four questions:

1. How do faculty members perceive policies regulating traditional and Gen-AI plagiarism?

2. How do faculty members respond to incidents of plagiarism?

3. According to faculty, what are the barriers to effective policy implementation?

4. How do faculty perceive the seriousness of Gen-AI and traditional forms of plagiarism in higher education?

Context

The study was conducted at a higher education institution in the United Arab Emirates where academic integrity constitutes a core institutional value. The institution has implemented regulatory frameworks addressing both traditional and Gen-AI plagiarism. Traditional plagiarism governance is characterized by extensive, scenario-based policies with corresponding sanction gradations. The institution employs Turnitin as its primary plagiarism detection mechanism, with institutional guidelines providing explicit thresholds for similarity percentages and associated disciplinary measures. In contrast, generative AI governance remains predominantly abstract, lacking the granular scenario-based approach and detailed procedural guidance that characterizes traditional plagiarism policies. This regulatory asymmetry between traditional and emerging forms of academic misconduct provides the institutional context for examining faculty perceptions of academic integrity violations.

Methodology

This investigation employs a mixed-methods research design, integrating quantitative and qualitative methodological approaches to leverage their complementary strengths. As Creswell and Creswell (2017) assert, mixed-methods protocols offer robust frameworks for addressing the multidimensional complexities inherent in social science inquiry, yielding more comprehensive insights than mono-methodological approaches. The use of this method for this study can be further justified by the need for quantitative data, which serves here as the primary source, to be supplemented with crucial additional information providing contextual depth and interpretive nuance essential for comprehensive analysis of the phenomena under investigation.

An anonymous online survey was distributed to 95 faculty members and 10 university leaders with teaching responsibilities at Selim University (pseudonym) in the UAE during February 2025. The survey respondents originate from 37 nations, with predominant representation from the United Kingdom (15%), the United Arab Emirates (10%), the United States of America (8%), Australia (7%), Jordan (6%), Canada (5%), Malaysia (3%), Mexico (3%), Taiwan (3%), Pakistan (3%), and Italy (3%). Seventy-one individuals responded to the survey (a 68% response rate), with 17 volunteering for follow-up interviews which were conducted a month after the completion of the primary data collection phase. In the results and discussion sections of this paper, interviewees are referred to using the labels F1 to F17. Prior to data collection, ethical approvals were secured from both Lancaster University, where one of the authors is a postgraduate researcher, and Selim University, where the survey and interviews were administered.

The survey included four parts. In part one, respondents were asked to provide their attitudinal positioning in relation to five aspects of policies regulating traditional plagiarism and how they respond to traditional plagiarism cases. The second part included similar items covering Gen-AI plagiarism policies and practice. Many of the items appearing in these sections are synthesized from Bretag and her associates’ (2011) framework. The third part provided traditional plagiarism scenarios and respondents were asked to rate the seriousness of potential traditional plagiarism incidents on a Likert scale from 1 to 5 with 5 being the most serious. The scenarios are largely based on the survey items used in Chan (2024). The last part included scenarios covering possible Gen-AI plagiarism, which are also based on Chan’s (2024) work. The use of scenarios to investigate ethical matters is supported by Walker et al. (1987) who found that the use of real-life hypothetical dilemmas is “adequate for capturing individuals’ best level of moral reasoning competence” (p. 855). In the context of academic integrity, Moriarty and Wilson (2022) argue that using scenarios can support institutions in building academic integrity policies that promote justice and consistency.

Following Kvale’s (2012) work, semi-structured interviews were conducted with 17 individuals from the institution to arrive at a “deeper and critical interpretation of meaning” (p. 121). Voice Memos was used to record the interviews and MS Word was utilized to auto-transcribe the audio files. The transcribed output was reviewed for accuracy against the audio files by the researchers. Transcripts were later analyzed using NVivo. Following Braun and Clarke’s (2006) and Braun and her associates’ (2016) work, thematic analysis was used to gain an understanding of interviewees’ perceptions of traditional and Gen-AI plagiarism. This method allows participants to become collaborators in the research effort (Braun and Clarke, 2006, p. 97) and is robust in producing policy-focused research (Braun et al., 2016, p. 1). The quantitative data obtained from the survey were analyzed using R.

Results and discussion

This section presents the study findings and contextualizes them within the existing literature. The section is organized into six subsections: (1) the five dimensions of policies, (2) additional dimensions of policies, (3) incidence and response, (4) barriers to policy effectiveness, (5) responses to Gen-AI plagiarism scenarios, and (6) response to traditional plagiarism policies. The first and second subsections address question 1 of this paper, with the third answering question 2, and the fourth answering question 3. The last two subsections answer question 4.

Five dimensions of plagiarism policies

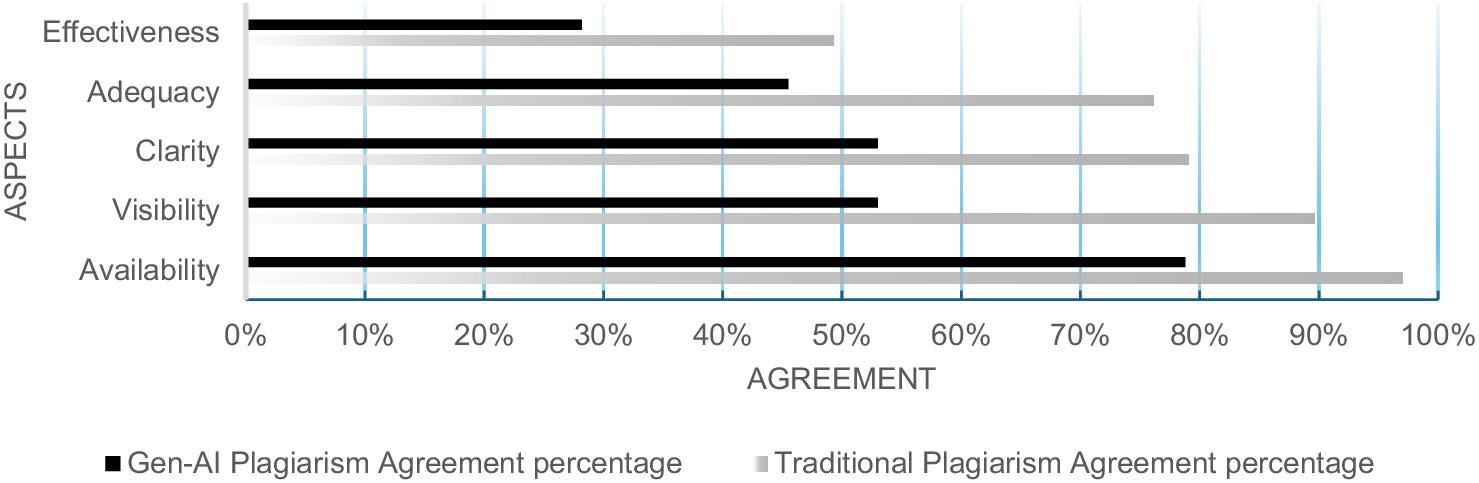

Faculty members evaluated five key dimensions of plagiarism policies: availability, visibility, clarity, adequacy, and effectiveness. Availability, visibility, and clarity correspond to the ‘access’ element in Bretag and her associates’ (2011) framework, while adequacy corresponds to ‘detail.’ ‘Approach,’ ‘support’ and ‘responsibility’ are elicited from interviews as shown in the next section.

Results indicate a significant disparity in perceived quality between traditional and Gen-AI plagiarism policies, with traditional policies receiving substantially more favorable evaluations across all dimensions. Traditional policies received favorable majority evaluations in availability, visibility, clarity, and adequacy, with effectiveness approaching majority approval. In contrast, Gen-AI plagiarism policies achieved favorable majority ratings only in availability, with borderline majority assessments in visibility and clarity, and a notably low score for effectiveness. Comparative analysis (Figure 1) reveals substantial disparities between the two guideline types, with particularly pronounced differences in visibility (a 35-percentage-point gap) and adequacy (a 25-percentage-point gap). These findings suggest that the investigated institution, similar to its peer institutions globally, appears to be navigating the complex process of developing and implementing comprehensive policies that address the unique challenges presented by Gen-AI plagiarism. These perceptions among faculty corroborate the conclusions of McDonald et al. (2025) who found that while a majority of Gen-AI policies adopted by US institutions (63%) encourage Gen-AI use, only 43% provided detailed guidance to faculty and students on how to manage Gen-AI plagiarism. The interview findings are consistent with this quantitative outcome, as faculty members generally agreed that the policies for traditional plagiarism are understood by both students and instructors. In contrast, they expressed significant concern that current policies and detection tools fall short when addressing AI-generated content. The emergence of tools like ChatGPT has created uncertainties, as noted by F3 in his/her remark:

The LLMs [Large Language Models] are moving faster than the policies – we are now dealing with technology that our policies have not yet caught up with.

This observation underscores the technological-policy gap currently facing higher education institutions and suggests the need for more agile policy frameworks that can adapt to rapidly advancing AI capabilities. This finding is consistent with the conclusions drawn by Miron et al. (2021), who argued for a need to update academic integrity policies to account for the evolving higher education landscape.

Deeper statistical analysis of faculty perceptions revealed statistically significant differences across all five measured dimensions. For example, paired-samples t-tests demonstrated that traditional policies were rated significantly higher than Gen-AI policies on effectiveness [M = 3.44, SD = 1.09 vs. M = 2.89, SD = 1.11; t(65) = 4.76, p < 0.001, d = 0.59]. The magnitude of these differences, as indicated by Cohen’s d values, ranged from medium to large effect sizes. Notably, the standard deviations for Gen-AI policies were consistently higher across all dimensions, suggesting greater variability in faculty perceptions of these newer policies. This pattern of results indicates not only that traditional policies are perceived more favorably, but also that there is greater consensus about them, whereas perceptions of Gen-AI policies demonstrate less uniformity, potentially reflecting the emergent and evolving nature of institutional responses to Gen-AI technologies. Furthermore, the data reveal that a thin majority of faculty believed traditional plagiarism policies were effective, with an overwhelmingly larger proportion holding an opposing view for Gen-AI plagiarism policies. This empirical evidence reveals a notable paradox: despite the ostensible visibility, availability, and adequacy of institutional policies, particularly those governing traditional forms of plagiarism, faculty members consistently report significant concerns regarding the effectiveness of these policies in addressing plagiarism. This discrepancy underscores the need for a thorough reevaluation of the impact of these policies and subsequent improvement of the existing policies governing both forms of plagiarism.
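The relationship between the reported t statistic and effect size can be illustrated with a short sketch. It is written in Python for illustration only (the study's own analysis was run in R) and rests on one assumption not stated in the text: that the reported d is the paired-samples effect size d_z, that is, the mean of the within-person differences divided by their standard deviation, for which d_z = t / sqrt(n). The function names and the toy difference scores are hypothetical.

```python
import math

def paired_t_and_dz(diffs):
    """Return (t, df, d_z) for a list of within-person difference scores.

    Assumption: effect size is d_z = mean(diff) / sd(diff); the paper
    does not state which variant of Cohen's d was computed.
    """
    n = len(diffs)
    mean = sum(diffs) / n
    # Sample variance with the n - 1 denominator.
    var = sum((x - mean) ** 2 for x in diffs) / (n - 1)
    sd = math.sqrt(var)
    t = mean / (sd / math.sqrt(n))
    return t, n - 1, mean / sd

def dz_from_t(t, df):
    """Recover d_z from a reported paired t statistic: d_z = t / sqrt(df + 1)."""
    return t / math.sqrt(df + 1)

# Reported effectiveness comparison: t(65) = 4.76, so n = 66 paired ratings.
print(round(dz_from_t(4.76, 65), 2))  # 0.59

# Hypothetical difference scores (traditional minus Gen-AI rating), not study data.
t, df, dz = paired_t_and_dz([1, 0, 2, 1, 1])
print(round(t, 3), df, round(dz, 3))
```

Under this assumption, the reported t(65) = 4.76 implies d of about 0.59, which matches the value given for effectiveness.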

The next section addresses the remaining elements of Bretag and her associates’ (2011) framework, namely approach, support and responsibility.

Additional dimensions of policies

The interviews revealed that faculty consistently advocate for more developmental rather than punitive approaches to academic integrity violations. This is exemplified by F2 who expressed concerns that by following policy s/he would “be causing [the student] to lose his career, just for something silly he made.” Along the same lines, F5 also remarked:

[I tell the student] I can report it, and we can go through the process … [alternatively,] you can rewrite. [If you] fix it, you get ten marks off.

Faculty responses suggest that the policies are written in punitive, legalistic terms that overlook educative approaches. This policy approach is not unique to the investigated institution, as found by Stoesz et al. (2019) and Cullen and Murphy (2025), who concluded that academic integrity policies in Canadian and American institutions are more punitive than educative.

On the other hand, faculty perceive that, while the policy points to stakeholders who are involved in the process (including committees, student sponsoring agencies, the students themselves, and faculty), the practice places excessive responsibility on faculty members in proving the incidence of plagiarism. This is exemplified by F10’s comment arguing that: “[t]he onus here is very high for the faculty to prove, and we often cannot get to that burden.” A similar concern was echoed by F11 who noted:

It’s very hard and time-consuming for faculty to actually, one, to prove. I mean, we are not a court of law, but it seems we actually have a higher standard of proof for our students.

Finally, interviewees repeatedly pointed to an urgent need for more institutional support to navigate the challenges associated with plagiarism. The section entitled Barriers to Policy Effectiveness addresses this matter in detail.

Incidence and faculty response

A majority of faculty (62%) indicated in the survey that they encounter traditional plagiarism instances, with 76% indicating that Gen-AI has increased plagiarism among students. This finding agrees with Harper et al. (2019) who found that about 67% of Australian faculty encounter academic misconduct and Eaton et al. (2019) who claimed that 90% of students in Canada self-reported engagement in dishonest behaviors. When participants were asked whether they report these incidents, a majority indicated they do, with more faculty indicating that they report traditional plagiarism cases than Gen-AI cases (66% vs. 62%). This also agrees with the same Australian study which found that 56% of faculty members indicated that they report these incidents. Despite this expressed attitude among the faculty, a review of institutional records reveals that an incommensurate number of plagiarism cases was reported for investigation over the past two academic years. The gap between attitude and behavior, on the one hand, and policy and practice, on the other, was articulated in the responses to questions asking whether faculty allow students to re-submit their work based on percentage of plagiarism or type of plagiarism. In response to these questions, 82% and 79% of faculty, respectively, indicated that they do so, even though institutional policy requires them to report any case based on suspicion and regardless of type or percentage of plagiarism to help maintain systematic record-keeping of incidents. This finding is consistent with de Maio et al. (2020) who concluded that “most academic staff do not appear to report” incidents in Australia (p. 103), Nelson (2021) who found that only two faculty members (out of 12) ever reported academic integrity incidents, and Eaton et al. (2019) who highlighted the disconnect between the prevalence of self-reported academic dishonesty and percentage of reported cases by faculty (p. 7).
The survey included items that shed some light on the reasons for this response to academic incidents. First, faculty reported that plagiarism increases their workload (60% for traditional plagiarism and 63% for Gen-AI plagiarism). This paper argues that under-reporting is a coping mechanism adopted by faculty members to manage their increased workload. This agrees with the findings of Sattler et al. (2017) who concluded that the more time-consuming the effort is, the less likely faculty are to report academic misconduct (p. 1138). It also corroborates the findings of Harper et al. (2019) who concluded that a quarter of faculty who do not report academic integrity incidents thought that the process was time consuming. Second, the perceived effectiveness of the policies (49% for traditional plagiarism policies vs. 28% for Gen-AI plagiarism policies) could be argued to affect the reportability of academic misconduct. This corroborates the findings of Sattler et al. (2017) who concluded that the lower the efficacy of detection, the less likely faculty are to use and report detection methods (p. 1129). Third, faculty members reported having different interpretations of the policies regulating plagiarism, with many more faculty interpreting Gen-AI plagiarism policies differently (70% vs. 46% for traditional plagiarism). This also agrees with Eaton et al. (2019) who noted in a review of the literature on academic plagiarism in Canada that there is a widening gap between policy and practice among faculty in Canadian higher education settings.

The interviews provided additional data on how faculty respond to plagiarism. Many participants reported using early intervention approaches to avoid reporting students. For example, F2 remarked:

I give lots of feedback. So, I insist that all my students submit the first draft. I check [it]. [I tell them] I see the first draft is not your work. Do not submit this one. It’s like I’m trying to stop the behavior before it happens.

This preventive approach reflects a discretionary decision to prioritize education over punishment aligning with the findings of Walker and White (2014) who concluded that “most participants maintained that educative strategies … were an effective preventative strategy [to plagiarism]” (p. 679) and Gottardello and Karabag (2022) who found that a majority of faculty participating in their study maintained that they view themselves as educators rather than protectors of academic integrity (pp. 532–3). Other participants expressed concerns about the proportionality of consequences relative to the offense. For example, F8 argued:

Accusing a student of intentional plagiarism is serious. It sticks with them. So, we couch words carefully, take an educational approach, and avoid assumptions of malice.

This concern about fairness and proportionality aligns with Tummers and Bekkers (2014) who noted that faculty consider the impact of their decisions on their students’ lives, allowing them to make a difference in those lives when implementing the policy (p. 528). This approach is also consistent with Kier and Ives’ (2022) recommendation for policy review to ensure proportionality between academic integrity sanctions and the severity of student violations (p. 14). Others take matters into their own hands and reduce the student’s grade without reporting the incident. For instance, F5 remarked:

[I tell the student] I can report it, and we can go through the process … [or] you can rewrite, you can fix it, you get ten marks off… If you do not believe you have done anything wrong, we can go through the formal process, or I’ll take ten points off the top … and most students have just accepted the ten points [off].

This finding aligns with the conclusions drawn by Coalter et al. (2007), who documented that faculty members frequently employ grade reduction as a punitive measure for plagiarism infractions, circumventing formal institutional reporting mechanisms. Concomitantly, this approach resonates with the ‘intimidator’ role faculty play, as documented by Gottardello and Karabag (2022), wherein faculty reported employing deterrence strategies and fear-inducing tactics to discourage academic dishonesty among students.

Barriers to policy effectiveness

Following Amigud and Pell’s (2021, p. 929) call for institutions to endeavor to design plagiarism policies that are effective, this study examined faculty-identified impediments to academic integrity policy effectiveness through qualitative analysis of interview data. Seven distinct barrier categories were identified and quantified according to frequency of occurrence. Results indicate that technological insufficiencies (n = 17, 100%) constitute the predominant concern, followed by procedural limitations (n = 16, 94%), cultural impediments (n = 15, 88%), structural and systemic constraints (n = 14, 82%), faculty preparedness deficiencies (n = 13, 76%), accountability and enforcement gaps (n = 12, 70%), and student lack of awareness and preparedness (n = 8, 47%). These findings suggest that effective policy implementation requires multidimensional interventions addressing both structural and cultural dimensions of the academic integrity ecosystem.
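The percentages above follow directly from the interview counts. As a minimal sketch (not part of the study's analysis), the snippet below recomputes each barrier's share of the 17 interviewees and ranks the categories. The dictionary keys are shorthand labels introduced here for illustration, and shares are printed to one decimal place rather than as whole percentages.

```python
# Interview counts per barrier category, as reported in the paragraph above.
# The key names are shorthand labels chosen for this illustration.
TOTAL_INTERVIEWEES = 17

barrier_counts = {
    "technological insufficiencies": 17,
    "procedural limitations": 16,
    "cultural impediments": 15,
    "structural and systemic constraints": 14,
    "faculty preparedness deficiencies": 13,
    "accountability and enforcement gaps": 12,
    "student lack of awareness and preparedness": 8,
}


def share_of_interviewees(count, total=TOTAL_INTERVIEWEES):
    """Return the percentage of interviewees who raised a given barrier."""
    return 100 * count / total


# Percentage share for every category.
shares = {name: share_of_interviewees(n) for name, n in barrier_counts.items()}

# Rank categories from most to least frequently cited.
ranked = sorted(shares.items(), key=lambda item: item[1], reverse=True)

for name, pct in ranked:
    count = barrier_counts[name]
    print(f"{name:<45s} {count:>2d}/{TOTAL_INTERVIEWEES}  {pct:5.1f}%")
```

Because every category is expressed against the same denominator, the ranking by percentage is identical to the ranking by raw count.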

Technological insufficiencies emerged as the most frequently cited barrier (n = 17), with faculty reporting significant limitations in plagiarism detection, particularly regarding AI-facilitated misconduct. As F10 and F7 explicitly stated:

Our technology needs continuous upgrades to catch up [with advances in Gen-AI]. Students will find a way to circumvent our programs (F10).

AI detectors give conflicting results. Turnitin shows percentages, but it’s not always accurate (F7).

This finding is not surprising and comports with those of Lodge et al. (2023) and Alshurafat et al. (2024).

Procedural limitations (n = 16) also appeared repeatedly in the interviews when faculty spoke about sanctioning parameters, reporting protocols, and escalation hierarchies. One faculty member described how “a [team] member handled [plagiarism] very poorly and because of how strict the policy and procedures appeared… [he] … had no choice” (F6). These procedural limitations engender inconsistent enforcement practices, with faculty frequently resorting to extra-institutional resolution strategies rather than engaging with formalized reporting frameworks. As one respondent explained, “If you get 3 or 4 people together, you’ll get 3 or 4 different opinions. Some take a harder stance on plagiarism; others are more flexible” (F8). This finding is not unique to the UAE: Cullen and Murphy (2025) concluded that academic integrity policies in the US are not consistently implemented, and Brown and Howell (2001) found that clearly written and educative policies are more likely to be effective.

Faculty interviewees also revealed that cultural impediments (n = 15) operate through institutional paradigms that privilege leniency over accountability, coupled with student value systems that prioritize assessment outcomes over learning. One faculty member observed:

“Some students think consequences are insignificant […] believing punishments are rare or mild” (F14).

F16 attributed this to a lack of student motivation, while F17 critiqued a “bargaining culture” where some students negotiate grades rather than reflect on misconduct. Faculty also contribute to this barrier through emotional reluctance to report, such as F2, who admitted avoiding penalties to prevent student dismissal. This cultural leniency normalizes non-compliance, as students perceive consequences as rare or mild. This finding agrees with that of Tummers and Bekkers (2014), who contend that faculty consider the impact of their decisions on students’ lives when implementing the policy (p. 528).

Faculty also reported structural and systemic constraints (n = 14) as a driving factor, creating environmental conditions that inadvertently incentivize academic misconduct while simultaneously discouraging rigorous enforcement. F2 observed that high academic workloads and systemic pressure act as barriers. F5 noted that plagiarism checks are labor-intensive and unrewarded, leading faculty to prioritize teaching over enforcement. This perception aligns with the survey responses, in which 60% and 63% of faculty perceived that traditional and Gen-AI plagiarism, respectively, increase their workload. This finding is also consistent with Harper et al. (2019), who found that a quarter of faculty who do not report academic integrity incidents attributed this behavior to the load associated with reporting. In this vein, F3 noted:

Faculty opt for presentations over written work because they are harder to scrutinize […] Students exploit this, submitting AI-generated scripts or bullet points.

Additionally, some faculty claimed that policies often conflict with practical realities. For example, F14 noted that faculty bypass formal reporting due to perceived futility, while F17 highlighted inconsistent penalties (e.g., some students were expelled while others were allowed to resubmit). Structural misalignment between institutional rhetoric and practice further erodes trust, as “integrity” values remain aspirational without operational frameworks (F14 and F16). This systemic barrier can be argued to enable plagiarism and weaken policy enforcement.

Within this constrained environment, faculty and student training becomes increasingly important yet remains elusive. In this vein, faculty agreed that insufficient training and excessive workloads hinder consistent enforcement. Many faculty, like F8 and F15, lacked familiarity with policies and relied on personal judgment. F2 specifically identified a lack of policy awareness and training as a critical barrier. Another, F1, noted that faculty may lack standardized tools or training to detect plagiarism reliably, creating loopholes in policy implementation. F9 stressed the need for interactive workshops, rather than passive training, to calibrate understanding of policy, while F14 criticized the absence of AI ethics programs. This finding agrees with that of Amigud and Pell (2021), who contend that policy communication, through training and awareness, is key to policy effectiveness.

Faculty also reported accountability and enforcement gaps as another reason for policy ineffectiveness. The burden of proof and fear of repercussions deter reporting. F2 emphasized the difficulty of proving Gen-AI plagiarism, while F4 noted that faculty fear administrative backlash. Informal resolutions seemed to dominate: F15 described an approach typified by consulting with the program chair rather than filing reports. Inconsistent penalties, such as allowing resubmissions instead of reporting (F17), undermine deterrence, as argued by F4, F15, and F9. F11 highlighted ghostwriting’s evidentiary challenges, which leave faculty powerless without “smoking gun” proof. This finding is consistent with many studies that identify accountability gaps as the driving reason for the under-reporting of plagiarism. For example, Malak (2015) found that establishing legally valid evidence of student engagement in plagiarism was one of the main factors that reduced the likelihood of faculty reporting academic integrity incidents. Coalter et al. (2007) also found that 90% of faculty who did not report integrity incidents did so because of lack of proof (p. 7). Similarly, LeBrun’s (2023) findings revealed that a significant majority (87%) of participants reported difficulties in establishing sufficient evidence to meet the required burden of proof, particularly in cases involving Gen-AI plagiarism.

Student disengagement, while less frequently cited, also represents a significant barrier, characterized by policies that inadequately address fundamental motivational deficits and the misalignment between institutional expectations and student capacity. F1 identified a lack of student engagement with policies as a primary concern. Language barriers and inadequate institutional scaffolding exacerbate this. F7 and F6 noted that students whose pre-tertiary schooling took place in rote-learning systems struggle with citation practices, leading to unintentional plagiarism. F6 and F8 described non-native speakers misusing AI to compensate for poor English. While less frequent, this theme underscores the need for proactive education to bridge gaps between secondary and tertiary expectations. LeBrun’s (2023) research corroborates this finding, demonstrating that a predominant proportion of faculty members identified cultural disparities and linguistic challenges as significant impediments to international students’ comprehension of and adherence to academic integrity protocols.

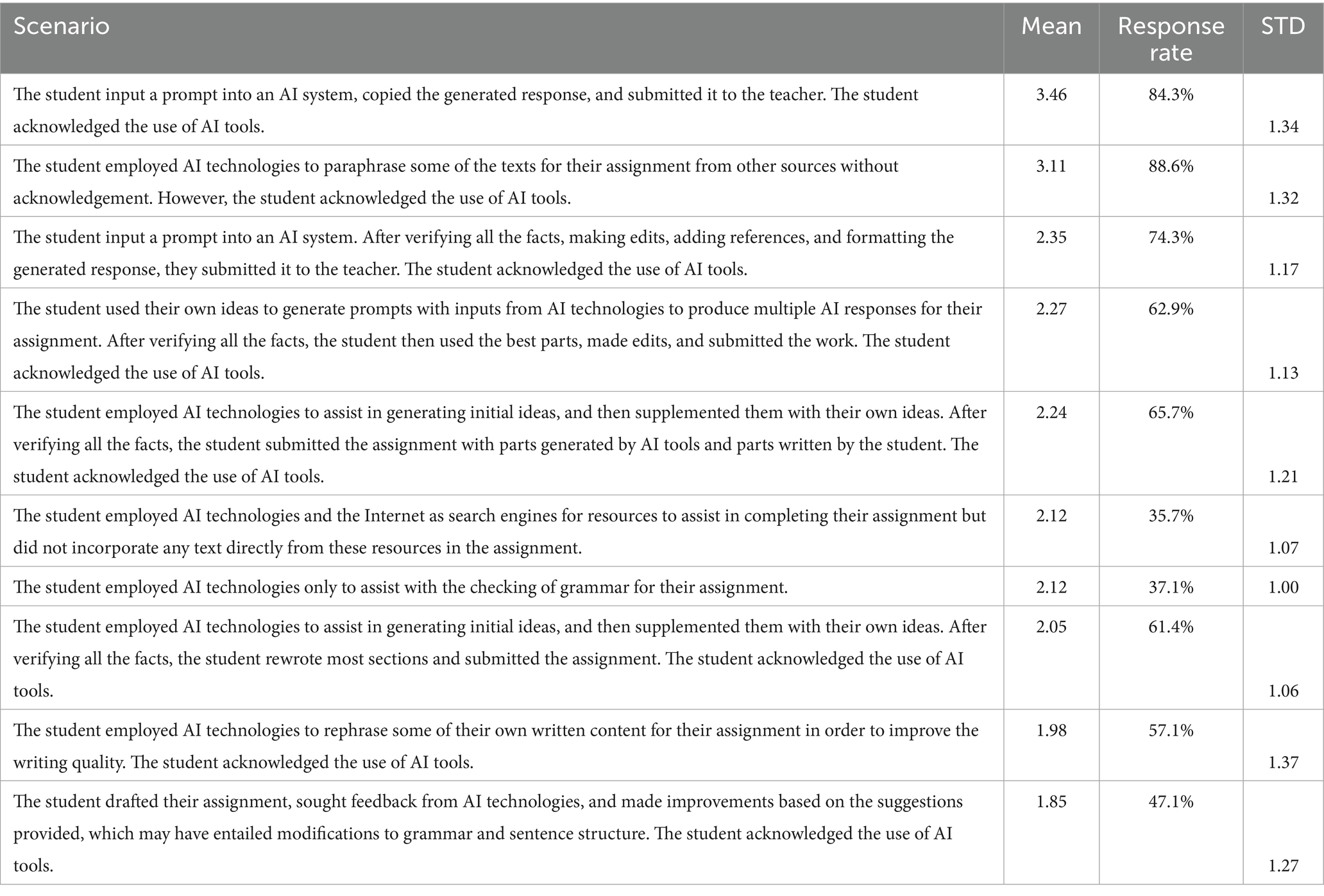

Response to Gen-AI plagiarism scenarios

Section four of the survey presented possible Gen-AI plagiarism scenarios, and respondents were asked to rate their seriousness on a scale of 1 to 5. As shown in Table 1, direct copying of AI-generated content (3.46/5) and using AI for plagiarism (3.11/5) are considered the most serious or unethical infractions, while using AI for grammar checking (1.85/5) and rephrasing one’s own content (1.98/5) are viewed as relatively acceptable practices. A strong correlation (0.78) between scenario seriousness and response rate suggests that more concerning scenarios elicit greater faculty engagement. The narrow spread of scores (SD = 0.52) indicates moderate consensus among faculty, with clear distinctions between problematic and acceptable AI uses. Faculty appear to differentiate based on the degree of student contribution: scenarios where AI replaces student work are viewed negatively, while those where AI augments student-generated content are more acceptable. Notably, simply acknowledging AI use does not significantly mitigate concerns when the underlying practice involves minimal student input. Concomitantly, the data shows that Gen-AI scenarios generated much lower response rates than traditional plagiarism scenarios (next section). This paper argues that there are two main possible reasons for this observation. First, some faculty members are struggling to navigate the subtleties of Gen-AI plagiarism amid insufficient institutional guidelines and professional development opportunities, resulting in an inability to formulate definitive positions on these emerging scenarios. This is not surprising given the nascency of this development in higher education, and it corresponds with empirical evidence from multiple investigations examining faculty engagement with plagiarism detection, particularly concerning AI-generated content. Petricini et al. (2023) found that faculty were uncertain about the ethicality of using Gen-AI in higher education settings. Hostetter et al. (2024) and Kofinas et al. (2025) demonstrated that faculty were unable to reliably distinguish between student-authored and AI-generated submissions. Similarly, Freeman (2024) found that lack of policy specificity undermines the ability to clearly identify the appropriate use of Gen-AI. Second, the positioning of this inquiry within the terminal section of the survey instrument may have contributed to diminished response rates, potentially attributable to respondent fatigue, a methodological limitation warranting consideration in the interpretation of these findings.

The findings of this study offer a compelling, complementary faculty perspective that substantively corroborates the student-centered patterns identified by Chan (2024). The hierarchical evaluation of AI-assisted behaviors mirrors Chan’s findings with remarkable consistency; both datasets reveal that direct AI utilization (prompt input and submission) received higher ratings than more elaborate engagement strategies involving verification, editing, and synthesis. This parallel suggests that faculty and students share similar evaluative frameworks regarding AI tool usage in academic contexts, despite their different roles in the educational ecosystem. The close numerical alignment between our faculty-reported scores and Chan’s student-reported means (with differences ranging from just 0.01 to 0.41 across scenarios) indicates a surprising consensus between these two stakeholder groups. In this sense, the alignment between student and faculty attitudes corroborates the findings of Kim et al. (2025) who concluded that “students and faculty did not differ significantly in their attitudes toward Gen-AI in higher education” (p. 1).

However, a deeper statistical analysis reveals that faculty and student perspectives on AI-assisted work vary significantly in both severity assessments and consensus levels. Comparative analysis of faculty data versus student responses demonstrates that faculty consistently assign higher severity ratings to AI-assisted academic practices while exhibiting substantially greater response variability. For instance, regarding direct AI-generated content submission, faculty ratings (M = 4.37, SD = 7.23) contrast markedly with student evaluations (M = 3.44, SD = 1.18). This pattern persists across all measured scenarios, from simple grammar checking (faculty: M = 2.97, SD = 4.70; students: M = 2.09, SD = 1.29) to more complex AI integration practices.

The pronounced standard deviations in faculty responses (ranging from SD = 4.57 to SD = 7.47) compared to the relatively uniform student assessments (consistently SD = 1.13–1.29) constitute a critical finding with substantial implications for academic policy development. While students demonstrate cohesive understanding of AI-related academic integrity boundaries, faculty judgments reflect a heterogeneous evaluative framework, likely stemming from diverse disciplinary backgrounds, pedagogical philosophies, and varying degrees of technological familiarity.

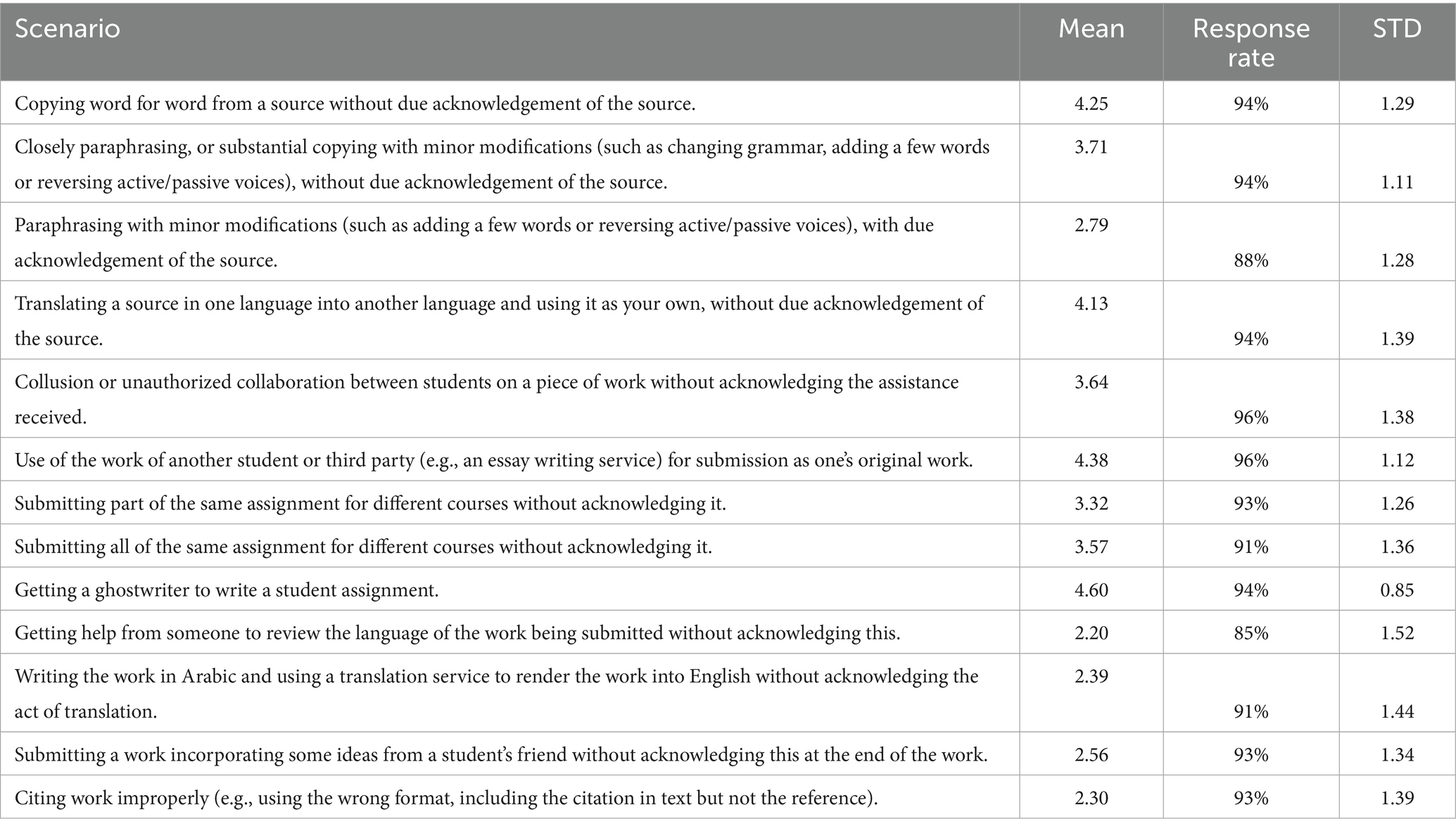

Response to traditional plagiarism scenarios

Analysis of traditional plagiarism scenarios (Table 2) reveals a clear hierarchy of perceived violations, with intentional deception through ghostwriting (4.60) and submission of others’ work (4.38) viewed most severely, while technical citation errors (2.50) and unacknowledged language assistance (2.45) are considered less serious infractions. The data demonstrates that proper acknowledgment significantly reduces perceived severity across similar actions, as evidenced by the substantial difference between paraphrasing without acknowledgment (3.71) and with acknowledgment (2.79). Notably, context matters significantly in translation scenarios, with direct translation of sources without attribution (4.13) viewed much more severely than using translation services to render one’s own work (2.43). Self-plagiarism through submitting identical work across courses (3.57) occupies a middle ground of concern, while unauthorized collaboration (3.64) is perceived as moderately serious. These findings suggest that academic institutions should implement graduated sanctions aligned with violation severity, while focusing educational efforts on clarifying gray areas where student confusion may exist, particularly regarding translation services, collaboration boundaries, and proper acknowledgment practices.

A comparative analysis with Chan (2024) reveals significant discrepancies between faculty and student evaluations of academic integrity infractions. Faculty assessments demonstrate elevated mean severity ratings for direct plagiarism and contract cheating behaviors, with a particularly pronounced disparity in the evaluation of ghostwriting (faculty M = 4.60, SD = 0.85; students M = 3.99, SD = 1.27). This substantial gap suggests a fundamental difference in how these groups conceptualize the severity of outsourced academic work. Conversely, for textual appropriation involving paraphrasing without attribution, student perceptions (M = 3.95, SD = 1.12) indicate marginally higher severity ratings than faculty evaluations (M = 3.71, SD = 1.11).
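To give a rough sense of the size of these faculty-student gaps, the reported means and standard deviations can be converted into a standardized mean difference. The sketch below is illustrative only and is not an analysis performed in this study. It assumes the two groups are weighted equally when pooling the standard deviations (group sizes are not used), and the function name `cohens_d_equal_weight` is hypothetical.

```python
import math


def cohens_d_equal_weight(mean_a, sd_a, mean_b, sd_b):
    """Standardized mean difference (Cohen's d) between groups A and B.

    Assumption: the pooled SD is the root mean square of the two SDs,
    i.e. both groups are weighted equally regardless of sample size.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# (faculty mean, faculty SD, student mean, student SD), as reported above.
comparisons = {
    "ghostwriting": (4.60, 0.85, 3.99, 1.27),
    "paraphrasing without attribution": (3.71, 1.11, 3.95, 1.12),
}

effect_sizes = {
    scenario: cohens_d_equal_weight(*stats)
    for scenario, stats in comparisons.items()
}

for scenario, d in effect_sizes.items():
    print(f"{scenario}: d = {d:+.2f}")
```

A positive value means faculty rated the scenario as more serious than students did, and a negative value means the reverse. Under these assumptions, the ghostwriting gap is more than twice as large in magnitude as the paraphrasing gap, which matches the qualitative reading given above.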

The heterogeneity in standard deviations between these populations merits particular attention as it illuminates the degree of consensus within each group. Faculty responses to ghostwriting demonstrate notably lower variance (SD = 0.85), indicating substantial agreement among faculty. In contrast, student responses exhibit greater dispersion across multiple categories, suggesting more diverse interpretative frameworks regarding plagiarism.

The current faculty-focused study makes a significant contribution to the academic integrity literature by addressing critical scenarios not covered in Chan’s student-centered investigation. Specifically, it introduces and quantifies faculty perspectives on language assistance without acknowledgment (M = 2.45, SD = 1.39), translation services usage (M = 2.43, SD = 1.42), informal peer idea incorporation (M = 2.65, SD = 1.27), and citation formatting errors (M = 2.50, SD = 1.29)—all representing increasingly common academic practices in multilingual and collaborative learning environments that have been underexplored in previous research.

Faculty concerns encompass a granular spectrum of academic integrity issues, particularly partial or technical violations often overlooked in student conceptualizations. This measurement of “gray area” scenarios provides crucial insights into academic integrity’s evolving landscape amid digital tools and collaborative practices transforming traditional authorship concepts.

Conclusion, implications, and limitations

This study provides a wide range of insights into the impact of the advent of Gen-AI on faculty experiences and policy making. The study shows a significant disparity in perceived quality between traditional and Gen-AI plagiarism policies, underscoring the need for more agile policy frameworks that can adapt to rapidly advancing AI capabilities. Faculty not only perceived traditional policies more favorably but also did so with more uniformity, reflecting the emergent and evolving nature of institutional responses to Gen-AI technologies. This empirical evidence also reveals a notable paradox: despite the ostensible visibility, availability, and adequacy of institutional policies, they still fall short in perceived effectiveness. The data shows that having policies alone is not sufficient for promoting academic integrity, as policies continue to overlook a wide range of challenges associated with their implementation, including detection challenges, workload, faculty discretion, and the nuanced ethical considerations that Gen-AI technologies necessitate. The tension between effective implementation and effective policy instrumentation (or between ideals and reality) necessitates a balanced approach in which governance is reconciled with impact and faculty engagement. As such, while Bretag et al.’s (2011) framework is foundational in accounting for faculty perceptions in relation to policies regulating plagiarism, it could benefit from an evaluation component articulating how the policy will be evaluated for effectiveness. It could also be expanded to focus not only on educative versus punitive strategies but also on discretion, which has been found to greatly impact policy implementation (but not necessarily effectiveness).

The study also demonstrated that faculty perceptions of Gen-AI plagiarism reveal a clear ethical hierarchy, with direct copying of AI-generated content and using AI for plagiarism deemed most serious, while grammar checking and rephrasing one’s own content are considered acceptable. The determining factor appears to be the degree of student contribution, with AI replacement of student work viewed negatively compared to AI augmentation of student-generated content. Response rates for Gen-AI scenarios were markedly lower than for traditional plagiarism scenarios, attributable to faculty uncertainty amid insufficient institutional guidelines and the survey’s structure. This finding aligns with recent research demonstrating faculty difficulties in navigating Gen-AI ethicality and identification.

This study contributes to academic integrity literature by addressing critical scenarios not covered in Chan’s student-centered investigation. Specifically, it introduces and quantifies faculty perspectives on language assistance without acknowledgment, translation services usage, informal peer idea incorporation, and citation formatting errors—all representing increasingly common academic practices in multilingual and collaborative learning environments that have been underexplored in previous research. Faculty concerns encompass a granular spectrum of academic integrity issues, particularly partial or technical violations often overlooked in student conceptualizations. This measurement of “gray area” scenarios provides crucial insights into academic integrity’s evolving landscape amid digital tools and collaborative practices transforming traditional authorship concepts.

Several limitations of this study warrant consideration. First, the research was conducted at a single internationalized institution in the UAE, potentially limiting the generalizability of findings to other institutional and cultural contexts. Second, the study’s cross-sectional design captures faculty perceptions at a specific moment in the rapidly evolving landscape of AI technologies, necessitating longitudinal follow-up studies. Third, while faculty perspectives provide valuable insights, the absence of administrator and policymaker viewpoints represents a notable gap in understanding the full spectrum of institutional responses to Gen-AI plagiarism. Finally, the study’s reliance on self-reported data may not fully capture actual faculty behaviors in addressing academic integrity violations.

Future research should focus on developing expanded theoretical frameworks that build upon Bretag et al.’s foundation while incorporating the technological, pedagogical, and ethical dimensions unique to the Gen-AI era. Such frameworks should accommodate the dynamic nature of AI technologies and provide adaptable guidelines for policy development across diverse institutional contexts. This study contributes to the scholarly discourse by highlighting both the enduring value and inherent limitations of established academic integrity frameworks when confronting rapidly evolving technological challenges in higher education.

Data availability statement

The raw data supporting the conclusions of this article can be made available by the authors, without undue reservation.

Ethics statement

This study involves human participants and was approved by Selim University’s Ethics Committee. The study was conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

RA: Conceptualization, Formal analysis, Methodology, Visualization, Writing – original draft, Writing – review & editing. NA: Data curation, Investigation, Project administration, Software, Validation, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. Data analysis was minimally supported by Julius AI (https://julius.ai), powered by GPT-4 (April 2025 version), which provided high-level insights into the research data. Additionally, Claude 3.7 Sonnet (April 2025 version) was utilized to provide a review of some parts of the language used in the manuscript. All interpretations and linguistic revisions were independently and religiously verified by the researchers.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aljanahi, M. H., Aljanahi, M. H., and Mahmoud, E. Y. (2024). “I’m not guarding the dungeon”: faculty members’ perspectives on contract cheating in the UAE. Int. J. Educ. Integr. 20, 9–19. doi: 10.1007/s40979-024-00156-5

Alshurafat, H., Al Shbail, M. O., Hamdan, A., Al-Dmour, A., and Ensour, W. (2024). Factors affecting accounting students’ misuse of chatgpt: an application of the fraud triangle theory. J. Financ. Reporting Account. 22, 274–288. doi: 10.1108/JFRA-04-2023-0182

Amigud, A., and Pell, D. J. (2021). When academic integrity rules should not apply: a survey of academic staff. Assess. Eval. High. Educ. 46, 928–942. doi: 10.1080/02602938.2020.1826900

Awasthi, S. (2019). Plagiarism and academic misconduct a systematic review. DESIDOC J. Libr. Inf. Technol. 39, 94–100. doi: 10.14429/djlit.39.2.13622

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Braun, V., Clarke, V., and Weate, P. (2016). “Using thematic analysis in sport and exercise research” in Handbook of qualitative research in sport and exercise (London: Routledge), Smith, B. M., and Sparkes, A. C. 213–227.

Bretag, T. (2005). Implementing plagiarism policy in the internationalised university. Newcastle, Australia: University of Newcastle.

Bretag, T., and Mahmud, S. (2016). “A conceptual framework for implementing exemplary academic integrity policy in Australian higher education” in Handbook of academic integrity, T. Bretag. Singapore: Springer. 463–480.

Bretag, T., Mahmud, S., Wallace, M., Walker, R., James, C., Green, M., et al. (2011). Core elements of exemplary academic integrity policy in Australian higher education. Int. J. Educ. Integr. 7, 3–12. doi: 10.21913/IJEI.v7i2.759

Brown, V. J., and Howell, M. E. (2001). The efficacy of policy statements on plagiarism: do they change students’ views? Res. High. Educ. 42, 103–118. doi: 10.1023/A:1018720728840

Chan, C. K. Y. (2024). Students’ perceptions of ‘AI-giarism’: investigating changes in understandings of academic misconduct. Educ. Inf. Technol. 30, 1–22. doi: 10.1007/s10639-024-13151-7

Coalter, T., Lim, C. L., and Wanorie, T. (2007). Factors that influence faculty actions: a study on faculty responses to academic dishonesty. Int. J. Scholarsh. Teach. Learn. 1:n1. doi: 10.20429/ijsotl.2007.010112

Creswell, J. W., and Creswell, J. D. (2017). Research design: qualitative, quantitative, and mixed methods approaches. California: Sage publications.

Crompton, H., and Burke, D. (2024). The educational affordances and challenges of ChatGPT: state of the field. TechTrends 68, 380–392. doi: 10.1007/s11528-024-00939-0

Cullen, C. S., and Murphy, G. (2025). Inconsistent access, uneven approach: ethical implications and practical concerns of prioritizing legal interests over cultures of academic integrity. J. Acad. Ethics, 1–20. doi: 10.1007/s10805-025-09606-2

de Maio, C., Dixon, K., and Yeo, S. (2020). Responding to student plagiarism in Western Australian universities: the disconnect between policy and academic staff. J. High. Educ. Policy Manag. 42, 102–116. doi: 10.1080/1360080X.2019.1662927

Eaton, S. E. (2023). Postplagiarism: transdisciplinary ethics and integrity in the age of artificial intelligence and neurotechnology. Int. J. Educ. Integr. 19, 23–10. doi: 10.1007/s40979-023-00144-1

Eaton, S. E., Conde, C. F., Rothschuh, S., Guglielmin, M., and Otoo, B. K. (2020). Plagiarism: a Canadian higher education case study of policy and practice gaps. Alberta J. Educ. Res. 66, 471–488. doi: 10.55016/ojs/ajer.v66i4.69204

Eaton, S. E., Crossman, K., and Edino, R. (2019). Academic integrity in Canada: an annotated bibliography.

Fadlelmula, F., and Qadhi, S. (2024). A systematic review of research on artificial intelligence in higher education: practice, gaps, and future directions in the GCC. J. Univ. Teach. Learn. Pract. 21, 146–173. doi: 10.53761/pswgbw82

Fishman, T. (2009). “We know it when we see it” is not good enough: toward a standard definition of plagiarism that transcends theft, fraud, and copyright.

Freeman, J. (2024). Provide or punish? Students’ views on generative AI in higher education. Oxford: Higher Education Policy Institute.

Gottardello, D., and Karabag, S. F. (2022). Ideal and actual roles of university professors in academic integrity management: a comparative study. Stud. High. Educ. 47, 526–544. doi: 10.1080/03075079.2020.1767051

Gullifer, J. M., and Tyson, G. A. (2014). Who has read the policy on plagiarism? Unpacking students’ understanding of plagiarism. Stud. High. Educ. 39, 1202–1218. doi: 10.1080/03075079.2013.777412

Harper, R., Bretag, T., Ellis, C., Newton, P., Rozenberg, P., Saddiqui, S., et al. (2019). Contract cheating: a survey of Australian university staff. Stud. High. Educ. 44, 1857–1873. doi: 10.1080/03075079.2018.1462789

Hostetter, A. B., Call, N., Frazier, G., James, T., Linnertz, C., Nestle, E., et al. (2024). Student and faculty perceptions of generative artificial intelligence in student writing. Teach. Psychol. 986283241279401. doi: 10.1177/00986283241279401

Howard, R. M. (2000). Sexuality, textuality: the cultural work of plagiarism. Coll. Engl. 62, 473–491. doi: 10.2307/378866

Karkoulian, S., Sayegh, N., and Sayegh, N. (2024). ChatGPT unveiled: understanding perceptions of academic integrity in higher education-a qualitative approach. J. Acad. Ethics, 1–18. doi: 10.1007/s10805-024-09543-6

Kier, C. A., and Ives, C. (2022). Recommendations for a balanced approach to supporting academic integrity: perspectives from a survey of students, faculty, and tutors. Int. J. Educ. Integr. 18, 22–19. doi: 10.1007/s40979-022-00116-x

Kim, J., Klopfer, M., Grohs, J. R., Eldardiry, H., Weichert, J., Cox, L. A., et al. (2025). Examining faculty and student perceptions of generative AI in university courses. Innov. High. Educ. 1–33. doi: 10.1007/s10755-024-09774-w

Kofinas, A. K., Tsay, C. H. H., and Pike, D. (2025). The impact of generative AI on academic integrity of authentic assessments within a higher education context. Br. J. Educ. Technol.

LeBrun, P. (2023). Faculty barriers to academic integrity violations reporting: a qualitative exploratory case study. University of Phoenix. ProQuest Dissertations and Theses Global.

Levine, J., and Pazdernik, V. (2018). Evaluation of a four-prong anti-plagiarism program and the incidence of plagiarism: a five-year retrospective study. Assess. Eval. High. Educ. 43, 1094–1105. doi: 10.1080/02602938.2018.1434127

Lodge, J. M., Thompson, K., and Corrin, L. (2023). Mapping out a research agenda for generative artificial intelligence in tertiary education. Australas. J. Educ. Technol. 39, 1–8. doi: 10.14742/ajet.8695

Longoni, C., Tully, S., and Shariff, A. (2023). Plagiarizing AI-generated content is seen as less unethical and more permissible.

Malak, J. M. (2015). Academic integrity in the community college setting: full-time faculty as street level bureaucrats. Ph.D., Illinois State University, Illinois, United States. ProQuest Dissertations & Theses Global.

McDonald, N., Johri, A., Ali, A., and Collier, A. H. (2025). Generative artificial intelligence in higher education: evidence from an analysis of institutional policies and guidelines. Comput. Human Behav. Artif. Humans 3:100121. doi: 10.1016/j.chbah.2025.100121

Miranda-Rodríguez, R. A., Sánchez-Nieto, J. M., and Ruiz-Rodríguez, A. K. (2024). Effectiveness of intervention programs in reducing plagiarism by university students: a systematic review. Front. Educ. 9:1357853. doi: 10.3389/feduc.2024.1357853

Miron, J., McKenzie, A., Eaton, S. E., Stoesz, B., Thacker, E., Devereaux, L., et al. (2021). Academic integrity policy analysis of publicly-funded universities in Ontario, Canada: a focus on contract cheating. Can. J. Educ. Adm. Policy, 62–75. doi: 10.7202/1083333ar

MoE (2023). Higher Education Institutions in the UAE. Unpublished Report. Ministry of Education, UAE.

Moriarty, C., and Wilson, B. (2022). Justice and consistency in academic integrity: philosophical and practical considerations in policy making. J. Coll. Char. 23, 21–31. doi: 10.1080/2194587X.2021.2017971

Nelson, D. (2021). How online business school instructors address academic integrity violations. J. Educ. Online 18:n3. doi: 10.9743/JEO.2021.18.3.1

Niraula, S. (2024). The impact of ChatGPT on academia: a comprehensive analysis of AI policies across UT system academic institutions. Advanc. Mobile Learn. Educ. Res. 4, 973–982. doi: 10.25082/AMLER.2024.01.009

Page, J. S. (2004). Cyber-pseudepigraphy: a new challenge for higher education policy and management. J. High. Educ. Policy Manag. 26, 429–433. doi: 10.1080/1360080042000290267

Pecorari, D., and Petrić, B. (2014). Plagiarism in second-language writing. Lang. Teach. 47, 269–302. doi: 10.1017/S0261444814000056

Petricini, T., Wu, C., and Zipf, S. T. (2023). Perceptions about generative AI and ChatGPT use by faculty and college students. doi: 10.35542/osf.io/jyma4

Pickard, J. (2006). Staff and student attitudes to plagiarism at university college Northampton. Assess. Eval. High. Educ. 31, 215–232. doi: 10.1080/02602930500262528

Sánchez-Vera, F., Reyes, I. P., and Cedeño, B. E. (2024). "Impact of artificial intelligence on academic integrity: perspectives of faculty members in Spain" in Artificial intelligence and education: enhancing human capabilities, protecting rights, and fostering effective collaboration between humans and machines in life, learning, and work (Octaedro), 13–29.

Sattler, S., Wiegel, C., and van Veen, F. (2017). The use frequency of 10 different methods for preventing and detecting academic dishonesty and the factors influencing their use. Stud. High. Educ. 42, 1126–1144. doi: 10.1080/03075079.2015.1085007

Stoesz, B. M., Eaton, S. E., Miron, J., and Thacker, E. J. (2019). Academic integrity and contract cheating policy analysis of colleges in Ontario, Canada. Int. J. Educ. Integr. 15, 1–18. doi: 10.1007/s40979-019-0042-4

Stuhmcke, A., Booth, T., and Wangmann, J. (2016). The illusory dichotomy of plagiarism. Assess. Eval. High. Educ. 41, 982–995. doi: 10.1080/02602938.2015.1053428

Tummers, L., and Bekkers, V. (2014). Policy implementation, street-level bureaucracy, and the importance of discretion. Public Manag. Rev. 16, 527–547. doi: 10.1080/14719037.2013.841978

Walker, L. J., de Vries, B., and Trevethan, S. D. (1987). Moral stages and moral orientations in real-life and hypothetical dilemmas. Child Dev. 58, 842–858. doi: 10.2307/1130221

Walker, M., and Townley, C. (2012). Contract cheating: a new challenge for academic honesty? J. Acad. Ethics 10, 27–44. doi: 10.1007/s10805-012-9150-y

Walker, C., and White, M. (2014). Police, design, plan and manage: developing a framework for integrating staff roles and institutional policies into a plagiarism prevention strategy. J. High. Educ. Policy Manag. 36, 674–687. doi: 10.1080/1360080X.2014.957895

Watermeyer, R., Phipps, L., Lanclos, D., and Knight, C. (2024). Generative AI and the automating of academia. Postdigit. Sci. Educ. 6, 446–466. doi: 10.1007/s42438-023-00440-6

Yu, H., Glanzer, P. L., Johnson, B. R., Sriram, R., and Moore, B. (2018). Why college students cheat: a conceptual model of five factors. Rev. High. Educ. 41, 549–576. doi: 10.1353/rhe.2018.0025

Keywords: Gen-AI plagiarism, faculty experience, UAE, policy and practice, higher education, academic integrity

Citation: Alsharefeen R and Al Sayari N (2025) Examining academic integrity policy and practice in the era of AI: a case study of faculty perspectives. Front. Educ. 10:1621743. doi: 10.3389/feduc.2025.1621743

Edited by:

Josiline Phiri Chigwada, University of South Africa, South Africa

Reviewed by:

Mohammad Mohi Uddin, University of Alabama, United States

Robert Buwule, Mbarara University of Science and Technology, Uganda

Elisha Mupaikwa, National University of Science and Technology, Zimbabwe

Copyright © 2025 Alsharefeen and Al Sayari. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rami Alsharefeen
