- 1Department of Chemistry, Louisiana State University, Baton Rouge, LA, United States

- 2College of Science, Louisiana State University, Baton Rouge, LA, United States

This original research article investigates the use of generative artificial intelligence (GAI) among students in communication-intensive STEM courses and how this engagement shapes their scientific communication practices, competencies, confidence, and science identity. Using a mixed-methods approach, the study identified patterns in how students perceived their current science identity and their incorporation of artificial intelligence (AI) into writing, oral, and technical tasks. Thematic analysis reveals that students use AI for a range of STEM communication endeavors such as structuring lab reports, brainstorming presentation ideas, and verifying code. While many minoritized students explicitly describe AI as a confidence-boosting, timesaving, and competence-enhancing tool, others, particularly those from privileged backgrounds, downplay its influence despite evidence of its significant role in their science identity. These results suggest reframing science identity as being shaped by technological usage and social contingency. This research illuminates both the potential and pitfalls of AI-use in shaping the next generation of scientists.

1 Introduction

In today's society, there is a need for individuals to have sufficient scientific knowledge to engage in science-related issues (Zhai et al., 2022). Science communication can play an integral role in science identity development as it overlays with the three frames of science identity: competence, performance, and recognition (Carlone and Johnson, 2007). Within scientific writing and science communication as a whole, appropriate skills, media, activities, and dialogue are used to produce Awareness (familiarity with new aspects of science), Enjoyment (appreciation of science), Interest (voluntary involvement with science or its communication), Opinion-forming (the forming, reforming, or confirming of science-related attitudes), and Understanding (understanding of science, its content, processes, and social factors)—defining the purpose of science communication and its effectiveness (Burns et al., 2003). Students enter higher education with varying levels of communication proficiency, yet are often assessed based on their ability to express ideas effectively in writing (Grammarly, 2022a,b). To promote equity, institutions must provide all students—regardless of background—with the support needed to develop strong communication skills. This begins with identifying systemic gaps in communication support and assessing the resources currently available to students.

Generative artificial intelligence (AI), specifically ChatGPT, is a current issue of pressing importance and concern in higher education (Mohebi, 2024). ChatGPT and its forecasted use challenge traditional notions of academic and professional ethics. During this emerging period when new technology is being learned and adopted, critical populations may be vulnerable. Beasley (2016) reports that students of color (non-white) were more likely to be accused of academic dishonesty than their white counterparts. At the same time, AI tools are currently used in academia through many platforms as plagiarism detectors for assignments (Surahman and Wang, 2022). There is a need to understand what constitutes positive institutional settings and learning experiences that influence students' career paths and how systemic bias and other forms of discrimination impact a student's journey, especially as students and society as a whole expand their AI-use (Winfield et al., 2020; Garcia Ramos and Wilson-Kennedy, 2024). Students from historically marginalized backgrounds continue to experience inequitable learning experiences through curriculum placement, access to certified instructors, and access to educational resources (Murphy et al., 1986; Lawson and Murray, 2014; Lawson et al., 2015). Inequitable outcomes in students' beliefs and performance in STEM may be a result of inequitable access to resources, inadequate support, and inequitable learning environments, which in turn affect students' science identity (Cwik and Singh, 2022). However, issues of fairness and equity in educational AI (AIEd) systems have received comparatively little attention (Holstein and Doroudi, 2022). 
The development of AIEd systems has often been motivated by their potential to promote educational equity and reduce achievement gaps across different groups of learners, for example by scaling up the benefits of one-on-one human tutoring to a broader audience or by filling gaps in existing educational services (Holstein and Doroudi, 2022). However, research has shown that even when schools and individual learners have equal access to new technology, the technology tends to be used and accessed in unequal ways, exacerbating inequity (Holstein and Doroudi, 2022). Puckett and Rafalow (2020) found that instructors at institutions with different demographic compositions adopted different attitudes toward students' digital literacy skills and expressions based on racial stereotypes about the student body, while schools that are better-resourced, serving students from more privileged backgrounds, tend to use technology in more innovative ways (Puckett and Rafalow, 2020; Rafalow and Puckett, 2022).

While studies have shown that AI techniques can bring opportunities to develop STEM education and heighten students' interest and motivation in STEM learning, little research has investigated the application of AI, including ChatGPT, in a STEM education context, and research on its influence on STEM identity and equitable student learning remains limited (Hwang et al., 2020; Xu and Ouyang, 2022). In addition, although studies in education have used AI tools for automated scoring (including writing assessments), recommending personalized student resources, and diagnosing learning gaps, little research has analyzed the use of AI technology across the broad spectrum of scientific writing and its dissemination, encompassing written, oral, and technical communication (Barstow et al., 2017; Ledesma and García, 2017; Liu et al., 2017; Perin and Lauterbach, 2018; Zhang et al., 2022; Alvarez et al., 2024; Blau et al., 2024; Nicholas et al., 2024; Nixon et al., 2024).

2 Methodology

A Quantitative–Qualitative (Quant–Qual) mixed-methods research design was employed in this study. Based on the gaps in research intersecting AI in education and science identity and the need for better understanding of AI use's influence on science identity among students, this study aimed to investigate the following research questions:

1. How are students using ChatGPT and similar artificial intelligence tools in science communication (writing, oral, technical)?

2. How did the use of artificial intelligence impact students' competencies, confidence, and identity development in communication-intensive STEM courses?

2.1 Ethical considerations

The study received ethical approval from the Institutional Review Board (IRB): IRB # IRBAM-23-0748. Virtually signed consent forms were obtained from participants. Student names were redacted and replaced with numerical coding during data collection and analysis to ensure data integrity and maintain participant privacy. Any potentially identifying details were removed from all documentation prior to analysis.

2.2 Participants and study environment

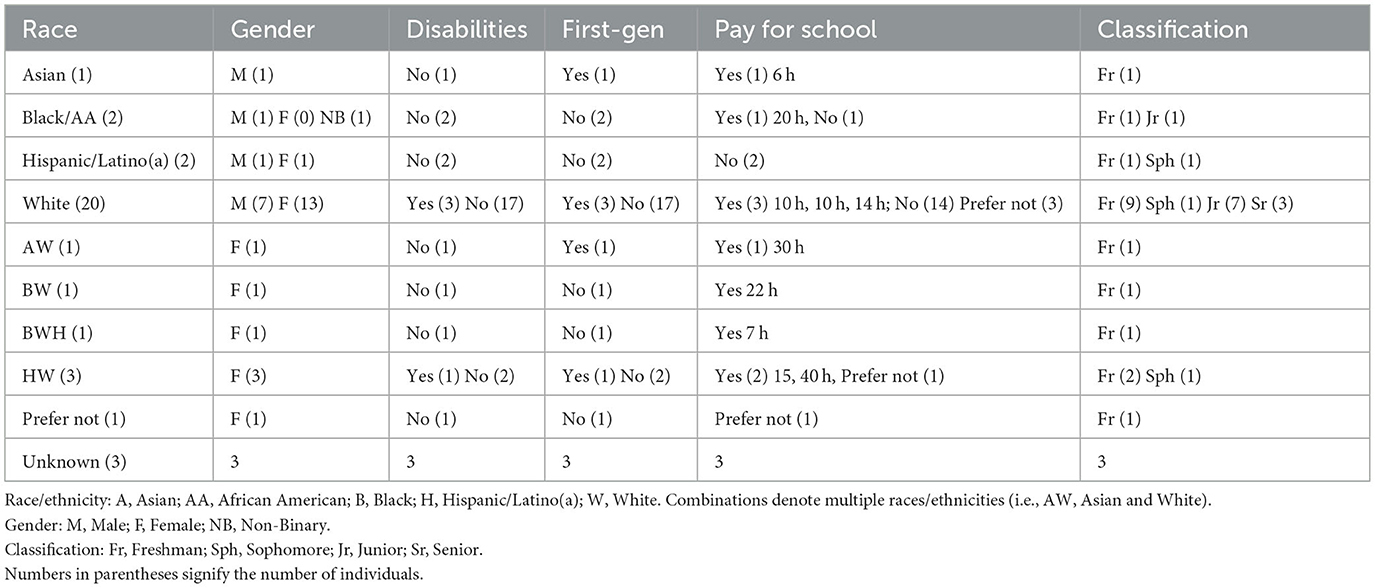

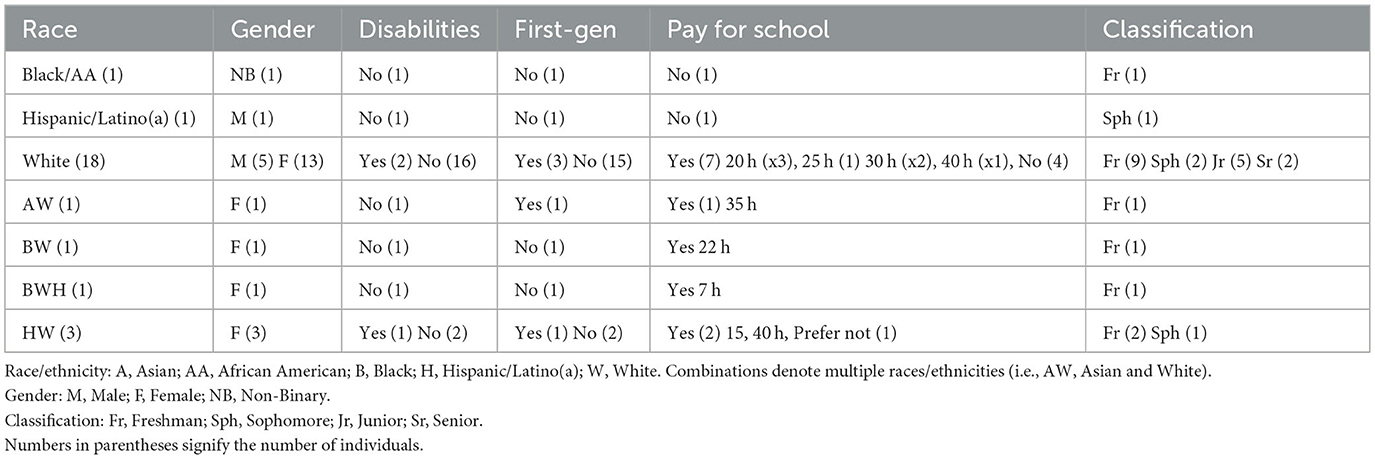

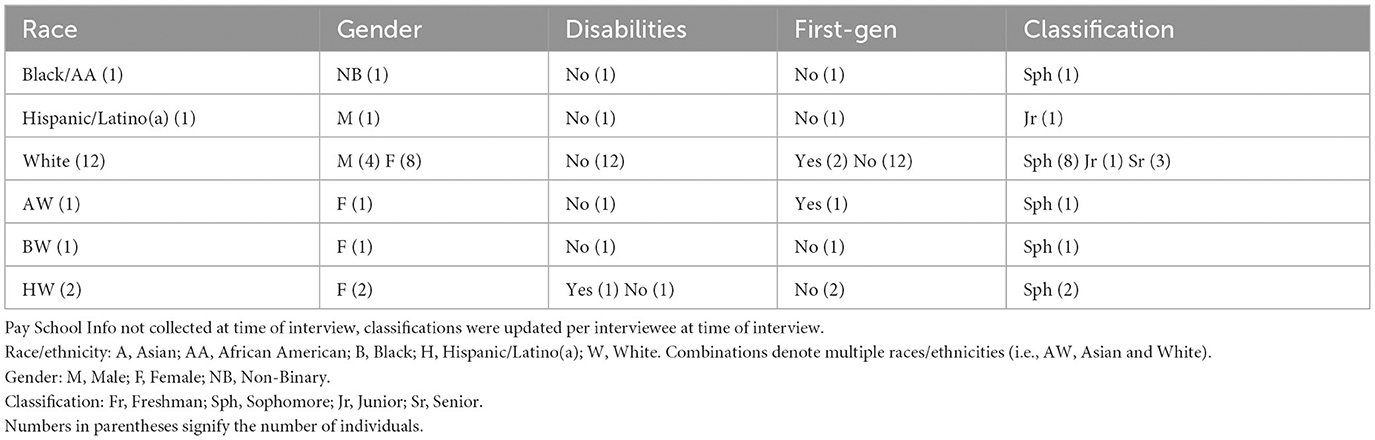

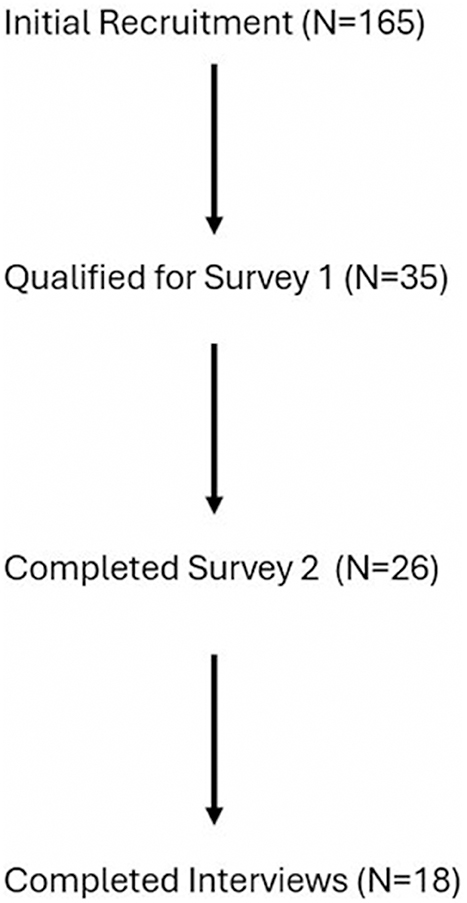

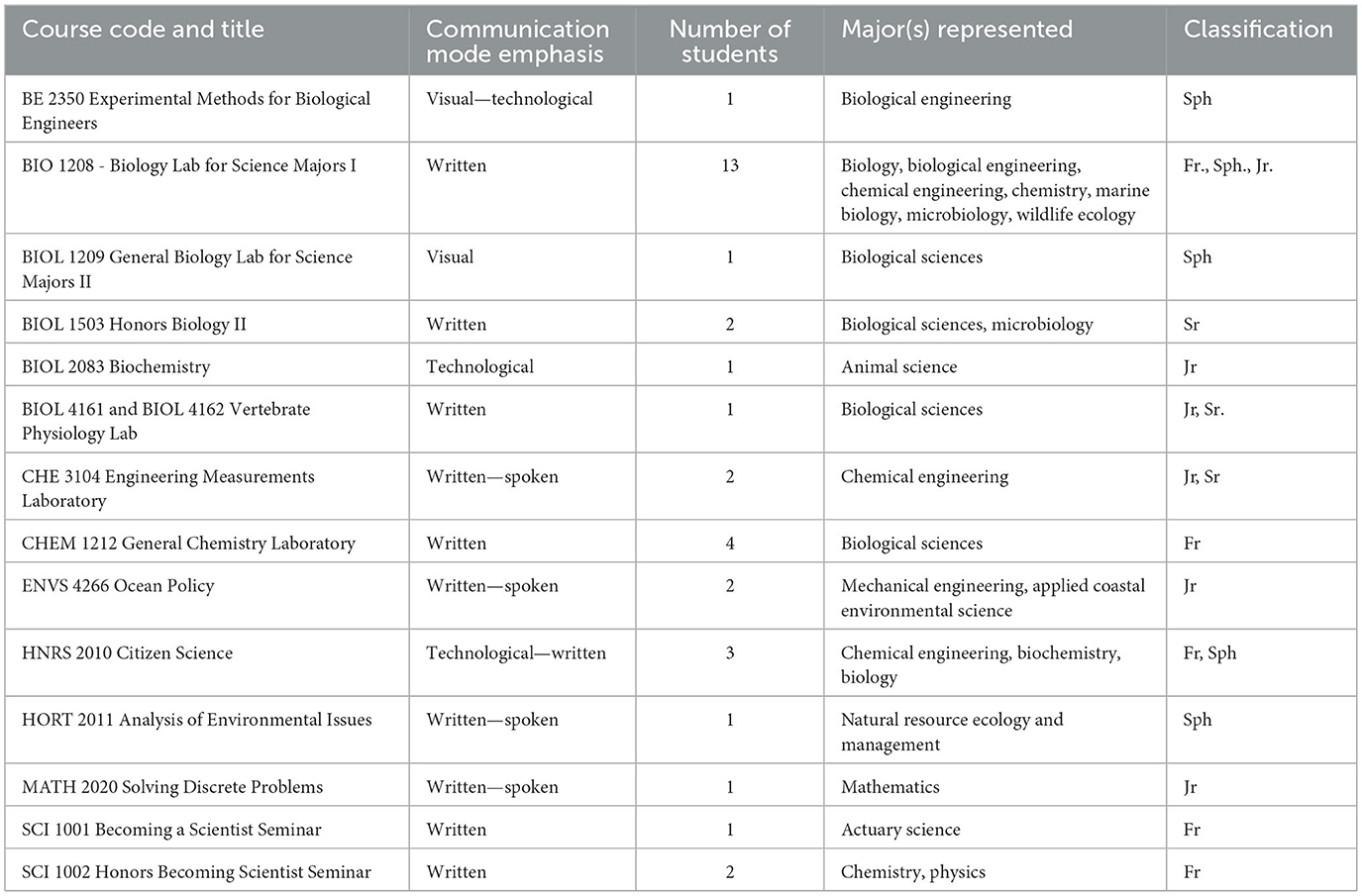

This study was conducted at a public flagship research university located in the southern United States. Although it is a predominantly white and historically privileged institution, its student body is diverse, characterized by intersecting identities in relation to race, ethnicity, socioeconomic status, first-generation college status, and gender. The university has a campus-wide initiative to integrate discipline-specific communication skills across all curricula, including written, spoken, visual, and technological communication. Courses designated as communication-intensive must be approved by the initiative, outlining what components are emphasized within the course (lab reports, speeches, presentations, etc.). Two progressive surveys (completion and results of Survey 1 determined progression to Survey 2) and an interview were conducted for this study. Survey 1 was deployed through the university's Qualtrics system in the middle of Fall 2023, remained open for recruitment until the end of the Spring 2024 semester, and was advertised campus-wide to recruit students who were STEM majors, were taking STEM communication-intensive courses certified under the campus program, and had used AI in those courses. Of the 165 students who initially took the survey, 35 qualified for the study; although 35 students completed Survey 1, only 31 left contact information to progress to Survey 2. Survey 2 was administered to the qualifying 31 students during the Spring 2024 semester, and only 26 students completed it. The 26 students were then invited to participate in the interview. Due to delayed responses from the students, the interviews were conducted during the Fall 2024 semester, and only 18 students participated. The interviews were conducted in a university conference room. 
Sixty (60) $25 Tango gift cards were given at random via Random Number Generator (RNG) for Survey 1. Each participant in Survey 2 received a $25 Tango gift card. Each participant in the interview was given a $50 Tango gift card. Details regarding the surveys and interviews are found in the subsequent sections. The demographic breakdowns of the participants for Survey 1, Survey 2, and the interviews are found in Tables 1–3, respectively. A basic workflow of the participant recruitment is shown in Figure 1. Table 4 displays the communication courses and methods of communication used.

Figure 1 displays the basic workflow of participants throughout the study.
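
As a quick check on the attrition described in this section, the reported stage counts (165, 35, 31, 26, and 18) can be converted into stage-to-stage retention rates. The following is a minimal Python sketch; the stage labels and the `retention` helper are illustrative names introduced here and are not part of the study's materials.

```python
# Stage counts as reported in Section 2.2; the labels are illustrative.
STAGES = [
    ("Took Survey 1", 165),
    ("Qualified and completed Survey 1", 35),
    ("Left contact information", 31),
    ("Completed Survey 2", 26),
    ("Participated in interview", 18),
]


def retention(stages):
    """Return (label, count, percent retained from the previous stage) tuples.

    The first stage has no previous stage, so its percentage is None.
    """
    rows = []
    previous = None
    for label, count in stages:
        pct = None if previous is None else round(100 * count / previous, 1)
        rows.append((label, count, pct))
        previous = count
    return rows


if __name__ == "__main__":
    for label, count, pct in retention(STAGES):
        suffix = "" if pct is None else f" ({pct}% of previous stage)"
        print(f"{label}: {count}{suffix}")
```

Reporting each stage relative to the previous one, rather than relative to the initial 165 respondents, separates screening loss (most respondents did not meet the eligibility criteria) from later attrition among qualified participants.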

2.3 Theoretical frameworks

A synthesis of the following theoretical frameworks was used.

2.3.1 STEM identity framework

Carlone and Johnson (2007) define STEM Identity as one's recognition as a STEM person and proposed a science identity framework comprising three dimensions: (1) competence, or one's knowledge and understanding of STEM; (2) performance, or one's ability to engage in various STEM practices; and (3) recognition, or being seen by others and seeing one's self as a STEM person. Competence refers to having scientific knowledge as well as motivation to understand the world scientifically. Performance refers to being able to demonstrate scientific knowledge to others. Recognition refers to both self-recognizing as well as meaningful others recognizing one as a “science person.” According to this model, a science identity is composed of combinations of these dimensions. For instance, a student may be able to perform activities that scientists do and show competence in that performance, but may not be recognized as a “science person” by others. Another student, by contrast, may be recognized as a “science person” while in fact having lower competence and lower performance than the former individual. However, this framework has gaps within the realm of recognition and its influence on confidence. Carlone and Johnson (2007) emphasize the importance of recognition from those they define as meaningful, scientific others. However, because the framework does not explicitly recognize science identity as a social identity, the potential impact of others (such as peers or instructors) is not fully explored. Another limitation of the framework is that it does not place sufficient emphasis on the role of the environment when investigating science identity development. Recognition from others is important when a student is explicitly aware of the recognition or the lack thereof. However, environmental influences can be more subtle. Master et al.'s study suggests that making the environment more welcoming extends beyond explicit recognition of individual students (Master et al., 2016). 
It also suggests that there are opportunities to support the development of a science identity in a wider range of students through environmental cues. When students feel included, they are more likely to continue engagement in science (Master et al., 2016).

2.3.2 The Communication Theory of Identity (CTI)

The Communication Theory of Identity (CTI) frames science identity as a communicative and interactional process, rather than a set of individual cognitions or beliefs (Stewart, 2022). Work using this framework suggests that support for science identity development should be designed to focus not only on formal interventions (e.g., mentoring, experiential learning) but also on creating opportunities for students to form peer relationships and communities (Stewart, 2022). The CTI framework was used as it best encompasses science identity development within science communication and AI contexts.

2.3.3 Intersectionality

Intersectionality encompasses psychosocial, cultural discourses as well as relations and practices of inclusion, exclusion, marginalization and centering (Phoenix and Pattynama, 2006). An intersectional approach is one that considers the simultaneous and mutually constitutive effects of the multiple social categories of identity, difference, and disadvantage that individuals inhabit (Crenshaw, 1991; Cole, 2009). Intersectionality theory has its roots in the writings of U.S. Black feminists who challenged the notion of a universal gendered experience and argued that Black women's experiences were also shaped by race and class (Collins, 2002; Davis, 2011). Rather than articulating gender, race, and class as distinct social categories, intersectionality postulates that these systems of oppression are mutually constituted and work together to produce inequality (Crenshaw, 1991; Collins, 2002; Cole, 2009). As such, analyses that focus on gender, race, or class independently are insufficient because these social positions are experienced simultaneously. Although intersectionality has had an impact on both Feminist Theory and Critical Race Theory, its integration into the STEM education, science writing, and artificial intelligence literature has been limited to non-existent. The synthesis of Science Identity and Intersectionality best reflects the need for educational contexts to integrate AI use into the scientific writing curriculum and the importance of that integration in promoting STEM identity and equitable learning outcomes.

2.4 Quantitative

The quantitative component of this study was designed to provide a structured overview of participants' self-perception of their science identity broadly and with the use of AI. In this study, two previously validated instruments were adapted and used: the STEM Professional Identity Overlap measure (STEM-PIO-1), originally developed and validated by McDonald et al. (2019), and the Science Communication Training Effectiveness (SCTE) scale, originally developed and validated by Rodgers et al. (2020). Each instrument was selected based on its validity and relevance to the study. The quantitative data were analyzed using IBM SPSS Statistics 29.0.0.0.

2.4.1 STEM-PIO-1

The original STEM-PIO-1 instrument is a single-item pictorial scale designed to measure STEM identity through participants' perceived overlap between their self-image and their image of a typical STEM professional. McDonald et al. provided evidence of the measure's validity and reliability, demonstrating convergent validity through moderate to strong correlations with established STEM identity, attitudes, and self-efficacy scales. Furthermore, criterion validity was established by differentiating effectively between STEM and non-STEM majors and by showing associations with persistence and academic engagement in STEM fields. The STEM-PIO-1 also displayed distinct discriminant validity, capturing unique variance separate from STEM identity measures that emphasize identity centrality. Its test-retest reliability over 6 months was moderate, with a multi-item variant demonstrating good internal consistency (Cronbach's alpha = 0.87).

2.4.2 SCTE

The Science Communication Training Effectiveness (SCTE) scale was designed specifically to evaluate perceived improvements in motivation, self-efficacy, cognition, affect, and behavior among students following science communication training. Rodgers et al. provided evidence for the scale's validity and reliability, where face validity was confirmed through expert evaluations involving interdisciplinary faculty members who assessed the scale's relevance and appropriateness. Content validity was strengthened through detailed item critiques from experts in education, plant sciences, and strategic communication, resulting in a refined and conceptually accurate measurement tool. The SCTE scale demonstrated strong construct validity, with internal consistency reliability (Cronbach's alpha) ranging from moderate (α = 0.555) to high (α = 0.962), and averaging α = 0.856, thereby confirming reliable measurement across its constructs. Criterion and convergent validity were established through significant positive correlations between increases in science communication knowledge and oral presentation self-confidence, as well as negative correlations between measures of self-efficacy, confidence, and stress or anxiety levels. These relationships validate the theoretical expectations that improved knowledge and confidence following training would decrease trainees' anxiety and stress.
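
For readers less familiar with the internal consistency statistic reported for both instruments, Cronbach's alpha for a scale of k items is k/(k - 1) multiplied by one minus the ratio of the summed item variances to the variance of respondents' total scores. The sketch below computes it in Python using only the standard library. The function name and the toy response matrix are hypothetical; they are not data from this study or from the original validation studies, and this is not a reproduction of how either instrument's authors computed their values.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a matrix of respondents (rows) by items (columns).

    Uses sample variances (n - 1 denominator) for both the item
    variances and the variance of the total scores.
    """
    if len(responses) < 2 or len(responses[0]) < 2:
        raise ValueError("need at least two respondents and two items")
    k = len(responses[0])
    items = list(zip(*responses))  # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical 4 respondents x 2 items on a Likert-type scale.
toy_responses = [[1, 2], [2, 1], [3, 4], [4, 3]]
toy_alpha = cronbach_alpha(toy_responses)
```

With the small sample sizes in this study, an estimate of this kind would be unstable, which is consistent with the decision reported in Section 2.4.3 not to run internal consistency tests on the adapted instruments.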

2.4.3 STEM-PIO-1 and SCTE

For our research, the surveys were adapted specifically to align with our participant population and research context. For Survey 1, the STEM-PIO-1 was kept in its original format, whereas the term “AI” was added after each SCTE statement. For Survey 2, the word “Professional” was replaced with “Communicator” in the STEM-PIO-1, whereas the SCTE was kept in its original format. However, the study was limited by a small sample size (N = 35 for Survey 1, N = 26 for Survey 2), restricting the feasibility of performing statistical validation methods, such as confirmatory factor analysis or internal consistency reliability tests. Consequently, our quantitative results did not reach statistical significance. Despite this limitation, the adapted instruments' face and content validity were carefully ensured through expert reviews and pilot testing (N = 6). The second author (ZWK), who is familiar with STEM identity research, evaluated the adapted surveys to confirm their appropriateness and clarity for the study participants. During the pilot study, participant feedback indicated the clarity, relevance, and appropriateness of the adapted survey items, further supporting the validity of the versions used in both surveys.

2.5 Qualitative

The qualitative component of this study was conducted in person in a university conference room during the Fall of 2024, except for one session held via Zoom to accommodate participants' needs. Group interviews were chosen to encourage conversation among the participants regarding their AI-use. Due to participant availability, there was one solo interview and five group interviews with 6 (Zoom), 2, 2, 4, and 3 participants, respectively. The interviews were audio recorded. Discussion of informed consent occurred before each interview. Participants were instructed to answer each question to the best of their ability and encouraged to reply to others' responses if they felt the need to comment. The interviews were transcribed verbatim using Rev and refined by the primary researcher (JGR). A pilot study was done with 6 students prior to the full execution of the study. The interviews lasted between 40 and 75 min. Delve, an online qualitative data analysis software, was used to create, sort, store, and retrieve data during analysis (Delve, 2025). Inter-rater reliability (IRR) between JGR and ZWK was assessed to ensure reliability of the coding scheme and analyzed data, with an initial percent agreement of 90%, which increased to 100% after discussion. Although the interview sample size (N = 18) was below the commonly recommended threshold of 20 for theme saturation, the recurrence of themes across participants and the coherence of shared reflections suggest that thematic saturation was achieved (Hennink and Kaiser, 2022). Inductive coding was used for data analysis. The coding process was guided by the frameworks described in Section 2.3. 
Although the coding was guided by these frameworks, responses across the different demographic categories suggest that institutional and curricular structures may have had a more prominent influence on students' experiences than individual identity characteristics alone. Nonetheless, distinctions across lived experiences were documented and preserved where present. Additional quotes are found in the Supplementary material document.
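
The percent agreement reported for inter-rater reliability in this section is the share of coded excerpts to which both coders assigned the same code. A minimal Python sketch follows, assuming each coder's decisions are stored as a list with one code per excerpt in the same order. The code labels and the ten excerpts are hypothetical and were made up for illustration; they are not the study's codebook or data.

```python
def percent_agreement(codes_a, codes_b):
    """Percentage of excerpts on which two coders assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("both coders must rate the same excerpts")
    if not codes_a:
        raise ValueError("no excerpts to compare")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100 * matches / len(codes_a)


# Hypothetical codes for ten excerpts (not the study's data).
coder_1 = ["confidence", "time", "competence", "time", "ethics",
           "confidence", "competence", "time", "ethics", "confidence"]
coder_2 = ["confidence", "time", "competence", "time", "ethics",
           "confidence", "competence", "time", "ethics", "competence"]

initial = percent_agreement(coder_1, coder_2)   # one disagreement out of ten
resolved = percent_agreement(coder_1, coder_1)  # after discussion, codes match
```

Percent agreement is simple to report but does not correct for agreement expected by chance, so it tends to read higher than chance-corrected statistics computed on the same codes.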

2.6 Study limitations

The data gathered in this study are derived from a large R1 institution in the southern United States that has a campus-wide communication-intensive program. Thus, the data may not be representative of smaller colleges, of universities in other states, or of institutions whose communication-intensive initiative is absent or not campus-wide. There are emerging reports and data on AI-use within all disciplines; however, this study uniquely emphasizes student AI-use in STEM communication-intensive courses.

The quantitative data collected, although exploratory in nature due to the small sample size, remain valuable as they complement and contextualize our qualitative findings. The quantitative descriptive insights offer additional depth and help reinforce the themes emerging from our qualitative data analysis, thus enriching our overall understanding of participants' STEM identity perceptions and experiences within science communication (Creswell and Clark, 2017). Given these considerations, future studies are recommended to utilize larger sample sizes to conduct comprehensive psychometric evaluations, further validating the adapted STEM-PIO and SCTE instruments' reliability and applicability across diverse educational contexts.

3 Results

3.1 Quantitative results

The following data quantitatively demonstrate how AI-supported and general communication training and explicit curricular experiences affect students' science identity and their competencies in scientific communication. Descriptive statistics are given in the B tables in the Supplementary material document.

These quantitative findings offer empirical evidence on the influence of combining innovative technological tools and identity-focused educational practices.

3.1.1 Survey 1 and 2 data comparison

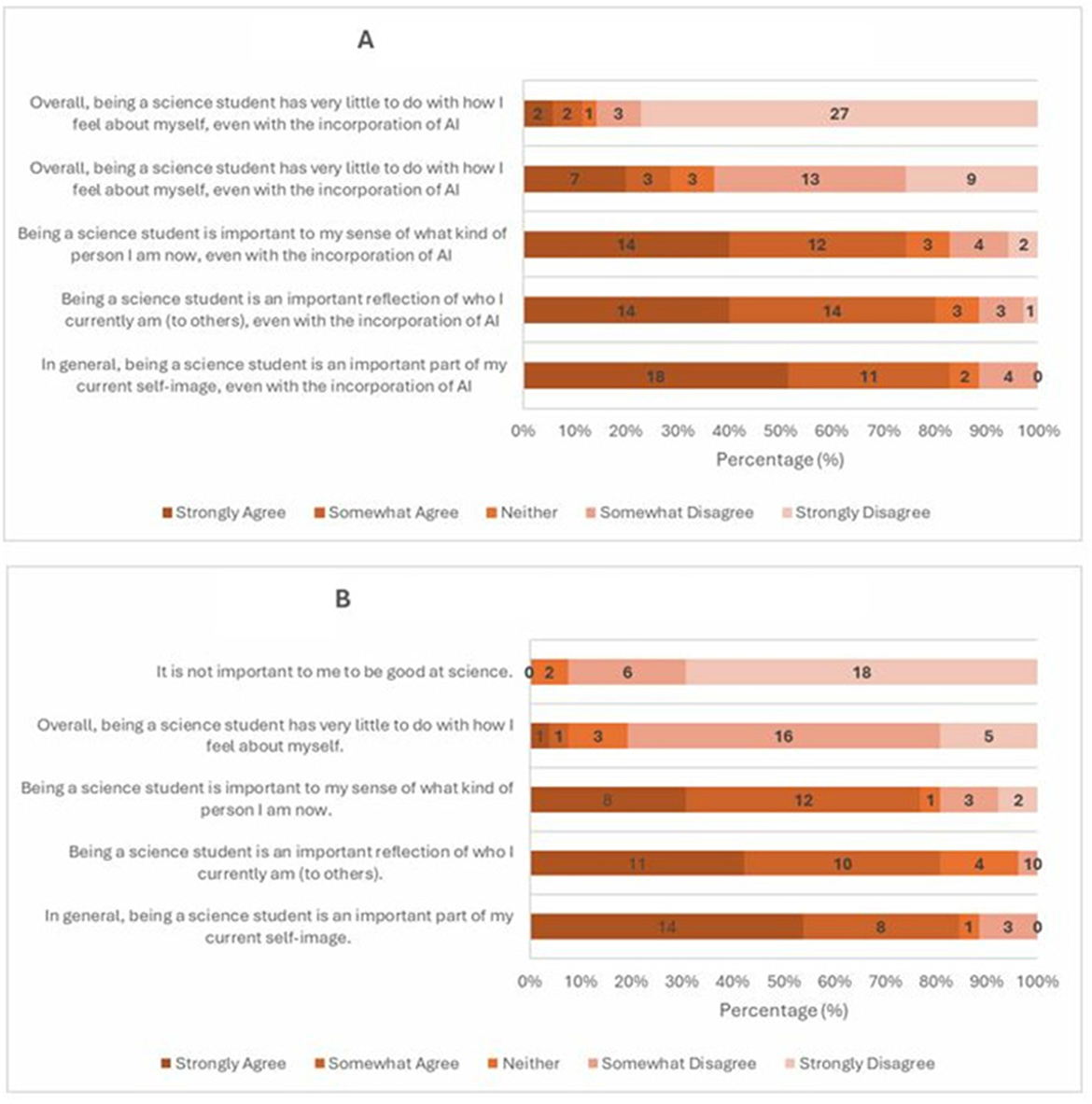

Figures 2A, B (Supplementary Tables 1, 6) show that the students largely affirmed the importance of their identity as science students despite incorporating AI into their learning process, and that, without explicit AI integration, being a science student significantly impacts their self-image. A notable majority, with 18 strongly agreeing and 11 somewhat agreeing, indicated that being a science student remains a crucial element of their current self-image. Similarly, when asked about their science identity relative to others' perception, respondents showed overall strong agreement, with 14 strongly agreeing and 14 somewhat agreeing, indicating internal and external alignment of their identities as STEM Professionals despite AI usage. The few students who disagreed suggest that AI-use may be internalized differently by certain students. This highlights a consistent sense of personal and social identification with science independent of explicit technological influences. The comparative analysis (Supplementary Table 11) shows minor differences in students' perceptions of their science identity when integrating AI, suggesting that the incorporation of AI does not dramatically alter fundamental self-perceptions as science students, although slight variations suggest some students may feel AI impacts external perceptions more than internal perceptions.

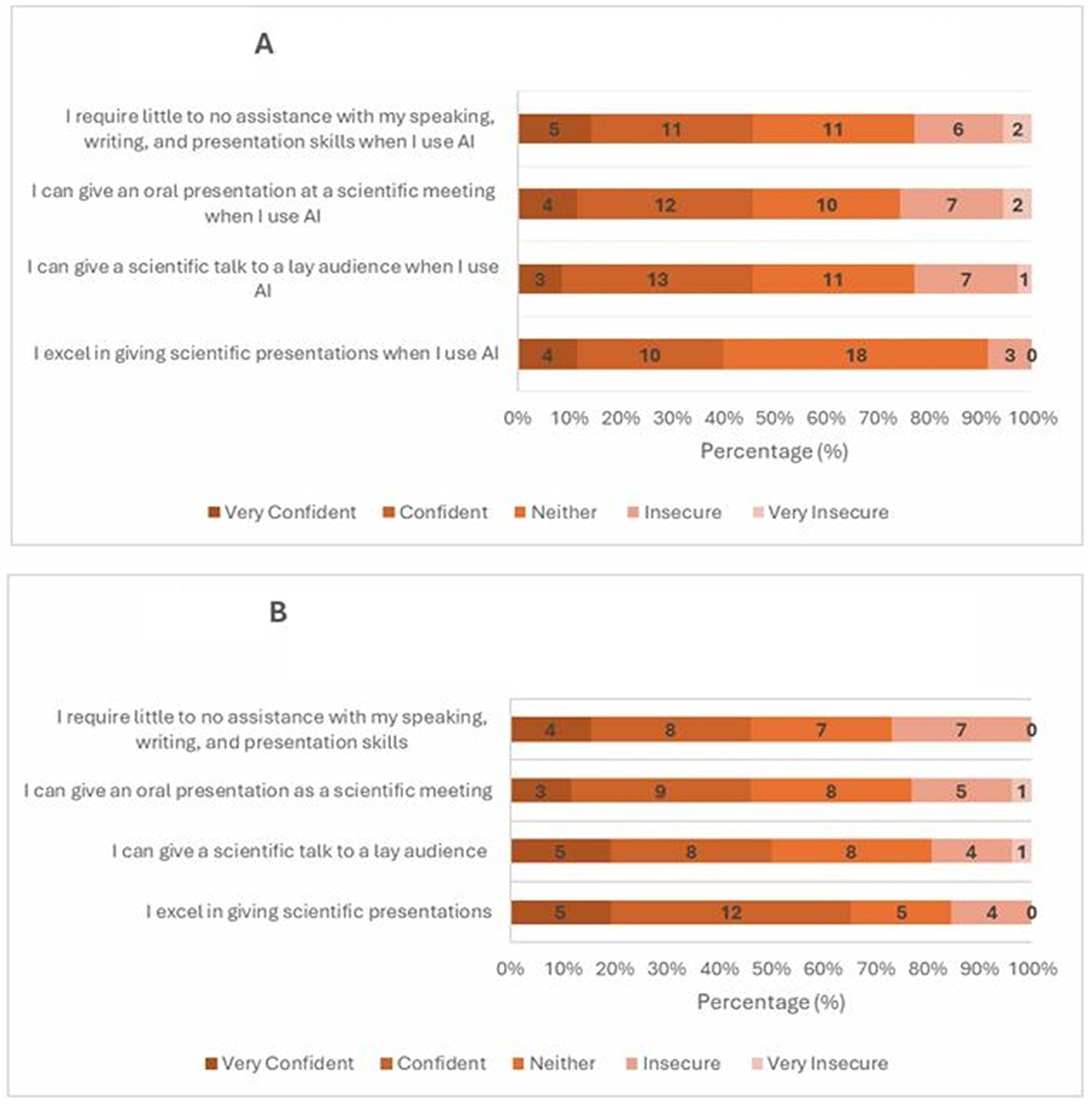

Figures 3A, B (Supplementary Tables 2, 7) show that the students' responses were mixed regarding self-confidence in oral communication tasks utilizing AI. A moderate proportion of students expressed confidence in using AI for excelling in scientific presentations and presenting to audiences. Neutral responses remained significant across tasks, indicating a degree of uncertainty about AI's role in enhancing oral presentation abilities. The variation in these data highlights an area where AI integration, while perceived as beneficial, has yet to unanimously boost confidence among students. The data also show that a majority of students expressed confidence in their ability to conduct effective scientific presentations. However, considerable neutral and insecure responses suggest that while many students feel well-equipped, a notable portion still experiences self-doubt, underscoring the need for continued pedagogical interventions in enhancing communication skills. The comparative analysis (Supplementary Table 12) shows that AI integration generally resulted in slightly lower reported confidence across most presentation metrics, potentially indicating initial uncertainty and skepticism about AI's effectiveness or reflecting students' reservations about depending on technology rather than personal skill.

Figure 3. (A) Confidence in science presentations using AI. (B) General scientific presentations without AI.

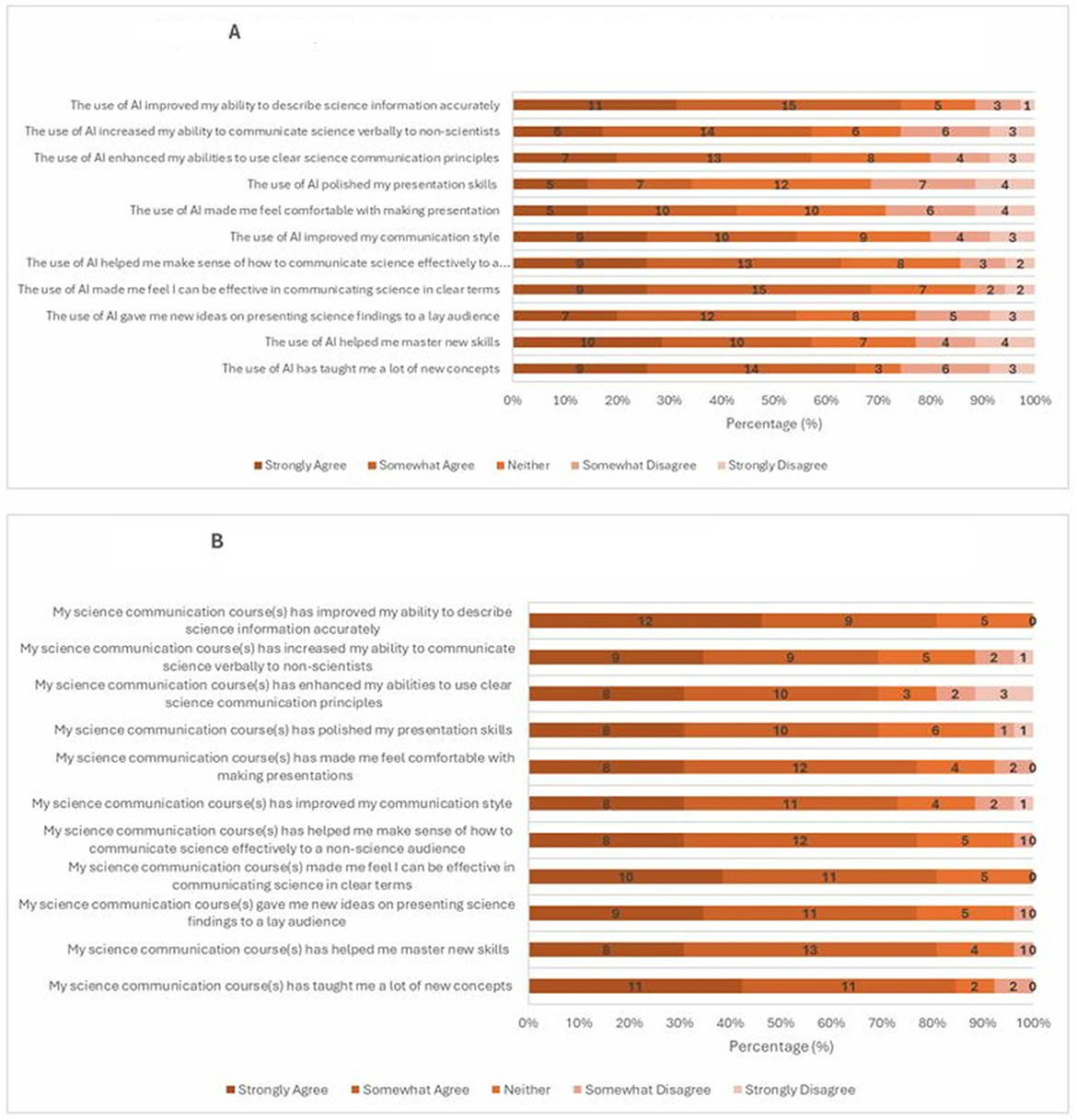

Figures 4A, B (Supplementary Tables 3, 8) display students overwhelmingly endorsing the role of AI in helping them acquire new concepts and master new skills. The students also strongly credited AI for improving their communication, suggesting that students recognize the substantial improvements in their communication skills directly associated with AI-support. However, the students who disagreed across the board reflect skepticism of AI's influence on their communication abilities. In addition, the data display students overwhelmingly reporting that their science communication-intensive courses significantly bolstered their capabilities. Strong agreement was evident in terms of mastering new communication concepts, improving communication style, and enhancing the clarity of verbal science communication. Interestingly, the comparative analysis (Supplementary Table 13) shows that students rated their skill development and conceptual understanding more positively without explicit AI integration. This may indicate students' greater reliance on, or perceived value in, traditional communication training methods, or it may reflect hesitancy about attributing personal growth directly to technological tools.

Figure 4. (A) AI's impact on science communication skills development and conceptual understanding. (B) Science communication skills development and competencies without AI.

3.1.2 Survey 1

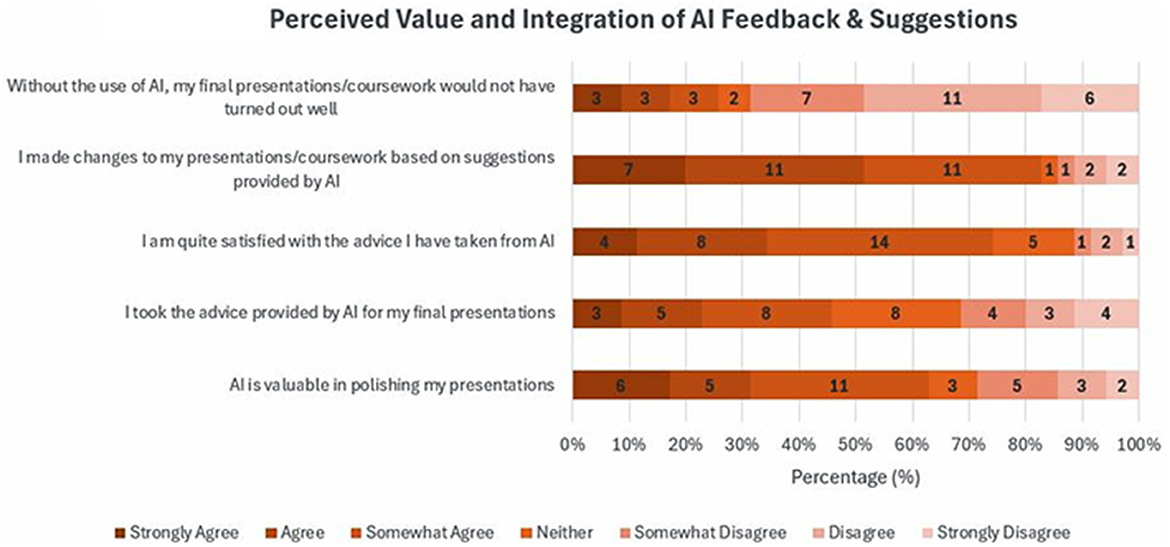

Figure 5 (Supplementary Table 4) displays students acknowledging the instrumental influence AI feedback had on their coursework. A significant group explicitly agreed that AI suggestions led to substantive changes in their presentations, while those who disagreed expressed skepticism about whether AI was essential to delivering successful assignments. While AI is largely viewed as helpful, students maintained a strong sense of ownership of their academic products.

Figure 5. Perceived value and integration of AI feedback and suggestions. Students' mixed acknowledgment of the instrumental influence AI feedback had on their coursework.

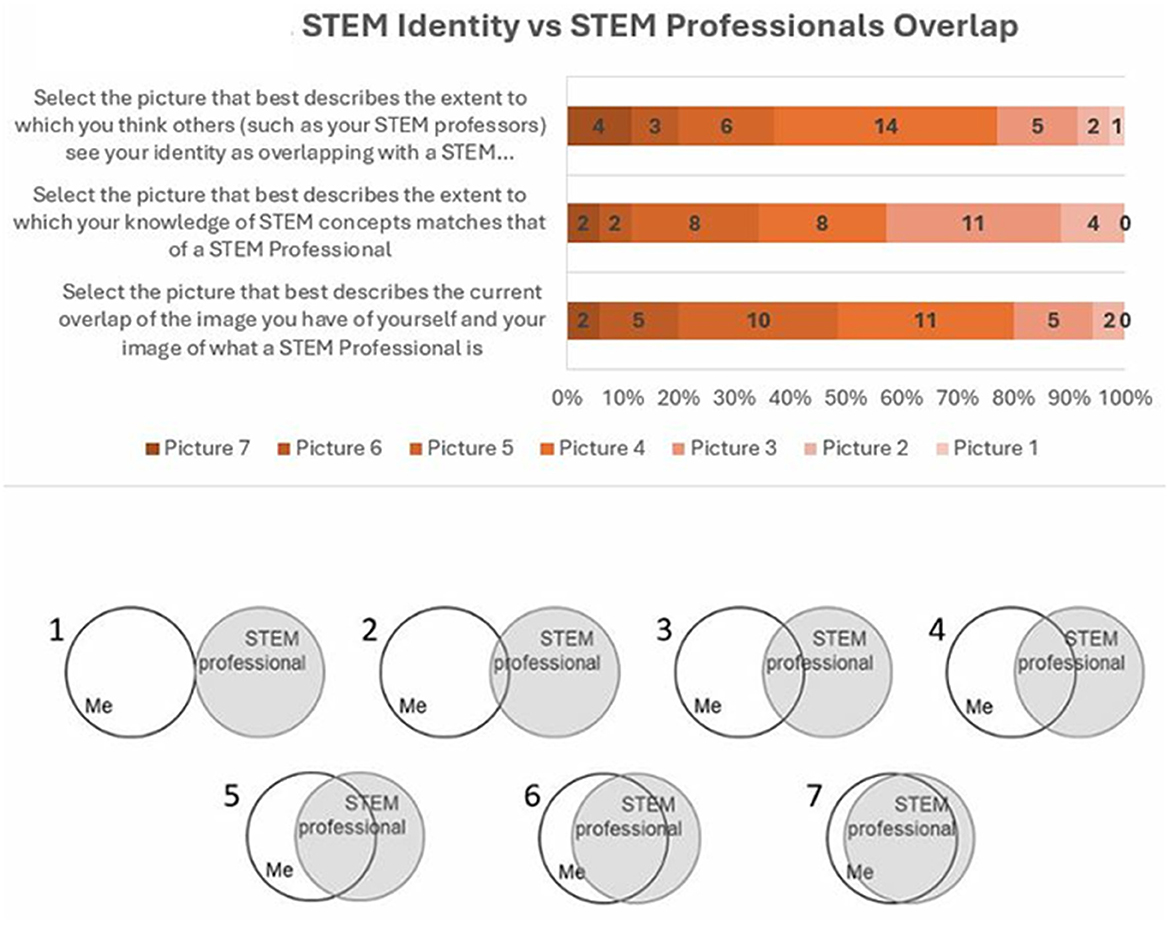

Figure 6 (Supplementary Table 5) displays the moderate alignment between students' personal STEM identities and their conceptualizations of STEM Professionals. Most students selected mid-level overlap representations, suggesting active development of their STEM identities. Although some students perceived substantial overlap, a significant number indicated partial alignment, suggesting room for continued growth in their professional self-concept influenced by the presence, assistance, and use of AI.

Figure 6. STEM identity vs. STEM professionals overlap. STEM professionals are individuals whose professional activities relate to the STEM fields (science, technology, engineering, or mathematics).

3.1.3 Survey 2

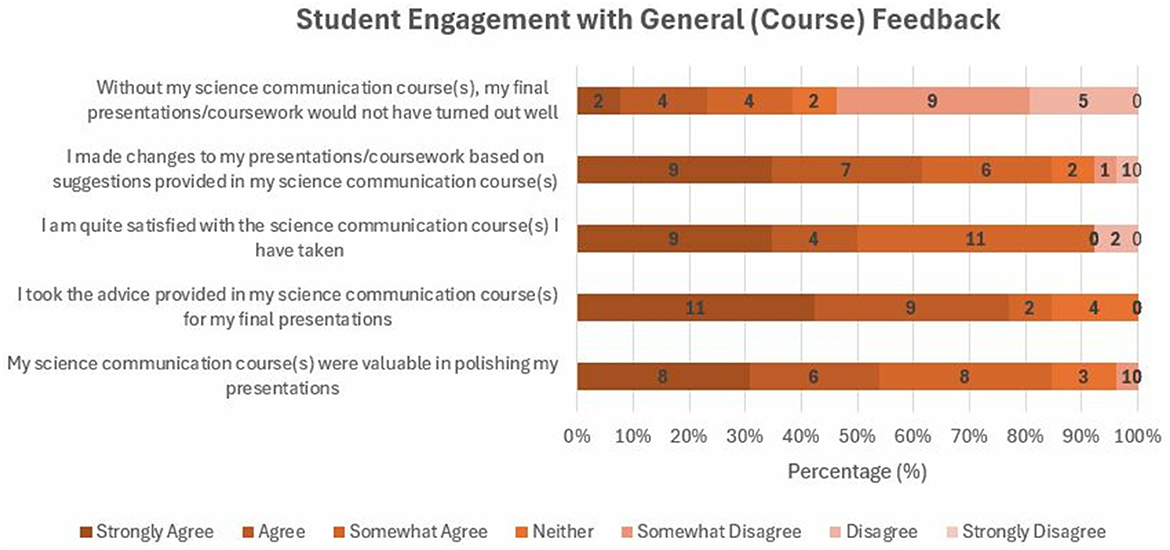

Figure 7 (Supplementary Table 9) shows that students actively integrated course feedback into their work, with many strongly agreeing or agreeing that the courses significantly contributed to polishing assignments and led to tangible improvements in their final products. Students generally rated non-AI feedback more positively, particularly in terms of value and adherence to feedback, highlighting a preference for, or perceived greater value in, traditional instructor feedback vs. AI-generated suggestions. Yet, notable disagreement reflects student perceptions of instructional necessity, highlighting variability in individual reliance on structured feedback.

Figure 7. Student engagement with general (course) feedback. Students actively integrated course feedback into their work without AI.

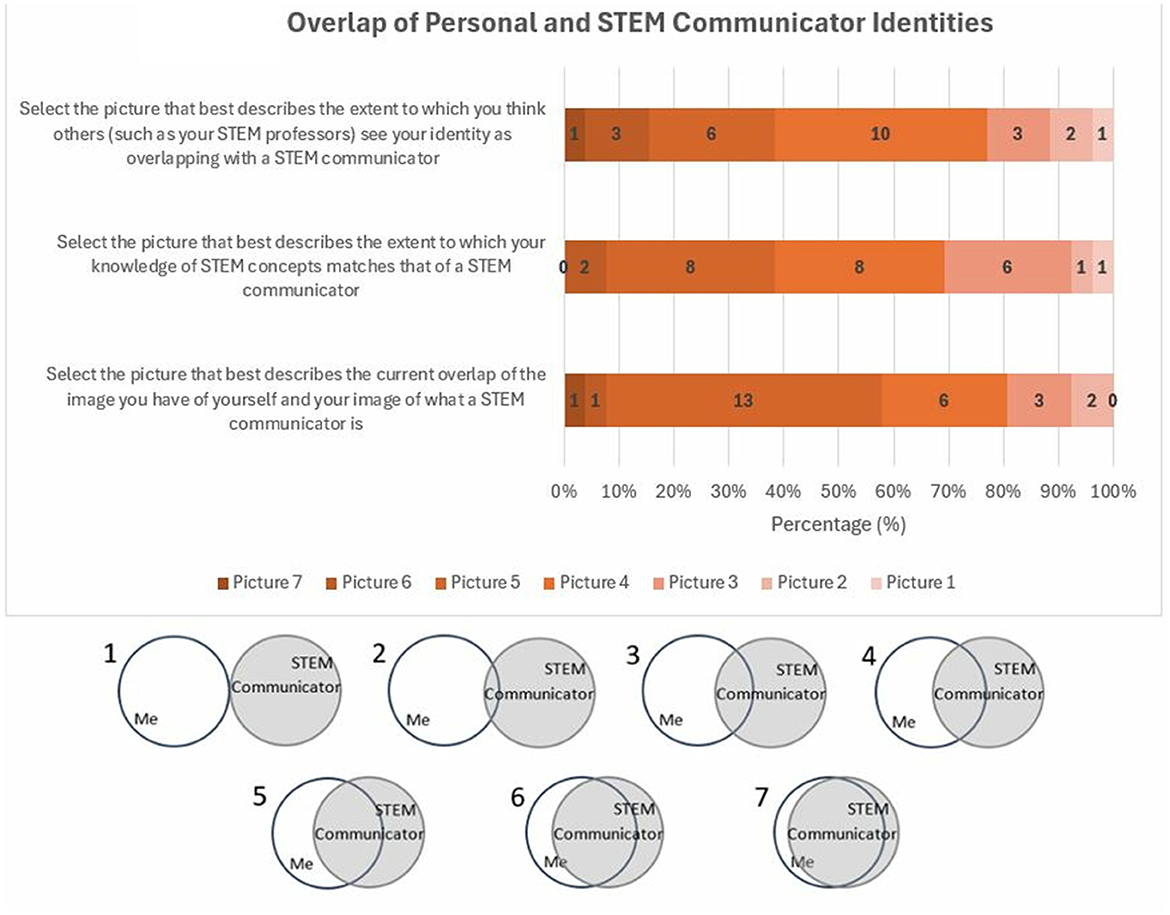

Figure 8 (Supplementary Table 10) displays students' moderate congruence between personal identity and perceptions of STEM communicators, predominantly selecting intermediate identity overlap levels. This finding suggests a well-established, though not fully matured, professional identity as STEM communicators, emphasizing the impact of curricular efforts in shaping students' professional self-concept. The data also suggests that external validation and recognition of personal identity represent crucial growth areas for enhancing persistence and identification with STEM fields.

Figure 8. Overlap of personal and STEM communication identities. STEM communicators are individuals whose professional communication activities relate to the STEM fields (science, technology, engineering, or mathematics).

3.2 Qualitative results

Analysis of the interviews revealed an intricate relationship between undergraduate students and their use of AI tools within their courses, especially when compared to their self-reports from Surveys 1 and 2. Eight broad thematic categories, encompassing the many themes present, emerged from the data, addressing how students use AI in their communication-intensive STEM courses and how that use has influenced their competencies, confidence, and science identity.

3.2.1 AI enhances learning, but requires critical evaluation

All of the students have used AI to support science communication-based tasks for their courses. Students have used ChatGPT to draft, summarize, and edit their scientific writing and technical work, including structuring oral presentations and revising technical outputs such as code and data descriptions.

“For me, I usually just, it comes in the form of the AI going in a different direction than what I necessarily envisioned for my paper or my presentation. And so, usually, in those instances I'm just like, ‘Oh, how can I reword this prompt in a way that actually fits the mold of what I'm actually trying to talk about.' And I think in those instances, it actually helps me because it allows me to rephrase things in my own brain and be like, oh look, this would be a more beneficial way of looking at it, or this is a topic I really want to touch on more. And so, I'd say in those instances, I see those slip-ups as partially an inconvenience, but also it gives me more confidence in knowing that I know what I'm talking about essentially. And then also, that I can, rephrasing it is always a good thing just in a broader scientific scope because you are going to have tons of different audiences.”

“So they have this, it's called like LaTeX is the website that we use to do our presentations. He suggested we use that, not saying we would have got a better score if we didn't use that, but the only way to write the slides is using coding, which it was the first time I've ever done that. So it was a new learning curve to our presentations. So it would take double the amount of time to once learn the code and then write my presentations. So I used it to help me write the code and learn the code really fast so I can go ahead and write the presentation, take a day or two on actually doing the math, working through it and whatnot”

“I wouldn't really say grammar or content. However, on the content end, it would at times… I would put it in say grade my lab report based on this rubric, and I kind of rewrote the rubric because AI was like, ‘What is this?' I would tell it to grade it based on this rubric and it would tell me, ‘Oh, you're missing an equation here, or you need to include the program that you analyze your data with and the methods.' It would just be small, small details like that. But typically, it was a really good feedback. I didn't really use it to write my stuff and I didn't find it to be particularly great at coming up with its own stuff.”

“For me, I use it to do the work that I essentially almost can't be bothered to do. So essentially, in the sense that I mostly use it for my communicative intense engineering courses and essentially I'm in a position where it's like I'm in engineering, I have other work to do, so I essentially use it as almost a starter prompt to get the ball rolling. Because once I have a starter prompt in front of me, it tricks my brain into thinking, oh, I already have work done. And even if I don't keep any of the original prompts that I actually use, I still end up using that as a basis to work off of writing the rest of my paper. And then, I usually use that, or if I'm doing a presentation, usually use that as inspiration of what I want to put in this PowerPoint and how I want to talk about things. And so that's mostly how I use AI.”

Although the use of GAI improved their workflow and access to explanations, students described both their trust in AI outputs and the limits of that trust, noting the need to double-check results. This critical engagement suggests developing competencies, even as AI initially masks misunderstandings.

“…it just encourages me to fact check the AI and just be like, I know I'm not crazy, I know I definitely have the right answer in my brain. And so, it encourages me to do deeper research in a sense to prove that the AI is faulty in that sense.”

“It would only answer a chemistry problem right a couple of the times because I would take the answer and put it into the homework and present it, but it's just I didn't trust it so much in that field anymore. I continued to trust it because I kept getting good scores and good feedback from my TAs and professors on the written assignments for lab and stuff. But I feel like answering questions, I learned really quickly that in chemistry answering questions, you can't really trust AI to do that for you. So I just learned what use, because AI I learned as a tool and I just learned where it's best used and best not used.”

“It makes me feel really confident when I realize that it's a wrong answer, I'll be like, ‘Okay, I know what I'm doing.' I know what I'm doing well enough to know that this is completely wrong.”

3.2.2 AI supports time management, but risks over-reliance

Students consistently described AI as a time-saving tool for completing what they deemed repetitive assignments. Students also used AI to organize content, break down content into manageable chunks, and generate summaries when under deadline pressure. In many cases, this use stemmed from procrastination or low motivation, with AI positioned as a coping tool to “just get it done.”

“It's easier something as simple as enjoying my free time. Just as college students, I feel like, and especially in science or science adjacent classes, I find myself almost constantly doing work for school. So it's one of those instances where it's like, okay, do I really want to spend three hours writing this paper or can I get it done in an hour, hour and a half with the help of AI and then using that rest of that time to do anything else that I would just enjoy doing more. And then, beyond that, it also goes hand in hand with what I said in terms of having other work to do. If I have a lab report and a presentation and an engineering assignment all due in the same night, whatever I can use to help get all of those things done and as quick and efficient as a manner that I can, I'm going to use it.”

“So in my other courses, sometimes if they give us a really long paper to read and I just don't have the time to read it, for example, in my ecology class, there was one by this guy named Lima. I don't know why, there were 40 pages to it and it wasn't saying anything. So I slammed it into ChatGPT and I was like, ‘Can you just give me a really brief summary?' And it did and I ended up using it, and that's all I actually needed for the exam. So other than that, I really just use it to condense down data, write code, and probably condense papers as well.”

“…it makes me push things off longer. I knew it was there for me. I knew I could learn it super-fast just because I had it. So I waited until the last two days to go ahead and work on my project when I knew I had it for the entire month. So my enthusiasm lacks a lot just because I have something I can rely on, look back on and do it really fast because it gives me the solution in one second.”

Students also reported short-term boosts in confidence but expressed concern that their understanding and scientific writing competencies were shallow or surface-level due to AI reliance, which in turn led them to question their science identity.

“I feel like AI in general kind of makes me feel bad because I feel like I'm just relying on something without actually putting in the work. And I do put in the work. I'm not saying I do it all the time, but when I do use it, I tell myself that people probably that are in medical school right now do not use this at all. People that are doctors probably do not use this. And it creates doubts whenever it doesn't give me the answers that I want, or it gives me this really weird explanation. And so I start to doubt myself like, should I really be in this course if I don't even know how to learn something, even with the use of AI? Should I really be here as a scientist?”

“It's kind of humbling in the sense because in high school I pretty much got straight As and then I come here and it's like I'm happy with getting a C. and that's not normally how I am. And so my enthusiasm when I'm working with AI and it just doesn't give me what I want, I don't want to say it completely diminishes, but it does create self-doubt. Like, what am I supposed to do now?”

3.2.3 AI fosters STEM identity and confidence—but raises skill concerns

Students described how AI tools empowered them to participate more confidently in class discussions, presentations, and writing-intensive tasks. Students then identified themselves more closely with STEM roles due to the increased competence via AI support. However, many voiced concerns about whether their success was due to their own efforts or AI. This tension of authenticity has shaped their reflections on science identity and perceived preparedness/skill development for future STEM careers.

“If anything, it's probably solidified my science identity just because I feel like I'm able to keep up with, the [science] community.”

“I think in my case, it's definitely made me a lot more confident in this practical situation rather than all my homework because I can, in real-time, see the effects of it I guess in class. And then, the other part of the question, my science identity, I guess it makes me feel more knowledgeable so it improves my identity as a teacher of some sort, I guess. If I had not used it, I feel like I would be a little bit more hesitant to participate in the lecture because I wouldn't really know what else to say about it. So having AI give me more ideas to expand on has really helped significantly.”

“It just gives me doubts. I just think, am I not going to be able to do this? Am I not going to be able to finish it? Because at the end of the day, even in my field of work, people can use it at their own work jobs and everything, but if I can't make it through college even using it then might really fit out to be one? So it kind of puts my esteem down a little bit and just makes me worried, makes me anxious about if I can't even pass the lower level of this, when I get to the higher one, is it only going to get harder and whatnot?”

3.2.4 Institutional and generational gaps shape AI use

Students highlighted inconsistencies in how AI was discussed, permitted, or integrated into their courses. Some professors and teaching assistants (TAs) encouraged AI use for brainstorming or formatting, while others prohibited its use altogether and equated it with academic dishonesty.

“From a professor standpoint, I think it just depends on the professor. Like my microbiology professor, of course, she highly encourages us to use AI, but other professors think of it as laziness or a way to avoid actually putting an effort to the assignment.”

“My genetics professor was just like, ‘You can use AI because I use AI.' And I was like, ‘All right.'”

“I even have one class, it's like a statistics or whatnot, that a TA will literally tell us to use it, but then the professor himself, which he doesn't actually go to the class, it's taught by the TA, he's not for it all. But even the TA will tell us in class like, ‘Hey look, this can help you and we'll use it,' or whatnot, ‘During the lab and stuff.'”

In courses with open AI policies, students were more willing to use AI for scientific communication. In restrictive environments, students turned to AI privately or avoided it entirely. Inconsistent messaging, whether from different professors or from the same professor across courses, affected students' confidence and willingness to fully integrate AI into their science communication practices and created inequity of use.

“…most of my professors get really angry at that. I've never been caught. I've never known anybody personally to be caught, but I have had people caught out of class, kicked out of class and whatnot …”

“I usually use Gemini by Google because that's what my chemical engineering professor recommended to us, and I know how to use it more than ChatGPT.”

Students reported a stronger science identity when instructors modeled or encouraged ethical use.

“It makes me feel like I'm a part of this thing and if everybody else started using it, and my 50-year-old teacher who had to learn AI on the fly as it was coming out is using it for phylogenetic work and coding, yeah, that's really, really helpful, then me using it is not really any different. It makes me feel like a scientist. It's cool!”

“I think because AI is so widespread and everybody's using it now, that professors are accommodating for that. So even in the syllabus they'll be like, ‘Oh, appropriate uses of AI' and they'll let you know what you can and cannot do. They're not just like, ‘Oh, you cannot use AI whatsoever.' They're like, ‘Oh, you can, but you can't use it for this. You can only use it to do this.'”

3.2.5 AI access and literacy affect equity and outcomes

Discussing the use of AI tools has revealed disparities in access to advanced AI tools and in students' digital literacy. Students with access to premium paid versions of ChatGPT and other AI tools used them more frequently. Other students were limited by access or knowledge gaps. This unequal access affected confidence and performance, with students noting they felt “left behind” when their peers could use the more powerful AI models to their full capacity for assignments, especially those that were high-stakes.

“My family pays for the premium ChatGPT. It's like ChatGPT 4.0 right now. As far as I'm aware, that's actually a big improvement. So I trust that more than, for example, when I use a search engine.”

“Yeah, it definitely has its ups and downs. There's obviously going to be some students that abuse the technology and use it to completely do their assignments, but that's inevitable. But most of the time, I feel like it's used beneficially to help study and aid with assignments. And I feel like, like he said, that stigma should definitely be erased and AI is becoming the new norm and it's time to accept that and move forward with regulations that might help students that use it beneficially to use it in the classroom.”

“I mean AI is so new, if I understand the question correctly, AI is so new and people will have different levels of technological prowess and savviness. So some people will be using AI more or less and when it is allowed or even if it's not allowed, people who use AI to… People who are good using AI when it's allowed or people who use it to cheat will probably do better in these classes because AI is a good tool already. So I could definitely see that and probably have seen, I never really consciously acknowledged this, but certainly I would think that I have seen in practice where some students will get an edge because they are good or using AI or use it when they shouldn't have as well possibly.”

3.2.6 Help-seeking behavior and comfort impact AI dependency

Students' personal comfort and experiences with faculty significantly influenced whether they sought human support or turned to AI. Some students described professors and TAs as inaccessible or intimidating, leading them to use AI as a safer and non-judgemental alternative. Students who described their instructors as supportive (in demeanor and/or toward AI use) used AI as a starting point but still engaged with human feedback and valued personal guidance.

“Most of the time for me, AI will be at my last resort. Usually, I'll check the textbook before I even ask AI if the professor is not available. But in terms of if I would rather use AI or go to the professor, I would always rather go to the professor because I know they would have the right answer over AI, considering AI can be wrong at least 30% of the time.”

“It's great if your professors or TAs don't have office hours available.”

“I think it also depends on the professor. Some professors are scary and I do not want to talk to.”

“ … a lot of professors in those classes don't know everyone's name, don't ever see people basically. And the only people they do remember are the people who sit in the front or the people who annoy them or the people they don't like, and I don't want to be seen as a person they don't like basically, if that makes any sense.”

“I do think if I wasn't supposed to be using AI and then I just said that I was, maybe that would inhibit me from going and really reaching out to professors.”

“The reason why I went to AI again is because it's easier. I don't have to talk face to face probably because it would hurt my ego to have another person directly saying it. Also, I might trust an AI more to give me real feedback because I feel like people face-to-face might not be harsh enough with their criticism. But yeah, it's right there, it's accessible, it's easy, and I don't have to worry about any human face-to-face barriers…”

“I think a lot of the labs here, some of the TAs aren't the best, so you're left with a lot of questions and obviously, you're a freshman taking a lot of… like, I am a bio major, so I took two labs my freshman year and both of them, my TAs were just not too helpful. So I was left with a lot of questions on how to do things and how things should be formatted and I think AI was really helpful with being very clear and professional-sounding and helping me to understand how I needed to format things.”

3.2.7 Emotional and motivational factors and responses due to AI use

As seen in previous quotes, students often used AI to reduce anxiety, overcome mental barriers, and motivate themselves to begin difficult assignments, especially when lacking focus and/or enthusiasm. Students described AI as a non-judgemental tool that helped them “get something on the page” even when they were unmotivated or unsure where to start. They reported using AI to generate starter text, especially in writing and oral tasks. Students were aware that AI lowered the barrier to initiating and completing tasks but that it did not always lead to lasting skill gains. However, AI outputs themselves could provoke negative emotions and responses when the tool gave incorrect information or could not solve a problem or perform a task.

“It makes me frustrated and I multitask a lot, so most of the time I just quit what I'm doing and I'll go do something else. But yeah, it just makes me frustrated a lot, especially when the time is getting lower and it gives me the wrong answer. It gives me the wrong wording or whatnot for the presentation. Sometimes it just makes me frustrated. Then I'll have to go do it on my own, and most of the time it's not my best work because I am frustrated from what it did.”

“It just, not for enthusiasm, but it lowers my self-esteem, I guess. I kind of look at things differently. So this year I'm in a really hard probabilities course and I can't even figure it out using ChatGPT. I can't figure it out talking to a teacher. So I'm kind of just looking at different ways now and I don't want to drop it. I don't want to get out of it, but I'm trying to figure certain ways out right now. I'm looking back at tutoring, stuff like that. It's making me take routes that I've never taken in my life before because I had good grades all my life and everything, but if I can't figure it out using this, and it has me going a bunch of different ways. So my enthusiasm and everything's kind of low right now. I know it's high to a sense because I'm trying to figure different stuff out, but it's low because I've never done this before. I've never done this bad. I've never struggled this hard with the class. So it's really making me frustrated and whatnot and trying to figure it out. I have no clarity on things.”

“Yeah, I 100% agree. Sometimes the explanation that it gives you, you rely on that explanation and then they give you some really weird one and you're like, well, this doesn't reflect what's going on in the course. So like you said, it really does slow you down. It's frustrating because you want to get it done, but you also want to learn in a timely fashion. So it's just very annoying and sometimes it creates doubts like, oh my gosh, if I can't learn this or figure it out, how is this going to look if I have to present something? But normally I'm able to learn it in time to make sure that my presentation looks well enough and has correct information on it.”

“I feel like that goes the other way because the more that I understand tech and everything and I use it more, the less I feel like I'm actually learning, and I feel like other kids are getting ahead in that aspect. So I don't know. I feel like it goes both ways.”

3.2.8 Disconnection between perceived and actual impact of AI on science identity

As mentioned in the prior section discussing the survey results, a compelling disconnect emerged when comparing the quantitative data with the students' statements. Several students, particularly those who identified as White and came from privileged academic and/or socioeconomic backgrounds, explicitly stated that AI use had no influence on their science identity. However, their subsequent statements and behaviors revealed implicit links between AI use and their confidence, enthusiasm, communication competencies, and professional self-concept as scientists and science communicators. Despite their denial, their statements reflect increased confidence and competency and a greater sense of belonging in science, all core elements of science identity.

“The most common instance in which I would use AI for a course like Science 1002 would be to get feedback on my writing. So on the biggest part of that class, which was the final presentation, you had to make up a large presentation in a group setting to present to an audience which had no idea what it was about. And so AI helped me to (a) just get feedback on whether the writing was good, whether the writing needs some improvement, and then also how understandable it would be to an audience who had no idea. … Yeah, I mean the ability to write more compelling presentations and do well in my science courses with the help of some AI definitely helps with my science identity. I would definitely not tie my identity to how well I did on any specific assignment in these courses. But having produced these good works with the help of AI does increase my confidence as a scientist. But in general, I think that for my science identity, I actually do want to do it myself. And so the end goal is to wean off AI. It's helping me write right now, but with the end goal of being able to write with myself without the help of AI because I have all my English courses already done, that's all behind me and science writing is not a larger component in the courses I'll be taking in the future. And so AI helps me write better and with the end goal of weaning off of it and being confident in my writing in a vacuum, how people used to evaluate their own writing.”

“I mean I think identity is really something that comes from within and what you've done and not necessarily with your school work. AI can give good feedback on general writing stuff and my structure and just how it generally flows. I don't use it for grammar so much, but I might ramble on them about a topic and then it needs to be shortened a lot and AI can help with that. … It does improve my confidence a lot and because it improves my confidence, I feel like I'm not weighed down by an element of fear like, I hope I get an A. Now I feel like, okay, it's going to tell me where I did it wrong. I'm going to rewrite it and it's at least going to be a 94. But I feel like I'm not weighed down by the fear of not getting a good grade. And so I feel like that gives me more freedom to engage and actually enjoy the subject that I'm learning about. So I guess it does improve my excitement.”

“I don't think it affected me at all really. I feel like it pointed out certain things that I would tend to forget every time. And I feel like in that circumstance I did learn to put those elements that I would continuously forget back into my paper. I feel like I got a lot better at writing lab reports, but I feel like it didn't really impact me all too much. … I'd say it definitely has improved my confidence because I feel like whenever I read my lab reports, I'm looking at say how to critique my own lab report now. I feel like I have been criticized enough by AI and other resources as well, but we're talking about AI right now. I feel like I'm going through it and I'm now looking to see like, oh, I have this, I typically forget this or I have that just double-checking, learning how to double-check and how to criticize my work.”

“The reason why I call myself an amateur crystallographer is because I can use the machine myself like be the crystallographer at least for the day, and I go in and run a sample. … I will be using AI because it does a good job at giving those stuff where especially crystallography, those resources for a undergraduate student are limited, very limited.”

4 Discussion

This study explored how undergraduate students in STEM communication-intensive courses use artificial intelligence tools such as ChatGPT and how that use influences their competencies, confidence, and science identity. The findings reveal that AI is not simply a technological aid but a complex social and academic tool that shapes learning, motivation, and self-perception, often in ways students do not consciously or openly acknowledge. Students described using AI to support scientific writing, oral presentations, technical tasks (e.g., coding and data interpretation), and workflow organization. These uses were deeply connected to how students built confidence, navigated academic challenges, and identified as capable participants in STEM. However, this development was shaped by differences in access, cultural perceptions of academic merit, and instructor attitudes toward AI, resulting in uneven outcomes and hidden tensions.

A critical finding emerged when examining the quantitative and qualitative data together: students from more privileged backgrounds (particularly White students) often claimed AI had no effect on their science identity, yet their statements revealed clear evidence of increased confidence, perceived competence, and a greater sense of alignment with scientific practices. This disconnect suggests that those most embedded in privileged academic cultures may not fully recognize how digital tools are shaping their educational experiences and identities. These contradictions illuminate the nuanced and often invisible ways in which AI shapes students' science identity. While some students (particularly minoritized students) explicitly credited AI with helping them feel like a scientist, others (both White students and some minoritized students) downplayed or denied its impact despite behaviorally demonstrating reliance on it. This divergence has implications for how science identity is studied and for who feels permitted to attribute their growth to external support or to deny that attribution.

The data also suggests a risk that AI use (for example, relying on summaries) replaces deep engagement with the material, as well as variation in students' confidence to engage in academic settings. Students who avoided faculty interactions used AI to build confidence and competency independently, though this may limit opportunities for deeper mentorship and science identity formation. AI use improved confidence temporarily and thus masked gaps in content and competency development. The gap between the stated and demonstrated impact of AI on science identity suggests an underacknowledged and/or subconscious relationship with AI, one that likely reflects deeper cultural or identity-based dynamics around self-perception, merit, and technological legitimacy in STEM. These findings echo those of other studies on improvement of conceptual understanding, overreliance on or misuse of AI tools hindering student learning outcomes and skill acquisition, the influence of behavioral intentions on AI use, and the impact of technology on learning and science identity development (Huang and Pei, 2024; Hutson et al., 2024; Azoulay et al., 2025; El Fathi et al., 2025; Ji et al., 2025; Wang et al., 2025).

The data highlights the need for awareness of meritocratic narratives among individuals from privileged ethnic/racial majority groups. Students from privileged backgrounds are more likely to internalize merit-based frameworks in STEM and to resist acknowledging the role of AI (or any source of assistance) in shaping their success or identity, as doing so could be perceived as undermining their self-image as independently capable scientists. This resistance was coupled with performative detachment: students presented themselves as distant from AI while integrating it into their academic practices, maintaining a perception of intellectual autonomy driven by social norms in competitive academic environments. The normalization of technology in today's society supports modern students' view of AI tools as an expected extension of their academic toolkit. Although the students may not intentionally or consciously recognize this as transformative, their behaviors and reflections demonstrate shifts in engagement and self-efficacy.

5 Implications of research and practice

These findings emphasize the importance of viewing science identity through the lens of privilege and social positionality. Students with institutional and/or structural advantages may be less attuned to the ways support tools scaffold their growth, in contrast to first-generation and/or minoritized students, who more readily attribute their progress and success to AI. Educational interventions and curricular design should include reflective opportunities that explicitly ask students to analyze how tools like AI shape their learning, even when those influences are subtle or unacknowledged. Faculty should normalize and demystify AI use in academic spaces through open conversations about AI as a legitimate support tool, rather than stigmatizing or ignoring it. Doing so will help dismantle performative detachment and support honest engagement with emerging technologies. Institutions should design equity-focused initiatives that address disparities in AI access by providing free access to essential tools and training. Faculty should also receive training in ethical and inclusive AI integration that supports student growth. Faculty discomfort and generational gaps in AI understanding contribute to inconsistent and exclusionary classroom practices; training is needed to inform equitable and responsible AI integration, a need echoed by Ren and Wu (2025). Future quantitative instruments for measuring science identity should include indirect or behavioral indicators of tool reliance to capture latent effects not revealed through self-reporting.

6 Conclusion

AI tools are a part of the evolving landscape of STEM education and practice. The results of this study show that science identity is technologically mediated and socially contingent, challenging currently dominant models of science identity. While students gained confidence and competencies through AI, inconsistent institutional messaging, digital privilege, and cultural narratives of merit influence identity development. This work contributes to emerging conversations about how students form and (self-)negotiate their science identities with AI and technology use, along with responsible AI integration and its role in inclusive STEM education. Aligning technological advancement with educational practices that center on identity and equity paves the way for higher education to better nurture the next generation of scientists so that they not only act like scientists but truly see themselves as scientists.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the LSU Institutional Review Board (Dr. Alex Cohen, IRB Chair; Douglas Villien, Assistant Director of Research Compliance & Integrity and IRB Manager). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

JGR: Visualization, Conceptualization, Formal analysis, Resources, Data curation, Validation, Project administration, Funding acquisition, Methodology, Writing – review & editing, Writing – original draft, Supervision, Investigation. ZW-K: Validation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was funded by the National Science Foundation Award Number 2327418.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1644873/full#supplementary-material

References

Alvarez, A., Caliskan, A., Crockett, M. J., Ho, S. S., Messeri, L., and West, J. (2024). Science communication with generative AI. Nat. Hum. Behav. 8, 625–627. doi: 10.1038/s41562-024-01846-3

Azoulay, R., Hirst, T., and Reches, S. (2025). Large language models in computer science classrooms: ethical challenges and strategic solutions. Appl. Sci. 15:1793. doi: 10.3390/app15041793

Barstow, B., Fazio, L., Lippman, J., Falakmasir, M., Schunn, C. D., and Ashley, K. D. (2017). The impacts of domain-general vs. domain-specific diagramming tools on writing. Int. J. Artif. Intell. Educ. 27, 671–693. doi: 10.1007/s40593-016-0130-z

Beasley, E. M. (2016). Comparing the demographics of students reported for academic dishonesty to those of the overall student population. Ethics Behav. 26, 45–62. doi: 10.1080/10508422.2014.978977

Blau, W., Cerf, V. G., Enriquez, J., Francisco, J. S., Gasser, U., Gray, M. L., et al. (2024). Protecting scientific integrity in an age of generative AI. Proc. Natl. Acad. Sci. U. S. A. 121:e2407886121. doi: 10.1073/pnas.2407886121

Burns, T. W., O'Connor, D. J., and Stocklmayer, S. M. (2003). Science communication: a contemporary definition. Public Underst. Sci. 12, 183–202. doi: 10.1177/09636625030122004

Carlone, H. B., and Johnson, A. (2007). Understanding the science experiences of successful women of color: science identity as an analytic lens. J. Res. Sci. Teach. 44, 1187–1218. doi: 10.1002/tea.20237

Cole, E. R. (2009). Intersectionality and research in psychology. Am. Psychol. 64, 170–180. doi: 10.1037/a0014564

Collins, P. H. (2002). Black Feminist Thought: Knowledge, Consciousness, and the Politics of Empowerment, 2nd Edn. New York, NY: Routledge. doi: 10.4324/9780203900055

Crenshaw, K. (1991). Mapping the margins: intersectionality, identity politics, and violence against women of color. Stanford Law Rev. 43:1241. doi: 10.2307/1229039

Creswell, J. W., and Clark, V. L. P. (2017). Designing and Conducting Mixed Methods Research. Los Angeles, CA: SAGE Publications.

Cwik, S., and Singh, C. (2022). Developing an innovative sustainable science education ecosystem: lessons from negative impacts of inequitable and non-inclusive learning environments. Sustainability 14:11345. doi: 10.3390/su141811345

Delve (2025). Software Tool to Analyze Qualitative Data. Delve. Available online at: https://delvetool.com (Accessed December 17, 2022).

El Fathi, T., Saad, A., Larhzil, H., Lamri, D., and Al Ibrahmi, E. M. (2025). Integrating generative AI into STEM education: enhancing conceptual understanding, addressing misconceptions, and assessing student acceptance. Discip. Interdiscip. Sci. Educ. Res. 7:6. doi: 10.1186/s43031-025-00125-z

Garcia Ramos, J., and Wilson-Kennedy, Z. (2024). Promoting equity and addressing concerns in teaching and learning with artificial intelligence. Front. Educ. 9:1487882. doi: 10.3389/feduc.2024.1487882

Grammarly (2022a). Demand for Strong Written Communication Skills Is Soaring—Why Isn't the Support? Available online at: https://www.grammarly.com/blog/demand-for-strong-written-communication-skills-is-soaring-why-isnt-the-support/ (Accessed March 21, 2024).

Grammarly (2022b). There's a Hidden Equity Issue in Education. Here's How to Address It. Available online at: https://www.grammarly.com/blog/equity-in-communications/ (Accessed March 25, 2024).

Hennink, M., and Kaiser, B. N. (2022). Sample sizes for saturation in qualitative research: a systematic review of empirical tests. Soc. Sci. Med. 292:114523. doi: 10.1016/j.socscimed.2021.114523

Holstein, K., and Doroudi, S. (2022). “Equity and artificial intelligence in education: will ‘AIEd’ amplify or alleviate inequities,” in The Ethics of Artificial Intelligence in Education (New York, NY: Routledge). doi: 10.4324/9780429329067-9

Huang, L., and Pei, X. (2024). Exploring the impact of web-based inquiry on elementary school students' science identity development in a STEM learning unit. Humanit. Soc. Sci. Commun. 11, 1–11. doi: 10.1057/s41599-024-03299-5

Hutson, J., Plate, D., and Berry, K. (2024). Embracing AI in English composition: insights and innovations in hybrid pedagogical practices. Int. J. Chang. Educ. 1, 19–31. doi: 10.47852/bonviewIJCE42022290

Hwang, G.-J., Xie, H., Wah, B. W., and Gašević, D. (2020). Vision, challenges, roles and research issues of artificial intelligence in education. Comput. Educ. Artif. Intell. 1:100001. doi: 10.1016/j.caeai.2020.100001

Ji, Y., Zhan, Z., Li, T., Zou, X., and Lyu, S. (2025). Human–machine cocreation: the effects of ChatGPT on students' learning performance, AI awareness, critical thinking, and cognitive load in a STEM course toward entrepreneurship. IEEE Trans. Learn. Technol. 18, 402–415. doi: 10.1109/TLT.2025.3554584

Lawson, A., Ferrer, L., Wang, W., and Murray, J. (2015). “Detection of demographics and identity in spontaneous speech and writing,” in Multimedia Data Mining and Analytics, eds. A. K. Baughman, J. Gao, J.-Y. Pan, and V. A. Petrushin (Cham: Springer International Publishing), 205–225. doi: 10.1007/978-3-319-14998-1_9

Lawson, A., and Murray, J. (2014). “Identifying user demographic traits through virtual-world language use,” in Predicting Real World Behaviors from Virtual World Data, eds. M. A. Ahmad, C. Shen, J. Srivastava, and N. Contractor (Cham: Springer International Publishing), 57–67. doi: 10.1007/978-3-319-07142-8_4

Ledesma, E. F. R., and García, J. J. G. (2017). Selection of mathematical problems in accordance with student's learning style. Int. J. Adv. Comput. Sci. Appl. 8. doi: 10.14569/IJACSA.2017.080316

Liu, M., Li, Y., Xu, W., and Liu, L. (2017). Automated essay feedback generation and its impact on revision. IEEE Trans. Learn. Technol. 10, 502–513. doi: 10.1109/TLT.2016.2612659

Master, A., Cheryan, S., and Meltzoff, A. N. (2016). Computing whether she belongs: stereotypes undermine girls' interest and sense of belonging in computer science. J. Educ. Psychol. 108, 424–437. doi: 10.1037/edu0000061

McDonald, M. M., Zeigler-Hill, V., Vrabel, J. K., and Escobar, M. (2019). A single-item measure for assessing STEM identity. Front. Educ. 4:78. doi: 10.3389/feduc.2019.00078

Mohebi, L. (2024). Empowering learners with ChatGPT: insights from a systematic literature exploration. Discov. Educ. 3:36. doi: 10.1007/s44217-024-00120-y

Murphy, J., Hallinger, P., and Lotto, L. S. (1986). Inequitable allocations of alterable learning variables. J. Teach. Educ. 37, 21–26. doi: 10.1177/002248718603700604

Nicholas, D., Swigon, M., Clark, D., Abrizah, A., Revez, J., Herman, E., et al. (2024). The impact of generative AI on the scholarly communications of early career researchers: an international, multi-disciplinary study. Learn. Publ. 37:e1628. doi: 10.1002/leap.1628

Nixon, N., Lin, Y., and Snow, L. (2024). Catalyzing equity in STEM teams: harnessing generative AI for inclusion and diversity. Policy Insights Behav. Brain Sci. 11, 85–92. doi: 10.1177/23727322231220356

Perin, D., and Lauterbach, M. (2018). Assessing text-based writing of low-skilled college students. Int. J. Artif. Intell. Educ. 28, 56–78. doi: 10.1007/s40593-016-0122-z

Phoenix, A., and Pattynama, P. (2006). Intersectionality. Eur. J. Womens Stud. 13, 187–192. doi: 10.1177/1350506806065751

Puckett, C., and Rafalow, M. H. (2020). “From ‘impact' to ‘negotiation': educational technologies and inequality,” in The Oxford Handbook of Digital Media Sociology, eds. D. A. Rohlinger and S. Sobieraj (Oxford University Press), 274–278. doi: 10.1093/oxfordhb/9780197510636.013.8

Rafalow, M. H., and Puckett, C. (2022). Sorting machines: digital technology and categorical inequality in education. Educ. Res. 51, 274–278. doi: 10.3102/0013189X211070812

Ren, X., and Wu, M. L. (2025). Examining teaching competencies and challenges while integrating artificial intelligence in higher education. TechTrends 69, 519–538. doi: 10.1007/s11528-025-01055-3

Rodgers, S., Wang, Z., and Schultz, J. C. (2020). A scale to measure science communication training effectiveness. Sci. Commun. 42, 90–111. doi: 10.1177/1075547020903057

Stewart, C. O. (2022). STEM identities: a communication theory of identity approach. J. Lang. Soc. Psychol. 41, 148–170. doi: 10.1177/0261927X211030674

Surahman, E., and Wang, T.-H. (2022). Academic dishonesty and trustworthy assessment in online learning: a systematic literature review. J. Comput. Assist. Learn. 38, 1535–1553. doi: 10.1111/jcal.12708

Wang, F., Cheung, A. C. K., Chai, C. S., and Liu, J. (2025). Development and validation of the perceived interactivity of learner-AI interaction scale. Educ. Inf. Technol. 30, 4607–4638. doi: 10.1007/s10639-024-12963-x

Winfield, L. L., Wilson-Kennedy, Z. S., Payton-Stewart, F., Nielson, J., Kimble-Hill, A. C., and Arriaga, E. A. (2020). Journal of chemical education call for papers: special issue on diversity, equity, inclusion, and respect in chemistry education research and practice. J. Chem. Educ. 97, 3915–3918. doi: 10.1021/acs.jchemed.0c01300

Xu, W., and Ouyang, F. (2022). The application of AI technologies in STEM education: a systematic review from 2011 to 2021. Int. J. STEM Educ. 9:59. doi: 10.1186/s40594-022-00377-5

Zhai, X., He, P., and Krajcik, J. (2022). Applying machine learning to automatically assess scientific models. J. Res. Sci. Teach. 59, 1765–1794. doi: 10.1002/tea.21773

Keywords: generative AI, education, AI integration, broadening participation, equity, inclusive teaching, technology, ethics

Citation: Garcia Ramos J and Wilson-Kennedy Z (2025) The use of artificial intelligence in STEM communication-intensive courses and its impact on science identity. Front. Educ. 10:1644873. doi: 10.3389/feduc.2025.1644873

Received: 11 June 2025; Accepted: 06 August 2025; Published: 26 August 2025.

Edited by:

Sumali Pandey, Minnesota State University Moorhead, United States

Reviewed by:

Jamie Lynn Sturgill, University of Kentucky, United States

Nicole Kelp, Colorado State University, United States

Copyright © 2025 Garcia Ramos and Wilson-Kennedy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jennifer Garcia Ramos, amVubmlmZXJnYXJjaWEyMEBnbWFpbC5jb20=

†ORCID: Jennifer Garcia Ramos orcid.org/0000-0001-5807-8829