- 1School of Foreign Languages, Izmir University of Economics, İzmir, Türkiye

- 2Directorate of Computer Technologies & Robotics Application and Research Center, Faculty of Engineering and Architecture, Istanbul Gelisim University, Istanbul, Türkiye

The study explores the acceptance and use of ChatGPT in higher education, focusing on university students and faculty members. The research aims to identify the factors that influence the behavioral intention to use ChatGPT, utilizing the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2) as the theoretical framework. Key constructs such as performance expectancy, effort expectancy, social influence, facilitating conditions, hedonic motivation, price value, and habit were examined for their impact on the acceptance of ChatGPT. Data were collected through a survey of 378 participants, including 346 students and 32 faculty members, from various faculties at a university in Eastern Europe. The findings reveal that effort expectancy and performance expectancy were the most significant predictors of behavioral intention to use ChatGPT. Faculty members demonstrated a higher intention to use ChatGPT compared to students, likely due to their greater experience with technology. Hedonic motivation also played an important role in both groups, indicating that enjoyment contributed to the acceptance of the tool. The study concludes that ChatGPT holds great potential for enhancing education, but its habitual use is not yet widespread. The results suggest that universities should focus on improving institutional support and training to facilitate broader acceptance of ChatGPT among students and faculty.

Introduction

AI-powered chatbots have rapidly emerged as transformative tools across various sectors, with ChatGPT becoming a particularly prominent example in education (Adigüzel et al., 2023). Released in late 2022, ChatGPT quickly garnered unprecedented global attention for its ability to produce coherent, human-like responses to user prompts in real time (OpenAI, 2023). In higher education, this technology has sparked both excitement and concern, as students and instructors explore its potential uses for learning and teaching (Zhai et al., 2024). On one hand, ChatGPT offers immediate answers, personalized explanations, and creative content generation, which has led to optimism about its capacity to enhance educational practices (Zhao et al., 2023). On the other hand, its introduction into academia has raised critical questions about academic integrity, reliability of AI-generated content, and the appropriate role of generative AI in pedagogy (Bin-Nashwan et al., 2023).

As with any disruptive innovation, the extent to which end-users are willing to embrace ChatGPT remains uncertain. University stakeholders are only beginning to confront whether and how such AI tools can be integrated into coursework and instruction. In this context, a clear need has emerged for empirical research on the acceptance of ChatGPT in higher education. While the public discourse on ChatGPT's capabilities and risks has been vigorous, systematic evidence on how higher education communities perceive and adopt this tool is still limited. To address this gap, the present study provides a focused investigation into the acceptance and use of ChatGPT among university students and instructors.

The research centers on identifying the key factors that influence the behavioral intention to use ChatGPT, drawing on an established technology acceptance model, UTAUT2, as a guiding framework. Unlike previous studies focusing solely on students (e.g., Strzelecki, 2024), our approach also includes faculty members as a distinct user group, and this comparative analysis contributes to understanding whether different pedagogical roles lead to different drivers of adoption. In particular, the research applies the UTAUT2 model to identify which constructs (e.g., performance expectancy and effort expectancy) drive acceptance of ChatGPT, and whether these drivers differ between the two user groups. By examining both student and instructor perspectives, the study seeks to provide a comprehensive understanding of ChatGPT's adoption in the academic context. In doing so, it intends to contribute both theoretically, by extending technology acceptance research to generative AI in academia, and practically, by offering actionable insights for institutions seeking to support effective and responsible integration of ChatGPT into teaching and learning.

Literature review

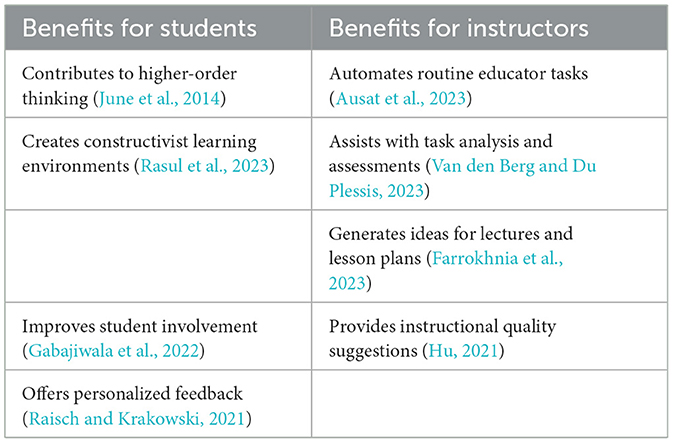

ChatGPT and generative AI in higher education

In higher education, ChatGPT has shown considerable promise. Recent studies suggest it contributes to higher-order thinking and personalized learning experiences through tailored feedback and adaptive learning preferences (Raisch and Krakowski, 2021). Increased student engagement and motivation have also been reported when using ChatGPT as a supplemental instructional tool (Gabajiwala et al., 2022). ChatGPT has quickly emerged as an invaluable resource for both students and instructors. It has been put forward that the “T” dimension of ChatGPT holds significant potential to transform education, particularly in higher education settings (Rawas, 2023). Table 1 illustrates that many studies have underscored different positive contributions of ChatGPT for university students and instructors.

While the acceptance and use of ChatGPT seem to hold great promise at the university level, some educators have shared mixed feelings about the complex tasks it performs. Concerns have been raised with regard to its limitations and disadvantages: ethical concerns (Willems, 2023), potential deception (McCallum, 2023), enhanced cheating risks (Tlili et al., 2023), issues of discrimination, intellectual property, bias, and the privacy of data (Li et al., 2022), as well as over-reliance and dependency on technology (Adigüzel et al., 2023). Conversely, Garcia-Penalvo (2023) argues that these negative attitudes toward ChatGPT, in fact, arise from a resistance to embracing its innovative and transformative power, rather than the disruptive nature of this technology as it necessitates a paradigmatic shift in instructional practices (Bozkurt, 2023).

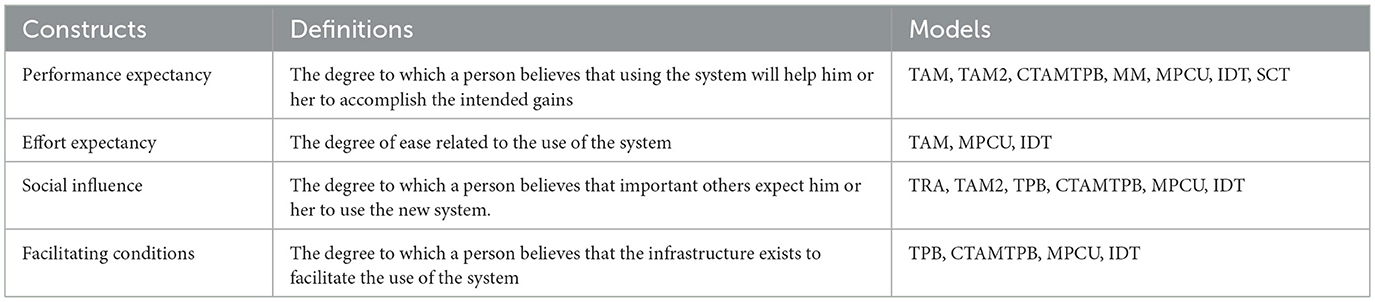

UTAUT and technology acceptance frameworks

Factors influencing technology acceptance have been widely explored in academic research. To this end, numerous models and theories have been proposed, one of which is the Unified Theory of Acceptance and Use of Technology (UTAUT) developed by Venkatesh et al. (2003). UTAUT outlines two direct determinants of technology use: facilitating conditions and behavioral intention. Furthermore, it identifies three direct determinants affecting the behavioral intention to utilize a technology: performance expectancy, effort expectancy, and social influence. The model also presents four contingencies: gender, age, experience, and voluntariness, which further enhance the predictive capacity of the model. As seen in Table 2, Venkatesh et al. (2003) built this model on the key components of the previous models: Technology Acceptance Model (TAM), Technology Acceptance Model-2 (TAM2), Theory of Reasoned Action (TRA), Theory of Planned Behavior (TPB), Model of PC Utilization (MPCU), Innovation Diffusion Theory (IDT), Social Cognitive Theory (SCT), Motivational Model (MM), and Combined TAM and the Theory of Planned Behavior (C-TAM-TPB).

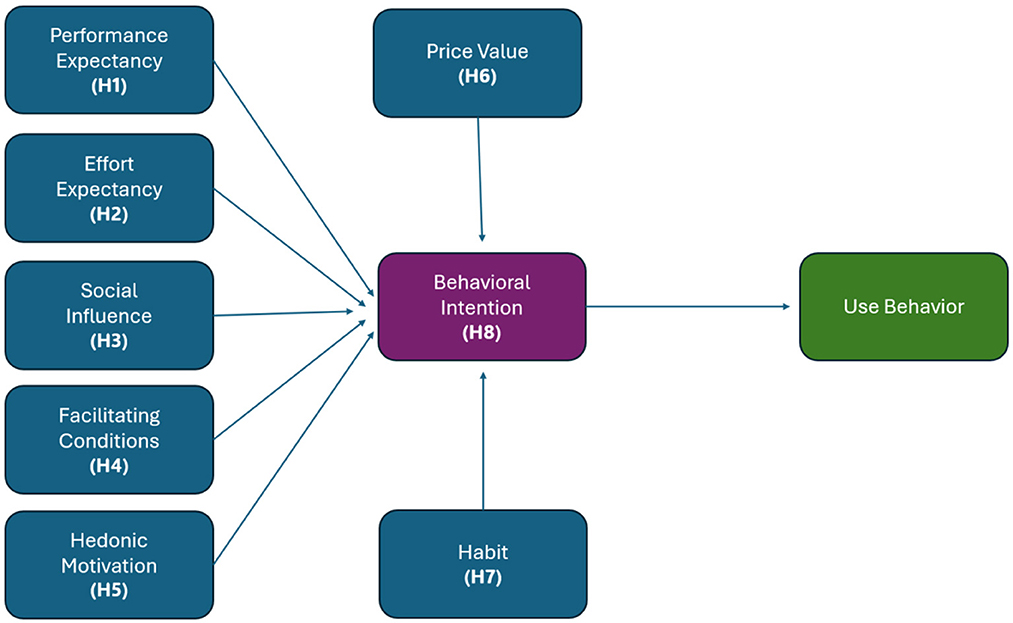

In due course, UTAUT has evolved into UTAUT2, including three additional constructs: hedonic motivation, price value, and habit (Venkatesh et al., 2012). Hedonic motivation refers to the pleasure derived from using a technology; price value refers to the decision that the benefits of using a technology are more than the perceived financial cost of the technology; and habit is defined as the degree to which people tend to perform a behavior automatically because of learning. UTAUT2 presents a stronger predictive power than UTAUT in explaining about 74% of the variability in users' behavioral intention and 52% in their technology usage (Venkatesh et al., 2016).

Empirical studies on ChatGPT adoption using UTAUT2

In addition to the growing body of research on ChatGPT, several related studies provide useful comparative insights into AI adoption in education. For example, Farhi et al. (2023) emphasized students' ethical concerns and perceived usefulness in shaping ChatGPT use, while Xie et al. (2024) analyzed policy-to-practice challenges in implementing generative AI in diverse educational institutions. Similarly, studies by Wang et al. (2025) and Cachola and Vu (2024) highlight broader digital education trends, such as ease of use and long-term engagement through chatbots in higher education. These studies collectively reinforce the value of applying structured models like UTAUT2 to understand the key constructs driving AI adoption across diverse educational contexts.

Empirical studies using UTAUT2 highlight factors like performance expectancy, effort expectancy, social influence, and facilitating conditions as influential in students' acceptance of ChatGPT (Habibi et al., 2023). Hedonic motivation and habit are also significant predictors of sustained engagement (Strzelecki, 2024). Cross-cultural studies further emphasize the universal importance of ease of use and social influence in different contexts (Budhathoki et al., 2024).

Emerging studies explore educator perspectives. Dietrich and Grassini (2025) reveal ethical perceptions as primary drivers for instructors' adoption intentions, contrasting students' performance-oriented and habitual motivations. Research with specific learner populations provides further insights. Moradi (2025) found that habit significantly influences adoption among English-language learners, while performance expectancy and social influence were also crucial. Interestingly, ease of use and hedonic motivation were less significant, suggesting context-dependent acceptance drivers.

In empirical comparisons, the UTAUT2 model outperforms older frameworks like TAM in predicting usage intentions (Rondan-Cataluna et al., 2015). While TAM focuses narrowly on perceived usefulness and ease of use, it often overlooks social, organizational, and affective factors that influence adoption. By integrating social and facilitative influences alongside task-related beliefs, UTAUT2 provides a more holistic view of why users embrace new systems, which is especially valuable for novel tools like AI chatbots in academia (Lee et al., 2025).

UTAUT2 as the theoretical framework

UTAUT2 has emerged as a widely accepted model for explaining technology acceptance in educational contexts, making it a strong choice for the present study. Its predecessor, UTAUT, was originally developed by consolidating eight prominent adoption theories (including TAM, TPB, and Diffusion of Innovations) into a unified framework (Wedlock and Trahan, 2019). Compared to earlier models, UTAUT2 incorporates a broader range of determinants such as performance expectancy, effort expectancy, social influence, facilitating conditions, hedonic motivation, price value, and habit (Acosta-Enriquez et al., 2024). This comprehensive scope has translated into high explanatory power: UTAUT/UTAUT2 can account for roughly 70–75% of the variance in users' behavioral intentions to adopt technology (Wedlock and Trahan, 2019).

The relevance of UTAUT2 for this study lies in its ability to capture the multifaceted nature of technology adoption in higher education. Unlike earlier models such as TAM, UTAUT2 integrates both extrinsic factors (e.g., performance expectancy, facilitating conditions) and intrinsic drivers (e.g., hedonic motivation, habit), which are particularly important when examining emerging technologies like ChatGPT. The higher education environment involves diverse stakeholders with varying expectations and technology experiences. UTAUT2's constructs are well-suited to reflect this complexity, as they account for not only perceived usefulness and ease of use but also the social and institutional context influencing adoption. In the case of ChatGPT, constructs such as performance expectancy and hedonic motivation are highly relevant: students and faculty adopt the tool not only for its utility but also for its engaging and interactive features. Habit and facilitating conditions further help to explain sustained use in a learning environment where digital tools must integrate seamlessly into academic routines. By employing UTAUT2, this study ensures that both the cognitive and affective aspects of ChatGPT acceptance are systematically analyzed, offering a robust framework to explain why and how users in academia choose to engage with this innovative AI technology.

Importantly, UTAUT2 has been successfully applied in studies of AI and chatbot adoption in higher education, underscoring its relevance to our research. For example, Yildiz Durak and Onan (2024) used UTAUT2 to examine university students' intentions to use educational chatbots, and Strzelecki (2024) employed an extended UTAUT2 model to investigate acceptance of ChatGPT among college students. A recent systematic review of AI in universities further confirms the versatility of UTAUT2 in elucidating technology adoption processes in higher education (Acosta-Enriquez et al., 2024). Across these studies, the core UTAUT2 constructs (e.g., performance expectancy and effort expectancy) consistently emerge as significant predictors of students' intentions to adopt AI-driven tools (Marlina et al., 2021). This track record in educational AI contexts justifies using UTAUT2 as our base model: it is a validated, robust framework capable of capturing the key drivers of chatbot acceptance among faculty and students.

Methodology

This survey-based quantitative research examines the impact of UTAUT2 constructs on the behavioral intention of university students and instructors to use ChatGPT, as shown in Figure 1. It classifies each construct and explores how instructors and students perceive “ChatGPT use”. In addition to analyzing the effects of gender, school year, and faculty on each factor, distinct hypotheses have been developed for each UTAUT2 construct from the perspectives of both students and instructors. The study places particular emphasis on the use of ChatGPT within instructional contexts, highlighting its pedagogical implications, student involvement, and academic performance, which makes it more context-specific than the broader UTAUT2 applications found in Venkatesh et al. (2012). Furthermore, by examining both students and instructors, the research provides new insights into how roles and experiences shape technology acceptance, revealing how these groups differ in their perceptions of ease of use and in their behavioral intention.

Performance expectancy (PE) is defined as the extent to which an individual perceives that using the technology will enhance the attainment of meaningful gains. It has been identified as an important determinant of behavioral intention, with its impact varying by gender and age (Venkatesh et al., 2003). Recent research confirms that PE is a significant predictor of students' intention to adopt ChatGPT in higher education (Dietrich and Grassini, 2025).

H1s: PE significantly influences students' behavioral intention to use ChatGPT in higher education.

H1f: PE significantly influences faculty's behavioral intention to use ChatGPT in higher education.

Effort expectancy (EE) is described as the level of ease linked to the utilization of new technology (Venkatesh et al., 2003). It has been observed that the impact of effort expectancy on behavioral intention differs across gender and age, with the strongest effect on older women in the early stages of experience (Venkatesh and Zhang, 2010). Thus, it needs to be investigated if the acceptance and use of ChatGPT enhances the efficiency of university instructors' and students' performance across different genders and ages. According to Foroughi et al. (2024), effort expectancy significantly shapes learners' intention to adopt ChatGPT in educational contexts, emphasizing ease of use as a key determinant.

H2s: EE significantly influences students' behavioral intention to use ChatGPT in higher education.

H2f: EE significantly influences faculty's behavioral intention to use ChatGPT in higher education.

Social influence (SI) is defined as the extent to which an individual perceives that important others think she or he should use the new technology (Venkatesh et al., 2003). Women tend to be more responsive to others' opinions (Venkatesh et al., 2000). As shown in a recent study by Parveen et al. (2024), social influence plays a substantial role in individuals' willingness to adopt AI-based educational tools like ChatGPT. In this study, the impact of social influence on university students and faculty is investigated through the following hypotheses:

H3s: SI significantly influences students' behavioral intention to use ChatGPT in higher education.

H3f: SI significantly influences faculty's behavioral intention to use ChatGPT in higher education.

Facilitating conditions (FC) are characterized as the degree to which an individual believes the presence of an organizational and technical infrastructure supports the use of the technology (Venkatesh et al., 2003). It has been determined that the impact of facilitating conditions on technology use depends on age and experience, with the most significant impact on older workers in later stages of experience (Venkatesh and Zhang, 2010). The significance of such conditions for instructors' and students' behavioral intention to use ChatGPT at university is further supported by Parveen et al. (2024), who emphasized access to support resources as a significant factor in promoting ChatGPT usage among students.

H4s: FC significantly influences students' behavioral intention to use ChatGPT in higher education.

H4f: FC significantly influences faculty's behavioral intention to use ChatGPT in higher education.

PE is linked to extrinsic motivation; thus, in order to complement this and involve an intrinsic dimension, hedonic motivation (HM) is added to UTAUT2, which refers to the fun and joy derived from using the new technology (Venkatesh et al., 2012). There is research that indicates its significant influence on determining technology acceptance (Shaw and Sergueeva, 2019). Thus, in the use of ChatGPT by faculty and university students, the extent to which hedonic motivation holds a key role needs to be explored thoroughly. Faraon et al. (2025) found that enjoyment derived from ChatGPT significantly predicted students' intentions to use it across different cultural settings, underscoring the motivational appeal of the tool.

H5s: HM significantly influences students' behavioral intention to use ChatGPT in higher education.

H5f: HM significantly influences faculty's behavioral intention to use ChatGPT in higher education.

Price value (PV) refers to a person's decision based on the perceived advantages of using the system and its financial cost (Venkatesh et al., 2012). This construct is viewed as a key predictor in determining a person's behavioral intention to use a new technology (Tamilmani et al., 2018). Although ChatGPT is currently free for many users, Arbulú Ballesteros et al. (2024) demonstrated that students still consider its cost–benefit perception relevant in forming their intention to adopt AI tools in university settings. Whether it holds the same key role in the use of ChatGPT by faculty and university students is investigated through the following hypotheses:

H6s: PV significantly influences students' behavioral intention to use ChatGPT in higher education.

H6f: PV significantly influences faculty's behavioral intention to use ChatGPT in higher education.

Habit (H) is defined as the degree to which individuals tend to perform a behavior automatically because of learning (Venkatesh et al., 2012). Tamilmani et al. (2019) regard “habit” as the most important theoretical addition to the original UTAUT. Whether university students and faculty have such a habit to enhance their use of ChatGPT needs to be investigated closely. Recent research by Strzelecki (2024) found that habit was among the strongest predictors of sustained ChatGPT use in academic settings, particularly among students.

H7s: H significantly influences students' behavioral intention to use ChatGPT in higher education.

H7f: H significantly influences faculty's behavioral intention to use ChatGPT in higher education.

Behavioral intention (BI) is seen as the key component in the acceptance and use of new technologies (Ajzen, 1991). It refers to the willingness and intention of people to utilize an innovation for a specific purpose (Venkatesh et al., 2003). In this study, BI is explored to display faculty's and university students' willingness to use ChatGPT. As highlighted by Strzelecki (2024), behavioral intention to use ChatGPT strongly predicts actual usage behavior among higher education students, consistent with core assumptions of UTAUT2.

H8s: BI significantly influences students' use behavior of ChatGPT in higher education.

H8f: BI significantly influences faculty's use behavior of ChatGPT in higher education.

Setting and participants

The research was conducted at a small university in Eastern Europe. It is an English-medium institution with around 500 instructors and 10,000 students in six faculties. It is considered a world university, with students and instructors drawn from several countries. The vision and mission of this higher education institution align closely with modern instructional technologies.

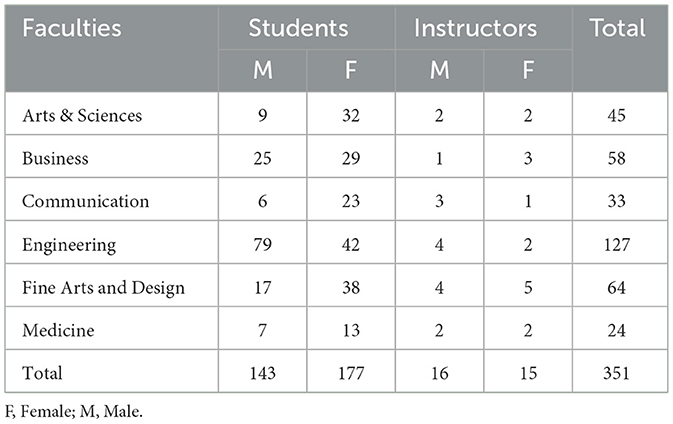

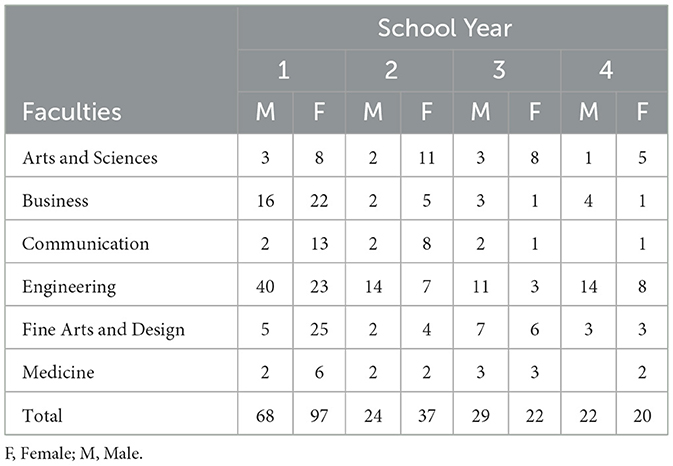

Participants were selected through convenience sampling, that is, on the basis of their accessibility and willingness to take part in the study. In the first data collection phase, 378 participants responded to the survey. The dataset then went through a rigorous screening and cleaning process to identify potential data quality issues: incomplete survey responses were removed, an outlier analysis was conducted, and cases whose response patterns lacked meaningful variation were removed. After these steps, a final sample of 351 participants was retained for analysis, yielding a reliable and representative dataset for identifying the predictors and determinants of ChatGPT acceptance within a higher education setting. As seen in Table 3, most participants are students (91.2%), while instructors represent 8.8%. The sample is demographically diverse, including male and female students and instructors from all six faculties. Engineering students constitute the largest portion, at 34.4% of the total. Fine Arts and Design follows with 15.7%, and Business students make up 15.4%. Arts and Sciences students account for 10.3% of the total, while students from Communication make up 8.3%. Finally, Medicine students comprise 4.1%, the smallest group. This distribution reflects the balance between technical and creative disciplines in the sample.
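The screening steps described above can be sketched in a few lines. This is a minimal illustration and not the authors' procedure: the respondent ids, the answers, and the zero-variance rule for "no meaningful variation" are all assumptions, and the outlier analysis is omitted because the text does not specify how it was done. Only the counts 378 and 351 come from the study.

```python
from statistics import pstdev

# Hypothetical responses on a 1-7 Likert scale; None marks an unanswered item.
responses = {
    "r01": [6, 5, 7, 6, 5, 6],
    "r02": [4, None, 5, 4, 3, 4],  # incomplete
    "r03": [4, 4, 4, 4, 4, 4],     # identical answers throughout
    "r04": [2, 3, 2, 4, 3, 2],
    "r05": [7, 7, 7, 7, 7, 7],     # identical answers throughout
    "r06": [5, 6, 5, 4, 6, 5],
}

def screen(data, min_sd=0.0):
    """Split respondents into kept cases and dropped cases (id -> reason)."""
    kept, dropped = {}, {}
    for rid, answers in data.items():
        if any(a is None for a in answers):
            dropped[rid] = "incomplete"
        elif pstdev(answers) <= min_sd:  # assumed rule: no variation at all
            dropped[rid] = "no variation"
        else:
            kept[rid] = answers
    return kept, dropped

kept, dropped = screen(responses)
print(sorted(kept))  # ['r01', 'r04', 'r06']
print(dropped)

# Retention rate implied by the counts reported in the text.
retention = 351 / 378
print(f"retained: {retention:.1%}")  # retained: 92.9%
```

A stricter `min_sd` would also flag near-uniform response patterns; the appropriate cutoff is a judgment call that the text does not report.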

As Table 4 illustrates, first-year students constitute the largest share of the student sample, at 51%. Sophomores comprise 19.7%, and juniors account for 15.9%. Fourth-year students, at 13.3%, offer a somewhat more experienced perspective. This spread offers a wide spectrum of perspectives from different points in the students' academic careers.

The sample was drawn using a convenience sampling method, targeting both students and faculty who had access to the institution's learning management system and responded voluntarily to the invitation to participate. Given the size and diversity of the institution, which includes six faculties, around 10,000 students, and 500 instructors, convenience sampling was considered the most feasible and efficient strategy to reach a large pool of participants within the study's time and resource constraints. Although convenience sampling has well-documented limitations, particularly regarding representativeness and generalizability, several factors support its appropriateness for this research. First, participants were drawn from all six academic faculties and included both male and female students and instructors, creating demographic and disciplinary diversity. Second, the institution itself actively promotes the integration of digital and AI-driven tools into its instructional practices, making it a particularly relevant setting in which to examine ChatGPT adoption, since participants were already situated within a technologically engaged academic environment. Third, the student body represents diverse nationalities, which may extend the relevance of the findings beyond a single cultural context. This demographic heterogeneity mirrors the diversity targeted in prior technology acceptance studies and lends support to the results despite the limitations of convenience sampling. Taken together, these considerations strengthen the rationale for adopting this method and suggest that the insights generated are credible and transferable to similar higher education contexts.

The choice of convenience sampling also aligns with established practices in educational technology acceptance research. Prior studies in higher education have frequently employed this method to investigate AI adoption and user acceptance. For example, Strzelecki (2024) conducted a large-scale survey on ChatGPT acceptance using convenience sampling across multiple faculties in a European university, while Yildiz Durak and Onan (2024) examined educational chatbot use in a Turkish university through a similar approach. Beyond these, Awal and Haque (2025) applied convenience sampling in a South Asian context to assess students' intention to adopt AI-powered chatbots, Liu et al. (2025) investigated barriers to chatbot use among teacher trainees in Malaysia using the same strategy, and Tian et al. (2024) employed it in a study of graduate students' adoption of chatbots in Chinese higher education. Collectively, these studies demonstrate that convenience sampling, though non-probabilistic, is widely recognized as an acceptable and effective method in technology acceptance research, particularly when the focus is on capturing perceptions of large and heterogeneous academic populations.

Data collection and analysis

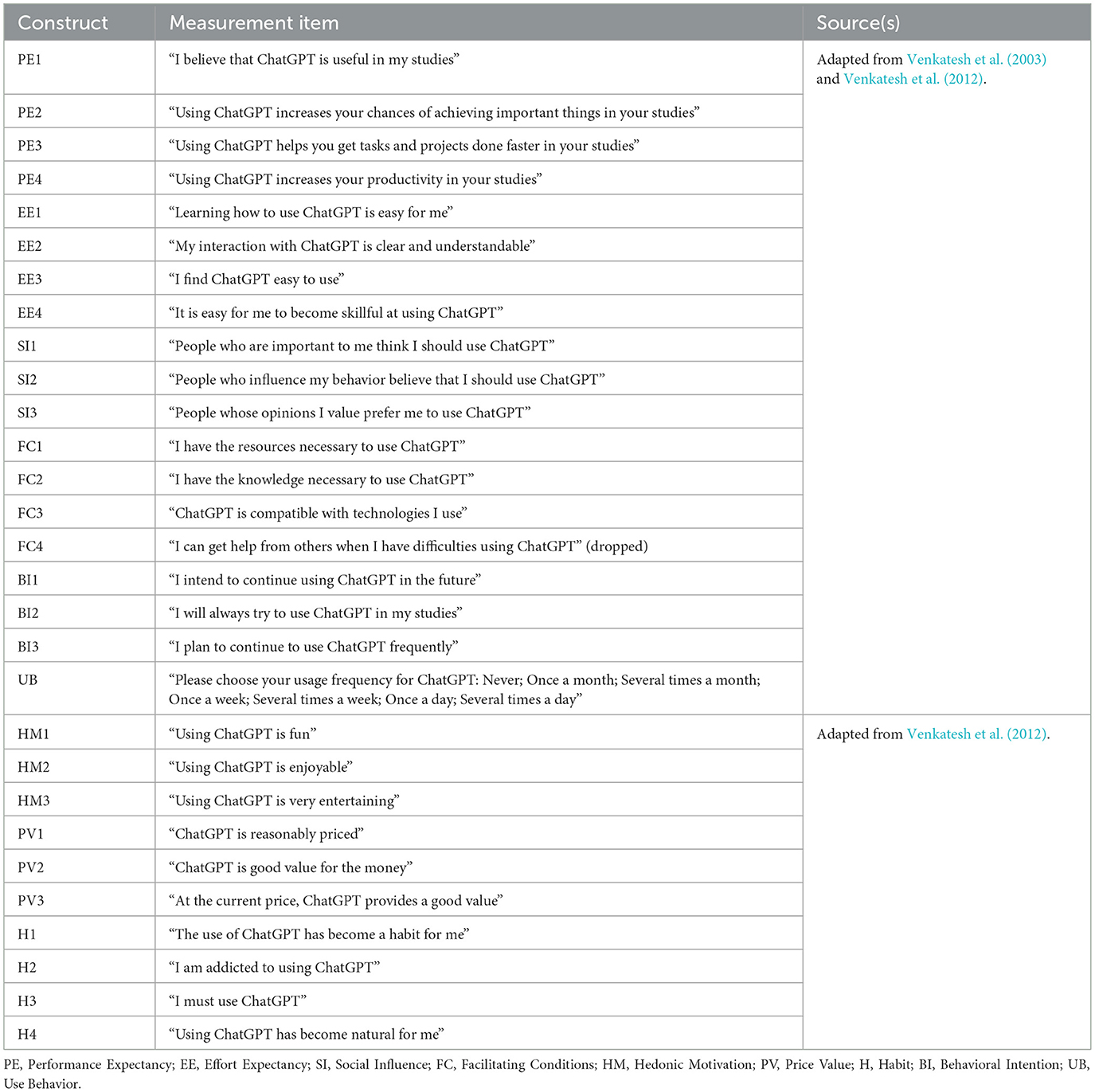

The data were gathered with a questionnaire using a seven-point Likert scale that measured the UTAUT2 constructs driving participants' use of ChatGPT at university (Appendix A) (Strzelecki, 2024). Responses ranged from “strongly disagree” to “strongly agree.” The item measuring “use behavior” offered seven options, ranging from “never” to “several times a day.” Data collection was conducted by one of the researchers through multiple channels: sending the questionnaire to the instructors via email every other Wednesday for 2 months, distributing leaflets carrying a QR code for the questionnaire on campus for 2 months, and making the scale available on the learning management system for students and instructors for 2 months.

In the study, descriptive statistics were computed: means and standard deviations for each UTAUT2 construct (e.g., Performance Expectancy, Effort Expectancy) by participant group (students vs. instructors), faculty, school year, and gender. The comparative analysis aimed to highlight differences between these groups, while the frequency analysis of Likert-scale responses aimed to show how responses were distributed.
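As a rough illustration of this kind of grouped summary, the sketch below computes the mean and sample standard deviation of construct scores per group. The rows are invented for the example and do not reproduce the study's data; the function name `describe` is hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical rows: (role, construct, score on the 1-7 scale).
rows = [
    ("student", "EE", 6), ("student", "EE", 5), ("student", "EE", 7),
    ("student", "H", 3), ("student", "H", 2), ("student", "H", 4),
    ("instructor", "EE", 7), ("instructor", "EE", 6), ("instructor", "EE", 7),
    ("instructor", "H", 4), ("instructor", "H", 3), ("instructor", "H", 5),
]

def describe(records):
    """Return {(role, construct): (mean, sample SD)} for each group."""
    groups = defaultdict(list)
    for role, construct, score in records:
        groups[(role, construct)].append(score)
    return {key: (mean(vals), stdev(vals)) for key, vals in groups.items()}

summary = describe(rows)
for (role, construct), (m, sd) in sorted(summary.items()):
    print(f"{role:<10} {construct:<2} M = {m:.2f}, SD = {sd:.2f}")
```

The same grouping key could be swapped for faculty, school year, or gender to produce the other breakdowns mentioned above.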

In this study, robust measures were performed to ensure the security and accuracy of the data. The responses of the participants were anonymized to maintain confidentiality. No personally identifiable information was collected, stored, or associated with the dataset. Participation in the survey was entirely voluntary, and informed consent was obtained from all participants. Furthermore, the data were kept on encrypted platforms with restricted access, accessible only to the researchers of this study.

Consistent with prior studies, this research utilized PLS-SEM to analyze complex relationships between latent variables. This method enabled the simultaneous testing of multiple hypotheses, which was particularly valuable for exploring mediators, moderators, and diverse constructs such as performance expectancy, hedonic motivation, and habit. A survey-based data collection approach was employed, with relationships analyzed using bootstrapping procedures to assess the statistical significance of path coefficients. Separate analyses were conducted for students and instructors, which ensured robustness in hypothesis validation.

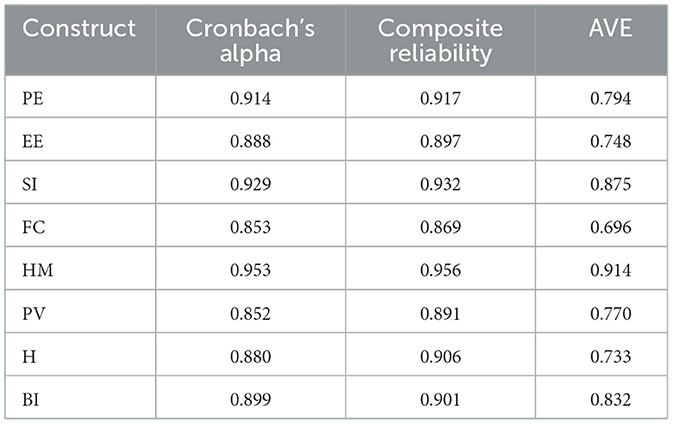

To evaluate the reliability and validity of the constructs in this study, key metrics like Cronbach's alpha, composite reliability (CR), and average variance extracted (AVE) were assessed. As illustrated in Table 5, all constructs met the criteria for acceptable reliability and validity. Cronbach's alpha and composite reliability values exceeded the 0.70 threshold, ensuring acceptable internal consistency reliability. Moreover, AVE values, which measure the proportion of variance explained by each construct, are above the 0.50 threshold, demonstrating robust convergent validity.
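For readers unfamiliar with these metrics, the following sketch shows how they are commonly computed: Cronbach's alpha from raw item scores, and composite reliability and AVE from standardized loadings. The item scores and loadings are hypothetical and are not the values in Table 5.

```python
from statistics import variance

def cronbach_alpha(items):
    """items: list of item columns, each a list of respondent scores."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent sum
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))

def composite_reliability(loadings):
    """CR from standardized loadings; error variance taken as 1 - loading^2."""
    squared_sum = sum(loadings) ** 2
    error = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error)

def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

# Hypothetical data: three items answered by five respondents.
items = [[5, 6, 4, 7, 3], [5, 7, 4, 6, 3], [6, 6, 5, 7, 2]]
loadings = [0.82, 0.88, 0.79]  # hypothetical standardized loadings

alpha = cronbach_alpha(items)
cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"alpha = {alpha:.3f} (threshold 0.70)")
print(f"CR    = {cr:.3f} (threshold 0.70)")
print(f"AVE   = {ave:.3f} (threshold 0.50)")
```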

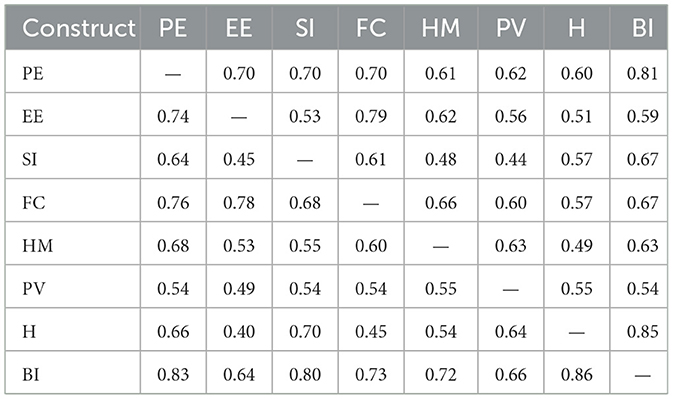

All indicator loadings exceeded the recommended 0.70 threshold and were statistically significant (p < 0.001), supporting indicator reliability for all constructs. One item from the Facilitating Conditions construct (FC4) was removed due to a low loading, which improved the overall reliability. Discriminant validity was confirmed through the Fornell–Larcker criterion, as each construct's square-root AVE (ranging from 0.83 to 0.96) was greater than its correlations with any other construct. Additionally, the Heterotrait-Monotrait (HTMT) ratios between all construct pairs were below the suggested 0.90 cutoff (Hair and Alamer, 2022), further demonstrating discriminant validity. Table 6 presents the HTMT values for the constructs in the model.
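The two discriminant validity checks can be illustrated as follows. This is a sketch under stated assumptions: the AVE values, latent correlations, and item correlations are hypothetical, and the HTMT function uses the common definition (mean between-construct item correlation divided by the geometric mean of the mean within-construct item correlations) for a single pair of constructs.

```python
from math import sqrt

def fornell_larcker_ok(ave, corr):
    """True if sqrt(AVE) of both constructs exceeds every correlation between them.

    ave:  construct -> AVE
    corr: (construct_a, construct_b) -> latent variable correlation
    """
    return all(
        sqrt(ave[a]) > abs(r) and sqrt(ave[b]) > abs(r)
        for (a, b), r in corr.items()
    )

def htmt(between, within_a, within_b):
    """HTMT ratio for one pair of constructs from item-level correlations."""
    def avg(values):
        return sum(values) / len(values)
    return avg(between) / sqrt(avg(within_a) * avg(within_b))

# Hypothetical AVE values and latent correlations.
ave = {"PE": 0.74, "EE": 0.70, "HM": 0.81}
corr = {("PE", "EE"): 0.62, ("PE", "HM"): 0.58, ("EE", "HM"): 0.55}
fl_passed = fornell_larcker_ok(ave, corr)

# Hypothetical item correlations for two constructs with two items each:
# four cross-construct pairs, one within-construct pair per construct.
ratio = htmt(between=[0.50, 0.55, 0.48, 0.52], within_a=[0.70], within_b=[0.64])

print(f"Fornell-Larcker satisfied: {fl_passed}")
print(f"HTMT = {ratio:.3f}, below 0.90: {ratio < 0.90}")
```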

To check for multicollinearity among the predictors, variance inflation factor (VIF) values were examined for the structural model. All VIFs were well below the recommended threshold of 5 (PE = 2.99, EE = 2.53, SI = 2.21, FC = 2.87, HM = 2.07, PV = 1.82, H = 1.99), indicating that collinearity was not a concern. Kock and Lynn (2012) proposed the full collinearity test, in which a VIF greater than 3.3 indicates pathological collinearity and suggests that a model may be contaminated by common method bias. Because most of the VIFs resulting from the full collinearity test were lower than 3.3, the model can be considered free of common method bias.
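To make the VIF figures more tangible, the sketch below inverts the standard definition VIF = 1 / (1 - R²) to show how much of each predictor's variance is shared with the other predictors, using the structural-model VIFs reported above. The comparison against 3.3 is shown only for illustration; in the study that cutoff applies to the full collinearity VIFs, which are not listed in the text.

```python
# Structural-model VIFs as reported in the text.
reported_vif = {"PE": 2.99, "EE": 2.53, "SI": 2.21, "FC": 2.87,
                "HM": 2.07, "PV": 1.82, "H": 1.99}

def vif_from_r2(r2):
    """VIF of a predictor whose regression on the other predictors yields r2."""
    return 1 / (1 - r2)

def implied_r2(vif):
    """Invert VIF = 1 / (1 - R^2): share of variance explained by other predictors."""
    return 1 - 1 / vif

for name, vif in reported_vif.items():
    print(f"{name}: VIF = {vif:.2f}, implied R^2 = {implied_r2(vif):.3f}, "
          f"below 5: {vif < 5}, below 3.3: {vif < 3.3}")
```

For example, the largest reported value (PE = 2.99) corresponds to about two thirds of the variance in PE being explained by the other predictors.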

Results

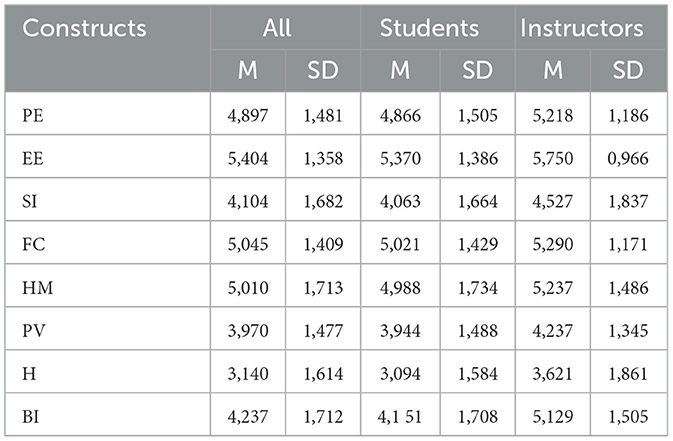

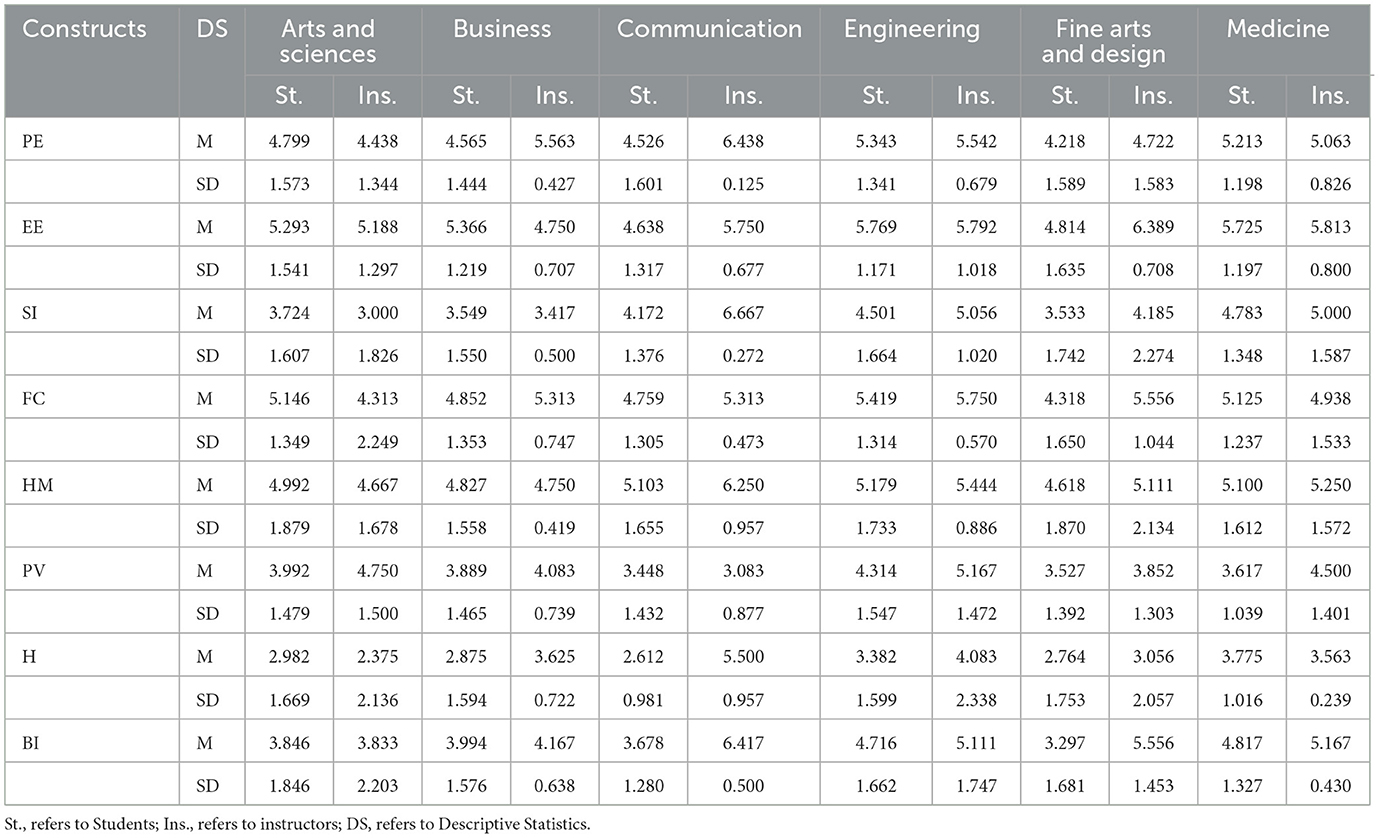

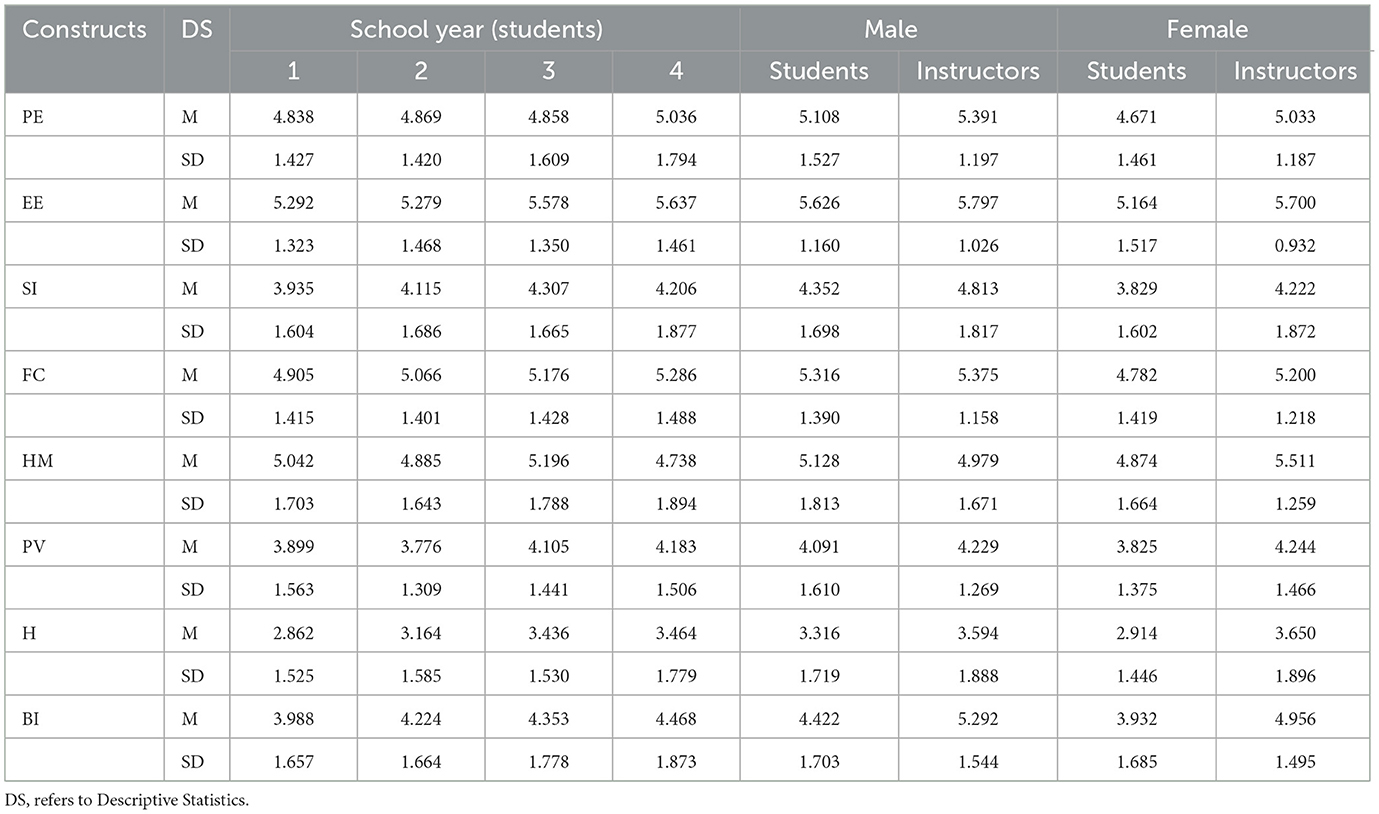

This section presents the findings of the study based on the hypotheses developed using the constructs of the UTAUT2 theory for students and instructors. Tables 7–9 show the mean scores and standard deviations across faculty, gender, and school year for the UTAUT2 constructs, which underscore the key differences between the two groups. Among both students and instructors, Effort Expectancy received the highest scores, highlighting the significance of ease of use for behavioral intention. In contrast, Habit had the lowest scores across all groups.

Table 8. Descriptive results (M = means, SD = standard deviations) of UTAUT2 constructs based on the faculties.

Table 9. Descriptive results (M = means, SD = standard deviations) of UTAUT2 constructs based on gender and school year.

The instructors from the Communication Faculty reported higher scores in Performance Expectancy (6.43) and Social Influence (6.66) than the students. Among students, those in Engineering scored the highest across departments. Significant gaps were observed in Fine Arts and Design and in Engineering, where the instructors consistently scored higher than the students across all constructs. Additionally, in the Medicine faculty, the students perceived ChatGPT as less cost-effective than the instructors did.

Effort Expectancy showed an increasing trend across all school years, indicating that perceived ease of use grows as students advance in their studies. Similarly, Performance Expectancy showed an upward trajectory, reaching its highest average of 5.39 among senior students. In terms of gender differences, males and females showed similar results across most constructs; however, males scored slightly higher in Effort Expectancy, while females scored lower in Social Influence. Habit consistently had the lowest averages for both groups, suggesting that habitual use is not yet well established among the participants.

Hypothesis testing

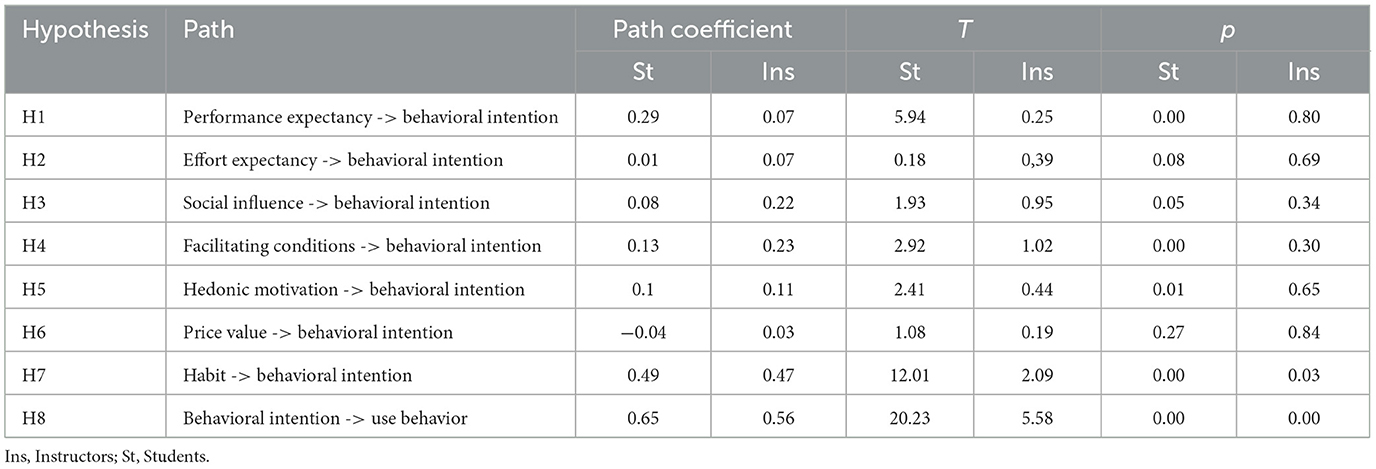

The structural model was evaluated by analyzing the path coefficients, R² values, and t-statistics for the relationships between latent variables. Significance testing of the path coefficients was conducted using bootstrapping with 5,000 subsamples.
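The logic of bootstrap significance testing can be sketched as follows. This is an illustration under stated assumptions, not the procedure implemented in the authors' software: a single-predictor standardized slope (which equals Pearson's r) stands in for a PLS path coefficient, the data are simulated, and `bootstrap_t` is a hypothetical helper name.

```python
import numpy as np

def bootstrap_t(x, y, n_boot=5000, seed=0):
    """Bootstrap t-statistic for the standardized slope of y on x.

    A one-predictor standardized slope is used here as a simple stand-in
    for a PLS path coefficient; it is not a PLS-SEM estimator.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(x)

    def std_slope(xs, ys):
        # With one predictor, the standardized slope equals Pearson's r.
        return np.corrcoef(xs, ys)[0, 1]

    estimate = std_slope(x, y)
    boot = np.empty(n_boot)
    for b in range(n_boot):
        # Resample respondents with replacement and re-estimate the path.
        idx = rng.integers(0, n, size=n)
        boot[b] = std_slope(x[idx], y[idx])

    se = boot.std(ddof=1)  # bootstrap standard error
    return estimate, se, estimate / se

# Simulated data (illustrative only).
rng = np.random.default_rng(1)
x = rng.normal(size=200)
y = 0.5 * x + rng.normal(size=200)

beta, se, t = bootstrap_t(x, y)
print(f"beta={beta:.2f}, SE={se:.3f}, t={t:.1f}")
```

The t-statistic is the original estimate divided by the standard deviation of the bootstrap estimates; values above roughly 1.96 correspond to two-tailed significance at the 5% level.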

The model accounts for 79% of the variance in Behavioral Intention (R² = 0.79) and 53% of the variance in Use Behavior (R² = 0.53), showing strong explanatory power for Behavioral Intention and moderate explanatory power for Use Behavior. Model fit and predictive relevance were evaluated using the SRMR (Standardized Root Mean Square Residual) and Q² (Predictive Relevance) indices, respectively. The SRMR values for students (0.06) and instructors (0.07) fall below the 0.08 threshold, indicating a good model fit. The Q² values for students (0.25) and instructors (0.22) are positive, indicating moderate predictive relevance. Taken together, these indices suggest that the model is adequately specified and has predictive relevance.

Table 10 summarizes the path coefficients and hypothesis testing results for students and instructors. A significant relationship is observed between Performance Expectancy and Behavioral Intention for students (β = 0.29, p < 0.01); however, this relationship is not significant for instructors (β = 0.07, p = 0.80). Similarly, the effect of Effort Expectancy on Behavioral Intention is minimal and non-significant in both groups, suggesting that ease of use does not have a strong influence on Behavioral Intention (β = 0.01 for students, β = 0.07 for instructors). In contrast, Habit proves to be a key predictor, showing strong coefficients in both groups, particularly for students (β = 0.49, p < 0.01). Furthermore, Behavioral Intention significantly predicts Use Behavior for both groups, with a stronger association for students (β = 0.65) than for instructors (β = 0.56). These findings highlight the key role of Behavioral Intention in shaping ChatGPT usage in educational settings.

Furthermore, the effect size (f²) for each path was calculated to assess the relative impact of each construct. Habit exhibited a large effect on Behavioral Intention (f² = 0.58 for students, f² = 0.28 for instructors), indicating that removing Habit would substantially reduce the model's R². Performance Expectancy showed a moderate effect on students' Behavioral Intention (f² = 0.15), while Effort Expectancy had virtually no effect (f² ≈ 0.00). Likewise, Facilitating Conditions, Hedonic Motivation, Social Influence, and Price Value had negligible contributions (f² < 0.03), aligning with their non-significant paths. For the Use Behavior outcome, Behavioral Intention had large effects (f² = 0.75 for students, f² = 0.48 for instructors), underscoring its pivotal role in explaining actual ChatGPT usage.
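The effect size is conventionally computed as f² = (R² with the predictor − R² without the predictor) / (1 − R² with the predictor). The sketch below applies that formula and labels values using the commonly cited benchmarks of 0.02, 0.15, and 0.35 for small, medium, and large effects. The benchmarks are assumed here because the text does not state which cutoffs were used; the R² pair is hypothetical, and the function names are illustrative.

```python
def f_squared(r2_included, r2_excluded):
    """Effect size of one predictor: the drop in R^2 when it is removed,
    scaled by the variance left unexplained by the full model."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)

def effect_label(f2):
    """Label an f^2 value with the conventional 0.02 / 0.15 / 0.35 cutoffs
    (assumed benchmarks, not stated in the article)."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"

# f^2 values reported in the text for the student group.
reported = {
    "Habit -> Behavioral Intention": 0.58,
    "Performance Expectancy -> Behavioral Intention": 0.15,
    "Behavioral Intention -> Use Behavior": 0.75,
}
for path, f2 in reported.items():
    print(f"{path}: f2={f2:.2f} ({effect_label(f2)})")

# Hypothetical R^2 values, only to show the arithmetic of the formula.
print(round(f_squared(r2_included=0.50, r2_excluded=0.40), 2))
```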

Discussion

This part provides a critical evaluation of the results, examining the research hypotheses in the context of relevant literature and the conclusions derived. A comprehensive integration of the findings and supporting arguments is presented as follows:

• The evaluation of the hypotheses of the study.

• The significance of gender and school year on the acceptance of ChatGPT.

• The role of UTAUT2 constructs by faculties.

The evaluation of the hypotheses of the study

The evaluation of the hypotheses reveals a complex interplay between UTAUT2 constructs and their impact on the acceptance of ChatGPT by both students and instructors at the tertiary level. The findings show that students' Behavioral Intention (BI) to use ChatGPT is more significantly influenced by Performance Expectancy (PE) than instructors', which indicates that students place greater emphasis on the tool's potential to improve academic outcomes. This finding is consistent with Venkatesh et al. (2012), who argued that perceived usefulness is a critical determinant of technology acceptance, particularly for less experienced users. For students who may have limited experience with similar AI technologies, the expectation of improved academic performance serves as a primary motivator, aligning with the findings of Habibi et al. (2023).

Effort Expectancy (EE) does not significantly influence BI for either students or instructors, suggesting that perceived ease of use is not a chief driver of the acceptance of ChatGPT in instructional contexts. This finding diverges from previous research and may be explained by ChatGPT's user-friendly interface, which, as Venkatesh and Zhang (2010) argued, can reduce the significance of EE in technology acceptance. ChatGPT's design likely contributes to making EE a less prominent construct for both groups. This finding aligns with studies indicating that when a technology is free and easy to use, these factors have diminished predictive value (e.g., Tiwari et al., 2024).

Social Influence (SI) had minor effects, marginally significant for students but not for instructors, suggesting that adoption is driven more by personal utility than by peer pressure. Instructors were influenced mainly by practical applications, while students valued performance benefits and enjoyment. Together, these findings suggest that usefulness, institutional support, and habit outweigh ease of use and peer effects in driving adoption.

Facilitating Conditions (FC), which represent the availability of resources and support, demonstrated a moderate impact on BI, with a significantly stronger effect for instructors than students. This finding underscores the key role of organizational support in facilitating technology acceptance among instructors because they often require institutional assurance regarding compatibility and resources to effectively integrate ChatGPT into their teaching (Iqbal et al., 2022). For students, while support is advantageous, their relatively lower reliance on FC may indicate a more self-directed and independent approach to accepting new learning tools.

Hedonic Motivation (HM) showed a positive influence on BI for both students and instructors, with a slightly stronger effect observed on students. This finding is consistent with existing literature that emphasizes the importance of enjoyment as a key driver of technology acceptance, particularly in instructional contexts where intrinsic motivation is a significant variable (Strzelecki, 2024). Higher HM scores among students highlight the role of intrinsic factors. Enjoyment likely fosters sustained engagement with ChatGPT and makes it an appealing tool for educational use.

Finally, Habit (H) emerged as the strongest predictor of BI for both students and instructors, with a stronger effect observed among students. This finding suggests that as the use of ChatGPT becomes part of daily routines, users are more likely to continue using it. Tamilmani et al. (2019) note that habitual behavior underpins sustained technology acceptance, even when other motivators diminish. This underscores the significance of early and consistent exposure to ChatGPT, especially for students, since it helps establish technology-driven learning routines that contribute to long-term engagement.

These findings collectively show that although performance benefits and enjoyment serve as primary drivers for initial acceptance, ongoing institutional support and the development of habitual use are essential for sustained acceptance and use. Highlighting and addressing these constructs could greatly foster the successful integration of ChatGPT into higher education, establishing its role as a valuable resource for both students and instructors.

The significance of gender and school year on the acceptance of ChatGPT

No significant gender differences were observed in construct means or behavioral intention. However, university students' acceptance of ChatGPT appears to vary by school year. Freshman students showed lower levels of PE and EE than students in the upper years, suggesting they regard ChatGPT as moderately useful and not particularly easy to use. These lower scores may be attributed to limited technological self-efficacy, as freshmen are still adapting to university life and its associated digital tools (Bouteraa et al., 2024). SI is moderate, which indicates limited peer interactions involving ChatGPT. This is also consistent with findings that freshmen typically have less collaborative experience with technology (Sobaih et al., 2024). Despite this, HM is relatively high, indicating curiosity-driven engagement with ChatGPT among first-year students.

Sophomore students show increased scores in all constructs except for slight decreases in EE, PV, and HM. PE rises to 4.86 and BI to 4.22, which indicates growing acknowledgment of ChatGPT's advantages and use (Strzelecki and ElArabawy, 2024). The increased scores of FC (5.06) and SI (4.11) imply improved access to supportive resources and slightly stronger peer influence, possibly owing to coursework that requires more collaboration (Elkefi et al., 2024). HM (4.5) remains strong, reflecting sustained interest, while H (3.0) indicates the early stages of routine use.

Junior students exhibit further growth across key constructs. BI increases to 4.35, and EE rises to 5.57, reflecting a notable appreciation for ChatGPT's ease of use, possibly prompted by the increased academic requirements of junior-year coursework (Venkatesh et al., 2003). There is also an improvement in FC (5.17) and SI (4.30), indicating stronger integration of ChatGPT into the academic environment and enhanced peer influence (Shah et al., 2024). A higher HM score implies heightened enjoyment and engagement with ChatGPT.

Senior students achieve the highest scores across most constructs, with a slight drop in SI and HM. PE rises to 5.03, and EE reaches 5.63, which implies strong confidence in ChatGPT's effectiveness and ease of use, possibly owing to their increased experience and interaction with it (Sobaih et al., 2024). FC (5.28) and BI (4.46) also continue to increase, reflecting institutional support and a strong tendency to use ChatGPT. The modest decrease in HM (4.73) may reflect a fading novelty effect as familiarity increases (Xu and Thien, 2024).

The increasing scores in almost all constructs from freshman to senior year demonstrate growing acceptance and integration of ChatGPT as students progress in their academic life at the university. Early challenges, such as unfamiliarity and perceived difficulty, gradually disappear as they continue experiencing and using it. The notable increase in EE and PE underscores the importance of initial training and continuous assistance to help students handle related challenges and appreciate ChatGPT's advantages. Furthermore, the rise in SI and HM shows the key function of peer influence and enjoyment in facilitating acceptance. Promoting a collaborative learning environment can further enhance the acceptance and effective use of ChatGPT, which makes it an integral part of the educational experience.

The role of UTAUT2 constructs by faculties

The variations across UTAUT constructs in different faculties significantly impact the acceptance and use of ChatGPT at the university. Regarding PE, Romero-Rodríguez et al. (2023) identify it as a key variable influencing BI to accept ChatGPT in instructional contexts, noting that PE differs across faculties. In this study, the instructors in Communication and Business showed higher PE scores than the students, with scores of 5.56 for Business and 6.43 for Communication. This indicates that the instructors regard ChatGPT as more beneficial for academic tasks. Conversely, in Engineering, both the students and instructors showed similarly high PE scores, which reflects a mutual recognition of ChatGPT in technical disciplines. However, lower PE ratings in Fine Arts and Design indicate the need for improved integration strategies to make the most of ChatGPT's potential in creative fields. Recent research (e.g., Cambra-Fierro et al., 2025) has shown that instructors also perceive ChatGPT as a tool for improving instructional quality and reducing workload, which further strengthens Performance Expectancy in academic settings.

EE scores show that ChatGPT is usually regarded as easy to use across all faculties, despite some variations. For example, both the students (5.77) and instructors (5.79) in Engineering and Medicine rated EE highly. This reflects their technical expertise and regular interaction with AI tools. In contrast, a significant disparity in EE scores was noted in Fine Arts and Design, where students rated it at 4.81 compared to instructors (6.39). This gap suggests that students in creative disciplines may need more tutorials to use ChatGPT more effectively. These findings are consistent with Bervell and Umar (2017), who validated the UTAUT model and emphasized the key function of effort expectancy, especially in settings where individuals display variations in their technical proficiency, paralleling the EE differences seen across faculties. This reflects a broader pattern observed across countries, where Effort Expectancy is sometimes less predictive of intention when users already perceive the tool as easy to use (Moradi, 2025).

Regarding SI, there were notable variations across faculties. Communication instructors reported much higher peer pressure to accept ChatGPT (6.66) than students (4.17). This disparity points to a strong collaborative culture in Communication, where peers strongly shape technology acceptance. Almahri et al. (2020) similarly identify social influence as a crucial determinant of technology acceptance, particularly in fields requiring collaborative work. In Fine Arts and Design, the instructors may experience greater peer pressure than the students, while in Arts and Sciences, the students appear to face higher levels of peer influence. These differences emphasize the key role of social factors in the acceptance of ChatGPT across various disciplines. However, as recent faculty studies indicate (Bhat et al., 2024), this peer influence can also manifest as discouragement due to unclear institutional policies or fear of misuse, which must be addressed to promote faculty adoption.

Gunasinghe et al. (2019) emphasized the influence of FC on technology acceptance, pinpointing the changing degrees of institutional support for ChatGPT across faculties. In this study, both Engineering students and instructors rated FC highly. This reflects a robust institutional infrastructure for ChatGPT use in this field. In Arts and Sciences and Medicine, the students reported better support than their instructors, whereas in other departments, the pattern was reversed. These findings suggest that enhancing targeted resources and support for instructors could significantly improve the acceptance and use of ChatGPT across faculties.

HM scores showed a consistent pattern with high scores across all the faculties. Supporting this, Arain et al. (2019) highlighted HM's influence on faculty-specific variations in ChatGPT use. Notably, Communication instructors gave a higher HM score (5.10), reflecting their strong interaction with ChatGPT. In contrast, Fine Arts and Design showed slightly lower HM scores, implying moderate enjoyment. Meanwhile, both the students and instructors in Medicine emphasized the entertainment value of using ChatGPT. This variation mirrors findings across countries where enjoyment varies depending on academic goals, with students in more pragmatic fields focusing less on the entertaining aspects of AI (Moradi, 2025).

Although BI scores were generally high in all the faculties, H scores were consistently low, which suggests that ChatGPT has not yet become a regular part of participants' daily routines. The notably low H scores in Arts and Sciences indicate that habitual use is still in its infancy there, while Medicine demonstrated moderate habit development. This indicates a step-by-step integration of ChatGPT into academic practices. Given that multiple studies (e.g., Abdi et al., 2025; Sergeeva et al., 2025; Wu et al., 2025) found Habit to be a primary driver of behavioral intention, faculty-specific strategies for regular engagement, such as embedding ChatGPT into common teaching workflows, could foster sustainable use.

While PE and EE play a key role in the acceptance of ChatGPT, it is of utmost importance to address the low H scores, as doing so could improve long-term adoption rates. Higher education institutions can facilitate better integration of ChatGPT if they strengthen their institutional support and offer tailored training programs that unlock its full potential across academic disciplines.

Conclusion

This study provides a comprehensive examination of university students' and faculty members' acceptance of ChatGPT in a higher education context using the UTAUT2 framework. The findings reveal that multiple factors significantly shape the intention to use ChatGPT. In particular, performance expectancy emerged as a strong positive predictor of adoption, alongside facilitating conditions such as institutional support, hedonic motivation, and habit. By contrast, social influence and price value were found to be comparatively weak or non-significant factors, suggesting that peer opinions and cost considerations are less central in this context. Notably, effort expectancy did not show a significant effect on usage intentions in our analysis. This pattern indicates that once users become acquainted with ChatGPT's capabilities, ease of use may not be a decisive hurdle, possibly because the tool is sufficiently user-friendly or because other factors like usefulness and enjoyment dominate the decision to embrace it.

Theoretically, this study extends UTAUT2 into generative AI, reaffirming core constructs while highlighting new dynamics. Hedonic motivation emerged as a key driver, underscoring the role of intrinsic enjoyment. This aligns with evidence that enjoyment fosters sustained engagement with AI tools. Social influence was weaker than expected in traditional models, suggesting adoption is driven more by personal utility than peer pressure. The inclusion of both students and faculty in the analysis further extends theoretical understanding: it indicates that pedagogical role may moderate technology acceptance, as faculty exhibited generally higher intentions to use ChatGPT than students. This observation, likely reflecting faculty's greater technological experience or clearer use cases for teaching, provides a richer theoretical insight into how user context influences the weight of UTAUT2 factors.

Practically, the study offers valuable insights into how higher education stakeholders can facilitate the effective integration of ChatGPT. The implications of this study emphasize how crucial it is to embed generative AI technologies in educational frameworks in ways that optimize their benefits and enhance learning outcomes. By identifying the key factors influencing acceptance, our findings help educators and administrators focus their efforts more effectively. For instance, the strong impact of performance expectancy suggests that clearly communicating and demonstrating ChatGPT's educational benefits could bolster user intention to adopt the tool.

Specifically for faculty, institutions should consider offering targeted professional development programs, communities of practice, and incentives for early adopters to model innovative use cases. Providing practical teaching resources, discipline-specific integration strategies, and administrative support can further encourage uptake and experimentation. Likewise, the significance of facilitating conditions underscores the importance of providing a supportive environment such as reliable technical infrastructure, training opportunities, and guidance on AI use to empower both students and faculty to utilize ChatGPT confidently. The prominence of hedonic motivation implies that making interactions with ChatGPT engaging and enjoyable may further encourage voluntary use, thereby enriching the learning experience. The comparative approach of this study also suggests that different user groups might benefit from tailored strategies: faculty members, who have higher initial acceptance, can act as champions and integrate ChatGPT into curricula, while students may need orientation on how ChatGPT's capabilities align with their learning needs. Together, these practical insights contribute to a better-informed approach for universities looking to responsibly harness ChatGPT's potential in education, without mandating any specific institutional policy or action.

Although supported by a large sample, the study has limitations. Reliance on convenience sampling may introduce bias, restricting generalizability. Future work should employ stratified samples, longitudinal analysis, and triangulated data to examine how initial intentions develop into sustained use. In addition, future-focused strategies should address potential barriers to ChatGPT adoption, such as privacy concerns and ethical considerations. Institutions should consider clear policies and training on responsible AI use to ensure safe and effective implementation. Addressing privacy concerns through secure data handling practices, anonymization protocols, and digital literacy education can help overcome adoption barriers and build user trust.

In conclusion, while ChatGPT holds great potential for enhancing educational outcomes, its acceptance and use in higher education are influenced by a range of factors identified in the UTAUT2 framework, including performance expectancy, effort expectancy, hedonic motivation, and institutional support. By acknowledging these key determinants and addressing current limitations through further research, educational institutions can more effectively integrate AI tools like ChatGPT into their curricula, ultimately enhancing the learning experience for both students and faculty.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Izmir University of Economics, Ethical Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

MK: Writing – original draft, Writing – review & editing. TA: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Gen AI was used in the creation of this manuscript. This manuscript utilized ChatGPT to enhance language clarity.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdi, A. N. M., Omar, A. M., Ahmed, M. H., and Ahmed, A. A. (2025). The predictors of behavioral intention to use ChatGPT for academic purposes: evidence from higher education in Somalia. Cogent Educ. 12:2460250. doi: 10.1080/2331186X.2025.2460250

Acosta-Enriquez, B. G., Farroñan, E. V. R., Zapata, L. I. V., Garcia, F. S. M., Rabanal-León, H. C., Angaspilco, J. E. M., et al. (2024). Acceptance of artificial intelligence in university contexts: a conceptual analysis based on UTAUT2 theory. Heliyon 10:e38315. doi: 10.1016/j.heliyon.2024.e38315

Adigüzel, T., Kaya, M. H., and Cansu, F. K. (2023). Revolutionizing education with AI: exploring the transformative potential of ChatGPT. Contemp. Educ. Technol. 15:ep429. doi: 10.30935/cedtech/13152

Ajzen, I. (1991). The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50, 179–211. doi: 10.1016/0749-5978(91)90020-T

Almahri, F. A. J., Bell, D., and Merhi, M. (2020). “Understanding student acceptance and use of chatbots in the United Kingdom universities: a structural equation modelling approach,” in 2020 6th International Conference on Information Management (ICIM) (Piscataway, NJ: IEEE), 284–288.

Arain, A., Hussain, Z., Rizvi, W. H., and Vighio, M. S. (2019). Extending UTAUT2 toward acceptance of mobile learning in the context of higher education. Univ. Access Inf. Soc. 18, 659–673. doi: 10.1007/s10209-019-00685-8

Arbulú Ballesteros, M. A., Acosta Enríquez, B. G., Ramos Farroñán, E. V., García Juárez, H. D., Cruz Salinas, L. E., Blas Sánchez, J., et al. (2024). The sustainable integration of AI in higher education: analyzing ChatGPT acceptance factors through an extended UTAUT2 framework in Peruvian universities. Sustainability 16:10707. doi: 10.3390/su162310707

Ausat, A. M. A., Massang, B., Efendi, M., Nofirman, N., and Riady, Y. (2023). Can chat GPT replace the role of the teacher in the classroom: a fundamental analysis. J. Educ. 5, 16100–16106. doi: 10.31004/joe.v5i4.2745

Awal, M. R., and Haque, M. E. (2025). Revisiting university students' intention to accept AI-powered chatbot with an integration between TAM and SCT: a South Asian perspective. J. Appl. Res. High. Educ. 17, 594–608. doi: 10.1108/JARHE-11-2023-0514

Bervell, B., and Umar, I. (2017). Validation of the UTAUT model: Re-considering non-linear relationships of exogeneous variables in higher education technology acceptance research. Eurasia J. Math. Sci. Technol. Educ. 13, 6471–6490. doi: 10.12973/ejmste/78076

Bhat, M. A., Tiwari, C. K., Bhaskar, P., and Khan, S. T. (2024). Examining ChatGPT adoption among educators in higher educational institutions using extended UTAUT model. J. Inform. Commun. Ethics Soc. 22, 331–353. doi: 10.1108/JICES-03-2024-0033

Bin-Nashwan, S. A., Sadallah, M., and Bouteraa, M. (2023). Use of ChatGPT in academia: academic integrity hangs in the balance. Technol. Soc. 75:102370. doi: 10.1016/j.techsoc.2023.102370

Bouteraa, M., Bin-Nashwan, S. A., Al-Daihani, M., Dirie, K. A., Benlahcene, A., and Sadallah, M. (2024). Understanding the diffusion of AI-generative (ChatGPT) in higher education: does students' integrity matter? Comput. Human Behav. Rep. 14:100402. doi: 10.1016/j.chbr.2024.100402

Bozkurt, A. (2023). Generative artificial intelligence (AI) powered conversational educational agents: the inevitable paradigm shift. Asian J. Dist. Educ. 18, 198–204. doi: 10.5281/zenodo.7716416

Budhathoki, T., Zirar, A., Njoya, E. T., and Timsina, A. (2024). ChatGPT adoption and anxiety: a cross-country analysis utilising the unified theory of acceptance and use of technology (UTAUT). Stud. High. Educ. 49, 831–846. doi: 10.1080/03075079.2024.2333937

Cachola, K., and Vu, K. (2024). “Investigating the use of chatbots as an educational tool,” in Human Factors in Design, Engineering, and Computing. AHFE (2024) International Conference. AHFE Open Access, vol 159, eds. T. Ahram and W. Karwowski (Orlando, FL: AHFE International).

Cambra-Fierro, J. J., Blasco, M. F., López-Pérez, M. E. E., and Trifu, A. (2025). ChatGPT adoption and its influence on faculty well-being: an empirical research in higher education. Educ. Inform. Technol. 30, 1517–1538. doi: 10.1007/s10639-024-12871-0

Dietrich, L. K., and Grassini, S. (2025). Assessing ChatGPT acceptance and use in education: a comparative study among German-speaking students and teachers. Educ. Inform. Technol. 30, 1–26. doi: 10.1007/s10639-025-13658-7

Elkefi, S., Tounsi, A., and Kefi, M. A. (2024). Use of ChatGPT for education by engineering students in developing countries: a mixed-methods study. Behav. Inform. Technol. 43, 1–17. doi: 10.1080/0144929X.2024.2354428

Faraon, M., Rönkkö, K., Milrad, M., and Tsui, E. (2025). International perspectives on artificial intelligence in higher education: an explorative study of students' intention to use ChatGPT across the Nordic countries and the USA. Educ. Inform. Technol. 30, 17835–17880. doi: 10.1007/s10639-025-13492-x

Farhi, F., Jeljeli, R., Aburezeq, I., Dweikat, F. F., Al-shami, S. A., and Slamene, R. (2023). Analyzing the students' views, concerns, and perceived ethics about chat GPT usage. Comput. Educ. Artif. Intell. 5:100180. doi: 10.1016/j.caeai.2023.100180

Farrokhnia, M., Banihashem, S. K., Noroozi, O., and Wals, A. (2023). A SWOT analysis of ChatGPT: implications for educational practice and research. Innov. Educ. Teach. Int. 61, 460–474. doi: 10.1080/14703297.2023.2195846

Foroughi, B., Senali, M. G., Iranmanesh, M., Khanfar, A., Ghobakhloo, M., Annamalai, N., et al. (2024). Determinants of intention to use ChatGPT for educational purposes: findings from PLS-SEM and fsQCA. Int. J. Human–Comput. Interact. 40, 4501–4520. doi: 10.1080/10447318.2023.2226495

Gabajiwala, E., Mehta, P., Singh, R., and Koshy, R. (2022). “Quiz maker: automatic quiz generation from text using NLP,” in Futuristic Trends in Networks and Computing Technologies: Proceedings of Fourth International Conference on FTNCT 2021, eds. P. K. Singh, S. T. Wierzchon, K. K. Chhabra, and S. Tanwar (New York: Springer Nature), 523–533.

Garcia-Penalvo, F. J. (2023). The perception of artificial intelligence in educational contexts after the launch of ChatGPT: disruption or panic? Educ. Knowl. Soc. 24:e31279. doi: 10.14201/eks.31279

Gunasinghe, A., Hamid, J., Khatibi, A., and Azam, S. (2019). The adequacy of UTAUT-3 in interpreting academicians' adoption to e-learning in higher education environments. Interact. Technol. Smart Educ. 17, 86–106. doi: 10.1108/ITSE-05-2019-0020

Habibi, A., Muhaimin, M., Danibao, B. K., Wibowo, Y. G., Wahyuni, S., Octavia, A., et al. (2023). ChatGPT in higher education learning: acceptance and use. Comput. Educ. Artif. Intell. 5:100190. doi: 10.1016/j.caeai.2023.100190

Hair, J., and Alamer, A. (2022). Partial Least Squares Structural Equation Modeling (PLS-SEM) in second language and education research: guidelines using an applied example. Res. Methods Appl. Linguist. 1:100027. doi: 10.1016/j.rmal.2022.100027

Hu, J. J. (2021). Teaching evaluation system by use of machine learning and artificial intelligence Methods. Int. J. Emerg. Technol. Learn. 16, 87–101. doi: 10.3991/ijet.v16i05.20299

Iqbal, N., Ahmed, H., and Azhar, K. A. (2022). Exploring teachers' attitudes towards using ChatGPT. Global J. Manage. Administ. Sci. 3, 97–111. doi: 10.46568/gjmas.v3i4.163

June, S., Yaacob, A., and Kheng, Y. K. (2014). Assessing the use of YouTube videos and interactive activities as a critical thinking stimulator for tertiary students: an action research. Int. Educ. Stud. 7, 56–67. doi: 10.5539/ies.v7n8p56

Kock, N., and Lynn, G. S. (2012). Lateral collinearity and misleading results in variance-based SEM: an illustration and recommendations. J. Assoc. Inform. Syst. 13:2. doi: 10.17705/1jais.00302

Lee, A. T., Ramasamy, R. K., and Subbarao, A. (2025). “Understanding psychosocial barriers to healthcare technology adoption: a review of TAM technology acceptance model and unified theory of acceptance and use of technology and UTAUT frameworks,” in Healthcare (Vol. 13). Basel: MDPI, 250.

Li, C., Xing, W., and Leite, W. (2022). Building socially responsible conversational agents using big data to support online learning: a case with Algebra Nation. Br. J. Educ. Technol. 53, 776–803. doi: 10.1111/bjet.13227

Liu, Y., Awang, H., and Mansor, N. S. (2025). Exploring the potential barrier factors of AI Chatbot usage among teacher trainees: from the perspective of innovation resistance theory. Sustainability 17:4081. doi: 10.3390/su17094081

Marlina, E., Tjahjadi, B., and Ningsih, S. (2021). Factors affecting student performance in e-learning: a case study of higher educational institutions in Indonesia. J. Asian Fin. Econ. Bus. 8, 993–1001. doi: 10.13106/jaf

Moradi, H. (2025). Integrating AI in higher education: factors influencing ChatGPT acceptance among Chinese university EFL students. Int. J. Educ. Technol. High. Educ. 22:30. doi: 10.1186/s41239-025-00530-4

OpenAI. (2023). Models and data use at OpenAI. Available online at: https://openai.com (accessed September 5, 2025).

Parveen, K., Phuc, T. Q. B., Alghamdi, A. A., Hajjej, F., Obidallah, W. J., Alduraywish, Y. A., et al. (2024). Unraveling the dynamics of ChatGPT adoption and utilization through Structural Equation Modeling. Sci. Rep. 14:23469. doi: 10.1038/s41598-024-74406-4

Raisch, S., and Krakowski, S. (2021). Artificial intelligence and management: the automation–augmentation paradox. Acad. Manag. Rev. 46, 192–210. doi: 10.5465/amr.2018.0072

Rasul, T., Nair, S., Kalendra, D., Robin, M., de Oliveira Santini, F., Ladeira, W. J., et al. (2023). The role of ChatGPT in higher education: benefits, challenges, and future research directions. J. Appl. Learn. Teach. 6, 41–56. doi: 10.37074/jalt.2023.6.1.29

Rawas, S. (2023). ChatGPT: empowering lifelong learning in the digital age of higher education. Educ. Inform. Technol. 29, 6895–6908. doi: 10.1007/s10639-023-12114-8

Romero-Rodríguez, J. M., Ramírez-Montoya, M. S., Buenestado-Fernández, M., and Lara-Lara, F. (2023). Use of ChatGPT at university as a tool for complex thinking: students' perceived usefulness. J. New Approach. Educ. Res. 12, 323–339. doi: 10.7821/naer.2023.7.1458

Rondan-Cataluna, F. J., Arenas-Gaitán, J., and Ramírez-Correa, P. E. (2015). A comparison of the different versions of popular technology acceptance models: a non-linear perspective. Kybernetes 44, 788–805. doi: 10.1108/K-09-2014-0184

Sergeeva, O. V., Zheltukhina, M. R., Shoustikova, T., Tukhvatullina, L. R., Dobrokhotov, D. A., Kondrashev, S. V., et al. (2025). Understanding higher education students' adoption of generative AI technologies: an empirical investigation using UTAUT2. Contemp. Educ. Technol. 17:ep571. doi: 10.30935/cedtech/16039

Shah, M. H. A., Dharejo, N., Shah, S. A. A., Dayo, F., and Murtaza, G. (2024). “Designing a conceptual model: predictors influencing the acceptance of ChatGPT usage in the academia of underdeveloped countries,” in 2024 IEEE 1st Karachi Section Humanitarian Technology Conference (KHI-HTC), 1–5.

Shaw, N., and Sergueeva, K. (2019). The non-monetary benefits of mobile commerce: extending UTAUT2 with perceived value. Int. J. Inf. Manage. 45, 44–55. doi: 10.1016/j.ijinfomgt.2018.10.024

Sobaih, A. E. E., Elshaer, I. A., and Hasanein, A. M. (2024). Examining students' acceptance and use of ChatGPT in Saudi Arabian higher education. Eur. J. Invest. Health Psychol. Educ. 14, 709–721. doi: 10.3390/ejihpe14030047

Strzelecki, A. (2024). Students' acceptance of ChatGPT in higher education: an extended unified theory of acceptance and use of technology. Innov. High. Educ. 49, 223–245. doi: 10.1007/s10755-023-09686-1

Strzelecki, A., and ElArabawy, S. (2024). Investigation of the moderation effect of gender and study level on the acceptance and use of generative AI by higher education students: comparative evidence from Poland and Egypt. Br. J. Educ. Technol. 55, 1209–1230. doi: 10.1111/bjet.13425

Tamilmani, K., Rana, N., Dwivedi, Y., Sahu, G. P., and Roderick, S. (2018). “Exploring the role of ‘price value’ for understanding consumer adoption of technology: a review and meta-analysis of UTAUT2 based empirical studies,” in PACIS 2018 Proceedings, 64.

Tamilmani, K., Rana, N. P., and Dwivedi, Y. K. (2019). “Use of ‘habit’ is not a habit in understanding individual technology adoption: a review of UTAUT2 based empirical studies,” in Smart Working, Living and Organising: IFIP WG 8.6 International Conference on Transfer and Diffusion of IT, TDIT 2018, Portsmouth, UK, June 25, 2018, Proceedings (Berlin: Springer International Publishing), 277–294.

Tian, W., Ge, J., Zhao, Y., and Zheng, X. (2024). AI Chatbots in Chinese higher education: adoption, perception, and influence among graduate students—an integrated analysis utilizing UTAUT and ECM models. Front. Psychol. 15:1268549. doi: 10.3389/fpsyg.2024.1268549

Tiwari, C. K., Bhat, M. A., Khan, S. T., Subramaniam, R., and Khan, M. A. I. (2024). What drives students toward ChatGPT? An investigation of the factors influencing adoption and usage of ChatGPT. Interact. Technol. Smart Educ. 21, 333–355. doi: 10.1108/ITSE-04-2023-0061

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., et al. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 10, 1–24. doi: 10.1186/s40561-023-00237-x

Van den Berg, G., and Du Plessis, E. (2023). ChatGPT and generative AI: possibilities for its contribution to lesson planning, critical thinking and openness in teacher education. Educ. Sci. 13:998. doi: 10.3390/educsci13100998

Venkatesh, V., Morris, M. G., and Ackerman, P. L. (2000). A longitudinal field investigation of gender differences in individual technology adoption decision-making processes. Organ. Behav. Hum. Decis. Process. 83, 33–60. doi: 10.1006/obhd.2000.2896

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478. doi: 10.2307/30036540

Venkatesh, V., Thong, J. Y., and Xu, X. (2016). Unified theory of acceptance and use of technology: a synthesis and the road ahead. J. Assoc. Inform. Syst. 17, 328–376. doi: 10.17705/1jais.00428

Venkatesh, V., Thong, J. Y. L., and Xu, X. (2012). Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q. 36, 157–178. doi: 10.2307/41410412

Venkatesh, V., and Zhang, X. (2010). Unified theory of acceptance and use of technology: US vs. China. J. Global Inform. Technol. Manage. 13, 5–27. doi: 10.1080/1097198X.2010.10856507

Wang, C., Chen, X., Hu, Z., Jin, S., and Gu, X. (2025). Deconstructing university learners' adoption intention towards AIGC technology: a mixed-methods study using ChatGPT as an example. J. Comput. Assist. Learn. 41:e13117. doi: 10.1111/jcal.13117

Wedlock, B. C., and Trahan, M. P. (2019). Revisiting the unified theory of acceptance and the use of technology (UTAUT) model and scale: an empirical evolution of educational technology. Res. Issues Contemp. Educ. 4, 6–20. Available online at: https://eric.ed.gov/?id=EJ1244613

Willems, J. (2023). ChatGPT at universities–the least of our concerns. SSRN Electron. J. doi: 10.2139/ssrn.4334162

Wu, Q., Tian, J., and Liu, Z. (2025). Exploring the usage behavior of generative artificial intelligence: a case study of ChatGPT with insights into the moderating effects of habit and personal innovativeness. Curr. Psychol. 44, 8190–8203. doi: 10.1007/s12144-024-07193-w

Xie, Q., Li, M., and Enkhtur, A. (2024). Exploring generative AI policies in higher education: a comparative perspective from China, Japan, Mongolia, and the USA. arXiv [preprint]. arXiv:2407.08986. Available online at: https://doi.org/10.48550/arXiv.2407.08986

Xu, X., and Thien, L. M. (2024). Unleashing the power of perceived enjoyment: exploring Chinese undergraduate EFL learners' intention to use ChatGPT for English learning. J. Appl. Res. High. Educ. 17, 578–593. doi: 10.1108/JARHE-12-2023-0555

Yildiz Durak, H., and Onan, A. (2024). Predicting the use of chatbot systems in education: a comparative approach using PLS-SEM and machine learning algorithms. Curr. Psychol. 43, 23656–23674. doi: 10.1007/s12144-024-06072-8

Zhai, X., Chu, X., Wang, M., Tsai, C. C., Liang, J. C., Spector, J. M., et al. (2024). A systematic review of Stimulated Recall (SR) in educational research from 2012 to 2022. Humanit. Soc. Sci. Commun. 11, 1–14. doi: 10.1057/s41599-024-02987-6

Zhao, S., Shen, Y., and Qi, Z. (2023). Research on chatgpt-driven advanced mathematics course. Acad. J. Math. Sci. 4, 42–47. doi: 10.25236/AJMS.2023.040506

Appendix

Keywords: ChatGPT acceptance, higher education, UTAUT2 framework, student and faculty perceptions, AI

Citation: Kaya MH and Adıgüzel T (2025) Exploring the acceptance of ChatGPT in higher education: a comprehensive quantitative study of university students and faculty. Front. Educ. 10:1652292. doi: 10.3389/feduc.2025.1652292

Received: 25 June 2025; Accepted: 01 September 2025; Published: 23 September 2025.

Edited by: Muhammad Farrukh Shahzad, Beijing University of Technology, China

Reviewed by: Ahmed-Nor Mohamed Abdi, SIMAD University, Somalia; Hira Zahid, University of Education Lahore, Pakistan

Copyright © 2025 Kaya and Adıgüzel. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mehmet Haldun Kaya, mehmethaldunkaya@gmail.com
