- Chair of Primary School Education, Institute of Pedagogy, Faculty of Human Sciences, Julius-Maximilians-University of Würzburg, Würzburg, Germany

Introduction: Children increasingly engage with online content from an early age, but often lack the competencies to critically evaluate it. To foster these skills, suitable assessment instruments are required, yet none currently exist for the elementary school level. Drawing on a conceptual framework comprising four evaluation criteria applied across five content areas, this study addresses the need for a target group-specific operationalization. The aim was to derive concrete indicators to guide the development of an assessment instrument designed to measure elementary school children’s ability to evaluate online content.

Methods: To specify evaluation criteria within the content areas, a qualitative preliminary study was conducted. All German curricula were systematically examined with respect to evaluation competencies in digital contexts. In addition, interviews with media experts were carried out. Both the curricula and the interviews were analyzed using qualitative content analysis to refine and extend existing research with indicators tailored to elementary school children.

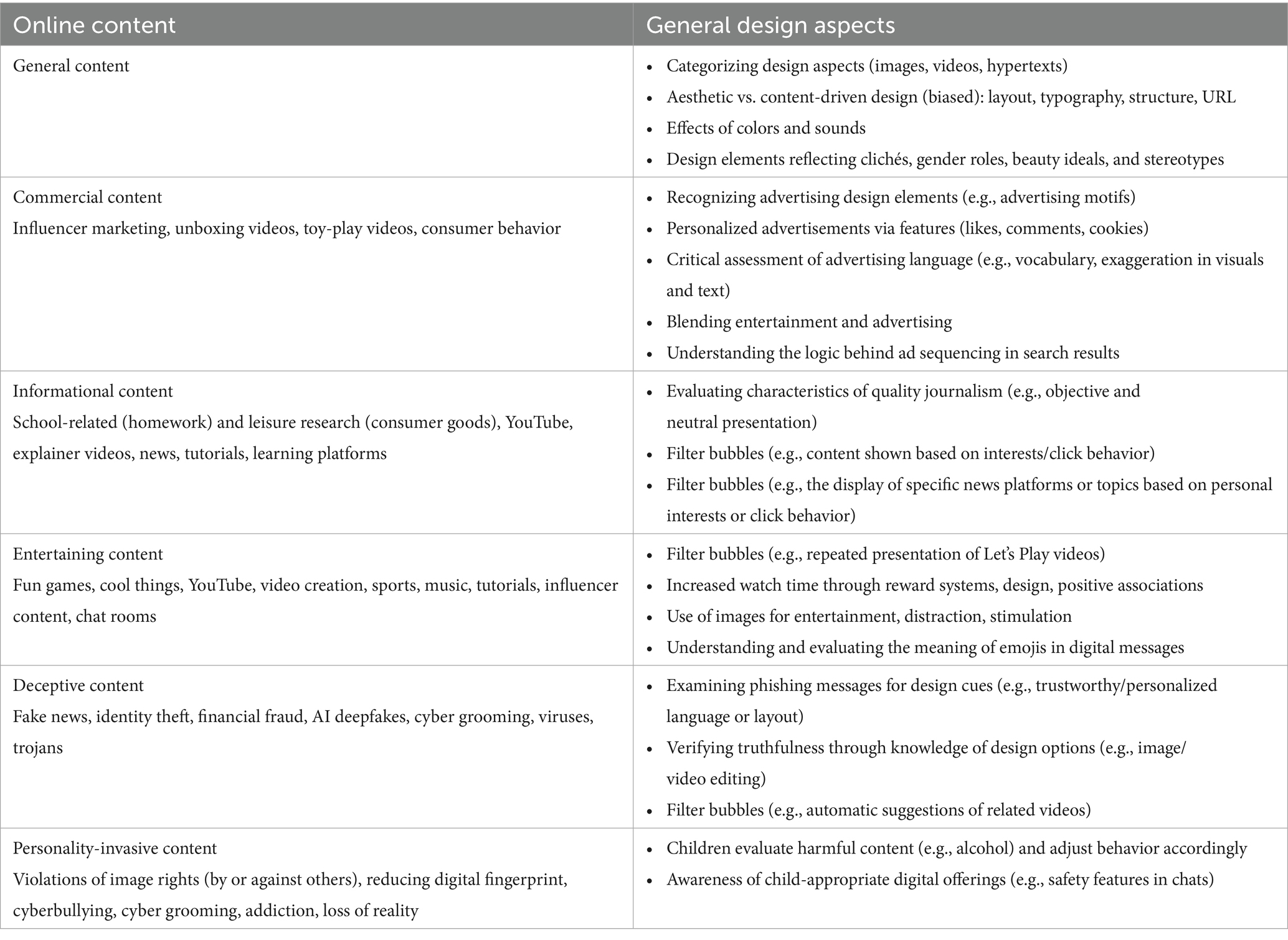

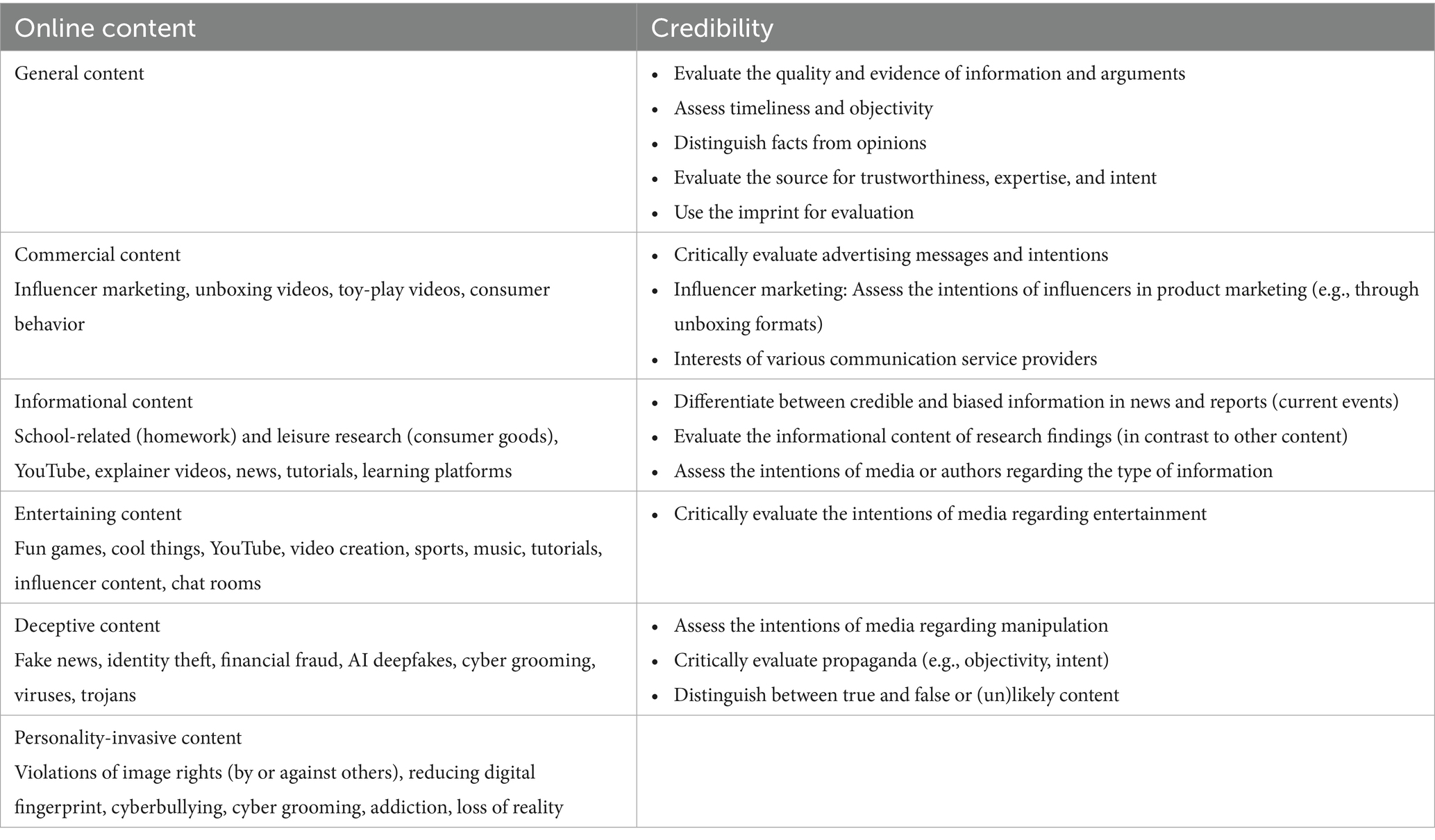

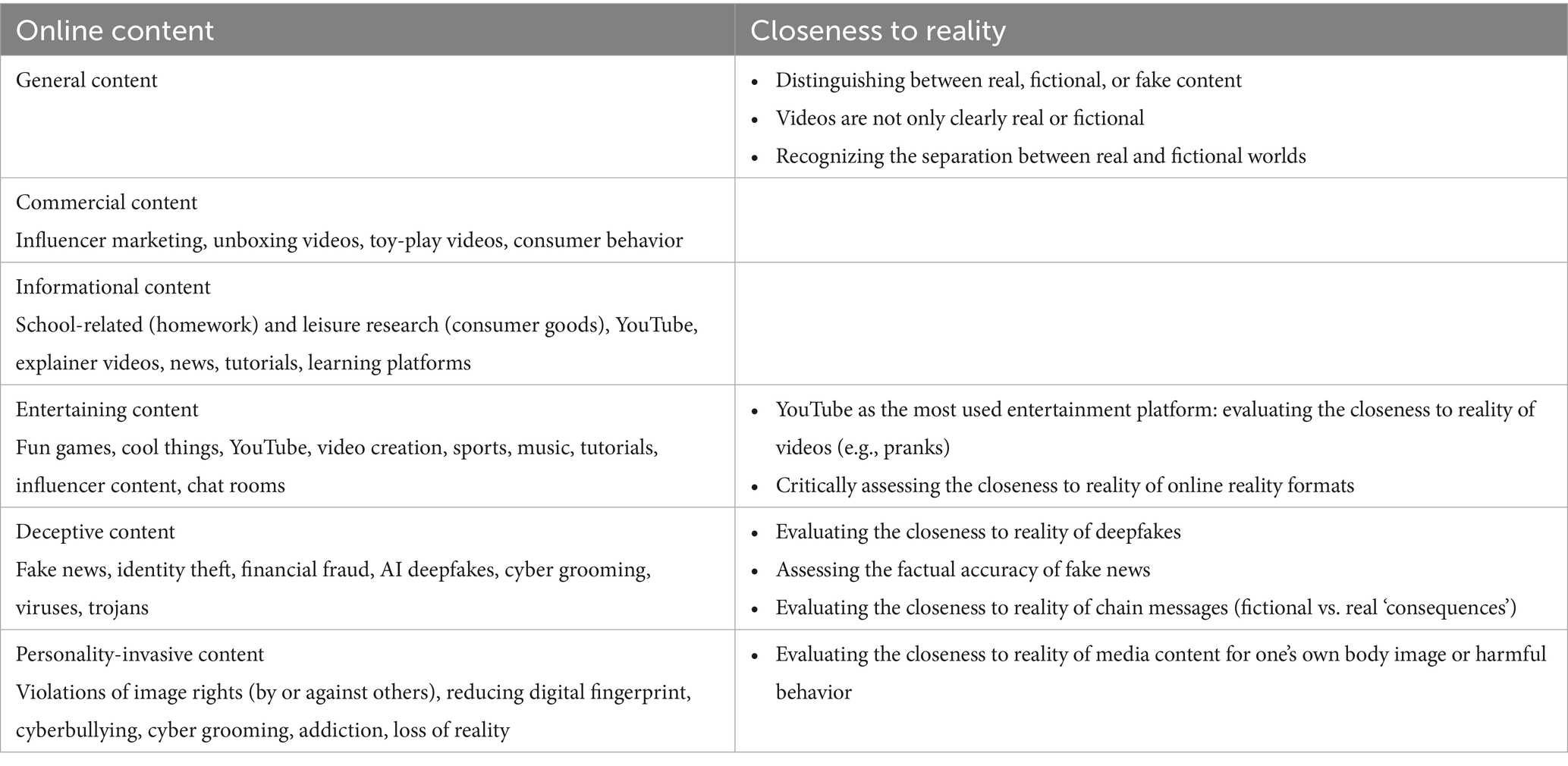

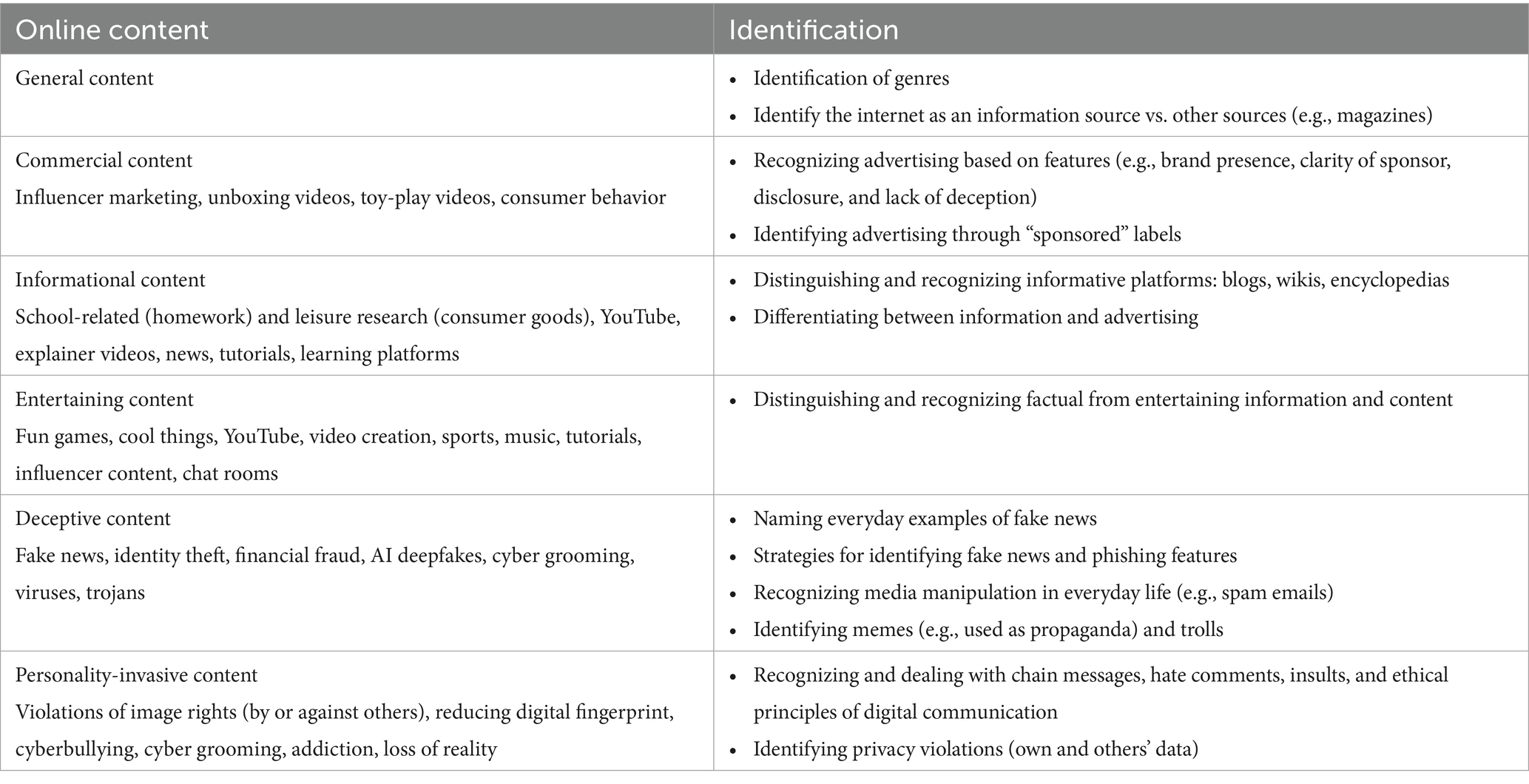

Results: Indicators were derived for four evaluation criteria across various forms of online content relevant to elementary school children. For design aspects, indicators include, for example, understanding the role of likes and comments in personalized content or interpreting emojis in chat messages. Indicators for credibility involve distinguishing facts from opinions and evaluating the intent behind influencer content or chain messages. Regarding closeness to reality, children are expected to differentiate real from fictional content, such as assessing YouTube pranks. Finally, identification relates to recognizing digital phenomena, for instance, distinguishing between educational videos and hidden advertising.

Discussion: This study highlights the development of indicators for assessing elementary school children’s evaluation of online content. These indicators enable the construction of standardized items that capture both knowledge and procedural skills using age-appropriate, multimodal online materials.

1 Introduction

Elementary school children engage with various online offerings on a regular basis. Among the most popular social media platforms for this age group are YouTube, TikTok, and WhatsApp, which are primarily accessed for entertainment purposes (Feierabend et al., 2023; Ofcom, 2022). In recent years, empirical research has increasingly examined the use of social media platforms and their associated risks for children and adolescents from educational, psychological, and pediatric perspectives. Depending on individual factors, social media use may negatively affect body image (Modrzejewska et al., 2022) or mental health, potentially contributing to conditions such as depression and anxiety disorders (Mojtabai, 2024). Moreover, 21.1% of children and adolescents aged 10–17 exhibit risky patterns of social media use, with 4.0% of 10- to 13-year-olds meeting the criteria for pathological use (according to ICD-11 criteria; Wiedemann et al., 2025). Additional online risks include the rise of cyberbullying (Beitzinger and Leest, 2024), targeted advertising based on children’s personal data (Trevino and Mortin, 2019), and exposure to deceptive content such as fake news, deepfakes, or phishing messages (Dale, 2019).

Overall, the findings presented above should be interpreted considering individual factors such as gender, self-esteem, and social support, and should not be understood as promoting fear-based or overly protective pedagogical approaches. Rather, children and adolescents need to develop digital competencies and strategies to navigate potential risks and to leverage the potential of digital environments through structured learning opportunities and interventions. Among these digital competencies, one aspect is the ability of children to critically evaluate online content from an early age (Weisberg et al., 2023; Livingstone, 2014). Strategies for evaluating online content aim, among other things, to help children distinguish between true and false information (Artmann et al., 2023), verify sources or claims across different websites (Paul et al., 2019), and identify manipulated images and videos. To assess and foster such abilities, the underlying construct must first be clearly defined for the elementary school context. The conceptualization of online content evaluation for this target group serves as a theoretical foundation (Jocham and Pohlmann-Rother, 2025, in review; see section 2).

In addition, validated and standardized instruments are required to assess interventions and learning opportunities that aim to develop children’s ability to critically assess online content. Such tools are currently lacking for this age group and are crucial both for empirical research and for educators aiming to foster and assess children’s competencies. To measure children’s abilities, the operationalization must include elementary school-specific requirements and indicators. Purington Drake et al. (2023) emphasize the importance of such specifications, arguing that elementary education requires concrete contexts and situations in which children’s competencies can be assessed. Without this contextualization, items designed to measure complex competencies remain too abstract and difficult for the target group to comprehend. This study aims to address a notable gap in existing literature by refining the criteria used to evaluate online content in elementary education. While previous academic discussions have offered valuable insights, the criteria have often been described in broad and abstract terms and remain only partially developed with respect to the specific cognitive and developmental needs of this age group. Accordingly, the study derives indicators for the construct of online content evaluation that can serve as a basis for item development in future empirical research.

2 Elementary school children evaluate online content

Given that children engage with online content with varying intentions, the ability to evaluate content in a goal- and task-oriented manner is essential. Children must be able to determine which evaluation criteria are appropriate for their specific task, such as school-related research, or for their particular purpose, such as watching videos for entertainment (Weisberg et al., 2023). In addition, multimodal online content, including visual and auditory elements, plays a significant role. This is particularly relevant, as elementary school children primarily engage with video- and image-based content, with YouTube as the most popular platform. So far, existing studies on online content evaluation have focused on the application of evaluation criteria to (multiple) online texts, such as comparing different websites to answer a question. This emphasis has primarily highlighted reading comprehension skills (e.g., Paul et al., 2018), while overlooking (multimodal) online content, which children predominantly engage with.

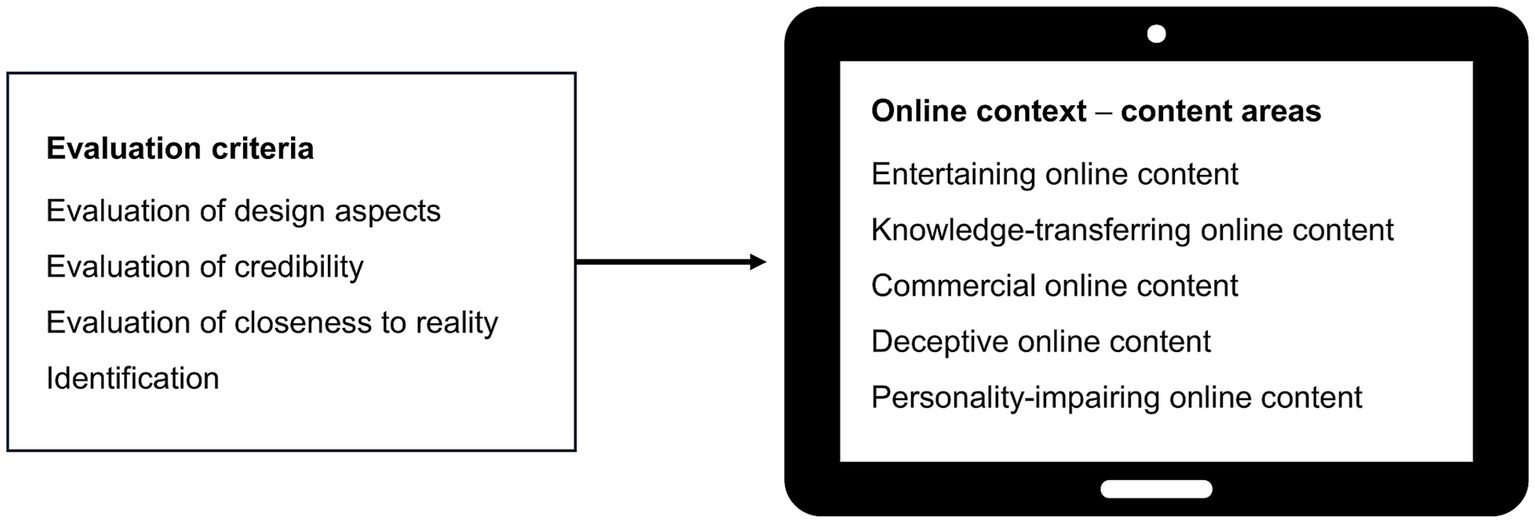

Based on established theoretical and normative models as well as empirical research findings, a conceptual framework for evaluating online content for elementary school children has been developed (Jocham and Pohlmann-Rother, 2025, in review). At its core, the framework comprises four evaluation criteria, which are applied across five distinct content areas (see Figure 1). These criteria include the evaluation of design aspects, credibility, closeness to reality, and the identification of characteristics relevant to evaluation. The criteria can be applied to entertaining, knowledge-transferring, commercial, deceptive, and personality-impairing online content (content areas). The following section provides definitions of the four evaluation criteria and five content areas, which are not exclusively intended for elementary school children (ages 6–10 in Germany). The presented research findings serve to further specify these criteria, highlighting that the context of application may vary (e.g., fake news) or that criteria may be emphasized (e.g., the evaluation of expertise).

Figure 1. Conceptualization of online content evaluation (Jocham and Pohlmann-Rother, 2025, in review).

2.1 Evaluation criteria

2.1.1 General design aspects

The way information is presented online can be complex, increasing the challenge for elementary school children to evaluate content effectively (Macedo-Rouet et al., 2013). Critical evaluation of online content requires knowledge of and awareness about digital environments, which are characterized by a change that occurs in the “language, interaction, and behavior [as part of] different social and cultural contexts” (Yeh and Swinehart, 2020, p. 1736) formed online. To account for the specific nature of digital spaces, this criterion focuses on the evaluation of various design aspects, including their potentially manipulative intent. This includes general design aspects of the internet, such as connectivity and search engine optimization (Kammerer and Gerjets, 2012), as well as features of digital platforms, including multimodality and algorithmic structuring (Cotter and Reisdorf, 2020). Despite theoretical overlaps with credibility assessments of context (Forzani, 2020) and with platform- and content-related prior knowledge (Brand-Gruwel et al., 2017; Vanwynsberghe et al., 2012), the unique characteristics of online content and platforms are better conceptualized within the criterion of design aspects rather than being subsumed under credibility (Sundar, 2008). This is particularly relevant for deriving concrete criteria and indicators that can be used to assess and foster competencies in the elementary school age group.

At the core of this criterion is the awareness children need to develop regarding the fundamental characteristics of digital content—and how these characteristics can add complexity to the application of other evaluation criteria. Technical features of digital media serve as cues that trigger various heuristics, which in turn influence the users’ evaluations (Sundar, 2008). For example, the closeness to reality heuristic predicts greater trust in audiovisual modalities, as they are perceived to more closely resemble the “real” world (see also Bezemer and Jewitt, 2010). Multimodal forms of presentation—such as videos, images, and hypertext—are defining features of digital content. Studies involving children point to contradictory mechanisms: some findings suggest that videos exert a stronger influence on attitude change, while others indicate that children assign greater authority to textual content (Salmerón et al., 2020). This highlights the need for items that assess evaluative competencies based on multimodal online content. Relevant indicators can be partially derived from the following findings. However, gaps become apparent in goal- and task-specific evaluation contexts that also consider children’s patterns of interaction with online content.

Initially, knowledge about how online information is presented can play a significant role in the evaluation process. Unlike print media, digital environments require awareness of elements such as hyperlinks (e.g., PageRank), layout (e.g., graphics or navigation functionality), typography (e.g., vocabulary use) (Keshavarz, 2021), structure (e.g., the arrangement and formatting of visual or textual elements), and URLs (Forzani, 2020). Empirical findings highlight the strong influence of information presentation. For instance, students tend to focus more on the aesthetic design of social media content than on its actual message or source (Shabani and Keshavarz, 2022). Furthermore, third- to fifth-grade students rate websites as more trustworthy when they include more dynamic graphics (Eastin et al., 2006). A study involving fifth- to eighth-grade students also shows that learners primarily rely on superficial cues—such as typographic emphasis or keywords—when assessing the relevance of online content (Rouet et al., 2011).

Further indicators relate to knowledge about the design of specific platforms, which also becomes relevant in the evaluation process (e.g., the peer-editing structure of Wikipedia; Miller and Bartlett, 2012). Many digital platforms are intentionally designed to be more appealing and engaging to children than other leisure activities, often through reward systems and persuasive design elements (Radesky, 2021). However, these individually positive experiences are frequently leveraged to serve other, often commercial, purposes. As a result, it is essential that users learn to critically evaluate how (social media) platforms influence their own perceptions. This can be supported by introducing children to overarching persuasive strategies that are common across many (social media) platforms (Tandoc et al., 2021). This includes, for instance, developing an understanding of their digital footprint, which enables them to critically evaluate personalized content and phenomena such as filter bubbles (Cotter and Reisdorf, 2020; Weisberg et al., 2023). Moreover, it is essential to understand specific design aspects, such as characteristics of quality journalism (Association of College and Research Libraries, 2015) and persuasive techniques like image and video editing (Syam and Nurrahmi, 2020). For example, elementary school children need specific knowledge to identify and evaluate online advertising and influencer marketing. This includes understanding advertising language, the blending of entertainment and promotional content, and strategies for building relationships with consumers (Rozendaal et al., 2011; Evans et al., 2019). Although elementary school children use social media frequently, they remain underrepresented in research on this topic. One possible reason is that social media platforms are not designed for this age group.

2.1.2 Credibility

The concept of credibility is defined and operationalized in various ways across theoretical and empirical studies. Credibility judgments may be made at different levels or in combination. First, credibility can be assessed with regard to specific content, such as search results or social media posts, which is interpreted in light of one’s prior knowledge and beliefs (Anttonen et al., 2023). In the context of a (research) goal framed as a problem-solving process (Kim et al., 2019), judgments about the relevance and usefulness of content are also essential (Keshavarz, 2021; Eickelmann et al., 2019). Furthermore, online content can be evaluated based on its argumentation (Forzani, 2020), using criteria such as truthfulness (Hilligoss and Rieh, 2008), accuracy (Tate, 2018), objectivity (Keshavarz, 2021), and clarity (Eickelmann et al., 2019). To assess the quality and evidential basis of online content (Forzani, 2020), the strategy of corroboration—comparing information across multiple sources—is particularly effective (Eickelmann et al., 2019). These and similar cognitively demanding strategies are more complex than evaluating the source (e.g., author, expert, or publisher) and are used less frequently by adolescents (Kiili et al., 2008). Closely related to content evaluation is the assessment of sources, which serves to verify the reliability and objectivity of information (Kim, 2019). Evaluation strategies used by skilled readers (Flanagin and Metzger, 2010) include assessing trustworthiness, for example the objectivity or impartiality of content, and expertise, including author qualifications or document type (Braasch et al., 2009; Wineburg, 1991; Fogg and Tseng, 1999; McGrew and Byrne, 2021). The concept of expertise refers to the author’s or user profile’s professional experience or qualifications in a specific domain (Forzani, 2020). 
This assessment is particularly challenging on digital platforms, where anyone can potentially assume the role of an expert (Chinn et al., 2020). Additionally, analyzing the source’s intent is crucial (Polanco-Levicán and Salvo-Garrido, 2022), raising questions about personal interests and political or commercial motives. Strategies for verifying source information are often grouped under the term sourcing (Wineburg, 1991), which involves consulting multiple sources to validate information (Duncan et al., 2018).

Empirical studies consistently suggest that students, university learners, and adults struggle to evaluate online content effectively. This poses a risk of being influenced by misinformation or inaccurate representations (Stanford History Education Group, 2016). As a result, the importance of targeted educational interventions is increasingly emphasized. On the one hand, elementary and high school teachers report that students tend to overestimate their ability to handle online information and often reuse inaccurate content (Miller and Bartlett, 2012). On the other hand, only a portion of students report that they learn how to handle internet-related tasks in school, as shown by recent ICILS findings (Eickelmann et al., 2024). When examining students’ source evaluation skills, it becomes evident that seventh graders often cannot determine whether an author is an expert (Coiro et al., 2015). Similarly, Kiili et al. (2018) found that half of sixth graders did not question the credibility of a commercial source. Overall, secondary school students rarely attend to source characteristics, even though they are generally capable of doing so (Paul et al., 2017). At the same time, Kuiper et al. (2008) showed that students, regardless of academic performance, possess knowledge about search and evaluation strategies, but do not apply it consistently. The reasons for this are multifaceted and influenced by various factors, including social aspects.

Given findings from studies with adults and adolescents, it is unsurprising that elementary school children struggle to distinguish between factual reporting and opinion (Kerslake and Hannam, 2022). However, this distinction is crucial for differentiating between largely neutral (journalistic) and biased information. To do so, children must be familiar with characteristics of credible and ethical journalism (Weisberg et al., 2023). Research also shows that elementary students can identify source information and assess an author’s intent and expertise in age-appropriate reading tasks. However, they struggle to transfer these skills to multiple documents (Paul et al., 2018), which is particularly relevant in digital contexts due to the interconnected nature of information. Macedo-Rouet et al. (2013) similarly found that fourth and fifth graders could identify information sources, but had difficulty evaluating the knowledge of those sources using textual cues. Following an intervention, students were better able to evaluate source credibility (Macedo-Rouet et al., 2013). Other studies also suggest that sourcing skills can be improved through targeted prompts, such as citation guidance (Paul et al., 2019), and through training, which in turn supports the development of critical thinking (e.g., Pérez et al., 2018).

A substantial portion of the literature on the evaluation of online content focuses on criteria for determining credibility. Although recent findings increasingly address the digital context, much of the research still centers on information-seeking scenarios, which represent only a fraction of elementary school children’s actual media use. Contexts in which children pursue personal goals have received significantly less attention. Moreover, the emphasis has largely been on the evaluation of (multiple) online documents, rather than multimodal online content that includes not only text but also audio, images, and video. Existing insights can be used to derive indicators for new contexts, such as multimodal online content.

2.1.3 Closeness to reality

This criterion has gained importance as a growing number of individuals receive and disseminate content through interconnected platforms and new forms of communication. On social media platforms in particular, the boundaries between consumers and producers are increasingly blurred (Vanwynsberghe, 2014), in contrast to traditional mass media, which are typically produced by trained professionals within a limited number of media organizations. In digital environments, content is shaped by individual motivations, experiences, and values, resulting in diverse representations of reality (Pangrazio and Selwyn, 2018). This diversity, along with rapid technological advancements, makes it increasingly difficult to distinguish between real, fictional, or manipulated content (Cho et al., 2022). For example, technologies such as deepfakes enable highly realistic editing of audio, images, and video. Since elementary school children primarily utilize videos and images on their preferred platforms, these editing capabilities make it harder for them to detect manipulation or fakes and to assess the authenticity of content (Chesney and Citron, 2019). The complex knowledge required for this is particularly challenging for elementary school children.

Empirical findings in early childhood and elementary education primarily focus on children’s evaluations of the closeness to reality of fictional or real characters, events, people, or other aspects in television, stories, or fairy tales (e.g., Li et al., 2015). Children as young as 3 years old are capable of distinguishing between reality and fantasy at a basic level (Woolley and Ghossainy, 2013). Numerous studies show that children develop and refine these abilities with age, depending on the context. For example, their ability to reliably identify real people or events increases, while they are less likely to classify unrealistic or fictional elements as real (e.g., Bunce and Harris, 2013). Nevertheless, elementary school children may still believe in magical figures or events and may mistakenly judge unfamiliar content as impossible (Mares and Bonus, 2019). Preschool children (ages 4–5) tend to justify their distinctions between real and fictional characters based on authenticity, while children aged 5–7 also consider whether natural laws are violated (e.g., Snow White being revived by a kiss) (Bunce and Harris, 2013). As they grow older, elementary students increasingly draw on their own experiences and other sources of information to evaluate closeness to reality (Woolley and Ghossainy, 2013). This development is rooted in cognitive growth: although elementary students can sometimes make similar judgments about the closeness to reality as adults, doing so requires significantly more cognitive effort (Li et al., 2015). The significance of evaluating the perceived closeness to reality of online content becomes evident when considering its impact on the effectiveness of media literacy training programs (Cho et al., 2019; Cho et al., 2020).

Given the popularity and frequency of YouTube use (Pew Research Center, 2020), recent studies have also examined how children assess the closeness to reality of YouTube content (e.g., Hassinger-Das and Dore, 2023; Hassinger-Das et al., 2020; Martínez and Olsson, 2019). These studies highlight the challenge of multiplicity: videos cannot always be clearly categorized as real or fictional, and various aspects must be evaluated and weighed (Cho et al., 2022). One illustrative example is a video featuring a real YouTuber caring for fictional (digital) pets in an app. Initial findings with children aged 3–8 show that individuals in smartphone videos are perceived as more real than those on television. The perceived closeness to reality of individuals on YouTube is lower than in smartphone videos but higher than on television, suggesting that children may find it more difficult to evaluate the closeness to reality of YouTube content, possibly due to the platform’s diversity and complexity. Children of all ages were able to distinguish between formats. They justified their evaluations of YouTube personalities using “medium-objective” reasoning, whereas their judgments of the other two formats were primarily based on characteristics of the person (Hassinger-Das et al., 2020). These tendencies increase with age, suggesting that older children possess more differentiated knowledge about the YouTube platform or increasingly use this knowledge to evaluate the closeness to reality (Hassinger-Das and Dore, 2023). Finally, children’s preferences for certain videos could be predicted by how real they perceived the videos to be (Hassinger-Das et al., 2020). Potential indicators may therefore include findings from previous research on YouTube formats, while expanding the scope to other relevant platforms could be beneficial in covering a broader range of topics and content areas.

2.1.4 Identification

The previously described criteria focus on online-specific aspects that can support children in evaluating online content. However, a fundamental prerequisite is that users can identify key features relevant to evaluation (e.g., content genre) in the first place. Only then can they assess whether these features are relevant to their specific goals and whether they support or undermine the quality of the content (Lucassen and Schraagen, 2011). The ability to identify such features is also addressed in the revised version of Bloom’s taxonomy, where identifying is part of the learning objective recognizing and serves as a necessary condition for more complex problem-solving processes such as evaluation itself (Mayer, 2002). The taxonomy should not be interpreted as a rigid hierarchy of lower- and higher-order cognitive tasks. For example, identifying hidden features or phenomena (e.g., phishing) may be more challenging for children than evaluating familiar and obvious content (Dubs, 2009). Equally important is the consideration of how knowledge across different content areas can support the critical evaluation of online content. This assumption is supported by empirical studies showing that different evaluation competencies are required depending on the type of online content, for instance when comparing neutral and commercial content (Kiili et al., 2018). To better understand how the identification of features contributes to the evaluation itself, three research contexts are considered, from which initial indicators can be derived.

The first area concerns the identification of genre, for example blogs, wikis, or video formats, which can significantly improve online research (Leeder, 2016). This is explained by the activation of implicit knowledge, which enables predictions about complexity and other genre-specific features. As a result, cognitive resources are freed up for further evaluation processes (Santini et al., 2011). However, studies show that students often lack comprehensive knowledge of online genres that would allow for such cognitive relief (e.g., Leeder, 2016; Sidler, 2002). Moreover, research suggests that the genre of online texts can imply specific intentions and trigger expectations related to credibility evaluation (Kiili et al., 2023).

The second area involves the identification of specific phenomena in digital environments. When children suspect or identify a deepfake in a video, this influences their evaluation of other aspects, such as the perceived closeness to reality or the credibility of the content. This highlights that recognizing complex online phenomena, for instance phishing, cyber grooming, or clickbait, requires abstraction skills that are cognitively demanding. On social media, the boundaries between entertainment, information, and marketing strategies are increasingly blurred—something that is particularly difficult for children to detect (Lupiánez-Villaneuva et al., 2016; Scholl et al., 2007). However, studies on elementary school children’s developmental stages show that they are potentially capable of recognizing advertising and reflecting on aspects of commercial privacy. Between the ages of 7 and 11, information processing improves significantly (analytical stage), enabling more complex knowledge about advertising and more detached reflection (John, 1999). Kerslake and Hannam (2022), for example, found that younger children struggle to recognize covert advertising. Similar assumptions can be made for deceptive content. Qualitative studies indicate that elementary school children already possess knowledge about fake news: they are aware of its existence, can define the term, cite everyday examples, and describe identification strategies (Tamboer et al., 2024; Vartiainen et al., 2023). However, it must be noted that the children surveyed rarely named all key features of fake news (Tamboer et al., 2024) and were less able to articulate deeper evaluation strategies (e.g., quality and consistency of evidence, Vartiainen et al., 2023). Although initial intervention studies on dealing with disinformation in elementary education exist (e.g., Artmann et al., 2023), research is still in its early stages. 
The challenge of sustainably promoting the identification of complex phenomena in elementary school is illustrated by an intervention study by Lastdrager et al. (2017), which found no lasting improvement in the identification of phishing messages among children aged 9–12.

The third area relates to credibility evaluation, which can involve many criteria, for instance expertise, recency, or trustworthiness (see 2.1.2). Identifying credible content or sources can serve as an initial anchor for evaluation (Lucassen and Schraagen, 2011). When clear indicators are available—such as identifying the most credible source in a search result—working memory capacity is freed up for deeper information processing (Keßel, 2017).

2.2 Online context

To assess and promote the presented evaluation criteria beyond an abstract context, specific areas of application are required. The importance of online context is also emphasized by Kim (2019), who advocates for the development of context-based (credibility) models. As a result, the conceptualization integrates five content areas reflecting the online contexts of elementary school children. Prior to outlining the content areas, attention is directed to the social media platforms where such online content is commonly encountered and engaged with by elementary school children.

2.2.1 Ethical considerations for social media in elementary schools

Social media platforms are not designed for elementary school children and explicitly exclude them through their terms of use. Nevertheless, evidence shows that children increasingly engage with these platforms. This engagement is closely linked to greater access to personal devices, which enable independent use. By the end of elementary school, both the frequency of use and the proportion of children owning a mobile phone rise significantly, from 44% at age 9 to 91% at age 11 (Ofcom, 2022). This creates a dilemma: children are accessing online content that is not appropriate for their age. For instance, 11% of children aged 6–11 report having encountered content that made them feel uncomfortable, most of which was pornographic or erotic (Feierabend et al., 2023; von Soest, 2023). Research increasingly points to the negative impact of social media on children’s mental health. A large-scale longitudinal study (n = 17,409) identifies sensitive developmental windows (girls: 11–13 and 19 years; boys: 14–15 and 19 years), during which increased social media use predicts lower life satisfaction, and vice versa (Orben et al., 2022). This may explain survey findings among German adolescents aged 16–17, in which 45% supported a minimum age of 16 years for creating a personal social media account, as applied in Australia (Wedel et al., 2025). Against this backdrop, unrestricted access to social media platforms across Europe appears problematic. Educators and institutions must consider how to respond to this reality. If children in a classroom use different platforms, this should be acknowledged as part of their lived experience and integrated into educational efforts. To do so, teachers need to be aware of the learning prerequisites within their class and of the relevant platforms to foster critical evaluation competencies.

2.2.2 Online content areas in children’s media use

On social media platforms, children encounter a wide range of online content, each potentially requiring different evaluation criteria. The application of these criteria depends on the intent and purpose behind the content’s use. To derive indicators for the use of evaluation criteria that are specifically relevant for elementary school settings, the following section briefly introduces selected content areas. These areas offer a foundation for identifying concrete situations in which the promotion and assessment of evaluative competencies can be meaningfully differentiated.

2.2.2.1 Entertaining online content

Numerous qualitative and quantitative studies confirm that elementary school children primarily use the internet for school-related and entertainment purposes (e.g., Trevino and Mortin, 2019; Feierabend et al., 2023). Children describe their online activities with phrases such as “access [to] fun games and cool things” (female, age 8 years), “watch YouTube” (male, age 7 years), or “make videos” (female, age 7 years) (Donelle et al., 2021, p. 4). These activities are reflected in the popularity of platforms such as YouTube, WhatsApp, and TikTok, with short-form video content gaining increasing traction. Content preferences vary by age and gender: for example, boys tend to use more gaming- or sports-related content, while girls more often watch music videos, tutorials, or influencer content (Ofcom, 2022).

2.2.2.2 Knowledge-transferring online content

Beyond entertainment, the internet is the primary medium for searching for information. In Germany, 71% of elementary school children actively search online for information related to schoolwork, 51% research consumer products, and 45% use online searches to solve everyday problems (Feierabend et al., 2023; von Soest, 2023). In addition to search engines used for student projects, there are other digital offerings aimed at informing or educating users, such as educational influencers (see Carpenter et al., 2023). These include news articles, tutorials, explainer videos, learning apps, or online courses. Of particular importance is the fact that children use popular platforms like YouTube to acquire knowledge through multisensory content (Donelle et al., 2021), which they perceive as having high educational value (Hassinger-Das et al., 2020). This helps explain YouTube’s longstanding dominance as both an entertainment and search platform.

2.2.2.3 Commercial and deceptive online content

During both academic and recreational online activities, children inevitably encounter commercial and deceptive content. Advertising includes influencer marketing, which many brands use, sometimes exclusively, to promote their products (De Veirman et al., 2017). In the toy industry, for instance, entertaining unboxing and toy-play videos are produced specifically to appeal to children (Radesky et al., 2020). Deceptive practices may include “lies, omission, evasion, equivocation and generating false conclusions with objectively true information” (Levine, 2014, p. 381). In digital environments, deception often manifests in the form of fake news, identity theft, phishing, financial fraud (Dale, 2019), such as Ponzi and pyramid schemes or scam cryptocurrencies (Chiluwa, 2019), AI-generated deepfakes (Weisberg et al., 2023), or cyber grooming (Singh and Gitte, 2014). However, elementary school children may not encounter all these phenomena.

2.2.2.4 Personality-impairing online content

In addition to commercial and deceptive content, the digital space presents numerous ways in which individuals’ personal development can be negatively affected. Both governmental and private actors, including companies and individuals, may infringe on informational self-determination, for example, by collecting and disclosing personal data, violate image rights, or otherwise compromise personal privacy (Bumke and Voßkuhle, 2020). Other threats to the “development and preservation of personality” (translation by the authors; Bumke and Voßkuhle, 2020, p. 88) include issues related to cyber safety, cyberbullying, and hate speech. Cyber safety refers to children’s ability to navigate the internet safely and minimize risks by managing their digital footprint and responding to challenging content (Roddel, 2006). This includes knowledge about handling personal data in the face of risks such as phishing, cyber grooming, and targeted advertising, as well as knowledge about others’ data, including copyright and data protection, with overlaps to the construct of data literacy. “The ability to collect, manage, evaluate, and apply data, in a critical manner” (Ridsdale et al., 2015, p. 2) is closely linked to children’s capacity to mitigate data-driven threats in cyber safety contexts (e.g., dataveillance; Lupton and Williamson, 2017) while also respecting the rights of others. Cyberbullying and hate speech represent a second major concern, where elementary school children may be both victims and offenders. Prevalence rates vary depending on the study and methodology. A recent WHO/Europe study (Cosma et al., 2024) involving 279,000 participants found that one in six children aged 11–15 had experienced cyberbullying. For elementary school children, prevalence rates range between 13% and 31% (Muller et al., 2017; Tao et al., 2022).

In summary, elementary school children encounter a wide variety of online content that presents both challenges and opportunities. To navigate these effectively, children must be familiar with and able to apply evaluation criteria across different online contexts. However, existing research often focuses on older age groups, is formulated too broadly, or lacks specificity when it comes to the needs of younger children. Therefore, the aim of this study is to specify evaluation criteria for online content that are appropriate for elementary school children. This includes linking concrete types of online content with corresponding, age-appropriate evaluation criteria.

3 Methodology

To address the research gap outlined above, this study aims to specify evaluation criteria suitable for elementary school children based on prior research. As part of this process, all German curricula were analyzed, and interviews with media experts were conducted to identify additional indicators. Accordingly, the present study represents a qualitative preliminary study (Mayring, 2001) within the context of an item development process.

3.1 Data collection

German Curricula: Each of Germany’s 16 federal states has its own curriculum and guidelines outlining subject-specific and cross-disciplinary competency goals. The integration of digital competencies in elementary education is guided by overarching national directives (KMK, 2016), which are based on the European DigComp framework (Ferrari, 2013). Since the DigComp framework is not specifically tailored to elementary education and lacks age-specific requirements, the federal states in Germany define digital competencies within subject profiles or in supplementary media and digital competency plans. These curricula serve as binding reference frameworks and form the foundation for educational practice in elementary schools. Through clearly defined goals and content across subjects and learning domains, they aim to ensure quality and comparability across schools. Competency goals are also assessed in national comparative studies such as PIRLS (German; McElvany et al., 2023) and TIMSS (Mathematics; Schwippert et al., 2024). However, there is currently no standardized assessment of digital competencies at the elementary level. Teachers retain autonomy in implementing the curriculum. They determine the pedagogical methods, sequencing of content, and areas of emphasis.

The data basis for this study comprises the subject-specific and cross-disciplinary curricula of each federal state (curricula per state: M = 12.5; SD = 1.7). While implementation varies, the curricula share a common structure. All are competency-based and aim to foster methodological, social, and self-competence. They include defined thematic areas and learning objectives, often supplemented by practical implementation suggestions and sample tasks.

Media Experts: To identify additional indicators, media experts in the education sector were recruited for interviews. Selection criteria included: (1) active engagement in digital media, (2) development of online content and offerings for elementary school children, and (3) design and implementation of programs in digital media for this age group. Based on these criteria, major German child-focused search engines, public broadcasters, and well-known online formats promoting digital literacy (e.g., identifying fake news) were contacted. This resulted in a random sample of seven media experts (n = 6 female). Three work in editorial teams of child search engines, two develop support programs for public broadcasting channels, and two serve as media advisors to schools. The experts have an average age of 45 years (SD = 8) and predominantly hold degrees or additional qualifications in media studies, media education, information science, or special education.

Prior to the interviews, participants were informed about the study’s objectives and the voluntary nature of participation. They signed data protection and consent forms. Interviews were conducted via Zoom and recorded. On average, interviews lasted 63.7 min (SD = 13.3).

3.2 Expert interviews

The interviews were conducted using an interview guide divided into three thematic sections. As the primary aim was to generate indicators for item development (Reinders, 2022), a semi-structured interview format was chosen. This ensured that all participants received the same core questions while allowing flexibility for follow-up inquiries tailored to individual professional contexts and responses (Merriam and Tisdell, 2015). The first section focused on initial questions (Savin-Baden and Major, 2023) designed to gather background information about the interviewees, such as their educational qualifications and professional roles. The second section aimed to collect in-depth data relevant to the research question. The initial focus was on general media and digital competencies that children need to navigate the internet effectively. This was followed by questions about what experts consider when designing and producing multimodal online content for elementary school children. Subsequently, the discussion was narrowed to evaluation competencies in digital environments. Specific and often challenging types of online content and phenomena commonly encountered by elementary school children were addressed, particularly those requiring critical assessment. The final section of the interview guide was intended to conclude the interview (Reinders, 2022). Throughout the interview, follow-up questions were used to elicit concrete examples from the experts’ professional practice, which could inform the development of relevant use cases for elementary education. This approach ensured that the interviewer could fully understand the content by posing clarifying questions where necessary (Merriam and Tisdell, 2015).

All interviews were recorded, anonymized, and transcribed. Verbal responses were transcribed verbatim, without corrections for grammar, dialect, or colloquial language (literal transcription). Paralinguistic and nonverbal elements were excluded, as they were not relevant to the research focus (Misoch, 2015). The analysis primarily focused on responses related to evaluation competencies and the online contexts in which these competencies are required.

3.3 Qualitative content analysis

The interviews and curricula were analyzed using qualitative content analysis (Mayring, 2022). Since the aim of the study was to derive indicators for item development, the four evaluation criteria of the proposed framework served as the main categories. These categories also guided the development of subcategories, which represent the specific contexts in which the evaluation criteria are applied. These were defined a priori based on five content areas: advertising, knowledge transfer, entertainment, deception, and intrusions into personal integrity. This contextualization is particularly relevant for elementary school children, as indicators should not be abstract but embedded in specific online contexts (Purington Drake et al., 2023). The rationale for the deductive category development was to concretize the existing conceptual framework through empirical data and to support the operationalization of the construct for the target group. At the same time, the data could be assigned to the categories, because the interview guide was designed accordingly and the curricula describe evaluation competencies as part of digital literacy.

Following the deductive development of the category system, the first interviews and curricula were coded (Cohen et al., 2018). All units that relate to the evaluation criteria or address specific internet phenomena that children might potentially need to evaluate were coded. This and all subsequent coding steps were conducted independently by the authors and two additional raters. A consensus coding process was then used to compare all codings across raters and to discuss discrepancies in detail (Kuckartz and Rädiker, 2022). The aim was to validate the deductively developed category system through empirical data and to refine definitions and coding rules to ensure conceptual coherence. Once discrepancies in the consensus coding process decreased, the entire dataset was coded (Mayring, 2022).

This approach was intended to specify the framework for elementary school children using empirical data. Therefore, both interview and curriculum data were incorporated into the analysis. Moran-Ellis et al. (2006) refer to this as data integration, where both data sources are weighted equally and address the same research question. Triangulating the data would not have been appropriate considering the research question, as the aim was not to weigh or compare the findings from the two approaches against each other, but rather to compile a set of indicators. Triangulation would have been appropriate, for example, if teachers had been asked to what extent they perceive and concretely implement curricular requirements in a specific area. However, this would have required a different interview protocol.

4 Results

The results derived from the deductive category system are presented below in accordance with the four evaluation criteria. For each criterion, insights from both the interview data and curriculum documents are synthesized. This process includes assigning findings to specific content domains or refining those domains where necessary. The empirical data are interpreted in light of the existing theoretical and research literature. Based on this analysis, a series of competency matrices is developed, each grounded in previously established indicators and expanded through the empirical contributions of this study. Since the aim of the study is to identify additional indicators for item development, divergences between the media experts are not analyzed. Any distinct content-related emphases identified in the curricula and interviews are reported.

4.1 Evaluation of general design aspects

Overall, the experts and all curricula emphasized the importance of competencies related to multimedia content, which is particularly engaging for children. Students should be able to interpret various design elements (e.g., images, videos, hypertext) and understand their interrelations (i.e., going beyond purely technical skills). One possible element in both self-produced and pre-existing media content is the effect of colors or sounds (“Structure and impact of advertising: identifying, describing, and comparing advertising strategies and design elements, such as color or shape”; MBK, 2011, p. 21). The goal is for children to understand and apply visual and textual elements as tools of advertising and communication in both analog and digital formats, and to evaluate design elements based on specific criteria, such as advertising language or the motives behind advertising in areas like health or mobility (“Design elements of a commercial versus objective information”; Der Senator für Bildung und Wissenschaft, 2007, p. 34). In the interviews, experts emphasized the importance of child-friendly design in online environments. This includes the use of different colors for distinct sections, simplified language, age-appropriate content labels, and a clear, structured layout of websites using frames, headings, subheadings, and advertising elements (“So it really needs to be right next to that ad element—advertisement or ad—although from our perspective, advertisement would actually be the better wording, something kids would understand more easily”; I3, pos. 230–232).

Criteria concerning the influence of design elements appeared almost exclusively in curriculum documents (“Recognize and evaluate design techniques used in digital media offerings”; SMK, 2017, p. 40) and were largely absent from expert interviews. In general, students are expected to develop the ability to critically describe both their own and others’ media productions and to assess them based on design aspects and intended effects using defined criteria. This also includes the evaluation of techniques and strategies used in media manipulation. Furthermore, students should be able to assess the truthfulness of media products by drawing on familiar design aspects (“Students can create and present different types of media products and, based on their understanding of design possibilities in media products, verify the truthfulness of information”; Der Senator für Bildung und Wissenschaft, 2007, p. 15). Additional examples relate to the evaluation of design elements and persuasive techniques in the context of stereotypes, gender roles, beauty ideals, and clichés. In the context of online searches, children need to understand how to interpret search results (“The other thing is how to deal with the search results. What determines a ranking like this? What comes up at the top, what appears further down”; I1, pos. 330–331).

Further considerations regarding the evaluation of design aspects focused on child-appropriate design, a topic primarily emphasized by the experts. Given that elementary school children often use platforms and search engines designed for adults, knowledge about the design of digital environments is essential. According to the experts, this includes an awareness of how websites are interconnected, and a basic understanding of how the internet is structured and functions (“A lot of kids just think, you know, the first hits on the list—that’s the answer they were looking for. Just figuring out what this whole networking thing on the internet is. Like, how do other sites link to other sites, and then those link to something else again. I mean, even a lot of adults do not really get that.”; I1, pos. 326–330). Navigation design and its level of complexity were also identified as critical factors. Regarding content, experts highlighted the need for age-appropriate topic presentation, factual accuracy, and child protection measures (e.g., exclusion of alcohol advertising). Safety features such as moderated chats and comment sections were seen as helpful in reducing risks like cyberbullying and grooming. Moreover, knowledge about algorithms, data usage, and the role of likes in content personalization is already relevant at the elementary school level (“Knowledge about algorithms. For elementary school kids—I actually think they can understand that. You can definitely break it down, and do it in a meaningful way. (.) Like, show them how an algorithm reacts faster and faster.”; I4, pos. 240–244).

Table 1 presents a synthesis of the study’s findings in light of existing theoretical and empirical research. This synthesis results in a set of indicators that serve to operationalize the evaluation of design aspects. The indicators listed under general content in the table refer to the evaluation of design aspects that apply regardless of the application context. These indicators, along with those specific to the content areas, were added to the table where applicable. Importantly, the evaluation of these design aspects is not confined to a single content area; rather, it can be meaningfully transferred across various content areas. For instance, design aspects used in advertising may be applied to other online content by incorporating specific elements from entertaining formats.

4.2 Evaluation of credibility

Educational standards emphasize the (guided) evaluation of credibility of information and sources based on selected criteria (“Critically evaluate sources of information: distinguish between informational and commercial content”; MBWFK, 2019, p. 34). Specific criteria for evaluating internet sources include the identity of the author(s), references, objectivity, and recency. Experts addressed specific criteria for the evaluation of credibility less frequently. Instead, they primarily emphasized that elementary students should be able to critically assess the presence of an imprint and the quality of sources (“So you also have to raise awareness, like, look (.) Who’s behind this site? Why might they actually be right, or credible enough? I mean, are they even, could they be experts?”; I3, pos. 496–499). This is particularly relevant given that children increasingly use social media platforms (e.g., TikTok, YouTube) to inform themselves about current events.

This evaluation applies not only to online texts but also to the credibility of images. In addition, elementary students are expected to evaluate the informational content of their research findings (“Can extract information from age-appropriate texts/media, understand it, and evaluate it within its respective context”; HMKB, 2011, p. 9) and distinguish between factual and interest-driven information. Several curricula emphasize that elementary students should be able to evaluate whether content is true or false, or (un)likely. Further criteria mentioned include balance, informational value, and supporting evidence. Experts also highlighted the importance of assessing the accuracy of content (“But what they obviously cannot do is judge whether what the person is saying is actually true or not. But yeah, you can at least ask: What do you see? What do you hear? And what do you feel?”; I6, pos. 300–302).

Another frequently mentioned criterion emerging from both interviews and curricula is the evaluation of intent in visual as well as verbal communication. Students should be able to judge the different intentions of media in relation to information, entertainment, and manipulation (“Different intentions of media regarding entertainment, advertising, and information. Understand advertising messages and intentions, become familiar with different perspectives in reporting, manipulation”; TMBJS, 2017, p. 8). This includes recognizing advertising messages and intentions, different perspectives in news reporting, and propaganda (“What really comes into play here is getting them to put themselves in the author’s perspective—whether it’s an ad message, a company doing advertising, a blogger, or an editor (.). What is this message, this text supposed to achieve? And I think that’s definitely a goal: to notice these kinds of questions and, through that, to recognize the author’s intention”; I4, pos. 161–165). It also involves analyzing and evaluating the interests of various communication service providers.

Table 2 synthesizes the study’s results with existing research to offer a comprehensive overview of indicators for evaluating credibility. The criteria described can be applied across different content areas. This applies particularly to the empty fields and those with only limited entries in Table 2, for which no or only minimal results could be assigned. Nevertheless, it remains possible to examine personality-impairing online content in terms of intent or trustworthiness, for example in the context of cyber grooming.

4.3 Evaluation of closeness to reality

Many educational frameworks address the critical evaluation of the closeness to reality of media representations, referring to both actual reality and reality as mediated through images and media (“Recognize that media and virtual constructs and environments cannot be directly transferred into reality”; MBWFK, 2019, p. 40). In line with this, students are expected to distinguish between fiction and reality and to apply criteria for differentiating fictional and non-fictional media formats and content. This objective is further reinforced by the goal of separating the real world from the media world (“Distinguish between reality and fiction when engaging with media representations of history, including computer games and cinematic portrayals”; MBS, 2021, p. 194), which includes distinguishing between realistic and fictional images and understanding the relationship between mediated and actual reality. However, the degree of closeness to reality in online content is not always transparent to children, even though this is crucial, as media images shape perceptions of reality, establish aesthetic standards, and influence individual conceptions. Experts also highlighted the growing challenge of evaluating fakes, deceptions, and tricks in multimedia contexts, particularly regarding their degree of closeness to reality (“Especially photos, pictures, tricks—that’s really hard. What’s a trick, and what’s the difference between an act in a photo and a photo trick? Like, a staged photo, a little scene, or a photo trick where someone is actually deceiving you. (.) And the thing is, the whole idea of appearance versus reality is something that only develops over time (.), and even adults still cannot really tell the difference between appearance and reality.”; I5, pos. 78–83). In this context, elementary school children are increasingly confronted with chain messages, which they are expected to evaluate for their closeness to reality.

To address these challenges, curricula recommend introducing students to manipulation techniques as a means of understanding criteria for evaluating closeness to reality. Suggested activities include analyzing film scenes, creating and evaluating image montages, and altering images through cropping or editing (“Question information, alter images by selecting specific sections or through image editing”; Der Senator für Bildung und Wissenschaft, 2007, p. 32). At the same time, experts pointed to the rapid evolution of manipulative formats such as deepfakes and memes, which further complicate the evaluation of authenticity in digital media (“And then there’s this really thin line between, you know, it’s just a funny meme and when it’s actually propaganda. That’s a really fine line”; I4, pos. 350–351).

As shown in Table 3, not all content areas could be specified to the same extent based on the combined insights from curricula, interview data, and previous research. Nevertheless, the findings can be transferred to content areas that remain incomplete or only vaguely specified. For example, criteria used to evaluate the closeness to reality of deceptive online content (e.g., real vs. fictional deepfakes) can also be applied to commercial online content (e.g., real vs. fictional advertising videos, such as exaggerated claims in promotional messages). The same applies to the evaluation of closeness to reality in news content, which can be assessed for its degree of factual accuracy.

4.4 Identification of relevant characteristics

German educational curricula emphasize the ability to identify relevant features of media as part of evaluative competence. Elementary students are expected to recognize statements in relation to the medium (“Compare information from newspapers, television, and the internet as well as their content”; TMBJS, 2017, p. 5). Furthermore, curricula highlight the importance of identifying advertising and understanding how it works, particularly in contrast to informational content. Students should also develop an awareness of the extent to which media influences their perception of the world (“Students recognize that their worldview is shaped by media”; MBWK M-V, 2020, p. 35). In addition, they are encouraged to uncover media manipulation in their everyday lives. Experts reinforced this perspective by emphasizing that elementary school students need knowledge about internet phenomena to identify and evaluate them. This includes recognizing advertising, memes, deepfakes, and trolls as part of understanding manipulative media practices (“When is something an advertising?”; I5, pos. 128–129 and “Fake news—how do you recognize that and so on. That’s really another content-related point that’s important to us”; I2, pos. 163–164).

Curricula also address behavioral norms for digital interaction and cooperation, including the understanding of ethical principles of digital communication (“Follow basic rules of communication when using digital media under guidance, e.g., SMS, email, chat”; MBWFK, 2019, p. 36). This involves recognizing and responding to chain messages, spam emails, hate comments, and insults as part of responsible online behavior (“Respect personal rights and behave respectfully in social networks”; MBWK M-V, 2020, p. 35). Furthermore, children should be sensitized to the handling of their own data and be able to recognize prompts that violate their own or others’ privacy, such as data entry in messengers, forums, comments, or chats (“Comment sections are still pretty common on websites, but how much data do I have to give up just to leave a comment? And also, is it moderated in any way?”; I3, pos. 204–206).

Finally, the findings from interviews and curriculum analysis are synthesized with prior research, as illustrated in Table 4. For all content areas, concrete specifications were identified, which can in turn be used to supplement other content areas. For example, the identification of genres aimed at knowledge transfer can also be applied to genres intended for entertainment (e.g., reality formats).

5 Discussion

To assess knowledge about evaluation criteria and their application in elementary education, it is essential to select and continuously adapt evaluation criteria and online content that are relevant to children’s everyday lives. The development of appropriate assessment instruments requires the conceptualization and operationalization of sub-competencies of digital competence that are tailored to the target group (Siddiq et al., 2016) and go beyond purely normative considerations. Currently, only a few studies assess the status quo of specific sub-competencies in elementary school children using methods other than self-report (e.g., Godaert et al., 2022; Pedaste et al., 2023; Kong et al., 2019; Lazonder et al., 2020; Aesaert and van Braak, 2014).

Based on a conceptual framework for the evaluation of online content, this study aims to derive indicators of elementary school children’s ability to evaluate online content as a basis for future item development. To this end, interviews with media experts and German curricula were analyzed based on the framework. These findings were then synthesized with existing research to provide an overview of indicators (Tables 1–4). A qualitative design was chosen because methods such as interviews are suitable for operationalization when there is limited prior knowledge for item construction in a given domain (Reinders, 2022). The results are discussed below, followed by educational implications and limitations.

5.1 Indicators for the evaluation of online content

As part of the operationalization process, it was necessary to define the online contexts in which the evaluation criteria should be applied at the elementary school level. Particular attention was given to specifying evaluation criteria for multimodal online content (Hassinger-Das and Dore, 2023), as this is the type of content children most frequently encounter. Wherever possible, the criteria were concretized across five overarching content areas: advertising, entertainment, knowledge transfer, deception, and intrusions into personal integrity. These areas served as umbrella categories from which a wide range of online content types and phenomena were derived. In accordance with youth protection standards, violent or sexualized online content was excluded from the operationalization, even though students may encounter such potentially harmful content during internet use (Livingstone, 2014). These topics are more appropriately addressed through pedagogical and trust-based engagement.

In integrating the findings from interviews, curricula, and the research literature, an uneven distribution of indicators across the evaluation criteria and/or content areas became apparent. One possible reason for this is that the methodological approaches emphasize different focal points. For instance, numerous German curricula address the critical evaluation of advertising, which also includes specific design aspects. Other content areas, however, are either not addressed or receive significantly less attention. The same applies to specific evaluation criteria, which often refer broadly to the assessment of online content and sources or mention criteria such as “age appropriateness, timeliness, scope, credibility” (TMBJS, 2017, p. 5). However, such references are unlikely to provide teachers with detailed guidance on what these criteria entail and how they can be applied in a task- and goal-oriented manner in elementary education. Moreover, curricula are normative documents that define educational goals and competencies in a formal and rather abstract manner. In contrast, the interview transcripts reflect subjective interpretations and expert knowledge provided by media professionals, which emerged in context- and topic-specific ways during the interviews. For example, the perspectives of media experts varied according to their professional domain. Experts working with child-oriented search engines focused more on evaluation skills in the context of online content searches, whereas those involved in developing support programs and online services for children tended to emphasize risk-related aspects such as misinformation, cyber grooming, or cyberbullying. Indicators derived from previous research primarily relate to the evaluation of multiple online texts and the assessment of sources (e.g., author expertise) by elementary school students (Paul et al., 2018). 
Multimodal content on social platforms, which adolescents predominantly use, has received considerably less attention in this regard (Veum et al., 2024).

When synthesizing the collected indicators, empty fields in Tables 1–4 also became apparent. These were intentionally left blank because no results emerged from the individual approaches in these areas. However, these fields can be expanded by transferring indicators from other fields. Indicators that specify an evaluation criterion within one content area (e.g., assessing the intention of online advertising) can be applied to other content areas (e.g., assessing the intention of personality-influencing online content). Nevertheless, the findings provide a more concrete basis for previous theoretical and normative assumptions. National and international frameworks such as DigComp (Vuorikari et al., 2022) do not specifically address the requirements for elementary school children and remain rather general with regard to the individual subdimensions of digital competence. Even media literacy plans tailored to elementary education (e.g., Medienberatung, 2020) largely remain at a general level, for example stating that children should “recognize and evaluate information and its sources as well as underlying strategies and intentions, e.g., in news and advertising” (p. 15). To measure and foster digital competences in a target-group-specific way, further specification of the individual subdimensions is therefore necessary (Siddiq et al., 2016). Previous studies, however, have operationalized and validated digital competencies using a wide range of subdimensions (e.g., Godaert et al., 2022). It is therefore unsurprising that the evaluation of online content is represented in most existing measurement instruments by only a few items. In such cases, it can be assumed that a comprehensive assessment of a subdimension of digital competence is not possible. This study therefore aims to specify various evaluation criteria for the application context of elementary school children to develop multimodal items and validate them empirically.

5.2 Educational implications

In addition to the importance of specifying the construct for operationalization, the findings also offer points of reference for curricular considerations. It is important to note that the actual internet usage of elementary school children does not necessarily align with the competency goals outlined in curricula (e.g., German curricula), or is only partially represented in them (e.g., social media). Against the backdrop of existing national and international frameworks (e.g., DigComp, Vuorikari et al., 2022; ISTE standards, International Society for Technology in Education, 2016), curriculum guidelines should further specify evaluation criteria, as current frameworks often only refer broadly to evaluating content in terms of ‘credibility and reliability’. This also requires that evaluation criteria be explained through specific application contexts that are relevant for acquiring knowledge and skills at this age. This includes, for example, not only content areas that are frequently integrated into curricula, such as advertising, but also the cross-cutting integration of deceptive or entertaining content that should be critically examined across different platforms (e.g., clickbait on YouTube or cyber grooming in platform chats). Particular attention should be paid to the interrelation of content areas and their underlying intentions in the context of social media use (e.g., Fernández-Gómez et al., 2024). For instance, commercial or deceptive content is often embedded within entertaining formats (e.g., unboxing videos), making it particularly difficult for children to identify. To address this, children need to be familiar with the characteristics of different content areas and specific phenomena (e.g., emotionalization in fake news).

Specifying evaluation criteria and their areas of application in the curriculum could potentially support teachers in identifying subject-specific connections and practical applications for their lessons. Evaluation criteria become particularly relevant in the context of (school-based) research tasks (Feierabend et al., 2023), where ideally the specific task determines the selection of appropriate criteria. Furthermore, raising awareness of the application of suitable evaluation criteria in leisure contexts appears to be important. Children should therefore learn to apply evaluation criteria in a task- and goal-dependent manner (Weisberg et al., 2023). To implement this in classroom practice, teachers need to be able to assess students’ current level of competence and have access to appropriate instructional materials. These materials should, for example, cover different types of media (such as blogs or news articles), characteristics of content areas (e.g., what defines deceptive content), and platform-specific features (e.g., differences between social media platforms and online newsrooms). Such efforts require that elementary school teachers can respond to their students’ usage behavior by being familiar with the potential risks and opportunities associated with the platforms and online content they engage with (Berger and Wolling, 2019). While a few primarily digital teaching resources are now available for elementary education in Germany (e.g., Fake Finder by SWR) and internationally (e.g., Be Internet Awesome by Google), these resources have rarely been empirically validated. Furthermore, it is essential that elementary school teachers have opportunities to develop their digital competencies in this and related sub-dimensions, for instance through MOOCs (e.g., European Schoolnet Academy). 
To ensure a consistent knowledge base, mandatory integration of digital competencies into teacher education programs could be one possible approach (OECD, 2023).

5.3 Limitations and outlook

The present study also has methodological limitations. The results of the qualitative content analysis of the German curricula and interviews were synthesized with the existing body of research. However, it is possible that not all previous findings were incorporated, as this was not a systematic literature review. Moreover, numerous normative requirements exist, suggesting that additional relevant literature may not have been included in the review. A research bias in certain evaluation criteria may also be assumed, as not all criteria have been equally investigated with elementary school children across different contexts. A systematic review that centers on a specific research question and evaluates sources based on their quality would therefore be necessary in the future. Additionally, the normative educational frameworks referenced stem exclusively from Germany, as the underlying objective is to develop a measurement instrument for German elementary school children. Nevertheless, additional international curricula for the elementary education sector could be analyzed to identify further specific indicators. The selection of media experts can also be critically discussed (selection bias; von Soest, 2023). The individuals interviewed volunteered to participate, which constitutes self-selection. Consequently, the composition of expertise and professional domains was not systematically controlled. In additional interviews, the existing indicators could be validated and expanded, either with the same experts or with new participants.

The data presented in Tables 1–4 aim to identify indicators for elementary school children. Therefore, in this study, the data were integrated with the objective of collecting as many indicators as possible that can be used for future item development. Gaps in Tables 1–4 point to further research needs, for example focusing on indicators for evaluating the closeness to reality of informational content. Moreover, the indicators represent only a snapshot of current technological developments. These must be continuously updated to keep pace with the digital experiences of elementary school children. The findings of this study are also not based on the perspectives of elementary school children, which could have contributed additional indicators. In the next phase, the indicators serve as the basis for constructing an initial item pool. To this end, multimodal online content is selected that is relevant to the everyday lives of elementary school children (e.g., familiar formats, influencers) and originates from platforms popular among the target group (YouTube, TikTok, WhatsApp; Ofcom, 2022; Feierabend et al., 2023). The items and indicators were first refined using the think-aloud method with elementary school children and subsequently tested in a pilot study (Theurer et al., 2024). The larger empirical study aimed at validating the items has been completed, with analyses currently underway.

Data availability statement

The datasets presented in this article will not be made available in their original form, as some interviewees disclosed internal information about the platforms they work for. Requests to access the datasets should be directed to Tina Jocham, tina.jocham@uni-wuerzburg.de.

Ethics statement

Ethical approval was not required for the studies involving humans because at the time of data collection, no ethics committee was established at the Faculty of Human Sciences. However, the informed consent process was coordinated with the university’s data protection officer. The study involved interviews with media experts, who were informed about data anonymization and deletion procedures, among other aspects. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

TJ: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing. SP-R: Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Faculty of Human Sciences of the Julius-Maximilians-University of Würzburg, Germany.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aesaert, K., and van Braak, J. (2014). Exploring factors related to primary school pupils’ ICT self-efficacy: a multilevel approach. Comput. Hum. Behav. 41, 327–341. doi: 10.1016/j.chb.2014.10.006

Anttonen, R., Räikkönen, E., Kiili, K., and Kiili, C. (2023). Sixth graders evaluating online texts: self-efficacy beliefs predict confirming but not questioning the credibility. Scand. J. Educ. Res. 68, 1214–1230. doi: 10.1080/00313831.2023.2228834

Artmann, B., Scheibenzuber, C., and Nistor, N. (2023). Elementary school students’ information literacy: instructional design and evaluation of a pilot training focused on misinformation. JMLE 15, 31–43. doi: 10.23860/JMLE-2023-15-2-3

Association of College and Research Libraries. (2015). “Framework for information literacy for higher education.” Available online at: https://www.ala.org/acrl/sites/ala.org.acrl/files/content/issues/infolit/framework1.pdf.