- 1 Department of Pedagogy and Psychology, The Faculty of Social and Humanities Sciences, Khoja Akhmet Yassawi International Kazakh-Turkish University, Turkistan, Kazakhstan

- 2 Department of Preschool and Primary Education, The Faculty of Social and Humanities Sciences, Khoja Akhmet Yassawi International Kazakh-Turkish University, Turkistan, Kazakhstan

Introduction: This study introduces a training model developed to help practitioners use the Denver II Developmental Screening Test effectively. The model was shaped through a five-step process and is grounded in metacognitive principles. It aims to help practitioners not only follow procedural steps but also pause, anticipate challenges, and reflect on their actions, practices that are often neglected in typical training.

Methods: The model was developed through qualitative data collection with fifteen early childhood professionals who engaged with the model during its implementation. Their feedback and observed behaviors were examined qualitatively. Additionally, six field experts reviewed the model and provided their insights.

Results: Findings indicated that the model was perceived as flexible and relevant across diverse practice settings. Experts highlighted its potential to improve practitioners' ability to reflect, self-monitor, and adapt their professional judgment when using developmental screening tools.

Discussion: The model’s contribution lies in shifting the focus from simply following procedures to fostering practitioners’ cognitive and metacognitive engagement in decision-making. While developed around the Denver II framework, the approach shows potential to inform practices in related assessment contexts.

Introduction

Practitioners use developmental screening tools to detect early risks across children's developmental areas, allowing support to begin before delays become more pronounced. Accurate assessments support individual development and benefit societal and educational systems (Glascoe, 2005; Squires and Bricker, 2009). Closely tracking children's development, particularly between the ages of 0 and 6, has been linked to later academic success and social adjustment.

Among early childhood screening tools, the Denver II stands out for its simplicity and adaptability. Practitioners in pediatric healthcare, early education, and child welfare use it to evaluate children aged 0–6. It assesses development across four domains: personal-social, fine motor-adaptive, language, and gross motor. Owing to its brief administration time and suitability across diverse cultural contexts, the test has gained widespread acceptance not only in clinical practice but also in educational policy and research (Frankenburg et al., 1992).

The Denver II test was first introduced in the United States and has since found its way into various countries and educational systems. As it spread, it became clear that the test could not always be used the same way everywhere. Differences in parenting, daily routines, and even how much space children have to move around (such as outdoor access or playtime norms) shape how children develop. For example, children growing up in rural areas may learn to climb, run, or balance earlier than those living in city apartments (Wang et al., 1993). Such differences explain why the test sometimes needs to be adjusted to better reflect local realities.

In its traditional form, the Denver II is administered face-to-face using manual procedures. Even so, it is adaptable enough to be applied across diverse developmental settings. Screening tests, which aim to detect developmental delays or risk indicators and facilitate appropriate intervention, depend largely on the knowledge, skills, and procedural awareness of practitioners. The Denver II, a tool designed to assess children's developmental status, illustrates particularly clearly why practitioner training is critical. In the Denver II and similar screening tests, practitioners are not passive implementers who simply follow instructions but active participants who facilitate and monitor the process. Their roles in the testing process can be categorized as follows:

Correct application of test instructions: on standardized tests such as the Denver II, standardized delivery of instructions is vital to the validity and reliability of results. As Anastasi and Urbina (1997) noted, when a test's standard procedures are not fully followed, its reliability tends to decrease noticeably. For this reason, the way the practitioner gives instructions is important: they should be clear, easy to follow, and consistent with the test's aim. Observing natural behaviors during testing is also essential to the assessment process (Zimmerman, 2002). In the Denver II, the practitioner must observe how the child interprets task demands, makes decisions, and responds to stimuli; these observations help determine the child's developmental status.

Contextual sensitivity: screening tests are influenced by context. Tests such as the Denver II should be administered with regard to physical, social, and cultural contexts (Hofstede, 2001). For example, ensuring a distraction-free environment helps a child exhibit natural responses (Bayley, 2006).

Process management: practitioners must manage unexpected situations during test administration. For example, if the child is distracted or struggles with instructions, the practitioner must maintain professional neutrality. While appropriate interventions improve test accuracy, inappropriate ones can compromise the evaluation (Schneider and McGrew, 2012).

Training Denver II practitioners is therefore essential to ensuring the test's validity, and the literature supports the value of training in the following areas:

Ensuring standardization: to ensure proper test use and reliability, practitioners must receive adequate training (Anastasi and Urbina, 1997). In standardized tests such as the Denver II, practitioners must follow instructions to maintain validity.

Development of objective observation skills: practitioners' ability to observe children's natural behaviors during screening can be improved through training. Zimmerman (2002) emphasized that observation skills support the accuracy of results.

Context awareness: Hofstede (2001) demonstrated how contextual factors influence test results and emphasized that practitioners should consider these factors.

This applies not only to the Denver II but also to other developmental assessment tools. In the Wechsler Intelligence Scale for Children (WISC), practitioners must present instructions accurately and interpret behaviors (Wechsler, 1949). In the Bayley Scales, used for early motor and cognitive assessment, practitioner reporting affects the reliability of results (Bayley, 2006). In the Stanford-Binet test, practitioner training improves the accuracy of problem-solving assessments (Terman and Merrill, 1960).

Although each test has unique demands, practitioner training remains a shared success factor. Studies show that practitioners need technical knowledge, process management skills, contextual awareness, and objective observation skills. This requirement underscores the importance of training practitioners in ways that safeguard scientific validity and reliability. Practitioner training is thus key to test accuracy and directly affects the overall success of screening.

Purpose of the study

This study aims to develop a training model for Denver II Developmental Screening Test practitioners based on the Kazakh adaptation of the test. The program aims to help practitioners follow instructions, build scoring and interpretation skills, and apply the test properly. The training also builds practitioners’ judgment and decision-making in challenging test situations.

Methods

Research model

This study used a design-based research (DBR) model, primarily based on a qualitative approach, to develop a metacognition-based practitioner training program for the Denver II Developmental Screening Test. Design-based research was deemed appropriate for this study because it facilitates iterative model development through the interaction of theoretical frameworks, field applications, and expert opinions.

The DBR approach was developed to bridge the gap between theory and practice in educational research (McKenney and Reeves, 2012). In this context, our study developed a literature-based theoretical framework and continuously revised the model based on practitioners' experiences and expert opinions. The study's five-phase process model (analysis, design, development, implementation, and evaluation) aligns directly with the cyclical structure of this approach. The practitioner training model was developed using the Kazakh adaptation of the Denver II Developmental Screening Test. The multiple data sources used in this study (117 application videos, 161 communication recordings, expert opinions, and practitioner experiences) were analyzed in accordance with DBR's principle of "multi-faceted data collection and continuous improvement." Thus, the developed model is not merely a theoretical proposal but an educational model that has been tested with field data and whose applicability has been verified.

Participants

Research data were obtained from three groups of participants (children, families, and practitioners), and the model was constructed using these data. Implementation videos involving the children and families were analyzed, and these analyses fed directly into model construction. Some demographic characteristics of these participants are presented below (Tables 1, 2).

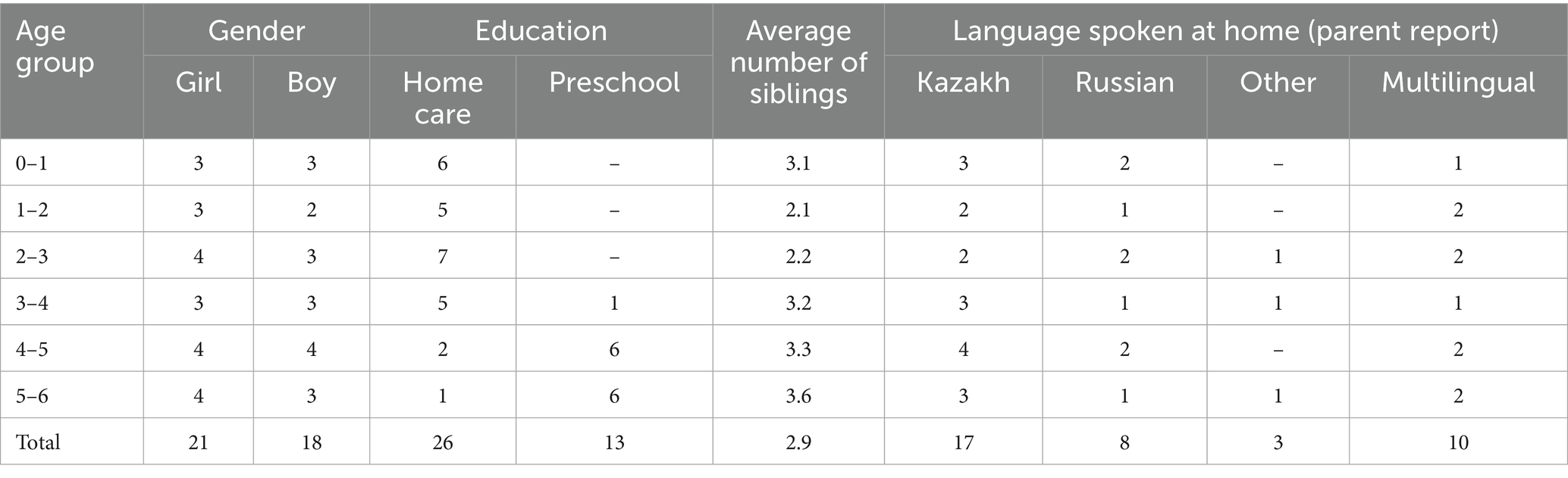

Table 1 shows the demographic characteristics of the 39 children who participated in the study. The sample was gender-balanced (21 girls, 18 boys) and covered all age groups from 0 to 6 years. Most of the children (n = 26) received home care, while 13 attended preschool. The average number of siblings was 2.9; it was lowest in the 1–2 age group (2.1) and highest in the 5–6 age group (3.6). Each child had between 1 and 6 siblings. Overall, the sample reflected large families with multiple siblings. Regarding the language spoken at home, the largest group reported speaking Kazakh (n = 17), while others reported multilingual use (n = 10), Russian (n = 8), or other languages (n = 3). This distribution reflects the multilingual nature of the region where the study was conducted. Children with medical conditions or special needs were not included in the sample (Table 2).

According to Table 2, mothers were on average 32.4 years old (SD = 5.13), whereas fathers were slightly older, averaging 35.2 years (SD = 7.27). This suggests that the parents were generally young adults. In terms of education, most parents were university graduates (n = 57), while smaller groups were high school graduates (n = 17) or held a master's or doctoral degree (n = 4). The participating parents therefore had a relatively high level of education.

Regarding employment status, most parents were employed (n = 69), and very few were unemployed (n = 9). This distribution suggests that the participant group had a socioeconomically stable profile. In terms of residence, the overwhelming majority of families lived in urban areas (n = 36), with very few rural residents (n = 3). The study therefore primarily represents families living in urban centers. Furthermore, because the study was conducted in Almaty, Astana, Shymkent, and Turkistan, which are among the major cities of Kazakhstan, the data largely reflect the metropolitan context.

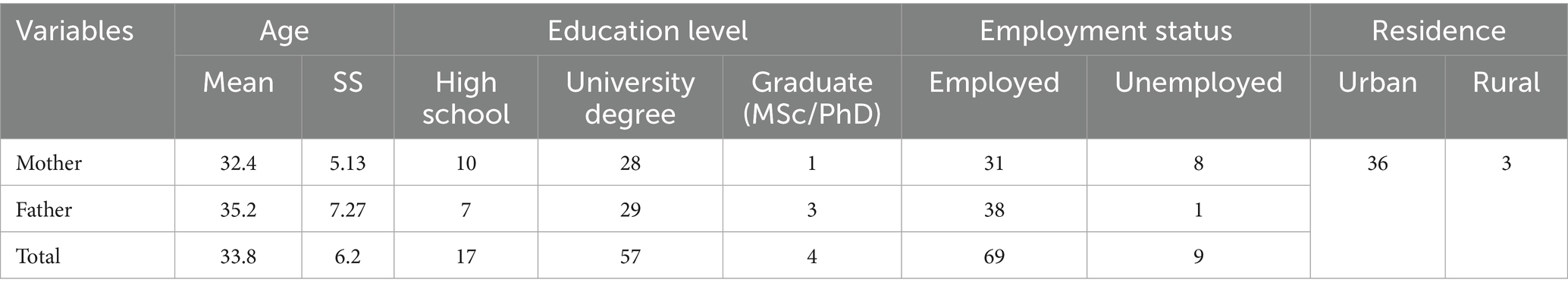

Practitioners, the third participant group, took an active part in the training process and thus contributed to the creation of the practitioner training model. Some variables related to this group are presented in Table 3.

Table 3 shows the demographic characteristics of the 15 practitioners who participated in the training program. All participants were female. The largest group was aged 26–30 (46.7%, n = 7), and most held a bachelor's degree (80%, n = 12). Sixty percent (n = 9) had 6–10 years of professional experience, and 80% (n = 12) were preschool teachers. None of the practitioners had received prior Denver II training. Overall, the participant group consisted of experienced, highly educated, and actively working female educators.

Procedural steps in developing the training model

The theoretical foundation of the study is Halloun's (2007) model development approach. In this approach, models emerge through the interaction of empirical data and theoretical reasoning, in a dialectical process shaped by the interpretation of empirical observations and the analysis of theoretical frameworks. The approach emphasizes strengthening models through critical analysis of theoretical constructs rather than relying on data alone. In this context, Halloun's work serves as both a theoretical basis and a guide for our practitioner training model.

The empirical foundations of the training process are field studies, practical activities, and feedback data. During this process, practitioners took part in realistic practice and developed their skills in applying professional judgment to the problems they encountered. Including participants as codesigners enhances contextual sensitivity and sustainability, a strength highlighted by Stockless and Brière (2024) in design-based research. This participatory aspect helped shape our model in real-world settings.

The theoretical framework shaped the training content through literature and expert input. The content of the program was structured to help practitioners adapt to different situations while following the Denver II guidelines. The findings and the feedback from the participants shaped the final model by establishing a balance between theory and practice. This structuring process allowed the model to be shaped through the interaction of the empirical and rational components emphasized by Halloun in his model development approach.

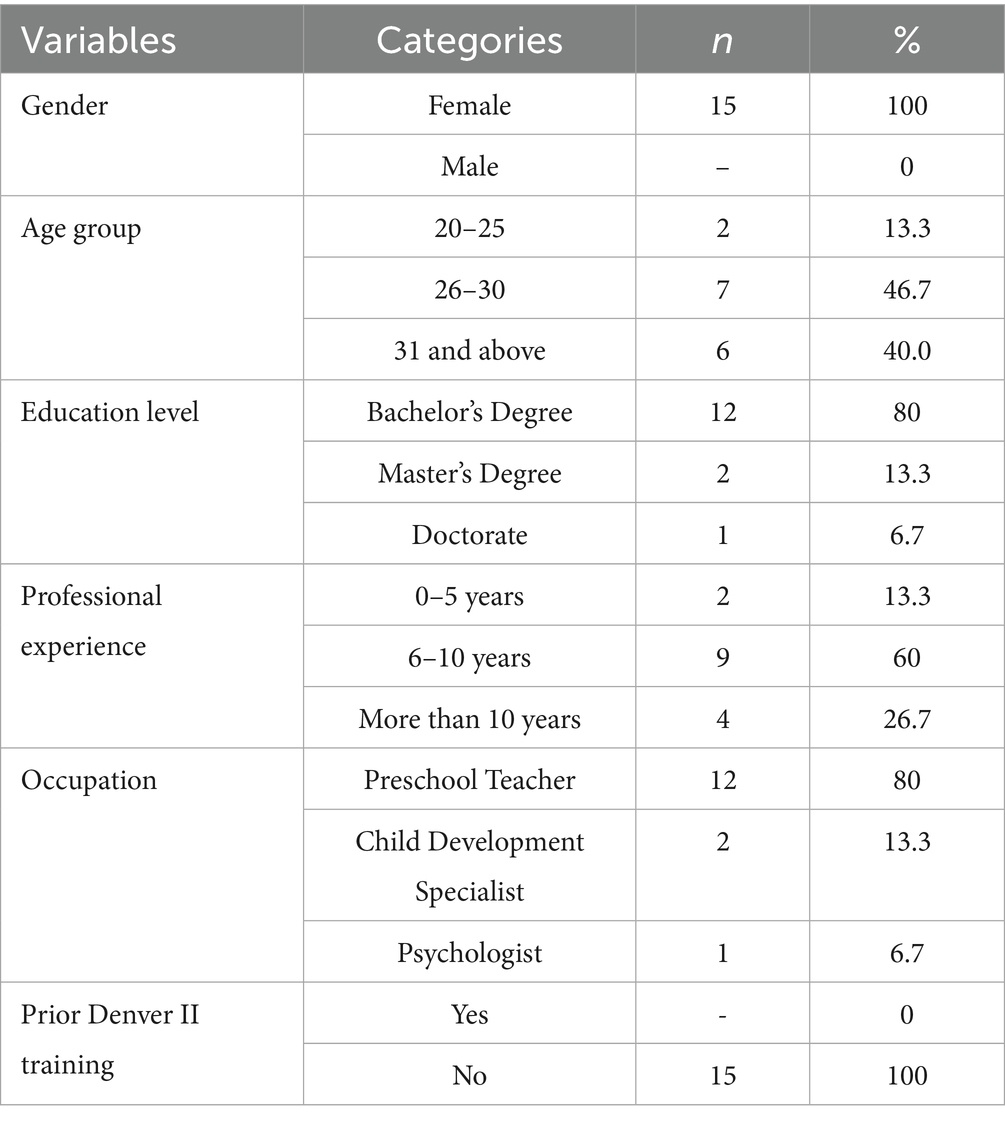

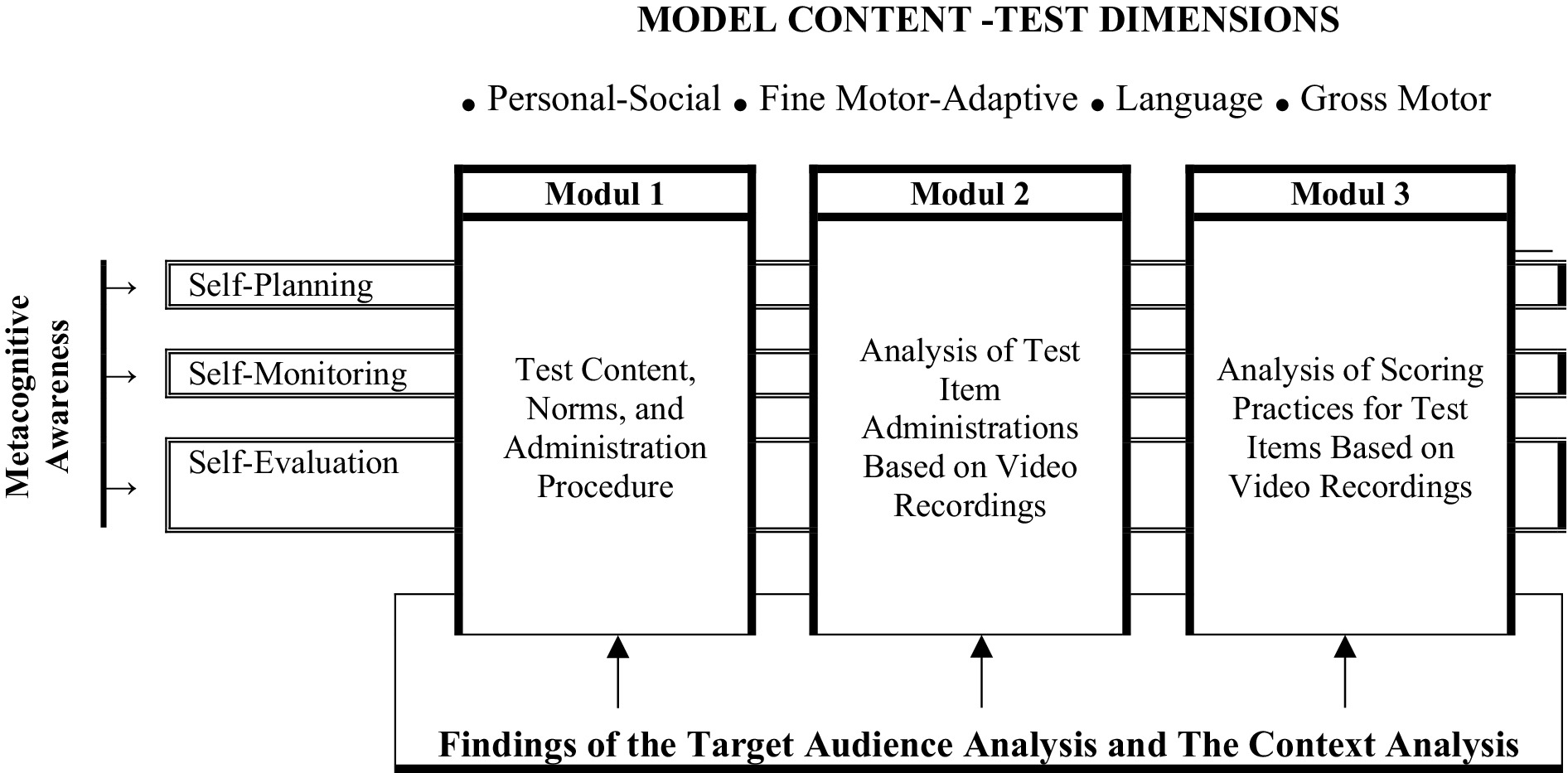

A five-stage model was developed on the basis of the literature and participant-expert feedback, as shown in Figure 1.

The model development process was carried out according to the procedural sequence presented in Figure 1, and the five process steps described below were shaped based on the opinions and practices of practitioners and experts. The process was completed in a total of 13 weeks.

First phase (determining the focus area – inspiration phase): In this phase, the model idea was formulated and the researcher’s perspective on practitioner training was defined. The initial phase of the study lasted approximately 2 weeks.

Second phase (analysis of the target audience, context, and model plan): Participant profiles, implementation context, and model plan were examined in detail. This phase lasted approximately 3 weeks.

Third phase (presentation of the proposed model and its core components): The model was drafted, and its training modules and core structures were introduced in detail. This phase lasted approximately 2 weeks. Practitioner training in this phase was structured into three sequential modules: (1) Module 1 focused on test content, norms, and implementation procedures and fostered planning skills within the self-regulation process; (2) Module 2 analyzed test item administration using video recordings, contributing to the development of self-monitoring skills; and (3) Module 3 analyzed test item scoring using video recordings and strengthened self-assessment processes. Based on the results of the target audience and context analyses, the modules followed a clear sequence from planning through monitoring to evaluation.

Fourth phase (discussion of reflections on program development): The contributions of the model developed in the third phase to program development were evaluated through a pilot implementation of the practitioner training model. Inferences were drawn about the implementation processes, and adjustments were made accordingly. This phase lasted approximately 4 weeks.

Fifth phase (obtaining feedback from implementers and experts and making necessary adjustments): Participant and expert opinions were collected, and necessary changes were made to the model. The process was completed in approximately 2 weeks.

Data collection

The data collection process for constructing the model consisted of five main stages. The first stage defined the theoretical framework of the research context through a comprehensive literature review. The second stage focused on needs and context analysis: (a) practitioners were given a brief introduction to test administration, (b) they were asked to conduct and record sample administrations, (c) these administration videos were analyzed by the researchers, (d) semi-structured interviews were conducted with practitioners, and (e) expert opinions were obtained for the context analysis. The third stage structured the practitioner training program based on these findings. The fourth stage was a pilot implementation of the program with 15 practitioners, during which data on practitioners' experiences were systematically collected and evaluated, primarily through descriptive analysis, and the model was revised as needed.

In addition, 117 video recordings of the practitioners' test administrations and their online question-and-answer exchanges were analyzed, and the findings were discussed in class. The fifth stage consisted of interviews with field experts to assess the validity and applicability of the model. This gradual process allowed different data sources to corroborate each other, increasing the reliability of the results. Member checking was also conducted systematically throughout the process.

Data analysis

As noted in the description of data collection, data collection, analysis, and feedback were carried out continuously throughout the study. The planned data collection can nevertheless be grouped into three stages: (1) needs analysis (interviews and observation) and context analysis (interviews) in the Exploration-Analysis Phase; (2) participant opinions in the Implementation Phase; and (3) expert opinions in the Feedback (Evaluation) Phase. Qualitative methods such as descriptive analysis and content analysis were used to analyze these interview and observation data.

The purpose of the semi-structured interviews (n = 15) conducted with the participants was to reveal their experiences and perceptions regarding the Denver II implementation processes. The interviews were audio-recorded, transcribed, and analyzed within the framework of predetermined themes (e.g., implementation fidelity, language use, cultural context). New concepts expressed by the participants were also incorporated into the analysis through open coding. Coding was conducted independently by two researchers, consistency was achieved through comparison of their codes, and the findings were reported using direct quotes from the participants.

Video recordings (n = 117) of Denver II administrations conducted by practitioners were analyzed to assess the testing process in terms of fidelity to the instructions, language and communication styles, and children's responses. Each recording was viewed independently by at least three researchers, who recorded their observations on standardized forms. The researchers' findings were compared and discussed until consensus was reached, and the results were converted into thematic codes. The coded data were integrated into the content analysis to identify strengths and weaknesses in the administration process.

During the video-recorded test administrations, Standard Kazakh (the central dialect) was designated as the primary language, as the research was structured around the Kazakh adaptation of the Denver II. Given the bilingual context, however, the following procedure was used: if the child could not recognize or respond to a concept in Standard Kazakh, regional dialect variants were presented; if the child responded in Russian and had understood the concept correctly, the response was also scored as correct. This approach was applied systematically across all tasks to minimize potential disadvantages stemming from bilingualism and to maintain the validity of the assessments.

The purpose of the expert opinions (n = 6) obtained in the final stage of model development was to assess the model's validity, applicability, and cultural adaptability. These opinions were analyzed using descriptive analysis, and the recommendations were grouped under three main themes (validity of implementation processes, adequacy of training modules, and cultural adaptability). The experts' assessments were further examined through open coding and then integrated and interpreted comparatively, contributing directly to the final construction of the model.
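The bilingual scoring procedure described for the video-recorded administrations can be summarized as a small decision function. The sketch below is a hypothetical illustration under stated assumptions, not the study's instrument: the `Attempt` record, the labels such as `"standard"`, `"dialect"`, and `"russian"`, and the choice to flag responses in any other language for review are all assumptions, since the procedure as described covers only Standard Kazakh, regional Kazakh dialects, and Russian.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical labels: the study does not publish a coding scheme.
ACCEPTED_RESPONSE_LANGUAGES = {"kazakh", "russian"}


@dataclass
class Attempt:
    prompt_variant: str               # "standard" (central dialect) or "dialect" (regional variant)
    response_language: Optional[str]  # e.g. "kazakh" or "russian"; None if the child did not respond
    concept_understood: bool          # examiner's judgement that the child grasped the concept


def score_item(attempts: Sequence[Attempt]) -> Optional[bool]:
    """Return True (correct), False (incorrect), or None (outside the described rule).

    Attempts are listed in the order the prompts were given: one Standard
    Kazakh prompt first, then any regional-dialect prompts.
    """
    if not attempts or attempts[0].prompt_variant != "standard":
        raise ValueError("the first prompt must be given in Standard Kazakh")
    if any(a.prompt_variant != "dialect" for a in attempts[1:]):
        raise ValueError("follow-up prompts must use regional dialect variants")

    for attempt in attempts:
        if attempt.response_language is None:
            continue  # no response: fall through to the next (dialect) prompt, if any
        if attempt.response_language not in ACCEPTED_RESPONSE_LANGUAGES:
            return None  # assumption: other languages are flagged for review
        if attempt.concept_understood:
            return True  # a correct Kazakh or Russian response counts as correct
    return False  # no prompt variant elicited a correct response
```

For example, a child who does not respond to the Standard Kazakh prompt but answers correctly in Russian after hearing the dialect variant is scored as correct: `score_item([Attempt("standard", None, False), Attempt("dialect", "russian", True)])` returns `True`. Returning `None` rather than `False` for unlisted languages keeps such cases visible for the consensus discussion instead of silently counting them as failures.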

The differences of opinion that emerged during the analysis process were addressed at two levels. Coding differences between researchers regarding the same data source were resolved through comparison of independent codes and discussion sessions, and recoding was performed until consensus was reached. Discrepancies between different data sources (interviews, observations/videos, and expert opinions) were resolved using triangulation. Within this framework, the context in which the findings were obtained was reassessed, the data were cross-compared, and the data were synthesized, taking into account their complementarities. This ensured both reliability within individual data sources and the holistic contribution of the different methods.

Phases of the research process

Identification of the focus area: inspiration phase

The starting point of the study was the official support that the Ministry of Higher Education of the Republic of Kazakhstan granted to the researchers' project on standardizing the Denver II developmental screening test in Kazakhstan. The researchers first obtained Denver II practitioner and trainer certificates. During this process, they worked with the original English version and the adapted Turkish version. One researcher is a native Turkish speaker and the other is Kazakh; both have upper-intermediate English proficiency.

During certification, the researchers focused on standardization in Kazakhstan and identified language and culture as key challenges. They agreed that Kazakhstan's cultural and linguistic diversity, particularly the roles of teachers, students, and families, distinguishes it from the contexts of other standardization models. The incomplete adaptation of technical and academic terminology into Kazakh was also seen as a major difficulty.

These challenges became evident once the certification program and trial training began. Initially, situations arose that were difficult to interpret. Although the Kazakh translations of the test items and instructions had been carried out meticulously, the practitioners made obvious mistakes and failed to complete the assessments properly. The most frequent mistake was prompting the child to complete a task even though the instructions did not provide for it. These prompts were either verbal or physical, and most practitioners were observed giving the answers directly. Although continuous feedback and corrections were provided, no change in behavior was observed. Interestingly, practitioners from different parts of the country who had no contact with each other showed the same tendency. Moreover, mothers intervened in similar ways, which made the testing process progressively harder to administer. The researchers analyzed this situation and concluded that the practitioners' tendency to act not as implementers but as protectors, shielding the child from failure and protecting social reputation, was shaped by culturally embedded social norms. Practitioner training programs must therefore take this effect into account.

The researchers' personal experiences also informed the analysis of this situation. One researcher is a Turkish citizen who has worked as a faculty member in Kazakhstan for 8 years, and her two children also study in Kazakhstan. She has extensive experience with the deep differences that cultural interpretations can create. The other researcher, from Kazakhstan, spent a long period in Turkey for her postdoctoral research and likewise has experience with intercultural perception and semantic variation. The researchers therefore agreed that cultural and linguistic variables should be carefully considered in practitioner training during the adaptation process.

Additionally, Saban's (2021) study titled "Curriculum Development through Action Research: A Model Proposal for Practitioners" inspired the practitioner education model developed in this research both theoretically and methodologically. This study helped the researchers determine more clearly the path they would follow in structuring their own model.

Drawing on both their own experiences and the foundational studies, the researchers also considered factors that might otherwise go unobserved. They emphasized conducting a well-grounded analysis to support model development before starting the application training.

The literature states that the analysis process is a critical starting point in the model development process. Halloun (2007) stated that the model construction and application processes in the modeling cycle are generally addressed in balance with empirical and rational elements and that the modeling process begins with problem analysis. Accordingly, the first step in the modeling process is to analyze existing physical systems or phenomena and to identify appropriate theories or models.

Inspiration phase—the analysis phase

The first phase of our Denver II Practitioners’ Education Program Model (DUYEPM) development process, analysis, was conducted to comprehensively evaluate the current situation and determine the core components of the model. This phase includes a detailed examination of both the needs of the target audience and the characteristics of the context.

Our analysis process was informed by established models in the literature. For example, in the ADDIE model, analysis is the first step, in which the target audience and context are determined, and it serves as the basis for subsequent phases (Molenda, 2015). Similarly, Tyler's (1949) goal-based program development model defines needs analysis as the primary step in determining educational goals. According to Tyler, analyzing students' current knowledge, skills, and social needs is essential for setting goals. In line with this, the step-by-step procedure for thematic analysis outlined by Naeem et al. (2023) offers a clear roadmap for researchers aiming to derive models directly from qualitative data, and it further supported structuring our practitioner training model on the basis of qualitative findings.

To this end, the following steps were followed:

Target audience analysis: The first study in our analysis focused on the assessment of current knowledge, skills, and needs of the target audience. In this context, the competence of Denver II implementers in applying instructions and scoring items was taken into consideration. A systematic approach was adopted to obtain initial data about the target audience and determine their knowledge and skill levels. Accordingly, the following steps were followed.

Sample application study: Participants were presented with Denver II basic application scenarios, and their knowledge levels, skills and decision-making competencies were observed. This study aimed to understand how the process was perceived by the participants and how the instructions were followed. For this purpose, the Denver II 5-item guideline was given to the participants. The guidelines define the physical setup, materials, and implementer limits. In addition, three tasks (Drawing a Square, Recognizing Colors, Drawing Lines) for 3–4-year-olds were included. The participants were asked to perform these tasks with a 3–4-year-old child nearby, adhering to the instructions, and to record the process on video. The instructions also included task-based evaluation criteria.

Unstructured interviews were conducted as follows: After the sample applications, the video recordings were watched together with the participants, and their thoughts about the process, the difficulties they encountered, and their awareness were examined through unstructured interviews.

Applications and interview data were evaluated: Participant applications were observed by independent evaluators/trainers and analyzed with a standard evaluation form.

Below are some striking, frequent, and meaningful situations from the application videos, together with dialogs from the follow-up interviews, that were considered foundational for structuring the model:

Square Drawing Task. In one application, the practitioner guided the child's hand during the drawing. Although the child asked, "Can I do it myself?," the practitioner replied, "It would be better if I helped you." This limited the opportunity to assess the child's independent performance.

In the follow-up interview, she stated: “I helped him draw because I thought he would not do it on his own.” Reflecting later, she acknowledged, “Maybe I should’ve been more patient. I wasn’t supposed to intervene.”

Another participant gave an overly complex instruction: “Now I want the shape in the picture, but it should be regular, pay attention to the corners.” When the child asked, “What is this like?,” the practitioner hesitated, making the task harder to grasp. In the interview, she reflected: “I thought I needed to explain more, but that might have confused him.” She later admitted, “He would’ve understood more easily if I’d kept it simple.”

Color Identification Task. One participant gave indirect hints such as "Is this red?," prompting the correct answer. After a pause, the child replied, "Yes, it is red." These prompts seemed to limit his ability to think independently. In the follow-up interview, the practitioner said: "I gave him a little clue because he confused the colors." She then admitted: "Maybe I should've let him find the truth on his own."

Another video showed a distracted child. Instead of observing the child's natural behavior, the practitioner insisted, "Now look at this color." She later reflected: "I constantly warned him. Maybe I should've just taken notes. It might have changed his natural response."

Line Drawing Task. In one case, the practitioner focused on neatness rather than the process. When the child asked, "Is this line good?," the practitioner responded, "You did not do it properly, try again," and repeated this nearly ten times. This insistence went beyond the instructions and undermined the purpose of the task. Reflecting later, the practitioner said: "I actually focused on teaching him to draw, not the line."

Similarly, another participant emphasized uniformity in the child's lines. Comments such as "This one is longer" and directives such as "Try again, they should be equal" overshadowed the child's natural problem-solving process. When asked, the practitioner admitted: "My focus was on the lines being equal. I aimed for perfection. But the child could have done it his way."

Context analysis: Following the target audience analysis, the second stage was the context analysis, in which the physical, social, and cultural conditions for Denver II administration were examined. A comprehensive review of the literature on the Kazakh context was conducted, particularly to identify potential challenges and necessary arrangements, and expert opinions were then obtained. These opinions deepened the analysis by providing important findings on implementation. The expert opinions are grouped under the following headings.

Physical Conditions: The Denver II test requires a comfortable environment that allows children to react naturally. In administrations in Kazakhstan, it is essential that test environments be distraction-free and child-friendly. The categories derived from the expert statements are as follows:

• The test environment should be quiet, plain and arranged to avoid distraction.

• The materials (cubes, bottles, balls, dolls, pencils, etc.) should be clean, complete, and compliant with the test standards.

• The application room should have a warm atmosphere that feels safe and comfortable for children.

Some expert opinions are as follows:

“Test environments should be arranged in a way that prevents children from feeling anxious. Families usually want their children to be very successful; this situation creates pressure on the child without being noticed. The environment should feel safe so that children are not afraid of making mistakes.” — Child Psychology Expert

“The physical space should be colorful but not distracting so that children can display their natural behaviors. A more relaxed environment should be preferred, unlike traditional school classrooms in Kazakhstan.” — Education Sciences Expert

Cultural Conditions: Traditional values, family structures, and child-rearing styles in Kazakhstan can directly affect test administration and results. In particular, families’ attitudes and expectations influence the testing process. The categories derived from the expert opinions are as follows:

• The family is central to child development. Parents and extended family (e.g., grandparents) can indirectly affect the testing process.

• The traditional structure can lead to children being overly compliant with adult authority.

• Families’ expectations regarding the results can put pressure on practitioners.

Expert opinions on this are as follows:

“Families see the child’s performance as a reflection of their parenting skills. Therefore, the results should not be evaluated directly, but with understanding.” — Sociology Expert

“In Kazakhstan, children are encouraged to comply with parental authority. This can lead to unnatural reactions during the test. Practitioners should provide a comfortable environment for children.” — Education Science Expert

Social Conditions: Social dynamics in Kazakhstan can affect children’s test behavior and how examiners evaluate it. Adherence to social norms and interactions with adults are important in this context. The categories derived from the expert opinions are as follows:

• Traditional child–adult relationships can limit child independence.

• The examiner should maintain an appropriate distance and attitude during the test.

• Social expectations can mask natural child behavior.

Here are expert opinions on this:

“Social norms can limit children’s ability to make independent decisions. This is especially evident in tasks that require individual performance. Examiners should be patient and avoid intervention.” — Educational Sociology Expert

“In society, adults control children rather than encouraging them to express their thoughts. This can lead to passive or reserved behavior during the test.” — Developmental Psychology Expert

The context analysis also included an analysis of the practitioners’ sample application videos, which contain important data reflecting the Kazakh context. Among the contextual findings from these videos, language emerged as a significant dimension.

Linguistic factor: The most striking linguistic aspect encountered in the analyzed videos is children’s multilingualism or the limited mutual intelligibility between dialects. “Limited mutual intelligibility between dialects” means that children who speak one dialect of Kazakh lack sufficient knowledge of other dialects, particularly of their vocabulary. This was a frequently observed finding. Some findings regarding the language factor are listed below.

a. Colors: almost all children were found to have knowledge of colors. The most important observation regarding the Kazakh context is that children used either the Kazakh or the Russian names for all the colors asked and recognized their meanings; that is, some children knew colors only in Kazakh, while others knew them only in Russian. This was attributed to the child’s language of education and the language spoken in the family. An evaluation of Kazakh educational programs revealed that children aged 2–3 were taught the primary colors (red, blue, green, and yellow); children aged 3–4 were taught to name, compare, and distinguish colors; children aged 4–5 were taught shades of the primary colors; and children aged 5–6 were taught naming and comparing, as well as classifying objects by color.

b. Numbers: All children were found to understand the numerical expressions used in the test. The decimal and regular structure of Kazakh number words is believed to make numbers easier for children to use regardless of language barriers. Furthermore, an examination of Kazakhstan’s preschool programs shows that children aged 2–3 learn to distinguish objects and the concepts of more and less. Children aged 3–4 learn to count to 3, while children aged 4–5 learn to count forward and backward to 5 and to compare numbers with neighboring numbers. Children aged 5–6 can count to 10 and are introduced to adding and subtracting objects. By age 6–7, children can count to 20, combine numbers, and perform simple arithmetic operations.

c. The Prominence of the Near-to-Far Principle: During test preparation, it was anticipated that in naming/defining tasks such as “What is…?”, a significant portion of children would be unable to identify an object, concept, or phenomenon that does not exist in their immediate environment. For example, in the Kazakh adaptation study, the word “lake” was used instead of “sea,” since children living in a landlocked country might not know the word “sea.” Despite this adjustment, some children taking the Kazakh version who live in areas without a lake did not understand the word “lake.” This and similar cases were interpreted as showing that children’s language use is grounded in what they see and hear in their environment.

d. Multilingualism: This was frequently encountered in the sample application videos. In naming/defining tasks related to parts of buildings, some children understood certain concepts only in Russian (e.g., roof). The same applied to household items, as frequently seen in another task of the test, “What do we do with…?” For example, the words “ıstakan,” “bakal,” “kese,” and “piyala” are used in different regions to mean “glass,” and the term used in the Standard Kazakh test instructions was generally not recognized by children who use a different regional word. This was attributed to regional and dialect differences.

e. Language proficiency: Linguistic areas in the Kazakh-language test instructions that children did not find difficult include: (1) concepts expressing contrast (long–short, etc.), (2) concepts indicating location and direction (on top of, under, next to, etc.), and (3) action commands (take, give, jump, etc.).

The findings from the analysis revealed that although the implementers had technical skills, they were not adequately able to integrate these skills with the context or to evaluate their own behavior. The target audience analysis identified limitations in applying the instructions correctly and objectively while observing children’s natural reactions. The context analysis showed that physical, social, and cultural conditions strongly influenced test administration and that implementers needed to develop the skills to administer the test with sensitivity to these conditions.

These findings suggest that training methods focused only on technical skills are inadequate. In a test such as Denver II, which requires high sensitivity, it is not enough for implementers simply to follow the instructions; they also need to recognize how their own behavior affects test results and make conscious decisions accordingly. A metacognitive approach therefore stands out as a suitable way to address these deficiencies.

Flavell (1979) defined metacognition as an individual’s ability to recognize, monitor, and organize their own thought processes. Metacognition enables individuals not only to complete a task but also to understand how and why they completed it. In this context, practitioners need to evaluate not only what they do during the test but also why they do it and how their behavior affects children’s performance. Brown et al. (1987) and Schraw and Dennison (1994) emphasized that metacognitive awareness increases success in problem solving, decision making, and learning.

Many deficiencies observed in the Denver II process stem from practitioners’ lack of process awareness. For example, unnecessary interventions arise not only from an insufficient command of the instructions but also from a failure to assess how the intervention affects the outcomes. Metacognitive awareness enables practitioners to question their behavior in such situations, evaluate its effects, and adjust it when necessary. The metacognitive approach is also critical in contexts where social and cultural factors are influential: in an environment such as Kazakhstan, where traditional family structures are strong, practitioners need to consider how family expectations and societal norms affect the child.

Design–development phase

Design

In the design process, it is important to clearly define the objectives of the model. For this purpose, the objectives of the implementer training program were determined on the basis of the findings of the analysis process. These objectives include developing the skills of taking initiative, making correct assessments and acting in accordance with the purpose of the test.

Video analyses and interviews revealed that implementers had deficiencies in their ability to follow instructions, communicate effectively with children and guide the process. These findings guided both the determination of needs and the structuring of the training model.

In the analysis process, the theoretical approaches in the literature and the field data exemplified above were evaluated together. The findings showed that the training process should not be limited to transferring theoretical knowledge but should also address the real needs encountered in practice. Thus, the model aimed to provide a structure that is both theoretical and practical.

Within this framework, it was determined that the participants needed support in the following areas during training:

Independence support: the practitioners made unnecessary interventions during tasks that children were meant to complete independently. For example, a participant who guided the child’s hand when he hesitated while drawing a square made an intervention that did not suit the purpose of the test. This shows that practitioners should observe the consequences of their interventions more carefully.

Lack of awareness: situations were identified in which practitioners did not sufficiently recognize how their own behavior affected children’s performance throughout the process. For example, in the color recognition task, a practitioner’s excessive prompting altered the child’s natural thinking process. This requires practitioners to evaluate their effects on the test more consciously.

Feedback approach: feedback was given to teach the content of the task rather than to let the child complete it. This took the form of repeated attempts and teaching-focused feedback, as in the line drawing task, where the child was told to try again nearly ten times.

Clarity of instruction: practitioners had difficulty presenting the test instructions clearly and understandably. For example, in the square drawing task, the participant’s excessive explanation confused the child. This shows that instructions should include only the necessary information.

Reporting natural responses: instead of observing and reporting children’s natural reactions during the test, practitioners focused on matters outside the instructions. For example, a participant’s comments such as “This is longer” during the line drawing task distracted the child, whose behavior was then shaped by the practitioner’s priorities.

Attention management: practitioners generally gave warnings or directions when the child was distracted, but they did not objectively record these reactions. Natural reactions such as distraction should instead be recorded objectively.

On the basis of expert opinions and the context analysis results, the following goals were determined for Denver II practitioners to both develop their technical skills and evaluate their effects on the process. These goals focus on practitioners managing test processes in a way that is sensitive to physical, cultural, and social conditions and observing and evaluating children’s natural behaviors objectively.

Management of physical conditions: organizing the test environment such that the child can exhibit natural behaviors, selecting appropriate materials, and recognizing the impact of the environment on performance.

Management of cultural and family dynamics: observing families’ attitudes toward test results, considering factors in communication with parents that may affect the child’s natural behavior, and managing the process so that extended family influences are not reflected in it.

Adaptation to social dynamics and evaluation of child–adult relationships: this process involves observing the effects of social norms on children’s independent behaviors and evaluating natural reactions without providing guidance in the child–practitioner relationship.

Evaluation of natural reactions: objectively observing the child’s natural reactions during the test and evaluating the effects of the practitioner’s behavior on performance.

Attention and process management: properly directing the process in situations such as distraction; monitoring and evaluating conditions affecting the child’s performance.

Initial applications and analyses revealed that participants needed not only the skills to apply instructions correctly but also the skills to evaluate their thinking processes, make decisions, and manage the process; the objectives above were determined accordingly. The literature shows that metacognition-based approaches increase success in learning and practice (Flavell, 1979; Schraw and Dennison, 1994). The remainder of the study is therefore structured within the framework of a metacognition-supported training model. Metacognitive awareness allows practitioners to evaluate their own biases, reactions, and decisions, which strengthens not only individual performance but also the scientific validity of the test. The training model developed in this study is based on metacognition and the following foundations:

Process awareness of practitioners: in tests such as Denver II, it is not enough for practitioners to follow the instructions; their failure to recognize the impact of their own behavior on test results is a significant deficiency. Metacognitive awareness enables individuals to recognize such issues and eliminate deficiencies (Flavell, 1979; Schraw and Dennison, 1994). Paris and Winograd (1990) reported that metacognitive strategies increase awareness and improve performance and process management.

Theoretical basis: metacognition allows individuals not only to complete a task but also to understand why and how they carry it out (Brown et al., 1987). In Flavell’s (1979) model, knowledge, experience, and strategy play a central role in structuring learning. Schraw and Moshman (1995) emphasize that metacognition deepens learning by improving problem solving and decision making.

Contextual requirements: physical, social, and cultural contexts require not only technical skills but also awareness of the factors that affect processes and outcomes. Especially in settings with traditional family structures, such as Kazakhstan, the influence of family expectations and social norms should be considered (Hofstede, 2001). Metacognition supports conscious decision-making by increasing this awareness.

Practical observations: in the video analysis, unnecessary interventions by practitioners negatively affected children’s natural performance. These interventions limited children’s independent thinking and problem-solving, demonstrating the importance of metacognitive awareness (Zimmerman, 2002). Schraw et al. (2006) emphasize that metacognitive skills are an effective guide for both learners and practitioners.

A metacognitive awareness approach was adopted so that Denver II practitioners would not only apply the instructions but also evaluate the impact of their own behavior on children’s reactions.

Metacognitive awareness provides practitioners with the following:

• Questioning their role in the test and understanding its impact on the results.

• Analyzing their interventions and evaluating their impact on children’s performance (Schraw and Dennison, 1994).

• Making conscious decisions while considering contextual and cultural differences (Hofstede, 2001).

• Observing and evaluating natural reactions objectively (Zimmerman, 2002).

The metacognitive awareness approach integrated into the Denver II practitioner training model was embodied in each module through a different metacognitive component. In the first module, self-planning skills were supported: participants examined how, and at what language level, they delivered instructions, and were encouraged to make preliminary assessments of how children would perceive the task. In the second module, self-monitoring was developed: practitioners analyzed their own practice videos to observe how their gestures, facial expressions, tone of voice, and directions influenced children’s natural responses, and engaged in reflective discussions based on these observations. In the third module, self-assessment became prominent: participants examined the extent to which contextual factors, social expectations, or personal tendencies influenced their scoring decisions, and compared their decisions against objective criteria. In this way, abstract metacognitive components were made applicable by linking them to concrete behavioral patterns in each module.

Consequently, the sensitivity required by Denver II is achieved not only through technical knowledge and skills but also through the ability to assess and regulate the impact of one’s behavior within the process. A metacognition-based training model addresses these shortcomings and enables practitioners to make more informed and effective decisions. The literature shows that this approach not only enhances performance but also deepens learning and evaluation processes (Zimmerman, 2002; Schraw et al., 2006). Therefore, metacognitive awareness provides a strong and valid framework for practitioner training.

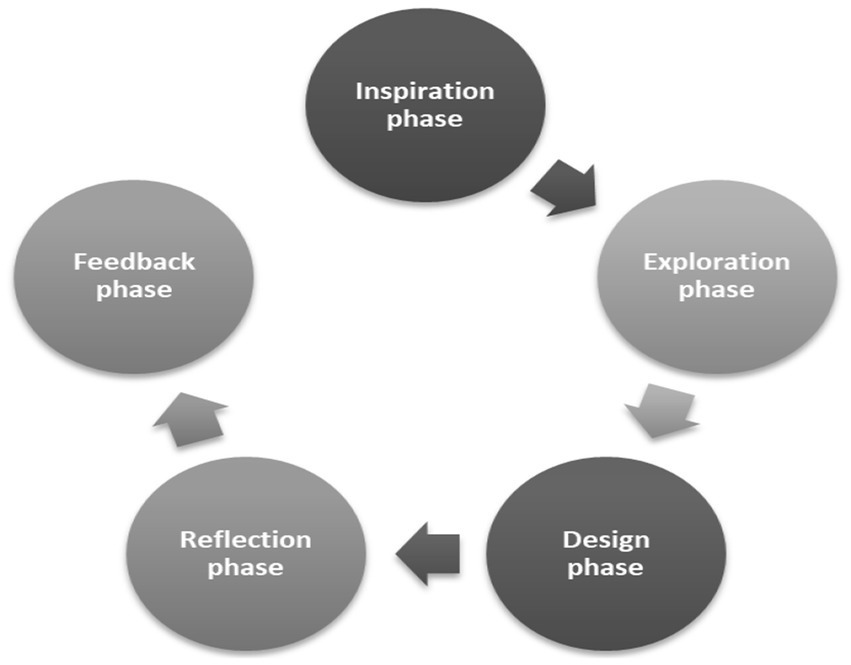

Development

The Denver II training program model for practitioners was designed as three structured, metacognition-supported modules. The general framework of the program model is presented in Figure 2.

As shown in Figure 2, the program is based on three basic metacognitive processes that aim to improve the cognitive processes of practitioners: self-planning, self-monitoring, and self-evaluation. These processes aim to increase the participants’ test application performance and their ability to analyze test results correctly.

“Self-planning is the ability to anticipate and organize the necessary steps before starting a task” (Zimmerman, 2002). “Self-monitoring is the constant awareness of cognitive and emotional states during the learning process” (Schraw and Dennison, 1994). “Self-evaluation is the process of evaluating learning outcomes and strategies used after they are completed” (Paris and Winograd, 1990). Each module includes special activities for the gradual implementation of these metacognitive processes.

The training program consists of three modules. The first module aims to teach practitioners the content, norms, and application of the Denver II test. The second module focuses on the analysis of test items through video footage, providing process awareness through visual materials. The third module teaches the evaluation and scoring of test items through sample videos. The second and third modules were conducted simultaneously. The program aims to gradually develop metacognitive skills by encouraging the active participation of practitioners in each module. The model is based on integrating targets derived from the target audience and context analyses into each module.

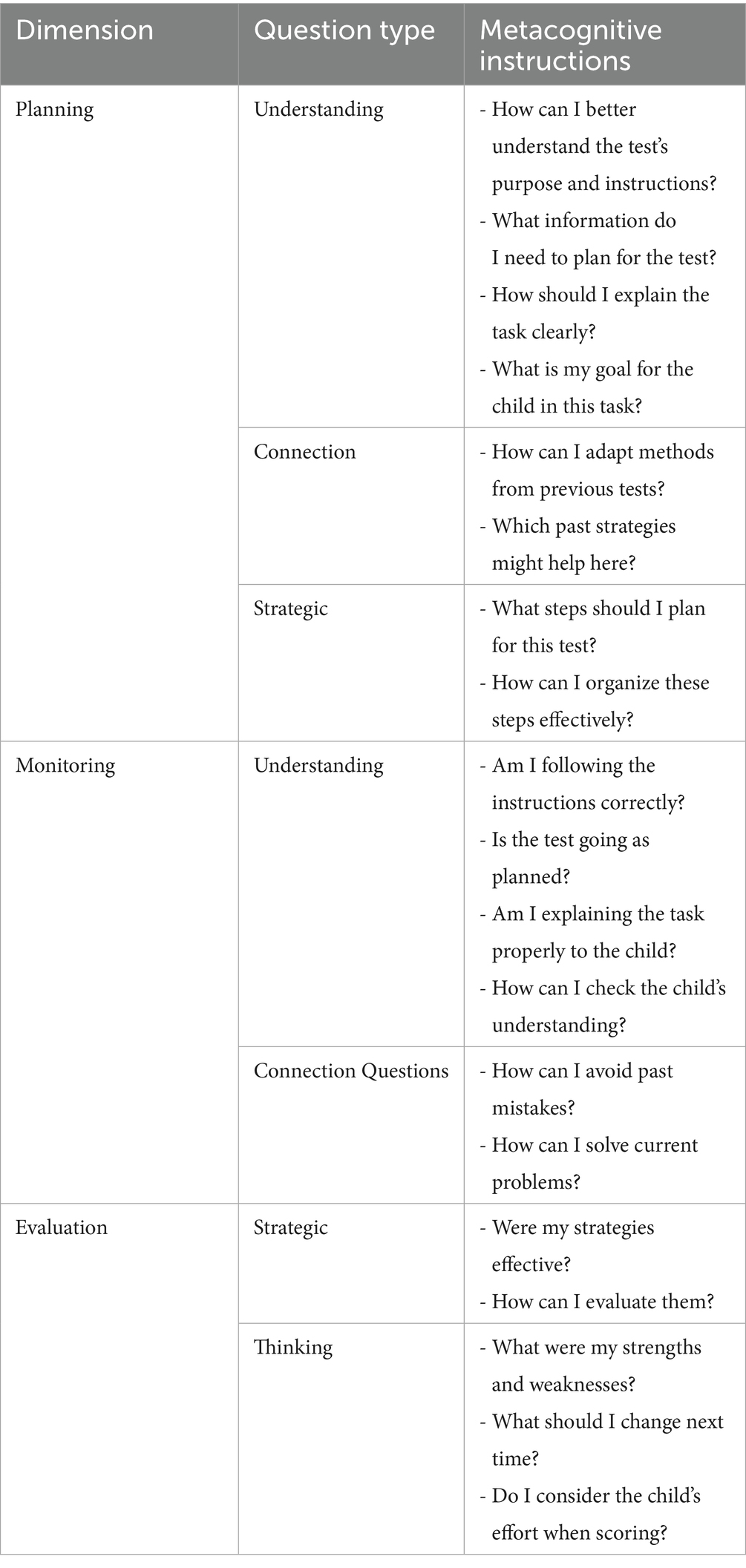

The guidance process in the model was carried out with prompt cards specially designed for each module. The questions on these cards, which encourage individual metacognitive questioning, are classified as “preparatory level understanding,” “connection,” “strategic,” and “thinking” questions. The questions were developed in line with the theoretical framework of Mevarech and Kramarski (1997): (1) Comprehension questions prepare the student to solve the problem; the student reads the task aloud, defines it in his/her own words, and explains its meaning. (2) Connection questions prompt students to think about the similarities and differences between the current task and previous tasks. (3) Strategic questions aim to identify an appropriate solution strategy and explain why it is appropriate. (4) Thinking questions direct students to evaluate their understanding of, and impressions about, the solution process.

The prompt card for Module 1, which covers the structure, content, norms, and administration procedures of the test, is presented in Table 4.

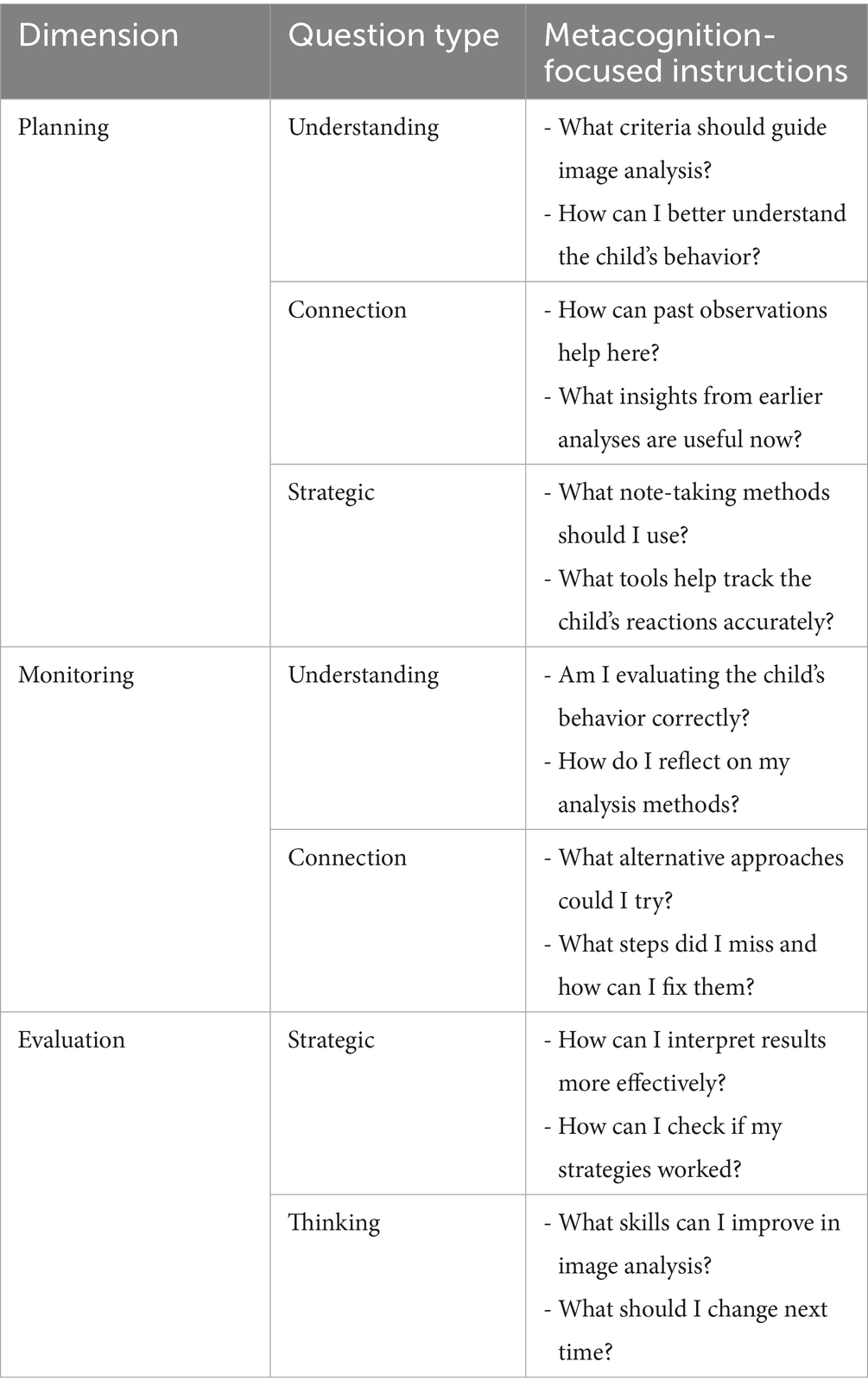

Table 5 presents the prompt card for Module 2, the next stage of the practitioner training model, which guides practitioners in analyzing administrations of test items through video footage.

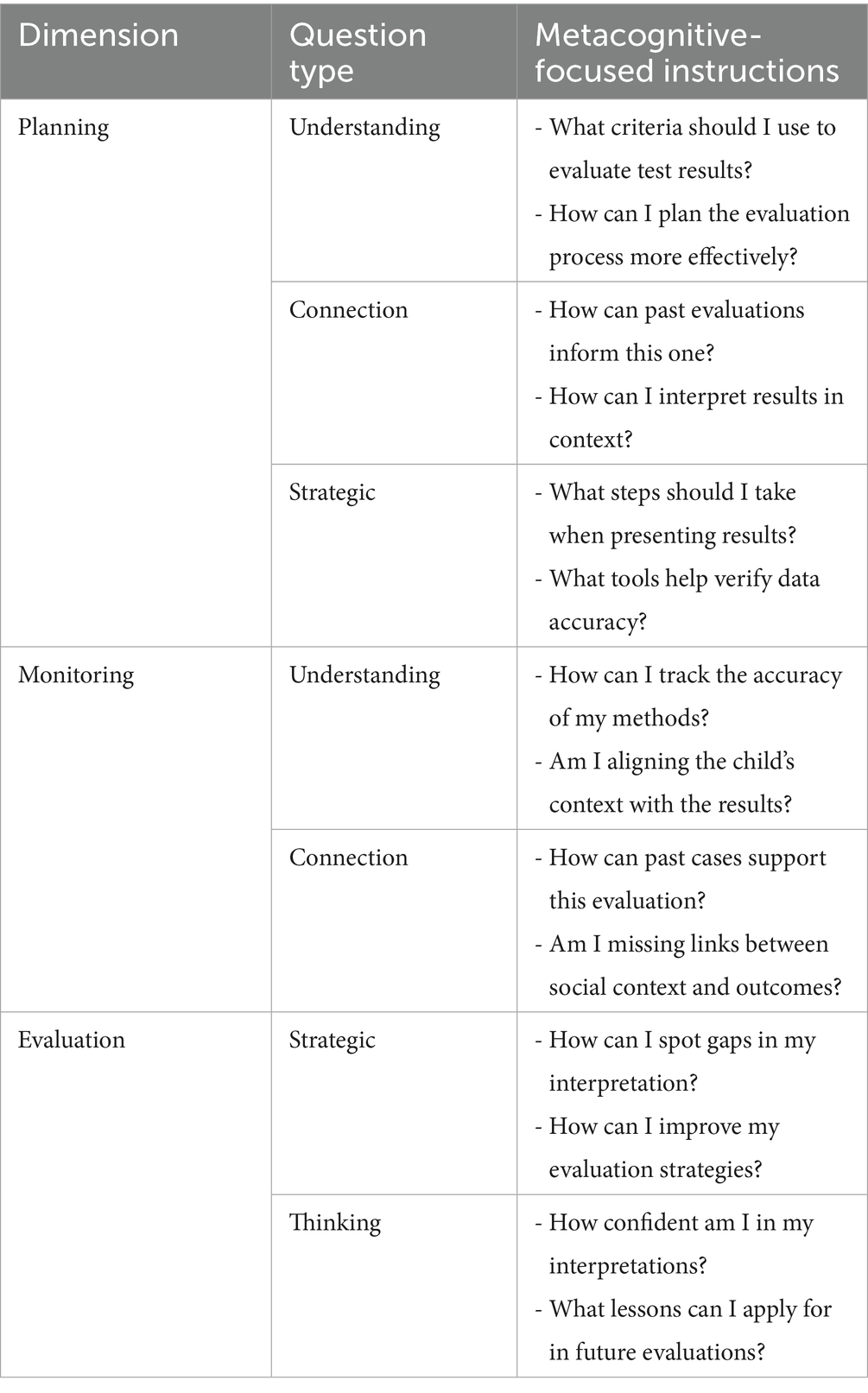

Table 6 presents the prompt card for Module 3, the final stage of the implementer training model, which covers the evaluation of test results and the transfer of adaptation studies.

In addition to the prompt cards, metacognitive-based teaching strategies were applied in all the modules.

Question generation: this allows practitioners to generate metacognitive questions to guide participants’ thinking processes. These questions help individuals realize and explain how they think, organize their thoughts and increase their metacognitive awareness (King, 1991). “What questions should I ask to solve this task? How do these questions guide the thinking process?”

Identifying difficulty: this aims for participants to identify the challenging aspects of a problem and develop solutions. This supports individuals in producing strategies by focusing on problems in the learning process (Schraw and Moshman, 1995). “What is the most difficult part of this test item? What strategies can I use to overcome this difficulty?”

Explaining ideas in detail: participants are encouraged to express their thoughts in detail. This contributes to the analysis of their thinking processes and the development of effective strategies (Chi, 2009). “How can I guide the participant to explain their thoughts in more detail?”

Naming behaviors: participants are encouraged to name and give meaning to the behaviors they exhibit. This helps individuals understand their own process and relate it to the context (Zimmerman, 2002). “How would you name and explain this behavior?”

Questioning thought processes: the aim is for participants to question their thoughts and develop different perspectives. This develops problem-solving and metacognitive strategies (Flavell, 1979). “How can I get the participant to question their thinking?”

Thinking aloud: by having participants express their thoughts aloud, their thought processes are made visible. This makes it easier to monitor the process and helps individuals to notice their mistakes (Ericsson and Simon, 1993). “How can I provide an environment for the participant to express their thoughts aloud?”

Collaborative learning / student teaching student: this encourages metacognitive interaction and collaboration among participants, which increases awareness and deepens learning (Vygotsky, 1978). “How can I support participants in teaching each other?”

Reflection—implementation phase

The implementation phase of the Denver II Metacognition-Supported Training Program for Practitioners Model, whose analysis, design, and development phases had been completed, was carried out to obtain real-world feedback and make final adjustments before the evaluation. This phase aimed to put the work carried out on the model into practice and to test the model’s functionality.

The implementations were carried out by two researchers holding Denver II practitioner and trainer certificates. Fifteen candidate practitioners participated in the training program. The candidates were identified through preliminary interviews, which considered their experience working with young age groups, their interest in scientific research, their access to this age group in different regions of Kazakhstan, and their advanced Kazakh language skills.

In the implementation phase, a metacognition-supported practitioner training process was carried out to convey the technical and psychometric properties of the scale and to define its 134 tasks, which are grouped into four sections. The training program comprised a total of 25 h over 10 weeks. The researchers conducted a preliminary analysis of the video recordings of the candidate practitioners’ trial applications and prepared them for presentation before the lessons; preparing the 117 trial videos for presentation required approximately 65 h of work. In addition, a communication channel that served as a counseling line for the candidate practitioners was actively used throughout the process. By the end of the process, a total of 161 requests had been submitted through this channel, and solutions were developed for all of them: 94 concerned explaining the instructions for a task, 45 concerned scoring the child’s behavior and the related decision-making, and 22 concerned technical issues such as age and month calculations.

In this section, application examples are presented in the order of the modules and supported by qualitative data collected during implementation. Participants confirmed the statements in the relevant examples before they were included in the text. The application examples for each module were structured by illustrating a metacognitive dimension from that module’s prompt card, presenting the related metacognitive activities, and incorporating the findings of the target audience and context analyses. Sample reflection and application activities for the modules are as follows:
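The workload and counseling-line figures reported above can be summarized as a simple derived breakdown. These shares and averages are computed here from the reported counts and are not separately reported in the study; the weekly and per-video averages assume the hours were spread evenly.

```latex
\[
\begin{aligned}
94 + 45 + 22 &= 161 \quad \text{(instructions + scoring + technical issues)} \\
\tfrac{94}{161} &\approx 58.4\%, \qquad \tfrac{45}{161} \approx 28.0\%, \qquad \tfrac{22}{161} \approx 13.7\% \\
\tfrac{25\ \text{h}}{10\ \text{weeks}} &= 2.5\ \text{h per week} \\
\tfrac{65\ \text{h}}{117\ \text{videos}} &\approx 0.56\ \text{h} \approx 33\ \text{min per video}
\end{aligned}
\]
```

On this reading, well over half of the counseling contacts concerned how to explain task instructions, which is consistent with the emphasis on instruction clarity in Module 1.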

Module 1: test content, norms and application method

This module has two main objectives: (1) to ensure that participants understand the instructions and standards for the word definition task and (2) to enable practitioners to give appropriate instructions to children by clarifying the purpose of the test. In light of this, participants were first asked to read the instructions individually, and then some participants were asked to convey the task to others in their own sentences. Examples of the implementation of the module are presented below.

During implementation, participants were asked to focus on the questions on their prompt cards that corresponded to the relevant point in the process. Metacognitive activities were used, as designed, while participants answered the questions. After the task was read, participants were asked to look at the planning questions and were given time to think about the following question on the cards: “What kind of language should I use to explain the task clearly?”

This question is a metacognitive question aimed at creating a practitioner language scheme (Module 1 / Prompt card – Planning – Preparation Level: Understanding). A dialog about this process is given below:

K5: I should use language that the child can understand.

A1: What kind of language do you mean exactly? Can you explain?

K5: I can say it is a child’s language. In other words, children do not speak like adults do.

A1: Can you give an example?

K5: For apple, “What is this? Can you tell me what this is? It is the pear’s friend.”

A1: Now, I want you to evaluate K5’s directive sentence. If there is an error, determine its type, then name this error, approach and behavior (Metacognitive Activity: Generalization and Naming of Behavioral Patterns).

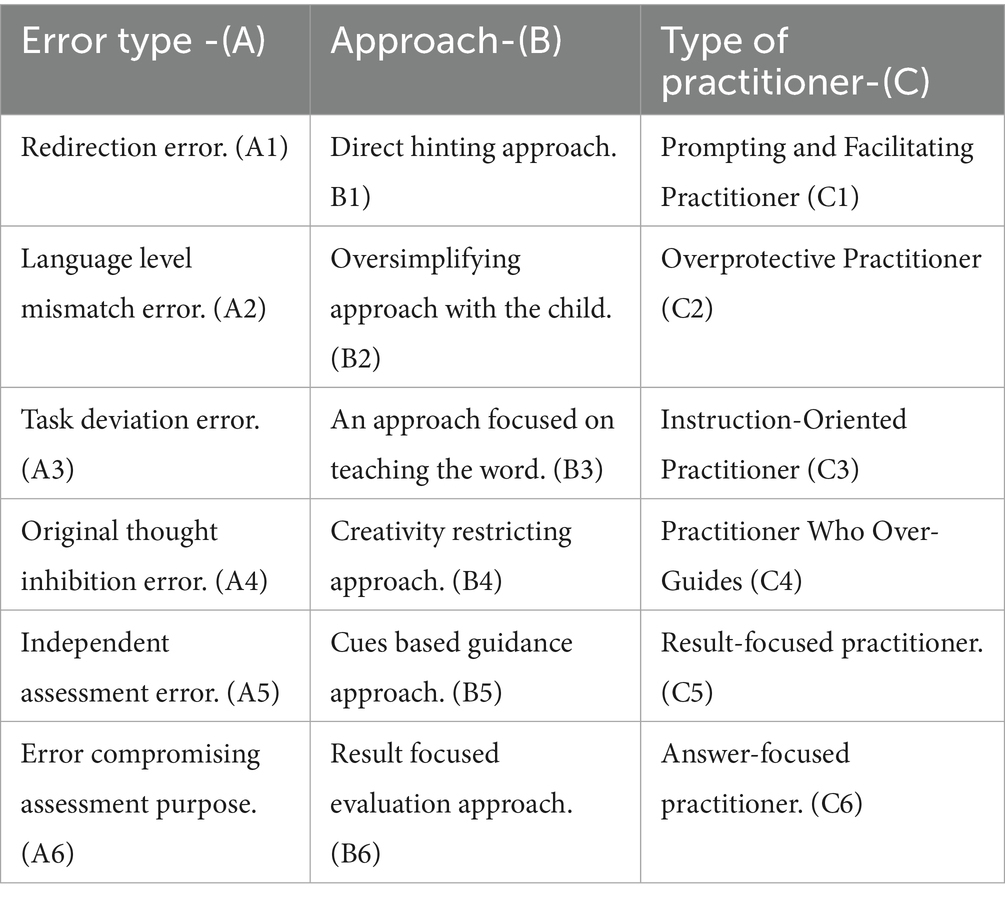

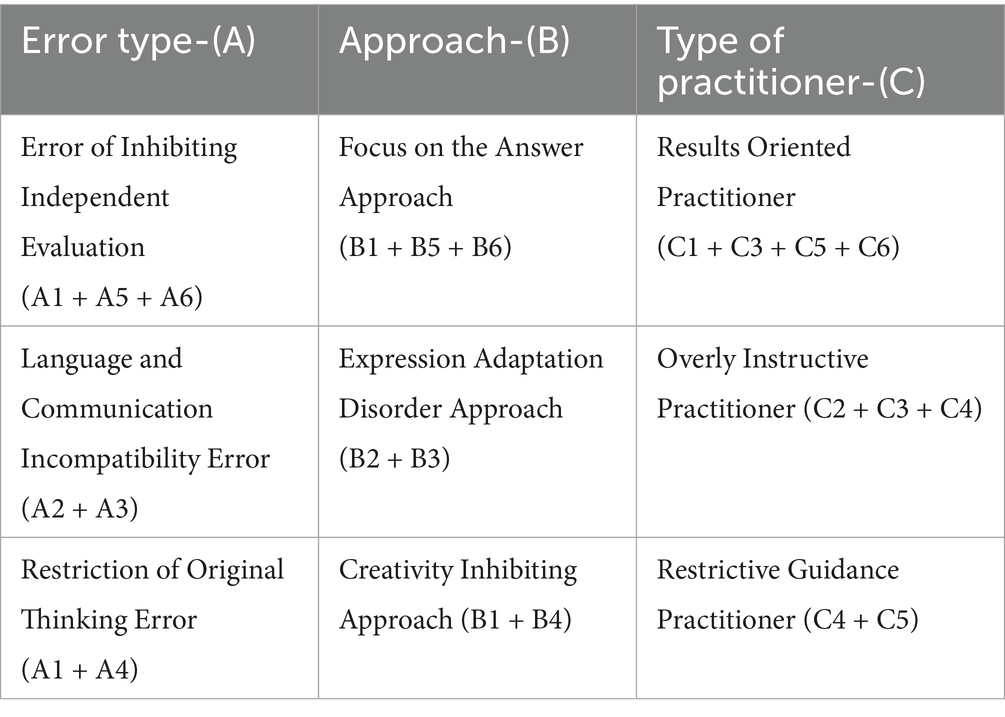

All the participants stated that there was an error. The definitions created through brainstorming and agreed upon by the whole group, including K5, are presented in Table 7.

A1: Thank you. You see the definitions on the screen. Now, I want you to organize the data in the table. This is actually a classification exercise: we create categories by combining those that indicate similar meanings and purposes. First, we work individually; then, we determine the final version through group discussion.

The Error, Approach and Practitioner Type categories created by the participants were restructured again via the brainstorming technique. The restructured categories and the variables that form the categories are presented in Table 8.

The following study was guided by Table 8 (Metacognitive Activity: Creating Questions).

A1: Dear friends, now I want you to prepare questions that will help determine the type of practitioner. These questions should directly point to the type of practitioner when they are answered. What do you think, what kind of questions could they be?

K12: What behaviors does the practitioner exhibit during the task and how can I relate these behaviors to the purpose of the task?

A1: Thank you. Do you think this question is related to the type of practitioner; does it reveal the characteristic features?

K5: I think it is good but not enough. The behavior of the practitioner and being task-oriented are okay, but how they guide the child and to what extent they enable them to demonstrate their skills are also important. I think these three indicators should be evaluated together. They can put so much pressure that the child may become frustrated even if the task is done correctly.

K9: I agree. Which of the behaviors that the practitioner exhibits to ensure that the task achieves its purpose is dominant?

A1: What do you think?

K8: I prepared a question for a direct type: What is the name given to the type of practitioner who restricts the child’s original thinking and prevents creativity?

K9: This is a bit like a direct definition. If our aim is to name the situation in the examples, more comprehensive questions may be more appropriate.

A1: I think K9’s question is more appropriate in this respect. If you agree, let us adopt it as our guiding question.

In this activity, with the participants’ approval, an attempt was made to clarify the implementer behavior patterns that emerge in response to the instructions. In the first module, further activities were conducted on the basis of the target audience and context analyses; the first of these addressed the clarity of the instructions.

A1: Friends, I want you to read the instructions in the Word Definition Task Group. I am curious about your opinions.

K1: The instructions are clear, but in practice I noticed that the question “What is a table?” is not understandable for children. It is more appropriate to say “Can you tell me what a table is?” The question “What is it?” usually leads to one-word answers.

K6: I agree. Although it seems simple, children do not fully understand it.

A1: Thank you for your valuable contribution. Is there anything that is not clear to you?

K7: It could be written more clearly that a passing score is given when a definition is made from one perspective.

A2: Do you think there is a need to add anything about the physical conditions?

K4: No need. This was already stated in the general rules.

K6: Yes, we now clearly know that the test environment should be simple and distraction-free. No need to add.

The researchers observed the positive impact of these activities within a limited timeframe. By the end of the module, the participants were applying the processes effectively on the basis of metacognitive awareness and had become more conscious of the planning, monitoring and evaluation stages. In addition, the transfer of theoretical knowledge gave the participants an understanding of the structure and instructions of the test and supported them in developing strategies for dealing with administration difficulties.

The next two modules aim to help participants internalize the test process and scoring criteria through their own practice, analyze their experiences and integrate theoretical knowledge with practice, thereby increasing the practical effectiveness of the test in the field.

Modules 2 and 3: analysis of test item administrations and scoring based on video recordings

These modules, which were conducted simultaneously, had three main objectives: (1) increasing process awareness through diverse implementation practices, (2) developing awareness of whether tasks are performed independently or with the implementer’s support, and (3) building decision-making skills for scoring. In Modules 2 and 3, participants were guided by the questions on the prompt card for the relevant stage, and they studied a video and a scoring form containing positive and negative examples. Before watching the video materials, the participants completed some tasks and were directed to the following metacognitive questions: “What alternative methods can I think of to better understand the child’s behavior? Which steps am I missing and how can I correct them?” (Module 2 / Prompt Card – Monitoring – Connection Questions).

A sample of dialogs related to this process is presented below:

K5: The child seems not to understand the task but is actually capable of doing it. When the implementer explains too much, he deviates from the instructions.

A1: What would you recommend in such a case?

K2: First, you need to make him listen. It may be better not to start right away.

K5: Being in the child’s natural environment beforehand helps him adapt.

K2: Preparing with similar games also helps.

A1: Good suggestions. What else did you notice?

K8: The child’s behavior can be predicted. Solutions and strategies can be developed for each task.

A1: I’m taking notes, thank you.

K2: In addition to learning the instructions, let us clarify the underlying rationale of the test. Our goal is not to support development, but to evaluate the current situation.

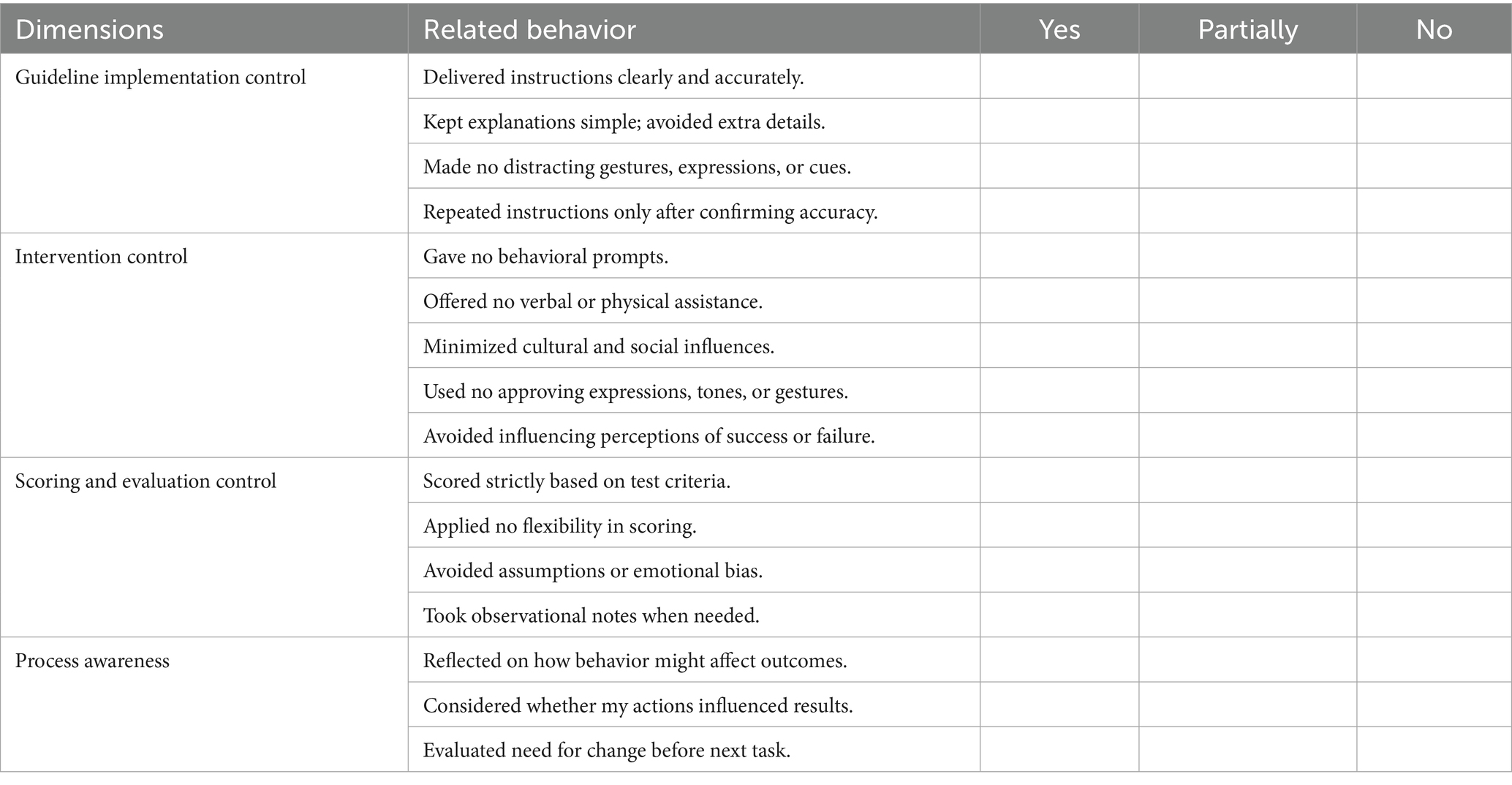

A1: Therefore, let us do an activity. Let us prepare the implementer evaluation criteria and a self-assessment checklist.

The video was then reanalyzed with the following question: “How can I develop my strategies for a more effective evaluation?”

K2: It should be clarified whether the instructions were applied correctly.

K13: The level of intervention and the scoring process should also be examined.

A1: Now, let everyone write down the dimensions and behaviors that should be included in the self-assessment form. Let us discuss them and determine the final version together.

After this step, the participants’ written proposals were collected and evaluated by three researchers, and the self-assessment list in Table 9 was created.

After Table 9 was presented, the participants were asked to discuss, refine, and approve the dimensions and behaviors on the list. In this session of approximately 30 min, the template was agreed upon by all participants and deemed valid. The activity aimed not only to answer the metacognitive question on the prompt card but also to have participants express their thinking processes in detail and to raise mutual awareness among practitioners. In addition, the target audience and context analyses conducted at the beginning were integrated and added to the attention-process management and social dynamics checklist.

Feedback (evaluation) phase

In this phase, the “Metacognition-Based Practitioner Training Model” was evaluated through the opinions of six experts with doctoral degrees, all working at various universities in Kazakhstan and specializing in program development and/or educational assessment.

The model was presented to the experts as follows: (a) the introductory content and the study report were shared via e-mail after each expert confirmed their willingness to participate; (b) an online presentation of approximately 60 min was given at a mutually convenient time; (c) the experts’ questions were answered; and (d) opinions, criticisms and suggestions were collected via e-mail.

Expert opinions were evaluated via content analysis. All statements were read several times, and similar comments were grouped under themes and supported with direct quotes. Quotes were coded from “Expert 1” to “Expert 6” according to the order in which the e-mails were returned. The findings were classified into three main themes:

Content of the practitioner training model

The experts evaluated the practitioner training model under two headings: positive aspects and areas for development. Most of them found the content and theoretical basis of the model both original and practically applicable. In particular, the metacognitive structure, the logical flow between modules, and the way the prompt cards support thinking were welcomed. Some experts drew attention to the model’s adaptability across diverse practitioner profiles; they stated that certain metacognitive concepts may be too abstract for practical implementation and that process monitoring strategies should be explained more concretely. Positive evaluations and suggestions based on these views are presented below.

Positive Themes. Awareness-Oriented Instructional Model: Focusing on the practitioner’s thinking processes instead of transferring information was evaluated by experts as an innovative approach. “The metacognitive-based structure brings a new breath to practitioner training. It provides the participant with not only knowledge but also awareness.” — Expert 2.

Structured Progression Across Modules: The structure consisting of three modules is balanced in terms of content and process; the consistent and gradual progression of the modules increases applicability. “The three-module structure is balanced and gradually deepens. It facilitates participant development.” — Expert 5.

Prompt-Driven Reflective Thinking: The variety of questions on the cards stimulates metacognitive thinking. “Prompt cards support multi-dimensional thinking. Practitioners go beyond learning.” — Expert 1.

Aspects to be improved. Practitioner-Centered Flexibility: Some experts emphasized that the model may be too academic for the field and that implementation guidelines may be needed for practitioners at varying levels. “The model is strong, but practitioners have different levels of education, experience and cultural sensitivities. Flexible guidelines may be useful.” — Expert 3.

Perceived Abstractness of Concepts: Some experts noted that practitioners working in the field may have difficulty understanding metacognitive concepts and that simple explanations and examples are needed. “Self-monitoring and self-evaluation are valuable concepts, but they should be supported with explanations and examples for some practitioners.” — Expert 6.

Structured Process Monitoring: It was suggested that the evaluation dimension of the model be made clearer so that trainers can effectively monitor the process. “The model is impressive; however, the criteria for practitioner evaluation and process monitoring should be more clearly defined.” — Expert 4.

Methodological structure of the practitioner training model

The experts evaluated the methodological structure of the model under two headings: positive aspects and areas for development. Most experts found its foundation in both theoretical and field data, its modular structure and its practitioner-centered design to be strong. Some experts stated that practitioner participation could be more systematically organized, that the method should be supported with quantitative data and that long-term monitoring strategies should be strengthened.

Positive Themes. Theory and field compatibility: Integrating the theoretical basis with field observations increased the practical validity of the model. “One of the rare studies where the theoretical framework and field observations are balanced.” — Expert 2.

Design on the basis of practitioner experience: By incorporating the dilemmas and observations experienced by practitioners, this design turned the model into a living structure. “The model is not desk-based; it offers a structure that is sensitive to the field and realistic.” — Expert 4.

Modular and systematic structure: Content integrity and gradual progress between modules are clearly planned. “Transitions between modules are very clear, making it easier to follow the process.” — Expert 1.

Aspects to be improved. Increasing practitioner participation: Several experts emphasized that although the model effectively reflects field practices, its impact and applicability might be enhanced if practitioners played a more active role. Rather than serving only as sources of data, practitioners could be involved in key stages such as design, interpretation, or even decision-making, which could foster greater engagement and shared ownership.

Quantitative data support: Although the model stands on a strong qualitative basis, some experts wondered whether a modest use of quantitative tools might improve its overall reliability. This was not meant to replace qualitative insight but rather to serve as a complementary layer. Even basic numeric indicators could provide a more nuanced sense of how the model functions in various settings.

Long-term monitoring: A recurring concern among the experts was the lasting impact of the training. The model appears promising in the short term, but it remains unclear whether this progress holds over time. A few suggested that even informal check-ins a few months after implementation could offer valuable information on sustainability and perhaps highlight areas needing fine-tuning.

Suggestions from experts

Although the model was considered effective in practice, the experts offered suggestions for increasing its impact, which are grouped under the following three headings:

Extending the training period: Extending the duration of the modules over longer periods and supporting them with practice repetitions can increase the depth of learning. “Modules are valuable, but short periods of time limit learning. Practice repetitions would be beneficial.” — Expert 2.

Preparation of guide materials: Module summaries, examples and checklists can help implementers reinforce the process. “What is learned in training can be forgotten; support materials are important in this regard.” — Expert 5.

Institutional dissemination: Institution-specific presentations, plans and pilot application reports can facilitate the use of the model in wider environments. “The model can also be effective at the institutional level; it contributes to the training of in-house trainers.” — Expert 1.

Discussion, conclusion, and recommendations

Reflection on the implementation process

The most striking aspect of this study was the evolution of the theoretical model through field applications. Initially conceived as both theoretical and functional, the model offered, during the application process, the opportunity to test the extent to which its content and methods suited the field. Discussions with practitioners allowed the researchers to gain an insider’s perspective on the model.

The most critical insight was that practitioner training would not work in the same way in every social context. The implementation in Kazakhstan demonstrated the importance of attending to context before introducing conceptual content. Pretraining analyses facilitated the understanding of practitioners’ established routines and intervention patterns in the Denver II administration.

The main finding was that practitioners make decisions on the basis of the child’s emotional state or their own experiences rather than on the basis of the test instructions. This raised not only the question ‘what should we teach?’ but also ‘which practitioner behavior patterns require transformation?’ As a result, the content was integrated with the context and adapted to the participants.