- ¹Faculty of Education, Primary School Pedagogy, Institute of Pre-Primary and Primary Education, Leipzig University, Leipzig, Germany

- ²Department of Inclusion and Pedagogical Development Support, Institute for Special Education, University of Flensburg, Flensburg, Germany

Reading competence is a prerequisite for academic success, yet studies reveal persistent deficits among primary school students, especially in inclusive classrooms. Digital formative diagnostics and aligned support tools offer promising opportunities; however, their sustainable implementation depends on both technical and pedagogical usability. This study explores the design of digital inclusive reading support to ensure usability and foster acceptance in practice. In the context of the collaborative project DaF-L, an adaptive digital reading screening and corresponding reading packages were developed and integrated into the competence-oriented learning platform Levumi as Open Educational Resources. A qualitative exploratory design was employed, integrating expert interviews with teachers (n = 13) and participatory classroom observations (n = 33) to capture perspectives from both teachers and students. Educators underscored the need for simplified navigation, individualized class management, and clear feedback mechanisms. Students responded positively to motivational features such as visual design, repetition opportunities, and immediate feedback. However, technical issues (e.g., unstable Wi-Fi connections, browser incompatibilities) and pedagogical challenges (e.g., the skip function) were identified as factors that reduced usability. The findings indicate that the efficacy of digital formative reading support depends on the synergy of intuitive technical design and pedagogical functionality, which in turn facilitates differentiated learning. The study demonstrates how practice-to-research transfer can systematically enhance usability and provides implications for the future development of inclusive digital learning environments.

Introduction

The ability to read and comprehend text is widely regarded as a pivotal component of academic and societal success. However, international comparative studies such as the Progress in International Reading Literacy Study (PIRLS; Mullis et al., 2023) and the German national education report (Stanat et al., 2022) indicate that a high percentage of primary school students in Germany, as in many other countries, display substantial deficiencies in reading (Betthäuser et al., 2023; McElvany et al., 2023; Mullis et al., 2023; Organisation for Economic Co-operation and Development [OECD], 2023). Furthermore, heterogeneity in inclusive primary schools (grades 1–4, approximate ages 6–10) has increased, and teachers are tasked with the education of children from a variety of linguistic, cultural, and cognitive backgrounds (Kultusministerkonferenz [KMK], 2022). This discourse has intensified in Germany since the COVID-19 pandemic, which has left a significant number of primary school children with worrying learning deficits. As a result, the German Kultusministerkonferenz (KMK) [The Standing Conference of the Ministers of Education and Cultural Affairs] recommends the early intensification of nationwide diagnostics and the provision of scientifically based, quality-assured diagnostic instruments and aligned support in the form of formative diagnostics to ensure basic competencies (Köller et al., 2022). The PIRLS, which has been conducted as a computer-based assessment since 2016 and also focuses on digital forms of reading, came to a similar conclusion (Mullis and Martin, 2019). In this context, formative diagnosis is becoming increasingly important, as it identifies learning ability at an early stage and enables customized support (Connor, 2019; Förster and Souvignier, 2014). Digital formative diagnostics offer particular potential in this regard. These tools facilitate continuous monitoring of learning progress, provide immediate feedback, and assist teachers in providing individualized support (Leshchenko et al., 2021).

For digital formative diagnostics to be effective, instruments must be both reliable and accurate in measurement and usable for students and teachers. These goals can conflict: for example, the more items a test contains, the more reliable it tends to be. In practice, however, a longer test takes more time to administer, requires the child to concentrate for an extended period, and demands additional planning time for its integration into the classroom (Schurig et al., 2021). Consequently, it is imperative to consider the requirements for reliable and objective measurement as well as the requirements of teachers and students. The usability of diagnostic instruments has received minimal consideration and analysis throughout the development process. Furthermore, research indicates that a number of these digital tools are rarely utilized in practice, often due to a lack of technical and/or pedagogical usability (May and Berger, 2014; Blumenthal et al., 2022). This indicates that the design of digital diagnostic and support tools has rarely been systematically examined and optimized from the perspective of the main users, namely teachers and students. This is especially relevant, as usability is a key factor in the actual use of digital tools in inclusive education (Jahnke et al., 2020).
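The trade-off between test length and reliability can be illustrated with the Spearman-Brown prophecy formula from classical test theory. The formula is not part of the study reported here. It is offered only as a sketch, and it assumes that the added items are parallel to the existing ones. The symbols $k$ (the factor by which the test is lengthened), $\rho$ (the reliability of the original test), and $\rho_k$ (the predicted reliability of the lengthened test) are introduced here for illustration.

```latex
\rho_k = \frac{k\,\rho}{1 + (k - 1)\,\rho}
\qquad\text{e.g., } \rho = 0.60,\; k = 2:\quad
\rho_2 = \frac{2 \cdot 0.60}{1 + 0.60} = 0.75
```

In this hypothetical example, doubling the number of items raises the predicted reliability from 0.60 to 0.75, but it also roughly doubles the time a child must concentrate, which is the usability cost described above.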

This article investigates how digital reading support can be designed to be effectively implemented in inclusive primary school classrooms. The primary focus of this study is to assess the technical and pedagogical usability of the learning platform Levumi and the developed digital reading packages, which are available as Open Educational Resources.¹ The research was conducted within the framework of the federally funded collaborative project DaF-L² [Digitale alltagsintegrierte Förderdiagnostik–Lesen in der inklusiven Bildung (digital support diagnostics integrated into everyday life–reading in inclusive education, own translation)] to develop an adaptive reading screening and aligned support materials. This study explores how teachers’ and students’ feedback can be systematically integrated in order to enhance the technical and pedagogical usability of digital tools. The objective is to foster their acceptance and sustainable use in inclusive educational practice.

Literature review

Reading competencies in elementary school

By the end of the fourth school year, students are expected to have achieved specific reading competencies and a certain level of reading comprehension. The PIRLS assesses not only an individual’s literary experience, but also their capacity to acquire and utilize information. The assessment comprises two main parts, covering the following reading comprehension processes: (1) focus on and retrieve explicitly stated information, (2) make straightforward inferences, (3) interpret and integrate ideas and information, (4) evaluate and critique content and textual elements (Mullis et al., 2023, p. 57). These four processes (retrieving, straightforward inferencing, interpreting and integrating, and evaluating and critiquing) are also grouped in other ways in the PIRLS reporting, for example with an emphasis on relative average achievement in literary and informational reading purposes (Mullis et al., 2023). Additionally, they can be combined into two broader reading comprehension processes: (1) retrieving and making straightforward inferences, and (2) interpreting, integrating, and evaluating (Mullis et al., 2023, pp. 61–62).

Formative assessment through digitalization to support all readers

Formative assessment entails the continuous monitoring of student learning, enabling instructors to offer immediate and continuous feedback for the purpose of refining their teaching methods, and students to enhance their own learning progress (Liebers et al., 2019). Continuous feedback provided through formative assessment empowers students to reflect on their learning and to make targeted improvements. Simultaneously, formative assessments enable teachers to gain deeper insights into students’ understanding and performance, enabling them to adapt their teaching approaches and create personalized learning opportunities that cater to the needs of the individual student. In essence, the implementation of formative assessment methods fosters a supportive learning environment, enabling students to maximize their potential (Connor, 2019; Förster and Souvignier, 2014; Mullis and Martin, 2019; Organisation for Economic Co-operation and Development [OECD], 2005).

Digital learning applications serve as valuable tools for formative assessment, enabling real-time progress monitoring and targeted support. Additionally, digitization in education allows for personalized learning experiences that accommodate individual preferences and learning styles. With appropriate guidance and use of digital learning applications, children can effectively develop the skills needed for reading and navigating the digital world (Kultusministerkonferenz [KMK], 2022).

Furthermore, the integration of digital technologies into education has been shown to contribute to a reduction in teachers’ workloads while enhancing their pedagogical effectiveness. The data are saved, sorted, and (partly) analyzed digitally. As a result, teachers save time and receive information on their students’ learning, which they can use to adjust their lessons and focus on supporting individual students.

The digital reading packages offer teachers the necessary tools to support their students effectively. By receiving results digitally, teachers can easily track students’ progress, make essential adjustments to their teaching methods, and provide personalized support. Students experience a more engaging and effective learning process with the reading packages because they receive immediate feedback. This instant validation for correct answers can boost their confidence, while timely corrections prevent frustration, helping them stay motivated to improve their reading skills.

However, the current landscape presents a challenge, as there is a lack of digital tools that systematically integrate formative assessment with targeted reading support. Although digital reading programs exist, only a limited number of them are designed with a formative approach that permits continuous monitoring of learning progress and adaptive support. Recent national work, such as Junger and Liebers (2024), underscores the nascent stage of this research. However, at the international level, there is a paucity of evidence to suggest the existence of comparable digital formative programs that specifically address reading in inclusive classroom contexts. This dearth of research underscores the significance of the present study and its contribution to the nascent field of digital formative diagnostics in reading education.

Technical-pedagogical usability

The concept of usability originates from the field of software development, and it is defined as the discrepancy between the potential usefulness of a system and the extent to which users are able and willing to utilize it (e.g., Eason, 1984; Sarodnick and Brau, 2016). In recent decades, a number of guidelines have been developed on how to assess the technical usability of such systems (e.g., Chalmers, 2003; Nielsen, 1994; Tognazzini, 2003). In the context of the ongoing digitization of teaching materials, diagnostic tools, and support materials as well as the increasing use of digital devices in schools, the usability of digital learning environments has become more important (Estrada-Molina et al., 2022; Lu et al., 2022). To ensure comprehensive evaluations of learning materials, the focus has shifted from examining only their technical usability to also considering pedagogical aspects (e.g., Jahnke et al., 2020; Moore et al., 2014; Nokelainen, 2006). Technical usability focuses primarily on the efficiency, effectiveness, and satisfaction of using software or devices, whereas pedagogical usability specifically considers the requirements of the educational sector, in particular the effectiveness and user-friendliness of educational materials, platforms, and technologies. Pedagogical usability is defined as the design of teaching and learning materials that are easy to understand, accessible, and motivating. This encompasses elements such as clear instructions, engaging presentations of learning content, and the integration of interactive elements (Jahnke et al., 2020). Pedagogical usability is imperative to ensure that educational technologies contribute effectively to knowledge transfer and actively engage learners.

An examination of digital formative diagnostic tools reveals the existence of a substantial collection of well-designed tools that adhere to scientific standards. Nonetheless, the majority of formative digital diagnostic tools developed to assess and enhance sustainable engagement have been evaluated under scientific conditions, with limited user involvement in their development (Girdzijauskienė et al., 2022; D’Mello, 2021). Research in real-life educational settings on how and under what conditions these diagnostic tools are used is still lacking. In school practice, this is particularly evident in their rather rare use in the classroom (Dancsa et al., 2023; Wammes et al., 2022; Souvignier, 2021; May and Berger, 2014). The reasons cited for this reluctance include incompatibility with regular school life and teachers leaving the potential of digital diagnostic tools unused (Abdykerimova et al., 2025; Blumenthal et al., 2022; Liebers et al., 2019).

Although there is growing research interest in the overall user experience and how people perceive technology (Law and Abrahão, 2014; Schmidt and Huang, 2022; Schmidt et al., 2020), the primary focus has predominantly been on technological usability. Pedagogical usability, defined as the extent to which digital systems are designed to be didactically meaningful and conducive to learning, has been the subject of only a limited number of empirical studies (Lu et al., 2022). In order to cultivate acceptance and sustainable implementation in the classroom, it is essential to engage prospective users in the (further) development of digital formative diagnostic tools and the associated support materials. This engagement must systematically take into account both technical and pedagogical usability.

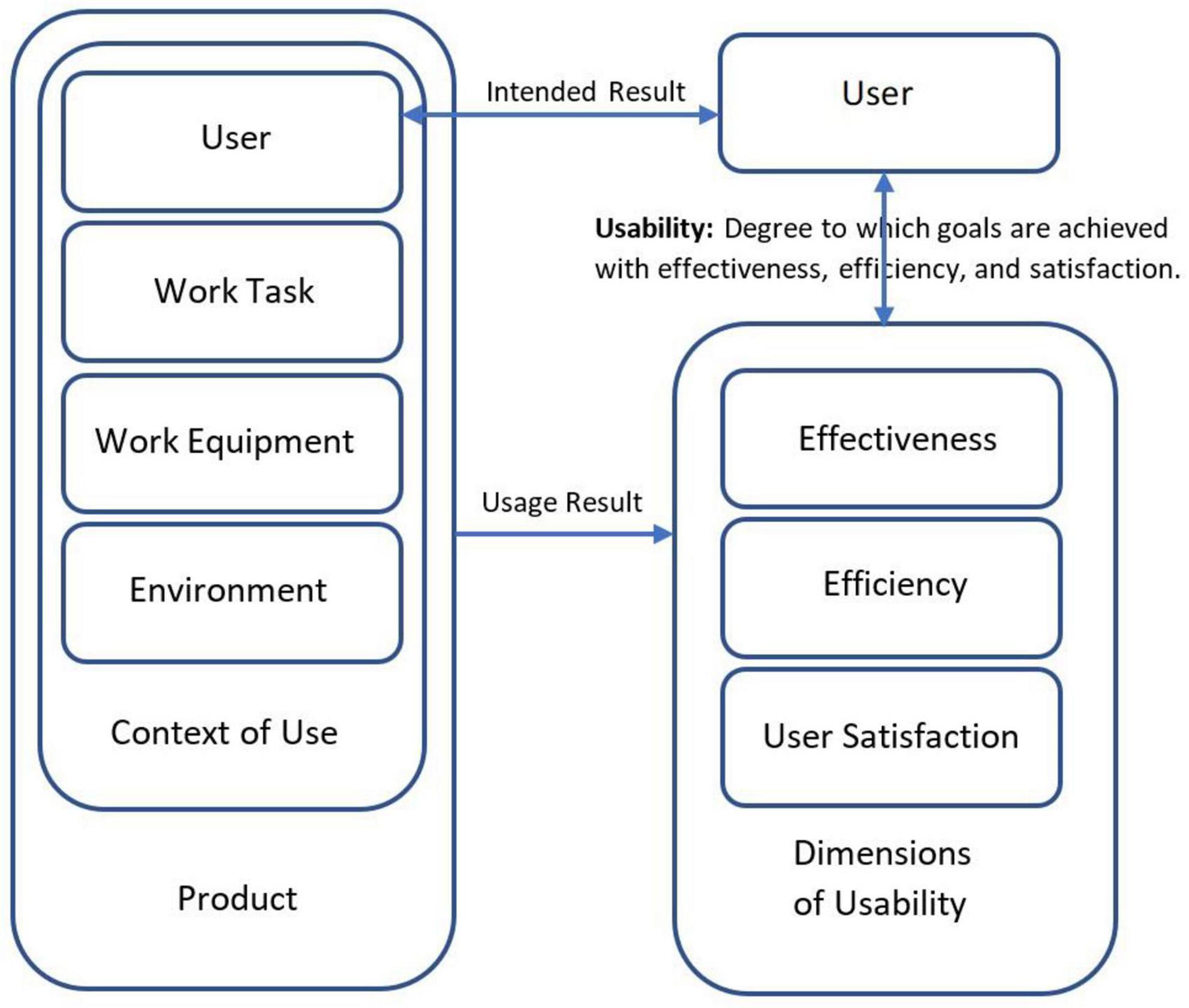

Technical usability is addressed in the DIN EN ISO 9241 series of standards, Ergonomics of human-system interaction. Within this series, the ergonomic design of software products is described in more detail in DIN EN ISO 9241 Part 11 (Usability: Definitions and concepts) and DIN EN ISO 9241 Part 110 (Dialog principles). Figure 1 schematically illustrates the framework of usability.

Figure 1. Framework of usability consisting of the three dimensions of usability: effectiveness, efficiency, user satisfaction, and considerations regarding the product: context of use. These form the basis for technical usability (cf. DIN EN ISO 9241-11:2018-11, 2018).

According to DIN EN ISO 9241-11, usability consists of effectiveness, efficiency, and user satisfaction with the system, which are also referred to as the dimensions of usability. In this context, technical usability should be considered in connection with the specific context of use, rather than as an absolute measure. Factors such as the type of task, the environment, and the characteristics and abilities of the users also influence technical usability (DIN EN ISO 9241-11:2018-11, 2018; Tullis and Albert, 2013).

In the context of technical usability, the development of a diagnostic instrument entails not only the construction of the instrument in accordance with its diagnostic function, but also focuses on the instrument’s users. This way, acceptance of the procedure can be increased and frustration during usage reduced in order to improve the productivity of the procedure (Kähler et al., 2019). However, the dimensions of technical usability need to be further specified to comprehensively illuminate usability and its factors.

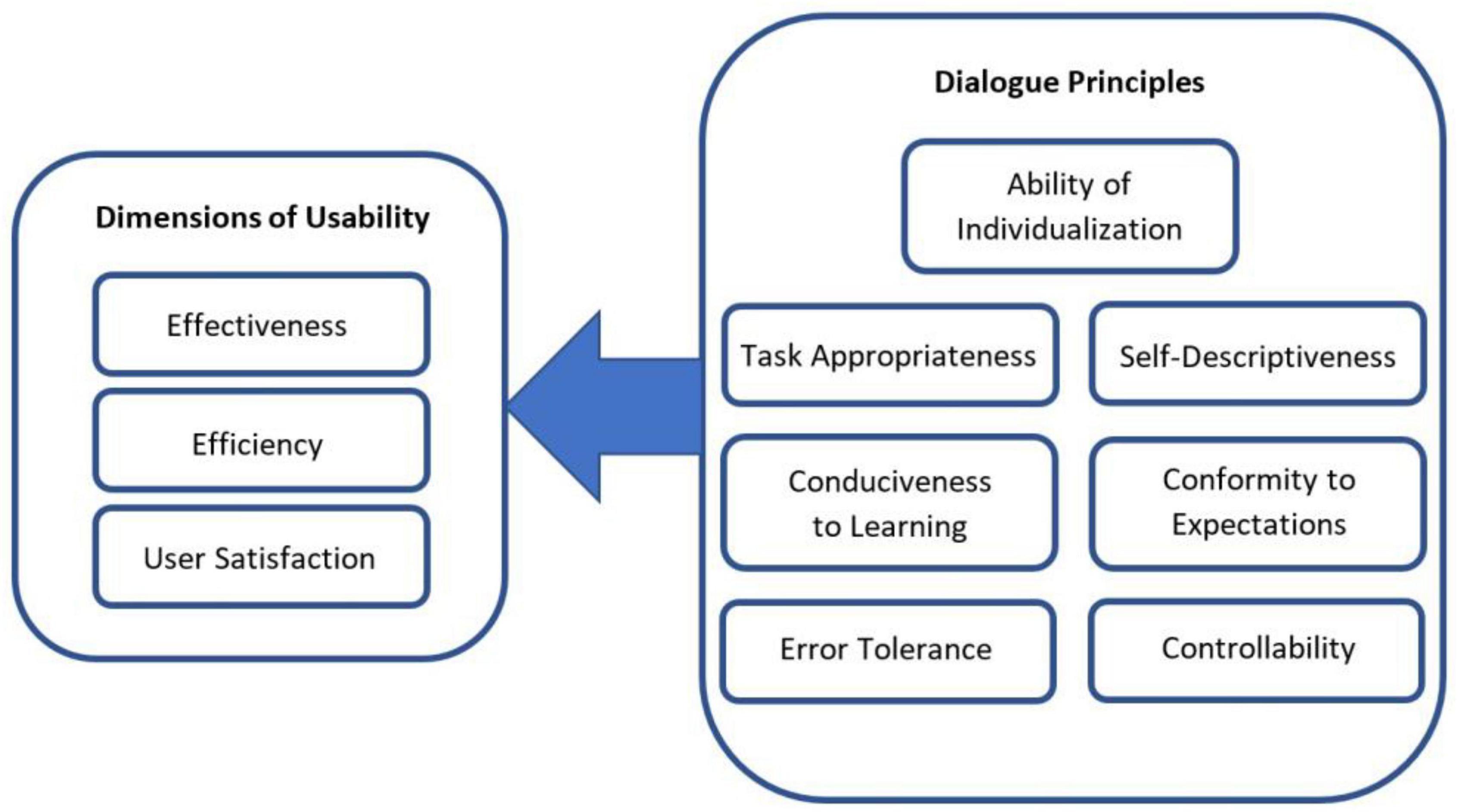

When a digital diagnostic tool is operated, a dialog between the user and the tool is created. This dialog is described in DIN EN ISO 9241 Part 110, Dialog principles (see Figure 2), as the interaction between a user and an interactive system in the form of a sequence of actions by the user (inputs) and responses by the interactive system (outputs) to achieve a goal (DIN EN ISO 9241-110:2008-09, 2006). To minimize misleading or insufficient information from the diagnostic tool, the design of the interaction should be based on the seven principles of dialog design: ability of individualization, task appropriateness, self-descriptiveness, conduciveness to learning, conformity to expectations, error tolerance, and controllability. The principles of dialog design act as general goals for how user interactions should be built. Therefore, it is essential to adapt or operationalize them for practical use according to the system being designed and the characteristics of its user group (Kähler et al., 2019).

Figure 2. Seven dialog principles (e.g., task appropriateness, self-descriptiveness, error tolerance) that structure user–system interaction (cf. Kähler et al., 2019).

As described, pedagogical usability ensures that teaching and learning materials are easy to understand, accessible, and motivating. This approach is designed to facilitate students’ navigation of the learning environment, thereby ensuring their ability to achieve optimal learning outcomes. Therefore, a crucial factor in constructing a digital formative diagnostic tool is the students’ perspective. According to Nokelainen (2006), pedagogical usability consists of ten dimensions (Learner control, Learner activity, Cooperative/collaborative learning, Goal orientation, Applicability, Added value, Motivation, Valuation of previous knowledge, Flexibility, and Feedback) as well as 51 sub-dimensions.

Developing a digital learning environment where students can easily access, understand, and engage with the platform’s content contributes significantly to their overall learning experience. Furthermore, this can promote a positive attitude toward learning, boost their confidence, and ultimately lead to more effective learning outcomes (Wilson and Myers, 2000).

In summary, usability can be defined as the effectiveness, efficiency, and satisfaction with which users operate a system (DIN EN ISO 9241-11:2018-11, 2018). Pedagogical usability extends this perspective to encompass didactic criteria such as comprehensibility, motivation, and suitability for learning objectives (Nokelainen, 2006). Research has shown that only the synergy of technical and pedagogical usability enables the comprehensive use of digital formative diagnostic tools (Jahnke et al., 2020; Moore et al., 2014). However, there are still significant gaps in the existing research. A prevailing tendency in the field is to examine technical and pedagogical aspects in isolation, rather than to systematically analyze their interactions. In addition, feedback from teachers and students is seldom used to further develop digital tools, even though it could be of great importance for acceptance and effectiveness in inclusive elementary schools.

Collaborative project DaF-L

The BMBF [Bundesministerium für Bildung und Forschung (Federal Ministry of Education and Research)] within the Rahmenprogramm empirische Bildungsforschung [Framework program empirical educational research, own translation] funded the collaborative project DaF-L. A digital and competence-oriented screening for reading competencies with aligned reading packages, consisting of literary texts and reading exercises (reading comprehension questions), was developed and tested. The study centered on the practice-to-research transfer to enhance the technical-pedagogical usability of the learning platform Levumi, in conjunction with the co-development of adaptive reading screening and packages in collaboration with school practitioners (Junger et al., 2024). Since then, the materials have been available to teachers as an Open Educational Resource (OER) (Junger et al., 2023). The learning platform Levumi, which was developed in 2015, provides competence-oriented learning progression diagnostic tools as well as support materials that aid regular school teachers and special education teachers in the diagnostics and support of students (Gebhardt et al., 2016; Jungjohann et al., 2018; Mühling et al., 2017). The collaborative project DaF-L comprised four universities in Germany. The digital screening for reading competencies was developed by the University of Munich in 2022 (LES-IN-CAT; Ebenbeck et al., 2023; Jungjohann et al., 2023). The reading support was constructed by the University of Flensburg in 2023 (Reading path; Hanke and Diehl, 2024a,b; Diehl and Hanke, 2024). Qualitative expert interviews were conducted by the University of Leipzig throughout the entire project period to ensure the practice-to-research transfer and to improve the technical-pedagogical usability (Junger et al., 2023). The University of Kiel was responsible for the digital integration of the newly developed tools into the learning platform Levumi. The objective was threefold: to provide simple, data-driven, and effective reading support; to determine what makes daily diagnostic tools successful; and to create a better environment for inclusive education in primary schools.

Reading path–digital reading packages with formative assessment

The reading packages, which promote reading comprehension in inclusive classrooms, contain literary texts at three ability levels and reading exercises (reading comprehension questions) tailored to them. The reading packages are intended to promote the reading skill of reading fluently and the reading abilities of reading comprehension and strategies for reading comprehension. The ability to read fluently implies that the students can read quietly, aloud, automatically, accurately, meaningfully, and quickly (Kultusministerkonferenz [KMK], 2022). The students have to read texts and solve reading exercises; therefore, they read both repeatedly (Mayer, 2018) and a lot (Kruse et al., 2015), which promotes reading fluency. With regard to reading comprehension, the main focus of the reading packages, the students read texts that correspond to their ability level and understand their meaning. This includes students’ ability to identify textual information at the local level, which may be either explicitly stated or deduced through straightforward inferencing. In doing so, students also pay close attention to linguistic means in order to ensure that the context of the text is understood, and to link text information, draw conclusions, and construct an overall understanding using their previous knowledge (Kultusministerkonferenz [KMK], 2022). With regard to strategies for reading comprehension (reading strategies), the students know how to use basic cognitive and metacognitive reading strategies after reading. They work with after-reading strategies such as central text statements (Kultusministerkonferenz [KMK], 2022).

As previously stated, the implementation of digital formative assessment and digital reading tasks, such as engaging with literary texts and completing reading assignments, has been demonstrated to offer significant advantages for both students and teachers. The effectiveness of these tools, however, is contingent upon their design, which must be both thoughtful and user-friendly in order to ensure good technical-pedagogical usability.

The present study reexamines expert interviews on the reading packages as well as participatory classroom observations during their implementation, guided by the following research question: How should digital inclusive reading support be designed to increase its utilization in the classroom?

Materials and methods

As part of the practice-to-research transfer, expert interviews and participatory observation protocols were implemented to test the usability of the learning platform Levumi and the intervention for further development. In the interviews, the following sub-research questions were addressed:

(1) How do educators rate the usability of Levumi before the redevelopment?

(2) What changes would be beneficial from the educators’ perspective to increase the usability of the learning platform Levumi?

To answer the questions, the DaF-L project conducted two surveys, one in spring 2022 and the other one in spring 2023.

Additionally, participatory observations were conducted during the intervention to examine the reading support sessions and the digital reading packages. Given the importance of pedagogical usability for learning support materials, these observations were reevaluated with a focus on how usable the learning platform and the digital reading packages were for students.

Research design

The present study employed a qualitative exploratory design to examine the technical and pedagogical usability of the learning platform Levumi and its developed digital reading packages. The study drew on two complementary data sources: expert interviews with teachers and participatory classroom observations. This dual perspective enabled a systematic analysis of both teacher and student experiences, providing a richer and more nuanced interpretation of the findings and supporting informed recommendations for the further development of digital reading interventions and improvements in inclusive education.

To ensure a direct practice-to-research transfer, expert interviews in a qualitative longitudinal design were planned. The processual character of a qualitative longitudinal design is intended to improve usability by focusing on the stakeholders’ perspective (Bortz and Döring, 2016; Witzel, 2020). The qualitative interviews were conducted at two different points in time with expert teachers to accompany the further development of the learning platform Levumi in coordination with school practitioners.

The first round of expert interviews, a needs analysis, was conducted in spring 2022 and captured educators’ initial requests for improvements to the learning platform Levumi. The second round of expert interviews, concerning the study of the digital reading packages, took place in spring 2023. Teachers assessed and reported on the technical-pedagogical usability of the reading intervention, including the literary texts and the reading exercises, to inform its further development.

Additionally, in order to include feedback on usability for students, observational data were collected in a qualitative cross-sectional design. For this purpose, participatory observation protocols were employed to record students’ challenges during the intervention in spring 2023. The protocols were designed to evaluate different parts of each intervention session. To secure the instruments’ usability for the students, the collection and analysis of qualitative data through participatory observation protocols represent an essential aspect of this study’s research methodology. Through detailed records carefully completed by research staff, the participatory observation protocols captured the unfolding course of tests and intervention sessions, providing a wealth of valuable information about how individual tests and measures were administered and carried out.

Sample and data collection

Sample

The recruitment of teachers for the first expert interviews regarding the needs analysis was carried out in three acquisition rounds. The term expert is applied to describe interview participants possessing specialized knowledge that relates to a specific area and is not part of general knowledge (Misoch, 2019). Accordingly, teachers with practical experience using the learning platform Levumi are regarded as specialists in its technical usability. Their specialized knowledge concerning the platform is supposed to offer indications of how the usability of the platform can be improved.

In the first attempt, the last two school years (2020/2021, 2021/2022) were defined as the period of active use. A total of 217 active users were recorded in the database of the learning platform Levumi. Due to data protection constraints preventing direct contact with the expert teachers, they were approached via the newsletter function of the learning platform Levumi. In total, only six teachers accepted the invitation to participate in the interviews.

Due to the low response, the search period for teachers who actively use the learning platform Levumi was extended to the 2019/2020 school year in the second attempt. In that school year, Levumi was updated to a new version with a revised platform as well as new functions. This extended the sample period to the three school years 2019/2020, 2020/2021, and 2021/2022. The total number of active users thus increased from 217 to 276. Unfortunately, extending the period did not yield any additional participants.

In a third attempt, former teacher candidates who had written their master’s thesis with a collaborative project partner via the platform were contacted directly by a project partner with a request to participate in the interviews. Unfortunately, there was no response to these requests either. However, through an independent interview, it was possible to establish contact with a person who had studied social work but was included in the sample as an expert on the technical usability of the learning platform Levumi based on two years of experience in using the platform. In total, the recruitment described above yielded seven interview partners for individual interviews.

In the second survey for the study of the digital reading packages, educators were required to evaluate the beta version of the reading packages with students before the interviews in order to provide feedback. Unlike in the initial needs analysis survey, it was not necessary for teachers to have used the learning platform Levumi before. First, the experts from the needs analysis interviews were asked to participate in the new survey. Three of the original seven educators responded positively to the request. To maximize outreach, various Levumi social media channels were used, such as a Levumi X (formerly Twitter) account, the Levumi newsletter, and the Levumi blog. Incentives for school supplies were included in the ads to further motivate teachers to participate in the interviews. As a result, three additional teachers were recruited for the interviews, so that a total of six teachers participated in the second survey.

In addition to conducting interviews, a series of participatory classroom observations were undertaken in three primary school classes during the implementation of the digital reading packages, resulting in 33 structured observation protocols. Student interactions with the platform and its associated materials were analyzed, with a particular emphasis on usability, engagement, and any pedagogical hurdles encountered. In order to ensure the participation of teachers from previous studies, the project team re-contacted the relevant teachers. Furthermore, the project was presented at a principals’ conference, and information letters were distributed.

Data

The data of the surveys was collected by semi-structured, guided expert interviews as this allows researchers access to the special knowledge of the people involved in the situation and the processes (Gläser and Laudel, 2010). The semi-structured guide was designed according to the research questions and adapted to the domain-specific content of technical usability.

The interview guide consisted of four parts with a total of 22 questions. It started with an introductory question about the practical use of the learning platform Levumi in school. The two main categories followed: the dimensions of usability, with six questions on effectiveness, efficiency, and satisfaction, and the dialog principles, with 14 questions on task appropriateness, self-descriptiveness, conformity to expectations, conduciveness to learning, controllability, error tolerance, and ability of individualization. As a final question, educators were asked about the urgency of processing, as not all feedback could be incorporated immediately and the feasibility of implementation had to be evaluated first. The same semi-structured guide was used in both interview rounds (needs analysis and study of the digital reading packages).

All interviews were conducted exclusively in digital form because of the physical distance to the interview partners (different federal states) and the ongoing pandemic-related restrictions on business travel. The open-source virtual classroom software BigBlueButton, provided by the University of Leipzig, was used for online communication. The interviews were recorded using a voice recorder. The second survey of the expert interviews was initiated before the commencement of the intervention study in April 2023.

The participatory observation protocols comprised inquiries spanning both overall observations and the documentation of specific segments of the intervention. The distinct sections under scrutiny encompassed Warm-Up, Text Work 1 (digital reading packages), Text Work 2 (reading with a partner), and Closer. Questions pertaining to the pedagogical usability of the reading materials and the learning platform’s interface encompassed considerations such as the extent to which students encountered challenges while interacting with the tablet. Additionally, factors such as the level of student engagement (active, interested, etc.) were assessed. Further inquiries encompassed the students’ ability to maintain focus on the digital reading materials and to successfully complete them. Notably, any difficulties or issues (related to the reading packages and platform’s interface) were also examined. Moreover, the protocols delved into whether students required assistance with the digital exercises (content).

During the sessions, researchers provided active support to students, checking their progress and clarifying the functions of the digital reading packages. Prior to the intervention, students were introduced to the structure and navigation of the reading packages, which included texts, comprehension questions, and exercise formats.

The protocols for the participatory observation provided a detailed account of the intervention process and offered valuable insights into students’ behaviors, interactions, and engagement, shedding light on their understanding, learning styles, and responses to the reading packages (Katz-Buonincontro and Anderson, 2018; Postholm, 2019). Simultaneously, the qualitative analysis pinpointed challenges and contextual variables that may have affected the quantitative findings. These nuanced insights enriched the interpretation of the results and complemented other data, ultimately leading to a more comprehensive understanding of the intervention’s outcomes and its significance for the field of reading education.

Data analysis

The interviews lasted between 51 and 90 min, with an average duration of 76 min. In total, 9 h of material was recorded (including interviewer questions).

In order to focus on the content of the interviews at an early stage, a semantic-content transcription system was used. The audio files were transcribed by trained personnel with the transcription software Amberscript, following the transcription rules of Dresing and Pehl (2018). In this system, speech is transcribed more fluently and readably, with the focus on its (semantic) content (Kuckartz, 2018). This means, for example, omitting word breaks, slurred words, word repetitions, slips of the tongue, filler sounds, and comprehension signals (if these are not meaningful), and approximating dialect to written language (Dresing and Pehl, 2018).

The interview material was prepared for analysis and then coded and extracted using MAXQDA 2020, a program for qualitative data and text analysis. The categories were created deductively based on the theoretical operationalization of technical usability in the interview guide. The two main categories of technical usability, dimensions of usability and principles of dialog design, were coded as upper categories, and the ten associated variables were coded as subcategories. The subcategories were further subdivided into the current state (coding of the first sub-question, which evaluates current usability) and the target state (coding of the second sub-question, which asks for changes that would improve usability).
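The resulting category system can be sketched as a small data structure. The following is only an illustration: the category names are taken from the interview guide described above, whereas the dictionary layout, the function name `build_codes`, and the tuple format of the codes are assumptions made for this sketch and do not reproduce the MAXQDA project file.

```python
from itertools import product

# Category names follow the interview guide; the layout is an
# illustrative assumption, not an export from MAXQDA.
CATEGORY_SYSTEM = {
    "dimensions of usability": [
        "effectiveness",
        "efficiency",
        "satisfaction",
    ],
    "principles of dialog design": [
        "task appropriateness",
        "self-descriptiveness",
        "conformity to expectations",
        "conduciveness to learning",
        "controllability",
        "error tolerance",
        "ability of individualization",
    ],
}

# Each subcategory is coded once per sub-question.
STATES = ("current state", "target state")


def build_codes(system, states=STATES):
    """Return one (upper category, subcategory, state) code per combination."""
    codes = []
    for upper, subcategories in system.items():
        for subcategory, state in product(subcategories, states):
            codes.append((upper, subcategory, state))
    return codes


codes = build_codes(CATEGORY_SYSTEM)
n_subcategories = sum(len(subs) for subs in CATEGORY_SYSTEM.values())
print(n_subcategories)  # 10 subcategories
print(len(codes))       # 20 codes (10 subcategories x 2 states)
```

Under these assumptions, the ten subcategories and two states yield 20 codes, which mirrors the 20 category-related questions of the interview guide (six on the dimensions of usability and 14 on the dialog principles).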

For each intervention session, the researchers utilized participatory observation protocols. The protocols encompassed general inquiries related to the overall observation, such as the students’ level of engagement (active, interested, unmotivated, independent, etc.). Additionally, they included specific questions concerning various parts of the intervention. The intervention was structured into several segments, namely the warm-up, individual digital work with the reading packages (Text Work 1), partner reading (Text Work 2), and the closing activity. Consequently, the participatory observation protocols included queries like, “To what extent do the students encounter difficulties handling the tablet?” and “How does the partner reading function? (smoothly, hesitantly, with frequent breaks, etc.).”

Results

The interviews conducted with teachers, along with the participatory observation protocols completed by the researchers, played a crucial role in improving technical-pedagogical usability through the implementation of suggested enhancements.

Teachers’ perspectives on technical usability

Regarding the first sub-research question, how teachers assess the usability of the learning platform Levumi, they generally evaluate its technical usability and the pedagogical benefit positively. “All it takes is time to register and then for the students to log in, but after that you only benefit from it” (AP_A_1, teacher 5, pos. 28, own translation).

For the second sub-question, which changes would be beneficial from the educators’ perspective in order to increase the usability of Levumi, the teachers primarily identified changes in three areas: the controllability of the platform’s navigation levels, the individualization of class administration, and the feedback given to students during the test.

According to the teachers, the controllability of the navigation levels lacked clarity; “So the process is all clear, but it’s not really clearly structured […]” (AP_A_1, teacher 1, pos. 29, own translation). In addition, many steps (clicks) have to be taken to get to the “desired” result. It was assumed that certain tests were not sufficiently intuitive, which could result in confusion and an increase in workload during classroom implementation. Teachers suggested clearer organization of the exercises and improved user guidance to reduce barriers to use.

To increase the ability of individualization, the management of both classes and students was identified as a significant area for enhancement, particularly with regard to the transfer of individual students between groups. Teachers underscored the necessity for features that would enable them to transfer students without losing previously collected results, thereby ensuring the continuity and comparability of learning data. At present, entire classes can be shared with other teachers, but individual students cannot yet be transferred.
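The requirement voiced by the teachers can be illustrated with a minimal sketch. It assumes a purely hypothetical data model in which results are stored per student rather than per class, so that moving a student changes only class membership. The class `Roster`, its methods, and the sample identifiers are invented for illustration and do not describe Levumi’s actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Roster:
    """Hypothetical model: results are keyed by student, not by class."""

    classes: dict = field(default_factory=dict)  # class name -> set of student ids
    results: dict = field(default_factory=dict)  # student id -> list of scores

    def add_student(self, class_name, student_id):
        self.classes.setdefault(class_name, set()).add(student_id)
        self.results.setdefault(student_id, [])

    def record(self, student_id, score):
        self.results[student_id].append(score)

    def transfer(self, student_id, source, target):
        """Move one student between classes; the result history is untouched."""
        if student_id not in self.classes.get(source, set()):
            raise ValueError(f"{student_id} is not in {source}")
        self.classes[source].remove(student_id)
        self.classes.setdefault(target, set()).add(student_id)


# Usage with invented sample data.
roster = Roster()
roster.add_student("3a", "student-1")
roster.record("student-1", 12)
roster.record("student-1", 15)
roster.transfer("student-1", "3a", "3b")
print(roster.results["student-1"])       # history is still available
print("student-1" in roster.classes["3b"])
```

In such a model, the continuity and comparability of learning data follow from the storage choice itself, because the transfer operation never touches the result history.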

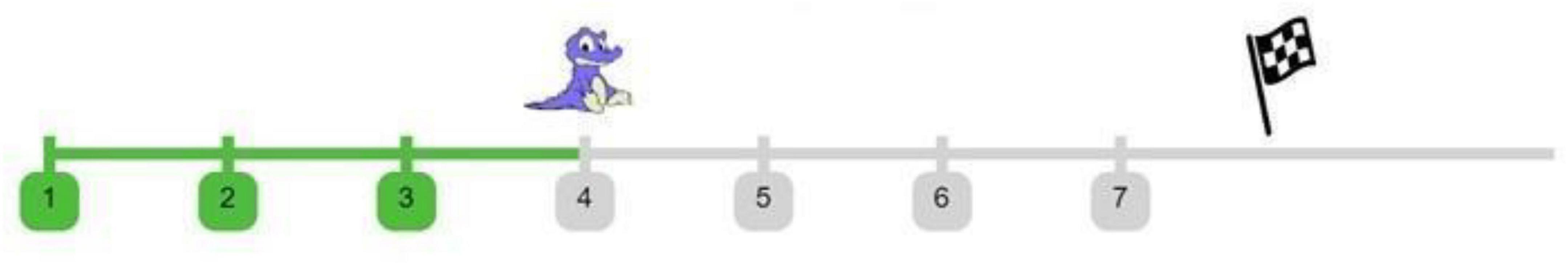

Teachers identified the implementation of a time or progression indicator as a high priority with regard to feedback. This indicator would provide clearer feedback about students’ engagement and performance, thereby supporting formative assessment practices. Thus, teacher 2 explains, “students sometimes just don’t have an understanding of time or […] of 5 min tests, then yes, they don’t know what’s coming up. How much longer?” (AP_A_1, teacher 2, 2/2, pos. 47, own translation).

Students’ perspectives from classroom observations

After the first survey (the needs analysis interviews), some changes (such as the Levumi timeline, see Figure 3) were made to the learning platform Levumi as well as to the reading packages, which were then tested during the study of the digital reading packages for further improvements. In order to include the students’ perspective in the development process of the reading packages, the participatory observations were re-evaluated using Nokelainen’s (2006) dimensions of pedagogical usability.

Figure 3. Levumi timeline view of student progress in Levumi’s reading packages (Gebhardt and Mühling, n.d.).

The participatory classroom observations provided detailed insights into how students engaged with the platform in practice. A recurring issue that was identified pertained to the clarity of the Levumi timeline. Many students encountered challenges in differentiating between main and sub-exercises, which occasionally resulted in disruptions to the learning process. This prompted educators to frequently intervene to clarify task structures.

From the students’ perspective, the participatory observation protocols revealed that self-descriptiveness, particularly regarding the timeline, still required improvement. Despite implementing changes based on the initial survey, the timeline’s self-descriptiveness remained unclear. While it does demonstrate students’ progress, it exclusively reflects the completion of primary exercises (see Figure 3) and does not account for the number of subsidiary exercises. For instance, Exercise 1 contains two sub-exercises, while Exercise 2 contains eight, resulting in perceived inconsistencies. Specifically, the mascot advances quickly in Exercise 1 but appears to stall in Exercise 2, causing uncertainty. These findings underscore the importance of intuitive design and clear, self-explanatory progress indicators.
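The perceived stalling can be made concrete with a short calculation. The sketch below uses only the two sub-exercise counts reported above (two for Exercise 1, eight for Exercise 2) and compares an indicator that advances per completed main exercise with a hypothetical alternative that advances per sub-exercise. The function names and the sub-exercise-based indicator are assumptions for illustration, not a description of how the Levumi timeline is implemented.

```python
# Sub-exercise counts for Exercises 1 and 2 as reported in the observations;
# everything else in this sketch is an illustrative assumption.
SUB_EXERCISES = [2, 8]


def progress_by_main(sub_counts, done):
    """Share of main exercises fully completed after `done` sub-exercises."""
    completed = 0
    remaining = done
    for count in sub_counts:
        if remaining >= count:
            completed += 1
            remaining -= count
        else:
            break
    return completed / len(sub_counts)


def progress_by_sub(sub_counts, done):
    """Share of all sub-exercises completed after `done` sub-exercises."""
    return done / sum(sub_counts)


for done in (2, 6, 10):
    print(
        done,
        progress_by_main(SUB_EXERCISES, done),
        progress_by_sub(SUB_EXERCISES, done),
    )
# 2 0.5 0.2
# 6 0.5 0.6
# 10 1.0 1.0
```

With the main-exercise indicator, the displayed progress jumps to one half after only two sub-exercises and then remains unchanged for the next seven, which corresponds to the mascot appearing to stall in Exercise 2. A sub-exercise-based indicator would advance in equal steps over the same sequence.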

The design of the reading packages provided substantial support for the notion that motivational aspects played a significant role in the learning process. Visual elements such as the mascot, illustrations, animations, and a trophy page positively influenced students, supported navigation, and stimulated engagement. Observations revealed that students frequently exhibited signs of enthusiasm in response to these elements, thereby facilitating the maintenance of concentration during extended activities.

Furthermore, the incorporation of the mascot, such as the reading dragon within the literary text and the dragon at the end holding a trophy and signaling “finished” (see Figure 4), further engaged and motivated them to complete the reading packages. However, the skip button occasionally undermined these efforts by allowing students to bypass exercises without completing them. Despite this challenge, the majority of students actively engaged with the digital reading materials and successfully completed the exercises, underscoring the motivational and learning potential of the reading packages.

Figure 4. Dragon mascot with trophy signaling task completion in Levumi (Gebhardt and Mühling, n.d.).

Despite challenges during the intervention, students’ overall experience with the platform and reading packages was positive. The enjoyment expressed by the students was evident in their enthusiasm for using the platform, engaging with the reading texts, and completing the reading exercises. Their positive response to the digital tool reflects a genuine interest and appreciation for the interactive and dynamic learning environment that the digital platform provides.

Pedagogical usability of the reading packages

Pedagogical usability emerged as a central theme, with both teacher interviews and classroom observations highlighting its significant influence on how teachers and students engaged with the digital reading materials. Teachers noted that the reading packages offered materials that were differentiated and tailored to various reading levels in three different reading ability groups. The materials included literary texts and eight suitable exercises, allowing students to work at their own pace and receive individualized support. The digital materials were assessed for their potential to reduce preparation time, thereby allowing teachers to prioritize providing targeted feedback in the classroom. Furthermore, the interviews revealed areas that necessitated enhancement. Teachers suggested implementing a visual separation between the exercises and the literary texts. This approach is intended to enhance the applicability of the exercises to the students, thereby facilitating comprehension. In addition, it was recommended to provide targeted support to learners who have not yet attained the full text reading level but are engaged in the acquisition of vowels, syllables, or words.

Students particularly valued features that allowed them to repeat exercises and receive immediate, individualized feedback, such as grading their answers or second-try options. These elements not only facilitated reflection on their reading and learning but also enhanced motivation, persistence, and a sense of achievement. The pedagogical usability of the reading packages was, therefore, closely linked to both learning outcomes and students’ emotional engagement with reading.

Challenges and limitations

Despite the overall positive evaluation, several challenges and limitations became evident. Technical issues, including unstable Wi-Fi connections and browser incompatibilities, at times resulted in data loss. This, in turn, often compelled students to redo exercises, causing frustration for both teachers and learners. These issues not only hindered effective classroom implementation but also diminished the time allotted for genuine learning activities. Furthermore, the improper use of particular features, such as the skip button, compromised the pedagogical efficacy of specific exercises and emphasized the necessity for more stringent control mechanisms. Taken together, these limitations underscore the need for continuous technological advancement and the judicious incorporation of pedagogical principles into the development of digital reading instruments. Addressing these challenges is essential to ensure the reliable implementation of the platform in diverse classroom settings, particularly in inclusive education contexts.

Overall synthesis

The study demonstrated that a combination of digital reading support and formative assessment yielded substantial added value in the classroom setting. This combination enables educators to assess individual learning levels and adapt instruction accordingly, while empowering students to strengthen their reading skills. A comprehensive evaluation of the Levumi digital reading packages revealed that they were perceived as engaging, motivational, and conducive to differentiated learning. Teachers highlighted their potential for formative assessment and individual support, and students reported enjoyment, motivation, and a sense of accomplishment. Notably, the alignment of technical and pedagogical usability, through intuitive navigation, clear feedback mechanisms, and differentiated, motivating tasks, proved essential for successful implementation and meaningful contributions to inclusive reading instruction.

Discussion

Key findings

The objective of the study was to explore how digital inclusive reading support should be designed to increase its utilization in the classroom. The findings underscore the pivotal role of technical and pedagogical usability in determining acceptance and effective implementation. Teachers emphasized the importance of simplified navigation, efficient class management, and meaningful feedback mechanisms. Students, in turn, responded positively to motivational elements such as visual design, repetition opportunities, and immediate feedback. The findings indicate that the efficacy of digital formative reading programs is contingent upon a harmonious balance between intuitive technical design and pedagogical functionality that fosters inclusive and differentiated learning.

The findings are consistent with existing research on usability and user experience. According to EN ISO 9241-11:2018-11 (2018), usability encompasses effectiveness, efficiency, and satisfaction in the use of a system. Nokelainen’s (2006) work builds upon this foundation by emphasizing pedagogical usability, a concept that encompasses didactic criteria such as comprehensibility, motivation, and alignment with learning objectives. The findings of this study demonstrate that a synthesis of both perspectives is imperative to optimize the efficacy of digital diagnostic tools. Although previous studies predominantly concentrated on technological usability (Lu et al., 2022), this study underscores the imperative of equally prioritizing pedagogical aspects.

The integration of teacher and student feedback into the design process aligns with the findings of user experience research (Law and Abrahão, 2014; Schmidt and Huang, 2022), which underscores the significance of end-users’ perceptions in technology acceptance. The participatory approach employed in this study offers empirical evidence for the role of user feedback in guiding iterative development, particularly within inclusive educational contexts. In accordance with the findings of international research on digital education tools (Estrada-Molina et al., 2022; Girdzijauskienė et al., 2022; Wammes et al., 2022), the learning platform Levumi demonstrates how technical-pedagogical usability can contribute to more accessible and motivating learning environments.

Practical implications

The results of this study carry important implications for future practice and development. Digital formative reading packages, such as those developed in Levumi, provide teachers with valuable support in assessing individual learning levels and adapting instruction accordingly. This finding aligns with national results reported by Junger and Liebers (2024), who also emphasize the diagnostic potential of digital formative tools. Concurrently, the platform provides students with engaging materials designed to cultivate motivation, persistence, and confidence in their reading abilities. This component is of particular relevance in inclusive classrooms, where the provision of differentiated support is paramount. However, the investigation also revealed several challenges. Technical issues, including unstable Wi-Fi and browser incompatibilities, impeded smooth classroom implementation and caused frustration for both teachers and students. Furthermore, the presence of features such as the skip button has been demonstrated to compromise the pedagogical effectiveness of specific tasks. From a methodological perspective, the qualitative exploratory design yielded rich and detailed insights; however, it is limited in terms of the generalizability of the findings. In future studies, the integration of quantitative measures would help substantiate the intervention’s broader impact. In summary, while the intervention demonstrated considerable potential for enhancing student engagement and enjoyment, it also revealed areas for improvement in the technical-pedagogical usability of the platform. Addressing these issues can further increase the effectiveness and user experience of such tools, ultimately supporting students’ reading development and teachers’ motivation to adopt them. The findings also reflect the adaptability and digital literacy of students and underscore the potential of digital platforms to create inclusive, engaging, and effective learning environments. 
The present study demonstrates the importance of close collaboration between educators and researchers in the ongoing development of digital educational tools.

Future research

Future research endeavors should delve deeper into the integration of technical and pedagogical usability in digital formative diagnostic tools. The execution of comparative studies across a variety of educational systems has the potential to yield valuable insights regarding the transferability of the Levumi approach. Furthermore, the implementation of longitudinal and mixed-method designs would facilitate a more profound comprehension of the long-term implications on student achievement and pedagogical practices. Addressing technical limitations while continuously incorporating user feedback will be essential for advancing digital tools that are both inclusive and effective in supporting reading development.

Limitations

Throughout the course of the study, both before and during the intervention, a number of limitations occurred, which inevitably impacted the research process and the depth of insights gathered. The feedback obtained from the study was primarily derived from interviews with teachers and participatory observation protocols. While these methods offered valuable perspectives, they also posed certain challenges that influenced the overall findings.

One of the primary limitations arose from the difficulty in recruiting a sufficient number of teachers, schools, and classes for interviews as well as the reading intervention study. Despite deploying various outreach strategies, such as utilizing blogs, emails, and letters, the process of persuading educators to participate proved to be challenging and yielded limited results. The ongoing impact of the COVID-19 pandemic further complicated matters, as schools and teachers were grappling with the aftermath of the disruptions caused by the health crisis, leading them to prioritize other essential obligations, including making up for missed academic assessments.

Moreover, the reluctance of some parents/guardians and students to take part in the study due to personal concerns added a further layer of challenge to the research process. Privacy concerns, skepticism about the study’s purpose, and the potential impact on their daily routines were some of the factors that deterred certain individuals from participating fully.

Due to the limited number of interviews, further research is needed on the technical and pedagogical usability of digital learning platforms.

As schools continue to advance in their use of technology, more research is needed to promote student and teacher motivation to use digital learning platforms. Therefore, cooperation between educators and researchers must be strengthened to improve the transfer of educational practices and research.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://osf.io/ga6bm/.

Ethics statement

The studies involving humans were approved by the Ethics Advisory Board and the Data Protection Officer of the University of Leipzig. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

RJ: Writing – review & editing, Investigation, Data curation, Conceptualization, Methodology, Writing – original draft. JH: Investigation, Methodology, Data curation, Writing – review & editing, Writing – original draft.

Funding

The authors declare financial support was received for the research and/or publication of this article. The collaborative project DaF-L was funded by the Federal Ministry of Education and Research under the funding code 01NV2116. The publication was supported by the Open Access Publishing Fund of Leipzig University.

Conflict of interest

The authors were part of the collaborative project DaF-L and were involved in the further development of the learning platform Levumi.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

2. The collaborative project DaF-L is funded by the Federal Ministry of Education and Research under the funding code 01NV2116. The responsibility for the content of this publication lies with the authors.

References

Abdykerimova, E., Turkmenbayev, A., Sagindykova, E., Nigmetova, G., and Mukhtarkyzy, K. (2025). Systematic review of digital tools’ impact on primary and secondary education outcomes. Int. J. Eng. Pedagogy (IJEP) 15, 92–114. doi: 10.3991/ijep.v15i3.50511

Betthäuser, B. A., Bach-Mortensen, A. M., and Engzell, P. (2023). A systematic review and meta-analysis of the evidence on learning during the COVID-19 pandemic. Nat. Hum. Behav. 7, 375–385. doi: 10.1038/s41562-022-01506-4

Blumenthal, S., Gebhardt, M., Förster, N. G., and Souvignier, E. (2022). Internetplattformen zur diagnostik von lernverläufen von schülerinnen und schülern in deutschland: Ein vergleich der plattformen lernlinie, levumi und quop. [Internet platforms for diagnosing the learning progress of pupils in Germany: A comparison of the platforms Lernlinie, Levumi and Quop]. Zeitschrift Heilpädagogik 73, 153–167. doi: 10.18453/rosdok_id00003556 German

Bortz, J., and Döring, N. (2016). Forschungsmethoden und evaluation in den sozial- und humanwissenschaften. [Research methods and evaluation in the social sciences and humanities]. Heidelberg: Springer Verlag. German

Chalmers, P. (2003). The role of cognitive theory in human-computer interface. Comp. Hum. Behav. 19, 593–607. doi: 10.1016/S0747-5632(02)00086-9

Connor, C. M. (2019). Using technology and assessment to personalize instruction: Preventing reading problems. Prevent. Sci. 20, 89–99. doi: 10.1007/s11121-017-0842-9

Dancsa, D., Štempeľová, I., Takáč, O., and Annuš, N. (2023). Digital tools in education. Int. J. Adv. Nat. Sci. Eng. Res. 7, 289–294. doi: 10.59287/IJANSER.717

Diehl, K., and Hanke, J. (2024). LesePfad (Lesepakete mit formativem Assessment digital). [Reading Path (reading packages with formative assessment, digital)] QfI - Qualifizierung für Inklusion 6. German. doi: 10.25656/01:33041

DIN EN ISO 9241-110:2008-09 (2006). Ergonomics of human-system interaction - Part 110: Dialogue principles (ISO 9241-110:2006); German version EN ISO 9241-110:2006. Berlin: Beuth Verlag GmbH.

DIN EN ISO 9241-11:2018-11 (2018). Ergonomics of human-system interaction - Part 11: Usability: Definitions and concepts (ISO 9241-11:2018); German version EN ISO 9241-11:2018. Berlin: Beuth Verlag GmbH.

D’Mello, S. K. (2021). “Improving student engagement in and with digital learning technologies,” in OECD digital education outlook 2021, ed. R. S. Baker (Paris: OECD), 79–104.

Dresing, T., and Pehl, T. (2018). Praxisbuch interview, transkription & analyse: Anleitungen und regelsysteme für qualitativ forschende. Marburg: Eigenverlag.

Eason, K. D. (1984). Towards the experimental study of usability. Behav. Inform. Technol. 3, 133–143. doi: 10.1080/01449298408901744

Ebenbeck, N., Jungjohann, J., and Gebhardt, M. (2023). Testbeschreibung des digitalen levumi-lesescreenings les-in-dig. beschreibung der testkonstruktion sowie der items der digitalen screeningtests „phonologische bewusstheit“, „lexikalischer abruf“, „blitzlesen“ und „sinnkonstruierendes satzlesen“ in deutscher sprache. Version 1. [Test description of the digital levumi reading screening les-in-dig. Description of the test construction and the items of the digital screening tests “phonological awareness,” “lexical retrieval,” “speed reading,” and “meaning-constructing sentence reading” in German. Version 1]. Available online at: https://epub.uni-regensburg.de/53993/1/LES-IN-DIG.pdf (accessed May 3, 2024).

Estrada-Molina, O., Fuentes-Cancell, D. R., and Morales, A. A. (2022). The assessment of the usability of digital educational resources: An interdisciplinary analysis from two systematic reviews. Educ. Inform. Technol. 27, 4037–4063. doi: 10.1007/s10639-021-10727-5

Förster, N., and Souvignier, E. (2014). Learning progress assessment and goal setting: Effects on reading achievement, reading motivation and reading self-concept. Learn. Instruct. 32, 91–100. doi: 10.1016/j.learninstruc.2014.02.002

Gebhardt, M., Diehl, K., and Mühling, A. (2016). Online Lernverlaufsmessung für alle SchülerInnen in inklusiven Klassen. Zeitschrift Heilpädagogik 67, 444–454.

Gebhardt, M., and Mühling, A. (n.d.). Levumi. Available online at: http://www.levumi.de/

Girdzijauskienė, R., Rupšienė, L., and Pranckūnienė, E. (2022). “Usage of digital learning tools to engage primary school students in learning,” in Human, technologies and quality of education, (Latvia: University of Latvia Press). doi: 10.22364/htqe.2022.20

Gläser, J., and Laudel, G. (2010). Experteninterviews und qualitative inhaltsanalyse als instrumente rekonstruierender untersuchungen. Wiesbaden: VS Verlag.

Hanke, J., and Diehl, K. (2024a). Handbuch - beschreibung der lesepakete lesepfad für dritte inklusive klassen: Beschreibung der konstruktion der lesepakete sowie beispiele der lesetexte und leseaufgaben in deutscher sprache. [Handbook - description of the reading packages, reading path for third-grade inclusive classes: Description of the construction of the reading packages as well as examples of reading texts and reading tasks in German.]. Available online at: https://nbn-resolving.org/urn:nbn:de:gbv:8:3-2024-00240-2 (accessed March 1, 2024). German

Hanke, J., and Diehl, K. (2024b). “LesePfad – digitale lesepakete zur förderung der lesekompetenz im inklusiven unterricht. [Reading Path – digital reading packages to promote reading skills in inclusive education],” in Grundschulforschung meets kindheitsforschung reloaded, eds A. Flügel, A. Gruhn, I. Landrock, J. Lange, B. Müller-Naendrup, J. Wiesemann, et al. (Bad Heilbrunn: Verlag Julius Klinkhardt). German

Jahnke, I., Schmidt, M., Pham, M., and Singh, K. (2020). “Sociotechnical-pedagogical usability for designing and evaluating learner experience in technology-enhanced environments,” in Learner and user experience research: An introduction for the field of learning design & technology, eds M. Schmidt, A. A. Tawfik, I. Jahnke, and Y. Earnshaw (Utah: EdTech Books), 127–144.

Junger, R., Hanke, J., Ebenbeck, N., Zellner, J., Bastian, M., Liebers, K., et al. (2025). “Ein maßgeschneidertes digitales Gesamtpaket für die Leseförderung in inklusiven 3. Klassen — das adaptive Screening LES-IN-CAT und das Förderpaket LesePfad: Ergebnisse aus dem Verbundprojekt DaF-L. [A tailor-made digital package for promoting reading in inclusive 3rd grade classes — the adaptive screening tool les-in-cat and the reading path support package — results from the DaF-L collaborative project],” in Förderbezogene Diagnostik in der inklusiven Bildung: Kompetenzbereiche — Fachdidaktik, Vol. 1, eds K. Beck, R. A. Ferdigg, D. Katzenbach, J. Kett-Hauser, S. Laux, and M. Urban (Münster: Waxmann Verlag GmbH), 111–131. German.

Junger, R., and Liebers, K. (2024). Digitale formative diagnoseverfahren in der grundschule – begründungen und überblick über vorliegende entwicklungen am beispiel deutsch. ZFG 17, 3–19. doi: 10.1007/s42278-024-00190-9

Junger, R., Hanke, J., Ebenbeck, N., Zellner, J., Bastian, M., Liebers, K., et al. (2024). Ein maßgeschneidertes digitales gesamtpaket für die leseförderung in inklusiven 3. klassen – das adaptive screening les-in-cat und das förderpaket lesepfad – ergebnisse aus dem verbundprojekt DaF-L. [A tailor-made digital package for promoting reading in inclusive 3rd grade classes – the adaptive screening tool les-in-cat and the reading path support package – results from the DaF-L collaborative project]. German

Jungjohann, J., Diehl, K., Mühling, A., and Gebhardt, M. (2018). Graphen der lernverlaufsdiagnostik interpretieren und anwenden – leseförderung mit der onlineverlaufsmessung levumi. [Interpreting and applying graphs of learning progress diagnostics – promoting reading with the online progress measurement tool levumi]. Forschung Sprache 6, 84–91. doi: 10.17877/DE290R-19806 German

Jungjohann, J., Ebenbeck, N., Liebers, K., Diehl, K., and Gebhardt, M. (2023). Das lesescreening LES-IN für inklusive grundschulklassen: Entwicklung und psychometrische prüfung einer paper-pencil-version als basis für Computerbasiertes Adaptives Testen (CAT). [The LES-IN reading screening tool for inclusive primary school classes: Development and psychometric testing of a paper-and-pencil version as a basis for computer-based adaptive testing (CAT)]. Empirische Sonderpädagogik 15, 141–156. doi: 10.2440/003-0003 German

Kähler, B., Zettl, A., and Prinz, F. (2019). Nutzerfreundliche softwaregestaltung in der pflegedokumentation: Handreichung für softwareentwickler. [User-friendly software design in nursing documentation: A guide for software developers]. Available online at: https://www.bgw-online.de/resource/blob/8484/7b3f62956b9f4e76d22fb0bf0aa70e42/bgw-09-14-114-bgw-test-pflegedokumentation-handreichung-softwareentwickler-data.pdf (accessed May 30, 2025). German

Katz-Buonincontro, J., and Anderson, R. C. (2018). A review of articles using observation methods to study creativity in education (1980-2018). J. Creat. Behav. 54, 508–524. doi: 10.1002/jocb.385

Köller, O., Thiel, F., van Ackeren, I., Anders, Y., Becker-Mrotzek, M., Cress, U., et al. (2022). Basale kompetenzen vermitteln – bildungschancen sichern. perspektiven für die grundschule. gutachten der ständigen wissenschaftlichen kommission der kultusministerkonferenz (SWK). Bonn: SWK. German

Kruse, G., Rickli, U., Riss, M., and Sommer, T. (2015). Lesen. das training klasse 2./3. [Reading. The training for grades 2/3.]. Stuttgart: Klett. German

Kuckartz, U. (2018). Qualitative inhaltsanalyse: Methoden, praxis, computerunterstützung. [Qualitative content analysis: methods, practice, computer support]. Weinheim: Beltz Juventa.

Kultusministerkonferenz [KMK] (2022). Bildungsstandards für das fach deutsch primarbereich. [Educational standards for the subject of German in primary education]. Berlin: KMK.

Law, E. L.-C., and Abrahão, S. (2014). Interplay between user experience (UX) evaluation and system development. Int. J. Human-Comp. Stud. 72, 523–525. doi: 10.1016/j.ijhcs.2014.03.003

Leshchenko, M., Lavrysh, Y., and Kononets, N. (2021). Framework for assessment the quality of digital learning resources for personalized learning intensification. N. Educ. Rev. 64, 148–159. doi: 10.15804/tner.21.64.2.12

Liebers, K., Schmidt, C., Junger, R., and Prengel, A. (2019). “Formatives assessment in der inklusiven grundschule im spannungsfeld von wissenschaft und transfer,” in Grundschulpädagogik zwischen wissenschaft und transfer, eds C. Donie, F. Foerster, M. Obermayer, A. Deckwerth, G. Kammermeyer, G. Lenske, et al. (Wiesbaden: Springer), 303–313.

Lu, J., Schmidt, M., Lee, M., and Huang, R. (2022). Usability research in educational technology: A state-of-the-art systematic review. Educ. Technol. Res. Dev. 70, 1951–1992. doi: 10.1007/s11423-022-10152-6

May, P., and Berger, C. (2014). “Monitoring des hamburger sprachförderkonzepts,” in Lesekompetenz nachhaltig stärken. evidenzbasierte Maßnahmen und programme, eds R. Valtin and I. Tarelli (Berlin: dgls), 225–247.

Mayer, A. (2018). Blitzschnelle worterkennung (BliWo): Grundlagen und praxis. [Lightning-fast word recognition (BliWo): Fundamentals and practice]. Dortmund: Borgmann. German

McElvany, N., Lorenz, R., Frey, A., Goldhammer, F., Schilcher, A., and Stubbe, T. C. (2023). “Kapitel 1 IGLU 2021: Zentrale befunde im überblick. [Chapter 1 IGLU 2021: Key findings at a glance],” in IGLU 2021. lesekompetenz von grundschulkindern im internationalen vergleich und im trend über 20 jahre, eds N. McElvany, R. Lorenz, A. Frey, F. Goldhammer, A. Schilcher, and T. C. Stubbe (Münster: Waxmann Verlag GmbH), 13–25. German

Moore, J. L., Dickson-Deane, C., and Liu, M. Z. (2014). “Designing CMS courses from a pedagogical usability perspective. perspectives in instructional technology and distance education,” in Research on course management systems in higher education, eds A. D. Benson and A. Whitworth (Charlotte NC: Information Age Publishing, Inc), 143–169.

Mühling, A., Gebhardt, M., and Diehl, K. (2017). Formative diagnostik durch die onlineplattform levumi. Informatik Spektrum 40, 556–561. doi: 10.1007/s00287-017-1069-7

Mullis, I. V. S., and Martin, M. O. (eds) (2019). PIRLS 2021 assessment frameworks. Chestnut Hill, MA: Boston College, TIMSS & PIRLS International Study Center.

Mullis, I. V. S., von Davier, M., Foy, P., Fishbein, B., Reynolds, K. A., and Wry, E. (2023). PIRLS 2021 international results in reading. Chestnut Hill, MA: Boston College, TIMSS & PIRLS International Study Center.

Nielsen, J. (1994). “Heuristic evaluation,” in Usability inspection methods, eds J. Nielsen and R. Mack (Hoboken, NJ: John Wiley & Sons), 25–62.

Nokelainen, P. (2006). An empirical assessment of pedagogical usability criteria for digital learning material with elementary school students. J. Educ. Technol. Soc. 9, 178–197.

Organisation for Economic Co-operation and Development [OECD] (2005). Formative assessment: Improving learning in secondary classrooms. policy brief. Paris: OECD.

Organisation for Economic Co-operation and Development [OECD] (2023). PISA 2022 results (Volume I): The state of learning and equity in education, PISA. Paris: OECD Publishing.

Postholm, M. B. (2019). Research and development in school. grounded in cultural-historical activity theory. Netherlands: Brill Academic Publishers.

Sarodnick, F., and Brau, H. (2016). Methoden der usability evaluation: Wissenschaftliche grundlagen und praktische anwendung. [Methods of usability evaluation: Scientific foundations and practical application]. Göttingen: Hogrefe. German

Schmidt, M., and Huang, R. (2022). Defining learning experience design: Voices from the field of learning design & technology. TechTrends 66, 141–158. doi: 10.1007/s11528-021-00656-y

Schmidt, M., Tawfik, A. A., Jahnke, I., and Earnshaw, Y. (2020). Learner and user experience research. Utah: EdTech Books.

Schurig, M., Jungjohann, J., and Gebhardt, M. (2021). Minimization of a short computer-based test in reading. Front. Educ. 6:684595. doi: 10.3389/feduc.2021.684595

Souvignier, E. (2021). “Interventionsforschung im kontext schule. [Intervention research in the context of schools],” in Handbuch schulforschung, eds T. Hascher, T. S. Idel, and W. Helsper (Wiesbaden: Springer VS). doi: 10.1007/978-3-658-24734-8_9-1 German

Stanat, P., Schipolowski, S., Schneider, R., Sachse, K. A., Weirich, S., and Henschel, S. (2022). IQB-Bildungstrend 2021: Kompetenzen in den fächern deutsch und mathematik am ende der 4. jahrgangsstufe im dritten ländervergleich. [IQB Education Trend 2021: Competencies in German and mathematics at the end of the 4th grade in the third comparison of the German federal states]. Münster: Waxmann Verlag. German

Tognazzini, B. (2003). First principles. Available online at: http://www.asktog.com/basics/firstPrinciples.html (accessed November 24, 2005).

Tullis, T., and Albert, B. (2013). Measuring the user experience: Collecting, analyzing and presenting usability metrics. Burlington, MA: Morgan Kaufmann.

Wammes, D., Slof, B., Schot, W., and Kester, L. (2022). Pupils’ prior knowledge about technological systems: Design and validation of a diagnostic tool for primary school teachers. Int. J. Technol. Design Educ. 32, 2577–2609. doi: 10.1007/s10798-021-09697-z

Wilson, B., and Myers, K. (2000). “Situated cognition in theoretical and practical context,” in Theoretical foundations of learning environments, eds D. H. Jonassen and S. Land (Mahwah, NJ: Lawrence Erlbaum Associates), 57–88.

Keywords: digital diagnostic, formative assessment, practice-to-research transfer, reading support, technical-pedagogical usability

Citation: Junger R and Hanke J (2025) Technical-pedagogical usability of digital inclusive reading support evolving through practice-to-research transfer. Front. Educ. 10:1657822. doi: 10.3389/feduc.2025.1657822

Received: 01 July 2025; Accepted: 28 October 2025;

Published: 01 December 2025.

Edited by:

Gavin T. L. Brown, The University of Auckland, New Zealand

Reviewed by:

Fahri Haswani, State University of Medan, Indonesia

Meriem Elboukhrissi, Ibn Tofail University, Morocco

Copyright © 2025 Junger and Hanke. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ralf Junger, ralf.junger@uni-leipzig.de

†ORCID: Ralf Junger, orcid.org/0000-0003-3331-5909; Judith Hanke, orcid.org/0000-0002-2010-1808
