- Teaching & Learning Centre, Amsterdam University Medical Centre, University of Amsterdam, Amsterdam, Netherlands

Background: The use of generative AI (GenAI) by students is transforming education and professional practice, necessitating literacy training. Comprehensive data on usage, competencies, needs and ethics, especially in the Netherlands, is scarce. To inform future literacy education, this study examines usage frequency and type, self-assessed competencies, ethical attitudes, and perceived career impact among Medical Informatics students at the University of Amsterdam.

Methods: From September 2024 to February 2025, we conducted a cross-sectional survey among Bachelor and Master students. Eighty-six percent of the 155 students completed a questionnaire based on the EU's DigComp 2.2 framework. Items (Likert scale and multiple-choice) assessed GenAI usage frequency and purposes, tool types, paid subscription use, critical evaluation capabilities, prompting proficiency, ethical concerns (privacy, bias, environmental impact, copyright), and perceived effects on academic behavior and career outlook.

Results: Daily use grew from 5% among first-years to 33% of MSc students. Paid subscriptions rose from 2.4% among first-year Bachelor's students to 38% among second-year Master's students. ChatGPT was used by >90%. Educational use included brainstorming (56%−75%), summarizing (50%−76%), and text rewriting (44%−70%); coding assistance increased after the first year (16% vs. 44%−67% in later cohorts). Reliance on GenAI over traditional resources peaked in third-year undergraduates (45.8% agreement) vs. first year (9.1%). Competency ratings varied: Critical evaluation was high (60%−85% agreement). Prompting proficiency was moderate (35%−68%). Ethical appraisal was low (15%−56%). Competence in GenAI-assisted tasks (code writing, essay composition, practice question creation) was lowest among first-year students (16%−38%) and highest in advanced cohorts (33%−83%). Privacy was the top ethical concern (46%−76%), followed by effects on skill development (38%−63%) and bias (33%−100%). Copyright (12%−46%) and environmental impact (13%−28%) received less concern. Interest in learning centered on prompting (58%−84%), privacy/security (60%−84%), and bias mitigation (38%−88%). Sixteen percent to thirty percent of students feared a negative impact of GenAI on their career perspectives.

Conclusion: Students increasingly use GenAI as a complementary academic resource but exhibit skill gaps in ethical safety practices and content creation. Institutions should ensure equitable, secure GenAI infrastructure and integrate ethics-centered AI literacy in the curriculum.

Introduction

Generative artificial intelligence (GenAI), exemplified by tools such as ChatGPT, is fundamentally transforming how students learn, write, and prepare for professional life... Unlike traditional AI systems that focus on pattern recognition and decision-making based on predefined rules, GenAI actively produces new content (text, images, audio, or code) that resembles human-generated outputs (European Parliament, 2024). This shift from consumption to co-creation with AI represents a paradigm change that demands new forms of literacy beyond conventional digital skills. The widespread adoption of GenAI among students worldwide, coupled with regulatory developments like the EU AI Act, underscores the urgent need for comprehensive GenAI literacy in higher education (Chan and Hu, 2023; Hirabayashi et al., 2024; Vuorikari et al., 2022; Zulfikasari et al., 2024). The AI Act explicitly mandates training in (Gen)AI use for both students and educators, recognizing GenAI as a systemic technology that all learners, not just technical experts, must engage with critically and responsibly (Laupichler et al., 2022; European Parliament, 2024). However, this legislation raises fundamental questions: What does GenAI literacy actually encompass? How do current student competencies align with these emerging requirements? And what specific educational interventions are needed to bridge existing gaps?

GenAI literacy represents a specialized evolution of digital literacy that requires distinct competencies. While traditional digital literacy focuses on consuming, evaluating, and sharing existing digital content, GenAI literacy centers on collaborative content creation with AI systems. This involves not only technical skills like effective prompting and output evaluation, but also new forms of critical thinking about authorship, attribution, and the ethical implications of AI-generated content.

Crucially, GenAI literacy encompasses competencies that extend beyond existing digital frameworks: the ability to recognize AI hallucinations and biases, understand the environmental impact of AI model training and use, navigate complex questions of intellectual property in AI-assisted work, and maintain human agency in AI-mediated learning processes (Almatrafi et al., 2024; Shiri, 2024; Tzirides et al., 2024; Vuorikari et al., 2022). These skills represent a qualitative shift from traditional digital literacy, requiring specialized training approaches that current educational systems are only beginning to address.

Recent empirical studies reveal widespread but uneven GenAI adoption among students globally. Usage rates vary significantly across contexts: 63.4% of students in Germany (von Garrel and Mayer, 2023), 67% in Hong Kong (Chan and Hu, 2023), 92% in Indonesia (Zulfikasari et al., 2024), and 87.5% of Harvard STEM undergraduates (Hirabayashi et al., 2024) report having used GenAI tools at least once, with nearly half of the Harvard cohort using them daily.

These studies reveal consistent patterns in how students integrate GenAI into their learning processes. Common applications include clarifying concepts, idea generation, literature review assistance, and writing support, including summarization, paraphrasing, and proofreading. ChatGPT emerges as the predominant tool, followed at a distance by specialized applications like DeepL and DALL-E (von Garrel and Mayer, 2023).

However, this widespread adoption occurs largely without systematic literacy training. Students' use of GenAI tools appears driven primarily by perceived utility, institutional norms, and faculty attitudes (Chan and Hu, 2023; Singh et al., 2023; Strzelecki, 2023) rather than structured educational frameworks. Notably, usage patterns appear to evolve with academic progression (Crček and Patekar, 2023; Hirabayashi et al., 2024), suggesting that GenAI literacy develops through practice rather than formal instruction. This raises concerns about the development of critical evaluation skills and ethical awareness (Chan and Hu, 2023; Zawacki-Richter et al., 2019).

While international studies provide valuable insights into GenAI adoption patterns, comprehensive data on usage, competencies, and ethical attitudes in the Netherlands remains notably scarce. The limited available evidence comes primarily from linguistic analyses of TU Delft theses, which suggest substantial student uptake of GenAI tools following ChatGPT's introduction (De Winter et al., 2023). However, these indirect measures cannot capture the nuanced picture of how Dutch students actually use GenAI, what competencies they possess, or what ethical concerns they harbor.

This lack of evidence on ethical considerations around GenAI suggests a potential gap between current educational offerings and both professional demands and the EU AI Act's training requirements. Understanding Dutch students' GenAI literacy baseline is essential for developing appropriate training programs and ensuring compliance with EU AI Act requirements in a Dutch educational context.

To address GenAI literacy systematically, educators need structured frameworks that can guide both assessment and instruction. The European Union's Digital Competence Framework 2.2 (Vuorikari et al., 2022) provides such a foundation, organizing digital competence into five mutually-reinforcing areas: information & data literacy, communication & collaboration, digital content creation, safety, and problem solving. While alternative frameworks exist, such as UNESCO's AI competency framework (Miao et al., 2024), DigComp 2.2 offers particular advantages: it is specifically designed for European educational contexts, aligns with EU regulatory requirements, and provides detailed competency descriptors that can be adapted for GenAI-specific applications.

When projected onto GenAI use, these areas translate into:

• Using critical data-literacy skills to craft effective prompts and verify AI-generated outputs;

• Sharing data, information, and digital content through a variety of appropriate digital tools and applying sound referencing and attribution practices to credit original sources;

• Co-creating text, images or audio with GenAI tools and negotiating new questions of authorship, accessibility, and licensing;

• Extending digital safety to encompass privacy-preserving use of models, transparency of GenAI use, and the ethical use of GenAI content;

• Employing generative AI as a problem-solving partner that can simulate scenarios, prototype ideas, and debug solutions—skills that also demand reflective oversight to detect hallucinations or misuse.

However, existing research reveals significant gaps in students' ethical awareness regarding GenAI use. While students demonstrate some awareness of concerns like plagiarism and data privacy, they show limited understanding of more complex ethical dimensions: algorithmic bias in AI outputs, environmental impacts of AI model training and use, and broader implications for skill development and academic integrity (Chan and Hu, 2023; Zawacki-Richter et al., 2019). Without these skills, risks such as misinformation, plagiarism, superficial learning, and ethical missteps increase (Stolpe and Hallström, 2024; Tlili et al., 2023). Furthermore, AI undermines traditional notions of privacy through the covert collection, integration, and analysis of revealing personal information, which can lead to data de-anonymization and detailed profiling (Chan and Lo, 2025). This ethical knowledge gap represents a critical challenge for educational institutions seeking to develop responsible AI users.

Despite widespread student adoption of GenAI tools, educational institutions have been slow to develop comprehensive literacy programs. While universities are beginning to implement GenAI literacy programs to teach students, little is known about how students, particularly in the Netherlands, currently use GenAI, how competent they are or feel, or what ethical concerns they have. This reactive approach leaves students to develop GenAI competencies through trial and error, potentially reinforcing problematic usage patterns and ethical blind spots.

Furthermore, students increasingly report feeling unprepared for GenAI-integrated workplaces, expressing anxiety about their career prospects in an AI-transformed job market (Abdelwahab et al., 2023; Hirabayashi et al., 2024). Several studies indicate that GenAI literacy skills are becoming highly valued across various labor market sectors (Ahmadi et al., 2024; Brynjolfsson et al., 2025; Gazquez-Garcia et al., 2025; Gulati et al., 2025). Future professionals will not only work with GenAI tools but also with the ethical dilemmas and societal structures shaped by AI systems (Chan and Lo, 2025). Yet formal educational preparation remains limited. This disconnect between workplace demands and educational provision creates an urgent need for evidence-based curriculum development.

To inform responsible and effective educational integration of GenAI, comprehensive baseline data on student usage patterns, competencies, and attitudes is essential. While studies like Hirabayashi et al. (2024) among Harvard undergraduates provide valuable insights, they focus primarily on usage patterns and general attitudes rather than systematic competency assessment across established frameworks. Moreover, no comprehensive study has examined GenAI literacy in the Dutch educational context or provided detailed mapping to European competency standards.

To address these gaps and inform responsible and well-founded educational integration of GenAI, this study investigates:

1. How do students use GenAI, with regard to frequency, types of tools and purposes?

2. How do students assess their own GenAI competency?

3. How do students perceive the impact of GenAI on their career?

4. What do students consider important regarding ethical aspects such as privacy, data security, factual accuracy, bias, environmental impact, and what would they like to learn more about?

By focusing on Medical Informatics students—a population with high baseline digital literacy but varied exposure to AI technologies—this study provides insights into how GenAI literacy develops in technically oriented educational contexts. The systematic mapping to DigComp 2.2 competencies enables direct translation of findings into curriculum development, while the comprehensive coverage of usage, competency, ethical, and career dimensions provides a holistic foundation for educational planning.

This baseline study aims to inform evidence-based curriculum development that aligns with student needs, regulatory requirements, and professional preparation goals, contributing to the broader effort to integrate GenAI literacy effectively and responsibly into higher education.

Methods

Study design, study setting & participants

This study employed a cross-sectional design using questionnaires to assess AI use, competency, ethical attitudes, and perceived career impact among university students. The study was conducted in the Medical Informatics (MI) programme of the University of Amsterdam between September 2024 (BSc year 1 and MSc year 1) and February 2025 (BSc years 2 and 3, MSc year 2). It focused on first-, second-, and third-year Bachelor's and first- and second-year Master's students in MI. All enrolled students were eligible to participate. Table 1 lists the number of students who were present in the class where the questionnaire was administered and the number who participated in the study. The data were collected anonymously. At that time, University of Amsterdam students were officially not allowed to use GenAI for studying and had not yet received any formal GenAI literacy training.

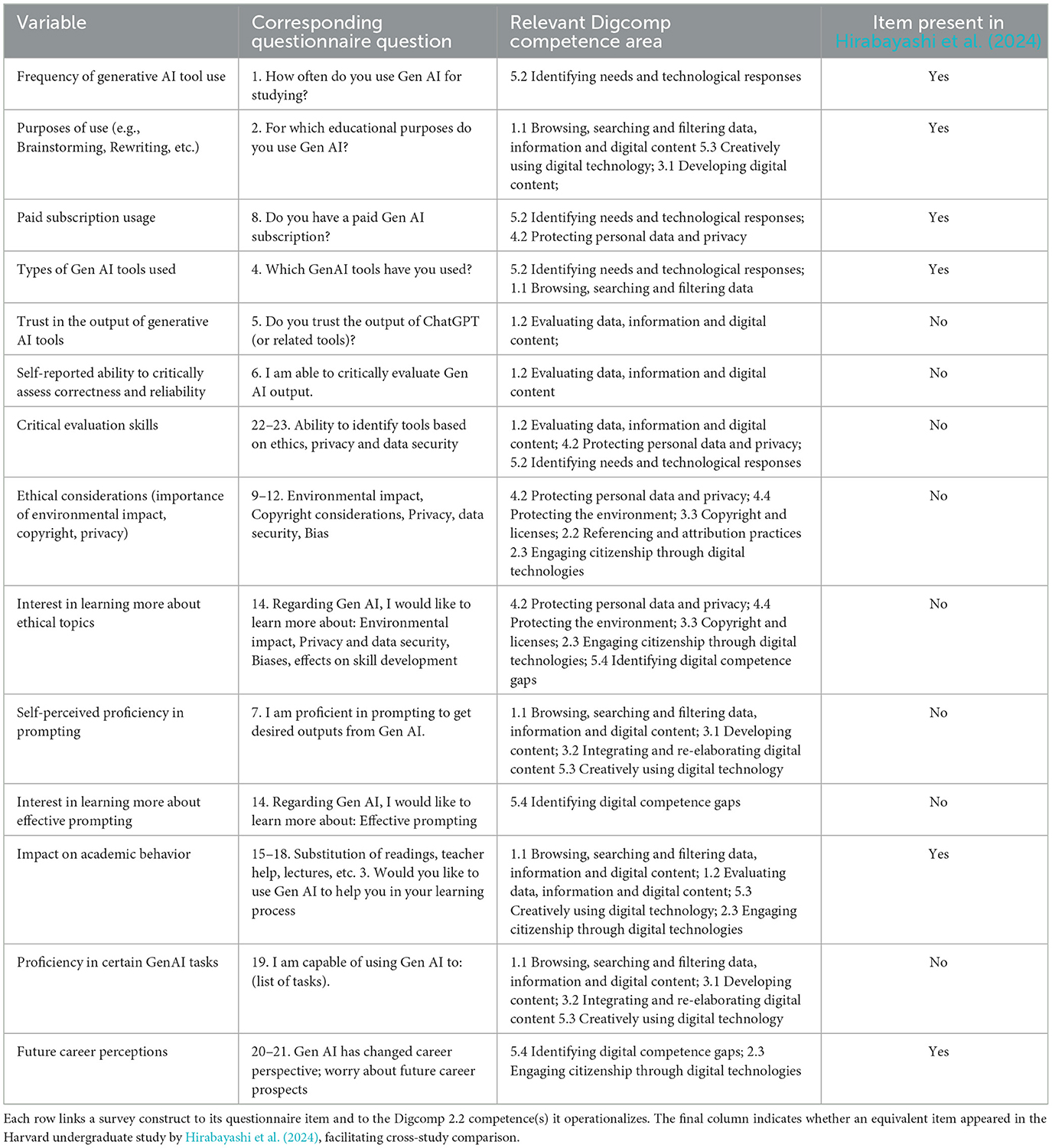

Table 1. “Students present” denotes the number of Medical Informatics students physically in class when the questionnaire QR-code was shown.

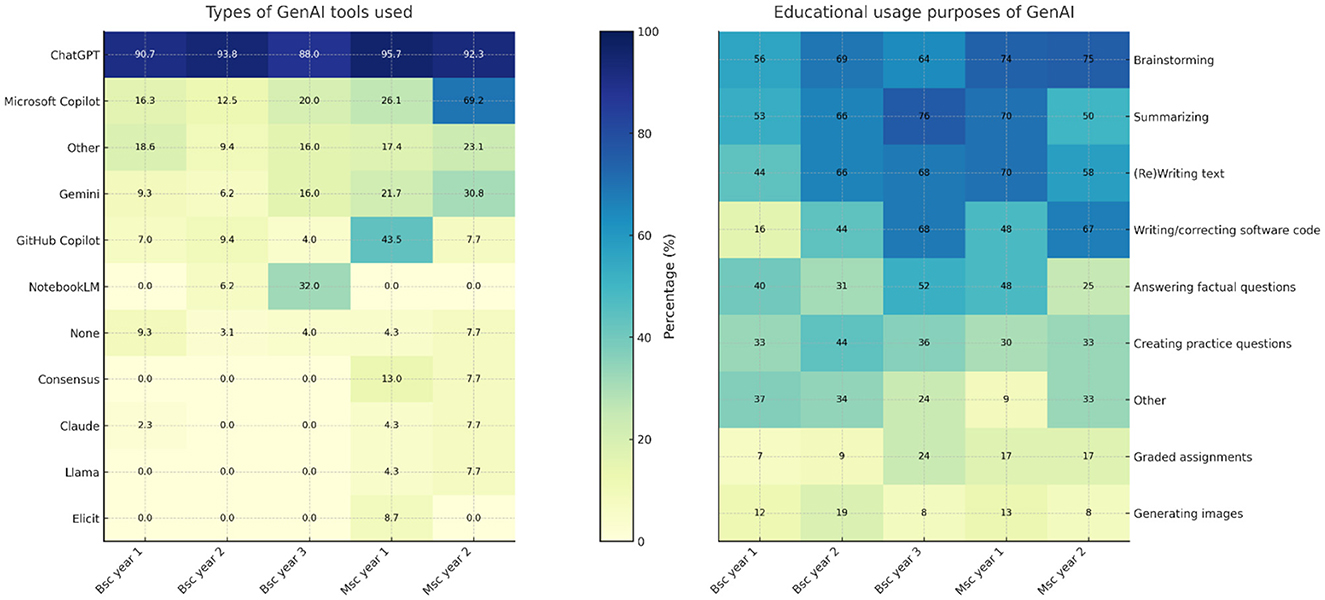

Data sources and questionnaires

The questionnaire (Appendix 1) consists of questions based on the five competency areas of the European Union's Digital Competence Framework 2.2 and the Harvard Undergraduate Survey (Hirabayashi et al., 2024; Vuorikari et al., 2022). The link between our questionnaire items, the DigComp framework, and the study by Hirabayashi et al. (2024) is detailed in Table 2. The DigComp framework was developed by the European Union to provide an overview of the digital competencies required by the general workforce. To improve face and content validity, the questionnaire was sent for review to a group of five other educational (GenAI) researchers and adapted accordingly. By using a number of questions that were piloted in a previous study (Hirabayashi et al., 2024), we further supported the methodological validity of our assessment. The questionnaire consists of Likert-scale (1–5) and multiple-choice questions. The survey, consisting of 23 items, was administered online and distributed via QR code before a lecture.

Statistical analysis

Descriptive statistics were used to summarize the results. Variables are aggregated as frequencies and percentages. When comparative statements are made concerning Likert scales, a Kruskal–Wallis test was conducted, followed by pairwise Mann–Whitney U tests with Holm adjustment for multiple testing, to assess statistical significance. For multiple-response counts, a chi-squared goodness-of-fit test was performed, followed by 2 × 2 proportion tests to assess pairwise differences. A p-value < 0.05 was considered statistically significant. To assess whether GenAI usage frequency increases with academic progression, the original seven response categories (Daily, Every other day, Weekly, Bi-weekly, Monthly, Rarely, Not) were collapsed into four ordered levels: Frequent (Daily + Every other day), Weekly, Bi-weekly, and Infrequent (Monthly + Rarely + Not), coded 4–1 respectively. A Jonckheere–Terpstra test for ordered alternatives was applied to the five independent cohorts (BSc Y1 → BSc Y2 → BSc Y3 → MSc Y1 → MSc Y2). Because ties and unequal group sizes were present, exact p-values were approximated via 20,000 Monte Carlo label permutations. The same procedure was repeated for the Bachelor cohorts only (Y1–Y3). For the Master cohorts (two levels), the trend test reduces to a Mann–Whitney U test. To determine whether paid GenAI subscription use increased over the years, a Cochran–Armitage linear-by-linear χ2 trend test was performed on a 2 × 5 contingency table (Paid = Yes/No × Cohort order: BSc Y1 → MSc Y2). All analyses were performed in Python 3.11 using the SciPy 1.11 library.
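The category collapsing and the permutation-based trend test described above can be sketched as follows. This is an illustration only, not the authors' analysis code: the names `COLLAPSE`, `jt_statistic`, and `jt_permutation_test` are hypothetical, and the one-sided (increasing) alternative, the 0.5 weight for tied pairs, and the fixed random seed are assumptions that the text does not specify.

```python
import numpy as np

# Collapse the seven response categories into four ordered levels (coded 4-1),
# following the mapping described in the text.
COLLAPSE = {
    "Daily": 4, "Every other day": 4,        # Frequent
    "Weekly": 3,
    "Bi-weekly": 2,
    "Monthly": 1, "Rarely": 1, "Not": 1,     # Infrequent
}


def jt_statistic(groups):
    """Jonckheere-Terpstra statistic for groups given in their hypothesised order.

    For every pair of groups (i < j), count the pairs (x, y) with x < y.
    Tied pairs contribute 0.5 (an assumption; conventions differ).
    """
    total = 0.0
    for i in range(len(groups) - 1):
        for j in range(i + 1, len(groups)):
            x = np.asarray(groups[i], dtype=float)[:, None]
            y = np.asarray(groups[j], dtype=float)[None, :]
            total += np.sum(x < y) + 0.5 * np.sum(x == y)
    return float(total)


def jt_permutation_test(groups, n_perm=20_000, seed=0):
    """Monte Carlo p-value for an increasing trend (one-sided, assumed).

    Cohort labels are shuffled while group sizes are kept fixed.
    """
    rng = np.random.default_rng(seed)
    sizes = [len(g) for g in groups]
    pooled = np.concatenate([np.asarray(g, dtype=float) for g in groups])
    split_points = np.cumsum(sizes)[:-1]

    observed = jt_statistic(groups)
    exceed = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(pooled)
        if jt_statistic(np.split(shuffled, split_points)) >= observed:
            exceed += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (exceed + 1) / (n_perm + 1)
    return observed, p_value
```

In use, each cohort's responses would first be mapped through `COLLAPSE` (for example `[COLLAPSE[r] for r in responses]`), and the resulting lists passed in cohort order (BSc Y1 through MSc Y2) to `jt_permutation_test`.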

Ethics

The data was collected according to the principles of the Ethical Review Board of the Dutch Association of Medical Education (approved dossier 2025.3.5). Participation in the research was voluntary. The data were collected anonymously, with no identifying information being collected, stored or published. Participants were provided with a clear explanation of the study's purpose, and written informed consent was obtained prior to data collection. The researchers had no involvement in the assessment of the students, ensuring that their participation could not influence academic progress or standing. All data are securely stored on a password-protected server, accessible only to authorized personnel. Additionally, the study was designed to minimize any potential risks or discomfort to participants.

Results

RQ1 - How do students use GenAI, with regard to frequency, types of tools, and purposes?

Frequency of GenAI use

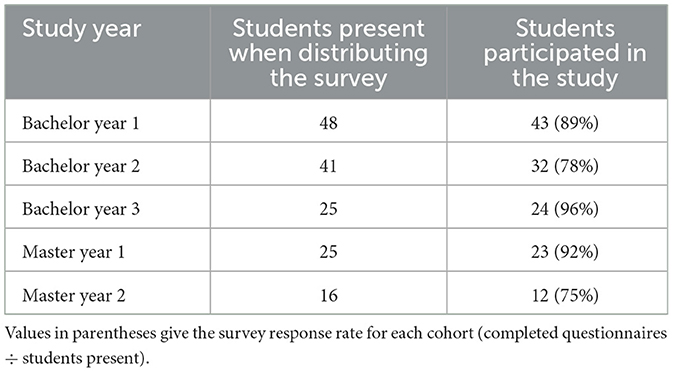

Overall, students' use of GenAI rose over the course of their combined BSc and MSc degrees (Figure 1 - left, p < 0.0001). Within the Bachelor's programme, GenAI use rose from 5% among first-year undergraduates to 33% in the third undergraduate year (p < 0.0001). Within the Master cohorts, no trend in GenAI use was observed (p = 0.26). Paid subscriptions also increased over the course of the study programme (Figure 1 - right, p = 0.0022): roughly 2.4% of first-years, 12.5% of second-years, and 8.7% of third-years reported having one, rising further in postgraduate years (16% in MSc 1 and 38% in MSc 2).

Figure 1. Frequency of GenAI use and paid subscription by academic year. The stacked area chart (left) displays the percentage of students in each cohort (“BSc Year 1” to “MSc Year 2”) reporting their frequency of GenAI use, categorized as: Daily, Every other day, Weekly, Biweekly, Monthly, Rarely, or Not at all. Colors correspond to the seven use-frequency categories. The bar graph (right) shows the proportion of students with a paid GenAI subscription in each academic year. Bar heights reflect subscription rates on a consistent 0%−100% scale.

Types of tools and usage purposes

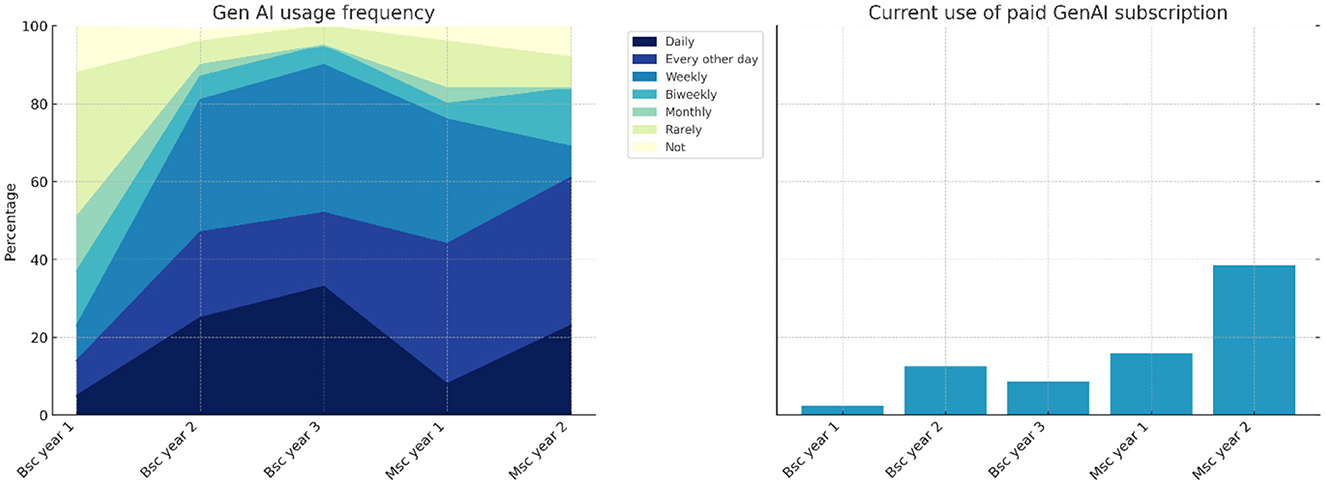

ChatGPT was the predominant GenAI tool (Figure 2 - left, p = 0.004), with >90% of students reporting having used it (from 90.7% in BSc 1 up to 95.7% in MSc 1, and 92.3% in MSc 2).

Figure 2. Types of GenAI tools and educational usage purposes. Side-by-side heatmaps showing (left) the percentage of students in each cohort (BSc Year 1 → MSc Year 2) who have used specific GenAI tools (ChatGPT, Claude, Microsoft Copilot, etc.) and (right) the percentage who have employed GenAI for various educational tasks (brainstorming, rewriting text, summarizing, etc.). Darker cells denote higher usage rates; both panels share a common colour scale.

In terms of educational purposes, students most frequently (p < 0.001) employed GenAI for brainstorming (Figure 2 - right, 56% in BSc year 1 to 75% in MSc year 2), summarizing (50%−76%), and rewriting text (44%−70%). The middle group of usage frequency (p = 0.001) comprised writing or correcting software code (16% in BSc year 1 vs. 44%−67% in later years), answering factual questions (25%−52%), and creating practice questions (30%−33%). The least frequent applications (p < 0.001) included use for graded assignments (7%−24%), generating images (8%−19%), and “other” tasks (9%−37%).

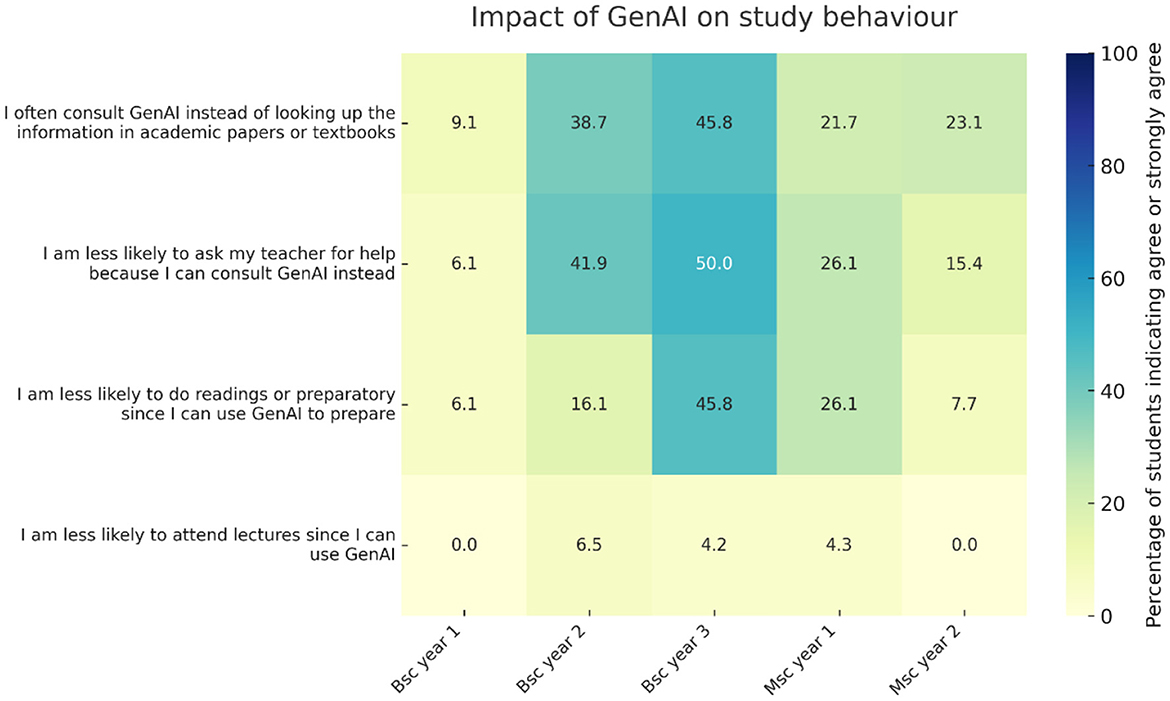

Use of GenAI instead of traditional study resources

Overall, third-year bachelor students report the greatest shift, with the exception of lecture replacement (Figure 3, p = 0.001–0.04). Among first-year undergraduates, 9.1% agreed or strongly agreed that they “often consult GenAI instead of looking up the information in academic papers or textbooks,” compared to 38.7% (BSc year 2), 45.8% (BSc year 3), 21.7% (MSc year 1), and 23.1% (MSc year 2). Similar patterns can be seen for asking GenAI instead of a teacher (6%−50%) and for being less likely to do readings and using GenAI instead (6%−48%). Across all years, students are unlikely to attend fewer lectures because of the option of using GenAI (0%−7%) (Figure 3).

Figure 3. Impact of GenAI on study behaviour. Heat-map of the percentage of students by programme year (BSc Year 1 → MSc Year 2) who “agree” or “strongly agree” with statements about substituting GenAI for traditional study activities. Darker cells indicate a higher proportion of students. The y-axis lists four behaviours (consulting GenAI instead of academic sources; refraining from teacher help; skipping preparatory readings; skipping lectures), and the x-axis shows academic year.

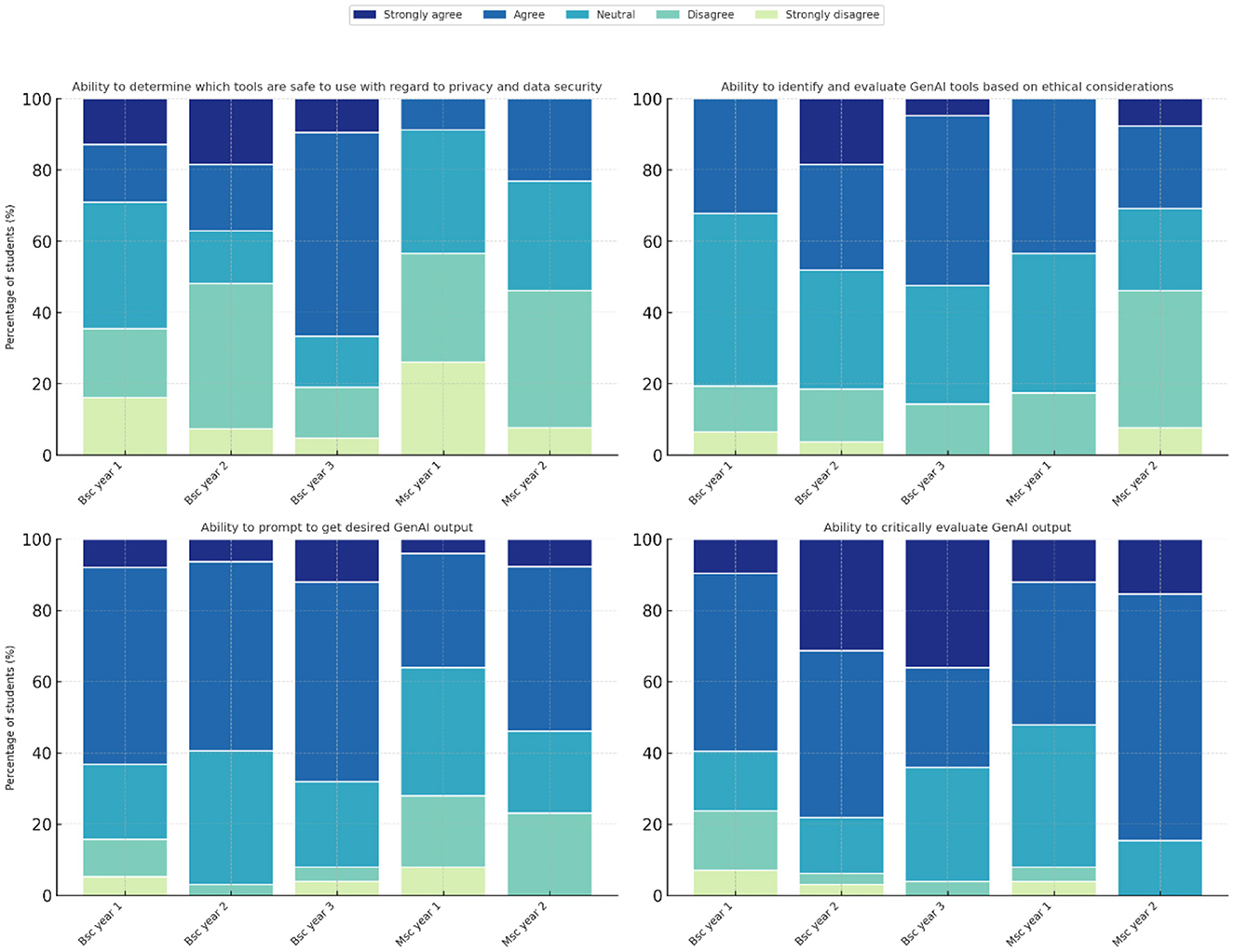

RQ2 - How do students assess their own GenAI competency?

The highest self-ratings (Figure 4) were reported for critically evaluating GenAI output (60%−85% agree or strongly agree, p = 0.006), followed by ability to prompt (35%−68%, p = 0.003–0.028), ethical appraisal (32%−52%, p = 0.028–0.038), and ability to select a tool based on privacy and data security (25%−43%, p = 0.038).

Figure 4. Self-assessed GenAI competencies by academic year. Four stacked-bar panels display, for each cohort (BSc Year 1 → MSc Year 2), the self-assessed ability for four GenAI competencies.

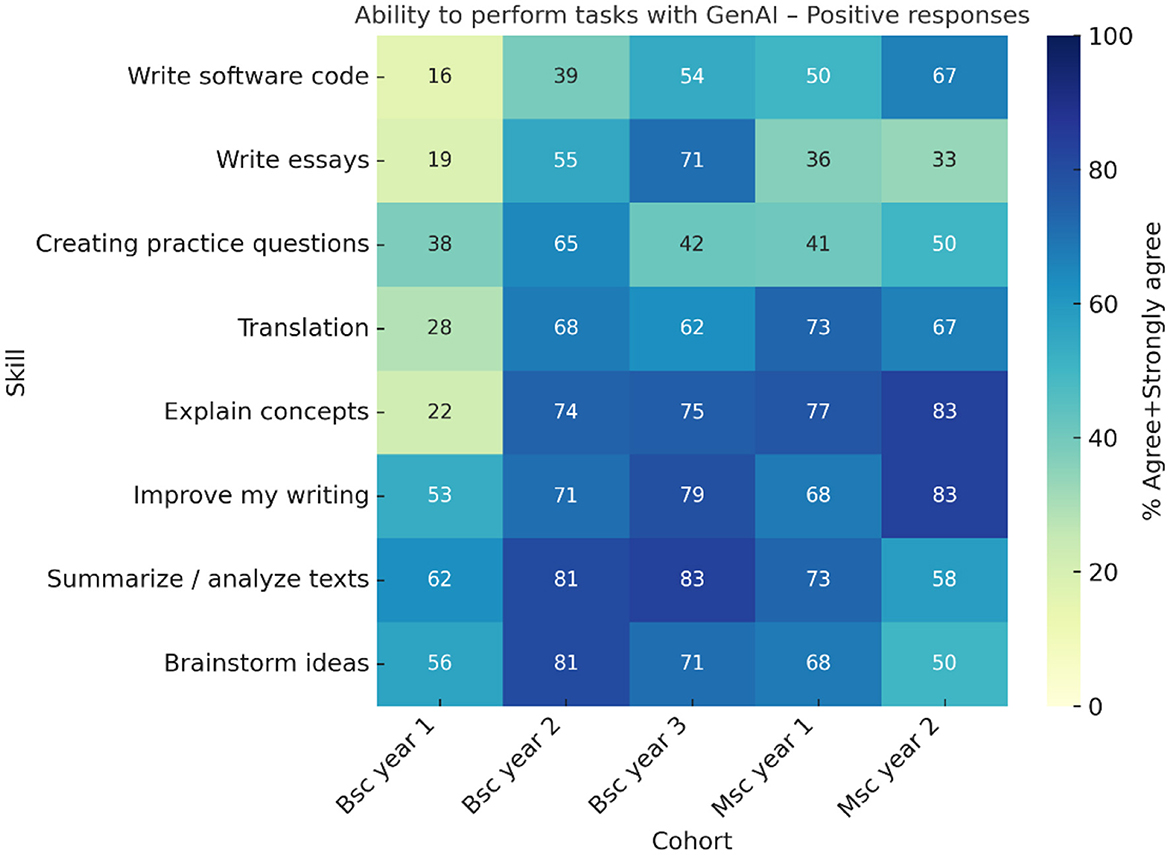

Ability to perform tasks with GenAI

Students' self-reported competence in GenAI-assisted tasks (Figure 5) is lower in the first year (16%−62%) than in the other years (33%−85%) for improving writing, writing essays, writing software code, translation, and explaining concepts (p < 0.001–0.27). Second-year Master's students score lowest on summarizing/analyzing texts, creating practice questions, and brainstorming ideas (p = 0.002–0.015).

Figure 5. Ability to perform tasks with GenAI. Heat-map showing the percentage of students in each cohort (BSc Year 1 → MSc Year 2) who “agree” or “strongly agree” that they feel able to perform eight academic tasks using generative AI. Darker shading indicates higher self-reported competence; annotations give the exact percentages.

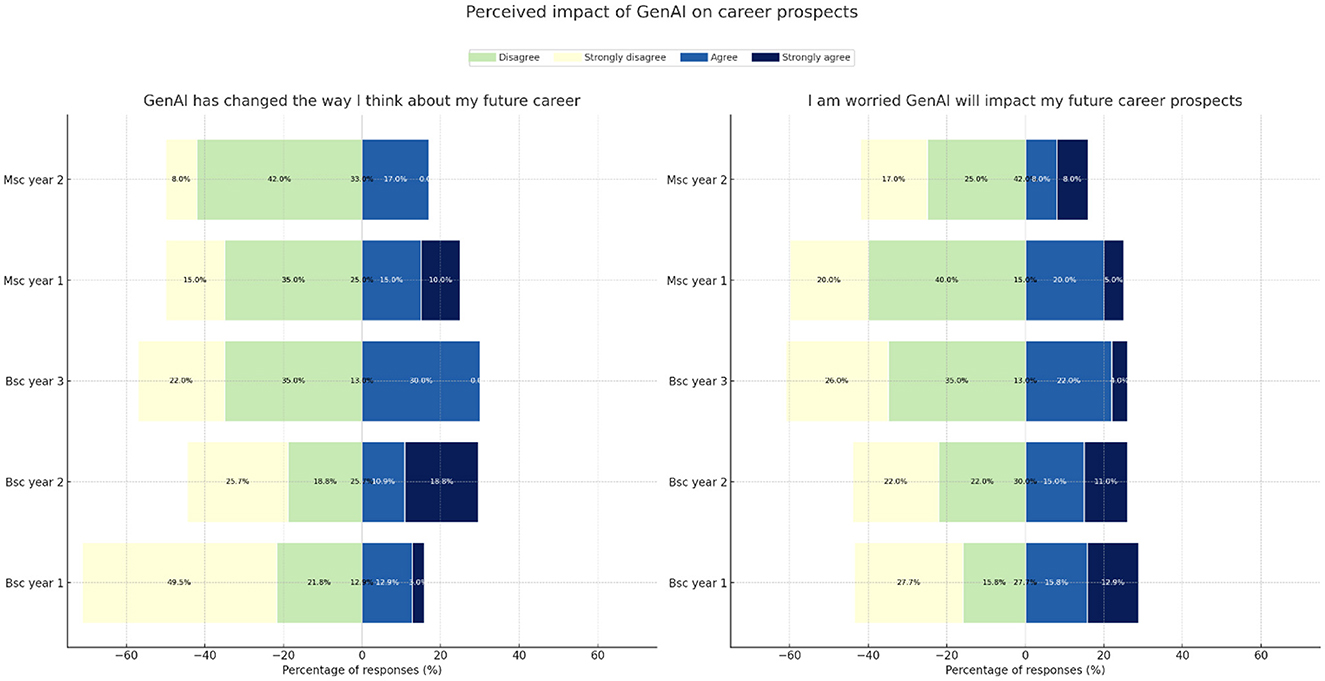

RQ3 - How do students perceive the impact of GenAI on their career?

Sixteen percent to thirty percent of students indicate (strong) agreement that GenAI has changed the way they think about their future careers and 45%−71% (strongly) disagree that GenAI changed the way they think about their future careers. Sixteen percent to thirty percent of students are (strongly) worried that GenAI will impact their future career prospects and 42%−62% of students are not worried (Figure 6).

Figure 6. Perceived impact of GenAI on career prospects. Diverging bar charts showing the distribution of respondents' perceptions of Generative AI (GenAI) on their future career prospects across five student cohorts (BSc Yr 1–3, MSc Yr 1–2). Negative bars (left) represent the combined percentages of “Disagree” and “Strongly disagree,” positive bars (right) the percentages of “Agree” and “Strongly agree,” and the neutral category percentage is indicated at the vertical zero line. The left panel illustrates responses to “GenAI has changed the way I think about my future career,” and the right panel to “I am worried GenAI will impact my future career prospects.”

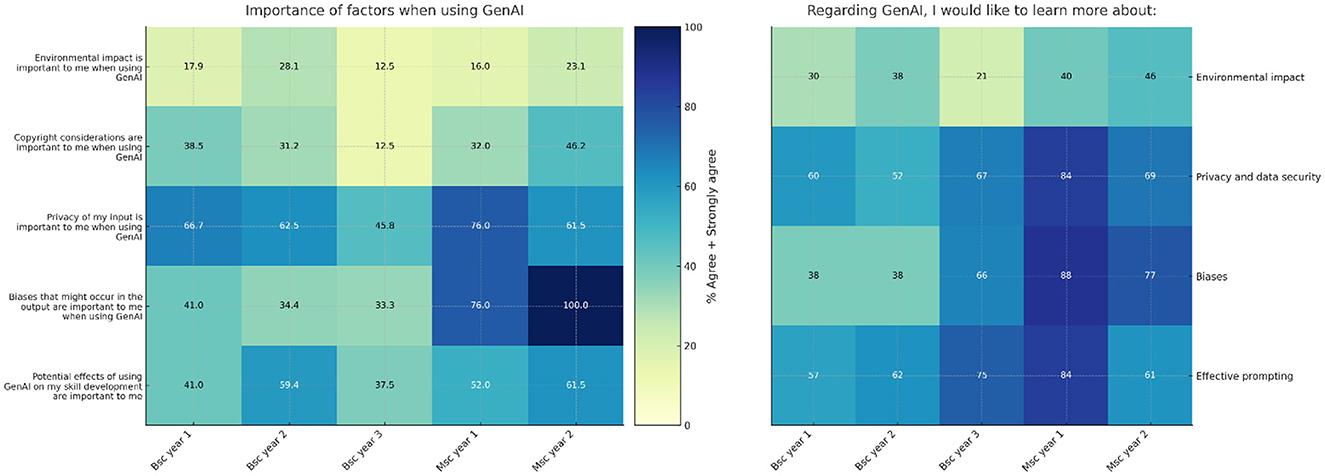

RQ4 - What do students consider important regarding ethical aspects such as privacy, data security, bias, environmental impact, and what would they like to learn more about?

Students rate privacy of their input, skill development, and bias as the most important considerations (p = 0.0004–0.05) when using GenAI (Figure 7). Regarding privacy, 46%−76% of students (strongly) agree that this factor is important. Potential biases are considered especially important by Master students (76%−100%), compared to Bachelor students (33%−41%). Copyright (12%−46%) and environment (13%−28%) are considered least important (p = 0.001–0.006).

Figure 7. Perceived importance of factors and learning interests regarding GenAI. Side-by-side heatmaps showing, for each cohort (BSc Year 1 → MSc Year 2), the percentage of students who “agree” or “strongly agree” that each factor is important when using GenAI (left) and who “agree” or “strongly agree” that they would like to learn more about each topic (right). Darker cells indicate higher percentages; both panels share a common legend for % Agree + Strongly Agree.

When asked what they would like to learn more about, interest is highest (p < 0.0001) for effective prompting [58%−84% (strongly) agree], privacy and data security (60%−84%), and bias (38%−88%). Interest in learning more about biases is especially high among Master students (38%−66% in Bachelor vs. 77%−88% in the Master). Students are least interested in learning more about the environmental impact of GenAI (21%−46%, p < 0.0001).

Conclusion and discussion

This cross-sectional survey of Medical Informatics students at the University of Amsterdam demonstrates that engagement with generative AI tools increases substantially among students after their first Bachelor year. Gaps in paid GenAI subscriptions, which widen in the later study years (2.4% of BSc year 1 students vs. 38% of MSc year 2 students), could threaten digital equity for students who cannot afford a paid subscription. To prevent adverse effects, universities should consider providing a universal, safe, and powerful GenAI infrastructure.

Overall, students typically continue to rely on traditional study materials, such as textbooks and lectures, rather than GenAI, although the tendency to substitute conventional resources grows among upper-level bachelor's students. Given the high level of use combined with limited substitution, students appear to integrate GenAI as a complement to, rather than a replacement for, traditional resources. The types of use reported align with those in previous studies, such as brainstorming, text (re)writing, and answering factual questions (Hirabayashi et al., 2024; von Garrel and Mayer, 2023). Notably, the usage of GenAI increases with academic progression, which is consistent with findings in other educational contexts that report higher tool adoption among advanced students (Chan and Hu, 2023; Zulfikasari et al., 2024).

Students' self-assessments reveal an uneven competence profile that, when mapped onto the DigComp 2.2 competence areas, signals where additional instruction is most needed.

• Area 1 – Information & Data Literacy. Abilities tied to prompting, summarizing, and critically vetting AI output show the highest levels of competence: 60%−85% of respondents agree or strongly agree that they perform well in these tasks.

• Area 2 – Communication & Collaboration. This area is less applicable to GenAI use, given the human-to-human sharing and teamwork behaviors it lists. Therefore, this study did not include area 2 skills.

• Area 3 – Digital Content Creation. Competences such as using GenAI for writing and text refinement are also considered relatively strong, with 53%−83% reporting (high) proficiency, although some skills, such as creating practice questions, are rated lower (38%−64%).

• Area 4 – Safety. Many students indicate that they are not able to select a GenAI tool based on privacy, data security or ethical preferences (15%−56%).

• Area 5 – Problem solving. Students report being competent in using GenAI to solve study-related problems such as creating an essay, coding, explaining concepts, or brainstorming, with 19%−81% reporting (high) proficiency.

These findings indicate that the main self-reported skill gaps in GenAI literacy are safety and certain aspects of digital content creation.

The majority of students in this study do not fear adverse career impacts from AI (70%−85% agree or strongly agree) and seem less worried than other STEM students at Harvard about the impact of GenAI on their career prospects (Hirabayashi et al., 2024). Perhaps this is because they expect that, with their background in informatics, GenAI will provide more career opportunities rather than fewer.

The study highlights a (strong) desire for additional training in prompting (area 1), privacy-preserving practices (area 4), and bias mitigation (area 1). Students rate privacy of their input (area 4), potential effects on skill development (area 2 – digital citizenship), and bias (area 1) as the most important considerations when using GenAI; copyright (area 3) and environment (area 4) are considered least important. The skill gap in area 4, mainly concerning environmental impact, combined with the lack of interest in learning more about the environmental impact, signals the need for additional awareness-raising.

There are some aspects to consider regarding the interpretation and implications of this study. Firstly, the study's cross-sectional design provides a snapshot at a specific time, which may not capture the rapid evolution of both GenAI technologies and student competencies and attitudes over time. The two different timepoints of measurement (September 2024 and February 2025) might also impact the results, thus reducing the comparability between BSc year 1 and MSc year 1 on the one hand and the other cohorts on the other. Future research should involve longitudinal studies to examine changes in usage patterns, competencies, and ethical perspectives as students progress in their education, receive (additional) AI literacy training, and get access to secure and potent GenAI infrastructure from universities. It would also be interesting to see whether students who use GenAI more often during their studies have different ethical attitudes than those who use it less. Furthermore, the reliance on self-reported data regarding students' perceived competencies introduces potential bias. Students might not be able to accurately assess their own competency, especially when their competency is low, the so-called Dunning-Kruger effect (Kruger and Dunning, 1999). Although the questionnaire is fully anonymous, students may still display some degree of social desirability bias in their responses. However, since they also report socially undesirable views, such as indicating that environmental impact is not particularly important, we consider the influence of this bias to be relatively limited. Objective measures of GenAI literacy, such as assessments, would provide more accurate insights into students' abilities. Such objective measures would also make it possible to ascertain more reliably a potential relationship between amount of use, perceived GenAI literacy, and objective GenAI literacy. Lastly, Medical Informatics is a technical field of study, with an expected higher digital literacy than other studies. This might limit the generalizability of the results to other types of degrees outside of the sciences.

The relatively high response rate among participants enhances the reliability of the findings, and the comprehensive approach—covering usage patterns, competencies, ethical attitudes, and perceived career impact—provides a holistic understanding of students' relationship with GenAI.

Given the rapid development of GenAI tools, continued research in this field is crucial. Future studies could focus on measuring digital literacy more objectively, either through standardized tests or AI-skill assessments, to better understand students' true capabilities, particularly when compared to other disciplines with varying levels of digital literacy. Moreover, qualitative research exploring the reasons behind students' preferences and their ethical concerns could shed light on their evolving needs and attitudes toward GenAI tools. Longitudinal cohort studies would also provide valuable insights into how GenAI usage patterns and competencies develop over time with increased availability of GenAI infrastructure and, hopefully, increased AI literacy education.

In terms of educational practice, it is clear that our students, particularly those in the early stages of their studies, require more structured training in areas 1, 3, and 5 of the DigComp framework (Information & Data Literacy, Digital Content Creation, Problem Solving). Students in all academic years require more training in area 4 (Safety). Other universities could use a similar approach to measure the baseline GenAI competence level of their students and determine which aspects require more training.

By linking our findings to the EU Digital Competence Framework, we establish a valuable connection between academic reality and digital literacy standards, which can guide future educational practices. Future GenAI literacy training should not only address technical skills but also cover ethical considerations, ensuring that students understand the implications of using AI in academic contexts.

Considering the limited ability of students to assess whether tools are (ethically) safe and the observation that many students have paid GenAI subscriptions, we consider it important that institutions offer access to (ethically) safe and capable GenAI infrastructure to prevent issues with ethical considerations or digital inequity. Providing access to GenAI tools and integrating them into educational strategies could better prepare students for the workplace.

Considering the prevalent GenAI use by students worldwide, and with ~50% of students in this study using it daily or every other day for studying and 7%−24% using it for graded assignments, it is important for educational institutes to consider the validity of their assessments and the relevance of their learning goals, and to adapt their curricula accordingly (Jongkind et al., 2025; Corbin et al., 2025). GenAI use by students is now routine, but the full range of skills needed for responsible and effective use is not, creating the need for universities to pair equitable access with curriculum-wide, safety-centered AI literacy.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://osf.io/qdh43/, Open Science Framework.

Ethics statement

The studies involving humans were approved by the Ethical Review Board of the Dutch Society of Medical Education (Nederlandse Vereniging van Medisch Onderwijs, NVMO). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

RJ: Investigation, Writing – original draft, Visualization, Formal analysis, Writing – review & editing, Methodology, Data curation, Project administration, Conceptualization. YB: Conceptualization, Writing – review & editing, Methodology, Writing – original draft. JM: Investigation, Conceptualization, Writing – review & editing, Data curation, Methodology. TB: Conceptualization, Methodology, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors would like to thank Suzanne Geerlings, Stéphanie van der Burgt, Anne van Hoogmoed, Emma Vermeulen, Gerard Spaai, Erik Joukes, and Floris Wiesman for providing valuable feedback or input on the questionnaire, the design of the study, or the manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that GenAI was used in the creation of this manuscript. Generative AI was used to generate the Python code to make the figures. The figures were generated with the Python library matplotlib. The Python code was double-checked and edited where necessary.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdelwahab, H. R., Rauf, A., and Chen, D. (2023). Business students' perceptions of Dutch higher educational institutions in preparing them for artificial intelligence work environments. Ind. High. Educ. 37, 22–34. doi: 10.1177/09504222221087614

Ahmadi, M., Kheslat, N. K., and Akintomide, A. (2024). Generative AI impact on labor market: analyzing ChatGPT's demand in job advertisements. arXiv [Preprint]. arXiv:2412.07042 [cs.CY]. doi: 10.48550/arXiv.2412.07042

Almatrafi, O., Johri, A., and Lee, H. (2024). A systematic review of AI literacy conceptualization, constructs, and implementation and assessment efforts (2019–2023). Comput. Educ. Open 6:100173. doi: 10.1016/j.caeo.2024.100173

Brynjolfsson, E., Li, D., and Raymond, L. (2025). Generative AI at work. Q. J. Econ. 140, 889–942. doi: 10.1093/qje/qjae044

Chan, C. K. Y., and Hu, W. (2023). Students' voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 20:43. doi: 10.1186/s41239-023-00411-8

Chan, H. W. H., and Lo, N. P. K. (2025). A study on human rights impact with the advancement of artificial intelligence. J. Posthumanism 5, 1114–1153. doi: 10.63332/joph.v5i2.490

Corbin, T., Dawson, P., and Liu, D. (2025). Talk is cheap: why structural assessment changes are needed for a time of GenAI. Assess. Eval. High. Educ. 1–11. doi: 10.1080/02602938.2025.2503964

Crček, N., and Patekar, J. (2023). Writing with AI: university students' use of ChatGPT. J. Lang. Educ. 9, 128–138. doi: 10.17323/jle.2023.17379

De Winter, J. C. F., Dodou, D., and Stienen, A. H. A. (2023). ChatGPT in education: empowering educators through methods for recognition and assessment. Informatics 10:87. doi: 10.3390/informatics10040087

European Parliament and Council of the European Union (2024). Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence and amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act) (Text with EEA relevance). Available online at: http://data.europa.eu/eli/reg/2024/1689/oj (Accessed June, 2025).

Gazquez-Garcia, J., Sánchez-Bocanegra, C. L., and Sevillano, J. L. (2025). AI in the health sector: systematic review of key skills for future health professionals. JMIR Med. Educ. 11:e58161. doi: 10.2196/58161

Gulati, P., Marchetti, A., Puranam, P., and Sevcenko, V. (2025). Generative AI adoption and higher order skills. SSRN Electron. J. doi: 10.2139/ssrn.5175809

Hirabayashi, S., Jain, R., Jurković, N., and Wu, G. (2024). Harvard undergraduate survey on generative AI. arXiv [Preprint] arXiv:2406.00833 [cs.CY]. doi: 10.48550/arXiv.2406.00833

Jongkind, R., Elings, E., Joukes, E., Broens, T., Leopold, H., Wiesman, F., et al. (2025). Is your curriculum GenAI-proof? A method for GenAI impact assessment and a case study. MedEdPublish 15:11. doi: 10.12688/mep.20815.1

Kruger, J., and Dunning, D. (1999). Unskilled and unaware of it: how difficulties in recognizing one's own incompetence lead to inflated self-assessments. J. Pers. Soc. Psychol. 77, 1121–1134. doi: 10.1037/0022-3514.77.6.1121

Laupichler, M. C., Aster, A., Schirch, J., and Raupach, T. (2022). Artificial intelligence literacy in higher and adult education: a scoping literature review. Comput. Educ. Artif. Intell. 3:100101. doi: 10.1016/j.caeai.2022.100101

Miao, F., Shiohira, K., and Lao, N. (2024). AI Competency Framework for Students. Paris: UNESCO Digital Library.

Shiri, A. (2024). Artificial intelligence literacy: a proposed faceted taxonomy. Digit. Libr. Perspect. 40, 681–699. doi: 10.1108/DLP-04-2024-0067

Singh, H., Tayarani-Najaran, M.-H., and Yaqoob, M. (2023). Exploring computer science students' perception of ChatGPT in higher education: a descriptive and correlation study. Educ. Sci. 13:924. doi: 10.3390/educsci13090924

Stolpe, K., and Hallström, J. (2024). Artificial intelligence literacy for technology education. Comput. Educ. Open 6:100159. doi: 10.1016/j.caeo.2024.100159

Strzelecki, A. (2023). To use or not to use ChatGPT in higher education? A study of students' acceptance and use of technology. Interact. Learn. Environ. 32, 5142–5155. doi: 10.1080/10494820.2023.2209881

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., et al. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 10:15. doi: 10.1186/s40561-023-00237-x

Tzirides, A. O. (Olnancy), Zapata, G., Kastania, N. P., Saini, A. K., Castro, V., Ismael, S. A., et al. (2024). Combining human and artificial intelligence for enhanced AI literacy in higher education. Comput. Educ. Open 6:100184. doi: 10.1016/j.caeo.2024.100184

von Garrel, J., and Mayer, J. (2023). Artificial intelligence in studies—use of ChatGPT and AI-based tools among students in Germany. Humanit. Soc. Sci. Commun. 10, 1–9. doi: 10.1057/s41599-023-02304-7

Vuorikari, R., Kluzer, S., and Punie, Y. (2022). DigComp 2.2: The Digital Competence Framework for Citizens - With new examples of knowledge, skills and attitudes [WWW Document]. JRC Publ. Repos. doi: 10.2760/115376

Zawacki-Richter, O., Marín, V. I., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? Int. J. Educ. Technol. High. Educ. 16:39. doi: 10.1186/s41239-019-0171-0

Zulfikasari, S., Sulistio, B., and Aprilianasari, W. (2024). Utilization of Chat GPT Artificial Intelligence (AI) in student's learning experience Gen-Z class. Lect. J. Pendidik. 15, 259–272. doi: 10.31849/lectura.v15i1.18840

Appendix

Questionnaire

1. How often do you use Gen AI for studying?

° Daily, every other day, weekly, biweekly, monthly, rarely ever, not

2. For which educational purposes do you use Gen AI?

° Brainstorming, (Re)writing text, summarizing, answering factual questions, writing software code, generating images, making graded assignments, creating practice questions, Other

3. Would you like to use Gen AI to help you in your learning process?

° Scale 1–5: Strongly Disagree to Strongly Agree

4. Which GenAI tools have you used?

° ChatGPT, Claude, Microsoft Copilot, Llama, GitHub Copilot, Gemini, Grammarly, NotebookLM, Elicit, Consensus, Other, Not applicable

5. Do you trust the output of ChatGPT (or related tools)?

° Scale 1–5: Strongly Disagree to Strongly Agree

6. I am able to critically evaluate Gen AI output.

° Scale 1–5: Strongly Disagree to Strongly Agree

7. I am proficient in prompting to get desired outputs from Gen AI.

° Scale 1–5: Strongly Disagree to Strongly Agree

8. Do you have a paid Gen AI subscription? (e.g., ChatGPT Plus)

° Yes/No

9. Environmental impact is important to me when using GenAI

° Scale 1–5: Strongly Disagree to Strongly Agree

10. Copyright considerations are important to me when using GenAI

° Scale 1–5: Strongly Disagree to Strongly Agree

11. Privacy of my input is important to me when using GenAI

° Scale 1–5: Strongly Disagree to Strongly Agree

12. Biases that might occur in the output are important to me when using GenAI

° Scale 1–5: Strongly Disagree to Strongly Agree

13. Potential effects of using Gen AI on my skill development are important to me.

° Scale 1–5: Strongly Disagree to Strongly Agree

14. Regarding Gen AI, I would like to learn more about:

° Environmental impact (Scale 1–5)

° Privacy and data security (Scale 1–5)

° Biases (Scale 1–5)

° Effective prompting (Scale 1–5)

15. I often consult Gen AI instead of looking up the information in academic papers or textbooks.

° Scale 1–5: Strongly Disagree to Strongly Agree

16. I am less likely to ask my teacher for help because I can consult Gen AI instead.

° Scale 1–5: Strongly Disagree to Strongly Agree

17. I am less likely to do readings or preparatory work since I can use Gen AI to prepare.

° Scale 1–5: Strongly Disagree to Strongly Agree

18. I am less likely to attend lectures because of Gen AI.

° Scale 1–5: Strongly Disagree to Strongly Agree

19. I am capable of using Gen AI to:

° Improve my writing, Write essays, Summarize/analyze texts, Write software code, Brainstorm ideas, Create practice questions, Translation, Explain concepts

° Scale 1–5: Strongly Disagree to Strongly Agree

20. Gen AI has changed the way I think about my future career.

° Scale 1–5: Strongly Disagree to Strongly Agree

21. I am worried Gen AI will impact my future career prospects.

° Scale 1–5: Strongly Disagree to Strongly Agree

22. I am able to identify and evaluate Gen AI tools based on my ethical considerations

° Scale 1–5: Strongly Disagree to Strongly Agree

23. I am able to determine which tools are safe to use with regard to privacy and data security.

° Scale 1–5: Strongly Disagree to Strongly Agree

Keywords: generative AI, GenAI literacy, GenAI usage patterns, higher education, ethical attitudes, GenAI competences and skills, DigComp 2.2, GenAI skill development

Citation: Jongkind R, Bikker Y, Meinema J, and Broens T (2025) Studying with GenAI: cross-sectional study on usage patterns, needs, competencies, and ethical perspectives of medical informatics students. Front. Educ. 10:1658415. doi: 10.3389/feduc.2025.1658415

Received: 02 July 2025; Accepted: 26 August 2025;

Published: 19 September 2025.

Edited by:

Leman Figen Gul, Istanbul Technical University, Türkiye

Reviewed by:

Noble Lo, Lancaster University, United Kingdom

Pongsakorn Limna, Pathumthani University, Thailand

Copyright © 2025 Jongkind, Bikker, Meinema, and Broens. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Remco Jongkind
