- Centre for Educational Technology, Indian Institute of Technology Bombay, Mumbai, India

Introduction: Active reading is essential for students' comprehension and engagement when working with complex academic texts. Although digital textbooks and multimedia resources are increasingly combined with print materials, little is known about how students engage in cross-media active reading using smartphone-based companion applications in authentic learning contexts.

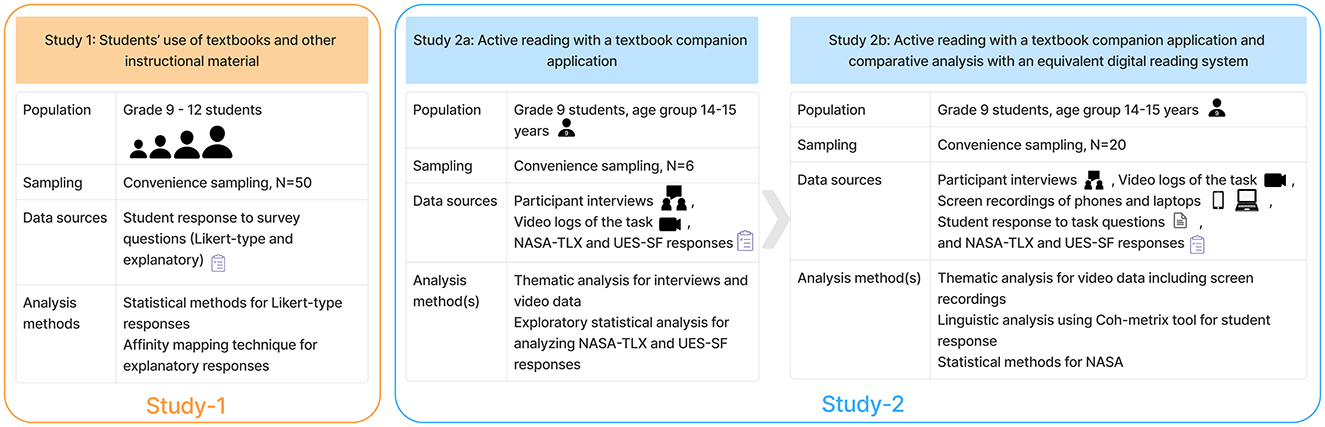

Methods: We conducted a two-part investigation with high school students in India. First, an exploratory survey of 45 students examined their reading preferences and use of supplemental resources. Second, a mixed-methods study with 26 students compared active reading behaviors across two systems: a printed textbook with a smartphone companion app, and an interactive digital textbook. Data sources included video recordings of the reading process, validated self-report instruments for cognitive load and engagement, and students' textual responses to task questions.

Results: The results showed that the phygital system (printed textbook + companion app) was associated with lower cognitive load, higher engagement, and linguistically richer written responses compared to the digital textbook system.

Discussion: These findings suggest that phygital learning environments can enhance academic reading experiences and inform the design of technology-integrated instructional materials.

1 Introduction

Academic reading is a foundational cognitive process that shapes students' capacity to learn, reflect, and apply disciplinary knowledge. However, students, particularly in secondary education, often report difficulties maintaining attention, understanding abstract concepts, and meaningfully engaging with curricular texts (Moreno Rocha et al., 2021). These challenges can be attributed in part to cognitive overload stemming from dense instructional material, especially when students must process complex information across multiple sources (Mayes et al., 2001). To mitigate these difficulties, educators have emphasized active reading strategies, such as annotating, questioning, and summarizing, that support deeper comprehension through active engagement with content (Mehta et al., 2017; Mahler, 2021; Shibata et al., 2015). Active reading is a process of meaning making by engaging with the content (Mehta et al., 2017) and understanding the text (Shibata and Omura, 2020b). It is characterized by fluid transitions between immersive reading and sub-tasks while the reader interacts with the text. Secondary tasks, such as annotation, content browsing, and cross-referencing, are often performed during active reading (Hong et al., 2012).

Meanwhile, the presence of digital technologies in students' lives has changed how they approach academic reading. Increasingly, students blend printed materials with digital learning tools, such as educational videos, interactive websites, and mobile applications. This cross-media behavior reflects an evolution toward multimodal learning, where learners flexibly engage with multiple representations of knowledge. According to Dual Coding Theory (Paivio, 1991), the use of both verbal and visual channels, such as combining text with animation or AR overlays, can enhance memory and comprehension by activating parallel cognitive systems. In this context, phygital learning environments (Saindane et al., 2023b), which integrate physical textbooks with digital scaffolds via smartphones or tablets, offer novel affordances for learning (Saindane et al., 2023a). Although promising, little empirical work has examined how such systems influence students' engagement, cognitive load, and reading comprehension in real-world academic contexts. As reading in an academic context is dependent on multiple factors, including the use of technological interventions (Moreno Rocha et al., 2021), understanding the reading process in such contexts becomes crucial.

Hence, this paper focuses on understanding the active reading behavior of students in the context of academic reading. This work presents two studies with students in grades 9–12 in urban India. Specifically, we investigate how this blended format affects students' reading behaviors, perceived workload, cognitive engagement, and comprehension.

2 Background and related work

2.1 Academic reading contexts

Academic reading is defined as the reading of textbooks or other educational texts for an associated educational goal (like research or learning) (Moreno Rocha et al., 2021). It is often accompanied by other tasks (or activities) such as note-taking and annotation (Lopatovska and Sessions, 2016), which are impacted by media and technology (Moreno Rocha et al., 2021). This effect has been studied empirically for various aspects of learning (Hare et al., 2024), for example, in experimental studies that compare the effect of media (usually digital and print) on comprehension (Singer and Alexander, 2017), proof-reading (Schmid et al., 2023), and reading strategies (Jian, 2022). Similar investigations are presented for multi-device and cross-media reading contexts, which primarily include augmented reality (AR) systems that enable learner interactions with print and digital learning materials through their mobile devices (Rajaram and Nebeling, 2022; Prajapati and Das, 2025). Results indicate improvements in reading comprehension in children when reading picture books (Liu et al., 2024) or storybooks (Şimşek and Direkçi, 2023), and reduced cognitive load in reading tasks for adults (Miah and Kong, 2024).

2.2 Understanding reading process and learning experience

Reading is a multifaceted cognitive process of constructing meaning from a text (Lopatovska and Sessions, 2016), and due to its multifaceted nature, learners' interaction strategies are varied (Jian, 2022). Hence, researchers use multiple methods for studying the reading process. A convenient way of analyzing learner interactions with print artifacts is through video logs that capture learner actions during reading. These logs can be annotated (or coded) with relevant labels that can collectively be used to form themes related to the reading process (like skimming, annotating, etc.) (Hong et al., 2012), or can be studied as patterns of actions or behaviors (page turning, dog earing, etc.) to support different reading types (Takano et al., 2014). Similar temporal analysis of the reading process and activities can also be used for understanding the difference in behaviors across media (Mahler, 2021). By comparison, in digital or computer-based learning environments, click-stream data from the respective reading platform (e.g., BookRoll1) is used for analyzing reading behavior. In addition to behavioral analysis using process log data, multiple studies have also used self-report instruments that are administered to students. Cognitive load and engagement are constructs of importance in the reading process (O'Brien et al., 2016) and are often measured in this manner. The NASA-TLX scale is one such validated instrument for measuring cognitive load that is used in educational settings (Zumbach and Mohraz, 2008). It consists of six dimensions (mental demand, physical demand, temporal demand, performance, effort, and frustration), and it can be administered digitally or in pen-and-paper format (Hart, 2006). Similarly, for engagement, the User Engagement Scale (UES) is used (O'Brien et al., 2018), which also consists of Likert-type questions given to the user after the experience session.

2.3 Analysis of learning outcomes in reading

Evaluation studies on learning interventions analyze student outputs as an indicator of the enhancement in learning, which can be conceptualized in different ways, including quantitative measures like improvement in test or assessment scores (Kirkwood and Price, 2014). Assessments related to reading comprehension can be performed using validated tests, which are often applicable to broad contexts (Joshi and Vogel, 2024), or tests designed by researchers or experts (specifically for the respective study). Comprehension assessments can be performed using multiple-choice test questions (Liu et al., 2024), constructed-response questions (Singer and Alexander, 2017), open-ended questions that require inference generation (Kreijkes et al., 2025), or a combination of these (Şimşek and Direkçi, 2023). Furthermore, computational linguistic tools such as Coh-Metrix can quantitatively analyze student-written responses by evaluating linguistic dimensions including readability, coherence, connective use, lexical diversity, and text complexity (McNamara et al., 2014). Coh-Metrix has been used as an independent evaluation tool for student-generated texts (in assessments such as essays) (Petchprasert, 2021), which can also be helpful in between-group comparison studies (Simion Malmcrona, 2020). Such tools can also be combined with existing rubrics (Mahadini et al., 2021); moreover, the computed linguistic indices have been shown to be related to measures of reading comprehension (Allen et al., 2015; Zagata et al., 2023).

2.4 Research gap

Existing literature provides significant insights into active reading behaviors across separate digital (e.g., Akçapınar et al., 2020) and print (e.g., Hong et al., 2012) contexts; however, integrated multi-device cross-media environments remain understudied. Specifically, little is known about how high school students engage with a printed textbook when augmented with a smartphone-based companion application. Additionally, with multiple interventions based on extended reality for textbooks (Chulpongsatorn et al., 2023; Gunturu et al., 2024b,a; Rajaram and Nebeling, 2022; Saindane et al., 2023b; Karnam et al., 2021), it becomes crucial to analyze the reading behavior of learners to understand how such solutions affect learning in the context of academic reading. Therefore, this paper reports on the academic reading behaviors of students (studying in 9th grade) with textbooks through two major studies: Study 1, an exploratory analysis of students' academic reading practices with textbooks and additional instructional materials such as videos, reference books, and websites; and Study 2, a mixed-method study investigating active reading behaviors with a textbook companion application in a controlled setting. The overall methodology is described in a schematic diagram in Figure 1, and the specific research questions guiding the investigation are:

RQ1: How do students integrate textbooks with other instructional materials for academic reading?

RQ2: How do active reading behaviors differ when reading with a printed textbook and a smartphone-based companion application vs. an interactive digital textbook?

RQ3: How do the cognitive load, perceived engagement, and textual characteristics vary across the two textbook systems?

The remainder of this paper is organized as follows. The next section presents Study 1, a survey-based investigation, along with its key findings. This is followed by the next section on Study 2, which is subdivided into subsections, elucidating Study 2a and Study 2b respectively. For each of these sub-sections the employed data analysis procedures and the corresponding results are reported. Subsequently, the Discussion section interprets these findings in relation to the three research questions. Finally, the paper concludes with a summary of contributions and implications, while also mentioning the limitations of this work and future work to address these limitations.

3 Study 1: students' preferences for textbook and other instructional materials for their academic goals

The objective of this exploratory study was to gain insights into student preferences regarding their use of textbooks (mandated in the curriculum) and other instructional materials for learning purposes. Data from grade 9–12 students studying in Indian schools in metro cities were collected through internet surveys (Cohen et al., 2017a), and the participants were recruited through the convenience sampling method (Cohen et al., 2017b) using social media platforms. Participants provided informed consent before answering the survey questions. The survey consisted of Likert-type questions (five-point scale) (De Winter and Dodou, 2010) and descriptive questions related to (i) usage of textbooks, (ii) usage of other instructional materials, and (iii) experience of learning from textbooks. A link to the survey questions is provided in the Appendix. Likert-type survey questions were analyzed using statistical methods, and the responses to the subjective questions were analyzed using the affinity mapping method (Hanington and Martin, 2019). The results were interpreted to obtain a broad understanding of learner preferences and associated issues while studying with textbooks and other instructional materials.

3.1 Students' textbook usage contexts

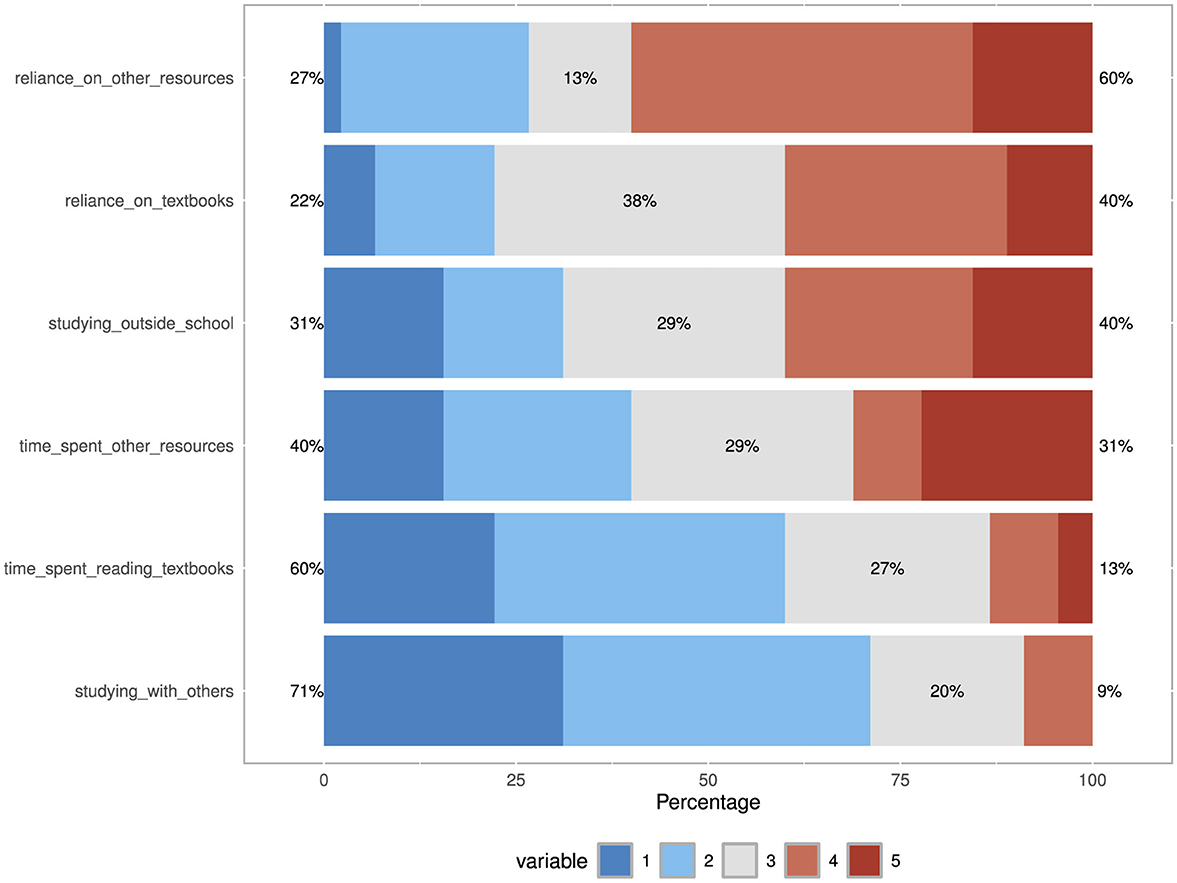

Forty-five survey responses (N = 45) out of 50, from the respondents who completed the survey, were selected for analysis. The respondents were in the age range of 14–18 years and were studying in grades 9–12 where the medium of classroom instruction was English. A large proportion of respondents (40%) reported spending significant time studying outside school hours (respondents who selected 4 or 5 on the Likert scale). Regarding the preference for studying alone or in a group, 71% of the students reported that they mostly preferred studying alone, while no one preferred always studying with others (see Figure 2). Comparing the time students spent reading textbooks with the time spent learning from other resources, we notice that learners seemingly spend more time on other resources than on textbooks [greater preference for using other resources (Median = 3) than textbooks (Median = 2), W = 542, p = 0.032, Rank biserial correlation = 0.390].
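To make the reported statistics easier to interpret, the sketch below shows how a paired signed-rank statistic and a matched-pairs rank-biserial correlation can be computed from two Likert items answered by the same respondents. It assumes the comparison was a Wilcoxon signed-rank test, which the reported W and rank-biserial correlation suggest but which is not stated explicitly. The ratings and the function names (`average_ranks`, `signed_rank_summary`) are hypothetical and are not the survey data or the analysis script.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def signed_rank_summary(x, y):
    """Return (W_plus, W_minus, rank_biserial) for paired ratings x and y."""
    # Zero differences carry no direction and are dropped.
    diffs = [a - b for a, b in zip(x, y) if a != b]
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    total = w_plus + w_minus
    r_rb = (w_plus - w_minus) / total if total else 0.0
    return w_plus, w_minus, r_rb


# Hypothetical five-point ratings from six respondents (illustration only).
time_other = [3, 4, 3, 2, 5, 3]
time_textbook = [2, 2, 3, 3, 2, 1]
print(signed_rank_summary(time_other, time_textbook))
```

A positive rank-biserial value indicates that the first item tends to be rated higher than the second. A p-value is not computed here; a statistics package (for example, `scipy.stats.wilcoxon`) would be needed for that.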

Figure 2. Visualization of user responses to Likert-type questions in the survey. The questionnaire items are: (i) Reliance on other resources, (ii) Reliance on textbooks, (iii) Studying outside school, (iv) Time spent on other resources, (v) Time spent on reading textbooks, (vi) Studying with others.

On questions about reliance on these instructional materials for understanding concepts, we could again see that learners rely slightly more on other resources (like videos, websites, etc.) for their conceptual learning. However, these opinions do not differ much statistically, as no significant difference was seen between learners' reliance on other resources (Median = 4) and school textbooks (Median = 3) (W = 332, p = 0.355, Rank biserial correlation = 0.182; see Figure 2). Further, the responses to the open-ended questions were analyzed using the affinity mapping technique (Hanington and Martin, 2019). There were three such survey questions, which looked into how students use textbooks, how they use other resources in comparison to textbooks, and their experiences of learning from textbooks.

It was observed that students used textbooks (i) as a reference for definitions and important points from the text (“mainly for definitions,” “to revise key points”), and (ii) primarily for the goal of preparing for examinations (“Yes, to know the particular topic in-depth, and for competitive exams”), while other resources were used (iii) for supplementing their learning from textbooks after reading (“For details about some interesting content encountered in textbook.”), and (iv) when they sought clarity on concepts (“Asking different questions and clearing doubt also,” “To the topic that I could not understand,” “I use this YouTube resource to understand easily. Sometimes some topics in the textbook are directly given, which we aren't able to understand properly and we get confused to avoid this i use online videos,” “Textbook skips a lot of steps in derivation and all but vids and tutors they make it more easy than textbook”). A significant portion of the respondents reported using online videos and websites as other instructional resources for their academic goals.

3.2 Findings

The survey indicated that students use various instructional materials for their academic needs. One of the emergent themes from this analysis was that they have integrated technological solutions well into their ecosystem. Regarding their learning workflow, one practice mentioned by a significant number of respondents was the use of additional instructional materials to supplement their learning. This was due to multiple reasons: (a) persisting doubt about a concept they had read in the textbook, (b) seeking detailed or alternative explanations for a concept, and (c) looking for additional practice problems. Also, some responses mentioned that supplemental digital content helped them with focus and engagement (“for some reason I find myself more focused while watching video than during reading textbook”), which is a common issue in the context of academic reading (Moreno Rocha et al., 2021). We wanted to further investigate the practice of supplementing one's learning using additional instructional materials during academic reading. Specifically, we wanted to gain behavioral insights into how students supplement their academic reading. For this exploration we chose the context of using printed textbooks with a smartphone, primarily because of the general preference for smartphones over laptops (or desktops) for learning (Diao and Hedberg, 2020). Additionally, we scoped the context further to the reading of science texts.

4 Study 2: active reading behavior with a textbook companion application

To investigate how learners integrate dynamic content while reading and their reading experience, we conducted two controlled studies (Cohen et al., 2017b), referred to as study 2a and study 2b in the text. These studies follow a mixed-method design, where data from quantitative sources (such as self-report instruments) and qualitative sources (participant interviews, video recordings, etc.) were integrated for the investigation of the research questions. Furthermore, such a research design affords the ability to draw insights beyond a single data analysis method (Creswell, 2021).

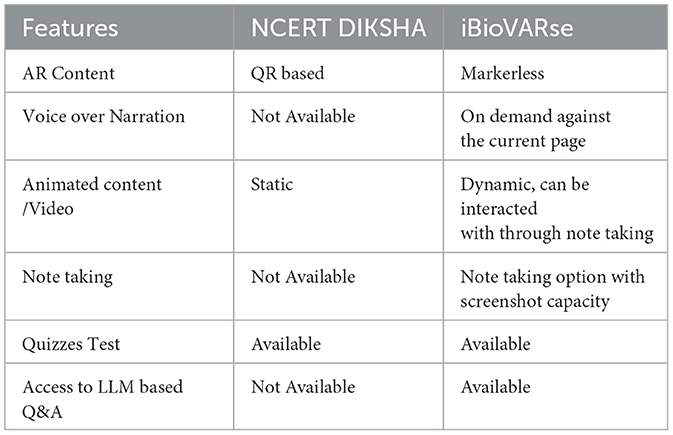

The reading behavior of the students was studied (in studies 2a and 2b) by adopting the strategies and protocols suggested in previous works on the study of reading behaviors (Hong et al., 2012; Takano et al., 2014; Mahler, 2021). The smartphone-based companion application selected for this study was iBioVARse (Saindane et al., 2023b), which was developed as per the learning needs of the participants (Saindane et al., 2023a). A comparison between iBioVARse and DIKSHA2 (another companion application provided by the government) is shown in Table 1. In study 2b, an additional group of participants was also introduced that performed the task using a digital textbook on a laptop. In addition, participant data from interviews and self-reported instruments (task workload and engagement) were also collected.

Table 1. Comparison of the features between NCERT DIKSHA and iBioVARse applications (table adapted from Saindane et al., 2023b).

This study was conducted in accordance with the ethical standards of the institutional research committee and prior to the commencement of the study, ethical approval was obtained from the Institutional Review Board (IRB). Informed consent was obtained from the parents or guardians of all participating students, and informed assent was obtained from the students themselves. Participants were clearly informed that their participation was entirely voluntary and that they could withdraw from the study at any time without any penalty or consequence. They were also informed that they could take breaks whenever needed and were encouraged to ask questions if any part of the procedure or instructions was unclear. All data were anonymized to protect the privacy and confidentiality of the participants.

4.1 Study 2a: active reading with a textbook companion application

To study the reading behavior with a textbook companion application, six participants (n = 5 male, n = 1 female) studying in grade 9 in an urban school in India were recruited through the convenience sampling method (Cohen et al., 2017b), and student assent along with parent consent was obtained. The study began with a semi-structured interview of the participant, which was followed by the reading task. After completing the reading task, the students were given self-report instruments for workload and engagement. These phases are further elucidated as follows:

• Interview: Participants were asked about their use of textbooks and additional instructional materials for their study goals, and how they integrate digital devices in their workflows through semi-structured interviews (Cohen et al., 2017b) (see Appendix for representative interview questions). The interview duration was around 15 min for the participants, and it was recorded in audio format by the researchers.

• Reading session: As mentioned in the previous sections, the context for this study involved reading a printed textbook with a mobile companion application. Hence, the research team chose the iBioVARse application (Saindane et al., 2023b) for this study, as it was designed to be used as a companion application for the NCERT textbooks and has significantly higher usability than DIKSHA (see text footnote 2), which is widely used in the school ecosystem. Students were given a topic from the NCERT Biology textbook (can be accessed through this URL: https://ncert.nic.in/textbook.php?kebo1=15-19) to read and five related questions to answer on a worksheet (the evaluation questions are provided in the Appendix). Additionally, researchers ensured that the students were able to use the application by demonstrating various use cases and allowing them to interact with it before the task. The task data was collected by the researchers using a video camera. The task duration for the participants was approximately 40 minutes, which was consistent with the study durations mentioned in the previous literature on reading behavior analysis (Hong et al., 2012) and quasi-experimental studies with school students (Liu et al., 2024; Casteleiro-Pitrez, 2021).

• Self-report and feedback: After the reading session, participants were administered the NASA-TLX (Hart, 2006) and UES (O'Brien et al., 2018) self-report instruments for task workload and perceived engagement during the task, respectively. Participant responses to these scales and their feedback were collected digitally3. Participants spent 10 minutes on this phase.

4.1.1 Data analysis

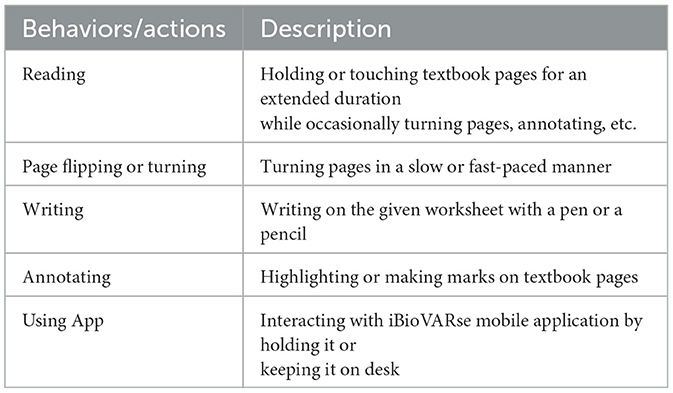

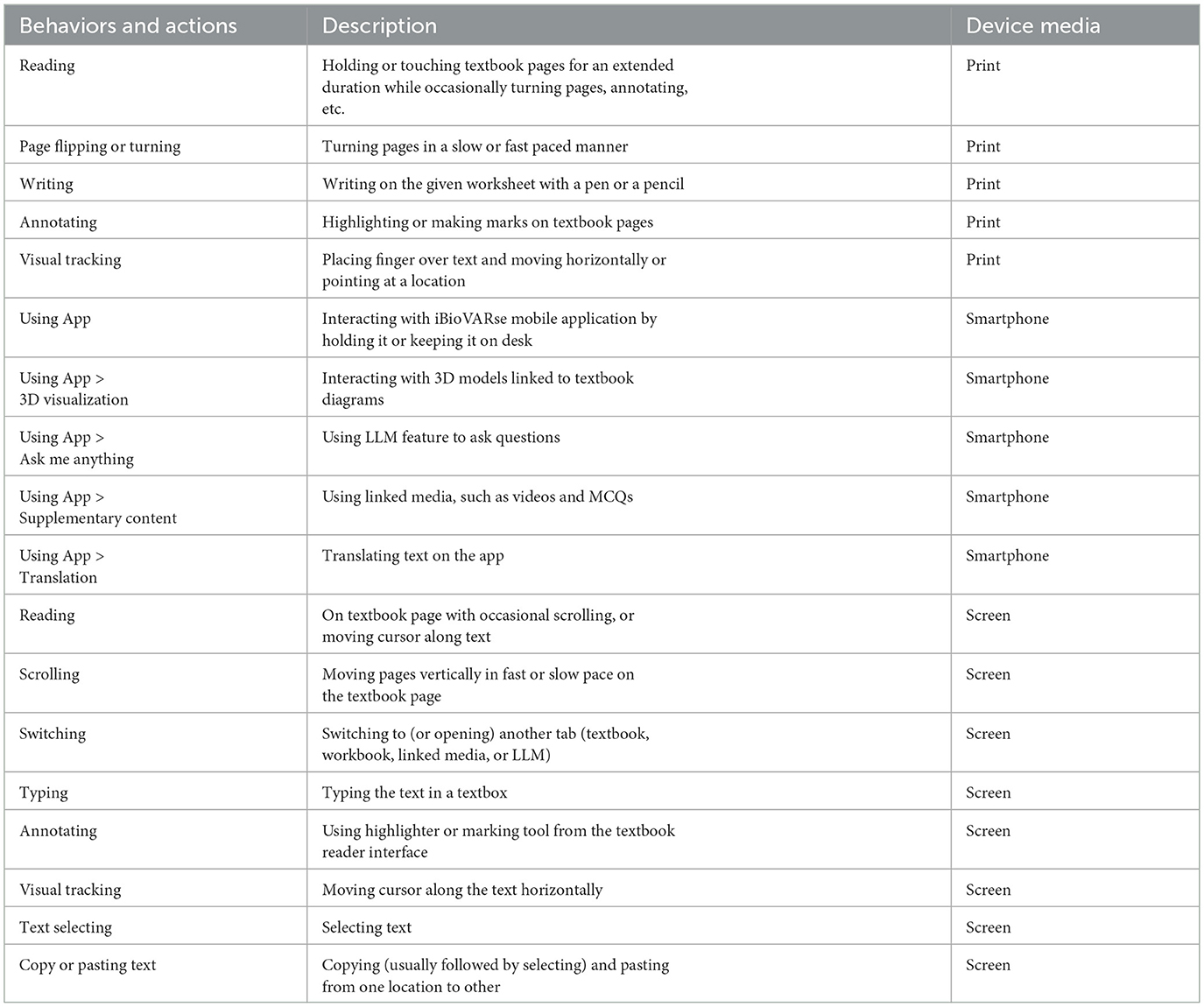

The data sources for this study were transcripts from the interviews, video logs of the reading sessions, and survey responses to NASA-TLX (on a seven-point scale) and UES (on a five-point scale). The transcripts were analyzed with a thematic analysis approach using inductive coding of the interview texts (Mo et al., 2024). Video logs of the reading session (total duration of 152 min) were first segmented using an open coding process (Saldaña, 2021), where the researchers coded for user actions, artifact usage, and reading processes. The coding was further refined based on prior studies on reading behavior (Hong et al., 2012; Takano et al., 2014). To ensure coding reliability, two of the participant interviews and video recordings (33.3% of the data) were reviewed by two researchers, and any disagreements were discussed until a consensus was reached to finalize the codebook (see Table 2). Additionally, the scores for the NASA-TLX and UES instruments were calculated from the Likert-scale responses as per the suggested guidelines (Hart, 2006; O'Brien et al., 2018).

4.1.2 Findings

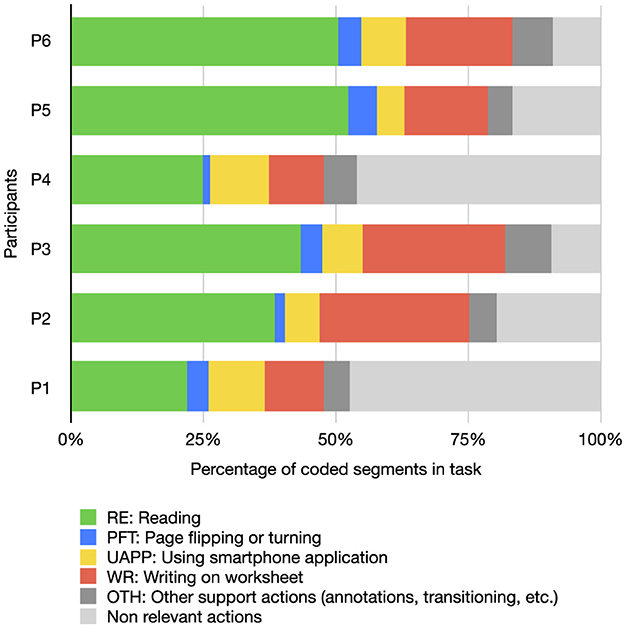

4.1.2.1 In-task reading behavior and interaction patterns

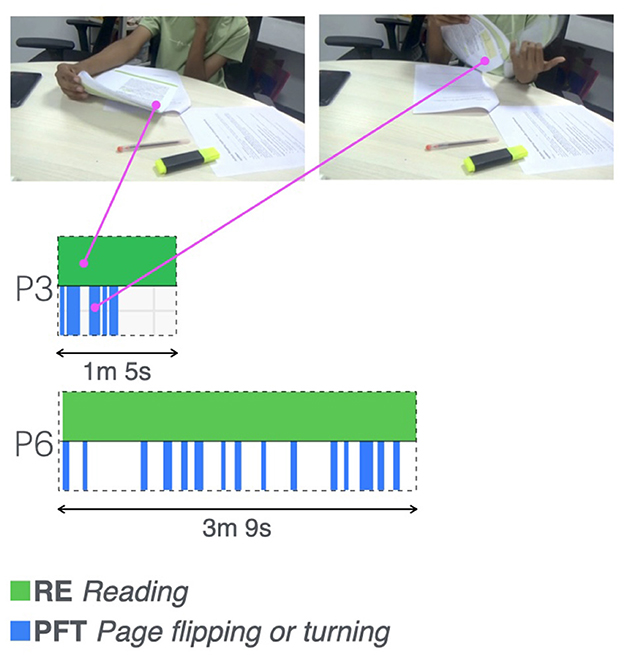

Examining the coded segments for the participants (see Figure 3), we see that the participants were reading from the textbook for a significant portion of the time spent on the task. Additionally, the duration of each of these interactions was in line with the findings of Hong et al. (2012), where a similar method was used to analyze reading behavior through sequential analysis of video data. In the data, the reading process is accompanied by the actions of page flipping and turning, which is indicated by overlapping segments with codes RE and PFT. Other information-extraction behaviors, such as skimming and scanning, can also be interpreted from the data; these appear when a short reading duration overlaps with multiple frequent page flipping segments, as shown in Figure 4.

Figure 4. Timelines showing a continuous reading activity segment during the task for participants P3 and P6, with cropped snapshots from the video data (of participant P3).
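The interpretation described above, where a short reading segment overlapping several page-flipping segments is read as skimming or scanning, can be sketched as a simple interval-overlap rule. This is only an illustration: the codes RE and PFT come from the codebook, but the `Segment` structure, the function names, and the thresholds (`max_skim_duration`, `min_flips`) are assumptions, and the coding in the study was done manually by the researchers.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    code: str     # "RE" (reading) or "PFT" (page flipping and turning)
    start: float  # seconds from the start of the task
    end: float


def overlaps(a, b):
    """True if the two time intervals share any duration."""
    return a.start < b.end and b.start < a.end


def label_reading(segments, max_skim_duration=30.0, min_flips=2):
    """Label each RE segment as 'skim/scan' or 'read'.

    A reading segment counts as skim/scan when it is short and overlaps
    with several page-flipping segments. Both thresholds are hypothetical.
    """
    flips = [s for s in segments if s.code == "PFT"]
    labels = []
    for seg in segments:
        if seg.code != "RE":
            continue
        n_flips = sum(overlaps(seg, f) for f in flips)
        is_short = (seg.end - seg.start) <= max_skim_duration
        label = "skim/scan" if is_short and n_flips >= min_flips else "read"
        labels.append((seg, label))
    return labels


# Hypothetical coded timeline (illustration only).
timeline = [
    Segment("RE", 0, 120), Segment("PFT", 50, 53),
    Segment("RE", 130, 150), Segment("PFT", 132, 134),
    Segment("PFT", 140, 142), Segment("PFT", 147, 149),
]
for seg, label in label_reading(timeline):
    print(seg.start, seg.end, label)
```

In this toy timeline, the long reading segment with a single page turn is labeled as reading, and the short segment with three page flips is labeled as skimming or scanning.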

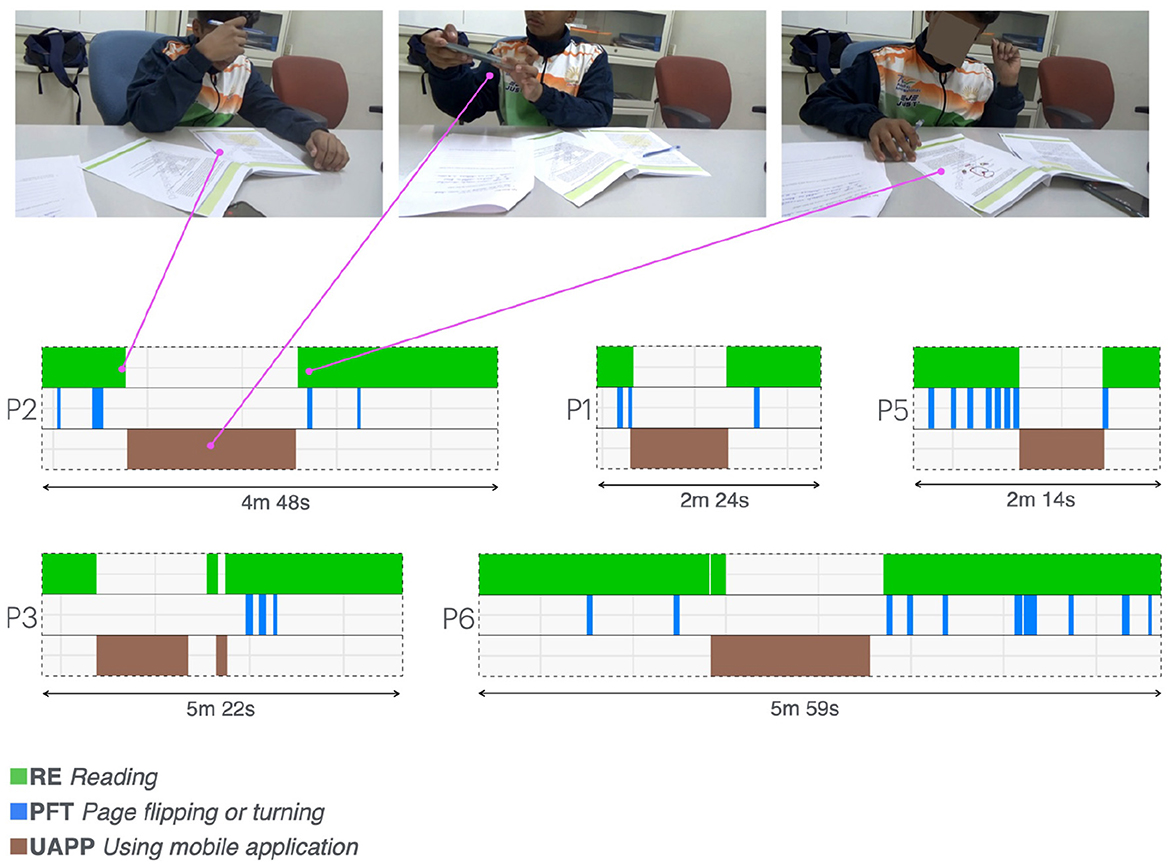

4.1.2.2 Students' usage pattern of an integrated smartphone companion application

The companion application was used by all participants, commonly in between the reading sessions. This was characterized by a segment indicating app usage in between reading segments, i.e., reading followed by using the app followed by reading again (Figure 5). While giving feedback after the reading session, participants mentioned that they mostly used the augmented reality exploration of the textbook diagrams and the generative AI-based question-answering feature of the iBioVARse app. While reflecting, they mentioned that they searched for information related to the given question by asking their own versions of it, followed by re-verification from the textbook. Surprisingly, no participant except P6 used the integrated videos in the app during the task. Upon probing the participants, P5 mentioned that they learn from videos after finishing reading their textbooks, and that searching for answers to the given questions is much easier while reading.

Figure 5. Timelines showing the app usage activity segment in between continuous reading activity segments during the task for participants P2, P1, P5, P3, and P6, with cropped snapshots from the video data (of participant P2).
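The reading, app usage, reading pattern shown in the timelines can be expressed as a small sequence check over the ordered segment codes. The sketch below is illustrative only: `RE` is the reading code from the codebook, whereas `APP` and `WR` are hypothetical labels standing in for app usage and writing, and the function name is an assumption.

```python
def app_between_reading(codes, reading="RE", app="APP"):
    """Return indices of app segments that sit directly between two reading segments."""
    return [
        i
        for i in range(1, len(codes) - 1)
        if codes[i] == app and codes[i - 1] == reading and codes[i + 1] == reading
    ]


# Hypothetical ordered sequence of coded segments for one participant.
sequence = ["RE", "APP", "RE", "WR", "APP", "RE", "APP", "RE"]
print(app_between_reading(sequence))
```

Here the app segment that follows writing is not counted, because it is not preceded by a reading segment.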

4.1.2.3 Cognitive load and engagement in a reading task

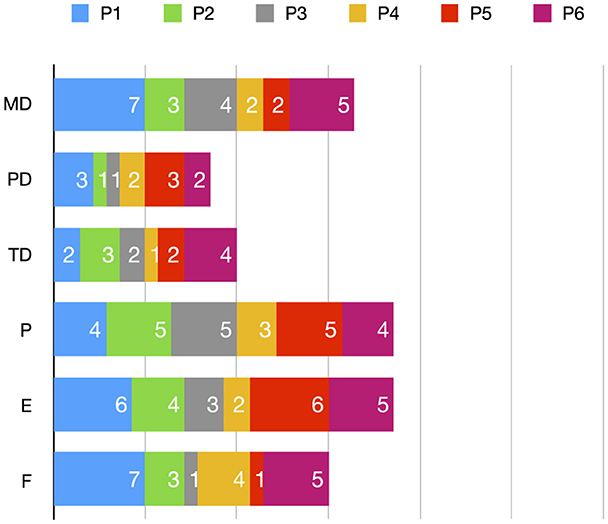

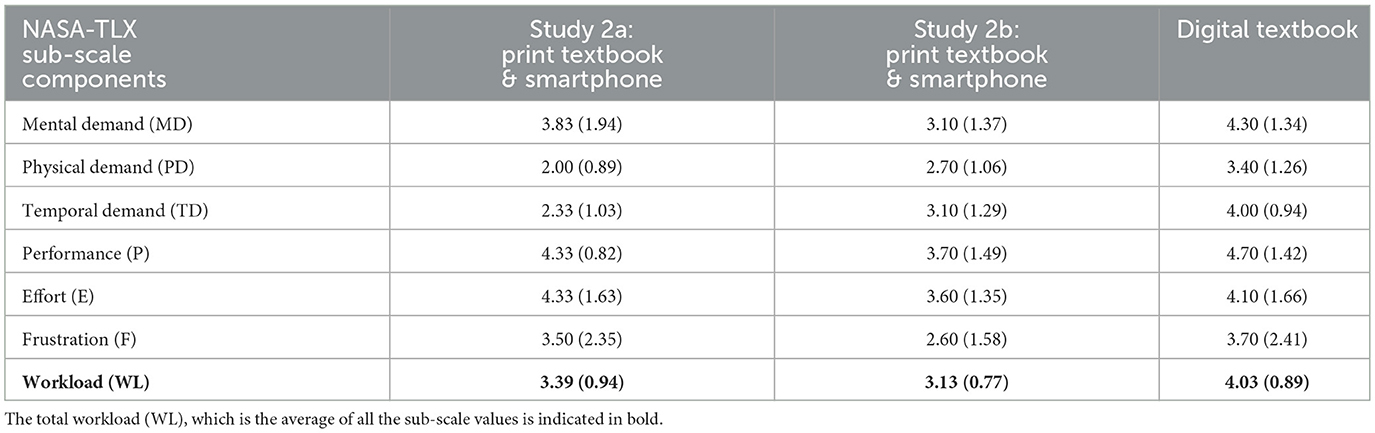

NASA-TLX was administered on a 7-point Likert scale, and the responses were analyzed as per the methodology described in the literature (Hart, 2006) and used in similar studies (Gu et al., 2024). The NASA-TLX responses showed moderate values for mental demand (MD), effort (E), and frustration, which are indicative of the cognitive load of the task. The means were lower than the values reported for the standard digital reading environment for MD and E, which were 5.13 for MD and 4.78 for E. However, the frustration values were higher, 2.29 (see Figure 6).
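As a rough illustration of how such per-dimension means can be derived, the sketch below computes dimension means across participants and an unweighted overall workload score (often called Raw TLX) per participant. Whether the unweighted or the weighted variant was used is not stated here, so the unweighted form is an assumption, and the responses and function names are hypothetical.

```python
from statistics import mean

DIMENSIONS = [
    "mental_demand", "physical_demand", "temporal_demand",
    "performance", "effort", "frustration",
]


def raw_tlx(response):
    """Unweighted workload score: the mean of the six subscale ratings."""
    return mean(response[d] for d in DIMENSIONS)


def dimension_means(responses):
    """Mean rating per dimension across all participants."""
    return {d: mean(r[d] for r in responses) for d in DIMENSIONS}


# Hypothetical ratings on a 7-point scale for two participants (illustration only).
responses = [
    {"mental_demand": 4, "physical_demand": 2, "temporal_demand": 3,
     "performance": 3, "effort": 4, "frustration": 2},
    {"mental_demand": 5, "physical_demand": 1, "temporal_demand": 4,
     "performance": 2, "effort": 5, "frustration": 3},
]
print([raw_tlx(r) for r in responses])
print(dimension_means(responses))
```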

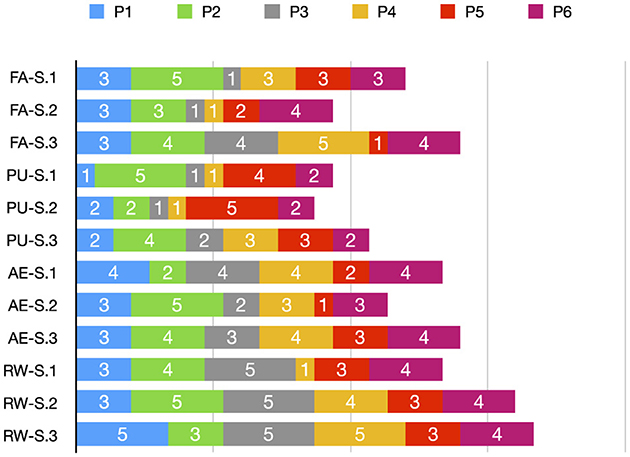

Figure 7 shows participants' responses to the Likert-type questions (which were on a scale of 5). Examining the individual dimensions, it can be seen that the use of iBioVARse in reading is a rewarding experience for the participants (items RW-S.1, RW-S.2, and RW-S.3), and the perceived usability was above average (lower values for items PU-S.1, PU-S.2, and PU-S.3), which was consistent with previous results in usability evaluation of iBioVARse (Saindane et al., 2023b).
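For readers unfamiliar with the short form of the UES, the sketch below shows one way its subscale and overall scores can be computed on a five-point scale. It assumes, following our reading of the published scoring guidance, that the three perceived usability items are negatively worded and are reverse-coded before averaging; the item values, the item keys for the FA and AE subscales, and the function name are hypothetical.

```python
from statistics import mean


def score_ues_sf(items, scale_max=5):
    """Return (subscale_means, overall_mean) for a 12-item UES-SF response.

    `items` maps item labels such as "PU-S.1" to ratings. Perceived usability
    (PU) items are reverse-coded here, which is an assumption of this sketch.
    """
    adjusted = {
        key: (scale_max + 1 - value) if key.startswith("PU-S") else value
        for key, value in items.items()
    }
    subscales = {
        prefix: mean(v for k, v in adjusted.items() if k.startswith(prefix + "-S"))
        for prefix in ("FA", "PU", "AE", "RW")
    }
    return subscales, mean(adjusted.values())


# Hypothetical single-participant response (illustration only).
response = {
    "FA-S.1": 3, "FA-S.2": 4, "FA-S.3": 3,
    "PU-S.1": 2, "PU-S.2": 1, "PU-S.3": 2,
    "AE-S.1": 4, "AE-S.2": 4, "AE-S.3": 3,
    "RW-S.1": 5, "RW-S.2": 4, "RW-S.3": 4,
}
subscales, overall = score_ues_sf(response)
print(subscales, overall)
```

Under this scoring, low raw ratings on the PU items translate into a high usability subscale score, which matches the interpretation given above.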

Next, we wanted to understand the differences in student behavior between the digital and the phygital (physical textbook and a digital system) reading experience. Hence, we introduced an additional group of participants who performed the task on a laptop.

4.2 Study 2b: active reading with a textbook companion application and comparison with a digital textbook system

Study 2b was an extension of study 2a, in which the investigation of students' reading behavior was extended to digital textbooks. For this study, a separate set of grade 9 students from similar demographics was recruited (N = 20, 8 male, 12 female). They were randomly assigned by the researchers to one of the two textbook systems for the reading task. The data collection methods were similar to study 2a for the textbook system with the iBioVARse companion application, whereas for the digital textbook system the reading behavior was analyzed from screen recordings (Glassman and Russell, 2016). The three phases of the study remained the same as described in study 2a: (a) Interviews (10–15 min), (b) Reading session (40–60 min), and (c) Self-report and feedback (10 min).

4.2.1 Data analysis

For study 2b, the data analysis approach involved qualitative and quantitative methods for the two participant groups, i.e., printed textbook with a smartphone-based companion application (iBioVARse) vs. an interactive digital textbook. For the qualitative analysis of the reading behavior from the video data, a procedure similar to study 2a was adopted. The corresponding codebook for labeling video data was expanded to accommodate screen recordings for smartphones and laptops (see Table 3). Similarly, for the quantitative data obtained from the NASA-TLX and UES-SF scales, a statistical test was performed (in Jamovi4: an open statistical tool) to compare the two groups (as used in prior studies for quantitative measures; Jian, 2022; Schmid et al., 2023; Liu et al., 2024).
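The specific test is not named above. As one plausible illustration for small independent groups with ordinal ratings, the sketch below computes the Mann-Whitney U statistics and a rank-biserial effect size by direct pair counting. The choice of test, the group scores, and the function name are all assumptions made for illustration and do not describe the analysis actually run in Jamovi.

```python
def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b, rank_biserial) for two independent samples.

    U_a counts the pairs in which a value from group_a exceeds a value from
    group_b, with ties counted as half a pair.
    """
    n_pairs = len(group_a) * len(group_b)
    u_a = sum(
        1.0 if a > b else 0.5 if a == b else 0.0
        for a in group_a
        for b in group_b
    )
    u_b = n_pairs - u_a
    return u_a, u_b, (u_a - u_b) / n_pairs


# Hypothetical mental demand ratings for the two groups (illustration only).
phygital = [2, 3, 3, 4]
digital = [4, 5, 3, 5]
print(mann_whitney_u(phygital, digital))
```

A negative rank-biserial value here means that ratings in the first group tend to be lower than in the second. Obtaining a p-value would require a statistics package (for example, `scipy.stats.mannwhitneyu`).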

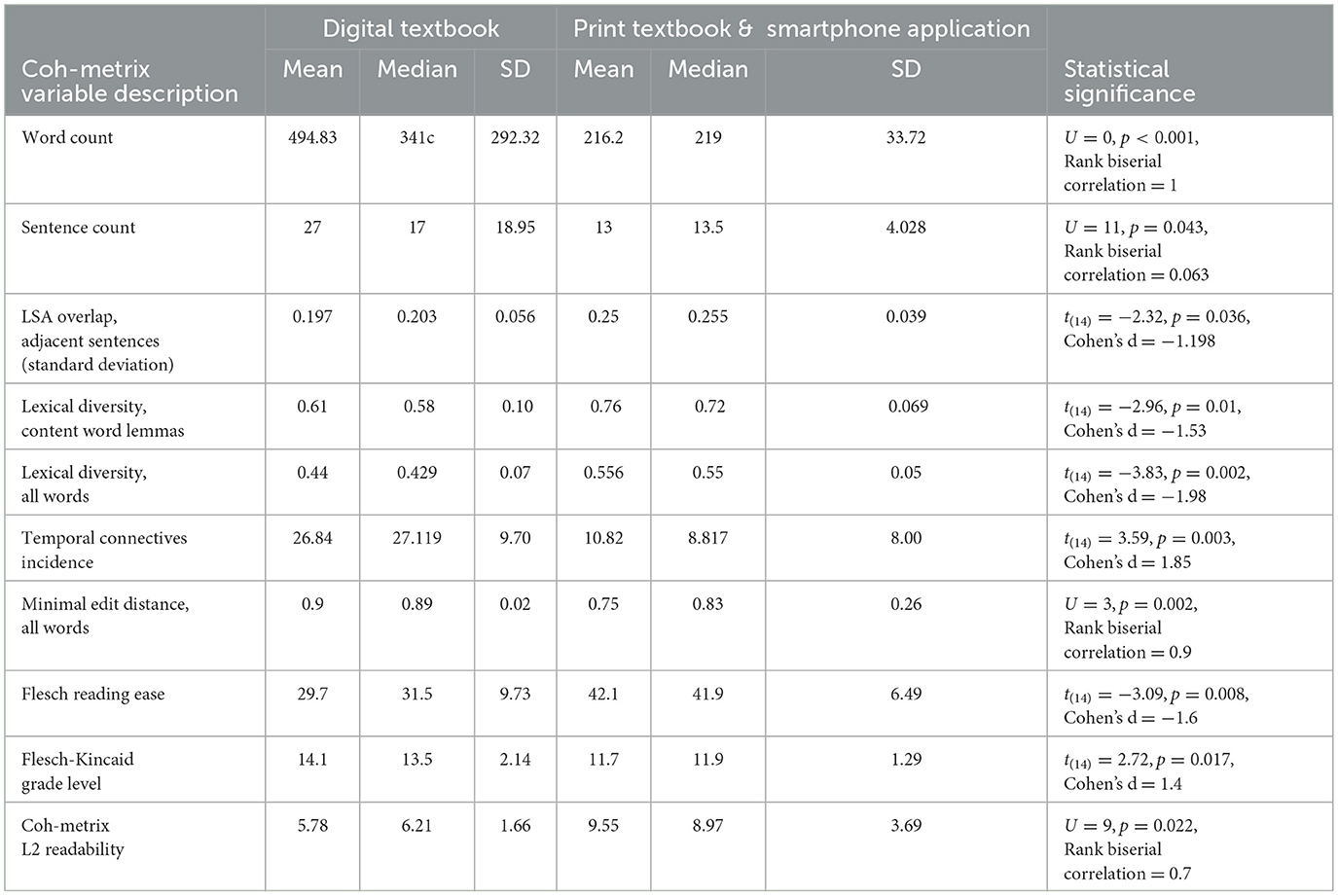

The students' textual responses to the questions were analyzed using Coh-Metrix, which computes indices ranging from basic text properties to lexical, syntactic, and cohesive measures (McNamara et al., 2014). For the current analysis, Coh-Metrix 3.0 was selected as it provides a set of 108 indices for assessing lower-level (word count, paragraph count, etc.) and higher-level (e.g., cohesive measures) aspects of the text. Prior studies have used these indices in multiple ways, such as using a subset of the indices to examine a single aspect of the text (like readability) (Feller et al., 2024), alongside a conventional rubric for evaluation (Mahadini et al., 2021), and in predictive modeling (Zagata et al., 2023). Hence, after computing the indices for all the valid student responses, statistical tests for comparison between the two groups were performed on the indices that are reported (in prior literature) to be related to reading comprehension skill (Allen et al., 2015). In this analysis we wanted a broad-based comparison across all aspects of the text, rather than focusing on an individual aspect [for example, only analyzing cohesion using the “Tool for the Automatic Analysis of Cohesion” (TAACO) (Crossley et al., 2016)].
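To give a sense of what the lower-level indices capture, the sketch below computes three simple descriptive measures of a written response: word count, sentence count, and type-token ratio (a basic lexical diversity measure). This is not Coh-Metrix and does not reproduce its tokenization or its definitions; the regular expressions, the function name, and the sample response are assumptions for illustration.

```python
import re


def basic_text_indices(text):
    """Compute simple descriptive indices for a short written response."""
    words = re.findall(r"[A-Za-z']+", text.lower())
    # Split on sentence-ending punctuation and ignore empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        "word_count": len(words),
        "sentence_count": len(sentences),
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


# Hypothetical student response (illustration only).
print(basic_text_indices("The cell divides. The cell grows and divides again."))
```

Higher-level indices, such as cohesion measures, depend on syntactic and semantic analysis and are not approximated by this sketch.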

4.2.2 Findings

4.2.2.1 Reading in a digital textbook system

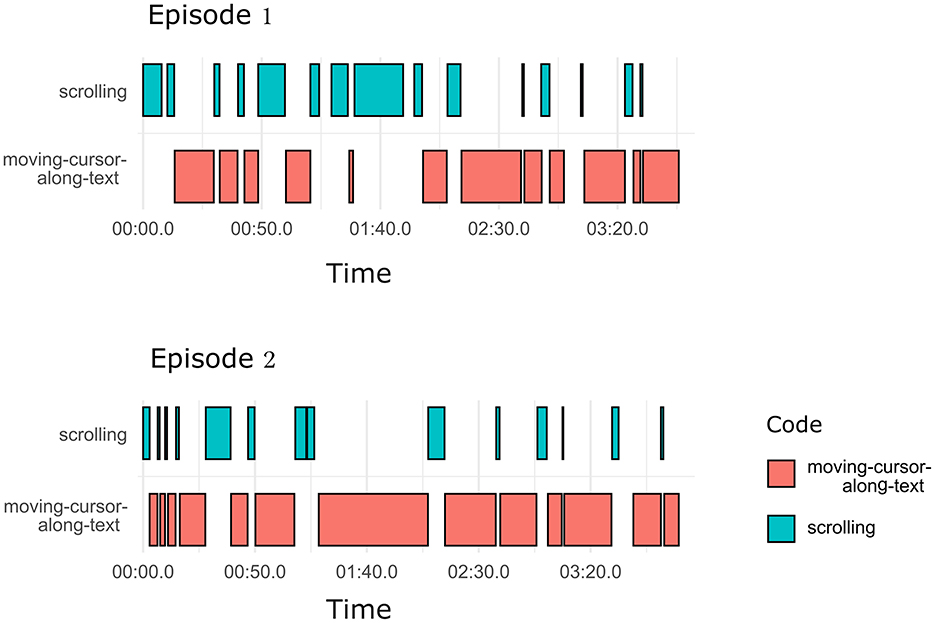

A reading process in the digital textbook system was interpreted from a combination of codes. For example, when a learner is on the textbook page and that time segment intersects with actions such as scrolling and moving the cursor along the text, the learner is reading (Figure 8). Two types of reading on the textbook page were observed: one where the learner moves the cursor along the text with minimal scrolling, and another where cursor movement along the text is sparse and scrolling is frequent. These two patterns indicate deep reading and skimming (or scanning), respectively. Figure 9 provides an example of these reading processes by a participant using a temporal sequence visualization of coded actions (often used when analyzing video data; Isenberg et al., 2008), which in this case were scrolling and visual tracking. The visualization shows learner actions over a time span of around 3 minutes and 30 seconds while they read the digital textbook. Two reading episodes are compared (for the same participant): in episode 1 (see Figure 9), both scrolling and visual tracking (moving the cursor along the text) actions are significant, whereas in episode 2 the scrolling action is sparse and larger segments of visual tracking indicate deep reading by the participant.

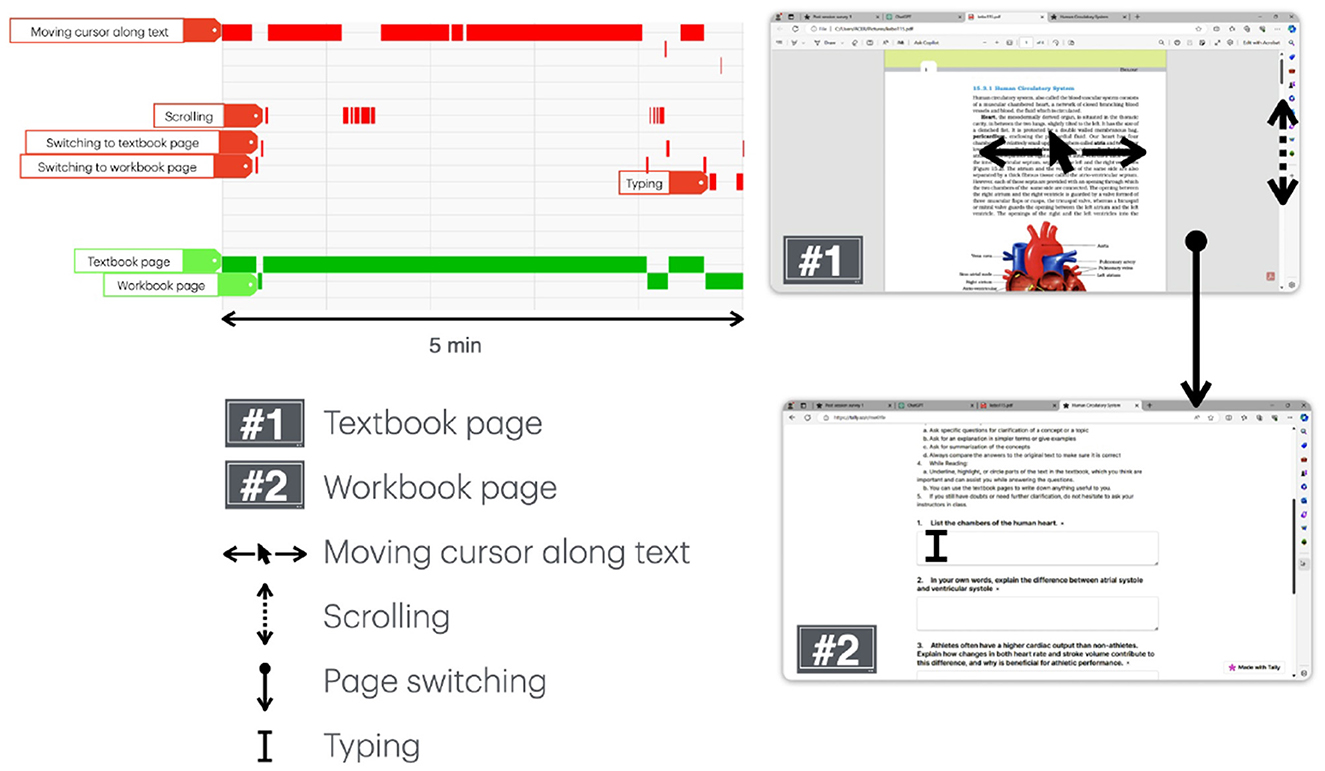

Figure 8. Timeline showing various actions performed by users while reading in a digital environment. Textbook and workbook screens are also shown along with action schematics.

Figure 9. Temporal sequences (two episodes) of digital reading processes with the actions scrolling and visual tracking (moving the cursor along the text, as described in the codebook in Table 3).

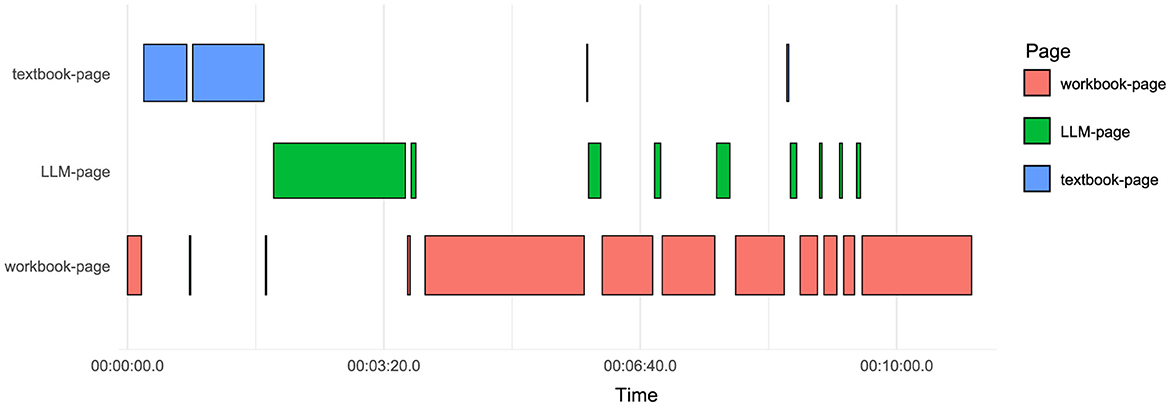

Writing answers involved multiple switches between the workbook, textbook, and LLM pages. In most cases, when learners could not easily locate an answer by reading the textbook, they switched to LLMs; moreover, there were instances where they formulated their answers with the help of LLMs. The temporal sequence of the time a participant spent on different pages is visualized in Figure 10. Here, instead of the learner actions, we coded the digital artifact with which the learner was interacting while answering a question. In this sequence, the learner starts on the workbook page to read and understand the question and then searches the textbook. Thereafter, the learner switches to the LLM page to search for the answer to the workbook question and refers exclusively to LLMs for the remaining duration of the answering task for that question.
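A page-level sequence of this kind can be summarized by the time spent on each page and the number of switches between pages. The sketch below assumes a simple list of `(page, start, end)` visits in seconds; the function name, the page labels, and the timings are hypothetical and do not reproduce the participant data shown in Figure 10.

```python
from collections import defaultdict


def summarize_page_sequence(visits):
    """Return (seconds spent per page, number of page switches).

    `visits` is an ordered list of (page, start_s, end_s) tuples.
    """
    time_on_page = defaultdict(float)
    switches = 0
    previous = None
    for page, start, end in visits:
        time_on_page[page] += end - start
        # A switch is counted whenever the page differs from the previous visit.
        if previous is not None and page != previous:
            switches += 1
        previous = page
    return dict(time_on_page), switches


# Hypothetical sequence: workbook, then textbook, then LLM for the rest of the task.
visits = [("workbook", 0, 30), ("textbook", 30, 90), ("llm", 90, 200)]
time_on_page, switches = summarize_page_sequence(visits)
print(time_on_page, switches)
```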

Figure 10. Temporal sequences showing the time spent on different pages, i.e., textbook, workbook, and LLM by a participant.

4.2.2.2 Students' academic reading practices

Similar academic reading behaviors were observed for this participant cohort, which used textbooks as the primary instructional material and digital content as additional reference material. The challenges reported by the students were also similar and related to understanding the text; one participant also mentioned that finding the needed information is challenging. The most common digital device for active reading was a smartphone; only 5 students reported reading on a laptop (or a PC), and one reported using a tablet. In terms of the use of digital content, the trend was similar (“I use websites for searching easy and short answers and also for understanding maths problems”). Additionally, a few students reported using ChatGPT (“Searching on Google, YouTube and chat GPT”). Similarly, one student commented on the ease of searching for information in a digital system, highlighting the affordances of digital media for learning (“websites contains more information and it is easier to find what we are looking for”).

4.2.2.3 Cognitive load and perceived engagement in academic reading tasks

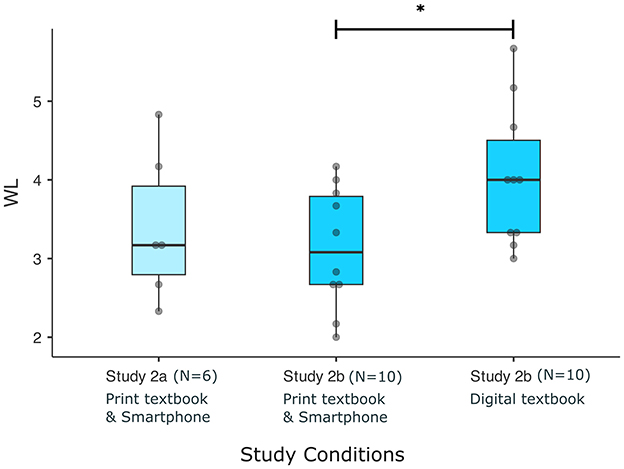

Table 4 shows the descriptive statistics of the learner responses to the different components of the NASA-TLX instrument. For all six components of the NASA-TLX scale, the mean values for the reading task were higher in the digital textbook case. This indicates that the overall workload experienced by the learners while using the digital interactive textbook system was, on average, greater than when they were using the iBioVARse system. Additionally, a statistically significant difference in mean workload was found between the two groups using the non-parametric Mann-Whitney U test (U = 23.5, p = 0.048, rank-biserial correlation = 0.530) (Figure 11). The non-parametric test was chosen because of the ordinal nature of the Likert scale responses.
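For readers unfamiliar with the effect size reported alongside U, the sketch below shows one common way to compute the Mann-Whitney U statistic and a rank-biserial correlation, r = 1 − 2U/(n1·n2), where U is the smaller of the two U values. Whether the analysis in this study used exactly this convention is an assumption, and the workload scores and group sizes in the example are hypothetical. The p-value is omitted; a statistics package such as SciPy (`scipy.stats.mannwhitneyu`) would typically be used for that.

```python
from itertools import product


def mann_whitney_u(x, y):
    """U statistic for sample x: pairs where x exceeds y, ties counted as 0.5."""
    u = 0.0
    for xi, yj in product(x, y):
        if xi > yj:
            u += 1.0
        elif xi == yj:
            u += 0.5
    return u


def rank_biserial(u, n1, n2):
    """Magnitude of the rank-biserial correlation, r = 1 - 2 * U_min / (n1 * n2)."""
    u_min = min(u, n1 * n2 - u)
    return 1.0 - 2.0 * u_min / (n1 * n2)


# Hypothetical workload scores, invented for illustration only.
digital = [55, 60, 48, 70, 65]
phygital = [40, 52, 45, 38, 50]

u = mann_whitney_u(digital, phygital)
r = rank_biserial(u, len(digital), len(phygital))
print(u, round(r, 3))

# Arithmetic check under an assumed group size of 10 learners per group
# (the group sizes are not stated in this passage).
r_assumed = rank_biserial(23.5, 10, 10)
print(round(r_assumed, 3))
```

The second call only illustrates how U and the group sizes jointly determine the effect size; it should not be read as a statement about the actual sample.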

Figure 11. Perceived task load (mean NASA-TLX score) for the reading task in studies 2a and 2b. *p < 0.05

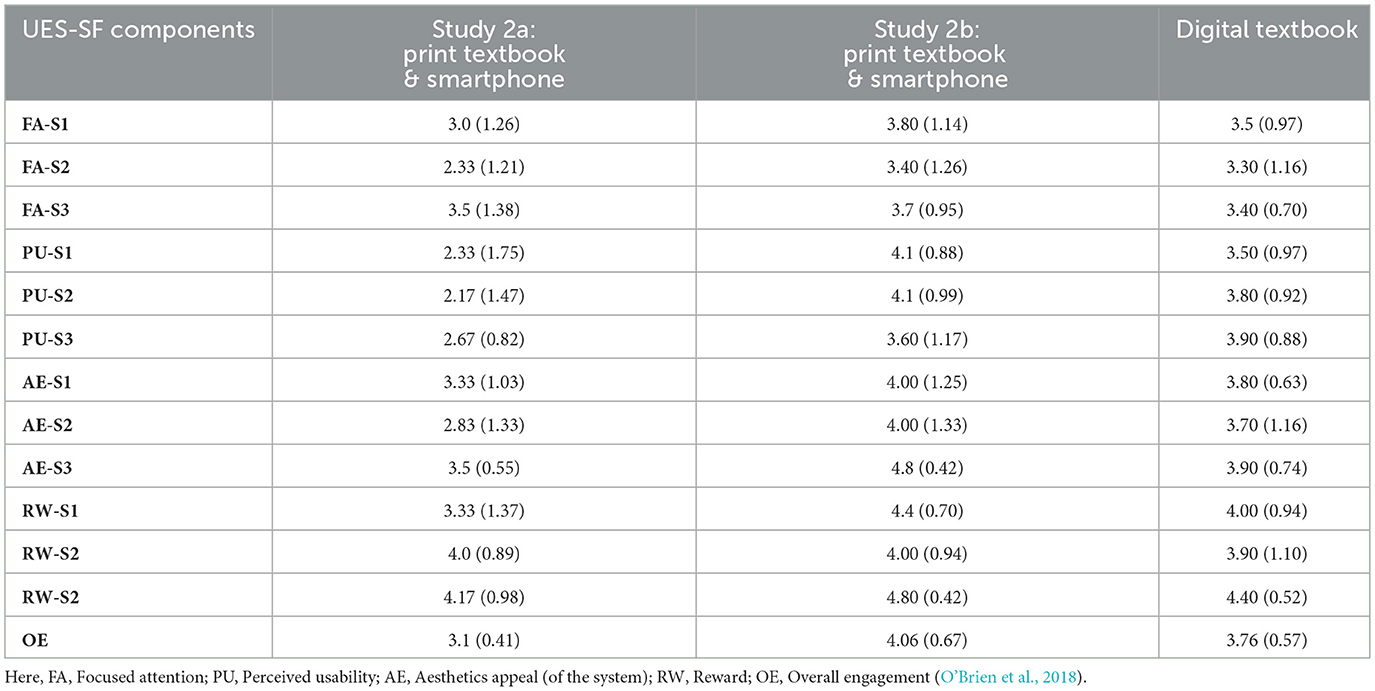

To evaluate perceived engagement, the short-form UES scale was used, adapted to the context of the present study according to the guidelines in the literature (O'Brien et al., 2018). Participants answered the engagement questions after the NASA-TLX questions. The questions used a five-point Likert scale format, and the engagement score was calculated as the mean value of all twelve questions for both groups. The mean overall engagement was higher for the group that read the printed textbook with iBioVARse (see Table 5); however, the difference between the two groups was not statistically significant according to the Mann-Whitney U test (U = 37.0, p = 0.343, rank-biserial correlation = 0.260).
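The scoring step described above (the mean of twelve five-point items) can be sketched as follows. The function name `ues_sf_score` and the example responses are hypothetical. The optional `reverse_coded` parameter is an assumption added for illustration, since some questionnaires contain negatively worded items; this passage does not state whether any items were reverse-coded, so the default applies none.

```python
from statistics import mean


def ues_sf_score(item_responses, reverse_coded=(), scale_max=5):
    """Mean of twelve Likert items for one participant.

    `reverse_coded` holds zero-based indices of items to flip (an assumption;
    by default no item is flipped).
    """
    if len(item_responses) != 12:
        raise ValueError("expected responses to twelve items")
    adjusted = [
        (scale_max + 1 - r) if i in reverse_coded else r
        for i, r in enumerate(item_responses)
    ]
    return mean(adjusted)


# Hypothetical responses from one participant.
responses = [4, 5, 4, 3, 4, 4, 5, 4, 3, 4, 5, 4]
score = ues_sf_score(responses)
score_reversed = ues_sf_score(responses, reverse_coded=(3, 4, 5))
print(round(score, 3), round(score_reversed, 3))
```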

Table 5. UES-SF questionnaire item scores for studies 2a and 2b, reported as mean (standard deviation).

4.2.2.4 Variation in textual characteristics of students' response

Coh-Metrix variables were computed for the students' answers for both groups in Study 2b using the Coh-Metrix tool (McNamara et al., 2014). The text of each student response was first pre-processed, and only valid entries were retained, following the guidelines in the literature (Zagata et al., 2023). Independent-samples t-tests revealed statistically significant differences in several indices correlated with reading comprehension skill and text readability. Table 6 summarizes the statistical test results (Table S1 in the Appendix provides the descriptive statistics for all relevant indices).
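As a reminder of what an independent-samples t-test computes for a single index, the sketch below derives the pooled-variance t statistic and its degrees of freedom from two groups of values. It assumes the classical equal-variance (Student) form; whether the study used this form or Welch's variant is not stated here. The index values are hypothetical, and the p-value is left to a statistics package such as SciPy (`scipy.stats.ttest_ind`).

```python
from math import sqrt
from statistics import mean, variance


def independent_t(a, b):
    """Pooled-variance t statistic and degrees of freedom for two samples."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Weighted average of the two sample variances.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / standard_error, df


# Hypothetical lexical diversity values for two groups, invented for illustration.
group_a = [0.62, 0.70, 0.66, 0.74]
group_b = [0.50, 0.58, 0.54, 0.62]

t_stat, df = independent_t(group_a, group_b)
print(round(t_stat, 3), df)
```

When many indices are tested in this way, a correction for multiple comparisons is usually worth considering.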

5 Discussion

This study sought to explore students' active reading behaviors and learning experiences when engaging with academic content across two distinct textbook systems: a traditional printed textbook augmented with a smartphone-based companion application (phygital system), and a fully digital interactive textbook. Through a combination of behavioral observation, self-report scales, and linguistic analysis, we examined differences in student engagement, cognitive load, and textual comprehension. The results of this mixed-methods investigation contribute to ongoing discussions around the design of cross-media educational environments, particularly in secondary school contexts where printed textbooks remain dominant but are increasingly supplemented by mobile technology.

5.1 Integration of instructional materials for academic reading (RQ1)

The results of Study 1 reveal that secondary school students frequently rely on a variety of instructional materials beyond their prescribed textbooks. Learners often turn to digital resources such as online videos, websites, and generative tools like ChatGPT to supplement their understanding of textbook content. This integration of resources reflects a pragmatic and self-directed learning strategy, where students shift between modes to suit their comprehension needs. Furthermore, students' responses to the interviews in Study 2 suggest a strategic use of such dynamic content, such as revisiting online explanations after textbook reading or re-verifying answers with generative tools. They also prefer interacting with dynamic content through smartphones, which is in line with the trend of using digital technologies for academic reading purposes (Moreno Rocha et al., 2021; Diao and Hedberg, 2020). These findings suggest that textbook design and curricular planning should consider students' multi-resource learning strategies and provide more integrated access points for supplemental content, while also accounting for the ubiquitous nature of mobile devices in academic reading workflows.

5.2 Active reading behaviors in phygital vs. digital systems (RQ2)

The behavioral analysis from Study 2 offers a detailed view of how learners engage in active reading tasks when supported by different media. In both the phygital and digital textbook conditions, students exhibited familiar active reading behaviors such as annotating, scanning, and sustained reading. However, notable differences emerged in the frequency and quality of these behaviors between conditions. Behaviors indicative of deep reading, which is an intentional process crucial for comprehension (Chen et al., 2023), were inferred from segments of video data for both learning environments. In the phygital system, deep reading was inferred from segments where the participant is reading and their page-flipping actions are spread throughout the interval (Figure 4); in the digital system, it was inferred when the learner is on the textbook page, scrolling activity is brief, and the mouse pointer moves across the text (Figure 8). These sequences indicate a productive oscillation between immersive reading and supporting behaviors, which is characteristic of active reading (Hong et al., 2012; Mahler, 2021) and indicative of deep reading (Chen et al., 2023; Jian, 2022). On the other hand, behaviors indicative of surface reading were also observed. When learners were reading with a print textbook, skimming was characterized by shorter reading segments with a dense overlap of page-turning actions, indicating rapid movement through pages (Figure 4). In the digital textbook system, skimming was seen when complementary actions such as scrolling and page-switching were closely spaced in the timeline (Figure 8). Such deep and shallow reading behaviors are also discussed in the context of reading in print (Shibata and Omura, 2020a; Mahler, 2021) and on screens (Chen et al., 2023; Mahler, 2021).

Active reading behaviors are characterized by reading and support activities (such as annotation and navigation) (Hailpern et al., 2015; Tashman and Edwards, 2011; Yoon et al., 2015). Annotation behaviors also differed considerably between the two learning environments. In the phygital condition, students used pens or highlighters to mark their textbooks, a practice well supported in educational literature as contributing to metacognitive awareness and learning (Schmid et al., 2023). In contrast, annotation was less frequent in the digital system, possibly due to interface friction or a lack of familiarity with on-screen tools. A similar finding was reported in a comparative study of active reading behaviors between digital and print media (Mahler, 2021). This affirms earlier findings that paper-based materials afford a more natural and operable reading experience, particularly for tasks requiring sustained attention and interaction (Shibata and Omura, 2020b; Sellen and Harper, 2003). Additionally, when students wrote answers to the comprehension questions, the annotated data point to a greater tendency to copy and paste answers from LLMs with minimal editing, a pattern also reported in other learning contexts with LLMs (Kumar et al., 2024; Kreijkes et al., 2025). These differences highlight that the medium of delivery not only influences how students access information but also shapes their cognitive strategies and learning dispositions during the task.

5.3 Cognitive load, perceived engagement, and textual characteristics of students' response (RQ3)

The results from the NASA-TLX and UES-SF instruments provide quantitative support for the observed behavioral differences. Learners in the phygital group reported significantly lower task workload, particularly in the dimensions of mental demand and effort. This suggests that the division of labor between paper and mobile app helped offload certain cognitive tasks, such as content retrieval or concept explanation, thereby reducing extraneous cognitive load. Additionally, while the digital textbook group had access to similar tools, their higher reported workload, especially in frustration and temporal demand, may stem from the cognitive overhead of navigating multiple nested interfaces. Prior studies (e.g., Qian et al., 2022; Cheng, 2017) have documented similar results, where interacting with a paper-based document along with a mobile device was associated with lower perceived cognitive load. In terms of perceived engagement, UES-SF scores were higher for the phygital group, though the difference was not statistically significant. These results add to investigations comparing learners' engagement across different reading conditions (Hare et al., 2024) and reaffirm the association between engagement (as measured using UES scales) and comprehension (O'Brien et al., 2016).

Perhaps the most compelling evidence comes from the linguistic analysis of students' written responses using the Coh-Metrix tool (McNamara et al., 2014). The textual outputs of the digital textbook group were longer (higher mean values for sentence and word count) but demonstrated lower lexical diversity and fewer temporal connectives (see Table 6 for statistical results). In contrast, the group that read with the print textbook and the smartphone application produced shorter but linguistically richer responses characterized by greater lexical diversity (indicated by the mean values of the variables ‘sentence count’ and ‘lexical diversity’ in Table 6). These linguistic differences provide an important result related to reading comprehension in different textbook systems, as results from prior studies show a statistically significant correlation between Coh-Metrix variables (computed on students' written responses) and reading comprehension (Allen et al., 2015; Zagata et al., 2023).

6 Conclusion

The studies presented in this work indicate that students integrate digital devices, mainly smartphones, to better understand the text by seeking more information, accessing visualizations, watching videos, etc. This access to additional content through interactions with learning systems supports academic reading and comprehension (Lopatovska and Sessions, 2016). For this investigation, we used a mixed-methods approach (Cohen et al., 2017b) combining behavioral observations (through video logs), validated self-report instruments for workload (NASA-TLX) and perceived engagement (UES-SF), and linguistic analysis of learners' written responses (Coh-Metrix tool). Qualitative analysis indicated differences in active reading behaviors between the two textbook systems, with multiple episodes of deep and shallow reading. In addition to the differences in learner interactions, one of the key differences was observed in the annotation behavior of learners. Quantitative analysis, in turn, indicated a statistically significant difference in workload (lower in the group that read a printed textbook with a companion app) and higher, though not statistically significant, perceived engagement compared to the group that read using a digital textbook system. Moreover, the written responses obtained during the task also showed significant differences in relevant Coh-Metrix variables (see Table 6) that correlate with reading comprehension skill.

6.1 Implications for educational design and practice

The findings of this study have several implications for the design of learning environments and educational technologies:

• Phygital systems that integrate print with digital scaffolds can offer the best of both worlds, supporting student autonomy during academic reading activities. Such multi-device interactions can be explored in novel ways to improve students' learning outcomes.

• Mobile-first design strategies are crucial, given students' strong preference for smartphones over laptops. However, design must go beyond content delivery and consider usability, learning flow, and scaffolding mechanisms.

• Teachers and facilitators should be aware that not all digital resources reduce workload; poorly designed systems or over-reliance on generative tools can fragment attention and impair deep engagement. Instructional guidance on how to meaningfully integrate such tools is essential.

• Assessment practices should move beyond word counts to include linguistic richness and cohesion as proxies for understanding. Tools like Coh-Metrix or rubrics grounded in discourse analysis can inform both formative and summative assessment.

6.2 Limitations and future work

Despite the rich insights offered by this study, some limitations must be acknowledged. Participants were recruited through convenience sampling, which limits the generalizability of the findings. Although the results are discussed in the context of existing findings, random sampling will be adopted in future investigations. Furthermore, the quantitative analysis had limited statistical power, which the study sought to mitigate through its mixed-methods design. For future quantitative investigations, an a priori power analysis will be performed.

Future studies should examine the effects of a phygital textbook system (textbook with a companion application) on comprehension and retention over sustained use. Additionally, the impact on comprehension of adaptive scaffolds provided using LLMs in such settings can be explored. Another line of investigation could involve cross-cultural cohorts, expanding the demographics to identify different design requirements for such learning systems.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Indian Institute of Technology Bombay - Institutional Review Board (IRB). The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

Author contributions

SP: Writing – review & editing, Formal analysis, Methodology, Software, Data curation, Conceptualization, Visualization, Writing – original draft. SD: Project administration, Conceptualization, Validation, Writing – review & editing, Supervision, Resources.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors would like to acknowledge Mr. Ashish Patil (TGT Science, Secondary section, IITB Campus School & Jr. College) for his assistance with the study logistics.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Gen AI was used in the creation of this manuscript. The Generative AI was used in the preparation of this manuscript, specifically, for rephrasing.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1660133/full#supplementary-material

References

Akçapinar, G., Hasnine, N. M., Majumdar, R., Chen, A. M.-R., Flanagan, B., and Ogata, H. (2020). “Exploring temporal study patterns in eBook-based learning,” in 28th International Conference on Computers in Education Conference Proceedings (Asia-Pacific Society for Computers in Education (APSCE)), 342–347.

Allen, L. K., Snow, E. L., and McNamara, D. S. (2015). “Are you reading my mind? Modeling students' reading comprehension skills with natural language processing techniques,” in Proceedings of the Fifth International Conference on Learning Analytics And Knowledge (Poughkeepsie, New York: ACM), 246–254. doi: 10.1145/2723576.2723617

Casteleiro-Pitrez, J. (2021). Augmented reality textbook: a classroom quasi-experimental study. IEEE Rev. Iberoamer. Tecnol. Aprendizaje 16, 258–266. doi: 10.1109/RITA.2021.3122887

Chen, X., Srivastava, N., Jain, R., Healey, J., and Dingler, T. (2023). “Characteristics of deep and skim reading on smartphones vs. desktop: a comparative study,” in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (Hamburg, Germany: ACM), 1–14. doi: 10.1145/3544548.3581174

Cheng, K.-H. (2017). Reading an augmented reality book: an exploration of learners' cognitive load, motivation, and attitudes. Australasian J. Educ. Technol. 33:2820. doi: 10.14742/ajet.2820

Chulpongsatorn, N., Lunding, M. S., Soni, N., and Suzuki, R. (2023). “Augmented math: authoring AR-based explorable explanations by augmenting static math textbooks,” in Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (San Francisco, CA, USA: ACM), 1–16. doi: 10.1145/3586183.3606827

Cohen, L., Manion, L., and Morrison, K. (2017a). “Internet surveys,” in Research Methods in Education (Routledge), 361–374. doi: 10.4324/9781315456539-18

Cohen, L., Manion, L., and Morrison, K. (2017b). Research Methods in Education. London: Routledge. doi: 10.4324/9781315456539

Creswell, J. W. (2021). A Concise Introduction to Mixed Methods Research. New York: SAGE Publications.

Crossley, S. A., Kyle, K., and McNamara, D. S. (2016). The tool for the automatic analysis of text cohesion (TAACO): automatic assessment of local, global, and text cohesion. Behav. Res. Methods 48, 1227–1237. doi: 10.3758/s13428-015-0651-7

De Winter, J. C., and Dodou, D. (2010). Five-point Likert items: t test versus Mann-Whitney-Wilcoxon. Pract. Assessm. Res. Eval. 15, 1–12. doi: 10.7275/bj1p-ts64

Diao, M., and Hedberg, J. G. (2020). Mobile and emerging learning technologies: are we ready? EMI. Educ. Media Int. 57, 233–252. doi: 10.1080/09523987.2020.1824422

Feller, D. P., Sabatini, J., and Magliano, J. P. (2024). Differentiating less-prepared from more-prepared college readers. Discour. Proc. 61, 180–202. doi: 10.1080/0163853X.2024.2319515

Glassman, E. L., and Russell, D. M. (2016). DocMatrix: self-teaching from multiple sources. Proc. Assoc. Inf. Sci. Technol. 53, 1–10. doi: 10.1002/pra2.2016.14505301064

Gu, Z., Arawjo, I., Li, K., Kummerfeld, J. K., and Glassman, E. L. (2024). “An AI-resilient text rendering technique for reading and skimming documents,” in Proceedings of the CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA: ACM), 1–22. doi: 10.1145/3613904.3642699

Gunturu, A., Jadon, S., Zhang, N., Thundathil, J., Willett, W., and Suzuki, R. (2024a). Reality summary: on-demand mixed reality document enhancement using large language models. arXiv:2405.18620.

Gunturu, A., Wen, Y., Zhang, N., Thundathil, J., Kazi, R. H., and Suzuki, R. (2024b). “Augmented physics: creating interactive and embedded physics simulations from static textbook diagrams,” in Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology, 1–12. doi: 10.1145/3654777.3676392

Hailpern, J., Vernica, R., Bullock, M., Chatow, U., Fan, J., Koutrika, G., et al. (2015). “To print or not to print: hybrid learning with METIS learning platform,” in Proceedings of the 7th ACM SIGCHI Symposium on Engineering Interactive Computing Systems (Duisburg, Germany: ACM), 206–215. doi: 10.1145/2774225.2774837

Hanington, B., and Martin, B. (2019). Universal Methods of Design Expanded and Revised: 125 Ways to Research Complex Problems, Develop Innovative Ideas, and Design Effective Solutions. Beverly, MA: Rockport publishers.

Hare, C., Johnson, B., Vlahiotis, M., Panda, E. J., Tekok Kilic, A., and Curtin, S. (2024). Children's reading outcomes in digital and print mediums: a systematic review. J. Res. Read. 47, 309–329. doi: 10.1111/1467-9817.12461

Hart, S. G. (2006). “NASA-task load index (NASA-TLX); 20 years later,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 904–908. doi: 10.1177/154193120605000909

Hong, M., Piper, A. M., Weibel, N., Olberding, S., and Hollan, J. (2012). “Microanalysis of active reading behavior to inform design of interactive desktop workspaces,” in Proceedings of the 2012 ACM International Conference on Interactive Tabletops and Surfaces (Cambridge, MA, USA: ACM), 215–224. doi: 10.1145/2396636.2396670

Isenberg, P., Tang, A., and Carpendale, S. (2008). “An exploratory study of visual information analysis,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Florence, Italy: ACM), 1217–1226. doi: 10.1145/1357054.1357245

Jian, Y.-C. (2022). Reading in print versus digital media uses different cognitive strategies: evidence from eye movements during science-text reading. Read. Writ. 35, 1549–1568. doi: 10.1007/s11145-021-10246-2

Joshi, N., and Vogel, D. (2024). “Constrained highlighting in a document reader can improve reading comprehension,” in Proceedings of the CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA: ACM), 1–10. doi: 10.1145/3613904.3642314

Karnam, D., Agrawal, H., Parte, P., Ranjan, S., Borar, P., Kurup, P. P., et al. (2021). Touchy feely vectors: a compensatory design approach to support model-based reasoning in developing country classrooms. J. Comput. Assist. Learn. 37, 446–474. doi: 10.1111/jcal.12500

Kirkwood, A., and Price, L. (2014). Technology-enhanced learning and teaching in higher education: what is enhanced and how do we know? A critical literature review. Learn. Media Technol. 39, 6–36. doi: 10.1080/17439884.2013.770404

Kreijkes, P., Kewenig, V., Kuvalja, M., Lee, M., Vitello, S., Hofman, J., et al. (2025). Effects of LLM Use and Note-Taking on Reading Comprehension and Memory: A Randomised Experiment in Secondary Schools. Available online at: https://ssrn.com/abstract=5095149

Kumar, H., Musabirov, I., Reza, M., Shi, J., Wang, X., Williams, J. J., et al. (2024). “Guiding students in using LLMs in supported learning environments: effects on interaction dynamics, learner performance, confidence, and trust,” in Proceedings of the ACM on Human-Computer Interaction, 1–30. doi: 10.1145/3687038

Liu, S., Sui, Y., You, Z., Shi, J., Wang, Z., and Zhong, C. (2024). Reading better with AR or print picture books? A quasi-experiment on primary school students' reading comprehension, story retelling and reading motivation. Educ. Inf. Technol. 29, 11625–11644. doi: 10.1007/s10639-023-12231-4

Lopatovska, I., and Sessions, D. (2016). Understanding academic reading in the context of information-seeking. Library Rev. 65, 502–518. doi: 10.1108/LR-03-2016-0026

Mahadini, M. K., Setyaningsih, E., and Sarosa, T. (2021). Using conventional rubric and coh-metrix to assess EFL students' essays. Int. J. Lang. Educ. 5, 260–270. doi: 10.26858/ijole.v5i4.19105

Mahler, J. (2021). A Study of multi-document active reading in analog and digital environments. PhD Thesis, Wien.

Mayes, D. K., Sims, V. K., and Koonce, J. M. (2001). Comprehension and workload differences for VDT and paper-based reading. Int. J. Ind. Ergon. 28, 367–378. doi: 10.1016/S0169-8141(01)00043-9

McNamara, D. S., Graesser, A. C., McCarthy, P. M., and Cai, Z. (2014). Automated Evaluation of Text and Discourse with Coh-Metrix. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511894664

Mehta, H., Bradley, A., Hancock, M., and Collins, C. (2017). Metatation: annotation as implicit interaction to bridge close and distant reading. ACM Trans. Comput. Hum. Interact. 24, 1–41. doi: 10.1145/3131609

Miah, M. O., and Kong, J. (2024). Augmented reality and cross-device interaction for seamless integration of physical and digital scientific papers. Int. J. Hum. Comput. Inter. 41, 7040–7057. doi: 10.1101/2024.02.05.578116

Mo, Z. P., Prajapati, S. P., Vasudevan, S., and Murthy, S. (2024). “From textbooks to classroom implementation: experience report of middle school science teachers' pedagogy for activity-based learning,” in International Conference on Computers in Education. doi: 10.58459/icce.2024.4994

Moreno Rocha, M. A., Nacenta, M. A., and Ye, J. (2021). “An exploratory study on academic reading contexts, technology, and strategies,” in 8th Mexican Conference on Human-Computer Interaction (Mexico: ACM), 1–10. doi: 10.1145/3492724.3492727

O'Brien, H. L., Cairns, P., and Hall, M. (2018). A practical approach to measuring user engagement with the refined user engagement scale (UES) and new UES short form. Int. J. Hum. Comput. Stud. 112, 28–39. doi: 10.1016/j.ijhcs.2018.01.004

O'Brien, H. L., Freund, L., and Kopak, R. (2016). “Investigating the role of user engagement in digital reading environments,” in Proceedings of the 2016 ACM on Conference on Human Information Interaction and Retrieval (Carrboro, NC, USA: ACM), 71–80. doi: 10.1145/2854946.2854973

Paivio, A. (1991). Dual coding theory: retrospect and current status. Canadian J. Psychol. 45:255. doi: 10.1037/h0084295

Petchprasert, A. (2021). Utilizing an automated tool analysis to evaluate EFL students' writing performances. Asian-Pacific J. Second Fore. Lang. Educ. 6:1. doi: 10.1186/s40862-020-00107-w

Prajapati, S. P., and Das, S. (2025). “Is ‘quick and dirty’ good enough? An analysis of the usability evaluation practices for learning environment design,” in Proceedings of the 2025 ACM International Conference on Interactive Media Experiences, 313–321. doi: 10.1145/3706370.3727857

Qian, J., Sun, Q., Wigington, C., Han, H. L., Sun, T., Healey, J., et al. (2022). “Dually noted: layout-aware annotations with smartphone augmented reality,” in CHI Conference on Human Factors in Computing Systems (New Orleans, LA, USA: ACM), 1–15. doi: 10.1145/3491102.3502026

Rajaram, S., and Nebeling, M. (2022). “Paper trail: an immersive authoring system for augmented reality instructional experiences,” in CHI Conference on Human Factors in Computing Systems (New Orleans, LA, USA: ACM), 1–16. doi: 10.1145/3491102.3517486

Saindane, D., Prajapati, S., and Das, S. (2023a). “Supporting learning through affordance-based design: a comparative analysis of ‘bioVARse’ and a standard textbook companion application in biology education,” in International Conference on Computers in Education, 484–486. doi: 10.58459/icce.2023.1020

Saindane, D., Prajapati, S. P., and Das, S. (2023b). “Converting physical textbooks into interactive and immersive 'phygital' textbooks: a proposed system architecture design for textbook companion apps,” in iTextbooks@ AIED, 88–93.

Schmid, A., Sautmann, M., Wittmann, V., Kaindl, F., Schauhuber, P., Gottschalk, P., et al. (2023). “Influence of annotation media on proof-reading tasks,” in Mensch und Computer 2023 (Rapperswil, Switzerland: ACM), 277–288. doi: 10.1145/3603555.3603572

Shibata, H., and Omura, K. (2020a). “The ease of reading from paper and the difficulty of reading from displays,” in Why Digital Displays Cannot Replace Paper (Singapore: Springer), 27–33. doi: 10.1007/978-981-15-9476-2_3

Shibata, H., and Omura, K. (2020b). “Effects of operability on reading,” in Why Digital Displays Cannot Replace Paper (Singapore: Springer), 43–110. doi: 10.1007/978-981-15-9476-2_5

Shibata, H., Takano, K., and Tano, S. (2015). “Text touching effects in active reading: the impact of the use of a touch-based tablet device,” in Human-Computer Interaction INTERACT 2015, eds. J. Abascal, S. Barbosa, M. Fetter, T. Gross, P. Palanque, and M. Winckler (Cham: Springer International Publishing), 559–576. doi: 10.1007/978-3-319-22701-6_41

Simion Malmcrona, H. (2020). Variation in assessment: A coh-metrix analysis of evaluation of written English in the Swedish upper secondary school (Dissertation). Available online at: https://urn.kb.se/resolve?urn=urn:nbn:se:su:diva-178837

Şimşek, B., and Direkçi, B. (2023). The effects of augmented reality storybooks on student's reading comprehension. Br. J. Educ. Technol. 54, 754–772. doi: 10.1111/bjet.13293

Singer, L. M., and Alexander, P. A. (2017). Reading across mediums: effects of reading digital and print texts on comprehension and calibration. J. Exper. Educ. 85, 155–172. doi: 10.1080/00220973.2016.1143794

Takano, K., Shibata, H., Ichino, J., Tomonori, H., and Tano, S. (2014). “Microscopic analysis of document handling while reading paper documents to improve digital reading device,” in Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: the Future of Design (Sydney, NSW, Australia: ACM), 559–567. doi: 10.1145/2686612.2686701

Tashman, C. S., and Edwards, W. K. (2011). “Active reading and its discontents: the situations, problems and ideas of readers,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Vancouver, BC, Canada: ACM), 2927–2936. doi: 10.1145/1978942.1979376

Yoon, D., Hinckley, K., Benko, H., Guimbretière, F., Irani, P., Pahud, M., et al. (2015). “Sensing tablet grasp + micro-mobility for active reading,” in Proceedings of the 28th Annual ACM Symposium on User Interface Software and Technology (Charlotte, NC, USA: ACM), 477–487. doi: 10.1145/2807442.2807510

Zagata, E., Kearns, D., Truckenmiller, A. J., and Zhao, Z. (2023). Using the features of written compositions to understand reading comprehension. Read. Res. Quart. 58, 624–654. doi: 10.1002/rrq.503

Keywords: active reading, phygital learning, textbook companion app, digital education, cognitive load, user engagement, secondary education, multimodal learning

Citation: Prajapati SP and Das S (2025) Understanding students' active reading in phygital learning environments: a study of smartphone-based textbook companions in Indian classrooms. Front. Educ. 10:1660133. doi: 10.3389/feduc.2025.1660133

Received: 05 July 2025; Accepted: 26 August 2025; Published: 30 September 2025.

Edited by:

Shashidhar Venkatesh Murthy, James Cook University, Australia

Reviewed by:

Federico Batini, University of Perugia, Italy

Meriem Elboukhrissi, Ibn Tofail University, Morocco

Copyright © 2025 Prajapati and Das. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sunny Prakash Prajapati, mails.sunnyprajapati@gmail.com
