- College of Education, Dalian University, Dalian, China

Introduction: In recent years, virtual venue technology has been increasingly adopted in higher education. While existing studies indicate that feedback strategies can promote deep learning among college students in virtual environments, the specific mechanisms underlying this effect remain poorly understood. This study investigates how peer feedback strategies influence deep learning processes within virtual learning environments.

Methods: The study employed the ErgoLAB Environment Synchronous Cloud Platform V3.0 alongside questionnaire scales to collect multimodal data, including behavioral patterns, physiological responses (eye movements, EEG, and electrodermal activity), learning experience metrics, and deep learning outcomes.

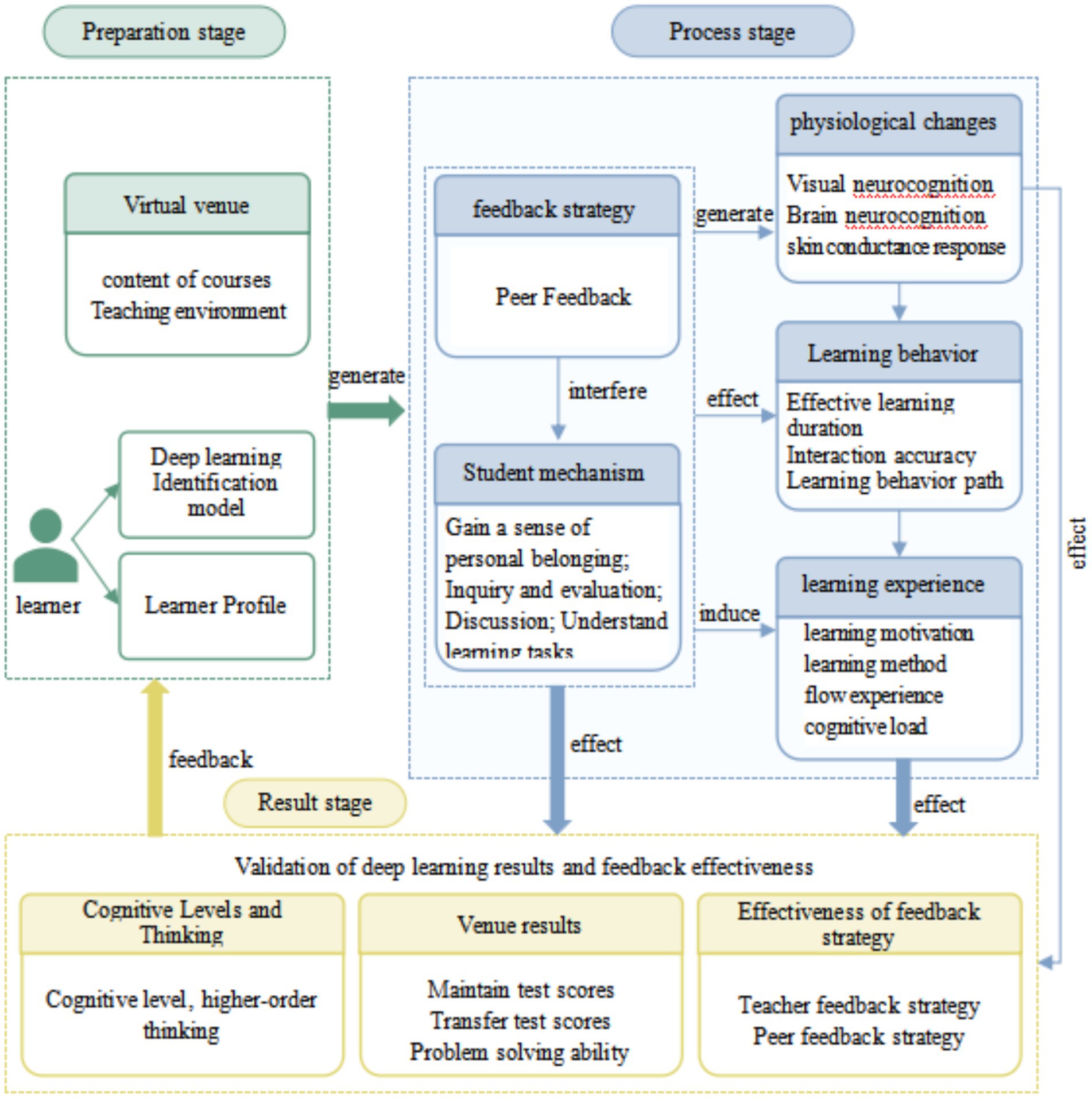

Results: Analysis revealed significant differences in deep learning outcomes across peer feedback strategies. The peer dialogue feedback group operating in a basic virtual interaction environment outperformed the other three experimental groups, suggesting that structured peer dialogue combined with foundational virtual interactions may most effectively support deep learning.

Discussion: The findings underscore the importance of deliberately designed peer feedback strategies to enhance deep learning in virtual educational contexts. This study addresses the need for targeted feedback interventions in virtual instruction and offers empirical evidence for the integration of virtual venues into academic curricula. It also provides practical insights for fostering innovative talent development in the context of digital transformation in higher education.

1 Introduction

In the context of global educational digital transformation, education systems are encountering both novel challenges and opportunities (Schmidt and Tang, 2020). The rapid advancement of online learning technologies has established digital and intelligent virtual learning environments as a crucial focus in higher education research and practice (Wu et al., 2024). Recent years have witnessed the continuous expansion of higher education enrollment alongside rising demands for improved talent cultivation quality, driving widespread adoption of course platforms that integrate virtual venue technology with multidisciplinary curricula. However, learners in virtual environments increasingly exhibit surface-level learning behaviors, leading to suboptimal deep learning outcomes that ultimately compromise the quality of talent development in virtual teaching modalities (Tevet et al., 2024). Existing research demonstrates that deep learning, serving as a critical pathway to enhance learning efficiency while fostering critical thinking and innovation capabilities, holds particular significance in technology-enhanced higher education contexts.

Despite growing interdisciplinary integration and extensive application of virtual venue feedback strategies, several practical challenges have emerged. The accelerated development of virtual venue technologies and ongoing innovations in university teaching methodologies have heightened focus on achieving meaningful deep learning in virtual environments (Nwakanma and Aijaz, 2024). Nevertheless, research remains limited regarding both the mechanisms and efficacy of feedback strategies for promoting deep learning in virtual settings, with neither comprehensive theoretical frameworks nor effective practical guidelines yet established (Attiogbe et al., 2025). Current research primarily focuses on technological improvements and environmental optimization of virtual venues, and few studies have designed feedback strategies for virtual venue environments and validated them quasi-experimentally. To address these gaps, this study centers on one core question: through what mechanisms, and to what effect, do peer feedback strategies promote university students’ deep learning in virtual venue environments? It also investigates the following three sub-questions:

• Question 1: How can multimodal data be utilized to characterize learners’ deep learning in virtual venues?

• Question 2: How can a quasi-experiment be designed to verify the enhancing effect of peer feedback strategies on university students’ deep learning outcomes in virtual venue environments?

• Question 3: How do peer feedback strategies influence the mechanisms underlying the occurrence of deep learning among university students in virtual venue environments?

2 Literature review

2.1 Research on deep learning in virtual venue environments

“Deep learning” is a term used in both education and computer science. This study, situated within the context of a university virtual venue course platform, explores the process and outcomes of university students’ deep learning in virtual venue environments. It therefore concerns deep learning in the educational sense, that is, a quality of student learning rather than a family of machine learning methods. In related literature, deep learning is also referred to as “in-depth learning” or “profound learning,” in contrast to surface-level or superficial learning. This study uses the terms “deep learning” and “surface learning” consistently.

Deep learning encompasses in-depth exploration across three dimensions: learning engagement, methodology, and outcomes. This approach aims to describe the quality of learning, from internalization to explicit expression. Specifically, internalized deep learning is reflected in learners’ behaviors, experiences, and motivations, particularly in terms of high engagement, enjoyment, and satisfaction, often associated with flow experiences. Externally, deep learning manifests in learning outcomes, such as enhanced cognitive levels, development of higher-order thinking skills, improved academic performance, and strengthened problem-solving abilities.

Building on these perspectives, this study adopts a comprehensive framework that integrates both the learning process and outcomes. In terms of the learning process, depth is demonstrated through learners’ behaviors, physiological responses, and experiential engagement within virtual venue environments. Regarding learning outcomes, beyond academic achievement, deep learners exhibit elevated cognitive abilities, advanced higher-order thinking, and improved problem-solving skills.

Virtual Venues (Virtual Museums) are online learning spaces based on virtual reality (VR) technology, allowing students to access and immerse themselves in these environments via computers, smartphones, or other VR devices. Learning in virtual environments provides students with an immersive educational experience, while also offering interactive learning activities that require active participation and engagement in operational interactions to acquire knowledge and skills (Hite et al., 2024). Virtual venues are increasingly gaining attention in higher education, particularly in bridging theoretical coursework with practical learning. Course platforms integrating specialized curricula with virtual venue learning spaces are being adopted by a growing number of higher education institutions. Compared to traditional methods, students in virtual environments demonstrate higher levels of engagement, deeper understanding of complex concepts, and improved knowledge retention.

The teaching of specialized university courses in virtual venue environments aims to maximize students’ deep learning outcomes. By profiling students and recognizing their deep learning states, instruction can promptly identify groups that exhibit deep learning characteristics or surface learning tendencies and intervene with effective feedback strategies. Students’ deep learning outcomes, as a key metric of learning effectiveness, reflect the impact of instructional interventions. Feedback strategy interventions based on multimodal learning analytics in virtual venue environments therefore fundamentally seek to enhance students’ deep learning outcomes.

Supported by multimodal learning analytics, this approach thoroughly considers factors such as the learning environment, learners’ individual states, task completion, and the application of feedback strategies. Simultaneously, it cross-validates students’ cognitive and behavioral performance, learning experience data, and physiological response data during the instructional process. Based on this, deep learning outcome assessments are conducted to provide timely feedback on the effectiveness of intervention strategies. This serves as a robust guarantee for achieving dynamic and precise instructional interventions.

2.2 The role of peer feedback strategies in promoting deep learning among college students in virtual environments

Feedback mechanisms serve as a vital strategy for enhancing university students’ learning outcomes, fundamentally relying on bidirectional information exchange. These strategies are commonly categorized into teacher feedback and peer feedback, with the latter emerging as a key approach strongly associated with deep learning. In virtual environments, the implementation of peer feedback depends on collaborative interaction among students, which not only increases learner engagement but also facilitates profound knowledge construction and conceptual understanding. Research indicates that in virtual learning settings, providing learning cues—such as holographic demonstrations of operational errors—along with opportunities for discussion among peers, can effectively shift students’ learning approaches from surface learning to strategic and deep learning. This is particularly beneficial for those who tend to learn at a superficial level.

With the ongoing digital transformation in education, peer feedback in virtual learning environments has emerged as a collaborative learning strategy that demonstrates significant potential in stimulating students’ higher-order cognitive abilities and promoting deep learning. Deep learning emphasizes critical thinking, knowledge integration, and transferable application rather than superficial memorization. Recent studies indicate that well-structured peer feedback in virtual environments can effectively enhance university students’ metacognitive skills and learning engagement. In such settings, peer feedback strategies provide strong support for deep learning through their interactive nature and diverse forms of presentation, showing considerable promise in facilitating knowledge construction, skill development, and cognitive growth. In innovative virtual learning contexts, real-time peer feedback can simulate authentic communicative scenarios and offer learners multi-perspective and multi-dimensional learning resources (Feng et al., 2023).

Peer feedback facilitates deep learning through the lens of social constructivism. By evaluating the work of others, learners reflect on their own cognitive blind spots, while receiving multi-perspective feedback that promotes knowledge reconstruction (Nicol, 2021). Virtual environments provide synchronous and asynchronous interaction, anonymous evaluation, and multimodal feedback support, thereby reducing the social pressures typical of traditional classrooms and fostering more candid critical dialogue (Chen et al., 2022). For instance, a meta-analysis by Al-Samarraie et al. (2023) indicated that embedding reflective prompt frameworks in peer feedback within virtual environments significantly enhances students’ analytical depth. When instructional feedback strategies are incorporated into these environments, university students exhibit improved epistemic cognition and feedback literacy through interactive learning operations (Carless and Winstone, 2024). Although existing studies have validated the influence of peer feedback strategies on deep learning in virtual settings, current research lacks a comprehensive investigation incorporating physiological data.

In conclusion, within virtual learning environments, peer feedback strategies offer robust support for college students’ deep learning through their distinctive interactive nature and diversified presentation formats. These strategies demonstrate significant potential in advancing knowledge construction, skill development, and cognitive growth.

2.3 Multimodal learning analytics for evaluating deep learning in virtual learning environments

Evaluating deep learning outcomes serves as one of the methods for assessing the performance of digital transformation in higher education. Traditional evaluation approaches, such as self-report scales and behavioral coding, often rely on unimodal data, making it difficult to comprehensively capture learners’ states and outcomes (Jabeen et al., 2023). Only through the scientific assessment of diverse teaching models can higher education reforms under digital transformation be continuously enhanced (Slack et al., 2003). Moreover, the process of deep learning is inherently complex, requiring the integration of multiple factors, including the learning environment and learners’ cognitive states, thus necessitating multimodal data for a holistic evaluation.

Multimodal cognition includes cerebral neural cognition, visual neural cognition, electrodermal activity, and electrocardiography, among other modalities. From an educational perspective, exploring learning processes through the lens of neuroscience represents a crucial research approach for understanding human learning mechanisms (Lövdén et al., 2013). The plasticity of the human brain and its learning functions constitute one of the most distinctive features differentiating humans from other species. The integration of neuroscience and educational theory through multimodal cognition theory provides clear direction for investigating the brain’s role in learning activities, offering new pathways to explore the intrinsic mechanisms of deep learning.

Multimodal learning analytics technology enables the integration of data from diverse modalities, such as EEG signals, eye-tracking data, and behavioral logs, facilitating a comprehensive and in-depth understanding of learners’ cognitive states and learning outcomes. For example, analyzing learners’ EEG activity in virtual venues can reveal their cognitive load and attentional allocation. Eye-tracking data provides insights into learners’ focus patterns and information processing regarding key elements in virtual environments, while behavioral logs reflect operational habits and problem-solving strategies.

In virtual venue environments, university students’ deep learning processes exhibit increased complexity and multidimensional characteristics. Virtual venues create immersive learning experiences by simulating real or fictional scenarios, requiring learners not only to process information through visual and auditory channels but also to engage deeply through hands-on operations and interactive exchanges (Oviatt et al., 2018). Therefore, assessing deep learning in virtual venues cannot rely solely on traditional unimodal evaluation methods. Instead, multimodal learning analytics technology should be adopted to more comprehensively and accurately capture learners’ multidimensional performance. In contrast to previous studies, which have predominantly focused on learning behavioral data in virtual environments with limited attention to the synchronous collection of learners’ physiological data, this research emphasizes the synchronization and alignment of multimodal data in a virtual environment setting. This approach significantly enhances the accuracy and scientific rigor of data analysis.

In summary, multimodal learning analytics provides novel approaches for evaluating university students’ deep learning in virtual venues. To better assess deep learning outcomes, it is essential to investigate the multimodal data representations of deep learning. Based on the characteristics of virtual venues and relevant literature on deep learning assessment, such evaluation should encompass both learning processes and outcomes. In virtual environments, multimodal data primarily originates from learners’ subjective self-reports, physiological experiments, and system-logged interaction data. Processing these multimodal datasets enables a comprehensive and accurate assessment of students’ deep learning outcomes. It is important to note that conducting multimodal learning analytics research in virtual environments requires precise experimental instruments and software tools capable of modality alignment, which imposes environmental constraints on the implementation of such evaluations. Furthermore, while applying multimodal learning analytics techniques, attention must be paid to both the correlations and distinctions between data related to the learning process and learning outcomes. The influence of temporal and other contextual factors on quasi-experimental studies should not be overlooked.

3 Theoretical framework for promoting university students’ deep learning through virtual venue feedback strategies

3.1 Preparatory phase analysis

In virtual venue educational environments, the primary participants are university students who possess certain fundamental characteristics. Prior to engaging in virtual venue learning, students undergo a pre-assessment phase involving prior knowledge surveys and quantitative evaluations of self-regulation capabilities. Based on students’ preparatory performance, instructors provide tailored pre-learning feedback strategies to support subsequent learning activities. To achieve highly personalized and targeted feedback interventions, this study employs a university student deep learning state identification model. This model accurately distinguishes between deep learners and surface learners, establishing a technical foundation for implementing precise feedback strategies and instructional interventions to ensure effective teaching implementation (Figure 1).

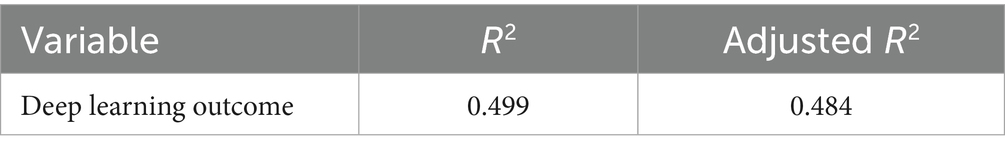

Figure 1. Theoretical framework for promoting university students’ deep learning through virtual venue feedback strategies.

3.2 Process phase analysis

(1) Feedback strategy analysis

The theoretical framework provides a solid basis for selecting various venue-specific feedback strategies. Through systematic organization and implementation of different feedback interventions, optimal approaches for promoting university students’ deep learning can be identified. Based on the source of feedback, virtual venue feedback strategies are primarily categorized into instructor feedback and peer feedback strategies, with selection determined by specific instructional needs and applications. These strategies intervene at appropriate moments to influence learners’ task processing, affecting their interactive behaviors, knowledge construction, and self-regulation, thereby inducing physiological changes and altering learning experiences.

(2) Student mechanism analysis

As the “feedback strategy” variable influences students’ “mechanistic performance” during virtual venue learning processes, which subsequently affects “deep learning outcomes,” each sub-study investigates specific questions regarding: (a) the direct impact of virtual venue feedback strategies on deep learning (both process and outcomes), and (b) the relationship between students’ procedural behaviors and their deep learning enhancement within specific strategy contexts. The student mechanism in virtual venues emphasizes interactive activities including multimedia browsing, challenge-based quizzes, peer interactions, and 3D model manipulations. This mechanism manifests through students developing personal belongingness while engaging in evaluative, discursive, and self-reflective processes during these learning tasks, thereby promoting cognitive and thinking development that enhances deep learning outcomes.

(3) Physiological change analysis

Multimodal learning theory and cognitive mechanisms suggest that learners’ task processing in virtual venues affects visual neural, cerebral neural, and autonomic nervous systems, resulting in measurable physiological changes. Feedback strategy implementation alters students’ cognition and behaviors, subsequently causing variations in physiological indicators, with different strategies producing distinct physiological impact patterns.

(4) Learning behavior analysis

In virtual venue environments, learners’ behaviors are influenced by multiple factors. Within feedback-strategy-enhanced environments, learning behaviors are primarily shaped by feedback interventions, evidenced by improved interaction accuracy, increased effective learning duration, and optimized learning pathways. These positive behavioral modifications subsequently influence deep learning outcomes in virtual venues.

(5) Learning experience analysis

In virtual learning environments, learners’ cognitive behaviors and experiential formation are shaped by multifaceted factors. Comprehensive analysis requires cross-validation across behavioral, visual neural, cerebral neural, and physiological dimensions. The learning experience encompasses factors like learning methods, flow experiences, and cognitive load, which significantly mediate cognitive behaviors. Research indicates that both cognitive behaviors and acquired learning experiences jointly influence deep learning outcomes, making understanding and optimizing these factors crucial for enhancing virtual venues’ educational effectiveness.

3.3 Outcome phase analysis

The outcome phase in virtual venues comprises two components: deep learning outcomes and feedback strategy validation. Deep learning outcomes are measured through cognitive level progression, higher-order thinking development, venue-specific retention/transfer test performance, and problem-solving capabilities. Feedback strategy effectiveness is evaluated through comparative analysis of instructor and peer feedback approaches. Outcome measures are triangulated with process-phase physiological, behavioral, and experiential data, providing comprehensive evidence of learners’ deep learning achievements throughout the instructional continuum.

3.4 Theoretical support and limitations

The construction of the theoretical framework draws upon the 3P model and theories regarding how feedback strategies facilitate deep learning mechanisms, incorporating characteristics of virtual learning environments and the learning attributes of university students. Based on the above theoretical construction and analysis, the proposed framework on how virtual environment feedback strategies promote deep learning is specifically applicable to higher education settings. Its applicability to virtual learning environments in basic education remains to be validated.

4 Research methodology

4.1 Methodology and participant selection

The experimental design employed a randomized assignment of participants across conditions in a 2 × 2 factorial design, with two independent variables: (1) virtual venue feedback type (peer evaluation feedback vs. peer dialogue feedback) and (2) virtual venue interaction type (basic interaction vs. three-dimensional interaction). The complete experimental design is presented in Figure 2. Among these, basic interactions in virtual environments refer primarily to gesture-based operations, spatial navigation, quiz-based challenges, and other fundamental interactive activities. In contrast, three-dimensional (3D) interactions specifically involve stereoscopic engagements between students and 3D models, including tasks such as model assembly and three-dimensional spatial navigation.

Both independent variables had two levels. Based on the analysis of virtual venue characteristics and interaction classifications presented in the literature review, virtual venue interaction type was categorized as basic interaction or three-dimensional interaction, and peer feedback type as peer dialogue feedback or peer evaluation feedback. The dependent variable was deep learning effectiveness, comprising multiple dimensions: deep learning outcomes, advanced and innovative thinking, problem-solving ability, learning experience, and the application effectiveness of peer feedback strategies.

Participants in this study were recruited from Experimental University S, located in City S, Province L, Country C. All participants provided written informed consent prior to the experiment. The study protocol was approved by the Institutional Review Board of Experimental University S (Ethics Approval No.: SYU20230201). This study focuses on a foundational computer course delivered through a virtual learning environment at the experimental university. As a general education course widely offered in higher education institutions, it aims to provide first-year undergraduates with fundamental computer knowledge and skills training. Participants were randomly selected from the freshman cohort across academic disciplines, including engineering, natural sciences, humanities, and medical sciences. All participants were right-handed with normal visual acuity. They were randomly assigned to four experimental conditions of 45 students each, with gender balanced across conditions: (1) basic interaction with peer dialogue feedback, (2) three-dimensional interaction with peer dialogue feedback, (3) basic interaction with peer evaluation feedback, and (4) three-dimensional interaction with peer evaluation feedback.
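The assignment procedure described above can be illustrated with a minimal sketch. It assumes simple randomization of 180 hypothetical participant IDs (4 conditions × 45 students); the function name, the ID format, and the fixed seed are illustrative assumptions, and the sketch does not reproduce the gender balancing reported in the text, which would require stratifying the shuffle by gender.

```python
import random
from itertools import product

FEEDBACK_TYPES = ("peer dialogue", "peer evaluation")
INTERACTION_TYPES = ("basic", "three-dimensional")
GROUP_SIZE = 45  # from the text: 45 students per condition


def assign_conditions(participant_ids, seed=0):
    """Shuffle participants and split them evenly across the 2 x 2 cells."""
    conditions = list(product(INTERACTION_TYPES, FEEDBACK_TYPES))
    if len(participant_ids) != GROUP_SIZE * len(conditions):
        raise ValueError("expected exactly 180 participants")
    shuffled = list(participant_ids)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    return {
        condition: shuffled[i * GROUP_SIZE:(i + 1) * GROUP_SIZE]
        for i, condition in enumerate(conditions)
    }


# Hypothetical participant IDs P001 ... P180.
groups = assign_conditions([f"P{n:03d}" for n in range(1, 181)])
for (interaction, feedback), members in groups.items():
    print(f"{interaction} interaction + {feedback} feedback: {len(members)} students")
```

Each participant appears in exactly one cell, so the four groups are mutually exclusive and together cover the whole sample.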

4.2 Experimental materials and apparatus

The study utilized the “Computer Foundation” virtual learning platform of the experimental university as the experimental material. This platform incorporates built-in pre-learning (preview) feedback and peer feedback strategies, with a backend system that monitors learners’ behaviors and states in real time to implement timely peer feedback interventions. The pre-learning feedback strategy, systematically administered before formal instruction, aims to help learners establish a fundamental understanding of the virtual learning environment and enhance their preliminary perception of the digital learning space.

All four experimental groups used identical course materials in terms of knowledge content and difficulty level, differing only in interaction types and peer feedback implementations: (1) basic interaction with peer evaluation feedback, (2) three-dimensional interaction with peer evaluation feedback, (3) basic interaction with peer dialogue feedback, and (4) three-dimensional interaction with peer dialogue feedback. In the peer evaluation feedback conditions, students could rate each other but were restricted from generating dialogue. Conversely, the peer dialogue feedback conditions allowed students to engage in interactive discussion tasks to facilitate learning communication.

As illustrated in Figure 3, the platform interface includes distinct feedback features: the left side displays the peer dialogue feedback function with a discussion button and area for open dialogue, while the right side shows the peer evaluation feedback function limited to “like” ratings without opinion expression capabilities.

4.3 Research hypotheses

Hypothesis 1 (H1): The preview-based peer feedback strategy (hereinafter referred to as “the peer feedback strategy”) in virtual learning environments significantly influences learners’ behavioral patterns, producing observable differences in behavioral pathway visualizations.

Hypothesis 2 (H2): The peer feedback strategy in virtual environments significantly modulates learners’ neural cognitive activity, particularly manifesting as characteristic alpha-band (8–13 Hz) power spectral density attenuation patterns in frontal, central, parietal, and occipital regions. These neurophysiological changes correlate with enhanced attentional engagement, providing mechanistic evidence for cognitive facilitation in deep learning. Distinct peer feedback strategies will yield significantly different alpha power distributions and topographical brain mapping patterns.

Hypothesis 3 (H3): The peer feedback strategy significantly affects visual cognitive behaviors, evidenced by between-group differences in three eye-tracking metrics: (1) fixation duration, (2) fixation count, and (3) saccade frequency. These metrics demonstrate strategy-dependent variation in visual information acquisition rates across experimental conditions.

Hypothesis 4 (H4): The strategy exerts significant main effects on electrodermal activity (EDA) and cognitive load measures. Specifically, EDA parameters (peak amplitude, SCR magnitude, SCR frequency, and mean SCR amplitude) show positive covariation with cognitive load levels, increasing with cognitive demand elevation and decreasing with demand reduction.

Hypothesis 5 (H5): Significant main effects emerge on learning experience dimensions, including: (1) engagement, (2) motivation, and (3) flow state, with measurable between-strategy differences following intervention.

Hypothesis 6 (H6): The strategy significantly enhances deep learning outcomes, operationalized through: (1) retention test scores, (2) transfer test performance, (3) problem-solving ability, and (4) higher-order thinking skills. Cognitive performance demonstrates time-dependent improvement with strategy-specific growth trajectories.

Hypothesis 7 (H7): In virtual learning environments, significant correlations exist among behavioral, physiological, and experiential measures with both deep learning outcomes and peer feedback strategy efficacy metrics, suggesting an underlying interaction mechanism.
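To make the alpha-band measure in H2 concrete, the following sketch estimates 8–13 Hz power for a single channel and expresses attenuation relative to a baseline segment. It is an illustration under stated assumptions, not the ErgoLAB pipeline: the sampling rate, the synthetic sine-wave signals, and the function name `band_power` are hypothetical, and a plain periodogram stands in for whatever spectral estimator the platform applies.

```python
import numpy as np


def band_power(signal, fs, low=8.0, high=13.0):
    """Estimate power in [low, high] Hz from a one-sided periodogram."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove the DC offset
    n = x.size
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * n)
    psd[1:] *= 2.0  # fold negative frequencies into the one-sided estimate
    if n % 2 == 0:
        psd[-1] /= 2.0  # the Nyquist bin has no negative-frequency twin
    in_band = (freqs >= low) & (freqs <= high)
    return psd[in_band].sum() * (fs / n)  # PSD bins times bin width


fs = 256  # hypothetical sampling rate in Hz
t = np.arange(4 * fs) / fs  # four seconds of samples

# Synthetic signals: a 10 Hz alpha component plus a 20 Hz component.
baseline = 1.0 * np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)
task = 0.6 * np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)

alpha_baseline = band_power(baseline, fs)
alpha_task = band_power(task, fs)
attenuation = 1.0 - alpha_task / alpha_baseline
print(f"alpha attenuation relative to baseline: {attenuation:.2f}")
```

In practice, averaged estimators such as Welch's method and artifact rejection would normally precede any between-group comparison of alpha power.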

4.4 Research instruments and evaluation metrics

4.4.1 Research apparatus and experimental platform

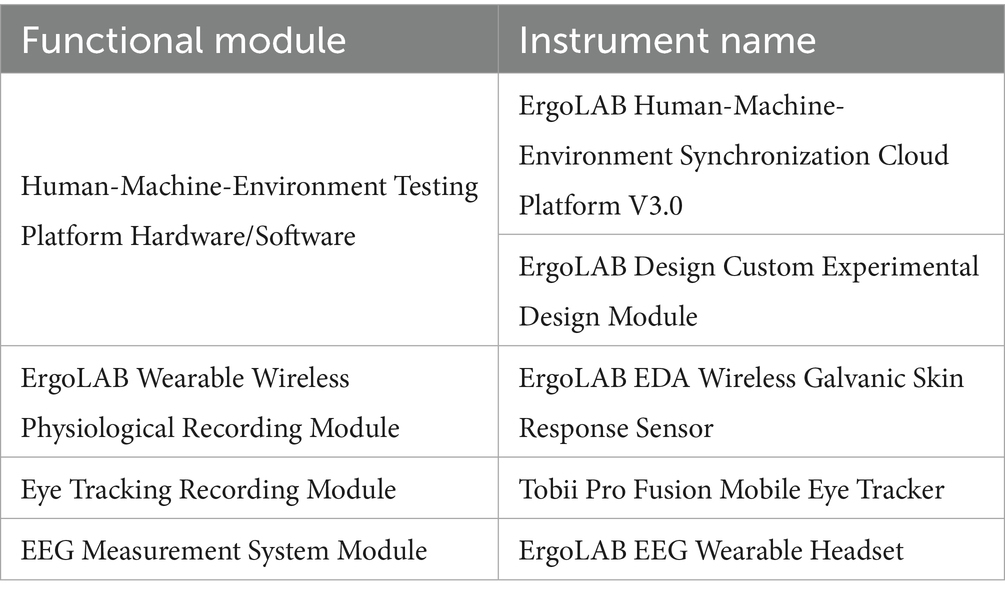

The university’s multimodal laboratory is equipped with 45 sets of ErgoLAB experimental systems, so 45 students could be tested concurrently. Each session lasted 30 minutes, and multiple rounds of 45 students were conducted weekly. This study employed multi-channel, multimodal data acquisition instruments capable of synchronously capturing: (1) eye movement patterns, (2) electroencephalographic (EEG) signals, and (3) electrodermal activity (EDA) through screen-based terminals.

The ErgoLAB Human-Machine Environment Synchronization Cloud Platform V3.0, a cloud-architecture-based system, served as the primary multimodal data collection and analysis platform. This ISO 9241-210 certified professional apparatus features:

(1) ±1 ms timestamp precision for synchronous physiological, behavioral, and environmental data acquisition

(2) Python/Matlab compatibility for secondary development

(3) Integrated machine learning algorithms for multidimensional educational data fusion analysis

Specifically designed for education research, this platform optimally supports quasi-experimental studies utilizing multimodal learning analytics. Detailed instrument specifications are presented in Table 1.

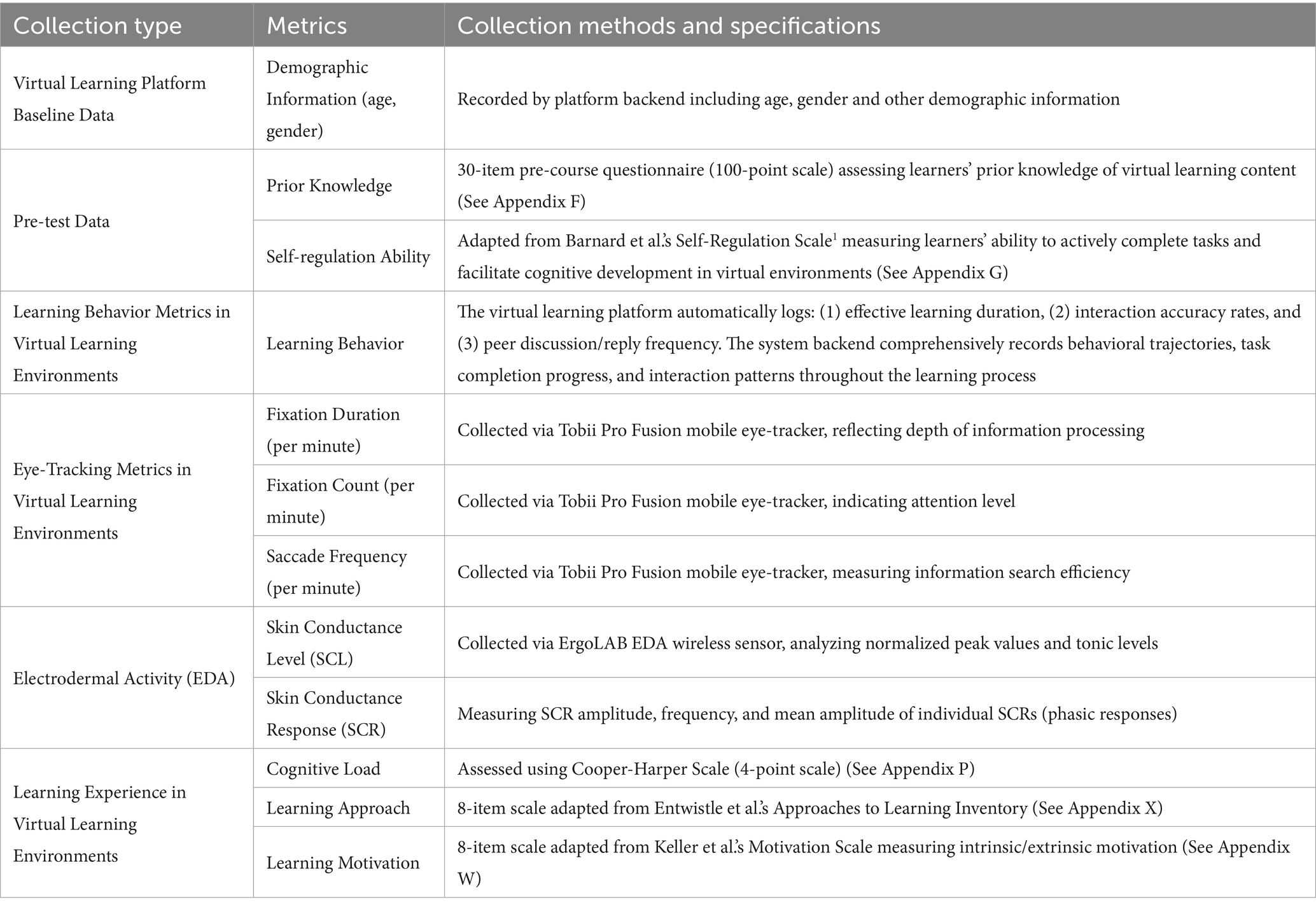
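Synchronous acquisition matters because the modalities are sampled at different rates and must be aligned on a common timeline before joint analysis. The sketch below shows one generic way to do this, nearest-timestamp matching with a tolerance. It is not the platform's own synchronization routine: the function name `align_nearest`, the sample values, and the choice of 1 ms as the matching tolerance (borrowed from the stated timestamp precision) are assumptions for illustration.

```python
import numpy as np


def align_nearest(reference_ts, stream_ts, stream_values, tolerance_ms=1.0):
    """For each reference timestamp, take the nearest sample of another stream.

    Timestamps are in milliseconds; stream_ts must be sorted ascending and
    contain at least two samples. Entries whose nearest sample lies further
    away than tolerance_ms are returned as NaN.
    """
    reference_ts = np.asarray(reference_ts, dtype=float)
    stream_ts = np.asarray(stream_ts, dtype=float)
    stream_values = np.asarray(stream_values, dtype=float)

    # Index of the first stream sample at or after each reference time.
    right = np.clip(np.searchsorted(stream_ts, reference_ts), 1, len(stream_ts) - 1)
    left = right - 1
    use_left = (reference_ts - stream_ts[left]) <= (stream_ts[right] - reference_ts)
    nearest = np.where(use_left, left, right)

    aligned = stream_values[nearest].copy()
    too_far = np.abs(stream_ts[nearest] - reference_ts) > tolerance_ms
    aligned[too_far] = np.nan
    return aligned


# Hypothetical eye-tracking timeline and EDA samples (timestamps in ms).
eye_ts = [0.0, 10.0, 20.0, 30.0]
eda_ts = [0.4, 9.8, 25.0, 30.9]
eda_values = [1.0, 1.1, 1.2, 1.3]

aligned = align_nearest(eye_ts, eda_ts, eda_values)
print(aligned)
```

Marking unmatched samples as NaN, rather than interpolating, keeps gaps visible to later analysis steps.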

4.4.2 Experimental evaluation metrics and data collection methods

A quasi-experimental study was conducted utilizing multimodal learning analytics technology to systematically collect and analyze: (1) learning behaviors, (2) eye-tracking metrics, (3) electrodermal activity (EDA) data, and (4) virtual learning environment experience measures. The comprehensive experimental evaluation metrics are detailed in Table 2.

5 Experimental data analysis and mechanistic exploration

5.1 Learning behavior differential analysis

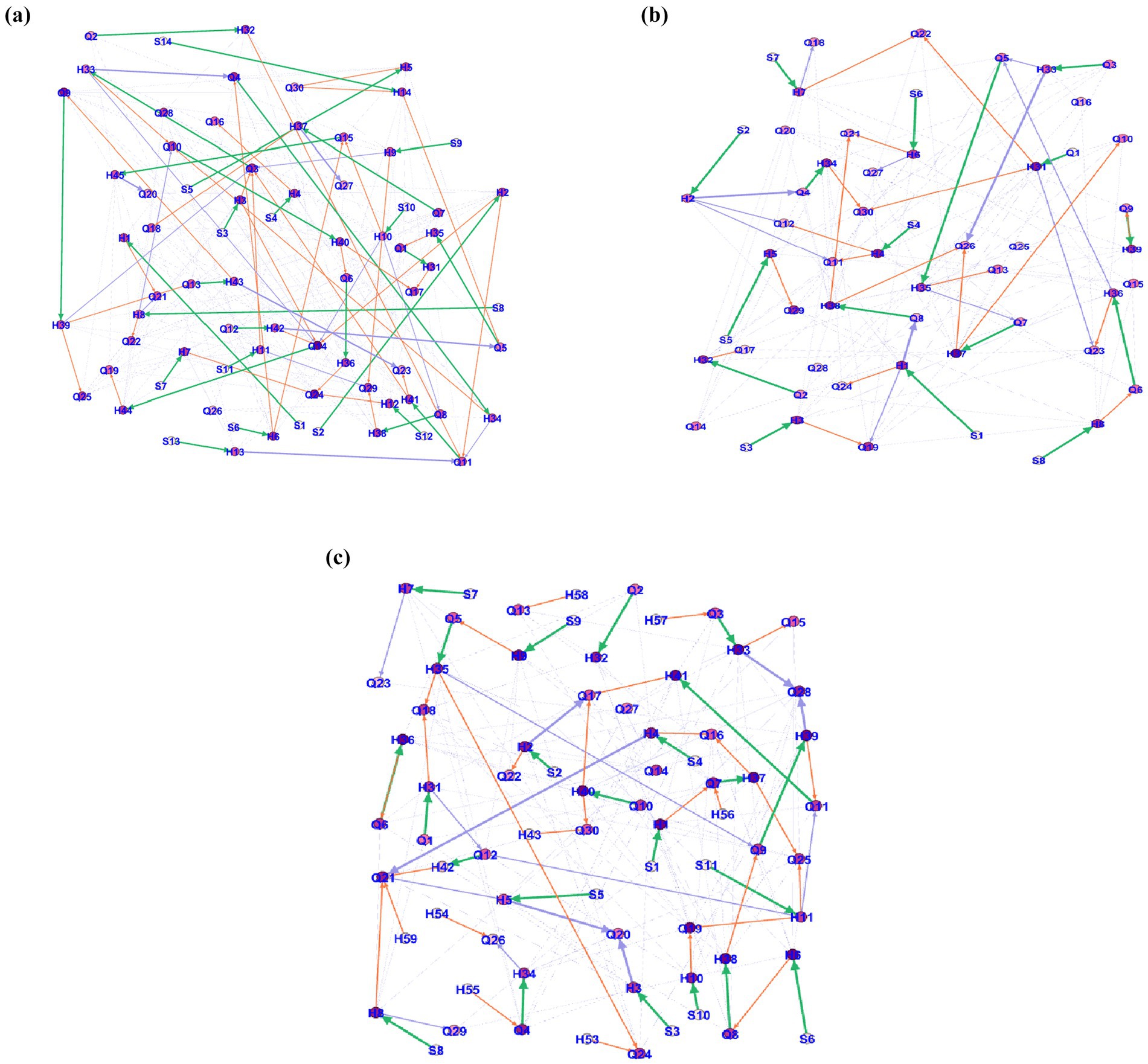

Figures 4a–d present the in-degree and out-degree centrality of surface learners in the discussion areas of the virtual learning environment following distinct peer feedback interventions. Centrality indicates a learner’s position in the interaction network: a learner who interacts directly with many peers is considered to occupy a central position in the community, with greater discourse authority.

Figure 4. (a) Basic interaction with peer dialogic feedback. (b) Three-dimensional interaction with peer dialogic feedback. (c) Basic interaction with peer evaluative feedback. (d) Three-dimensional interaction with peer evaluative feedback.
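As a minimal sketch of how such centrality metrics can be derived from discussion-area logs, the following Python snippet computes normalized in-degree and out-degree centrality from a list of reply pairs. The learner identifiers, the `reply_edges` data, and the function name `degree_centrality` are hypothetical illustrations; they are not the study's data or the platform's own procedure.

```python
from collections import defaultdict


def degree_centrality(edges):
    """Return {learner: (in_degree, out_degree)} normalized by (n - 1).

    edges: iterable of (replier, recipient) pairs, one per reply.
    Repeated replies between the same pair count once, and
    self-replies are ignored.
    """
    nodes = set()
    incoming = defaultdict(set)
    outgoing = defaultdict(set)
    for src, dst in edges:
        nodes.update((src, dst))
        if src != dst:
            outgoing[src].add(dst)
            incoming[dst].add(src)
    scale = max(len(nodes) - 1, 1)  # avoid division by zero for one node
    return {
        node: (len(incoming[node]) / scale, len(outgoing[node]) / scale)
        for node in nodes
    }


# Hypothetical reply log: (who replied, whose post was replied to)
reply_edges = [("s1", "s2"), ("s3", "s2"), ("s4", "s2"), ("s2", "s1")]
centrality = degree_centrality(reply_edges)
for learner in sorted(centrality):
    in_c, out_c = centrality[learner]
    print(f"{learner}: in={in_c:.2f} out={out_c:.2f}")
```

In this toy log, learner `s2` receives replies from every other learner and therefore has the highest in-degree centrality, which corresponds to the central community position described above.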

Prior to intervention, behavioral analysis revealed that surface learners exhibited minimal posting activity (constituting only a small fraction of replies), with liking behavior representing their most frequent interaction. Post-intervention observations demonstrated significant behavioral shifts:

(1) Increased posting frequency accompanied by deeper learning engagement

(2) Enhanced reply quality and like counts for their posts

(3) Particularly in basic interaction conditions, surface learners showed:

a. Maximum posting frequency increase

b. Highest reply and like reception.

The social network analysis further revealed the following rank order of posting frequency across conditions, from highest to lowest:

(1) Peer dialogue-based basic interaction (highest)

(2) Peer evaluative 3D interaction

(3) Peer dialogue 3D interaction

(4) Peer evaluative basic interaction (lowest)

5.2 Physiological data differential analysis

5.2.1 Eye-tracking experiment analysis

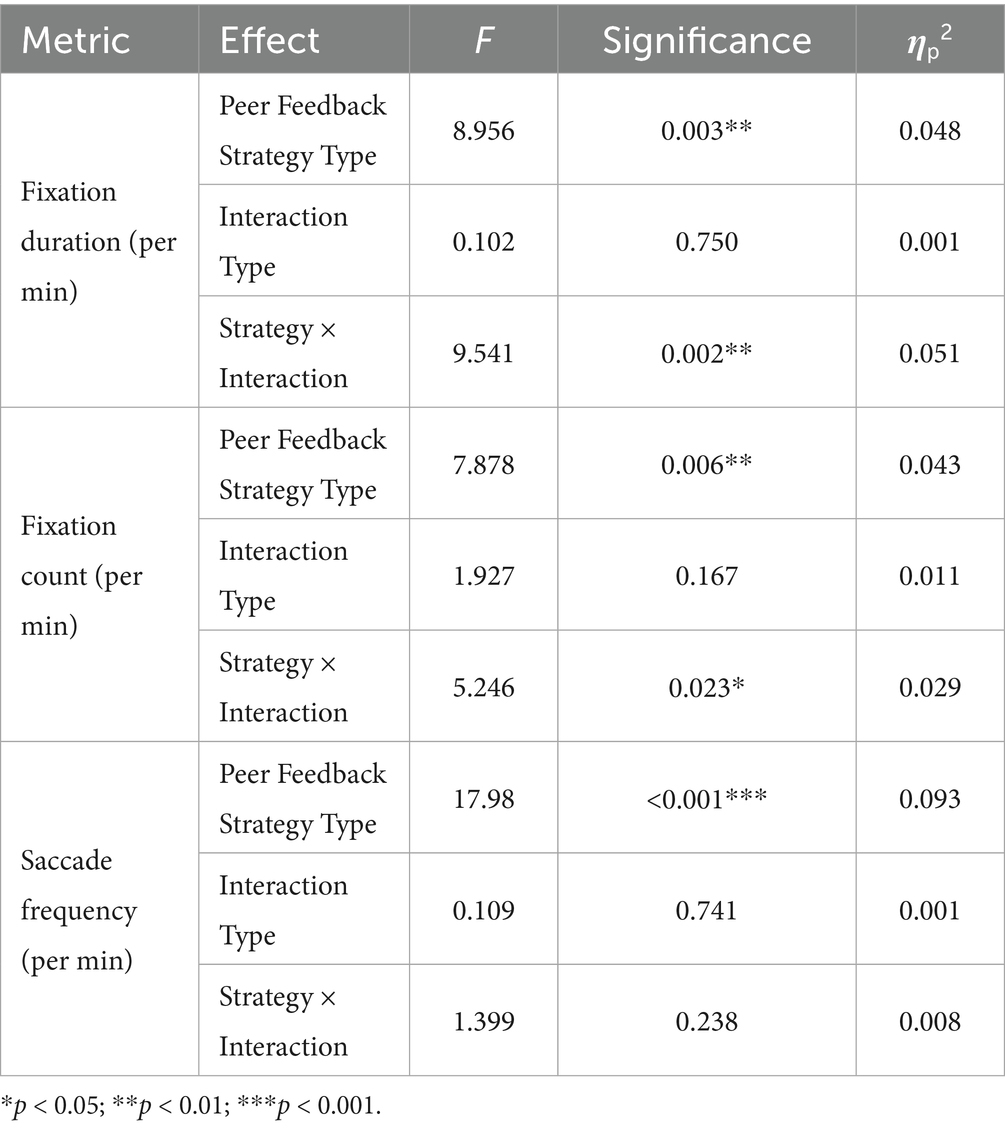

Table 3 presents the ANOVA results for eye movement metrics. Regarding fixation duration per minute:

(1) A significant main effect emerged for preview-based peer feedback strategy (F = 8.956, p = 0.003, ηp2 = 0.048), with post-hoc tests revealing longer fixation durations in the dialogic feedback condition compared to evaluative feedback.

(2) The feedback strategy × interaction type interaction was significant (F = 9.541, p = 0.002, ηp2 = 0.051). Simple effects analysis indicated:

a. Under evaluative feedback, 3D interaction yielded significantly longer fixations than basic interaction (p = 0.017)

b. Under dialogic feedback, basic interaction showed marginally longer fixations than 3D interaction (p = 0.052)

For fixation frequency per minute:

(1) Significant main effect of feedback strategy (F = 8.78, p = 0.006, ηp2 = 0.043), with dialogic feedback producing higher fixation counts

(2) Significant interaction effect (F = 5.246, p = 0.023, ηp2 = 0.029):

a. Basic interaction elicited significantly more fixations than 3D interaction (p = 0.01)

b. No significant difference between 3D and basic interaction conditions (p > 0.05)

Regarding saccadic frequency per minute:

(1) Significant main effect of feedback strategy (F = 17.98, p < 0.001, ηp2 = 0.093)

(2) Dialogic feedback generated significantly more saccades than evaluative feedback.
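The effect sizes reported above can be related to their F statistics through the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it in Python. Because each factor has two levels, df_effect = 1; the error degrees of freedom are not reported in this section, so the value `ASSUMED_DF_ERROR = 176` is purely an assumption chosen for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# ASSUMPTION: the error df is not given in the text; 176 is hypothetical.
ASSUMED_DF_ERROR = 176

reported_f = {
    "fixation duration, feedback strategy": 8.956,
    "fixation duration, strategy x interaction": 9.541,
}
for label, f_value in reported_f.items():
    eta = partial_eta_squared(f_value, 1, ASSUMED_DF_ERROR)
    print(f"{label}: partial eta squared = {eta:.3f}")
```

Under this assumed error df, the two fixation-duration effects come out at roughly 0.048 and 0.051, in line with the values listed above; a different error df would shift these figures.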

5.2.2 Analysis of neural cognitive level differences

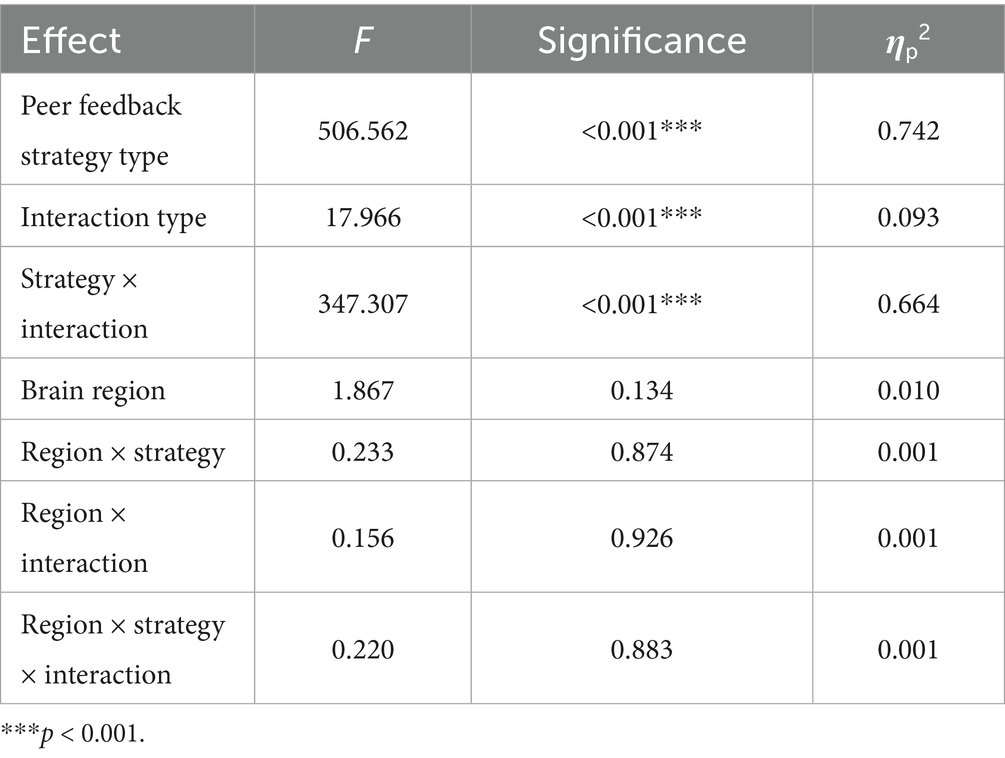

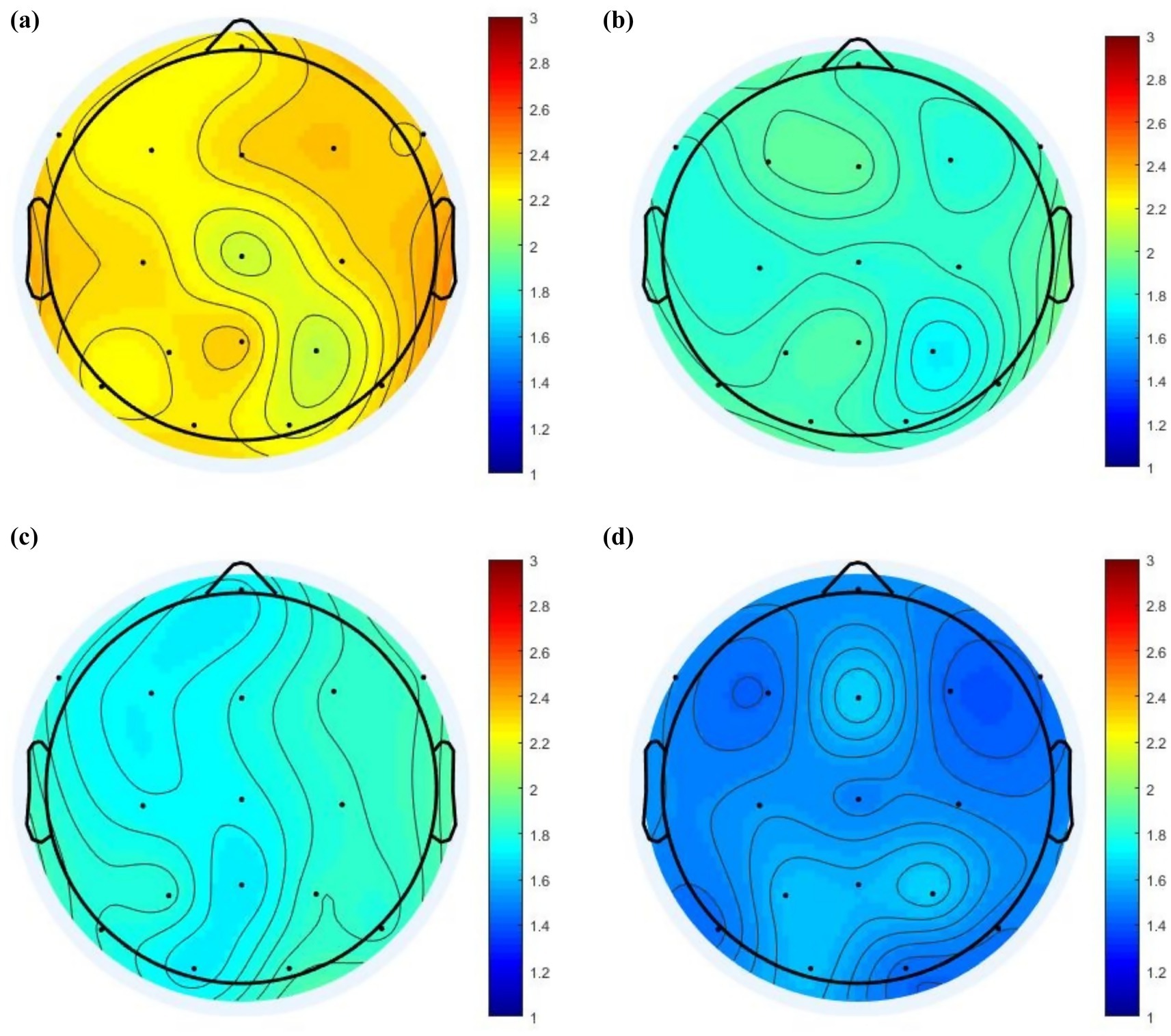

Alpha power spectral density (8–13 Hz) was computed across 16 electrode sites, with EEG preprocessing performed on the ErgoLAB Environment Synchronization Cloud Platform V3.0. The derived values were subsequently analyzed in MATLAB 2017a (MathWorks), and topographic maps were generated with EEGLAB 2021. Cortical activation patterns differed significantly between the four experimental conditions, as shown in Figures 5a–d. Power spectral intensity in the topographic maps is expressed in dB, and the color scale links each value to a map color. For example, in Figure 5a the power spectral intensity falls within the range of 2.2–2.4, which corresponds to a yellow-to-orange gradient in the map (Table 4).
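To illustrate what an alpha-band power value represents, the following Python sketch estimates 8–13 Hz band power in dB for a single channel using a plain periodogram. It is not the ErgoLAB or EEGLAB pipeline used in the study; the sampling rate, the synthetic signals, and the function name `band_power_db` are assumptions introduced only for this example.

```python
import cmath
import math


def band_power_db(signal, fs, low=8.0, high=13.0):
    """Band power (dB re 1 unit^2) from a one-sided periodogram."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [v - mean for v in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2):
        freq = k * fs / n
        if low <= freq <= high:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(centered)
            )
            # One-sided power contribution of frequency bin k
            power += 2.0 * abs(coeff) ** 2 / n ** 2
    return 10.0 * math.log10(power) if power > 0 else float("-inf")


# Hypothetical 2 s recordings at an assumed 128 Hz sampling rate
fs = 128
times = [i / fs for i in range(2 * fs)]
alpha_wave = [math.sin(2 * math.pi * 10 * t) for t in times]  # 10 Hz
beta_wave = [math.sin(2 * math.pi * 20 * t) for t in times]   # 20 Hz

print("10 Hz signal:", round(band_power_db(alpha_wave, fs), 2), "dB")
print("20 Hz signal has less alpha power:",
      band_power_db(beta_wave, fs) < band_power_db(alpha_wave, fs))
```

A production analysis would typically use windowed, averaged spectra (for example Welch's method) per electrode rather than a single raw periodogram.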

Figure 5. (a) Brain topography based on power spectral intensity: Peer Evaluative Feedback (Basic Interaction). (b) Brain topography based on power spectral intensity: Peer Evaluative Feedback (3D Interaction). (c) Brain topography based on power spectral intensity: Peer Dialogic Feedback (3D Interaction). (d) Brain topography based on power spectral intensity: Peer Dialogic Feedback (Basic Interaction).

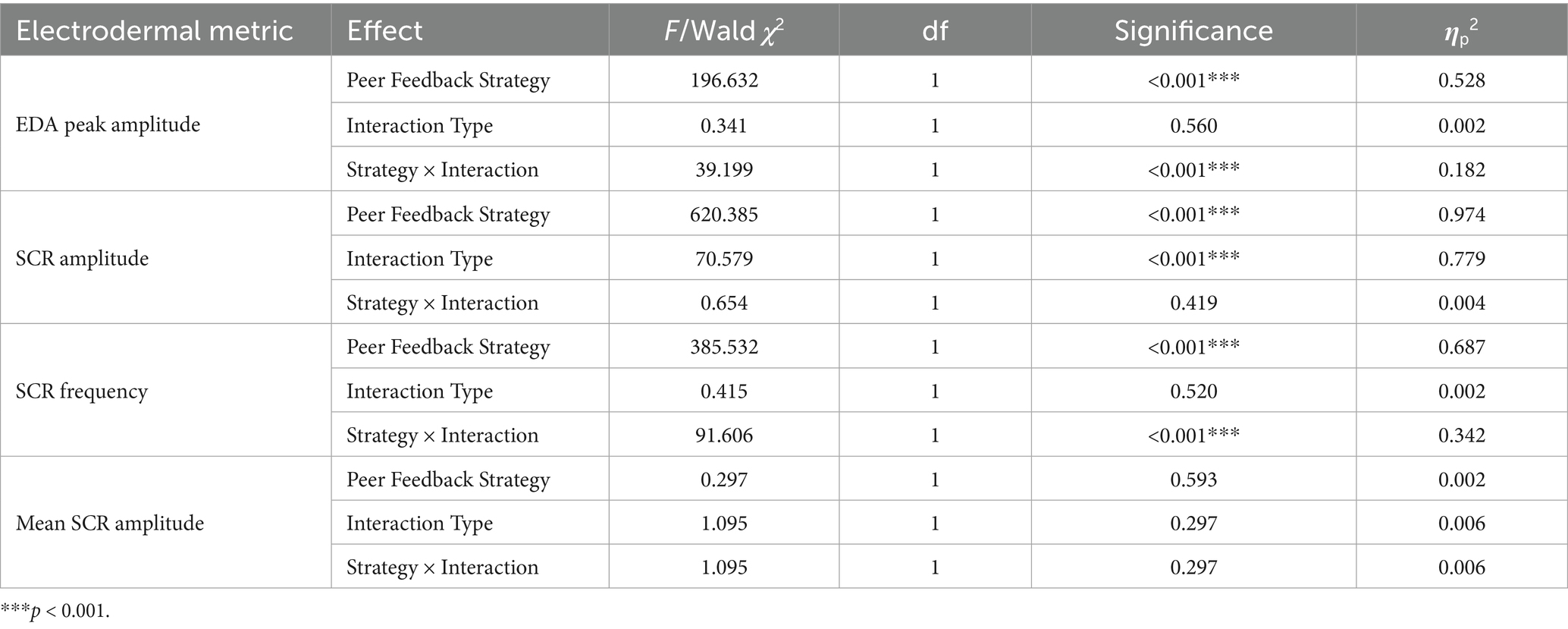

5.2.3 Electrodermal activity and cognitive load analysis

Given the non-normal distribution characteristics of SCR amplitude and mean single SCR amplitude in the electrodermal data, a generalized linear model (GLM) with linear regression was employed for analysis (see Table 5 for complete results). Key findings revealed:

(1) Significant main effect of peer feedback strategy on EDA peak amplitude (p < 0.05), indicating substantial between-group differences in electrodermal responses among learner types.

(2) Non-significant effect of interaction type on EDA peak amplitude (p > 0.05), suggesting minimal variation across different interaction modalities.

(3) Significant interaction effect between feedback strategy and interaction type (p < 0.05), demonstrating that the influence of feedback strategy on electrodermal responses is modulated by interaction conditions.

Note: Complete statistical details including effect sizes and confidence intervals are presented in Table 5.

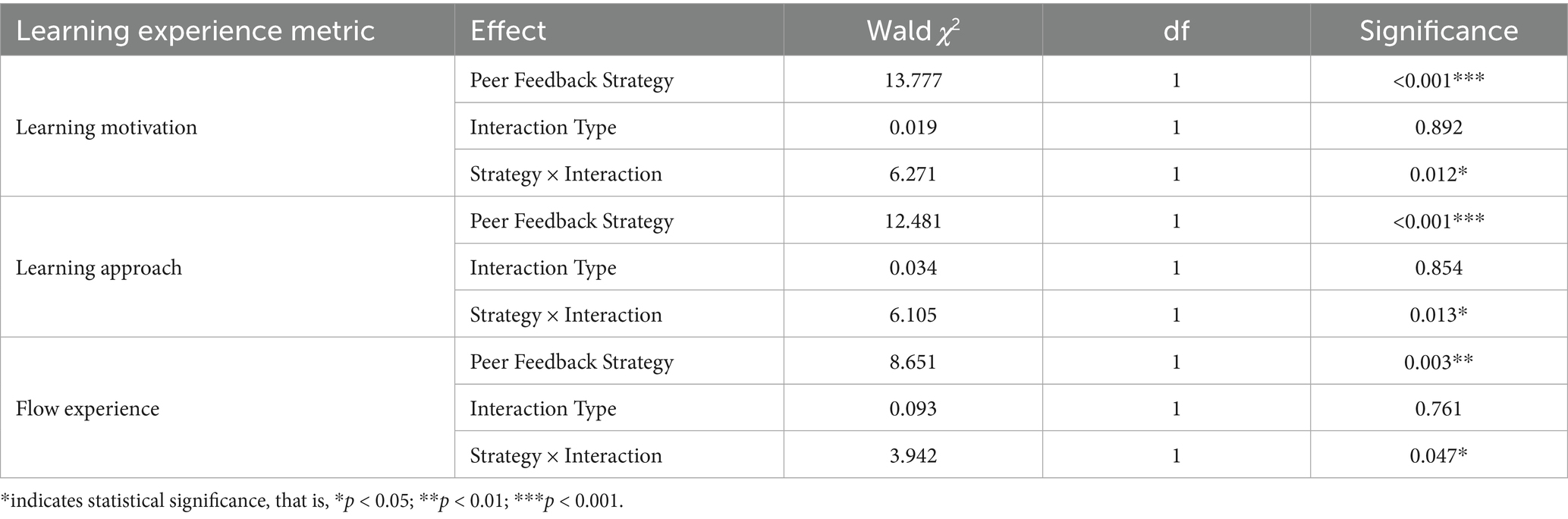

5.3 Learning experience differential analysis in virtual environments

Given that the learning experience metrics (motivation, learning approach, and flow experience) were all scale-based and exhibited non-normal distribution characteristics, we employed a generalized linear model (GLM) with linear regression for multivariate analysis (see Table 6 for complete results). The key findings revealed:

(1) Significant main effect of peer feedback strategy on learning motivation (p < 0.05), demonstrating substantial between-group differences in motivational levels among learner types.

(2) Non-significant effect of interaction type on learning motivation (p > 0.05), suggesting that motivation levels remained consistent across different interaction modalities.

(3) Significant interaction effect between feedback strategy and interaction type (p < 0.05), indicating that the motivational impact of feedback strategies was moderated by interaction conditions.

Note: Complete statistical details including effect sizes and confidence intervals are presented in Table 6.

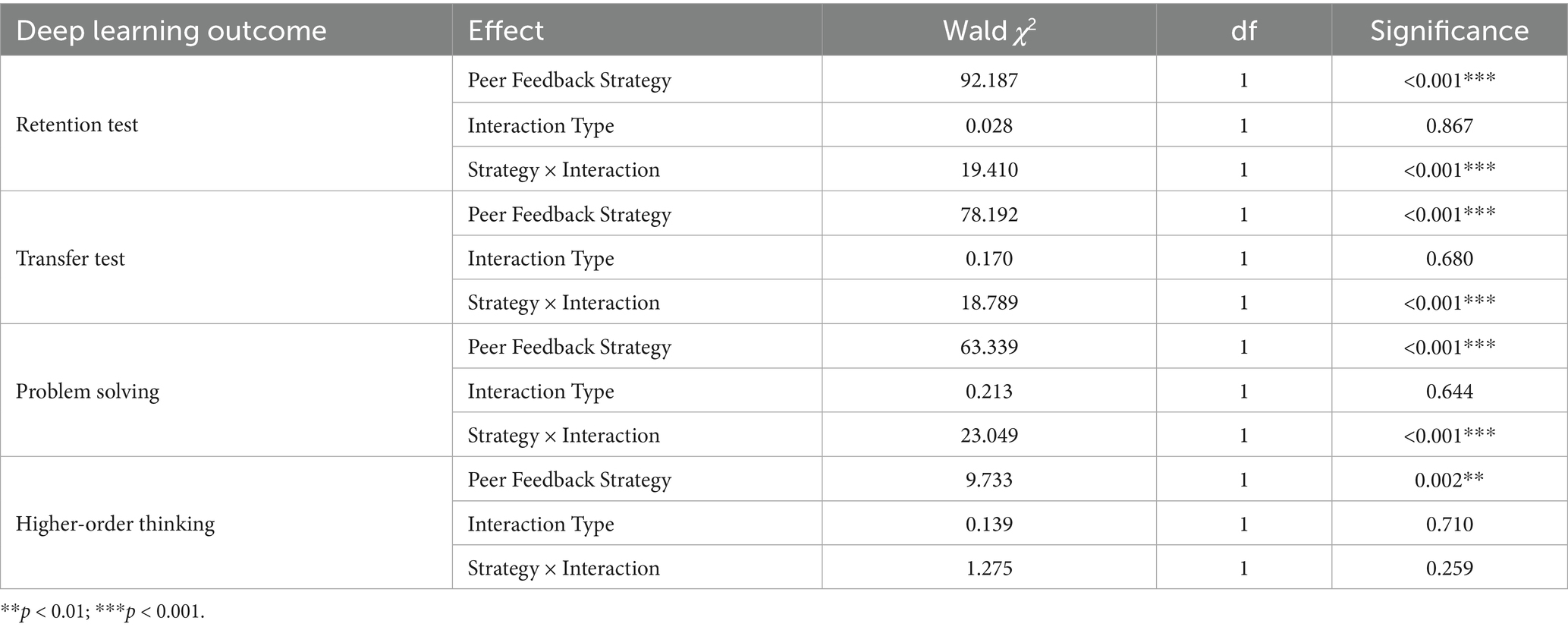

5.4 Virtual learning environment performance and higher-order thinking analysis

Given that higher-order thinking scores derived from deep learning outcomes exhibited non-normal distribution (Shapiro–Wilk test, p < 0.05), we employed a generalized linear model (GLM) with linear regression for multifactorial analysis (see Table 7 for complete results). The analysis revealed three key findings:

(1) Significant main effect of peer feedback strategies on knowledge retention (Wald χ2 = [value], p < 0.01, ηp2 = [value]), demonstrating significant differences between groups.

(2) Non-significant effect of interaction type on retention (Wald χ2 = [value], p = 0.[value]), suggesting consistent retention levels across different interaction modalities.

(3) Significant strategy × interaction effect (Wald χ2 = [value], p < 0.05), indicating that the efficacy of feedback strategies was moderated by interaction conditions.

Note: Complete statistical parameters including effect sizes and 95% confidence intervals are presented in Table 7.

6 Mechanism analysis of peer feedback strategies in promoting college students’ deep learning

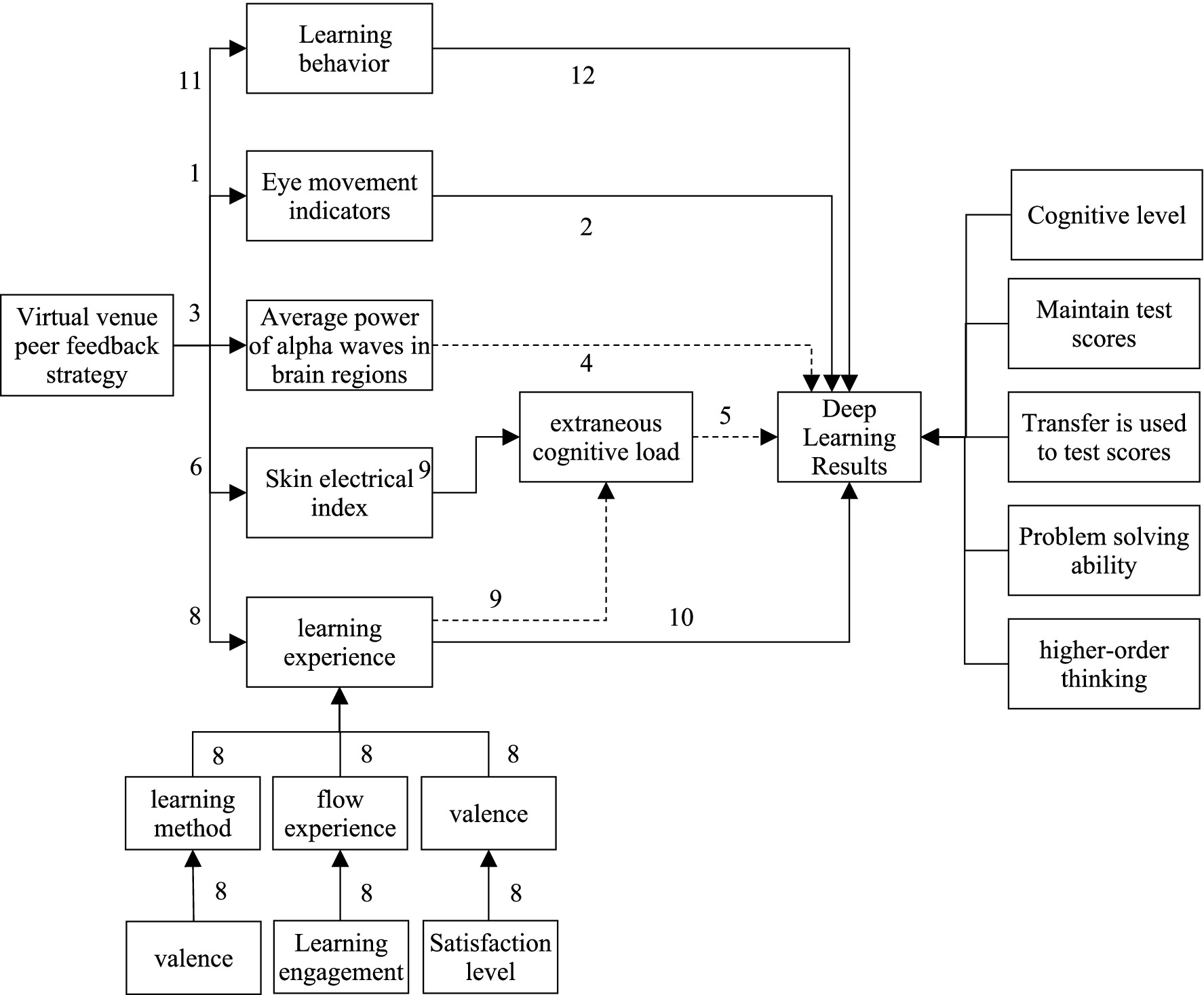

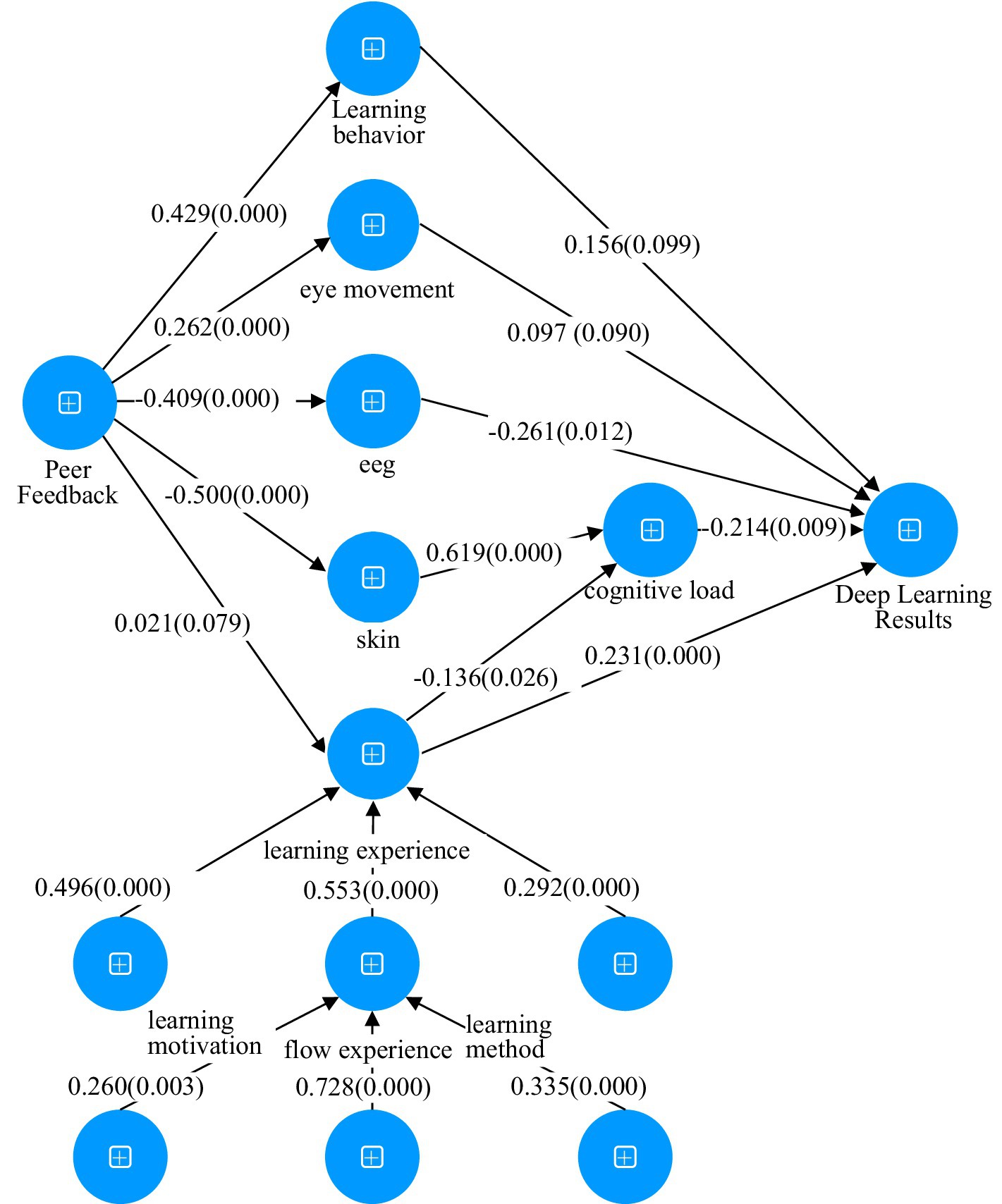

To further elucidate the influence pathways through which virtual peer feedback strategies affect learners’ deep learning outcomes, this study investigates a mechanism model based on correlation analysis results and deep learning design principles, as illustrated in Figure 6. Using preview-based peer feedback as the input and deep learning outcomes as the output, we construct a hypothesized mechanism model with the following proposed pathways: (1) peer feedback strategy → learning behavior → deep learning outcomes; (2) peer feedback strategy → eye movement indicators → deep learning outcomes; (3) peer feedback strategy → average Alpha wave power → deep learning outcomes; (4) external cognitive load → deep learning outcomes; (5) peer feedback strategy → electrodermal activity indicators → external cognitive load; and (6) peer feedback strategy → learning experience → external cognitive load → deep learning outcomes.

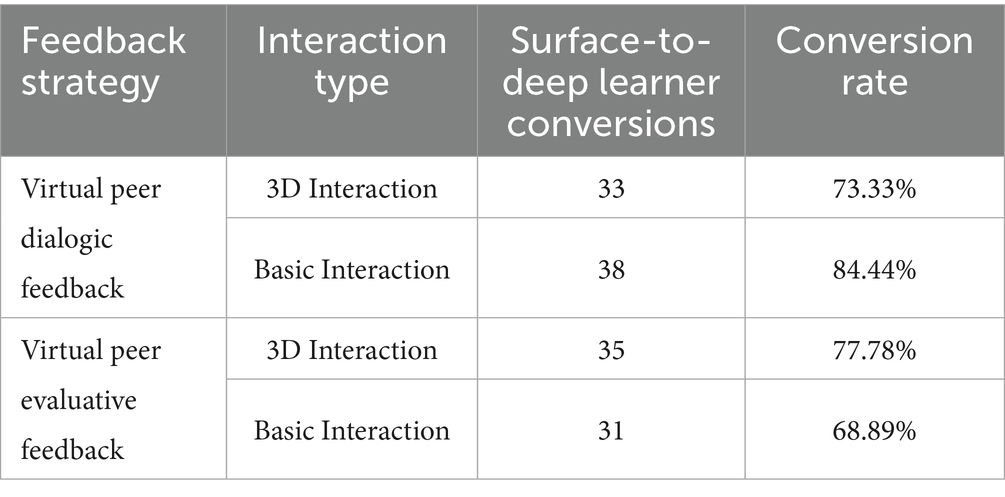

In Partial Least Squares Structural Equation Modeling (PLS-SEM) analysis, R2 (the coefficient of determination) serves as the most fundamental and widely adopted model evaluation metric. As a crucial indicator of predictive validity, R2 quantifies the proportion of variance in endogenous latent variables explained by the model, with values ranging from 0 to 1. Higher R2 values indicate greater predictive power of the model.

The literature presents varying perspectives regarding the interpretation thresholds for R2. Cohen (1988) established 0.26, 0.13, and 0.02 as benchmark values representing large, medium, and small effect sizes, respectively. Hair et al. (2019) proposed that in behavioral science research, R2 values of 0.75, 0.50, and 0.25 could be interpreted as indicating substantial, moderate, and weak explanatory power, respectively. Notably, Falk and Miller (1992) argued that R2 values should exceed 0.10 to demonstrate practical significance, while Chin (1998) suggested 0.67, 0.33, and 0.19 as alternative cutoff points for model evaluation. The model fit analysis is presented in Table 8.

As shown in Table 8, the R2 value for deep learning outcomes reaches 0.499, exceeding the threshold of 0.33 and thus satisfying the criterion for moderate explanatory power. The verified structural equation model, including its path diagram and standardized coefficients, is presented in Figure 7.
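Because the cited benchmarks differ, the same R2 value can receive different labels. The short Python sketch below encodes the cutoffs quoted above and classifies the reported value of 0.499 under each scheme. The function name `classify_r2` and the dictionary layout are illustrative choices, and the "below lowest cutoff" label is an assumption for values under every threshold.

```python
# Cutoffs as quoted in the text, ordered from highest to lowest.
R2_SCHEMES = {
    "Cohen (1988)": [(0.26, "large"), (0.13, "medium"), (0.02, "small")],
    "Chin (1998)": [(0.67, "substantial"), (0.33, "moderate"), (0.19, "weak")],
    "Hair et al. (2019)": [(0.75, "substantial"), (0.50, "moderate"), (0.25, "weak")],
}


def classify_r2(r2, scheme):
    """Return the label of the highest cutoff that r2 meets or exceeds."""
    for cutoff, label in R2_SCHEMES[scheme]:
        if r2 >= cutoff:
            return label
    return "below lowest cutoff"


for scheme in R2_SCHEMES:
    print(f"{scheme}: R2 = 0.499 -> {classify_r2(0.499, scheme)}")
```

The value 0.499 is moderate under the Chin (1998) cutoffs adopted above, but it falls just short of the 0.50 moderate threshold of Hair et al. (2019), so the chosen benchmark should be stated explicitly when interpreting Table 8.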

Through dual validation employing both correlation analysis and structural equation modeling, this study identified six mechanism pathways through which preview-based peer feedback promotes deep learning. Several links in these pathways reach significance only at the p < 0.10 level:

• Path 1: Preview-based peer feedback significantly enhances learners’ behavioral engagement (β = 0.429, p < 0.001), which in turn improves deep learning outcomes (β = 0.156, p < 0.10).

• Path 2: Preview-based peer feedback significantly improves learners’ eye movement indices (β = 0.262, p < 0.001), thus improving deep learning results (β = 0.097, p < 0.10).

• Path 3: Preview-based peer feedback significantly reduces average Alpha wave power in relevant brain regions (β = −0.409, p < 0.001), thereby facilitating improvement in deep learning outcomes (β = −0.261, p < 0.05).

• Path 4: Preview-based peer feedback significantly decreases electrodermal activity (β = −0.500, p < 0.001), which correspondingly reduces students’ cognitive load (β = 0.619, p < 0.001), ultimately promoting deep learning outcomes (β = −0.214, p < 0.01).

• Path 5: Preview-based peer feedback significantly enhances learning experience quality (β = 0.021, p < 0.10), which mitigates cognitive load (β = −0.136, p < 0.05) and subsequently improves deep learning outcomes (β = −0.214, p < 0.01).

• Path 6: Preview-based peer feedback significantly elevates learning experience levels (β = 0.021, p < 0.10), directly contributing to improved deep learning outcomes (β = 0.231, p < 0.001).
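For readers who want to compare the pathways on a common scale, one common convention is to estimate an indirect effect as the product of the standardized coefficients along a path. The Python sketch below applies that convention to the coefficients listed for Paths 1 to 4. The source does not report indirect effects, so these products are illustrative arithmetic under that assumption, not results of the study; the dictionary keys and the function name `indirect_effect` are hypothetical.

```python
from math import prod

# Standardized coefficients copied from the path list above.
PATH_COEFFICIENTS = {
    "path1_behavior": [0.429, 0.156],
    "path2_eye_movement": [0.262, 0.097],
    "path3_alpha_power": [-0.409, -0.261],
    "path4_eda_cognitive_load": [-0.500, 0.619, -0.214],
}


def indirect_effect(coefficients):
    """Product-of-coefficients estimate of an indirect effect (assumption)."""
    return prod(coefficients)


for name, coefficients in PATH_COEFFICIENTS.items():
    print(f"{name}: {indirect_effect(coefficients):.4f}")
```

Two negative links in a chain, as in Paths 3 and 4, multiply to a positive product, which matches the interpretation that lower alpha power and lower cognitive load accompany better deep learning outcomes. Significance of such products would need to be tested separately, for example by bootstrapping.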

These pathways collectively demonstrate that virtual peer feedback strategies promote deep learning by influencing multiple dimensions: behavioral engagement, oculomotor metrics, neural activity (alpha wave power), physiological arousal (electrodermal activity), cognitive load, and subjective learning experience. Correlation analyses revealed significant interrelationships among most indicators. The established association mechanisms confirm that feedback strategies affect deep learning through an integrated pathway network, thereby validating experimental hypothesis H7.

7 Discussion

7.1 Design of peer feedback strategies in virtual learning environments

Existing research demonstrates that structured peer evaluation activities—including work review, problem identification, questioning, discussion facilitation, and feedback provision—can significantly enhance learners’ critical thinking and metacognitive abilities (Gielen et al., 2010). Prior to implementation, systematic evaluator training is essential to establish clear assessment criteria and protocols, thereby reducing subjectivity and bias in the evaluation process.

Compared to traditional classroom or conventional online course peer assessments, virtual learning environment-based peer feedback strategies offer distinct advantages. Most notably, learners demonstrate greater willingness to provide constructive criticism and improvement suggestions in virtual modalities than in face-to-face settings. This model enables instructors to dynamically monitor the entire evaluation process while continuously tracking learners’ developmental progress.

This study examines two primary peer feedback strategies in higher education virtual learning contexts:

(1) Peer evaluative feedback

(2) Peer dialogic feedback

Quasi-experimental results indicate that virtual peer feedback strategies significantly improve participants’:

(1) Cognitive and behavioral outcomes

(2) Physiological responses

(3) Learning experiences

(4) Deep learning performance

Furthermore, these strategies effectively facilitated the transition from surface to deep learning. Notably, the basic interaction group employing preview-based peer dialogic feedback demonstrated superior deep learning outcomes compared to other experimental conditions.

Table 8 shows the model fitting metrics. As demonstrated in Table 9, systematic analysis of empirical data reveals differential effects of various peer feedback strategies coupled with preview-based interaction modalities on facilitating the transition from surface to deep learning in virtual learning environments. Specifically, the basic interaction framework demonstrated superior efficacy in enhancing peer dialogue feedback strategies, whereas the three-dimensional interaction system exhibited optimal performance when implemented alongside peer evaluation feedback mechanisms. These findings offer empirical evidence to inform the optimization of instructional design in virtual learning platforms.

7.2 Quantitative analysis of intervention efficacy on deep learning outcomes

Quantitative analysis showed that deep learning outcomes decreased across the intervention groups in the following order: (1) basic interaction with peer dialogic feedback, (2) three-dimensional interaction with peer evaluative feedback, (3) three-dimensional interaction with peer dialogic feedback, and (4) basic interaction with peer evaluative feedback. Overall, dialogic peer feedback was more effective than evaluative peer feedback. This study argues that student-to-student dialogue is more likely to stimulate learners’ interest and foster a discussion-oriented mindset, thereby enhancing mutual understanding of the subject matter. Three-dimensional interactive tasks, such as those involving manipulation of 3D models, are comparatively harder to operate, whereas basic interactive activities such as clicking or drag-and-drop connections allow students to complete operations successfully more readily.

A significant finding regarding brain topography is that the four intergroup brain maps exhibit distinct differences in color presentation, characterized by a clear contrast between cool and warm tones. The experimental group with the most effective deep learning outcomes—which engaged in basic interactive dialogic peer feedback—displayed a dark blue brain topography with values approaching 1. In contrast, the group with the weakest deep learning performance showed a light yellow brain topography with values close to X. The observed correlation between color patterns in brain topographies and deep learning effects provides a visual assessment reference for future research and represents an innovative discovery in the context of virtual environment-based instruction.

7.3 Discussion of experimental effects supported by multimodal learning analysis

The statistical analysis of learning behaviors in virtual environments reveals that peer feedback strategies (including dialogic and evaluative feedback) implemented for surface learners demonstrate significant positive effects in both basic and three-dimensional interactive environments. These strategies effectively curtail ineffective learning behaviors, particularly reducing instances of superficial engagement such as abrupt transitions between irrelevant materials and direct answer-seeking, thereby guiding learners away from passive learning states. Concurrently, the strategies foster the development of effective learning behaviors, evidenced by increased repeated reading of instructional materials and active video viewing, indicating learners’ propensity for deeper material exploration through peer interaction and evaluation. The social interaction dimension also exhibited enhancement, with learners demonstrating more frequent posting and liking behaviors in comment sections, which not only stimulated learning community engagement but also reflected strengthened peer interaction and mutual recognition.

Notably, the pre-learning peer feedback strategy significantly improves learners’ ability to sustain learning states following 3D model interaction and video learning. This strategy substantially reduces the likelihood of regression to surface learning after deep cognitive engagement, confirming its efficacy in maintaining post-deep learning cognitive retention. However, the strategy’s impact on certain specific behaviors remains limited, particularly in promoting learning progress monitoring and subsequent behavioral transformation, suggesting incomplete coverage of all learning behavior enhancement aspects. The preview-based peer feedback strategy, through enhanced peer evaluation and dialogue activities, effectively facilitates knowledge exchange among peers, promotes constructive and interactive learning approaches, and consequently enhances deep learning outcomes. While these strategies have demonstrated broad positive influences on surface learners’ behaviors in virtual environments, the study’s limitations indicate the need for future educational practices to refine feedback strategy precision and adaptability, enabling targeted interventions across diverse learning behaviors to holistically improve learning efficiency and quality. Experimental hypothesis H1 is thereby confirmed.

Physiological data analysis across three eye-tracking metrics (fixation duration, fixation frequency, and saccade frequency per minute) revealed improved visual cognition levels with intergroup differences; the group receiving preview-based peer dialogic feedback with basic interaction performed best. EEG analysis showed reduced Alpha power, indicating enhanced concentration, and the brain topographic maps showed the most pronounced improvement (a warm-to-cool color transition) in the same group, confirming experimental hypothesis H2. Significant intergroup differences in fixation duration, fixation frequency, and saccade frequency corresponded with varied increases in visual cognitive input, and improved visual cognition consistently promoted deep learning outcomes, thereby validating experimental hypothesis H3.

Under the preview-based peer feedback intervention in virtual environments, simultaneous analysis of electrodermal activity (EDA) and cognitive load data demonstrated strong correlations between cognitive load and EDA peak height, SCR amplitude, and SCR frequency. However, the correlation between average single SCR amplitude and cognitive load did not reach significance, partially confirming experimental hypothesis H4. The preview-based peer feedback strategy significantly enhanced learners’ experience across multiple dimensions: engagement, motivation, methodology, and flow state, thereby validating experimental hypothesis H5.

Deep learning outcome assessments further verified the performance ranking of experimental groups across retention test scores, transfer test scores, problem-solving ability, cognitive level, and higher-order thinking development. Latent variable mixed modeling of cognitive level progression (pre-, during, and post-learning) confirmed cognitive improvement across all groups, with the preview-based peer dialogic feedback in basic interaction group showing most significant enhancement, thus validating experimental hypothesis H6.

7.4 Universality of the theoretical framework for promoting college students’ deep learning through virtual venue feedback strategies

This study proposes a three-stage theoretical framework (preparation, process, outcome) to guide the design and application of virtual venue feedback strategies for deep learning. In the preparation stage, learners are classified via a deep learning identification model, while instructional content and the virtual environment are prepared. Preliminary feedback (e.g., operational guidance) is also provided. During learning, intermittent feedback strategies influence learners’ physiological and behavioral states, shaping their experiences. Post-learning, students access personalized reports (performance and reflection), creating a dynamic feedback loop that reinforces iterative learning.

To address virtual learning environments, this study integrates multimodal learning theory, emphasizing cognitive and physiological responses. An optimized 3P model of deep learning factors underpins the feedback strategy design. Quasi-experimental validation confirms the framework’s feasibility, offering theoretical support for assessing deep learning in virtual venues.

8 Limitations and future study directions

This study was conducted solely among first-year students at the participating university, considering the importance of foundational computer courses for freshmen. This approach limited the sample size and precluded the inclusion of undergraduates across all grade levels. Future research will explore the integration of virtual environment technology with various academic disciplines in higher education and continue to employ quasi-experimental designs to inform the application of virtual environments in university curriculum development. Due to time constraints, this study did not incorporate interviews to collect qualitative data—an aspect that will be addressed in ongoing research starting in the fall semester of 2025. Subsequent qualitative investigations will be introduced to complement the existing findings.

The implementation of virtual environments is constrained by institutional policies and the availability of experimental equipment, in addition to requiring instructors and researchers to receive training in delivering virtual environment-based instruction. These factors present challenges for the widespread adoption of virtual environment courses across universities. However, these difficulties are temporary. With advances in artificial intelligence in educational technology, a growing number of higher education institutions and research centers are recognizing the benefits that virtual environment-based teaching offers to both educators and students—particularly its notable contributions to safety education, which will be a key focus of future research.

9 Conclusion

The prevalence of shallow learning behaviors in virtual learning environments has significantly compromised instructional effectiveness and the cultivation of digital competencies, underscoring the critical need for well-designed feedback strategies and timely interventions. To systematically examine the differential impacts of various feedback strategies on college students’ deep learning outcomes in virtual environments, the current study implemented a quasi-experimental design incorporating multimodal learning analytics. Comprehensive multimodal data encompassing learning processes and outcomes were collected to establish multi-source verification.

Through integrated analysis of cognitive-behavioral data, physiological indicators, learning experience measures, feedback strategy efficacy, and deep learning outcomes—coupled with correlation analyses and mechanistic pathway examinations—the findings validate both the effectiveness of feedback strategies in virtual environments and their differential impacts on promoting deep learning.

Key findings demonstrate the superior efficacy of peer dialogic feedback strategies over peer evaluative feedback in virtual environments, with dialogic approaches proving most effective in basic interactive settings. These results suggest that virtual environment designers and instructors should strategically tailor feedback approaches according to specific disciplinary requirements to optimize students’ deep learning outcomes.

The study contributes to the field by: (1) enriching the design framework for feedback strategies in virtual learning environments; (2) advancing the assessment of deep learning; (3) providing empirically validated strategy prototypes and evaluation metrics for future research; and (4) offering practical references for implementing blended virtual learning approaches across academic disciplines.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/supplementary material.

Ethics statement

The studies involving humans were approved by the Ethics Committee of Shenyang University (SYU20230201). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

XW: Project administration, Visualization, Validation, Formal analysis, Supervision, Methodology, Data curation, Software, Writing – original draft, Funding acquisition, Resources, Investigation, Conceptualization. MZ: Writing – original draft, Methodology, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Research on Optimization Strategies for Social Presence in Online Learning Based on Cognitive Style Differences among Rural Students, Youth Project of Shandong Provincial Social Science Planning Research Project. Approval Number: 23DJYJ05.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Al-Samarraie, H., Shamsuddin, A., and Alzahrani, A. I. (2023). The impact of structured peer assessment in virtual learning environments: a meta-analysis. Comput. Educ. 194:104745. doi: 10.1016/j.compedu.2022.104745

Attiogbe, E. J. K., Oheneba-Sakyi, Y., Kwapong, O. A. T. F., and Boateng, J. (2025). Assessing the relationship between feedback strategies and learning improvement from a distance learning perspective. J. Res. Innov. Teach. Learn. 18, 165–186. doi: 10.1108/JRIT-10-2022-0061

Carless, D., and Winstone, N. (2024). AI-mediated feedback processes: expanding student feedback literacy horizons. Comput. Educ. 216:105042. doi: 10.1016/j.compedu.2024.105042

Chen, B., Håklev, S., and Rosé, C. P. (2022). Designing anonymity in peer feedback systems: a socio-cognitive perspective. Br. J. Educ. Technol. 53, 571–589. doi: 10.1111/bjet.13188

Chin, W. W. (1998). “The partial least squares approach to structural equation modeling” in Modern methods for business research. ed. G. A. Marcoulides. (America: Lawrence Erlbaum Associates), 295–336.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. 2nd Edn. America: Lawrence Erlbaum Associates.

Falk, R. F., and Miller, N. B. (1992). A primer for soft modeling. America: University of Akron Press.

Feng, Z., Wang, T., and Liu, H. (2023). AI-guided peer feedback in online collaborative learning: effects on metacognitive awareness and knowledge convergence. Comput. Educ. 198:104762. doi: 10.1016/j.compedu.2023.104762

Gielen, S., Peeters, E., Dochy, F., Onghena, P., and Struyven, K. (2010). Improving the effectiveness of peer feedback for learning. Learn. Instr. 20, 304–315. doi: 10.1016/j.learninstruc.2009.08.007

Hair, J. F., Hult, G. T. M., Ringle, C. M., and Sarstedt, M. (2019). A primer on partial least squares structural equation modeling (PLS-SEM). 2nd Edn. America: Sage.

Hite, R. L., Jones, M. G., and Childers, G. M. (2024). Classifying and modeling secondary students’ active learning in a virtual learning environment through generated questions. Comput. Educ. 208:104940. doi: 10.1016/j.compedu.2023.104940

Jabeen, S., Li, X., Amin, M. S., Bourahla, O., Li, S., and Jabbar, A. (2023). A review on methods and applications in multimodal deep learning. ACM Trans. Multimedia Comput. Commun. Appl. 19, 1–41. doi: 10.1145/3545572

Lövdén, M., Wenger, E., Mårtensson, J., Lindenberger, U., and Bäckman, L. (2013). Structural brain plasticity in adult learning and development. Neurosci. Biobehav. Rev. 37, 2296–2310. doi: 10.1016/j.neubiorev.2013.02.014

Nicol, D. (2021). The power of internal feedback: exploiting natural comparison processes. Assess. Eval. High. Educ. 46, 756–778. doi: 10.1080/02602938.2020.1823314

Nwakanma, C., and Aijaz, R. (2024). A critical analysis of the integration of artificial intelligence in 6G: Vision, hype, and reality. ICT Express. Advance online publication. doi: 10.1016/j.icte.2024.04.008

Oviatt, S., Grafsgaard, J., Chen, L., and Ochoa, X. (2018). “Multimodal learning analytics: assessing learners' mental state during the process of learning” in The handbook of multimodal-multisensor interfaces: signal processing, architectures, and detection of emotion and cognition, vol. 2 (New York: The Association for Computing Machinery and Morgan & Claypool), 331–374.

Schmidt, J. T., and Tang, M. (2020). “Digitalization in education: challenges, trends and transformative potential” in Führen und managen in der digitalen transformation: trends, best practices und herausforderungen (Wiesbaden: Springer Fachmedien Wiesbaden), 287–312.

Slack, F., Beer, M., Armitt, G., et al. (2003). Assessment and learning outcomes: the evaluation of deep learning in an online course. J. Inf. Technol. Educ. Res. 2, 305–317. doi: 10.28945/330

Tevet, O., Gross, R. D., Hodassman, S., Rogachevsky, T., Tzach, Y., Meir, Y., et al. (2024). Efficient shallow learning mechanism as an alternative to deep learning. Phys. A Stat. Mech. Appl. 635:129513. doi: 10.1016/j.physa.2024.129513

Keywords: peer feedback strategy, virtual venues, deep learning, multimodal learning analysis, EEG experiment

Citation: Wu X and Zhao M (2025) The mechanisms of peer feedback strategies in facilitating deep learning for university students in virtual exhibition environments. Front. Educ. 10:1662330. doi: 10.3389/feduc.2025.1662330

Edited by:

Rawan Nimri, Griffith University, Australia

Reviewed by:

Maila Pentucci, University of Studies G. d'Annunzio Chieti and Pescara, Italy

Thiti Jantakun, Roi et Rajabhat University, Thailand

Copyright © 2025 Wu and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Miaomiao Zhao, emhhb21pYW9taWFvMjAyNUAxNjMuY29t
