- ¹Rabdan Academy, Abu Dhabi, United Arab Emirates

- ²Lancaster University, Lancaster, United Kingdom

Introduction: This study examines how university faculty members at an internationalized higher education institution in the UAE navigate the challenges of generative artificial intelligence (Gen-AI) plagiarism through the theoretical lens of Michael Lipsky’s Street-Level Bureaucracy (SLB) framework.

Methods: Drawing on qualitative data from semi-structured interviews with 17 faculty members at an internationalized university in the UAE, this paper analyzes how faculty members exercise discretion when confronted with suspected AI-generated content in student work.

Results: The findings of the study reveal that faculty, as street-level bureaucrats, develop various coping strategies to manage the additional workload associated with Gen-AI detection, including preventive education, discretionary intervention, and modified assignment designs. Faculty decisions are influenced by tensions between empathy and policy enforcement, skepticism about detection tools, and concerns about institutional processes. The study also highlights a significant gap between institutional expectations and faculty practices, with program chairs critiquing discretionary approaches while faculty defend them as essential for addressing nuanced student contexts.

Discussion: This paper argues that institutional policies should acknowledge and accommodate faculty discretion rather than attempt to eliminate it, emphasizing prevention and education over detection and punishment. This research contributes to understanding how front-line academic integrity enforcers shape policy implementation in practice, with significant implications for institutional governance, faculty development, and academic integrity in higher education.

1 Introduction

The rapid proliferation of Generative AI (Gen-AI) tools has fundamentally disrupted traditional conceptions of academic integrity and plagiarism detection in higher education (Alsharefeen and AlSayari, 2025; Chan, 2024; Crompton and Burke, 2024; Kim et al., 2025; Sánchez-Vera et al., 2024). Rushing to ‘play catch-up’, academic institutions across the globe have adopted varying stances in response to Gen-AI. In a review of Gen-AI policies at 116 universities across the US, McDonald et al. (2025) found that although a majority (63%) encouraged its use, only 43% provided detailed guidance to faculty and students on how to manage Gen-AI content. While institutional policies typically outline formal procedures for handling plagiarism cases, the implementation of these policies falls to individual faculty members, who must make real-time judgments about suspected violations (Malak, 2015). This discretionary decision-making process is complicated by several factors, including the technical challenges of definitively identifying AI-generated content, concerns about false accusations, empathy for students’ circumstances, workload constraints, and personal beliefs about the educational purpose of addressing academic misconduct (cf. Alsharefeen and AlSayari, 2025; Hostetter et al., 2024; Malak, 2015). Lipsky’s (2010) Street-Level Bureaucracy framework offers a valuable theoretical lens for understanding how faculty navigate these challenges. Lipsky defines street-level bureaucrats as public service workers who interact directly with citizens and have substantial discretion in executing their jobs. In the context of education, faculty members serve as street-level bureaucrats (SLBs) who interpret and implement institutional academic integrity policies through their interactions with students (Lipsky, 2010; Maynard-Moody and Musheno, 2000). 
Through a qualitative analysis of faculty interviews, this paper explores how faculty members at an internationalized higher education institution in the UAE develop coping mechanisms, decision-making frameworks, and discretionary practices that may diverge from institutional guidelines. By applying Lipsky’s SLB framework to this contemporary challenge, this paper contributes to the understanding of how front-line academic integrity enforcers shape policy implementation in practice, especially in international institutions, such as those in the UAE, where faculty members come from diverse cultural backgrounds. The findings of this study have significant implications for institutional governance, faculty development, and academic integrity policies in higher education. By highlighting the complex reality of policy implementation at the classroom level, this research can inform more effective approaches to addressing Gen-AI plagiarism that acknowledge the crucial role of faculty discretion while promoting consistency and fairness in academic integrity enforcement.

To achieve its objectives, the study first reviews the literature on Lipsky’s Street-Level Bureaucracy, academic integrity, and Gen-AI plagiarism. It then outlines the methodology of the study, followed by the findings and discussion. The contributions of the study are outlined before the conclusion.

2 Literature review

2.1 Street-level bureaucracy and discretionary decision-making

First published in 1980, Lipsky’s (2010) seminal work on street-level bureaucracy provides a theoretical framework for understanding how public service workers, exemplified by teachers, police officers, and welfare workers, implement policy through their direct interactions with citizens. Evans and Harris (2004, p. 872) argue that Lipsky’s ideas are both novel and rare in the way they shed light on aspects of real life. Maynard-Moody and Portillo (2010) posit that Lipsky’s work is a classic example of a book that fundamentally reshaped its field of study. According to Lipsky (2010, p. 13), SLBs often operate in environments characterized by limited resources, high workloads, ambiguous policy directives, and conflicting goals. These conditions necessitate the exercise of varying degrees of discretion, as workers must interpret policies and make judgment calls about how to apply rules to specific cases. Tummers and Bekkers (2014, p. 529) define SLBs’ discretion as “the perceived freedom of street-level bureaucrats in making choices concerning the sort, quantity, and quality of sanctions, and rewards on offer when implementing a policy.”

Taylor and Kelly (2006, pp. 630–1) argue that the literature tends to overlook the fact that Lipsky did not advocate greater discretion for SLBs when implementing policy. Instead, Lipsky held that SLBs are more likely to be effective if a degree of control and regulation is exercised over their work. However, Taylor and Kelly (2006) note that Lipsky’s analysis implies that the absence of explicit policy guidance inherently demands increased discretionary judgment from SLBs. Along these lines, Evans and Harris (2004, p. 889) argue that discretion is a healthy necessity for SLBs, as curtailing it would give rise to practices that eventually subvert policy. This manifests itself in situations where SLBs either creatively interpret the policy to create room for maneuver or claim to service recipients that the policy allows no flexibility, thereby discrediting both the policy and its enforcing agency (p. 888). To manage the challenges they encounter, SLBs develop various coping mechanisms that simplify their work in ways that maximize its outcomes (Lipsky, 2010, p. xi). These coping mechanisms often lead to operational practices that diverge from official policy intentions, creating a gap between policy as written and policy as implemented. Consequently, SLBs assume de facto policymaking roles through the exercise of discretionary judgment, thereby reconfiguring policy outcomes at the point of delivery (p. 13).

Many studies have applied this theoretical framework to higher education (e.g., Karaevli et al., 2024; Khelifi and Triki, 2020; Lovell, 2024; Malak, 2015). However, very few studies have applied this framework in the context of academic integrity and technological change. As such, this study extends the application of street-level bureaucracy theory to examine how university faculty navigate academic integrity challenges posed by generative AI.

2.2 Faculty as SLBs

Faculty in higher education settings share key characteristics with Lipsky’s SLBs. They have immediate and direct contact with students; they exercise considerable discretion in their daily work; they deliver benefits and sanctions to students; they are held accountable through performance evaluation reviews; and they often operate under conditions of limited resources and institutional guidance (Malak, 2015, pp. 27–30). In his theoretical paper, Lovell (2024) argues for extending the scope of SLBs to higher education faculty, as many aspects of their work fit the traditional definition of SLBs. He posits that the prevailing literature often excludes higher education faculty from discussions of public administration, attributing this omission to the divergent epistemological orientations and contextual demands inherent in academic work (p. 28). For example, he asserts that faculty can adapt the curriculum and teaching approach to the needs of their students and enjoy varying degrees of academic freedom (pp. 25, 35), a form of discretionary power argued to be exclusively associated with higher education (Khelifi and Triki, 2020). Additionally, faculty are routinely required to make ethical decisions with significant implications for their students’ lives, a responsibility intensified during disruptive events such as the COVID-19 pandemic (Lovell, 2024, p. 26).

In the context of academic integrity and generative AI, faculty make daily decisions about what constitutes acceptable use of these tools, how to identify potential violations, and how to respond to suspected cases. Through these situated judgements, faculty shape the academic integrity policy that students actually experience. Among the very few studies that have employed the SLB framework in academic integrity contexts, Malak (2015) reported that a majority of faculty members, despite being aware of institutional academic integrity policies, exercised discretion in their application, resulting in materially divergent enforcement practices. Reasons faculty provided included the burden of proof, workload, processing time, disagreement with the premise of the policy, and fear of backlash (p. 57). By applying the lens of street-level bureaucracy to faculty experiences with generative AI and academic integrity, this study provides insights into how technological change affects policy implementation in higher education. It examines how faculty exercise discretion, navigate resource constraints, develop coping mechanisms, and effectively become policymakers in this rapidly evolving technological context.

2.3 Academic integrity policies in higher education

Academic integrity policies are key instruments that “strategically guide the management of plagiarism” (Gullifer and Tyson, 2014, p. 1204) and are argued to help mitigate the risk of students engaging in plagiarism (Bretag and Mahmud, 2016; Levine and Pazdernik, 2018). Effective plagiarism management requires a holistic institutional approach in which clear policies are implemented consistently (Duggan, 2006; Morris and Carroll, 2016). However, studies have revealed inconsistencies in how academic integrity policies are implemented across institutions and even within departments (e.g., Amigud and Pell, 2021; Cullen and Murphy, 2025). Faculty members often vary in their understanding of what constitutes plagiarism, in their approaches to detection, and in their responses to suspected violations (Eaton et al., 2019; Glendinning, 2013; Morris and Carroll, 2016). In a study of academic integrity policies across the European Union, Glendinning (2013, p. 38) found that the lack of a shared understanding of plagiarism was a fundamental barrier to common educational standards in Europe. Walker and White (2014) contend that inconsistency in handling plagiarism undermines the principles of equity and transparency. These inconsistencies can be attributed to various factors, including disciplinary differences, personal beliefs about the educational purpose of addressing misconduct, workload constraints, and concerns about the procedural fairness of formal reporting systems (Eaton et al., 2019). Along these lines, many studies have concluded that academic integrity policies tend to be punitive rather than educative. Stoesz and Eaton (2022) found that policies in western Canadian universities remain punitive in nature, leaving faculty and students with little support. Similarly, Pecorari and Petrić (2014) drew attention to the need for academic integrity policies to be more educative than punitive.

2.4 Generative AI and academic integrity

The rapid proliferation of Generative AI (Gen-AI) tools, such as ChatGPT, Claude, DeepSeek, and Bard, has fundamentally disrupted traditional conceptions of academic integrity and plagiarism detection in higher education (Chan, 2024; Crompton and Burke, 2024; Hostetter et al., 2024; Kim et al., 2025; Sánchez-Vera et al., 2024). These sophisticated language models can produce coherent, contextually appropriate text that is increasingly difficult to distinguish from human-authored content (Eaton, 2023; Hostetter et al., 2024; Lodge et al., 2023). Sánchez-Vera et al. (2024, p. 20) surveyed faculty members across Spain and found that the top three risks associated with Gen-AI were the deterioration of essential student skills, excessive dependence on technology, and plagiarism. As Gen-AI technologies continue to evolve, university faculty find themselves at the forefront of detecting, addressing, and preventing this new form of academic misconduct. Alsharefeen and AlSayari (2025) refer to this form as ‘neo-plagiarism’ to reflect its nascent nature, while Chan (2024, p. 3) calls it “AI-giarism” and defines it as “[t]he unethical practice of using artificial intelligence technologies, particularly generative language models, to generate content that is plagiarised either from original human-authored work or directly from AI-generated content, without appropriate acknowledgement of the original sources or AI’s contribution.” In a systematic review of the literature on Gen-AI in higher education, Xia et al. (2024) found that the majority of the literature focuses on its impact on academic integrity and on strategies for integrating it into instruction and assessment. However, much less attention has been paid to how faculty experience academic integrity policies in response to suspected Gen-AI plagiarism (Kim et al., 2025). 
This gap is significant because, as Lipsky’s SLB framework suggests, the reality of policy implementation often diverges from formal guidelines due to the discretionary decisions of “front-line workers.”

3 Context

Building on the investigation initiated by Alsharefeen and AlSayari (2025), this study was situated within Rayyan University (a pseudonym), a higher education institution in the UAE, where academic integrity represents a fundamental institutional principle. The investigated institution maintains distinct regulatory frameworks for traditional and generative AI-mediated plagiarism, characterized by marked asymmetry in their development and implementation. Traditional plagiarism governance comprises comprehensive, scenario-specific policies delineating graduated sanctions corresponding to violation severity. The institution utilizes Turnitin as its primary detection mechanism, with established protocols specifying similarity thresholds and concomitant disciplinary actions. Conversely, policies addressing generative AI misconduct remain largely conceptual, lacking the operational specificity and procedural granularity that define traditional plagiarism regulations. This regulatory disparity between established and emergent forms of academic misconduct creates a unique institutional environment for investigating faculty perceptions and responses to integrity violations. The juxtaposition of mature traditional plagiarism frameworks against nascent AI-related policies provides a critical context for understanding how educators navigate evolving technological challenges to academic integrity.

4 Methodology

This paper primarily employs a qualitative research design to examine how university faculty navigate academic integrity challenges posed by generative AI. An anonymous online survey was distributed to 95 faculty members and 10 university leaders with teaching responsibilities at Rayyan University in February 2025, yielding a 78% response rate. The survey recipients came from 37 countries, with the largest shares from the United Kingdom (15%), the United Arab Emirates (10%), the United States of America (8%), Australia (7%), Jordan (6%), Canada (5%), Malaysia (3%), Mexico (3%), Taiwan (3%), Pakistan (3%), and Italy (3%). Participants were predominantly social scientists, reflecting the disciplinary focus of the host university’s academic programs. Participants had a mean tenure of 3.7 years (SD = 2.4) at Rayyan University, with the majority (72.7%) classified as early-career institutional members (0–4 years of service). Nearly one-quarter (24.2%) were mid-career faculty (5–9 years), while only 3.0% had served the institution for 10 or more years. In contrast to their institutional tenure, participants possessed extensive higher education experience, averaging 14.6 years (SD = 7.3) in academic settings. Over half (53.7%) were senior academics with 10–19 years of experience, while 20.9% were veterans with two or more decades in higher education. Mid-career academics (5–9 years) comprised another 20.9%, with only 4.5% representing early-career academics. Participants’ experience within the UAE averaged 7.1 years (SD = 6.7), indicating considerable variation in regional familiarity. Half of the participants (51.5%) had fewer than 5 years of UAE work experience, while 21.2% had 5–9 years, and 22.7% possessed 10 or more years of regional experience. 
Higher education experience specifically within the UAE averaged 6.1 years (SD = 5.4), with 53.0% having fewer than 5 years of UAE-specific academic experience.

The survey asked participants to indicate their willingness to take part in follow-up semi-structured interviews by providing their email addresses at the end of the survey. Seventeen faculty members expressed interest, and the interviews were conducted a month after the completion of the primary data collection phase. Three of the interviewees were female and the remaining fourteen were male. Five interviewees served as program chairs with reduced teaching responsibilities, while the remaining interviewees were faculty members with no administrative responsibilities. In the findings and discussion sections of this paper, interviewees are referred to as Interviewee 1 to Interviewee 17; program chairs are Interviewees 3, 6, 9, 11, and 17. Prior to data collection, ethical approval was secured from Lancaster University, where the author is a postgraduate researcher, and from Rayyan University, where the survey and interviews were administered.

Following Kvale (2012, p. 121), semi-structured interviews were conducted with faculty members in order to arrive at a “deeper and critical interpretation of meaning.” The interviews were recorded using Voice Memos, and Microsoft Word was used to auto-transcribe the audio files. The researcher then reviewed the transcripts for accuracy against the audio recordings. The transcripts were subsequently analyzed using NVivo. Following Braun and Clarke (2006) and Braun et al. (2016), thematic analysis was used to gain a deep understanding of interviewees’ perceptions of traditional and Gen-AI plagiarism. This method allows participants to become collaborators in the research effort (Braun and Clarke, 2006, p. 97) and is robust in producing policy-focused research (Braun et al., 2016, p. 1). While this study is centrally premised on qualitative findings from in-depth interviews, relevant quantitative findings from the survey are systematically integrated to reinforce the core arguments presented in this paper. Many of these findings were reported in Alsharefeen and AlSayari (2025), upon which this research builds. This approach is supported by Creswell and Creswell (2017), who assert that combining qualitative and quantitative data offers a robust framework for addressing the multi-dimensional complexities inherent in social science inquiry, yielding more comprehensive insights than single-method approaches.

5 Findings

The analysis of interviews revealed several key themes related to how faculty members exercise discretion when confronted with suspected Gen-AI plagiarism. These themes illuminate the complex reality of policy implementation at the classroom level and highlight the role of faculty as SLBs who shape academic integrity enforcement through their discretionary decisions.

5.1 Discretionary intervention for educative purposes

A majority of faculty participants (n = 10/17) emphasized the importance of educative and early intervention strategies for addressing Gen-AI plagiarism. Rather than focusing solely on detection and reporting in accordance with existing policies regulating plagiarism, most interviewees opted for discretionary approaches aimed at addressing misconduct before, and sometimes after, it occurs. These strategies included opportunities for resubmission and confrontational warnings without reporting. Of the seventeen interviewees, fifteen reported not following official channels for investigation, as typified by Excerpt 1. Such an approach contravenes institutional policy, which mandates immediate reporting of suspected violations and explicitly bars faculty from implementing punitive measures, a responsibility that falls exclusively under the purview of the Investigation Committee. Notably, this qualitative insight contradicts the quantitative survey data reported in Alsharefeen and AlSayari (2025), in which 62% of faculty indicated compliance with formal reporting procedures, despite 60% perceiving Gen-AI plagiarism as more serious than traditional plagiarism. In Excerpt 2, the interviewee exercises discretion by instituting a mandatory first-draft requirement on which s/he provides extensive feedback to students. By insisting on checking early drafts, the faculty member creates a structured opportunity to identify potential dishonesty before formal submission. This explicit approach aims to educate students and deter the problematic behavior entirely. This discretionary creation of an early checkpoint transforms the faculty member’s role into that of a preventative gatekeeper, seeking to intercept plagiarism proactively and avoid the significant time and procedural burden of investigating a final submitted paper. 
A similar approach is echoed in Excerpt 3, where Interviewee 5 prioritizes addressing plagiarism at the draft stage, thereby avoiding formal reporting by resolving issues before final submission. Other faculty, such as Interviewee 4 in Excerpt 4, described following a more confrontational yet educational approach with students, issuing warnings or requiring revisions without formal reporting when they suspected AI-generated content.

I deal with them, you know, in my own way. I do not report them (Interviewee 2).

I give lots of feedback. So, I insist that all my students submit the first draft. I check [it]. [I tell them] ‘I see the first draft is not your work. Do not submit this one’. It’s like I’m trying to stop the behavior before it happens (Interviewee 2).

I catch issues in drafts … give the benefit of doubt as a mistake. If it does not get submitted, no problem (Interviewee 5).

I would normally have a very direct conversation … [and would tell him/her directly], ‘I do not think you have written that essay … you are on my radar now’ (Interviewee 4).

The data also show that some faculty (n = 5/17) allowed students to resubmit even after the detection of Gen-AI plagiarism. Excerpt 5 exemplifies an SLB exercising significant discretion within Lipsky’s framework. Faced with a policy violation, the faculty member does not mechanically apply sanctions. Instead, s/he interprets the student’s situation (attributing the issue to unawareness rather than defiance), which allows him/her to adapt how the rule is applied. S/he offers a second chance focused on revision, fundamentally redefining the policy interaction as a “teaching tool.” This adaptation aligns with the faculty member’s professional ethic as an educator who prioritizes the broader goal of student learning over strict, immediate policy enforcement, demonstrating how frontline workers reshape policy implementation based on their judgement and context.

I’m going to assume that [the student is] not aware [of the policy] so I’ll give … another go. [I would tell the student] please revise it and look at it. Then you have another chance. Because sometimes, you know, maybe a student misunderstood. This approach is as a kind of a teaching tool (Interviewee 7).

This preventive approach reflects a discretionary decision to prioritize education over punishment, aligning with what Lipsky (2010) describes as SLBs’ tendency to develop alternative strategies in response to workplace challenges, especially when formal procedures are perceived as ineffective or counterproductive. Such a perception was evident in the quantitative survey, in which only 28% of respondents believed that the Gen-AI plagiarism policy was effective. The finding also resonates with Lipsky’s (2010, pp. 11–24) argument that SLBs see themselves as mediators of students’ relationship to the institution, thereby exercising considerable influence over their lives. In the literature on plagiarism, Bretag et al. (2011) posit that an effective policy should follow an educative approach that prioritizes learning over punishment. This pedagogical orientation is substantiated by empirical evidence from multiple investigations, notably those of Brown and Howell (2001), Walker and White (2014), and Pecorari and Petrić (2014). For example, Walker and White (2014, p. 679) concluded that “most participants maintained that educative strategies … [in managing plagiarism] were an effective preventative strategy.”

5.2 Burden of proof and discretionary avoidance

Analysis of the results revealed that 59% of faculty participants (n = 10/17) systematically diverted suspected generative AI cases from institutional reporting channels, citing their inability to meet evidentiary thresholds for substantiating academic misconduct as the principal factor in their discretionary non-compliance. Unlike traditional plagiarism, which produces tangible evidence through text-matching software such as Turnitin, Gen-AI use presents unique evidentiary challenges that fundamentally alter enforcement dynamics. Excerpt 6 articulates this challenge, encapsulating the evidentiary dilemma faculty face: the necessity of proof coupled with the difficulty of obtaining it in AI-mediated cases.

I will try to confront the students and I will gather evidence to prove that this is not his or her work because accusing a student without having evidence is not right (Interviewee 1).

Interviewee 10 echoed the concern over the burden of proof, noting:

It’s very hard and time consuming for faculty to actually … to prove. I mean, we are not a court of law, but it seems to be we actually have a higher standard of proof for our students and therefore faculty it’s too cumbersome of a process for them.

This comparison to legal standards highlights faculty perception that institutional expectations for evidence exceed reasonable capabilities given available detection tools. Interviewee 3 provided insight into the resulting discretionary behavior:

So basically, do I think there are [breaches] of integrity that do not get [formally] investigated? Yes, absolutely. [It is because] there’s not enough to reach the burden of proof. Yes, that’s the way I see it. The main reason for it is the proof.

Consistent with SLB theory’s emphasis on resource limitations, faculty members identify the temporal and administrative burden of formal reporting as a significant deterrent. The investigation process demands substantial time investment that competes with other professional obligations. Interviewee 10 explicitly connected policy non-enforcement to these burdens:

Because again, we can write down, yes, we have got all these fancy policies and procedures, but at the end of the day, if we do not enforce them, they are not worth any. Because the onus here is very high for the faculty to prove, and we often cannot get to that burden.

This sentiment reflects Lipsky’s (2010, p. 27) observation that street-level bureaucrats face “chronically inadequate resources” relative to their tasks. Faculty members must balance integrity enforcement against teaching, research, and service obligations, leading to rational calculations about resource allocation. Concurrently, these discretionary practices align with Lipsky’s (2010) theoretical framework positing that SLBs exercise heightened discretion under conditions of regulatory ambiguity and operational uncertainty. Such behavioral adaptations emerge as rational responses to institutional policy inadequacies, as evidenced by the survey data revealing that merely 28% of faculty respondents perceived existing Gen-AI plagiarism guidelines as effective, while only 45% deemed them adequate. In the context of plagiarism, this finding is consistent with Alsharefeen and AlSayari (2025), who identified the burden of proof as one of the main barriers to effective implementation of Gen-AI academic integrity policies. Coalter et al. (2007, p. 7) also found that 90% of faculty who did not report integrity incidents did so because of a lack of proof. Similarly, LeBrun’s (2023) findings revealed that a significant majority (87%) of participants reported difficulties in establishing sufficient evidence to meet the required burden of proof, particularly in cases involving Gen-AI plagiarism.

5.3 Discretionary reduction of grades

Analysis of faculty discretionary sanctioning practices reveals a systematic deviation from institutional reporting protocols, exemplifying Lipsky’s (2010, p. 27) theoretical proposition that SLBs exercise “substantial discretion” in translating policy into practice. Faculty members operationalize an informal graduated sanctioning framework that circumvents official channels. The data demonstrate that at least four interviewees constructed parallel justice systems in which grade reductions substitute for formal disciplinary proceedings. One participant exemplifies this discretionary calculus:

[If I am not satisfied with outcome of the meeting with the student] I will reduce the grade. If [however,] I found a lot of evidence that this is an essay that is a GenAI plagiarism, then I would of course [give] a mark of 0 (Interviewee 1).

This approach illustrates how evidentiary thresholds determine sanction severity outside institutional frameworks. Such practices constitute what Lipsky identifies as “coping mechanisms” whereby street-level bureaucrats reconcile professional values with organizational constraints. Faculty transform binary report/non-report mandates into nuanced pedagogical interventions, effectively rewriting plagiarism policy through accumulated discretionary decisions. This informal sanctioning regime reflects rational adaptations to structural impediments—particularly time constraints and evidentiary burdens—while preserving faculty autonomy to prioritize educational outcomes over punitive compliance, thereby instantiating policy through implementation rather than formal channels. This empirical finding corroborates Aljanahi et al.’s (2024, p. 14) observations regarding faculty discretionary practices in the United Arab Emirates, wherein instructors systematically deploy grade reduction as an informal sanctioning mechanism for suspected academic misconduct while circumventing formal investigative protocols.

5.4 Empathy vs. policy enforcement

A recurring theme in the interviews was the tension between empathy for students and the obligation to enforce academic integrity policies. Faculty described feeling torn between their role as policy enforcers and their desire to protect students from potentially severe consequences. This conflict is characteristic of what Lipsky (2010) describes as the “dilemmas of the individual in public service,” where SLBs must reconcile their personal values with institutional mandates. Personal connections with students and concerns about their futures often influenced faculty decisions about whether to formally report suspected Gen-AI plagiarism. As one participant explained,

I feel like they are my sons, kids. If I’m going to report him, hundred percent, he’s going to be dismissed. I worry that I will be causing him to lose his career (Interviewee 2).

Another added,

Accusing a student of intentional plagiarism is serious. It sticks with them. So, we couch words carefully, take an educational approach, and avoid assumptions of malice (Interviewee 8).

This finding is consistent with what Maynard-Moody and Musheno (2000, p. 352) describe as the “citizen-agent narrative,” in which SLBs make decisions based on relationships rather than rules because these relationships are the “source of their greatest sense of accomplishment.” It is also consistent with Lipsky’s (2010, p. 15) argument that SLBs’ discretionary behavior promotes their self-regard when students believe that SLBs “hold the keys to their well-being.”

Faculty also expressed concerns about the proportionality of consequences relative to the offense, particularly in cases where students might not fully understand the implications of using AI tools. One participant noted,

Accusing a student without having evidence is not fair. You should not assume guilt just because they prepared a very good essay. What if the student worked hard? (Interviewee 1).

This concern about fairness and proportionality aligns with what Tummers and Bekkers (2014, p. 528) describe as “client meaningfulness,” whereby street-level bureaucrats weigh the impact of their decisions on clients’ lives and see policy implementation as a way to make a difference in those lives. It is also consistent with Aljanahi et al. (2024, p. 15), who reported that faculty weigh the long-term implications for students when deciding whether to report plagiarism.

5.5 Technological limitations

Faculty participants described a lack of faith in technological tools for addressing Gen-AI plagiarism. Many expressed skepticism about the reliability of AI detection software and the procedural fairness of formal reporting systems. One participant articulated fundamental epistemological challenges with detecting Gen-AI plagiarism by stating:

It’s very hard to detect and establish, very hard. The AI detectors do not work (Interviewee 2).

In describing his/her approach to suspected cases, another interviewee noted:

If Turnitin flags a high percentage of AI, I sit down with the student, ask them to walk me through how they produced it. They usually come clean. I give them a second chance but warn that if the resubmission is not their work, I’ll report it to the [relevant] office (Interviewee 14).

This approach reflects what Lipsky (2010, p. 14) describes as SLBs’ tendency to develop their own procedures when formal systems are perceived as ineffective, voluminous, fluid, or contradictory.

The technological limitations of AI detection tools further complicated faculty discretion. Participants described the challenges of definitively identifying AI-generated content, particularly given the rapid evolution of AI technologies. As one faculty member explained,

With tight marking timelines, [faculty] skim assignments and miss AI misuse. We need more time and training to detect these issues properly (Interviewee 9).

These technological and resource constraints align with what Lipsky (2010, p. xv) describes as the “corrupted worlds of service,” where street-level bureaucrats must make decisions with incomplete information and inadequate resources. In the plagiarism literature, several studies, including Lodge et al. (2023) and Alshurafat et al. (2024), have underscored the difficulty of identifying text produced by Gen-AI. Applying the Fraud Triangle Theory, Alshurafat et al. (2024) argue that students often view the use of generative AI as a victimless act (rationalization). Under strain to achieve higher grades (pressure) and confident that detection is unlikely (opportunity), students are consequently inclined to use these tools.

5.6 Workload management and coping strategies

Survey results indicated that a majority of faculty (76%) believed that Gen-AI tools increase plagiarism frequency among students, and a substantial proportion (63%) reported a concomitant increase in workload. In response, faculty participants described various coping strategies for managing the additional workload associated with detecting and addressing Gen-AI plagiarism. These strategies include modifying assignment designs, implementing in-class assessments, developing efficient screening processes for identifying suspicious submissions, and avoiding reporting altogether. One participant described how faculty manage this workload:

With tight marking timelines, they skim assignments and miss AI misuse. We need more time and training to detect these issues properly (Interviewee 9).

This sentiment reflects what Lipsky (2010, p. 82) describes as the “resource inadequacy” that characterizes street-level bureaucracies, where workers must develop coping mechanisms to manage excessive demands with limited resources.

This detection uncertainty catalyzes systematic assessment restructuring, exemplified by Interviewee 14’s declaration:

I’ve moved all these electronic submissions to in-class assignments last semester.

Other faculty avoid scrutiny and burden by switching their assessment instruments to modes that do not undergo Gen-AI checks, as one comment illustrates:

Faculty avoid accountability by opting for presentation assessments. They know PowerPoints are harder to check for AI than written work (Interviewee 4).

Other faculty opted for multistage summative assessment where students are required to produce evidence of the development process (e.g., Interviewee 1). These approaches reveal how institutional pressures (e.g., workload, student pushback) indirectly encourage discretionary non-enforcement, creating loopholes for AI misuse. This avoidance resonates with what Lipsky (2010) calls “routines of practice” that minimize the administrative burden. Complementing these structural adaptations, faculty deploy interpersonal verification mechanisms, with Interviewee 1 describing the need to:

Confront the student to make sure that he’s the author of the ideas right when they are presenting in class.

The individualized coping strategies illustrate how technological disruption intensifies discretionary burdens on academic street-level bureaucrats. The prevalence of such ad hoc adaptations, rather than coordinated institutional responses, underscores faculty’s role as de facto policy architects navigating what Lipsky terms “the gap between expectations and accomplishment,” constructing pragmatic workarounds that preserve evaluative integrity despite technological uncertainties and institutional policy vacuums.

Faculty also described prioritizing certain cases over others based on their assessment of the severity of the violation and the student’s intent. As one participant noted,

If you do not have enough proof, make sure you do an authenticity interview. Record your authenticity interview. … And then she would only want the ones … [that were] on the bar (Interviewee 4).

This prioritization reflects what Lipsky (2010) describes as “creaming,” where street-level bureaucrats focus their attention on cases that are deemed more important or more likely to yield positive outcomes.

The time constraints associated with detecting and addressing Gen-AI plagiarism influenced faculty decisions about how thoroughly to investigate suspicious submissions. As one participant explained, “With tight marking timelines, they skim assignments and miss AI misuse” (Interviewee 9). This time pressure aligns with what Lipsky (2010) describes as the “chronically inadequate resources” that characterize street-level bureaucracies, forcing workers to make trade-offs in how they allocate their time and attention. This finding is also consistent with Harper et al. (2019), who found that a quarter of faculty who do not report academic integrity incidents attributed this behavior to the workload associated with reporting. Similar conclusions were drawn by Malak (2015) and Alsharefeen and AlSayari (2025), who reported that workload was among the top-rated challenges faculty members encounter when managing plagiarism incidents.

5.7 Program chairs (managers) vs. faculty (SLBs)

Program chairs generally criticized discretionary practices that undermine institutional policies. Interviewee 9 rejected discretion entirely, arguing that “plagiarism is plagiarism—if it’s above 20%, it should be flagged,” and emphasized fixed thresholds to avoid subjectivity. Interviewee 3 similarly advocated minimal discretion in “clear-cut cases,” criticizing disciplinary committee overreach influenced by non-academic factors such as students’ historical performance. This aligns with the SLB framework’s emphasis on systemic boundaries, within which managers prioritize policy uniformity to maintain institutional credibility. Constrained by these boundaries, program chairs viewed discretion as a risk to institutional consistency. Interviewee 3 criticized committees for “interpret[ing] events differently,” leading to “wild disagreements.” This critique aligns with a survey finding that 70% of faculty believed that faculty interpret the guidelines regulating Gen-AI plagiarism differently. In contrast, faculty, operating within pedagogical boundaries, defended discretion as essential for addressing nuanced student contexts. Interviewee 2’s empathy-driven approach (“I feel like they are my sons”) exemplifies this divide.

Program chairs identified gaps between policy intent and implementation. Interviewee 17 lamented that “AI essays are rampant” because of unreliable detection tools, while Interviewee 11 cited “burdensome” processes that discourage reporting. The SLB framework shows how role-based responsibilities, with chairs focused on policy design and faculty focused on enforcement, create disjointed accountability.

Both groups acknowledged AI’s disruptive impact, but their responses differed. Program chairs demanded “clearer policies and training” to standardize enforcement (Interviewee 9), whereas faculty favored “preemptive discretion” to avoid bureaucratic hurdles (Interviewee 13). The data show that program chairs, anchored in systemic boundaries, advocated for policy rigor, detection tools, and institutional accountability, whereas faculty prioritized restorative justice, cultural sensitivity, and individualized learning. These divergences reveal a critical tension: academic integrity requires both systemic consistency to deter misconduct and pedagogical flexibility to nurture ethical growth.

6 Discussion

The findings of this study illuminate how faculty operate as SLBs who shape academic integrity policy implementation through their discretionary decisions. By applying Lipsky’s SLB framework to the context of Gen-AI plagiarism detection and response, we gain valuable insights into the complex reality of policy implementation at the classroom level. This section provides a discussion of the implications of faculty discretion through the theoretical lens of street-level bureaucracy, examining how faculty navigate the challenges of Gen-AI plagiarism amid resource constraints, technological limitations, and moral dilemmas.

6.1 Faculty discretion and policy implementation

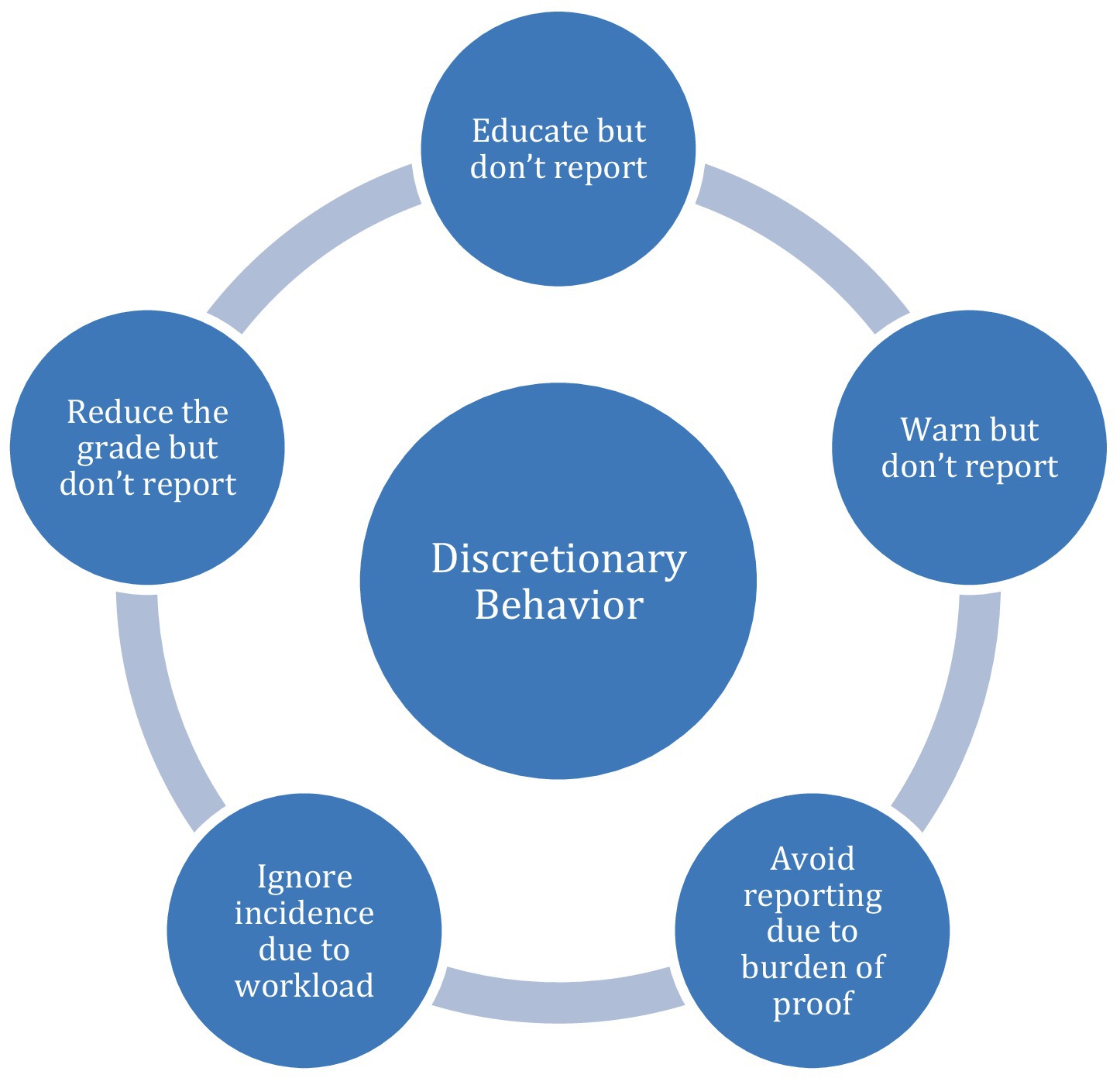

The findings of this study show that a vast majority of participants engage in discretionary behavior that bypasses institutional policies. Figure 1 provides a visual summary of how faculty members use their discretion in responding to Gen-AI plagiarism. This exercise of discretion by faculty in addressing Gen-AI plagiarism aligns with Lipsky’s (2010, p. 10) characterization of street-level bureaucrats as policymakers in practice. While institutional policy outlines formal procedures for addressing academic integrity violations, this study revealed that faculty frequently develop their own interpretations and applications of these policies based on their individual judgments and contextual factors. As a result, their practice becomes the policy in the eyes of students and of the colleagues they mentor. The preventive, educative approach adopted by many faculty participants exemplifies what Tummers and Bekkers (2014) describe as “meaningful discretion,” where SLBs adapt policies to better serve client needs. This approach represents a significant departure from the punitive emphasis of institutional policies, focusing instead on education and prevention.

The data also revealed that a majority of faculty participants preferred to handle suspected Gen-AI plagiarism cases through informal interventions rather than formal reporting channels. This pattern of discretionary decision-making effectively transforms institutional policy from its written form into its implemented reality, creating what Lipsky (2010, p. xvii) terms a “gap” between policy as written and policy as practiced. This discretionary implementation is particularly evident in how faculty interpret what constitutes “evidence” of Gen-AI plagiarism. Although faculty maintained that institutional policy requires definitive proof, they described relying on their professional judgment and intuition when formal detection tools proved inadequate. This approach represents what Maynard-Moody and Musheno (2022) describe as “pragmatic improvisation,” where street-level bureaucrats develop practical solutions to policy implementation challenges.

The findings also revealed a significant variation in how faculty exercise discretion across different contexts and cases. Several participants described employing different approaches based on the severity of the suspected violation and the specific assignment context. This contextual adaptation of policy aligns with Lipsky’s (2010, p. 221) observation that SLBs’ discretionary practices are shaped by both individual agency and institutional structures. As one faculty member explained: “over 20%, it’s got to be reported. Over 30%? faculty members confirm the plagiarism, mark the grade right there. It does not need to go to a committee” (Interviewee 6).

This variation in discretionary approaches raises important questions about equity and consistency in academic integrity enforcement. While discretion allows faculty to respond to the unique circumstances of each case, it may also lead to inconsistent outcomes for students, potentially undermining the perceived fairness of academic integrity processes. This tension between flexibility and consistency represents a central challenge in street-level bureaucracy (Lipsky, 2010) that institutions must address in developing effective approaches to Gen-AI plagiarism.

6.2 Coping with resource constraints and technological limitations

Faculty participants described various coping strategies for managing the additional workload and technological challenges associated with detecting and addressing Gen-AI plagiarism. Workload concerns were frequent: a majority of faculty participants mentioned time constraints as a factor in their discretionary decisions. This pattern aligns with Tummers and Bekkers’ (2014) observation that workload pressures significantly influence street-level bureaucrats’ policy implementation choices.

The technological limitations of AI detection tools represent another significant constraint on faculty discretion. Participants consistently expressed skepticism about the reliability of these tools, describing them as inadequate for definitively identifying Gen-AI content. This technological uncertainty forces faculty to rely more heavily on their professional judgment, potentially increasing the subjectivity of plagiarism detection. This finding is consistent with the conclusions of Alsharefeen and AlSayari (2025), who identified technological limitations as one of the leading barriers to effective Gen-AI management.

Faculty participants described developing various workarounds to address these technological limitations. Several mentioned adapting their assessment strategies to make AI-assisted plagiarism more detectable or less feasible. Some faculty described using in-class writing assignments or oral examinations to establish baseline writing samples for comparison. These adaptive strategies reflect one of the two approaches that Evans and Harris (2004) identify among SLBs facing uncertainty and confusion: creatively interpreting rules to gain room for maneuver while working within resource constraints.

The combination of workload pressures and technological limitations creates what Lipsky (2010) terms a “dilemma of service provision,” where SLBs must balance quality and quantity in their work. Faculty participants described making strategic decisions about which suspected cases to pursue based on the perceived severity of the violation and the available evidence.

These findings highlight the need for institutional approaches that acknowledge and address the resource constraints and technological limitations faculty face in detecting and responding to Gen-AI plagiarism. Without adequate support and realistic expectations, faculty will likely continue to develop discretionary coping strategies that may diverge from institutional policy intentions.

6.3 Moral dimensions of faculty discretion

The moral conflicts described by faculty participants highlight what Maynard-Moody and Musheno (2022) call the “moral work” of SLBs, who must make value judgments about how to apply policies to individual cases. These moral dimensions of discretion were particularly evident in how faculty balanced concerns about student welfare with institutional expectations regarding academic integrity enforcement. Participants described experiencing significant moral tension when deciding how to respond to suspected Gen-AI plagiarism. This tension often centered on the potential consequences of formal reporting for students’ academic and professional futures. This concern for student welfare frequently led faculty to choose educational interventions over punitive measures, particularly for first-time offenses or cases perceived as stemming from ignorance rather than intentional misconduct.

This moral dimension of discretion was particularly evident in how faculty responded to students who speak English as a foreign language or who come from educational backgrounds where academic integrity expectations might differ. Several participants described adapting their approach to account for cultural differences and language barriers. As one faculty member explained: “For students using AI to ‘improve’ English, the final text is often unrecognizable from their usual writing.” LeBrun’s (2023) work substantiates this observation, revealing that most faculty identified cultural disparities and linguistic barriers as primary obstacles to international students’ understanding of and compliance with academic integrity protocols.

The moral complexity of discretionary decision-making was further complicated by faculty participants’ varying perspectives on the educational value of different responses to Gen-AI plagiarism. Some viewed strict enforcement as necessary for maintaining academic standards and teaching professional ethics, while others saw educational interventions as more aligned with their teaching mission. As one participant articulated: “Everything you do in life has a consequence. I repeat this to my students. It’s about educating them” (Interviewee 2). This diversity of moral perspectives contributes to the variation in how faculty exercise discretion, creating what Lipsky (2010) terms “unequal treatment” in policy implementation.

Faculty participants also described moral conflicts related to the fairness of academic integrity processes. Several expressed concern about the proportionality of consequences relative to the offense. This concern for procedural fairness influenced how faculty exercised discretion, often leading them to require a higher standard of evidence than institutional policies might specify.

These findings highlight the complex moral calculations that shape faculty discretion in addressing Gen-AI plagiarism. While institutional policies focus on procedural consistency and deterrence, faculty discretionary decisions are often guided by more nuanced moral considerations related to student welfare, educational values, and procedural fairness. This moral dimension of discretion represents a significant factor in the “implementation gap” between policy as written and policy as practiced (Lipsky, 2010).

6.4 Implications for academic integrity policy and practice

The findings of this study have several implications for academic integrity policy and practice in the era of generative AI in the UAE and beyond, especially in contexts where English is a foreign language and where faculty come from diverse cultural backgrounds. First, they highlight the need for institutional policies that acknowledge and accommodate faculty discretion rather than attempting to eliminate it. As Lipsky (2010) argues, discretion is an inevitable and necessary aspect of street-level bureaucracy, particularly in complex professional contexts like education. Rather than viewing faculty discretion as a problem to be solved, institutions should recognize it as a valuable resource for adapting policies to diverse contexts and student needs. According to Hudson et al. (2019, p. 12), this could be achieved by extending the collaborative consensus established with key stakeholders during the policy design phase to encompass implementation actors occupying managerial and professional positions. This approach requires a comprehensive understanding of bottom-up discretionary practices and of the inherent dilemmas SLBs face in their operational contexts. Another approach involves creating what Rutz and de Bont (2019, p. 284) termed the “collective discretionary room,” a form of freedom constrained by managerial authority and by senior policymakers, who formally prescribe the limits of workers’ maneuverability.

Second, our findings suggest that effective academic integrity governance requires what Hupe and Hill (2007) describe as “multiple accountability” systems that balance professional autonomy with institutional oversight. Faculty participants expressed frustration with policies that they perceived as overly rigid or disconnected from classroom realities. This suggests a need for more collaborative approaches to policy development that incorporate faculty perspectives and experiences.

Third, the frequency with which faculty mentioned preventive and educational approaches suggests that institutional policies should place greater emphasis on prevention and education rather than focusing primarily on detection and punishment. Many participants described developing their own preventive strategies, such as requiring draft submissions or providing explicit guidance on AI use. Institutions could support these efforts by developing comprehensive educational resources and training arrangements and by integrating academic integrity education more systematically into the curriculum.

Fourth, the technological limitations identified by faculty participants highlight the need for more sophisticated and reliable approaches to detecting Gen-AI content. As current AI detection tools have significant limitations, leading international institutions could collaborate on evaluating these technologies and invest in developing research-based solutions that are made available to other institutions, especially those in less privileged environments. At the same time, our findings suggest that technological solutions should complement rather than replace faculty judgment.

Fifth, the workload concerns expressed by faculty participants indicate a need for institutional processes that recognize and account for the time required to address Gen-AI plagiarism effectively. Several participants described avoiding formal reporting because of the administrative burden involved. Institutions should consider how to streamline and automate reporting processes and provide adequate resources and recognition for faculty engaged in academic integrity work. Additionally, the design of workload models, a new reality of today’s academe (cf. Fumasoli and Marini, 2022), should duly take into account the impact of the proliferation of Gen-AI on faculty workload.

Sixth, the moral dimensions of faculty discretion highlighted in our findings suggest a need for institutional approaches that acknowledge the ethical complexity of academic integrity decisions. Rather than prescribing uniform responses to all cases of suspected plagiarism, institutions should provide ethical frameworks and support systems that help faculty navigate these complex decisions. This might include creating opportunities for faculty to discuss and reflect on their discretionary approaches, developing case-based resources that illustrate different ethical perspectives, introducing cognitive debiasing techniques, and providing access to ethics consultation when needed (e.g., Daniel et al., 2017). In doing so, universities provide safe spaces for faculty to address inequities and inconsistencies that may arise from the exercise of discretion.

Finally, our findings highlight the importance of faculty development in preparing faculty members to address Gen-AI plagiarism effectively. Many participants described learning through trial and error, developing their approaches in isolation from colleagues or institutional support. Institutions should provide comprehensive faculty development opportunities focused on understanding Gen-AI technologies, detecting AI-generated content, designing AI-resistant assessments, and navigating the ethical challenges of academic integrity enforcement. These development opportunities should recognize faculty as SLBs who play a crucial role in shaping policy implementation, rather than treating them merely as policy executors.

By acknowledging and addressing these implications, institutions can develop more effective approaches to academic integrity in the era of generative AI—approaches that harness the valuable resource of faculty discretion while providing the support and guidance needed to ensure fair and consistent outcomes for students.

6.5 Contribution of the study

This study makes several significant contributions to the literature on academic integrity, policy implementation, and the emerging challenges of generative artificial intelligence in higher education in the UAE and beyond. First, we extend Lipsky’s Street-Level Bureaucracy framework to a novel context by applying it to faculty responses to generative AI plagiarism. While previous research has examined faculty as street-level bureaucrats in other contexts, our study is among the first to utilize this theoretical lens to understand how faculty exercise discretion when confronting AI-generated content in student work. This theoretical application provides a robust framework for understanding the gap between institutional academic integrity policies and their implementation at the classroom level.

Second, our findings reveal the complex interplay between policy enforcement and faculty discretion, highlighting how faculty develop coping mechanisms to navigate the tensions between institutional expectations and classroom realities. By documenting these strategies—including preventive education, assignment redesign, and selective enforcement—this paper contributes to a more nuanced understanding of policy implementation in practice. This extends beyond simplistic compliance/non-compliance binaries to illuminate how SLBs reshape policies through their daily decisions.

Third, this study illuminates the divergent perspectives of program chairs (managers) and faculty (SLBs) regarding discretion in academic integrity enforcement. This contribution enhances our understanding of the vertical tensions within policy implementation hierarchies and challenges assumptions about policy alignment across organizational levels. Finally, this research offers practical insights for higher education institutions grappling with generative AI technologies. By documenting faculty experiences and decision-making processes, this paper provides an empirical foundation for developing more effective institutional approaches that acknowledge faculty discretion while supporting consistent and fair academic integrity enforcement.

7 Conclusion

This study has demonstrated the value of applying Lipsky’s street-level bureaucracy framework to understand how faculty navigate the challenges of Gen-AI plagiarism in higher education. Our findings reveal that faculty exercise considerable discretion in interpreting and implementing academic integrity policies, often prioritizing educational interventions over punitive measures when confronted with suspected AI-generated content. This discretion is shaped by multiple factors, including workload constraints, technological limitations, empathetic concerns for students, and skepticism about institutional processes.

The tension between program chairs’ expectations for policy adherence and faculty’s contextual decision-making highlights a fundamental governance challenge in higher education: how to balance institutional consistency with the need for professional judgment in complex academic integrity cases. Rather than viewing faculty discretion as a problem to be eliminated through stricter oversight or automated detection tools, institutions should recognize it as a valuable resource for adapting policies to diverse contexts and student needs.

Moving forward, effective institutional responses to Gen-AI plagiarism should focus on: (1) developing policies that explicitly acknowledge the role of faculty discretion; (2) investing in reliable detection tools that complement rather than replace faculty judgment; (3) streamlining reporting processes to reduce administrative burden; (4) providing adequate resources and recognition for faculty engaged in academic integrity work; and (5) creating ethical frameworks and faculty development opportunities to navigate the complexities of academic integrity decisions.

By reconceptualizing faculty as SLBs who actively shape policy implementation through their discretionary practices, this research contributes to a more nuanced understanding of academic integrity governance in the age of generative AI. Future research should explore how these discretionary practices vary across different institutional and cultural contexts, and how they might evolve as AI technologies and institutional responses continue to develop.

This research has two main limitations that suggest directions for future investigation. First, the single-institution sampling frame constrains the generalizability of the results, as institutional characteristics, pedagogical approaches, and student demographics may not be representative of broader educational contexts. Second, the rapidly evolving nature of Gen-AI technologies means that the findings may require regular updating as new tools and detection methods emerge. Future research should therefore explore the longitudinal impacts of Gen-AI on academic integrity practices and investigate student perspectives to complement faculty insights.

Data availability statement

The raw data supporting the conclusions of this article can be made available by the author upon obtaining ethical approval from Rayyan University.

Ethics statement

The studies involving humans were approved by the Ethics Committee of Rayyan University. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

RA: Software, Resources, Writing – review & editing, Formal analysis, Writing – original draft, Validation, Investigation, Visualization, Data curation, Conceptualization, Methodology.

Funding

The author declares that no financial support was received for the research and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author declares that Gen-AI was used minimally in the preparation of this manuscript. Claude 3.7 Sonnet (July 2025 version) was used to review the language of minor parts of the manuscript, and all linguistic revisions were rigorously verified by the author. This review was carried out after the reviewers' feedback had been received and after AI use had been declared.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aljanahi, M. H., Aljanahi, M. H., and Mahmoud, E. Y. (2024). “I’m not guarding the dungeon”: faculty members’ perspectives on contract cheating in the UAE. Int. J. Educ. Integr. 20, 9–19. doi: 10.1007/s40979-024-00156-5

Alsharefeen, R., and AlSayari, N. (2025). Examining academic integrity policy and practice in the era of AI: a case study of faculty perspectives. Front. Educ. 10:1621743. doi: 10.3389/feduc.2025.1621743

Alshurafat, H., Al Shbail, M. O., Hamdan, A., Al-Dmour, A., and Ensour, W. (2024). Factors affecting accounting students’ misuse of ChatGPT: an application of the fraud triangle theory. J. Financ. Report. Account. 22, 274–288. doi: 10.1108/JFRA-04-2023-0182

Amigud, A., and Pell, D. J. (2021). When academic integrity rules should not apply: a survey of academic staff. Assess. Eval. High. Educ. 46, 928–942. doi: 10.1080/02602938.2020.1826900

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Braun, V., Clarke, V., and Weate, P. (2016). “Using thematic analysis in sport and exercise research” in Routledge handbook of qualitative research in sport and exercise. (London: Routledge), 213–227.

Bretag, T., and Mahmud, S. (2016). “A conceptual framework for implementing exemplary academic integrity policy in Australian higher education” in Handbook of academic integrity. ed. T. Bretag (Singapore: Springer), 463–480. doi: 10.1007/978-981-287-098-8_24

Bretag, T., Mahmud, S., Wallace, M., Walker, R., James, C., Green, M., et al. (2011). Core elements of exemplary academic integrity policy in Australian higher education. Int. J. Educ. Integr. 7, 3–12. doi: 10.21913/IJEI.v7i2.759

Brown, V. J., and Howell, M. E. (2001). The efficacy of policy statements on plagiarism: do they change students' views? Res. High. Educ. 42, 103–118. doi: 10.1023/A:1018720728840

Chan, C. K. Y. (2024). Students’ perceptions of ‘AI-giarism’: investigating changes in understandings of academic misconduct. Educ. Inf. Technol. 30, 8087–8108. doi: 10.1007/s10639-024-13151-7

Coalter, T., Lim, C. L., and Wanorie, T. (2007). Factors that influence faculty actions: a study on faculty responses to academic dishonesty. Int. J. Scholarsh. Teach. Learn. 1:n1. doi: 10.20429/ijsotl.2007.010112

Crompton, H., and Burke, D. (2024). The educational affordances and challenges of ChatGPT: state of the field. TechTrends 68, 380–392. doi: 10.1007/s11528-024-00939-0

Creswell, J. W., and Creswell, J. D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches. Thousand Oaks, CA: Sage.

Cullen, C. S., and Murphy, G. (2025). Inconsistent access, uneven approach: ethical implications and practical concerns of prioritizing legal interests over cultures of academic integrity. J. Acad. Ethics 1–20. doi: 10.1007/s10805-025-09606-2

Daniel, M., Carney, M., Khandelwal, S., Merritt, C., Cole, M., Malone, M., et al. (2017). Cognitive debiasing strategies: a faculty development workshop for clinical teachers in emergency medicine. MedEdPORTAL 13:10646. doi: 10.15766/mep_2374-8265.10646

Duggan, F. (2006). Plagiarism: prevention, practice and policy. Assess. Eval. High. Educ. 31, 151–154. doi: 10.1080/02602930500262452

Eaton, S. E. (2023). Postplagiarism: transdisciplinary ethics and integrity in the age of artificial intelligence and neurotechnology. Int. J. Educ. Integr. 19:23. doi: 10.1007/s40979-023-00144-1

Eaton, S. E., Crossman, K., and Edino, R. (2019). Academic integrity in Canada: an annotated bibliography. Calgary: University of Calgary Online Submission.

Evans, T., and Harris, J. (2004). Street-level bureaucracy, social work and the (exaggerated) death of discretion. Br. J. Soc. Work. 34, 871–895. doi: 10.1093/bjsw/bch106

Fumasoli, T., and Marini, G. (2022). “The irresistible rise of managerial control? The case of workload allocation models in British universities” in Research Handbook on academic careers and managing academics. (Cheltenham, UK: Edward Elgar Publishing), 298–309.

Glendinning, I. (2013). Comparison of policies for academic integrity in higher education across the European Union.

Gullifer, J. M., and Tyson, G. A. (2014). Who has read the policy on plagiarism? Unpacking students' understanding of plagiarism. Stud. High. Educ. 39, 1202–1218. doi: 10.1080/03075079.2013.777412

Harper, R., Bretag, T., Ellis, C., Newton, P., Rozenberg, P., Saddiqui, S., et al. (2019). Contract cheating: a survey of Australian university staff. Stud. High. Educ. 44, 1857–1873. doi: 10.1080/03075079.2018.1462789

Hostetter, A. B., Call, N., Frazier, G., James, T., Linnertz, C., Nestle, E., et al. (2024). Student and faculty perceptions of generative artificial intelligence in student writing. Teach. Psychol. 52, 319–329. doi: 10.1177/00986283241279401

Hudson, B., Hunter, D., and Peckham, S. (2019). Policy failure and the policy-implementation gap: can policy support programs help? Policy Design Pract. 2, 1–14. doi: 10.1080/25741292.2018.1540378

Hupe, P., and Hill, M. (2007). Street-level bureaucracy and public accountability. Public Adm. 85, 279–299. doi: 10.1111/j.1467-9299.2007.00650.x

Karaevli, Ö., Çeven, G., and Korumaz, M. (2024). Implementing education policy: reflections of street-level bureaucrats. Asia Pac. J. Educ. 44, 488–502. doi: 10.1080/02188791.2022.2118670

Khelifi, S., and Triki, M. (2020). Use of discretion on the front line of higher education policy reform: the case of quality assurance reforms in Tunisia. High. Educ. 80, 531–548. doi: 10.1007/s10734-019-00497-y

Kim, J., Klopfer, M., Grohs, J. R., Eldardiry, H., Weichert, J., Cox, L. A., et al. (2025). Examining faculty and student perceptions of generative AI in university courses. Innov. High. Educ. 1–33. doi: 10.1007/s10755-024-09774-w

LeBrun, P. (2023). Faculty barriers to academic integrity violations reporting: a qualitative exploratory case study [Doctoral dissertation, University of Phoenix]. ProQuest Dissertations and Theses Global.

Levine, J., and Pazdernik, V. (2018). Evaluation of a four-prong anti-plagiarism program and the incidence of plagiarism: a five-year retrospective study. Assess. Eval. High. Educ. 43, 1094–1105. doi: 10.1080/02602938.2018.1434127

Lipsky, M. (2010). Street-level bureaucracy: dilemmas of the individual in public service. New York, USA: Russell Sage Foundation.

Lodge, J. M., Thompson, K., and Corrin, L. (2023). Mapping out a research agenda for generative artificial intelligence in tertiary education. Australas. J. Educ. Technol. 39, 1–8. doi: 10.14742/ajet.8695

Lovell, D. (2024). Rethinking faculty as street-level bureaucrats: exploring the role of ethics and administrative discretion in contemporary higher education. Public Integr. 26, 23–39. doi: 10.1080/10999922.2022.2148985

Malak, J. M. (2015). Academic integrity in the community college setting: full-time faculty as street level bureaucrats [Doctoral dissertation, Illinois State University]. ProQuest Dissertations & Theses Global (Publication No. 3723281).

Maynard-Moody, S., and Musheno, M. (2000). State agent or citizen agent: two narratives of discretion. J. Public Adm. Res. Theory 10, 329–358. doi: 10.1093/oxfordjournals.jpart.a024272

Maynard-Moody, S. W., and Musheno, M. C. (2022). Cops, teachers, counselors: stories from the front lines of public service. Ann Arbor, USA: University of Michigan Press.

Maynard-Moody, S., and Portillo, S. (2010). “Street-level bureaucracy theory” in The Oxford handbook of American bureaucracy. ed. R. F. Durant (Oxford, UK: Oxford University Press).

McDonald, N., Johri, A., Ali, A., and Collier, A. H. (2025). Generative artificial intelligence in higher education: evidence from an analysis of institutional policies and guidelines. Comput. Hum. Behav. Artif. Hum. 3:100121. doi: 10.1016/j.chbah.2025.100121

Morris, E. J., and Carroll, J. (2016). “Developing a sustainable holistic institutional approach: dealing with realities ‘on the ground’ when implementing an academic integrity policy” in Handbook of academic integrity. ed. T. Bretag (Singapore: Springer), 449–462. doi: 10.1007/978-981-287-098-8_23

Pecorari, D., and Petrić, B. (2014). Plagiarism in second-language writing. Lang. Teach. 47, 269–302. doi: 10.1017/S0261444814000056

Rutz, S., and de Bont, A. (2019). “Organized discretion” in Discretion and the quest for controlled freedom. (Cham, Switzerland: Springer), 279–294.

Sánchez-Vera, F., Reyes, I. P., and Cedeño, B. E. (2024). “Impact of artificial intelligence on academic integrity: perspectives of faculty members in Spain” in Artificial intelligence and education: enhancing human capabilities, protecting rights, and fostering effective collaboration between humans and machines in life, learning, and work.

Stoesz, B. M., and Eaton, S. E. (2022). Academic integrity policies of publicly funded universities in western Canada. Educ. Policy 36, 1529–1548. doi: 10.1177/0895904820983032

Taylor, I., and Kelly, J. (2006). Professionals, discretion and public sector reform in the UK: re-visiting Lipsky. Int. J. Public Sect. Manag. 19, 629–642. doi: 10.1108/09513550610704662

Tummers, L., and Bekkers, V. (2014). Policy implementation, street-level bureaucracy, and the importance of discretion. Public Manag. Rev. 16, 527–547. doi: 10.1080/14719037.2013.841978

Walker, C., and White, M. (2014). Police, design, plan and manage: developing a framework for integrating staff roles and institutional policies into a plagiarism prevention strategy. J. High. Educ. Policy Manag. 36, 674–687. doi: 10.1080/1360080X.2014.957895

Keywords: street-level bureaucracy, academic integrity, generative AI, plagiarism, faculty discretion, higher education policy

Citation: Alsharefeen R (2025) Faculty as street-level bureaucrats: discretionary decision-making in the era of generative AI. Front. Educ. 10:1662657. doi: 10.3389/feduc.2025.1662657

Edited by:

Paitoon Pimdee, King Mongkut’s Institute of Technology Ladkrabang, Thailand

Reviewed by:

Thiyaporn Kantathanawat, King Mongkut’s Institute of Technology Ladkrabang, Thailand

Mohammad Mohi Uddin, University of Alabama, United States

Puris Sornsaruht, King Mongkut’s Institute of Technology Ladkrabang, Thailand

Copyright © 2025 Alsharefeen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rami Alsharefeen, rami.alsharefeen@yahoo.com

Rami Alsharefeen