- ¹Fudan University, Shanghai, China

- ²Gansu Qilian Mountain Water Conservation Forest Research Institute, Zhangye, China

The learning environment has evolved from a mere backdrop to teaching into a core educational technology in contemporary engineering education. A learning environment that meets engineering education accreditation standards helps standardize teaching and achieve educational goals. However, existing learning environment scales are insufficiently aligned with professional certification standards, and in China in particular, few studies have developed learning environment scales based on engineering education professional certification. This study adopts students’ perception, the mainstream perspective in learning environment evaluation, and integrates seven factors: curriculum learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, project and practice, and physical resources. The item content is closely aligned with the benchmarks of the graduation requirements of China’s engineering education certification, and the quality of the questionnaire is tested systematically. The results show that the engineering education learning environment scale has a robust seven-factor structure with 36 appropriate items, good reliability and validity, and no serious common method bias, and that the constructed model fits well. The scale can be used as a learning environment measurement tool that supports graduation requirements. Future innovation in learning environments should not only follow the graduation requirement standards of engineering education certification but also support graduates in becoming a new generation of engineers with a global vision and leadership in sustainable development.

1 Introduction

Education is an important catalyst for achieving the United Nations 2030 Sustainable Development Goals (SDGs). With the global spread of COVID-19, economists predicted that uneven recovery would be the main feature of economic development after 2021 (Clouston et al., 2021; Wolff and Ehrström, 2020), and this inequality also exists in education (Bacher-Hicks et al., 2021). Education is a core driver of inclusive economic growth and must actively transform itself. Engineering education is currently shifting from a “technology-based” to a “sustainable development-based” orientation, but progress so far has not been encouraging.

The Higher Education Council of Australia has proposed assessing the learning environment of universities as an important measure for controlling the quality of higher education. UN Secretary-General Antonio Guterres stressed: “Without a healthy planet, there will be no prosperous future.” The environment is not only a standalone goal of the SDGs but also a basic constraint on achieving all of the goals. The engineering education learning environment is a core element supporting sustainable development standards and interacts closely with engineering decision-making ability and academic sustainable development. For example, in the Living Lab model established by the Technical University of Aachen in Germany, student teams complete the full project cycle, from hydrogen storage system design to carbon footprint assessment, in real industrial scenarios. This kind of immersive learning improved students’ engineering decision-making ability by 37% (p < 0.01) and was associated with a stronger tendency toward interdisciplinary collaboration. In particular, students who had been exposed to life cycle analysis tools were 2.1 times more likely to adopt sustainable design criteria when selecting subsequent engineering schemes (Tien et al., 2019). In addition, AI is changing the environment of engineering practice, so graduate training needs to emphasize sustainable skill development (Cañavate et al., 2025). The study of the engineering education learning environment is therefore of great significance.

The engineering education certification system is central to ensuring the quality of talent training and is profoundly reshaping the global paradigm of engineering talent training. The 2023 revision of the Washington Accord explicitly requires graduates to have the “ability to solve complex engineering problems.” The ABET EC 2000 criteria (2023–2024) emphasize achieving a closed engineering loop of ‘design-implementation-verification’ through learning environment design under Criterion 3 (Student Outcomes) and Criterion 5 (Curriculum). The China Engineering Education Accreditation Association (CEEAA) also treats the ‘continuous improvement mechanism’ as a veto criterion for certification, requiring institutions to provide a chain of evidence for optimizing the learning environment (Standard Clause 4.2). This means that a learning environment scale is not only a data collection tool but also the starting point of the evidence chain for continuous improvement. Overall, these policies point to a core proposition: the learning environment, as the physical and social setting in which engineering competencies are developed, has become a key focus of certification.

China’s engineering education certification system now covers 67.2% of the country’s engineering majors; its core is to drive curriculum reform through outcome-based education (OBE). Research shows that program certification has increased graduates’ employment competitiveness by 19.3%. However, some colleges and universities exhibit ‘formal compliance’ (Li and Zhao, 2021). Although engineering education in China is enormous in scale, few studies have developed localized learning environment scales based on certification. Shaping the engineering learning environment is one of the necessary conditions for improving the engineering competence of engineering students in local colleges and universities (Shen, 2007). For application-oriented undergraduate colleges, that is, newly established undergraduate colleges that were upgraded or merged after 2000 and subsequently transformed, engineering education professional certification is at a critical stage of implementation. There is an urgent need to develop a localized evaluation scale and to make full use of the learning environment as the core vehicle for implementing certification.

There is currently a structural mismatch between existing learning environment assessment tools and certification requirements. These tools also have insufficient cross-cultural adaptability in the context of engineering education: traditional instruments such as NSSE do not cover elements specific to engineering, such as laboratory resources and school–enterprise cooperation. The extension of learning environment theory to engineering education therefore requires further study. To address these research gaps, this study poses the following questions:

(1) How should the theoretical structure of the engineering education learning environment scale be constructed? This question comprises two sub-questions: What dimensions does the scale include, and what content does each dimension cover?

(2) How can the reliability and validity of the Engineering Education Learning Environment Scale be tested? This question comprises two sub-questions: first, how can the reliability and validity of the pilot scale be tested; second, how can the reliability and validity of the formal scale be tested?

Students’ perception and evaluation of the learning environment is the mainstream perspective of research on learning environments. This study evaluates the scale’s target construct, the ‘learning environment’ (that is, the characteristics and quality of the engineering education environment itself), through students’ ‘perception’ and ‘experience’. The core of the research is to construct a multidimensional construct of the engineering education learning environment, with the ultimate aim of developing and validating a reliable and valid tool that can describe and evaluate that environment. The expected results will provide a reliable tool for monitoring the quality of the engineering education learning environment. The 12 competency benchmark indicators of the graduation requirements in China’s engineering education accreditation are operationalized as observable learning environment variables, providing data to support accreditation evaluation and forming a quantitative evidence chain for the continuous improvement cycle of “formative evaluation-feedback-improvement” required by China’s engineering education professional certification.

2 Theoretical framework

2.1 Definition of evaluation content

2.1.1 Comparison of international engineering education accreditation organizations

The International Engineering Alliance (IEA) comprises three agreements for the mutual recognition of engineering education degrees, namely the ‘Washington Accord’, the ‘Sydney Accord’, and the ‘Dublin Accord’, and three agreements for the mutual recognition of engineers’ professional qualifications, namely the ‘Engineers Mobility Forum agreement’, the ‘APEC Engineer agreement’, and the ‘Engineering Technologists Mobility Forum agreement’. Among them, the Washington Accord (WA) is the most authoritative and systematic, and the international engineering education community recognizes it as the authoritative agreement on the requirements for engineering graduates and engineers’ professional competence. Member states promote the transnational mobility of engineering talent through the international mutual recognition of certification standards (Patil and Codner, 2007). Other influential frameworks include the professional competence standards for engineers of the European Federation of National Engineering Associations (FEANI), the standards of ABET (the Accreditation Board for Engineering and Technology), and China’s general standards for engineering education certification. By comparison, the Washington Accord is the globally authoritative standard for the attributes required of engineering graduates. ABET follows the requirements of the Washington Accord, whereas the FEANI certification standard lags relatively behind and does not reflect the new requirements of international engineering talent training.

As the cornerstone document of the global system for mutual recognition of engineering education, the Washington Accord centers on 12 competency benchmark indicators for engineering graduates. Through these 12 substantially equivalent standards, the signatories have improved the competence of engineering talent and facilitated its cross-border mobility. Research shows that certification standards effectively narrow the gap in engineering education between developing and developed countries by establishing threshold quality benchmarks (Lennon, 2021). After developing countries implemented certification systems, the core competence compliance rate of engineering graduates increased by 27% (Marin et al., 2021), and the international mobility of graduates also increased significantly (β = 0.33, p < 0.01) (Kim and Ozturk, 2020).

2.1.2 General standards for engineering education accreditation in China

China joined the Washington Accord on 2 June 2016 (Li and Zhao, 2023), which means that China’s engineering professional certification standards have been recognized by international certification organizations, laying an institutional foundation for the transnational mobility of engineering talent. Guided by three core concepts, student-centered, outcome-oriented, and continuous improvement, the general standard of China’s engineering education certification comprises seven first-level indicators and 24 second-level indicators; the first-level indicators are students, training objectives, graduation requirements, continuous improvement, curriculum system, teaching staff, and supporting conditions. ‘Graduation requirements’ is the core of the certification system and covers 12 ability indicators. These 12 graduation requirements are benchmarked against the “Graduate Attributes” of the Washington Accord, reflecting the dual logic of international substantial equivalence and local practical innovation (Zhang et al., 2023).

2.1.3 Interpretation of the graduation requirements indicator in China’s engineering education accreditation

The “Graduation Requirements” indicators of the General Standards for Engineering Education Accreditation in China are closely aligned with the 12 core competencies that Washington Accord graduates need to possess, while integrating the concept of engineering education with Chinese characteristics. For example, the requirements of ‘engineering and society’ and ‘environment and sustainable development’ emphasize not only technical compliance but also engineering ethical responsibility and participation in social governance. The ‘professional norms’ indicator strengthens the guidance of socialist core values over engineering practice.

The graduation requirements in the general standard of China’s engineering education certification construct a three-dimensional ability model of ‘knowledge, ability, and quality’ (Ping, 2014). The knowledge dimension requires mastery of mathematics, natural science, and professional engineering knowledge to support the identification and modeling of complex engineering problems. The ability dimension covers engineering practice abilities such as design and development, application of modern tools, teamwork, and cross-cultural communication; here, the definition of ‘complex engineering problems’ explicitly requires features such as ‘technical uncertainty’ and ‘multi-stakeholder conflict’. The quality dimension emphasizes engineers’ social responsibility and lifelong learning ability, which can significantly affect the quality of ethical decision-making in engineering.

In terms of the mechanism for assessing the degree to which graduation requirements are achieved, the general standard of China’s engineering education certification reflects a dual-track ‘quantitative + qualitative’ evaluation concept. At the quantitative level, competencies are tracked through course assessment data and graduation design scores; at the qualitative level, a joint school–enterprise evaluation mechanism focuses on the industry adaptability of non-technical competencies. Research shows that this multidimensional evaluation system can improve the match between graduates’ competencies and job requirements by 23–31% (Sheng-Tao et al., 2023).

Based on the above analysis, this study focuses on the 12 benchmarks of the core indicator ‘graduation requirements’ in China’s engineering education certification system and constructs a content framework for measuring the learning environment of China’s engineering education. Regarding the evaluation subjects, because the “student-centered” concept of China’s engineering education is the premise and driving force of outcome orientation and continuous improvement, this study follows that concept and evaluates the learning environment of China’s engineering education from the students’ perspective.

2.2 Construction of the evaluation dimension

The term ‘learning environment’ usually carries three meanings: a place of learning, a collection of external conditions and places, and a collection of conditions that support learning activities. Specifically: (1) the learning environment is the place where learning activities take place (Kaicheng, 2000). For example, the American scholar Wilson proposed that the learning environment is a supportive place where learners use tools and information resources to achieve learning goals and solve learning problems. (2) Wang Binhua of East China Normal University argues that the learning environment refers to the external conditions and places of learning, covering both in-class and after-class learning environments (Thy, 2017) and including schools, families, society, and so on. (3) The constructivist view holds that learning results from the interaction between learners and the environment. From this perspective, a learning environment is a collection of learning resources with which learners construct knowledge, including physical information resources, cognitive tools, and contextualized learning resources (Feng and Weimin, 2001).

In addition, some scholars have found that since the 1990s, Chinese and Western definitions of the learning environment have mainly covered three dimensions: the space that supports learners’ development, mainly physical space, activity space, and psychological space; the combined forces that support learning activities, drawn from resources, tools, teacher support, and the psychological environment; and the learning styles that constructively support learning activities (Zhixian, 2005). In a study measuring how the learning environment of top-notch innovative talents influences learning style and learning achievement, Yu Haiqin of Huazhong University of Science and Technology divided the learning environment into six factors: curriculum learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, and project and practice (Haiqin et al., 2013).

Based on these definitions, this study defines the learning environment of engineering education as a collection of supportive conditions that enable students to participate in the conception, design, implementation, and operation of products and thereby acquire engineering knowledge, practical skills, and engineering literacy. According to the CDIO concept of engineering education, conception refers to understanding product requirements and technology, design refers to describing how the product will be implemented, implementation refers to turning the design into a product, and operation refers to realizing the product’s use value (Crawley et al., 2007). “Supportive conditions” include factors that support learning, such as courses, practical activities inside and outside the school, institutional systems, and physical resources, as well as regulating factors such as the learning atmosphere and interpersonal relationships.

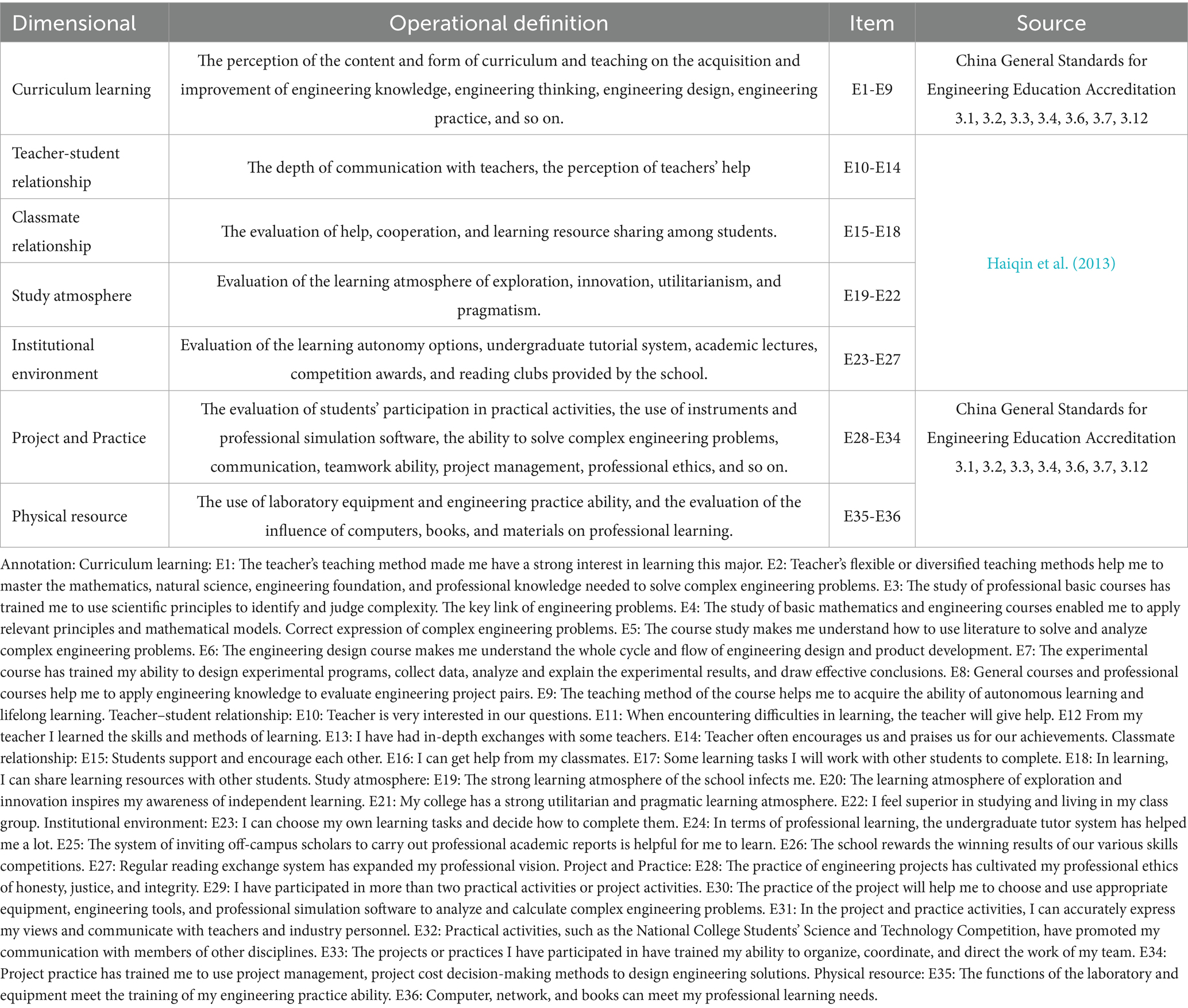

On this basis, the study developed a learning environment assessment scale for engineering education with seven dimensions: ‘course learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, project and practice, and physical resources’. The following research hypotheses are proposed: (H1) the evaluation structure of the engineering education learning environment consists of seven dimensions; (H2) the scale has good CITC, internal consistency reliability, content validity, and composite reliability, and there is no common method bias.

3 Method

3.1 Scale development procedure

The scale development procedure integrates the approach of Clark and Watson (Clark and Watson, 2019) and comprises four steps. First, the literature is reviewed to establish the theoretical definition and dimensions of the engineering education learning environment. Second, the initial scale is formed through expert review and interviews. Third, a small-sample pretest is conducted, and items are screened through item discrimination analysis, corrected item-total correlation, exploratory factor analysis, and other tests. Fourth, the items and content are revised, and the reliability and validity of the formal scale are tested again, including confirmatory factor analysis.

3.2 Research object

3.2.1 Case college introduction

Application-oriented universities originated in Europe and North America and take different forms as higher education develops in different countries. The German University of Applied Sciences is a typical example: founded in 1960, it is an institution for training practical, specialized, advanced applied talent. Most applied undergraduate colleges in China are among the more than 600 local undergraduate colleges established after 1999. According to the 2018 Education Bulletin, China’s 1,131 local undergraduate colleges account for 90.84% of all regular undergraduate colleges in the country (including 265 independent colleges), and 85% of them are located in non-capital cities, extending higher education resources to lower-tier regions. In October 2015, with the implementation of a policy document issued by the Ministry of Education of the People’s Republic of China on transforming some local undergraduate colleges and universities into applied undergraduate institutions, the transformation and development of local undergraduate colleges and universities officially began.

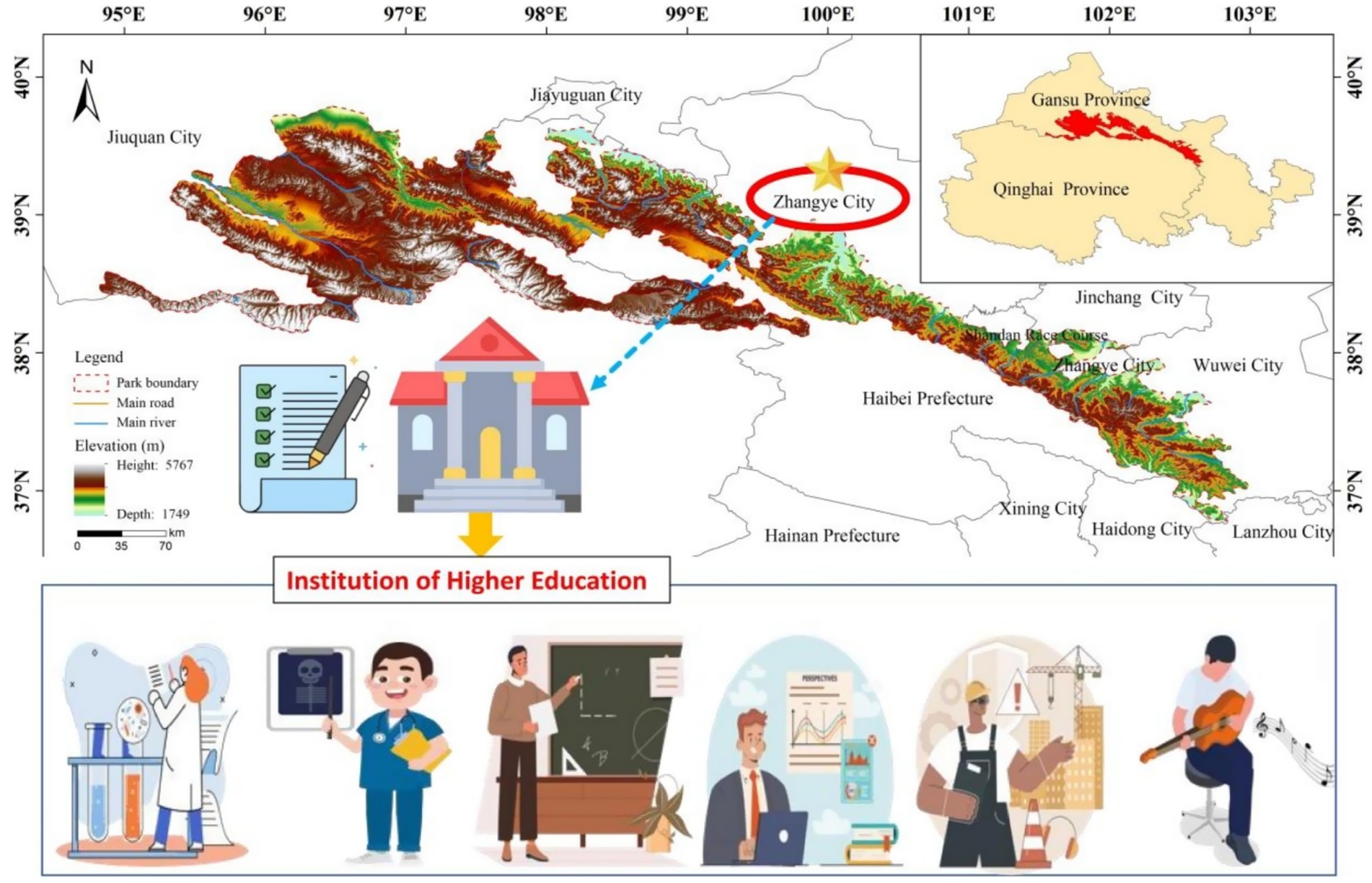

The case, College X (Hexi University), is an applied undergraduate college administered by the provincial education department. It is located in Gansu Province, an economically underdeveloped province in western China (as shown in Figure 1). Among China’s 23 provinces, 5 autonomous regions, and 4 municipalities directly under the central government, Gansu ranks in the bottom 5% by GDP. X College is the only undergraduate college in Zhangye City, which is accelerating its innovation-driven transformation and development; the college is responsible for serving this transformation and supplying innovative applied technical talent to local enterprises. X College was founded in 1941 as a normal college. In 2000, it merged with regional agricultural schools and vocational secondary schools; in 2001, it was upgraded to a local undergraduate college; in 2014, it merged with a regional medical college; and in 2015, it was named in a provincial document as one of the first pilot colleges for the transformation of local colleges and universities. By 2020, X College had largely completed this transformation, which was undertaken to meet local demand for high-quality applied technical talent and to promote the innovative development of local industries. Given the college’s role in the regional economy and industry, its development from a newly established undergraduate college to an applied technology college and then to a demonstration applied technology college, and the status of its engineering education professional certification, its engineering and technical students constitute a representative research sample.

3.2.2 Majors of the case college

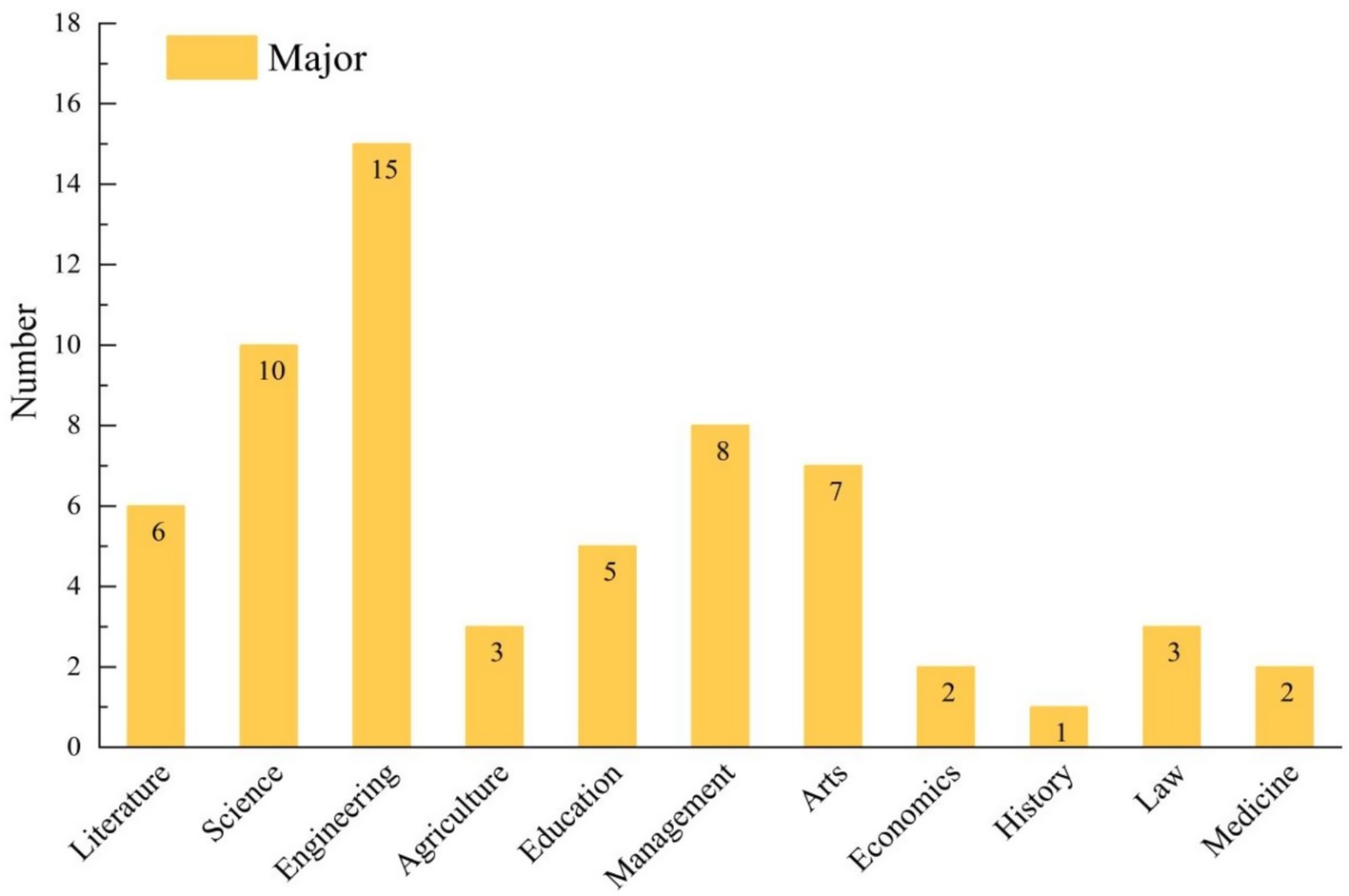

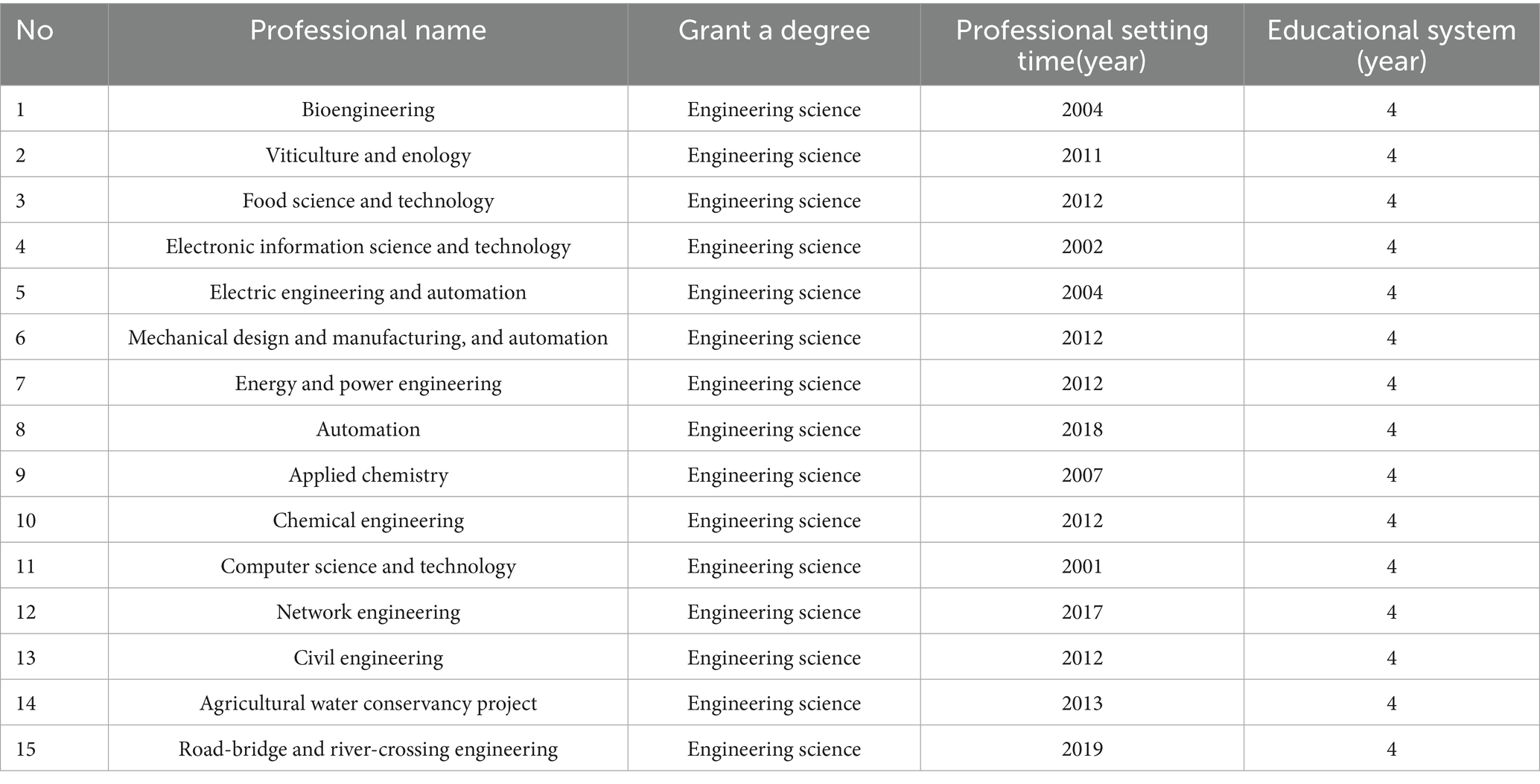

As shown in Figure 2, College X covers five major subject groups: engineering technology, agriculture, medicine, education, and arts and sciences, spanning 11 disciplines including engineering, science, and literature. Of its 62 undergraduate majors, 15 are engineering majors, the largest share of any discipline, and these are grouped into five categories (Table 1). Because X College focuses on training engineering professionals, it is a representative case for this study.

3.2.3 Sample and data sources

According to Hinkin’s basic sample size standard, the pilot sample should be at least five times the number of scale items (Hinkin, 1998), and a sample of 200 meets Comrey and Lee’s absolute sample size benchmark (Comrey and Lee, 1992). For the pilot survey, 200 students majoring in water conservancy engineering and electrical engineering at X College were selected by simple random sampling. Before the survey, its purpose was explained to the student counselors, who distributed a questionnaire link and QR code and asked students to answer truthfully on the Wenjuanxing (Questionnaire Star) online platform. The questionnaire was open from 13 March 2025 to 28 March 2025. The average completion time was 11 min, and 183 valid questionnaires were collected. A census collects data from all individuals within a defined population (Safitri et al., 2024). For the formal scale, a census approach was used to survey online all 2,796 students, from first-year to fourth-year, in all engineering majors at the case college. The survey was open from late June to mid-July 2021, and 2,276 questionnaires were collected, a response rate of 81.40%. Non-response bias can still occur in a census, but a response rate of 81.40% is acceptable (Terek et al., 2021). To further reduce non-response bias, the study used a complete sampling frame with information on all individuals, explained the purpose of the survey, guaranteed anonymity, and provided incentives. Nevertheless, because not all students participated in the formal survey, the 2,276 questionnaires actually collected constitute a subset of the population, and the data are in practice closer to a convenience sample.
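The sample-size and response-rate figures above can be re-checked with simple arithmetic. The sketch below is illustrative only: the helper names `hinkin_minimum` and `pct` are hypothetical, the 5:1 respondents-per-item ratio is the one stated in the text, and the counts are those reported in this section.

```python
# Hypothetical helpers that re-check the sample-size arithmetic reported in the text.
N_ITEMS = 36            # items in the pilot scale
PILOT_SAMPLED = 200     # students drawn for the pilot survey
PILOT_VALID = 183       # valid pilot questionnaires
POPULATION = 2796       # engineering students covered by the census
RETURNED = 2276         # questionnaires collected in the formal survey


def hinkin_minimum(n_items: int, ratio: int = 5) -> int:
    """Minimum pilot sample under a respondents-per-item ratio (5:1 as stated in the text)."""
    return ratio * n_items


def pct(part: int, whole: int) -> float:
    """Percentage rounded to two decimal places."""
    return round(100 * part / whole, 2)


minimum = hinkin_minimum(N_ITEMS)
print(f"Minimum pilot sample: {minimum}, valid pilot responses: {PILOT_VALID}, "
      f"meets rule: {PILOT_VALID >= minimum}")
print(f"Pilot valid rate: {pct(PILOT_VALID, PILOT_SAMPLED)}%")
print(f"Formal response rate: {pct(RETURNED, POPULATION)}%")
```

With these inputs, the 183 valid pilot responses exceed the 5 × 36 = 180 minimum, and 2,276 / 2,796 rounds to the reported 81.40%.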

3.3 Instruments

The scale is based on the 12 graduation requirement indicators in the general standard of China’s engineering education professional certification (2020 edition) and on this study’s definition of the engineering education learning environment. It also draws on the work of the Chinese scholar Yu Haiqin, who, in measuring the impact of the learning environment of top-notch innovative talents on learning methods and learning achievement, divided the learning environment into six factors: curriculum learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, and project and practice (Haiqin et al., 2013). On this basis, an engineering education learning environment assessment scale with seven dimensions was developed: curriculum learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, project and practice, and physical resources. A total of 36 items were compiled as the first draft of the pilot scale, whose structure is shown in Table 2. The instrument is a self-developed, self-report scale scored on a seven-point Likert scale (from ‘very inconsistent’ to ‘very consistent’).

3.4 Statistical analysis: methods for testing the quality of the measurement tool

3.4.1 Pilot scale item tests

3.4.1.1 CITC and internal consistency reliability test

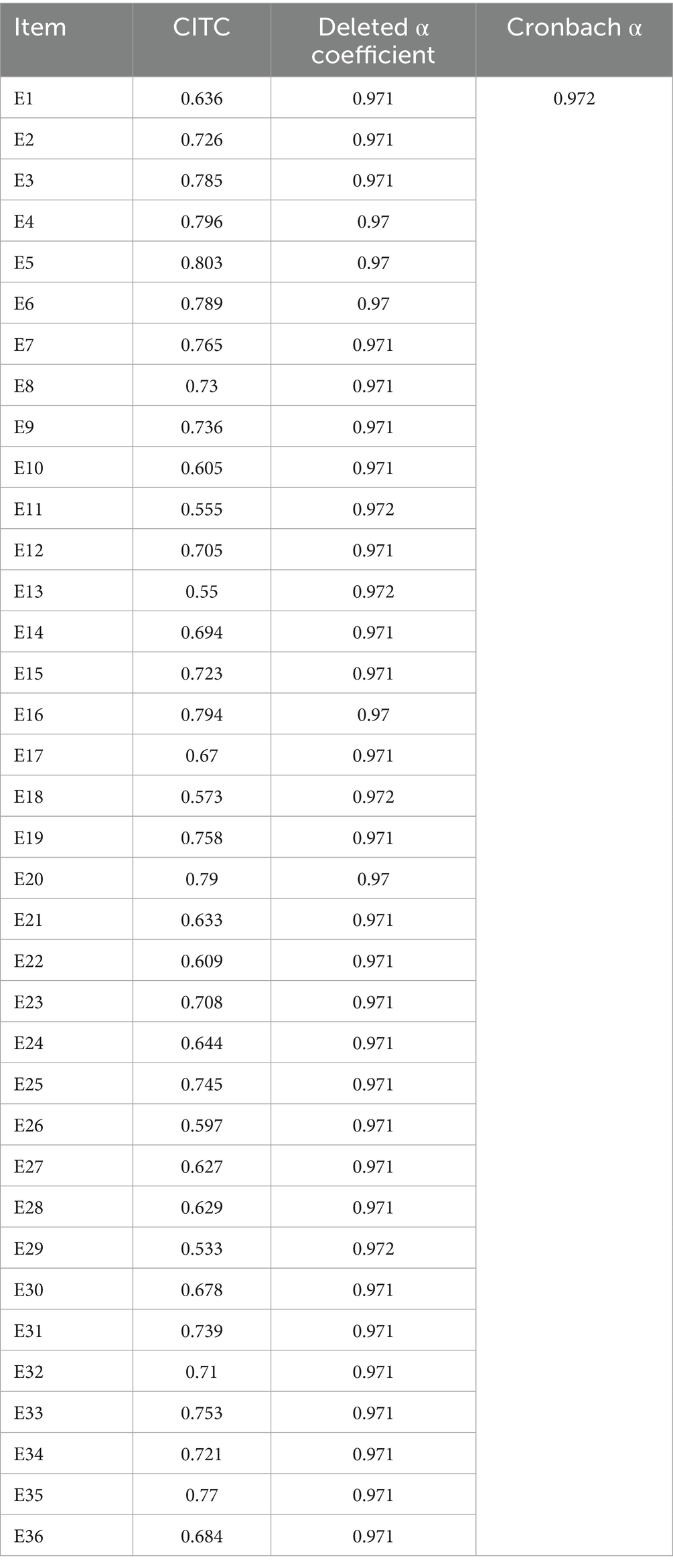

The reliability coefficient is an index of the consistency and stability of a measurement tool or its results (Tang et al., 2024). Scholars mostly use the corrected item-total correlation (CITC) and the internal consistency reliability coefficient to screen valid questionnaire items. CITC is the correlation between an item and the sum of the other items in the same dimension. Items with a CITC below 0.3 should be deleted to improve the reliability of the questionnaire (Daiwen, 2002).

Internal consistency reliability coefficients include Cronbach’s α coefficient, split-half reliability, the Kuder-Richardson (K-R) reliability coefficients proposed by G. F. Kuder et al., the composite reliability coefficient, and Hoyt’s analysis-of-variance reliability coefficient. Among them, the α coefficient is commonly used to estimate the reliability of Likert scales and is widely used in the social sciences. The α coefficient is a function of the degree of correlation among the questionnaire items: the closer it is to 1, the better the internal consistency of the scale. The α coefficient of each construct (subscale) should be greater than 0.50, and that of the whole scale should be at least 0.70 (Minglong, 2009).
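For reference, the standard form of Cronbach’s α for a scale of $k$ items can be written as follows, where $\sigma^2_{Y_i}$ is the variance of item $i$ and $\sigma^2_X$ is the variance of the total score; these symbols are introduced here for illustration and are not defined in the original text.

$$
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} \sigma^{2}_{Y_i}}{\sigma^{2}_{X}}\right)
$$

Because $\sigma^2_X$ equals the sum of the item variances plus twice the sum of the inter-item covariances, stronger inter-item correlation shrinks the ratio in parentheses and moves α toward 1.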

The reliability of this scale is estimated with the unstandardized α coefficient. In this study, the item selection criteria are a CITC below 0.3 and α coefficients below 0.50 for the subscales and 0.70 for the whole scale. For each candidate item, the α coefficient after deletion is compared with the original value; if α increases, deleting the low-CITC item improves the reliability of the scale.
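The screening rule above can be sketched in Python with NumPy. This is a minimal illustration, not the authors’ analysis script: `cronbach_alpha` and `screen_items` are hypothetical names, the scores are assumed to be a complete respondents × items matrix for one dimension with at least three items, and an item is flagged only when its CITC is below 0.3 and deleting it raises α. The simulated data are invented for demonstration.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Unstandardized Cronbach's alpha for an (n_respondents, k_items) score matrix."""
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = items.var(axis=0, ddof=1)        # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)    # sample variance of the summed score
    return float(k / (k - 1) * (1 - item_vars.sum() / total_var))


def screen_items(items: np.ndarray, citc_cutoff: float = 0.3):
    """Return the dimension's alpha and, per item, (CITC, alpha if deleted, flagged)."""
    if items.shape[1] < 3:
        raise ValueError("screening needs at least three items per dimension")
    alpha_full = cronbach_alpha(items)
    report = []
    for j in range(items.shape[1]):
        rest = np.delete(items, j, axis=1)       # all other items in the dimension
        citc = float(np.corrcoef(items[:, j], rest.sum(axis=1))[0, 1])
        alpha_if_deleted = cronbach_alpha(rest)
        # Hypothetical rule: flag only if CITC is low AND deletion raises alpha.
        flagged = citc < citc_cutoff and alpha_if_deleted > alpha_full
        report.append((round(citc, 3), round(alpha_if_deleted, 3), flagged))
    return alpha_full, report


# Simulated scores: four items share a common factor; item 4 is unrelated noise.
rng = np.random.default_rng(0)
factor = rng.normal(size=500)
scores = np.hstack([factor[:, None] + 0.5 * rng.normal(size=(500, 4)),
                    rng.normal(size=(500, 1))])
alpha, report = screen_items(scores)
for idx, (citc, a_del, flag) in enumerate(report):
    print(f"item {idx}: CITC={citc}, alpha if deleted={a_del}, flagged={flag}")
```

Requiring both conditions mirrors the two checks described in the text; whether a single failed criterion should already trigger deletion is a judgment call.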

3.4.1.2 Content validity

Content validity is an important part of scale development and evaluation. The purpose of the content validity test is to ensure that the scale accurately and comprehensively reflects the target content, avoiding an overly narrow measurement range or the omission of important dimensions (Mokkink et al., 2025). The content validity evaluation process mainly includes defining the measurement objectives and theoretical framework (Marinho et al., 2024); constructing the items of the measurement tool based on that framework (Matolić et al., 2023); inviting experts to conduct assessments (Du, 2025); and revising the items according to expert feedback (Anggara and Abdillah, 2023).

This study defined a clear measurement goal, constructed the evaluation dimensions and content, and determined the measurement items according to the theoretical framework. Through interviews with experts in the field and teachers of engineering majors, the concepts and structure of the variables were reviewed, together with whether the wording of the pilot items was easy to understand and whether their content was meaningful. Based on this feedback, uncommon words were removed from the items and the wording was refined with terms familiar to engineering students, to ensure good content validity.

3.4.1.3 Exploratory factor analysis

After the CITC and α coefficient tests, exploratory factor analysis (EFA) is carried out on the questionnaire: items with the same attributes are grouped into one dimension to test the discriminant validity of each dimension. Before EFA, the Kaiser-Meyer-Olkin (KMO) measure and Bartlett’s test of sphericity are used to check whether the data are suitable for factor analysis. Bartlett’s test of sphericity tests whether the variables are correlated, which is a basic condition for EFA; its null hypothesis is that the population correlation matrix is an identity matrix, that is, that the variables are uncorrelated. A statistically significant result rejects the null hypothesis, indicating that the items share common variance and that the data are suitable for factor analysis. The KMO measure compares simple correlations with partial correlations; the larger the KMO value, the stronger the correlations among the variables and the more suitable the data are for factor analysis (Qingguo, 2002).
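Both suitability checks can be computed directly from the item correlation matrix. The sketch below assumes a complete respondents × items NumPy array with a non-singular correlation matrix; `bartlett_sphericity` and `kmo` are hypothetical helper names, and the simulated data are invented for demonstration. It uses Bartlett’s statistic χ² = −(n − 1 − (2p + 5)/6) ln|R| with p(p − 1)/2 degrees of freedom, and the overall KMO computed from anti-image (partial) correlations. The p-value can then be read from a chi-square distribution, for example with `scipy.stats.chi2.sf`.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test statistic (H0: correlation matrix is identity) and its degrees of freedom."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)          # log-determinant, numerically stable
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return float(chi2), df


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)              # anti-image (partial) correlations
    off = ~np.eye(corr.shape[0], dtype=bool)     # mask selecting off-diagonal cells
    r2 = (corr[off] ** 2).sum()
    q2 = (partial[off] ** 2).sum()
    return float(r2 / (r2 + q2))


# Simulated data: four items driven by one factor versus four unrelated items.
rng = np.random.default_rng(1)
f = rng.normal(size=500)
correlated = f[:, None] + 0.5 * rng.normal(size=(500, 4))
independent = rng.normal(size=(500, 4))
print(bartlett_sphericity(correlated), round(kmo(correlated), 3))
print(bartlett_sphericity(independent), round(kmo(independent), 3))
```

Correlated items yield a very large χ² and a KMO well above 0.8, whereas unrelated items yield a χ² close to its degrees of freedom.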

EFA is a dimension-reduction method that combines observed variables into a small number of common factors that represent the information in all items. In this study, principal component analysis (PCA) is used to extract factors and compute the variance of the items that they explain. Factors with initial eigenvalues greater than 1 are retained and rotated by varimax (maximum variance orthogonal) rotation. Following existing literature, items with factor loadings below 0.5 are deleted.
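The extraction and rotation steps can be illustrated with a short NumPy sketch. It assumes complete data, extracts principal components from the correlation matrix, keeps components with eigenvalues above 1 (the Kaiser criterion), applies a textbook SVD-based varimax iteration, and flags items whose largest absolute rotated loading is below 0.5. `varimax` and `pca_efa` are hypothetical helpers, the two-factor data are simulated, and statistical packages may differ in details such as Kaiser normalization, which this sketch omits.

```python
import numpy as np


def varimax(loadings: np.ndarray, gamma: float = 1.0,
            max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation via the common SVD-based iteration (no Kaiser normalization)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - (gamma / p) * rotated @ np.diag((rotated ** 2).sum(axis=0))
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_criterion = s.sum()
        if criterion != 0.0 and new_criterion < criterion * (1 + tol):
            break                                 # criterion no longer improving
        criterion = new_criterion
    return loadings @ rotation


def pca_efa(data: np.ndarray, loading_cutoff: float = 0.5):
    """PCA extraction, Kaiser criterion (eigenvalue > 1), varimax rotation, weak-item flags."""
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)       # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    n_factors = int((eigvals > 1.0).sum())
    loadings = eigvecs[:, :n_factors] * np.sqrt(eigvals[:n_factors])
    rotated = varimax(loadings) if n_factors > 1 else loadings
    weak_items = np.where(np.abs(rotated).max(axis=1) < loading_cutoff)[0]
    explained = float(eigvals[:n_factors].sum() / eigvals.sum())
    return n_factors, rotated, weak_items, explained


# Simulated data: items 0-2 measure one factor, items 3-5 another.
rng = np.random.default_rng(2)
f1, f2 = rng.normal(size=(2, 500))
data = np.hstack([f1[:, None] + 0.6 * rng.normal(size=(500, 3)),
                  f2[:, None] + 0.6 * rng.normal(size=(500, 3))])
n_factors, rotated, weak_items, explained = pca_efa(data)
print(n_factors, weak_items, round(explained, 3))
print(np.round(rotated, 2))
```

On this simulated data, two components are retained, each item loads strongly on its own rotated factor, and no item falls below the 0.5 cutoff.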

3.4.2 Quality indices of the formal scale

3.4.2.1 Composite reliability (CR)

Compared with Cronbach’s α, composite reliability is considered more suitable for assessing the reliability of a scale composed of multiple dimensions. Composite reliability is the proportion of the variance of the combined items that is attributable to the underlying construct; it can be understood as the degree to which all the items of a dimension jointly measure the latent variable. The higher the composite reliability, the higher the internal consistency of the scale and the more reliable the measurement results (Vakili and Jahangiri, 2018).

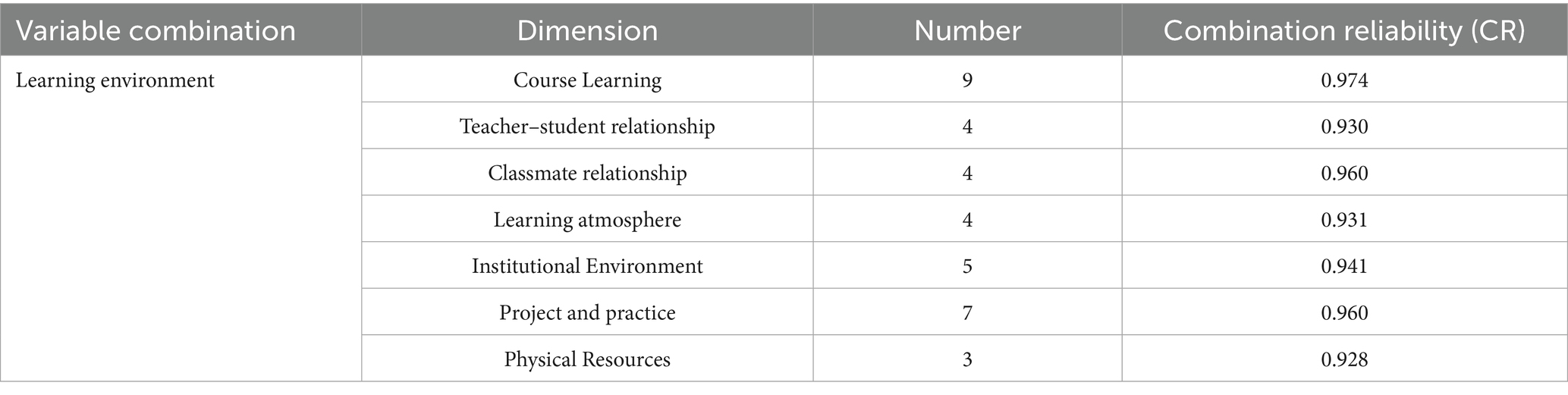

The composite reliability coefficient also tests whether the items of a dimension measure the same factor. Composite reliability is generally required to exceed 0.7 (Fornell and Larcker, 1981). Following Hou Jietai’s view that the composite reliability coefficient gives a more effective estimate of internal consistency, the reliability of the formal questionnaire was tested with composite reliability (Jietai et al., 2004).
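For standardized loadings λᵢ of the items in one dimension and uncorrelated measurement errors, the commonly used composite reliability formula associated with Fornell and Larcker (1981) is CR = (Σλᵢ)² / [(Σλᵢ)² + Σ(1 − λᵢ²)]. The short sketch below applies that formula, assuming each error variance equals 1 − λᵢ²; the loadings are hypothetical and are not values from Table 6.

```python
def composite_reliability(loadings: list[float]) -> float:
    """Composite reliability from standardized loadings (error variance assumed = 1 - loading^2)."""
    total = sum(loadings)
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Hypothetical loadings for a three-item dimension
cr = composite_reliability([0.8, 0.8, 0.8])
print(f"CR = {cr:.3f}, acceptable: {cr > 0.7}")  # CR = 0.842, acceptable: True
```

Because CR grows with both the size and the number of loadings, a dimension with only a few weak items (for example, two loadings of 0.5 give CR = 0.4) fails the 0.7 criterion.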

3.4.2.2 Common method bias (CMB)

The scale data come from college students’ self-reports, which may produce common method bias, that is, spurious correlations between item variables. To reduce this systematic error, anonymity was guaranteed in the questionnaire design, and no guiding wording that might influence students’ judgments was used. For statistical control, a common practice is Harman’s single-factor test based on exploratory factor analysis: if more than one factor has an eigenvalue greater than 1 and the first factor explains less than 40% of the variance, the study is considered free of serious common method bias (Hao and Lirong, 2004; Baojuan et al., 2017). However, some scholars have pointed out that this test is insensitive: for a scale with four dimensions it signals serious common method bias only when common method variance (CMV) reaches about 80%, and for a scale with seven dimensions only when CMV exceeds 90% (Fuller et al., 2016; Dandan and Zhonglin, 2020). When confirmatory factor analysis (CFA) is used to judge CMB instead, some scholars propose that if several fit indices of the single-factor model fail to meet the fitting criteria, CMB can be considered not serious (Iverson and Maguire, 2000). In this study, all items of the scale were treated as a single factor; if the single-factor model does not fit, the common method bias is not serious.
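The EFA-based version of Harman’s test described above reduces to inspecting the unrotated eigenvalues of the item correlation matrix. The sketch below illustrates that rule only; it is not the CFA-based single-factor model this study actually fits, and, as Fuller et al. (2016) note, passing it does not rule out moderate bias. The function name, the 0.40 default and the simulated data are illustrative assumptions.

```python
import numpy as np


def harman_single_factor(scores: np.ndarray, max_share: float = 0.40):
    """Harman-style check on unrotated components of the item correlation matrix."""
    corr = np.corrcoef(scores, rowvar=False)
    eigvals = np.sort(np.linalg.eigvalsh(corr))[::-1]  # descending order
    first_share = float(eigvals[0] / eigvals.sum())  # variance share of the first factor
    n_above_one = int(np.sum(eigvals > 1.0))
    no_serious_bias = n_above_one > 1 and first_share < max_share
    return first_share, n_above_one, no_serious_bias


# Hypothetical data: nine items from three factors vs. nine items from a single factor
rng = np.random.default_rng(2)
factors = rng.normal(size=(500, 3))
three_factor = np.repeat(factors, 3, axis=1) * 0.8 + 0.6 * rng.normal(size=(500, 9))
one_factor = np.repeat(factors[:, :1], 9, axis=1) * 0.8 + 0.6 * rng.normal(size=(500, 9))
print(harman_single_factor(three_factor))
print(harman_single_factor(one_factor))
```

The three-factor data pass the rule (several eigenvalues above 1, a first-factor share near 25%), while data generated by one factor fail it.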

3.4.2.3 Confirmatory factor analysis (CFA)

Confirmatory factor analysis tests how well the theoretical structural model derived from the literature fits the measurement model of the sample data. It is used to screen effective items, obtain an appropriate variable structure, and arrive at a high-quality questionnaire. More specifically, CFA tests whether the measurement indicators of the questionnaire validly measure their latent variables and thus whether the constructed model is appropriate; in essence, it is a test of the construct validity of the questionnaire. Model fit is generally judged with absolute fit indices (DF, CMIN, GFI, AGFI, RMSEA), incremental fit indices (NFI, RFI, IFI, TLI, CFI), and parsimonious fit indices (χ2/df, PGFI, PNFI) (Minglong, 2009).

The fit test in this study mainly uses GFI, RMSEA, NFI, IFI, TLI, and CFI. GFI (goodness-of-fit index) measures overall fit: the closer its value is to 1, the better the theoretical model fits the measurement model. For RMSEA (root mean square error of approximation), smaller values are better, and a value below 0.10 is considered acceptable. NFI, IFI, TLI, and CFI are judged acceptable when greater than 0.9, and the closer they are to 1, the better the fit. Because χ2, and hence χ2/df, generally increases with sample size, χ2/df is not used in this study as a criterion for judging model fit.
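Apart from GFI, which requires the model-implied covariance matrix, the indices above can be computed from the chi-square statistics of the fitted model and of the independence (baseline) model. The sketch below uses the standard textbook formulas and the cut-offs stated in the text; the chi-square inputs are hypothetical, not values from Tables 7 or 8, and RMSEA is computed with N − 1 (some programs use N).

```python
import math


def fit_indices(chi2_m: float, df_m: int, chi2_b: float, df_b: int, n: int) -> dict[str, float]:
    """Chi-square-based fit indices for a model (m) against the independence baseline (b)."""
    rmsea = math.sqrt(max(chi2_m - df_m, 0.0) / (df_m * (n - 1)))
    nfi = (chi2_b - chi2_m) / chi2_b
    ifi = (chi2_b - chi2_m) / (chi2_b - df_m)
    tli = (chi2_b / df_b - chi2_m / df_m) / (chi2_b / df_b - 1)
    denom = max(chi2_b - df_b, chi2_m - df_m, 0.0)
    cfi = 1.0 - max(chi2_m - df_m, 0.0) / denom if denom > 0 else 1.0
    return {"RMSEA": rmsea, "NFI": nfi, "IFI": ifi, "TLI": tli, "CFI": cfi}


def meets_criteria(idx: dict[str, float]) -> dict[str, bool]:
    """Cut-offs stated in the text: RMSEA < 0.10; NFI, IFI, TLI and CFI > 0.9."""
    checks = {"RMSEA": idx["RMSEA"] < 0.10}
    checks.update({k: idx[k] > 0.9 for k in ("NFI", "IFI", "TLI", "CFI")})
    return checks


# Hypothetical chi-square values for illustration only
idx = fit_indices(chi2_m=100.0, df_m=50, chi2_b=1200.0, df_b=66, n=201)
print({k: round(v, 3) for k, v in idx.items()})
print(meets_criteria(idx))
```

For a single-factor model of the kind used in the common method bias test, the same functions would show which indices fall short of these cut-offs.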

4 Results

4.1 Prediction item purification and exploratory factor analysis results

4.1.1 CITC and internal consistency reliability analysis

The prediction scale contains 36 items. As shown in Table 3, the minimum corrected item-total correlation (CITC) is 0.5, which exceeds the 0.3 threshold; Cronbach’s α for the questionnaire is 0.972, indicating very high overall reliability.
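The two statistics reported here follow standard formulas: CITC correlates each item with the sum of the remaining items, and Cronbach’s α compares the summed item variances with the variance of the total score. A minimal sketch, assuming a respondents × items score matrix; the function names and simulated data are hypothetical and do not reproduce Table 3.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for a respondents x items score matrix."""
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1).sum()
    total_variance = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - item_variances / total_variance))


def citc(items: np.ndarray) -> np.ndarray:
    """Corrected item-total correlation: each item vs. the sum of the other items."""
    total = items.sum(axis=1)
    return np.array([np.corrcoef(items[:, j], total - items[:, j])[0, 1]
                     for j in range(items.shape[1])])


# Hypothetical example: 300 simulated respondents answering 6 items
rng = np.random.default_rng(3)
factor = rng.normal(size=(300, 1))
items = factor + 0.6 * rng.normal(size=(300, 6))
print(f"alpha = {cronbach_alpha(items):.3f}, min CITC = {citc(items).min():.3f}")
```

Excluding the item itself from the total is what makes the correlation "corrected"; otherwise each item would inflate its own item-total correlation.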

4.1.2 Exploratory factor analysis

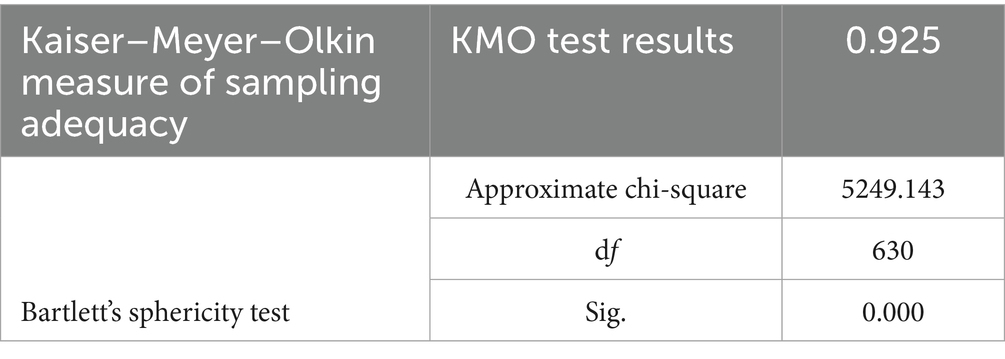

As shown in Table 4, the KMO value of the scale is 0.925, indicating that the data are highly suitable for factor analysis. Bartlett’s test is significant (p < 0.001), indicating that the items are sufficiently intercorrelated for factor analysis.

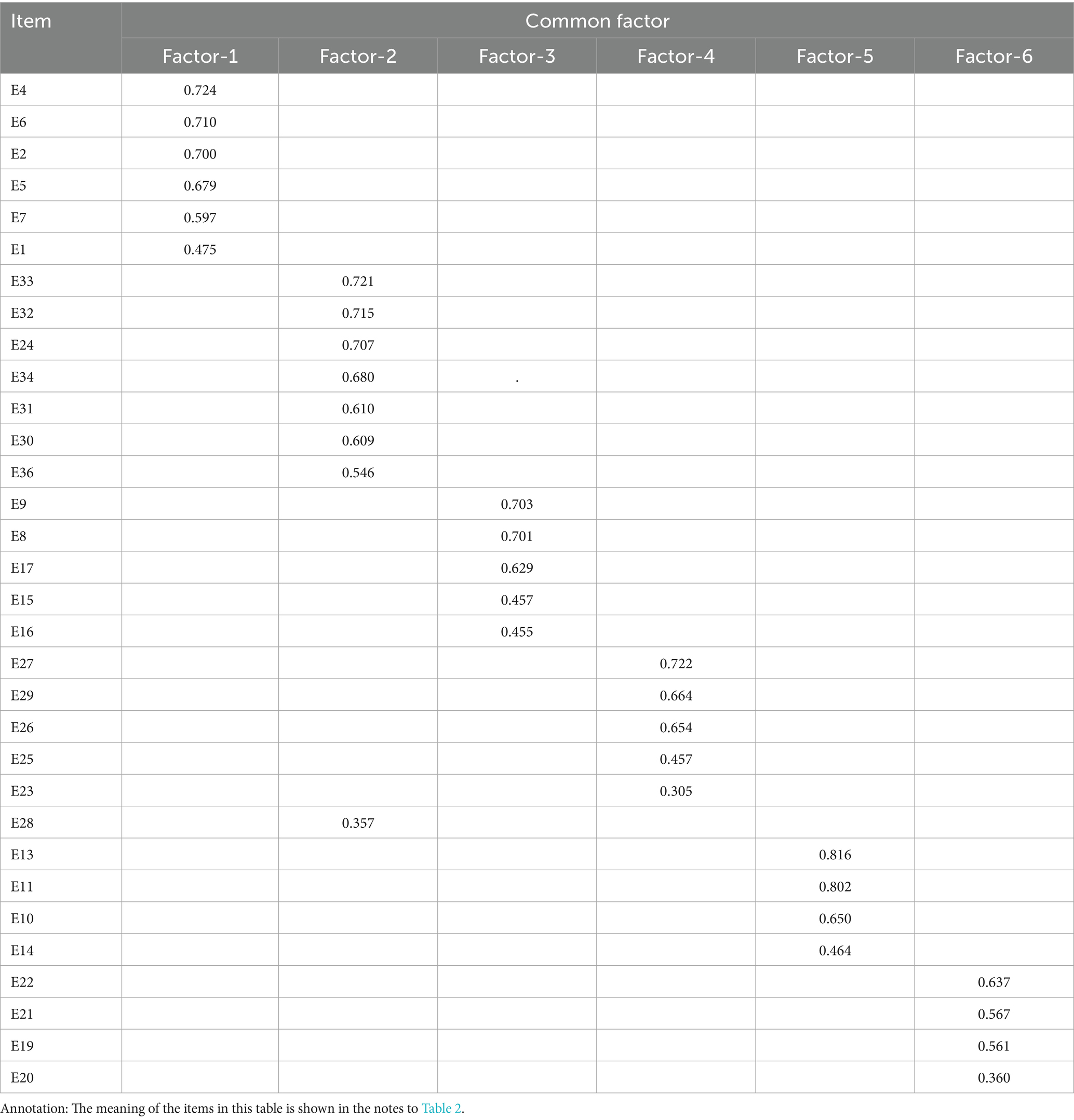

Principal component analysis was conducted, retaining components with eigenvalues greater than 1. After varimax (maximum-variance orthogonal) rotation, six common factors were obtained, with eigenvalues of 18.537, 1.918, 1.611, 1.381, 1.187, and 1.095, respectively. The total variance explained was 71.469% (greater than 50%). Table 5 shows the factor loading matrix of the items on the common factors. Factor 1 contains items 1, 2, 3, 4, 5, 6, and 7, but not items 8 and 9 of the originally specified course learning dimension. Factor 2 consists of items 24, 30, 31, 32, 33, 34, and 36; apart from item 24, it is generally consistent with the theoretical construct of the project and practice dimension. Factor 3 contains items from two dimensions: 8 and 9 (course learning) and 15, 16, and 17 (classmate relationship). Factor 4 contains items 25, 26, 27, and 29; apart from item 29, these items conform to the established structure of the institutional environment. Factor 5 is composed of items 10, 11, 13, 14, and 15, which is consistent with the structure of the teacher–student relationship.
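Because the eigenvalues of a p-item correlation matrix sum to p, the reported total can be checked from the six eigenvalues alone, assuming they come from the 36-item correlation matrix of the prediction scale.

```python
# Eigenvalues of the six retained factors, as reported in the text
eigenvalues = [18.537, 1.918, 1.611, 1.381, 1.187, 1.095]
n_items = 36  # prediction scale size; correlation-matrix eigenvalues sum to the item count

explained_pct = sum(eigenvalues) / n_items * 100
print(f"Total variance explained: {explained_pct:.3f}%")  # Total variance explained: 71.469%
```

The eigenvalues sum to 25.729, and 25.729 / 36 reproduces the reported 71.469%.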

Factor 6 includes items 19, 21, and 22, which is basically in line with the theoretical construct of learning atmosphere. The exploratory factor analysis did not extract the physical resource dimension of the engineering education learning environment. Since a factor in exploratory factor analysis generally requires more than two items, one item was added to this dimension in the revision: ‘the practice base of school-enterprise cooperation helps to strengthen my engineering practice ability’.

Based on the rotated component matrix of the engineering education learning environment and the existing division of dimensions, the revised questionnaire deleted item 12, revised items 18 and 24, whose meaning was ambiguous, and revised items 20, 23, and 28, which had low validity. In the revised scale, the course learning dimension includes original items 1, 2, 3, 4, 5, 6, 7, 8, and 9; the project and practice items remain unchanged; the classmate relationship dimension includes original items 15, 16, 17, and 18, with the content of item 18 modified; the institutional environment dimension includes original items 23, 24, 25, 26, and 27, with items 23 and 24 revised; the teacher–student relationship dimension consists of original items 10, 11, 13, and 14; the learning atmosphere dimension includes original items 19, 20, 21, and 22; and one item is added to the physical resource dimension.

4.2 Quality analysis of formal scale

4.2.1 Composite reliability

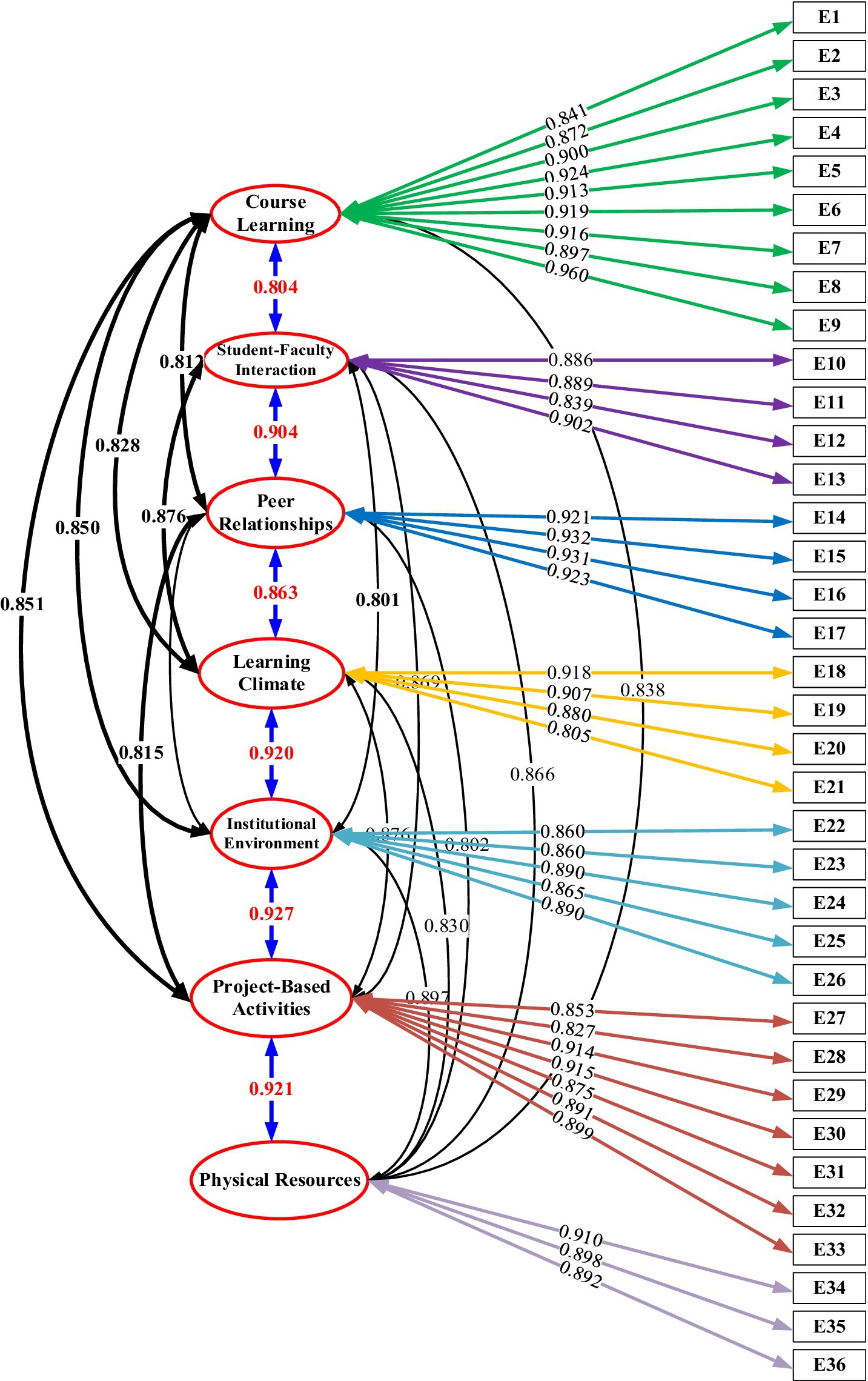

The composite reliability coefficient is generally required to exceed 0.7. The composite reliability values of course learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, project and practice, and physical resources need not be equal. As shown in Table 6, the values for all seven dimensions are greater than 0.7 and close to 1, indicating high internal consistency of the engineering education learning environment scale. Items E1–E9 measure the course learning factor, E10–E13 the teacher–student relationship factor, E14–E17 the classmate relationship factor, E18–E21 the learning atmosphere factor, E22–E26 the institutional environment factor, E27–E33 the project and practice factor, and E34–E36 the physical resource factor.

4.2.2 Common method bias

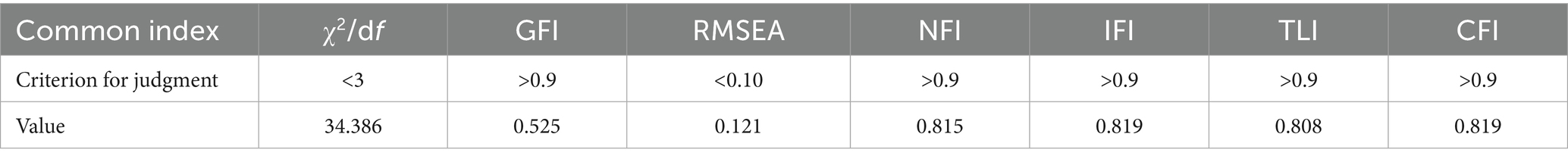

Table 7 shows the results of the common method bias test, in which all 36 items of the scale were assumed to load on a single factor. The RMSEA value of 0.121 exceeds the 0.10 criterion, and GFI, NFI, IFI, TLI, and CFI are all below 0.9. The single-factor model of the learning environment scale therefore fits poorly, and the items cannot be reduced to a single dimension, indicating that there is no serious common method bias in the questionnaire.

4.2.3 Confirmatory factor analysis

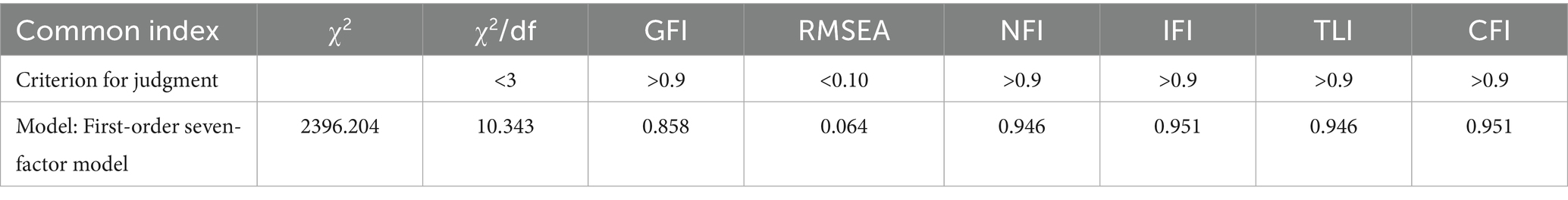

After the exploratory factor analysis of the prediction scale, some items were deleted and one was added. The engineering education learning environment was hypothesized to follow a first-order seven-factor model, and the model’s fit indices are shown in Table 8.

Except for GFI, which is close to the critical value, all other fit indices of the model meet the criteria, indicating that the theoretical model and the measurement model of the engineering education learning environment fit well, in line with the theoretical conception of engineering education learning environment measurement. The path diagram of the model is shown in Figure 3. The loadings of the items on their corresponding common factors are all above 0.9 (rounded), indicating that the items are strongly correlated with their latent constructs and that the items within each dimension are highly homogeneous. The seven-dimensional structure of the engineering education learning environment is therefore reasonable. In addition, as shown in Figure 3, the path coefficients among the seven variables (course learning, student–faculty interaction, peer relationship, learning climate, institutional environment, project-based activities, and physical resources) were compared. Among these seven variables, the path coefficients of the following pairs are all greater than 0.9: teacher–student relationship and classmate relationship, learning atmosphere and institutional environment, institutional environment and project and practice, and project and practice and physical resources. The path coefficient between institutional environment and project and practice is the largest (0.927), indicating that these two factors are the most strongly correlated in the seven-dimensional model of the engineering education learning environment.

5 Discussion

This study focuses on developing a learning environment assessment scale from the perspective of China’s engineering education accreditation. It follows the constructivist view that learners actively construct knowledge in the learning environment and require experience and social interaction (Nurhuda et al., 2023). The tests of the prediction scale and the formal scale use students’ ‘perception’ and ‘experience’ to verify the characteristics and quality of the engineering education learning environment. A review found that “from 2011 to 2020, the quantitative literature related to the learning environment scale included in the Web of Sciences database is mostly based on constructivism and empiricism epistemology, focusing on students’ autonomy and independence in education” (Brito and Silva, 2023). The research perspective of this paper is consistent with this conclusion. The difference is that this study focuses on learning environment assessment in the field of engineering education and examines it in depth on the basis of the graduation requirements of China’s engineering education professional certification and the training of excellent engineering talent.

5.1 Evaluation of the theoretical structure of the scale

Although the 12 benchmark contents of the graduation requirements of China’s engineering education professional certification define the content scope for writing the scale items, they cannot be used directly as the measurement dimensions of the engineering education learning environment scale. The theoretical structure of engineering education learning environment evaluation therefore comprises seven factors (curriculum learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, project and practice, and physical resources), which together form a system covering the 12 benchmark contents of the certification graduation requirements.

From the perspective of the theoretical structure of the scale, on the one hand, the scale covers the 12 benchmark contents of China’s engineering education professional certification graduation requirements: ‘engineering knowledge, problem analysis, design/development solutions, research, use of modern tools, engineering and society, environment and sustainable development, professional norms, individuals and teams, communication, project management, and lifelong learning’. Studies by Zhu Lu, Hu Dexin, and others have identified two reference points for the graduation requirements of China’s engineering education (Zhu et al., 2024): first, the content examined under the graduation requirements should be feasible and evaluable; second, the graduation requirements include an evaluation mechanism and its implementation. The scale developed in this study translates the content of China’s engineering education graduation requirements into evaluable items, so that the content of the 12 indicators can be implemented and evaluated when graduation requirements are examined in professional certification. The scores obtained with this scale can also serve as a reference for quantitative conclusions about undergraduate graduation requirements. The scale therefore addresses the lack of a systematic connection between existing learning environment assessment tools and the graduation requirements of engineering education professional certification, which is significant for advancing engineering education and supporting the sustainable development of future engineers.
On the other hand, the evaluation dimensions of this scale cover both hard learning environment conditions that support the cultivation of excellent undergraduate engineering talent, such as courses, practical activities inside and outside the school, institutional systems, and material resources, and soft learning environment conditions, such as learning atmosphere, teacher–student relationship, and classmate relationship. Compared with Yu Haiqin’s (Haiqin et al., 2013) evaluation dimensions of the learning environment for Chinese undergraduate top-notch innovative talents, this study adds a physical resource evaluation factor covering ‘the use of laboratory and equipment and engineering practice ability, the evaluation of the impact of computers and books on professional learning’. The theoretical model shows that the engineering education learning environment evaluation scale reflects a college students’ learning environment system that integrates classroom and extracurricular activities, school and society, and material, natural, social, and cultural elements (Lijun and Bushi, 2010).

5.2 Reflection on the results of the scale test

The exploratory factor analysis of the prediction scale led to item revisions, after which the seven common factors of the revised scale are measured by 36 items. The CITC, internal consistency reliability, content validity, and composite reliability tests show that the scale has good reliability and validity, and the common method bias test shows no serious common method bias. The confirmatory factor analysis shows that the seven-dimensional structure of the engineering education learning environment conforms to the theoretical construct. The model constructed for the engineering education learning environment scale is therefore appropriate, and the scale can serve as a reliable tool for investigating and managing the engineering education learning environment. Each dimension of the scale is discussed below.

The course learning factor, comprising items E1–E9, reflects students’ evaluation of how courses, through their different teaching contents and forms, improve their engineering knowledge, engineering thinking, engineering design, and engineering practice ability. This indicator shows that engineering education professional certification emphasizes student-centeredness and requires colleges and universities, when building a learning environment, to fully consider students’ learning needs and characteristics regarding engineering knowledge, engineering thinking, engineering design, and engineering practice in course and teaching design. This means that colleges and universities need to move away from the traditional teacher-centered teaching model and adopt teaching methods and courses that emphasize students’ active participation and independent inquiry (Zheng et al., 2023). For example, flexible or diversified teaching methods can help students master the mathematics, natural science, engineering fundamentals, and professional knowledge needed to solve complex engineering problems; teaching should cultivate the key skill of identifying and judging complex engineering problems using scientific principles; and general and professional courses should help students apply engineering knowledge to evaluate the impact of engineering projects on society, sustainable development, and the environment, and to understand the social responsibilities they should bear.

The teacher–student relationship factor, comprising items E10–E13, evaluates the depth of communication between students and teachers and the degree of help teachers provide. Research shows that the teacher–student relationship is the cornerstone of the engineering education learning environment. It supports professional certification by ensuring the achievement of students’ learning outcomes, supporting the student-centered teaching paradigm, promoting continuous improvement of teaching, and helping educational programs adapt to the trends of digitization and globalization (Qian, 2023; Agrawa et al., 2024; Dai, 2024). In general, effective teacher–student interaction is key to the high-quality development of engineering education and to successfully passing professional certification. As digital technology becomes more widespread, maintaining and strengthening positive teacher–student relationships remains an important issue that requires continued attention and research in engineering education (Jaafar et al., 2021; Makda, 2024).

Classmate relationship refers to the evaluation of mutual encouragement, help, cooperation, and learning resource sharing among students, including E14–E17 items. The research shows that the classmate relationship plays an irreplaceable role in the professional certification of engineering education (Craps et al., 2021). It directly supports the requirements of certification standards for graduates’ comprehensive quality by promoting the development of teamwork (Inegbedion, 2024), communication skills (Estriegana et al., 2024), knowledge sharing (Jia et al., 2024), learning motivation (Hsiao et al., 2019), professional quality, and cross-cultural ability (Kokroko et al., 2024). Therefore, in the construction of an engineering education learning environment, we should attach great importance to cultivating positive and healthy classmate relationships, so as to lay a solid foundation for students’ future career development and lifelong learning.

The learning atmosphere factor, comprising items E18–E21, evaluates the school’s atmosphere of exploration, innovation, and pragmatic learning. Learning atmosphere is of great significance to the construction of the engineering education learning environment. Research shows that a positive learning atmosphere is more likely to foster students’ learning motivation (Qian, 2023) and better absorption and understanding of knowledge (Zheng et al., 2023). In addition, students are more likely to generate new ideas and innovative thinking in an open, inclusive learning atmosphere that encourages questioning and exploration (Chen et al., 2023).

The institutional environment is the evaluation of the school’s independent choice of learning, undergraduate tutorial system, academic lectures, competition awards, and reading clubs. The items include E22–E26. This indicator emphasizes that engineering education accreditation encourages colleges and universities to focus on cultivating students’ innovative spirit and critical thinking in the construction of the learning environment (Zhang et al., 2022).

Project and practice is the evaluation of students’ participation in practical activities, the use of instruments and professional simulation software, the ability to solve complex engineering problems, communication, teamwork ability, engineering management, honest and fair professional ethics, etc. Items include E27–E33. This indicator shows the importance of practical training in the engineering education certification project.

The physical resources factor, comprising items E34–E36, evaluates the use of laboratories and equipment, the contribution of training bases to engineering practice ability, and whether computers, networks, and books and materials meet the needs of professional learning. This indicator shows that engineering education certification attaches great importance to students’ evaluation of physical resources, which means that colleges and universities should strengthen laboratories, instruments and equipment, training bases, information networks, and books and materials when building the learning environment (Chen et al., 2023).

5.3 Evaluation of scale design concept

In line with the shift of international engineering education toward sustainable development standards, the elements of China’s engineering education learning environment evaluation scale are consistent with the concept of sustainable development in engineering education. For example, the curriculum learning factor is a powerful vehicle for promoting sustainable development. Taking the mechanical engineering major at the Massachusetts Institute of Technology as an example, since 2018 this major has embedded modules such as sustainable design and circular economy in its core curriculum. This kind of curriculum restructuring enables students to build a systematic framework for sustainable development thinking while mastering professional technology. Research shows that 5 years after graduation, the proportion of such students engaged in green technology research and development reaches 42%, significantly higher than the 23% of the traditional curriculum group (MIT Office of Institutional Research, 2023). Teacher support within the teacher–student relationship and the quality of collaboration within classmate relationships are key factors affecting students’ sustainable academic development. Teacher support can foster students’ autonomous motivation and sustain their learning motivation (Corradini and Nardelli, 2020), while collaborative classmate relationships enhance cognitive flexibility and a sense of belonging (Rosé and Järvelä, 2021).

6 Limitations and future directions

Based on the benchmark indicators of the graduation requirements of China Engineering Education Accreditation, this study developed a learning environment assessment scale that can support the continuous improvement of engineering education professional certification outcomes from the dimension of the learning environment. However, the cross-cultural adaptability of the scale remains unknown and requires further comparative verification. The research data come from underdeveloped areas of western China, and all respondents were engineering technology students at application-oriented universities. Although the research objects are typical, the results represent only the situation of such universities in China, so the scale should be applied and generalized with caution. Future studies should evaluate the reliability and validity of the scale with heterogeneous samples.

In addition, in the confirmatory factor analysis (CFA) model, the correlation coefficients between some pairs of latent factors exceed 0.9, indicating possible multicollinearity that makes it difficult to determine the independent effects of the variables (Kyriazos and Poga, 2023). This means that the independent influence of learning atmosphere, institutional environment, project activities, and material resources on the engineering education learning environment is difficult to determine, which is a limitation of this research. The concepts of these four latent factors are distinct, and the items measuring them do not overlap (as verified by the exploratory factor analysis). Merging factors can reduce multicollinearity, but it may also reduce the explanatory power of the model (Upendra et al., 2023). Therefore, the study neither deleted nor merged these four factors. Existing studies have also reported latent factor correlations greater than 0.9 in confirmatory factor analysis without this making the model unreasonable (Zhai et al., 2024). To address this limitation, future work will consider increasing the sample size to improve the model’s tolerance of multicollinearity and make the parameter estimates more reliable (Ismaeel et al., 2021).

Engineering education is the cradle of future technology leaders, and engineering education professional certification helps ensure the quality of talent training. Innovation in the university learning environment should not stop at meeting certification benchmarks or improving the efficiency of knowledge transfer, but should also focus on shaping a new generation of engineers with a global vision and sustainable development leadership. This study mainly addresses the design and validation of an engineering education learning environment scale. Future work should further explore how the learning environment can be built to support the coordinated mechanism of knowledge construction, ability training, and value shaping in educational practice. Possible implementation paths include: (1) building a “three-layer nested” curriculum system that adds a sustainable development theory layer and an interdisciplinary practice layer to the professional and technical layer; (2) developing a dynamic capability assessment matrix that integrates ABET certification standards and SDG indicators to establish a new evaluation paradigm for engineering education quality; and (3) creating an industry–academia community, drawing on Stanford University’s ‘Change Lab’ model, so that students understand the practical logic of sustainable development in real technology iterations.

7 Conclusion

This study aimed to develop and test a learning environment assessment scale for engineering education. Taking the 12 benchmark contents of the graduation requirements in the general standard of China’s engineering education certification as the content scope for item writing, and following the principles of scale design and validation, it developed and validated an evaluation scale of the learning environment in China’s engineering education with seven dimensions: curriculum learning, teacher–student relationship, classmate relationship, learning atmosphere, institutional environment, project and practice, and physical resources. In doing so, it addressed the theoretical structure of learning environment evaluation in the context of China’s engineering education and the reliability and validity of the scale. The results confirm that the seven-factor scale is robust, indicating its potential usefulness for the continuous improvement of China’s engineering education and for aligning the engineering talent training of certified institutions with global engineering education professional certification standards. The tests indicate that the learning environment measured by this scale is the physical carrier and social field in which Chinese engineering education graduates develop their engineering ability, and a key observation point for graduation requirements. The scale can thus support the cultivation of outstanding engineering talent and the growth of students into a new generation of sustainable engineers.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

BJ: Data curation, Formal analysis, Writing – original draft, Writing – review & editing. RX: Funding acquisition, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research has been supported by the National Natural Science Foundation of China (U22A20592), Gansu Province Science and Technology Plan Project (24RCKG001, 25RCKG002, and 24JRRG034), Gansu Province International Science and Technology Cooperation Project (25YFWG001), Gansu Sea Talent Project (GSHZJH 12-2025-01), and Special Project of the Postdoctoral Research Station at Gansu Qilian Mountain Water Resource Conservation Forest Research Institute (20221231).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Agrawa, S., Sagi, S., Srinivasaiah, B., and Ray, N. K. (2024). The impact of teacher-student relationships on students’ perceptions of teachers’ educational effectiveness. J. Res. Vocat. Educ. 6, 45–53. doi: 10.53469/jrve.2024.6(11).10

Anggara, D. S., and Abdillah, C. (2023). Content validity analysis of literacy assessment instruments. Jurnal Cakrawala Pendidik 42, 447–459. doi: 10.21831/cp.v42i2.55900

Bacher-Hicks, A., Goodman, J., and Mulhern, C. (2021). Inequality in household adaptation to schooling shocks: COVID-induced online learning engagement in real time. J. Public Econ. 193:104345. doi: 10.1016/j.jpubeco.2020.104345

Baojuan, Y., Qing, Z., Shenghong, D., Xiaoting, F., and Linlin, L. (2017). The effect of career mission on college students' employability: the mediating role of job search clarity and job search self-efficacy. Psychol. Dev. Educ. 33, 37–44.

Brito, S., and Silva, A. (2023). Mapping measurement scales for the assessment of learning environments. Int. Educ. Stud. 16:164. doi: 10.5539/ies.v16n2p164

Cañavate, J., Martínez-Marroquín, E., and Colom, X. (2025). Engineering a sustainable future through the integration of generative AI in engineering education. Sustainability 17:3201. doi: 10.3390/su17073201

Chen, Q., Wang, X., Yin, H., and Feng, J. (2023). Design of an exploratory experiment in teaching for engineering education accreditation: fluoride geochemical simulation during water–rock interactions under the effect of seawater intrusion. Sustainability 15:12910. doi: 10.3390/su151712910

Clark, L. A., and Watson, D. (2019). Constructing validity: new developments in creating objective measuring instruments. J. Abnorm. Psychol. 128, 500–508.

Clouston, S. A. P., Natale, G., and Link, B. G. (2021). Socioeconomic inequalities in the spread of coronavirus-19 in the United States: a examination of the emergence of social inequalities. Soc. Sci. Med. 268:113554. doi: 10.1016/j.socscimed.2020.113554

Comrey, A. L., and Lee, H. B. (1992). A first course in factor analysis. 2nd Edn. New York: Psychology Press.

Corradini, I., and Nardelli, E. (2020). “Developing digital awareness at school: a fundamental step for cybersecurity education” in Advances in human factors in cybersecurity. eds. I. Corradini, E. Nardelli, and T. Ahram (New York, NY, USA: Springer International Publishing), 102–110.

Craps, S., Pinxten, M., Knipprath, H., and Langie, G. (2021). Different roles, different demands. A competency-based professional roles model for early career engineers, validated in industry and higher education. Eur. J. Eng. Educ. 47, 144–163.

Crawley, E. F., Malmqvist, J., Östlund, S., and Brodeur, D. R. (2007). Rethinking engineering education: The CDIO approach. 2nd Edn. Cham, Switzerland: Springer.

Dai, P. (2024). The influence of teacher-student relationship on students’ learning. Lect. Notes Educ. Psychol. Public Media 40, 241–247. doi: 10.54254/2753-7048/40/20240764

Dandan, T., and Zhonglin, W. (2020). Common method bias test: problems and suggestions. Psychol. Sci. 43, 215–223.

Du, W. (2025). Content validation and content validity index calculation for teacher innovativeness instrument among Henan private universities in China. Commun. Appl. Nonlinear Anal. 32, 175–187. doi: 10.52783/cana.v32.4705

Estriegana, R., Teixeira, A. M., Robina-Ramirez, R., Medina-Merodio, J. A., and Otón, S. (2024). Impact of communication and relationships on student satisfaction and acceptance of self- and peer-assessment. Educ. Inf. Technol. doi: 10.1007/s10639-023-12276-5

Feng, L., and Weimin, W. (2001). Characteristics and design of network learning environment. China Dist. Educ. 7, 21–23.

Fornell, C., and Larcker, D. F. (1981). Structural equation models with unobservable variables and measurement error: algebra and statistics. J. Mark. Res. 18, 382–388. doi: 10.1177/002224378101800313

Fuller, C. M., Simmering, M. J., Atinc, G., Atinc, Y., and Babin, B. J. (2016). Common methods variance detection in business research. J. Bus. Res. 69, 3192–3198. doi: 10.1016/j.jbusres.2015.12.008

Haiqin, Y., Chen, L., and Haimei, S. (2013). Effects of learning environment on college students’ learning style and academic achievement: an empirical study based on the cultivation of top innovative undergraduate talents. High. Educ. Res. 34, 62–70.

Hao, Z., and Lirong, L. (2004). Statistical test and control of common method bias. Psychol. Sci. Progress 6, 942–950.

Hinkin, T. R. (1998). A brief tutorial on the development of measures for use in survey questionnaires. Organ. Res. Methods 1, 104–121. doi: 10.1177/109442819800100106

Hsiao, A., McSorley, G., and Taylor, D. (2019). “Peer learning and leadership in engineering design and professional practice” in Proceedings of the Canadian Engineering Education Association (CEEA).

Inegbedion, H. E. (2024). Influence of educational technology on peer learning outcomes among university students: the mediation of learner motivation. Educ. Inf. Technol. 29, 21241–21261. doi: 10.1007/s10639-024-12670-7

Ismaeel, S. S., Midi, H., and Sani, M. (2021). Robust multicollinearity diagnostic measure for fixed effect panel data model. Malays. J. Fundam. Appl. Sci. 17, 636–646. doi: 10.11113/mjfas.v17n5.2391

Iverson, R. D., and Maguire, C. (2000). The relationship between job and life satisfaction: evidence from a remote mining community. Hum. Relat. 53, 807–839. doi: 10.1177/0018726700536003

Jaafar, I., Statti, A., Torres, K. M., and Pedersen, J. M. (2021). “Technology integration and the teacher-student relationship” in Advances in educational technologies and instructional design (IGI Global), 196–213.

Jia, M., Zhao, Y., Song, S., Zhang, X., Wu, D., and Li, J. (2024). How vicarious learning increases users’ knowledge adoption in live streaming: the roles of parasocial interaction, social media affordances, and knowledge consensus. Inform. Proc. Manag. 61:103599.

Jietai, H., Zhonglin, W., and Zijuan, C. (2004). Structural equation model and its application. Beijing: Education Science Press.

Kaicheng, Y. (2000). Design principles of constructivist learning environment. Elec. Educ. China 4, 14–18.

Kim, S., and Ozturk, I. (2020). Global mobility patterns of Washington accord graduates. High. Educ. Q. 74, 210–228.

Kokroko, K. J., Leipold, W., and Hovis, M. (2024). Applying a pedagogy of interdisciplinary and cross-cultural collaboration as socio-ecological practice in landscape architecture education. Soc. Ecol. Pract. Res. 6, 21–40. doi: 10.1007/s42532-023-00175-5

Kyriazos, T., and Poga, M. (2023). Dealing with multicollinearity in factor analysis: the problem, detections, and solutions. Open J. Stat. 13, 404–424. doi: 10.4236/ojs.2023.133020

Lennon, M. C. (2021). Global engineering education and the Washington accord: threshold standards for quality assurance. High. Educ. Policy 34, 589–607.

Li, Z. Y., and Zhao, W. B. (2021). Latest developments in engineering education accreditation in China. High. Eng. Educ. Res. 5, 39–43.

Li, M., and Zhao, M. (2023). “Research on governance of higher engineering education quality in China after accessing the Washington accord” in 2023 ASEE Annual Conference & Exposition Proceedings.

Lijun, C., and Bushi, L. (2010). On the construction of learning support system for higher engineering education in knowledge society. China High. Educ. Res. 9, 19–23.

Makda, F. (2024). Digital education: mapping the landscape of virtual teaching in higher education – a bibliometric review. Educ. Inf. Technol. 30, 2547–2575.

Marin, P., Gómez, H., and Lee, J. (2021). Assessing the impact of international accreditation in emerging economies: evidence from engineering graduates. Stud. High. Educ. 46, 1789–1805.

Marinho, A. C. F., De Medeiros, A. M., Lima, E. d. P., and Teixeira, L. C. (2024). Speaking in public coping scale (ECOFAP): content and response process validity evidence. CoDAS 36, 125–137. doi: 10.1590/2317-1782/20242023200en

Matolić, T., Jurakić, D., Greblo Jurakić, Z., Maršić, T., and Pedišić, Z. (2023). Development and validation of the EDUcational course assessment TOOLkit (EDUCATOOL): a 12-item questionnaire for evaluation of training and learning programmes. Front. Educ. 8.

Minglong, W. (2009). Operation and application of structural equation model: AMOS. Chongqing University Press.

MIT Office of Institutional Research (2023). Longitudinal study on engineering curriculum reform outcomes. Cambridge: MIT Press.

Mokkink, L. B., Herbelet, S., Tuinman, P. R., and Terwee, C. B. (2025). Content validity: judging the relevance, comprehensiveness, and comprehensibility of an outcome measurement instrument – a COSMIN perspective. J. Clin. Epidemiol. 185:111879. doi: 10.1016/j.jclinepi.2025.111879

Nurhuda, A., Al Khoiron, M. F., Syafi’i Azami, Y., and Ni’mah, S. J. (2023). Constructivism learning theory in education: characteristics, steps and learning models. Res. Educ. Rehabil. 6, 234–242. doi: 10.51558/2744-1555.2023.6.2.234

Patil, A., and Codner, G. (2007). Accreditation of engineering education: review, observations, and proposals for global benchmarking. Eur. J. Eng. Educ. 32, 639–651. doi: 10.1080/03043790701520594

Ping, C. (2014). The concept of professional certification promotes the connotative development of engineering specialty construction. Chin. Univ. Teach. 1, 42–47.

Qian, Y. (2023). Improvement of teaching management mechanism for engineering education accreditation. J. Contemp. Educ. Res. 7, 46–51. doi: 10.26689/jcer.v7i9.5320

Qingguo, M. (2002). Management statistics: Data acquisition, statistical principles and SPSS tools and applied research. Beijing: Science Publishing, 27–42.

Rosé, C., and Järvelä, S. (2021). A theory-driven reflection on context-aware support for collaborative discussions in light of analytics, affordances, and platforms. Int. J. Comput.-Support. Collab. Learn. 16, 435–440. doi: 10.1007/s11412-021-09360-8

Safitri, S. M., Masnawati, E., and Darmawan, D. (2024). Pengaruh Gaya Mengajar Guru, Dukungan Orang Tua dan Kepercayaan Diri terhadap Minat Belajar Siswa [The influence of teachers’ teaching style, parental support and self-confidence on students’ interest in learning]. EL-BANAT Jurnal Pemikiran Dan Pendidikan Islam 14, 77–90. doi: 10.54180/elbanat.2024.14.1.77-90

Shen, Z. D. (2007). “Five strengthenings” in engineering quality cultivation for students at local normal universities. China Adult Educ. 24, 121–122.

Sheng-Tao, L., Xu, L., Qing-Qing, L., and Yu-Ling, Z. (2023). Empirical study on the effectiveness of engineering education certification from the perspective of students: based on SERU data of university. Univ. Educ. Sci. 3, 73–83.

Tang, D., Boker, S. M., and Tong, X. (2024). Are the signs of factor loadings arbitrary in confirmatory factor analysis? Problems and solutions. United States: PubMed.

Terek, M., Muchova, E., and Lesko, P. (2021). How to make estimates with compensation for nonresponse in statistical analysis of census data. J. Eastern Europ. Central Asian Res. 8, 149–159. doi: 10.15549/jeecar.v8i2.619

Thy, S. (2017). A survey on cyber security awareness among college students in Tamil Nadu. IOP Conf. Ser. Mater. Sci. Eng. 263:4.

Tien, D. T. K., Namasivayam, S. N., and Ponniah, L. S. (2019). A review of transformative learning theory with regards to its potential application in engineering education. AIP Conf. Proc. 2137:050001.

Upendra, S., Abbaiah, R., and Balasiddamuni, P. (2023). Multicollinearity in multiple linear regression: detection, consequences, and remedies. Int. J. Res. Appl. Sci. Eng. Technol. 11, 1047–1061. doi: 10.22214/ijraset.2023.55786

Vakili, M. M., and Jahangiri, N. (2018). Content validity and reliability of the measurement tools in educational, behavioral, and health sciences research. J. Med. Educ. Dev. 10, 106–118.

Wolff, L. A., and Ehrström, P. (2020). Social sustainability and transformation in higher educational settings: a utopia or possibility? Sustainability 12:4176. doi: 10.3390/su12104176

Zhai, R. N., Liu, Y., and Wen, J. X. (2024). Competency scale of quality and safety for greenhand nurses: instrument development and psychometric test. BMC Nurs. 23:219. doi: 10.1186/s12912-024-01873-5

Zhang, Q., Ding, X., Wang, Q., and Zhang, Y. (2022). Competency of guiding teachers of technology competitions for Chinese college students under the global engineering education accreditation. Int. J. Technol. Des. Educ. 33, 1819–1833.

Zhang, J., Yuan, H., Zhang, D., Li, Y., and Mei, N. (2023). International engineering education accreditation for sustainable career development: a comparative study of ship engineering curricula between China and UK. Sustainability 15:11954. doi: 10.3390/su151511954

Zheng, W., Wen, S., Lian, B., and Nie, Y. (2023). Research on a sustainable teaching model based on the OBE concept and the TSEM framework. Sustainability 15:5656. doi: 10.3390/su15075656

Keywords: learning environment, engineering education accreditation, scale preparation, graduation requirements, stability

Citation: Jing B and Xiaofeng R (2025) The development and test of the learning environment scale from the perspective of China’s engineering education certification. Front. Educ. 10:1665226. doi: 10.3389/feduc.2025.1665226

Edited by:

Dillip Das, University of KwaZulu-Natal, South Africa

Reviewed by:

Jose Roberto Cardoso, University of São Paulo, Brazil
Vaishali Nirgude, Thakur College of Engineering and Technology, India

Copyright © 2025 Jing and Xiaofeng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bian Jing, 18110460001@fudan.edu.cn
