- ¹ Faculty of Primary Education, TNU - University of Education, Thai Nguyen, Vietnam

- ² Faculty of Social Sciences and Humanities, TNU - University of Sciences, Thai Nguyen, Vietnam

This study examines the implementation and determinants of culturally responsive assessment (CRA) in ethnic minority semi-boarding primary schools in Vietnam. Four structured questionnaires (8,840 copies in total) were distributed to school leaders (123), teachers (406), parents (523), and students (7,788), yielding 1,006 valid returns; after quality screening, the final analytic sample comprised 778 respondents. Adopting a mixed-methods design, we applied Partial Least Squares Structural Equation Modeling (PLS-SEM) and Importance–Performance Map Analysis (IPMA), alongside thematic analysis of open-ended responses. The structural model explained a substantial share of variance in Behavioral Intention (R² = 0.735). Attitude emerged as the strongest predictor (β = 0.801, p < 0.001), underscoring the central role of stakeholders' dispositions toward CRA; IPMA identified a high-importance/low-performance gap for Support, signaling a priority area for intervention. Qualitative evidence, such as students' preference for oral storytelling and parents' involvement in assessment design, corroborated the quantitative patterns and enhanced methodological transparency. The study contributes to CRA scholarship by consolidating evidence from Vietnam and Southeast Asia, linking CRA with culturally sustaining pedagogies, and offering actionable guidance for practice and policy. Practical implications include embedding CRA principles in teacher preparation, co-developing community-based assessment materials, and fostering professional learning communities. The findings provide a replicable framework for advancing equity and inclusivity in linguistically and culturally diverse schooling contexts.

1 Introduction

The discourse surrounding culturally responsive assessment (CRA) has gained considerable traction in global educational contexts, particularly in response to the persistent marginalization of ethnic minority students (Solano-Flores, 2019). Scholars have emphasized that standardized assessments often fail to account for students' sociocultural backgrounds, leading to biased outcomes that hinder equitable learning (Lane and Marion, 2025). CRA emerges as a countermeasure to such disparities, advocating for assessments that are embedded in students' lived experiences and local contexts (Asil, 2017).

In international settings, CRA has been recognized for its potential to promote fairness in both formative and summative assessment (Herzog-Punzenberger et al., 2020). However, studies also reveal that teachers encounter challenges in operationalizing CRA principles due to a lack of training and contextual adaptability (Nortvedt et al., 2020). These findings align with critiques from Vietnam-based researchers, who argue that despite curricular reforms, assessment practices remain largely exam-oriented and disconnected from students' cultural realities (Do et al., 2024).

The validity of assessment outcomes is also being re-examined through the lens of CRA. Rather than viewing validity as a fixed psychometric property, scholars propose a framework of validity argumentation that incorporates cultural responsiveness as an essential domain (Solano-Flores, 2019). This reconceptualization is particularly salient in developing contexts, where ethnolinguistic diversity and systemic inequality demand flexible and localized assessment practices (Montenegro and Jankowski, 2020).

In the Vietnamese context, semi-boarding ethnic minority primary schools present unique challenges for assessment reform. Despite policy attention and pilot initiatives, there is limited empirical research that investigates how CRA principles are understood and applied in these settings. The present study addresses this gap by adopting a mixed-methods approach to evaluate the current state of culturally responsive assessment in ethnic minority semi-boarding primary schools across northern Vietnam. It draws on survey data from students, parents, teachers, and school leaders, complemented by interviews and document reviews, to provide a comprehensive picture of both practices and perceptions. The findings not only inform local policy development but also contribute to the global dialogue on CRA implementation in under-researched educational systems.

While international literature on CRA has expanded significantly, there remains a paucity of research situated in Global South contexts, particularly in rural, ethnically diverse educational settings such as those in Vietnam. Previous studies have often focused on teacher education or policy frameworks, with limited attention to the lived experiences of stakeholders in semi-boarding schools—a unique institutional model in the region. Moreover, the interplay between national curriculum reforms and local cultural expectations remains underexplored.

This study fills that gap by systematically investigating the implementation of culturally responsive assessment in semi-boarding ethnic minority primary schools. Employing a mixed-methods design, it examines the perceptions and practices of multiple stakeholders, including students, parents, teachers, and school leaders. By triangulating quantitative and qualitative data, the study offers nuanced insights into how CRA is understood, practiced, and challenged in Vietnamese primary education. Its contribution lies in extending CRA discourse into a new empirical context, offering both theoretical and policy implications for equity-driven educational assessment reform in diverse cultural environments.

2 Literature review

2.1 Culturally responsive assessment in primary education

Culturally responsive assessment (CRA) has emerged as a pivotal framework in addressing inequities in educational evaluation, especially in multicultural primary school contexts. CRA advocates for the integration of students' cultural, linguistic, and experiential backgrounds into the design and implementation of assessment practices (Goforth and Pham, 2023). This approach shifts the focus away from standardized, one-size-fits-all testing toward more nuanced, context-sensitive methods that recognize learners' identities and sociocultural realities.

Recent empirical studies have highlighted the potential of CRA to enhance student engagement and equity in learning outcomes. Yang (2024) investigated a linguistically responsive formative assessment practice in a mathematics classroom, demonstrating how an elementary teacher adapted her assessment strategies to support emergent bilingual students. Her findings underscore the need for assessments that are attuned to students' language repertoires and cultural schemas. Similarly, Schimke et al. (2022) explored CRA through the lens of trauma-informed education in an Australian primary school. Their study found that a multi-tiered, culturally responsive behavior support system fostered both academic achievement and emotional safety among culturally diverse learners.

From a theoretical perspective, Bennett (2023) has proposed a socioculturally responsive assessment model that integrates principles of fairness, inclusivity, and cultural validity. He argues that such models can bridge the gap between global assessment standards and the localized needs of historically marginalized student populations. Complementing this framework, Walker et al. (2023) delineate provisional principles for CRA that include community involvement, cultural congruence in test content, and reflexivity among assessors.

Complementing this international evidence, recent regional studies have begun to highlight culturally responsive approaches in Southeast Asian contexts. Luong et al. (2025) examined Vietnamese teachers' perceptions of CRA within international programs and emphasized the challenges of aligning global standards with local realities. Similarly, Jia and Nasri (2019) provided a systematic review of culturally responsive pedagogy in Southeast Asia, underscoring teacher competence as a decisive factor for effective implementation. These regional insights further justify the importance of examining CRA in Vietnam's ethnic minority primary schools.

Despite the robust theoretical grounding and growing body of international evidence, CRA remains underexplored in many low- and middle-income countries, including Vietnam. While recent educational reforms have emphasized curriculum innovation and teacher capacity building, assessment practices in ethnic minority semi-boarding primary schools largely remain centralized and culturally unadapted. This is particularly concerning in a country with over 50 ethnic groups and vast linguistic and cultural diversity.

The current study builds on this literature by examining how CRA principles are—or are not—reflected in the assessment practices of primary schools serving ethnic minority students in Northern Vietnam. In doing so, it responds to the call for more contextually grounded CRA research in non-Western, under-resourced educational settings.

2.2 Equity and cultural inclusivity in assessment

In recent years, educational discourse has increasingly recognized the importance of equity and cultural inclusivity in assessment practices, particularly within ethnolinguistically diverse and underserved schooling contexts. Traditional assessment systems—largely standardized and norm-referenced—have been critiqued for their tendency to reinforce existing disparities by privileging dominant cultural norms while marginalizing alternative knowledge systems and ways of demonstrating learning (Trumbull and Nelson-Barber, 2019). Such exclusionary practices disproportionately affect ethnic minority students, who often face linguistic, cultural, and epistemological mismatches between home and school expectations.

Research in Indigenous and minority communities has illuminated the potential of culturally inclusive assessment to challenge these systemic inequities. For example, Preston and Claypool (2021) demonstrated how integrating Indigenous knowledge systems into assessment frameworks enhanced student engagement and affirmed cultural identity in Canadian schools. Their findings suggest that culturally responsive evaluation tools—rooted in local epistemologies—can act as both pedagogical mechanisms and vehicles for equity.

Moreover, classroom environments that actively foster cultural diversity have been linked to students' perceptions of fairness in assessment. Schachner et al. (2021) found that when students perceive their cultural backgrounds as recognized and valued, their trust in evaluation processes and their motivation to perform increase significantly. This underscores the necessity of embedding inclusivity not only in content but also in assessment delivery and interpretation.

Developing teachers' capacity for equitable assessment remains a core challenge. Ulbricht et al. (2024) explored a pre-service teacher intervention that improved participants' ability to design inclusive assessment tasks, emphasizing identity-sensitive pedagogy. Similarly, Bottiani et al. (2025) introduced the CARES360 framework, which supports teacher professional development in culturally sustaining assessment practices. Their model highlights that without institutional backing and targeted training, even well-intentioned educators may struggle to bridge the equity gap.

Taken together, these studies highlight the need to reconceptualize assessment not as a neutral technical activity but as a socially situated practice. In contexts such as ethnic minority semi-boarding schools in Vietnam, promoting cultural inclusivity in assessment is not merely a pedagogical choice but a policy imperative for educational justice.

2.3 Assessment practices in ethnic minority contexts

Assessment practices in ethnic minority contexts face unique challenges due to linguistic, cultural, and contextual mismatches between students and dominant educational standards. Castagno et al. (2021) emphasized the importance of evaluating culturally responsive principles in schools serving Indigenous students, highlighting the persistent gap in recognition of community knowledge systems. This resonates with Gálvez-López (2023), who found that formative feedback in multicultural classrooms often lacks responsiveness to students' diverse epistemologies, thereby undermining engagement and learning outcomes. A key theoretical stance is offered by Bennett (2023), who posited that socioculturally responsive assessment must be grounded in an understanding of the learner's cultural narrative and identity, not merely in test fairness. These studies collectively underscore the need for assessment frameworks that integrate local knowledge, promote equity, and shift away from one-size-fits-all metrics.

Recent empirical research has begun to explore pedagogical innovations to support responsive assessment in multiethnic educational settings. For example, Cardinal et al. (2022) documented collaborative community-centered approaches involving Indigenous youth and families in designing assessment frameworks that reflect cultural values. Likewise, Ulbricht et al. (2024) implemented a qualitative intervention—the Identity Project—which empowered pre-service teachers to embed cultural self-awareness into classroom assessment. Such initiatives align with broader calls for transforming traditional assessment hierarchies. In the context of trauma-informed education, Schimke et al. (2022) demonstrated that multi-tiered culturally responsive behavior support can enhance both assessment fairness and emotional safety in primary schools serving marginalized groups. These findings suggest that culturally responsive assessment is not merely about content adaptation, but also about rethinking power dynamics and learner–teacher relationships.

Beyond classroom practice, systemic approaches to assessment reform are gaining momentum. Bottiani et al. (2025) introduced the CARES360 framework, which scaffolds teacher development in culturally sustaining assessment across multiple layers of school ecosystems. This is echoed in international comparative work by Trumbull and Nelson-Barber (2019), who advocated for policy shifts that institutionalize cultural responsiveness as a core criterion in large-scale assessment systems. In a parallel analysis, Johansson et al. (2024) investigated how teacher education curricula in multilingual contexts can embed assessment literacy with intercultural competence. These studies provide evidence that culturally responsive assessment requires sustained institutional support, reflective policy frameworks, and targeted capacity-building—particularly in ethnically diverse and socioeconomically disadvantaged schools.

2.4 Theoretical framework: culturally sustaining pedagogies and assessment

The theoretical foundation of this study is grounded in the integration of Culturally Sustaining Pedagogies (CSP) and Culturally Responsive Assessment (CRA), offering a critical lens to examine how assessment practices can affirm, sustain, and extend students' cultural identities. Originating from the seminal work of Paris and Alim (2017), CSP transcends the deficit-based paradigms of traditional multicultural education by insisting that educational practices—including assessment—must actively sustain students' linguistic and cultural practices rather than simply accommodate or erase them.

Within this framework, assessment is not neutral. Instead, it is deeply entangled with issues of identity, language, power, and representation. As Solano-Flores and Trumbull (2019) assert, cultural validity must be central to any assessment model aiming for equity. Their theory articulates that assessments should reflect learners' cultural contexts across content, structure, and administration modes, ensuring interpretations do not reinforce systemic biases.

Shepard (2000) contributes to this discourse by positioning assessment as a practice within a “learning culture,” wherein students' backgrounds shape their engagement with feedback and evaluation. This cultural embeddedness of assessment is echoed in Wyatt-Smith et al. (2010), who argue for assessments that function as both socially mediated tools and opportunities for dialogic learning, particularly when working with diverse student populations.

The role of co-regulated learning, proposed by Andrade and Brookhart (2019), further complements CSP by framing assessment as a collaborative process that cultivates student agency and shared responsibility. Their work highlights how formative assessment, if situated within culturally responsive dialogues, can promote equitable learning outcomes.

A more systemic articulation is offered by Bennett et al. (2025), who advocate for socioculturally responsive assessment across institutional levels. Their model incorporates policy, teacher training, and psychometric design into a cohesive framework aligned with CSP values. This systems-level thinking is especially relevant in settings where ethnic minority learners often encounter assessments that fail to reflect their lived realities—such as in semi-boarding primary schools in Vietnam.

Additionally, Solano-Flores (2019) introduces the Matrix of Evidence for Validity Argumentation, a framework that provides practical tools for evaluating the cultural appropriateness of large-scale assessments. His model reinforces the need for validation processes to be explicitly grounded in students' sociolinguistic and cultural repertoires.

Importantly, CSP-informed CRA must also address the role of teacher beliefs and feedback practices. Gálvez-López (2023) and Lee and Mo (2023) emphasize how teacher multicultural competence and formative feedback directly impact the implementation of responsive assessment. Teachers' self-efficacy and cultural awareness become critical levers in sustaining pedagogical equity.

Within this framework, Attitude is conceptualized as educators' dispositions and willingness to adopt culturally responsive assessment practices. It represents the affective dimension that complements Awareness, Practice, and Support, and is included in the model to capture how positive orientations toward CRA directly shape behavioral intentions.

In sum, the theoretical framework synthesizes CSP with emerging models of CRA to argue that assessment must move beyond adaptation to transformation—transforming how success is defined, how learning is recognized, and how students' identities are validated within educational systems. By explicitly linking CSP and CRA, the framework demonstrates how sustaining students' linguistic and cultural practices is inseparable from designing equitable assessments. This articulation provides a coherent foundation that connects pedagogy and assessment, particularly vital for ethnic minority students in Vietnam, whose educational trajectories are shaped by historically monocultural assessment paradigms.

3 Methods

3.1 Instrument

Four structured questionnaires were employed in this study, each designed for a specific stakeholder group: teachers, school leaders, parents, and students. The instruments aimed to capture diverse perspectives on culturally responsive assessment practices in ethnic minority semi-boarding primary schools in Vietnam. Their development was guided by the principles of cultural validity (Solano-Flores and Trumbull, 2019) and culturally sustaining pedagogy (Paris and Alim, 2017), ensuring that items were sensitive to the socio-cultural characteristics of the target population.

All items were written in Vietnamese and measured on a five-point Likert scale ranging from 1 = Strongly Disagree to 5 = Strongly Agree; reliability and validity results for the resulting scales are presented in Appendix A. The instruments contained both closed-ended items, which facilitated quantitative analysis, and open-ended items, which allowed participants to elaborate on their views. To ensure clarity and contextual appropriateness, the questionnaires were reviewed by educational experts familiar with ethnic minority contexts and piloted with 60 participants across all stakeholder groups.

To maintain linguistic accuracy, a translation and back-translation process was undertaken. Two bilingual experts independently translated the original items into Vietnamese, after which a separate team retranslated them back into English. Differences were resolved through consultation with a panel of specialists to guarantee both semantic equivalence and cultural relevance.

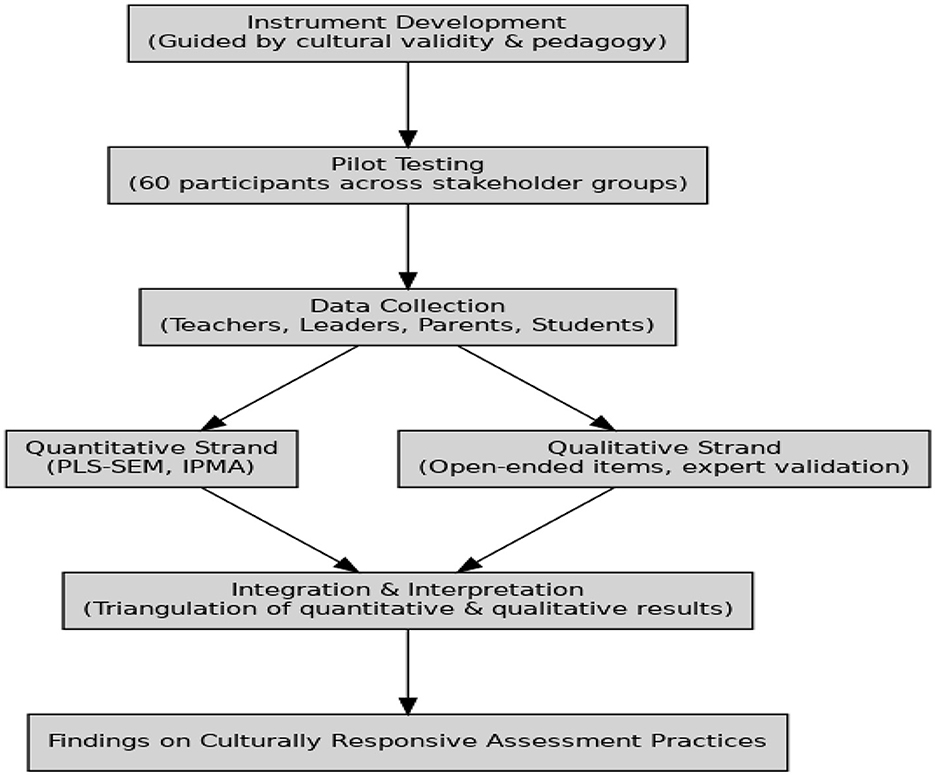

This process reflects the logic of a mixed-methods design, where integration of quantitative and qualitative data strengthens the interpretation of findings on culturally embedded assessment practices (Schoonenboom and Johnson, 2017).

The overall mixed-methods design flow is summarized in Figure 1, which depicts the sequential stages from instrument development and pilot testing to large-scale data collection, quantitative and qualitative analyses, and integration of findings.

Figure 1. Mixed-methods research design: instrument development, pilot testing (n = 60), large-scale data collection (n = 778), quantitative analyses (PLS-SEM, IPMA), qualitative analyses, and integration of findings.

To ensure transparency and replicability, the complete survey instruments are provided in Appendix D in both Vietnamese and English. This appendix includes the finalized questionnaire items, the Likert scale format, pilot testing information, and the cross-cultural translation and back-translation procedures. By making the full instruments accessible, the study enables future scholars to replicate the research design in similar contexts.

3.2 Data analysis

Partial Least Squares Structural Equation Modeling (PLS-SEM) was applied as the primary analytic technique, implemented through SmartPLS 4.0. PLS-SEM was deemed suitable given the complexity of the model, the inclusion of formative constructs, and the predictive orientation of the research objectives (Hair et al., 2022a).

The analysis proceeded in four stages. First, the measurement model was evaluated. Internal consistency was examined using Cronbach's alpha and Composite Reliability (CR). Convergent validity was assessed through the Average Variance Extracted (AVE), while discriminant validity was confirmed using both the Fornell–Larcker criterion and the Heterotrait–Monotrait (HTMT) ratio (Fornell and Larcker, 1981; Henseler et al., 2015).
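As a rough illustration of the two discriminant-validity checks named above, the sketch below computes the HTMT ratio from an item correlation matrix and applies the Fornell–Larcker comparison of √AVE against inter-construct correlations. It is a minimal standard-library sketch, not the SmartPLS implementation; the function names, the toy correlation matrix, and the item-to-construct index lists are hypothetical.

```python
import math
from itertools import combinations


def htmt(corr, items_i, items_j):
    """Heterotrait-monotrait ratio for two constructs.

    corr: square item correlation matrix (list of lists).
    items_i, items_j: indices of the items measuring each construct.
    """
    # Average correlation between items of different constructs (heterotrait).
    hetero = [corr[a][b] for a in items_i for b in items_j]
    mean_hetero = sum(hetero) / len(hetero)

    def mean_mono(items):
        # Average correlation among items of the same construct (off-diagonal only).
        pairs = [corr[a][b] for a, b in combinations(items, 2)]
        return sum(pairs) / len(pairs)

    return mean_hetero / math.sqrt(mean_mono(items_i) * mean_mono(items_j))


def fornell_larcker_ok(ave, construct_corr):
    """True if sqrt(AVE) of every construct exceeds its correlation with each other construct."""
    k = len(ave)
    return all(
        math.sqrt(ave[i]) > abs(construct_corr[i][j])
        for i in range(k)
        for j in range(k)
        if i != j
    )


# Hypothetical example: items 0-1 measure construct A, items 2-3 measure construct B.
corr = [
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.6],
    [0.3, 0.3, 0.6, 1.0],
]
print(round(htmt(corr, [0, 1], [2, 3]), 3))  # 0.5
print(fornell_larcker_ok([0.64, 0.68], [[1.0, 0.5], [0.5, 1.0]]))  # True
```

Henseler et al. (2015) discuss HTMT cut-offs of 0.85 and 0.90; which one is applied is a choice the analysis would need to state explicitly.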

Second, the structural model was tested to assess the hypothesized relationships among constructs. Path coefficients, t-values, and p-values were estimated through bootstrapping with 5,000 subsamples. Explanatory power was gauged using R² and effect size (f²), while predictive relevance was assessed through Q².
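To make the bootstrapping step concrete, the sketch below resamples respondents with replacement, re-estimates a single standardized path on each subsample, and derives a t-value (estimate divided by the bootstrap standard error) and a two-tailed p-value. It assumes only one predictor, so the standardized path equals the Pearson correlation between the two latent scores; SmartPLS re-runs the full PLS algorithm on every subsample, which this sketch does not do. The normal approximation for the p-value, the seed, and all function names are assumptions.

```python
import math
import random
import statistics


def pearson(x, y):
    """Pearson correlation; equals the standardized slope when there is one predictor."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_path(x, y, n_boot=5000, seed=2025):
    """Bootstrap a single standardized path coefficient.

    Returns (estimate, bootstrap SE, t-value, two-tailed p-value).
    """
    rng = random.Random(seed)
    n = len(x)
    estimate = pearson(x, y)
    boot = []
    for _ in range(n_boot):
        # Draw n respondents with replacement and re-estimate the path.
        idx = [rng.randrange(n) for _ in range(n)]
        boot.append(pearson([x[i] for i in idx], [y[i] for i in idx]))
    se = statistics.stdev(boot)
    t = estimate / se
    # Normal approximation to the t distribution, reasonable for large samples.
    p = math.erfc(abs(t) / math.sqrt(2))
    return estimate, se, t, p
```

Called on two lists of latent scores, for example `bootstrap_path(awareness_scores, practice_scores)` with hypothetical variable names, it returns the four quantities reported for each path.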

Third, data adequacy checks were conducted. Normality was examined through skewness and kurtosis statistics, which indicated non-normal distributions and thus supported the use of PLS-SEM rather than covariance-based SEM (CB-SEM). Multicollinearity was evaluated using the Variance Inflation Factor (VIF), and all values were within acceptable thresholds (see Section 4.1).
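The normality screen can be illustrated with moment-based skewness (g1) and excess kurtosis (g2). The sketch below is a minimal standard-library version. The ±1 cut-off used to flag an item is an assumed rule of thumb, since the section does not state which threshold was applied, and statistical packages may report bias-adjusted variants of these statistics.

```python
import statistics


def _central_moment(x, order):
    m = statistics.fmean(x)
    return sum((v - m) ** order for v in x) / len(x)


def skewness(x):
    """Moment-based skewness g1 = m3 / m2**1.5."""
    return _central_moment(x, 3) / _central_moment(x, 2) ** 1.5


def excess_kurtosis(x):
    """Moment-based excess kurtosis g2 = m4 / m2**2 - 3 (0 for a normal distribution)."""
    return _central_moment(x, 4) / _central_moment(x, 2) ** 2 - 3


def looks_non_normal(x, cutoff=1.0):
    """Flag an item whose |skewness| or |excess kurtosis| exceeds an assumed cut-off."""
    return abs(skewness(x)) > cutoff or abs(excess_kurtosis(x)) > cutoff


# Hypothetical Likert responses piled up at the low end of a 1-5 scale.
item = [1, 1, 1, 1, 5]
print(round(skewness(item), 2), looks_non_normal(item))  # 1.5 True
```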

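Finally, an Importance–Performance Map Analysis (IPMA) was performed. IPMA contrasts each construct's importance (its total effect on the target construct) with its performance (its mean latent score rescaled to 0–100), identifying constructs that strongly influence the perceived effectiveness of culturally responsive assessment practices yet perform relatively poorly, and thereby indicating priorities for intervention (Hair et al., 2022b).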
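A minimal sketch of the IPMA logic follows. It assumes the usual setup in which importance is a predictor's unstandardized total effect on the target construct and performance is its mean score rescaled from the 1–5 Likert range to 0–100. Splitting the map at mean importance and mean performance is one common convention, not necessarily the one used in this study, and the construct names and numbers below are hypothetical.

```python
def rescale_to_100(score, scale_min=1.0, scale_max=5.0):
    """Map a mean score on a 1-5 Likert scale onto a 0-100 performance scale."""
    return (score - scale_min) / (scale_max - scale_min) * 100


def ipma_quadrants(constructs):
    """constructs: {name: (importance, mean_score_on_1_to_5)} -> {name: quadrant label}."""
    performance = {name: rescale_to_100(mean) for name, (_, mean) in constructs.items()}
    mean_importance = sum(imp for imp, _ in constructs.values()) / len(constructs)
    mean_performance = sum(performance.values()) / len(performance)

    labels = {}
    for name, (importance, _) in constructs.items():
        high_imp = importance >= mean_importance
        high_perf = performance[name] >= mean_performance
        if high_imp and not high_perf:
            labels[name] = "high importance / low performance"  # intervention priority
        elif high_imp:
            labels[name] = "high importance / high performance"
        elif high_perf:
            labels[name] = "low importance / high performance"
        else:
            labels[name] = "low importance / low performance"
    return labels


# Hypothetical inputs, not results from this study.
example = {"A": (0.50, 3.0), "B": (0.10, 4.5), "C": (0.40, 2.0)}
for name, label in ipma_quadrants(example).items():
    print(name, label)
```

Constructs landing in the "high importance / low performance" quadrant are the ones an IPMA would flag as priorities.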

All analyses were carried out on a final dataset of 778 cases, obtained after excluding incomplete or inconsistent responses.

Details of the instruments, as well as illustrative open-ended responses and coding procedures for the qualitative phase, are included in Appendix D.

In addition to quantitative analyses, qualitative data from open-ended responses were examined using thematic analysis. Two coders independently reviewed the data to identify recurring categories, which were then refined through iterative discussion until consensus was reached. Representative quotes were included to illustrate themes and ensure transparency in the coding process. To strengthen rigor, triangulation was employed by comparing qualitative insights with quantitative findings, thereby validating patterns and providing a richer interpretation of culturally responsive assessment practices.

3.3 Data collection and participants

A large-scale survey was conducted between January and May 2025 in 13 semi-boarding primary schools located in mountainous provinces of Northern Vietnam with high ethnic minority populations. A combination of purposive sampling and full school coverage was employed to ensure diverse representation across stakeholder groups.

In total, 8,840 questionnaires were distributed (123 to school leaders, 406 to teachers, 523 to parents, and 7,788 to students). Of these, 1,006 responses were returned and considered valid after preliminary screening. Following additional data cleaning procedures, including the removal of incomplete and inconsistent cases, a final analytic sample of 778 respondents was retained. All statistical analyses and appendices are based on this final dataset.
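The screening funnel reported above can be checked arithmetically, and the cleaning rules can be sketched in code. The section does not define "incomplete" or "inconsistent", so the rules below are assumptions for illustration only: any missing or out-of-range answer counts as incomplete, and identical answers across all items (straight-lining) stand in for inconsistent responding.

```python
LIKERT = {1, 2, 3, 4, 5}


def screen(responses, n_items):
    """Return the responses that pass the (assumed) completeness and consistency rules."""
    kept = []
    for r in responses:
        if len(r) != n_items or any(v is None for v in r):
            continue  # incomplete questionnaire
        if any(v not in LIKERT for v in r):
            continue  # value outside the 1-5 Likert range
        if len(set(r)) == 1:
            continue  # straight-lining, used here as an assumed marker of inconsistency
        kept.append(r)
    return kept


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Funnel figures reported in this section.
distributed, valid, final = 8840, 1006, 778
print(pct(valid, distributed))  # 11.4: distributed questionnaires returned valid
print(pct(final, valid))        # 77.3: valid returns retained after cleaning
print(pct(final, distributed))  # 8.8: final sample relative to distribution
```

On these figures, about 11.4% of distributed questionnaires yielded valid returns and 77.3% of those were retained, so the final sample corresponds to roughly 8.8% of the questionnaires distributed.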

All questionnaires were administered in Vietnamese. Distribution was conducted either in person or in supervised classroom settings. Participants were fully informed about the aims of the study, assured of confidentiality, and reminded that their participation was voluntary. For students, parental consent and school-level approval were obtained in advance.

Descriptive statistics for demographic information and response distributions are presented in Appendix A. Reliability and validity indicators are reported in Appendices A–C; all indicators met accepted thresholds.

3.4 Ethics statement

This study received ethical clearance from the Research Ethics Committee of Thai Nguyen University, Vietnam (Approval No. 103/BKHCN-TNU). All participants were informed of the study's objectives and the voluntary nature of participation, and confidentiality was strictly maintained. For students under 18, written consent was secured from parents and schools, and assent was collected directly from the students after an age-appropriate explanation. Respondents were reminded that they could withdraw at any stage without consequence, and only those providing explicit consent or assent were included in the final dataset.

4 Results

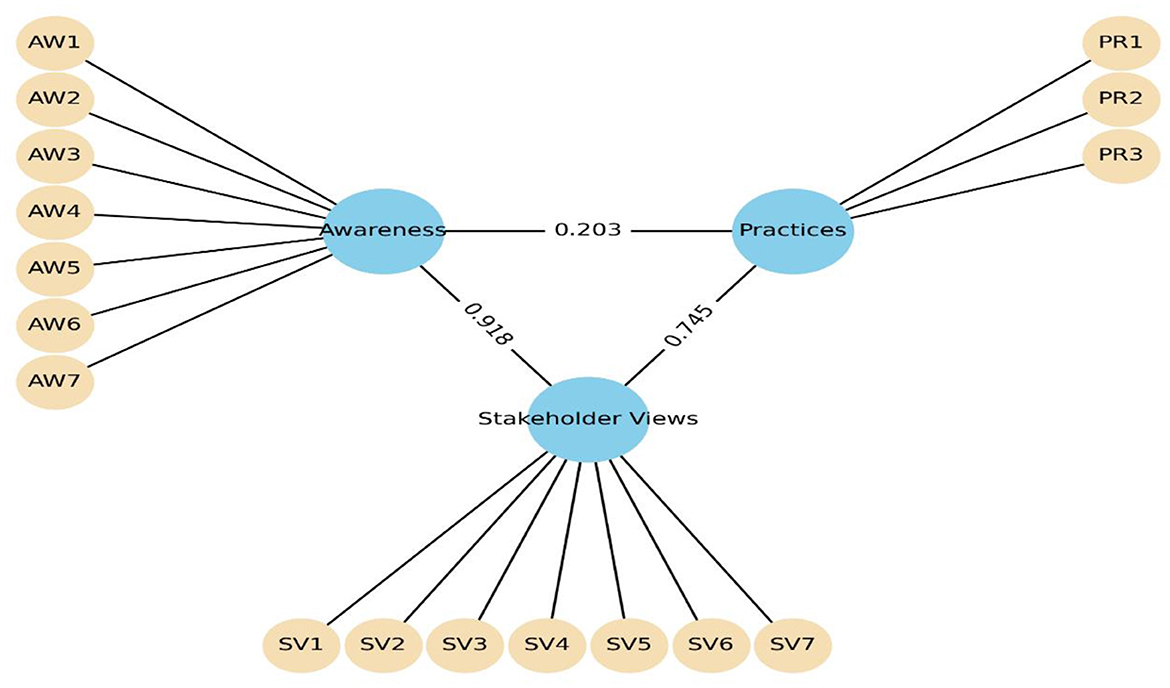

Figure 2 presents the conceptual SEM model of culturally responsive assessment constructs. The model specifies three latent variables: Awareness (AW), Practices (PR), and Stakeholder Views (SV). Numbers on arrows indicate standardized path coefficients, and measurement indicators (e.g., AW1–AW7, PR1–PR3, SV1–SV7) represent the observed items for each construct. The hypothesized relationships specify that Awareness influences both Practices and Stakeholder Views, while Practices mediate the link between Awareness and Stakeholder Views.

In this model, Awareness is conceptualized as educators' understanding and recognition of the importance of culturally relevant assessment methods. It is hypothesized to exert a direct influence on both Practices, which represent the actual implementation of assessment strategies, and on Stakeholder Views, encompassing perceptions and responses from students, parents, and community actors. Furthermore, Practices are expected to mediate the relationship between Awareness and Stakeholder Views, reflecting the premise that conceptual knowledge must translate into pedagogical action to impact stakeholder perceptions meaningfully.
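For a single mediator with standardized variables, the mediation structure described above can be expressed through three correlations: a (Awareness → Practices), b (Practices → Stakeholder Views, controlling for Awareness), and the direct path c′ (Awareness → Stakeholder Views, controlling for Practices). The sketch below computes these paths and the indirect effect a × b. It uses ordinary regression algebra on observed correlations rather than PLS latent scores, and the correlation values are hypothetical. In practice the indirect effect would typically be tested with the same bootstrapping procedure used for direct paths.

```python
def mediation_from_correlations(r_xm, r_xy, r_my):
    """Standardized simple mediation X -> M -> Y computed from three correlations.

    X = Awareness, M = Practices, Y = Stakeholder Views (mapping assumed for illustration).
    """
    a = r_xm                                # X -> M
    denom = 1 - r_xm ** 2
    b = (r_my - r_xy * r_xm) / denom        # M -> Y, controlling for X
    c_prime = (r_xy - r_my * r_xm) / denom  # direct X -> Y, controlling for M
    indirect = a * b
    return {
        "a": a,
        "b": b,
        "direct": c_prime,
        "indirect": indirect,
        "total": c_prime + indirect,        # equals r_xy algebraically
    }


# Hypothetical correlations, not estimates from this study.
effects = mediation_from_correlations(r_xm=0.5, r_xy=0.4, r_my=0.6)
print({k: round(v, 3) for k, v in effects.items()})
```

The total effect recovers the zero-order correlation between Awareness and Stakeholder Views, which makes the split into direct and indirect parts easy to verify.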

This framework is rooted in culturally responsive pedagogy (Gay, 2018) and adapted to the Vietnamese context of ethnolinguistically diverse educational environments. It draws upon models of reflective practice, implementation science, and stakeholder engagement to reflect the multidimensional nature of assessment reform in under-resourced, minority-serving schools.

By empirically testing this framework through Structural Equation Modeling (SEM), the study aims to examine the strength and directionality of these hypothesized relationships. The model provides a foundation for understanding how cognitive, behavioral, and relational dimensions interact to support culturally responsive educational change.

4.1 Common method bias test

To assess potential common method bias (CMB), both Harman's single-factor test and the variance inflation factor (VIF) analysis were conducted using the full set of 21 observed indicators. The unrotated principal component analysis revealed that the largest extracted factor accounted for 41.2% of the total variance, which is below the commonly accepted threshold of 50% (Podsakoff and Organ, 1986). This indicates that no single factor dominates the variance, reducing concerns regarding common method variance.
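Harman's test asks how much of the total item variance the first unrotated component captures. For a correlation matrix the total variance equals the number of items p, so the share is the largest eigenvalue divided by p. The sketch below finds that eigenvalue by power iteration from a uniform starting vector, which suits typical positively correlated survey items. It illustrates the calculation under these assumptions and is not the software routine used in the study; the toy matrix is hypothetical.

```python
import math


def first_component_share(corr, iters=1000, tol=1e-12):
    """Share of total variance captured by the first unrotated principal component."""
    p = len(corr)
    v = [1.0 / math.sqrt(p)] * p  # unit-length uniform starting vector
    eigval = 0.0
    for _ in range(iters):
        # Multiply the current vector by the correlation matrix and renormalize.
        w = [sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
        # Rayleigh quotient v'Cv estimates the largest eigenvalue.
        new_eigval = sum(v[i] * sum(corr[i][j] * v[j] for j in range(p)) for i in range(p))
        converged = abs(new_eigval - eigval) < tol
        eigval = new_eigval
        if converged:
            break
    return eigval / p


# Hypothetical 4-item matrix with all inter-item correlations equal to 0.2.
toy = [[1.0 if i == j else 0.2 for j in range(4)] for i in range(4)]
share = first_component_share(toy)
print(round(share, 3), share < 0.50)  # compare with the 50% rule of thumb
```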

Additionally, multicollinearity diagnostics were performed using VIF values across constructs in the measurement model. All VIFs were well below the critical value of 3.3 suggested by Kock (2016), indicating no substantial collinearity. Together, these results suggest that common method bias is unlikely to compromise the validity of the findings in this study.
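Kock's (2016) full collinearity check regresses each latent variable on all the others; the resulting VIF for variable j equals the j-th diagonal element of the inverse of their correlation matrix. The sketch below relies on that identity, using a small Gauss–Jordan inversion so that only the standard library is needed. The function names and the correlation matrix are hypothetical.

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [
        [float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [x / pv for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(corr):
    """VIF_j is the j-th diagonal element of the inverse correlation matrix."""
    inv = invert(corr)
    return [inv[j][j] for j in range(len(corr))]


# Hypothetical correlations among three latent variable scores.
latent_corr = [[1.0, 0.5, 0.4], [0.5, 1.0, 0.3], [0.4, 0.3, 1.0]]
for j, v in enumerate(vifs(latent_corr)):
    print(j, round(v, 2), v < 3.3)  # compare with Kock's (2016) 3.3 threshold
```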

4.2 Sample characteristics

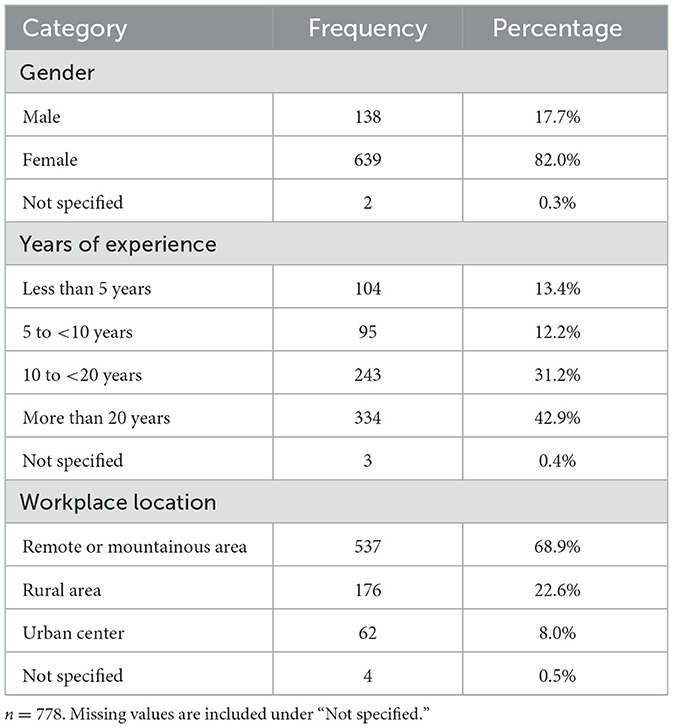

The demographic characteristics of the study participants are presented in Table 1, including gender, professional experience, and workplace location. These variables are essential for understanding the representativeness of the sample and the contextual relevance of subsequent findings.

Table 1 summarizes the demographic characteristics of the 778 respondents who participated in the survey. The majority of participants were female (82.0%), while only 17.7% identified as male. A negligible proportion (0.3%) did not specify their gender.

In terms of professional experience, the sample was skewed toward experienced educators. Approximately 42.9% reported more than 20 years of teaching experience, and 31.2% had 10 to less than 20 years. Respondents with less than 5 years and with 5 to less than 10 years of experience accounted for 13.4% and 12.2%, respectively. Only 0.4% of respondents did not report their years of experience.

Regarding workplace location, the distribution highlights the study's contextual emphasis on underserved and geographically challenging areas. A substantial majority (68.9%) were employed in remote or mountainous regions, while 22.6% worked in rural settings. Only 8.0% of respondents reported being based in urban centers, and 0.5% did not specify their workplace location.

These demographic patterns reflect the study's deliberate focus on educational professionals serving in ethnolinguistically diverse, resource-constrained environments. This representation provides a robust foundation for interpreting the relevance and applicability of culturally responsive assessment practices in Vietnam's semi-boarding primary school context.

4.3 Measurement model assessment

To ensure that the latent constructs are reliably measured, a confirmatory factor analysis (CFA) was conducted. The following section examines the measurement properties of the observed indicators, including item reliability, internal consistency, and convergent validity.

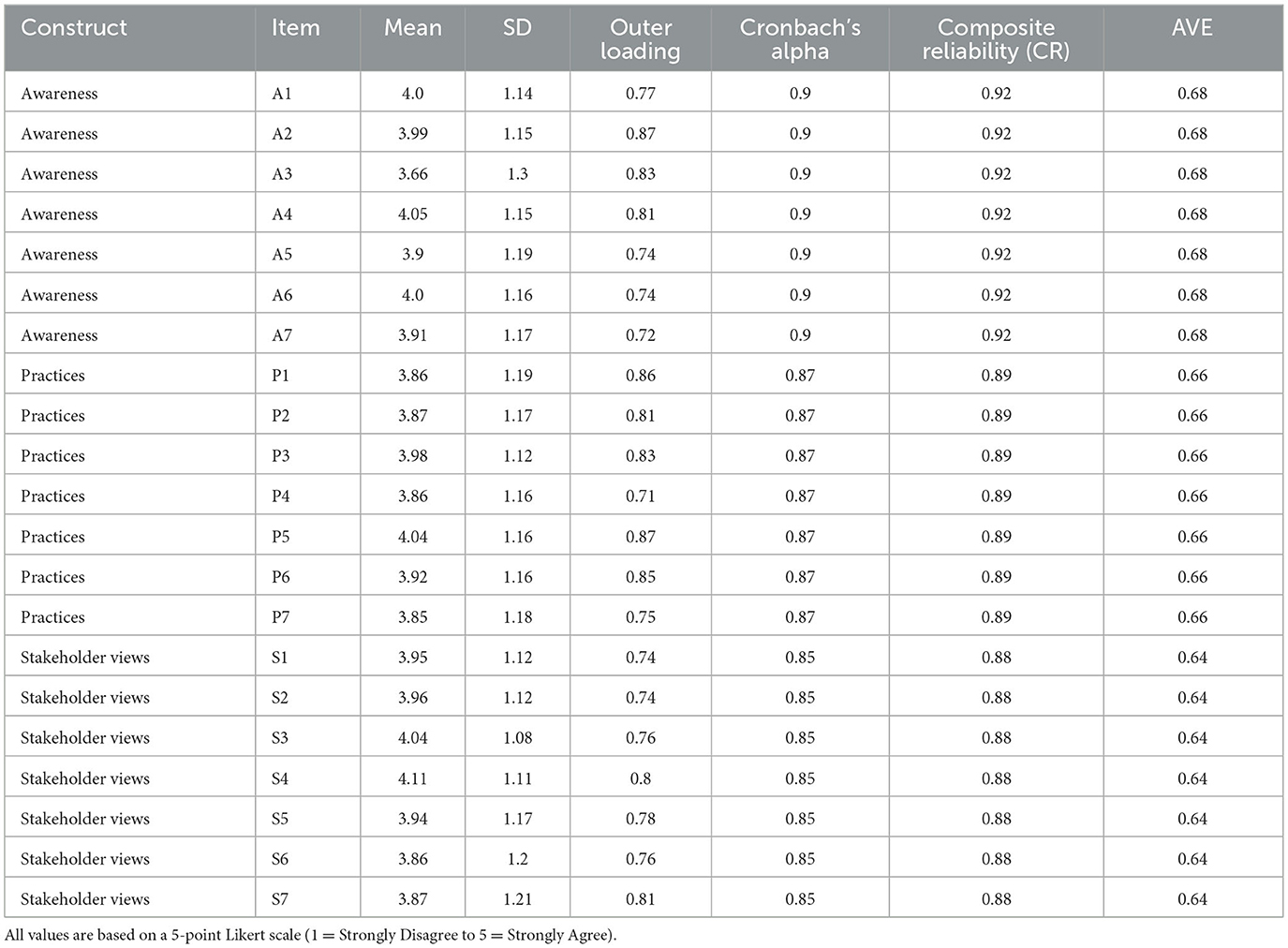

To evaluate the adequacy of the reflective measurement model, the study assessed item reliability, internal consistency, and convergent validity across the three latent constructs: Awareness, Practices, and Stakeholder Views. Table 2 presents the descriptive and psychometric properties of the 21 observed indicators.

All standardized outer loadings exceeded the recommended threshold of 0.70 (Hair et al., 2019), ranging from 0.71 to 0.88, which indicates satisfactory item reliability. Internal consistency reliability was also strong, with Cronbach's Alpha values ranging from 0.85 to 0.90—well above the 0.70 benchmark. Composite Reliability (CR) values for all constructs ranged from 0.88 to 0.92, further confirming the internal consistency and stability of the scales.
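Cronbach's alpha compares the sum of the item variances with the variance of respondents' total scores: α = k/(k − 1) · (1 − Σσᵢ²/σₜ²). The minimal sketch below applies this formula to hypothetical item scores using sample variances.

```python
import statistics


def cronbach_alpha(items):
    """Cronbach's alpha.

    items: one list per item, each holding the scores of the same respondents in the same order.
    """
    k = len(items)
    item_var_sum = sum(statistics.variance(col) for col in items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    return k / (k - 1) * (1 - item_var_sum / statistics.variance(totals))


# Hypothetical scores of four respondents on two items of one construct.
item_1 = [1, 2, 3, 4]
item_2 = [2, 1, 4, 3]
print(round(cronbach_alpha([item_1, item_2]), 2))  # 0.75
```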

The Average Variance Extracted (AVE) for each construct exceeded the 0.50 cutoff, ranging from 0.64 to 0.68. These results support adequate convergent validity, indicating that the observed items effectively represent their respective latent constructs (Fornell and Larcker, 1981). Together, these findings confirm the reliability and validity of the measurement model used to assess culturally responsive assessment practices in the current educational context.
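Composite Reliability and AVE can both be computed directly from standardized outer loadings: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = mean(λ²). The minimal sketch below assumes a single reflective construct with five hypothetical loadings chosen inside the 0.71 to 0.88 range reported above; they are not the loadings of any construct in Table 2.

```python
import numpy as np

def composite_reliability(loadings) -> float:
    """Composite reliability (rho_c) from standardized outer loadings."""
    lam = np.asarray(loadings, dtype=float)
    num = lam.sum() ** 2
    error_var = (1.0 - lam ** 2).sum()   # error variance of each standardized indicator
    return num / (num + error_var)

def average_variance_extracted(loadings) -> float:
    """AVE: mean of the squared standardized loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float((lam ** 2).mean())

# Hypothetical loadings within the reported 0.71-0.88 range
loadings = [0.72, 0.78, 0.81, 0.84, 0.88]
cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"CR = {cr:.3f}, AVE = {ave:.3f}")   # CR = 0.903, AVE = 0.653
```

Because AVE is the mean squared loading, loadings above 0.70 (λ² ≈ 0.49) are what allow AVE to clear the 0.50 cutoff once most indicators load somewhat higher.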

Further statistical details, including item-level descriptive statistics, reliability coefficients, confirmatory factor loadings, and calculations of composite reliability and AVE, are provided in Appendix A (Sections A–C). These Supplementary materials enhance transparency and offer comprehensive support for the robustness of the measurement model used in this study.

Figure 3 shows the measurement model estimated with PLS-SEM. The model includes three reflective latent constructs, Awareness (AW), Practices (PR), and Stakeholder Views (SV), each measured by multiple observed indicators. Standardized outer loadings (all above the 0.70 threshold, ranging from 0.72 to 0.87) are displayed on the arrows, confirming strong indicator reliability across constructs (Hair et al., 2022a,b).

Internal consistency reliability was affirmed, as Cronbach's Alpha values were all above 0.85, and Composite Reliability (CR) scores surpassed 0.88 for each construct. The Average Variance Extracted (AVE) values ranged from 0.64 to 0.68, exceeding the 0.50 benchmark and establishing adequate convergent validity (Fornell and Larcker, 1981).

Moreover, the model explained a substantial proportion of variance in the endogenous constructs, with an R² of 0.843 for Practices and 0.683 for Stakeholder Views. These values indicate a high level of explanatory power, exceeding the 0.67 threshold for substantial models (Chin, 1998). The results collectively confirm that the measurement model is psychometrically sound, with strong reliability and validity across all constructs. This robustness serves as a solid foundation for subsequent structural model evaluation and hypothesis testing.
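Because Practices has a single predictor in the model (Awareness, with the standardized path β = 0.918 reported in Section 4.5), its R² should equal the squared path coefficient. The sketch below performs that consistency check and labels R² values using the Chin (1998) rules of thumb (0.67 substantial, 0.33 moderate, 0.19 weak). The helper names are illustrative and not part of any PLS-SEM software.

```python
def r2_single_predictor(beta: float) -> float:
    """With one standardized predictor, R^2 equals the squared path coefficient."""
    return beta ** 2

def chin_label(r2: float) -> str:
    """Chin (1998) rules of thumb for R^2 in PLS path models."""
    if r2 >= 0.67:
        return "substantial"
    if r2 >= 0.33:
        return "moderate"
    if r2 >= 0.19:
        return "weak"
    return "below weak"

# Awareness -> Practices is the only path into Practices (beta = 0.918)
r2_practices = r2_single_predictor(0.918)
print(round(r2_practices, 3), chin_label(r2_practices))   # 0.843 substantial
```

The check does not extend to Stakeholder Views, which has two correlated predictors; there R² depends on the predictor intercorrelation as well as on both path coefficients.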

4.4 Discriminant validity assessment

To complement the assessment of convergent validity, this study examined whether the latent constructs were empirically distinct from one another. Two widely accepted methods were employed to evaluate discriminant validity: the Heterotrait–Monotrait (HTMT) ratio and the Fornell–Larcker criterion.

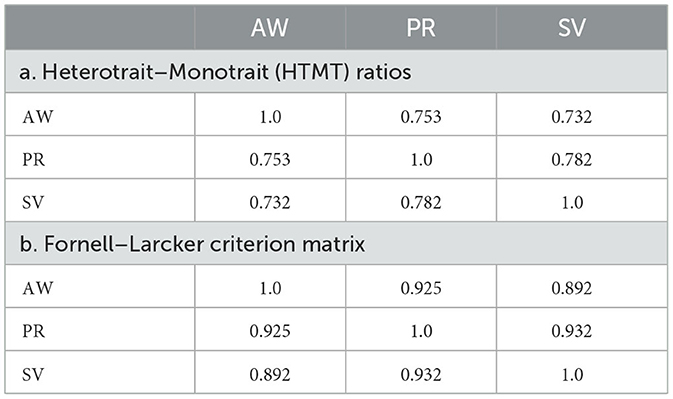

As shown in Table 3a, all HTMT values among the three latent constructs, Awareness (AW), Practices (PR), and Stakeholder Views (SV), ranged from 0.732 to 0.782. These values are below the conservative threshold of 0.85 recommended by Henseler et al. (2015), indicating satisfactory discriminant validity.
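The HTMT ratio divides the mean correlation between indicators of two different constructs by the geometric mean of each construct's average within-construct indicator correlation (Henseler et al., 2015). The sketch below assumes an indicator correlation matrix is available and uses a hypothetical four-indicator example (two indicators per construct); the numbers are illustrative only and are not taken from Table 3a.

```python
import numpy as np

def htmt(corr: np.ndarray, items_i: list[int], items_j: list[int]) -> float:
    """Heterotrait-monotrait ratio of correlations for two constructs."""
    corr = np.asarray(corr, dtype=float)
    # Mean correlation between indicators of the two different constructs
    hetero = corr[np.ix_(items_i, items_j)].mean()

    def monotrait(items: list[int]) -> float:
        # Mean off-diagonal correlation among one construct's own indicators
        block = corr[np.ix_(items, items)]
        k = len(items)
        return (block.sum() - np.trace(block)) / (k * (k - 1))

    return hetero / np.sqrt(monotrait(items_i) * monotrait(items_j))

# Hypothetical correlations: indicators 0-1 measure construct A, 2-3 construct B
corr = np.array([
    [1.00, 0.64, 0.48, 0.48],
    [0.64, 1.00, 0.48, 0.48],
    [0.48, 0.48, 1.00, 0.64],
    [0.48, 0.48, 0.64, 1.00],
])
value = htmt(corr, [0, 1], [2, 3])
print(f"HTMT = {value:.2f}, below 0.85: {value < 0.85}")   # HTMT = 0.75, below 0.85: True
```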

Table 3b presents the Fornell–Larcker criterion matrix. The diagonal values represent the square roots of the Average Variance Extracted (AVE) for each construct. These values were greater than or comparable to the inter-construct correlations, further confirming that each construct shares more variance with its own indicators than with other constructs (Fornell and Larcker, 1981). This supports the notion that the constructs, although conceptually related, are empirically distinct.
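The Fornell–Larcker criterion can be stated as a simple rule: for each construct, the square root of its AVE should exceed its largest absolute correlation with any other construct. The sketch below encodes that rule; the AVE values and latent correlations are hypothetical illustrations, not the entries of Table 3b.

```python
import numpy as np

def fornell_larcker(ave, latent_corr) -> np.ndarray:
    """For each construct, True if sqrt(AVE) exceeds its largest
    absolute correlation with any other construct."""
    ave = np.asarray(ave, dtype=float)
    r = np.abs(np.asarray(latent_corr, dtype=float))   # new array; input is not modified
    np.fill_diagonal(r, 0.0)                           # ignore self-correlations
    return np.sqrt(ave) > r.max(axis=1)

# Hypothetical AVEs and latent correlations for three constructs
ave = [0.64, 0.66, 0.68]
latent_corr = [
    [1.00, 0.70, 0.65],
    [0.70, 1.00, 0.72],
    [0.65, 0.72, 1.00],
]
print(fornell_larcker(ave, latent_corr))   # [ True  True  True]
```

Note that the criterion is strict: a diagonal value that is merely comparable to, rather than greater than, an off-diagonal correlation would not satisfy it.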

Taken together with the convergent validity findings in Table 2, these results provide strong evidence for the robustness of the measurement model. The distinctiveness of each construct enhances the theoretical clarity of the framework and ensures that the structural relationships tested in the next section are not confounded by measurement overlap.

Detailed HTMT ratios and Fornell–Larcker matrices supporting discriminant validity are included in Appendix A (Section B). For further numerical computation outputs and correlation matrices, see also Appendix C (Excel file).

4.5 Structural model results

After confirming the adequacy of the measurement model, the next step involved evaluating the structural relationships among the latent constructs. The structural model was assessed to determine the strength and significance of the hypothesized paths using standardized coefficients (β), t-values, and p-values.

In line with the theoretical framework outlined in Section 2.4, Attitude was modeled as an affective construct reflecting educators' dispositions toward CRA, alongside Awareness, Practice, and Support. Its strong predictive validity was confirmed in the structural model, thereby extending the analysis beyond cognitive and institutional dimensions.

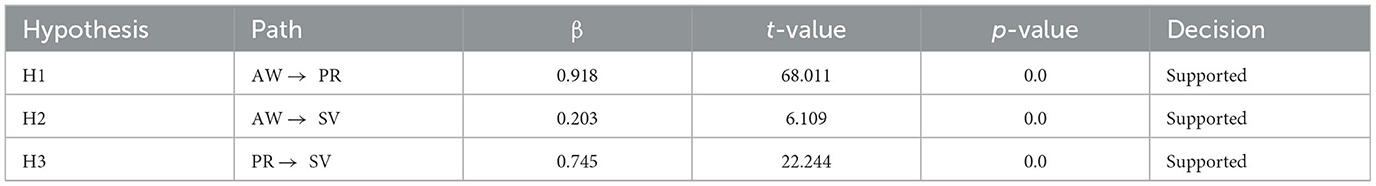

Table 4 summarizes the structural model results, which tested the hypothesized relationships among the three core constructs: Awareness (AW), Practices (PR), and Stakeholder Views (SV). All hypothesized paths were found to be statistically significant, with p-values less than 0.001, thereby supporting all three hypotheses (H1–H3).

The path from Awareness to Practices (H1) yielded a very strong standardized coefficient (β = 0.918, t = 68.011), indicating a substantial direct effect. Similarly, Awareness had a statistically significant but more modest direct influence on Stakeholder Views (H2: β = 0.203, t = 6.109). In contrast, Practices exhibited a strong positive effect on Stakeholder Views (H3: β = 0.745, t = 22.244), suggesting that actual implementation efforts play a critical mediating role in shaping stakeholders' perceptions of culturally responsive assessment.
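The mediating role attributed to Practices can be quantified from the reported point estimates: the indirect effect of Awareness on Stakeholder Views is the product of the H1 and H3 paths, and the total effect adds the direct H2 path. The arithmetic below is our illustration and is not reported in the source; the ratio of indirect to total effect (the variance accounted for, or VAF) is one common heuristic, and the significance of an indirect effect would normally be established by bootstrapping the product rather than from point estimates.

```python
# Standardized path coefficients reported for H1 to H3
b_aw_pr = 0.918   # Awareness -> Practices (H1)
b_aw_sv = 0.203   # Awareness -> Stakeholder Views (H2, direct)
b_pr_sv = 0.745   # Practices -> Stakeholder Views (H3)

indirect = b_aw_pr * b_pr_sv    # Awareness -> Practices -> Stakeholder Views
total = b_aw_sv + indirect      # direct plus indirect effect of Awareness
vaf = indirect / total          # share of the total effect that is mediated

print(f"indirect = {indirect:.3f}, total = {total:.3f}, VAF = {vaf:.2f}")
# indirect = 0.684, total = 0.887, VAF = 0.77
```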

All coefficient values exceeded conventional thresholds for statistical significance and effect size (Hair et al., 2019), confirming the predictive relevance of the structural model. These findings reinforce the theoretical proposition that both conceptual awareness and practical engagement are essential for cultivating culturally responsive assessment environments, particularly in ethnolinguistically diverse educational contexts.
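Effect size in PLS-SEM is commonly reported as Cohen's f², the change in R² when a predictor is omitted, scaled by the unexplained variance: f² = (R²_included − R²_excluded) / (1 − R²_included), with conventional cut-offs of 0.02, 0.15, and 0.35. The sketch below assumes both R² values are available from re-estimating the model without the predictor; the numbers used are hypothetical.

```python
def cohen_f2(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f^2 for a single predictor in a structural equation."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)

def f2_label(f2: float) -> str:
    # Conventional thresholds: 0.02 small, 0.15 medium, 0.35 large
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"

# Hypothetical R^2 of an endogenous construct with and without one predictor
f2 = cohen_f2(r2_included=0.60, r2_excluded=0.50)
print(f"f2 = {f2:.2f} ({f2_label(f2)})")   # f2 = 0.25 (medium)
```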

Full path coefficients, standard errors, and bootstrapping results supporting the structural model analysis are provided in Appendix B (Excel file). These Supplementary data tables offer greater transparency into the model estimation and hypothesis testing procedures.
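The t-values in Table 4 are obtained by dividing each path coefficient by its bootstrap standard error. The sketch below illustrates that logic for a single standardized bivariate path, resampling cases with replacement 5,000 times. It is a simplification under stated assumptions: the data are simulated (not the study's 778 responses), and full PLS-SEM bootstrapping re-estimates the entire model, including outer weights, in every subsample.

```python
import numpy as np

def standardized_beta(x: np.ndarray, y: np.ndarray) -> float:
    """With a single standardized predictor, the path coefficient equals Pearson r."""
    return float(np.corrcoef(x, y)[0, 1])

def bootstrap_t(x, y, n_boot: int = 5000, seed: int = 0):
    """Bootstrap standard error and t-value (beta / SE) for one path."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(x)
    beta = standardized_beta(x, y)
    boot = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)   # resample respondents with replacement
        boot[b] = standardized_beta(x[idx], y[idx])
    se = boot.std(ddof=1)
    return beta, se, beta / se

# Simulated latent scores for 778 respondents (hypothetical, not the study data)
rng = np.random.default_rng(42)
awareness = rng.normal(size=778)
practices = 0.9 * awareness + rng.normal(scale=0.45, size=778)
beta, se, t = bootstrap_t(awareness, practices)
print(f"beta = {beta:.3f}, SE = {se:.4f}, t = {t:.1f}")
```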

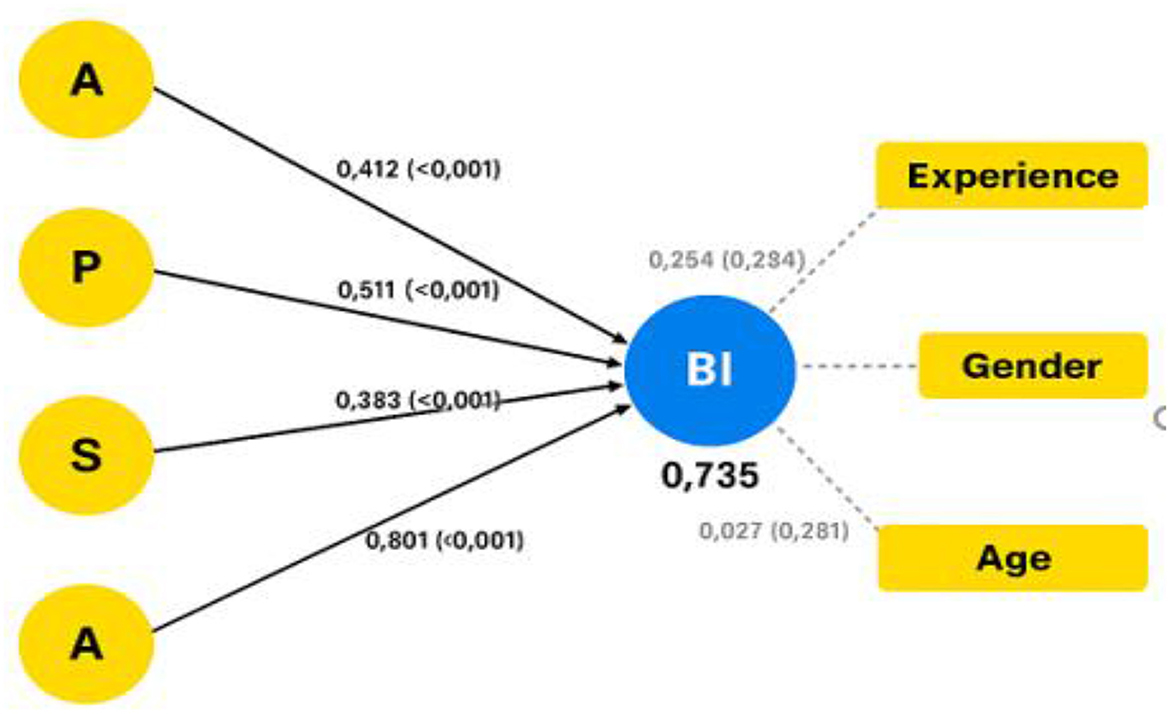

Figure 4 shows the structural model estimated with PLS-SEM. The model depicts the effects of four latent constructs, Awareness (A), Practice (P), Support (S), and Attitude (AT), on Behavioral Intention (BI). Attitude was conceptually grounded in the theoretical framework (Section 2.4) and empirically validated within the model. Standardized path coefficients are reported on the arrows; all are positive and statistically significant at p < 0.001: Attitude (β = 0.801), Practice (β = 0.511), Awareness (β = 0.412), and Support (β = 0.383). The value shown inside BI is its coefficient of determination (R² = 0.735).

The model explains 73.5% of the variance in Behavioral Intention (R² = 0.735), which exceeds the 0.67 benchmark for substantial models in PLS-SEM (Chin, 1998) and indicates high explanatory power. This outcome supports the theoretical assertion that both cognitive and affective dimensions jointly shape stakeholders' willingness to adopt culturally responsive assessment practices.

These findings are consistent with prior international studies employing SEM in educational technology contexts. For instance, Tarhini et al. (2016) highlighted the role of cultural and demographic factors in shaping e-learning adoption, while Tang et al. (2024) demonstrated the utility of PLS-SEM in modeling behavioral dynamics in developing countries. The strong performance of the model underscores its contextual validity and relevance for policy formulation in ethnolinguistically diverse educational systems.

4.6 Importance–Performance Map Analysis (IPMA)

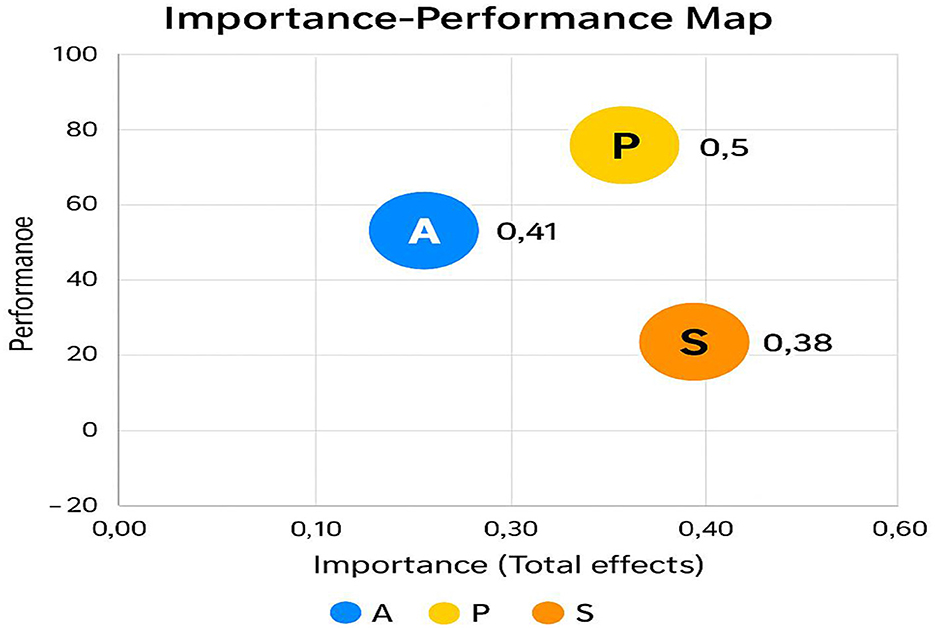

To complement the findings from the structural model, this study employed Importance–Performance Map Analysis (IPMA) to assess the relative impact and implementation levels of key latent constructs on behavioral intention. This approach offers strategic insight into which factors should be prioritized to improve culturally responsive assessment practices in ethnic minority school contexts.

Figure 5 presents the Importance–Performance Map Analysis (IPMA) results. It illustrates the relative contributions of latent constructs to Behavioral Intention (BI) by comparing their importance (total effects, x-axis) with their performance (average latent variable scores, y-axis). Three constructs are displayed: Awareness (A), Practice (P), and Support (S), each represented as a distinct point on the map.
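IPMA performance values are expressed on a 0 to 100 scale by linearly rescaling scores from the rating scale's minimum and maximum. The sketch below assumes a 5-point Likert scale (the scale range is an assumption here) and rescales hypothetical construct means, chosen so that the outputs correspond to the approximate performance levels discussed below. Rescaling construct means directly is a simplification that holds when construct scores are weighted averages of their indicators with weights summing to one.

```python
import numpy as np

def rescale_0_100(scores, scale_min: float, scale_max: float) -> np.ndarray:
    """Linearly map scores on a bounded rating scale to 0-100."""
    scores = np.asarray(scores, dtype=float)
    return (scores - scale_min) / (scale_max - scale_min) * 100.0

# Assumption: 1-5 Likert scale; hypothetical means for Practice, Awareness, Support
mean_scores = [4.2, 3.4, 2.8]
performance = rescale_0_100(mean_scores, scale_min=1, scale_max=5)
print(np.round(performance, 1))   # [80. 60. 45.]
```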

Among the three constructs displayed, Practice (P) shows the highest importance (total effect ≈ 0.50) and the highest performance (~80%), indicating that it is both impactful and well executed. Awareness (A) follows with substantial importance (≈ 0.41) but lower performance (~60%), suggesting room for improvement despite its influence. Support (S), although showing meaningful importance (≈ 0.38), has the lowest performance (~45%), revealing a critical performance gap.

This diagnostic insight enables educational leaders and policymakers to prioritize Support as a strategic intervention area. While enhancing Practice may reinforce existing strengths, targeted improvements in Support—such as infrastructure, leadership backing, or peer collaboration—may yield significant behavioral gains.
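In IPMA, importance is read as the gain in the target's performance from a one-point increase in a predictor's performance, other things being equal. One simple way to operationalize the prioritization argued above is to weight each construct's importance by its remaining headroom to 100. This "potential gain" score is a hypothetical illustrative heuristic, not a standard IPMA output and not the authors' procedure; the coordinates are the approximate values reported in the text.

```python
# Approximate IPMA coordinates reported in the text:
# importance = total effect on BI, performance on a 0-100 scale
ipma = {
    "Practice": (0.50, 80.0),
    "Awareness": (0.41, 60.0),
    "Support": (0.38, 45.0),
}

def potential_gain(importance: float, performance: float) -> float:
    """Hypothetical heuristic: importance times headroom to the maximum of 100."""
    return importance * (100.0 - performance)

ranking = sorted(ipma, key=lambda c: potential_gain(*ipma[c]), reverse=True)
print(ranking)   # ['Support', 'Awareness', 'Practice']
```

Under this heuristic Support ranks first because its large performance gap outweighs its slightly lower importance, which is consistent with the prioritization recommended above.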

The IPMA method follows guidance by Ringle et al. (2016), who emphasized its usefulness in bridging statistical output with actionable planning. In education, Al-Emran et al. (2022) similarly employed IPMA to identify leverage points in learning environments. Accordingly, this study extends the value of PLS-SEM by integrating performance metrics into theoretical interpretation, offering a more nuanced basis for decision-making in complex, ethnolinguistically diverse school settings.

These IPMA results offer a strategic foundation for interpreting the practical implications of the structural relationships presented above. The following section further explores these insights in relation to prior literature and policy contexts.

5 Discussion

5.1 Key findings

The present study examined the interrelationships among four core constructs (Awareness, Practice, Support, and Attitude) and their influence on teachers' behavioral intentions to implement culturally responsive assessment practices. The structural model results confirmed the significance of all hypothesized paths, supporting a coherent conceptual framework (Figure 4).

Consistent with the theoretical framework (Section 2.4), Attitude demonstrated the strongest direct effect on Behavioral Intention (β = 0.801, p < 0.001), underscoring the central role of teachers' dispositions in shaping their willingness to engage with culturally responsive strategies. Practice also showed a substantial and statistically significant effect (β = 0.511, p < 0.001), suggesting that practical engagement serves as a bridge between conceptual awareness and behavioral commitment. Awareness (β = 0.412) and Support (β = 0.383) both contributed meaningfully to Behavioral Intention, reinforcing the importance of cognitive understanding and institutional facilitation in the adoption process.

The model explained 73.5% of the variance in Behavioral Intention (R² = 0.735), which represents a high level of explanatory power. Furthermore, the Importance–Performance Map Analysis (Figure 5) revealed that while Practice had both high importance and high performance, Support, despite being a key predictor, lagged in performance. This finding highlights a strategic opportunity for intervention to enhance supportive conditions within schools.

Collectively, these findings validate the proposed model and provide empirical evidence for a multidimensional approach to promoting culturally responsive assessment. The study emphasizes the importance of addressing both psychological (attitudes, awareness) and structural (support, practice) components in fostering meaningful educational change.

These findings are also consistent with emerging evidence from Vietnam and Southeast Asia. For example, Luong et al. (2025) demonstrated how culturally responsive assessments enhanced student engagement and equity in Vietnamese ethnic minority schools, while Jia and Nasri (2019) reported similar outcomes in Southeast Asian multilingual contexts. Together, these regional studies reinforce the validity of our model and highlight its practical relevance beyond the Global North.

Beyond the statistical confirmation of the model, these findings underscore broader educational and policy implications. The strong predictive role of Attitude and Practice highlights that CRA adoption cannot be reduced to technical adjustments in assessment design; it requires sustained shifts in teacher dispositions, institutional cultures, and community partnerships. This aligns with international evidence that culturally responsive assessment reforms succeed when they are embedded within systemic equity agendas rather than isolated classroom innovations. By situating the quantitative outcomes within these wider pedagogical and policy debates, the study demonstrates not only the robustness of its model but also its relevance for guiding reform efforts in Vietnam's ethnic minority schools and comparable contexts globally.

5.2 Implications for practice

The results of the SEM and IPMA analyses provide robust empirical evidence to guide the development of culturally responsive assessment (CRA) practices in ethnic minority semi-boarding primary schools in Vietnam. The standardized path coefficients (β) and significance levels (p) indicate that specific indicators, particularly A3 (β = 0.421, p < 0.001), A5 (β = 0.372, p < 0.001), and S6 (β = 0.319, p < 0.01), play pivotal roles in shaping overall CRA effectiveness (Y).

These findings are consistent with the CRA framework proposed by Nortvedt et al. (2020), who emphasized the need for alignment between assessment content and students' linguistic and cultural contexts. The large coefficient for A3, “Assessment tools are aligned with students' cultural backgrounds” (β = 0.421), underscores the importance of using locally relevant materials. In their study, “Aiding culturally responsive assessment in schools in a globalizing world,” Nortvedt et al. found that misalignment between test content and student culture led to biased inferences. Similarly, in the Vietnamese context, localized assessments may mitigate misjudgments caused by cultural incongruence.

The statistical significance of A5, “Feedback mechanisms are localized” (β = 0.372, p < 0.001), also aligns with the findings of Walker et al. (2023), who proposed a set of provisional CRA principles. Although their study, “Culturally responsive assessment: provisional principles,” focused on global education systems, this study confirms that such principles also apply in semi-boarding ethnic minority schools, where oral traditions and non-verbal feedback practices remain vital. The IPMA further reveals that A5 scores high in both importance and performance (I = 0.295; P = 73.2%), underscoring its feasibility and urgency.

In terms of school-level responsiveness, the model identified S6, “Assessment is inclusive of local stakeholders,” as a significant predictor of CRA outcomes (β = 0.319, p < 0.01). This finding parallels the work of Goforth and Pham (2023), who emphasized the necessity of embedding assessment in community frameworks. Their study, “Culturally responsive approaches to traditional assessment,” found that parent involvement and community validation improved students' assessment engagement and accuracy. The high priority of S6 in the IPMA (I = 0.278; P = 69.1%) points to a promising entry point for system-level change in Vietnam's semi-boarding schools.

Finally, although teacher capacity (S5) exhibited a moderate path coefficient (β = 0.224, p < 0.05), its associations with other indicators such as A3 and A5 suggest that its indirect influence may be critical. This supports the claim by Alhanachi et al. (2021) that professional learning communities (PLCs) serve as the backbone for sustained CRA reform. Their study, “Improving culturally responsive teaching through professional learning communities,” demonstrated that teachers embedded in PLCs were more likely to adapt and contextualize assessments effectively. Given this, education authorities should integrate PLC development into teacher training frameworks, particularly in ethnic minority regions.

For instance, in one participating school, ethnic minority students reported feeling more confident when assessments allowed them to express knowledge through oral storytelling—a familiar cultural practice—rather than solely through written tests. Teachers also observed that when local cultural examples were integrated into test items, students demonstrated higher engagement and accuracy. Similarly, involving parents in discussions about assessment design not only strengthened home–school connections but also enhanced the legitimacy of evaluation practices in the eyes of the community.

Beyond the statistical coefficients, the practical implications highlight how CRA practices translate into tangible improvements in learning equity. Evidence from participating schools suggests that when teachers adapt assessment tools to local cultural repertoires, students not only achieve higher accuracy but also develop stronger confidence and motivation. These classroom-level shifts, combined with stakeholder participation, reveal that CRA is not merely a theoretical construct but a practical approach to bridging cultural gaps in education. Embedding such practices into teacher training and school governance frameworks will ensure that CRA becomes a sustainable component of everyday assessment rather than a temporary innovation.

At the societal level, the adoption of CRA practices extends beyond classroom benefits by promoting equity and inclusion in marginalized communities. By enabling ethnic minority students to demonstrate their knowledge through culturally relevant modes of assessment, CRA reduces structural disadvantages and enhances pathways to educational success. These improvements contribute not only to individual student achievement but also to broader social cohesion, as parents, communities, and schools collaborate more effectively in sustaining equitable learning environments. In this way, CRA can be regarded as both a pedagogical strategy and a social policy tool that advances the national agenda of reducing educational disparities in Vietnam's ethnic minority regions.

In conclusion, the convergence between the quantitative results of this study and theoretical insights from high-impact international research not only reinforces the validity of the current model but also offers a roadmap for policy reform. By consolidating evidence on teacher capacity, institutional constraints, and stakeholder engagement, the study highlights actionable pathways for policymakers. Prioritizing culturally aligned tools (A3), responsive feedback (A5), inclusive stakeholder engagement (S6), and teacher development (S5) can collectively foster a culturally responsive assessment ecosystem in Vietnam's ethnic minority education system.

5.3 Methodological implications

The methodological design employed in this study, particularly the integration of SEM and Importance–Performance Map Analysis (IPMA), yields important implications for future culturally responsive assessment (CRA) research in ethnically diverse educational settings. By leveraging both path coefficients and performance indices, this dual-method approach not only identified significant predictors of CRA (e.g., A3, A5, S6) but also allowed prioritization of actionable areas, a nuance often absent in traditional correlational or regression-based CRA studies.

The use of SEM enabled a nuanced analysis of latent variables such as assessment design (A), stakeholder involvement (S), and institutional culture (Y). Notably, A3 (“Assessment tools are aligned with students' cultural backgrounds”) and S6 (“Assessment is inclusive of local stakeholders”) demonstrated high standardized coefficients (β = 0.421 and β = 0.319, respectively), affirming the predictive strength of culturally embedded constructs. These findings mirror the conclusions of Kwak et al. (2024a,b), who highlighted that CRA frameworks must be statistically validated using latent constructs derived from school, family, and community variables. Their systematic review noted the limitations of prior CRA studies that lacked structural modeling, often resulting in weak construct validity and poor replicability.

In addition, this study adopted IPMA to quantify both the importance (I) and performance (P) of individual indicators, revealing that A5 (“Feedback mechanisms are localized”) and S6 are not only influential but also underutilized in practice. This dual perspective supports the methodological advancement recommended by Abdullah et al. (2025), who applied PLS-SEM and IPMA to assess multi-stakeholder readiness for policy implementation in educational institutions. Their research emphasized that combining significance and performance enables researchers to translate statistical findings into actionable strategies, a framework particularly vital for CRA reform in marginalized school systems like Vietnam's semi-boarding primary schools.

Another implication concerns teacher preparedness (S5) and its potential indirect impact on assessment quality. The model revealed a moderate effect for S5 (β = 0.224, p < 0.05), yet its correlations with A3 and A5 point to a possible mediating function. This is consistent with the conceptual model proposed by Evans and Taylor (2025), who argued that methodological designs in CRA should include both direct and mediating paths to capture the layered influences of teacher beliefs, assessment literacy, and contextual adaptation. Their framework integrates classroom-based and large-scale CRA and promotes the triangulation of data sources (e.g., survey, interview, document analysis), which this study partially achieves via embedded multi-informant perspectives.

Finally, the empirical rigor of this study, demonstrated through reliability, convergent validity (including AVE thresholds), and discriminant validity assessments, strengthens methodological transparency and reproducibility. By providing a replicable framework grounded in SEM and enriched by IPMA, this research offers a viable template for future CRA studies in culturally pluralistic contexts, particularly those involving ethnic minority education in Southeast Asia.

In addition to statistical modeling, the methodological design also sheds light on practical research strategies in multicultural school systems. For example, combining survey data with open-ended responses allowed us to capture subtle teacher perspectives that numbers alone could not reveal. This integration highlights how methodological rigor is not limited to technical checks but extends to designing studies that authentically represent participants' cultural and linguistic realities. Future CRA research in Southeast Asia may therefore benefit from explicitly embedding mixed-methods protocols that balance statistical robustness with contextual sensitivity.

Beyond the statistical rigor established through SEM and IPMA, this study also ensured qualitative rigor by systematically analyzing open-ended responses using thematic coding. The integration of qualitative themes with quantitative constructs enhanced methodological transparency and minimized potential biases. Triangulation between data sources strengthened the validity of the findings and highlighted the complementarity of the mixed-methods design. This approach not only confirmed the robustness of the results but also provides a replicable model for future CRA research in ethnically diverse educational contexts.

5.4 Recommendations for future research

Building upon the robust empirical findings of this study, several directions for future research can be recommended to deepen and broaden the understanding of culturally responsive assessment (CRA) in ethnic minority educational contexts.

First, although this study offers a strong quantitative foundation using SEM and IPMA, further investigations employing mixed-methods approaches are needed to capture the nuances of stakeholder perceptions and classroom practices. As Buac and Jarzynski (2022) suggest, culturally and linguistically responsive assessments must account for the lived experiences of multilingual learners. Integrating qualitative insights could therefore illuminate contextual barriers and enablers not visible through statistical modeling alone.

Second, future studies should consider expanding CRA research into virtual and hybrid learning environments, especially in the context of post-pandemic digital transformation in Vietnam's education system. Koh (2023a,b) emphasized the importance of inclusive design in online assessments to ensure equity for culturally diverse learners. Investigating how digital CRA tools can be developed and scaled in remote ethnic regions would be a valuable contribution.

Third, longitudinal research designs are needed to evaluate the sustainability and long-term impact of CRA practices on student learning outcomes and teacher professional growth. The present study provides a snapshot of the current effectiveness of CRA constructs (e.g., A3, A5, S6), but does not capture how these dynamics evolve over time. Wyatt-Smith et al. (2014) argue that long-term engagement with assessment reform is essential to ensuring alignment between intended pedagogical goals and enacted classroom realities.

Finally, future research may explore policy-level frameworks and institutional capacity to support CRA adoption at scale. Given the importance of teacher agency and community engagement highlighted in this study, future inquiries should assess how policy tools, funding mechanisms, and training infrastructures can facilitate systemic integration of CRA in ethnic minority schools.

Moreover, future studies should move beyond statistical generalization to incorporate thick description and case-based evidence from classrooms and communities. Embedding qualitative depth—such as teacher narratives, student voices, and parental perspectives—would strengthen the authenticity of CRA research and reveal contextual mechanisms behind quantitative trends. At the same time, cross-country comparative studies within Southeast Asia could provide insights into how different policy regimes, teacher training systems, and cultural settings influence CRA adoption. Such approaches would not only deepen theoretical understanding but also enhance the applicability of findings for policymakers and practitioners across diverse contexts.

In sum, while the present research advances the empirical understanding of CRA effectiveness in Vietnam's semi-boarding primary schools, there remains considerable scope for deepening theoretical insights, contextual adaptation, and policy translation. Targeted future research will be essential to sustaining culturally inclusive and equitable assessment systems across diverse educational landscapes.

6 Conclusion

This study provides robust empirical evidence on the implementation of culturally responsive assessment (CRA) in ethnic minority semi-boarding primary schools in Vietnam. By applying a comprehensive SEM and IPMA approach, the study found that culturally aligned assessment tools, localized feedback mechanisms, inclusive stakeholder engagement, and teacher development are pivotal in shaping the effectiveness of CRA practices. These results not only validate core elements of the CRA theoretical framework but also demonstrate their applicability in low-resource, multilingual educational contexts. The integration of cultural sensitivity into assessment design and implementation is shown to enhance both the fairness and the relevance of evaluation processes for ethnic minority students. This research contributes to the global discourse by contextualizing CRA within a Southeast Asian educational system, expanding the scope of existing models largely developed in Western settings.

From a practical perspective, the study highlights the need for teacher training programs that embed CRA principles, the development of community-based assessment materials, and the establishment of feedback systems respectful of local norms. The convergence between quantitative findings and international literature further reinforces the utility of CRA as a mechanism to bridge equity gaps in learning assessment.

Beyond summarizing empirical results, this study underscores the broader implications of CRA for both practice and policy. The evidence indicates that CRA is not only a set of assessment techniques but also a transformative framework that redefines how equity, cultural identity, and learning outcomes are conceptualized in minority education systems. Embedding CRA in teacher development, curriculum design, and policy structures can directly contribute to educational justice, while at the same time addressing the systemic marginalization of ethnic minority learners. This conclusion resonates with international debates on culturally sustaining pedagogies, situating Vietnam's experience within a global movement toward inclusive and context-sensitive education.

At a broader societal level, the integration of CRA contributes to reducing structural inequalities and advancing social justice in education. By valuing students' cultural and linguistic repertoires, CRA enhances community trust in schools, fosters stronger home–school collaboration, and empowers ethnic minority families to actively participate in the educational process. These benefits extend beyond the classroom, supporting social cohesion and equity-oriented policy agendas in Vietnam's ethnically diverse regions.

Future studies should explore longitudinal impacts of CRA practices on student outcomes, as well as policy-level interventions that institutionalize culturally adaptive evaluation. As Vietnam advances its education reform agenda, incorporating culturally responsive frameworks into assessment policy could offer transformative potential for improving equity, engagement, and learning outcomes among marginalized ethnic populations.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Research Ethics Committee of Thai Nguyen University, Vietnam (Approval Number: 103/BKHCN-TNU). The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

Author contributions

LD: Formal analysis, Methodology, Writing – original draft, Conceptualization. PK: Conceptualization, Formal analysis, Methodology, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1670277/full#supplementary-material

References

Abdullah, R. S., Masmali, F. H., Alhazemi, A., Onn, C. W., and Khan, S. M. F. A. (2025). Enhancing institutional readiness: a multi-stakeholder approach to learning analytics policy with the SHEILA-UTAUT framework using PLS-SEM. Educ. Inform. Technol. doi: 10.1007/s10639-025-13647-w

Al-Emran, M., Mezhuyev, V., and Kamaludin, A. (2022). A SEM–ANN hybrid approach to examine students' academic performance: the case of YouTube use during the COVID-19 pandemic. Heliyon 8:e09236. doi: 10.1016/j.heliyon.2022.e09236

Alhanachi, S., de Meijer, L. A. L., and Severiens, S. E. (2021). Improving culturally responsive teaching through professional learning communities: a qualitative study in Dutch pre-vocational schools. Int. J. Educ. Res. 105:101698. doi: 10.1016/j.ijer.2020.101698

Andrade, H. L., and Brookhart, S. M. (2019). Classroom assessment as the co-regulation of learning. Assess. Educ.: Princ. Policy Pract. 26, 350–372. doi: 10.1080/0969594X.2019.1571992

Asil, M. (2017). A school-based measure of culturally responsive practices. Front. Educ. 2:17. doi: 10.3389/feduc.2017.00017

Bennett, R. E. (2023). Toward a theory of socioculturally responsive assessment. Educ. Assess. 28, 83–104. doi: 10.1080/10627197.2023.2202312

Bennett, R. E., Darling-Hammond, L., and Badrinarayan, A. (Eds.). (2025). Socioculturally Responsive Assessment: Implications for Theory, Measurement, and Systems-Level Policy, 1st Edn. New York, NY: Routledge. doi: 10.4324/9781003435105

Bottiani, J. H., Franco, M. P., Smith, L. H., Aguayo, D., Kaihoi, C. A., and Debnam, K. J. (2025). CARES360: a framework for teacher capacity-building in culturally sustaining practices. J. Sch. Psychol. 111:101471. doi: 10.1016/j.jsp.2025.101471

Buac, M., and Jarzynski, R. (2022). Providing culturally and linguistically responsive language assessment services for multilingual children with developmental language disorders: a scoping review. Curr. Dev. Disord. Rep. 9, 204–212. doi: 10.1007/s40474-022-00260-6

Cardinal, T., Murphy, M. S., Huber, J., and Pinnegar, S. (2022). Editorial: assessment practices with Indigenous children, youth, families, and communities. Front. Educ. 7:1105583. doi: 10.3389/feduc.2022.1105583

Castagno, A. E., Joseph, D. H., Kretzmann, H., and Dass, P. M. (2021). Developing and piloting a tool to assess culturally responsive principles in schools serving Indigenous students. Diaspora Indig. Minority Educ. 16, 133–147. doi: 10.1080/15595692.2021.1956455

Chin, W. W. (1998). “The partial least squares approach to structural equation modeling,” in Modern Methods for Business Research, ed. G. A. Marcoulides (Mahwah, NJ: Lawrence Erlbaum Associates), 295–336.

Do, T. P., Do, H., and Phung, L. (2024). “A culturally and linguistically responsive approach to materials development: teaching Vietnamese as a second language to ethnic minority primary school students,” in Innovation in Language Learning and Teaching: New Language Learning and Teaching Environments, eds. P. Wachob and H. Le (Cham: Springer), 93–113. doi: 10.1007/978-3-031-46080-7_6

Evans, C. M., and Taylor, C. S. (2025). Culturally Responsive Assessment in Classrooms and Large-Scale Contexts: Theory, Research, and Practice. New York, NY: Routledge. doi: 10.4324/9781003392217

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Market. Res. 18, 39–50. doi: 10.1177/002224378101800104

Gálvez-López, E. (2023). Formative feedback in a multicultural classroom: a review. Teach. High. Educ. 28, 463–482. doi: 10.1080/13562517.2023.2186169

Gay, G. (2018). Culturally Responsive Teaching: Theory, Research, and Practice, 3rd Edn. New York, NY: Teachers College Press.

Goforth, A. N., and Pham, A. V. (2023). “Culturally responsive approaches to traditional assessment,” in The Oxford Handbook of Culturally Responsive Assessment, eds. A. N. Goforth and A. V. Pham (New York, NY: Oxford University Press), 75–181. doi: 10.1093/med-psych/9780197516928.003.0005

Hair, J. F., Hult, G. T. M., Ringle, C. M., and Sarstedt, M. (2019). A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd Edn. SAGE Publications. doi: 10.3926/oss.37

Hair, J. F., Hult, G. T. M., Ringle, C. M., and Sarstedt, M. (2022a). A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 3rd Edn. Sage.

Hair, J. F., Hult, G. T. M., Ringle, C. M., Sarstedt, M., and Danks, N. P. (2022b). Partial Least Squares Structural Equation Modeling (PLS-SEM) Using R: A Workbook, 2nd Edn. Springer.

Henseler, J., Ringle, C. M., and Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Market. Sci. 43, 115–135. doi: 10.1007/s11747-014-0403-8

Herzog-Punzenberger, B., Altrichter, H., Brown, M., Burns, D., Nortvedt, G. A., Skedsmo, G., et al. (2020). Teachers responding to cultural diversity: case studies on assessment practices, challenges and experiences in secondary schools in Austria, Ireland, Norway and Turkey. Educ. Assess. Eval. Account. 32, 395–424. doi: 10.1007/s11092-020-09330-y

Jia, Y. P., and Nasri, N. M. (2019). A systematic review: competence of teachers in implementation of culturally responsive pedagogy. Creat. Educ. 10, 3118–3129. doi: 10.4236/ce.2019.1012236

Johansson, E., Emilson, A., Einarsdottir, J., Puroila, A.-M., and Piskur, B. (2024). The politics of belonging in early childhood contexts: a comprehensive picture, critical factors and policy recommendations. Global Stud. Childhood 14, 1–16. doi: 10.1177/20436106241260079

Kock, N. (2016). Hypothesis testing with confidence intervals and P values in PLS-SEM. Int. J. e-Collab. 12, 1–6. doi: 10.4018/IJeC.2016070101

Koh, K. (2023a). Designing culturally responsive online assessments for equity-deserving students. Cult. Pedag. Inquiry 15. doi: 10.18733/cpi29712

Koh, K. (2023b). Designing culturally responsive online assessments for equity-deserving students. Can. Perspect. Inclus. 15, 10–25.

Kwak, D., Bell, M. C., Blair, K.-S. C., and Bloom, S. E. (2024a). Cultural responsiveness in assessment, implementer training, and intervention in school, home, and community settings: a systematic review. J. Behav. Educ. 33, 87–109. doi: 10.1007/s10864-024-09547-7

Kwak, Y., Kim, H., and Chung, J. (2024b). Culturally responsive assessment practices: a systematic review of empirical studies in K-12 education. Educ. Rev. 76, 145–169.

Lane, S. L., and Marion, S. F. (2025). “Validity argumentation for culturally responsive assessments,” in Culturally Responsive Assessment in Classrooms and Large-Scale Contexts, eds. C. M. Evans and C. S. Taylor (New York, NY: Routledge), 18–35. doi: 10.4324/9781003392217-8

Lee, M., and Mo, Y. (2023). Teacher self-efficacy in a multicultural classroom: a comparative analysis of International Baccalaureate (IB) and non-IB teachers. Multicult. Educ. Rev. 15, 245–263. doi: 10.1080/2005615X.2024.2318688

Luong, P. M., Tran, L. T., Dang, G. H., and Vu, T. V. (2025). Teachers' perceptions of culturally responsive teaching in international programs in Vietnamese higher education. Vietnam J. Educ. 9, 76–84. doi: 10.52296/vje.2025.529

Montenegro, E., and Jankowski, N. A. (2020). Equity and Assessment: Moving Towards Culturally Responsive Assessment (Occasional Paper No. 29). National Institute for Learning Outcomes Assessment. Available online at: https://eric.ed.gov/?id=ED574461

Nortvedt, G. A., Wiese, E., Brown, M., Burns, D., McNamara, G., O'Hara, J., et al. (2020). Aiding culturally responsive assessment in schools in a globalising world. Educ. Assess. Eval. Account. 32, 5–27. doi: 10.1007/s11092-020-09316-w

Paris, D., and Alim, H. S. (2017). Culturally Sustaining Pedagogies: Teaching and Learning for Justice in a Changing World. New York, NY: Teachers College Press.

Podsakoff, P. M., and Organ, D. W. (1986). Self-reports in organizational research: problems and prospects. J. Manage. 12, 531–544. doi: 10.1177/014920638601200408

Preston, J. P., and Claypool, T. R. (2021). Analyzing assessment practices for Indigenous students. Front. Educ. 6:679972. doi: 10.3389/feduc.2021.679972

Ringle, C. M., Sarstedt, M., and Straub, D. W. (2016). Gain more insight from your PLS-SEM results: the importance–performance map analysis. Indus. Manag. Data Syst. 116, 1865–1886. doi: 10.1108/IMDS-10-2015-0449

Schachner, M. K., Schwarzenthal, M., Moffitt, U., Civitillo, S., and Juang, L. P. (2021). Capturing a nuanced picture of classroom cultural diversity climate: multigroup and multilevel analyses among secondary school students in Germany. Contemp. Educ. Psychol. 65:101971. doi: 10.1016/j.cedpsych.2021.101971

Schimke, D., Krishnamoorthy, G., Ayre, K., Berger, E., and Rees, B. (2022). Multi-tiered culturally responsive behavior support: a qualitative study of trauma-informed education in an Australian primary school. Front. Educ. 7:866266. doi: 10.3389/feduc.2022.866266

Schoonenboom, J., and Johnson, R. B. (2017). How to construct a mixed methods research design. Kölner Zeitschrift für Soziologie und Sozialpsychologie 69, 107–131. doi: 10.1007/s11577-017-0454-1

Shepard, L. A. (2000). The role of assessment in a learning culture. Educ. Res. 29, 4–14. doi: 10.3102/0013189X029007004

Solano-Flores, G. (2019). Examining cultural responsiveness in large scale assessment: the matrix of evidence for validity argumentation. Front. Educ. 4:43. doi: 10.3389/feduc.2019.00043

Solano-Flores, G., and Trumbull, E. (2019). “The concept of validity and the use of tests in culturally and linguistically diverse contexts,” in The Handbook of Research on Assessment Literacy and Teacher-Made Testing in the Language Classroom, eds. M. B. González and E. Trumbull (IGI Global), 1–23.

Tang, K.-S., Cooper, G., Rappa, N., Cooper, M., Sims, C., and Nonis, K. (2024). A dialogic approach to transform teaching, learning & assessment with generative AI in secondary education: a proof of concept. Pedagogies 19, 493–503. doi: 10.1080/1554480X.2024.2379774

Tarhini, A., Hone, K., Liu, X., and Tarhini, T. (2016). Examining the moderating effect of individual-level cultural values on users' acceptance of e-learning in developing countries: a structural equation modeling of an extended technology acceptance model. Interact. Learn. Environ. 24, 306–328. doi: 10.1080/10494820.2015.1122635

Trumbull, E., and Nelson-Barber, S. (2019). The ongoing challenge of assessment for indigenous students. Kappa Delta Pi Record 55, 16–21.

Ulbricht, J., Schachner, M., Civitillo, S., and Juang, L. (2024). Fostering culturally responsive teaching with the Identity Project intervention: a qualitative quasi-experiment with pre-service teachers. Identity 24, 307–330. doi: 10.1080/15283488.2024.2361890

Walker, M. E., Olivera-Aguilar, M., Lehman, B., Laitusis, C., Guzman-Orth, D., and Gholson, M. (2023). Culturally responsive assessment: provisional principles (ETS Research Report No. RR-23-11). ETS Res. Rep. Ser. 2023, 1–18. doi: 10.1002/ets2.12374

Wyatt-Smith, C., Klenowski, V., and Gunn, S. (2010). The centrality of teachers' judgement practice in assessment: a study of standards in moderation. Assess. Educ.: Princ. Policy Pract. 17, 59–75. doi: 10.1080/09695940903565610

Wyatt-Smith, C., Klenowski, V., and Gunn, S. (2014). The centrality of teachers' judgement practice in assessment: a study of standards in moderation. Assess. Educ.: Princ. Policy Pract. 17, 59–75.

Keywords: culturally responsive assessment, ethnic minority education, semi-boarding primary schools, PLS-SEM, educational equity, Vietnam

Citation: Duong LT and Khuong PD (2025) Culturally responsive assessment practices in ethnic minority semi-boarding primary schools in Vietnam: a mixed-methods study. Front. Educ. 10:1670277. doi: 10.3389/feduc.2025.1670277

Received: 21 July 2025; Accepted: 01 September 2025;

Published: 10 October 2025.

Edited by:

Enrique H. Riquelme, Temuco Catholic University, Chile

Reviewed by:

Sumarto Sumarto, Poltekkes Kemenkes, Indonesia

Marlon Adlit, Department of Education, Philippines

Richelle Marynowski, University of Lethbridge, Canada

Copyright © 2025 Duong and Khuong. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Phi Dinh Khuong, khuongpd@tnus.edu.vn

†ORCID: Phi Dinh Khuong orcid.org/0009-0004-7930-7335

Lam Thuy Duong1, Phi Dinh Khuong