- 1Department of Business Science and Management, Albstadt-Sigmaringen University, Sigmaringen, Germany

- 2Department of Media Education, Weingarten University of Education, Weingarten, Germany

- 3Department of Life Sciences, Albstadt-Sigmaringen University, Sigmaringen, Germany

Background: Visualized data are central to economic decision-making. A key educational challenge is fostering related competencies from school to higher education. Research on cognitive competencies and their assessment in data literacy remains scarce. This study examines the effects of an eight-week intervention based on a cognitive model, aiming to enhance learners’ critical engagement with and evaluation of visualized data.

Methods: Using a design-based research approach, a cognitive model was implemented as an instructional intervention and iteratively refined through multiple design cycles. A pre-post comparison was conducted within the experimental group (N = 40). The model emphasizes a backward-oriented interpretation of data visualizations, guiding learners from analysis to mental reconstruction of the underlying data structure and the data collection process. To enhance acceptance, learners were involved in model development from the outset.

Results: There were changes in perceived ability to critically evaluate visualized data over time (p = 0.011, d = 1.72). In addition, the usefulness of the intervention is evaluated positively (M = 3.76, SD = 1.09). The model underwent several rounds of revision before being implemented in the main study during the winter 2025/26 as part of a statistics course for undergraduate students. These are therefore preliminary results in the context of this study.

Discussion: To our knowledge, this is the first instructional intervention based on a cognitive model to foster critical evaluation of visualized data. The backward-oriented approach guided learners’ step by step – from analyzing visualizations to mentally reconstructing the underlying data structure and drawing conclusions about the data collection process. Extending the intervention duration may further enhance its effectiveness. Overall, the cognitive-model-based intervention shows potential to improve data literacy competencies in higher education.

1 Introduction

In today’s data-driven society, the ability to understand, critically interpret and communicate data has become a key competence. The concept of data literacy has been established internationally to describe a broad set of skills ranging from analyzing and managing data to critical reflection and decision-making. Carlson et al. (2011) was among the first to define data literacy as an independent competence domain in higher education. Ridsdale et al. (2015) provided a comprehensive synthesis of international strategies and best practices, emphasizing the lack of systematically embedded and empirically tested teaching approaches. Empirical research further indicates that students often read data visualizations only superficially. Oguguo (2020) demonstrated significant deficits in practical data skills among university students in Nigeria, while Kedra and Zakeviciute (2019) showed that visual literacy practices are rarely taught explicitly in higher education. Zhu and Lim (2024) add that students tend to value visual literacy highly but underestimate their ability to interpret and critically evaluate visual materials. Together, these studies highlight the centrality of visual literacy within the broader concept of data literacy but also reveal that the cognitive processes involved remain underexplored. Theoretical work on how learners process multimodal information exists – for example, Mayer’s (2001) multimedia learning theory and Schnotz and Bannert’s (2003) model of text and picture comprehension – but these frameworks have rarely been applied systematically to the interpretation of data visualizations (Figure 1).

Figure 1. Integrated model of text and picture comprehension (Schnotz and Bannert, 2003; adapted by Hettmannsperger et al., 2016).

The German context reflects a similar pattern. The Future Skills framework developed by Schüller et al. (2019) identifies data literacy as a central skill set for graduates. Schüller and Sievers (2022) provide practice-based evidence showing that while data literacy education is emerging in German higher education, initiatives remain fragmented and rarely evaluated systematically. Demiröz and Lämmlein (2024) argue that data literacy should be considered a transversal competence to be embedded across all disciplines, while Artelt et al. (2024) provide large-scale empirical evidence of persistent gaps in digital and data-related skills in Germany. Taken together, these studies demonstrate that, despite growing attention, data literacy in the German context is still addressed mainly through frameworks and initiatives but lacks empirically validated didactic models that specifically foster critical engagement with data visualizations.

Mental models are essential for the reception and comprehension of data products. As cognitive structures, they facilitate the decoding of visual information and its integration into existing knowledge frameworks. These frameworks are often organized in the form of cognitive schemas-stable patterns of information processing that structure the perception, organization, and classification of environmental stimuli (Moser, 2014; Nerdinger, 2012). Cognitive schemas significantly influence how new information is perceived, interpreted, and evaluated. When existing mental models prove insufficient for problem-solving, they can be modified and further developed through learning processes, as described by Moser (2014) and Nerdinger (2012). Thus, mental models are dynamic constructs, continuously interacting with individual patterns of perception, reasoning, and behavior (Ford and Sterman, 1998). Moreover, studies have shown that domain-specific expertise relies on specialized mental models, which evolve and differentiate based on an individual’s level of experience and knowledge (Ford and Sterman, 1998; Johnson-Laird, 1983). Therefore, the integration of mental models into instructional designs is essential for the effective development of data literacy competencies.

Based on this foundation, a cognitive model was developed, drawing on the existing framework by Schnotz and Bannert (2003), which serves as a basis for a didactic intervention implemented by the instructor (Figure 2). It is assumed that the decoding process from text to data follows a similar mental pathway as the already studied transition from image to text, relying on comparable cognitive mechanisms.

Figure 2. Extended representation of the integrative model of text and picture comprehension (own illustration, adapted from Schnotz, 2005).

This study addresses that gap by introducing and testing a cognitive model designed to support learners in the critical evaluation of data visualizations. The model builds on a backward-oriented reasoning process, guiding students from surface-level analysis through mental reconstruction of the underlying data structure to reflection on the data collection process and its context. Despite increasing recognition of the importance of data and visual literacy, no empirically grounded instructional intervention has yet been developed that explicitly operationalizes a cognitive model for the critical evaluation of data visualizations. Existing studies have either focused on descriptive skills, on design principles for creating visualizations, or on general literacy frameworks without addressing the specific cognitive processes needed for critical interpretation (Schüller et al., 2019; Zelazny, 2015; Few, 2009; Shneiderman, 2004). To our knowledge, this is the first study that systematically develops, implements, and tests a cognitive model that guides learners through a backward-oriented reasoning process to critically assess visualized data. By doing so, it makes a unique contribution to both theory and practice: it bridges established cognitive models of information processing (Schnotz and Bannert, 2003; Mayer, 2001) with the field of data literacy education and offers an empirically tested instructional approach that can be integrated into higher education curricula. In doing so, it responds to calls for more systematic data literacy interventions while extending existing theoretical approaches. Unlike prior work that mainly provides guidelines for visualization design (Zelazny, 2015; Few, 2009; Shneiderman, 2004), the present model combines cognitive theory (Schnotz and Bannert, 2003; Mayer, 2001) with an instructional intervention that actively trains critical and reflective thinking. The goal is not only to improve formal reading skills but also to strengthen learners’ capacity for critical engagement with data as a foundation for informed communication and decision-making.

The first draft of the cognitive model was developed based on a comprehensive literature review of the current research and supplemented by expert interviews. The aim of the present study was to investigate the effects of a didactic intervention on the development of data literacy competencies in students.

We hypothesized that (1) the primary outcome, which is the perceived ability to evaluate visualized data, will increased over time as assessed at pre-intervention (T-pre) and post-intervention (T-post); and (2) the secondary outcome, which is the perceived usefulness of the intervention, will be assessed at T-post to potentially improve the intervention for future research.

2 Materials and methods

2.1 Study design

The cognitive model illustrated in Figure 2 was incorporated into the didactic intervention to enhance learners’ cognitive processes underpinning the critical assessment of data visualizations. The intervention group receives an eight-week instructional intervention based on this cognitive model, integrated into a course. A design-based research (DBR) approach was adopted to guide the development and implementation of the proposed cognitive model and its corresponding didactic intervention, enabling iterative refinement of both theoretical constructs and practical applications in authentic educational contexts. Participants were assessed before the intervention (pre-assessment) and will be assessed after the intervention (post-assessment) with respect to their level of competence. For this purpose, a paired-samples t-test was conducted. This statistical procedure examines mean differences within a group under conditions of dependent measurements (Bortz and Schuster, 2010).

2.2 Participants and setting

Participants were recruited from the Business Statistics course, where the author introduced the study. Prior to the end of the survey period, students were informed about the aim of the study as well as the predefined framework for participation (duration, procedure, and assurance of confidentiality). They were further reminded of the voluntary nature of participation and the principles of data protection (Blach, 2016). Participants are eligible for inclusion if they (a) are enrolled in an undergraduate Bachelor’s degree program at Albstadt-Sigmaringen University that includes the module Business Statistics and (b) have sufficient proficiency in the German language. Recruitment began in March 2025 and took place during the course Business Statistics at Albstadt-Sigmaringen University. The study specifically targets German-speaking students. A total of N = 40 students agreed to participate.

2.3 Procedure

Eligible participants (Figure 3) were invited to access the pre-assessment questionnaire via a provided link or QR code. The T-pre-questionnaire began with a statement informing participants of the voluntary nature of their involvement. Both the pre-assessment and post-assessment are administered using the online survey platform LimeSurvey.1 No personalized login credentials are required, ensuring participant anonymity. Figure 3 presents the planned study procedure and timeline for the experimental group. All data analyses were conducted using pseudonymized data, ensuring that the analyst could not identify individual participants.

2.4 Instruments

The primary outcome is measured using a self-developed questionnaire designed to assess participants’ perceived ability to critically evaluate visualized data. The questionnaire consisted of 11 items capturing students’ perceived ability to critically evaluate data visualizations. Item were phrased as self-assessment statements (e.g., “I have a clear sense of where the data in visualizations come from”). Responses are measured using a 5-point Likert scale, with values ranging from 1 (“strongly disagree”) to 5 (“strongly agree”). Scale responses are aggregated into sum scores (range 1–5), with higher scores indicating greater perceived critical evaluation ability. The reported aspects are then compared to the theoretically relevant dimensions outlined in the cognitive model, allowing for an assessment of learning effects based on conceptual alignment (Figure 4).

To assess the internal consistency of the instrument, Cronbach’s alpha was calculated. The coefficient was α = 0.87, which can be classified as high. According to common guidelines, values above 0.70 are considered acceptable, values above 0.80 satisfactory, and values above 0.90 very good (Bühner, 2011; George and Mallery, 2003). Thus, the instrument used demonstrates excellent reliability and can be applied for research purposes within the present sample. The following table presents the results of the detailed item analysis (Table 1).

In total, four items (1, 5, 6, 8) demonstrated corrected item-total correlations of ≥0.80. Six items (2, 3, 4, 7, 10, 11) showed values between 0.70–0.79, and item 9 fell within the range of 0.50–0.69.

To evaluate the construct validity of the developed instrument, an exploratory factor analysis was conducted. The suitability of the data for factor analysis was first examined. The Kaiser-Meyer-Olkin coefficient (KMO) was 0.80 (Kaiser, 1974), and Bartlett’s test of sphericity was significant, with χ2 (df = 55) = 450.56 and p < 0.0001, indicating sufficient correlations among the items. These results confirmed the adequacy of the data for factor analysis.

Principal axis factoring was used as the extraction method. The initial communalities suggest meaningful associations among the variables as shown in Table 2.

Factor retention was based on the eigenvalue criterion (λ > 1) and Cattell’s (1966) scree plot test. According to the Kaiser criterion, five factors were extracted (eigenvalues > 1) (Table 3).

As shown above, the five extracted factors together accounted for 88.33% of the variance. We can also observe a steady decrease in the proportion of explained variance as the number of factors increases. The number of factors to be extracted can be determined using the scree plot. In addition to a five-factor solution, a three-factor solution would also be conceivable (Kenemore et al., 2023) (Table 4).

The rotated factor matrix indicated that items loaded consistently based on three factors. High loadings (λ > 0.50) are shown in bold (see Table 5). Factor 1 showed strong loadings for items related to structuring and reporting data (e.g., “creating diagrams,” “structuring data logically,” “reporting information content”). Factor 2 was defined by items emphasizing critical evaluation and data-based decision-making (e.g., “critically evaluating data,” “understanding data provenance,” “making data-based decisions”). Factor 3 was characterized by items focusing on deriving and communicating insights (e.g., “deriving recommendations for action,” “presenting visualizations,” “evaluating information content”). Overall, the loading pattern supports the assumed construct structure of the instrument (Table 5).

The Varimax rotation has resulted in a theoretically meaningful and empirically robust factor structure, which is suitable for both research and practical application. Most items rely clearly on one factor. The three factors are logical and theoretically grounded and together explain 85.48% of the variance (see Table 6), which is substantially higher than the typical variance explained in psychological scales (40–60%). This result is likely attributed to the small number of items and their content homogeneity, which allows the variance to be more strongly captured by the three factors. Only 3.6% of large residuals were observed, indicating a very satisfactory model fit.

The secondary outcome, perceived usefulness of the intervention, is also measured using the same self-developed questionnaire, applying the five-point Likert scale from 1 (“strongly disagree”) to 5 (“strongly agree”). The questionnaire consisted of one item capturing students’ perceived usefulness of the didactic intervention. Item were phrased as self-assessment statements (“Using the cognition model improves my ability to critically understand data”). Aggregate scores are computed, with higher values indicating a greater perceived usefulness of the intervention. Perceived usefulness of the intervention was assessed exclusively in the post-test (T-post), as this construct could not be meaningfully measured prior to participation. For this purpose, descriptive statistics (M, SD and mode) were calculated to provide an overview of participants’ evaluations.

2.5 Intervention (prototype)

2.5.1 Defining learning objectives

Design-based research is a particularly suitable research approach for addressing complex and practice-oriented educational problems (Ford et al., 2017). Due to its high degree of flexibility and strong orientation toward practice, design-based research is applied across a wide range of educational contexts. Wherever concepts are developed with and for practice, this approach offers an appropriate and adaptable methodological framework. The definition of clear learning objectives is essential before developing any instructional intervention. In the design-based research approach, learning objectives serve as a foundation to guide, document, and evaluate the development process.

A prerequisite for defining appropriate learning objectives is to clarify the concept of data literacy. Data literacy comprises both the capacity to comprehend and interpret data - such as analyzing visualizations, drawing evidence-based conclusions, and detecting misleading representations (Oguguo, 2020; Carlson et al., 2011) - and the competencies needed to collect, manage, critically assess, and apply data in practical contexts (Oguguo, 2020; Ridsdale et al., 2015).

Within this competency spectrum, visualization literacy and visual literacy are of particular importance. Visualization literacy refers to the ability to create data visualizations, while visual literacy relates to the interpretation and critical evaluation of these visual representations (Schüller et al., 2019). Both competencies are particularly relevant in higher education to prepare students for data-informed professional environments (Zhu and Lim, 2024).

Visual literacy is especially critical as it supports the interpretation of complex visual content and fosters critical thinking - key prerequisites for effective and reflective communication (Kedra and Zakeviciute, 2019).

2.5.2 Specific learning objectives

The instructional intervention was designed to achieve the following learning objectives:

1. Learners understand the process of critically evaluating data products.

2. Learners are able to engage in backward reasoning from a given data product to the underlying data collection process, including data structures such as tables, columns, or matrices.

3. Learners recognize that critical engagement with data products extends beyond content evaluation to include the examination of underlying data collection processes as the basis for data-driven communication and decision-making.

These learning objectives formed the starting point for the iterative design phase.

To achieve the defined learning objectives, the intervention was designed based on a behavior-oriented approach. This approach draws on learning theory, which holds that most human behavior is learned and therefore subject to change (Skinner, 1953). Accordingly, the instructional goal is to build desired behaviors through targeted learning support.

The methodological implementation was carried out in a structured planning phase, in which specific strategies were defined. These strategies are based on the principles of observational learning (Bandura, 1977). The intervention thus follows a systematic, controlled approach to supporting the learning process.

3 Findings from the design-based research cycles

3.1 Iterative design phase: development of the cognitive model

Within an iterative design phase, the cognitive model presented in Figure 2 was systematically enhanced.

The present study is embedded in an empirically grounded, qualitatively oriented design-based research framework. The process began with a preliminary literature review and the development of the theoretical foundation, which were subsequently complemented by a pilot study involving expert interviews. The insights gained were analyzed using qualitative content analysis and informed the creation of an initial prototype of a cognitive model (C. Lieb, manuscript submitted for publication).

In the course of the iterative cycles, short interviews with participants were conducted as part of the formative evaluation of the intervention. These interviews primarily aimed to collect student feedback that could directly contribute to the design’s further development. Accordingly, the analysis was carried out in the pragmatic manner (e.g., summarizing key contents, identifying areas for improvement) rather than through a comprehensive qualitative content analysis. This approach is consistent with the logic of design-based research, in which data in the early cycles primarily serve to inform reflection and guide design optimization (McKenney and Reeves, 2019). A more in-depth qualitative analysis (e.g., Kuckartz, 2018; Mayring, 2010) would not have aligned with the main research objective at this stage and would have been disproportionate in methodological terms.

The current publication focuses on the design-based research cycles, which serve to iteratively refine and implement the cognitive model. These cycles aim to evaluate and optimize the model’s didactic utility through practice-based feedback and ongoing theoretical reflection. During each iteration, adjustments are made to the prototype based on whether the intervention is accepted in its current form, ultimately leading to a final version of the cognitive model once stability and acceptance have been achieved. A forthcoming publication will address the evaluation of the final cognitive model, including its implementation and impact measurement.

The following section presents the individual development cycles in a systematic manner to clarify the practical application of the design process. We first describe observations and resulting conclusions at the model level, then synthesize them across disciplinary perspectives.

3.1.1 Pilot phase 1: initial draft of the cognitive model

Focus and procedure: the first draft of the cognitive model focused on identifying the aspects students consider when critically evaluating visualized data. For this purpose, students were presented with a pie chart. The task followed a three-step process:

• Planning - Students were asked to define their evaluation strategy.

• Evaluation - Students performed the actual evaluation based on their chosen approach.

• Reflection - Students reflected on their process and assessed whether they had considered all relevant aspects.

Observations: the observations revealed that most students focused primarily on descriptive rather than interpretive aspects. Their evaluations often concentrated on formal criteria, such as clarity, structural layout, correct summation to 100%, and appropriate use of color. While many students acknowledged the importance of questioning the origin of the data, they were generally unable to specify concrete methods for doing so. Some students mentioned that they would seek additional information through independent research to assess the data source and the underlying data collection process.

Conclusions from pilot phase 1: the findings from this first pilot phase demonstrated the importance for a more structured process that explicitly outlines the steps involved in critical evaluation. This structure would help students more effectively identify and apply relevant evaluation criteria.

The design-based-research cycle now begins, consisting of iterative phases of development, testing, evaluation, and revision (Haagen-Schützenhöfer et al., 2024).

3.1.2 Revision 1: development of a structured approach for the critical evaluation of visualized data: adjusted draft of the cognitive model

In the first revision phase, the goal was to identify suitable methods to systematically promote the critical evaluation of visualized data. Analogous to the process of creating visualizations from raw data, the reverse process can also be described: starting with a visualization and reasoning backward to infer the underlying data (Zelazny, 2015; Few, 2009).

The model of text and picture comprehension by Schnotz (2005) describes a cognitive process relevant to this backward reasoning. When viewing a visualization, learners first generate a mental representation of its surface structure. A visual filter subsequently extracts relevant information and transfers it to working memory, where it supports the formation of a mental model. Mental models rely on the activation of prior knowledge stored in long-term memory (Dutke, 1994) and support the processing of complex information by simulating or internally visualizing processes. A similar cognitive process can be assumed when critically evaluating visualizations and analyzing their underlying data. To appropriately assess visualized data, students must first systematically analyze the visualization, including

• Identifying the type of chart or diagram,

• Interpreting axis labels and scales,

• Recognizing which elements are emphasized,

• Determining the key message conveyed.

This analysis enables students to draw reasoned conclusions about data collection methods and contextual factors. Based on these insights, backward analysis emerged as a promising methodological approach for supporting the critical evaluation of visualized data.

3.1.3 Pilot phase 2: application and reflection of backward analysis

Implementation and observations: in the second pilot phase, students were asked to apply backward analysis to critically evaluate a data visualization following the intervention. The results indicated that many students struggled to familiarize themselves with this unfamiliar approach within the limited time available.

Even after identifying the chart type and axis labels, several students were unable to derive the key message of the visualization based on the presented variables.

Reflection and identified need for cognitive scaffolding: follow-up discussions with the students revealed that this novel reasoning process - working backward from the visualization to the underlying data - could be enhanced by integrating an additional cognitive step. This step involves the conscious construction of an interpretive framework, enabling learners to:

• Move beyond formal analysis.

• Connect the visualization to relevant background knowledge.

• Systematically structure their analysis,

• More effectively derive meaningful conclusions from the data.

Conclusion of pilot phase 2: the findings suggest that cognitive scaffolding through the construction of an interpretive framework is essential to support students in applying backward analysis effectively and in making informed evaluations of visualized data.

3.1.4 Revision 2: model refinement – mental reconstruction as a cognitive bridge: adjusted draft of the cognitive model

In this revision phase, the cognitive model was further refined to include mental reconstruction as a bridging step between formal analysis and conclusions based on data collection and context.

For example, students are presented with a bar chart showing the increase in student dropout rates across various academic programs. Initially, they correctly analyze the chart type and axis labels. The newly introduced cognitive bridging step requires them to anticipate possible causes and to raise questions about additional factors or economic conditions. This enables them to identify key aspects and derive the main message of the visualization. Only after this step do students contextualize the data, consider the data collection objectives, and critically reflect on the visualization. The process of mentally reconstructing the underlying data structure serves as a cognitive bridge between surface-level analysis and deeper reflection on data collection and contextual factors. Existing literature emphasizes the importance of understanding the data structure and the ability to reason backward from a visualization to the original dataset (Few, 2009; Shneiderman, 2004). This cognitive step supports the critical assessment within the decoding process of visualized data, particularly with regard to recognizing emphasized aspects and deriving the intended key messages.

3.1.5 Pilot phase 3: testing the cognitive model as an instructional intervention

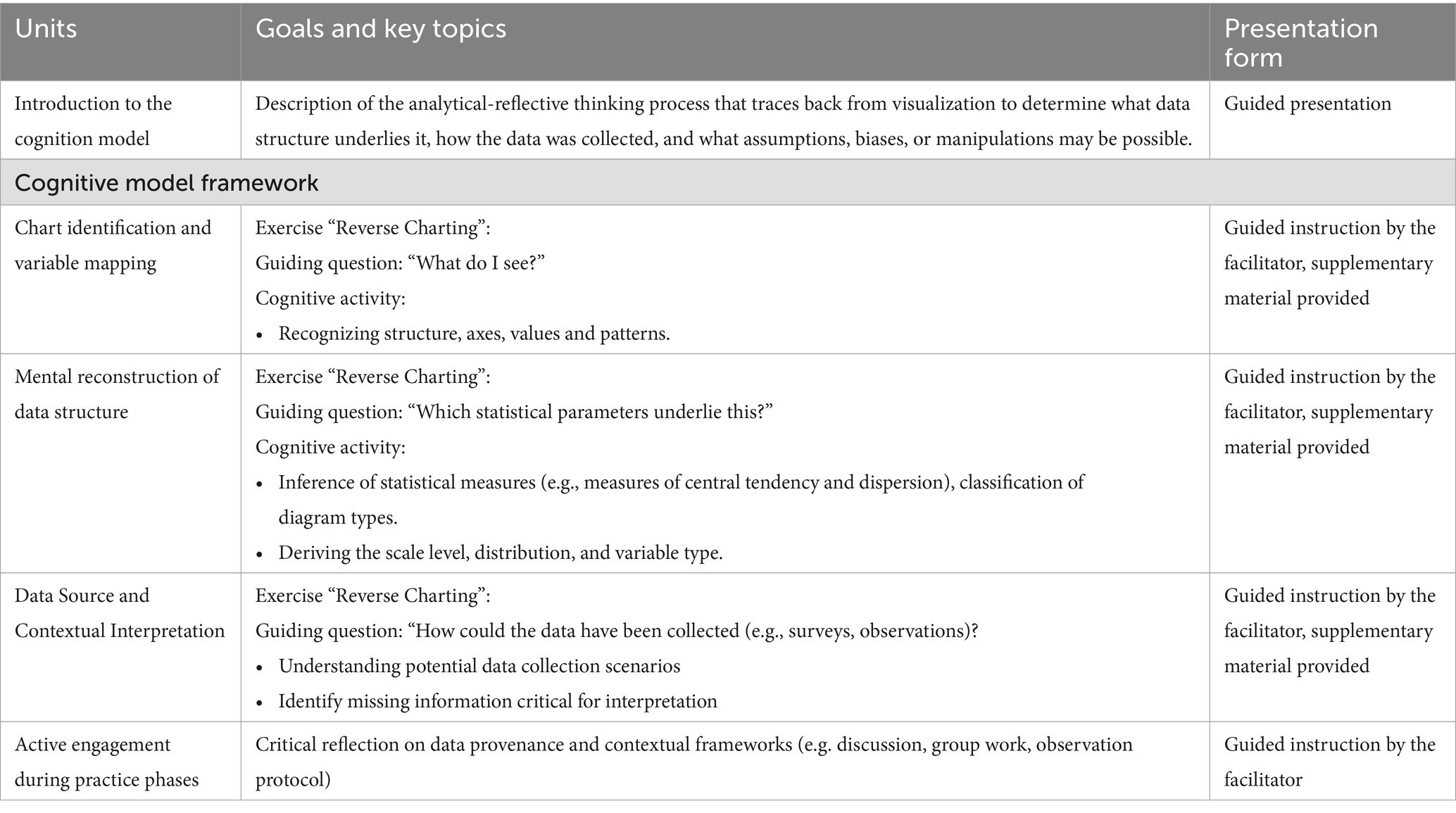

Integration into teaching: an overview of the intervention content, structured around the model’s three steps - (1) analysis of the visualization, (2) mental reconstruction of the data structure, and (3) reasoning back to data collection and context - is provided in Table 1. The cognitive model was integrated into the Business Statistics course. Additional visualized data products were presented for demonstration purposes but could not be used as active learning materials due to time constraints.

Observations and outcomes: despite these limitations, students worked with the cognitive model and developed a structured, step-by-step approach to the critical evaluation of visualized data. The results from this third pilot phase demonstrate the need for active engagement with the model to foster students’ understanding of critical data evaluation processes. However, due to time limitations, students were not yet able to fully apply the model independently, representing the next cognitive level in the learning process that remains to be achieved in future iterations. An overview of the alternating intervention contents is shown in Table 7.

3.2 Data analysis

To examine changes over time, various inferential statistical methods will be applied. The significance level for all analyses is set at 0.05. Only complete datasets from participants who provided data at both time points (T-pre and T-post) will be included in the analysis. Incomplete questionnaires will be excluded.

4 Results

4.1 Principal outcome measure: perceived critical evaluation ability

A total of 40 students participated in the experimental group (N = 40). To examine average competence development across the two measurement points, a paired-samples t-test was conducted. This test is appropriate for evaluating mean differences between pre-test (T-pre) and post-test (T-post). Effect sizes for the analyzed differences are reported using Cohen’s d. Table 7 summarizes descriptive statistics for each measurement point.

The paired-samples t-test revealed a significant increase from pre-test (M = 3.13, SD = 0.24) to post-test (M = 3.54; SD = 0.24), t(39) = 2.35, p = 0.011, Cohen’s d = 1.72. According to Cohen’s (1988) guidelines, this increase represents a very large effect, suggesting that the intervention had a substantial impact on participants’ competence development (Table 8).

Table 8. Descriptive statistics and paired-samples t-test results for pre- and post-intervention measures.

4.2 Secondary outcome measure: perceived usefulness

Perceived usefulness of the intervention was rated positively (M = 3.76, SD = 1.09), with 4 being the most frequently selected value.

5 Discussion

This study centers on the application and further development of a cognitive model designed to foster learners’ critical evaluation and communication of visualized data as a basis for informed decision-making. As far as we are aware, this is the first instructional intervention specifically targeting cognitive competencies in the context of data literacy, particularly focusing on communication and decision-making as outcome variables.

The intervention was developed following a design-based research (DBR) approach, an innovative method for addressing educational challenges that integrates iterative redesign processes within prototype phases and actively involves learners from the beginning. Participant feedback was integrated into the revised cognitive model and will be further examined to enhance the intervention. This study may provide a valuable contribution to the advancement of instructional interventions in data literacy, particularly in higher education settings. In the present intervention study, the inclusion of a control group was intentionally omitted. This decision is based on a combination of ethical considerations, practical constraints, and the exploratory nature of the investigation. From an ethical standpoint, it is not justifiable to deliberately withhold access to an intervention that holds the potential to be effective and contribute to competence development. This is particularly relevant in educational contexts, where there is a responsibility to support the development of all learners. Intentionally assigning participants to a non-intervention control group would therefore raise significant ethical concerns.

Moreover, the study adopts an exploratory approach. The primary objective is to examine the general feasibility and potential effectiveness of the intervention under naturalistic conditions. The aim is to gather initial evidence on changes in the target variable, which can serve as the basis for designing more rigorous, controlled studies in the future. The results are additionally contextualized through comparisons with existing normative data and findings from similar studies. This approach enables initial inferences regarding the effectiveness of the intervention, even in the absence of a classical control group. This methodological decision aligns with current ethical guidelines for educational interventions and represents a well-established approach in empirical educational research, especially during the early development phases of new interventions.

While existing cognitive models such as Mayer’s (2001) multimedia learning theory or the model of text and picture comprehension by Schnotz & Bannert (2005) describe how learners select, organize, and integrate external representations into mental models (see Figure 1), they focus primarily on forward-oriented decoding processes. The “mental reconstruction step” proposed in this study extends these frameworks by introducing a backward-oriented reasoning dimension. Learners are not only guided to decode the surface structure of a visualization but also to reconstruct the underlying data structure and reflect on the conditions of data collection. This additional cognitive step functions as a bridge between surface-level analysis and critical reflection, thereby integrating evaluative and reflexive processes into the act of comprehension itself. In this sense, the model does not replace but expands existing cognitive theories, making them applicable to the context of data literacy education.

The following section discusses the extent to which the overall student sample achieved performance gains through participation in the intervention. To address the hypothesis, the results of the paired-samples t-test and relevant descriptive statistics were examined. The findings revealed a significant increase in the competence dimension “perceived ability to critically evaluate visualized data” from the first (T-pre) to the second measurement point (T-post) (p = 0.011). The effect size on this difference was large (d = 1.72). These results are in line with previous research suggesting that visual literacy competencies (within the context of data literacy) can be acquired (e.g., Pohl, 2023). Participants also evaluated the perceived usefulness of the intervention. The mean rating was relatively high (M = 3.76, SD = 1.09, Mode = 4), indicating that participants considered the intervention useful.

5.1 Practical implications and curricular applications

The purpose of the didactic intervention is to aid students in developing data literacy competencies for data-based communication and decision-making. The findings of this study suggest that the developed cognitive model can be integrated not only into introductory statistics courses in economics but also more broadly across curricula. In the future, various teaching scenarios could be developed: First, the model can serve as a scaffolding tool that guides learners step by step in the critical analysis of visualized data and gradually supports their transition toward independent application. Second, the integration of real-world data problems – e.g. visualizations from the media, corporate reports, or scientific publications – ensures high relevance and strengthens the applicability of acquired competencies. Third, the inclusion of mental reconstruction as a cognitive bridging step deliberately fosters reflective competencies. Learners are trained not only to evaluate the surface features of a visualization but also to critically examine the underlying data structures, collection methods, and potential biases. Finally, the universal applicability of the model allows for interdisciplinary embedding across different domains of study. Concrete curricular applications can be identified in multiple disciplines. For example in marketing or consumer research, the model can support the critical assessment of survey data; in finance or corporate communication, it can be applied to the analysis of charts in annual or sustainability reports. In life sciences contexts, students may decode visualizations of clinical trials. In sociology or political science, the model supports the critical interpretation of visualizations from opinion polls or election forecasts. In (business) psychology, it can foster reflection on empirical studies and evaluation reports. Also in preparatory courses, the model can be applied to everyday examples such as charts from newspapers or social media to build foundational visual literacy skills. The perspective of collaborative learning could also be taken into account here. And in teacher education, it can be employed to prepare future educators to guide students in the critical reflection of data visualization. For instructional design, several approaches can be recommended. We recommend case-based learning, which incorporates authentic examples such as media reports, and project-based learning, which involves students creating and critically evaluating their own visualizations using the model. In addition, digital self-study units (e.g., “reverse-charting” exercises) can be integrated into blended-learning settings. Assessment formats may also benefit from the model, for instance through open-ended exam tasks that require students to decode and critically reflect upon a given visualization.

Overall, the cognition model demonstrates high transfer potential and provides a valuable foundation for fostering data literacy across diverse disciplinary and educational contexts.

6 Limitations

Limitations of the study should be mentioned and considered for the design of future research. To address the question of the effects of a didactic intervention may have in supporting students’ development of data literacy competencies, a cognition model was designed, implemented and evaluated using a paired-samples t-test. The results are positive despite several limitations. The present investigation constitutes a quantitative study. The methodological discussion primarily focuses on the concept of validity as a key quality criterion in empirical research (Döring and Bortz, 2016). Methodological rigor is assessed particularly with reference to the concept of validity, which refers to the degree to which scientific inferences drawn from the present quantitative empirical study are accurate (Shadish et al., 2001). However, in the context of competency assessment, situations may arise in which students’ competencies cannot be validly captured by the collected data, thereby limiting construct validity (ibid.). Such instances may occur, for example, when participants alter their behavior due to the testing situation (Bauhoff, 2011). Subsequently, the measurement values may not adequately reflect the actual level of data literacy competence, which must be regarded as a limitation of the study. The present study cannot entirely rule out external temporal factors that often restrict the internal validity of investigations (Döring and Bortz, 2016), and such influences cannot be entirely ruled out in the present study. Another limitation to internal validity lies in the repeated use of the same survey instrument. Due to the test-practice effect, students may adjust their responses over time. Such chances could be mistakenly interpreted as causal effects of the intervention on competence development (ibid.). This study cannot rule out this potential bias, as the same questionnaire was used at both measurement points to assess the intervention’s impact. Moreover, altered response patterns may also result from decreasing student engagement over the course of the study, which constitutes an additional limitation. It should also be noted that, due to the small number of participants in the study, the results are limited. Another aspect concerns the transferability of conclusions to other populations. Thereby, effects observed in a specific sample – here, students of business-related degree programs in a statistic course – cannot be readily generalized to other contexts (ibid.). Investigating other semesters and academic disciplines would increase external validity and allow for more robust conclusions across different student populations. However, such an extension was not feasible within the scope of the present study for practical research reasons. Moreover, in other courses and semesters, the development of a different intervention for fostering data literacy competencies would have been necessary. As a result, competence development across disciplines and semesters could not be attributed exclusively to the intervention, given the lack of identical conditions. In addition, no standardized measurement instrument was applied to assess competencies. Although the scale demonstrated very satisfactory reliability (α = 0.87), allowing for the assumption of consistent measurement, it should be noted that statements regarding students’ perceived competence gains rely exclusively on self-assessment. Due to potential differences in objective data, future studies should include both subjective and objective measures, particularly regarding acceptance and learning effects. Another issue to be considered is that statistically significant treatment effects may be misinterpreted in cases of incomplete and incorrect implementations (ibid.).

7 Outlook

Instead of employing a parallel-group design, the forthcoming publication will present a pre-post comparison with follow-up within the experimental group, based on assessments conducted before and after the intervention to evaluate changes over time. Data will be collected at baseline and upon completion of the 8-week intervention. In addition, 3 weeks after the post-test, follow-up interviews will be conducted with participants who previously completed both the pre-test and post-test to examine whether the intervention has a sustainable impact and is applied beyond the teaching context. The primary endpoint will be the perceived ability to critically evaluate data, which constitutes the foundation for effective data-driven communication and subsequent decision-making. The perceived usefulness of the intervention will also be assessed as a secondary endpoint. To test the significance of mean differences, one-way repeated measures ANOVAs will be employed. Moreover, gender will be analyzed as a potential factor influencing the average development of competence within the group.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

All participants were provided with written information outlining the study procedures, data protection measures, the voluntary nature of participation, their right to withdraw at any time, and the intended publication of anonymized results. The study exclusively collects self-reported data, with no psychophysiological measurements conducted. The online questionnaires administered via LimeSurvey are pseudonymized and stored in a secured cloud environment accessible only to authorized study team members who are bound by confidentiality agreements. In line with German data protection law, any data shared beyond the project team will be fully anonymized. The study findings are intended for publication in a peer-reviewed scientific journal.

Author contributions

CL: Conceptualization, Data curation, Formal analysis, Methodology, Visualization, Writing – original draft, Writing – review & editing. CP: Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

We would like to thank all students for their contribution to the study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. The authors affirm that the manuscript was originally written in German and then translated with ChatGPT-4o into English by the first author. The translated text was subsequently reviewed and manually revised for linguistic clarity and accuracy.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

References

Artelt, C., Wolter, I., Maurice, J.von, and Schoor, C. (2024). Digitale und datenbezogene Kompetenzen in Deutschland. Available online at: https://www.lifbi.de/de-de/Start/Forschung/Großprojekte/DataLiteracy (accessed May 15, 2025).

Bauhoff, S. (2011). Systematic self-report bias in health data: impact on estimating cross-sectional and treatment effects. Health Serv. Outcome Res. Methodol. 11, 44–53. doi: 10.1007/s10742-011-0069-3

Blach, C. (2016). Ein empirischer Zugang zum komplexen Phänomen der Hochsensibilität. Hamburg: Disserta.

Bortz, J., and Schuster, C. (2010). Statistik für Human- und Sozialwissenschaftler. Heidelberg: Springer.

Carlson, J. R., Fosmire, M., Miller, C., and Sapp Nelson, M. R. (2011). Determining data information literacy needs: a study of students and research faculty. Libraries Faculty Staff Scholarship Res. 11, 629–657. doi: 10.1353/pla.2011.0022

Cattell, R. B. (1966). The scree test for the number of factors. Multivar. Behav. Res. 1, 245–276. doi: 10.1207/s15327906mbr0102_10

Demiröz, V., and Lämmlein, B. (2024). Data Literacy im Hochschulkontext. Hochschulforum Digitalisierung 33, 6–15. doi: 10.48718/ys2m-ww35

Döring, N., and Bortz, J. (2016). Forschungsmethoden und Evaluation in den Sozial- und Humanwissenschaften. Heidelberg: Springer.

Dutke, S. (1994). Mentale Modelle: Konstrukte des Wissens und Verstehens: kognitionspsychologische Grundlagen für die Software-Ergonomie [Menal Models. Constructs of knowledge and understanding]. Göttingen: Verlag für Angewandte Psychologie.

Few, S. (2009). Now you see it: Simple visualization techniques for quantitative analysis. Oakland, CA: Analytics Press.

Ford, D., McNally, D., and Ford, K. (2017). Using design-based research in higher education innovation. Learning 21:1232. doi: 10.24059/olj.v21i3.1232

Ford, D. N., and Sterman, J. D. (1998). Expert knowledge elicitation to improve formal and mental models. Syst. Dyn. Rev. 14, 309–340. doi: 10.1002/(SICI)1099-1727(199824)14:4%3C309::AID-SDR154%3E3.0.CO;2-5

George, D., and Mallery, P. (2003). SPSS for windows step by step: A simple guide and reference. Boston, MA: Allyn & Bacon.

Haagen-Schützenhöfer, C., Obczovsky, M., and Kislinger, P. (2024). Design-based research - tension between practical relevance and knowledge generation – what can we learn from projects? Eurasia J. Mathematics Sci. Technol. Educ. 20:3. doi: 10.29333/ejmste/13928

Hettmannsperger, R., Mueller, A., Scheid, J., and Schnotz, W. (2016). Developing conceptual understanding in ray optics via learning with multiple representations. Z. Erziehungswiss. 19, 235–255. doi: 10.1007/s11618-015-0655-1

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika 39, 31–36. doi: 10.1007/BF02291575

Kedra, J., and Zakeviciute, R. (2019). Visual literacy practices in higher education: what, why and how? J. Vis. Literacy 28, 1–7. doi: 10.1080/1051144X.2019.1580438

Kenemore, J., Chavez, J., and Benham, G. (2023). The pathway from sensory processing sensitivity to physical health: stress as a mediator. Stress. Health 39, 1148–1156. doi: 10.1002/smi.3250

Kuckartz, U. (2018). Qualitative Inhaltsanalyse. Methoden, praxis, Computerunterstützung. Weinheim: Beltz Juventa.

McKenney, S., and Reeves, T. C. (2019). Conducting educational design research. New York, NY: Routledge.

Oguguo, B. (2020). Assessment of students' data literacy skills in southern Nigerian universities. Univ. J. Educ. Res. 8, 2717–2726. doi: 10.13189/ujer.2020.080657

Pohl, M. (2023). Visualization Literacy. Über das Entziffern visueller Botschaften. MedienPädagogik 55, 109–125. doi: 10.21240/mpaed/55/2023.10.05.X

Ridsdale, C., Rothwell, J., Smit, M., Ali-Hassan, H., Bliemel, M., Irvine, D., et al. (2015). Strategies and best practices for data literacy education knowledge synthesis report. Halifax, NS: Dalhousie University.

Schnotz, W. (2005). “An integrated model of text and picture comprehension” in Cambridge handbook of multimedia learning. ed. R. E. Mayer (Cambridge: Cambridge University Press), 49–69.

Schnotz, W., and Bannert, M. (2003). Construction and interference in learning from multiple representations. Learn. Instr. 13, 141–156. doi: 10.1016/S0959-4752(02)00017-8

Schüller, K., Busch, P., and Hindinger, C. (2019). Future Skills: Ein Framework für Data Literacy-Kompetenzrahmen und Forschungsbericht. Hochschulforum Digitalisierung 47, 14–31. doi: 10.5281/zenodo.3349865

Schüller, K., and Sievers, S. (2022). Data Literacy Education an deutschen Hochschulen – Erkenntnisse aus der Praxis. Available online at: https://hochschulforumdigitalisierung.de/data-literacy-education-an-deutschen-hochschulen-erkenntnisse-aus-der-praxis/ (accessed May 15, 2025).

Shadish, W. R., Cook, T. D., and Campbell, D. T. (2001). Experimental and Quai-experimental designs for generalized clausal inference. Bosten: Cengage Learning.

Shneiderman, B. (2004). Designing the user Interface: Strategies for effective human-computer interaction. Boston, MA: Addison-Wesley Longman.

Zelazny, G. (2015). Wie aus Zahlen Bilder werden. Der Weg zur visuellen Kommunikation – Daten überzeugend präsentieren. Wiesbaden: Springer Gabler.

Keywords: cognitive model, critical thinking, data literacy, design-based research, didactic intervention, visualized data

Citation: Lieb C and Pickhardt C (2025) A didactic intervention to strengthen critical thinking in the interpretation of visualized data. Front. Educ. 10:1680396. doi: 10.3389/feduc.2025.1680396

Edited by:

Chunxia Qi, Beijing Normal University, ChinaReviewed by:

Eleni Kolokouri, University of Ioannina, GreeceNestor L. Osorio, Northern Illinois University Founders Memorial Library, United States

Copyright © 2025 Lieb and Pickhardt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christiane Lieb, Y2hyaXN0aWFuZS5saWViQGhzLWFsYnNpZy5kZQ==

Christiane Lieb

Christiane Lieb Carola Pickhardt3

Carola Pickhardt3