- 1 Carrera de Sistemas Inteligentes, Universidad Bolivariana del Ecuador, Durán, Ecuador

- 2 Facultad de Ciencias Matemáticas y Físicas, Universidad de Guayaquil, Cdla. Universitaria Salvador Allende, Guayaquil, Ecuador

- 3 Instituto para el Futuro de la Educación, Tecnológico de Monterrey, Monterrey, México

- 4 Universidad Politécnica Metropolitana de Hidalgo, Hidalgo, México

This study examined the user experience that influences the adoption of artificial intelligence (AI) applications in higher education, focusing on three dimensions: habit, perceived complexity, and perception of adoption. A quantitative, non-experimental, cross-sectional design was applied, using a Likert scale questionnaire administered to 621 university students in Spain, Mexico, and Serbia, selected through convenience sampling. The results revealed that more frequent use of AI tools is positively associated with a lower perception of complexity and a higher assessment of their educational impact. In addition, significant differences were identified between countries and between public and private universities, with the perception of adoption being more favorable in private contexts and in countries with greater technological familiarity. Correlation, network, and centrality analyses highlighted the structural role of habit as a facilitator of use and of a positive perception of AI. These findings point to the need for differentiated strategies that promote the regular integration of AI, reduce perceived barriers, and enhance its educational impact, considering the contextual factors that condition its acceptance and effective use in university settings.

1 Introduction

The digital transformation of higher education has generated growing interest in the adoption of emerging technologies, among which artificial intelligence (AI) occupies a prominent place. This technology, defined as the ability of computer systems to perform tasks that require reasoning, learning, adaptation, and decision-making, has demonstrated the potential to reshape the way knowledge is taught, learned, and managed in university settings. The incorporation of AI applications in this field ranges from academic support chatbots and personalized recommendation engines to automatic assessment systems and adaptive learning platforms. These applications promise to improve the efficiency of the educational process, personalize the learning experience, optimize institutional management, and alleviate the administrative burden resulting from student overcrowding.

Various studies have documented the expected benefits of using AI in education, noting that this technology could contribute to improved academic performance, more dynamic interaction between teachers and students, and more accurate, data-driven institutional decision-making. Indeed, recent literature suggests that, in contexts where the technological infrastructure is adequate and educational actors are prepared, AI has the capacity to generate more inclusive, adaptive, and efficient learning environments (Chatterjee and Bhattacharjee, 2020). However, the mere development or availability of these tools does not guarantee their effective adoption. Factors such as perceived usefulness, ease of use, institutional support, prior experience, perceived value, and associated risks, especially in terms of privacy, technological dependence, or academic integrity, can influence users' willingness to integrate them into their daily practices.

To understand this dynamic, various theoretical models have been used to study the acceptance of technologies in higher education. Among them, the Unified Theory of Acceptance and Use of Technology (UTAUT) and its extended version UTAUT2 have established themselves as robust analytical frameworks for identifying the factors that influence the behavioral intention to use emerging technologies, including AI-based systems (Rudhumbu, 2022; Abu Gharrah and Aljaafreh, 2021). These models consider both cognitive aspects, such as performance and effort expectations, and affective and social dimensions, such as hedonic motivation, environmental influence, and acquired habits. In addition, recent research has proposed the incorporation of additional variables such as perceived risk or the emotional value associated with the use of technology to more accurately capture the determinants of technological behavior in educational contexts.

In this context, the study of the adoption of AI applications in higher education requires not only exploring the functional potential of this technology, but also understanding the personal, institutional, and contextual factors that condition its effective integration. Considering the breadth of possible variables, this study focuses exclusively on three dimensions directly related to the research objective: habit, understood as the frequency and naturalness with which students integrate AI into their academic activities; perceived complexity, which examines the perception of difficulty or simplicity when interacting with these technologies; and the adoption of AI in higher education, focused on assessing the positive impact of these tools on teaching, learning, and student engagement. The selection of these dimensions allows for a clear and focused empirical approach that captures both behavioral aspects and cognitive and attitudinal perceptions, which is key to understanding the user experience from a comprehensive, user-centered perspective. This delimitation responds to the interest in identifying operational and attitudinal factors that explain, in a concrete way, the willingness of university students to incorporate AI tools into their educational experience.

2 Literature review

The study of technology adoption in higher education has been extensively addressed through theoretical models that seek to explain user behavior in response to technological innovations (Khechine and Lakhal, 2018; Paublini-Hernández and Morales-La Paz, 2025). Among these models, UTAUT has established itself as a robust and widely validated reference framework (Popova and Zagulova, 2022; Paublini-Hernández and Morales-La Paz, 2025; Alhasan et al., 2025). Proposed by Venkatesh et al. (2003), the UTAUT model synthesizes eight previous theories on technology acceptance, including the Technology Acceptance Model (TAM), the Theory of Planned Behavior (TPB), and Diffusion of Innovation Theory (Martín García et al., 2014), giving it a comprehensive structure for understanding the intention to use and the effective use of technologies in various contexts (Wrycza et al., 2017; Lawson-Body et al., 2020). In higher education, UTAUT has been adapted to analyze the adoption of specific technologies such as e-learning, mobile learning, and virtual platforms, demonstrating its analytical versatility across technological scenarios (López Hernández et al., 2016; McKeown and Anderson, 2016).

UTAUT posits that behavioral intention and actual use of a technology are primarily determined by four constructs: performance expectancy, effort expectancy, social influence, and facilitating conditions (Lin et al., 2023; Kim and Lee, 2022; Raza et al., 2021). These constructs allow for a multidimensional assessment of users' predisposition to adopt a technology, integrating both individual and contextual factors (Martín García et al., 2014). Performance expectancy refers to the degree to which using the technology is perceived to improve performance; effort expectancy measures its perceived ease of use; social influence refers to pressure or support from the environment; and facilitating conditions refer to the availability of technical and organizational resources (Altalhi, 2021; Tolba and Youssef, 2022; Chauhan and Jaiswal, 2016). Furthermore, the effects of these constructs can be moderated by variables such as age, gender, and prior user experience, which are part of the original model and were retained in extensions such as UTAUT2 (I Kittinger and Law, 2024; Andrews et al., 2021; Chroustová et al., 2022; Alshehri et al., 2020).

Several studies have empirically validated these constructs in educational settings, finding that performance expectancy is one of the most consistent predictors of intention to use (Ab Jalil et al., 2022; Alshehri et al., 2019; Sharma et al., 2021). Meanwhile, the influence of effort expectancy varies depending on prior familiarity with the technology (Radovan and Kristl, 2017). In contexts where users have high digital literacy, as is often the case in higher education, this construct may carry less weight (Khechine et al., 2020; Hu et al., 2020). Facilitating conditions and social influence, on the other hand, are particularly relevant in institutional environments, where technological infrastructure and academic support directly influence the adoption of digital tools (Yang et al., 2019; Mafa and Govender, 2025). This pattern suggests that institutional policies should consider not only technological availability but also the cultural and social factors that favor the collective use of technology (Tolba and Youssef, 2022; de Souza Domingues et al., 2020).

The emergence of generative artificial intelligence (GAI) in education has reshaped the landscape of research on technology adoption, requiring frameworks that integrate both traditional dimensions of acceptance and new perspectives focused on the user experience (García Peñalvo et al., 2023). In this context, it is pertinent to extend the UTAUT model with constructs that reflect how users interact with AI on a daily basis, their perception of technological complexity, and the perceived educational impact (Honig et al., 2025; Yakubu et al., 2025). The user experience, understood as a subjective construct that encompasses habits, perceived difficulties, and general assessments, offers an appropriate way to explore the adoption of these emerging technologies (Chao-Rebolledo and Rivera-Navarro, 2024). This adaptation allows us to examine not only the initial disposition toward AI, but also the dynamics of sustained use that shape a deeper appropriation of technology in learning processes (Arif et al., 2018; Chen and Hwang, 2019).

Three dimensions allow us to operationalize the experience of using AI in higher education: habit, perceived complexity, and perceived adoption. These dimensions map directly onto core UTAUT/UTAUT2 constructs: perceived complexity corresponds to effort expectancy, habit aligns with the UTAUT2 habit construct, and perceived adoption reflects performance expectancy; social influence and facilitating conditions have been deliberately left out to keep the model simple. The habit dimension considers the frequency and naturalness with which students incorporate AI tools into their academic practices (Kim and Lee, 2022). Perceived complexity assesses the ease or difficulty of use and is closely related to the effort expectancy construct of the UTAUT model (Popova and Zagulova, 2022). Finally, the perceived adoption dimension integrates judgments about the positive impact of AI on teaching and learning processes, aligning conceptually with performance expectancy while broadening its scope to pedagogical assessments (Honig et al., 2025). These dimensions also allow us to capture possible resistance to the use of AI, especially when incompatibilities with traditional teaching methods or concerns about its academic legitimacy are perceived (Alonso-Rodríguez, 2024). Recent classroom evidence reinforces the relevance of these concepts. A study by Lo et al. (2025b) on generative AI in essay writing shows that AI support improves the quality of revision and student engagement, but also elicits mixed emotions that are best addressed through human support. Similarly, Lo et al. (2025a) compare teacher-only, AI-only, and hybrid feedback in English language learning and find that hybrid feedback consistently outperforms either mode alone in terms of motivation, perceived quality, and performance.
Taken together, these findings give concrete educational substance to our concept of perceived adoption as an indicator of performance expectancy and suggest how hybrid feedback workflows might reduce perceived complexity while maintaining engagement.

Recent literature on educational AI highlights the need to consider aspects such as prior training in AI, understanding how it works, and the ethical challenges associated with its use (Tramallino and Zeni, 2024). Emerging studies reveal that, although there is a generally positive perception of the potential of AI in the university environment, there is still low technological literacy and little critical reflection on ethical risks, such as misinformation, algorithmic bias, or academic dishonesty (Nikolic et al., 2024; Marc Fuertes Alpiste, 2024). These findings underscore the need to complement traditional theoretical frameworks with approaches that incorporate user perceptions of the ethical and social implications of technology (Chao-Rebolledo and Rivera-Navarro, 2024). The inclusion of these ethical dimensions as conditioning factors for adoption would enrich explanatory models and anticipate possible tensions in the use of AI in educational contexts (Nawafleh and Fares, 2024).

Likewise, it has been observed that training and institutional support have a decisive influence on the adoption of AI-based technologies (Tramallino and Zeni, 2024). The existence of clear institutional strategies, technical support, and ethical use policies strengthens the facilitating conditions of the UTAUT model, which in turn enhances adoption (Guggemos et al., 2020). Consequently, the study of the user experience cannot be separated from the organizational context in which these tools are implemented, especially in higher education institutions, where autonomy and critical thinking are fundamental pillars of the educational process (Chao-Rebolledo and Rivera-Navarro, 2024). In this sense, it is proposed that the organizational environment acts not only as a logistical facilitator but also as an agent that shapes attitudes and competencies around the responsible use of AI (Magsamen-Conrad et al., 2020).

In summary, this framework combines the simplicity of focusing on three dimensions with the broader structure of UTAUT/UTAUT2. Perceived complexity corresponds to effort expectancy, habit reflects the UTAUT2 habit construct, and perceived adoption aligns with performance expectancy. Social influence and facilitating conditions have been left out to maintain the analytical focus of the model; however, classroom studies on AI-assisted writing suggest that well-designed hybrid feedback workflows can act as facilitating conditions that shape both perceived ease of use and perceived educational impact.

Based on this background, the UTAUT model, enriched with constructs derived from user experience, constitutes a relevant theoretical framework for analyzing the adoption of artificial intelligence applications in higher education (Vinh Nguyen et al., 2024; Bayaga and Du Plessis, 2024). This approach allows us not only to identify the factors that predispose students to the use of these technologies, but also to understand how their everyday experience, perception of ease, and educational assessment influence the process of technological integration (Chao-Rebolledo and Rivera-Navarro, 2024). The proposed model thus offers a solid conceptual basis for guiding both empirical analysis and the design of institutional strategies that promote the thoughtful and effective adoption of artificial intelligence in educational contexts (Alonso-Rodríguez, 2024). Its implementation could guide the development of academic policies that integrate AI literacy, critical thinking, and ethical use as pillars for a responsible digital culture (Guggemos et al., 2020).

3 Materials and methods

3.1 Participants

The target population for this study consisted of undergraduate students enrolled in higher education institutions across various degree programs. Inclusion criteria required enrollment in the current academic semester and direct experience using at least one AI-based tool in an academic context. Students who did not give their informed consent to take part in the study were excluded. A total of 626 students voluntarily responded after receiving institutional invitations distributed via official digital channels, and 621 valid responses were retained after data screening. Participation was therefore based on a convenience sampling strategy, which provided broad access. The sample included participants from both public and private institutions in Spain, Mexico, and Serbia. A total of 53.8% were enrolled in public institutions and 46.2% in private institutions. The demographic profile consisted of 41.4% men, 58.1% women, and 0.5% non-binary students. Ages ranged from 17 to 57 years, with a mean age of 22 years. All participants were duly informed about the objectives of the research, its anonymous and voluntary nature, and their right to withdraw at any time without consequences. The process complied with the ethical principles for studies involving human participants, in accordance with institutional standards and current international recommendations.

3.2 Methodological design

This is basic research: its main objective is to expand theoretical knowledge about the factors that influence the adoption of AI applications in educational settings, rather than to produce immediate applications in professional practice. A quantitative approach was adopted, which allowed the variables involved to be measured objectively and analyzed statistically. The research design was non-experimental, as the variables were observed as they occurred in the students' natural environment, without any external manipulation. The design was also cross-sectional, given that the data were collected at a single point in time. In terms of scope, the research is correlational, as it aimed to identify the relationships between the experience of using AI technologies (structured in three analytical dimensions) and students' level of adoption of these technologies.

3.3 Instrument

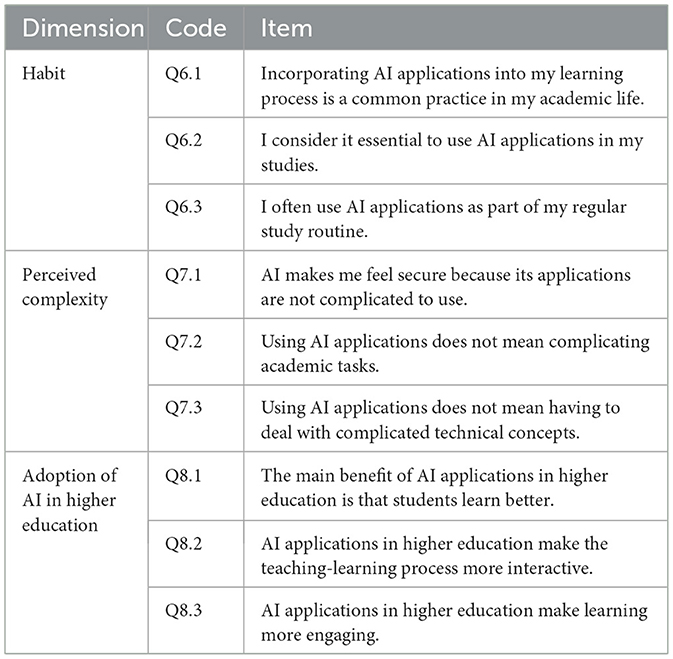

A structured questionnaire was used to collect data, consisting of a total of nine items distributed across three specific dimensions that are directly relevant to the objective: (1) habit, (2) perceived complexity, and (3) adoption of AI in higher education. Table 1 provides a detailed description of the items. The “habit” dimension assesses how often students use AI tools and the extent to which these tools are naturally integrated into their academic routines. The “perceived complexity” dimension analyzes the subjective perception of the ease or difficulty of interacting with these technologies, considering factors such as the interface, the learning curve, and the need for prior knowledge. Finally, the “AI adoption” dimension captures general perceptions regarding the positive impact of these applications on key processes in the educational environment, such as teaching, autonomous learning, and student engagement. The questionnaire used a five-point Likert scale to record responses, with options ranging from “strongly disagree” to “strongly agree”, thus allowing for nuances in the attitudes and assessments of respondents to be captured. These dimensions align with the constructs of UTAUT/UTAUT2: habit corresponds to the construct “habit” in UTAUT2, perceived complexity approximates the concept of “effort expectancy,” and adoption is linked to “performance expectancy” and the “behavioral intention” of technology adoption (Venkatesh et al., 2003, 2012).

Students interact with AI in a variety of ways (e.g., searching, writing, commenting). Although previous classroom studies suggest that feedback-oriented uses can increase perceived impact and reduce perceived complexity, this survey did not stratify responses by use case; this scope decision is a limitation that future versions of the instrument should address.

3.4 Procedure

The questionnaire was administered virtually through institutional platforms. Students were invited to participate through the official digital channels of their institutions. These invitations constituted the sampling frame, and participation was voluntary, resulting in convenience-based recruitment rather than a probabilistic procedure. From the eligible population, a total of 626 responses were collected, of which 621 were valid after data screening, corresponding to a valid-response (retention) rate of 99.2%. Each item was numerically coded according to the Likert scale described above, which allowed the information to be systematized and facilitated subsequent statistical processing. The content validity of the instrument was reviewed by specialists in educational research and emerging technologies, who ensured the relevance, clarity, and consistency of the items with respect to the study objectives. The data obtained were stored in protected databases and used exclusively for scientific purposes, respecting the confidentiality of the participants at all times.
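The coding and screening steps described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' procedure: the intermediate scale labels, the 1 to 5 codes, and the screening rule (discard a respondent with any blank or unrecognized answer) are hypothetical, because the paper reports only the scale endpoints and the counts of collected (626) and valid (621) questionnaires.

```python
from __future__ import annotations

# Assumed label-to-code mapping: the paper reports a five-point scale from
# "strongly disagree" to "strongly agree"; the middle labels and the 1-5
# codes below are hypothetical.
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neither agree nor disagree": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_response(answers: list[str]) -> list[int] | None:
    """Convert one respondent's answers to numeric codes.

    Returns None if any answer is blank or not a scale label, which is one
    plausible screening rule (the paper does not state its criteria).
    """
    codes = []
    for answer in answers:
        code = LIKERT_CODES.get(answer.strip().lower())
        if code is None:
            return None  # screened out
        codes.append(code)
    return codes


def valid_rate(n_valid: int, n_collected: int) -> float:
    """Percentage of collected questionnaires retained after screening."""
    return 100.0 * n_valid / n_collected


print(f"{valid_rate(621, 626):.1f}%")  # 99.2%
print(code_response(["Agree", " strongly agree ", "Disagree"]))  # [4, 5, 2]
print(code_response(["Agree", "", "Disagree"]))  # None
```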

3.5 Preliminary analysis

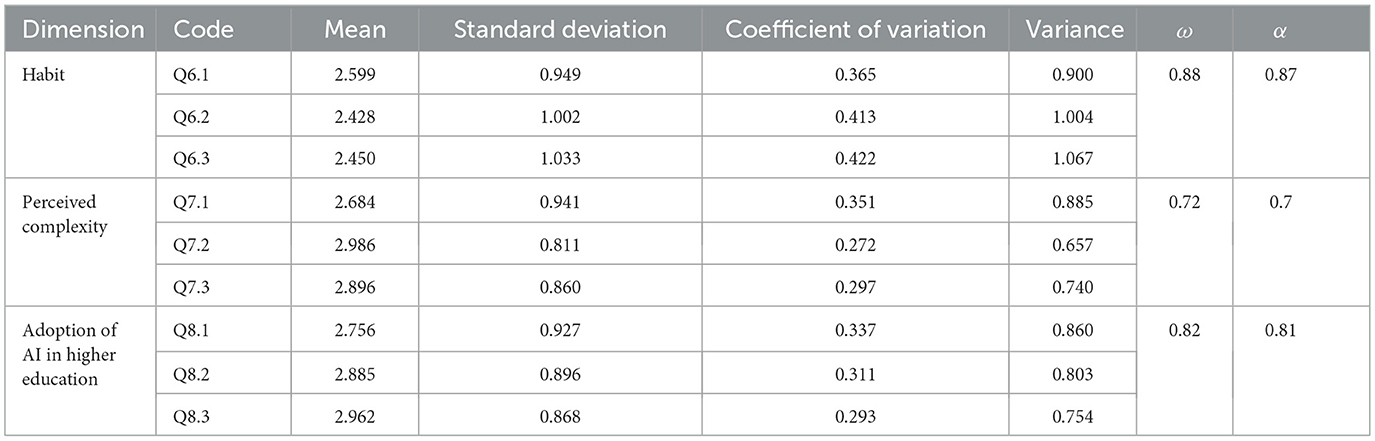

The dimensions analyzed reflect perceptions and practices related to the integration of AI applications in academia. According to the descriptive analysis in Table 2, the items with the highest averages were “Q7.2. Using AI applications does not complicate academic tasks” (M = 2.986; CV = 0.272) and “Q8.3. AI applications make learning more engaging” (M = 2.962; CV = 0.293), indicating a positive assessment of the accessibility and motivational impact of these technologies.

Table 2. Descriptive statistics: mean (M), standard deviation (SD), coefficient of variation (CV), variance (V), McDonald's omega (ω), and Cronbach's alpha (α).

In contrast, the items with the lowest means relate to the habitual use of these tools in academic life, such as “Q6.2. I consider it essential to use AI applications in my studies” (M = 2.428; CV = 0.413), which suggests that their incorporation is still limited or developing. The low coefficients of variation observed for items Q7.2 and Q8.3 indicate greater consensus among participants regarding the practical and pedagogical benefits of AI, while the highest coefficients of variation are concentrated in habit-related items (Q6.2 and Q6.3), suggesting divergences in the frequency of use and appropriation of these tools.
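The statistics in Table 2 follow standard definitions: CV = SD / M, V = SD², Cronbach's alpha = k / (k - 1) × (1 - sum of item variances / variance of the total score), and McDonald's omega = (Σλ)² / ((Σλ)² + Σθ) for standardized one-factor loadings λ and uniquenesses θ. The NumPy sketch below computes them; the function names are hypothetical, the toy data are invented, and omega is computed from loadings supplied by the caller, since fitting the one-factor model itself is outside the scope of this example.

```python
from __future__ import annotations

import numpy as np


def item_descriptives(x) -> dict[str, float]:
    """Mean (M), sample standard deviation (SD), variance (V) and CV = SD / M."""
    x = np.asarray(x, dtype=float)
    m = float(x.mean())
    sd = float(x.std(ddof=1))
    return {"M": m, "SD": sd, "V": sd ** 2, "CV": sd / m}


def cronbach_alpha(items) -> float:
    """Cronbach's alpha for an (n_respondents, k_items) response matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - sum_item_var / total_var))


def mcdonald_omega(loadings, uniquenesses) -> float:
    """Omega total from standardized one-factor loadings and uniquenesses,
    both assumed to come from an already fitted factor model."""
    common = float(np.sum(loadings)) ** 2
    return common / (common + float(np.sum(uniquenesses)))


# Toy responses (not study data): five respondents x three items.
demo = np.array([[1, 2, 2], [3, 3, 4], [4, 4, 5], [2, 3, 3], [5, 4, 5]])
print(item_descriptives(demo[:, 0]))
print(round(cronbach_alpha(demo), 3))
print(round(mcdonald_omega([0.8, 0.8, 0.8], [0.36, 0.36, 0.36]), 3))  # 0.842
```

As a consistency check on the reported values, Q7.2 has M = 2.986 and CV = 0.272, which implies SD ≈ 0.272 × 2.986 ≈ 0.81.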

4 Results

This study analyzes the experience of using AI applications in higher education, focusing on three key dimensions: habit, perceived complexity, and AI adoption. In all analyses, Q6, Q7, and Q8 refer to the composite scores of the three items corresponding to each dimension. These composites were calculated as the mean of the three items, with higher values reflecting stronger presence of the construct.
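Computing the composite scores is a row-wise mean over each block of three items. The sketch below assumes a hypothetical column order (Q6.1 to Q6.3, then Q7.1 to Q7.3, then Q8.1 to Q8.3) and numerically coded answers. It also assumes, as the wording of Q7.2 ("does not complicate academic tasks") suggests, that the Q7 items are keyed so that higher scores mean lower perceived complexity; the keying of every item is not documented here.

```python
from __future__ import annotations

import numpy as np

# Hypothetical column layout of the coded response matrix (9 items).
DIMENSIONS = {
    "Q6": slice(0, 3),  # habit: Q6.1-Q6.3
    "Q7": slice(3, 6),  # perceived complexity: Q7.1-Q7.3
    "Q8": slice(6, 9),  # adoption: Q8.1-Q8.3
}


def composite_scores(responses) -> dict[str, np.ndarray]:
    """Per-respondent mean of the three items in each dimension."""
    responses = np.asarray(responses, dtype=float)
    if responses.ndim != 2 or responses.shape[1] != 9:
        raise ValueError("expected an (n_respondents, 9) matrix")
    return {name: responses[:, cols].mean(axis=1) for name, cols in DIMENSIONS.items()}


# Two toy respondents (not study data).
scores = composite_scores([
    [2, 3, 1, 4, 3, 2, 3, 3, 4],
    [4, 4, 5, 3, 4, 4, 5, 4, 4],
])
print({name: np.round(values, 2).tolist() for name, values in scores.items()})
```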

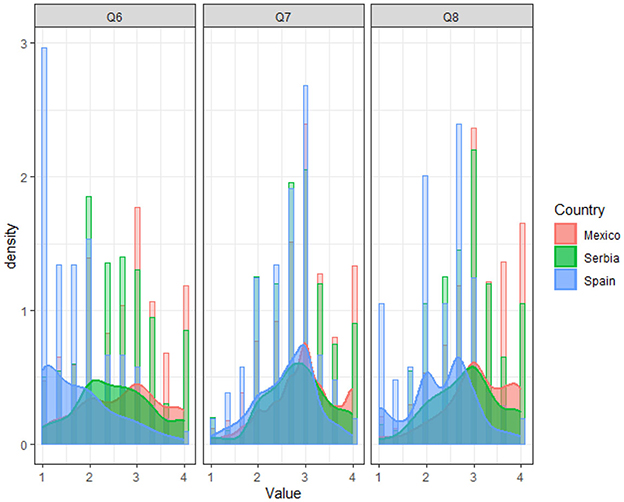

In Figure 1, the results are presented using histograms that reflect the responses of students from Spain, Mexico, and Serbia, evaluating the perception of different aspects of AI integration into their academic routines.

In terms of habit, which assesses the frequency and naturalness with which students integrate AI into their academic routines, the data show notable differences between countries. Spain has more concentrated distributions in lower values (1 and 2), suggesting less habitual integration of these technologies compared to Mexico and Serbia, where values tend to be more dispersed. This could be related to the level of technological familiarity and the availability of AI tools in the academic environment.

Perceived complexity, which measures the perception of difficulty or simplicity when interacting with AI applications, shows greater variability in Serbia, with scores widely distributed between levels 1 and 4. This suggests that, although some students perceive these tools as easy to use, others encounter significant barriers, possibly related to a lack of training or technical support. In contrast, Spain shows intermediate scores, concentrated around values 2 and 3, which suggests room for improvement in usability. In Mexico, scores are concentrated in intermediate values with a greater tendency toward high values.

Finally, the dimension of AI adoption in higher education, which assesses the overall perception of the positive impact of these technologies, shows positive trends in Mexico and Serbia, with a higher density in high values (3 and 4). This finding suggests that students recognize the potential of AI to improve learning, increase student engagement, and foster pedagogical innovation. However, Spain shows a slight tendency toward lower values (1 and 2), which could reflect a less favorable perception in certain contexts.

Taken together, these results suggest that contextual factors, such as institutional policies and access to technological infrastructure, may shape the experience of using AI applications, although these factors were not measured directly. While students generally recognize the value of these tools, it is necessary to address differences in the perception of complexity and to promote strategies that encourage their regular integration, especially in contexts with less technological familiarity.

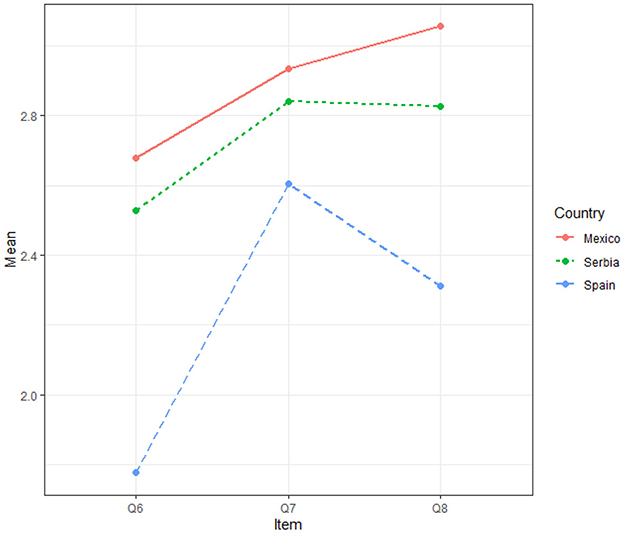

The line graph presented in Figure 2 shows the variations in the mean responses for the dimensions evaluated in Spain, Mexico, and Serbia, providing a comparative view of students' perceptions of the use of AI applications in higher education.

One of the most notable variations is observed in Spain, where the mean for Q6 is markedly lower than that for Q7, and the mean drops again in Q8. This suggests that, although students find AI relatively easy to use (Q7), they still face challenges in integrating it into their routines (Q6) and in their overall assessment of its impact (Q8).

In contrast, Mexico shows a steady upward trend across all three dimensions, with averages close to 3.0 in Q8. This indicates a progressively more positive perception in terms of AI use and adoption, which could reflect a more favorable environment for learning and implementing these technologies.

Serbia, on the other hand, shows a more stable curve, with averages around 2.8 in all dimensions. This suggests a moderate and consistent perception, where AI tools are seen as useful but perhaps not transformative. This behavior could be due to an intermediate level of technological familiarity or a less prominent implementation of AI in the educational field.

Overall, the results reflect differences between countries in the perception of AI in higher education, highlighting the need for policies and strategies tailored to local contexts to maximize the positive impact on students.
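The country profiles plotted in Figure 2 amount to averaging each composite score within each country. A minimal standard-library sketch follows; the function name and the toy rows are hypothetical and are not study data.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean


def means_by_country(rows):
    """Average each composite score (Q6, Q7, Q8) within each country.

    rows: iterable of (country, {"Q6": ..., "Q7": ..., "Q8": ...}) pairs.
    Returns {country: {dimension: mean}}.
    """
    groups: dict[str, dict[str, list[float]]] = defaultdict(lambda: defaultdict(list))
    for country, scores in rows:
        for dim, value in scores.items():
            groups[country][dim].append(value)
    return {c: {d: mean(v) for d, v in dims.items()} for c, dims in groups.items()}


# Hypothetical toy rows, not study data.
rows = [
    ("Spain", {"Q6": 2.0, "Q7": 3.0, "Q8": 2.5}),
    ("Spain", {"Q6": 1.0, "Q7": 3.0, "Q8": 2.0}),
    ("Mexico", {"Q6": 2.5, "Q7": 3.0, "Q8": 3.5}),
]
print(means_by_country(rows))
```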

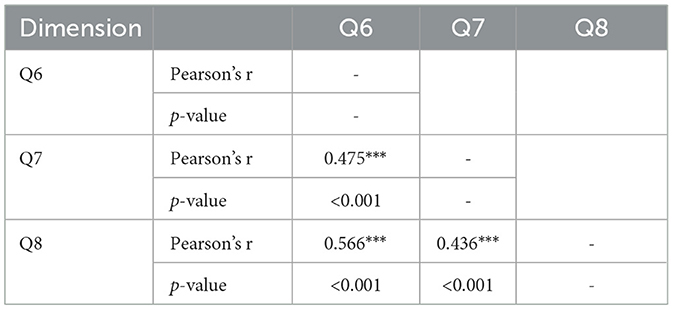

The correlational analysis in Table 3 reveals significant relationships between the dimensions evaluated (Q6, Q7, and Q8). The strongest correlation is observed between Q6 and Q8, with a value of r = 0.566 (p < 0.001). This indicates that students who integrate AI into their academic routines tend to perceive it as a tool with an overall positive impact on their educational experience.

Another important correlation is that between Q6 and Q7, with r = 0.475 (p < 0.001), indicating that students who use AI more regularly also perceive less difficulty in using it. This could be due to a continuous learning effect, where familiarity with these tools reduces perceived barriers.

Finally, the correlation between Q7 and Q8 (r = 0.436, p < 0.001) shows that the perceived simplicity of interacting with AI is associated with a higher assessment of its overall impact. This finding is consistent with the idea that improving the usability of these tools could contribute to their adoption in higher education, although the correlational design does not establish causality.
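Table 3 reports pairwise Pearson correlations between the composite scores together with their p-values. A minimal sketch using SciPy's `pearsonr` follows; the helper name is hypothetical, the toy data are invented (so the printed coefficients are not the study's), and the software actually used for Table 3 is not stated here.

```python
from __future__ import annotations

from itertools import combinations

import numpy as np
from scipy.stats import pearsonr


def correlation_table(scores: dict[str, np.ndarray]) -> dict[tuple[str, str], tuple[float, float]]:
    """Pearson r and two-sided p-value for every pair of composite scores."""
    table = {}
    for a, b in combinations(sorted(scores), 2):
        r, p = pearsonr(scores[a], scores[b])
        table[(a, b)] = (float(r), float(p))
    return table


# Toy composites for four respondents (not study data).
demo = {
    "Q6": np.array([1.0, 2.0, 3.0, 4.0]),
    "Q7": np.array([2.0, 1.0, 4.0, 3.0]),
    "Q8": np.array([1.0, 3.0, 2.0, 4.0]),
}
for pair, (r, p) in correlation_table(demo).items():
    print(pair, round(r, 3), round(p, 3))
```

With n = 621, even the smallest coefficient in Table 3 (r = 0.436) lies far beyond conventional significance thresholds, consistent with the reported p < 0.001.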

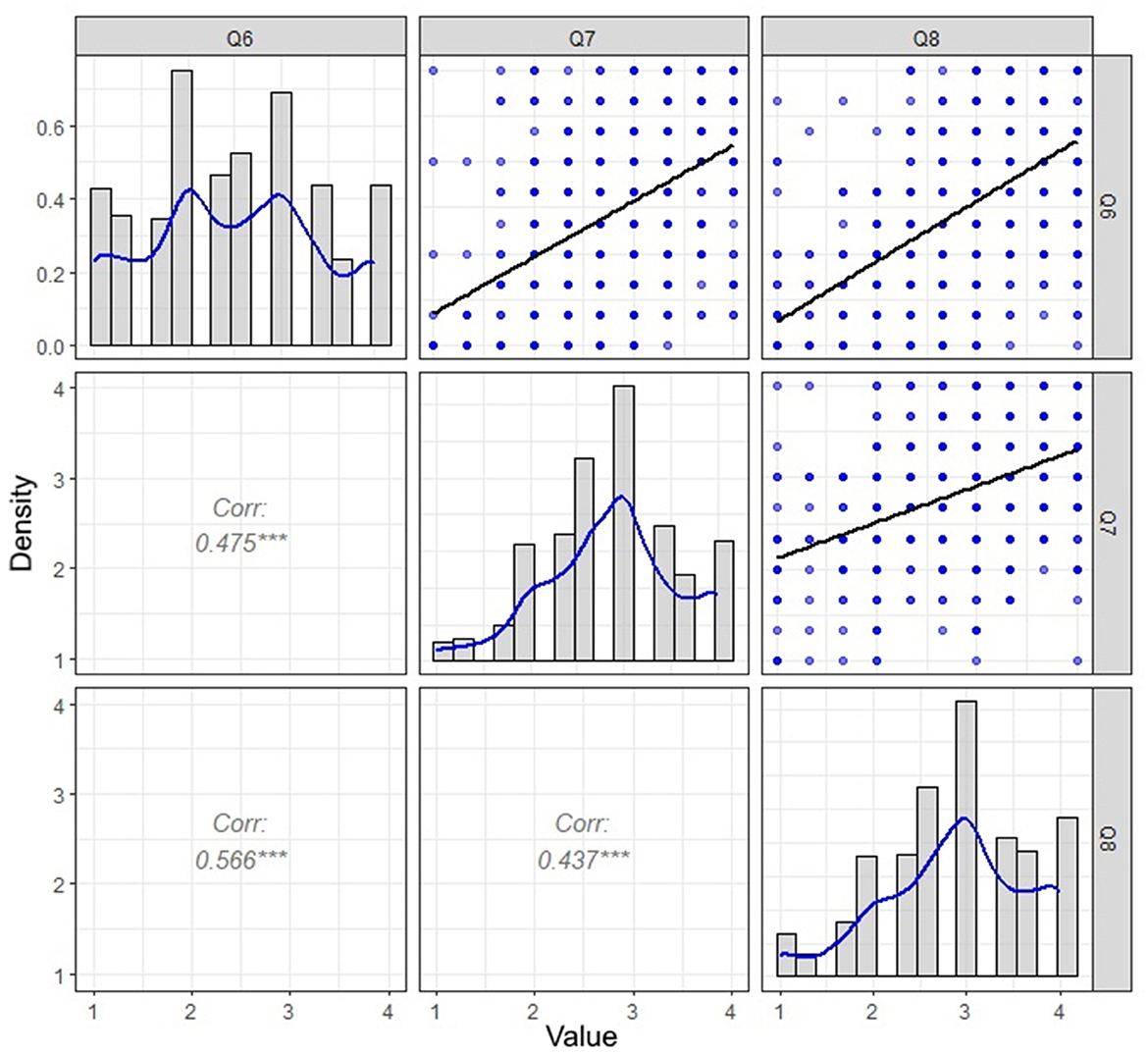

Figure 3 presents a more detailed analysis of the correlations between the dimensions evaluated, highlighting key relationships that reinforce the understanding of the factors that influence the perception and adoption of AI in academia.

The density plots show the distribution of responses for Q6, Q7, and Q8 and offer insight into students' perceptions of their user experience. In particular, the frequency of AI use (Q6) has a bimodal distribution, suggesting that one sizeable group of students regularly integrates AI into their academic routines while another uses these tools only sporadically. This divergence within the habit dimension suggests that the natural integration of AI into academic life may be key to its effective adoption.

The scatter plots between questions Q6, Q7, and Q8 show significant trends that deserve attention. The correlation between Q6 and Q7 is positive (r = 0.475), suggesting that students who use AI more frequently tend to perceive it as less complex. This implies that familiarity with AI could reduce the perception of difficulty, facilitating its adoption. This finding reinforces the idea that regular practice with these tools can improve confidence and competence in their use.

Similarly, the relationship between Q7 and Q8 shows a correlation of r = 0.436. This indicates that a lower perception of complexity is associated with a higher assessment of the positive impact of AI on learning and student engagement. This result suggests that simplifying interaction with the technology may not only facilitate its use but also improve overall educational experiences.

Finally, the correlation between Q6 and Q8 (r = 0.566), the strongest of the three, indicates that students who use AI more regularly also tend to report a more positive perception of its impact on teaching. This finding underscores the importance of encouraging regular use of AI applications to maximize their benefit in the educational setting.

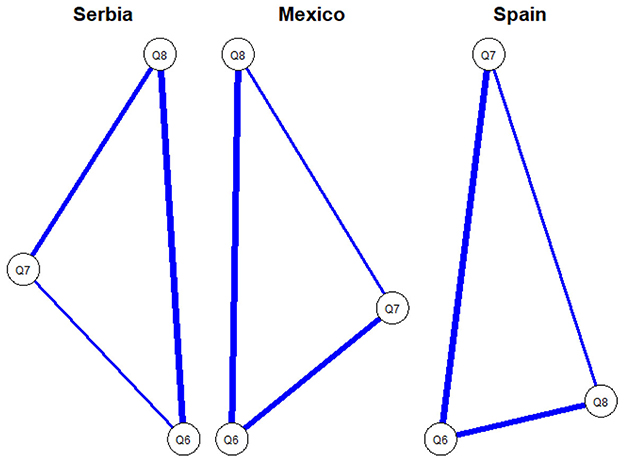

As a complementary part of the study, a network analysis was performed to explore the strength and structure of the relationships between the dimensions in the participating countries. Figure 4 shows the networks generated for Serbia, Mexico, and Spain, where the thickness of the edges represents the magnitude of the correlation between dimensions, and the color of the edges reflects the type of relationship: blue for positive relationships and red for negative ones. In all cases, a fully connected network with three nodes and three edges is observed, implying maximum density within the reduced model, with no negative links present.
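The visual encoding described for Figure 4 (edge width proportional to the magnitude of the association, blue for positive and red for negative edges) and the density of a three-node network can be sketched as follows. The function names are hypothetical, and the example uses the pooled Table 3 correlations only for illustration: Figure 4 is estimated separately for each country, and its exact edge estimator is not stated here.

```python
from __future__ import annotations


def build_edges(corr: dict[tuple[str, str], float], threshold: float = 0.0) -> list[dict]:
    """Turn pairwise associations into drawable edges.

    Width is |r| (edge thickness) and color encodes the sign: blue for
    positive, red for negative. Edges with |r| <= threshold are dropped.
    """
    edges = []
    for (a, b), r in corr.items():
        if abs(r) > threshold:
            edges.append({"source": a, "target": b, "width": abs(r),
                          "color": "blue" if r > 0 else "red"})
    return edges


def density(n_nodes: int, n_edges: int) -> float:
    """Share of possible undirected edges present: E / (N(N-1)/2)."""
    return n_edges / (n_nodes * (n_nodes - 1) / 2)


table3 = {("Q6", "Q7"): 0.475, ("Q6", "Q8"): 0.566, ("Q7", "Q8"): 0.436}
edges = build_edges(table3)
print(len(edges), density(3, len(edges)))  # 3 1.0
```

Three nodes admit at most three undirected edges, so a fully connected three-node network always has density 1.0, which is the "maximum density" noted above.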

In the case of Serbia, the connections between Q6–Q8 and Q7–Q8 are more pronounced, indicating a particularly strong association between the perception of adoption and perceived complexity, as well as between habit and complexity. This configuration suggests that in this context, the perception of difficulty or ease of use of AI is closely linked to both frequency of use and overall assessment of its usefulness, generating a network structure with high dependency around Q8.

Mexico, on the other hand, presents a network with more balanced edges, where the connections between Q6-Q7 and Q6-Q8 show a notable thickness. This arrangement suggests that the Adoption dimension acts as a central and mediating node, generating significant relationships with both habitual use and perceived difficulty. This structural integration indicates that, in this country, perceptions about AI adoption are built on a coherent interaction between practical experiences and perceived barriers.

In the case of Spain, the most prominent edge is between Q6–Q7, reflecting a strong direct association between frequent use and the perceived educational value of AI. Connections to the Q8 dimension are weaker in comparison, suggesting that the perception of complexity has less weight in the construction of the overall judgment about these technologies. This network reflects a more functional integration between habit and adoption, which may be related to a more established technological culture in the academic environment.

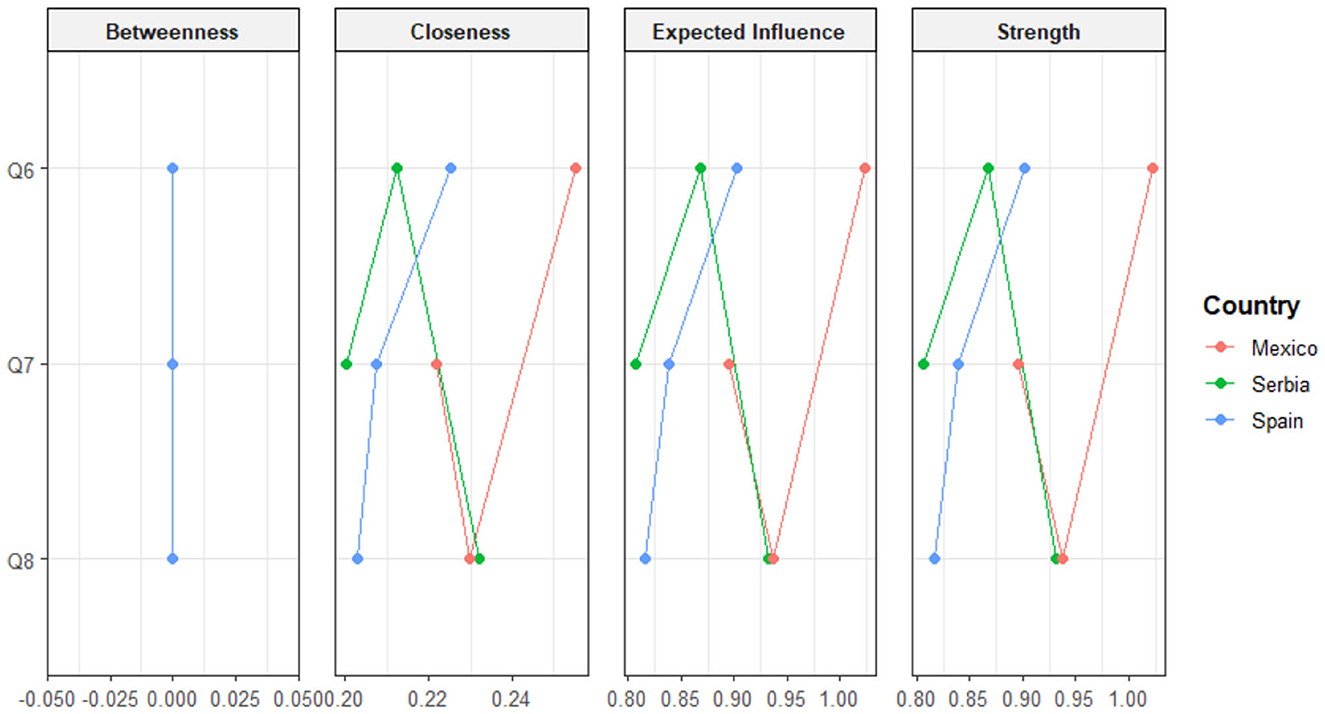

The centrality analysis allowed us to examine how student perceptions are structured around the three key dimensions. To this end, the metrics of betweenness, closeness, expected influence, and strength were used, differentiated by country (Spain, Mexico, and Serbia), as shown in Figure 5.

In the betweenness metric, all dimensions have a value of zero in the three countries, indicating that none of the variables lies on the shortest path between the other two. Because the network is fully connected, every pair of dimensions is linked by a direct edge that is shorter than any route through the third node, so no dimension needs to act as a bridge. A betweenness of zero therefore reflects the direct interconnection of the three perceptions rather than their structural separation: no dimension serves as an obligatory link that articulates the others in the mental model of AI use.
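The reasoning behind a betweenness of zero can be made concrete with a small Python sketch. It assumes, as is common for weighted networks, that the length of an edge is the inverse of its weight; the edge weights are hypothetical rather than the study's estimates, and the scores are raw (unnormalized) counts. In a fully connected triangle, a node has nonzero betweenness only when the route through it is shorter than the direct edge between the other two nodes, that is, when that direct edge is very weak.

```python
# Sketch: weighted betweenness in a fully connected three-node network.
# Assumption: edge length = 1 / weight, a common convention in weighted
# network analysis (stronger correlation -> shorter distance).

def triangle_betweenness(weights, tol=1e-12):
    """Betweenness of each node in a weighted triangle.

    `weights` maps frozenset({a, b}) to a positive edge weight. A node v can
    only lie between the other two nodes s and t; it scores 1 if the detour
    s -> v -> t is strictly shorter than the direct edge, 0.5 on a tie
    (half of the shortest paths pass through v), and 0 otherwise.
    """
    nodes = sorted(set().union(*weights))
    length = {edge: 1 / w for edge, w in weights.items()}
    scores = {}
    for v in nodes:
        s, t = (n for n in nodes if n != v)
        direct = length[frozenset((s, t))]
        detour = length[frozenset((s, v))] + length[frozenset((v, t))]
        if detour < direct - tol:
            scores[v] = 1.0
        elif abs(detour - direct) <= tol:
            scores[v] = 0.5
        else:
            scores[v] = 0.0
    return scores


def triangle_weights(ah, ac, hc):
    """Build hypothetical Adoption/Habit/Complexity edge weights."""
    return {
        frozenset(("Adoption", "Habit")): ah,
        frozenset(("Adoption", "Complexity")): ac,
        frozenset(("Habit", "Complexity")): hc,
    }


balanced = triangle_betweenness(triangle_weights(0.50, 0.40, 0.35))
one_weak_edge = triangle_betweenness(triangle_weights(0.50, 0.50, 0.10))
print(balanced)       # every node scores 0: all direct edges are shortest
print(one_weak_edge)  # Adoption scores 1: Habit-Complexity is shorter via Adoption
```

With the balanced weights every score is zero, as reported for all three countries, whereas a single very weak edge is enough to turn the third node into a bridge.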

In terms of closeness, there are notable variations between countries. In Mexico, the Adoption dimension (Q6) has the highest value (0.24), which implies that this variable maintains considerable structural proximity to the other nodes, i.e., it can be quickly reached from other perceptions. In Spain, the value of the Habit dimension (Q7) stands out (0.23), suggesting that frequent and natural use of AI occupies a central place in the perceptual network. In Serbia, on the other hand, distributed centrality is observed: both Habit and Adoption have similar and high closeness values (0.23 each), while Perceived Complexity is consistently low in all three countries (0.21 in Spain and Serbia, 0.22 in Mexico), indicating a more peripheral location in the network.

In terms of expected influence, Mexico has the highest value for the Adoption dimension (1.00), followed by Spain (0.95), while Serbia has a slightly lower value (0.94). This suggests that the perception of the positive impact of AI on education has a high capacity to indirectly influence the other variables, especially in Mexico. The Habit dimension ranks as the second most influential in Spain and Serbia, with values of 0.90 and 0.94 respectively, supporting its important role in consolidating favorable attitudes toward AI. In contrast, Perceived Complexity has the lowest value in all countries (0.90 in Mexico, 0.94 in Spain and Serbia), reflecting its limited influence in the network, which could indicate a weak association between the perception of difficulty and other forms of positive experience.

Finally, in the strength metric, the Adoption dimension again stands out with the highest values: 1.00 in Mexico and Serbia, and 0.95 in Spain. This indicates that this variable maintains the strongest direct connections with the other dimensions of the experience. It is followed by the Habit dimension, with values of 0.90 in Spain and Serbia and 0.85 in Mexico, indicating that habitual use also has strong links in the network, although with less relative weight. Once again, the Perceived Complexity dimension has the lowest or tied-lowest strength in every country, with values of 0.85 in Mexico, 0.90 in Spain, and 0.80 in Serbia, reinforcing its peripheral position within the overall perceptual structure.
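Strength, expected influence, and closeness can be computed from edge weights along the lines of the following Python sketch. It follows definitions commonly used in psychological network analysis (strength as the sum of absolute edge weights, expected influence as their signed sum, closeness as the inverse of the summed shortest-path distances with distance = 1/|weight|), but the edge weights are hypothetical and the published figure may apply a rescaling that is not reproduced here. One property follows directly from the definitions: when every edge is positive, as reported for the three national networks, strength and expected influence are computed from the same sum and coincide before any rescaling.

```python
# Sketch: strength, expected influence and closeness for a small weighted network.
# Assumptions: edge weights are hypothetical; distances are 1 / |weight|;
# closeness is the inverse of the summed shortest-path distances.
from itertools import product


def strength(weights, node):
    """Sum of absolute weights of the edges touching `node`."""
    return sum(abs(w) for edge, w in weights.items() if node in edge)


def expected_influence(weights, node):
    """Signed sum of the weights of the edges touching `node`."""
    return sum(w for edge, w in weights.items() if node in edge)


def closeness(weights, node):
    """Inverse of the summed shortest-path distances from `node`.

    Shortest paths are found with Floyd-Warshall (k is the outermost loop).
    Edges with weight 0 must be left out of `weights`.
    """
    nodes = sorted(set().union(*weights))
    inf = float("inf")
    dist = {(a, b): 0.0 if a == b else inf for a, b in product(nodes, nodes)}
    for edge, w in weights.items():
        a, b = tuple(edge)
        dist[a, b] = dist[b, a] = 1 / abs(w)
    for k, i, j in product(nodes, nodes, nodes):
        if dist[i, k] + dist[k, j] < dist[i, j]:
            dist[i, j] = dist[i, k] + dist[k, j]
    return 1 / sum(dist[node, other] for other in nodes if other != node)


# Hypothetical, all-positive edge weights.
weights = {
    frozenset(("Adoption", "Habit")): 0.50,
    frozenset(("Adoption", "Complexity")): 0.50,
    frozenset(("Habit", "Complexity")): 0.35,
}
for node in ("Adoption", "Habit", "Complexity"):
    print(node,
          round(strength(weights, node), 2),
          round(expected_influence(weights, node), 2),
          round(closeness(weights, node), 3))
```

A negative edge would lower expected influence but not strength, which is why the two indices are reported separately in networks that contain negative links.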

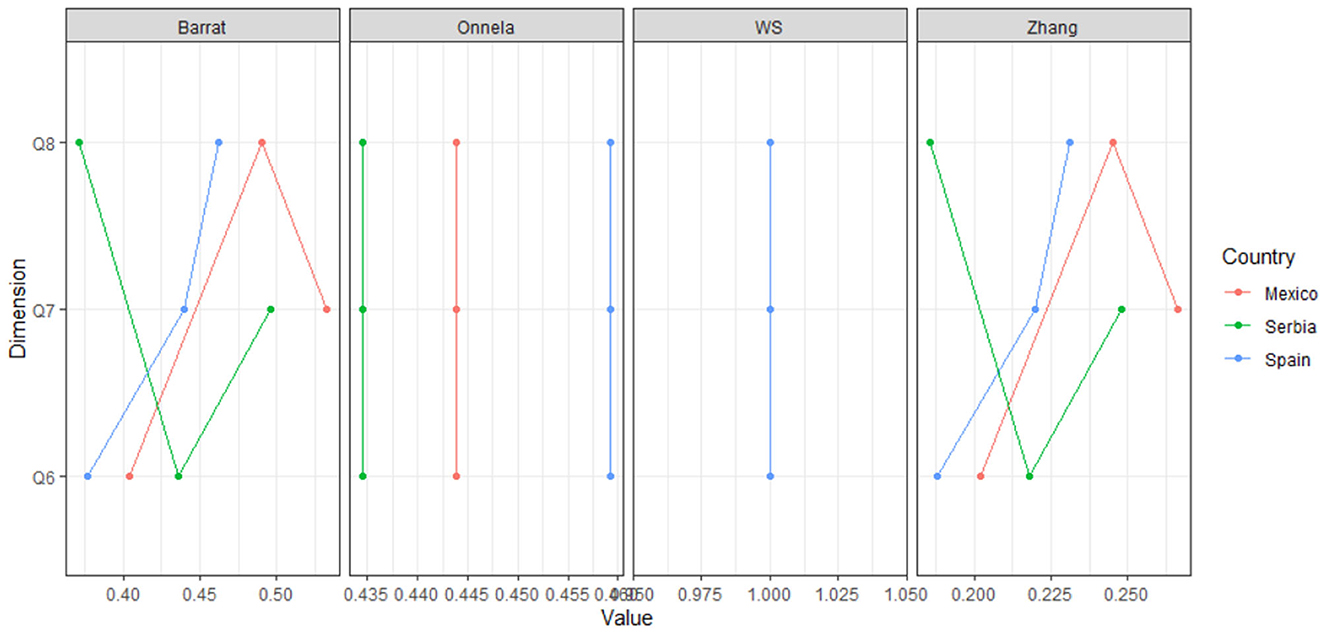

The results are complemented by an analysis using the Barrat, Onnela, WS (Watts-Strogatz), and Zhang clustering metrics, which evaluate the cohesion of the variables in each national context. Figure 6 reports clustering by question and country.
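For readers less familiar with these four coefficients, the following Python sketch computes them for a small undirected weighted network, using the formulations commonly attributed to Watts and Strogatz (unweighted), Barrat et al., Onnela et al., and Zhang and Horvath. The network, the placeholder node names, and the rescaling by the largest weight are assumptions for illustration; this section does not state which software or settings produced Figure 6. In their raw form all four coefficients lie between 0 and 1, so a plotted value above 1 reflects some rescaling (for example, standardization) rather than a raw coefficient.

```python
# Sketch: four weighted clustering coefficients for an undirected network.
# Assumptions: nonnegative weights, zero-weight edges omitted, hypothetical data.
# Onnela and Zhang use weights rescaled by the largest weight in the network.
from itertools import permutations


def weighted_clustering(edges):
    """Return {node: {"WS", "Barrat", "Onnela", "Zhang"}} for every node."""
    adj = {}
    for (a, b), w in edges.items():
        adj.setdefault(a, {})[b] = w
        adj.setdefault(b, {})[a] = w
    w_max = max(edges.values())
    result = {}
    for i, nbrs in adj.items():
        k = len(nbrs)
        if k < 2:  # no pair of neighbours, so no triangle is possible
            result[i] = dict.fromkeys(("WS", "Barrat", "Onnela", "Zhang"), 0.0)
            continue
        pairs = list(permutations(nbrs, 2))                 # ordered (j, h), j != h
        closed = [(j, h) for j, h in pairs if h in adj[j]]  # j and h also linked
        s = sum(nbrs.values())                              # node strength
        result[i] = {
            # Watts-Strogatz: share of neighbour pairs that are connected.
            "WS": len(closed) / len(pairs),
            # Barrat: closed triangles weighted by the two edges at node i.
            "Barrat": sum((nbrs[j] + nbrs[h]) / 2 for j, h in closed) / (s * (k - 1)),
            # Onnela: geometric mean of the three rescaled triangle weights.
            "Onnela": sum((nbrs[j] * nbrs[h] * adj[j][h]) ** (1 / 3) for j, h in closed)
                      / w_max / (k * (k - 1)),
            # Zhang: weighted triangles over weighted neighbour pairs.
            "Zhang": sum(nbrs[j] * nbrs[h] * adj[j][h] for j, h in closed)
                     / (w_max * sum(nbrs[j] * nbrs[h] for j, h in pairs)),
        }
    return result


# Hypothetical four-node network (node names are placeholders, not survey items).
edges = {("A", "B"): 0.6, ("A", "C"): 0.3, ("B", "C"): 0.4, ("C", "D"): 0.2}
for node, coefs in sorted(weighted_clustering(edges).items()):
    print(node, {name: round(value, 3) for name, value in coefs.items()})
```

In a fully connected three-node network, WS and Barrat equal 1 for every node and Onnela takes the same value at all three nodes, so these coefficients discriminate between nodes mainly in larger networks, for instance one built from individual questionnaire items.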

The Habit dimension explores how often students integrate AI into their daily academic routines, reflecting a natural and fluid relationship with these technologies. The clustering values in this dimension vary somewhat among the countries analyzed. For example, the WS metric shows high and consistent values in Mexico and Serbia (exceeding 1.000), suggesting greater cohesion in usage habits in these contexts. In contrast, Spain shows more dispersed values, which could reflect less systematic integration of AI into academic activities.

The Perceived Complexity dimension assesses how students perceive the difficulty or simplicity of interacting with AI applications. The Barrat and Zhang metrics show higher values for the associated questions in Spain, which suggests that the perception of complexity is more pronounced there. Mexico, by contrast, shows a more marked perception of simplicity, with Zhang values remaining below 0.250, indicating greater perceived ease in using these tools.

Finally, the general adoption dimension focuses on the perception of the positive impact of AI on learning, teaching, and student engagement. Here, cohesion metrics, such as Onnela and Barrat, highlight the case of Serbia, where clustering values are higher compared to the other countries. This suggests that students in Serbia perceive a greater positive impact of AI, which could be due to specific educational policies or training initiatives in this context.

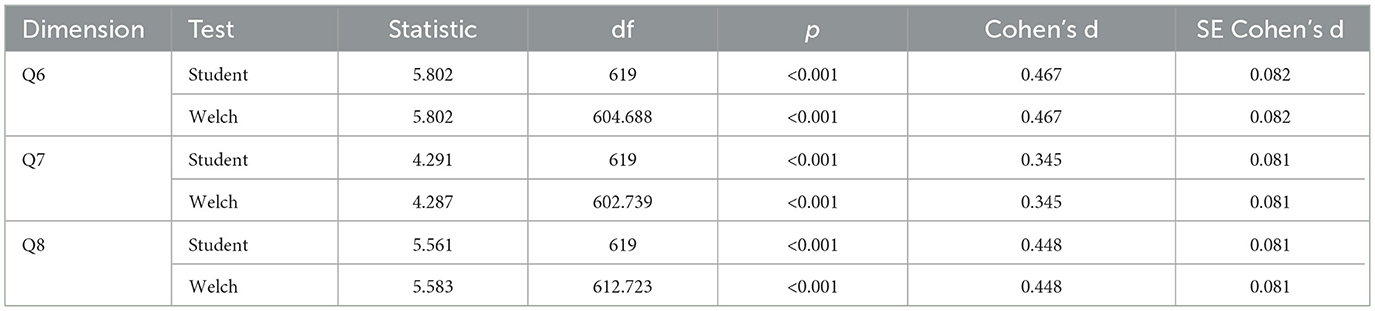

In a third phase of the study, a comparative analysis was carried out between public and private universities with the aim of identifying significant differences in the perception of the dimensions of Habit, Perceived Complexity, and AI Adoption. To this end, Student's t-tests and Welch's t-tests were applied, evaluating both statistical significance (p-value) and effect size using Cohen's d coefficient, the results of which are summarized in Table 4.
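The comparison described above can be sketched in Python with the standard library only. The group scores are hypothetical, and p-values are left out: in practice they come from the t distribution, for example via SciPy's `scipy.stats.ttest_ind`, whose `equal_var` argument switches between Student's and Welch's versions. Cohen's d is shown here with the pooled standard deviation; statistical packages sometimes use a different standardizer alongside Welch's test, and this section does not say which convention Table 4 follows.

```python
# Sketch: Student's and Welch's t statistics plus Cohen's d for two groups.
# The scores are hypothetical; p-values are omitted because the standard
# library has no t-distribution CDF.
import math
from statistics import mean, variance  # variance() uses the n - 1 denominator


def student_t(x, y):
    """Pooled-variance t statistic and its degrees of freedom."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / math.sqrt(pooled * (1 / nx + 1 / ny))
    return t, nx + ny - 2


def welch_t(x, y):
    """Unequal-variance t statistic with Welch-Satterthwaite degrees of freedom."""
    vx, vy = variance(x) / len(x), variance(y) / len(y)
    t = (mean(x) - mean(y)) / math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, df


def cohens_d(x, y):
    """Mean difference divided by the pooled standard deviation."""
    nx, ny = len(x), len(y)
    pooled_sd = math.sqrt(((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2))
    return (mean(x) - mean(y)) / pooled_sd


private = [4, 5, 4, 3, 5, 4, 5, 4]        # hypothetical dimension scores
public = [3, 4, 3, 3, 4, 2, 4, 3, 3, 4]

print("Student:", student_t(private, public))
print("Welch:  ", welch_t(private, public))
print("Cohen's d:", round(cohens_d(private, public), 3))
```

With equal group sizes the two t statistics are identical and only the degrees of freedom differ; with unequal sizes and variances, Welch's degrees of freedom fall below n1 + n2 - 2, which makes the test more conservative when the equal-variance assumption is doubtful.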

First, the Adoption dimension (Q6) showed the most marked differences between the two types of institutions. Both tests reported highly significant values (p < 0.001), with a moderate effect size (Cohen's d = 0.467 in both tests), indicating that students at private universities reported significantly higher levels of AI application adoption than their peers at public universities. This difference is not only statistically robust but also relevant in practical terms, as it suggests greater acceptance and appreciation of AI in private settings, possibly due to greater availability of technological resources or more proactive institutional strategies.

The Perceived Complexity dimension (Q8) also showed highly significant differences (p < 0.001), with an effect size of Cohen's d = 0.448. This finding indicates that students at private universities perceive the use of AI as less complex than students at public universities do. The perception of lower difficulty could reflect a greater degree of technological familiarity or a more fluid integration of these tools into the academic dynamics of the private sector.

Regarding the Habit dimension (Q7), significant differences were also observed (p < 0.001), although with a smaller effect size (Cohen's d = 0.345). This result suggests that students at private institutions incorporate AI into their academic routines more frequently and naturally. Although the effect is smaller than in the other dimensions, it is still relevant and reflects a consistent trend toward a more consolidated user experience in this context.

Overall, the results show a consistent trend across all dimensions, with variations in the magnitude of the differences observed. The greatest discrepancies are concentrated in the perceived academic impact of AI and its ease of use, while differences in regular use are more modest. The consistency between the Student and Welch tests supports the robustness of the findings.

5 Discussion

The results shown in Figure 1 highlight that the integration of AI in higher education is influenced by contextual factors such as the availability of technological resources, institutional policies, and student training. The variability in the perceived complexity dimension, especially in Serbia, underscores the need to reduce barriers to use through training and technical support. Likewise, the positive perception of AI adoption reinforces its potential to improve learning, although differences between countries highlight challenges in its equitable implementation. It is essential that institutions establish clear strategies to encourage the regular use of these technologies, ensuring that the benefits reach all students.

In line with these findings, Honig et al. (2025), who applied the UTAUT framework to the adoption of generative AI in higher engineering education, identified performance expectancy (PE) as a significant factor and found that concerns about the accuracy and scope of AI tools act as potential barriers to wider adoption. Effort expectancy (EE) and facilitating conditions (FC) had a lesser influence. Interestingly, a subset of students preferred AI tools to face-to-face interactions, suggesting that AI could help address social anxiety as a barrier to learning. This reinforces the idea that perceived usefulness and barriers, including complexity and anxiety, are crucial for AI integration.

The patterns observed in Figure 2 reflect significant differences in the perception and adoption of AI between Spain, Mexico, and Serbia. In Spain, the variation in the means highlights a possible challenge in the consistent integration of AI, which could be related to contextual barriers or the lack of sustained strategies to promote its use. In Mexico, the steady increase suggests that students are developing a more positive perception, likely driven by growing familiarity with these tools or favorable institutional policies. In Serbia, the stability of the means indicates a homogeneous but moderate perception, which could signal limited use or less emphasis on AI implementation in the educational environment.

These findings underscore the importance of addressing initial perceptions and experiences of use to encourage broader and more sustained adoption of AI in higher education. In particular, it is crucial to design interventions that reduce perceived barriers in countries such as Spain, while in contexts such as Mexico it would be beneficial to consolidate positive trends through greater incentives and technical support.

Nikolic et al. (2024), in a systematic review of academic teachers' attitudes toward AI, observed that attitudes toward generative AI may depend on academics' awareness of and familiarity with these tools. This is consistent with the variability observed here and with the notion that perception and adoption differ across countries according to contextual factors and the level of familiarity with the technology.

The correlation results in Table 3 highlight the interdependence between the dimensions of habit, perceived complexity, and perceived impact of AI. The strong correlation between Q6 and Q8 underscores the importance of encouraging regular use of these technologies to maximize their positive perception in the educational environment. Likewise, the relationship between Q6 and Q7 suggests that familiarity with AI not only increases its adoption but also reduces barriers associated with perceived complexity. This positive link is consistent with the view that repeated AI-supported review cycles produce visible improvements and sustain student engagement, reinforcing adoption through habit formation.

However, the moderate correlation between Q7 and Q8 indicates that, although simplicity is an important factor, it is not the only determinant of the overall perception of AI's impact. This highlights the need to address other contextual factors, such as institutional policies and training, to promote wider and more effective adoption.

Overall, the findings suggest that user experience plays a key role in the acceptance and valuation of AI in higher education, highlighting the importance of designing comprehensive strategies to improve both frequency of use and perceptions of ease and usefulness.

In this regard, the study by Hu et al. (2020) found that Habit (HT) was the strongest predictor of behavioral intention and actual use of mobile technologies by academics, even surpassing Performance Expectancy (PE). Furthermore, it was observed that Effort Expectancy (EE) was not a significant factor for academics with high digital literacy or experience, implying that the perception of complexity decreases with regular use and familiarity. This directly supports the interdependence between habit, perceived complexity, and usage experience, as well as the need to consider factors other than simplicity for successful adoption.

The results in Figure 3 show that the frequency of use of AI tools (Q6) is positively related to both the perception of simplicity (Q7) and the overall impact (Q8). This emphasizes the importance of encouraging early and frequent use habits to consolidate a more positive and effective experience with these technologies. Furthermore, the relationship between Q7 and Q8 suggests that improving usability and reducing perceived complexity may directly improve the overall assessment of AI, which is crucial for driving its acceptance in higher education. This inverse relationship between perceived complexity and perceived impact is consistent with evidence that combining AI with teacher feedback and light training makes interactions easier while improving both technical and higher-order learning outcomes.

The findings highlight the need for strategic interventions that reduce initial barriers to AI use, such as training programs and intuitive designs. At the same time, encouraging continued use could maximize perceived benefits, consolidating the positive impact of these tools on teaching and learning.

Complementarily, Muneer and Abbad's (2021) study on the use of learning management systems (LMS) in Jordan found that both Performance Expectancy (PE) and Effort Expectancy (EE) significantly influenced students' behavioral intentions to use the system. This finding supports the connection between simplicity (EE) and perceived impact (PE) with usage intention, which, in turn, predicts actual usage. The study also highlights the importance of PE as the main determinant of usage intention. This reinforces the notion that ease of use and perceived benefits are interdependent and crucial for the continued adoption of AI.

The patterns observed in the networks in Figure 4 reveal significant differences in how students cognitively structure their experience of using AI depending on the country. While in Serbia the strongest connections revolve around perceived complexity, suggesting a critical or evaluative approach to the use of technology, in Mexico a balanced integration predominates, with adoption positioned as a central variable. This configuration could reflect greater maturity in the appropriation of AI tools, where students value both their usefulness and the conditions of use.

In the case of Spain, the strong link between habit and adoption suggests a more automatic or natural internalization of AI use, in which perceived complexity becomes a secondary factor. This pattern may indicate an academic environment where the use of these technologies has become more common and less conditioned by technical or cognitive barriers.

In relation to these differentiated patterns, the study by Yakubu et al. (2025) investigated students' behavioral intentions to use content-generating AI (CG-AI) tools and found that Performance Expectancy (PE), Social Influence (SI), and Effort Expectancy (EE) were significant determinants of students' behavioral intention (BI) to use CG-AI tools. However, facilitating conditions (FC), perceived risk, and attitude were not significant. This evidence points to a specific cognitive structuring of acceptance factors, where some contextual and attitudinal elements may not be central, which is directly compatible with the idea of differentiated patterns of AI use depending on the country.

The findings shown in Figure 5 reveal different patterns by country in terms of the structural centrality of the dimensions that explain the user experience, which has important implications for the formulation of AI integration strategies in educational contexts. The fact that the Adoption dimension has a high influence and strength in Mexico suggests a consolidated positive perception of the pedagogical value of AI, which could be derived from greater exposure or effectiveness in institutional implementation practices. In contrast, the low centrality of Perceived Complexity in all contexts indicates that this dimension is not strongly connected to the other experience variables, which could reflect a tendency to dissociate perceived difficulty from concrete effects on adoption. Likewise, the outstanding performance of Habit in Spain and Serbia reinforces its role as a mechanism for cognitive access to technology, highlighting the importance of consolidating regular usage practices as a facilitating factor. These results invite consideration of country-specific interventions that strengthen the dimensions with the greatest structural capacity for influence, in order to promote a more effective and sustainable adoption of AI-based technologies in the university setting.

However, a study by Mafa and Govender (2025) that examined technology adoption by teachers showed that Effort Expectancy (EE) had a positive, albeit weak, influence on behavioral intention, and that Habit had a negative correlation with TPACK knowledge components. This offers a contrasting perspective on the role of habit and perceived complexity, suggesting that their influence is not uniform across all contexts and that simplicity alone does not guarantee a positive perception of impact. This finding underscores the need for country-differentiated interventions that strengthen the dimensions with the greatest structural capacity for influence, recognizing that the impact of habit may vary.

The analysis of the clustering metrics presented in Figure 6 reveals distinct patterns in how the dimensions of the user experience are articulated in each country. Regarding habit and cohesion, the high WS values in Mexico and Serbia suggest that AI is more integrated into academic routines, which could be related to technological availability or more robust digital literacy programs. Regarding perceived complexity and barriers, the high Barrat and Zhang values in Spain indicate that the perception of complexity could be a significant barrier to the adoption of AI in this context, which suggests the need for more intuitive interfaces and more accessible training programs. Regarding overall adoption and positive impact, the high Onnela values in Serbia point to a perceived positive impact of AI on higher education, which could be related to national strategies that prioritize the use of these technologies in the classroom.

In summary, the results show that the adoption of AI in higher education is not uniform and is influenced by cultural, technological, and educational factors specific to each country. Cohesion metrics, such as Barrat and Onnela, allow us to identify areas of opportunity to improve the user experience and, thereby, encourage wider and more effective adoption of these technologies in higher education.

The study by Alshehri et al. (2019) reinforces this perspective by highlighting the importance of Facilitating Conditions (FC), which include organizational support, training, available resources, and ICT infrastructure. It emphasizes that the successful implementation of e-learning technology depends on improving infrastructure and support, which is directly compatible with the discussion of technological availability and training programs as drivers of adoption and as means of overcoming perceived complexity in different countries.

The results of the analysis in Table 4 by type of university show a clear pattern: students at private universities report higher levels in all dimensions evaluated, with statistically significant differences and moderate effects. This finding has important implications for the design of educational policies aimed at reducing gaps in AI adoption between different types of institutions.

The higher adoption and lower perception of complexity in the private sector could be associated with greater investment in digital infrastructure, teacher training, or curricular flexibility, factors that facilitate the effective integration of these technologies. One plausible mechanism is that private institutions may more frequently provide structured and hybrid feedback and micro-support, contributing to a greater perception of adoption and a lower perception of complexity, although these conditions were not directly measured in this study. In contrast, the context of public universities could be limited by budget constraints, less access to specialized tools, or more rigid institutional processes.

Finally, although all dimensions show differences, the greater distance observed in Adoption and Perceived Complexity suggests that improvement strategies in public universities should focus not only on promoting use, but also on reducing perceived barriers and fostering a positive interaction experience with AI. These findings reinforce the need to develop differentiated policies that respond to the particularities of the institutional environment.

A study by Gunasinghe et al. (2019), focusing on the adoption of e-learning by academics in Sri Lanka, suggests that higher education institution (HEI) administrations should consider staff training, ongoing awareness-raising, periodic system review, and the introduction of policies and guidelines to encourage adoption. This aligns with the discussion on the educational policies and institutional strategies needed to reduce gaps in technology adoption between different types of universities.

From a design perspective, the three dimensions identified in this study can be translated into practical guidelines. Institutions should integrate regular, low-risk AI-assisted review activities to build habit; incorporate AI into hybrid feedback orchestrated by teachers to reduce perceived complexity and anxiety while improving both mechanics and higher-order writing; and make learning progress visible through review examples and reflective questions to reinforce performance expectations. These enabling conditions are particularly relevant for public institutions, where data suggest that additional support could help close adoption gaps.

Furthermore, recent studies on the use of generative AI in academic feedback show that technical reliability alone does not guarantee pedagogical acceptance. For example, a mixed-methods study in Hong Kong on AI-assisted writing assessment reported high agreement between human and AI-generated grades, but also skepticism regarding the depth and contextual appropriateness of the feedback. This suggests that adoption is more sustainable when AI is integrated into hybrid feedback flows mediated by teachers, where routine, low-risk use can reduce perceived complexity and maintain positive perceptions of impact (Lo and Chan, 2025).

6 Conclusions

This study explored the experience of using AI applications in higher education, focusing on the dimensions of habit, perceived complexity, and adoption. The findings highlight that the integration of these technologies is conditioned both by contextual factors, such as technological infrastructure and institutional policies, and by individual variables related to the perception of ease of use, frequency of interaction, and assessment of the positive impact on learning.

First, the results show that the frequency of AI use (habit) is closely linked to a more favorable perception of its impact on higher education. Students who integrate these tools into their academic routines tend to consider them less complex and more useful, suggesting that encouraging regular usage habits may be a key factor in consolidating technology adoption.

Second, the perception of complexity shows significant variations between national contexts, being lower in Mexico and higher in Spain. This underscores the importance of designing more intuitive interfaces, offering technical training, and providing ongoing support to reduce initial barriers to the use of these technologies. Likewise, the correlation between perceived simplicity and adoption reinforces the need to prioritize usability as a central component in the implementation of AI-based applications.

Third, the dimension of overall adoption reflects substantial differences between countries and types of universities. Students at private institutions report higher levels of adoption, which could be related to greater availability of technological resources and more proactive institutional strategies. This disparity indicates the need to design differentiated policies that close the gaps between public and private universities, ensuring equitable implementation of AI in education.

Finally, the patterns observed in the network and centrality analyses highlight that the adoption dimension acts as a central axis of the user experience, especially in contexts where AI is perceived as a pedagogical tool with high transformative potential. However, the low centrality of perceived complexity suggests that, although it is a relevant factor, its influence is secondary to frequency of use and overall impact assessment.

In conclusion, the effective adoption of AI applications in higher education requires strategic interventions that address both technical barriers and student perceptions. Encouraging usage habits, improving usability, and ensuring equitable access to these technologies are fundamental steps to maximizing their potential in teaching, learning, and student engagement. In addition, it is crucial to adapt strategies to local contexts and address the specific needs of each type of institution, thus promoting an inclusive and efficient environment for the use of artificial intelligence in academia.

Although this study focuses on the user experience through dimensions of the UTAUT model, perceived risks and ethical considerations should also be recognized as potential barriers to the adoption of AI in higher education. Recent research on the impacts of artificial intelligence on human rights warns of challenges related to privacy, equity, and accountability, reinforcing the need to incorporate principles of transparency, human oversight, and privacy by design in future educational applications (Chan and Lo, 2025).

This study opens up several lines of research to deepen the understanding of the adoption of AI applications in higher education. Future research could focus on evaluating how technical and pedagogical training programs for teachers and students influence the perception of complexity and the adoption of AI tools. It would also be relevant to conduct longitudinal studies to analyze how usage habits and perceptions of the impact of these technologies evolve in different academic and cultural contexts over time. Another important direction is to incorporate additional variables, such as perceived risk, trust in technology, and emotional value, to more fully understand the barriers and motivators that affect the acceptance of these tools. Furthermore, future surveys could include a small set of items to capture specific AI use cases and the presence of hybrid feedback workflows. A simple longitudinal or quasi-experimental design would allow us to test whether hybrid feedback mediates the path from habit, through perceived complexity, to perceived adoption, an extension motivated by existing classroom evidence. In addition, specific interventions, such as institutional policies and usability improvements, could be designed and evaluated to promote the equitable integration of AI in public and private universities. Finally, it would be valuable to expand the analysis to the international level, including other countries, to identify global trends and cultural differences in the adoption of AI in higher education. These lines of work will contribute to building a more comprehensive framework that favors the effective implementation of AI in education, maximizing its positive impact on teaching and learning processes.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://github.com/gary-reyes-zambrano/AI-Adoption-in-Higher-Education.

Ethics statement

Ethical approval was not required for the studies involving humans. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

GR: Conceptualization, Investigation, Methodology, Writing – original draft. RT-B: Supervision, Validation, Writing – review & editing, Conceptualization. CG-R: Data curation, Formal analysis, Writing – review & editing. DR: Formal analysis, Writing – review & editing. JB-M: Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ab Jalil, H., Rajakumar, M., and Zaremohzzabieh, Z. (2022). Teachers' acceptance of technologies for 4IR adoption: implementation of the UTAUT model. Int. J. Learn. Teach. Educ. Res. 21, 18–32. doi: 10.26803/ijlter.21.1.2

Abu Gharrah, A., and Aljaafreh, A. (2021). Why students use social networks for education: extension of UTAUT2. J. Technol. Sci. Educ. 11:53. doi: 10.3926/jotse.1081

Alhasan, K., Alhasan, K., and Alhashimi, S. (2025). Optimizing gamification adoption in higher education: an in-depth qualitative case study applying the UTAUT framework. Int. J. Game-Based Learn. 15, 1–24. doi: 10.4018/IJGBL.372068

Alonso-Rodríguez, A. (2024). Hacia un marco ético de la inteligencia artificial en la educación. Teoría de la Educación: Revista Interuniversitaria 36, 79–98. doi: 10.14201/teri.31821

Alshehri, A., Rutter, M. J., and Smith, S. (2019). An implementation of the UTAUT model for understanding students' perceptions of learning management systems: a study within tertiary institutions in Saudi Arabia. Int. J. Dist. Educ. Technol. 17, 1–24. doi: 10.4018/IJDET.2019070101

Alshehri, A. J., Rutter, M., and Smith, S. (2020). The effects of UTAUT and usability qualities on students' use of learning management systems in Saudi tertiary education. J. Inform. Technol. Educ.: Res. 19, 891–930. doi: 10.28945/4659

Altalhi, M. (2021). Toward a model for acceptance of MOOCs in higher education: the modified UTAUT model for Saudi Arabia. Educ. Inform. Technol. 26, 1589–1605. doi: 10.1007/s10639-020-10317-x

Andrews, J. E., Ward, H., and Yoon, J. (2021). UTAUT as a model for understanding intention to adopt AI and related technologies among librarians. J. Acad. Librarians. 47:102437. doi: 10.1016/j.acalib.2021.102437

Arif, M., Ameen, K., and Rafiq, M. (2018). Factors affecting student use of Web-based services: application of UTAUT in the Pakistani context. Elect. Library 36, 518–534. doi: 10.1108/EL-06-2016-0129

Bayaga, A., and Du Plessis, A. (2024). Ramifications of the Unified Theory of Acceptance and Use of Technology (UTAUT) among developing countries' higher education staffs. Educ. Inform. Technol. 29, 9689–9714. doi: 10.1007/s10639-023-12194-6

Chan, H. W. H., and Lo, N. P. K. (2025). A study on human rights impact with the advancement of artificial intelligence. J. Posthumanism 5:490. doi: 10.63332/joph.v5i2.490

Chao-Rebolledo, C., and Rivera-Navarro, M. A. (2024). Usos y percepciones de herramientas de inteligencia artificial en la educación superior en México. Revista Iberoamericana de Educación. doi: 10.35362/rie9516259

Chatterjee, S., and Bhattacharjee, K. K. (2020). Adoption of artificial intelligence in higher education: a quantitative analysis using structural equation modelling. Educ. Inform. Technol. 25, 3443–3463. doi: 10.1007/s10639-020-10159-7

Chauhan, S., and Jaiswal, M. (2016). Determinants of acceptance of ERP software training in business schools: Empirical investigation using UTAUT model. Int. J. Managem. Educ. 14, 248–262. doi: 10.1016/j.ijme.2016.05.005

Chen, P.-Y., and Hwang, G.-J. (2019). An empirical examination of the effect of self-regulation and the Unified Theory of Acceptance and Use of Technology (UTAUT) factors on the online learning behavioural intention of college students. Asia Pacific J. Educ. 39, 79–95. doi: 10.1080/02188791.2019.1575184

Chroustová, K., Šorgo, A., Bílek, M., and Rusek, M. (2022). Differences in chemistry teachers' acceptance of educational software according to their user type: an application of extended UTAUT model. J. Baltic Sci. Educ. 21, 762–787. doi: 10.33225/jbse/22.21.762

de Souza Domingues, M. J. C., Ewaldo Jader Pereira, P., and Deleon Fávero, J. (2020). Factores que influyen en el uso de teléfonos móviles en el contexto de aprendizaje por parte del profesorado de educación superior en la provincia de Santa Catarina (Brasil). Hallazgos 18:35. doi: 10.15332/2422409X.5773

García Peñalvo, F. J., Llorens-Largo, F., and Vidal, J. (2023). La nueva realidad de la educación ante los avances de la inteligencia artificial generativa. RIED: Revista Iberoamericana de Educación a Distancia. 27, 9–39. doi: 10.5944/ried.27.1.37716

Guggemos, J., Seufert, S., and Sonderegger, S. (2020). Humanoid robots in higher education: Evaluating the acceptance of Pepper in the context of an academic writing course using the UTAUT. Br. J. Educ. Technol. 51, 1864–1883. doi: 10.1111/bjet.13006

Gunasinghe, A., Hamid, J. A., Khatibi, A., and Azam, S. F. (2019). The adequacy of UTAUT-3 in interpreting academician's adoption to e-Learning in higher education environments. Interact. Technol. Smart Educ. 17, 86–106. doi: 10.1108/ITSE-05-2019-0020

Honig, C., Rios, S., and Desu, A. (2025). Generative AI in engineering education: Understanding acceptance and use of new GPT teaching tools within a UTAUT framework. Austral. J. Eng. Educ. 2025, 1–13. doi: 10.1080/22054952.2025.2467500

Hu, S., Laxman, K., and Lee, K. (2020). Exploring factors affecting academics' adoption of emerging mobile technologies-an extended UTAUT perspective. Educ. Inform. Technol. 25, 4615–4635. doi: 10.1007/s10639-020-10171-x

Khechine, H., and Lakhal, S. (2018). Technology as a double-edged sword: from behavior prediction with UTAUT to students' outcomes considering personal characteristics. J. Inform. Technol. Educ.: Res. 17, 063–102. doi: 10.28945/4022

Khechine, H., Raymond, B., and Augier, M. (2020). The adoption of a social learning system: Intrinsic value in the UTAUT model. Br. J. Educ. Technol. 51, 2306–2325. doi: 10.1111/bjet.12905

Kim, J., and Lee, K. S.-S. (2022). Conceptual model to predict Filipino teachers' adoption of ICT-based instruction in class: Using the UTAUT model. Asia Pacific J. Educ. 42, 699–713. doi: 10.1080/02188791.2020.1776213

Kittinger, I. L., and Law, V. (2024). A systematic review of the UTAUT and UTAUT2 among K-12 educators. J. Inform. Technol. Educ.: Res. 23:017. doi: 10.28945/5246

Lawson-Body, A., Willoughby, L., Lawson-Body, L., and Tamandja, E. M. (2020). Students' acceptance of E-books: an application of UTAUT. J. Comp. Inform. Syst. 60, 256–267. doi: 10.1080/08874417.2018.1463577

Lin, S.-C., Chuang, M.-C., Huang, C.-Y., and Liu, C.-E. (2023). Nursing staff's behavior intention to use mobile technology: an exploratory study employing the UTAUT 2 model. Sage Open 13:21582440231208483. doi: 10.1177/21582440231208483

Lo, N., and Chan, S. (2025). Examining the role of generative AI in academic writing assessment: a mixed-methods study in higher education. ESP Rev. 7, 7–45. Available online at: https://www.kci.go.kr/kciportal/landing/article.kci?arti_id=ART003233766

Lo, N., Chan, S., and Wong, A. (2025a). Evaluating teacher, AI, and hybrid feedback in English language learning: impact on student motivation, quality, and performance in Hong Kong. SAGE Open 15:21582440251352907. doi: 10.1177/21582440251352907

Lo, N., Wong, A., and Chan, S. (2025b). The impact of generative AI on essay revisions and student engagement. Comp. Educ. Open 9:100249. doi: 10.1016/j.caeo.2025.100249

López Hernández, F. A., and Silva Pérez, M. M. (2016). Factores que inciden en la aceptación de los dispositivos móviles para el aprendizaje en educación superior. Ese-estudios Sobre Educacion 30, 175–195. doi: 10.15581/004.30.175-195

Mafa, R. K., and Govender, D. W. (2025). Exploring teachers' technology adoption: linking TPACK knowledge and UTAUT-3 constructs. Discover Educ. 4:88. doi: 10.1007/s44217-025-00480-z

Magsamen-Conrad, K., Wang, F., Tetteh, D., and Lee, Y.-I. (2020). Using technology adoption theory and a lifespan approach to develop a theoretical framework for eHealth literacy: extending UTAUT. Health Commun. 35, 1435–1446. doi: 10.1080/10410236.2019.1641395

Marc Fuertes, A. (2024). Enmarcando las aplicaciones de IA generativa como herramientas para la cognición en educación. Pixel-Bit, Revista de Medios y Educación. 42–57. doi: 10.12795/pixelbit.107697

Martín-García, A. V., García, A., and Muñoz-Rodríguez, J. M. (2014). Factores determinantes de adopción de blended learning en educación superior. Adaptación del modelo UTAUT. Educación XX1 17, 217–240. doi: 10.5944/educxx1.17.2.11489

McKeown, T., and Anderson, M. (2016). UTAUT: capturing differences in undergraduate versus postgraduate learning? Educ. + Train. 58, 945–965. doi: 10.1108/ET-07-2015-0058

Muneer, A., and Abbad, M. (2021). Using the UTAUT model to understand students' usage of e-learning systems in developing countries. Educ. Inform. Technol. 2021, 1–20. doi: 10.1007/s10639-021-10573-5

Nawafleh, S., and Fares, A. M. S. (2024). UTAUT and determinant factors for adopting e-government in Jordan using a structural equation modelling approach. Elect. Governm. Int. J. 20, 20–46. doi: 10.1504/EG.2024.135323

Nguyen, V., Nguyen, C., Do, C., and Ha, Q. (2024). Understanding the factors that influence digital readiness in education: a UTAUT study among digital training learners. IPTEK. 34, 141–150. doi: 10.12962/j20882033.v34i2.17619

Nikolic, S., Wentworth, I., Sheridan, L., Moss, S., Duursma, E., Jones, R. A., et al. (2024). A systematic literature review of attitudes, intentions and behaviours of teaching academics pertaining to AI and generative AI (GenAI) in higher education: an analysis of GenAI adoption using the UTAUT framework. Austral. J. Educ. Technol. 40, 56–75. doi: 10.14742/ajet.9643

Paublini-Hernández, M. A., and Morales-La Paz, L. R. (2025). Preferencias por la educación a distancia: un análisis desde el comportamiento del consumidor. Kairós, Revista de Ciencias Económicas, Jurídicas y Administrativas. 8, 88–107. doi: 10.37135/kai.03.14.05

Popova, Y., and Zagulova, D. (2022). UTAUT model for smart city concept implementation: use of web applications by residents for everyday operations. Informatics 9:27. doi: 10.3390/informatics9010027

Radovan, M., and Kristl, N. (2017). Acceptance of technology and its impact on teachers' activities in virtual classroom: integrating UTAUT and COI into a combined model. Turkish Online J. Educ. Technol. - TOJET 16, 11–22. Available online at: https://tojet.net/articles/v16i3/1632.pdf

Raza, S. A., Qazi, W., Khan, K. A., and Salam, J. (2021). Social isolation and acceptance of the learning management system (LMS) in the time of COVID-19 pandemic: an expansion of the UTAUT model. J. Educ. Comp. Res. 59, 183–208. doi: 10.1177/0735633120960421

Rudhumbu, N. (2022). Applying the UTAUT2 to predict the acceptance of blended learning by university students. Asian Assoc. Open Univers. J. 17, 15–36. doi: 10.1108/AAOUJ-08-2021-0084

Sharma, S., Singh, G., Pratt, S., and Narayan, J. (2021). Exploring consumer behavior to purchase travel online in Fiji and Solomon Islands? An extension of the UTAUT framework. Int. J. Culture Tour. Hospital. Res. 15, 227–247. doi: 10.1108/IJCTHR-03-2020-0064

Tolba, E. G., and Youssef, N. H. (2022). High school science teachers' acceptance of using distance education in the light of UTAUT. Eurasia J. Mathem. Sci. Technol. Educ. 18:em2152. doi: 10.29333/ejmste/12365

Tramallino, C., and Zeni, A. M. (2024). Avances y discusiones sobre el uso de inteligencia artificial (IA) en educación. Educación. 33, 29–54. doi: 10.18800/educacion.202401.M002

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: toward a unified view. MIS Quart. 27:425. doi: 10.2307/30036540

Venkatesh, V., Thong, J., and Xu, X. (2012). Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Quart. 36:157. doi: 10.2307/41410412

Wrycza, S., Marcinkowski, B., and Gajda, D. (2017). The enriched UTAUT model for the acceptance of software engineering tools in academic education. Inform. Syst. Managem. 34, 38–49. doi: 10.1080/10580530.2017.1254446

Yakubu, M. N., David, N., and Abubakar, N. H. (2025). Students' behavioural intention to use content generative AI for learning and research: a UTAUT theoretical perspective. Educ. Inform. Technol. 30, 17969–17994. doi: 10.1007/s10639-025-13441-8

Keywords: artificial intelligence, higher education, technology adoption, UTAUT, habit

Citation: Reyes G, Tolozano-Benites R, George-Reyes C, Rumbaut D and Barzola-Monteses J (2025) Adoption of artificial intelligence applications in higher education. Front. Educ. 10:1680401. doi: 10.3389/feduc.2025.1680401

Received: 05 August 2025; Accepted: 10 October 2025;

Published: 29 October 2025.

Edited by:

Benito Yáñez-Araque, University of Castilla-La Mancha, Spain

Reviewed by:

Noble Lo, Lancaster University, United Kingdom

Khalid Mirza, Quaid-i-Azam University, Pakistan

Copyright © 2025 Reyes, Tolozano-Benites, George-Reyes, Rumbaut and Barzola-Monteses. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gary Reyes, gxreyesz@ube.edu.ec
