- ¹English Language Department, Al-Ahliyya Amman University, Amman, Jordan

- ²Accounting Department, Faculty of Business, Applied Science Private University, Amman, Jordan

- ³Balsillie School of International Affairs, Technology Governance Initiative, University of Waterloo, Waterloo, ON, Canada

Introduction: In today's rapidly evolving technological landscape, the integration of artificial intelligence (AI) into education is increasingly inevitable. AI has the potential to improve educational quality and, in turn, contribute to sustainable development. This study investigates the effects of selected AI tools (Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF) on the quality of education and examines students' perceptions of their influence on self-directed learning, problem-solving, critical thinking, and digital literacy skills.

Methods: A quantitative research design was adopted. Data were collected through a structured questionnaire and analyzed statistically using SPSS. Purposive sampling was employed, targeting 78 students enrolled in computer-aided translation courses over two semesters.

Results: The findings indicated that students perceived multiple AI tools as beneficial in enhancing their learning skills, although the degree of impact varied across tools. Overall, the selected AI tools positively influenced skills associated with quality education, including self-directed learning, problem-solving, critical thinking, and digital literacy.

Discussion: The study demonstrates that AI tools can significantly contribute to the enhancement of educational quality. These findings underscore the importance of integrating AI technologies into teaching and learning processes to foster sustainable education. Future research should explore strategies for optimizing the use of AI tools in various educational contexts to maximize their potential benefits.

1 Introduction

The United Nations General Assembly adopted the 2030 Agenda for Sustainable Development in 2015. This agenda incorporates 17 Sustainable Development Goals (SDGs) (United Nations, 2024) that aim to create a sustainable, peaceful, prosperous, and equitable world for all (UNESCO, 2017). Education plays a pivotal role within this Agenda, particularly through SDG 4 on quality education. SDG 4 emphasizes the importance of education for sustainable development, as articulated in Target 4.7: “by 2030 ensure all learners acquire knowledge and skills needed to promote sustainable development” (United Nations, 2024, p. 57).

The path toward sustainable development necessitates a thoughtful transformation in our mindsets and actions. To achieve this, individuals must be equipped with the knowledge, skills, values, and attitudes that empower them to contribute meaningfully to a sustainable future (UNESCO, 2017). Education is therefore central to realizing this objective. Education for Sustainable Development (ESD) goes beyond mere knowledge acquisition: it is a transformative process that empowers learners to make informed decisions and take responsible actions that ensure environmental integrity, economic viability, and a just society, for both present and future generations (UNESCO, 2017).

ESD, as defined by Gough and Scott (2003), is a learning process that enables individuals to become agents of positive action by providing them with the knowledge and skills needed to understand the intricacies of sustainability and lead the desired changes. Once equipped with such knowledge and skills, people can contribute to achieving the SDGs in an informed manner.

According to UN General Assembly Resolution 72/222 (2020, p. 57), ESD is “an integral element of SDG 4 on Education and a key enabler of all the other SDGs”. In a survey conducted on the Sustainable Development Goals, SDG 4 was identified as the second most important SDG for society to prioritize. Under SDG 4, quality education refers to the characteristics of a study program that meets the standards expected by its beneficiaries (United Nations, 2024). SDG 4 aims to ensure universal access to affordable education and to reduce gender disparities in the educational sector (Shim and Choi, 2023). According to Kumar (2023), quality education is attainable by sharpening students' abilities and skills.

Quality education is further exemplified when learners use technology, including Artificial Intelligence (AI) tools, to identify relevant solutions to challenges they encounter as students and in their future careers or lives (Kumar, 2023). The significance of AI in education is evident (Wang et al., 2022; Habib et al., 2024; Alkhawaja, 2023, 2024): it has been shown to enhance educational quality and pedagogical approaches and to foster practice-oriented learning in higher education (Singh et al., 2023). Educational AI encompasses technologies built on learning principles that can enhance the quality of education, including learning analytics and game-based learning (Nahar, 2024).

The promise of AI in education is significant, particularly when the right tools are adopted responsibly and thoughtfully. However, the challenge remains of ensuring the widespread acceptance and effective use of these technologies and AI tools by both educators and students. Previous literature has demonstrated the vital role of technology and AI in achieving quality education and sustainable development (Shim and Choi, 2023). At the same time, some studies (Shim and Choi, 2023; Kumar, 2023) have highlighted the potential risks of using technology and AI in education, owing to the ethical and security risks involved in building machine learning systems for learners. These contradictory findings regarding the role of AI in sustainable development in the education sector form the basis of this research.

The objective of this research is to evaluate the effects of AI tools on students' skill development and on making education more sustainable, using the Technology Acceptance Model (TAM) (Davis, 1989) as the theoretical framework. TAM is used to examine educators' and students' perceptions of the usefulness and ease of use of AI tools in education. The study seeks to determine how these tools could promote better learning outcomes and accessibility, thereby improving the quality of education. TAM helps identify the key challenges and benefits of using technology in education, yielding insights that can enhance students' digital literacy, critical thinking, and problem-solving skills. Adopting TAM as a theoretical framework is therefore expected to provide a comprehensive and practical approach to evaluating the effectiveness of AI tools in education.

By understanding these perceptions, educational institutions can gain valuable insights into how to integrate effective and user-friendly AI tools. This, in turn, can enhance students' digital literacy, critical thinking, and problem-solving skills, ultimately leading students to perceive these tools as useful for supporting their competencies and their progress toward Sustainable Development Goal 4 (SDG 4).

In response to this context, this research answers the following research questions:

1. What is the impact of AI tools on quality education?

1.1. How do learners perceive the influence of AI tools on the development of their learning skills, including self-led learning, critical thinking, digital literacy, and problem-solving?

1.2. How do learners assess their experiences with the use of Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF in their educational processes?

The term “learning skills” refers to the ability to develop and acquire skills that enable us to learn effectively. These skills span a broad range, including creativity, problem-solving, leadership, critical thinking, and collaboration (Higgins et al., 2007). In this research, the term refers to four learning skills fostered through the use of technology and AI tools: self-led learning, critical thinking, digital literacy, and problem-solving. Artificial intelligence refers to machines with technologies that emulate features of human intelligence, including the ability to learn, reason, and solve problems (Su, 2022). In this research, AI refers to educational technologies, such as Grammarly, QuillBot, and chatbots, that enhance learning experiences. Quality education refers to the characteristics of a study program that meets the quality standards expected by its beneficiaries (United Nations, 2024). In this research, quality education was conceptualized as students' perceptions of learning support, engagement, and ease of use, consistent with previous research (Bayrakci and Narmanlioglu, 2021; Ayala-Pazmiño, 2023).

Exploring the relationship between AI tools and sustainable education is significant to various stakeholders in the sector. The results will help address the dearth of literature on the potential benefits of AI tools. If positive impacts are identified, the research will help policy makers identify the essential aspects of AI tools that can ensure the education sector benefits from them. Educators might also benefit from the findings as they gain insight into whether such AI tools could enhance their learners' digital literacy, critical analysis, problem-solving, and self-directed learning skills. Additionally, the findings will guide educators and other stakeholders in the education sector as they develop guidelines and policies governing the use of AI tools to foster sustainable education. Ultimately, these findings could be used to advance the achievement of the SDG 4 targets.

2 Literature review

Artificial intelligence has a positive impact on learning outcomes, time and cost efficiency, and worldwide access to quality education, among other benefits (Jafari and Keykha, 2024). As quality education is measured by learners' characteristics and skills (UNESCO, 2005), it is vital to identify the effect of AI tools and technologies on learners' educational outcomes and their development of essential skills. According to Darwin et al. (2024), AI tools can foster learners' critical thinking abilities. Critical thinking denotes the reflective process of critically analyzing available data to make informed decisions (Parsakia, 2023). However, the use of AI may also present challenges and could potentially hamper the development of critical thinking skills. Keyes et al. (2021) suggested that AI may restrict the range of search results available to individuals, thereby potentially impeding critical analysis. Given these mixed findings in the existing literature, it is essential to investigate whether the selected AI tools have affected the critical thinking skills of the targeted learners.

2.1 The impact of AI tools on learners' skill development

AI tools have been found to positively impact learners' problem-solving skills as learners learn to think more critically and independently (Slimi and Carballido, 2023; Habib et al., 2024). However, other studies suggested that learners might rely on AI tools, such as chatbots, to resolve their problems, which can negatively affect their problem-solving skills (Parsakia, 2023; Darwin et al., 2024). There is therefore a risk that AI use might lead learners to rely more on technology-driven problem-solving, which could further hamper their own problem-solving skills. This highlights the need to explore whether such tools influence these abilities among learners.

In the same vein, studies indicate that learners who use AI technologies tend to demonstrate higher digital literacy (Wang et al., 2022; Kit et al., 2022; AlIssa, 2024). Learners using AI technologies develop the skills and competencies required to use AI tools and applications effectively (Bayrakci and Narmanlioglu, 2021; Ayala-Pazmiño, 2023; Almustafa, 2024). Hence, the use of AI tools in education is expected to improve learners' digital literacy.

Moreover, studies found that AI tools foster self-directed learning, particularly in terms of learners' motivation, experience, and efficiency (Yildirim et al., 2023; Kohnke et al., 2023). In this regard, Lashari and Umrani (2023) found that AI might facilitate self-led second language learning, but noted a risk that these tools produce incorrect output. They added that this might be because much of the phonetics of some of the world's languages has not yet been documented. Hence, the output AI chatbots generate for queries about less common languages might be incorrect or incomplete.

Generative AI, particularly ChatGPT, was highly prevalent in 2023 (Dempere et al., 2023). However, its use in education systems has sparked mixed reactions due to concerns about plagiarism among learners and its potential to yield incorrect results owing to inconsistency (UNESCO, 2023). Developing AI systems for students also involves collecting learners' data for machine learning, raising ethical concerns about potential data misuse and infringements of privacy (Chan and Hu, 2023). The use of AI in education faces further challenges, including the risk of bias and high implementation and maintenance costs, which may exacerbate global diversity issues and run counter to the aims of the Sustainable Development Goals (Wang et al., 2022).

The mixed evidence on the benefits of AI in education calls for further research to determine whether its advantages outweigh its costs. Quality education, as outlined by UNESCO (2019), is measured through various factors, including learner characteristics, pedagogical and learning processes, required inputs, context, and outcomes. One major outcome and learner characteristic that can be considered in assessing quality is the set of learning skills gained through educational AI. AI has the potential to influence learners' skills, including digital literacy and critical thinking abilities (Habib et al., 2024; Darwin et al., 2024; Mansour et al., 2025). According to Sarker and Ullah (2023), quality education can be assessed through learners' ICT adaptability and competencies, which refer to their capability to engage effectively in technology-related activities.

AI significantly impacts the quality of education (Liao et al., 2021; Nguyen, 2023). For example, self-directed English learning has been shown to be achievable through AI chatbots (Lashari and Umrani, 2023). Parsakia (2023) reported that AI-powered chatbots might enhance learners' critical thinking and problem-solving abilities, although these gains might be limited to specific contexts. Additionally, the provision of AI tools in preschools significantly influences learners' digital literacy skills by fostering their emotional, collaborative, and inquiry abilities (Kewalramani et al., 2021). Nonetheless, there is limited literature on the impact of AI tools on learners' digital literacy, critical thinking, problem-solving, and self-directed learning skills.

Existing literature conceptualizes AI's vital role in achieving sustainable development (Chan and Hu, 2023; Nguyen, 2023; Khoury, 2024). There is a positive relationship between AI and SDG 4, which focuses on quality education (Liao et al., 2021; Nguyen, 2023). However, Singh et al. (2023) and Kumar (2023) highlighted that the use of AI in education might hamper sustainability because of the ethical and security risks involved in building machine learning systems for learners. These contradictory findings regarding AI's role in sustainable development in education motivate the present research, whose importance is further underscored by the fact that research on the effects of AI tools on education is still in its early stages (Kohnke et al., 2023).

2.2 Learners' experiences with AI tools in education

Learners have reported varying insights regarding their encounters, perceptions, and interactions with AI tools, specifically in relation to their usability and impact on learning-related outcomes (Liao et al., 2021; Parsakia, 2023; Nguyen, 2023). An exploration of higher education learners' perceptions revealed positive attitudes toward using generative AI in their learning; learners perceived that such tools can support their learning and analytical abilities by assisting with brainstorming (Chan and Hu, 2023). Similarly, Grájeda et al. (2023) found that AI tools have a significant positive impact on the academic experiences of higher education learners. Reported improvements include learners' productivity, creativity, and comprehension (Darwin et al., 2024).

Numerous studies have asserted the positive impact of AI on quality education (e.g., Jafari and Keykha, 2024; Darwin et al., 2024). Slimi and Carballido (2023) provided evidence that AI use enhanced the quality of education in higher education institutions. Additionally, educational AI technologies have been identified as having the potential to enhance equality within the sector (Fussell and Truong, 2021). AI has also been shown to affect sustainable development (Keyes et al., 2021; Lashari and Umrani, 2023). Therefore, this research aims to investigate AI's influence on sustainable development through its impact on students' learning skills and quality education. In this regard, Kohnke et al. (2023, p. 5) asserted that “research on the effect of generative AI tools on education is still in its early stages”, underscoring the need for this research.

The reviewed literature largely demonstrates a positive impact of AI on sustainability and quality education, particularly in enhancing students' learning skills, such as digital literacy, critical thinking, self-led learning, and problem-solving.

This study investigates whether AI tools, including Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF, can enhance quality education by improving the skills associated with it. Grammarly is an AI writing assistant that offers real-time feedback on grammar, spelling, punctuation, and sentence structure; it corrects errors in the text and suggests ways to improve writing style. ChatGPT is a large language model chatbot that provides information and responds with relevant, natural-sounding answers. It provides access to relevant sources and helps learners investigate solutions to complicated challenges. ChatPDF helps users extract information from PDF documents by reading, summarizing, and answering questions about them. QuillBot helps users enhance the clarity and consistency of their writing. Textero AI assists users with the writing process by creating and organizing ideas. Learners using these AI tools could analyze their strengths and weaknesses, identify areas where they need improvement, and thus improve their learning outcomes. Such tools could also improve decision-making and problem-solving abilities. It is therefore worth investigating the degree of this improvement, as suggested by previous literature.

Accordingly, hypotheses were developed as follows:

H1: The integration of AI tools in education positively impacts quality education.

H1.1: Learners perceive that AI tools positively influence the development of their learning skills, including self-led learning, critical thinking, digital literacy, and problem-solving.

H1.2: Learners assess their experiences with the use of Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF in their educational processes as predominantly positive, characterized by perceived improvements in learning outcomes and user-friendly interactions.

To address the hypotheses and provide a clear framework for analysis, the study pursued the following research objectives:

1. To examine the impact of integrating AI tools on quality education in higher education settings.

2. To investigate learners' perceptions of AI tools in enhancing their learning skills, including self-directed learning, critical thinking, digital literacy, and problem-solving.

3. To evaluate learners' experiences with specific AI tools (Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF) in their educational processes, focusing on perceived improvements in learning outcomes, ease of use, and user-friendly interactions.

4. To provide evidence-based insights on the perceived usefulness of AI tools in supporting educational quality and enhancing learning experiences.

3 Theoretical framework

TAM aims to highlight the processes underpinning technology acceptance and to provide a theoretical explanation for the successful implementation of technology (Abdullah and Ward, 2016). The model measures a user's overall attitude toward using a technology through two specific constructs: perceived usefulness and perceived ease of use. Perceived usefulness refers to the degree to which an individual believes that using a particular technology would enhance their performance, whereas perceived ease of use is the degree to which an individual believes that using that technology would be free of effort (Granić and Marangunić, 2019).

TAM is one of the most widely used and validated models for predicting and explaining users' acceptance and adoption of technology in education and its relation to learners' skills (Yeou, 2016; Almaiah, 2018). The model predicts individuals' intentions to use technology; however, intentions do not always translate into behavior. For example, a student might intend to use ChatPDF but never follow through.

Several factors influence the strength of the relationship between intentions and behavior. Empirical studies in educational contexts have identified many factors that might influence users' perceived usefulness and perceived ease of use (Al-Azawei et al., 2017), such as computer self-efficacy (Liu et al., 2010; Ros et al., 2015), learners' experience (Chang et al., 2012; Al-Rahmi et al., 2021), learning styles (Keyes et al., 2021), and perceived convenience (Yu, 2020).

Other studies have applied TAM to technology use in education, including social media platforms (Yu, 2020), teaching assistant robots (Park and Kwon, 2016), virtual reality (Fussell and Truong, 2021), personal learning environments (Rejón-Guardia et al., 2020), and augmented reality technologies (Jang et al., 2021). For instance, Al-Azawei et al. (2017) examined learners' perceptions of a blended e-learning system and found that the TAM model predicted learners' intention to use the system, which in turn positively affected their satisfaction. Similarly, Al-Adwan et al. (2023) adopted TAM and its components to investigate the factors that influence higher education students' use of the metaverse for educational purposes. They found that students who perceived the metaverse as useful were more likely to use it for learning, whereas ease of use was not a significant factor. These findings suggest that applying TAM in educational contexts is valid and useful for both educators and learners, as it can encourage the integration of technology into the educational process for a potentially beneficial learning experience. Previous literature also indicates that perceived ease of use and perceived usefulness positively affect the acceptance of learning with technology (Granić and Marangunić, 2019).

4 Methodology

4.1 Research design

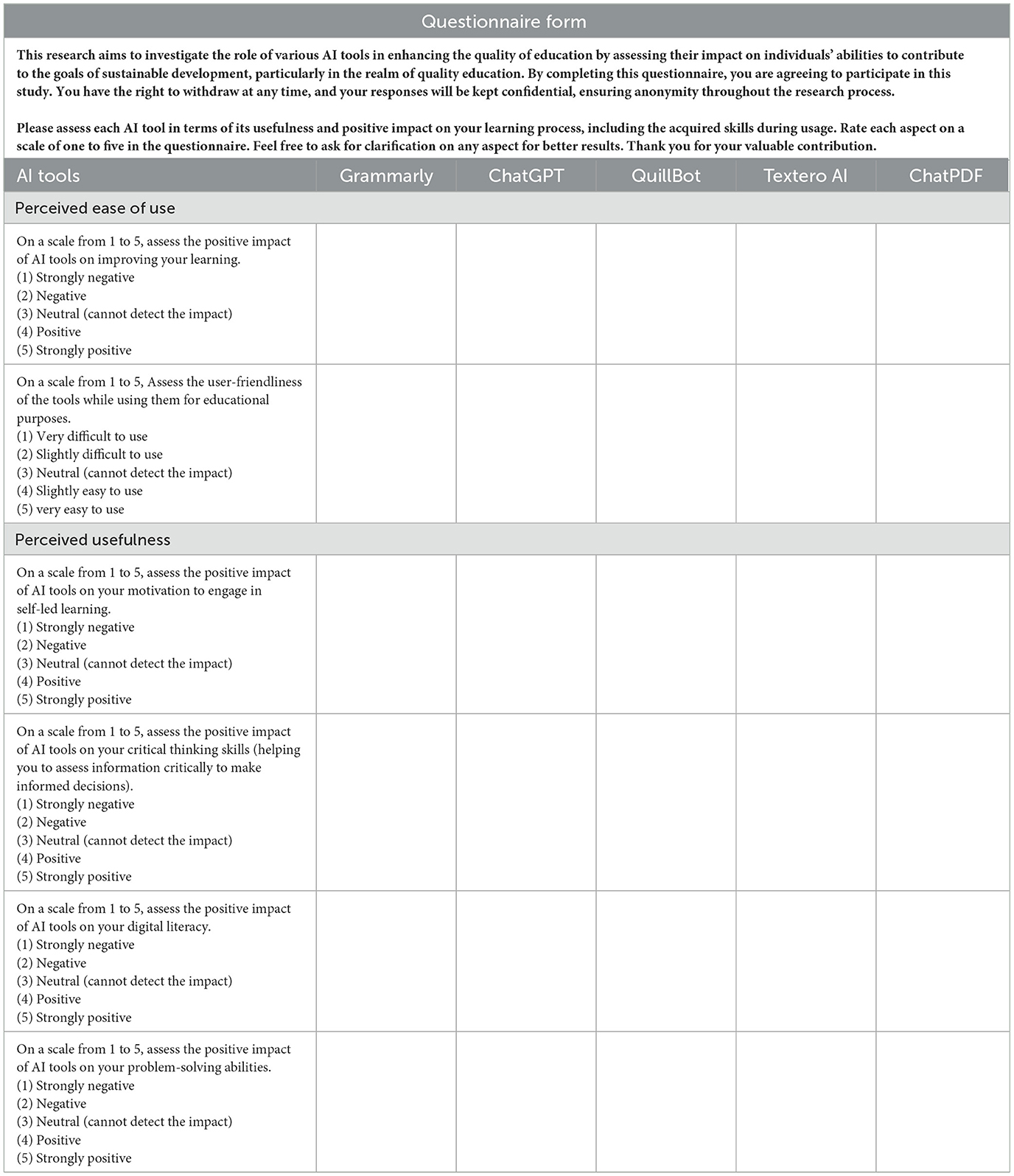

This research adopted a quantitative design. Data were collected using a questionnaire (Appendix) built around the two primary constructs of the Technology Acceptance Model (TAM): perceived usefulness and perceived ease of use. According to Charness and Boot (2016), TAM is one of the most influential models of technology acceptance. The questionnaire used closed-ended questions rated on a five-point Likert scale [(1) Strongly Negative; (2) Negative; (3) Neutral; (4) Positive; (5) Strongly Positive]. The responses were analyzed statistically to identify differences between the tools. For each of the six skills, ten Mann-Whitney tests were run, one for each of the 10 pairs of tools, totaling 60 tests.
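
Because the five response options are ordered categories rather than interval measurements, they are typically coded as integers and summarized with counts and medians before rank-based tests are applied. The Python sketch below shows one way the labels listed above could be coded from 1 to 5; the student answers are hypothetical, and the helper names are illustrative rather than the authors' SPSS procedure.

```python
from statistics import median

# Codes for the five-point scale described in the questionnaire.
LIKERT_CODES = {
    "Strongly Negative": 1,
    "Negative": 2,
    "Neutral": 3,
    "Positive": 4,
    "Strongly Positive": 5,
}


def code_responses(labels):
    """Map each response label to its ordinal code (KeyError on unknown labels)."""
    return [LIKERT_CODES[label] for label in labels]


def frequency_table(codes):
    """Count how many responses fall on each scale point from 1 to 5."""
    return {point: codes.count(point) for point in range(1, 6)}


# Hypothetical answers from five students about one tool on one item.
answers = ["Positive", "Strongly Positive", "Neutral", "Positive", "Negative"]
codes = code_responses(answers)
print(codes)                   # [4, 5, 3, 4, 2]
print(frequency_table(codes))  # {1: 0, 2: 1, 3: 1, 4: 2, 5: 1}
print(median(codes))           # 4
```

Keeping the coding in a single dictionary makes it easy to check that every questionnaire label maps to exactly one scale point.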

Prior to administering the survey, each participant was presented with an informed consent form outlining the nature of the research, including its objectives and procedures. Participants were informed that their participation was voluntary and that they could withdraw from the study at any time. To ensure anonymity, participants' identities were kept confidential throughout the research process.

The selection of Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF was based on their widespread adoption in educational settings, their diverse functionalities, and their relevance to language learning and academic tasks. Specifically:

1. Grammarly is widely used for enhancing writing accuracy, grammar, and style.

2. ChatGPT is an advanced AI language model supporting idea generation, comprehension, and academic writing.

3. QuillBot is used for paraphrasing, summarization, and improving clarity in written assignments.

4. Textero AI and ChatPDF support document analysis, information extraction, and interactive learning from texts and PDFs.

These tools collectively represent a range of AI functionalities that are directly relevant to students' learning skills, problem-solving, and digital literacy. The selection was guided by accessibility, popularity among students, and applicability to higher education tasks, ensuring that the study captures meaningful and practical insights into AI integration in educational processes.

4.2 Sample and sampling techniques

A total of 78 students enrolled in the Computer-Aided Translation course were sampled in this research. They were chosen from this course because participants need knowledge of the phenomenon under study (Hossan et al., 2023). These learners have adequate experience, as their course aims to guide them in using technology to support their learning process.

The study recruited participants using a convenience sample from a single undergraduate class in Computer-Aided Translation at Al-Ahliyya Amman University. Of the 82 students invited, 78 completed the survey, yielding a response rate of 95%. Participants were aged 21 to 22; 78% were female and 22% male. All participants were third-year students majoring in English Language and Translation. Because the sample was drawn from a single class, the findings may not generalize to other courses, academic years, or institutions. Future studies should employ larger and more diverse samples to enhance external validity.

Data for this study were collected in the middle of the semester, after students had had sufficient exposure to the AI tools integrated into the course activities. This timing ensured that participants had meaningful experience with Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF, allowing them to provide informed and reflective responses about their learning experiences and perceived skill development.

4.3 Data analysis

The survey responses were analyzed using SPSS. The Kruskal-Wallis test, the non-parametric counterpart of one-way ANOVA, was used to determine whether students' perceived benefits varied across the five AI tools. One-way ANOVA was not appropriate in this situation because Levene's test was statistically significant, indicating heterogeneity of variances.
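
The Kruskal-Wallis statistic works on ranks rather than raw scores: all ratings are pooled and ranked, tied ratings (very common on a five-point scale) share the average of their ranks, and the resulting H is compared with a chi-square distribution with k − 1 degrees of freedom (4 for five tools), which is why statistical packages may label it a chi-square value. The plain-Python sketch below illustrates that computation on hypothetical data; it is not the SPSS routine used in the study, and the p-value helper uses a closed form that holds only for even degrees of freedom.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values 1..N in ascending order; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H with the standard correction for ties."""
    pooled = [x for group in groups for x in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    offset = 0
    for group in groups:
        rank_sum = sum(ranks[offset:offset + len(group)])
        weighted += rank_sum ** 2 / len(group)
        offset += len(group)
    h = 12.0 / (n * (n + 1)) * weighted - 3 * (n + 1)
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are tied; H is undefined")
    return h / correction


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form is valid only for even df."""
    if df % 2:
        raise ValueError("this closed form needs an even number of degrees of freedom")
    half = x / 2
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


# Hypothetical ratings of three tools on one skill (df = 3 - 1 = 2).
h = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
p = chi2_sf_even_df(h, 2)
print(round(h, 4))  # 7.2
print(round(p, 4))  # 0.0273
```

With five tools the same functions apply, using df = 4 in the p-value helper.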

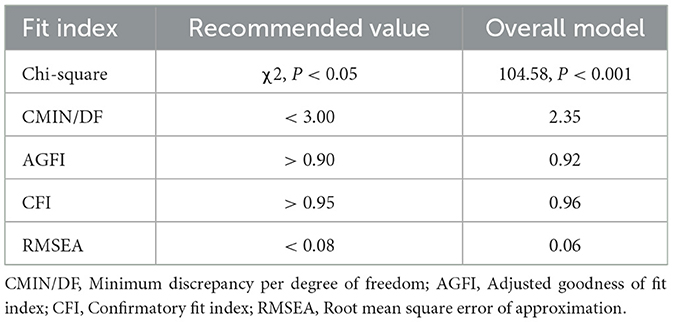

4.4 Reliability and validity of the data

The results of reliability and validity testing indicated that the hypothesized model fit the data adequately. The questionnaire's factor structure was tested using confirmatory factor analysis (CFA), which yielded the following results: χ2 = 104.58, p < 0.001, CMIN/DF = 2.35, AGFI = 0.92, CFI = 0.96, and RMSEA = 0.06. As indicated in Table 1, all fit indices fall within the cutoff values for acceptable model fit, confirming that the hypothesized six-item structure is a valid model for examining the data under study.
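
Two of the indices reported above follow directly from the model chi-square, its degrees of freedom, and the sample size (AGFI and CFI additionally require the fitted and baseline models, so they are not shown). The sketch below uses the common textbook formulas with hypothetical inputs, not the study's model; conventions for the sample-size term in RMSEA (N − 1 versus N) differ slightly across software.

```python
import math


def cmin_df(chi_square, df):
    """Relative chi-square: model chi-square divided by its degrees of freedom."""
    return chi_square / df


def rmsea(chi_square, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# Hypothetical model: chi-square of 30 on 20 degrees of freedom, 201 respondents.
print(cmin_df(30.0, 20))                # 1.5
print(round(rmsea(30.0, 20, 201), 3))  # 0.05
```

When the chi-square is below its degrees of freedom, the max(..., 0) term makes RMSEA exactly zero rather than undefined.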

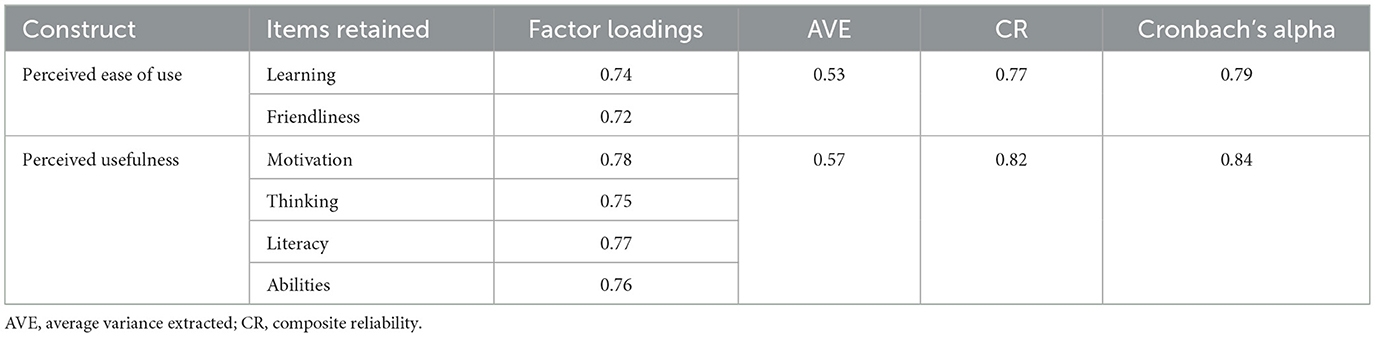

Construct validity was assessed through convergent and discriminant validity measures, and all measurement items were examined through their factor loadings. The measurement items had sufficient loadings on their respective variables (>0.50; Table 2), as suggested by Hair et al. (2006), and the average variance extracted (AVE) for each variable exceeded the recommended threshold of 0.50. The constructs also reported very good Cronbach's alpha and composite reliability scores, supporting the adequacy of their convergent validity (Fornell and Larcker, 1981).
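
The convergent-validity and reliability quantities mentioned above have standard closed forms: AVE is the mean squared standardized loading, composite reliability is (Σλ)² / ((Σλ)² + Σ(1 − λ²)), and Cronbach's alpha compares the summed item variances with the variance of the total score. The sketch below computes them for hypothetical loadings and item scores, not the values reported in Table 2.

```python
from statistics import variance


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized factor loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # error variance of each standardized item
    return total ** 2 / (total ** 2 + error)


def cronbach_alpha(rows):
    """Alpha from a respondents-by-items score matrix (sample variances throughout)."""
    k = len(rows[0])
    item_variances = sum(variance(column) for column in zip(*rows))
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variances / total_variance)


# Hypothetical standardized loadings for one three-item construct.
loadings = [0.7, 0.8, 0.9]
print(round(average_variance_extracted(loadings), 3))  # 0.647
print(round(composite_reliability(loadings), 3))       # 0.845

# Hypothetical ratings from 1 to 5: four respondents (rows) by three items (columns).
scores = [[4, 5, 4], [3, 3, 3], [5, 5, 4], [2, 3, 2]]
print(round(cronbach_alpha(scores), 3))                # 0.962
```

Against the thresholds cited above, these hypothetical loadings would pass the 0.50 cutoff for both individual loadings and AVE.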

It is worth noting that while objective measures would further strengthen validity, previous TAM-based studies in higher education have similarly relied on self-reported perceptions to evaluate system usefulness and educational benefits (e.g., Davis, 1989; Venkatesh, 2000; Marian et al., 2025).

5 Results

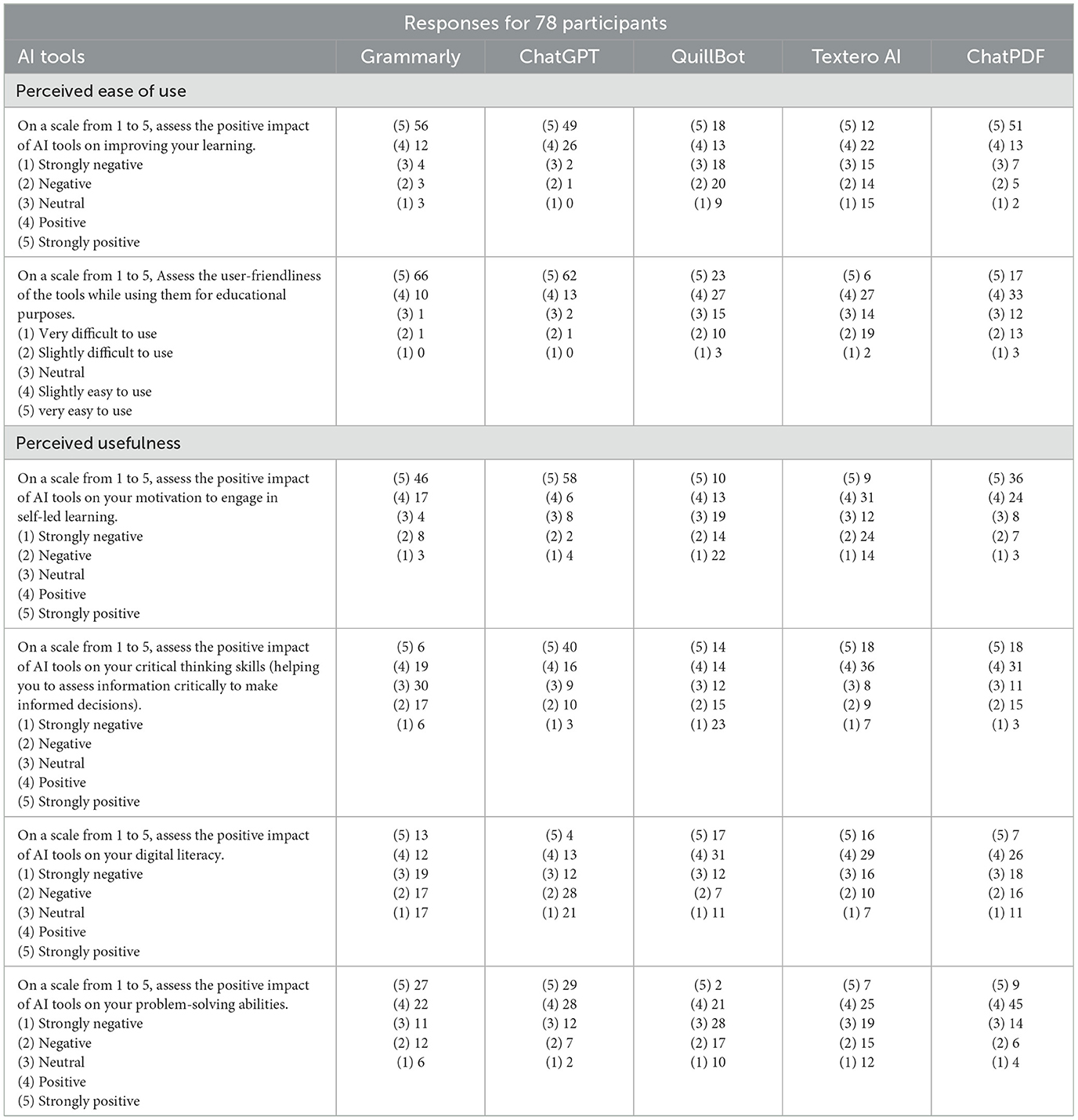

Table 3 presents the responses of 78 participants regarding the perceived ease of use, user-friendliness, perceived usefulness, impact on critical thinking, impact on digital literacy, and impact on problem-solving abilities of five AI tools: Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF. Responses are categorized based on a scale from 1 to 5, ranging from strongly negative to strongly positive.

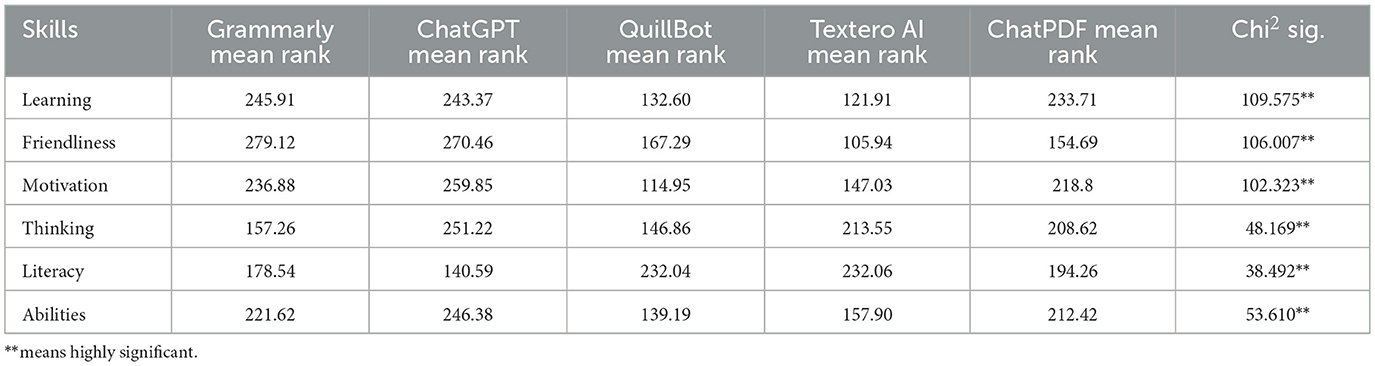

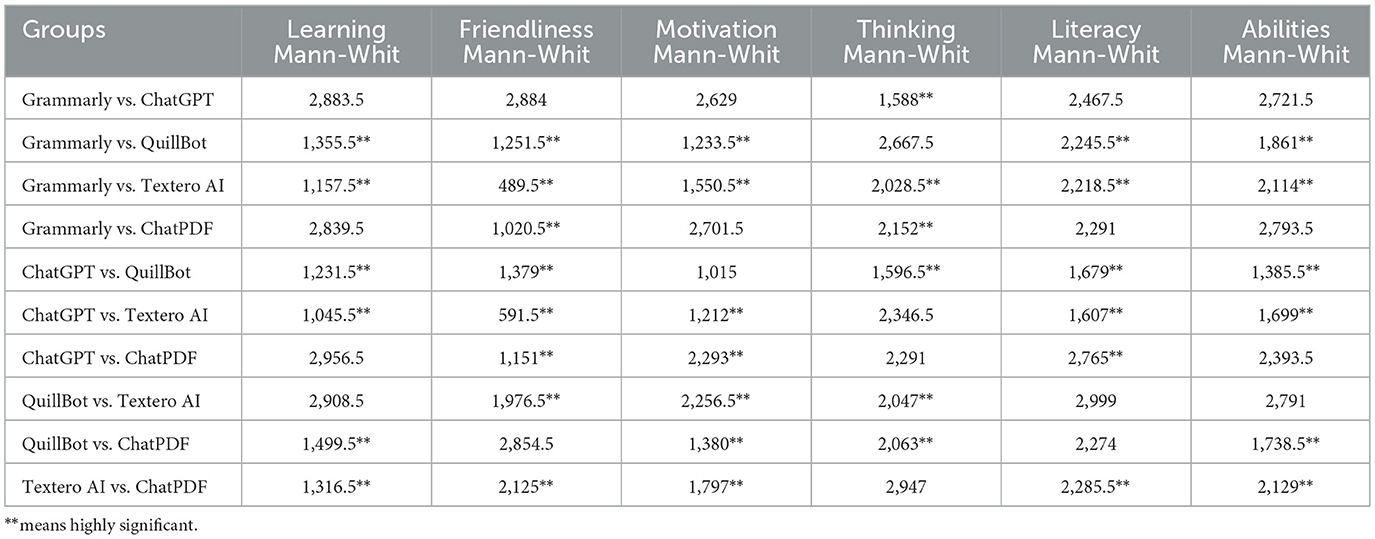

In Table 4, the Kruskal-Wallis chi-square statistics indicate statistically significant differences among the tools in the benefits for every skill. Post-hoc tests were then conducted to measure pairwise differences and rank the tools on each skill: for each of the six skills, ten Mann-Whitney tests (one per pair of tools) were run, totaling 60 tests. Table 5 shows the Mann-Whitney differences between each pair of tools for every skill.
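
The count of 60 post-hoc tests follows from the design: five tools give C(5, 2) = 10 distinct pairs, and each pair is compared on six skills. The sketch below enumerates the pairs and computes the Mann-Whitney U statistic by direct pair counting, returning the smaller of the two U values (a common reporting convention). The ratings are hypothetical, p-values are omitted, and the skill labels are paraphrased from the Results rather than taken from the questionnaire.

```python
from itertools import combinations

TOOLS = ["Grammarly", "ChatGPT", "QuillBot", "Textero AI", "ChatPDF"]
# Six rated dimensions; labels paraphrased from the Results section.
SKILLS = [
    "learning outcomes",
    "user-friendliness",
    "self-directed learning",
    "critical thinking",
    "digital literacy",
    "problem-solving",
]


def mann_whitney_u(x, y):
    """Smaller Mann-Whitney U; a tie between x_i and y_j counts as one half."""
    u_x = 0.0
    for a in x:
        for b in y:
            if a > b:
                u_x += 1.0
            elif a == b:
                u_x += 0.5
    return min(u_x, len(x) * len(y) - u_x)


pairs = list(combinations(TOOLS, 2))
print(len(pairs))                # 10 pairwise comparisons per skill
print(len(pairs) * len(SKILLS))  # 60 tests in total

# Hypothetical ratings for one pair of tools on one skill.
print(mann_whitney_u([4, 5, 5, 3], [3, 2, 4, 3]))  # 2.5
```

Direct pair counting is quadratic in the group sizes, which is negligible for a class of 78 students; larger samples would normally use the rank-sum form of U.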

Based on the pairwise tests of differences, students rated Grammarly, ChatPDF, and ChatGPT higher than QuillBot and Textero AI in terms of their positive impact on learning outcomes. This indicates that students perceived Grammarly, ChatPDF, and ChatGPT as more effective in facilitating learning. Moreover, these three tools were rated as equally effective in enhancing students' learning experiences, making them preferable choices for educational purposes.

Students ranked Grammarly and ChatGPT the highest in terms of user-friendliness, signifying their ease of use in educational contexts. They ranked QuillBot and ChatPDF next, indicating a moderate level of user-friendliness. Textero AI was ranked the lowest, suggesting that students found it the most challenging to use compared to the other tools.

Similarly, students rated ChatGPT, Grammarly, and ChatPDF equally high for fostering their motivation for self-directed learning. This suggests that students felt these tools increased their enthusiasm for independent study and encouraged their active participation in learning. QuillBot, however, was ranked lowest, indicating that students perceived it as less effective in motivating self-directed learning.

Regarding critical thinking skills, ChatGPT, Textero AI, and ChatPDF were rated equally high, suggesting that they effectively promoted students' analytical and evaluative abilities. Grammarly and QuillBot were ranked lower, indicating that students perceived them as less useful for enhancing critical thinking. The higher-ranked tools may offer more opportunities for critical engagement and problem-solving activities, which are crucial for developing critical thinking skills.

Concerning digital literacy, QuillBot and Textero AI were perceived to have the most substantial positive impact. They were rated higher than ChatPDF, which in turn was rated higher than Grammarly and ChatGPT. This ranking suggests that students found QuillBot and Textero AI more helpful in developing the skills needed to navigate and use digital technologies effectively.

For problem-solving abilities, all five tools (ChatGPT, Grammarly, ChatPDF, Textero AI, and QuillBot) were rated equally. This indicates that students perceived no significant difference among the tools in their ability to enhance problem-solving skills; each tool appeared to offer similar benefits for developing students' ability to tackle and resolve various challenges.

6 Discussion

Based on the findings of this research, Grammarly excels in four categories: positive impact on learning, user-friendliness for educational purposes, motivation for self-led learning, and problem-solving abilities. Additionally, it effectively enhances critical thinking through writing tasks. Similarly, ChatGPT excels in five categories: positive impact on learning, user-friendliness for educational purposes, motivation for self-led learning, critical thinking skills, and problem-solving abilities. QuillBot stands out in two categories: critical thinking skills and digital literacy, and it is highly rated for motivation for self-led learning. Textero AI demonstrates high performance in five categories: positive impact on learning, motivation for self-led learning, critical thinking skills, digital literacy, and problem-solving abilities, and it is also highly rated for user-friendliness. Finally, ChatPDF is ranked at the top in digital literacy and rated highly for user-friendliness.

The attributes of these tools shape the two core TAM constructs, perceived ease of use and perceived usefulness, and foster a positive learning environment that encourages technology acceptance and integration into students' learning process. In other words, the findings align with TAM, which holds that perceived usefulness and ease of use positively influence students' acceptance and long-term use of technology. The findings also support previous research indicating that AI tools positively affect students' learning outcomes and overall learning experience. Earlier studies have concluded that AI tools improve educational quality and acceptability by offering students interactive learning opportunities and enhancing their motivation and engagement. For example, the results of this study concur with those of Kewalramani et al. (2021) and Grájeda et al. (2023), who found that AI tools can foster learners' critical thinking and problem-solving skills. This highlights TAM's emphasis on the perceived usefulness of technology in education.

Regarding digital literacy, the findings also align with previous studies showing that AI tools can develop essential digital skills (Granić and Marangunić, 2019; Almaiah, 2018; Wang et al., 2022). Students perceived the tools as useful for supporting their competencies (Kewalramani et al., 2021; Su, 2022; Shim and Choi, 2023). Similarly, regarding students' motivation for self-directed learning, our findings suggest that AI tools can facilitate independent learning. These findings are consistent with previous studies (Liu et al., 2010; Al-Azawei et al., 2017), although some studies (e.g., Ros et al., 2015) have raised concerns about the reliability of information obtained from AI tools. To address such concerns, we suggest ongoing evaluation and refinement of AI technologies to ensure their reliability and effectiveness.

The findings of this study support existing literature on AI's potential to advance SDG 4 (Su, 2022; Jafari and Keykha, 2024; Kamalov et al., 2023). Integrating these tools into students' learning could therefore enhance the overall quality of education. Understanding how the tools differ in their perceived benefits can guide educators and students in selecting the most appropriate tools for their specific educational needs.

7 Study limitations

This research is limited to third- and fourth-year students enrolled in the computer-assisted translation program at a single institution. Hence, it does not reveal whether learners in other years, or graduates, hold different perspectives. The research is also limited to five educational AI tools, although many other technologies are available to learners, which leaves a gap that future researchers can fill by studying a wider range of AI tools.

Moreover, a limitation of this study is that the CFA model included only six items, which limited the robustness of the measurement structure. Future studies should consider using extended and validated item sets (minimum of three items per construct) to enhance measurement validity and allow for full structural model testing. Finally, we acknowledge that the cross-sectional, self-report design limits the ability to infer causal effects or objectively measure actual skill acquisition and educational quality.

8 Conclusion

This research found that students perceived the AI tools examined (Grammarly, ChatGPT, QuillBot, Textero AI, and ChatPDF) as significantly related to quality education, enhancing several aspects of educational quality. Given the perceived benefits of these tools for the learning skills used here to assess quality education, it can be concluded that students view the AI tools as having a positive impact on sustainable education. These results support the perceived efficacy of AI tools and point to the need to identify ways of mitigating their limitations. The integration of AI tools into education still has a long way to go, which calls for revising relevant education policies and regulations.

The study has several implications for learners, teachers, technology developers, and policy makers. Teachers might consider integrating technology, particularly AI tools, when designing and structuring courses, incorporating appropriate AI tools to facilitate students' learning, as suggested by Yang et al. (2017). Teachers should also demonstrate how technology and AI tools can benefit learners and facilitate course learning (Kit et al., 2022). Technology developers should consider building tools with practical functionality to ensure satisfactory service for users (Koranteng et al., 2020). A TAM-based assessment of AI tools in education can help policy makers identify the most effective tools for adoption and inform them about the training and support that learners and educators need to use technology and AI tools effectively. For students, a better understanding of AI tool adoption can lead to a more positive learning experience and foster essential skills for their future careers. Future studies could incorporate teacher assessments or validated performance measures to triangulate students' self-reported learning outcomes. In addition, longitudinal designs and objective assessments of skills and learning outcomes should be employed to complement self-report data and validate the perceived benefits reported in this study.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Al-Ahliyya Amman University Ethics Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

LA: Writing – review & editing, Conceptualization, Writing – original draft. MI: Formal analysis, Writing – review & editing. SS: Data curation, Methodology, Writing – review & editing. AA: Project administration, Supervision, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdullah, F., and Ward, R. (2016). Developing a General Extended Technology Acceptance Model for E-Learning (GETAMEL) by analysing commonly used external factors. Comput. Hum. Behav. 56, 238–256. doi: 10.1016/j.chb.2015.11.036

Al-Adwan, A. S., Li, N., Al-Adwan, A., Al-belushi, M., Al-abdallat, B., Al-madadha, A., et al. (2023). Extending the Technology Acceptance Model (TAM) to predict university students' intentions to use metaverse-based learning platforms. Educ. Inf. Technol. 28, 15381–15413. doi: 10.1007/s10639-023-11816-3

Al-Azawei, A., Parslow, P., and Lundqvist, K. (2017). Investigating the effect of learning styles in a blended e-learning system: an extension of the technology acceptance model (TAM). Austr. J. Educ. Technol. 33, 1–23. doi: 10.14742/ajet.2741

AlIssa, A. (2024). The effectiveness of employing Jordanian school teachers the artificial intelligence applications in blended learning. Al-Balqa J. Res. Stud. 27. doi: 10.35875/827jzc89

Alkhawaja, L. (2023). Artificial intelligence in education: harnessing its power as a valuable tool, not an adversary. Int. J. Comput. Assist. Lang. Learn. Teach. 13, 1–15. doi: 10.4018/IJCALLT.329607

Alkhawaja, L. (2024). Unveiling the new frontier: ChatGPT-3 powered translation for Arabic-English language pairs. Theory Pract. Lang. Stud. 14, 85–92. doi: 10.17507/tpls.1402.05

Almaiah, M. A. (2018). Acceptance and usage of a mobile information system services in University of Jordan. Educ. Inf. Technol. 23, 1873–1895. doi: 10.1007/s10639-018-9694-6

Almustafa, M. F. (2024). The reality of employing blended learning in achieving sustainable development in governmental secondary schools from the point of view of teachers of the Irbid district. Al-Balqa J. Res. Stud. 27. doi: 10.35875/g0g78s51

Al-Rahmi, A. M., Shamsuddin, A., Alturki, U., Aldraiweesh, A., Yusof, F. M., Al-Rahmi, W. M., et al. (2021). The influence of information system success and technology acceptance model on social media factors in education. Sustainability 13:7770. doi: 10.3390/su13147770

Ayala-Pazmiño, M. (2023). Artificial intelligence in education: exploring the potential benefits and risks. 593 Digit. Publ. CEIT 8, 892–899. doi: 10.33386/593dp.2023.3.1827

Bayrakci, S., and Narmanlioglu, H. (2021). Digital literacy as whole of digital competences: scale development study. Düşünce Toplum Sosyal Bilimler Dergisi 4, 1–30. Available online at: https://dergipark.org.tr/en/download/article-file/1797036

Chan, C., and Hu, W. (2023). Students' voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 20, 1–18. doi: 10.1186/s41239-023-00411-8

Chang, C.-C., Yan, C.-F., and Tseng, J.-S. (2012). Perceived convenience in an extended technology acceptance model: mobile technology and English learning for college students. Australas. J. Educ. Technol. 28, 850–863. doi: 10.14742/ajet.818

Charness, N., and Boot, W. R. (2016). “Technology, gaming, and social networking,” in Handbook of the Psychology of Aging, 8th Edn., Eds. J. E. Birren, and K. W. Schaie (Academic Press), 389–407.

Darwin, D., Rusdin, D., Mukminatien, N., Suryati, N., Laksmi, E. D., and Marzuki (2024). Critical thinking in the AI era: an exploration of EFL students' perceptions, benefits, and limitations. Cogent Educ. 11, 1–18. doi: 10.1080/2331186X.2023.2290342

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

Dempere, J., Modugu, K., Hesham, A., and Ramasamy, L. K. (2023). The impact of ChatGPT on higher education. Front. Educ. 8:1206936. doi: 10.3389/feduc.2023.1206936

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. doi: 10.1177/002224378101800104

Fussell, S., and Truong, D. (2021). Accepting virtual reality for dynamic learning: an extension of the technology acceptance model. Interact. Learn. Environ. 31, 5442–5459. doi: 10.1080/10494820.2021.2009880

Gough, S., and Scott, W. (2003). Sustainable Development and Learning: Framing the Issues, 1st Edn. London: Routledge.

Grájeda, A., Burgos, J., Olivera, P. C., and Sanjinés, A. (2023). Assessing student-perceived impact of using artificial intelligence tools: construction of a synthetic index of application in higher education. Cogent Educ. 11, 1–24. doi: 10.1080/2331186X.2023.2287917

Granić, A., and Marangunić, N. (2019). Technology acceptance model in educational context: a systematic literature review. Br. J. Educ. Technol. 50, 2572–2593. doi: 10.1111/bjet.12864

Habib, S., Vogel, T., Anli, X., and Thorne, E. (2024). How does generative artificial intelligence impact student creativity? J. Creat. 34:100072. doi: 10.1016/j.yjoc.2023.100072

Hair, J. F., Black, W. C., Babin, B. J., Anderson, R. E., and Tatham, R. L. (2006). Multivariate Data Analysis. Upper Saddle River, NJ: Pearson Prentice Hall.

Higgins, S., Baumfield, V., and Hall, E. (2007). Learning Skills and the Development of Learning Capabilities. London: EPPI-Centre, Social Science Research Unit, Institute of Education, University of London. Available online at: https://eppi.ioe.ac.uk/cms/Portals/0/PDF%20reviews%20and%20summaries/Learning%20Skills%20-%20Report%20-%20online2.pdf (Accessed June 1, 2024).

Hossan, D., Mansor, Z., and Jaharuddin, N. (2023). Research population and sampling in quantitative study. Int. J. Bus. Technopreneursh. 13, 209–222. doi: 10.58915/ijbt.v13i3.263

Jafari, F., and Keykha, A. (2024). Identifying the opportunities and challenges of artificial intelligence in higher education: a qualitative study. J. Appl. Res. High. Educ. 16, 1228–1245. doi: 10.1108/JARHE-09-2023-0426

Jang, J., Ko, Y., Shin, W. S., and Han, I. (2021). Augmented reality and virtual reality for learning: an examination using an extended Technology Acceptance Model. IEEE Access 9, 6798–6809. doi: 10.1109/ACCESS.2020.3048708

Kamalov, F., Santandreu Calonge, D., and Gurrib, I. (2023). New era of artificial intelligence in education: towards a sustainable multifaceted revolution. Sustainability 15:12451. doi: 10.3390/su151612451

Kewalramani, S., Kidman, G., and Palaiologou, I. (2021). Using Artificial Intelligence (AI)-interfaced robotic toys in early childhood settings: a case for children's inquiry literacy. Eur. Early Child. Educ. Res. J. 29, 652–668. doi: 10.1080/1350293X.2021.1968458

Keyes, O., Hitzig, Z., and Blell, M. (2021). Truth from the machine: artificial intelligence and the materialization of identity. Interdiscip. Sci. Rev. 46, 158–175. doi: 10.1080/03080188.2020.1840224

Khoury, O. Y. (2024). Reflection of explicitation in scientific translation: neural machine translation vs. human post-editing. J. Lang. Teach. Res. 15, 1510–1517. doi: 10.17507/jltr.1505.12

Kit, N., Luo, W., Chan, H., and Chu, S. K. W. (2022). An examination on primary students' development in AI literacy through digital story writing. Comput. Educ. Artif. Intell. 3:100054. doi: 10.1016/j.caeai.2022.100054

Kohnke, L., Moorhouse, B. L., and Zou, D. (2023). Exploring generative artificial intelligence preparedness among university language instructors: a case study. Comput. Educ. Artif. Intell. 5:100156. doi: 10.1016/j.caeai.2023.100156

Koranteng, F. N., Sarsah, F. K., Kuada, E., and Gyamfi, S. (2020). An empirical investigation into the perceived effectiveness of collaborative software for students' projects. Educ. Inf. Technol. 25, 3431–3449. doi: 10.1007/s10639-019-10011-7

Kumar, M. J. (2023). Artificial intelligence in education: are we ready? IETE Tech. Rev. 40, 153–154. doi: 10.1080/02564602.2023.2207916

Lashari, A. A., and Umrani, S. (2023). Reimagining self-directed learning language in the age of artificial intelligence: a systematic review. Grassroots Biannu. Res. J. 57, 92–114. Available online at: https://dergipark.org.tr/en/pub/grassroots/issue/12345/67890

Liao, Y., Huang, R., Sun, C., and Li, X. (2021). Artificial intelligence in education: opportunities and challenges from a learning science perspective. Front. Educ. 6:773608. doi: 10.3389/feduc.2021.773608

Liu, Y., Li, H., and Carlsson, C. (2010). Factors driving the adoption of m-learning: an empirical study. Comput. Educ. 55, 1211–1219. doi: 10.1016/j.compedu.2010.05.018

Mansour, A., Alshaketheep, K., Al-Ahmed, H., Shajrawi, A., and Deeb, A. (2025). Public perceptions of ethical challenges and opportunities for enterprises in the digital age. Ianna J. Interdiscip. Stud. 7, 197–210. doi: 10.5281/zenodo.15458907

Marian, A.-L., Apostolache, R., and Ceobanu, C. M. (2025). Toward sustainable technology use in education: psychological pathways and professional status effects in the TAM framework. Sustainability 17:7025. doi: 10.3390/su17157025

Nahar, S. (2024). Modeling the effects of artificial intelligence (AI)-based innovation on sustainable development goals (SDGs): applying a system dynamics perspective in a cross-country setting. Technol. Forecast. Soc. Change 201, 1–27. doi: 10.1016/j.techfore.2023.123203

Nguyen, N. D. (2023). Exploring the role of AI in education. Lond. J. Soc. Sci. 6, 84–95. doi: 10.31039/ljss.2023.6.108

Park, E., and Kwon, S. J. (2016). The adoption of teaching assistant robots: a technology acceptance model approach. Program Electron. Libr. Inf. Syst. 50, 354–366. doi: 10.1108/PROG-02-2016-0017

Parsakia, K. (2023). The effect of chatbots and AI on the self-efficacy, self-esteem, problem-solving and critical thinking of students. Health Nexus 1, 71–76. doi: 10.61838/hn.1.1.14

Rejón-Guardia, F., Polo-Peña, A. I., and Maraver-Tarifa, G. (2020). The acceptance of a personal learning environment based on Google Apps: the role of subjective norms and social image. J. Comput. High. Educ. 32, 203–233. doi: 10.1007/s12528-019-09206-1

Ros, S., Hernández, R., Caminero, A., Robles, A., Barbero, I., Maciá, A., et al. (2015). On the use of extended TAM to assess students' acceptance and intent to use third-generation learning management systems. Br. J. Educ. Technol. 46, 1250–1271. doi: 10.1111/bjet.12199

Sarker, M. F., and Ullah, M. S. (2023). A review of quality assessment criteria in secondary education with the impact of the COVID-19 pandemic. Soc. Sci. Humanit. Open 8:100740. doi: 10.1016/j.ssaho.2023.100740

Shim, J. Y., and Choi, S. (2023). A case study of the use of artificial intelligence in a problem-based learning program for the prevention of school violence. Hum. Ecol. Res. 61, 15–28. doi: 10.6115/her.2023.002

Singh, A., Kanaujia, A., Singh, V. K., and Vinuesa, R. (2023). Artificial intelligence for Sustainable Development Goals: bibliometric patterns and concept evolution trajectories. Sustain. Dev. 32, 724–754. doi: 10.1002/sd.2706

Slimi, Z., and Carballido, B. V. (2023). Systematic review: AI's impact on higher education - learning, teaching, and career opportunities. TEM J. 12, 1627–1637. doi: 10.18421/TEM123-44

Su, K.-D. (2022). Implementation of innovative artificial intelligence cognitions with problem-based learning guided tasks to enhance students' performance in science. J. Balt. Sci. Educ. 21, 245–257. doi: 10.33225/jbse/22.21.245

UNESCO (2019). SDG 4 - Education 2030: Part II, Education for Sustainable Development Beyond 2019 (Document code: 206 EX/6.II). Paris: United Nations Educational, Scientific and Cultural Organization. Available online at: https://unesdoc.unesco.org/ark:/48223/pf0000371095?posInSet=1&queryId=6ac26cb7-4383-4c7d-ad2b-333faef7e48c

UNESCO (2005). EFA Global Monitoring Report 2005: The Qualitative Imperative. Available online at: https://www.right-to-education.org/sites/right-to-education.org/files/resource-attachments/EFA_GMR_Quality_Imperative_2005_en.pdf (Accessed October 17, 2025).

UNESCO (2017). Unpacking Sustainable Development Goal 4 Education 2030. Available online at: http://unesdoc.unesco.org/images/0024/002463/246300E.pdf (Accessed June 1, 2024).

UNESCO (2023). Artificial Intelligence in Education. Uses and Impacts Introduced to Teachers in Bosnia and Herzegovina. Available online at: https://www.unesco.org/en/articles/artificial-intelligence-education-uses-and-impacts-introduced-teachers-bosnia-and-herzegovina (Accessed October 17, 2025).

United Nations (2024). About the 2030 Agenda on Sustainable Development OHCHR and the 2030 Agenda for Sustainable Development. Available online at: https://www.ohchr.org/en/sdgs/about-2030-agenda-sustainable-development

Venkatesh, V. (2000). Determinants of perceived ease of use: integrating control, intrinsic motivation, and emotion into the technology acceptance model. Inf. Syst. Res. 11, 342–365. doi: 10.1287/isre.11.4.342.11872

Wang, B., Rau, P.-L. P., and Yuan, T. (2022). Measuring user competence in using artificial intelligence: validity and reliability of artificial intelligence literacy scale. Behav. Inf. Technol. 42, 1–14. doi: 10.1080/0144929X.2022.2072768

Yang, C. Y. D., Ozbay, K., and Xuegang, J. B. (2017). Developments in connected and automated vehicles. J. Intell. Transp. Syst. 21, 251–254. doi: 10.1080/15472450.2017.1337974

Yeou, M. (2016). An investigation of students' acceptance of Moodle in a blended learning setting using Technology Acceptance Model. J. Educ. Technol. Syst. 44, 300–318. doi: 10.1177/0047239515618464

Yildirim, Y., Camci, F., and Aygar, E. (2023). “Advancing self-directed learning through artificial intelligence,” in Advancing Self-Directed Learning in Higher Education (Hershey, PA: IGI Global), 143–162.

Yu, Z. G. (2020). Extending the learning technology acceptance model of WeChat by adding new psychological constructs. J. Educ. Comput. Res. 58, 1121–1143. doi: 10.1177/0735633120923772

Appendix

Keywords: sustainability, learning skills, learning opportunities, Technology Acceptance Model, quality education, Artificial Intelligence

Citation: Alkhawaja L, Idris M, Al-Sayyed S and Al Jaber AM (2025) Exploring the impact of Artificial Intelligence on students' skills for sustainable development in education. Front. Educ. 10:1691148. doi: 10.3389/feduc.2025.1691148

Received: 22 August 2025; Accepted: 30 September 2025;

Published: 28 October 2025.

Edited by:

Oihane Korres, University of Deusto, Spain

Reviewed by:

Jessica Paños-Castro, University of Deusto, Spain

Iker Villanueva-Ruiz, Universidad de Deusto - Campus Donostia San Sebastian, Spain

Copyright © 2025 Alkhawaja, Idris, Al-Sayyed and Al Jaber. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Linda Alkhawaja, l.alkhawaja@ammanu.edu.jo
