- 1 Malmö University, Malmö, Sweden

- 2 Amity Law School, Amity University, Uttar Pradesh, India

Artificial Intelligence (AI) is fundamentally reshaping contemporary education, not merely as a technical tool but as a transformative sociotechnical force. While often promoted for its potential to personalize learning and improve efficiency, this paper argues that AI's deeper impact lies in its capacity to reorganize relations of power, authority, and inequality within educational systems. This study offers a sociological analysis, drawing on Actor-Network Theory and Critical Digital Sociology to examine how intelligent systems mediate teacher-student relationships, redistribute agency, and contribute to new forms of digital stratification. Through a thematic synthesis of recent literature (2017–2024) and critical analysis of global case examples, the findings demonstrate that AI can intensify existing disparities—through algorithmic bias, surveillance, and uneven access—while generating new dependencies that challenge teacher autonomy and human-centered pedagogy. The analysis further reveals that the dominant techno-optimistic narrative often obscures these power dynamics. In response, this paper concludes by proposing a novel Sociotechnical-Ethical-Pedagogical (STEP) framework, designed to guide the equitable and accountable adoption of AI in education. The STEP model emphasizes transparency, educator agency, and social equity as non-negotiable conditions for responsible innovation, positioning sociological critique as essential for a just educational future.

1 Introduction

Artificial Intelligence (AI) has rapidly evolved from a technical innovation into a structuring force within contemporary education systems. Its applications—ranging from adaptive learning platforms and predictive analytics to automated assessment and robotic learning assistants—now influence how learners engage with content, how teachers perform their roles, and how institutions organize decision-making (Costa et al., 2017; García et al., 2007; Bicknell et al., 2023). Examples such as Khan Academy's Khanmigo, Duolingo's personalized language pathways, iFlyTek's large-scale assessment systems, and social robots like Pepper and Nao illustrate the speed and scale of AI diffusion in classrooms and learning platforms (iFlyTek, 2024; Leh, 2024). While these developments demonstrate AI's capacity to personalize instruction and streamline administrative tasks, they also reveal a deeper sociotechnical shift: educational processes are increasingly mediated by opaque computational systems whose logics are not pedagogical, but algorithmic.

A growing body of scholarship acknowledges AI's potential to improve learning outcomes and expand access (Wilton and Vargas-Alejo, 2023; Li and Zhao, 2024). Yet, sociologists caution that such benefits often overshadow critical questions about power, governance, and inequality (Selwyn, 2020; Davies et al., 2020). AI systems are not neutral tools; they are embedded in social structures that shape who benefits, who is marginalized, and whose knowledge is legitimized. The risk is that AI solutionism—viewing technology as the remedy for systemic educational problems—can obscure entrenched inequalities, including digital access gaps, algorithmic bias, and the deskilling or disempowerment of teachers (Apple, 2019; Williamson, 2020). From this perspective, the integration of AI is not simply a technical project but a sociocultural and political one.

As AI becomes woven into assessment systems, pedagogical routines, institutional governance, and even emotional-relational aspects of schooling, its adoption reconfigures authority, agency, and educational meaning. Decisions once made through human judgement—such as evaluation, feedback, or behavioral interpretation—are increasingly delegated to automated systems that normalize surveillance, data extraction, and behavioral nudging. This raises urgent sociological questions: Who designs and controls these systems? Whose values and assumptions are embedded in them? Who becomes visible—or invisible—through their logics?

Therefore, this paper approaches AI in education not as a neutral enhancement, but as a sociotechnical actor that co-produces educational realities. By examining how AI reshapes relationships, reorganizes power, and reproduces or challenges inequalities, the study seeks to extend the debate beyond efficiency and personalization toward issues of justice, governance, and democratic accountability.

Following this introduction, the paper outlines the research gap, purpose, and significance of the study, and then establishes the theoretical framework of Actor-Network Theory and Critical Digital Sociology. The methodology section details the qualitative approach of thematic synthesis applied to literature and case studies. The findings section presents four key thematic analyses, followed by a discussion that directly addresses the research questions. The paper concludes by proposing implications for policy and practice.

2 The sociological imperative: gap and purpose

Artificial Intelligence (AI) has rapidly evolved from an experimental novelty to a constitutive force in education, shaping content engagement, pedagogical mediation, and institutional resource allocation (Holmes et al., 2023). However, the dominant discourse remains captured by technological and psychological paradigms, predominantly concerned with performance metrics, cognitive efficiency, and personalized learning pathways (Luckin et al., 2016). While this research provides valuable insights, it largely overlooks a more fundamental issue: how AI redistributes power, agency, and legitimacy within educational ecosystems (Selwyn, 2020).

This constitutes a critical theoretical and empirical gap. Education is not merely a technical process but a social and cultural practice (Apple, 2019), and AI is not a neutral tool but a sociomaterial actor that actively co-produces educational realities (Fenwick and Edwards, 2011; Latour, 2005). When algorithms determine knowledge visibility, pathway selection, and behavioral interpretation, they reshape core educational relations—defining who teaches, who learns, and what counts as knowledge (Williamson, 2020; Ragnedda and Muschert, 2018). The current fragmentation between studies of AI's technical effectiveness and critical analyses of digital inequality prevents a holistic understanding of how AI is transforming the social fabric and authority structures of education itself.

This paper directly addresses this gap by developing a sociologically grounded analysis that bridges empirical research on AI-driven pedagogy with critical social theory. The study is purposefully designed to move beyond instrumental accounts and instead examine what AI does to education as a social system. To achieve this, it is guided by the following research questions:

• How does AI reshape educational relationships among teachers, learners, and institutions?

• In what ways does AI reinforce or challenge existing inequalities in access, participation, and achievement?

• How can sociological theories—particularly Actor-Network Theory and critical digital sociology—help explain AI's role in educational transformation?

• What ethical tensions emerge from the integration of AI into educational governance and assessment?

• What conceptual model can guide equitable and reflective use of AI in diverse educational contexts?

By synthesizing literature and analyzing global cases through this dual theoretical lens, the study aims to illuminate how AI mediates participation, recognition, and authority, ultimately proposing a framework for just and accountable adoption.

3 Significance

By situating AI within the sociology of education, this study contributes to both conceptual and practical debates about the future of learning.

Conceptually, it challenges instrumental and solutionist narratives by framing AI as a sociotechnical force that co-produces educational realities, reshapes authority, and mediates participation. This perspective foregrounds the political, cultural, and ethical dimensions of AI, emphasizing that intelligent systems are not neutral tools but actors embedded in relations of power, policy, and ideology.

Practically, the study offers a critical framework for educators, policymakers, and technology developers. For educators, it provides a language to critically assess AI tools and advocate for pedagogical integrity. For policymakers, it underscores the necessity of equity-focused governance and ethical oversight in AI procurement and implementation. For developers, it highlights the social consequences of design choices, arguing for participatory and justice-oriented approaches.

Ultimately, this research aims to equip stakeholders to move beyond uncritical adoption, fostering a more democratic and reflective path for the integration of AI in education.

4 Theoretical framework

The integration of Artificial Intelligence (AI) into education can be conceptualized as a sociotechnical transformation rather than a purely technical innovation. To analyze how AI reshapes relationships, authority, and inequality within educational environments, this study adopts Actor-Network Theory (ANT) as its core theoretical framework, supported by insights from Critical Digital Sociology.

4.1 Actor-Network Theory: AI as an educational actor

Actor-Network Theory (Latour, 2005) views social reality as a network of relationships formed by both human and non-human actors. In educational contexts, AI systems—such as adaptive learning platforms, predictive analytics, chatbots, and automated assessment tools—participate in shaping pedagogical decisions, institutional practices, and learner experiences (Fenwick and Edwards, 2011). ANT rejects the notion of technology as a neutral tool and instead positions AI as an actor that co-produces educational outcomes alongside teachers, students, policy frameworks, and institutions.

This perspective is suited to the study's focus because it illuminates how AI reconfigures:

• teacher agency (e.g., when algorithms guide instruction or assessment),

• student identity and autonomy (e.g., when behavior is datafied or nudged),

• institutional governance (e.g., when decision-making shifts to automated systems).

By tracing how networks of humans and machines interact, ANT enables investigation of who gains authority, who loses influence, and how educational roles are renegotiated through AI adoption. This speaks directly to the study's concern with shifting power relations and learner-teacher-technology dynamics.

4.2 Critical digital sociology: power, inequality, and datafication

While ANT explains relational dynamics, Critical Digital Sociology provides a lens to interrogate how AI reproduces or disrupts social inequality within education. Scholars such as Selwyn (2019), Williamson (2020), Apple (2019), and Ball (2012) argue that digital technologies in education are embedded in systems of power, policy, and economic interest. AI can therefore reinforce existing hierarchies—through algorithmic bias, data surveillance, or unequal access—or contribute to new forms of control and stratification.

This lens foregrounds key sociological concerns relevant to this study's research questions:

• digital inequality (who has access, literacy, and cultural capital),

• governance and algorithmic authority (who controls data and decisions),

• ethical risk (bias, transparency, accountability),

• the commodification of education through EdTech markets and data economies.

By combining ANT with critical sociology, the framework captures both micro-level relational change and macro-level structural effects.

5 Methodology

The study adopts a qualitative sociological research design, using a thematic synthesis approach to analyze how AI is represented, implemented, and contested within educational systems. Thematic synthesis is well-suited for integrating diverse empirical and conceptual sources, allowing for interpretation beyond aggregation (Thomas and Harden, 2008).

The analysis followed the systematic procedures for thematic analysis outlined by Braun and Clarke (2006), progressing through five stages: (1) Familiarization: Immersive reading and re-reading of the selected literature; (2) Initial Coding: Generating systematic codes across the dataset to identify sociologically significant features; (3) Theme Development: Collating codes into potential themes and gathering all relevant data within them; (4) Reviewing and Refining Themes: Checking whether the themes work in relation to the coded extracts and the entire dataset to ensure coherence and distinctiveness; and (5) Defining and Naming Themes: Refining the essence of each theme for the final analysis, which resulted in the four key domains presented in the Findings section.
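As an illustration only, stage (3), in which codes are collated into candidate themes, can be sketched in a few lines of Python. The extracts, code labels, theme names, and the function `collate_themes` below are hypothetical placeholders and are not taken from the study's dataset; in practice the code-to-theme mapping is an interpretive decision made by the analyst and revised during stage (4).

```python
from collections import defaultdict

# Hypothetical coded extracts from stage (2): (source_id, code) pairs.
coded_extracts = [
    ("source_a", "algorithmic bias"),
    ("source_b", "teacher deskilling"),
    ("source_c", "unequal access"),
    ("source_a", "data surveillance"),
]

# Hypothetical analyst-defined mapping from codes to candidate themes.
code_to_theme = {
    "algorithmic bias": "ethical deficits",
    "data surveillance": "ethical deficits",
    "teacher deskilling": "pedagogical transformation",
    "unequal access": "stratification",
}


def collate_themes(extracts, mapping):
    """Group coded extracts under their candidate theme.

    Codes without a mapping are kept under 'unassigned' so that they
    can be revisited when themes are reviewed and refined.
    """
    themes = defaultdict(list)
    for source_id, code in extracts:
        theme = mapping.get(code, "unassigned")
        themes[theme].append((source_id, code))
    return dict(themes)


themes = collate_themes(coded_extracts, code_to_theme)
for theme, extracts in themes.items():
    print(theme, extracts)
```

Keeping unmapped codes visible, rather than discarding them, mirrors the iterative character of the review stage, where themes are checked against the coded extracts and the full dataset.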

The review covers literature published between 2017 and 2024, a period selected to capture the rapid maturation and large-scale implementation of AI in education, marked by the shift from adaptive tutoring systems to the pervasive influence of generative AI and large-language models. Peer-reviewed journal articles, policy papers, and institutional case studies were sourced from Scopus, Web of Science, ERIC, and Google Scholar databases.

Inclusion criteria comprised studies addressing the intersection of AI and education with explicit attention to pedagogy, digital equity, governance, or sociocultural implications. Exclusion criteria eliminated purely technical or computer science publications that did not engage with social, ethical, or cultural dimensions of AI use in educational contexts. This ensured a sociologically grounded evidence base, consistent with the aim of examining AI as a sociotechnical and relational phenomenon.
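The screening logic described above can be expressed as a simple filter. The following is a minimal sketch under stated assumptions: the `Record` structure, the tag vocabulary, and the function `is_included` are hypothetical, and the actual screening was a qualitative judgment rather than an automated tag match.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """Hypothetical bibliographic record with analyst-assigned topic tags."""
    title: str
    year: int
    tags: set = field(default_factory=set)


# Assumed tag labels standing in for the social dimensions named in the criteria.
SOCIAL_TAGS = {"pedagogy", "digital equity", "governance", "sociocultural"}


def is_included(record):
    """Apply the review window and the inclusion and exclusion criteria."""
    in_window = 2017 <= record.year <= 2024
    addresses_ai_and_education = {"ai", "education"} <= record.tags
    # Purely technical records carry none of the social tags and are excluded.
    has_social_focus = bool(record.tags & SOCIAL_TAGS)
    return in_window and addresses_ai_and_education and has_social_focus


records = [
    Record("Study A", 2019, {"ai", "education", "governance"}),
    Record("Study B", 2015, {"ai", "education", "pedagogy"}),
    Record("Study C", 2022, {"ai", "education", "optimization"}),
]
included = [r.title for r in records if is_included(r)]
print(included)
```

In this sketch, Study B falls outside the 2017–2024 window and Study C lacks any social, ethical, or cultural focus, so only Study A is retained.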

To provide contextual diversity, five global exemplars were purposively selected for comparative illustration: Carnegie Learning (USA), UNSW (Australia), Squirrel AI (China), Smart Kindergarten (Finland), and Stanford University (USA). Purposive sampling is appropriate when cases are selected for their conceptual relevance rather than representativeness (Patton, 2015). These cases were chosen specifically as “critical cases” (Flyvbjerg, 2006) that vividly exemplify different facets of the sociotechnical dynamics under study—such as algorithmic governance (UNSW), commercialization (Squirrel AI), and the mediation of human relationships (Smart Kindergarten)—enabling rich, comparative insight into how AI interacts with institutional norms and educational inequalities.

Data analysis proceeded in two stages. First, sources were inductively coded to identify recurring sociological themes, such as algorithmic bias, shifting teacher-student relations, institutional governance, and equity of access. Thematic coding followed Braun and Clarke's (2006) procedures, as described above. Second, the emerging themes were interpreted through Actor-Network Theory (Latour, 2005), which highlights how AI systems act as mediating actors within educational networks, and critical digital sociology (Selwyn, 2019; Williamson, 2020), which examines how power, inequality, and governance are reproduced through digital infrastructures. This dual theoretical lens supported a sociological interpretation of how AI reconfigures educational relationships and reflects broader structures of authority and control.

6 Findings: a sociological analysis of AI in education

This section presents the findings from the thematic synthesis of literature and case studies, organized around the core sociological concerns of this investigation. In line with recent calls for more critical syntheses of AI in education (Holmes et al., 2023; Selwyn, 2020), it offers a critical analysis of how AI operates as a sociotechnical actor within educational systems, rather than providing a comprehensive but neutral review. The analysis is structured into four key domains that emerged from the data, which collectively reveal the complex interplay between technology, power, and inequality. This synthesis sets the stage for addressing the study's research questions in the subsequent Discussion by first establishing the empirical and conceptual landscape shaped by AI's integration into learning environments.

6.1 The dominance of the techno-optimist narrative in AI emergence

Artificial Intelligence is increasingly recognized as a disruptive force in education, reshaping instructional models, assessment practices, and learner engagement (Young, 2024). AI technologies such as machine learning, natural language processing, and predictive analytics are now embedded in classrooms through adaptive platforms, intelligent tutoring systems, and automated feedback tools (Roshanaei and Omidi, 2023; Zawacki-Richter et al., 2019). Their adoption is often justified by their capacity to personalize learning, respond to student needs, and improve equity through differentiated support (Roshanaei and Omidi, 2023; Li and Zhao, 2024). Some scholars argue that AI can democratize access to quality education by lowering cost barriers and expanding reach (Fahimirad and Kotamjani, 2018).

However, the emergence of AI also raises critical challenges. Researchers highlight digital inequities, the risk of data-driven surveillance, and the need for institutional adaptation (Selwyn, 2020; Williamson, 2020). In particular, unequal access to bandwidth, devices, and AI literacy threatens to deepen existing educational disparities rather than close them (Ragnedda and Muschert, 2018). Thus, while AI is anticipated to continue expanding and shaping “technology-enhanced futures” (Young, 2024; Li and Zhao, 2024), scholars emphasize that questions of who benefits, who is marginalized, and under what conditions must remain central to AI adoption.

Although this body of research demonstrates the rapid expansion of AI tools and their technical capabilities, it tends to prioritize innovation, efficiency, and personalization while sidelining deeper sociological implications. The literature overwhelmingly treats AI as a solution to educational limitations rather than as a technology embedded in systems of power, policy, and inequality (Selwyn, 2019; Knox, 2020). As a result, questions of who benefits, who is marginalized, and whose knowledge is legitimized remain largely unexamined (Williamson, 2020). The dominant narrative assumes that access to AI will naturally lead to democratization, yet this perspective ignores structural disparities in infrastructure, teacher preparation, and institutional capacity (Ragnedda and Muschert, 2018). Moreover, AI is often positioned as a neutral tool, masking the political and cultural values embedded in its design and implementation (Wang et al., 2024). This techno-optimism leaves little room to interrogate how AI reshapes teacher authority or student identity, or how it reproduces existing hierarchies through algorithmic logics. Therefore, despite documenting technological progress, this research field lacks a critical sociological perspective, creating a gap that directly motivates the present study and its first research question on how AI reshapes educational relationships.

6.2 The contested terrain of AI's pedagogical transformation

AI has been widely promoted for its ability to tailor learning pathways, enhance motivation, and support teachers through automation (Alashwal, 2024; Rangavittal, 2024). Intelligent tutoring systems, virtual reality applications, and learning analytics can diagnose student strengths and weaknesses, adjust pacing, and provide immediate feedback (Baker and Inventado, 2018; Zawacki-Richter et al., 2019). Studies report positive outcomes such as improved retention, deeper engagement, and administrative efficiency (Williamson, 2017; Holmes et al., 2019; Mossavar-Rahmani and Zohuri, 2024). AI-enabled analytics can also support faculty decision-making and streamline instructional planning (Rangavittal, 2024).

Yet, scholars caution against an overly deterministic or solutionist view of AI in pedagogy (Selwyn, 2019; Knox, 2020). Critics argue that data-driven instruction risks narrowing pedagogy to what is measurable, overlooking relational, cultural, and interpretive dimensions of learning (Williamson, 2020). Ethical concerns also arise, including privacy violations, algorithmic bias, and opacity in automated decision-making (Vasile, 2023; Floridi et al., 2018). Therefore, while AI can support personalization and efficiency, meaningful integration requires reflective, human-centered pedagogical approaches and robust ethical safeguards (Williamson, 2017; Alashwal, 2024).

While researchers highlight AI's ability to enhance instructional personalization and learner engagement, this discourse remains heavily rooted in psychological and technical paradigms, with limited attention to broader social consequences. In emphasizing cognitive gains and performance metrics, the literature risks narrowing the purpose of education to measurable outputs, sidelining questions of relationality, ethics, and democratic participation (Andrejevic, 2020). Few studies interrogate how AI-mediated pedagogy redistributes agency among teachers, students, and algorithms, or how it may erode professional autonomy by privileging data-driven decision-making (Komljenovic, 2021). This framing also obscures the cultural and ideological assumptions embedded in adaptive learning systems, which can normalize surveillance and compliance in the name of personalization (Zuboff, 2019). As pedagogical control shifts toward automated systems, the meaning of teaching itself is altered, potentially reducing educators to facilitators of platform logic rather than shapers of human learning. This reveals a conceptual blind spot in existing scholarship: pedagogy is treated as an instructional procedure rather than a social relationship (Apple, 2019). Consequently, the literature fails to adequately address how AI reconfigures classroom power dynamics, directly linking to the present study's concern with inequality and governance.

6.3 Structural ethical deficits and sociological implications

AI is not merely a technical innovation but a sociotechnical phenomenon that redistributes authority, mediates relationships, and shapes educational governance (Fenwick and Edwards, 2011; Latour, 2005). Sociologists warn that AI systems may reproduce cultural, racial, and linguistic biases embedded in their training data (Noble, 2018; O'Neil, 2016) and contribute to “datafication,” where student behavior becomes continuously monitored and quantified (Williamson, 2020). This risks reducing learners to data points, thereby undermining agency and reinforcing existing hierarchies (Roberts-McMahon, 2023; Selwyn, 2020).

Digital inequality also remains a central concern. Even when AI tools are available, differences in digital literacy, institutional resources, and cultural capital can amplify educational stratification (DiMaggio and Hargittai, 2001; Ragnedda and Muschert, 2018). Ethical issues—including consent, transparency, and surveillance—further complicate AI adoption (Floridi et al., 2018). Scholars therefore call for frameworks that foreground justice, accountability, and human rights in educational AI governance (Holmes et al., 2023).

Research on AI ethics and equity identifies urgent risks such as algorithmic bias, surveillance, and the digital divide, but these concerns are often treated as technical problems requiring technical fixes rather than structural injustices rooted in policy and power (Benjamin, 2019; Noble, 2018). As a result, proposed solutions such as improving datasets or refining privacy protocols rarely challenge the socio-economic systems that produce inequity (Livingstone and Sefton-Green, 2021). This moral minimalism reduces ethics to risk management instead of questioning whether automated systems should govern learning at all (Andrejevic, 2020). Moreover, ethical scholarship seldom engages with theories of stratification or cultural reproduction, leading to fragmented analyses that fail to connect AI to broader educational inequality (Apple, 2019). By neglecting these connections, the literature overlooks how AI can normalize surveillance, intensify institutional control, and legitimize inequitable decision-making under the guise of objectivity (Zuboff, 2019). This gap directly motivates RQ4 and underscores the need for a sociological model—later developed in this study—to confront equity and accountability at the structural level.

6.4 An emerging geography of privilege and stratification: lessons from global cases

Several cases demonstrate AI's potential but also reveal its social and ethical complexities. Carnegie Learning's Cognitive Tutor (USA) has improved mathematics outcomes, yet its effectiveness varies widely depending on teacher mediation, demonstrating that AI amplifies pedagogy rather than replacing it (Aleven et al., 2023; Buchenn et al., 2021). UNSW (Australia) employs AI-driven assessment to deliver consistent feedback through NLP, although questions remain about transparency and student trust (Perkins et al., 2023). Squirrel AI (China) illustrates how adaptive learning can scale rapidly, but its commercial model raises concerns about privatization of education (Ciesinski and Chen, 2019). Smart Kindergartens (Finland) use AI robots to personalize early learning, yet researchers warn that social and emotional dimensions of learning require human presence (Belpaeme et al., 2018). Stanford University (USA) uses predictive analytics to reduce dropout rates, while the University of Toronto (Canada) uses AI chatbots to support student wellbeing, both showing promise but also highlighting privacy and ethics challenges. Collectively, these cases confirm that AI's success is contingent on context, teacher agency, governance, and ethical oversight, not simply on technological capability.

The global cases illustrate AI's capacity to produce both innovation and stratification, yet the literature frequently reports these cases as success stories without interrogating their societal implications. Studies often measure effectiveness in terms of performance gains or system efficiency, rarely examining how these implementations reshape local educational cultures, reproduce social hierarchies, or expand institutional control through datafication (Williamson and Piattoeva, 2022). Elite institutions such as Stanford or UNSW benefit from advanced AI infrastructure, while marginalized communities struggle with access or rely on commercialized platforms like Squirrel AI, which can deepen economic divides (Zhang and Ye, 2023). Meanwhile, AI-driven classrooms in early childhood contexts raise questions about emotional labor and relational development, yet these concerns are often overlooked in favor of technological enthusiasm (Chassignol et al., 2018). These cases reveal an emerging geography of AI privilege, where resources, data capacity, and institutional power determine who experiences personalization and who experiences automation. However, the literature rarely frames these outcomes as sociotechnical inequalities (Selwyn, 2020). A more critical comparative approach is needed—one that aligns with the present study's aim to theorize how AI operates within sociomaterial networks and systems of power.

Taken together, the literature highlights AI's rapid expansion but reveals a clear analytical gap: existing research remains fragmented, techno-centric, and insufficiently sociological. It rarely interrogates how AI reshapes relationships, reproduces inequality, or redistributes authority within educational systems. This gap creates the foundation for the present study, which applies a sociological theoretical lens to examine AI as a mediating actor in education and to address the research questions outlined earlier.

7 Discussion

7.1 AI and the reconfiguration of educational relationships (RQ1)

The findings indicate that AI is reshaping interaction patterns and pedagogical relationships by redistributing agency across students, teachers, institutions, and technological systems. AI-driven platforms increasingly mediate instructional decisions, feedback, and pacing, altering the relational balance in classrooms. For example, Carnegie Learning's Cognitive Tutor personalizes mathematics instruction by continuously analyzing learner performance and recommending next steps, thereby influencing how and when teachers intervene (Aleven et al., 2023). Similarly, the UNSW automated assessment system allows algorithms to evaluate student writing, reducing teacher control over evaluative judgment. In early childhood settings, Finland's Smart Kindergarten shows how AI-driven robots can mediate teacher-child communication, affecting emotional and instructional dynamics.

Across these cases, teachers are repositioned from authoritative knowledge-holders to supervisory figures who monitor, validate, or override algorithmic recommendations. Students, in turn, learn through interaction with both human and machine actors. This relational shift reflects what Actor-Network Theory describes as the redistribution of agency among heterogeneous actors, where technologies shape and condition social action rather than merely supporting it (Latour, 2005; Fenwick and Edwards, 2011). From this perspective, AI functions as a mediating actor that co-produces pedagogical decisions and relationships, illuminating how classroom authority is not lost but reconfigured through sociotechnical networks.

7.2 AI, inequality, and the reproduction of educational divides (RQ2)

Although AI is often promoted as a democratizing force, the findings demonstrate that it can reinforce or intensify existing inequalities. Sociological studies have shown that digital technologies are frequently adopted first—and most effectively—in high-resource institutions, creating new forms of advantage (Selwyn, 2019; Williamson, 2020). This is reflected in the contrast between elite implementations such as Stanford's adaptive platforms, which improve retention and performance, and resource-poor contexts where AI tools are either inaccessible or poorly supported.

The case of Squirrel AI in China further illustrates how commercialized AI systems can widen socioeconomic gaps, as access is tied to financial capacity and urban privilege. Scholarship on the digital divide confirms that inequitable access to infrastructure, teacher expertise, and data literacy disproportionately harms marginalized learners (Ragnedda and Muschert, 2018; Zhang and Ye, 2023). Even when access is achieved, biased algorithms—trained on narrow datasets—can reproduce stereotypes or penalize students whose linguistic, cultural, or behavioral norms deviate from the assumed “standard.”

From a Critical Digital Sociology perspective, these dynamics reveal how AI can reproduce structural inequalities by embedding existing hierarchies into data systems, thereby transforming digital disparities into forms of educational stratification (Selwyn, 2019; Apple, 2019). Thus, AI introduces a new form of digital capital that benefits those already positioned to leverage technology. Without equity-driven governance, AI risks amplifying rather than reducing educational inequality.

7.3 Power, governance, and algorithmic authority in education (RQ3)

The findings also show that AI reshapes institutional power relations by embedding decision-making within opaque algorithmic systems. In the UNSW assessment case, teachers and students were required to adapt to automated evaluative criteria, illustrating how algorithmic judgments can supersede professional pedagogical expertise. At Stanford, AI tools used for predictive analytics grant administrators greater surveillance capacity over learners, extending institutional oversight beyond the classroom. The University of Toronto chatbot demonstrates how AI can expand institutional governance into students' emotional and psychological spheres, blurring boundaries between care, control, and monitoring.

These developments signal a shift toward algorithmic governance in education, where data-driven systems classify, sort, and guide learners in ways that may not be fully transparent or accountable. This mirrors critical scholarship on power, which argues that digital systems extend institutional authority by normalizing surveillance and managerial control through sociotechnical infrastructures (Ball, 2012; Williamson, 2020). From an ANT perspective, algorithms act as powerful nodes within educational networks, exerting influence over human actors while obscuring the sources of authority (Latour, 2005). This raises urgent questions about accountability and democratic oversight in AI-mediated decision-making.

7.4 Ethical and sociotechnical tensions in AI adoption (RQ4)

Ethical concerns emerged consistently, particularly regarding privacy, surveillance, and bias. AI systems rely on continuous data extraction to function, making students and teachers subject to heightened monitoring. In the NLP-based assessment systems described by Perkins et al. (2023), student writing is not only evaluated but also stored and analyzed, raising concerns about data ownership and future use. Similarly, the Toronto chatbot shows how emotional support functions can unintentionally normalize surveillance and reduce confidentiality.

Bias is an additional ethical tension. Research warns that algorithmic evaluation risks embedding racial, linguistic, or cultural bias unless datasets and model training are explicitly diversified (Zhang and Ye, 2023). These ethical risks are not merely technical flaws but sociotechnical outcomes of design choices that privilege efficiency and control over relational pedagogy. As critical scholars argue, ethical debates must therefore move beyond technical fixes to interrogate the political, cultural, and economic interests shaping AI in education (Selwyn, 2019; Zuboff, 2019). Responsible integration requires transparent governance, participatory design, and stronger accountability structures.

7.5 Toward a Sociotechnical-Ethical-Pedagogical (STEP) model (RQ5)

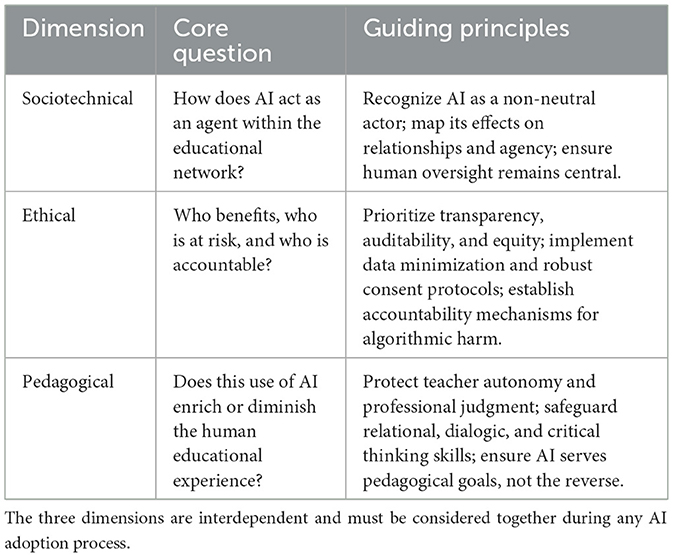

In response to these findings, this study proposes the Sociotechnical-Ethical-Pedagogical (STEP) framework, a conceptual model designed to guide the equitable and reflective use of AI in diverse educational contexts. The STEP model offers a sociological alternative to deterministic or solutionist narratives by insisting that AI integration must be evaluated across three interdependent dimensions, as detailed in Table 1.

The STEP model is designed explicitly to counter the gaps and risks identified in this analysis. It provides a structured response to the techno-optimistic narrative by centering sociological and pedagogical concerns, offers a concrete tool to tackle structural ethical deficits beyond technical fixes, and creates a checklist to mitigate the geographic stratification of AI privilege by prioritizing equity and context.

The utility of the STEP framework can be illustrated with a common example: the adoption of an automated essay grading system. A STEP-informed evaluation would not merely assess its technical accuracy (Sociotechnical), but would rigorously audit it for cultural and linguistic bias (Ethical), and critically examine whether its feedback mechanisms support the development of student voice and argumentation skills or simply train students to write for an algorithm (Pedagogical).

By linking ANT's focus on distributed agency with critical sociology's concern for inequality, the STEP framework centers human judgment, relationality, and equity as non-negotiable conditions for responsible innovation. It provides educators, policymakers, and designers with a practical heuristic to ensure that AI serves educational values, rather than re-engineering education to serve AI's logic.

8 Limitations of the study

While this study offers a critical sociological analysis of AI in education and proposes a novel conceptual framework, it is important to acknowledge its inherent limitations. These limitations primarily stem from the methodological choices necessary for a broad, synthesizing review of this nature.

First, the study relies on a thematic synthesis of existing literature and published case studies. Consequently, the findings are interpretive and contingent upon the availability, scope, and quality of the secondary sources analyzed. Although we employed systematic search procedures across major databases, it is possible that some relevant studies, particularly those in non-English languages or in gray literature, were inadvertently excluded, which may influence the comprehensiveness of the synthesis.

Second, the selection of global case studies (e.g., Carnegie Learning, Squirrel AI, Smart Kindergarten) was purposive, designed to provide rich, illustrative examples of different sociotechnical dynamics. While this approach yields valuable in-depth insights, these cases are not statistically representative of all AI implementations globally. The findings, therefore, illustrate key sociological themes rather than claim universal applicability across all educational contexts.

Finally, the proposed Sociotechnical-Ethical-Pedagogical (STEP) framework is a conceptual model derived from our theoretical and analytical work. Its utility and practical effectiveness for guiding policy, design, and implementation have not yet been empirically validated through application in real-world educational settings. Future research is needed to test, refine, and operationalize the STEP framework, assessing its impact on actual AI adoption processes and outcomes.

These limitations, however, also delineate a clear trajectory for future research, as outlined in the conclusion.

9 Conclusion

Artificial Intelligence in education represents not merely a technical shift but a profound sociotechnical transformation that reorganizes power, reconfigures relationships, and redistributes opportunities. Through the sociological lenses of Actor-Network Theory and Critical Digital Sociology, this study has shown that AI systems are non-neutral actors that often intensify existing inequalities through algorithmic governance, datafication, and unequal access. The analysis of diverse global cases reveals that AI's impact, whether personalizing or surveilling, democratizing or dividing, is contingent not on its technical prowess but on the sociotechnical context of its use.

In response, this paper's central contribution is the Sociotechnical-Ethical-Pedagogical (STEP) framework, a conceptual model designed to guide equitable AI integration by evaluating it across three interdependent dimensions: its role as a mediating actor (Sociotechnical), its adherence to transparency and equity (Ethical), and its support for teacher agency and human-centric learning (Pedagogical).

For policymakers and practitioners, this translates to actionable priorities: instituting equity-focused governance, strengthening teacher agency in AI design and oversight, enforcing robust ethical standards, and fostering critical algorithmic literacy. As a conceptual study, this work calls for future empirical research to test the STEP framework in practice.

Ultimately, the future of AI in education is a question of human choice, not technological determinism. Education will not automate its way to justice. A truly equitable future requires that these intelligent systems remain subject to democratic scrutiny, ethical courage, and an unwavering commitment to education as a human, dialogic, and emancipatory project.

Author contributions

LB: Conceptualization, Formal analysis, Writing – review & editing. SK: Methodology, Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.


Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alashwal, M. (2024). Empowering education through AI: potential benefits and future implications for instructional pedagogy. PUPIL Int. J. Teach. Educ. Learn. 8, 201–212. doi: 10.20319/ictel.2024.201212

Aleven, V., McLaughlin, E. A., Glenn, R. A., and Koedinger, K. R. (2023). “Instruction based on adaptive learning technologies,” in Handbook of Research on Learning and Instruction, 2nd Edn., eds. R. E. Mayer and P. A. Alexander (New York, NY: Routledge), 234–256.

Baker, R. S., and Inventado, P. S. (2018). “Educational data mining and learning analytics: applications to constructionist research,” in Constructionism (New York, NY: Routledge), 127–140.

Ball, S. J. (2012). Global Education Inc.: New Policy Networks and the Neo-Liberal Imaginary. New York, NY: Routledge.

Belpaeme, T., Kennedy, J., Ramachandran, A., Scassellati, B., and Tanaka, F. (2018). Social robots for education: a review. Sci. Robot. 3:eaat5954. doi: 10.1126/scirobotics.aat5954

Benjamin, R. (2019). Race After Technology: Abolitionist Tools for the New Jim Code. Medford, MA: Polity Press.

Bicknell, K., Bansal, A., and Lee, M. (2023). How Duolingo's AI learns what you need to learn. J. Educ. Technol. 45, 234–245.

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Buchenn, R., Carvalho, L., and Goodyear, P. (2021). The role of teachers in the age of automated tutoring: a systematic review of learning analytics and teacher assessment. Comput. Educ. 168:104212.

Chassignol, M., Khoroshavin, A., Klimova, A., and Bilyatdinova, A. (2018). Artificial intelligence trends in education: a narrative overview. Procedia Comput. Sci. 136, 16–24. doi: 10.1016/j.procs.2018.08.233

Ciesinski, S., and Chen, J. (2019). Squirrel AI: Learning by Scaling. Stanford, CA: Stanford University.

Costa, E. B., Fonseca, B., Santana, M. A., de Araújo, F. F., and Rego, J. (2017). Evaluating the effectiveness of educational data mining techniques for early prediction of students' academic failure in introductory programming courses. Comput. Hum. Behav. 73, 247–256. doi: 10.1016/j.chb.2017.01.047

Davies, H. E., Miller, R., and Smith, P. (2020). The mobilisation of AI in education: a Bourdieusean field analysis. Sociology 55, 539–560. doi: 10.1177/0038038520967888

DiMaggio, P., and Hargittai, E. (2001). From the “Digital Divide” to “Digital Inequality”: Studying Internet Use as Penetration Increases. Princeton, NJ: Princeton University Center for Arts and Cultural Policy Studies.

Fahimirad, M., and Kotamjani, S. S. (2018). A review on application of artificial intelligence in teaching and learning in educational contexts. Int. J. Learn. Dev. 8, 106–118. doi: 10.5296/ijld.v8i4.14057

Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., et al. (2018). AI4People—an ethical framework for a good AI society. Minds Mach. 28, 689–707. doi: 10.1007/s11023-018-9482-5

Flyvbjerg, B. (2006). Five misunderstandings about case-study research. Qual. Inq. 12, 219–245. doi: 10.1177/1077800405284363

García, P., Amandi, A., Schiaffino, S., and Campo, M. (2007). Evaluating Bayesian networks' precision for detecting students' learning styles. Comput. Educ. 49, 794–808. doi: 10.1016/j.compedu.2005.11.017

Holmes, W., Bialik, M., and Fadel, C. (2019). Artificial Intelligence in Education: Promises and Implications for Teaching and Learning. Boston, MA: Center for Curriculum Redesign.

Holmes, W., Porayska-Pomsta, K., Holstein, K., Sutherland, E., Baker, T., Shum, S. B., et al. (2023). Ethics of AI in education: towards a community-wide framework. Int. J. Artif. Intell. Educ. 33, 1–23.

iFlyTek (2024). From Holding the “Red Pen” to Holding the “Mouse”: The Technological Revolution Behind the College Entrance Examination Marking. Available online at: https://edu.iflytek.com/solution/examination (Accessed November 11, 2025).

Komljenovic, J. (2021). The rise of education rentiers: digital platforms, digital data and rents. Learn. Media Technol. 46, 320–332. doi: 10.1080/17439884.2021.1891422

Latour, B. (2005). Reassembling the Social: An Introduction to Actor-Network-Theory. Oxford: Oxford University Press.

Leh, J. (2024). AI in LMS: 10 Must-See Innovations for Learning Professionals. Talented Learning. Available online at: https://talentedlearning.com/ai-in-lms-innovations-learning-professionals-must-see/ (Accessed November 11, 2025).

Li, Y., and Zhao, Z. (2024). The role of education in the era of AI: challenges along with opportunities. J. Humanit. Arts Soc. Sci. 8, 45–52. doi: 10.26855/jhass.2024.04.039

Livingstone, S., and Sefton-Green, J. (2021). The Class: Living and Learning in the Digital Age. New York, NY: NYU Press.

Luckin, R., Holmes, W., Griffiths, M., and Forcier, L. B. (2016). Intelligence Unleashed: An Argument for AI in Education. London: Pearson.

Mossavar-Rahmani, F., and Zohuri, B. (2024). Artificial intelligence at work: transforming industries redefining the workforce landscape. J. Econ. Manage. Res.

Patton, M. Q. (2015). Qualitative Research and Evaluation Methods, 4th Edn. Thousand Oaks, CA: Sage.

Perkins, M., Roe, J., Postma, M., McGaughran, J., and Hickerson, D. (2023). Trust in AI: an integrated model of trust in AI-based automated writing evaluation systems. Comput. Educ. Artif. Intell. 5:100183.

Rangavittal, P. B. (2024). Transforming higher education with artificial intelligence. Int. J. Sci. Res. 13, 1234–1241.

Roshanaei, M., and Omidi, H. (2023). Harnessing AI to foster equity in education. J. Intell. Learn. Syst. Appl. 15, 123–143. doi: 10.4236/jilsa.2023.154009

Selwyn, N. (2020). Education and Technology: Key Issues and Debates, 3rd Edn. London: Bloomsbury Academic.

Thomas, J., and Harden, A. (2008). Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Med. Res. Methodol. 8:45. doi: 10.1186/1471-2288-8-45

Vasile, C. (2023). Artificial intelligence in education: transformative potentials and ethical considerations. J. Educ. Sci. Psychol. 15, 45–56. doi: 10.51865/JESP.2023.2.01

Wang, S., Wang, F., Zhu, Z., Wang, J., Tran, T., and Du, Z. (2024). Artificial intelligence in education: a systematic literature review. Expert Syst. Appl. 252:124167. doi: 10.1016/j.eswa.2024.124167

Williamson, B. (2020). New Pandemic Edtech? AI and Data Futures in the Making. Code Acts in Education. Available online at: https://codeactsineducation.wordpress.com/2020/04/01/new-pandemic-edtech/ (Accessed November 11, 2025).

Williamson, B., and Piattoeva, N. (2022). Objectivity as standardization in data-scientific education policy. Learn. Media Technol. 47, 1–15.

Wilton, M. S., and Vargas-Alejo, C. (2023). The impact of artificial intelligence on higher education. J. Namibian Stud. 34, 3284–3290.

Young, J. (2024). The rise of artificial intelligence in education. Int. J. Innov. Res. Dev. 13, 45–56.

Zawacki-Richter, O., Marín, V. I., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education. Int. J. Educ. Technol. High. Educ. 16:39. doi: 10.1186/s41239-019-0171-0

Keywords: Artificial Intelligence, sociology of education, Actor-Network Theory, algorithmic governance, digital inequality, power relations, STEP framework

Citation: Bouakaz L and Khalid S (2025) AI in education: a sociological exploration of technology in learning environments. Front. Educ. 10:1700876. doi: 10.3389/feduc.2025.1700876

Received: 07 September 2025; Accepted: 28 October 2025; Published: 26 November 2025.

Edited by:

Mohammed Saqr, University of Eastern Finland, Finland

Reviewed by:

Pitshou Moleka, Eudoxia Education Private Limited, India

Cristina-Georgiana Voicu, Alexandru Ioan Cuza University of Iasi, Romania

Copyright © 2025 Bouakaz and Khalid. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Laid Bouakaz
