- 1 Adaptive Systems Group, Department of Computer Science, Humboldt-Universität zu Berlin, Berlin, Germany

- 2 Cognitive Robotics Group, Center for Science Research, Universidad Autonoma del Estado de Morelos, Cuernavaca, Mexico

Sensorimotor control and learning are fundamental prerequisites for cognitive development in humans and animals. Evidence from behavioral sciences and neuroscience suggests that motor and brain development are strongly intertwined with the experiential process of exploration, where internal body representations are formed and maintained over time. In order to guide our movements, our brain must hold an internal model of our body and constantly monitor its configuration state. How can sensorimotor control enable the development of more complex cognitive and motor capabilities? Although a clear answer has still not been found for this question, several studies suggest that processes of mental simulation of action–perception loops are likely to be executed in our brain and are dependent on internal body representations. Therefore, the capability to re-enact sensorimotor experience might represent a key mechanism behind the implementation of higher cognitive capabilities, such as behavior recognition, arbitration and imitation, sense of agency, and self–other distinction. This work is mainly addressed to researchers in autonomous motor and mental development for artificial agents. In particular, it aims at gathering the latest developments in the studies on exploration behaviors, internal body representations, and processes of sensorimotor simulations. Relevant studies in human and animal sciences are discussed and a parallel to similar investigations in robotics is presented.

1. Introduction

The capability to perform sensory-guided motor behaviors, or sensorimotor control, is generally not fully developed at birth in mammals. Rather, it emerges through a learning process where the individual is actively involved in the interaction with the external environment. In humans, similarly, sensorimotor control is developed along the ontogenetic process of the individual. Developmental psychologists consider this skill as a fundamental prerequisite for the acquisition of more complex cognitive and social capabilities.

In robotics, a large number of studies investigated mechanisms for sensorimotor control and learning in artificial agents. Inspired by human development and aiming at producing adaptive systems (Asada et al., 2009; Law et al., 2011), researchers proposed robot learning mechanisms based on exploration behaviors. Evidence from human behavioral and brain sciences suggests that motor and brain development are strongly intertwined with this experiential process, where internal body representations would be formed and maintained over time. However, it is still not clear how sensorimotor development is linked to the development of cognitive skills. Indeed, one of the most challenging questions in developmental sciences, including developmental robotics, is how low-level motor skills scale up to more complex motor and cognitive capabilities throughout the lifespan of an individual.

A recent line of thought identifies the capability to internally simulate sensorimotor cycles based on previous experience, or to re-enact past sensorimotor experience, as one of the fundamental processes implicated in the implementation of cognitive skills (Barsalou, 2008). Several behavioral and brain studies can be found in the literature that support this idea. In this work, we argue that sensorimotor simulation mechanisms may serve as a bridge between sensorimotor representation and the implementation of basic cognitive skills, such as behavior recognition, arbitration and imitation, sense of agency, and self–other distinction. During the last years, an increasing number of robotics studies addressed similar processes for the implementation of cognitive skills in artificial agents. However, empirical investigation on exploration behaviors for the learning of sensorimotor control, on the functioning and modeling of simulation processes in the brain, and on their implementation in artificial agents is still fragmented.

This paper aims at gathering the latest developments in the study on exploration behaviors, on internal body representations, and on re-using sensorimotor experience for cognition. For each of these topics, relevant studies in human and animal sciences will be introduced and similar studies in robotics will be discussed. We strongly believe that this can be beneficial for those researchers who investigate autonomous motor and mental development for artificial agents. This manuscript provides a comprehensive overview of the state of the art in the mentioned topics from different perspectives. Moreover, we want to encourage robotics researchers in sensorimotor learning and in body representations to go a step further by investigating how the acquired sensorimotor experience can be used for cognition. Nonetheless, we would like to encourage researchers not to overlook the process of acquisition of sensorimotor experience by assuming the existence of a repertoire of sensorimotor schemes when investigating computational models for internal simulations. We strongly believe that tackling both issues at the same time would not only provide a more comprehensive view of the developmental process in artificial agents but would also give insights into the generalization and specialization of the proposed models. In addition, we believe that addressing both sensorimotor and cognitive development by simulation processes would bridge different specialties and provide new research directions for developmental robotics.

2. Exploration as a Drive for Motor and Cognitive Development

In the late 1980s, a new era known as post-cognitivism started to flourish in the cognitive sciences, bringing new philosophical interest in embodiment and in the importance of the role of the body for cognition (Wilson and Foglia, 2011). According to the embodied cognition framework, sensorimotor interaction is essential for the development of cognition. A common characteristic of humans, animals, and artificial agents is their embodiment and their being situated in an environment they can interact with. They possess the means for shaping these interactions: a body that can be actuated by controlling its muscles (in humans and animals) or actuators (in artificial agents) and the capability to perceive internal or external phenomena through their senses (in humans and animals) or sensors (in artificial agents) (Pfeifer and Bongard, 2006). In animals and humans, brain development is modulated by the multimodal sensorimotor information experienced by the individual while interacting with the external environment. In the literature, this process is often referred to as sensorimotor learning. Theorists on grounded cognition propose that cognitive capabilities are grounded on sensorimotor experiences (Barsalou, 2008). Although the validity of this theory is still under debate, it is commonly accepted that sensorimotor control and learning are fundamental prerequisites for cognitive development in humans. Therefore, developmental roboticists are particularly interested in implementing exploration behaviors in artificial agents, which would allow them to gather the necessary sensorimotor experience to further develop complex motor and cognitive skills.

Humans are not innately skillful at governing their body. Motor control is a capability that is acquired and refined over time, as demonstrated by several studies. For example, Zoia and colleagues have shown that learning of motor control is an ongoing process already during pre-natal stages (Zoia et al., 2007). In fact, they observed an improvement of coordinated kinematic patterns in fetuses between the age of 18 and 22 weeks. At initial stages, fetuses’ hand movements directed at their eyes and mouth were inaccurate and characterized by jerky and zigzag movements. However, already around the 22nd week of gestation, fetuses showed more precise hand trajectories, characterized by acceleration and deceleration phases that were apparently planned according to the size and to the delicacy of the target (facial parts, such as mouth or eyes). It is plausible to think that such an improvement in sensorimotor control would be the result of an experiential process, driven by exploration behaviors. Many developmental studies agree with this hypothesis (Piaget, 1954; Kuhl and Meltzoff, 1996; Thelen and Smith, 1996; Meltzoff and Moore, 1997). Others show systematic exploration behaviors already at early stages of post-natal development [for example, in the visual and proprioceptive domains (Rochat, 1998)].

In developmental psychology, exploration behaviors are seen as the common characteristic of initial stages of motor and cognitive development. In an early study, Jean Piaget defined exploration behaviors as circular reactions – or repetitions of movements that the child finds pleasurable – through which infants gather experience and acquire governance of those motor capabilities (such as reaching an object) that will enable them, subsequently, to explore the interactions with objects and with people (Piaget, 1954). Therefore, exploration would pave the way to the development of more complex motor and social capabilities. In a study on language acquisition, for example, Kuhl and Meltzoff (1996) reported that in infants younger than 6 months the vocal tract and the neuromusculature are still too immature for the production of recognizable sounds. It is through exploratory behaviors, which Meltzoff and Moore (1997) named body or vocal babbling, that infants would learn articulatory–auditory relations, a prerequisite for language acquisition.

However, it is not clear what the drive of exploration behaviors is. Behavioral studies agree with the fact that animals and humans seem to have a common desire to experience and to acquire new information (Berlyne, 1960; Reio et al., 2006; Reio, 2011). Such a characteristic, commonly referred to as curiosity, is usually associated with the experience of rewards, similar to appetitive desires for food and sex (Litman, 2005). However, several theories have been developed on the mechanisms that explain curiosity. For example, the curiosity-driven theory assumes that organisms are motivated to acquire new information through exploratory behaviors by the need of restoring cognitive and perceptual coherence (Berlyne, 1960). Such a coherence can be disrupted by an unpleasant experience of uncertainty, an unpleasant feeling of deprivation, the reduction of which is rewarding (Litman, 2005).

Curiosity and exploration behaviors are considered as fundamental aspects of learning and development. However, studying them in humans and animals often means to observe and to analyze only their behavioral effects, which is of course limiting the understanding of the underlying processes. Robots recently came into play as they provide a valuable test bed for the investigation of such mechanisms. Investigating curiosity and exploration behaviors in artificial agents is indeed also advantageous for developmental roboticists, whose aim is to produce autonomous, adaptive, and social robots, which learn from and adapt to the dynamic environment using mechanisms inspired by human development (Lungarella et al., 2003). The developmental approach in robotics is not only motivated by a mere interest in mimicking human development in artificial agents. Rather, studying human development can give insights in finding those basic behavioral components that may allow for the autonomous mental and motor development in artificial agents. In fact, researchers in developmental robotics try to avoid defining models of robot embodiment and of their surrounding world a priori, in order to not stumble across problems, such as robot behaviors lacking adaptability and the capability to react to unexpected events (Schillaci, 2014).

In developmental robotics, the general approach consists of providing artificial agents with learning mechanisms based on exploration behaviors. Like humans, robots can generate useful information about their bodily capabilities while interacting with the external environment. This information is shaped by the characteristics of the agent’s body and of the environment. In addition, dynamic environments and temporary or permanent changes in the bodily characteristics of the individual, for example, the ones caused by the usage of tools, can strongly affect the information that is perceived through the senses and the way the individual can interact with its surroundings. Therefore, pre-defining models of the robot’s body and of the environment can be very challenging, or even impossible, as an enormous number of variables have to be taken into account to cover all the aspects of such dynamic systems. This is one of the main motivations behind the developmental approach in robotics, where researchers try to implement computational models that self-organize along the sensorimotor information that is generated from the bodily interaction of the agent with the external environment, such as the one produced through exploration behaviors, while assuming as little prior information to construct the model as possible.

Several studies on the development of motor and cognitive skills based on exploration behaviors can be found in the literature on developmental robotics. For example, in a survey on cognitive developmental robotics, Asada and colleagues presented a developmental model of human cognitive functions starting from the fetal simulation of sensorimotor learning of body representation in the womb up to the social development through interaction between individuals, namely imitation (Asada et al., 2009). The authors assign a central role to exploration behaviors for the emergence of cognition in infants and artificial agents. These behaviors drive the construction of body representations (see Section 3), or mappings of multimodal sensorimotor information, which are necessary for interacting with the external environment, for example, with objects. Learning of coordinated movements, such as reaching and grasping, is considered to develop along the infant’s acquisition of predictive capabilities, which may play an important role in the development of non-verbal communication, such as pointing or imitation (Asada et al., 2009; Hafner and Schillaci, 2011).

Dearden and Demiris (2005) also adopted exploration behaviors for learning internal forward models in an artificial agent. As will be discussed in more detail in the following sections of this paper, forward models enable a robot to predict the consequence of its motor actions. In Dearden and Demiris (2005), a robot performed random movements of its gripper and visually observed the outcome of these actions. The internal forward model was encoded as a Bayesian network, whose structure and parameters were learned using the sensorimotor data gathered during the exploration behavior performed in the motor space. This exploration strategy, known also as random body babbling, chooses motor commands from the range of possible movements in a random fashion. Takahashi and colleagues implemented a similar exploration mechanism in a simulated robotic setup for learning motion primitives under tool-use conditions (Takahashi et al., 2014). A simulated robotic arm was programmed to execute an exploration behavior – random body babbling – in order to gather sensorimotor information to be used for building up a body representation. The authors adopted a recurrent neural network for training the body representation and a deep neural network for encoding the tool dynamic features and evaluated the approach in an object manipulation task.
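
As a rough illustration of random body babbling, the following sketch lets a simulated planar two-joint arm draw motor commands at random, records the resulting hand positions, and uses the stored pairs as a forward model. It is not the method of any study cited above: the arm, its link lengths, and all function names are hypothetical, and a nearest-neighbour lookup stands in for the Bayesian network or neural networks used in those works.

```python
import math
import random

LINK_LENGTHS = (0.3, 0.25)  # hypothetical link lengths in metres


def arm_end_point(q1, q2):
    """Simulated 'sensor': hand position of a planar two-joint arm."""
    l1, l2 = LINK_LENGTHS
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return (x, y)


def random_body_babbling(n_samples, rng):
    """Draw motor commands uniformly at random and record their outcomes."""
    samples = []
    for _ in range(n_samples):
        q = (rng.uniform(0.0, math.pi), rng.uniform(0.0, math.pi))
        samples.append((q, arm_end_point(*q)))
    return samples


def predict_outcome(samples, q):
    """Nearest-neighbour forward model: predict the outcome of command q
    by recalling the outcome of the most similar command tried so far."""
    nearest = min(samples, key=lambda s: math.dist(s[0], q))
    return nearest[1]


rng = random.Random(0)
memory = random_body_babbling(2000, rng)

test_command = (1.0, 0.5)
predicted = predict_outcome(memory, test_command)
actual = arm_end_point(*test_command)
prediction_error = math.dist(predicted, actual)
print(f"prediction error: {prediction_error:.3f} m")
```

The prediction error shrinks as more babbling samples are collected, which is the sense in which exploration in the motor space provides the data for a forward model. The number of samples needed for uniform coverage grows quickly with the number of joints.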

Stoytchev (2005) presented an experiment with a simulated robot on learning the binding affordances of objects using pre-defined exploration motion primitives that were selected in a random fashion. The action opportunities that an object provides to the agent, or affordances, were learned during the exploration session where the robot randomly chose sequences of pre-defined behaviors, applied them to explore the objects, and detected invariants in the resulting set of observations. However, the proposed approach is limited by the usage of pre-defined movements and by the lack of variability in the exploration behaviors. In fact, there could be object affordances that are unlikely to be discovered due to the unavailability of specific exploratory behaviors.

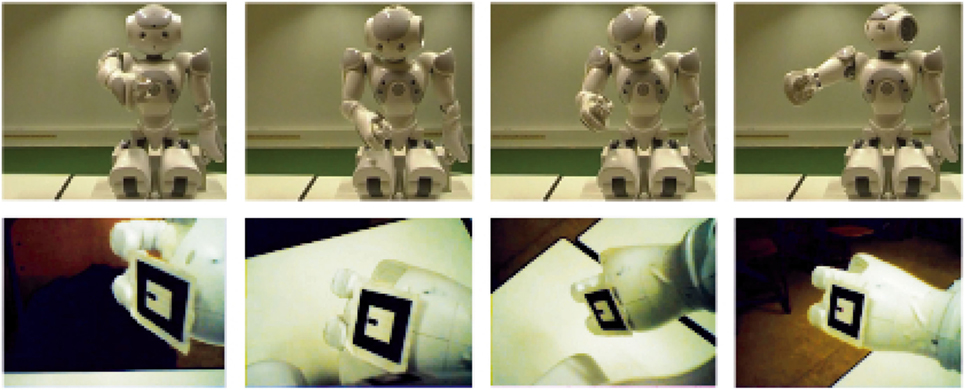

Many other developmental robotics studies adopting random exploration behaviors, and comparing different random movement strategies (Schillaci and Hafner, 2011), can be found in the literature. However, random exploration strategies – such as random body babbling, or motor babbling (see Figure 1) – have been found to be not optimal, especially when applied to robotic systems characterized by a high number of degrees of freedom. More efficient sensorimotor exploration behaviors have been proposed. For example, Baranes and Oudeyer (2013) presented an intrinsically motivated goal exploration mechanism that allows active learning of inverse models, or controllers, in redundant robots. In the proposed methodology, exploration is performed in the task space, making it more efficient than exploring the motor space, especially when using high-dimensional robots. Rolf and Steil showed a similar approach based on goal-directed exploration in the task space, which enabled successful learning of the controller on a challenging robot platform, the Bionic Handling Assistant (Rolf and Steil, 2014).
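
The difference between exploring the motor space and exploring the task space can be sketched in a few lines. The example below is a deliberately simplified goal-babbling loop, not the algorithm of Baranes and Oudeyer (2013) or of Rolf and Steil (2014): goals are sampled uniformly (no interest measure), the inverse model is a nearest-neighbour lookup, and the simulated arm, its link lengths, the goal range, and the noise level are all assumptions made for illustration.

```python
import math
import random

LINK_LENGTHS = (0.3, 0.25)      # hypothetical link lengths in metres
HOME = (math.pi / 2, math.pi / 2)  # assumed initial posture


def hand_position(q):
    """Simulated planar two-joint arm: joint angles -> hand position."""
    l1, l2 = LINK_LENGTHS
    return (l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1]),
            l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1]))


def clamp(angle):
    """Keep a joint angle inside the assumed limits [0, pi]."""
    return min(math.pi, max(0.0, angle))


def goal_babbling(n_iterations, rng, noise=0.1):
    """Sample goals in the task space and try to reach them."""
    memory = [(HOME, hand_position(HOME))]
    for _ in range(n_iterations):
        # 1. Pick a goal in the task space (here: uniformly at random).
        goal = (rng.uniform(-0.5, 0.5), rng.uniform(0.0, 0.5))
        # 2. Inverse model: recall the command whose outcome was closest.
        q_best, _ = min(memory, key=lambda s: math.dist(s[1], goal))
        # 3. Perturb that command, execute it, and store what happened.
        q_try = tuple(clamp(qi + rng.gauss(0.0, noise)) for qi in q_best)
        memory.append((q_try, hand_position(q_try)))
    return memory


def reaching_error(memory, goal):
    """Distance between a goal and the closest outcome observed so far."""
    return min(math.dist(outcome, goal) for _, outcome in memory)


rng = random.Random(1)
target = (0.2, 0.35)
initial_error = reaching_error([(HOME, hand_position(HOME))], target)
memory = goal_babbling(1500, rng)
final_error = reaching_error(memory, target)
print(f"error before: {initial_error:.3f} m, after: {final_error:.3f} m")
```

Because every trial starts from the command that came closest to the sampled goal, the stored experience spreads outward from the home posture toward the regions of the task space where goals are drawn, rather than being scattered over the whole motor space.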

Figure 1. A sequence from an exploration behavior – in this case random motor babbling – performed by the humanoid robot Aldebaran Nao. In the bottom, the corresponding frames grabbed by the robot camera are shown. Picture taken from Schillaci and Hafner (2011).

Investigating goal-directed exploration behaviors provided new insights and research directions toward the understanding of the mechanisms behind curiosity. In fact, one of the main questions posed by researchers on goal-directed exploration in artificial agents is how to generate goals in the task space. The typical approach proposes to simulate curiosity in an artificial agent, by adding interest factors in the exploration phase, usually based on measuring the confidence that the system has toward possible goals in the space to be explored. Information seeking through exploration behaviors, according to Gottlieb et al. (2013), is “a process that obeys the imperative to reduce uncertainty and can be extrinsically or intrinsically motivated.” This is in line with what has been proposed by Litman (2005), as mentioned before in this section, that the drive of curiosity might rely on the reduction of the unpleasant experience of uncertainty, which is rewarding. Oudeyer et al. (2007), Baranes and Oudeyer (2013), Moulin-Frier et al. (2013), and Schmerling et al. (2015) adopted an intrinsically motivated goal exploration mechanism, named Intelligent Adaptive Curiosity (IAC), which relies on the uncertainty reduction idea and on exploration based on learning progress. In other words, IAC selects goals maximizing a competence progress, thus creating developmental trajectories driving the robot to progressively focus on tasks of increasing complexity and is statistically significantly more efficient than selecting tasks in a random fashion. 
IAC has been applied to different contexts in artificial agents, such as in learning sensorimotor affordances (Oudeyer et al., 2007), in learning inverse kinematics of a simulated robotic arm and in learning motor primitives in mobile robots (Baranes and Oudeyer, 2013), in vocal learning (Moulin-Frier et al., 2013), in the context of oculomotor coordination (Gottlieb et al., 2013), and in learning visuo-motor coordination in a humanoid robot (Schmerling et al., 2015). In this latter study, in particular, the authors showed not only the superiority of goal-directed exploration strategies, compared to random ones, but also their effectiveness in the case where two separate motor sub-systems, head and arm in the presented experiment, need to be coordinated.
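
The idea of selecting what to explore on the basis of learning progress can be made concrete with a small sketch. The code below is not the IAC algorithm as published; it only illustrates the selection principle under simplifying assumptions: the regions of the space are fixed in advance, each region keeps a history of prediction errors, and learning progress is taken to be the drop in mean error between two consecutive windows. The region names, the window size, and the `epsilon` parameter are hypothetical.

```python
import random


def learning_progress(errors, window=5):
    """Drop in mean prediction error between the previous and the most
    recent window of trials (positive when the region is being learned)."""
    if len(errors) < 2 * window:
        return 0.0
    older = errors[-2 * window:-window]
    recent = errors[-window:]
    return sum(older) / window - sum(recent) / window


def select_region(error_histories, rng, epsilon=0.1, window=5):
    """Mostly pick the region with the highest learning progress;
    occasionally pick a random one to keep exploring."""
    regions = list(error_histories)
    if rng.random() < epsilon:
        return rng.choice(regions)
    return max(regions,
               key=lambda r: learning_progress(error_histories[r], window))


# Hypothetical error histories for three regions of a task space.
histories = {
    "already_mastered": [0.01] * 10,   # low error, nothing left to learn
    "unlearnable":      [0.50] * 10,   # high error that never improves
    "learnable":        [1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1],
}

chosen = select_region(histories, random.Random(0), epsilon=0.0)
print(chosen)
```

Note that the region with the highest error is not the one selected: a region where the error stays high but never decreases yields no progress, so a learner following this rule turns away from it, as well as from regions it has already mastered.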

Other experiments on curiosity-driven exploration behaviors can be found in the literature. However, most of them adopt an approach similar to IAC that implements exploration mechanisms based on the learning progress. Ngo et al. (2013), for example, proposed a system that generates goals based on the confidence in its predictions about how the environment reacts to its actions; when the confidence on a prediction is low, the environmental configuration that generated such an event becomes a goal. Pape et al. (2012) presented a similar curiosity-driven exploration behavior in the context of tactile skills learning, which allowed the robotic system to autonomously develop a small set of basic motor skills that lead to different kinds of tactile input, and to learn how to exploit the learned motor skills to solve texture classification tasks. Jauffret et al. (2013) presented a neural architecture based on an online novelty detection algorithm that is able to self-evaluate sensory-motor strategies. Similar to the abovementioned mechanism, in the proposed system, the prediction error coming from unexpected events provides a measure of the quality of the underlying sensory-motor schemes and it is used to modulate the system’s behavior in a navigation task. A greedy goal-directed exploration strategy has been adopted, instead, by Berthold and Hafner (2015), who presented an approach for online learning of a controller for a low-dimensional spherical robot based on reservoir computing. The exploration strategy adopted by the authors generated motor commands aimed at regulating the sensory input to externally generated target values.

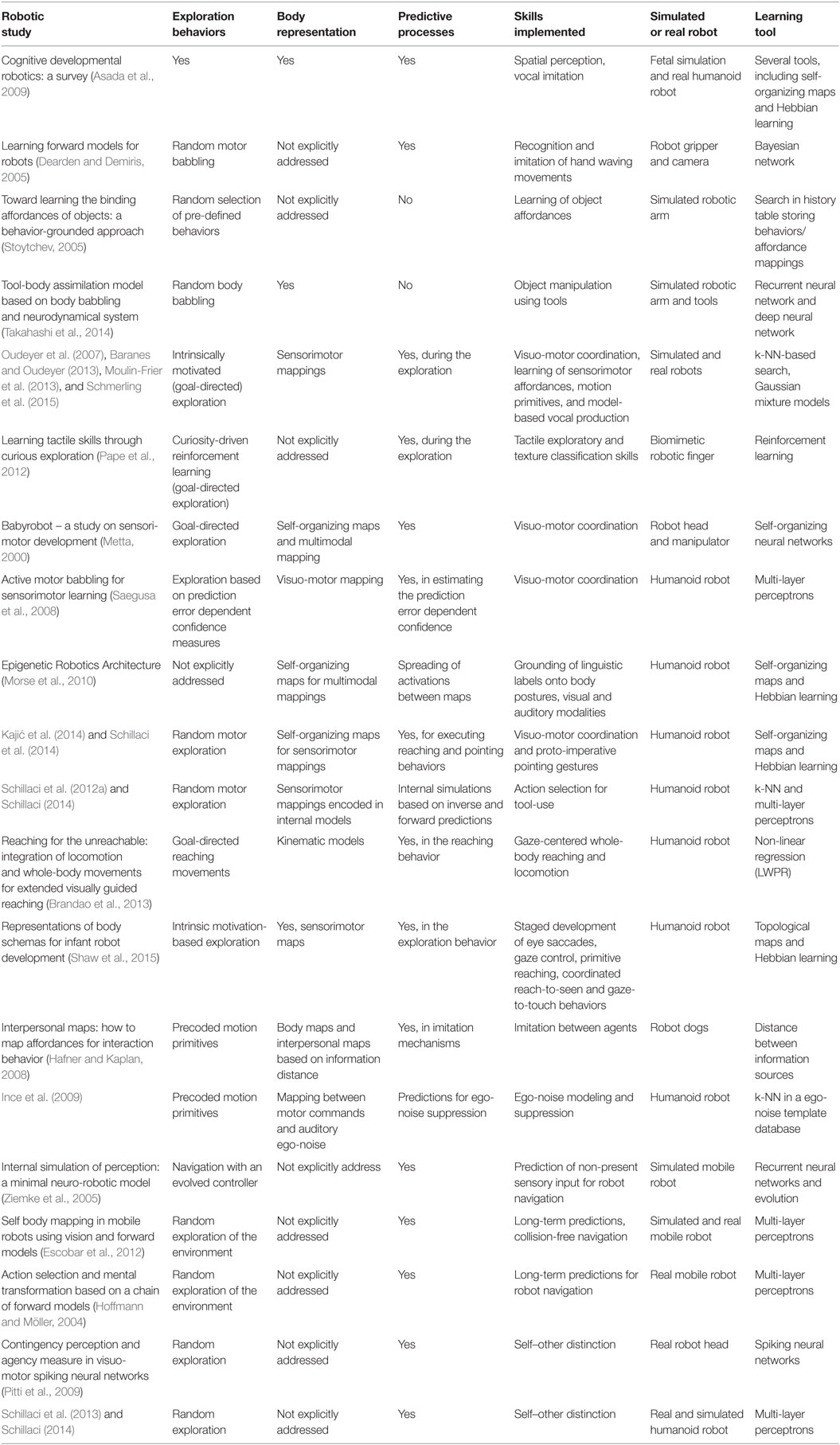

The number of robotics studies investigating sensorimotor exploration behaviors for robot learning has grown considerably in the last couple of decades. This section mentioned the most prominent studies, with a particular focus on the competences that such exploration behaviors allowed the robots to acquire. Table 1 summarizes the studies that are cited in this work, and for each of them it points out whether and what exploration strategies have been used for learning particular skills. As evident in these descriptions, intelligent exploration behaviors, or exploratory strategies that try to mimic human curiosity, are predominantly adopted only for learning sensorimotor skills. Unfortunately, links from sensorimotor development to cognitive development in these studies are often missing. Moreover, most of the abovementioned studies on intelligent exploration strategies address a single sensory modality. How can exploration be performed in multimodal domains? What is the role of attention and priming behaviors in curiosity-driven exploration? Only a few studies, such as Forestier and Oudeyer (2015) and di Nocera et al. (2014), tackle these issues. However, these and similar questions must be better addressed, in order to allow these strategies to be adopted in the implementation of more complex learning mechanisms.

In the following sections, we focus the review on studies on internal body representations and internal models, and on the predictive capabilities that they could provide to artificial agents. As will be described in the rest of this paper, predictive processes and, in general, simulation processes of sensorimotor activity could represent the bridging mechanisms between sensorimotor learning, implemented through exploration behaviors, and the development of basic cognitive skills.

3. Internal Body Representations

The rich multimodal information flowing through the sensory and motor streams during the interaction of an individual with the environment contains information about the body of the individual that has been proposed to be integrated in our brain in a sort of body schema (Hoffmann et al., 2010). This schema would keep an up-to-date representation of the positions of the different body parts in space and of the space of each individual modality and their combination (Hoffmann et al., 2010). Such a representation would be fundamental, for example, for constantly monitoring the position and configuration of our body, and thus for guiding our movements with respect to the environment.

In neuroscience, it is known that neural pathways and synapses in the brain change with the behavior and the interaction of the individual with the environment. Plastic changes are produced by sensory and motor experiences, which are strongly dependent on the characteristics of the body of the subject. Studies on body representations (Udin and Fawcett, 1988; Cang and Feldheim, 2013) suggested the existence of topographic maps in the brain, or projections of sensory receptors and of effector systems into structured areas of the brain. These maps self-organize throughout the brain development in a way that adjacent regions process spatially close sensory parts of the body. Kaas (1997) reported a number of studies showing the existence of such maps in the visual, auditory, olfactory, and somatosensory systems, as well as in parts of the motor brain areas. Moreover, evidence suggests that different areas belonging to different sensory and motor systems are integrated into a unique representation. The findings from Iriki et al. (1996), Maravita et al. (2003), Holmes and Spence (2004), and Maravita and Iriki (2004), for example, support the existence of an integrated representation of visual, somatosensory, and auditory peripersonal space in human and non-human primates, which operates in body-part-centered reference frames. In developmental psychology, Butterworth and Hopkins (1988) reported evidence demonstrating that various sensorimotor systems are potentially organized and coordinated in their functioning from birth, such as primitive forms of visually guided reaching (Von Hofsten, 1982). Similarly, Rochat and Morgan (1998) suggested that infants, already around the age of 12 months, possess a sense of a calibrated body schema, which is a perceptually organized entity which they can monitor and control. The existence of a body representation in the brain is also suggested by studies on sensory and motor disorders. 
For example, Haggard and Wolpert (2005) have shown that several sensory and motor disorders can be explained as caused by damage to some of the properties of a body representation in the human brain that are required for multimodal integration and coordinated sensorimotor control.

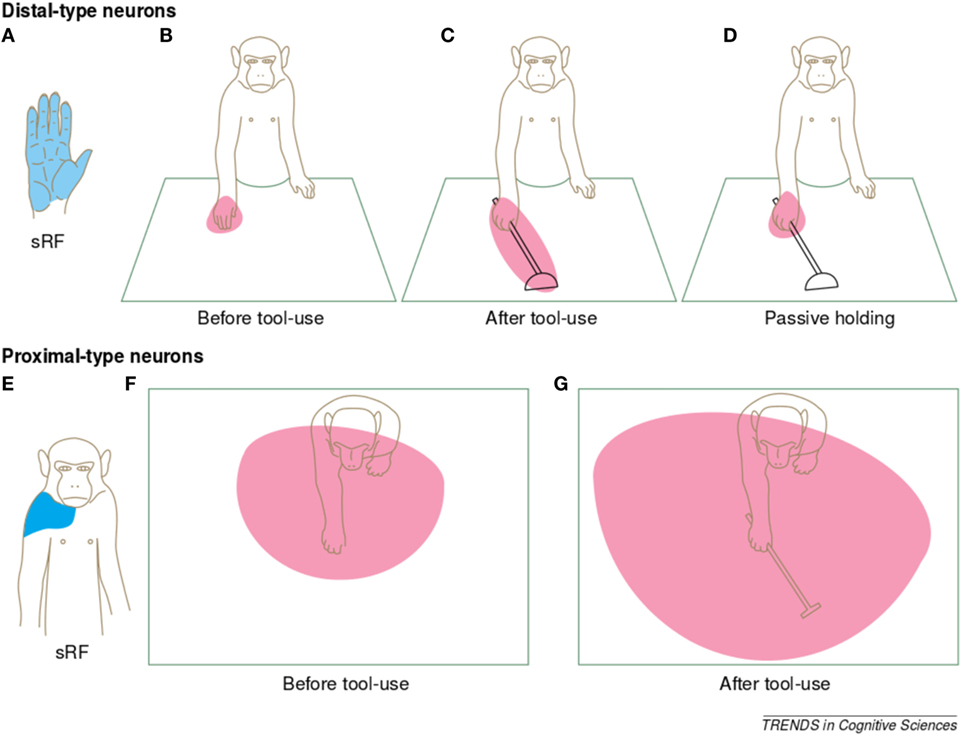

Body representations very likely undergo a continuous process of adaptation, as humans and animals follow an ontogenetic process, where corporal dimensions and morphology change over time. Nonetheless, even temporary alterations of the body of the individual can happen, such as those produced by the usage of tools. The way the brain deals with these changes has attracted the interest of many researchers. For example, Cardinali et al. (2009) studied the alterations in the kinematics of grasping movements from free-hand conditions to tool-use ones. Other studies (Iriki et al., 1996; Maravita and Iriki, 2004; Sposito et al., 2012; Ganesh et al., 2014) reported effects in the dynamics of movements with the usage of tools (see Figure 2), as well as plastic changes in the primary somatosensory cortex in the human brain (Schaefer et al., 2004).

Figure 2. Illustration of the changes in bimodal receptive field properties, following tool-use. Picture taken from Maravita and Iriki (2004). The authors of this work recorded the neuronal activity from the intraparietal cortex of Japanese macaques. In this brain region, neurons respond to both somatosensory and visual stimulation. The authors observed that some of these “bimodal neurons” (distal-type neurons) responded to somatosensory stimuli at the hand (A) and to visual stimuli near the hand (B), also when this moved in space. After the monkey had performed 5 min of food retrieval with an extension tool, the visual receptive fields (vRFs) of some of these bimodal neurons expanded to include the length of the tool (C). The vRFs of these neurons did not expand when the monkey was merely grasping the tool with its hand (D). Similarly, other bimodal neurons (proximal-type neurons) responded to somatosensory stimuli at the shoulder/neck of the monkey (E) and had visual receptive fields covering the reachable space of the arm (F). After tool-use, the visual receptive fields of these neurons expanded to cover the reachable space accessible with the tool (G).

It is still very challenging to reproduce and to deploy in a computational model the partially unexplained but fascinating capabilities of our brain to acquire and to maintain internal body representations, and to re-adapt them to temporary or permanent bodily changes. A typical challenge is related to finding a proper balance between stability and plasticity of the internal model of the body, which can ensure both long-term memory maintenance and propensity to sudden and temporary alteration of the body schema. During the last couple of decades, interest in the possibility to develop models inspired by the mechanisms of human body representations has been growing also in the robotics community. Equipping robots with multimodal body representations, capable of adapting to dynamic circumstances, would indeed improve their level of autonomy and interactivity. Moreover, body representations can be seen as the set of sensorimotor schemes that an agent acquires through the interaction with the environment.

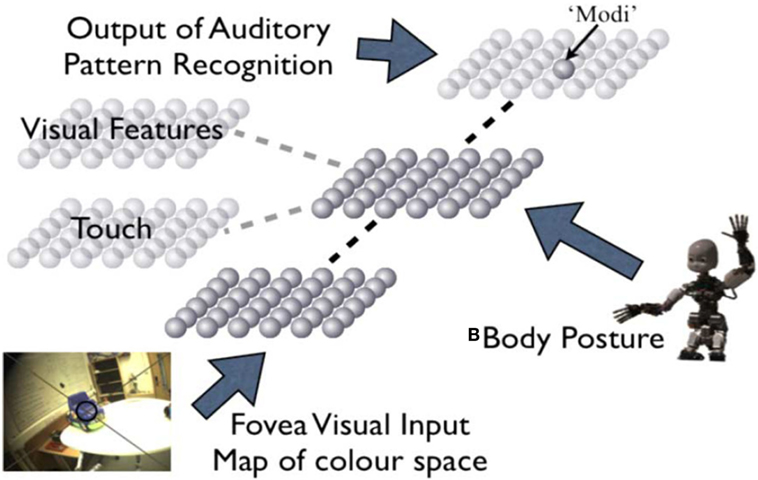

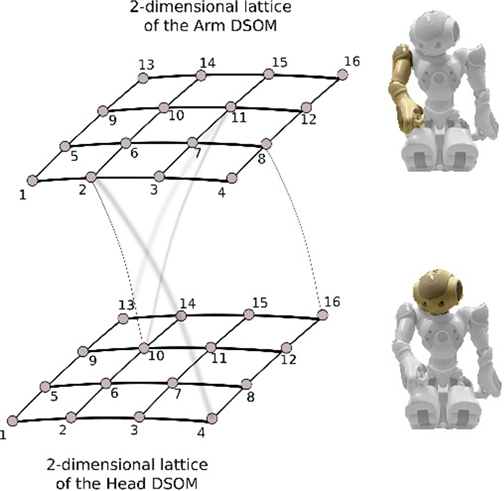

In the robotics literature, several terms can be found referring to the same concept of the abovementioned internal body representations, such as body schemes, body maps, internal models of the body, multimodal maps, intermodal maps, and multimodal representations. The investigation of body representations in robotics probably started within the context of the development of visuo-motor coordination. Visuo-motor coordination often refers to the capability to reach a particular position in space with a robotic arm, but it can also refer to oculomotor control and eye (camera)–head coordination. In both cases, this skill requires knowledge and coordination of the sensory and motor systems, and thus an internal model or representation of the embodiment of the artificial system. For example, Metta (2000) implemented an adaptive control system inspired by human development of visuo-motor coordination for the acquisition of orienting and reaching behaviors on a humanoid robot. The robotic agent started with learning how to move its eyes only and proceeded with acquiring closed-loop gains, reflex-like modules controlling the arm sub-system, and finally eye–head and head–arm coordination. Goal-directed exploration behaviors have been compared to random exploration ones in the study. Similarly, Saegusa et al. (2008) studied the acquisition of visuo-motor coordination skills in a humanoid robot using an intelligent exploration behavior based on a prediction error-dependent interest function. Kajić et al. (2014) adopted a random exploration strategy for acquiring visuo-motor coordination skills, but proposed a biologically inspired model consisting of Self-Organizing Maps (Kohonen, 1982) for encoding the sensory and motor mapping. Such a framework led to the development of pointing gestures in the robot. The model architecture proposed by Kajić et al. (2014) was inspired by the Epigenetic Robotics Architecture [ERA, Morse et al. (2010)], where a structured association of multiple SOMs has been adopted for mapping different sensorimotor modalities in a humanoid robot. The ERA architecture resembles the formation and maintenance of topographic maps in the primate and human brain (see Figure 3). Shaw et al. (2015) proposed a similar architecture for body representation based on sensorimotor maps and intrinsic motivation-based exploration behaviors. In their experiment, the robot progressed through a staged development whereby eye saccades emerged first, followed by gaze control, then primitive reaching, and eventually coordinated gaze-to-touch behaviors. An extension of the approach proposed by Kajić et al. (2014) was presented by Schillaci et al. (2014), where Dynamic Self-Organizing Maps [DSOMs (Rougier and Boniface, 2011)] and a Hebbian paradigm were adopted for online and continuous learning on both static and dynamic data distributions. The authors addressed the learning of visuo-motor coordination in robots, but focused on the capability of the proposed internal model for body representations to adapt to sudden changes in the dynamics of the system. Brandao et al. (2013) presented an architecture for integrating visually guided walking and whole-body reaching in a humanoid robot, thus increasing the reachable space that can be acquired with the visuo-motor coordination learning mechanisms proposed above. Goal-directed exploration mechanisms have been used by the authors.

Figure 3. Epigenetic Robotics Architecture, proposed by Morse and colleagues. Self-organizing maps are used to encode different sensory and motor modalities, such as color, body posture, and words. These maps are then linked using Hebbian learning with the body posture map that acts as a central hub. Picture taken from Morse et al. (2010).

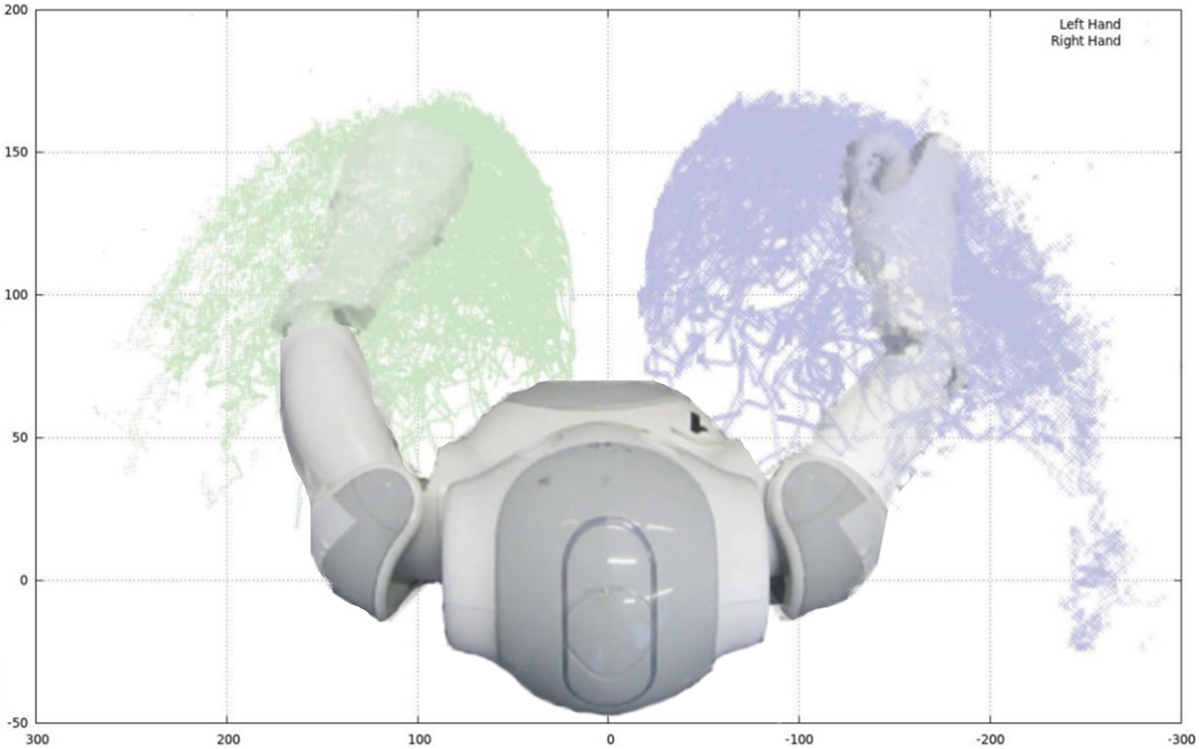

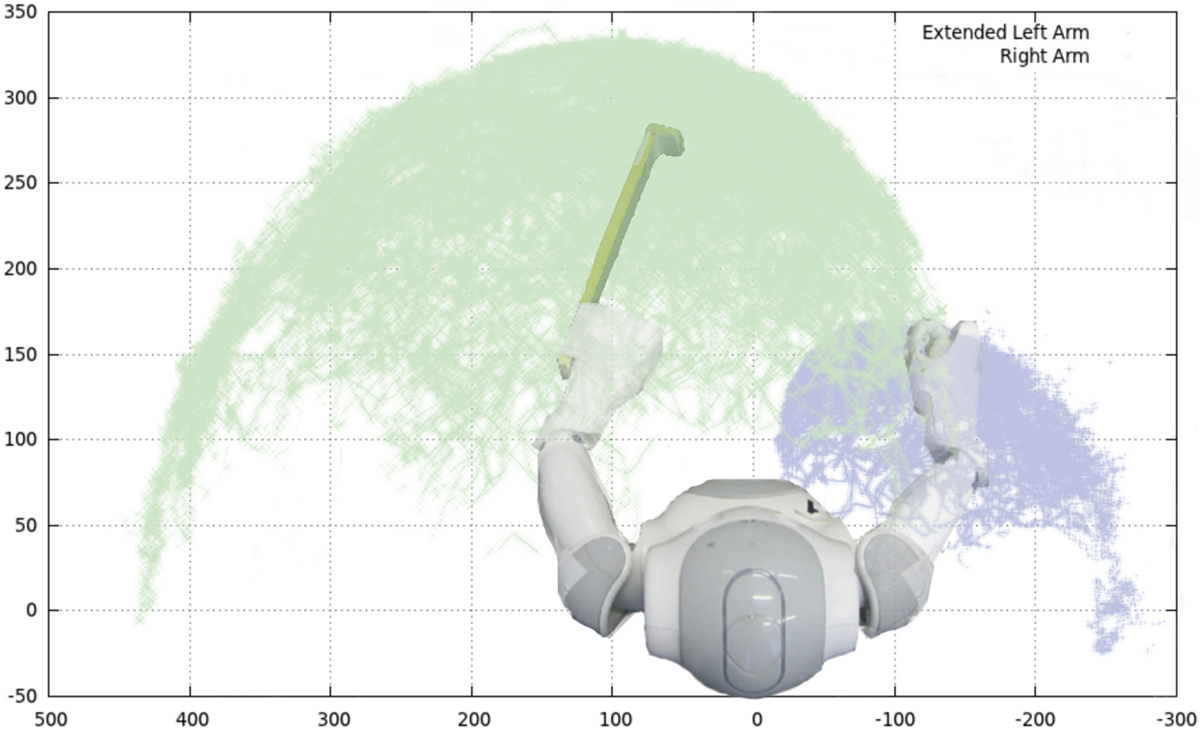

Roncone et al. (2014) investigated the calibration of the parameters of a kinematic chain by exploiting the correspondences between tactile input and the proprioceptive modality (joint angles), or the tactile-proprioceptive contingencies, in the humanoid robot iCub. The study is in line with the finding from Rochat and Morgan (1998), who suggested that the multimodal events continuously experienced by infants, such as the visual-proprioceptive event of looking at their own movements, or the perceptual event of the double touch resulting from the contact of two tactile surfaces, would drive the establishment of an intermodal calibration of the body. Yoshikawa et al. (2004) addressed visuo-motor and tactile coordination in a simulated robot. In particular, they proposed a method for learning multimodal representations of the body surface through double-touching, as this co-occurred with self-occlusions. Similarly, Fuke et al. (2007) addressed the learning of a body representation consisting of motor, proprioceptive, tactile, and visual modalities in a simulated humanoid robot. The authors encoded sensory and motor modalities as self-organizing maps. Hikita et al. (2008) extended this multimodal representation to the context of tool-use in a humanoid robot. Similarly, Schillaci et al. (2012a) implemented a learning mechanism based on random exploration strategies for the acquisition of visuo-motor coordination on a humanoid robot (see Figure 4) and analyzed how the action space of both arms can vary when the robot is provided with an extension tool (see Figures 5 and 6). The extended arm experiment can be seen as the body of the robot being temporarily extended by a suitable tool for a specific task (Schillaci, 2014).

Figure 4. Illustration of body representation proposed by Schillaci et al. (2014) and Kajić et al. (2014). The body representation is formed by two self-organizing maps [standard Kohonen SOMs in Kajić et al. (2014) and Dynamic SOMs in Schillaci et al. (2014)], connected through Hebbian links. On the left side, the 2-dimensional lattices of the two self-organizing maps (arm and head) are shown. Picture taken from Schillaci et al. (2014).
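The structure described in this caption, two self-organizing maps connected through Hebbian links, can be illustrated with a deliberately small sketch. It is not the implementation used in the cited studies: the maps are one-dimensional and take scalar inputs, the hand-eye relation `hand_position_seen` is a made-up stand-in for a real robot, and the maps are trained first and linked in a second pass for simplicity (the cited work on DSOMs addresses online learning instead). All names and parameter values are assumptions chosen for illustration.

```python
import math
import random


class SOM1D:
    """Tiny 1-D Kohonen map over scalar inputs (illustrative only)."""

    def __init__(self, n_nodes, rng):
        self.w = [rng.random() for _ in range(n_nodes)]

    def winner(self, x):
        # Index of the node whose weight is closest to the input.
        return min(range(len(self.w)), key=lambda i: abs(self.w[i] - x))

    def train(self, x, lr, radius):
        # Move the winner and its lattice neighbours towards the input.
        win = self.winner(x)
        for i in range(len(self.w)):
            h = math.exp(-((i - win) ** 2) / (2.0 * radius ** 2))
            self.w[i] += lr * h * (x - self.w[i])


def hand_position_seen(arm_angle):
    """Hypothetical body: gaze angle at which the hand is seen for an arm angle."""
    return 1.0 - arm_angle


def learn_body_map(n_nodes=10, n_samples=5000, seed=1):
    rng = random.Random(seed)
    arm, head = SOM1D(n_nodes, rng), SOM1D(n_nodes, rng)
    # Random exploration: sample arm angles and observe where the hand appears.
    samples = []
    for _ in range(n_samples):
        a = rng.random()
        samples.append((a, hand_position_seen(a)))
    for t, (a, g) in enumerate(samples):
        frac = t / n_samples
        lr = 0.5 * (0.02 / 0.5) ** frac        # learning rate decays 0.5 -> 0.02
        radius = 3.0 * (0.5 / 3.0) ** frac     # neighbourhood decays 3.0 -> 0.5
        arm.train(a, lr, radius)
        head.train(g, lr, radius)
    # Hebbian links: strengthen the connection between co-active winner nodes.
    hebb = [[0.0] * n_nodes for _ in range(n_nodes)]
    for a, g in samples:
        hebb[arm.winner(a)][head.winner(g)] += 1.0
    return arm, head, hebb


def arm_angle_for_gaze(arm, head, hebb, gaze):
    """Follow the strongest Hebbian link from the head map to the arm map."""
    j = head.winner(gaze)
    i = max(range(len(arm.w)), key=lambda k: hebb[k][j])
    return arm.w[i]


arm, head, hebb = learn_body_map()
estimate = arm_angle_for_gaze(arm, head, hebb, gaze=0.25)
print(f"arm angle proposed for gaze 0.25: {estimate:.2f} (ideal value 0.75)")
```

The query at the end reads the body representation in the visual-to-motor direction, which is the kind of lookup that underlies a pointing or reaching gesture; the accuracy is limited by the number of nodes in each map.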

Figure 5. Reachable spaces for both hands of the Aldebaran Nao robot. Each point in the clouds has been experienced together with the motor command that resulted in that end-effector position. Picture taken from Schillaci (2014).

Figure 6. Reachable space for the arms of the Nao, when one of the two arms is equipped with an extension tool. The extension tool considerably modifies the action space of the left arm. Picture taken from Schillaci et al. (2012a) and Schillaci (2014).

Nonetheless, several other studies in the literature address body representations for artificial agents outside the context of visuo-motor coordination. For example, Hafner and Kaplan (2008) extended the notion of body representations, or body maps, to that of interpersonal maps, a geometrical representation of the relationships between a set of proprioceptive and heteroceptive information sources. The study proposed a common representation space for comparing an agent’s behavior and the behavior of other agents, which was used to detect specific types of interactions between agents, such as imitation, and to implement a prerequisite for affordance learning. The abovementioned Epigenetic Robotics Architecture (Morse et al., 2010) addressed body representations for grounding linguistic labels onto body postures, visual, and auditory modalities. A similar framework has been proposed by Lallee and Dominey (2013), which encodes sensory and motor modalities as self-organizing maps into a body representation. Through the use of a goal-directed exploration behavior, the system learns a body model composed of specific modalities (arm proprioception, gaze proprioception, vision) and their multimodal mappings, or contingencies. Once multimodal mappings have been learned, the system is capable of generating and exploiting internal representations or mental images based on inputs in one of these multiple dimensions (Lallee and Dominey, 2013). Kuniyoshi and Sangawa (2006) presented a model of the neuro-musculo-skeletal system of a human infant, composed of self-organizing cortical areas for primary somatosensory and motor areas that participate in the explorative learning by simultaneously learning and controlling the movement patterns. In the simulated experiment, motor behaviors emerged, including rolling over and crawling-like motion. Body representations that include the auditory modality have also been addressed, although not explicitly, by Ince et al. (2009), who investigated methods for the prediction and suppression of ego-motion noise. The authors built up an internal body representation of a humanoid robot consisting of motor sequences mapped to the recorded motor noise and their spectra. This resulted in a large noise template database that was then used for ego-noise prediction and subtraction.

Exploration behaviors for the acquisition and maintenance of internal body representations are a very elegant and promising developmental approach for providing artificial agents with robustness and adaptivity to dynamic bodies and environments. However, how can these low-level behaviors and representations enable the development of more complex cognitive and motor capabilities? Although this question has still not been clearly answered, several behavioral and brain studies suggest that processes of mental simulations of action–perception loops are likely to be executed in our brain and are dependent on internal motor representations. The capability to simulate sensorimotor experience might represent a key mechanism behind the implementation of higher cognitive skills, as discussed in the following section.

4. Sensorimotor Simulations

In one of the most influential post-cognitivist studies, Lakoff and Johnson (1980) argued that cognitive processes are expressed and influenced by metaphors, which are based on personal experiences and shape our perceptions and actions. Correlations and co-occurrence of embodied experiences would lead to primitive conceptual metaphors. As argued by Lakoff, physical concepts, such as running and jumping, can be understood through the sensorimotor system, as they can be performed, seen, and felt. Abstract concepts would get their meaning via conceptual metaphors, a combination of basic primitive metaphors that get their meaning via embodied experience. Therefore, Lakoff (2014) concludes that the meaning of concepts comes through embodied cognition. Moreover, in Lakoff and Johnson (1980), the authors argued that metaphorical inferences would arise from neural simulation of experienced situations.

Similarly, Varela, Thompson, and Rosch argued that the interactions between the body, its sensorimotor circuit, and the environment determine the way the world is experienced. Cognitive agents are living bodies situated in the environment and knowledge would emerge through the embodied interaction with the world (Varela et al., 1992). According to the enaction paradigm proposed by Varela and colleagues, the embodied actions of an individual in the world constitute the way in which the environment is experienced and thereby ground the agent’s cognition. This is at least accepted in the Narrow Conception of Enactivism (de Bruin and Kästner, 2012).

A related concept is known in the philosophical and scientific literature as mental imagery [for a literature review on embodied cognition and mental imagery, see Schillaci (2014)]. This phenomenon has been defined as a quasi-perceptual experience (in any sensory modality, such as auditory, olfactory, and so on) which resembles perceptual experience but occurs in the absence of external stimuli (Nigel, 2014). The nature of this mental phenomenon has long been debated [Nigel (2014) provides a more comprehensive review of the literature on mental imagery]. Not surprisingly, studies on mental imagery can be found already in Greek philosophy. In De Anima, Aristotle saw mental images, residues of actual impressions or phantasmata, as playing a central role in human cognition, for example, in memory. Behaviorists held that psychology should deal only with observable behaviors of people and animals, not with unobservable introspective events. Mental imagery was therefore regarded as not sufficiently scientific (Watson, 1913), since no rigorous experimental method had been proposed to demonstrate it. Only after the 1960s did mental imagery gain new attention in psychology and neuroscience (Nigel, 2014).

During the last 20 years, many behavioral and cognitive studies on attitudes, emotion, and social perception investigated and supported the hypothesis that the body is closely tied with cognition. We argue that sensorimotor simulations are behind all of these processes. Strack et al. (1988) demonstrated that people’s facial activity influences their affective responses. Participants held a pen in their mouth in a way that either inhibited or facilitated the muscles typically associated with smiling, without being required to pose a smiling face. The authors found that subjects reported more intense humor responses when cartoons were presented under facilitating conditions than under inhibiting conditions (Strack et al., 1988). These results highlight the important overlap between motor activity and an agent’s affective response.

Wexler and Klam (2001) presented a study where participants predicted the position of moving objects, in cases of actively produced and passively observed movement. The authors found that in the absence of eye tracking, when occluding the object, the estimates are more anticipatory in the active conditions than in the passive ones. The anticipatory effect of an action depended on the congruence between the motor action and the visual feedback: the less congruent were the motor action and the visual feedback, the more diminished the anticipatory effect, but it was never eliminated. However, when the target was only visually tracked, the effect of manual action disappeared, indicating distinct contributions of hand and eye movement signals to the prediction of trajectories of moving objects (Wexler and Klam, 2001).

Animal research also suggests that rat brains implement simulation processes. O’Keefe and Recce (1993) found that particular cells in the hippocampus of the rat’s brain seem to be involved in the representation of the animal’s position. Their observations of the firing characteristics of these cells suggested that the position of the animal is periodically anticipated along the path. In a study on visual guidance of movements in primates (Eskandar and Assad, 1999), monkeys were trained to use a joystick to move a spot to a specific target. During the movements, the authors modified the relationship between the direction of joystick and movements of the spot, and eventually occluded the spot, thus dissociating the visual and motor correlations. The authors observed cells in the lateral intraparietal area of the monkey’s brain, which were not selectively modulated by either visual input or motor output, but rather seemed to encode the predicted visual trajectory of the occluded target (Eskandar and Assad, 1999).

Wolpert et al. (1995) suggested that sensorimotor prediction processes also exist in motor planning and execution in humans. In testing whether the central nervous system is able to maintain an estimate of the position of the limbs, the authors asked participants to move their arm in the absence of visual feedback. Each participant gripped a tool that was used to measure the position of the thumb and to apply forces to the hand using torque motors. The experimenters perturbed the hand movements of the participants, who were then asked to indicate their visual estimate of the unseen thumb position using a trackball held in the other hand. The distance between the actual and visual estimate of thumb location, used as a measure of the state estimation error, showed a consistent overestimation of the distance moved (Wolpert et al., 1995). The authors observed a systematic increase of the error during the first second of movement and then a decay. Therefore, they proposed that the initial phase is the result of a predictive process that estimates the hand position, followed by a correction of the estimate when the proprioceptive feedback is available (Wolpert et al., 1995). In another study, Wolpert et al. (1998) suggested that an internal body representation consisting of a combination of sensory input and motor output signals is stored in the posterior parietal cortex of the brain. The authors also reported that a patient with a lesion of the superior parietal lobe showed both sensory and motor deficits consistent with an inability to maintain such an internal representation between updates.

Blakemore et al. (2000b) supported the existence of self-monitoring mechanisms in the human brain for explaining why tickling sensations cannot be self-produced. The proposal is that sensory consequences of self-generated actions are perceived differently from an identical sensory input that is externally generated. This would explain the cancelation or attenuated tickle sensation when this is the consequence of self-produced motor commands (Blakemore et al., 2000b). The data reported in the study suggest that brain activity differs in response to externally and internally produced stimuli. Moreover, it has been proposed that illnesses, such as schizophrenia, would disable the patient’s capability to detect self-produced actions, therefore producing an altered perception of the world (Frith et al., 2000).

The internal models proposed by Wolpert et al. (1998) could explain the computational processes behind the attenuation of sensory sensation reported above. In particular, Wolpert and colleagues suggest that these internal models are constructed through the sensorimotor experience of the agent in the environment and used in simulation for processes, such as the attenuation of sensory sensations in Blakemore et al. (2000a) and conditions as in Frith et al. (2000). A similar effect has been reported by Weiss and colleagues in a study on selective attenuation of self-generated sounds (Weiss et al., 2011). The experience of generating actions, or self-agency, has been suggested to be linked to the internal motor signals associated with the ongoing actions. It has been proposed that the experience of perceiving actions as self-generated would be caused by the anticipation and, thus, the attenuation of the sensory consequences of such motor commands (Weiss et al., 2011). The results reported by the authors confirmed this hypothesis, as they found that participants perceived sounds as less loud when they were self-generated than when they were generated by another person or by software.

Further evidence suggesting that an internal model of our motor system is involved in the capability to distinguish between self and others can be found in Casile and Giese (2006). In this study, the authors showed that participants were better at recognizing themselves than others when watching movies of only point-light walkers. Knoblich and Flach (2001) performed a study on the capability of participants to predict the landing position of a thrown dart, observed from a video screen. The authors reported that predictions were more accurate when participants observed their own throwing actions than when they observed another person’s throwing actions, even if the stimulus displays were exactly the same for all participants. The results are consistent with the assumption that perceptual input can be linked with the action system to predict future outcomes of actions (Knoblich and Flach, 2001).

4.1. Computational Models for Sensorimotor Simulations

Hesslow (2002) presented evidence in support of the simulation theory of conscious thought, assuming that simulation processes are implemented in our brain and that the simulation approach can explain the relations between motor, sensory, and cognitive functions and the appearance of an inner world. In the investigation of internal simulation processes in the human brain, internal forward and inverse models have been proposed (Wolpert et al., 2001). A forward model is an internal model which incorporates knowledge about sensory changes produced by self-generated actions of an individual. In other words, a forward model predicts a sensory outcome $S_{t+1}$ of a motor command $M_t$ applied from an initial sensory situation $S_t$. This internal model was first proposed in the control literature as a means to overcome problems, such as the delay of feedback in standard control strategies and the presence of noise, both also characteristic of natural systems (Jordan and Rumelhart, 1992). More recently, Webb (2004) presented a discussion on the possibilities offered by studies in invertebrate neuroscience to unveil the existence of these types of models. The review concludes that, although there is no conclusive evidence, forward models might answer some of the open questions on the mapping between motor and sensory information.
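As a concrete illustration of the definition above, the following minimal sketch learns a forward model from random exploration, i.e., it learns to predict the next sensory state from the current sensory state and a motor command. Everything in it is an assumption made for illustration: the one-dimensional `plant` function standing in for the body, the linear model, and the delta-rule update. The studies cited in this article use richer models, such as neural networks.

```python
import random


def plant(s, m):
    """Hypothetical 1-D body: the true sensory outcome, unknown to the agent."""
    return 0.9 * s + 0.5 * m


class LinearForwardModel:
    """Predicts the next sensory state from (state, motor command)."""

    def __init__(self, lr=0.1):
        self.w_s = 0.0   # weight on the current sensory state
        self.w_m = 0.0   # weight on the motor command
        self.lr = lr

    def predict(self, s, m):
        return self.w_s * s + self.w_m * m

    def update(self, s, m, s_next):
        # Delta rule: adjust the weights in proportion to the prediction error.
        err = s_next - self.predict(s, m)
        self.w_s += self.lr * err * s
        self.w_m += self.lr * err * m
        return err


def explore(model, steps=5000, seed=0):
    """Random exploration: try random commands and learn from what happens."""
    rng = random.Random(seed)
    for _ in range(steps):
        s = rng.uniform(-1.0, 1.0)
        m = rng.uniform(-1.0, 1.0)
        model.update(s, m, plant(s, m))
    return model


model = explore(LinearForwardModel())
print("predicted:", model.predict(0.2, 0.4), "actual:", plant(0.2, 0.4))
```

Once trained, `predict` can be called without executing any movement, which is what makes a forward model usable for internal simulation.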

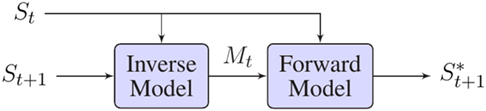

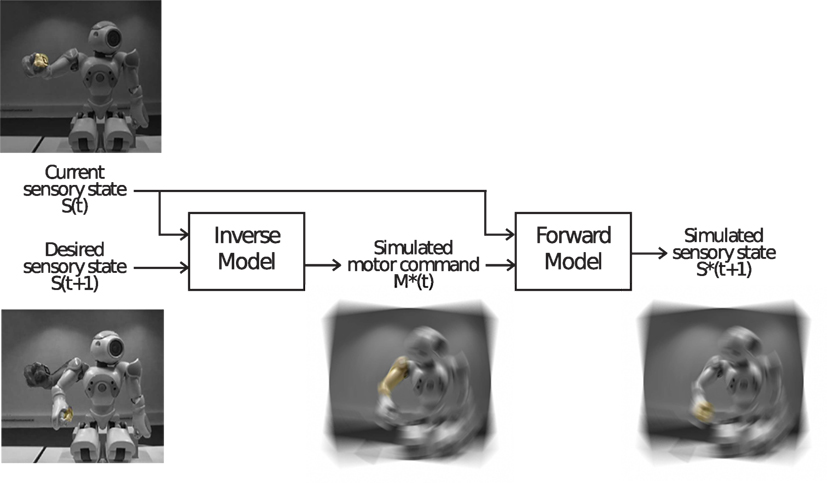

While forward models represent the causal relation between actions and their consequences, inverse models perform the opposite transformation, providing a system with the motor command $M_t$ necessary to go from a current sensory situation $S_t$ to a desired one $S_{t+1}$ (see Figure 7). Inverse models are also very well known in control theory and in robotics, as they have been used for the implementation of inverse kinematics in robotic manipulators. Kinematics describes the geometry of motion of points and objects. In classic control theory, kinematic equations are used to determine the joint configuration of a robot to reach a desired position of its end-effector.

Figure 7. Inverse and forward model pairs. The joint actions of these two models can produce internal simulations of sensorimotor cycles.
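The pairing shown in Figure 7 can be written compactly as follows. The function symbols $f$ and $g$, the hat for predicted quantities, and the star for the desired state are notation introduced here for illustration and do not appear in the cited works in this form.

$$
\hat{S}_{t+1} = f(S_t, M_t) \quad \text{(forward model)}, \qquad
\hat{M}_t = g(S_t, S^{*}_{t+1}) \quad \text{(inverse model)}
$$

Chaining the two yields one step of internal simulation, and comparing its outcome with the desired state gives a prediction error:

$$
\hat{S}_{t+1} = f\big(S_t,\; g(S_t, S^{*}_{t+1})\big), \qquad
e_{t+1} = \big\lVert S^{*}_{t+1} - \hat{S}_{t+1} \big\rVert
$$

Under this reading, the motor command proposed by $g$ never needs to reach the actuators: its predicted consequence can be evaluated first.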

Recently, forward and inverse models became central players in the coding of sensorimotor simulations, as they naturally fuse together different sensory modalities as well as motor information, not only providing individuals with multimodal representations but also encoding the dynamics of their motor systems (Wilson and Knoblich, 2005). Studies such as the ones reported in the previous section shed light on the importance that the prediction of the sensory consequences of our own actions has for basic motor tasks (Blakemore et al., 1998). Forward models, by operating on self-generated motor commands, are an important basis for the feeling of agency, as suggested by Weiss et al. (2011). A faulty functioning of forward models, in their role as self-monitoring mechanisms, is thought to be responsible for some of the symptoms present in schizophrenia (Frith, 1992). In general, the capability to anticipate sensorimotor activity is thought to be crucially involved in several cognitive functions, including attention, motor control, planning, and goal-oriented behavior (Pezzulo, 2007; Pezzulo et al., 2011).

Research has been done on computational internal models for action preparation and movement, in the context of reaching objects and of handling objects with different weights (Wolpert and Ghahramani, 2000). The main proposal became a standard reference known as the MOdular Selection And Identification for Control (MOSAIC) model (Haruno et al., 2001). In MOSAIC, different pairs of inverse and forward models encode specific sensorimotor schemes. The contribution of each pair to the choice of a motor command is weighted by a responsibility estimator according to the context and the behavior the system is currently modeling (Haruno et al., 2001). The authors extended the model to encode more complex behaviors and actions in Hierarchical MOSAIC (Wolpert et al., 2003). Conceptually, HMOSAIC is capable of accounting for and modeling social interaction, action observation, and action recognition.
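The weighting idea can be sketched as follows. This is a simplified illustration rather than the MOSAIC implementation: each module's responsibility is taken as the normalized Gaussian likelihood of its forward model's prediction error, while priors, responsibility predictors, and learning are omitted. The two modules ("light" and "heavy" object), the noise scale `sigma`, and all function names are hypothetical.

```python
import math


def responsibilities(observed, predictions, sigma=0.1):
    """Normalized Gaussian likelihood of each forward model's prediction."""
    likelihoods = [
        math.exp(-((observed - p) ** 2) / (2.0 * sigma ** 2)) for p in predictions
    ]
    total = sum(likelihoods)
    if total == 0.0:
        # No module explains the observation: fall back to equal weights.
        return [1.0 / len(predictions)] * len(predictions)
    return [l / total for l in likelihoods]


def blended_command(commands, weights):
    """Motor command as the responsibility-weighted sum of module outputs."""
    return sum(w * c for w, c in zip(weights, commands))


# Two hypothetical modules, each a forward/inverse pair for one context.
forward_models = [
    lambda s, m: s + 0.5 * m,   # context A: light object, large displacement
    lambda s, m: s + 0.2 * m,   # context B: heavy object, small displacement
]
inverse_models = [
    lambda s, goal: (goal - s) / 0.5,
    lambda s, goal: (goal - s) / 0.2,
]

state, last_command = 0.0, 1.0
observed = 0.2                  # the world behaved as in context B

predictions = [f(state, last_command) for f in forward_models]
lam = responsibilities(observed, predictions)
command = blended_command([g(observed, 1.0) for g in inverse_models], lam)
print("responsibilities:", lam, "next command:", command)
```

Because the second forward model predicted the observed outcome better, its paired inverse model dominates the next motor command.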

Tani et al. (2005) proposed an architecture in which multiple sensorimotor schemes can be learned in a distributed manner using a recurrent neural network with parametric biases. The model was demonstrated to implement behavior generation and recognition processes in an imitative interaction experiment, thus acting as a mirror system. Moreover, the model has been shown to support associative learning between behaviors and language, supporting the hypothesis posed by Arbib (2002) that the capabilities of the mirror neurons for conceptualizing object manipulation behaviors might lead to the origins of language (Tani et al., 2005). In the framework of cognitive robotics, interesting work has been done on incorporating internal simulations for navigation in autonomous robots. Ziemke et al. (2005) incorporated several aspects of the sensorimotor theories and performed internal simulations to achieve a navigation task. A trained robot equipped with the proposed framework was able, in some cases, to move blindly in a simple environment, using as input only its own sensory predictions rather than actual sensory input.

Lara and Rendon-Mancha (2006) equipped a simulated agent with a forward model implemented as an artificial neural network. The system learned to successfully predict multimodal sensory representations formed by visual and tactile stimuli for an obstacle avoidance task. Following the same strategy, Escobar et al. (2012) conducted an experiment on robot navigation through self body-mapping and the association between motor commands and their respective sensory consequences. A mobile robot was made to interact with its environment in order to learn the free space around it through re-enaction of sensory–motor cycles, predicting collisions from visual data. The robot formed multimodal associations, consisting of motor commands, disparity maps from vision, and tactile feedback, into a forward model, which was trained with data coming from random trajectories. The resulting forward model allowed the robot to navigate while avoiding undesired situations by performing long-term predictions of the sensory consequences of its actions (Escobar et al., 2012).

Continuing with navigation studies, Möller and Schenck (2008) conducted an experiment on anticipatory dead-end recognition, where a simulated agent learned to distinguish between dead ends and corridors without the necessity to represent these concepts in the sensory domain. By interacting with the environment, the agent acquired a visuo-tactile forward model that allowed it to predict how the visual input was changing under its movements and whether movements were leading to a collision. In addition, the agent learned an inverse model for suggesting which actions should be simulated for long-term predictions. Finally, Hoffmann and Möller (2004) and Hoffmann (2007) presented a chain of forward models that provides a mobile agent with the capability to select different actions to achieve a goal situation and perform mental transformations during navigation. It is worth highlighting that in the last five examples, the agents make use of long-term predictions (LTP) to achieve the desired behaviors. These LTPs are achieved by executing sensorimotor simulations acquired through the interaction of the agents with the environment.
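The principle behind such long-term predictions, feeding the output of a forward model back in as its next input, can be sketched in a toy setting. The sketch below is not taken from any of the cited studies: the corridor, the wall position `WALL`, and the hand-written `forward_model` (standing in for a model that would be learned through interaction) are all assumptions for illustration.

```python
from itertools import product

WALL = 3  # hypothetical position of an obstacle in a 1-D corridor


def forward_model(state, action):
    """Stand-in for a learned forward model: next position and collision flag."""
    nxt = state + action
    collision = nxt >= WALL
    return (state if collision else nxt), collision


def simulate(state, actions):
    """Long-term prediction: chain the forward model on its own predictions."""
    trajectory = [state]
    for a in actions:
        state, collision = forward_model(state, a)
        trajectory.append(state)
        if collision:
            return trajectory, True
    return trajectory, False


def plan(state, goal, horizon=4, actions=(-1, 0, 1)):
    """Pick the action sequence whose simulated outcome is closest to the goal,
    discarding every sequence that is predicted to end in a collision."""
    best = None
    for seq in product(actions, repeat=horizon):
        trajectory, collided = simulate(state, seq)
        if collided:
            continue
        cost = abs(trajectory[-1] - goal)
        if best is None or cost < best[0]:
            best = (cost, seq)
    return best[1]


sequence = plan(state=0, goal=2)
trajectory, collided = simulate(0, sequence)
print("chosen actions:", sequence, "simulated path:", trajectory)
```

No action is executed while planning; the agent only evaluates simulated sensory consequences, which is what allows undesired situations to be rejected before they occur.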

Akgün et al. (2010) presented an internal simulation mechanism for action recognition, inspired by the behavior recognition hypothesis of mirror neurons. The proposed computational model, similar to HAMMER and MOSAIC, is capable of recognizing actions online using a modified Dynamical Movement Primitives framework, a non-linear dynamic system that has been proposed for imitation learning, action generation, and recognition by Ijspeert et al. (2001). Schrodt et al. (2015) presented a generative neural network model for encoding biological motion, for recognizing observed movements, and for adopting the point of view of an observer. The proposed model learns to map and segment multimodal sensory streams of self-motion in order to anticipate motion progression, to complete missing sensory information, and to self-generate motion sequences that have been previously learned. In addition, the model was equipped with the capability to adopt the point of view of an observed person, establishing full consistency with the embodied self-motion encodings by means of active inference (Schrodt et al., 2015).

A MOSAIC-like architecture for action recognition was also presented by Schillaci et al. (2012b), where the authors also compared different learning strategies for inverse and forward model pairs (see Figure 8). In an experiment on action selection, Schillaci et al. (2012b, 2014) showed how a robot can deal with tool-use when equipped with self-exploration behaviors and with the capability to execute internal simulations of sensorimotor cycles. Schillaci et al. implemented learning of internal models through self-exploration on a humanoid robot, which were consequently used for predicting simple arm trajectories and for distinguishing between self-generated movements and arm trajectories executed by a different robot (Schillaci et al., 2013) or by a human (Schillaci, 2014).

Figure 8. An example of an internal simulation [image taken from Schillaci (2014)]. The inverse model simulates the motor command (in the example, a displacement of the joints of one arm of the humanoid robot Aldebaran Nao) needed for reaching a desired sensory state, from the current state of the system. Before being sent to the actuators, such a simulated motor command can be fed into the forward model that anticipates its outcome, in terms of sensory perception. A prediction error of the internal simulation can be calculated by comparing the simulated sensory outcome with the desired sensory state.
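The loop described in this caption can be sketched in a few lines. The one-dimensional state, the actuator limit `MAX_STEP`, and the hand-written inverse and forward models are assumptions made for illustration; in the cited work both models are learned through self-exploration on a humanoid robot.

```python
MAX_STEP = 0.3  # hypothetical limit on the joint displacement per command


def inverse_model(state, desired):
    """Propose a motor command that moves the state towards the desired one."""
    return max(-MAX_STEP, min(MAX_STEP, desired - state))


def forward_model(state, command):
    """Anticipate the sensory outcome of a motor command."""
    return state + command


def internal_simulation(state, desired):
    """Run one simulated sensorimotor cycle without moving the actuators."""
    command = inverse_model(state, desired)      # simulated motor command
    predicted = forward_model(state, command)    # anticipated sensory state
    error = abs(desired - predicted)             # prediction error
    return command, predicted, error


cmd, pred, err = internal_simulation(state=0.0, desired=0.2)
cmd_far, pred_far, err_far = internal_simulation(state=0.0, desired=1.0)
print("reachable goal   -> error", err)
print("unreachable goal -> error", err_far)
```

A small error indicates that the proposed command is expected to achieve the desired state, whereas a large error signals, before any movement is made, that the goal cannot be reached in a single step.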

Interesting research on sensorimotor simulations can be found in the context of action execution and recognition. For example, Dearden and Demiris (2005) presented a study where a robot learned a forward model that successfully imitated actions presented to its visual system. In a later study, Dearden (2008) presented a more complex system where a robot learns from a social context by means of forward and inverse models using memory-based approaches. Nishide et al. (2007) presented a study on predicting object dynamics through active sensing experiences with a humanoid robot. For predicting the movements of an unknown object, a static image of the object and robot motor command are fed into a neural network that was trained in a previous stage through a learning mechanism based on active sensing. In the HAMMER architecture (Hierarchical Attentive Multiple Models for Execution and Recognition) proposed by Demiris and Khadhouri (2006), inverse and forward model pairs encoded sensorimotor schemes and were used for action execution and action understanding. The HAMMER architecture was implemented using Bayesian Belief Networks and was also extended to include cognitive processes, such as attention (Demiris and Khadhouri, 2006).

Kaiser (2014) investigated a computational model for perceiving the functional role of objects, or their affordances, based on internally simulated object interactions. The approach was based on an implementation of visuo-motor forward models based on feed-forward neural networks and geometric approximations. The models were trained with sensorimotor data gathered from self-exploration, although in a structured systematic fashion, i.e., by defining grids in sensorimotor space or in motor space (Kaiser, 2014).

A promising line of investigation addresses the implementation of simulation processes for the development of the sense of agency, the sense of being the cause or author of a movement, and for distinguishing between self and other. Pitti et al. (2009) proposed a mechanism of spike timing-dependent synaptic plasticity as a biologically plausible model for detecting contingency between multimodal events and for allowing a robotic agent to experience its own agency during motion.

Finally, we would like to highlight the work presented in Hoffmann (2014), where the paradigm of cognitive developmental robotics is addressed through a case study. In it, information flow is analyzed for an agent interacting with the world. A very critical view of the paradigm is taken in the light of embodied cognition and the enactive paradigm. The extraction of low-level features in the sensorimotor space is analyzed, together with their use in higher level behaviors of the agent where sensorimotor associations are formed. Interestingly, an important conclusion concerns the importance and usefulness of forward models in the control structure of agents.

5. Conclusion

The goal of developmental roboticists is to implement mechanisms for autonomous motor and mental development in artificial agents. We argued that mechanisms for sensorimotor simulation may be the bridge between low-level sensorimotor representations learned through experience and the implementation of basic cognitive skills in artificial agents. Several robotics studies showed that internal simulations and imagery can provide robots with capabilities, such as long-term prediction for navigation, behavior selection and recognition, and perception of the functional role of objects, and can even serve as a possible basis for the acquisition of the sense of agency and for the capability to distinguish between self and other.

A prerequisite for the implementation of sensorimotor simulation processes in artificial agents is knowledge about the characteristics of their motor systems and their embodiment. In fact, to be able to internally simulate the outcome of their own actions, robots need to know their action possibilities and to have an antecedent perceptual experience of the consequences of their activities. An elegant and promising way of endowing artificial agents with such knowledge is provided by exploration, a learning mechanism inspired by human development. By exploring their bodily capabilities and by interacting with the environment, possibly using mechanisms resembling human curiosity, robots can generate a rich amount of sensory and motor experience. Maintaining this multimodal information in internal representations of the robot’s body could not only help in monitoring the correct functioning of the system but also be exploited for detecting unexpected events, such as temporary or permanent changes in the agent’s morphology, and for adapting to them. Such adaptivity would be impossible to implement with a priori defined models of the robot body and its surrounding environment, as this would require not only exact knowledge of the dynamics of the artificial system and its surroundings but also the definition of all the variables that could affect the normal functioning of the system. It is important to note that different implementations have made use of different computational strategies for the coding of these body representations. However, in all cases, these representations encompass the bulk of the possibilities an agent has of sensing and acting in the world. Following this line of thought, simulations are the off-line rehearsal of these schemes.

We argue that sensorimotor learning, internal body representations, and internal sensorimotor simulations are paramount in the development of artificial agents. We also strongly believe that these three processes have to be considered interdependent and necessary when investigating autonomous mental development. For these reasons, we have tried to give an interdisciplinary overview of what we believe to be the most prominent studies on these topics, from the disciplines of robotics, cognitive sciences, and neuroscience.

Author Contributions

Each of the authors has contributed equally and significantly to the study.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer (MH) and handling editor declared their shared affiliation, and the handling editor states that the process nevertheless met the standards of a fair and objective review.

Acknowledgments

The present work has been conducted as part of the EARS (Embodied Audition for RobotS) Project. The research leading to these results has partially received funding from the European Union’s Seventh Framework Programme (FP7/2007–2013) under grant agreement number 609465. The authors would like to thank the members of the Adaptive Systems Group of the Humboldt-Universität zu Berlin and of the Cognitive Robotics lab of the Universidad Autonoma del Estado de Morelos for discussion and inspiration.

References

Akgün, B., Tunaoglu, D., and Sahin, E. (2010). “Action recognition through an action generation mechanism,” in International Conference on Epigenetic Robotics (EPIROB). Örenäs Slott.

Arbib, M. A. (2002). “The mirror system, imitation, and the evolution of language,” in Imitation in Animals and Artifacts, eds K. Dautenhahn and C. L. Nehaniv (Cambridge, MA: MIT Press), 229–280.

Asada, M., Hosoda, K., Kuniyoshi, Y., Ishiguro, H., Inui, T., Yoshikawa, Y., et al. (2009). Cognitive developmental robotics: a survey. IEEE Trans. Auton. Ment. Dev. 1, 12–34. doi:10.1109/TAMD.2009.2021702

Baranes, A., and Oudeyer, P.-Y. (2013). Active learning of inverse models with intrinsically motivated goal exploration in robots. Rob. Auton. Syst. 61, 49–73. doi:10.1016/j.robot.2012.05.008

Barsalou, L. W. (2008). Grounded cognition. Annu. Rev. Psychol. 59, 617–645. doi:10.1146/annurev.psych.59.103006.093639

Berthold, O., and Hafner, V. V. (2015). “Closed-loop acquisition of behaviour on the sphero robot,” in Proceedings of the European Conference on Artificial Life (York, UK).

Blakemore, S. J., Goodbody, S. J., and Wolpert, D. M. (1998). Predicting the consequences of our own actions: the role of sensorimotor context estimation. J. Neurosci. 18, 7511–7518.

Blakemore, S.-J., Smith, J., Steel, R., Johnstone, E. C., and Frith, C. D. (2000a). The perception of self-produced sensory stimuli in patients with auditory hallucinations and passivity experiences: evidence for a breakdown in self-monitoring. Psychol. Med. 30, 1131–1139. doi:10.1017/S0033291799002676

Blakemore, S. J., Wolpert, D., and Frith, C. (2000b). Why can’t you tickle yourself? Neuroreport 11, 11–16. doi:10.1097/00001756-200008030-00002

Brandao, M., Jamone, L., Kryczka, P., Endo, N., Hashimoto, K., and Takanishi, A. (2013). “Reaching for the unreachable: integration of locomotion and whole-body movements for extended visually guided reaching,” in 2013 13th IEEE-RAS International Conference on Humanoid Robots (Humanoids) (Atlanta: IEEE), 28–33.

Butterworth, G., and Hopkins, B. (1988). Hand-mouth coordination in the new-born baby. Br. J. Dev. Psychol. 6, 303–314. doi:10.1111/j.2044-835X.1988.tb01103.x

Cang, J., and Feldheim, D. A. (2013). Developmental mechanisms of topographic map formation and alignment. Annu. Rev. Neurosci. 36, 51–77. doi:10.1146/annurev-neuro-062012-170341

Cardinali, L., Frassinetti, F., Brozzoli, C., Urquizar, C., Roy, A. C., and Farnè, A. (2009). Tool-use induces morphological updating of the body schema. Curr. Biol. 19, R478–R479. doi:10.1016/j.cub.2009.05.009

Casile, A., and Giese, M. A. (2006). Nonvisual motor training influences biological motion perception. Curr. Biol. 16, 69–74. doi:10.1016/j.cub.2005.10.071

de Bruin, L. C., and Kästner, L. (2012). Dynamic embodied cognition. Phenomenol. Cogn. Sci. 11, 541–563. doi:10.1007/s11097-011-9223-1

Dearden, A. (2008). Developmental Learning of Internal Models for Robotics. Ph.D. thesis, Imperial College London, London.

Dearden, A., and Demiris, Y. (2005). “Learning forward models for robots,” in Int. Joint Conferences on Artificial Intelligence (Edinburgh: IEEE), 1440.

Demiris, Y., and Khadhouri, B. (2006). Hierarchical attentive multiple models for execution and recognition of actions. Rob. Auton. Syst. 54, 361–369. doi:10.1016/j.robot.2006.02.003

di Nocera, D., Finzi, A., Rossi, S., and Staffa, M. (2014). The role of intrinsic motivations in attention allocation and shifting. Front. Psychol. 5:273. doi:10.3389/fpsyg.2014.00273

Escobar, E., Hermosillo, J., and Lara, B. (2012). “Self body mapping in mobile robots using vision and forward models,” in 2012 IEEE Ninth Electronics, Robotics and Automotive Mechanics Conference (CERMA) (Cuernavaca: IEEE), 72–77.

Eskandar, E. N., and Assad, J. A. (1999). Dissociation of visual, motor and predictive signals in parietal cortex during visual guidance. Nat. Neurosci. 2, 88–93. doi:10.1038/4594

Forestier, S., and Oudeyer, P.-Y. (2015). “Towards hierarchical curiosity-driven exploration of sensorimotor models,” in 2015 Joint IEEE International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob) (Providence: IEEE), 234–235.

Frith, C. D. (1992). The Cognitive Neuropsychology of Schizophrenia. Hove, UK; Hillsdale, USA: L. Erlbaum Associates.

Frith, C. D., Blakemore, S.-J., and Wolpert, D. M. (2000). Explaining the symptoms of schizophrenia: abnormalities in the awareness of action. Brain Res. Rev. 31, 357–363. doi:10.1016/S0165-0173(99)00052-1

Fuke, S., Ogino, M., and Asada, M. (2007). Body image constructed from motor and tactile images with visual information. Int. J. HR 4, 347–364. doi:10.1142/S0219843607001096

Ganesh, G., Yoshioka, T., Osu, R., and Ikegami, T. (2014). Immediate tool incorporation processes determine human motor planning with tools. Nat. Commun. 5, 4524. doi:10.1038/ncomms5524

Gottlieb, J., Oudeyer, P.-Y., Lopes, M., and Baranes, A. (2013). Information-seeking, curiosity, and attention: computational and neural mechanisms. Trends Cogn. Sci. 17, 585–593. doi:10.1016/j.tics.2013.09.001

Hafner, V. V., and Kaplan, F. (2008). “Interpersonal maps: how to map affordances for interaction behaviour,” in Towards Affordance-Based Robot Control, Volume 4760 of Lecture Notes in Computer Science, eds E. Rome, J. Hertzberg, and G. Dorffner (Berlin, Heidelberg: Springer), 1–15.

Hafner, V. V., and Schillaci, G. (2011). From field of view to field of reach – could pointing emerge from the development of grasping? Front. Comput. Neurosci. 5, 17. doi:10.3389/conf.fncom.2011.52.00017

Haggard, P., and Wolpert, D. M. (2005). “Disorders of body scheme,” in Higher-Order Motor Disorders, eds H. J. Freund, M. Jeannerod, M. Hallett, and R. Leiguarda (Oxford: Oxford University Press).

Haruno, M., Wolpert, D. M., and Kawato, M. (2001). Mosaic model for sensorimotor learning and control. Neural Comput. 13, 2201–2220. doi:10.1162/089976601750541778

Hesslow, G. (2002). Conscious thought as simulation of behaviour and perception. Trends Cogn. Sci. 6, 242–247. doi:10.1016/S1364-6613(02)01913-7

Hikita, M., Fuke, S., Ogino, M., Minato, T., and Asada, M. (2008). “Visual attention by saliency leads cross-modal body representation,” in 7th IEEE International Conference on Development and Learning, 2008. ICDL 2008 (Monterey, CA: IEEE), 157–162.

Hoffmann, H. (2007). Perception through visuomotor anticipation in a mobile robot. Neural Networks 20, 22–33. doi:10.1016/j.neunet.2006.07.003

Hoffmann, H., and Möller, R. (2004). “Action selection and mental transformation based on a chain of forward models,” in Proc. of the 8th Int. Conference on the Simulation of Adaptive Behavior (Cambridge, MA: MIT Press), 213–222.

Hoffmann, M. (2014). “Minimally cognitive robotics: body schema, forward models, and sensorimotor contingencies in a quadruped machine,” in Contemporary Sensorimotor Theory (Berlin: Springer), 209–233.

Hoffmann, M., Marques, H., Hernandez Arieta, A., Sumioka, H., Lungarella, M., and Pfeifer, R. (2010). Body schema in robotics: a review. IEEE Trans. Auton. Ment. Dev. 2, 304–324. doi:10.1109/TAMD.2010.2086454

Holmes, N., and Spence, C. (2004). The body schema and multisensory representation(s) of peripersonal space. Cogn. Process. 5, 94–105. doi:10.1007/s10339-004-0013-3

Ijspeert, A., Nakanishi, J., and Schaal, S. (2001). “Trajectory formation for imitation with nonlinear dynamical systems,” in Proceedings. 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2001, Vol. 2 (Outrigger Wailea Resort: IEEE), 752–757.

Ince, G., Nakadai, K., Rodemann, T., Hasegawa, Y., Tsujino, H., and Imura, J. (2009). “Ego noise suppression of a robot using template subtraction,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2009. IROS 2009 (St. Louis, MO: IEEE), 199–204.

Iriki, A., Tanaka, M., and Iwamura, Y. (1996). Coding of modified body schema during tool use by macaque postcentral neurones. Neuroreport 7, 2325–2330. doi:10.1097/00001756-199610020-00010

Jauffret, A., Cuperlier, N., Gaussier, P., and Tarroux, P. (2013). From self-assessment to frustration, a small step towards autonomy in robotic navigation. Front. Neurorobot. 7, 16. doi:10.3389/fnbot.2013.00016

Jordan, M. I., and Rumelhart, D. E. (1992). Forward models: supervised learning with a distal teacher. Cogn. Sci. 16, 307–354. doi:10.1207/s15516709cog1603_1

Kaas, J. H. (1997). Topographic maps are fundamental to sensory processing. Brain Res. Bull. 44, 107–112. doi:10.1016/S0361-9230(97)00094-4

Kaiser, A. (2014). Internal Visuomotor Models for Cognitive Simulation Processes. Ph.D. thesis, Bielefeld University, Bielefeld.

Kajić, I., Schillaci, G., Bodiroža, S., and Hafner, V. V. (2014). “Learning hand-eye coordination for a humanoid robot using soms,” in Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction, HRI ’14 (New York, NY: ACM), 192–193.

Knoblich, G., and Flach, R. (2001). Predicting the effects of actions: interactions of perception and action. Psychol. Sci. 12, 467–472. doi:10.1111/1467-9280.00387

Kohonen, T. (1982). Self-organized formation of topologically correct feature maps. Biol. Cybern. 43, 59–69. doi:10.1007/BF00337288

Kuhl, P. K., and Meltzoff, A. N. (1996). Infant vocalizations in response to speech: vocal imitation and developmental change. J. Acoust. Soc. Am. 100(4 Pt 1), 2425–2438. doi:10.1121/1.417951

Kuniyoshi, Y., and Sangawa, S. (2006). Early motor development from partially ordered neural-body dynamics: experiments with a cortico-spinal-musculo-skeletal model. Biol. Cybern. 95, 589–605. doi:10.1007/s00422-006-0127-z

Lakoff, G. (2014). Mapping the brain’s metaphor circuitry: is abstract thought metaphorical thought? Front. Hum. Neurosci. 8, 958. doi:10.3389/fnhum.2014.00958

Lallee, S., and Dominey, P. F. (2013). Multi-modal convergence maps: from body schema and self-representation to mental imagery. Adap. Behav. 1, 1–12.

Lara, B., and Rendon-Mancha, J. M. (2006). “Prediction of undesired situations based on multi-modal representations,” in Electronics, Robotics and Automotive Mechanics Conference, 2006, Vol. 1 (Cuernavaca: IEEE), 131–136.

Law, J., Lee, M., Hülse, M., and Tomassetti, A. (2011). The infant development timeline and its application to robot shaping. Adap. Behav. 19, 335–358. doi:10.1177/1059712311419380

Litman, J. (2005). Curiosity and the pleasures of learning: wanting and liking new information. Cogn. Emot. 19, 793–814. doi:10.1080/02699930541000101

Lungarella, M., Metta, G., Pfeifer, R., and Sandini, G. (2003). Developmental robotics: a survey. Connect. Sci. 15, 151–190. doi:10.1080/09540090310001655110

Maravita, A., and Iriki, A. (2004). Tools for the body (schema). Trends Cogn. Sci. 8, 79–86. doi:10.1016/j.tics.2003.12.008

Maravita, A., Spence, C., and Driver, J. (2003). Multisensory integration and the body schema: close to hand and within reach. Curr. Biol. 13, 531–539. doi:10.1016/S0960-9822(03)00449-4

Meltzoff, A. N., and Moore, M. K. (1997). Explaining facial imitation: a theoretical model. Early Dev. Parent. 6, 179–192. doi:10.1002/(SICI)1099-0917(199709/12)6:3/4<179::AID-EDP157>3.3.CO;2-I

Möller, R., and Schenck, W. (2008). Bootstrapping cognition from behavior – a computerized thought experiment. Cogn. Sci. 32, 504–542. doi:10.1080/03640210802035241

Morse, A. F., Greef, J. D., Belpaeme, T., and Cangelosi, A. (2010). Epigenetic robotics architecture (ERA). IEEE Trans. Auton. Ment. Dev. 2, 325–339. doi:10.1109/TAMD.2010.2087020

Moulin-Frier, C., Nguyen, S. M., and Oudeyer, P.-Y. (2013). Self-organization of early vocal development in infants and machines: the role of intrinsic motivation. Front. Psychol. 4, 1006. doi:10.3389/fpsyg.2013.01006

Ngo, H., Luciw, M., Förster, A., and Schmidhuber, J. (2013). Confidence-based progress-driven self-generated goals for skill acquisition in developmental robots. Front. Psychol. 4:833. doi:10.3389/fpsyg.2013.00833

Nigel, J. T. (2014). “Mental imagery,” in The Stanford Encyclopedia of Philosophy, Fall 2014 Edn, ed. E. N. Zalta (Stanford: Center for the Study of Language and Information), 1.

Nishide, S., Ogata, T., Tani, J., Komatani, K., and Okuno, H. (2007). “Predicting object dynamics from visual images through active sensing experiences,” in 2007 IEEE International Conference on Robotics and Automation (Roma: IEEE), 2501–2506.

O’Keefe, J., and Recce, M. L. (1993). Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus 3, 317–330. doi:10.1002/hipo.450030307

Oudeyer, P.-Y., Kaplan, F., and Hafner, V. V. (2007). Intrinsic motivation systems for autonomous mental development. IEEE Trans. Evol. Comput. 11, 265–286. doi:10.1109/TEVC.2006.890271