- 1Mobility and AI Laboratory, Nissan Motor Co., Ltd., Atsugi, Kanagawa, Japan

- 2Department of Robotics Brain and Cognitive Sciences, Italian Institute of Technology, Genoa, Liguria, Italy

- 3Department of Informatics, Bioengineering, Robotics and Systems Engineering, The University of Genoa, Genoa, Liguria, Italy

- 4Department of Medicine and Surgery, The University of Parma, Parma, Emilia–Romagna, Italy

- 5Research Planning Department, Nissan Motor Co., Ltd., Atsugi, Kanagawa, Japan

Designing a social humanoid robot to enhance human cognitive multitasking in a mixed human-robot team is anything but straightforward. In fact, the robot’s presence and behavior can either improve or impair human performance. In this study, we examined how different vitality forms—expressed through the robot’s actions, speech and facial expressions—affect cognitive multitasking. Analysis of human facial expressions and skin conductance data revealed that a robot exhibiting a gentle vitality form fostered a more positive and relaxed state than one displaying a rude vitality form. Moreover, the gentle vitality form improved human performance on tasks involving short-term memory and continuous target tracking. To our knowledge, this is the first study to explore the long-term impact of vitality forms. Overall, our findings suggest that properly designing a social humanoid robot’s vitality forms can significantly enhance cognitive multitasking performance in human-robot teams.

1 Introduction

Humans communicate richly through explicit social signals such as verbal cues and through implicit social signals such as eye gaze (Argyle et al., 1973), tone of voice (Zuckerman et al., 1982), facial expressions (Zuckerman et al., 1982; Ekman, 1970), and gestures (Goldin-Meadow, 1999; Iverson and Goldin-Meadow, 1998). These social signals are integral to everyday interactions. Enabling robots to recognize and generate such social signals makes human-robot communication more effective and can influence human task performance. For example, Bartneck et al. showed that the mere presence of social robots can motivate people to put in more effort on a task (Bartneck, 2003), and Spatola et al. found that social robots improve performance on both the Stroop test and the Eriksen flanker task (Spatola et al., 2019a; b). Moreover, Agrigoroaie and Tapus compared a robot that promoted impatience with one that remained motionless while encouraging a relaxed demeanor (Agrigoroaie and Tapus, 2020), finding that the former imposed a higher mental workload on participants, whereas the latter prompted them to relax, think carefully, and avoid undue worry. Rea et al. further reported that participants exercised harder and felt competitive with an impolite robot, while a polite robot was perceived as friendly but sometimes uncompelling and disingenuous (Rea et al., 2021).

Although these studies have focused on the impact of social robots on single tasks, everyday life rarely involves only one task at a time. Instead, people are often engaged in various forms of collaborative multitasking: driving with a passenger, operating an aircraft with a co-pilot, or walking side by side with a friend, facing the same direction, while exchanging advice and cautions. Therefore, in our previous study, we examined a robot that provided task advice through social signals during cognitive multitasking (Aoki et al., 2022) to evaluate social facilitation effects (Zajonc, 1965; Allport, 1924). The results indicated that while the social robot worsened performance on a simple reactive task, it enhanced performance on a cognitively demanding task during multitasking. Additionally, our analysis of participants’ facial expressions revealed more positive expressions when they were with a socially behaving robot than with a mechanically behaving one (Jirak et al., 2022).

Given the diversity of social behaviors, the different behavioral styles in social robots may affect human behaviors in various ways. For instance, Torrey et al. found that the use of hedges and discourse markers by robots can create a positive impression (Torrey et al., 2013) and Ghazali et al. showed that incorporating social cues reduces psychological reactance to highly controlling language (e.g., “you have to…”) (Ghazali et al., 2017). Building on our previous work in cognitive multitasking with social robots, this study focuses on how different behavioral styles—manifested through varying vitality forms—impact human performance.

2 Related work

2.1 Vitality forms in human-human interaction

Effective cooperation in social groups requires the ability to accurately predict others’ actions, interpret their behaviors, and adapt one’s own activities accordingly. When interacting socially with others, we typically understand their behavioral goals and intentions (Brown and Brüne, 2012). This ability to attribute mental states (such as beliefs, intentions, and knowledge) to oneself and to others, and to predict behavior based on these inferences, is known as “theory of mind” (Premack and Woodruff, 1978). Evidence suggests that the fundamental mechanisms underlying theory of mind involve mirror neurons, a group of neurons that fire during both action observation and action execution (Gallese and Goldman, 1998; Rizzolatti and Craighero, 2004; Rizzolatti, 2005; Kilner et al., 2007).

During social interactions, people express their positive or negative attitudes through the way they perform actions or speak, using different vitality forms (e.g., gentle, rude). For instance, an agent may grasp and pass an object either rudely or gently, depending on their attitude. Variations in the force, direction, and velocity of their actions enable the receiver to infer their affective state and attitude. Daniel Stern defined these fundamental aspects of social communication as “vitality forms” (Stern, 2010). Vitality forms play a dyadic role in interpersonal relationships by enabling the agent to convey their attitudes and allowing the receiver to understand those attitudes (Di Cesare et al., 2017; 2020a; Rizzolatti et al., 2021).

Vitality forms are distinct from emotions. Scherer defines emotions as responses elicited by stimulus events—occurring when an event triggers a response in the organism after being appraised for its significance (Scherer, 2005). Furthermore, Pace-Schott et al. describe basic emotions as brief events characterized by visceromotor responses and behavioral preparation (Pace-Schott et al., 2019). In contrast, vitality forms represent human behaviors that reflect an agent’s internal emotional state (Stern, 2010). Specifically, social actions performed with a rude vitality form tend to exhibit larger trajectories and higher velocity profiles than those executed with a gentle vitality form (Di Cesare et al., 2016b). Additionally, speech delivered with a rude vitality form is characterized by a lower pitch (pitch of sound) and weaker intensity (volume) compared to speech delivered with a gentle vitality form (Di Cesare et al., 2017).

The ability to perceive and express vitality forms is evident even in infants during mother-infant interactions, underscoring their critical role in relating to and understanding others (Condon and Sander, 1974; Ammaniti and Ferrari, 2013; Rochat, 2009). Moreover, the perception of vitality forms is often impaired in individuals with social and communication deficits, such as children on the autism spectrum (Rochat et al., 2013). Most notably, Di Cesare et al. have studied the neural correlates of vitality form processing, showing that both the expression and perception of vitality forms activate the dorso-central insula and the middle cingulate cortex (Di Cesare et al., 2016a; Di Cesare et al., 2017; Di Cesare et al., 2020a; Di Cesare et al., 2021).

2.2 Vitality forms from social robots

While humans and monkeys both translate observed motor behaviors into their own internal motor representations, it has been shown that groups of neurons with mirror properties fire even when observing goal-directed actions performed by a different species (Ferrari et al., 2003). This finding indicates that mirror neurons in monkeys respond to human movement, suggesting they may play a role in understanding actions and intentions of others across species boundaries.

Additionally, Gazzola et al. identified the motor cortex involved in executing hand actions in humans and found that this area is strongly activated when observing actions performed by either humans or robots (Gazzola et al., 2007). Similarly, Oberman et al. demonstrated that mirror neurons are activated when observing hand movements by a humanoid robotic hand (Oberman et al., 2007). These studies indicated that mirror neurons encode the goal of an action, and that humans can project the apparent mental state of robots onto themselves. In a related experiment, Hegel et al. showed that people exert their theory of mind abilities on humanoid robots in a classical prisoner’s dilemma game (Hegel et al., 2008).

Furthermore, Di Cesare et al. argued that the same motor cortex is activated when observing a humanoid robot (iCub) (Metta et al., 2008; 2010) performing different vitality forms (Di Cesare et al., 2020b; Di Cesare, 2020; Vannucci et al., 2018). They proposed that differences in perceived vitality forms affect motor responses, with the same motor cortex firing during the observation of both human and robot actions. In their study, they implemented the kinematic features of human actions into the iCub robot, allowing it to exhibit vitality forms like a human. Most importantly, they demonstrated that observing these actions activated the brain network involved in processing vitality forms, just as it does when observing human actions (Di Cesare et al., 2020b). Notably, their results showed that the velocity profile and peak velocity are more crucial for representing vitality forms than adherence to the 2/3 power law.

Based on these studies, we aim to study how differences in the vitality forms of social robots impact human cognitive multitasking performance. The next section presents the study design.

3 Study design

This section outlines the hypotheses, the experimental design, and the tasks assigned to participants.

3.1 Hypotheses

Drawing on research into vitality forms and our previous studies on human cognitive tasks with social robots, we propose the following hypotheses:

H1. Participants complete a short-term memory task in less time in the presence of a gently behaving robot than in the presence of a rudely behaving robot.

H2. Participants respond faster to a simple reactive task in the presence of a rudely behaving robot than in the presence of a gently behaving robot.

H3. Participants control a target more accurately on a tracking task in the presence of a gently behaving robot than in the presence of a rudely behaving robot.

H4. Participants show more positive facial expressions and are more relaxed in the presence of a gently behaving robot than in the presence of a rudely behaving robot.

3.2 Experimental setup

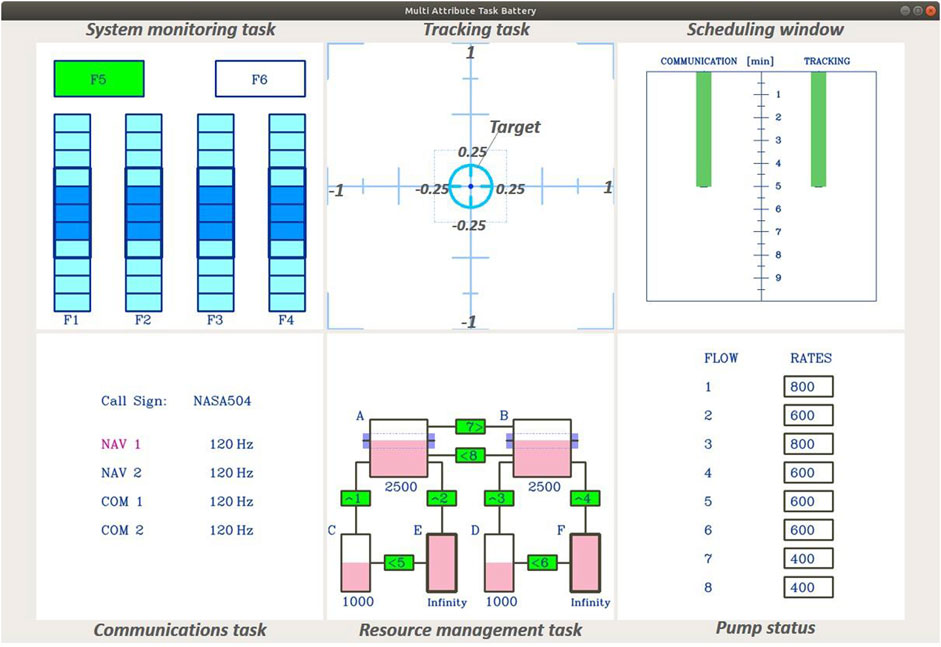

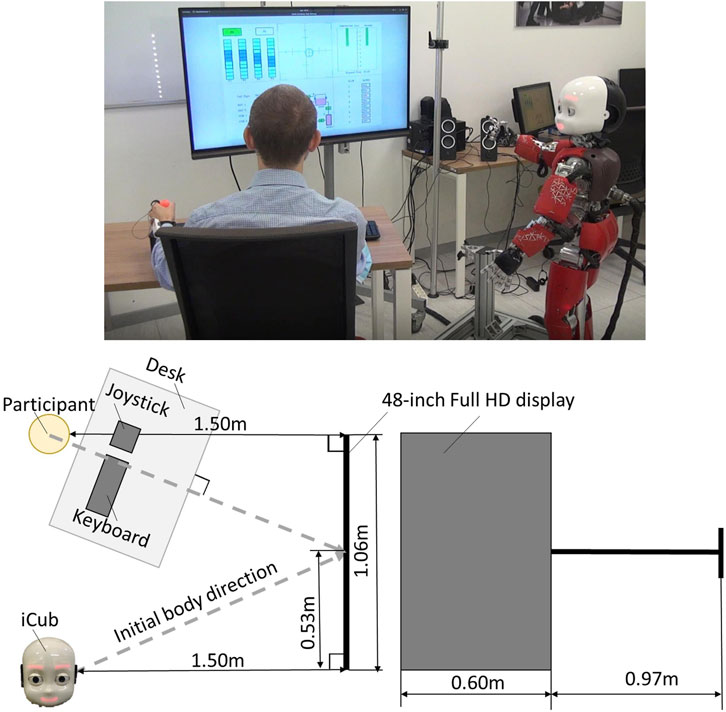

All experiments are conducted with the humanoid robot iCub (Metta et al., 2008; Metta et al., 2010). The iCub design and control infrastructure enable it to reproduce human-like behaviors based on specific cognitive models of human-human interaction. Participants perform the MATB-YARP (see Section 3.3), a task battery that simulates various cognitive challenges encountered by aircraft pilots. Each participant engages with the MATB-YARP for 5 min. During this period, the iCub (see Section 3.4) marks the start and end of the task while continuously displaying vitality forms through coordinated movements of its arms, torso, and head. Figure 1 illustrates the experimental setup, and Figure 2 shows the MATB-YARP display.

Figure 1. On the left is a plan view of the experimental layout, showing the positions of the participant, the iCub robot, the devices, and the display. On the right, the dimensions of the display are shown.

3.3 The cognitive tasks (The MATB-YARP)

This study aims to explore human task performance and emotional states—as measured through facial expressions, gestures (including arm, torso, and head movements), gaze, and tone of voice—while participants engage in demanding cognitive multitasking in the presence of social robots exhibiting different behavioral styles based on vitality forms.

To this end, we selected MATB-YARP (Aoki et al., 2022), an implementation of NASA’s Multi-Attribute Task Battery (MATB) (Comstock Jr and Arnegard, 1992; Santiago-Espada et al., 2011) on YARP, which is suitable for experiments with the iCub. From the task battery, we asked participants to perform the following three tasks for 5 min, as in our previous study (Aoki et al., 2022).

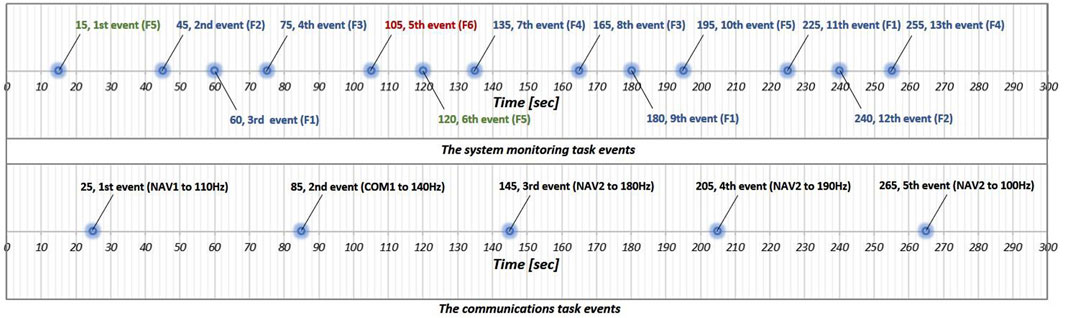

3.3.1 The communications task

The communications task requires participants to perform a series of key operations based on auditory information. In this task, participants adjust the radio frequencies of predetermined channels in response to voice instructions from a simulated air traffic control. Specifically, when instructed to adjust a radio frequency, they must select the appropriate channel (NAV1, NAV2, COM1, or COM2) using the up or down keys on a keyboard, navigate to the frequency change box with the right key, and then adjust the frequency using the up or down keys. The instructions are delivered in Italian, stating “Change NAV1 (NAV2, COM1, COM2) to

Figure 3. Overview of the MATB events for both the system monitoring task (first row) and the communications task (second row). Blue dots denote the timing of events that require input from participants.

3.3.2 The system monitoring task

The system monitoring task is a simple reactive task designed to measure reaction time based on visual cues. It features four indicator lights and two warning lights. Participants must press the F5 key when the top-left box labeled “F5” changes from green to white and press the F6 key when the top-right box labeled “F6” changes from white to red. Pressing a key resets the corresponding box to its original color. Additionally, the four lower indicator lights—labeled “F1” through “F4”—move randomly within each default area indicated by each blue box while no event is occurring. If an indicator goes outside of its blue box, pressing the corresponding key returns it to the designated area indicated by the blue box. As shown in Figure 3, events in the system monitoring task are designed to occur 13 times in the 5-min session.

3.3.3 The tracking task

The tracking task is a continuous, visually based compensatory task. In this task, participants use a joystick to keep a target centered. The target moves randomly in the

3.4 Stimuli (robot behavior)

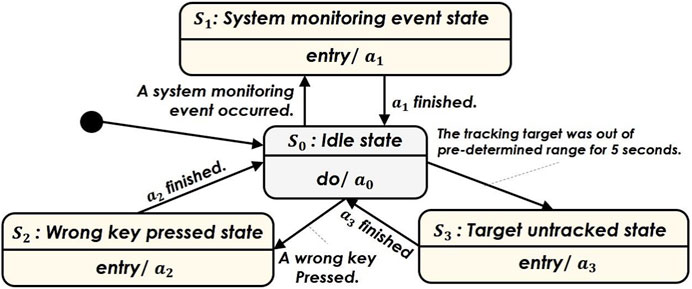

In both the gentle and rude conditions, the iCub interacts with participants during the MATB-YARP exercise using physical movements and speech—all delivered in Italian. The two conditions differ in their vitality forms—that is, in the manner in which the robot behaves—which is detailed later in this section. The robot’s behavior in both conditions is governed by the finite state machine shown in Figure 4. Its control strategy transitions among four states:

When a system monitoring event is activated, the robot notifies the participant using pointing gestures toward the display, gazing into the participant’s face, and speech. The spoken phrase in Italian is: “Press F1, please.”

If the participant presses an incorrect key, the robot notifies the participant using pointing gestures toward the display, gazing into the participant’s face, and speech. The spoken phrase in Italian is: “If I can help you, you have pressed the wrong key.”

When the participant fails to follow the tracking task, the robot alerts them using pointing gestures toward the display, gazing into the participant’s face, and speech. The spoken phrase in Italian is: “Sorry, you should correct your trajectory. Please pay attention.”

Figure 4. Finite state machine representing the flow of the interaction states with the iCub robot (cf. Section 3.4).
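The event-driven behavior described above can be sketched as a small state machine. This is only an illustration under stated assumptions: the state names, the `IDLE` resting state, the `next_state` helper, and the priority order among simultaneous events are all hypothetical and are not taken from Figure 4, which remains the authoritative description. The per-action durations are the ones reported for the gentle and rude conditions.

```python
from enum import Enum, auto


class RobotState(Enum):
    IDLE = auto()               # assumed resting state; not named in the text
    MONITORING_PROMPT = auto()  # a system monitoring event is active
    WRONG_KEY_NOTICE = auto()   # the participant pressed an incorrect key
    TRACKING_ALERT = auto()     # the participant is failing the tracking task


# Per-action durations reported for the two vitality forms (seconds).
ACTION_DURATION_S = {"gentle": 3.0, "rude": 1.25}


def next_state(monitoring_event: bool, wrong_key: bool, off_track: bool) -> RobotState:
    """Pick the next state from the current task flags.

    The priority order used here is an illustrative assumption.
    """
    if wrong_key:
        return RobotState.WRONG_KEY_NOTICE
    if monitoring_event:
        return RobotState.MONITORING_PROMPT
    if off_track:
        return RobotState.TRACKING_ALERT
    return RobotState.IDLE
```

In such a design the vitality form would not change which state is entered, only how the associated gesture, gaze shift, and utterance are rendered, which matches the description that both conditions share one state machine.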

The speech content was recorded by an adult male actor, and its pitch was slightly raised to match the iCub’s appearance. These recordings were identical in both the gentle and rude conditions. In the following section, we outline the differences between the two conditions.

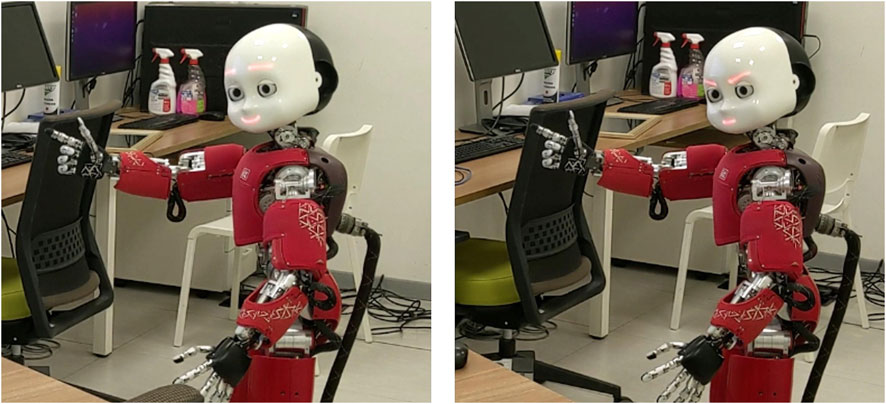

3.4.1 The gentle condition

In this condition, the robot exhibits a gentle vitality form characterized by calm, slow behaviors. Its movements are deliberately unhurried—each action, whether it is a pointing gesture, turning toward the participant, or reorienting its gaze to the monitor, lasts 3 s, which is slower than in the rude condition. When providing advice, the robot’s facial expression features level inner and outer eyebrows with slightly raised mouth corners (see Figure 5). Regarding voice, the robot uses weak plosives and accents, and it speaks slowly.

Figure 5. Robot behaviors and facial expressions in the gentle (the left image) and the rude (the right image) conditions.

3.4.2 The rude condition

In this condition, the robot exhibits the rude vitality form, characterized by aggressive and fast behaviors. Its movements are rapid: each action, whether it is a pointing gesture, turning toward the participant, or reorienting its gaze to the monitor, lasts 1.25 s, which is faster than in the gentle condition. When providing advice, the robot’s facial expression features raised outer eyebrows with the mouth in a straight line (see Figure 5). Regarding voice, the robot uses strong plosives and accents, and it speaks fast.

3.5 Integrated skin conductance response

To assess the participants’ mental workload during multitasking (i.e., to determine whether they are relaxed or not), we measure the electrodermal activity (EDA), also known as galvanic skin response (GSR), which is considered an indicator of sympathetic nervous system activity (Tao et al., 2019). EDA refers to changes in the electrical properties of the skin in response to sweat secretion. By applying a low constant voltage to the skin, we can noninvasively measure changes in skin conductance (SC) (Fowles et al., 1981).

The time series of SC can be divided into slow-changing tonic activity (skin conductance level, SCL) and rapid-changing phasic activity (skin conductance response; SCR).

In standard experimental settings, SCRs are often analyzed individually using peak detection methods. However, this approach assumes that responses are temporally isolated. In continuous tasks—such as those involving ongoing robot interactions—SCRs frequently occur in quick succession. As a result, subsequent responses may overlap with the decay phase of earlier ones, making it difficult to identify discrete peaks accurately. In such cases, peak detection methods tend to underestimate SCR amplitudes (Boucsein, 1992).

To address this issue, we adopt the integrated skin conductance response (ISCR) as our metric, following the approach proposed by Benedek and Kaernbach (Benedek and Kaernbach, 2010a; Benedek and Kaernbach, 2010b). ISCR is derived from the integral of the phasic driver and assumes that subsequent SCRs are additively superposed. This makes it particularly suitable for evaluating responses to continual stimuli, where traditional peak-based methods fall short.

Therefore, ISCR provides a more robust and accurate measure of mental workload under such conditions and is particularly well-suited to our study, which involves continuous task events and robot interactions that evoke overlapping SCRs. ISCR is defined by the following Equation 1:

where

where IRF, impulse response function, is given by the Bateman function in Equation 3:

with the optimal values for the time constants

For the calculation of
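For orientation, the continuous decomposition approach of Benedek and Kaernbach is usually written in the following form. This is a hedged sketch of that standard formulation, not a restatement of the authors' exact equations: the integration limits $t_1$ and $t_2$ (the bounds of the response window) and the symbols $\tau_1$ and $\tau_2$ for the two time constants are notation assumed here, and no numerical values for the time constants are implied.

```latex
\begin{align}
\mathrm{ISCR} &= \int_{t_1}^{t_2} \mathrm{Driver}_{\mathrm{phasic}}(t)\,\mathrm{d}t \\
\mathrm{SC}_{\mathrm{phasic}}(t) &= \left(\mathrm{Driver}_{\mathrm{phasic}} * \mathrm{IRF}\right)(t) \\
\mathrm{IRF}(t) &= e^{-t/\tau_1} - e^{-t/\tau_2}
\end{align}
```

Here $*$ denotes convolution, so the phasic driver is obtained by deconvolving the phasic skin conductance with the Bateman-shaped impulse response, and ISCR is the time integral of that driver, with units of μS·s.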

3.6 Arousal and valence from facial expressions

In this study, participants’ facial expressions are recorded, and arousal and valence (Russell, 1980) are estimated to determine whether the robot’s vitality forms during the task propagate to the participants. According to one approach, known as the “circumplex model of affect”, all emotional states arise from two basic neurophysiological systems, arousal and valence (Posner et al., 2005). Higher arousal indicates that the participant is less drowsy and more energetic, while higher valence signifies a more positive emotional state. Facial expressions are continuously recorded throughout the 5-minute tasks using a USB camera mounted on the monitor displaying MATB-YARP, capturing images at approximately 12–15 FPS. For each frame, arousal and valence are estimated using a deep learning model. Specifically, we adopted the FaceChannel deep neural network architecture (Barros et al., 2020), which was initially trained on the FER+ dataset (Barsoum et al., 2016) and fine-tuned on the AffectNet dataset (Mollahosseini et al., 2017). In the FaceChannel network, both arousal and valence are represented on a scale ranging from −1 to 1. By calculating the mean values over 20-second intervals, we obtain the arousal and valence values for each time period.
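The 20-second averaging step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `windowed_means`, the use of per-frame timestamps in seconds, and the choice to return `None` for a window without frames are assumptions. Timestamps are used rather than frame counts because the camera rate is only approximately constant.

```python
from statistics import fmean
from typing import List, Optional, Sequence


def windowed_means(
    timestamps_s: Sequence[float],
    values: Sequence[float],
    window_s: float = 20.0,
    duration_s: float = 300.0,
) -> List[Optional[float]]:
    """Average per-frame estimates (e.g. valence in [-1, 1]) per time window."""
    n_windows = int(duration_s // window_s)
    buckets: List[List[float]] = [[] for _ in range(n_windows)]
    for t, v in zip(timestamps_s, values):
        idx = int(t // window_s)
        if 0 <= idx < n_windows:  # ignore frames outside the session
            buckets[idx].append(v)
    # A window with no frames yields None instead of a fabricated value.
    return [fmean(b) if b else None for b in buckets]
```

With the default arguments, a 5-minute session yields 15 values per participant, which matches the number of data points per participant used in the analysis.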

3.7 Procedure

Participants are welcomed in a room arranged to be comfortable and distinctly different from a typical laboratory setting. To minimize distractions that might affect performance, the experimenter’s control area is placed behind the participants. The robot begins in its home position (expressionless, standing, and facing forward), and as soon as the participant enters the room, the following protocol is implemented:

1. Participants are asked to sit in the designated chair in the experimental setup.

2. Before the experiment begins, the experimenters explain how to operate the MATB-YARP task and instruct participants to wear the EDA sensor on their left hand. They are also directed to use the joystick with their left hand and the keyboard with their right hand.

3. The robot signals the start of the MATB-YARP task by speaking in Italian: “Are you ready to start? I have to inform you that the experiment is starting, let’s start the experiment, I am here for you.” The task then commences, with events occurring as scheduled in the time series. The robot’s behavior speed and its tone of voice vary depending on the condition.

4. After 5 min, the tasks end. The robot indicates the conclusion of the MATB-YARP task by speaking in Italian: “Thank you, the experiment is terminated.” Again, the robot’s behavior speed and its tone of voice vary depending on the condition.

5. Finally, the experimenter conducts a post-experiment interview to verify that the participant has observed the iCub’s behaviors.

The experimental protocol is designed to minimize interaction between participants and the experimenters, ensuring that the entire experience focuses on the interaction between the humanoid robot and the participant.

3.8 Participants

We recruited 29 native Italian speakers (18–54 years old,

3.9 Analysis

We recorded data from 15 participants (9 female, 6 male) in the gentle condition and 15 participants (9 female, 6 male) in the rude condition. Data from one participant in the rude condition were excluded because the recording was stopped mid-session, so the analysis for that condition included data from the remaining 14 participants (9 female, 5 male).

For data analysis, we assessed the following behavioral measures in the tasks:

In the communications task, events that took longer than 15 s to complete, or that received no response, were assigned a completion time of 15 s. In our experiment, 17 responses (5 in the gentle condition and 12 in the rude condition) were treated in this manner, resulting in a total of 145 responses (75 in the gentle condition and 70 in the rude condition) included in the analysis.

In the system monitoring task, events that took longer than 5 s to react to, or that received no response, were assigned a reaction time of 5 s. In our experiment, 40 responses (22 in the gentle condition and 18 in the rude condition) were treated in this manner, resulting in a total of 377 responses (195 in the gentle condition and 182 in the rude condition) included in the analysis.
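The capping rule for slow or missing responses can be expressed compactly. The sketch below is illustrative only: the helper name `cap_response_times` and the convention of encoding a missing response as `None` are assumptions, not details from the study's software.

```python
from typing import List, Optional, Sequence


def cap_response_times(times_s: Sequence[Optional[float]], limit_s: float) -> List[float]:
    """Replace missing (None) or over-limit times with the limit itself."""
    return [limit_s if (t is None or t > limit_s) else t for t in times_s]


# Communications task: 15 s limit; system monitoring task: 5 s limit.
comm = cap_response_times([3.2, None, 17.0, 15.0], limit_s=15.0)
sysmon = cap_response_times([0.8, 6.1, None], limit_s=5.0)

# Response counts implied by the design: 5 communications events and
# 13 system monitoring events per participant, 15 gentle + 14 rude participants.
comm_total = (15 + 14) * 5
sysmon_total = (15 + 14) * 13
```

Because capped values are retained rather than dropped, every scheduled event contributes one data point, which is why the totals equal the number of participants multiplied by the number of events.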

In the tracking task, the target’s distance from the center was averaged every 20 s, yielding 15 data points per participant. In our experiment, 435 data points were obtained from 29 participants (225 from 15 participants in the gentle condition and 210 from 14 participants in the rude condition) and included in the analysis.

For the facial expression data, we averaged the arousal and valence values every 20 s, yielding 15 data points per participant. Due to missing data, we excluded 1 participant from the gentle condition and 3 participants from the rude condition, leaving us with 375 data points from 25 participants (210 from 14 participants in the gentle condition and 165 from 11 participants in the rude condition).

In addition, the ISCR was calculated every 20 s during the 5-minute task, yielding 15 data points per participant. If a participant’s mean ISCR exceeded 10 μS·s (i.e., the mean SCR was more than 0.5 μS), we considered that they experienced excessive mental workload due to unfamiliarity with the experiment and excluded their data. Similarly, if a participant’s mean ISCR fell below 0.2 μS·s (i.e., the mean SCR was less than 0.01 μS), we considered it unlikely that they exhibited normal skin conductance responses, and we excluded their data. In our experiment, we excluded one participant from the gentle condition and three from the rude condition on this basis, leaving 375 data points from 25 participants (210 from 14 participants in the gentle condition and 165 from 11 participants in the rude condition).
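The window integral and the exclusion thresholds can be illustrated as follows. This sketch assumes that the phasic driver has already been extracted and is available as evenly sampled values in μS; the function names `iscr` and `keep_participant`, the trapezoidal integration, and the 4 Hz sampling rate in the example are assumptions rather than details reported in the study.

```python
from typing import Sequence


def iscr(driver_uS: Sequence[float], fs_hz: float) -> float:
    """Trapezoidal integral of the phasic driver over one window, in μS·s."""
    dt = 1.0 / fs_hz
    return sum((a + b) * 0.5 * dt for a, b in zip(driver_uS, driver_uS[1:]))


def keep_participant(window_iscr_uSs: Sequence[float],
                     low: float = 0.2, high: float = 10.0) -> bool:
    """Apply the exclusion rule to a participant's mean ISCR across windows."""
    mean_iscr = sum(window_iscr_uSs) / len(window_iscr_uSs)
    return low <= mean_iscr <= high


# A constant driver of 0.5 μS over a 20 s window (81 samples at 4 Hz)
# integrates to 10 μS·s, which is the upper threshold.
example = iscr([0.5] * 81, fs_hz=4.0)
```

The example also shows why the two thresholds correspond to mean SCR values of 0.5 μS and 0.01 μS: dividing 10 μS·s and 0.2 μS·s by the 20 s window length gives exactly those figures.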

For statistical analysis, we applied the Mann-Whitney

We also compared the values for each condition to baseline values, which were obtained from another group of 14 participants (5 female, 9 male) who performed the MATB-YARP task without any robot present. The baseline values were calculated as the median values of each task performance measure, arousal, valence, and ISCR. We then examined the differences between the median values in the gentle and rude conditions and the corresponding baseline values.
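For readers who want to reproduce this kind of between-group comparison, a self-contained sketch of a two-sided Mann-Whitney U test and of the median difference from baseline is given below. It is an assumption-laden illustration: it uses the normal approximation with tie correction and no continuity correction, which may differ from the variant the authors applied, and the function names `mann_whitney_u` and `median_shift` are hypothetical.

```python
import math
from statistics import median
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U statistic for x, two-sided p-value via normal approximation)."""
    n1, n2 = len(x), len(y)
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda p: p[0])
    ranks = [0.0] * len(pooled)
    tie_term = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = avg_rank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2.0
    n = n1 + n2
    mu = n1 * n2 / 2.0
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var == 0.0:  # all observations identical
        return u1, 1.0
    z = (u1 - mu) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return u1, p


def median_shift(condition: Sequence[float], baseline: Sequence[float]) -> float:
    """Difference between the condition median and the baseline median."""
    return median(condition) - median(baseline)
```

A rank-based test is a natural fit here because capped response times pile up at the limit value, producing ties and a skewed distribution that a test on means would handle poorly.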

4 Results

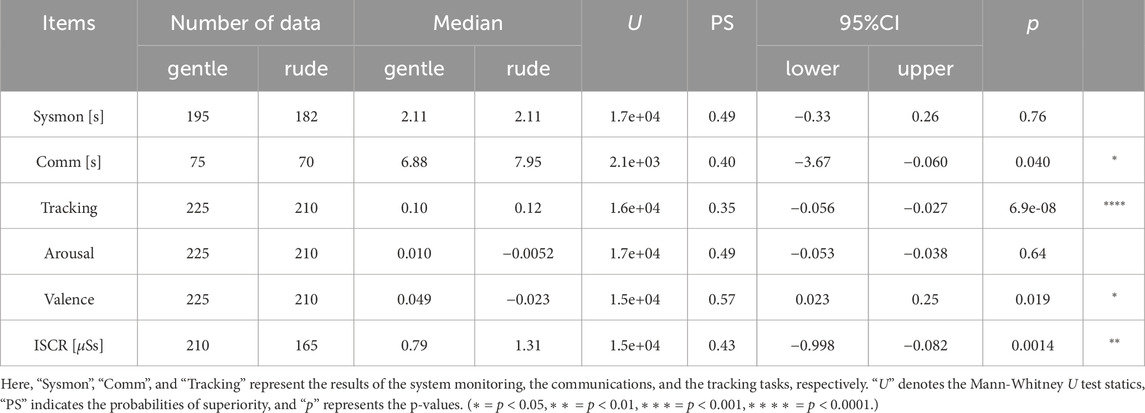

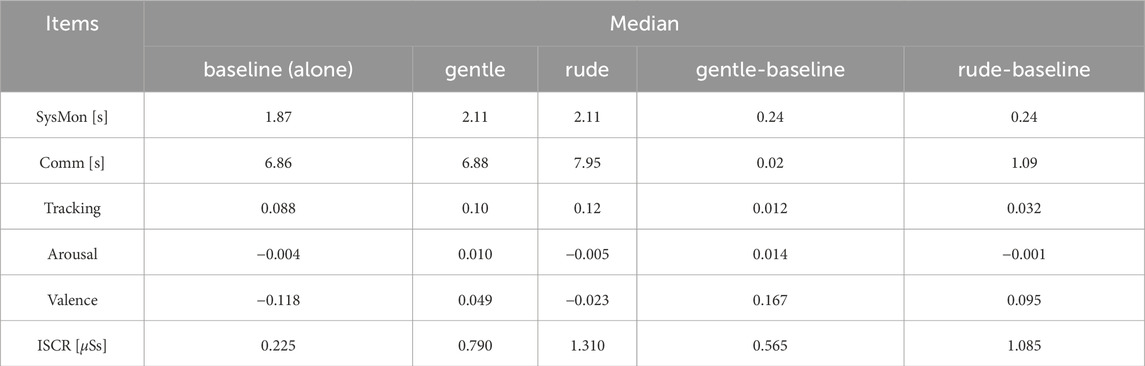

In this section, we summarize the experimental results in relation to the four hypotheses (see Section 3.1). Our results support H1, H3, and H4 but not H2. A summary of the data is provided in Table 1.

4.1 Hypothesis 1: supported

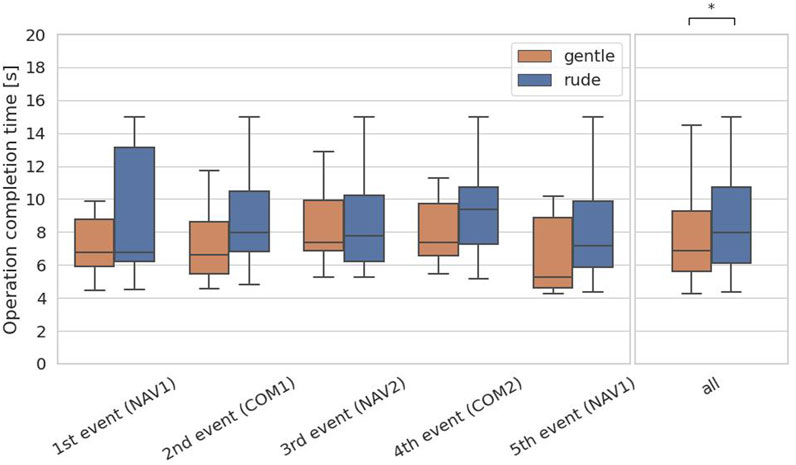

The first hypothesis examined whether the presence of a gently behaving robot significantly enhances human performance in a cognitive task requiring short-term memory, compared to a rudely behaving robot. To test the hypothesis, we evaluated the operation completion time of the communications task, which is associated with short-term memory. For each participant, we obtained 5 data samples, and we applied the Mann-Whitney

Figure 6. Evaluation of the operation completion time for each event and across all events in the communications task. (∗ = p < 0.05, ∗∗ = p < 0.01, ∗∗∗ = p < 0.001.)

4.2 Hypothesis 2: not supported

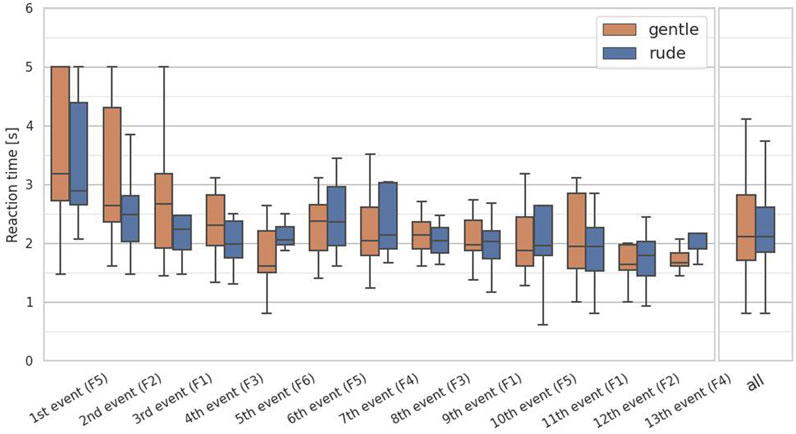

The second hypothesis examined whether the presence of a rudely behaving robot significantly affects human reactive performance on a cognitive task, compared to a gently behaving robot. To test the hypothesis, we analyzed the reaction time in the system monitoring task. For this task, we collected 13 data samples per participant and applied the Mann-Whitney

Figure 7. Evaluation of the reaction time for each event and across all events in the system monitoring task. (∗ = p < 0.05, ∗∗ = p < 0.01, ∗∗∗ = p < 0.001.)

4.3 Hypothesis 3: supported

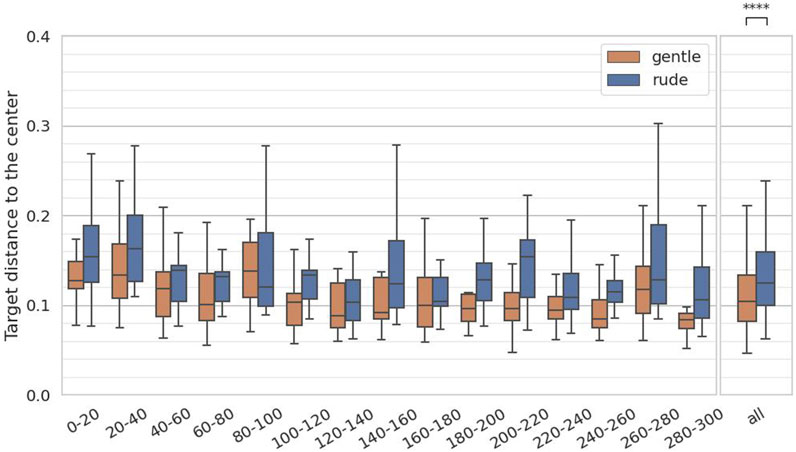

The third hypothesis examined whether the presence of a gently behaving robot significantly improves human tracking performance in a cognitive task requiring continuous focus, compared to a rudely behaving robot. To test the hypothesis, we measured the distance of the target from the center in the tracking task. We calculated the average distance to the center at 20-second intervals, yielding 15 data samples per participant, and we applied the Mann-Whitney

Figure 8. Evaluation of the target distance to the center for each 20 s interval and across all intervals in the tracking task. (∗ = p < 0.05, ∗∗ = p < 0.01, ∗∗∗ = p < 0.001.)

4.4 Hypothesis 4: supported

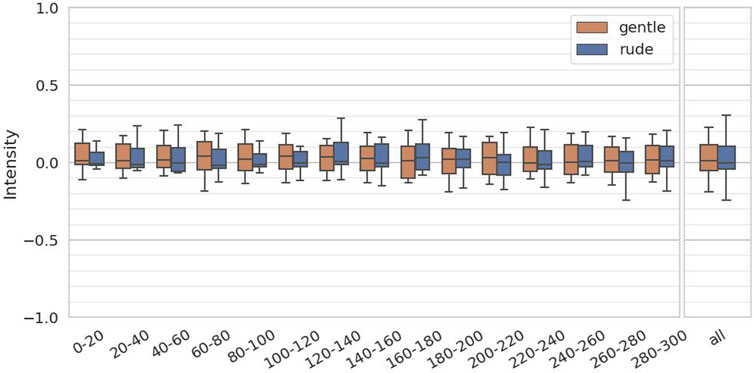

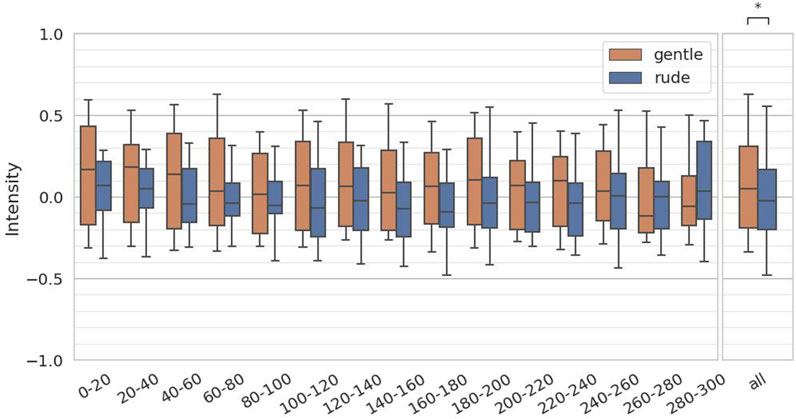

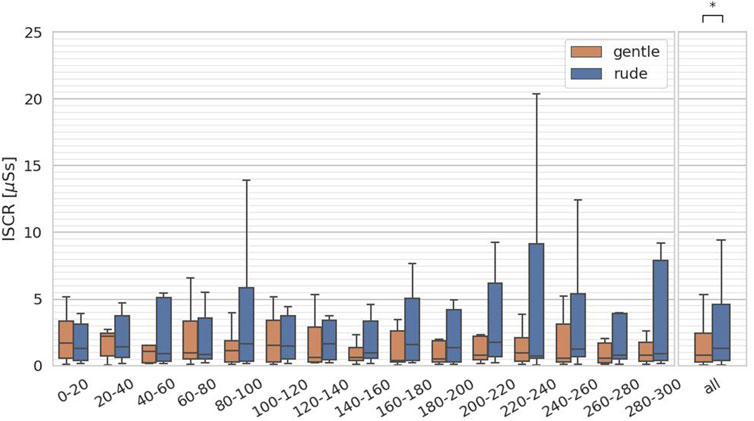

The fourth hypothesis examined whether the presence of a gently behaving robot significantly affects human facial expressions and mental workload, compared to a rudely behaving robot. To test this, we evaluated arousal and valence from facial expressions, as estimated by the FaceChannel deep neural network, and the ISCR from the skin conductance measurements. For both arousal and valence, we computed the mean values over 20-second intervals, yielding 15 data samples per participant, and we applied the Mann-Whitney

Figure 9. Evaluation of the arousal values for each 20 s interval and across all intervals. (∗ = p < 0.05, ∗∗ = p < 0.01, ∗∗∗ = p < 0.001.)

Figure 10. Evaluation of the valence values for each 20 s interval and across all intervals. (∗ = p < 0.05, ∗∗ = p < 0.01, ∗∗∗ = p < 0.001.)

Figure 11. Evaluation of the integrated skin conductance response (ISCR) for each 20 s interval and across all intervals. (∗ = p < 0.05, ∗∗ = p < 0.01, ∗∗∗ = p < 0.001.)

4.5 Comparison with the baseline

We compared the median values in each condition against the baseline, as summarized in Table 2. For the system monitoring task, reaction times were 0.24 s slower than baseline in both the gentle and rude conditions. In the communications task, the operation completion time was 0.02 s slower than baseline in the gentle condition and 1.09 s slower in the rude condition. In the tracking task, performance was 0.012 units worse than baseline in the gentle condition and 0.032 units worse in the rude condition. For arousal, values were 0.014 higher than baseline in the gentle condition and 0.001 lower in the rude condition. For valence, values were 0.167 higher than baseline in the gentle condition and 0.095 higher in the rude condition. Finally, for ISCR, values were 0.565

Table 2. The differences between the values obtained in this study and the baseline values are summarized. The baseline was measured when the MATB-YARP task was performed without any robot present.

5 Discussion

We examined the effects of two interaction styles of humanoid robots on multitasking performance, facial expressions, and mental workload. Data were collected from 29 participants during a multitasking experiment in which the robot provided advice for the system monitoring and tracking tasks—but not for the communications task. Our results showed that participants in the gentle condition performed better in the communications task—which required short-term memory of voice instructions and multiple key presses—and in the tracking task, which demanded continuous attention.

Moreover, analysis of valence derived from facial expressions and of ISCR derived from skin conductance revealed that participants exhibited more positive emotion and lower unconscious mental workload in the presence of the gently moving robot. These findings suggest that the robot’s gentle behavior and cooperative expressions unconsciously fostered a positive, relaxed state in the participants, thereby improving their performance on the communications task and demonstrating the influence of vitality forms.

Conversely, in the system monitoring task—a simple reactive task—there was no statistically significant difference between the two conditions, and the influence of vitality forms was not evident. In a previous study by Di Cesare et al. (2020b), participants received an object after observing an iCub offering it either gently or rudely. In our experiment, however, participants merely pressed keys on a keyboard, so any differences in reactive movement speed were likely less pronounced.

We also observed that the likelihood of failing to complete the communications task within 15 s was lower in the gentle condition (5 out of 75 responses) than in the rude condition (12 out of 70 responses). This suggests that rude robot behavior imposed a higher mental workload, causing participants to take longer in their decision-making process.
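The failure counts above can be turned into rates, and one possible way to test them is sketched below. No test for these counts is named in this section, so Fisher's exact test is used purely as an illustration, implemented with the standard library only. It also assumes that the responses are independent, which may not hold if several responses come from the same participant. The function names are illustrative.

```python
from math import comb


def failure_rate(failures, total):
    """Proportion of responses not completed within the time limit."""
    return failures / total


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum all tables that are no more probable than the observed one.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1))
               if p <= p_obs * (1 + 1e-9))


if __name__ == "__main__":
    # Counts reported in the text: failures out of total responses.
    gentle_fail, gentle_total = 5, 75
    rude_fail, rude_total = 12, 70
    print(f"gentle failure rate: {failure_rate(gentle_fail, gentle_total):.3f}")
    print(f"rude failure rate:   {failure_rate(rude_fail, rude_total):.3f}")
    p = fisher_exact_two_sided(gentle_fail, gentle_total - gentle_fail,
                               rude_fail, rude_total - rude_fail)
    print(f"two-sided Fisher exact p-value: {p:.4f}")
```

The rates work out to roughly 6.7% in the gentle condition and 17.1% in the rude condition.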

We further compared the median values in each condition to baseline values obtained when the MATB-YARP task was performed without any robot present. As noted in Section 4.5, reaction times in the system monitoring task were equally slower in both the gentle and rude conditions compared to the baseline. This suggests that for simple reactive tasks, it may be preferable to work alone to minimize distractions. In contrast, for the communications task—a cognitively demanding task—performance in the gentle condition was nearly identical to baseline, while performance in the rude condition deteriorated. Similarly, in the tracking task, which requires sustained concentration, the rude condition negatively impacted performance. Additionally, ISCR values were higher than baseline in both conditions, with the rude condition showing an even greater increase.

These results suggest that the mere social and physical presence of a robot can elevate mental workload, and that this effect is amplified when the robot behaves rudely. Notably, valence values were highest in the gentle condition, exceeding those in both the rude condition and the baseline, which indicates that participants experienced more positive emotions when interacting with a gentle robot. The fact that the rude condition also yielded slightly higher valence values than the baseline implies that simply interacting with a social robot for the first time may have a modestly positive emotional effect.

Taken together, these results indicate that a gentle social robot does not increase mental workload as much as a rude robot; rather, it elicits more positive emotions and reduces the likelihood of errors. These findings suggest that the presence of a gentle robot has the potential to enhance team performance in cognitive multitasking. Future research should explore these effects over longer periods to better understand the impact of interactions with robotic agents.

6 Limitations

This study has several limitations.

While further research is needed to reinforce our conclusions, some findings are clearly evident. A review of camera images shows that all participants observed the iCub’s behavior at the beginning and end of the 5-minute session. In contrast, only two participants in the gentle condition and none in the rude condition looked at the iCub during the tasks, and those two glanced at it only two and three times, respectively. Nevertheless, post-experiment interviews confirmed that all participants were aware of the iCub’s gesturing during the tasks, as the robot was positioned within their peripheral view. Thus, it is likely that participants recognized some of the robot’s behaviors but did not observe all of them, and that some participants were not influenced by these behaviors. Given these facts, additional studies are needed that separate the experimental conditions into voice-only and behavior-only variants to clarify the effects of each component.

Another limitation is that our between-subjects design prevented us from asking participants whether they could distinguish between the “gentle” and “rude” vitality forms. Subsequent physiological experiments could measure the activity of the brain networks involved in processing vitality forms, providing direct evidence of whether the vitality forms were indeed conveyed to the participants.

In addition, the sample size was too small to develop a predictive model combining the measured parameters. With more data, a more informative statistical analysis could have been performed.

Lastly, this study involved participants performing the three MATB tasks simultaneously. Future studies might enhance our understanding by having participants perform each individual task separately.

7 Conclusion

This paper contributed to the burgeoning field of vitality forms research, which is attracting increasing attention in neuroscience. Unlike previous experiments on vitality forms that measured a single human reaction to one isolated robot action, our research evaluated participants’ performance over a 5-minute period during a series of robot behaviors. This approach provided a more comprehensive view of the impact of vitality forms over an extended timeframe. Furthermore, these results may serve as a stepping stone for exploring the long-term effects of vitality forms in real-world settings beyond robotics. Overall, our findings suggest that properly designing a social humanoid robot’s vitality forms can significantly enhance cognitive multitasking performance in human-robot teams.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the ethical committee of the Italian Institute of Technology. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

MA: Writing – original draft. FR: Writing – original draft. GD: Writing – original draft. GS: Writing – original draft. TaY: Writing – original draft. AT: Writing – original draft. ToY: Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

Authors MA, TaY, AT, and ToY were employed by Nissan Motor Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Agrigoroaie, R., and Tapus, A. (2020). Cognitive performance and physiological response analysis. Int. J. Soc. Robotics 12, 47–64. doi:10.1007/s12369-019-00532-z

Ammaniti, M., and Ferrari, P. (2013). Vitality affects in daniel stern’s thinking—a psychological and neurobiological perspective. Infant Ment. Health J. 34, 367–375. doi:10.1002/imhj.21405

Aoki, M., Rea, F., Jirak, D., Sandini, G., Yanagi, T., Takamatsu, A., et al. (2022). On the influence of social robots in cognitive multitasking. Int. J. Humanoid Robotics 19, 2250022. doi:10.1142/s0219843622500220

Argyle, M., Ingham, R., Alkema, F., and McCallin, M. (1973). The different functions of gaze. Semiotica 7, 19–32. doi:10.1515/semi.1973.7.1.19

Barros, P., Churamani, N., and Sciutti, A. (2020). The FaceChannel: a fast and furious deep neural network for facial expression recognition. SN Comput. Sci. 1, 321. doi:10.1007/s42979-020-00325-6

Barsoum, E., Zhang, C., Canton Ferrer, C., and Zhang, Z. (2016). “Training deep networks for facial expression recognition with crowd-sourced label distribution,” in ACM international conference on multimodal interaction (ICMI).

Bartneck, C. (2003). “Interacting with an embodied emotional character,” in Proceedings of the 2003 international conference on Designing pleasurable products and interfaces, 55–60.

Benedek, M., and Kaernbach, C. (2007). Ledalab. Available online at: http://ledalab.de.

Benedek, M., and Kaernbach, C. (2010a). A continuous measure of phasic electrodermal activity. J. Neurosci. methods 190, 80–91. doi:10.1016/j.jneumeth.2010.04.028

Benedek, M., and Kaernbach, C. (2010b). Decomposition of skin conductance data by means of nonnegative deconvolution. Psychophysiology 47, 647–658. doi:10.1111/j.1469-8986.2009.00972.x

Boucsein, W. (1992). Electrodermal indices of emotion and stress, chapter 3. Electrodermal Activity, 369–391.

Brown, E. C., and Brüne, M. (2012). The role of prediction in social neuroscience. Front. Hum. Neurosci. 6, 147. doi:10.3389/fnhum.2012.00147

Burns, A., Doheny, E. P., Greene, B. R., Foran, T., Leahy, D., O’Donovan, K., et al. (2010a). “Shimmer™: an extensible platform for physiological signal capture,” in 2010 annual international conference of the IEEE engineering in medicine and biology (IEEE), 3759–3762.

Burns, A., Greene, B. R., McGrath, M. J., O’Shea, T. J., Kuris, B., Ayer, S. M., et al. (2010b). Shimmer™–a wireless sensor platform for noninvasive biomedical research. IEEE Sensors J. 10, 1527–1534. doi:10.1109/jsen.2010.2045498

Comstock, J. R., and Arnegard, R. J. (1992). The multi-attribute task battery for human operator workload and strategic behavior research.

Condon, W. S., and Sander, L. W. (1974). Neonate movement is synchronized with adult speech: interactional participation and language acquisition. Science 183, 99–101. doi:10.1126/science.183.4120.99

Di Cesare, G. (2020). “The importance of the affective component of movement in action understanding,” in Modelling human motion: from human perception to robot design (Cham: Springer), 103–116.

Di Cesare, G., De Stefani, E., Gentilucci, M., and De Marco, D. (2017). Vitality forms expressed by others modulate our own motor response: a kinematic study. Front. Hum. Neurosci. 11, 565. doi:10.3389/fnhum.2017.00565

Di Cesare, G., Fasano, F., Errante, A., Marchi, M., and Rizzolatti, G. (2016a). Understanding the internal states of others by listening to action verbs. Neuropsychologia 89, 172–179. doi:10.1016/j.neuropsychologia.2016.06.017

Di Cesare, G., Gerbella, M., and Rizzolatti, G. (2020a). The neural bases of vitality forms. Natl. Sci. Rev. 7, 202–213. doi:10.1093/nsr/nwz187

Di Cesare, G., Marchi, M., Lombardi, G., Gerbella, M., Sciutti, A., and Rizzolatti, G. (2021). The middle cingulate cortex and dorso-central insula: a mirror circuit encoding observation and execution of vitality forms. Proc. Natl. Acad. Sci. 118, e2111358118. doi:10.1073/pnas.2111358118

Di Cesare, G., Valente, G., Di Dio, C., Ruffaldi, E., Bergamasco, M., Goebel, R., et al. (2016b). Vitality forms processing in the insula during action observation: a multivoxel pattern analysis. Front. Hum. Neurosci. 10, 267. doi:10.3389/fnhum.2016.00267

Di Cesare, G., Vannucci, F., Rea, F., Sciutti, A., and Sandini, G. (2020b). How attitudes generated by humanoid robots shape human brain activity. Sci. Rep. 10, 16928. doi:10.1038/s41598-020-73728-3

Ferrari, P. F., Gallese, V., Rizzolatti, G., and Fogassi, L. (2003). Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. Eur. J. Neurosci. 17, 1703–1714. doi:10.1046/j.1460-9568.2003.02601.x

Filetti, M. (2020). Ledapy 1.2.1. Available online at: https://pypi.org/project/ledapy.

Fowles, D. C., Christie, M. J., Edelberg, R., Grings, W. W., Lykken, D. T., and Venables, P. H. (1981). Publication recommendations for electrodermal measurements. Psychophysiology 18, 232–239. doi:10.1111/j.1469-8986.1981.tb03024.x

Gallese, V., and Goldman, A. (1998). Mirror neurons and the simulation theory of mind-reading. Trends cognitive Sci. 2, 493–501. doi:10.1016/s1364-6613(98)01262-5

Gazzola, V., Rizzolatti, G., Wicker, B., and Keysers, C. (2007). The anthropomorphic brain: the mirror neuron system responds to human and robotic actions. Neuroimage 35, 1674–1684. doi:10.1016/j.neuroimage.2007.02.003

Ghazali, A. S., Ham, J., Barakova, E. I., and Markopoulos, P. (2017). “Pardon the rude robot: social cues diminish reactance to high controlling language,” in 2017 26th IEEE international symposium on robot and human interactive communication (RO-MAN), Lisbon, Portugal, 28 August 2017 - 01 September 2017 (IEEE), 411–417.

Goldin-Meadow, S. (1999). The role of gesture in communication and thinking. Trends Cognitive Sci. 3, 419–429. doi:10.1016/S1364-6613(99)01397-2

Hegel, F., Krach, S., Kircher, T., Wrede, B., and Sagerer, G. (2008). “Theory of mind (ToM) on robots: a functional neuroimaging study,” in Proceedings of the 3rd ACM/IEEE international conference on Human robot interaction, Amsterdam, Netherlands, 12-15 March 2008, 335–342. doi:10.1145/1349822.1349866

Iverson, J. M., and Goldin-Meadow, S. (1998). Why people gesture when they speak. Nature 396, 228. doi:10.1038/24300

Jirak, D., Aoki, M., Yanagi, T., Takamatsu, A., Bouet, S., Yamamura, T., et al. (2022). Is it me or the robot? a critical evaluation of human affective state recognition in a cognitive task. Front. Neurorobotics 16, 882483. doi:10.3389/fnbot.2022.882483

Kilner, J. M., Friston, K. J., and Frith, C. D. (2007). Predictive coding: an account of the mirror neuron system. Cogn. Process. 8, 159–166. doi:10.1007/s10339-007-0170-2

Mann, H. B., and Whitney, D. R. (1947). On a test of whether one of two random variables is stochastically larger than the other. Ann. Math. statistics 18, 50–60. doi:10.1214/aoms/1177730491

Metta, G., Natale, L., Nori, F., Sandini, G., Vernon, D., Fadiga, L., et al. (2010). The iCub humanoid robot: an open-systems platform for research in cognitive development. Neural Netw. 23, 1125–1134. doi:10.1016/j.neunet.2010.08.010

Metta, G., Sandini, G., Vernon, D., Natale, L., and Nori, F. (2008). “The iCub humanoid robot: an open platform for research in embodied cognition,” in Performance metrics for intelligent systems (PerMIS) workshop (ACM), 50–56. doi:10.1145/1774674.1774683

Mollahosseini, A., Hasani, B., and Mahoor, M. H. (2017). AffectNet: a database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 10, 18–31. doi:10.1109/taffc.2017.2740923

Oberman, L. M., McCleery, J. P., Ramachandran, V. S., and Pineda, J. A. (2007). EEG evidence for mirror neuron activity during the observation of human and robot actions: toward an analysis of the human qualities of interactive robots. Neurocomputing 70, 2194–2203. doi:10.1016/j.neucom.2006.02.024

Pace-Schott, E. F., Amole, M. C., Aue, T., Balconi, M., Bylsma, L. M., Critchley, H., et al. (2019). Physiological feelings. Neurosci. and Biobehav. Rev. 103, 267–304. doi:10.1016/j.neubiorev.2019.05.002

Posner, J., Russell, J. A., and Peterson, B. S. (2005). The circumplex model of affect: an integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 17, 715–734. doi:10.1017/s0954579405050340

Premack, D., and Woodruff, G. (1978). Does the chimpanzee have a theory of mind? Behav. brain Sci. 1, 515–526. doi:10.1017/s0140525x00076512

Rea, D. J., Schneider, S., and Kanda, T. (2021). “‘Is this all you can do? Harder!’: the effects of (im)polite robot encouragement on exercise effort,” in Proceedings of the 2021 ACM/IEEE international conference on human-robot interaction, 225–233.

Rizzolatti, G. (2005). The mirror neuron system and its function in humans. Anat. embryology 210, 419–421. doi:10.1007/s00429-005-0039-z

Rizzolatti, G., and Craighero, L. (2004). The mirror-neuron system. Annu. Rev. Neurosci. 27, 169–192. doi:10.1146/annurev.neuro.27.070203.144230

Rizzolatti, G., D’Alessio, A., Marchi, M., and Di Cesare, G. (2021). The neural bases of tactile vitality forms and their modulation by social context. Sci. Rep. 11, 9095. doi:10.1038/s41598-021-87919-z

Rochat, M. J., Veroni, V., Bruschweiler-Stern, N., Pieraccini, C., Bonnet-Brilhault, F., Barthélémy, C., et al. (2013). Impaired vitality form recognition in autism. Neuropsychologia 51, 1918–1924. doi:10.1016/j.neuropsychologia.2013.06.002

Rochat, P. (2009). Others in mind: social origins of self-consciousness. Cambridge University Press.

Russell, J. A. (1980). A circumplex model of affect. J. personality Soc. Psychol. 39, 1161–1178. doi:10.1037/h0077714

Santiago-Espada, Y., Myer, R. R., Latorella, K. A., and Comstock, J. R. (2011). The multi-attribute task battery II (MATB-II) software for human performance and workload research: a user’s guide. Tech. Rep. 60203411.

Scherer, K. R. (2005). What are emotions? and how can they be measured? Soc. Sci. Inf. 44, 695–729. doi:10.1177/0539018405058216

Spatola, N., Belletier, C., Chausse, P., Augustinova, M., Normand, A., Barra, V., et al. (2019a). Improved cognitive control in presence of anthropomorphized robots. Int. J. Soc. Robotics 11, 463–476. doi:10.1007/s12369-018-00511-w

Spatola, N., Monceau, S., and Ferrand, L. (2019b). Cognitive impact of social robots: how anthropomorphism boosts performances. IEEE Robotics and Automation Mag. 27, 73–83. doi:10.1109/mra.2019.2928823

Stern, D. N. (2010). Forms of vitality: exploring dynamic experience in psychology, the arts, psychotherapy, and development. Oxford University Press.

Tao, D., Tan, H., Wang, H., Zhang, X., Qu, X., and Zhang, T. (2019). A systematic review of physiological measures of mental workload. Int. J. Environ. Res. public health 16, 2716. doi:10.3390/ijerph16152716

Torrey, C., Fussell, S. R., and Kiesler, S. (2013). “How a robot should give advice,” in 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 03-06 March 2013 (IEEE), 275–282.

Vannucci, F., Di Cesare, G., Rea, F., Sandini, G., and Sciutti, A. (2018). “A robot with style: can robotic attitudes influence human actions?,” in 2018 IEEE-RAS 18th international conference on humanoid robots (humanoids) (IEEE), 1–6.

Keywords: human-robot interaction, social robots, vitality forms, cognitive multitasking, facial expressions, mental workload

Citation: Aoki M, Rea F, Di Cesare G, Sandini G, Yanagi T, Takamatsu A and Yamamura T (2025) Effects of gentle and rude vitality forms in social robots on humans during cognitive multitasking. Front. Robot. AI 12:1305685. doi: 10.3389/frobt.2025.1305685

Received: 02 October 2023; Accepted: 29 January 2025;

Published: 22 April 2025.

Edited by:

Silvia Rossi, University of Naples Federico II, Italy

Reviewed by:

Sofia Thunberg, Chalmers University of Technology, Sweden

Hatice Kose, Istanbul Technical University, Türkiye

Eleonora Zedda, National Research Council (CNR), Italy

Copyright © 2025 Aoki, Rea, Di Cesare, Sandini, Yanagi, Takamatsu and Yamamura. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Motonobu Aoki, mot-aoki@mail.nissan.co.jp
