- 1Department of Information Technology, Uppsala University, Uppsala, Sweden

- 2Department of Information and Computing Sciences, Utrecht University, Utrecht, Netherlands

- 3Department of Information Engineering and Computer Science, University of Trento, Trento, Italy

Friendship and rapport play an important role in the formation of constructive social interactions, and have been widely studied in education due to their impact on learning outcomes. Given the growing interest in automating the analysis of such phenomena through Machine Learning, access to annotated interaction datasets is highly valuable. However, no dataset on child-child interactions explicitly capturing rapport currently exists. Moreover, despite advances in the automatic analysis of human behavior, no previous work has addressed the prediction of rapport in child-child interactions in educational settings. We present UpStory — the Uppsala Storytelling dataset: a novel dataset of naturalistic dyadic interactions between primary school aged children, with an experimental manipulation of rapport. Pairs of children aged 8–10 participate in a task-oriented activity: designing a story together, while being allowed free movement within the play area. We promote balanced collection of different levels of rapport by using a within-subjects design: self-reported friendships are used to pair each child twice, either minimizing or maximizing pair separation in the friendship network. The dataset contains data for 35 pairs, totaling 3 h 40 m of audiovisual recordings. It includes two video sources, and separate voice recordings per child. An anonymized version of the dataset is made publicly available, containing per-frame head pose, body pose, and face features. Finally, we confirm the informative power of the UpStory dataset by establishing baselines for the prediction of rapport. A simple approach achieves 68% test accuracy using data from one child, and 70% test accuracy aggregating data from a pair.

1 Introduction

Rapport, the establishment of a close relationship based on mutual understanding, has long been considered to be an important facet of social interactions (Tickle-Degnen and Rosenthal, 1990), and particularly in dyadic (one-on-one) interactions (Travelbee, 1963). In the educational context, teacher-student rapport and student-student rapport have been shown to have a positive impact on the learning experience (Frisby and Martin, 2010). Friendship between classmates is naturally a high-rapport relationship and has been shown to improve measurable outcomes such as task performance, effective problem-solving, and learning outcomes (Azmitia and Montgomery, 1993; Newcomb and Bagwell, 1995; Wentzel et al., 2018).

Given the growing corpus of research in child-robot interaction seeking to use social robots as learning companions, helping students learn through simulated peer interaction (Belpaeme et al., 2018), the automatic analysis of rapport can be very beneficial, allowing social robots to better adapt to their role as peers. As such, observing data from child-child interactions provides valuable insights into modeling interaction dynamics and human behavior, which informs the creation of socially intelligent robots capable of interacting effectively with children in educational settings.

While the Machine Learning (ML)-based analysis of affect and related interpersonal constructs has been well studied (see, e.g., (Wu et al., 2020; Alsofyani and Vinciarelli, 2021; Nojavanasghari et al., 2016)), no previous work has investigated the prediction of rapport in child-child dyadic interactions in educational settings. Moreover, no dataset capturing this specific interaction context is available. The primary goal of this study is to address this gap by introducing a publicly available child-child interaction dataset annotated for rapport. We further verify the informative power of the dataset by providing ML baselines for the automatic prediction of rapport, showing that even a simple approach clearly attains better-than-random performance.

In order to learn useful models of social interaction through ML techniques, practitioners require access to high-quality multimodal datasets. Due to the sensitive nature of the recorded data (including the likeness and voice of the participants, as well as the information they disclose through speech), the number of such datasets is relatively small, and access is constrained. Some well-known datasets are available for adult-adult interactions (Bilakhia et al., 2015) and child-child interactions (Singh et al., 2018; Lemaignan et al., 2018), but in these, available annotations for social constructs are obtained from external raters. This has implications on the reliability of the annotations, and an objective measure of rapport would be preferable when studying this phenomenon. We address this issue by relying on the friendship network: a graph representing friendships in the classroom through self-reported friendship nominations. Friendship networks have long been used to study the social relationships in educational institutions (Hallinan, 1979), and have been analyzed using graph theory to evaluate the social fabric of the student population (Hansell, 1985). In this study, we propose a partition scheme based on friendship network analysis to obtain high-rapport and low-rapport pairings of the student population. We validate the partition scheme using graph analysis and questionnaire responses.

The data collection effort is centered on an educational collaborative storytelling game designed to elicit pair interactions. Pairs of children, determined with the proposed partition scheme, are invited to play a card-based storytelling game together. Storytelling has been widely used in the classroom as an activity to enhance children’s creativity, language development, cognitive, and social skills (Wright, 1995; Cassell and Ryokai, 2001). As such, previous research has shown that social robots could be used as facilitators and active co-creators of storytelling in child-robot interaction (Elgarf et al., 2022). In this study, the storytelling activity begins with children planing a story in a private space, while being recorded by two overhead cameras and head-mounted microphones. Each pair plays several rounds of the game; the obtained recordings form a private multimodal dataset. The UpStory dataset is subsequently obtained by extracting anonymized face and pose features from the video recordings.

Finally, we set ML baselines for the prediction of rapport, framed as a binary classification task: did this sample belong to a high-rapport or a low-rapport pair? We analyze non-verbal behaviors, including body posture and facial cues, to capture natural signals that indicate rapport and interpersonal attitudes during social interactions (Goldman and Buysse, 2007). We provide two variants, either using features from a single child (single-child baseline), or considering the data from both children in a game round (joint-pair baseline). We use a simple approach based on previous literature (Paetzel et al., 2017): focus on facial expression data, summarize the time-series into a small selection of statistics, and use shallow learning models to classify the sample. We obtain 68% test accuracy in the single-child baseline and 70% test accuracy in the joint-pair baseline, well above the 54% random chance baseline for the dataset. Our models are intended to demonstrate that the public dataset captures the dynamics of rapport in the shared time-series, and to provide a baseline for benchmarking the performance of further solutions, such as the usage of more advanced models or the addition of alternative data modalities.

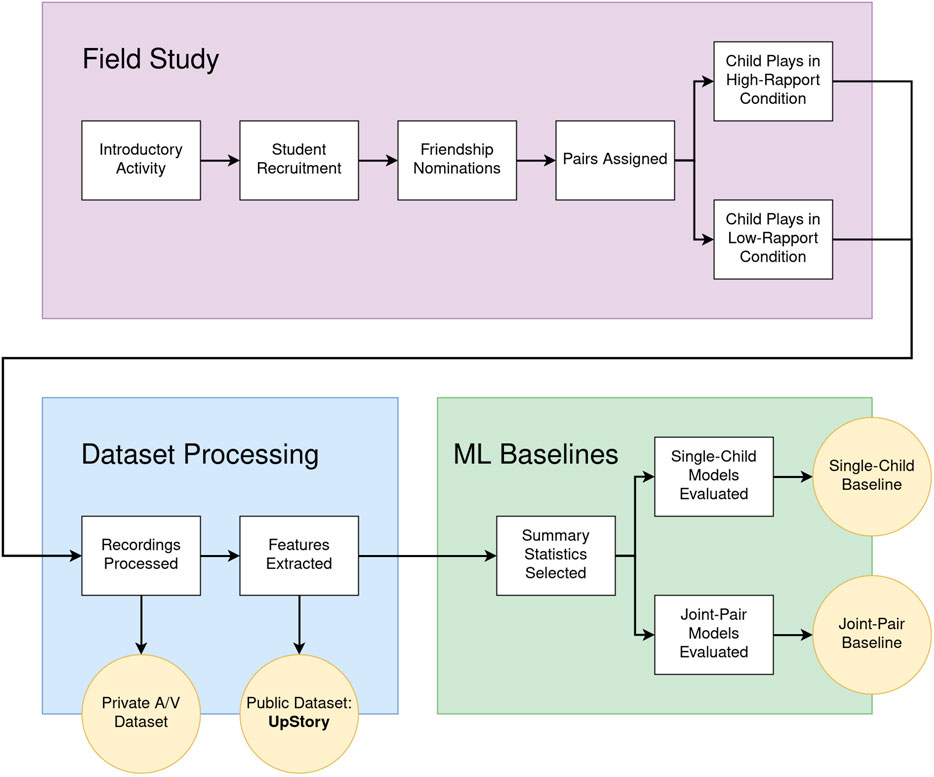

Figure 1 shows an overview of the steps in this study. Our main contributions can be summarized as follows:

1. We propose a novel experimental manipulation based on friendship network analysis to obtain high-rapport and low-rapport pairings of the student population in educational settings;

2. We collect a private dataset on dyadic child-child interaction, with an experimental manipulation for the level of rapport, including recordings of 35 pairs of children participating in a task-oriented activity, captured from two audio sources (head-mounted microphones) and two video sources (overhead cameras);

3. We publish UpStory, an open access dataset containing head pose, body pose and face features extracted from the private audiovisual dataset; and

4. We establish ML baselines for the prediction of rapport using the public UpStory feature dataset, using summary statistics of the provided AU time-series, and a selection of shallow learning techniques, achieving up to 70% test accuracy.

Figure 1. Research diagram summarizing the phases of this research (colored rectangles), the individual steps therein (white rectangles), and the produced artifacts (yellow circles). In the field study, children are first introduced to the storytelling game as a whole class. Consent forms are collected from the families of interested children. Afterwards, participating children fill in a friendship nomination form, and the resulting friendship network is used to form two pairings of the participant population: a high-rapport pairing and a low-rapport pairing. Each child participates once in each condition. In the dataset processing phase, first all recordings are cut and synchronized, producing a private audiovisual dataset, and then body pose, face pose, and facial expression features are extracted per-frame, producing the UpStory dataset. Finally, ML baselines are produced for rapport prediction using the feature representations, either corresponding to a single child in the pair (single-child baseline), or combining the data from both children in a pair (joint-pair baseline).

The UpStory dataset is publicly available at https://zenodo.org/doi/10.5281/zenodo.15391848.

The source code for this project is available at https://github.com/MarcFraile/dyadic-storytelling.

2 Related work

2.1 Children’s rapport

The Cambridge dictionary defines rapport as “a good understanding of someone and an ability to communicate well with them.”1. However, the true meaning of the word is considered difficult to define, sometimes relating to bonds built over time as implied by the dictionary definition (Tickle-Degnen and Rosenthal, 1990), but more often indicating a momentary state of constructive and relaxed communication (Travelbee, 1963). Naturally, the subtle nature of its definition implies that “rapport” needs to be operationalized with a tangible specific meaning in any empirical study of its effects.

Rapport requires two individuals willing to make and establish a meaningful connection. As rapport occurs when people’s communication styles synchronize, it facilitates communication during task-oriented activities, fostering a higher degree of cooperation, and leading to a more profound level of engagement (Newcomb and Bagwell, 1995; Azmitia and Montgomery, 1993; Wentzel et al., 2018). Children’s relationships with others evolve as they progress through various developmental stages impacting their cognitive development. Engaging in discussions and collaborative tasks with friends and peers prompts children to explore and critically evaluate varying perspectives, contributing to their cognitive growth (Hartup, 1996; Bukowski et al., 1998). For these positive effects to materialize, establishing relationships characterized by quality is crucial. In this context, rapport-building becomes essential in assessing children’s positive connections with others, involving mutual understanding and caring (Tickle-Degnen and Rosenthal, 1990).

Behaviors such as cooperation, social contact, positive affect, and verbal communication are significantly more prevalent among individuals who share rapport (Tickle-Degnen and Rosenthal, 1990). Numerous studies have aimed to develop tools for predicting and identifying peer relationships, employing diverse methodologies such as direct observation (Berndt et al., 1988), manual video annotation (Ladd, 1990), sensor-based assessment (Kanda and Ishiguro, 2006), and automatic prediction techniques (Messinger et al., 2022). These approaches are based on analyzing group behaviors, in which social relationships are evaluated through measurements of member distances, facial expressions, conversational patterns, synchrony, and time spent together (Messinger et al., 2022; Lin et al., 2023; Rabinowitch and Meltzoff, 2017; Maman et al., 2020).

While those studies have utilized diverse approaches to analyze group behaviors and evaluate social relationships, there is a need to understand the broader context of peer interactions. As such, research in Child Development has emphasized the exploration of social networks to understand children’s status within a social group (Kasari et al., 2011). More specifically, friendship networks provide insights into the dynamics of peer relationships and the formation of social bonds and rapport. Approaches such as self-reported friendship nominations contribute to the identification of social networks by identifying key players and patterns of connections (George and Hartmann, 1996; Strauss and Pollack, 2003). The research presented in this paper aims to provide a dataset for the automatic prediction of rapport in child-child dyadic interactions. Therefore, we relied on social networks to identify dyads with high and low rapport and, consequently, to comprehend the dynamics in these dyads.

2.2 Datasets on dyadic interactions

Dyadic social interaction datasets are crucial for understanding how individuals engage in various activities and gaining insights into interaction dynamics. For instance, the IEMOCAP dataset (Busso et al., 2008) consists of audiovisual recordings of English-speaking actors, either 1) acting out a scene or 2) improvising on a predefined topic. The dataset aims to elicit emotional expressions and provides annotated data for different categories of emotions such as happiness, anger, and sadness, and labels for valence and dominance dimensions. Another dataset is ALICO (Malisz et al., 2016), which consists of audiovisual recordings of German-speaking adults taking either the role of a storyteller (sharing a vacation story) or an active listener. The ALICO dataset provides manual annotations for head movements and the function of the listener’s responses, among others, characterizing mechanisms in interpersonal communication.

While these datasets provide great value to the study of social interactions between dyads, they suffer from low agreement between raters when annotating the data, which could affect the reliability and quality of it (Alhazmi et al., 2021). Cohen’s Kappa is a statistical criterion used to measure the reliability between raters, for the ALICO dataset, this value is around

Datasets designed to capture children’s social interactions often emphasize the adult-child context. These datasets have primarily concentrated on evaluating children’s reactive emotions to objects (Nojavanasghari et al., 2016), exploring play in the context of autism diagnoses (Rehg et al., 2013), or annotating and estimating synchrony between a child and a therapist (Li et al., 2021). Recent efforts have introduced a comprehensive multimodal dataset capturing the dynamics of parent-child interaction within a storytelling context (Chen et al., 2023). However, the tasks selected to capture social interactions in these datasets do not encompass the natural interactions that arise when children engage with their peers.

Only a few datasets focus on child-child interactions. The P2PSTORY dataset (Singh et al., 2018) focuses on child-child interactions during a collaborative storytelling task, with one child as the listener and the other as the storyteller. The dataset includes video and audio data, along with annotations of non-verbal behaviors such as gaze, smiles, forward leans, nods, and verbal cues like short utterances and backchanneling. Multiple coders manually annotated children’s behaviors following a defined coding scheme. While adhering to standard annotation practices, coder agreement varied across behaviors, with more observable actions achieving higher agreement levels than subjective evaluations, highlighting the complexity of annotating behaviors with multiple label options.

The PInSoRo dataset (Lemaignan et al., 2018) is also dedicated to understanding the social dynamics among children in free-play interactions. This multimodal dataset provides video and audio recordings, employing automated computational methods for feature extraction, such as prosody, voice quality, facial landmarks, Action Units (AUs), gaze, and skeleton data. It also includes manual annotations of social interaction, focusing on task and social engagement, and social attitudes in child-child dyads and child-robot dyads. Similar to the P2PSTORY Dataset, PInSoRo’s manual annotations involved multiple experts in human behavior; however, the challenge of coding social interaction is once again echoed, as it resulted in a low agreement between coders.

While both datasets contribute to understanding the dynamics in children’s interactions with others, they do not focus on the differences in interactions with friends versus acquaintances. This distinction is crucial, as interaction patterns can significantly vary based on the relationship between the individuals. Therefore, there is a need for datasets that explicitly categorize interactions based on the nature of the relationship, enabling a more nuanced analysis of children’s social dynamics.

2.3 Automated prediction of rapport

Computational models in Affective Computing are emerging to better understand the complex dynamics of social interactions, such as analyzing and predicting human behavior, affect, and social constructs (Wang et al., 2022). Therefore, different verbal and non-verbal behaviors could provide information on rapport in an interaction. For instance, the estimation of children’s closeness to others using computational approaches has primarily been explored through location-based techniques, specifically by computing the distance separating two children to predict their friendship status. Kanda and Ishiguro implemented a friendship estimation algorithm by using wearable sensors’ data to calculate potential friendships between children based on their interaction times, with specific attention to simultaneous interactions (Kanda and Ishiguro, 2006). In another study, depth sensors’ data was employed to design a tracking algorithm aimed at estimating the child’s position. Additionally, RGB cameras were utilized for face identification. These data sources were subsequently integrated to provide an estimation of children’s relationships with their peers within the classroom (Komatsubara et al., 2019).

In addition, synchrony and mimicry have been studied due to their impact on children’s attitudes toward each other, enhancing social bonds with friends (Denham et al., 2015; Rabinowitch and Meltzoff, 2017). For instance, in a peer-play context, a study involving children aged 4–6 years revealed that when interacting synchronously with their peers, children exhibited higher frequencies of helping behaviors, mutual smiles, and eye contact compared to asynchronous interactions (Tunçgenç and Cohen, 2018). Notably, mutual smiling and sustained eye contact were prominent features of interactions specifically among friends (Goldman and Buysse, 2007), suggesting that non-verbal behaviors play a crucial role when assessing children’s attitudes and relationships in different social interactions.

Other studies employ benchmark tools such as OpenFace (Baltrušaitis et al., 2016) for the extraction of facial features, OpenPose (Cao et al., 2019) for the extraction of body features, and OpenSMILE (Eyben et al., 2010) for the extraction of voice features. Wu and colleagues conducted a user study in which university students participated in video call consultations with simulated patients (Wu et al., 2020). Motor mimicry episodes were automatically detected using features extracted with OpenFace. Statistical analysis revealed a correlation between mimicry and communication skills in dyadic interactions. Alsofyani and Vinciarelli designed a multimodal approach for attachment recognition in children aged five to 9 (Alsofyani and Vinciarelli, 2021). Participants were asked to participate in a storytelling-based psychiatric test typically scored by an expert rater. In this case, an automated pipeline using OpenFace and OpenSMILE was used to predict the expert annotations, using a combination of logistic regression models for unimodal and multimodal data. Similar methodologies have been applied to analyze behaviors such as gaze duration and direction, and engagement, utilizing video data and exploring interactions between infants and children with adults (Erel et al., 2023; Fraile et al., 2021). However, the existing literature remains scarce in its examination of the dynamics of social interactions when assessing children’s relationships with others. Consequently, tools that automatically predict the level of rapport based on children’s behaviors are still limited. This limitation arises from the lack of appropriate datasets that distinguish between different types of rapport in children’s dyads and the relevant features that enable accurate rapport prediction.

To address these gaps, we present the UpStory dataset, which focuses on capturing the dynamics of social interactions in children’s dyads. We designed a study based on friendship network analysis to obtain low-rapport and high-rapport dyads. This method allowed us to study dynamic interactions between children and their peers in an educational context. Contrary to (subjective) manual annotation of the existing datasets, we used a friendship network methodology to obtain more objective annotations. In addition, we extracted behavioral features using benchmark tools such as OpenFace and OpenPose, and provided ML baselines for predicting the level of rapport in the dataset. By implementing the friendship network methodology, we ensured that the behaviors captured within the dataset were representative of social dynamics inherent to children’s interactions, where rapport levels can vary significantly depending on their social status (i.e., the position that one holds in a group (Coie et al., 1990)). As a result, the UpStory dataset facilitates a more robust analysis of children’s social interactions and the automatic prediction of rapport.

3 Materials and methods

In order to collect the UpStory dataset, we designed a study with the explicit goal to collect samples of high-rapport and low-rapport pairs of children participating in a collaborative storytelling activity. This section details the design of the study, including the participant population, the pair-making strategy, and the primary data collection effort.

3.1 Participants

The study was performed at a public primary school in Sweden that provides bilingual English-Swedish education, and was embedded in the school’s free play hours. Prior to execution, the research plan was reviewed by a local ethics committee2. We collaborated with the school’s teaching team to inform the students and their families about the study, and to collect consent forms signed by the children’s legal guardians. Only children who presented a completed consent form and showed interest in participating were retained for the study. The children were informed that they could refuse to participate at any point, without consequences.

The recruited children were students in Year 2 (ages 8-9) and Year 3 (ages 9-10) who could speak English fluently, to allow fluid communication with the (non-Swedish speaking) researchers conducting the experiment. Due to the school’s bilingual program, the children came from a diverse set of cultural and socioeconomic backgrounds. A total of 39 children signed up for the study and were assigned a two-digit random ID. One student was subsequently excluded due to lack of availability, resulting in a population of 38 participants: 28 students in Year 2 (14 boys and 14 girls), and 10 students in Year 3 (4 boys and six girls).

3.2 Experimental conditions

3.2.1 Pair making with a social distance heuristic

Since manual annotation of social constructs is prone to produce disagreement between coders (Singh et al., 2018; Lemaignan et al., 2018; Fraile et al., 2022), it is preferable to capture high-rapport and low-rapport pairs through an explicit experimental manipulation. Given a large cohort size, a between-subjects design could be achieved by choosing pairs of participants that are either known to be close friends (high-rapport condition), or known to have separate social circles (low-rapport condition), at the cost of introducing selection bias. However, this is not a valid approach if we have access to a smaller cohort (such as classmates in a primary school), or if we wish to avoid the selection bias. To address this, we propose a pair-assigning strategy that is suitable for small cohorts, using a within-subjects design which ensures that each child participates once in each condition. Our method is based on relaxing the criteria for pair selection: we seek to optimize a social distance heuristic, instead of choosing guaranteed close or distant pairs.

An established strategy to quantify social relations in the classroom is to form a friendship network by asking students to list their friends, and using graph theory to analyze the collected data (George and Hartmann, 1996; Strauss and Pollack, 2003). The particular form used in this study is a directed graph

Algorithm 1. Social distance heuristic: mathematical distance measuring separation between individuals in a directed friendship network

Our solution to obtain balanced experimental conditions is to ask each child to participate twice; once in each condition. In the high-rapport condition, we split the cohort into low-distance pairs by minimizing the sum of distances; in the low-rapport condition, we split the cohort into high-distance pairs by maximizing the sum of distances.

The described partitions can be obtained automatically through graph-manipulating software by reframing them as maximal matching problems. A maximal matching is a subgraph that is as big as possible (maximal), and in which each node has at most one edge (matching). If we consider the fully connected weighted graph

In other words: we operationalize rapport as measurable closeness between children that is naturally higher when children are near in the friendship network (close friends and good acquaintances), and naturally lower when children are far in the friendship network (disparate social circles), and can be detected with adequate questionnaires (described in Section 3.4).

3.2.2 Validation of the pair-making procedure

In the weeks before performing the main study, we asked each participant to privately fill in a form listing their “closest friends”. We refer to this document as the friendship nomination form. The form contained the prompt and 10 empty lines, with no specific instruction on how many names to include. Respondents were instructed to identify at least one close friend, and encouraged to list a good amount of friends. While respondents were encouraged to list classmates from school, they were also allowed to add any other names.

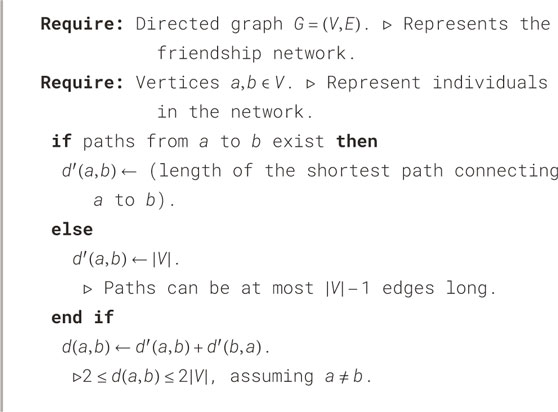

All 38 included participants filled in the friendship nomination. After matching the nominations to IDs with help from the teaching team, we obtained

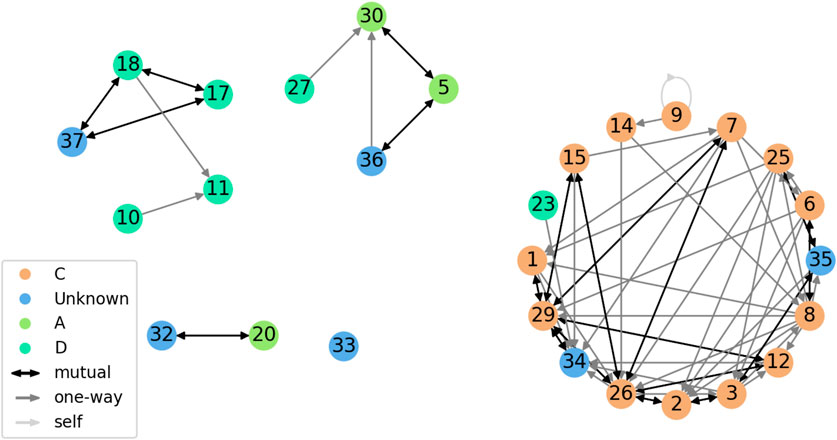

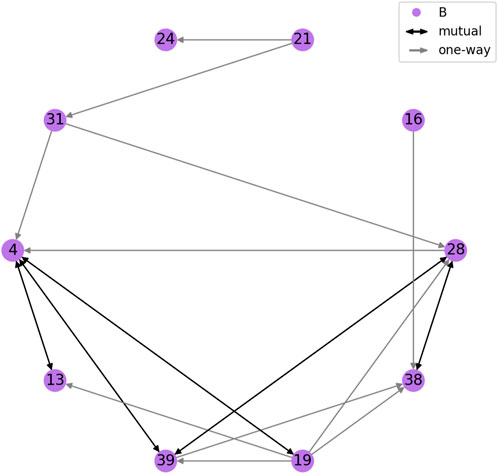

Figure 2 shows the friendship network for Year 2. We can see the subgraphs corresponding to each class tend to be quite connected, while very few connections exist across class boundaries. We hypothesize this helped the quality of the pair-making process. Figure 3 shows the friendship network for Year 3. Since only one class participated, the graph is more evenly connected, although some students are still completely separated from each other.

Figure 2. Friendship network of the Year two cohort. Vertex color indicates class (labeled randomly as A, C, D for anonymity). Edge color and shape indicate type of connection (one-way or mutual). The light gray loop corresponds to a child who nominated themselves.

Figure 3. Friendship network of the Year three cohort. All children belonged to the same class (labeled randomly as B; shown in purple). Edge color and shape indicate type of connection (one-way or mutual).

We used our pair-making procedure to obtain two partitions of the student population (a high-rapport pairing and a low-rapport pairing), calculated separately per year. This resulted in 14 pairs per condition in Year 2, as well as five pairs per condition in Year 3, totalling 38 pairs (19 per condition). The pairings were validated by the teaching team, who confirmed no problematic pairs were suggested by the algorithm (e.g., children likely to get in a fight), and that the high-rapport pairings generally corresponded to closer acquaintances than the low-rapport pairings.

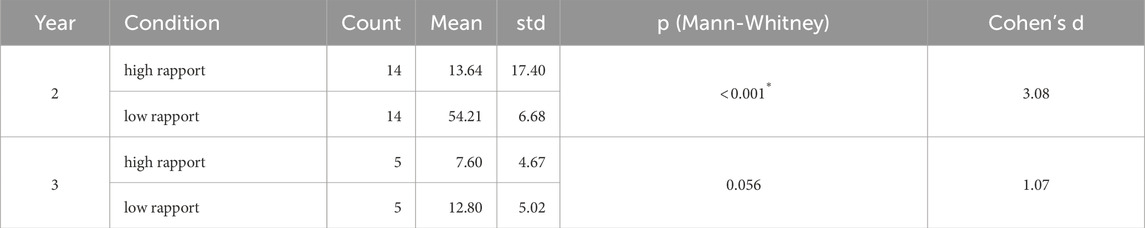

Table 1 shows statistics for the social distance heuristic in each year and condition. In both cohorts, we obtain a desired lower social distance for the high-rapport condition, with large effect sizes (as measured by Cohen’s d (Cohen, 1988)). Due to the small sample sizes, we tested the differences with non-parametric Mann-Whitney U tests, which found significance in Year 2

Table 1. Statistics for the social distance heuristic. The p-value corresponds to a Mann-Whitney U test with

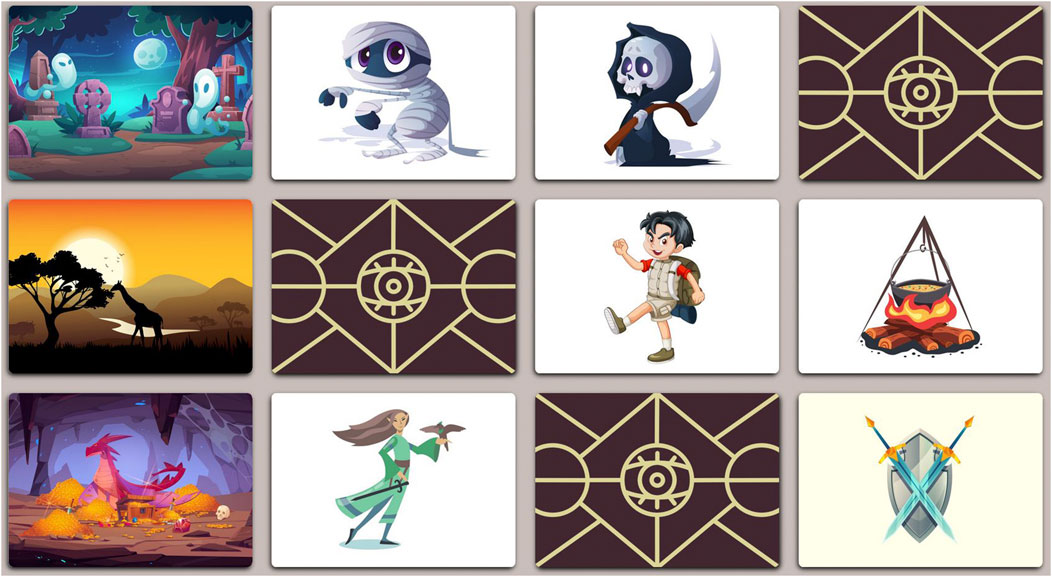

3.3 Task design

The primary activity in this study is a collaborative storytelling game in which a pair of children develop and present a story together, with the help of a virtual deck of picture cards. In the planning phase, the children are led into a private space (the planning area), and a random selection of 12 virtual cards is presented face-down in a standing touchscreen (Figure 4). The children can reveal as many cards as they see fit by pressing on them, and are instructed to select a subset of 6 cards to be incorporated into the story. They are allowed as much time as needed to plan the story and to move freely within the planning area. Later, in the presentation phase, the children jointly present the story they designed to the experimenters in a designated presentation area. The planning and presentation phases are then repeated for each additional game round.

Figure 4. Example playing board used in the planning phase, showing face-up and face-down cards. Each row corresponds to one setting. Top to bottom: Halloween monsters, hiking, fantasy. Each column corresponds to a card type. Left to right: location, character, character, object.

The virtual card deck used in the planning phase was implemented as a static webpage, using basic web technologies (HTML, JavaScript, CSS). The cards displayed to the children are chosen at random from a pool of settings and types. There are nine settings available, chosen to be a mix of realistic scenarios (e.g., hiking in nature, going to the hospital) and fantastic scenarios (e.g., knights and wizards, Halloween monsters). All cards are displayed in a similar cartoon style, in order to promote free mixing of options. Each setting contains a number of location cards, character cards, and object cards. In each round of the game, three settings are chosen at random (ensuring the same option is not chosen in two consecutive rounds). One location, two characters, and one object are chosen from each scenario, forming a grid of 12 cards. We collected the original card images from the free-use image website Freepik3, and processed them to a uniform size. Figure 4 shows an example board with most cards revealed.

3.4 Hypotheses and measures

In Section 3.2.2, we provided statistical evidence that the pair-making procedure attained larger pair distances in the low-rapport condition when compared to the high-rapport condition, but we did not address whether rapport was successfully manipulated. In order to measure social and emotional effects, we employed questionnaires to validate the following experimental hypotheses:

H1: Children feel closer to their partner when playing in the high-rapport condition than when playing in the low-rapport condition.

H2: Children report higher scores in the Valence-Arousal-Dominance model of emotion when playing in the high-rapport condition than when playing in the low-rapport condition.

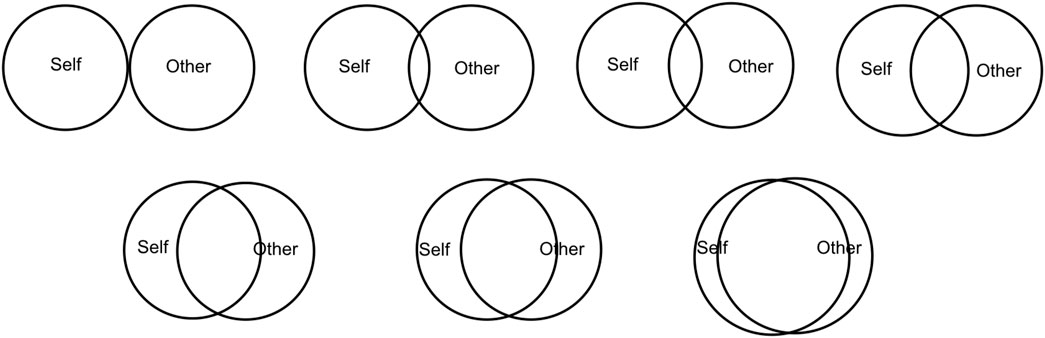

We tested H1 through a post-interaction administration of Inclusion of Other in the Self (IOS) (Aron et al., 1992) (shown in Figure 5), a single-item seven-point pictographic scale used to measure a person’s perceived closeness to another individual. Following a pre-existing protocol designed for administering IOS to children (Westlund et al., 2018), we add two calibration items to verify that the participants understood the questionnaire. We asked each child to rate their closeness with three individuals: (1) their best friend, (2) a “bad guy” from fictional media, and (3) their partner during the storytelling activity.

Figure 5. IOS questionnaire (Aron et al., 1992), used to measure the closeness of each participant with their best friend, a “bad guy” from fictional media, and their partner during the game. Measured after the interaction.

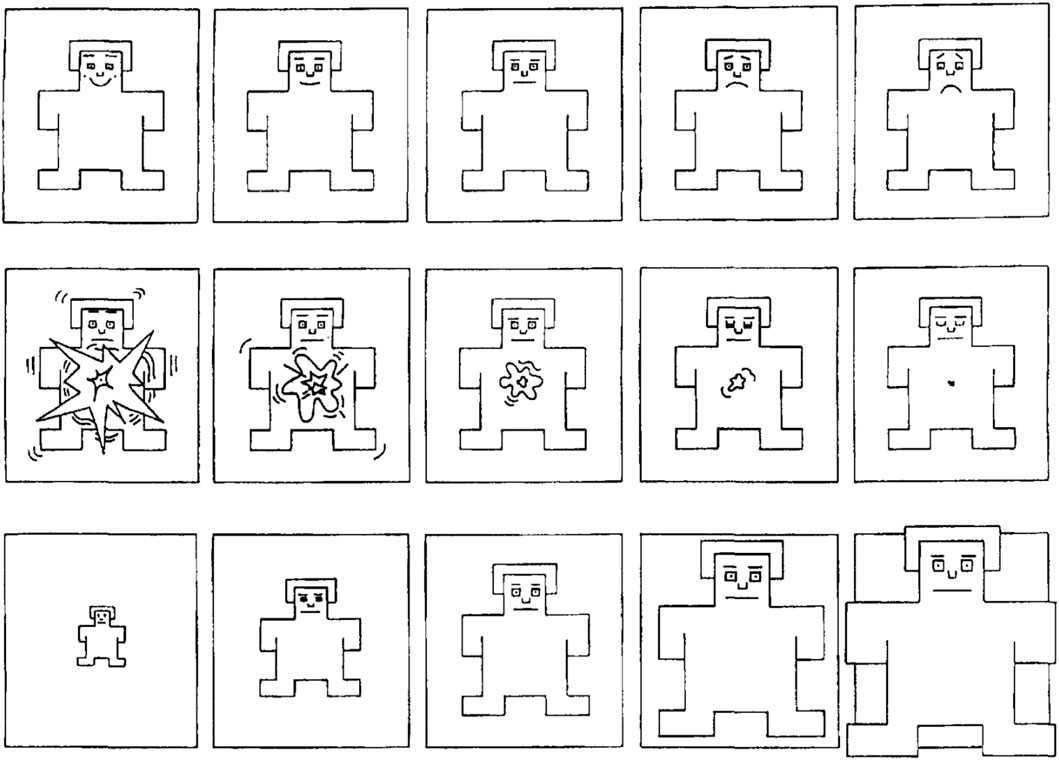

Regarding H2, the Valence-Arousal-Dominance model is a continuous-variable representation of emotion (Russell, 1980). Valence represents pleasantness of emotion, Arousal measures intensity of emotion, and Dominance refers to the degree of control experienced. We tested H2 through pre-interaction and post-interaction administration of the Self-Assessment Manikin (SAM) (Bradley and Lang, 1994) (shown in Figure 6), a pictographic questionnaire used to measure a person’s emotional state. It consists of three single-item scales directly measuring Valence, Arousal, and Dominance. Each scale contains five pictures and is graded 1-5, but the original procedure allows adding crosses between pictures, making it a nine-point scale. Given our young audience, we chose to simplify it as a five-point scale. SAM has a long history of use with primary school-aged children (Beidel and Turner, 1988), and is typically delivered without additional callibration

Figure 6. SAM questionnaire (Bradley and Lang, 1994), used to measure the Valence (top row, left to right), Arousal (middle row, right to left), and Dominance (bottom row, left to right) dimensions of emotion. Measured before and after the interaction.

Both questionnaires were chosen because they have a history of successful deployment with children, and do not require language skills to understand. Participants filled all questionnaires in a private space with the help of one researcher, who provided age-appropriate explanations for each item.

3.5 Experimental apparatus

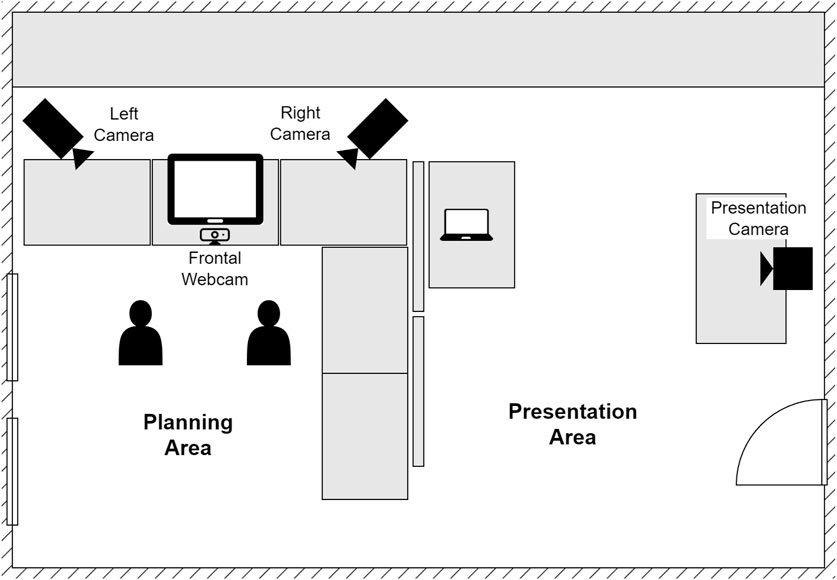

The main activity was performed inside the collaborating school, within the English library room. Figure 7 illustrates the experimental setup.

Figure 7. Experimental area diagram. Children first designed a story in the planning area, and then emerged into the presentation area to retell the story to the experimenters.

The room’s desks (wide rectangles in the diagram) were arranged to delineate the planning area, a rectangular enclosure of approximately 160 cm

One of the sides delimited by desks was designated as the front of the planning area. A touchscreen was placed at its center in standing mode, and used to display the storytelling card game. Two Panasonic HC-V380 cameras (

Per-child audio of the whole interaction was recorded using head-mounted microphones. Each child was fitted with a SubZero SZW-20 wireless microphone set. The audio was captured with a Tascam US-2X2HR audio interface.

In contrast to the walled-off planning area, the presentation area was the working space used by the experimenters. The presentation area contained a Windows laptop used to (1) run the game application shown on the touchscreen, using the Firefox web browser; (2) record the frontal camera video stream, using Logitech recording software; and (3) record the headset audio streams, using Audacity (a popular open-source audio editing and recording application).

Once a participating pair had finished planning their story, they were instructed to enter the presentation area, stand in front of the presentation camera, and retell their story to the experimenters. A Canon EOS Rebel T3i (

3.6 Procedure and protocol

The study started with an introductory meeting with each participating class. During the meeting, the whole classroom was introduced to the storytelling game, and allowed to try it in groups of 2-5 students. This was done to familiarize the children with the activity, and to register further interest from the students. During the following days, registered participants filled out the friendship nomination form in private. Each child was subsequently assigned to a high-rapport pair and a low-rapport pair, as indicated in Section 3.2.1.

For the remainder of the study, we invited one pair at a time to participate in the storytelling game. Pairs were chosen based on availability, prioritizing balance between experimental conditions to avoid order effects. Participating pairs were asked to communicate in English during the activity to allow for future word content analysis, and to allow the researchers to monitor the conversation.

The pair were first called into the experimental space, where they played a short warm-up round of the game with help from two researchers, in order to remind them of the rules and familiarize them with the planning area and presentation area. Following this, the pair were separated, and each child filled the pre-activity questionnaire (SAM) with help from a researcher.

Next, the children donned the microphones with help from the researchers and proceeded to play several rounds of the game. They were allowed to play up to three rounds, or until the total planning time exceeded 10 min. Some exceptions were made for fast pairs, allowing a fourth round if the children requested it. The participants could ask to stop early at any time.

Finally, the children removed the microphones with help from the researchers, and were separated again to fill the post-activity questionnaire (SAM and IOS) with help from a researcher.

4 Data analysis

4.1 Data collection

Of the 38 scheduled sessions, 33 were performed as planned. Two low-rapport sessions were canceled due to a lack of participant availability. One high-rapport session was discarded because it was a false positive: the name-based nomination system produced a mismatch due to two children sharing the same name. Finally, an error in communication caused a non-scheduled pair to play together, forcing us to substitute two distance-2 pairs (children had nominated each other directly) for two distance-4 pairs (children had nominated common friends). All children from the re-scheduled pairs were friends and could be found in the same playgroup.

In total, 35 sessions were included in the recordings: 18 high-rapport sessions, totalling 57 rounds (

For each of the three cameras recording the planning area (left, right, frontal), as well as the two head-mounted microphones, we obtained one continuous recording covering all rounds of the game (except the warm-up round). Upon review, the frontal camera recordings were deemed low-quality and were discarded from further analysis. The main factors for this decision were a disproportionately high number of frames in which the participants’ faces were out of view, and the fact that some children played with the camera, moving it around or even flipping it.

4.2 Data processing

The unprocessed audio sources (per-child headphone recordings) and video sources (left and right cameras) consisted of one continuous recording for each participating pair, containing all the game rounds that the pair played. Video sources were manually cut and processed using FFmpeg4; audio sources were similarly cut and processed within Audacity. A Python script was used to further synchronize the files based on audio content analysis. The package used for synchronization5 claims the “accuracy is typically to within about 0.01s”; manual observation indicates no perceivable time differences.

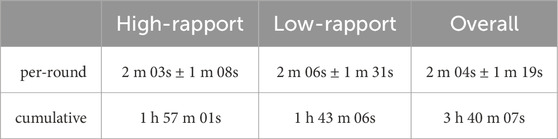

After processing the data from all 35 included pairs, we obtained 106 sets of synchronized multimodal recordings, corresponding to the planning phase of each game round played, for a total of 3 h 40 m of recorded interaction time. The presentation phase recordings were not processed, and are left for future analysis. Table 2 shows duration statistics, including per-round duration in each experimental condition. A cursory t-test analysis did not indicate that either group played longer than the other.

Table 2. Duration of the processed recordings. Times per round are given as mean and standard deviation.

4.3 Questionnaire analysis

From the collected questionnaire data, two entries were discarded due to unclear responses (the child selected multiple options, and the accompanying researcher could not clarify the child’s intent). Further entries were discarded if they could not be paired within-subjects (because the child only participated in one accepted pair, or because the child had missing data in one of their questionnaire responses).

After curation, the questionnaire data contained full sets of responses for 30 children, across 34 pairs. 17 children participated in the high-rapport condition first, and 13 children participated in the low-rapport condition first (56.67% high-rapport first).

We used the Python package Pingouin (Vallat, 2018) to perform statistical analysis of the questionnaire data. Shapiro-Wilks tests suggest none of the questionnaire item responses are normally distributed, either overall or per condition

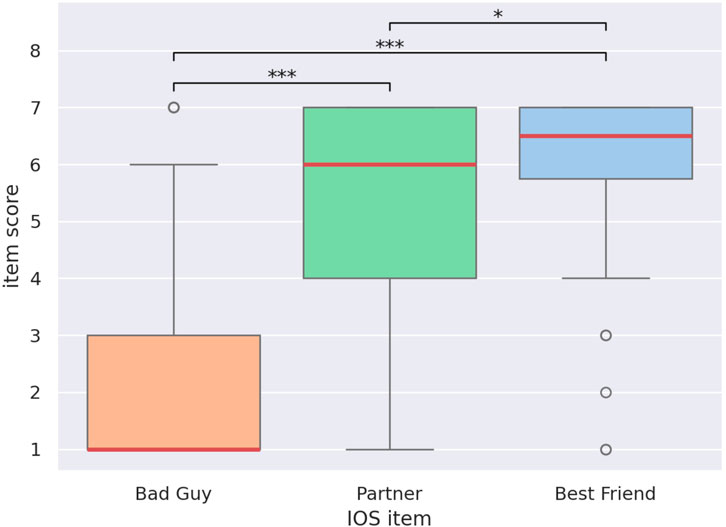

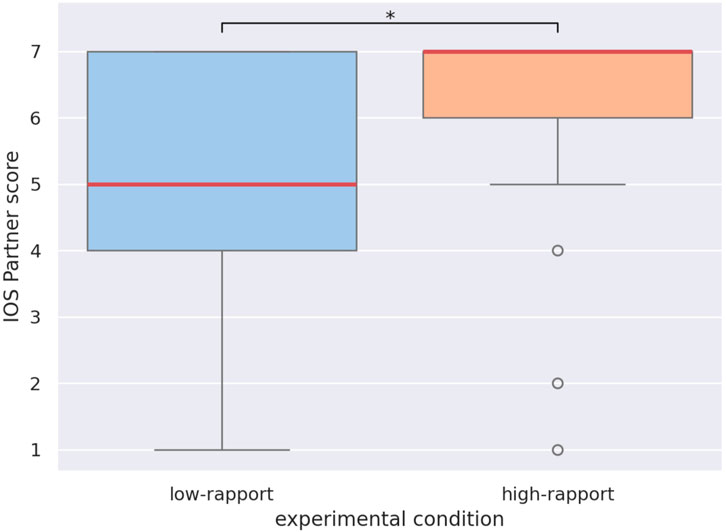

Overall response distributions for the IOS post-test are displayed in Figure 8 as a boxplot, with significant differences highlighted. The control items Bad Guy and Best Friend followed the expected ordering with respect to the target item Partner: A two-sided Wilcoxon signed-rank test showed that Bad Guy scores are significantly lower than Partner scores

Figure 8. Response distribution for the IOS questionnaire items Bad Guy, Partner, and Best Friend. Boxes show the 25% (bottom), 50% (red, middle), and 75% (top) quartiles. Whiskers extend to the lowest and highest samples within 1.5 interquartile ranges from the box. Samples outside the whiskers are shown as circles. Significant differences are shown

Figure 9. Response distribution for the IOS questionnaire item Partner, under the high-rapport (orange) and low-rapport (blue) conditions. The difference is significant

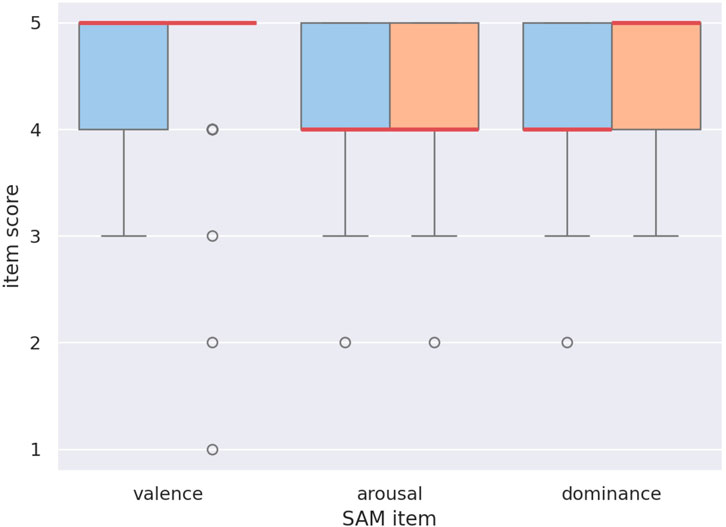

Figure 10 shows the response distribution for each SAM item, comparing pre-test to post-test responses. We observed a strong ceiling effect, causing the data to be inconclusive. Most participants immediately chose the options they perceived as most positive, resulting in a response distribution heavily biased towards the high end of each scale. In concordance with this observation, a cursory repeated-measures ANOVA analysis shows no significant effects of condition nor moment (pre-interaction vs. post-interaction) on the SAM responses. We are therefore forced to reject H2 due to a lack of information.

Figure 10. Response distribution for the SAM questionnaire items Valence, Arousal, and Dominance, in the pre-test (blue) and post-test (orange). No significant relations were found.

The observed results (strong support for H1 despite the small sample size; rejection of H2 due to a ceiling effect) suggest that our pair-making strategy successfully promoted the formation of high-rapport vs. low-rapport pairs at the population level.

5 The UpStory dataset

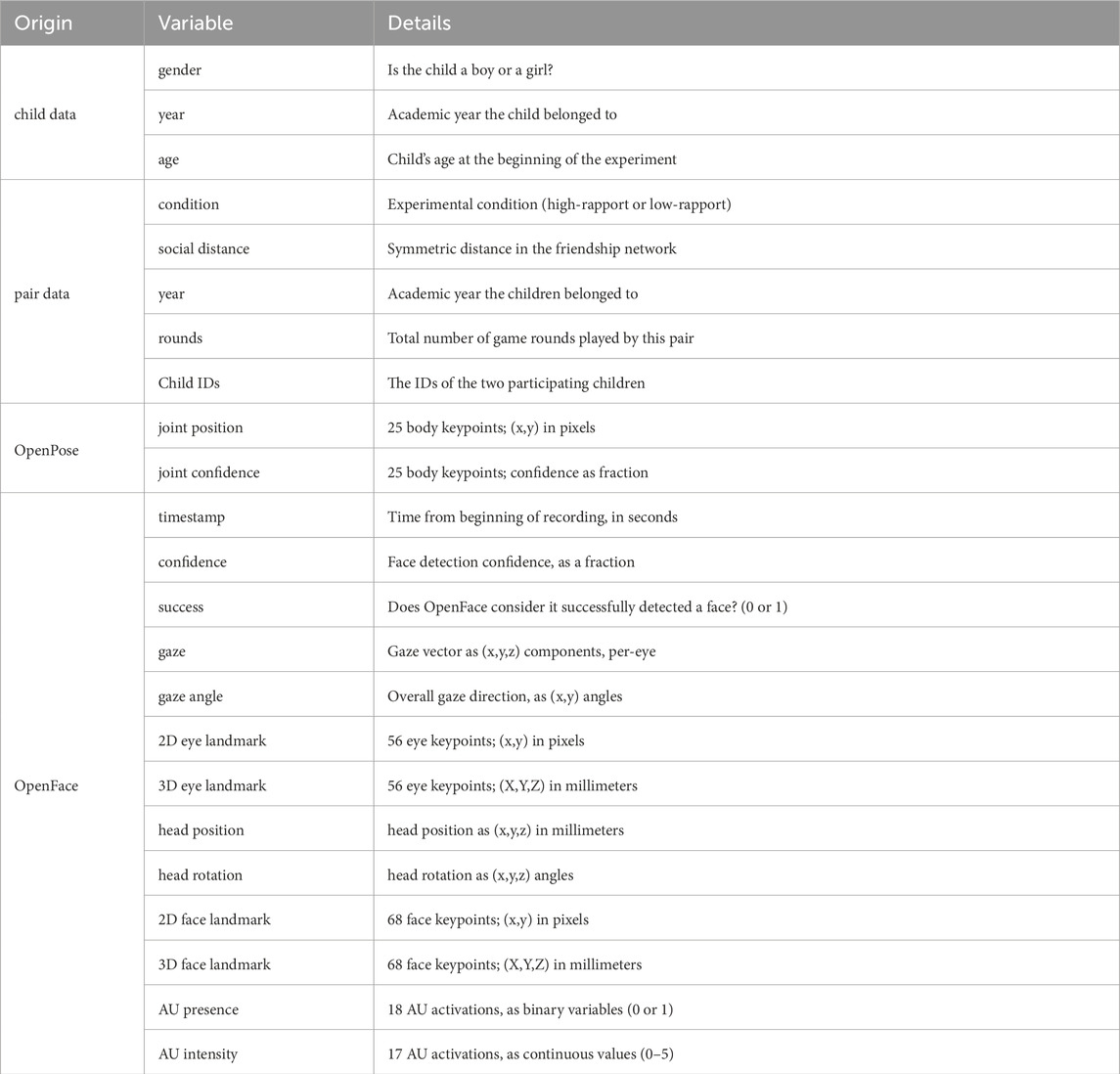

The UpStory dataset is publicly available at https://zenodo.org/doi/10.5281/zenodo.15391848. It is an anonymized feature-based dataset, containing OpenPose features (pose estimation) and OpenFace features (head pose, facial expression, gaze), extracted per-frame at 25 Hz. Data extracted from both the left camera and right camera sources is available. It provides data for all 35 pairs included in the recordings, totalling 3 h 40 m 07s of interaction data. The following per-child metadata is shared: gender, age, school year. The following per-pair metadata is included: experimental condition (high-rapport or low-rapport), social distance heuristic, school year, number of rounds played, and each child’s ID. Table 3 shows all extracted metadata and features, and gives a brief description of each item. As observed in Section 4.1, the recordings correspond to a total of 106 game rounds (57 high-rapport rounds and 49 low-rapport rounds). The remainder of this section gives detailed information on the choice of feature extractors, the extracted features, and custom post-processing required to track individuals over time.

Table 3. Metadata and feature sets contained in the UpStory feature dataset. Metadata is given per-pair; features are provided as time series, captured at 25 Hz.

In recent years, OpenPose and OpenFace have become the reference feature extractors for pose estimation and facial feature estimation in the literature (Wu et al., 2020; Srivastava et al., 2020; Alsofyani and Vinciarelli, 2021). For this reason, we chose these two feature extractors to obtain an anonymized version of the dataset that is amenable to ML research. When applied to our synchronized video sources (left and right cameras), they produce time series of features: an entry is produced for each tracked feature, for each frame in the recording, for each detected individual. In our case, we can obtain a 25 Hz time series per child for each tracked feature. OpenPose provides the position and confidence of 25 body keypoints. OpenFace provides 2D and 3D gaze direction estimates; 2D and 3D keypoint locations for the eyes; 2D and 3D keypoint locations for the whole face; position and angle estimates for the face as a whole; intensity estimates for 17 AUs; and presence estimates for 18 AUs. AUs represent specific muscle activations that produce facial appearance changes (Ekman and Friesen, 1978). We once again direct the reader to Table 3 for more details.

A processing challenge in this study is the fact that both participants could move freely in the experiment space. This means the feature extraction pipeline needs to deal with movement over time, occlusions, and temporary loss of detection. Neither OpenFace not OpenPose offer identification functionality, meaning their output needs to be processed to maintain stable child identity over time. This was achieved with a custom post-processing pipeline based on three principles: minimizing the distance each body keypoint moves between consecutive frames (accounting for reported detection confidence), caching the last known location when tracking is lost, and ensuring face and body identities are consistent with respect to each other.

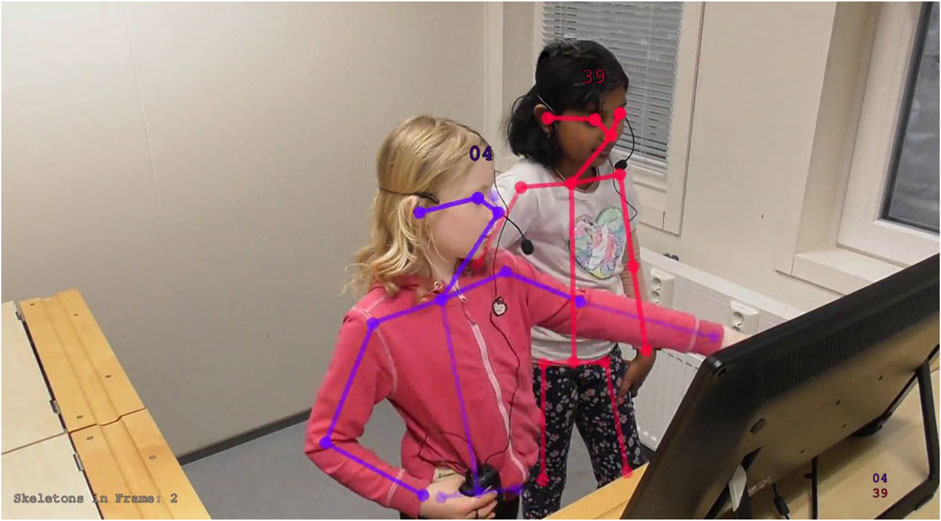

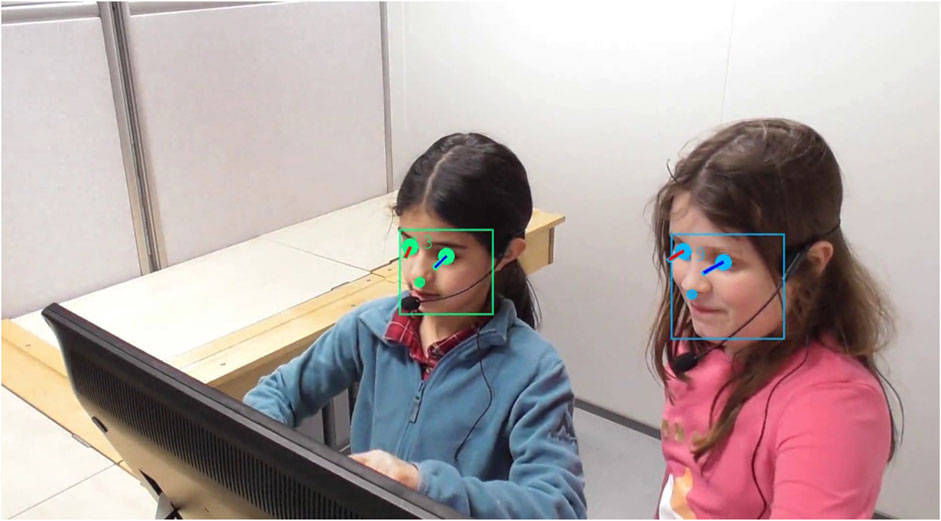

Figure 11 shows post-processed OpenPose output with attached identities (represented by the skeleton color and the ID tag). Each detected body keypoint is shown as a circle, with opacity indicating detection confidence. Similarly, Figure 12 shows post-processed OpenFace output with attached identities. For simplicity, we only display the eye positions, gaze vectors, and bounding boxes containing all face keypoints.

Figure 11. OpenPose output visualization displaying joint positions and confidences, overlayed on the original right camera frame. Displayed numbers are child IDs. Joint transparency indicates detection confidence, as reported by OpenPose (e.g., Child 04s face keypoints show high confidence, while their left wrist keypoint shows low confidence). In this frame, OpenPose successfully disambiguated a complex crossing of limbs. Child identity over time is not provided by either feature extraction tool, and is calculated as a post-processing step.

Figure 12. OpenFace output visualization, displaying the eye locations, gaze vectors, and face keypoint bounding box, overlayed on the original left camera frame. Hue indicates child identity; gaze vectors are color-coded Blue for Left and Red for Right. Child identity over time is deduced from the corresponding body pose estimate.

6 ML baselines

In order to validate the predictive power of the UpStory dataset, we established baselines for the prediction of the level of rapport based on the publicly available features. Two different variants are provided: Section 6.4 considers data from a single child as a sample, while Section 6.5 considers joint data from both children in a pair as a sample. In both cases, the task is binary classification: using AU time-series from a single round as input, the algorithm predicts the experimental condition that the pair belonged to (high-rapport vs. low-rapport). Since the UpStory dataset provides AU estimates from two video sources, we provide baselines either training and evaluating only on the left camera data, or training and evaluating only on the right camera data.

We first discuss feature selection in Section 6.1, data stratification in Section 6.2, and model selection in Section 6.3, since they apply to all provided baselines.

6.1 Feature selection

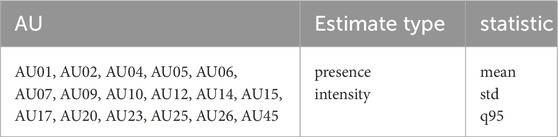

Following an established procedure (Paetzel et al., 2017; Srivastava et al., 2020), we focused on the AU estimates provided by OpenFace, and reduced each AU time series to a selection of summary statistics, calculated per-child. As listed in Table 3, OpenFace produces independently calculated estimates of presence (binary variable) and intensity (continuous variable); 17 AUs are covered in both estimate types. For each AU estimate, we calculated the following summary statistics: mean, standard deviation, and 95% percentile. The mean and standard deviation have been used by Paetzel (Paetzel et al., 2017) and by Srivastava (Srivastava et al., 2020), among others. Usage of the 95% percentile is adapted from a similar idea in Alsofyani and Vinciarelli’s work (Alsofyani and Vinciarelli, 2021). If we consider all possible combinations of AU, estimate type, and summary statistic, we obtain 102 features (e.g., AU10-intensity-q95, or AU17-presence-mean). Table 4 shows the possible combinations.

Table 4. A total of 102 features are considered for the ML baselines. Each feature is a combination of (1) a target AU, (2) an estimate type, and (3) a summary statistic. E.g., AU06-presence-mean (we track the AU06 presence estimate, and aggregate by taking the mean), or AU12-intensity-q95 (we track the AU12 intensity estimate, and aggregate by taking the 95% quantile). This table lists all considered values for (1), (2), and (3). In particular, only AUs with both estimates available are considered. q95 indicates 95% quantile; std indicates standard deviation.

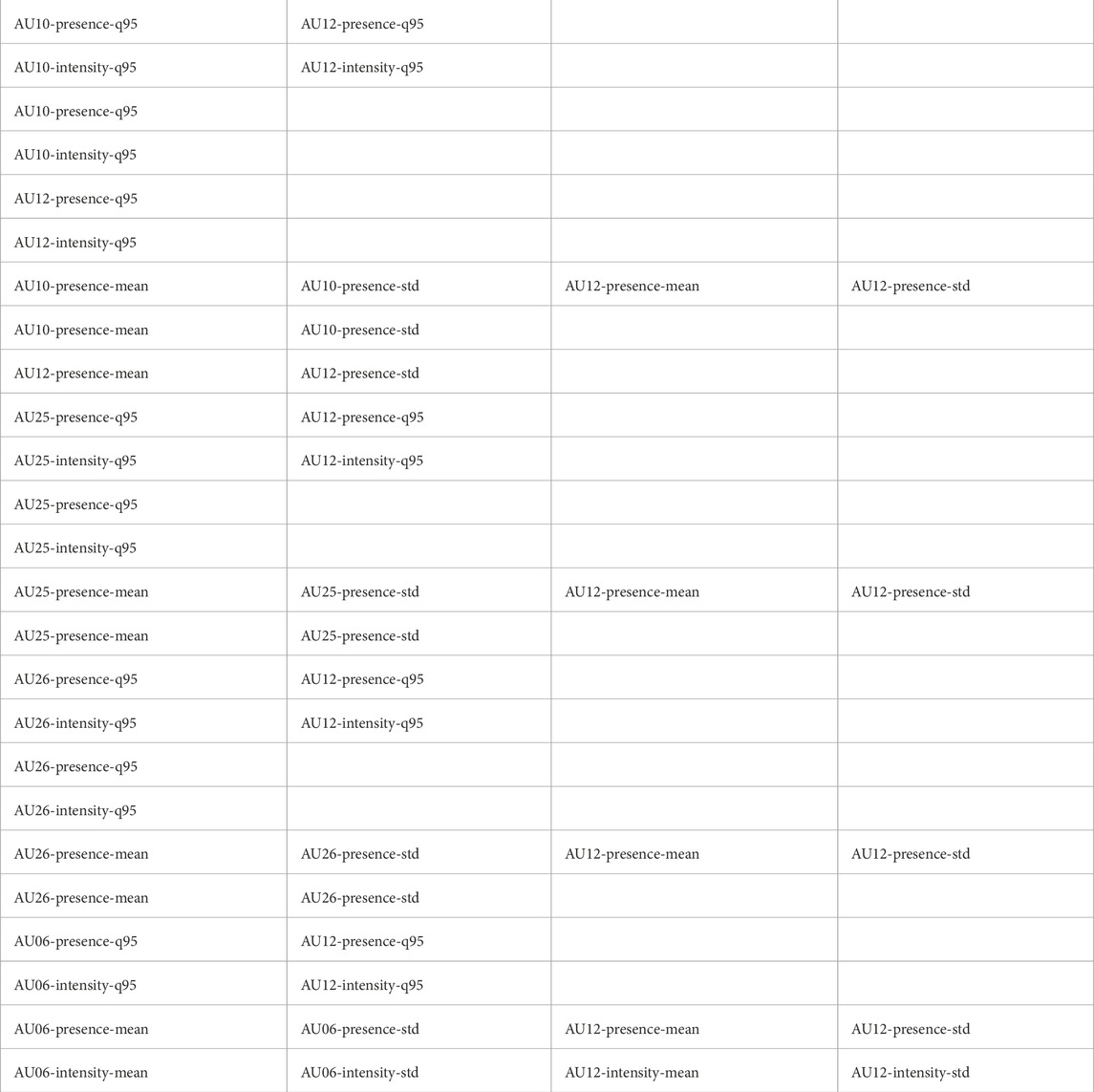

While other authors working with larger datasets have chosen to use all 17 AUs as input features (Alsofyani and Vinciarelli, 2021), due to our comparatively small number of samples, we decided to train on small feature sets consisting of 1-4 features to avoid overfitting. Two different feature selection approaches were combined: theory-based and data-driven.

The theory-based feature sets are based on expressed happiness: the joint activation of AU06 (cheek raiser) and AU12 (lip corner puller) is identified by Ekman as the indicator of a “genuine smile”, and associated with the basic emotion of happiness (Ekman and Friesen, 1978). We considered both presence and intensity estimates (without mixing estimate types). As summary statistics, we either took the more established combination of mean and standard deviation, or alternatively the 95% quantile for extreme event quantification. In total, four theory-based feature sets were considered. They are listed as the last block in Table 7.

For the data-driven approach, we used a battery of t-tests to find variables having significantly different values between experimental conditions. To account for the use of repeated tests, Bonferroni correction was applied to guarantee the correctness of our statistical analysis. We chose combinations of significantly different variables based on the following soundness rules: do not use more than 2 AUs at once, only use one type of statistic at a time (with mean and standard deviation being allowed as either two separate statistics, or a double combination), do not mix presence and intensity variables.

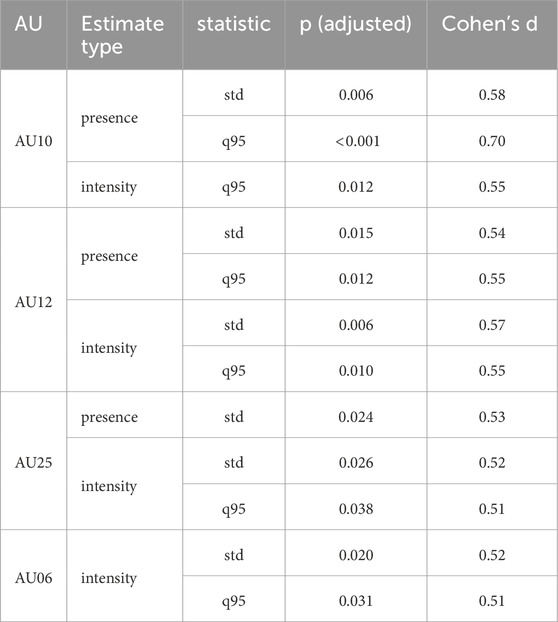

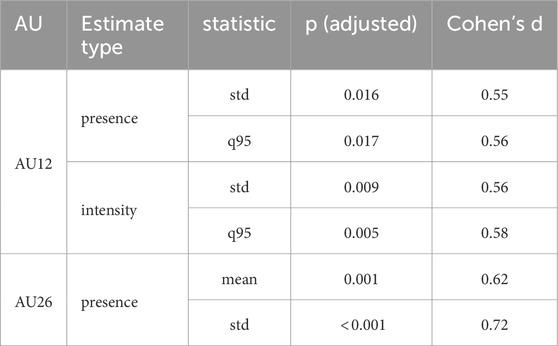

Table 5 lists the features that were significantly different across experimental conditions, as extracted from the left camera, and provides the t-test results (with Bonferroni correction). Table 6 lists the corresponding features and statistics for the right camera. In both sources, AU12 (lip corner puller) showed significant differences in standard deviation and 95% quantile for both estimate types, though the difference in means was not significantly different under Bonferroni correction. AU06 (cheek raiser), AU10 (upper lip raiser) and AU25 (lips part) displayed significant differences in the left camera, while AU26 (jaw drop) displayed significant differences in the right camera. Note that AU25 and AU26 can indicate speech, suggesting that the amount of speech might act as a predictor of the experimental condition. Notably, only one of the significant statistics is a mean. This suggests children in the high-rapport condition expressed a wider range of emotions, with more extreme values being detected.

Table 5. Significantly different summary AU statistics across experimental conditions for the left camera, as measured by a t-test with Bonferroni correction. q95 indicates 95% quantile; std indicates standard deviation.

Table 6. Significantly different summary AU statistics across experimental conditions for the right camera, as measured by a t-test with Bonferroni correction. q95 indicates 95% quantile; std indicates standard deviation.

Each AU selected by the data-driven approach was considered on its own, and combined with other AUs that were significant in the same source (e.g., AU10 and AU12 — left camera —, but not AU25 and AU26 — mix of cameras). For each of the selected AU combinations, 95% quantiles of the presence and intensity estimates were considered. Finally, the mean and standard deviation of the presence estimates was also considered. In total, 21 data-driven feature sets were considered. Together with the theory-driven feature sets, a total of 25 feature sets were considered. All combinations are listed in Table 7. The first three blocks correspond to features identified from the left camera; the third block corresponds to features identified from the right camera.

Table 7. Feature sets considered for ML training. Each row shows a feature set consisting of 1-4 individual features. Each feature represents a choice of tracked AU, estimate type, and summary statistic, as shown in Table 4. AU06 and AU12 combinations (last block) are chosen based on prior literature; other combinations are surfaced through data analysis.

6.2 Data stratification

In order to provide high-quality training, validation, and test sets, we partitioned the dataset into five folds (disjoint subsets of samples), separated at the pair level, and using stratification to ensure good sample balancing. Sampling was designed to always distribute pairs from the same year and condition evenly over the folds; further rejection sampling was performed to enforce good statistical qualities of each fold. In particular, the following quantities were optimized by random sampling, in order of importance:

Each listed quantity was evaluated using the relative square error compared to a uniform distribution over the folds, and weighted according to its importance. This resulted in five folds, each containing seven pairs (3–four pairs per condition). Distributions of game rounds per fold range from 48% high-rapport to 57% high-rapport (in total, 21 to 22 rounds per fold). The resulting random chance accuracy baseline is

6.3 Model selection

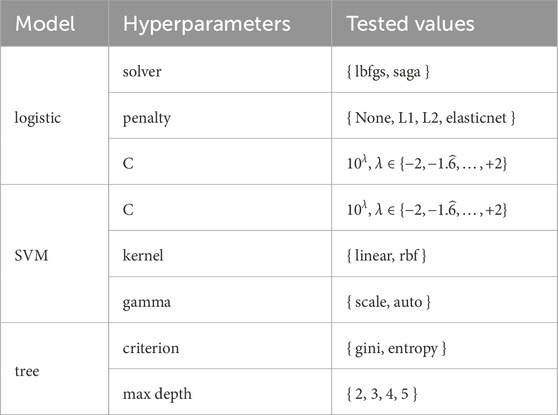

Three shallow ML models were chosen, due to their interpretability, and to set a statistically sound baseline for further study: logistic regression, Support Vector Machines (SVM), and decision trees. We performed the subsequent analysis in Python, using scikit-learn (Pedregosa et al., 2011). A set of hyperparameter values was identified for each model; the hyper-parameters and their tested values are shown in Table 8.

Table 8. Hyperparameters optimized for each model type. All valid combinations were tested in a grid search (e.g., SVM’s gamma parameter only applies to the radial basis function kernel).

For each possible combination of video source, feature set, and classification model, we performed nested

1. For each of the five pre-determined folds:

a. The fold is marked as the test set.

b. For each remaining fold:

(1) The fold is marked as the validation set. The remaining three folds are marked as the train set.

(2) Each combination of hyper-parameters (from the valid combinations shown in Table 8) is used to train on the train set, and evaluated on the validation set.

c. The best performing hyper-parameters are chosen (based on average accuracy on the validation sets), and evaluated on the test set.

2. The expected accuracy of the classification model is estimated as the average test accuracy.

Nested validation strategies are important in small dataset analysis to obtain reliable performance estimates, and especially to avoid over-optimistic results. See (Larracy e al., 2021) for an in-depth discussion of this subject.

6.4 Single-child baseline

In the single-child baseline, summary statistics for one child (calculated over the AU data from one game round) are used as features to predict the pair-level rapport label (high-rapport vs. low-rapport). This means we obtain two separate samples from one game round, for a total of 212 samples. Feature sets range from one to four features per sample. Combining the 25 feature sets described in Section 6.1 and the three models described in Section 6.3, we obtain 75 separate experiments for the single-child baseline.

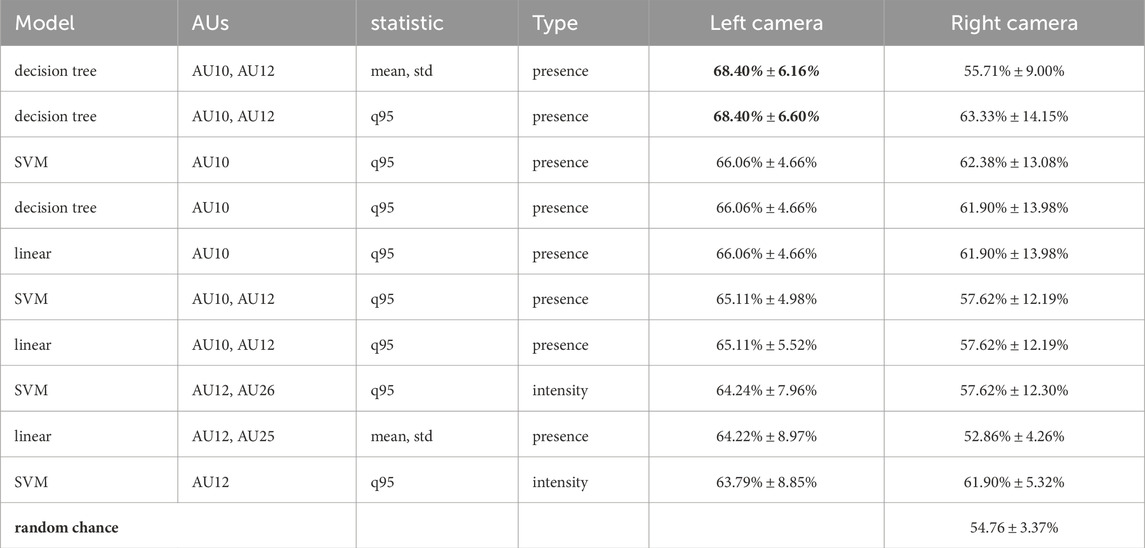

Compared to the baseline random classifier accuracy of 53.8%, all 75 feature-model combinations trained on the left camera obtained better-than-random accuracy on the train set, and 64/75 obtained better-than-random accuracy on the test set. Respectively for the right camera, 74/75 models performed better-than-random on the train set, and 58/75 performed better-than-random on the test set. These high success rates suggest that the AU features generally contain rapport information, and some amount can be extracted with any simple ML strategy.

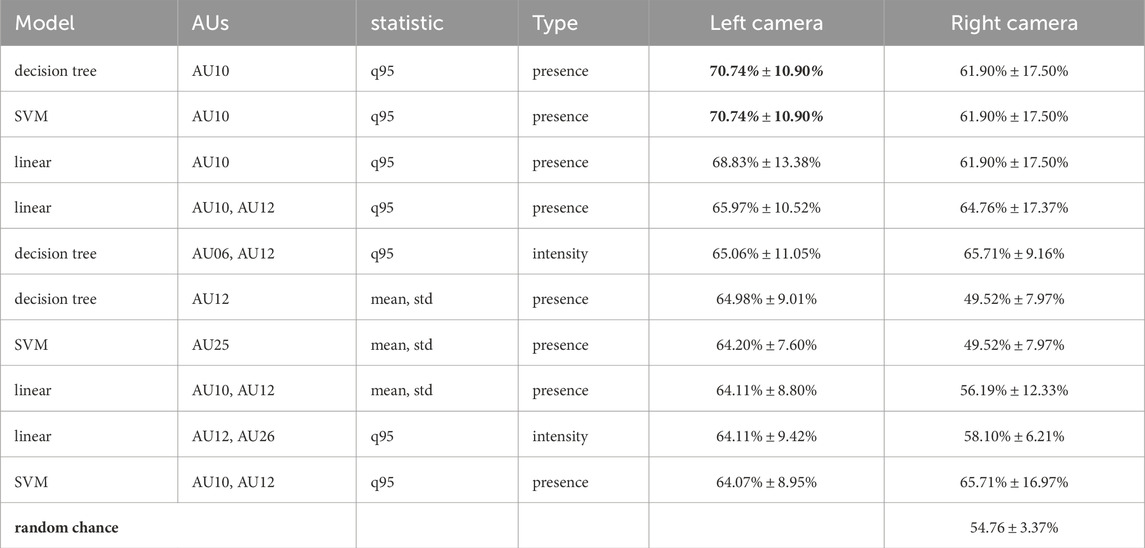

Table 9 lists the top 10 models, ranked by test accuracy on the left camera. Two entries tie for best performance: training a decision tree using AU10 and AU12 presence with either the mean - standard deviation combination or the 95% quantile yields a test accuracy of 68.40%. Most listed models rely on AU10, which was selected using the data-driven approach. Comparing model performance between the two camera streams (rightmost columns on the table), there seems to be a substantial generalization gap, with few models performing comparatively well on both sources.

Table 9. Top 10 single-child models ranked by left camera test accuracy. Listed values include the left camera’s test accuracy, and the right camera’s test accuracy. q95 indicates 95% quantile; std indicates standard deviation.

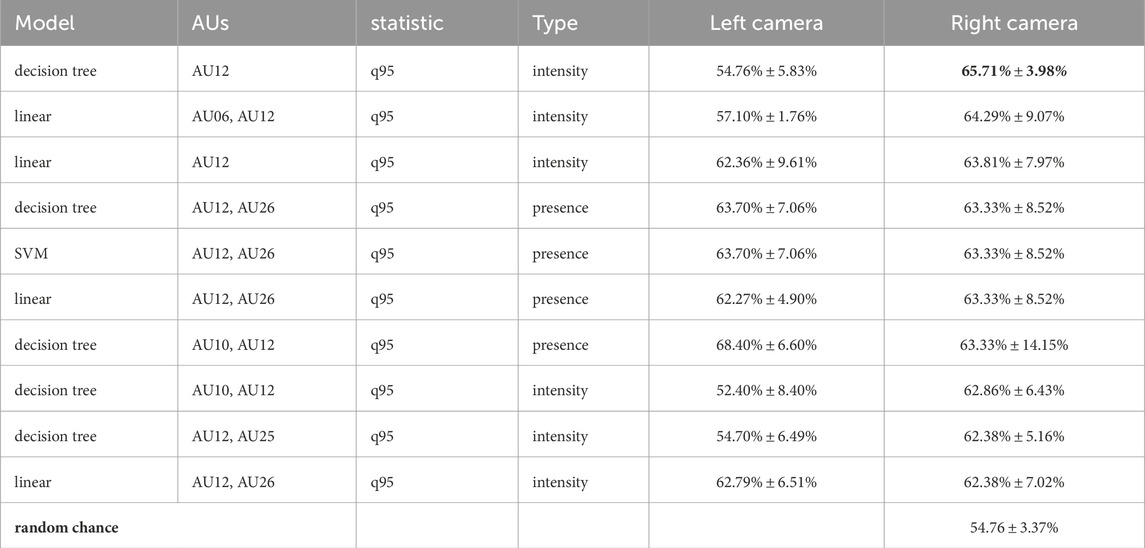

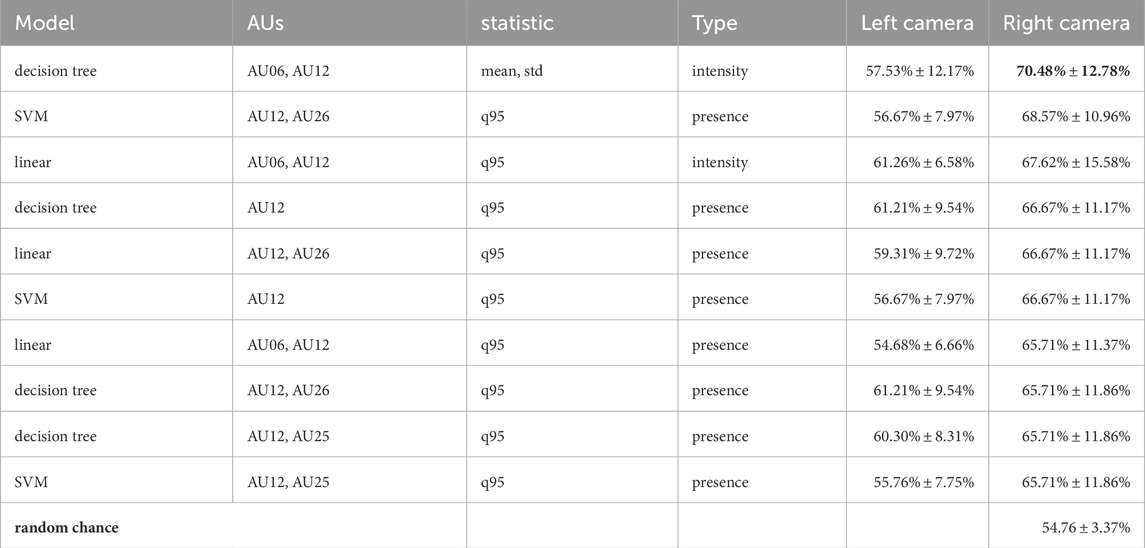

Table 10 lists the top 10 models, ranked by test accuracy on the right camera. The best performing model is a decision tree trained using the AU12 intensity 95% quantile, with a train accuracy of 69.77%, and a test accuracy of 65.71%. A theory-based combination ranks second; all listed solutions rely on AU12. While overall performance is slightly lower than in the left camera leaderboard, the generalization gap is also smaller between camera sources.

Table 10. Top 10 single-child models ranked by right camera test accuracy. Listed values include the left camera’s test accuracy, and the right camera’s test accuracy. q95 indicates 95% quantile; std indicates standard deviation.

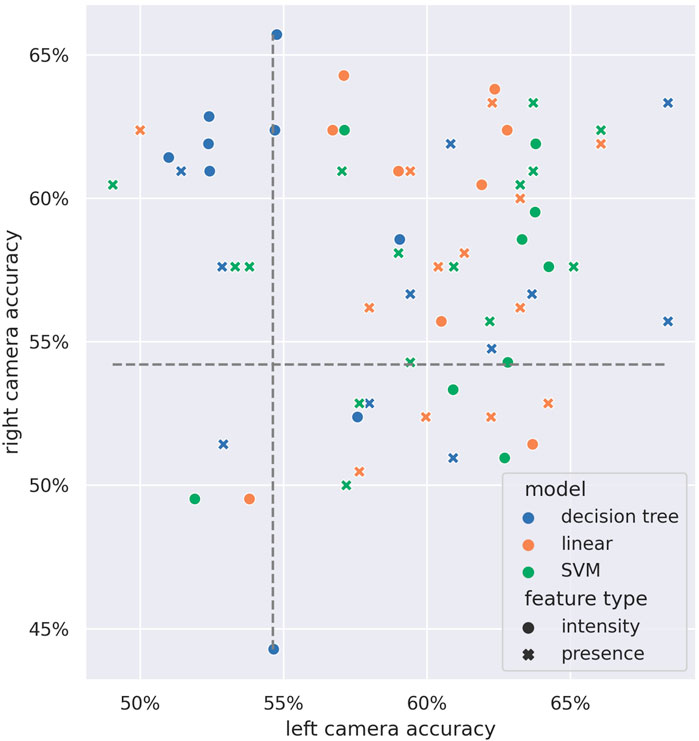

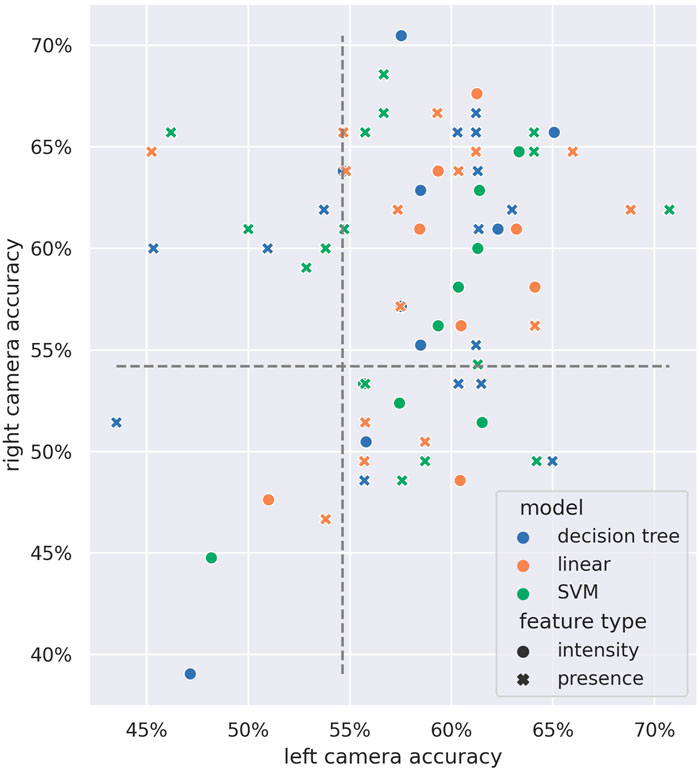

Figure 13 shows a comparison of the single-child test accuracies across both camera streams. We can see there is no strong preference for either of the three models, and the best-performing AU estimate type depends on the evaluated source.

Figure 13. Performance comparison of each single-child model across video sources. Axes indicate the test accuracies in the left camera (horizontal axis) and right camera (vertical axis). Color indicates model type; shape indicates feature type. Gray dashed lines indicate the random chance baseline.

6.5 Joint pair baseline

In the joint pair baseline, the AU summary statistics from both children in a game round are concatenated together, and once more used to predict the pair-level rapport label (high-rapport vs. low-rapport). Compared to Section 6.4, this means the feature count is doubled to 2-8 features per sample, and the sample count is halved to 106 samples. We use the same methodology, including the same choice of feature sets and ML models, again adding up to 75 feature-model combinations per video source.

All 75 left camera feature-model combinations obtained better-than-random train accuracy, and 64/75 obtained better-than-random test accuracy. Respectively for the right camera, 73/75 model-feature combinations obtain better-than-random train accuracy, and 54/75 obtain better-than-random test accuracy. These numbers are similar to the ones obtained in single-child experiments.

Table 11 lists the top 10 joint pair models, ranked by test accuracy on the left camera. Again, we get a tie: training either a decision tree or an SVM on the AU10 presence 95% quantile yields a test accuracy of 70.74%. While the model choice does not seem to matter much, most entries rely on AU10 presence estimates, with the notable exception of a theory-based result. Test accuracies are generally higher than in the single-child baseline, but standard deviations are larger—suggesting that the sample size reduction causes some instability.

Table 11. Top 10 joint pair models ranked by left camera test accuracy. Listed values include the left camera’s test accuracy, and the right camera’s test accuracy. q95 indicates 95% quantile; std indicates standard deviation.

Table 12 lists the top 10 joint pair models, ranked by test accuracy on the right camera. The best-performing model is a decision tree trained using a pure theory-based approach: AU06 and AU12 mean - standard deviation combination. It attains a train accuracy of 81.43%, and a test accuracy of 70.48%. Again, we observe higher accuracies but larger standard deviations when compared to single-child models.

Table 12. Top 10 joint pair models ranked by right camera test accuracy. Listed values include the left camera’s test accuracy, and the right camera’s test accuracy. q95 indicates 95% quantile; std indicates standard deviation.

Figure 14 shows a comparison of the joint pair test accuracies across both camera streams. We can see a similar overall distribution as in Figure 13, with higher overall accuracies reported.

Figure 14. Performance comparison of each joint-pair model across video sources. Axes indicate the test accuracies in the left camera (horizontal axis) and right camera (vertical axis). Color indicates model type; shape indicates feature type. Gray dashed lines indicate the random chance baseline.

Overall, using joint data from both children resulted in slightly better accuracies, but noticeably higher standard deviations. In this scenario, taking the interpersonal aspect of rapport into account did not outweigh the benefits from having a bigger population size.

7 Discussion and conclusion

To better understand the social dynamics and behaviors that emerge in the classroom when children interact with their friends and peers, we collected UpStory: a new dataset of dyadic interactions with different levels of rapport. In order to provide an objective measure of rapport, we leveraged friendship network analysis to propose and validate a novel pair-making technique. Our approach allowed us to control for different levels of rapport, resulting in a multimodal dataset on child-child interactions with high-rapport and low-rapport levels.

We recorded pairs of children participating in collaborative storytelling play for a total of 35 sessions, adding up to 106 game rounds, with a duration of 3 h 40 m. Each session is annotated with the pair’s condition (high-rapport or low-rapport) and a social distance heuristic, allowing practitioners to train ML models based on the children’s self-reported friendships. The resulting private dataset contains three video feeds with associated audio, and two additional audio feeds from head-mounted microphones, providing clean recordings for each child’s voice. UpStory is the anonymized dataset obtained by extracting frame-by-frame body and face features from two video sources; it is made publicly available at https://zenodo.org/doi/10.5281/zenodo.15391848. The provided features were extracted using OpenPose and OpenFace, with a custom solution for identity tracking over time.

We followed two methodologies to validate our pair-making technique. Firstly, analysis of the social distance heuristic’s distribution suggested that the pairing strategy worked at the population level, but not at the case-by-case level. This was expected, since we optimized for the sum of distances in order to guarantee that all children are assigned a pair, and the dynamics of popularity come into play: not all children were equally liked by their peers, so it was not always possible to provide a perfect match. This form of label noise was chosen to minimize selection bias, but it can be a limiting factor if strong labels are needed. A possibility for further ML experiments would be to base the labels on the pair distances instead of the assigned condition, although this can introduce confounding variables (e.g., sociable children being over-represented in the low-distance case). We observed better quality pairings in the Year two cohort, both due to a bigger cohort size and due to the participation of several classes. A possible extension to this method is to ensure even participation across several classes; in our study, we were limited by the requirement that the students speak English fluently.

Secondly, our questionnaire analysis showed that children felt significantly closer to their pair in the high-rapport condition, supporting H1. However, our survey data also revealed that children did not necessarily have more positive emotions when playing in the high-rapport condition than in the low-rapport condition, rejecting H2. This result is not surprising, as the novelty effect of the activity could lead to the observed ceiling effects when questionnaires are used to capture children’s subjective perceptions (Ligthart and Peters, 2018).

Overall, we demonstrated that our proposed pair-making technique is reliable in controlling rapport levels. We believe that our experimental manipulation more clearly captures the extremes of naturalistic behavior inherent to the social dynamics of child dyads when compared to post hoc annotation. Furthermore, we base the provided labels on the child’s own opinions, rather than the perceptions of external observers.

Any study involving social interaction at a single site is deeply affected by the local culture where the experiment took place, and this work is no exception. We collaborated with a school that specifically caters to international families, and obtained a very diverse student population, both in terms of home culture and in terms of socioeconomic status; but the fact that all the students were growing up in one Swedish town could in theory have a measurable effect on their behavior. However, note that the literature we based our protocol on involves research in other countries, and the facial expression cues that were selected for the ML baselines are generally understood to be universal: primarily, expressions of happiness, or amount of speech. We therefore expect results derived from the UpStory dataset to be applicable to other populations.

The naturalistic social dynamics captured by our dataset hold promise for the training of ML-based automatic prediction of rapport. To build on this, we have provided ML baselines for the prediction of the experimental condition. Both baselines using the data from a single child and baselines using joint pair data are provided. In all cases, the best-performing models attained test accuracies in the 60%–70% range, well above random chance. While our exhaustive parameter searches provide different optimal models for each experiment (single-child or joint pair data, left camera or right camera), the findings are quite consistent: all selected features are indicators of happiness and speech, with extreme event summarization (as captured by the 95% quantile) and decision trees generally performing well in the rankings. Having different views into the same interaction can provide complementary information (for example, having occlusions at different times), so one possible direction for future research would involve combining the data from both sources into one prediction.

While most of the available datasets for affective computing and child-child interaction are small, containing only a few instances of data (Mathur et al., 2021; Singh et al., 2018), previous research has demonstrated the potential of small datasets for modeling human behavior (Cheong et al., 2024). Collecting datasets for child-child interactions is an exhaustive and expensive process that requires a rigorous methodology and careful planning (Chen et al., 2023; Lemaignan et al., 2018), which makes these datasets highly valuable when understanding social dynamics. Moreover, small datasets are easier to align with individual needs and enable more personalized training and decision-making (Zehfroosh et al., 2017; Fraile et al., 2021).

We identified three areas in which the UpStory dataset could be used to design data-driven methodologies to further our understanding of children’s relationships with their friends and peers. First, the spontaneous nature of the interactions in our dataset provides significant information for ML models to learn from these social dynamics, as it captures real and unstructured peer collaborations. This could enhance the ability of ML to model interpersonal dynamics in educational settings. Data-driven approaches to understanding children’s social dynamics are still a developing field, due to the complexity involved in interpreting these complex interactions (Lemaignan et al., 2018; Horn et al., 2024).

Second, by incorporating both facial cues and body gestures, our dataset provides a more comprehensive approach to recognizing children’s affective states as they naturally emerge during peer interactions. Its high ecological validity—data collected in a school setting—enables researchers to study children’s affect and engagement in authentic learning contexts. This is crucial for child-robot interaction, as evidence shows that the flow of these interactions constantly fluctuates (Ros et al., 2011). We hope the UpStory dataset will contribute to the design of more socially aware robots and help advance of the field beyond the lab. While “real-world” studies may trade experimental control for ecological validity, requiring more logistical coordination or managing unpredictable peer interactions that might introduce new confounds, they also capture genuine social dynamics crucial for designing child–robot applications (Baxter et al., 2016). Therefore, field studies must therefore balance methodological rigour with transparent reporting of contextual influences to harness both its challenges and its insights.

Third, by operationalizing rapport, we believe that our dataset could contribute to the design of more effective collaborative learning systems, especially in areas where personalized education and assistance are required to enhance children’s wellbeing (Williams et al., 2024). For instance, there is evidence that assistive technologies, such as social robots, could help increase communication and emotional regulation through motor and gestural imitation, particularly in children with autism spectrum disorder (So et al., 2019).

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://zenodo.org/records/15391848 and https://github.com/MarcFraile/dyadic-storytelling.

Ethics statement

The requirement of ethical approval was waived by Etikprövningsmyndigheten, diarienummer 2022-04863-01 for the studies involving humans because the ethical board considered that the conducted research did not pose considerable risks to users and did not fall under the categories that require ethical approval. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin. Written informed consent was obtained from the minor(s)’ legal guardian/next of kin for the publication of any potentially identifiable images or data included in this article.

Author contributions

MF: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review and editing. NC-B: Writing – original draft, Writing – review and editing, Conceptualization, Methodology. AA: Investigation, Writing – original draft. GV: Conceptualization, Data curation, Supervision, Writing – review and editing. JL: Conceptualization, Supervision, Writing – review and editing. NS: Conceptualization, Supervision, Writing – review and editing. GC: Conceptualization, Supervision, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was partly funded by the Centre for Interdisciplinary Mathematics, Uppsala University; the Marianne and Marcus Wallenberg Foundation and the Marcus and Amalia Wallenberg Foundation, partly via the Wallenberg AI, Autonomous Systems and Software Program-Humanities and Society (WASP-HS); the Horizon Europe SymAware project (project number: 101070802); and the Swedish Research Council (grant number 2020-03167).

Acknowledgments

The authors wish to thank all the colleagues who donated time and effort to help complete this project. In no particular order: Alessio Galatolo, Gustaf Gredebäck, Johan Öfverstedt, and Rebecca Stower. Attribution for the card images displayed in Figure 4, in reading order: graveyard by upklyak; mummy by upklyak; grim reaper by upklyak; savannah by macrovector; boy scout by brgfx; hearth by macrovector; dragon hoard by upklyak; elf by pch. vector; shield by vectorpocket. All images collected from Freepik. Manuscripts of this article have been previously made available in MF’s doctoral thesis (Fraile, 2024) and in Arxiv https://arxiv.org/abs/2407.04352.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2025.1547578/full#supplementary-material

Footnotes

1https://dictionary.cambridge.org/us/dictionary/english/rapport

2Etikprövningsmyndigheten, diarienummer 2022-04863-01.

5https://github.com/bbc/audio-offset-finder

References

Alhazmi, K., Alsumari, W., Seppo, I., Podkuiko, L., and Simon, M. (2021). “Effects of annotation quality on model performance,” in 2021 international conference on artificial intelligence in information and communication (ICAIIC) (IEEE), 063–067.

Alsofyani, H., and Vinciarelli, A. (2021). “Attachment recognition in school age children based on automatic analysis of facial expressions and nonverbal vocal behaviour,” in Proceedings of the 2021 international conference on multimodal interaction, 221–228.

Aron, A., Aron, E. N., and Smollan, D. (1992). Inclusion of other in the self scale and the structure of interpersonal closeness. J. personality Soc. Psychol. 63, 596–612. doi:10.1037/0022-3514.63.4.596

Azmitia, M., and Montgomery, R. (1993). Friendship, transactive dialogues, and the development of scientific reasoning. Soc. Dev. 2, 202–221. doi:10.1111/j.1467-9507.1993.tb00014.x

Baltrušaitis, T., Robinson, P., and Morency, L.-P. (2016). “Openface: an open source facial behavior analysis toolkit,” in 2016 IEEE winter conference on applications of computer vision (WACV) (IEEE), 1–10.

Baxter, P., Kennedy, J., Senft, E., Lemaignan, S., and Belpaeme, T. (2016). “From characterising three years of hri to methodology and reporting recommendations,” in 2016 11th acm/ieee international conference on human-robot interaction (hri) (IEEE), 391–398.

Beidel, D. C., and Turner, S. M. (1988). Comorbidity of test anxiety and other anxiety disorders in children. J. Abnorm. child Psychol. 16, 275–287. doi:10.1007/bf00913800

Belpaeme, T., Kennedy, J., Ramachandran, A., Scassellati, B., and Tanaka, F. (2018). Social robots for education: a review. Sci. robotics 3, eaat5954. doi:10.1126/scirobotics.aat5954

Berndt, T. J., Perry, T. B., and Miller, K. E. (1988). Friends’ and classmates’ interactions on academic tasks. J. Educ. Psychol. 80, 506–513. doi:10.1037//0022-0663.80.4.506

Bilakhia, S., Petridis, S., Nijholt, A., and Pantic, M. (2015). The mahnob mimicry database: a database of naturalistic human interactions. Pattern Recognit. Lett. 66, 52–61. doi:10.1016/j.patrec.2015.03.005

Bradley, M. M., and Lang, P. J. (1994). Measuring emotion: the self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. psychiatry 25, 49–59. doi:10.1016/0005-7916(94)90063-9