- ¹CCPS Laboratory, ENSAM-C, Hassan II University, Casablanca, Morocco

- ²Pluridisciplinary Laboratory of Research and Innovation (LPRI), EMSI Casablanca, Casablanca, Morocco

- ³LISPEN, Arts et Métiers Institute of Technology, Lille, France

In today’s era of digital transformation, industries have made a decisive leap by adopting data-driven, robot-assisted disassembly solutions that cut cycle time and cost relative to labor-intensive manual tear-down. Including robots has not only improved production activities but also strengthened safety in tasks that human operators once handled alone. Minimizing the role of the human factor in the process also minimizes the incidents related to it. The disassembly of Waste Electrical and Electronic Equipment (WEEE) poses complex technical, economic, and safety challenges that traditional manual methods struggle to meet. There is therefore a need for a decision-making tool harmonized with human cooperation, in which Artificial Intelligence (AI) plays a pivotal role by providing financially viable solutions while ensuring a secure collaborative environment for both humans and robots. This review synthesizes recent advances in AI-enabled robotic disassembly by focusing on four main research areas: (i) optimization and strategic planning, (ii) human–robot collaboration (HRC), (iii) computer vision (CV) integration, and (iv) safety for collaborative applications. A supplementary subsection briefly acknowledges emerging topics, such as reinforcement learning, that lie outside the main scope but represent promising future directions. By analyzing 62 peer-reviewed studies published between 2000 and 2024, the review identifies how these themes converge, highlights open challenges, and maps out future research directions.

1 Introduction

Natural resources used in electronics cannot be regenerated, or at least not at the rate at which they are consumed. The United States alone generated 500 million volumes of electronic waste between 1997 and 2007 (Kiddee et al., 2013). During that period, printed-circuit boards (PCBs) relied on costlier raw materials, underscoring the imperative for resource stewardship and long-term sustainability (Perossa et al., 2023). Remanufacturing is defined as the process of restoring a used product to the level of its original equipment manufacturer (OEM), with the same warranty as an equivalent new product (Matsumoto and Ijomah, 2013). It has a major impact on preserving the environment: recovered components are reassembled into remanufactured products, which saves raw materials and reduces both production costs and environmental impact. A visualization of the life cycle of resources according to the circular economy business model is presented in Figure 1. The early adoption of such processes was driven by the need to repair, maintain, or understand complex machinery. However, as products became more complex and the need to recover individual components expanded, the term ‘disassembly’ was introduced, allowing valuable components to be extracted in a targeted manner and thereby facilitating efficient recycling and reducing the environmental footprint. The disassembly process represents the first phase in the remanufacturing cycle (Priyono et al., 2016). It is the reverse of assembly: a product is separated into its components and/or sub-assemblies by non-destructive or semi-destructive operations that damage only the connectors or fixings. If the process of separating the product is not reversible, it is called dismantling (Vanegas et al., 2019).
Within the resource life cycle, the disassembly process itself focuses on the extraction of sub-assemblies and individual components from end-of-life products (EOLPs) so that they can be reused or remanufactured. Non-destructive disassembly refers to separating components without damaging them, enabling their reuse, remanufacturing, or recycling. While this preserves the integrity of individual parts, it does not necessarily allow for full reassembly of the original product and is therefore not always fully reversible. For waste electrical and electronic equipment, however, the main technical and economic obstacles to successful recycling include the difficulty of classifying and disassembling components. Manual operations are considered prohibitively expensive, and full automation is also rejected because of the lack of uniformity of discarded appliances and the exorbitant costs associated with traditional automation techniques (Alvarez-de-los-Mozos and Renteria, 2017a). Manual disassembly also causes safety problems that increase labor costs, which represent the second most expensive item for a recycling plant (D’Adamo et al., 2016). Across successive industrial revolutions, from the first industrial robot to the advanced technologies of Industry 4.0 and 5.0, robots have made industrial plants more efficient, reducing errors and safety issues while improving both product stability and consistency. For this reason, one interesting solution consists of integrating robots into the disassembly process. The use of robots is increasing in remanufacturing systems, where it particularly improves the performance of disassembly lines. In these systems, robots can be deployed in various roles, ranging from fully autonomous execution of specific tasks to collaborative operations alongside human workers or other robots, with the flexibility to adapt to different task requirements.
The main advantage of robotics lies in the accurate and consistent performance of repeated tasks, such as on assembly lines. In robotic disassembly, however, which involves several uncertainties, a standard robot without any cognitive capacity for reasoning and logic has serious limitations compared with the ability of a human being to disassemble an EOLP intuitively. To fully realize the potential of automated disassembly, it is essential to implement artificial intelligence approaches such as reinforcement learning (RL) alongside computer vision systems capable of automatically identifying and locating components or finding the most efficient path (Wegener et al., 2015).

Figure 1. An abstract visualization of the life cycle of resources according to the circular economic business model. 01: Dismantling of the EOLP; 02: Recycling of materials; 03: New raw materials enter production during the design and manufacture of sub-components; 04: Production of the final product; 05: Distribution of the product to customers; 06: Consumption of the product.

This paper begins by presenting the background of robotic disassembly (Section 2), followed by the paper selection methodology (Section 3) used to identify relevant studies across four main areas, and then proceeds to a detailed analysis of the selected studies (Section 4), organized by domain.

2 Background: robotic disassembly

Robots enable faster, more consistent extraction of reusable components from end-of-life (EOL) products, and robotic systems are increasingly replacing manual disassembly to speed up the recovery of reusable materials from electrical and electronic products. Several companies have demonstrated this in their recycling processes. Apple introduced Liam in 2016 and its successor Daisy in 2018, robots that demonstrated an e-waste treatment process by disassembling an iPhone within minutes (Apple, 2024). In another example, CRG Automation deployed robotic systems to safely disassemble the M55 rocket, a chemical weapon containing nerve agents such as VX and sarin (James, 2023). Their automated solution enabled precise handling and neutralization within high-risk demilitarization facilities, and further use cases include e-waste and battery disassembly (Allison, 2023; Fraunhofer IFF, 2025). Robotic disassembly offers manufacturers promising benefits that combine flexibility, profitability, safety, and positive environmental outcomes. Robots can handle many different products, as human operators do. They also deliver labor savings, making remanufacturing more affordable. Robotic disassembly supports material reuse, which lowers environmental impact (Zeng et al., 2022). Finally, robots can operate in unsafe areas and manage dangerous materials, keeping human workers safe (Xu et al., 2021).

2.1 Collaborative approaches for disassembly

Existing e-waste management faces two issues: manual disassembly is expensive, and automated disassembly is complicated for virtually all types of legacy devices. Current approaches tackle these issues with a hybrid scheme in which robots and human operators collaborate (Alvarez-de-los-Mozos and Renteria, 2017b). This scheme brings robotic and human operators together to facilitate e-waste recycling, using system designs suited to the operating environment. The field builds on basic interactions between people and robots as well as on more advanced constructs of human-robot collaboration, in which the robot is endowed with the skills needed to work with people (Feil-Seifer et al., 2009; HRI, 2024). Human-centered collaborative robotics creates shared workspaces in which robots handle repetitive or hazardous operations, such as manipulating irregularly shaped, toxin-laden components, while humans provide real-time judgement. Analyzing e-waste disassembly therefore requires a concurrent examination of collaborative strategies and the enabling tool-chain.

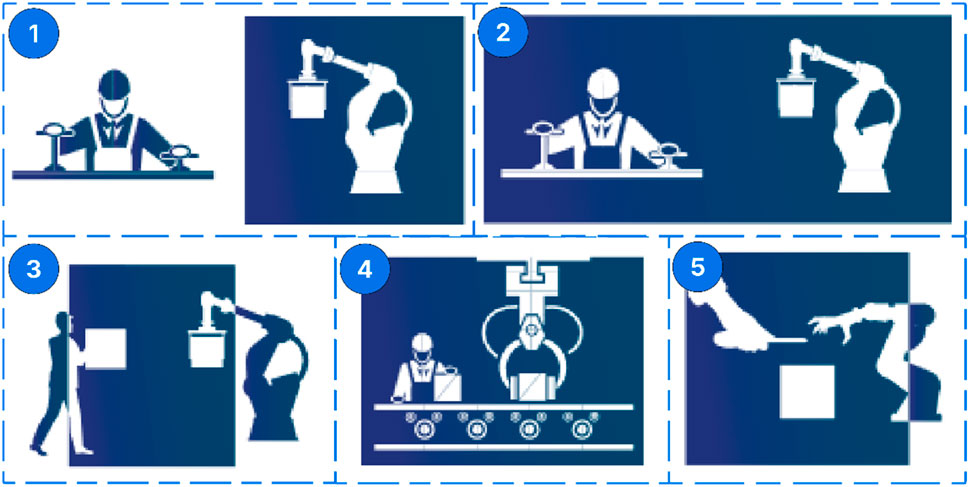

A specialized robotic disassembly cell is a system in which PLC-controlled robots execute disassembly tasks under remote monitoring (Dawande et al., 2005). Such cells are frequently used in recycling operations to separate products and remove hazardous elements. They combine built-in safety and environmental awareness with custom disassembly techniques, but still require human intervention for tasks that need direct assistance. Human-robot coexistence at disassembly sites brings both challenges and new opportunities for site management. Working alongside robots, people can integrate disassembly tasks flexibly: robots complete repetitive or dangerous procedures while humans handle decision-focused activities (Magrini et al., 2020). Industry relies heavily on robots for safety because they can handle toxic materials and sharp objects without exposing workers. Detection systems, flexible robotic elements, and cautious safety protocols together reduce exposure risks and support concurrent human-robot disassembly operations (Magrini et al., 2020). In synchronized disassembly, humans and robots work in the same space in a coordinated manner (Zhuang et al., 2019). The human workers share the space with the robots while each keeps to a separate task domain. For example, human operators first remove screws from the workpiece, clearing the way for robotic extraction operations that achieve highly precise results. This approach combines robotic processing with human capabilities to improve the accuracy and speed of complex disassembly.
In cooperation, humans and robots work in the same workspace with independent roles that are integrated into a shared task. Companies that adopt such systems can divide the workspace effectively between parallel assignments without sacrificing production objectives (Váncza et al., 2011). For example, after a robot detaches individual components, a human operator inspects them to verify their condition before further disassembly proceeds. In full collaboration, several operations can run simultaneously, with the robot remaining engaged regardless of whether the preceding task has finished, so that work proceeds without interruption. This collaborative process enables flexible operation within complex disassembly systems and allows human-robot teams to work together in both safe and optimized conditions (Ameur et al., 2024). Figure 2 illustrates the collaborative approaches in robotic disassembly.

Figure 2. Collaborative approaches in robotic disassembly (1. Robot Cell, 2. Coexistence, 3. Synchronized, 4. Cooperation, 5. Collaboration).

2.2 Challenges and difficulties

Robotic disassembly of WEEE nevertheless introduces a wide range of challenges that make automation particularly difficult compared with assembly. Among the most critical obstacles is the need to operate in dynamic environments in which variability in product orientation, product condition, and component integrity can interfere with fixed robotic routines. Unlike structured industrial tasks, disassembly often takes place in unpredictable spatial and material conditions that require real-time detection and adaptation. End-of-life conditions are not uniform either, as products may arrive damaged, incomplete, or severely worn (Hohm et al., 2000). Different product versions and user modifications may result in different internal configurations, which limits the effectiveness of predefined CAD-based trajectories or fixed motion sequences (Bogue, 2019). This unpredictability raises the cognitive and mechanical requirements of robotic systems. Disassembly also involves a variety of tooling requirements (Kernbaum et al., 2009). In contrast to repetitive assembly, it often requires several different operations on a single product, such as unscrewing, cutting, breaking, heating, or lifting components. Each of these operations may require a different tool head, actuation force, and level of precision. This calls for reconfigurable end-effectors and tool-change mechanisms that offer multiple functions while maintaining cycle time and safety (Poschmann et al., 2021; Karlsson and Järrhed, 2000). Coordinating these systems for seamless automation is more complex still. Perception, decision-making, and motion planning must be tightly synchronized, especially in cluttered or constrained environments. Robots must be able to decide on the disassembly sequence and execute high-precision movements, all in real time.
In collaborative scenarios, the coordination between human and robotic agents becomes even more critical, so robust interaction protocols and safety mechanisms are required. Other systemic barriers include designs that are not intended for disassembly, where products are manufactured to be compact and tamper-proof, with strong adhesives, welded joints, or concealed fixings. Such features make automated disassembly technically unfeasible or economically inefficient (Huang et al., 2021). The environmental and regulatory constraints associated with WEEE add to the complexity, since robots need to safely extract toxic components such as lithium batteries or mercury lamps while maximizing the recovery of valuable materials such as rare earth elements. In addition, the limited availability of datasets and the lack of standardization remain major obstacles. While assembly is documented and often standardized at every stage, the disassembly process has no guidelines or detailed labelling. This limits the ability to train intelligent systems or to generalize robot behavior across product types.

3 Paper selection methodology

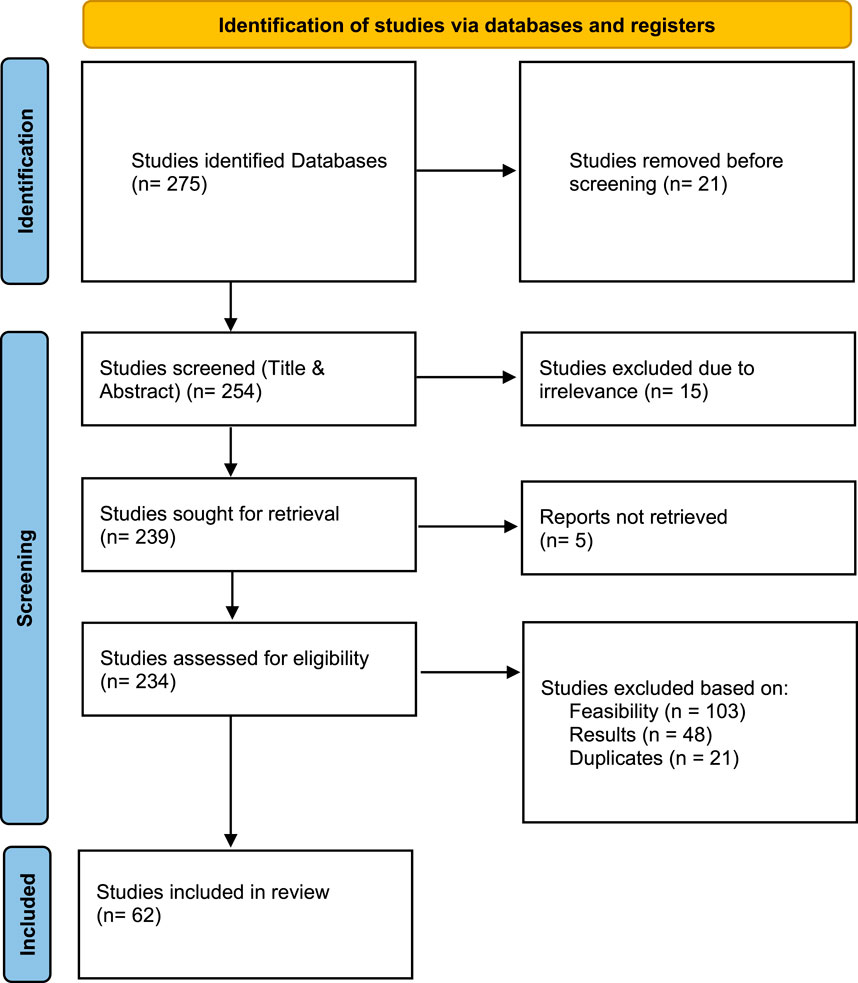

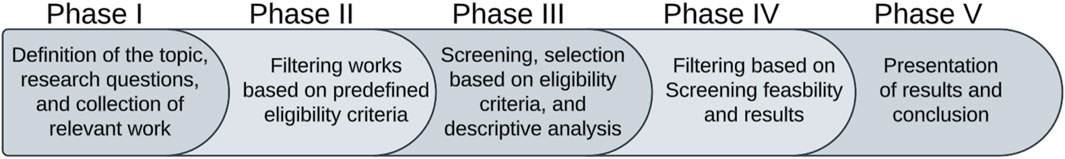

A systematic selection framework was developed to review research on robotic disassembly processes and the integration of AI methods. This study follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines defined by Moher et al. (2009) through a five-phase framework (Figure 3). Phase I defines the topic, formulates the research questions, and retrieves publications from multiple databases. Phase II applies predefined eligibility criteria to refine the initial study pool and retain relevant, research-based documents. Phase III combines eligibility verification with a descriptive analysis of the retained studies. Phase IV examines the chosen studies to assess their feasibility and confirm that they match the review objectives. Phase V presents the key findings. This structured process safeguards the scientific validity of the review and yields detailed outcomes that readers can easily follow.

Figure 3. The five Phases of the Paper Selection Methodology as adapted from Moher et al. (2009).

3.1 Search strategy

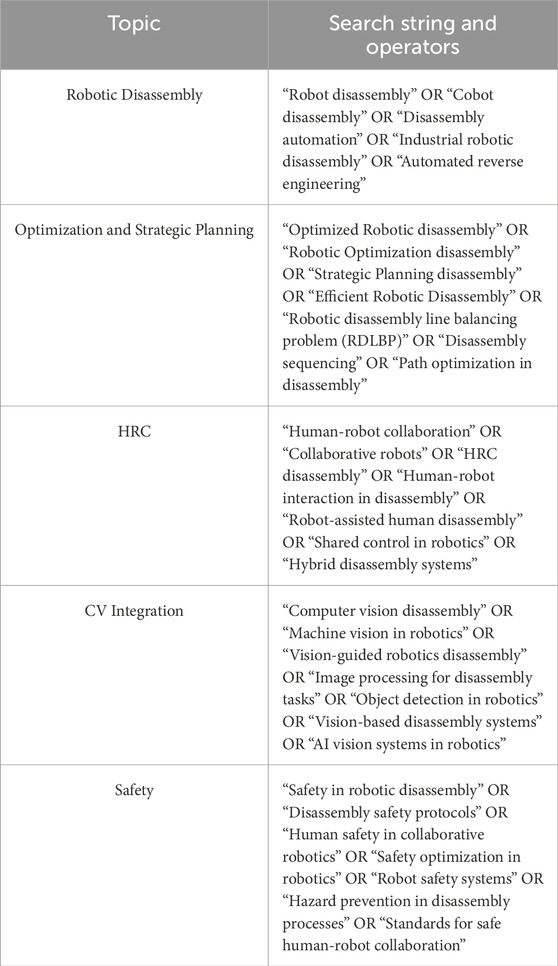

The search covered the four major robotic disassembly domains using precise search terms. These included optimization approaches and strategic planning methods, understood here as high-level, offline decisions that structure the entire disassembly system, as well as human-robot collaboration systems. Search strings combined “Robotic disassembly” with “Disassembly automation” to find general robotic disassembly studies, and “Human-robot collaboration” with “Collaborative robots” to identify HRC research. Searches for “Computer vision disassembly” OR “AI vision systems in robotics” targeted robotic applications that merge vision systems with artificial intelligence. Safety practices in robotic disassembly were covered through the terms “Safety in robotic disassembly” OR “Human safety in collaborative robotics” (Table 1). This methodical search strategy allowed the review to gather an extensive body of literature that represents the current state of knowledge.
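As an illustration, the search strings described above can be assembled programmatically. The following is a minimal sketch, assuming that the paired terms are quoted as exact phrases and joined with a Boolean OR; the dictionary keys, the `build_query` helper, and the grouping are hypothetical and do not reproduce the exact syntax submitted to each database.

```python
# Search terms quoted from the search-strategy description. The domain labels,
# the exact-phrase quoting, and the OR grouping are assumptions for illustration.
SEARCH_TERMS = {
    "robotic disassembly": ["Robotic disassembly", "Disassembly automation"],
    "human-robot collaboration": ["Human-robot collaboration", "Collaborative robots"],
    "computer vision": ["Computer vision disassembly", "AI vision systems in robotics"],
    "safety": ["Safety in robotic disassembly", "Human safety in collaborative robotics"],
}


def build_query(terms):
    """Quote each term as an exact phrase and join the group with OR."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


# One Boolean query string per research domain.
queries = {domain: build_query(terms) for domain, terms in SEARCH_TERMS.items()}

for domain, query in queries.items():
    print(f"{domain}: {query}")
```

Keeping the terms in a single structure makes it easier to rerun the same search on several databases and to document exactly which strings were used.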

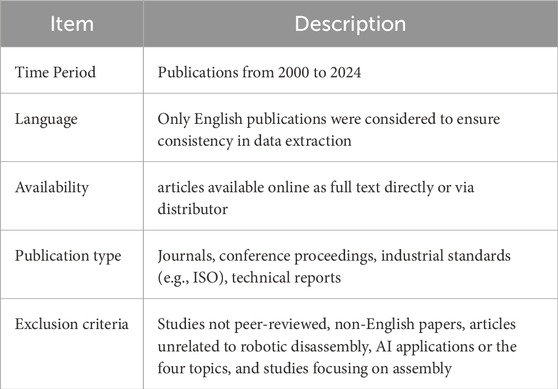

The search covered publications from 2000 to 2024 (Table 2) to capture advances in robotics, artificial intelligence, and industrial disassembly methods over the last 24 years. Only publications written in English were included to ensure uniformity throughout data extraction and interpretation. Eligible sources were peer-reviewed articles and conference proceedings, together with industrial standards (such as ISO) and technical reports, provided that the full text was accessible through online distributors or direct access. Materials were excluded if they did not meet peer-review standards, were not written in English, or focused on topics unrelated to robotic disassembly or AI applications. Papers that analyzed assembly operations alone, without discussing reverse engineering or disassembly, were also excluded. These criteria allowed the selection of studies that directly address the review objectives while preserving scientific standards.
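The eligibility criteria above can be read as a simple record filter. The sketch below is one possible encoding, not the tooling used in this review; the `Record` fields, the source-type labels, and the example records are all hypothetical.

```python
from dataclasses import dataclass

# Source types accepted by the criteria; the label strings are assumptions.
ALLOWED_TYPES = {"journal", "conference", "standard", "technical_report"}


@dataclass
class Record:
    title: str
    year: int
    language: str
    source_type: str
    full_text_available: bool
    covers_disassembly: bool  # False for assembly-only or unrelated work


def is_eligible(record: Record) -> bool:
    """Apply the stated criteria: period, language, source type, access, scope."""
    return (
        2000 <= record.year <= 2024
        and record.language == "English"
        and record.source_type in ALLOWED_TYPES
        and record.full_text_available
        and record.covers_disassembly
    )


# Hypothetical records, invented purely to exercise the filter.
records = [
    Record("Example A", 2021, "English", "journal", True, True),
    Record("Example B", 1998, "English", "journal", True, True),      # too early
    Record("Example C", 2019, "French", "conference", True, True),    # not English
    Record("Example D", 2022, "English", "blog", True, True),         # type not accepted
    Record("Example E", 2020, "English", "conference", True, False),  # assembly only
]

eligible = [record.title for record in records if is_eligible(record)]
print(eligible)  # ['Example A']
```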

3.2 Paper selection

To strengthen the examination process, the PRISMA flow diagram was used (Figure 4). In this way, a systematic and transparent evaluation of the literature is guaranteed, reinforcing the reliability of the findings and comparison. The inclusion and exclusion criteria were applied in distinct stages as follows:

3.2.1 Title and abstract screening

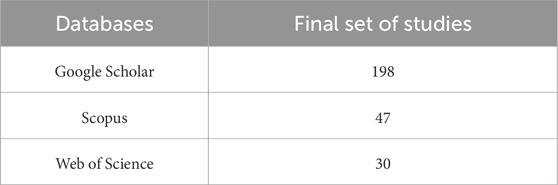

The database search returned 275 publications: 198 from Google Scholar, 47 from Scopus, and 30 from Web of Science. After removing 21 duplicates, 254 unique articles remained (Table 3). The research team screened these publications by title and abstract to assess their relevance to the research topic, eliminating studies that did not focus on robotic disassembly techniques or artificial intelligence applications.

3.2.2 Full-text screening

The full texts of the 254 remaining articles were then reviewed. At this stage, 192 articles were excluded because they were infeasible to implement, provided insufficient data, or did not align with the research context (for example, studies unrelated to disassembly systems, human-robot collaboration, or safety). The remaining 62 articles formed the final selection for in-depth analysis.
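The counts reported for the screening stages can be cross-checked with simple arithmetic. The short sketch below uses only the figures stated in this section (records per database, duplicates removed, and full-text exclusions); the variable names are illustrative.

```python
# Records identified per database, as reported in the screening description.
identified = {"Google Scholar": 198, "Scopus": 47, "Web of Science": 30}
duplicates_removed = 21
excluded_at_full_text = 192

total_identified = sum(identified.values())
after_deduplication = total_identified - duplicates_removed
included = after_deduplication - excluded_at_full_text

print(total_identified, after_deduplication, included)  # 275 254 62
```

The chain 275 identified, 254 after de-duplication, and 62 included matches the numbers carried into the full-text screening and the final selection.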

3.2.3 Sorting based on content type

The final 62 articles were categorized by content according to the review’s four main themes: optimization strategies, human-robot collaboration, computer vision integration, and safety for collaborative applications. These articles formed the basis for the descriptive and content analysis that follows.

3.3 Content analysis and classification

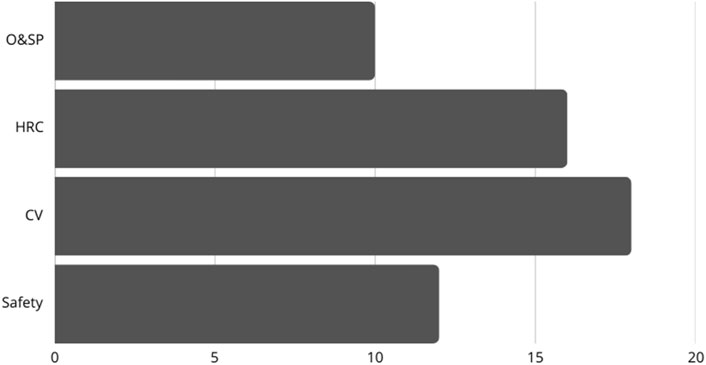

This classification system defines the research areas within robotic disassembly (Figure 5). Optimization and strategic planning (O&SP) techniques provide the algorithms that improve sequence planning and resource allocation. Ergonomic system designs that enable HRC make shared operations safer and more productive. Computer vision gives robots greater precision when detecting objects and sequencing disassembly operations. Safety is a constant requirement, since both autonomous and collaborative systems need appropriate risk-minimizing protocols. Across these domains, the reviewed studies show how artificial intelligence can link the areas and address their problems.

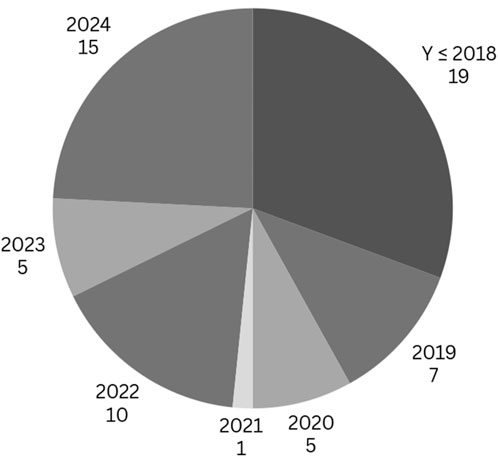

Figure 6 shows that the selection favors recent work while retaining essential foundational studies in robotic disassembly and artificial intelligence. Post-2019 publications dominate the selection because they reflect advanced robotic disassembly techniques and AI applications, including new insights into reinforcement learning, collaborative robots, and computer vision. A set of 19 influential papers published before 2018 serves as foundational material for subsequent investigation.

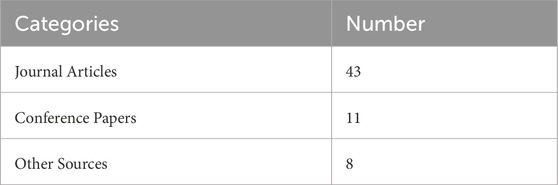

The sources are organized into three categories: journal articles, conference papers, and other sources. Journal articles present extensive examinations of the field, delivering both basic and advanced understanding. Conference papers highlight contemporary innovations and developments presented at prominent industrial events. The “Other Sources” category in Table 4 contains industry reports, white papers, and industrial standards, which strengthen the practical value of the study. The table gives an overview of the source categories together with their reference counts.

4 Detailed analysis of selected studies

Artificial intelligence has emerged as an essential tool for tackling the complexity inherent in robotic disassembly systems (Poschmann et al., 2020). Within WEEE recycling, combining different types of robots, such as high-precision industrial arms and flexible collaborative robots, can broaden task coverage but also increases integration challenges because of differing control interfaces, communication protocols, and tooling requirements. AI helps to address these challenges by providing adaptive perception, decision-making, and control capabilities that enable robots to navigate complex product geometries, identify components, and perform disassembly steps with greater accuracy and efficiency. Conceptually, AI refers to the integration of human-like reasoning and learning into machines. Machine learning forms the core, enabling systems to derive patterns from data and automatically build analytical models, while deep learning uses multi-layer neural networks to model complex relationships in visual, spatial, or sequential data (Saadat et al., 2022). Generative AI extends these capabilities to content creation, which in the disassembly domain can support tasks such as synthetic data generation for training vision systems (Sætra, 2023). The following sub-sections provide a detailed analysis of the selected studies, grouped into the four key research domains that structure the current landscape of AI-enabled robotic disassembly: optimization and strategic planning, human–robot collaboration, computer vision integration, and safety standards. These areas were identified as the most recurrent and impactful across the reviewed literature. A final subsection highlights supplementary trends, including emerging approaches such as reinforcement learning, which, while outside the main scope, indicate promising future directions.

4.1 Optimization and strategic planning

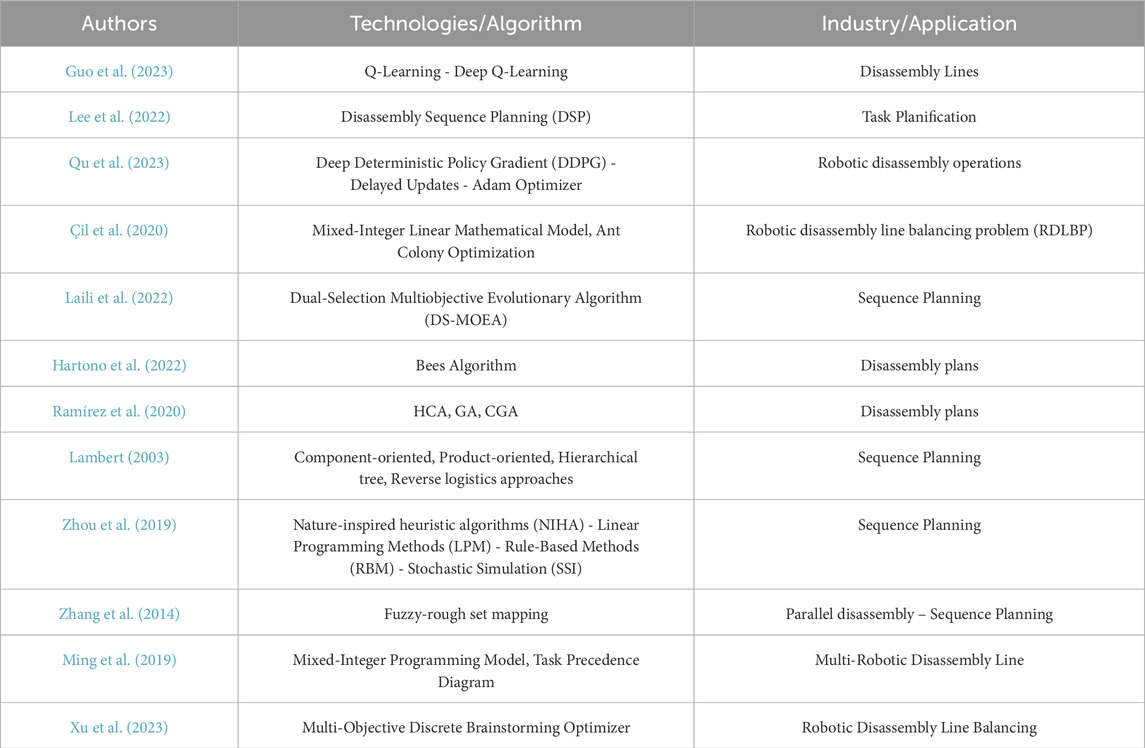

Strategic planning and navigation optimization for robotic systems rely on AI algorithms (Chen et al., 2021). This capability allows the robot to determine the most direct dismantling path without harming significant components and while reducing operational mistakes. Guo et al. (2023) implemented a dual-agent approach using Deep Q-learning (DQL) and RL to create decision frameworks that improved state exploration efficiency and calculation speed. Lee et al. (2022) established a computational framework for HRC that accounts for human safety and resource limitations in order to minimize total disassembly time within defined safety boundaries. Qu et al. (2023) employed RL together with neural networks and actor-critic modeling to teach robots how to extract bolts from door chain grooves with less than 1 mm of clearance between components. The objective of that work was to improve robotic disassembly capabilities through skill training and transfer. Çil et al. (2020) compared Ant Colony Optimisation (ACO), Genetic Algorithm (GA), and Random Search (RS) algorithms. The proposed ISIACO method combines the best elements of existing solution approaches to optimize disassembly line balancing with improved performance. Laili et al. (2022) show how backup actions improve the reliability of disassembly sequence planning when the automation system fails. Their study presents three types of backup action and introduces a new approach to disassembly planning together with the proposed DS-MOEA solution method. A two-pointer detection scheme is combined with an interference matrix to determine which components can be extracted from subassemblies. The algorithm outperforms conventional methods in constructing optimal sequence plans and achieves better completion results.

Hartono et al. (2022) developed a robot planning model that determines product disassembly sequences to maximize profit while saving energy and reducing greenhouse gas emissions. The model implements the Bees Algorithm, which is inspired by the natural foraging behavior of bees, to improve the efficiency of disassembly plans and to determine both the optimal recovery options and the associated disassembly information. Ramírez et al. (2020) cover the use of optimisation technologies and methodologies, including hybrid cellular automata (HCA) and GA, to solve the disassembly sequencing problem. The paper also describes in detail how various operations are customised for the disassembly problem, including the population size, initialisation, crossover and mutation operators, and stopping criteria. It reports the best solutions obtained by the different algorithms (HCA, GA, and CGA) with respect to fitness value and execution time. Lambert (2003) outlines research into the modelling, scheduling, and applications of the disassembly process. Zhou et al. (2019) introduce disassembly sequence planning (DSP) methods from the point of view of disassembly modelling and disassembly planning, describe the characteristics of the different DSP methods, and identify future directions for DSP. Zhang et al. (2014) presented a parallel disassembly fuzzy-rough set mapping model used to obtain the ideal parallel disassembly sequence. Ming et al. (2019) address the balancing of multi-robot disassembly lines with uncertain processing times, using multi-robot systems and stochastic task processing. On the same theme, Xu et al. (2023) explored a discrete brainstorming multi-objective optimizer for balancing robotic disassembly lines in the event of disassembly failure and product variability. The comparative results of these works are synthesized in Table 5.
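To make the role of an interference matrix in sequence planning concrete, the following minimal sketch generates feasible disassembly sequences and keeps the one with the fewest tool changes. It is a plain random-search baseline under stated assumptions, not the DS-MOEA, ISIACO, or any other method from the cited studies; the five-component product, the tool assignments, and the single objective are hypothetical.

```python
import random

# Hypothetical product: component names and the tool each one requires.
NAMES = ["screws", "cover", "battery", "pcb", "speaker"]
TOOLS = ["screwdriver", "gripper", "gripper", "screwdriver", "gripper"]

# INTERFERENCE[i][j] == 1 means component j blocks the removal of component i.
INTERFERENCE = [
    [0, 0, 0, 0, 0],  # screws: free to remove
    [1, 0, 0, 0, 0],  # cover: blocked by screws
    [0, 1, 0, 0, 0],  # battery: blocked by cover
    [0, 1, 1, 0, 0],  # pcb: blocked by cover and battery
    [0, 1, 0, 0, 0],  # speaker: blocked by cover
]


def removable(remaining):
    """Components that no other remaining component blocks."""
    return [i for i in sorted(remaining)
            if not any(INTERFERENCE[i][j] for j in remaining if j != i)]


def random_feasible_sequence(rng):
    """Build one feasible sequence by repeatedly removing a random free part."""
    remaining = set(range(len(NAMES)))
    sequence = []
    while remaining:
        candidates = removable(remaining)
        if not candidates:
            raise ValueError("interference matrix contains a cycle")
        chosen = rng.choice(candidates)
        sequence.append(chosen)
        remaining.remove(chosen)
    return sequence


def tool_changes(sequence):
    """Count how often the required tool differs between consecutive steps."""
    return sum(TOOLS[a] != TOOLS[b] for a, b in zip(sequence, sequence[1:]))


def random_search(n_samples=50, seed=0):
    """Sample feasible sequences and return the one with the fewest tool changes."""
    rng = random.Random(seed)
    samples = (random_feasible_sequence(rng) for _ in range(n_samples))
    return min(samples, key=tool_changes)


best = random_search()
print([NAMES[i] for i in best], tool_changes(best))
```

For this toy product the minimum is two tool changes. The metaheuristics reviewed above replace the random sampling step with guided search and typically optimize several objectives at once, such as time, profit, or energy.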

4.2 Human-robot collaboration

Thanks to human-robot collaboration, disassembly tasks have become more efficient and flexible. Such collaborative action optimises the use of resources by giving repetitive or physically demanding tasks to robots, while leveraging human problem-solving and adaptability skills. Thanks to advanced sensors and safety features, robots can work next to human operators. HRC’s adaptability offers major advantages for disassembly, as it enables rapid adaptation to different types of products as well as materials. In addition, working in this collaborative mode makes it easier to improve human skills, as robots help to stabilise components or carry out complex tasks. Hjorth and Chrysostomou (2022), Matheson et al. (2019) explores in a literature review, different technologies and standards related to human-robot collaboration in disassembly processes. Also, they focus on the technology and approaches used in human-robot collaborative disassembly systems. Kay et al. (2022), concluded that the optimum disassembly solution for an EV battery pack/module should be a human-robot collaboration, where the robot can efficiently make cuts on the battery pack, allowing the technician to quickly sort out the battery parts and remove any plugs or connectors that the robot is having trouble with. Li et al. (2018), have carried out research to overcome the challenges presented by the flexibility and reconfigurability of processing variable-sized components from electric vehicles, offering a robotized disassembly approach to boost value recovery and reduce environmental impact. Chen et al. (2014), describe a comprehensive state diagram for training a robot for a new bit position. With this approach, the robot can return several times to its initial position using joint control, thus improving the accuracy of the bit approach. 
The paper also offers some valuable insights into the development of robotic systems for unscrewing in disassembly processes, responding to the need for adaptability and flexibility within industrial automation. Chu and Chen (2023), present the results of a mathematical model which calculates the completion time under different conditions, and compare the performance of various optimization algorithms. Results reveal that the suggested approach achieves a reduced completion time while guaranteeing the sustainability of all disassembly sequences. One case study by Huang et al. (2020) shows a two-finger gripper KUKA LBR iiwa robot being employed to separate press-fit components, making use of active compliance monitoring along with impedance monitoring for safe and flexible interaction with human operators. Prioli and Rickli (2020), developed a cyber-physical architecture which uses human-robot interaction with collaborative robots (Cobots) to form a flexible automated disassembly system. The project aimed to solve the problem of executing large-scale disassembly operations which addresses uncertain end-of-life product conditions to support recycling and remanufacturing. Li et al. (2020) developed a control method which combines torque and position monitoring features with active compliance to unlock hex screws by using collaborative robots to improve end effector and screw head engagement success rates. Ding et al. (2019) proposes a knowledge graph-based system which enables human-robot collaboration during disassembly operations. In order to lower the downtime and disassembly cost, Wu et al. (2022) have proposed a study on a multi-objective optimization model to be implemented in human-robot collaborative disassembly extracting electric vehicle battery modules. In addition, a disassembly cell was designed by Huang et al. (2021) using active compliance and tactile sensing, making accurate human-robot interaction of complex elements such as automotive turbochargers. 
Table 6 gives an overview of the referenced approaches.

4.3 Computer vision integration

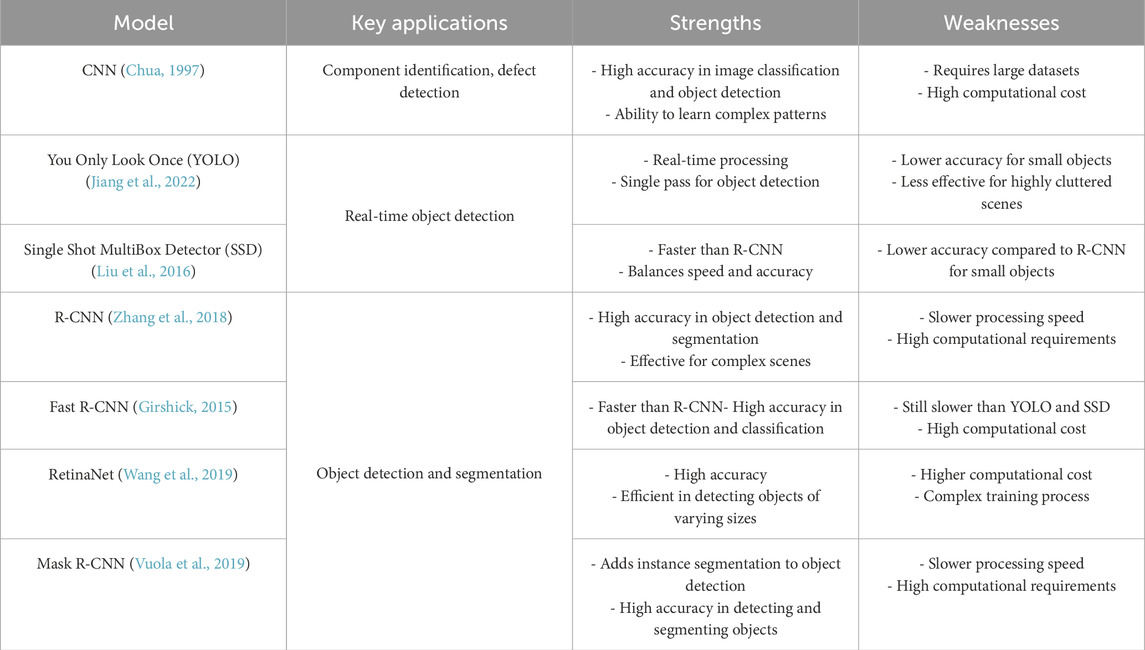

The combination of robotic devices with computer vision has transformed multiple industrial processes, and robotic disassembly stands out as a notable application. Robotic disassembly requires locating and extracting components from products or equipment, and computer vision offers distinct advantages for this challenging task. Giving robots the ability to observe and perceive their environment significantly increases the accuracy, speed, and safety of disassembly operations. Component identification becomes more accurate and faster when robots employ multiple product-specific detection models to update their understanding of environmental features. Convolutional neural networks (CNNs) (Chua, 1997) achieve high image classification accuracy because they learn complex patterns in image data, but they demand large datasets and extensive computational resources. The YOLO (You Only Look Once) network stands apart for its real-time processing: it detects objects in a single pass, which benefits time-sensitive applications, but it performs less well on small objects and in cluttered scenes (Jiang et al., 2022). Region-based CNNs (R-CNNs) achieve high accuracy for detection and segmentation in challenging environments, yet their processing demands lead to long computation times. The Single Shot MultiBox Detector (SSD) runs faster than R-CNN with moderate accuracy that lies between that of YOLO and R-CNN, which makes it suitable for real-time applications (Liu et al., 2016). Reported speed comparisons between SSD and YOLO depend on the model versions considered. Fast R-CNN pursued a similar objective: making the original R-CNN faster without compromising its precision (Girshick, 2015; Zhang et al., 2018).
Faster R-CNN improves on both speed and accuracy, including for small objects, although its computational requirements exceed those of YOLO and SSD. RetinaNet offers high precision for objects of all sizes with a training process that remains straightforward and computationally efficient. Mask R-CNN extends Faster R-CNN by adding instance segmentation to object detection, maintaining high precision at the cost of significantly more processing time and computational power. Used together, these models can improve the automation efficiency of robotic disassembly operations (Vuola et al., 2019). To complement this discussion, Table 7 outlines the synthesized characteristics of prior works.

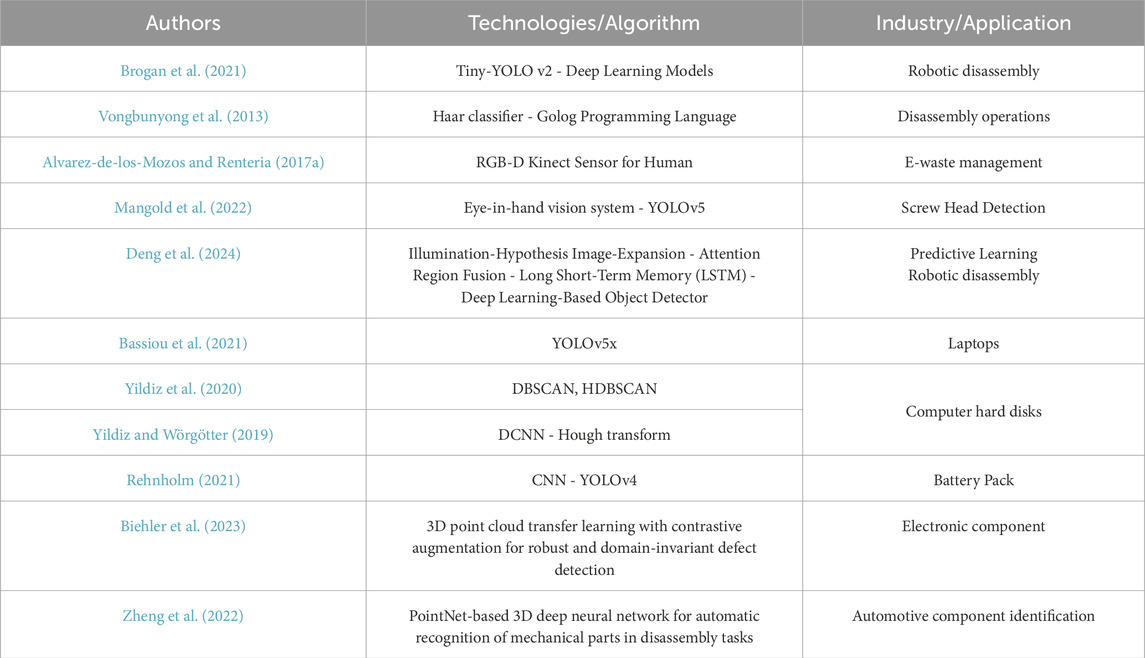

Brogan et al. (2021) present deep learning and computer vision methods for automatic screw detection designed for maintenance and disassembly operations. They compare several models on screw and object detection using Average Accuracy (AA) and Frames Per Second (FPS) metrics. Training used a pool of 900 original images at 12.3 MPx resolution, and testing was carried out on three distinct image sets of 90 images each. Tiny-YOLO v2 was the deep learning object detection model selected for testing. Vongbunyong et al. (2013) provided an extensive study of process monitoring for disassembly tasks using a vision-based cognitive robotics system, including its supervision structures and decision pathways for reaching target objectives. A rule-based reasoning system implemented on the IndiGolog programming platform reduces the search space that must be evaluated to execute operations at given points during disassembly. The system repeats its execution loop until the desired goal state is reached. The system developed by Alvarez-de-los-Mozos and Renteria (2017a) combines body tracking, facial recognition, and color segmentation to compute the position of the operator’s hands. These capabilities are central to human-robot interaction: through hand gestures combined with voice instructions, operators can teach robots specific operational settings. Mangold et al. (2022) developed an adaptable system for fast screw head detection and classification in automated disassembly for remanufacturing, which allows the robot to adapt to diverse workpiece types.
A 1280 × 1024 pixel monochrome camera with a 6 mm lens is mounted on the robot arm as a hand-eye camera system, and the YOLOv5 object detection architecture is used to locate and classify the screws in the captured images. The dataset consists of 550 images covering six categories of screw heads of different sizes. Deng et al. (2024) proposed an approach for efficient and highly accurate real-time exposure control in vision-based robotic disassembly under difficult lighting conditions. It consists of three major modules: a region-of-interest (ROI) extraction module, an ROI quality assessment module, and an exposure time prediction module. Based on the deep learning object detection model YOLOv5, the ROI extraction module extracts ROIs from images captured under a variety of hypothetical lighting conditions. Bassiouny et al. (2021) reported that deep learning with YOLOv5x outperformed conventional image processing techniques in identifying e-waste laptop parts. The experiment used Hasty.ai to label images of laptops with open or closed lids from a curated dataset. Yildiz et al. (2020) devised a visual perception system based on deep learning and point cloud processing for automated hard disk drive (HDD) disassembly, achieving accurate gap detection. Yildiz and Wörgötter (2019) proposed a system for screw detection and localization in waste electronic products. Because screws appear as circular shapes, candidates are first identified with the Hough transform and then passed to a classifier trained on positive and negative examples.
Using a dataset of over 10,000 samples, the performance of the screw classifiers is measured, and the two best-performing classifiers are combined into an integrated model (Yildiz and Wörgötter, 2019). Rehnholm (2021) concentrates on two object detection approaches, pattern matching and CNNs, and benchmarks their performance in dismantling electric vehicle batteries in order to find the best solution in terms of accuracy, recall, and time consumption. Biehler et al. (2023) proposed a contrastive transfer learning framework (PLURAL) that improves defect detection in 3D point clouds by using domain-invariant features. Zheng et al. (2022) applied PointNet to identify mechanical parts from 3D scans and to facilitate the robotic disassembly of complex automotive systems. The detailed characteristics of the cited contributions are organized in Table 8.
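The Hough-transform step mentioned above for proposing circular screw candidates can be illustrated with a small sketch. It is not the implementation of Yildiz and Wörgötter (2019): the function names, the fixed known radius, the synthetic edge points, and the number of angle steps are all assumptions chosen for illustration, and a real pipeline would obtain edge pixels from an edge detector and pass the resulting candidates to a classifier.

```python
import math
from collections import Counter


def circle_points(cx, cy, radius, n=72):
    """Generate n points on a circle; a stand-in for edge pixels of a screw head."""
    return [
        (cx + radius * math.cos(2 * math.pi * k / n),
         cy + radius * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]


def hough_circle_votes(edge_points, radius, angle_steps=72):
    """Accumulate votes for circle centres of a known radius.

    Each edge point votes for every centre that would place it on a circle
    of the given radius; true centres collect the most votes.
    """
    votes = Counter()
    for x, y in edge_points:
        for k in range(angle_steps):
            theta = 2 * math.pi * k / angle_steps
            centre = (round(x - radius * math.cos(theta)),
                      round(y - radius * math.sin(theta)))
            votes[centre] += 1
    return votes


# Hypothetical example: one circular screw head centred at (20, 15), radius 8 px.
edges = circle_points(20, 15, radius=8)
votes = hough_circle_votes(edges, radius=8)
best_centre, best_count = votes.most_common(1)[0]
print("candidate screw centre:", best_centre)
```

In practice the radius is usually unknown, so the accumulator is extended with a radius dimension, and the highest-scoring candidates are cropped and handed to the classifier stage.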

4.4 Safety standards for collaborative applications

The use of robotic disassembly systems is rapidly becoming an established feature across a range of industries, providing efficiency and accuracy in the disassembly of electronic devices and machinery. However, the safety of human operators and the environment has become a major issue as the use of robotic systems for disassembly tasks continues to grow. The integration of safety measures plays a key role in minimising the risks involved in these tasks. ISO 10218 is a major standard governing the safety aspects of industrial robots, including those used in disassembly applications.

4.4.1 ISO 10218-1:2011 - safety requirements for industrial robots—part 1: robots

This section deals with the essential safety aspects of industrial robots, including robot design, system integration and installation. Guidelines are given for risk assessment, safeguards, and implementation of safety features to avoid potential accidents in normal working conditions as well as under exceptional conditions (ISO, 2024a).

4.4.2 ISO 10218-2:2011 - safety requirements for industrial robots—Part 2: robot systems and integration

In the second part, the standard deals with the interaction between a robot and its environment, which includes human workers. This part of the standard specifies collaborative operation and describes the safety measures that need to be taken when humans are operating in the proximity of robots, focusing on the need to reduce risks (ISO, 2024b).

4.4.3 ISO/TS 15066:2016 - robots and robotic devices—collaborative robots

This technical specification provides safety guidelines for robots that work together with human operators. It establishes the maximum force and pressure levels that the human body can tolerate in the event of accidental contact with a robotic system. The document specifies risk assessment procedures and safety measures for human-robot interaction, and details collaborative operation modes such as speed and separation monitoring, hand guiding, and power and force limiting. It serves as an essential complement to ISO 10218 by reducing risks in collaborative environments shared by human operators and robotic systems (ISO, 2024c).

Looking beyond ISO standards, several organisations have issued safety guidelines that shape how robots are designed and used. In the EU, the Machinery Directive (2006/42/EC) (Eur-Lex, 2006) sets out a legal framework for machine safety, including industrial robots, and is often linked to EN ISO 10218 and ISO/TS 15066. In other sectors such as defence, safety rules cover autonomous and unmanned systems. NATO’s STANAG 4586 (STANAG 4586, 2025) helps to standardise drone operations, while the US Department of Defense applies MIL-STD-882E (Department of Defense, 2023) to manage risks associated with complex, high-risk robotic systems. Together, these policies highlight the general need for safety, from factories to battlefields, in order to ensure both the protection of people and the reliability of systems.

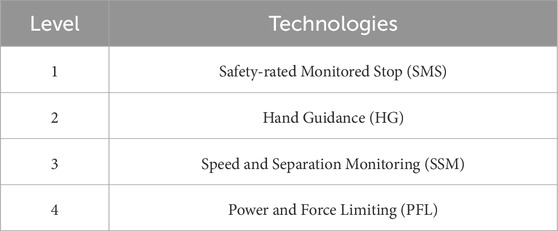

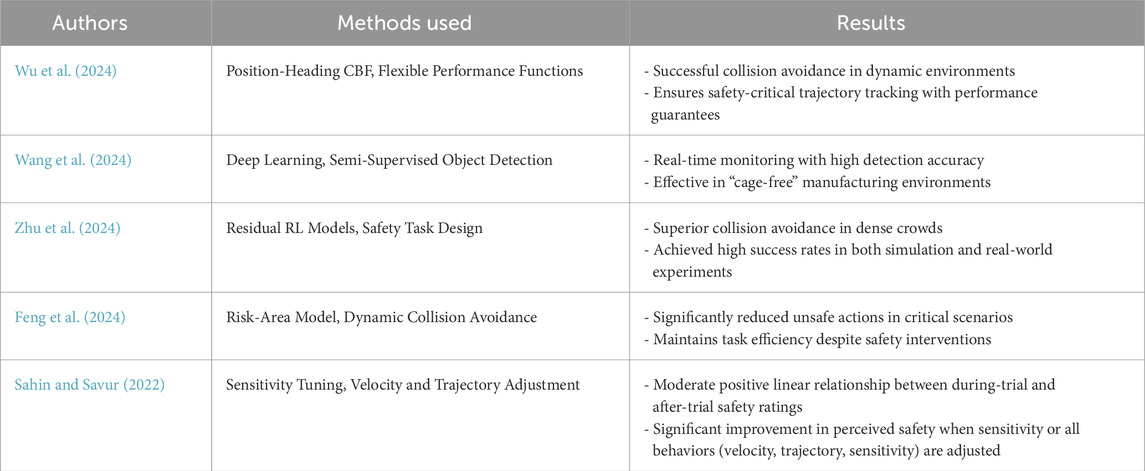

Robot safety standards identify four collaborative operation modes, as listed in Table 9. The first, Safety-rated Monitored Stop, is the most basic form of collaboration. The worker carries out manual tasks within the space shared by human and robot. Both can work inside this collaboration zone, but not at the same time, since the robot is not allowed to move while the operator occupies the shared space. This mode is well suited to the manual placement of objects on the robot end-effector, whether for visual checking, finishing, or complex tasks (Vysocky and Novak, 2016). The second mode is Hand Guidance. Also referred to as “direct teach”, it allows the operator to teach the robot positions by simply moving the robot, with no need for an additional interface such as a teach pendant. The weight of the robot arm is compensated so that it holds its position. A guiding device that drives the robot’s movement allows the operator to be in direct contact with the machine (Ogura et al., 2012). The third mode is Speed and Separation Monitoring. Also referred to as Speed and Position Monitoring (SPM), it gives humans access to the robot space under the supervision of safety monitoring sensors. When a human is in the first zone, the robot operates at full speed; in the second zone it operates at reduced speed; and it stops when the human enters the third zone (Marvel, 2013). The fourth mode is Power and Force Limiting. It limits the power and force of the motors so that a human worker can work side by side with the robot. It requires specific equipment and control models to handle collisions between robot and human without negative consequences for the human (Haddadin, 2015). Table 10 presents key studies that apply safety technologies for human-robot collaboration in dynamic environments.
These studies present techniques and verified outcomes that show progress in robot collision prevention, human perception of safety, and human-robot interface systems. They highlight how vital it is to combine adaptive and predictive systems to maintain safety and efficiency in shared work environments.
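The three-zone behaviour described above for Speed and Separation Monitoring can be sketched as a simple speed-scaling rule. This is a minimal illustration only: the zone boundaries (2.0 m and 0.8 m), the reduced-speed factor, and the function name are hypothetical values and do not come from ISO/TS 15066, which derives the protective separation distance from robot and human speeds, stopping performance, and sensor uncertainty.

```python
def ssm_speed_scale(distance_m, slow_zone_m=2.0, stop_zone_m=0.8, slow_factor=0.25):
    """Return a speed scaling factor in [0, 1] for a measured human-robot distance.

    Zone 1 (far):   distance >  slow_zone_m  -> full speed (1.0)
    Zone 2 (near):  distance <= slow_zone_m  -> reduced speed (slow_factor)
    Zone 3 (close): distance <= stop_zone_m  -> protective stop (0.0)
    All thresholds are illustrative assumptions.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= stop_zone_m:
        return 0.0
    if distance_m <= slow_zone_m:
        return slow_factor
    return 1.0


# Hypothetical distance readings from a safety-rated sensor, in metres.
for reading in (3.5, 1.4, 0.5):
    print(reading, "m ->", ssm_speed_scale(reading))
```

A deployed system would compute the thresholds dynamically and run this logic on safety-rated hardware; the sketch only shows the zone logic.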

4.5 Supplementary trends

Self-supervised learning refers to an approach based on a machine learning concept in which a model can learn representations directly from the data itself, with no explicit supervision. In conventional supervised learning, the model learns from labeled data, where every input is associated with a corresponding target output. Yet in self-supervised learning, a model is trained to predict certain aspects of the data without depending on external labels.

4.5.1 Grasp2Vec

Grasp2Vec combines robotic grasping with ideas from word embeddings, representing grasping actions as vectors in the way words are represented in NLP. This exploits the properties of vector spaces for tasks such as grasp recommendation and similarity analysis, providing a new approach to improving robotic manipulation capabilities (Jang et al., 2018). As presented by Jang et al. (2018), representations are learned directly from observations: a robotic arm removes an object from the scene, and the resulting scene and the object in the gripper are examined. Training enforces that the difference between the scene representations before and after the grasp corresponds to the representation of the removed object. The learned representations also supervise grasping: a similarity metric between object representations is used as the reward for grasping an object, which eliminates the need to label grasp outcomes manually.

The grasping system can detect that it has moved an object but does not know which object it is handling. Cameras record images of both the complete scene and the grasped object. During initial training, the robot grasps objects at random, producing a triplet of images (Spre, Spost, O): O is an image of the grasped object as seen by the camera, Spre is the scene before the grasp, with the object present, and Spost is the same scene after the grasp, with the object absent.
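The consistency between scene and object representations, and its use as a reward, can be sketched as follows. This is an illustrative sketch rather than the code of Jang et al. (2018): the toy three-dimensional embeddings stand in for the outputs of learned encoders, and the use of cosine similarity and the function names are assumptions.

```python
import math


def subtract(u, v):
    """Element-wise difference of two embedding vectors."""
    return [a - b for a, b in zip(u, v)]


def cosine_similarity(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def grasp_reward(phi_pre, phi_post, phi_goal):
    """Reward a grasp by comparing what left the scene with the goal object.

    phi_pre - phi_post approximates the embedding of the removed object,
    so no manual label of the grasp outcome is needed.
    """
    removed = subtract(phi_pre, phi_post)
    return cosine_similarity(removed, phi_goal)


# Toy embeddings (assumed): a scene embedding is the sum of its objects.
screw = [1.0, 0.0, 0.0]
cover = [0.0, 1.0, 0.0]
scene_pre = [1.0, 1.0, 0.0]   # screw and cover present
scene_post = [0.0, 1.0, 0.0]  # screw has been removed

print(grasp_reward(scene_pre, scene_post, screw))  # high: the goal object was grasped
print(grasp_reward(scene_pre, scene_post, cover))  # low: a different object was grasped
```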

4.5.2 RIG (reinforcement learning with imagined goals)

RIG combines reinforcement learning with self-supervised learning methods through an integrated system. The addition of imagined goals improves sample efficiency and policy robustness through exploration and learning of generalized policies. The method has demonstrated utility across different domains to improve RL agent performance within complicated scenarios.

Like Grasp2Vec, RIG applies data augmentation through latent goal relabeling: half of the goals are sampled at random from the prior, and the other half are selected using hindsight experience replay (HER). As with Grasp2Vec, the rewards do not depend on ground-truth states but only on the learned state encoding, so the method can be used for training on real robots, as outlined by Nair et al. (2018).
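The relabeling scheme described above can be sketched in a few lines. This is a simplified illustration, not the implementation of Nair et al. (2018): the two-dimensional latent vectors, the standard normal prior, the choice of future states from the same trajectory for the HER half, and all function names are assumptions.

```python
import math
import random


def sample_prior(rng, dim=2):
    """Sample an imagined goal from an assumed standard normal latent prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def relabel_goals(trajectory_latents, t, batch_size, rng):
    """Return (source, goal) pairs for the transition at index t.

    Half of the goals are imagined (drawn from the prior); the other half
    are latent states reached later in the same trajectory (HER-style).
    """
    n_prior = batch_size // 2
    goals = [("prior", sample_prior(rng)) for _ in range(n_prior)]
    future = trajectory_latents[t + 1:] or trajectory_latents[t:]
    for _ in range(batch_size - n_prior):
        goals.append(("her", rng.choice(future)))
    return goals


def latent_reward(z, z_goal):
    """Reward is the negative Euclidean distance in latent space (no ground-truth state)."""
    return -math.sqrt(sum((a - b) ** 2 for a, b in zip(z, z_goal)))


# Hypothetical encoded trajectory of four latent states.
trajectory = [[0.0, 0.0], [0.5, 0.1], [1.0, 0.4], [1.5, 0.9]]
rng = random.Random(0)
for source, goal in relabel_goals(trajectory, t=0, batch_size=4, rng=rng):
    print(source, latent_reward(trajectory[1], goal))
```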

4.5.3 TCN (time-contrastive networks)

TCN (Time-Contrastive Networks) is based on the intuition that different viewpoints of the same scene at the same time should share the same embedding (as in FaceNet), whereas the embedding should vary over time, even for the same camera viewpoint. The embedding therefore captures the semantic meaning of the underlying state rather than visual similarity. The TCN embedding is trained with a triplet loss. In the work of Sermanet et al. (2018), training data are collected by simultaneously recording videos of the same scene from different angles. All videos are unlabeled.

Frames taken from two camera views at the same timestep serve as the anchor and positive samples, while a frame from a different timestep is the negative sample. The acronym TCN is also used for temporal convolutional networks, a distinct family of architectures applied to sequential data tasks such as speech recognition, natural language processing, and time series prediction. Those networks have demonstrated competitive performance against other recurrent and convolutional architectures, particularly for tasks requiring long-term dependencies and the capture of complex temporal patterns.
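The triplet objective used to train the time-contrastive embedding can be sketched as follows. This is a minimal illustration assuming squared Euclidean distance and a hypothetical margin of 0.2; in practice the three vectors are produced by a neural network from video frames, and the exact loss settings of Sermanet et al. (2018) are not reproduced here.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss.

    The loss is zero once the anchor is closer to the positive (same time,
    other viewpoint) than to the negative (other time) by at least `margin`.
    """
    return max(0.0,
               squared_distance(anchor, positive)
               - squared_distance(anchor, negative)
               + margin)


# Toy 2-D embeddings (assumed values).
anchor = [0.0, 0.0]         # view 1 at time t
positive = [0.1, 0.0]       # view 2 at time t
far_negative = [1.0, 0.0]   # view 1 at a distant time: already well separated
near_negative = [0.2, 0.0]  # view 1 at a nearby time: still too close

print(triplet_loss(anchor, positive, far_negative))   # no penalty
print(triplet_loss(anchor, positive, near_negative))  # positive penalty
```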

4.5.4 SOAR cognitive architecture

Soar is a framework designed to emulate human cognitive processes and decision-making capabilities in complex and dynamic environments. As outlined in recent studies, Soar, whose name derives from State, Operator And Result, reasons over states and operators to systematically analyze and respond to environmental stimuli. It operates by constructing state spaces that combine long-term memory elements (domain-specific knowledge) with short-term memory elements (real-time environmental data). This architecture supports the generation of interpretable decisions through rule-based mechanisms, allowing agents to transition adaptively between states to achieve predefined goals. The decision-making process in Soar can be expressed as:

where

4.5.5 Adaptive control of thought-rational (ACT-R)

ACT-R is a cognitive architecture that models human thinking by integrating perception modules with motor execution and memory retrieval. It contains procedural and declarative memory modules, which interact through buffers and pattern matching to fire the production rules that control behavior. Robotic systems using ACT-R have been deployed for adaptive tasks that support human-robot interaction and collaboration. Such systems combine real-world sensory data with established cognitive models to produce context-specific responses. The modular design of ACT-R supports advanced perception and motor functions, which benefits humanoid robot implementations such as Pepper. Robots that use ACT-R can be given the ability to interpret human emotions, allowing them to adapt verbal and non-verbal outputs for improved human-robot interaction. Practical implementations show that ACT-R can link robotic operational competencies with models of human cognition, supporting the development of more empathetic robotic platforms.

4.5.6 Robot operating system (ROS)

As middleware frameworks, the Robot Operating System (ROS) and its successor ROS2 are key to how distributed robotics components communicate and work together. They abstract hardware complexity through a publish-subscribe model using topics, services, and messages, making it simpler to build robotics features in a modular way. While ROS1 relies on a centralized master node, ROS2 adopts a decentralized approach based on the Data Distribution Service (DDS), offering better scalability and real-time communication as well as fault tolerance. Both systems serve as a foundation for robotics software integration, supporting not only task orchestration but also system-level extensions such as safety monitoring, as demonstrated by recent efforts (Rivera et al., 2020).
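The publish-subscribe pattern underlying ROS topics can be illustrated with a small in-process sketch. It deliberately does not use the ROS or ROS2 client libraries: the `TopicBus` class, the topic name `/human_distance`, the message fields, and the 0.8 m threshold are all hypothetical, and a real system would rely on the ROS middleware for transport, discovery, and typed messages.

```python
class TopicBus:
    """Minimal in-process stand-in for a publish-subscribe transport (not the ROS API)."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, topic, callback):
        """Register a callback to be invoked for every message on `topic`."""
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic, message):
        """Deliver `message` to all subscribers of `topic`; return how many received it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


bus = TopicBus()
commands = []


def safety_monitor(message):
    """Hypothetical safety node: request a stop when a human is too close."""
    if message["distance_m"] < 0.8:
        commands.append("stop")
    else:
        commands.append("continue")


# The perception node and the safety node only share a topic name,
# so either can be replaced without changing the other.
bus.subscribe("/human_distance", safety_monitor)
bus.publish("/human_distance", {"distance_m": 1.6})
bus.publish("/human_distance", {"distance_m": 0.5})
print(commands)
```

The decoupling shown here is what allows a safety monitor to be added as a system-level extension without modifying the nodes that orchestrate the disassembly task.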

5 Conclusion and perspectives

This paper presented a systematic review of artificial intelligence approaches for robotic disassembly, focusing on optimization and strategic planning, HRC, CV, and safety measures. These technologies offer substantial benefits, with the potential to improve the overall efficiency, precision, and flexibility of disassembly processes. The integration of machine learning, robotic handling, and advanced sensor systems shows promising results for automating disassembly tasks. Such technologies address the diversity of e-waste materials and intricate product designs, but they depend on systems that are safe for human operators, user-friendly, and easy to deploy. Despite these advances, several barriers still prevent the widespread adoption of robotic disassembly. Key issues are cost-effective implementation, scalability, and legal constraints, all of which must be addressed before these technologies can be properly applied in industrial settings. Further research and development is needed to resolve these challenges and move the field forward. Progress will also require closer integration of robotics engineering, AI research, and policymaking. This interdisciplinary collaboration will be a defining feature of the future landscape of robotic disassembly. Aligning technological advances with practical, economic, and regulatory frameworks could establish the preconditions for the effective and widespread introduction of robotic disassembly systems, which in turn would be a step towards more sustainable and efficient e-waste management.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

SA: Writing – original draft, Writing – review and editing. MT: Methodology, Supervision, Writing – review and editing. ZH: Writing – review and editing. MH: Writing – review and editing. KK: Methodology, Writing – review and editing. RB: Supervision, Writing – review and editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Allison, P. (2023). Oak ridge demos AI-Driven robotic battery disassembly. Needham, MA: Battery Power Online. Available online at: https://www.batterypoweronline.com/news/oak-ridge-demos-ai-driven-robotic-battery-disassembly/.

Alvarez-de-los-Mozos, E., and Renteria, A. (2017a). Collaborative robots in E-Waste management. Procedia Manuf. 11, 55–62. doi:10.1016/j.promfg.2017.07.133

Alvarez-de-los-Mozos, E., and Renteria, A. (2017b). “Collaborative robots in E-Waste management,” in Proceedings of the 27th International Conference on Flexible Automation and Intelligent Manufacturing (FAIM), Modena, Italy, 27–30.

Ameur, S., Tabaa, M., Hamlich, M., Hidila, Z., and Bearee, R. (2024). “Human-robot collaboration in remanufacturing: an application for computer disassembly,” in Smart applications and data analysis. SADASC 2024. Editors M. Hamlich, F. Dornaika, C. Ordonez, L. Bellatreche, and H. Moutachaouik (Cham: Springer), 2168, 70–84. doi:10.1007/978-3-031-77043-2_6

Apple (2024). Apple adds Earth day donations to Trade-In and recycling program. Available online at: https://www.apple.com/uk/newsroom/2018/04/apple-adds-earth-day-donations-to-trade-in-and-recycling-program/ (Accessed November 29, 2024).

Bassiouny, A. M., Farhan, A. S., Maged, S. A., and Awaad, M. I. (2021). “Comparison of different computer vision approaches for E-Waste components detection to automate E-Waste disassembly,” in Proceedings of the 2021 international Mobile, intelligent, and ubiquitous computing conference (MIUCC) (Cairo, Egypt), 17–23. doi:10.1109/MIUCC52538.2021.9447637

Biehler, M., Sun, Y., Kode, S., Li, J., and Shi, J. (2023). PLURAL: 3D point cloud transfer learning via contrastive learning with augmentations. IEEE Trans. Automation Sci. Eng. 21, 7550–7561. doi:10.1109/tase.2023.3345807

Bogue, R. (2019). Robots in recycling and disassembly. Ind. Robot. 46, 461–466. doi:10.1108/IR-03-2019-0053

Brogan, D., DiFilippo, N., and Jouaneh, M. (2021). Deep learning computer vision for robotic disassembly and servicing applications. Array 12, 100094. doi:10.1016/j.array.2021.100094

Chen, W. H., Wegener, K., and Dietrich, F. (2014). “A robot assistant for unscrewing in hybrid human-robot disassembly,” in Proceedings of the 2014 IEEE international conference on robotics and Biomimetics (ROBIO 2014) (Bali, Indonesia), 536–541. doi:10.1109/ROBIO.2014.7090386

Chen, Z., Alonso-Mora, J., Bai, X., Harabor, D. D., and Stuckey, P. J. (2021). Integrated task assignment and path planning for capacitated multi-agent pickup and delivery. IEEE Robotics Automation Lett. 6 (3), 5816–5823. doi:10.1109/LRA.2021.3074883

Chu, M., and Chen, W. (2023). Human-robot collaboration disassembly planning for end-of-life power batteries. J. Manuf. Syst. 69, 271–291. doi:10.1016/j.jmsy.2023.06.014

Chua, L. O. (1997). CNN: a vision of complexity. Int. J. Bifurc. Chaos 7, 2219–2425. doi:10.1142/s0218127497001618

Çil, Z. A., Mete, S., and Serin, F. (2020). Robotic disassembly line balancing problem: a mathematical model and ant colony optimization approach. Appl. Math. Model. 86, 335–348. doi:10.1016/j.apm.2020.05.006

Dawande, M., Geismar, H. N., Sethi, S. P., and Sriskandarajah, C. (2005). Sequencing and scheduling in robotic cells: recent developments. J. Sched. 8, 387–426. doi:10.1007/s10951-005-2861-9

Deng, W., Liu, Q., Pham, D., Hu, J., Lam, K., Wang, Y., et al. (2024). Predictive exposure control for vision-based robotic disassembly using deep learning and predictive learning. Robot. Comput.-Integr. Manuf. 85, 102619. doi:10.1016/j.rcim.2023.102619

Department of Defense (DoD) (2023). “MIL-STD-882E: Standard practice for system Safety, including change 1. Publication date: 27 September 2023,” in Status: current revision superseding MIL-STD-882D, 106.

Ding, Y., Xu, W., Liu, Z., Zhou, Z., and Pham, D. T. (2019). Robotic task-oriented knowledge graph for human-robot collaboration in disassembly. Procedia CIRP 83, 105–110. doi:10.1016/j.procir.2019.03.121

D’Adamo, I., Rosa, P., and Terzi, S. (2016). Challenges in waste electrical and electronic equipment management: a profitability assessment in three European countries. Sustainability 8, 633. doi:10.3390/su8070633

Eur-Lex (2006). Directive 2006/42/EC of the european parliament and of the council of 17 may 2006 on machinery, and amending directive 95/16/EC (recast). OJ L 157 (9.6), 24–86.

Feil-Seifer, D., and Matarić, M. J. (2009). “Human-robot interaction,” in Encyclopedia of complexity and systems science (Springer), 4643–4659. doi:10.1007/978-0-387-30440-3_274

Feng, Z., Xue, B., Wang, C., and Zhou, F. (2024). Safe and socially compliant robot navigation in crowds with fast-moving pedestrians via deep reinforcement learning. Robotica 42 (9), 1212–1230. doi:10.1017/S0263574724000183

Fraunhofer IFF (2025). Cognitive robotics and new safety technologies for human-robot collaboration. Fraunhofer Institute for Factory Operation and Automation IFF. Available online at: https://www.iff.fraunhofer.de/en/press/2025/cognitive-robotics-and-new-safety-technologies-for-human-robot-collaboration.html.

Girshick, R. (2015). “Fast R-CNN,” in Proceedings of the IEEE international conference on computer vision (ICCV).

Guo, X., Bi, Z., Wang, J., Qin, S., Liu, S., and Qi, L. (2023). Reinforcement learning for disassembly system optimization problems: a survey. Int. J. Netw. Dyn. Intell. 2, 1–14. doi:10.53941/ijndi0201001

Haddadin, S. (2015). “Physical safety in robotics,” in Formal modeling and verification of cyber-physical systems. Editors R. Drechsler, and U. Kühne (Wiesbaden: Springer Vieweg). doi:10.1007/978-3-658-09994-7_9

Hartono, N., Ramírez, F. J., and Pham, D. T. (2022). Optimisation of robotic disassembly plans using the bees algorithm. Robot. Comput.-Integr. Manuf. 78, 102411. doi:10.1016/j.rcim.2022.102411

Hjorth, S., and Chrysostomou, D. (2022). Human–robot collaboration in industrial environments: a literature review on non-destructive disassembly. Robot. Comput.-Integr. Manuf. 73, 102208. doi:10.1016/j.rcim.2021.102208

Hohm, K., Müller Hofstede, H., and Tolle, H. (2000). “Robot assisted disassembly of electronic devices,” in Proceedings of the international conference on intelligent robots and Systems (IROS), 1273–1278. doi:10.1109/IROS.2000.893194

HRI (2024). Human-Robot interaction and Collaboration. Available online at: https://hri.iit.it/human-robot-interaction-and-collaboration (Accessed November 29, 2024).

Huang, J., Pham, D. T., Wang, Y., Qu, M., Ji, C., Su, S., et al. (2020). A case study in human–robot collaboration in the disassembly of press-fitted components. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 234, 654–664. doi:10.1177/0954405419883060

Huang, J., Pham, D. T., Li, R., Qu, M., Wang, Y., Kerin, M., et al. (2021). An experimental human–robot collaborative disassembly cell. Comput. Ind. Eng. 155, 107189. doi:10.1016/j.cie.2021.107189

ISO (2024a). Robots and robotic devices—safety requirements for industrial robots—Part 1: robots; ISO 10218-1:2011. Available online at: https://www.iso.org/obp/ui/#iso:std:iso:10218:-1:ed-2:v1:en (Accessed November 29, 2024).

ISO (2024b). Robots and robotic devices—safety requirements for industrial robots—Part 2: robot systems and integration; ISO 10218-2:2011. Available online at: https://www.iso.org/standard/41571.html (Accessed November 29, 2024).

ISO (2024c). ISO/TS 15066:2016. Available online at: https://www.iso.org/standard/62996.html (Accessed November 29, 2024).

James, D. (2023). Robots automate disassembly of chemical weapons. Assem. Mag. Available online at: https://www.assemblymag.com/articles/96763-robots-automate-disassembly-of-chemical-weapons.

Jang, E., Devin, C., Vanhoucke, V., and Levine, S. (2018). Grasp2Vec: learning object representations from self-supervised grasping. arXiv. Available online at: https://arxiv.org/abs/1811.06964 (Accessed November 29, 2024).

Jiang, P., Ergu, D., Liu, F., Cai, Y., and Ma, B. (2022). A review of YOLO algorithm developments. Procedia Comput. Sci. 199, 1066–1073. doi:10.1016/j.procs.2022.01.135

Karlsson, B., and Järrhed, J. O. (2000). Recycling of electrical motors by automatic disassembly. Meas. Sci. Technol. 11, 350–357. doi:10.1088/0957-0233/11/4/303

Kay, I., Farhad, S., Mahajan, A., Esmaeeli, R., and Hashemi, S. R. (2022). Robotic disassembly of electric vehicles’ battery modules for recycling. Energies 15, 4856. doi:10.3390/en15134856

Kernbaum, S., Franke, C., and Seliger, G. (2009). Flat screen monitor disassembly and testing for remanufacturing. Int. J. Sustain. Manuf. 1, 347. doi:10.1504/IJSM.2009.023979

Kiddee, P., Naidu, R., and Wong, M. H. (2013). Electronic waste management approaches: an overview. Waste Manag. 33, 1237–1250. doi:10.1016/j.wasman.2013.01.006

Laili, Y., Li, X., Wang, Y., Ren, L., and Wang, X. (2022). Robotic disassembly sequence planning with backup actions. IEEE Trans. Autom. Sci. Eng. 19, 2095–2107. doi:10.1109/TASE.2021.3072663

Lambert, A. J. D. (2003). Disassembly sequencing: a survey. Int. J. Prod. Res. 41, 3721–3759. doi:10.1080/0020754031000120078

Lee, M.-L., Behdad, S., Liang, X., and Zheng, M. (2022). Task allocation and planning for product disassembly with human-robot collaboration. Robot. Comput.-Integr. Manuf. 76, 102306. doi:10.1016/j.rcim.2021.102306

Li, J., Barwood, M., and Rahimifard, S. (2018). Robotic disassembly for increased recovery of strategically important materials from electrical vehicles. Robot. Comput.-Integr. Manuf. 50, 203–212. doi:10.1016/j.rcim.2017.09.013

Li, R., Ji, C., Liu, Q., Zhou, Z., Pham, D. T., Huang, J., et al. (2020). Unfastening of hexagonal headed screws by a collaborative robot. IEEE Trans. Autom. Sci. Eng. 17, 1–14. doi:10.1109/TASE.2019.2958712

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C. Y., et al. (2016). “SSD: single shot multibox detector,” in Proceedings of the European conference on computer vision, 21–37.

Magrini, E., Ferraguti, F., Ronga, A. J., Pini, F., De Luca, A., and Leali, F. (2020). Human-robot coexistence and interaction in open industrial cells. Robot. Comput.-Integr. Manuf. 61, 101846. doi:10.1016/j.rcim.2019.101846

Mangold, S., Steiner, C., Friedmann, M., and Fleischer, J. (2022). Vision-based screw head detection for automated disassembly for remanufacturing. Proc. 29th CIRP Life Cycle Eng. Conf. 105, 1–6. doi:10.1016/j.procir.2022.02.001

Marvel, J. (2013). Performance metrics of speed and separation monitoring in shared workspaces. IEEE Trans. Autom. Sci. Eng. 10, 405–414. doi:10.1109/TASE.2013.2237904

Matheson, E., Minto, R., Zampieri, E., Faccio, M., and Rosati, G. (2019). Human-robot collaboration in manufacturing applications: a review. Robotics 8, 100. doi:10.3390/robotics8040100

Matsumoto, M., and Ijomah, W. (2013). “Remanufacturing,” in Handbook of sustainable engineering (Springer), 389–408. doi:10.1007/978-1-4020-8939-8_93

Ming, H., Liu, Q., and Pham, D. T. (2019). Multi-robotic disassembly line balancing with uncertain processing time. Procedia CIRP 83, 71–76. doi:10.1016/j.procir.2019.02.140

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., and The PRISMA Group (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLOS Med. 6 (7), e1000097. doi:10.1371/journal.pmed.1000097

Nair, A., Pong, V. H., Dalal, M., Bahl, S., Lin, S., and Levine, S. (2018). Visual reinforcement learning with imagined goals. NeurIPS.

Ogura, Y., Fujii, M., Nishijima, K., Murakami, H., and Sonehara, M. (2012). Applicability of hand-guided robot for assembly-line work. J. Robot. Mechatron. 24, 547–552. doi:10.20965/jrm.2012.p0547

Perossa, D., El Warraqi, L., Rosa, P., and Terzi, S. (2023). Environmental sustainability of printed circuit boards recycling practices: an academic literature review. Cham: Springer International Publishing.

Poschmann, H., Brüggemann, H., and Goldmann, D. (2020). Disassembly 4.0: a review on using robotics in disassembly tasks as a way of automation. Chem. Ing. Tech. 92, 341–359. doi:10.1002/cite.201900107

Poschmann, H., Brüggemann, H., and Goldmann, D. (2021). Fostering end-of-life utilization by information-driven robotic disassembly. Procedia CIRP 98, 282–287. doi:10.1016/j.procir.2021.01.104

Prioli, J. P. J., and Rickli, J. L. (2020). Collaborative robot-based architecture to train flexible automated disassembly systems for critical materials. Procedia Manuf. 51, 46–53. doi:10.1016/j.promfg.2020.10.008

Priyono, A., Ijomah, W., and Bititci, U. S. (2016). Disassembly for remanufacturing: a systematic literature review, new model development and future research needs. J. Ind. Eng. Manage. 9, 899–932. doi:10.3926/jiem.2053

Qu, M., Wang, Y., and Pham, D. (2023). Robotic disassembly task training and skill transfer using reinforcement learning. IEEE Trans. Ind. Inf. 19, 10934–10943. doi:10.1109/TII.2023.3242831

Ramírez, F. J., Aledo, J. A., Gamez, J. A., and Pham, D. T. (2020). Economic modelling of robotic disassembly in end-of-life product recovery for remanufacturing. Comput. Ind. Eng. 142, 106339. doi:10.1016/j.cie.2020.106339

Rehnholm, J. (2021). Battery pack part detection and disassembly verification using computer vision. Västerås, Sweden: School of Innovation Design and Engineering.

Rivera, S., Iannillo, A. K., Lagraa, S., Joly, C., and State, R. (2020). “ROS-FM: fast monitoring for the robotic operating system (ROS),” in 2020 25th international conference on engineering of complex computer systems (ICECCS) (Singapore), 187–196. doi:10.1109/ICECCS51672.2020.00029

Saadat, M., Goli, F., and Wang, Y. (2022). “Perspective of self-learning robotics for disassembly automation,” in Proceedings of the 27th IEEE international conference on automation and computing (ICAC2022) (Bristol, UK). doi:10.1109/ICAC55051.2022.9911085

Sahin, M., and Savur, C. (2022). “Evaluation of human perceived safety during HRC task using multiple data collection methods,” in 2022 17th annual system of systems engineering conference (SOSE) (IEEE Press), 465–470. doi:10.1109/SOSE55472.2022.9812693

Sætra, H. S. (2023). Generative AI: here to stay, but for good? Technol. Soc. 75, 102372. doi:10.1016/j.techsoc.2023.102372

Sermanet, P., Lynch, C., Chebotar, Y., Hsu, J., Jang, E., Schaal, S., et al. (2018). “Time-contrastive networks: self-supervised learning from video,” in Proceedings of the 2018 IEEE international conference on robotics and automation (ICRA), Brisbane, QLD, Australia (IEEE), 1134–1141.

STANAG 4586 (2025). Formally titled standard interfaces of UAV control System (UCS) for NATO UAV Interoperability.

Váncza, J., Monostori, L., Lutters, D., Kumara, S. R., Tseng, M., Valckenaers, P., et al. (2011). Cooperative and responsive manufacturing enterprises. CIRP Ann. Manuf. Technol. 60, 797–820. doi:10.1016/j.cirp.2011.05.009

Vanegas, P., Peeters, J., and Duflou, J. (2019). “Disassembly,” in CIRP encyclopedia of production engineering (Springer), 395–399. doi:10.1007/978-3-642-35950-7

Vongbunyong, S., Kara, S., and Pagnucco, M. (2013). Basic behaviour control of the vision-based cognitive robotic disassembly automation. Assem. Autom. 33, 38–56. doi:10.1108/01445151311294694

Vuola, A. O., Akram, S. U., and Kannala, J. (2019). “Mask-RCNN and U-Net ensembled for nuclei segmentation,” in Proceedings of the 2019 IEEE 16th international symposium on Biomedical imaging (ISBI 2019) (Venice, Italy), 208–212.

Vysocky, A., and Novak, P. (2016). Human–robot collaboration in industry. MM Sci. J. 2016, 903–906. doi:10.17973/MMSJ.2016_06_201611

Wang, Y., Wang, C., Zhang, H., Dong, Y., and Wei, S. (2019). Automatic ship detection based on RetinaNet using multi-resolution Gaofen-3 imagery. Remote Sens. 11, 531. doi:10.3390/rs11050531

Wang, S., Zhang, J., Wang, P., Law, J., Calinescu, R., and Mihaylova, L. (2024). A deep learning-enhanced digital twin framework for improving safety and reliability in human–robot collaborative manufacturing. Robot. Comput.-Integr. Manuf. 85, 102608. doi:10.1016/j.rcim.2023.102608

Wegener, K., Chen, W. H., Dietrich, F., Dröder, K., and Kara, S. (2015). Robot-assisted disassembly for the recycling of electric vehicle batteries. Procedia CIRP 29, 716–721. doi:10.1016/j.procir.2015.02.051

Wu, T., Zhang, Z., Yin, T., and Zhang, Y. (2022). Multi-objective optimisation for cell-level disassembly of waste power battery modules in human-machine hybrid mode. Waste Manag. 144, 513–526. doi:10.1016/j.wasman.2022.04.015

Wu, W., Wu, D., Zhang, Y., Chen, S., and Zhang, W. (2024). Safety-critical trajectory tracking for mobile robots with guaranteed performance. IEEE/CAA J. Automatica Sinica 11 (9), 2033–2035. doi:10.1109/JAS.2023.123864

Xu, W., Cui, J., Liu, B., Liu, J., Yao, B., and Zhou, Z. (2021). Human-robot collaborative disassembly line balancing considering the safe strategy in remanufacturing. J. Clean. Prod. 324, 129158. doi:10.1016/j.jclepro.2021.129158

Xu, G., Zhang, Z., Li, Z., Guo, X., Qi, L., and Liu, X. (2023). Multi-objective discrete brainstorming optimizer to solve the stochastic multiple-product robotic disassembly line balancing problem subject to disassembly failures. Mathematics 11 (6), 1557. doi:10.3390/math11061557

Yildiz, E., and Wörgötter, F. (2019). “DCNN-based screw detection for automated disassembly processes,” in Proceedings of the 2019 15th international conference on signal-image technology and Internet-based systems (SITIS) (Sorrento, Italy), 187–192. doi:10.1109/SITIS.2019.00040

Yildiz, E., Brinker, T., Renaudo, E., Hollenstein, J. J., Haller-Seeber, S., Piater, J., et al. (2020). A visual intelligence scheme for hard drive disassembly in automated recycling routines. Robovis 2020, Int. Conf. Robotics, Vis. Intelligent Syst., 17–27. doi:10.5220/0010016000170027

Zeng, Y., Zhang, Z., Yin, T., and Zheng, H. (2022). Robotic disassembly line balancing and sequencing problem considering energy-saving and high-profit for waste household appliances. J. Clean. Prod. 381, 135209. doi:10.1016/j.jclepro.2022.135209

Zhang, X. F., Yu, G., Hu, Z. Y., Pei, C. H., and Ma, G. Q. (2014). Parallel disassembly sequence planning for complex products based on fuzzy-rough sets. Int. J. Adv. Manuf. Technol. 72, 231–239. doi:10.1007/s00170-014-5655-4

Zhang, M., Li, W., and Du, Q. (2018). Diverse region-based CNN for hyperspectral image classification. IEEE Trans. Image Process. 27, 2623–2634. doi:10.1109/tip.2018.2809606

Zheng, S., Lan, F., Baronti, L., Pham, D. T., and Castellani, M. (2022). Automatic identification of mechanical parts for robotic disassembly using the PointNet deep neural network. Int. J. Manuf. Res. 17 (1), 1–21. doi:10.1504/ijmr.2022.121591

Zhou, Z., Liu, J., Pham, D. T., Xu, W., Ramírez, F. J., Ji, C., et al. (2019). Disassembly sequence planning: recent developments and future trends. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 233, 1450–1471. doi:10.1177/0954405418789975

Zhu, C., Yu, T., and Chang, Q. (2024). Task-oriented safety field for robot control in human-robot collaborative assembly based on residual learning. Expert Syst. Appl. 238 (Part C), 121946. doi:10.1016/j.eswa.2023.121946

Keywords: robotic disassembly, AI approaches, human robot collaboration, computer vision, systematic review

Citation: Ameur S, Tabaa M, Hidila Z, Hamlich M, Karboub K and Bearee R (2025) The future of robotic disassembly: a systematic review of techniques and applications in the age of AI. Front. Robot. AI 12:1584657. doi: 10.3389/frobt.2025.1584657

Received: 04 March 2025; Accepted: 01 September 2025;

Published: 09 October 2025.

Edited by:

Erfu Yang, University of Strathclyde, United Kingdom

Reviewed by:

Barış Can Yalçın, University of Luxembourg, Luxembourg

Vicent Girbés-Juan, University of Valencia, Spain

Jose Saenz, Fraunhofer Institute for Factory Operation and Automation (IFF), Germany

Copyright © 2025 Ameur, Tabaa, Hidila, Hamlich, Karboub and Bearee. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohamed Tabaa, m.tabaa@emsi.ma
