- 1 School of Automation, Southeast University, Nanjing, Jiangsu, China

- 2 Ministry of Education Key Laboratory of Measurement and Control of Complex Systems of Engineering, Southeast University, Nanjing, Jiangsu, China

Robotic surfaces consisting of many actuators can change shape to perform tasks such as object transportation and sorting. Increasing the number of actuators can enhance the robot’s capacity, but controlling many actuators is challenging, not least because the system-wide refresh time grows. We propose a novel control method whose refresh time stays constant no matter how many actuators the robot has. The method is distributed: it first approximates the target shape, then broadcasts the approximation coefficients to the actuators, which compute their own inputs. To confirm the system size-independent scaling, we build a robotic surface and measure the refresh time as a function of the number of actuators. We also perform experiments to approximate target shapes and achieve good agreement between the experiments and theoretical predictions. Our method is more efficient because it requires fewer control messages to coordinate robotic surfaces with the same accuracy. We also present a modeling strategy for the complex robot–object interaction force based on our control method and derive a feedback controller for object transportation tasks. Object transportation experiments with this feedback controller demonstrate the validity of the model and the controller.

1 Introduction

Robotic surfaces (Liu et al., 2021; Walker, 2017) typically consist of many actuation modules arranged in an array and can serve as intelligent conveyors (Uriarte et al., 2019; Chen et al., 2024), adaptive structures (Wang et al., 2019; Salerno et al., 2020), molding tools (Tian et al., 2022), treadmills (Smoot et al., 2019), shape displays, or haptic interfaces (Leithinger et al., 2014; Nakagaki et al., 2019). The capability of a robotic surface grows with the number of its actuators, because more actuators allow the robot to perform multiple tasks in parallel. Developments in soft robotics also offer new ways to meet the demand for actuators (Liu et al., 2021; Johnson et al., 2023; Robertson et al., 2019). However, coordinating many actuators is challenging. Generating control commands for them requires substantial resources, such as physical space, equipment, and communication bandwidth (Winck and Book, 2013). A notable quantity is the time delay between the first and the last actuator when the system is updated to a new shape, which we denote as the refresh time

A non-sequential and more scalable approach is to drive each row and column of the actuator array, similar to the matrix drive technique used in LED displays (Chen et al., 2011). This method controls all actuators in the same row or column simultaneously. Early work by Zhu, Winck, Book, and colleagues shows that the scaling of

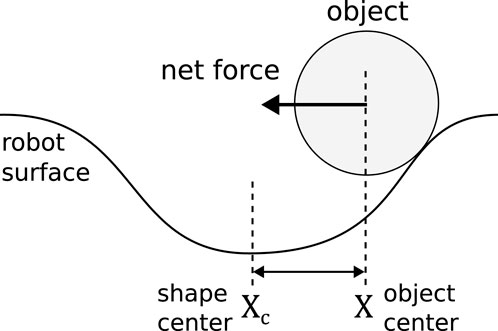

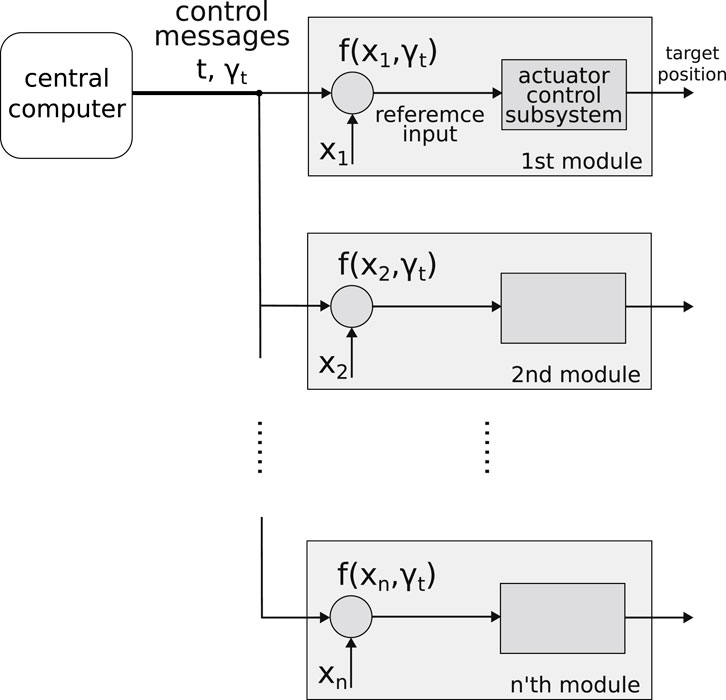

We propose a novel control method for robotic surfaces that has a system size-independent refresh time. In general, shape control proceeds in two steps: a continuous surface profile is first discretized, and the corresponding actuation commands are then passed to each actuator. Our method focuses on the second step, so we simply discretize the surface profile on a square lattice representing the pin array. When approximating the discretized surface profile, it is worth noting that (1) neighboring actuators usually have similar inputs, so there is no need to send an input to every one of them, and (2) the discretized surface profile can often be described by a few parameters; for example, for the Gaussian function-like patterns used in object manipulation tasks (Johnson et al., 2023), only the two center coordinates matter. Therefore, in our method, a central computer broadcasts features of the target shape to the individual actuation modules and lets them calculate their own inputs. Our method is illustrated in Figure 1. This approach results in a size-independent scaling

Figure 1. An illustration of our control method. At time

The rest of the article is organized as follows. In Section 2, we present the control method and algorithms to compute the shape features, derive equations for the object manipulation problem, and describe the robot setup. In Section 3, we present our experimental results on the time-delay scaling, quantification of shape-changing capacity, and object manipulation tasks. Section 4 contains a discussion and concluding remarks.

2 Materials and methods

2.1 Control message calculation

Our control method is illustrated in Figure 1. One highlight is that the control messages

The universal approximation ability guarantees that any target shape can be exactly represented by a set of

where
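The cosine-basis idea can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses an orthonormal 2D DCT-II on a 4 × 4 lattice (matching the 16 modules described in Section 2.3), keeps the largest-magnitude coefficients as the broadcast messages, and lets each module rebuild only its own height. The names `encode` and `module_height`, the (u, v, coefficient) message layout, and the example parabola are hypothetical.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C, so that C @ x @ C.T is the 2D DCT of an n x n array x."""
    k = np.arange(n)[:, None]   # frequency index (rows)
    i = np.arange(n)[None, :]   # lattice index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # rescale the constant (DC) row for orthonormality
    return c


def encode(shape: np.ndarray, k_terms: int):
    """Central computer: 2D DCT, then keep the k_terms largest-magnitude coefficients."""
    c = dct_matrix(shape.shape[0])
    coeffs = c @ shape @ c.T
    order = np.argsort(np.abs(coeffs), axis=None)[::-1][:k_terms]
    us, vs = np.unravel_index(order, coeffs.shape)
    # Hypothetical message format: one (u, v, coefficient) triple per broadcast term.
    return [(int(u), int(v), float(coeffs[u, v])) for u, v in zip(us, vs)]


def module_height(i: int, j: int, n: int, messages) -> float:
    """Module at lattice site (i, j): inverse DCT evaluated at its own site only."""
    c = dct_matrix(n)
    return sum(w * c[u, i] * c[v, j] for u, v, w in messages)


n = 4
x = np.linspace(-1.0, 1.0, n)
target = 30.0 * (x[:, None] ** 2 + x[None, :] ** 2)  # hypothetical parabola (mm)
msgs = encode(target, k_terms=3)
approx = np.array([[module_height(i, j, n, msgs) for j in range(n)] for i in range(n)])
```

Because every module runs the same `module_height` on the same broadcast list, the communication cost depends on the number of terms rather than on the number of modules. This particular target is separable and symmetric, so only three of its DCT coefficients are nonzero and three messages reconstruct it up to floating-point error; general shapes need more terms.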

Because cosine functions may not represent localized patterns efficiently, we use time–frequency functions to capture both extended and localized patterns:

where
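Matching pursuit (Mallat and Zhang, 1993) greedily selects atoms from an overcomplete dictionary. The sketch below is a hedged stand-in: instead of the article's time–frequency functions, it assumes a simple dictionary formed by the 16 cosine atoms (extended patterns) plus 16 single-site impulses (localized patterns) on a 4 × 4 lattice. `build_dictionary` and `matching_pursuit` are hypothetical names; each selected (atom index, coefficient) pair plays the role of one broadcast message.

```python
import numpy as np


def build_dictionary(n: int) -> np.ndarray:
    """Hypothetical dictionary: n*n cosine atoms plus n*n single-site impulses.

    Each column is a unit-norm atom over the row-major flattened n x n lattice.
    """
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    cosine_atoms = [np.outer(c[u], c[v]).ravel() for u in range(n) for v in range(n)]
    impulse_atoms = list(np.eye(n * n))
    return np.column_stack(cosine_atoms + impulse_atoms)


def matching_pursuit(target: np.ndarray, dictionary: np.ndarray, k_terms: int):
    """Greedy MP: repeatedly pick the atom most correlated with the current residual."""
    residual = target.ravel().astype(float)
    messages = []
    for _ in range(k_terms):
        corr = dictionary.T @ residual          # inner products (atoms are unit norm)
        best = int(np.argmax(np.abs(corr)))
        messages.append((best, float(corr[best])))
        residual = residual - corr[best] * dictionary[:, best]
    return messages, residual.reshape(target.shape)


n = 4
target = np.full((n, n), 2.0)
target[1, 2] += 20.0  # hypothetical localized bump on a flat offset (mm)
D = build_dictionary(n)
msgs, res = matching_pursuit(target, D, k_terms=4)
```

Each step removes the squared correlation of the chosen atom from the residual energy, so the error never increases as terms are added. Including impulse atoms lets MP spend a single message on a localized feature that would otherwise spread over several cosine terms.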

For object manipulation tasks, we use the Gaussian radial basis function (GRBF):

where the coefficients in
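A single-GRBF shape is cheap to broadcast: every module receives the same handful of numbers and evaluates the Gaussian at its own position. The sketch assumes a concave dip of the form h = base − depth · exp(−r²/(2σ²)); the packet fields (`xc`, `yc`, `depth`, `sigma`, `base`), the 50 mm module pitch, and the example values are hypothetical and not taken from the article.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GrbfMessage:
    """Hypothetical broadcast packet: the same few numbers go to every module."""
    xc: float     # shape center x (mm)
    yc: float     # shape center y (mm)
    depth: float  # dip depth (mm); positive values give a concave dip
    sigma: float  # width parameter (mm)
    base: float   # flat height far from the dip (mm)


def grbf_height(x: float, y: float, msg: GrbfMessage) -> float:
    """Evaluated by each module at its own (x, y): base - depth * exp(-r^2 / (2 sigma^2))."""
    r2 = (x - msg.xc) ** 2 + (y - msg.yc) ** 2
    return msg.base - msg.depth * math.exp(-r2 / (2.0 * msg.sigma ** 2))


PITCH = 50.0  # hypothetical module spacing (mm), not taken from the article
msg = GrbfMessage(xc=60.0, yc=40.0, depth=20.0, sigma=40.0, base=35.0)
heights = [[grbf_height(i * PITCH, j * PITCH, msg) for j in range(4)] for i in range(4)]
```

Moving the dip only requires broadcasting a new (`xc`, `yc`) pair, which is why this parameterization pairs naturally with the object transportation controller.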

2.2 Force model and controller design

Generally speaking, an object is governed by the equation of motion of its center of mass

In this work, we manipulate an object with a shape defined by one concave

where

Together, Equations 4 and 5 provide a force model for shapes generated by a single GRBF.

The force model is obtained via order-of-magnitude analysis and symmetry considerations; hence, many realistic details are not captured, such as the discreteness of the robot shape due to the finite actuator size, object rotation, friction, and the visco-elastic force at contact. We also consider only the planar motion of the object and ignore its vertical motion.

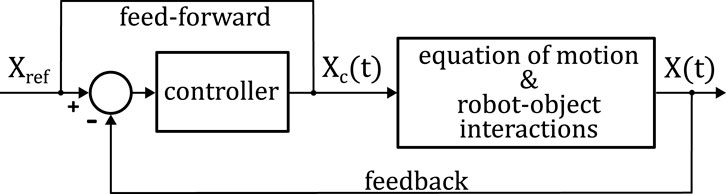

The feedback controller needs to compute the shape center

where

so that

The first and second terms in Equation 9 are the proportional feedback and feed-forward terms, respectively. The control loop is shown in Figure 3.

To ensure stability of the control process when

The controller has only one parameter
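The closed-loop idea can be explored in a toy simulation. Everything here is an assumption for illustration, not the article's force model or Equation 9: the object is treated as an overdamped point mass whose velocity is b times its offset from the GRBF center, and a hypothetical law c = x + k(x* − x) shifts the dip center from the object toward the target, with k as the single gain. The simulation also shows why a gain bound matters: with explicit Euler steps of size Δt, the position error obeys e[n+1] = (1 − Δt·b·k) e[n], so this toy loop converges only for 0 < Δt·b·k < 2.

```python
import numpy as np


def shape_center(x: np.ndarray, x_target: np.ndarray, k: float) -> np.ndarray:
    """Hypothetical single-gain law: shift the dip center from the object toward the target."""
    return x + k * (x_target - x)


def simulate(x0, x_target, k: float, b: float = 2.0, dt: float = 0.05, steps: int = 200):
    """Toy plant (assumption): object velocity = b * (dip center - object position)."""
    x = np.asarray(x0, dtype=float)
    xt = np.asarray(x_target, dtype=float)
    traj = [x.copy()]
    for _ in range(steps):
        c = shape_center(x, xt, k)
        x = x + dt * b * (c - x)  # explicit Euler step of the toy dynamics
        traj.append(x.copy())
    return np.array(traj)


# dt*b*k = 0.1 here, inside the stable range (0, 2) of this toy loop.
traj = simulate([0.0, 0.0], [150.0, 150.0], k=1.0)
```

In this model, raising k speeds up convergence until Δt·b·k approaches 2; beyond that the error oscillates and grows.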

2.3 Experimental setup

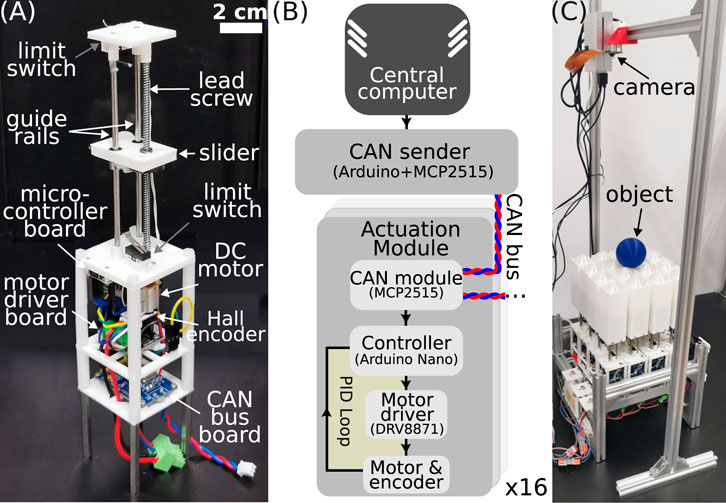

The robot has a modular design and consists of 16 identically built linear actuation modules arranged in an 85-mm-long square area. As shown in Figure 4A, each module is approximately 47 mm wide and 200 mm long. A lead screw 100 mm long with a 2.5 mm pitch converts the rotary motion of a DC motor into linear motion. The screw is attached to a slider, and two additional guide rails parallel to the lead screw reduce friction. Two limit switches installed at the two ends prevent overshoot that could damage the motor, and the overall arrangement of the mechanical parts results in a linear stroke of 70 mm. A complete module also has a rectangular cover attached to the slider (see Figure 4C). The DC motor (Tianqu Motor, N20VA, 1:10) has a rated maximum speed of 50 revolutions per second, which yields a nominal linear speed of 125 mm/s. The motor’s tail has a Hall rotary encoder to measure the angular position of the shaft, defined as
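A quick consistency check of the quoted figures, assuming a single-start lead screw so that one shaft revolution advances the slider by one pitch p = 2.5 mm:

$$
v_{\max} = 50\,\mathrm{rev/s} \times 2.5\,\mathrm{mm/rev} = 125\,\mathrm{mm/s},
\qquad
t_{\mathrm{stroke}} \geq \frac{70\,\mathrm{mm}}{125\,\mathrm{mm/s}} = 0.56\,\mathrm{s},
\qquad
h = \frac{p\,\theta}{2\pi}.
$$

The stroke time is only a lower bound because it ignores acceleration and load; the last relation converts a commanded height h into a shaft-angle setpoint θ.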

Figure 4. (A) A picture of a single linear actuation module. The rectangular cover is removed to expose mechanical components. (B) Block diagram of the electronic system. The arrows indicate information flows. (C) The robot and vision servo system for object manipulation.

The electronics block diagram is shown in Figure 4B. Each actuator is controlled by an Arduino Nano board, which is programmed as a closed-loop control system for shaft position
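On the module side, a commanded height must become a shaft-position setpoint. The sketch below is written in Python for readability rather than as Arduino firmware, and it assumes a simple saturated proportional loop; the encoder resolution `COUNTS_PER_REV`, the gain `kp`, and the 8-bit PWM limit are hypothetical, while the 2.5 mm pitch and 70 mm stroke come from the text.

```python
PITCH_MM = 2.5       # lead-screw pitch from the text
STROKE_MM = 70.0     # usable stroke from the text
COUNTS_PER_REV = 70  # hypothetical encoder counts per output-shaft revolution


def height_to_counts(h_mm: float) -> int:
    """Clamp to the stroke, then convert height -> shaft revolutions -> encoder counts."""
    h = min(max(h_mm, 0.0), STROKE_MM)
    return round(h / PITCH_MM * COUNTS_PER_REV)


def p_step(setpoint: int, count: int, kp: float = 0.05, pwm_max: int = 255) -> int:
    """One iteration of a saturated proportional loop (hypothetical gain); returns signed PWM."""
    u = kp * (setpoint - count)
    return int(max(-pwm_max, min(pwm_max, u)))
```

A real loop would run at a fixed rate and would typically add integral or derivative action; the clamp to the stroke is a software counterpart to the limit switches.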

For the object manipulation tasks, a simple vision servo system is built to track the object and compute the control output

3 Results

3.1 Experiments on refresh time

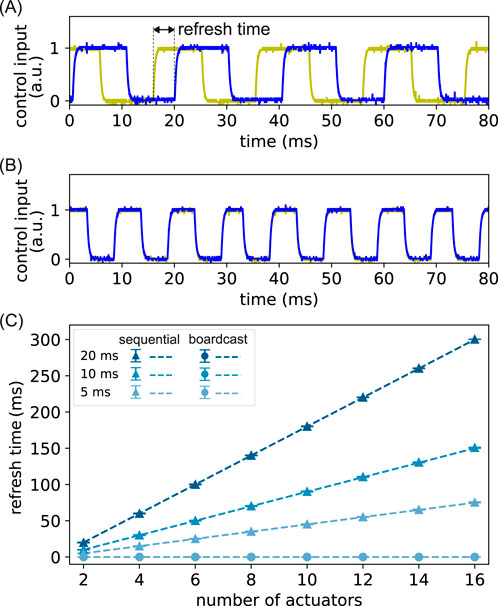

We first perform an experiment to measure the refresh time in the absence of actuator dynamics, so that only the communication delay between the central computer and the actuator modules contributes. The robot is refreshed between two uniform patterns

Figure 5. Experimental refresh time scaling without actuator dynamics. (A) The reference inputs of two actuators when using a sequential control method. The yellow (blue) line is the first (last) actuator in a two-actuator system; (B) the reference inputs of the same actuators when using our control method; (C) the averaged refresh time is plotted as a function of the number of actuators for the two control methods and at different communication rates (expressed in

The average refresh time is plotted as a function of
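The scaling argument can be made concrete with an idealized communication model. This is a hedged sketch, not the article's theoretical prediction: it assumes UART framing with 8 data bits, no parity, and one stop bit (10 bits on the wire per byte), a fixed message length `n_bytes`, and no processing overhead, and it counts the time needed to deliver the commands for one new shape. Under these assumptions, sequential addressing needs one message per actuator, whereas broadcasting needs one message per approximation term regardless of the actuator count.

```python
def message_time(n_bytes: int, baud: int) -> float:
    """Seconds to send n_bytes over UART with 8N1 framing (10 bits per byte)."""
    return 10.0 * n_bytes / baud


def refresh_sequential(n_actuators: int, n_bytes: int, baud: int) -> float:
    """Idealized: one addressed message per actuator, sent back to back."""
    return n_actuators * message_time(n_bytes, baud)


def refresh_broadcast(k_terms: int, n_bytes: int, baud: int) -> float:
    """Idealized: k_terms broadcast messages; the actuator count does not appear at all."""
    return k_terms * message_time(n_bytes, baud)


for n_act in (1, 4, 16, 256):
    t_seq = refresh_sequential(n_act, n_bytes=4, baud=115200)
    t_bc = refresh_broadcast(3, n_bytes=4, baud=115200)
    print(f"N={n_act:4d}  sequential={t_seq * 1e3:7.3f} ms  broadcast={t_bc * 1e3:6.3f} ms")
```

The sequential column grows linearly with N while the broadcast column is flat; lowering the baud rate scales both, which is why the comparison is made at several communication rates.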

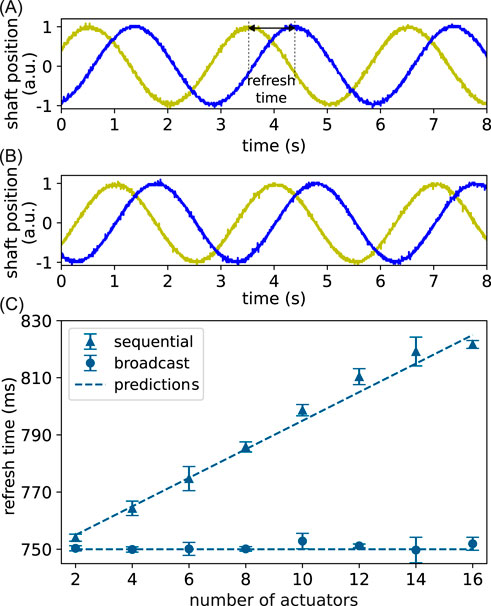

In the second experiment, we take the actuator dynamics into account by measuring the refresh time between the shaft positions

where

Figure 6. Experimental refresh time scaling with actuator dynamics. (A) The motor shaft angular position when using the sequential control method. The yellow (blue) trace is the first (last) actuator in a 16-actuator system, with its refresh time indicated by the dashed line; (B) the shaft position of the same actuators when using our control method; (C) the refresh time plotted as a function of the number of actuators for the two control methods. The triangles and circles are experimentally measured refresh times using the sequential and our control methods, respectively. Each point is an average of at least six refresh times, and error bars indicate one standard deviation. The dashed lines are theoretical predictions.
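Measuring the refresh time from logged traces requires a settling criterion. One possible choice, assumed here rather than taken from the article, is the first time after which a trace stays within ±tol of its target; the refresh time is then the spread between the earliest and latest settling times. The first-order responses in the example are synthetic.

```python
import numpy as np


def settle_time(t, theta, target, tol):
    """First sample time after which |theta - target| <= tol holds to the end; None if never."""
    outside = np.flatnonzero(np.abs(np.asarray(theta) - target) > tol)
    if outside.size == 0:
        return float(t[0])       # already inside the band at the first sample
    if outside[-1] == len(t) - 1:
        return None              # still outside the band at the end of the log
    return float(t[outside[-1] + 1])


def refresh_time(t, traces, targets, tol):
    """Delay between the first and the last actuator settling at the new shape."""
    times = [settle_time(t, th, tg, tol) for th, tg in zip(traces, targets)]
    if any(s is None for s in times):
        return None
    return max(times) - min(times)


# Synthetic example: two first-order responses whose commands start 0.3 s apart.
t = np.linspace(0.0, 2.0, 2001)


def first_order(delay, target=10.0, tau=0.05):
    return np.where(t > delay, target * (1.0 - np.exp(-(t - delay) / tau)), 0.0)


r = refresh_time(t, [first_order(0.0), first_order(0.3)], [10.0, 10.0], tol=0.1)
```

With identical dynamics, the settling offsets cancel and the measured refresh time reflects only the delay between command arrivals.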

3.2 Characterization of shape change

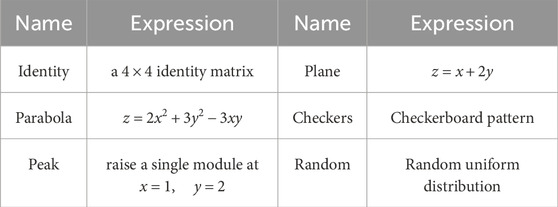

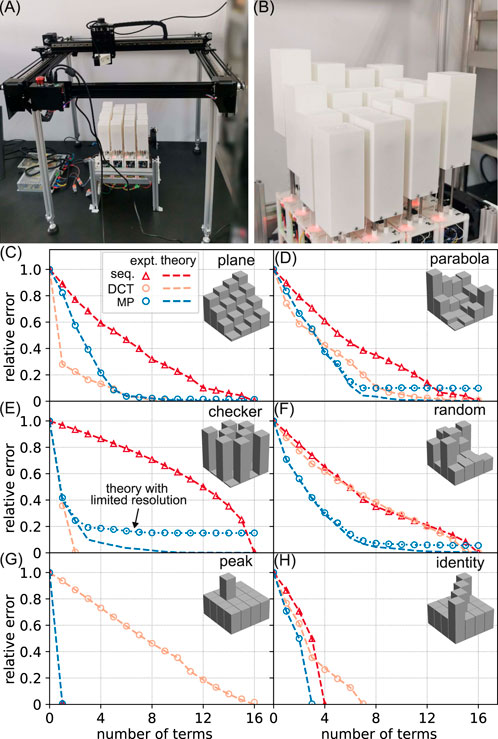

To quantify the approximation accuracy of our control method, we drive the robot to six distinct shapes and quantify the errors between the target and the measured shapes. The shape measurement apparatus is shown in Figure 7A. A laser distance meter (Shanghai Kedi, KG01) is used to measure the height change of each actuator, and the scan process is automated via a homemade Cartesian robot. The system has an accuracy of 0.2 mm. The six shapes are listed in Table 1. All shapes are represented as a

Figure 7. Characterization of shape-changing ability. (A) The experimental setup for shape measurement; (B) the parabola shape displayed by the robot; (C–H) the relative error of the shape is plotted as a function of the number of terms used to approximate the shape. The triangles (circles) are from the sequential (our) control method. The orange and blue colors of the circles correspond to the DCT and MP algorithms, respectively. Each data point is an average of three independent runs. The error bar is smaller than the marker size, so it is not shown. The dashed lines are theoretical predictions calculated with 64-bit floating point numbers, and the dotted blue lines are calculated with 16- and 8-bit resolution-limited numbers. The inset displays the target shape.

The relative error as a function of the number of terms (or, equivalently, the number of control messages) is shown in Figures 7C–H. Our control method with the MP algorithm can outperform the sequential control method in that it requires fewer terms to approximate the target shape to the same error. For example, it takes 11 terms for the sequential method to approximate the parabola shape to a
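The relative-error metric and the effect of limited numeric resolution can be illustrated with the sketch below. It assumes the relative error is the Frobenius norm of the height error divided by that of the target, and that values are rounded to a signed uniform grid spanning the 70 mm stroke; both choices, and the example parabola, are hypothetical and may differ from the article's definitions.

```python
import numpy as np


def relative_error(target: np.ndarray, approx: np.ndarray) -> float:
    """Assumed metric: ||target - approx||_F / ||target||_F."""
    return float(np.linalg.norm(target - approx) / np.linalg.norm(target))


def quantize(values: np.ndarray, bits: int, full_scale: float) -> np.ndarray:
    """Round to a signed uniform grid with 2**(bits - 1) - 1 levels on each side of zero."""
    levels = 2 ** (bits - 1) - 1
    step = full_scale / levels
    return np.clip(np.round(values / step), -levels, levels) * step


x = np.linspace(-1.0, 1.0, 4)
target = 30.0 * (x[:, None] ** 2 + x[None, :] ** 2)  # hypothetical parabola (mm)
err8 = relative_error(target, quantize(target, bits=8, full_scale=70.0))
err16 = relative_error(target, quantize(target, bits=16, full_scale=70.0))
```

The same `quantize` function could be applied to broadcast coefficients before reconstruction, which gives a floor below which adding more terms no longer reduces the error.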

3.3 Closed-loop object manipulation

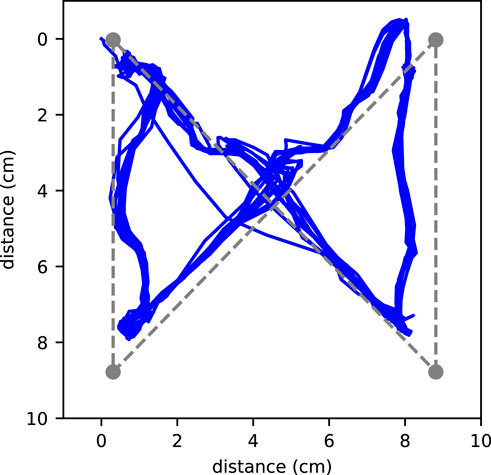

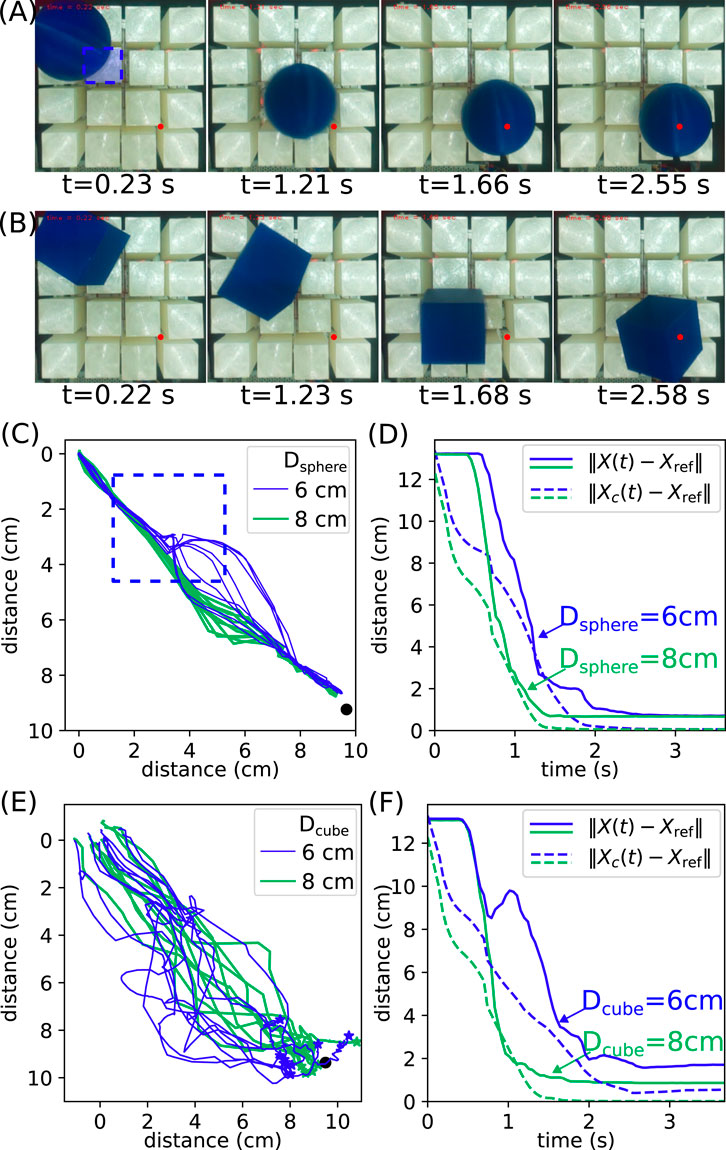

We demonstrate the object manipulation capability of the robot using the closed-loop controller derived in Section 2.2. Motion videos are provided in the Supplementary Material. The objects are lightweight 3D-printed spheres of diameter

The initial and target positions of the object are at opposite corners of the robot, and snapshots of typical transportation processes are shown in Figures 8A,B. The objects can be repeatedly transported to their target positions, indicating that our closed-loop control method is robust. We collect 10 independent runs for each object, and the trajectories are visualized in Figures 8C,E for spheres and cubes, respectively. For the

Figure 8. Object transportation experiments. (A) Snapshots of transportation processes of a sphere with

A similar analysis can be performed for the cubic objects, and the results are shown in Figures 8E,F. The trajectories of the cubes are much more scattered than those of the spheres. This can be explained by the fact that cubes are more irregular than spheres and experience more random forces during transportation. In addition, the trajectories of the larger cube diverge less than those of the smaller cube. The convergence of the distance to the target position is also less smooth, and cubes sometimes move away from the target, as shown by the increasing trend in Figure 8F. The

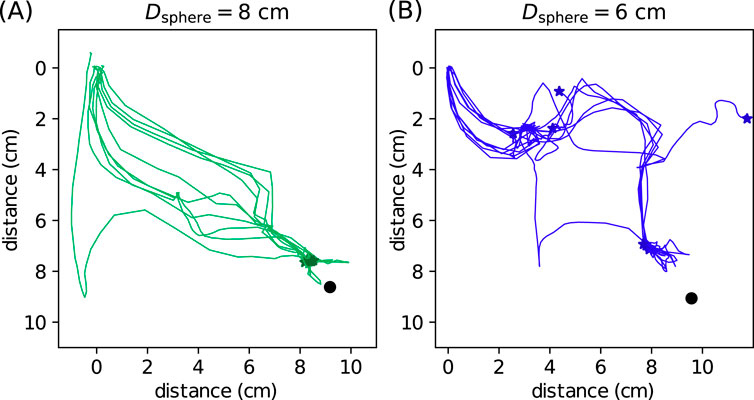

To further test the robustness of the designed controller, we change the surface property by removing all spherical caps attached to the pins, so that the surface becomes flat. This modification increases the randomness during object manipulation, as objects can sometimes stabilize themselves on the pins or quickly roll away on the flat surface. In addition, the bare pins are sharper and stiffer, producing larger force disturbances than the soft spherical caps. Without modifying any control parameter, we perform the same object transportation experiments for two spheres and collect 10 independent runs for each object. The resulting trajectories are shown in Figure 9. As expected, the trajectories are more scattered than those in Figure 8. For the

Figure 9. Object transportation experiments without the spherical caps. The target position is marked by a circle, and the end position of each trajectory is marked by a star. (A) and (B) are trajectories of 10 independent runs for the

It is worth noting that our proposed controller does not explicitly constrain the object shape. The ideal object–surface interaction model in Equation 6 treats the object as a point mass and the robotic surface as smooth. In this regard, a smooth spherical object is closest to the ideal model; in other words, the controller is better suited to spherical objects. The effects of object-shape and surface irregularities are shown in Figures 8 and 9, respectively. Irregularities in both the object shape and the robotic surface act as disturbances and can lead to unstable trajectories, because the interaction force fluctuates more through unstable contacts, rolling, or slipping.

Finally, to showcase the object manipulation capability of the robot, we have the robot continuously control the

4 Discussion

We present a novel control method for robotic surfaces that can substantially reduce the number of independent inputs. The control method has a size-independent refresh time and, when a suitable approximation function is used, can lead to an effective object manipulation controller. We implement the control method on a robotic surface and experimentally confirm the system size-independent refresh time. Moreover, the presence of actuator dynamics does not affect this refresh time scaling. Using the discrete cosine transform and the matching pursuit algorithm, different shapes are approximated efficiently, with fewer control messages than the standard sequential method requires. Hence, our control method is more scalable and has the potential to control robotic surfaces with more actuators. Note that the practical upper limit depends on whether the communication technology can reliably broadcast signals to every module in time. Based on our control method, we also provide a modeling method for object manipulation tasks. Using the GRBF as the shape generator, we simplify the complex interaction force with reasonable assumptions based on order-of-magnitude analysis and symmetry considerations, and we derive a compact feedback controller for object transportation tasks. The validity of the force model and controller is confirmed by the successful transportation of objects of different sizes and shapes.

Because all modules receive the same control message at every time step, our control method can update the state of all modules simultaneously. Alternatively, one may design a scheduling procedure that updates only the relevant modules at each step and hence saves communication bandwidth even when serial communication is used. In this regard, the advantage of our method is that scheduling is performed implicitly when the control message is computed. For example, if only one module is determined to be actuated, a sufficiently small width parameter (such as
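The implicit-scheduling remark can be checked numerically. With a normalized GRBF on a unit-pitch lattice, a module one pitch from the center receives exp(−1/(2σ²)) of the peak amplitude, so shrinking σ confines the commanded change to a single module. The 4 × 4 layout, the unit pitch, the helper names, and the 1e-3 activity threshold are hypothetical choices for illustration.

```python
import math


def grbf_weights(center, sigma, pitch=1.0, n=4):
    """Normalized GRBF amplitude at each lattice site of a hypothetical n x n layout."""
    cx, cy = center
    return [[math.exp(-((i * pitch - cx) ** 2 + (j * pitch - cy) ** 2) / (2 * sigma ** 2))
             for j in range(n)] for i in range(n)]


def active_modules(weights, threshold=1e-3):
    """Modules whose commanded change exceeds a (hypothetical) activity threshold."""
    return [(i, j) for i, row in enumerate(weights) for j, w in enumerate(row) if w > threshold]


print(active_modules(grbf_weights((1, 2), sigma=0.2)))        # → [(1, 2)]
print(len(active_modules(grbf_weights((1, 2), sigma=1.5))))   # → 16
```

With σ = 0.2 pitch, the nearest neighbors receive about exp(−12.5) ≈ 4 × 10⁻⁶ of the peak, so the broadcast message effectively addresses one module without any explicit schedule.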

As multi-actuator systems, robotic surfaces benefit from a large number of actuators working together to accomplish various tasks, but they suffer from the cost and complexity of coordinating many actuators. In essence, our method sends compressed coordination commands to all actuators. A trade-off may exist between the burden imposed by system size and the complexity of the commands. Although the refresh time scaling has only been validated on a small set of actuation modules and the closed-loop controller is quite simple, we demonstrate its scalable performance. It would be interesting to achieve shape control and object manipulation with distributed control methods, such as designing a sparse state-feedback gain matrix

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

YZ: Conceptualization, Data curation, Formal Analysis, Funding acquisition, Project administration, Software, Writing – original draft, Writing – review and editing. YC: Data curation, Software, Writing – review and editing, Formal Analysis.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the start-up research fund of Southeast University.

Acknowledgments

YZ thanks Cheng Zhao, Yifan Wang, Shihua Li, and Xin Xin for helpful discussions.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2025.1633131/full#supplementary-material

References

Babazadeh, M., and Nobakhti, A. (2017). Sparsity promotion in state feedback controller design. IEEE Trans. Automatic Control 62, 4066–4072. doi:10.1109/TAC.2016.2626371

Chanfreut, P., Maestre, J. M., and Camacho, E. F. (2021). A survey on clustering methods for distributed and networked control systems. Annu. Rev. Control 52, 75–90. doi:10.1016/j.arcontrol.2021.08.002

Chen, J., Cranton, W., and Fihn, M. (2011). Handbook of visual display technology. Springer Publishing Company, Incorporated.

Chen, Z., Deng, Z., Dhupia, J. S., Stommel, M., and Xu, W. (2021). Motion modeling and trajectory tracking control for a soft robotic table. IEEE/ASME Trans. Mechatronics, 1–11. doi:10.1109/TMECH.2021.3120436

Chen, Z., Deng, Z., Dhupia, J. S., Stommel, M., and Xu, W. (2024). Trajectory planning and tracking of multiple objects on a soft robotic table using a hierarchical search on time-varying potential fields. IEEE Trans. Robotics 40, 351–363. doi:10.1109/tro.2023.3337291

Ferguson, K. M., Tong, D., and Winck, R. C. (2020). “Multiplicative valve to control many cylinders,” in 2020 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM) (Boston, MA, USA: IEEE), 673–678.

Follmer, S., Leithinger, D., Olwal, A., Hogge, A., and Ishii, H. (2013). “inFORM: dynamic physical affordances and constraints through shape and object actuation,” in Proceedings of the 26th annual ACM symposium on user interface software and technology (New York, NY, USA: Association for Computing Machinery), 417–426.

Jadhav, S., Glick, P. E., Ishida, M., Chan, C., Adibnazari, I., Schulze, J. P., et al. (2023). Scalable fluidic matrix circuits for controlling large arrays of individually addressable actuators. Adv. Intell. Syst. 5, 2300011. doi:10.1002/aisy.202300011

Johnson, B. K., Naris, M., Sundaram, V., Volchko, A., Ly, K., Mitchell, S. K., et al. (2023). A multifunctional soft robotic shape display with high-speed actuation, sensing, and control. Nat. Commun. 14, 4516. doi:10.1038/s41467-023-39842-2

Leithinger, D., and Ishii, H. (2010). “Relief: a scalable actuated shape display,” in Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction (New York, NY, USA: Association for Computing Machinery), 221–222.

Leithinger, D., Follmer, S., Olwal, A., and Ishii, H. (2014). “Physical telepresence: shape capture and display for embodied, computer-mediated remote collaboration,” in Proceedings of the 27th annual ACM symposium on user interface software and technology (New York, NY, USA: Association for Computing Machinery), 461–470.

Liu, K., Hacker, F., and Daraio, C. (2021). Robotic surfaces with reversible, spatiotemporal control for shape morphing and object manipulation. Sci. Robotics 6, eabf5116. doi:10.1126/scirobotics.abf5116

Mallat, S., and Zhang, Z. (1993). Matching pursuits with time-frequency dictionaries. IEEE Trans. Signal Process. 41, 3397–3415. doi:10.1109/78.258082

Nakagaki, K., Fitzgerald, D., Ma, Z. J., Vink, L., Levine, D., and Ishii, H. (2019). “inFORCE: bi-directional ‘force’ shape display for haptic interaction,” in Proceedings of the Thirteenth International Conference on Tangible, Embedded, and Embodied Interaction (New York, NY, USA: Association for Computing Machinery), 615–623.

Park, J., and Sandberg, I. W. (1991). Universal approximation using radial-basis-function networks. Neural Comput. 3, 246–257. doi:10.1162/neco.1991.3.2.246

Robertson, M. A., Murakami, M., Felt, W., and Paik, J. (2019). A compact modular soft surface with reconfigurable shape and stiffness. IEEE/ASME Trans. Mechatronics 24, 16–24. doi:10.1109/tmech.2018.2878621

Salerno, M., Paik, J., and Mintchev, S. (2020). Ori-pixel, a Multi-DoFs origami pixel for modular reconfigurable surfaces. IEEE Robotics Automation Lett. 5, 6988–6995. doi:10.1109/lra.2020.3028054

Siu, A. F., Gonzalez, E. J., Yuan, S., Ginsberg, J. B., and Follmer, S. (2018). “shapeShift: 2D spatial manipulation and self-actuation of tabletop shape displays for tangible and haptic interaction,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–13. doi:10.1145/3173574.3173865

Smoot, L. S., Niemeyer, G. D., Christensen, D. L., and Bristow, R. (2019). Floor system providing omnidirectional movement of a person walking in a virtual reality environment.

Stanley, A. A., Hata, K., and Okamura, A. M. (2016). “Closed-loop shape control of a haptic jamming deformable surface,” in 2016 IEEE International Conference on Robotics and Automation (ICRA), 2718–2724. doi:10.1109/icra.2016.7487433

Tian, Y., Fang, G., Petrulis, J. S., Weightman, A., and Wang, C. C. L. (2022). Soft robotic mannequin: design and algorithm for deformation control. IEEE/ASME Trans. Mechatronics 27, 1820–1828. doi:10.1109/tmech.2022.3175759

Uriarte, C., Asphandiar, A., Thamer, H., Benggolo, A., and Freitag, M. (2019). Control strategies for small-scaled conveyor modules enabling highly flexible material flow systems. Procedia CIRP 79, 433–438. doi:10.1016/j.procir.2019.02.117

Walker, I. D. (2017). “Continuum robot surfaces: smart saddles and seats,” in Mechatronics and robotics engineering for advanced and intelligent manufacturing (Cham: Springer), 97–105.

Wang, Y., Frazelle, C., Sirohi, R., Li, L., Walker, I. D., and Green, K. E. (2019). “Design and characterization of a novel robotic surface for application to compressed physical environments,” in 2019 International Conference on Robotics and Automation (ICRA), 102–108. doi:10.1109/icra.2019.8794043

Wang, J., Sotzing, M., Lee, M., and Chortos, A. (2023). Passively addressed robotic morphing surface (parms) based on machine learning. Sci. Adv. 9, eadg8019. doi:10.1126/sciadv.adg8019

Winck, R. C., and Book, W. J. (2012). A control loop structure based on singular value decomposition for input-coupled systems. American Society of Mechanical Engineers Digital Collection, 329–336.

Winck, R. C., and Book, W. J. (2013). Dimension reduction in a feedback loop using the SVD: results on controllability and stability. Automatica 49, 3084–3089. doi:10.1016/j.automatica.2013.07.017

Winck, R. C., and Book, W. J. (2017). Stability and performance of the SVD system. IEEE Trans. Automatic Control 62, 6619–6624. doi:10.1109/TAC.2017.2717808

Winck, R. C., Kim, J., Book, W. J., and Park, H. (2012). Command generation techniques for a pin array using the SVD and the SNMF. IFAC Proc. Vol. 45, 411–416. doi:10.3182/20120905-3-hr-2030.00072

Xue, Z., Zhang, H., Cheng, J., He, Z., Ju, Y., Lin, C., et al. (2024). “ArrayBot: reinforcement learning for generalizable distributed manipulation through touch,” in 2024 IEEE International Conference on Robotics and Automation (ICRA), 16744–16751. doi:10.1109/ICRA57147.2024.10610350

Zhu, H., and Book, W. J. (2004). Practical structure design and control for digital clay. American Society of Mechanical Engineers Digital Collection, 1051–1058.

Zhu, H., and Book, W. J. (2006). “Construction and control of massive hydraulic miniature-actuator-sensor array,” in 2006 IEEE Conference on Computer Aided Control System Design, 2006 IEEE International Conference on Control Applications, 2006 IEEE International Symposium on Intelligent Control, 820–825. doi:10.1109/cacsd-cca-isic.2006.4776751

Keywords: robotic surfaces, object manipulation, cellular robots, distributed robot systems, pin array, refresh time scaling

Citation: Zhao Y and Chen Y (2025) A shape control and object manipulation technique based on function approximation for robotic surfaces. Front. Robot. AI 12:1633131. doi: 10.3389/frobt.2025.1633131

Received: 22 May 2025; Accepted: 02 October 2025;

Published: 05 November 2025.

Edited by:

Anthony Tzes, New York University Abu Dhabi, United Arab Emirates

Reviewed by:

Nikolaos Evangeliou, New York University Abu Dhabi, United Arab Emirates

Yadan Zeng, Nanyang Technological University, Singapore

Copyright © 2025 Zhao and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuchen Zhao, eXVjaGVuLnpoYW8wNzhAc2V1LmVkdS5jbg==

†ORCID: Yuchen Zhao, orcid.org/0000-0002-9779-4577

Yuchen Zhao Yuxin Chen 1,2