- 1 IRIDIA, Université libre de Bruxelles, Brussels, Belgium

- 2 R&D, Toyota Motor Europe, Brussels, Belgium

Swarm perception enables a robot swarm to collectively sense and interpret the environment by integrating sensory inputs from individual robots. In this study, we explore its application to people re-identification, a critical task in multi-camera tracking scenarios. We propose a decentralized, feature-based perception method that allows robots to re-identify people across different viewpoints. Our approach combines detection, tracking, re-identification, and clustering algorithms, enhanced by a model trained to refine extracted features. Robots dynamically share and fuse data in a decentralized manner, ensuring that collected information remains up to date. Simulation results, measured by the cumulative matching characteristics (CMC) curve, mean average precision (mAP), and average cluster purity, show that decentralized communication significantly improves performance, enabling robots to outperform static cameras without communication and, in some cases, even centralized communication. Furthermore, the findings suggest a trade-off between the amount of data shared and the consistency of the Re-ID.

1 Introduction

Swarm perception refers to the ability of a robot swarm (Beni, 2005; Şahin, 2005; Dorigo et al., 2014) to leverage the sensory input of individual robots and achieve a collective understanding of the environment. Due to their distributed nature, robot swarms can collectively gather, share, and update information about their surroundings in a scalable, flexible, and fault-tolerant manner (Brambilla et al., 2013). This can be particularly advantageous in people (re)identification and tracking scenarios, especially in environments with unknown structure where static methods, which rely on strategic sensor placement or predefined path planning, become ineffective (Robin and Lacroix, 2016).

In the general case, tracking people across multiple cameras falls within the domain of multi-target, multi-camera tracking (MTMCT) (Amosa et al., 2023). Person re-identification (Re-ID) specifically addresses MTMCT problems in which people must be matched across multiple non-overlapping cameras (Tang et al., 2017; Ristani and Tomasi, 2018; Gaikwad and Karmakar, 2021). Traditional Re-ID methods are designed for static CCTV cameras capturing low-resolution video feeds. These systems typically rely on whole-body feature extraction, making them sensitive to occlusions, lighting changes, pose variations, and clothing alterations (Ye et al., 2022). In contrast, robot swarms offer dynamic and adaptive perception. Their mobility and compact size allow them to reposition in response to crowd movement, capture scenes from closer and varied viewpoints, and scale easily by adding more units without requiring fixed infrastructure. Unlike static CCTV cameras, robots can also interact directly with people, providing guidance, assistance, or real-time information. However, swarm perception presents challenges, particularly in integrating and fusing observations collected along diverse and unsynchronized trajectories.

In this paper, we investigate how communication between robots impacts the performance of decentralized person Re-ID in robot swarms. We propose a feature-based decentralized perception framework that integrates detection, tracking, Re-ID, and clustering algorithms, enhanced by a model trained for robust visual feature extraction. Although our framework does not employ state-of-the-art models for detection, tracking, or re-identification, it is intentionally built upon well-established and reproducible components. This choice is motivated by the need to isolate and rigorously study the impact of inter-robot communication on collective perception in decentralized swarm systems. By controlling for the complexity and variability of individual modules, we ensure that observed effects can be attributed to communication mechanisms rather than to fluctuations in module performance. This approach naturally limits absolute perception performance, yet provides a robust and interpretable basis for analyzing system-level coordination, adaptability, and resilience. Moreover, the modular structure of our framework allows any component to be replaced with a more advanced alternative, facilitating future studies that combine communication mechanisms with high-performance perception models.

To assess the role of communication, we systematically vary the communication range and analyze its impact on the ability to accurately re-identify people and on the consistency of clustering observations across the swarm. We conduct our evaluation in a simulated conference-like environment, which provides a dynamic and complex setting where Re-ID is particularly valuable. Furthermore, we compare the swarm’s performance to that of CCTV-based systems, analyzing both communication-enabled and isolated camera setups to underscore the advantages and limitations of decentralized robot swarms.

2 Related work

In swarm robotics, extensive research has been devoted to understanding collective behaviors (Trianni and Campo, 2015; Brambilla et al., 2013; Garattoni and Birattari, 2016) and collective decision making (Strobel et al., 2018; Valentini et al., 2016b), often highlighting the crucial role of perception. For example, studies by Valentini et al. (2016a) and Zakir et al. (2022) explore swarm perception in the context of collective decision making. Collective perception has received increasing attention, particularly in the context of (semi-)autonomous vehicles (Günther et al., 2015; Günther et al., 2016; Thandavarayan et al., 2019) and monitoring systems (Fiore et al., 2008; Choi and Savarese, 2012; Montero et al., 2023). In monitoring, person re-identification (Zheng et al., 2016) plays a crucial role, as collective perception requires agents to reach a consensus on the identity of detected and tracked people, enabling data fusion. Recent advances primarily leverage deep-learning techniques (Ye et al., 2022), especially feature-embedding methods such as those based on triplet loss and its variations (Hermans et al., 2017). Triplet loss has been a key framework for learning discriminative feature representations, ensuring that people remain distinguishable under challenging conditions such as occlusions, pose variations, and lighting changes (Cheng et al., 2016; Zeng et al., 2020). Although most re-identification research has focused on stationary cameras in surveillance systems (Ukita et al., 2016; Koide et al., 2017), mobile robots equipped with cameras offer the advantage of dynamic repositioning, enabling multi-view observations and closer interactions (Murata and Atsumi, 2018). Research has primarily explored single-robot applications, particularly in human-robot interaction and service robotics (Pinto et al., 2023; Carlsen et al., 2024). 
Face Re-ID improves the ability of a robot to interact personally with users (Wang et al., 2019), while recent work has integrated voice Re-ID to further improve recognition (Lu et al., 2024). Beyond recognition, robots can also follow users to provide continuous assistance (Ye et al., 2023). For instance, the CARPE-ID framework enables person re-identification despite occlusions and clothing changes, allowing a single robot to reliably track a person (Rollo et al., 2024). Preliminary studies have also explored multi-robot (Popovici et al., 2022) and swarm-based approaches (Kegeleirs et al., 2024a; b), highlighting promising directions for future advancements. However, most prior efforts emphasize the performance of individual models rather than their integration into robust and scalable systems. In contrast, our work focuses on the system-level effectiveness of decentralized swarm perception using readily available algorithms.

3 Methods

In the proposed method, robots acquire data and share it with their peers whenever they are within communication range.

3.1 Individual data acquisition

Each robot independently performs re-identification and tracking using the video stream from its onboard camera. This process follows a three-step pipeline, as illustrated in Figure 1.

First, people are detected using YOLOv8 (Varghese and Sambath, 2024) trained on COCO (Lin et al., 2014). Second, the BoT-SORT tracking algorithm (Aharon et al., 2022) assigns a unique ID to each detected person and keeps tracking them as long as they remain within the camera’s field of view. Third, embeddings for each person are computed using the OSNet model (Zhou et al., 2021). As the pre-trained weights lack sufficient discriminative power, we fine-tuned the model on three datasets: Market1501 (Zheng et al., 2015), DukeMTMC-reID (Ristani et al., 2016; Gou et al., 2017), and CUHK03 (Li et al., 2014). This training makes the embeddings more discriminative by bringing those of the same person closer together in the feature space and pushing those of different people farther apart. Embeddings are then clustered based on the IDs assigned by BoT-SORT. Clusters sharing the same ID are merged, so each resulting cluster ideally contains all detections and associated embeddings corresponding to a single person. When a new ID is to be assigned to a person, their embeddings are compared to all existing clusters using a distance-based merging algorithm: (i) the distance between the new embeddings and each existing cluster is computed as the distance between their means. (ii) If the distance between the new embeddings and an existing cluster is below a predefined threshold (0.2), the new data are merged into this cluster, and the new ID is appended to the cluster’s known IDs. (iii) If no sufficiently similar cluster is found, a new cluster is created and initialized with the new ID. At any given time, the resulting set of clusters constitutes the robot’s people database.
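The ID-assignment step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ implementation: it assumes cosine distance between mean embeddings (the text specifies only "the distance between their means" and a threshold of 0.2), assumes non-zero embedding vectors, and assumes that when several clusters fall below the threshold the nearest one is chosen. The names `Cluster`, `assign_new_track`, and `add_observation` are hypothetical.

```python
import math
from dataclasses import dataclass, field

# Threshold value taken from the text; using cosine distance is an assumption.
MERGE_THRESHOLD = 0.2


def mean_vector(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine_distance(a, b):
    """1 - cosine similarity (assumes both vectors are non-zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


@dataclass
class Cluster:
    ids: set = field(default_factory=set)           # tracker IDs attributed to this person
    embeddings: list = field(default_factory=list)  # feature vectors collected so far

    def mean(self):
        return mean_vector(self.embeddings)


def assign_new_track(database, track_id, track_embeddings, threshold=MERGE_THRESHOLD):
    """Attach a new tracker ID to the nearest cluster if close enough, else create one."""
    query = mean_vector(track_embeddings)
    best, best_dist = None, float("inf")
    for cluster in database:
        d = cosine_distance(query, cluster.mean())
        if d < best_dist:
            best, best_dist = cluster, d
    if best is not None and best_dist < threshold:
        best.ids.add(track_id)                 # step (ii): merge and record the new ID
        best.embeddings.extend(track_embeddings)
        return best
    new_cluster = Cluster(ids={track_id}, embeddings=list(track_embeddings))
    database.append(new_cluster)               # step (iii): unseen person
    return new_cluster


def add_observation(database, track_id, embedding):
    """Route one embedding to the cluster that already owns its tracker ID."""
    for cluster in database:
        if track_id in cluster.ids:
            cluster.embeddings.append(embedding)
            return cluster
    return assign_new_track(database, track_id, [embedding])
```

Real OSNet embeddings are high-dimensional; two-dimensional vectors are enough to exercise the logic. Recomputing cluster means on every comparison keeps the sketch short, whereas a deployed system would more likely cache running means.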

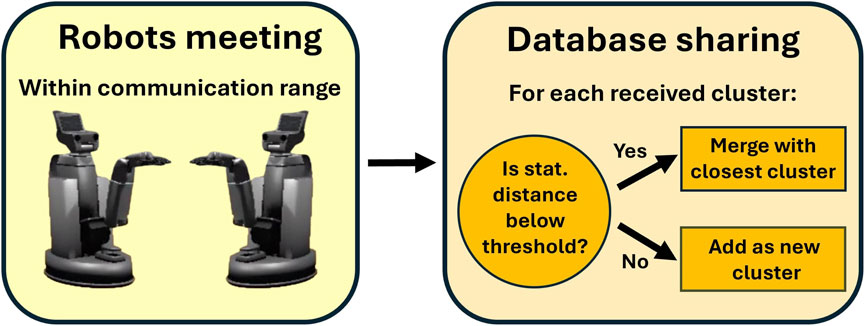

3.2 Inter-robot data sharing

When robots meet, they exchange their respective people databases. The received clusters are integrated into the robot’s database using a distance-based merging algorithm similar to the one described above: (i) for each received cluster, the distance to every existing cluster is computed as the distance between their means. (ii) If the distance between a received cluster and an existing one is below a predefined threshold, the two clusters are merged. This groups into a single cluster the embeddings that represent the same person, regardless of which robots collected them. (iii) If the distance between a received cluster and every existing cluster exceeds the threshold, the received cluster is added as a new person. Figure 2 summarizes this process.
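A minimal Python sketch of this merging step follows. It is illustrative only and rests on assumptions not stated in the text: cosine distance between cluster means, the nearest cluster winning when several fall below the threshold, and a default threshold of 0.2 used as a placeholder (the text only says "a predefined threshold"). The dict layout and the `(robot, track)` ID tuples are hypothetical; tagging IDs with the robot that produced them simply avoids collisions between robots’ local tracker IDs.

```python
import copy
import math


def mean_vector(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine_distance(a, b):
    """1 - cosine similarity (assumes both vectors are non-zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def merge_databases(local, received, threshold=0.2):
    """Fold a peer's clusters into the local database, modifying it in place.

    Each cluster is a dict {"ids": set, "embeddings": list}. Clusters appended
    earlier in the same exchange are also candidates for later merges.
    """
    for incoming in received:
        incoming = copy.deepcopy(incoming)  # never alias the peer's data
        incoming_mean = mean_vector(incoming["embeddings"])
        best, best_dist = None, float("inf")
        for cluster in local:
            d = cosine_distance(incoming_mean, mean_vector(cluster["embeddings"]))
            if d < best_dist:
                best, best_dist = cluster, d
        if best is not None and best_dist < threshold:
            best["ids"] |= incoming["ids"]              # same person seen by both robots
            best["embeddings"].extend(incoming["embeddings"])
        else:
            local.append(incoming)                      # person unknown to this robot
    return local
```

Because robots transmit their whole database at each exchange, the same received cluster may arrive repeatedly; with this merge rule it is absorbed into its matching local cluster rather than duplicated, although its embeddings are appended again, so a practical version would likely deduplicate them.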

3.3 Exploration behavior

Exploration behavior plays a crucial role in swarm-based perception, particularly in how data is collected and shared across the swarm. Robots can actively influence coverage and observation overlap by adjusting their movement patterns, potentially intensifying search in under-observed areas. However, in this specific study, to isolate and evaluate the direct impact of communication rates on decentralized person re-identification, we adopt a random walk exploration strategy. In particular, we selected ballistic motion, a straightforward implementation commonly used in previous studies (Francesca et al., 2014; 2015; Kegeleirs et al., 2019; Spaey et al., 2020). Random walk is a widely used baseline in swarm robotics due to its simplicity, decentralization, and unbiased spatial coverage (Dimidov et al., 2016; Schroeder et al., 2017). It provides a clean and neutral framework for analysis, avoiding confounding effects introduced by more complex or adaptive behaviors, and allowing us to attribute performance differences primarily to communication dynamics.
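For readers unfamiliar with ballistic motion, the sketch below simulates one common variant for a single point robot: drive straight, and on reaching a wall, turn in place to a uniformly random heading. It reuses the arena size (625 m², i.e. 25 m per side) and the speeds given in Section 4.1, but the time step, the 0.5 m wall margin (a stand-in for proximity sensing), and the heading distribution are assumptions. It ignores people, other robots, and obstacles, and it is not the ARGoS controller used in the study.

```python
import math
import random

ARENA_SIDE = 25.0    # m; side of the 625 m^2 square room (Section 4.1)
LINEAR_SPEED = 0.3   # m/s (Section 4.1)
ANGULAR_SPEED = 0.2  # rad/s (Section 4.1); used to charge time for turning in place
DT = 0.1             # s; hypothetical simulation step


def ballistic_walk(duration, seed=0, margin=0.5):
    """Return sampled (x, y) positions of one point robot doing ballistic motion.

    The robot drives straight; when the next step would bring it within `margin`
    of a wall, it picks a new random heading and rotates in place before moving on.
    """
    rng = random.Random(seed)
    x, y = ARENA_SIDE / 2, ARENA_SIDE / 2
    heading = rng.uniform(-math.pi, math.pi)
    t, turn_time_left = 0.0, 0.0
    path = [(x, y)]
    while t < duration:
        if turn_time_left > 0:
            turn_time_left -= DT  # still rotating in place: position unchanged
        else:
            nx = x + LINEAR_SPEED * DT * math.cos(heading)
            ny = y + LINEAR_SPEED * DT * math.sin(heading)
            inside = margin <= nx <= ARENA_SIDE - margin and margin <= ny <= ARENA_SIDE - margin
            if inside:
                x, y = nx, ny
            else:
                new_heading = rng.uniform(-math.pi, math.pi)
                delta = new_heading - heading
                turn = abs(math.atan2(math.sin(delta), math.cos(delta)))  # smallest turn angle
                turn_time_left = turn / ANGULAR_SPEED
                heading = new_heading
        t += DT
        path.append((x, y))
    return path
```

With these speeds, a half turn (π rad) takes roughly 16 s, which is one reason the low angular speed matters for keeping tracking stable during turns.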

4 Experimental Setup

To evaluate the swarm’s ability to perform Re-ID using the method presented in Section 3, we designed a series of simulated experiments in which Toyota HSR robots navigate within a closed environment resembling a conference venue, where multiple people move freely. In particular, we assess how communication enables the robot swarm to maintain a coherent and robust shared database of the tracked people.

4.1 Material

The environment and people are simulated in Unity, while robot movements are simulated in ARGoS3 (Pinciroli et al., 2012) and mirrored in Unity via ROS communication. The environment is a 625 m² (25 m × 25 m) empty square room with bright lighting, enclosed by 3 m high walls. Additionally, we consider a second environment identical to the first but featuring five obstacles: 5 m × 3 m × 0.2 m panels that obstruct both movement and visibility. People move randomly at speeds ranging from 0.5 to 1.5 m/s, while robots execute a random walk with a linear speed of 0.3 m/s and an angular speed of about 0.2 rad/s; this low angular speed keeps tracking performance stable during turns.

4.2 Protocol

For each environment, we consider two group sizes: 6 and 50 people. For each combination of environment and group size, we evaluate two swarm sizes, 4 and 8 robots, except in the 6-person scenario, where only 4 robots are used. This results in a total of 6 unique experiments. Additionally, four CCTV cameras are positioned at the room’s corners, facing the center, to capture video footage for comparison with the robots’ performance. For experiments with 50 people and 8 robots, four extra CCTV cameras are placed at the center of the room, each facing a different corner. Each experiment is a 5-min simulation during which robots and people move freely while avoiding obstacles. The robots continuously record video from their onboard cameras, while the CCTV cameras record from fixed positions. Robot positions are also logged, solely to verify the communication range during video replay. After the simulation, each robot’s video is processed independently, frame by frame, with all videos advanced in lockstep. This synchronization ensures that when robots exchange information, they refer to the same time window. In this way, robots generate clusters of features as described in Section 3. We assume error-free communication. In practice, limited packet loss should have minimal impact because robots transmit their full data payload at every exchange, allowing missing items to be recovered in subsequent exchanges. Exchanges are modeled as instantaneous relative to a simulation step (all data are synchronized before the next step), but they occur at most once every 5 s; this prevents both excessive data exchange when robots remain in proximity for extended periods and unrealistically high data rates. We consider four communication settings: no communication and three communication ranges of 1.5 m, 5 m, and 10 m, with wider ranges leading to more data exchanges.
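The range check and the 5 s rate limit can be made concrete with a small scheduling function that, at a given replay time, returns the robot pairs that should exchange databases. This is a hypothetical sketch: the function name and data layout are invented for illustration, and applying the 5 s limit per pair of robots (rather than per robot) is an assumption, since the text does not say which.

```python
import itertools
import math

MIN_INTERVAL = 5.0  # s; exchanges happen at most once every 5 s (Section 4.2)


def due_exchanges(positions, t, comm_range, last_exchange, min_interval=MIN_INTERVAL):
    """Return the robot pairs that exchange databases at replay time t.

    positions: dict robot_id -> (x, y) in metres, taken from the position log.
    last_exchange: dict frozenset({a, b}) -> time of that pair's last exchange;
    updated in place. Rate limiting per pair is an assumption.
    """
    pairs = []
    for a, b in itertools.combinations(sorted(positions), 2):
        (xa, ya), (xb, yb) = positions[a], positions[b]
        if math.hypot(xa - xb, ya - yb) > comm_range:
            continue  # out of communication range
        key = frozenset((a, b))
        if t - last_exchange.get(key, -math.inf) >= min_interval:
            last_exchange[key] = t
            pairs.append((a, b))
    return pairs
```

Each returned pair would then run the database merge of Section 3.2 in both directions. Widening `comm_range` from 1.5 m to 10 m admits more pairs per step, which is how the larger ranges translate into more frequent exchanges.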
CCTV cameras process their video feeds synchronously using the same algorithm as the robots, but only two communication settings are considered: no communication and centralized communication, where all cameras exchange data every 5 s.

For each experiment and communication setting, we compute the cumulative matching characteristics (CMC) curve, the mean average precision (mAP), and the average cluster purity for both robots and CCTV cameras, then aggregate the results accordingly. CMC@k measures top-k identification accuracy, that is, how often the first correct identity appears within the top-k ranks. The mAP measures retrieval quality over the full ranking, that is, how completely and how early all correct matches are returned. The CMC curve and mAP are computed using 10% of all images observed by the robots during the experiment as queries, with a minimum of 1,000 and a maximum of 5,000 queries. Cluster purity quantifies the extent to which each cluster contains only a single identity. It is calculated as the proportion of the most represented ground-truth ID within each cluster. If the same ground-truth ID is the most represented one in several clusters (duplicate IDs), only the largest of these clusters is considered. IDs that are never detected are penalized by assigning them a purity of 0%.
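The three metrics can be sketched as follows. This is a minimal illustration, not the evaluation code used in the study. For CMC and mAP it follows the usual Re-ID convention of ranking a gallery by distance for each query and skipping queries with no true match; it does not exclude same-camera matches, which many Re-ID protocols do. For purity, it encodes one reading of the description above, labelled as an assumption: each cluster is scored by its most represented ground-truth ID, only the largest cluster per ID counts, and IDs that are never detected score 0.

```python
from collections import Counter


def cmc_and_map(distances, query_ids, gallery_ids, max_rank=10):
    """CMC@1..max_rank and mAP from a query x gallery distance matrix (lists of lists).

    Assumes at least one query has a true match in the gallery.
    """
    hits = [0] * max_rank
    aps = []
    for row, qid in zip(distances, query_ids):
        order = sorted(range(len(row)), key=row.__getitem__)  # ascending distance
        matches = [gallery_ids[j] == qid for j in order]
        if not any(matches):
            continue  # no true match: skipped, a common convention
        first = matches.index(True)
        for k in range(first, max_rank):
            hits[k] += 1  # correct identity found within the top k+1 ranks
        found, precisions = 0, []
        for rank, is_match in enumerate(matches, start=1):
            if is_match:
                found += 1
                precisions.append(found / rank)  # precision at each correct match
        aps.append(sum(precisions) / len(precisions))
    n = len(aps)
    return [h / n for h in hits], sum(aps) / n


def average_purity(clusters, all_ids):
    """clusters: list of lists holding the ground-truth ID of each detection.

    Assumed reading: a cluster is labelled by its most represented ID; if several
    clusters share a label, only the largest counts; undetected IDs score 0.
    """
    best = {}  # ground-truth ID -> (size, purity) of its largest cluster
    for members in clusters:
        label, count = Counter(members).most_common(1)[0]
        size = len(members)
        if label not in best or size > best[label][0]:
            best[label] = (size, count / size)
    return sum(best[i][1] if i in best else 0.0 for i in all_ids) / len(all_ids)
```

For example, with clusters `[[1, 1, 1, 2], [2, 2], [1]]` and ground-truth IDs `[1, 2, 3]`, person 1 scores 0.75 (its largest cluster), person 2 scores 1.0, and the undetected person 3 scores 0, for an average purity of about 0.58.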

5 Experimental results

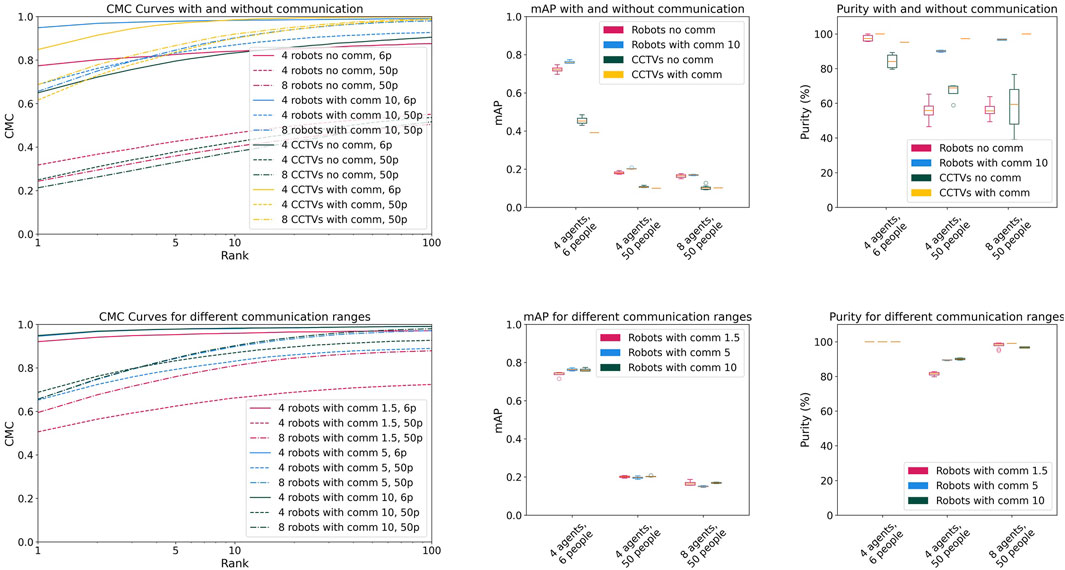

We first examine the impact of communication on the performance (see Figure 3).

Figure 3. CMC, mAP, and purity (top) with and without communication and (bottom) for different communication ranges.

Without communication, robots outperform CCTV cameras on all three metrics: the CMC curve, purity, and mAP. The difference is most pronounced in the experiments with 6 people. This is noteworthy given that both systems rely on the same lightweight, standard algorithmic components, and it highlights the practical benefits of the mobility and varied viewpoints available to decentralized robotic agents. Although CCTV cameras have a wider field of view, which allows them to observe more people simultaneously, frequent occlusions reduce the consistency of their clusters. Overall, results without communication remain relatively low in scenarios with 50 people. However, introducing communication greatly improves the robots’ performance, particularly in the 50-person experiments. This shows that by exchanging information, robots improve cluster quality, both by detecting new people and by refining what they know about previously detected people through correct clustering of the data received from their peers. Centralized communication enables CCTV cameras to achieve slightly better results in the 50-person experiments, but they are outperformed by the robots in the 6-person experiments. These results suggest that, although centralized communication lets CCTV cameras collect more information, the extra data does not drastically improve clustering quality. Their broader field of view helps when 50 people are present, enabling them to monitor many individuals at once, but this benefit largely vanishes in the 6-person experiments.

Cameras also yield lower mAP scores than the robots: even though they detect more people overall, the clusters they form are less consistent. Two additional factors influence performance. First, the number of people to be detected has a direct impact: as it increases, the likelihood of errors rises, leading to lower overall performance. Second, the number of robots or cameras also plays a role, albeit more subtly. A higher number of robots leads to more frequent interactions between them. Without communication, this is only detrimental, as robots waste time avoiding one another and may occlude each other’s field of view. For CCTV cameras, lower performance with more cameras may also result from the presence of robots: since cameras sometimes misidentify robots as people, a higher robot count increases misclassification errors. However, when communication is enabled, a greater number of robots or cameras results in more acquired data. As a consequence, the CMC curve and purity improve, while mAP stagnates or slightly declines due to the accumulation of errors. This suggests a trade-off between the amount of shared data and the consistency of the clusters formed by the agents. Overall, the results indicate that communication substantially enhances the Re-ID capability of both robots and cameras.

We then examine the impact of communication frequency on the robots’ performance (see Figure 3). Increasing the communication range, and consequently the communication frequency, generally improves performance, as robots can share more data. However, the results confirm the previously identified trade-off: in experiments with 8 robots, the CMC curve improves, whereas mAP first decreases and then increases, and purity eventually decreases. Since a larger number of robots leads to more frequent encounters, there is a threshold beyond which additional meetings still help detect new people but also reduce cluster consistency, as more misidentifications may occur. Depending on the application and the number of available robots, the communication range should therefore be tuned carefully to optimize performance.

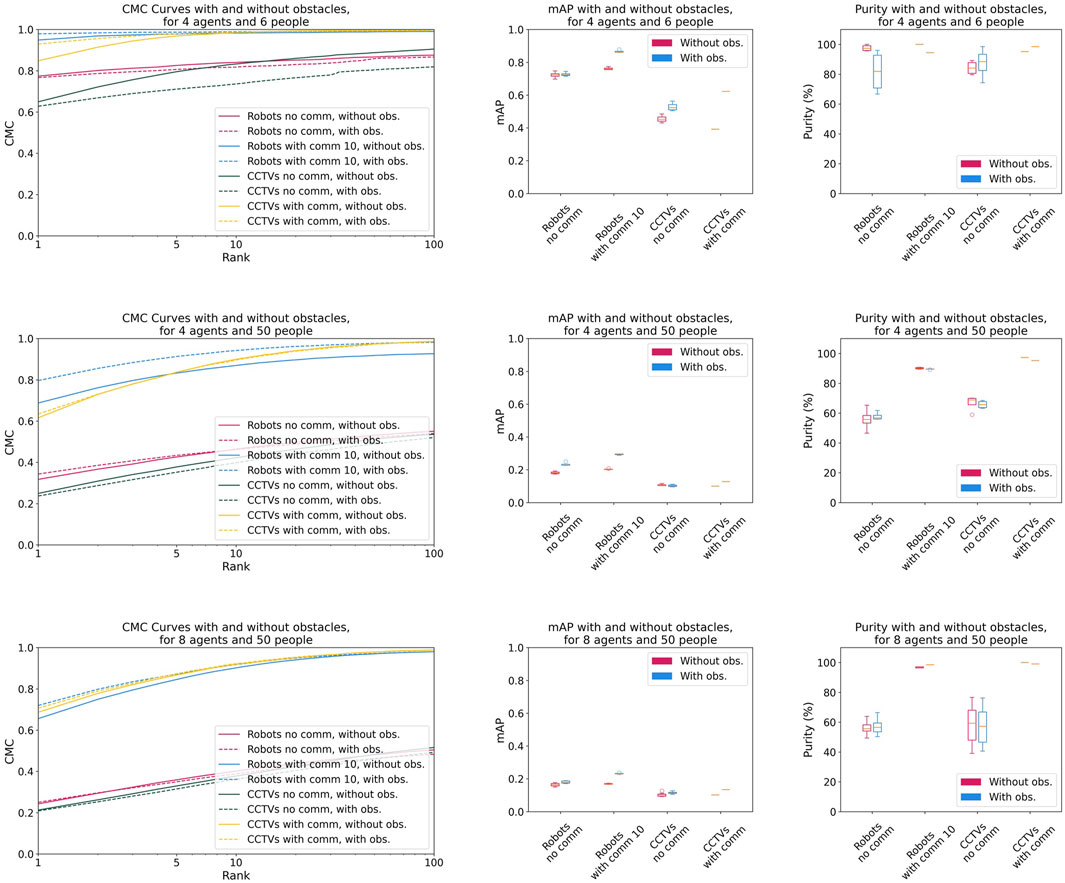

Finally, we examine the impact on performance of obstacles obstructing vision (see Figure 4).

Figure 4. CMC, mAP, and purity with and without obstacles for 4 agents (robots or CCTV cameras) and 6 people, 4 agents and 50 people, and 8 agents and 50 people.

We initially expected obstacles to degrade performance in most configurations, with robots being less affected. However, the results show minimal impact, and in some cases obstacles even have positive effects. Specifically, obstacles tend to slightly improve CMC and mAP while reducing purity. We hypothesize that, while obstacles introduce occlusions, they also increase the separation between people in the environment. As a result, agents observe fewer people at the same time, which simplifies detection. This finding may also indicate that the Re-ID method is robust to occlusions, enabling agents to correctly re-identify people even after they disappear behind an obstacle. A more thorough investigation would be needed to explore this further.

6 Conclusions

The results show that a decentralized approach leveraging swarm perception holds significant potential for person re-identification. By exchanging data in a decentralized manner, robots detect more people and refine their knowledge of those they have already seen. Moreover, mobile agents offer inherent advantages for Re-ID, as even robots without communication outperform fixed CCTV cameras. In a controlled, enclosed environment, a centralized system with optimally placed cameras and continuous communication still achieves slightly better results in most cases. However, its database may be less consistent than that created by the robots, and such a system is not always feasible, particularly in open environments where uncontrollable factors, such as changing lighting conditions or moving obstacles, can disrupt its functionality.

Despite these advantages, challenges remain, particularly when multiple people exhibit high visual similarity. Additionally, the limited field of view caused by the robots’ low height may hinder their performance in densely crowded environments.

Future work will focus on three key directions: enhancing the re-identification pipeline, improving communication and data sharing strategies, and extending the system to more realistic deployment scenarios. First, we plan to augment the re-identification process by incorporating additional modalities—such as spatial and temporal cues within embeddings—and exploring complementary techniques like face recognition. While our current pipeline uses standard components, integrating more discriminative models and advanced merging strategies (e.g., those from the CARPE-ID framework (Rollo et al., 2024)) could reduce clustering errors and improve performance in more challenging conditions. Second, we will investigate selective and adaptive data-sharing mechanisms. Rather than sharing all available data, robots could exchange compressed representations, such as statistical summaries or representative embeddings, to reduce communication load and increase scalability. We also aim to explore federated learning-inspired approaches, where robots share model updates instead of raw data, enabling collaborative adaptation without centralized coordination. Finally, we intend to expand our simulator and testbed to include dynamic and unstructured environments, such as outdoor public spaces or emergency scenarios, where decentralized swarm perception is especially relevant. This includes exploring more sophisticated exploration behaviors that allow robots to actively adapt coverage and reallocate resources based on perceptual uncertainty or mission goals. Moreover, experiments with physical robots would provide critical insight into the system’s real-world transfer, where uncontrolled factors (e.g., lighting variation, dynamic obstacles) and physical limitations (e.g., sensor noise, communication loss, and latency) may degrade performance.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

MiK: Formal Analysis, Writing – original draft, Visualization, Data curation, Writing – review and editing, Conceptualization, Software, Methodology, Investigation. IG: Writing – review and editing, Software. MaK: Writing – review and editing, Software, Methodology. LG: Supervision, Validation, Methodology, Writing – review and editing, Conceptualization, Resources. GF: Writing – review and editing, Funding acquisition, Resources, Methodology, Conceptualization, Validation, Supervision. MB: Supervision, Project administration, Validation, Writing – review and editing, Methodology, Resources, Funding acquisition.

Funding

The authors declare that financial support was received for the research and/or publication of this article. The research was supported by the Wallonia-Brussels Federation of Belgium through the ARC Advanced Project GbO. It was also partially supported by the Belgian Fonds de la Recherche Scientifique–FNRS under Grant T001625F. MB further acknowledges support from the Belgian Fonds de la Recherche Scientifique–FNRS, of which he is a Research Director.

Conflict of interest

Authors MaK, LG and GF were employed by Toyota Motor Europe.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Generative AI was used in the creation of this manuscript. Generative AI was used to improve the quality of the language during the editing process. It was not used to generate a first draft or to conduct research.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aharon, N., Orfaig, R., and Bobrovsky, B.-Z. (2022). BoT-SORT: robust associations multi-pedestrian tracking. Available online at: https://arxiv.org/abs/2206.14651.

Amosa, T. I., Sebastian, P., Izhar, L. I., Ibrahim, O., Ayinla, L. S., Bahashwan, A. A., et al. (2023). Multi-camera multi-object tracking: a review of current trends and future advances. Neurocomputing 552, 126558. doi:10.1016/j.neucom.2023.126558

Beni, G. (2005). “From swarm intelligence to swarm robotics,” in SAB 2004. Editors E. Şahin and W. M. Spears (Berlin, Germany: Springer), Vol. 3342 of LNCS, 1–9. doi:10.1007/978-3-540-30552-1_1

Brambilla, M., Ferrante, E., Birattari, M., and Dorigo, M. (2013). Swarm robotics: a review from the swarm engineering perspective. Swarm Intell. 7, 1–41. doi:10.1007/s11721-012-0075-2

Carlsen, P. A., Taylor, A. M., Chan, D. M., Uddin, M. Z., Riek, L., and Torresen, J. (2024). “Real-time person re-identification to improve human-robot interaction,” in 2024 IEEE international conference on Real-time computing and robotics (RCAR) (IEEE), 425–430. doi:10.1109/RCAR61438.2024.10671043

Cheng, D., Gong, Y., Zhou, S., Wang, J., and Zheng, N. (2016). “Person re-identification by multi-channel parts-based CNN with improved triplet loss function,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 1335–1344.

Choi, W., and Savarese, S. (2012). “A unified framework for multi-target tracking and collective activity recognition,” in Computer vision–ECCV 2012. Editors A. Fitzgibbon, S. Lazebnik, P. Perona, Y. Sato, and C. Schmid (Springer), Vol. 7575, 215–230. doi:10.1007/978-3-642-33765-9_16

Dimidov, C., Oriolo, G., and Trianni, V. (2016). “Random walks in swarm robotics: an experiment with Kilobots,” in ANTS 2016. Editors M. Dorigo, M. Birattari, X. Li, M. López-Ibáñez, K. Ohkura, and T. Stützle (Cham, Switzerland: Springer), Vol. 9882 of LNCS, 185–196. doi:10.1007/978-3-319-44427-7_16

Dorigo, M., Birattari, M., and Brambilla, M. (2014). Swarm robotics. Scholarpedia 9, 1463. doi:10.4249/scholarpedia.1463

Fiore, L., Fehr, D., Bodor, R., Drenner, A., Somasundaram, G., and Papanikolopoulos, N. (2008). Multi-camera human activity monitoring. J. Intelligent Robotic Syst. 52, 5–43. doi:10.1007/s10846-007-9201-6

Francesca, G., Brambilla, M., Brutschy, A., Trianni, V., and Birattari, M. (2014). AutoMoDe: a novel approach to the automatic design of control software for robot swarms. Swarm Intell. 8, 89–112. doi:10.1007/s11721-014-0092-4

Francesca, G., Brambilla, M., Brutschy, A., Garattoni, L., Miletitch, R., Podevijn, G., et al. (2015). AutoMoDe-Chocolate: automatic design of control software for robot swarms. Swarm Intell. 9, 125–152. doi:10.1007/s11721-015-0107-9

Gaikwad, B., and Karmakar, A. (2021). Smart surveillance system for real-time multi-person multi-camera tracking at the edge. J. Real-Time Image Process. 18, 1993–2007. doi:10.1007/s11554-020-01066-8

Garattoni, L., and Birattari, M. (2016). “Swarm robotics,” in Wiley encyclopedia of electrical and electronics engineering. Editor J. G. Webster (Hoboken, NJ, USA: John Wiley & Sons), 1–19. doi:10.1002/047134608X.W8312

Gou, M., Karanam, S., Liu, W., Camps, O., and Radke, R. J. (2017). “DukeMTMC4ReID: a large-scale multi-camera person re-identification dataset,” in 2017 IEEE conference on computer vision and pattern recognition workshops (CVPRW) (IEEE), 10–19. doi:10.1109/CVPRW.2017.185

Günther, H.-J., Trauer, O., and Wolf, L. (2015). “The potential of collective perception in vehicular ad-hoc networks,” in 2015 14th international conference on ITS telecommunications, 1–5. doi:10.1109/ITST.2015.7377190

Günther, H.-J., Mennenga, B., Trauer, O., Riebl, R., and Wolf, L. (2016). “Realizing collective perception in a vehicle,” in 2016 IEEE vehicular networking conference, 1–8. doi:10.1109/VNC.2016.7835930

Hermans, A., Beyer, L., and Leibe, B. (2017). In defense of the triplet loss for person re-identification. Available online at: https://arxiv.org/abs/1703.07737.

Kegeleirs, M., Garzón Ramos, D., and Birattari, M. (2019). “Random walk exploration for swarm mapping,” in TAROS 2019. Editors K. Althoefer, J. Konstantinova, and K. Zhang (Cham, Switzerland: Springer), Vol. 11650, 211–222. doi:10.1007/978-3-030-25332-5_19

Kegeleirs, M., Garzón Ramos, D., Legarda Herranz, G., Gharbi, I., Szpirer, J., Debeir, O., et al. (2024a). “Collective perception for tracking people with a robot swarm,” in ICRA@40 (IEEE).

Kegeleirs, M., Garzón Ramos, D., Legarda Herranz, G., Gharbi, I., Szpirer, J., Hasselmann, K., et al. (2024b). Leveraging swarm capabilities to assist other systems. Available online at: https://arxiv.org/abs/2405.04079.

Koide, K., Menegatti, E., Carraro, M., Munaro, M., and Miura, J. (2017). “People tracking and re-identification by face recognition for RGB-D camera networks,” in European conference on mobile robots (ECMR), 1–7. doi:10.1109/ECMR.2017.8098689

Li, W., Zhao, R., Xiao, T., and Wang, X. (2014). “DeepReID: deep filter pairing neural network for person re-identification,” in 2014 IEEE conference on computer vision and pattern recognition (IEEE), 152–159. doi:10.1109/CVPR.2014.27

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., et al. (2014). “Microsoft COCO: common objects in context,” in Computer vision–ECCV 2014 (Springer), 740–755. doi:10.1007/978-3-319-10602-1_48

Lu, Z., Ashok, A., and Berns, K. (2024). “RoboReID: audio-visual person re-identification by social robot,” in 2024 10th IEEE RAS/EMBS international conference for biomedical robotics and biomechatronics (BioRob) (IEEE), 1758–1763. doi:10.1109/BioRob60516.2024.10719738

Montero, D., Aranjuelo, N., Leskovsky, P., Loyo, E., Nieto, M., and Aginako, N. (2023). Multi-camera BEV video-surveillance system for efficient monitoring of social distancing. Multimedia Tools Appl. 82, 34995–35019. doi:10.1007/s11042-023-14416-y

Murata, Y., and Atsumi, M. (2018). “Person re-identification for mobile robot using online transfer learning,” in 2018 joint 10th international conference on soft computing and intelligent systems (SCIS) and 19th international symposium on advanced intelligent systems (ISIS) (IEEE), 977–981. doi:10.1109/SCIS-ISIS.2018.00162

Pinciroli, C., Trianni, V., O’Grady, R., Pini, G., Brutschy, A., Brambilla, M., et al. (2012). ARGoS: a modular, parallel, multi-engine simulator for multi-robot systems. Swarm Intell. 6, 271–295. doi:10.1007/s11721-012-0072-5

Pinto, V., Bettencourt, R., and Ventura, R. (2023). “People re-identification in service robots,” in 2023 IEEE international conference on autonomous robot systems and competitions (ICARSC) (IEEE), 44–49. doi:10.1109/ICARSC58346.2023.10129612

Popovici, M.-A., Gil, I. E., Montijano, E., and Tardioli, D. (2022). “Distributed dynamic assignment of multiple mobile targets based on person re-identification,” in Iberian robotics conference (Springer), 211–222.

Ristani, E., and Tomasi, C. (2018). “Features for multi-target multi-camera tracking and re-identification,” in 2018 IEEE conference on computer vision and pattern recognition (Piscataway, NJ, USA: IEEE), 6036–6046. doi:10.1109/CVPR.2018.00632

Ristani, E., Solera, F., Zou, R., Cucchiara, R., and Tomasi, C. (2016). “Performance measures and a data set for multi-target, multi-camera tracking,” in European conference on computer vision (Springer), 17–35. doi:10.1007/978-3-319-48881-3_2

Robin, C., and Lacroix, S. (2016). Multi-robot target detection and tracking: taxonomy and survey. Auton. Robots 40, 729–760. doi:10.1007/s10514-015-9491-7

Rollo, F., Zunino, A., Tsagarakis, N., Hoffman, E. M., and Ajoudani, A. (2024). “Continuous adaptation in person re-identification for robotic assistance,” in ICRA 2024 (IEEE), 425–431. doi:10.1109/ICRA57147.2024.10611226

Şahin, E. (2005). “Swarm robotics: from sources of inspiration to domains of application,” in SAB 2004. Editors E. Şahin and W. M. Spears (Berlin, Germany: Springer), LNCS 3342, 10–20. doi:10.1007/978-3-540-30552-1_2

Schroeder, A., Ramakrishnan, S., Kumar, M., and Trease, B. (2017). Efficient spatial coverage by a robot swarm based on an ant foraging model and the Lévy distribution. Swarm Intell. 11, 39–69. doi:10.1007/s11721-017-0132-y

Spaey, G., Kegeleirs, M., Garzón Ramos, D., and Birattari, M. (2020). “Evaluation of alternative exploration schemes in the automatic modular design of robot swarms,” in Artificial intelligence and machine learning: BNAIC 2019, BENELEARN 2019. Editors B. Bogaerts, G. Bontempi, P. Geurts, N. Harley, B. Lebichot, and T. Lenaerts (Cham, Switzerland: Springer), 1196, 18–33. doi:10.1007/978-3-030-65154-1_2

Strobel, V., Castello Ferrer, E., and Dorigo, M. (2018). “Managing byzantine robots via blockchain technology in a swarm robotics collective decision making scenario,” in AAMAS 2018 (Richland, SC, USA: International Foundation for autonomous agents and multiagent systems (IFAAMAS)), 541–549.

Tang, S., Andriluka, M., Andres, B., and Schiele, B. (2017). “Multiple people tracking by lifted multicut and person re-identification,” in 2017 IEEE conference on computer vision and pattern recognition (Piscataway, NJ, USA: IEEE), 3539–3548. doi:10.1109/CVPR.2017.394

Thandavarayan, G., Sepulcre, M., and Gozalvez, J. (2019). “Analysis of message generation rules for collective perception in connected and automated driving,” in 2019 IEEE intelligent vehicles symposium, 134–139. doi:10.1109/IVS.2019.8813806

Trianni, V., and Campo, A. (2015). “Fundamental collective behaviors in swarm robotics,” in Handbook of computational intelligence. Editors J. Kacprzyk and W. Pedrycz (Heidelberg, Germany: Springer), Springer Handbooks, 1377–1394. doi:10.1007/978-3-662-43505-2

Ukita, N., Moriguchi, Y., and Hagita, N. (2016). People re-identification across non-overlapping cameras using group features. Comput. Vis. Image Underst. 144, 228–236. doi:10.1016/j.cviu.2015.06.011

Valentini, G., Brambilla, D., Hamann, H., and Dorigo, M. (2016a). “Collective perception of environmental features in a robot swarm,” in ANTS 2016. Editors M. Dorigo, M. Birattari, X. Li, M. López-Ibáñez, K. Ohkura, and C. Pinciroli (Cham, Switzerland: Springer), 9882, 65–76. doi:10.1007/978-3-319-44427-7_6

Valentini, G., Ferrante, E., Hamann, H., and Dorigo, M. (2016b). Collective decision with 100 kilobots: speed versus accuracy in binary discrimination problems. Auton. Agents Multi-Agent Syst. 30, 553–580. doi:10.1007/s10458-015-9323-3

Varghese, R., and Sambath, M. (2024). “YOLOv8: a novel object detection algorithm with enhanced performance and robustness,” in 2024 international conference on advances in data engineering and intelligent computing systems (ADICS), 1–6. doi:10.1109/ADICS58448.2024.10533619

Wang, Y., Shen, J., Petridis, S., and Pantic, M. (2019). A real-time and unsupervised face re-identification system for human-robot interaction. Pattern Recognit. Lett. 128, 559–568. doi:10.1016/j.patrec.2018.04.009

Ye, M., Shen, J., Lin, G., Xiang, T., Shao, L., and Hoi, S. C. H. (2022). Deep learning for person re-identification: a survey and outlook. IEEE Trans. Pattern Analysis Mach. Intell. 44, 2872–2893. doi:10.1109/TPAMI.2021.3054775

Ye, H., Zhao, J., Pan, Y., Chen, W., He, L., and Zhang, H. (2023). “Robot person following under partial occlusion,” in ICRA 2023, 7591–7597. doi:10.1109/ICRA48891.2023.10160738

Zakir, R., Dorigo, M., and Reina, A. (2022). “Robot swarms break decision deadlocks in collective perception through cross-inhibition,” in ANTS 2022. Editors M. Dorigo, H. Hamann, M. López-Ibáñez, J. García-Nieto, A. Engelbrecht, and C. Pinciroli (Cham, Switzerland: Springer), LNCS 13491, 209–221. doi:10.1007/978-3-031-20176-9_17

Zeng, K., Ning, M., Wang, Y., and Guo, Y. (2020). “Hierarchical clustering with hard-batch triplet loss for person re-identification,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 13657–13665.

Zheng, L., Shen, L., Tian, L., Wang, S., Wang, J., and Tian, Q. (2015). “Scalable person re-identification: a benchmark,” in Proceedings of the IEEE international conference on computer vision (IEEE), 1116–1124. doi:10.1109/ICCV.2015.133

Zheng, L., Yang, Y., and Hauptmann, A. G. (2016). Person re-identification: past, present and future. CoRR abs/1610.02984. doi:10.48550/arXiv.1610.02984

Keywords: swarm robotics, swarm perception, distributed systems, robot communication, people re-id

Citation: Kegeleirs M, Gharbi I, Kaplanis M, Garattoni L, Francesca G and Birattari M (2025) Assessing the impact of feature communication in swarm perception for people re-identification. Front. Robot. AI 12:1671952. doi: 10.3389/frobt.2025.1671952

Received: 23 July 2025; Accepted: 20 November 2025;

Published: 02 December 2025.

Edited by:

Edgar A. Martinez-Garcia, Universidad Autónoma de Ciudad Juárez, Mexico

Reviewed by:

Hian Lee Kwa, Thales, Singapore
Razanne Abu-Aisheh, University of Bristol, United Kingdom

Copyright © 2025 Kegeleirs, Gharbi, Kaplanis, Garattoni, Francesca and Birattari. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mauro Birattari, mauro.birattari@ulb.be
