- 1Communication and Computing Systems Lab, Computer, Electrical and Mathematical Sciences and Engineering Division, King Abdullah University of Science and Technology, Thuwal, Saudi Arabia

- 2Mathematics and Engineering Physics Department, Faculty of Engineering, Cairo University, Giza, Egypt

- 3Compumacy for Artificial Intelligence Solutions, Cairo, Egypt

Autonomous driving has the potential to enhance driving comfort and accessibility, reduce accidents, and improve road safety, with vision sensors playing a key role in enabling vehicle autonomy. Among existing sensors, event-based cameras offer advantages such as a high dynamic range, low power consumption, and enhanced motion detection capabilities compared to traditional frame-based cameras. However, their sparse and asynchronous data present unique processing challenges that require specialized algorithms and hardware. While some models originally developed for frame-based inputs have been adapted to handle event data, they often fail to fully exploit the distinct properties of this novel data format, primarily due to its fundamental structural differences. As a result, new algorithms, including neuromorphic, have been developed specifically for event data. Many of these models are still in the early stages and often lack the maturity and accuracy of traditional approaches. This survey paper focuses on end-to-end event-based object detection for autonomous driving, covering key aspects such as sensing and processing hardware designs, datasets, and algorithms, including dense, spiking, and graph-based neural networks, along with relevant encoding and pre-processing techniques. In addition, this work highlights the shortcomings in the evaluation practices to ensure fair and meaningful comparisons across different event data processing approaches and hardware platforms. Within the scope of this survey, system-level throughput was evaluated from raw event data to model output on an RTX 4090 24GB GPU for several state-of-the-art models using the GEN1 and 1MP datasets. The study also includes a discussion and outlines potential directions for future research.

1 Introduction

Autonomous vehicles, powered by Autonomous Driving (AD) technologies, are rapidly expanding their presence in the market. Autonomy in the context of AD systems refers to a vehicle’s capability to independently execute critical driving tasks, including object detection, path planning, motion prediction, and vehicle control functions such as steering, braking, and acceleration. This progress is largely enabled by breakthroughs in artificial intelligence (AI), machine learning, computer vision, robotics, and sensor technology. The effective operation of Autonomous Driving Systems (ADS) relies on key functions such as perception, decision-making, and control. The perception system allows the vehicle to sense and interpret its environment in real time, enabling timely and appropriate responses (Messikommer et al., 2022). It collects data from a variety of sensors, including cameras, LiDARs, and radars, to acquire and understand the surrounding environment. The raw sensor data are then processed to perform critical tasks such as object detection, segmentation, and classification, providing essential information for high-level decision making in various applications, including self-driving cars, drones, robotics, wireless communication, and augmented reality (El Madawi et al., 2019; Petrunin and Tang, 2023; Fabiani et al., 2024; Wang Y. et al., 2025). The major players in the field of ADS are Waymo, Tesla, Uber, BMW, Audi, Apple, Lyft Baidu and others (Johari and Swami, 2020; Kosuru and Venkitaraman, 2023; Zade et al., 2024). In particular, Waymo offers “robotaxi” services in major US cities, including Phoenix, Arizona, San Francisco, California. It relies on the fusion of cameras, radar, and LiDAR to navigate in urban surroundings. Tesla implemented its Autopilot system, which functions similarly to an airplane’s autopilot, assisting with driving tasks while the driver remains responsible for full control of the vehicle. Its system eliminates LiDAR and functions based on advanced camera and AI technologies. BMW, in its BMWi Vision Dee system, is working toward integrating augmented reality and human-machine interaction (Suarez, 2025).

Among sensors used in the AD perception system, LiDAR offers high accuracy but suffers from high latency. Radar, on the other hand, provides low latency but lacks precision (Wang H. et al., 2025). Traditional frame-based cameras, which are currently the dominant type (Liu et al., 2024), face challenges in dynamic environments where lighting conditions change rapidly or where extremely high-speed motion is involved. The typical dynamic range of frame-based cameras is around 60 dB (Gallego et al., 2020), and in the high-quality frame cameras, it does not exceed 95 dB (Chakravarthi et al., 2025). The power consumption of these cameras is 1–2 W with a data rate around 30–300 MB/s and a latency of 10–100 ms (Xu et al., 2025). Therefore, recently introduced event-based cameras have gained attention for their distinct operating principles, which are inspired by biological vision systems. This approach emulates the way the brain and nervous system process sensory input, inherently exhibiting neuromorphic properties (Lakshmi et al., 2019). Unlike traditional frame-based cameras that capture the entire scene at fixed intervals, event-based cameras detect changes in brightness at each pixel asynchronously and record events only when a change occurs (Kryjak, 2024; Reda et al., 2024). As a result, they offer faster update rates in the range of 1–10

Object detection is a fundamental component of the perception system and plays a vital role in ensuring safe navigation in autonomous driving (Balasubramaniam and Pasricha, 2022). The ability to accurately and promptly identify nearby vehicles, pedestrians, cyclists, and static obstacles is crucial for informed decision-making. Event-based sensors are particularly well-suited for high-speed motion and challenging lighting conditions, offering robustness to motion blur, low latency, and high temporal resolution. This responsiveness enables more precise and timely object recognition, making them a strong candidate for enhancing perception in autonomous vehicles (Zhou and Jiang, 2024). Notably, some of the earliest datasets collected with event-based cameras were captured in driving scenarios, highlighting their relevance for real-world autonomous navigation. These include N-Cars (Sironi et al., 2018), DDD17 (Binas et al., 2017), DDD20 (Hu et al., 2020) datasets. Furthermore, the first large-scale real-world datasets focused on object detection, GEN1 (De Tournemire et al., 2020) and 1MP (Fei-Fei et al., 2004), were specifically designed for this task and are widely accepted as benchmarks for evaluating models.

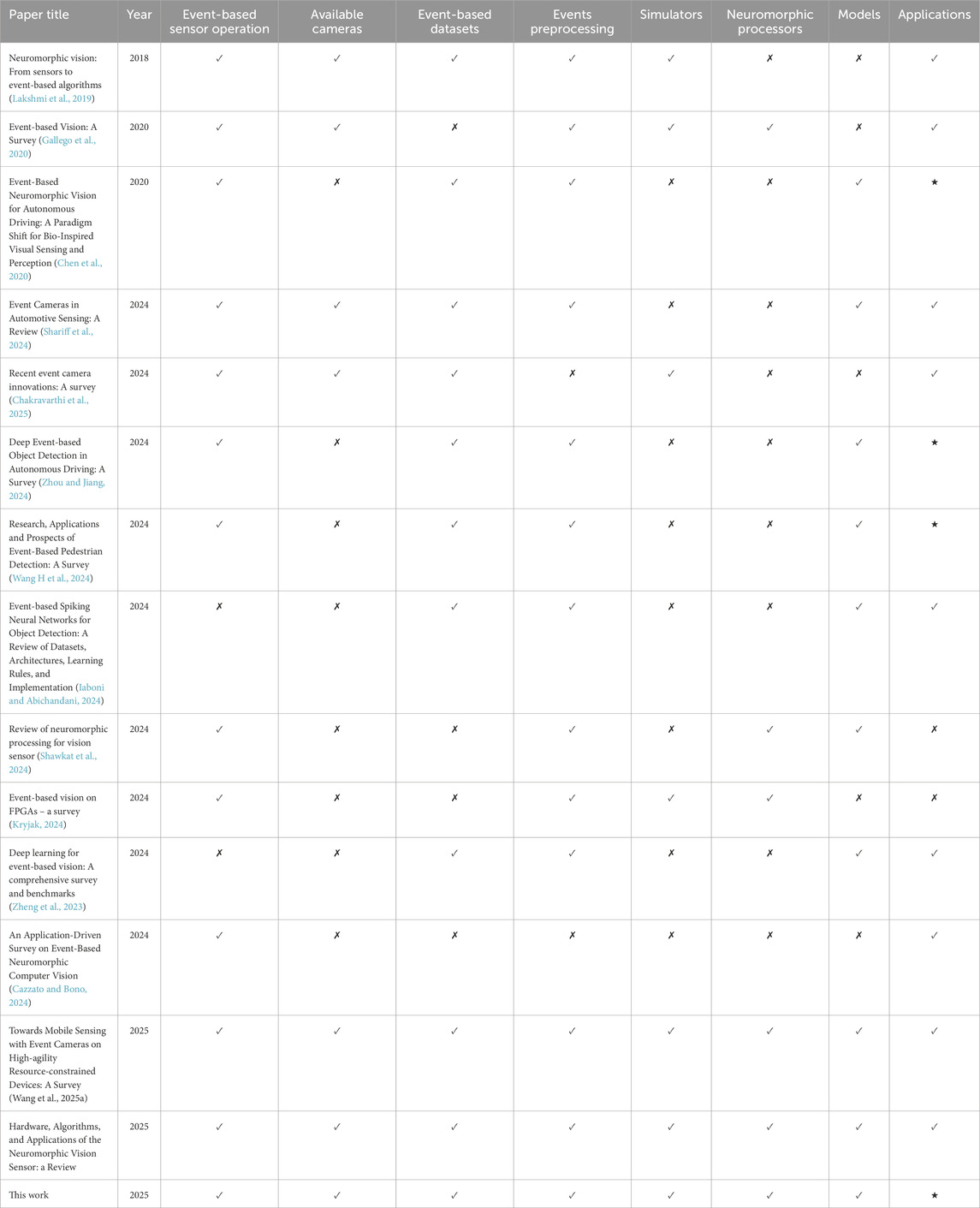

Despite promising features of event-based cameras, modern processing systems and algorithms are not fully suitable or ready to process sparse spatiotemporal data produced by such sensors. Most traditional computer vision pipelines and Deep Neural Network (DNN) models are designed for frame-based data, where information is structured as sequential images (Perot et al., 2020; Messikommer et al., 2020). In addition, there are significantly fewer event-based datasets available compared to traditional frame-based datasets. Nevertheless, there has been a significant surge in research activity and specialized workshops focused on event-based processing and applications (Chakravarthi et al., 2025; Cazzato and Bono, 2024). This growing interest has also resulted in numerous surveys that review and analyze various aspects of event-based processing and its applications. One of the pioneering surveys in this area was presented in (Lakshmi et al., 2019). It describes the architecture and operating principles of neuromorphic sensors, followed by a brief summary of commercially available event-based cameras, their applications, and relevant algorithms. Due to the limited availability of commercial event-based cameras at the time, the survey includes only early event-based datasets and, for the same reason, explores methods for generating more event data from conventional frame-based sources. A later survey (Gallego et al., 2020) expands the coverage to include both commercially available and prototype event cameras and extends the discussion to include neuromorphic data processors. However, it does not provide information on datasets.

One of the first reviews on event-based neuromorphic vision with a specific focus on autonomous driving is presented in (Chen et al., 2020). The survey discusses the operating principles of event-based cameras, highlighting their advantages and suitability for autonomous driving. It also presents early driving scenario datasets that can be adapted through post-processing for object detection tasks, along with signal processing techniques and algorithms tailored for event-based applications. However, it does not discuss hardware components such as commercially available event-based cameras or neuromorphic processors. The fundamentals of event-based cameras, along with their capabilities, challenges, and the common state-of-the-art cameras, are listed in (Shariff et al., 2024). Most importantly, this survey discusses the appropriate settings for acquiring high-quality data and applications. A more recent survey (Chakravarthi et al., 2025) provided a general overview of research and publication trends in the field, highlighting significant milestones in event-based vision and presenting real-world datasets for various applications and existing cameras. But it lacks information about state-of-the-art preprocessing and processing algorithms and neuromorphic hardware.

Another recent survey on event-based autonomous driving reviewed both early and state-of-the-art publicly available object detection datasets, along with the processing methodologies, classifying them into four main categories, such as traditional Deep Neural Networks (DNNs), bio-inspired Spiking Neural Networks (SNNs), spatio-temporal Graph Neural Networks (GNNs), and multi-modal fusion models (Zhou and Jiang, 2024). There is also a recent survey on event-based pedestrian detection (EB-PD) that evaluates various algorithms using the 1MP and self-collected datasets for the pedestrian detection task, which can be seen as a specific use case of object detection in autonomous driving (Wang H. et al., 2024). A comprehensive and well-structured study on event-based object detection using SNNs, including applications in autonomous driving, can be found in (Iaboni and Abichandani, 2024). It provides an overview of state-of-the-art event-based datasets, as well as SNN architectures and their algorithmic and hardware implementations for object detection. The work also highlights the evaluation metrics that can be used to assess the practicality of SNNs.

Biologically inspired approaches to processing the output of event-based cameras show great promise for their potential to enable energy-efficient and high-speed computing, though they have yet to surpass traditional methods (Shawkat et al., 2024; Iaboni and Abichandani, 2024; Chakravarthi et al., 2025). The study (Shawkat et al., 2024) reviewed approaches involving neuromorphic sensors and processors and pointed out that a major challenge in building fully neuromorphic systems, especially on a single chip, is the lack of solutions for integrating event vision sensors with processors. Similarly, challenges exist in interfacing event-based cameras with systems accelerated using Field Programmable Gate Arrays (FPGAs) or System-on-Chip FPGAs (SoC FPGAs). Additionally, there is limited availability of publicly accessible code, particularly in Hardware Description Languages (HDLs) (Kryjak, 2024).

While effective algorithms and efficient hardware acceleration are crucial for processing event-based data, there are also techniques specifically aimed at enhancing the quality of the event data itself. These methods improve data representation and reduce noise to enhance performance (Shariff et al., 2024). A recent comprehensive survey on deep learning approaches for event-based vision and benchmarking provides a detailed taxonomy of the latest studies, including event quality enhancement and encoding techniques (Zheng et al., 2023). Another survey provides an overview of hardware and software acceleration strategies, with a focus on mobile sensing and a range of application domains (Wang H. et al., 2025). A recent work also surveyed algorithms, hardware, and applications in the event-based domain, highlighting the research gap (Cimarelli et al., 2025).

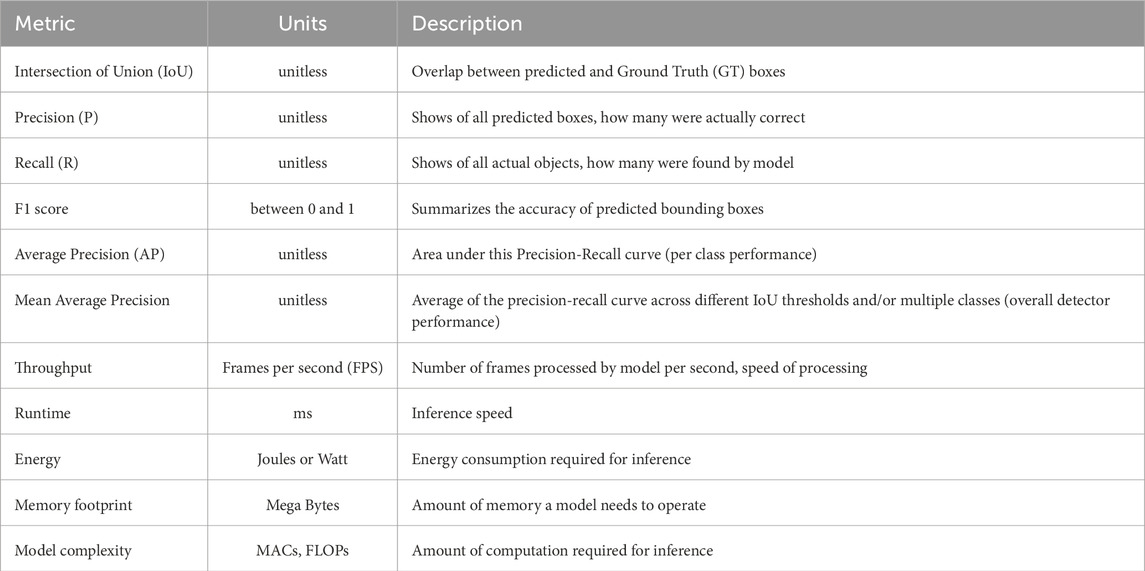

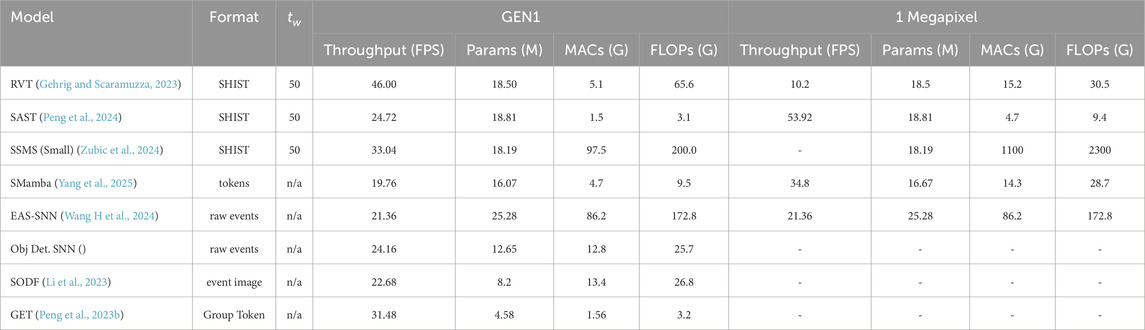

All aforementioned surveys provide important insights into event-based vision and are summarized in Table 1. Building on these contributions, our survey provides an end-to-end review of event-based vision, covering event-based sensor architectures, key datasets with a focus on object detection in autonomous driving, and the full pipeline from data preprocessing and processing to postprocessing. In addition, we discuss benchmarking metrics designed to support fair and consistent evaluation across different processing approaches and hardware accelerators, aiming to ensure a balanced comparison. This work provides a summary of popular evaluation metrics for object detection models and evaluation of system-level throughput that includes conversion events to the required data format.

Table 1. Summary of existing surveys on event-based vision: from sensors and algorithms to processors (

The structure of the paper is outlined as follows: Section 2 introduces the fundamental concepts of autonomous driving systems and explains the distinctions between different levels of driving automation. It also highlights the role of object detection in supporting autonomous driving functionality. Section 3 provides a brief overview of the available event-based datasets and their acquisition methods. In particular, Section 3.1 introduces the fundamentals of event-based sensors and highlights notable commercially available models. Section 3.3 explores the characteristics of event-based datasets, covering both early-stage research datasets and real-world as well as synthetic datasets, with an emphasis on autonomous driving scenarios. Section 4 introduces the evaluation metrics and focuses on the neuromorphic processing pipeline, detailing state-of-the-art event-based object detection architectures, their classification, relevant event encoding techniques, and data augmentation methods. Sections 2–4 cover the fundamentals of object detection and event-data acquisition, making the survey accessible to a broader audience, including researchers who are new to event-based object detection. Section 5 presents a system-level evaluation of event-based object detectors and summarizes the performance of models discussed in Section 4.2. Additionally, it addresses missing aspects in end-to-end evaluation. Finally, Section 6 offers a discussion.

2 Autonomous driving systems

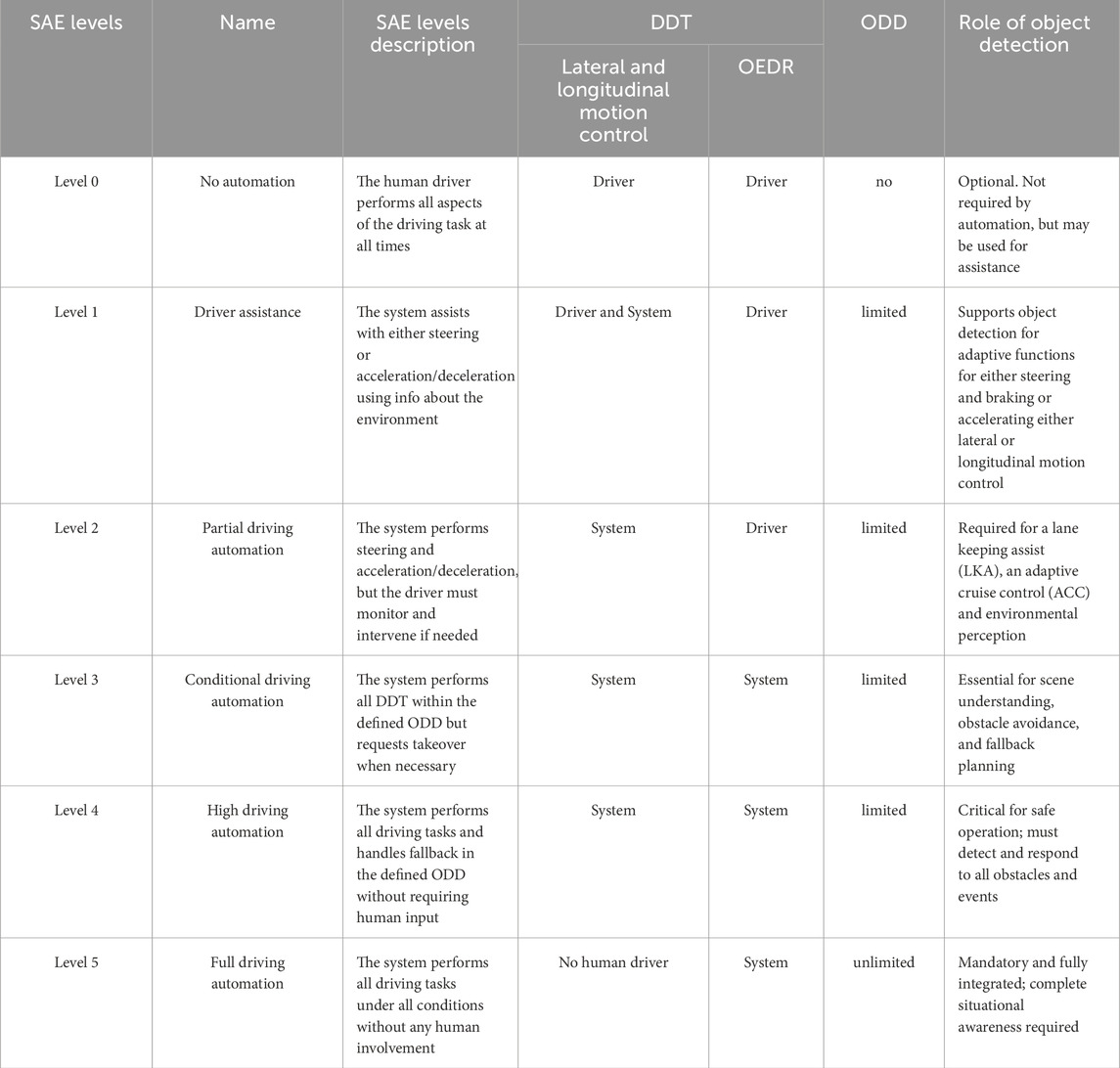

The Society of Automotive Engineers (SAE) defines six levels of autonomy in autonomous driving systems (Zhao et al., 2025). These levels are based on who performs the Dynamic Driving Task (DDT), either the driver or the system. A key part of DDT is Object and Event Detection and Response (OEDR), which refers to the system’s ability to detect objects in the environment, such as vehicles, pedestrians, and traffic signs, and respond appropriately. Level 0 of the SAE indicates no autonomy and full manual driving, while Levels 1 through 5 represent increasing degrees of automation, with each level incorporating more advanced autonomous features. As the level of autonomy increases, the vehicle’s reliance on intelligent systems becomes more critical for ensuring safe and efficient navigation in complex environments (Zhao et al., 2025; Balasubramaniam and Pasricha, 2022). The SAE also introduced the concept of the Operational Design Domain (ODD), a key characteristic of a driving automation system. Defined by the system’s manufacturer, the ODD outlines the specific conditions, such as geographic area, road type, weather, and traffic scenarios under which the autonomous system is intended to operate ERTRAC (2019). Overall, the SAE levels describe the degree of driver involvement and the extent of autonomy, while the ODD defines the specific conditions where and when that autonomy can be applied (Warg et al., 2023). Table 2 summarizes SAE Levels of automation for on-road vehicles and the role of object detection. Clearly, as the level of autonomy increases, the importance of object detection becomes increasingly critical.

Most commercial vehicles today operate at Level 2, where the system can control steering and speed. This includes Tesla Autopilot, Ford BlueCruise, Mercedes Drive Pilot (Leisenring, 2022). Waymo has advanced into Level 4, offering fully autonomous services within geofenced urban areas like Phoenix and San Francisco, without a safety driver onboard Ahn (2020). Uber, while investing heavily in autonomy, currently operates at Level 2–3 through partnerships and focuses on integrating automation with human-supervised fleets Vedaraj et al. (2023). Level 5, representing universal, human-free autonomy in all environments, remains a long-term goal for the industry and has not yet been achieved by any company.

The SAE proposes an engineering-centric classification, while there is also a user-centric perspective for vehicle automation classification. According to Koopman, there are four operational modes, which include driver assistance, supervised automation, autonomous operation, and vehicle testing. The latter distinct category is for testing purposes, where the human operator is expected to respond more effectively to automation failures than a typical driver. Mobileye also suggests four dimensions, such as hands-on/hands-off (for steering wheel), eyes-on/eyes-off (the road), driver/no driver, and Minimum Risk Maneuver (MRM) requirement Warg et al. (2023). All of the above-mentioned automation level definitions are focused on driving tasks on-road traffic. There are other dimensions for autonomy classification focused on interaction in various environments, which are not covered in this work.

3 Neuromorphic data acquisition and datasets

3.1 Event-based sensors

Traditional image- and video-acquiring technology primarily revolves around frame-based cameras capable of capturing a continuous stream of still pictures at a specific rate. Each still frame consists of a grid of 2D pixels with global synchronization, generated using sensor technologies like Charge-Coupled Devices (CCDs) or Complementary Metal Oxide-Semiconductor (CMOS) sensors. Due to their superior imaging quality, CCDs are favored in specialized fields such as astronomy (Polatoğlu and Özkesen, 2022), microscopy (Faruqi and Subramaniam, 2000), and others. These sensors feature arrays of photodiodes, capacitors, and charge readout circuits that convert incoming light into electrical signals. In contrast, CMOS sensors dominate consumer electronics due to their lower cost and sufficient image quality. CMOS sensors can be designed as either Active Pixel Sensors (APS) or, less commonly, Passive Pixel Sensors (PPS) (Udoy et al., 2024). A basic APS pixel sensor is comprised of a 3-transistor (3-T) cell, which includes a reset transistor

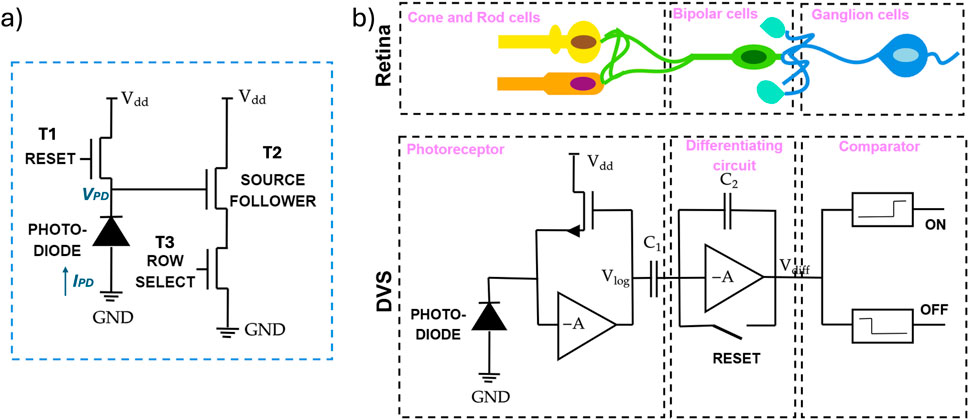

However, these technologies generate large amounts of spatiotemporal data, requiring hardware with high processing capabilities and increased power consumption. This has also led to the development of sensors inspired by biological vision (Shawkat et al., 2024). Particularly, a new imaging paradigm inspired by the function of the human retina, located at the back of the eye, has started gaining attention. The sensing in the retina is done by cones and rods of a photoreceptor, which convert light to electrical signals and pass them to ON/OFF bipolar cells and eventually to ganglion cells. The latter two respond to various visual stimuli, such as intensity increments or decrements, colour, or motion. Similar to the retina, pixels in novel event-based cameras generate output independently from each other and only when some changes in the captured scene occur.

There are several approaches to implementing event-based sensors. The first one is the Dynamic Vision Sensor (DVS). Its pixel architecture shown in Figure 1b mimics a biological retina and is comprised of three blocks, such as a photoreceptor, switched capacitor differentiator, and comparator blocks, which act as photoreceptor, bipolar, and ganglion cells. To produce ON and OFF events, DVS measures light intensity change and slope. In particular, at the initial stage, the DVS pixel starts with a reference voltage that corresponds to the logarithmic intensity of previously observed light. When light hits a photodiode, the generated current

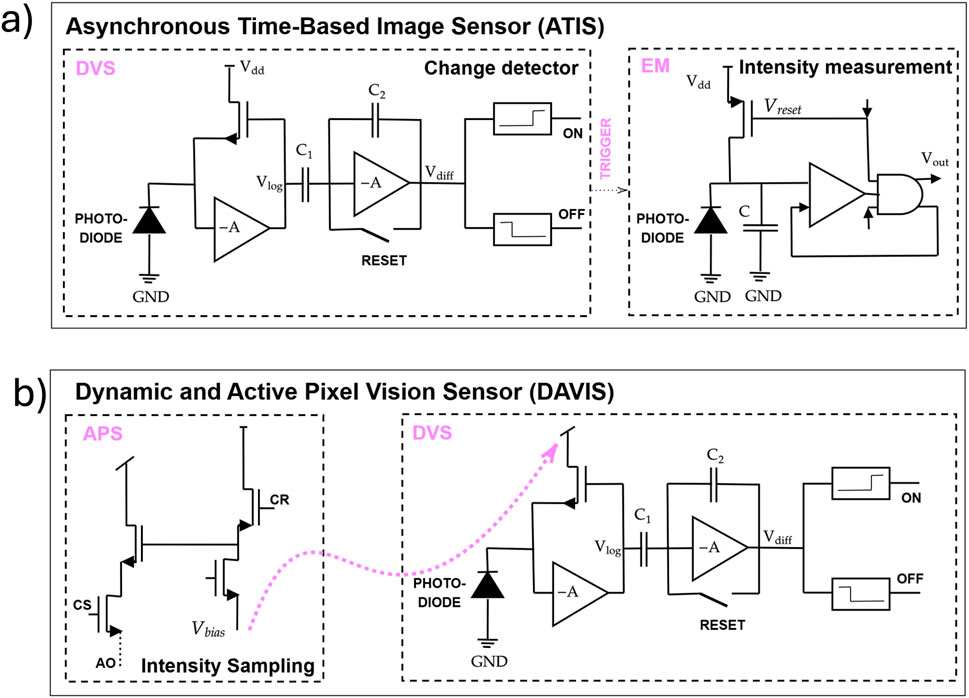

In addition, there are hybrid types of event-based sensors, which include Asynchronous Time Based Image Sensor (ATIS) and DAVIS, shown in Figures 2a,b, respectively. ATIS is a combination of DVS and Time to First Spike (TFS) technologies (Posch et al., 2010). Here, the DVS detects changes in the event stream, while Pulse Width Modulation (PWM) in the Exposure Measurement (EM) component enables the capture of absolute brightness levels. The second photodiode in the ATIS architecture allows it to measure both event intensity and temporal contrast. As a result, ATIS has a larger pixel area compared to DVS and produces enriched tripled data output. The output event of ATIS is

Figure 2. (a) Asynchronous Time-Based Image Sensor (ATIS); (b) Dynamic and Active Pixel Vision Sensor (DAVIS).

DAVIS is an image sensor comprised of synchronous APS and asynchronous DVS that share a common photodiode, as shown in Figure 2b. It provides multimodal output, which requires data fusion and more complex processing. In particular, a frame-based sampling of the intensities by APS allows for receiving static scene information at regular intervals but leads to higher latency (Shawkat et al., 2024), while DVS produces events in real-time based on changes.

Event-based cameras are typically equipped with control interfaces known as “biases”. These biases configure key components such as amplifiers, comparators, and photodiode circuits, directly impacting latency and event rate. The event bias settings can be adjusted to adapt to specific environmental conditions and to filter out noise (Shariff et al., 2024).

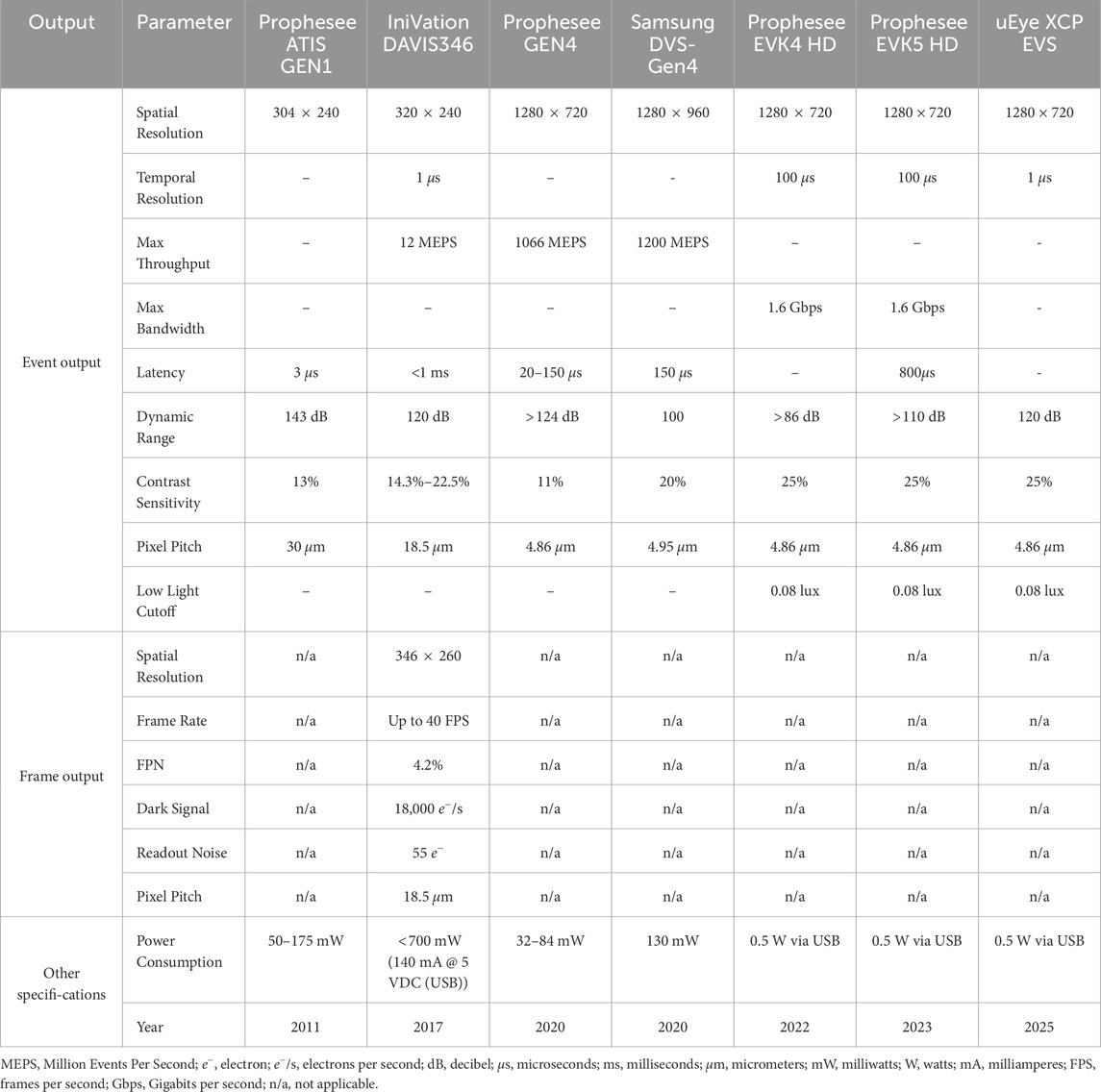

The most recent summary on the commercially available event-based cameras and their specifications can be found in (Gallego et al., 2020; Chakravarthi et al., 2025). The main vendors include iniVation (e.g., DVS128, DVS240, DVS346), Prophesee (e.g., ATIS, Gen3 CD, Gen 3 ATIS, Gen 4 CD, EVK4 HD), CelePixel (e.g., Cele-IV, Cele-V), Samsung (e.g., DVS Gen 2, DVS Gen 3, DVS Gen 4), and Insightness. In addition, (Chakravarthi et al., 2025), provides a list of open-source event-based camera simulators. The notable ones include DAVIS (Mueggler et al., 2017) and Prophesee Video to Event Simulator (Prophesee, 2025). The key event cameras used for the collection of the real-world large-scale event datasets include Prophesee’s GEN1, GEN4, EVK4, and IniVation DAVIS346, whose specifications can be found in Table 3. An important milestone in the field of event-based sensing is the collaboration of Prophesee and Sony, resulting in a hybrid architecture IMX636. This sensor was integrated into industrial camera IDS Imaging uEye XCP EVS (IDS Imaging Development Systems GmbH, 2025), Prophesee EVK4 and EVK5 Evaluation Kits (Chakravarthi et al., 2025), and others.

Table 3. Key commercial event cameras [adapted from (Gallego et al., 2020; Chakravarthi et al., 2024; Wang H. et al., 2025)].

3.2 Synthetic event-based data generation

Slow progress in the event-based domain was caused by the fact that event sensors are both rare and expensive. Furthermore, producing and labeling real-world data is a resource-intensive and time-consuming process. As an alternative, datasets can be generated synthetically (Aliminati et al., 2024). One of the prominent tools for this purpose is the Car Learning to Act (CARLA) simulator (Dosovitskiy et al., 2017), which provides highly realistic virtual environments for autonomous driving. CARLA supports a variety of sensor outputs, including event cameras, RGB cameras, depth sensors, optical flow, and others, enabling the creation of diverse and realistic synthetic event-based datasets.

The Event Camera Simulator (ESIM) is one of the pioneering works in event simulation Rebecq et al. (2018). Its architecture is tightly integrated with the rendering engine and generates events through adaptive sampling, either from brightness changes or pixel displacements. Vid2E Gehrig et al. (2020) follows the same principle and is considered an extension of ESIM. Unlike ESIM, which relies on image input, Vid2E uses video as input. The data generated by Vid2E was evaluated on object recognition and semantic segmentation tasks.

EventGAN generates synthetic events using a Generative Adversarial Network (GAN) (Zhu et al., 2021). The GAN is trained on a pair of frame data and events from the DAVIS sensor. During training, the network is constrained to mimic information present in the real data. To generate events, EventGAN takes input from a pair of grayscale images from existing image datasets.

V2E toolbox creates events from intensity frames Hu et al. (2021). This enabled the generation of event data under bad lighting and motion blur. This contributed to the development of more robust models. V2E produces a sequence of discrete timestamps, whereas real DVS sensors generate a continuous event stream Zhang et al. (2024). Video to Continuous Events Simulator (V2CE) tried to overcome this issue of V2E. V2CE includes two stages: (1) motion-aware event voxels prediction, and (2) voxels to continuous events sampling. Besides, it takes into account the nonlinear characteristics of the DVS camera. Additionally, this work introduced quantifiable metrics to validate synthetic data Zhang et al. (2024).

DVS-Voltmeter allows the generation of synthetic events from high frame-rate videos. It is the first event simulator that took into account physics-based characteristics of real DVS, which include circuit variability and noise Lin et al. (2022). The generated data was evaluated on semantic segmentation and intensity-image reconstruction tasks, demonstrating strong resemblance to real event data.

The ADV2E framework proposed a fundamentally different approach in event generation Jiang et al. (2024). It focuses on analogue properties of pixel circuitry rather than logical behavior. Synthetic events are generated from APS frames. Particularly, emulating an analog low-pass filter allows generating events based on varying cutoff frequencies.

The Raw2Event framework enables the generation of event data from raw frame cameras, producing outputs that closely resemble those of real event-based sensors Ning et al. (2025). It currently generates events from grayscale images, but could be extended to support color event streams. A low-cost solution deployed on Raspberry Pi could also be built on edge AI hardware, enabling lower latency and practical use at the edge.

A recently proposed PyTorch-based library, Synthetic Events for Neural Processing and Integration (SENPI), converts input frames into realistic event-based tensor data Greene et al. (2025). SENPI also includes dedicated modules for event-driven input/output, data manipulation, filtering, and scalable processing pipelines for both synthetic and real event data.

To sum up, most of these tools are rule-based, designed to convert APS-acquired images into synthetic event streams. The only exception is EventGAN, which is learning-based, but it tends to be less reliable and heavily dependent on the quality and diversity of the training data. Among these simulators, ESIM and DVS-Voltmeter stand out for offering the highest realism. Tools like v2e, v2ce, and ADV2E are the most scalable for large dataset generation, while recently introduced Raw2Event is the simplest, lightest, and fastest option. A novel framework, SENPI, offers controlled simulation of event cameras and extended processing features, including data augmentation and manipulation, and algorithmic development.

3.3 Event-based datasets

3.3.1 Early event-based datasets

There is a growing variety of neuromorphic datasets that were generated synthetically or recorded in real-world scenarios and cover a wide spectrum of event-based vision tasks, from small-scale classification to real-world autonomous navigation. Depending on the method of capture, they are primarily divided into two categories: ego-motion and static, also known as fixed. Event-based datasets collected from a static/fixed perspective typically focus on the movement of objects or features in the environment, whereas ego-motion datasets emphasize the movement of the observer or camera relative to the scene (Verma et al., 2024).

Early event-based datasets include DVS-converted datasets N-MNIST (Orchard et al., 2015), MNIST-DVS (Serrano-Gotarredona and Linares-Barranco, 2015), CIFAR 10-DVS (Li et al., 2017), N-Caltech101 (Orchard et al., 2015), and N-ImageNet (Kim et al., 2021) are publicly available datasets converted to event-based representation from frame-based static image datasets MNIST (LeCun et al., 1998), CIFAR 10 (Krizhevsky and Hinton, 2009), Caltech101 (Fei-Fei et al., 2004), and ImageNet (Deng et al., 2009). The conversion of frame-based images to an event stream was achieved either by moving the camera, as in case of N-MNIST and N-Caltech101, or by a repeated closed-loop smooth (RCLS) movement of frame-based images, as in MNIST-DVS, CIFAR 10-DVS(Iaboni and Abichandani, 2024; Li et al., 2017). The latter method produces rich local intensity changes in continuous time (Li et al., 2017). The pioneering DVS-captured dataset is DVS128 Gesture. It was generated by natural motion under three lighting conditions, including natural light, fluorescent light, and LED light (He et al., 2020). All of them serve as important benchmark datasets for developing and testing models in the context of event-based vision. However, only N-Caltech includes bounding box annotations, making it the most suitable dataset for the object detection task, which is the primary focus of this survey.

3.3.2 Event-based datasets with autonomous driving context

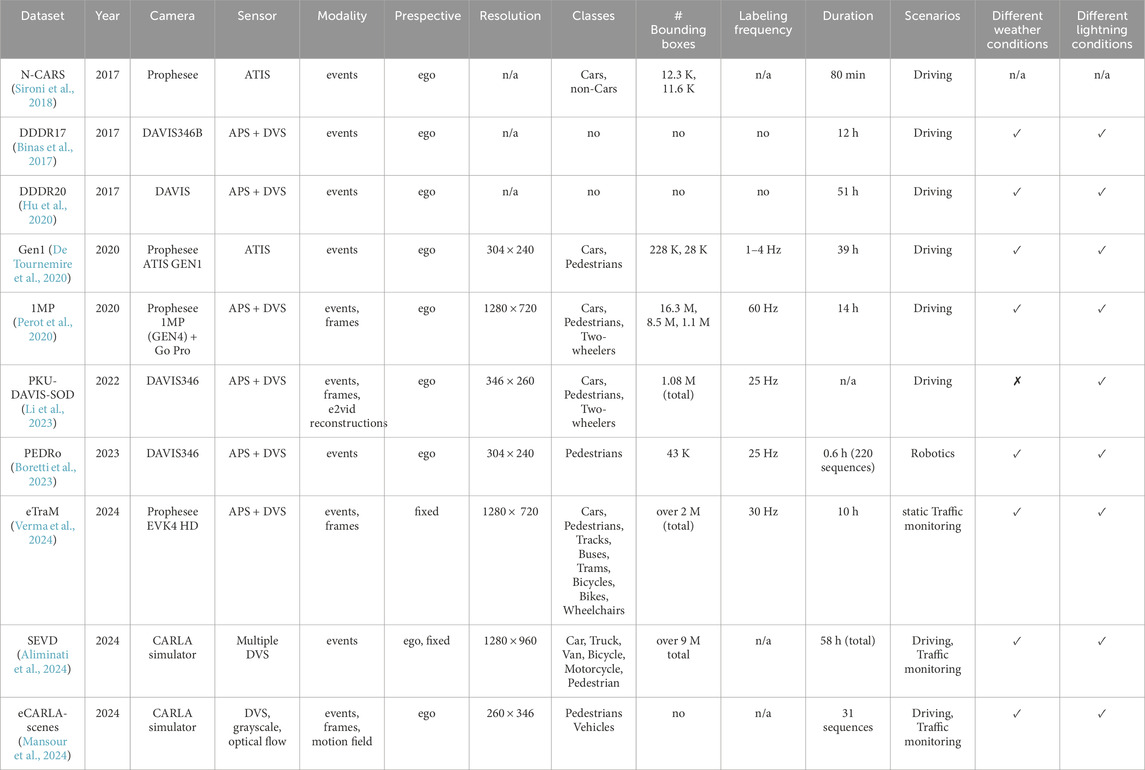

There is a variety of DVS-captured datasets, each focusing on different aspects of event-based vision and application domains. Table 4 summarizes commonly used event-based datasets related to autonomous driving. These datasets differ in spatial and temporal resolution, collection sensor types, and environmental conditions such as lighting and weather. In addition to the dataset collection process, dataset labeling also plays an essential role in effective object detection. However, annotating event-based data at every timestamp is highly resource-intensive (Wu et al., 2024). Moreover, event data with low spatial or temporal resolution often results in poor quality and limited utility, while higher-resolution data significantly increases memory requirements. Although high temporal resolution improves the tracking of fast-moving objects, it also introduces greater sensitivity to noise. To balance these trade-offs, different datasets adopted different labeling frequencies.

The DDD17 (Davis Driving Dataset, 2017; Binas et al., 2017) was among the first datasets specifically created for this purpose and includes 12 h of recording. It was collected from German and Swiss roads at speeds ranging from 0 to 160 km/h using a DAVIS346B prototype camera with a resolution of

Another complex dataset recorded in changing environments is N-Cars (Sironi et al., 2018). It was collected using Prophesee’s ATIS camera mounted behind the windshield of a car and consists of 80 min of video. Then, gray-scale measurements from the ATIS sensor were converted into conventional gray-scale images. ATIS’s luminous intensity measures were used to generate ground-truth annotations. The resulting dataset has two classes, comprised of 12,336 car samples and 11,693 non-car samples.

Three additional event-based datasets focusing on human motion were later introduced: the pedestrian detection dataset, the action recognition dataset, and the fall detection dataset. The event streams, recorded both indoors and outdoors, were converted into frames and annotated using the labelImg tool. The resulting DVS-Pedestrian dataset contains 4,670 annotated frames (Miao et al., 2019).

Prophesee’s GEN1 Automotive Detection Dataset (also called GAD (Crafton et al., 2021)) is the first large-scale real-world event-based labeled dataset that includes both cars and pedestrians (De Tournemire et al., 2020) and is recognized as the first major detection benchmark. The dataset was collected by the Prophesee ATIS GEN 1 sensor with a resolution of

More detailed environmental mapping is achieved in a 1 Megapixel (1MP) automotive detection dataset (Perot et al., 2020) recorded by an event-based vision sensor with high resolution

PKU-DAVIS-SOD is a multimodal object detection dataset with the focus on challenging conditions. It has 1.08 M bounding boxes for 3 classes, such as cars, pedestrians, and two-wheelers (Li et al., 2023). Compared to GEN1 and 1MP datasets, the PKU-DAVIS-SOD dataset offers moderate resolution (346

Person Detection in Robotics (PEDRo) is another event-based dataset primarily designed for robotics, but can also be used in autonomous driving contexts for pedestrian detection. DAVIS346 camera with a resolution of

eTraM is one of the recent event-based datasets (Verma et al., 2024). It is a static traffic monitoring dataset recorded by a

3.3.3 Synthetic event-based datasets

CARLA simulator was used to generate the Synthetic Event-based Vision Dataset (SEVD) (Aliminati et al., 2024) for both multi-view (360°) ego-motion and fixed-camera traffic perception scenarios, providing comprehensive information for a range of event-based vision tasks. The synthetic data sequences were recorded using multiple dynamic vision sensors under different weather and lightning conditions and include several object classes such as car, truck, van, bicycle, motorcycle, and pedestrian.

Additionally, the CARLA simulator, along with the recently developed eWiz a Python-based library for event-based data processing and manipulation, was used to generate the eCARLA-scenes synthetic dataset, which includes four preset environments and various weather conditions (Mansour et al., 2024).

3.3.4 Event-based dataset labeling

Event-based datasets remain underrepresented. Additionally, the accuracy of object detection is influenced by dataset labeling and its temporal frequency. If labels are sparse in time, the model may miss critical information, especially in high-speed scenarios. On the other hand, higher labeling frequency can become redundant in low-motion scenes and is often expensive to implement manually. To address the scarcity of well-labeled event-based datasets, the overlap between event-based and frame-based data can be exploited to generate additional labeled event datasets (Messikommer et al., 2022). In (Perot et al., 2020), event-based and frame-based cameras were paired as in the 1MP dataset. Since frame-based and event-based sensors were placed side by side, a distance approximation was applied afterwards, and labels extracted from the frame-based camera were transferred to event-based data. Another option suggests the generation of event-based data from existing video using video-to-event conversion (Gehrig et al., 2020).

Unlike frame-based cameras, event-based sensors inherently capture motion information. Adoption of Unsupervised Domain Adaptation (UDA) to enable the transfer of knowledge from a labeled source (e.g., image

Labeling event data directly from sensor output, without relying on corresponding frame-based information, faces its own challenges. In particular, labeling event-based data at each timestep is expensive due to its high temporal resolution. To address this challenge, Label-Efficient Event-based Object Detection (LEOD) was proposed (Wu et al., 2024). LEOD involves pre-training a detector on a small set of labeled data, which is then used to generate pseudo-labels for unlabeled samples. This approach supports both weakly supervised and semi-supervised object detection settings. To improve the accuracy of the pseudo-labels, temporal information was used. Specifically, time-flip augmentation was applied, which enabled model predictions on both the original and temporally reversed event streams. LEOD was evaluated on the GEN1 and 1MP datasets, and it can outperform fully supervised models or be utilized together to enhance their performance.

4 Event-based object detection

To a great extent, traditional object detectors can be divided into single-stage detectors and two-stage detectors (Bouraya and Belangour, 2021; Carranza-García et al., 2020). The single-stage detector is comprised of several parts, which typically include an input, a backbone for feature extraction, a detection head, and, optionally, neck layers. Its neck layers are located between the backbone and head layers and consist of several top-down and bottom-up paths to extract multi-scale features for detecting objects of various sizes (Bouraya and Belangour, 2021). A detection head takes the outputs of the backbone and neck and transforms extracted features into a final prediction. You Only Look Once (YOLO) (Hussain, 2024) and Single Shot MultiBox Detector (SSD) (Liu et al., 2016) are examples of Single-stage detectors. YOLO divides the image into a grid and predicts bounding boxes for each cell, while SSD uses multiple feature maps at different scales to detect objects of varying sizes. Two-stage detectors include an additional step before the classification stage, known as the regions of interest (RoI) proposal stage (Carranza-García et al., 2020). This extra stage helps to identify potential object locations for better performance. As a result, single-stage detectors predict object classes and bounding boxes in one pass and provide higher speed, whereas two-stage detectors try to ensure accurate prediction and involve more computational cost.

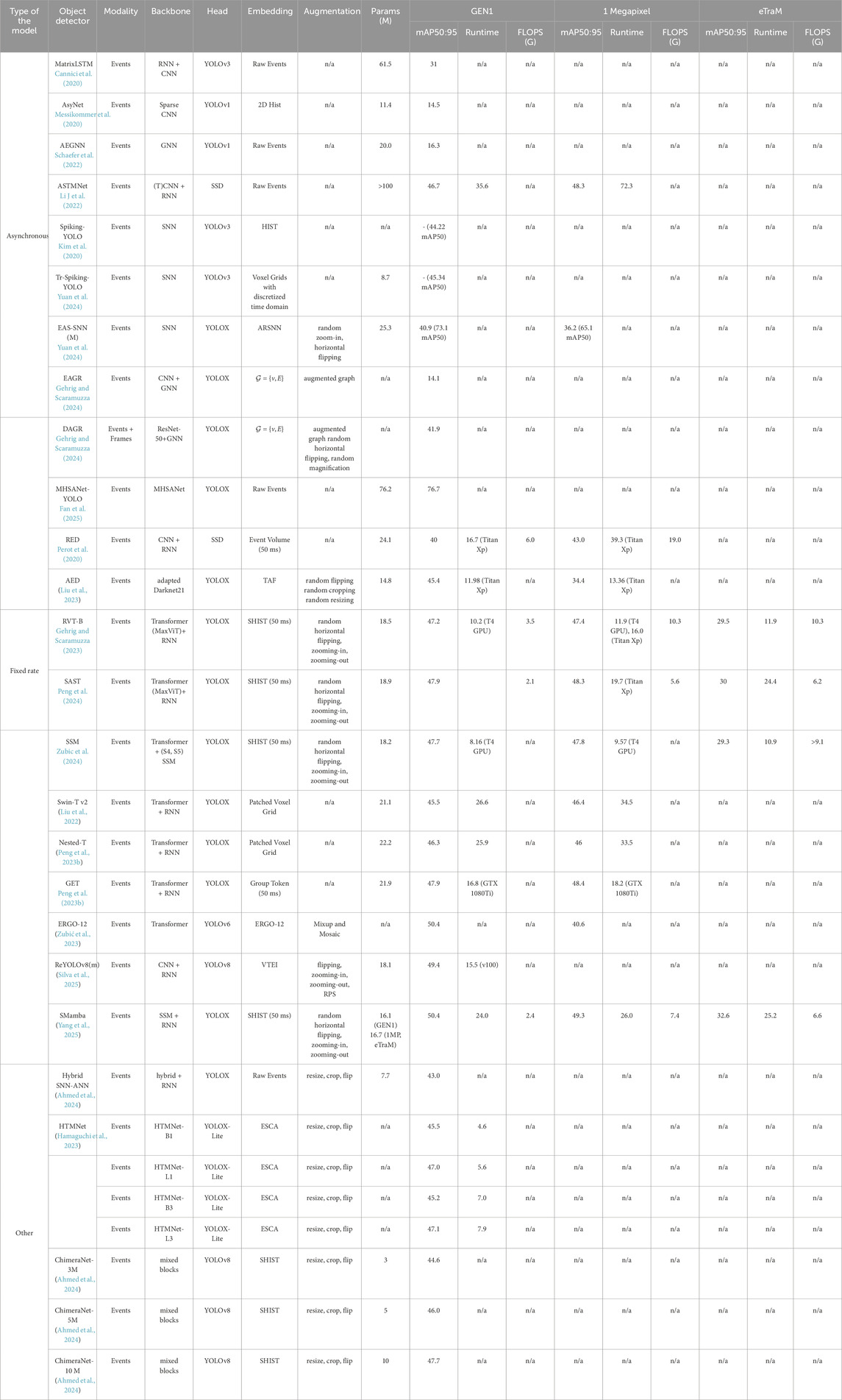

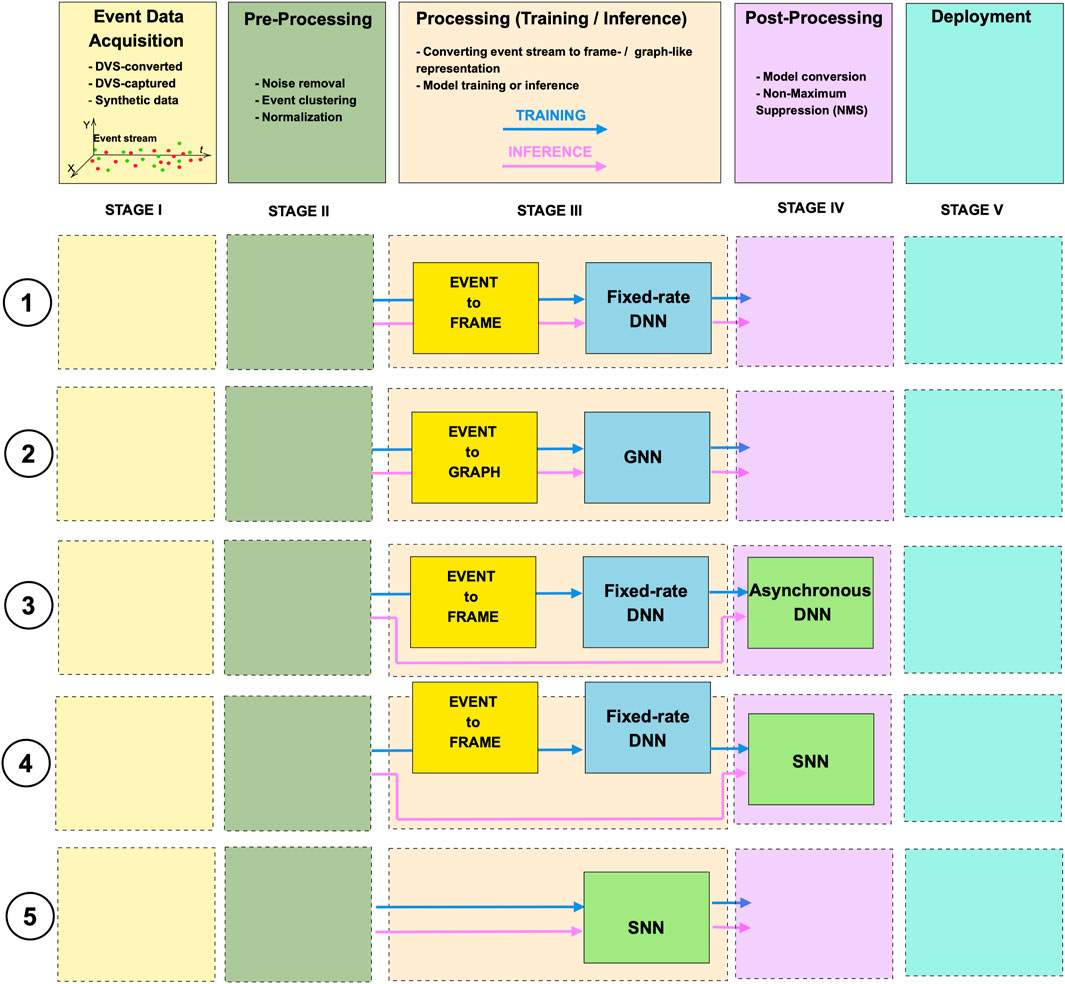

Unlike frame-based data, the binary event stream is characterized by spatial and temporal sparsity. Handling such data requires high-performing algorithms. The structure of existing event-based object detection models is comprised of a backbone architecture followed by an SSD- or YOLO-based head. Detection model backbone architectures can be classified as dense, spiking, or graph-based, and can often be converted between formats to enhance efficiency during training and inference. Depending on the model architecture, event data may be processed in its raw form or require conversion. Once formatted appropriately, models can operate either asynchronously on raw event streams or at a fixed rate using dense frame or graph-based representations.

Figure 3 summarizes the basic pipeline of event-based object detectors, categorized by the type of model used. While the pipeline can be extended with additional pre- and post-processing stages, in the diagram we focus on the minimal encoding and processing components. The processing stage typically involves converting event data into a specific format, if required, to match the input requirements of the target model and training or inference processes. Based on the type of data processing, these models can be categorized as either event-driven asynchronous (green boxes in Figure 3) or fixed-rate synchronous (blue boxes in Figure 3). Furthermore, based on the backbone model architecture, the networks can be categorized as dense, spiking, or graph-based, resulting in five possible processing pathways within the pipeline. More details on models are provided below in Section 4.2. Although detection models differ in their architectures and processing strategies, it should be noted that they share several common evaluation metrics, with some variations depending on the specific processing approach. In the following sections, we begin by outlining these key evaluation metrics, then introduce state-of-the-art models. We also review existing data augmentation techniques and highlight relevant neuromorphic accelerators.

Figure 3. Event-based object detection pipeline: event-data acquisition, pre-processing, processing, post-processing, and deployment. Five types of pipelines based on processing rate and backbone model architecture: fixed-rate dense, fixed-rate graph-based, asynchronous sense, asynchronous spike-based processing dense data, and asynchronous spike-based processing raw events.

4.1 Evaluation metrics

Evaluation methods applied to event-based object detectors are inherited from frame-based frameworks. The widely adopted one is the COCO (Common Object in Context) metric protocol, which utilizes various performance metrics such as Average Precision (AP),

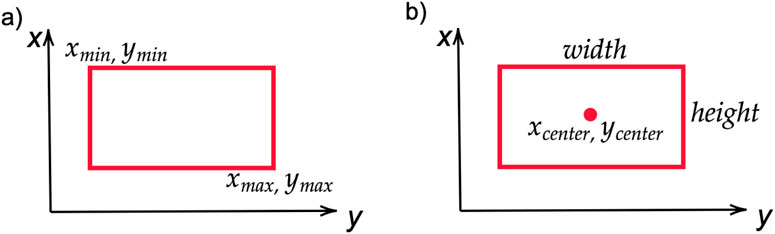

These performance metrics evolved based on prediction boxes produced by detection models. The output of object detectors is bounding boxes encoded as (

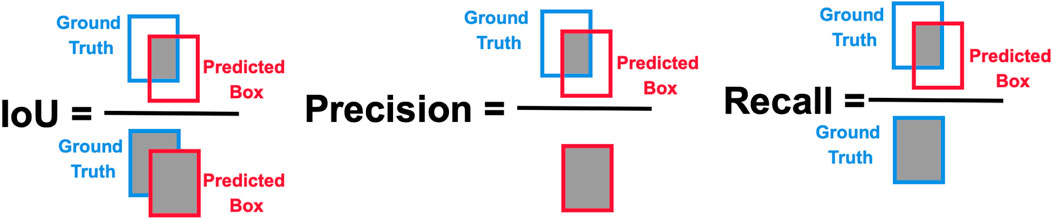

The Intersection of Union (IoU) is a measure of the overlap between predicted and Ground Truth (GT) bounding boxes. Based on the given specific threshold

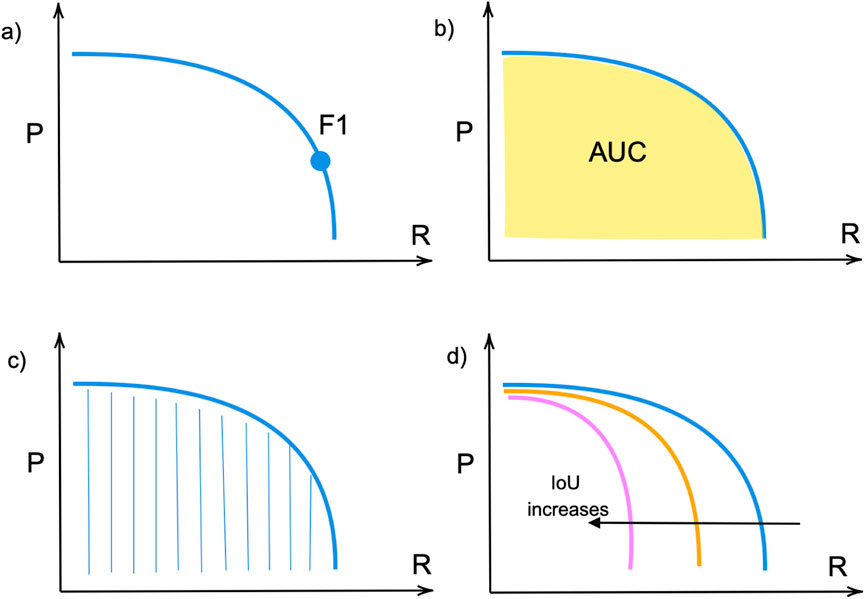

The precision-recall curve illustrates a trade-off at various confidence values. The model is considered good if the precision remains high as its recall increases (Padilla et al., 2020). The F1 score is the metric that shows the trade-off between precision P and recall R as illustrated in Figure 6a and can be found from Equation 3. It ranges between 0 and 1, where 1 shows the highest accuracy. Average Precision (AP) is identified individually for each class and represents the area under the curve (AUC) of the precision-recall corresponding to Figure 6b for that specific class as shown in Figure 6c. It measures how well the model balances precision (accuracy of positive predictions) and recall (coverage of actual positives) at different confidence thresholds. Eventually, mAP (Figure 6d) is the average of the Average Precision (AP) of each class.

Figure 6. (a) F1 score; (b) Precision-Recall Area Under Curve (PR-AUC); (c) Average precision (AP); (d) mAP over various IoU.

In addition to mAP, which represents the prediction quality, the number of floating point operations (FLOPs) is commonly used to measure the computational efficiency and complexity of a model (Messikommer et al., 2020). For asynchronous models, where data is event-driven rather than frame-based, the adopted metric is FLOPs per event (FLOPs/ev) (Santambrogio et al., 2024), which more accurately reflects the computational cost relative to the number of events processed.

Another important performance indicator is the runtime of the object detection model, referring to the time required to process the input data and evaluate all bounding box annotations across the images. Lower runtime is crucial, especially in real-time or resource-constrained applications such as robotics and autonomous systems.

Besides, there are evaluations such as latency

4.2 Models

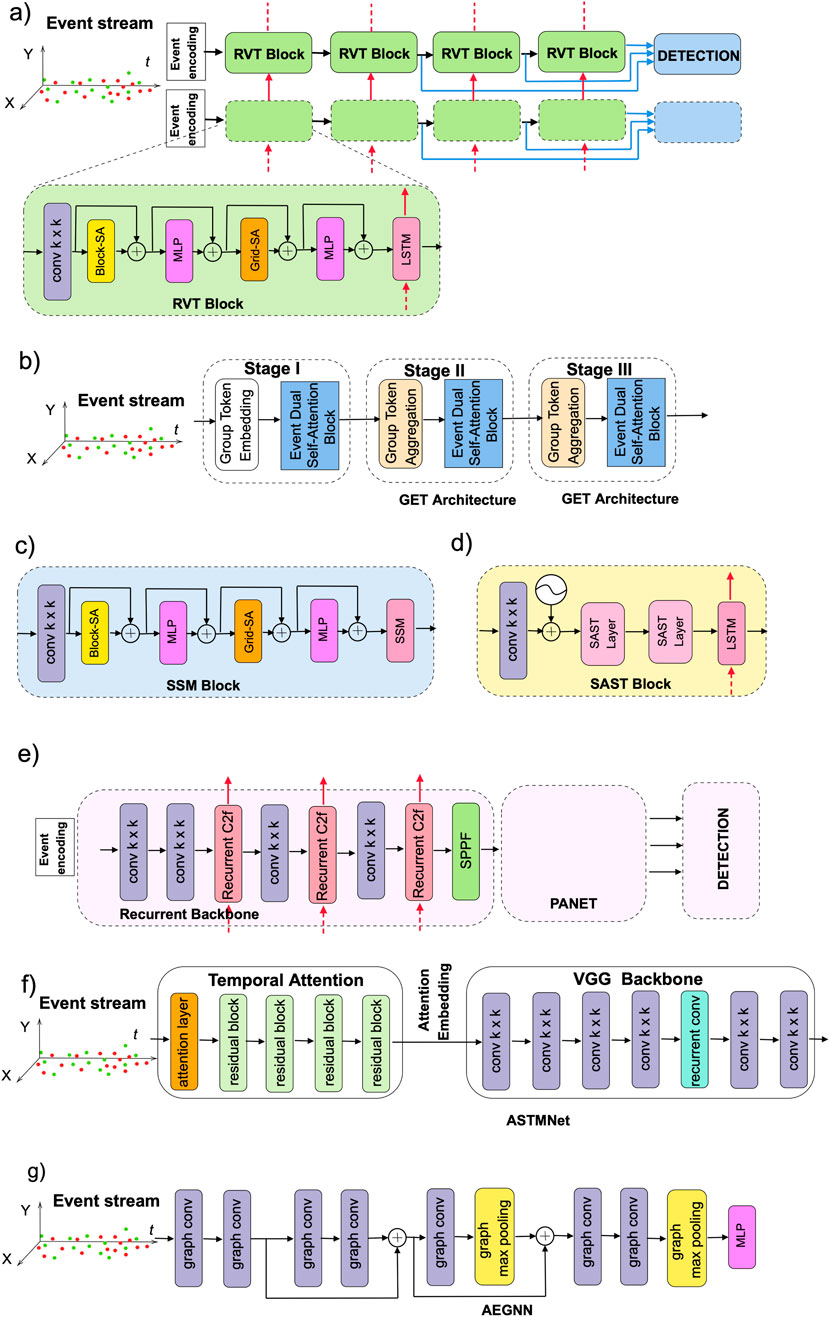

As mentioned earlier, event data is a new and fundamentally different type of information compared to traditional data. Nevertheless, existing neural models have been adapted to effectively process event streams. These approaches can be broadly categorized into dense, asynchronous dense, SNNs, GNNs, and other model types. Below, we present these categories with a focus on state-of-the-art models for autonomous event-based object detection, particularly those evaluated on the GEN1, 1MP, and eTraM datasets. Figure 7 illustrates some of them.

Figure 7. Schematic of fixed-rate (a) RVT and RVT Block; (b) GET architecture; (c) SSM Block; (d) SAST Block; (e) Recurrent YOLOv8; and asynchronous (f) ASTMNet; (g) AEGNN models.

4.2.1 Dense models

Currently, DNNs remain a practical choice for event-based data processing due to their well-established training methodologies and scalability. In particular, in (Perot et al., 2020; Silva et al., 2024c; Peng et al., 2023a), authors evaluated the performance of popular CNN-based RetinaNet and YOLOv5 models on GEN1 and 1MP datasets, which lately served as a baseline for their frameworks. However, it should be noted that conventional models require event streams to be converted into a grid-like format before they can be processed. Earlier methods often relied on reconstructing grayscale images from events (Liu et al., 2023; Perot et al., 2020), while recent works use more advanced encoding techniques (Peng et al., 2023a; Liu et al., 2023; Peng et al., 2023b), which are discussed later in Section 4.3.

Generally, DNN-based backbones can be categorized into either CNN-based or Transformer-based architectures. Additionally, they can be improved by incorporating specialized architectural layers to better capture the temporal dynamics of event data. In particular, networks that integrate recurrent layers form a distinct subgroup of models. One of the first models with recurrency is Recurrent Event-camera Detector (RED) (Perot et al., 2020). The architecture of RED includes convolutional layers extracting low-level features followed by convolutional long short-term memory (ConvLSTM) layers to extract high-level spatio-temporal patterns from the input. RED showed that memory mechanism created by recurrent layers allows detection of objects directly from events, achieving results comparable to those obtained using reconstructed grayscale images. However, utilization of ConvLSTM layers also led to increased computational complexity and latency and resulted in slow inference.

The Agile Event Detector (AED) is a YOLO-based architecture, which demonstrated faster and more accurate performance than the baseline YOLOX model on the GEN1 and 1MP datasets (Liu et al., 2023). Prior to AED, many event-based detection models were computationally intensive and suffered from low inference speeds. In addition, conventional approaches for converting events into dense representations often rely on fixed global time windows

The next architecture is Recurrent Vision Transformer (RVT) (Gehrig and Scaramuzza, 2023) and has a transformer-based backbone with recurrent layers. RVT is designed to overcome a trade-off between accuracy and computational complexity of previous event-based object detectors (Perot et al., 2020; Messikommer et al., 2020). It has a hierarchical multi-stage design of several blocks, which include an attention mechanism to process spatio-temporal data. Moreover, to reduce computation, RVT blocks gave preference to Vanilla LSTM cells over ConvLSTM layers, which allowed for a decrease in inference time compared to the RED. Following the introduction of RVT, numerous event-based object detection models were proposed within a relatively short period, and RVT served as a baseline for the majority of them, as can be noticed below.

In most cases, converting events to an image-like dense format can result in the loss of some properties. A group-based vision Transformer backbone called Group Event Transformer (GET) tried to overcome this problem by incorporating Group Token representation of asynchronous events that consider their time and polarity (Peng et al., 2023b). The architecture of GET has three stages comprised of Group Token Embedding (GTE), Event Dual Self-Attention (EDSA), and Group Token Aggregation (GTA) blocks. The visualization study demonstrated that by incorporating the EDSA block, GET could effectively capture counterclockwise motion. The enhanced version of GET with ConvLSTM layers was able to outperform most state-of-the-art models like RED, RVT-B, and others. Overall, GET is reported to be the fastest end-to-end method since other frameworks require longer data pre-processing time, which is typically not omitted in runtime results.

Traditional Vision Transformers benefit from the self-attention mechanism, which improves performance by capturing long-range dependencies. However, its quadratic computational complexity also introduces a great overhead in terms of A-FLOPs (Attention-related FLOPs) and limits scalability during processing high-resolution tasks (Gehrig and Scaramuzza, 2023; Peng et al., 2024). One of the ways to reduce computational burden was using sparse and sparse window-based transformers that rely on token-level sparsification or adaptive sparsification. In the event-based domain, these ideas were implemented in the Scene adaptive sparse transformer (SAST) (Peng et al., 2024). Its architecture is composed of multiple SAST blocks, each of which concludes with an LSTM layer. Through the combined use of window-token co-sparsification and Masked Sparse Window Self-Attention (MS-WSA), SAST effectively discards uninformative windows and tokens. This enables scene-aware adaptability, which allows focusing only on relevant objects. As a result, it could achieve better performance than variants of RVT at lower computational expense.

Recurrent YOLOv8 (ReYOLOv8) is an object detection framework that leverages the state-of-the-art CNN-based YOLOv8 model for efficient and fast object detection, and enhances its spatiotemporal processing capabilities to process events by integrating ConvLSTM layers (Silva et al., 2025). ReYOLOv8 achieved better accuracy with a relatively smaller number of parameters compared to other state-of-the-art event-based object detectors, including RED (Perot et al., 2020), GET (Peng et al., 2023b), SAST (Peng et al., 2024), variants of RVT (Gehrig and Scaramuzza, 2023), HMNet (Hamaguchi et al., 2023), and others.

As mentioned earlier, prior to being processed by dense models, the event stream must be converted into a frame-like format. The time window

SSM with 2D selective scan (S6) was adopted in the architecture of Sparse Mamba (SMamba) (Yang et al., 2025). It was evaluated on widely adopted GEN1, 1MP datasets and the recent eTRaM dataset, and outperformed the state-of-the-art models, including its sparse transformer-based counterpart SAST. While SAST proposed a window attention-based sparsification strategy, SMamba utilizes information-guided spatial selective scanning and global spatial-based channel selective scanning that can measure the information content of tokens and discard non-event noisy tokens.

4.2.2 Asynchronous dense models

Conversion of a stream of asynchronous and spatially sparse events into a synchronous tensor-like format and processing them by dense models at fixed rates leads to high latency and computational costs. Therefore, some works focus on dense models that process asynchronous event-by-event data during inference, leveraging both the temporal and spatial features of the event information. Nevertheless, training asynchronous dense models still requires converting raw event data into frame-like representations, which remains computationally intensive.

AsyNet is a framework designed to convert traditional models, trained on synchronous dense images, into asynchronous models that produce identical outputs (Messikommer et al., 2020). To preserve sparsity in event-based input data, AsyNet employs a sparse convolutional (SparseConv) technique such as the Submanifold Sparse Convolutional (SSC) Network, which effectively ignores zero-valued inputs within the convolutional receptive field. To maintain temporal sparsity, Sparse Recursive Representations (SRRs) are used. Unlike traditional methods that reprocess the entire image-like representation from scratch for every incoming event, SRRs enable recursive and sparse updates as new events arrive, which eliminates the need to rebuild the full representation each time. Examples of SRRs include event histograms (Maqueda et al., 2018), event queues (Tulyakov et al., 2019), and time images (Mitrokhin et al., 2018), where only single pixels need updating for each new event.

The next approach for asynchronous processing is known as MatrixLSTM and uses a grid of Long Short-Term Memory (LSTM) cells to convert asynchronous streams of events into 2D event representations (Cannici et al., 2020). All outputs of LSTM layers are collected into a dense tensor of shape

Asynchronous spatio-temporal memory network for continuous event-based object detection (ASTMNet) also processes raw event sequence directly without converting to image-like format (Li J. et al., 2022). This became possible due to the utilization of an adaptive temporal sampling strategy and temporal attention convolutional module.

Fully Asynchronous, Recurrent and Sparse Event-based CNN (FARSE-CNN) uses hierarchical recurrent units in a convolutional way to process sparse and asynchronous input (Santambrogio et al., 2024). Unlike MatrixLSTM, which also uses ConvLSTM but uses a single recurrent layer, FARSE-CNN is a multi-layered hierarchical network. FARSE-CNN also introduced Temporal Dropout, a temporal compression mechanism, which allows building deep networks.

The transformer-based framework for streaming object detection (SODformer) also operates asynchronously without being tied to a fixed frame rate (Li et al., 2023). SODformer was designed for object detection based on heterogeneous data, and, to improve detection accuracy from event- and frame-based streams, it introduced transformer and asynchronous attention-based fusion modules. The performance of SODformer was evaluated on the multimodal PKU-DAVIS-SOD dataset.

4.2.3 Spiking Neural Networks

As observed in dense models, adding recurrent connections can enhance the performance of dense backbones due to the ability to capture the temporal dependencies of events (Perot et al., 2020; Gehrig and Scaramuzza, 2023). One study further showed that Spiking Neural Networks (SNNs) outperform standard RNNs in processing sparse, event-driven data and achieve performance comparable to LSTMs (He et al., 2020). SNNs are widely known as biologically inspired, energy-efficient architectures that are inherently well-suited for processing asynchronous input (Cordone et al., 2022) and are considered as neuromorphic or/and event-driven neural networks. However, as the resolution of the vision data increases, the performance of SNNs begins to decline (He et al., 2020). Moreover, SNNs face significant challenges when it comes to training and scalability, primarily due to their inherent complexity and the need for algorithms to handle the discrete and event-driven nature of their neurons (Kim et al., 2020). Besides, there is a lack of specialized hardware. Traditional gradient-based training methods and Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) are well-optimized for DNNs, but not directly suitable for SNNs (Cordone, 2022). Different topologies of SNNs and training methods are continuously evolving. Additionally, pre-trained DNNs can be converted into SNNs for inference, often achieving results comparable to those obtained with DNNs (Silva D. et al., 2024).

One of the first spike-based object detection models is a Spiking-YOLO, which was obtained via DNN-to-SNN conversion (Kim et al., 2020). Initially, the converted model was unable to detect any objects due to a low firing rate and a lack of an efficient implementation method of leaky-ReLU. After introducing channel-wise normalization and signed neurons with an imbalanced threshold, the modified model achieved up to 98% on non-trivial PASCAL VOC and MS COCO datasets, comparable to the original DNN-based TinyYOLO model. However, applied normalization methods also led to an increase in the required number of timesteps, which is unfeasible for real-world implementation on neuromorphic hardware due to high latency (Cordone, 2022). In particular, the conversion-based Spiking-YOLO model (Kim et al., 2020) required 500 timesteps to achieve results comparable to those of the Trainable Spiking-YOLO (Tr-Spiking-YOLO) (Yuan et al., 2024), which uses direct training with the surrogate gradient algorithm and only 5 timesteps on the GEN1 dataset.

EMS-YOLO is the first deep spiking object detector trained directly with surrogate gradients, without relying on ANN-to-SNN conversion Su et al. (2023). EMS-YOLO uses the standard Leaky Integrate-and-Fire (LIF) neuron model and surrogate gradient backpropagation through time (BPTT) across all spiking layers. On the GEN1 dataset, EMS-ResNet10 achieves performance comparable to dense ResNet10 while consuming 5.83

End-to-End Adaptive Sampling and Representation for Event-based Detection with Recurrent Spiking Neural Networks (EAS-SNN) is another SNN-based model that introduced Residual Potential Dropout (RPD) and Spike-Aware Training (SAT) (Wang Z. et al., 2024). It also uses backpropagation through time (BPTT) with surrogate gradient functions to overcome the non-differentiability of spikes. Surrogate gradient applied in Spike-Aware Training (SAT) improves the precision of spike timing updates. With only 3 timesteps required for detection, EAS-SNN demonstrated competitive detection speeds of 54.35 FPS and reduced energy consumption up to a

A recently introduced Multi-Synaptic Firing (MSF) neuron inspired by multisynaptic connections represents a practical breakthrough for event-based object detection Fan et al. (2025). Unlike vanilla spiking neuron, MSF-based SNN is capable of simultaneously encoding spatial intensity through firing rates and temporal dynamics through spike timing. By combining multi-threshold and multi-synaptic firing with surrogate gradients, MSF networks can be trained at scale for deep model architectures. Particularly, the MHSANet-YOLO model with MSF neurons achieved up to 73.7 mAP on the GEN1 dataset, which is better than both ReLU and LIF versions. Moreover, MSF-based MHSANet-YOLO required 16.6

4.2.4 Graph-based models

The architecture of GNNs can also process event-based data by preserving their sparsity and asynchronous nature. One of the GNN-based object detection frameworks, called Asynchronous Event-based Graph Neural Network (AEGNN) processes events as “static” spatio-temporal graphs in a sequential manner (Schaefer et al., 2022). AEGNN uses an efficient training method where only the affected nodes are updated when a single event occurs. In other words, they were able to process events sparsely and asynchronously. In addition, it can also process batches of events and use the standard backpropagation method. This enables AEGNN to be trained on synchronized event data and support asynchronous inference. For object detection tasks, AEGNN demonstrated up to 200

The asynchronous nature of the event stream is also considered in Efficient Asynchronous Graph Neural Networks (EAGR) (Gehrig and Scaramuzza, 2022). EAGR offers per-event processing and can be configured using several architecture design choices. To reduce computational cost, it used max pooling in early layers and a pruning method, which resulted in skipping up to 73% of node updates. Therefore, a reduced number of FLOPS was observed during the first three layers while processing GEN1 dataset. A small size variant of EAGR achieved a 14.1 mAP higher performance and around 13% times fewer MFLOPS/ev than the AEGNN. Nevertheless, GNN-based models’ performance is still behind dense counterparts, especially involving recurrent connections.

Deep Asynchronous GNN (DAGr) attempted to improve GNN’s performance by combining event- and frame-based sensors in a hybrid object detector (Gehrig and Scaramuzza, 2024). The study showed that combining a 20-FPS RGB camera with high-rate event cameras can match the latency of a 5000-FPS camera and the bandwidth of a 45-FPS camera. Similarly to EAGR, it comes with different variants of configurations, conditionally divided into nano, small, and large size models. By effectively leveraging each modality, the large variant of DAGr achieved improved performance, reaching 41.9 mAP by the large size variant.

4.2.5 Other models

Some architectures cannot be categorized into the aforementioned groups and include frameworks that are employed to enhance the performance of the object detectors.

The first one is Hierarchical Neural Memory Network (HMNet) (Hamaguchi et al., 2023). It is a multi-rate network architecture inspired by Hierarchical Temporal Memory (HTM). An ordinary HTM is a brain-inspired algorithm that uses an unsupervised Hebbian-learning rule and is characterized by sparsity, hierarchy, and modularity. It operates at a single rate and incorporates Spatial Pooling and Temporal Pooling acting as convolutional and recurrent layers (Smagulova et al., 2019). On the other hand, HMNet features a temporal hierarchy of multi-level latent memories that operate at different rates, allowing it to capture scenes with varying motion speeds (Hamaguchi et al., 2023). In HMNet, low-level memories encode local and dynamic information, while high-level memories focus on static information. For embedding the sparse event stream into dense memory cells, HMNet introduced an Event Sparse Cross Attention (ESCA). There are four variants of HMNet, including HMNet-B1/L1/B3/L3, which differ in the number of memory levels and dimensions. In addition, the architecture of HMNet can be extended to the multisensory inputs. Overall, HMNet outperforms other methods in speed, particularly the recurrent baselines, which require a long accumulation time to construct an event frame.

The dense-to-sparse event-based object detection framework, DTSDNet, provides enhanced speed robustness and enables a reduction in event stream accumulation time by a factor of five, such as decreasing it from the typical 50 ms to just 10 ms (Fan et al., 2024). In particular, in conventional recurrent models, event streams are partitioned evenly, whereas DTSDNet uses an attention-based dual-pathway aggregation module to integrate rich spatial information from dense pathway with asynchronous sparse pathway.

While manually designed architectures like HMNet and others demonstrate strong performance, they often rely on expert knowledge and trial-and-error. To overcome this limitation and explore more efficient configurations, Neural Architecture Search (NAS) can automate the design of novel neural networks by exploring various combinations of architectural components using strategies like gradient-based search, evolutionary algorithms, and reinforcement learning (Ren et al., 2021). Chimera is the first block-based Neural Architecture Search (NAS) for event-based object detection using dense models (Silva et al., 2024b). The choice of encoding format, along with models designed using the Chimera NAS framework, achieved performance comparable to state-of-the-art models on the GEN1 and PEDRo datasets, while reducing the number of parameters up to

There are also hybrid models that include both SNN and dense Artificial neural network (ANN) architectures. One of such examples is an attention-based hybrid SNN-ANN. Its SNN part captures spatio-temporal events and converts them into dense feature maps to be further processed by the ANN part (Ahmed et al., 2025). SNN component of Hybrid SNN-ANN model used the surrogate gradient approach during training. Hybrid SNN-ANN achieves dense-like performance at a reduced number of parameters, latency, and power.

4.3 Event encoding techniques

Each event in a event stream

SNNs are inherently suited for processing event-based data. Models that utilize asynchronous sparse architectures are also capable of handling raw events. However, in the case of DNNs and GNNs, events cannot be processed directly by models and need to be encoded into a specific format. To be utilized by GNNs, events must first be transformed into a graph format (Gehrig and Scaramuzza, 2022; 2024), whereas DNNs process events that have been adapted into the image- or tensor-like structure.

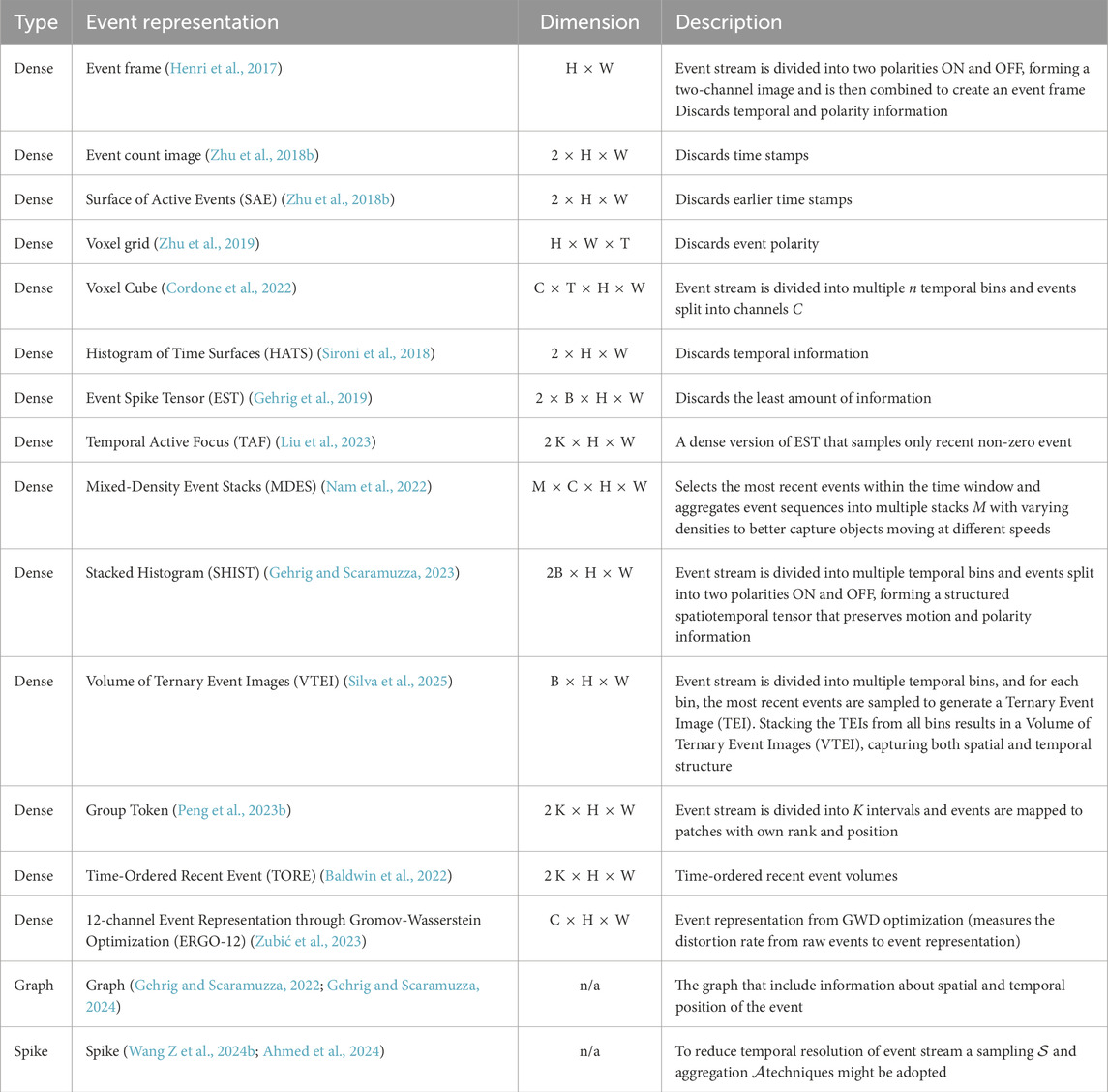

During event encoding into a specific format, the choice of representation can significantly impact performance. For example, the temporal component of the event stream can be used to identify patterns and provide valuable insights in certain applications, a concept known as temporal sensitivity (Shariff et al., 2024). Additionally, focusing on the most informative changes in a scene, which is called selectivity, further improves processing. These representations can also be used to satisfy computational and memory requirements (Shariff et al., 2024). Table 6 presents a summary of common event encoding formats, with detailed descriptions provided in the sections below.

Table 6. Common event encoding techniques [adapted from (Gehrig et al., 2019; Zheng et al., 2023].

4.3.1 Dense aggregation

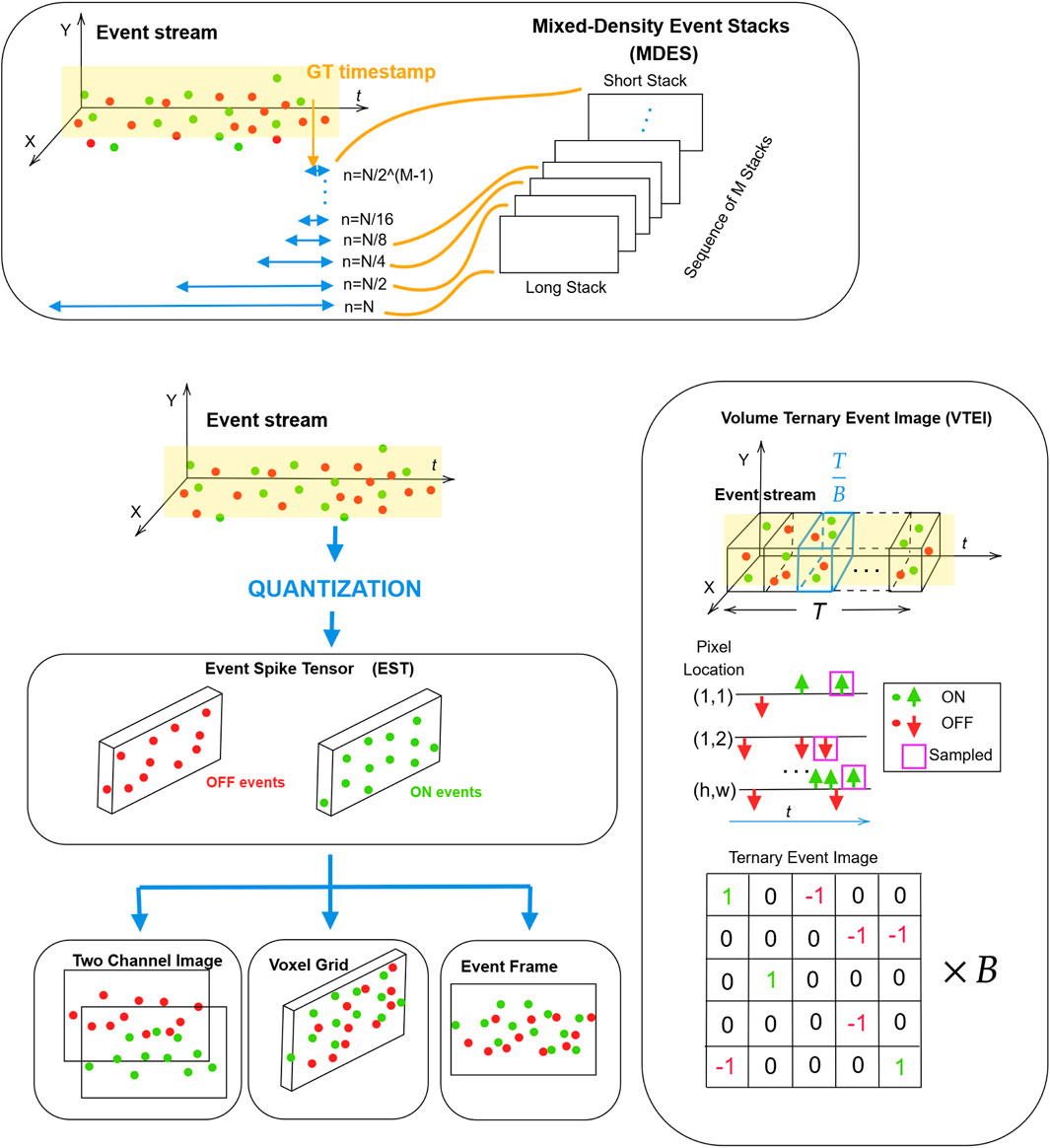

A common approach for converting an event stream into a dense, grid-like format involves stacking the events in various configurations. Based on image formation strategies, existing stacking methods are categorized into four types: stacking by polarity, timestamps, event count, and a combination of timestamps and polarity (Zheng et al., 2023). This section highlights several noteworthy techniques for encoding events and illustrates some of them in Figure 8.

Figure 8. Some of the dense representations of events: Event Spike Tensor (EST), Two-Channel Image, Voxel Grid, Event Frame, Mixed-Density Event Stacks (MDES), Volume Ternary Event Image (VTEI) (adapted from (Gehrig et al., 2019; Nam et al., 2022; Silva et al., 2025).

• Event Frame is formed by merging two-channel images, each corresponding to stacked ON and OFF polarity events (Henri et al., 2017).

• Event Volume or Voxel Grid is a volumetric representation of the events expressed as

Each element in the event volume consists of events represented by a linearly weighted accumulation, analogous to bilinear interpolation as in Equation 6:

where

• Voxel Cube are obtained from a voxel grid which is formed via accumulation of events over a specified time window

• Event Spike Tensor (EST) allows to process continuous-time event data as a grid-like 4-dimensional data structure

In a given time interval

Examples of

The convolved signal is also known as membrane potential. Prior works employed various task-specific kernel functions, including the exponential kernel, which was used in the hierarchy of time-surfaces (HOTS) (Lagorce et al., 2016) and histogram of average time surfaces (HATS) (Sironi et al., 2018) encodings. After a convolutional step, the signal is further sampled at regular intervals to produce a grid-like generalized Event Spike Tensor (EST) representation as in Equation 9:

with the spatiotemporal coordinates

The generalized EST can be further modified via different operations such as summation

• Temporal Active Focus (TAF) is seen as a dense version of the Event Spike Tensor (EST), which involves spatiotemporal data processing with efficient queue-based storage (Liu et al., 2023). While traditional EST is a sparse tensor covering the entire event stream

According to Equation 10, at each detection step

Then its non-zero values are pushed into the FIFO queues. At the next step

• Mixed-Density Event Stacks (MDES) was proposed to alleviate the event missing or overriding issues due to different speeds of the captured objects (Nam et al., 2022).

Due to the different speeds of the moving objects, stacking events with the pre-defined number of events or time period may lead to the loss of information. For example, short stacks can not track slow objects, whereas long stacks with excessive events may overwrite earlier scenes. To overcome the problem, Mixed-Density Event Stacks (MDES) format is proposed, where the length of each event sequence

• Stacked Histogram (SHIST) A Stacked Histogram (SHIST) is designed to save memory and bandwidth (Gehrig and Scaramuzza, 2023). The algorithm creating SHIST includes several steps. It starts by creating a 4-dimensional byte tensor. The first two dimensions are polarity and

where

• Volume of Ternary Event Images (VTEI) Volume of Ternary Event Images (VTEI) method ensures high sparsity, low memory usage, low bandwidth, and low latency (Silva et al., 2025). Similar to MDES, VTEI focuses on the encoding of the last event data, but with uniform temporal bin sizes and considering events’ polarity, +1 and −1. The VTEI tensor is created in several steps. The first step involves the initialization of a tensor

where

• Group Token representation groups asynchronous events considering their timestamps and polarities (Peng et al., 2023b). Conversion of the event stream into GT format is done using Group Token Embedding (GTE) module. First, asynchronous time events are discretized into

where:

Then, two 1D arrays with length

• Time-Ordered Recent Event (TORE) volumes avoid fixed and predefined frame rates, which helps to minimize information loss (Baldwin et al., 2022). Similar to TAF, TORE prioritizes the most recent events since they have the most impact and employs FIFO buffer. TORE volumes are implemented based on a per pixel polarity specific FIFO queues

where

• 12-channel Event Representation through Gromov-Wasserstein Optimization (ERGO-12) It was discovered that several measures can improve model convergence and speed up optimization, and include (i) normalization of the event coordinates and timestamps, (ii) concatenation of the normalized pixels, and (iii) sparsification (Zubić et al., 2023).

The choice of encoding format depends on the specific task, dataset, and network backbone used. Traditionally, identifying the optimal representation relies on validation scores obtained through neural networks, which is often a resource-intensive process. A recently introduced method for ranking event representations across various formats leverages the Gromov-Wasserstein Discrepancy (GWD), achieving a 200

where

The tests of the two-channel 2D Event Histogram and 12-channel Voxel Grid, MDES, TORE, and ERGO-12 using YOLOv6 architecture preserved the same ranking across multiple backbones, SwinV2 (Liu et al., 2022), ResNet-50 (He et al., 2016), and EfficientRep (Weng et al., 2023). Moreover, ERGO-12 outperformed other methods by up to 2.9% mAP on the GEN1 dataset using YOLOv6 with SwinV2 backbone (Zubić et al., 2023).

4.3.2 Spike-based representation

Although SNNs can naturally perform event-driven computations, their performance lags behind DNNs. One of the possible reasons is that the temporal resolution of sensors exceeds the processing capability of object detectors. Inspired by a sampling

4.3.3 Graph representation

In AEGNN, the event stream is converted into a spatio-temporal graph format using uniform subsampling (Schaefer et al., 2022). In particular, events are embedded into a spatio-temporal space

Both DAGr (Gehrig and Scaramuzza, 2024) and EAGR process the spatio-temporal graphs

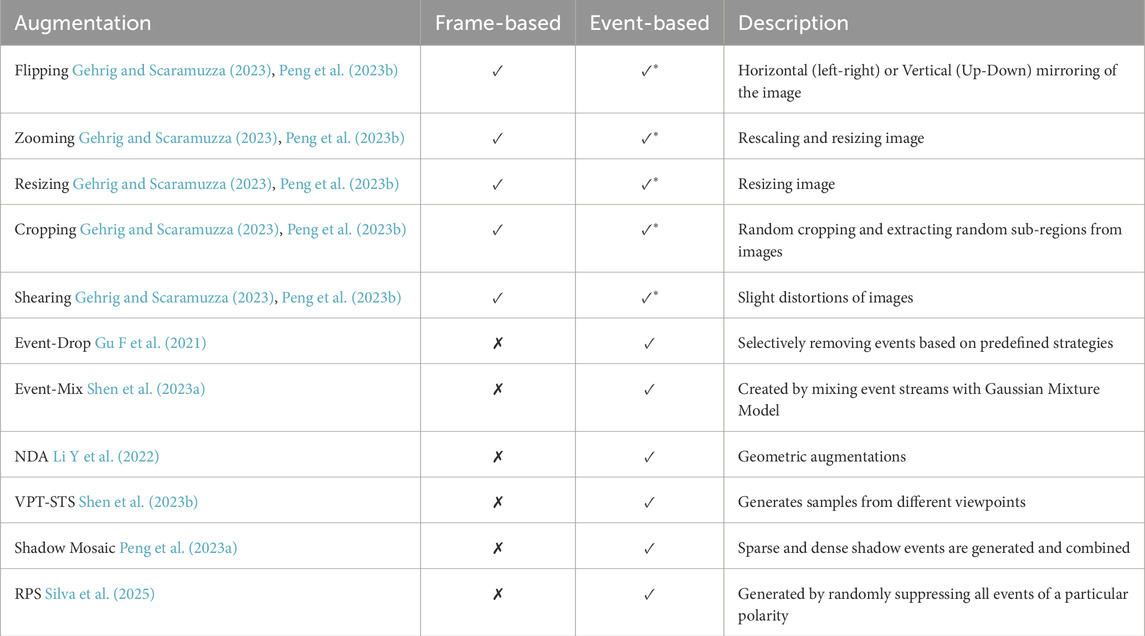

4.4 Augmentation

Data augmentation can increase the generalization ability of neural networks and greatly affect their performance (Zoph et al., 2020). The most common augmentation techniques for event-based data are similar to those used for traditional frame-based images and include horizontal flipping, zoom-in, zoom-out, resizing, adding noise, shearing, and cropping (Gehrig and Scaramuzza, 2023; Peng et al., 2023b).

On the other hand, other augmentation methods exploit the nature of event-based data for augmentation. EventDrop (Gu F. et al., 2021) is applied to raw events. It augments asynchronous event data by selectively removing events based on predefined strategies such as random drop, drop by time, and drop by area. The method was evaluated using DNN models with four event encoding representations, such as Event Frame, Event Count, Voxel Grid, and Event Spike Tensor (EST), on N-Caltech101 and N-Cars datasets. In addition, EventDrop can enhance the model’s generalization in object recognition and tracking by generating partially occluded cases, improving performance in scenarios with occlusion. Besides, EventDrop is reported to be compatible with SNNs too.

Similar to EventDrop, the EventMix method can be applied to both DNNs and SNNs. It creates augmentation by mixing event streams with a Gaussian Mixture Model (Shen G. et al., 2023). Performance of EventMix was tested on DVS-CIFAR10, N-Caltech101, N-CARS, and DVS-Gesture datasets. SNN with Event-Mix achieved state-of-the-art results (Shen G. et al., 2023).

Neuromorphic Data Augmentation (NDA), a family of geometric augmentations, was specifically designed to enhance the robustness of SNNs (Li Y. et al., 2022). SNN model with NDA improved accuracy by 10.1% and 13.7% on DVS-CIFAR10 and N-Caltech 101, respectively. The next ViewPoint Transform and Spatio-Temporal Stretching (VPT-STS) augmentation method is also designed for SNNs (Shen H. et al., 2023). In particular, the SNN model with VPT-STS achieved 84.4% on the DVS-CIFAR10 dataset. The VPT-STS generates samples from different viewpoints by transforming the rotation centers and angles in the spatiotemporal domain.

Another proposed method for enhancing event data diversity is Shadow Mosaic (Peng et al., 2023a). It consists of several stages, including Shadow Mosaic, Scaling, and Cropping, which aim to reduce the imbalance in spatio-temporal density of event streams due to different speeds of objects and the brightness change. Sparse shadow events are generated through random sampling, while dense shadow events are created by replicating events in the three-dimensional domain. At the mosaic stage, resulting shadow event samples are merged and scaled up or down, leading to a distortion. To restore realistic event structures, the shadow method is re-applied, and cropping is performed. The Shadow Mosaic augmentation method was used with Hyper Histograms encoding for the DNN model and improved mAP by up to 9.0% and 8.8% compared to the baseline without augmentation on the 1MP and GEN1 real-world datasets, respectively. A recent work introduced Random Polarity Suppression (RPS) augmentation method, which was applied on the VTEI tensor (Silva et al., 2025). Table 7 provides summary on augmentation techniques mentioned above.

4.5 Hardware accelerators

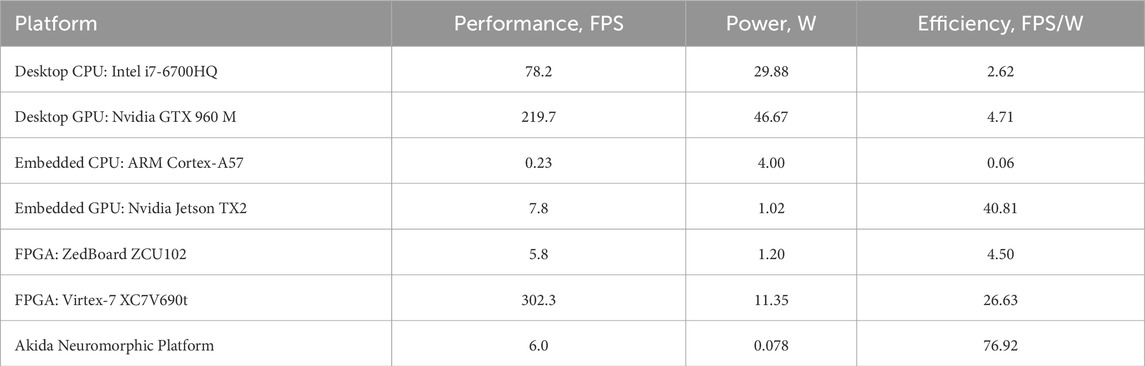

4.5.1 Graphical Processing Units

Majority of the event-based data object detection architectures with the state-of-the-art performance were trained and evaluated on Graphical Processing Units (GPUs), which represent conventional Von-Neumann architectures. Some of the works omit the hardware specification, making their direct comparisons challenging, but the most commonly used evaluation platforms for both dense and sparse algorithms include NVIDIA Tesla T4, NVIDIA Titan Xp, NVIDIA Quadro RTX 4000, and others (Gehrig and Scaramuzza, 2023; Peng et al., 2024). Generally, GPUs, along with specialized libraries such as PyTorch and TensorFlow, are well-suited for executing traditional DNNs due to their optimized support for parallel matrix operations and high computational throughput. However, they are less efficient when it comes to processing sparse models, as they typically do not skip computations involving zero-value elements (Smagulova et al., 2023).

Generally, sparse neuromorphic models like SNN are better aligned with the nature of event-based data, offering greater potential for efficient processing due to their ability to exploit data sparsity and reduce unnecessary computations. The same characteristic also poses a major obstacle to training efficiency. To address the issue, a range of specialized frameworks for SNNs have been developed, which include snnTorch and SpikingJelly, each targeting different aspects of model design and simulation. More recently, temporal fusion has been proposed as a strategy for scalable, GPU-accelerated SNN training Li et al. (2024).

4.5.2 FPGA-based accelerators

AI-based object detection systems on FPGAs lag behind GPU-based developments due to a time-consuming implementation process(Kryjak, 2024). Additional challenges include the lack of standardized benchmarks and the limited availability of Hardware Description Language (HDL) codes. However, the introduction of Prophesee’s industry-first event-based vision sensors, combined with the FPGA-based AMD Kria Vision AI Starter Kit, marks a significant milestone for future advancements in the field (Kalapothas et al., 2022). The recent work introduces SPiking Low-power Event-based ArchiTecture (SPLEAT) neuromorphic accelerator, a full-stack neuromorphic solution that utilizes the Qualia framework for deploying state-of-the-art SNNs on an FPGA (Courtois et al., 2024). In particular, it was used to implement a small 32-ST-VGG model, which achieved 14.4 mAP on the GEN1 dataset. The model’s backbone was accelerated on SPLEAT, operating with a power consumption of just 0.7 W and a latency of 700 ms, while the SSD detection head was executed on a CPU.

4.5.3 Neuromorphic platforms

Neuromorphic processing platforms for SNNs remain in their early stages of development, but represent a significant area of ongoing research (Bouvier et al., 2019; Smagulova et al., 2023). The notable SNN accelerators include Loihi (Davies et al., 2018), Loihi-2 (Orchard et al., 2021), TrueNorth (Akopyan et al., 2015), BrainScaleS (Schemmel et al., 2010), BrainScaleS-2 (Pehle et al., 2022), Spiking Neural Network Architecture (SpiNNaker) (Furber and Bogdan, 2020), SpiNNaker 2 (Huang et al., 2023), and one of the first commercially available neuromorphic processors, Akida by BrainChip (Posey, 2022).

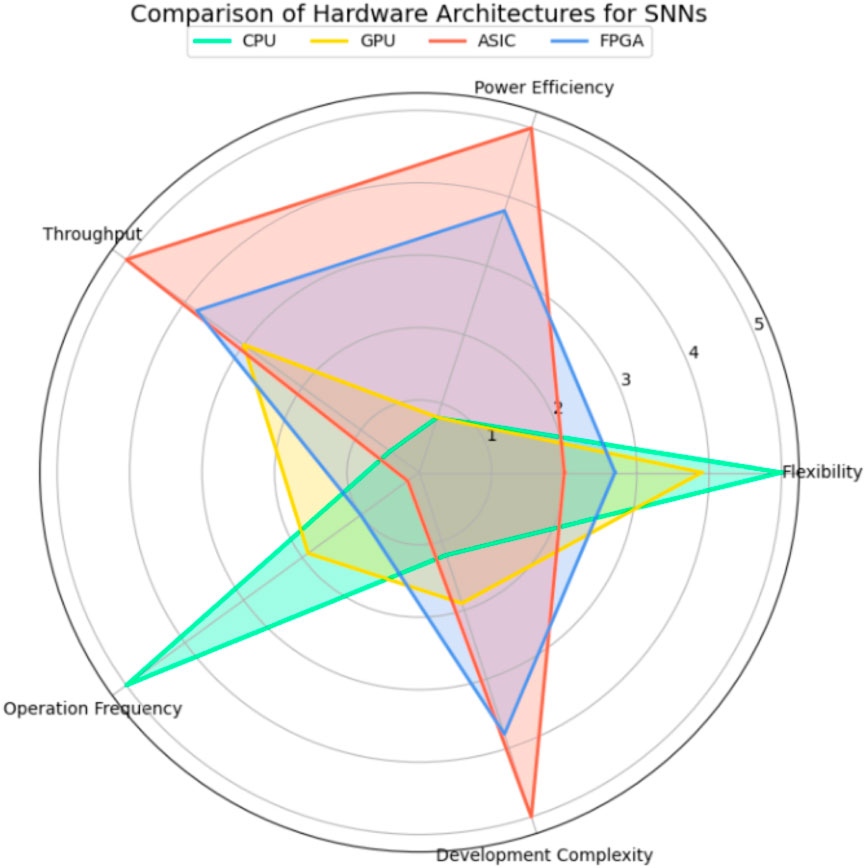

TrueNorth is an early large-scale neuromorphic ASIC designed for SNNs. While it was a significant milestone in brain-inspired computing, it lacks the flexibility required for modern AI applications and has been superseded by newer designs. BrainScaleS and BrainScaleS-2 are mixed-signal brain-inspired platforms suitable for large-scale SNN simulations. However, their large physical footprint and complex infrastructure requirements make them less suitable for deployment in embedded or real-world applications such as autonomous driving Iaboni and Abichandani (2024).