- 1Institute for Life Sciences/Computer Science, University of Southampton, Southampton, United Kingdom

- 2Allen Discovery Center, Tufts University, Medford, MA, United States

- 3Wyss Institute for Biologically Inspired Engineering, Harvard University, Boston, MA, United States

- 4School of Engineering and Informatics, University of Sussex, Brighton, United Kingdom

The truly surprising thing about evolution is not how it makes individuals better adapted to their environment, but how it makes individuals. All individuals are made of parts that used to be individuals themselves, e.g., multicellular organisms from unicellular organisms. In such evolutionary transitions in individuality, the organised structure of relationships between component parts causes them to work together, creating a new organismic entity and a new evolutionary unit on which selection can act. However, the principles of these transitions remain poorly understood. In particular, the process of transition must be explained by “bottom-up” selection, i.e., on the existing lower-level evolutionary units, without presupposing the higher-level evolutionary unit we are trying to explain. In this hypothesis and theory manuscript we address the conditions for evolutionary transitions in individuality by exploiting adaptive principles already known in learning systems. Connectionist learning models, well-studied in neural networks, demonstrate how networks of organised functional relationships between components, sufficient to exhibit information integration and collective action, can be produced via fully-distributed and unsupervised learning principles, i.e., without centralised control or an external teacher. Evolutionary connectionism translates these distributed learning principles into the domain of natural selection, and suggests how relationships among evolutionary units could become adaptively organised by selection from below without presupposing genetic relatedness or selection on collectives. In this manuscript, we address how connectionist models with a particular interaction structure might explain transitions in individuality. 
We explore the relationship between the interaction structures necessary for (a) evolutionary individuality (where the evolution of the whole is a non-decomposable function of the evolution of the parts), (b) organismic individuality (where the development and behaviour of the whole is a non-decomposable function of the behaviour of component parts) and (c) non-linearly separable functions, familiar in connectionist models (where the output of the network is a non-decomposable function of the inputs). Specifically, we hypothesise that the conditions necessary to evolve a new level of individuality are described by the conditions necessary to learn non-decomposable functions of this type (or deep model induction) familiar in connectionist models of cognition and learning.

Introduction: Evolutionary Transitions in Individuality

All complex individuals are made of parts that used to be individuals themselves (e.g., the transition from single-celled life to multicellular organisms). Such evolutionary transitions in individuality have occurred at many levels of biological organisation, and have been fundamental to the origin of biological complexity, but how they occurred is not well understood (Maynard Smith and Szathmary, 1997; Michod, 2000; Okasha, 2006; Godfrey-Smith, 2009; Szathmary, 2015; West et al., 2015). Before a transition, adaptations under natural selection support component entities in acting to maintain their individual survival and reproduction. But after a transition, natural selection supports components in acting to serve the development, survival and reproduction of an individual at a higher level of organisation (e.g., the multicellular organism), even when it conflicts with or suppresses the survival and reproduction of these component parts (e.g., somatic cells) (Maynard Smith and Szathmary, 1997; Godfrey-Smith, 2009).

How do they come to work together in this way? The form and function of the many different parts within an individual, and their working together as a coordinated whole, is consistent with natural selection acting at the higher level. When the higher-level individual is established as an evolutionary unit, i.e., after a transition, this can even explain self-sacrifice at the level of component parts – as they are no longer effective evolutionary units as individuals. But this presupposes the higher-level individual as an evolutionary unit and does not explain the process of the transition. The evolutionary changes involved in the creation and maintenance of a new level of individuality are complex and can involve many evolutionary steps in multiple dimensions including population structure, functional interdependence and reproductive specialisation (Godfrey-Smith, 2009). For example, these may include: a new kind of compartmentalisation (e.g., cell membrane) that limits the distribution of public goods or provides physical protection that binds selective fates together; new social relationships that create irreversible fitness dependencies between ecological partners (e.g., from ecological “trade” to division of labour); the synchronisation and centralisation of reproductive machinery (e.g., as in the origin of chromosomes and the eukaryote cell); changes to physical population structure that implement genetic assortment (e.g., a reproductive bottleneck in the origin of multicellular animals) and/or reproductive specialisation (with early-determination and sequestration of a germ line) (Margulis and Fester, 1991; Maynard Smith and Szathmary, 1997; Michod, 2000; Okasha, 2006; Godfrey-Smith, 2009; Buss, 2014; Szathmary, 2015; West et al., 2015). Such changes cannot be explained as adaptations of the higher-level unit because the higher-level unit does not exist until after (some sufficient subset of) these adaptations have taken place. 
Rather they must be a result of selection on the extant lower-level units changing their functional relationships with one another. That is, evolutionary transitions in individuality must be understood as evolved or coevolved changes to relationships between existing evolutionary units – not as some kind of instantaneous jump in the unit of selection (followed by the evolutionary complexification of internal relationships and mechanisms) (Black et al., 2020; Veit, 2021).

This presents an evolutionary puzzle because, whilst the collective benefit of adaptations at the higher level may be significant in the long term, natural selection is famously short-sighted and self-interested. That is, characteristics that decrease immediate benefit, or differentially benefit others, do not increase in frequency. Selection at the higher level of organisation necessary to overcome this is not effective until after the new level of individuality is constructed, and selection at the lower level will not favour any changes that decrease short-term individual fitness (Veit, 2021). Assuming the new evolutionary unit did not spring into existence “all at once,” with the necessary organised relationships already in place, the multiple changes involved in its creation must have been driven “bottom-up” by the selective interests of the extant, lower-level units – even though these same units are consequently caused to give up their self-interest in the process. A key question in the transitions is thus:

How do multiple short-sighted, self-interested entities organise their relationships with one another to create a new level of individuality, meaning that they are caused by these relationships to act in a manner that is consistent with long-term collective interest?

To answer this, a theory of evolutionary transitions in individuality (ETIs) needs to describe (a) what kind of functional relationships between components are needed to make a new individual, and how they need to be organised; and (b) how the organisation of these relationships arises “bottom-up,” i.e., without presupposing the higher-level individual we are trying to explain.

Existing evolutionary theory struggles with these questions. Specifically, a conventional evolutionary framework cannot explain adaptations in systems that are not evolutionary units. After the transition, when the higher-level individual is established as an evolutionary unit, selection at this higher level can explain complex relationships and even altruistic behaviours among the component parts. But before the transition, we cannot invoke natural selection to explain the adaptation of such system-level relationships or behaviours. Thus, if these are adaptations required to create the new level of individuality, how can selection explain them? Questions about how new units are created, or transition from one level of organisation to another, cannot be addressed within a framework that presupposes the unit it is trying to explain. As Veit puts it, the problem is one of circular reasoning: “how to explain the origins of Darwinian properties without already invoking their presence at the level they emerge?” (Veit, 2021). So the process of ETIs under bottom-up selection creates a chicken-and-egg problem for conventional thinking: which came first, the higher-level unit of selection required for complex adaptations, or the complex adaptations required to create the higher-level unit of selection? (Griesemer, 2005; Clarke, 2016).

In this manuscript, we outline the existing theoretical frameworks and hypotheses regarding the ETIs, and discuss their limitations – in particular, the problem of creating fitness differences at the collective level that are not just a by-product of fitness differences among particles, and how to explain the selective mechanisms by which the structures necessary to produce this transition can evolve bottom-up.

We then introduce some new experimental findings in developmental biology – namely, “basal cognition” and the separation of organismic individuality from genetics (Manicka and Levin, 2019a; Lyon et al., 2021a) – and new perspectives on evolutionary processes, namely “evolutionary connectionism” (Watson et al., 2016), which deepens and expands the formal links between evolution and learning. The link between evolution and simple types of learning has often been noted (Skinner, 1981; Watson and Szathmary, 2016) but is sometimes interpreted in an uninteresting way, as if to say “some types of learning are no more clever than random variation and selection.” But the formal equivalence between evolution and learning (Frank, 2009; Harper, 2009; Shalizi, 2009; Valiant, 2013; Chastain et al., 2014) also has a much more interesting implication, namely that “evolution is more intelligent than we realised” (Watson and Szathmary, 2016). Evolutionary connectionism addresses the two questions above by utilising (a) the principles of distributed cognition, familiar in neural network models, to explain how the relationships between evolutionary units can produce something that is “more than the sum of the parts” in a formal sense, and (b) the principles of distributed learning to address how evolving relationships can be organised bottom-up, without presupposing system-level feedback. This provides new ways of thinking about these questions, leading to a new hypothesis for what ETIs are and how they occur.

The core of the idea is that ETIs are the evolutionary equivalent of deep learning (LeCun et al., 2015) (i.e., multi-level model induction), familiar in connectionist models of cognition and learning (Watson et al., 2016; Watson and Szathmary, 2016; Czégel et al., 2018, 2019; Vanchurin et al., 2021). We hypothesise that this is not merely a descriptive analogy, but a functional equivalence (Watson and Szathmary, 2016) that describes the types of relationships required to support a new level of individuality and the selective conditions required for these relationships to arise bottom-up. Specifically, we hypothesise that (i) the type and organisation of functional relationships between components required for a new level of individuality are those which encode a specific but basic type of non-decomposable computational function (i.e., non-linearly separable functions), (ii) these relationships are enacted by the mechanisms of information integration and collective action (“basal cognition”) observed in the developmental processes of organismic individuality, and (iii) the conditions necessary for natural selection to produce these organisations are described by the conditions for deep model induction.
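As a minimal illustration of what “non-linearly separable” means in connectionist terms, the following Python sketch checks whether a single linear threshold unit can reproduce a two-input truth table. The function names, the weight grid and the hand-chosen hidden units are assumptions made for this illustration only; the grid search is a demonstration rather than a proof, and nothing here models an evolutionary process.

```python
from itertools import product

def threshold_unit(w1, w2, bias, x1, x2):
    """Single linear threshold unit: outputs 1 when the weighted sum exceeds zero."""
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0

def is_linearly_separable(truth_table, grid):
    """Search a coarse grid of weights for one unit that reproduces the table."""
    for w1, w2, bias in product(grid, repeat=3):
        if all(threshold_unit(w1, w2, bias, x1, x2) == y
               for (x1, x2), y in truth_table.items()):
            return True
    return False

# Hypothetical search grid: weights and bias from -4.0 to 4.0 in steps of 0.5.
GRID = [i / 2 for i in range(-8, 9)]

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

def xor_two_layer(x1, x2):
    """XOR built from two hidden units (OR and NAND) feeding an AND unit."""
    h_or = threshold_unit(1, 1, -0.5, x1, x2)
    h_nand = threshold_unit(-1, -1, 1.5, x1, x2)
    return threshold_unit(1, 1, -1.5, h_or, h_nand)

and_is_separable = is_linearly_separable(AND, GRID)
xor_is_separable = is_linearly_separable(XOR, GRID)
xor_needs_hidden_layer = all(xor_two_layer(*x) == y for x, y in XOR.items())
```

In this sketch AND is matched by a single unit, whereas no unit on the grid matches XOR; adding one intermediate layer is enough to compute it. This is the sense in which the output is a non-decomposable function of the inputs.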

Existing Approaches to the Problem of the Evolutionary Transitions in Individuality

The evolutionary transitions in individuality, ETIs, have been some of the most important innovations in the history of biological complexity (Maynard Smith and Szathmary, 1997; Michod, 2000; Godfrey-Smith, 2009; West et al., 2015). These include the transition from individual autocatalytic molecules to the first protocells, individual self-replicating genes to chromosomes, from simple bacterial cells to eukaryote cells containing multiple organelles, and from unicellular life to multicellular organisms (Maynard Smith and Szathmary, 1997; Michod, 2000). Each transition is characterised by the “de-Darwinisation” of units at the existing level of organisation and the “Darwinisation” of collectives at a higher-level of organisation (Godfrey-Smith, 2009). That is, at the lower level, each component part loses its ability to replicate independently – the most fundamental property of a Darwinian unit – and after the transition, can replicate only as part of a larger whole (Maynard Smith and Szathmary, 1997). Conversely, before the transition, reproduction does not occur at the collective level; and after the transition the collective exhibits heritable variation in fitness that belongs properly to this new level of organisation (Maynard Smith and Szathmary, 1997; Okasha, 2006).

Whereas conventional evolutionary theory takes individuality for granted, and assumes the unit of selection is fixed, it is now recognised that Darwinian individuality is a matter of degree in many dimensions (e.g., degree of genetic homogeneity, degree of functional integration, degree of reproductive specialisation) (Godfrey-Smith, 2009). The research programme of the ETIs seeks to understand the processes, mechanisms and drivers that cause evolutionary processes to move through this space of possibilities (Okasha, 2006; Godfrey-Smith, 2009).

Social Evolution Theory and Kin Selection

Social evolution theory, a general approach to explain social behaviour, notes that it is evolutionarily rational to cooperate with someone that makes more copies of you (or your genes). Thus, in the case that interactors are genetically related or homogeneous, as they can be in the case of the cells within a multicellular organism, for example, this can explain the altruism of the somatic cells (West et al., 2015; Birch, 2017). The inclusive fitness perspective on ETIs, derived from this kind of social evolution theory, also offers a viewpoint that side-steps the whole problem. The question, as we posed it, asked why short-sighted self-interested individuals would act in a manner that opposes their individual interest to serve the interests of the whole. But an inclusive fitness perspective suggests this is wrong-headed because they were never different individuals in the first place – they were always of one genotype, and the multicellular organism is just a phenotype of this singular evolutionary unit. Problem solved?

For some purposes, it might be appropriate to view ETIs as an extreme point on the same continuum as other social behaviours. But genetic relatedness, kin selection or inclusive fitness do not explain all ETIs or even key examples such as multicellularity with homogeneous genetics.

First, acting with unity of purpose in multicellular organisms does not require genetic homogeneity (Grossberg, 1978; Levin, 2019, 2021b; Levin et al., 2019; Bechtel and Bich, 2021). Second, other transitions in individuality involve components that are genetically unrelated, for example, the transition from self-replicating molecules to chromosomes, and the transition from bacterial cells to eukaryote cells with multiple organelles (Maynard Smith and Szathmary, 1997). Third, and perhaps most important, social evolution theory only explains the cooperation that is expected given a certain interaction structure (i.e., determining whether those that interact are related). It does not explain changes in interaction structures that are necessary to increase or decrease genetic assortment, let alone to reach such extremes. Moreover, the genetic definition of individuality fails to address all the questions that are really interesting about individuality – not least how individuality changes from one level of organisation to another. By asserting that, both before and after the transition, the only relevant individual was the gene, this approach fails to address the meaning of the individual at all. Of course, it is common that the cells of multicellular organisms, especially animals, are for the most part genetically homogeneous. And given that they are, this can explain the apparently altruistic behaviours of soma. But this does not explain how this situation evolved, nor other instances of individuality that are not genetically homogeneous.

Evolved Change in Interaction Structure: Ecological Scaffolding and Social Niche Construction

One recent approach to explain how new interaction structures might evolve is ecological scaffolding (Black et al., 2020; Veit, 2021). That is, extrinsic ecological conditions, that are not in themselves adaptations and do not require selective explanation, create conditions where individuals live in a grouped or meta-population structure, e.g., microbial mats aggregated around water reed stems (Veit, 2021). The differential survival and reproduction of such sub-populations, e.g., in recolonising vacant locations, affords the possibility of higher-level selection (Wilson, 1975; Wade, 2016). Thus far in this account, nothing has evolved to support or maintain these structures; it is simply an assumption of fortuitous extrinsic conditions that alter population structure to create these different selective pressures. But from there it becomes more interesting. Given these conditions, individual selection at the lower level supports the evolution of characters that access synergistic fitness interactions, changing the relationships among the particles, and given that synergistic fitness interactions among particles have evolved, it is subsequently advantageous for particles to evolve traits that actively support this grouped population structure. Now the original extrinsic ecological conditions might change or cease, but the population structure necessary to support higher-level selection is nonetheless maintained, supported by the adaptations of the particles. That is, the ecological scaffolding becomes redundant, and is replaced by endogenous effects of characters produced by selection at the particle level. This ecological scaffolding thus provides a way to overcome the chicken-and-egg problem of the ETIs (by temporarily assuming the presence of a “chicken”). It does, however, depend on the initial assumption of extrinsic ecological conditions that happen to support higher-level selection in the first place. 
Moreover, if population structure changes evolutionary outcomes for individuals, and individuals have the ability to alter population structure, we must consider the possibility that rather than adapting to support the new level of selection they act to oppose or disrupt it, e.g., by evolving dispersal behaviours rather than aggregation behaviours.

These works and others in this area point to the need to explain how evolution modifies the parameters of its own operation when these parameters exhibit heritable variation (Powers and Watson, 2011; Ryan et al., 2016; Watson and Szathmary, 2016; Watson and Thies, 2019), i.e., to endogenise the explanation of its own parameter values (Bourrat, 2021b; Okasha, 2021). For example, with or without scaffolding, suppose that organisms have heritable variation in traits that modify their interaction structure with others, such as compartmentalisation or group size, reproductive synchronisation, or reproductive specialisation. These traits can modify relatedness – they change how related interactors are [not by changing anyone’s genetics but by changing who interacts with whom (Taylor and Nowak, 2007; Jackson and Watson, 2013)]. How does natural selection act on these traits? For example, initial group size is known to be an important factor in modifying the efficacy of (type 1) group selection (Wilson, 1975; Powers et al., 2009, 2011), and individuals may have traits that modify initial group size (e.g., propagule size) (Powers et al., 2011). The term “social niche construction” refers to the evolution of traits that alter interaction structure, i.e., who you interact with and how much (Powers et al., 2011; Ryan et al., 2016). In some circumstances, natural selection will act to modify such traits toward structures that increase cooperation (Santos et al., 2006; Powers et al., 2011; Jackson and Watson, 2013). This social niche construction has potential advantages over ecological scaffolding because it does not presuppose exogenous reasons for favourable population structure (that is later canalised by endogenous traits), but shows conditions where such population structure can evolve de novo.

Multi-Level Selection and Individuation Mechanisms

In contrast to the kin selection approach (i.e., focussing on the lower-level units and whether they interact with other units that are related), the multi-level selection approach conceives higher-level organisations (collectives) as units of a higher-level evolutionary process (Wilson, 1997; Okasha, 2006; O’Gorman et al., 2008). The multi-level Price approach, for example, attempts to divide the covariance of character and fitness into “between collective selection” (acting at the higher level) and “within collective selection” (acting at the particle level) (e.g., Bourrat, 2021b). Clarke (2016) proposes that we might assess the degree of individuality as “the proportion of the total change that is driven by selection at the higher level,” and like Okasha (2006), suggests that an ETI involves a decrease in the proportion of selection driven by the lower level and an increase in the proportion driven by selection at the higher level. In the limit of complete Darwinisation of the collective, and complete de-Darwinisation of the particles, this proportion becomes maximal.
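The covariance partition described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the formulation used in the cited works: collectives are assumed to be of equal size, covariances are population covariances, and the example data and the fitness rule in the comments are hypothetical. Under these assumptions the total character-fitness covariance equals the covariance between collective means plus the average within-collective covariance.

```python
from statistics import fmean

def cov(xs, ys):
    """Population covariance (divides by n rather than n - 1)."""
    mx, my = fmean(xs), fmean(ys)
    return fmean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

def price_partition(collectives):
    """Split character-fitness covariance into between- and within-collective parts.

    collectives: list of equal-sized lists of (character z, fitness w) pairs.
    Returns (between, within, total).
    """
    z_means = [fmean([z for z, _ in c]) for c in collectives]
    w_means = [fmean([w for _, w in c]) for c in collectives]
    between = cov(w_means, z_means)
    within = fmean([cov([w for _, w in c], [z for z, _ in c]) for c in collectives])
    all_z = [z for c in collectives for z, _ in c]
    all_w = [w for c in collectives for _, w in c]
    return between, within, cov(all_w, all_z)

# Hypothetical data: z = 1 marks a cooperator, z = 0 a defector.
# Fitness was generated as w = 1 + 2 * (fraction of cooperators) - 0.5 * z.
collectives = [
    [(1, 2.0), (1, 2.0), (1, 2.0), (0, 2.5)],  # mostly cooperators
    [(1, 1.0), (0, 1.5), (0, 1.5), (0, 1.5)],  # mostly defectors
]

between, within, total = price_partition(collectives)
```

In this toy data set the between-collective term is positive and the within-collective term is negative, which is the kind of opposition the multi-level approach is designed to expose. As the following paragraphs argue, however, such a partition can be computed for any choice of collective boundaries, so the quantities alone do not identify which level is causally responsible.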

One problem with this analysis is that, as Wimsatt (1980) points out, the presence of heritable variation in reproductive success at the collective level is not in itself “sufficient for the entity to be a unit of selection, however, for they guarantee only that the entity in question is either a unit of selection or is composed of units of selection.” Moreover, Bourrat argues that “there is no fact of the matter as to whether natural selection occurs at one level or another” because “when evolution by natural selection occurs at one level, it does so concomitantly at many other levels, even in cases where, intuitively, these levels do not count as genuine levels of selection” (Bourrat, 2021a). Collectives can be defined at any level and with any boundary, and their character-fitness covariance can be measured, and yet we could have equally well drawn boundaries in any other way. We would have got different quantities (if the interactions among particles are non-linear), but nothing about these quantities tells us how to identify which units are playing a factually causal role in the evolutionary process. Thus, even when there are salient functional interactions among the particles within a collective, it can be hard to disentangle what is happening at one level and what is happening at another, or more exactly, what caused things to happen at one level or another (see also cross-level by-products; Okasha, 2006).

Bourrat (2021a) goes on to provide an extension to the multi-level Price approach which divides the response to selection (the product of selection and heritability) into a component that is functionally additive (aggregative) and a non-additive component. The latter non-aggregative component is associated with the collective response to selection that is not explained by the particle response to selection (Thies and Watson, 2021). This is useful in drawing attention to the nature of the interactions among particles and its significance in identifying the salient level of causal processes. It also emphasises how a change in heritability at the collective level could alter the ability to respond to selection at the collective level. We will develop related ideas below (but argue that in order for higher-level selection to alter evolutionary outcomes, the type of non-aggregative interaction needs to be more specific).

Beyond matters of quantifying individuality, we also aim to better understand the mechanisms that cause these changes (e.g., changes in the ability to produce heritable fitness differences at the collective level) and how selection acts on these mechanisms. In other words, in addition to knowing whether the evolutionary change in a character is explained by lower-level or higher-level evolutionary units, and quantifying how this balance might alter in the course of a transition, we also want to explain how and why this balance changes. We want to explain the mechanisms by which natural selection changes the identity of the evolutionary unit. Here theory is less well developed.

Clarke offers the concept of “individuation mechanisms” that influence “the extent to which objects are able to exhibit heritable variance in fitness” (see also Godfrey-Smith, 2009). These might include developmental bottlenecks, sexual reproduction, egg-eating behaviours, germ separation, immune regulation and physical boundaries (Clarke, 2014, 2016). In general such mechanisms may affect genetic variance (by affecting the extent to which genetic variation is heritable at the collective level), the fitness effects of that variation, or other (non-genetic) sources of heritable variance in fitness. But still, we want to know how selection, more specifically, bottom-up selection, acts on such traits. For example, we need to be able to explain why lower-level selection would act on such traits in a manner that increases non-aggregative components of the collective heritability and response to selection, and not in a manner that decreases it. Intuitively, one might imagine that the reason the traits evolve, the source of their selective advantage, derives specifically from the change in the collective-level response to selection – e.g., the non-aggregative component identified by Bourrat. The models of social niche construction demonstrate that this is possible in some circumstances. However, we cannot assume that it is in the interest of particles to reduce their ability to respond to selection independently, and make themselves dependent on the collective to respond to selection. Given that such traits must be evolved through a particle-level response to selection (since a collective-level response to selection does not exist until after the transition), and that a collective-level response to selection may ultimately create a situation that opposes their direct fitness (e.g., that of somatic cells), this direction of travel cannot at all be taken for granted. 
As yet, these approaches do not tie together the effects that such traits have on the level of individuality with the selection that causes such traits to evolve.

Types of Fitness Interactions: Emergence, Non-aggregative Interactions and Collectives That Change Evolutionary Outcomes

In order for a new level of biological organisation to have a meaningful causal role as an evolutionary unit, evolutionary outcomes of the collective must not be simply summary statistics over the lower-level units they contain (Okasha, 2006; Bourrat, 2021a). Being a bona fide evolutionary unit requires heritable variation in fitness (Lewontin, 1970; Okasha, 2006), and being a new evolutionary unit (that is “more than the sum of the parts”) requires heritable fitness differences at the new level that are not just the average of heritable fitness differences at the lower level (Okasha, 2006). Otherwise, how can it be that collective characters, and not particle characters, determine particle fitness? If particle characters determine collective characters, and collective characters determine the fitness of the particles they contain, then particle characters determine particle fitness. We can write this as follows. If the sum (or other aggregative property) of particle characters (Σz) in a collective determines (linearly) the reproductive output of the collective (Ω), and the reproductive output of the collective determines (linearly) the fitness of a particular particle therein (ω1), then the value of that particle determines its fitness (z1 → ω1), and hence the collective is explanatorily redundant in describing the selection on particles (Eq. 1).

The point is perhaps better made by focussing on changes in characters and fitnesses. Thus if the change in a character (Δz1) determines a change in collective fitness (ΔΩ), and a change in collective fitness determines a change in particle fitness, then changes in particle fitness are determined by changes in particle characters (Δz1 → Δω1), and the collective is redundant.
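The redundancy argument for the linear case can be checked numerically. The sketch below is illustrative only: the particular linear maps (a collective output of a·Σz shared equally among the particles), the parameter value and the function names are assumptions introduced here, not the equations of the original text.

```python
def collective_output_linear(zs, a=2.0):
    """Hypothetical aggregative map: collective output Ω is linear in Σz."""
    return a * sum(zs)

def particle_fitness(omega_collective, n):
    """Hypothetical linear map: each of the n particles gets an equal share of Ω."""
    return omega_collective / n

def fitness_effect(collective_fn, z1, dz1, others):
    """Change in particle 1's fitness (Δω1) when its character changes by Δz1,
    with the characters of the other particles held fixed."""
    n = len(others) + 1
    before = particle_fitness(collective_fn([z1] + others), n)
    after = particle_fitness(collective_fn([z1 + dz1] + others), n)
    return after - before

# The same change Δz1 = 1 evaluated in two different collective contexts.
effect_sparse = fitness_effect(collective_output_linear, 1.0, 1.0, [0.0, 0.0, 0.0])
effect_dense = fitness_effect(collective_output_linear, 1.0, 1.0, [3.0, 1.0, 2.0])
```

Under these linear assumptions the two effects are identical, so Δω1 can be predicted from Δz1 alone without any reference to the collective the particle belongs to.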

So, given that collective characters and hence collective fitness are entailed by the characters of the particles they contain, how can collectives and not particles be the reason that one particle character was selected and another was not? The means by which collectives can somehow break the association between particle character and particle fitness will be a key focus of what follows.

To create a meaningful causal role for the collective, there is often an appeal to the notion of creating something qualitatively new at a higher level of organisation, a.k.a. emergence. This can be difficult to define (Corning and Szathmary, 2015; Bourrat, 2021a), especially since we generally want to retain the assumption that salient differences at the higher level require salient differences at the lower level (supervenience). It is agreed, at least, that in order for the collective to be a meaningful evolutionary unit, fitness interactions between components cannot be linearly additive (Corning and Szathmary, 2015; Bourrat, 2021b). If the fitness-affecting character of the collective is simply the sum or average of the particles, or more generally, an aggregative property of the parts (Bourrat, 2021b), then the distinction between higher and lower levels of selection is merely conventional, not substantial (Bourrat, 2021a).

Bourrat examines cases where the relationship between z and collective character, Z (and hence Ω), is non-linear (Bourrat, 2021b). For example, suppose a change in the character of a particular particle (Δz1) given a particular context where the sum of other particle characters has a particular value (Σzx = p), results in a change to collective fitness and hence a change to particle fitness (Δω1). Now consider the same change, Δz1, in a different context where the sum of other particle characters has a different value (Σzx ≠ p), i.e., we are at a different point on the non-linear curve relating the particle characters to collective character. If this has a different effect on collective fitness (ΔΩ′ ≠ ΔΩ) and hence a different effect on the fitness of this particular particle (Δω1′ ≠ Δω1) then it does not follow that this change to particle character results in a change in its fitness that is independent of context (Eq. 3). In this sense, the collective is not explanatorily redundant.

Corning and Szathmary (2015) and Bourrat (2021b) describe some examples of possible scaling relationships, such as step functions or thresholds, and super-linear curves, that effect a non-linear relationship between the characters of parts and the characters of wholes. The salient criterion of such functions is “whether or not there are combined effects that are interdependent and cannot be achieved by the ‘parts’ acting alone” or whether they “produce an interdependent, qualitatively different functional result” (Corning and Szathmary, 2015).

However, although Δω1′ and Δω1 may be different in any such non-linear function, they could nonetheless have the same sign. This is the case whenever the function relating Σz to Z, and Ω, is monotonic (such as a diminishing returns or economy of scale relationship). An increase (a particular directional change) in particle character (↑z1) will then systematically produce an increase in particle fitness (↑ω1) regardless of context. That is, for monotonic relationships, the collective is explanatorily redundant in determining the direction of selection on particle characters (Eq. 4), even though the collective character may be non-aggregative.

This means that, although the effect of selection at the collective level may be different from selection at the particle level, it is always affected by particle characters in the same direction. This does not describe cases where higher-level selection changes evolutionary outcomes, i.e., changes which of two variants is favoured; it affects only how quickly the preferred variant will fix. Such monotonic non-linearities alter only the magnitude of selection, and thus might alter how quickly selection modifies the frequency of a type, but not which type is favoured. Heritable variation in fitness at the collective level thus remains explanatorily redundant in determining which particle character is favoured by selection.
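The monotonic case can be illustrated numerically. The following is a minimal sketch, assuming a hypothetical diminishing-returns mapping from the sum of particle characters to collective fitness; the function `collective_fitness`, its exponential form, and the chosen values are illustrative assumptions, not taken from the cited work. It shows that the same change Δz1 has a different magnitude of effect in different contexts, but never a different sign.

```python
import math

def collective_fitness(z_values):
    """Hypothetical diminishing-returns (monotonic) mapping from the sum of
    particle characters to collective fitness. Assumed for illustration only."""
    return 1.0 - math.exp(-sum(z_values))

def effect_of_change(z1, dz1, context_sum):
    """Change in collective fitness caused by changing z1 by dz1 when the
    other particle characters sum to context_sum."""
    before = collective_fitness([z1, context_sum])
    after = collective_fitness([z1 + dz1, context_sum])
    return after - before

# The same change in z1, evaluated in three different contexts.
effects = {p: effect_of_change(z1=0.5, dz1=0.1, context_sum=p) for p in (0.0, 1.0, 2.0)}
for p, delta in effects.items():
    print(f"context sum = {p}: change in collective fitness = {delta:+.4f}")
```

In this sketch the effect shrinks as the context sum grows, yet it stays positive in every context, which is the sense in which a monotonic non-linearity changes the magnitude but not the direction of selection.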

We think this is not a minor point because altering evolutionary outcomes in this sense – where individual and collective levels of selection “want different things” – is central to ETIs. Restricting attention to monotonic relationships excludes scenarios where the creation of a higher-level evolutionary unit causes the lower-level units to “do something they didn’t want to do” such as evolve characters that decrease their individual fitness (e.g., somatic cells, or other reproductive division of labour), or decrease fitness differences between particles (e.g., fair meiosis, mitochondrial reproductive regulation, or other policing strategies). Although other types of non-linearity where the interaction is not monotonic are sometimes mentioned (in particular a division of labour, as developed below) there is perhaps a reason why the worked examples in previous work have not addressed this. Specifically, if the direction of selection on particle character is different under particle selection and collective selection, such that higher-level selection opposes the phenotypes favoured by lower-level selection, why would bottom-up selection create a new evolutionary unit that opposed its interests in this way?

It is relatively easy to explain why selective conditions can be different (even reversed) after a transition compared to what they were before a transition, as in scenarios of strong altruism, for example. What is not easy to explain is how traits (or the parameters of individuating mechanisms) that change evolutionary outcomes in this way themselves evolve. Before a transition the only entities that can be evolving are particles, not collectives, so it must be some character of particles that explains these changes in individuality. How can individual selection favour characters that serve collective interest at the expense of the short-term self-interest of particles?

New Data and Insights

A number of current inter-related topics provide new perspectives and new data that contribute to a different way of looking at evolutionary transitions and individuality.

When the Direction of Selection on Components Is Context Sensitive - Division of Labour Games, Nonlinearly Separable Functions, Non-decomposable Phenotypes, and Comparison With Other Non-aggregative Functions

Intuitively, collectives could alter evolutionary outcomes if the way in which the character of a particle affects the fitness of the particle depends on the other particles present. More specifically, the direction of selection produced by a change in the character of a particle must depend on the other particles present.

Interactions of this form can be written as follows. Suppose that in one context (say when a neighbouring particle, z2, has a positive character value or a value above a given threshold, θ) increasing particle fitness requires an increase in a particular particle character, and yet in another context (e.g., z2 < θ) increasing particle fitness requires a decrease in the same particle character. In this case, neither an increase nor a decrease in particle character reliably determines an increase in particle fitness (Eq. 5). Accordingly, although collective character determines the direction of selection on particles, particle character does not (Watson and Thies, 2019).

In such cases, the sign of the relationship between particle character and particle fitness depends on what other particles are present. When interacting components are within one evolutionary unit (e.g., genes), this kind of sign change in fitness effects is known as reciprocal sign epistasis (Weinreich et al., 2005). But before a transition, the components are different evolutionary units and can instead be construed as players interacting in a game (Hofbauer and Sigmund, 1988). In this case, this kind of sign change in fitness effects is described by a division of labour game (Ispolatov et al., 2012; Tudge et al., 2013, 2016), requiring individuals to adopt complementary heterogeneous roles (Hayek, 1980; Tudge et al., 2013; Watson and Thies, 2019) [e.g., reproductive specialisations such as germ/soma (Godfrey-Smith, 2009)]. The significance of role specialisation and division of labour (or combination of labour) in ETIs has been noted by many writers (e.g., Bonner, 2003; Kirk, 2005; Ratcliff et al., 2012; Simpson, 2012; Wilson, 2013; Corning and Szathmary, 2015), but not formally developed in the manner that follows.

In this case, and only in this case, there is no particle character that maximises particle fitness but there is nonetheless a collective character (e.g., complementarity or coordination of particles) that cannot be reduced to the character of individual particles, and this collective character confers (collective fitness and hence) particle fitness. This is a basic but fundamental way of describing a non-decomposable collective character; i.e., a collective character, entailed by particle characters, that confers particle fitness, and yet there is no particle character that systematically confers increases in particle fitness over all contexts.

For what comes later, it will be useful to note that a division of labour scenario is the game theory equivalent of a non-linearly separable function in learning theory (Box 1). This provides a formal way to characterise what is important about these functions in evolutionary terms because the distinction between linearly separable and non-linearly separable functions is fundamental in machine learning for the same reasons. That is, the effect of one input changes sign depending on the other input (Box 1). We refer to a collective character underpinned by such a function as a non-decomposable collective character (Figure 1). That is, the collective character cannot be decomposed into a sum of contributions from individual characters (non-linearity) – and more specifically, the sign of the effect of changing one particle character is not independent of its context (non-decomposable).

BOX 1. Non-linearly separable functions.

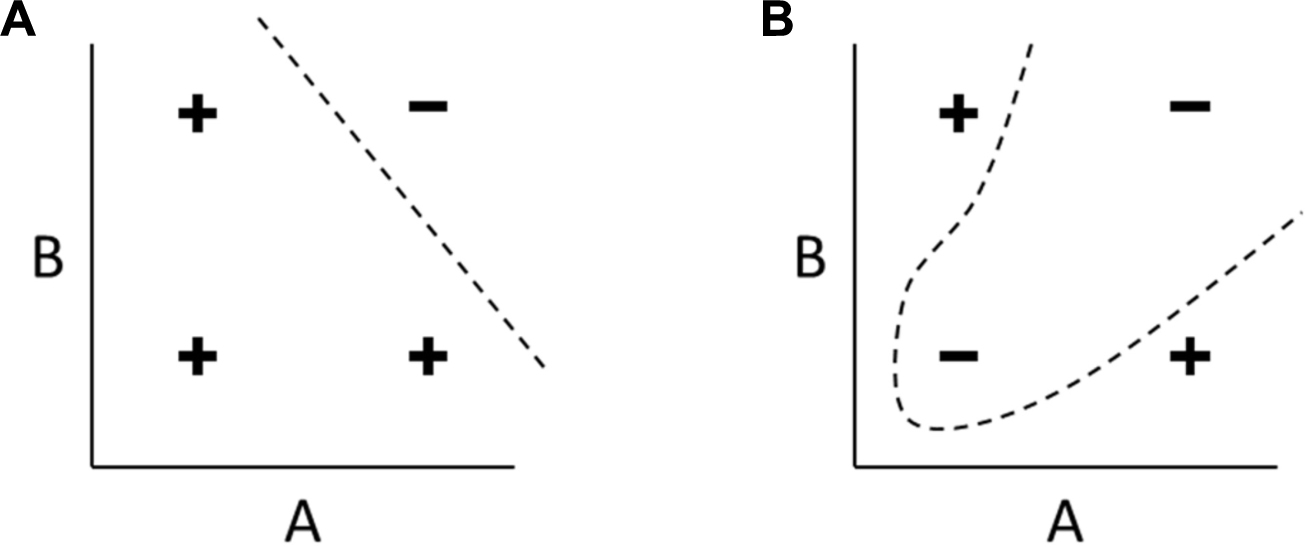

In machine learning, examples of non-linearly separable functions for two binary inputs are logical exclusive-or (XOR) and if-and-only-if (IFF), meaning that the inputs are different or the same, respectively. In such a function, the contribution of each component input to the output value changes sign depending on the value of another input. For example, if A = true then the output [A XOR B] is made true by B = false. But if A = false then the output [A XOR B] is made true by B = true (for example, if this cell is soma that cell should be germ, and vice versa) (Figure B1). In functions that are linearly separable (e.g., the unary functions IDENTITY and NOT, and two-argument functions such as OR, AND, NAND, and NOR) the effect of an input “shows-through” to the output (or cannot be “decoupled” from the output). That is, if there is a context (a set of values for the other inputs) where increasing a given input increases the output, its effect cannot be the reverse in another context. Put simply, in non-linearly separable functions the sign of the effect of an input on the output depends on an interaction with other inputs. This is a simple way of defining what it means for an output to be non-decomposable or “more than the sum of the parts” in a formal sense, i.e., not decomposable into a sum of sub-functions over individual inputs. Technically, the term linearly separable refers to the idea that dividing the multidimensional input space into points where the output is true and those where the output is false, only requires one straight line (or, for more than two inputs, one hyperplane). In a non-linearly separable function, in contrast, this is not possible (Figure B1). A corollary of this is that linear directional movements through input space can pass through regions where the output is true, then false, then true again. 
Put differently, getting from one point where the output is true, to another region where the output is true, without going through a region where the output is false, can require either a nonlinear trajectory or a “jump” in input space where several input variables change simultaneously in a specific manner. It is not guaranteed that there is one variable that, on its own, can be changed incrementally to reach the other region (nor any linear combination of input variables) (see also Figure B3.B). This is a simple way to formalise what is meant by a scenario that requires “coordinated action,” i.e., variability that maintains a particular output requires specific coordinated simultaneous change in multiple variables.

Figure B1. Linearly separable and non-linearly separable functions. (A) A linearly separable function of two inputs A and B. The four combinations of high and low values are classified as either positive or negative. In any linearly separable function, like this example representing NAND(A,B), the positive and negative examples can be separated with a linear decision boundary (another example is shown in Figure B3.B). (B) In any non-linearly separable function, like this example representing XOR(A,B), no such linear decision boundary can be drawn, and separating the two classes requires a non-linear boundary.
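The distinction drawn in Box 1 can be checked by brute force for two binary inputs. The sketch below is an illustration under stated assumptions: the helper names (`threshold_unit`, `is_linearly_separable`) and the small grid of candidate weights and biases are hypothetical choices, sufficient for the two-input Boolean case but not a general separability test. It enumerates all 16 Boolean functions of A and B and reports those that no single threshold unit on the grid can reproduce.

```python
from itertools import product

# All (A, B) input pairs in a fixed order: (0,0), (0,1), (1,0), (1,1).
INPUTS = list(product((0, 1), repeat=2))

def threshold_unit(w_a, w_b, bias, a, b):
    """A single linear threshold unit: outputs 1 if the weighted sum exceeds 0."""
    return 1 if w_a * a + w_b * b + bias > 0 else 0

def is_linearly_separable(truth_table):
    """Search a small grid of weights and biases (an assumed grid that covers
    every linearly separable two-input Boolean function) for a unit that
    reproduces the given truth table."""
    weights = (-1, 0, 1)
    biases = (-1.5, -0.5, 0.5, 1.5)
    for w_a, w_b, bias in product(weights, weights, biases):
        outputs = tuple(threshold_unit(w_a, w_b, bias, a, b) for a, b in INPUTS)
        if outputs == truth_table:
            return True
    return False

# Each truth table lists the outputs for the four input pairs in INPUTS order.
all_functions = list(product((0, 1), repeat=4))
not_separable = [t for t in all_functions if not is_linearly_separable(t)]
print(not_separable)  # the truth tables of XOR and IFF
```

Of the 16 functions, 14 are reproduced by a single unit; the two that remain are XOR, with truth table (0, 1, 1, 0), and IFF, with truth table (1, 0, 0, 1).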

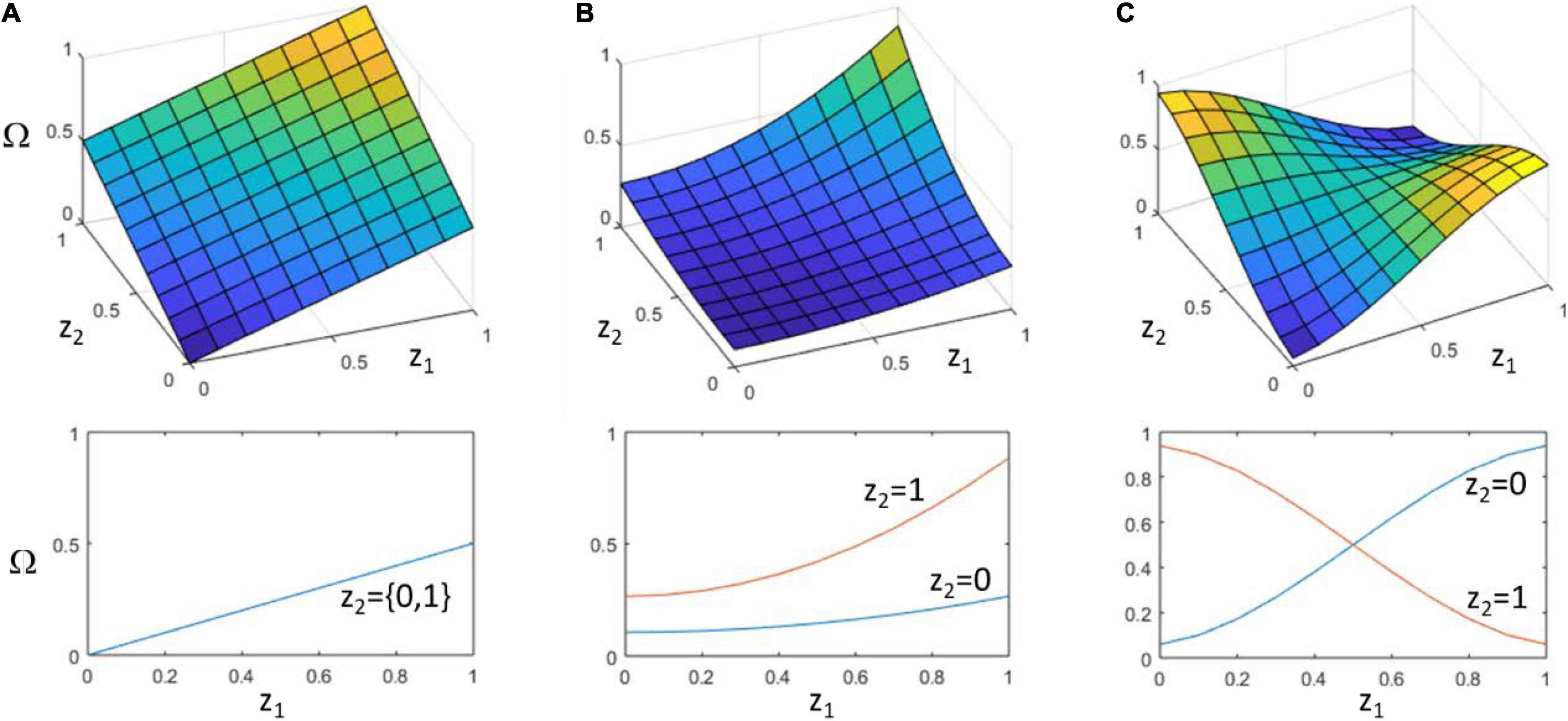

Figure 1. Different possibilities for the relationship of particle characters to collective character. (A) Additive (no interaction). Two particles, with characters z1 and z2, each contribute to collective character, Ω, independently. That is, changes in z1 always have the same effect on Ω regardless of the value of z2 and vice versa. (B) Synergistic interaction. The effect of z1 on Ω increases with the value of z2. The two together thus have a synergistic, i.e., super-additive, effect on collective character. (C) Non-decomposable interaction. The sign of the effect of z1 on Ω can be reversed by the context of z2. The class of particle character configurations that confer high values of Ω (yellow) is defined by a non-linearly separable function over z1 and z2, such as z1 XOR z2 (Box 1) (this is not the case in (B) which remains linearly separable, i.e., z1 AND z2). The lower panels show the value of Ω as a function of z1. (A) insensitive to z2, (B) slope of response depends on value of z2 but does not change sign, (C) sign of response depends on value of z2. Note that in both (B,C), Ω is a non-aggregative function of the particle characters, but only (C) is non-decomposable in the sense of being non-linearly separable.

Note that the statistical average of curves in Figure 1C can be flat (i.e., the context-free contribution of a single particle character to collective character is zero, averaged over all contexts). This does not mean that Ω is insensitive to particle characters; the functional interactions of particles matter significantly in determining the collective character. In this sense, non-decomposability is intimately related to the issue of separating particle character from particle fitness, and the possibility that collective character (and not particle character) determines the fitness of the particles it contains (even though collective character supervenes on particle characters). Note that, confirming the intuition of Okasha (2006), non-decomposability must be defined in terms of traits or characters, not particle fitnesses. It is not logically possible for particle fitness to control collective fitness and not control its own fitness. But it is possible for a particle trait to determine collective fitness and not control the fitness of the particle itself. The particle character matters to its fitness, but the way it matters (the direction of selection conferred by a change in particle character) is not determined by itself independently, i.e., free from context.
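The three cases in Figure 1, and the flat average noted above, can be made concrete with binary characters. This is a minimal sketch in which the three functions (`additive`, `synergistic`, `non_decomposable`) are hypothetical stand-ins for the panels of Figure 1, using a sum, AND, and XOR, respectively; the helper `effect_of_z1` measures how the collective character changes when z1 goes from 0 to 1 in a given context z2.

```python
def additive(z1, z2):
    """Panel (A) stand-in: each particle contributes independently."""
    return z1 + z2

def synergistic(z1, z2):
    """Panel (B) stand-in: z1 AND z2, linearly separable but non-aggregative."""
    return z1 * z2

def non_decomposable(z1, z2):
    """Panel (C) stand-in: z1 XOR z2, non-linearly separable."""
    return z1 ^ z2

def effect_of_z1(fn, z2):
    """Change in collective character when z1 goes from 0 to 1 in context z2."""
    return fn(1, z2) - fn(0, z2)

for fn in (additive, synergistic, non_decomposable):
    per_context = [effect_of_z1(fn, z2) for z2 in (0, 1)]
    mean_effect = sum(per_context) / len(per_context)
    print(f"{fn.__name__}: effects by context = {per_context}, mean = {mean_effect}")
```

Under these assumptions only the XOR case shows a sign reversal across contexts, and its context-averaged effect is zero even though z1 changes the collective character in every context.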

Note that non-decomposability is a stronger condition than (a refinement of) non-aggregative interactions (Bourrat, 2021b). Non-aggregative interactions include both monotonic non-linear interactions and these non-linearly separable scenarios, but previous examples of non-aggregative interactions have largely been monotonic and thus linearly separable. It is easy to see why: Looked at from the particle level, if a particular change in particle character can increase its fitness in one context and the same change can decrease it in another, how can particles evolve to control and take advantage of collective benefits? Looked at from the collective level, collectives containing an appropriate complement of particle types can solve a division of labour game, and will thus be fitter than collectives that do not. But this creates a problem for the heritability of the collective – the heterogeneous functions with homogeneous fitness (HFHF) problem (Box 2). To solve this problem, and understand how selection at the lower level can find solutions to non-decomposable problems, we need to look at higher-level individuals not as containers of heterogeneous but inert particles, but as dynamical systems that “calculate” collective phenotypes through the interactive behaviours of particles. The domain of such dynamics is the process of development. How can development perform such computations?

BOX 2. The “heterogeneous functions with homogeneous fitness” (HFHF) problem.

For particle fitness to be determined by collective character and not particle character, a division of labour game is required (“When the Direction of Selection on Components Is Context Sensitive - Division of Labour Games, Nonlinearly Separable Functions, Non-decomposable Phenotypes, and Comparison With Other Non-aggregative Functions”). Solving a division of labour game requires individuals to be different to each other. But if a collective contains multiple types of individuals, how does it reproduce? If reproduction occurs through a single-celled bottleneck or unitary propagule this creates homogeneous descendant groups (and homogeneous groups cannot be solutions to a division of labour game). If reproduction occurs through fissioning the group, or any propagule greater than size one, and individuals are intrinsically different, then selection at the individual level will act on these differences, driving changes in the composition of the group. The latter appears as transmission bias opposing the ability to respond to selection at the higher level (Okasha, 2006). To remove this problem and stop selection at the lower level from interfering with selection at the collective level, the fitnesses of the components must be equalised (de-Darwinised). Individuality thus requires collectives to solve the “heterogeneous functions with homogeneous fitness” (HFHF) problem (Watson and Thies, 2019). Heterogeneous functions are necessary to create fitness differences at the collective level (a.k.a. Darwinisation of the whole); and homogeneous fitness is required to remove fitness differences at the individual level (a.k.a. de-Darwinisation of the parts) (Godfrey-Smith, 2009). But how can particles be functionally different and have the same fitness? Solving the heterogeneous functions with homogeneous fitness problem requires individuals to be plastic (Watson and Thies, 2019). 
This is logical; untying function from fitness requires either plasticity of function or plasticity of fitness. Functional (or phenotypic) plasticity allows individuals to be intrinsically the same (e.g., same genotype and hence same fitness) but act differently (e.g., different phenotype and function). Alternatively, reproductive plasticity (e.g., where reproduction is cued by or enacted by the context of the collective, rather than by autonomous reproductive mechanisms of the particles) allows individuals to be intrinsically different (providing functional complementarity) but reproduce the same (e.g., synchronised reproduction of chromosomes equalises fitnesses) (Watson and Thies, 2019). In evolutionary transitions, these two different ways of solving the HFHF problem are manifest in two different kinds of transitions (Queller, 1997; Watson and Thies, 2019). Fraternal transitions solve the HFHF problem with phenotypic plasticity (and homogeneous genetics) whereas egalitarian transitions utilise reproductive plasticity (and heterogeneous genetics).

New Perspectives on Organismic Individuality – Development and Basal Cognition

Organismic concepts of individuality, like evolutionary concepts of individuality, can also be hard to pin down (Clarke, 2010; Levin, 2019). Properties such as functional integration, spatial continuity or physical cohesion, coordinated action and developmental dependency, for example, may or may not be aligned with notions of evolutionary or Darwinian individuality (Godfrey-Smith, 2009). Tying individuality to an evolutionary unit identified by its genetics quickly unravels (Godfrey-Smith, 2009; Clarke, 2010). Clonal growth of a bacterial colony may be genetically homogeneous, for example, but does not constitute an organismic individual by most accounts. And even normal looking natural multicellular organisms can be profoundly genetically heterogeneous. For example, planaria are multicellular organisms that can reproduce by fissioning (without a cellular population bottleneck) and thus can accumulate somatic diversity over many generations (Lobo et al., 2012). Nonetheless, planaria exhibit development, morphology and behaviour just like genetically homogeneous multicellular organisms. At a smaller scale, the mechanisms of chromosomal reproduction and (fair) meiosis are tightly coordinated within cells but the chromosomes are genetically heterogeneous. And the behaviour of individual unicellular organisms is, of course, far from a linear combination of gene-products. At a higher level of organisation, holobionts, for example, are sometimes offered as a candidate for a higher-level individual – not because of shared genetics but because of coordinated functional integration and dependencies. Some argue for a view of the biosphere as a whole that is organismic in kind, despite the lack of conditions necessary to be an evolutionary unit. How do we distinguish a collection of multiple organisms that is merely complicated from a new level of individuality?

In multicellular organisms, morphogenesis and its disorder, the breakdown of individuality known as cancer, are intrinsic to individuality (Deisboeck and Couzin, 2009; Doursat et al., 2013; Rubenstein et al., 2014; Friston et al., 2015; Pezzulo and Levin, 2015, 2016; Slavkov et al., 2018; Pezzulo et al., 2021). In most organisms cancerous growths originate from genetically homogeneous tissue and, conversely, in planaria, despite their heterogeneity, cancers are rare. New work shows that cancerous growth can be induced by a disruption of electrical coordination signals between cells and in some cases can be reversed by re-establishing them, without genetic changes (Levin, 2021a). Meanwhile, new experiments demonstrate that artificial multicellular genetic chimeras can also exhibit holistic behaviours and functions (Blackiston et al., 2021). These recent experiments and considerations add to the growing evidence that genetic homogeneity is neither necessary nor sufficient for organismic individuality. Is functional integration more important? And what kind of functional integration is necessary and sufficient?

Recent work has begun to apply the tools of collective intelligence and cognitive neuroscience to describe “the signals that turn societies into individuals?” (Lyon et al., 2021a,b). In particular, this includes consideration of behaviours and their reward structures or incentives. Like the considerations of evolutionary individuality above, if the incentives of the whole (its macro-scale reward structures and sensory-action feedbacks) are just summary statistics over the incentives of the parts (micro-scale reward structures and sensory-action feedbacks), then the individuality of the whole is conceptually degenerate. Levin recently makes the case that organismic individuality is appropriately ascribed to systems that are capable of information integration and collective action at some spatiotemporal scale (regardless of whether they are genetically related or not) (Levin, 2019, 2021b). This is a cognitive notion of “self” (“cogito, ergo sum” perhaps?). But it does not require neurons or brains; Basal cognition refers to processes of information integration and collective action that occur in non-neural substrates – such as in the development of morphological form (Pezzulo and Levin, 2015; Manicka and Levin, 2019a,b; Lyon et al., 2021a,b). It refers to cognition in an algorithmic sense that is substrate independent (Levin and Dennett, 2020). “[F]unctional data on aneural systems show that the cognitive operations we usually ascribe to brains—sensing, information processing, memory, valence, decision making, learning, anticipation, problem solving, generalization and goal directedness—are all observed in living forms that don’t have brains or even neurons” (Levin et al., 2021). What is important is the presence of functional and informational interactions (signals and responses of any nature) that facilitate information integration and the ability to orchestrate cued responses that coordinate action. 
In this manuscript we develop this cognitive notion of self by making explicit equivalences with computational models of individuality based on connectionist notions of cognition and learning. This provides the dynamical substrate in which interacting particles can collectively compute solutions that solve the HFHF problem.

Particle Plasticity and Collective Development

Solving the heterogeneous functions with homogeneous fitness problem requires individuals to be plastic (Watson and Thies, 2019; Box 2). Plasticity allows function and fitness to be separated such that the phenotype of the particle (e.g., whether it is type A or type B) does not determine its reproductive output (Eq. 5). Nonetheless, when this plasticity is used to coordinate phenotypes with other particles, it can access the non-decomposable component of collective fitness. Thus the ability to adopt a phenotype that is complementary to its neighbour (such as “becoming an A when with a B” or “becoming a B when with an A”) confers a consistent selective signal (toward being different for XOR, or toward being the same for IFF, Box 1). Plasticity thus pushes a collective trait like “diversity” down to a particle trait like “an ability to plastically differentiate.” This introduces the notion of a second-order particle trait – a trait about relationships between things rather than the things themselves – in contrast to a first-order or context-free trait. That is, a second-order trait, such as a differentiating or coordinating behaviour, controls the combinations of first-order characters. It is thus an individual character which increases the heritability of a non-decomposable collective character (e.g., phenotypic diversity necessary to solve a division of labour game).

Note that although the direction of selection on a first-order individual character will reverse depending on context in a division of labour game, the direction of selection on the second-order character (e.g., favouring being different rather than being the same) is consistent for a given game. It is then possible to attribute particle fitness to this (second-order) particle character. This appears to put us back at square one with a collective that is explanatorily redundant (Eq. 4). But note that second-order characters such as plasticity really are different from first-order characters because they are about relational attributes. For example, a particle cannot be “the same” or “different” on its own, and a phenotype that is sensitive to the context of others cannot be assigned a fitness until the others are present and the plasticity is enacted (i.e., development happens). Intuitively, although the property of being able to plastically differentiate from your partner is a property that a single particle can have, the ability to solve a division of labour game is not a property that a single particle can have. This collective property is the result of a basal “calculation” performed by multiple particles within the collective in interaction with each other. When this functional outcome (a solution to the division of labour game) is a non-linearly separable function of the individual particle characters, the fitness of the particles (and more specifically, the direction of selection on particle characters) that results cannot be attributed to those individual particle characters, and accordingly, the collective is not explanatorily redundant. This view thus resolves the tension between the two desiderata of (i) collectives that are not explanatorily redundant and (ii) collective properties that are nonetheless determined by particle properties.
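The contrast between first-order and second-order characters can be sketched as a toy two-player division of labour game. Everything in this block is an illustrative assumption rather than a model from the cited work: the payoff is XOR over phenotypes "A" and "B", `fixed` represents a context-free first-order character, `differentiate` represents a second-order plastic character, and `develop` is a hypothetical minimal "development" in which particles take turns responding to one another.

```python
def payoff(p1, p2):
    """Division of labour game (assumed XOR payoff): both particles are
    rewarded only if their phenotypes differ."""
    return 1 if p1 != p2 else 0

def fixed(phenotype):
    """First-order character: always express the same phenotype,
    regardless of the partner."""
    return lambda partner_phenotype: phenotype

def differentiate(partner_phenotype):
    """Second-order character: express the phenotype complementary
    to the partner's current phenotype."""
    return "B" if partner_phenotype == "A" else "A"

def develop(strategy1, strategy2, initial=("A", "A"), steps=4):
    """Minimal 'development': particles take turns updating their phenotype
    in response to the other, starting from an undifferentiated state."""
    p1, p2 = initial
    for _ in range(steps):
        p1 = strategy1(p2)
        p2 = strategy2(p1)
    return p1, p2

# A fixed phenotype is rewarded in one context and not in the other.
for partner in ("A", "B"):
    print("fixed A with fixed", partner, "->", payoff(*develop(fixed("A"), fixed(partner))))

# The plastic character is rewarded in every context, including with itself.
for name, partner in (("fixed A", fixed("A")), ("fixed B", fixed("B")), ("plastic", differentiate)):
    print("plastic with", name, "->", payoff(*develop(differentiate, partner)))
```

In this sketch no fixed phenotype earns the payoff in every context, whereas the plastic character does; but the payoff can only be evaluated after the interaction has run, which is the sense in which the outcome is computed by the pair rather than by either particle alone.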

We thus identify particle plasticity (enabling coordinated phenotypes or coordinated reproductive behaviour between particles) as a concrete type of individuation mechanism. This is a particularly significant type because it enables access to components of selection that cannot otherwise be accessed, precisely when functional interactions between particles have a non-decomposable relationship. Because the ability to coordinate with others is a characteristic that can be heritable at the particle level, and the result of this ability is a coordinated collective phenotype that would not otherwise be heritable, this facilitates a response to selection at the collective level that was not previously present. This particular kind of particle-level trait therefore connects directly with the particular kind of non-aggregative component of selection, and the collective-level heritability, required to facilitate a response to selection at the collective level (Bourrat, 2021b).

How does the necessary plasticity evolve? Given the consistent direction of selection on plastic traits, Tudge et al. (2016) showed that natural selection can evolve phenotypic plasticity that solves division of labour games in two-player collectives with homogeneous genotypes, by evolving phenotypic sensitivity to one another to facilitate complementary differentiation (Brun-Usan et al., 2020). Plasticity of any kind requires a timescale on which it can take effect – time to go from undifferentiated types (genotypes) to differentiated types (phenotypes), with communication between one particle and another to determine the coordinated outcome. In a fraternal transition, this temporally extended process effects a minimal separation between an “embryonic group” (undifferentiated components with the same genotype) and the “group phenotype” (differentiated components with coordinated complementary functions) – and the process that separates them is a minimal model for development.2 To Darwinise the collective at the same time as de-Darwinising the components thus requires the components to be plastic and a developmental process that coordinates their behaviour. The Tudge model involves just two particles and the one connection between them. It also assumes genetic relatedness which presupposes the higher-level unit of selection and its heritability. The evolution of relationships that solve the HFHF problem in more general networks of interactions (more than two players, thus more general games), and under bottom-up selection, has not yet been shown.

Note that development is not merely a process that modifies particle phenotypes and particle fitnesses. More specifically, to produce fitness differences that properly belong to the collective level, it must solve a division of labour game. These considerations argue that developmental interactions required for evolutionary individuality must be able to coordinate solutions to non-decomposable functions of this type. This complexity exists in the substrate of basal cognition (implicated in organismic individuality) and at the timescale of organismic development. It suggests that organismic individuality (i.e., the plasticity of particles, and the developmental interactions that coordinate their differentiation) is intrinsic to Darwinian individuality (i.e., creating non-decomposable fitness differences that properly belong to the collective level). Recent expansions on the equivalence between evolution and learning provide a new theoretical framework to make sense of and unify these observations. In particular, these develop connectionist models of cognition and learning that focus on interactions (or second-order characters) in systems of many components and many interactions.

Connectionist Models of Cognition and Learning

Connectionism explores the idea that the intelligence of a system lies not in the intelligence of its parts but in the organisation of the connections between them. Each neuron might be computationally trivial (e.g., a unit that produces an output if the sum of its inputs is strong enough), but connected together in the right way, networks of such units have computational capabilities at the system level that are qualitatively different. For example, the output of a network can be a non-linearly separable function of its inputs (Box 3), and built up in multiple layers (the outputs of one layer being the input to the next), such networks can represent any arbitrary function of their inputs. In networks with recurrent connections (creating activation loops), the system as a whole can have multiple dynamical attractors that produce particular activation patterns. The information that produces these patterns is not held in any one neuron (or any one connection) but in the organisation of the connections between them. Patterns stored in this way can be recalled through presentation of a partial or corrupted stimulus pattern, a capability known as “associative memory” (Watson et al., 2014; Power et al., 2015).
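The idea of recalling a stored pattern from a corrupted stimulus can be sketched with a small Hopfield-style recurrent network. This is a generic textbook construction used here as an assumption for illustration, not the specific model of the cited papers; the function names (`train_hebbian`, `recall`) and the two eight-unit patterns are hypothetical. Weights are set by a Hebbian rule, so each stored pattern is held in the organisation of the connections rather than in any single unit.

```python
def train_hebbian(patterns):
    """Hebbian learning: strengthen the connection between units that are
    active together in a stored pattern (no self-connections)."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += pattern[i] * pattern[j]
    return weights

def recall(weights, state, sweeps=5):
    """Asynchronous recall: each unit in turn takes the sign of its
    weighted input, until the sweeps are exhausted."""
    state = list(state)
    for _ in range(sweeps):
        for i in range(len(state)):
            total = sum(w * s for w, s in zip(weights[i], state))
            if total != 0:
                state[i] = 1 if total > 0 else -1
    return state

# Two hypothetical patterns over eight units, with states in {-1, +1}.
pattern_1 = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_2 = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hebbian([pattern_1, pattern_2])

# Corrupt the first unit of pattern_1 and let the network settle.
corrupted = [-1] + pattern_1[1:]
recovered = recall(weights, corrupted)
print(recovered == pattern_1)
```

In this small example both stored patterns are fixed points of the dynamics, and the corrupted stimulus settles back to the stored pattern it most resembles.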

BOX 3. Depth is required to represent non-linearly separable functions.

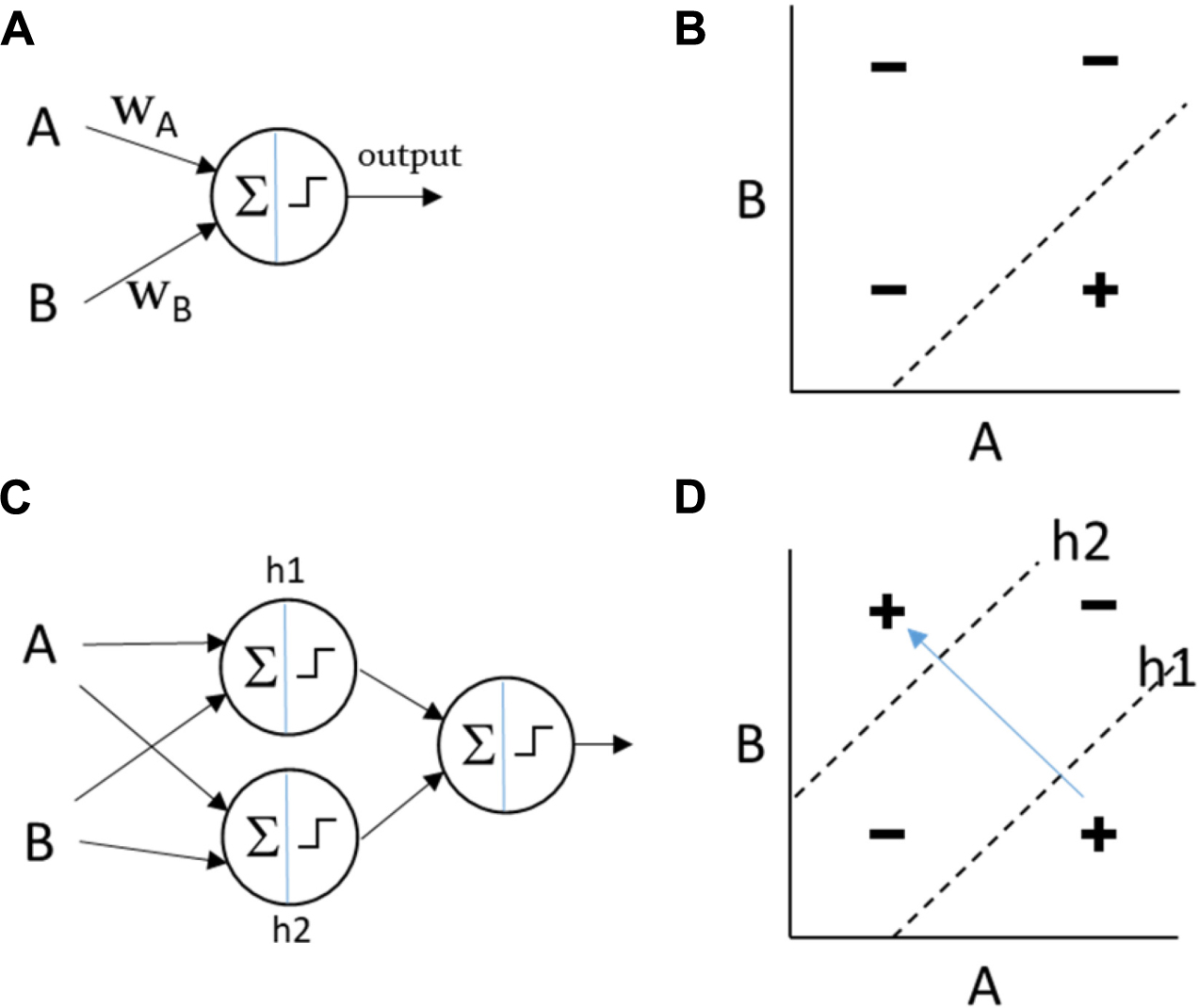

In simple artificial neural networks (e.g., the Perceptron), the output of each neuron is a function of the sum of its weighted inputs (Minsky and Papert, 1988). The shape of this function is non-linear but monotonic (e.g., sigmoidal or threshold). For a single neuron, a particular input might influence the output more or less strongly than other inputs (depending on the magnitude of the weight), it might have a positive or negative influence (depending on the sign of its weight), and because of the non-linearity of the output function, the slope of this influence can be affected by its magnitude and the magnitude of other inputs. But the influence of a particular input on the output cannot change sign. Whether it increases or decreases the output is not sensitive to other inputs [by analogy, see the difference between magnitude epistasis and sign epistasis (Weinreich et al., 2005)]. This means that when directional changes in the input “show-through” to directional changes on the output they do so in a consistent manner, i.e., there cannot be two contexts where a given change on the input has the opposite effect on the output. This property makes it easy to incrementally adjust the weights toward a desired output function because the correct direction to change a weight does not depend on the state of other inputs. However, this means that a single neuron of this type, or a network with a single layer of such neurons, cannot compute non-linearly separable functions of the inputs, where the influence of one of the inputs must be reversed depending on the value of the other input (Box 1). To represent a non-linearly separable function an intermediate level of representation (or “hidden layer”) between inputs and outputs can be employed. 
A multi-layer Perceptron can compute A XOR B, for example, by computing OR(AND(A, NOT(B)), AND(NOT(A),B)), i.e., (A XOR B) = “A without B or B without A.” The sub-functions used in this construction (AND, OR, and NOT) are all linearly separable functions (computable with a single Perceptron). One node in the hidden layer, let’s call it h1, can thus compute h1 = AND(A,NOT(B)) and another node can compute h2 = AND(NOT(A),B), and then an output node can be stacked on top to compute OR(h1,h2). More generally, to represent a non-linearly separable function, a network must be able to compute higher-order or multiplicative terms – not just a weighted sum of inputs.1

Figure B3. Shallow and deep computations. (A) A Perceptron of two inputs calculates an output that is a non-linear weighted sum of its inputs. (B) The Perceptron can represent any linearly separable function, such as this example, AND(A,NOT(B)). (C) The multi-layer Perceptron utilises “hidden” nodes to calculate intermediate functions which are fed forward to the output node. This can calculate any linearly or non-linearly separable function of its inputs. (D) In this example, h1 calculates AND(A,NOT(B)) and h2 calculates AND(NOT(A),B). The output node can calculate OR(h1,h2) such that the network as a whole represents the non-linearly separable function XOR(A,B). In a non-linearly separable function, moving between different positive regions (variation within the class without visiting regions that are not in the class) cannot be achieved by linear movements in the input space and instead requires “jumps” or coordinated “collective action” (simultaneous discontinuous changes in multiple variables).
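
The construction described in Box 3 can be sketched in a few lines of Python. This is a minimal illustration only: the function names `perceptron` and `xor_network`, and the particular weights and biases, are our own assumptions chosen so that each threshold unit computes the stated sub-function; many other weight settings would work equally well.

```python
def perceptron(inputs, weights, bias):
    """Threshold unit: outputs 1 if the weighted input sum plus bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def xor_network(a, b):
    """Two-layer network computing OR(AND(A, NOT(B)), AND(NOT(A), B))."""
    # Hidden layer: two linearly separable sub-functions (illustrative weights).
    h1 = perceptron((a, b), (1, -1), -0.5)   # AND(A, NOT(B))
    h2 = perceptron((a, b), (-1, 1), -0.5)   # AND(NOT(A), B)
    # Output layer: OR(h1, h2), also linearly separable.
    return perceptron((h1, h2), (1, 1), -0.5)


truth_table = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
print(truth_table)
```

Each unit on its own responds to an input in one consistent direction; the sign reversal that XOR requires appears only when the hidden units are combined.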

System-Level Organisation Without System-Level Reinforcement

The organisation necessary for such distributed intelligence can arise through simple learning mechanisms – without design or selection. In most learning systems, the learning mechanism (used to adjust connections) is simply incremental adjustment that follows local improvements in an objective function. The objective function can be based on the accuracy of the output (supervised learning), the fit of the model to data (unsupervised learning), or the reward from behaviours that are generated from the model (reinforcement learning).3 Supervised learning requires an “external teacher” to define a desired output or target, whereas reinforcement learning requires only a “warmer/colder” feedback signal and nothing more specific. Reinforcement learning is commonly identified as the analogue of evolution by natural selection, but for bottom-up evolutionary processes we are particularly interested in unsupervised learning (Watson and Szathmary, 2016). Unsupervised learning does not depend on a reinforcement signal at all. It demonstrates conditions where the organisations necessary to produce system-level cognitive capabilities can arise through very simple distributed mechanisms operating without system-level feedback. A simple example is the application of Hebbian learning, often paraphrased as “neurons that fire together wire together” (Watson and Szathmary, 2016). This mechanism changes relationships (under local information, i.e., using only the state of the two nodes involved in that connection) in a manner that makes the connection more compatible with the current state of the nodes it connects. Despite this simplicity, this type of learning is sufficient to produce an associative memory capable of storing and recalling multiple patterns, generalisation, data-compression and clustering, and optimisation abilities (“System-Level Optimisation Without System-Level Reinforcement”).
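
As a minimal sketch of how a local Hebbian rule can yield an associative memory, the following Python example stores two patterns in a small Hopfield-style network and recalls one of them from a corrupted cue. The function names, the ±1 state encoding, the pattern choices, and the update schedule are all our illustrative assumptions rather than details of the cited models.

```python
import numpy as np


def hebbian_weights(patterns):
    """Hebbian storage: each weight grows when the two units it connects agree."""
    patterns = np.asarray(patterns, dtype=float)
    n = patterns.shape[1]
    w = patterns.T @ patterns / n   # sum of outer products, one per pattern
    np.fill_diagonal(w, 0.0)        # no self-connections
    return w


def recall(w, state, sweeps=10):
    """Asynchronous threshold updates; each unit uses only its own inputs."""
    s = np.array(state, dtype=float)
    for _ in range(sweeps):
        for i in range(len(s)):
            s[i] = 1.0 if w[i] @ s >= 0 else -1.0
    return s


# Two stored patterns (chosen to be orthogonal for a clean demonstration).
stored = np.array([
    [1,  1, 1,  1, -1, -1, -1, -1],
    [1, -1, 1, -1,  1, -1,  1, -1],
])
w = hebbian_weights(stored)

corrupted = stored[0].copy()
corrupted[0] = -1                   # flip one unit of the first pattern
recovered = recall(w, corrupted)
print(recovered)
```

Here each weight is computed from the states of only the two units it connects, yet the network as a whole restores the complete stored pattern from the corrupted cue.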

Learning is not the same as simply remembering something. Learning (apart from rote learning) requires generalisation – the ability to use past experience to respond appropriately to novel situations. That is, the ability to model (recognise, generate or respond to) not just the situations encountered in past experience but also novel situations that have not been encountered before. Connectionist models of cognition and learning exhibit generalisation naturally. When representing the pattern “11,” for example, the network could represent that the first neuron value is “1,” and independently, the second neuron value is “1.” But because networks can represent patterns with connections, they can also represent an association between the value of neuron 1 and the value of neuron 2 – in this case, that the values are the same. This “associative model” represents not just this particular pattern but the class of patterns where the values have the same relationship. In this example, it will also include “00.” In some situations, this might be a mistake – after all, “00” has no individual values in common with “11.” But the relationships between values (such as “sameness” or “differentness”) in a pattern are higher-order features that might represent useful underlying structures within a broader set, or “class,” of patterns. If consistent with past experience, learning such relationships enables generalisation that cannot be provided by treating individual components of the pattern as though they were unrelated. This enables neural networks, over an extraordinarily broad range of domains, to learn generalised models that capture deep underlying structural regularities from past experience and exploit them in novel situations.
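
The “11” example can be made concrete with a two-unit sketch. We assume, purely for illustration, that bits are encoded as states in {-1, +1} and that the compatibility of a pattern with a connection is the product of the weight and the two states; the helper names `encode` and `compatibility` are hypothetical.

```python
def encode(bits):
    """Map a bit string such as "11" to states in {-1, +1} (an assumed encoding)."""
    return [1 if b == "1" else -1 for b in bits]


# A single Hebbian step after experiencing only the pattern "11":
# the weight becomes the product of the two states.
s1, s2 = encode("11")
w12 = s1 * s2


def compatibility(bits, weight):
    """How well a pattern agrees with the learned association (higher is better)."""
    a, b = encode(bits)
    return weight * a * b


scores = {p: compatibility(p, w12) for p in ("11", "00", "10", "01")}
print(scores)
```

The learned connection scores “00” exactly as highly as “11,” although “00” was never presented, because the connection encodes the relationship “same value” rather than the individual values.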

System-Level Optimisation Without System-Level Reinforcement

Because of their ability to generalise, neural networks can also discover novel solutions to optimisation problems. Specifically, simple fully-distributed mechanisms of unsupervised learning, using only local information, can produce system-level optimisation abilities (Watson et al., 2011a,c). The initial weights of the network define the constraints of a problem and running the network from random initial states finds state patterns that correspond to locally optimal solutions to these constraints (Hopfield and Tank, 1986; Tank and Hopfield, 1987a,b). If the network is repeatedly shocked or perturbed, e.g., by occasionally randomising the states, with repeated relaxations in between, this causes it to visit a distribution of locally optimal solutions over time. Without a learning mechanism, however, the network cannot exploit past experience and may never find really good solutions. In contrast, if Hebbian learning slowly adjusts the weights of the network whilst it visits this distribution of locally optimal solutions, the dynamics of the system slowly change. Specifically, these systems learn to solve complex combinatorial problems better with experience (Watson et al., 2009, 2011a,c). This happens because the network learns an associative model of its own behaviour [known as a self-modelling dynamical system (Watson et al., 2011c)]. That is, it forms memories of the locally optimal solutions it visits, causing it to visit these patterns more often in future. Hebbian changes to connections create a memory of the current state, making it more likely that the system dynamics visit this state in future by enlarging its basin of attraction (i.e., the region of configuration space that is attracted to that state configuration by the state dynamics). Moreover, because it is an associative model, it is not simply memorising these past solutions but learning regularities that generalise. 
That is, any state configuration that shares that combination of states (consistent with that connection) is more likely to be visited. This means it also enlarges the dynamical attractors for other states that it has not visited in the past but that have similarly coordinated states. Over time, as relationships change slowly, the attractor that is enlarged the most tends to be a higher quality solution, sometimes even better than all of the locally optimal attractors visited without such learning (Mills, 2010; Watson et al., 2011a,b,c; Mills et al., 2014). The ability to improve performance at a task with experience is perhaps not unexpected in learning systems. Importantly for our purposes, however, there is no reinforcement learning signal used in these models – system-level optimisation is produced without system-level feedback, using only unsupervised and fully-distributed Hebbian learning acting on local information, together with repeated perturbation and relaxation.
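
The perturb, relax, and learn cycle described above can be sketched as follows. This is a simplified illustration under our own assumptions, not a reproduction of the cited models: the problem is a random symmetric constraint matrix, and the function names, learning rate, number of relaxations, and network size are arbitrary choices. The sketch shows the mechanism only and makes no claim about how much improvement a given parameter setting yields.

```python
import numpy as np


def relax(w, s, rng, sweeps=100):
    """Asynchronous updates until no unit changes (a local optimum of w)."""
    for _ in range(sweeps):
        changed = False
        for i in rng.permutation(len(s)):
            new = 1.0 if w[i] @ s >= 0 else -1.0
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


def energy(w, s):
    """Constraint violation measure: lower energy means better satisfied constraints."""
    return -0.5 * s @ w @ s


def self_model(w_problem, relaxations=200, rate=0.001, seed=0):
    """Repeated perturbation and relaxation with slow Hebbian learning."""
    rng = np.random.default_rng(seed)
    n = w_problem.shape[0]
    w_learned = np.zeros_like(w_problem)
    energies = []
    for _ in range(relaxations):
        s = rng.choice([-1.0, 1.0], size=n)        # perturbation: randomise states
        s = relax(w_problem + w_learned, s, rng)   # relaxation under current dynamics
        energies.append(energy(w_problem, s))      # scored on the ORIGINAL constraints
        dw = rate * np.outer(s, s)                 # Hebbian change at the visited state
        np.fill_diagonal(dw, 0.0)
        w_learned += dw
    return np.array(energies), w_learned


# A random symmetric constraint problem (illustrative only).
n = 20
a = np.random.default_rng(1).choice([-1.0, 1.0], size=(n, n))
w_problem = np.triu(a, 1)
w_problem = w_problem + w_problem.T

energies, w_learned = self_model(w_problem)
print(energies[:20].mean(), energies[-20:].mean())
```

Note that the learning step never uses the energy values: they are recorded only so that an observer can compare early and late relaxations. The weight changes depend solely on the states of the two units each connection joins.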

Furthermore, the principle of Hebbian learning is entirely natural; it does not require a mechanism designed or selected for the purpose of performing such learning. Specifically, Hebbian changes to connections result from incremental “relaxation” of connections, i.e., changes that reduce conflicting constraints, reduce the forces that variables exert on one another, or equivalently, decrease system energy (Watson et al., 2011a,c). This means that any network of interactions, where connections differentially deform under the stress they experience, can exhibit this type of associative learning and optimisation. The action of natural selection provides one such case in point when there is heritable variation in connections – even without system-level selection. This enables the computational framework of cognition and learning familiar in connectionist models to be unified with the evolutionary domain – hence evolutionary connectionism.

Evolutionary Connectionism

Evolutionary connectionism is a new theoretical framework which formalises the functional equivalence between the evolution of networks and connectionist models of cognition and learning (Watson et al., 2016; Watson and Szathmary, 2016). This work shows that the action of random variation and selection, when acting on heritable variation in relationships, is equivalent to simple types of associative learning. Accordingly, these models can be translated into the domain of evolutionary systems to explain the evolution of biological networks with system-level computational abilities (Watson et al., 2010; Kounios et al., 2016; Kouvaris et al., 2017; Brun-Usan et al., 2020). This work demonstrates mechanisms of information integration in biological interaction networks, equivalent to simple (but powerful) types of neural network cognition.

In some cases, these models characterise the evolution of developmental organisation (evo-devo) where the interactions are inside a single evolutionary unit (among the multiple components it contains), such as gene-regulatory interactions (Watson et al., 2010). The kind of information integration that gene-networks can evolve is the same as that which neural networks can learn and, for example, is capable of demonstrating associative memory (one genotype can store and recall multiple phenotypes, recalled from partial or corrupted selective conditions) and generalisation (networks can produce novel adaptive phenotypes that have not been produced or selected in past generations) (Watson et al., 2010; Kouvaris et al., 2017). These models demonstrate that the conditions for effective learning can be transferred into the evolutionary domain and help explain biological phenomena such as the evolution of evolvability (Kounios et al., 2016; Watson, 2021). However, these models assume that selection is applied at the system level (equivalent to reinforcement learning at the system level).

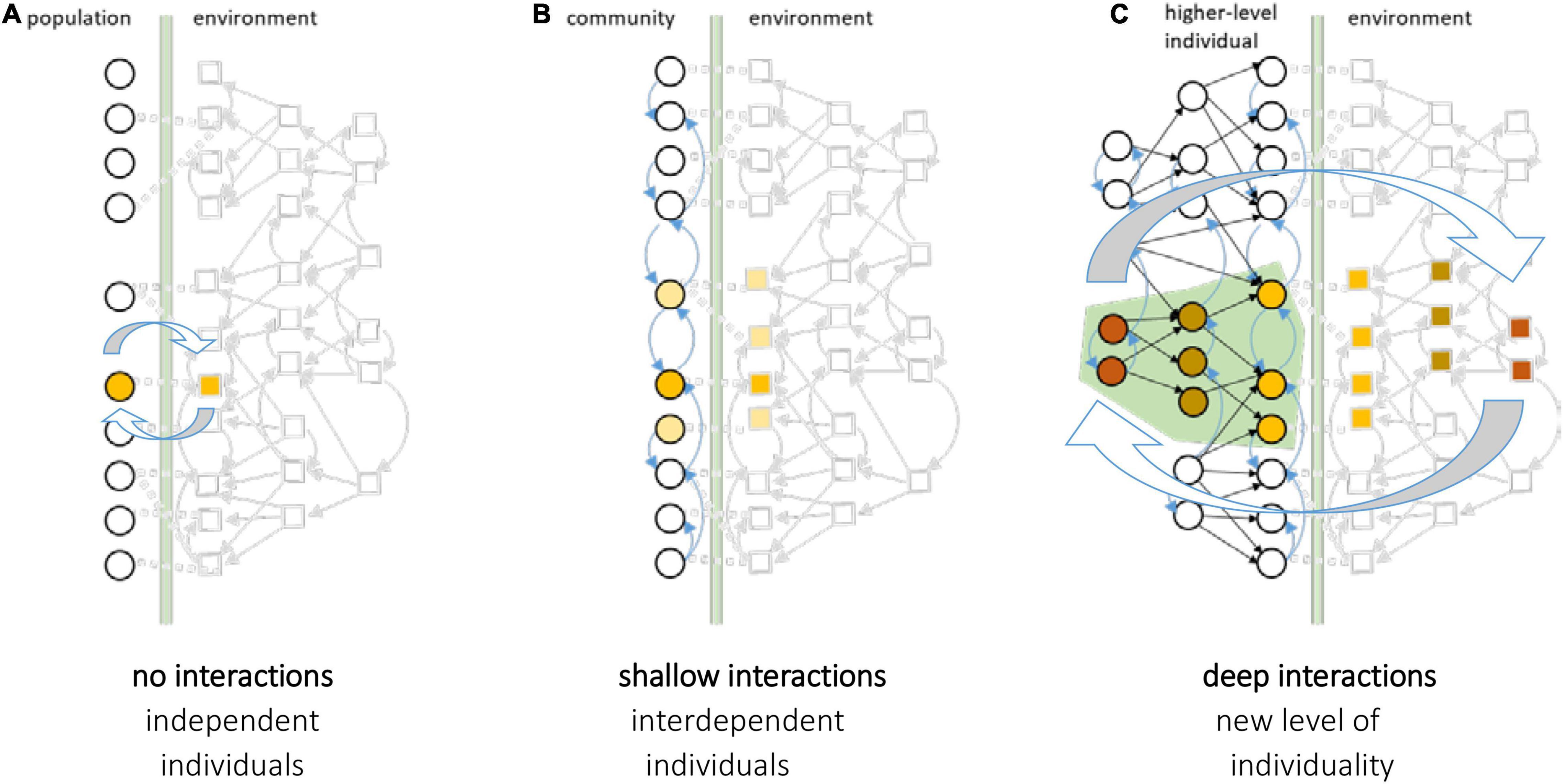

System-Level Organisation Without System-Level Selection: Evolving Organised Relationships Bottom-Up

For the ETIs, driven by bottom-up selection, we cannot assume a reward function that operates over the system as a whole; rather it must be analogous to a reward function for each individual particle (Power et al., 2015). How does reinforcement learning at the level of individual particles, in interaction with each other and acting on their relationships, change system-level behaviours?

Previous work shows that the action of fitness-based incremental change at the individual level (or individual reinforcement learning in a network of pairwise games; Davies et al., 2011; Watson et al., 2011a), when applied to relationships between agents, is equivalent to unsupervised associative learning at the system scale. That is, individual-level reinforcement learning, when given control over the strength of connections, is equivalent to unsupervised learning at the system level (Davies et al., 2011; Watson et al., 2011a; Power et al., 2015). This means that the same learning principles can be translated into evolutionary scenarios where the system is not a single evolutionary unit but a network of relationships among many evolutionary units – such as an ecological community with a network of fitness dependencies between species. These models characterise the evolution of ecological organisation (evo-eco) under individual-level natural selection (Power et al., 2015; Watson and Szathmary, 2016). Even though, in this case, selection acts at the level of the components not at the system level, the kind of information integration that community networks can evolve is also the same as that which neural networks can learn (with unsupervised learning) (Power et al., 2015). These learning principles do not depend on any centralised mechanisms, or an external teacher/system-level feedback (Watson and Szathmary, 2016). This can be used to demonstrate the evolution of ecological assembly rules that implement an associative memory that can store multiple ecological attractors visited in the past and recall them from partial or corrupted ecological conditions (Power et al., 2015). This is crucial in demonstrating how natural selection organises interaction networks bottom-up – before a transition.
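
The equivalence between individual-level reinforcement and system-level Hebbian learning can be illustrated with a small numerical check. We assume, for illustration only, that agent i receives a utility of the form u_i = s_i Σ_j w_ij s_j from its pairwise interactions, with states in {-1, +1}; the function names and parameter values are hypothetical and the cited models may differ in detail.

```python
import numpy as np


def utility(w, s, i):
    """Agent i's payoff from its pairwise interactions (an assumed form)."""
    return s[i] * (w[i] @ s)


def selfish_update(w, s, rate=0.01, delta=1e-3):
    """Each agent nudges each of its own connections in whichever direction
    raises its own utility, using only trial-and-error feedback."""
    new_w = w.copy()
    n = len(s)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            trial = w.copy()
            trial[i, j] += delta                      # try a small change
            gain = utility(trial, s, i) - utility(w, s, i)
            new_w[i, j] += rate * np.sign(gain)       # keep the beneficial direction
    return new_w


def hebbian_update(w, s, rate=0.01):
    """Unsupervised Hebbian rule: strengthen connections between agreeing units."""
    dw = rate * np.outer(s, s)
    np.fill_diagonal(dw, 0.0)
    return w + dw


rng = np.random.default_rng(0)
n = 6
w = rng.normal(size=(n, n))
np.fill_diagonal(w, 0.0)
s = rng.choice([-1.0, 1.0], size=n)

same = np.allclose(selfish_update(w, s), hebbian_update(w, s))
print(same)
```

Under this assumed utility, the change in agent i's payoff from strengthening w_ij is proportional to s_i s_j, so the direction each agent prefers coincides with the Hebbian update even though no agent receives system-level feedback.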

System-Level Adaptation Without System-Level Selection: Bottom-Up Adaptation