- 1 National Meteorological Center, China Meteorological Administration, Beijing, China

- 2 Key Laboratory of South China Sea Meteorological Disaster Prevention and Mitigation of Hainan Province, Haikou, China

- 3 School of Artificial Intelligence, Beijing University of Posts and Telecommunications, Beijing, China

- 4 Hainan Meteorological Observatory, Hainan Meteorological Service, Haikou, China

Meteorological satellites have become an indispensable tool for Earth observation, aiding in tasks such as cloud detection, which provides important guidance for maritime activities. However, obtaining fine-grained annotations of multi-spectral maritime cloud imagery, whether from expert manual labelling or from mature satellite cloud products, is time-consuming and labor-intensive, especially when new satellites are launched. Moreover, because different satellites use different detection bands, existing models generalize poorly to new satellites, and some cannot be transferred to them directly. In this paper, to reduce the discrepancy between data distributions, we present an unsupervised domain adaptation approach for marine cloud detection that uses Himawari-8 satellite data as the source domain and Fengyun-4 satellite data as the target domain. The proposed method leverages the representation power of adversarial learning to extract domain-invariant features and consists of a segmentation model, a feature extraction model for the target domain, and a domain discriminator. In addition, to remedy the discrepancy in detection bands, a band mapping module is designed to enforce consistency between different bands. Experiments demonstrate the effectiveness of the proposed method, with a 7% improvement over the comparative experiment. We also designed a series of statistical experiments on different satellite data, including a data visualization experiment and cloud type statistics, to further study the representation of cloud perception.

Introduction

Multi-cloud recognition is an indispensable technology in marine meteorological science. Precise classification of marine cloud types provides guidance for meteorological disaster monitoring and prevention, weather forecasting, development of the marine industry, and ecological environment monitoring (Astafurov and Skorokhodov, 2022). For example, sea fog, a severe marine phenomenon that reduces atmospheric visibility to less than 1 km, is composed of strato-cumulus and stratus clouds. China's offshore waters experience more than 80 days of sea fog every year, and 80% of the collisions between ships at sea each year are caused by sea fog. Accurate cloud classification at sea is therefore crucial.

In recent years, many methods have been developed for cloud recognition from meteorological satellites. For example, the Split-Window Algorithm (SWA) (Purbantoro et al., 2018) uses bands 13 and 15 of the Himawari-8 (H-8) satellite to construct a two-dimensional scattergram of brightness temperature and brightness temperature difference, and uses it to segment the image into regions representing different cloud types. Another cloud classification method is based on multispectral bin classification (Anthis and Cracknell, 1999); it predicts cloud types using data from the Advanced Very High Resolution Radiometer (AVHRR) aboard the third-generation operational meteorological satellites of the United States National Oceanic and Atmospheric Administration (NOAA), together with data from the European geostationary meteorological satellite (METEOSAT). However, like other traditional cloud classification methods, these approaches require expert knowledge and complex processing, which consumes a great deal of professional meteorologists' time. Moreover, even with expert support, they still suffer from problems such as inaccurate manual annotation and poor performance in complex situations.
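To make the split-window idea concrete, the sketch below bins pixels into a two-dimensional scattergram of band-13 brightness temperature against the band-13 minus band-15 brightness temperature difference (BTD). It is a minimal illustration, not the SWA implementation of Purbantoro et al. (2018): it assumes the inputs are already calibrated brightness temperatures in kelvin, and the bin widths `bt_bin` and `btd_bin` are hypothetical. The cloud-type regions that SWA delineates on this scattergram are not reproduced here.

```python
from collections import Counter


def split_window_scattergram(bt13, bt15, bt_bin=5.0, btd_bin=0.5):
    """Count pixels in 2-D bins of (BT13, BTD), where BTD = BT13 - BT15.

    bt13, bt15: brightness temperatures in kelvin for the same pixels.
    Returns a Counter keyed by (BT13 bin index, BTD bin index).
    """
    if len(bt13) != len(bt15):
        raise ValueError("band arrays must cover the same pixels")
    counts = Counter()
    for t13, t15 in zip(bt13, bt15):
        btd = t13 - t15  # split-window brightness temperature difference
        counts[(int(t13 // bt_bin), int(btd // btd_bin))] += 1
    return counts


# Three hypothetical pixels: two similar warm pixels and one cold pixel.
scatter = split_window_scattergram([290.0, 292.0, 220.0], [288.5, 290.5, 216.0])
```

Each key identifies one cell of the scattergram, so plotting the counts over (BT13, BTD) reproduces the kind of diagram on which cloud-type regions would then be drawn.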

With the development of artificial intelligence, deep learning, transfer learning, and related methods, these techniques are now widely used in many image processing fields; for example, convolutional networks extract deep features from images, identify cloud types from those features, and replace manual classification. CloudFCN (Francis et al., 2019), proposed in 2019, feeds high-resolution satellite data into a segmentation network to extract multi-scale features and performs two-class segmentation to detect whether each pixel is covered by cloud. For classification according to the international ten-category cloud system, however, UATNet (Wang et al., 2021a), proposed in 2021, is more suitable. Based on the U-Net segmentation network, it realizes pixel-level classification of meteorological satellite cloud images, introduces channel cross-entropy to guide multi-scale fusion, and achieves good results. A deep learning-based cloud detection method (Liu et al., 2021), also proposed in 2021, likewise achieves good results: it distinguishes cloudy from non-cloudy areas with an accuracy of 95%, which is of great significance for applying deep learning to cloud classification. However, when a new satellite is first launched, pixel-level labels for its data are scarce, which prevents supervised training of segmentation models. In addition, different satellites differ greatly in wavelengths and observation bands (Zhang et al., 2016), which makes it difficult to apply existing models to cloud classification on data from new satellites.

Unsupervised domain adaptation (UDA) acquires knowledge from an annotated source domain and transfers it to an unannotated target domain (Huang, 2021), with the aim of adapting a classifier learned on the source to target-domain data (Long et al., 2019). UDA is widely used in medical imaging, road extraction, and other fields. For marine cloud classification and identification, UDA can likewise be used for transfer learning when new satellite data become available. UDA has been applied to sea fog detection (Xu et al., 2022) by transferring knowledge from land fog to sea fog data, with good results. However, different satellites often have different channels, and their data differ in time and space, which makes it hard to share knowledge between them; consequently, UDA cannot be applied directly to transfer learning between different satellites (Tzeng et al., 2017).

We propose an unsupervised domain adaptation network architecture for pixel-level labeling of maritime cloud types on satellites that lack human-annotated cloud classification labels. In this paper, the annotated Himawari-8 satellite data are defined as the source domain and the unannotated Fengyun-4 satellite data as the target domain. Using the semantic space shared by the two satellites, we build an adversarial learning network (Goodfellow et al., 2014) consisting of a segmentation model, a target domain feature extraction model, and a domain discriminator. This design addresses both the difference in the number of channels between the two satellites and the difference in their data distributions. Having learned the data distributions of the two domains, the network extracts domain-invariant features, which are passed through the discriminator to reduce the domain gap, so that the source-domain semantic segmentation model can be applied to target-domain data. In our experiments, the final accuracy reaches 55.8%, enabling identification of marine cloud types in the target domain.

Data

Himawari-8 is a geostationary meteorological satellite operated by the Japan Meteorological Agency (JMA) (Kotaro et al., 2016). Launched in 2014, it carries 16 detection bands: 3 visible channels, 3 near-infrared channels, and 10 thermal-infrared channels. The bands have different spatial resolutions, and observations are made every 10 min (Yi et al., 2019). Because of its high-quality data and sophisticated cloud classification (Suzue et al., 2016), its products are often used to study cloud classification at sea: its cloud classification product has high resolution, a mature visual interface, and cloud categories consistent with the international cloud classification. The Fengyun-4 satellite is a quantitative remote sensing meteorological satellite in geostationary orbit launched by China in recent years (Dong, 2016). It carries 14 detection bands: 2 visible channels, 4 near-infrared channels, 6 thermal-infrared channels, and 2 water vapor channels. Observations are made every 15 min (Lu et al., 2017), and its cloud classification product has low resolution, is relatively simple, and uses its own cloud categories, as shown in Table 1. Using satellite data, clouds can be classified into ten types: clear, cirrus, cirro-stratus, etc. In our study, the Himawari-8 data have manually annotated pixel-level labels and are therefore used as the source domain, whereas the Fengyun-4 data have no pixel-level labels and are used as the target domain.

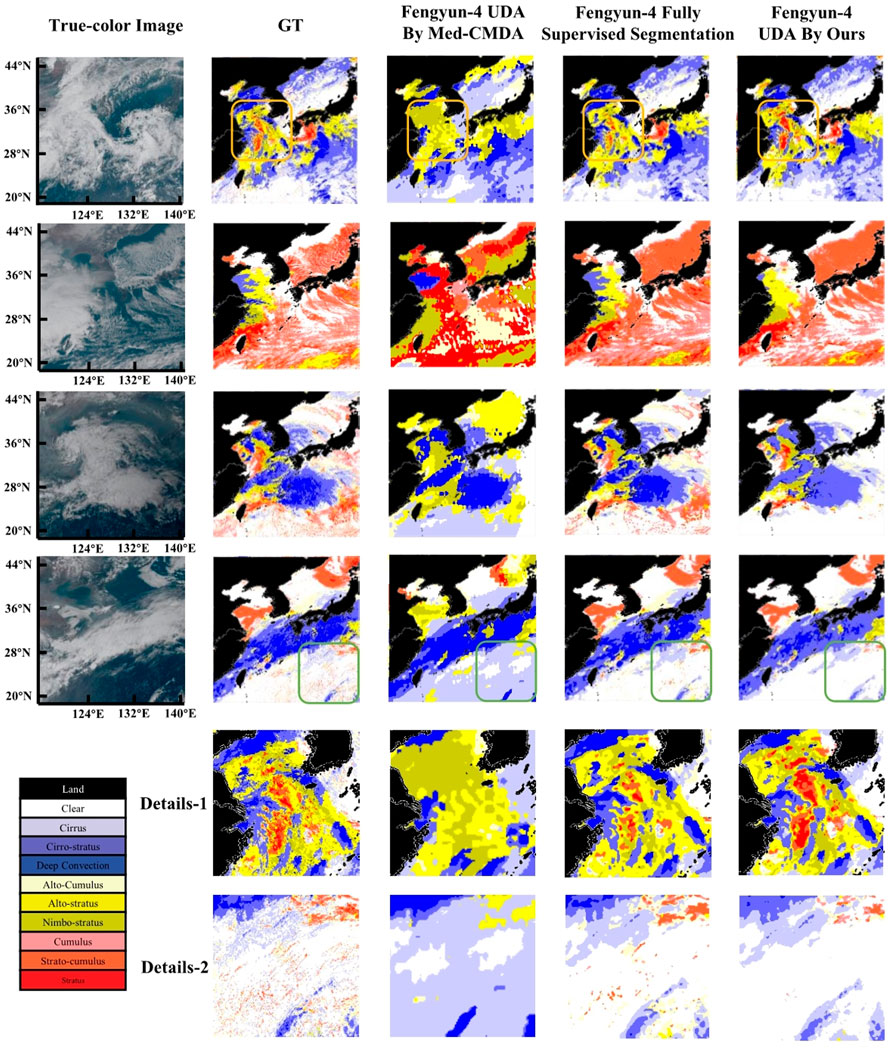

We focus mainly on parts of the Yellow Sea and the Bohai Sea (Cao et al., 2012), which are rich in cloud types, experience various kinds of maritime weather, and are therefore quite representative. The study area spans 116°E to 141.6°E in longitude and 19.4°N to 45°N in latitude. The time window is 23:00–09:30 UTC (07:00–17:30 local time) on selected dates from January to June 2020. Because visible-channel data are not available at night, we consider only daytime marine cloud classification in this experiment. For example, Figure 1A shows a satellite image collected on 6 February 2020 at 05:00 UTC (13:00 Beijing Standard Time), with a color image synthesized from the visible channels. Since the discrimination of cloud types depends closely on visible, near-infrared, thermal-infrared, and other channel data, our experiments use all channels, that is, the 16 channels of Himawari-8 and the 14 channels of Fengyun-4. Figure 1B shows grayscale images of the 16 Himawari-8 channels, and Figure 1C shows grayscale images of the 14 Fengyun-4 channels.
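Both spans of the study area equal 25.6° (141.6 − 116 and 45 − 19.4), so once the crops are rescaled to 512 × 512 pixels each pixel would cover 25.6° / 512 = 0.05° in both latitude and longitude, assuming the crop lies on a regular latitude/longitude grid. Under that assumption, a hypothetical helper for locating a point (for instance a ship report) in the image could look like this:

```python
LON_MIN, LON_MAX = 116.0, 141.6   # degrees east
LAT_MIN, LAT_MAX = 19.4, 45.0     # degrees north
SIZE = 512                        # pixels per side after rescaling


def resolution(size=SIZE):
    """Degrees per pixel along latitude and longitude (assumes a regular grid)."""
    return (LAT_MAX - LAT_MIN) / size, (LON_MAX - LON_MIN) / size


def latlon_to_pixel(lat, lon, size=SIZE):
    """Map a point to (row, col); row 0 is the northern edge, col 0 the western edge."""
    if not (LAT_MIN <= lat <= LAT_MAX and LON_MIN <= lon <= LON_MAX):
        raise ValueError("point lies outside the study area")
    d_lat, d_lon = resolution(size)
    row = min(int((LAT_MAX - lat) / d_lat), size - 1)  # clamp the southern edge
    col = min(int((lon - LON_MIN) / d_lon), size - 1)  # clamp the eastern edge
    return row, col
```

If the actual projection of the cropped data differs (for example, the satellites' native geostationary projection), only `resolution` and `latlon_to_pixel` would need to change.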

FIGURE 1. (A) Red-Green-Blue image generated from Himawari-8 satellite data, GT images, and the cloud types on 6 February 2020 at 05:00 UTC (13:00 Beijing Standard Time). (B) Grayscale images of Himawari-8, each formed from one channel of remote sensing data, H01 to H16. (C) Grayscale images of Fengyun-4, each formed from one channel of remote sensing data, F01 to F14.

At the beginning of the experiment, we first obtained the collected satellite image data. Because the satellite images do not cover a uniform collection area, we cropped the satellite data (stored in NumPy format) to the same part of the Yellow Sea and the Bohai Sea and uniformly rescaled it to 512 × 512 pixels. We also aligned the satellite data in time, sampling both satellites at half-hour intervals. The data were randomly split into training and validation sets at a ratio of 4:1 to ensure the randomness and reliability of the samples. Details of the data are given in Table 2.
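The half-hour interval follows from the two observation cycles: Himawari-8 observes every 10 min and Fengyun-4 every 15 min, and the least common multiple of 10 and 15 is 30 min. The sketch below, assuming both schedules start together at the beginning of the daily window (real nominal scan times may be offset), lists the shared timestamps and then performs a seeded 4:1 random split. The function names and the example date are hypothetical.

```python
import math
import random
from datetime import datetime, timedelta

H8_STEP_MIN = 10    # Himawari-8 observation cycle (minutes)
FY4_STEP_MIN = 15   # Fengyun-4 observation cycle (minutes)


def common_times(start, end, step_a=H8_STEP_MIN, step_b=FY4_STEP_MIN):
    """Timestamps in [start, end] shared by two scan cycles that both begin at start."""
    step = step_a * step_b // math.gcd(step_a, step_b)  # least common multiple: 30 min
    times, t = [], start
    while t <= end:
        times.append(t)
        t += timedelta(minutes=step)
    return times


def split_4_to_1(samples, seed=0):
    """Shuffle with a fixed seed and split into training/validation sets at 4:1."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * 4 / 5)
    return shuffled[:n_train], shuffled[n_train:]


# Daily window 23:00-09:30 UTC on a hypothetical example date.
times = common_times(datetime(2020, 2, 5, 23, 0), datetime(2020, 2, 6, 9, 30))
train, val = split_4_to_1(times)
```

For the 23:00–09:30 UTC window this gives 22 shared timestamps per day; fixing the seed keeps the split reproducible across runs.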

Materials and Methods

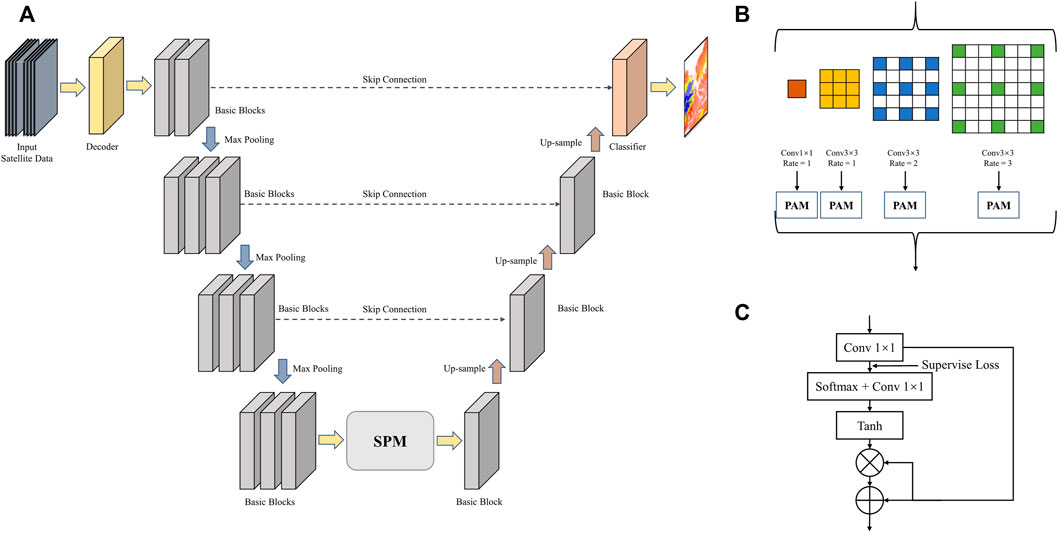

To perform unsupervised domain adaptation between the source and target domains, we design a network consisting of three modules: the source domain segmentation model, the target domain feature extractor, and the domain discriminator. The feature extractor aims to extract domain-invariant features, and the discriminator aims to correctly identify the source of each input segmentation mask. We exploit the adversarial relationship between the feature extractor and the domain discriminator to optimize the two modules until the extractor outputs acceptable segmentation masks. In addition, we place an encoder at the input of each branch to handle the difference in the number of satellite channels. The entire network is built from multiple convolutional layers that extract deep features from the satellite data, and the weights of each layer are optimized by gradient descent on the loss. Adversarial updates between the segmentation model/feature extraction model and the discriminator continuously reduce the domain gap between the target and source domains, enabling our network to identify multiple marine cloud types autonomously. The architecture of the network model is shown in Figure 2.

In the early stage of training, source-domain data are fed into the upper branch of the model (Figure 2) and target-domain data into the lower branch. After passing through their respective encoders, both are processed by the weight-shared segmentation/feature extraction network, and the source-domain predictions are constrained by the segmentation loss. The output predicted images are then fed into the discriminator one by one, and the adversarial loss is back-propagated. Finally, training under these loss constraints yields the predicted images.

Source Domain Segmentation Model/Target Domain Feature Extractor

The source domain segmentation model and the target domain feature extraction model are based on the idea of VecNet (Wang et al., 2021b): multiple 1 × 1 convolutional layers extract deep spectral features across bands. Pooling layers progressively down-sample the features, and each Basic Block contains a convolutional layer, a Batch Normalization (BN) layer, and an activation layer. We also use Squeeze-and-Excitation (SE) layers to assign appropriate weights to different channels. After down-sampling, the features pass through the semantic pyramid module (SPM) and are up-sampled back to the original resolution, as shown in Figure 3. Skip connections (Ronneberger et al., 2015) are used to fuse features of different depths. The loss

FIGURE 3. (A) Architecture of the Segmentation Model/Feature Extractor. (B) Architecture of the semantic pyramid module (SPM). (C) Architecture of the Point-wise Guided Attention Module (PAM).
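The channel re-weighting performed by the SE layers can be illustrated with the standard squeeze, excitation, and scale steps: global average pooling gives one descriptor per channel, a two-layer bottleneck with ReLU and sigmoid turns these into weights in (0, 1), and each channel is multiplied by its weight. This pure-Python sketch omits biases, and the weight matrices `w1` and `w2` are hypothetical inputs rather than the trained parameters of the model described here.

```python
import math


def squeeze_excite(features, w1, w2):
    """Squeeze-and-Excitation re-weighting of a C x H x W feature map.

    features: C channels, each an H x W list of floats.
    w1: (C // r) x C bottleneck weights; w2: C x (C // r) expansion weights.
    Biases are omitted for brevity. Returns (re-weighted features, channel weights).
    """
    # Squeeze: global average pooling -> one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a weight in (0, 1) per channel.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    scales = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
              for row in w2]
    # Scale: multiply every value of channel c by its weight scales[c].
    out = [[[v * s for v in row] for row in ch] for ch, s in zip(features, scales)]
    return out, scales


# Two 2 x 2 channels and reduction ratio r = 2 (hidden size 1); weights are made up.
fmap = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
out, scales = squeeze_excite(fmap, w1=[[1.0, 0.0]], w2=[[0.0], [0.0]])
```

With zero expansion weights every channel receives weight 0.5; in training, the learned weights would instead emphasize the channels (bands) most informative for a given scene.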

The SPM module uses four branches to integrate multi-scale features and fuse semantics with details. Each branch takes the down-sampled deep features as input and consists of dilated convolutions (Wang et al., 2018), with different dilation rates across branches. Finally, the local features are re-weighted (He et al., 2015) according to the cloud type prediction to minimize the loss for each cloud type.
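The branches differ only in dilation rate, which changes how far each kernel reaches without adding parameters: along each axis, a k-tap kernel with dilation rate d spans k + (k − 1)(d − 1) input pixels. The rates used by the authors are not stated here, so the sketch below uses 1, 2, 4, and 8 purely as hypothetical examples, together with a one-dimensional dilated convolution that shows which inputs each output combines.

```python
def effective_kernel_size(k, d):
    """Number of input pixels spanned by a k-tap kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def dilated_conv1d(x, kernel, d):
    """'Valid' 1-D dilated convolution (cross-correlation, as in deep learning frameworks)."""
    span = effective_kernel_size(len(kernel), d)
    return [sum(w * x[i + j * d] for j, w in enumerate(kernel))
            for i in range(len(x) - span + 1)]


# Hypothetical dilation rates for the four SPM branches, each with a 3-tap kernel.
BRANCH_RATES = (1, 2, 4, 8)
BRANCH_SPANS = [effective_kernel_size(3, d) for d in BRANCH_RATES]
```

With these rates a 3-tap kernel spans 3, 5, 9, and 17 pixels in the four branches, which is how a pyramid of dilated branches can combine local detail with wider context.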

The source domain segmentation model shares its trained weights with the feature extraction model, and both feed their segmentation masks to the domain discriminator for judgment. In addition, because the source and target domains have different numbers of channels, we designed an encoder to unify the channel dimensions.
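One simple way to realize such an encoder, assumed here for illustration since the source does not specify its internals, is a learned 1 × 1 convolution: at every pixel it applies the same linear map from the input bands to a common number of feature channels, so the 16 Himawari-8 bands and the 14 Fengyun-4 bands can both be projected to, say, 8 channels before the shared network. The output width `C_COMMON = 8` and the uniform demo weights are hypothetical.

```python
def conv1x1(image, weight, bias):
    """1 x 1 convolution: the same linear map from C_in to C_out channels at every pixel.

    image: C_in x H x W nested lists; weight: C_out x C_in; bias: length C_out.
    """
    c_in, h, w = len(image), len(image[0]), len(image[0][0])
    if any(len(row) != c_in for row in weight):
        raise ValueError("each weight row must have one entry per input band")
    return [[[b + sum(wk[c] * image[c][y][x] for c in range(c_in)) for x in range(w)]
             for y in range(h)]
            for wk, b in zip(weight, bias)]


C_COMMON = 8  # hypothetical shared channel count


def demo_encoder(n_bands):
    """Hypothetical encoder weights: uniform, for shape checking only."""
    return [[1.0] * n_bands for _ in range(C_COMMON)], [0.0] * C_COMMON


h8_image = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(16)]   # 16 Himawari-8 bands
fy4_image = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(14)]  # 14 Fengyun-4 bands
h8_feat = conv1x1(h8_image, *demo_encoder(16))
fy4_feat = conv1x1(fy4_image, *demo_encoder(14))
```

Both outputs have the same 8 × H × W shape, which is what allows a single weight-shared network to process data from either satellite.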

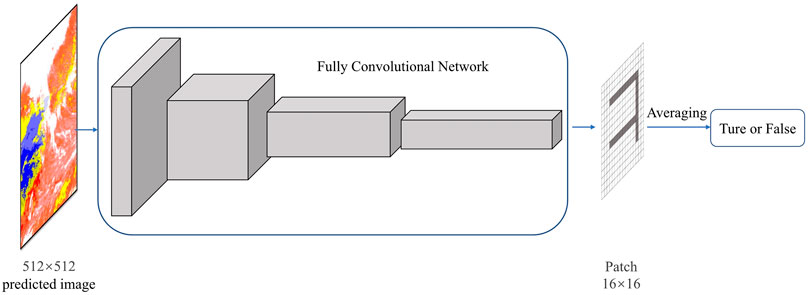

Discriminator

To reduce the domain gap between the source and target domains, our discriminator is continuously trained on the segmentation masks output by the segmentation model/feature extraction model to identify the correct source of each mask. A traditional discriminator maps the input to a single real number, the probability that the sample is real, so its only output is an evaluation of the whole image. Because our samples have high resolution, a whole-image evaluation value is not suitable as the loss indicator, so we design the discriminator following PatchGAN (Isola et al., 2016).

Our discriminator network consists of multiple convolutional layers and activation functions and outputs a 16 × 16 matrix; each element of the matrix evaluates a small region of the input image, namely the receptive field of that element. The average over all patches is the final output of the discriminator, as shown in Figure 4. In this way, the influence of different parts of the input image is taken into account, making the output more reliable. The discriminator network is updated iteratively and is constrained by the adversarial loss
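The relationship between the 512 × 512 input and the 16 × 16 output can be checked with standard convolution arithmetic. The layer configuration below (five 4 × 4 convolutions with stride 2 and padding 1) is a hypothetical choice that happens to reduce 512 to 16; the actual layers are not specified here. With it, each output element sees a 94 × 94 pixel receptive field, and the final discriminator output is the mean of the 256 patch scores.

```python
def conv_out_size(n, k, s, p):
    """Output side length of a convolution with kernel k, stride s, padding p."""
    return (n + 2 * p - k) // s + 1


def patchgan_geometry(n_in, layers):
    """Return (output side length, receptive field in input pixels) for a conv stack.

    layers: sequence of (kernel, stride, padding) tuples.
    """
    n, rf, jump = n_in, 1, 1
    for k, s, p in layers:
        n = conv_out_size(n, k, s, p)
        rf += (k - 1) * jump  # each layer widens the field by (k - 1) input strides
        jump *= s             # distance in input pixels between adjacent outputs
    return n, rf


def discriminator_output(patch_scores):
    """Average a 2-D map of per-patch scores into the discriminator's single output."""
    flat = [v for row in patch_scores for v in row]
    return sum(flat) / len(flat)


# Hypothetical stack: five 4 x 4 convolutions with stride 2 and padding 1.
LAYERS = [(4, 2, 1)] * 5
```

Because the receptive field (94 pixels) is much smaller than the image, each score judges a local region, which matches the motivation for preferring PatchGAN over a single whole-image score.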

Design of Loss

During the training process, we use the segmentation loss

Among them,

Among them,

In addition, to mitigate the effect of domain shift and reduce the domain gap, domain confusion must be maximized. We therefore also use the adversarial loss
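The loss equations are not preserved in this version of the text. As a hedged illustration only, the sketch below uses common forms for this kind of adversarial set-up: a pixel-wise cross-entropy on labelled source pixels, a binary cross-entropy that trains the discriminator to score source masks as 1 and target masks as 0, and an adversarial term in which the target feature extractor is trained as if its masks should be scored 1, in the spirit of adversarial discriminative domain adaptation (Tzeng et al., 2017). Any class weighting or loss coefficients used by the authors are not recoverable here and are omitted.

```python
import math

EPS = 1e-12  # guards log(0)


def pixel_cross_entropy(probs, labels):
    """Mean -log p(true class) over labelled source pixels.

    probs: per-pixel lists of class probabilities; labels: per-pixel class indices.
    """
    return -sum(math.log(max(p[y], EPS)) for p, y in zip(probs, labels)) / len(labels)


def bce(preds, target):
    """Binary cross-entropy of discriminator outputs in [0, 1] against a 0/1 target."""
    return -sum(target * math.log(max(q, EPS)) + (1 - target) * math.log(max(1 - q, EPS))
                for q in preds) / len(preds)


def discriminator_loss(d_source, d_target):
    """D is trained to score source masks as 1 and target masks as 0."""
    return bce(d_source, 1) + bce(d_target, 0)


def adversarial_loss(d_target):
    """The target feature extractor is trained so that D scores its masks as 1."""
    return bce(d_target, 1)
```

Minimizing `discriminator_loss` and `adversarial_loss` in alternation is what drives the two domains' mask distributions together: the extractor's loss falls only when the discriminator can no longer tell target masks from source masks.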

Experiment Results

In our experiments, we use mean intersection-over-union (mIoU) and overall accuracy (allAcc) as metrics. For our multi-class problem, mean accuracy is not an objective metric, because the cloud categories in a given area are not balanced. The overall accuracy is defined as follows, where

In addition, we introduce mean intersection-over-union (mIoU) as a secondary evaluation metric, where i is the true class, j is the predicted class, and
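Both metrics can be computed from a confusion matrix whose rows are true classes i and whose columns are predicted classes j. Since the exact equations are not preserved in this text, the sketch below assumes the usual definitions: overall accuracy as the trace divided by the total pixel count, and per-class IoU as TP / (TP + FP + FN). Classes absent from both the ground truth and the prediction are skipped when averaging, which is one common convention.

```python
def confusion_matrix(gt, pred, n_classes):
    """cm[i][j] counts pixels whose true class is i and predicted class is j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for i, j in zip(gt, pred):
        cm[i][j] += 1
    return cm


def overall_accuracy(cm):
    """Correctly classified pixels divided by all pixels (trace / total)."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total


def mean_iou(cm):
    """Average over classes of TP / (TP + FP + FN)."""
    ious = []
    for k in range(len(cm)):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                              # true k, predicted other
        fp = sum(cm[i][k] for i in range(len(cm))) - tp   # predicted k, true other
        if tp + fp + fn:  # skip classes absent from both ground truth and prediction
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious)


cm = confusion_matrix(gt=[0, 0, 1, 1, 2], pred=[0, 1, 1, 1, 2], n_classes=3)
```

In this toy example overall accuracy is 0.8 while mIoU is about 0.72, illustrating how mIoU penalizes the single confusion between classes 0 and 1 more heavily.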

We use stochastic gradient descent (SGD) as the optimizer to minimize the differentiable objective function. It iteratively updates the weights and bias terms using the gradient of the loss function computed on mini-batches of data. The weight decay is 1e-4, the momentum and power are both set to 0.9, and
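These settings match a common recipe: SGD with momentum and weight decay, combined with a polynomial ("poly") learning-rate decay whose exponent is the power; reading "power" this way is an assumption. The sketch below applies one update in the same order as PyTorch's `torch.optim.SGD` without Nesterov or dampening (weight decay added to the gradient, then the momentum buffer updated). The base learning rate is not given here, so the value used in the example is hypothetical.

```python
def poly_lr(base_lr, step, max_steps, power=0.9):
    """Polynomial learning-rate decay: base_lr * (1 - step / max_steps) ** power."""
    return base_lr * (1 - step / max_steps) ** power


def sgd_step(params, grads, velocity, lr, momentum=0.9, weight_decay=1e-4):
    """One SGD update with momentum and L2 weight decay on flat parameter lists.

    Returns (new parameters, new momentum buffers).
    """
    new_params, new_velocity = [], []
    for w, g, v in zip(params, grads, velocity):
        g = g + weight_decay * w   # add the weight-decay gradient
        v = momentum * v + g       # update the momentum buffer
        new_velocity.append(v)
        new_params.append(w - lr * v)
    return new_params, new_velocity
```

For example, with a hypothetical base learning rate of 0.01, `poly_lr(0.01, 50, 100)` gives about 0.0054 halfway through training and decays smoothly to 0 at the final step.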

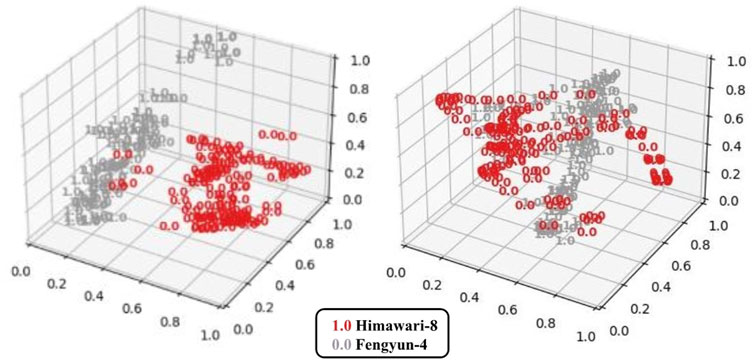

In our experiments, we use t-SNE (t-distributed Stochastic Neighbor Embedding) (Laurens and Hinton, 2016) for a set of visualization experiments. t-SNE maps data from a high-dimensional space to a low-dimensional space while preserving the local structure of the data. The Himawari-8 data have 16 channels and the Fengyun-4 data have 14 channels; comparing the original satellite data with the output of the adversarial domain adaptation model shows that the UDA model significantly reduces the domain gap between the two satellites, as shown in Figure 5. This experiment illustrates the need for our UDA model to address domain adaptation.

FIGURE 5. Satellite data distribution maps in 3D (Left: original source-domain and target-domain data; Right: source-domain and target-domain data after passing through the unsupervised model).

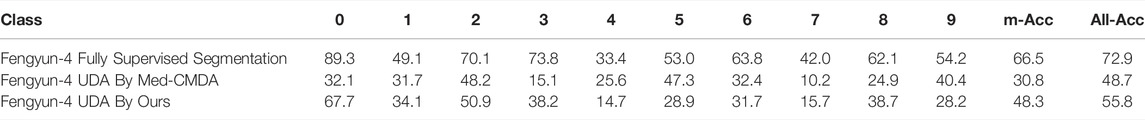

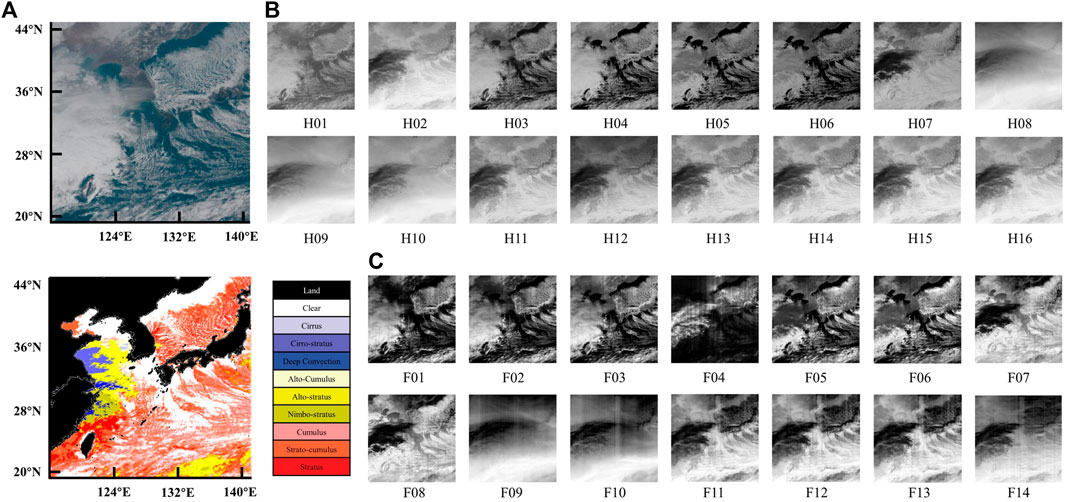

After 80 epochs of training, we obtained the accuracy for each cloud type under adversarial training and under fully supervised training on Fengyun-4 data. With domain adaptation, the accuracy approaches that of segmentation trained on labelled Fengyun-4 data, which is promising, and the results are significantly better than those of the Med-CMDA method (Dou et al., 2018). The All-Acc of UDA training on Fengyun-4 reaches 55.8%. Prediction accuracy is higher for cloud-free areas, whereas for cloud types such as alto-cumulus and cumulus the results are poor owing to the lack of training data. Overall, the results meet expectations for unsupervised training; see Table 3.

The comparison results are shown in the figure, with the cloud classes indicated on the right. Marine cloud recognition achieves good results with the supervised segmentation networks. Under unsupervised training without masks, complex edges and isolated pixels cannot be distinguished as accurately as under supervised training, but the cloud classification task on the target domain can essentially be completed. For example, for the deep convection case at 2020-05-02 08:00, the results are not as good as those of fully supervised training because of the complex details and features, but the method still works without any masks in the target domain.

In addition, we carried out comparative experiments based on the Med-CMDA model architecture, an unsupervised domain adaptation model designed mainly for medical images. The visualization results in the third column of the figure clearly show that, because of this model's large receptive field and strong aggregation, it does not perform well on pixel-level satellite cloud classification.

In the figure, the first column on the left is a pseudo-color image synthesized from channels 1, 2, and 3 of the satellite; because of the complexity of the image, it is difficult to judge the cloud types directly. The second column is an image manually labelled by meteorologists, in which the black areas are coastline and land. The third column is the Med-CMDA result, the fourth column is the supervised training result using Fengyun-4 data and labels, and the fifth column is the result of training with Himawari-8 and Fengyun-4 data without Fengyun-4 labels. For details, please refer to Figure 6.

In the first row of test results, the region in the yellow box contains obvious stratus in the GT image; the fully supervised segmentation model does not perform well there, but our UDA method predicts its cloud category accurately (see Details-1). In the fourth row, however, for the scattered stratus and alto-stratus marked in the green box in the GT image, our UDA method is not as accurate as the fully supervised segmentation model (see Details-2).

Conclusion

In this paper, we proposed an algorithm for classifying marine cloud types based on unsupervised domain adaptation. Satellite-based multi-cloud recognition is now a common technique, is widely used in many applications, and provides important guidance for human activities at sea. Our research aimed to transfer the experience and knowledge acquired from a mature satellite to another satellite through unsupervised learning, addressing the data differences caused by different detection bands and numbers of detection channels when facing new satellites, new fields, and new data. Our network model consists of a segmentation model, a target domain feature extraction model, and a domain discriminator. By learning the data distributions of the two domains and extracting domain-invariant features, it reduces domain differences in the high-level semantic feature space, thereby realizing unsupervised multi-cloud recognition on unlabeled satellites. In addition, we propose for the first time the use of two encoders to resolve the channel difference between satellites. After iterative training, All-Acc reaches 55.8% without cloud classification labels. The results show that our network model reduces the time and manpower that professional meteorologists need for labeling and is effective for marine cloud classification without masks. In the future, we will work on optimizing the model architecture and tuning parameters to improve accuracy, and we hope to test the model on data from other satellites.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author contributions

Drafting of article: BH; Data pre-processing: WF; Planning and supervision of the research: MW and XF; Model construction: BH, LX, and MX; Experiment: LX and MX.

Funding

This work was supported by the National Key Research and Development Program of China (2019YFC1510102) and the Key Laboratory of South China Sea Meteorological Disaster Prevention and Mitigation of Hainan Province (Grant No. SCSF202203).

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors gratefully acknowledge the funding provided by the National Key Research and Development Program of China (2019YFC1510102) and the Key Laboratory of South China Sea Meteorological Disaster Prevention and Mitigation of Hainan Province (Grant No. SCSF202203). They also thank the reviewers for their efforts.

References

Anthis, A. I., and Cracknell, A. P. (1999). Use of satellite images for fog detection (AVHRR) and forecast of fog dissipation (METEOSAT) over lowland Thessalia, Hellas. Int. J. Remote Sens. 20 (6), 1107–1124. doi:10.1080/014311699212876

Astafurov, V. G., and Skorokhodov, A. V. (2022). Using the results of cloud classification based on satellite data for solving climatological and meteorological problems. Russ. Meteorology Hydrology, 12. doi:10.3103/S1068373921120050

Cao, X., Shao, L., and Li, X. (2012). Analysis on the characteristics and causes of a persistent heavy fog in the Yellow Sea and Bohai Sea. Meteorological Sci. Technol. (01), 92–99. doi:10.19517/j.1671-6345.2012.01.018

Dong, Y. (2016). Fengyun-4 meteorological satellite and its application prospect. Shanghai Aerosp. (02), 1–8. doi:10.19328/j.cnki.1006-1630.2016.02.001

Dou, Q., Ouyang, C., Chen, C., Chen, H., and Heng, P. (2018). Unsupervised cross-modality domain adaptation of convnets for biomedical image segmentations with adversarial loss. doi:10.48550/arXiv.1804.10916

Francis, A., Sidiropoulos, P., and Muller, J. (2019). CloudFCN: Accurate and robust cloud detection for satellite imagery with deep learning. Remote Sens. 11 (19), 2312. doi:10.3390/rs11192312

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., et al. (2014). Generative adversarial nets. MIT Press.

He, K., Zhang, X., Ren, S., and Sun, J. (2015). Deep residual learning for image recognition. CoRR. doi:10.1109/CVPR.2016.90

Huang, Z. (2021). Unsupervised domain adaptive method based on the deep learning research (Master's thesis). Hangzhou: University of Electronic Science and Technology.

Isola, P., Zhu, J., Zhou, T., and Efros, A. (2016). Image-to-Image translation with conditional adversarial networks. CoRR. doi:10.1109/CVPR.2017.632

Kotaro, B., Kenji, D., Masahiro, H., Ikeda, A., Imai, T., Inoue, H., et al. (2016). An introduction to himawari-8/9— Japan’s new-generation geostationary meteorological satellites. J. Meteorological Soc. Jpn. 94 (2), 151–183. doi:10.2151/jmsj.2016-009

Liu, C., Yang, S., Di, D., Yang, Y., Zhou, C., Hu, X., et al. (2021). A machine learning-based cloud detection algorithm for the himawari-8 spectral image. Adv. Atmos. Sci. doi:10.1007/S00376-021-0366-X

Long, M., Cao, Y., Cao, Z., Wang, J., and Michael, J. (2019). Transferable representation learning with deep adaptation networks. IEEE Trans. Pattern Anal. Mach. Intell. 41 (12), 3071–3085. doi:10.1109/TPAMI.2018.2868685

Lu, F., Zhang, X., Chen, B., Liu, H., Wu, R., Han, Q., et al. (2017). Imaging characteristics and application prospects of Fengyun-4 meteorological satellite. J. Mar. Meteorology (02), 1–12. doi:10.19513/j.cnki.issn2096-3599.2017.02.001

Purbantoro, B., Aminuddin, J., Manago, N., Toyoshima, K., Lagrosas, N., Sumantyo, J., et al. (2018). Comparison of cloud type classification with Split window algorithm based on different infrared band combinations of himawari-8 satellite. Adv. Remote Sens. 07 (3), 218–234. doi:10.4236/ars.2018.73015

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. CoRR, 234–241. doi:10.1007/978-3-319-24574-4_28

Shrivastava, A., Gupta, A., and Girshick, R. (2016). Training region-based object detectors with online hard example mining. IEEE Computer Society, 761–769. doi:10.1109/CVPR.2016.89

Suzue, H., Imai, T., and Mouri, K. (2016). High-resolution cloud analysis information derived from himawari-8 data. Meteorol. Satell. Cent. Tech. Note 61, 43–51.

Tzeng, E., Hoffman, J., Saenko, K., and Darrell, T. (2017). "Adversarial discriminative domain adaptation," in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Los Alamitos, CA, USA), 2962–2971. doi:10.1109/CVPR.2017.316

Wang, P., Chen, P., Yuan, Y., Liu, D., Huang, Z., Hou, X., et al. (2018). Understanding convolution for semantic segmentation. IEEE. doi:10.1109/WACV.2018.00163

Wang, Z., Kong, X., Cui, Z., Wu, M., Liu, T., Gong, M., et al. (2021b). "Vecnet: A spectral and multi-scale spatial fusion deep network for pixel-level cloud type classification in himawari-8 imagery," in IGARSS 2021 - 2021 IEEE International Geoscience and Remote Sensing Symposium (IEEE). doi:10.1109/IGARSS47720.2021.9554737

Wang, Z., Zhao, J., Zhang, R., Li, Z., Lin, Q., Wang, X., et al. (2021a). UATNet: U-shape attention-based transformer Net for meteorological satellite cloud recognition. Remote Sens. 14 (1), 104. doi:10.3390/RS14010104

Xu, M., Wu, M., Guo, J., Zhang, C., Wang, Y., Ma, Z., et al. (2022). Sea fog detection based on unsupervised domain adaptation. Chin. J. Aeronautics 35 (4), 415–425. doi:10.1016/J.CJA.2021.06.019

Yi, H., Siems, S., Manton, M., Protat, A., Majewski, L., Nguyen, H., et al. (2019). Evaluating himawari-8 cloud products using shipborne and CALIPSO observations: Cloud-top height and cloud-top temperature. J. Atmos. Ocean. Technol. 36 (12), 2327–2347. doi:10.1175/jtech-d-18-0231.1

Yi, Z., Zhang, H., Tan, P., and Gong, M. (2017). DualGAN: Unsupervised dual learning for image-to-image translation. IEEE Comput. Soc. doi:10.48550/arXiv.1704.02510

Keywords: marine cloud classification, unsupervised domain adaptation, deep learning, transfer learning, semantic segmentation

Citation: Huang B, Xiao L, Feng W, Xu M, Wu M and Fang X (2022) Domain Adaptation on Multiple Cloud Recognition From Different Types of Meteorological Satellite. Front. Earth Sci. 10:947032. doi: 10.3389/feart.2022.947032

Received: 19 May 2022; Accepted: 07 June 2022;

Published: 12 August 2022.

Edited by:

Dmitry Efremenko, German Aerospace Center (DLR), Germany

Reviewed by:

Chao Liu, Nanjing University of Information Science and Technology, China

Xing Yan, Beijing Normal University, China

Copyright © 2022 Huang, Xiao, Feng, Xu, Wu and Fang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ming Wu, wuming@bupt.edu.cn; Xiang Fang, fangxiang@cma.gov.cn

†ORCID ID: Luming Xiao, orcid.org/0000-0002-9359-2097; Mengqiu Xu, orcid.org/0000-0002-3029-7664; Ming Wu, orcid.org/0000-0001-8390-5398
