Abstract

A commonly cited use of Learning Styles theory is to use information from self-report questionnaires to assign learners into one or more of a handful of supposed styles (e.g., Visual, Auditory, Converger) and then design teaching materials that match the supposed styles of individual students. A number of reviews, going back to 2004, have concluded that there is currently no empirical evidence that this “matching instruction” improves learning, and that it could potentially cause harm. Despite this lack of evidence, survey research and media coverage suggest that belief in this use of Learning Styles theory is high amongst educators. However, it is not clear whether this is a global pattern, or whether belief in Learning Styles is declining as a result of the publicity surrounding the lack of evidence to support it. It is also not clear whether this belief translates into action. Here we undertake a systematic review of research into belief in, and use of, Learning Styles amongst educators. We identified 37 studies representing 15,405 educators from 18 countries around the world, spanning 2009 to early 2020. Self-reported belief in matching instruction to Learning Styles was high, with a weighted percentage of 89.1%, ranging from 58 to 97.6%. There was no evidence that this belief has declined in recent years; for example, 95.4% of trainee (pre-service) teachers agreed that matching instruction to Learning Styles is effective. Self-reported use, or planned use, of matching instruction to Learning Styles was similarly high. There was evidence of effectiveness for educational interventions aimed at helping educators understand the lack of evidence for matching instruction to Learning Styles, with self-reported belief dropping by an average of 37% following such interventions. From a pragmatic perspective, the concerning implications of these results are moderated by a number of methodological aspects of the reported studies. Most used convenience sampling with small samples and did not report critical measures of study quality. It was unclear whether participants fully understood that they were specifically being asked about the matching of instruction to Learning Styles, or whether the questions asked could be read as referring to a broader interpretation of the theory. These findings suggest that the concern expressed about belief in Learning Styles may not be fully supported by current evidence, and highlight the need to undertake further research on the objective use of matching instruction to specific Learning Styles.

Introduction

For decades, educators have been advised to match their teaching to the supposed Learning Styles of students (Hyman and Rosoff, 1984). There are now over 70 different Learning Styles classification systems (Coffield et al., 2004). They are largely questionnaire-based: students are asked to self-report their preferences for different approaches to learning and other activities, and are then assigned one or more Learning Styles. The VARK classification, which categorizes individuals as one or more of Visual, Auditory, Read-Write and Kinesthetic learners, is perhaps the best known (Newton, 2015; Papadatou-Pastou et al., 2020). Other common Learning Styles classifications in the literature include those by Kolb, Honey and Mumford, Felder, and Dunn and Dunn (Coffield et al., 2004; Newton, 2015).

In the mid-2000s, two substantial reviews of the literature concluded that there was currently no evidence to support the idea that matching instructional methods to the supposed Learning Styles of individual students improved their learning (Coffield et al., 2004; Pashler et al., 2008). Subsequent reviews have reached the same conclusion (Cuevas, 2015; Aslaksen and Lorås, 2018), and there have been numerous, carefully controlled attempts to test this “matching” hypothesis (e.g., Krätzig and Arbuthnott, 2006; Massa and Mayer, 2006; Rogowsky et al., 2015, 2020; Aslaksen and Lorås, 2019). Identifying a student's supposed Learning Style does not appear to influence the way in which that student chooses to study (Husmann and O'Loughlin, 2018), and does not correlate with students' stated preferences for different teaching methods (Lopa et al., 2015).

Despite this lack of evidence, a number of studies suggest that many educators believe that matching instruction to Learning Style(s) is effective. One of the first studies to test this belief was undertaken in 2009 and looked at various statements about the brain and nervous system which are widespread but not supported by research evidence, for example the idea that we only use 10% of our brain, or that we are born with all the brain cells that we will ever have. The study described such statements as “neuromyths” and showed that belief in them was high, including belief in matching of instruction to Learning Styles, which was reported by 82% of a sample of trainee teachers in the United Kingdom (Howard-Jones et al., 2009). A number of similar studies have been conducted since, and have reached the same conclusion, with belief in Learning Styles reaching as high as 97.6% in a study of preservice teachers in Turkey (Dündar and Gündüz, 2016).

This apparent widespread belief in an ineffective teaching method has caused concern amongst the education community. Part of the concern arises from a perception that the use of Learning Styles is actually harmful (Pashler et al., 2008; Riener and Willingham, 2010; Dekker et al., 2012; Rohrer and Pashler, 2012; Dandy and Bendersky, 2014; Willingham et al., 2015). The proposed harms include concerns that learners will be pigeonholed or demotivated by being allocated to a Learning Style. For example, a student who is categorized as an “auditory learner” may conclude that there is no point in pursuing studies, or a career, in visual subjects such as art, or written subjects such as journalism, and so be demotivated during those classes. They might also conclude that they will be more successful in auditory subjects such as music, and thus be inappropriately motivated by unrealistic expectations of success, becoming demotivated if that success does not materialise. It is worth noting, however, that many advocates of Learning Styles propose that it may be motivating for individual learners to know their supposed style (Coffield et al., 2004). Another concern is that trying to match instruction to Learning Styles risks wasting resources and effort on an ineffective method. Educators are motivated to do the best for their learners, and a logical extension of the matching hypothesis is that educators would need to generate four or more versions of their teaching materials and activities, to match the different styles identified in whatever classification they have used. Additional concerns are that continued belief in Learning Styles undermines the credibility of educators and education research, and creates unwarranted and unrealistic expectations of educators (Newton and Miah, 2017). These unrealistic expectations could also manifest when students do not achieve the academic grades that they expect, or do not enjoy, or engage with, their learning; if students are not taught in a way that matches their supposed Learning Style, then they may attribute these negative experiences to a lack of matching and be further demotivated for future study. These concerns, and the associated controversy, have also generated publicity in the media, both in the mainstream media and in publications aimed at educators (Pullmann, 2017; Strauss, 2017; Brueck, 2018).

The apparent widespread acceptance of a technique that is not supported by evidence is made more striking by the fact that there are many teaching methods which demonstrably promote learning. Many of these methods are simple and easy to learn, for example the use of practice tests, or the spacing of instruction (Weinstein et al., 2018). These methods are based upon an abundance of research which demonstrates how we learn (and how we don't), in particular the limitations of human working memory for processing new information in real time, and the use of strategies to account for those limitations (e.g., Young et al., 2014). Unfortunately, these evidence-based techniques do not appear to be reflected in teacher-training textbooks (National Council on Teacher Quality, 2016).

The lack of evidence to support the matching hypothesis is now acknowledged by some proponents of Learning Styles theory. For example, Richard Felder states in a 2020 opinion piece:

“As the critics of learning styles correctly claim, the meshing hypothesis (matching instruction to students' learning styles maximizes learning) has no rigorous research support, but the existence and utility of learning styles does not rest on that hypothesis and most proponents of learning styles reject it.” (Felder, 2020)

and

“I now think of learning styles simply as common patterns of student preferences for different approaches to instruction, with certain attributes - behaviors, attitudes, strengths, and weaknesses - being associated with each preference”. (Felder, 2020)

This specific distinction between the matching/meshing hypothesis and the existence of individual preferences is at the heart of many studies which have examined belief in the matching hypothesis. Many studies ask about both preferences and matching. These are very different concepts, but the wording of the questions asked about them is very similar. Here, for example, is the original wording of the questions used in Howard-Jones et al. (2009), which has been used in many studies since. Participants are asked to rate their agreement with the following statements:

“Individuals learn better when they receive information in their preferred learning style (e.g., auditory, visual, kinesthetic)” (Matching question).

and, separately,

“Individual learners show preferences for the mode in which they receive information (e.g., visual, auditory, kinesthetic)” (Preferences question).

The similarity between these statements creates a risk that participants may not fully distinguish between them. This risk is heightened by the existence of similar-sounding but distinct concepts. For example, there is evidence that individuals show fairly stable differences in certain cognitive tests, e.g., of visual or verbal ability, sometimes called a “cognitive style” (e.g., Mayer and Massa, 2003). There is also evidence that individuals express reasonably stable preferences for the way in which they receive information, although these preferences do not appear to be correlated with abilities (Massa and Mayer, 2006). This literature, and the underlying science, is complex and multi-faceted, but its nomenclature resembles that of the Learning Styles literature, and the science itself may be the genesis of many Learning Styles theories (Pashler et al., 2008).

This potential overlap in concepts is reflected in studies which have examined what educators understand by the term Learning Styles. A 2020 qualitative study investigated this in detail and found a range of different interpretations of the term. Although the VAK/VARK classification system was the most commonly recognized, many educators incorrectly conflated it with other theories, such as Howard Gardner's theory of Multiple Intelligences, and with learning theories such as cognitivism. There was also a large diversity in the ways in which educators attempted to account for the use of Learning Styles in their teaching practice. Many educators responded by including a diversity of approaches within their teaching, but not necessarily mapped onto a specific Learning Styles instrument or with instruction specific to individuals, for example by using a wide variety of audiovisual modalities, or a diversity of active approaches to learning (Papadatou-Pastou et al., 2020). An earlier study reported that participants incorrectly used the term “Learning Styles” interchangeably with “Universal Design for Learning” and with other strategies that take into account individual differences (differentiation) (Ruhaak and Cook, 2018). This complexity is reflected in teacher-training textbooks, which commonly refer to Learning Styles but in a variety of ways, including student motivation and preferences for learning (Wininger et al., 2019). There is also a related misunderstanding about Learning Styles theory: the absence of evidence for a matching hypothesis does not mean that students should all be taught the same way, or that they do not have preferences for how they learn. Attempts to refute the matching hypothesis have been incorrectly interpreted in this way (Newton and Miah, 2017).

Thus, one interpretation of the current literature and surrounding media is that concern has arisen due to widespread belief in the efficacy of an ineffective and potentially harmful teaching technique, but that the participants in studies which report this widespread belief do not clearly understand what they are being asked, or what the intended consequences are if they disagree with what they are asked.

One set of questions to be addressed in this review, then, is whether the aforementioned concern is fully justified, and whether this potential confusion is reflected in the data. We examine this by using a systematic review approach to take a broader look at trends and patterns in a larger dataset. Reviews showing a lack of evidence for matching instruction to Learning Styles have been available since 2004. It would be reasonable, then, to expect that belief in this method would have declined since then, particularly if it is harmful. A related question is whether educators actually use Learning Styles; generating multiple versions of teaching materials and activities would require considerable additional effort for no apparent benefit, which should also hasten the decline of Learning Styles.

With this in mind, we have conducted a Pragmatic Systematic Review. Pragmatism is an approach to research that attempts to identify results that are useful and relevant to practical issues in the real world, rather than focusing solely on academic questions (Duram, 2010; Feilzer, 2010). Pragmatic Evidence-based Education is an approach which combines the most useful education research evidence and relies on judgement to apply it in specific contexts (Newton et al., accepted). Thus, here we have designed research questions to help us develop and discuss findings which are, we hope, useful to the sector rather than solely of academic interest. In addition, we have included many of the usual measures of study quality associated with a systematic review. However, these are included as results in themselves, rather than as reasons to include or exclude studies from the review. A detailed picture of the quality of studies should help the sector to determine whether the findings justify the aforementioned concern, and whether it needs to be addressed.

Research Questions

-

What percentage of educators believe in the matching of instruction to Learning Styles?

-

What percentage of educators enact, or plan to enact, the matching of instruction to Learning Styles?

-

Has belief in matching instruction to Learning Styles decreased over time?

-

Do evidence-based interventions reduce belief in matching instruction to Learning Styles?

-

Do studies present clear evidence that participants understand the difference between (a) matching instruction to Learning Styles and (b) preferences exhibited by learners for the ways in which they receive information?

Methods

The review followed the PRISMA guidelines for conducting and reporting a Systematic Review (Moher et al., 2009), with consideration of measures of quality and reporting for survey-based research taken from Kelley et al. (2003) and Bennett et al. (2011).

Eligibility Criteria, Information Sources, and Search Strategy

Education research is often published in journals that are outside the immediate field of education, but instead are linked to the subject being learned. Therefore, we used EBSCO to search the following databases: CINAHL Plus with Full Text; eBook Collection (EBSCOhost); Library, Information Science & Technology Abstracts; MEDLINE; APA PsycArticles; APA PsycINFO; Regional Business News; SPORTDiscus with Full Text; Teacher Reference Center; MathSciNet via EBSCOhost; MLA Directory of Periodicals; MLA International Bibliography. We also searched PubMed and the Education Research database ERIC.

The following search terms were used: “belief in learning styles”; “believe in learning styles”; “believed in learning styles”; “Individuals learn better when they receive information in their preferred learning style” (the survey question used in the original Howard-Jones paper; Howard-Jones et al., 2009); Neuromyth*; “learning styles” AND myth AND survey or questionnaire. We used advanced search settings for all sources to apply related words and to ensure that the searches looked for the terms within the full text of the articles. No date restriction was applied to the searches, and so the results included items up to and including April 2020.

This returned 1,153 items. Exclusion of duplicates left 838 items. These were then screened according to the inclusion criteria (below). Screening articles on the basis of their titles identified 85 eligible items. The abstracts of these were then evaluated, which resulted in 46 items for full-text screening. We also used Google Scholar to search for the same terms. Google Scholar provides better inclusion of non-journal research, including gray literature (Haddaway et al., 2015) and unpublished theses that are hosted on servers outside the normal databases (Jamali and Nabavi, 2015). For example, a search for the specific survey item used in the original Howard-Jones paper (Howard-Jones et al., 2009), and in many studies subsequently, “Individuals learn better when they receive information in their preferred learning style,” returned zero results on ERIC and four results on PsycINFO, but 107 results on Google Scholar, most of which were relevant. However, all Google Scholar results had to be hand-screened in real time, since Google Scholar does not have the same functionality as the databases described above; it includes multiple versions of the same papers, and the search interface is limited, making it difficult to accurately quantify and report search results (Boeker et al., 2013).

Study Selection

To be included in the review, a study had to meet the following criteria:

-

Survey educators about their belief in the matching of instruction to one or more of the Learning Styles classifications identified in the aforementioned reviews (Coffield et al., 2004; Pashler et al., 2008) and/or educators' use of that matching in their teaching. This included pre-service or trainee teachers (individuals studying toward a teaching qualification).

-

Report sufficient data to allow calculation of the number and percentage of respondents stating a belief that individuals learn better when they receive information in their preferred learning style (or use/plan to use Learning Styles theory in this way).

Exclusion criteria included the following:

-

Surveys of participant groups that were not educators or trainee educators.

-

Only survey belief in individual learning preferences (i.e., rather than matching instruction).

-

Survey other opinions about Learning Styles, for example whether they explain differences in academic abilities (e.g., Bellert and Graham, 2013).

-

Survey belief in personalizing learning to suit preferences or other characteristics not included in the Learning Styles literature (e.g., prior educational achievement, “deep, surface or strategic learners”).

Some studies were not explicitly clear that they surveyed belief in matching instruction, but used related non-specific concepts such as the “existence of Learning Styles.” These were excluded unless additional information was available to confirm that the studies specifically surveyed belief in matching instruction to Learning Styles. For example, Grospietsch and Mayer (2018) reported surveying belief in the existence of Learning Styles. However, the content of this paper discussed knowledge acquisition in the context of matching, and stated that the research instrument was derived from Dekker et al. (2012) and had been used in an additional paper by the same authors (Grospietsch and Mayer, 2019), while a follow-up paper from the same authors described both these earlier papers as surveying belief in matching instruction to Learning Styles (Grospietsch and Mayer, 2020). These two survey studies were therefore included. Another study (Canbulat and Kiriktas, 2017) was unclear, and no additional information was available. Two emails were sent to the corresponding author with a request for clarification, but no response was received.

Application of the inclusion criteria resulted in 33 studies being included, containing a total of 37 samples. We then went back to Google Scholar to search within those articles which cited the 33 included studies. No further studies were identified which met the inclusion criteria.

Data Collection Process

Data were independently extracted from every paper by two authors working separately (PN + AS). Extracted data were then compared and any discrepancies resolved through discussion.

Data Items

The following metrics were collected where available (all data are shown in Appendix 1):

-

The year the study was published

-

Year that data were collected (where stated, and if different from the publication date). If a range was stated, then the year which occupied the majority of the range was taken (e.g., Aug 2014–April 2015 was recorded as 2014).

-

Country where the research was undertaken

-

Publication type (peer reviewed journal, thesis, gray literature)

-

Population type (e.g., academics in HE, teachers, etc.)

-

Whether or not funding was received and if so where from

-

Whether or not a Conflict of Interest was reported/detected

-

Target population size

-

Sample size

-

“N” (completed returns)

-

Average teaching experience of participant group

-

Percentage and number of participants who stated agreement with a question regarding belief in the matching of instruction to Learning Styles, and the text of the specific question asked

-

Percentage and number of participants who stated agreement with a question regarding belief that learners express preferences for how they receive information, and the text of the specific question asked

-

The percentage and number of participants who stated that they did, or would, use matching of instruction to Learning Styles in their teaching, and the text of the specific question asked

-

The percentage and number of participants who stated agreement with a question regarding belief in the matching of instruction to Learning Styles after any intervention aimed at helping participants understand the lack of evidence for matching instruction to Learning Styles
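The rule above for recording a single data-collection year from a stated range can be made concrete. The following is a minimal Python sketch, not a tool used in the review: `majority_year` is a hypothetical helper, and both the month-level resolution and the tie-break (the earlier year wins when both years cover the same number of months) are assumptions, since the text does not specify them.

```python
from datetime import date


def majority_year(start: date, end: date) -> int:
    """Return the calendar year that covers the most months of a collection period.

    Months are counted inclusively at calendar-month resolution. Ties go to the
    earlier year; that tie-break is an assumption, not a rule stated in the review.
    """
    if end < start:
        raise ValueError("end must not precede start")
    months_per_year: dict[int, int] = {}
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        months_per_year[year] = months_per_year.get(year, 0) + 1
        month += 1
        if month > 12:  # roll over into January of the next year
            year, month = year + 1, 1
    # max() returns the first maximal item, so iterating in ascending order
    # resolves ties in favour of the earlier year
    return max(sorted(months_per_year), key=lambda y: months_per_year[y])


# The example given in the text: Aug 2014 to April 2015 is recorded as 2014
print(majority_year(date(2014, 8, 1), date(2015, 4, 30)))  # 2014
```

August to December 2014 covers five months and January to April 2015 covers four, so 2014 is recorded.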

Summary Measures and Synthesis of Results

Most measures are simple percentages of participants who agreed, or not, with questionnaire statements. Summary measures are then the average of these. In order to account for unequal sample size, simple weighted percentages were calculated; percentages were converted to raw numbers using the stated “N” for an individual sample. The sum of these raw numbers from each study was then divided by the sum of “N” from each study and converted to a percentage. Percentages from individual studies were used as individual data points in groups for subsequent statistical analysis, for example to compare the percentage of participants who believed in matching instruction to the percentage who actually used Learning Styles in this way.
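The weighted percentage described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors' analysis code: `weighted_percentage` is a hypothetical helper, the input numbers are invented, and reconstructed counts are left fractional rather than rounded to whole respondents.

```python
def weighted_percentage(percentages: list[float], ns: list[int]) -> float:
    """Pool per-sample percentages, weighting each sample by its N.

    Each percentage is converted back to a (possibly fractional) count of
    agreeing respondents, p / 100 * N; the summed counts are then divided
    by the summed Ns and expressed as a percentage.
    """
    if len(percentages) != len(ns) or not ns:
        raise ValueError("need one N per percentage, and at least one sample")
    agreeing = sum(p / 100 * n for p, n in zip(percentages, ns))
    return 100 * agreeing / sum(ns)


# Hypothetical samples, not data from the review
pcts = [90.0, 60.0]
ns = [300, 100]
print(weighted_percentage(pcts, ns))  # 82.5
print(sum(pcts) / len(pcts))          # 75.0 (simple, unweighted average)
```

The gap between the two printed values shows why weighting matters: a large sample with high agreement pulls the pooled figure above the simple average of the study percentages.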

Risk of Bias Within and Across Studies

Bias is defined as anything which leads a review to “over-estimate or under-estimate the true intervention effect” (Boutron et al., 2019). In this case an “intervention effect” would be belief in, or use of, Learning Styles either before or after any intervention, or belief in a preference for receiving information in different ways.

Many concerns regarding bias are unlikely to apply here. One example is publication bias, wherein results are less likely to be reported if they are not statistically significant. Most of the data reported in the studies under consideration here are not subject to tests of significance, so this is less of a concern.

However, a number of other factors can generate bias within a questionnaire study of the type analyzed here. These factors also affect the external validity of study findings, i.e., how likely it is that study findings can be generalized to other populations. We collected the following information from each study in order to assess the external validity of the studies. These metrics were derived from multiple sources (Kelley et al., 2003; Bennett et al., 2011; Boutron et al., 2019). Some were calculated from the objective data described above, whereas others were subject to judgement by the authors. In the latter case, each author made an independent judgement and then any queries were resolved through discussion.

-

Sampling Method

Each study was classified into one of the following categories, which are drawn from the literature (Kelley et al., 2003) and from the studies themselves.

◦ Convenience sampling. The survey was distributed to all individuals within a specified population, and data were analyzed from those individuals who voluntarily completed the survey.

◦ Snowball sampling. Participants from a convenience sample were asked to then invite further participants to complete the survey.

◦ Unclassifiable. Insufficient information was provided to allow determination of the sampling method.

◦ (no other sampling approaches were used by the included studies)

-

Validity Measures

◦ Neutral Invitation. Were participants invited to the study using neutral language? Neutrality in this case was defined as not demonstrating support for, or criticism of, Learning Styles in a way that could influence the response of a participant. An example of a neutral invitation is Dekker et al. (2012): “The research was presented as a study of how teachers think about the brain and its influence on learning. The term neuromyth was not mentioned in the information for teachers.”

◦ Learning Styles vs. styles of learning. Was sufficient information made available to participants for them to be clear that they were being asked about Learning Styles rather than styles of learning, or preferences (Papadatou-Pastou et al., 2020)? For example, was it explained that, in order to identify a Learning Style, a questionnaire needs to be administered which then results in learners being allocated to one or more styles, with named examples (e.g., Newton and Miah, 2017)?

◦ Matching Instruction. If yes to the above, was it also made clear that, according to the matching hypothesis, educators are supposed to tailor instruction to individual Learning Styles?

Additional Analyses

The following additional analyses were pre-specified in line with our initial research questions.

Has Belief in Matching Instruction to Learning Styles Decreased Over Time?

The lack of evidence to support matching instruction to Learning Styles has been established since the mid-2000s and has been the subject of substantial publicity. We might therefore hypothesize that belief in matching instruction has decreased over time, for example due to the effects of the publicity, and/or from a revision of teacher-training programmes to reflect this evidence. Three different analyses were conducted to test for evidence of a decrease.

-

A Spearman Rank Correlation test was conducted to test for a correlation between the year that the study was undertaken and the percentage of participants who reported a belief in matching instruction to learning styles. A significant negative correlation would indicate a decrease over time.

-

Belief in matching instruction to Learning Styles was compared in trainee teachers vs. practicing teachers. If belief in Learning Styles was declining then we would expect to see lower rates of belief in trainee teachers. Two samples (Tardif et al., 2015; van Dijk and Lane, 2018) contained a mix of trainee and qualified teachers and were excluded from this analysis. The samples of teachers in Dekker et al. (2012) and Macdonald et al. (2017) both contained 94% practicing teachers and 6% trainee teachers, and so the samples were counted as practicing teachers for the purpose of this analysis.

-

A Spearman Rank Correlation test was conducted to test for a correlation between the average teaching experience of study participants and the percentage of participants who reported a belief in matching instruction to Learning Styles. If belief in matching instruction to learning styles is decreasing then we might expect to see a negative correlation.
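Both correlation analyses above use Spearman's rank correlation, which is the Pearson correlation computed on ranks, with tied values given the average of the ranks they span. A minimal standard-library Python sketch follows; the function names and the (year, percentage) pairs are hypothetical, and the review does not state which software it used. Where SciPy is available, `scipy.stats.spearmanr` returns both the coefficient and a p-value.

```python
from statistics import mean


def average_ranks(values: list[float]) -> list[float]:
    """Rank values 1..n in ascending order; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # extend the run while the next value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman's rho as the Pearson correlation of the tie-averaged ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical (year, % believing in matching) pairs, not data from the review
years = [2009, 2012, 2014, 2016, 2018]
belief = [82.0, 93.0, 90.0, 97.6, 88.0]
print(round(spearman_rho(years, belief), 3))  # 0.3
```

A decline in belief over time, or with teaching experience, would show up as a negative rho.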

Is There a Difference Between Belief in Learning Styles and Use of Learning Styles?

The weighted percentage for each of these was calculated, and the two groups of responses were also compared.

Question Validity Analysis

In many of the studies here, participants were asked about both “preferences for learning” and “matching instruction to Learning Styles.” As described in the introduction, the wording for both questions was similar. If there was confusion about the difference between these two statements, then we would expect the pattern of response to them to be broadly similar. To test for this, we calculated a difference score for each study by subtracting the percentage of participants who believed in matching instruction to Learning Styles from the percentage who agreed that individuals have preferences for how they learn. We then conducted a one-tailed t-test to determine whether the distribution of these scores was significantly different from zero. We also compared both groups of responses.
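The difference-score analysis can be sketched as follows. The helper names and percentages are hypothetical, and the sketch computes only the one-sample t statistic and its degrees of freedom. Taking the alternative hypothesis to be a positive mean difference (more agreement with the preferences statement than with the matching statement) is an assumption about the direction of the one-tailed test; the one-tailed p-value would then come from the t distribution, for example via `scipy.stats.ttest_1samp(diffs, 0, alternative="greater")` in recent SciPy versions.

```python
from statistics import mean, stdev


def difference_scores(pref_pct: list[float], match_pct: list[float]) -> list[float]:
    """Per study: % agreeing that learners have preferences minus % agreeing with matching."""
    return [p - m for p, m in zip(pref_pct, match_pct)]


def one_sample_t(diffs: list[float], mu0: float = 0.0) -> tuple[float, int]:
    """One-sample t statistic and degrees of freedom for H0: mean difference == mu0."""
    n = len(diffs)
    standard_error = stdev(diffs) / n ** 0.5
    return (mean(diffs) - mu0) / standard_error, n - 1


# Hypothetical per-study percentages, not data from the review
prefs = [96.0, 94.0, 98.0, 92.0]
matching = [90.0, 93.0, 89.0, 88.0]
diffs = difference_scores(prefs, matching)
print(diffs)  # [6.0, 1.0, 9.0, 4.0]
t, df = one_sample_t(diffs)
print(round(t, 2), df)  # 2.97 3
```

If participants treated the two statements as equivalent, the differences would cluster around zero and t would be small.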

Analysis

All datasets were checked for normality before analysis using a Kolmogorov-Smirnov test. Non-parametric tests were used where datasets failed this test. Individual tests are described in the Results section.
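For reference, the one-sample Kolmogorov-Smirnov statistic D is the largest vertical gap between the empirical distribution of the data and a reference normal CDF. The sketch below assumes the normal is fitted with the sample mean and standard deviation, which the text does not specify; when parameters are estimated from the same data, the standard KS critical values are conservative (the Lilliefors variant corrects for this). `ks_normal_d` is a hypothetical helper that returns D only, not a p-value.

```python
from statistics import NormalDist, mean, stdev


def ks_normal_d(data: list[float]) -> float:
    """Kolmogorov-Smirnov D between the data and a normal fitted by sample mean and SD."""
    xs = sorted(data)
    n = len(xs)
    fitted = NormalDist(mean(xs), stdev(xs))
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = fitted.cdf(x)
        # the empirical CDF steps from (i - 1)/n to i/n at x; check both sides of the step
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


# Hypothetical per-study percentages, not data from the review
print(round(ks_normal_d([60.0, 80.0, 85.0, 90.0, 92.0, 95.0]), 3))
```

Larger values of D indicate a poorer fit to normality, which is what would trigger the switch to non-parametric tests.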

Results

89.1% of Participants Believe in Matching Instruction to Learning Styles

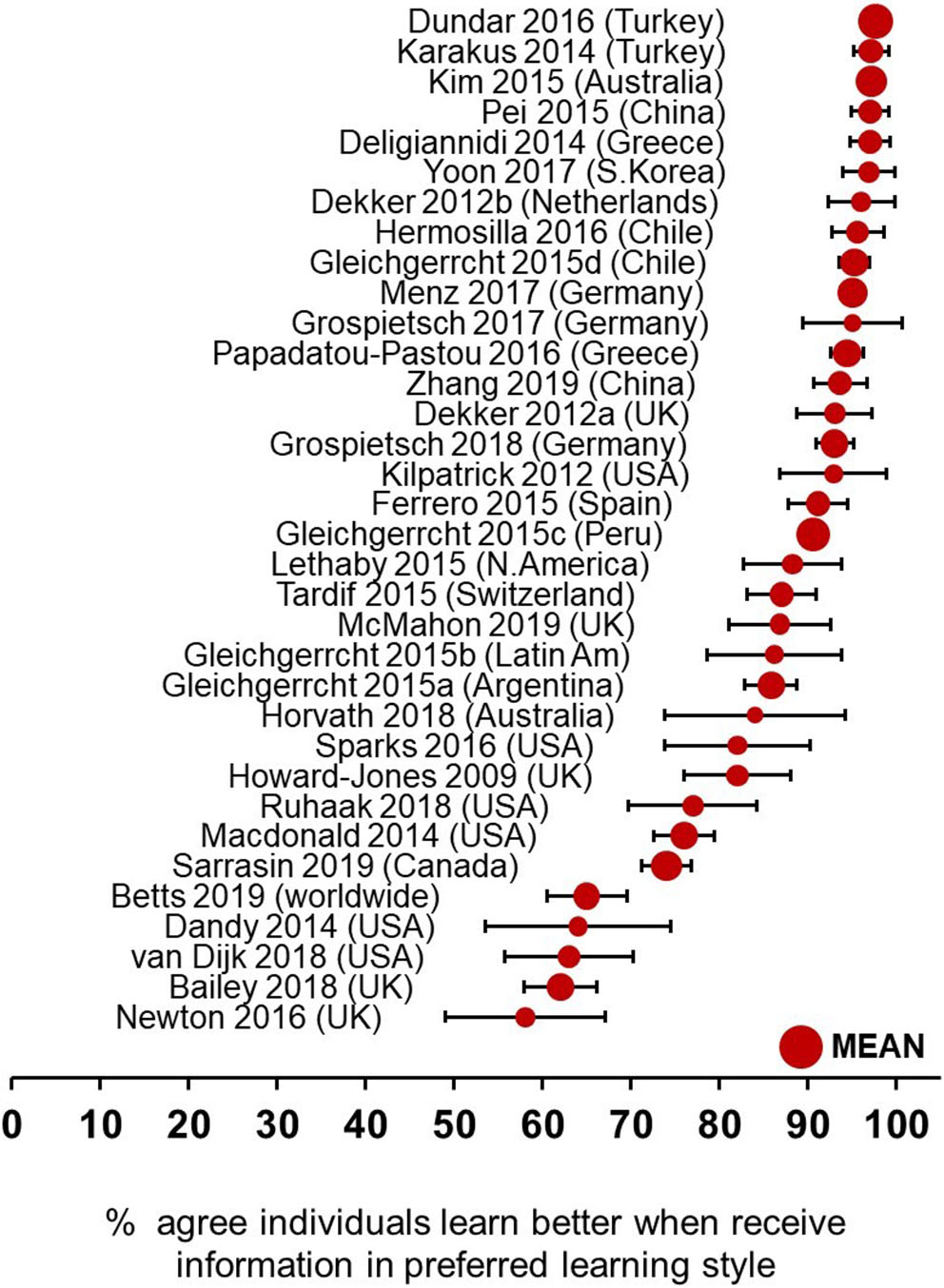

34/37 samples reported the percentage of participants who stated agreement with an incorrect statement that individuals learn better when they receive information in their preferred learning style. The simple average of these 34 data points is 86.2%. To calculate a weighted percentage, these percentages were converted to raw numbers using the stated “N.” The sum of these raw numbers was then divided by the sum of “N” from the 34 samples to create a percentage. This calculation returned a figure of 89.1%. A distribution of the individual studies is shown in Figure 1.

Figure 1

The percentage of participants who stated agreement that individuals learn better when they receive information in their preferred Learning Style. Individual studies are shown with the name of the first author and the year the study was undertaken. Data are plotted as ±95% CI. Bubble size is proportional to the Log10 of the sample size.
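The caption does not say how the 95% confidence intervals were computed. Purely as an assumption, the sketch below uses the normal-approximation (Wald) interval for a single study's percentage; with many percentages close to 100%, a Wilson score interval would usually behave better near the boundary. `wald_ci` and its inputs are hypothetical.

```python
from statistics import NormalDist


def wald_ci(pct: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Normal-approximation (Wald) confidence interval for a percentage, in percent."""
    p = pct / 100
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    half_width = z * (p * (1 - p) / n) ** 0.5
    # clip to the valid 0-100% range, since the Wald interval can overshoot
    return max(0.0, 100 * (p - half_width)), min(100.0, 100 * (p + half_width))


# Hypothetical study: 80% agreement among 100 respondents
low, high = wald_ci(80.0, 100)
print(round(low, 1), round(high, 1))  # 72.2 87.8
```

The interval narrows as the sample size grows, which is why small convenience samples produce wide bars in a plot of this kind.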

No Evidence of a Decrease in Belief Over Time

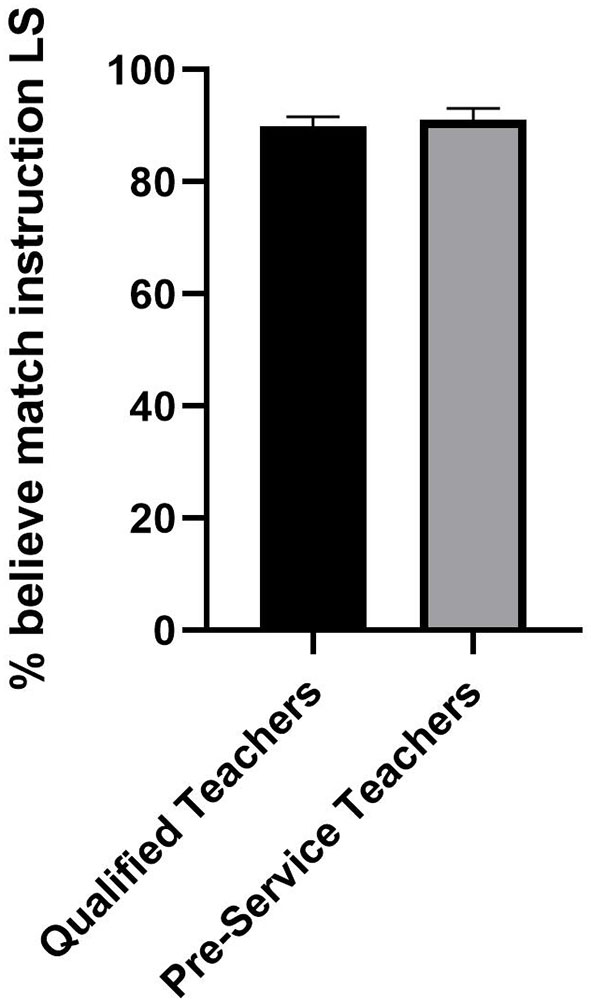

As described in the methods, we undertook three separate analyses to test for evidence that belief in Learning Styles has decreased over time. (1) A Spearman Rank correlation analysis was conducted to test for a relationship between the year a study was conducted and the percentage of participants who reported that they believed in matching instruction to Learning Styles. No significant relationship was found (r = −0.290, P = 0.102). (2) Belief in matching instruction to Learning Styles was compared in samples of qualified teachers (N = 16) vs. pre-service teachers (N = 12) using a Mann-Whitney U test. No significant difference was found (U = 82, P = 0.529; Figure 2). When calculating the weighted percentage for each group, belief in matching was 95.4% for pre-service teachers and 87.8% for qualified teachers. The weighted percentage for participants from Higher Education was 63.6%, although this was not analyzed statistically, since these data were calculated from only three studies, which also differed from the others in additional ways (see Discussion). (3) A Spearman Rank correlation analysis was conducted to test for a relationship between the mean years of experience reported by a participant group (qualified teachers) and the percentage who reported that they believed in matching instruction to Learning Styles. No significant relationship was found (r = −0.158, P = 0.642).

Figure 2

No difference between the percentage of Qualified Teachers vs. Pre-Service Teachers who believe in the efficacy of matching instruction to Learning Styles. The percentage of educators who agreed with each statement was compared by Mann-Whitney U test. P = 0.529.

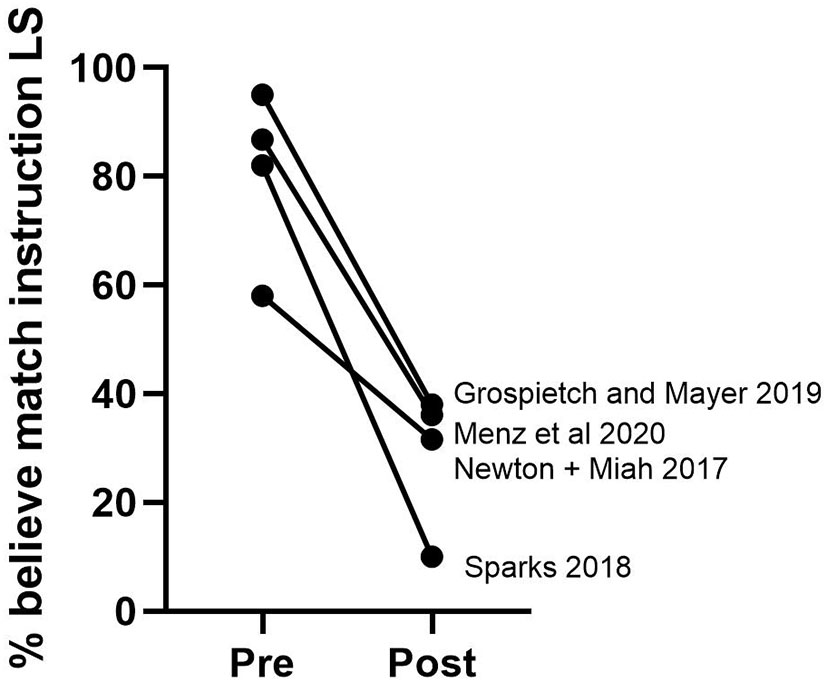

Effect of Interventions

Four studies used some form of training to explain to participants the lack of current evidence for matching instruction to Learning Styles. These studies used a pre-post design to evaluate participants' belief in the efficacy of matching instruction to Learning Styles both before and after the training. Across these four studies, the weighted percentage of participants reporting this belief fell from 78.4 to 37.1%. The effect size for this intervention was large (Cohen's d = 3.6), and a paired t-test across the four studies showed that the difference between pre and post was significant (P = 0.012). Results from the individual studies are shown in Figure 3.
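A paired pre/post comparison of this kind can be sketched as follows. The pre/post percentages in the example are hypothetical, and the article does not state which variant of Cohen's d was used; the sketch computes d_z (mean difference divided by the standard deviation of the differences), one common choice for pre-post designs, so it is not expected to reproduce the reported d = 3.6. A P value would come from a t distribution with n − 1 degrees of freedom, for example via `scipy.stats.ttest_rel`.

```python
import math
import statistics


def paired_summary(pre, post):
    """Paired t statistic and Cohen's d_z for matched pre/post values.

    diff = pre - post for each study
    d_z  = mean(diff) / sd(diff)              (sd uses the n - 1 denominator)
    t    = mean(diff) / (sd(diff) / sqrt(n))  with n - 1 degrees of freedom
    """
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)
    t = mean_d / (sd_d / math.sqrt(n))
    d_z = mean_d / sd_d
    return mean_d, t, d_z, n - 1


# Hypothetical pre/post belief percentages for four studies (not Table 1 data)
pre = [80, 70, 90, 60]
post = [40, 35, 50, 20]
mean_d, t, d_z, df = paired_summary(pre, post)
print(f"mean drop {mean_d:.2f} points, t({df}) = {t:.2f}, d_z = {d_z:.2f}")
```

Because t = d_z × √n, the two quantities carry the same information once the number of paired studies is fixed.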

Figure 3

Interventions which explain the lack of evidence to support the efficacy of matching instruction to Learning Styles are associated with a drop in the percentage of participants who report agreeing that matching is effective. Each of the four studies used a pre-post design to measure self-reported belief. The weighted percentage dropped from 78.4 to 37.1%.

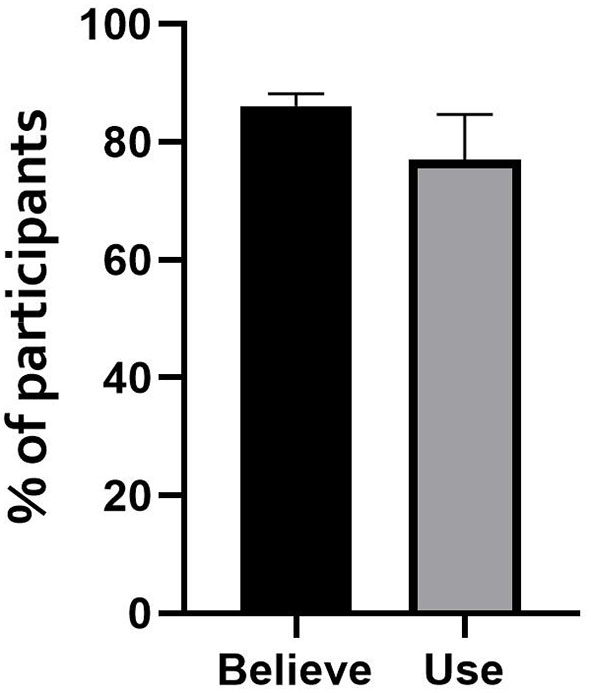

Use of Learning Styles vs. Belief

Seven studies measured self-reported use, or planned use, of matching instruction to Learning Styles. The weighted average showed that 79.7% of participants said they used, or intended to use, matching of instruction to Learning Styles. This was compared to the percentage who reported that they believed in the efficacy of matching instruction. A Mann-Whitney U test was used because not all seven studies also measured belief in matching instruction, so a paired test was not possible. No significant difference was found between the percentage of participants who reported believing that matching instruction to Learning Styles is effective (89.1%) and the percentage who used, or planned to use, it as a teaching method (79.7%) (U = 76.5, P = 0.146). Data are shown in Figure 4.
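The same Mann-Whitney statistic can also be computed from rank sums, using the identity U_x = R_x − n_x(n_x + 1)/2, where R_x is the sum of the tie-averaged ranks of sample x in the pooled data. A minimal standard-library sketch, assuming the inputs are the seven “% Use LS” values and the 34 “% Believe matching” values in Table 1; the helper names are hypothetical. It returns U = 76.5, the reported statistic. The P value is omitted here because it depends on how the null distribution handles ties.

```python
def tie_averaged_ranks(values):
    """Rank each value by the mean of the 1-based sorted positions it occupies."""
    positions = {}
    for pos, v in enumerate(sorted(values), start=1):
        positions.setdefault(v, []).append(pos)
    mean_rank = {v: sum(p) / len(p) for v, p in positions.items()}
    return [mean_rank[v] for v in values]


def mann_whitney_from_ranks(x, y):
    """U via rank sums: U_x = R_x - n_x (n_x + 1) / 2, and U_y = n_x n_y - U_x."""
    ranks = tie_averaged_ranks(list(x) + list(y))
    r_x = sum(ranks[: len(x)])  # the first len(x) pooled ranks belong to x
    u_x = r_x - len(x) * (len(x) + 1) / 2
    return u_x, len(x) * len(y) - u_x


# "% Use LS" column of Table 1 (7 samples)
use = [95.3, 84.3, 77, 33, 79.4, 90, 80]
# "% Believe matching" column of Table 1 (34 samples)
belief = [65, 62, 64, 93, 96, 97, 97.6, 91.1, 85.8, 86.2, 90.6, 95.2,
          93, 95, 95.6, 84, 82, 97.1, 92.9, 97.1, 88.3, 76, 86.8, 95.0,
          58, 94.4, 97, 77, 74, 82, 87, 63, 96.9, 93.6]

u_use, u_belief = mann_whitney_from_ranks(use, belief)
print("U =", min(u_use, u_belief))
```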

Figure 4

No difference between the percentage of participants who report believing in the efficacy of matching instruction to Learning Styles, and the percentage who used, or intended to use, Learning Styles in this way. The pooled weighted percentage was 89.1 vs. 79.7%. P = 0.146 by Mann-Whitney U test.

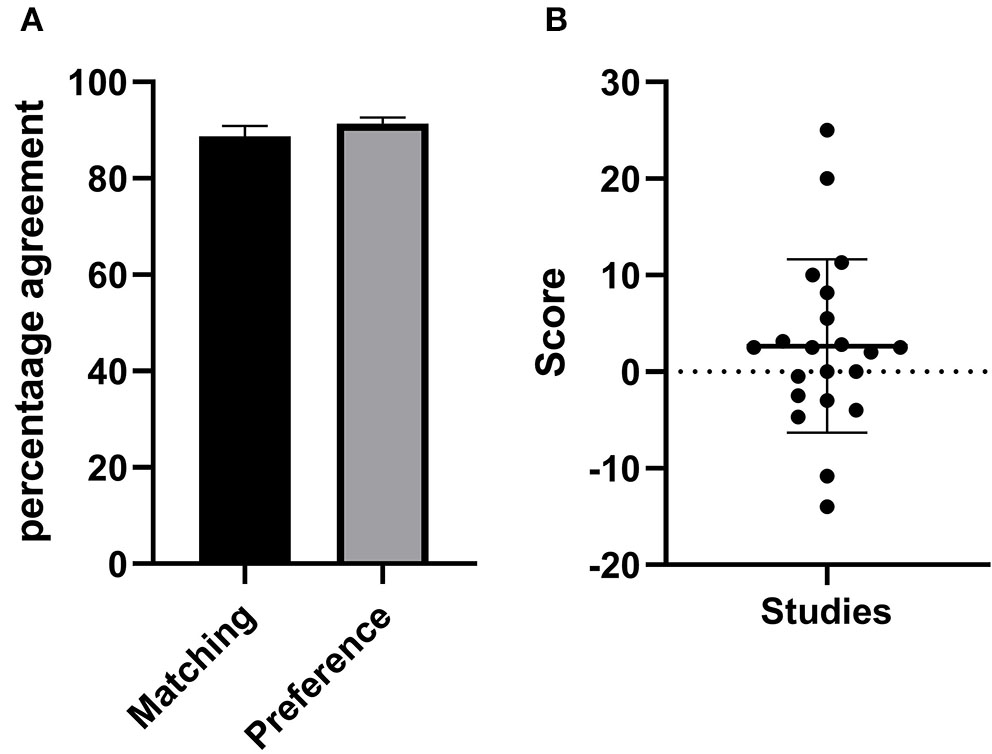

No Difference in Belief in Preferences vs. Belief in Matching Instruction to Learning Styles

As described in the Introduction, many studies compared belief in matching instruction to Learning Styles (a “neuromyth”) with belief in the correct statement that individuals show preferences for the mode in which they receive information. Twenty-one studies questioned participants on both their belief in matching instruction to Learning Styles and their belief that individual learners have preferences for the ways in which they receive information. A Wilcoxon matched-pairs test showed no significant difference between these two datasets (P = 0.262, W = 57). For each sample, a difference score was calculated by subtracting the percentage who believe in matching instruction from the percentage who believe that learners show preferences. The mean of these scores was 2.66, with a standard deviation of 8.97. A one-sample t-test showed that the mean of these scores was not significantly different from zero (P = 0.189). The distribution of these scores is shown in Figure 5 and includes many negative scores, i.e., samples in which belief in matching instruction to Learning Styles is higher than belief that individuals have preferences for how they receive information.
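Both tests can be recomputed from the 21 sample pairs in Table 1 with the Python standard library. This is a sketch, not the authors' code: it takes W to be the sum of signed ranks (W+ minus W−), a convention used by some statistics packages, and it omits P values, which would need the relevant null distributions (for example via `scipy.stats.wilcoxon` and `scipy.stats.ttest_1samp`). On the Table 1 values it gives a mean difference of about 2.66, a standard deviation of about 8.97, t ≈ 1.36 with 20 degrees of freedom, and W = 57.

```python
import math
import statistics

# (% believe preferences, % believe matching) for the 21 Table 1 samples reporting both
pairs = [
    (90, 65), (95, 93), (82, 96), (97, 97),         # Betts; Dekker UK, NL; Deligiannidi
    (86.8, 97.6), (93.6, 91.1),                     # Dundar; Ferrero
    (94, 85.8), (97.5, 86.2), (96.1, 90.6), (97.7, 95.2),  # Gleichgerrcht x4
    (93, 93), (98.4, 95.6), (94, 84), (79, 82),     # Grospietsch 2019; Hermosilla; Horvath; Howard-Jones
    (94.6, 97.1), (91.41, 88.3), (93.9, 94.4),      # Karakus; Lethaby; Papadatou-Pastou
    (93, 97), (79.5, 77), (83, 63), (88.9, 93.6),   # Pei; Ruhaak; van Dijk; Zhang
]

# Difference score: preference minus matching, rounded to strip float noise
diffs = [round(p - m, 4) for p, m in pairs]

# One-sample t-test of the difference scores against zero
n = len(diffs)
mean_d = statistics.mean(diffs)
sd_d = statistics.stdev(diffs)
t_one_sample = mean_d / (sd_d / math.sqrt(n))


def signed_rank_sum(d):
    """Wilcoxon signed-rank: drop zero differences, rank |d| with ties
    averaged, and return (W_plus, W_minus, W_plus - W_minus)."""
    nonzero = [x for x in d if x != 0]
    positions = {}
    for pos, v in enumerate(sorted(abs(x) for x in nonzero), start=1):
        positions.setdefault(v, []).append(pos)
    rank = {v: sum(p) / len(p) for v, p in positions.items()}
    w_plus = sum(rank[abs(x)] for x in nonzero if x > 0)
    w_minus = sum(rank[abs(x)] for x in nonzero if x < 0)
    return w_plus, w_minus, w_plus - w_minus


print(f"mean = {mean_d:.2f}, sd = {sd_d:.2f}, t({n - 1}) = {t_one_sample:.2f}")
print("W (sum of signed ranks) =", signed_rank_sum(diffs)[2])
```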

Figure 5

No difference between belief in Learning Styles and Learning Preferences. (A) The percentage of participants who report believing that individuals have preferences for how they receive information, and the percentage who report believing that individuals learn better when receiving information in their preferred Learning Style. (B) The difference between these two measures, calculated for individual samples. A negative score means that fewer participants believed that students have preferences for how they receive information than believed that matching instruction to Learning Styles is effective.

Risk of Bias and Validity Measures

A summary table of the individual studies is shown in Table 1. (The full dataset is available in Appendix 1).

Table 1

| First author | Year published | Country | Year of study | Population type | Neutral invitation? | Make clear LS? | Make clear matching? | Sampling method | N | Mean teaching exp. (years) | HJQ? | % Believe matching | % Believe matching post-training | % Use LS | % Preference |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Betts | 2019 | Worldwide | 2019 | Higher Education | – | N | N | Sno | 427 | – | Y | 65 | – | – | 90 |
| Bailey | 2018 | UK + Ireland | 2017 | Sports Coaches | – | N | N | Con | 545 | – | Y | 62 | – | – | – |
| Carter | 2015 | Australia | 2015 | Pre-service teachers | – | Y | Y | Con | 235 | 0 | – | – | – | 95.3 | – |
| Dandy | 2014 | USA | 2014 | Higher Education | – | Y | Y | Con | 81 | – | N | 64 | – | – | – |
| Dekker | 2012 | UK | 2012 | Teachers (mixed) | Y | N | N | Con | 137 | – | Y | 93 | – | – | 95 |
| Dekker | 2012 | Netherlands | 2012 | Teachers (mixed) | Y | N | N | Con | 105 | – | Y | 96 | – | – | 82 |
| Deligiannidi | 2015 | Greece | 2014 | Teachers (mixed) | – | N | N | Con | 217 | 15.1 | Y | 97 | – | – | 97 |
| Dundar | 2016 | Turkey | 2016 | Pre-service teachers | – | N | N | Con | 2932 | 0 | Y | 97.6 | – | – | 86.8 |
| Ferrero | 2016 | Spain | 2015 | Teachers (mixed) | Y | N | N | Con | 284 | 16.9 | Y | 91.1 | – | – | 93.6 |
| Gleichgerrcht | 2015 | Argentina | 2015 | Teachers (mixed) | – | N | N | Unc | 551 | 17.8 | Y | 85.8 | – | – | 94 |
| Gleichgerrcht | 2015 | Latin America | 2015 | Teachers (mixed) | – | N | N | Unc | 80 | 17.8 | Y | 86.2 | – | – | 97.5 |
| Gleichgerrcht | 2015 | Peru | 2015 | Teachers (mixed) | – | N | N | Unc | 2222 | 17.8 | Y | 90.6 | – | – | 96.1 |
| Gleichgerrcht | 2015 | Chile | 2015 | Teachers (mixed) | – | N | N | Unc | 598 | 17.8 | Y | 95.2 | – | – | 97.7 |
| Grospietsch | 2019 | Germany | 2018 | Pre-service teachers | Y | N | N | Con | 550 | 0 | Y | 93 | – | – | 93 |
| Grospietsch | 2017 | Germany | 2017 | Pre-service teachers | – | N | N | Con | 57 | 0 | Y | 95 | 38 | – | – |
| Hermosilla | 2016 | Chile | 2016 | Pre-service teachers | Y | N | N | Con | 184 | 0 | Y | 95.6 | – | – | 98.4 |
| Horvath | 2018 | UK, USA, Australia | 2018 | Teachers | – | N | N | Con | 50 | 18.6 | Y | 84 | – | – | 94 |
| Howard-Jones | 2009 | UK | 2009 | Pre-service teachers | – | N | N | Con | 158 | 0 | Y | 82 | – | – | 79 |
| Karakus | 2014 | Turkey | 2014 | Teachers (mixed) | – | N | N | Con | 278 | – | Y | 97.1 | – | – | 94.6 |
| Kilpatrick | 2012 | USA | 2012 | Teachers (Elem School) | – | N | N | Con | 70 | 13.4 | N | 92.9 | – | 84.3 | – |
| Kim | 2017 | Australia | 2015 | Pre-service teachers | – | N | N | Con | 1144 | 0 | Y | 97.1 | – | – | – |
| Lethaby | 2015 | North America | 2015 | Teachers (TESOL) | – | N | N | Con | 128 | – | Y | 88.3 | – | – | 91.41 |
| Macdonald | 2017 | USA | 2014 | Teachers (mixed) | – | N | N | Con | 598 | – | Y | 76 | – | – | – |
| McMahon | 2019 | UK | 2015 | Pre-service teachers | – | N | N | Con | 130 | – | Y | 86.8 | 63.1 | – | – |
| Menz | 2020 | Germany | 2017 | Pre-service teachers | Y | N | N | Sno | 936 | 0 | N | 95.0 | – | – | – |
| Morehead | 2015 | USA | 2015 | Higher Education | Y | N | N | Con | 146 | 0 | – | – | – | 77 | 91 |
| Newton | 2016 | UK | 2016 | Higher Education | Y | Y | Y | Con | 114 | 11 | Y | 58 | 31.6 | 33 | – |
| Papadatou-Pastou | 2017 | Greece | 2016 | Pre-service teachers | – | N | N | Con | 571 | 0 | Y | 94.4 | – | – | 93.9 |
| Pei | 2015 | East China | 2015 | Teachers (mixed) | – | N | N | Con | 238 | – | Y | 97 | – | – | 93 |
| Piza | 2019 | USA | 2017 | Higher Education | – | N | N | Con | 156 | – | – | – | – | 79.4 | 91 |
| Ruhaak | 2018 | USA | 2018 | Pre-service teachers | – | N | N | Con | 129 | 0 | Y | 77 | – | 90 | 79.5 |
| Sarrasin | 2019 | Quebec | 2019 | Teachers (mixed) | – | N | N | Con | 972 | 4 | Y | 74 | – | – | – |
| Sparks | 2018 | USA | 2016 | Pre-service teachers | – | N | N | Con | 84 | 0 | Y | 82 | 10 | – | – |
| Tardif | 2015 | Switzerland | 2015 | Teachers + Pre-service | – | N | N | Con | 274 | – | N | 87 | – | 80 | – |
| van Dijk | 2018 | USA | 2018 | Teachers + Pre-service | – | N | N | Sno | 169 | – | Y | 63 | – | – | 83 |
| Yoon | 2018 | South Korea | 2017 | Pre-service teachers | Y | N | N | Con | 132 | 0 | Y | 96.9 | – | – | – |
| Zhang | 2019 | China | 2019 | Teachers (Headmasters) | – | N | N | Con | 251 | 18.8 | Y | 93.6 | – | – | 88.9 |

Characteristics of included studies.

For sampling, Con = convenience, Sno = snowball, Unc = unclassifiable. HJQ = did the study use the question from Howard-Jones et al. (2009) to measure belief in matching instruction to Learning Styles? Make clear LS = did the study provide additional information to explain Learning Styles to participants before surveying their belief in matching instruction to Learning Styles? Make clear matching = did the study provide additional information to explain the specific issue of matching instruction to Learning Styles to participants before surveying their belief in that topic?

Of the 34 samples which measured belief in matching instruction to Learning Styles, 30 used the same question as Howard-Jones et al. (2009) (see Introduction). The four which used different questions were “Does Teaching to a Student's Learning Style Enhance Learning?” (Dandy and Bendersky, 2014), “Students learn best when taught in a manner consistent with their learning styles” (Kilpatrick, 2012), “How much do you agree with the thesis that there are different learning styles (e.g., auditory, visual or kinesthetic) that enable more effective learning?” (Menz et al., 2020) and “A pedagogical approach based on such a distinction favors learning” (participants had previously been asked to rate their agreement with the statement “Some individuals are visual, others are auditory”) (Tardif et al., 2015).

Sampling

Thirty of the 37 samples used convenience sampling. Three used snowball sampling starting from a convenience sample, while the remaining four were unclassifiable; these four all came from one study whose participants were recruited “at various events related to education (e.g., book fair, pedagogy training sessions, etc.), by word of mouth, and via email invitations to databases of people who had previously enquired about information/courses on neuroscience and education” (Gleichgerrcht et al., 2015). Thus, no study used a rigorous, representative, random sample, and so no further analysis was undertaken on the basis of sampling method. Some studies considered representativeness in their methodology; for example, Dekker et al. (2012) reported that the local schools they approached “could be considered a random selection of schools in the UK and NL,” but the participants were then “Teachers who were interested in this topic and chose to participate.” No information is given about the size of the population or the number of individuals to whom the survey was sent, and no demographic characteristics of the population are reported.

Response Rate

Only five samples reported the size of the population from which the sample was drawn, so no meaningful analysis of response rate could be conducted across the 37 samples. In one case (Betts et al., 2019), the inability to calculate a response rate resulted from our design rather than from the study from which the data were extracted: Betts et al. (2019) reported distributing their survey to a Listserv of 65,780, but the respondents included many non-educators whose data were not relevant to our research question. It is perhaps worth noting, however, that their total final participant number was 929, so their overall response rate across all participant groups was 1.4%.
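As a worked example, the Betts et al. (2019) figure is the final participant count divided by the size of the Listserv. This treats every Listserv member as invited, which is an assumption, since the number who actually received the invitation is not stated:

```latex
\text{response rate} = \frac{\text{respondents}}{\text{invited}}
  = \frac{929}{65\,780} \approx 0.0141 \approx 1.4\%
```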

Neutral Invitation

Nine of the 37 studies presented evidence of using a neutral invitation. None of the remaining studies provided evidence of a biased invitation; the information was simply not provided.

Briefing on Learning Styles and Matching

Three of the 37 samples (two of the 34 that measured belief in matching) reported giving participants additional information about Learning Styles, sufficient (in our view) for participants to be clear that they were being asked specifically about Learning Styles as defined by Coffield et al. (2004), and about the matching of instruction to Learning Styles.

Discussion

We find that 89.1% of 15,405 educators, surveyed from 2009 through to early 2020, self-reported a belief that individuals learn better when they receive information in their preferred Learning Style. In every study analyzed, the majority of educators reported believing in the efficacy of this matching, reaching as high as 97.6% in one study by Dündar and colleagues, which was also the largest study in our analysis, accounting for 19% of the total sample (Dündar and Gündüz, 2016).

Perhaps the most concerning finding from our analysis is that there is no evidence that this belief is decreasing, despite research going back to 2004 which demonstrates that such an approach is ineffective and potentially harmful. We conducted three separate analyses to test for evidence of a decline but found none; in fact, the weighted percentage of pre-service teachers who believe in Learning Styles (95.4%) was higher than that of qualified teachers (87.8%). This finding suggests that belief in matching instruction to Learning Styles is acquired before, or during, teacher training. Tentative support for this comes from a preliminary indication that belief in Learning Styles may be lower among educators in Higher Education, where teacher training is less formal and not always compulsory. In addition, van Dijk and Lane report that overall belief in neuromyths is lower in Higher Education, although they do not report this breakdown for their data on Learning Styles (van Dijk and Lane, 2018). However, the studies from Higher Education are small, and two of them are also studies in which more information is provided to participants about Learning Styles (see below).

From our pragmatic perspective, there are a number of issues to consider when determining whether these findings should be a cause for alarm, and what to do about them.

The data analyzed here are mostly extracted from studies which assess teacher belief in a range of so-called neuromyths. These all use some version of the questionnaire developed by Howard-Jones and co-workers (Howard-Jones et al., 2009). The value of surveying belief in neuromyths has been questioned, on the basis that, in a small sample of award-winning teachers, there did not appear to be any correlation between belief in neuromyths and receiving a teaching award (Horvath et al., 2018). The Horvath study ultimately proposed that awareness of neuromyths is “irrelevant” to determining teacher effectiveness and played down concerns, expressed elsewhere in the field, that belief in neuromyths might be harmful to learners, or undermine the effectiveness of educators. We have only analyzed one element of the neuromyths questionnaire (Learning Styles), but we share some of the concerns expressed by Horvath and co-workers. The majority (30/34) of the samples analyzed here measured belief in Learning Styles using the original Howard-Jones/Dekker questionnaire. A benefit of having the same questions asked across multiple studies is that there is consistency in what is being measured. However, a problem is that any limitations with that instrument are amplified within the synthesis here. One potential limitation with the Howard-Jones question set is that the “matching” question is asked in many of the same surveys as a “belief” question, as shown in the introduction, potentially leading participants to conflate or confuse the two. Any issues may then be exacerbated by a lack of consistency in what participants understand by “matching instruction to Learning Styles”; this could affect all studies. 
The potential for multiple interpretations of these questions regarding Learning Styles is acknowledged by some authors (e.g., Morehead et al., 2016), and some studies report a lack of clarity regarding the specific meaning of Learning Styles and the matching hypothesis (Ruhaak and Cook, 2018; Papadatou-Pastou et al., 2020). This lack of clarity is also reflected in the psychometric properties of Learning Styles instruments themselves, many of which fail to meet the basic standards of reliability and validity required for psychometric validation (Coffield et al., 2004). In addition, we have previously found that participants, when advised against matching instruction to Learning Styles, may conclude that this means educators should eliminate any consideration of individual preferences or variety in teaching methods (Newton and Miah, 2017).

Here we found no significant difference between participant responses to the question regarding belief in matching instruction and the question about individual preferences, with a third of the studies analyzed (7 of 21) actually reporting a higher percentage of participants who believed in matching instruction than believed that individuals have preferences for how they receive information. This is concerning from a basic methodological perspective. The question is normally phrased as follows: “Individual learners show preferences for the mode in which they receive information (e.g., visual, auditory, kinesthetic).” In any sample of learners, some individuals are going to express preferences. It may not be all learners, those preferences may not be stable for all learners, and the question does not encompass all preferences, but the question, as asked, cannot be anything other than true.

More relevant for our research questions is the apparent evidence of a lack of clarity within the research instrument; it may not be clear to study participants what the matching hypothesis is, and so it is difficult to conclude that the results truly represent belief in matching instruction to Learning Styles. This interpretation is tentatively supported by our analysis, which shows that, in the two studies which give participants additional instructions and guidance to help them understand the matching hypothesis, belief in matching instruction to Learning Styles is much lower, a weighted average of 63.5% (Dandy and Bendersky, 2014; Newton and Miah, 2017). However, these are both small studies, and both were conducted in Higher Education rather than school teaching, so the difference may be explained by other factors, for example the amount and nature of teacher training given to educators in Higher Education when compared to school teaching. It would be informative to conduct further studies in which more detail is provided to participants about Learning Styles before they are asked whether they believe matching instruction to Learning Styles is effective.

However, even if we conclude that the findings represent, in part, a lack of clarity over the specific meaning of “matching instruction to Learning Styles,” this might itself still be a cause for concern. The theory is very common in teacher training and academic literature (Newton, 2015; National Council on Teacher Quality, 2016; Wininger et al., 2019), and so we might hope that its meaning and use are clear to a majority of educators. An additional potential limitation is that the Howard-Jones question cites VARK as an example of Learning Styles, when there are over 70 different classifications. Thus we have almost no information about belief in other common classifications, such as those devised by Kolb, Honey and Mumford, and Dunn and Dunn (Coffield et al., 2004).

Overall, 79.7% of participants reported that they used, or planned to use, the approach of matching instruction to Learning Styles. This high percentage was surprising, since our earlier work (Newton and Miah, 2017) showed that only 33% of participants had used Learning Styles in the previous year. If Learning Styles are ineffective, wasteful of resources and even harmful, then we might predict that far fewer educators would actually use them. There are a number of caveats to the current results. Only seven studies report on this, and all are small, together accounting for <10% of the total sample. Most are not paired, i.e., they do not explicitly ask about belief in the efficacy of Learning Styles and then compare it to use of Learning Styles. The questions are often vague and broad, and do not specifically describe matching instruction to individual students' Learning Styles as organized into one of the recognized classifications; for example, “do you teach to accommodate those differences,” where “those differences” refers to Learning Styles. Agreement with statements like these might reflect educators feeling that they have to say they use Learning Styles in order to respect any and all individual differences, rather than a commitment to Learning Styles specifically. In addition, this is still a self-report of a behavior, or planned behavior. It would be useful, in further work, to measure actual behavior: how many educators have actually designed distinct versions of educational resources, aligned to multiple specific individual student Learning Styles? This would appear to be a critical question when determining the impact of the Learning Styles neuromyth.

The studies give us little insight into why belief in Learning Styles persists. The theory is consistently promoted in teacher-training textbooks (National Council on Teacher Quality, 2016) although there is some evidence that this is in decline (Wininger et al., 2019). If educators are themselves screened using Learning Styles instruments as students at school, then it seems reasonable that they would then enter teacher-training with a view that the use of Learning Styles is a good thing, and so the cycle of belief would be self-perpetuating.

We have previously shown that the research literature generally paints a positive picture of the use of Learning Styles; a majority of papers which are “about” Learning Styles have been undertaken on the basis that matching instruction to Learning Styles is a good thing to do, regardless of the evidence (Newton, 2015). Thus an educator who was unaware of, or skeptical of, the evidence might be influenced by this. Other areas of the literature reflect this idea. A 2005 meta-analysis published in the Journal of Educational Research attempted to test the effect of matching instruction to the Dunn and Dunn Learning Styles Model. The results were supposedly clear:

“results overwhelmingly supported the position that matching students' learning-style preferences with complementary instruction improved academic achievement” (Lovelace, 2005).

A subsequent publication in the same journal in 2007 (Kavale and LeFever, 2007) discredited the 2005 meta-analysis. A number of technical and conceptual problems were identified, including a concern that the vast majority of the included studies were dissertations supervised by Dunn and Dunn themselves, undertaken at the St. John's University Center for the Study of Learning and Teaching Styles, which Dunn and Dunn ran. At the time of writing (August 2020), the 2005 meta-analysis has been cited 292 times according to Google Scholar, whereas the rebuttal has been cited 38 times. A similar pattern played out a decade earlier, when a meta-analysis by R. Dunn claiming to validate the Dunn and Dunn Learning Styles model was published in 1995 (Dunn et al., 1995). This meta-analysis has been cited 610 times, whereas a 1998 rebuttal (Kavale et al., 1998) has been cited 60 times.

An early attempt by Dunn and Dunn to promote the use of their Learning Styles classification was made on the basis that teachers would be less likely to be the subject of malpractice lawsuits if they could demonstrate that they had made every effort to identify the learning styles of their students (Dunn et al., 1977). This is perhaps an extreme example, but reflective of a general sense that, by identifying a supposed learning style, educators may feel they are doing something useful to help their students.

A particular issue to consider from a pragmatic perspective is that of study quality. Many of the studies did not include key indicators of the quality of survey responses (Kelley et al., 2003; Bennett et al., 2011). For example, none of the studies use a defined, representative sample, and very few include sufficient information to allow the calculation of a response rate. From a traditional research perspective, the absence of these indicators undermines confidence in the generalizability of the findings reported here. Pragmatic research defines itself as identifying useful answers to research questions (Newton et al., accepted). From this perspective then, we considered it useful to still proceed with an analysis of these studies, and consider the findings holistically. It is useful for the research community to be aware of the limitations of these studies, and we report on these measures of study quality in Appendix 1. We also think it is useful to report on the evidence, within our findings, of a lack of clarity regarding what is actually meant by the term “Learning Styles.” Taken all together these analyses could prompt further research, using a large representative sample with a high response rate, using a neutral invitation, with a clear explanation of the difference between Learning Styles and styles of Learning. Perhaps most importantly this research should focus on whether educators act on their belief, as described above.

Some of these limitations, in particular those regarding representative sampling, are tempered by the number of studies, the consistency of the findings between studies, and the overall very high rates of self-reported belief in Learning Styles. Thirty-four samples report on this question, and in all of them the majority of participants agree with the key question. In 26 of the 34 samples, the rate of agreement is over 80%. Even if some samples were not representative, this would seem unlikely to change the qualitative account of the main finding (although this may be undermined by the other limitations described above).

A summary conclusion from our findings, then, is that belief in matching instruction to Learning Styles is high and has not declined, even though there is currently no evidence to support such an approach. There are a number of methodological issues which might affect that conclusion, but taken together these are insufficient to completely alleviate the concerns which arise from it: a substantial majority of educators state belief in a technique for which the lack of evidence was established in 2004. In the final section of the Discussion, we consider, from a pragmatic perspective, what useful things we might do with these findings, and what could be done to address the concerns which arise from them.

Our findings present some limited evidence that training has some effect on belief in matching instruction to Learning Styles. Only four studies looked at training, but in those studies the percentage who reported belief in the efficacy of matching instruction to individual Learning Styles dropped from 78.4 to 37.1%. It seems reasonable to conclude that there is a risk of social desirability bias in these studies; if participants have been given training which explains the lack of evidence to support Learning Styles, then they might reasonably be expected to disagree with a statement which supports matching. Even so, for 37.1% of participants to still report that they believe this approach is effective is potentially concerning; it still represents a substantial number of educators. Perhaps more importantly, these findings are, like many others discussed here, a self-report of a belief rather than a measure of actual behavior.

There is already a substantial body of literature which identifies Learning Styles as a neuromyth, or an “urban legend.” A 2018 study analyzed the discourse used in a sample of this literature and concluded that the language used reflected a power imbalance wherein “experts” told practitioners what was true or not. A conclusion was that this language may not be helpful if we truly want to address this widespread belief in a method that is ineffective (Smets and Struyven, 2018). We have previously proposed that a “debunking” approach is unlikely to be effective (Newton and Miah, 2017). It takes time and effort to identify student learning styles, and much more effort to then try to design instruction to match those styles. The sorts of instructors who go to that sort of effort are likely to be motivated by a desire to help their students, and so being told that they have been propagating a “myth” seems unlikely to be well received.

Considering these limitations from a pragmatic perspective, it does not seem that training, or debunking, is a useful approach to addressing widespread belief in Learning Styles. It is also difficult to determine whether training has been effective when we have limited data regarding the actual use of Learning Styles theory. It may be better to focus on the promotion of techniques that are demonstrably effective, such as retrieval practice and other simple techniques as described in the introduction. There is evidence that these are currently lacking from teacher training (National Council on Teacher Quality, 2016). Many evidence-based techniques are simple to implement, for example the use of practice tests, the spacing of instruction, and the use of worked examples (Young et al., 2014; Weinstein et al., 2018). Concerns exist about the generalizability of education research findings to specific contexts, but these concerns might be addressed by the use of a pragmatic approach (Newton et al., accepted).

In summary then, we find a substantial majority of educators, almost 90%, from samples all over the world in all types of education, report that they believe in the efficacy of a teaching technique that is demonstrably not effective and potentially harmful. There is no sign that this is declining, despite many years of work, in the academic literature and popular press, highlighting this lack of evidence. To understand this fully, future work should focus on the objective behavior of educators. How many of us actually match instruction to the individual Learning Styles of students, and what are the consequences when we do? Does it matter? Should we instead focus on promoting effective approaches rather than debunking myths?

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author/s.

Author contributions

PN conceived and designed the study, undertook searches, extracted data, undertook analysis, drafted the manuscript, and finalized the manuscript. AS re-extracted data and provided critical comments on the manuscript. AS and PN undertook PRISMA quality analyses.

Acknowledgments

The authors would like to acknowledge the assistance of Gabriella Santiago and Michael Chau who undertook partial preliminary data extraction on a subset of papers identified in an initial search. We would also like to thank Prof Greg Fegan and Dr. Owen Bodger for advice and reassurance with the analysis.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2020.602451/full#supplementary-material

References

1

Aslaksen K. Lorås H. (2018). The modality-specific learning style hypothesis: a mini-review. Front. Psychol. 9:1538. 10.3389/fpsyg.2018.01538

2

Aslaksen K. Lorås H. (2019). Matching instruction with modality-specific learning style: effects on immediate recall and working memory performance. Educ. Sci. 9:32. 10.3390/educsci9010032

3

Bellert A. Graham L. (2013). Neuromyths and neurofacts: information from cognitive neuroscience for classroom and learning support teachers. Spec. Educ. Perspect. 22, 7–20.

4

Bennett C. Khangura S. Brehaut J. C. Graham I. D. Moher D. Potter B. K. et al . (2011). Reporting guidelines for survey research: an analysis of published guidance and reporting practices. PLoS Med. 8:e1001069. 10.1371/journal.pmed.1001069

5

Betts K. Miller M. Tokuhama-Espinosa T. Shewokis P. Anderson A. Borja C. et al . (2019). International Report: Neuromyths and Evidence-Based Practices in Higher Education. Available online at: https://onlinelearningconsortium.org/read/international-report-neuromyths-and-evidence-based-practices-in-higher-education/ (accessed September 01, 2020).

6

Boeker M. Vach W. Motschall E. (2013). Google Scholar as replacement for systematic literature searches: good relative recall and precision are not enough. BMC Med. Res. Methodol. 13:131.

7

Boutron I. Page M. J. Higgins J. P. T. Altman D. G. Lundh A. Hróbjartsson A. (2019). Chapter 7: considering bias and conflicts of interest among the included studies, in Cochrane Handbook for Systematic Reviews of Interventions version 6.0 (Cochrane). Available online at: /handbook/current/chapter-07 (accessed July 2019).

8

Brueck H. (2018). There's No Such Thing as “Auditory” or “Visual” Learners. Business Insider. Available online at: https://www.businessinsider.com/auditory-visual-kinesthetic-learning-styles-arent-real-2018–l-2012 (accessed September 01, 2020).

9

Canbulat T. Kiriktas H. (2017). Assessment of educational neuromyths among teachers and teacher candidates. J. Educ. Learn. 6:326. 10.5539/jel.v6n2p326

10

Coffield F. Moseley D. Hall E. Ecclestone K. (2004). Learning Styles and Pedagogy in Post 16 Learning: A Systematic and Critical Review. The Learning and Skills Research Centre. Available online at: https://www.voced.edu.au/content/ngv%3A13692 (accessed September 01, 2020).

11

Cuevas J. (2015). Is learning styles-based instruction effective? A comprehensive analysis of recent research on learning styles. Theory Res. Educ. 13, 308–333. 10.1177/1477878515606621

12

Dandy K. Bendersky K. (2014). Student and faculty beliefs about learning in higher education: implications for teaching. Int. J. Teach. Learn. High. Educ. 26, 358–380.

13

Dekker S. Lee N. C. Howard-Jones P. Jolles J. (2012). Neuromyths in education: prevalence and predictors of misconceptions among teachers. Front. Psychol. 3:429. 10.3389/fpsyg.2012.00429

14

Dündar S. Gündüz N. (2016). Misconceptions regarding the brain: the neuromyths of preservice teachers. Mind Brain Educ. 10, 212–232. 10.1111/mbe.12119

15

Dunn R. Dunn K. Price G. E. (1977). Diagnosing learning styles: a prescription for avoiding malpractice suits. Phi Delta Kappan. 58, 418–420.

16

Dunn R. Griggs S. A. Olson J. Beasley M. Gorman B. S. (1995). A meta-analytic validation of the Dunn and Dunn model of learning-style preferences. J. Educ. Res. 88, 353–362. 10.1080/00220671.1995.9941181

17

Duram L. A. (2010). Pragmatic study, in Encyclopedia of Research Design. Thousand Oaks, CA: SAGE Publications, Inc.

18

Feilzer M. (2010). Doing mixed methods research pragmatically: implications for the rediscovery of pragmatism as a research paradigm. J. Mix. Methods Res. 4, 6–16. 10.1177/1558689809349691

19

Felder R. (2020). OPINION: uses, misuses, and validity of learning styles, in Advances in Engineering Education. Available online at: https://advances.asee.org/opinion-uses-misuses-and-validity-of-learning-styles/ (accessed September 01, 2020).

20

Gleichgerrcht E. Luttges B. L. Salvarezza F. Campos A. L. (2015). Educational neuromyths among teachers in Latin America. Mind Brain Educ. 9, 170–178. 10.1111/mbe.12086

21

Grospietsch F. Mayer J. (2018). Professionalizing pre-service biology teachers' misconceptions about learning and the brain through conceptual change. Educ. Sci. 8:120. 10.3390/educsci8030120

22

Grospietsch F. Mayer J. (2019). Pre-service science teachers' neuroscience literacy: neuromyths and a professional understanding of learning and memory. Front. Hum. Neurosci. 13:20. 10.3389/fnhum.2019.00020

23

Grospietsch F. Mayer J. (2020). Misconceptions about neuroscience – prevalence and persistence of neuromyths in education. Neuroforum 26, 63–71. 10.1515/nf-2020-0006

24

Haddaway N. R. Collins A. M. Coughlin D. Kirk S. (2015). The role of Google Scholar in evidence reviews and its applicability to grey literature searching. PLoS ONE 10:e0138237. 10.1371/journal.pone.0138237

25

Horvath J. C. Donoghue G. M. Horton A. J. Lodge J. M. Hattie J. A. C. (2018). On the irrelevance of neuromyths to teacher effectiveness: comparing neuro-literacy levels amongst award-winning and non-award winning teachers. Front. Psychol. 9:1666. 10.3389/fpsyg.2018.01666

26

Howard-Jones P. A. Franey L. Mashmoushi R. Liao Y.-C. (2009). The neuroscience literacy of trainee teachers, in British Educational Research Association Annual Conference, Manchester.

27

Husmann P. R. O'Loughlin V. D. (2018). Another nail in the coffin for learning styles? Disparities among undergraduate anatomy students' study strategies, class performance, and reported VARK learning styles. Anat. Sci. Educ. 12, 6–19. 10.1002/ase.1777

28

Hyman R. Rosoff B. (1984). Matching learning and teaching styles: the jug and what's in it. Theory Pract. 23:35. 10.1080/00405848409543087

29

Jamali H. R. Nabavi M. (2015). Open access and sources of full-text articles in Google Scholar in different subject fields. Scientometrics 105, 1635–1651. 10.1007/s11192-015-1642-2

30

Kavale K. A. Hirshoren A. Forness S. R. (1998). Meta-analytic validation of the Dunn and Dunn model of learning-style preferences: a critique of what was Dunn. Learn. Disabil. Res. Pract. 13, 75–80.

31

Kavale K. A. LeFever G. B. (2007). Dunn and Dunn model of learning-style preferences: critique of Lovelace meta-analysis. J. Educ. Res. 101, 94–97. 10.3200/JOER.101.2.94-98

32

Kelley K. Clark B. Brown V. Sitzia J. (2003). Good practice in the conduct and reporting of survey research. Int. J. Qual. Health Care 15, 261–266. 10.1093/intqhc/mzg031

33

Kilpatrick J. T. (2012). Elementary School Teachers' Perspectives on Learning Styles, Sense of Efficacy, and Self-Theories of Intelligence [Text, Western Carolina University]. Available online at: http://libres.uncg.edu/ir/wcu/listing.aspx?id=9058 (accessed September 01, 2020).

34

Krätzig G. Arbuthnott K. (2006). Perceptual learning style and learning proficiency: a test of the hypothesis. J. Educ. Psychol. 98, 238–246. 10.1037/0022-0663.98.1.238

35

La Lopa J. M. Wray M. L. (2015). Debunking the matching hypothesis of learning style theorists in hospitality education. J. Hospital. Tour. Educ. 27, 120–128. 10.1080/10963758.2015.1064317

36

Lovelace M. K. (2005). Meta-analysis of experimental research based on the Dunn and Dunn model. J. Educ. Res. 98, 176–183. 10.3200/JOER.98.3.176-183

37

Macdonald K. Germine L. Anderson A. Christodoulou J. McGrath L. M. (2017). Dispelling the myth: training in education or neuroscience decreases but does not eliminate beliefs in neuromyths. Front. Psychol. 8:1314.

38

Massa L. J. Mayer R. E. (2006). Testing the ATI hypothesis: should multimedia instruction accommodate verbalizer-visualizer cognitive style? Learn. Indiv. Diff. 16, 321–335. 10.1016/j.lindif.2006.10.001

39

Mayer R. E. Massa L. J. (2003). Three facets of visual and verbal learners: cognitive ability, cognitive style, and learning preference. J. Educ. Psychol. 95, 833–846. 10.1037/0022-0663.95.4.833

40

Menz C. Spinath B. Seifried E. (2020). Misconceptions die hard: prevalence and reduction of wrong beliefs in topics from educational psychology among preservice teachers. Eur. J. Psychol. Educ. 10.1007/s10212-020-00474-5. [Epub ahead of print].

41

Moher D. Liberati A. Tetzlaff J. Altman D. G. The PRISMA Group (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 6:e1000097. 10.1371/journal.pmed.1000097

42

Morehead K. Rhodes M. G. DeLozier S. (2016). Instructor and student knowledge of study strategies. Memory 24, 257–271. 10.1080/09658211.2014.1001992

43

National Council on Teacher Quality (2016). Learning About Learning: What Every New Teacher Needs to Know. National Council on Teacher Quality. Available online at: http://www.nctq.org/dmsStage.do?fn=Learning_About_Learning_Report (accessed September 01, 2020).

44

Newton P. (2015). The learning styles myth is thriving in higher education. Front. Psychol. 6:1908. 10.3389/fpsyg.2015.01908

45

Newton P. Da Silva A. Berry S. (accepted). The case for pragmatic evidence-based higher education; a useful way forward? Front. Educ. 10.3389/feduc.2020.583157

46

Newton P. Miah M. (2017). Evidence-based higher education – is the learning styles ‘myth’ important? Front. Psychol. 8:444. 10.3389/fpsyg.2017.00444

47

Papadatou-Pastou M. Touloumakos A. K. Koutouveli C. Barrable A. (2020). The learning styles neuromyth: when the same term means different things to different teachers. Eur. J. Psychol. Educ. 10.1007/s10212-020-00485-2. [Epub ahead of print].

48

Pashler H. McDaniel M. Rohrer D. Bjork R. (2008). Learning styles: concepts and evidence. Psychol. Sci. Public Interest 9, 105–119. 10.1111/j.1539-6053.2009.01038.x

49

Pullmann J. (2017). Scientists: “Learning Styles” Like Auditory, Visual, And Kinesthetic Are Bunk. The Federalist. Available online at: https://thefederalist.com/2017/03/22/brain-scientists-learning-styles-like-auditory-visual-and-kinesthetic-are-bunk/ (accessed September 01, 2020).

50

Riener C. Willingham D. (2010). The myth of learning styles. Change Magaz. High. Learn. 42, 32–35. 10.1080/00091383.2010.503139

51

Rogowsky B. A. Calhoun B. M. Tallal P. (2015). Matching learning style to instructional method: Effects on comprehension. J. Educ. Psychol. 107, 64–78. 10.1037/a0037478

52

Rogowsky B. A. Calhoun B. M. Tallal P. (2020). Providing instruction based on students' learning style preferences does not improve learning. Front. Psychol. 11:164. 10.3389/fpsyg.2020.00164

53

Rohrer D. Pashler H. (2012). Learning styles: where's the evidence? Med. Educ. 46, 634–635. 10.1111/j.1365-2923.2012.04273.x

54

Ruhaak A. E. Cook B. G. (2018). The prevalence of educational neuromyths among pre-service special education teachers. Mind Brain Educ. 12, 155–161. 10.1111/mbe.12181

55

Smets W. Struyven K. (2018). Power relations in educational scientific communication—A critical analysis of discourse on learning styles. Cogent Educ. 5:1429722. 10.1080/2331186X.2018.1429722

56

Strauss V. (2017). Analysis | Most Teachers Believe That Kids Have Different ‘Learning Styles.’ Here's Why They Are Wrong. Washington Post. Available online at: https://www.washingtonpost.com/news/answer-sheet/wp/2017/09/05/most-teachers-believe-that-kids-have-different-learning-styles-heres-why-they-are-wrong/ (accessed September 01, 2020).

57

Tardif E. Doudin P. Meylan N. (2015). Neuromyths among teachers and student teachers. Mind Brain Educ. 9, 50–59. 10.1111/mbe.12070

58

van Dijk W. Lane H. B. (2018). The brain and the US education system: perpetuation of neuromyths. Exceptionality 28, 1–14. 10.1080/09362835.2018.1480954

59

Weinstein Y. Madan C. R. Sumeracki M. A. (2018). Teaching the science of learning. Cognit. Res. Princ. Implic. 3:2. 10.1186/s41235-017-0087-y

60

Willingham D. T. Hughes E. M. Dobolyi D. G. (2015). The scientific status of learning styles theories. Teach. Psychol. 42, 266–271. 10.1177/0098628315589505

61

Wininger S. R. Redifer J. L. Norman A. D. Ryle M. K. (2019). Prevalence of learning styles in educational psychology and introduction to education textbooks: a content analysis. Psychol. Learn. Teach. 18, 221–243. 10.1177/1475725719830301

62

Young J. Q. Van Merrienboer J. Durning S. Ten Cate O. (2014). Cognitive load theory: implications for medical education: AMEE guide no. 86. Med. Teach. 36, 371–384. 10.3109/0142159X.2014.889290

Summary

Keywords

evidence-based education, pragmatism, neuromyth, differentiation, VARK, Kolb, Honey and Mumford

Citation

Newton PM and Salvi A (2020) How Common Is Belief in the Learning Styles Neuromyth, and Does It Matter? A Pragmatic Systematic Review. Front. Educ. 5:602451. doi: 10.3389/feduc.2020.602451

Received

12 September 2020

Accepted

25 November 2020

Published

14 December 2020

Volume

5 - 2020

Edited by

Robbert Smit, University of Teacher Education St. Gallen, Switzerland

Reviewed by

Håvard Lorås, Norwegian University of Science and Technology, Norway; Steve Wininger, Western Kentucky University, United States; Kristen Betts, Drexel University, United States