Abstract

Introduction:

Digital technologies are widely integrated into teaching and learning, and examining these technological interventions in classrooms has become an active research area. Existing reviews are often constrained: they rely on evidence-synthesis methods such as meta-analysis, scoping reviews, or systematic reviews, which tend to cover a limited number of studies. We conducted a bibliometric analysis of 1,123 articles published between 2014 and 2023 in the Web of Science database to provide a comprehensive overview of this field.

Methods:

We used Biblioshiny and VOSviewer to perform performance analysis and scientific mapping. The performance analysis covered descriptive statistics, publication trends, and the identification of key actors. The scientific mapping visualized the prevalent structural aspects and hot research topics to trace how the field evolved over the past 10 years.

Results:

The findings indicate a significant rise in publications over the past decade, with an annual growth rate of 21.5%. We identified the most prolific authors, institutions, journals, countries, articles, and keywords in the domain of digital technologies in classrooms. We also identified prospective challenges, including the need for a more technology-driven culture and for proper training, limited teacher assistance, user interface design issues, and a technological divide.

Discussion:

The findings could encourage the use of digital technologies in the classrooms and offer insights for policymakers to (re)allocate resources. Furthermore, this work is valuable for informing scholars and practitioners about the current state of research, helping them to identify and focus on trending topics when deciding which areas to explore.

1 Introduction

According to the United Nations Educational, Scientific and Cultural Organization (UNESCO), education’s primary objective is to foster holistic development, sustainability, and inclusion and to prepare upcoming generations for the digital era (UNESCO, 2021). Digital technologies encompass computers, internet-based applications, and devices such as video cameras, smartphones, and personal digital assistants (Ertmer, 1999). They have significantly enhanced various aspects of education, e.g., by reshaping traditional teaching methods (Okoye et al., 2023), increasing accessibility (Moon and Hofferth, 2018), and enriching the learning experience of students worldwide (Cohen et al., 2022). To meet the needs of diverse learners and remain competitive in the global education market, many academic institutions and universities use cutting-edge, technology-based teaching strategies inside and outside the classroom (Haleem et al., 2022; Torres-Ruiz and Moreno-Ibarra, 2019). The use of digital technologies and their interactive features has gained scholarly attention for its potential to enhance pedagogical and learning functions (Bourbour, 2023). These technologies have facilitated remote communication between teachers and students in diverse classroom settings, extending learning beyond traditional formats (Kumi-Yeboah et al., 2020). Conventional classroom teaching methods often lack immediacy, rapid assessment, and high engagement (Ertmer, 2005), shortcomings that digital technologies have addressed effectively (Okoye et al., 2023). 
Despite these advantages, the use of digital technologies in education poses various challenges, such as the digital divide (Moon and Hofferth, 2018), distraction (Forsler and Guyard, 2023), overdependence during lectures (Torres-Ruiz and Moreno-Ibarra, 2019), decreased social interaction, excessive screen time, reduced attention span (Forsler and Guyard, 2023), teacher training (Okoye et al., 2023), and content quality (Torres-Ruiz and Moreno-Ibarra, 2019). The adoption of digital technologies in classroom settings has increased significantly over the last two decades (Harju et al., 2019). Several governments, e.g., those of the United Kingdom (UK), the United States of America (USA), and China, have invested considerably in classroom digital technologies to improve learning outcomes (Luo et al., 2021; Banks and Williams, 2022). A survey of 368 European higher education institutions across 48 countries identified improved student engagement, enhanced teaching effectiveness, and greater flexibility in course delivery as significant benefits of technology-enhanced learning (Zhu, 2019). Each year in the UK, schools spend £470 million and colleges spend £140 million to support the integration of digital tools in classrooms. The Flexible Learning Fund, worth £11 million, was also established to support innovative approaches to adult education through blended or online learning (Britain, 2019). Similarly, China’s “Education Modernization 2035” policy committed significant investment to digital education, including building a modern education governance system through deeper comprehensive educational reform, making full use of digital technologies and mechanisms, expanding internet access in rural schools, and promoting digital textbooks and online learning platforms (Zhu, 2019). 
This growing governmental attention to, and capital spending on, digital technologies in education has led to rapid advancements, reflected in a growing research community and a rise in scholarly publications (Major et al., 2018). Despite these advancements, challenges in traditional educational practices persist.

1.1 Rationale for bibliometric analysis

It is crucial to understand how digital technologies shape the educational landscape and whether they address or exacerbate existing issues. There is significant demand for a synthesis of current research on digital technologies in the classroom (Major et al., 2018) that offers a concise overview of the existing literature. Existing comprehensive reviews in the field are often constrained: they rely on evidence-synthesis methods such as meta-analysis (Forsler and Guyard, 2023), scoping reviews (Major et al., 2018), or systematic reviews (Bathla et al., 2023; Aytekin et al., 2022), which tend to cover a limited number of studies and concentrate on specific research outcomes and themes. These methods prioritize analyzing individual research evidence and thematic patterns over a quantitative evaluation of the entire research landscape (Haleem et al., 2022). Bibliometric analysis, which tracks academic trends, is an effective way to assess the overall research landscape quantitatively (Tsay and Yang, 2005). Given the large number of publications in this interdisciplinary domain, such an analysis is needed to map trends, evaluate research impact, and guide policy decisions. According to Zupic and Čater (2015), bibliometrics helps to examine how disciplines change over time in their conceptual, social, and intellectual structures. Bibliometrics also facilitates research retrospectives and can be used to explore research hotspots and discipline-specific development trends objectively and scientifically (Tsay and Yang, 2005; Van Eck and Waltman, 2014). 
Bibliometric analysis makes it possible to evaluate the progress made in a field, identify the most reliable and widely used sources of scientific publications, recognize prominent actors such as authors and institutions, establish the intellectual framework for evaluating recent developments (Aria and Cuccurullo, 2017), identify new areas of research interest, and forecast the success of future studies (Ellegaard and Wallin, 2015). It also helps researchers identify suitable institutions for collaboration and potential co-authors (Zupic and Čater, 2015; Aria and Cuccurullo, 2017).

1.2 Research gap

Currently, many bibliometric studies focus on specific venues rather than broader topics, e.g., 25 years of research in the Journal of Special Education Technology (Sinha et al., 2023) or three decades of research in the journal Interactive Learning Environments (Mostafa, 2023). Other bibliometric studies have focused on global research on emerging digital technology (Herlina et al., 2025), digital technology for the sustainable development goals (Bathla et al., 2023), the intersection of Islam and digital technology (Wahid, 2024), and the unified theory of acceptance and use of technology for mobile learning adoption (Aytekin et al., 2022). However, few bibliometric studies have specifically examined research in education, and those that have generally focus on specific areas, such as topic evolution in education research (Huang et al., 2020), smart education (Li and Wong, 2022), physical education (Gazali and Saad, 2023), technology for classroom dialogue (Hao et al., 2020), e-learning (Djeki et al., 2022), and construction education (Aliu and Aigbavboa, 2023). Our research aims to fill this gap by conducting a bibliometric analysis that provides a comprehensive overview of the scattered literature on digital technologies in education while offering insights for future research.

1.3 Research questions

To accomplish the goal of this study, we performed a bibliometric analysis to address the following research questions:

RQ1: What are the global trends, distribution of the major actors, and research landscape in the interdisciplinary research area of digital technologies and classrooms in the last 10 years?

RQ2: What are the prevalent structural aspects and hot research topics in digital technologies in classrooms over the last 10 years?

1.4 Structure of the paper

The rest of this study is organized as follows. Section 2 outlines the materials and methods, covering article selection through a search query, the data analysis tools, and the methodology used to perform the bibliometric analysis. Section 3 presents the findings of the analysis. Section 4 highlights key contributions and suggests future research directions in the field. Lastly, Section 5 concludes the study and outlines possible future work.

2 Materials and methods

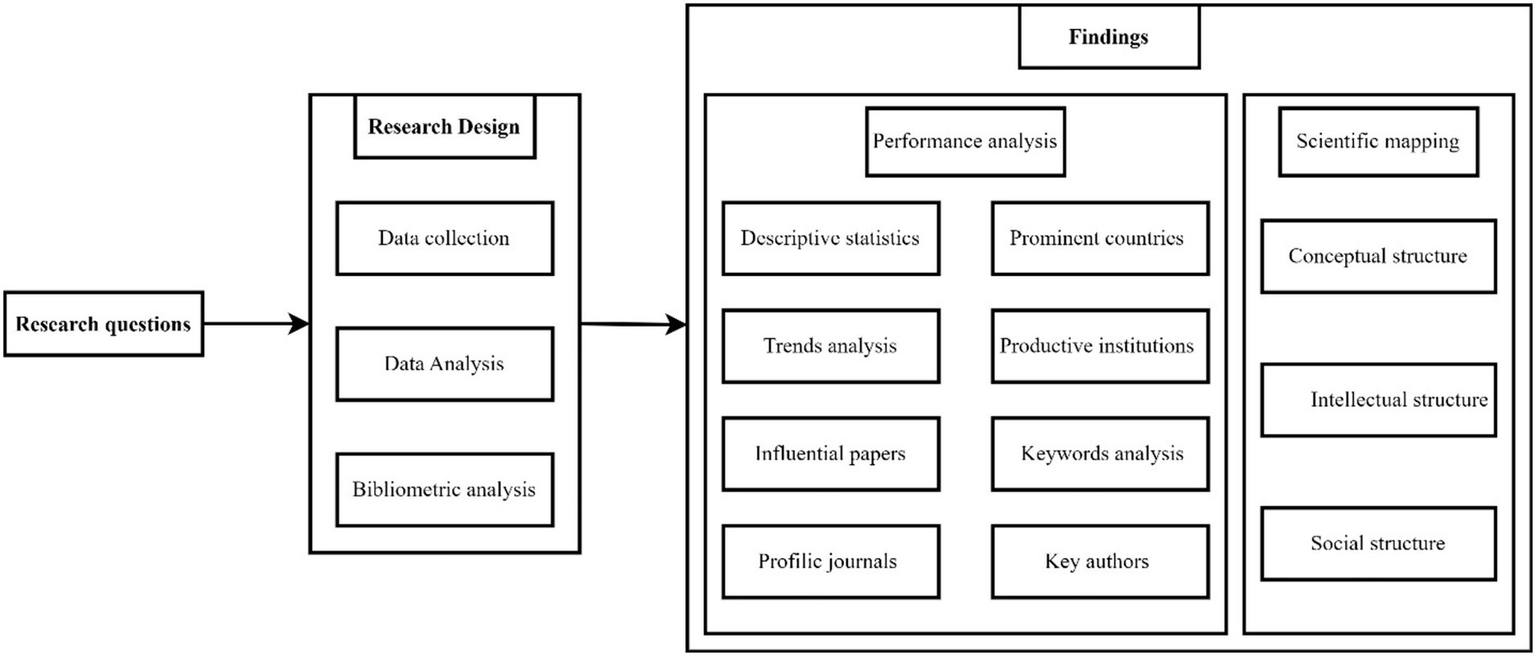

This study used bibliometric analysis, which applies statistical methods to measure the influence of scientific publications and the extent of knowledge dissemination (Van Eck and Waltman, 2014; Zupic and Čater, 2015). We collected a relatively large number of articles and extracted the relevant information for data analysis. We then conducted a quantitative study using performance analysis and scientific mapping. The research design of our study is presented in Figure 1.

Figure 1

The research design of our study.

2.1 Data collection

The first step in conducting a bibliometric analysis is gathering raw data to obtain essential article metadata, such as authors, affiliations, abstracts, keywords, references, and citation counts (CC) (Noyons et al., 1999). The two principal bibliometric databases, Web of Science (WoS) and Scopus, hold vast collections of academic literature. Google Scholar is also popular but is unsuitable for bibliometric analysis because of inconsistent data, lack of controlled indexing, inflated citation counts, limited advanced metrics, and difficulty exporting structured data (Roldan-Valadez et al., 2019). WoS contains over 15,000 journals and 90 million documents, and Scopus indexes more than 20,000 sources totalling about 69 million records. This research selected the WoS Core Collection for its superior quality standards compared with Scopus. This choice aims to minimize false positives in author and keyword disambiguation, a process further facilitated by Keywords Plus (Merigó et al., 2015). Keywords Plus are generated automatically by WoS from terms that recur in an article’s reference list, which avoids comparison issues such as singular versus plural forms or acronyms. Consequently, WoS is considered the most suitable database and has become a primary choice for scholars conducting bibliometric analysis (Tsay and Yang, 2005). Formulating the search query is a challenging part of data retrieval: although digital technologies are widely used, opinions on their nomenclature and conceptualization remain divided, and terms such as “digital tools” also appear in the literature (Hao et al., 2020). Publications on digital technologies in classrooms from 2014 to 2023 were retrieved using a keyword-based search query. 
The choice of timespan was not arbitrary. Previous bibliometric analyses of digital technologies in education covered different timespans, for example, e-learning (2015–2020) (Djeki et al., 2022), technology-mediated classroom dialogue (1997–2016) (Hao et al., 2020), and the evolution of topics in education research (2000–2017) (Huang et al., 2020). We focused on the last decade because of rapid technological advancements (Harju et al., 2019), significant shifts in educational paradigms (Mostafa, 2023), and the accelerated adoption of digital technologies during the COVID-19 pandemic (Alabdulaziz, 2021), which make it important to identify current trends. The following advanced query was used to retrieve raw bibliographic data on digital technologies in the classroom from WoS, the most significant bibliometric database (Merigó et al., 2015).

TS = (("digital technolog*" OR "digital tool*") AND ("education*" OR "classroom" OR "learn*"))
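To make the Boolean structure of the query concrete, the following minimal Python sketch applies the same logic to a record's title, abstract, and keywords, the fields that TS covers. It is an approximation under our own assumptions, not the WoS matching engine: WoS may apply its own lemmatization, whereas this sketch matches "classroom" only as an exact word and treats `*` as "any word characters". The names `GROUP_A`, `GROUP_B`, `phrase_to_regex`, and `matches_ts` are hypothetical.

```python
import re

# Hypothetical re-implementation of the query's Boolean logic:
# ("digital technolog*" OR "digital tool*") AND ("education*" OR "classroom" OR "learn*")
GROUP_A = ["digital technolog*", "digital tool*"]
GROUP_B = ["education*", "classroom", "learn*"]


def phrase_to_regex(phrase: str) -> re.Pattern:
    """Turn a quoted phrase with optional trailing * wildcards into a regex."""
    parts = []
    for word in phrase.split():
        if word.endswith("*"):
            # Truncation: keep the stem, allow any further word characters.
            parts.append(re.escape(word[:-1]) + r"\w*")
        else:
            parts.append(re.escape(word))
    # Words of a phrase must be adjacent (separated only by whitespace).
    return re.compile(r"\b" + r"\s+".join(parts) + r"\b", re.IGNORECASE)


PATTERNS_A = [phrase_to_regex(p) for p in GROUP_A]
PATTERNS_B = [phrase_to_regex(p) for p in GROUP_B]


def matches_ts(title: str, abstract: str, keywords: list[str]) -> bool:
    """True if the topic fields satisfy (any term of A) AND (any term of B)."""
    text = " ".join([title, abstract, *keywords])
    has_a = any(p.search(text) for p in PATTERNS_A)
    has_b = any(p.search(text) for p in PATTERNS_B)
    return has_a and has_b


print(matches_ts("Digital tools in the primary classroom", "", []))
```

The OR operators become `any(...)` within each group and the AND becomes the conjunction of the two groups, which mirrors the parenthesization of the query.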

“TS” (Topic) searches a publication’s title, abstract, and keywords. The final retrieval search was conducted on December 31, 2023. Articles were included if they were (1) written in English; (2) published between 2014 and 2023; (3) assigned to the WoS category “Education & Educational Research”; and (4) indexed in the Social Sciences Citation Index (SSCI) or the Science Citation Index-Expanded (SCIE). Restricting the dataset to English-language publications provides access to internationally recognized research, and the selected timeframe ensures that the studies address contemporary educational challenges under current educational trends (Aytekin et al., 2022; Sinha et al., 2023).
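The four inclusion criteria translate directly into a record filter. In the minimal sketch below, the `Record` fields are hypothetical simplifications of a WoS record (an actual export does not necessarily expose the citation index in this form), and criterion (4) is read as "indexed in at least one of SSCI or SCIE".

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    # Hypothetical, simplified bibliographic record; field names are illustrative.
    title: str
    language: str
    year: int
    wos_categories: list[str] = field(default_factory=list)
    indexes: list[str] = field(default_factory=list)  # e.g. ["SSCI"]


TARGET_CATEGORY = "Education & Educational Research"
ALLOWED_INDEXES = {"SSCI", "SCIE"}


def meets_criteria(rec: Record) -> bool:
    """Apply the four inclusion criteria to one record."""
    return (
        rec.language == "English"                      # (1) English only
        and 2014 <= rec.year <= 2023                   # (2) timespan
        and TARGET_CATEGORY in rec.wos_categories      # (3) WoS category
        and bool(ALLOWED_INDEXES & set(rec.indexes))   # (4) SSCI or SCIE
    )


def apply_criteria(records: list[Record]) -> list[Record]:
    """Keep only the records that satisfy every criterion."""
    return [r for r in records if meets_criteria(r)]
```

Keeping each criterion on its own line makes it easy to count how many records each one removes, which is the kind of tally a selection flow diagram reports.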

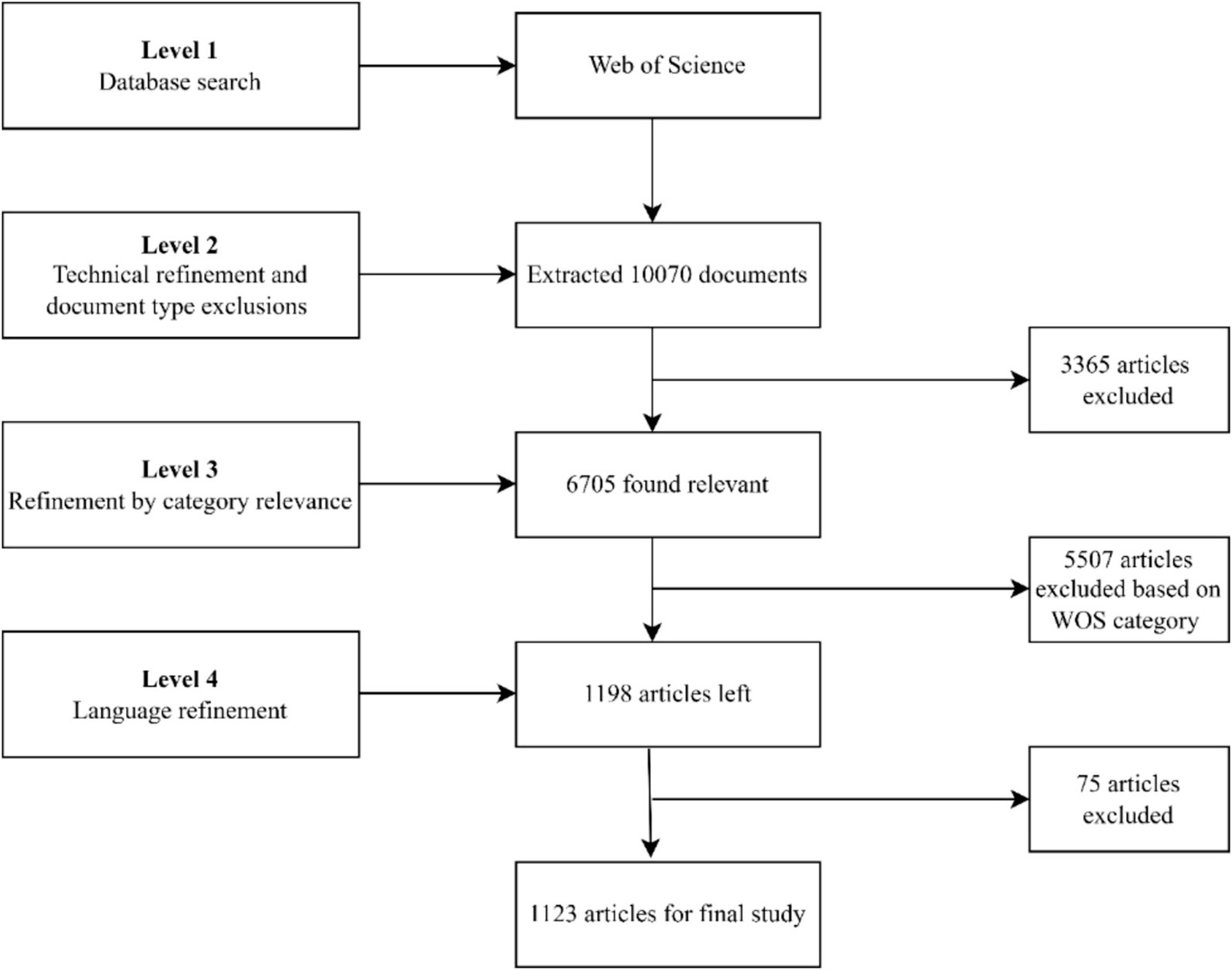

A total of 10,070 publications with complete bibliographic data and citations, published between 2014 and 2023, were extracted. The downloaded raw data were imported into Microsoft Excel for further processing to extract essential components of each publication, such as the title, authors, affiliation, abstract, journal, and year of publication. Preprocessing and data filtering were performed to ensure a reliable and efficient analysis. After the inclusion criteria were applied, 1,123 papers remained in the final dataset, as shown in Figure 2. This sample size meets the requirements for bibliometric analysis: Rogers et al. (2020) recommend a minimum of 200 documents, and Donthu et al. (2021) at least 300. Previous studies have used anywhere from relatively few papers (Aliu and Aigbavboa, 2023; Mostafa, 2023; Sinha et al., 2023; Aytekin et al., 2022; Bathla et al., 2023) to thousands (Djeki et al., 2022; Hao et al., 2020; Li and Wong, 2022; Huang et al., 2020; Wahid, 2024; Herlina et al., 2025). Ellegaard and Wallin (2015) noted that a high volume of papers provides breadth, whereas a limited volume enables more targeted insights. Ultimately, the appropriate volume depends on whether the domain is still developing or already mature.

Figure 2

A visual representation of a systematic process for selecting relevant articles based on predetermined criteria.
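The text states that the downloaded data were processed in Excel and exported as Excel and BibTeX files, but not how individual fields were extracted. Purely as an illustration, the sketch below assumes the WoS tagged plain-text export, in which each line starts with a two-letter field tag (e.g. AU for authors, TI for title, PY for year, DE for author keywords), continuation lines are indented by three spaces, and each record ends with ER. The function names `parse_wos_plaintext`, `joined`, and `keywords` are our own.

```python
from typing import Optional


def parse_wos_plaintext(text: str) -> list[dict[str, list[str]]]:
    """Split a WoS tagged plain-text export into {tag: [values]} records."""
    records: list[dict[str, list[str]]] = []
    current: dict[str, list[str]] = {}
    last_tag: Optional[str] = None
    for raw in text.lstrip("\ufeff").splitlines():  # drop a leading BOM if present
        line = raw.rstrip()
        if not line:
            continue
        if line.startswith("   "):
            # Continuation line: another value for the most recent tag.
            if last_tag is not None:
                current[last_tag].append(line.strip())
            continue
        tag, _, value = line.partition(" ")
        if tag == "ER":
            # End of one bibliographic record.
            records.append(current)
            current, last_tag = {}, None
        elif tag in {"FN", "VR", "EF"}:
            # File header lines and end-of-file marker carry no record data.
            last_tag = None
        else:
            current.setdefault(tag, []).append(value.strip())
            last_tag = tag
    return records


def joined(record: dict[str, list[str]], tag: str) -> str:
    """Rejoin a wrapped field (e.g. a long title) into one string."""
    return " ".join(record.get(tag, []))


def keywords(record: dict[str, list[str]], tag: str = "DE") -> list[str]:
    """Split a semicolon-separated keyword field into a clean list."""
    return [k.strip() for k in joined(record, tag).split(";") if k.strip()]
```

Multi-valued fields such as AU keep one entry per line, while wrapped single-valued fields such as TI are rejoined with `joined`; the resulting dictionaries can then be written to a spreadsheet for filtering.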

2.2 Data analysis

The bibliometric analysis was performed with Biblioshiny (run from RStudio), VOSviewer, and Microsoft Excel. Microsoft Excel was used to filter the data and to plot publication trends. Biblioshiny, a web interface to the bibliometrix R package, combines the capabilities of bibliometrix with the user-friendly interface of a Shiny web application (Aria and Cuccurullo, 2017). After applying the inclusion and exclusion criteria, we exported the results in two file formats: Microsoft Excel and BibTeX. Biblioshiny was used to perform descriptive analyses, such as annual scientific productivity, and to identify the top contributing authors, institutions, journals, countries, articles, and keywords. The co-occurrence network, thematic map, author collaboration network, and cross-country collaboration network were also created with Biblioshiny. VOSviewer was used to create the author-based, article-based, and source-based co-citation networks.

2.3 Bibliometric analysis

The final data analysis applied bibliometric techniques, particularly performance analysis and scientific mapping (Noyons et al., 1999). These techniques help to explore bibliographic characteristics and to visualize research landscapes in order to identify trends and patterns within the literature.

2.3.1 Performance analysis

Performance analysis in bibliometrics involves descriptive statistics, publication trends, and an evaluation of the impact, productivity, and influence of journals, institutions, countries, authors, and keywords within a specific field (Li and Wong, 2022). It can employ a variety of indicators, primarily focused on the overall trend of the topic and on the publication and citation counts of articles in the dataset, grouped by author, journal, country, and affiliation (Huang et al., 2020). Other indicators are also commonly used to assess the scientific impact of researchers or journals. One of the most popular, known for its straightforward interpretation, is the h-index introduced by Hirsch (2005): the largest number h such that h of the papers have each received at least h citations, which gives a combined measure of productivity and impact. However, while it provides objectivity, the h-index is ill-suited to comparing authors across diverse research domains or at different career stages (Kelly and Jennions, 2006). The g-index, which accounts for the cumulative citations of the top-ranked articles and thus gives more weight to highly cited papers, has been reported to be more robust than the h-index. The m-index has also been proposed to overcome the problem of comparing researchers at different career stages; it is the h-index divided by the number of years since the scientist’s first publication (Hirsch, 2007). This study compares only scientists within the field of digital technologies in classroom environments.
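The three indices can be computed from a list of per-paper citation counts, as in the minimal sketch below (function names are ours). One convention is inferred from the data rather than stated in the text: counting years inclusively up to 2024, i.e. m = h / (2024 − PY + 1), reproduces every m-index in Table 2 (e.g. 18 / 7 ≈ 2.571 for “Education and Information Technologies”), so the sketch uses that convention. The g-index here is capped at the number of papers.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    h = 0
    for rank, cites in enumerate(sorted(citations, reverse=True), start=1):
        if cites < rank:
            break
        h = rank
    return h


def g_index(citations: list[int]) -> int:
    """Largest g (capped at the number of papers) such that the top g
    papers together have at least g**2 citations."""
    g, running_total = 0, 0
    for rank, cites in enumerate(sorted(citations, reverse=True), start=1):
        running_total += cites
        if running_total >= rank * rank:
            g = rank
    return g


def m_index(h: int, first_year: int, current_year: int) -> float:
    """h-index divided by the number of years since the first publication,
    counting the first year itself (hence the + 1)."""
    return h / (current_year - first_year + 1)


paper_citations = [10, 8, 5, 4, 3]  # hypothetical citation counts
print(h_index(paper_citations), g_index(paper_citations))  # 4 5
print(round(m_index(18, 2018, 2024), 3))                    # 2.571
```

Because the g-index sums citations over the top papers, a single highly cited paper can raise g without changing h, which is the extra weight on highly cited work mentioned above.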

2.3.2 Scientific mapping

Scientific mapping aims to uncover, through visual representations, latent relationships and phenomena within the structure of science that would otherwise be difficult to detect. It typically uses symbols of varying sizes and colors to represent concepts and their significance (Aria and Cuccurullo, 2017). It enables scholars to identify conceptual, social, and intellectual structures and to trace how these structures evolve over time (Aria and Cuccurullo, 2017; Noyons et al., 1999). It is also an efficient way to delineate research topics in the literature, provide a comprehensive overview of the current state of research, and, above all, identify research gaps to guide future research directions (Aria and Cuccurullo, 2017).
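Maps such as the keyword co-occurrence network start from a simple pair count. The minimal sketch below shows only that first step, under our own assumptions: keywords are lower-cased and deduplicated per article, and each article adds at most one to any keyword pair. Biblioshiny and VOSviewer add normalization, clustering, and layout steps that are not reproduced here, and `keyword_cooccurrence` is a hypothetical name.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(keyword_lists: list[list[str]]) -> Counter:
    """Count, for each unordered keyword pair, the articles containing both."""
    pairs: Counter = Counter()
    for keywords in keyword_lists:
        # Normalize and deduplicate within one article so that each article
        # adds at most 1 to any given pair.
        unique = sorted({k.strip().lower() for k in keywords if k.strip()})
        for a, b in combinations(unique, 2):  # a < b because `unique` is sorted
            pairs[(a, b)] += 1
    return pairs


articles = [
    ["E-learning", "COVID-19", "Digital technology"],
    ["digital technology", "covid-19"],
    ["Teacher education", "Digital technology"],
]
for (a, b), n in keyword_cooccurrence(articles).most_common(2):
    print(a, "--", b, n)
```

In a map, each keyword becomes a node and each counted pair an edge whose weight is the count; frequently co-occurring pairs therefore appear as thick links within the same cluster.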

3 Results

3.1 Performance analysis

This section presents a bibliometric analysis utilizing diverse performance metrics to address the first research question of this study.

RQ1: What are the global trends, distribution of the major actors, and research landscape in the interdisciplinary research area of digital technologies and classrooms in the last 10 years?

3.1.1 Descriptive statistics

Table 1 summarizes the descriptive characteristics of the 1,123 articles on digital technologies in the classroom published from 2014 to 2023. Over this timespan, publications grew at a notable annual rate of 21.5%; the average article age is 4.04 years, and articles received an average of 14.3 citations. The articles carry 1,202 automatically generated keywords (Keywords Plus) and 3,104 author keywords. Of the 2,680 contributing authors, 237 wrote single-authored articles (259 such articles in total). Collaboration among authors is evident, with an average of 2.81 co-authors per article and 21.19% of articles involving international co-authorship.
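The 21.5% figure can be checked against the yearly counts reported in the article trend analysis (41 articles in 2014 and 237 in 2023). The sketch below assumes the rate is a compound annual growth rate between the first and last year of the span, which we believe is how bibliometrix reports it; we have not verified this against the tool's source for this dataset.

```python
def annual_growth_rate(first_count: float, last_count: float, n_years: int) -> float:
    """Compound annual growth rate (%) from the first to the last year of a span.

    n_years counts both endpoints, so a 2014-2023 span has n_years = 10
    and nine year-on-year steps.
    """
    if first_count <= 0 or n_years < 2:
        raise ValueError("need a positive first-year count and at least two years")
    return ((last_count / first_count) ** (1 / (n_years - 1)) - 1) * 100


rate = annual_growth_rate(41, 237, 10)
print(f"{rate:.1f}%")  # 21.5%
```

Because only the endpoints enter the formula, a dip in an intermediate year (such as 2017) does not affect the reported rate.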

Table 1

| Description | Results |
|---|---|
| Primary information about the data | |
| Timespan | 2014–2023 |
| Sources (journals) | 194 |
| Articles | 1,123 |
| Annual growth rate (%) | 21.5 |
| Average article age (years) | 4.04 |
| Average citations per article | 14.3 |
| References | 45,463 |
| Article contents | |
| Keywords Plus | 1,202 |
| Author keywords | 3,104 |
| Author information | |
| Authors | 2,680 |
| Authors of single-authored articles | 237 |
| Author collaboration | |
| Single-authored articles | 259 |
| Co-authors per article | 2.81 |
| International co-authorships (%) | 21.19 |

Descriptive characteristics of digital technologies in the classroom literature.

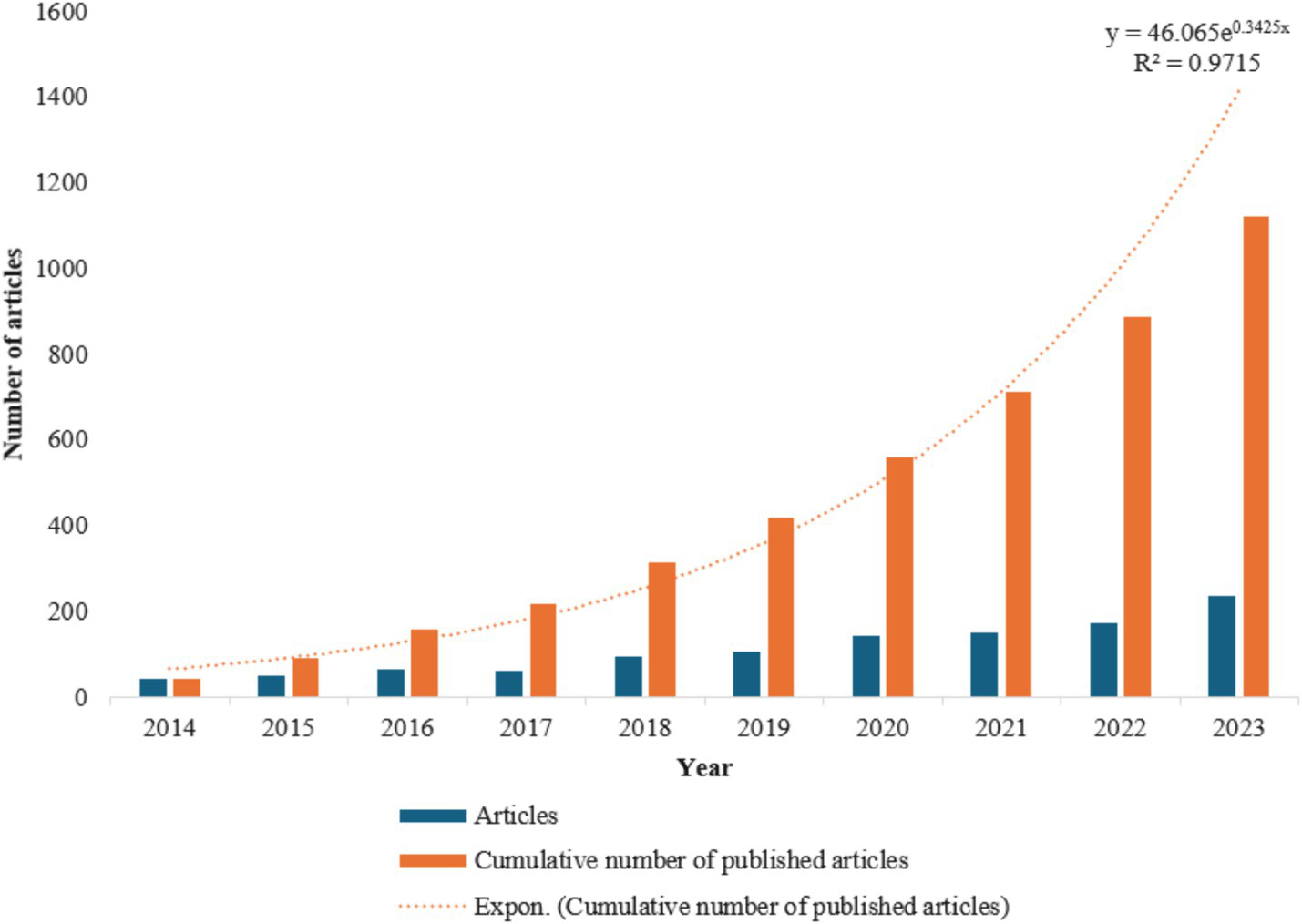

3.1.2 Articles trends analysis

The number of articles published across scientific domains has grown remarkably in recent years, in line with notable shifts in the behavior of scholars engaged in scientific research (Roldan-Valadez et al., 2019; Kelly and Jennions, 2006). Figure 3 shows the number of articles per year for the 1,123 articles. There were 41 publications in 2014 (3.65%) and 49 in 2015 (4.36%), followed by 66 in 2016 (5.88%), 62 in 2017 (5.52%), 95 in 2018 (8.46%), 106 in 2019 (9.44%), 142 in 2020 (12.65%), 151 in 2021 (13.45%), 172 in 2022 (15.32%), and 237 in 2023 (21.12%). Overall, 72.2% of the articles were published within the last 5 years (2019–2023), compared with 27.8% from 2014 to 2018. The fitted curve (R² = 0.9715) indicates that the cumulative number of published articles on digital technologies in classrooms has grown substantially. The trend suggests that research on digital technologies in classrooms has matured since 2019.

Figure 3

Trend analysis of digital technologies in the classroom in the last decade.

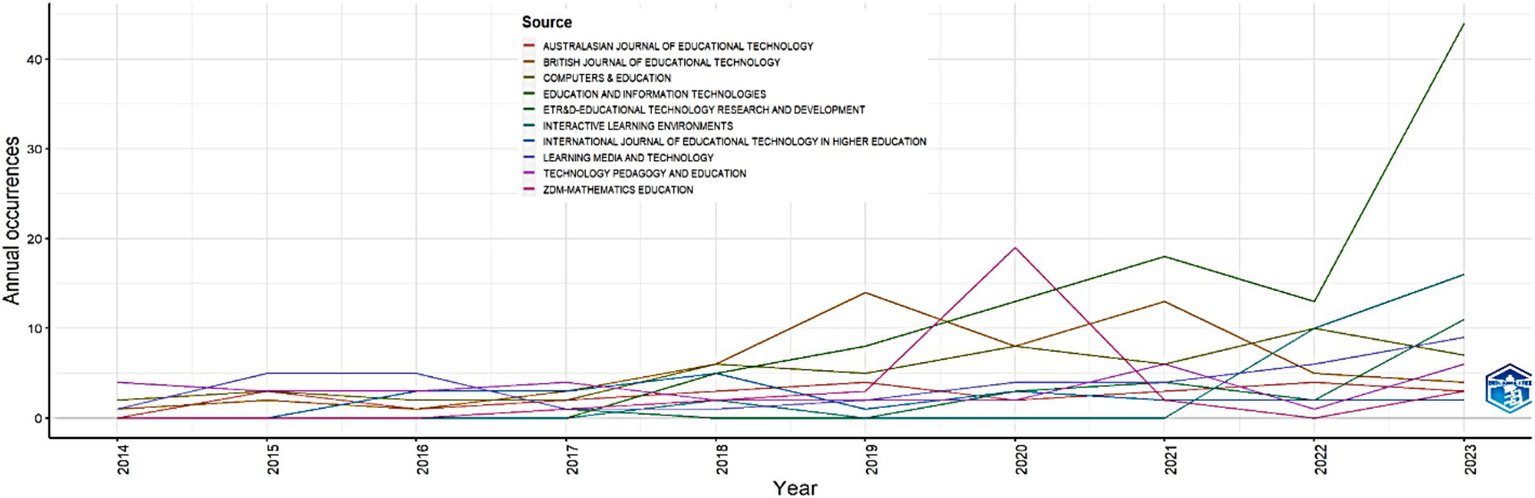

3.1.3 Journal profiles analysis

Journal profile analysis examines the characteristics of academic journals against various criteria (Roldan-Valadez et al., 2019). We combined source impact analysis with Bradford’s law to identify the primary journals disseminating literature on digital technologies in the classroom within the education domain. Table 2 ranks the journals by publication count (PC) and reports their citation count (CC), cumulative frequency (CF), h-, g-, and m-index, zone (Bradford’s law), and first publication year (PY). The significance of each journal is further indicated by its impact factor (IF), SCImago Journal Rank (SJR), CiteScore, Source Normalized Impact per Paper (SNIP), and overall h-index (Roldan-Valadez et al., 2019). According to Bradford’s law, journals are divided into zones by productivity: Zone 1 comprises the core sources with the most publications, while Zones 2 and 3 contain progressively less productive sources (Tsay and Yang, 2005). Of the 194 journals analyzed, nine fall within the core Zone 1, while 39 and 147 journals were classified under Zones 2 and 3, respectively. Figure 4 illustrates the yearly proportions of relevant papers for the 20 most prolific journals, allowing readers to follow the trend within each top-ranked journal over the past decade. These top 20 journals are the key venues for literature on digital technologies in the classroom and together contain 47.55% of all articles, with “Education and Information Technologies” alone accounting for 8.99%. The top five journals are “Education and Information Technologies,” “British Journal of Educational Technology,” “Computers & Education,” “Learning Media and Technology,” and “Technology Pedagogy and Education.” Of the 194 sources, only 23 journals published at least 10 papers between 2014 and 2023. 
The “British Journal of Educational Technology” and “Technology Pedagogy and Education” rank second and fifth, respectively, by PC. However, the PC of the top-ranked journal, “Education and Information Technologies” (101), is nearly double that of the second-ranked “British Journal of Educational Technology” (57). “Education and Information Technologies” also has the highest m-index (2.571) of all journals. Considering these parameters together, “Education and Information Technologies” ranks first, indicating the high caliber of its papers within the discipline.
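Bradford zoning can be sketched as splitting the ranked journal list into three groups that each hold roughly one third of the articles. The text does not state how a journal straddling a one-third boundary is assigned, so the sketch below assumes it stays in the zone where its articles start. With the first ten rows of Table 2 this convention reproduces the table's Zone 1/Zone 2 split: the ninth journal starts at a cumulative 363 articles, below 1,123 / 3 ≈ 374.3, whereas the tenth starts at 384. We have not checked it against Biblioshiny's implementation or the full list of 194 journals.

```python
from typing import Optional


def bradford_zones(counts: list[int], total: Optional[int] = None) -> list[int]:
    """Assign Bradford zones (1, 2, 3) to sources.

    `counts` must be article counts per source, already sorted in descending
    order. `total` defaults to sum(counts); pass it explicitly when `counts`
    is only the head of the full source list.
    """
    if total is None:
        total = sum(counts)
    third = total / 3
    zones: list[int] = []
    cumulative_before = 0  # articles in all higher-ranked sources
    for c in counts:
        # Assumed convention: a source that straddles a one-third boundary
        # stays in the zone where its articles start.
        if cumulative_before < third:
            zones.append(1)
        elif cumulative_before < 2 * third:
            zones.append(2)
        else:
            zones.append(3)
        cumulative_before += c
    return zones


# Publication counts of the first ten journals in Table 2 (of 1,123 articles).
top10 = [101, 57, 51, 38, 33, 30, 28, 25, 21, 21]
print(bradford_zones(top10, total=1123))
```

Moving the straddling source to the higher zone instead would shrink Zone 1 to eight journals here, so the reported zone sizes depend partly on this boundary rule.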

Table 2

| Journal (n = 194) | Publication count | Citation count | Cumulative frequency | Zone | H-index | G-index | M-index | First publication year | Impact factor (2022) | SCImago journal rank (2022) | CiteScore (2022) | Source normalized impact per paper (2022) | Overall H-index |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Education and Information Technologies | 101 | 1,133 | 101 | Zone 1 | 18 | 29 | 2.571 | 2018 | 5.5 | 1.25 | 8.2 | 2.26 | 61 |
| British Journal of Educational Technology | 57 | 1,205 | 158 | Zone 1 | 22 | 32 | 2 | 2014 | 6.6 | 2.1 | 13.8 | 2.75 | 110 |
| Computers & Education | 51 | 2,006 | 209 | Zone 1 | 20 | 44 | 1.818 | 2014 | 12 | 3.68 | 23.8 | 5 | 215 |
| Learning Media and Technology | 38 | 794 | 247 | Zone 1 | 14 | 27 | 1.273 | 2014 | 6.9 | 1.88 | 10.4 | 2.81 | 56 |
| Technology Pedagogy and Education | 33 | 432 | 280 | Zone 1 | 13 | 20 | 1.182 | 2014 | 4.9 | 1.26 | 7.1 | 1.97 | 45 |
| ZDM-Mathematics Education | 30 | 305 | 310 | Zone 1 | 9 | 16 | 1.125 | 2017 | 3 | 1.4 | 5.2 | 2.23 | 56 |
| Interactive Learning Environments | 28 | 169 | 338 | Zone 1 | 7 | 12 | 1 | 2018 | 5.4 | 1.17 | 11 | 1.69 | 57 |
| Australasian Journal of Educational Technology | 25 | 274 | 363 | Zone 1 | 10 | 16 | 1 | 2015 | 1.1 | 1.1 | 6.9 | 1.72 | 61 |
| Educational Technology Research and Development | 21 | 94 | 384 | Zone 1 | 6 | 8 | 0.75 | 2017 | 5 | 1.52 | 8.1 | 2 | 101 |
| International Journal of Educational Technology in Higher Education | 21 | 713 | 405 | Zone 2 | 12 | 21 | 1.333 | 2016 | 7.6 | 2.05 | 15.3 | 3.85 | 49 |
| Journal of Computer Assisted Learning | 15 | 67 | 420 | Zone 2 | 4 | 7 | 0.5 | 2017 | 5 | 1.63 | 8 | 2.18 | 105 |
| Sport Education and Society | 14 | 488 | 434 | Zone 2 | 8 | 14 | 0.727 | 2014 | 2.9 | 1.13 | 6.9 | 2.11 | 74 |
| Journal of Early Childhood Literacy | 14 | 175 | 448 | Zone 2 | 7 | 13 | 0.875 | 2017 | 1.6 | 0.65 | 4.4 | 1.51 | 45 |
| International Journal of Technology and Design Education | 14 | 104 | 462 | Zone 2 | 6 | 10 | 0.545 | 2014 | 2.1 | 0.84 | 4.7 | 2.26 | 50 |
| Educational Technology & Society | 13 | 250 | 475 | Zone 2 | 9 | 13 | 0.818 | 2014 | 4 | 1.05 | 5.8 | 1.53 | 103 |
| Educational Studies in Mathematics | 13 | 145 | 488 | Zone 2 | 8 | 12 | 0.727 | 2014 | 3.2 | 1.64 | 4.7 | 2.35 | 76 |
| BMC Medical Education | 13 | 78 | 501 | Zone 2 | 5 | 8 | 1 | 2020 | 3.6 | 0.91 | 4.5 | 1.79 | 87 |
| Journal of Science Education and Technology | 11 | 221 | 512 | Zone 2 | 9 | 11 | 0.9 | 2015 | 4.4 | 1.28 | 7 | 1.9 | 74 |
| Learning Culture and Social Interaction | 11 | 48 | 523 | Zone 2 | 5 | 6 | 0.556 | 2016 | 1.9 | 0.77 | 4.4 | 1.58 | 30 |
| Computer Assisted Language Learning | 11 | 128 | 534 | Zone 2 | 4 | 11 | 0.444 | 2016 | 7 | 1.75 | 12.6 | 3.1 | 63 |

Top 20 influential and prestigious journals concerning the frequency of their publications.

Figure 4

The growth of journals over time in the last 10 years.

3.1.4 Influential countries analysis

Identifying the most influential countries is important because it provides insights into the global research landscape (Ellegaard and Wallin, 2015). Authors from 72 countries have published research on digital technologies in classrooms. Table 3 lists the 20 most prominent countries with their PC, CC, average citations per article (ACA), gross domestic product (GDP) ranking, single-country publications (SCPs), multi-country publications (MCPs), and MCP ratio. Zaman et al. (2018) reported a positive correlation between a country’s research growth and its GDP ranking, with higher GDP rankings associated with greater research productivity. The MCP ratio indicates each country’s propensity for international collaboration (Ellegaard and Wallin, 2015), and the countries with the highest publication output do not necessarily collaborate the most internationally: the USA, for example, has an MCP ratio of 0.132, compared with 0.316 for China. As Table 3 shows, the USA is the most productive country, followed by Australia, the UK, China, and Sweden. Overall, publications on digital technologies in classrooms are led by the USA (14.87%), Australia (14.33%), the UK (10.50%), China (6.76%), Sweden (6.41%), and Spain (5.34%).

Table 3

| Countries = 72 | Publication count | Citation count | Average citations per article | Publication count (%) | Gross domestic product ranking (2024) | Single-country publications | Multi-country publications | Multi-country publications ratio |
|---|---|---|---|---|---|---|---|---|
| USA | 167 | 1,784 | 10.7 | 14.87 | 1 | 145 | 22 | 0.132 |
| Australia | 161 | 3,022 | 18.8 | 14.33 | 12 | 129 | 32 | 0.199 |
| United Kingdom | 118 | 2,185 | 18.5 | 10.50 | 6 | 91 | 27 | 0.229 |
| China | 76 | 954 | 12.6 | 6.76 | 2 | 52 | 24 | 0.316 |
| Sweden | 72 | 913 | 12.7 | 6.41 | 24 | 66 | 6 | 0.083 |
| Spain | 60 | 791 | 13.2 | 5.34 | 15 | 45 | 15 | 0.25 |
| New Zealand | 32 | 421 | 13.2 | 2.84 | 50 | 24 | 8 | 0.25 |
| Finland | 31 | 477 | 15.4 | 2.76 | 47 | 21 | 10 | 0.323 |
| Norway | 31 | 869 | 28 | 2.76 | 23 | 25 | 6 | 0.194 |
| Germany | 26 | 550 | 21.2 | 2.31 | 4 | 24 | 2 | 0.077 |
| Ireland | 26 | 313 | 12 | 2.31 | 26 | 23 | 3 | 0.115 |
| Canada | 24 | 403 | 16.8 | 2.13 | 9 | 21 | 3 | 0.125 |
| Turkey | 21 | 244 | 11.6 | 1.86 | 19 | 19 | 2 | 0.095 |
| Switzerland | 19 | 224 | 11.8 | 1.69 | 20 | 18 | 1 | 0.053 |
| Brazil | 16 | 146 | 9.1 | 1.42 | 11 | 11 | 5 | 0.313 |
| Israel | 16 | 353 | 22.1 | 1.42 | 27 | 13 | 3 | 0.188 |
| France | 15 | 185 | 12.3 | 1.33 | 7 | 7 | 8 | 0.533 |
| Italy | 15 | 173 | 11.5 | 1.33 | 10 | 9 | 6 | 0.4 |
| Greece | 13 | 171 | 13.2 | 1.15 | 54 | 11 | 2 | 0.154 |
| Denmark | 12 | 77 | 6.4 | 1.06 | 40 | 9 | 3 | 0.25 |

Top-ranking countries based on total publication count.
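The derived columns of Table 3 follow from the raw counts: ACA is CC divided by PC, and the MCP ratio is MCP divided by PC, with every publication counted as either single- or multi-country. The minimal sketch below encodes these relations; the class and attribute names are hypothetical, and the consistency check (SCP + MCP = PC) is our assumption, though it holds for every row of Table 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CountryStats:
    name: str
    pc: int    # publication count
    cc: int    # citation count
    scp: int   # single-country publications
    mcp: int   # multi-country publications

    def __post_init__(self) -> None:
        # Every publication is either single- or multi-country.
        if self.scp + self.mcp != self.pc:
            raise ValueError(f"{self.name}: SCP + MCP must equal PC")

    @property
    def aca(self) -> float:
        """Average citations per article (CC / PC)."""
        return self.cc / self.pc

    @property
    def mcp_ratio(self) -> float:
        """Share of publications with co-authors from more than one country."""
        return self.mcp / self.pc


usa = CountryStats("USA", pc=167, cc=1784, scp=145, mcp=22)
print(round(usa.aca, 1), round(usa.mcp_ratio, 3))
```

For the USA row this gives an ACA of about 10.7 and an MCP ratio of about 0.132, matching Table 3; a high PC with a low MCP ratio is the pattern discussed above for the most productive countries.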

3.1.5 Productive institutions analysis

Analyzing productive institutions is crucial for identifying research hubs (Hao et al., 2020). A university’s research competence, academic reputation, and standing in the international and national education landscape should also be considered, based on the Quacquarelli Symonds (QS) ranking, the Academic Ranking of World Universities (ARWU, or Shanghai ranking), and national rankings (Moskovkin et al., 2022). Table 4 lists the top 20 universities by PC. The top five are “Monash University” (48), “University of Oslo” (21), “Deakin University” (18), “University of Gothenburg” (15), and “Griffith University” (15). Nine of the top 20 are Australian, indicating Australia’s central position in the research field. Several renowned universities in the QS top 50, namely the “University of Cambridge,” “University College London,” “the University of Sydney,” “the University of Edinburgh,” “The University of Hong Kong,” “The University of Melbourne,” and “Monash University,” also published many articles in this domain. Likewise, leading universities in Norway, Finland, the UK, New Zealand, Australia, and Hong Kong are active and influential in research on digital technologies in classrooms at the national level. Together, these 20 institutions account for 26.17% of all publications.

Table 4

| Affiliation | Country | Publication count | Citation count | Quacquarelli Symonds ranking (2024) | Academic Ranking of World Universities (2023) | National ranking (ARWU, 2023) |

|---|---|---|---|---|---|---|

| Monash University | Australia | 48 | 1,343 | 42 | 77 | 5 |

| University of Oslo | Norway | 21 | 934 | 117 | 73 | 1 |

| Deakin University | Australia | 18 | 134 | 233 | 201–300 | 9–15 |

| University of Gothenburg | Sweden | 15 | 182 | 185 | 101–150 | 4 |

| Griffith University | Australia | 15 | 357 | 243 | 301–400 | 16–22 |

| University College London | UK | 14 | 247 | 8 | 17 | 3 |

| University of Helsinki | Finland | 14 | 190 | 106 | 101–150 | 1 |

| University of Limerick | Ireland | 14 | 280 | 426 | 801–900 | 5 |

| Australian Catholic University | Australia | 14 | 271 | 801–850 | 501–600 | 25–26 |

| University of Cambridge | UK | 13 | 299 | 2 | 4 | 1 |

| University of Auckland | New Zealand | 13 | 145 | 87 | 201–300 | 1 |

| The University of Melbourne | Australia | 13 | 293 | 14 | 35 | 1 |

| The University of Hong Kong | Hong Kong | 12 | 173 | 26 | 88 | 1 |

| Queensland University of Technology | Australia | 12 | 150 | 189 | 301–400 | 16–22 |

| University of Edinburgh | UK | 11 | 408 | 22 | 38 | 5 |

| Macquarie University | Australia | 10 | 119 | 195 | 201–300 | 9–15 |

| University of Sydney | Australia | 10 | 124 | 19 | 73 | 4 |

| Orebro University | Sweden | 9 | 270 | 501–550 | 701–800 | 12 |

| Stockholm University | Sweden | 9 | 185 | 118 | 98 | 3 |

| University of Wollongong | Australia | 9 | 179 | 162 | 201–300 | 9–15 |

Top institutions sorted by total publication count.

3.1.6 Productive authors analysis

Table 5 lists the influential researchers in digital technologies in classrooms, giving each author’s name, affiliation, country, PC, CC, h-index, g-index, m-index, and PY. With 15 articles published between 2014 and 2023, Neil Selwyn ranked highest, followed by Dominik Petko with nine publications and Susan Edwards with eight. Michael Henderson and Paul Drijvers ranked fourth and fifth with seven articles each, although their citation counts differ considerably (413 and 65, respectively). Australia is home to three of the top five most active researchers. Among the top 20 researchers, seven are based in Australia and five in the UK.
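The h-, g-, and m-indices in Table 5 follow standard definitions, sketched below in Python for a hypothetical list of per-paper citation counts. Two details are assumptions inferred from the table rather than stated in the text: the m-index reference year of 2024 (every m-index in Table 5 equals h / (2024 − PY + 1), e.g., 13 / 11 ≈ 1.182 for Neil Selwyn), and capping the g-index at the number of papers, which is consistent with g equalling PC for every listed author.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def g_index(citations):
    """Largest g such that the top g papers have at least g**2 citations in total.

    g is capped at the number of papers (no padding with zero-cited papers).
    """
    ranked = sorted(citations, reverse=True)
    total = 0
    g = 0
    for rank, cites in enumerate(ranked, start=1):
        total += cites
        if total >= rank * rank:
            g = rank
    return g


def m_index(h, first_year, reference_year=2024):
    """h divided by the years from the first publication to reference_year, inclusive."""
    years_active = reference_year - first_year + 1
    return h / years_active


if __name__ == "__main__":
    # Hypothetical citation counts for one author's five papers.
    papers = [10, 8, 5, 4, 3]
    print(h_index(papers), g_index(papers))  # 4 5
    # Neil Selwyn's row in Table 5: h = 13, first publication year 2014.
    print(round(m_index(13, 2014), 3))  # 1.182
```

The same `m_index` call reproduces the other rows as well, for example 4 / 3 ≈ 1.333 for Chiara Antonietti (h = 4, PY = 2022).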

Table 5

| Author’s name | Affiliation | Country | Publication count | Citation count | H-index | G-index | M-index | First publication year |

|---|---|---|---|---|---|---|---|---|

| Neil Selwyn | Monash University | Australia | 15 | 944 | 13 | 15 | 1.182 | 2014 |

| Dominik Petko | University of Zurich | Switzerland | 9 | 88 | 5 | 9 | 0.833 | 2019 |

| Susan Edwards | Australian Catholic University | Australia | 8 | 213 | 6 | 8 | 0.545 | 2014 |

| Michael Henderson | Monash University | Australia | 7 | 413 | 6 | 7 | 0.545 | 2014 |

| Paul Drijvers | Utrecht University | Netherlands | 7 | 65 | 4 | 7 | 0.667 | 2019 |

| Antero Garcia | Stanford University | USA | 6 | 61 | 4 | 6 | 0.4 | 2015 |

| Paul Warwick | University of Cambridge | UK | 6 | 155 | 4 | 6 | 0.571 | 2018 |

| Oliver McGarr | University of Limerick | Ireland | 6 | 44 | 3 | 6 | 0.5 | 2019 |

| Sara Hennessy | University of Cambridge | UK | 5 | 172 | 5 | 5 | 0.5 | 2015 |

| Ekaterina Tour | Monash University | Australia | 5 | 102 | 5 | 5 | 0.5 | 2015 |

| Ina Blau | The Open University of Israel | Israel | 5 | 247 | 4 | 5 | 0.5 | 2017 |

| Marcelo Borba | São Paulo State University | Brazil | 5 | 101 | 3 | 5 | 0.6 | 2020 |

| Michelle Margaret Neumann | Southern Cross University | Australia | 5 | 216 | 3 | 5 | 0.333 | 2016 |

| Chiara Antonietti | University of Zurich | Switzerland | 4 | 65 | 4 | 4 | 1.333 | 2022 |

| Ashley Casey | Loughborough University | UK | 4 | 175 | 4 | 4 | 0.5 | 2017 |

| Sarah K. Howard | University of Wollongong | Australia | 4 | 109 | 4 | 4 | 0.4 | 2015 |

| Deborah Lupton | University of New South Wales | Australia | 4 | 122 | 4 | 4 | 0.4 | 2015 |

| Louis Major | University of Cambridge | UK | 4 | 87 | 4 | 4 | 0.571 | 2018 |

| Benjamin Luke Moorhouse | Hong Kong Baptist University | China | 4 | 52 | 4 | 4 | 1 | 2021 |

| Selena Nemorin | University of Oxford | UK | 4 | 83 | 4 | 4 | 0.444 | 2016 |

Authors with the highest number of publications.

3.1.7 Influential papers analysis

Among 1,123 articles, 16 papers received more than 100 citations, suggesting significant impact and influence within their respective fields. Table 6 lists the 20 most influential publications, ranked by CC, with their journals, publishers, IF, CC, and citation count per year (CCY). The analysis shows that research on digital technologies in classrooms focuses mainly on students and teachers, their experiences and acceptance, the impact of digital technologies on learning, and ways to improve education. The article by Ronny Scherer et al. entitled “The Technology Acceptance Model (TAM): A Meta-analytic Structural Equation Modeling Approach to Explaining Teachers’ Adoption of Digital Technology in Education,” published in “Computers & Education” in 2019, is the most cited (580 times in WoS and 1,548 times in Google Scholar). The paper titled “What Works and Why? Student Perceptions of ‘Useful’ Digital Technology in University Teaching and Learning,” authored by Michael Henderson et al., ranks second. Published in 2015 in “Studies in Higher Education,” it has been cited 272 times in WoS and 1,093 times in Google Scholar. The paper by Melissa Bond et al., titled “Digital Transformation in German Higher Education: Student and Teacher Perceptions and Usage of Digital Media” and published in 2018 in the “International Journal of Educational Technology in Higher Education,” ranks third, with 178 citations in WoS and 464 in Google Scholar. The examination further indicated that the most-cited papers tend to be older, with most of the influential papers published between 2014 and 2019, which is expected because older papers have had more time to accumulate citations.
Notably, several highly cited papers are literature reviews, systematic literature reviews, or meta-analyses, emphasizing the critical role of review studies in synthesizing social and experimental research on digital technologies in educational settings.
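The CCY column appears consistent with dividing CC by the number of calendar years from publication through 2024, inclusive (for example, 580 / 6 ≈ 96.67 for the 2019 TAM paper and 178 / 7 ≈ 25.43 for the 2018 paper by Bond et al.). The Python sketch below assumes that convention; the 2024 reference year is inferred from the table rather than stated in the text.

```python
def citations_per_year(citations, pub_year, reference_year=2024):
    """CC divided by the calendar years from pub_year to reference_year, inclusive."""
    years = reference_year - pub_year + 1
    if years <= 0:
        raise ValueError("pub_year must not be after reference_year")
    return citations / years


# (short title, CC, publication year) for two Table 6 rows; years as stated in the text.
PAPERS = [
    ("Digital transformation in German higher education", 178, 2018),
    ("The technology acceptance model (TAM)", 580, 2019),
]

if __name__ == "__main__":
    # Rank by CC, highest first, as in Table 6.
    for title, cc, year in sorted(PAPERS, key=lambda p: p[1], reverse=True):
        print(f"{title}: CC={cc}, CCY={citations_per_year(cc, year):.2f}")
```

Because CCY divides by elapsed years, it favors recent papers; this is why a 2023 paper with 91 citations can have the second-highest CCY in Table 6.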

Table 6

| Title | Journal | Publisher | Impact factor (2022) | Citation count | Citation count per year |

|---|---|---|---|---|---|

| “The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education” | Computers & Education | Elsevier | 12 | 580 | 96.67 |

| “What works and why? Student perceptions of ‘useful’ digital technology in university teaching and learning” | Studies in Higher Education | Taylor & Francis | 4.2 | 272 | 34 |

| “Digital transformation in German higher education: student and teacher perceptions and usage of digital media” | International Journal of Educational Technology in Higher Education | Springer | 7.6 | 178 | 25.43 |

| “Factors influencing digital technology use in early childhood education” | Computers & Education | Elsevier | 12 | 168 | 15.27 |

| “Data entry: toward the critical study of digital data and education” | Learning, Media, and Technology | Taylor & Francis | 6.9 | 159 | 15.9 |

| “What’s the matter with ‘technology-enhanced learning’?” | Learning, Media, and Technology | Taylor & Francis | 6.9 | 146 | 14.6 |

| “Embedding Digital Literacies in English Language Teaching: Students’ Digital Video Projects as Multimodal Ensembles” | TESOL Quarterly | Wiley | 3.2 | 138 | 12.55 |

| “Student preparedness for university e-learning environments” | The Internet and Higher Education | Elsevier | 8.6 | 135 | 13.5 |

| “Blended learning in higher education: Trends and capabilities” | Education and Information Technologies | Springer | 5.5 | 122 | 20.33 |

| “Young people’s uses of wearable healthy lifestyle technologies; surveillance, self-surveillance and resistance” | Sport, Education, and Society | Taylor & Francis | 2.9 | 115 | 19.17 |

| “Re-designed flipped learning model in an academic course: The role of co-creation and co-regulation” | Computers & Education | Elsevier | 12 | 113 | 14.13 |

| “Technology use and learning characteristics of students in higher education: Do generational differences exist?” | British Journal of Educational Technology | Wiley | 6.6 | 107 | 10.7 |

| “The promise and the promises of Making in science education” | Studies in Science Education | Taylor & Francis | 4.9 | 105 | 13.13 |

| “The potential of digital tools to enhance mathematics and science learning in secondary schools: A context-specific meta-analysis” | Computers & Education | Elsevier | 12 | 104 | 20.8 |

| “Rethinking the relationship between pedagogy, technology and learning in health and physical education” | Sport, Education, and Society | Taylor & Francis | 2.9 | 104 | 13 |

| “Children under five and digital technologies: implications for early years pedagogy” | European Early Childhood Education Research Journal | Taylor & Francis | 2.3 | 102 | 11.33 |

| “Dialogue, thinking together and digital technology in the classroom: Some educational implications of a continuing line of inquiry” | International Journal of Educational Research | Elsevier | 3.2 | 97 | 16.17 |

| “Using tablets and apps to enhance emergent literacy skills in young children” | Early Childhood Research Quarterly | Elsevier | 3.7 | 96 | 13.71 |

| “Digital downsides: exploring university students’ negative engagements with digital technology” | Teaching in Higher Education | Taylor & Francis | 2.6 | 92 | 10.22 |

| “Examining Science Education in ChatGPT: An Exploratory Study of Generative Artificial Intelligence” | Journal of Science Education and Technology | Springer | 4.4 | 91 | 45.5 |

Most cited papers for digital technologies in classrooms.

3.1.8 Keywords analysis

Keywords are pivotal in bibliometric analysis as identifiers of research topics, themes, and trends, and keyword analysis facilitates the exploration of research trajectories and the mapping of intellectual landscapes. Table 7 presents the most frequent Keywords Plus, author keywords, title keywords, and abstract keywords. Notably, “digital technologies” is the most frequently used author keyword, with a count of 103. Author keywords, by contrast, are used relatively sparingly across the literature, and only a few researchers employ them. “Digital” is the most frequent word in both the title and abstract sections, with frequencies of 500 and 3,045, respectively. However, terms used in titles and abstracts tend to be more generic and are therefore less able to delineate specific themes or research streams.
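Keyword frequencies like those in Table 7 amount to counting, per record, which keywords a field contains. The Python sketch below assumes records shaped like a Web of Science plain-text export, in which author keywords sit under the DE tag and Keywords Plus under the ID tag, separated by semicolons; the records themselves are hypothetical. Keywords are only lower-cased and trimmed, not lemmatized, so “digital technologies” and “digital technology” are counted separately, as they are in Table 7.

```python
from collections import Counter


def keyword_counts(records, field="DE", sep=";"):
    """Count, for each keyword, how many records list it in `field`."""
    counts = Counter()
    for record in records:
        raw = record.get(field, "")
        # Lower-case and trim; a set makes each keyword count once per record.
        keywords = {kw.strip().lower() for kw in raw.split(sep) if kw.strip()}
        counts.update(keywords)
    return counts


# Hypothetical records; real exports carry many more fields per record.
RECORDS = [
    {"DE": "Digital technologies; Higher education; COVID-19"},
    {"DE": "digital technology; Higher Education"},
    {"DE": "Digital technologies; Digital literacy"},
    {"TI": "A record without author keywords"},
]

if __name__ == "__main__":
    # Sort by count (descending), then alphabetically, for a stable listing.
    for keyword, n in sorted(keyword_counts(RECORDS).items(), key=lambda kv: (-kv[1], kv[0])):
        print(keyword, n)
```

Passing `field="ID"` would count Keywords Plus instead; records lacking the field simply contribute nothing.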

Table 7

| Keywords plus | Count | Author’s keywords | Count | Title keywords | Count | Abstract keywords | Count |

|---|---|---|---|---|---|---|---|

| Education | 141 | digital technologies | 103 | Digital | 500 | digital | 3,045 |

| Technology | 137 | digital technology | 96 | Learning | 313 | learning | 2,271 |

| Students | 95 | higher education | 70 | Education | 271 | students | 1,783 |

| Knowledge | 64 | Technology | 64 | Technology | 196 | teachers | 1,442 |

| ICT | 62 | Covid-19 | 38 | Teachers | 168 | technology | 1,303 |

| Framework | 53 | digital literacy | 32 | Students | 125 | education | 1,248 |

| Teachers | 51 | Education | 29 | Teaching | 108 | Study | 1,175 |

| Design | 49 | educational technology | 28 | Technologies | 107 | technologies | 1,110 |

| Impact | 48 | digital tools | 27 | School | 82 | teaching | 830 |

| Perceptions | 42 | Pedagogy | 27 | Study | 82 | research | 826 |

| Literacy | 41 | online learning | 26 | Teacher | 75 | Tools | 582 |

| Science | 41 | blended learning | 23 | Online | 66 | Data | 579 |

| Beliefs | 40 | professional development | 23 | Practices | 63 | online | 538 |

| Skills | 37 | teacher education | 23 | Literacy | 62 | school | 511 |

| Performance | 34 | ICT | 21 | Development | 59 | Paper | 494 |

| Information | 32 | Digital | 20 | Exploring | 52 | teacher | 490 |

| higher education | 31 | early childhood education | 20 | Classroom | 49 | development | 487 |

| Media | 31 | secondary education | 19 | Design | 49 | educational | 482 |

| Pedagogy | 31 | technology integration | 19 | covid-19 | 48 | practices | 470 |

| Model | 30 | Learning | 18 | Analysis | 43 | results | 451 |

Top 20 keywords ranked based on count.

3.2 Scientific mapping

In this section, we present the findings of the scientific mapping, which conclude the analysis of digital technologies in the classroom. This involves identifying the conceptual, intellectual, and social structures surrounding the topic and thereby addresses the second research question of this study:

RQ2: What are the prevalent structural aspects and hot research topics in digital technologies in classrooms over the last 10 years?

3.2.1 Conceptual structure

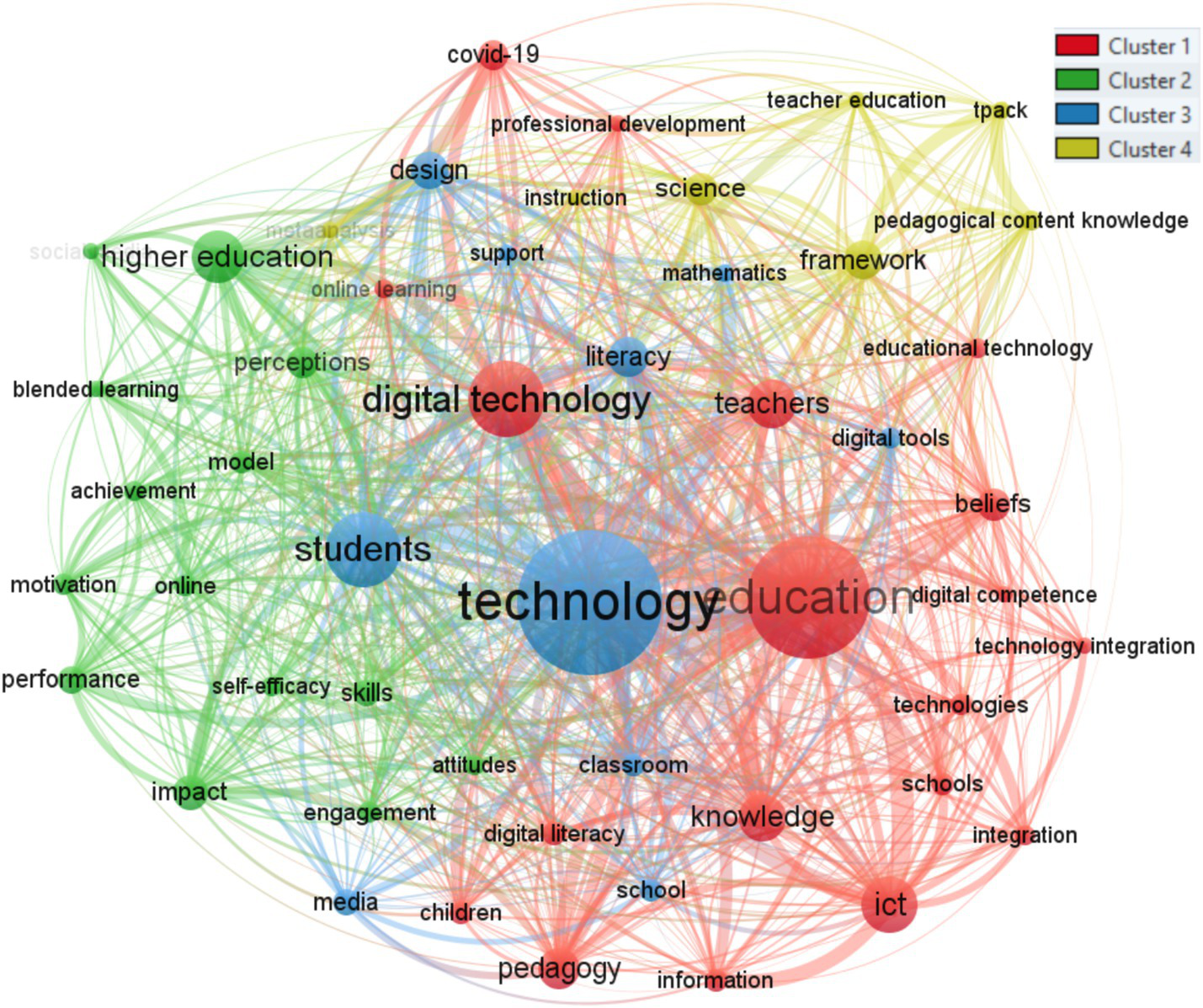

Conceptual structure enables researchers to highlight the relationships among terms that occur together within the documents of a particular collection, referred to as co-occurrences (Ellegaard and Wallin, 2015). It identifies words that frequently appear together in a cluster, revealing conceptual or semantic groupings that research constituents regard as topics or sub-topics (Aria and Cuccurullo, 2017). The more frequently a keyword was used, the larger its bubble. Three standard weight attributes were used: links, occurrences, and total link strength. Occurrences indicate the number of documents in which a keyword appears, while links and total link strength indicate, respectively, the number of connections an item has with other items and the cumulative strength of these connections. Figure 5 shows the co-occurrence of keywords in research on digital technologies in classrooms.

Figure 5

Co-occurrence network.
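The three weight attributes of the co-occurrence network can be illustrated with a small Python sketch. It is not VOSviewer’s implementation: it assumes full counting (each pair of keywords in a document adds 1 to the link between them) and uses hypothetical documents represented as keyword sets.

```python
from collections import Counter, defaultdict
from itertools import combinations


def cooccurrence_attributes(documents):
    """Occurrences, links, and total link strength for each keyword (full counting)."""
    occurrences = Counter()
    pair_weight = Counter()
    for keywords in documents:
        unique = sorted(set(keywords))
        occurrences.update(unique)            # one occurrence per document
        for a, b in combinations(unique, 2):  # every keyword pair in the document
            pair_weight[(a, b)] += 1
    links = defaultdict(int)           # number of distinct co-occurring keywords
    total_strength = defaultdict(int)  # summed co-occurrence counts
    for (a, b), weight in pair_weight.items():
        for kw in (a, b):
            links[kw] += 1
            total_strength[kw] += weight
    return occurrences, dict(links), dict(total_strength)


# Hypothetical documents, each reduced to its set of keywords.
DOCS = [
    {"digital technology", "education", "teachers"},
    {"digital technology", "education"},
    {"education", "covid-19"},
]

if __name__ == "__main__":
    occ, links, tls = cooccurrence_attributes(DOCS)
    for kw in sorted(occ):
        print(kw, occ[kw], links.get(kw, 0), tls.get(kw, 0))
```

Here “education” occurs in three documents, links to three other keywords, and has a total link strength of 4, because its link to “digital technology” is counted twice.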

Technology and digital technology, the primary focus of this study, are the most prominent nodes. Because its terms frequently occur together, cluster 1 indicates that these subtopics are closely related to digital education and pedagogy during COVID-19. Cluster 2 represents factors influencing online and blended learning in higher education. Cluster 3 represents digital technology integration in classroom design and literacy support. Cluster 4 depicts the evaluation of digital technologies through conceptual frameworks, e.g., TPACK. Table 8 presents the distribution of the clusters by frequency of occurrence (top 50 keywords).

Table 8

| Cluster # | Keywords |

|---|---|

| Cluster 1 | digital technology, education, teachers, educational technology, beliefs, digital competence, technology integration, technologies, knowledge, digital literacy, children, integration, ict, information, pedagogy, covid-19, professional development, online learning |

| Cluster 2 | higher education, perceptions, blended learning, model, achievement, online, motivation, performance, self-efficacy, skills, impact, attitudes, engagement, social media |

| Cluster 3 | technology, students, digital tools, literacy, support, design, classroom, school, media |

| Cluster 4 | meta-analysis, instruction, science, tpack, framework, pedagogical content knowledge, teacher education |

Distribution of clusters based on number of occurrences (top 50 keywords).

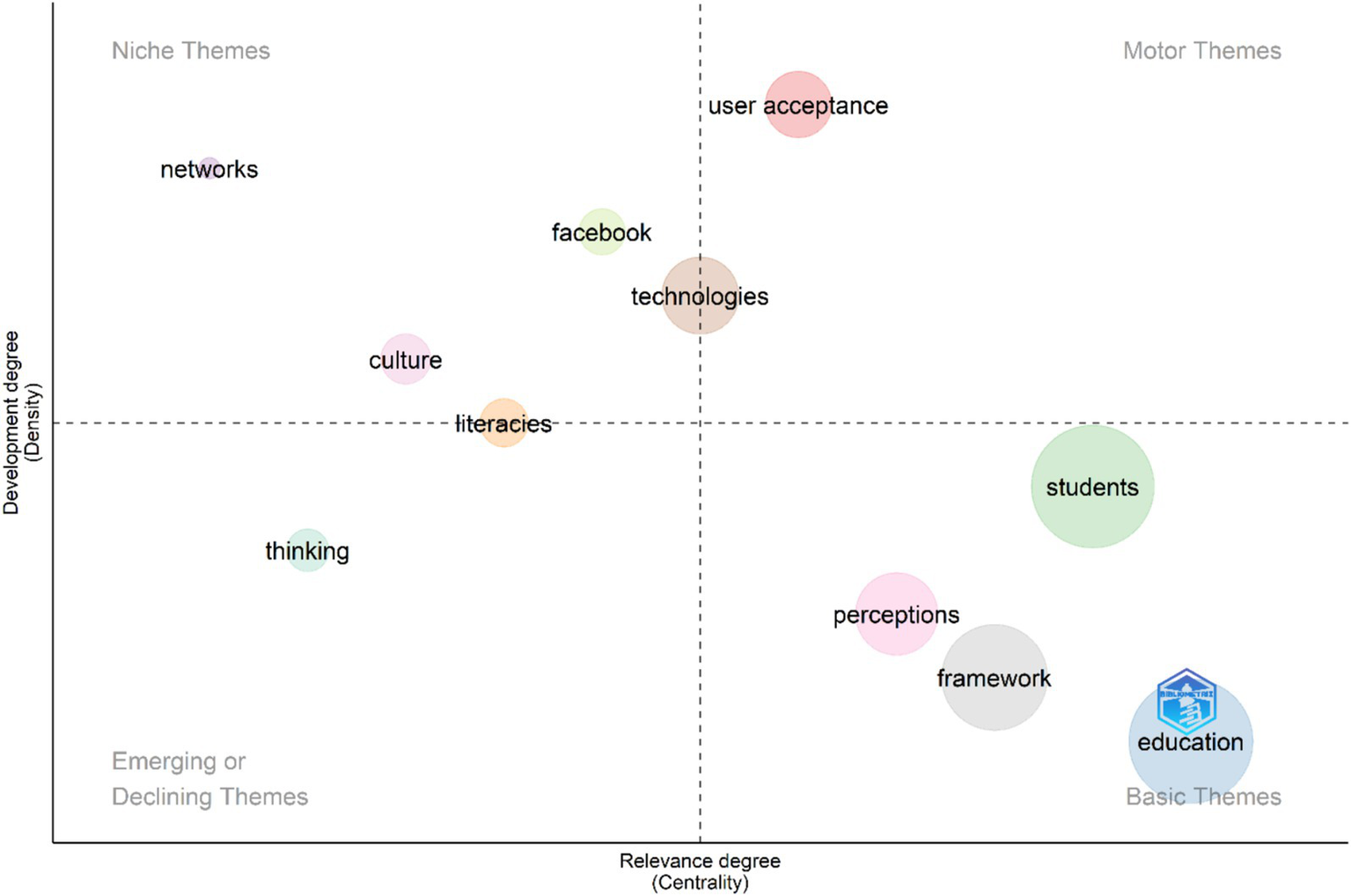

This study also included a thematic analysis of digital technologies in the classroom, which uncovers themes from keyword clusters and their interconnections. Two crucial aspects define these themes: centrality and density (Mostafa, 2023). Centrality, depicted along the horizontal axis, signifies the degree of association between a theme and other themes, while density, represented along the vertical axis, measures the cohesion among a theme’s nodes (Sinha et al., 2023). This cohesion reflects a theme’s ability to develop and sustain itself over time. The thematic map was created using the Walktrap clustering algorithm, recognized for its efficiency and effectiveness (Bathla et al., 2023). The map is divided into four quadrants (Q1–Q4), as shown in Figure 6. Basic themes in the lower right quadrant (Q1) are well-established research themes that are highly relevant to the field; themes such as education, students, framework, and perceptions in Q1 are crucial for the field’s development. Motor themes in the upper right quadrant (Q2) are the dominant or driving topics that attract significant attention and research activity. Themes such as technologies and user acceptance in Q2 suggest that the technology acceptance model (TAM) and the unified theory of acceptance and use of technology (UTAUT) are central frameworks for evaluating the integration of digital technologies in classrooms.

Figure 6

Thematic map.

Niche themes in the upper left quadrant (Q3) are less explored topics that might not receive as much attention as motor themes but are of significant interest to certain researchers or subfields. These themes include social networks (e.g., Facebook), culture (youth), thinking (users), and networks, which can provide research insights or opportunities for interdisciplinary collaboration. A theme such as technologies, positioned between Q2 and Q3, is well developed and capable of structuring this multidisciplinary research field. The theme “thinking” in the lower left quadrant (Q4) is either emerging or declining; determining which requires comparison with prior literature, but it points to potential areas for further exploration.
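The quadrant logic of the thematic map can be sketched in Python. The centrality and density used here are simplified versions of Callon’s measures (the total weight of a theme’s links to other themes, and its internal link weight per keyword); Biblioshiny applies its own normalization, which this sketch does not reproduce. Splitting the axes at the median values and the example themes are assumptions for illustration only.

```python
from statistics import median


def theme_metrics(edges, clusters):
    """Simplified centrality (external link weight) and density (internal weight per keyword)."""
    membership = {kw: name for name, kws in clusters.items() for kw in kws}
    internal = dict.fromkeys(clusters, 0)
    external = dict.fromkeys(clusters, 0)
    for (a, b), weight in edges.items():
        ca, cb = membership.get(a), membership.get(b)
        if ca is None or cb is None:
            continue  # ignore keywords outside the clustered set
        if ca == cb:
            internal[ca] += weight
        else:
            external[ca] += weight
            external[cb] += weight
    density = {name: internal[name] / len(clusters[name]) for name in clusters}
    return external, density


def quadrant(centrality, density, c_split, d_split):
    """Quadrant labels as used in this section (Q1 lower right ... Q4 lower left)."""
    if centrality >= c_split:
        return "Q1 basic" if density < d_split else "Q2 motor"
    return "Q3 niche" if density >= d_split else "Q4 emerging/declining"


# Hypothetical two-keyword themes and co-occurrence weights.
CLUSTERS = {"A": {"a1", "a2"}, "B": {"b1", "b2"}, "C": {"c1", "c2"}, "D": {"d1", "d2"}}
EDGES = {("a1", "a2"): 1, ("b1", "b2"): 8, ("c1", "c2"): 6, ("d1", "d2"): 1,
         ("a1", "b1"): 4, ("a2", "d1"): 1, ("b2", "c1"): 1}

if __name__ == "__main__":
    centrality, density = theme_metrics(EDGES, CLUSTERS)
    c_split, d_split = median(centrality.values()), median(density.values())
    for name in CLUSTERS:
        print(name, quadrant(centrality[name], density[name], c_split, d_split))
```

In this toy network, theme A is well connected but loosely knit (basic), B is both (motor), C is tight but isolated (niche), and D is neither (emerging or declining).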

3.2.2 Intellectual structure

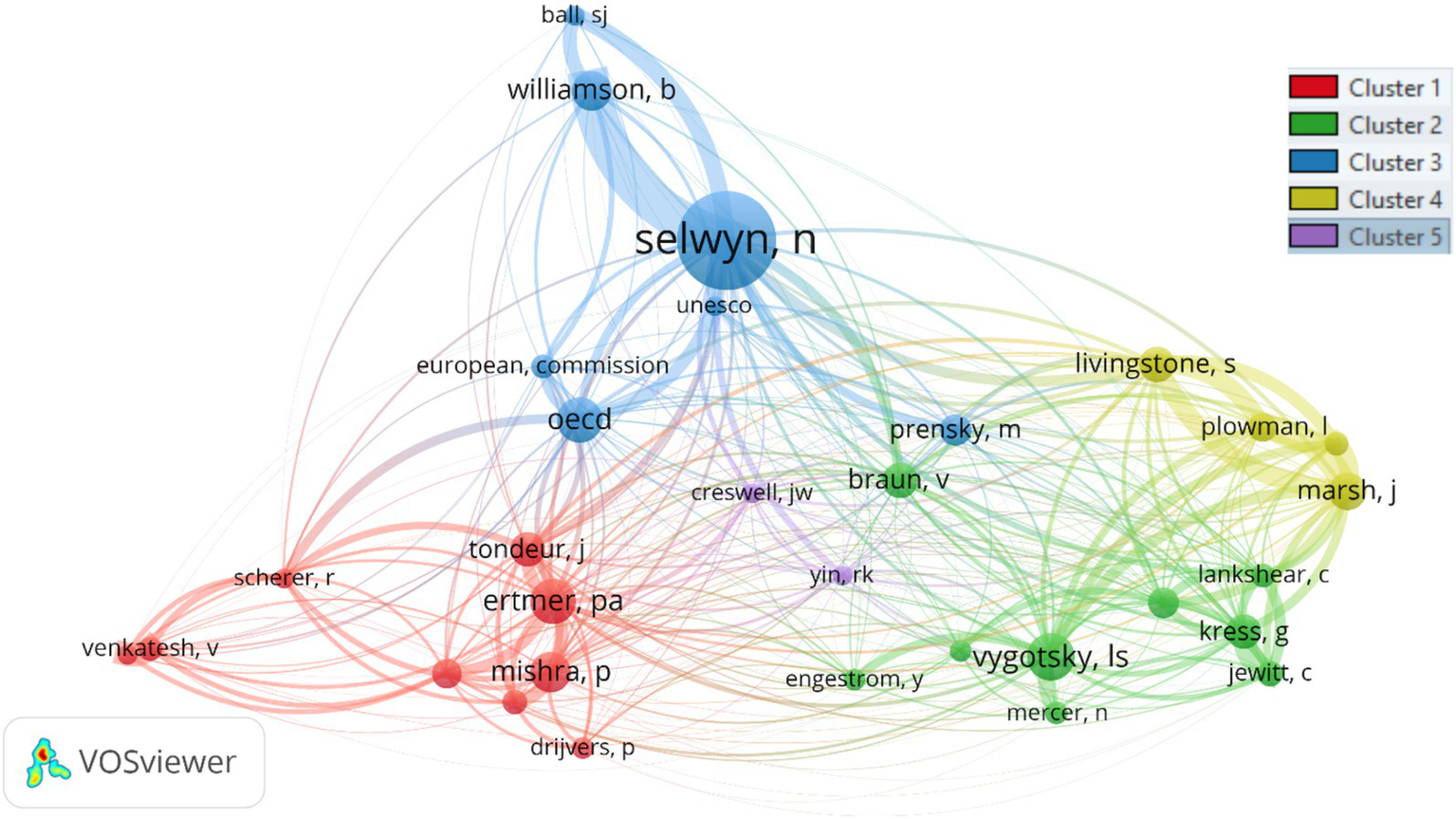

Intellectual structure refers to the organization of knowledge within a field, as revealed by co-citation patterns among authors, articles, and sources. Co-citation analysis measures how frequently two articles are cited together by a third article (Aria and Cuccurullo, 2017; Zupic and Čater, 2015). The connection is established not by the cited articles themselves but by the third article that references them (Noyons et al., 1999). Co-citation analysis assumes that authors cite works together because of their similarity, relevance, and interconnectedness (Callon et al., 1983). Figure 7 illustrates the author co-citation network. The size of each node corresponds to how often it is co-cited with others, so larger nodes indicate more frequent co-citations, while distinct colors and node placements signify different clusters. Table 9 presents the cluster categorization of the author co-citation network based on citation count (top 25 authors). We also used article-based co-citation analysis (Figure 8) to identify influential articles shaping the field, and source-based (journal-based) co-citation analysis (Figure 9).

Figure 7

Author-based co-citation analysis.
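Co-citation counting as defined in this section can be sketched in Python: every pair of references appearing in the same citing document’s reference list receives one co-citation. The reference strings and citing documents below are hypothetical, and real tools first normalize reference strings, which this sketch omits.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how often each pair of references is cited together by one citing document."""
    pairs = Counter()
    for refs in reference_lists:
        unique_refs = sorted(set(refs))  # a reference listed twice still counts once
        for a, b in combinations(unique_refs, 2):
            pairs[(a, b)] += 1
    return pairs


# Hypothetical citing documents, each given by its (abbreviated) reference list.
CITING_DOCS = [
    ["DAVIS FD, 1989", "VENKATESH V, 2003", "MISHRA P, 2006"],
    ["DAVIS FD, 1989", "VENKATESH V, 2003"],
    ["MISHRA P, 2006", "KOEHLER MJ, 2009"],
]

if __name__ == "__main__":
    for (a, b), n in cocitation_counts(CITING_DOCS).most_common(1):
        print(a, "+", b, "co-cited", n, "times")
```

The link between two cited works exists only because third documents cite both; the cited works need not reference each other at all.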

Table 9

| Cluster # | Authors |

|---|---|

| Cluster 1 | tondeur j, ertmer pa, Mishra p, drijvers p, scherer r, Venkatesh v |

| Cluster 2 | Vygotsky ls, braun v, lankshear c, kress g, engestrom y, jewitt c, mercer n |

| Cluster 3 | Selwyn n, ball sj, Williamson b, unesco, european commission, oecd, prensky m |

| Cluster 4 | livingstone s, plowman l, marsh j |

| Cluster 5 | creswell jw, yin rk |

Breakdown of clusters for author’s co-citation network based on citation count (top 25 authors).

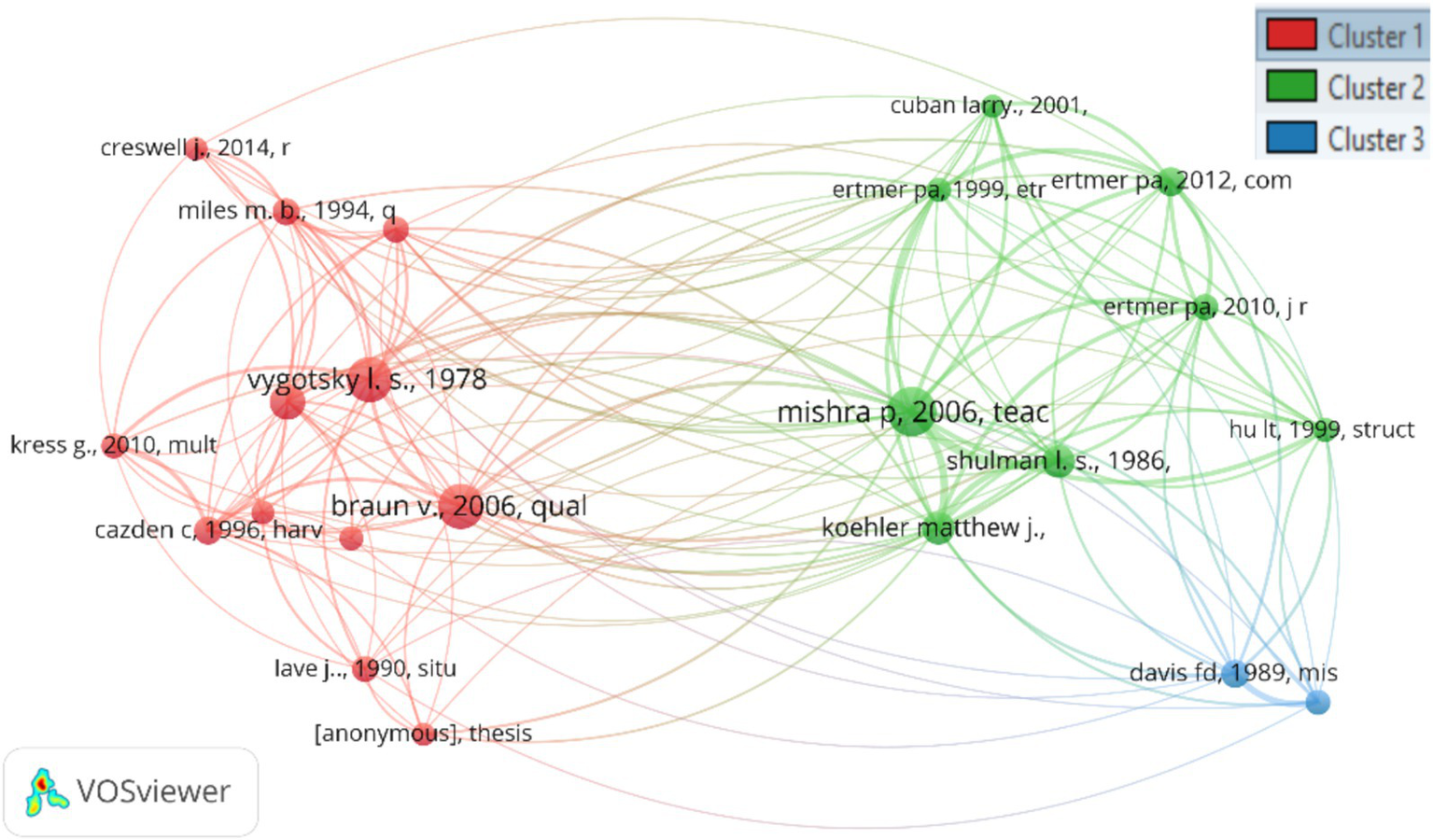

Figure 8

Article-based co-citation analysis.

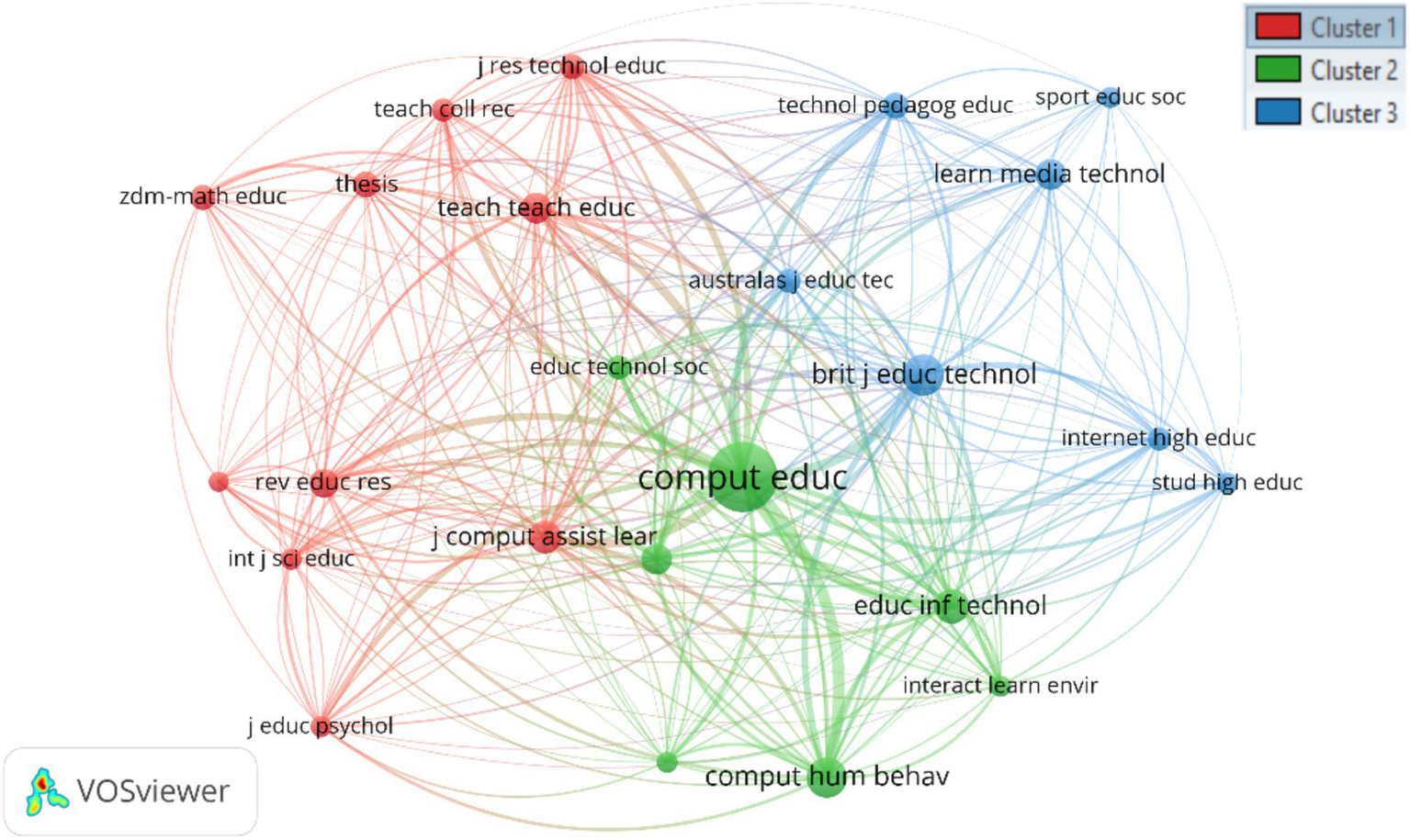

Figure 9

Source-based co-citation analysis.

Author-based co-citation analysis revealed five clusters. Cluster 1 addresses technology acceptance among teachers and students in classrooms. Ertmer (1999) examined technological change, particularly teachers’ beliefs, knowledge, and confidence regarding digital technologies in classrooms (Ertmer, 2005; Ertmer and Ottenbreit-Leftwich, 2010). Mishra and Koehler (2006) proposed a framework, now known as TPACK, for the complex dynamics of teachers incorporating technology into their pedagogical practices. Similarly, Tondeur et al. (2012) and Tondeur et al. (2016) explored strategies for preparing pre-service teachers for technology integration and developed a tool to assess their perceived support and training adequacy.

Venkatesh extended the technology acceptance model (TAM) originally proposed by Davis (1989): he examined determinants of perceived ease of use (Venkatesh, 2000) and, with colleagues, developed TAM2 (Venkatesh and Davis, 2000), TAM3 (Venkatesh and Bala, 2008), and the unified theory of acceptance and use of technology (UTAUT; Venkatesh et al., 2003), frameworks used to evaluate the integration of digital tools in diverse settings. Cluster 2 is formed around the works of Vygotsky, Braun, Kress, and others. Central to this cluster are foundational ideas on the role of sociocultural context in shaping cognitive growth (e.g., Vygotskij and John-Steiner, 1979), thematic analysis for understanding students’ perceptions (e.g., Braun and Clarke, 2006; Braun and Clarke, 2021), learning design in the digital age (e.g., Kress, 2005; Kress, 2003), and new literacies in the classroom (e.g., Lankshear and Knobel, 2006; Lankshear and Knobel, 2003). The authors in cluster 3 contributed to the field by examining the governance of digital technologies in education, broadly covering the advantages and disadvantages of educational technology in the classroom (e.g., Selwyn, 2011; Selwyn, 2007), digital governance in education (e.g., Williamson, 2016b; Williamson, 2016a), and students as “digital natives” (Prensky, 2001). Selwyn, Williamson, and Prensky are the key authors of this cluster. Scholars in cluster 4 laid the foundations for the theme of digital technology for young children in school (e.g., Livingstone et al., 2014; Plowman and Stephen, 2007; Marsh et al., 2017). Cluster 5 provides the methodological foundation for the qualitative and quantitative evaluation of technology integration in classrooms (e.g., Creswell and Poth, 2016; Yin, 2009).

Article-based co-citation analysis revealed three clusters. In cluster 1, Vygotskij and John-Steiner (1979) and Braun and Clarke (2006) are the most foundational works, and the co-cited articles laid the foundation for a basic understanding of technology integration in classrooms (e.g., Vygotskij and John-Steiner, 1979; Braun and Clarke, 2006; Miles and Huberman, 1994).

These works also provided foundational research on the role of sociocultural contexts in shaping cognitive growth (e.g., Vygotskij and John-Steiner, 1979) and on thematic analysis for understanding students’ perceptions (e.g., Braun and Clarke, 2006; Miles and Huberman, 1994). In cluster 2, the article by Mishra and Koehler (2006) laid the foundation for research on a framework for the complex dynamics of teachers incorporating technology into their pedagogical practices, and it was co-cited with related work by Koehler and Mishra (2009). Articles in cluster 3 formed the basis for the theme of user acceptance of digital technologies (Davis, 1989; Venkatesh et al., 2003). Table 10 presents the cluster classification of the article co-citation network, organized by citation count (top 15 articles).

Source-based co-citation analysis revealed three clusters of foundational journals. The “Journal of Computer-Assisted Learning,” “Teaching and Teacher Education,” “Journal of Research on Technology in Education,” and “ZDM-Mathematics Education” are the key journals in cluster 1. “Computers & Education” is the most co-cited journal; it was co-cited with “Education and Information Technologies,” “Computers in Human Behavior,” and “Educational Technology & Society” in cluster 2, which also had the highest number of citations among all clusters. The “British Journal of Educational Technology,” “Learning Media and Technology,” “Technology Pedagogy and Education,” and “Internet and Higher Education” form cluster 3. Table 11 shows the source-based co-citation analysis clustered by citation count (top 20 sources).

Table 10

| Cluster # | Article’s |

|---|---|

| Cluster 1 | Creswell j., 2014; miles m., 1994; Vygotsky l. s., 1978; kress g., 2010; braun v., 2006; cazden c, 1996; lave j., 1990; (anonymous), thesis |

| Cluster 2 | Cuban larry., 2001; ertmer pa, 1999; ertmer pa, 2012; mishra, 2006; hu lt, 1999; Shulman l. s., 1986; Koehler matthew j., 2003 |

| Cluster 3 | Davis fd, 1989 |

Cluster grouping of article-based co-citation analysis grounded on citation count (top 15 articles).

Table 11

| Cluster # | Sources (journals) |

|---|---|

| Cluster 1 | Journal of Computer-Assisted Learning, Journal of Educational Psychology, International Journal of Science Education, Review of Educational Research, Teaching and Teacher Education, thesis, ZDM-Mathematics Education, Journal of Research on Technology in Education |

| Cluster 2 | Computers & Education, Education and Information Technologies, Interactive Learning Environments, Computers in Human Behavior, Educational Technology & Society |

| Cluster 3 | Studies in Higher Education, Internet and Higher Education, British Journal of Educational Technology, Australasian Journal of Educational Technology, Learning Media and Technology, Technology Pedagogy and Education, Sport Education and Society |

Co-citation analysis of sources clustered according to citation count (top 20 sources).

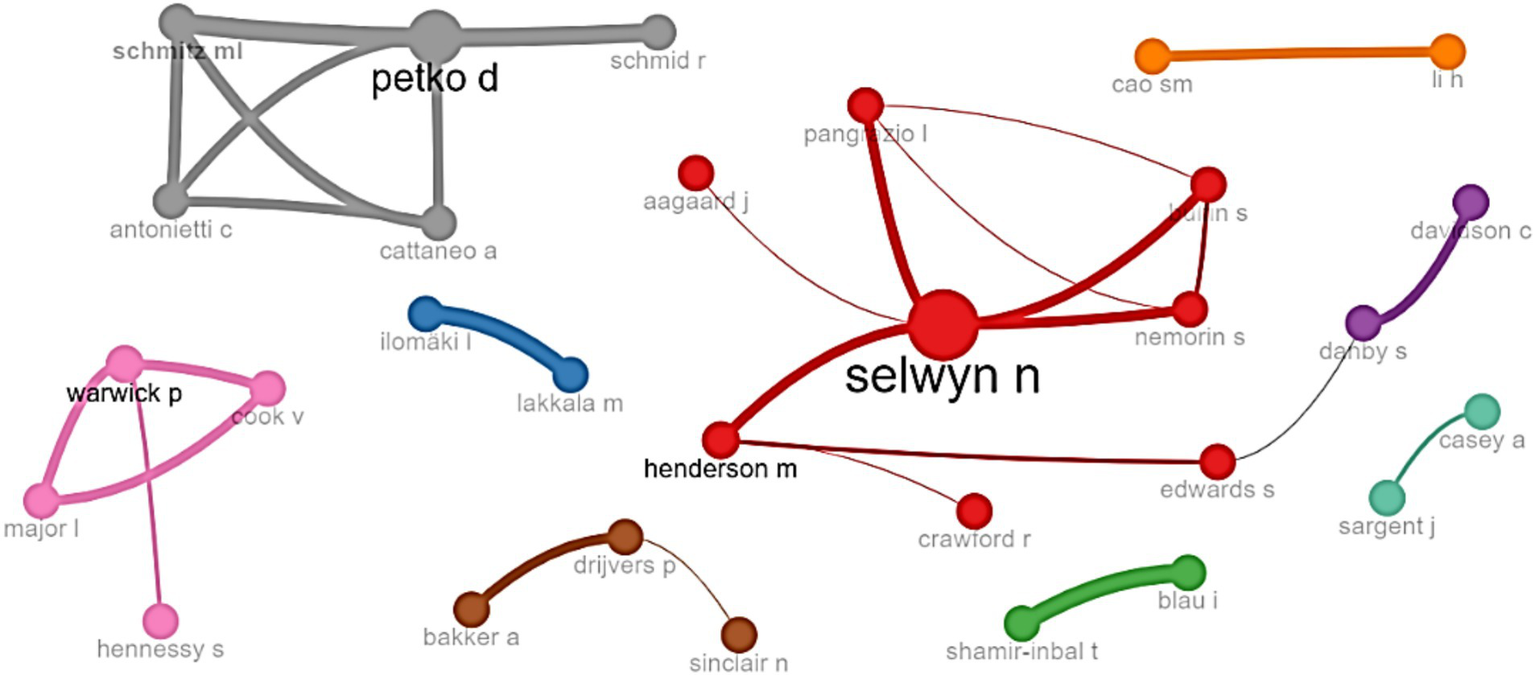

3.2.3 Social structure

Social structure in bibliometric analysis refers to the network of collaborations and interactions among researchers or countries within the scholarly community, typically examined through co-authorship networks or collaboration analysis (Aria and Cuccurullo, 2017). This section discusses collaboration between authors and between countries in research on digital technologies in classrooms. Author collaboration was assessed using betweenness and closeness centrality, commonly employed metrics for analyzing collaboration networks (Noyons et al., 1999). Node size corresponds to the number of articles, while the links between nodes represent the intensity of collaboration (Aria and Cuccurullo, 2017). Scholars who collaborate closely are clustered together and distinguished by color. The biggest group (red) includes 10 scholars, with Selwyn, Henderson, Pangrazio, Nemorin, and Bulfin at its center, as shown in Figure 10. Among the other groups, the grey group comprises five scholars from Switzerland (Petko, Schmid, Cattaneo, Antonietti, and Schmitz), the pink group is formed around UK scholars (Warwick, Cook, Major, and Hennessy), and the brown group is associated with the Netherlands (Bakker, Drijvers, and Sinclair). Only four groups have three or more scholars, while the remaining groups are very small. Table 12 presents the author collaboration groups identified by the Walktrap algorithm, together with their betweenness, closeness, and PageRank values.

Figure 10

Author’s collaboration network.

Table 12

| Node | Cluster | Betweenness | Closeness | PageRank | Node | Cluster | Betweenness | Closeness | PageRank |

|---|---|---|---|---|---|---|---|---|---|

| Selwyn n | Red | 23 | 0.06250 | 0.07799 | Cao sm | Orange | 0 | 1.00000 | 0.03333 |

| Edwards s | Red | 14 | 0.05000 | 0.02507 | Drijvers p | Brown | 1 | 0.50000 | 0.04865 |

| Henderson m | Red | 23 | 0.06250 | 0.04406 | Sinclair n | Brown | 0 | 0.33333 | 0.01534 |

| Nemorin s | Red | 0 | 0.04545 | 0.03553 | Bakker a | Brown | 0 | 0.33333 | 0.03601 |

| Pangrazio l | Red | 0 | 0.04545 | 0.03036 | Warwick p | Pink | 2 | 0.33333 | 0.04780 |

| Aagaard j | Red | 0 | 0.04167 | 0.01010 | Hennessy s | Pink | 0 | 0.20000 | 0.01516 |

| Bulfin s | Red | 0 | 0.04545 | 0.03553 | Major l | Pink | 0 | 0.25000 | 0.03519 |

| Crawford r | Red | 0 | 0.04167 | 0.01124 | Cook v | Pink | 0 | 0.25000 | 0.03519 |

| Ilomaki l | Blue | 0 | 1.00000 | 0.03333 | Petko d | Grey | 3 | 0.25000 | 0.04980 |

| Lakkala m | Blue | 0 | 1.00000 | 0.03333 | Antonietti c | Grey | 0 | 0.20000 | 0.03221 |

| Blau i | Green | 0 | 1.00000 | 0.03333 | Cattaneo a | Grey | 0 | 0.20000 | 0.03221 |

| Shamir-inbal t | Green | 0 | 1.00000 | 0.03333 | Schmid r | Grey | 0 | 0.14285 | 0.01709 |

| Danby s | Purple | 8 | 0.03846 | 0.03570 | Schmitz ml | Grey | 0 | 0.20000 | 0.03535 |

| Davidson c | Purple | 0 | 0.02941 | 0.02776 | Casey a | Sea green | 0 | 1.00000 | 0.03333 |

| Li h | Orange | 0 | 1.00000 | 0.03333 | Sargent j | Sea green | 0 | 1.00000 | 0.03333 |

Grouping of author’s collaboration through Walktrap algorithm (betweenness, closeness, PageRank).
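The betweenness and closeness values in Table 12 can be reproduced on a small group with the Python sketch below. Closeness here is 1 divided by the sum of shortest-path distances to every reachable author, which is consistent with the table (e.g., 1/3 ≈ 0.33333 for Warwick and 1.00000 for two-author groups); betweenness uses Brandes’ algorithm without normalization. The pink group’s edges (Warwick linked to Hennessy, Major, and Cook; Major linked to Cook) are an assumption, reconstructed as the only configuration consistent with that group’s Table 12 values; Figure 10 remains the authoritative source. PageRank is not reproduced.

```python
from collections import deque


def closeness(adj):
    """1 / (sum of shortest-path distances to every reachable node).

    Isolated nodes get 0.0 by convention in this sketch.
    """
    scores = {}
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search: unweighted shortest paths
            v = queue.popleft()
            for w in adj[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
        total = sum(dist.values())
        scores[source] = 1 / total if total else 0.0
    return scores


def betweenness(adj):
    """Brandes' algorithm for unweighted, undirected graphs (unnormalized)."""
    scores = dict.fromkeys(adj, 0.0)
    for s in adj:
        order, preds = [], {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)  # number of shortest paths from s
        dist = dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):  # accumulate dependencies, farthest nodes first
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                scores[w] += delta[w]
    # Each unordered pair was counted once from each endpoint.
    return {v: b / 2 for v, b in scores.items()}


# Assumed edges of the pink group (see lead-in); adjacency sets are symmetric.
PINK = {
    "Warwick p": {"Hennessy s", "Major l", "Cook v"},
    "Hennessy s": {"Warwick p"},
    "Major l": {"Warwick p", "Cook v"},
    "Cook v": {"Warwick p", "Major l"},
}

if __name__ == "__main__":
    c, b = closeness(PINK), betweenness(PINK)
    for author in PINK:
        print(f"{author}: betweenness={b[author]:g}, closeness={c[author]:.5f}")
```

Under these assumed edges, Warwick lies on the only shortest paths from Hennessy to Major and to Cook, which yields the betweenness of 2 shown in Table 12, while the other three authors score 0.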

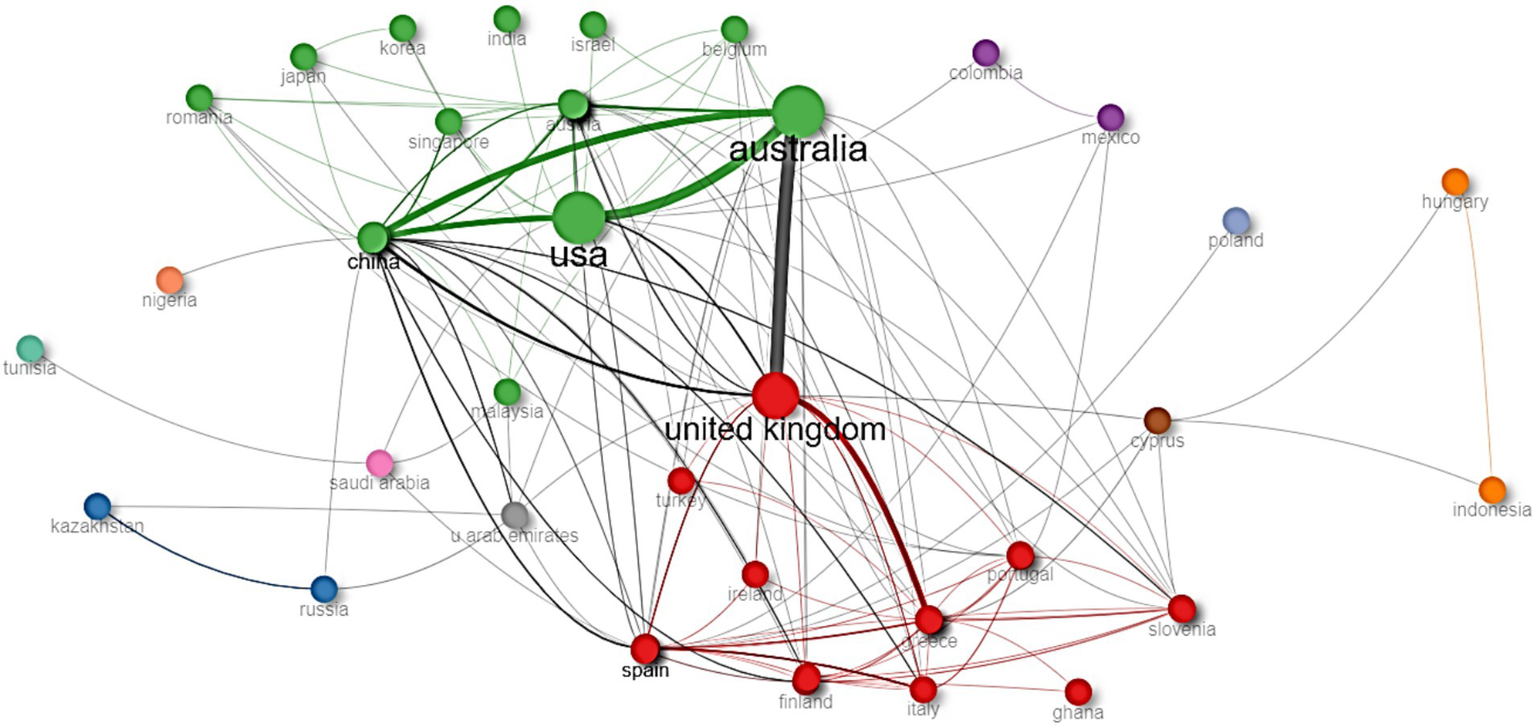

The cross-country collaboration network depicts collaborative efforts among partner countries. Each node represents a country, and its size reflects the volume of publications. The thickness of the lines connecting nodes corresponds to the level of collaboration between countries (Aria and Cuccurullo, 2017). The countries collaborating with others include Australia, the UK, the USA, China, and Spain, as indicated in Figure 11.

Figure 11

Cross-country collaboration network.

Australia and the UK have the strongest collaboration in research on digital technologies in classrooms, followed by Australia and the USA, the USA and China, and Australia and China. Two prominent clusters can be identified. The biggest (green) includes Australia, the USA, China, and other countries such as Singapore, Belgium, Israel, India, Korea, Japan, Romania, and Austria. The second cluster (red) has the UK at its center and includes Spain, Finland, Italy, Ghana, Slovenia, Portugal, Turkey, Ireland, and Greece, among other countries. The remaining clusters involve only limited collaboration among countries. Table 13 presents the cross-country collaboration clusters identified by the Walktrap algorithm, together with their betweenness, closeness, and PageRank values.

Table 13

| Node | Cluster | Betweenness | Closeness | PageRank | Node | Cluster | Betweenness | Closeness | PageRank |

|---|---|---|---|---|---|---|---|---|---|

| United Kingdom | Red | 247.26010 | 0.01470 | 0.09890 | Germany | Green | 14.86466 | 0.01219 | 0.02760 |

| Spain | Red | 100.70729 | 0.01250 | 0.04674 | Canada | Green | 16.92967 | 0.01234 | 0.03408 |

| Finland | Red | 82.70229 | 0.01190 | 0.02957 | Israel | Green | 0 | 0.00943 | 0.00632 |

| Norway | Red | 17.79930 | 0.01190 | 0.03072 | Chile | Green | 9.31875 | 0.01149 | 0.02229 |

| Switzerland | Red | 8.76740 | 0.01136 | 0.01613 | Korea | Green | 1.60712 | 0.00990 | 0.00813 |

| Ireland | Red | 0.21372 | 0.01030 | 0.01174 | Belgium | Green | 0.58087 | 0.01063 | 0.01204 |

| Italy | Red | 10.49105 | 0.01123 | 0.02848 | India | Green | 0 | 0.00854 | 0.00534 |

| France | Red | 2.66752 | 0.01020 | 0.01967 | Singapore | Green | 0 | 0.01030 | 0.01378 |

| Turkey | Red | 0.04889 | 0.00970 | 0.00860 | Malaysia | Green | 7.01941 | 0.00990 | 0.01136 |

| Brazil | Red | 17.70970 | 0.01087 | 0.01815 | Austria | Green | 0.02380 | 0.00970 | 0.00976 |

| Greece | Red | 14.35419 | 0.01052 | 0.01963 | Estonia | Green | 0.01190 | 0.01010 | 0.00738 |

| Netherlands | Red | 18.11776 | 0.01190 | 0.02522 | Romania | Green | 2.98402 | 0.01075 | 0.00967 |

| Denmark | Red | 0.10201 | 0.01010 | 0.01190 | Japan | Green | 0.77572 | 0.00892 | 0.00836 |

| South Africa | Red | 19.14897 | 0.01087 | 0.01970 | Mexico | Maroon | 2.49299 | 0.00980 | 0.00934 |

| Portugal | Red | 2.45144 | 0.00934 | 0.01382 | Colombia | Maroon | 0 | 0.00869 | 0.00625 |

| Ghana | Red | 0 | 0.00826 | 0.00573 | Hungary | Orange | 0 | 0.00694 | 0.00833 |

| Slovenia | Red | 0 | 0.00800 | 0.00573 | Indonesia | Orange | 0 | 0.00694 | 0.00833 |

| Russia | Blue | 12.53810 | 0.00819 | 0.01861 | Cyprus | Brown | 88.08000 | 0.01000 | 0.01884 |

| Kazakhstan | Blue | 0 | 0.00724 | 0.01257 | Saudi Arabia | Pink | 46.44145 | 0.00961 | 0.01201 |

| USA | Green | 222.11491 | 0.01388 | 0.08231 | United Arab Emirates | Grey | 38.19197 | 0.01030 | 0.01560 |

| Australia | Green | 135.66004 | 0.01449 | 0.09141 | Tunisia | Sea green | 0 | 0.00671 | 0.00574 |

| China | Green | 126.80114 | 0.01234 | 0.05786 | Nigeria | Sea green | 0 | 0.00793 | 0.00423 |

| Sweden | Green | 8.10119 | 0.01204 | 0.02754 | Poland | Sky blue | 0 | 0.00800 | 0.00432 |

| New Zealand | Green | 9.92051 | 0.01162 | 0.02990 |

Cross-country collaboration clusters identified by the Walktrap algorithm, with betweenness, closeness, and PageRank centrality for each country.
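To make the three centrality columns in Table 13 concrete, the following is a minimal pure-Python sketch on a small, invented collaboration network (the country links are hypothetical, not the study's data). Betweenness counts how many shortest paths between other countries pass through a node, closeness is the reciprocal of the summed shortest-path distances from a node, and PageRank scores a node by the importance of the countries it links to. The sketch treats the network as unweighted and leaves out the Walktrap clustering step itself; to our understanding, Biblioshiny delegates these computations to the igraph library on a weighted network, so the sketch will not reproduce the values in Table 13.

```python
from collections import deque

# Hypothetical toy network: an edge means the two countries co-authored at
# least one paper. These links are illustrative only, not the study's data.
EDGES = [
    ("UK", "Australia"), ("UK", "Spain"), ("UK", "Finland"),
    ("Australia", "USA"), ("USA", "China"), ("Australia", "China"),
    ("Spain", "Portugal"), ("Finland", "Norway"),
]


def build_graph(edges):
    """Undirected, unweighted adjacency sets."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def bfs_distances(graph, source):
    """Shortest-path lengths (in hops) from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in graph[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist


def closeness(graph):
    """Raw closeness: 1 / (sum of distances to all reachable nodes)."""
    result = {}
    for v in graph:
        total = sum(bfs_distances(graph, v).values())
        result[v] = 1.0 / total if total else 0.0
    return result


def betweenness(graph):
    """Brandes' algorithm, unweighted and unnormalized; each pair counted once."""
    score = dict.fromkeys(graph, 0.0)
    for s in graph:
        stack, preds = [], {v: [] for v in graph}
        sigma = dict.fromkeys(graph, 0)   # number of shortest s-v paths
        dist = dict.fromkeys(graph, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(graph, 0.0)
        while stack:  # accumulate dependencies in reverse BFS order
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each undirected pair was visited once from each endpoint.
    return {v: c / 2 for v, c in score.items()}


def pagerank(graph, damping=0.85, iterations=100):
    """Power iteration; assumes every node has at least one neighbour."""
    n = len(graph)
    rank = dict.fromkeys(graph, 1.0 / n)
    for _ in range(iterations):
        new = dict.fromkeys(graph, (1 - damping) / n)
        for v, neighbours in graph.items():
            share = damping * rank[v] / len(neighbours)
            for w in neighbours:
                new[w] += share
        rank = new
    return rank


if __name__ == "__main__":
    g = build_graph(EDGES)
    b, c, p = betweenness(g), closeness(g), pagerank(g)
    for country in sorted(g, key=b.get, reverse=True):
        print(f"{country:10s} {b[country]:6.1f} {c[country]:.4f} {p[country]:.4f}")
```

Running the script lists the countries by betweenness. In this toy network the UK scores highest (16 pairs of countries can reach each other only through it) and also has the highest closeness, a miniature version of the bridging role the UK plays in the red cluster of Table 13.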

4 Discussion

In this study, we conducted a bibliometric analysis to address the research questions outlined earlier, focusing on digital technologies in the classroom. Our research enriches this field by combining performance analysis and scientific mapping of the past decade of publications. Previous bibliometric analyses have demonstrated their effectiveness in identifying trends and informing decision-making in educational contexts (Huang et al., 2020; Li and Wong, 2022). For example, such analyses have shed light on the adoption rates of digital tools in classrooms, guiding the development of educational policies and frameworks that support technology integration (Hao et al., 2020). The majority (72.2%) of the articles were published in the most recent 5 years, compared with only 27.8% from 2014 to 2018. This trajectory, together with supporting data from longitudinal studies (e.g., Harju et al., 2019), suggests that the growth is likely to persist. The analysis of influential journals shows that articles advancing digital technologies in classroom settings have gained notable recognition and popularity since 2014. The 20 most popular journals (Table 3) account for 47.55% of the articles, and 23 journals published at least 10 papers between 2014 and 2023. Given the rapid spread of predatory journals in recent years, which pose a global threat to the integrity of scientific research (Strong, 2019), this research will help scholars identify the top-tier journals in this interdisciplinary field based on PC, CC, H-index, IF, SJR, CiteScore, and SNIP (Table 2). Most of the productive authors are from research institutions in developed countries, such as “Monash University” in Australia, “the University of Gothenburg” in Sweden, “the University of London” in the UK, and “the University of Limerick” in Ireland.
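The period split reported above can be checked with simple arithmetic. The sketch below assumes the corpus of 1,128 articles and the 21.5 per cent annual growth rate reported in the Abstract, and it assumes that the growth rate follows the compound annual growth formula ((last / first) ** (1 / (years - 1)) - 1) * 100, which is how we understand Biblioshiny to compute it; that formula is not restated in this article, and the function names are illustrative.

```python
# Worked arithmetic behind the publication split discussed above.
# Assumptions: N = 1,128 articles and 21.5% annual growth (from the Abstract);
# the growth formula is the compound annual growth rate we understand
# Biblioshiny to report. It is not restated in this article.

TOTAL_ARTICLES = 1128
RECENT_SHARE_PCT = 72.2   # share published 2019-2023
YEARS = 10                # 2014-2023 inclusive
ANNUAL_GROWTH_PCT = 21.5


def implied_recent_count(total, share_pct):
    """Every integer count whose share of `total`, rounded to one decimal
    place, equals the reported percentage."""
    return [k for k in range(total + 1)
            if round(100 * k / total, 1) == share_pct]


def cagr(first_year_count, last_year_count, years):
    """Compound annual growth rate (%) between the first and last year."""
    return ((last_year_count / first_year_count) ** (1 / (years - 1)) - 1) * 100


def growth_multiple(growth_pct, years):
    """Last-year / first-year output ratio implied by an annual growth rate."""
    return (1 + growth_pct / 100) ** (years - 1)


if __name__ == "__main__":
    recent = implied_recent_count(TOTAL_ARTICLES, RECENT_SHARE_PCT)
    print("2019-2023 counts consistent with 72.2%:", recent)
    print("2014-2018 counts:", [TOTAL_ARTICLES - k for k in recent])
    ratio = growth_multiple(ANNUAL_GROWTH_PCT, YEARS)
    print(f"Last/first-year ratio at {ANNUAL_GROWTH_PCT}% per year: {ratio:.2f}")
```

Only 814 articles yield a rounded share of 72.2 per cent, which leaves 314 articles (27.8 per cent) for 2014 to 2018. Under the compound-growth assumption, a 21.5 per cent annual rate implies that output in 2023 was roughly 5.8 times that of 2014.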
The absence of scholars from developing countries in Tables 4 and 5 is noticeable, as developed countries (for example, the United States, the United Kingdom, the Netherlands, Switzerland, China, and Australia) have contributed significantly to the global literature. This disparity may be attributed to factors such as limited funding, inadequate research infrastructure, and a lack of international networks (Zaman et al., 2018). It could be addressed through increased funding, international collaborations, and capacity-building initiatives to ensure more inclusive and globally relevant research.

We went beyond performance analysis to map the scientific landscape of digital technologies in the classroom, particularly its conceptual, intellectual, and social structures. The conceptual structure revealed four research directions: digital education and pedagogy during COVID-19, factors influencing online and blended learning in higher education, digital technology integration in classroom design, and literacy support and evaluation of digital technologies through technology acceptance frameworks (Alabdulaziz, 2021). Moreover, the outcomes of remote learning were mixed, with some studies indicating that students had difficulty maintaining engagement, motivation, and academic performance (Kumi-Yeboah et al., 2020; Alabdulaziz, 2021; Bergdahl and Bond, 2022). The thematic map divided the conceptual structure into four quadrants: basic themes such as education, students, and frameworks; motor themes such as technologies and user acceptance; niche themes such as social networks and culture; and emerging or declining themes such as “thinking.” The intellectual structure was determined from co-citation patterns of authors, articles, and sources. Author co-citation analysis revealed several clusters: technology acceptance in classrooms (Alabdulaziz, 2021), thematic analysis of student perceptions (Braun and Clarke, 2021; Miles and Huberman, 1994), governance of digital technologies in education (Williamson, 2016b; Williamson, 2016a), and qualitative and quantitative evaluation of technological integration in classrooms (Creswell and Poth, 2016; Yin, 2009).
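The four quadrants of the thematic map follow from two scores per theme, usually called centrality (how strongly a theme's keywords connect to other themes) and density (how strongly they connect to each other). The minimal sketch below classifies themes by comparing both scores with their mean across themes. The mean split point and the four theme scores are assumptions made for illustration; Biblioshiny computes and positions these scores from the keyword network in its own way.

```python
from statistics import mean

# Each theme is placed by two scores (Callon's measures):
#   centrality - strength of its links to other themes (relevance to the field)
#   density    - strength of links among its own keywords (internal development)
# Splitting both axes at the mean is an assumption of this sketch.


def quadrant(centrality, density, c_split, d_split):
    """Map one theme's scores to a thematic-map quadrant label."""
    high_c, high_d = centrality >= c_split, density >= d_split
    if high_c and high_d:
        return "motor"
    if high_c:
        return "basic"
    if high_d:
        return "niche"
    return "emerging/declining"


def thematic_map(themes):
    """themes: {name: (centrality, density)} -> {name: quadrant label}."""
    c_split = mean(c for c, _ in themes.values())
    d_split = mean(d for _, d in themes.values())
    return {name: quadrant(c, d, c_split, d_split)
            for name, (c, d) in themes.items()}


# Invented scores for four hypothetical themes (not the study's values).
THEMES = {
    "theme_A": (0.9, 0.8),
    "theme_B": (0.8, 0.2),
    "theme_C": (0.1, 0.9),
    "theme_D": (0.2, 0.1),
}

if __name__ == "__main__":
    for name, label in thematic_map(THEMES).items():
        print(name, label)
```

High centrality with high density gives a motor theme, high centrality with low density a basic theme, low centrality with high density a niche theme, and low values on both axes an emerging or declining theme, matching the labels used in the thematic map discussion.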
Article co-citation analysis revealed three clusters of foundational articles: the role of sociocultural context in shaping cognitive growth (Vygotskij and John-Steiner, 1979) together with thematic analysis of students’ perceptions (Braun and Clarke, 2006); the complex dynamics of teachers incorporating technology into their pedagogical practices (Mishra and Koehler, 2006); and user acceptance of digital technologies (Davis, 1989; Venkatesh et al., 2003). Source co-citation analysis also revealed three clusters: the “Journal of Computer-Assisted Learning,” “Teaching and Teacher Education,” “Journal of Research on Technology in Education,” and “ZDM-Mathematics Education”; the “British Journal of Educational Technology,” “Learning Media and Technology,” “Technology Pedagogy and Education,” and “Internet and Higher Education”; and “Education and Information Technologies,” “Computers in Human Behavior,” and “Educational Technology & Society.” The social structure analysis examined collaboration among authors and countries; the largest author group comprises Selwyn, Henderson, Pangrazio, Nemorin, and Bulfin. Only four groups have more than three scholars, while the remaining groups contain very few authors. The cross-country collaboration network shows how partner countries work together. As indicated in the collaboration map, the countries that collaborate with others include Australia, the UK, the USA, China, and Spain. Australia and the UK have the strongest collaboration in digital technologies in classrooms, followed by Australia and the USA, the USA and China, and Australia and China.
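The co-citation analyses above rest on one counting rule: two items are co-cited when the same citing document references both, and their co-citation strength is the number of such documents. The sketch below applies that rule to three hypothetical reference lists; the same function works for author or source co-citation if the reference keys are author names or journal titles. The reference lists are invented, and tools such as VOSviewer may additionally normalize these counts and cluster the resulting network, which the sketch does not attempt.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how many citing documents reference each pair of items together."""
    counts = Counter()
    for refs in reference_lists:
        # De-duplicate and sort so (a, b) and (b, a) share one key.
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


# Hypothetical reference lists of three citing papers (illustrative only).
CITING_PAPERS = [
    ["Davis 1989", "Venkatesh 2003", "Mishra 2006"],
    ["Davis 1989", "Venkatesh 2003"],
    ["Braun 2006", "Vygotskij 1979", "Davis 1989"],
]

if __name__ == "__main__":
    for (a, b), n in cocitation_counts(CITING_PAPERS).most_common():
        print(f"{a} + {b}: {n}")
```

Here Davis (1989) and Venkatesh et al. (2003) are co-cited by two of the three papers, giving the strongest link; in a real corpus, repeated pairs like this are what pull foundational works into the same cluster.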

Digital technologies offer several educational benefits, such as increased accessibility, better engagement, personalized learning, and flexible learning environments (Cohen et al., 2022). Some researchers have also pointed out significant institutional challenges: a lack of technology-driven culture, limited assistance for teachers, and a lack of proper training (Okoye et al., 2023); institutional settings lacking ethical conduct, transparency, and accountability, which discourage teachers from adopting substantial reforms (McGarr and McDonagh, 2021); and institutional skepticism about the potential risks of implementing new technologies (Cohen et al., 2022). Technical concerns must be resolved to make these tools practical (Ferrante et al., 2024). The availability of adequate resources to meet demand is another concern; teachers can plan courses featuring new technologies only if classrooms have the necessary equipment (Okoye et al., 2023; Yildiz Durak, 2021). Furthermore, geographical differences reveal notable adoption gaps, especially in developing countries or regions with inadequate resources and infrastructure. While these challenges present obstacles to integrating digital technologies in classrooms, they also open opportunities to innovate and enhance the educational experience. The integration of digital technology into classroom instruction has become pervasive and continues to expand, facilitating classroom dynamics (Harju et al., 2019). Hence, the focus should extend beyond whether digital technologies should be employed in classrooms and how they can be used in the classroom context (Kumi-Yeboah et al., 2020; Tondeur et al., 2012). Instead, attention should be directed toward optimizing the incorporation of diverse technologies to enhance the effectiveness and productivity of learning (Howard et al., 2015).
The focus should be on recent technological advancements, such as artificial intelligence and virtual reality, and their applications in the classroom (Bathla et al., 2023). Blended and online modes need to be harmonized with learning through digital technologies and digital learning materials. This also opens the way to project-based learning, flipped learning, adaptive learning, and the use of student data analysis to support teaching by personalizing learning (Britain, 2019). Teachers’ digital competence is not just about digitizing lectures or using software to prepare lessons; it also involves bringing teaching methods, classroom management, and student interaction into the digital space (Luo et al., 2021). Teachers should be equipped with approaches to integrate digital tools into pedagogy, aligning them with curriculum goals and students’ distinct needs. Adopting digital tools requires professional development that efficiently improves teachers’ digital literacy. Policymakers should address teachers’ digital literacy and the digital divide and provide equal access to digital resources. Furthermore, digital technologies could also support evidence-based decisions that optimize their use in education. Institutions should be provided with artificial intelligence-based tools that support instructional content creation and help personalize learning. Resources should be allocated to virtual and augmented reality technologies to create more immersive learning experiences and increase student engagement with instructional content (Bathla et al., 2023). By examining the landscape of digital technology in classrooms over the past decade, along with current challenges and opportunities, this research offers practical implications for improving teaching and learning practices.

This research has several limitations. First, the search was restricted to a few keywords, which may have influenced the results; future research should incorporate additional related keywords. Second, this study focused on a broad range of digital technologies rather than specific platforms. Newer technologies such as large language models or extended reality may not yet have an extensive publication record, giving them lower bibliometric visibility despite their growing relevance in classrooms. Third, only the Web of Science (WoS) database was used; including other databases, such as Scopus, ProQuest, or IEEE Xplore, could strengthen the findings. Lastly, excluding non-English publications may have omitted valuable insights from research conducted in other languages.

5 Conclusion

This study offers a comprehensive review of publications on digital technologies in the classroom from 2014 to 2023 through bibliometric analysis. It also demonstrates how bibliometric analysis, combining performance analysis and scientific mapping, can be applied to other fields. Publication trends suggest a promising future for research on digital technologies in the classroom and highlight its significant value in applied settings. Insights into leading authors, institutions, journals, countries, articles, and keywords will help researchers identify key contributors and suitable outlets for disseminating their work. International collaboration should be pursued to explore opportunities and address challenges. Visualizations of keyword co-occurrence, the thematic map, co-citation, and collaboration networks allow a more thorough interpretation of the data. Our study also highlights research trends and developments in the field over the past 10 years, helping scholars become more aware of current research hotspots when choosing topics to pursue. Teachers should pursue professional development to enhance their digital competencies, while policymakers should invest in equitable access to infrastructure and support digital literacy through training programs that can shape technology integration in education. These implications can help researchers, policymakers, and practitioners understand the past, present, and future scientific structure of the interdisciplinary field of education and digital technologies. Future research should focus on particular technologies, such as immersive, adaptive, or virtual learning.

Statements

Author contributions

TA: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Visualization, Writing – original draft, Writing – review & editing. GS: Conceptualization, Investigation, Methodology, Project administration, Resources, Supervision, Writing – original draft, Writing – review & editing. KS: Conceptualization, Formal analysis, Project administration, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. ÖÖ: Funding acquisition, Methodology, Resources, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted without any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note