- 1Research Center for Climate Change Education and Education for Sustainable Development (ReCCE), Pädagogische Hochschule Freiburg University of Education, Freiburg, Germany

- 2Department of Physics Education, Pädagogische Hochschule Freiburg University of Education, Freiburg, Germany

Climate change is one of the most pressing issues of our times. Consequently, climate change education (CCE) should prepare students to mitigate the effects of climate change and adapt to its consequences through promoting climate literacy. The present meta-analysis aims to investigate the effectiveness of CCE interventions on climate literacy, including climate related knowledge, attitudes, and behavior. Additionally, we investigated moderator variables that may influence the effectiveness of CCE. We conducted a systematic literature search and selection process following PRISMA guidelines. A total of 6,159 records were identified, of which 53 were eligible for inclusion in the meta-analysis. The data were analyzed using multi-level random effects modelling to pool the effect sizes across all studies. Results revealed a significant medium to large mean effect size for knowledge related outcomes (g = 0.77, 95% CI = 0.58, 0.96), a significant small to medium effect for attitude related outcomes (g = 0.39, 95% CI = 0.17, 0.62), and a small to medium effect for behavior related outcomes (g = 0.36, 95% CI = 0.12, 0.61). Regarding moderator analysis, there were significant effects for the content of the intervention for knowledge related outcomes. For attitudinal outcomes, we found a significant effect for treatment duration and for the teacher who delivered the CCE intervention. Overall, the heterogeneity of the included studies was large, calling for caution when interpreting these results. Our findings highlight that more high-quality research on CCE is needed.

1 Introduction

There is substantial evidence that human activities and actions contribute significantly to climate change and are associated with impacts on many natural and social systems (Cook et al., 2013; IPCC, 2022). Immediate and extensive actions are necessary to mitigate climate change by reducing greenhouse gas emissions and reaching net zero emissions (IPCC, 2022). At the same time, it is important to adapt to current and predictable effects of climate change, such as sea level rise, extreme weather events, deterioration in ecosystem structures, species extinctions, adverse effects on food production, and water scarcity in certain areas of the world (Berrang-Ford et al., 2011; IPCC, 2022). Climate change mitigation and adaptation require a societal transformation to achieve extensive changes in politics, technology, economy, and individual behavior. To facilitate such a transformation, education has been described as one of the most important tipping points because climate change education (CCE) can influence the values and behaviors of an entire cohort and can lead to initiating and supporting changes in politics and economy as soon as the educated and climate literate cohort reaches relevant positions in society (IPCC, 2014, 2022, 2023; Otto et al., 2020; UNFCCC, 1998, 2015; UNFCCC and UNESCO, 2016).

In recent decades, numerous intervention studies have been conducted investigating the effect of CCE and developing evidence-based educational programs. These studies investigated the effects of CCE on a range of outcomes and have reported varying results regarding the magnitude of the effects (e.g., Barata et al., 2017; Eggert et al., 2017; Reinfried et al., 2012). The broad heterogeneity in content, methods, and dependent variables makes it hard to comprehend the existing evidence and draw conclusions for educational programs. Four recent reviews addressed this issue by summarizing the findings of intervention studies by qualitative methods. Monroe et al. (2019) summarized the findings of 49 studies to identify effective instructional methods. They concluded that focusing on personally meaningful information and using active and engaging teaching methods might contribute to effective climate education. In another review, Jorgenson et al. (2019) summarized 70 studies on energy and CCE and found that these studies have a focus on energy conservation, whereas there is a need for more studies focusing on energy transition. Furthermore, Bhattacharya et al. (2021) summarized 178 studies, concluding that (a) there is a great diversity in the nature of studies on climate education, (b) climate education is taught in a rather fragmented way, (c) most interventions focus on basic concepts such as the greenhouse effect, the carbon cycle, or Earth’s energy budget, and (d) many teachers feel underprepared in their knowledge about climate change. A review by Kranz et al. (2022) included 75 studies and focused on how intervention studies on climate education address a political perspective. Their findings showed that intervention studies rarely addressed the political dimension of climate change.

In sum, these reviews provide a good overview of the contents and teaching methods of climate education interventions. However, they demonstrate that the research field is fragmented and that it is hard to compare the diverse evidence. To this end, meta-analyses are an important means for summarizing data from multiple studies in a comprehensive and systematic way, which can increase the precision and accuracy of the effect estimates of the individual studies (Higgins et al., 2023). Compared to individual studies, meta-analyses provide a higher degree of evidence by reducing the impact of confounding factors and random findings. Currently, there is no meta-analysis summarizing studies on CCE, but there are two meta-analyses in the related field of environmental education. The meta-analyses by Van De Wetering et al. (2022) and Świątkowski et al. (2024) integrate findings focusing broadly on environmental education, thus also including studies focusing on other aspects than CCE, such as outdoor education, sustainability education, or conservation education. While the meta-analysis of Świątkowski et al. (2024) is limited to intervention effects on behavior changes, the analysis of Van De Wetering et al. (2022) also investigates effects of environmental education on knowledge, attitudes, and behavioral intentions. Both analyses include intervention studies with children and adolescents conducted in formal and non-formal educational settings such as summer or nature camps. This broad focus on environmental education and the inclusion of studies from formal and informal education might be one reason why the existing meta-analyses found no (Van De Wetering et al., 2022) or just two (Świątkowski et al., 2024) significant moderator effects that explain variance in study outcomes. The study sample might be too diverse to detect systematic differences in the effectiveness of intervention studies. A meta-analysis with a clear focus on CCE in formal educational settings is missing.
We argue that such a meta-analysis is needed because climate change differs from other topics of environmental education. In contrast to many other topics of environmental education, climate change is a global issue that can only be understood and solved from a global perspective, as the climate system is a global system. Moreover, compared to other environmental problems, the consequences of human action (emission of greenhouse gases) for natural (e.g., global warming) and social systems (e.g., food prices) are delayed. Finally, the complexity and relevance of climate change are greater than those of other environmental issues because climate change impacts all social systems, such as the political, economic, and health systems, and thus many aspects of the personal and social life of all people. Moreover, we argue that a meta-analysis is needed that is restricted to studies in formal primary and secondary education, as studies conducted in informal settings often rely on selective samples and can utilize resources and methods that cannot be transferred to regular classrooms. If climate education is to be a social tipping point, it must reach as many students as possible in the most effective way, i.e., be effective in formal educational settings.

The present meta-analysis investigates the effectiveness of intervention studies focusing specifically on CCE in formal primary and secondary education. Besides estimating the average effectiveness of CCE, we also investigate moderator variables that explain differences in the effectiveness of the individual studies to determine characteristics of CCE that are particularly effective. To cover the broad variety of objectives related to CCE, this meta-analysis summarizes multiple outcome variables such as knowledge, attitudes, and behavior changes.

1.1 Promoting climate literacy

Numerous political treaties and documents describe education as a key strategy in facilitating mitigation and adaptation to climate change through promoting climate literacy (IPCC, 2014, 2022, 2023; UNFCCC, 1998, 2015; UNFCCC and UNESCO, 2016). No universally accepted definition of climate literacy exists (Pfeiffer, 2022), although many definitions encompass a component of knowledge (Azevedo and Marques, 2017; Kuthe et al., 2020; Stadler et al., 2025; USGCRP, 2009), attitude (Azevedo and Marques, 2017; Kolenatý et al., 2022; Kuthe et al., 2020; Stadler et al., 2025), and behavior or competencies crucial in the context of climate change (Azevedo and Marques, 2017; Kranz et al., 2022; Kuthe et al., 2020; Stadler et al., 2025; USGCRP, 2009). Within many conceptualizations of climate literacy, the component of knowledge is particularly prominent and differentiated (Stadler et al., 2025) and has been described to comprise various forms of knowledge, including content, procedural, epistemic knowledge, and effectiveness knowledge (e.g., Azevedo and Marques, 2017; Frick et al., 2004). The attitude component can be described as a set of sub-facets that promote taking action against climate change and may include concern about climate change (e.g., Bedford, 2016), belief in climate change (e.g., Flora et al., 2014), perceived importance of climate change (e.g., Schauss and Sprenger, 2019), feeling of responsibility (e.g., Deisenrieder et al., 2020), feeling prepared (e.g., Keller et al., 2019), perception of self-efficacy, and locus of control (e.g., Kuthe et al., 2020). The behavioral component can be described as the ability to change one’s behavior in a more climate-friendly way (e.g., Kuthe et al., 2020; Miléř and Sládek, 2011), the ability to make informed decisions related to climate (Stadler et al., 2025; USGCRP, 2009), as well as competencies in engaging in multiplicative actions (e.g., Azevedo and Marques, 2017; Stadler et al., 2025). 
In consequence of the diverse aims related to climate literacy, existing intervention studies vary broadly in their content and teaching approaches. These differences might cause differences in the effectiveness of CCE interventions and thus are potential moderator variables.

1.2 Effects of the content of CCE on intervention effectiveness

While some interventions focus only on knowledge about the climate system (Ben-Zvi Assaraf and Orpaz, 2010; Chang et al., 2018), others also include action knowledge about potential actions to mitigate climate change and to adapt to its consequences (Lester et al., 2006). In our analysis, we utilize the framework of Probst et al. (2018), which was created to analyze curricula and teaching materials about climate change education, to categorize the intervention content. The framework also includes action knowledge but divides system knowledge further into (1) basic knowledge about climate and (2) knowledge about causes and effects of climate change on various natural and societal systems. We decided to utilize this framework because it has been shown that knowledge about causes and effects of climate change has a bigger impact on mitigation actions than basic knowledge about the climate system (Tasquier and Pongiglione, 2017). One aim of the current meta-analysis was to investigate how the contents covered in CCE influence the effectiveness of CCE interventions.

1.3 Effects of intervention, student, and study characteristics

The effects of an intervention study not only depend on the contents of the intervention but also on other aspects such as the duration of the intervention, the teacher who delivers the intervention, students’ age, or the study design (Schwichow and Zoupidis, 2023). It has been shown that intervention duration can be a moderating factor in meta-analyses investigating intervention studies, though the evidence is still inconclusive, with some studies reporting an effect of the intervention duration (e.g., Schwichow and Zoupidis, 2023; Sun and Zhou, 2023) whilst other meta-analyses did not find such an effect (e.g., Doğan et al., 2023; Van De Wetering et al., 2022). Besides the duration of the intervention, the teacher can have a potential moderating effect on students’ learning gains. An often cited meta-study by Hattie (2003) has shown that the teacher seems to play an important role, accounting for approximately 30 per cent of the variance in students’ learning gains. Regarding CCE, it has been shown that teachers vary greatly in their knowledge about climate change and that many teachers show substantial knowledge gaps in their understanding of climate change (Boon, 2010; Wise, 2010). It has been demonstrated in sustainability education that teachers’ attitudes and professional knowledge predict students’ gains in knowledge and attitudes (Scharenberg et al., 2021).

The complexity of climate change might also make it less accessible for younger students than for older ones. On the other hand, it has been suggested that environmental consciousness drops during middle adolescence (i.e., lower secondary school) and is lower compared to both younger and older students (Olsson and Gericke, 2016; Van De Wetering et al., 2022). This might lead to CCE interventions being less effective during lower secondary school compared to primary and upper secondary school. In their meta-analysis of effects of environmental education on students’ behavior, Świątkowski et al. (2024) indeed found a negative effect of students’ age on intervention effects. In the current meta-analysis, we were interested in whether the duration of an intervention, the teacher’s background, and students’ age have an influence on the multiple outcomes such as knowledge, attitudes and behavior changes.

Furthermore, the design of an intervention study can have an impact on the outcome of the study. Studies with a pre-post design that compare pre- and post-intervention measures of the same students usually report larger effect sizes than studies that compare students from an intervention and students from a control group. This is because pre-post designs do not control for retest effects occurring when students become more familiar with the test instrument and, thus, achieve higher scores in a post-test than in a pre-test, even without getting any intervention (Scharfen et al., 2018). In addition to the study design, the instruments used to measure learning gains may influence the findings of intervention studies. For instance, a meta-analysis by Schwichow et al. (2016), which synthesizes the effects of intervention studies aiming at facilitating students’ understanding of controlled experiments, showed that the item format had an impact on the measured intervention effect. Therefore, in this meta-analysis, we were interested in the effects of the study design and the test instrument on the outcome of a study.

1.4 Research questions

Numerous studies have investigated education interventions aimed at promoting climate literacy. It is currently not known how effective teaching CCE at school is in general. Thus, the first research question of this meta-analysis was:

RQ1: What is the average effectiveness of CCE studies on climate related knowledge, attitude, and behavior across all intervention studies?

The content covered in climate education varies greatly between studies. We were interested whether the content covered in the climate education interventions has an influence on how effective an intervention is. Therefore, our second research question was:

RQ2: Does the content of the intervention influence the effect size of intervention studies?

Climate change is a complex topic. Hence, teaching about climate change might require longer lasting interventions and teachers that have a certain background knowledge about climate change. Moreover, students’ age might also be a moderating factor. These variables, however, have rarely been investigated within individual intervention studies on climate education. Yet, there are huge differences between studies regarding the treatment duration, the teachers’ background, and students’ age. Based on these differences between studies, we addressed the following research question:

RQ3: Do treatment duration, teacher characteristics, and grade level of the students influence the effect size of intervention studies?

Furthermore, it is known that the study design and test instruments used to assess the effects of an intervention can influence the effect sizes. Accordingly, our fourth research question was:

RQ4: How do the study design and the test instruments used to measure intervention effects influence the effect sizes?

2 Methods

This meta-analysis follows the typical steps in conducting meta-analyses described by the PRISMA guidelines, consisting of: (1) systematic literature search and selection, (2) data extraction and coding, (3) effect size calculation, (4) synthesis of effect sizes, (5) moderation analysis, and (6) discussion of findings (Page et al., 2021).

2.1 Literature search

We conducted a systematic literature search using three electronic databases: ERIC, PsycInfo, and Web of Science. The database search was conducted on May 16th, 2022, and considered publications since database inception. The keyword selection was based on previous reviews about climate education and also considered the databases’ thesaurus terms. The keywords for our search included synonyms of the terms “climate change education” (climate change education, climate change science education, climate literacy, climate change education research, education for sustainability, education for sustainable development, sustainability education), “environmental education” (conservation education, ecology education, energy education) and “climate change” (global warming, environmental effects). The keywords were combined with Boolean operators in the following way: climate education OR (environmental education AND climate change). In addition to the database search, we included the articles that had been included in a comprehensive recent review article on climate education (Kranz et al., 2022).
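The Boolean combination described above can be sketched as a small query builder. This is only an illustration: the term lists are taken from the text, but the phrase quoting, the inclusion of each head term in its own group, and the helper name `or_group` are assumptions, and each database has its own search syntax.

```python
def or_group(terms):
    """Join a list of synonyms with OR and quote each phrase (assumed syntax)."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"

# Term lists as given in the text; adding the head terms themselves is an assumption.
climate_education = [
    "climate change education", "climate change science education",
    "climate literacy", "climate change education research",
    "education for sustainability", "education for sustainable development",
    "sustainability education",
]
environmental_education = [
    "environmental education", "conservation education",
    "ecology education", "energy education",
]
climate_change = ["climate change", "global warming", "environmental effects"]

# climate education OR (environmental education AND climate change)
query = (
    f"{or_group(climate_education)} OR "
    f"({or_group(environmental_education)} AND {or_group(climate_change)})"
)
print(query)
```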

To be included in the meta-analysis, studies had to meet the following inclusion criteria:

Intervention: Eligible studies had to include an education intervention explicitly focusing on climate change. Studies that included interventions that only briefly touched on the subject of climate change or studies that focused on education for sustainability in general were excluded. For example, a study by Riess and Mischo (2010) had a focus on forest sustainability in general but not explicitly on climate change and was therefore excluded; or a study by Richter et al. (2015) focused on environmental education, conservation, and biodiversity in rural Madagascar and only touched on the subject of climate change and was therefore excluded.

Population: To be included, studies must investigate a population of elementary or secondary school students or, if information about school grade was not available, students aged between 6 and 20 years. Populations outside of a formal education setting, as well as populations in higher education and adult education, were not included.

Study design: Studies were included that applied an empirical design with either a pre-post design, quasi-experimental design, or randomized controlled design. All other designs were excluded, including, for example, theoretical articles, qualitative studies, curriculum analyses, or literature reviews.

Publication type: Eligible studies had to be written in English (i.e., internationally published articles) and had to be published in a peer reviewed journal.

Outcome and data for effect size calculation: To be included, studies had to report outcomes on knowledge, attitude, or behavior related to climate change. Regarding behavior related outcomes, we included self-reported measures of performed or intended climate friendly behavior, laboratory tasks, as well as objective measures such as household electricity consumption (Lange and Dewitte, 2019). Additionally, studies had to provide sufficient information for effect size calculation.

We did not apply any exclusion criteria based on publication year because our study is the first meta-analysis on climate education studies and because we aimed to include all available information about the effects of CCE.

The study screening and selection process was done in two steps using the web application Rayyan (Ouzzani et al., 2016). First, the titles and abstracts of all the articles that had been obtained were screened according to the above-described inclusion criteria. Second, the full texts were reviewed of those studies considered relevant in the first screening step or whose abstracts did not provide enough information regarding the inclusion criteria. Studies where judgement about eligibility for inclusion was ambiguous were reviewed and discussed in a team of the three authors.

2.2 Coding of study characteristics

We created a coding guide for coding study characteristics relevant for investigating our research questions. Studies were coded for (1) study characteristics including the authors, publication year, and country the study was conducted in; (2) study population including grade level (primary: grade 1–4, lower secondary: grade 5–9, and upper secondary: grade 10–13), age of students and number of participants; (3) content of the intervention (i.e., whether basic knowledge about climate and weather, natural and anthropogenic causes of climate change, impacts of climate change on natural and social environment, and potential actions against climate change were provided); (4) duration of the intervention; (5) the teacher who taught the intervention (i.e., whether the intervention was taught by the students’ regular teacher, an expert or researcher in climate change or in a co-teaching format between the regular teacher and an expert); (6) study design including pre-post designs, quasi experimental designs and randomized controlled designs and characteristics of the evaluation instrument including the kind of instrument that was used (i.e., multiple choice questionnaire, open answers, mixed tests of multiple choice questions and open answers). The climate intervention content’s coding guide was adapted from a recent study by Probst et al. (2018) that analyses curricula and teaching materials in climate change education. To ensure intersubjectivity, three researchers independently extracted and coded the study characteristics before comparing the results. Interrater reliability before comparison was substantial with Fleiss κ = 0.60. Any discrepancies were resolved by discussion.
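For readers unfamiliar with the agreement statistic reported above, the following is a minimal sketch of Fleiss' κ for a fixed number of raters per item. The rating table is hypothetical and is not the coding data of this study.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa.

    counts[i][j] is the number of raters who assigned item i to category j.
    Every item must be rated by the same number of raters.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    n_categories = len(counts[0])

    # Share of all assignments that fall into each category.
    p_category = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    # Observed agreement per item, then averaged over items.
    p_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(p_item) / n_items
    # Agreement expected by chance.
    p_expected = sum(p * p for p in p_category)
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical example: three raters code five studies on a yes/no category.
ratings = [[3, 0], [0, 3], [3, 0], [2, 1], [1, 2]]
kappa = fleiss_kappa(ratings)
print(round(kappa, 2))
```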

2.3 Effect size calculation

For effect size calculation, we used the pre-test (before the climate intervention), post-test (immediately after the intervention), and, where available, follow-up data. For within-group designs, we calculated standardized mean differences (SMD) by dividing the difference between pre-test and post-test (or follow-up, respectively) by the pooled standard deviation, according to Cohen’s d: $SMD = d = (M_{post} - M_{pre}) / SD_{pooled}$. We used the pooled standard deviation instead of the pre-test standard deviation to consider changes in the variance in consequence of the treatment (Borenstein et al., 2010). To adjust for biases due to small sample sizes, the SMDs were transformed to Hedges’ g: $g = SMD \times J$ with $J = 1 - 3/(4(n_1 + n_2) - 1)$. Hedges’ g and Cohen’s d are practically identical for large sample sizes. Hence, the meta-analysis results reported as Hedges’ g can be interpreted in accordance with Cohen’s d (Durlak, 2009). For between-group designs, we calculated the difference between the SMDs for the intervention groups and the control groups: $SMD = SMD_{IG} - SMD_{CG}$, with IG referring to the intervention group and CG referring to the control group.
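The calculation described above can be illustrated with a short sketch. The correction factor J is implemented as printed in the text; note that other sources define it with the degrees of freedom n1 + n2 - 2 instead. The n-weighted pooled standard deviation and all numbers are assumptions chosen for illustration, not values from the included studies.

```python
import math

def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation (standard n-weighted form, an assumption here)."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))

def cohens_d(m_pre, sd_pre, n_pre, m_post, sd_post, n_post):
    """Pre-post standardized mean difference."""
    return (m_post - m_pre) / pooled_sd(sd_pre, n_pre, sd_post, n_post)

def hedges_g(d, n1, n2):
    """Small-sample correction with J as written in the text."""
    j = 1 - 3 / (4 * (n1 + n2) - 1)
    return d * j

# Hypothetical intervention group (IG) and control group (CG), 30 students each.
d_ig = cohens_d(10.0, 4.0, 30, 13.0, 4.0, 30)
d_cg = cohens_d(10.0, 4.0, 30, 11.0, 4.0, 30)

g_within = hedges_g(d_ig, 30, 30)          # within-group (pre-post) effect
g_between = hedges_g(d_ig - d_cg, 30, 30)  # difference of IG and CG gains
print(round(g_within, 3), round(g_between, 3))
```

The between-group value is smaller than the within-group value because the gain of the control group is subtracted, which is the retest effect that pre-post designs cannot separate from the treatment effect.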

When means and standard deviations were not reported, we estimated effect sizes from alternative values, including t-values, pre-post difference scores together with the standard deviation of the difference, and the effect size r, using the conversion formulas provided by Lipsey and Wilson (2001). If only rates of success were reported, we calculated the effect size using the arcsine transformation ($d = \arcsin(p_{group1}) - \arcsin(p_{group2})$) (Lipsey and Wilson, 2001). If sufficient data for effect size calculation were not reported but figures illustrated means and standard deviations, we extracted these values from the figures using the tool WebPlotDigitizer (Rohatgi, 2022) to calculate effect sizes. In cases where articles did not report sufficient data for effect size calculation, we contacted the authors of the article to ask whether they could provide the missing data.

Effect sizes were coded in a way that positive values indicate an advantage of the intervention over the control group or higher post- than pre-test scores, indicating learning gains. In addition to effect sizes, we calculated the sampling variance of every effect size. If no value for the correlation r required by this calculation was provided, we used a conservative value of 0.5.

For studies reporting both within and between group differences, we calculated effect sizes for both options. Moreover, we calculated multiple effect sizes for studies reporting students’ outcomes for more than one intervention group (i.e., any group that received a climate education intervention) or reporting results based on multiple test instruments or subscales of test instruments (Borenstein et al., 2010) to allow for inclusion in moderation analysis.

2.4 Estimation of the mean effect size and moderation analysis

We calculated separate meta-analyses for the three outcome variables: knowledge, attitudes, and behavior, in the context of climate change. This was because simulation studies demonstrated that the statistical parameters of meta-analyses are only reliable if separate meta-analytical models are calculated for different outcomes (Fernández-Castilla et al., 2021a).

We excluded outliers defined as effect sizes whose z-values were larger than 3.29 or smaller than −3.29 (Assink and Wibbelink, 2016). Due to this outlier analysis, one value was excluded for the meta-analysis of the outcome knowledge (Karpudewan and Mohd Ali Khan, 2017) and one value for the meta-analysis of the outcome attitude (Hu and Chen, 2016). For many studies, more than one effect size was calculated resulting in dependencies between the effect sizes and the standard errors, since these originate from test results of the same test persons or the same study context. These dependencies and nested structures within the data require the use of meta-analytic multilevel models for data analysis (Assink and Wibbelink, 2016; Scammacca et al., 2014). To identify the best fitting number of levels for our data, we compared different model configurations: (a) a two level model which takes into account the sampling variance of the extracted effect sizes (level 1) and the variance between the studies (level 2); (b) a two level model which takes into account the sampling variance of the individual effect sizes (level 1) and the variance within the studies (level 2); (c) a three level model which takes into account the sampling variance (level 1), the variance within the individual studies (level 2), and the variance between the studies (level 3), and (d) a four level model which takes into account the sampling variance (level 1), the variance within the individual subgroups of a study (level 2), the variance within the individual studies (level 3), and the variance between the studies (level 4). We identified the model with the best fit to the data by comparing the four models by a likelihood-ratio test (LRT) and looking at the model fit indices (AIC, BIC). This model was then used in all subsequent calculations.
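The outlier rule can be sketched as follows, assuming that the z-values are obtained by standardizing each effect size against the mean and sample standard deviation of all effect sizes for one outcome. That reading, the function name, and the data are illustrative assumptions.

```python
import statistics

def flag_outliers(effect_sizes, cutoff=3.29):
    """Return True for every effect size whose |z| exceeds the cutoff."""
    mean = statistics.mean(effect_sizes)
    sd = statistics.stdev(effect_sizes)  # sample standard deviation
    return [abs((g - mean) / sd) > cutoff for g in effect_sizes]

# Hypothetical effect sizes for one outcome; the last value is extreme.
effects = [0.2, 0.4, 0.6, 0.8] * 7 + [0.5, 5.0]
flags = flag_outliers(effects)
kept = [g for g, is_outlier in zip(effects, flags) if not is_outlier]
print(len(effects), len(kept))
```

A cutoff of 3.29 corresponds to a two-sided p of about .001 under a normal distribution, so only very extreme values are removed.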

To determine the mean effect size, models were calculated with an intercept and without moderator variables. Additionally, we estimated the sampling variance (variance on level 1), within group variance (variance on level 2), within study variance (variance on level 3), and between study variance (variance on level 4) (Assink and Wibbelink, 2016). All analyses were conducted in R using the package metafor (Viechtbauer, 2010) and applying a restricted maximum likelihood (REML) estimator. We implemented the small sample correction described by Tipton (2015) to adapt the residuals and degrees of freedom of all estimators because meta-analyses with a low number of studies tend to have an increased Type I error rate.
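To convey the idea of pooling under a random-effects model, the sketch below uses the simpler DerSimonian-Laird estimator for a two-level model with one effect size per study. It is deliberately not the multilevel REML model with small-sample correction that was fitted in metafor, and the input values are hypothetical.

```python
import math

def random_effects_dl(g, v):
    """Pooled mean, standard error, and between-study variance (DerSimonian-Laird)."""
    w = [1 / vi for vi in v]                      # inverse-variance weights
    fixed = sum(wi * gi for wi, gi in zip(w, g)) / sum(w)
    q = sum(wi * (gi - fixed) ** 2 for wi, gi in zip(w, g))  # heterogeneity statistic Q
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(g) - 1)) / c)       # between-study variance
    w_re = [1 / (vi + tau2) for vi in v]          # random-effects weights
    mean = sum(wi * gi for wi, gi in zip(w_re, g)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    return mean, se, tau2

# Hypothetical effect sizes (Hedges' g) and their sampling variances.
g_values = [0.2, 0.5, 0.8]
variances = [0.04, 0.04, 0.04]
mean, se, tau2 = random_effects_dl(g_values, variances)
ci_low, ci_high = mean - 1.96 * se, mean + 1.96 * se
print(round(mean, 2), round(ci_low, 2), round(ci_high, 2), round(tau2, 3))
```

Multilevel models extend this idea by splitting the heterogeneity into several variance components (for example within and between studies) instead of a single between-study variance.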

Moderator analyses were conducted to identify variables that could explain systematic differences between effect sizes and identify characteristics of effective interventions. To this end, we added moderator variables as predictors to the meta-analytic models. To be included in the moderator analysis, categorical variables such as whether basic knowledge about climate and weather was taught or not were dummy-coded into 0 (not teaching basic knowledge) and 1 (teaching basic knowledge). To limit the risk of over-fitting and multicollinearity, all moderator analyses were first conducted individually, that is, with models containing only one moderator variable each. Next, we calculated a model with multiple predictors that included all moderator variables identified as significant moderators in the previous step.
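With a single dummy-coded moderator, an inverse-variance weighted regression has a simple closed form: the intercept is the weighted mean of the studies coded 0, and the slope is the difference between the weighted means of the two groups. The sketch below shows this simplified case. It ignores between-study variance and the multilevel structure used in the actual analysis, and all values are hypothetical.

```python
import math

def weighted_mean(g, v):
    """Inverse-variance weighted mean and the sum of weights."""
    w = [1 / vi for vi in v]
    return sum(wi * gi for wi, gi in zip(w, g)) / sum(w), sum(w)

def dummy_moderator_effect(g, v, x):
    """Intercept, slope, and slope SE for a 0/1 moderator x."""
    g0 = [gi for gi, xi in zip(g, x) if xi == 0]
    v0 = [vi for vi, xi in zip(v, x) if xi == 0]
    g1 = [gi for gi, xi in zip(g, x) if xi == 1]
    v1 = [vi for vi, xi in zip(v, x) if xi == 1]
    mean0, w0 = weighted_mean(g0, v0)
    mean1, w1 = weighted_mean(g1, v1)
    slope = mean1 - mean0              # effect of the moderator
    se = math.sqrt(1 / w0 + 1 / w1)    # standard error of the difference
    return mean0, slope, se

# Hypothetical data: x = 1 if basic knowledge about climate was taught, else 0.
g_values = [0.2, 0.4, 0.8, 1.0]
variances = [0.05, 0.05, 0.05, 0.05]
taught_basics = [0, 0, 1, 1]
intercept, slope, se = dummy_moderator_effect(g_values, variances, taught_basics)
print(round(intercept, 2), round(slope, 2), round(slope / se, 2))
```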

2.5 Investigating publication bias

To identify a potential bias in the mean effect sizes due to publication bias, we visually analyzed the funnel plots for asymmetries and performed an Egger’s test with corrected standard error ($SE_n = \sqrt{(n_1 + n_2)/(n_1 \times n_2)}$) (Pustejovsky and Rodgers, 2019). In case of a positive Egger’s test, we performed a trim and fill analysis to impute effect sizes that might be missing due to publication bias. Based on a reanalysis of the imputed dataset, we estimated the sensitivity of our findings to a potential publication bias. Considering the hierarchical structure of our dataset, this reanalysis was done for two conditions: all lacking effect sizes originate from one study, and all lacking effect sizes originate from different studies (Fernández-Castilla et al., 2021b).
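The logic of the Egger-type regression with the corrected standard error can be sketched as a weighted regression of the effect sizes on $SE_n$. The sketch assumes inverse-variance weights, reports a z-statistic rather than the small-sample test used in the analysis, and uses hypothetical data in which smaller studies report larger effects.

```python
import math

def corrected_se(n1, n2):
    """Sample-size based standard error, which does not depend on the effect size."""
    return math.sqrt((n1 + n2) / (n1 * n2))

def egger_regression(g, v, se_n):
    """Weighted regression of g on se_n; returns intercept, slope, and z of the slope."""
    w = [1 / vi for vi in v]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, se_n)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, g)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, se_n))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, se_n, g))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    z = slope / math.sqrt(1 / sxx)
    return intercept, slope, z

# Hypothetical studies: group sizes and effect sizes that grow as studies get smaller.
group_sizes = [(10, 10), (20, 20), (50, 50), (100, 100)]
se_values = [corrected_se(n1, n2) for n1, n2 in group_sizes]
g_values = [0.1 + 2 * se for se in se_values]
variances = [se**2 for se in se_values]  # rough sampling variances (assumption)
intercept, slope, z = egger_regression(g_values, variances, se_values)
print(round(intercept, 2), round(slope, 2))
```

A clearly positive slope means that effect sizes increase with the standard error, which is the funnel plot asymmetry that would be expected under publication bias.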

3 Results

3.1 Selected studies

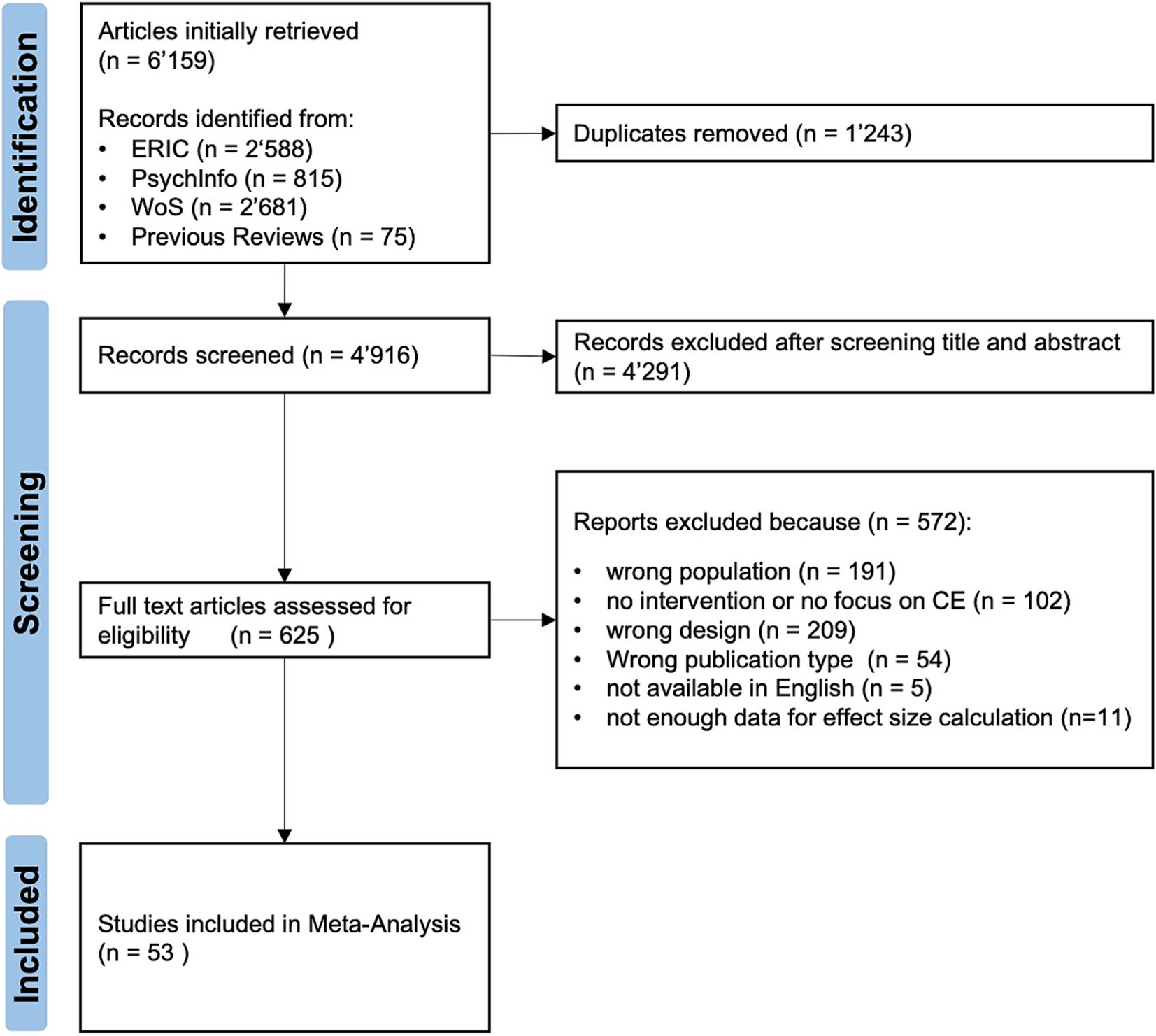

We initially retrieved a total of 6,159 studies. Interestingly, we found only a small overlap between literature found in the ERIC and Web of Science databases. The reason is that the databases search in different citation indexes: literature from some journals on environmental education (e.g., Australian Journal of Environmental Education) is not listed in the Social Sciences Citation Index, while other journals are not listed in ERIC (e.g., Environmental Conservation). This might be the reason why previous literature reviews overlap only partly in the studies they include, and future research syntheses should consider this issue.

After removing duplicates, we screened the titles and abstracts of 4,916 articles, of which 625 met our inclusion criteria. After screening the full texts of these articles, 53 articles were included in the final meta-analysis. Figure 1 illustrates the process of study selection. Of the included studies, 43 reported outcomes on knowledge about climate change, 17 outcomes on attitude, and 11 outcomes on behavior. Several studies investigated not only one outcome but a combination of the three outcome variables (see Appendix Table A1). The studies included in the meta-analysis were published between 1995 and 2022, with the majority of studies published between 2008 and 2022. Twenty-two studies were conducted in the USA, six in Asia, five in Germany, three in Australia/New Zealand, two in Canada, two in Great Britain; one study each was conducted in Austria, Denmark, Greece, India, Israel, Italy, Portugal, and Switzerland; and four studies were conducted in multiple countries (e.g., one study was conducted both in Austria and Australia). Furthermore, the majority of the studies were conducted with secondary school students (23 studies in lower secondary and 18 studies in upper secondary). The remaining studies included mixed populations from lower and upper secondary schools, of which only two studies additionally included primary school students. Regarding the content of the climate education interventions, 36 studies addressed the basics about climate and weather, whereas 17 studies did not explicitly address these. Furthermore, 45 studies addressed anthropogenic causes of climate change, five studies addressed both anthropogenic and natural causes, and three studies did not provide any information in this regard.
In terms of consequences of climate change, 49 studies addressed consequences on the climate system (physical systems such as the hydrological cycle), 26 studies addressed consequences on the utility systems (human systems such as food production, water supply or the economy), 18 studies addressed consequences on the biosystems (e.g., biodiversity, marine ecosystem), while nine studies addressed extreme events (e.g., drought, hurricanes). With regard to potential actions against climate change, 33 studies addressed actions against climate change, whereas 20 did not explicitly mention actions. Of the studies mentioning actions against climate change, 31 focused on mitigation, and 11 focused on adaptation.

The duration of the interventions varied greatly between studies, ranging from a single 25-min lesson to a year-long curriculum. Numerous studies only provided vague information about the duration of the intervention. Therefore, we roughly categorized studies into short interventions (i.e., 90 min or shorter) and long interventions (i.e., longer than 90 min). Of the included studies, seven studies used short interventions, 44 studies included interventions that were longer, and two studies did not provide any information about the duration of the intervention. In 28 studies, the intervention was taught by the regular teacher, in 11 studies in a co-teaching format between the regular teacher and an expert in the field of climate change, and in seven studies the intervention was taught by experts.

Regarding the study design, the majority of the studies used a pre-post design (35 studies), 16 studies used a controlled quasi-experimental design, and two studies used an experimental design. It should be noted, however, that a large proportion of the controlled studies compared different variations of climate education interventions, which differed, for instance, in the methodological implementation of the climate education content, but not in the content itself, meaning that both the intervention and the control group received a CCE intervention. Only nine studies used control groups that received no CCE intervention. Appendix Table A2 provides an overview of the characteristics of the included studies.

3.2 Mean effect sizes

In a first step we compared the four multi-level models described in the methods section to identify the model with the best fit to the data using a likelihood-ratio test (LRT) and looking at the model fit indices (AIC, BIC). The random effects model with a four-level structure showed the best fit indices and was therefore used in all subsequent calculations. A comparison of the different models with respective fit indices is provided in Appendix Table A3.
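The model comparison described above can be illustrated with a minimal sketch. It assumes that the log-likelihoods of two nested models are already available from the fitting software; all numbers below are hypothetical placeholders and are not taken from Appendix Table A3. The helper names `aic`, `bic`, and `lrt_one_df` are introduced here for illustration only.

```python
import math

def aic(log_lik, n_params):
    """Akaike information criterion; lower values indicate better fit."""
    return 2 * n_params - 2 * log_lik

def bic(log_lik, n_params, n_obs):
    """Bayesian information criterion; penalizes parameters more strongly."""
    return n_params * math.log(n_obs) - 2 * log_lik

def lrt_one_df(log_lik_reduced, log_lik_full):
    """Likelihood-ratio test for nested models differing by ONE parameter.

    For one degree of freedom the chi-square survival function reduces to
    erfc(sqrt(x / 2)), so no external library is needed.
    """
    stat = 2 * (log_lik_full - log_lik_reduced)
    p_value = math.erfc(math.sqrt(max(stat, 0.0) / 2))
    return stat, p_value

# Hypothetical values (assumption): a model with two variance components
# versus a model with one additional variance component.
reduced = {"log_lik": -150.0, "n_params": 3}
full = {"log_lik": -140.0, "n_params": 4}
n_effect_sizes = 132  # assumed number of observations for the BIC

stat, p_value = lrt_one_df(reduced["log_lik"], full["log_lik"])
print("LRT statistic:", stat, "p:", p_value)
print("AIC reduced/full:", aic(**reduced), aic(**full))
print("BIC reduced/full:",
      bic(reduced["log_lik"], reduced["n_params"], n_effect_sizes),
      bic(full["log_lik"], full["n_params"], n_effect_sizes))
```

In this sketch the model with the lower AIC and BIC and a significant LRT would be preferred. Because variance components are tested at the boundary of their parameter space, the chi-square p-value should be read as approximate.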

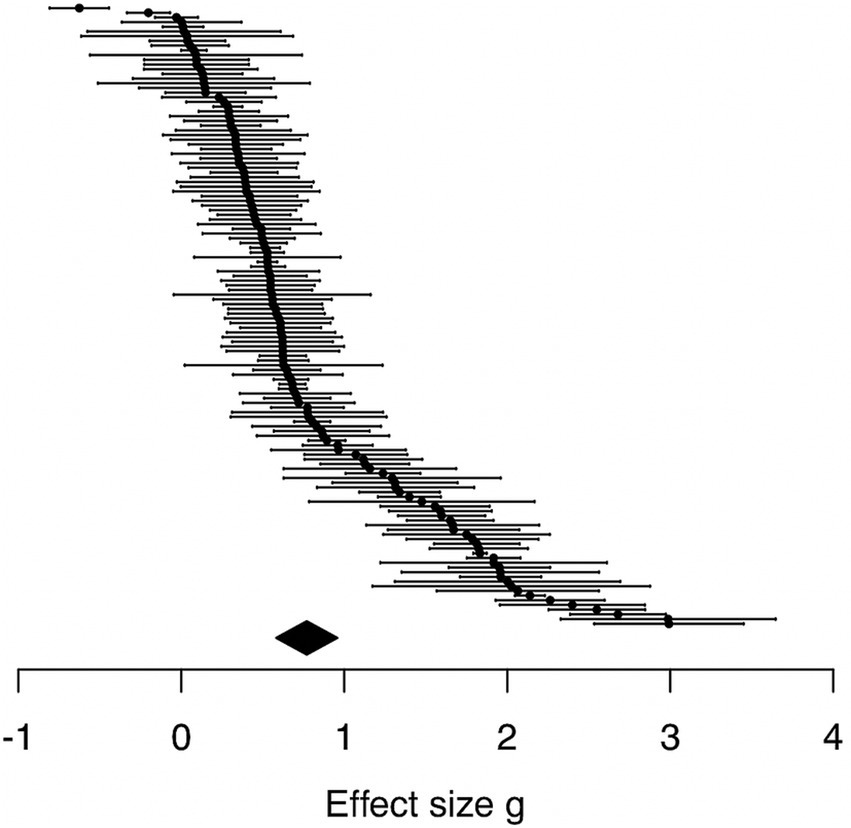

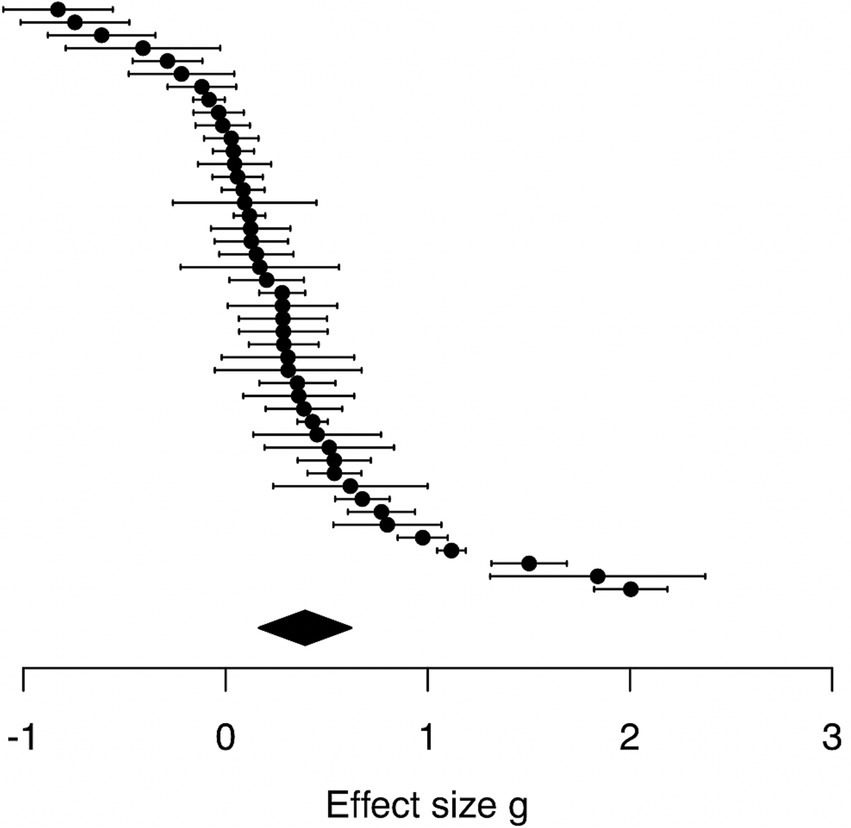

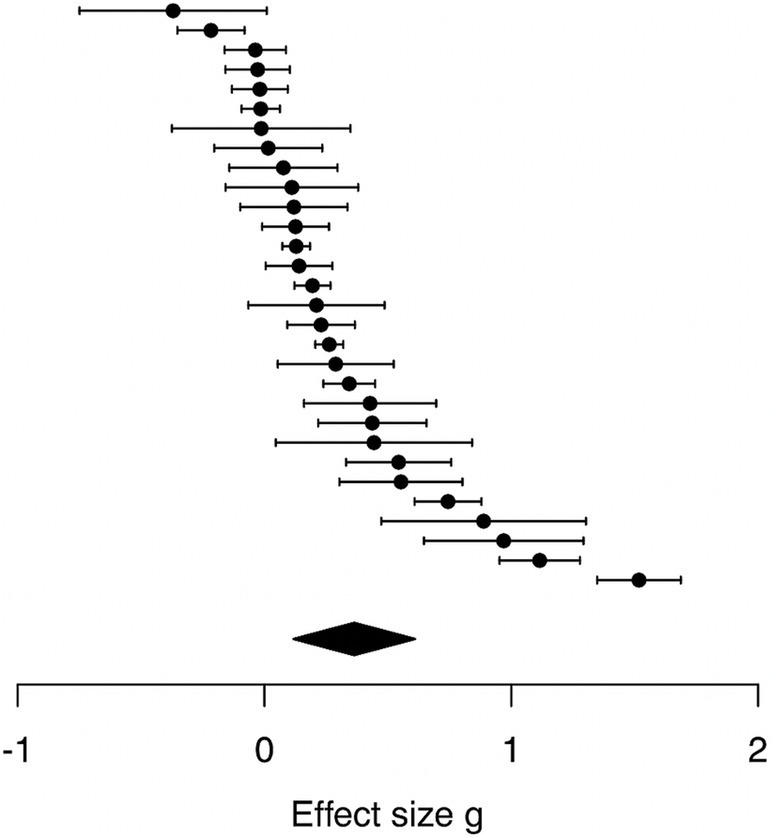

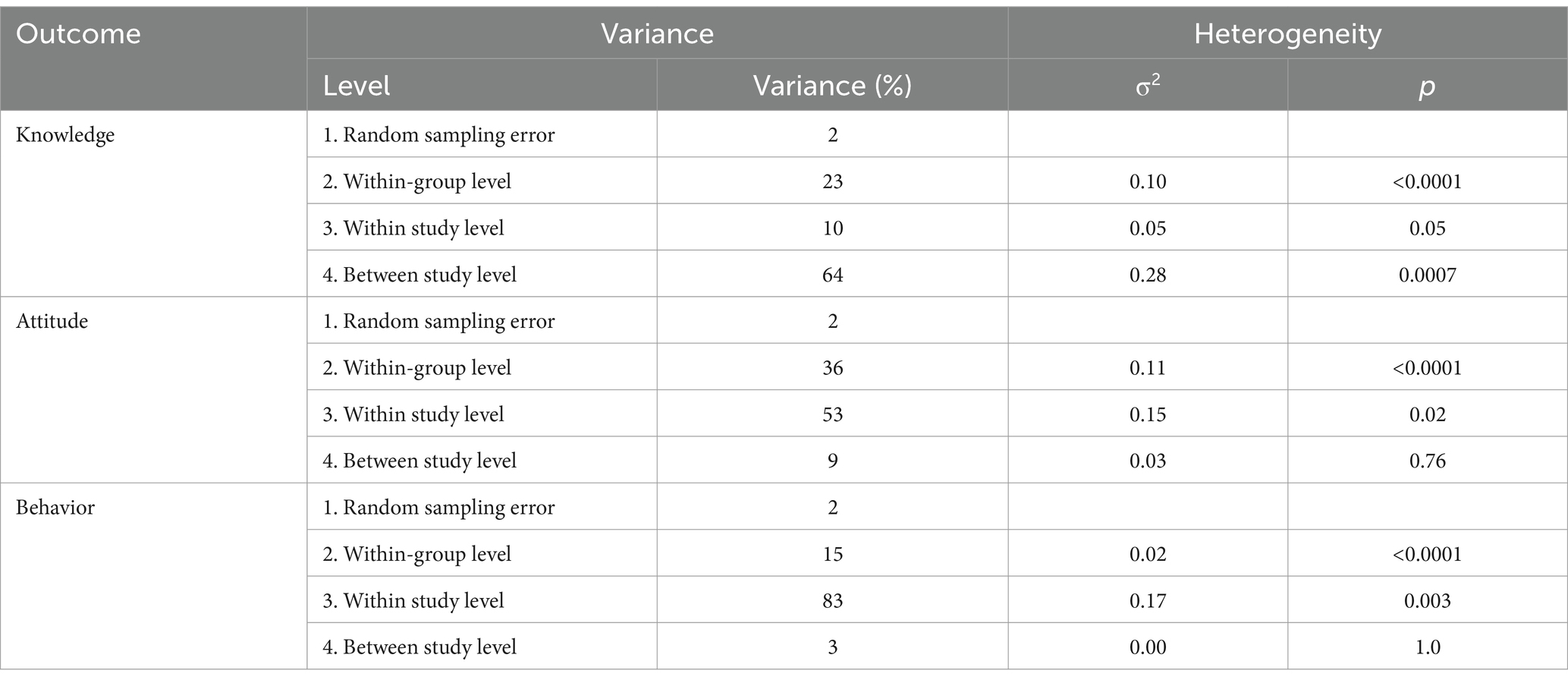

In a second step, we calculated the mean effect sizes. Figures 2–4 provide an overview of the individual effect sizes and their corresponding 95% confidence intervals for knowledge, attitude, and behavior. Overall, there was a significant medium to large mean effect for the outcome climate related knowledge based on 43 studies with 132 effect sizes (g = 0.77, 95% CI = 0.58, 0.96; Figure 2). Sensitivity analyses that included the outlier value showed a similar result (g = 0.79, 95% CI = 0.59, 0.99, based on 133 effect sizes from 43 studies). The heterogeneity between the effect sizes was substantial [Q(df = 131) = 6,541.43; p < 0.0001], as were the within group variance and the between study variance (Table 1). Regarding the mean effect size for attitude related outcomes, there was a significant small to medium effect based on 17 studies with 46 effect sizes (g = 0.39, 95% CI = 0.17, 0.62; Figure 3). Sensitivity analyses that included outlier values showed a slightly higher effect size (g = 0.45, 95% CI = 0.14, 0.75, based on 48 effect sizes from 17 studies). The heterogeneity between the effect sizes was also substantial [Q(df = 45) = 1,777.26; p < 0.0001], as were the within group variance and the within study variance (Table 1). In terms of behavior related outcomes, there was a small to medium mean effect based on 11 studies with 30 effect sizes (g = 0.36, 95% CI = 0.12, 0.61; Figure 4). The heterogeneity between the effect sizes was substantial [Q(df = 29) = 613.65; p < 0.0001], as were the within group variance and the within study variance (Table 1).
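To make the quantities reported above more concrete, the following sketch shows how a pooled effect size, its 95% confidence interval, and the Q statistic can be computed. It is a deliberately simplified two-level random effects model with the DerSimonian-Laird estimator, not the four-level model used in this meta-analysis, and it ignores the dependence between effect sizes from the same study. The function name `random_effects_pool` and the input values are hypothetical.

```python
import math

def random_effects_pool(effects, variances):
    """Pool effect sizes with a two-level random effects model.

    Uses the DerSimonian-Laird estimator for the between-study
    variance (tau^2). Returns the pooled mean, its standard error,
    a 95% confidence interval, Cochran's Q, and tau^2.
    """
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    fixed_mean = sum(w * y for w, y in zip(weights, effects)) / sum_w

    # Cochran's Q: weighted squared deviations from the fixed effect mean.
    q = sum(w * (y - fixed_mean) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1

    # DerSimonian-Laird estimate of tau^2, truncated at zero.
    c = sum_w - sum(w ** 2 for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # Random effects weights add tau^2 to each sampling variance.
    re_weights = [1.0 / (v + tau2) for v in variances]
    sum_re = sum(re_weights)
    mean = sum(w * y for w, y in zip(re_weights, effects)) / sum_re
    se = math.sqrt(1.0 / sum_re)
    return {
        "mean": mean,
        "se": se,
        "ci": (mean - 1.96 * se, mean + 1.96 * se),
        "q": q,
        "df": df,
        "tau2": tau2,
    }

# Hypothetical Hedges' g values and sampling variances (not from the paper).
result = random_effects_pool([0.20, 0.80, 0.55, 1.10], [0.04, 0.04, 0.02, 0.09])
print(result)
```

A Q value far above its degrees of freedom, as reported for all three outcomes, indicates that the effect sizes vary more than sampling error alone would predict.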

3.3 Moderation analysis

Table 2 provides an overview of the results of the moderator analysis for the three outcome variables: climate related knowledge, attitude, and behavior. For knowledge related outcomes, we found a significant effect for the content of the intervention (i.e., interventions that covered the basics of climate science seem to be more effective in promoting knowledge than those that do not). For attitude related outcomes, there was a significant effect for the duration of the intervention (i.e., 90-min or shorter interventions seem to be more effective than longer ones) and the teacher (i.e., studies in which experts delivered the intervention have larger effect sizes than studies that implemented co-teaching between the regular teacher and an expert). We found no significant moderator variables for behavior related outcomes. Note, however, that the moderator analyses for the outcome variables attitude and behavior are based on a small number of studies, which means that these results must be interpreted with caution.
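The logic of a categorical moderator test can be sketched as a comparison of two subgroup means. This is a simplified stand-in for the moderator models summarized in Table 2, which were estimated within the multi-level framework; the function `subgroup_z_test` and the input values are hypothetical and serve only to illustrate the principle.

```python
import math

def subgroup_z_test(mean_a, se_a, mean_b, se_b):
    """Wald z-test for the difference between two pooled subgroup effects.

    Assumes the two subgroup estimates are independent, which holds only
    if no study contributes effect sizes to both subgroups.
    """
    diff = mean_a - mean_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    z = diff / se_diff
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return diff, z, p_value

# Hypothetical pooled effects (assumption): interventions that cover
# climate basics versus interventions that do not.
diff, z, p_value = subgroup_z_test(0.90, 0.10, 0.50, 0.10)
print("difference:", diff, "z:", z, "p:", p_value)
```

With few studies per subgroup, the standard errors become large and such a test has little power, which is one reason why the moderator results for attitude and behavior call for caution.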

3.4 Publication bias

An Egger’s test with corrected standard error was conducted separately for the three outcome variables and indicated a significant publication bias for knowledge related outcomes, b = 1.20 (CI: 0.92–1.49), p = 0.003. Visual analysis of the funnel plots also showed an asymmetry for knowledge related outcomes (see Figure 5). The trim and fill analysis suggests that our sample of effect sizes might lack six negative effect sizes. If these effect sizes originated from one study, the overall effect size would decrease to g = 0.62 (95% CI = 0.38, 0.89). If they originated from six different studies, the overall effect size would decrease to g = 0.71 (95% CI = 0.48, 0.93). For the outcomes attitude and behavior, we found no evidence of publication bias.

Figure 5. Funnel plot for knowledge, attitude, and behavior related outcomes with the effect sizes (Hedges’ g) displayed on the x-axis and standard errors (SE) on the y-axis.
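The idea behind the funnel plot asymmetry test can be sketched with the classic Egger regression, in which the standardized effect (g divided by its SE) is regressed on precision (1/SE); an intercept that departs from zero points to small-study effects. This is the textbook variant, assumed here for illustration, and not the version with corrected standard error applied in this meta-analysis. The function `egger_regression` and the data are hypothetical.

```python
import math

def egger_regression(effects, std_errors):
    """Classic Egger regression via ordinary least squares.

    Regresses effect / SE on 1 / SE. Returns (intercept, slope,
    se_intercept). The intercept captures funnel plot asymmetry,
    the slope estimates the underlying effect.
    """
    n = len(effects)
    if n < 3:
        raise ValueError("At least three effect sizes are required.")
    x = [1.0 / s for s in std_errors]                    # precision
    y = [e / s for e, s in zip(effects, std_errors)]     # standardized effect
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Residual variance and standard error of the intercept.
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    residual_var = residual_ss / (n - 2)
    se_intercept = math.sqrt(residual_var * (1.0 / n + x_mean ** 2 / sxx))
    return intercept, slope, se_intercept

# Hypothetical data (assumption): effects grow with the standard error,
# i.e., small studies report larger effects, by construction.
std_errors = [0.10, 0.15, 0.20, 0.30, 0.40]
effects = [0.30 + 0.80 * s for s in std_errors]
intercept, slope, se_intercept = egger_regression(effects, std_errors)
print("intercept:", intercept, "slope:", slope)
```

Because the hypothetical effects were constructed as 0.30 + 0.80 × SE, the regression recovers an intercept near 0.80 and a slope near 0.30; with real data the intercept would be tested against its standard error.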

4 Discussion

The aim of this meta-analysis was twofold: first, to determine the average effectiveness of climate education interventions on climate literacy, including knowledge, attitude, and behavior, across all intervention studies; and second, to investigate moderator variables that explain differences in the effect sizes of the individual studies in order to identify characteristics of CCE that are particularly effective.

4.1 Mean effect sizes (RQ 1)

We found a significant medium to large mean effect size for knowledge related outcomes (g = 0.77), and small to medium effects for attitude related outcomes (g = 0.39) and behavior related outcomes (g = 0.36). However, we found evidence that the mean effect size for knowledge related outcomes is affected by publication bias and thus might be substantially smaller. The mean effect sizes for attitudes and behavior are nearly identical to the mean effect sizes for the outcomes reported by van de Wetering et al. (2022) in their meta-analysis on environmental education, and the associated confidence intervals of these two meta-analyses overlap substantially. Świątkowski et al. (2024) report a larger effect size of g = 0.53 for intervention effects on children’s pro-environmental behavior, but again the confidence interval of this effect overlaps substantially with the confidence intervals for behavioral outcomes found in the meta-analysis of van de Wetering et al. (2022) and in our meta-analysis. We found a smaller mean effect size for knowledge (g = 0.77) compared to van de Wetering et al. (g = 0.95), but the confidence intervals of both analyses overlap, too (even with the ones from our trim and fill analysis). The similarity of the mean effects between the three meta-analyses is striking, as they differ in their inclusion criteria and as the body of primary studies in our analysis overlaps by only five studies with the analysis by van de Wetering et al. (2022) and by three studies with the work of Świątkowski et al. (2024). The similar findings of those three meta-analyses support the validity of the identified mean effect sizes because CCE can be seen as a branch of the much broader environmental education (Blum et al., 2013).

Regarding the finding that the effect sizes for knowledge were higher compared to attitude and behavior, multiple explanations are possible. First, from a methodological perspective it is easier to reliably assess students’ knowledge than to assess attitudes or behavior (Lange and Dewitte, 2019). More reliable measures are less affected by error variance and thus better capture intervention effects, which means that they produce bigger effect sizes. Second, most interventions focus on teaching knowledge about climate change. This focus on knowledge was also found in the meta-analysis of van de Wetering et al. (2022) and is also reflected in the fact that most studies assessed climate change related knowledge, whereas comparatively few assessed attitude and behavior. If the focus of an intervention is not to change attitudes and behaviors, it is not surprising that the intervention is not as effective on these side-measures as on measures of the focus construct. Third, attitudes can be strong, meaning that they are stable over time and difficult to change (Ajzen, 2001; Verplanken and Orbell, 2022). Fourth, a growing body of literature suggests that knowledge about climate issues is not sufficient to stimulate climate related behavior change and that the relationship between knowledge and behavior is complex (Boyes and Stanisstreet, 2012; Norgaard, 2009; Tasquier and Pongiglione, 2017). Some empirical studies report correlations between climate and environmental knowledge and behavior (Masud et al., 2015; Pothitou et al., 2016) or knowledge and attitudes (Tasquier and Pongiglione, 2017), while others failed to find such effects (Ajzen et al., 2011; Mei et al., 2016). These conflicting findings can be explained by the theory of planned behavior, according to which knowledge is insufficient to explain behavior.
Instead, individual attitudes, norms, perceived behavioral control, and beliefs that may or may not be based on correct knowledge are crucial to explain individual behavior (Ajzen et al., 2011). In consequence, the same gain in knowledge does not result in the same changes in behavior, so that the effect sizes of behavior measures are smaller than those of knowledge measures. Fifth, not all types of knowledge might be equally relevant for supporting behavior changes (Tasquier and Pongiglione, 2017; Truelove and Parks, 2012). Declarative knowledge about the basics of climate change might be less relevant for changing behavior than procedural or action knowledge regarding what can be done against climate change. Thus, current studies on climate change education might effectively teach knowledge, but the wrong type of knowledge for behavior changes. Sixth, even though the CCE interventions assessed within the studies of this meta-analysis covered a wide range of topics, including basic knowledge about climate change, its effects, and potential mitigation and adaptation actions, the majority of CCE interventions were conducted within subjects of natural sciences, whereas CCE interventions conducted within subjects of social sciences and humanities were scarce. However, it has been argued that a stronger focus on social sciences would be important for promoting climate literacy in a more holistic way, particularly in addressing the social drivers of climate change, which in turn can inform and promote climate change actions (Shwom et al., 2017; Vuong and Nguyen, 2024). Although our argumentation focuses on behavior changes, it can be transferred to attitudes since attitudes and behavior are related (Kaiser et al., 2010). Overall, there are several potential explanations for the finding of smaller effects for attitude and behavior compared to knowledge.
Future research should have a stronger focus on how attitude and behavior can be promoted in the context of climate education and how knowledge relates to attitudes and behavior (see, e.g., suggestions for research on how to effectively promote attitudes and behavior in Riess et al., 2022).

In addition to differences in the mean effect sizes, we also found interesting differences between knowledge, attitude, and behavior variables in the distribution of variance across the between-study, within-group, and within-study levels (Table 1). For knowledge related outcomes, the variance attributed to the between-study level is much larger than the within-group and within-study variance. For attitude and behavior related outcomes, however, the within-group and within-study variance exceed the between-study variance. The variance estimations for attitude and behavior variables should be treated with caution, as they are estimated from a small number of studies, which can result in an underestimation of the total variance (Moeyaert et al., 2017). However, as the meta-analysis by van de Wetering et al. (2022), which is based on a much larger sample of studies, also found that the within-study variance increases for attitude and behavior outcomes, we will discuss the meaning of this finding, too. A possible reason for the increasing within-study variance for attitude and behavior related outcomes is that the same persons respond differently to items addressing different attitudes or behaviors. This can lead to an increased within-group and within-study variance if multiple attitudes and behaviors are measured within the same study. From environmental education, it is known that persons’ responses to behavior measures depend on the concrete behavior, as some behaviors are associated with higher costs than others, while some changes may be accompanied by additional benefits such as saving money (Lange and Dewitte, 2019). Similarly, it can be assumed that attitudes differ for different facets of attitude such as concern about climate change, self-efficacy, or belief in climate change. In consequence, it is not meaningful to treat various measures of attitudes and behavior as uniform constructs.
To overcome this challenge, further research needs a common theoretical framework to describe which costs and benefits are related to a particular behavior or attitude.

4.2 Findings of moderator analyses (RQ 2–4)

Regarding research question two, we found a significant effect of the content of the intervention on knowledge related outcomes. Interventions that teach basic knowledge about climate change like the difference between climate and weather have larger effect sizes than interventions that do not teach such knowledge. A potential reason for this effect might be that basic knowledge about the climate system is crucial for understanding concepts such as global warming as the basic knowledge is a foundation for understanding more complex concepts. For attitudinal and behavioral outcomes, we did not find an effect of basic knowledge.

Moreover, we found a significant moderator effect for the duration and the teacher (research question three) for attitude related outcomes. Shorter interventions of up to 90 min were more effective than interventions that were longer than 90 min. At first glance, this finding may sound counterintuitive. However, a possible explanation could be that early on in an intervention in which students are confronted with the alarming topic of climate change, students’ emotions may be raised, making the urgency of the topic particularly present. This initial urgency and accompanying emotions may diminish over time as students go about their everyday lives. Hence, attitude changes might be most pronounced after short interventions in which the assessment takes place immediately before and after the intervention. Similar effects are known from other psychological areas, such as mindfulness interventions, where short interventions of one or two weeks showed larger effects than longer interventions of three or four weeks (Sedlmeier et al., 2018). However, more research is needed to investigate the temporal development of attitude during CCE interventions. Moreover, we found that studies in which experts teach have significantly larger effects on attitudinal outcomes than studies in which teachers and experts teach together. We can only speculate about the reasons for this finding. It might demonstrate the crucial role of the teacher for students’ learning (Hattie, 2003). The multi-level analysis by Scharenberg et al. (2021) provides a first indication that this assumption might be correct. Teachers’ attitudes towards ESD and their professional knowledge emerged as important predictors of students’ development of sustainability competencies over the course of a school year. Further research should investigate which teacher variables are related to higher student learning to understand the role of teachers in CCE.
Regarding research question four (impact of the study design and test instruments), we found no significant effect.

However, contrary to our expectations, we found only very few moderator effects in this meta-analysis. One reason might be the relatively small number of primary studies, especially for attitude and behavior outcomes, which limits the power of our analysis. However, the meta-analysis by van de Wetering et al. (2022) also did not find any significant effects, even though they had a larger sample of studies. It might be that the absence of moderator effects is a property of environmental and climate education, as these educational concepts are very broad and aim at changing multiple outcome variables by utilizing a broad variety of teaching methods. Thus, it might be that the variance in the field is too broad to detect variables explaining the variance in study outcomes. This problem could be addressed only if more detailed theoretical frameworks exist based on which existing studies can be categorized into meaningful categories that represent specific learning processes for particular outcome variables.

4.3 Limitations

The present meta-analysis has several limitations. First, there was substantial heterogeneity among the included studies, calling for caution when interpreting the mean effect sizes of this meta-analysis. Second, a large proportion of studies used ad hoc measures that had not previously been validated. This likely contributed to the large heterogeneity among measures, making aggregation within a meta-analysis challenging. Future research should rely on validated measures more often. Third, most studies assessing behavior relied on self-report measures for performed or intended climate friendly behavior. However, self-report measures of behavioral outcomes are susceptible to retrospective biases, personal expectations, and social desirability (Lange and Dewitte, 2019). Future studies should use more objective and behavioral measures. Fourth, only a few studies included students in primary education, and only within mixed samples of primary and secondary students. Accordingly, the results of this meta-analysis can only be generalized to secondary students. This is a crucial limitation, as a recent meta-analysis has shown that intervention effects on children’s pro-environmental behaviors are larger for younger than for older children (Świątkowski et al., 2024). Fifth, our meta-analysis has a bias towards content from the natural sciences, as the majority of included interventions were conducted within subjects of natural sciences, whereas CCE interventions conducted within subjects of social sciences and humanities were scarce (Westphal et al., 2025). Thus, further intervention studies should investigate how interventions within social sciences influence students’ knowledge, attitudes, and behavior in the context of climate change.

Sixth, since we only included studies published in English, there was a country bias, with the majority of studies having been published in the United States. Lastly, the methodological quality of many studies was low. Therefore, more high-quality research is needed in the area of climate change education.

5 Conclusion

In sum, this meta-analysis shows a significant mean effect of climate education on climate literacy. The medium to large effects for climate related knowledge demonstrate that CCE is effective for promoting the knowledge component of climate literacy. However, the small to medium effects for attitude and behavior show that CCE is less effective for substantial changes in attitudes and behavior. This interpretation is supported by findings of the meta-analysis of van de Wetering et al. (2022) on environmental education and by a meta-analysis of Delmas et al. (2013) on interventions aiming at saving electric energy, who also found only small effects of education on behavior changes. Thus, to expect that CCE can contribute to mitigating climate change seems unrealistic. Furthermore, we found significant moderator effects for the duration of the intervention and the teacher on attitudinal outcomes, as well as a significant effect of teaching basic knowledge about the climate system on knowledge related outcomes. It must be noted, however, that the heterogeneity of the included studies was large, calling for caution when interpreting these results. Overall, the results of this meta-analysis are promising, but based on the current evidence, no specific recommendations for climate change education practice are possible, and there is a great need for more high-quality research about CCE. Furthermore, additional research syntheses are needed because the bodies of primary studies of the existing meta-analyses show (nearly) no overlap, which demonstrates that research on environmental education and climate education is fragmented. Further research syntheses should address this fragmentation, for example by combining the datasets of existing meta-analyses in a meta-meta-analysis.

Author contributions

VM-JA: Data curation, Formal analysis, Methodology, Project administration, Visualization, Writing – original draft, Writing – review & editing. MS: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Writing – original draft, Writing – review & editing. WR: Conceptualization, Funding acquisition, Methodology, Resources, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the German Federal Ministry of Education and Research (Bundesministerium für Bildung und Forschung) [grant number 16MF1014].

Acknowledgments

The authors thank the research assistants Adrian Schad, Laura Wevelsiep, Karla Hummel, Jonas Nick, and Nico Worzeck who helped in the screening process and coding of the studies. Furthermore, the authors thank the researchers Anushree Bopardikar, Alexandar Ramadoss, and Gannet Hallar who provided additional data for inclusion in the meta-analysis.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. During the preparation of this work the authors used DeepL and Bing/Microsoft in order to edit English language of individual sentences and words. After using this, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1563816/full#supplementary-material

References

Ajzen, I. (2001). Nature and operation of attitudes. Annu. Rev. Psychol. 52, 27–58. doi: 10.1146/annurev.psych.52.1.27

Ajzen, I., Joyce, N., Sheikh, S., and Cote, N. G. (2011). Knowledge and the prediction of behavior: the role of information accuracy in the theory of planned behavior. Basic Appl. Soc. Psychol. 33, 101–117. doi: 10.1080/01973533.2011.568834

Assink, M., and Wibbelink, C. J. M. (2016). Fitting three-level meta-analytic models in R: a step-by-step tutorial. Quant. Methods Psychol. 12, 154–174. doi: 10.20982/tqmp.12.3.p154

Azevedo, J., and Marques, M. (2017). Climate literacy: a systematic review and model integration. Int. J. Global Warm. 12, 414–430. doi: 10.1504/IJGW.2017.084789

Barata, R., Castro, P., and Martins-Loução, M. A. (2017). How to promote conservation behaviors: the combined role of environmental education and commitment. Environ. Educ. Res. 23, 1322–1334. doi: 10.1080/13504622.2016.1219317

Bedford, D. (2016). Does climate literacy matter? A case study of U.S. students’ level of concern about anthropogenic global warming. J. Geogr. 115, 187–197. doi: 10.1080/00221341.2015.1105851

Ben-Zvi Assaraf, O., and Orpaz, I. (2010). The Life at the Poles study unit: Developing junior high school students’ ability to recognize the relations between earth systems. Res. Sci. Educ. 40, 525–549.

Berrang-Ford, L., Ford, J. D., and Paterson, J. (2011). Are we adapting to climate change? Glob. Environ. Chang. 21, 25–33. doi: 10.1016/j.gloenvcha.2010.09.012

Bhattacharya, D., Carroll Steward, K., and Forbes, C. T. (2021). Empirical research on K-16 climate education: a systematic review of the literature. J. Geosci. Educ. 69, 223–247. doi: 10.1080/10899995.2020.1838848

Blum, N., Nazir, J., Breiting, S., Goh, K. C., and Pedretti, E. (2013). Balancing the tensions and meeting the conceptual challenges of education for sustainable development and climate change. Environ. Educ. Res. 19, 206–217. doi: 10.1080/13504622.2013.780588

Boon, H. J. (2010). Climate change? Who knows? A comparison of secondary students and pre-service teachers. Australian J. Teacher Educ. 35, 43–64. doi: 10.14221/ajte.2010v35n1.9

Borenstein, M., Hedges, L., and Rothstein, H. (2010). Introduction to meta-analysis. Hoboken, NJ: John Wiley and Sons.

Boyes, E., and Stanisstreet, M. (2012). Environmental education for behavior change: which actions should be targeted? Int. J. Sci. Educ. 34, 1591–1614. doi: 10.1080/09500693.2011.584079

Chang, C. -H., Pascua, L., and Ess, F. (2018). Closing the “Hole in the Sky”: The use of refutation-oriented instruction to correct students’ climate change misconceptions. J. Geogr. 117, 3–16. doi: 10.1080/00221341.2017.1287768

Cook, J., Nuccitelli, D., Green, S. A., Richardson, M., Winkler, B., Painting, R., et al. (2013). Quantifying the consensus on anthropogenic global warming in the scientific literature. Environ. Res. Lett. 8:24024. doi: 10.1088/1748-9326/8/2/024024

Deisenrieder, V., Kubisch, S., Keller, L., and Stötter, J. (2020). Bridging the action gap by democratizing climate change education—the case of k.i.d.Z.21 in the context of fridays for future. Sustainability 12:1748. doi: 10.3390/su12051748

Delmas, M. A., Fischlein, M., and Asensio, O. I. (2013). Information strategies and energy conservation behavior: a meta-analysis of experimental studies from 1975 to 2012. Energy Policy 61, 729–739. doi: 10.1016/j.enpol.2013.05.109

Doğan, Y., Batdı, V., and Yaşar, M. D. (2023). Effectiveness of flipped classroom practices in teaching of science: a mixed research synthesis. Res. Sci. Technol. Educ. 41, 393–421. doi: 10.1080/02635143.2021.1909553

Durlak, J. A. (2009). How to select, calculate, and interpret effect sizes. J. Pediatr. Psychol. 34, 917–928. doi: 10.1093/jpepsy/jsp004

Eggert, S., Nitsch, A., Boone, W. J., Nückles, M., and Bögeholz, S. (2017). Supporting students’ learning and socioscientific reasoning about climate change—the effect of computer-based concept mapping scaffolds. Res. Sci. Educ. 47, 137–159. doi: 10.1007/s11165-015-9493-7

Fernández-Castilla, B., Aloe, A. M., Declercq, L., Jamshidi, L., Beretvas, S. N., Onghena, P., et al. (2021a). Estimating outcome-specific effects in meta-analyses of multiple outcomes: a simulation study. Behav. Res. Methods 53, 702–717. doi: 10.3758/s13428-020-01459-4

Fernández-Castilla, B., Declercq, L., Jamshidi, L., Beretvas, S. N., Onghena, P., and Van Den Noortgate, W. (2021b). Detecting selection bias in meta-analyses with multiple outcomes: a simulation study. J. Exp. Educ. 89, 125–144. doi: 10.1080/00220973.2019.1582470

Flora, J. A., Saphir, M., Lappé, M., Roser-Renouf, C., Maibach, E. W., and Leiserowitz, A. A. (2014). Evaluation of a national high school entertainment education program: the Alliance for climate education. Clim. Chang. 127, 419–434. doi: 10.1007/s10584-014-1274-1

Frick, J., Kaiser, F. G., and Wilson, M. (2004). Environmental knowledge and conservation behavior: exploring prevalence and structure in a representative sample. Personal. Individ. Differ. 37, 1597–1613. doi: 10.1016/j.paid.2004.02.015

Hattie, J. (2003). Teachers make a difference, what is the research evidence? Paper presented at the building teacher quality: What does the research tell us? ACER research conference, Melbourne, Australia.

Higgins, J. P. T., Thomas, J., Chandler, M., Li, T., Page, M. J., and Welch, V. A. (2023). Cochrane handbook for systematic reviews of interventions version 6.4 (updated august 2023). Cochrane. Available online at: www.training.cochrane.org/handbook

Hu, S., and Chen, J. (2016). Place-based inter-generational communication on local climate improves adolescents’ perceptions and willingness to mitigate climate change. Clim. Chang. 138, 425–438. doi: 10.1007/s10584-016-1746-6

IPCC (2014). “Climate change 2014: synthesis report” in Contribution of working groups I, II and III to the fifth assessment report of the intergovernmental panel on climate change [core writing team]. eds. R. K. Pachauri and L. A. Meyer (Geneva: IPCC).

IPCC (2022). Climate change 2022 – Impacts, adaptation and vulnerability: Working group II contribution to the sixth assessment report of the intergovernmental panel on climate change. Cambridge: Cambridge University Press.

IPCC (2023). “Climate change 2023: synthesis report” in Contribution of working groups I, II and III to the sixth assessment report of the intergovernmental panel on climate change [core writing team]. eds. H. Lee and J. Romero (Geneva: IPCC).

Jorgenson, S. N., Stephens, J. C., and White, B. (2019). Environmental education in transition: a critical review of recent research on climate change and energy education. J. Environ. Educ. 50, 160–171. doi: 10.1080/00958964.2019.1604478

Kaiser, F. G., Byrka, K., and Hartig, T. (2010). Reviving Campbell’s paradigm for attitude research. Personal. Soc. Psychol. Rev. 14, 351–367. doi: 10.1177/1088868310366452

Karpudewan, M., and Mohd Ali Khan, N. S. (2017). Experiential-based climate change education: fostering students’ knowledge and motivation towards the environment. Int. Res. Geogr. Environ. Educ. 26, 207–222. doi: 10.1080/10382046.2017.1330037

Keller, L., Stötter, J., Oberrauch, A., Kuthe, A., Körfgen, A., and Hüfner, K. (2019). Changing climate change education: exploring moderate constructivist and transdisciplinary approaches through the research-education co-operation k.i.d.Z.21. GAIA Ecol. Pers. Sci. Soc. 28, 35–43. doi: 10.14512/gaia.28.1.10

Kolenatý, M., Kroufek, R., and Činčera, J. (2022). What triggers climate action: the impact of a climate change education program on students’ climate literacy and their willingness to act. Sustainability 14:10365. doi: 10.3390/su141610365

Kranz, J., Schwichow, M., Breitenmoser, P., and Niebert, K. (2022). The (un)political perspective on climate change in education—a systematic review. Sustainability 14:4194. doi: 10.3390/su14074194

Kuthe, A., Körfgen, A., Stötter, J., and Keller, L. (2020). Strengthening their climate change literacy: a case study addressing the weaknesses in young people’s climate change awareness. Appl. Environ. Educ. Commun. 19, 375–388. doi: 10.1080/1533015X.2019.1597661

Lange, F., and Dewitte, S. (2019). Measuring pro-environmental behavior: review and recommendations. J. Environ. Psychol. 63, 92–100. doi: 10.1016/j.jenvp.2019.04.009

Lester, B. T., Ma, L., Lee, O., and Lambert, J. (2006). Social activism in elementary science education: a science, technology, and society approach to teach global warming. Int. J. Sci. Educ. 28, 315–339. doi: 10.1080/09500690500240100

Lipsey, M. W., and Wilson, D. B. (2001). Practical meta-analysis. Thousand Oaks, CA: Sage Publications.

Masud, M. M., Akhtar, R., Afroz, R., Al-Amin, A. Q., and Kari, F. B. (2015). Pro-environmental behavior and public understanding of climate change. Mitig. Adapt. Strateg. Glob. Chang. 20, 591–600. doi: 10.1007/s11027-013-9509-4

Mei, N. S., Wai, C. W., and Ahamad, R. (2016). Environmental awareness and behavior index for Malaysia. Procedia. Soc. Behav. Sci. 222, 668–675. doi: 10.1016/j.sbspro.2016.05.223

Miléř, T., and Sládek, P. (2011). The climate literacy challenge. Procedia. Soc. Behav. Sci. 12, 150–156. doi: 10.1016/j.sbspro.2011.02.021

Moeyaert, M., Ugille, M., Beretvas, S. N., Ferron, J., Bunuan, R., and Van Den Noortgate, W. (2017). Methods for dealing with multiple outcomes in meta-analysis: a comparison between averaging effect sizes, robust variance estimation and multilevel meta-analysis. Int. J. Soc. Res. Methodol. 20, 559–572. doi: 10.1080/13645579.2016.1252189

Monroe, M. C., Plate, R. R., Oxarart, A., Bowers, A., and Chaves, W. A. (2019). Identifying effective climate change education strategies: a systematic review of the research. Environ. Educ. Res. 25, 791–812. doi: 10.1080/13504622.2017.1360842

Norgaard, K. M. (2009). Cognitive and behavioral challenges in responding to climate change. Background paper to the 2010 world development report. World Bank Dev. doi: 10.1596/1813-9450-4940

Olsson, D., and Gericke, N. (2016). The adolescent dip in students’ sustainability consciousness—implications for education for sustainable development. J. Environ. Educ. 47, 35–51. doi: 10.1080/00958964.2015.1075464

Otto, I. M., Donges, J. F., Cremades, R., Bhowmik, A., Hewitt, R. J., Lucht, W., et al. (2020). Social tipping dynamics for stabilizing Earth’s climate by 2050. Proc. Natl. Acad. Sci. 117, 2354–2365. doi: 10.1073/pnas.1900577117

Ouzzani, M., Hammady, H., Fedorowicz, Z., and Elmagarmid, A. (2016). Rayyan—a web and mobile app for systematic reviews. Syst. Rev. 5:210. doi: 10.1186/s13643-016-0384-4

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., et al. (2021). The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Int. J. Surg. 88:105906. doi: 10.1016/j.ijsu.2021.105906

Pfeiffer, C. (2022). “Paving the way through the ‘literacy jungle’: science education in the context of social media and climate change” in Esera summer school 2022—Ebook of synopses. ed. K. Kortland (Utrecht: University of Utrecht), 231–234.

Pothitou, M., Hanna, R. F., and Chalvatzis, K. J. (2016). Environmental knowledge, pro-environmental behavior and energy savings in households: an empirical study. Appl. Energy 184, 1217–1229. doi: 10.1016/j.apenergy.2016.06.017

Probst, M., Reinfried, S., Adamina, M., Hertig, P., and Stucki, P. (2018). Klimabildung in allen Zyklen der Volksschule und in der Sekundarstufe II. Bern: Climate Change Education and Science Outreach [CCESO].

Pustejovsky, J. E., and Rodgers, M. A. (2019). Testing for funnel plot asymmetry of standardized mean differences. Res. Synth. Methods 10, 57–71. doi: 10.1002/jrsm.1332

Reinfried, S., Aeschbacher, U., and Rottermann, B. (2012). Improving students’ conceptual understanding of the greenhouse effect using theory-based learning materials that promote deep learning. Int. Res. Geogr. Environ. Educ. 21, 155–178. doi: 10.1080/10382046.2012.672685

Richter, T., Rendigs, A., and Maminirina, C. (2015). Conservation messages in speech bubbles–evaluation of an environmental education comic distributed in elementary schools in Madagascar. Sustainability 7, 8855–8880. doi: 10.3390/su7078855

Riess, W., Martin, M., Mischo, C., Kotthoff, H.-G., and Waltner, E.-M. (2022). How can education for sustainable development (ESD) be effectively implemented in teaching and learning? An analysis of educational science recommendations of methods and procedures to promote ESD goals. Sustainability 14:3708. doi: 10.3390/su14073708

Riess, W., and Mischo, C. (2010). Promoting systems thinking through biology lessons. Int. J. Sci. Educ. 32, 705–725. doi: 10.1080/09500690902769946

Rohatgi, A. (2022). WebPlotDigitizer (4.6) [computer software]. Available online at: https://automeris.io/WebPlotDigitizer

Scammacca, N., Roberts, G., and Stuebing, K. K. (2014). Meta-analysis with complex research designs: dealing with dependence from multiple measures and multiple group comparisons. Rev. Educ. Res. 84, 328–364. doi: 10.3102/0034654313500826

Scharenberg, K., Waltner, E.-M., Mischo, C., and Rieß, W. (2021). Development of students’ sustainability competencies: do teachers make a difference? Sustainability 13:12594. doi: 10.3390/su132212594

Scharfen, J., Peters, J. M., and Holling, H. (2018). Retest effects in cognitive ability tests: a meta-analysis. Intelligence 67, 44–66. doi: 10.1016/j.intell.2018.01.003

Schauss, M., and Sprenger, S. (2019). Conceptualization and evaluation of a school project on climate science in the context of education for sustainable development (ESD). Educ. Sci. 9:217. doi: 10.3390/educsci9030217

Schwichow, M., Croker, S., Zimmerman, C., Höffler, T., and Härtig, H. (2016). Teaching the control-of-variables strategy: a meta-analysis. Dev. Rev. 39, 37–63. doi: 10.1016/j.dr.2015.12.001

Schwichow, M., and Zoupidis, A. (2023). Teaching and learning floating and sinking: a meta-analysis. J. Res. Sci. Teach. 61, 487–516. doi: 10.1002/tea.21909

Świątkowski, W., Surret, F. L., Henry, J., Buchs, C., Visintin, E. P., Butera, F., et al. (2024). Interventions promoting pro-environmental behaviors in children: a meta-analysis and a research agenda. J. Environ. Psychol. 96:102295. doi: 10.1016/j.jenvp.2024.102295

Sedlmeier, P., Loße, C., and Quasten, L. C. (2018). Psychological effects of meditation for healthy practitioners: an update. Mindfulness 9, 371–387. doi: 10.1007/s12671-017-0780-4

Shwom, R., Isenhour, C., Jordan, R. C., McCright, A. M., and Robinson, J. M. (2017). Integrating the social sciences to enhance climate literacy. Front. Ecol. Environ. 15, 377–384. doi: 10.1002/fee.1519

Stadler, M., Martin, M., Schuler, S., Stemmann, J., Rieß, W., and Künsting, J. (2025). “Entwicklung eines Kompetenzstrukturmodells für Climate Literacy” in Bildung für nachhaltige Entwicklung: Einblicke in aktuelle Forschungsprojekte. eds. H. Kminek, V. Holz, M. Singer-Brodowski, H. Ertl, T. S. Idel, and C. Wulf, vol. 17 (Berlin: Springer-Verlag).

Sun, L., and Zhou, D. (2023). Effective instruction conditions for educational robotics to develop programming ability of K-12 students: a meta-analysis. J. Comput. Assist. Learn. 39, 380–398. doi: 10.1111/jcal.12750

Tasquier, G., and Pongiglione, F. (2017). The influence of causal knowledge on the willingness to change attitude towards climate change: results from an empirical study. Int. J. Sci. Educ. 39, 1846–1868. doi: 10.1080/09500693.2017.1355078

Tipton, E. (2015). Small sample adjustments for robust variance estimation with meta-regression. Psychol. Methods 20, 375–393. doi: 10.1037/met0000011

Truelove, H. B., and Parks, C. (2012). Perceptions of behaviors that cause and mitigate global warming and intentions to perform these behaviors. J. Environ. Psychol. 32, 246–259. doi: 10.1016/j.jenvp.2012.04.002

UNFCCC. (1998). Kyoto Protocol to the United Nations Framework Convention on Climate Change. Available online at: https://unfccc.int/resource/docs/convkp/kpeng.pdf

UNFCCC and UNESCO. (2016). Action for climate empowerment: Guidelines for accelerating solutions through education, training and public awareness. UNESCO. Available online at: https://unfccc.int/sites/default/files/action_for_climate_empowerment_guidelines.pdf

USGCRP (2009). Climate literacy: The essential principles of climate science. Washington, DC: Global Change Research Program.

Van De Wetering, J., Leijten, P., Spitzer, J., and Thomaes, S. (2022). Does environmental education benefit environmental outcomes in children and adolescents? A meta-analysis. J. Environ. Psychol. 81:101782. doi: 10.1016/j.jenvp.2022.101782

Verplanken, B., and Orbell, S. (2022). Attitudes, habits, and behavior change. Annu. Rev. Psychol. 73, 327–352. doi: 10.1146/annurev-psych-020821-011744

Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 36, 1–48. doi: 10.18637/jss.v036.i03

Vuong, Q. H., and Nguyen, M. H. (2024). Better economics for the earth: A lesson from quantum and information theories. Hanoi, Vietnam: AISDL.

Westphal, A., Kranz, J., Schulze, A., Schulz, H., Becker, P., Wulff, P., et al. (2025). Climate change education: bibliometric analysis of the status-quo and future research paths. J. Environ. Educ. 1–20. doi: 10.1080/00958964.2025.2475299

Keywords: climate change education, climate, environmental education, climate literacy, meta-analysis, synthesis

Citation: Aeschbach VM-J, Schwichow M and Rieß W (2025) Effectiveness of climate change education—a meta-analysis. Front. Educ. 10:1563816. doi: 10.3389/feduc.2025.1563816

Edited by:

Xiang Hu, Renmin University of China, China

Reviewed by:

Maria João Guimarães Fonseca, Universidade do Porto, Portugal

Minh-Hoang Nguyen, Phenikaa University, Vietnam

Copyright © 2025 Aeschbach, Schwichow and Rieß. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Vanessa Marie-Jane Aeschbach, vanessa.aeschbach@ph-freiburg.de