Abstract

Generative Artificial Intelligence (GAI), such as OpenAI’s ChatGPT, has rapidly emerged as a transformative tool in higher education, offering opportunities to enhance teaching and learning. This paper describes the design and implementation of ChatGPT-integrated curriculum activities, featuring coding learning in psychology and conceptual discussions in physics, and presents the findings of a year-long experimental study in both types of classrooms. Our findings suggest that students generally found ChatGPT easy to use and beneficial to their learning, reporting improved confidence, motivation, and engagement. However, its ability to address individual needs or replace instructors was viewed less favorably. Comparative analyses showed that coding activities in psychology led to higher levels of activity satisfaction and perceived usefulness of ChatGPT compared to the more abstract discussion activities in physics. While graduate students were more enthusiastic about using ChatGPT for skill acquisition than undergraduates, demographic factors such as gender, race, and first-generation college status showed no significant influence on such perceptions. Meanwhile, instructors’ reflections emphasize the importance of thoughtful integration, technical support, and pedagogical balance to maximize GAI’s potential while mitigating its limitations. Recommendations for integrating GAI into teaching practices and future research directions are discussed, contributing to the evolving discourse on GAI’s role in transforming modern classrooms.

1 Introduction

Generative Artificial Intelligence (GAI) such as ChatGPT has emerged as a transformative tool in higher education, offering great potential to enhance both teaching and learning experiences. Software tools that leverage current GAI functionality can facilitate personalized learning, provide instant feedback, and assist in content creation. The integration of these tools into educational contexts and processes is rapidly occurring (e.g., Athanassopoulos et al., 2023; Su et al., 2023). At the same time, the use of GAI also raises many concerns, particularly in terms of ethical considerations related to plagiarism and the challenge of determining how to effectively incorporate this technology into teaching and learning contexts (e.g., Hutson, 2024).

Although there is growing interest in the use of GAI in education, there is a great need for more empirical studies that discuss its adoption and impact (Farrokhnia et al., 2024). In particular, systematic evaluations of the effectiveness of GAI-based classroom activities remain scarce. Such evaluations should carefully consider factors like pedagogical formats, audience demographics, and disciplinary differences. To help address this gap, we present the findings from a year-long experiment conducted in 2023, where the authors incorporated ChatGPT into college classroom teaching across various subjects and formats, involving both undergraduate and graduate students. This teaching experiment spans two semesters and provides a unique perspective on the dynamics of using ChatGPT in real-world educational settings. Participating students and teachers provided their feedback and reflections upon completion of the teaching experiment, offering valuable insights and practical suggestions for educators interested in using GAI tools in their future teaching practices.

In summary, our goal with this manuscript is to contribute to the growing body of knowledge on implementing GAI in higher education and offer data-driven insights and actionable recommendations for educators and institutions. The structure of this paper is as follows: a review of the relevant literature, a detailed description of the experimental design and methodology, a summary of the experiment findings, and a discussion of the implications and future recommendations.

1.1 Background and literature review

Driven by significant advancements in machine learning and deep learning, natural language processing and GAI have evolved rapidly over the past decade. One of the most notable recent innovations is OpenAI’s ChatGPT (OpenAI, 2022), which is built on the Generative Pre-trained Transformer (GPT) model and is capable of producing human-like text and engaging in sophisticated “conversations” with human users. In educational contexts, ChatGPT is a novel yet promising tool that offers numerous benefits, including (but not limited to) personalized learning experiences, enhanced engagement, immediate feedback on assignments, easy access to rich information and resources, the ability to facilitate discussions and debates, the promotion of independent learning, and the potential to reduce workload by automating routine tasks. These advantages could contribute to a more dynamic, interactive, and efficient educational environment, ultimately enhancing the overall learning experience for students and teaching experience for instructors.

Despite its potential benefits, the use of GAI in educational contexts also raises concerns, including (among others) the potential to: engender an over-reliance on GAI, reduce development of critical thinking skills, and present ethical issues related to data transparency and accessibility. A developing GAI-related debate among educators reflects a broader discussion on balancing the innovative potential of GAI with the need to maintain rigorous educational standards (e.g., Yu, 2023). While some view GAI as a transformative tool that can enrich the learning experience, others fear this type of technology may diminish the quality of education by fostering dependence and undermining academic integrity.

Numerous studies have explored this topic since the advent of ChatGPT. To provide a general overview of the current state of GAI research within the educational sector, particularly in the traditional schooling systems, we conducted an extensive literature search covering studies published up to April 2024. Given the rapid pace of AI advancements, newer studies may have emerged since our search; however, we believe our analysis captures key trends and patterns that remain relevant and consistent over the early stage of GAI adoption in education. Our focus was on peer-reviewed academic journal articles that specifically examined the use of ChatGPT as a tool in education. Using Google Scholar and Web of Science, we filtered articles to include only those with “education” and “ChatGPT” in the title or abstract. We then coded each article as either empirical (E) or review (R) in form, and whether it focused on education within schools (1) or not (2). This search resulted in 376 articles, 271 of which directly addressed the use of ChatGPT in school settings. The remaining studies primarily focused on ChatGPT’s application in various professional fields, such as healthcare, research, electronics, white-collar work, language, and marketing, with healthcare being the predominant area. Notably, a large number of review articles (n = 151) were identified during the search and generally explored the broader implications of ChatGPT in the context of education and training. These articles frequently discussed both the potential benefits and risks of using ChatGPT, with most adopting a neutral or cautiously optimistic stance. This cautious approach is understandable, as ChatGPT is still in its early stages of development, and its long-term impacts have yet to be established through more robust empirical research.

For the present study, we focus exclusively on empirical studies within the education system. Through the literature search process described above, we identified 158 such studies for further analysis. A summary of these studies is presented in Table 1, which shows that most of the existing research in this domain has been conducted in a higher (post-secondary) education context. The numbers of studies that focus on graduate-level (n = 64) and undergraduate-level (n = 70) topics are similar and collectively account for 85% of the studies identified. In contrast, only 16 studies focus on K-12 grade levels, while eight studies do not clearly specify the educational level.

TABLE 1

Empirical studies of use of ChatGPT in education.

Among the studies identified, the most common category (n = 40) focuses on testing ChatGPT’s proficiency and utility for generating and responding to exam and assignment questions. These studies primarily evaluate ChatGPT’s performance on exam and assignment questions relevant to higher education classroom content (e.g., Currie and Barry, 2023). In particular, a large portion of studies within the category use medical licensing exam questions to assess ChatGPT’s capabilities (e.g., Wang et al., 2023).

The second most prevalent category evident in these identified studies (n = 36) explores how ChatGPT can support both teachers and students as an innovative tool. While some studies focus on using ChatGPT to develop educational content and design learning tools (e.g., Jeon and Lee, 2023), others investigate its potential as a teaching assistant to aid student learning (e.g., Lee et al., 2024). The third category (n = 30) examines student and teacher perceptions of ChatGPT, often assessing its perceived usefulness and potential risks (e.g., Zou and Huang, 2023). Although fewer in number, several studies investigate the current prevalence of ChatGPT use by students and its impact on student performance. These studies are mostly at the undergraduate level, with some examining K-12 educational contexts. Surprisingly, few studies directly compare ChatGPT’s responses with students’ responses to homework assignments (one of the few attempts is Currie and Barry, 2023), even though such comparisons bear directly on educators’ concerns about plagiarism. Finally, in the “Others” category shown in Table 1, an emerging trend in the literature is the use of ChatGPT to support research processes, particularly in manuscript generation at the graduate education level.

From our review, we noticed that while existing research offers some mostly positive signs and insights regarding the use of ChatGPT, additional empirical studies are needed to promote the responsible and effective use of GAI in classrooms and other learning settings. Unlike many existing studies that capture perceptions at a single point in time, the present year-long experiment follows a consistent framework across two consecutive university semesters. This approach allows us to track changes in learning effectiveness and provide a more comprehensive view of student perceptions. By assessing how student perspectives have evolved with the rising popularity and understanding of GAI’s benefits and limitations, our study offers deeper insights into current trends in student perceptions within higher education, as well as students’ adaptation to this new technology.

Moreover, the present study connects student perceptions directly to various class activities in different academic disciplines (e.g., a GAI-facilitated coding activity in statistics courses in psychology and a GAI-enhanced discussion activity in physics), rather than gathering general opinions about non-contextualized GAI applications. This method yields more refined and meaningful data on students’ perceived usefulness of the activities themselves, providing valuable information for researchers and educators interested in adopting similar classroom instruction methods. Further, direct comparisons across distinct activity designs and over time offer invaluable opportunities for teachers to reflect on the effective integration of GAI into their own teaching practices.

In the following section, we explain the details of our class activities incorporating ChatGPT into classroom settings during the spring and fall semesters of 2023.

2 Experimenting with the integration of ChatGPT in classroom activities

2.1 Experiment 1: learning Python coding in a psychology statistics course

The primary task of this classroom activity was to facilitate students’ familiarity with Python programming, with a specific goal of building and improving models for handwritten digit recognition. Throughout this activity, students were guided in constructing a neural network model to recognize handwritten digits. The learning process involved students executing instructor-provided Python code examples, responding to various related queries, and iteratively modifying their code to enhance model performance. Students were introduced to ChatGPT as a potentially helpful resource and were permitted to use it to find answers to their questions. This exercise was designed to foster an interactive, hands-on approach to learning Python and understanding the process of building a neural network model. The activity lasted one hour. The implementation details of this class activity are outlined below (see also Figure 1 for a workflow illustration of all class activities):

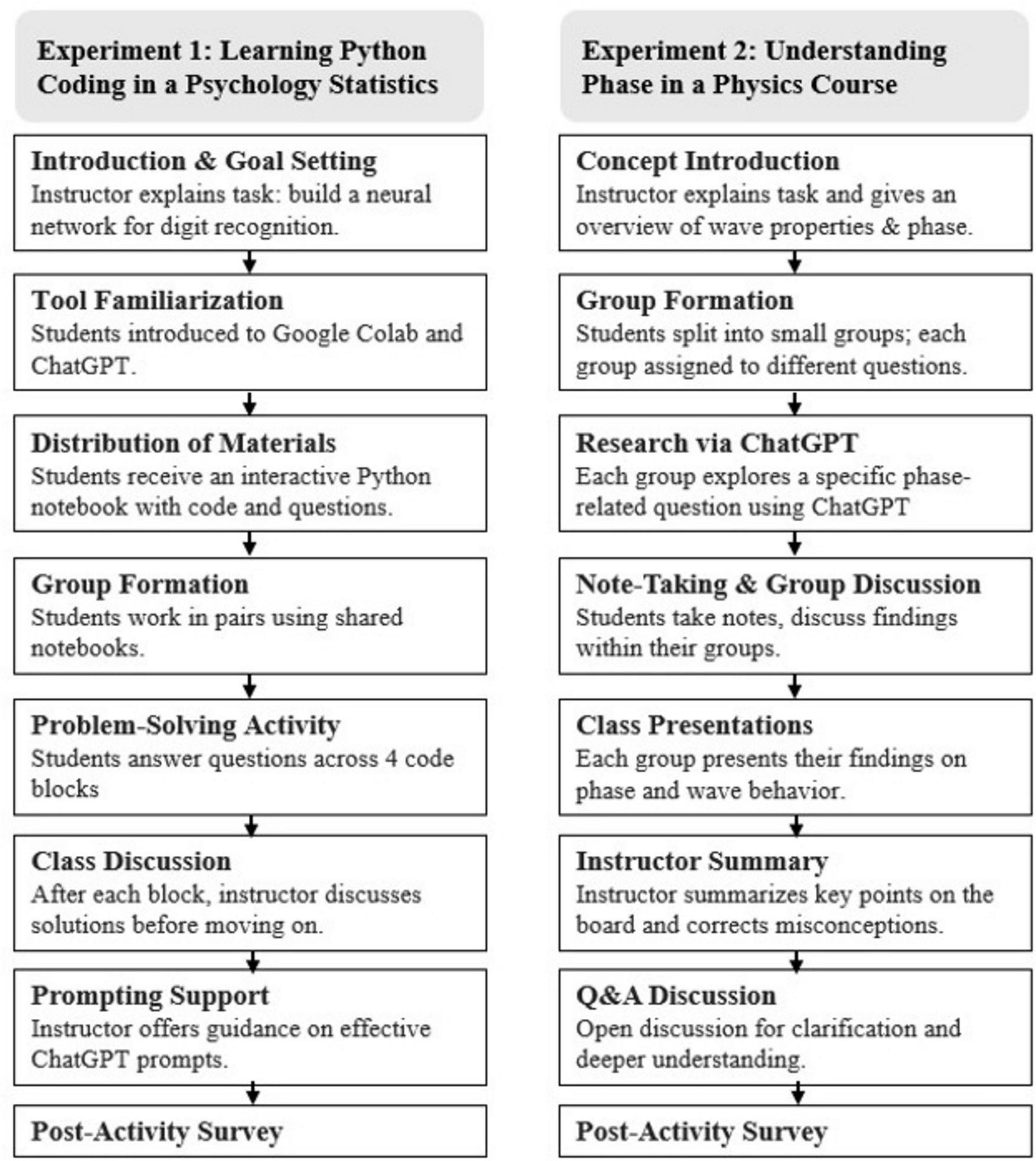

FIGURE 1

Workflow illustration of each class activity.

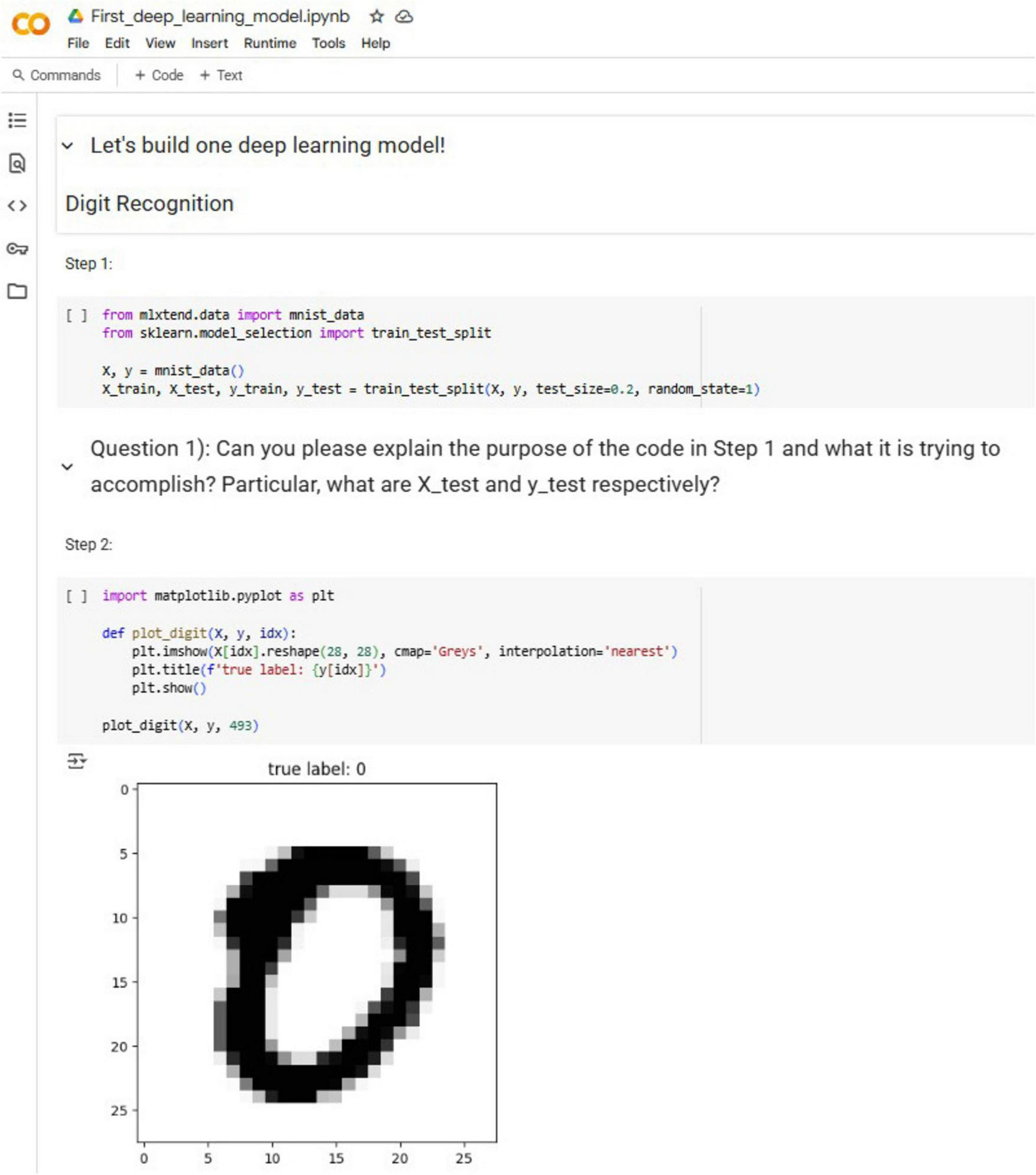

First, the activity task and goal were explained to students. The students were then introduced to Google Colab and ChatGPT, and guided to a level of familiarity with these tools. Next, after ensuring that all students had access to both tools and were comfortable with their basic operations, each student was provided with a copy of an interactive Python notebook. This notebook contained pre-written Python code snippets and accompanying questions. An excerpt from the notebook is provided in Figure 2, while the complete notebook can be found in the Supplementary materials.

FIGURE 2

Excerpt of Python code from the psychology in-class coding activity.
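To give a sense of what building a neural network for digit recognition involves, the following is a minimal, dependency-free sketch. It is not the notebook code used in class (that code is in the Supplementary materials). The 3x5 pixel glyphs, the network size, the learning rate, and the function names `forward`, `train`, and `predict` are all illustrative assumptions; a real activity would more likely use a machine learning library and a dataset of actual handwritten digits.

```python
import math
import random

# Hypothetical 3x5 pixel glyphs (rows concatenated) standing in for real
# handwritten digit images; this is NOT the data used in the class notebook.
GLYPHS = [
    "111101101101111",  # 0
    "010110010010111",  # 1
    "111001111100111",  # 2
    "111001111001111",  # 3
    "101101111001001",  # 4
    "111100111001111",  # 5
    "111100111101111",  # 6
    "111001001001001",  # 7
    "111101111101111",  # 8
    "111101111001111",  # 9
]
DATA = [([float(c) for c in bits], digit) for digit, bits in enumerate(GLYPHS)]


def forward(x, W1, b1, W2, b2):
    """Return hidden activations and softmax class probabilities for input x."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    z = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(W2, b2)]
    top = max(z)  # subtract the maximum for a numerically stable softmax
    e = [math.exp(v - top) for v in z]
    total = sum(e)
    return h, [v / total for v in e]


def train(data, hidden=8, epochs=300, lr=0.1, seed=0):
    """Fit a one-hidden-layer network with per-example gradient descent."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    n_out = max(y for _, y in data) + 1
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(n_out)]
    b2 = [0.0] * n_out
    losses = []  # mean cross-entropy loss per epoch
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, y in data:
            h, p = forward(x, W1, b1, W2, b2)
            epoch_loss += -math.log(max(p[y], 1e-12))
            # Gradient of cross-entropy w.r.t. output scores: p - one_hot(y).
            dz = [p[k] - (1.0 if k == y else 0.0) for k in range(n_out)]
            # Backpropagate through tanh, using W2 before it is updated.
            dh = [sum(dz[k] * W2[k][j] for k in range(n_out)) * (1 - h[j] ** 2)
                  for j in range(hidden)]
            for k in range(n_out):
                for j in range(hidden):
                    W2[k][j] -= lr * dz[k] * h[j]
                b2[k] -= lr * dz[k]
            for j in range(hidden):
                for i in range(n_in):
                    W1[j][i] -= lr * dh[j] * x[i]
                b1[j] -= lr * dh[j]
        losses.append(epoch_loss / len(data))
    return (W1, b1, W2, b2), losses


def predict(x, params):
    """Return the most probable digit label for input x."""
    _, p = forward(x, *params)
    return max(range(len(p)), key=p.__getitem__)


params, losses = train(DATA)
print(f"mean loss: first epoch {losses[0]:.2f}, last epoch {losses[-1]:.2f}")
print("predicted label for the glyph '3':", predict(DATA[3][0], params))
```

Changing `hidden`, `epochs`, or `lr` and watching how the loss responds mirrors, in miniature, the kind of iterative code modification the activity asked students to perform.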

Next, students were grouped into pairs and collaboratively tackled a series of problems within a shared notebook. The exercise was structured around four sets of questions that corresponded to four distinct code blocks provided with the activity materials. To maintain a cohesive learning pace and ensure no one was left behind, the students were asked to address the questions sequentially and as a class. Students were also encouraged to ask the instructor any questions during the problem-solving process, fostering an open dialogue within the classroom. Before progressing to the next problem, the instructor reviewed and explained each previous question.

It is important to note that the teacher actively monitored the class to ensure effective communication between the students and ChatGPT. While the teacher did not prescribe a one-size-fits-all “prompt,” guidance and suggestions regarding the selection of prompts were provided, especially when students encountered difficulties.

After the class activity, students were given a brief survey (summarized in Table 2) regarding their general reactions or perceptions toward the use of ChatGPT in the activity, along with some basic demographic information (e.g., gender, race, and first-generation college status).

TABLE 2

| Survey item (Likert scale 1–5) | All data (n = 169), M (SD) | PHYS T1 M (n = 42) | PHYS T2 M (n = 36) | PHYS combined M | PSYC T1 M (n = 30) | PSYC T2 M (n = 61) | PSYC combined M |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1. ChatGPT is easy to use | 4.08 (0.90) | 3.67 | 4.11 | 3.87 | 4.37 | 4.20 | 4.25 |
| 2. ChatGPT seems to be useful for my learning | 3.78 (0.98) | 3.26 | 3.58 | 3.41 | 4.10 | 4.10 | 4.10 |
| 3. Use of ChatGPT could improve my learning | 3.82 (0.95) | 3.33 | 3.72 | 3.51 | 3.83 | 4.20 | 4.08 |
| 4. I have fun interacting with ChatGPT | 3.78 (0.98) | 3.38 | 3.72 | 3.54 | 4.23 | 3.85 | 3.98 |
| 5. The use of ChatGPT helps me connect ideas in new ways | 3.67 (0.96) | 3.31 | 3.53 | 3.41 | 3.80 | 3.95 | 3.90 |
| 6. The use of ChatGPT can help me develop confidence in the subject area | 3.70 (1.00) | 3.29 | 3.69 | 3.47 | 3.90 | 3.90 | 3.90 |
| 7. The use of ChatGPT motivated me to learn certain new skills | 3.35 (1.00) | 2.93 | 3.22 | 3.06 | 3.63 | 3.57 | 3.59 |
| 8. Use of ChatGPT can be important supplement to the classroom | 3.65 (1.04) | 3.19 | 3.67 | 3.41 | 3.70 | 3.93 | 3.86 |
| 9. I think the use of ChatGPT in classroom is a good idea. | 3.45 (1.03) | 3.00 | 3.31 | 3.14 | 3.47 | 3.84 | 3.71 |
| 10. I think ChatGPT provides a fair education opportunity to all the students | 3.43 (1.13) | 2.95 | 3.53 | 3.22 | 3.20 | 3.80 | 3.60 |
| 11. I plan to or will continue to use ChatGPT in the future | 3.60 (1.19) | 2.93 | 3.50 | 3.19 | 3.90 | 3.97 | 3.95 |
| 12. I am satisfied with the ChatGPT activity. | 3.62 (0.99) | 3.19 | 3.72 | 3.44 | 3.73 | 3.80 | 3.78 |
| 13. I think ChatGPT can better address my individual questions than teachers | 2.64 (1.12) | 2.76 | 2.44 | 2.62 | 2.57 | 2.72 | 2.67 |
| 14. I have some concerns over the use of ChatGPT in classrooms | 3.36 (1.15) | 3.69 | 3.28 | 3.50 | 3.40 | 3.16 | 3.24 |
| 15. Using ChatGPT takes too much of my time | 2.11 (0.82) | 2.43 | 2.11 | 2.28 | 1.87 | 2.00 | 1.96 |
| 16. I am not comfortable with the idea of use of ChatGPT | 2.47 (1.23) | 2.95 | 2.33 | 2.67 | 2.37 | 2.26 | 2.30 |

Basic descriptive statistics for students’ survey data.

T1 refers to the Spring semester of 2023, and T2 refers to the Fall semester of 2023.
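As a reading aid for Table 2, the short sketch below shows how a "combined" mean can be recovered from the two semester means. It assumes the combined columns are sample-size-weighted averages of the T1 and T2 means; the paper does not state this explicitly, and the helper name `combined_mean` is hypothetical. Because the inputs are the rounded values printed in the table, small rounding discrepancies are possible for some items.

```python
def combined_mean(means, sizes):
    """Sample-size-weighted average of group means (assumed pooling rule)."""
    return sum(m * n for m, n in zip(means, sizes)) / sum(sizes)


# Item 1 ("ChatGPT is easy to use"), physics: T1 M = 3.67 (n = 42), T2 M = 4.11 (n = 36)
phys_item1 = combined_mean([3.67, 4.11], [42, 36])

# Item 11 ("I plan to or will continue to use ChatGPT"), psychology:
# T1 M = 3.90 (n = 30), T2 M = 3.97 (n = 61)
psyc_item11 = combined_mean([3.90, 3.97], [30, 61])

print(round(phys_item1, 2))   # 3.87, matching the PHYS combined column
print(round(psyc_item11, 2))  # 3.95, matching the PSYC combined column
```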

2.2 Experiment 2: learning the physical meaning of phase in a physics course

The primary goal of this activity was for students to grasp the physical meaning of “phase” in wave mechanics and its role in generating interference and diffraction patterns in acoustics and optics. In physics, phase is a key parameter that influences the behavior and interaction of waves, particles, and various physical systems. Understanding phase is crucial for describing a wide range of physical processes, including wave interference, signal processing, synchronization, and the dynamics of electrical circuits and mechanical vibrations. In thermodynamics and condensed matter physics, phase also refers to different states of matter and their transitions, underscoring its broad relevance across multiple domains in physics. This one-hour class activity was specifically designed for non-physics STEM undergraduates. The implementation details of this class activity are outlined below:

The activity started with the instructor’s brief introduction to wave properties, including wavelength, frequency, amplitude, and phase. Phase was explained as a wave’s position within its cycle, a concept that can be observed in everyday life, and its critical role in understanding phenomena such as interference and diffraction was emphasized.

Next, students were divided into small groups and tasked with exploring different aspects of phase using ChatGPT.1 Each group was assigned a specific question, such as “How do phase shifts affect interference patterns?” or “What is the difference between constructive and destructive interference?” By interacting with ChatGPT, students asked a series of individual questions and received tailored explanations. They also took notes on the information provided by ChatGPT and discussed their findings within their groups.

Following their interactions with ChatGPT, each group presented their findings to the class, where students explained the concept of phase and its effects on wave behavior based on their AI-assisted research. The instructor summarized the key points on the whiteboard and clarified any misconceptions. To deepen students’ understanding, the instructor facilitated a brief question-and-answer discussion where students could ask additional questions and seek further clarification. This discussion helped resolve any remaining uncertainties and reinforced the main concepts. The instructor concluded the activity by having students reflect on how using ChatGPT had enhanced their understanding of phase. The students were instructed to respond to the same brief survey described in the in-class activity above.

3 Findings

3.1 General survey findings from students

Over the course of this year-long experiment (i.e., spring and fall semesters of 2023), a total of 169 students participated in one of the class experiments just described, providing their feedback in response to the student survey. The survey items and basic descriptive statistics of students’ responses are presented in Table 2. In the psychology discipline, both graduate and undergraduate students from multiple courses (i.e., undergraduate and graduate statistics courses) participated in the coding activity experiment. A total of 91 students provided feedback, with 47% being graduate students, 79% female, 78% White, and 34% first-generation college students. For physics, 78 undergraduate students participated (all enrolled in a 1,000-level general education course focused on fundamentals of electromagnetism and optics). Among these students, 64% were female, 88% White, and 23% first-generation college students.

Overall, students found ChatGPT easy to use and beneficial as a resource for learning. They enjoyed interacting with the tool, felt it boosted their confidence and motivation, and saw it as a valuable classroom supplement. They also believed it provided fair educational opportunities and expressed a desire to continue using ChatGPT in the future. At the same time, the students did not believe that ChatGPT could replace the instructors in classrooms to address their individual needs. Additionally, they expressed varying degrees of concern over the use of ChatGPT.

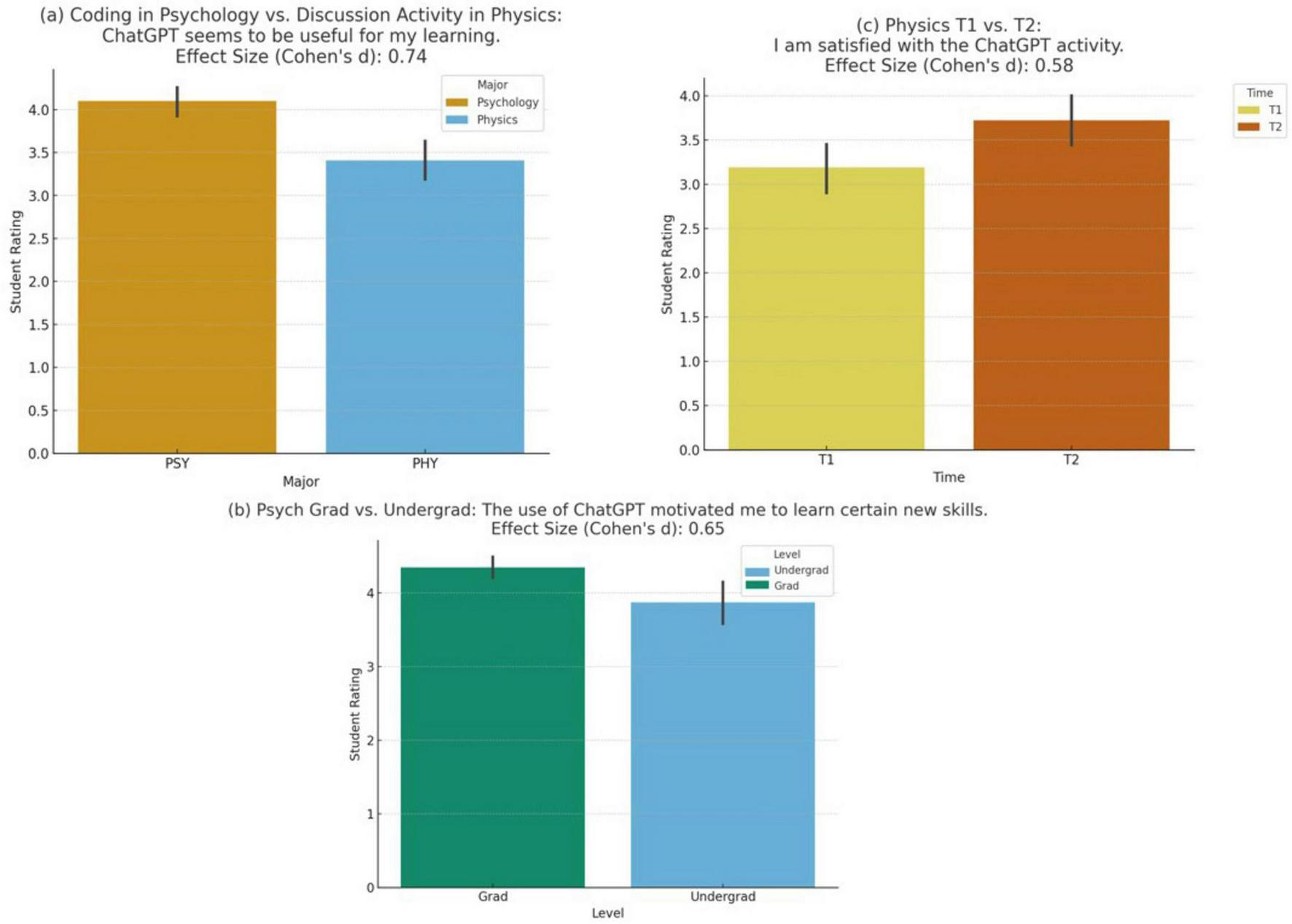

3.2 Coding activity versus conceptual learning

Next, we present findings that compare students’ perceptions across different subgroups of interest. All analysis details can be found in Table 3. The comparative analysis first reveals that the type of activity significantly influences student reactions to ChatGPT as an instructional aid. The coding activity in psychology classes was better received by students than the conceptual discussion activity in physics. Almost all survey items showed significant differences, except for the items “better addresses my individual questions than teachers” and “have some concerns over the use of ChatGPT in classrooms.” Among all the items, perceived usefulness and intention for future use showed the largest differences, with Cohen’s d effect sizes of 0.74 and 0.66 in magnitude, respectively. These findings suggest that the type of activity plays a crucial role in shaping students’ perceptions. Coding activities may have been perceived as more valuable because they offer more tangible and structured learning opportunities compared to the abstract nature of conceptual discussions.
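For readers less familiar with the effect size reported here, the sketch below computes a mean difference and Cohen’s d for two independent groups. It assumes the common pooled-standard-deviation form of d; the paper does not specify which variant was used. The ratings are made-up illustrative values, not study data, and the function name `cohens_d` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical 1-5 Likert ratings for one survey item (NOT the study's data).
phys = [3, 4, 3, 2, 4, 3]
psyc = [4, 5, 4, 3, 5, 4]

mean_difference = mean(phys) - mean(psyc)  # sign convention: PHYS minus PSYC
effect_size = cohens_d(phys, psyc)
print(f"mean difference = {mean_difference:.2f}, d = {effect_size:.2f}")
```

With the PHYS minus PSYC convention, a negative d means the physics group rated the item lower than the psychology group.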

TABLE 3

| Survey item | PHYS vs. PSYC (PHYS − PSYC): mean difference | PHYS vs. PSYC: effect size d | PHYS T1 vs. T2 (PHYS_T1 − PHYS_T2): mean difference | PHYS T1 vs. T2: effect size d | Grad vs. Undergrad (PSYC_grad − PSYC_undergrad): mean difference | Grad vs. Undergrad: effect size d |
| --- | --- | --- | --- | --- | --- | --- |
| 1. ChatGPT is easy to use | −0.38* | −0.43 | −0.44* | −0.54 | 0.36* | 0.41 |
| 2. ChatGPT seems to be useful for my learning | −0.69* | −0.74 | −0.32 | −0.32 | 0.47* | 0.60 |
| 3. Use of ChatGPT could improve my learning | −0.56* | −0.62 | −0.39 | −0.41 | 0.34 | 0.41 |
| 4. I have fun interacting with ChatGPT | −0.44* | −0.46 | −0.34 | −0.40 | 0.61* | 0.63 |
| 5. The use of ChatGPT helps me connect ideas in new ways. | −0.49* | −0.53 | −0.22 | −0.25 | 0.58* | 0.64 |
| 6. The use of ChatGPT can help me develop confidence in the subject area | −0.43* | −0.43 | −0.41 | −0.43 | 0.28 | 0.28 |
| 7. The use of ChatGPT motivated me to learn certain new skills | −0.53* | −0.55 | −0.29 | −0.33 | 0.64* | 0.65 |
| 8. Use of ChatGPT can be important supplement to the classroom | −0.45* | −0.44 | −0.48* | −0.49 | 0.49* | 0.49 |
| 9. I think the use of ChatGPT in classroom is a good idea. | −0.57* | −0.57 | −0.31 | −0.28 | 0.41* | 0.46 |
| 10. I think ChatGPT provides a fair education opportunity to all the students | −0.39* | −0.34 | −0.58* | −0.51 | 0.13 | 0.12 |
| 11. I plan to or will continue to use ChatGPT in the future | −0.75* | −0.66 | −0.57* | −0.49 | 0.55* | 0.53 |
| 12. I am satisfied with the ChatGPT activity | −0.34* | −0.35 | −0.53* | −0.58 | 0.33 | 0.33 |
| 13. I think ChatGPT can better address my individual questions than teachers | −0.05 | −0.05 | 0.32 | 0.27 | −0.21 | −0.20 |
| 14. I have some concerns over the use of ChatGPT in classrooms | 0.26 | 0.22 | 0.41 | 0.36 | 0.16 | 0.14 |
| 15. Using ChatGPT takes too much of my time | 0.33* | 0.40 | 0.32 | 0.39 | −0.31 | −0.40 |
| 16. I am not comfortable with the idea of use of ChatGPT | 0.37* | 0.30 | 0.62* | 0.50 | −0.52* | −0.46 |

Students’ perception comparison among various subgroups.

Total n = 169.

*p < .05. T1 refers to the Spring semester of 2023, and T2 refers to the Fall semester of 2023. The comparisons between graduate and undergraduate students are within the psychology classrooms. Across all items, no significant differences were found between male and female students, White students and students of other races, or first-generation and non-first-generation college students.

3.3 Spring semester versus fall semester in 2023

For the psychology coding activity, the perceptions of participating students were similar across the two semesters, with no significant differences in responses except for one item: “I think ChatGPT provides a fair education opportunity to all the students.” For this item, student ratings increased from the first to the second semester by 0.60 points on the five-point Likert agreement scale (Cohen’s d = 0.56). This change may indicate that as students became more familiar with ChatGPT over time, they developed a greater appreciation for its ability to provide equitable learning opportunities and effectively address individual questions. Additionally, although no other items differed significantly over time, there was a general trend toward improved student perceptions of ChatGPT. This improvement may reflect the important influence of sustained exposure to GAI tools in fostering positive attitudes and maximizing their potential in educational settings.

For the discussion activity in physics courses, students’ perceptions improved significantly over time. Notably, significant changes with moderate effect sizes (absolute Cohen’s d between 0.49 and 0.58; see Table 3) were observed in several areas, including ease of use, usefulness as a supplemental tool to classroom instruction, provision of fair educational opportunities, activity satisfaction, intent for future use, and comfort level with using ChatGPT. These findings suggest the importance of self-exploration and the dynamic interactions within student group activities, which appear to play a crucial role in enhancing both engagement and learning outcomes when integrating ChatGPT into classroom activities.

3.4 Comparisons among various student subgroups

Comparisons between graduate and undergraduate students in the participating psychology courses reveal significant differences in the perceived effectiveness and usefulness of the GAI. Graduate students showed greater motivation to use ChatGPT for learning new skills (Cohen’s d = 0.65) and reported a higher level of intention to use the tool in the future (Cohen’s d = 0.53) compared to undergraduates.

Additionally, we examined the impact of various demographic factors on students’ reactions to ChatGPT among all students as well as within each discipline. These analyses suggest that there are no significant differences between male and female students, White students and students of other races, or first-generation and non-first-generation college students across the items of our evaluation.

4 Discussion

Before presenting a general discussion, it is valuable to consider the individual instructors’ reflections based on their year-long observations and experiences with the activities described here. These reflections provide a unique and nuanced perspective that complements the students’ feedback.

4.1 Reflections from the psychology instructor

For the coding activity, clear instructions and a well-thought-out design were crucial in fostering students’ high levels of engagement and perceived learning effectiveness. Several key observations are worth noting. First, regarding prior interaction experiences, most graduate students had some level of familiarity with ChatGPT. However, we were surprised to learn that many undergraduate students, even in the fall of 2023, were still unfamiliar with or had no prior experience using ChatGPT (i.e., 13 out of 25 psychology undergraduates, or 52%, had never used ChatGPT in September 2023). Despite this lower-than-expected familiarity with ChatGPT, its ease of use ensured that participants’ lack of experience did not affect their engagement in the experimental activity. On the other hand, while ChatGPT proved accessible, other tools used in the activity, such as Google Colab, required more technical support from the instructor. Providing a thorough introduction and a step-by-step walkthrough of related operational tasks (e.g., creating, modifying, or running code) was essential to ensure students could effectively use these tools.

Second, despite their overall comfort and satisfaction with the ChatGPT-integrated activity, students expressed some reservations about its use in a classroom setting (e.g., concerns over privacy and inaccurate information). These concerns underscore the need for careful consideration of how GAI is integrated into learning environments. Finally, although the instructor anticipated that this new GAI tool could serve as a customized resource to meet students’ individual needs and promote a fairer educational environment, it may take time to fully realize this potential. As GAI tools become more versatile and mature, students will also need to become more adept at leveraging their capabilities.

To maximize engagement and effectiveness, it is crucial for instructors to design activities thoughtfully, ensuring they are engaging and provide opportunities for students to share their solutions and feedback. For instance, group work was essential in this activity, as some students may struggle with new topics and could benefit from peer support. The support and feedback from the instructor are equally important. Additionally, the difficulty level of the tasks should be appropriately challenging, allowing students to effectively utilize the GAI tool to solve problems while fostering deeper learning. Finally, instructors should not hold any a priori expectation or assumption of uniform familiarity or comfort with GAI tools among diverse groups of students.

4.2 Reflections from the physics instructor

Using ChatGPT to teach the concept of phase, or physics concepts in general, offers several advantages. Its interactive nature allows students to engage dynamically with the material, asking questions and receiving tailored explanations that make complex ideas more accessible. The GAI tool’s availability at any time supports continuous learning, enabling students to explore topics outside of class hours and revisit concepts as needed. Additionally, ChatGPT provides immediate feedback, which helps students correct misunderstandings quickly and tailor their learning experience to their specific needs.

One of the most significant lessons learned and, we believe, a key driver of the improved outcomes observed in the second semester was the critical role of increased student engagement. Strategies such as group discussions and opportunities for self-exploration were crucial in enhancing the effectiveness of the activity: they encouraged collaboration and deeper understanding, fostered a more active and participatory learning environment, and were thus important to the successful integration of GAI tools into classroom settings.

While ChatGPT offers valuable interactive learning, it has limitations that can impact the depth of understanding for complex concepts. For instance, when explaining the intricacies of phase shifts in wave interference, ChatGPT might provide a basic overview without delving into the mathematical derivations and experimental evidence that underpin these phenomena. This could result in students having a superficial grasp of the material rather than a comprehensive understanding. Moreover, ChatGPT may struggle to fully understand the context of a student’s question. For example, if a student asks, “How does phase affect the sound quality in a concert hall?” ChatGPT might provide a general explanation of phase and sound waves without addressing the specific acoustical design considerations and real-world examples relevant to concert halls. This misalignment can lead to responses that are less relevant to the student’s actual query.

The text-based nature of ChatGPT’s interactions also may not adequately support more visual learners. Consider a student who learns best through visual aids asking ChatGPT to explain how phase differences lead to constructive and destructive interference. While GAI can describe these concepts textually, it cannot provide diagrams or interactive simulations that visually depict overlapping waves and their resultant interference patterns. This can limit the effectiveness of the explanation for visual learners.

Additionally, there is a risk of students misinterpreting the responses of GAI tools. For instance, consider a student asking ChatGPT, “What happens when two waves are out of phase by 180 degrees?” ChatGPT might explain that the waves will cancel each other out due to destructive interference. While this is correct, the GAI might not elaborate on the conditions necessary for perfect cancelation, such as the requirement for the waves to have the same amplitude and frequency. If the student misunderstands this, they might incorrectly assume that any two out-of-phase waves will always completely cancel each other out, regardless of their other properties. This misinterpretation could lead to confusion when the student encounters real-world scenarios or more complex problems where these additional factors play a crucial role.
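The amplitude and frequency conditions described above can be checked numerically. The following is a minimal sketch and was not part of the classroom activity; the function name `peak_of_sum` and the chosen amplitudes, frequencies, and sampling settings are illustrative assumptions. It adds two sine waves that are 180 degrees out of phase and reports the largest displacement of the combined wave.

```python
import math


def peak_of_sum(a1, a2, phase, f1=1.0, f2=1.0, duration=10.0, samples=10_000):
    """Return the largest |y1 + y2| sampled over `duration` seconds.

    a1, a2 : amplitudes of the two waves (hypothetical example values)
    phase  : phase offset of the second wave, in radians
    f1, f2 : frequencies of the two waves, in Hz
    """
    peak = 0.0
    for i in range(samples):
        t = duration * i / samples
        y1 = a1 * math.sin(2 * math.pi * f1 * t)
        y2 = a2 * math.sin(2 * math.pi * f2 * t + phase)
        peak = max(peak, abs(y1 + y2))
    return peak


# Same amplitude and frequency, 180 degrees apart: the sum is essentially zero.
equal = peak_of_sum(1.0, 1.0, math.pi)

# Different amplitudes: a residual wave of amplitude |a1 - a2| remains.
unequal_amp = peak_of_sum(1.0, 0.5, math.pi)

# Different frequencies: the waves drift in and out of phase (beats).
unequal_freq = peak_of_sum(1.0, 1.0, math.pi, f2=1.1)

print(f"equal amplitude and frequency: peak = {equal:.3g}")
print(f"unequal amplitudes:            peak = {unequal_amp:.3g}")
print(f"unequal frequencies:           peak = {unequal_freq:.3g}")
```

Only the first case cancels, up to floating-point rounding. With unequal amplitudes the combined wave keeps an amplitude of |a1 - a2|, and with unequal frequencies the two waves are out of phase only momentarily, so the sum grows and shrinks over time.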

In summary, while ChatGPT can be a valuable supplementary tool for teaching physics concepts such as phase, it is best used in conjunction with other educational methods to address its limitations and enhance the overall learning experience.

4.3 General discussion and implications

In this manuscript, we describe the implementation details and findings from two in-class activity experiments conducted over two semesters in a university environment. These experiments were designed to evaluate undergraduate and graduate student (and instructor) reactions to and experiences with ChatGPT in classroom settings across two different academic disciplines. Overall, the integration of ChatGPT into classroom teaching was well-received by students, though outcomes such as learning effectiveness varied depending on the type and purpose of the activity. When the goal was to learn a new coding language in a statistics course, ChatGPT proved to be a highly effective tool, demonstrating its usefulness to students. In contrast, while students’ perceptions of the physics-based conceptual discussion activity were generally positive, they were less favorable compared to those of the coding-focused learning activity. It is also important to note that perceptions of the discussion activity improved significantly over time, primarily due to the incorporation of collaborative group activities that enhanced engagement and learning outcomes.

Graduate students appeared more ready and motivated to embrace ChatGPT as a tool for acquiring new skills compared to their undergraduate counterparts. At the same time, no significant differences in perceptions or reactions were found across other demographic categories, such as gender, race, or first-generation college status. Some of the key findings among all comparative analyses are visualized in Figure 3. Notably, there was an improvement in satisfaction with the physics discussion activity over time, a higher level of motivation among graduate students compared to undergraduates in the psychology coding activity, and a greater perceived usefulness of ChatGPT in coding-based activities compared to discussion-based activities.

FIGURE 3

Key differences in students’ perceptions across subgroups.

The findings from this preliminary study highlight key areas for improving the use of GAI tools like ChatGPT in the classroom. First, although students generally expressed positive satisfaction with the experimental class-based learning activities, their satisfaction levels were modest, with mean ratings falling below four on a five-point Likert scale. This indicates considerable room for improvement, particularly in fostering greater student engagement. Specifically, ChatGPT did not motivate students to learn new skills to the level we had anticipated in the physics course. This may be due to the nature of the discussion activity, where ChatGPT’s performance did not exceed students’ expectations as an effective facilitator of a discussion in that setting. Meanwhile, the overall improvement in students’ perceptions in the physics course likely reflected the impact of pedagogical adjustments. Likewise, contrary to our initial prediction that ChatGPT could and would be perceived to function effectively as a personalized tutor, students did not perceive it as significantly better in facilitating fair educational opportunities. It is noteworthy that, on average, students did not believe ChatGPT could address individual questions more effectively than their instructors. These findings suggest that while ChatGPT may be a valuable educational tool, its effectiveness depends on the context in which it is used, the nature of the learning activity, and students’ perceptions of its role in their education. For instance, its impact may be limited when students lack adequate support, or when they do not perceive it as meaningfully enhancing their engagement.

Although the present analyses did not identify statistically significant subgroup differences among participants (i.e., male vs. female, White vs. minority, first-generation vs. non-first-generation students), first-generation and minority students rated the use of ChatGPT as a fairer learning opportunity than their counterparts did. Additionally, minority students reported higher levels of confidence and motivation to learn new skills. Because these differences were not statistically significant, they should be read as tentative trends. Still, they suggest that GAI may be a helpful tool for addressing the needs of disadvantaged groups, offering benefits not typically provided by traditional instructional resources. These trends also come with a cautionary note: the easy accessibility of GAI tools should not overshadow the technical challenges that some students face. It is essential to provide adequate support to all students, particularly those who are less familiar with such technologies.

Instructors should thoughtfully plan how to integrate ChatGPT and similar AI tools into their teaching practices to maximize their potential benefits while addressing potential challenges. Based on our findings, we offer the following suggestions for designing effective classroom activities using GAI tools like ChatGPT:

Address technical challenges: Ensure students have adequate support to effectively use GAI tools. This may include providing clear instructions, step-by-step walkthroughs, and troubleshooting guidance to build student confidence in navigating these technologies.

Tackle pedagogical challenges: Integrate GAI in ways that enhance, rather than replace, traditional teaching methods. Activities should leverage AI to complement human instruction by encouraging critical thinking, collaboration, and engagement.

Stay updated on technological advancements: With the rapid pace of GAI development, it is crucial for instructors to stay informed about emerging tools and features. For example, Google Colab now includes the embedded Gemini chatbot for direct interactions.

Consider ethical concerns: Carefully examine and address issues such as privacy and potential bias in AI-generated content. Instructors should promote discussions around the responsible use of AI tools among students.

Overcome practical limitations: Be mindful of accessibility and cost-related barriers that may prevent widespread adoption of AI tools. Additionally, investing in ongoing teacher training is essential to equip instructors with the skills needed to effectively implement AI technologies.

Evaluate long-term impact: While GAI tools like ChatGPT show promise in enhancing student learning, their long-term impact on educational outcomes remains unclear. Further research is necessary to better understand how these tools influence learning processes and how to optimize their integration into diverse classroom settings.

By considering these factors, instructors can design GAI-enhanced activities that are engaging, equitable, and effective, ensuring that generative AI tools like ChatGPT become valuable assets in modern education.

This study has several limitations. First, it is exploratory in nature and focuses on how students engage with GAI in a classroom setting, particularly during a period when such tools were still relatively new and unfamiliar. It was not designed to rigorously evaluate the effect of GAI on learning outcomes (e.g., by including a control or comparison group in which a traditional teaching approach was used for the same learning objectives). In addition, certain potential confounding variables, such as cohort differences, were not fully accounted for in the study design. Therefore, while the findings are informative, they should be interpreted with caution. Second, the study would benefit from being conducted on a larger scale, ideally including students from more diverse backgrounds to enhance the generalizability of the findings. At the same time, it is increasingly important to teach students to use GAI tools critically, effectively, and responsibly. Third, the reliance on self-report measures may introduce bias in evaluating learning outcomes. Future studies could incorporate more objective measures to provide a more robust assessment of students’ learning and engagement. The measures could also be further refined, and their psychometric properties validated, to more robustly support the study’s conclusions. Furthermore, the literature review could be expanded to include more databases and a broader range of GAI tools beyond ChatGPT.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by University of Tennessee at Chattanooga IRB#23-107 (exempt). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

FG: Writing – original draft, Writing – review and editing. TL: Writing – original draft, Writing – review and editing. CC: Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The publication of this article is funded by Tian Li’s research grant from the University of Tennessee at Chattanooga. Tian Li also acknowledges support from the National Science Foundation through the ExpandQISE program under Award No. ExpandQISE-2426699.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) verify and take full responsibility for the use of generative AI in the preparation of this manuscript. Generative AI was used. We acknowledge the use of ChatGPT solely for grammar checks and proofreading assistance in the preparation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1574477/full#supplementary-material

Footnotes

1. In the spring semester of 2023, no group-based exploration tasks were included. Instead, the activities were instructor-led and demonstrated to the students. The activity outcome differences are discussed later in the manuscript.

References

1

Agarwal M. Sharma P. Goswami A. (2023). Analysing the applicability of ChatGPT, Bard, and Bing to generate reasoning-based multiple-choice questions in medical physiology. Cureus 15:e40977. doi: 10.7759/cureus.40977

2

Alali R. M. Al-Barakat A. A. (2023). Leveraging the revolutionary potential of ChatGPT to enhance kindergarten teachers’ educational performance: A proposed perception. Eur. J. Educ. Res. 106, 50–69. doi: 10.14689/ejer.2023.106.004

3

Alenizi M. A. K. Mohamed A. M. Shaaban T. S. (2023). Revolutionizing special education: How ChatGPT is transforming the way teachers approach language learning. Innoeduca 9, 5–23. doi: 10.24310/innoeduca.2023.v9i2.16774

4

Ali J. K. M. Shamsan M. A. A. Hezam T. A. Mohammed A. A. (2023). Impact of ChatGPT on learning motivation: Teachers and students’ voices. J. Engl. Stud. Arabia Felix 2, 41–49. doi: 10.56540/jesaf.v2i1.51

5

Aljindan F. Al Qurashi A. Albalawi I. Alanazi A. Aljuhani H. Falah Almutairi F. et al (2023). ChatGPT conquers the Saudi medical licensing exam: Exploring the accuracy of artificial intelligence in medical knowledge assessment and implications for modern medical education. Cureus 15:e45043. doi: 10.7759/cureus.45043

6

Alkhaaldi S. Kassab C. Dimassi Z. Oyoun Alsoud L. Al Fahim M. Al Hageh C. et al (2023). Medical student experiences and perceptions of ChatGPT and artificial intelligence: Cross-sectional study. JMIR Med. Educ. 9:e51302. doi: 10.2196/51302

7

Alneyadi S. Wardat Y. (2023). ChatGPT: Revolutionizing student achievement in the electronic magnetism unit for eleventh-grade students in Emirates schools. Contemp. Educ. Technol. 15:e448. doi: 10.30935/cedtech/13417

8

Amoozadeh M. Daniels D. Nam D. Kumar A. Chen S. Hilton M. et al (2024). “Trust in generative AI among students: An exploratory study,” in Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1, (ACM), 67–73.

9

Ariyaratne S. Iyengar K. Nischal N. Chitti Babu N. Botchu R. (2023). A comparison of ChatGPT-generated articles with human-written articles. Skeletal Radiol. 52, 1755–1758. doi: 10.1007/s00256-023-04340-5

10

Athanassopoulos S. Manoli P. Gouvi M. Lavidas K. Komis V. (2023). The use of ChatGPT as a learning tool to improve foreign language writing in a multilingual and multicultural classroom. Adv. Mobile Learn. Educ. Res. 3, 818–824. doi: 10.25082/amler.2023.02.009

11

Ayub I. Hamann D. Hamann C. Davis M. (2023). Exploring the potential and limitations of chat generative pre-trained transformer (ChatGPT) in generating board-style dermatology questions: A qualitative analysis. Cureus 15:e43717. doi: 10.7759/cureus.43717

12

Banerjee A. Ahmad A. Bhalla P. Goyal K. (2023). Assessing the efficacy of ChatGPT in solving questions based on the core concepts in physiology. Cureus 15:e43314. doi: 10.7759/cureus.43314

13

Bartoli A. May A. Al-Awadhi A. Schaller K. (2024). Probing artificial intelligence in neurosurgical training: ChatGPT takes a neurosurgical residents written exam. Brain Spine 4:102715. doi: 10.1016/j.bas.2023.102715

14

Bašić Ž. Banovac A. Kružić I. Jerković I. (2023). ChatGPT-3.5 as writing assistance in students’ essays. Human. Soc. Sci. Commun. 10, 1–5. doi: 10.1057/s41599-023-02269-7

15

Bhayana R. Krishna S. Bleakney R. (2023). Performance of ChatGPT on a Radiology board-style examination: Insights into current strengths and limitations. Radiology 307:e230582. doi: 10.1148/radiol.230582

16

Bin-Nashwan S. A. Sadallah M. Bouteraa M. (2023). Use of ChatGPT in academia: Academic integrity hangs in the balance. Technol. Soc. 75:102370. doi: 10.1016/j.techsoc.2023.102370

17

Bitzenbauer P. (2023). ChatGPT in physics education: A pilot study on easy-to-implement activities. Contemp. Educ. Technol. 15:e430. doi: 10.30935/cedtech/13176

18

Bonsu E. M. Bafour-Koduah D. (2023). From the consumers’ side: Determining students’ perception and intention to use ChatGPT in Ghanaian higher education. J. Educ. Soc. Multicult. 4, 1–29. doi: 10.2478/jesm-2023-0001

19

Borchert R. Hickman C. Pepys J. Sadler T. (2023). Performance of ChatGPT on the situational judgement test-a professional dilemmas-based examination for doctors in the United Kingdom. JMIR Med. Educ. 9:e48978. doi: 10.2196/48978

20

Chan C. K. Y. Hu W. (2023). Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 20:43. doi: 10.1186/s41239-023-00411-8

21

Chaudhry I. S. Sarwary S. A. M. El Refae G. A. Chabchoub H. (2023). Time to revisit existing student’s performance evaluation approach in higher education sector in a new era of ChatGPT—A case study. Cogent Educ. 10:2210461. doi: 10.1080/2331186X.2023.2210461

22

Chen J. Zhuo Z. Lin J. (2023). Does ChatGPT play a double-edged sword role in the field of higher education? An in-depth exploration of the factors affecting student performance. Sustainability 15:16928. doi: 10.3390/su152416928

23

Chen T. Multala E. Kearns P. Delashaw J. Dumont A. Maraganore D. et al (2023). Assessment of ChatGPT’s performance on neurology written board examination questions. BMJ Neurol. Open 5:e000530. doi: 10.1136/bmjno-2023-000530

24

Chiu T. K. (2024). The impact of Generative AI (GenAI) on practices, policies and research direction in education: A case of ChatGPT and Midjourney. Interactive Learn. Environ. 32, 6187–6203. doi: 10.1080/10494820.2023.2253861

25

Cingillioglu I. (2023). Detecting AI-generated essays: The ChatGPT challenge. Int. J. Information Learn. Technol. 40, 259–268. doi: 10.1108/IJILT-03-2023-0043

26

Clark T. M. Anderson E. Dickson-Karn N. M. Soltanirad C. Tafini N. (2023). Comparing the performance of college chemistry students with ChatGPT for calculations involving acids and bases. J. Chem. Educ. 100, 3934–3944. doi: 10.1021/acs.jchemed.3c00500

27

Cowling M. Crawford J. Allen K. A. Wehmeyer M. (2023). Using leadership to leverage ChatGPT and artificial intelligence for undergraduate and postgraduate research supervision. Australasian J. Educ. Technol. 39, 89–103. doi: 10.14742/ajet.8598

28

Crcek N. Patekar J. (2023). Writing with AI: University students’ use of ChatGPT. J. Lang. Educ. 9, 128–138. doi: 10.17323/jle.2023.17379

29

Cross J. Robinson R. Devaraju S. Vaughans A. Hood R. Kayalackakom T. et al (2023). Transforming medical education: Assessing the integration of ChatGPT into faculty workflows at a Caribbean medical school. Cureus 15:e41399. doi: 10.7759/cureus.41399

30

Currie G. Barry K. (2023). ChatGPT in nuclear medicine education. J. Nucl. Med. Technol. 51, 247–254. doi: 10.2967/jnmt.123.265844

31

Currie G. Singh C. Nelson T. Nabasenja C. Al-Hayek Y. Spuur K. (2023). ChatGPT in medical imaging higher education. Radiography (Lond) 29, 792–799. doi: 10.1016/j.radi.2023.05.011

32

Cuthbert R. Simpson A. (2023). Artificial intelligence in orthopaedics: Can chat generative pre-trained transformer (ChatGPT) pass section 1 of the Fellowship of the Royal College of Surgeons (Trauma & Orthopaedics) examination? Postgrad. Med. J. 99, 1110–1114. doi: 10.1093/postmj/qgad053

33

Dahlkemper M. N. Lahme S. Z. Klein P. (2023). How do physics students evaluate artificial intelligence responses on comprehension questions? A study on the perceived scientific accuracy and linguistic quality of ChatGPT. Phys. Rev. Phys. Educ. Res. 19:010142. doi: 10.1103/PhysRevPhysEducRes.19.010142

34

Dai Y. Lai S. Lim C. P. Liu A. (2023). ChatGPT and its impact on research supervision: Insights from Australian postgraduate research students. Australasian J. Educ. Technol. 39, 74–88. doi: 10.14742/ajet.8843

35

Danesh A. Pazouki H. Danesh K. Danesh F. Danesh A. (2023). The performance of artificial intelligence language models in board-style dental knowledge assessment: A preliminary study on ChatGPT. J. Am. Dent. Assoc. 154, 970–974. doi: 10.1016/j.adaj.2023.07.016

36

Das D. Kumar N. Longjam L. Sinha R. Deb Roy A. Mondal H. et al (2023). Assessing the capability of ChatGPT in answering first- and second-order knowledge questions on microbiology as per competency-based medical education curriculum. Cureus 15:e36034. doi: 10.7759/cureus.36034

37

Dasari D. Hendriyanto A. Sahara S. Suryadi D. Muhaimin L. H. Chao T. et al (2024). ChatGPT in didactical tetrahedron, does it make an exception? A case study in mathematics teaching and learning. Front. Educ. 8:1295413. doi: 10.3389/feduc.2023.1295413

38

Davies N. Wilson R. Winder M. Tunster S. McVicar K. Thakrar S. et al (2024). ChatGPT sits the DFPH exam: Large language model performance and potential to support public health learning. BMC Med. Educ. 24:57. doi: 10.1186/s12909-024-05042-9

39

Day T. (2023). A preliminary investigation of fake peer-reviewed citations and references generated by ChatGPT. Professional Geogr. 75, 1024–1027. doi: 10.1080/00330124.2023.2190373

40

de Vicente-Yagüe-Jara M. I. López-Martínez O. Navarro-Navarro V. Cuéllar-Santiago F. (2023). Writing, creativity, and artificial intelligence: ChatGPT in the university context. Comunicar Med. Educ. Res. J. 31, 45–54. doi: 10.3916/C77-2023-04

41

de Winter J. C. Dodou D. Stienen A. H. (2023). ChatGPT in education: Empowering educators through methods for recognition and assessment. Informatics 10:87. doi: 10.3390/informatics10040087

42

Dengel A. Gehrlein R. Fernes D. Görlich S. Maurer J. Pham H. H. et al (2023). Qualitative research methods for large language models: Conducting semi-structured interviews with ChatGPT and BARD on computer science education. Informatics 10:78. doi: 10.3390/informatics10040078

43

Dergaa I. Chamari K. Zmijewski P. Ben Saad H. (2023). From human writing to artificial intelligence generated text: Examining the prospects and potential threats of ChatGPT in academic writing. Biol. Sport 40, 615–622. doi: 10.5114/biolsport.2023.125623

44

Desaire H. Chua A. Isom M. Jarosova R. Hua D. (2023). Distinguishing academic science writing from humans or ChatGPT with over 99% accuracy using off-the-shelf machine learning tools. Cell Rep. Phys. Sci. 4:101426. doi: 10.1016/j.xcrp.2023.101426

45

Dhanvijay A. Pinjar M. Dhokane N. Sorte S. Kumari A. Mondal H. (2023). Performance of large language models (ChatGPT, Bing Search, and Google Bard) in solving case vignettes in physiology. Cureus 15:e42972. doi: 10.7759/cureus.42972

46

Ding L. Li T. Jiang S. Gapud A. (2023). Students’ perceptions of using ChatGPT in a physics class as a virtual tutor. Int. J. Educ. Technol. High. Educ. 20:63. doi: 10.1186/s41239-023-00434-1

47

Duong C. D. Vu T. N. Ngo T. V. N. (2023). Applying a modified technology acceptance model to explain higher education students’ usage of ChatGPT: A serial multiple mediation model with knowledge sharing as a moderator. Int. J. Manag. Educ. 21:100883. doi: 10.1016/j.ijme.2023.100883

48

Farrokhnia M. Banihashem S. K. Noroozi O. Wals A. (2024). A SWOT analysis of ChatGPT: Implications for educational practice and research. Innov. Educ. Teach. Int. 61, 460–474. doi: 10.1080/14703297.2023.2195846

49

Fergus S. Botha M. Ostovar M. (2023). Evaluating academic answers generated using ChatGPT. J. Chem. Educ. 100, 1672–1675. doi: 10.1021/acs.jchemed.3c00087

50

Flores-Cohaila J. García-Vicente A. Vizcarra-Jiménez S. De la Cruz-Galán J. P. Gutiérrez-Arratia J. D. Quiroga Torres B. G. et al (2023). Performance of ChatGPT on the Peruvian national licensing medical examination: Cross-sectional study. JMIR Med. Educ. 9:e48039. doi: 10.2196/48039

51

Friederichs H. Friederichs W. März M. (2023). ChatGPT in medical school: How successful is AI in progress testing? Med. Educ. Online 28:2220920. doi: 10.1080/10872981.2023.2220920

52

Fütterer T. Fischer C. Alekseeva A. Chen X. Tate T. Warschauer M. et al (2023). ChatGPT in education: Global reactions to AI innovations. Sci. Rep. 13:15310. doi: 10.1038/s41598-023-42227-6

53

Gencer A. Aydin S. (2023). Can ChatGPT pass the thoracic surgery exam? Am. J. Med. Sci. 366, 291–295. doi: 10.1016/j.amjms.2023.08.001

54

Ghafouri M. (2024). ChatGPT: The catalyst for teacher-student rapport and grit development in L2 class. System 120:103209. doi: 10.1016/j.system.2023.103209

55

Ghosh A. Maini Jindal N. Gupta V. Bansal E. Kaur Bajwa N. Sett A. (2023). Is ChatGPT’s knowledge and interpretative ability comparable to first professional MBBS (Bachelor of Medicine, Bachelor of Surgery) students of India in taking a medical biochemistry examination? Cureus 15:e47329. doi: 10.7759/cureus.47329

56

Giannos P. (2023). Evaluating the limits of AI in medical specialisation: ChatGPT’s performance on the UK neurology specialty certificate examination. BMJ Neurol. Open 5:e000451. doi: 10.1136/bmjno-2023-000451

57

Gill S. S. Xu M. Patros P. Wu H. Kaur R. Kaur K. et al (2024). Transformative effects of ChatGPT on modern education: Emerging Era of AI Chatbots. Internet Things Cyber Phys. Syst. 4, 19–23. doi: 10.1016/j.iotcps.2023.06.002

58

Guleria A. Krishan K. Sharma V. Kanchan T. (2023). ChatGPT: Ethical concerns and challenges in academics and research. J. Infect. Dev. Ctries 17, 1292–1299. doi: 10.3855/jidc.18738

59

Guo Y. Lee D. (2023). Leveraging ChatGPT for enhancing critical thinking skills. J. Chem. Educ. 100, 4876–4883. doi: 10.13140/RG.2.2.20970.32968

60

Hasanein A. Sobaih A. (2023). Drivers and consequences of ChatGPT use in higher education: Key stakeholder perspectives. Eur. J. Investig. Health Psychol. Educ. 13, 2599–2614. doi: 10.3390/ejihpe13110181

61

Herbold S. Hautli-Janisz A. Heuer U. Kikteva Z. Trautsch A. (2023). A large-scale comparison of human-written versus ChatGPT-generated essays. Sci. Rep. 13:18617. doi: 10.1038/s41598-023-45644-9

62

Hmoud M. Swaity H. Hamad N. Karram O. Daher W. (2024). Higher education students’ task motivation in the generative artificial intelligence context: The case of ChatGPT. Information 15:33. doi: 10.3390/info15010033

63

Hosseini M. Gao C. Liebovitz D. Carvalho A. Ahmad F. Luo Y. et al (2023). An exploratory survey about using ChatGPT in education, healthcare, and research. PLoS One 18:e0292216. doi: 10.1371/journal.pone.0292216

64

Hu J. Liu F. Chu C. Chang Y. (2023). Health care trainees’ and professionals’ perceptions of ChatGPT in improving medical knowledge training: Rapid survey study. J. Med. Internet Res. 25:e49385. doi: 10.2196/49385

65

Huang H. (2023). Performance of ChatGPT on registered nurse license exam in Taiwan: A descriptive study. Healthcare (Basel) 11:2855. doi: 10.3390/healthcare11212855

66

Huang Y. Gomaa A. Semrau S. Haderlein M. Lettmaier S. Weissmann T. et al (2023). Benchmarking ChatGPT-4 on a radiation oncology in-training exam and Red Journal Gray Zone cases: Potentials and challenges for AI-assisted medical education and decision making in radiation oncology. Front. Oncol. 13:1265024. doi: 10.3389/fonc.2023.1265024

67

Hutson J. (2024). Rethinking plagiarism in the era of generative AI. J. Intell. Commun. 4, 20–31. doi: 10.54963/jic.v4i1.220

68

Ignjatovic A. Stevanovic L. (2023). Efficacy and limitations of ChatGPT as a biostatistical problem-solving tool in medical education in Serbia: A descriptive study. J. Educ. Eval. Health Prof. 20:28. doi: 10.3352/jeehp.2023.20.28

69

Ilgaz H. Çelik Z. (2023). The significance of artificial intelligence platforms in anatomy education: An experience with ChatGPT and Google Bard. Cureus 15:e45301. doi: 10.7759/cureus.45301

70

Imran M. Almusharraf N. (2023). Analyzing the role of ChatGPT as a writing assistant at higher education level: A systematic review of the literature. Contemp. Educ. Technol. 15:e464. doi: 10.30935/cedtech/13605

71

Jeon J. Lee S. (2023). Large language models in education: A focus on the complementary relationship between human teachers and ChatGPT. Educ. Information Technol. 28, 15873–15892. doi: 10.1007/s10639-023-11834-1

72

Khlaif Z. Mousa A. Hattab M. Itmazi J. Hassan A. Sanmugam M. et al (2023). The potential and concerns of using AI in scientific research: ChatGPT performance evaluation. JMIR Med. Educ. 9:e47049. doi: 10.2196/47049

73

Kieser F. Wulff P. Kuhn J. Küchemann S. (2023). Educational data augmentation in physics education research using ChatGPT. Phys. Rev. Phys. Educ. Res. 19:020150. doi: 10.1103/PhysRevPhysEducRes.19.020150

74

Kiryakova G. Angelova N. (2023). ChatGPT—A challenging tool for the university professors in their teaching practice. Educ. Sci. 13:1056. doi: 10.3390/educsci13101056

75

Knoedler L. Alfertshofer M. Knoedler S. Hoch C. Funk P. Cotofana S. et al (2024). Pure wisdom or Potemkin villages? A comparison of ChatGPT 3.5 and ChatGPT 4 on USMLE step 3 style questions: Quantitative analysis. JMIR Med. Educ. 10:e51148. doi: 10.2196/51148

76

Küchemann S. Steinert S. Revenga N. Schweinberger M. Dinc Y. Avila K. E. et al (2023). Can ChatGPT support prospective teachers in physics task development? Phys. Rev. Phys. Educ. Res. 19:020128. doi: 10.1103/PhysRevPhysEducRes.19.020128

77

Kufel J. Paszkiewicz I. Bielówka M. Bartnikowska W. Janik M. Stencel M. et al (2023). Will ChatGPT pass the Polish specialty exam in radiology and diagnostic imaging? Insights into strengths and limitations. Pol. J. Radiol. 88, e430–e434. doi: 10.5114/pjr.2023.131215

78

Kumah-Crystal Y. Mankowitz S. Embi P. Lehmann C. (2023). ChatGPT and the clinical informatics board examination: The end of unproctored maintenance of certification? J. Am. Med. Inform. Assoc. 30, 1558–1560. doi: 10.1093/jamia/ocad104

79

Kung J. Marshall C. Gauthier C. Gonzalez T. Jackson J. (2023). Evaluating ChatGPT performance on the orthopaedic in-training examination. JB JS Open Access 8:e23.00056. doi: 10.2106/JBJS.OA.23.00056

80

Lai U. Wu K. Hsu T. Kan J. (2023). Evaluating the performance of ChatGPT-4 on the United Kingdom medical licensing assessment. Front. Med. 10:1240915. doi: 10.3389/fmed.2023.1240915

81

Lappalainen Y. Narayanan N. (2023). Aisha: A custom AI library chatbot using the ChatGPT API. J. Web Librariansh. 17, 37–58. doi: 10.1080/19322909.2023.2221477

82

Lee U. Han A. Lee J. Lee E. Kim J. Kim H. et al (2024). Prompt aloud!: Incorporating image-generative AI into STEAM class with learning analytics using prompt data. Educ. Inf. Technol. 29, 9575–9605. doi: 10.1007/s10639-023-12150-4

83

Leite B. S. (2023). Artificial intelligence and chemistry teaching: A propaedeutic analysis of ChatGPT in chemical concepts defining. Quimica Nova 46, 915–923. doi: 10.21577/0100-4042.20230059

84

Li B. Kou X. Bonk C. J. (2023). Embracing the disrupted language teaching and learning field: Analyzing YouTube content creation related to ChatGPT. Languages 8:197. doi: 10.3390/languages8030197

85

Lian Y. Tang H. Xiang M. Dong X. (2024). Public attitudes and sentiments toward ChatGPT in China: A text mining analysis based on social media. Technol. Soc. 76:102442. doi: 10.1016/j.techsoc.2023.102442

86

Limna P. Kraiwanit T. Jangjarat K. Klayklung P. Chocksathaporn P. (2023). The use of ChatGPT in the digital era: Perspectives on chatbot implementation. J. Appl. Learn. Teach. 6, 64–74. doi: 10.37074/jalt.2023.6.1.32

87

Lin C. Akuhata-Huntington Z. Hsu C. (2023). Comparing ChatGPT’s ability to rate the degree of stereotypes and the consistency of stereotype attribution with those of medical students in New Zealand in developing a similarity rating test: A methodological study. J. Educ. Eval. Health Prof. 20:17. doi: 10.3352/jeehp.2023.20.17

88

Livberber T. (2023). Toward non-human-centered design: Designing an academic article with ChatGPT. Profesional Inf. 32, 1–19. doi: 10.3145/epi.2023.sep.12

89

Livberber T. Ayvaz S. (2023). The impact of artificial intelligence in academia: Views of Turkish academics on ChatGPT. Heliyon 9:e19688. doi: 10.1016/j.heliyon.2023.e19688

90

Lower K. Seth I. Lim B. Seth N. (2023). ChatGPT-4: Transforming medical education and addressing clinical exposure challenges in the post-pandemic era. Indian J. Orthop. 57, 1527–1544. doi: 10.1007/s43465-023-00967-7

91

Luo W. He H. Liu J. Berson I. R. Berson M. J. Zhou Y. et al (2024). Aladdin’s Genie or Pandora’s box for early childhood education? Experts chat on the roles, challenges, and developments of ChatGPT. Early Educ. Dev. 35, 96–113. doi: 10.1080/10409289.2023.2214181

92

Luo Y. Weng H. Yang L. Ding Z. Wang Q. (2023). College students’ employability, cognition, and demands for ChatGPT in the AI Era among Chinese nursing students: Web-based survey. JMIR Form. Res. 7:e50413. doi: 10.2196/50413

93

Mannam S. Subtirelu R. Chauhan D. Ahmad H. Matache I. Bryan K. et al. (2023). Large language model-based neurosurgical evaluation matrix: A novel scoring criteria to assess the efficacy of ChatGPT as an educational tool for neurosurgery board preparation. World Neurosurg. 180, e765–e773. 10.1016/j.wneu.2023.10.043

Meo S. Al-Masri A. Alotaibi M. Meo M. Meo M. (2023). ChatGPT knowledge evaluation in basic and clinical medical sciences: Multiple choice question examination-based performance. Healthcare (Basel) 11:2046. 10.3390/healthcare11142046

Meron Y. Araci Y. T. (2023). Artificial intelligence in design education: Evaluating ChatGPT as a virtual colleague for post-graduate course development. Design Sci. 9:e30. 10.1017/dsj.2023.28

Michalon B. Camacho-Zuñiga C. (2023). ChatGPT, a brand-new tool to strengthen timeless competencies. Front. Educ. 8:1251163. 10.3389/feduc.2023.1251163

Morjaria L. Burns L. Bracken K. Ngo Q. Lee M. Levinson A. et al. (2023). Examining the threat of ChatGPT to the validity of short answer assessments in an undergraduate medical program. J. Med. Educ. Curric. Dev. 10:23821205231204178. 10.1177/23821205231204178

Muñoz S. A. S. Gayoso G. G. Huambo A. C. Tapia R. D. C. Incaluque J. L. Aguila O. E. P. et al. (2023). Examining the impacts of ChatGPT on student motivation and engagement. Soc. Space 23, 1–27.

Nam B. H. Bai Q. (2023). ChatGPT and its ethical implications for STEM research and higher education: A media discourse analysis. Int. J. STEM Educ. 10:66. 10.1186/s40594-023-00452-5

Ngo A. Gupta S. Perrine O. Reddy R. Ershadi S. Remick D. (2024). ChatGPT 3.5 fails to write appropriate multiple choice practice exam questions. Acad. Pathol. 11:100099. 10.1016/j.acpath.2023.100099

Niu Y. Xue H. (2023). Exercise generation and student cognitive ability research based on ChatGPT and Rasch Model. IEEE Access 11, 116695–116705. 10.1109/ACCESS.2023.3325741

Oh N. Choi G. Lee W. (2023). ChatGPT goes to the operating room: Evaluating GPT-4 performance and its potential in surgical education and training in the era of large language models. Ann. Surg. Treat. Res. 104, 269–273. 10.4174/astr.2023.104.5.269

OpenAI. (2022). ChatGPT: Optimizing Language Models for Dialogue. Available online at: https://openai.com/blog/chatgpt/ (accessed May 8, 2025).

Panthier C. Gatinel D. (2023). Success of ChatGPT, an AI language model, in taking the French language version of the European Board of Ophthalmology examination: A novel approach to medical knowledge assessment. J. Fr. Ophtalmol. 46, 706–711. 10.1016/j.jfo.2023.05.006

Parker J. Becker K. Carroca C. (2023). ChatGPT for automated writing evaluation in scholarly writing instruction. J. Nurs. Educ. 62, 721–727. 10.3928/01484834-20231006-02

Peres F. (2024). Health literacy in ChatGPT: Exploring the potential of the use of artificial intelligence to produce academic text. Cien Saude Colet 29:e02412023. 10.1590/1413-81232024291.02412023

Podlasov S. O. Matviichuk O. V. (2023). Application of ChatGPT in the teaching of physics. Inf. Technol. Learn. Tools 97, 149–166. 10.33407/itlt.v97i5.5374

Polyportis A. (2024). A longitudinal study on artificial intelligence adoption: Understanding the drivers of ChatGPT usage behavior change in higher education. Front. Artif. Intell. 6:1324398. 10.3389/frai.2023.1324398

Rahman M. S. Sabbir M. M. Zhang J. Moral I. H. Hossain G. M. S. (2023). Examining students’ intention to use ChatGPT: Does trust matter? Aust. J. Educ. Technol. 39, 51–71. 10.14742/ajet.8956

Raman R. Mandal S. Das P. Kaur T. Sanjanasri J. P. Nedungadi P. (2023). Exploring university students’ adoption of ChatGPT using the diffusion of innovation theory and sentiment analysis with gender dimension. Hum. Behav. Emerg. Technol. 2024:3085910. 10.1155/2024/3085910

Relmasira S. C. Lai Y. C. Donaldson J. P. (2023). Fostering AI literacy in elementary science, technology, engineering, art, and mathematics (STEAM) education in the age of generative AI. Sustainability 15:13595. 10.3390/su151813595

Riedel M. Kaefinger K. Stuehrenberg A. Ritter V. Amann N. Graf A. et al. (2023). ChatGPT’s performance in German OB/GYN exams - paving the way for AI-enhanced medical education and clinical practice. Front. Med. 10:1296615. 10.3389/fmed.2023.1296615

Romero-Rodríguez J. M. Ramírez-Montoya M. S. Buenestado-Fernández M. Lara-Lara F. (2023). Use of ChatGPT at university as a tool for complex thinking: Students’ perceived usefulness. J. New Approaches Educ. Res. 12, 323–339. 10.7821/naer.2023.7.1458

Ruiz-Rojas L. I. Acosta-Vargas P. De-Moreta-Llovet J. Gonzalez-Rodriguez M. (2023). Empowering education with generative artificial intelligence tools: Approach with an instructional design matrix. Sustainability 15:11524. 10.3390/su151511524

Saad A. Iyengar K. Kurisunkal V. Botchu R. (2023). Assessing ChatGPT’s ability to pass the FRCS orthopaedic part A exam: A critical analysis. Surgeon 21, 263–266. 10.1016/j.surge.2023.07.001

Salifu I. Arthur F. Arkorful V. Abam Nortey S. Solomon Osei-Yaw R. (2024). Economics students’ behavioural intention and usage of ChatGPT in higher education: A hybrid structural equation modelling-artificial neural network approach. Cogent Soc. Sci. 10:2300177. 10.1080/23311886.2023.2300177

Sallam M. Al-Salahat K. (2023). Below average ChatGPT performance in medical microbiology exam compared to university students. Front. Educ. 8:1333415. 10.3389/feduc.2023.1333415

Sallam M. Salim N. Barakat M. Al-Mahzoum K. Al-Tammemi A. Malaeb D. et al. (2023). Assessing health students’ attitudes and usage of ChatGPT in Jordan: Validation study. JMIR Med. Educ. 9:e48254. 10.2196/48254

Sánchez-Ruiz L. M. Moll-López S. Nuñez-Pérez A. Moraño-Fernández J. A. Vega-Fleitas E. (2023). ChatGPT challenges blended learning methodologies in engineering education: A case study in mathematics. Appl. Sci. 13:6039. 10.3390/app13106039

Scherr R. Halaseh F. Spina A. Andalib S. Rivera R. (2023). ChatGPT interactive medical simulations for early clinical education: Case study. JMIR Med. Educ. 9:e49877. 10.2196/49877

Shin D. Lee J. H. (2023). Can ChatGPT make reading comprehension testing items on par with human experts? Lang. Learn. Technol. 27, 27–40. doi: 10125/73530

Shin H. Kang J. (2023). Bridging the gap of bibliometric analysis: The evolution, current state, and future directions of tourism research using ChatGPT. J. Hosp. Tour. Manag. 57, 40–47. 10.1016/j.jhtm.2023.09.001

Shoufan A. (2023). Exploring students’ perceptions of ChatGPT: Thematic analysis and follow-up survey. IEEE Access 11, 38805–38818. 10.1109/ACCESS.2023.3268224

Shue E. Liu L. Li B. Feng Z. Li X. Hu G. (2023). Empowering beginners in bioinformatics with ChatGPT. Quant. Biol. 11, 105–108. 10.15302/j-qb-023-0327

Silva A. M. D. Rottava L. (2024). Lexical density in texts generated by ChatGPT: implications of artificial intelligence for writing in additional languages. Texto Livre 17:e47836. 10.1590/1983-3652.2024.47836

Singh H. Tayarani-Najaran M. H. Yaqoob M. (2023). Exploring computer science students’ perception of ChatGPT in higher education: A descriptive and correlation study. Educ. Sci. 13:924. 10.3390/educsci13090924

Smolansky A. Cram A. Raduescu C. Zeivots S. Huber E. Kizilcec R. F. (2023). “Educator and student perspectives on the impact of generative AI on assessments in higher education,” in Proceedings of the tenth ACM conference on Learning@ Scale, (ACM), 378–382.

Su Y. Lin Y. Lai C. (2023). Collaborating with ChatGPT in argumentative writing classrooms. Assess. Writing 57:100752. 10.1016/j.asw.2023.100752

Sudheesh R. Mujahid M. Rustam F. Shafique R. Chunduri V. Villar M. G. et al. (2023). Analyzing sentiments regarding ChatGPT using novel BERT: A machine learning approach. Information 14:474. 10.3390/info14090474

Surapaneni K. M. (2023). Assessing the performance of ChatGPT in medical biochemistry using clinical case vignettes: Observational study. JMIR Med. Educ. 9:e47191. 10.2196/47191

Tarisayi K. S. (2024). ChatGPT use in universities in South Africa through a socio-technical lens. Cogent Educ. 11:2295654. 10.1080/2331186X.2023.2295654

Theophilou E. Koyutürk C. Yavari M. Bursic S. Donabauer G. Telari A. et al. (2023). “Learning to prompt in the classroom to understand AI limits: A pilot study,” in Proceedings of the International Conference of the Italian Association for Artificial Intelligence, (Cham: Springer Nature Switzerland), 481–496.

Tiwari C. K. Bhat M. A. Khan S. T. Subramaniam R. Khan M. A. I. (2024). What drives students toward ChatGPT? An investigation of the factors influencing adoption and usage of ChatGPT. Interactive Technol. Smart Educ. 21, 333–355. 10.1108/ITSE-04-2023-0061

Tlili A. Shehata B. Adarkwah M. A. Bozkurt A. Hickey D. T. Huang R. et al. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 10:15. 10.1186/s40561-023-00237-x

Totlis T. Natsis K. Filos D. Ediaroglou V. Mantzou N. Duparc F. et al. (2023). The potential role of ChatGPT and artificial intelligence in anatomy education: A conversation with ChatGPT. Surg. Radiol. Anat. 45, 1321–1329. 10.1007/s00276-023-03229-1

Uddin S. J. Albert A. Ovid A. Alsharef A. (2023). Leveraging ChatGPT to aid construction hazard recognition and support safety education and training. Sustainability 15:7121. 10.3390/su15097121

van den Berg G. du Plessis E. (2023). ChatGPT and generative AI: Possibilities for its contribution to lesson planning, critical thinking and openness in teacher education. Educ. Sci. 13:998. 10.3390/educsci13100998

Vartiainen H. Tedre M. (2023). Using artificial intelligence in craft education: Crafting with text-to-image generative models. Digital Creativity 34, 1–21. 10.1080/14626268.2023.2174557

Vaughn J. Ford S. H. Scott M. Jones C. Lewinski A. (2024). Enhancing healthcare education: Leveraging ChatGPT for innovative simulation scenarios. Clin. Simul. Nurs. 87:101487. 10.1016/j.ecns.2023.101487

Vazquez-Cano E. Ramirez-Hurtado J. M. Saez-Lopez J. M. Lopez-Meneses E. (2023). ChatGPT: The brightest student in the class. Thinking Skills Creativity 49:101380. 10.1016/j.tsc.2023.101380

Veras M. Dyer J. O. Rooney M. Barros Silva P. G. Rutherford D. Kairy D. (2023). Usability and efficacy of artificial intelligence chatbots (ChatGPT) for health sciences students: Protocol for a crossover randomized controlled trial. JMIR Res Protoc. 12:e51873. 10.2196/51873

Von Garrel J. Mayer J. (2023). Artificial Intelligence in studies—Use of ChatGPT and AI-based tools among students in Germany. Human. Soc. Sci. Commun. 10:799. 10.1057/s41599-023-02304-7

Waltzer T. Cox R. L. Heyman G. D. (2023). Testing the ability of teachers and students to differentiate between essays generated by ChatGPT and high school students. Hum. Behav. Emerg. Technol. 2023:1923981. 10.1155/2023/1923981

Wandelt S. Sun X. Zhang A. (2023). AI-driven assistants for education and research? A case study on ChatGPT for air transport management. J. Air Transport Manag. 113:102483. 10.1016/j.jairtraman.2023.102483

Wang H. Wu W. Dou Z. He L. Yang L. (2023). Performance and exploration of ChatGPT in medical examination, records and education in Chinese: Pave the way for medical AI. Int. J. Med. Informatics 177:105173. 10.1016/j.ijmedinf.2023.105173

Wang L. Chen X. Wang C. Xu L. Shadiev R. Li Y. (2024). ChatGPT’s capabilities in providing feedback on undergraduate students’ argumentation: A case study. Thinking Skills Creativity 51:101440. 10.56297/vaca6841//BFFO7057/MYEH4562

Wardat Y. Tashtoush M. A. AlAli R. Jarrah A. M. (2023). ChatGPT: A revolutionary tool for teaching and learning mathematics. Eurasia J. Math. Sci. Technol. Educ. 19:em2286. 10.29333/ejmste/13272

West J. K. Franz J. L. Hein S. M. Leverentz-Culp H. R. Mauser J. F. Ruff E. F. et al. (2023). An analysis of AI-generated laboratory reports across the chemistry curriculum and student perceptions of ChatGPT. J. Chem. Educ. 100, 4351–4359. 10.1021/acs.jchemed.3c00581

Wood D. A. Achhpilia M. P. Adams M. T. Aghazadeh S. Akinyele K. Akpan M. et al. (2023). The ChatGPT artificial intelligence chatbot: How well does it answer accounting assessment questions? Issues Account. Educ. 38, 81–108. 10.2308/ISSUES-2023-013

Wu T. T. Lee H. Y. Li H. Huang C. N. Huang Y. M. (2024). Promoting self-regulation progress and knowledge construction in blended learning via ChatGPT-based learning aid. J. Educ. Comput. Res. 61, 3–31. 10.1177/07356331231191125

Xiao C. Xu S. X. Zhang K. Wang Y. Xia L. (2023). “Evaluating reading comprehension exercises generated by LLMs: A showcase of ChatGPT in education applications,” in Proceedings of the 18th Workshop on Innovative Use of NLP for Building Educational Applications (BEA 2023), (Association for Computational Linguistics), 610–625.
