Abstract

Introduction:

Competency-Based Education (CBE) has become increasingly important in Chilean higher education since the 2006 reform, which aimed to improve educational quality. However, implementing CBE effectively poses challenges, particularly in developing assessments that adhere to CBE principles and are consistent across all university courses. To address this issue, the Competency Assessment and Monitoring (C-A&M) model was created. This study evaluates the effectiveness of C-A&M in standardizing course assessments within the CBE paradigm at the Pontifical Catholic University of Valparaíso (PUCV) in Chile.

Methods:

Two observational studies were conducted. The first study utilized a within-group design and included 20 Engineering courses. The second study employed a between-groups design, examining the perceptions of 109 instructors from various faculties, including Law, Economic and Administrative Sciences, Philosophy, and Education, among others.

Results:

The within-group study emphasized the need for better course assessment and alignment of learning objectives with competencies. It found a positive correlation between C-A&M and the effective implementation of CBE in university courses. The between-groups study showed a minor effect size but suggested a similar relationship.

Discussion:

Assessment based on CBE principles is not being effectively implemented at PUCV. Key areas for improvement include the evaluation plan, aligning learning objectives with competencies, and coordinating training with these objectives. The application of C-A&M has led to significant improvements, indicating a positive correlation between its use and these enhancements.

1 Introduction

Competency-Based Education (CBE) has emerged as a crucial approach in higher education in Chile since the 2006 educational reform (Zancajo and Valiente, 2019). This paradigm emphasizes the development of specific competencies in students, aiming to enhance academic outcomes and better prepare them for professional environments. The recognition of CBE as a fundamental approach reflects a broader commitment to improving educational quality and effectiveness in the country.

CBE's gradual consolidation and expansion can be attributed to its proven ability to enhance academic results. Studies conducted in Chile, such as those by Letelier et al. (2003), Gomez et al. (2017), and Sanchez-Garcia et al. (2019), highlight the positive results associated with CBE implementation. CBE has become a transformative force in the global educational landscape, with successful implementations in many other countries, such as Canada (Karpinski et al., 2024; Faiella and Styles, 2024), Dubai (Shadan et al., 2025), Germany (Kruppa et al., 2024), India (Sahadevan et al., 2021; Singh and Shah, 2023), Italy (Barbina et al., 2025), Kenya (Ruparelia et al., 2021), Malaysia (Shariff and Razak, 2022), Mexico (Martínez-Ávila et al., 2024), Spain (Vázquez-Espinosa et al., 2024), South Africa (Nel et al., 2023), and the United States (Banse et al., 2024; Chen et al., 2024).

However, despite CBE's growing recognition and implementation, several challenges impede its effective integration into the educational framework (Struyven and De Meyst, 2010; Deng et al., 2024). As pointed out by Morales and Zambrano (2016), aligning competency-based assessment with student learning outcomes remains a significant hurdle within Chile. This misalignment can lead to inconsistencies in the quality and competencies of engineering graduates across different institutions, affecting the expected outcomes (Cruz et al., 2019). This has become even more critical since the Chilean National Accreditation Commission (CNA-Chile) published the New Quality Criteria and Standards for Education on September 30th, 2021. These criteria and standards adopt CBE and have been mandatory for all Chilean universities since October 1st, 2023. They are classified into six main scopes: (1) Institutional Accreditation, (2) Focus on Learning Outcomes, (3) Research Development, (4) Community Engagement, (5) Efficient Institutional Management, and (6) Internationalization. Among them, the focus on learning outcomes entails a central concern for the quality of education delivered and the assessment of student progress. Nevertheless, CBE assessment at the level of learning outcomes is still not being fully addressed by academic institutions, which puts at risk their compliance with the current regulatory framework for education quality assurance outlined by CNA-Chile. Furthermore, operational obstacles such as limited faculty time, large class sizes, and other organizational factors (fundamentally, the contracts and incentives of the teaching staff) also complicate widespread adoption, as highlighted by Koenen et al. (2015).

In addition, the implementation of innovative practices at the micro-curricular level is largely restricted to a small group of educators, further complicating efforts to advance CBE in the country (Pey et al., 2013). This limited engagement suggests that a broader approach is necessary to foster widespread acceptance and integration of competency-based methods among educators in Chile. In this sense, and as pointed out by Zlatkin-Troitschanskaia and Pant (2016), there is a significant need for valid models and instruments that adequately support students' assessment under the CBE paradigm. Traditional assessment practices in Chilean higher education predominantly focus on individual learning and rely heavily on memorization and reproduction of content. The Organisation for Economic Co-operation and Development (OECD) has reported that these practices limit the potential of CBE, emphasizing the need for a shift toward more dynamic and competency-focused assessments (OECD, 2009, 2017). To address these issues, we created the Competency Assessment and Monitoring (C-A&M) model (Vargas et al., 2019; Marin et al., 2020; Vargas et al., 2023, 2024).

This paper discusses the experience of using C-A&M to standardize course assessments at the Pontifical Catholic University of Valparaíso (PUCV) in Chile. The report includes two observational studies: one with a within-groups design involving 20 Engineering courses, and another with a between-groups design that examines the perceptions of 109 instructors from various faculties, including Law, Economic and Administrative Sciences, Philosophy, and Education, among others. Based on our experience, CBE is not currently being implemented effectively at PUCV. Several aspects need improvement, especially the evaluation plan, the alignment of learning objectives with competencies, and the coordination of training activities with the learning objectives. Nevertheless, following the application of C-A&M, we observed significant improvements in these areas, which indicates a positive correlation between the use of C-A&M and these enhancements.

The remainder of this paper is organized as follows. Section 2 provides a brief introduction to C-A&M. Section 3 outlines the methodology we used to evaluate the current CBE implementations in PUCV's courses and assess the impact of C-A&M on these implementations. Section 4 summarizes the findings from our studies. Section 5 discusses the implications and limitations of our studies, and also potential threats to the validity of our conclusions.

2 A brief introduction to C-A&M

The goal of C-A&M is to provide instructors with a systematic approach to evaluating students. To do so, C-A&M requires identifying 5 types of elements and their interrelationships in a decompositional manner: Competencies (Cs), Learning Outcomes (LOs), Global Indicators (GIs), Specific Indicators (SIs), and Assessment Tools (ATs). Let us introduce C-A&M with a course on Automatic Control that is part of the Electronic Engineering master's program at PUCV's Faculty of Engineering. The course lasts a total of 16 weeks and covers the fundamental concepts of linear control systems. The lectures span 4 h per week and, after them, students participate in weekly simulation sessions, each lasting 2 h, where they work in pairs to apply the control theory they have learned using specialized software tools.

The current graduate profile of the study program encompasses a total of 17 competencies. Specifically, the automatic control course contributes to the development of two of these competencies, which are described below.

The student ...

combines basic science and engineering knowledge to identify, analyze, and solve problems in the field (Competency C1).

models and simulates processes to optimize their parameters and enhance their operating conditions (Competency C2).

The first step in applying C-A&M is to define the learning outcomes, which aim to connect the course content with the required competencies. In this case, the following LOs have been established:

The student will be able to ...

use control system analysis methodologies to address discipline-related problems (Learning Outcome LO1.1).

use control system design methodologies to address discipline-related problems (Learning Outcome LO1.2).

model and simulate control systems to address discipline-related problems (Learning Outcome LO2.1).

In C-A&M, the decompositions are represented as weighted means, with the weights indicating each element's contribution (weights are scaled between 0 and 1, and they must sum to 1). Weighted means are convenient because they are easy to understand and use. For instance, Equation 1 summarizes the decomposition of competencies into specific learning outcomes. Competency C1 is developed through two learning outcomes: LO1.1, which focuses on control system analysis, and LO1.2, which accounts for control system design. Both learning outcomes contribute equally to this competency, so each LO has a weight of 0.5.
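Written out with the weights stated above, and assuming that the symbols $C_1$, $LO_{1.1}$, and $LO_{1.2}$ denote the grades obtained for the competency and its two learning outcomes (this notation is an assumption), the decomposition can be expressed as:

$$
C_1 = 0.5 \cdot LO_{1.1} + 0.5 \cdot LO_{1.2} \tag{1}
$$

The two weights lie between 0 and 1 and sum to 1, as the weighted-mean formulation requires.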

Similarly, competency C2 is developed through LO2.1, which is related to modeling and simulating control systems. In this case, this single LO contributes fully to the corresponding competency, so its weight is 1 (i.e., C2 = LO2.1).

LOs are further broken down into GIs, which are then refined into SIs, which are finally evaluated with ATs. For each AT, a rubric is defined (i) to ensure that each student is assessed consistently and objectively and (ii) to provide constructive feedback to the students. The rubric outlines specific criteria and performance standards that guide the evaluation process. It clarifies expectations, making it easier for evaluators to assess the quality of the work based on predetermined metrics. This structured approach not only enhances the transparency of the assessment but also provides valuable feedback to students, helping them identify areas for growth and development.

In the automatic control course, a one-to-one assessment strategy is used, i.e., LO1.1 = AT1, LO1.2 = AT2, and LO2.1 = AT3. AT1 and AT2 refer to traditional individual written tests, while AT3 involves a simulation-based homework assignment that is completed in pairs. Tables 1–3 present the GIs and SIs used in AT1, AT2, and AT3.

Table 1

| LO | GI description | GI weight | SI description (The student ...) | SI weight |
|---|---|---|---|---|
| LO1.1 | GI1: Analysis of the time response and performance specifications | 0.2 | SI1: Determines the transient response specifications | 0.5 |
| | | | SI2: Determines the steady-state error | 0.5 |
| | GI2: Control system analysis by using control diagrams (rlocus/bode/nyquist) | 0.5 | SI3: Determines and plots the root-locus | 0.6 |
| | | | SI4: Determines phase and gain margins | 0.4 |
| | GI3: Interpretation and validation of results obtained from control system analysis | 0.3 | SI5: Interprets the transient response | 0.2 |
| | | | SI6: Interprets the steady-state response | 0.2 |
| | | | SI7: Interprets stability from rlocus diagrams | 0.3 |
| | | | SI8: Interprets stability from phase and gain margins | 0.3 |

Indicators and weights for AT1.

Table 2

| LO | GI description | GI weight | SI description (The student ...) | SI weight |
|---|---|---|---|---|
| LO1.2 | GI1: Analytical design of controllers | 0.6 | SI1: Designs phase lead/lag controllers | 1/3 |
| | | | SI2: Designs PID controllers | 1/3 |
| | | | SI3: Designs state-space controllers | 1/3 |
| | GI2: Analytical validation of controllers | 0.4 | SI4: Validates the design of phase lead/lag controllers | 0.4 |
| | | | SI5: Validates the design of PID controllers | 0.4 |
| | | | SI6: Validates the design of state-space controllers | 0.2 |

Indicators and weights for AT2.

Table 3

| LO | GI description | GI weight | SI description (The student ...) | SI weight |
|---|---|---|---|---|
| LO2.1 | GI1: Deployment of simulations of control systems | 0.2 | SI1: Configures the simulation parameters | 0.25 |
| | | | SI2: Deploys and runs control system simulations | 0.75 |
| | GI2: Analysis of control systems by simulation tools | 0.4 | SI3: Time-domain control system analysis by simulations | 0.4 |
| | | | SI4: Frequency-domain control system analysis by simulations | 0.4 |
| | | | SI5: Interprets simulation plots | 0.2 |
| | GI3: Design and validation of controllers by simulation tools | 0.4 | SI6: Uses simulations to support the design of controllers | 0.5 |
| | | | SI7: Validates the design of controllers by simulations | 0.5 |

Indicators and weights for AT3.
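To make the bottom-up computation concrete, the following Python sketch propagates rubric scores through the weights of Table 1 up to LO1.1 and then to competency C1. It is only an illustration under stated assumptions: the SI scores, the LO1.2 grade, and the function names (`weighted_mean`, `lo_grade`) are hypothetical, and we assume that every SI is scored on the Chilean 1 to 7 scale.

```python
# Weights copied from Table 1 (AT1, which assesses LO1.1).
# Structure: GI -> (GI weight, {SI: SI weight}).
AT1_WEIGHTS = {
    "GI1": (0.2, {"SI1": 0.5, "SI2": 0.5}),
    "GI2": (0.5, {"SI3": 0.6, "SI4": 0.4}),
    "GI3": (0.3, {"SI5": 0.2, "SI6": 0.2, "SI7": 0.3, "SI8": 0.3}),
}


def weighted_mean(scores, weights):
    """Weighted mean of `scores`; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * scores[name] for name in weights)


def lo_grade(si_scores, at_weights):
    """Aggregate SI scores into GI scores, then GI scores into one LO grade."""
    gi_scores = {
        gi: weighted_mean(si_scores, si_weights)
        for gi, (_, si_weights) in at_weights.items()
    }
    gi_weights = {gi: gi_weight for gi, (gi_weight, _) in at_weights.items()}
    return weighted_mean(gi_scores, gi_weights)


# Hypothetical rubric scores of one student (assumed 1-7 scale).
si_scores = {
    "SI1": 5.0, "SI2": 4.0, "SI3": 3.0, "SI4": 4.0,
    "SI5": 6.0, "SI6": 5.0, "SI7": 4.0, "SI8": 3.0,
}

lo_1_1 = lo_grade(si_scores, AT1_WEIGHTS)
lo_1_2 = 4.6  # hypothetical grade for LO1.2, which would come from AT2

# C1 is the equally weighted mean of LO1.1 and LO1.2.
c1 = weighted_mean(
    {"LO1.1": lo_1_1, "LO1.2": lo_1_2},
    {"LO1.1": 0.5, "LO1.2": 0.5},
)
print(f"LO1.1 = {lo_1_1:.2f}, C1 = {c1:.3f}")
```

With these hypothetical scores, the student falls just below the passing grade in LO1.1 but still attains C1, which illustrates why inspecting both abstraction levels can be informative.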

In 2016, C-A&M was first implemented in the course. Since then, C-A&M has proven to help keep students informed about their grades and evaluate their performance based on competencies and learning outcomes. Additionally, it has assisted teachers in monitoring the progress of the course over time by measuring, recording, and tracking students' academic performance. This information is then used to implement corrective actions to improve competency attainment in future courses.

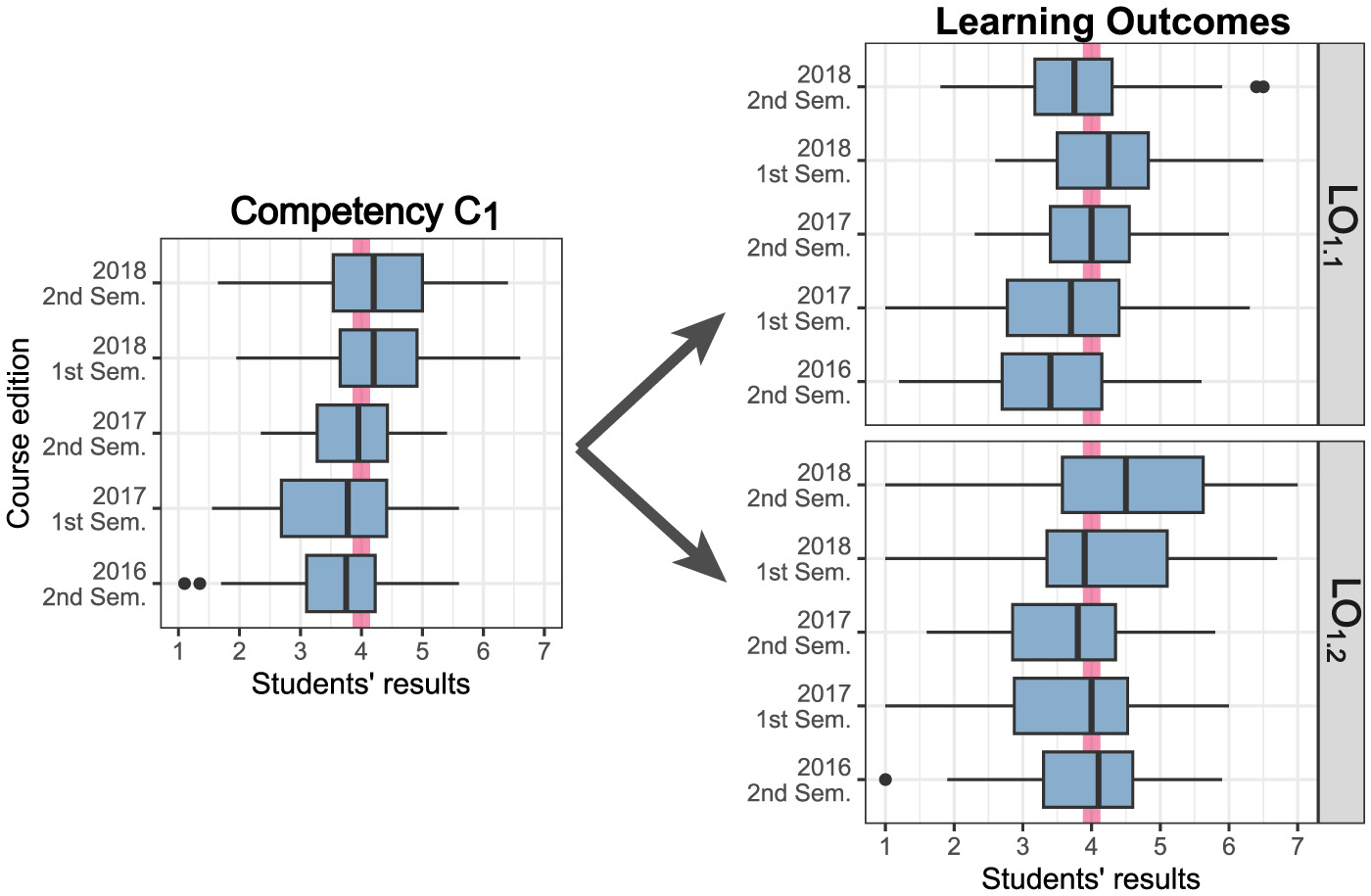

For example, the left side of Figure 1 presents a boxplot illustrating students' progress in fulfilling C1 over five consecutive course editions from 2016 to 2018. On average, there were 60.4 students enrolled in each course. It is important to note that in the Chilean university system, grades range from 1 to 7, with 4 being the minimum passing score. Figure 1 displays a red line highlighting the boundary between the successful and unsuccessful achievement of competencies and learning outcomes. The instructors expressed significant concern over the low competency achievement in the initial versions of the course. For example, in the 2nd semester of 2016, most students did not achieve competency C1.

Figure 1

Example of the type of analysis C-A&M supports: students' results for C1 and its associated LO1.1 and LO1.2.

C-A&M facilitates the transition between abstraction levels to identify the root cause of educational problems. For instance, the right side of Figure 1 shows students' results at the LO level. It appears that LO1.1 was the main factor contributing to the low achievement of C1 in the second semester of 2016, as its median score was only 3.4. In contrast, LO1.2 had a median score of 4.1. Consequently, teachers decided to focus on improving the instruction for LO1.1. To address this, they revised the teaching materials for control system analysis and reorganized the course schedule to allocate more class time to LO1.1. This change led to a slight improvement in student grades for LO1.1 during the first semester of 2017. However, this improvement came at the expense of lower performance in LO1.2, as the reduced class time negatively impacted students' understanding of that learning outcome. As a result, the teachers then redirected their efforts toward enhancing student performance in LO1.2. It is noticeable that instructors have struggled to find the right balance between learning outcomes LO1.1 and LO1.2 over various editions of the course. Nevertheless, the actions taken by the instructors have had a cumulative impact on C1, which became palpable in the first semester of 2018.
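The drill-down described above can be sketched in a few lines of Python. The grade lists below are hypothetical and were chosen only so that their medians match the values reported for the second semester of 2016 (3.4 and 4.1); the function name `weakest_learning_outcomes` is likewise an assumption made for illustration.

```python
from statistics import median

PASSING_GRADE = 4.0  # minimum passing score on the Chilean 1-7 scale


def weakest_learning_outcomes(lo_grades, threshold=PASSING_GRADE):
    """Return the LOs whose median grade is below `threshold`, lowest first."""
    medians = {lo: median(grades) for lo, grades in lo_grades.items()}
    below = {lo: m for lo, m in medians.items() if m < threshold}
    return dict(sorted(below.items(), key=lambda item: item[1]))


# Hypothetical grades for one course edition.
lo_grades = {
    "LO1.1": [2.8, 3.1, 3.4, 3.9, 4.5],
    "LO1.2": [3.5, 3.9, 4.1, 4.6, 5.2],
}

flagged = weakest_learning_outcomes(lo_grades)
print(flagged)
```

Only LO1.1 is flagged here, which mirrors the reasoning that led the teachers to concentrate first on control system analysis.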

C-A&M assists instructors in many ways:

It avoids missing any of the key components of competency-based education.

It helps to think in a top-down manner, moving from abstract concepts to concrete components, which aids in designing the course assessment.

It helps to think in a bottom-up manner, which is useful to derive the grades of the abstract concepts (i.e., competencies and learning outcomes) from the most concrete ones (i.e., the rubric). This is done automatically using the weighted means. Also, by modeling each component's contribution with weighted means, C-A&M encourages instructors to reflect on these relationships and their importance.

It prevents instructors from getting overwhelmed by the complexity of the whole model by allowing them to focus on one element at a time when defining each component. For example, when defining LO1.1, the instructor concentrated solely on the breakdown of this learning outcome into its global indicators, without being distracted by other LOs or competencies.

It offers a thorough overview of the assessment components for a course.

To learn more about C-A&M, please refer to the references summarized in Table 4. The last column indicates the open repositories that store the anonymized experimental data and the scripts to analyze them.

Table 4

| References | Summary | Data repository |

|---|---|---|

| Vargas et al. (2019) | This paper provides a comprehensive and detailed overview of C-A&M. | https://github.com/rheradio/C-AM/ |

| Marin et al. (2020) | This paper reports the use of C-A&M to assess the interactive simulation tool LCSD, finding it effective for enhancing analysis skills in an automatic control course while also noting areas for future improvement. | https://github.com/rheradio/LCSDAssessment |

| Vargas et al. (2023) | This paper uses C-A&M to evaluate Factory I/O, a 3D simulation tool, to enhance practical learning. It demonstrates that Factory I/O complements Matlab/Simulink by helping students develop essential real-world skills and supports continuous improvement. | https://github.com/rheradio/FactoryIO |

| Vargas et al. (2024) | This paper presents a comprehensive discussion of the example summarized in this section and another case in which C-A&M is used in a laboratory course. The second example is notably more complex, involving 3 competencies, 6 LOs, and 4 ATs. Additionally, it includes data regarding the use of C-A&M in 15 other courses across 8 university degrees, such as computer programming, computer networks, digital technologies for learning, and professional teacher development. | https://github.com/rheradio/CAM |

References to learn more about C-A&M.

Since most of us teach at engineering schools, it was easier to begin testing C-A&M in engineering courses. As C-A&M proved its benefits, we started promoting its use in other faculties. For example, in Vargas et al. (2024), we reported C-A&M's use in PUCV's School of Pedagogy. In the current paper, we continue this trend by examining C-A&M's impact not only on engineering courses but also on other disciplines such as Law, Marine Sciences, Geography, Theology, and more.

3 Materials and methods

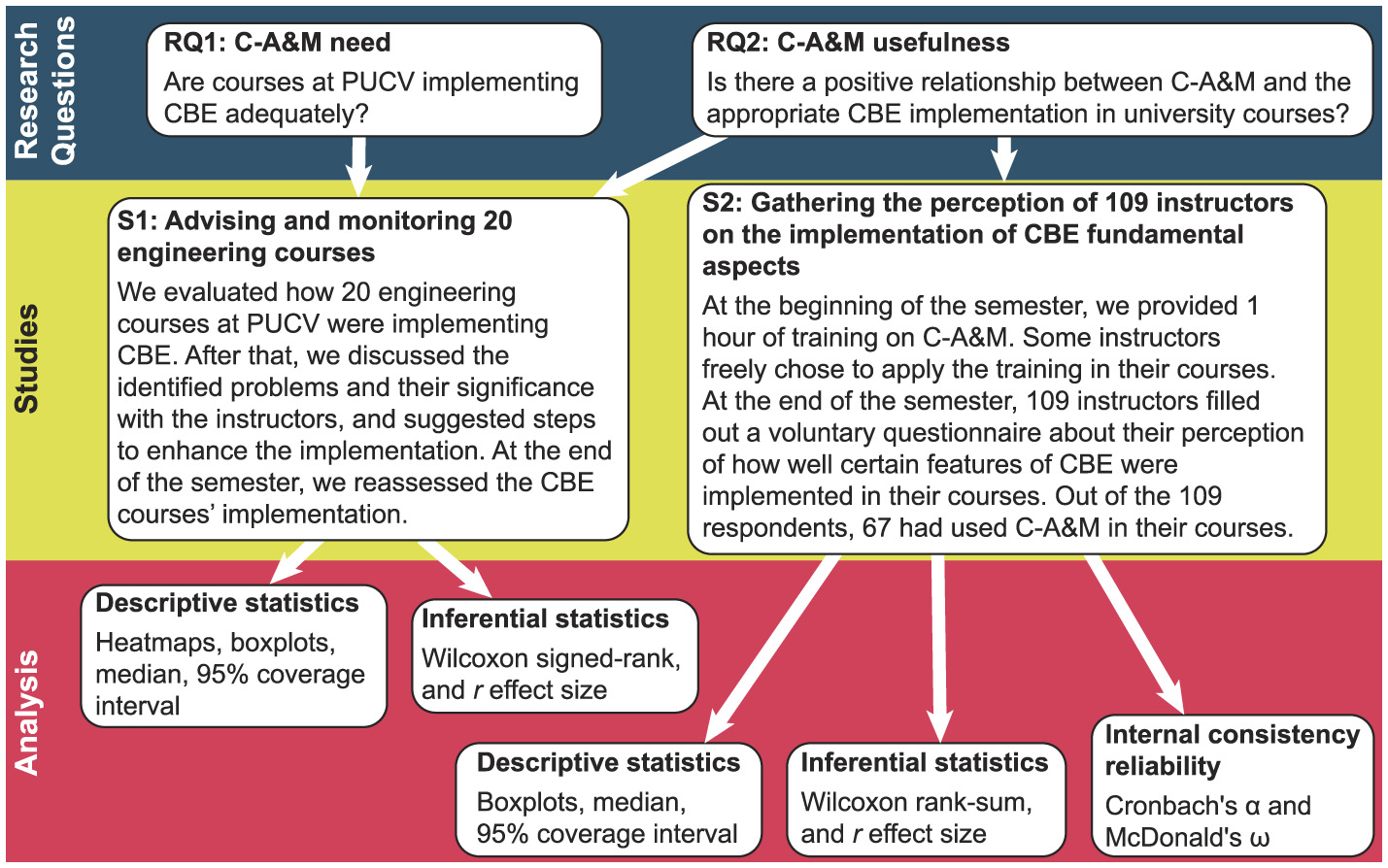

Figure 2 outlines the method we used to evaluate (i) the current CBE implementations in PUCV's courses and (ii) the impact of C-A&M on these implementations. The following subsections provide a detailed description of the method.

Figure 2

Schematic summary of the evaluation method we followed.

3.1 Design

Our investigation targeted two Research Questions (RQ1 and RQ2):

RQ1: C-A&M's need. Are courses at PUCV implementing CBE adequately? Specifically, are they identifying, aligning, and assessing the competencies?

RQ2: C-A&M's usefulness. Is there a positive relationship between C-A&M and the appropriate CBE implementation in university courses?

To answer the RQs, we performed two complementary Studies:

S1: Advising and monitoring 20 engineering courses. S1 followed a within-groups design. At the beginning of the semester, we issued an open call for participation in all courses offered at the Engineering School of PUCV. The coordinators of 20 different courses chose to take part. First, we assessed the CBE implementation in all these courses. Afterward, we discussed the issues we identified and their significance with the instructors, suggesting steps for improvement. At the end of the semester, we conducted a follow-up assessment to evaluate how CBE had been reimplemented in these courses.

S2: Gathering the perception of 109 instructors on the implementation of CBE fundamental aspects. S2 used a between-groups design. At the beginning of the semester, we offered a one-hour training session on C-A&M. Some instructors freely chose to incorporate the training content into their courses. At the end of the semester, 109 instructors completed a voluntary questionnaire assessing their perceptions of how well certain CBE features had been implemented in their courses. Of the 109 respondents, 67 had used C-A&M in their courses.

As shown in Figure 2, both S1 and S2 contribute to answering question RQ2, thereby improving the reliability of the investigation results. Also, both studies employ an observational design rather than an experimental one. We chose this approach due to several ethical considerations:

Randomly assigning instructors to use C-A&M might have disrupted their ability to teach effectively or led to resistance. In contrast, the observational approach ensured that participation was voluntary and did not force instructors to alter their everyday activities.

An experimental design would have required a control group, which means that some instructors and their students would not have been able to receive the potential benefits of C-A&M, thus leading to inequality in learning opportunities.

Universities are complex environments with tight schedules and pre-established curricula. Introducing an experimental design would have required significant changes to teaching methods, potentially disrupting the educational flow.

3.2 Participants

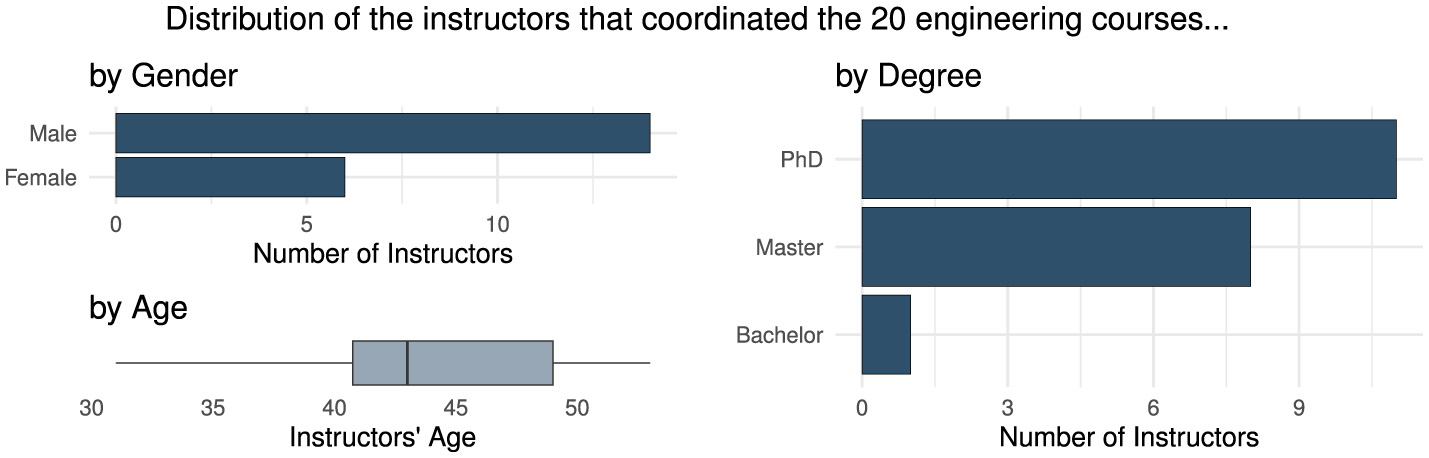

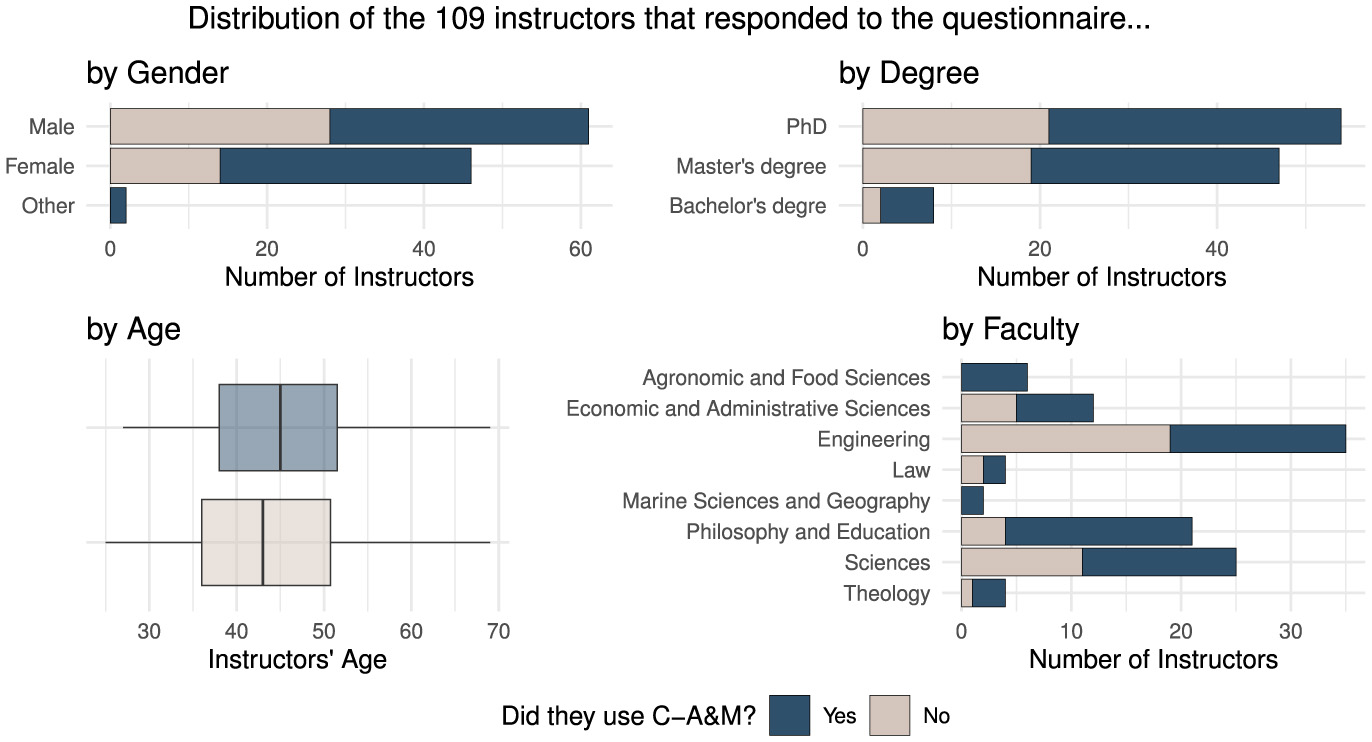

Figures 3 and 4 summarize the distribution of participants by gender, age, academic degree, and faculty (all participants in S1 are from the Engineering School, while participants in S2 are from eight different faculties).

Figure 3

Characterization of the participants in S1.

Figure 4

Characterization of the participants in S2.

3.3 Procedure

3.3.1 Procedure for S1

We evaluated the CBE implementation of the participating courses twice: at the beginning of the semester and again at the end, after we had provided recommendations to the course coordinators. To facilitate this process, we created the rubric detailed in Appendix 1, which encompassed four key dimensions, each one with its own sub-dimensions:

Competencies: The quality of the course competencies' specification (c_quality), structure (c_structure), and components (c_components).

LOs: The quality of the specification and structure of the LOs (lo_quality and lo_structure), and their alignment with the competencies, course content, formative activities, and evaluation tools (lo_alignment).

Content & training activities: The relevance of the content and training activities (cta_relevance), to what extent they covered all LOs (cta_coverage), their sequencing throughout the course (cta_sequence), and their alignment with the LOs (cta_alignment).

Evaluation plan: The quality of the evaluation plan, including whether the ATs covered all LOs (ep_coverage), whether the ATs were relevant to the competency-based assessment context (ep_ats), whether the course included a detailed evaluation scheme based on achievement indicators that allowed the precise assessment of each LO (ep_achiev_ind), and, finally, the quality of the assessment rubrics used in the course (ep_rubrics).

3.3.2 Procedure for S2

At the beginning of the semester, we organized a one-hour training session on C-A&M, which was available to all interested instructors at PUCV. After the training, some instructors applied what they had learned in their courses. By the end of the semester, 109 instructors completed the questionnaire included in Appendix 2 on a voluntary basis. The questionnaire assessed five dimensions:

Coherence: Whether (i) the relationship between competencies and LOs was coherent, (ii) the ATs effectively evaluated these outcomes, and (iii) the assigned weights for the assessments were appropriate for measuring competencies.

Exercises: Whether the assessment exercises and study cases engaged students in work-related scenarios relevant to their fields, challenging them while maintaining a clear link between competencies and LOs and reflecting common situations they might encounter in their careers.

Feedback: Whether the instructor's review was provided within two weeks and helped students understand their mistakes and areas of improvement.

Alignment with CBE: Whether the course (i) was designed to accommodate diverse students and followed a competency-based approach, and (ii) it employed teaching methodologies that promoted the development and practical application of skills while engaging students as active participants.

Assessment: Whether (i) the course aligned its rubrics with LOs and clearly defined levels of achievement in a structured manner that guided students, (ii) these levels were attainable and demonstrated competency progression, and (iii) the rubrics were accessible to the students in the virtual classroom before their evaluations, along with detailed explanations of their content and goals.

3.4 Materials

3.4.1 Data collection

In S1, we held in-person meetings with the coordinators of the participating courses. We used the rubric provided in Appendix 1 to guide our discussions and conduct the course evaluations, which were recorded in CSV files. After evaluating all the courses, we prepared reports outlining the necessary actions for each course. We then sent these reports to the coordinators and scheduled another meeting to discuss the proposed improvements in detail. At the end of the semester, we met with the coordinators for a final evaluation of the courses, and recorded the results in CSV files.

In S2, we made the questionnaire in Appendix 2 available on the LimeSurvey platform for 2 weeks. The link to the questionnaire was distributed through PUCV's institutional email, with an initial message sent on the first day and a reminder sent after one week. The responses were stored in a CSV file.

3.4.2 Data analysis

The statistical analysis of the collected data was conducted using the R language:

The ggplot2 package was utilized to create the heatmaps and boxplots shown in Figures 3–8.

The wilcox.test function from R's standard library was used to check the statistical significance and effect size of S1 and S2 results by means of Wilcoxon signed-rank and rank-sum tests, respectively.

The ci.reliability function from the MBESS package was used to compute McDonald's ωh for testing the internal consistency reliability of the questionnaire in Appendix 2 (MBESS is particularly recommended by Hayes and Coutts, 2020).

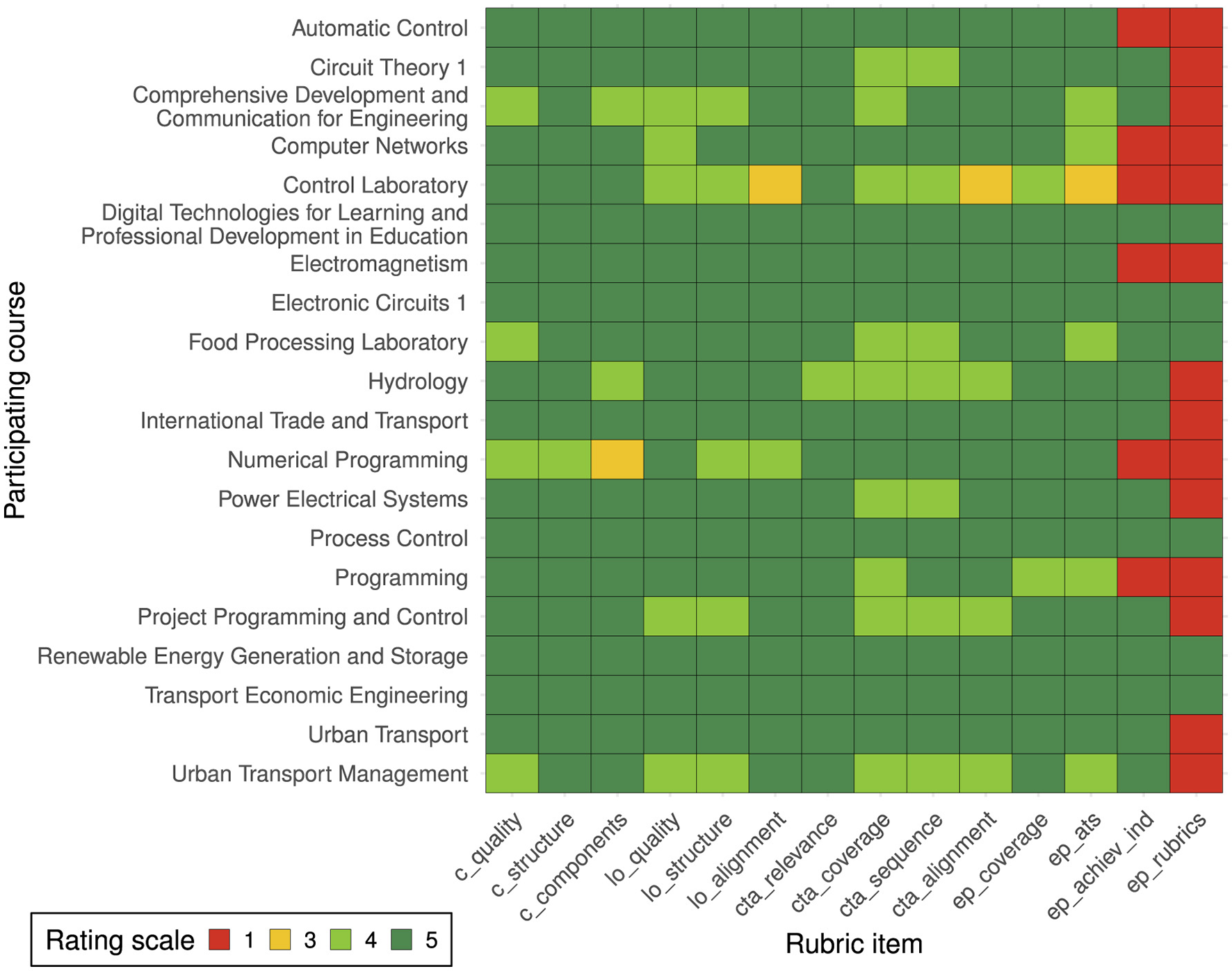

Figure 5

Rating of the courses that participated in S1 before they used C-A&M.

Figure 6

Rating of the courses that participated in S1 after they used C-A&M.

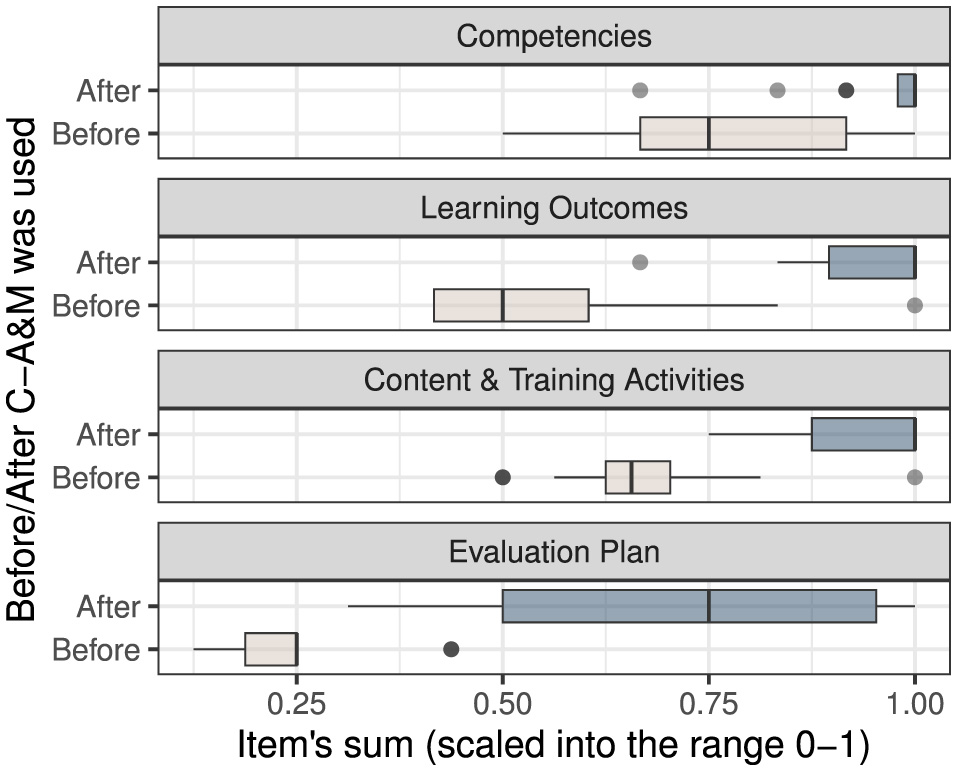

Figure 7

Course rating distribution before and after C-A&M was used.

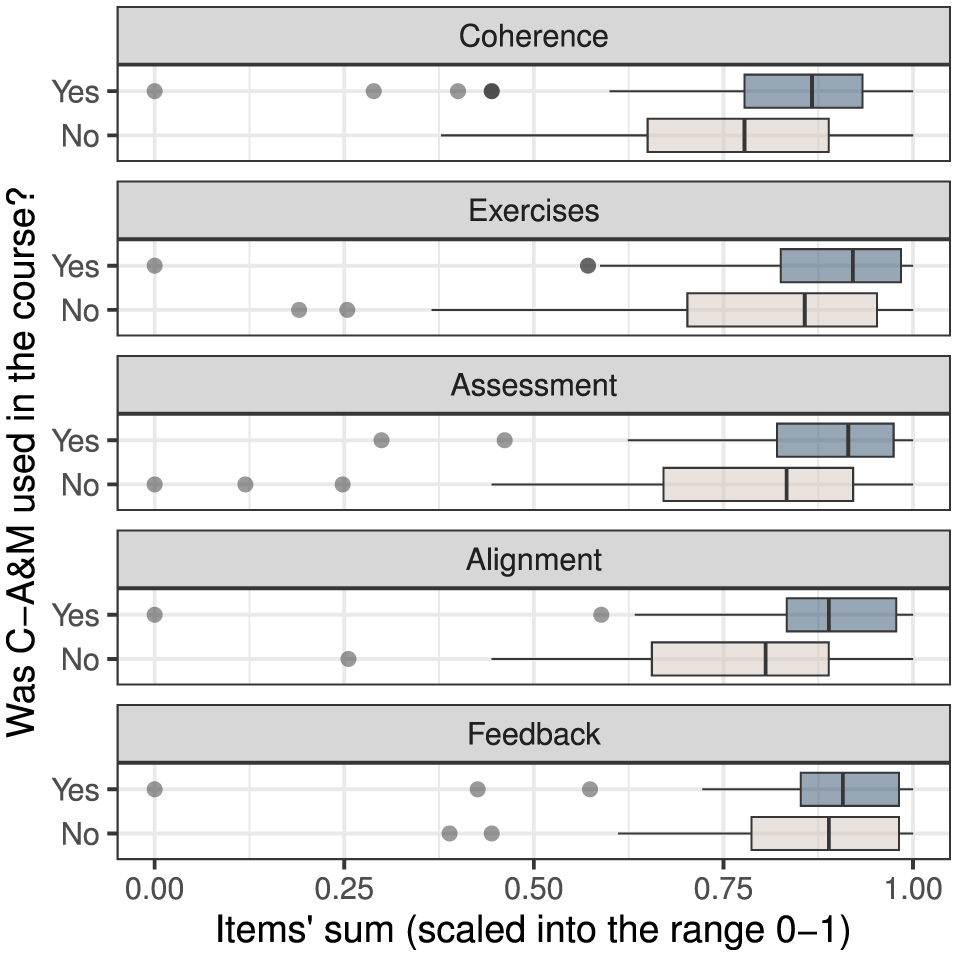

Figure 8

Questionnaire rating distribution between the participants who used C-A&M and those who did not.

4 Results

This section summarizes the results of S1 and S2, organized by the research questions RQ1 and RQ2.

4.1 RQ1: C-A&M's need

The heatmap in Figure 5 depicts the results of the initial evaluation in S1, which was carried out with the rubric specified in Appendix 1. The x-axis represents the rubric items, while the y-axis shows the names of the participating courses. The red, orange, and yellow squares indicate that CBE is not currently being implemented appropriately in most courses. In particular:

The evaluation plan got the worst results among the four rubric dimensions, especially in ep_achievement_indicators and ep_rubrics.

The alignment among elements was inadequate. In particular, (i) in 60% of the courses, LOs had a low alignment to the competencies they contributed to, and (ii) in 80% of the courses, content and training activities were poorly aligned to the LOs (i.e., lo_alignment = cta_alignment = 2).

4.2 RQ2: C-A&M's usefulness

4.2.1 Study S1

Figure 6 shows the final evaluation results in S1, after participants incorporated C-A&M into their courses. Most problems were fixed, leading to a considerable improvement in all the courses. While some of the major issues identified in the initial evaluation were completely resolved (lo_alignment and cta_alignment), others were only partially addressed (ep_achievement_indicators and ep_rubrics).

To check the conclusion validity of S1 results, the Wilcoxon signed-rank tests summarized in Table 5 were conducted for every rubric dimension. First, we calculated the global rating of each dimension by adding up its sub-dimensions and scaling the results to the range [0, 1]. Figure 7 shows the distribution of these scaled sums before and after C-A&M was used, pointing out a substantial difference. Then, we applied Bonferroni's correction to minimize the probability of making a type 1 error due to performing multiple comparisons, an issue known as the fishing and error rate problem (Trochim and Donnelly, 2016). Bonferroni's method adjusts the significance level α by dividing it by the number of comparisons made. In our case, four comparisons were made (one per rubric dimension), and so the significance level decreased from α = 0.05 to α = 0.05/4 = 0.0125. The effect size of Wilcoxon signed-rank tests is estimated using the r statistic. The standard convention (Cohen, 1988) is that when |r| = 0, there is no effect, when 0 < |r| < 0.3, the effect size is considered small, when 0.3 ≤ |r| < 0.5, the effect is medium, and when |r| ≥ 0.5, the effect is large. Accordingly, S1 results show pre/post differences that are statistically significant and of medium size in all four rubric dimensions.

Table 5

| Dimension | When C-A&M? | Median | 95%-CI | 95%-CI range | p-value (Wilcoxon signed-rank) | r (Wilcoxon signed-rank) |
|---|---|---|---|---|---|---|
| Competencies | After | 1 | [0.7458, 1] | 0.2542 | 8e-04* | –0.3767‡ |
| | Before | 0.7500 | [0.5000, 1] | 0.5000 | | |
| Learning Outcomes | After | 1 | [0.7458, 1] | 0.2542 | 1e-04* | –0.4454‡ |
| | Before | 0.5000 | [0.4167, 0.9208] | 0.5042 | | |
| Content & Training activities | After | 1 | [0.7500, 1] | 0.2500 | 1e-04* | –0.4353‡ |
| | Before | 0.6562 | [0.5000, 0.9109] | 0.4109 | | |
| Evaluation Plan | After | 0.7500 | [0.3422, 1] | 0.6578 | ~0* | –0.4555‡ |
| | Before | 0.2500 | [0.1250, 0.4375] | 0.3125 | | |

Wilcoxon signed-rank tests of the differences between the course ratings before and after C-A&M was used; statistically significant p-values are highlighted with *, and medium effect sizes are emphasized with ‡.
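The three arithmetic steps behind this analysis (scaling a dimension to [0, 1], adjusting α with Bonferroni's correction, and labeling the effect size) can be sketched in Python as follows. This is a minimal illustration, not the R scripts used in the study: the function names are hypothetical, and the 1 to 5 rating scale assumed for the rubric items is an assumption made only for the example.

```python
def scale_dimension(item_ratings, min_rating, max_rating):
    """Sum the sub-dimension ratings and rescale the sum to [0, 1]."""
    n_items = len(item_ratings)
    lowest = n_items * min_rating    # smallest possible sum
    highest = n_items * max_rating   # largest possible sum
    return (sum(item_ratings) - lowest) / (highest - lowest)


def bonferroni_alpha(alpha, n_comparisons):
    """Significance level after Bonferroni's correction."""
    return alpha / n_comparisons


def effect_size_label(r):
    """Label |r| following the convention quoted in the text (Cohen, 1988)."""
    magnitude = abs(r)
    if magnitude == 0:
        return "none"
    if magnitude < 0.3:
        return "small"
    if magnitude < 0.5:
        return "medium"
    return "large"


# Hypothetical ratings of three sub-dimensions on an assumed 1-5 scale.
scaled = scale_dimension([2, 3, 3], min_rating=1, max_rating=5)

alpha_s1 = bonferroni_alpha(0.05, 4)  # four rubric dimensions
label = effect_size_label(-0.3767)    # r reported for Competencies in Table 5
print(round(scaled, 4), alpha_s1, label)
```

The sign of r is ignored when labeling the effect, since only its magnitude matters for the convention above.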

In summary, the results from S1 illustrate how identifying the course structure using C-A&M significantly improved instructors' understanding of the pedagogical components of competencies. This alignment between the elements of the educational model, the specific course content, and the assessment plan facilitated the development of a more solid and coherent structure for course implementation.

4.2.2 Study S2

The questionnaire in Appendix 2 encompasses five constructs, each one operationalized with several items: Coherence (Items 6–10), Exercises (Items 11–17), Feedback (Items 18–23), Alignment (Items 24–33), and Assessment (Items 34–46). Following the recommendations of Hayes and Coutts (2020), Table 6 summarizes the evaluation of the internal consistency of this operationalization with McDonald's ωh. All values of ωh are above 0.8, which is the threshold generally considered indicative of good internal consistency (Hermsen et al., 2013; Cheung et al., 2023). The 3rd and 4th columns present the standard errors and the 95% confidence intervals of the estimates. Although the sample comprises only 109 respondents, the standard errors are small and the intervals are narrow, thus supporting the accuracy of the estimated ωh values.

Table 6

| Construct | McDonald's ωh | Std. Error | 95%-CI |
|---|---|---|---|
| Coherence | 0.890 | 0.0292 | [0.818, 0.933] |
| Exercises | 0.948 | 0.0172 | [0.898, 0.970] |
| Assessment | 0.932 | 0.023 | [0.881, 0.967] |
| Feedback | 0.908 | 0.0386 | [0.825, 0.962] |
| Alignment | 0.945 | 0.0199 | [0.904, 0.971] |

Reliability of the constructs' operationalization in the questionnaire.

Figure 8 compares the questionnaire results of participants who had used C-A&M with those of participants who had not. As in the analysis of the rubric results, the item ratings for each construct were summed, and the total was scaled to the range [0, 1]. Figure 8 highlights a difference between the two groups, which the Wilcoxon rank-sum tests summarized in Table 7 support statistically for the Assessment and Alignment constructs.
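The scaling step can be sketched as follows. This is a minimal illustration under stated assumptions: the min-max formula, the function name, the 1–10 item scale, and the example ratings are hypothetical, since this section states only that the summed ratings were scaled to [0, 1].

```python
def scale_construct(ratings, item_min=1, item_max=10):
    """Sum the item ratings of one construct and rescale the sum to [0, 1]."""
    if not ratings:
        raise ValueError("a construct needs at least one item rating")
    if any(not item_min <= x <= item_max for x in ratings):
        raise ValueError("rating outside the assumed item scale")
    # Smallest and largest totals that the construct can reach.
    lowest = item_min * len(ratings)
    highest = item_max * len(ratings)
    # Min-max scaling of the total (an assumed formula).
    return (sum(ratings) - lowest) / (highest - lowest)


# Hypothetical ratings for a five-item construct, not the study's data.
coherence_ratings = [9, 10, 8, 9, 8]
score = scale_construct(coherence_ratings)
print(round(score, 4))
```

Because the total is divided by the construct's own attainable range, constructs with different numbers of items become directly comparable.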

Table 7

| Construct | C-A&M | Median | 95%-CI | 95%-CI range | Wilcoxon rank-sum p-value | Effect size r |
|---|---|---|---|---|---|---|
| Coherence | Yes | 0.8667 | [0.3611, 1] | 0.6389 | 0.0193 | –0.2242† |
| | No | 0.7778 | [0.4444, 1] | 0.5556 | | |
| Exercises | Yes | 0.9206 | [0.5714, 1] | 0.4286 | 0.0215 | –0.2203† |
| | No | 0.8571 | [0.2567, 1] | 0.7433 | | |
| Assessment | Yes | 0.9145 | [0.5671, 1] | 0.4329 | 0.002* | –0.2953† |
| | No | 0.8333 | [0.1229, 1] | 0.8771 | | |
| Alignment | Yes | 0.8889 | [0.6178, 1] | 0.3822 | 3e-04* | –0.3454‡ |
| | No | 0.8056 | [0.4444, 0.9889] | 0.5444 | | |
| Feedback | Yes | 0.9074 | [0.5222, 1] | 0.4778 | 0.232 | –0.1145† |
| | No | 0.8889 | [0.4486, 1] | 0.5514 | | |

Wilcoxon rank-sum tests of the differences in the questionnaire ratings between the participants who used C-A&M and those who did not; statistically significant p-values are highlighted with * (note that α = 0.05/5 = 0.01 due to Bonferroni's correction); small and medium effect sizes are emphasized with † and ‡, respectively.
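The significance and effect-size markers in Table 7 can be reproduced with a short sketch. The p-values and r values are transcribed from the table, and the corrected threshold follows the caption (α = 0.05/5). The effect-size cut-offs of 0.1, 0.3, and 0.5 are the conventional ones usually attributed to Cohen (1988); that the authors used exactly these cut-offs is an assumption, although the markers in the table are consistent with them. The function and variable names are illustrative.

```python
FAMILY_ALPHA = 0.05

# (p-value, r) pairs transcribed from Table 7.
table7 = {
    "Coherence": (0.0193, -0.2242),
    "Exercises": (0.0215, -0.2203),
    "Assessment": (0.002, -0.2953),
    "Alignment": (3e-04, -0.3454),
    "Feedback": (0.232, -0.1145),
}


def effect_label(r):
    """Classify |r| with the conventional 0.1 / 0.3 / 0.5 cut-offs (assumed)."""
    magnitude = abs(r)
    if magnitude >= 0.5:
        return "large"
    if magnitude >= 0.3:
        return "medium"
    if magnitude >= 0.1:
        return "small"
    return "negligible"


# Bonferroni's correction: divide alpha by the number of tests in the family.
adjusted_alpha = FAMILY_ALPHA / len(table7)

significant = [name for name, (p, _) in table7.items() if p < adjusted_alpha]
labels = {name: effect_label(r) for name, (_, r) in table7.items()}

print(significant)
print(labels)
```

Coherence and Exercises would pass an uncorrected threshold of 0.05 but not the corrected one, which is why only Assessment and Alignment carry an asterisk.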

The results from S2 indicate that participants who have used the C-A&M model demonstrate a deeper understanding of its components. Specifically, these participants rated assessment and alignment, two aspects often overlooked in course development, significantly higher than participants who had not used the model. Employing C-A&M requires instructors to attend to these elements more thoroughly. This results in better alignment among key course components (namely, competencies, learning outcomes, content, and assessments), which ultimately enhances the overall structure of the course. This improvement is recognized as a distinguishing characteristic of instructors who implement C-A&M.

5 Discussion

5.1 Findings

The article addressed the implementation of Competency-Based Education (CBE) at the Pontifical Catholic University of Valparaíso (PUCV) in Chile, focusing on the challenges associated with assessment methods for accurately measuring students' competencies. To this end, two studies were conducted to answer two research questions.

According to the S1 results, the courses that used C-A&M improved in identifying competencies, learning outcomes, learning activities, and assessment plans within their program structure. This is consistent with the findings of several smaller studies (Vargas et al., 2019; Marin et al., 2020; Vargas et al., 2023, 2024). In particular, C-A&M helped instructors gain a deeper structural understanding of the CBE pedagogical elements in their courses (competencies, learning outcomes, contents, and the assessment plan), especially in identifying and aligning these elements. Nevertheless, one area that did not show improvement was the rubrics instructors used. A possible explanation is the numerous tasks that teachers must handle as part of their academic responsibilities. As reported by Pey et al. (2013), and more recently by Koenen et al. (2015), heavy workloads and additional duties reduce the time available to develop effective course rubrics. In addition, the S2 results indicate a positive difference between instructors who used C-A&M and those who did not regarding course assessment and the alignment of CBE components.

Although CBE presents significant challenges both internationally and nationally (OECD, 2009; Struyven and De Meyst, 2010; Morales and Zambrano, 2016; OECD, 2017; Deng et al., 2024), the implementation of C-A&M could serve as a means to improve key aspects of CBE and close the gap between assessment and learning outcomes (Morales and Zambrano, 2016).

The findings from both studies indicate improvements in certain areas, while others remain unchanged. In the case of S1, the rubrics utilized in the courses did not improve (see column ep_rubrics in Figure 6). This may be attributed to the fact that developing rubrics requires specific knowledge that university professors often lack and would require targeted training. Furthermore, constructing rubrics demands additional preparation time for each course assessment activity, which conflicts with the numerous responsibilities that university faculty members must manage.

In S2, certain aspects showed more significant improvement than others. Notably, instructors who received training in C-A&M had different perceptions regarding alignment and assessment. These elements are crucial components of C-A&M since assessments must be explicitly aligned with course content, a concept referred to as constructive alignment by Biggs et al. (2022).

Additionally, the interventions evaluated in the two studies differed in their implementation. In S1, each instructor worked directly on revising their course syllabi. In contrast, the training in S2 was delivered through a workshop, typically lasting ten hours, in which C-A&M was introduced and application exercises were performed. However, there was no direct follow-up with each participating instructor. This lack of follow-up may have influenced how effectively C-A&M was implemented.

5.2 Implications

CBE has become the predominant educational paradigm in Chilean higher education, particularly following the reforms initiated in 2006. However, despite the recognized benefits of CBE, challenges remain in its implementation, particularly concerning students' assessment. C-A&M has been proposed to systematize course assessment under CBE. The potential benefits of C-A&M include ensuring that all essential elements of CBE are considered, facilitating a top-down approach that transitions from abstract concepts to specific components for effective course assessment design, and enabling a bottom-up perspective that connects competencies and learning outcomes back to concrete elements through weighted sums. C-A&M simplifies the complexity for instructors, allowing them to focus on individual components, such as LOs, without distraction. Additionally, C-A&M provides a comprehensive overview of all course assessment elements, aiding in tracking and improving the course over time. The two complementary observational studies reported in this paper indicate a positive correlation between C-A&M adoption and improvements in CBE assessment practices at PUCV. As we standardized course assessments using C-A&M, we observed notable advancements in critical areas such as the evaluation plan, alignment of LOs with competencies, and coordination of training activities.
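The bottom-up aggregation through weighted sums can be illustrated with a small sketch. Everything in it is hypothetical: the activity names, the weights, the scores, and the requirement that the weights at each level sum to 1 are assumptions made for illustration, not the model's published formulation.

```python
def weighted_sum(scores, weights):
    """Combine scores using weights that are expected to sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical scores of one student in two assessment activities, in [0, 1].
activity_scores = {"exam": 0.8, "project": 0.6}

# Hypothetical weights linking activities to learning outcomes (LOs).
lo_weights = {
    "LO1": {"exam": 0.5, "project": 0.5},
    "LO2": {"project": 1.0},
}

# Hypothetical weights linking LOs to one competency.
competency_weights = {"LO1": 0.4, "LO2": 0.6}

# First level: each LO score is a weighted sum of activity scores.
lo_scores = {lo: weighted_sum(activity_scores, w) for lo, w in lo_weights.items()}

# Second level: the competency score is a weighted sum of LO scores.
competency_score = weighted_sum(lo_scores, competency_weights)

print(lo_scores)
print(round(competency_score, 2))
```

Keeping the weights explicit at both levels makes it possible to trace a competency score back to the concrete activities that produced it.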

PUCV is currently promoting the use of C-A&M in its courses. In collaboration with PUCV, we are developing:

A self-instructional online course for teachers focused on strengthening foundational concepts of CBE by applying C-A&M.

A standardized course design template to facilitate the creation of syllabi and course programs structured around CBE principles. This format will guide instructors to ensure precise alignment between competencies, LOs, instructional activities, and assessments.

A C-A&M-oriented rubric template, easily adaptable to different disciplines, which includes explicit descriptors for performance levels aligned with competency-based elements.

C-A&M presents a transformative opportunity for higher education institutions seeking to enhance CBE. By embracing this model, universities can make significant strides toward overcoming existing barriers, ultimately achieving a more effective and meaningful educational experience for students. The ongoing collaboration between PUCV and our research team is a promising example of how innovative practices can substantially improve educational quality and adaptability in an increasingly complex academic landscape.

5.3 Limitations

As mentioned in Section 3.1, we chose an observational design instead of an experimental one for ethical reasons. Therefore, when interpreting our results, it is important to acknowledge the limitations that are inherent to observational designs. We cannot definitively state that the use of C-A&M causes improvements in the implementation of CBE, since other factors may be influencing the results; for example, instructors who decided to use C-A&M might be more engaged with CBE than their peers, which could lead to more effective CBE implementation in their courses. Nevertheless, both S1 and S2 suggest a positive relationship between C-A&M and the implementation of CBE. While our results cannot demonstrate internal validity, they do support conclusion validity.

In summary, we faced two threats to conclusion validity (see footnote 9):

The fishing and error rate problem, which happens when multiple analyses are conducted on the same data, was mitigated by adjusting the level of statistical significance α with Bonferroni's correction.

The threat of violated assumptions in parametric statistical tests was addressed by employing non-parametric tests, specifically the Wilcoxon signed-rank and rank-sum tests.

Statements

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://doi.org/10.5281/zenodo.14559216.

Ethics statement

The studies involving humans were approved by the Pontificia Universidad Católica de Valparaíso. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin because the data were fully anonymized, ensuring that no identifiable information was collected or analyzed. For the same reason, written informed consent was not obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

HV: Conceptualization, Formal analysis, Funding acquisition, Investigation, Project administration, Resources, Supervision, Writing – original draft, Writing – review & editing. EA: Conceptualization, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. RH: Data curation, Formal analysis, Funding acquisition, Methodology, Project administration, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. LT: Data curation, Formal analysis, Project administration, Software, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The Pontificia Universidad Católica de Valparaíso (PUCV) funded this work for open-access publishing.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1579124/full#supplementary-material

Footnotes

1.^The repository with all documents related to the criteria and quality standards of CNA-Chile is available at https://www.cnachile.cl/noticias/paginas/nuevos_cye.aspx.

2.^Rubric items are denoted as dimension_subdimension. The dimensions are abbreviated with their initials: c stands for Competencies, lo for Learning Outcomes, cta for Content & Training Activities, and ep for Evaluation Plan. For example, c_quality represents the quality of the course competencies. In Section 4, Figures 5 and 6 use this fine-grained notation to compare CBE implementation before and after C-A&M in the courses that participated in S1.

3.^A CSV (Comma-Separated Values) file is a text format used to represent tabular data. Each row in a CSV file is defined by a new line, with commas separating the values within that row. This format is human-readable and easy to import into software such as R or Excel.

4.^https://www.limesurvey.org/

6.^https://ggplot2.tidyverse.org/

7.^https://cran.r-project.org/web/packages/MBESS/index.html

8.^Some rubric dimensions have varying numbers of sub-dimensions; for example, ep has four sub-dimensions, while lo has three. Thus, it is necessary to scale the sums of the sub-dimensions to a range of 0 to 1 to facilitate comparison across the dimensions.

9.^Refer to Section 11.2a of Trochim and Donnelly (2016) for a detailed discussion of threats to conclusion validity.

References

1. Banse, H. E., Kedrowicz, A., Michel, K. E., Burton, E. N., Jean, K. Y.-S., Anderson, J., et al. (2024). Implementing competency-based veterinary education: a survey of AAVMC member institutions on opportunities, challenges, and strategies for success. J. Vet. Med. Educ. 51, 155–163. doi: 10.3138/jvme-2023-0012

2. Barbina, D., Breda, J., Mazzaccara, A., Di Pucchio, A., Arzilli, G., Fasano, C., et al. (2025). Competency-based and problem-based learning methodologies: the WHO and ISS European Public Health Leadership Course. Eur. J. Public Health 35, ii21–ii28. doi: 10.1093/eurpub/ckae178

3. Biggs, J., Tang, C., and Kennedy, G. (2022). Teaching for Quality Learning at University 5e. London: McGraw-Hill Education (UK).

4. Chen, A. M., Kleppinger, E. L., Churchwell, M. D., and Rhoney, D. H. (2024). Examining competency-based education through the lens of implementation science: a scoping review. Am. J. Pharm. Educ. 88:100633. doi: 10.1016/j.ajpe.2023.100633

5. Cheung, G. W., Cooper-Thomas, H. D., Lau, R. S., and Wang, L. C. (2023). Reporting reliability, convergent and discriminant validity with structural equation modeling: a review and best practice recommendations. Psychol. Assess. 41, 745–783. doi: 10.1007/s10490-023-09871-y

6. Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences, 2nd Edn. London: Routledge.

7. Cruz, M., Saunders-Smits, G., and Groen, P. (2019). Evaluation of competency methods in engineering education: a systematic review. Eur. J. Eng. Educ. 45, 729–757. doi: 10.1080/03043797.2019.1671810

8. Deng, L., Wu, Y., Chen, L., and Peng, Z. (2024). ‘Pursuing competencies’ or ‘pursuing scores’? High school teachers' perceptions and practices of competency-based education reform in China. Teach. Teach. Educ. 141, 1–11. doi: 10.1016/j.tate.2024.104510

9. Faiella, W., and Styles, K. (2024). Navigating competency-based cardiology training: challenges and successes. Canad. J. Cardiol. 40, 1347–1349. doi: 10.1016/j.cjca.2024.01.004

10. Gomez, M., Aranda, E., and Santos, J. F. (2017). A competency model for higher education: an assessment based on placements. Stud. High. Educ. 42, 2195–2215. doi: 10.1080/03075079.2016.1138937

11. Hayes, A. F., and Coutts, J. J. (2020). Use omega rather than Cronbach's alpha for estimating reliability. But... Commun. Methods Measur. 14, 1–24. doi: 10.1080/19312458.2020.1718629

12. Hermsen, L. A., Leone, S. S., Smalbrugge, M., Knol, D. L., van der Horst, H. E., and Dekker, J. (2013). Exploring the aggregation of four functional measures in a population of older adults with joint pain and comorbidity. BMC Geriatr. 13, 1–8. doi: 10.1186/1471-2318-13-119

13. Karpinski, J., Stewart, J., Oswald, A., Dalseg, T. R., Atkinson, A., and Frank, J. R. (2024). Competency-based medical education at scale: a road map for transforming national systems of postgraduate medical education. Perspect. Med. Educ. 13, 24–32. doi: 10.5334/pme.957

14. Koenen, A.-K., Dochy, F., and Berghmans, I. (2015). A phenomenographic analysis of the implementation of competence-based education in higher education. Teach. Teach. Educ. 50, 1–12. doi: 10.1016/j.tate.2015.04.001

15. Kruppa, C., Rudzki, M., Baron, D. J., Dudda, M., Schildhauer, T. A., and Herbstreit, S. (2024). Success factors and obstacles in the implementation of competence-oriented teaching in surgery. Chirurgie 95, 833–840. doi: 10.1007/s00104-024-02107-9

16. Letelier, M., Herrera, J. A., Canales, A., Carrasco, R., and López, L. L. (2003). Competencies evaluation in engineering programmes. Eur. J. Eng. Educ. 28, 275–286. doi: 10.1080/0304379031000098247

17. Marin, L., Vargas, H., Heradio, R., de La Torre, L., Diaz, J. M., and Dormido, S. (2020). Evidence-based control engineering education: evaluating the LCSD simulation tool. IEEE Access 8, 170183–170194. doi: 10.1109/ACCESS.2020.3023910

18. Martínez-Ávila, M., García-García, R. M., Guajardo-Flores, S., Bonifaz Delgado, L. V., and Guajardo-Flores, D. (2024). “Fostering post-university success: exploring emotional intelligence in college graduates through the traditional model vs. competency-based model,” in IEEE Global Engineering Education Conference (EDUCON) (Kos, Greece), 01–06. doi: 10.1109/EDUCON60312.2024.10578699

19. Morales, B., and Zambrano, H. (2016). Coherencia evaluativa en formación universitaria por competencias: estudio en futuros educadores en Chile. Infancias Imágenes 15, 9–26. doi: 10.14483/udistrital.jour.infimg.2016.1.a01

20. Nel, D., McNamee, L., Wright, M., Alseidi, A., Cairncross, L., Jonas, E., et al. (2023). Competency assessment of general surgery trainees: a perspective from the global south, in a CBME-naive context. J. Surg. Educ. 80, 1462–1471. doi: 10.1016/j.jsurg.2023.06.027

21. OECD (2009). Reviews of National Policies for Education: Tertiary Education in Chile 2009. Washington, DC: OECD and World Bank. doi: 10.1787/9789264051386-en

22. OECD (2017). Reviews of National Policies for Education: Education in Chile. Washington, DC: OECD and World Bank.

23. Pey, R., Duran, F., and Jorquera, P. (2013). Análisis y recomendaciones del proceso de innovación curricular en las Universidades del Consejo de Rectores de las Universidades Chilenas (CRUCH). Santiago de Chile: Consejo de Rectores de Las Universidades Chilenas, CRUCH.

24. Ruparelia, J., McMullen, J., Anderson, C., Munene, D., and Arakawa, N. (2021). Enhancing employability opportunities for Pharmacy students; a case study of processes to implement competency-based education in Pharmacy in Kenya. High. Educ. Quart. 75, 608–617. doi: 10.1111/hequ.12319

25. Sahadevan, S., Kurian, N., Mani, A. M., Kishor, M. R., and Menon, V. (2021). Implementing competency-based medical education curriculum in undergraduate psychiatric training in India: Opportunities and challenges. Asia-Pacific Psychiatry 13:e12491. doi: 10.1111/appy.12491

26. Sanchez-Garcia, I. N., Ureña-Molina, M. P., López-Medina, I. M., and Pancorbo-Hidalgo, P. (2019). Knowledge, skills and attitudes related to evidence-based practice among undergraduate nursing students: a survey at three universities in Colombia, Chile and Spain. Nurse Educ. Pract. 39, 117–123. doi: 10.1016/j.nepr.2019.08.009

27. Shadan, M., Shalaby, R. H., Ziganshina, A., and Ahmed, S. (2025). Integrating portfolio and mentorship in competency-based medical education: a Middle East experience. BMC Med. Educ. 25. doi: 10.1186/s12909-024-06553-1

28. Shariff, N. M., and Razak, R. A. (2022). Exploring hospitality graduates' competencies in Malaysia for future employability using Delphi method: a study of Competency-Based Education. J. Teach. Travel Tour. 22, 144–162. doi: 10.1080/15313220.2021.1950103

29. Singh, T., and Shah, N. (2023). Competency-based medical education and the McNamara fallacy: assessing the important or making the assessed important? J. Postgrad. Med. 69, 35–40. doi: 10.4103/jpgm.jpgm_337_22

30. Struyven, K., and De Meyst, M. (2010). Competence-based teacher education: Illusion or reality? An assessment of the implementation status in Flanders from teachers' and students' points of view. Teach. Teach. Educ. 26, 1495–1510. doi: 10.1016/j.tate.2010.05.006

31. Trochim, W. M., and Donnelly, J. P. (2016). Research Methods: The Essential Knowledge Base. Boston: Cengage Learning.

32. Vargas, H., Heradio, R., Chacon, J., De La Torre, L., Farias, G., Galan, D., et al. (2019). Automated assessment and monitoring support for competency-based courses. IEEE Access 7, 41043–41051. doi: 10.1109/ACCESS.2019.2908160

33. Vargas, H., Heradio, R., Donoso, M., and Farias, G. (2023). Teaching automation with Factory I/O under a competency-based curriculum. Multimed. Tools Appl. 82, 19221–19246. doi: 10.1007/s11042-022-14047-9

34. Vargas, H., Heradio, R., Farias, G., Lei, Z., and de la Torre, L. (2024). A pragmatic framework for assessing learning outcomes in competency-based courses. IEEE Trans. Educ. 67, 224–233. doi: 10.1109/TE.2023.3347273

35. Vázquez-Espinosa, M., Sancho-Galán, P., González-de Peredo, A. V., Calle, J. L. P., Ruiz-Rodríguez, A., Fernández Barbero, G., et al. (2024). Enhancing competency-based education in instrumental analysis: a novel approach using high-performance liquid chromatography for real-world problem solving. Educ. Sci. 14. doi: 10.3390/educsci14050461

36. Zancajo, A., and Valiente, O. (2019). TVET policy reforms in Chile 2006–2018: between human capital and the right to education. J. Vocat. Educ. Train. 71, 579–599. doi: 10.1080/13636820.2018.1548500

37. Zlatkin-Troitschanskaia, O., and Pant, H. (2016). Measurement advances and challenges in competency assessment in higher education. J. Educ. Measur. 53, 253–264. doi: 10.1111/jedm.12118

Keywords

competency-based course assessment standardization, competency-based education, assessment model, course structuring, systematic evaluation, higher education

Citation

Vargas H, Arredondo E, Heradio R and Torre Ldl (2025) Standardizing course assessment in competency-based higher education: an experience report. Front. Educ. 10:1579124. doi: 10.3389/feduc.2025.1579124

Received

18 February 2025

Accepted

26 May 2025

Published

18 June 2025

Volume

10 - 2025

Edited by

Tom Prickett, Northumbria University, United Kingdom

Reviewed by

Hsiao-Fang Lin, Chaoyang University of Technology, Taiwan

Alexandros Chrysikos, London Metropolitan University, United Kingdom

Harold Connamacher, Case Western Reserve University, United States

Copyright

© 2025 Vargas, Arredondo, Heradio and Torre.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ruben Heradio, rheradio@issi.uned.es
