Abstract

Introduction:

Recent work has shown that student trust in their instructor is a key moderator of STEM student buy-in to evidence-based teaching practices (EBTs), enhancing positive student outcomes such as performance, engagement, and persistence. Although trust in instructor has been previously operationalized in related settings, a systematic classification of how undergraduate STEM students perceive trustworthiness in their instructors remains to be developed. Moreover, previous operationalizations impose a structure that often includes distinct domains, such as cognitive and affective trust, that have yet to be empirically tested in the undergraduate STEM context.

Methods:

To address this gap, we use a multi-step qualitative approach to unify existing definitions of trust from the literature and to analyze structured interviews with 57 students enrolled in undergraduate STEM classes who were asked to describe a trusted instructor. Through thematic analysis, we propose that characteristics of a trustworthy instructor can be classified into three domains. We then assess the validity of the three-domain model both qualitatively and quantitatively. First, we examine student responses to determine how traits from different domains are mentioned together. Second, we use a process-model approach to instrument design that leverages our qualitative interview codebook to develop a survey that measures student trust. We then perform an exploratory factor analysis on survey responses to quantitatively test the construct validity of the proposed three-domain trust model.

Results and discussion:

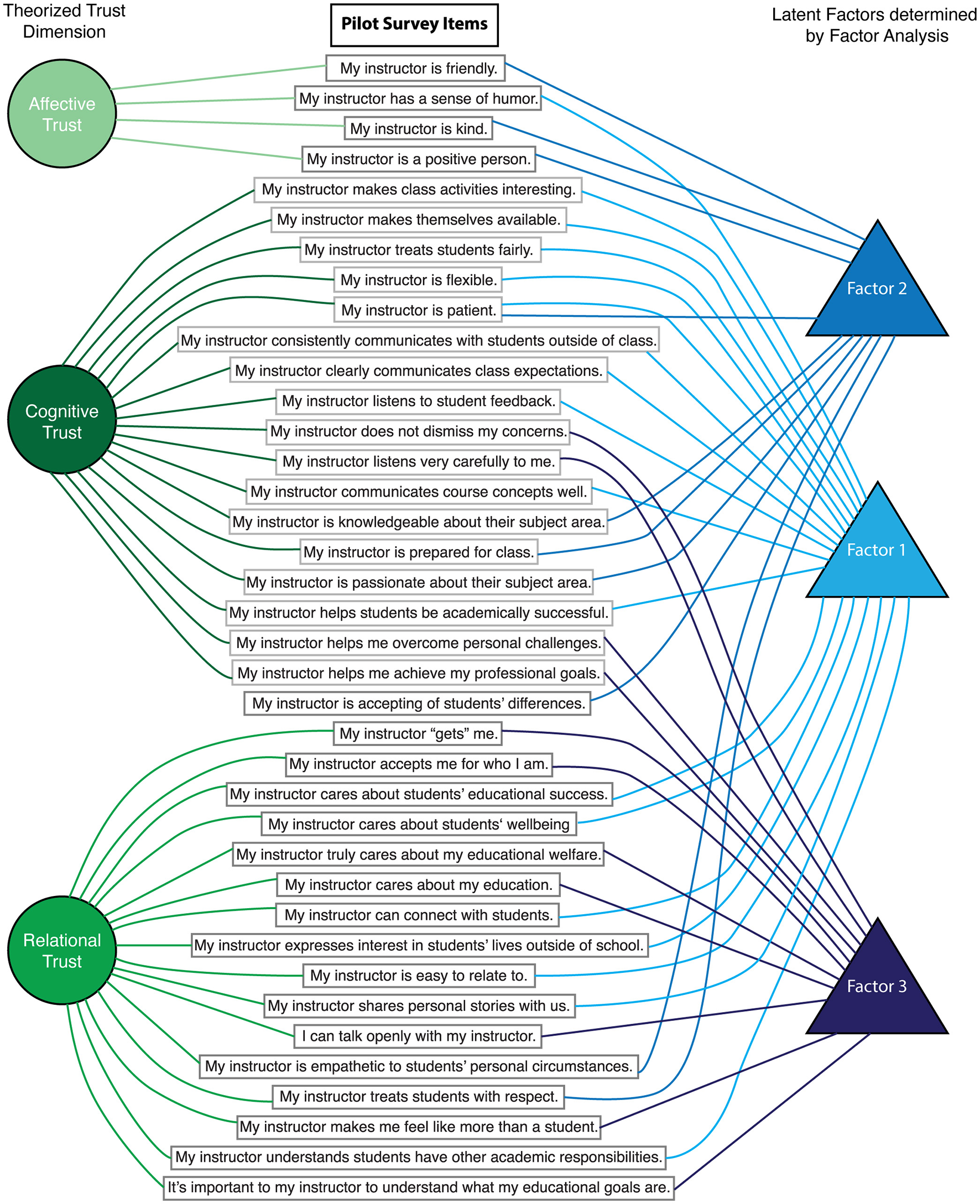

We identified 28 instructor traits that students perceived as trustworthy, categorized into cognitive, affective, and relational domains. Within student responses, we found a high degree of interconnectedness between traits in the cognitive and relational domains. When we assessed the construct validity of the three-factor model using survey responses, we found that a three-factor model did not adequately capture the underlying latent structure. Our findings align with recent calls both to closely examine long-held assumptions about trust dimensionality and to develop context-specific trust measurements. The work presented here can inform the development of a reliable measure of student trust in undergraduate STEM learning environments and, ultimately, improve our understanding of how instructors can best leverage EBTs to support positive student learning outcomes.

1 Introduction

Two reports published 8 years apart, one in 2012 by the President's Council of Advisors on Science and Technology (PCAST) and one in 2020 by the National Science Board, both call for a modernization of STEM education to better retain students and strengthen the domestic science and technology workforce. The 2012 PCAST report found that only 40% of students who matriculate into higher education with the intent of pursuing a STEM degree persist to the end of their degree. The learning environment of introductory courses in the first 2 years of the STEM major is a critical factor in retaining these students (President's Council of Advisors on Science and Technology, 2012). Since then, national assessments have shown stagnant or declining STEM competencies among students and the general public (National Science Board, National Science Foundation, 2020; US Department of Education, 2018, 2024).

Evidence-based teaching practices (EBTs) such as student-centered active learning or discovery-based learning improve student achievement and persistence in STEM fields (Chasteen and Pollock, 2008; Gross et al., 2015; Freeman et al., 2014; Handelsman et al., 2007; Hanauer et al., 2017; Henderson and Dancy, 2009; Jensen et al., 2015; Reeves et al., 2023; Wieman, 2014). Yet, widespread adoption remains limited due to institutional barriers and student resistance (Brazeal et al., 2016; Brownell and Tanner, 2012; Finelli et al., 2018; Minhas et al., 2012; Nguyen et al., 2016; Patrick, 2020; Seidel and Tanner, 2013; Stains et al., 2018; Walker et al., 2008). Critically, instructors' experience of student resistance, which can manifest as lack of engagement or disruptive behavior, may contribute to high rates of instructors who revert to traditional lecturing after trying EBTs (Lake, 2001; Henderson et al., 2012; Seidel and Tanner, 2013; Nguyen et al., 2021). Thus, a better understanding of the social and cognitive factors underlying students' buy-in, or commitment, to the use of EBTs may improve adoption rates (Cavanagh et al., 2016; Corwin et al., 2015; Dolan, 2015; Wang et al., 2021). One factor that has emerged as an empirically significant moderator of student buy-in is trust in their instructor (Cavanagh et al., 2018; Wang et al., 2021).

Indeed, empirical studies have shown that strong personal connections between faculty and students can positively affect a variety of student outcomes (Mayhew et al., 2016), such as persistence in college (Guzzardo et al., 2021; Milem and Berger, 1997; Nora et al., 1996; Pascarella and Terenzini, 1979; Robinson et al., 2019; Schudde, 2019; Pike et al., 1997; Wilcox et al., 2005), attitudes toward learning (Christophel, 1990; McLure et al., 2022), motivation (Komarraju et al., 2010; Wentzel, 2016; Zhou et al., 2023), academic self-concept (Kim and Sax, 2014; Trinidad et al., 2024), self-efficacy (Ballen et al., 2017; Ferguson, 2021), engagement (Umbach and Wawrzynski, 2005; Snijders et al., 2020), performance (Roorda et al., 2011; Zhao and You, 2023), self-worth (Alt et al., 2022; Kuh, 1995; Trinidad et al., 2024), and interest and effort put toward a course (Fedesco et al., 2019). Students themselves report that closer relationships with faculty based on trust are critical for success in college STEM classrooms (Pedersen et al., 2022). Among these relational elements, trust has emerged as a key construct that not only underpins the quality of student–instructor relationships but also directly moderates student buy-in to evidence-based teaching practices (Cavanagh et al., 2018; Wang et al., 2021). Because buy-in has been identified as a critical mechanism for improving student engagement and persistence, especially in STEM, we focus our investigation on trust as a theoretically grounded and empirically supported factor within the broader construct of student–instructor connection. Despite the importance of positive student-teacher relationships for student success, how students develop a sense of trust in their instructor remains empirically understudied and may be undervalued by college STEM instructors (Beltrano et al., 2021; Christe, 2013; Felten et al., 2023; Hagenauer and Volet, 2014; Niedlich et al., 2021; Payne et al., 2022; Tierney, 2006).

The construct of trust has been widely studied across disciplines both from theoretical and empirical perspectives. For example, in an empirical study of romantic partnerships, Rempel et al. (1985) consider the development of trust as beginning with demonstrations of consistency and evolving based on shared values and goodwill. Revisiting this work, Camanto and Campbell (2025) found three key dimensions of trust in romantic relationships that reiterate Rempel et al.'s (1985) framework: predictability, dependability, and faith. Lewicki and Bunker (1996) offer an expanded theoretical framework to describe the development of trust in professional relationships. Initial calculus-based trust informed by self-interest grows into knowledge-based trust through familiarity. When two individuals identify with each other's shared values and goals, they progress to the deepest form of identification-based trust. While Lewicki and Bunker's (1996) framework describes the development of trust through different domains over time, McAllister's (1995) empirical study of workplace relationships suggests that different domains of trust, specifically cognitive and affective, develop simultaneously and independently from each other. The cognitive domain depends on a rational assessment of professional competence while the affective domain is rooted in an emotional bond. Indeed, Massey et al. (2019) argue that interpersonal trust is bidimensional in nature and consists of both affective and cognitive components, highlighting in their empirical study that affective and cognitive trust domains explain significant variance in one's perception of the quality of an interpersonal relationship. Lewis and Weigert (1985) present a theoretical description of trust as a collective social force that also considers the distinction between cognitive and emotional processes but treats trust as a generalized attitude toward an institution rather than in the context of a specific relationship. 
In another framing of organizational trust, Mayer et al. (1995) treat trust as unidimensional and provide a theoretical model that distinguishes trust, an internal state in which a trustor is willing to be vulnerable to a trustee in the face of uncertainty, from the trustee's trustworthiness. The decision to trust is based on the trustor's sense of the other party's trustworthiness, determined by the trustee's demonstration of ability, benevolence, and integrity. In an empirical study of how trust is built in both hybrid and in-person work settings, Fischer et al. (2023) highlight the value of behavioral or relational trust, identifying authenticity and communication as trustworthy professional behaviors.

There is no single unified definition of trust, although there is some consensus in the literature that trust has at least two distinct dimensions: cognitive and affective. Despite this consensus and the attention these two dimensions have received, the literature still lacks a consistent, empirically supported distinction between the cognitive and affective domains (Legood et al., 2023). In higher education, there is even less consensus on how to define trust between students and instructors (Beltrano et al., 2021; Christe, 2013; Felten et al., 2023; Hagenauer and Volet, 2014; Niedlich et al., 2021; Payne et al., 2022; Tierney, 2006). In the K-12 context, Bryk and Schneider (2002) put forth a relational trust framework, based on empirical research in Chicago public schools, that describes trust as a collective property of the school environment that improves student outcomes and organizational effectiveness. In this framework, trust is built through the quality of social exchanges (measured by benevolence, competence, integrity, and respect) among teachers, students, administrators, and parents. Tschannen-Moran and Hoy (2000) take a broader scope, placing more emphasis on school leadership, policies, and climate. They also synthesize the literature with an empirical aim, focusing on measurable characteristics that could be used to develop a quantitative tool. Their resulting Omnibus Trust Scale measures five dimensions of trust: benevolence, reliability, competence, honesty, and openness.
Using Tschannen-Moran and Hoy's framework to theoretically ground their study, Holzer and Daumiller (2025) analyze qualitative interviews with students and teachers in ninth-grade classes and suggest that teachers' willingness to be vulnerable and to confide personal information to their students are also critical components of trust. Although developed in the context of K-12 education, Tschannen-Moran and Hoy's framework has been used as a reference point for investigating trust in higher education.

Models of trust in higher education marketing have examined the relationship between students' trust and their loyalty toward their institution. Surveys of students and alumni revealed that trust in the institution included five dimensions parallel to those identified in Tschannen-Moran and Hoy's framework: expertise, integrity, congeniality, sincerity, and openness (Ghosh et al., 2001). Sampaio et al. (2012) take Ghosh et al.'s model a step further through a quantitative survey of business students, identifying student trust in faculty as a critical component of trust in their institution. Indeed, conceptual models of retention suggest that trust depends on the success of relational exchanges between students and faculty (Dzimińska et al., 2018; Schertzer and Schertzer, 2004). These are primarily conceptual or theoretical papers, offering models rather than new empirical data. The pedagogical impact of student-faculty trust as an important form of social capital is illustrated by Ream et al. (2014), who conducted an empirical mixed-methods study of STEM students in a research program. Using survey data and qualitative interviews, they found that STEM students who had greater trust in their mentor during a summer research program reported greater motivation and higher career expectations. Building upon Mayer et al.'s (1995) and Tschannen-Moran and Hoy's (2000) frameworks of trust, Ream et al. (2014) estimated students' perceptions of trustworthiness through surveys measuring competence, benevolence, and integrity. Importantly, research students in this study interacted with their faculty mentor outside of a formal classroom setting. Similarly, past empirical studies of student-faculty relationships demonstrate the importance of informal interactions with faculty outside of class for student satisfaction, engagement, and retention (Mattanah et al., 2024; Wong and Chapman, 2023; Pascarella and Terenzini, 2005; Tinto, 2015; Wilcox et al., 2005).

Whether students choose to interact with faculty outside of class depends on perceptions of approachability and support, informed by behavioral cues during class (Lamport, 1993; Wilson et al., 1974). Based on surveys and classroom observation, Lamport (1993) found that rather than age, gender, academic rank, or research accolades, students are more likely to engage in informal interactions with faculty based on their instructors' interpersonal sociopsychological characteristics, such as friendliness, understanding, and authenticity. Similarly, student surveys collected by Schussler et al. (2021) found that student ratings of instructor support were influenced by student perceptions of care and approachability as well as by the instructor's personality. Moreover, an empirical survey study (Denzine and Pulos, 2000) found that in-class behaviors demonstrating care and concern for the student (such as asking personal questions) explained significantly more variance in measures of approachability than behaviors that demonstrated conscientiousness (such as starting class on time). Empirical evidence gathered from surveys shows that students who report greater trust in the instructor are more likely to engage in out-of-classroom contact with their instructors (Faranda, 2015; Jaasma and Koper, 1999); thus, faculty approachability based on demonstrations of care may be a significant factor contributing to student trust. Relatedly, other empirical studies have found that when instructors bring personal elements into their instruction, such as showing vulnerability through acts of self-disclosure (Johnson and LaBelle, 2017; LaBelle et al., 2023) or teacher immediacy (Andersen, 1979; Liu, 2021), students report higher relational satisfaction (Johnson and LaBelle, 2017; LaBelle et al., 2023), increased motivation, and more positive attitudes toward learning (Christophel, 1990; Frymier, 1994; Frymier et al., 2019).
While these studies do not explicitly reference the development of trust, the broader literature suggests that trust develops over the course of repeated interactions between individuals. Thus, the factors that lead students to have a positive view of their interactions with faculty both inside and out-of-class likely play a significant role in the development of trust. In a reflective, qualitative study that did explicitly explore the development of trust, Meinking and Hall (2024) describe how students emphasized the importance of relational trust and the willingness of both students and instructors to be vulnerable with one another as key factors for building a trusting learning environment.

The treatment of the teacher-student relationship as an interpersonal one with significant relational and emotional components has been widely adopted in the instructional communication literature (Hess and Mazer, 2017). In 2018, Cavanagh et al. adapted and validated the use of Clark and Lemay's (2010) close interpersonal relationship framework to define student trust in their STEM instructor. Clark and Lemay's work highlights the positive effects of mutual responsiveness and communal norms, in which individuals act for each other's benefit without expecting anything in return, on long-term, intimate relationships. Cavanagh et al. (2018) adapt this theory to model students' responsiveness to their instructor's use of EBTs, arising from trust that their instructor is acting for their benefit. The decision to trust is based on the extent to which students believe their instructor understands, accepts, and cares about them. This operationalization of trust was further validated in Wang et al.'s (2021) study of the relationship between student trust and buy-in in 14 large-enrollment STEM courses. However, a critique of the instructional communication literature has been that too much focus has been placed on the interpersonal aspects of the student-teacher relationship without consideration of cognitive factors (Hess and Mazer, 2017). Indeed, when students in an online learning environment were surveyed about instructor trustworthiness, high-trusting students cited the instructor's professional credibility and expertise in addition to interpersonal traits related to care, acceptance, and understanding (Hai-Jew, 2007). Similarly, a conceptual model of student trust developed through interviews with college faculty included a domain related to cognitive factors, such as instructors' knowledge, skill, and competence, in addition to affective, identity, and value-based domains (Felten et al., 2023).
These studies suggest that both students and faculty believe that trust in instructor encompasses both affective and cognitive domains and that the conceptualization of student-instructor trust solely through the lens of a close personal relationship is insufficient.

However, the distinction between different dimensions of trustworthiness has also been debated. McEvily and Tortoriello's (2011) and Whipple et al.'s (2013) reviews of the measurement of trustworthiness argue that there is weak evidence to support the construct validity of separate dimensions. A literature review conducted by Niedlich et al. (2021) similarly highlights the lack of conceptual clarity and the inconsistent application of existing theoretical frameworks to define trust and its dimensionality across studies specifically within education contexts. Moreover, Niedlich et al. (2021) note that while existing research often depends on multidimensional trust scales, the relationships between dimensions are rarely examined. Concerns about the construct validity of trust dimensions have also been raised in other domains. For instance, Bradford et al. (2022), in a mixed-methods study of trust in police among immigrant communities in Australia, emphasize the contextual and interpretive variability in how trustworthiness is perceived and measured, raising similar questions about the transferability of predefined trust constructs. Likewise, Nielsen and Nielsen (2023), working from an ethnomethodological and micro-sociological perspective, argue that trustworthiness emerges in the details of social interaction, challenging the assumption that it can be cleanly isolated and captured through conventional self-report measures. Together, these studies align with our argument that trust, as perceived by undergraduate STEM students, may not be fully captured by dimensions derived from other top-down theoretical models.

To empirically test the construct validity of trust in higher education, Di Battista et al. (2020, 2021) sought to determine whether students could themselves consistently differentiate between instructor characteristics related to two dimensions of trust often used in education contexts: competence and benevolence. In a quantitative study, Di Battista et al. (2021) found that manipulating students' perceptions of an instructor's competence significantly affected their subsequent judgment of benevolence, and vice versa. In a qualitative study, they further found that when students were asked to list characteristics associated with a benevolent or competent instructor, students frequently used the same words to describe both dimensions and used words that were not aligned with theoretical definitions (Di Battista et al., 2020). These findings support the argument that theorized sub-constructs of trust and the relationships between them may be highly dependent on institutional context or may overlap entirely when empirically tested (PytlikZillig and Kimbrough, 2016). The lack of empirical studies of trust dimensionality in STEM higher education calls for a more thorough examination of how well theorized trust dimensions drawn from organizational, social, and educational psychology frameworks or from K-12 contexts represent student perceptions in this specific context.

In the current study, we therefore seek to address the following research question: are college STEM students' perceptions of instructor trustworthiness accurately captured by previously theorized sub-constructs of trust? Based on research evidence discussed above, we hypothesize that a simple two- or three-domain model may not capture the rich dimensionality of student descriptions of trustworthiness. To test this hypothesis, we first employ a multi-step qualitative approach that gives students the opportunity to describe trusted instructors in their own words. To the best of our knowledge, such a “bottom-up” approach has yet to be applied in empirical studies of American college STEM students' trust in their instructors (Di Battista et al., 2020). Di Battista et al.'s qualitative study (2020) was conducted with a group of 125 psychology students in a single course at an Italian institution. Previous studies of American STEM undergraduate student trust have been limited to research faculty mentorship (Ream et al., 2014), faculty perceptions (Felten et al., 2023; Bayraktar et al., 2025), small classroom settings (Meinking and Hall, 2024) or to a close personal relationship framing of the student-instructor relationship (Cavanagh et al., 2018; Wang et al., 2021). Therefore, deepening our understanding of trust from the perspective of students themselves is a key step toward advancing student experiences in STEM classrooms.

To prioritize empirical model testing, we chose to follow a defined “process model” approach that leverages qualitative data for instrument design (Chatterji, 2003). First, we reviewed literature across education, psychology, and management to identify existing trust constructs. We then conducted structured interviews with 57 STEM undergraduates, asking them to describe a trusted instructor. Using a priori codes from the literature and inductively generating new ones, we developed a codebook that categorized traits into conceptual groupings. These categories were then used to draft survey items and test dimensionality. The purpose of the qualitative work was therefore twofold: (1) to propose a preliminary model of instructor trustworthiness grounded in student descriptions and (2) to draft an instrument for empirical testing.

In this manuscript, we distinguish between trust and trustworthiness. Drawing on Mayer et al. (1995), trust refers to the psychological state of the trustor based on a decision to be vulnerable to the actions of the trustee. Trustworthiness, by contrast, refers to the characteristics or behaviors of the trustee, such as competence, care, or fairness, that lead the trustor to view them as deserving of trust. Our study centers on students' descriptions of instructor trustworthiness and uses these perceptions as a window into how trust develops. Although we use the term “trust” at times for brevity, our analyses focus on the observable antecedents to trust as experienced and articulated by students.

Our qualitative analysis revealed that trustworthy instructor traits clustered into cognitive, affective, and relational domains, with notable overlap between cognitive and relational elements. We piloted a survey based on the codebook in a large-enrollment STEM course. A forced three-factor exploratory factor analysis (EFA) yielded poor-to-acceptable model fit while higher-order models performed better. Moreover, items did not load cleanly into the predefined domains, indicating that student conceptions of trustworthiness may not align neatly with previously theorized models. These findings suggest a more nuanced understanding of trust is needed to improve student buy-in to evidence-based practices and, ultimately, support retention in STEM fields (Cavanagh et al., 2018; Graham et al., 2013; Wang et al., 2021).
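One simple way to quantify how often traits from different domains are mentioned together is to count, for each pair of domains, the number of responses that mention at least one trait from both. The minimal Python sketch below assumes each interview response has already been coded into trait labels; the trait names and their domain assignments are hypothetical placeholders, not the study's 28-trait codebook, and the counting rule is an illustration rather than the authors' analytic procedure.

```python
from collections import Counter
from itertools import combinations

# Hypothetical trait-to-domain mapping (placeholders, not the study's codebook).
TRAIT_DOMAIN = {
    "knowledgeable": "cognitive",
    "clear communication": "cognitive",
    "caring": "affective",
    "empathetic": "affective",
    "approachable": "relational",
    "responsive": "relational",
}


def domain_cooccurrence(responses):
    """Count responses that mention traits from each pair of domains.

    Each response is a collection of coded trait labels. A response adds one
    to a domain pair if it contains at least one trait from each domain;
    traits missing from TRAIT_DOMAIN are ignored.
    """
    counts = Counter()
    for traits in responses:
        domains = sorted({TRAIT_DOMAIN[t] for t in traits if t in TRAIT_DOMAIN})
        for pair in combinations(domains, 2):
            counts[pair] += 1
    return counts


# Invented coded responses for illustration only.
responses = [
    {"knowledgeable", "approachable"},
    {"clear communication", "responsive", "caring"},
    {"empathetic"},
]
print(domain_cooccurrence(responses))
```

Counting each response at most once per domain pair keeps long, trait-heavy answers from dominating the tally; a per-trait count would be a reasonable alternative if frequency of mention matters.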

2 Materials and methods

2.1 Process model approach to instrument design

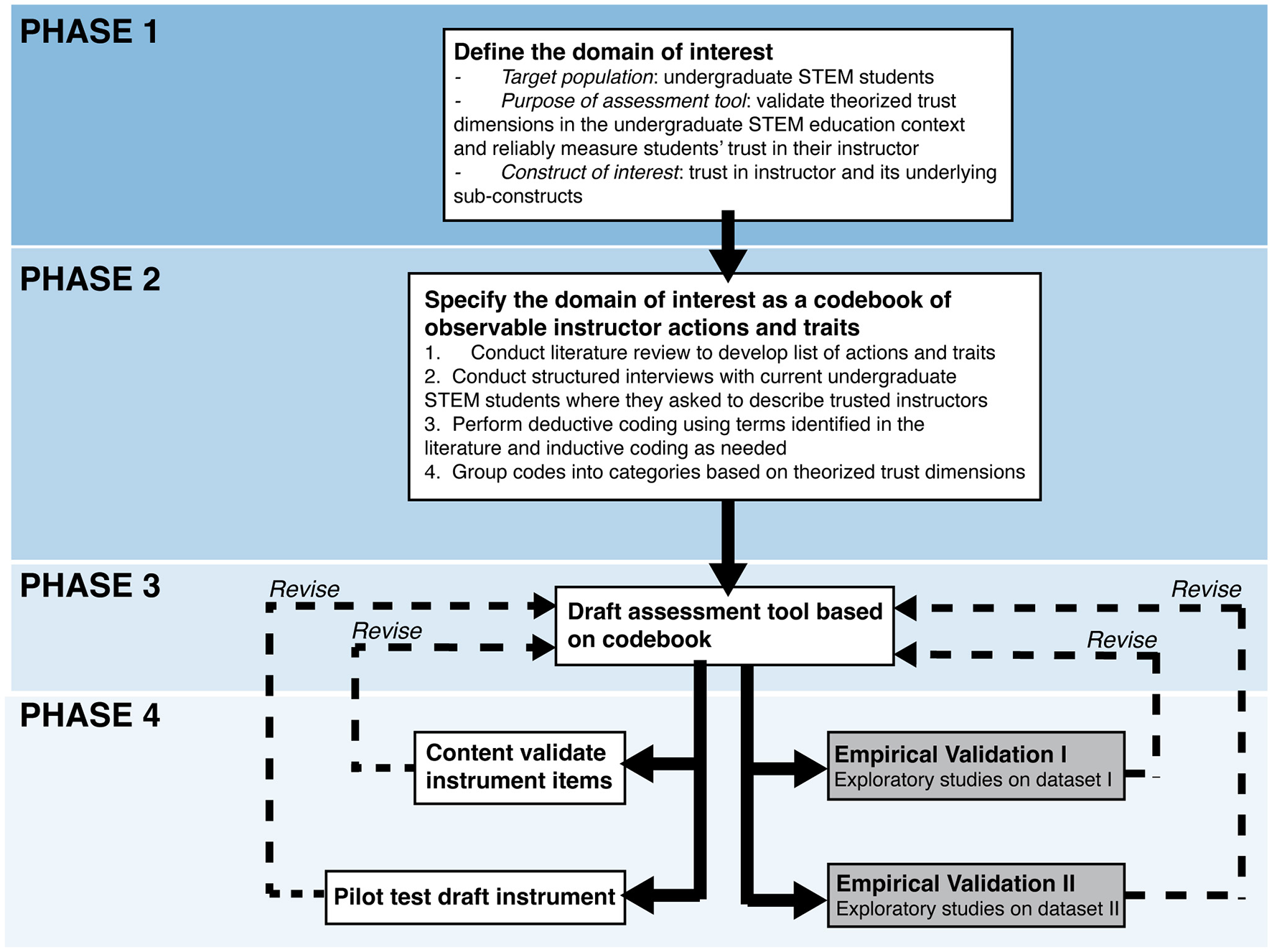

In this study, we apply an iterative process model for instrument design to develop a codebook of trustworthy instructor characteristics (Chatterji, 2003; Chatterji et al., 2002; Graham et al., 2009). The process has four phases, depicted in Figure 1. In phase 1, we began by defining the assessment context, including the constructs and population that would be targeted for measurement. In this case, the domain of interest was defined as the constructs underlying trust for undergraduate STEM students. In phase 2, we specified the domain in terms of action-oriented and observable indicators to facilitate instrument construction in phase 3. To do so, we conducted a literature review and held structured interviews with current undergraduate STEM students. The work of phase 3 then focused on converting the specified behaviors and characteristics into rating items for a survey. Finally, in phase 4, we conducted iterative rounds of validation and revision, including content validation of the items and a pilot test of the instrument. The process model approach used here is based on recommendations for test development grounded in psychometric modeling described in the Standards for Educational and Psychological Testing (American Educational Research Association, 1999). Instruments developed using this model typically achieve the desired reliability and a concise factor structure within fewer rounds of empirical testing (Chatterji et al., 2002; Graham et al., 2009). We apply all four phases of the process model in this work, focusing primarily on domain specification to clarify and validate the constructs underlying student trust in their instructor. Importantly, while we present findings from a pilot study, this paper does not address phase 4 in full. In the process model, phase 4 typically includes exploratory and confirmatory studies with large independent data sets.
Overall, this study followed a sequential exploratory mixed methods design, in which qualitative data collection and analysis preceded and directly informed the quantitative phase. The qualitative phase involved structured interviews to identify traits students associate with instructor trustworthiness. These traits were used to develop a survey instrument, which was then pilot tested using exploratory factor analysis to examine the dimensional structure of trust.

Figure 1

Phases in the development of a codebook of instructor behaviors and characteristics that contribute to the development of student trust and validation of trust measurement instrument derived from the codebook. Dashed lines depict revisions made to the instrument following rounds of validation. Boxes in gray (“Empirical Validation I” and “Empirical Validation II”) represent steps of the process model approach not addressed in this paper.
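Before fitting a factor model to pilot survey data, the number of factors the data can plausibly support is often previewed from the eigenvalues of the inter-item correlation matrix. The standard-library Python sketch below computes Pearson correlations, extracts eigenvalues with the cyclic Jacobi method, and applies the Kaiser criterion (retain factors whose eigenvalue exceeds 1). This is a common, and frequently criticized, retention heuristic offered only as an illustration; it is not the forced three-factor EFA described in this study, and the toy response matrix is invented.

```python
import math


def correlation_matrix(data):
    """Pearson correlations between the columns of `data` (rows = respondents).

    Assumes every item has nonzero variance.
    """
    n, p = len(data), len(data[0])
    cols = [[row[j] for row in data] for j in range(p)]
    means = [sum(c) / n for c in cols]
    sds = [math.sqrt(sum((x - m) ** 2 for x in c) / (n - 1)) for c, m in zip(cols, means)]
    r = [[0.0] * p for _ in range(p)]
    for i in range(p):
        for j in range(p):
            cov = sum((cols[i][k] - means[i]) * (cols[j][k] - means[j]) for k in range(n)) / (n - 1)
            r[i][j] = cov / (sds[i] * sds[j])
    return r


def jacobi_eigenvalues(a, tol=1e-12, max_sweeps=100):
    """Eigenvalues (descending) of a symmetric matrix via cyclic Jacobi rotations."""
    a = [row[:] for row in a]  # work on a copy
    p = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(p) for j in range(p) if i != j)
        if off < tol:
            break
        for i in range(p - 1):
            for j in range(i + 1, p):
                if abs(a[i][j]) < 1e-15:
                    continue
                # Rotation angle chosen to zero the (i, j) element.
                theta = (a[j][j] - a[i][i]) / (2 * a[i][j])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta ** 2 + 1))
                c = 1 / math.sqrt(t ** 2 + 1)
                s = t * c
                for k in range(p):  # A <- A P (columns i and j)
                    aki, akj = a[k][i], a[k][j]
                    a[k][i] = c * aki - s * akj
                    a[k][j] = s * aki + c * akj
                for k in range(p):  # A <- P^T A (rows i and j)
                    aik, ajk = a[i][k], a[j][k]
                    a[i][k] = c * aik - s * ajk
                    a[j][k] = s * aik + c * ajk
    return sorted((a[i][i] for i in range(p)), reverse=True)


def kaiser_factor_count(data):
    """Number of correlation-matrix eigenvalues greater than 1."""
    return sum(1 for ev in jacobi_eigenvalues(correlation_matrix(data)) if ev > 1.0)


# Invented toy data: items 1-2 move together, items 3-4 move together,
# and the two pairs are uncorrelated with each other.
toy = [
    [1, 1, 1, 1],
    [-1, -1, 1, 1],
    [1, 1, -1, -1],
    [-1, -1, -1, -1],
]
print(jacobi_eigenvalues(correlation_matrix(toy)))
print(kaiser_factor_count(toy))  # → 2
```

With real pilot data, parallel analysis or comparing fit across several forced solutions (as with the three-factor and higher-order models discussed here) is generally preferred over the Kaiser rule alone.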

2.2 Process model phase 1

We defined the domain of interest as the constructs underlying the latent variable “trust” and the assessment context as undergraduate STEM classrooms in the United States.

2.3 Process model phase 2

2.3.1 Literature review

To identify actions, characteristics, and other related variables underlying descriptions of trusted individuals, the research team conducted an exploratory literature search to identify key dimensions previously used to operationalize the latent variable, “trust,” across multiple disciplinary contexts. We began our search by first expanding upon theoretical frameworks used to measure trust in schools. These included the close personal relationship framework adapted by Cavanagh et al. (2018) and the five dimensions of trust highlighted by Tschannen-Moran and Hoy (2000). The research team identified potential new sources via keyword searches of the following online databases: JSTOR, ProQuest, EBSCOHost, and Google Scholar. Example keywords used in the search included: “trust in schools,” “trust in organizations,” “trust between superior and subordinates,” “trust among colleagues,” “trust in leaders,” and “trust in teachers.” Searches were conducted between Spring 2021 and 2022 and yielded about 100 research articles published between 1967 and 2022, spanning psychology, education, and organizational studies. Articles were included based on whether the authors provided a clear operational definition or framework of trust with specific domains, or clear descriptions of behaviors and characteristics associated with trustworthy individuals. Articles that did not involve the study of human behavior or that did not provide a working definition of trust were excluded.

In reviewing each article, research team members recorded the dimensions and individual characteristics used to operationalize and describe trustworthiness. Each dimension or characteristic was recorded within a preliminary codebook and accompanied by any available definitions, behaviors, or sample items included in the original article. Definitions and examples of dimensions or characteristics not explicitly tied to education settings were contextualized to the student-instructor relationship based on definitions provided in the original source material. Our focus was to clarify existing trust dimensions with descriptive language that placed actions and characteristics into the specified domain context. The literature review concluded once the research team agreed that saturation had been achieved, or that few new terms appeared in each successive article. The full literature review codebook is presented in Supplementary Table 1.
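The stopping rule described above was a consensus judgment by the research team. As an illustration only, it could be formalized as "stop once each of the last few articles added at most a small number of new terms to the codebook." The Python sketch below encodes that hypothetical rule; the `window` and `max_new` parameters and the example term sets are assumptions, not values used in this study.

```python
def saturation_point(articles, window=3, max_new=1):
    """Return the 1-based index of the article at which the review would stop.

    `articles` is a sequence of sets of terms recorded per article, in reading
    order. The review stops once each of the last `window` articles added at
    most `max_new` terms not already in the codebook. Returns None if the
    rule is never met. Both parameters are hypothetical choices.
    """
    codebook = set()
    new_per_article = []
    for idx, terms in enumerate(articles, start=1):
        new_terms = set(terms) - codebook
        codebook |= new_terms
        new_per_article.append(len(new_terms))
        recent = new_per_article[-window:]
        if len(recent) == window and all(n <= max_new for n in recent):
            return idx
    return None


# Invented per-article term sets, using dimension names mentioned in the text.
reviewed = [
    {"competence", "benevolence", "integrity"},
    {"openness", "honesty", "competence"},
    {"reliability", "care"},
    {"competence", "care"},
    {"integrity", "openness", "respect"},
    {"benevolence"},
]
print(saturation_point(reviewed))  # → 6
```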

2.3.2 Interview participants and procedures

Next, we sought to obtain the perspectives of students as key stakeholders in the study of instructor trust. To do so, we recruited undergraduate students enrolled in STEM classes (N = 57) from one large public research university, one mid-size private research university, and one mid-size public teaching college to participate in a study about their relationships with college instructors. Students were first recruited via convenience sampling by undergraduate assistants on the research team. Recruitment was then formalized through posters, web posts, and emails to students with the incentive of $10 compensation.

Thirty participants identified as students of color (52.6%). Of those 30, 12 identified as Black (21.1%), 10 identified as Hispanic or Latino (17.5%), three as more than one group (5.3%), and five did not identify their race/ethnicity (8.8%). Thirty-eight participants identified as women (66.7%) and 19 identified as men (33.3%). Most students were in their first and second years (56.1%), while the remainder were in their third and fourth years (43.9%) of college. Most students majored in STEM fields (68.4%), with some from the social sciences (21.1%), humanities (7.0%), and other fields (3.5%). Seventeen students (29.8%) were first-generation college students. See Table 1 for all interview participant demographic characteristics.

Table 1

| | Number of participants | Percent |
|---|---|---|
| Self-identified gender | | |
| Male | 19 | 33.3% |
| Female | 38 | 66.7% |
| School year | | |
| First-year | 5 | 8.8% |
| Second-year | 27 | 47.4% |
| Third-year | 17 | 29.8% |
| Fourth-year | 8 | 14.0% |
| Race/ethnicity | | |
| White or Asian or Pacific Islander | 27 | 47.4% |
| Black or African American | 12 | 21.1% |
| Hispanic or Latino | 10 | 17.5% |
| Multiple Ethnicities | 3 | 5.3% |
| Prefer not to answer | 5 | 8.8% |
| First-generation student status | | |
| Yes | 17 | 29.8% |
| No | 8 | 14.0% |
| Unsure/prefer not to answer | 32 | 56.1% |
| Major | | |
| STEM | 39 | 68.4% |
| Social Sciences | 12 | 21.1% |
| Humanities | 4 | 7.0% |
| Other | 2 | 3.5% |

Demographic characteristics of student interview participants (N = 57).

Percentages are rounded to one decimal place.
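Because grouped percentages are easy to misstate when already-rounded values are added together, the short Python sketch below recomputes selected Table 1 percentages directly from the counts, rounding to one decimal place. The `percent` helper and the dictionary are illustrative names, not part of the study materials.

```python
# Recompute selected Table 1 percentages from participant counts (N = 57).
N_INTERVIEW = 57

table1_counts = {
    "Male": 19,
    "Female": 38,
    "Black or African American": 12,
    "Hispanic or Latino": 10,
    "Unsure/prefer not to answer (first-generation)": 32,
}


def percent(count: int, total: int) -> float:
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


for label, count in table1_counts.items():
    print(f"{label}: {count} ({percent(count, N_INTERVIEW)}%)")

# Grouped categories are computed from summed counts rather than by adding
# already-rounded percentages, which can drift by 0.1 percentage points.
print("First/second year:", percent(5 + 27, N_INTERVIEW))
print("Third/fourth year:", percent(17 + 8, N_INTERVIEW))
```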

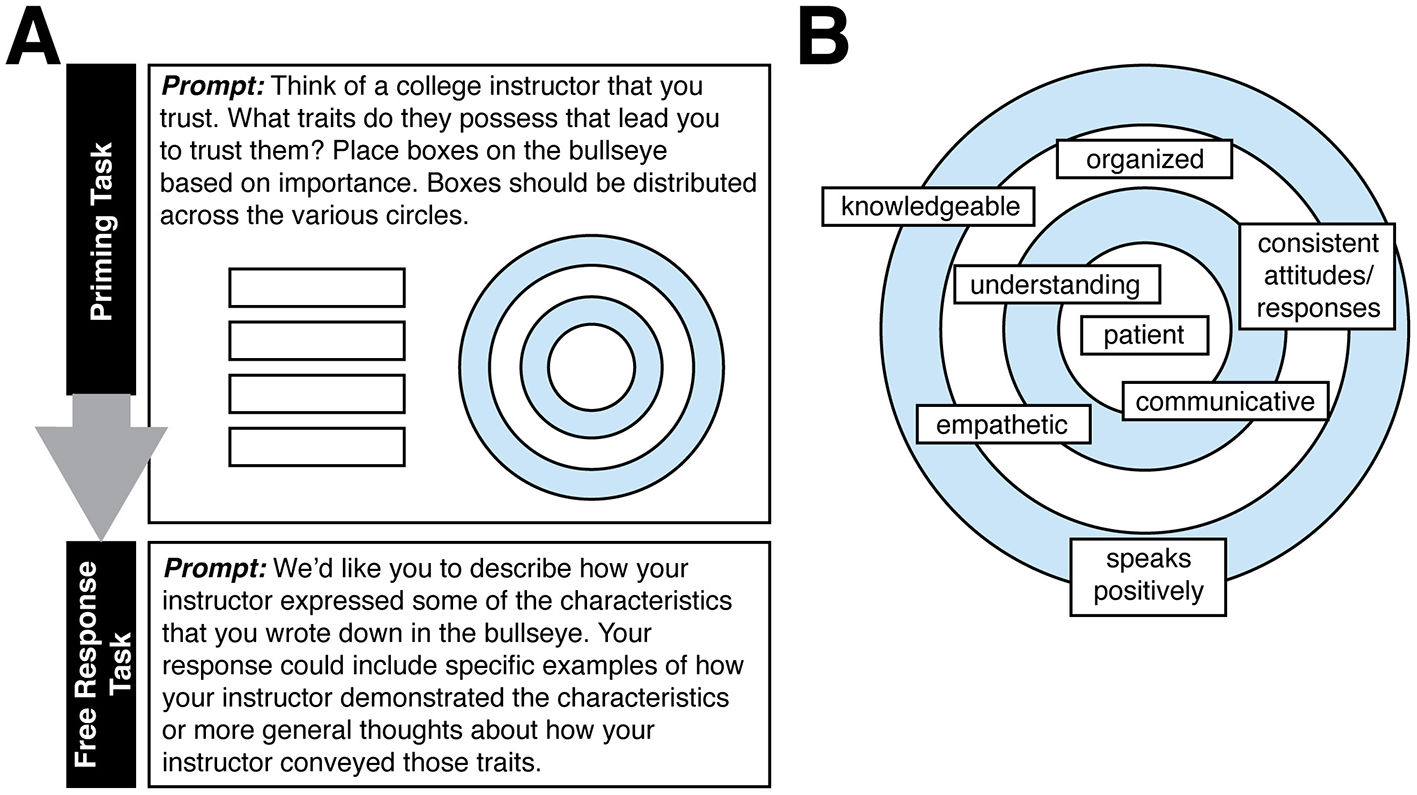

Upon volunteering to participate in the study, students were invited to a 15-minute Zoom interview with a member of the research team. The interview followed a two-part structure. In the first part, the priming task, participants were asked to reflect on a past college instructor they trusted and to generate words describing the instructor's traits. Using Google Slides, participants were asked to place each trait on a bullseye graphic based on how important they believed each trait was to their perception of trustworthiness (e.g. if a student believed that an instructor's “punctuality” was the foremost reason for trust, the student would place “punctuality” in the center of the bullseye; see Figure 2B for an example). In the second part, the free response task, participants were asked to explain why the characteristics they chose were perceived as trustworthy and how those characteristics were demonstrated by the instructor. Participants were encouraged to provide anecdotal examples of how their instructor had displayed the traits they had chosen. The priming task was intended to prompt participants to reflect on a past trusted instructor in preparation for discussing their experiences in depth during the free response task (see Figure 2A for a schematic overview of the interview procedure). In the current study, we focus our analysis on responses during the free response task. A companion manuscript (Chen et al., in review) analyzes the responses to the priming task. Interviewers (two full-time researchers and three undergraduate research assistants) asked follow-up questions only if participants' responses were unclear. Each interview was securely recorded on Zoom. This project was granted exempt status from each institution's Institutional Review Board Human Subjects Committee, as it examined standard educational practices.

Figure 2

(A) Schematic overview of the two-part student interview procedure. In the first priming task, students were prompted to recall a past trusted instructor, identify characteristics of that instructor that they perceived as trustworthy, and enter each characteristic into a box. Students then placed traits in order of perceived importance by moving boxes onto a “bullseye” image. In the second free response task, students were asked to expand upon the characteristics they listed in the priming task. (B) Example of a bullseye completed by a student participant in the priming task.

2.3.3 Thematic coding of interview free responses

We adhered to the established qualitative methodology of thematic analysis to identify emergent themes in students' free-response interview data (Braun and Clarke, 2006). First, three members of the research team (one full-time researcher and two undergraduate research assistants) familiarized themselves with the data by reading through all student responses and generating an initial list of themes. Next, we engaged in a directed content analysis approach (Hsieh and Shannon, 2005), integrating both deductive and inductive coding, to systematically code individual student responses. All interview responses were uploaded to NVivo, which enabled us to electronically code and manage data and ideas (Hilal and Alabri, 2013). Research team members systematically identified all traits associated with the trusted instructor by considering student responses sentence by sentence, contextualizing each sentence within the greater paragraph. Using deductive coding, constructs from the literature review and initial list of themes were used as an a priori list of codes to label students' responses. When student responses did not readily align with existing codes, we used inductive coding to generate new codes that more accurately captured emerging constructs. This iterative process ensured that our coding scheme remained grounded in prior research while allowing flexibility to accommodate novel insights from the data.

While our methodological approach incorporated both deductive and inductive elements, we did not adopt a fully inductive Grounded Theory method (Glaser and Strauss, 2017). Instead, we followed an abductive approach informed by Chatterji's (2003) process model, using existing frameworks to guide initial coding while allowing new codes to emerge from student responses. We recognize that this hybrid strategy limits the possibility of generating an entirely novel theory of instructor trust. However, it was intentionally chosen to balance theoretical grounding with openness to context-specific constructs, as our goal was to develop a preliminary instrument aligned with both empirical data and existing conceptualizations of trust.

Once an initial codebook was generated, two members of the research team used the codebook to code all student responses independently. Once the two raters had coded all responses, they met to discuss their independent analyses. To ensure coding consistency and reliability, three rounds of intercoder reliability checks were conducted. When coding disagreements arose between the two coders, the coders and a senior investigator discussed them and assigned a final code after reaching consensus. The kappa value of the first intercoder reliability check was 0.64. The kappa value increased to 0.80 and finally to 0.85 after disagreements were resolved during the second and third rounds of intercoder reliability checks. The final kappa value indicates strong agreement between coders based on the codebook. Once agreement was reached on the codebook structure, the two coders completed the coding of all student interview free responses. At the completion of coding, NVivo's Coding Query functionality was used to calculate code frequencies. Even if a construct was mentioned more than once by a participant, we coded it a maximum of one time per response.
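The section reports kappa values without naming the statistic or the unit being compared. With two coders, Cohen's kappa is the usual choice, so the Python sketch below assumes Cohen's kappa computed over paired code assignments, for example the presence or absence of a given code in each response. The function name and the ten coder judgments are hypothetical and only illustrate the calculation.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders labeling the same units.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each coder's label frequencies.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty set of units")
    n = len(coder_a)
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    marg_a, marg_b = Counter(coder_a), Counter(coder_b)
    labels = marg_a.keys() | marg_b.keys()
    p_e = sum(marg_a[c] * marg_b[c] for c in labels) / n**2
    if p_e == 1:
        return 1.0  # both coders used one identical label for every unit
    return (p_o - p_e) / (1 - p_e)


# Hypothetical presence ("1") / absence ("0") of one code across ten responses.
coder_1 = ["1", "1", "0", "0", "1", "0", "1", "1", "0", "0"]
coder_2 = ["1", "1", "0", "1", "1", "0", "1", "0", "0", "0"]
print(round(cohens_kappa(coder_1, coder_2), 2))
```

Here the coders agree on 8 of 10 responses (p_o = 0.8), but because each used "1" half the time, chance agreement is 0.5, so kappa is (0.8 - 0.5) / (1 - 0.5) = 0.6. This correction for chance is why kappa is reported instead of raw percent agreement.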

Finally, we searched for themes in the data by grouping codes into categories based on existing theoretical frameworks of trust identified during the literature review. Specifically, the literature review had revealed a consensus that trust broadly encompasses at least two domains: affective and cognitive. Additionally, previous research on undergraduate STEM students defined trust using a close personal relationship framework; thus, we included a third, relational trust domain. Members of the research team first grouped codes into these broad domains. These themes were then iteratively refined through discussion among the research team. Where necessary, major themes were divided into sub-categories to ensure that the richness of our interview data was accurately represented. The final codebook contains 28 individual codes grouped into three major themes: affective, cognitive, and relational trust. The cognitive domain comprises six sub-categories, the relational domain comprises five sub-categories, and it was not necessary to split the affective domain into smaller sub-categories.

2.3.4 Network analysis of code frequencies

To examine patterns in how students associated different instructor traits with trust, we constructed a co-occurrence network diagram based on qualitative interview responses. Each node in the network represents a unique interview code (trait) mentioned by students, and edges represent instances where two traits were co-mentioned in the same interview. The weight of each edge reflects the frequency of co-occurrence across all participants. To further analyze network structure, for each node, we calculated degree centrality (the number of connections a trait had) and betweenness centrality (how often a trait acted as a bridge between others). At the domain level, we computed the average node degree and average betweenness centrality, as well as the intra-domain edge density, defined as the ratio of actual to possible connections among traits within the same domain. Finally, we quantified inter-domain edge frequencies to assess the extent to which traits from different trust domains co-occurred. These analyses allowed us to identify not only which traits were most central to students' conceptualizations of trust, but also how traits within and across trust domains were structurally interconnected.
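The network measures described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: the three interviews and the code-to-domain mapping are hypothetical, betweenness is computed with Brandes' algorithm on the unweighted graph (edge weights are ignored for path lengths), and inter-domain frequency is taken to be the summed co-occurrence weight of edges linking two different domains.

```python
from collections import deque
from itertools import combinations

# Hypothetical data: codes assigned to each interview (each code counted at
# most once per interview) and the trust domain of every code.
interviews = [
    {"Supportive", "Caring", "Flexible"},
    {"Caring", "Understanding"},
    {"Supportive", "Caring", "Funny"},
]
domain = {
    "Supportive": "cognitive", "Flexible": "cognitive",
    "Caring": "relational", "Understanding": "relational",
    "Funny": "affective",
}


def build_network(interviews):
    """Edge weights (number of interviews co-mentioning a pair) and an adjacency map."""
    weights = {}
    adj = {code: set() for codes in interviews for code in codes}
    for codes in interviews:
        for u, v in combinations(sorted(codes), 2):
            weights[(u, v)] = weights.get((u, v), 0) + 1
            adj[u].add(v)
            adj[v].add(u)
    return weights, adj


def betweenness(adj):
    """Unnormalized betweenness centrality (Brandes) on the unweighted, undirected graph."""
    bc = dict.fromkeys(adj, 0.0)
    for s in adj:
        stack, preds = [], {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)   # number of shortest paths from s
        dist = dict.fromkeys(adj, -1)   # BFS distance from s (-1 = unvisited)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(adj, 0.0)  # dependency of s on each node
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # Each undirected pair was counted from both endpoints, so halve the totals.
    return {v: b / 2 for v, b in bc.items()}


def domain_summary(weights, adj, domain):
    """Per-domain average degree, average betweenness, and intra-domain density, plus inter-domain weights."""
    degree = {v: len(adj[v]) for v in adj}  # number of distinct co-mentioned traits
    bc = betweenness(adj)
    members = {}
    for v in adj:
        members.setdefault(domain[v], []).append(v)
    summary = {}
    for d, nodes in members.items():
        n = len(nodes)
        possible = n * (n - 1) / 2
        actual = sum(1 for u, v in weights if domain[u] == d and domain[v] == d)
        summary[d] = {
            "avg_degree": sum(degree[v] for v in nodes) / n,
            "avg_betweenness": sum(bc[v] for v in nodes) / n,
            "intra_density": actual / possible if possible else 0.0,
        }
    inter = {}
    for (u, v), w in weights.items():
        if domain[u] != domain[v]:
            key = tuple(sorted((domain[u], domain[v])))
            inter[key] = inter.get(key, 0) + w
    return summary, inter


weights, adj = build_network(interviews)
bc = betweenness(adj)
summary, inter = domain_summary(weights, adj, domain)
for d, stats in sorted(summary.items()):
    print(d, stats)
print(inter)
```

In this toy network "Caring" is the only route from "Understanding" to every other trait, so it accumulates the highest betweenness; this is the kind of bridging role the betweenness measure is meant to expose.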

2.4 Process model phase 3

2.4.1 Survey item writing and content validation

Following the process model approach to instrument design, we used the interview codebook to draft items for an assessment tool measuring students' trust in their instructor, deriving each survey item from a unique code. To draft survey items, three senior members of the research team independently wrote items for all codes. In some cases, multiple items were written for the same code to accurately represent the behaviors or traits encompassed within the code. The researchers then convened to discuss the drafted items. When items written for the same code differed from each other, the researchers reviewed the contextual definition of the code, consulted with senior investigators, and edited the items until consensus was reached. To establish content validity, three currently enrolled undergraduate STEM students were asked to provide feedback on each item. Each student described their interpretation of what each item was asking, highlighted items that were unclear or ambiguous, and commented on how well the items aligned with their experiences as STEM students. Items were revised according to this feedback. The final survey contained 38 items and is provided on the first page of the Supplementary material.

2.5 Process model phase 4

2.5.1 Survey participants and procedures

As a pilot study, the survey was distributed in one STEM classroom at a large public research university. Students received an e-mail from their instructor inviting them to participate in an online survey administered with Qualtrics survey software. Of the 252 students who received the survey, 210 (83.3%) completed it in its entirety. Of the participants, 58.6% identified as female, 14.8% as male, and 0.5% as non-binary, while 26.2% declined to provide their gender identity. Most students were in their second year (54.3%), with 41.4% in their third and fourth years, 1.0% in their first year, and 3.3% reporting “Other.” Most participants self-identified as White (58.6%), followed by Asian or Pacific Islander (15.7%), Hispanic or Latino (8.1%), multiple ethnicities (6.7%), and Black or African American (6.2%); a further 4.3% declined to provide information regarding their race or ethnicity. In total, 28.1% of participants were first-generation college students. Almost all students (96.2%) majored in STEM fields, 2.4% majored in the social sciences, and 1.4% were undeclared. See Table 2 for all survey participant demographic characteristics. This project was granted exempt status from each institution's Institutional Review Board Human Subjects Committee, as it examined standard educational practices.

Table 2

| | Number of participants | Percent |
|---|---|---|
| Self-identified gender | | |
| Male | 31 | 14.8% |
| Female | 123 | 58.6% |
| Non-binary | 1 | 0.5% |
| Prefer not to answer | 55 | 26.2% |
| School year | | |
| First-year | 2 | 1.0% |
| Second-year | 114 | 54.3% |
| Third-year | 66 | 31.4% |
| Fourth-year | 21 | 10.0% |
| Other | 7 | 3.3% |
| Race/Ethnicity | | |
| White | 125 | 58.6% |
| Asian or Pacific Islander | 34 | 15.7% |
| Black or African American | 13 | 6.2% |
| Hispanic or Latino | 19 | 8.1% |
| Multiple Ethnicities | 15 | 6.7% |
| Prefer not to answer | 9 | 4.3% |
| Other | 1 | 0.5% |
| First-generation student status | | |
| Yes | 59 | 28.1% |
| No | 148 | 70.5% |
| Unsure/prefer not to answer | 3 | 1.4% |
| Major | | |
| STEM | 202 | 96.2% |
| Social Sciences | 5 | 2.4% |
| Undecided | 3 | 1.4% |

Demographic characteristics of survey participants (N = 210).

Percentages are rounded to one decimal place.

2.5.2 Psychometric analysis of survey

Statistical analyses for the pilot study were conducted to investigate the psychometric properties of the survey derived from the interview codebook. Based on thematic analysis of the codebook, we hypothesized that a three-factor solution (affective, cognitive, and relational trust) would define the dimensions of the survey. To evaluate this hypothesis, we conducted an exploratory maximum-likelihood factor analysis with promax rotation. Sampling adequacy was evaluated using the Kaiser-Meyer-Olkin (KMO) measure, and suitability for factor analysis was evaluated using Bartlett's test of sphericity. We evaluated model fit with the chi-square test of model fit, the comparative fit index (CFI; Bentler, 1990), the Tucker-Lewis index (TLI; Tucker and Lewis, 1973), the normed fit index (NFI; Bentler and Bonett, 1980), and the root mean square error of approximation (RMSEA). Finally, we computed a factor correlation matrix and examined internal consistency using Cronbach's α for the full set of survey items and for each factor.
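Several of the quantities named above have simple closed forms. The Python sketch below computes Cronbach's α from item scores and the CFI, TLI, NFI, and RMSEA from the chi-square statistics of the fitted model and of the baseline (independence) model. The function names and all example numbers are hypothetical, not results from this study. Conventions differ slightly between software packages (for example, some compute RMSEA with N rather than N − 1). The maximum-likelihood extraction, promax rotation, KMO, and Bartlett's test are not reimplemented here; they would normally come from an established package, such as the third-party `factor_analyzer` library for Python.

```python
from math import sqrt
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of per-item score lists (same respondents, same order).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """RMSEA, CFI, TLI, and NFI from the fitted model (m) and the baseline model (b)."""
    excess_m = max(chi2_m - df_m, 0.0)  # model misfit beyond its degrees of freedom
    excess_b = max(chi2_b - df_b, 0.0)
    rmsea = sqrt(excess_m / (df_m * (n - 1)))
    denom = max(excess_m, excess_b)
    cfi = 1.0 - excess_m / denom if denom > 0 else 1.0
    tli = (chi2_b / df_b - chi2_m / df_m) / (chi2_b / df_b - 1.0)
    nfi = (chi2_b - chi2_m) / chi2_b
    return {"RMSEA": rmsea, "CFI": cfi, "TLI": tli, "NFI": nfi}


# Illustration only: toy item scores and made-up chi-square values.
consistent = [[1, 2, 3, 4, 5], [2, 3, 4, 5, 6], [1, 2, 3, 4, 5]]
unrelated = [[1, 2, 1, 2], [1, 1, 2, 2]]
print(round(cronbach_alpha(consistent), 3))
print(round(cronbach_alpha(unrelated), 3))
print(fit_indices(chi2_m=120.0, df_m=60, chi2_b=1000.0, df_b=78, n=210))
```

The two toy scales bracket the range of α: items that rise and fall together give α = 1, while items with no shared variance give α = 0. CFI, TLI, and NFI all compare the fitted model against the independence model, whereas RMSEA judges the fitted model's misfit per degree of freedom on its own.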

3 Results

To understand how students conceptualize trust in instructors in their own words, we conducted structured interviews with 57 currently enrolled undergraduate STEM students at three institutions. They were asked to describe characteristics of a past trusted instructor, including examples of how the instructor had demonstrated these characteristics (see Section 2 for details). We first performed qualitative analyses to determine the dimensional organization of traits students used to describe trusted instructors, resulting in an interview codebook that proposes a three-dimensional structure for the development of trust. Next, we used qualitative and quantitative methods to understand the relationships between the proposed dimensions of trust. Specifically, we examined co-occurrences of trait mentions in student responses to determine whether the proposed dimensions were highly interrelated or remained distinct. Finally, we developed a survey based on the qualitative interview codebook and used it to empirically test the construct validity of the proposed dimensions of trust.

3.1 Emergent dimensions of trust based on student interviews

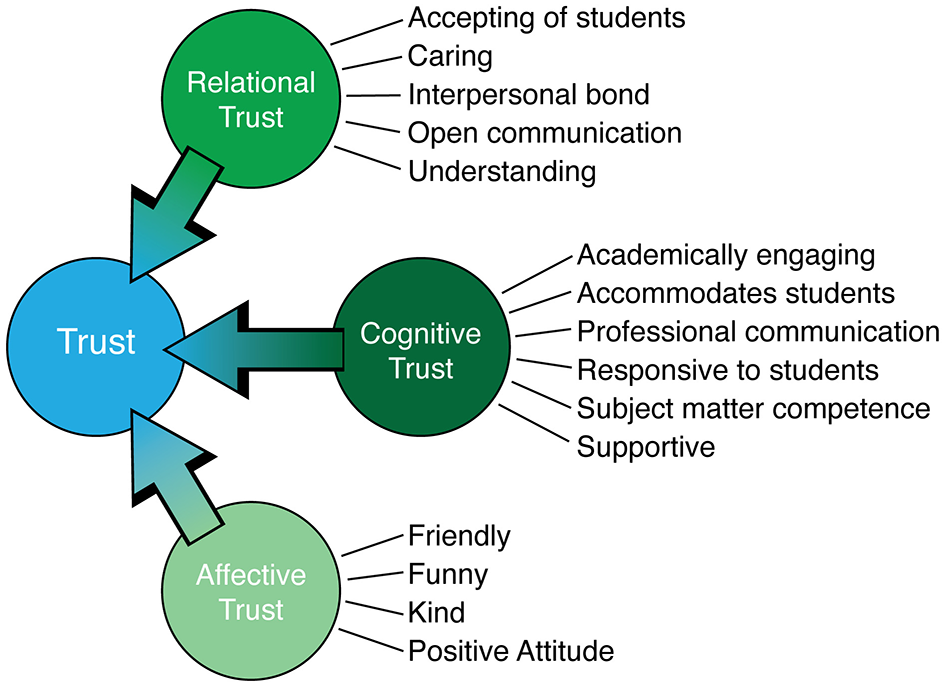

Through content analysis of open-ended interview responses, our findings reveal 28 individual codes representing instructor characteristics perceived by students as demonstrating trustworthiness (see Section 2 for details). Because students were asked to provide examples of how instructors demonstrated these characteristics, we extracted observable contextual definitions for each code and included them in our codebook. Through thematic analysis, we organized individual codes into three major dimensions: affective, cognitive, and relational trust (Figure 3; see Section 2 for details). The major coding categories were then further divided into subcategories that grouped together similar individual codes as needed. In our qualitative codebook of trust, readers can find the three major coding categories, subcategories, individual code definitions, and accompanying student examples for each code (Table 3).

Figure 3

Schematic of major code categories and their subcategories, representing the three dimensions of trust captured by qualitative analysis of student interview responses.

Table 3

| Trust dimension | Code category | Code | Definition | Student example |
|---|---|---|---|---|
| Affective trust | | Friendly/personable | The instructor demonstrates personal kindness to students. Statements indicate that the instructor is pleasant, likable, and/or agreeable | “He was always friendly and greeted everyone when he got into class” |
| | | Funny | The instructor displays a sense of humor | “I was considerably more likely to attend and enjoy class taught by professors that…could make jokes to keep us interested” |
| | | Kind | The instructor demonstrates benevolence. [Sentence must mention the word “kind”] | “One of the biggest things a professor can do that can increase my likelihood of trusting them is to simply be kind…” |
| | | Positive attitude | The instructor displays a positive demeanor | “They did their best to lighten the mood and keep everyone smiling” |
| Cognitive trust | Academically engaging | Academically engaging | The instructor cultivates a positive academic/classroom environment through involvement with students and course material | “They were also very engaging and did not read off of their slides but rather made sure that they engaged with us” |
| | Accommodates students | Available | The instructor is accessible to students and makes time to meet | “He took a genuine interest in individual students and would be willing to spend a long time in office hours with every student who was struggling” |
| | | Fair | The instructor demonstrates reasonable judgments, such as when grading assignments | “She was always fair in her grading and willing to work with her students to understand and achieve” |
| | | Flexible | The instructor breaks existing norms or patterns of behavior to accommodate students | “When classes switched to ‘online-mode' she was super flexible when it came to the new difficulties and challenges we were facing” |
| | | Patient | The instructor tolerates delays, confusion, or other unanticipated difficulties | “The instructor showed patience by taking the time to listen to others who had questions” |
| | Professional communication | Ongoing communication | The instructor communicates with students over time and, perhaps, after the course ends | “He has consistently checked in with me throughout the semester since that exam and even reached out to me before the next exam offering some last-minute help” |
| | | Transparent communication | The instructor sets out goals for the class that are understandable | “Our class only had ten people, so she was able to connect with us and clearly communicate everything that was going on with the course” |
| | Responsive to students | Good listener | The instructor demonstrates their capacity to hear students' questions/concerns and the instructor thoughtfully considers students' words and values their opinions/feedback | “He always listened to all of his students' concerns- and made it obvious that he was really listening and really cared” |
| | | Provides feedback | The instructor actively and constructively reacts to students' assignments, including providing feedback | “I built trust with this professor because of his ability to provide active feedback to all of my work for his class” |
| | Subject matter competence | Communicates concepts clearly | The instructor is adept at relaying academic concepts in class. The instructor answers student questions well | “The teacher was dependable in the sense that you as the student could count on the professor to teach difficult topics really well and efficiently” |
| | | Knowledgeable | The instructor is well-versed in their subject area | “Having a breadth of knowledge also played a role because this professor would include small details about why this topic was important and how it could be used in the bigger picture made me actually want to learn it” |
| | | Organized, prepared, and responsible in class | The instructor is adept at effectively structuring and planning their course | “Finally, he was very organized and responsible, as all of the class material was organized very neatly and in an easy-to-access manner” |
| | | Passionate about subject and work | The instructor has a strong interest, commitment and desire for their work | “This professor has a huge depth of knowledge in this class and just wants to share their passion with us every time we meet” |
| | Supportive | Supportive | The instructor indicates they are a resource for academic assignments, projects, materials, etc. The instructor also supports and motivates students to interact with course material | “He was very supportive and would not belittle students for their failures or difficulties…” |
| Relational trust | Accepting of students | Accepting of students | The instructor is cognizant of and supportive of a diversity of learners (This requires patience and flexibility). The instructor ensures all students are given access to opportunities and made to feel welcomed and involved (e.g. no matter ability, background, etc.). The instructor comprehends and shows consideration for other points of view/ideas, creating a safe environment | “He was always sure to engage all of the students no matter their age or willingness to raise their hand” |
| | Caring | Caring | The instructor shows interest and investment in student achievement within the scope of their class/in the academic sphere. The instructor may motivate/inspire students to care about course materials. When a sentence mentions a teaching approach/strategy (e.g., patience, teaching competence: communicating concepts), do not use this code. Only code as “caring for students' success” if no strategy is mentioned and only the instructor's intention(s) are mentioned | “My instructor demonstrated that they cared about students' by making it a point to learn each and every student's name” |
| | Interpersonal bond | Connects with students | The instructor seeks to cultivate a personal relationship with students | “While we were in class, she would make it a point to laugh with her students and truly connect” |
| | | Expresses interest in students' lives | The instructor actively displays interest in students beyond the classroom (e.g., asking questions about their past) | “The instructor that I trusted really wanted to get to know me and my background” |
| | | Relatable | The student perceives that the instructor is more like them than not | “In our one-to-one meeting, my professor made sure to take my worries/doubts about declaring the major seriously and made me feel as though she had once experienced them herself and that she could relate to my experience” |
| | | Vulnerable | The instructor takes risks by disclosing personal information or emotions | “They established this relationship by getting personal and sharing information about themself so I could then open up” |
| | Open communication | Open communication | The instructor communicates in a way that is perceived as open, or a mode wherein multiple subjects (e.g. academic subjects, personal subjects, etc.) can be broached. The sentence should indicate that there was a “two-way communication” which fosters an engaging, communicative relationship | “My instructor would always have time to meet with me either to talk about course material or just what was going on in my life” |
| | Understanding | Empathetic | The instructor is able to share students' feelings and experiences | “My professor is a very empathetic person who would always make sure his students were doing well in and outside of class” |
| | | Humanizes students | The instructor comprehends and shows consideration for the fact that students are human beings, not just students. Therefore, the instructor may show that they really know the student (e.g., knowing students' names). They may also respect the student and display politeness | “He understood that we are human beings and not just robots that should always complete our work right on time and always know the answer” |
| | | Understanding | The instructor comprehends and shows consideration for students' situational non-academic responsibilities, personal circumstances, the difficulties of the pandemic, being sick during school, and other things more generally | “A professor who understands that life happens and that their students have other responsibilities besides school and reaches out to their students when they notice something might be wrong is greatly appreciated” |

Qualitative codebook emerging from student interviews.

As described in “Section 2,” our coding and thematic analysis were informed by a broad literature review, which yielded 50 distinct characteristics that have previously been used to operationalize the latent variable “trust” or have been found to be strongly statistically associated with trust. Our search included more than 100 review articles, experimental studies, and qualitative analyses across a wide array of fields (see Supplementary Table 1 for the full literature review codebook). Although our review was limited in scope relative to the entire body of literature on “trust,” we found that many operational definitions of trust included two sub-constructs: cognitive and affective trust. Thus, we sought to categorize interview codes into these two domains, using the existing literature to guide our categorization of traits related either to an instructor's professional capabilities or to an instructor's ability to elicit positive emotions from their students, respectively. Additionally, in previous studies of undergraduate STEM student trust, trust was operationally defined using a close personal relationship framework encompassing care, acceptance, and understanding as sub-constructs (Clark and Lemay, 2010; Cavanagh et al., 2018; Wang et al., 2021). We therefore opted to include a third, relational trust domain to capture instructor traits related to these and other constructs that could be associated with developing and maintaining a close personal relationship with students. In the following, we describe each of the three domains (cognitive, relational, and affective trust) in more detail, providing contextual information about each domain and a rationale for the inclusion of individual codes within specific domains.

3.1.1 Cognitive trust

In our literature review, we found that cognitive trust is causally driven and based on a knowledge-based evaluation of a trustee's ability to fulfill an obligation (Dowell et al., 2015; Johnson and Grayson, 2005; Lewis and Weigert, 1985; Rempel et al., 1985). In this framing of trust, the trustor holds certain expectations of the trustee, based on a promise the trustee made to the trustor. Traits frequently associated with the cognitive domain in the literature included “competence,” “reliability,” “consistency,” “fairness,” “professionalism,” “responsiveness,” “flexibility,” and “timeliness” (Butler and Cantrell, 1984; Cook and Wall, 1980; Friedland, 1990; Ghosh et al., 2001; Lindskold and Bennett, 1973; McAllister, 1995; Moorman et al., 1993; Rousseau et al., 1998; Tschannen-Moran and Hoy, 2000; others, see Supplementary Table 1).

In the higher education context, the trustor is the student making a cognitive decision about whether the trustee, their instructor, can meet their expectations of what an instructor should do in the classroom. This decision may be driven by evaluations of the instructor's competence and reliability, or other behaviors that seek to facilitate an effective working relationship between the student and instructor. Thus, interview codes related to the professional responsibilities typical of an undergraduate STEM instructor, such as demonstrating subject matter competence and providing adequate support for students' academic success, were subsequently grouped into the cognitive dimension (Table 3). In previous work, student trust in the higher education context was assessed using Tschannen-Moran and Hoy's framework of trust developed in the K-12 setting, which included “reliability” and “competence” as key domains of trust (McClain and Cokley, 2017). These elements were also captured in our analysis, represented in the cognitive domain of trust.

Overall, we found that cognitive trust was an important dimension for student perceptions of trustworthiness. Cognitive trust was the second most frequently coded domain after relational trust, with 54 of 57 students (94.7%) referencing at least one instructor characteristic associated with building cognitive trust (Table 4). The project team divided the 14 cognitive trust codes into six subcategories: academically engaging, accommodating students, professional communication, responsive to students, competent in subject matter, and supportive. The most frequently cited individual codes within the cognitive domain were “supportive,” referenced by 33 of 57 students (57.9%), and “flexible,” referenced by 19 of 57 students (33.3%; Table 4).

Table 4

| Trust dimension | Code category | Code | Number of mentions (out of 57 students) | Most frequently co-occurring codes (number of co-occurrences across all interviews) |
|---|---|---|---|---|
| Affective trust | | | 19 | |
| | | Friendly/personable | 5 | Humanizes Students (5), Supportive (4) |
| | | Funny | 6 | Caring (4), Supportive (3), Relatable (3), Empathetic (3), Understanding (3) |
| | | Kind | 6 | Supportive (4), Available (3), Good Listener (3), Humanizes Students (3) |
| | | Positive attitude | 7 | Caring (5), Supportive (4), Understanding (3) |
| Cognitive trust | | | 54 | |
| | Academically engaging | Academically engaging | 10 | Communicates Concepts Clearly (5), Passionate (5), Caring (5), Supportive (4), Accepting (4) |
| | Accommodates students | Available | 13 | Caring (10), Supportive (9), Flexible (5), Communicates Concepts Clearly (5) |
| | | Fair | 9 | Caring (6), Communicates Concepts Clearly (5), Understanding (5) |
| | | Flexible | 19 | Caring (13), Supportive (10), Understanding (9) |
| | | Patient | 8 | Accepting (6), Communicates Concepts Clearly (5), Supportive (5) |
| | Professional communication | Ongoing communication | 6 | Supportive (6), Caring (5), Understanding (4) |
| | | Transparent communication | 3 | Caring (3), Communicates Concepts Clearly (2) |
| | Responsive to students | Good listener | 12 | Supportive (7), Caring (6), Humanizes Students (5), Understanding (5) |
| | | Provides feedback | 4 | Caring (4), Supportive (3) |
| | Subject matter competence | Communicates concepts clearly | 14 | Caring (9), Passionate (8), Supportive (7) |
| | | Knowledgeable | 11 | Supportive (7), Passionate (6), Caring (6) |
| | | Organized, prepared, and responsible in class | 5 | Caring (4), Supportive (3) |
| | | Passionate about subject and work | 13 | Caring (9), Supportive (6), Accepting (5), Understanding (5) |
| | Supportive | Supportive | 33 | Caring (26), Open Communication (11), Understanding (11) |
| Relational trust | | | 55 | |
| | Accepting of students | Accepting of students | 16 | Caring (8), Open Communication (6), Humanizes Students (4) |
| | Caring | Caring | 40 | Understanding (19), Open Communication (12), Humanizes Students (9) |
| | Interpersonal bond | Connects with students | 5 | Understanding (3) |
| | | Expresses interest in students' lives | 8 | Vulnerable (4), Humanizes Students (4), Understanding (4) |
| | | Relatable | 8 | Humanizes Students (5), Understanding (5), Empathetic (4) |
| | | Vulnerable | 9 | Understanding (5), Open Communication (4), Humanizes Students (4) |
| | Open communication | Open communication | 18 | Understanding (7), Humanizes Students (4) |
| | Understanding | Empathetic | 11 | Understanding (7), Humanizes Students (4) |
| | | Humanizes students | 15 | Understanding (7) |
| | | Understanding | 23 | Caring (19), Supportive (11), Flexible (9) |

Frequency of unique code mentions by students (out of 57 students) and codes most frequently co-occurring with it.
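The counting rules behind Table 4 can be expressed in a few lines of Python: each code is counted at most once per student, a domain is counted once per student if any of its codes appears, and pairwise co-occurrences are tallied across students. The responses and domain labels below are hypothetical placeholders, not study data, and the helper names are illustrative.

```python
from collections import Counter
from itertools import combinations

# Hypothetical coded data: one list of code labels per student response.
# A code may appear several times in a response but is counted once per student.
responses = {
    "S01": ["Caring", "Supportive", "Caring", "Flexible"],
    "S02": ["Understanding", "Caring"],
    "S03": ["Funny", "Supportive"],
}
domain = {"Caring": "relational", "Understanding": "relational",
          "Supportive": "cognitive", "Flexible": "cognitive",
          "Funny": "affective"}

# Deduplicate within each response before counting.
unique_codes = {sid: set(codes) for sid, codes in responses.items()}

# Number of students mentioning each code.
mentions = Counter(code for codes in unique_codes.values() for code in codes)

# Number of students mentioning at least one code from each domain.
domain_students = Counter(
    d for codes in unique_codes.values() for d in {domain[c] for c in codes}
)

# Pairwise co-occurrence counts across students.
co_occurrence = Counter(
    pair for codes in unique_codes.values() for pair in combinations(sorted(codes), 2)
)


def top_partners(code, k=3):
    """Codes that most often co-occur with `code`, with their counts."""
    partners = Counter()
    for (a, b), n in co_occurrence.items():
        if code == a:
            partners[b] += n
        elif code == b:
            partners[a] += n
    return partners.most_common(k)


n_students = len(responses)
for d, n in sorted(domain_students.items()):
    print(f"{d}: {n}/{n_students} students ({100 * n / n_students:.1f}%)")
print(top_partners("Supportive"))
```

Because a domain is counted once per student, domain totals (such as 54 of 57 students for cognitive trust) are smaller than the sum of that domain's individual code counts.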

In interviews, students described instructor behaviors and traits that demonstrated the instructor's ability to fulfill their professional obligations in creating an effective learning environment. For example, instructor flexibility was described as an instructor's willingness to accommodate extenuating circumstances, such as illness or family emergencies, that prevented students from turning in assignments on time:

“[My instructor] was willing to work with me when it came to catching up on class notes. He set up informal office hours with me so I could catch up on materials and this was an action that not many of my professors were willing to do when I was sick. His ability to be flexible, understanding, and attentive to my needs as a student truly meant a lot to me.”

While this action could be interpreted as kindness, students described this trait in contexts with strong implications for their course performance. Similarly, students described instructor support not as emotional support but as the instructor's role as a resource for academic success. For example, behaviors described as supportive included providing the necessary resources for students to complete assignments and motivating students to engage deeply with the course material. One student explained the importance of instructor support as follows: “I considered this period in my academic career my most productive because I knew there was always someone who supported my learning and would answer any of my questions, no matter what.”

3.1.2 Relational trust

In contrast to cognitive trust, which captures instructor characteristics aimed at building an effective working relationship, relational trust captures characteristics that reflect the cultivation of a strong personal relationship. This is not meant to connote an inappropriate relationship; rather, it refers to the ways in which an instructor may get to know a student and treat them as a whole person. These actions may not directly affect students' classroom performance or academic achievement, but they may have indirect effects through their impact on students' self-efficacy, engagement, academic self-concept, motivation, and persistence (Ballen et al., 2017; Eimers, 2001; Komarraju et al., 2010; Kuh and Hu, 2001; Micari and Pazos, 2012; Umbach and Wawrzynski, 2005; Vogt et al., 2007).

Previous studies of trust in the higher education STEM context defined trust through elements of care, understanding, and acceptance (Cavanagh et al., 2018; Wang et al., 2021; Supplementary Table 1). Thus, we assigned emergent interview codes reflecting these three elements to the relational trust domain (Table 3). Further, we considered key elements that other studies have associated with positive personal student-teacher relationships, including openness, benevolence, care, connectedness, vulnerability, and respect (Anderson and Carta-Falsa, 2002; Jacklin and Le Riche, 2009; Komarraju et al., 2010; Meinking and Hall, 2024; McClain and Cokley, 2017; Micari and Pazos, 2012; Tschannen-Moran and Hoy, 2000; Umbach and Wawrzynski, 2005). Willing vulnerability and the disclosure of personal information by teachers have also been emphasized as key components of a trusting student-teacher relationship in the K-12 context (Holzer and Daumiller, 2025). Interview codes describing instructor behaviors intended to build and maintain strong interpersonal relationships were therefore also grouped into the relational trust dimension (Table 3).

The relational trust category contained 10 unique codes, divided into five subcategories: accepting of students, caring, interpersonal bond, open communication, and understanding. Relational trust was the most coded theme, with 55 out of 57 students (96.5%) referencing at least one instructor characteristic associated with building relational trust (Table 4), and relational characteristics were among the traits students cited most often in explaining their overall perception of an instructor's trustworthiness. Indeed, the single instructor characteristic most often cited by students as indicating trustworthiness was “caring,” mentioned by 40 out of 57 students (70.2%; Table 4). After “caring,” traits associated with an instructor's understanding were the most mentioned during student interviews, referenced by 34 out of 57 students (59.6%; Table 4).

In student interviews, traits associated with building relational trust were often described within the context of the instructor's efforts to recognize students' identities beyond their role as students and to share aspects of their own identity beyond that of an instructor. For example, one student explained that the trust they had in their instructor came from how:

“[t]he instructor [] really wanted to get to know me and my background. Usually, instructors just see you as just another person in a class, but this instructor really wanted to understand what [was] going on in my other classes and would check in to make sure that I wasn't being too hard on myself. They established this relationship by getting personal and sharing information about themself so I could then open up. This instructor's willingness and desire to get to know me past the identity of a student is why I trust them.”

Across disciplines, trust is often described as a “willingness to be vulnerable” to the actions of a trustee (Mayer et al., 1995). In the student experiences described here, instructors who were themselves willing to be vulnerable through acts of self-disclosure, or who made strides to accept and understand their students' vulnerability, appeared to build not only relational trust but also overall trust with their students.

3.1.3 Affective trust

Affective trust is the emotional component of trust based upon an initial interpersonal connection between two individuals that can lead to feelings of closeness, care, concern, or friendship. In turn, these positive emotions can deepen the development of trust, even in the absence of other causal attributes (Dowell et al., 2015; Johnson and Grayson, 2005; Lewis and Weigert, 1985; Rempel et al., 1985). It is important to distinguish between the relational and affective domains of trust. For example, “benevolence,” or acting out of kindness, is often cited as a component of trust in the broader literature as part of an affective dimension (Erdem and Ozen, 2003; Hoy and Tschannen-Moran, 1999; Jarvenpaa and Leidner, 1998; Kramer and Cook, 2004; Lindskold and Bennett, 1973; Mayer et al., 1995; McAllister, 1995; Morgan and Hunt, 1994; Renn and Levine, 1991; Rousseau et al., 1998; others, see Supplementary Table 1). However, taking students' contextualized meaning into account in our qualitative analysis, we deemed that certain interview codes that could be related to “benevolence” went beyond simple acts of professional courtesy or kindness and instead represented truly individualized acts of care. Such codes were subsequently categorized within the relational trust domain.

Interview codes included in the affective domain were instead related to students developing positive feelings toward their instructor that initially built trust or encouraged students' openness to the possibility of pursuing a personal relationship with their instructor (Table 3). In other words, codes within the affective domain relate to students' first impressions of their instructor's affect and approachability, which then informed their decision to interact further with their instructor. Indeed, our review of the literature found that instructor approachability and frequency of positive interactions with instructors were important affective components of trustworthiness (Boyas and Sharpe, 2010; Denzine and Pulos, 2000; Edmondson et al., 2004; Jaasma and Koper, 1999; Kramer and Cook, 2004; Lamport, 1993; Robinson, 1996; Tschannen-Moran and Hoy, 1998; others, see Supplementary Table 1). Of the three dimensions of trust that emerged from student interviews, affective trust was the least commonly coded theme, with only 19 out of 57 students (33.3%) referencing at least one of the associated characteristics in their interviews (Table 4). The affective trust category comprised four individual codes (friendly/personable, funny, kind, and positive attitude) and required no further subcategorization (Table 3).

When students referenced the affective domain of trust during interviews, they referred to instructor characteristics that made them feel more positive about the classroom environment and attending class or office hours. For example, one student discussed the importance of instructor kindness in building trust and motivating attendance: “One of the biggest things a professor can do that can increase my likelihood of trusting them is to simply be kind and empathetic. I am much less likely to go to a professor's office hours if they are cold and callous during class but am much more likely to approach a professor when they are kind.”

3.2 Relationships between dimensions of trust in students' words

Once we had qualitatively categorized interview codes into three dimensions, we sought to understand how different dimensions of trust interacted within students' open-ended responses. In doing so, we aimed to assess whether students systematically distinguished between dimensions of trust in their descriptions or whether there was a pattern in how traits were mentioned in relation to each other. First, we tabulated the number of codes mentioned by each student in their free responses (Table 4). Of the 57 students we interviewed, 37 mentioned traits from exactly two dimensions, 17 mentioned traits from all three dimensions, and three mentioned traits from only one dimension; all three of these students used only traits belonging to the relational domain. On average, students used five to six traits to describe an instructor, and two to three of those traits tended to fall within each of the cognitive and relational domains.
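The per-student tabulation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the `CODE_DOMAIN` mapping is a hypothetical excerpt listing only a handful of the 28 codes, the student responses are invented toy data, and the function names `domains_spanned` and `tabulate` are illustrative.

```python
from collections import Counter
from statistics import mean

# HYPOTHETICAL excerpt of the codebook (Table 3): code -> trust domain.
# The real codebook has 28 codes; only a few are listed here.
CODE_DOMAIN = {
    "supportive": "cognitive",
    "flexible": "cognitive",
    "knowledgeable": "cognitive",
    "caring": "relational",
    "understanding": "relational",
    "open communication": "relational",
    "kind": "affective",
    "funny": "affective",
}
DOMAINS = ("cognitive", "relational", "affective")


def domains_spanned(codes):
    """Set of trust domains touched by one student's codes."""
    return {CODE_DOMAIN[code] for code in codes}


def tabulate(responses):
    """Summarize coded interviews.

    `responses` maps a student id to the set of unique codes in that
    student's response (each code counted once per student).
    Returns:
      span      -- Counter: number of domains spanned -> number of students
      single    -- Counter: domain -> students whose codes all fall in it
      avg       -- mean number of codes per student
      by_domain -- mean number of codes per student within each domain
    """
    spans = [domains_spanned(codes) for codes in responses.values()]
    span = Counter(len(s) for s in spans)
    single = Counter(next(iter(s)) for s in spans if len(s) == 1)
    avg = mean(len(codes) for codes in responses.values())
    by_domain = {
        d: mean(sum(CODE_DOMAIN[c] == d for c in codes) for codes in responses.values())
        for d in DOMAINS
    }
    return span, single, avg, by_domain


# Invented toy data for illustration only (not study data).
responses = {
    "s1": {"supportive", "caring", "understanding"},
    "s2": {"caring", "open communication"},
    "s3": {"flexible", "caring", "kind"},
}
span, single, avg, by_domain = tabulate(responses)
print(span, single, avg, by_domain)
```

Applied to the full coded interviews, the `span` counter would correspond to the reported split of 37, 17, and 3 students spanning two, three, and one domains; the toy data simply contain one student of each kind.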

Next, we determined how frequently codes co-occurred within a student's response. Across all interviews, “supportive” from the cognitive domain and “caring” from the relational domain were most frequently mentioned together, co-occurring 26 times. They were followed by “caring” and “understanding” from the relational domain, which co-occurred 19 times, while “flexible” from the cognitive domain and “caring” from the relational domain were mentioned together 13 times. “Supportive” from the cognitive domain also frequently co-occurred with “open communication” (12 co-occurrences) and “understanding” (11 co-occurrences), both from the relational domain. The rightmost column of Table 4 lists the traits most frequently mentioned in conjunction with each code.
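A minimal Python sketch of this co-occurrence count is shown below. It assumes, following the Figure 4 caption, that two codes co-occur when the same student mentions both, so each unordered pair is counted at most once per student; the function names and the toy responses are hypothetical, not the study's data or code.

```python
from collections import Counter
from itertools import combinations


def code_counts(responses):
    """Number of students mentioning each code (node size in the network)."""
    return Counter(code for codes in responses.values() for code in codes)


def cooccurrences(responses):
    """Number of students mentioning each unordered pair of codes (edge weight)."""
    pairs = Counter()
    for codes in responses.values():
        # Sorting gives each pair a canonical order, so ("a", "b") and
        # ("b", "a") are counted as the same undirected pair.
        pairs.update(combinations(sorted(codes), 2))
    return pairs


def top_partners(pairs, code, k=3):
    """The k codes that most often co-occur with `code` (cf. Table 4's last column)."""
    partners = Counter()
    for (a, b), n in pairs.items():
        if a == code:
            partners[b] = n
        elif b == code:
            partners[a] = n
    return partners.most_common(k)


# Invented toy data; each student's codes are already deduplicated.
responses = {
    "s1": {"supportive", "caring", "understanding"},
    "s2": {"caring", "understanding", "open communication"},
    "s3": {"supportive", "caring", "flexible"},
}
pairs = cooccurrences(responses)
print(pairs[("caring", "supportive")])  # 2 (students s1 and s3)
print(top_partners(pairs, "caring", k=2))
```

Because counts are taken per student, a pair mentioned several times within one interview still contributes a single co-occurrence. Whether the authors counted at the student level or at a finer unit, such as a sentence, is an assumption of this sketch.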

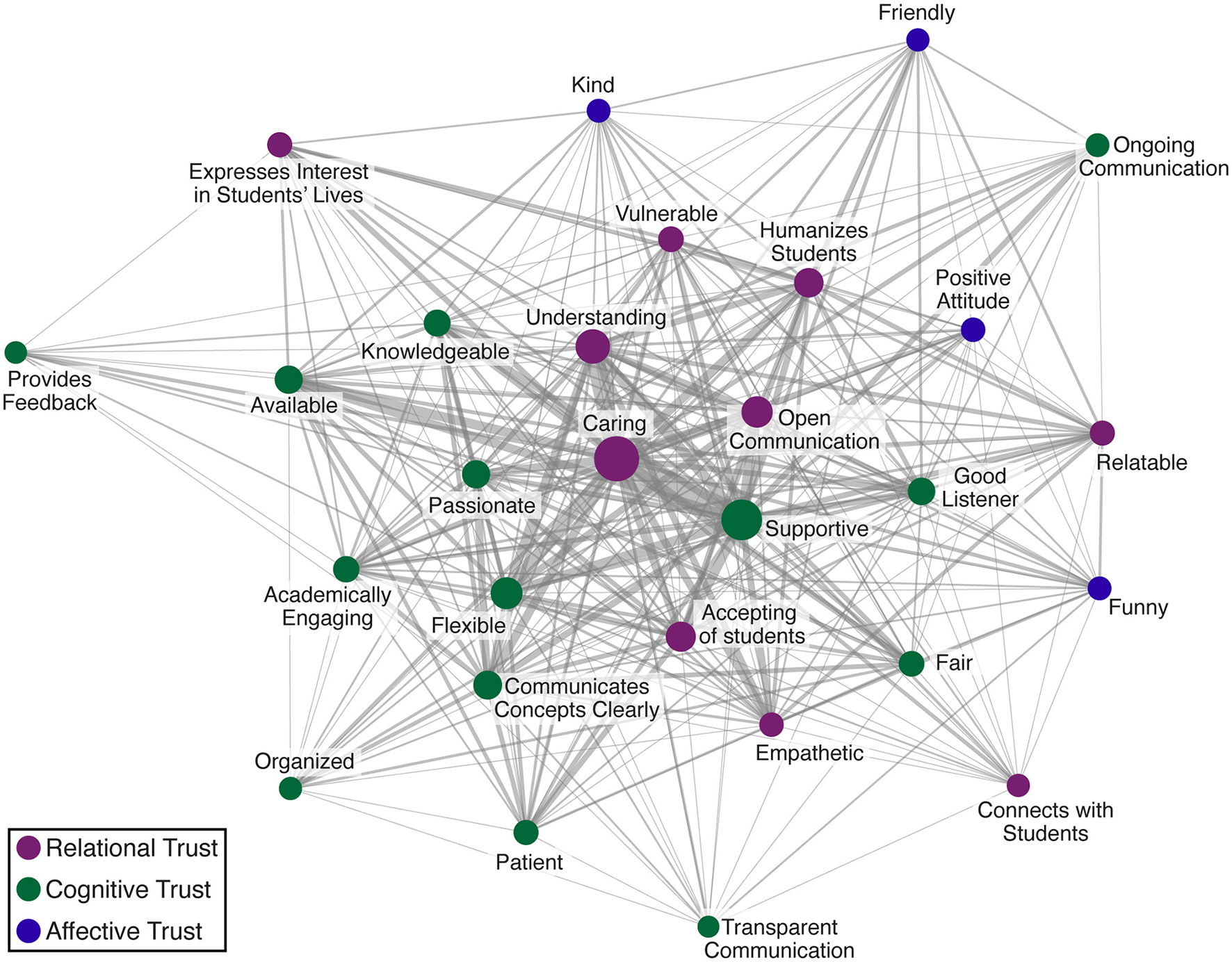

The complete network diagram is shown in Figure 4, where each individual code is represented by a node and edges connect nodes that co-occurred in student responses. The size of the node reflects the number of times a code was mentioned across all students, and thickness of the edges represents how frequently two codes were mentioned together. Nodes are additionally color-coded by trust domain. As seen in the co-occurrence network, traits associated with the relational and cognitive domains occupy central positions in the network and exhibit a high degree of co-occurrence. These nodes are not only frequently mentioned, based on their size, but also highly interconnected. The high degree of interdependence suggests that students may perceive traits falling within these domains as closely linked and reinforcing when evaluating trust in their instructors. On the other hand, traits falling within the affective domain are not as consistently interconnected with other traits in the network, suggesting they may play a more peripheral role in student evaluations of trust.

Figure 4

Co-occurrence network graph of interview codes. Each code is represented by a node, whose size reflects the number of times it was mentioned across all student interviews and whose color denotes the thematic domain, or trust dimension, to which it belongs. Edges connect codes that were mentioned by the same student and thickness of the edges represents how frequently pairs co-occurred.

To further characterize the network structure, we calculated node-level and domain-level descriptive statistics. Traits in the relational and cognitive domains exhibited higher average degrees (22.8 and 21.21, respectively) than those in the affective domain (18.25), indicating that they co-occurred with a larger number of distinct traits. Similarly, betweenness centrality scores were higher for relational (3.4) and cognitive (2.77) traits than for affective traits (1.56), suggesting that nodes within these domains act as more central bridges within the network. Intra-domain edge density was also higher among relational (0.93) and cognitive (0.82) traits than among affective traits (0.67), reinforcing that these domains are more densely interconnected. Lastly, the number of inter-domain edges was highest between the relational and cognitive domains (113 edges, compared to 31 edges between the relational and affective domains and 34 edges between the cognitive and affective domains), further supporting their overlapping nature in students' descriptions of trustworthy instructors.
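The domain-level statistics above can be computed from an edge list and a code-to-domain map, as in the minimal Python sketch below. It assumes an unweighted, undirected graph in which an edge exists if two codes co-occurred at least once; this is consistent with the reported average degrees staying below the maximum of 27 possible neighbors for 28 codes. The function names are hypothetical. As a cross-check, `avg_degree_from_counts` rebuilds each domain's average degree from edge counts alone, using domain sizes of 10 relational and 4 affective codes (stated in the text) and 14 cognitive codes (inferred as 28 − 10 − 4). The intra-domain edge counts it uses (42, 75, 4) are back-calculated from the reported densities and are not values reported in the study.

```python
def domain_stats(edges, domain):
    """Domain-level statistics for an unweighted, undirected co-occurrence graph.

    edges  -- iterable of (code_a, code_b) pairs that co-occurred at least once
    domain -- dict mapping every code (node) to its trust domain
    Returns (average degree per domain,
             intra-domain edge density per domain,
             number of edges between each unordered pair of domains).
    """
    edges = {tuple(sorted(e)) for e in edges}  # drop duplicate/reversed pairs
    degree = {node: 0 for node in domain}
    intra, inter = {}, {}
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
        du, dv = domain[u], domain[v]
        if du == dv:
            intra[du] = intra.get(du, 0) + 1
        else:
            key = tuple(sorted((du, dv)))
            inter[key] = inter.get(key, 0) + 1

    members = {}
    for node, d in domain.items():
        members.setdefault(d, []).append(node)

    avg_degree = {d: sum(degree[n] for n in ns) / len(ns) for d, ns in members.items()}
    # Density = observed intra-domain edges / possible pairs n(n-1)/2.
    density = {
        d: intra.get(d, 0) / (len(ns) * (len(ns) - 1) / 2) if len(ns) > 1 else 0.0
        for d, ns in members.items()
    }
    return avg_degree, density, inter


def avg_degree_from_counts(n_nodes, intra_edges, inter_edges):
    """Average degree of one domain from edge counts: every intra-domain edge
    adds 2 to the domain's degree sum, every inter-domain edge adds 1."""
    return (2 * intra_edges + sum(inter_edges)) / n_nodes


# Toy graph (invented): codes a, b in domain X and code c in domain Y.
avg, dens, inter = domain_stats(
    [("a", "b"), ("b", "c"), ("c", "b")], {"a": "X", "b": "X", "c": "Y"}
)
print(avg, dens, inter)

# Cross-check of the reported averages. Intra-domain edge counts are
# BACK-CALCULATED from the reported densities (0.93 x 45, 0.82 x 91,
# 0.67 x 6, rounded to whole edges); they are not reported values.
print(avg_degree_from_counts(10, 42, [113, 31]))  # relational
print(avg_degree_from_counts(14, 75, [113, 34]))  # cognitive
print(avg_degree_from_counts(4, 4, [31, 34]))     # affective
```

With these inputs the three averages come out to 22.8, about 21.21, and 18.25, matching the reported figures, so the reported degrees, densities, and inter-domain edge counts are mutually consistent under the unweighted-graph assumption. Betweenness centrality is omitted from this sketch. A library routine such as NetworkX's `betweenness_centrality` could supply it, but the section does not say whether the reported scores were weighted or normalized, so those options remain open assumptions.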

Finally, we examined students' open-ended responses to qualitatively understand how students, in their own words, used traits from different domains in relation to each other when describing trusted instructors. In a notable example of the relationship between the relational and cognitive dimensions, one student described their instructor as follows:

“He viewed the task of building students' understanding as his responsibility as a professor rather than the student's responsibility. When students went to see him for office hours, he always asked about them even though it was technically extraneous information—what a student's major was, what their interests were, etc. And he would not only remember this information, but he would also use it to help explain ideas better. He would introduce students to each other if they were in office hours at the same time. Essentially, he humanized and dignified the students who would come to see him, which was particularly helpful during times of struggling with the material.”