- Faculty of Humanities and Arts, Macau University of Science and Technology, Taipa, Macao SAR, China

The usefulness and ease of use of generative artificial intelligence (GAI) technology provide the technical foundation for its rapid proliferation. However, in the current educational landscape, students have raised growing concerns about the ethical governance of GAI technologies and their potential to reduce employment opportunities through job displacement. This study investigated the relationship between perceived threats and hesitancy concerning the use of GAI technology among college students. A survey of 805 participants revealed a positive correlation between perceived technological threat and use hesitancy. Importantly, perceived avoidability and fear of GAI were found to serve as sequential mediators in this relationship. These findings elucidate the psychological mechanisms underlying students’ reluctance to adopt emerging GAI technologies and suggest that interventions aimed at addressing threat perceptions, increasing perceived avoidability, and reducing fear may promote greater acceptance of such technologies among students.

1 Introduction

Since the mid-2010s, machine learning and artificial intelligence systems based on neural networks have gradually been adopted in various creative fields, for example in machine-learning-based generative art (Caramiaux and Fdili Alaoui, 2022). This shift has brought new vitality to many areas of human life; in particular, the release of ChatGPT in 2022 made AI available as a far more general-purpose tool. Simpler, less specialized natural-language input and the rapid generation of outputs have made the use of AI in creative and production processes a mainstream trend (Pradana et al., 2023). In addition, generative AI (GAI) technology has been increasingly integrated into educational settings, where it shows transformative potential across diverse applications. These include immersive learning experiences that enhance student engagement and knowledge retention, adaptive learning systems that improve instructional efficiency, and intelligent tools that streamline educators’ administrative tasks, freeing them to focus on personalized student development (Mittal et al., 2024). Such advancements position GAI technology as a catalyst for redefining pedagogical paradigms, and scholars identify it as a pivotal driver of future educational innovation (Lim et al., 2023).

College education plays an important role in connecting students’ professional training to their later contributions to society. College students are therefore considered early adopters of advanced technologies such as GAI. However, students exhibit differing attitudes toward the use of GAI for content creation (Avcı, 2024), and empirical evidence points to a paradoxical relationship between students’ adoption of and attitudes toward GAI in higher education. According to a HEPI survey (Freeman, 2025), although GAI usage among university students has grown steadily, significant concerns persist: 53% of respondents fear being accused of academic misconduct when using GAI tools, and 51% are concerned about the technology’s propensity to generate erroneous outputs. This reflects a complex behavioral pattern in which students simultaneously embrace and distrust GAI, a pattern corroborated by Chegg’s 2025 Global Student Survey (Bandarupalli, 2025), which found that despite widespread use of GAI for learning assistance, 53% of students remain skeptical about its tendency to produce inaccurate information. This ambivalence appears particularly pronounced among students in disciplines vulnerable to professional displacement by GAI. For example, students in arts and design programs show heightened hesitation because of unresolved copyright concerns surrounding AI-generated content, and computer science majors perceive GAI as a potential threat to programming employment opportunities (Wach et al., 2023). There is also growing concern that overuse of GAI tools weakens students’ dialectical thinking and foundational skills (Basha, 2024).

However, few studies have explored students’ hesitant attitudes toward the use of GAI or the reasons underlying this phenomenon. This study hypothesizes that college students’ attitudes toward adopting GAI may be shaped by their subjective perceptions. It examines this group’s perception of the threats posed by the rapid development of GAI and the resulting hesitancy in its use. Moreover, individuals may respond to threats in distinct subjective ways (Yin et al., 2024), depending on factors such as the perceived avoidability of the threat, fear, and AI literacy. In contrast to traditional technology acceptance and diffusion studies, which emphasize the characteristics of the technology itself, this research supplements the analysis with the personal, subjective factors that influence adoption as the technology develops.

2 Literature review

2.1 Technology adoption frameworks: from traditional models to GAI-specific paradigms

The Technology Acceptance Model (TAM) (Davis et al., 1989) examines user adoption behaviors toward computational systems through dual cognitive mechanisms: perceived ease of use and perceived usefulness. Similarly, Rogers’ (1962) diffusion of innovations (DOI) theory explicates the societal adoption process of novel technologies, ideas, products, and services. Rogers (2003) identified five critical factors influencing innovation adoption—relative advantage, complexity, compatibility, trialability, and observability—while acknowledging that certain factors may impede acceptance and lead to adoption failure.

While generative AI offers ease of access, simplicity of use, and efficient outputs, the paradoxes that accompany its development in education (use versus dependency, abundance of information versus accuracy, and limited access) challenge traditional acceptance models (Lim et al., 2023). Extended models such as the unified theory of acceptance and use of technology (UTAUT) and TAM3 incorporate factors beyond the characteristics of the technology itself, such as gender, age, and prior usage experience (Jin et al., 2025). However, acceptance factors addressed in TAM3, such as use anxiety and personal fear of technology, have yet to be examined specifically for GAI technology (Avcı, 2024). The present study therefore explores the process by which individuals accept GAI technology.

2.2 GAI technology use hesitancy

Hesitancy in decision-making manifests as a form of behavioral postponement and delay of individual action (Bussink-Voorend et al., 2022). In the context of GAI technology, hesitancy involves not only delayed acceptance or skepticism about the foundational use of GAI techniques but also selective adoption of the content and form of GAI outputs, as well as delayed use or avoidance of potentially risky GAI techniques.

The phenomenon of vaccine adoption hesitancy, operationalized as delayed adoption behaviors or nonacceptance decisions that persist despite healthcare service accessibility, has been systematically investigated in immunization behavioral studies. Building upon this conceptual foundation, contemporary health behavior models frame resistance as a continuum of behavioral uncertainty, a sociocognitive process demonstrating temporal–spatial contingency and situational variability across demographic groups (Bedford et al., 2018).

Research on technology adoption and diffusion indicates that rapid advancements in AI technology do not necessarily cultivate enthusiastic adoption; instead, users often exhibit cautious or resistant attitudes (Hinks, 2020). Different categories of GAI tools, particularly those designed for entertainment versus professional applications, have generated varying market responses (Wach et al., 2023). Within the student population, while the utility of GAI technology prevents wholesale rejection, individual subjective factors significantly influence adoption patterns. Therefore, characterizing higher education students’ complex relationship with GAI technology as hesitancy more accurately reflects the nuanced reality of their engagement with these emerging tools.

2.3 Perceived technological threat and GAI technology use hesitancy

In the technological domain, stress-inducing elements frequently manifest as technological threats, which are particularly evident in the functionality and autonomy of GAI systems (Clauson, 2022). Perceived technological threat encompasses both the perceived severity of potential risks and their anticipated negative impacts.

A substantial corpus of literature exists on the development of AI technology and the potential risks associated with the advancement of GAI technology. First, the iterative process of technological updating facilitated by GAI technology, improvements in productivity, adjustments in the current position of humans regarding labor, and the corresponding employment crisis have led to significant changes in the labor market (Kandoth and Kushe Shekhar, 2022). Therefore, in the AI era, eliminating technological threats to human jobs has become more important than ever. Second, the technology gap created by the fact that few people control such technology has widened the wealth gap between individuals who have access to this technology and everyone else (Kitsara, 2022). As an emerging and rapidly evolving technological innovation, GAI technology has intensified public discourse that critically examines both its transformative potential and associated societal risks. While theoretical frameworks persistently highlight systemic vulnerabilities inherent in its developmental trajectory, empirical investigations are progressively quantifying user perceptions through measurable impact assessments of algorithmic systems. This dual analytical approach combines philosophical contemplation of technological evolution with data-driven evaluations of human–algorithm interactions, fostering a comprehensive understanding of GAI’s tangible implications across operational contexts.

Clauson (2022) divided perceived technological threat into four measurable dimensions: substitution (GAI technology replacing humans’ jobs, tasks, lifestyles, and core experiences), difficulty (the difficulty of learning to use GAI technology), burden (the time and energy consumed by using GAI technology), and control (issues such as violations of personal privacy through monitoring).

The influence of GAI technology on university students is becoming evident in two areas that pose significant challenges: learning styles and learning outputs, and future career paths in an increasingly AI-driven labor market (Holmes and Tuomi, 2022). From the perspective of human instinct, people perceive cues related to threats and react to them through avoidance or rejection; this is a basic behavioral mechanism for maximizing biological survival (Luchkina and Bolshakov, 2019). Moreover, empirical studies on vaccines have shown that hesitant behavior arises because people have some degree of apprehension about vaccination (Bedford et al., 2018). We therefore expect that, although university students perceive the usefulness and ease of use of GAI technology, their apprehension about the threats it poses leads to hesitancy in using it rather than to clear acceptance or rejection. On the basis of the preceding discussion, this study posits Hypothesis 1:

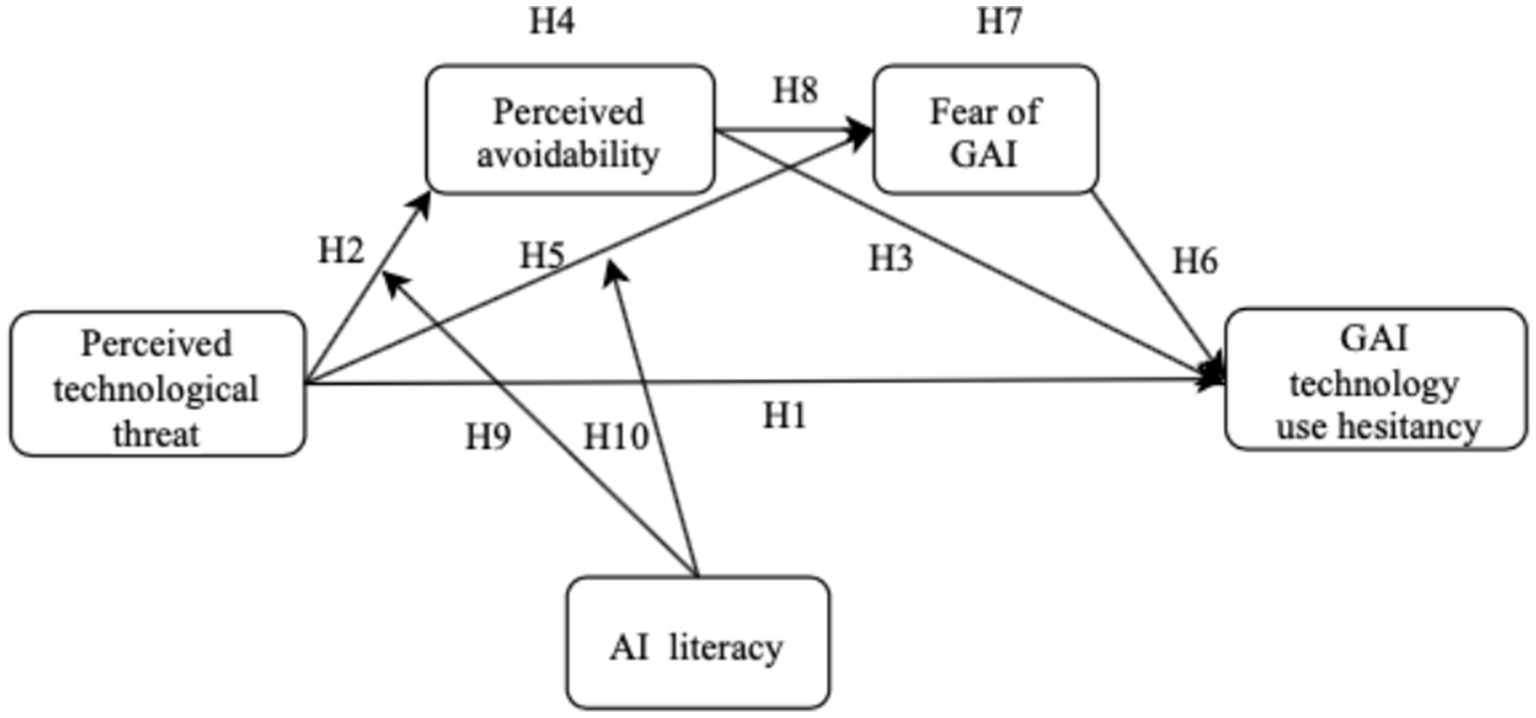

H1: Perceived technological threat positively influences GAI technology use hesitancy.

2.4 Mediating role of perceived avoidability

Perceived avoidability is defined as the likelihood that an individual can avoid a threat by implementing specific safeguards (Liang and Xue, 2009). Technology threat avoidance theory (TTAT) posits that perceived avoidability is a protective appraisal that follows a user’s perception of a threat (Cao et al., 2021). Similarly, protection motivation theory (PMT) posits that, when stimulated by the external environment, individuals assess both the perceived threat and their personal capacity to respond to it and subsequently engage in specific actions aimed at self-protection (Floyd et al., 2000). Perceived technological threat represents the assessment of the threat itself, whereas perceived avoidability reflects individuals’ evaluation of their personal capacity to confront technological threats; specifically, it denotes the perceived probability that an individual currently has the ability to avoid a technological threat (Alam et al., 2024). Greater perceived technological threats demand more extensive coping skills to resolve. Therefore, when a technological threat is perceived to be high, perceived avoidability is likely to decline. When a technology is perceived as a threat that is difficult to avoid, individuals may engage in self-protective behaviors, delaying or refusing its use for reasons related to personal development and well-being. Therefore, this study proposes Hypotheses 2, 3, and 4.

H2: Perceived technological threat negatively influences perceived avoidability.

H3: Perceived avoidability negatively influences GAI technology use hesitancy.

H4: Perceived avoidability mediates the relationship between perceived technological threat and GAI technology use hesitancy.

2.5 Mediating role of fear of GAI

Fear of GAI is a negative emotion evoked when an individual perceives threats from the development of GAI technology (Gorman, 2008). Public apprehension about GAI technology stems predominantly not from the technological artifacts themselves but from the sociotechnical externalities of algorithmic advancement. These societal repercussions span time, encompassing both current anxieties about workforce displacement and the prospect of future shifts in the labor market. The core concern is algorithmic displacement, whereby intelligent systems progressively automate cognitive tasks traditionally performed by humans, fundamentally reconfiguring employment. The fear-as-acquired-drive model (Hovland et al., 1953) posits that fear or emotional tension motivates individuals to react defensively when they perceive a threat. When individuals perceive a threat from GAI technology, fear and apprehension about its development will drive them to reduce their use of it and thereby avoid the harm that the technology’s development may cause them. Sutton’s (1982) meta-analysis established a causal link between threat cognition and affective arousal, showing that heightened risk appraisals evoke psychophysiological fear responses that proportionally intensify intentions to adopt protective measures. Extending this framework to GAI, an increase in the perceived threat of GAI technology may raise students’ level of fear, and greater fear may in turn increase their reluctance to use the technology as an indirect means of self-protection. Therefore, this study proposes Hypotheses 5, 6, and 7.

H5: Perceived technological threat positively influences fear of GAI.

H6: Fear of GAI positively influences GAI technology use hesitancy.

H7: Fear of GAI mediates the relationship between perceived technological threat and GAI technology use hesitancy.

Within the theoretical architecture of protection motivation theory, fear emerges as a dynamic psychosocial phenomenon arising from the interaction between threat appraisal and coping appraisal (Floyd et al., 2000; Alam et al., 2024). Individuals who perceive GAI threats will assess them personally; threats that cannot be avoided given the individual’s abilities and social circumstances will elicit anxiety or fear, which in turn prompts protective avoidance behaviors. It can therefore be reasoned that reluctance to use GAI may be driven by fear that arises when an individual perceives that the threats posed by the technology cannot be avoided. We therefore propose H8.

H8: Perceived avoidability and fear of GAI play chain-mediating roles in the relationship between perceived technological threat and GAI technology use hesitancy.

2.6 Moderating role of AI literacy

Building upon foundational work in artificial intelligence education (Long and Magerko, 2020), AI literacy has been conceptualized as the ability to critically assess and operationally deploy AI technologies, thereby enhancing collaborative workflows and productive outcomes. This investigation synthesizes contemporary scholarly perspectives, integrating Ng et al.’s (2021) framework to propose a structured definition aligned with technological advancements. Specifically, AI literacy encompasses four interconnected dimensions: recognizing and understanding AI, using and applying AI, evaluating and creating AI, and understanding the ethical implications of AI.

From an AI-for-social-good perspective, Hermann (2022) argued that AI literacy can help individuals more effectively assess and address the challenges posed by the large-scale changes in production brought about by AI. Higher levels of AI literacy mitigate the adverse effects of the technology while enabling individuals to capitalize on the opportunities that AI developments present. Furthermore, higher AI literacy can motivate more people and institutions to take the initiative in establishing measures that balance people and technology, increasing the service potential of AI and minimizing its negative impacts (Carolus et al., 2023). We therefore expect AI literacy to moderate students’ responses to GAI technology, such that higher AI literacy attenuates the overall relationship between technological threat and use hesitancy. Specifically, as AI literacy increases, individuals may perceive less threat from AI, greater avoidability of its negative consequences, and less fear, with a corresponding reduction in hesitation to use it. Thus, this research proposes Hypotheses 9 and 10.

H9: AI literacy enhances the relationship between perceived technological threat and perceived avoidability; that is, AI literacy has a promotional effect on the influence of perceived technological threat on perceived avoidability.

H10: AI literacy weakens the relationship between perceived technological threat and fear of GAI; that is, AI literacy has an inhibitory effect on the influence of perceived technological threat on fear of GAI.

Figure 1 illustrates the idea underlying this study and the specific model.

3 Method

This study used a survey method, with data collected in March 2024 via the sample pool of WenJuanXing, a recognized professional survey platform in China. Participants were first required to have knowledge of or experience with GAI technology. Stratified random sampling was then used to obtain a 1:1 male-to-female ratio, with 450 responses initially collected from each stratum, for a total of 900. After responses with completion times shorter than 120 s and those with uniform (straight-line) response patterns were excluded, 805 valid questionnaires remained. Demographically, the sample comprised 52.3% male and 47.7% female participants. Approximately 87.3% of the respondents were between the ages of 18 and 25, and 47.7% reported an intermediate level of English proficiency. With respect to educational level, 72.5% were studying for a bachelor’s or associate degree, 23.2% for a master’s degree, and 4.3% for a doctoral degree. Regarding marital status, 6.6% of the respondents were married, 33.7% were single, and the remainder were in committed relationships.
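The two exclusion rules above can be expressed as a simple filter. The sketch below is illustrative only: the `Response` record, its field names, and the reading of "consistent patterns" as straight-lining (the same answer to every item) are assumptions, not the authors’ actual data-cleaning script.

```python
from dataclasses import dataclass
from typing import List

MIN_SECONDS = 120  # responses completed faster than this are excluded


@dataclass
class Response:
    """Hypothetical record for one questionnaire submission (field names are assumptions)."""
    respondent_id: str
    seconds: float        # total completion time
    answers: List[int]    # Likert answers, 1-5


def is_straightliner(answers: List[int]) -> bool:
    """True if every item received the same answer (assumed meaning of a 'consistent pattern')."""
    return len(set(answers)) == 1


def screen(responses: List[Response], min_seconds: float = MIN_SECONDS) -> List[Response]:
    """Keep responses that took at least `min_seconds` and are not straight-lined."""
    return [
        r for r in responses
        if r.seconds >= min_seconds and not is_straightliner(r.answers)
    ]


sample = [
    Response("a", 300, [2, 4, 3, 5]),  # kept
    Response("b", 95, [2, 4, 3, 5]),   # too fast
    Response("c", 250, [3, 3, 3, 3]),  # straight-lined
]
print([r.respondent_id for r in screen(sample)])  # ['a']
```

A real straight-lining rule might instead flag long runs of identical answers or very low within-person variance; the all-identical rule is simply the most conservative version.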

3.1 Measurements

This study employed measurement items drawn from well-established previous studies to enhance the validity of this research. Two doctoral students majoring in Communication Studies and proficient in English translated the questionnaire into Chinese. Their translations were compared and reconciled to create a preliminary version, which was pretested on 50 college students and then revised to correct any inaccuracies, yielding the final version. The key variables included in the analysis were operationalized as follows:

3.1.1 Perceived technological threat

The investigation employed a twenty-item psychometric scale to assess student apprehensions regarding artificial intelligence risks, structured according to four theoretical dimensions outlined in Clauson’s analytical framework (2022). Five items focused on replacement, including the risk that AI technology might replace a task, job, lifestyle, or core experience of humans. Six items focused on the difficulties associated with AI technology, including the processes of understanding, learning, and using technology. The burden of technology was also the subject of five questions that emphasized the ways in which AI technology can distract users, its time-consuming nature, and its ability to invade their personal time. Four questions focused on control, including invasions of personal privacy and monitoring (Clauson, 2022). The mean score was calculated to construct the variable (Cronbach’s α = 0.90, M = 2.67, SD = 0.74).
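Each scale score in this section is an item mean, and reliability is reported as Cronbach’s α = k/(k − 1) · (1 − Σσ²_item / σ²_total), where k is the number of items. A minimal stdlib sketch, assuming a respondents × items matrix with no missing values; the function names and toy data are illustrative, not taken from the study.

```python
from statistics import mean, pvariance
from typing import List, Sequence


def cronbach_alpha(rows: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (no missing values)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across respondents, and of the summed scale score
    item_vars = [pvariance([row[j] for row in rows]) for j in range(k)]
    total_var = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def scale_scores(rows: Sequence[Sequence[float]]) -> List[float]:
    """Construct score per respondent as the mean of that respondent's items."""
    return [float(mean(row)) for row in rows]


toy = [[1, 1], [2, 3], [3, 2]]  # three respondents, two items
print(round(cronbach_alpha(toy), 4))  # 0.6667
print(scale_scores(toy))              # [1.0, 2.5, 2.5]
```

Population and sample variances give the same α as long as one is used consistently, because the n/(n − 1) factor cancels in the ratio.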

3.1.2 GAI technology use hesitancy

This survey asked participants four questions regarding use hesitancy, all adapted from Demirgüneş (2018). An example item is as follows: I hesitate to use GAI products to create. Participants rated each item on a five-point scale ranging from 1 (strongly disagree) to 5 (strongly agree) (Cronbach’s α = 0.89, M = 2.69, SD = 1.09).

3.1.3 Perceived avoidability

Three items on the avoidability of the negative effects of AI technology were adapted from Liang and Xue (2010) and answered on a 5-point scale. The first item asked respondents to rate how feasible it would be to mitigate the detrimental impacts of GAI technology with currently available organizational infrastructure. The second asked them to rate their agreement that, taking all relevant facts into consideration, they could protect themselves from the negative effects of GAI technology. The third asked them to rate their agreement that, taking all relevant facts into consideration, the negative effects of GAI technology were avoidable. The responses were averaged to form the variable (Cronbach’s α = 0.85, M = 3.32, SD = 1.10).

3.1.4 Fear of GAI

Participants self-reported their affective states through four items. Specifically, they rated their agreement with statements about how apprehensive they felt about implementing GAI-driven solutions and how uncomfortable they felt about integrating GAI technology. The items were adapted from an established pandemic-related fear scale (Ahorsu et al., 2022), recontextualizing its fear dimensions for technological risk while maintaining conceptual equivalence. The responses were averaged to form the variable (Cronbach’s α = 0.89, M = 2.70, SD = 1.01).

3.1.5 AI literacy

A set of five items pertaining to AI literacy was adapted from Carolus et al. (2023). An example question was related to whether the participant can use AI to complete tasks in his or her daily life and work. The participants answered all these questions on a five-point Likert scale. The responses were averaged to form the variable (Cronbach’s α = 0.82, M = 3.21, SD = 0.95).

The control variables were general demographic variables and English proficiency, rated from 1 (elementary stage) to 3 (advanced stage) according to the standards of the Chinese English Proficiency Scale (Ministry of Education of the People’s Republic of China and National Language Commission of the People’s Republic of China, 2018). A specific evaluation form was attached to the questionnaire so that participants could compare their proficiency against these standards.

4 Data analysis and results

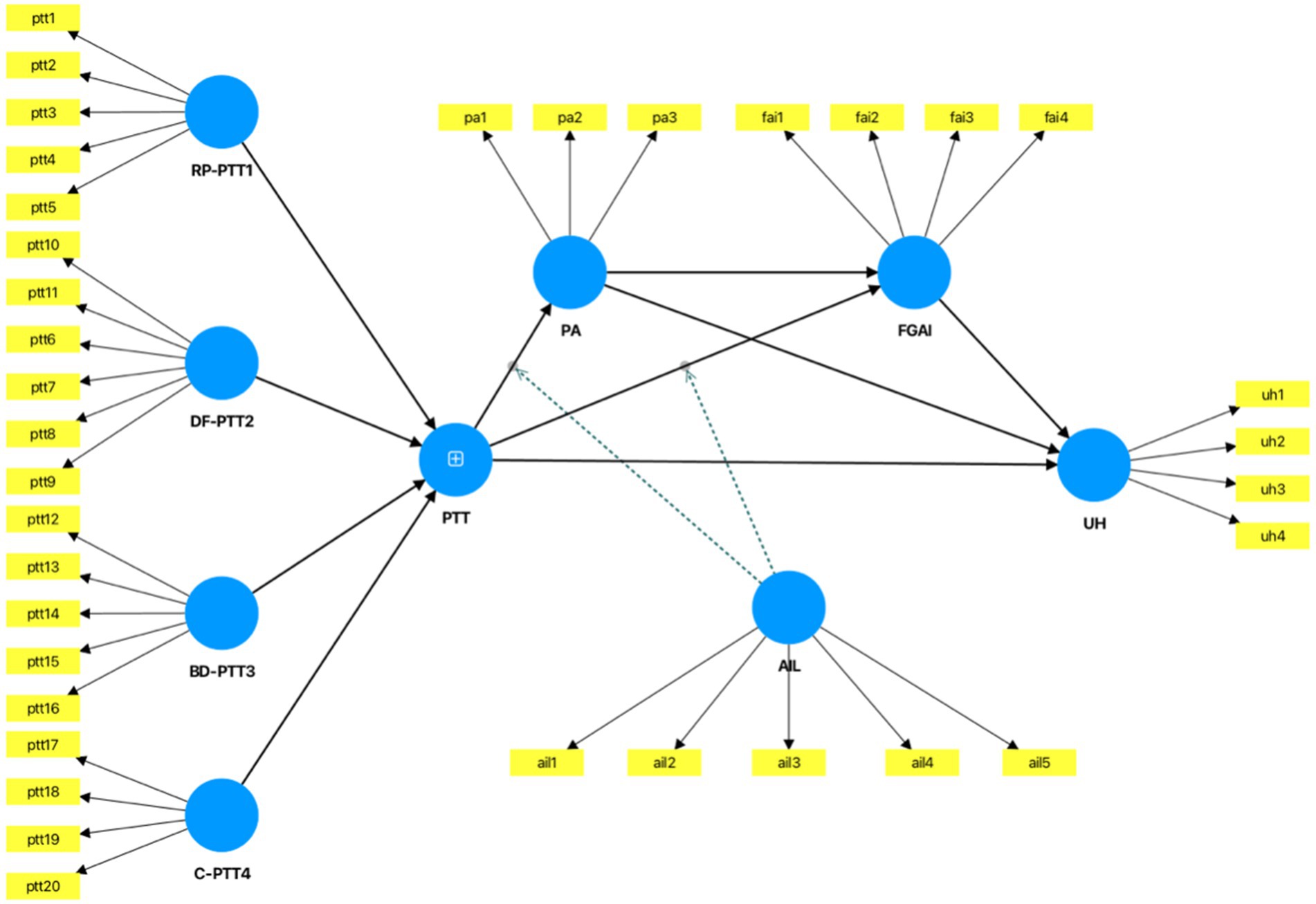

This study employs partial least squares structural equation modeling (PLS-SEM) to analyze and validate the proposed theoretical framework. The key independent variable, perceived technological threat, is conceptualized as a higher-order construct (HOC) comprising four distinct, weakly intercorrelated subdimensions: substitutive effects, usage difficulties, boundary issues, and privacy concerns. Each subdimension is a lower-order construct (LOC) measured by 4–6 reflective indicators, consistent with the reflective-formative (Type II) specification in PLS-SEM. The disjoint two-stage approach is appropriate for this reflective-formative configuration (Becker et al., 2023), as illustrated in Figure 2.

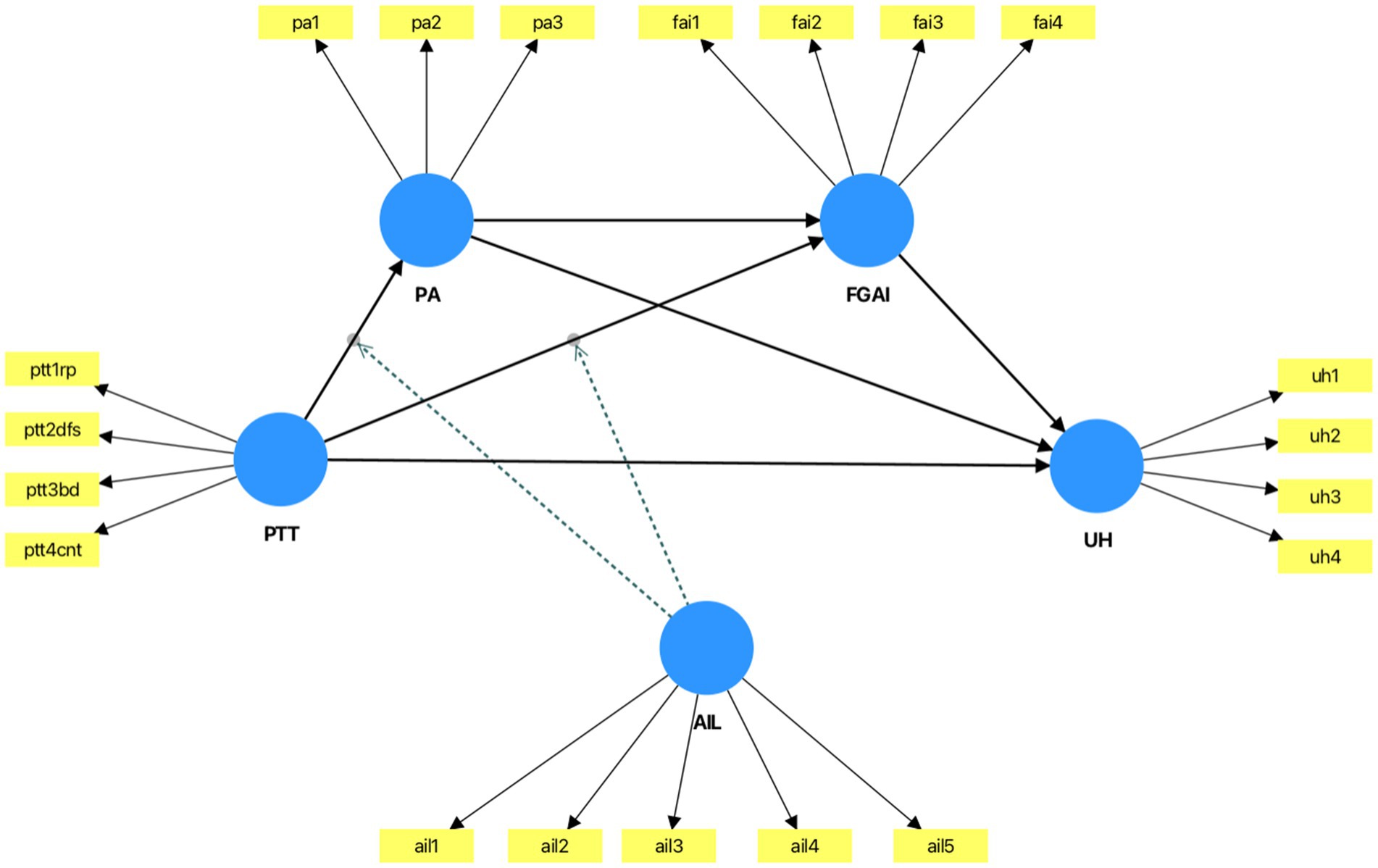

Following Becker et al.’s (2023) PLS-SEM guidelines, we first assessed the reliability and validity of the measurement model using SmartPLS 4. In the first stage, we estimated a model in which the lower-order constructs were linked directly to the final endogenous variable (GAI technology use hesitancy) and saved the latent variable scores for each LOC. In the second stage, these scores served as formative indicators of the HOC, as shown in Figure 3. This sequence ensures proper operationalization of the construct while maintaining statistical robustness throughout the analysis.

4.1 Measurement model of reflective constructs

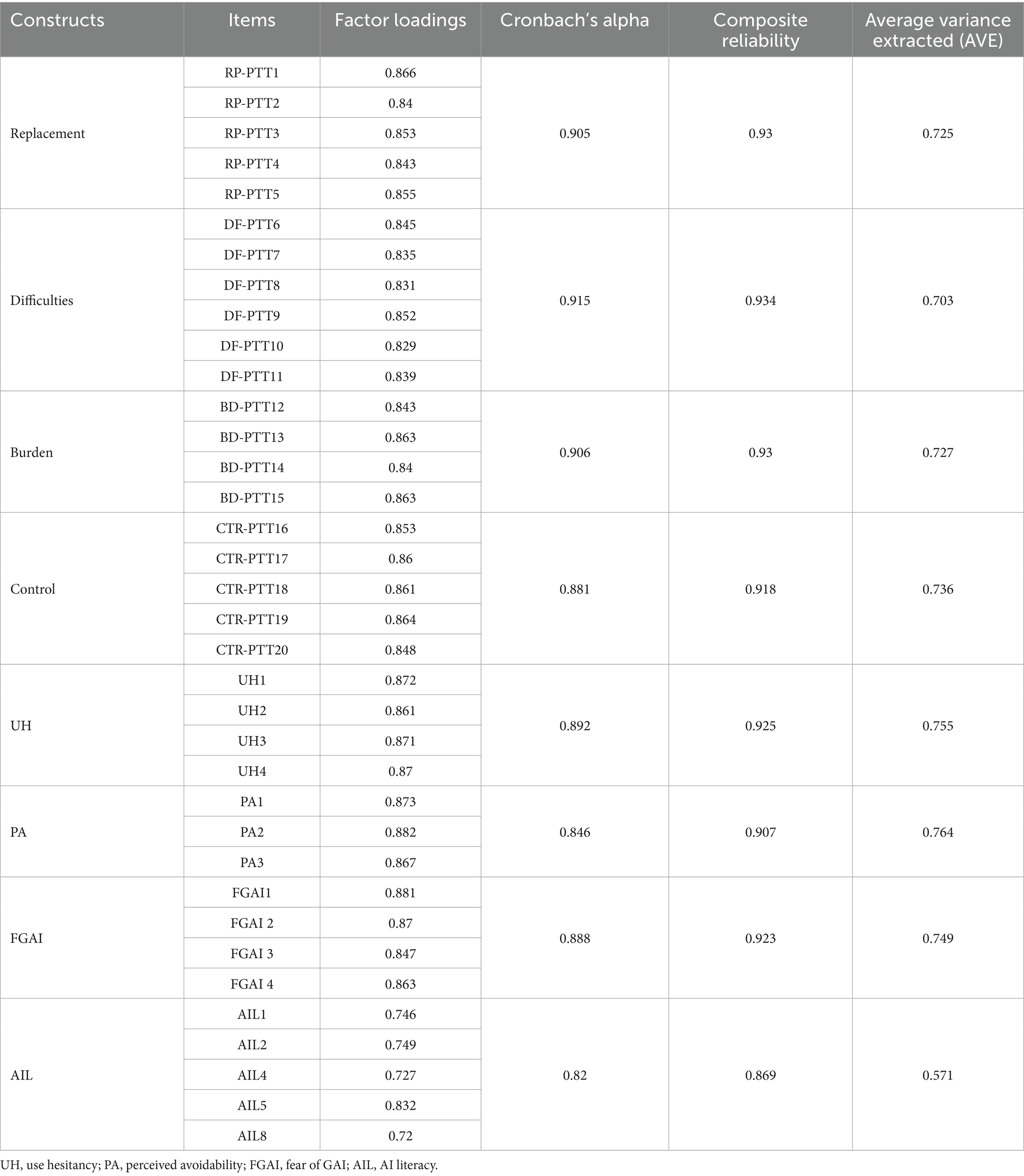

As presented in Table 1, the reliability analysis established measurement consistency across all eight primary latent variables, with Cronbach’s alpha coefficients (α) ranging from 0.82 to 0.915, exceeding the recommended benchmark of 0.7 for social science research. Furthermore, composite reliability estimates ranged between 0.869 and 0.934, surpassing the 0.70 benchmark for construct reliability (Hair et al., 2020). These results collectively confirm adequate internal consistency across all the measurement scales.
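Composite reliability (ρc) is computed from the standardized outer loadings λᵢ as (Σλᵢ)² / ((Σλᵢ)² + Σ(1 − λᵢ²)). A short sketch, assuming standardized loadings and uncorrelated indicator errors; the loadings in the example are hypothetical, not values from Table 1.

```python
from typing import Sequence


def composite_reliability(loadings: Sequence[float]) -> float:
    """rho_c from standardized loadings, assuming uncorrelated error terms."""
    total = sum(loadings)
    # For a standardized indicator, error variance = 1 - loading^2
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Hypothetical loadings for a three-item construct
print(round(composite_reliability([0.8, 0.8, 0.8]), 3))  # 0.842
```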

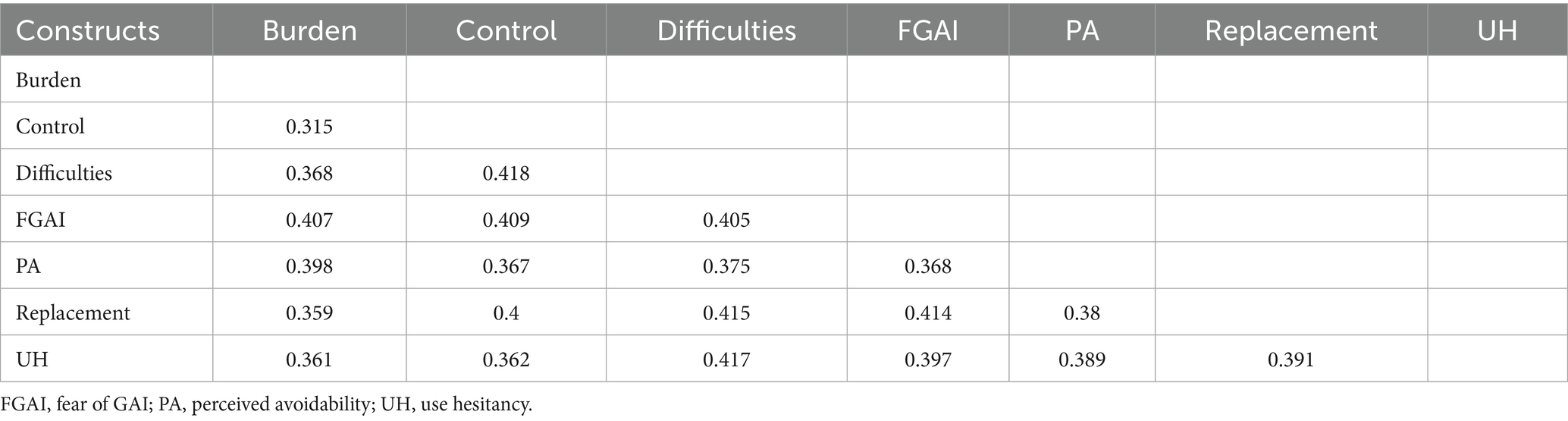

Convergent validity was evaluated following Hair et al. (2020) using two complementary metrics: standardized outer loadings and average variance extracted (AVE). All standardized loadings exceeded 0.7, indicating strong indicator reliability (Table 1), and all AVE values exceeded the 0.50 benchmark (range: 0.571–0.764), establishing convergent validity (Hair et al., 2020). Discriminant validity was assessed with the heterotrait–monotrait (HTMT) ratio; all HTMT values remained below the conservative cutoff of 0.85 (Table 2; Hair et al., 2020). Taken together, these results support the psychometric adequacy of the measurement model and indicate that the constructs are empirically distinct.
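Both criteria reduce to short formulas: AVE is the mean of the squared standardized loadings, and the HTMT ratio divides the mean heterotrait correlation (between items of two different constructs) by the geometric mean of the two constructs’ average monotrait correlations (between items of the same construct). A sketch assuming an item correlation matrix is already available; the index lists, loadings, and matrix in the example are hypothetical.

```python
from itertools import combinations
from math import sqrt
from typing import Iterable, Sequence


def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def _mean(values: Iterable[float]) -> float:
    values = list(values)
    return sum(values) / len(values)


def htmt(corr: Sequence[Sequence[float]], items_a: Sequence[int], items_b: Sequence[int]) -> float:
    """Heterotrait-monotrait ratio for two constructs, given an item correlation matrix.

    items_a / items_b are the row indices of each construct's indicators;
    each construct needs at least two indicators for the monotrait term.
    """
    hetero = _mean(corr[i][j] for i in items_a for j in items_b)
    mono_a = _mean(corr[i][j] for i, j in combinations(items_a, 2))
    mono_b = _mean(corr[i][j] for i, j in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical matrix: items 0-1 measure construct A, items 2-3 measure construct B
R = [
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.6],
    [0.3, 0.3, 0.6, 1.0],
]
print(round(ave([0.8, 0.75, 0.7]), 3))     # 0.564
print(round(htmt(R, [0, 1], [2, 3]), 3))   # 0.5
```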

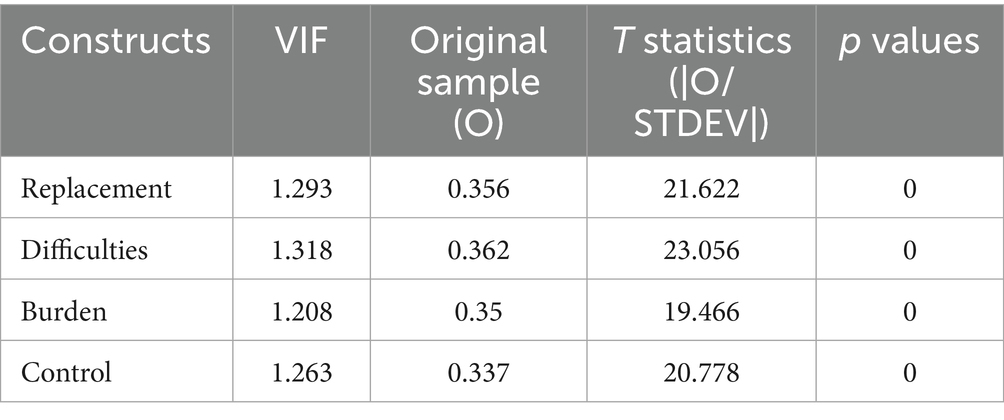

4.2 Measurement model of the higher-order formative construct

For the formative measurement evaluation, multicollinearity was first assessed through variance inflation factor (VIF) analysis. As summarized in Table 3, all the VIF values for indicators of the formative construct (perceived technological threat) remained substantially below the critical threshold of 5 (Hair et al., 2020), effectively ruling out multicollinearity concerns. Subsequent bootstrapping analysis with 5,000 subsamples was used to evaluate the statistical significance of the outer weights, which represent standardized regression coefficients indicating each indicator’s relative contribution to the formative construct (Becker et al., 2023). The results in Table 3 demonstrate significant outer weights for all four formative indicators (p < 0.01), confirming their substantive relevance to the higher-order construct.
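For standardized indicators, each VIF equals the corresponding diagonal element of the inverse of the indicators’ correlation matrix, which is equivalent to 1/(1 − R²ⱼ) from regressing indicator j on the remaining indicators. A dependency-free sketch, assuming the correlation matrix of the four LOC scores is available; the example matrix is hypothetical.

```python
from typing import List, Sequence


def invert(matrix: Sequence[Sequence[float]]) -> List[List[float]]:
    """Gauss-Jordan inversion with partial pivoting (small, dense matrices only)."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Choose the row with the largest pivot to limit rounding error
        pivot = max(range(col, n), key=lambda row: abs(aug[row][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular: perfect collinearity")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(corr: Sequence[Sequence[float]]) -> List[float]:
    """VIF of each indicator = diagonal of the inverse correlation matrix."""
    inv = invert(corr)
    return [inv[i][i] for i in range(len(corr))]


# Hypothetical: three indicators, each pair correlated at 0.5
print([round(v, 3) for v in vifs([[1, 0.5, 0.5], [0.5, 1, 0.5], [0.5, 0.5, 1]])])  # [1.5, 1.5, 1.5]
```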

Table 3. VIF values and significance of outer weights of higher-order formative construct (perceived technological threat).

This sequential validation process, encompassing collinearity diagnostics, outer weight significance testing, and threshold compliance verification, satisfies the recommended criteria for formative measurement model evaluation (Becker et al., 2023; Hair et al., 2020). The collective evidence substantiates both the psychometric adequacy of the measurement framework and the appropriateness of proceeding to structural model interpretation.

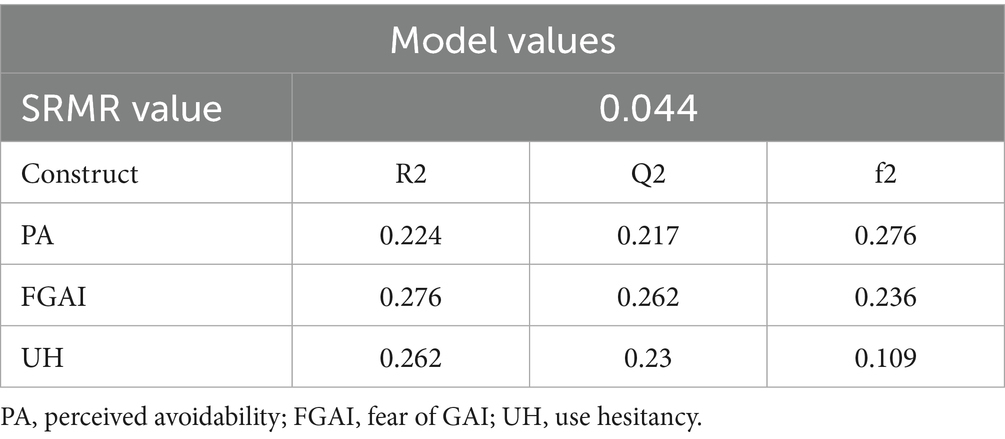

4.3 Structural model assessment

As shown in Table 4, the model demonstrated good global fit, with an SRMR value of 0.044, well below the recommended threshold of 0.08 (Hair et al., 2020). The model showed moderate explanatory power for the endogenous constructs perceived avoidability (R² = 0.244), fear of GAI (R² = 0.276), and use hesitancy (R² = 0.262). By Cohen’s conventional f² benchmarks (0.02 small, 0.15 medium, 0.35 large), perceived technological threat had medium effects on perceived avoidability (f² = 0.276) and fear of GAI (f² = 0.236), as well as a medium effect on use hesitancy.
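Cohen’s f² for a predictor compares the R² of an endogenous construct with and without that predictor: f² = (R²included − R²excluded) / (1 − R²included). A sketch with Cohen’s conventional benchmarks (0.02, 0.15, 0.35); the R² values in the example are hypothetical, since the reduced-model R² values are not reported here.

```python
def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """Effect size of one predictor: change in R^2 relative to unexplained variance."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def f2_label(f2: float) -> str:
    """Cohen's conventional benchmarks for f^2."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


f2 = cohens_f2(0.30, 0.20)           # hypothetical R^2 with / without the predictor
print(round(f2, 3), f2_label(f2))   # 0.143 small
```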

The model’s predictive relevance was further supported by blindfolding, which yielded positive Q² values for all endogenous constructs (Table 4; Hair et al., 2020). Bootstrapping of the path coefficients (5,000 subsamples) showed that all hypothesized direct paths were statistically significant (p < 0.01). Collectively, these metrics indicate that the structural model explains the theoretical relationships robustly while remaining parsimonious.

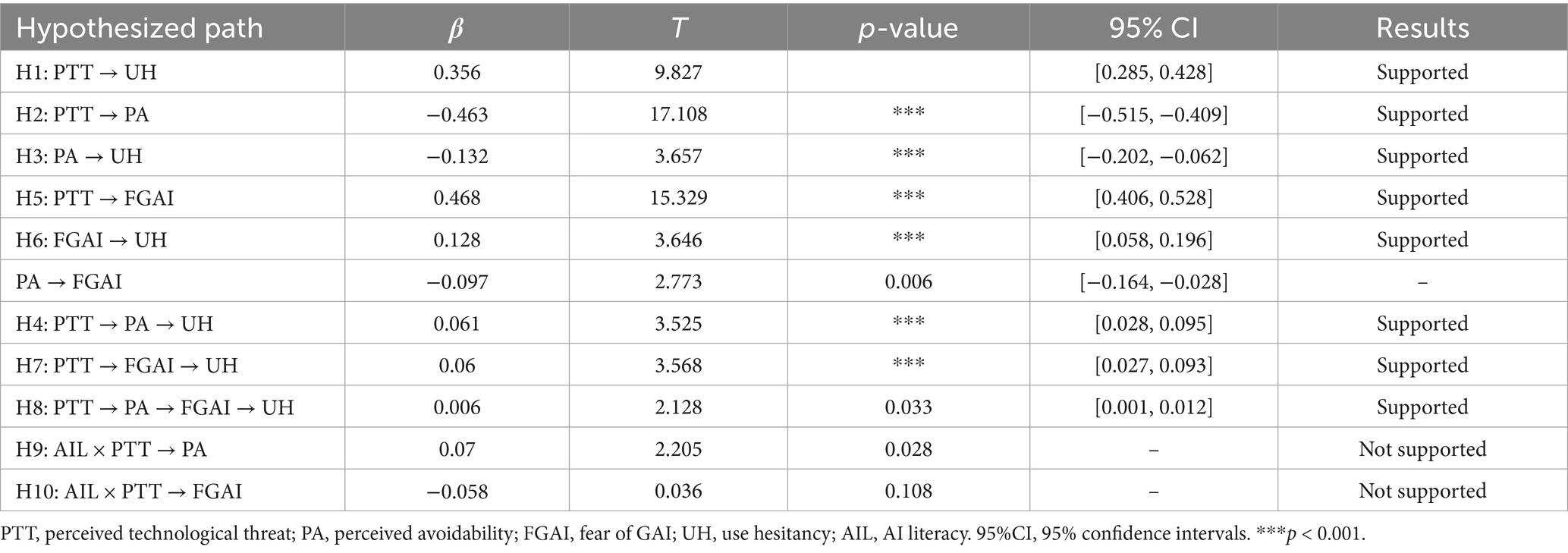

The final step of the structural model assessment is hypothesis testing. Table 5 and Figure 3 report the path coefficients with their t statistics, p values, and 95% confidence intervals, together with the resulting hypothesis decisions.

As expected, the direct-effect hypotheses H1, H2, H3, H5, and H6 are supported. Specifically, perceived technological threat is positively associated with GAI technology use hesitancy (β = 0.356, p < 0.001) and fear of GAI (β = 0.468, p < 0.001) but negatively associated with perceived avoidability (β = −0.463, p < 0.001), supporting H1, H2, and H5. Perceived avoidability is negatively associated with GAI technology use hesitancy (β = −0.132, p < 0.001), supporting H3, and fear of GAI is positively associated with GAI technology use hesitancy (β = 0.128, p < 0.001), supporting H6.

Table 5 also reports the mediating effects of perceived avoidability and fear of GAI, which are central to this study. The indirect effect of perceived technological threat on use hesitancy through perceived avoidability is significant [β = 0.061, 95% CI = (0.028, 0.095)], supporting H4. The indirect effect through fear of GAI is also significant [β = 0.060, 95% CI = (0.027, 0.093)], supporting H7. The chain indirect effect through perceived avoidability and then fear of GAI is significant as well [β = 0.006, 95% CI = (0.001, 0.012)], supporting H8. The control variables show no significant relationships with use hesitancy, perceived avoidability, or fear of GAI.
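In a mediation model with standardized paths, each specific indirect effect is the product of the coefficients along its route. Multiplying the path coefficients reported for H1 to H6 reproduces the two simple indirect effects up to rounding, as the short check below shows. The chain route (threat, then avoidability, then fear, then hesitancy) would also need the coefficient from perceived avoidability to fear of GAI, which the text does not report, so it is left out. The abbreviations are shorthand introduced for this example, and the confidence intervals come from bootstrapping, not from this arithmetic.

```python
# Standardized path coefficients as reported in the text.
# Shorthand (introduced for this example): PTT = perceived technological threat,
# PA = perceived avoidability, FEAR = fear of GAI, UH = use hesitancy.
PATHS = {
    ("PTT", "PA"): -0.463,
    ("PTT", "FEAR"): 0.468,
    ("PA", "UH"): -0.132,
    ("FEAR", "UH"): 0.128,
}


def indirect_effect(*route: str) -> float:
    """Multiply the path coefficients along a route such as PTT -> PA -> UH."""
    effect = 1.0
    for source, target in zip(route, route[1:]):
        effect *= PATHS[(source, target)]
    return effect


via_avoidability = indirect_effect("PTT", "PA", "UH")
via_fear = indirect_effect("PTT", "FEAR", "UH")
print(f"PTT -> PA -> UH:   {via_avoidability:.3f}")  # 0.061
print(f"PTT -> FEAR -> UH: {via_fear:.3f}")  # 0.060
```

The indirect effect through perceived avoidability is positive because it multiplies two negative paths: threat lowers avoidability, and lower avoidability raises hesitancy.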

4.4 Testing the moderating effects

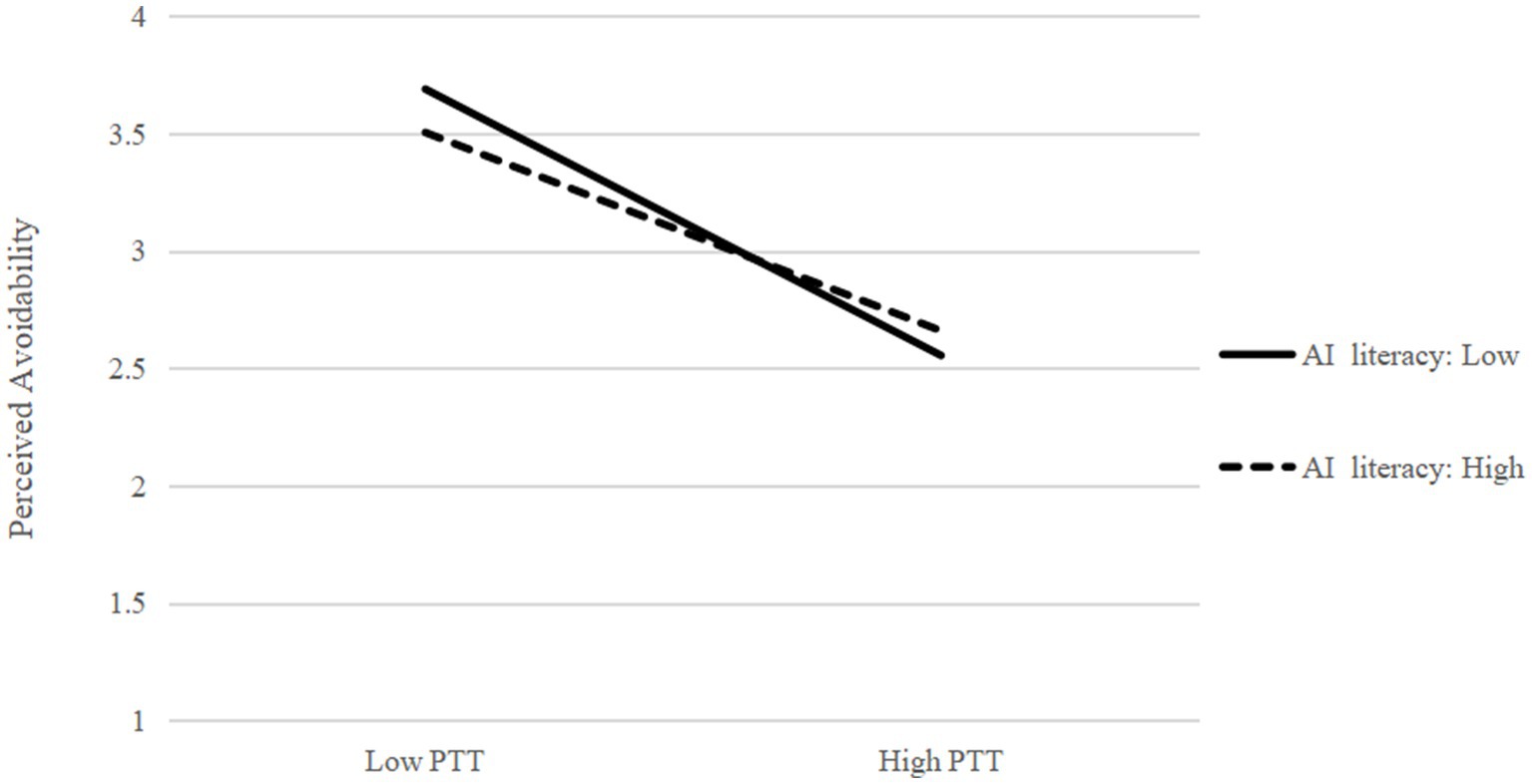

H9 and H10 proposed that AI literacy would moderate the path from perceived technological threat to perceived avoidability and the path from perceived technological threat to fear of GAI, respectively. To test these hypotheses, we added the interaction term between perceived technological threat and AI literacy to the structural model. As Table 5 shows, the interaction term has a significant effect on perceived avoidability (β = 0.07, p < 0.05), indicating that AI literacy moderates this path. However, because perceived technological threat is negatively related to perceived avoidability, a positive interaction term means that higher AI literacy makes this negative relationship weaker rather than stronger. AI literacy therefore does not enhance the relationship between perceived technological threat and perceived avoidability, and H9 is not supported. In addition, AI literacy does not significantly moderate the relationship between perceived technological threat and fear of GAI (β = −0.058, p = 0.108), so H10 is not supported. The results are presented in Figure 4.

To probe this interaction, Figure 5 plots predicted perceived avoidability against perceived technological threat at low and high levels of AI literacy. The negative relationship between perceived technological threat and perceived avoidability is weaker among participants with high AI literacy (simple slope b = −0.83, p < 0.001) than among those with low AI literacy (simple slope b = −0.90, p < 0.001). In other words, the effect of perceived technological threat on perceived avoidability diminishes as AI literacy increases.
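Why a positive interaction term weakens, rather than strengthens, a negative path follows from simple-slope arithmetic. In a model with threat X, AI literacy W, and their product, the slope of perceived avoidability on threat at a given W is b_threat + b_interaction × W. The sketch below uses a hypothetical main effect of −0.50 and an interaction of 0.07 (the sign and size of the reported interaction term), with standardized literacy evaluated at ±1 SD; it illustrates the logic only and is not meant to reproduce the simple slopes reported above.

```python
def simple_slope(b_threat: float, b_interaction: float, literacy: float) -> float:
    """Conditional effect of threat on avoidability at a given AI literacy level.

    For Y = b0 + b_threat*X + b_literacy*W + b_interaction*X*W, the slope of
    Y on X at moderator value W is b_threat + b_interaction * W.
    """
    return b_threat + b_interaction * literacy


# Hypothetical coefficients for illustration, not the study's estimates:
# a negative main effect and a positive threat x literacy interaction.
B_THREAT = -0.50
B_INTERACTION = 0.07

for label, w in [("low AI literacy (-1 SD)", -1.0), ("high AI literacy (+1 SD)", 1.0)]:
    slope = simple_slope(B_THREAT, B_INTERACTION, w)
    print(f"{label}: slope = {slope:.2f}")
# The high-literacy slope (-0.43) is closer to zero than the low-literacy
# slope (-0.57): the negative relationship is weakened, not strengthened.
```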

Figure 5. AI literacy as a moderator in the relationship between perceived technological threat and perceived avoidability (two-way interaction graph). PTT, perceived technological threat.

5 Discussion

Consistent with earlier findings on vaccination behavior, the present study shows that greater perceived technological threat during the diffusion of a new technology is associated with greater use hesitancy (Bedford et al., 2018; Hinks, 2020). A likely reason is that GAI technologies continually affect the learning outputs of students in higher education as well as their social and employment environments. Students' anxieties about their future development make it impossible for them to ignore the threat of GAI and may amplify the perceived threat of using the technology (Audry, 2021). At the same time, the convenience of GAI technology and society's demand for technological innovation give students reasons to use it. This tension can also be explained by PMT: higher perceived threat can activate defensive responses that keep people from adopting GAI technologies decisively (Marikyan and Papagiannidis, 2023).

Consistent with TTAT and PMT (Liang and Xue, 2009; Floyd et al., 2000), this study found that perceived avoidability, as part of threat appraisal, played a buffering role in the relationship between perceived technological threat and use hesitancy. The results suggest that when individuals appraise a GAI-related threat as one they can counter with their own abilities, they perceive it as highly avoidable. Perceiving high avoidability reduces the distress caused by the threat, which in turn reduces defensive hesitancy and ultimately enables effective use of GAI technology. Taken together, these findings also support applying the risk-buffering hypothesis (Luthar et al., 2015) to the current rapid development of AI, in which individuals appraise threats and take appropriate protective measures to reduce the many uncertainties that the rapid development of GAI technology brings.

When facing the threat of GAI technology, people respond emotionally as they appraise it. Individuals who perceive a high threat are more likely to feel fear and, in turn, to hesitate more. From the perspective of emotion and decision-making, the risk-as-feelings model proposed by Loewenstein et al. (2001) holds that emotions strongly influence how individuals deal with threats and make final decisions. Negative emotions such as fear push individuals toward more defensive decisions, such as taking a hesitant stance toward GAI technology. This finding therefore extends support for the risk-as-feelings model to the diffusion of new technologies.

The present study indicated that perceived avoidability and fear of GAI both mediate the association between perceived technological threat and GAI technology use hesitancy. According to the TMS, people may respond to technological stressors and threats with negative emotions. However, if they find that they can avoid these negative effects through their own abilities or by taking appropriate measures, their negative emotions may change and they may ultimately accept the new technology (Lazarus and Folkman, 1987). In this study, college students who believed that they could avoid the threats posed by GAI technology, or turn the associated pressure into an advantage, appeared to cope more positively. Consistent with this account, the link between perceived avoidability and fear of GAI also holds within the chain mediation from perceived technological threat to use hesitancy: greater perceived threat lowers perceived avoidability, whereas higher perceived avoidability mitigates fear of GAI and thereby reduces hesitation to use the technology.

Contrary to our hypotheses, AI literacy did not strengthen the relationship between perceived technological threat and perceived avoidability; instead, it had a comparatively small attenuating effect. Moreover, its moderating effect on the relationship between perceived technological threat and fear of GAI was not statistically significant. One explanation is that AI technology is inherently complex, yet current AI literacy education focuses mainly on superficial applications and everyday entertainment rather than on a deep understanding of core algorithms and logic, leaving critical barriers to advanced AI use unresolved (Hermann, 2022). In addition, there is a discernible gap between users' basic AI literacy and the rapid evolution of market-driven technologies. Current literacy frameworks not only fail to equip users adequately to use GAI technologies but may also, paradoxically, heighten perceptions of technological inevitability by exposing users to accelerating technical iterations (Kong et al., 2025). Finally, AI literacy extends beyond fundamental knowledge and functional awareness to include ethical discernment, and despite widespread adoption, issues such as algorithmic bias and opaque decision-making remain fundamentally unresolved (Taulli, 2023). More literate users may therefore also be more aware of problems that they cannot avoid on their own.

From the perspective of the technology acceptance paradox, basic AI literacy, comprising cognitive understanding and operational proficiency, may enhance user acceptance and foster perceptions that certain technological risks are avoidable, but this relationship may become counterintuitive at advanced levels. As individuals develop deeper technical familiarity, operational expertise, and critical awareness of embedded ethical dilemmas, they increasingly recognize the inherent and systemic nature of AI-related threats. Even superficial technological risks may then be perceived as entrenched rather than transient (Büchi, 2024).

6 Conclusion

In summary, this study indicates that perceived technological threat is positively related to GAI use hesitancy. The mediation analysis further revealed that perceived avoidability and fear of GAI each mediate the association between perceived technological threat and GAI technology use hesitancy, and that together they also form a chain mediation in this relationship. Because GAI development can be neither circumvented nor disregarded, those in higher education could strengthen their GAI application skills and their overall personal resilience to reduce the potential risks associated with GAI development.

6.1 Implications and limitations

This study extends the traditional TAM and DOI theory to new technologies. Faced with the current technological revolution, the audience not only attends to the advantages of a technology but also worries about the threats it poses; even when people may benefit greatly from a new technology, they remain concerned about it. This study also extends the notion of behavioral intention in the traditional TAM by examining technology use hesitancy. Because AI technology is developing rapidly, the audience's values or social environment may change at any time, and their attitudes toward AI technology may shift accordingly (Demirgüneş, 2018). The ambivalence of today's audience is therefore difficult to capture as simple acceptance or rejection of a given technology, and our focus on hesitation reflects the psychological experiences that the audience may have over a relatively long period.

In practical terms, this study suggests that targeted training in professional AI literacy, combined with the cultivation of general knowledge competencies among students in higher education, may help students perceive technological threats less acutely when appraising them and respond to such threats strategically, including by avoiding them. Such training may also reduce fear and the emotionally driven avoidance decisions that result from a lack of competence. Within universities, establishing sound rules for the use of AI technology can reduce students' misuse of and bias toward the technology (Pradana et al., 2023), and guiding students to view the development and use of AI technology positively can ease the negative emotions they associate with using it. In addition, industry norms for AI use should be clarified, and proactive ways of addressing the threats posed by AI technology should be proposed; doing so could effectively ease the public's negative emotions about new technologies and help promote the use of AI technology.

This study has several limitations. First, because AI technology is changing rapidly, our findings cannot support long-term predictions. Second, we employed a cross-sectional design, so longitudinal or experimental designs are needed to confirm the causal relationships among the variables reported here. Finally, this study focuses primarily on the potential threats that GAI technology poses to individuals and therefore mainly discusses the negative impacts that accompany its development; it does not discuss the positive impacts of GAI development in depth.

Future research should keep pace with the rapid development of GAI technology and give more attention to the technical pressures that people face when using GAI. Examining how to avoid or address these negative effects while applying the technical advantages of GAI across all walks of life would be of great practical value.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval were not required for the study of human participants in accordance with local legal and institutional requirements. The participants themselves completed an informed consent form before the questionnaire was started.

Author contributions

YY: Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Validation.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

I would like to express my sincere gratitude to Assistant Prof. Peng Kun for her insightful comments on an earlier draft of the manuscript. I also thank the editors and reviewers for their extensive and rigorous feedback on this manuscript.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1618850/full#supplementary-material

References

Ahorsu, D. K., Lin, C., Imani, V., Saffari, M., Griffiths, M. D., and Pakpour, A. H. (2022). The fear of COVID-19 scale: development and initial validation. Int. J. Ment. Health Addict. 20, 1537–1545. doi: 10.1007/s11469-020-00270-8

Alam, S. S., Ahsan, N., Kokash, H. A., Alam, S., and Ahmed, S. (2024). A students’ behaviors in information security: extension of protection motivation theory (PMT). Inf. Secur. J. Glob. Perspect. 34, 191–213. doi: 10.1080/19393555.2024.2408264

Avcı, Ü. (2024). Students’ GAI acceptance: role of demographics, creative mindsets, anxiety, attitudes. J. Comput. Inf. Syst. 1–15. doi: 10.1080/08874417.2024.2386545

Bandarupalli, M.. (2025). Chegg global student survey 2025: 80% of undergraduates worldwide have used GenAI to support their studies—but accuracy a top concern. Available online at: https://investor.chegg.com/Press-Releases/press-release-details/2025/Chegg-Global-Student-Survey-2025-80-of-Undergraduates-Worldwide-Have-Used-GenAI-to-Support-their-Studies--But-Accuracy-a-Top-Concern/default.aspx (accessed July 15, 2025)

Basha, J. Y. (2024). The negative impacts of AI tools on students in academic and real-life performance. Int. J. Soc. Sci. Commer. 1, 1–16.

Becker, J.-M., Cheah, J.-H., Gholamzade, R., Ringle, C. M., and Sarstedt, M. (2023). PLS-SEM’S most wanted guidance. Int. J. Contemp. Hosp. Manag. 35, 321–346. doi: 10.1108/IJCHM-04-2022-0474

Bedford, H., Attwell, K., Danchin, M., Marshall, H., Corben, P., and Leask, J. (2018). Vaccine hesitancy, refusal and access barriers: the need for clarity in terminology. Vaccine 36, 6556–6558. doi: 10.1016/j.vaccine.2017.08.004

Büchi, M. (2024). Digital well-being theory and research. New Media Soc. 26, 172–189. doi: 10.1177/14614448211056851

Bussink-Voorend, D., Hautvast, J. L., Vandeberg, L., Visser, O., and Hulscher, M. E. (2022). A systematic literature review to clarify the concept of vaccine hesitancy. Nat. Hum. Behav. 6, 1634–1648. doi: 10.1038/s41562-022-01431-6

Cao, G., Duan, Y., Edwards, J. S., and Dwivedi, Y. K. (2021). Understanding managers’ attitudes and behavioral intentions towards using artificial intelligence for organizational decision-making. Technovation 106:102312. doi: 10.1016/j.technovation.2021.102312

Caramiaux, B., and Fdili Alaoui, S. (2022). Explorers of unknown planets. Practices and politics of artificial intelligence in visual arts. Proc. ACM Hum. Comput. Interact. 6, 1–24. doi: 10.1145/3555578

Carolus, A., Koch, M. J., Straka, S., Latoschik, M. E., and Wienrich, C. (2023). MAILS—Meta AI literacy scale: development and testing of an AI literacy questionnaire based on well-founded competency models and psychological change- and meta-competencies. Comput. Human Behav. 1:100014. doi: 10.1016/j.chbah.2023.100014

Clauson, M. (2022). The Technological Threat Measure: Subjective Threat Perception in the Technology-Work Interface, Scale Development and Validation (Doctoral dissertation, University of Georgia).

Davis, F. D., Bagozzi, R. P., and Warshaw, P. R. (1989). User acceptance of computer technology: a comparison of two theoretical models. Manag. Sci. 35, 982–1003. doi: 10.1287/mnsc.35.8.982

Demirgüneş, B. K. (2018). Determination of factors that cause shopping hesitation. METU Stud. Dev. 45, 25–57.

Floyd, D. L., Prentice-Dunn, S., and Rogers, R. W. (2000). A meta-analysis of research on protection motivation theory. J. Appl. Soc. Psychol. 30, 407–429. doi: 10.1111/j.1559-1816.2000.tb02323.x

Freeman, J. (2025). HEPI/Kortext AI survey shows explosive increase in the use of generative AI tools by students. Available online at: https://www.hepi.ac.uk/2025/02/26/hepi-kortext-ai-survey-shows-explosive-increase-in-the-use-of-generative-ai-tools-by-students/ (accessed July 15, 2025)

Gorman, J. M. (2008). Fear and anxiety: the benefits of translational research. Arlington, VA: American Psychiatric Publishing, Inc.

Hair, J. F., Howard, M. C., and Nitzl, C. (2020). Assessing measurement model quality in PLS-SEM using confirmatory composite analysis. J. Bus. Res. 109, 101–110. doi: 10.1016/j.jbusres.2019.11.069

Hermann, E. (2022). Artificial intelligence and mass personalization of communication content—an ethical and literacy perspective. New Media Soc. 24, 1258–1277. doi: 10.1177/14614448211022702

Hinks, T. (2020). Fear of robots and life satisfaction. Int. J. Soc. Robot. 12:98:792. doi: 10.1007/s12369-020-00640-18

Holmes, W., and Tuomi, I. (2022). State of the art and practice in AI in education. Eur. J. Educ. 57, 542–570. doi: 10.1111/ejed.12533

Hovland, C. I., Janis, I. L., and Kelley, H. H. (1953). Communication and persuasion. New Haven, CT: Yale University Press.

Jin, Y., Yan, L., Echeverria, V., Gašević, D., and Martinez-Maldonado, R. (2025). Generative AI in higher education: a global perspective of institutional adoption policies and guidelines. Comput. Educ. Artif. Intell. 8:100348. doi: 10.1016/j.caeai.2024.100348

Kandoth, S., and Kushe Shekhar, S. (2022). Social influence and intention to use AI: the role of personal innovativeness and perceived trust using the parallel mediation model. Forum Sci. Oecon. 10, 131–150. doi: 10.23762/FSO_VOL10_NO3_7

Kitsara, I. (2022). “Artificial intelligence and the digital divide: from an innovation perspective” in Platforms and artificial intelligence. Progress in IS 245–265. ed. A. Bounfour (Cham: Springer).

Kong, S.-C., Korte, S.-M., Burton, S., Keskitalo, P., Turunen, T., Smith, D., et al. (2025). Artificial intelligence (AI) literacy—an argument for AI literacy in education. Innov. Educ. Teach. Int. 62, 477–483. doi: 10.1080/14703297.2024.2332744

Lazarus, R. S., and Folkman, S. (1987). Transactional theory and research on emotions and coping. Eur. J. Personal. 1, 141–169. doi: 10.1002/per.2410010304

Liang, H., and Xue, Y. (2009). Avoidance of information technology threats: a theoretical perspective. MIS Q. 33, 71–90. doi: 10.2307/20650279

Liang, H., and Xue, Y. (2010). Understanding security behaviors in personal computer usage: a threat avoidance perspective. J. Assoc. Inf. Syst. 11, 394–413. doi: 10.17705/1jais.00232

Lim, W. M., Gunasekara, A., Pallant, J. L., Pallant, J. I., and Pechenkina, E. (2023). Generative AI and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators. Int. J. Manag. Educ. 21:100790. doi: 10.1016/j.ijme.2023.100790

Loewenstein, G., Weber, E., Hsee, C., and Welch, N. (2001). Risk as feelings. Psychol. Bull. 127, 267–287. doi: 10.1037/0033-2909.127.2.267

Long, D., and Magerko, B. (2020). What is AI literacy? Competencies and design considerations. In: Proceedings of the 2020 CHI conference on human factors in computing systems (pp. 1–16).

Luchkina, N. V., and Bolshakov, V. Y. (2019). Mechanisms of fear learning and extinction: synaptic plasticity–fear memory connection. Psychopharmacology 236, 163–182. doi: 10.1007/s00213-018-5104-4

Luthar, S. S., Crossman, E. J., and Small, P. J. (2015). “Resilience and adversity” in Socioemotional processes: 3. Handbook of child psychology and developmental science. eds. R. M. Lerner and M. E. Lamb. 7th ed (New York: Wiley), 247–286.

Marikyan, D., and Papagiannidis, S. (2023). “Protection motivation theory: A review,” in TheoryHub Book. ed. S. Papagiannidis. Available at: http://open.ncl.ac.uk/ ISBN:9781739604400

Ministry of Education of the People’s Republic of China and National Language Commission of the People’s Republic of China. China’s standards of English language ability (2018). Available online at: https://cse.neea.edu.cn/res/ceedu/1811/6bdc26c323d188948fca8048833f151a.pdf (accessed January 10, 2024)

Mittal, U., Sai, S., Chamola, V., and Sangwan, D. (2024). A comprehensive review on generative AI for education. IEEE Access 12, 142733–142759. doi: 10.1109/ACCESS.2024.3468368

Ng, D. T. K., Leung, J. K. L., Chu, K. W. S., and Qiao, M. S. (2021). AI literacy: definition, teaching, evaluation, and ethical issues. Proc. Assoc. Inf. Sci. Technol. 58, 504–509. doi: 10.1002/pra2.487

Pradana, M., Elisa, H. P., and Syarifuddin, S. (2023). Discussing ChatGPT in education: a literature review and bibliometric analysis. Cogent Educ. 10:2243134. doi: 10.1080/2331186X.2023.2243134

Sutton, S. (1982). “Fear-arousing communications: a critical examination of theory and research” in Social psychology and behavioral medicine. ed. J. Eiser (London, UK: John Wiley & Sons), 303–337.

Taulli, T. (2023). “Introduction to generative AI: the potential for this technology is enormous” in Generative AI: how ChatGPT and other AI tools will revolutionize business (Berkeley, CA: Apress), 1–20.

Wach, K., Duong, C. D., Ejdys, J., Kazlauskaitė, R., Korzynski, P., Mazurek, G., et al. (2023). The dark side of generative artificial intelligence: a critical analysis of controversies and risks of ChatGPT. Entrep. Bus. Econ. Rev. 11, 7–30. doi: 10.15678/EBER.2023.110201

Keywords: generative AI, technology threats, use hesitancy, AI literacy, higher education

Citation: Yin Y (2025) From threat perception to use hesitancy: examining college students’ psychological barriers to generative AI adoption. Front. Educ. 10:1618850. doi: 10.3389/feduc.2025.1618850

Edited by:

Yudhi Arifani, Universitas Muhammadiyah Gresik, Indonesia

Reviewed by:

Xianglong Wang, University of California, Davis, United States
Janika Leoste, Tallinn University, Estonia

Copyright © 2025 Yin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yukun Yin, MzIyMDAwNTI5MUBzdHVkZW50Lm11c3QuZWR1Lm1v

†ORCID: Yukun Yin, orcid.org/0009-0006-5955-5475
