- ¹Office of International Cooperation and Exchange, Yunnan Agricultural University, Kunming, China

- ²Department of Laboratory Animal Science of Fudan University, Shanghai, China

This paper examines the profound impact of artificial intelligence (AI) and geopolitics on cross-border higher education cooperation. AI has the potential to enhance educational accessibility and collaboration efficiency by enabling personalized learning, virtual classrooms, open resource platforms, and open-source research collaborations, ultimately helping bridge global educational gaps. However, significant challenges arise, such as techno-nationalism (e.g., semiconductor export controls), data sovereignty conflicts (e.g., GDPR restrictions), divergent algorithmic values, and the expanding digital divide. To address these challenges, this study proposes several solutions: the creation of an inclusive technological ecosystem (including open-source platforms, shared computing power, and cross-cultural models); the development of mutual recognition mechanisms (such as data stratification and standard harmonization); the strengthening of South-South cooperation through digital public goods; and the reconstruction of ethical consensus, emphasizing cultural diversity and human-in-the-loop principles. Notably, China has actively contributed to these efforts through technological empowerment (e.g., National Smart Education Platform, Luban Workshops), regulatory input (e.g., Global Governance Initiative), and infrastructure support. Looking ahead, the paper argues for the establishment of an “Intelligent Education Community,” guided by the principles of “extensive consultation, joint contribution, shared benefits, and wise governance,” to ensure that AI advances global educational equity and promotes human progress.

1 Introduction

The world today is experiencing a dual wave of technological transformation and geopolitical restructuring. The rapid development of artificial intelligence (AI) is fundamentally changing the way knowledge is produced, disseminated, and acquired, while profound shifts in the global geopolitical landscape are reshaping the basic logic of international cooperation (Chaudhry and Kazim, 2022; Fernández et al., 2023). According to data from the 2025 Artificial Intelligence Action Summit, over 90% of the world’s top universities have adopted AI-powered teaching tools. Educational digitalization has evolved from a supplementary tool into a core component of higher education infrastructure. At the same time, the rise of techno-nationalism, intensifying digital sovereignty rivalries, and the fragmentation of international rules have created an unprecedentedly complex environment for cross-border educational cooperation. Traditional models of higher education internationalization–based on physical mobility and fixed curriculum systems–are undergoing disruptive reconstruction (Luo, 2022; Luo and Van Assche, 2023).

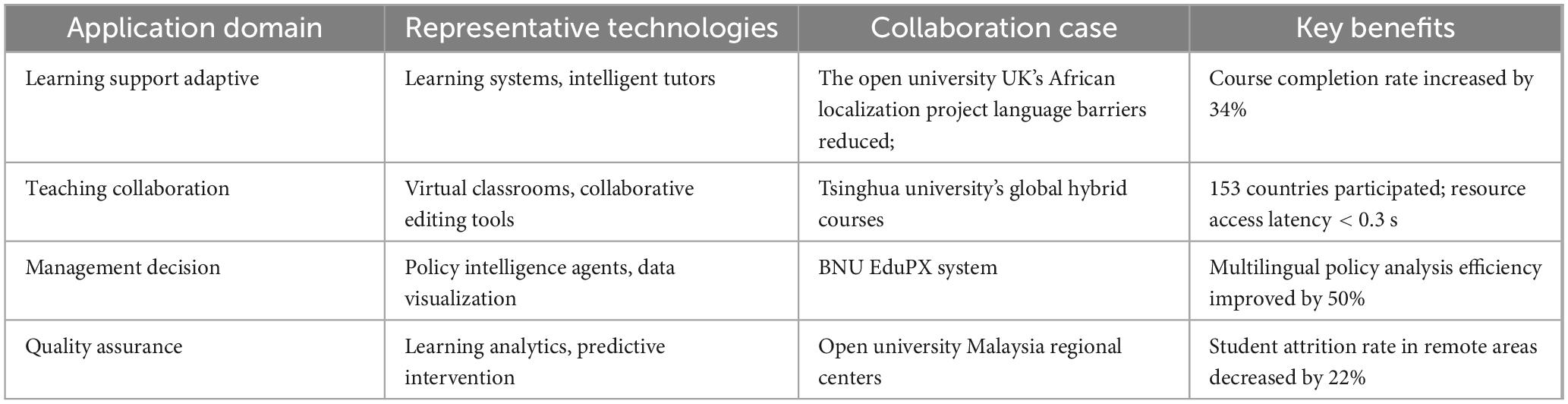

The integration of artificial intelligence and cross-border higher education is unfolding across multiple dimensions. At the technological application level, innovations such as adaptive learning systems, virtual laboratories, and multilingual AI tutors are making it possible to deliver education across geographic boundaries. The UK’s Open University, for example, has used AI translation tools to localize its courses into 37 languages including Swahili, significantly enhancing access for learners in underrepresented regions. AI is also enabling more personalized and flexible learning pathways, thus making education more inclusive and learner-centered (Dang et al., 2021; Wang et al., 2022; Sallam, 2023).

In terms of cooperation models, the landscape is shifting from traditional modes like offshore campuses and credit transfer agreements toward new forms of collaboration based on data sharing, platform interoperability, and joint research. China’s international edition of the National Smart Education Public Service Platform now connects over 1,300 universities worldwide, forming a decentralized global resource network. In 2024 alone, the platform recorded over 210% growth in its user base, reflecting strong international demand for open and intelligent education infrastructure (Groot et al., 2020).

Geopolitically, the landscape of cooperation is being reshaped by major global forces, including the U.S.–China tech rivalry, the European Union’s Artificial Intelligence Act, and multilateral initiatives such as the BRICS Digital Education Alliance. These developments have produced a contradictory scenario in which “technological sovereignty” and “open science” coexist, often uneasily. Regulatory divergence, infrastructure gaps, and differing values embedded in AI algorithms have all contributed to growing tension between national interests and global collaboration (Vijayakumar, 2022; Wang et al., 2024).

To contextualize this transformation, recent studies from adjacent domains provide insights into how policy-driven digital interventions reshape institutional landscapes:

First, Xu et al. (2025) examined the systemic effects of China’s National Civilized Cities initiative and found that coordinated top-down policies significantly improved regional sustainability outcomes, both economically and environmentally (Yang et al., 2025). This parallels how coordinated AI-enabled education strategies–especially when embedded in national development agendas–can drive fundamental shifts in higher education models and infrastructure. It underscores the idea that digitalization, when policy-aligned, can serve as a structural catalyst for institutional transformation across borders.

Second, Wang and He (2024) investigated how environmental regulatory frameworks influenced the technological complexity of high-tech industry exports in China (Yang et al., 2024). Their findings suggest that policy environments play a decisive role in shaping national innovation pathways. This insight is particularly relevant to the context of cross-border higher education, where geopolitical forces and techno-nationalist policies increasingly shape the direction and ethics of AI deployment in global knowledge systems.

Third, Zhai et al. (2023) analyzed the impact of cross-border e-commerce pilot zones and revealed how digital infrastructure, supported by policy integration, fosters inclusive regional development and transnational collaboration (Yang et al., 2023). This finding echoes the current shift toward decentralized educational platforms and data-sharing frameworks in higher education. Just as e-commerce platforms enhanced trade interoperability, AI-powered education ecosystems can lower barriers to academic collaboration and promote shared digital knowledge across geopolitical boundaries.

Understanding this transformation requires returning to the fundamental purpose of education and responding to the imperatives of our time. As emphasized by UNESCO, the digitalization of education is not simply a technological application but a new pathway to realizing the right to education. Against the backdrop of intertwined AI and geopolitical dynamics, cross-border higher education cooperation now faces three central challenges: how to leverage AI to bridge rather than widen educational inequalities; how to safeguard academic freedom and universal values amidst the competition over technological sovereignty; and how to build a global education governance system that balances innovation with inclusiveness, and ethics with security (AlShebli et al., 2024; Ducret et al., 2024).

This paper aims to provide a systematic response to these questions by analyzing the emerging opportunities, identifying the key challenges, and proposing viable pathways forward. Through this framework, it seeks to contribute to the reimagining of cross-border higher education in an era defined by intelligent technology and geopolitical complexity.

More specifically, the study critically explores how integrating artificial intelligence (AI) into cross-border higher education can reshape global educational cooperation under technological and geopolitical pressure. It identifies the key opportunities that AI offers for educational accessibility, equity, and flexibility, and investigates the risks that arise from geopolitical rivalries, data sovereignty conflicts, and regulatory fragmentation. By examining these interrelated factors, it proposes viable solutions for making AI a tool for inclusive and innovative education while maintaining academic freedom, ethical standards, and global cooperation. Ultimately, the goal is a comprehensive framework that informs the future development of international educational policies and governance systems, so that AI advances not only technological progress but also universal educational values.

2 Methods

This article adopts a qualitative and interpretive approach to review recent developments at the intersection of artificial intelligence, geopolitics, and cross-border higher education. The materials discussed in this manuscript were selected through a comprehensive reading of relevant academic and policy-oriented sources published in recent years. These include journal articles, policy documents, international initiatives, and case-based reports that explore the technological, regulatory, and strategic dimensions of global education cooperation.

The inclusion criteria were: (1) relevance to AI applications in international higher education or educational governance, (2) focus on geopolitical or regulatory dimensions, and (3) empirical or conceptual contributions to educational transformation, digital infrastructure, or policy formation. Exclusion criteria included sources lacking substantive discussion of cross-border cooperation or those focused solely on domestic applications of AI in education.

A thematic coding process was applied to identify and cluster core dimensions emerging from the literature, including AI empowerment in education, techno-nationalism, data sovereignty, regulatory divergence, South–South cooperation, and ethical governance. These themes formed the basis of the analytical structure of the manuscript. Special attention was given to China’s role in global digital education initiatives, which was treated as a separate sub-theme given its strategic relevance.

To ensure the analytical rigor and reliability of the thematic analysis, the coding process was independently conducted by two authors. Each author reviewed the selected materials and performed open coding using an inductive approach, allowing key themes to emerge naturally from the data. The initial coding results were then compared, and differences in theme boundaries, terminology, or interpretation were identified.

To resolve these discrepancies, a third author acted as an adjudicator and facilitated a structured consensus-building process. Through a series of joint discussions, the team refined the thematic framework, clarified the definitions of each category, and agreed on a unified codebook. This revised coding scheme was subsequently applied across all materials to ensure consistency in theme identification and application.

Throughout the process, analytical memos were maintained to document coding decisions and justifications, providing an audit trail to enhance transparency. Regular team meetings were held to reflect on emerging insights and ensure a balanced integration of multiple perspectives. This collaborative, multi-author approach helped reduce individual bias, improve coding coherence, and strengthen the validity of the review.
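
The comparison step in this workflow can be illustrated with a minimal sketch. It assumes each coder's output is a simple mapping from a source identifier to one theme label; the identifiers, labels, and the `compare_codings` helper are hypothetical and are not part of the study's actual tooling. The raw agreement rate is shown only for illustration, since the review does not report an agreement statistic.

```python
def compare_codings(coder_a, coder_b):
    """Compare two coders' theme labels.

    coder_a / coder_b: dict mapping a source id to the theme that coder assigned.
    Returns (raw_agreement, disagreements), where disagreements is a list of
    (source_id, label_a, label_b) tuples to pass on to the adjudicator.
    """
    shared = sorted(set(coder_a) & set(coder_b))
    if not shared:
        raise ValueError("no sources were coded by both coders")
    disagreements = [
        (src, coder_a[src], coder_b[src])
        for src in shared
        if coder_a[src] != coder_b[src]
    ]
    raw_agreement = 1 - len(disagreements) / len(shared)
    return raw_agreement, disagreements


# Hypothetical example data (not taken from the reviewed literature).
coder_a = {
    "doc01": "data sovereignty",
    "doc02": "techno-nationalism",
    "doc03": "ethical governance",
    "doc04": "AI empowerment",
}
coder_b = {
    "doc01": "data sovereignty",
    "doc02": "regulatory divergence",
    "doc03": "ethical governance",
    "doc04": "AI empowerment",
}

agreement, to_adjudicate = compare_codings(coder_a, coder_b)
print(f"Raw agreement: {agreement:.0%}")
for src, label_a, label_b in to_adjudicate:
    print(f"{src}: coder A = {label_a!r}, coder B = {label_b!r} (refer to adjudicator)")
```

In practice, the list of disagreements would feed the consensus discussions, and the agreed resolutions would be recorded in the codebook and the analytical memos.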

To ensure a global perspective, the review incorporated literature from both Western and non-Western academic traditions, and drew upon case studies from Africa, Southeast Asia, and Latin America where available. This approach allowed for a balanced, inclusive, and policy-relevant examination of how AI and geopolitical dynamics jointly shape the future of international higher education (Ejaz et al., 2022; Gilbert, 2024).

3 AI empowerment: a transformative force in cross-border educational cooperation

3.1 The reconstruction and innovation of teaching models

Artificial intelligence is fundamentally reshaping the pedagogical landscape of cross-border higher education. The application of generative AI has enabled unprecedented levels of precision in personalized learning support. For instance, practices at institutions such as Athabasca University in Canada demonstrate how AI systems, by analyzing student interaction data, can dynamically adjust content difficulty, recommend culturally relevant case studies, and provide 24/7 academic tutoring. These features have significantly enhanced learner engagement and course completion rates among international students from diverse backgrounds (Arslan et al., 2024; As’ad, 2024).

More profound, however, is the transformation of the educator’s role–from that of a knowledge transmitter to a designer of pedagogical frameworks. Within international collaborative programs, faculty are increasingly tasked with creating cross-cultural learning environments, facilitating critical inquiry, and guiding ethical reflection. The cognitive and affective dimensions of teaching–previously constrained by time and geography–are being amplified through intelligent systems that assume partial responsibility for content delivery and assessment (Almansour et al., 2025; Symeou et al., 2025).

The rise of virtual academic exchange has also dismantled traditional geographical boundaries in education. In 2024, leveraging platforms like the Global MOOC and Online Education Alliance, institutions such as Tsinghua University offered hundreds of globally integrated courses, drawing tens of thousands of learners from over 150 countries. With the aid of immersive technologies such as virtual reality, Brazilian students can now “walk through” the Forbidden City while studying Chinese architectural history, and Malaysian students can “operate” German Industry 4.0 virtual production lines (As’ad, 2024; Wu et al., 2025). These multi-spatial, hybrid learning experiences foster a new mode of internationalization–marked by physical absence but cognitive presence–enabling a deeper level of global engagement.

Equally significant is the role of AI in lowering the institutional barriers to international cooperation. The “EduPX” system, developed by Beijing Normal University, is an AI-driven education policy and planning agent capable of operating in twelve languages including Chinese, English, French, and Spanish. It provides cross-border education stakeholders with decision-support tools such as curriculum alignment standards, quality assurance frameworks, and policy analysis modules. By automating and optimizing administrative processes, such tools help reduce regulatory friction, improve interoperability, and streamline governance in joint education programs (Létourneau et al., 2025; Zhou and Peng, 2025).

In sum, AI is not merely a supplementary tool in the educational process–it is a transformative force that is redefining how teaching is structured, delivered, and experienced across borders. Its integration into cross-border higher education signals a shift toward more adaptive, inclusive, and globally interconnected learning ecosystems (Table 1).

3.2 Global mechanisms for equitable access to educational resources

Artificial intelligence has opened new possibilities for the global redistribution of educational resources. Traditional models of cross-border education have long been constrained by physical infrastructure and institutional capacity, often resulting in a “core–periphery” structure that reinforces global educational inequality. In contrast, the rise of AI-driven Open Educational Resources (OER) is reshaping this landscape, promoting more decentralized, inclusive, and equitable access (Gamage et al., 2022).

For example, the international version of China’s National Smart Education Public Service Platform integrates over 27,000 MOOCs, 18,000 virtual simulation experiments, and 1,200 discipline-specific digital teaching repositories. These high-quality resources are made freely accessible to users in developing countries, offering them opportunities previously limited to elite institutions. Notably, in 2024, 68% of newly registered users on the platform came from Asia, Africa, and Latin America, reflecting a significant southward flow of educational content and the potential for AI to rebalance global knowledge exchange (Zhu et al., 2023).

At the heart of equitable access lies the ability to localize content effectively. The experience of Open University Malaysia offers valuable insights: by developing AI-based translation tools that convert international courses into Malay, Tamil, and other regional languages, and by adapting course cases to Southeast Asian cultural contexts, the university significantly improved learners’ comprehension–raising the completion rate for an agricultural technology course from 52% to 89%. This underscores the importance of both linguistic and cultural adaptation in achieving meaningful access (Li et al., 2024).

However, the promise of AI translation is not without risk. As Professor Rob Farrow has noted, AI tools designed for literature and discourse analysis may misinterpret or devalue local narrative structures, inadvertently marginalizing indigenous knowledge systems. To address this, the China–ASEAN Vocational Education Community has adopted a dual-track content development model: international experts provide the foundational knowledge architecture, while local educators embed cultural relevance and context. This approach helps create educational resources that are both globally shareable and locally rooted.

Universal access also depends on robust supporting infrastructure. In remote islands of Southeast Asia, Open University Malaysia has established regional learning hubs equipped with offline AI-enabled terminals. In rural parts of Africa, Chinese-funded “Smart Mobile Classrooms” use satellite connections to access international education platforms. These innovations help bridge the “last mile” of digital learning, extending AI-powered education to populations previously unreachable by conventional systems.

Nevertheless, systemic challenges persist. According to recent global assessments, only about 45% of the least developed countries have reliable access to electricity, which severely limits the deployment of AI-based educational tools. Realizing the full potential of AI-driven global education equity will therefore require not only technological innovation, but also sustained investment in foundational infrastructure, stronger multilateral cooperation, and inclusive financing mechanisms to support underserved regions (Al Mazrooei et al., 2022; Zhu et al., 2023).

In sum, AI offers a transformative opportunity to create a global educational commons–but realizing this vision demands a balance between digital innovation, cultural respect, and infrastructural justice.

3.3 A paradigm shift in scientific collaboration

Artificial intelligence has significantly enhanced both the depth and efficiency of cross-border scientific collaboration. Under traditional research models, international cooperation was often constrained by time zones, limited access to shared infrastructure, and high coordination costs. AI-driven collaborative platforms are now transforming this landscape by enabling real-time data integration, distributed computing, and seamless communication across borders.

For instance, the European Union’s Science Cloud initiative links high-performance computing centers across 17 countries to support large-scale climate change simulations with distributed computing power. In parallel, Chinese astronomers have used the AI-based “Sky Survey” platform to coordinate observatories in Chile and South Africa, enabling real-time monitoring of southern hemisphere galaxies. These cases illustrate how AI can orchestrate globally dispersed resources to achieve integrated scientific outputs (Trägårdh et al., 2020; Zhu et al., 2023).

Large language models (LLMs) are also emerging as catalysts for research innovation. Open-source models such as DeepSeek and LLaMA are empowering researchers in resource-constrained regions to participate in cutting-edge scientific exploration. A notable example is the agricultural AI project in Ethiopia, where local scientists built a crop disease diagnosis system using open-source models. By combining satellite imagery provided by Chinese institutions with plant genome data from French repositories, the system achieved a 92% accuracy rate in predicting coffee leaf rust outbreaks. This model of “open technology + data sharing” exemplifies the role of AI in democratizing global knowledge production and narrowing the research capability gap between developed and developing countries (Roberts et al., 2021).

However, the rapid expansion of AI in scientific research also raises complex ethical challenges. There are considerable divergences among China, the United States, and the European Union regarding algorithmic transparency, data sovereignty, and ethical oversight. The EU mandates strict governance for “high-risk” AI research under its Artificial Intelligence Act, emphasizing human rights and accountability. China advocates a balanced approach that promotes development while safeguarding national security. These regulatory divergences not only shape technical and institutional architectures but also reflect deeper philosophical tensions around data control, accountability, and the values embedded in AI governance. Meanwhile, many developing countries express concern over becoming “ethical colonies”–forced to adopt foreign regulatory standards that may not align with their socio-political contexts or research priorities (Abiri et al., 2023). This contrast in governance models–between normative assertiveness and pragmatic sovereignty–raises critical questions about the future of global AI coordination and the legitimacy of emerging digital education frameworks.

To address this tension, the BRICS framework for AI ethics has proposed the principle of “mutual recognition with differences.” This approach encourages countries to develop domestic ethical and regulatory standards tailored to their national conditions, while establishing interoperability through equivalence assessments. Such a model offers a flexible and pluralistic path to governing cross-border scientific research, allowing for ethical diversity without sacrificing international collaboration (Bontempi et al., 2024).

In sum, AI is not merely optimizing existing research methods–it is redefining the very paradigm of global scientific cooperation. As collaborative platforms, foundational models, and shared data infrastructures continue to evolve, the future of research will depend on our ability to build inclusive, transparent, and ethically sound systems that transcend national boundaries while respecting local contexts. These structural tensions around infrastructure and institutional alignment inevitably raise pressing questions about who controls data, how it flows across borders, and which values underpin its governance–concerns that are at the heart of the emerging debate on data sovereignty.

4 Geopolitical challenges: structural barriers to international cooperation

4.1 Techno-nationalism and academic fragmentation

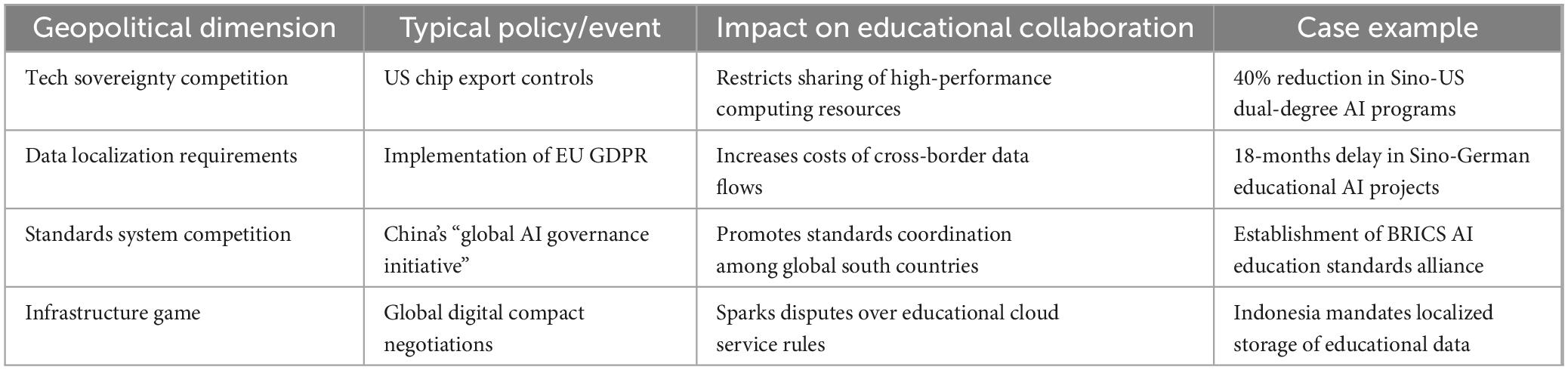

Rising technological competition–now elevated to the realm of national strategic security–is profoundly reshaping the landscape of cross-border educational collaboration. U.S. export controls on advanced semiconductors, introduced in the same period as the CHIPS and Science Act, restrict the export of high-performance GPUs to China and have directly affected numerous joint AI research projects between Chinese and American institutions. According to recent studies, nearly 30% of China’s dual-degree programs have had to adjust curricula due to limited computing capacity, severely hindering teaching in cutting-edge fields such as machine learning (Tang et al., 2025).

More concerning is the trend toward secondary sanctions in technology governance. In 2024, Delft University of Technology in the Netherlands was forced to terminate its joint autonomous driving research with Chinese partners after being flagged for using American chip design tools–despite not being a U.S. institution. This kind of “long-arm jurisdiction” has entangled academic collaboration in the vortex of geopolitical rivalry, blurring the boundaries between education, research, and strategic competition.

AI development is also becoming increasingly polarized along geopolitical lines. The United States, through closed-source ecosystems built around companies such as OpenAI and Anthropic, is reinforcing proprietary technological barriers (Hahn and Oleynik, 2020). In contrast, China is charting a more open-source-driven path with models like DeepSeek and Zhipu. Meanwhile, the European Union positions itself as a “third pole,” using regulatory tools–such as the Artificial Intelligence Act–to assert ethical leadership. This divergence in technical trajectories is weakening the foundation for educational cooperation. Joint publications between Chinese and U.S. universities in natural language processing, for instance, have declined by 43% since their 2021 peak, while China–EU collaborations in AI ethics have risen by 35%, reflecting a geopolitically influenced realignment of academic networks (Fung and Etienne, 2023).

Academic freedom is increasingly subject to institutional pressure. The U.S. National Science Foundation (NSF) now requires AI research projects to sign “technology protection agreements.” China’s “indigenous innovation” policy mandates the localization of educational data storage. The EU’s GDPR restricts the cross-border flow of biometric and other sensitive data. While these policies may be grounded in legitimate security concerns, they collectively contribute to the emergence of a “digital iron curtain.” As one Harvard professor warned, “When scientists must undergo political screening just to share code, the engine of scientific progress stalls.”

In sum, techno-nationalism is no longer a peripheral concern–it has become a structural barrier to international academic cooperation. The erosion of shared infrastructure, misaligned regulatory regimes, and rising distrust among global powers threaten to fragment the once-open landscape of global knowledge exchange. Addressing this challenge requires not only technical interoperability but also renewed commitments to academic openness, mutual trust, and the depoliticization of science (Sun et al., 2024; Wang et al., 2024).

4.2 Digital sovereignty and conflicts in data governance

Data has emerged as a new form of strategic asset, and divergent national regulatory frameworks are increasingly impeding the free flow of educational data across borders. The European Union’s General Data Protection Regulation (GDPR) enshrines the principle of “data subject primacy,” requiring explicit consent from students before AI systems can process their personal information. In contrast, China’s Interim Measures for the Management of Generative AI Services emphasize “data security assessments,” imposing strict limitations on the export of sensitive data. Southeast Asian nations have adopted a more flexible stance, permitting the cross-border transfer of certain types of educational data under defined safeguards.

These differences have led to both friction and breakthroughs in practice. A Sino-German digital education project was delayed for 18 months due to disputes over the transmission of student facial expression data, while a China–Singapore smart campus initiative advanced smoothly thanks to a mutually recognized data classification and protection framework. These contrasting cases underscore the critical importance of institutional compatibility and governance interoperability in cross-border collaboration (Pesapane et al., 2018).
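
The idea of a mutually recognized data classification framework can be made concrete with a minimal sketch. Everything in it is an illustrative assumption rather than a description of any actual agreement: the three tiers, the partner labels `X`, `Y`, and `Z`, the `MAX_EXPORT_TIER` table, and the `may_transfer` helper are all hypothetical. The point is only that once both sides agree on tiers and on the highest tier each agreement allows to cross the border, a transfer request becomes a simple lookup rather than a case-by-case dispute.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical sensitivity tiers for educational data."""
    PUBLIC = 1     # e.g. course catalogues, open teaching materials
    INTERNAL = 2   # e.g. aggregated, de-identified learning analytics
    SENSITIVE = 3  # e.g. biometric data such as facial expression recordings


# Hypothetical agreements: the highest tier that may be transferred
# from the first partner to the second. Pairs not listed have no agreement.
MAX_EXPORT_TIER = {
    ("X", "Y"): Tier.INTERNAL,
    ("X", "Z"): Tier.PUBLIC,
}


def may_transfer(origin, destination, tier):
    """Return True if data of the given tier may move from origin to destination."""
    limit = MAX_EXPORT_TIER.get((origin, destination))
    # Without a recognized agreement, nothing is transferable by default.
    return limit is not None and tier <= limit


print(may_transfer("X", "Y", Tier.INTERNAL))   # allowed under the X-Y agreement
print(may_transfer("X", "Y", Tier.SENSITIVE))  # blocked: above the agreed tier
print(may_transfer("Y", "X", Tier.PUBLIC))     # blocked: no agreement in this direction
```

Under this sketch, a dispute such as the one over facial expression data would surface as a tier mismatch at the design stage rather than as a delay during implementation.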

Beneath the legal and procedural tensions lie deeper cultural and ideological divisions–particularly in the values encoded within AI algorithms. Western education AI systems tend to prioritize individualism, tailoring content to personal learning styles and encouraging critical thinking. In contrast, many East Asian models emphasize collective efficacy, focusing on improving overall classroom performance and reinforcing foundational knowledge. This divergence reflects not only pedagogical differences but also cultural worldviews about education, learning, and social development.

The conflict intensifies when algorithmic design becomes a vehicle for ideological projection. For example, one U.S.-based MOOC platform embedded a “democracy index” into its course recommendation algorithm, sparking backlash from multiple countries that viewed it as political interference. In response, Kazakhstan’s Digital Education Act now mandates that “educational algorithms must reflect national cultural values.” These developments highlight a core tension in cross-border educational AI cooperation: AI is not merely a neutral tool–it is also a carrier of culture and a medium of value transmission.

At a structural level, dependency on global infrastructure further exacerbates power asymmetries. Today, approximately 92% of education-focused AI platforms operate on just three cloud service providers: AWS, Azure, and Alibaba Cloud. Most leading learning analytics tools originate from companies in the United States or Europe. This concentration creates technological dependencies that limit autonomy. For instance, when Indonesian universities host online courses on U.S. platforms, their student data is automatically funneled into third-party monitoring networks. Similarly, when African institutions rely on AI laboratories donated by external actors, they are often constrained by the donor’s terms regarding maintenance, updates, and system integration (Nemitz, 2018; Zhou and Peng, 2025).

Recent empirical work by Chan et al. (2024) provides valuable insight into how generative AI can enhance university-level English writing proficiency. In a large-scale randomized controlled trial involving 918 undergraduate students in Hong Kong, the authors examined the effects of automated feedback generated by GPT-3.5-turbo on students’ academic writing performance. The study found that students who received AI-generated feedback demonstrated statistically significant improvements in their writing quality compared to those in the control group. Moreover, survey and interview data revealed increased motivation and engagement among students, with many reporting that AI feedback allowed them to learn more efficiently and independently. However, some students also reported mixed emotional responses, such as feelings of anxiety, overreliance on the technology, and concerns about the accuracy of AI-generated corrections. These emotional reactions highlight the complex relationship between students and AI in educational contexts.

This work not only highlights the pedagogical benefits of large language models but also underscores the need to address the affective and ethical dimensions of AI-assisted learning in multilingual, cross-cultural higher education settings. While generative AI shows promise in reducing the cognitive load of manual writing corrections, it raises concerns about the potential for diminishing critical thinking and creativity in students. AI’s reliance on predefined algorithms may inadvertently reinforce biases, making it essential to develop systems that ensure fairness, transparency, and adaptability to diverse linguistic and cultural needs. Furthermore, future research should explore the long-term impacts of AI feedback on student development, including its role in fostering self-regulation and academic autonomy. Ethical considerations, such as the protection of students’ data privacy and the potential for AI to replace human interaction in feedback processes, must also be critically examined (Chan et al., 2024).

China’s National Smart Education Platform (International Version) offers a vivid case of how state-led digital infrastructure can serve as a conduit for educational globalization. Launched in 2022 under the Ministry of Education, the platform provides free access to over 25,000 courses, including general education (K–12), higher education, vocational training, and teacher professional development. The content spans disciplines such as mathematics, artificial intelligence, engineering, Chinese language, and Marxist theory, available in both Mandarin and English. As of March 2024, official data report that the platform has attracted 8.46 million international visits, with more than 412,000 active registered learners from 36 developing countries, particularly across Southeast Asia, the Middle East, and Sub-Saharan Africa.

Several bilateral cooperation programs have been established through the platform, including the “Digital Silk Road Education Initiative,” which supports digital curriculum exchange and remote teacher training. In Laos, for example, more than 4,000 secondary school students enrolled in platform-hosted STEM courses, and 360 teachers received AI-enhanced pedagogical training by late 2023. In Ethiopia, digital literacy scores among participating schools improved by 22%, as reported in a joint monitoring report by Addis Ababa University and East China Normal University (Shen and Sun, 2022). Moreover, the platform’s multilingual AI-powered translation engine processed over 12 million words of educational content into Arabic, French, and Swahili, directly supporting curriculum localization and inclusion for partner nations (Ma et al., 2025).

Importantly, qualitative feedback from participating institutions underscores institutional transformation beyond content delivery. A 2023 UNESCO-affiliated policy forum highlighted how the platform enabled decentralized teacher development in countries with limited access to in-person upskilling programs. In Uzbekistan, where over 1,100 university instructors engaged with the platform’s blended learning modules, the Ministry of Higher Education formally integrated co-developed courses on AI literacy and digital ethics into its national curriculum blueprint. These outcomes demonstrate that China’s platform is not merely a technical export but a strategic vehicle for shaping educational governance, pedagogical values, and digital dependencies in the Global South.

From a human rights perspective, this study not only explores the opportunities and challenges posed by artificial intelligence (AI) and geopolitics in cross-border higher education, but also examines the far-reaching societal implications of these developments. As AI continues to reshape educational systems globally, it is essential to ensure that its application aligns with fundamental values such as human dignity, autonomy, and equality. Particular attention must be paid to the risks it poses to privacy, academic freedom, and equitable access to information, especially in regions where legal and regulatory frameworks remain underdeveloped. A careful examination of AI’s roles in surveillance, data collection, and automated decision-making is necessary to protect vulnerable groups from discrimination and exploitation.

Furthermore, viewing AI development through a human rights lens highlights the need to promote inclusivity, fairness, and social justice in AI-driven education. This means ensuring transparency in AI systems, accountability in their use, and safeguarding the rights of students from algorithmic bias and structural inequality. By grounding itself in human rights principles, this research contributes to current academic discourse and helps ensure that international educational cooperation remains both technologically innovative and ethically responsible. Ultimately, this approach promotes a future in which AI supports a fair, inclusive, and just global educational landscape.

In response to these concerns, UNESCO has advocated for the development of Digital Public Goods (DPGs)–open, interoperable, and inclusive technological infrastructures that empower developing countries with greater freedom of choice. By investing in DPGs, the international community can help build a more balanced digital ecosystem–one that reduces dependency, respects cultural sovereignty, and fosters equitable participation in global educational innovation (Table 2).

4.3 The stratification of the digital divide

Artificial intelligence has the potential to exacerbate global educational inequality, particularly through the deepening of existing digital divides. Developed countries enjoy a triple advantage in computing infrastructure, data availability, and talent concentration. For instance, the average AI infrastructure investment per university in the United States is 17 times higher than that in India. Europe hosts approximately 43% of the world’s top AI researchers. In China, the budget for intelligent education across “Double First-Class” universities exceeds the combined funding available to the top universities in Africa. These disparities reinforce a “core-dominated” structure in cross-border educational cooperation: nearly 80% of AI-powered education collaboration projects are initiated by institutions in North America, Europe, or East Asia, while African institutions are frequently positioned as data providers rather than co-creators in the design and governance of such initiatives (Al Mazrooei et al., 2022). Building on these findings, the following section explores the broader normative and strategic implications–particularly how AI and geopolitical dynamics shape governance values, institutional legitimacy, and the prospects for inclusive international cooperation.

Hardware inequality translates directly into educational exclusion. In rural Kenya, students access AI-based courses over 2G networks, experiencing an average latency of 8 s per interaction. In Bangladesh, chronic electricity shortages have rendered approximately 40% of smart classrooms non-functional. As Professor Tan Qing observed, “AI learning platforms depend on stable connectivity, and in infrastructure-deficient regions, operational feasibility becomes a critical challenge.”

A more subtle–but equally significant–dimension of inequality lies in the epistemic layer. Many AI education platforms, particularly those developed in the West, are designed with an implicit assumption of technological universalism. This often results in the marginalization of local knowledge systems. For example, Ethiopian educators have raised concerns that AI models label pastoralist cultures as “primitive modes of production,” prompting the question: is AI empowering communities, or merely reproducing digital forms of cultural imperialism? (Chawla et al., 2023; Xiao et al., 2024).

The absorption of AI technologies is further constrained by disparities in teacher preparedness. Countries such as France and Singapore have made AI literacy part of mandatory teacher training, while in Indonesia, only 12% of teachers have received any form of training in intelligent education tools. This capability gap becomes especially pronounced in international projects. In one notable case, a China-funded smart classroom initiative in Laos saw a 60% equipment idle rate just 3 months after launch due to local teachers’ inability to operate the systems.

To address this issue, the UNESCO International Institute for Educational Planning (IIEP) launched the “Digital Resilience for Teachers” initiative. The program focuses on contextualized professional development, aiming to close the capacity gap through culturally sensitive, needs-based training modules. This reflects a broader shift from simply deploying digital tools to building inclusive, adaptive ecosystems that empower educators and learners alike.

In sum, the digital divide is no longer just about access–it is stratifying across multiple levels: infrastructure, cognition, participation, and capacity. If left unaddressed, these layered inequalities risk transforming AI from a tool of educational equity into a mechanism of global stratification.

5 Pathways forward: innovative approaches to multi-level collaborative governance

5.1 Building an inclusive technological ecosystem

Overcoming technological barriers in cross-border higher education must go beyond expanding access–it requires an explicit engagement with the human rights implications of advanced artificial intelligence. While open and inclusive frameworks like the EU-led OpenAIRE project and Asian licensing initiatives have made AI resources more accessible to the Global South, their design and deployment often overlook critical rights-based considerations. The right to privacy, freedom from algorithmic discrimination, and cultural self-determination are frequently compromised when technological inclusion is pursued without ethical guardrails.

For example, modular AI teaching devices adopted by Nigerian universities enable educational delivery in low-connectivity settings, but without robust oversight, these devices may capture and process student data in ways that bypass meaningful consent. The push for offline AI functionality–though crucial for equity–must therefore be accompanied by strong human rights protections such as data minimization, local data stewardship, and transparency about algorithmic functions.

Similarly, emerging transnational computing networks that redistribute infrastructure and data responsibilities–like those inspired by the European Science Cloud–raise concerns about data sovereignty and the equitable treatment of educational partners. If developing countries contribute sensitive data (e.g., student behavior, learning analytics, cultural materials), but lack agency over how such data is processed or commercialized, the risk of digital exploitation increases. Here, directly addressing human rights means embedding principles like “fair benefit sharing,” “algorithmic explainability,” and “participatory governance” into all resource-sharing agreements (Greene et al., 2019; Wenzel, 2023).

The development of culturally inclusive AI models also presents both opportunity and risk. Multilingual AI systems that accommodate non-Western modes of reasoning have improved engagement and performance in Kenya, Indonesia, and beyond. However, if these systems are later standardized through global platforms without sustained community input, they risk reproducing a new form of epistemic domination. Actively protecting the right to cultural identity, educational pluralism, and linguistic equity must be part of any initiative claiming inclusivity (Mohammed and Prasad, 2023).

In short, building an inclusive AI ecosystem for education is inseparable from protecting fundamental human rights. Technological justice cannot be achieved by access alone; it requires active and continuous safeguards against surveillance, discrimination, and marginalization. Engaging directly with these human rights impacts–through policy design, platform governance, and field-level accountability–is essential to ensure that AI serves as a tool of empowerment rather than control.

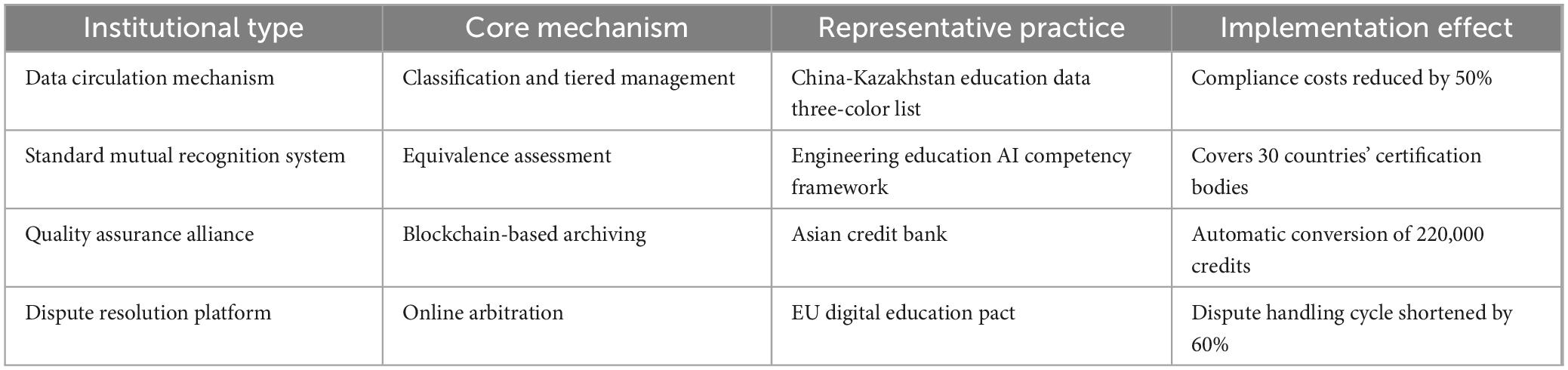

5.2 Innovating systems for mutual recognition

A key step toward enabling responsible and scalable cross-border educational cooperation is the establishment of differentiated mechanisms for data circulation–not only to improve efficiency and interoperability, but also to safeguard fundamental human rights. As AI increasingly governs the flow and analysis of educational data, rights such as privacy, autonomy, and non-discrimination must be actively protected through robust design and policy.

One promising model involves implementing tiered data governance frameworks based on data type and sensitivity. For example, teaching behavior data may require strict localization to prevent behavioral profiling; academic research data may be shared internationally under strong ethical oversight; and cultural heritage data should only cross borders with prior consent and fair attribution, protecting the cultural rights of source communities. In a pioneering case, the China–Kazakhstan digital education partnership introduced a “three-tier data list” model: whitelisted metadata flows freely, gray-listed anonymized learning data is shared conditionally with audit mechanisms, and blacklisted archives are protected from export. This model has become a rights-aware template, balancing openness with ethical safeguards (Dort et al., 2022).
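The tiered logic described above can be illustrated with a minimal Python sketch. It is not a description of the China–Kazakhstan implementation: the category names, the `TransferRequest` fields, and the default-deny rule for unlisted categories are all hypothetical assumptions introduced for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    WHITE = "white"  # metadata: flows freely
    GRAY = "gray"    # anonymized learning data: conditional, audited
    BLACK = "black"  # protected archives: never exported


# Hypothetical mapping from data category to tier (illustrative only).
TIER_BY_CATEGORY = {
    "course_metadata": Tier.WHITE,
    "anonymized_learning_data": Tier.GRAY,
    "protected_archive": Tier.BLACK,
}


@dataclass
class TransferRequest:
    category: str
    anonymized: bool = False
    audit_approved: bool = False


def may_export(request: TransferRequest) -> bool:
    """Return True if the request may cross the border under this sketch's rules."""
    # Assumption: categories that are not listed fall into the most restrictive tier.
    tier = TIER_BY_CATEGORY.get(request.category, Tier.BLACK)
    if tier is Tier.WHITE:
        return True
    if tier is Tier.GRAY:
        # Gray-listed data needs both anonymization and an audit sign-off.
        return request.anonymized and request.audit_approved
    return False
```

Defaulting unknown categories to the blacklist is one possible design choice; it favors protection over openness whenever the classification is incomplete.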

Mutual recognition and interoperability of academic standards are essential, but they must also protect learner dignity and identity. For instance, while the International Engineering Alliance (IEA) bridges China’s “New Engineering” and Western accreditation systems, such frameworks should ensure that student data are not subject to discriminatory treatment or opaque algorithmic profiling. Similarly, the blockchain-based “credit bank” launched by the Asian Universities Alliance should guarantee fairness in academic mobility by embedding algorithmic explainability, transparency, and dispute resolution mechanisms.

Crucially, governance models must incorporate human rights protections at every level. National agreements should go beyond IP rights to include digital rights protocols (e.g., against coercive data localization or AI surveillance); industry bodies must align technical standards with international human rights norms; and universities must ensure participatory governance through student consent, faculty oversight, and transparent accountability.

The China–Africa AI Education Community provides a case in point. While it shows promise in inclusive collaboration and infrastructure sharing, its long-term success depends on incorporating ethical review boards, student data protection charters, and redress mechanisms for affected individuals. Without such rights-based frameworks, even well-meaning South–South cooperation risks reproducing asymmetries and digital dependencies.

In sum, institutional interoperability in the AI era must not merely connect systems–it must uphold the dignity, autonomy, and cultural integrity of every learner. Embedding human rights principles into the design and governance of cross-border AI education systems is not optional; it is essential for legitimacy, sustainability, and justice (Liu et al., 2023).

Together, these strategies form a blueprint for institutional interoperability in the digital age–grounded in differentiated data governance, cross-system standard alignment, and adaptive, multi-stakeholder collaboration.

5.3 Deepening south–south technological cooperation

Innovation among developing countries holds unique strategic advantages. Compared to traditional North–South partnerships, South–South cooperation is often less constrained by ideological divergence and more focused on practical, context-specific solutions. A prime example is the China–ASEAN Smart Agriculture Education Network. In this initiative, Yunnan Agricultural University provided AI-based pest recognition technology; Thai universities contributed tropical crop data; and Philippine institutions designed miniaturized hardware. The result was a cost-effective field teaching kit priced at just 15% of comparable Western products–demonstrating the efficiency and contextual relevance of South–South collaboration. However, such collaborations must also embed protections for data ownership, fair attribution, and the right of local communities to benefit from technologies derived from their knowledge and resources.

Establishing demand-driven technology transfer centers is a critical next step. With support from UNESCO, Ethiopia has launched an East African AI education hub focused on digital transformation in agriculture, healthcare, and primary education. The hub operates under a “needs-driven” model: Rwanda identified a demand for frontline medical training; Kenya contributed medical imaging datasets; and China provided AI algorithms. Together, they co-developed an offline-capable AI-assisted diagnostic training system. To ensure equitable participation, these projects must integrate rights-based design principles–such as local control over data governance, informed community consent, and algorithmic transparency–so that technology transfer does not become a form of extractive digital dependency (Sardinha et al., 2018; Dang et al., 2021).

Promoting digital public goods (DPGs) is another essential dimension of South–South cooperation. The Indian government has open-sourced its national AI education platform “Bhashini,” offering multilingual education modules in 22 regional languages. China, in turn, has opened its National Smart Education Platform interface, enabling free access to course resources for partner countries. Globally, education accounts for 38% of all registered DPG projects. Yet, to qualify as true public goods in a human rights sense, DPGs must protect user privacy, uphold cultural sovereignty, and enable users–especially marginalized learners–to influence how platforms evolve. Long-term sustainability also remains a challenge: only 15% of these projects currently receive sustained funding. To address this, G20 countries should consider establishing a “Global EdTech Fund” to provide reliable financial channels for high-quality digital public goods, particularly those developed and used by countries in the Global South. This fund should include accountability benchmarks for rights protection, equity impact, and participatory evaluation by end users (AlShebli et al., 2024; As’ad, 2024).

In sum, deepening South–South technological cooperation means moving beyond traditional hierarchies and toward a future of shared design, contextual relevance, and mutual empowerment. With the right mechanisms, South-led innovation can become a powerful driver of global educational equity.

5.4 Reconstructing a shared humanistic value consensus

Embedding the principle of cultural diversity into educational AI is essential for ensuring inclusive and meaningful global learning experiences. A compelling example comes from Malaysia’s Ministry of Education, which recontextualized imported AI-based curricula by assembling local scholars to revise case libraries. They integrated elements such as Malay folktales and traditional crafts, raising the perceived cultural relevance of the courses from 42% to 79%. This initiative demonstrates that safeguarding cultural rights in AI education requires more than translation–it demands structural representation of non-dominant knowledge systems within algorithmic content. On a deeper level, efforts are underway to construct multicultural training datasets. A trilateral initiative among Saudi Arabia, Egypt, and China has produced an Islamic civilization knowledge graph to supply non-Western content for large-scale educational models, helping to correct cultural bias in algorithmic training corpora (Masters, 2019). Such efforts should be accompanied by governance mechanisms that prevent cultural appropriation and ensure local communities retain control over how their knowledge is digitized, contextualized, and used.

Reframing the ethical relationship between teachers, students, and AI systems is equally critical. The European Union promotes the “human-in-the-loop” principle, which stipulates that AI should only assist–not replace–educators in decision-making processes. For instance, in France, AI tools are prohibited from automatically generating student evaluations, and in Finland, any algorithmic recommendation must include a clear pedagogical rationale. In China, the “Human-led, Technology-empowered” framework emphasizes the primacy of education values over technical efficiency. These models reflect a growing recognition that teacher autonomy is a foundational right in education, and that algorithmic authority must never override professional judgment. As part of this model, universities such as Beijing Normal University have introduced mandatory “AI Ethics Workshops” for educators, where teachers learn to identify algorithmic bias and uphold fairness in technologically mediated learning environments (Dave and Patel, 2023; Boscardin et al., 2024). Embedding such training in professional development standards helps operationalize the right to fair and non-discriminatory education in AI-enhanced settings.
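As a rough illustration of the human-in-the-loop principle, the following Python sketch shows one way a system could require a pedagogical rationale for every algorithmic recommendation and leave the final decision with the teacher. The class and function names are hypothetical and do not correspond to any national system mentioned above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    student_id: str
    suggestion: str
    rationale: str  # pedagogical rationale supplied with the suggestion


@dataclass
class Decision:
    recommendation: Recommendation
    decided_by: str    # always a human educator in this sketch
    final_action: str


def review(rec: Recommendation, teacher: str,
           override: Optional[str] = None) -> Decision:
    """Record the teacher's decision; the AI suggestion is only advisory."""
    # Assumption: a recommendation without a rationale is never shown as actionable.
    if not rec.rationale.strip():
        raise ValueError("recommendation rejected: no pedagogical rationale")
    # The teacher may accept the suggestion or replace it with their own action.
    final_action = override if override is not None else rec.suggestion
    return Decision(rec, teacher, final_action)
```

In this design nothing takes effect without a named educator attached to the decision, which mirrors the idea that AI assists rather than replaces professional judgment.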

Toward a global consensus, the United Nations’ Guidance for Human-Centered AI Governance proposes five foundational principles for education-related AI: transparency, fairness, accountability, privacy protection, and human autonomy. However, these principles must be localized and interpreted within specific cultural contexts. African educators have emphasized communalism, calling for AI to serve collective well-being rather than purely individual achievement. In Latin America, educators have drawn upon traditions of critical pedagogy, opposing AI implementations that risk entrenching social hierarchies. These regional insights highlight the need for participatory governance in AI deployment–where teachers, students, and civil society actors shape not just the use of AI, but the values and norms encoded in it. They also underline the importance of a flexible global framework–one that maintains universal ethical principles while allowing for diverse implementation models (Table 3).

Ultimately, reconstructing a shared humanistic value consensus in AI-enabled education requires more than high-level declarations. It demands culturally grounded datasets, educator empowerment, and ethical pluralism. Only by acknowledging the moral agency of both humans and communities can educational AI systems foster genuine inclusion and global solidarity.

6 China’s role: practical explorations as a responsible global actor

6.1 Technological empowerment: supplying digital public goods in education

China has actively participated in global education governance through technological assistance and platform sharing. Within 1 year of its launch, the international version of the National Smart Education Public Service Platform provided access to over 143,000 free resources worldwide. Among them, the vocational education module known as the “Luban Workshop” has been adopted by institutions in more than 60 countries. The platform operates on a “core platform + localized mirroring” architecture: in Egypt, it integrates with the national student registration system; in Pakistan, courses are equipped with Urdu subtitles; in Laos, offline servers enable access in remote regions. This model of “unified standards with flexible localization” has led to over 8.7 million monthly active users globally (Fang et al., 2020). While impressive in scale, such platforms must incorporate safeguards to ensure cultural appropriateness, user consent, and rights-respecting use of learner data. Open access alone is not sufficient–platforms must also be subject to public accountability and transparent data governance protocols.

China also exports context-specific technological solutions tailored to the infrastructure conditions of developing countries. Innovations include low-power education terminals with up to 72 h of standby time, lightweight AI models requiring less than 100 MB of memory, and fully offline learning systems. For example, Huawei has designed solar-powered AI classrooms for rural schools in Cambodia. These combine photovoltaic energy with edge computing, enabling stable digital instruction even in areas with unreliable electricity supply. Such innovations illustrate the importance of designing AI systems that are not only technically efficient but also inclusive and rights-aligned–respecting the autonomy of local educators and preventing reliance on opaque, externally controlled technologies.

Beyond regulatory divergence and issues of data governance, it is also imperative to consider the broader human rights implications of deploying AI technologies in cross-border educational ecosystems. Chan and Lo (2025) offer a comprehensive analysis of how AI-driven surveillance systems–such as facial recognition, predictive policing, AI-powered drones, and smart sensors–pose significant threats to fundamental rights, particularly the right to privacy. Their study illustrates how these technologies, often operating with limited transparency and accountability, may lead to structural discrimination and the erosion of civil liberties, especially when used in educational or institutional settings. The concept of a “digital Panopticon” introduced in their work underscores the normalization of constant monitoring, which could severely compromise academic freedom, student autonomy, and democratic participation in global higher education partnerships. This raises urgent questions for AI education governance: Who owns the data? Who audits the algorithms? How are power and oversight distributed across borders? The authors call for legal and ethical reforms including the recognition of a “right to reasonable inferences,” privacy-by-design principles, algorithmic transparency, and stronger human oversight. Integrating such perspectives into cross-border AI education frameworks is not optional–it is a normative imperative to ensure that the expansion of AI in international education enhances learning and connectivity while safeguarding human dignity, liberty, and fundamental rights across diverse geopolitical contexts (Chan and Lo, 2025).

In the field of vocational education, AI-based “Chinese + Vocational Skills” programs have trained high-speed rail technicians in Thailand and textile machinery operators in Ethiopia. These initiatives facilitate both technology transfer and talent development, contributing to long-term capacity building in partner countries (Li and Qin, 2023).

Through such efforts, China demonstrates how scalable digital public goods–rooted in practical adaptability and inclusive access–can support equitable and sustainable development in global education.

6.2 Rule-shaping contributions: China’s wisdom in global governance

China has taken an active role in shaping the global governance architecture for artificial intelligence. In 2023, it introduced the Global Initiative for AI Governance, promoting the principles of “human-centered” development and “AI for good,” with a strong emphasis on fairness and inclusiveness in the field of education. To move beyond aspirational language, the implementation of these principles must include mechanisms to monitor AI’s real-world effects on academic freedom, data protection, and educational equity. Under the United Nations framework, China also sponsored the resolution on “Enhancing International Cooperation on AI Capacity Building,” which garnered support from over 140 countries and created new channels for developing countries to access critical AI technologies (Ng et al., 2023). These efforts must now be matched with safeguards that ensure such access does not come at the cost of user autonomy, local control, or ethical integrity.

China has also been a proactive contributor in innovating cross-border cooperation frameworks. In the field of data governance, it has piloted a “tiered and categorized” data flow mechanism. For example, within the Shanghai Free Trade Zone, educational and research data may be selectively transmitted across borders under regulated conditions. Such frameworks should be anchored in enforceable privacy standards, consent protocols, and user oversight mechanisms to prevent misuse or coercive data practices. In the area of technical standards, China’s engineering education accreditation system has been formally included in the Washington Accord, laying a foundation for the global mobility of AI engineering professionals. This mobility must be accompanied by mutual recognition of rights, ethical training standards, and protections for students and professionals working in transnational contexts. Regarding intellectual property rights, the “Luban Workshop” vocational education model employs a tripartite approach: original content developed by Chinese institutions, localized adaptation by partner countries, and revenue-sharing mechanisms that ensure equitable benefits for all stakeholders (Zhang et al., 2024). To ensure fairness, such models must also incorporate transparent licensing terms, culturally respectful adaptation protocols, and inclusive benefit-sharing agreements–especially where indigenous or community-generated knowledge is involved.

Through these contributions, China is not merely participating in global governance–it is actively helping shape a more balanced, inclusive, and development-oriented international order for emerging technologies like AI. The next frontier lies in embedding accountability, participation, and human rights protections as foundational elements–not just aspirations–of this evolving global AI architecture.

6.3 Ethical governance and human rights safeguards in AI-enabled education

Building on recent scholarship such as “The Impact of Advances in Artificial Intelligence on Human Rights,” this study reinforces the argument that ethical governance of AI in cross-border education cannot be decoupled from broader human rights considerations. The referenced work provides a critical and comprehensive examination of how AI-driven surveillance technologies–including facial recognition, predictive algorithms, and intelligent sensors–pose unprecedented challenges to fundamental rights, particularly the right to privacy. It identifies alarming gaps in current legal and ethical frameworks, where opacity, algorithmic bias, and lack of human oversight risk normalizing systemic infringements on civil liberties (Sharma and Jindal, 2023).

These findings are particularly relevant in the context of international education cooperation, where AI platforms often operate across jurisdictions with uneven regulatory protections. When data generated by students, teachers, or institutions traverses national borders–whether through learning analytics, cloud-based platforms, or automated assessment tools–it is essential to establish globally agreed-upon safeguards that prioritize individual autonomy, consent, and non-discrimination.

In light of this, our analysis highlights the need to embed the principles of transparency, fairness, and human oversight–emphasized in the aforementioned study–into all levels of cross-border AI deployment. This includes designing interoperable platforms with privacy-by-design architecture, enforcing data localization protocols sensitive to sovereignty concerns, and ensuring that educational AI systems do not disproportionately disadvantage vulnerable populations such as linguistic minorities, rural learners, or politically marginalized communities. By aligning with the normative insights of this reference, this paper contributes to advancing a global governance agenda that treats educational data not merely as a commodity, but as a site of rights, agency, and collective dignity.

The concerns raised by Chan and Lo (2025) regarding the emergence of a “digital Panopticon” offer a critical lens through which to examine the broader implications of AI-driven educational infrastructure. While large-scale digital platforms and data-intensive learning systems can enhance pedagogical efficiency and transnational access, they also risk normalizing constant surveillance within educational environments. This is particularly salient in cross-border partnerships, where asymmetries in technological power and regulatory maturity may result in opaque data practices and limited avenues for redress (Sharma and Jindal, 2023).

By drawing upon the metaphor of the “digital Panopticon,” Chan and Lo emphasize how the pervasive use of facial recognition, predictive monitoring, and smart sensors in educational settings can erode students’ sense of autonomy, inhibit academic freedom, and reinforce behavioral conformity. These dynamics are not merely technical or legal concerns–they are deeply cultural and psychological. The routinization of surveillance in the name of performance optimization may cultivate a climate of internalized monitoring, where learners adapt their behavior not based on intrinsic motivation or critical engagement, but under the implicit gaze of algorithmic systems.

This analysis resonates with our own findings, which highlight that the expansion of digital education infrastructure, particularly when deployed without adequate safeguards, can amplify social inequalities and institutionalize surveillance logics. Therefore, a truly ethical and human-centered approach to AI in cross-border education must go beyond infrastructural provisioning and algorithmic efficiency. It must actively address the social and cultural consequences of long-term monitoring–by ensuring transparency, student agency, and robust accountability mechanisms that transcend national boundaries.

6.4 Infrastructure support: a new cornerstone for education digitalization

China is actively bridging the global digital divide through international cooperation that links “new infrastructure” with education. In Southeast Asia, China has supported the construction of regional education cloud nodes, delivering computing services to countries such as Laos and Myanmar. In Africa, Huawei has played a central role in developing a “smart education backbone network,” bringing internet access to over 3,000 schools. These initiatives place a strong emphasis on capacity transfer: for example, the Djibouti Teacher Training Center is equipped with AI-powered teaching systems developed in China and is expected to reach a 90% local operations and maintenance rate within 5 years (Talib et al., 2019). To align with a rights-based development agenda, such infrastructure must not only promote access but also give local actors control over platforms, respect educational sovereignty, and provide transparent accountability for digital outcomes.

Innovative financing mechanisms further underpin these infrastructure efforts. The Asian Infrastructure Investment Bank (AIIB) has launched a dedicated “New Infrastructure for Education” loan program, offering interest rates approximately 40% below commercial benchmarks. Additionally, the China–ASEAN Digital Education Fund employs a blended financing model: 30% from government sources, 50% from private enterprises, and 20% from international organizations. This structure effectively addresses the financing bottlenecks faced by developing countries, making large-scale educational infrastructure projects more feasible and sustainable (Platas, 2023; Adhikari et al., 2024). However, such financing strategies must also include enforceable social safeguards–such as protections against digital debt dependence, conditionalities that support equitable education outcomes, and public audit mechanisms for transparency and stakeholder participation.

Through the integration of digital infrastructure, financial innovation, and local capacity building, China is helping lay the foundational architecture for inclusive, future-ready education systems in the Global South. Yet for this architecture to be rights-respecting, human agency, local consent, and cultural diversity must be integral to its design and deployment–not secondary considerations.

Recent empirical studies have measured the tangible benefits of AI-assisted learning, especially in writing proficiency and cognitive load reduction. Chan et al.’s (2024) large-scale randomized controlled trial involving 918 Hong Kong undergraduates reported that students receiving GPT-3.5-generated feedback improved their writing scores by an average of 12% compared to controls (p < 0.01). Survey results further indicated a 20%–25% increase in learning motivation and engagement among participants. Complementing these findings, a German study that examined biometric indicators–eye-tracking and functional near-infrared spectroscopy (fNIRS)–during analytical writing tasks found that AI-assisted students maintained equivalent quality to controls but exhibited a 15% reduction in cognitive strain and a 10% faster task completion rate. These converging results support our argument that AI-driven feedback mechanisms can boost learning efficiency, reduce student effort, and support sustainable, learner-centered education, which is particularly relevant for multilingual, digitally mediated, international contexts (Yu et al., 2025). While these results are promising, such studies must also assess whether algorithmic feedback respects student privacy, reinforces or mitigates cognitive inequities, and preserves educator agency in evaluating student performance.

However, evidence from other disciplines underscores the contextual variability of AI’s effectiveness. A randomized trial in pharmacy education assessed the impact of AI-generated guidance during OSCE-style clinical exams and found no statistically significant differences in performance outcomes or anxiety levels between AI-assisted and control groups. This suggests that while generative AI may excel in structured writing tasks, its benefits may be less pronounced in experiential learning or high-stakes professional assessments (Huespe et al., 2023). The divergence across these domains highlights the need for discipline-sensitive AI integration strategies. It reinforces our recommendation that AI interventions in cross-border higher education should be tailored to task complexity, cultural-linguistic context, and the balance between technological assistance and human oversight. “One-size-fits-all” deployment, by contrast, can exacerbate educational inequalities, which is why human oversight and cultural alignment remain essential to preserving fairness and pedagogical quality.

Taken together, the findings of this manuscript align with and extend existing empirical research by situating AI-enabled education within a broader geopolitical and policy-driven framework. While prior studies such as those by Chan et al. (2024) and Kramer et al. (2024) have provided micro-level evidence of generative AI’s pedagogical benefits–ranging from improved writing outcomes to reduced cognitive load–this study offers a macro-level perspective that foregrounds the strategic, regulatory, and ethical dimensions of AI deployment in cross-border higher education. By integrating policy analysis, global case studies, and thematic synthesis, this manuscript contributes a multidimensional framework that not only accounts for individual learning enhancements but also interrogates the institutional and geopolitical constraints that shape their implementation. This broader lens reveals that the transformative potential of AI in education is neither automatic nor uniform, but highly contingent upon governance systems, infrastructural disparities, and value-driven algorithmic design. As such, the present study complements existing literature by arguing that the future of international educational collaboration requires not only technological innovation but also inclusive governance, cultural sensitivity, and sustained global cooperation. It also shows that technological benefits must not obscure questions of digital equity, student agency, and systemic accountability; without attention to these, transformative potential may translate into structural harm.

This study contributes a novel perspective by examining the intersection of artificial intelligence, geopolitics, and cross-border higher education through a multidimensional and policy-oriented lens. One of its distinctive strengths lies in the integration of both Western and non-Western literature, combined with illustrative case studies from diverse geopolitical regions such as China, Southeast Asia, and Africa. Additionally, the manuscript offers forward-looking policy recommendations grounded in emerging trends, including platform interoperability, data sovereignty, and ethical AI governance.

Nevertheless, the review is not without limitations. As a qualitative synthesis, it lacks original empirical data, and the rapid evolution of both AI technology and geopolitical contexts means that some findings may soon require updating. Furthermore, while efforts were made to ensure balanced geographic representation, there remains an overrepresentation of Chinese and Anglophone sources, and certain regions such as South America and Central Asia are underexplored. This unevenness reflects broader structural imbalances in global knowledge production, reinforcing the need for inclusive research collaboration and capacity building that empowers scholars from the Global South to co-shape digital education policy and AI design agendas. Future research could benefit from a more systematic meta-analysis of empirical studies and greater engagement with regional stakeholders to capture diverse educational realities in the Global South.

In South America, the São Paulo Virtual University (Univesp) in Brazil has significantly expanded digital access to higher education by integrating AI-powered learning management systems. As of 2023, the platform had enrolled over 200,000 students across all states of Brazil, offering STEM and vocational modules via open-source platforms. Although not globally syndicated like China’s Smart Education Platform, Univesp has collaborated with UNESCO to localize digital infrastructure standards, making it a potential regional model for scalable, inclusive online education (Barilli et al., 2011). The case of Univesp shows that AI integration need not follow extractive models dominated by global platforms–it can be guided by public values, regional ownership, and open-source principles that center equity and transparency.

Meanwhile, in Central Asia, Kazakhstan has made strides in implementing digital infrastructure through the Kundelik.kz platform, which connects over 300,000 teachers and 2.5 million students nationwide. A 2022 World Bank assessment reported improvements in parental engagement and student attendance linked to the platform’s AI-enabled communication tools. These regional cases–though often underreported–demonstrate the diverse modalities through which digital education platforms are evolving beyond the Global North–South binary (Abdigapbarova et al., 2025). Highlighting these decentralized, plural models helps resist technological standardization and affirms the right of regions to develop education ecosystems that reflect their own social, linguistic, and epistemological priorities.

7 Conclusion

Artificial intelligence and geopolitics are jointly reshaping the architecture of cross-border higher education. While AI offers transformative opportunities–including virtual classrooms, personalized learning, and real-time international collaboration–geopolitical frictions over data sovereignty, technological standards, and normative values are increasingly fragmenting educational ecosystems.

To guide the future of AI-enabled international education, we propose three foundational principles: AI must augment–not displace–human educators; global openness must be reconciled with national digital sovereignty; and technological innovation must serve global equity and accessibility. Crucially, these goals cannot be achieved through technical design alone–they require a governance architecture that embeds privacy-by-design, algorithmic transparency, and inclusive oversight mechanisms.

Such governance must be collaborative and rights-based. Engaging policymakers, technologists, educators, and civil society is essential to ensure that AI deployment respects human dignity, autonomy, and academic freedom–particularly across diverse geopolitical contexts. These protections are not peripheral; they are central to maintaining trust, justice, and ethical legitimacy in AI-powered education.

China’s evolving role–through digital public goods, global rule-making, and infrastructure partnerships–demonstrates the potential of inclusive and development-oriented cooperation. By integrating both Western and non-Western perspectives and adopting a forward-looking, policy-based framework, this study underscores the need for holistic, responsible, and globally relevant analysis. As affirmed at the 2025 World Artificial Intelligence Conference, “Only through shared dialogue and responsible governance can AI truly empower the future of education.”

Author contributions

YZ (1st author): Project administration, Visualization, Resources, Formal Analysis, Validation, Data curation, Conceptualization, Supervision, Investigation, Methodology, Writing – original draft, Writing – review & editing, Software. ZZ: Methodology, Conceptualization, Data curation, Investigation, Writing – original draft, Visualization, Project administration. WX: Validation, Project administration, Supervision, Data curation, Writing – original draft, Conceptualization, Investigation. YZ (4th author): Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

Our heartfelt appreciation goes out to every author who played a role in contributing to this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

AI, Artificial intelligence; OER, Open Educational Resources; LLMs, Large language models; NSF, National Science Foundation; GDPR, General Data Protection Regulation; DPGs, Digital Public Goods; GPAI, Global Partnership on Artificial Intelligence; IEA, International Engineering Alliance; AIIB, Asian Infrastructure Investment Bank.

References