Abstract

Introduction:

The learning process is characterized by its variability rather than linearity, as individuals differ in how they receive, process, and store information. In traditional learning, taking into consideration the individual differences between students can be difficult. As a result, many talented students may fail because their learning speed does not align with the assessment requirements.

Objectives:

In this study, we propose the efficiency algorithm, a new artificial intelligence-based assessment method for adaptive learning (AL), to evaluate differences in students’ learning speed and help ensure that graduates become competent professionals in their discipline.

Methods:

Our assessment method is based on how effectively students apply the knowledge they have acquired to complete tasks. It uses four parameters that address how a student completes a task rather than whether the task is completed: information search, information evaluation, information processing, and information communication. Together, these parameters constitute the basic components of our efficiency algorithm.

Key findings:

Our results showed that, by using the Naïve Bayes algorithm, we can determine with high accuracy (93%) in which part of the learning process (information search, information evaluation, information processing, or information communication) the student encounters difficulties.

Contribution:

Our proposed approach helps in designing personalized learning plans that directly target individual weaknesses.

1 Introduction

In the digital age, digital technologies have entered every aspect of our lives and changed the way we work. Data and information are available anywhere and anytime, leading to changes in all areas of our lives, and education, teaching, and learning are also affected. As in many other fields, the introduction of artificial intelligence (AI) in education has revolutionized teaching and learning. Teaching has shifted from a teacher-centered to a student-centered model, and traditional learning methods no longer align with labor market requirements. AI and digital technologies have enabled adaptive learning (AL), in which each student follows a learning process and curriculum matched to their own abilities and tendencies. In adaptive learning, understanding students’ needs is essential for adjusting lectures, assessment tools, and course objectives to meet those needs effectively. The shift from teacher-centered to student-centered education may burden teachers with extra work, but AI tools simplify the new tasks that arise with adaptive learning.

Personalized learning refers to an educational strategy in which all learning activities—from objectives to the sequence of content and instructions—can vary according to students’ needs. The objective of adaptive learning is to generate learning experiences that take into consideration the individual differences of students.

In the majority of European, industrialized, and advanced countries, universities and academic institutions are the primary counterparts of the industrial sector and the labor market. Accordingly, the industrial sector and the labor market are the primary funders of universities, as companies that need employees seek partnerships with universities and educational institutions.

The key question is: what is the role of artificial intelligence in this scenario? In the adaptive learning model discussed in this article, student assessment is continuous, so a large amount of data on each student’s learning process is produced during class. These data are difficult to analyze manually without AI tools. AI tools help teachers analyze the data collected about students to predict adaptive learning paths and courses for each student within the same curriculum and discipline. This approach aligns the learning process with each student’s capabilities, enabling them to achieve proficiency in their specialization. Adaptive learning helps prepare students for analytical thinking and problem-solving, and it provides quality education and good learning opportunities for all. Competent graduates are needed to reduce the gap between university outcomes and labor market requirements. The employment ratio of graduates is an essential performance indicator: a high unemployment ratio among graduates indicates that university outcomes do not align with labor market requirements. The only independent variable in this equation that we can control is the student. We live in an era defined by a conflict of competencies; therefore, we need graduates with broader competencies, greater skills, and a strong knowledge base.

In this article, we explore how artificial intelligence can help change assessment methods in higher education.

A previous study (eLearning Industry, 2023) defines adaptive learning as a learner-centric approach that provides learners with a customized, flexible learning journey aligned with their passions. The study explains how adaptive learning changes the learning process and goals to meet learners’ needs, adjusting the pace at which students learn, the material they use, the technologies employed, and the way they are taught. AI technology helps predict how people will learn; therefore, AI can be used in adaptive learning to analyze students’ data, such as their performance, strengths, and weaknesses, and to provide each student with a corresponding pathway and appropriate content. An individual learning path ensures that students receive appropriate challenges and support to maximize their learning outcomes. To address unemployment among graduates, we must reduce the gap between university outcomes and labor market requirements, and adaptive learning is an important step in this direction. Another study (Randieri, 2024) examines how AI has revolutionized education, drawing on a survey of the perception of AI in education: 88% of respondents strongly agreed that AI is significant in education and an additional 9% agreed, whereas 14% disagreed and 5% strongly disagreed. The study’s findings support our approach: the use of AI in education does not replace human teachers, and the study emphasizes the essential role of human emotional and empathetic engagement in teaching. AI can support educational materials, assessments, and the analysis of student performance. Another study (ElevenLabs, 2024) presents how AI is used in education and explains how it can solve educational challenges that traditional learning cannot. AI improves concentration to enhance comprehension, encourages learning, and develops students’ writing skills. It can also reduce teachers’ workload by providing tools for assessment and exam generation, and improve comprehension by simplifying complex content and providing multimedia tools for presenting it.

In our study, we aim to transform the learning method for university students in order to reduce the gap between university outcomes and labor market requirements. We focus on changing assessment methods, based on the idea that the only factor in the learning process that we can truly control is the student, who needs motivation, encouragement, and new tools adapted to the new data era. The literature reveals a lack of statistical and quantitative studies that focus on identifying the weak points of individual undergraduate students. We therefore aim to use an online platform that helps teachers collect data and measure students’ improvement.

Today, information is available everywhere; the educator’s role is to help students understand it and use it to produce useful knowledge, guiding them in accessing information and processing it in a useful manner. A previous study (Matthews, 2023) presents how AI tools improve student assessment outcomes. Using AI tools in assessment enables educators to process more information and data about learners and to develop new materials quickly, which can then be used to provide individualized student assessments. Adaptive learning helps educators evaluate students’ progress as they work through materials at their own pace. It allows the educator to take into consideration differences in students’ capabilities and to identify students’ weaknesses and strengths in specific topics. In addition, it allows the educator to perform continuous assessment and to receive real-time feedback on student performance, something that is difficult to achieve in traditional learning. By analyzing students’ weaknesses and strengths, educators can create an adaptive learning pathway. However, AI tools such as ChatGPT are seen as a threat to academic integrity in traditional higher education assessments (Rudolph et al., 2023). Perkins (2023) discusses concerns regarding AI detection tools, and closed-book exams have been discussed as an alternative assessment strategy (Rudolph et al., 2023). Furthermore, as generative AI continues to evolve, there is growing skepticism about the effectiveness of the assessment process, student achievement, and student motivation to learn (Balducci, 2024). Balducci (2024) proposes a student-centered approach to education based on three main concepts, namely authenticity, collaboration, and process complexity, which are considered critical to the security of assessments.
The concept of student assessment has changed from evaluating whether a student has completed the work to understanding how the student has completed it.

A previous study (Ideas Hub, 2023) discusses the impact of AI on student assessment in higher education, highlighting several ways in which AI can make education more efficient, including minimizing bias, providing adaptive learning feedback to students, fostering a growth mindset among students, and improving accuracy and reliability. Most research articles in the literature focus on the concept of adaptive learning, which promotes a shift away from the traditional closed-ended exam, designed to measure how students’ and teachers’ responses align with the standard defined by the institution, toward new assessment methods based on formative evaluation. These methods assess what students have learned in the past and how they will apply this learning in the future. In adaptive learning, students compare their performance with their own previous performance rather than with their peers. This strategy requires changes in learning behavior for both students and teachers (Black et al., 2004). Dai and Ke (2022) suggest using multimodal computing for assessment and feedback to improve existing assessments, which have various limitations, such as their inability to accurately evaluate how students apply the knowledge and skills they have learned in real-life contexts. The authors emphasize moving toward assessments that measure how well students master the knowledge and skills they have acquired.

Contrino et al. (2024) observed the impact of integrating adaptive learning (AL) with flexible, interactive technology (FIT) on learning outcomes and student achievement in online and face-to-face courses. They used the Cog Book platform to test student performance in both teaching scenarios, providing a quantitative evaluation of the impact of incorporating adaptive learning in higher education, a choice motivated by the lack of quantitative studies on the impact of AL on student performance in higher education. Their results show that, when FIT+AL or FIT alone was applied to students in the same semester (August–December) in two consecutive years, the grade point average was higher for FIT+AL than for FIT. Furthermore, in the second year, a comparison of FIT+AL in online and face-to-face courses showed that the percentage of passing students was higher in face-to-face courses than in online courses. The percentage of grades over 90 was also higher in face-to-face courses with AL than in online courses. Student performance improved in the second year owing to increased familiarity with the new methodology and its advantages. Integrating adaptive learning into undergraduate courses improves grades and the percentage of passing students, which in turn increases learner satisfaction, a key indicator of success. According to Martin et al. (2020), AL helps generate a personalized learning experience by taking into account students’ individual differences. This approach is designed to increase understanding of the material while measuring the results obtained through evaluation. Personalized learning experiences based on students’ previous knowledge help in collecting information about their progress.
du Plooy et al. (2024) examined the impact of personalized adaptive learning on academic performance and engagement in higher education, drawing on concepts such as learning preferences, behaviors, knowledge, academic achievements, and learning analytics. In 59% of the observed cases, students’ academic performance increased after personalized adaptive learning was implemented. The positive impact of AL was not limited to overall academic metrics; it also enhanced critical thinking skills and improved self-regulation strategies for learning. In addition, 36% of the observed cases showed that adaptive learning increased student engagement. The study concludes that personalized adaptive learning has a positive impact on teaching and learning outcomes. Ipinnaiye and Risquez (2024) examined the pedagogical effect of adaptive learning on student performance using two separate indicators: the amount of time spent studying and the number of completed assignments. Student performance was enhanced by the number of completed adaptive assignments, whereas the amount of time spent studying was negatively associated with performance, with a strong non-linear relationship between time spent and performance. The study concludes that the amount of time spent studying is not sufficient on its own to improve student performance; rather, it is the quality of study time that promotes deeper engagement with learning.

AI makes the learning process more effective and scalable, as it can assist in content generation, provide instant feedback and assessment, offer time flexibility, reduce costs, and support professional development. In addition, AI can help identify individual learners’ needs, create adaptive learning paths, and deliver instant, individualized feedback. AI in education can also keep track of learners’ progress, monitor their performance, and predict the next topic or step in the student’s academic life. The first step toward applying adaptive learning for undergraduate students is to clearly define its goal and objectives, understand learners’ needs and abilities, create adaptable content, and use adaptive assessments and dynamic evaluations. Feedback should then be gathered to improve or adjust AI content and technologies in a way that increases student motivation and encourages students to develop skills aligned with local, regional, and international labor market requirements. As with any new technology, adaptive learning offers advantages for students but may pose challenges for institutions, whose learning processes all need to be restructured.

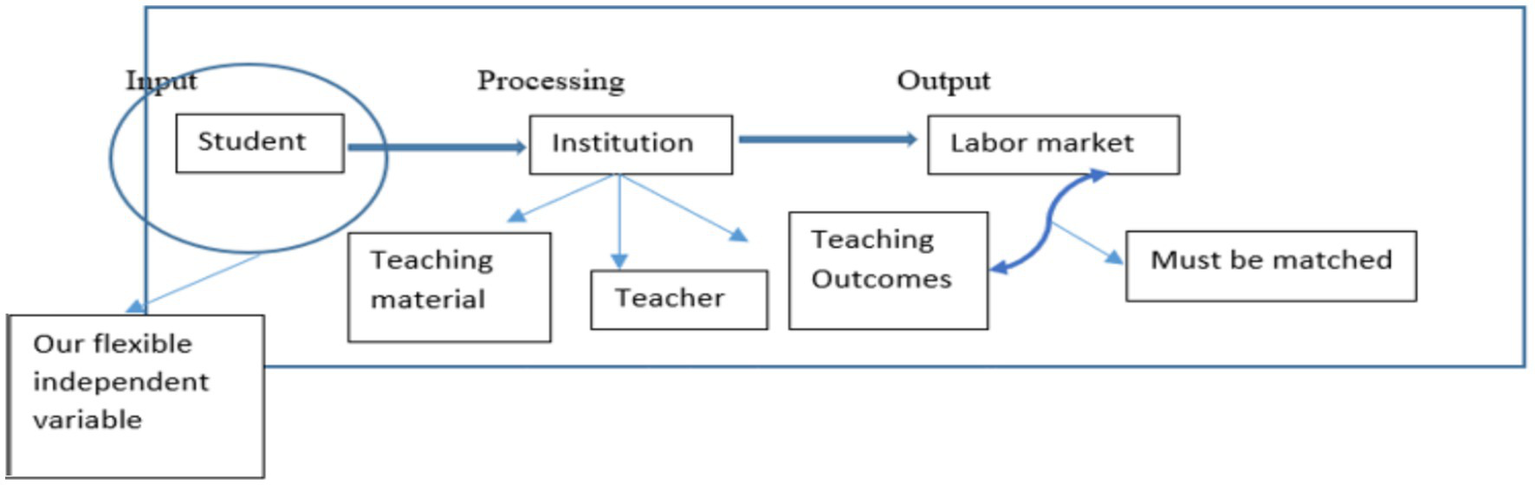

In this study, we propose an adaptive learning system based on continuous student assessment to predict the best pathway for each student according to their abilities and learning speed. The learning process depends on several elements: the students, who serve as the input; the institutions, which offer teaching materials, teachers, and teaching outcomes and represent the processing phase; and the output, which is the prepared graduate entering the labor market.

2 Materials and methods

In the learning process, the teaching material is stable and clear. The teacher, the most important element in the learning process, now has a new role: rather than only helping students acquire knowledge and skills aligned with labor market requirements, the teacher guides them in applying the knowledge and skills they have acquired during their academic life. The output of the learning process results from how the input (i.e., the student) is processed to produce the required output (graduate competences). In this scenario, the only independent variable that we can control is the student. Figure 1 represents our learning process paradigm.

Figure 1

The learning process paradigm.

The problem with traditional learning is that teaching outcomes do not match labor market requirements: the unemployment rate among graduates has increased, and graduates do not have the skills and competencies that the labor market needs. The question, then, is: what will change for students under our proposed methodology? Continuous assessment motivates them to pay more attention to their capabilities. The educational system in higher education needs to be restructured to shift the role of students from passive recipients to active participants in their learning.

Our proposed system aims to motivate students to increase their cognitive level to meet labor market requirements. This is achieved by identifying the weaknesses and strengths in their knowledge, skills, and competencies. Today, information is available to students from various sources and in multiple formats. The role of teachers is to teach students how to use this information to reach their goals. In the labor market, a student’s goal is to find a good job, which, from employers’ point of view, depends on the graduate’s efficiency.

2.1 Efficiency algorithm

Before explaining how our algorithm guides students toward mastering what they have learned, it is appropriate to define what an algorithm is: a sequence of logical steps followed to solve a problem. The steps in designing an algorithm are depicted in Figure 1, which defines the input, the processing phase, and the expected output.

Graduate efficiency is developed during the academic years by mastering four skills: information search, information evaluation, information processing, and information communication. When graduates know how to search for the information they need, distinguish relevant from irrelevant information, and identify the relationships among information, topics, and context, they can use the appropriate information to find the needed solution and express the results obtained.

The parameters described above are expressed in our efficiency algorithm using a linear equation as follows:

2.1.1 Efficiency or degree of mastery

$$\text{Efficiency} = \text{information search} + \text{information evaluation} + \text{information processing} + \text{information communication}$$

The linear equation above forms the core of our proposed system and defines the degree of mastery, which serves as a new assessment method. Information search measures how students search for the information they need; its value ranges from 0 to 10, where 0 indicates that the student is unable to search for information and 10 indicates that the student has searched for the information correctly. Information evaluation, which is part of the input of our algorithm, represents the constraints that separate one problem from another and measures how well students can relate the information found to the topic studied; its value also ranges from 0 to 10. Information processing measures students’ ability to use appropriate information in the correct context. Information communication measures students’ ability to present and describe the results obtained from the solution (output). Each of the four parameters is evaluated on a scale from 0 to 10, so the degree of mastery ranges from 0 to 40.

In our proposed algorithm, assessments are based on these four parameters, reflecting the fact that information today is available to all and emphasizing the importance of knowing how to use it.
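A minimal Python sketch of the degree-of-mastery equation, assuming each parameter is entered on the 0 to 10 scale described above. The dictionary keys, the range check, and the `weak_components` helper with its threshold of 5 are illustrative assumptions (the threshold mirrors the 0 to 10 midpoint mentioned in the conclusion), not part of the published method.

```python
# Sketch of the efficiency (degree-of-mastery) score.
# Assumed, not from the paper: key names, range validation, and the
# threshold of 5 used to flag weak components.
from __future__ import annotations

PARAMETERS = (
    "information_search",
    "information_evaluation",
    "information_processing",
    "information_communication",
)


def efficiency_score(scores: dict[str, float]) -> float:
    """Sum the four 0-10 parameter scores; the result lies in [0, 40]."""
    total = 0.0
    for name in PARAMETERS:
        value = scores[name]  # raises KeyError if a parameter is missing
        if not 0 <= value <= 10:
            raise ValueError(f"{name} must be in [0, 10], got {value}")
        total += value
    return total


def weak_components(scores: dict[str, float], threshold: float = 5.0) -> list[str]:
    """List the parameters scored below the (hypothetical) threshold."""
    return [name for name in PARAMETERS if scores[name] < threshold]


student = {
    "information_search": 8,
    "information_evaluation": 7,
    "information_processing": 4,
    "information_communication": 9,
}
print(efficiency_score(student))  # 28.0
print(weak_components(student))   # ['information_processing']
```

Returning the weak components alongside the total reflects the paper's aim of locating the stage of the learning process where a student struggles, rather than reporting a single number.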

2.1.1.1 Results and analysis

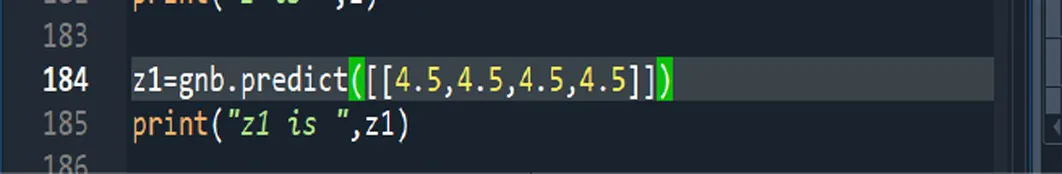

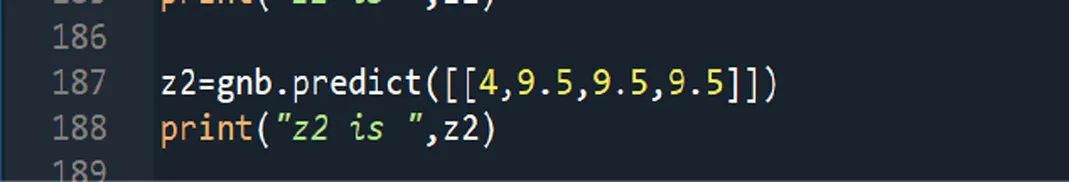

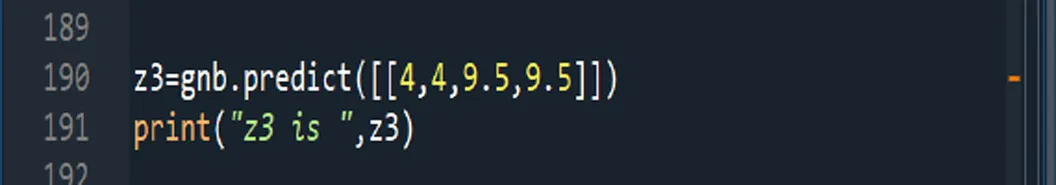

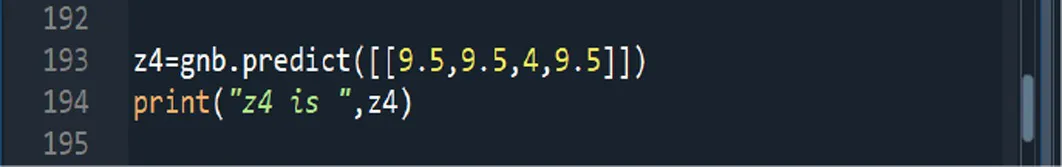

To evaluate the performance of our algorithm, we implemented it from scratch in Python. We used linear regression to determine whether the relationship between the independent variables (information search, information evaluation, information processing, and information communication) and the dependent variable (efficiency) was linear and to identify the parameter with the greatest impact on this relationship (Figure 2). The dataset was downloaded from the Mendeley Data repository (Mendeley Data Repository, n.d.; Köhler and González-Ibáñez, 2023) and is distributed under a CC BY 4.0 license. The data were collected by the Department of Information Engineering at the University of Santiago, Chile.
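The linear-regression step can be sketched without external libraries by solving the normal equations. This is a hedged illustration, not the author's implementation: `fit_ols` and `predict` are hypothetical helper names, and the toy rows are synthetic values generated from arbitrary coefficients purely to check that the solver recovers them; they are not drawn from the Mendeley dataset.

```python
# Ordinary least squares via the normal equations (X^T X) b = X^T y.
# Hypothetical helper names; the toy data are synthetic, not the Mendeley dataset.
from __future__ import annotations


def fit_ols(rows: list[list[float]], targets: list[float]) -> list[float]:
    """Return [intercept, b_search, b_evaluation, b_processing, b_communication]."""
    X = [[1.0, *row] for row in rows]  # prepend an intercept column
    k = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * y for x, y in zip(X, targets))]
        for i in range(k)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("singular system: features are collinear")
        for r in range(col + 1, k):
            factor = A[r][col] / A[col][col]
            for c in range(col, k + 1):
                A[r][c] -= factor * A[col][c]
    # Back substitution.
    coef = [0.0] * k
    for i in reversed(range(k)):
        residual = A[i][k] - sum(A[i][j] * coef[j] for j in range(i + 1, k))
        coef[i] = residual / A[i][i]
    return coef


def predict(coef: list[float], row: list[float]) -> float:
    """Intercept plus the weighted sum of the four parameter scores."""
    return coef[0] + sum(b * x for b, x in zip(coef[1:], row))


# Synthetic check: targets generated exactly from arbitrary coefficients.
rows = [[(3 * i) % 11, (7 * i) % 11, (5 * i + 2) % 11, (2 * i + 1) % 11] for i in range(20)]
true_coef = [-2.0, 0.5, 1.0, 1.0, 1.0]
targets = [predict(true_coef, r) for r in rows]
coef = fit_ols(rows, targets)
print([round(c, 6) for c in coef])
```

Solving the normal equations directly is adequate for four well-scaled features; for larger or nearly collinear feature sets, an SVD-based solver such as `numpy.linalg.lstsq` is numerically safer.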

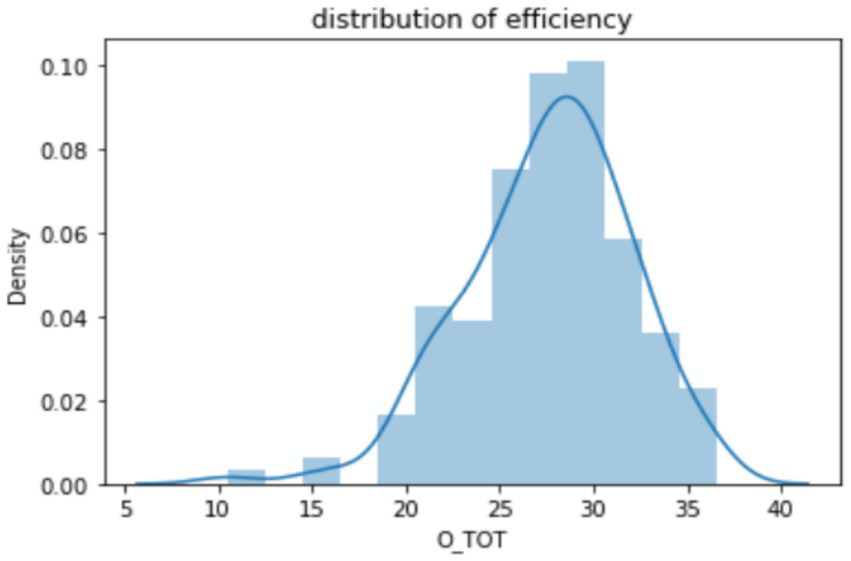

Figure 2

Distribution of efficiency.

The dataset consists of 153 observations from 23 engineering programs at a Chilean university and includes 25 features. From these data, we extracted the fields relevant to our study: information search and information evaluation for self-learning, and information search, information evaluation, information processing, and information communication for observed learning. Each parameter was evaluated on a scale from 0 to 10. We then compared students’ total performance in the two cases: self-learning and observed learning.

We first determined the percentage of students whose performance in information search and information evaluation was better in self-learning than in observed learning. We then compared two totals: one combining the self-learning scores for information search and information evaluation with the observed-learning scores for information processing and information communication, and one using observed-learning scores for all four parameters. The percentage of students whose total performance was better in the first case (self-learning) was 75.8%. The maximum possible total performance was 40; the maximum value in the dataset was 36.58, and the average was 27.59.
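One reading of the comparison above, sketched in Python. The field names (`self_search`, `obs_processing`, and so on) are hypothetical placeholders, since the dataset's actual column names are not given here, and computing the maximum and mean on observed-learning totals is our assumption. The four student records are made up for illustration.

```python
# Comparison of self-learning vs observed-learning totals.
# Field names are hypothetical placeholders for the dataset's columns.
from __future__ import annotations


def total_observed(s: dict[str, float]) -> float:
    """All four parameters taken from observed learning."""
    return s["obs_search"] + s["obs_evaluation"] + s["obs_processing"] + s["obs_communication"]


def total_mixed(s: dict[str, float]) -> float:
    """Self-learning search/evaluation plus observed processing/communication."""
    return s["self_search"] + s["self_evaluation"] + s["obs_processing"] + s["obs_communication"]


def summarize(students: list[dict[str, float]]) -> dict[str, float]:
    n = len(students)
    better = sum(total_mixed(s) > total_observed(s) for s in students)
    totals = [total_observed(s) for s in students]  # assumption: stats on observed totals
    return {
        "pct_better_self_learning": 100.0 * better / n,
        "max_total": max(totals),
        "mean_total": sum(totals) / n,
    }


students = [
    {"self_search": 8, "self_evaluation": 7, "obs_search": 6, "obs_evaluation": 6,
     "obs_processing": 7, "obs_communication": 8},
    {"self_search": 5, "self_evaluation": 5, "obs_search": 6, "obs_evaluation": 7,
     "obs_processing": 6, "obs_communication": 6},
    {"self_search": 9, "self_evaluation": 8, "obs_search": 7, "obs_evaluation": 7,
     "obs_processing": 8, "obs_communication": 9},
    {"self_search": 6, "self_evaluation": 6, "obs_search": 5, "obs_evaluation": 6,
     "obs_processing": 5, "obs_communication": 6},
]
print(summarize(students))  # {'pct_better_self_learning': 75.0, 'max_total': 31, 'mean_total': 26.25}
```

Because processing and communication appear in both totals, the comparison reduces to whether the self-learning search and evaluation scores exceed their observed-learning counterparts.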

The results obtained by applying linear regression on the dataset indicated that the relationship between efficiency and the four parameters considered was linear and can be expressed as follows:

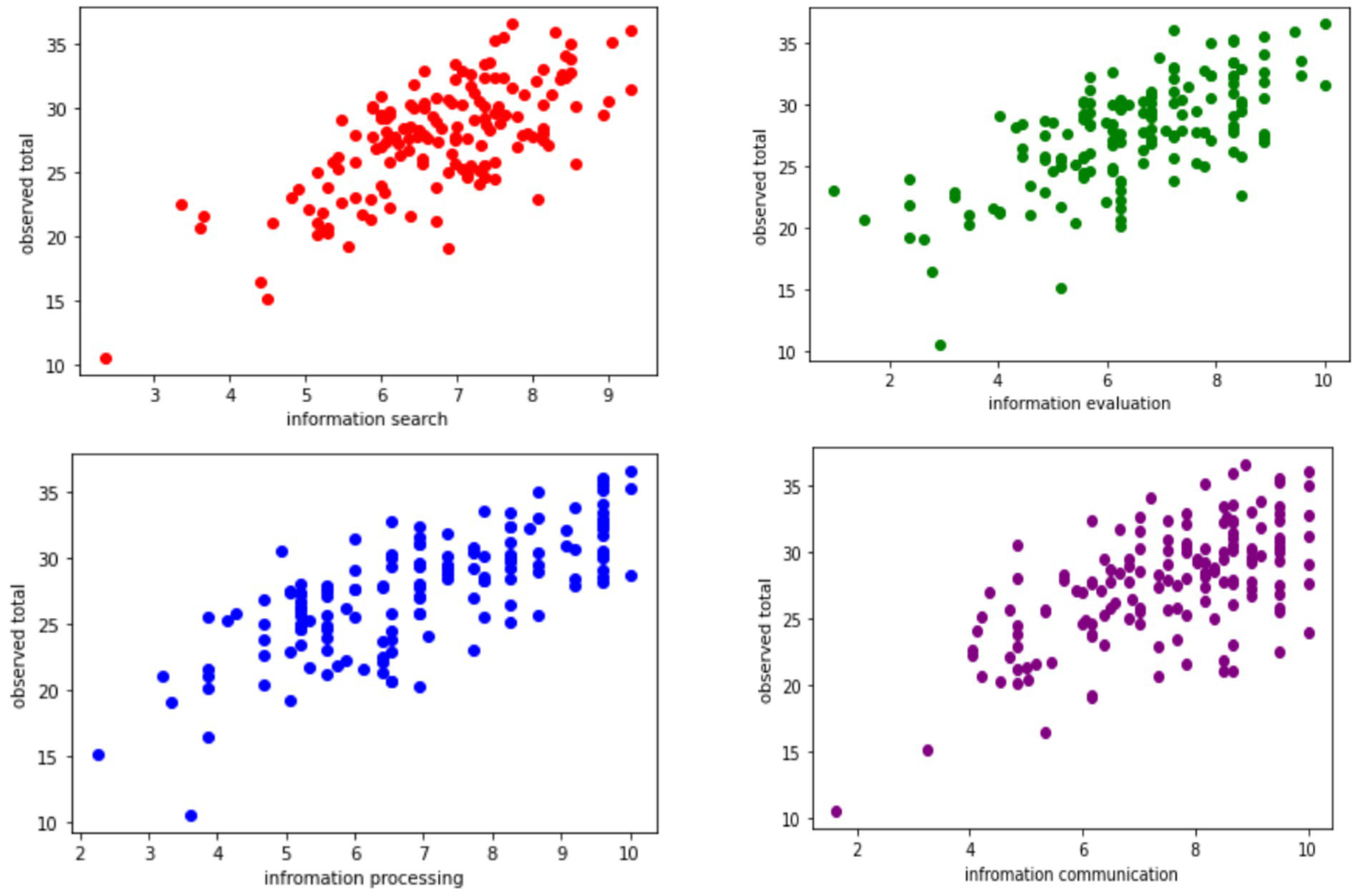

This relationship shows that information search was the least important factor, whereas the other three parameters (information evaluation, information processing, and information communication) had similar importance and a greater impact on the efficiency value. The negative constant term indicates that the predicted efficiency would be negative if all four parameters were zero. With an 80%/20% train/test split, the accuracy ratio was 65.34% and the mean absolute error was 2.26. The average 5-fold cross-validation score was 57.15%. Figure 3 shows the relationship between our parameters and the total efficiency value, which is linear. Figure 2 shows the distribution of efficiency in the observed-learning data, which is close to normal. Overall, the linear regression results confirm a linear relationship between the four parameters and student efficiency.
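The evaluation protocol above (an 80/20 split, mean absolute error, and 5-fold cross-validation) can be sketched as follows. We assume that the regression "accuracy ratio" corresponds to the coefficient of determination R², which is what scikit-learn's `LinearRegression.score` returns; the paper does not state which metric was used. The split and fold helpers are illustrative names, not the author's code.

```python
# Evaluation helpers: MAE, R^2, an 80/20 split, and k-fold indices.
# Assumption: the reported regression "accuracy" is R^2.
from __future__ import annotations

import random
from collections.abc import Iterator


def mae(y_true: list[float], y_pred: list[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: list[float], y_pred: list[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def train_test_split_indices(n: int, test_fraction: float = 0.2, seed: int = 0):
    """Shuffle indices with a fixed seed and hold out test_fraction of them."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[n_test:], idx[:n_test]  # (train, test)


def kfold_indices(n: int, k: int = 5) -> Iterator[tuple[list[int], list[int]]]:
    """Yield (train, test) index lists for k contiguous folds of near-equal size."""
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


# 153 observations, as in the dataset described above.
train, test = train_test_split_indices(153)
print(len(train), len(test))  # 122 31
print([len(fold_test) for _, fold_test in kfold_indices(153, k=5)])  # [31, 31, 31, 30, 30]
```

The folds here are contiguous and unshuffled for simplicity; with ordered data, shuffling before splitting (as in the 80/20 helper) avoids folds that differ systematically.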

Figure 3

Relationship between the efficiency algorithm parameters and competency.

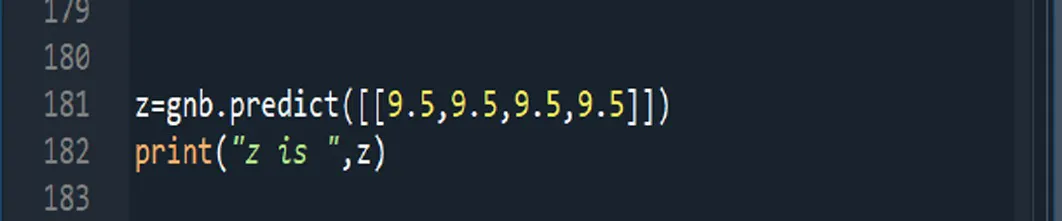

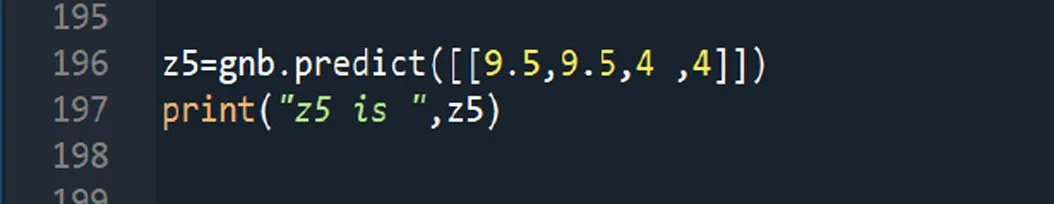

To determine the best level of efficiency and to classify students as proficient or non-proficient, we used the Naïve Bayes algorithm as a classification method. Students with a total performance evaluation above the average were considered proficient, and those below the average were considered non-proficient. Our estimation was accurate at a rate of 95% for proficient students and 91% for non-proficient students, and the average precision was 93%. Table 1 shows how our four variables affected the evaluation of the students (proficient and non-proficient).

Table 1

| Predicted class | Evaluation pattern |
|---|---|
| Proficient | All four evaluations were high |
| Non-proficient | All four evaluations were low |
| Proficient | Only information search was low; the other three evaluations were high |
| Non-proficient | Information search and information evaluation were low |
| Proficient | Only information processing was low |
| Non-proficient | Information processing and information communication were low |

Predictions of student evaluations using the Naïve Bayes algorithm.
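The classification step described above can be sketched from scratch as follows: students are labeled proficient when their total exceeds the mean total, and a Gaussian Naïve Bayes model is fit on the four parameter scores. The paper does not specify the Naïve Bayes variant; the Gaussian form is our assumption because the scores are continuous. For real use, scikit-learn offers `sklearn.naive_bayes.GaussianNB`; note that its `var_smoothing` is relative to the largest feature variance, whereas the small constant added here is absolute. The toy scores are synthetic.

```python
# From-scratch Gaussian Naive Bayes for proficient (1) / non-proficient (0) labels.
# Assumptions: Gaussian likelihoods, mean-threshold labelling, synthetic toy data.
from __future__ import annotations

import math


def label_by_mean(totals: list[float]) -> list[int]:
    """1 if a student's total is above the mean total, else 0 (ties -> 0)."""
    mean = sum(totals) / len(totals)
    return [1 if t > mean else 0 for t in totals]


class GaussianNaiveBayes:
    def __init__(self, var_floor: float = 1e-9):
        self.var_floor = var_floor  # absolute floor that avoids division by zero
        self.stats: dict[int, tuple[float, list[float], list[float]]] = {}

    def fit(self, X: list[list[float]], y: list[int]) -> "GaussianNaiveBayes":
        for c in sorted(set(y)):
            rows = [x for x, label in zip(X, y) if label == c]
            cols = list(zip(*rows))
            means = [sum(col) / len(rows) for col in cols]
            variances = [
                sum((v - m) ** 2 for v in col) / len(rows) + self.var_floor
                for col, m in zip(cols, means)
            ]
            # Store log prior, per-feature means, and per-feature variances.
            self.stats[c] = (math.log(len(rows) / len(X)), means, variances)
        return self

    def _log_posterior(self, x: list[float], c: int) -> float:
        log_prior, means, variances = self.stats[c]
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
            for xi, m, v in zip(x, means, variances)
        )

    def predict(self, X: list[list[float]]) -> list[int]:
        return [max(self.stats, key=lambda c: self._log_posterior(x, c)) for x in X]


# Toy scores: [search, evaluation, processing, communication], each 0-10.
X = [[8, 8, 7, 9], [9, 7, 8, 8], [7, 8, 9, 8], [3, 2, 4, 3], [2, 3, 3, 4], [4, 3, 2, 2]]
y = label_by_mean([sum(row) for row in X])
model = GaussianNaiveBayes().fit(X, y)
print(y)                                            # [1, 1, 1, 0, 0, 0]
print(model.predict([[8, 9, 8, 7], [3, 3, 3, 3]]))  # [1, 0]
```

Working in log space keeps the product of four likelihoods numerically stable.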

We also used several other machine learning algorithms to evaluate the performance of our algorithm, namely decision tree, KNN, and AdaBoost. The results in Table 2 show that the best performance was obtained using the Naïve Bayes algorithm, followed by the KNN algorithm, which achieved an accuracy of 90.3%. Each value in the table is the average of a metric measured separately for the proficient and non-proficient classes.

Table 2

| Metric | Naïve Bayes | Decision tree | KNN | AdaBoost |
|---|---|---|---|---|
| Average accuracy | 93% | 81% | 90.3% | 81% |
| Average precision | 93% | 79% | 79% | |
| Average recall | 94% | 80% | 81% | |

Performance comparison.
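Table 2 reports each metric averaged over the proficient and non-proficient classes, which corresponds to macro-averaging. A minimal sketch of that computation for binary labels (1 = proficient, 0 = non-proficient, an encoding we assume) is shown below; the labels in the example are made up and the function names are illustrative.

```python
# Macro-averaged precision and recall over the two classes
# (1 = proficient, 0 = non-proficient; encoding assumed).
from __future__ import annotations


def per_class_precision_recall(
    y_true: list[int], y_pred: list[int], cls: int
) -> tuple[float, float]:
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == cls and p == cls for t, p in pairs)
    fp = sum(t != cls and p == cls for t, p in pairs)
    fn = sum(t == cls and p != cls for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def macro_average(
    y_true: list[int], y_pred: list[int], classes: tuple[int, ...] = (0, 1)
) -> tuple[float, float]:
    """Average per-class precision and recall with equal weight per class."""
    per_class = [per_class_precision_recall(y_true, y_pred, c) for c in classes]
    precision = sum(p for p, _ in per_class) / len(per_class)
    recall = sum(r for _, r in per_class) / len(per_class)
    return precision, recall


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 1, 1]  # made-up predictions
print(macro_average(y_true, y_pred))
print(accuracy(y_true, y_pred))
```

Macro-averaging weights both classes equally, so a classifier that favors the majority class is not rewarded for it.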

The main challenge in our work lies in the degree of acceptance by students, as the approach requires collaboration among everyone involved in the learning process: students, teachers, and institutions. Our work was applied to undergraduate students in higher education but can also be applied to school students, provided that all necessary information and evaluations are collected through an online platform that provides an interactive environment for students, teachers, and institutions.

3 Conclusion and future work

In this article, we propose a new assessment system that helps teachers analyze data collected from continuous student assessments to identify students’ weaknesses and strengths and develop an adaptive learning curriculum. Using artificial intelligence tools, the system can analyze large amounts of student data and predict the best pathway. We propose an efficiency algorithm that expresses graduate efficiency as a linear relationship among four independent variables, namely information search, information evaluation, information processing, and information communication, on which student assessments should be based. Our results showed that, by measuring these four variables, we can predict students’ strengths and weaknesses with high accuracy: 95% for proficient students and 91% for non-proficient students. Our variables were evaluated on a scale from 0 to 10; students with an evaluation above 5 were considered proficient, while students with an evaluation below 5 were considered non-proficient. In future work, we will focus on proposing a comprehensive question structure based on Bloom’s taxonomy to evaluate each variable.

Statements

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found at: https://data.mendeley.com/research-data.

Author contributions

AZ: Conceptualization, Data curation, Formal analysis, Methodology, Validation, Writing – original draft, Writing – review & editing.

Funding

The author declares that financial support was received for the research and/or publication of this article. This work was funded by the Deanship of Research of Jadara University, and the author is grateful to the Deanship of Research of Jadara University for providing financial support for this publication.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Balducci, B. (2024). AI and student assessment in human-centered education. Front. Educ. 9, 1–3.

Black, P. J., Harrison, C., Lee, C., et al. (2004). Working inside the black box: assessment for learning in the classroom. PDK International 86, 8–21.

Contrino, M. F., Reyes-Millán, M., Vazquez-Villegas, P., et al. (2024). Using an adaptive learning tool to improve student performance and satisfaction in online and face-to-face education for a more personalized approach. Smart Learn. Environ. 11, 1–24.

Dai, C.-P., and Ke, F. (2022). Educational applications of artificial intelligence in simulation-based learning: a systematic mapping review. Comput. Educ. Artif. Intell. 3, 100087. doi: 10.1016/j.caeai.2022.100087

du Plooy, E., Casteleijn, D., and Franzsen, D. (2024). Personalized adaptive learning in higher education: a scoping review of key characteristics and impact on academic performance and engagement. Heliyon 10, 1–24.

eLearning Industry (2023). How AI is personalizing education for every student. Available online at: https://elearningindustry.com/how-ai-is-personalizing-education-for-every-student (Accessed August 23, 2024).

ElevenLabs (2024). Top 5 AI-enabled educational tools shaping learning in 2024. ElevenLabs. Available online at: https://elevenlabs.io/blog/top-5-ai-enabled-educational-tools (Accessed August 23, 2024).

Ideas Hub (2023). AI in higher education: impact of AI on student assessment. Tensorway. Available online at: https://www.tensorway.com/post/ai-for-student-assessment-in-higher-education (Accessed May 4, 2025).

Ipinnaiye, O., and Risquez, A. (2024). Exploring adaptive learning, learner-content interaction and student performance in undergraduate economics classes. Comput. Educ. 215, 1–11.

Köhler, J., and González-Ibáñez, R. (2023). Information competences and academic achievement: a dataset. Data 8:164. doi: 10.3390/data8110164

Martin, F., Chen, Y., Moore, R. L., and Westine, C. (2020). Systematic review of adaptive learning research designs, context, strategies, and technologies from 2009 to 2018. Educ. Technol. Res. Dev. 68, 1903–1929.

Matthews, E. (2023). How can AI tools improve student assessment outcomes? TAO. Available online at: https://www.taotesting.com/blog/how-can-ai-tools-improve-student-assessment-outcomes/ (Accessed August 23, 2024).

Mendeley Data Repository (n.d.). Dataset of information competences and first-semester grades. doi: 10.17632/7r5fnrhyky.1

Perkins, M. (2023). Academic integrity considerations of AI large language models in the post-pandemic era: ChatGPT and beyond. J. Univ. Teach. Learn. Pract. 20, 7–24. doi: 10.53761/1.20.02.07

Randieri, C. (2024). Personalized learning and AI: revolutionizing education. Forbes Technology Council. Available online at: https://www.forbes.com/councils/forbestechcouncil/2024/07/22/personalized-learning-and-ai-revolutionizing-education/ (Accessed August 23, 2024).

Rudolph, J., Tan, S., and Tan, S. (2023). ChatGPT: bullshit spewer or the end of traditional assessments in higher education? J. Applied Learn. Teach. 6, 342–363.

Rudolph, J., Tan, S., and Tan, S. (2023). War of the chatbots: Bard, Bing Chat, ChatGPT, Ernie and beyond. The new AI gold rush and its impact on higher education. J. Applied Learn. Teach. 6, 364–389.


Keywords

adaptive learning, higher education outcomes, student pathway, graduate efficiency, structured curriculum

Citation

Zabian AH (2025) Efficiency algorithm: new AI-based tools for an adaptive learning environment. Front. Educ. 10:1702662. doi: 10.3389/feduc.2025.1702662

Received

10 September 2025

Accepted

31 October 2025

Published

26 November 2025

Volume

10 - 2025

Edited by

Sergio Ruiz-Viruel, University of Malaga, Spain

Reviewed by

John Mark R. Asio, Gordon College, Philippines

Ying Chen, Taizhou University, China


Copyright

© 2025 Zabian.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Arwa Hasan Zabian, arwa@jadara.edu.jo
