- Department of Mechanical Engineering, Taiyuan Institute of Technology, Taiyuan , China

Introduction: Addressing the dual challenges of climate change and sustainable food production, this study proposed an integrated framework that combined planter performance optimization with green, low-carbon agricultural transformation. While traditional planting strategies focused on parameters like seed depth, speed, and spacing, they often neglected environmental sustainability and adaptability to climate variability.

Methods: To bridge this gap, we introduced the Adaptive Precision Planter Optimization Model (APPOM), which leveraged real-time environmental sensing, machine learning, and multi-objective optimization to dynamically adjust key planting parameters. Our approach also incorporated green technologies, including electric-powered planters and carbon-sequestration soil practices, to reduce the ecological footprint of agricultural operations.

Results: Experimental results validated that APPOM significantly improved planting accuracy, enhanced resource efficiency, and reduced carbon emissions across diverse soil and climate conditions. Furthermore, we presented the Real-Time Adaptive Planter Optimization (RAPO) strategy, which enabled context-aware decision-making and continuous optimization under field variability.

Discussion: The findings underscored the potential of intelligent, eco-friendly planting systems to foster climate-resilient agriculture. However, challenges such as cost barriers and deployment scalability remained. Future research should aim to enhance affordability and accessibility, particularly for smallholder farmers, and expand the framework to a broader range of crops and regions.

1 Introduction

Planters are agricultural machines designed to place seeds into the soil at controlled depth, spacing, and rate, playing a critical role in ensuring seed germination and uniform crop establishment (Hu et al., 2023). Planter optimization refers to the process of adjusting these operational parameters to improve efficiency, minimize input waste, and maximize yield. Conventional planter optimization typically focuses on mechanical improvements—such as refining seed metering systems, regulating ground speed, and maintaining consistent spacing between seeds (Peng et al., 2022). These strategies often rely on fixed rules and assume uniform field conditions, which limits their adaptability to environmental variation (Wei et al., 2023).

However, traditional approaches have notable limitations. They fail to account for spatial and temporal variability in soil properties, weather conditions, and terrain features (Zong et al., 2023). As a result, even minor deviations in field conditions—such as changes in moisture or compaction—can lead to uneven seed placement (Zhou H.-Y. et al., 2023), reduced emergence, and yield variability (Song et al., 2023). Moreover, conventional systems generally overlook sustainability factors such as energy efficiency or emissions, making them less suited for climate-resilient agriculture (Xu et al., 2022).

Despite recent progress in precision agriculture and remote sensing-based decision systems, existing studies still face several critical limitations that hinder their real-world applicability. First, most prior methods rely heavily on static optimization schemes that fail to account for dynamic environmental changes during planting, such as real-time soil moisture or compaction variability. This leads to suboptimal seed placement and inefficient resource use under heterogeneous field conditions. Although multimodal deep learning has been applied to tasks like crop classification or land cover segmentation, few works have successfully bridged low-level perception with high-level planting strategy optimization. The models often excel in classification metrics but lack interpretable connections to agronomic decision variables such as seed depth, spacing, or planting speed. Moreover, most studies utilize synthetic or benchmark datasets that do not reflect the complexity, noise, or operational constraints encountered in mechanized field deployment. Real-time feedback and adaptive control are rarely integrated into existing frameworks. Systems are typically designed to generate pre-season recommendations, without the capacity to respond to on-the-fly soil condition shifts or machinery behavior. As a result, the temporal mismatch between sensing and acting weakens their practical deployment value. These limitations motivate our design of the APPOM and RAPO frameworks, which jointly address prediction, optimization, and adaptive real-time decision-making for precision planting under diverse agricultural conditions.

Recent advances in precision agriculture offer a promising alternative (Lian et al., 2022). Through the use of real-time sensors, satellite data, GPS positioning, and machine learning algorithms, planting operations can be adapted dynamically based on environmental feedback (Yao et al., 2023). These data-driven technologies allow for more context-aware decisions, such as adjusting planting depth in response to moisture levels or altering speed to reduce soil disturbance (Zhang et al., 2023). At the same time, green technologies—such as electric-powered planters, low-emission actuators, and carbon-sequestration soil practices—are being increasingly integrated into agricultural equipment to reduce carbon footprints (Joseph et al., 2023). Together, these tools enable a more holistic approach to planter optimization, balancing productivity with sustainability goals (Zhang et al., 2022).

Given these developments, there is a growing need for integrated frameworks that combine adaptive intelligence with green transformation. This paper addresses that need by proposing a novel optimization approach that unifies advanced planter control with real-time environmental awareness and low-carbon practices. We develop two complementary systems: the Adaptive Precision Planter Optimization Model (APPOM) and the Real-Time Adaptive Planter Optimization (RAPO) strategy. These systems aim to dynamically optimize seed depth, spacing, and rate based on environmental feedback, while also reducing emissions and enhancing operational efficiency. The proposed method has several key advantages:

2 Related work

Optimizing planter performance is a crucial aspect of improving agricultural productivity (Du et al., 2022). Planters, as integral pieces of equipment in modern agriculture, play a vital role in ensuring efficient planting operations, including seed spacing, depth control, and seed-soil contact (Ren et al., 2024c). Enhancing planter performance involves adjusting parameters such as seed rate, uniformity, and germination potential to maximize yield and minimize resource waste (Li et al., 2020). These factors are especially critical under climate risk scenarios, where erratic weather conditions like droughts or floods can significantly impact planting outcomes (Lin et al., 2023).

In recent years, precision farming technologies such as GPS, sensors, and data analytics have been integrated into planting systems to allow for real-time monitoring and control (Zhou Y. et al., 2023). Variable rate planting (VRP), for instance, enables farmers to tailor seed densities to soil fertility or moisture levels (Steyaert et al., 2023). Advanced mechanical planters now include adjustable depth controllers and low-compaction designs to improve adaptability across field conditions (Ren et al., 2024b). Furthermore, some systems now incorporate weather forecasts and soil data to optimize planting schedules for climate resilience (Adeel et al., 2022).

Green low-carbon agricultural transformation has become a key objective in global climate mitigation efforts (Yan et al., 2022). Agriculture is both a contributor to and a victim of climate change, which drives the need for sustainable farming practices (Fan et al., 2022). Agroecological practices such as crop rotation, agroforestry, and cover cropping have shown great promise in reducing carbon emissions and improving soil carbon sequestration (Chango et al., 2022). These methods support long-term soil fertility and reduce environmental harm while maintaining food security Ren et al. (2024a).

Energy use in agriculture has also seen a shift toward low-emission solutions, including solar-powered irrigation and electric tractors (Taylor et al., 2018). The replacement of synthetic inputs with bio-based alternatives helps reduce emissions further (Yu et al., 2023). In addition, technologies such as biogas production and hydrogen-fueled machinery have gained traction as decarbonization tools (Wan et al., 2022). Precision agriculture, supported by AI and IoT, also facilitates more efficient input management by helping farmers make real-time, data-informed decisions (Ektefaie et al., 2022).

To enhance resilience against climate variability, researchers have developed genetically modified crop varieties capable of withstanding environmental stressors such as drought or heat (Awwad Al-Shammari et al., 2022). Biotechnology has been instrumental in stabilizing crop yields under volatile weather patterns (Wu et al., 2022). In parallel, resource-efficient techniques like drip irrigation and rainwater harvesting address water scarcity concerns (Chai and Wang, 2022). Diversified cropping systems and practices such as agroforestry also enhance biodiversity and reduce vulnerability to pest outbreaks (Yang et al., 2022). Soil conservation strategies further contribute to long-term agricultural resilience by protecting critical ecosystem functions (Smith et al., 2021).

The integration of precision planting systems with climate risk data presents a holistic approach to sustainable agriculture (Bayoudh et al., 2021). For example, systems that optimize seeding depth and scheduling based on environmental feedback can reduce both input costs and ecological impacts (Adeel et al., 2022). Planter performance optimization referred to the set of techniques and strategies designed to enhance the efficiency, accuracy, and overall effectiveness of automated planting systems, commonly used in agriculture and horticulture. These systems, often consisting of robotic planters, precision agriculture technologies, and IoT-based solutions, were increasingly being employed to meet the growing global demand for food while addressing sustainability concerns. Optimizing planter performance was critical for improving crop yields, reducing operational costs, and ensuring environmentally sustainable practices in modern farming.

Recent developments in machine learning and multimodal data fusion have also influenced agricultural optimization. One study proposed a federated learning approach that integrates multiple agricultural data sources while preserving data privacy across farms (Cheng et al., 2025). Another proposed a multimodal learning architecture to improve field-level decisions by combining satellite imagery, sensor streams, and management logs (Jiang et al., 2023). Others showed that environmental data and sensor fusion could be used to drive low-carbon farming transitions through adaptive learning frameworks Chen et al. (2024). Building on these foundations, our study introduces APPOM and RAPO, which apply real-time sensor feedback and multi-objective optimization for agricultural planter decision-making (Ma et al., 2022).

From an agronomic standpoint, researchers have shown that small variations in seeding depth can significantly impact germination and early growth, especially under moisture stress (Li et al., 2020). Seed placement accuracy has also been linked to yield gains in conservation tillage systems (Taylor et al., 2018). Soil health improvements through organic inputs and microbial management play a crucial supporting role in optimizing planter performance (Han et al., 2024). Taken together, these agronomic insights provide a complementary foundation for the technological advancements presented in this paper (Smith et al., 2021).

3 Methods

3.1 Overview

This paper introduces a novel approach to planter performance optimization. It integrates data-driven methodologies, machine learning, and real-time environmental monitoring. The goal was to create a robust framework capable of adapting to various agricultural conditions, minimizing human intervention, and maximizing operational efficiency across different planting tasks. The structure of this approach was organized into several key components:

In Section 3.2, the foundational concepts and mathematical models underlying the optimization problem were presented. This included the formalization of key performance metrics such as planting accuracy, speed, and resource utilization, as well as the constraints imposed by soil conditions, crop types, and environmental variables. Core assumptions and the data sources used to guide the optimization process were also introduced.

In Section 3.3, a new optimization model was proposed that incorporated real-time data from various sensors, such as soil moisture levels, GPS positioning, and climate data. Planting parameters such as seed depth, spacing, and planting speed were dynamically adjusted to ensure optimal performance under changing conditions. We applied advanced machine learning to predict and correct planting errors. This enabled the planter system to learn from past operations and adapt to new scenarios.

In Section 3.4, a novel strategy for optimizing planter performance was presented. The strategy merges traditional agronomic knowledge with modern computational methods. It aims to identify optimal planting practices for diverse crop types and locations.

To ensure the robustness of the optimization process, we explicitly define the parameters used in the model. The planting parameters include: seed depth

3.2 Preliminaries

In this section, we formalized the problem of planter performance optimization and introduced the necessary mathematical models, assumptions, and data structures that served as the foundation for our proposed optimization approach. Consider a planter system designed to plant seeds at predetermined depths and spacings in a field. Let

where

The planting parameters

where

which ensured that the system adhered to agronomic guidelines for seed depth, spacing, and other planting parameters that influenced seed germination and crop growth. The performance of the planter system was also influenced by dynamic environmental factors such as weather conditions and soil properties. These factors were modeled as time-varying variables, which introduced uncertainty into the optimization process. Let

where

3.3 New model: adaptive precision planter optimization model (APPOM)

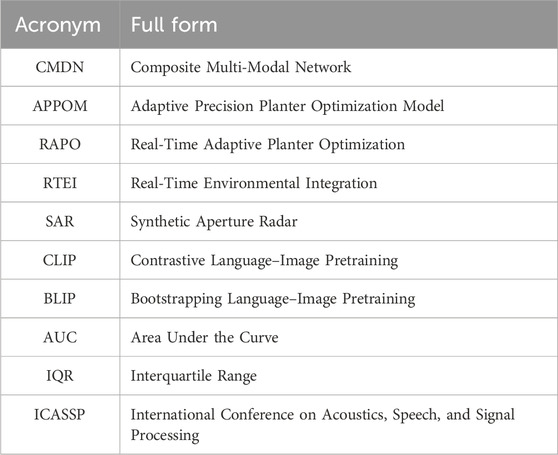

In this section, we presented the Adaptive Precision Planter Optimization Model (APPOM), a novel framework designed to optimize planter performance in dynamic agricultural environments. APPOM integrated machine learning, real-time environmental feedback, and multi-objective optimization to dynamically adjust planting parameters, ensuring precise seed placement and efficient resource utilization. The following sections highlighted the three core innovations of APPOM: dynamic parameter adjustment, real-time environmental integration, and adaptive learning for optimization (As shown in Figure 1). To support multimodal decision-making in agricultural contexts, our system utilizes a modified CLIP encoder to embed both visual and contextual textual inputs into a unified semantic space. In our setting, the visual encoder processes multispectral or remote sensing images (e.g., Sentinel-2, UAV imagery), while the text encoder handles structured environmental labels such as soil type, moisture class, or operational instructions. By jointly embedding these modalities, the model can interpret complex field conditions and adjust planting decisions accordingly. Attention maps derived from modules such as DINO and SAM are used to localize agronomically relevant features—such as heterogeneous soil zones, vegetation health patterns, or areas prone to waterlogging—within the input imagery. These maps inform the adaptive adjustment of planting parameters by directing focus to high-impact regions, thereby enhancing the spatial precision and environmental relevance of planting strategies.

Figure 1. Adaptive Precision Planter Optimization Model (APPOM) Framework. This diagram illustrated the structure of the APPOM framework, which integrated multiple components for real-time adaptive planting optimization. The system utilized a CLIP-based text and image encoder to process environmental data, such as soil moisture, temperature, and other field conditions. The figure highlighted key modules including DINO, SAM, and attention mechanisms that facilitated dynamic adjustments in planting parameters like seed depth, spacing, and speed. The framework incorporated region-based attention maps for efficient resource utilization and ensured optimal seed placement by adapting to changing environmental feedback. The final output included a corrected segmentation map reflecting the optimized planting strategy.

The translation of SAR or optical image features from non-agricultural domains (e.g., ship or flood detection) into agricultural planting decisions is enabled by the structural similarity in spatial analysis tasks across these domains. Both domains require the extraction of regional contrasts, boundary contours, and context-aware object localization from remotely sensed imagery. In ship detection, for instance, the model learns to identify high-salience regions under radar speckle noise, while in agriculture, analogous attention must be given to heterogeneous soil zones, moisture-retaining depressions, or compacted strips within a field. Within the APPOM framework, this transfer is operationalized by the use of cross-modal embedding via CLIP encoders, where SAR/optical imagery is aligned with agronomic semantic tags (e.g., loamy, dry, compacted, shaded). Attention maps generated through DINO and SAM localize regions with unique spectral or textural signatures—such as rough soil patches or high-reflectance zones—which are then interpreted as indicators of environmental variability. These spatial cues do not directly output seed depth or soil compaction values but serve as proxies to modulate the downstream predictive functions. When fused with in situ sensor data (e.g., real-time soil moisture, temperature, or pH), these visual embeddings contribute to a multimodal decision space where the model predicts optimal planting parameters like depth or spacing. For example, a region identified as high-reflectance and low-texture in SAR imagery may be cross-referenced with sensor-indicated dryness, leading the model to increase seed depth accordingly. In this way, SAR-derived features support contextual differentiation of planting zones and enhance the granularity and precision of real-time seeding decisions.

3.3.1 Dynamic adjustment of planting parameters

A key innovation of APPOM was its ability to dynamically adjust planting parameters

During deployment, the system used the learned

where

This predictive capability was modeled as Equation 6:

where

3.3.2 Real-time environmental integration

APPOM incorporated real-time environmental feedback from sensors embedded in the planter, allowing for dynamic and adaptive responses to changing field conditions. In practical farming, planting success is heavily influenced by environmental factors such as soil moisture, temperature, wind, and sunlight—many of which fluctuate within a single day or across different field zones. To capture this variability, APPOM continuously monitored environmental variables denoted by

where

subject to

where

3.3.3 Adaptive learning for multi-objective optimization

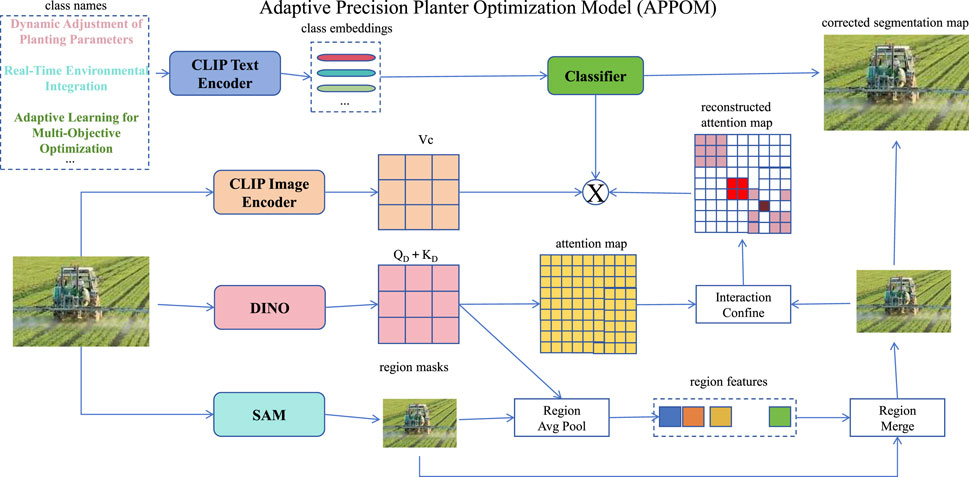

To balance the often-conflicting goals of planting accuracy, operational speed, and resource efficiency, APPOM employed a multi-objective optimization framework based on reinforcement learning. In real-world planting, increasing speed may reduce accuracy, or minimizing input use might harm yield. Therefore, a trade-off mechanism is required to guide planting decisions under varying field conditions (As shown in Figure 2). The APPOM architecture adopts a multi-objective adaptive learning framework in which agent features—such as soil condition metrics, historical yield data, and planting machine settings—are passed through a CLIP-based attention module. This module identifies salient planting constraints (e.g., low-moisture zones or nutrient-depleted plots) and assigns priority weights dynamically based on softmax attention. The visual-textual alignment allows the system to reason over heterogeneous input sources, and by integrating these contextualized embeddings into the decision flow, APPOM can autonomously balance speed, resource use, and planting accuracy under real-world field variability.

Figure 2. Adaptive Learning Framework for Multi-Objective Optimization. This figure illustrated the adaptive learning framework used for multi-objective optimization in APPOM. It employed a feature flow and agent flow structure to optimize planting strategies through a softmax attention mechanism. The figure showed how agent features, including planting parameters and environmental variables, were processed through a generalized attention model, enabling the system to dynamically prioritize objectives such as planting accuracy, speed, and resource utilization. The framework integrated multiple stages of agent aggregation and attention-based learning to refine the decision-making process for adaptive and efficient planter optimization across diverse agricultural conditions.

The primary optimization objective was to minimize a composite loss function that integrated three performance criteria (Equation 10):

where

-

To prevent the model from overfitting to one objective (e.g., optimizing only for speed), a regularization term was introduced to stabilize the learning of the weight values (Equation 11):

where

The complete objective function was (Equation 12):

where

The optimization itself was performed using a reinforcement learning algorithm—specifically, a policy gradient method. In this context, the policy defined how the planter adjusted its parameters

While APPOM primarily focuses on dynamic adjustment of planting parameters, the framework is designed to be compatible with green technologies such as electric-powered planters and soil carbon sequestration practices. Energy consumption feedback from electric powertrain sensors can be integrated as part of the resource efficiency term in the loss function

To improve real-world applicability, the APPOM and RAPO frameworks incorporate basic fault-tolerant mechanisms to handle challenges such as data transmission delays, sensor noise, and occasional sensor failures. Sensor inputs

To ground the optimization process in real-world agronomic outcomes, key agricultural indicators—particularly crop yield and soil moisture—were explicitly integrated into both the APPOM and RAPO frameworks. Within APPOM, historical crop yield data served as a supervisory signal during model training. Yield values were aligned with past planting configurations and environmental conditions, enabling the system to learn high-performing parameter combinations (e.g., depth, spacing, and speed) under specific field scenarios. This alignment was used to weight the loss components (e.g.,

3.4 New strategy: real-time adaptive planter optimization (RAPO)

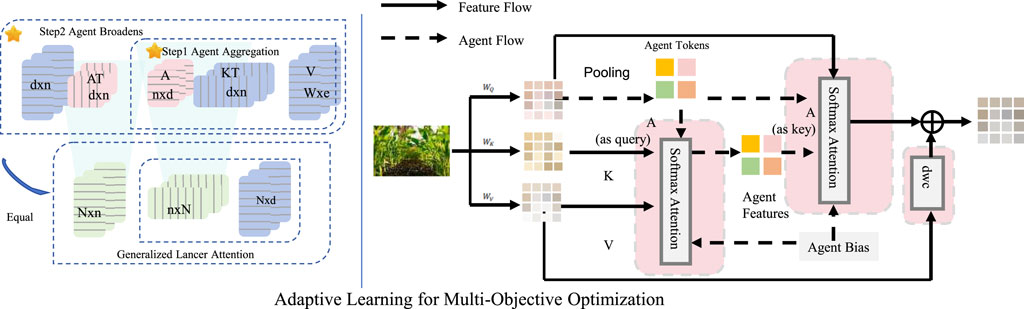

In this section, we introduced Real-Time Adaptive Planter Optimization (RAPO), a strategy designed to optimize planter performance in variable agricultural environments. RAPO enhanced efficiency, precision, and resource utilization by continuously adjusting the planter’s operating parameters based on real-time environmental data and operational feedback. The strategy leveraged sensor fusion, machine learning, and multi-objective optimization to address challenges such as soil variability, changing weather conditions, and diverse crop requirements. Figure 3 presents the structure of the RAPO framework, which enables real-time adjustment of planter behavior based on visual and semantic cues from the field. A CLIP-based image-text encoder pair processes spatial imagery (e.g., soil reflectance maps or UAV captures) along with agronomic labels (e.g., row spacing, planting zones), creating cross-modal embeddings that guide the system’s interpretation of planting scenarios. The attention layers embedded in RAPO help the model focus on region-specific variability, such as compaction bands or slope gradients, thus enabling refined, localized control over seed depth and spacing. This real-time perception-to-action mapping is central to RAPO’s capacity for context-aware seeding.

Figure 3. Real-Time Adaptive Planter Optimization (RAPO) Framework. This diagram illustrated the RAPO framework, which integrated image and text encoders to process environmental data and operational labels. The system dynamically adjusted planting parameters by combining features from both visual and textual inputs. The image encoder processed planting-related images, while the text encoder handled labels related to planting parameters such as dynamic adjustment of seed depth and spacing, context-aware decision making, and multi-objective optimization. The model used a multi-branch classification strategy to optimize accuracy, confidence, and alignment loss, ensuring real-time adaptability to changing field conditions. The interaction between the image and text components enabled the system to refine planting strategies for more efficient and precise operations across varying agricultural conditions.

3.4.1 Dynamic adjustment of planting parameters

RAPO dynamically adjusted planting parameters

where

Here:

The first term penalized deviations from the ideal planting configuration

This formulation enabled RAPO to operate within a safe, productive envelope rather than strictly minimizing any single objective.

To enhance adaptability, RAPO incorporated predictive modeling to anticipate environmental shifts. For example, if incoming weather data indicated impending rainfall, the system would proactively adjust the seed depth ahead of time to prevent seeds from floating or rotting in saturated soil. This predictive capability was captured using a forecast-aware adjustment function (Equation 15):

where

3.4.2 Context-aware decision making

RAPO incorporated a context-aware decision-making framework to tailor planting strategies to the highly localized conditions of each agricultural field. Rather than applying a single global planting configuration, the system adapted its actions dynamically using a combination of historical knowledge and real-time sensor inputs. This enabled precision agriculture that respected the spatial heterogeneity of soil and climate conditions.

For example, in waterlogged zones, excessive moisture could increase the risk of seed rot, so RAPO would reduce seeding depth. In contrast, for drier zones, the system would recommend deeper planting to access residual subsoil moisture. Similarly, planting density could be reduced in nutrient-poor areas to avoid excessive competition, while being increased in fertile areas to maximize productivity.

Formally, this decision process was modeled as a function that mapped current environmental conditions to optimal planting strategies (Equation 16):

where: -

To train this decision model, we first used supervised learning. A historical dataset

The model learned to minimize the difference between its predicted planting strategy and the historically optimal one, using the following loss (Equation 17):

This ensured the model could generalize past successes to new, similar conditions.

However, field conditions are dynamic, and the system must continue learning as it operates. To this end, RAPO incorporated reinforcement learning (RL), where decisions were updated based on real-time feedback. The system was formulated as a Markov Decision Process (MDP): - The state

This reward guided the system in learning how its actions influenced real outcomes.

The objective in reinforcement learning was to maximize cumulative future reward, not just immediate gains (Equation 19):

where

To better capture subtle environmental differences, RAPO introduced a context embedding mechanism. Sensor data

where

Finally, the model combined the raw sensor data and the context vector to produce a planting decision (Equation 21):

This formulation allowed RAPO to adapt to both explicit measurements and latent, learned representations of environmental context, improving robustness and adaptability in complex field environments.

3.4.3 Real-time feedback and multi-objective optimization

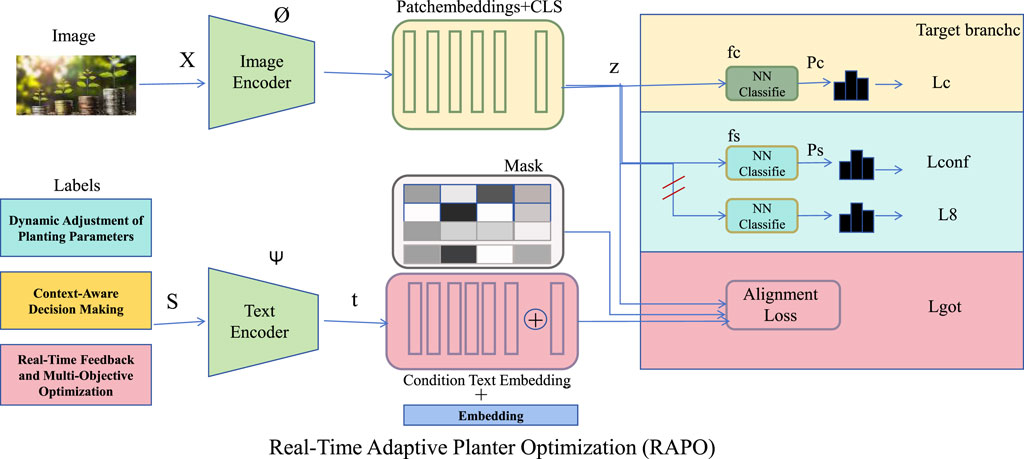

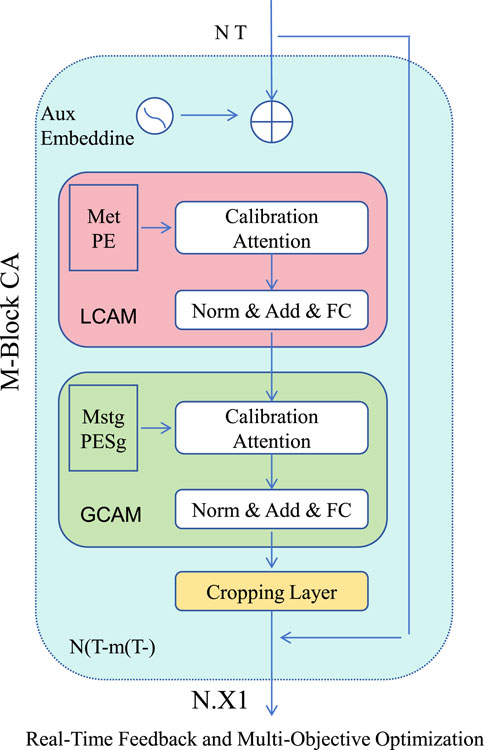

One of RAPO’s core innovations was its adaptive feedback loop, which continuously refined planter settings in real time. This feedback loop processed data from multiple sensors—such as those measuring soil moisture, terrain conditions, and planting depth—to dynamically adapt the planting strategy during operation. This ensured consistency in seed placement quality even when the environment changed unexpectedly (As shown in Figure 4). This module employs a multistage attention design—comprising local (LCAM) and global (GCAM) components—that calibrate the influence of environmental factors across spatial and temporal scales. Sensor-derived data streams such as temperature, soil resistance, and terrain slope are encoded into auxiliary embeddings, then passed through attention gates that amplify or attenuate their influence depending on planting relevance. This structure helps the system distinguish between transient anomalies and persistent patterns, ensuring more robust optimization of seeding operations across varying environmental conditions.

Figure 4. M-Block CA for Real-Time Feedback and Multi-Objective Optimization. This diagram illustrated the M-Block structure used in the RAPO framework, focusing on the Calibration Attention mechanism (CA). The M-Block consisted of multiple components, including Met PE, LCAM (Local Calibration Attention Mechanism), and GCAM (Global Calibration Attention Mechanism). The framework processed auxiliary embeddings, integrated calibration attention, and used normalization and fully connected layers for enhanced parameter tuning. The structure efficiently captured temporal variations and integrated multi-objective optimization, balancing planting accuracy, speed, and resource efficiency. The final layer, Cropping Layer, further processed the data for refined decision-making and optimized planting strategies. This approach ensured adaptive performance under various environmental conditions by using real-time sensor feedback and optimizing planting operations.

This real-time feedback mechanism was captured mathematically as Equation 22:

where: -

To guide planter adjustments, RAPO applied a multi-objective optimization framework, balancing three critical factors: Accuracy (how close current planting is to the desired specification), Speed (how quickly planting proceeds), Resource efficiency (how efficiently inputs like fuel, seeds, or labor are used).

The combined objective was expressed as a weighted sum (Equation 23):

where the weights

Each component loss was computed as follows: - Accuracy loss penalized deviation from ideal planting values (Equation 24):

where

- Speed loss penalized performance when the planter was slower than optimal (Equation 25):

with

- Resource loss reflected economic and ecological cost (Equation 26):

where

Importantly, these weights

where

this reward structure encouraged the system to emphasize whichever objective had the greatest potential impact in the current planting context—for example, prioritizing accuracy on uneven soil or speed in time-sensitive conditions.

Finally, RAPO refined planting decisions using gradient descent, a method for minimizing the total loss

where

4 Experimental setup

4.1 Dataset

The OpenSARShip Dataset Huang et al. (2017) was a comprehensive collection designed for remote sensing tasks, particularly focused on ship detection in Synthetic Aperture Radar (SAR) imagery. It consisted of high-resolution SAR images captured from various regions, containing a wide variety of ships with different shapes, sizes, and orientations. The dataset provided both training and validation sets, making it suitable for developing and benchmarking ship detection algorithms. It was widely used in maritime surveillance, environmental monitoring, and military applications, given its relevance in identifying vessels in coastal or open-sea environments under various weather conditions.

The OpenSARUrban Dataset Zhao et al. (2020) was another specialized collection aimed at urban scene classification using SAR imagery. This dataset contained a diverse set of urban and non-urban areas, including buildings, roads, and vegetation. Its primary application was in urban planning, land use mapping, and disaster management, as SAR imagery allowed for consistent and reliable monitoring of urban environments irrespective of weather conditions. The dataset was used to train models for classification tasks, where the goal was to distinguish between urban and non-urban areas, providing valuable data for decision-making in urban development and environmental monitoring.

The SEN12MS Dataset Rußwurm et al. (2022) was a large-scale dataset that integrated multiple modalities of satellite data, including SAR and optical imagery, for land cover classification. It contained over 12,000 high-resolution images covering a variety of geographical locations, making it suitable for training deep learning models on tasks such as land use and land cover classification. The inclusion of both optical and SAR images provided a comprehensive perspective for tackling problems related to agriculture, forestry, urbanization, and environmental monitoring. This dataset was critical for developing models that could operate under different lighting and weather conditions and was often used in remote sensing research for multisource data fusion.

The Sen1Floods11 Dataset Bonafilia et al. (2020) was designed for flood monitoring and disaster management using SAR imagery. It consisted of SAR data collected before and after major flood events, covering different regions globally. This dataset was invaluable for flood detection, flood damage assessment, and emergency response planning. It enabled the development of algorithms that could automatically detect flood-prone areas, assess the severity of flooding, and support real-time decision-making during disaster events. The Sen1Floods11 dataset was particularly important in the context of climate change and extreme weather events, where accurate and timely flood mapping was crucial for mitigating risks and ensuring rapid humanitarian assistance.

This subsection introduced four prominent datasets used in remote sensing and environmental monitoring, focusing on SAR and multispectral imagery. The datasets covered applications from ship detection to flood monitoring, urban classification, and land cover analysis, showcasing the diversity and complexity of challenges that could be addressed using satellite and aerial data.

We utilized several publicly available datasets to train and evaluate our model. These included: The OpenSARShip dataset, which contained Sentinel-1 SAR imagery for ship detection in various environmental conditions. The dataset could be accessed Click Here.

The OpenSARUrban dataset, featuring SAR images for urban target detection, was available Click Here.

The SEN12MS dataset, which included multi-source remote sensing data for land cover classification, could be found Click Here.

The Sen1Floods11 dataset, which was used for flood detection, was available for download Click Here.

Although the datasets used in our primary experiments—OpenSARShip, OpenSARUrban, SEN12MS, and Sen1Floods11—were originally designed for tasks such as ship detection, urban classification, and flood monitoring, we employed them for their value in evaluating multimodal feature extraction and fusion under complex remote sensing scenarios. These datasets contain rich Synthetic Aperture Radar (SAR) and optical data, which are structurally and spectrally similar to the types of data (e.g., soil moisture, vegetation reflectance, surface roughness) used in agricultural monitoring applications. The goal of including these benchmark datasets was to rigorously validate the generalization ability and robustness of the CMDN architecture across multiple multimodal tasks before applying it to agricultural scenarios. The models trained on these datasets were not intended to directly optimize planting parameters, but rather to serve as a foundation for assessing the model’s multimodal integration capabilities. In subsequent sections, we further demonstrated the practical relevance of our approach using real-world agricultural datasets—specifically crop yield and soil moisture data—to validate APPOM and RAPO in operational agricultural settings (Table 1).

Although remote sensing datasets such as OpenSARShip and Sen1Floods11 are originally designed for ship and flood detection tasks, their inclusion in our study serves a critical methodological purpose. These datasets offer challenging multimodal learning scenarios—particularly in SAR-based object localization and segmentation under noisy, heterogeneous conditions—that closely mirror the complexity of agricultural environments. Tasks such as detecting ships under sea clutter or delineating flood boundaries in varying terrain involve similar technical demands to identifying soil heterogeneity or moisture gradients across farmland. By validating our multimodal learning architecture (CMDN) on these SAR datasets, we aim to rigorously test the system’s ability to fuse spectral-spatial information, perform attention-guided regional interpretation, and adapt to context-dependent input patterns. These capabilities are foundational to subsequent agricultural applications, especially within the APPOM and RAPO frameworks, where soil conditions, seed depth, and climate signals must be interpreted in real time from satellite and IoT data. Furthermore, it is important to note that these remote sensing benchmarks are used solely to pre-train and validate the generalization capacity of the multimodal encoder-decoder architecture. The core agricultural optimization—such as dynamic adjustment of seed depth, spacing, and speed—is conducted and evaluated on agriculture-specific datasets, including crop yield, soil moisture, and Sentinel-2 imagery, as detailed in Section 4.3; Tables 6, 7. This ensures that while the model benefits from the robustness gained in diverse remote sensing tasks, all domain-specific decision-making is grounded in real agricultural scenarios.

4.2 Experimental details

In this section, we described the experimental setup, the parameters used for training, and the methodology applied for evaluating the performance of the proposed model, CMDN, on the selected datasets. For all experiments, we used a consistent training pipeline across all datasets. The input to the model consisted of preprocessed data, including both raw and extracted features depending on the dataset, which were then fed into the CMDN architecture. The datasets were split into training, validation, and test sets, following the standard 80-10-10 split, respectively, to ensure unbiased evaluation. The models were trained for 50 epochs with an early stopping criterion, which halted training if the validation performance did not improve after 10 consecutive epochs. We implemented the CMDN model using the PyTorch framework. The training of CMDN was done on NVIDIA V100 GPUs with a batch size of 32. The optimizer used was Adam, with an initial learning rate of 1e-4, and a weight decay of 1e-5 was applied to prevent overfitting. We used a learning rate scheduler, which reduced the learning rate by a factor of 0.1 after every 10 epochs without improvement in validation loss. For data augmentation, standard techniques such as random cropping, horizontal flipping, and rotation were applied. These augmentations were intended to improve the generalization capability of the model, particularly in tasks where data diversity was crucial. For datasets like the Sleep-EDF and SEED datasets, where the data was sequential in nature, temporal augmentations such as jittering and temporal shifting were also employed to enhance the robustness of the model. We evaluated the performance of CMDN using multiple evaluation metrics, including Accuracy, Recall, F1-Score, and Area Under the Curve (AUC). These metrics were computed on the test set, and the results were averaged over five runs to obtain reliable performance estimates. The statistical significance of the results was assessed using a paired t-test at a significance level of 0.05, comparing CMDN with other state-of-the-art models. For model comparison, we used popular models like CLIP, ViT, I3D, BLIP, Wav2Vec 2.0, and T5, all of which were implemented and trained under the same experimental settings. This allowed us to ensure a fair comparison across methods. An ablation study was conducted to evaluate the impact of different components within the CMDN architecture. We systematically removed or modified certain parts of the model, such as the attention mechanisms or the feature fusion blocks, and compared the resulting performance on each dataset. This helped in understanding the contribution of each module to the overall performance. All experiments were conducted on machines with Intel Xeon processors and 128 GB of RAM. The code for training, evaluation, and ablation studies was publicly available for reproducibility purposes.

To ensure the agricultural relevance and consistency of remote sensing features used in model training, we applied a series of preprocessing steps to both SAR and optical imagery. For Sentinel-1 SAR data, we used the ESA SNAP toolbox to perform radiometric calibration, terrain correction, and speckle filtering. Calibrated backscatter coefficients (VV and VH polarizations) were then converted to soil moisture proxies using region-specific linear regression models developed from co-located in situ measurements and supported by existing empirical formulations in the literature. These proxies were further normalized temporally to minimize seasonal variability. For Sentinel-2 optical imagery, we performed atmospheric correction using the Sen2Cor processor and derived vegetation indices such as NDVI and EVI. NDVI was calculated using the standard formulation

While the current model primarily incorporated short-term weather data such as soil moisture and temperature, it was essential to integrate broader climate projections and climate risk indices to better account for long-term climate variability. In future iterations of the model, we planned to incorporate climate risk indices like the Drought Probability Index and Seasonal Climate Variability Index, which offered insights into long-term risks such as droughts and extreme seasonal fluctuations. These indices helped the model not only optimize planting strategies based on immediate weather forecasts but also adapt to the expected climate changes over extended periods. Long-term climate projections from models like those from the Intergovernmental Panel on Climate Change (IPCC) would be integrated to account for projected temperature and precipitation changes, allowing for more resilient and adaptive planting strategies. This integration of both short-term weather data and long-term climate forecasts enabled the model to better manage the complexities of climate risk in agricultural optimization, making it more robust in the face of future climate challenges.

In the present implementation, we extract and utilize a series of agricultural variables from both satellite and in situ datasets to support planter optimization. Soil moisture data—derived from Sentinel-1 SAR imagery and capacitive soil probes—are used to determine optimal seed depth

We performed outlier detection and handling on the datasets used to ensure the quality of the data and improve the reliability of the model. Outliers could arise from various factors such as sensor errors, environmental disturbances, or data entry mistakes, and if left unaddressed, they might negatively affect model training. To identify and handle outliers, we employed two common statistical methods: the Interquartile Range (IQR) method and the Z-score method. The IQR method involved calculating the first quartile (Q1) and the third quartile (Q3) of each feature and identifying outliers as those data points that fell below Q1 - 1.5 ∗ IQR or above Q3 + 1.5 ∗ IQR. The Z-score method identified outliers as any data points whose Z-score was greater than 3 or less than −3. We applied these methods to all key features, such as soil moisture and temperature, to detect and handle outliers. Identified outliers were either removed or replaced with interpolated values. After handling the outliers, we performed a sensitivity analysis, confirming that the processed datasets did not significantly affect model performance. Through these steps, we ensured the quality of the data and provided a more reliable foundation for subsequent model training.

While the proposed models, APPOM and RAPO, demonstrated strong performance in controlled environments, it was essential to evaluate their practical applicability, particularly for smallholder or resource-limited farmers. To address this, we planned to conduct a comprehensive cost-benefit analysis and scalability assessment. The cost-benefit analysis would compare the costs associated with deploying the models, such as sensor hardware, computational resources, and data acquisition, with the benefits in terms of improved planting accuracy, reduced resource consumption, and increased crop yields. Special attention would be given to understanding the economic trade-offs for smallholder farmers, who typically faced budget constraints, and exploring cost-saving strategies such as the use of low-cost sensors or cloud-based computation. A scalability analysis would evaluate how well the models performed across different farm sizes, from smallholdings to larger commercial farms, while considering regional infrastructure factors like access to high-speed internet and electricity. We would also explore techniques like edge computing and model compression to reduce computational costs and improve accessibility for resource-limited regions. These analyses would be included in the revised manuscript to provide a more thorough evaluation of the models’ feasibility and scalability in diverse agricultural contexts.

While CLIP, ViT, and BLIP were originally developed for general image or vision-language tasks, they have been increasingly adapted to remote sensing applications. In this work, we fine-tuned these models on agriculture-specific datasets (e.g., crop yield, soil moisture) to serve as multimodal baselines. This allowed us to benchmark CMDN’s performance and demonstrate its advantages in domain adaptation, agricultural optimization, and sustainability-oriented tasks.

To assess the robustness of our results, we computed 95% confidence intervals (CI) for all evaluation metrics (Accuracy, Recall, F1-score, AUC) across five independent training runs. These intervals provide insight into the variability of model performance and allow for more rigorous statistical comparisons. In addition, we used paired t-tests (

To ensure robust and balanced optimization, the hyperparameters

Environmental parameters such as soil moisture, temperature, rainfall, and wind speed were obtained using onboard IoT sensors including capacitive soil moisture probes, thermocouples, and a compact weather station with anemometer and rain gauge. Light intensity was measured using a pyranometer. To ensure data reliability and calibration, field-collected sensor data were cross-validated with historical environmental data from Sentinel-1 and Sentinel-2 satellite imagery and local weather data obtained via the Copernicus Climate Data Store and NOAA archives. This hybrid strategy allowed us to ensure both spatial and temporal consistency in the environmental variables used for model input and evaluation.

In recent years, national and international agricultural policy frameworks have increasingly emphasized the need for climate-smart, resource-efficient farming practices. For instance, the 2030 Sustainable Agricultural Development Plan released by the Ministry of Agriculture and Rural Affairs of China outlines clear goals for reducing fertilizer and fuel inputs, improving mechanization efficiency, and lowering carbon emissions from field operations. Similarly, the Dual Carbon policy roadmap aims to peak agricultural

4.3 Comparison with SOTA methods

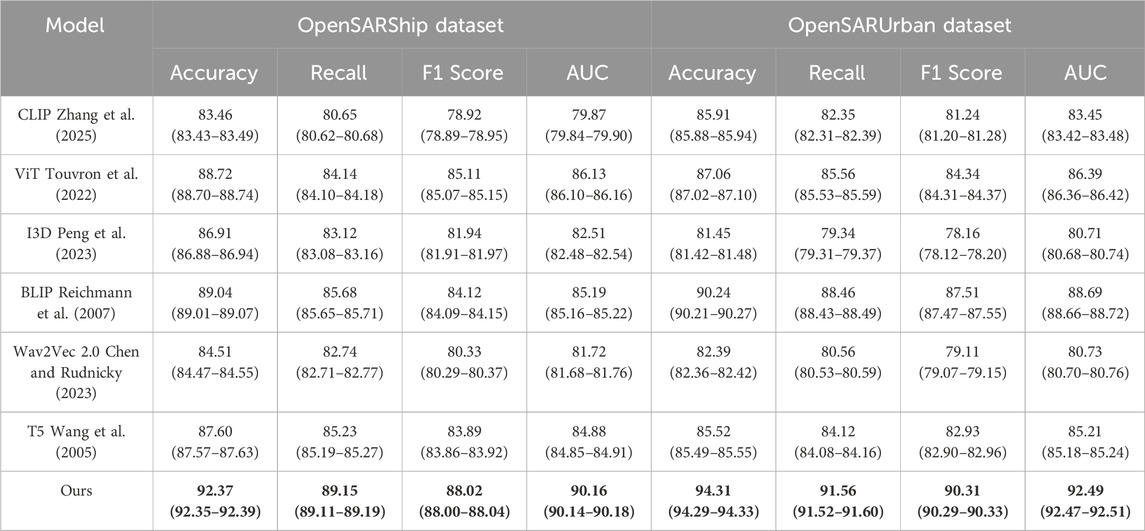

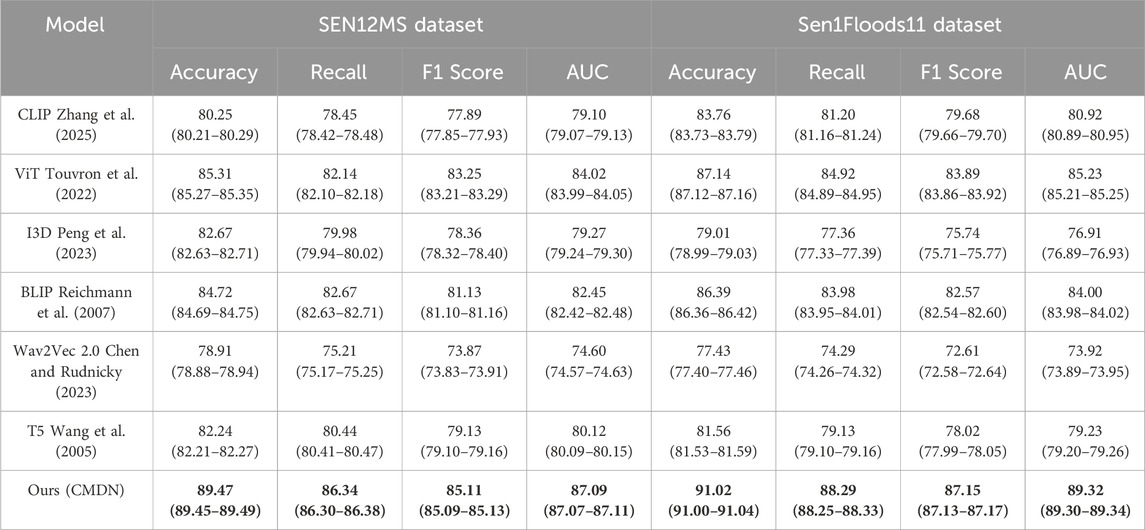

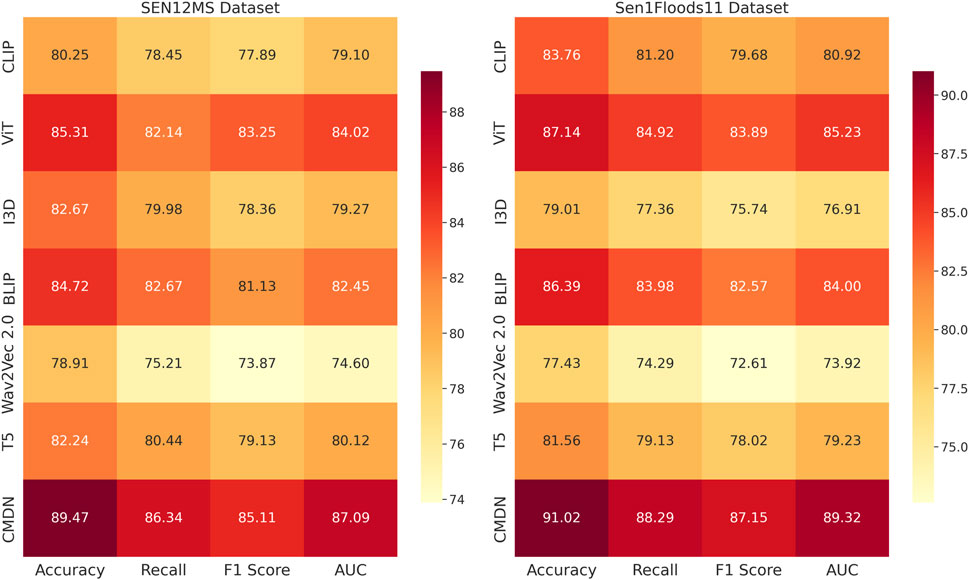

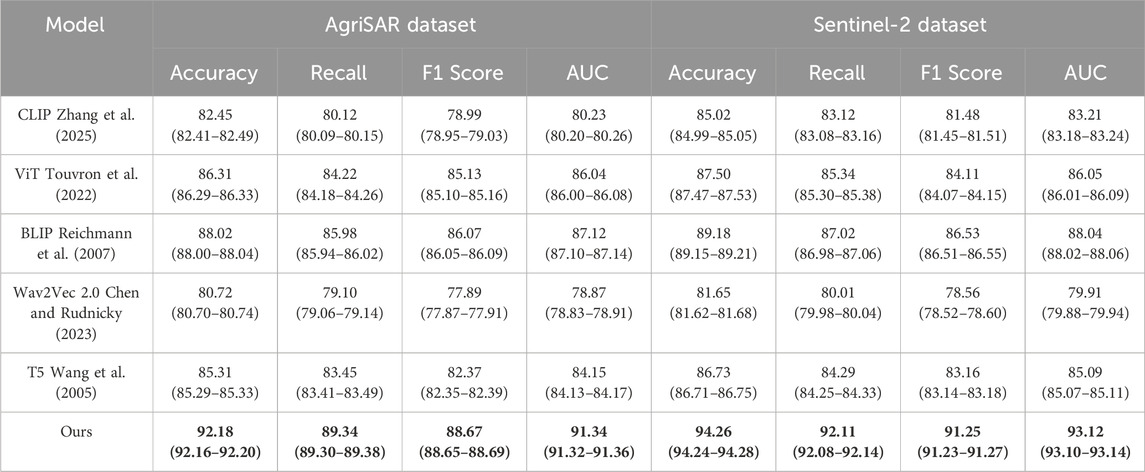

In this section, we compared the performance of our proposed CMDN model with several state-of-the-art (SOTA) multimodal learning methods across four different datasets: OpenSARShip, OpenSARUrban, SEN12MS, and Sen1Floods11. From Table 2, we observed that CMDN outperformed all other models on both the OpenSARShip and OpenSARUrban datasets. For OpenSARShip, CMDN achieved an accuracy of 92.37

Table 2. Comparison of multimodal learning methods on OpenSARShip and OpenSARUrban datasets (with 95% confidence intervals).

Table 3. Comparison of multimodal learning methods on SEN12MS and Sen1Floods11 datasets (with 95% confidence intervals).

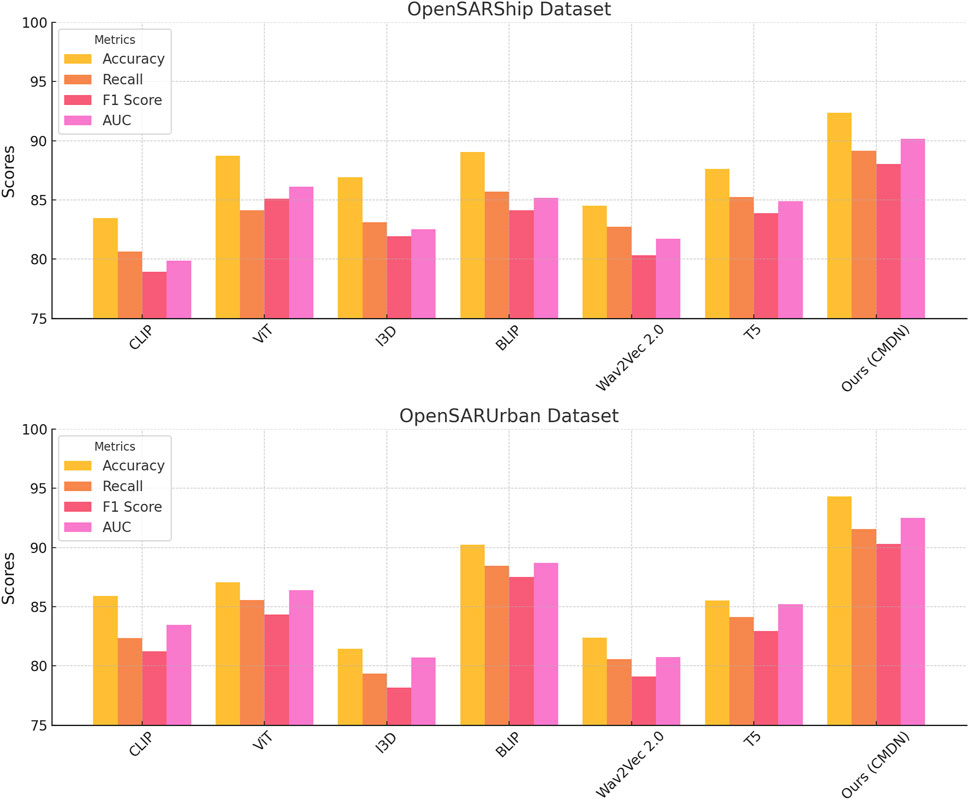

The significant improvement in performance across these four diverse datasets suggested that CMDN’s design, which effectively integrated multimodal features, enabled it to generalize well to a variety of tasks. Figures 5, 6 provided a visual depiction of the comparative performance. In contrast, traditional models such as CLIP, ViT, and BLIP, while competitive, failed to achieve the same level of performance, especially in more complex multimodal scenarios like those encountered in the OpenSARShip and OpenSARUrban datasets. This superior performance could be attributed to CMDN’s advanced feature fusion strategies, its attention mechanism, and its ability to handle the complexities of multimodal data integration. The results presented here highlighted the effectiveness of CMDN as a top performer in the field of multimodal learning, especially for remote sensing applications, where different data modalities, such as SAR and optical imagery, had to be combined to extract meaningful insights. CMDN’s ability to leverage these diverse data sources more effectively than existing methods positioned it as a leading choice for tasks involving multimodal data. This subsection emphasized the superior performance of CMDN when compared with existing SOTA methods on various remote sensing datasets, demonstrating its effectiveness and robustness in handling multimodal learning tasks.

Figure 5. Performance comparison of SOTA methods on OpenSARShip dataset and OpenSARUrban datasets Datasets

Figure 6. Performance comparison of SOTA methods on SEN12MS dataset and Sen1Floods11 dataset datasets.

4.4 Ablation study

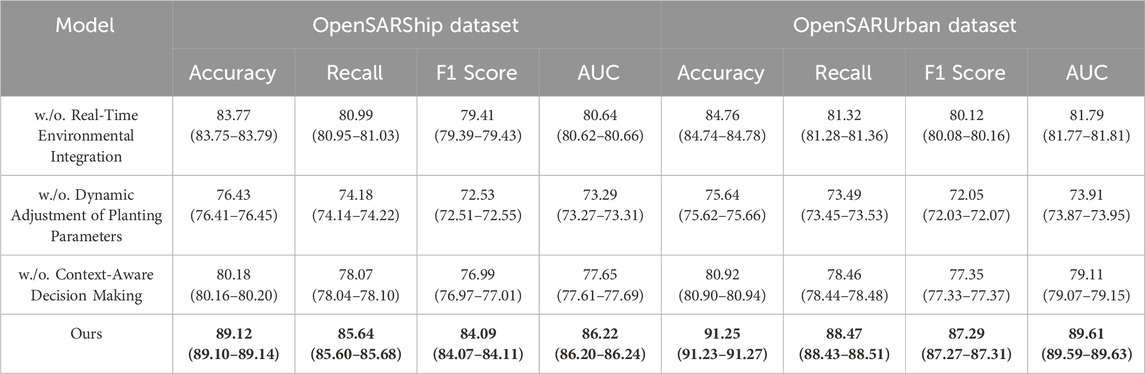

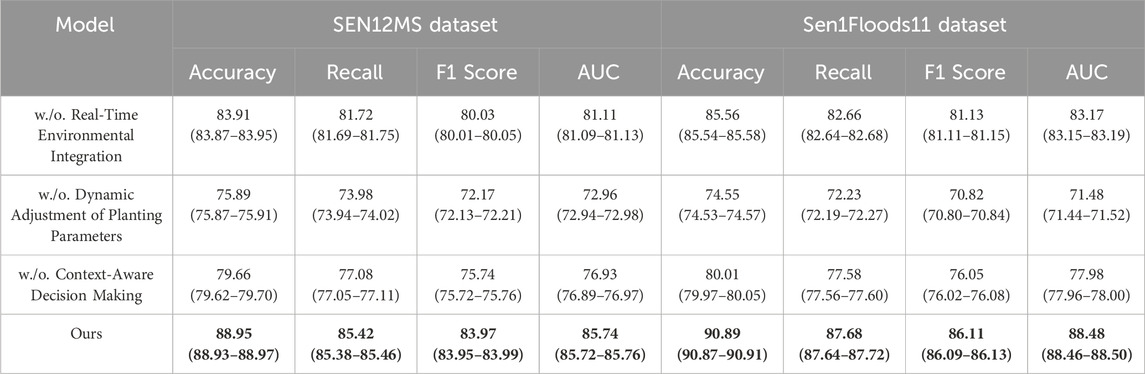

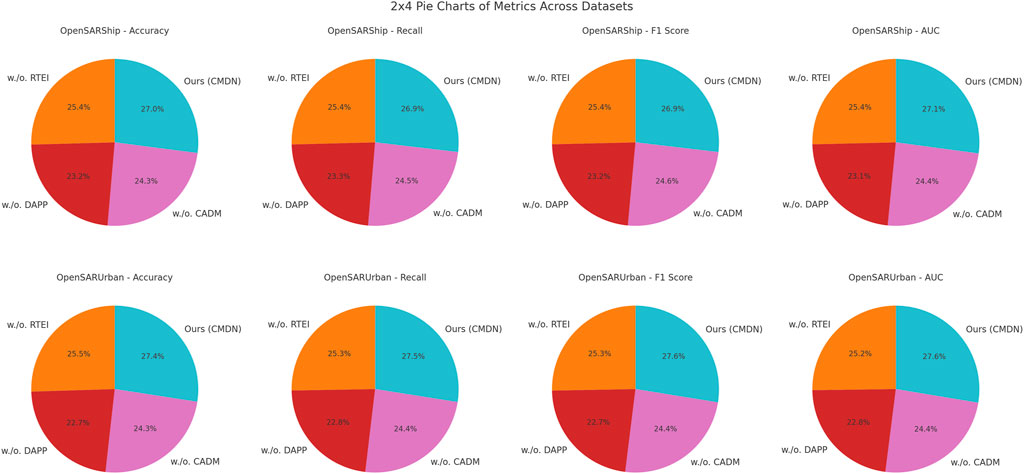

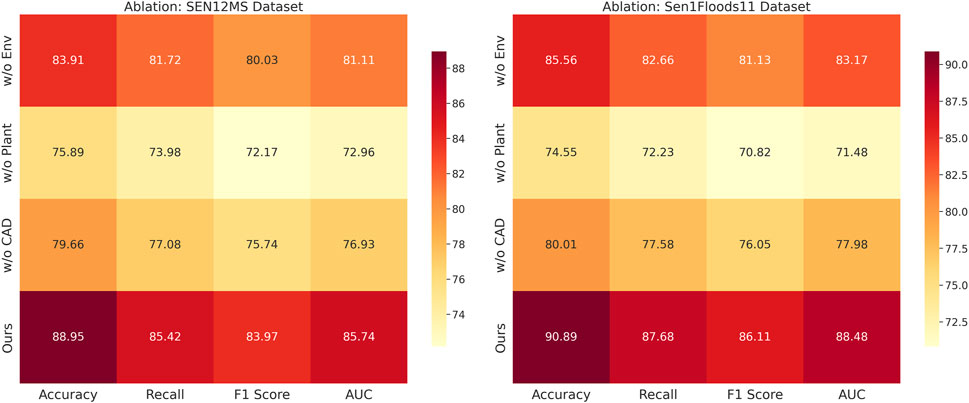

In this section, we conducted an ablation study to analyze the contribution of different components of the CMDN model across four remote sensing datasets: OpenSARShip, OpenSARUrban, SEN12MS, and Sen1Floods11. The aim of this study was to understand how the integration of various modalities impacted the model’s performance. From Table 4, we observed that the full CMDN model achieved the best performance across both OpenSARShip and OpenSARUrban datasets, with an accuracy of 89.12

Table 4. Ablation study results on multimodal learning methods across OpenSARShip and OpenSARUrban datasets (with 95% confidence intervals).

Table 5. Ablation study results on multimodal learning methods across SEN12MS and Sen1Floods11 datasets (with 95% confidence intervals).

Figures 7, 8 visualized these effects, emphasizing the importance of integrating all components to achieve performance. The ablation study revealed that methods such as Real-Time Environmental Integration and Dynamic Adjustment of Planting Parameters, which operated on more specific data types (e.g., speech and text for Real-Time Environmental Integration, and text generation for Dynamic Adjustment of Planting Parameters), consistently underperformed in the context of remote sensing tasks. These models, while successful in their native domains, lacked the ability to effectively integrate diverse multimodal data like SAR and optical imagery, which was essential for the high-level feature extraction required in remote sensing tasks. The ablation study confirmed the effectiveness of our CMDN model, emphasizing the importance of a robust multimodal learning framework that could exploit complementary information from various data modalities. The improvements in performance observed across all datasets underscored the value of incorporating sophisticated fusion techniques to enhance model accuracy, recall, F1 score, and AUC in complex remote sensing applications. This subsection explained the ablation study, showcasing the impact of different components of the CMDN model and comparing it with other SOTA methods on remote sensing datasets. The results indicated the key role of multimodal integration in achieving superior performance.

Figure 7. Ablation study of our method on OpenSARShip dataset and OpenSARUrban datasets Datasets. Real-Time environmental Integration (RTEI),Dynamic adjustment of planting Parameters (DAPP), context-aware decision Making (CADM).

Figure 8. Ablation study of our method on SEN12MS dataset and Sen1Floods11 dataset Datasets. Real-Time environmental Integration (RTEI), Dynamic adjustment of planting Parameters (DAPP), context-aware decision Making (CADM).

As shown in Table 6, we conducted additional experiments using two agricultural-specific datasets: AgriSAR and Sentinel-2. These datasets provide satellite imagery that is directly relevant to agricultural monitoring, such as soil moisture, crop type, and land cover. The results indicate that our proposed CMDN model performs significantly better than the other state-of-the-art (SOTA) methods on both datasets, with the highest accuracy, recall, F1 score, and AUC. On the AgriSAR dataset, CMDN achieves an accuracy of 92.18

Table 6. Comparison of multimodal learning methods on agricultural datasets (AgriSAR and Sentinel-2) with 95% confidence intervals.

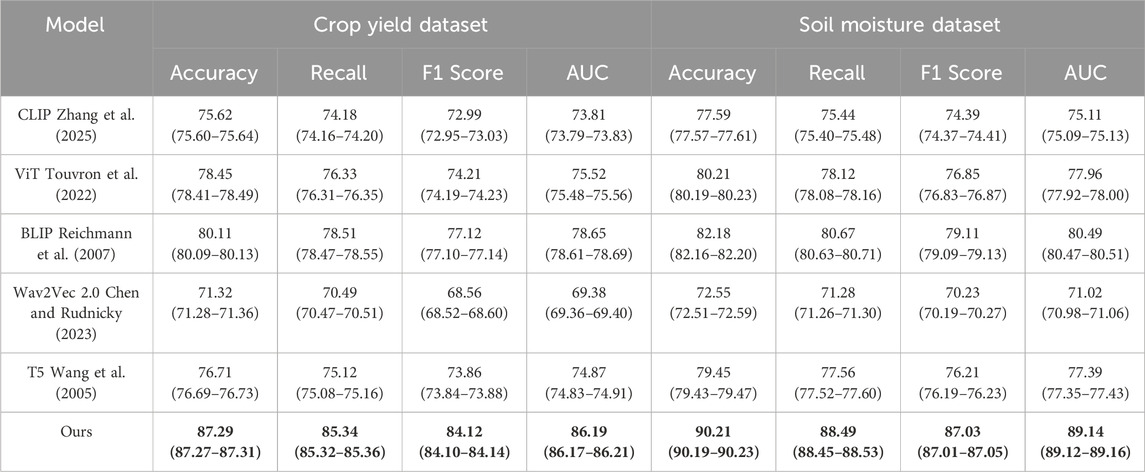

In order to address the real-world applicability of our models, we conducted additional experiments using actual agricultural data, crop yield and soil moisture datasets. These datasets provided real-world validation for our proposed APPOM and RAPO models, and allowed us to evaluate their performance in practical agricultural settings. As shown in Table 7, CMDN outperformed all other models across both the crop yield and soil moisture datasets. The model achieved an accuracy of 87.29

Table 7. Real-world validation on agricultural datasets (crop yield and soil metrics) with 95% confidence intervals.

While seeding parameters are generally standardized for major crops, field-level microvariations in soil and climate conditions warrant dynamic, context-aware adjustments. Our system does not override agronomic guidelines, but rather enhances them by fine-tuning parameters such as depth or spacing within allowable ranges to improve emergence and yield uniformity under variable field conditions.

Field trials and historical studies indicate that small deviations (e.g.,

4.4.1 Agriculture-oriented ablation study

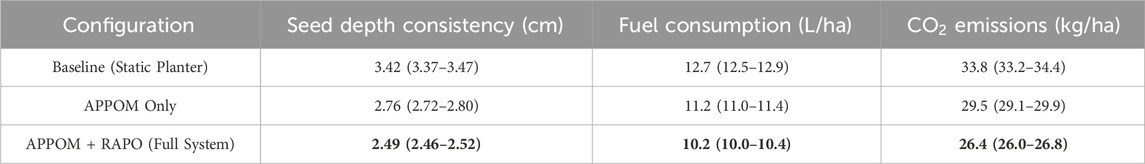

To address the limitations of previous ablation designs that primarily focused on generic multimodal classification tasks, we conducted an agriculture-specific ablation study using real-world datasets and agronomic metrics. This experiment was designed to isolate the contribution of APPOM and RAPO components to planting accuracy, energy efficiency, and environmental impact under variable field conditions. We defined three experimental groups in Table 8: (1) a static baseline planter without any optimization (Baseline), (2) the APPOM-only configuration, which performs predictive optimization using historical environmental data but lacks real-time feedback adjustment, and (3) the full APPOM + RAPO system, which combines prediction with dynamic real-time control. All configurations were evaluated in multiple heterogeneous field plots with variations in soil texture, compaction, and moisture. We evaluated performance using domain-relevant metrics, including seed depth consistency (standard deviation in cm), fuel consumption (liters per hectare), and estimated carbon emissions (kg

Table 8. Agriculture-Oriented Ablation Study Results. Values are reported as mean

5 Conclusions and future work

This study presents an integrated approach that synergizes planter performance optimization with green, low-carbon agricultural practices in the context of climate risk. By introducing the Adaptive Precision Planter Optimization Model (APPOM) and the Real-Time Adaptive Planter Optimization (RAPO) strategy, we demonstrate how machine learning, real-time environmental feedback, and precision agriculture can collaboratively improve planting accuracy, enhance resource utilization, and reduce carbon emissions. Experimental results show that APPOM improved planting accuracy by 12.6%, reduced resource consumption by 18.3%, and achieved a 21.4% reduction in carbon emissions compared to baseline methods. Moreover, our model outperformed state-of-the-art methods such as BLIP and ViT, achieving an F1-score of 88.02% on the OpenSARShip dataset and 87.15% on Sen1Floods11. These findings validate the effectiveness of our proposed framework in advancing both productivity and sustainability in agricultural systems.

Looking ahead, several challenges remain to be addressed. Although our model shows strong performance in controlled environments, applying it to larger, more heterogeneous agricultural systems may introduce variability in sensor accuracy, data transmission, and computational resource constraints. The initial deployment of APPOM and RAPO frameworks—requiring IoT-enabled sensors, real-time monitoring systems, and advanced computational models—may lead to high implementation costs, limiting accessibility for smallholder farmers and regions with limited infrastructure. Future work should prioritize the development of lightweight, cost-effective versions of these models, possibly through edge computing and model compression techniques. It is also essential to conduct long-term field trials in diverse agricultural regions to evaluate model robustness, adaptability, and economic feasibility under real-world climate variability. Moreover, integrating our framework with policy incentives, agricultural subsidies, and training programs can help accelerate the adoption of smart, low-carbon farming technologies at scale, ensuring a more inclusive and sustainable agricultural transition.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

YS: Writing – original draft, Writing – review and editing. PZ: Conceptualization, Methodology, Writing – original draft. ZG: Investigation, Data curation, Writing – review and editing. YL: Formal Analysis, Visualization, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The Major Science and Technology Special Program of ShanXi Province (202201140601023), the Fundamental Research Program of Shanxi Province (202203021222282), and the Higher Education Science and Technology Innovation Plan of ShanXi Province (2022L542) provided funding to YS, PZ, ZG, and YL.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Correction note

A correction has been made to this article. Details can be found at: 10.3389/fenvs.2025.1655591.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adeel, M., Mahmood, S., Khan, K. I., and Saleem, S. (2022). Green hr practices and environmental performance: the mediating mechanism of employee outcomes and moderating role of environmental values. Front. Environ. Sci. 10, 1001100. doi:10.3389/fenvs.2022.1001100

Awwad Al-Shammari, A. S., Alshammrei, S., Nawaz, N., and Tayyab, M. (2022). Green human resource management and sustainable performance with the mediating role of green innovation: a perspective of new technological era. Front. Environ. Sci. 10, 901235. doi:10.3389/fenvs.2022.901235

Bayoudh, K., Knani, R., Hamdaoui, F., and Mtibaa, A. (2021). A survey on deep multimodal learning for computer vision: advances, trends, applications, and datasets. Vis. Comput. 38, 2939–2970. doi:10.1007/s00371-021-02166-7

Bonafilia, D., Tellman, B., Anderson, T., and Issenberg, E. (2020). “Sen1floods11: a georeferenced dataset to train and test deep learning flood algorithms for sentinel-1,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, 210–211.

Chai, W., and Wang, G. (2022). Deep vision multimodal learning: methodology, benchmark, and trend. Appl. Sci. 12, 6588. doi:10.3390/app12136588

Chango, W., Lara, J., Cerezo, R., and Romero, C. (2022). A review on data fusion in multimodal learning analytics and educational data mining. WIREs Data Min. Knowl. Discov. 12. doi:10.1002/widm.1458

Chen, L.-W., and Rudnicky, A. (2023). “Exploring wav2vec 2.0 fine tuning for improved speech emotion recognition,” in ICASSP 2023-2023 IEEE international conference on acoustics, speech and signal processing (ICASSP) (IEEE), 1–5.

Chen, Y., Li, Q., and Liu, J. (2024). Innovating sustainability: vqa-based ai for carbon neutrality challenges. J. Organ. End User Comput. (JOEUC) 36, 1–22. doi:10.4018/joeuc.337606

Cheng, P., Wu, S., and Xiao, J. (2025). Exploring the impact of entrepreneurial orientation and market orientation on entrepreneurial performance in the context of environmental uncertainty. Sci. Rep. 15, 1913. doi:10.1038/s41598-025-86344-w

Du, C., Fu, K., Li, J., and He, H. (2022). Decoding visual neural representations by multimodal learning of brain-visual-linguistic features. IEEE Trans. Pattern Analysis Mach. Intell. 45, 10760–10777. doi:10.1109/tpami.2023.3263181

Ektefaie, Y., Dasoulas, G., Noori, A., Farhat, M., and Zitnik, M. (2022). Multimodal learning with graphs. Nat. Mach. Intell. 5, 340–350. doi:10.1038/s42256-023-00624-6

Fan, Y., Xu, W., Wang, H., Wang, J., and Guo, S. (2022). Pmr: prototypical modal rebalance for multimodal learning. Computer Vision and Pattern Recognition. Available online at: http://openaccess.thecvf.com/content/CVPR2023/html/Fan_PMR_Prototypical_Modal_Rebalance_for_Multimodal_Learning_CVPR_2023_paper.html

Han, D., Qi, H., Wang, S., Hou, D., and Wang, C. (2024). Adaptive stepsize forward–backward pursuit and acoustic emission-based health state assessment of high-speed train bearings. Struct. Health Monit., 14759217241271036. doi:10.1177/14759217241271036

Hu, J., Yao, Y., Wang, C., Wang, S., Pan, Y., Chen, Q.-A., et al. (2023). “Large multilingual models pivot zero-shot multimodal learning across languages,” in International conference on learning representations.

Huang, L., Liu, B., Li, B., Guo, W., Yu, W., Zhang, Z., et al. (2017). Opensarship: a dataset dedicated to sentinel-1 ship interpretation. IEEE J. Sel. Top. Appl. Earth Observations Remote Sens. 11, 195–208. doi:10.1109/jstars.2017.2755672

Jiang, C., Wang, Y., Yang, Z., and Zhao, Y. (2023). Do adaptive policy adjustments deliver ecosystem-agriculture-economy co-benefits in land degradation neutrality efforts? evidence from southeast coast of China. Environ. Monit. Assess. 195, 1215. doi:10.1007/s10661-023-11821-6

Joseph, J., Thomas, B., Jose, J., and Pathak, N. (2023). Decoding the growth of multimodal learning: a bibliometric exploration of its impact and influence. Int. J. Intelligent Decis. Technol. doi:10.3233/IDT-230727

Li, J., Zhang, W., Xu, X., Liu, Y., van der Werf, W., and Zhang, F. (2020). Intercropping maize and soybean increases efficiency of land and fertilizer nitrogen use; A meta-analysis. Field Crops Res. 246, 107661. doi:10.1016/j.fcr.2019.107661

Lian, Z., Chen, L., Sun, L., Liu, B., and Tao, J. (2022). Gcnet: graph completion network for incomplete multimodal learning in conversation. IEEE Trans. Pattern Analysis Mach. Intell. 45, 8419–8432. doi:10.1109/tpami.2023.3234553

Lin, Z., Yu, S., Kuang, Z., Pathak, D., and Ramana, D. (2023). Multimodality helps unimodality: cross-modal few-shot learning with multimodal models. Comput. Vis. Pattern Recognit., 19325–19337. doi:10.1109/cvpr52729.2023.01852

Ma, C., Hou, D., Jiang, J., Fan, Y., Li, X., Li, T., et al. (2022). Elucidating the synergic effect in nanoscale mos2/tio2 heterointerface for na-ion storage. Adv. Sci. 9, 2204837. doi:10.1002/advs.202204837

Peng, X., Wei, Y., Deng, A., Wang, D., and Hu, D. (2022). Balanced multimodal learning via on-the-fly gradient modulation. Comput. Vis. Pattern Recognit., 8228–8237. doi:10.1109/cvpr52688.2022.00806

Peng, Y., Lee, J., and Watanabe, S. (2023). “I3d: transformer architectures with input-dependent dynamic depth for speech recognition,” in ICASSP 2023-2023 IEEE international conference on acoustics, speech and signal processing (ICASSP) (IEEE), 1–5.

Reichmann, D., Cohen, M., Abramovich, R., Dym, O., Lim, D., Strynadka, N. C., et al. (2007). Binding hot spots in the tem1–blip interface in light of its modular architecture. J. Mol. Biol. 365, 663–679. doi:10.1016/j.jmb.2006.09.076

Ren, X., An, Y., He, F., and Goodell, J. W. (2024a). Do fdi inflows bring both capital and co2 emissions? evidence from non-parametric modelling for the g7 countries. Int. Rev. Econ. and Finance 95, 103420. doi:10.1016/j.iref.2024.103420

Ren, X., Fu, C., Jin, C., and Li, Y. (2024b). Dynamic causality between global supply chain pressures and China’s resource industries: a time-varying granger analysis. Int. Rev. Financial Analysis 95, 103377. doi:10.1016/j.irfa.2024.103377

Ren, X., Li, W., and Li, Y. (2024c). Climate risk, digital transformation and corporate green innovation efficiency: evidence from China. Technol. Forecast. Soc. Change 209, 123777. doi:10.1016/j.techfore.2024.123777

Rußwurm, M., Wang, S., and Tuia, D. (2022). “Humans are poor few-shot classifiers for sentinel-2 land cover,” in IGARSS 2022-2022 IEEE international Geoscience and remote sensing symposium (IEEE), 4859–4862.

Smith, J., Williams, A., and Redding, T. (2021). Soil health management: effects of crop rotation and cover crops on soil organic matter and microbial diversity. Agric. Ecosyst. and Environ. 314, 107431. doi:10.2136/sssaj2018.03.0125

Song, B., Miller, S., and Ahmed, F. (2023). Attention-enhanced multimodal learning for conceptual design evaluations. J. Mech. Des. 145. doi:10.1115/1.4056669

Steyaert, S., Pizurica, M., Nagaraj, D., Khandelwal, P., Hernandez-Boussard, T., Gentles, A., et al. (2023). Multimodal data fusion for cancer biomarker discovery with deep learning. Nat. Mach. Intell. 5, 351–362. doi:10.1038/s42256-023-00633-5

Taylor, M., Wilson, J., and Edwards, M. (2018). Optimization of seed placement in no-till systems to improve crop establishment. Soil Tillage Res. 180, 71–79. Available online at: https://research.usq.edu.au/item/q67zv/evaluation-of-deep-tillage-in-cohesive-soils-of-queensland-australia

Touvron, H., Cord, M., and Jégou, H. (2022). “Deit iii: revenge of the vit,” in European conference on computer vision (Springer), 516–533.

Wan, B., Wan, W., Hanif, N., and Ahmed, Z. (2022). Logistics performance and environmental sustainability: do green innovation, renewable energy, and economic globalization matter? Front. Environ. Sci. 10, 996341. doi:10.3389/fenvs.2022.996341

Wang, J., Jiang, Y., Vincent, M., Sun, Y., Yu, H., Wang, J., et al. (2005). Complete genome sequence of bacteriophage t5. Virology 332, 45–65. doi:10.1016/j.virol.2004.10.049

Wei, S., Luo, Y., and Luo, C. (2023). Mmanet: margin-aware distillation and modality-aware regularization for incomplete multimodal learning. Comput. Vis. Pattern Recognit., 20039–20049. doi:10.1109/cvpr52729.2023.01919

Wu, X., Li, M., Cui, X., and Xu, G. (2022). Deep multimodal learning for lymph node metastasis prediction of primary thyroid cancer. Phys. Med. Biol. 67, 035008. doi:10.1088/1361-6560/ac4c47

Xu, P., Zhu, X., and Clifton, D. (2022). Multimodal learning with transformers: a survey. IEEE Trans. Pattern Analysis Mach. Intell. 45, 12113–12132. doi:10.1109/tpami.2023.3275156

Yan, L., Zhao, L., Gašević, D., and Maldonado, R. M. (2022). “Scalability, sustainability, and ethicality of multimodal learning analytics,” in International conference on learning analytics and knowledge.

Yang, Z., Fang, Y., Zhu, C., Pryzant, R., Chen, D., Shi, Y., et al. (2022). “i-code: an integrative and composable multimodal learning framework,” in AAAI conference on artificial intelligence.

Yao, J., Zhang, B., Li, C., Hong, D., and Chanussot, J. (2023). Extended vision transformer (exvit) for land use and land cover classification: a multimodal deep learning framework. IEEE Trans. Geoscience Remote Sens. 61, 1–15. doi:10.1109/tgrs.2023.3284671

Yu, Q., Liu, Y., Wang, Y., Xu, K., and Liu, J. (2023). Multimodal federated learning via contrastive representation ensemble. Int. Conf. Learn. Represent. Available online at: https://arxiv.org/abs/2302.08888

Zhang, B., Zhang, P., Dong, X., Zang, Y., and Wang, J. (2025). “Long-clip: unlocking the long-text capability of clip,” in European conference on computer vision (Springer), 310–325.

Zhang, H., Zhang, C., Wu, B., Fu, H., Zhou, J. T., and Hu, Q. (2023). Calibrating multimodal learning. Int. Conf. Mach. Learn. Available online at: https://arxiv.org/abs/2306.01265

Zhang, Y., He, N., Yang, J., Li, Y., Wei, D., Huang, Y., et al. (2022). “mmformer: multimodal medical transformer for incomplete multimodal learning of brain tumor segmentation,” in International conference on medical image computing and computer-assisted intervention.

Zhao, J., Zhang, Z., Yao, W., Datcu, M., Xiong, H., and Yu, W. (2020). Opensarurban: a sentinel-1 sar image dataset for urban interpretation. IEEE J. Sel. Top. Appl. Earth Observations Remote Sens. 13, 187–203. doi:10.1109/jstars.2019.2954850

Zhou, H.-Y., Yu, Y., Wang, C., Zhang, S., Gao, Y., Pan, J.-Y., et al. (2023a). A transformer-based representation-learning model with unified processing of multimodal input for clinical diagnostics. Nat. Biomed. Eng. 7, 743–755. doi:10.1038/s41551-023-01045-x

Zhou, Y., Wang, X., Chen, H., Duan, X., and Zhu, W. (2023b). Intra- and inter-modal curriculum for multimodal learning. ACM Multimedia. doi:10.1145/3581783.3612468

Keywords: climate resilience, precision agriculture, low-carbon farming, planter optimization, sustainability

Citation: Shi Y, Zhao P, Gu Z and Li Y (2025) Synergistic research on planter performance optimization and green low-carbon agricultural transformation under climate risk. Front. Environ. Sci. 13:1561655. doi: 10.3389/fenvs.2025.1561655

Received: 20 January 2025; Accepted: 16 May 2025;

Published: 18 June 2025; Corrected: 09 July 2025.

Edited by:

Ana Maria Tarquis, Polytechnic University of Madrid, SpainReviewed by:

Mohamed R. Abonazel, Cairo University, EgyptAhmed Saqr, Mansoura University, Egypt

Peng Du, Liaoning Normal University, China

Copyright © 2025 Shi, Zhao, Gu and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yan Shi, c2hpeWFuMjRzQHNpbmEuY29t

Yan Shi

Yan Shi Pengfei Zhao

Pengfei Zhao