- ¹Department of Otolaryngology–Head and Neck Surgery/Surgical Oncology, Princess Margaret Cancer Centre/University Health Network, Toronto, ON, Canada

- ²Guided Therapeutics (GTx) Program, Techna Institute, University Health Network, Toronto, ON, Canada

- ³Unit of Otorhinolaryngology–Head and Neck Surgery, University of Brescia–ASST “Spedali Civili di Brescia”, Brescia, Italy

- ⁴Section of Otorhinolaryngology–Head and Neck Surgery, University of Padua–Azienda Ospedaliera di Padova, Padua, Italy

Objective: To report the first use of a novel projected augmented reality (AR) system in open sinonasal tumor resections in preclinical models and to compare the AR approach with an advanced intraoperative navigation (IN) system.

Methods: Four tumor models were created. Five head and neck surgeons participated in the study, performing virtual osteotomies. Unguided, AR, IN, and AR + IN simulations were performed, and the approaches were compared statistically. The intratumoral cut rate was the main outcome. The groups were also compared in terms of the percentage of intratumoral, close, adequate, and excessive distances from the tumor. Data from a wearable gaze-tracking headset and NASA Task Load Index questionnaire results were also analyzed.

Results: A total of 335 cuts were simulated. Intratumoral cuts were observed in 20.7%, 9.4%, 1.2%, and 0% of the unguided, AR, IN, and AR + IN simulations, respectively (p < 0.0001). AR was superior to the unguided approach in univariate and multivariate models. The percentage of time spent looking at the screen during the procedures was 55.5% for the unguided approach and 0%, 78.5%, and 61.8% for AR, IN, and AR + IN, respectively (p < 0.001). The combined approach significantly reduced screen time compared with IN alone.

Conclusion: We reported the use of a novel AR system for oncological resections in open sinonasal approaches, with improved margin delineation compared with unguided techniques. AR mitigated the gaze-toggling drawback of IN. Further refinements of the AR system are needed before our experience can be translated to clinical practice.

Introduction

The complex anatomy and close proximity of critical structures in the sinonasal region represent a major challenge for surgeons when treating advanced tumors in this location, and incomplete resections are not uncommon, both in open and endoscopic approaches (1, 2). Intraoperative navigation (IN) has been proposed as a potential strategy to improve surgical margins (3). IN enables co-registration of computed tomography (CT) and/or magnetic resonance imaging (MRI) studies with surgical instruments. As a result, real-time feedback of instrument location is provided in order to help the surgeon during the operation.

Our group recently described an advanced IN system for open sinonasal approaches during the resection of locally aggressive cancers (4). This technology not only allows the surgeon to locate a registered instrument or pointer tool in two dimensions but also introduces planar cutting-tool capabilities along with three-dimensional (3D) volume rendering. The surgeon can therefore anticipate the direction of the cutting instrument in 3D relative to the tumor and improve the accuracy of margin delineation. Still, a key drawback of all IN systems is that the information is displayed outside the surgical field, forcing surgeons to switch their gaze between the procedure and the navigation monitor, which can affect safety and efficiency.

Augmented reality (AR) uses visual inputs to enhance the user’s natural vision and can therefore integrate navigation information into the surgical field (5). This feature could address the gaze-toggling drawback of IN while providing valuable information to the surgeon, for example, by facilitating tumor localization and delineation. Reports of AR in otolaryngology–head and neck surgery are scarce, and most come from endoscopic sinus surgery (6), transoral robotic surgery (7), and otology (8, 9). Nevertheless, open sinonasal procedures are also a suitable indication for AR. The rigid structure of the sinonasal region facilitates the co-registration required for AR, and this technology could help reduce the high rate of incomplete resections in advanced sinonasal tumors.

The objective of this study was to report the first use of a novel AR system in open sinonasal tumor resections in preclinical models and to compare the AR approach with an advanced IN system.

Materials and Methods

Tumor Models

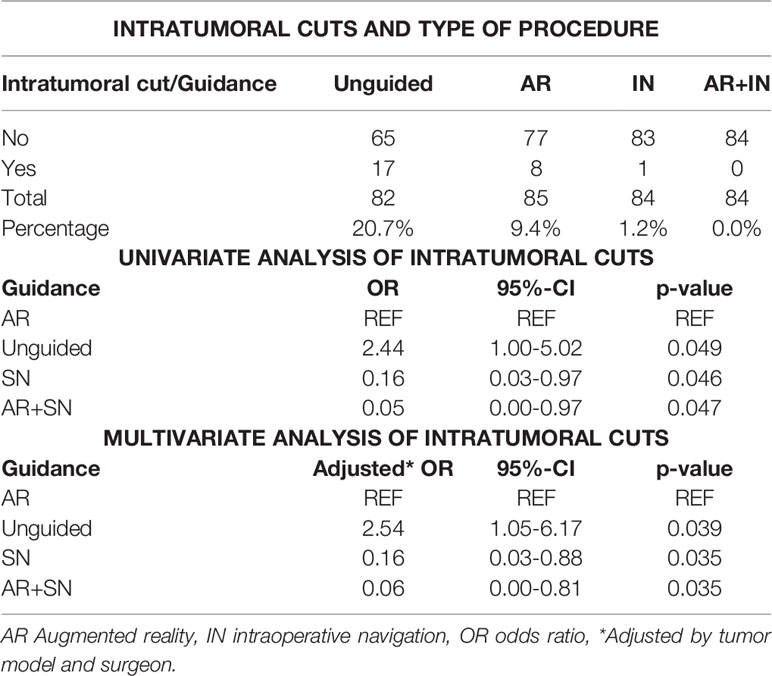

Two artificial skulls (Sawbones®) and a moldable material (Play-Doh®) mixed with acrylic glue were employed to build four locally advanced sinonasal tumor models (Figure 1A). Tumor surfaces were disguised with tape. Five different areas to be osteotomized were delineated: palatal osteotomy (Pa), fronto-maxillary junction (FMJ), latero-inferior orbital rim (LIOR), zygomatic arch (Zy), and pterygomaxillary junction (PMJ) (Figure 1B).

Figure 1 Tumor models, image acquisition, and tumor contouring. (A) Artificial skulls with moldable material simulating advanced sinonasal tumors. (B) Final tumor model, with the tumors covered with white tape. The areas to be osteotomized are delineated with visible tape and marked with numbers. A four-sphere reference tool is drilled into the skull to co-register the intraoperative navigation. (C) Higher attenuation of the artificial tumor models in the cone-beam computed tomography (CBCT) images. (D) Three-dimensional (3D) contouring of the left tumor.

Image Acquisition and Tumor Contouring

Cone-beam computed tomography (CBCT) scans acquired 3D images of the skull models (9). Tumors showed higher x-ray attenuation than the artificial bone (Figure 1C). Tumor contouring was performed semiautomatically (10). First, a global threshold was applied to provide a quick, coarse segmentation, and then manual refinement was used to smooth the segmentation (Figure 1D).
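The coarse first pass of this semiautomatic contouring can be illustrated with a minimal sketch, assuming the CBCT volume is available as a NumPy array and that tumor voxels are simply those at or above a single intensity cutoff. The threshold and toy volume below are hypothetical (the study does not report its threshold), and the manual refinement step is not modeled.

```python
import numpy as np

def global_threshold_mask(volume, threshold):
    """Coarse binary segmentation: voxels at or above `threshold` are labelled tumor.

    `volume` is a 3D array of CBCT intensities; `threshold` is a hypothetical,
    user-chosen cutoff separating the denser tumor material from the artificial bone.
    """
    return np.asarray(volume) >= threshold

# Toy volume: "bone" background at 100 and a denser 4x4x4 "tumor" block at 300.
volume = np.full((10, 10, 10), 100.0)
volume[3:7, 3:7, 3:7] = 300.0

mask = global_threshold_mask(volume, threshold=200.0)
print(int(mask.sum()))  # number of voxels labelled tumor
```

A single global cutoff works here only because the tumor material is uniformly denser than the bone; on real scans it typically leaves speckle and ragged borders, which is why a manual refinement pass follows.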

Advanced Intraoperative Navigation System

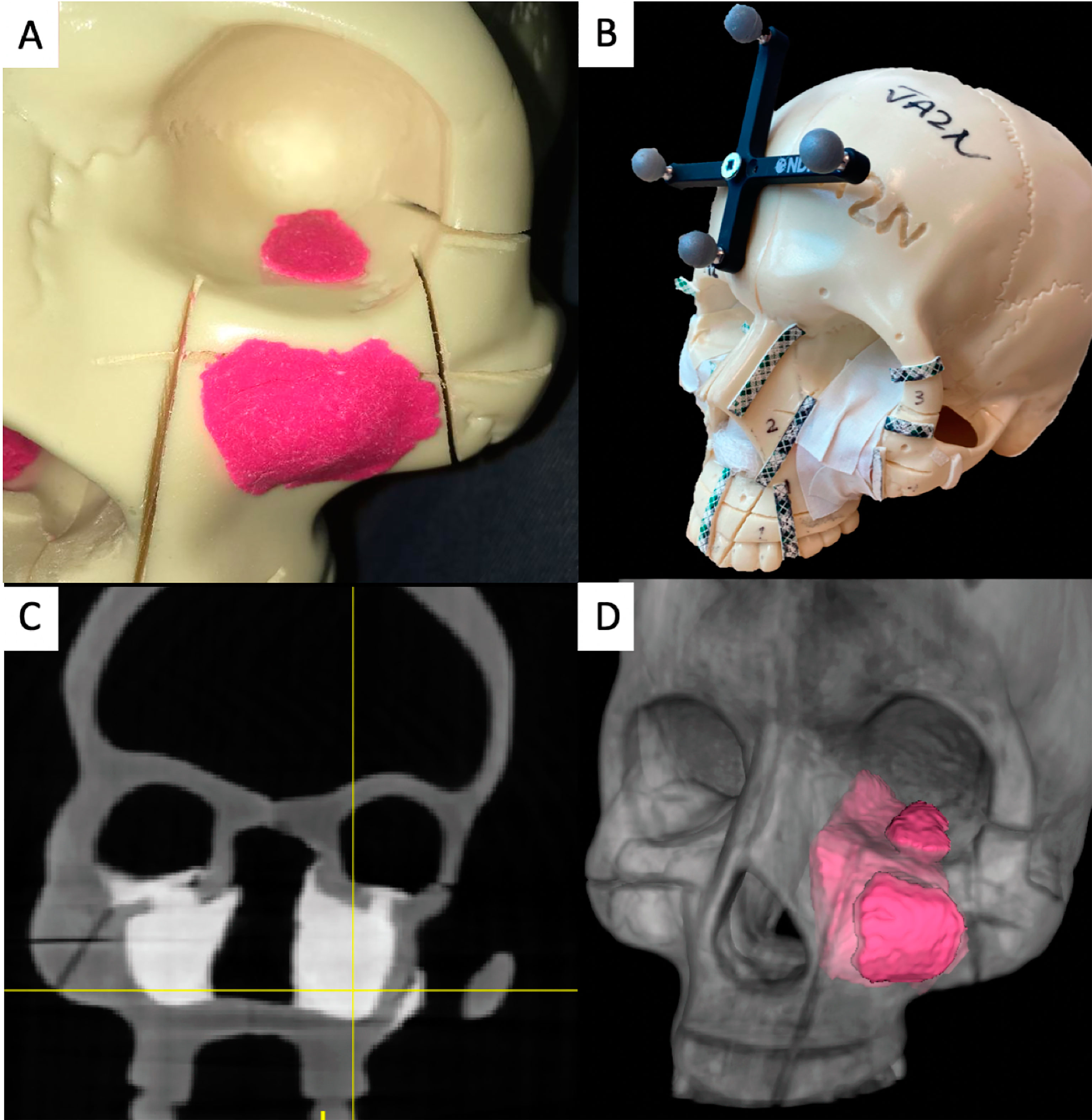

An in-house navigation software package, GTx-Eyes, processed and displayed the CBCT images (11). This software has proven useful across a range of surgical oncology subspecialties (12–15), and its technical aspects are described elsewhere (16). Tumor and margin segmentations were superimposed on triplanar views and also shown as 3D surface renderings (Figure 2A). Tools were tracked with a stereoscopic infrared camera. Image-to-tracker registration was obtained by paired-point matching of predrilled divots in the skull using a tracked pointer. A four-sphere reference tool was drilled into the skull. A fiducial registration error of ≤1 mm was deemed acceptable. A three-sphere reference was attached to an osteotome and calibrated. This advanced IN system allows visualization of the entire trajectory of the cutting instrument with respect to the tumor in 3D views (Figures 2B–D, Supplementary Video).
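Paired-point matching is commonly solved as a least-squares rigid registration. The sketch below assumes the SVD-based closed-form solution (Arun/Kabsch) and defines fiducial registration error as the root-mean-square residual between transformed image fiducials and their tracked positions. Whether GTx-Eyes uses this exact formulation is not stated here, and the divot coordinates and pose are hypothetical.

```python
import numpy as np

def paired_point_registration(image_pts, tracker_pts):
    """Least-squares rigid transform (R, t) mapping image_pts onto tracker_pts.

    Inputs are (N, 3) arrays of corresponding fiducials (N >= 3, not collinear).
    """
    P = np.asarray(image_pts, dtype=float)
    Q = np.asarray(tracker_pts, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)              # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Correct a possible reflection so that det(R) = +1.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = q_mean - R @ p_mean
    return R, t

def fiducial_registration_error(image_pts, tracker_pts, R, t):
    """RMS distance (same units as input) between mapped image fiducials and tracked ones."""
    P = np.asarray(image_pts, dtype=float)
    Q = np.asarray(tracker_pts, dtype=float)
    residuals = P @ R.T + t - Q
    return float(np.sqrt(np.mean(np.sum(residuals**2, axis=1))))

# Hypothetical divot positions (mm) in image space and a known ground-truth pose.
image_pts = np.array([[0, 0, 0], [50, 0, 0], [0, 40, 0], [0, 0, 30], [25, 25, 10]], float)
a = np.deg2rad(30.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([10.0, -5.0, 100.0])
tracker_pts = image_pts @ R_true.T + t_true

R, t = paired_point_registration(image_pts, tracker_pts)
fre = fiducial_registration_error(image_pts, tracker_pts, R, t)
print(fre <= 1.0)  # acceptance criterion quoted in the text
```

With noise-free synthetic points the error is essentially zero; with real pointer localization noise it is the quantity compared against the 1-mm acceptance threshold.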

Figure 2 Advanced intraoperative navigation system. (A) Setup of the system, showing the triplanar cutting views and the three-dimensional (3D) rendering of the tumor on the screen. The skull and the cutting instrument (in this case an osteotome) carry reference markers so that they can be tracked and co-registered. (B–D) The system allows users to visualize the cutting trajectory of the instrument with respect to the tumor: cutting of (B) the latero-inferior orbital rim, (C) the pterygomaxillary junction, and (D) the palate.

Augmented Reality System

The AR system consisted of a portable high-definition projector (PicoPro, Celluon Inc., Federal Way, WA, USA), a stereoscopic infrared camera (Polaris Spectra, NDI, Waterloo, ON, Canada), a generic 2-megapixel USB camera (ICAN Webcam 2MP, China), and a laptop computer (Precision M4500, Dell, Round Rock, TX, USA). A custom 3D-printed case was fabricated to anchor a four-sphere reference tool and house the other components of the AR system (Figure 3). The technical details of this system, including the kinematic transformation, reference system, and conventional image-to-tracker registration method, have been described elsewhere (17).

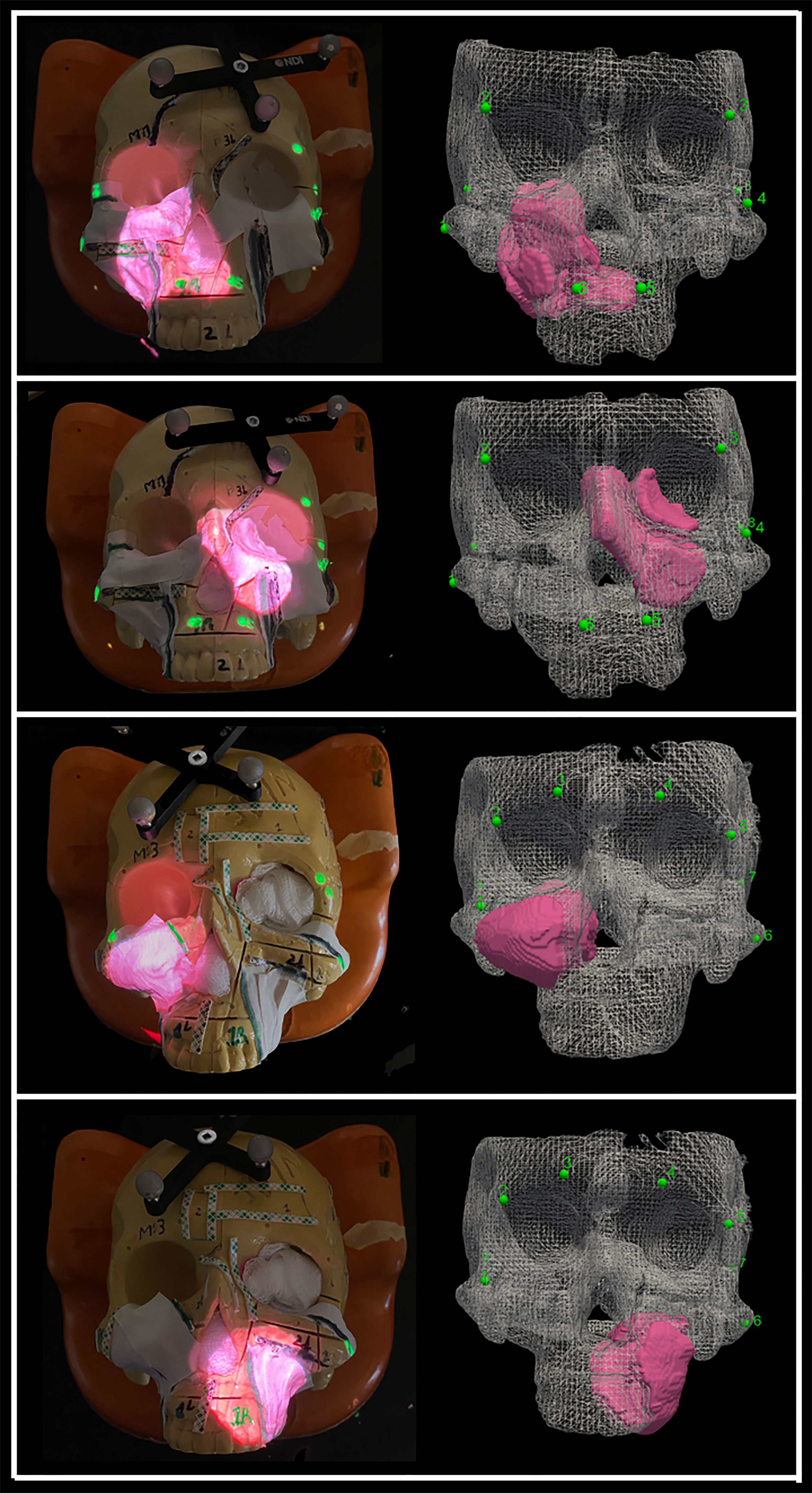

The AR system was registered into a single coordinate system by pairing corresponding landmarks, using fiducial markers identifiable in both the images and the projection surface (Figure 4). GTx-Eyes provided the 3D surface renderings of the tumors, which the AR system projected onto the skulls. The virtual tumor was delineated on CBCT imaging with ITK-SNAP software. The optical sensor mounted on the projector case enabled real-time tracking of the AR device, so that the projector and/or skull could be repositioned during tasks without compromising projection accuracy (Supplementary Video), with a registration error <1 mm. This approach facilitated identification of the tumors and consequently guided the participants’ virtual cuts (Supplementary Figure S1).
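Because both the projector case and the skull are tracked, the overlay can be recomputed from their current poses at every frame. The sketch below illustrates that idea by chaining 4×4 homogeneous transforms and applying a pinhole projector model. The frame names, the fixed marker-to-optics calibration `T_projref_proj`, and the intrinsic matrix `K` are hypothetical illustrations, not the kinematic model of reference (17).

```python
import numpy as np

def rigid(R, t):
    """4x4 homogeneous transform from a 3x3 rotation R and a translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def rot_z(deg):
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])

def project_to_projector(points_skull, T_tracker_skull, T_tracker_projref, T_projref_proj, K):
    """Map 3D points in the skull reference frame to projector pixel coordinates.

    T_a_b is the pose of frame b expressed in frame a (hypothetical naming).
    """
    T_tracker_proj = T_tracker_projref @ T_projref_proj          # projector optics in tracker frame
    T_proj_skull = np.linalg.inv(T_tracker_proj) @ T_tracker_skull
    pts_h = np.c_[points_skull, np.ones(len(points_skull))]      # homogeneous (N, 4)
    pts_proj = (T_proj_skull @ pts_h.T).T[:, :3]                 # points in projector frame
    uvw = (K @ pts_proj.T).T                                     # pinhole projection
    return uvw[:, :2] / uvw[:, 2:3]

K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 360.0], [0.0, 0.0, 1.0]])
tumor_pts = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]])  # mm, skull frame
T_projref_proj = np.eye(4)
T_tracker_projref = np.eye(4)
T_tracker_skull = rigid(np.eye(3), [0.0, 0.0, 400.0])
px_before = project_to_projector(tumor_pts, T_tracker_skull, T_tracker_projref, T_projref_proj, K)

# Moving skull and projector together by the same motion M leaves the overlay unchanged,
# because only their relative pose enters the projection.
M = rigid(rot_z(20.0), [30.0, -10.0, 5.0])
px_after = project_to_projector(tumor_pts, M @ T_tracker_skull, M @ T_tracker_projref,
                                T_projref_proj, K)
print(np.allclose(px_before, px_after))
```

If only one of the two moves, the same function simply yields new pixel coordinates from the updated tracked pose, which is the behavior that allows repositioning without re-registration.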

Figure 4 Projection of the four sinonasal tumors using augmented reality, which enables tumor localization. The alignment points are depicted as well as the three-dimensional (3D) reconstruction of the tumors. Pictures were taken without light for demonstration purposes, but good visualization is obtained with light as well.

Gaze-Tracking System

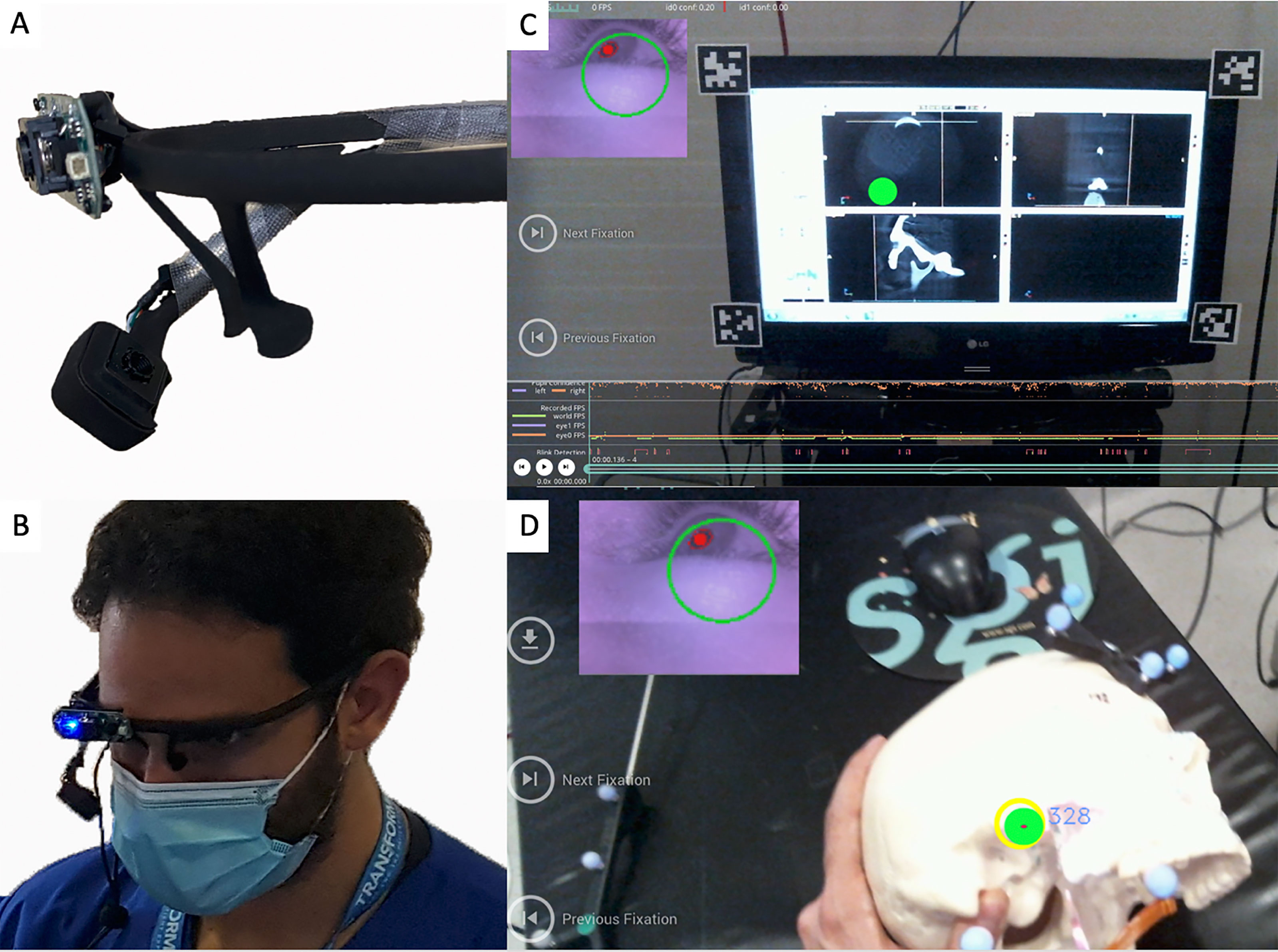

A wearable gaze-tracking headset was developed to continuously monitor and locate the user’s gaze (e.g., surgical field vs. navigation monitor) during surgical tasks. This headset was used solely for gaze-tracking and did not project any information. The eye tracker (Pupil Labs, Berlin, Germany) consists of two cameras: one trained on the eye and the other, a “world camera”, recording the individual’s field of view. A series of computer vision algorithms is applied to the input from the eye camera to reliably detect the pupil throughout the eye’s range of motion. A calibration step provides a triangulating mapping function between the pupil and world cameras, which enables the user’s gaze to be precisely tracked (Figures 5A–D).
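The study does not specify the form of the mapping function. One common choice for head-mounted trackers is a low-order polynomial regression from pupil position to world-camera coordinates, fitted while the user fixates known calibration targets. A minimal sketch under that assumption, with synthetic calibration data generated from a hypothetical ground-truth mapping:

```python
import numpy as np

def design_matrix(pupil_xy):
    """Second-order polynomial features of the pupil position (x, y)."""
    x, y = pupil_xy[:, 0], pupil_xy[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * y, x**2, y**2])

def fit_gaze_mapping(pupil_xy, world_xy):
    """Least-squares coefficients mapping pupil positions to world-camera pixels."""
    A = design_matrix(np.asarray(pupil_xy, float))
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(world_xy, float), rcond=None)
    return coeffs  # shape (6, 2): one column per world-camera coordinate

def map_gaze(coeffs, pupil_xy):
    """Apply a fitted mapping to new pupil positions."""
    return design_matrix(np.asarray(pupil_xy, float)) @ coeffs

# Synthetic calibration: 3x3 grid of fixation targets (normalized pupil coordinates).
gx, gy = np.meshgrid([0.3, 0.5, 0.7], [0.3, 0.5, 0.7])
pupil = np.column_stack([gx.ravel(), gy.ravel()])

def true_map(p):  # hypothetical ground truth, used only to generate calibration data
    x, y = p[:, 0], p[:, 1]
    return np.column_stack([100 + 800 * x + 50 * x * y, 50 + 600 * y + 20 * x**2])

coeffs = fit_gaze_mapping(pupil, true_map(pupil))
estimate = map_gaze(coeffs, np.array([[0.45, 0.55]]))
print(estimate)
```

Nine targets over-determine the six coefficients, so the least-squares fit also averages out small fixation errors during calibration.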

Figure 5 (A) Gaze-tracking system with the two cameras that allow it to visualize the pupils and also the participant’s view. (B) The device placed on one of the participants. (C, D) The “world camera” showing the participant’s view, which could be either on the screen (C) or on the surgical field (D). The green dot indicates the exact position of the gaze. A small picture-in-picture screen (upper left) shows the position of the pupil.

Simulations

Five head and neck fellowship-trained surgeons with 3–5 years of experience in oncologic ablations from the Department of Otolaryngology–Head and Neck Surgery of the University Health Network participated in the simulations.

Surgeons were instructed to position the osteotome between the delineated areas of the different osteotomy sites in sequential order (Pa-FMJ-LIOR-Zy-PMJ) and to provide a 1-cm margin from the tumor along the plane trajectory. Virtual cuts were performed instead of actual cuts so that the models could be reused: after the surgeon placed the osteotome in the intended direction and confirmed the planned cut, the osteotome position and orientation were recorded in the navigation software. The virtual cutting trajectories were analyzed after all simulations were completed.

Four procedures were performed: 1) unguided, using axial, sagittal, and coronal images; 2) AR-guided; 3) IN-guided; and 4) guided by AR and IN combined. The last condition was possible because both systems are contained in the same software platform and can be used simultaneously. Cutting planes were analyzed using MATLAB software. From each plane, an area of 4 cm × 2 cm (1 cm on each side of the longitudinal axis of the cut) was isolated along the longitudinal axis. The minimal distance to the tumor surface was calculated for each point in the isolated area and displayed as a distribution of distances in a 4 cm × 2 cm color-scaled image. Distance from the tumor surface was classified as “intratumoral” when ≤0 mm, “close” when >0 mm and ≤5 mm, “adequate” when >5 mm and ≤15 mm, and “excessive” when >15 mm. The percentages of points at intratumoral, close, adequate, and excessive distances were calculated for each simulation plane.
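The per-plane analysis was done in MATLAB; the Python sketch below only illustrates its logic. It assumes a signed distance (negative inside the tumor), which the ≤0 mm “intratumoral” class implies, and it replaces the segmented CBCT surface with a hypothetical spherical tumor so that the distance has a closed form. The 1-mm grid spacing is also an assumption.

```python
import numpy as np

def sample_cut_plane(origin, long_axis, in_plane_axis,
                     length_mm=40.0, half_width_mm=10.0, step_mm=1.0):
    """Grid of points on a 4 cm x 2 cm patch: 40 mm along the cut's longitudinal axis
    and 10 mm to either side of it. Both (orthogonal) axes lie in the cutting plane."""
    u = np.asarray(long_axis, float)
    u = u / np.linalg.norm(u)
    v = np.asarray(in_plane_axis, float)
    v = v / np.linalg.norm(v)
    s = np.arange(0.0, length_mm + step_mm / 2, step_mm)
    w = np.arange(-half_width_mm, half_width_mm + step_mm / 2, step_mm)
    S, W = np.meshgrid(s, w, indexing="ij")
    return np.asarray(origin, float) + S[..., None] * u + W[..., None] * v

def signed_distance_to_sphere(points, center, radius):
    """Hypothetical spherical tumor: negative inside, positive outside (mm)."""
    return np.linalg.norm(points - np.asarray(center, float), axis=-1) - radius

def margin_percentages(signed_dist_mm):
    """Percentage of plane points in each distance class defined in the text."""
    d = np.ravel(signed_dist_mm)
    classes = {
        "intratumoral": d <= 0,
        "close": (d > 0) & (d <= 5),
        "adequate": (d > 5) & (d <= 15),
        "excessive": d > 15,
    }
    return {name: 100.0 * np.count_nonzero(m) / d.size for name, m in classes.items()}

center, radius = np.zeros(3), 15.0       # hypothetical 15-mm tumor at the origin
axis_u, axis_v = [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]

# A plane 10 mm outside the tumor surface (x = 25 mm) vs. one through its center (x = 0).
outside = sample_cut_plane([25.0, -20.0, 0.0], axis_u, axis_v)
through = sample_cut_plane([0.0, -20.0, 0.0], axis_u, axis_v)
pct_outside = margin_percentages(signed_distance_to_sphere(outside, center, radius))
pct_through = margin_percentages(signed_distance_to_sphere(through, center, radius))
print(pct_outside)
print(pct_through)
```

For a real segmentation, the closed-form sphere distance would be replaced by a signed distance to the tumor mesh or mask, but the classification step is unchanged.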

The gaze-tracking system was calibrated to each participant and used in all simulations. The eye-tracking data were analyzed to identify each time point at which the participants switched their gaze between the navigation monitor and the surgical field. The metric reported is the percentage of total study time spent looking at the navigation monitor.
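The reported metric can be computed from a time series of gaze labels. A minimal sketch, assuming each gaze sample has already been labeled as on the monitor or on the surgical field (how frames were labeled in the study is not detailed here), and that each sample lasts until the next timestamp; the timestamps and labels are hypothetical.

```python
import numpy as np

def gaze_metrics(timestamps_s, on_monitor):
    """Percentage of task time spent on the monitor and the number of gaze switches.

    timestamps_s: increasing sample times (s); on_monitor: True when the gaze is on
    the navigation monitor, False when it is on the surgical field. Each sample is
    assumed to last until the next timestamp, so the final label adds no duration.
    """
    t = np.asarray(timestamps_s, float)
    m = np.asarray(on_monitor, bool)
    dt = np.diff(t)
    monitor_pct = 100.0 * dt[m[:-1]].sum() / (t[-1] - t[0])
    switches = int(np.count_nonzero(m[1:] != m[:-1]))   # label changes between samples
    return monitor_pct, switches

t = np.arange(11.0)   # 0-10 s, one hypothetical sample per second
labels = [False, False, True, True, True, False, False, True, False, False, False]
pct, n_switches = gaze_metrics(t, labels)
print(pct, n_switches)
```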

Finally, participants completed a NASA Task Load Index (NASA-TLX) questionnaire, which measures task workload, to evaluate the different approaches (18). This widely used and validated questionnaire rates perceived workload to assess a task, system, or other aspects of performance (19–21), and it has been employed to assess the workload of new technologies during surgical procedures (22). The total workload is divided into six subjective subscales: mental demand, physical demand, temporal demand, performance, effort, and frustration. Ratings range from very low to very high.
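The text does not state whether the weighted or the unweighted (“raw”) form of the TLX was scored. A minimal sketch of both, assuming the conventional 0 to 100 rating per subscale and, for the weighted form, tallies from the 15 pairwise subscale comparisons of the original procedure; the ratings and tallies are hypothetical.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")

def raw_tlx(ratings):
    """Unweighted TLX: mean of the six subscale ratings (0-100)."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)

def weighted_tlx(ratings, tallies):
    """Weighted TLX: each rating weighted by how many of the 15 pairwise
    comparisons its subscale was chosen in; the weights must sum to 15."""
    if sum(tallies[s] for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison tallies must sum to 15")
    return sum(ratings[s] * tallies[s] for s in SUBSCALES) / 15.0

# Hypothetical ratings for one participant and one approach.
ratings = {"mental": 70, "physical": 20, "temporal": 40,
           "performance": 30, "effort": 60, "frustration": 50}
tallies = {"mental": 5, "physical": 0, "temporal": 2,
           "performance": 2, "effort": 4, "frustration": 2}
print(raw_tlx(ratings), weighted_tlx(ratings, tallies))
```

Subscale-level comparisons, such as those reported for mental demand, effort, and frustration, use the individual ratings directly rather than either composite.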

Statistical Analysis

Statistical analysis was performed with XLSTAT® (Addinsoft®, New York). Simulations were grouped into four categories: unguided, AR, IN, and AR + IN. The rate of intratumoral virtual cuts was the main outcome and was assessed with Fisher’s exact test. Multivariable analysis adjusting for surgeon and tumor was performed with logistic regression. The groups were also compared in terms of the percentage of intratumoral, close, adequate, and excessive distances from the tumor and the duration of the simulations with the two-sided Kruskal–Wallis test and the Steel–Dwass–Critchlow–Fligner post-hoc test. The Kruskal–Wallis test was also used to analyze the gaze-tracking outcomes and the NASA-TLX scores. The level of significance was set at 0.05 for all statistical tests.
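A pairwise comparison of intratumoral cut rates between two approaches (for example, AR vs. unguided) reduces to a 2×2 table of intratumoral vs. non-intratumoral cuts. The following pure-Python sketch of a two-sided Fisher’s exact test uses the common definition that sums the probabilities of all tables with the observed margins that are no more likely than the observed table. The study itself used XLSTAT, and the example counts are a textbook table, not the study data.

```python
from math import comb

def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact p-value for the table [[a, b], [c, d]].

    Rows could be two approaches; columns intratumoral vs. non-intratumoral cuts.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x, margins fixed.
        return comb(row1, x) * comb(n - row1, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # Small relative tolerance so tables tied with the observed one are included.
    p_value = sum(p for p in probs if p <= p_obs * (1 + 1e-7))
    return min(1.0, p_value)

print(round(fisher_exact_2x2(3, 1, 1, 3), 4))  # → 0.4857 (exactly 17/35)
```

Comparing all four groups at once requires the r × c generalization of the test, which statistical packages provide; the 2×2 form is shown because it makes the hypergeometric logic explicit.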

Results

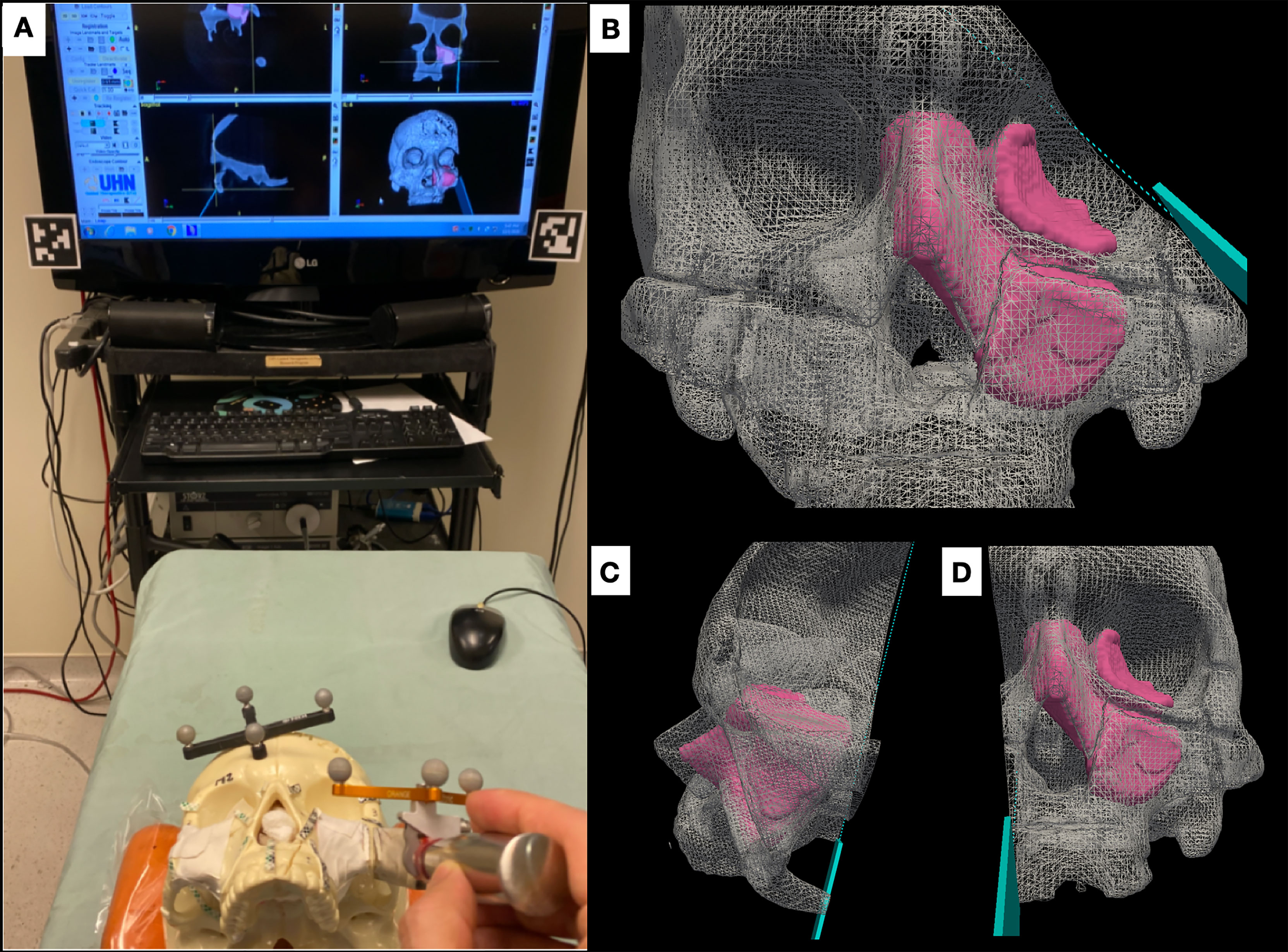

Intratumoral Cuts

A total of 335 cuts were simulated. Intratumoral cuts were observed in 20.7%, 9.4%, 1.2%, and 0% of the unguided, AR, IN, and AR + IN simulations, respectively (p < 0.0001). In univariate analysis, AR improved margins compared with unguided simulations. The advanced IN approach reduced the intratumoral cut rate compared with AR, whereas combining AR and IN did not significantly decrease the intratumoral cut rate compared with IN alone (p = 0.51). These differences were also seen in a multivariate model adjusted for tumor and surgeon (Table 1).

Distribution of Points Forming Simulation Planes

The distribution of the points forming the simulation planes across distance categories was also analyzed. Only the advanced IN system and the combined approach significantly decreased the percentage of intratumoral (p < 0.0001) and close margin points (p = 0.008) compared with unguided resections (Supplementary Table S1).

Duration of Simulations and Gaze-Tracking Results

Mean total duration of the simulations was 215 s for unguided procedures and 117, 134, and 120 s for the AR, IN, and AR + IN procedures, respectively. Participants required significantly more time to perform the unguided simulations than the AR- and IN-guided ones (p = 0.004), with no differences among the AR-, IN-, and AR + IN-guided procedures. The percentage of time spent looking at the screen was 55.5% for the unguided approach and 0%, 78.5%, and 61.8% for AR, IN, and AR + IN, respectively (p < 0.001). By adding AR, the combined approach significantly reduced screen time compared with the advanced IN procedures alone (Supplementary Table S2).

NASA-TLX Scores

We found no differences in scores between the unguided and AR procedures, and both showed high mental demand, effort, and frustration. Combining AR with IN significantly improved these scores (Supplementary Graph 1 and Supplementary Table S3).

Discussion

In this study, both advanced IN and AR improved margin delineation compared with unguided procedures. Advanced IN outperformed AR for margin delineation but required gaze-toggling between the surgical field and the navigation monitor, whereas AR allowed the surgeon to focus only on the surgical field. Combining both technologies partially offset their respective weaknesses: the less accurate margins of AR and the need to look away from the surgical field with IN. The integration of AR and IN also improved the Mental Demand, Performance, Effort, and Frustration domains of the NASA-TLX questionnaire.

Margin control is among the most important prognostic factors and the only surgeon-controlled variable in head and neck cancer, and efforts have centered on obtaining clear margins after tumor resection. Nevertheless, positive surgical margins remain a major issue, even in the hands of experienced surgeons. In a report from the largest tertiary referral head and neck cancer center in the Netherlands, 39% of 69 resections of advanced maxillary tumors (>T3) were incomplete, with the posterior and superior margins most commonly involved (23). In a bi-institutional study from the Cleveland Clinic and UC San Francisco (24), 24% of 75 post-maxillectomy patients had positive margins on final pathology. Positive margins were associated with a 2-fold increased risk of death, and in multivariate analysis controlling for age, nodal stage, and surgical treatment, margins were independently associated with survival (25). Moreover, intraoperative frozen sections, probably the only intraoperative resource for evaluating the adequacy of resection, have been reported to have only 40% sensitivity in open sinonasal approaches (26).

Currently, IN is employed in many centers for endoscopic sinonasal procedures (27–29). By point-tracking an instrument and locating it in two dimensions on triplanar views, IN has been shown to increase accuracy and reduce operative time, with a favorable impact on surgical outcomes and complications. The use of IN in open procedures for resecting malignant tumors has been reported less often, but small cohorts have shown promising results in terms of margin status (3, 30, 31). Our group recently published a preclinical experience using the same advanced IN system as the present study to assist in open sinonasal approaches (4). The main novelty is that our advanced IN system allows surgeons not only to track the desired instrument but also to visualize the entire cutting trajectory of a tracked cutting tool in 3D. In that study, eight head and neck surgeons performed 381 simulated osteotomies for the resection of seven tumor models. 3D navigation significantly improved margin control: 18.1% of unguided cuts were intratumoral, compared with 0% with 3D navigation (p < 0.0001). Furthermore, a clinical study using this advanced IN system for mandibulectomies demonstrated <1.5-mm accuracy between the planned cuts and the actual bone resection on post-resection imaging (15). One of the main criticisms of the system by the surgeons in that report was the challenge of multitasking between the surgical field and the IN monitor, which can ultimately affect not only efficiency but also patient safety, as the surgeon has to look away from the surgical field.

AR enhances the surgeon’s vision rather than replacing it: CT, PET-CT, or MRI scans can be visualized in 3D and in real time, granting “X-ray vision” to the physician (32, 33). It has recently gained the interest of computer-assisted surgery researchers because it integrates imaging information into the surgical field. This has the potential to overcome the main drawbacks of IN technology: the frequent switching of focus between the navigation screens and the surgical field, and the translation of 2D imaging data into 3D anatomical structures (33). Although AR applications have been reported in otolaryngology–head and neck surgery, most describe wearable computers (Microsoft HoloLens®, Microsoft Corporation, Redmond) and other head-mounted displays (HMDs), which might be cumbersome, especially in long procedures, and preclude the use of loupes/headlights. Reported HMD limitations include the weight of the devices, breaches of patient privacy/information, battery life, potential lag time secondary to preoperative image processing, and signal interference from wireless Internet or Bluetooth connections that may cause intermittent image transmission (34). Moreover, most HMD reports rely solely on the operator’s visual alignment of the projected images with the anatomical area of interest (35), without any co-registration between the projection surface and the AR system, which can lead to errors. Lastly, other reports of AR in the operating room are merely descriptive and not aimed at improving a surgical task (36), or are for educational purposes only (37).

Our study reports several innovations. Tracking both the AR projector and the projection surface with reflective markers allowed us to reposition the skull models and the projector without losing accuracy (17, 38), which has not been described previously in head and neck surgery. This is paramount in computer-assisted surgery, as it allows precise projection even when movement occurs, as in real-time situations in the operating room. Another key aspect of our approach is the use of an external projector, which avoids the need for heavy wearable headsets; the headsets used in our study were for gaze-tracking only. The rigidity of the sinonasal/skull base region makes it an excellent indication for AR, as tissue deformation is minimal and co-registration is facilitated. Deformation has to be taken into consideration during soft-tissue resections, as the projections cannot be adjusted for it during AR (7, 39). By tracking the participants’ gaze, we were able to quantify the percentage of time that surgeons had to look away from the surgical field. Our results suggest a significant improvement when AR is employed either alone or in combination with IN, addressing the main disadvantage of IN. Our AR system shares the same software platform as the advanced IN system, and both can be used concurrently, which allowed us to evaluate their combination. Finally, user evaluations of AR are lacking, so we used a validated questionnaire to investigate the differences between approaches.

Although AR was significantly superior to unguided simulations in terms of intratumoral cut rates, there is room for improvement in our AR system. The advanced IN technology performed better than AR in terms of intratumoral cut rates, as well as the intratumoral and close distribution of points forming the simulation planes. This might be explained by the difficulty of finding the correct angle between the projector and the projection surface. We observed that when the angle differed greatly from 90 degrees, the image became distorted, which could lead to inaccurate surgical guidance. For example, the PMJ cuts required turning the skull 180 degrees, and in some cases alignment was lost, which might have contributed to intratumoral cuts. Another important limitation is that the sense of depth can be lost in the projections, and the image can be interpreted as 2D on the surface rather than as 3D, especially with changes in ambient light. Image projection is also subject to parallax (40), which occurs when the viewer’s and the projector’s perspectives are not aligned in 3D space. Our system minimizes this issue by placing the pico-projector so that its perspective is close to the surgeon’s line of sight. In addition, the AR system is fully integrated into our intraoperative navigation system with real-time tracking; therefore, relocating or displacing the projector does not affect projection accuracy, and no further recalibration or registration is needed. These limitations were also reflected in the NASA-TLX scores, where mental demand, effort, and frustration ratings for AR were higher than those for IN and similar to those of unguided approaches.
Participants commented that when the adequate projection angle was lost, they had difficulty interpreting the information from the AR system, which negatively affected the aforementioned NASA-TLX domains. A plausible way to address these flaws, and consequently improve margin delineation, is to project the cutting trajectory using AR: similar to the advanced IN capabilities, AR could display the intended cut trajectories on the surgical field in addition to projecting the tumor for localization. We also acknowledge the limitations of preclinical models, which may not perfectly replicate operating room conditions. Lastly, the simulated cuts were not randomized. This was done to prevent participants from retaining memory of the guided views if these were seen before the unguided cuts. Still, because all surgeons followed the sequence unguided-AR-IN-AR + IN, some degree of learning effect toward the end of the tasks cannot be excluded.
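The angle dependence described in these limitations can be approximated, to first order, by the stretch of a narrow projected beam on a flat surface: if the projector axis meets the surface at 90° − θ, a feature of width w spans about w / cos θ. The sketch below assumes a planar surface and ignores the projector’s perspective divergence and the skull’s curvature, so it only indicates the trend (for instance, a 60° tilt roughly doubles the projected width), not the distortion actually observed on the models.

```python
import math

def footprint_stretch(tilt_deg):
    """First-order stretch factor of a projected feature on a flat surface whose
    normal is tilted `tilt_deg` away from the projector axis (1.0 = no distortion).
    Valid for tilt_deg < 90; assumes a narrow, effectively parallel beam."""
    return 1.0 / math.cos(math.radians(tilt_deg))

for tilt in (0, 30, 60, 75):
    print(tilt, round(footprint_stretch(tilt), 2))
```

Because the factor grows steeply beyond roughly 60°, small additional rotations of the skull at steep angles produce disproportionately large overlay distortion.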

Conclusion

We reported the use of AR in open sinonasal approaches, with improved margin delineation compared with unguided techniques. The advanced IN system performed better in terms of margin delineation, but AR mitigated the gaze-toggling drawback of IN. Further research by our group is currently underway before this experience is translated to clinical practice.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

Ethics Statement

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This is a self-funded study. Funding was provided by the Princess Margaret Cancer Foundation (Toronto, Canada), including the Kevin and Sandra Sullivan Chair in Surgical Oncology, the Myron and Berna Garron Fund, the Strobele Family Fund, and the RACH Fund.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The gaze-tracking system was developed by Dr. Kazuhiro Yasufuku’s research laboratory.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2021.723509/full#supplementary-material

Supplementary Video | Advanced Intraoperative Navigation system showing the entire cutting trajectory with respect to the tumor, and the Augmented Reality system providing the exact location of the tumor. The co-registration of the skull and the projector in the Augmented Reality system allows the image to be accurately projected and automatically adjusted despite movements.

References

1. Deganello A, Ferrari M, Paderno A, Turri-Zanoni M, Schreiber A, Mattavelli D, et al. Endoscopic-Assisted Maxillectomy: Operative Technique and Control of Surgical Margins. Oral Oncol (2019) 93:29–38. doi: 10.1016/j.oraloncology.2019.04.002

2. Nishio N, Fujimoto Y, Fujii M, Saito K, Hiramatsu M, Maruo T, et al. Craniofacial Resection for T4 Maxillary Sinus Carcinoma. Otolaryngol Head Neck Surg (2015) 153(2):231–8. doi: 10.1177/0194599815586770

3. Catanzaro S, Copelli C, Manfuso A, Tewfik K, Pederneschi N, Cassano L, et al. Intraoperative Navigation in Complex Head and Neck Resections: Indications and Limits. Int J Comput Assist Radiol Surg (2017) 12(5):881–7. doi: 10.1007/s11548-016-1486-0

4. Ferrari M, Daly MJ, Douglas CM, Chan HHL, Qiu J, Deganello A, et al. Navigation-Guided Osteotomies Improve Margin Delineation in Tumors Involving the Sinonasal Area: A Preclinical Study. Oral Oncol (2019) 99:104463. doi: 10.1016/j.oraloncology.2019.104463

5. Moawad GN, Elkhalil J, Klebanoff JS, Rahman S, Habib N, Alkatout I. Augmented Realities, Artificial Intelligence, and Machine Learning: Clinical Implications and How Technology Is Shaping the Future of Medicine. J Clin Med (2020) 9(12):3811. doi: 10.3390/jcm9123811

6. Khanwalkar AR, Welch KC. Updates in Techniques for Improved Visualization in Sinus Surgery. Curr Opin Otolaryngol Head Neck Surg (2021) 29(1):9–20. doi: 10.1097/MOO.0000000000000693

7. Liu WP, Richmon JD, Sorger JM, Azizian M, Taylor RH. Augmented Reality and Cone Beam CT Guidance for Transoral Robotic Surgery. J Robot Surg (2015) 9(3):223–33. doi: 10.1007/s11701-015-0520-5

8. Creighton FX, Unberath M, Song T, Zhao Z, Armand M, Carey J. Early Feasibility Studies of Augmented Reality Navigation for Lateral Skull Base Surgery. Otol Neurotol (2020) 41(7):883–8. doi: 10.1097/MAO.0000000000002724

9. King E, Daly MJ, Chan H, Bachar G, Dixon BJ, Siewerdsen JH, et al. Intraoperative Cone-Beam CT for Head and Neck Surgery: Feasibility of Clinical Implementation Using a Prototype Mobile C-Arm. Head Neck (2013) 35(7):959–67. doi: 10.1002/hed.23060

10. Jermyn M, Ghadyani H, Mastanduno MA, Turner W, Davis SC, Dehghani H, et al. Fast Segmentation and High-Quality Three-Dimensional Volume Mesh Creation From Medical Images for Diffuse Optical Tomography. J Biomed Opt (2013) 18(8):86007. doi: 10.1117/1.JBO.18.8.086007

11. Daly MJ, Chan H, Nithiananthan S, Qiu J, Barker E, Bachar G, et al. Clinical Implementation of Intraoperative Cone-Beam CT in Head and Neck Surgery, Vol. 796426. Wong KH, Holmes DR III, editors. Lake Buena Vista, FL: SPIE (2011). doi: 10.1117/12.878976

12. Dixon BJ, Chan H, Daly MJ, Qiu J, Vescan A, Witterick IJ, et al. Three-Dimensional Virtual Navigation Versus Conventional Image Guidance: A Randomized Controlled Trial. Laryngoscope (2016) 126(7):1510–5. doi: 10.1002/lary.25882

13. Sternheim A, Daly M, Qiu J, Weersink R, Chan H, Jaffray D, et al. Navigated Pelvic Osteotomy and Tumor Resection: A Study Assessing the Accuracy and Reproducibility of Resection Planes in Sawbones and Cadavers. J Bone Joint Surg Am (2015) 97(1):40–6. doi: 10.2106/JBJS.N.00276

14. Lee CY, Chan H, Ujiie H, Fujino K, Kinoshita T, Irish JC, et al. Novel Thoracoscopic Navigation System With Augmented Real-Time Image Guidance for Chest Wall Tumors. Ann Thorac Surg (2018) 106(5):1468–75. doi: 10.1016/j.athoracsur.2018.06.062

15. Hasan W, Daly MJ, Chan HHL, Qiu J, Irish JC. Intraoperative Cone-Beam CT-Guided Osteotomy Navigation in Mandible and Maxilla Surgery. Laryngoscope (2020) 130(5):1166–72. doi: 10.1002/lary.28082

16. Daly MJ, Siewerdsen JH, Moseley DJ, Jaffray DA, Irish JC. Intraoperative Cone-Beam CT for Guidance of Head and Neck Surgery: Assessment of Dose and Image Quality Using a C-Arm Prototype. Med Phys (2006) 33(10):3767–80. doi: 10.1118/1.2349687

17. Chan HHL, Haerle SK, Daly MJ, Zheng J, Philp L, Ferrari M, et al. An Integrated Augmented Reality Surgical Navigation Platform Using Multi-Modality Imaging for Guidance. PLoS One (2021) 16(4):e0250558. doi: 10.1371/journal.pone.0250558

18. Hart SG, Staveland LE. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In: Hancock PA, Meshkati N, editors. Human Mental Workload. Adv Psychol (1988) 52:139–83. doi: 10.1016/S0166-4115(08)62386-9

19. Rainieri G, Fraboni F, Russo G, Tul M, Pingitore A, Tessari A, et al. Visual Scanning Techniques and Mental Workload of Helicopter Pilots During Simulated Flight. Aerosp Med Hum Perform (2021) 92(1):11–9. doi: 10.3357/AMHP.5681.2021

20. Lebet RM, Hasbani NR, Sisko MT, Agus MSD, Nadkarni VM, Wypij D, et al. Nurses’ Perceptions of Workload Burden in Pediatric Critical Care. Am J Crit Care (2021) 30(1):27–35. doi: 10.4037/ajcc2021725

21. Devos H, Gustafson K, Ahmadnezhad P, Liao K, Mahnken JD, Brooks WM, et al. Psychometric Properties of NASA-TLX and Index of Cognitive Activity as Measures of Cognitive Workload in Older Adults. Brain Sci (2020) 10(12):994. doi: 10.3390/brainsci10120994

22. Lowndes BR, Forsyth KL, Blocker RC, Dean PG, Truty MJ, Heller SF, et al. NASA-TLX Assessment of Surgeon Workload Variation Across Specialties. Ann Surg (2020) 271(4):686–92. doi: 10.1097/SLA.0000000000003058

23. Kreeft AM, Smeele LE, Rasch CRN, Hauptmann M, Rietveld DHF, Leemans CR, et al. Preoperative Imaging and Surgical Margins in Maxillectomy Patients. Head Neck (2012) 34(11):1652–6. doi: 10.1002/hed.21987

24. Likhterov I, Fritz MA, El-Sayed IH, Rahul S, Rayess HM, Knott PD. Locoregional Recurrence Following Maxillectomy: Implications for Microvascular Reconstruction. Laryngoscope (2017) 127(11):2534–8. doi: 10.1002/lary.26620

25. Mücke T, Loeffelbein DJ, Hohlweg-Majert B, Kesting MR, Wolff KD, Hölzle F. Reconstruction of the Maxilla and Midface – Surgical Management, Outcome, and Prognostic Factors. Oral Oncol (2009) 45(12):1073–8. doi: 10.1016/j.oraloncology.2009.10.003

26. Murphy J, Isaiah A, Wolf JS, Lubek JE. The Influence of Intraoperative Frozen Section Analysis in Patients With Total or Extended Maxillectomy. Oral Surg Oral Med Oral Pathol Oral Radiol (2016) 121(1):17–21. doi: 10.1016/j.oooo.2015.07.014

27. Leong J-L, Batra PS, Citardi MJ. CT-MR Image Fusion for the Management of Skull Base Lesions. Otolaryngol Head Neck Surg (2006) 134(5):868–76. doi: 10.1016/j.otohns.2005.11.015

28. Kacker A, Tabaee A, Anand V. Computer-Assisted Surgical Navigation in Revision Endoscopic Sinus Surgery. Otolaryngol Clin North Am (2005) 38(3):473–82. doi: 10.1016/j.otc.2004.10.021

29. Vicaut E, Bertrand B, Betton J-L, Bizon A, Briche D, Castillo L, et al. Use of a Navigation System in Endonasal Surgery: Impact on Surgical Strategy and Surgeon Satisfaction. A Prospective Multicenter Study. Eur Ann Otorhinolaryngol Head Neck Dis (2019) 136(6):461–4. doi: 10.1016/j.anorl.2019.08.002

30. Tarsitano A, Ricotta F, Baldino G, Badiali G, Pizzigallo A, Ramieri V, et al. Navigation-Guided Resection of Maxillary Tumours: The Accuracy of Computer-Assisted Surgery in Terms of Control of Resection Margins – A Feasibility Study. J Cranio Maxillofacial Surg (2017) 45(12):2109–14. doi: 10.1016/j.jcms.2017.09.023

31. Feichtinger M, Pau M, Zemann W, Aigner RM, Kärcher H. Intraoperative Control of Resection Margins in Advanced Head and Neck Cancer Using a 3D-Navigation System Based on PET/CT Image Fusion. J Cranio Maxillofacial Surg (2010) 38(8):589–94. doi: 10.1016/j.jcms.2010.02.004

32. Chen X, Xu L, Wang Y, Wang H, Wang F, Zeng X, et al. Development of a Surgical Navigation System Based on Augmented Reality Using an Optical See-Through Head-Mounted Display. J Biomed Inform (2015) 55:124–31. doi: 10.1016/j.jbi.2015.04.003

33. Gsaxner C, Pepe A, Li J, Ibrahimpasic U, Wallner J, Schmalstieg D, et al. Augmented Reality for Head and Neck Carcinoma Imaging: Description and Feasibility of an Instant Calibration, Markerless Approach. Comput Methods Programs Biomed (2020) 200:105854. doi: 10.1016/j.cmpb.2020.105854

34. Rahman R, Wood ME, Qian L, Price CL, Johnson AA, Osgood GM. Head-Mounted Display Use in Surgery: A Systematic Review. Surg Innov (2020) 27(1):88–100. doi: 10.1177/1553350619871787

35. Battaglia S, Badiali G, Cercenelli L, Bortolani B, Marcelli E, Cipriani R, et al. Combination of CAD/CAM and Augmented Reality in Free Fibula Bone Harvest. Plast Reconstr Surg Glob Open (2019) 7(11):e2510. doi: 10.1097/GOX.0000000000002510

36. Tepper OM, Rudy HL, Lefkowitz A, Weimer KA, Marks SM, Stern CS, et al. Mixed Reality With HoloLens: Where Virtual Reality Meets Augmented Reality in the Operating Room. Plast Reconstr Surg (2017) 140(5):1066–70. doi: 10.1097/PRS.0000000000003802

37. Rose AS, Kim H, Fuchs H, Frahm JM. Development of Augmented-Reality Applications in Otolaryngology–Head and Neck Surgery. Laryngoscope (2019) 129(S3):S1–11. doi: 10.1002/lary.28098

38. Meulstee JW, Nijsink J, Schreurs R, Verhamme LM, Xi T, Delye HHK, et al. Toward Holographic-Guided Surgery. Surg Innov (2019) 26(1):86–94. doi: 10.1177/1553350618799552

39. Chan JYK, Holsinger FC, Liu S, Sorger JM, Azizian M, Tsang RKY. Augmented Reality for Image Guidance in Transoral Robotic Surgery. J Robot Surg (2020) 14(4):579–83. doi: 10.1007/s11701-019-01030-0

Keywords: augmented reality, intraoperative navigation, surgical margins, sinonasal tumors, surgical margin delineation

Citation: Sahovaler A, Chan HHL, Gualtieri T, Daly M, Ferrari M, Vannelli C, Eu D, Manojlovic-Kolarski M, Orzell S, Taboni S, de Almeida JR, Goldstein DP, Deganello A, Nicolai P, Gilbert RW and Irish JC (2021) Augmented Reality and Intraoperative Navigation in Sinonasal Malignancies: A Preclinical Study. Front. Oncol. 11:723509. doi: 10.3389/fonc.2021.723509

Received: 10 June 2021; Accepted: 12 October 2021;

Published: 01 November 2021.

Edited by:

Florian M. Thieringer, University Hospital Basel, Switzerland

Reviewed by:

Catriona M. Douglas, NHS Greater Glasgow and Clyde, United Kingdom

Thomas Gander, University Hospital Zürich, Switzerland

Copyright © 2021 Sahovaler, Chan, Gualtieri, Daly, Ferrari, Vannelli, Eu, Manojlovic-Kolarski, Orzell, Taboni, de Almeida, Goldstein, Deganello, Nicolai, Gilbert and Irish. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jonathan C. Irish, jonathan.irish@uhn.ca

†These authors have contributed equally to this work
