- College of Education, China West Normal University, Nanchong, China

Immersive 360° videos interest educators because of the immersive sensory experience they provide, among other features. This study examined the effects of four cue conditions on learning performance, attention, cognitive load, and mood in 360° video learning, using an eye tracker, a brainwave meter, and subjective questionnaires. Participants (n = 62) were randomly assigned to one of three experimental groups (visual cues only, auditory cues only, or audiovisual cues) or to a control group (no cues). The results showed that visual and audiovisual cues effectively guide learners’ attention to the relevant learning content, reduce cognitive load during learning, and improve retention performance, but have no significant effect on knowledge transfer or long-term memory. Auditory cues increase the number of times learners look at the relevant learning content but do not affect gaze duration; they also distract learners’ attention, hindering the acquisition of relevant learning content. The study also revealed that visual cues effectively increase learners’ relaxation when viewing 360° videos. These findings can inform the instructional design of information in 360° videos and their practical application in teaching.

1 Introduction

Because virtual reality offers immersive presence and interactive features (Albus et al., 2021), its deep integration with education creates new possibilities for learning. For example, virtual reality-based learning can increase the sense of presence in the learning process (Makransky et al., 2019), and studies have shown that virtual reality can effectively improve learning outcomes (Merchant et al., 2014). Virtual reality can be divided into desktop virtual reality, cave automatic virtual environments (CAVEs), and immersive virtual reality delivered through head-mounted displays (HMDs), each accessed through different hardware (Buttussi and Chittaro, 2018).

360° videos are widely applicable: they can be viewed on computers, mobile phones, tablets, VR boxes, and HMDs, and they are easy to use and inexpensive (Shadiev and Sintawati, 2020). Because of their high potential for educational application, 360° videos have attracted considerable attention. The immersive 360° video discussed in this study is a panoramic video viewed through an HMD (e.g., HTC Vive, Oculus Rift), which enhances immersion in the learning process (Harrington et al., 2018) by allowing viewers to turn their heads and explore the video as if they were exploring the real world. Because 360° videos present scenes visible across the full 360° field of view together with continuously progressing audio narration, learners may become distracted or have difficulty concentrating. They may then overlook important learning content and fail to carry out the necessary cognitive processing, which ultimately lowers their academic performance. How to guide learners’ attention to the necessary content in immersive 360° video learning environments is therefore worth further study.

In traditional media, cues can direct learners’ attention to relevant material, thereby increasing essential processing and reducing extraneous processing of irrelevant material (Mayer and Fiore, 2014) and lowering cognitive load (Moreno and Abercrombie, 2010). These studies suggest that cues might likewise guide learners in 360° videos and so address the attention problem described above. This study therefore investigated whether including cues in immersive 360° videos affects learners’ attention, cognitive load, and learning performance. The research can inform the design and development of educational 360° videos; for example, educators who want learners to focus on a particular piece of information can add cues that direct attention to it and promote understanding.

1.1 360° video in education

In contrast to the flat screen of traditional video, 360° video is immersive and spherical (Moreno and Abercrombie, 2010), creating an almost realistic learning environment (Parmaxi, 2020). Unlike fully interactive VR, however, 360° videos do not allow users to interact with elements in the virtual environment. Instead, learners look left, right, up, and down from a fixed viewpoint (Ward, 2017), which creates the illusion of being in a place and an immersive experience that increases learner engagement (Harrington et al., 2018) and improves learning outcomes (Rupp et al., 2019). 360° videos have been used successfully in various educational fields, including medicine (Yoganathan et al., 2018), sports (Liu et al., 2020), science (Wu et al., 2019), and public speaking training (Stupar-Rutenfrans et al., 2017). For example, Yoganathan et al. (2018) demonstrated that 360° videos help medical students acquire knot-tying skills; Stupar-Rutenfrans et al. (2017) showed that 360° videos depicting a natural environment can effectively relieve learners’ nervousness about public speaking; and Araiza-Alba et al. (2020) used 360° videos, traditional videos, and posters to teach water safety skills and found that learners considered 360° videos more inspiring and attractive than traditional learning methods. However, the impact of 360° videos on learners is not always positive. Rupp et al. (2016) found that 360° videos made learners focus more on the immersive experience than on the learning content, causing distraction and even increasing cognitive load, which hinders the processing of relevant knowledge (Licorish et al., 2018). Research on 2D media suggests a remedy: the more a cue reduces total cognitive load, the better learners retain and transfer multimedia content (Xie et al., 2017).
These findings highlight the need to understand how cues work in 360° videos and whether they can guide learners to select specific information for processing, reduce cognitive load, and improve learning outcomes.

1.2 The cognitive process in 360° videos

The learning content in 360° videos is presented through narration and visual images. According to Baddeley’s working memory model, cognitive resources are used most effectively when visual information is accompanied by simultaneous auditory information (Baddeley, 1992). The cognitive theory of multimedia learning (CTML) (Mayer, 2005a,b) treats selection, organization, and integration as the processes of meaningful learning, with selection achieved through attention. The all-around field of view of 360° video makes selection more complex, because learners must filter out more elements that are irrelevant to learning (Albus et al., 2021). Moreover, each channel of the cognitive system has limited capacity, which limits how much information learners can organize and process (Sweller et al., 1998). The information presented in 360° videos may therefore lead to information overload, increasing cognitive load during learning and impairing the learner’s processing and integration of the learning content.

1.3 Principle of multimedia learning cues

Cueing is an instructional design approach in which noncontent information is added to multimedia learning materials to draw learners’ attention to critical information and promote learning achievement (De Koning et al., 2007, 2009). Mayer (2005a,b) divided cues into visual and verbal cues: visual cues include colors, spotlights, and arrows, whereas verbal cues include titles and outlines. According to the multimedia learning principles proposed by Mayer (2014), cues can support the complex processes of selecting, organizing, and integrating information that learners must perform when learning from 360° video content. Previous studies in 2D media have shown that cues can direct learners’ attention to specific learning content (Mayer, 2005a,b), reduce the time spent visually searching less relevant areas (Jamet, 2014), and improve learning performance (Grant and Spivey, 2003). However, scholars disagree over whether cues remain effective in immersive virtual reality environments. Cooper et al. (2018) suggested that multisensory feedback cues provide additional information that improves overall task performance and user-perceived presence. Pizzoli et al. (2021) reported that olfactory cues can trigger distant memories, reduce the cognitive load required to re-evoke memories and experiences, and help learners relax. Other studies, however, have found no significant effect of cues on cognitive load (Albus et al., 2021) or on posttest performance (Hwang and Shin, 2018) when learning in virtual reality environments. Scholars in other fields have also examined how much weight individuals give to cues from different senses. Soares et al. (2021) analyzed the role of visual and auditory cues in pedestrian crossing decisions and found that these decisions were based mainly on pedestrians’ visual perception of the motion of approaching vehicles. Keshavarz et al. (2017) explored the effect of different cues on estimated time-to-contact (TTC) in a virtual reality environment and found that visual cues were generally dominant. However, comparable findings are lacking in the educational domain.

Given that learners in VR must select, organize, and integrate information with limited working memory, it is worth considering how cues can best be designed and embedded in 360° videos to support them. Drawing on Paivio’s (1991) dual coding theory, this study designed cues acting on the visual, auditory, and audiovisual channels and used an eye tracker, a brainwave meter, and questionnaires to assess how cues acting on different channels affect 360° video learning.

2 Hypothesis

This study aimed to investigate the effects of cues acting on different channels (visual, auditory, and audiovisual) embedded in 360° videos on learners’ performance, attention, emotion, and cognitive load.

Hypothesis 1: Cues effectively guide the distribution of learners’ visual attention in 360° videos. The frequency of visual attention allocation to relevant learning content is highest with audiovisual cues, lower with visual cues, and lowest with auditory cues.

Hypothesis 2: Cues effectively regulate learners’ implicit attention when learning with 360° videos. Implicit attention to relevant learning content is highest with audiovisual cues, lower with visual cues, and lowest with auditory cues.

Hypothesis 3: Cues reduce the cognitive load of learners learning with 360° videos, and cognitive load is lower with audiovisual cues than with visual or auditory cues.

Hypothesis 4: Cues enhance learners’ learning performance in 360° videos, and the audiovisual cue group performs better than the visual and auditory cue groups.

3 Methodology

3.1 Participants

More than 90 undergraduate and graduate students majoring in Chinese Language and Literature, Education, Foreign Language and Literature, and similar fields at a normal university were randomly recruited as candidates. Candidates were selected if they had studied liberal arts in high school or had not taken biology in the New College Entrance Examination, and if they passed the cell structure knowledge screening test; candidates scoring more than 6 points on this test were excluded. Sixty-two participants were retained as valid: 7 males and 55 females aged 19 to 24 years (M = 20.42, SD = 1.139). With the participants’ consent, the researchers randomly assigned them to four groups, and each participant was paid 15 yuan after the experiment.

3.2 Experimental devices

Participants’ eye movements were recorded with an HTC VIVE Pro Eye headset with integrated eye tracking, which has a per-eye resolution of 1,440 × 1,600, a combined resolution of 3K (2,880 × 1,600), a refresh rate of 90 Hz, and a maximum field of view of 110°. Participants remained standing throughout the experiment, and eye tracking was calibrated with a 5-point procedure.

A portable BrainLink Pro brainwave instrument (Macro Intelligence Technology Co., Ltd.) was also used. The study analyzed three indices derived by the instrument: concentration (attention), relaxation, and brain use. Attention measured by the instrument reflects the brain’s concentration level in real time, ranging from 0 (lowest) to 100 (highest). Importantly, attention measured by brainwaves differs from attention measured by eye tracking, which is expressed as the number of gazes and the mean gaze duration. Wright and Ward (2008) classify attention into two types: explicit (overt) and implicit (covert). Explicit attention is an individual’s response to external stimuli, such as visual attention. Implicit attention, the internal enhancement of neural processing of signals received by the sensory organs, is usually not directly observable. The attention measured by brainwaves here is therefore implicit. Brain use reflects the brain’s real-time workload: the higher the workload, the higher the value, ranging from −100 (lowest) to 100 (highest). Relaxation indicates the degree of relaxation of the brain; the more relaxed the brain, the lower the value, with 0 the lowest and 100 the highest.

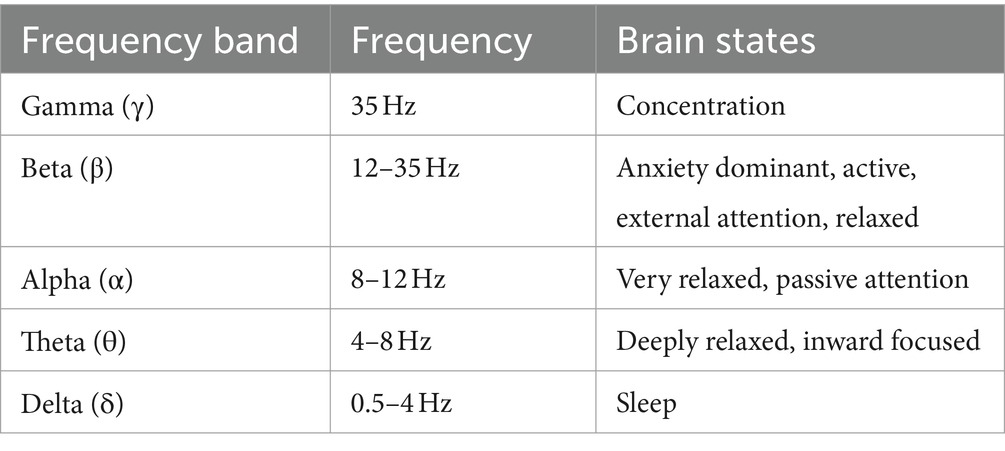

Brainwaves reflect the electrical activity of neurons in the brain, which arises mainly from action potentials and postsynaptic potentials. The electrical signals the brain produces while working are conventionally divided by frequency range into delta, theta, alpha, beta, and gamma waves (Abhang et al., 2016). The characteristics of these five basic EEG waves are shown in Table 1.

Table 1. Characteristics of the five basic brainwaves (Abhang et al., 2016).

In this study, brainwave data were collected with the brainwave instrument while participants viewed the 360° videos wearing the HTC VIVE HMD. The instrument recorded α, β, δ, γ, and θ EEG signals during learning and computed each learner’s concentration, relaxation, and brain use with built-in algorithms. Its basic EEG specifications are a dry-electrode contact sensor, an acquisition frequency range of 3–100 Hz, a sampling rate of 512 Hz, a bandwidth of 100 Hz, a 24-bit ADC, a maximum input impedance of 20 MΩ, and serial (UART) signal transmission. The instrument has been used successfully in previous studies (Lin and Li, 2018; Phanichraksaphong and Tsai, 2019).
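The instrument’s built-in algorithms for concentration, relaxation, and brain use are proprietary and not described here. As a hedged illustration of the underlying idea only, the sketch below splits a raw EEG trace sampled at 512 Hz into the five frequency bands and reports each band’s share of power using a plain discrete Fourier transform. The band limits are conventional values assumed for illustration (published cutoffs vary and may differ from Table 1), and `relative_band_power` is a hypothetical helper, not part of any BrainLink software.

```python
import cmath
import math

FS = 512  # sampling rate in Hz, as reported for the instrument

# Conventional EEG band limits in Hz (an assumption; published cutoffs vary).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 100.0),
}


def power_spectrum(samples, fs=FS):
    """Return (frequency_hz, power) for each non-negative DFT bin (naive O(n^2) DFT)."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        spectrum.append((k * fs / n, abs(coeff) ** 2))
    return spectrum


def relative_band_power(samples, fs=FS):
    """Share of the total in-band power that falls in each EEG band."""
    band_power = {name: 0.0 for name in BANDS}
    for freq, power in power_spectrum(samples, fs):
        for name, (low, high) in BANDS.items():
            if low <= freq < high:  # half-open bands so no bin is counted twice
                band_power[name] += power
                break
    total = sum(band_power.values())
    if total == 0:
        raise ValueError("signal has no power in the EEG bands")
    return {name: p / total for name, p in band_power.items()}
```

For instance, one second of a pure 10 Hz sine wave sampled at 512 Hz is attributed almost entirely to the alpha band. The naive DFT keeps the example dependency-free; for real recordings an FFT (e.g., `numpy.fft.rfft`) computes the same spectrum far faster.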

3.3 Experimental materials

All participants were randomly divided into four groups: 16 watched video A (no cues), 16 watched video B (visual cues only), 15 watched video C (auditory cues only), and 15 watched video D (audiovisual cues). To control for extraneous variables, all four groups followed exactly the same experimental procedure.
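Random assignment to groups of the reported sizes can be sketched as a shuffle followed by slicing. This is a minimal illustration assuming participants are identified by arbitrary IDs; `assign_groups` and the optional seed are hypothetical choices, not the authors’ documented procedure.

```python
import random

# Group labels and sizes as reported in Section 3.3.
GROUP_SIZES = {
    "A (no cues)": 16,
    "B (visual cues only)": 16,
    "C (auditory cues only)": 15,
    "D (audiovisual cues)": 15,
}


def assign_groups(participant_ids, group_sizes=GROUP_SIZES, seed=None):
    """Shuffle participant IDs and deal them into groups of fixed size."""
    ids = list(participant_ids)
    if len(ids) != sum(group_sizes.values()):
        raise ValueError("number of participants must equal the total group size")
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    rng.shuffle(ids)
    assignment, start = {}, 0
    for label, size in group_sizes.items():
        assignment[label] = ids[start:start + size]
        start += size
    return assignment
```

Shuffling once and slicing guarantees the exact group sizes, which independent coin flips per participant would not.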

3.3.1 Video

The 360° video, The Body VR: Journey Inside a Cell, was obtained from YouTube and had previously been used successfully by Meyer et al. (2019). It depicts a virtual journey in which the viewer travels through the bloodstream, passes through blood vessels to reach a cell in the tissue fluid, enters the cell, and observes its internal structure and how it works. To meet the needs of the experiment, we first translated and dubbed the video, and two practicing high school biology teachers then reviewed the content and made appropriate corrections. The final video lasted 7 min 21 s.

3.3.2 Audio

All audio for the 360° video was recorded in a professional recording studio using a panoramic camera and voiced by a student majoring in broadcasting and hosting. The audio was rendered as static, non-spatial stereo. Noise reduction, volume level, and timbre were processed in Adobe Audition, and the audio was exported in stereo. The edited video and audio were combined using the VR module in Adobe Premiere Pro. Participants watched and listened to the experimental material through an HTC VIVE HMD, which supports stereo playback.

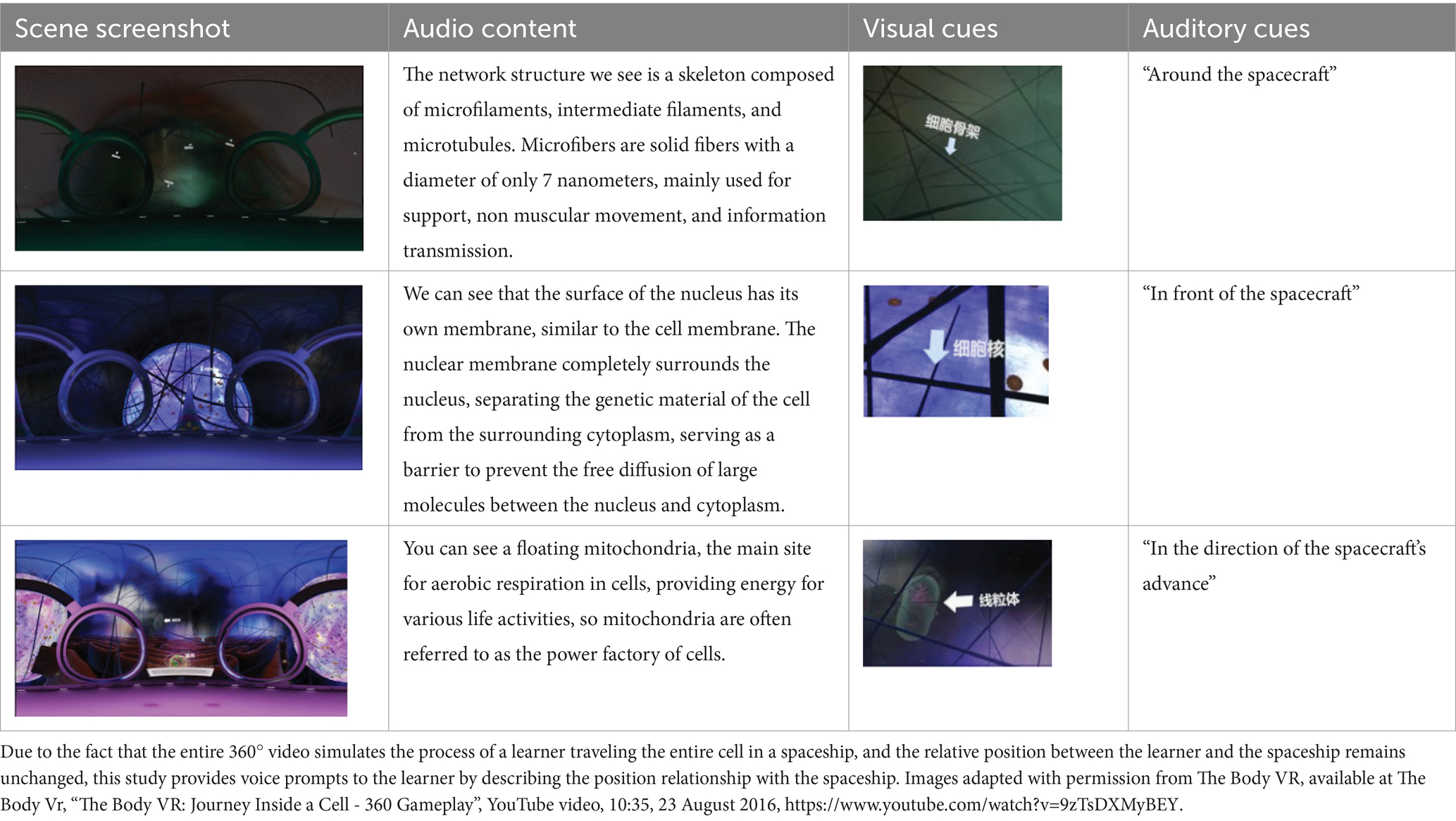

Finally, the 360° video was produced in four versions: without cues, with visual cues, with auditory cues, and with audiovisual cues. Visual cues consisted only of arrows and text placed at the appropriate locations, and auditory cues consisted only of spoken narration. Audiovisual cues presented both the visual and the auditory cues simultaneously. Examples of the auditory and visual cues are shown in Table 2.

3.3.3 Prior knowledge test

The prior knowledge questions were based on the pretest used in Meyer et al.’s (2019) media and methods experiment and were adapted by two practicing high school biology teachers to measure participants’ knowledge of cells.

3.3.4 Emotion measurement

Subjective emotion was measured before and after the experiment with Watson et al.’s (1988) Positive Affect Questionnaire, scored on a 5-point Likert scale. The reliability coefficients of the pretest and posttest measures were 0.881 and 0.858, respectively.
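The source does not name the reliability statistic. Assuming it is Cronbach’s alpha, the usual coefficient for Likert-type scales, it can be computed from a respondents × items score matrix as α = k/(k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. `cronbach_alpha` below is an illustrative helper, and it uses sample variances throughout.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(r) for r in responses])  # variance of each respondent's total
    return (k / (k - 1)) * (1 - item_var_sum / total_var)
```

When every item ranks respondents identically, alpha reaches 1; weaker inter-item agreement lowers it.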

3.3.5 Cognitive load measurement

Sweller et al. (2019) classified cognitive load into intrinsic, extraneous (external), and germane (associated) load, and Frederiksen et al. (2020) discussed how being in a virtual reality environment relates to these three types. This study therefore combined Paas’s (1994) subjective rating scale with Leppink et al.’s (2013) cognitive load scale to develop a cognitive load questionnaire. The questionnaire evaluates the difficulty of the learning task with the 360° video materials, subjective mental effort, and intrinsic, external, and associated cognitive load. Items are scored on a 9-point Likert scale. The reliability coefficients of the subjective mental effort scale and the cognitive load scale were 0.692 and 0.700, respectively.
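Scoring such a questionnaire amounts to averaging the items within each subscale. The sketch below assumes a hypothetical 10-item layout (three intrinsic, three external, and four associated items); the questionnaire’s actual item-to-subscale mapping is not given here, so `SUBSCALES` would have to be replaced with the real one.

```python
from statistics import mean

# Hypothetical item-to-subscale mapping (item numbers are illustrative only).
SUBSCALES = {
    "intrinsic": [1, 2, 3],
    "external": [4, 5, 6],
    "associated": [7, 8, 9, 10],
}


def score_cognitive_load(item_scores, subscales=SUBSCALES, scale_min=1, scale_max=9):
    """Average 9-point Likert item scores within each cognitive load subscale."""
    for item, value in item_scores.items():
        if not scale_min <= value <= scale_max:
            raise ValueError(f"item {item} is out of range: {value}")
    return {name: mean(item_scores[i] for i in items) for name, items in subscales.items()}
```

Validating the range first catches data-entry errors before they distort the subscale means.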

3.3.6 Attention measurement

Attention is a mental activity that forms part of cognitive processing. In this study, visual attention was measured with eye tracking, since visual attention is correlated with gaze (Pi et al., 2019). To examine how participants allocated visual attention, we recorded the number of gazes and the mean gaze duration in the area of interest (AOI) while cues appeared in seven scenes of the 360° video. The time to first fixation on each scene’s AOI was also recorded as a measure of how cues affected the salience of the learning content.
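The three eye-tracking measures can be computed from a list of fixations once each fixation’s direction on the 360° sphere is known. The sketch below is an assumption-laden illustration: it represents gaze direction as yaw and pitch in degrees, models each AOI as a yaw/pitch rectangle (allowing it to straddle the ±180° seam), and counts only fixations that start while a cue is displayed. `Fixation`, `AOI`, and `aoi_metrics` are hypothetical names, not part of the HTC VIVE or any eye-tracking SDK.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    onset_ms: float     # fixation start, relative to video start
    duration_ms: float
    yaw_deg: float      # horizontal direction on the sphere, -180..180
    pitch_deg: float    # vertical direction, -90..90


@dataclass
class AOI:
    yaw_min: float
    yaw_max: float
    pitch_min: float
    pitch_max: float

    def contains(self, fx: Fixation) -> bool:
        if self.yaw_min <= self.yaw_max:
            in_yaw = self.yaw_min <= fx.yaw_deg <= self.yaw_max
        else:  # the AOI wraps around the ±180° seam
            in_yaw = fx.yaw_deg >= self.yaw_min or fx.yaw_deg <= self.yaw_max
        return in_yaw and self.pitch_min <= fx.pitch_deg <= self.pitch_max


def aoi_metrics(fixations, aoi, cue_onset_ms, cue_offset_ms):
    """Fixation count, mean fixation duration, and time to first fixation in an AOI during a cue."""
    hits = [f for f in fixations
            if cue_onset_ms <= f.onset_ms < cue_offset_ms and aoi.contains(f)]
    if not hits:
        return {"count": 0, "mean_duration_ms": None, "time_to_first_ms": None}
    first = min(hits, key=lambda f: f.onset_ms)
    return {
        "count": len(hits),
        "mean_duration_ms": mean(f.duration_ms for f in hits),
        "time_to_first_ms": first.onset_ms - cue_onset_ms,
    }
```

Measuring time to first fixation from cue onset, rather than from scene onset, isolates how quickly the cue drew the eye to the AOI.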

3.3.7 Learning achievement test

The learning achievement test comprised a retention test, a transfer test, and a knowledge forgetting (delayed) test. The retention test consisted of 16 single-choice questions on the content of the 360° video, for a maximum score of 16 points. The transfer test assessed participants’ ability to apply the cell knowledge learned from the video to new situations through three open-ended questions worth 3 points each, for a maximum of 9 points. For the knowledge forgetting test, the retention questions were presented again in a changed order and participants answered them a second time.
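Because the delayed test reuses the retention items in a different order, scoring by question ID rather than by position keeps the two tests comparable. The helpers below are a hypothetical sketch with an invented answer key and item IDs; expressing forgetting as immediate minus delayed retention is one simple option, not necessarily how the study quantified it.

```python
def score_retention(answers, key):
    """One point per correct single-choice answer, matched by question ID so item order is irrelevant."""
    return sum(1 for qid, correct in key.items() if answers.get(qid) == correct)


def score_transfer(item_points, max_per_item=3):
    """Sum of rater-assigned points for the open-ended transfer questions."""
    if any(not 0 <= p <= max_per_item for p in item_points):
        raise ValueError("transfer item score out of range")
    return sum(item_points)


def forgetting(immediate, delayed):
    """Points lost between the immediate and the one-week delayed retention test."""
    return immediate - delayed
```

Unanswered questions simply score zero, since `answers.get` returns `None` for a missing ID.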

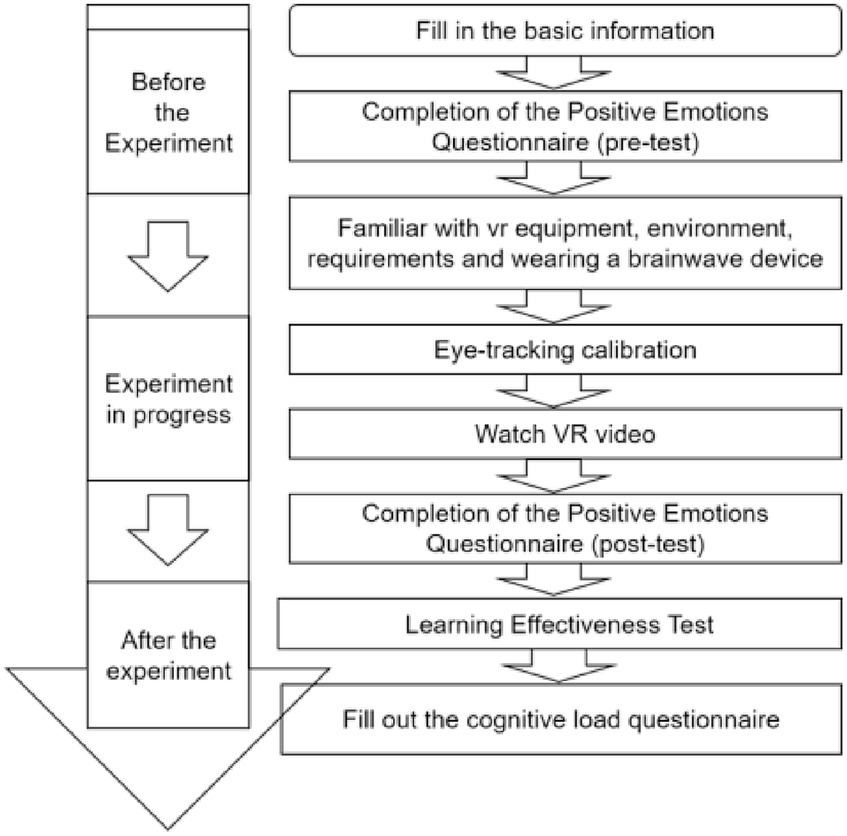

3.4 Experimental process

The experiment took place in an eye-movement laboratory and lasted 25 min. First, in the reception laboratory, participants provided basic information, completed the pretest questions, and completed the positive emotion scale (pretest). They then entered the eye-movement laboratory and put on the brainwave meter and VR headset. After participants had become accustomed to the equipment, the researcher explained the procedure and confirmed that each participant understood it. Participants then entered the 360° video learning stage, during which their eye movements were recorded. After learning, they completed the positive emotion scale (posttest), the cognitive load questionnaire, the retention test, and the transfer test. At the end of the experiment, participants were asked to join a QQ group (QQ is an instant-messaging application from Tencent). One week later, the retention test, with the order of its options changed, was sent to them as an online questionnaire to serve as the knowledge forgetting test. The experimental process is shown in Figure 1.

4 Results

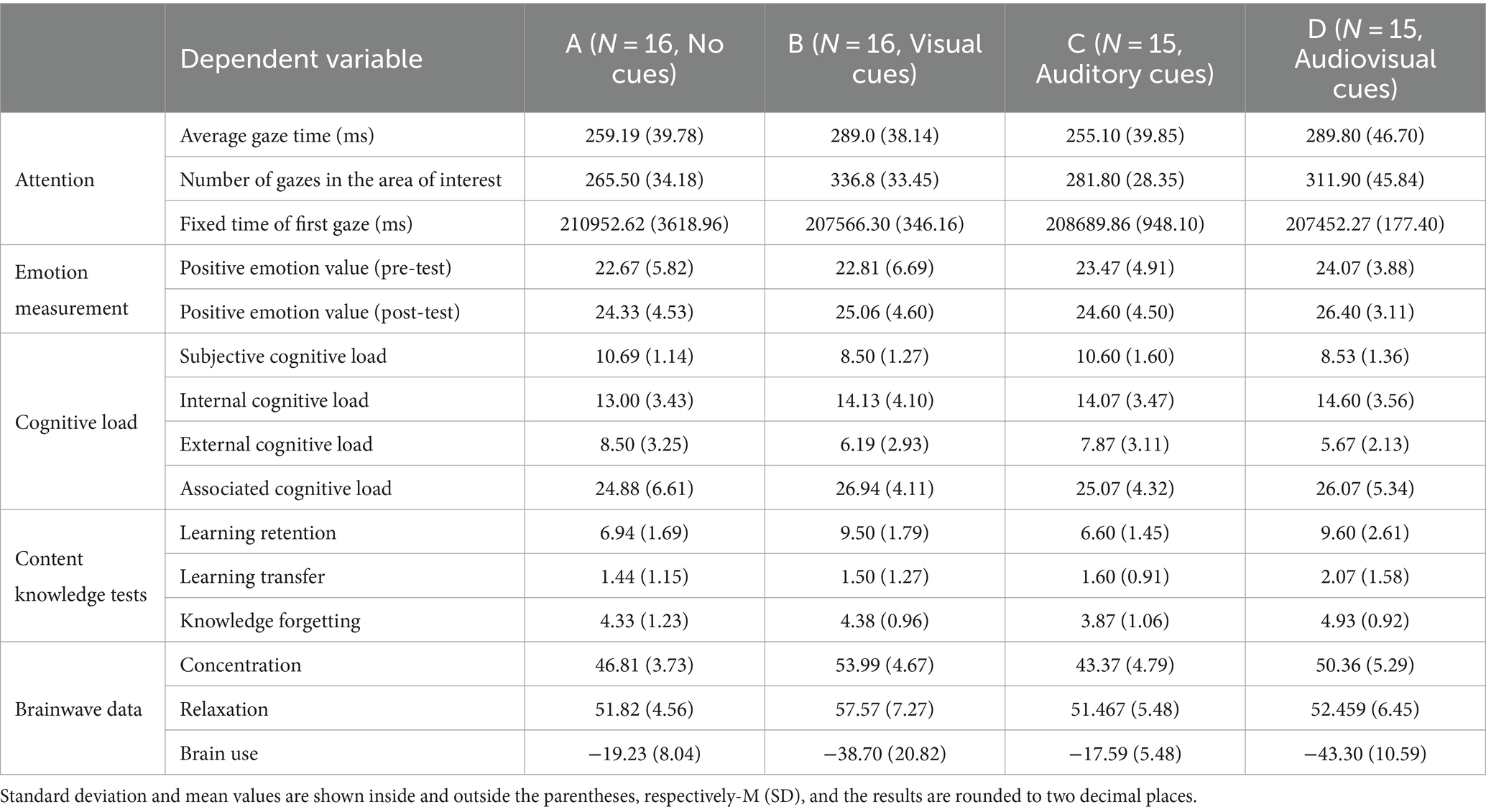

The pretest scores of the four groups did not differ significantly (F = 0.40, p = 0.76). The dependent variables were participants’ positive emotion scores (pretest and posttest); cognitive load questionnaire scores; the number of gazes in the AOI; mean gaze duration; retention, transfer, and forgetting test scores; and the brainwave indices of concentration, relaxation, and brain use. The independent variable was the cue condition, that is, the channel on which the cues acted. Data were analyzed with one-way ANOVA. During the experiment, eye-movement data were not recorded for one participant, two participants did not complete the online knowledge forgetting questionnaire, and brainwave recording failed for one participant. Descriptive statistics for the dependent variables are shown in Table 3.
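As a minimal sketch of the one-way ANOVA applied to each dependent variable, the F statistic is the ratio of the between-group mean square to the within-group mean square, F = [SS_between / (k − 1)] / [SS_within / (N − k)], for k groups and N participants. The code below computes only F and its degrees of freedom; `one_way_anova` is an illustrative helper, and a p-value would come from the F distribution (for example via `scipy.stats.f_oneway`).

```python
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    k = len(groups)
    if k < 2:
        raise ValueError("need at least two groups")
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: group size times squared deviation of each group mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: squared deviations of scores from their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With four groups of 16, 16, 15, and 15 participants, the degrees of freedom are 3 and 58; each participant with missing data on a measure reduces N, and therefore the within-group degrees of freedom, by one.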

4.1 Attention allocation

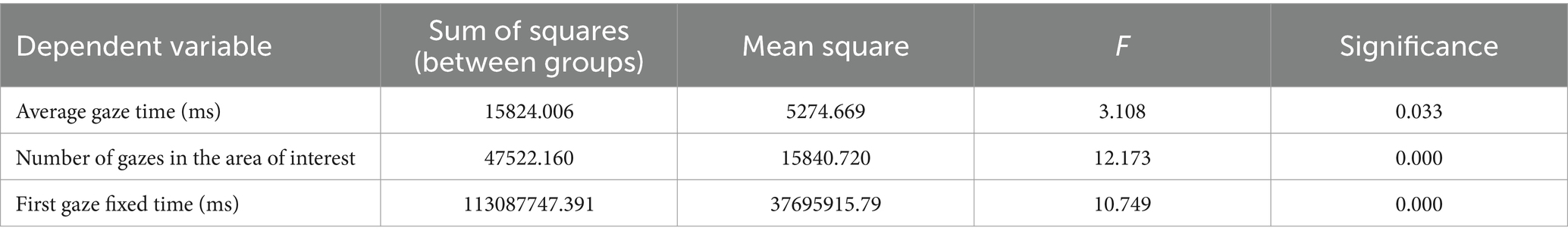

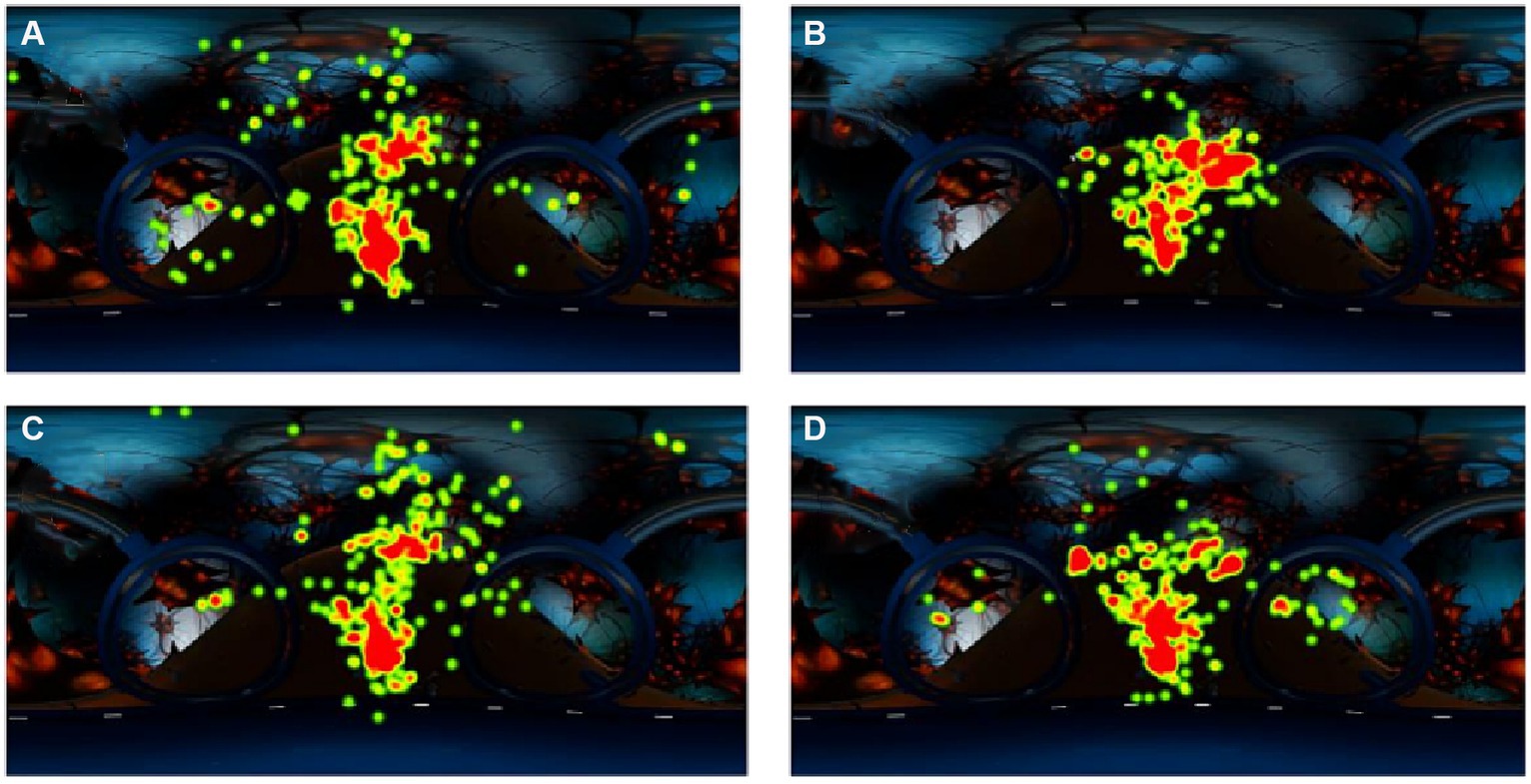

For visual attention, the mean gaze duration and the number of gazes in the AOI were selected as eye-movement dependent variables. One-way ANOVA revealed that cues acting on different channels significantly affected the allocation of visual attention. Visual and audiovisual cues increased the mean gaze duration (F = 3.11, p = 0.03) and the number of gazes in the AOI (F = 12.17, p < 0.001), although the effect of audiovisual cues on the number of gazes was weaker than that of visual cues. The effect of cues on the mean time to first fixation in the AOI was also significant: visual and audiovisual cues effectively shortened learners’ visual search, allowing them to focus on specific learning content. In contrast, the effect of auditory cues was not significant. The one-way ANOVA results for each attention-related dependent variable are shown in Table 4 and Figure 2.

Figure 2. Gaze heat maps for each group [Group (A): no cues, Group (B): visual cues, Group (C): auditory cues, Group (D): audiovisual cues].

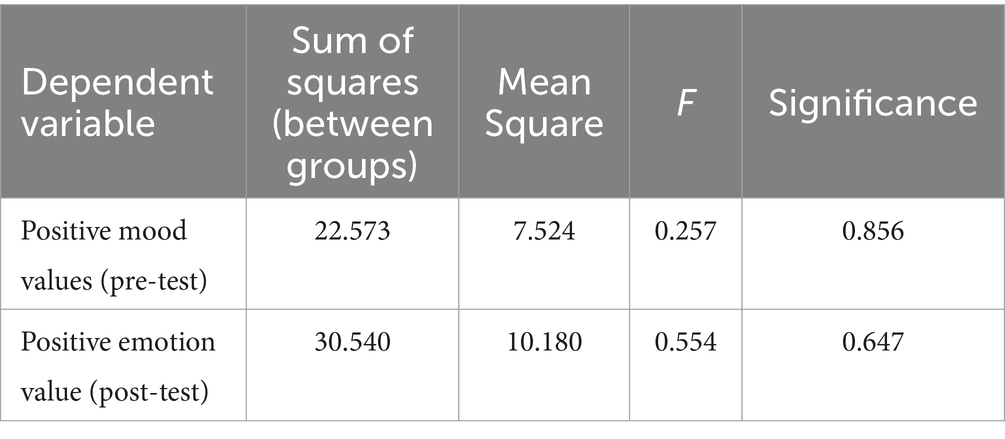

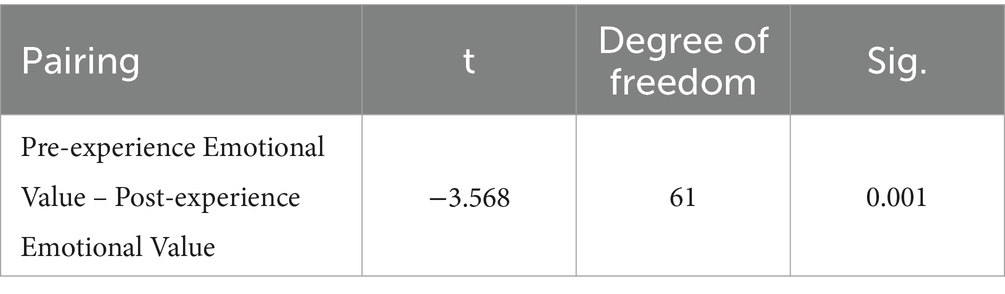

4.2 Emotion measurements

Positive emotion from the subjective questionnaire was analyzed with one-way ANOVA at pretest and at posttest and with a paired-samples t-test comparing pretest and posttest; the results are shown in Table 3 (pretest: F = 0.26, p = 0.86; posttest: F = 0.55, p = 0.65; pretest vs. posttest: t = −3.57, p = 0.001). Thus, positive emotion did not differ significantly between groups either before or after the experiment, but it changed significantly from before to after the experiment. The paired-samples t-test results for positive emotion and the one-way ANOVA results for relaxation measured by the brainwave meter in each group are shown in Tables 5 and 6, respectively.

Table 5. Results of paired-samples t-tests of positive emotion before and after the experience.

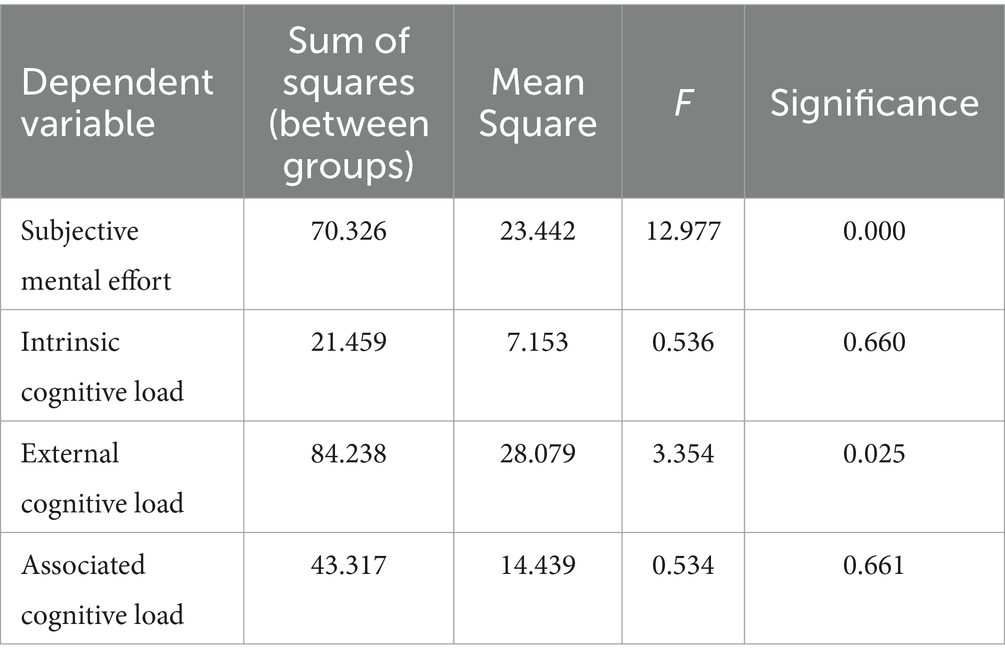
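The pretest/posttest comparison of positive emotion is a within-participant design, so a paired-samples t statistic applies: t = mean(d) / (s_d / √n) with n − 1 degrees of freedom, where d is each participant’s pretest minus posttest score and s_d is the sample standard deviation of d. The sketch below is illustrative; `paired_t` is a hypothetical helper, and the p-value would come from the t distribution.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired-samples t statistic (pretest minus posttest) and its degrees of freedom."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    t_stat = mean(diffs) / (stdev(diffs) / sqrt(n))  # mean difference over its standard error
    return t_stat, n - 1
```

With differences taken as pretest minus posttest, a negative t means that posttest scores were higher on average.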

4.3 Cognitive load

The subjective questionnaire revealed that cues acting on different channels significantly affected learners’ subjective mental effort (F = 12.98, p < 0.001). Multiple comparisons showed that visual and audiovisual cues reduced learners’ subjective mental effort in the 360° video environment, with audiovisual cues about as effective as visual cues, whereas auditory cues had no significant effect. The questionnaire also revealed no significant effect of cue channel on intrinsic cognitive load (F = 0.54, p = 0.66) or associated cognitive load (F = 0.53, p = 0.66), but a significant effect on external cognitive load (F = 3.35, p = 0.03). Least significant difference (LSD) multiple comparisons showed that visual and audiovisual cues effectively reduced external cognitive load. The one-way ANOVA results for cognitive load in each group are shown in Table 7.

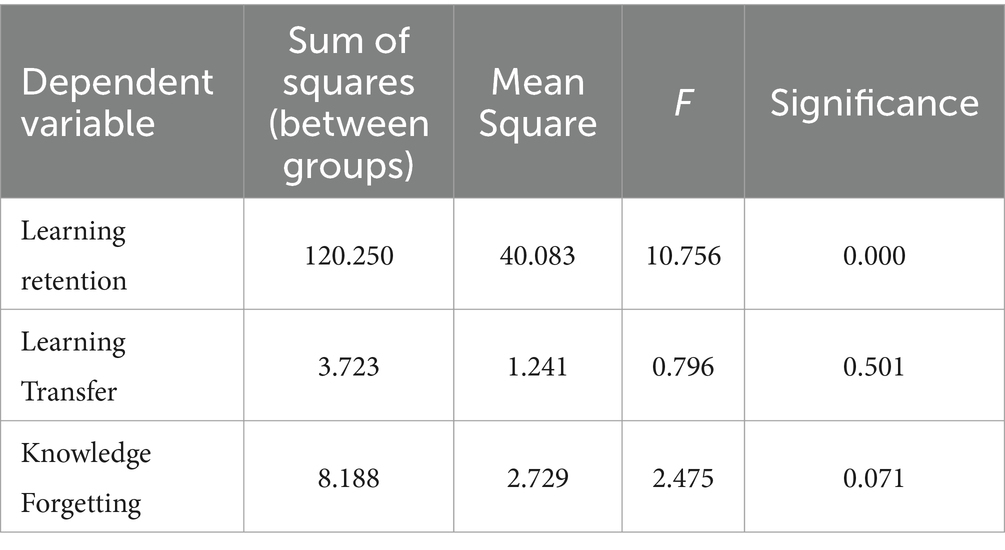
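Fisher’s LSD test compares each pair of group means with an ordinary t statistic that uses the pooled within-group mean square error (MSE) from the ANOVA: t = (M_i − M_j) / √(MSE × (1/n_i + 1/n_j)), with N − k degrees of freedom. The following is a minimal sketch with a hypothetical `lsd_pairwise` helper; two-tailed p-values could then be obtained as `2 * scipy.stats.t.sf(abs(t), df)`.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def lsd_pairwise(groups):
    """Fisher's LSD t statistics for every pair of named groups, using the pooled ANOVA error term."""
    k = len(groups)
    n_total = sum(len(g) for g in groups.values())
    df_within = n_total - k
    means = {name: mean(g) for name, g in groups.items()}
    ss_within = sum((x - means[name]) ** 2 for name, g in groups.items() for x in g)
    mse = ss_within / df_within  # pooled within-group variance
    results = {}
    for a, b in combinations(groups, 2):
        se = sqrt(mse * (1 / len(groups[a]) + 1 / len(groups[b])))
        results[(a, b)] = (means[a] - means[b]) / se
    return results, df_within
```

Because LSD does not adjust for multiple comparisons, it is conventionally applied only after a significant omnibus F test.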

4.4 Academic performance

Cues significantly affected retention performance (F = 10.76, p < 0.001): visual and audiovisual cues contributed significantly to knowledge retention, whereas auditory cues had no significant effect. For transfer performance, the cue effect was not significant (F = 0.80, p = 0.50), indicating that cues acting on different channels did not affect knowledge transfer. On the online knowledge forgetting test one week later, the scores of the four groups did not differ significantly (F = 2.48, p = 0.07). Thus, visual and audiovisual cues aided immediate retention but had no significant effect on knowledge transfer or long-term memory. The one-way ANOVA results for each group’s learning performance are shown in Table 8.

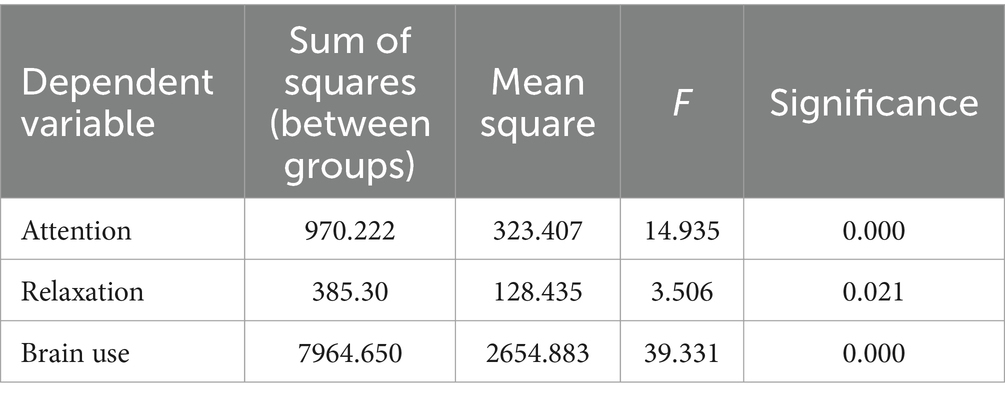

4.5 Brainwave data

Regarding attention, cues acting on different channels significantly affected implicit attention (F = 14.94, p < 0.001). Multiple comparisons revealed that visual and audiovisual cues increased implicit attention, with visual cues the most effective, whereas auditory cues decreased it.

Regarding relaxation, one-way ANOVA showed a significant effect of cues (F = 3.51, p = 0.02). Multiple comparisons showed that visual cues significantly increased relaxation, whereas auditory and audiovisual cues had no significant effect. One possible explanation is that in 360° videos learners receive mismatched information from the auditory and visual channels while the narration and scenes change continuously, which makes the information harder to process and may make learners nervous or upset. Visual cues draw learners’ attention to the relevant areas more easily, reducing tension and enhancing relaxation. Audiovisual cues may not significantly affect relaxation because the directional effect of the auditory cues offsets the effect of the visual cues on relaxation.

Regarding brain use, objective brainwave meter measurements also showed that cues acting on different channels significantly affected the learners’ cognitive load (F = 11.86, p < 0.001). Multiple comparisons showed that the visual and audiovisual cues effectively reduced the learners’ cognitive load, while the auditory cues had no significant effect. The results of one-way ANOVA for each group of brainwave data are shown in Table 9.

5 Conclusions and discussion

The current study investigated the effects of cues acting on different channels in 360° videos on learning declarative knowledge and the underlying mechanisms involved. The results showed that cues acting on different channels affected learners differently. Visual and audiovisual cues effectively guide learners’ attention to the related learning content, reduce the external cognitive load during learning, and improve learning retention performance. However, they have no significant effect on knowledge transfer or long-term memory. Auditory cues increase the number of times learners attend to related learning content, but they do not affect the duration of attention. Auditory cues distract implicit attention during learning and have no significant impact on the acquisition of relevant learning content.

5.1 Effects of cues in different channels of 360° videos on attention

Visual and audiovisual cues effectively direct learners’ visual attention, but auditory cues have no significant effect on visual attention. Visual and audiovisual cues increase implicit attention, with visual cues being the most effective, while auditory cues distract implicit attention.

Regarding visual cues: in immersive 360° video environments, cues such as arrows and annotations can make specific learning content more salient than other content. Visual cues can effectively direct visual attention to learning content (Liu et al., 2022), prevent spatial selection errors (i.e., help learners align visual information with the spoken narration), and increase learners’ attention. This explanation is supported by the finding that visual cues effectively reduced learners’ visual search time for specific learning content, as shown by the time to first fixation in the AOI in this experiment.

Auditory cues tell learners where to look in the virtual reality environment, but they do not themselves provide content-related information. Because they are brief, once auditory cues have directed learners’ visual attention to the relevant area, learners still need to rely on visual search to select the relevant information. Without the support of visual cues, the information in the auditory cues and the narration may be redundant. Inconsistency between visual information and the video narration causes temporal selection errors and distracts learners’ implicit attention. Thus, auditory cues alone in 360° videos appear to make information harder to access in a virtual reality environment and to distract learners’ implicit attention, consistent with Feintuch et al. (2006).

Regarding audiovisual cues: in immersive 360° video learning environments, visual information is dominant, and when both auditory and visual cues are available, the visual cues dominate; therefore, audiovisual cues significantly affect learners’ attention allocation. Based on Baddeley’s working memory model, multimedia learning shows a modality effect (Baddeley, 1992): cognitive resources are used most effectively when visual information is accompanied by simultaneous auditory information. However, the results of this study showed an “inverse modality effect” for cues in 360° videos, in that adding auditory cues did not enhance the effect of visual cues. A likely reason is that audiovisual cues require a cross-channel shift of attention, from auditory to visual or from visual to auditory. During this shift, the auditory cues distract implicit attention, so they do not strengthen the effect of visual cues.

At the same time, some studies have shown a channel effect for cues: cues that appear in the same channel as the attended content accelerate cognitive responses (Turatto et al., 2002). In 360° video immersive virtual reality environments, the most salient information is presented visually. Embedding visual cues (arrows and text) that match the audio narration in the scene area that requires attention therefore makes the relationship between the visual information and the audio narration more salient. This supports the organization and integration of information and is in line with the consistency principle of the CTML (Mayer, 2005a,b). Eye-movement data suggest that the auditory component of audiovisual cues weakens their effect in guiding visual attention; thus, auditory cues do not enhance the effect of visual cues.

5.2 Effects of embedding cues of different channels in 360° videos on emotions

This study found no significant moderating effect of cues on learners' positive emotions in 360° videos. A possible reason is that 360° videos themselves stimulate positive emotions in learners before and after the experience. They provide an immersive presence that enhances emotional arousal (Shen et al., 2019) and stimulate positive emotions when learners study 360° video content (Stavroulia et al., 2019).

5.3 Effects of embedding cues of different channels in 360° videos on cognitive load

Visual and audiovisual cues reduce learners' cognitive load (subjective mental effort) when learning with 360° videos, specifically their extraneous cognitive load. They have no significant effect on intrinsic or germane cognitive load. Visual cues can guide learners' attention to relevant information and improve the efficiency and effectiveness of locating necessary information (Ozcelik et al., 2010). In doing so, they reduce the difficulty learners have in accessing learning content in 360° videos.

5.4 Effect of the embedding cues of different channels in 360° videos on learning performance

Visual and audiovisual cues improve learning retention performance. Meyer et al. (2019) found that virtual reality learning environments do not affect learning performance related to declarative knowledge. Building on this finding, this study embedded cues in 360° videos. The cues effectively enhanced learners' acquisition and retention of declarative knowledge but had no significant effect on knowledge transfer or long-term memory. This finding indicates that cues modulate learners' attention in 360° video learning environments and positively influence the cognitive process of selection but not the processes of organization and integration. This may be because the novelty of VR technology brings excitement and fun. Learners pay more attention to the learning environment itself (Rupp et al., 2016) and are distracted by the novelty of the video experience. They therefore focus on surface features of the learning content rather than processing it deeply, so cues do not promote a deeper understanding of the learning content. Clark (1994) argued that differences in learning result from differences in teaching methods rather than from differences in the media themselves. Different instructional approaches to using 360° video resources could therefore be explored to enhance learning performance. For example, in a study of the effect of pretraining before VR experiences, Meyer et al. (2019) found that pretraining improved performance on declarative knowledge transfer in VR.

6 Shortcomings of the study and outlook

This study has several limitations. Objective conditions limited this study, including the sex ratio of the participants (more females than males), the age of the participants, and the differences between the experimental setting and the actual teaching environment. Moreover, the experimental materials used static stereo audio, so the conclusions about auditory cues apply only to static stereo audio. Further research is therefore needed before these findings are used to guide actual teaching and resource development.

This study has produced new findings on how cues in 360° videos affect the retention of declarative knowledge. Future research should address the following areas.

1. Exploring the effects of using 360° videos as a teaching modality on learning performance.

2. Exploring boundary conditions such as the method, dynamics, and number of cues embedded in 360° videos.

3. Exploring the effects of cues on other knowledge types or subject knowledge during 360° video learning.

4. Exploring the effects of cues delivered through tactile, olfactory, and other channels on learning performance in virtual reality environments.

5. Exploring the effects of 360° videos with immersive spatial audio on learning performance.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Local Ethics Committee of China West Normal University. The participants provided their written informed consent to participate in this study.

Author contributions

GH: Writing – review & editing. CC: Writing – original draft. YT: Writing – review & editing. RL: Writing – review & editing. HZ: Writing – review & editing. LZ: Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This study was supported by the Projects of Industry-University Collaborative Education of the Ministry of Education (202102464026), the 2022 University-level Student Innovation and Entrepreneurship Training Program of China West Normal University, and the Nanchong Social Science Research “14th Five-Year Plan” 2022 Annual Project (NC22C472).

Acknowledgments

We thank Deng Shuangjie, Zhang Lei, and Wang Yihan for their help in writing and publishing this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abhang, P., Gawali, B. W., and Mehrotra, S. C. (2016). “Chapter 2 - Technological basics of EEG recording and operation of apparatus” in Introduction to EEG- and Speech-Based Emotion Recognition (Cambridge, MA: Academic Press), 19–50.

Albus, P., Vogt, A., and Seufert, T. (2021). Signaling in virtual reality influences learning outcome and cognitive load. Comput. Educ. 166:104154. doi: 10.1016/j.compedu.2021.104154

Araiza-Alba, P., Keane, T., Matthews, B. L., Simpson, K., Strugnell, G., Chen, W. S., et al. (2020). The potential of 360-degree virtual reality videos to teach water-safety skills to children. Comput. Educ. 163:104096. doi: 10.1016/j.compedu.2020.104096

Buttussi, F., and Chittaro, L. (2018). Effects of different types of virtual reality display on presence and learning in a safety training scenario. IEEE Trans. Vis. Comput. Graph. 24, 1063–1076. doi: 10.1109/TVCG.2017.2653117

Clark, R. E. (1994). Media will never influence learning. Educ. Technol. Res. Dev. 42, 21–29. doi: 10.1007/BF02299088

Cooper, N., Milella, F., Pinto, C., Cant, I., White, M., and Meyer, G. (2018). The effects of substitute multisensory feedback on task performance and the sense of presence in a virtual reality environment. PLoS One 13:e0191846. doi: 10.1371/journal.pone.0191846

de Koning, B. B., Tabbers, H. K., Rikers, R. M. J. P., and Paas, F. (2007). Attention cueing as a means to enhance learning from an animation. Appl. Cogn. Psychol. 21, 731–746. doi: 10.1002/acp.1346

de Koning, B. B., Tabbers, H. K., Rikers, R. M., and Paas, F. (2009). Towards a framework for attention cueing in instructional animations: guidelines for research and design. Educ. Psychol. Rev. 21, 113–140. doi: 10.1007/s10648-009-9098-7

Feintuch, U., Liat, R., Hwang, J., Josman, N., Katz, N., Kizony, R., et al. (2006). Integrating haptic-tactile feedback into a video capture based VE for rehabilitation. Cyberpsychol. Behav. 9, 129–132. doi: 10.1089/cpb.2006.9.129

Frederiksen, J. G., Sørensen, S. M. D., Konge, L., Svendsen, M. B. S., Nobel-Jørgensen, M., Bjerrum, F., et al. (2020). Cognitive load and performance in immersive virtual reality versus conventional virtual reality simulation training of laparoscopic surgery: a randomized trial. Surg. Endosc. 34, 1244–1252. doi: 10.1007/s00464-019-06887-8

Grant, E. R., and Spivey, M. J. (2003). Eye movements and problem solving: guiding attention guides thought. Psychol. Sci. 14, 462–466. doi: 10.1111/1467-9280.02454

Harrington, C. M., Kavanagh, D. O., Wright Ballester, G., Wright Ballester, A., Dicker, P., Traynor, O., et al. (2018). 360° operative videos: a randomised cross-over study evaluating attentiveness and information retention. J. Surg. Educ. 75, 993–1000. doi: 10.1016/j.jsurg.2017.10.010

Hwang, Y., and Shin, D. D. (2018). Visual cues enhance user performance in virtual environments. Soc. Behav. Pers. 46, 11–24. doi: 10.2224/sbp.6500

Jamet, E. (2014). An eye-tracking study of cueing effects in multimedia learning. Comput. Hum. Behav. 32, 47–53. doi: 10.1016/j.chb.2013.11.013

Keshavarz, B., Campos, J. L., DeLucia, P. R., and Oberfeld, D. (2017). Estimating the relative weights of visual and auditory tau versus heuristic-based cues for time-to-contact judgments in realistic, familiar scenes by older and younger adults. Atten. Percept. Psychophys. 79, 929–944. doi: 10.3758/s13414-016-1270-9

Leppink, J., Paas, F., Van der Vleuten, C. P., Van Gog, T., and Van Merriënboer, J. J. (2013). Development of an instrument for measuring different types of cognitive load. Behav. Res. Methods 45, 1058–1072. doi: 10.3758/s13428-013-0334-1

Licorish, S. A., Owen, H. E., Daniel, B., and George, J. L. (2018). Students’ perception of Kahoot!’s influence on teaching and learning. Res. Pract. Technol. Enhanc. Learn. 13, 1–23. doi: 10.1186/s41039-018-0078-8

Lin, L., and Li, M. (2018). Optimizing learning from animation: examining the impact of biofeedback. Learn. Instr. 55, 32–40. doi: 10.1016/j.learninstruc.2018.02.005

Liu, Q., Homma, R., and Iki, K. (2020). Evaluating cyclists’ perception of satisfaction using 360° videos. Transport. Res. Part A 132, 205–213. doi: 10.1016/j.tra.2019.11.008

Liu, R., Xu, X., Yang, H., Li, Z., and Huang, G. (2022). Impacts of cues on learning and attention in immersive 360-degree video: An eye-tracking study. Front. Psychol. 12:792069. doi: 10.3389/fpsyg.2021.792069

Makransky, G., Terkildsen, T. S., and Mayer, R. E. (2019). Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learn. Instr. 60, 225–236. doi: 10.1016/j.learninstruc.2017.12.007

Mayer, R. E. (2005a). “Cognitive theory of multimedia learning” in The Cambridge handbook of multimedia learning, vol. 41 (Cambridge: Cambridge University Press), 31–48.

Mayer, R. E. (2005b). The Cambridge handbook of multimedia learning. Cambridge: Cambridge University Press.

Mayer, R. E. (2014). The Cambridge handbook of multimedia learning. 2nd Edn. Cambridge: Cambridge University Press.

Mayer, R. E., and Fiorella, L. (2014). “Principles for reducing extraneous processing in multimedia learning: coherence, signaling, redundancy, spatial contiguity, and temporal contiguity principles” in The Cambridge handbook of multimedia learning. ed. R. E. Mayer. 2nd ed (Cambridge: Cambridge University Press), 279–315.

Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., and Davis, T. J. (2014). Effectiveness of virtual reality-based instruction on students' learning outcomes in K-12 and higher education: a meta-analysis. Comput. Educ. 70, 29–40. doi: 10.1016/j.compedu.2013.07.033

Meyer, O. A., Omdahl, M. K., and Makransky, G. (2019). Investigating the effect of pre-training when learning through immersive virtual reality and video: a media and methods experiment. Comput. Educ. 140:103603. doi: 10.1016/j.compedu.2019.103603

Moreno, R., and Abercrombie, S. (2010). Promoting awareness of learner diversity in prospective teachers: signaling individual and group differences within virtual classroom cases. J. Technol. Teach. Educ. 18, 111–130.

Ozcelik, E., Arslan-Ari, I., and Cagiltay, K. (2010). Why does signaling enhance multimedia learning? Evidence from eye movements. Comput. Hum. Behav. 26, 110–117. doi: 10.1016/j.chb.2009.09.001

Paas, F. G. W. C., and Van Merriënboer, J. J. G. (1994). Variability of worked examples and transfer of geometrical problem-solving skills: a cognitive-load approach. J. Educ. Psychol. 86, 122–133. doi: 10.1037/0022-0663.86.1.122

Paivio, A. (1991). Dual coding theory: retrospect and current status. Can. J. Psychol. 45, 255–287. doi: 10.1037/h0084295

Parmaxi, A. (2020). Virtual reality in language learning: a systematic review and implications for research and practice. Interact. Learn. Environ. 31, 172–184. doi: 10.1080/10494820.2020.1765392

Phanichraksaphong, V., and Tsai, W. (2019). A study of the suitability of marine transportation personnel using brainwave analysis and virtual reality technology. Maritime Technol. Res. 2, 69–81. doi: 10.33175/mtr.2020.218954

Pi, Z., Zhang, Y., Zhu, F., Xu, K., Yang, J., and Hu, W. (2019). Instructors' pointing gestures improve learning regardless of their use of directed gaze in video lectures. Comput. Educ. 128, 345–352. doi: 10.1016/j.compedu.2018.10.006

Pizzoli, S. M., Monzani, D., Mazzocco, K., Maggioni, E., and Pravettoni, G. (2021). The power of odor persuasion: the incorporation of olfactory cues in virtual environments for personalized relaxation. Perspect. Psychol. Sci. 17, 652–661. doi: 10.1177/17456916211014196

Rupp, M. A., Kozachuk, J., Michaelis, J. R., Odette, K. L., Smither, J. A., and McConnell, D. S. (2016). The effects of immersiveness and future VR expectations on subjective experiences during an educational 360° video. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 60, 2108–2112. doi: 10.1177/1541931213601477

Rupp, M. A., Odette, K. L., Kozachuk, J., Michaelis, J. R., Smither, J. A., and McConnell, D. S. (2019). Investigating learning outcomes and subjective experiences in 360-degree videos. Comput. Educ. 128, 256–268. doi: 10.1016/j.compedu.2018.09.015

Shadiev, R., and Sintawati, W. (2020). A review of research on intercultural learning supported by technology. Educ. Res. Rev. 31:100338. doi: 10.1016/j.edurev.2020.100338

Soares, F., Silva, E., Pereira, F. C., Silva, C., Sousa, E., and Freitas, E. (2021). To cross or not to cross: impact of visual and auditory cues on pedestrians’ crossing decision-making. Transport. Res. F: Traffic Psychol. Behav. 82, 202–220. doi: 10.1016/j.trf.2021.08.014

Stavroulia, K., Christofi, M., Baka, E., Michael-Grigoriou, D., Magnenat-Thalmann, N., and Lanitis, A. (2019). Assessing the emotional impact of virtual reality-based teacher training. Int. J. Inform. Learn. Technol. 36, 192–217. doi: 10.1108/IJILT-11-2018-0127

Stupar-Rutenfrans, S., Ketelaars, L. E. H., and van Gisbergen, M. S. (2017). Beat the fear of public speaking: Mobile 360° video virtual reality exposure training in home environment reduces public speaking anxiety. Cyberpsychol. Behav. Soc. Netw. 20, 624–633. doi: 10.1089/cyber.2017.0174

Sweller, J., van Merrienboer, J. J. G., and Paas, F. G. W. C. (1998). Cognitive architecture and instructional design. Educ. Psychol. Rev. 10, 251–296. doi: 10.1023/A:1022193728205

Sweller, J., van Merrienboer, J. J. G., and Paas, F. G. W. C. (2019). Cognitive architecture and instructional design: 20 years later. Educ. Psychol. Rev. 31, 261–292. doi: 10.1007/s10648-019-09465-5

Turatto, M., Benso, F., Galfano, G., and Umiltà, C. (2002). Nonspatial attentional shifts between audition and vision. J. Exp. Psychol. Hum. Percept. Perform. 28, 628–639. doi: 10.1037//0096-1523.28.3.628

Ward, C. (2017). Demystifying 360 vs. VR [blog post]. Available at: https://vimeo.com/blog/post/virtual-reality-vs-360-degree-video/

Watson, D., Clark, L. A., and Tellegen, A. (1988). Development and validation of brief measures of positive and negative affect: the PANAS scales. J. Pers. Soc. Psychol. 54, 1063–1070. doi: 10.1037/0022-3514.54.6.1063

Wu, J., Guo, R., Wang, Z., and Zeng, R. (2019). Integrating spherical video-based virtual reality into elementary school students’ scientific inquiry instruction: effects on their problem-solving performance. Interact. Learn. Environ. 29, 496–509. doi: 10.1080/10494820.2019.1587469

Shen, X., Zhang, J., and Wang, X. (2019). The emotional mechanism of virtual reality: body schema enhances emotional arousal. China Electron. Educ. 12, 8–15.

Xie, H., Wang, F., Hao, Y., Chen, J., An, J., Wang, Y., et al. (2017). The more total cognitive load is reduced by cues, the better retention and transfer of multimedia learning: a meta-analysis and two meta-regression analyses. PLoS One 12:e0183884. doi: 10.1371/journal.pone.0183884

Keywords: 360° video, cues, eye movement test, brainwave test, learning result

Citation: Huang G, Chen C, Tang Y, Zhang H, Liu R and Zhou L (2024) A study on the effect of different channel cues on learning in immersive 360° videos. Front. Psychol. 15:1335022. doi: 10.3389/fpsyg.2024.1335022

Edited by:

Meryem Yilmaz Soylu, Georgia Institute of Technology, United States

Reviewed by:

Diana Sanchez, San Francisco State University, United States

David Murphy, University College Cork, Ireland

Copyright © 2024 Huang, Chen, Tang, Zhang, Liu and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guan Huang, helen1983226@aliyun.com
