- 1Huntsman Mental Health Institute, Salt Lake City, UT, United States

- 2Department of Psychiatry, University of Utah, Salt Lake City, UT, United States

- 3Center for High Performance Computing, University of Utah, Salt Lake City, UT, United States

Introduction: Internalizing disorders (depression, anxiety, somatic symptom disorder) are among the most common mental health conditions that can substantially reduce daily life function. Early adolescence is an important developmental stage for the increase in prevalence of internalizing disorders and understanding specific factors that predict their onset may be germane to intervention and prevention strategies.

Methods: We analyzed ~6,000 candidate predictors from multiple knowledge domains (cognitive, psychosocial, neural, biological) contributed by children of late elementary school age (9–10 yrs) and their parents in the ABCD cohort to construct individual-level models predicting the later (11–12 yrs) onset of depression, anxiety and somatic symptom disorder using deep learning with artificial neural networks. Deep learning was guided by an evolutionary algorithm that jointly performed optimization across hyperparameters and automated feature selection, allowing more candidate predictors and a wider variety of predictor types to be analyzed than the largest previous comparable machine learning studies.

Results: We found that the future onset of internalizing disorders could be robustly predicted in early adolescence with AUROCs ≥~0.90 and ≥~80% accuracy.

Discussion: Each disorder had a specific set of predictors, though parent problem behavioral traits and sleep disturbances represented cross-cutting themes. Additional computational experiments revealed that psychosocial predictors were more important to predicting early adolescent internalizing disorders than cognitive, neural or biological factors and generated models with better performance. Future work, including replication in additional datasets, will help test the generalizability of our findings and explore their application to other stages in human development and mental health conditions.

Introduction

Depression, anxiety and problematic somatic symptoms (physical symptoms such as headaches and stomachaches) are common mental health issues in adolescence. Often collectively referred to as internalizing disorders, they have been associated with reduced levels of well-being and daily life function, increased risk of self-harm and suicide and are substantial predictors of adult psychopathology (1). Depression and anxiety are among the most common mental illnesses in the population with lifetime prevalence of ~30% and ~20% respectively (2). The incidence of internalizing disorders increases exponentially during the peri-adolescent period, with anxiety having an earlier developmental arc (3). Anxiety disorders emerge during elementary school, with the median age of onset being 11 years of age (yrs) and 75% of lifetime illness occurring by 21 yrs. Major depression cases begin to onset at 11–12 yrs with median onset at 31–32 yrs and 75% of lifetime illness having onset by 44 yrs (4). Problematic somatic symptoms affect up to 40% of youth and increase over peri-adolescence: one third to a half continue to report symptoms as adults with 5-7% in the general population and ~17% in the primary care population meeting criteria as adults for Somatic Symptom Disorder (SSD). (5, 6).

Given the considerable personal, societal and economic burdens associated with internalizing disorders (7–10), there is great interest in identifying specific factors that predict their onset, since evidence suggests that early intervention improves outcomes (11, 12) and reduces resource use (13). Isolating key predictors of internalizing disorders is challenging since they have been associated with a host of different factors from varied domains ranging from biological (neural; genetic; hormonal) and psychological models (fear/threat response) to interpersonal relationship function, parent characteristics, the community environment and wider social determinants of health such as relative poverty. Historically, an important barrier to disambiguating the relative importance of such factors to predicting case onset has been the paucity of appropriate multimodal data in large participant samples. Outside the US, national registries or school system data have been available offering large sample sizes (n>10,000) but these typically lack physiologic information such as neuroimaging data (14–17). An alternative strategy is to combine data from multiple studies offering neuroimaging or genomic data to boost sample size such as the datasets offered by IMAGEN or ENIGMA, though pooling across heterogenous studies may inherently limit features (variables) available for analysis to those that are shared across all studies (18–20). Consequently, to promote comparative discovery at scale, federal and other organizations have recently sponsored the formation of large, longitudinal cohorts collecting a wide variety of multimodal data types with standardized protocols. In peri-adolescence, the flagship initiative of this type is the ongoing population-level ABCD study (n = 11,800) used in the present study (21–23).

Concomitantly, interest has recently grown in applying machine learning (ML) methods to these newly-emerging large-scale population cohorts as ML techniques offer advantages in approaching such high-dimension data. Firstly, they can generate individual-level case predictions from multidimensional data to bridge extant work focused on group-level statistical effects with individual-level discoveries of potential clinical relevance by “providing multivariate signatures that are valid at the single-subject level” (24, 25). Secondly, ML techniques can simultaneously analyze hundreds of candidate predictors and incorporate non-linear relationships among a set of predictors. These properties are relevant to the construction of individual-level models since significant group-level effects may not be useful at the individual level while a feature with low effect size at the group level may prove germane. While a number of ML predictive studies have been performed in youth internalizing disorders, these have to date considered <200 candidate predictors and focused largely on prevailing cases of depression, rather than new onset cases in adolescence, especially early adolescence. The latter are of considerable translational interest since understanding individual-level drivers of illness onset and obtaining better visibility into whether future onset can be reliably predicted using ML would potentially inform risk stratification strategies. Extant work is also highly heterogenous with respect to which candidate predictors (input features) are considered. In particular, some studies use only psychosocial features and some only neuroimaging features, while a few have incorporated both types. Concomitantly, performance has been variable, with accuracy ranging over ~50-90% but the achievement of robust precision (positive predictive value) - an important metric for translational relevance - typically proving more elusive. Moreover, since obtaining physiologic measures such as neuroimaging metrics is complex and uncommon in clinical practice, it is relevant to understand whether they improve individual-level case prediction. Finally, few studies have constructed predictive models of anxiety or somatic problems in youth using ML classifiers or applied a consistent analytic architecture across the three major categories of internalizing disorders simultaneously in the same population and data to enable direct comparisons and determine the specificity of predictive models to different internalizing disorders.

Beyond empirical findings, developmental and ecological frameworks also suggest that a variety of personal and environmental factors such as family context and self-regulatory processes are influential in the emergence of internalizing disorders. Bronfenbrenner’s bioecological model emphasizes the broader influence of family and environment on child development, while Cicchetti and Rogosch’s developmental psychopathology framework highlights how multiple interacting risks can lead to similar internalizing outcomes (26, 27). Transactional perspectives likewise point to the reciprocal shaping of child and parent behavior across developmental stages (28). Also, sleep has been conceptualized as a particularly important self-regulatory domain, with sleep disturbances proposed as a transdiagnostic risk factor for later internalizing symptoms (29–31).

In the present study, we aim to build on prior work by predicting cases of depression, anxiety and SSD in early adolescence (9–12 yrs) using deep learning guided by a large-scale AI optimization process. Specifically, we aimed to a) identify and rank the most important predictors after analyzing thousands of multidomain candidate predictors; b) provide individual-level predictions of future, new onset cases at 11–12 yrs in comparison to all prevailing cases at the same age and 9–10 yrs; c) determine the incremental value of using multidomain predictors vs neural-only modeling; and d) examine the relationship between predictor importance and accuracy. Applying a common analytic architecture to data from the ABCD cohort, we first constructed multimodal predictive models by analyzing 5,810 candidate predictors spanning demographics; developmental and medical history; white and gray matter brain structure, neural function (cortical and subcortical connectivity, 3 tasks); brain volumetrics; physiologic function (e.g. sleep, hormone levels, pubertal stage, physical function); cognitive and academic performance; social and cultural environment (e.g. parents, friends, bullying); activities of everyday life (e.g. screen use, hobbies); living environment (e.g. crime, pollution, educational and food availability) and substance use. Subsequently, we recapitulated all analytic procedures using multiple types of neural candidate predictors.

To make these case classifications, we used deep learning with artificial neural networks, which incorporates non-linear relationships among predictors and is resistant to multicollinearity. While artificial neural networks offer powerful predictive capability, their application to translational aims can be limited by the relative difficulty of tuning these models (setting hyperparameters that control learning) and their tendency to act as ‘black box’ estimators where the features used to make predictions are not interpretable and their relative importance is difficult to determine. We enhanced deep learning performance with Integrated Evolutionary Learning (IEL), an AI-based form of computational intelligence, to jointly optimize across the hyperparameters and learn the most important final predictors and render explainable predictions. IEL is a genetic algorithm which instantiates the principles of natural selection in computer code, typically performing ~40,000 model fits during training before testing final, optimized models in a holdout, unseen data partition. All results presented are from testing for generalization in this holdout, unseen data.

Materials and methods

Terminology and definitions

Terms used in quantitative analysis may be shared among different fields with variant meanings. Here, we use ML conventions throughout (32–34). ‘Prediction’ means predicting the quantitative value of a target variable by analyzing patterns in input data. We refer to the set of all input data as containing ‘features’ or ‘candidate predictors’ and those identified in final, optimized models (presented in RESULTS) as ‘final predictors’. The set of observations used to train and validate models is referred to as the ‘training set’ and the unseen holdout set of observations is termed the ‘test set’. We use ‘generalizability’ to refer to the ability of a trained model to adapt to new, previously unseen data drawn from the same distribution i.e. model fit in the test set. ‘Precision’ refers to the fraction of positive predictions that were correct; ‘Recall’ to the proportion of true positives that were correctly predicted; and ‘Accuracy’ to the number of correct predictions as a fraction of total predictions. Receiver Operating Characteristic curves (ROC Curves) are provided that quantify classification performance at different classification thresholds plotting true positive versus false positive rates, where the Area Under the Curve (AUROC) is defined as the two-dimensional area under the ROC curve from (0,0) to (1,1).

Data and data collection in the ABCD study

Data used in the present study comes from the ABCD study, an epidemiologically informed prospective cohort study that is the largest study of brain development and child health conducted in the United States to date. ABCD recruited 11,880 children (52% male; 48% female) at ages 9–10 years (108–120 months) via 21 sites across the United States and will follow this cohort until age 19-20. The cohort is oversampled for twin pairs (n = 800) and non-twin siblings from the same family may also be enrolled. A wide variety of information is collected about participants. This data has been made available to qualified researchers at no cost by the NIH since 2018 and is released periodically. Currently, it may be obtained from the NBDC Data Hub (https://www.nbdc-datahub.org/). This study uses data from release 4.0, which includes data up to the 42-month follow-up date. A full explanation of recruitment procedures, the participant sample and overall design of the ABCD study may be found in Jernigan et al; Garavan et al; and Volkow et al. (35–37) This study has been reviewed and deemed not human subjects research by the University of Utah Institutional Review Board.

The phenotypic and substance abuse assessment protocol is covered in detail in Barch et al. and Lisdahl et al, respectively (38, 39). In brief, phenotypic assessments of physical and mental health, substance use, neurocognition and culture and environment are performed for youth and their parents and biospecimen collection for DNA, pubertal hormone levels, substance use metabolites (hair) and substance and environmental toxin exposure (baby teeth) are collected from youth at 9–10 yrs. A summary description of assessments performed and environmental and school-related variables derived from geocoding at age 9–10 yrs surveyed in the present study may be inspected in Supplementary Table S1.

Brain imaging is collected at 9–10 yrs and every two years thereafter and incorporates optimized 3D T1; 3D T2; Diffusion Tensor Imaging; Resting state functional MRI (rsfMRI); and 3 task MRI (tfMRI) protocols that are harmonized to be compatible across acquisition sites. The tfMRI protocol comprises the Monetary Incentive Delay (MID) and Stop Signal (SST) tasks and an emotional version of the n-back task which collectively measure reward processing, motivation, impulsivity, impulse control, working memory and emotion regulation. The ABCD study provides fully-processed metrics from each of these imaging types. Full details of the neuroimaging protocol may be inspected in Casey et al. and the pre-processing and analytic pipeline used to generate neural metrics in Hagler et al. (40, 41) The present study uses all available processed metrics that have passed quality control from the diffusion fullshell; cortical and subcortical Gordon correlations (derived from rsfMRI); structural; volumetric; and all three tasks as well as corresponding head motion statistics for each modality. For certain modalities such as rsfMRI, multiple scans were attempted or completed. In such cases we use variables from the first scan.

Study inclusion criteria and sample partitioning for machine learning

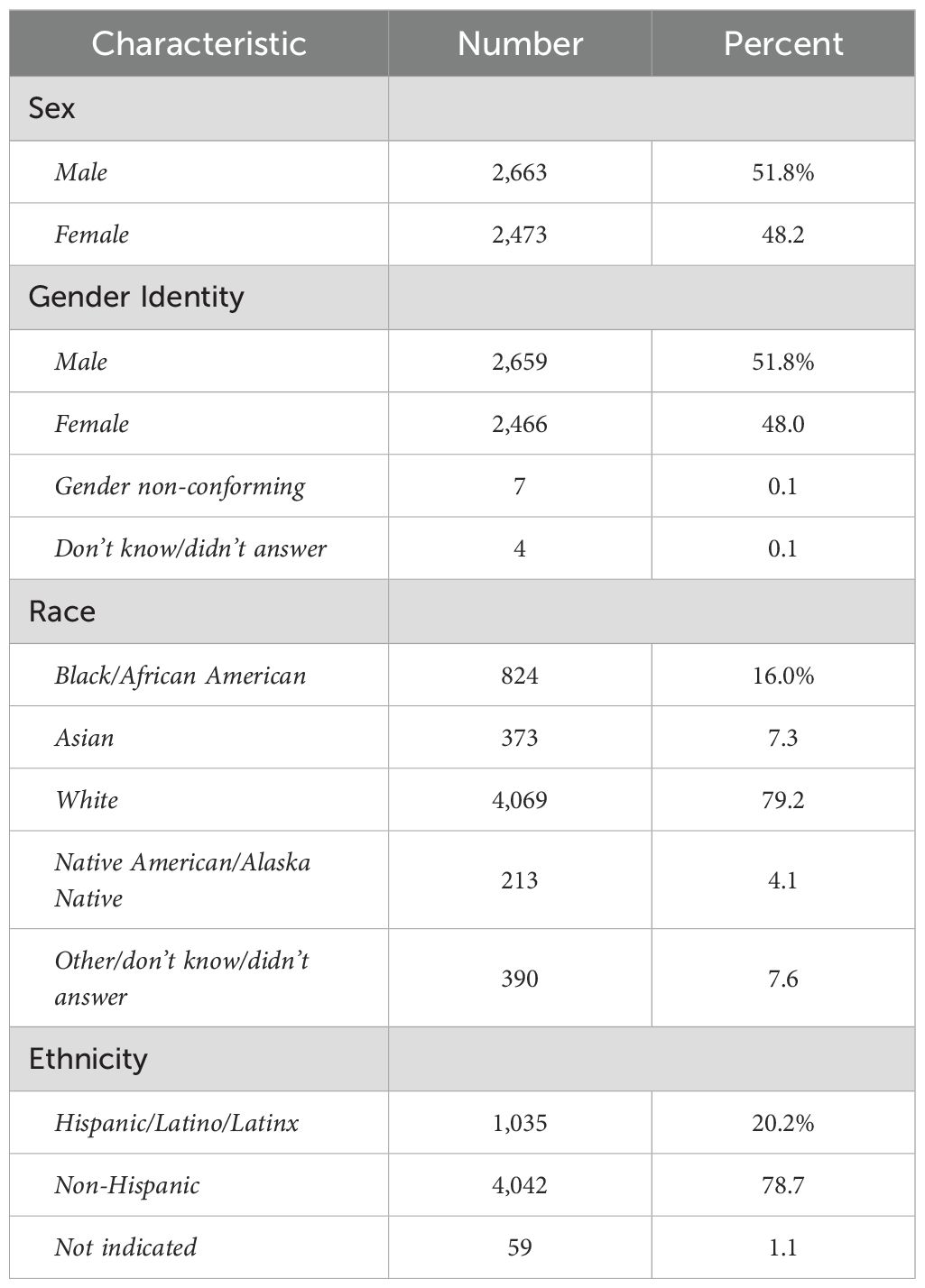

Inclusion criteria for the present study were a) participants enrolled in the study at baseline who were still enrolled at 2-year follow-up (n = 8,084) who had b) complete data passing quality control available for all neural metric types (n = 6,178) and were c) youth participants unrelated to any other youth participant in the study (n = 5,136). If a youth had a twin or other sibling(s) present in the cohort, we selected the older or oldest sibling for inclusion in our study. We present characteristics of the study sample at 9–10 yrs since these participants correspond to the input data used to make predictions. Demographic characteristics of this sample at age 9–10 yrs are presented in Table 1.

Sex refers to sex assigned at birth on the original birth certificate. Gender refers to the youth’s gender identification. Race and ethnicity refer to the parents’ view of youth’s race or ethnicity. More than one race identification may be selected and therefore percentages sum to >100%.

Physiologic and cognitive characteristics of the participant sample at 9–10 yrs may be viewed in Table 2.

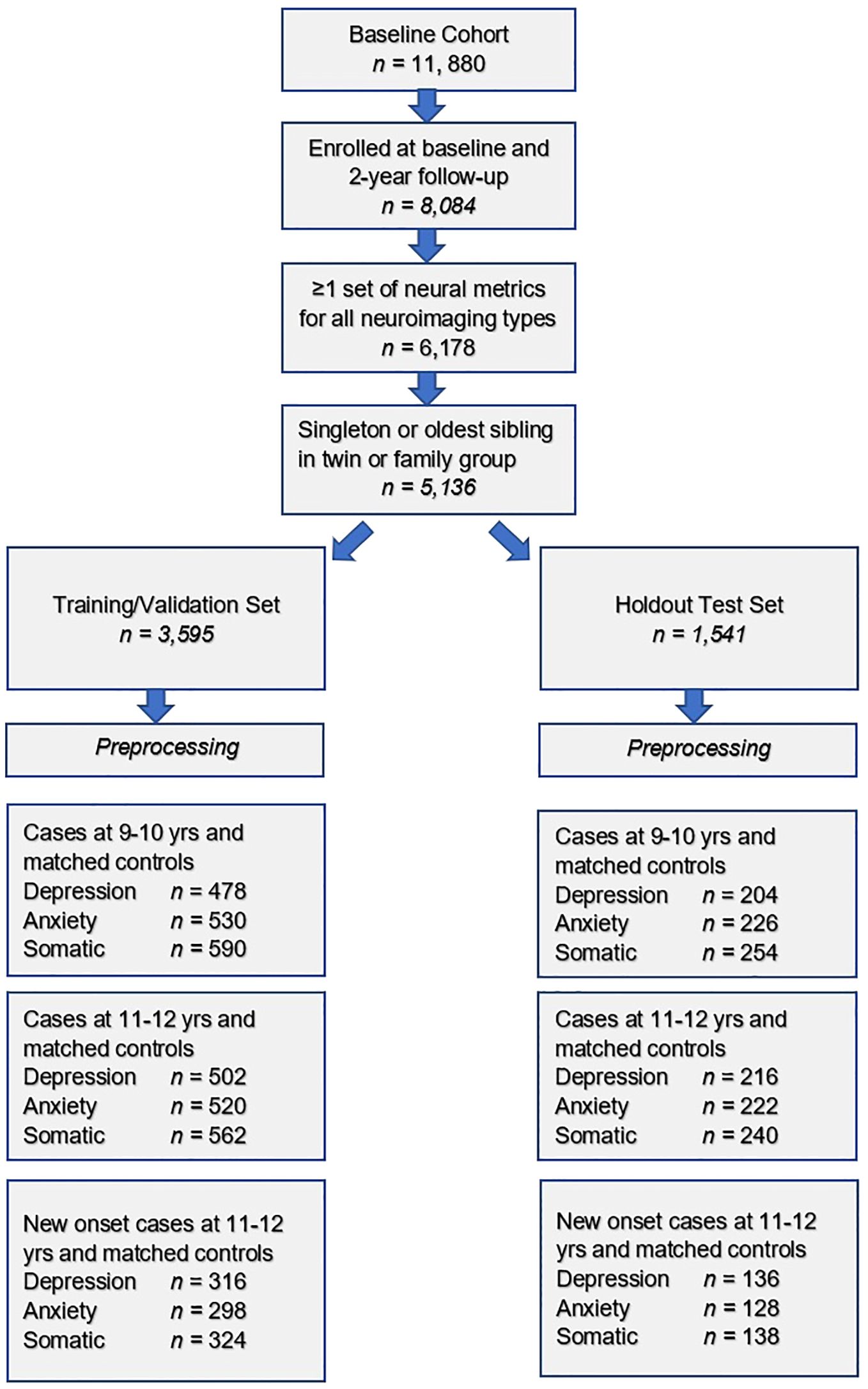

Steps in the formation of the study sample used to construct predictive models of depression, anxiety and somatic symptom disorder are shown. After exclusion criteria are applied, the sample was randomly partitioned into training and test sets followed by separate pre-processing of targets and features. Subsequently, samples for each experiment were formed as described in Preparation of predictive targets and Construction of participant case samples for internalizing disorders and controls.

Preparation of predictive targets

The present study uses predictive targets of depression, anxiety and somatic problems derived from the Child Behavior Checklist for youth ages 4–18 years (CBCL) called the ‘ABCD Parent Child Behavior Checklist Scores Aseba (CBCL) in the ABCD study. The CBCL is a standardized instrument in widespread clinical and research use for the assessment of mental and emotional well-being in youth. It forms part of the Achenbach System of Empirically Based Assessment (ASEBA) “designed to facilitate assessment, intervention planning and outcome evaluation among school, mental health, medical and social service practitioners who deal with maladaptive behavior in children, adolescents and young adults.” (42) During assessment with the CBCL, parents rate their child on a 0-1–2 scale on 118 specific problem items such as “Unhappy, sad or depressed” or “Acts too young for age” for the prior 6 months. The answers to these questions are aggregated into raw, T and percentile scores for 8 syndrome subscales (Anxiety, Somatic Problems, Depression, Social Problems, Thought Problems, Attention Problems, Rule Breaking and Aggressive Behavior) derived from principal components analysis of data from 4455 children referred for mental health services. The CBCL is normed in a sex/gender-specific manner on a U.S. nationally representative sample of 2368 youth ages 4–18 that takes into account differences in problem scores for “males versus females”. It exhibits excellent test-retest reliability of 0.82-0.96 for the syndrome scales with an average r of 0.89 across all scales. Content and criterion validity is strong with referred versus non-referred children scoring higher on 113/188 problem items and significantly higher on all problem scales, respectively.

To form binary classification targets for prediction, we thresholded CBCL subscale T scores for Depression, Anxiety and Somatic problems using cutpoints established by ASEBA for clinical practice. Specifically, a T score of 65-69 (95th to 98th percentile) is considered in the ‘borderline clinical’ range, and scores of ≥70 are considered in the ‘clinical range.’ Accordingly, we discretized T scores for each of the 3 subscales under consideration by deeming every individual with a T score ≥ 65 as a ‘case’ [1] and every individual with a score <65 as a ‘not case’ [0]. This process was performed separately for CBCL scores at baseline and 2-year follow-up in the training and test sets.

Construction of participant case samples for internalizing disorders and controls

To test our hypotheses, we formed 3 different participant samples for each of the internalizing disorders in the training and test sets, respectively (Figure 1). The first sample contained cases of depression, anxiety and SSD as defined in Preparation of predictive targets at baseline assessment, when youth were 9–10 years of age. The second sample contained cases of depression, anxiety and SSD at 2-year follow-up, when youth were 11–12 years of age. Finally, the third sample contained only new onset cases of depression, anxiety and SSD at 2-year follow-up. A new onset case was defined as a youth who met criteria for depression, anxiety or SSD following the ASEBA threshold in the CBCL who did not meet criteria for the disorder in question at baseline assessment. In all samples, we constructed a balanced sample of controls matched for age and sex/gender selected from the eligible study population (see: Study inclusion criteria and sample partitioning for machine learning above) from youth with the lowest possible scores on the relevant syndrome scale. No sample in the training sets was <200 participants, a recommended threshold for robust ML analyses.

Preparation of candidate predictors (input features)

The feature set in the present study comprises the majority of available phenotypic and environmental variables derived from baseline assessment at 9–10 years of age (including data collection site) and all available neural metrics (including head motion statistics) with the exception of temporal variance measures. For continuous phenotypic features where subscale or total scores for assessments were available, these were used. For example, subscale scores for different types of sleep-related disorders from the larger Munich Chronotype Questionnaire. Any metrics or instruments that directly quantified mental health symptoms were excluded since we aimed to predict cases of mental illness without using symptoms. For example, the Youth 7UP Mania scale. The feature set was then partitioned into training and test sets that conformed with the partitions detailed above in Formation of the study participant sample for internalizing disorders in Figure 1. Pre-processing of phenotypic and environmental features was subsequently performed separately in the training and test sets. First, features with >35% missing values were discarded. This threshold was used since prior research shows that good results may be obtained with ML methods with imputation up to 50% missing data (43). Also, it was selected pragmatically to balance the retention of potentially informative features against the risk of excessive imputation. Recent works have also suggested a slightly more lenient threshold (e.g., 40%) to exclude variables containing many missing values from analysis (44, 45). Nominal variables were one-hot encoded to transform them into binary variables. Continuous variables were then winsorized to [mean +/- 3] standard deviations to remove outliers, whereas ordinal variables were winsorized according to the bounds defined in the provided data dictionary. Then, all features were scaled in the interval [0,1] with the minimum-maximum normalization. Missing values were imputed using non-negative matrix factorization (NNMF). NNMF is a mathematically-proven imputation method that minimizes the cost function of missing data rather than assuming zero values. It can effectively reconstruct missing values in high-dimensional datasets by leveraging latent structure. It is effective at capturing both global and local structures in the data and has been demonstrated to perform well regardless of the underlying pattern of missingness (46–48). Supplementary Table S2 shows the number and percentage of observations which were trimmed and filled with NNMF for the training and test sets, respectively.

Further, a sensitivity analysis was performed to compare the predictive performance of NNMF with the Multiple Imputation by Chained Equations (MICE), which is a common alternative imputation method modeling each variable conditionally on the others and imputes missing values through iterative regression. Results of the sensitivity analysis (included in the Supplementary Table S4) demonstrate that test accuracy (when using our proposed algorithm described in Integrated Evolutionary Learning for deep learning optimization below) is generally consistent across the two imputation methods, with NNMF tending to slightly outperform MICE for most targets and case definitions (i.e., an average of 2.4% improvement in accuracy). In light of these findings, NNMF is selected to be the default data imputation method adopted in this work, as it provided equal or better performance in most scenarios while maintaining computational efficiency for high-dimensional datasets.

After imputation with NNMF, any variables originating from phenotypic assessments lacking summary scores were reduced to a summary metric using feature agglomeration to produce a final set of (n=804) phenotypic and environmental features. Neural metrics (n = 5,006) were processed and underwent quality control by the ABCD study team and were therefore not pre-processed with the exception of scaling, again performed separately in the training and test partitions. There were no missing neural features. The final combined feature set including neural, phenotypic, environmental, head motion and site features comprised 5,810 features.

Overview of predictive analytic pipeline

We used deep learning with artificial neural networks (AdamW optimizer) to predict cases of depression, anxiety and somatic problems in early adolescence in three scenarios: at 9–10 years of age, at 11–12 years of age and in new onset cases at 11–12 years of age. Deep learning models were implemented with k-fold cross-validation and trained by an AI meta-learning algorithm that jointly performed feature selection and optimized across the hyperparameters in an automated manner, pursuing ~40,000 model fits for each experiment. Model training was terminated based on the AUROC. Subsequently, final optimized models were tested for their ability to generalize in the holdout, unseen test set and performance statistics of AUROC, accuracy, precision and recall, and ROC curves are reported for the best-performing models. We also report the relative importance of final predictors to making case predictions quantified with two techniques: Shapley Additive Explanations (SHAP) and permutation using the eli5 algorithm. Detailed explanations of these methods are provided below. Code for the predictive analytics may be accessed at the de Lacy Laboratory GitHub: https://github.com/delacylab/integrated_evolutionary_learning.

Coarse feature selection

Prior to beginning model training, we performed coarse feature selection for each of the nine experiments i.e. 3 targets of depression, anxiety and SSD each in 3 participant samples of 9–10 yrs; 11–12 yrs and new onset cases at 11–12 yrs. The purpose of this process was to quantify, for each sample, which of the 5,810 features exhibited a non-zero relationship with the target in order to reduce the number of features entering the deep learning pipeline in a principled manner. First, a simple filtering process was performed in which χ2 (categorical features) and ANOVA (continuous features) statistics and mutual information metric (all features) were computed to quantify the relationship between all features and the target, where the target (depression, anxiety, SSD) was represented by a categorical vector in [0,1]. Any feature with a non-zero relationship (either positive or negative) with the target was retained. While we acknowledge the potential risk that this univariate filtering cannot fully capture complex interactions across the features, it is practically advantageous to reduce the dimensionality of the feature space before the execution of additional filtering procedures that require high computational complexity in face of large feature sets.

Subsequently, feature selection was performed on these filtered feature subsets using the Least Absolute Shrinkage and Selection Operator (LASSO) algorithm. LASSO is a popular regularization technique based in linear regression that efficiently selects a reduced set of features by forcing certain regression coefficients to zero. The LASSO algorithm has a hyperparameter (commonly called the α) that instantiates the amount of penalization (shrinkage) that will be imposed on the features. We implemented the LASSO with our AI meta-learning algorithm Integrated Evolutionary Learning to tune the α hyperparameter in the same manner as described below in Integrated Evolutionary Learning for deep learning optimization.

Notice that the LASSO algorithm may lead to biased feature selection results when multicollinearity exists in the pre-selected feature set or features were related to the target in a non-linear manner. To alleviate these potential problems, Boruta (49) was selected as a complementary feature selection method. Boruta is an ensemble-based method designed to capture all relevant features using random forest modeling and has been shown to be less sensitive to multicollinearity (50). A sensitivity analysis comparing the LASSO only, the Boruta only, and the LASSO combined with Boruta methods was performed. Since the feature set selected by Boruta tends to be small but non-overlapping with that selected by LASSO, we focused on the comparison between LASSO and the LASSO combined with Boruta methods. Results from the sensitivity analysis, included in Supplementary Table S5, demonstrated that the combined method yields 1-10% improvement in predictive accuracy (when using our proposed Integrated Evolutionary Learning models explained in the next subsection) compared to the LASSO only method in all targets and ages of case determination. In light of this improvement, this combined method, which selects the union of features retained by both LASSO and Boruta, is taken as the default feature selection configuration in this study unless specified otherwise.

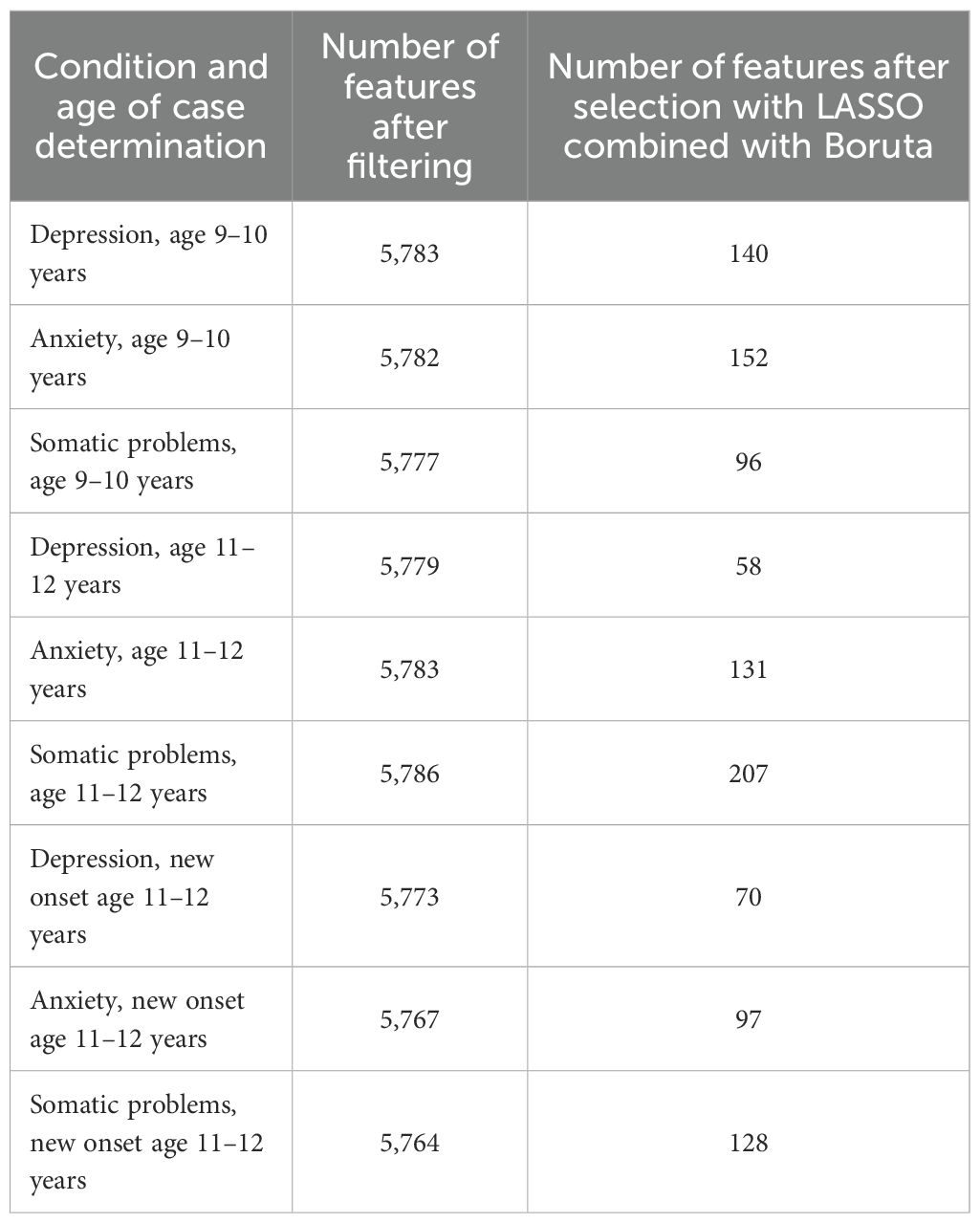

The number of features retained for each of the 9 experiments after each step in the coarse feature selection process may be examined in Table 3. Specific features selected by the LASSO combined with Boruta and the resulting feature importance scores (univariate coefficients in LASSO and Boruta importance scores) between each of these features and the target vectors (depression, anxiety, somatic problems) for each participant sample (9–10 yrs; 11–12 yrs and new onset cases at 11–12 yrs) may be viewed in Supplementary Table S3a-i. Each feature set selected by the LASSO combined with Boruta then entered the deep learning pipeline.

The total baseline set of 5,810 features was reduced via coarse feature selection in a two-step process of filtering followed by regularization with the LASSO algorithm combined with the Boruta algorithm. This table displays the number of remaining features after each step for each target (depression, anxiety and somatic problems) and participant sample (at age 9–10 years, at age 11–12 years and for new onset cases at age 11–12 years). Detailed tables showing the univariate coefficients between each feature selected by the LASSO and the target vectors for each case sample and controls may be viewed in Supplementary Table S3a-i.

Deep learning with artificial neural networks

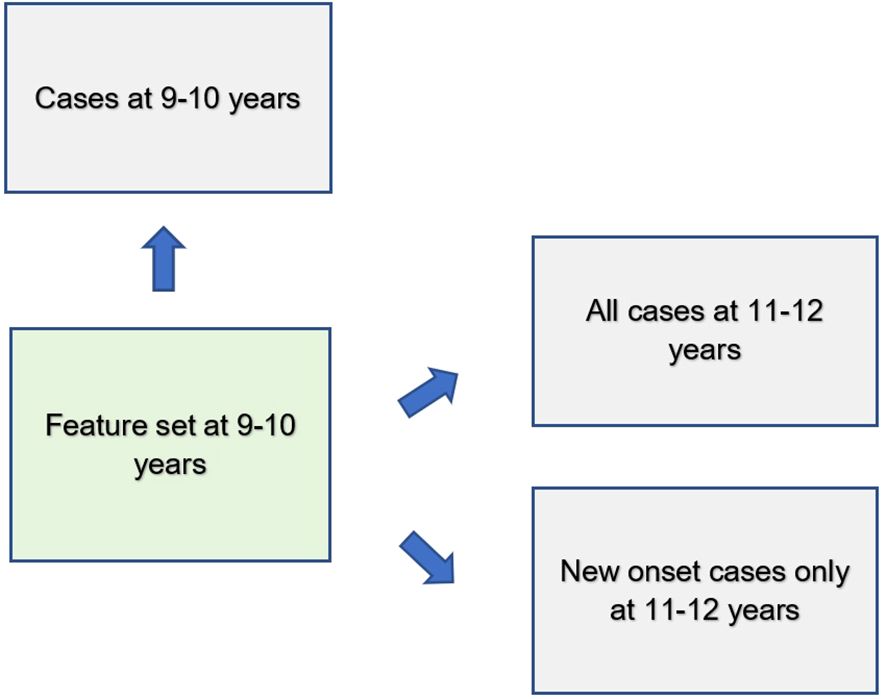

We used deep learning to predict cases of depression, anxiety and somatic problems in each participant sample (at ages 9-10, ages 11–12 and for new onset cases only at ages 11–12 years). In order to determine the relative ability of features to predict future cases of internalizing disorders, features collected at baseline assessment (ages 9–10 years) were used to predict cases present at ages 11–12 years. We also constructed similar models that restricted the cases at 11–12 years of age to only new onset cases, where the participant was not exhibiting clinical levels of symptoms at ages 9–10 years. Finally, to quantify any dropoff in predictive power over the two-year followup period, comparative models predicting cases at 9–10 years of age were also computed. Therefore, the feature set comprised only variables collected at 9–10 years of age in all analytic scenarios (Figure 2).

Features assessed at baseline (ages 9–10 years) were used to predict cases of depression, anxiety and somatic problems present contemporaneously as well as all cases 2 years in the future (ages 11–12 years) and only new onset cases at ages 11–12 years.

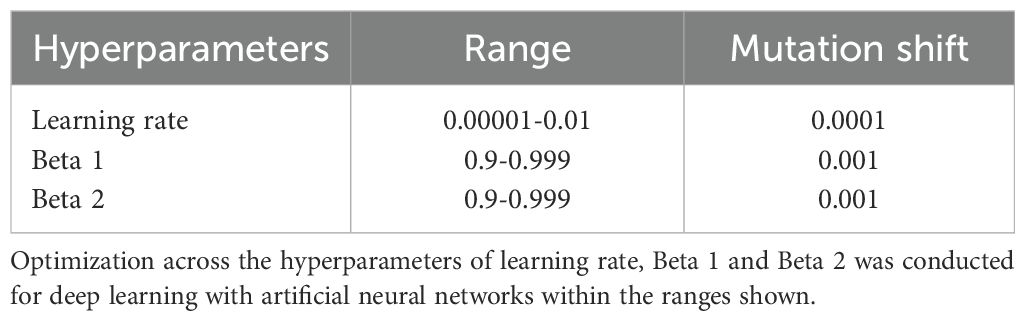

We trained artificial neural networks using the AdamW algorithm with 3 layers, 300 neurons per layer, early stopping (patience = 3, metric = validation loss) and the ReLU activation function. The last output layer contained a conventional softmax function. Learning parameters (Table 3) were tuned with IEL as detailed below. Deep learning models were encoded with PyTorch embedded in custom Python code (51).

Integrated Evolutionary Learning for optimization across hyperparameters and fine feature selection

Many ML algorithms have hyperparameters that control learning. Their settings require ‘tuning’ that can have a dramatic effect on performance. Typically, tuning is performed via ‘rules of thumb’ and ≤50 model fits are explored, introducing the possibility of bias and potentially limiting the solution space (52–54). To address this issue, we previously developed and here applied an AI technique called Integrated Evolutionary Learning (IEL) which can improve the performance of ML predictive algorithms in comparable tabular data by up to 20-25% versus the use of default model hyperparameters and conventional designs (55). IEL is a form of computational intelligence or metaheuristic based on an evolutionary algorithm that instantiates the concepts of biological evolutionary selection in computer code. It optimizes across the hyperparameters of the deep learning algorithm by adaptively breeding models over hundreds of learning generations by selecting for improvements in a fitness function (here, AUROC).

For each experiment, the deep learning algorithm was nested inside IEL, which initialized the first generation of 100 models with randomized hyperparameter values or ‘chromosomes’. These hyperparameter settings (Table 4) were subsequently recombined, mutated or eliminated over successive generations. In recombination, ‘parent’ hyperparameters were arithmetically averaged to form ‘children’. In mutation, hyperparameter settings were shifted with the range of possible values shown in Table 4. When these first 100 models were trained, the BIC was computed for each solution. Of the 80 best models, 40 were recombined by averaging the hyperparameter setting after a pivot point at the midpoint to produce 20 ‘child’ models. 20 were mutated to produce the same number of child models by shifting the requisite hyperparameter by the mutation shift value (Table 4). The remaining 20 were discarded. The next generation of models was then formed by adding 60 new models with randomized settings and adding these to the 40 child models retained from the initial generation. Thereafter, IEL continued to recombine, mutate and discard 100 models per generation in a similar fashion to minimize the BIC until the latter fitness function plateaued. With 100 models fitted per generation, IEL typically fits ~40,000 models per experiment over ~400 generations.

IEL jointly performs optimization across hyperparameter settings with automated feature selection and mitigate the risk of overfitting. For each experiment, IEL has available to it the set of features selected in the two-step feature selection process performed with filtering and the LASSO (Coarse feature selection, Supplementary Table S3). From each of these sets, a random number of features in the range [2-50] was set for each model in the initial generation of 100 models and specific features were randomly sampled from the set of available features. This iterative subset exploration reduces the risk that predictive performance hinges on a single subset or on correlated features retained by the initial selection. After computing the AUROC for each model, feature sets from the best-performing 60 models were individually allocated to the recombined and mutated child models. Other feature sets were discarded. As with hyperparameter tuning, this process was repeated for succeeding generations until the AUROC plateaued.

IEL implements recursive learning to facilitate computationally efficiency. After training until the AUROC plateaued, we determine the elbow of the fitness function plotted versus number of features and re-start learning with a warm start. The feature set available after this warm start is constrained to that subset of features, thresholded by their importance, corresponding to the fitness function elbow. Learning then proceeds by thresholding features available for learning at the original warm start feature importance + 2 standard deviations. In addition, the number of models per generation is reduced to 50 and 20 models are recombined and 10 models are mutated. Otherwise, training after the warm start uses the same principles as detailed above.

Cross validation

Deep learning models were fit within IEL using stratified k-fold cross validation i.e. every one of the 100 models in each learning generation within IEL was individually trained and validated using cross-validation in the training partition. IEL allows the number of features used to fit each model to differ within each model in every generation. Accordingly, k (the number of splits) was set as the nearest integer above [sample size/number of features]. Cross validation was implemented with the scikit-learn StratifiedKFold function.

Testing for generalization in holdout, unseen test data and performance measurement

After training was completed, optimized models generated by IEL were tested on the holdout, unseen test set for each sample and mental health condition by applying the requisite hyperparameter settings and selected features obtained from the 100 best-performing models in the training phase to the test set. The area under the receiver operating curve (AUROC), accuracy, precision, and recall were computed for test set models using standard Sci-Kit learn libraries and models with the best performance in each statistic selected for presentation as the final, optimized models. The threshold for prediction probability was 0.5 and receiver operating characteristic (ROC) curves are also provided for each experiment (Supplementary Figures 1, 2).

Feature importance determination

Shapley Additive Explanations (SHAP) values were computed to determine the relative importance of each feature to predicting cases of mental illness. SHAP is a game theoretic approach commonly used in ML to explain the output of any ML model including ‘black box’ estimators such as artificial neural networks and is considered resistant to multicollinearity (56). In this work, GradientSHAP, encoded in PyTorch (51) and Captum (57) packages in Python as a combined technique of Integrated Gradients (58) and SmoothGrad (59), is adopted as a fast approximation of SHAP values for gradient-based models.

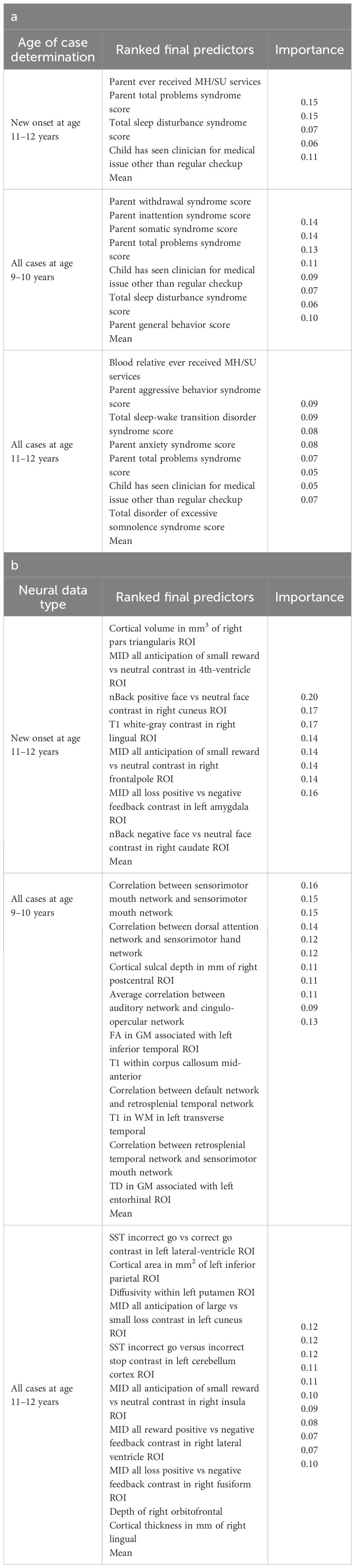

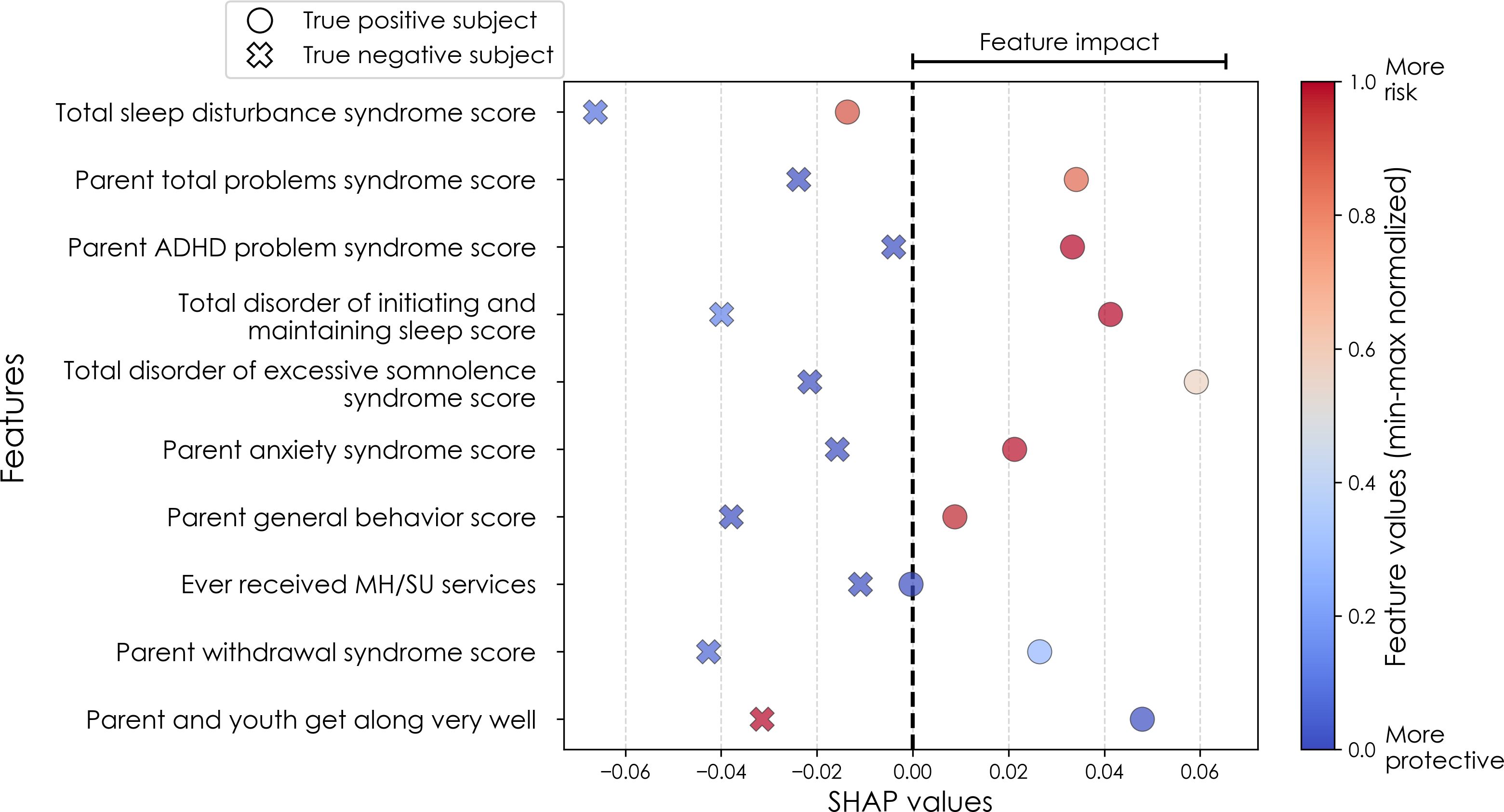

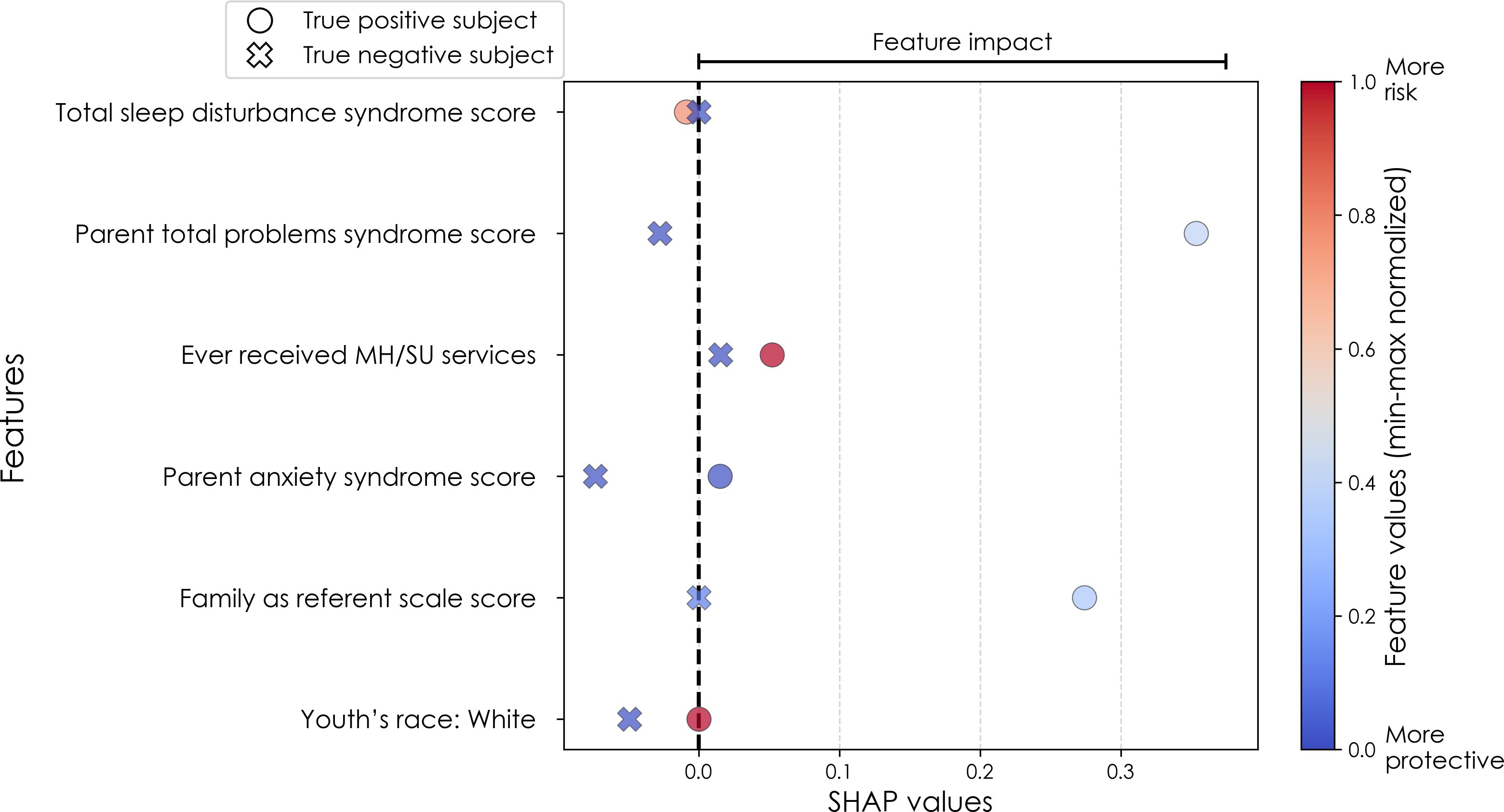

To illustrate the model’s decision-making process in clinically interpretable terms, a case study is provided for each target’s onset cases at 11–12 years. In each case, the contribution from the key features to the predicted labels of two selected contrasting participants were studied in terms of GradientSHAP values. The visualized GradientSHAP values in Figures 3–5 serve as indicators of risk versus protective factors of the relevant mental illness.

Figure 3. Case study of depression predictors in the multimodal predictive model of new onset at 11–12 years. The summary plot presents the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting depression with new onset at 11–12 yrs. The color gradient represents the scaled value of each feature where red = high and blue = low. Circle and cross marks correspond to the feature values of a pre-selected pair of (true) positive subject and (true) negative subject respectively. MH, mental health; SU, substance use.

Figure 4. Case study of anxiety predictors in the multimodal predictive model of new onset at 11–12 years. The summary plot presents the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting depression with new onset at 11–12 yrs. The color gradient represents the scaled value of each feature where red = high and blue = low. Circle and cross marks correspond to the feature values of a pre-selected pair of (true) positive subject and (true) negative subject respectively. MH, mental health; SU, substance use; SST, Standard Stop Signal task; MID, Monetary Incentive Delay task; ROI, region of interest; FA, fractional anisotropy; LD, longitudinal diffusivity; WM, white matter; GM, gray matter.

Figure 5. Case study of predictors of somatic disorder in the multimodal predictive model of new onset at 11–12 years. The summary plot presents the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting depression with new onset at 11–12 yrs. The color gradient represents the scaled value of each feature where red = high and blue = low. Circle and cross marks correspond to the feature values of a pre-selected pair of (true) positive subject and (true) negative subject respectively. MH, mental health; SU, substance use.

Fairness subgroup performance analysis

While the ABCD dataset is one of the most demographically diverse pediatric neuro-developmental cohorts currently available, it may not fully represent all racial and socioeconomic groups within the U.S. population. Thus, even if a trained model has a decent predictive performance in general, it may not perform as well in each demographic subgroup. To robustly evaluate the model performance from a fairness perspective, we conducted a subgroup performance analysis on the held-out test set, stratified by race and annual family income level, in order to assess whether models perform consistently across subpopulations.

The stratification of race is conducted in terms of 3 racial subgroups: White, Black, and other/multiple races (Others). Annual family income level was stratified into 4 subgroups: below $15,999, $16,000-$34,999, $35,000-$74,999, and above $75,000). The fairness subgroup analysis considers only the onset cases of 11–12 years of each studied internalizing disorders, which are the main focus groups in this work. For each subgroup analysis, we evaluated the predictive performance in terms of accuracy, precision, recall, and AUROC to assess whether the predictive model systematically performs better or worse across subpopulations.

Baseline modeling comparison

To assess the predictive performance of the IEL modeling technique, we conducted a baseline modeling comparison using several traditional ML modeling methods, including logistic regression, random forest, and support vector machines (SVM). Thees models were trained on the same multimodal feature sets obtained after the LASSO combined with Boruta feature selection step (i.e., containing 58–207 features). This aims to compare whether the IEL modeling pipeline, which embeds an iterative feature inclusion step in its evolutionary process, can result in consistent predictive performance compared to the baseline benchmarking models while involving strictly fewer features. All baseline models were evaluated using the same train/test splits as our deep learning approach and encoded using the Python package sklearn (60) with their default runtime parameters. Student t-tests were performed to verify whether the predictive accuracy score of IEL was statistically significantly different from the scores of the three baseline modeling methods.

Summary of the preprocessing and modeling pipelines

To improve the transparency and reproducibility of our study, the data preprocessing and modeling pipelines are documented on our code-sharing space (https://github.com/delacylab/integrated_evolutionary_learning). Below we recap the overall pipelines for clarity.

● Variables with less than 35% of missing values were retained.

● The full samples were partitioned into a training set for model training and a held-out test set for model evaluation.

● For each of the training set and test set, variables were winsorized, scaled to the unit interval, and imputed.

● Coarse feature selection was performed to retain features that exhibited a non-zero relationship with the target in the training set.

● Feature selection combining LASSO and Boruta was performed to retain relevant features.

● Cross-validated deep learning models were trained within the IEL algorithm to perform hyperparameter optimization and fine feature inclusion.

● Identify the elbow of the fitness curve (obtained across the generations run in the IEL algorithm) to shrink the feature subset further.

● Re-train the IEL algorithm with a warm-started feature subset until the fitness score plateau.

● Evaluate the test data set using the model retrained with the hyperparameter settings and selected features of the best-fitting model identified in the last execution of IEL.

Results

Overview

All results are from testing the final model obtained after optimization with IEL for generalization in the holdout, unseen test dataset for each participant sample and experiment. For each condition (depression, anxiety, SSD) a parallel set of results is presented for each participant sample of new onset cases at 11–12 yrs; all prevailing cases at 9–10 yrs and all prevailing cases at 11–12 yrs. In all experiments only data collected at 9–10 yrs is input to deep learning to make predictions. Thus, results obtained for new onset and prevailing cases at 11–12 yrs represent predictions of future case status.

For each disorder and age group, results are presented for the metrics below for a) multimodal models constructed using all types of input features; and b) neural-only models.

● Performance statistics: accuracy, precision, recall and AUROC. ROC curves may be viewed in Supplementary Figures 1, 2.

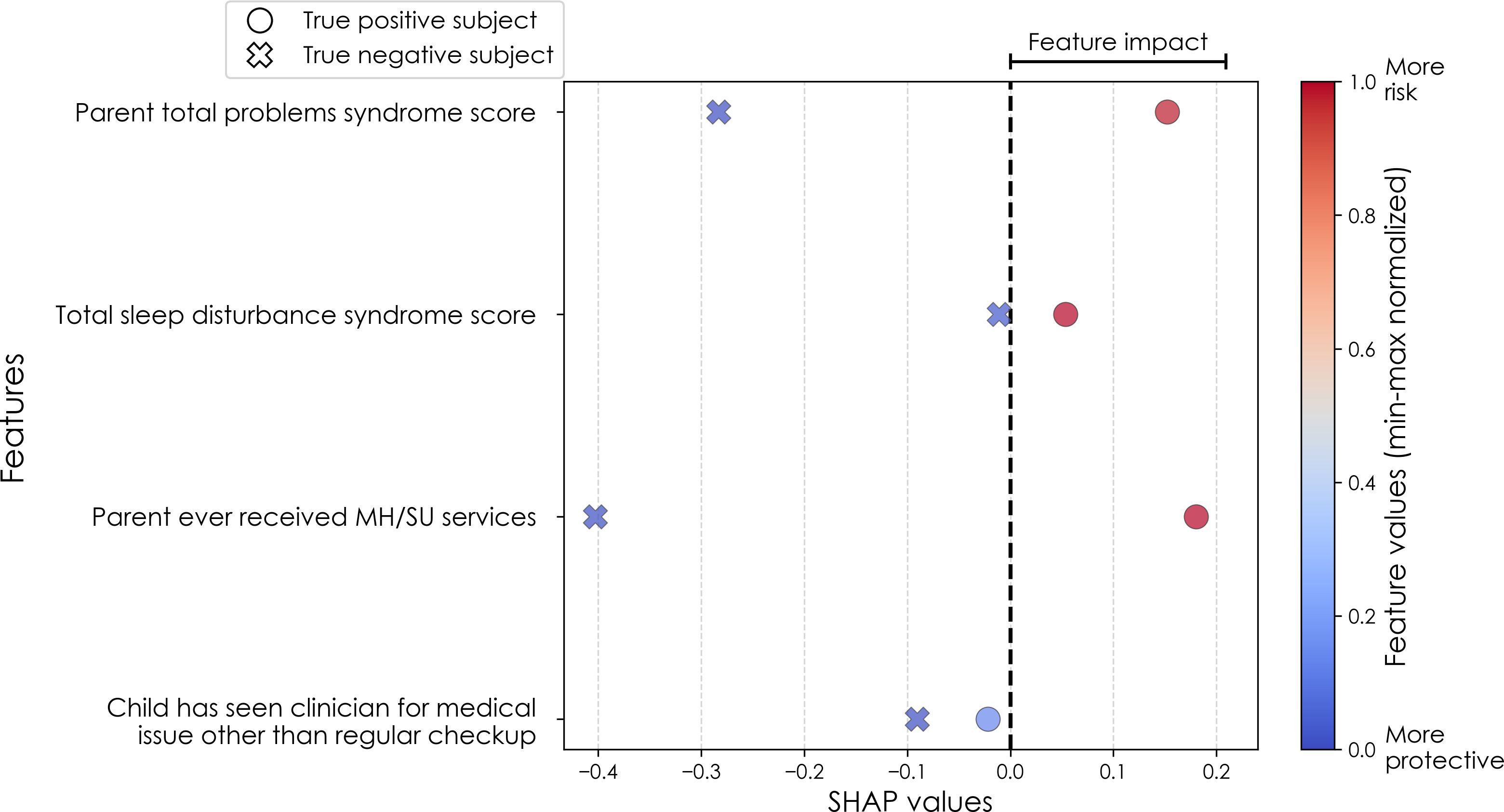

● Final predictors ranked in order of importance by their group-level SHAP score (average absolute value across the participant sample) and the mean predictor importance for the requisite experiment.

● Individual-level final predictor importance (SHAP scores) across the participant sample. This summary plot is also used to determine the directionality of the relationship between the predictor and case status.

Results summary

Across all prediction scenarios, our models consistently achieved strong discriminative ability, particularly in multimodal settings where phenotypic, environmental, and neural predictors were included. In all cases, multimodal models outperformed neural-only models (see Tables 5–7), underscoring the central importance of psychosocial domains in early prediction of internalizing disorders. Predictive models are essential precursors of risk stratification tools, and we note that our models here achieved very strong positive class discrimination, with precision (positive predictive value) of 77-84%. Parental psychopathology and child sleep disturbances emerged as important cross-cutting predictors across outcomes (see Figures 6–8). Our proposed IEL modeling strategy achieved highly comparable performance with the traditional ML modeling methods with the primary benefit of improved model parsimony, another important feature when creating risk stratification precursor models. We note that results from our fairness subgroup analyses demonstrate visible disparities in predictive performance across different racial groups and family income levels.

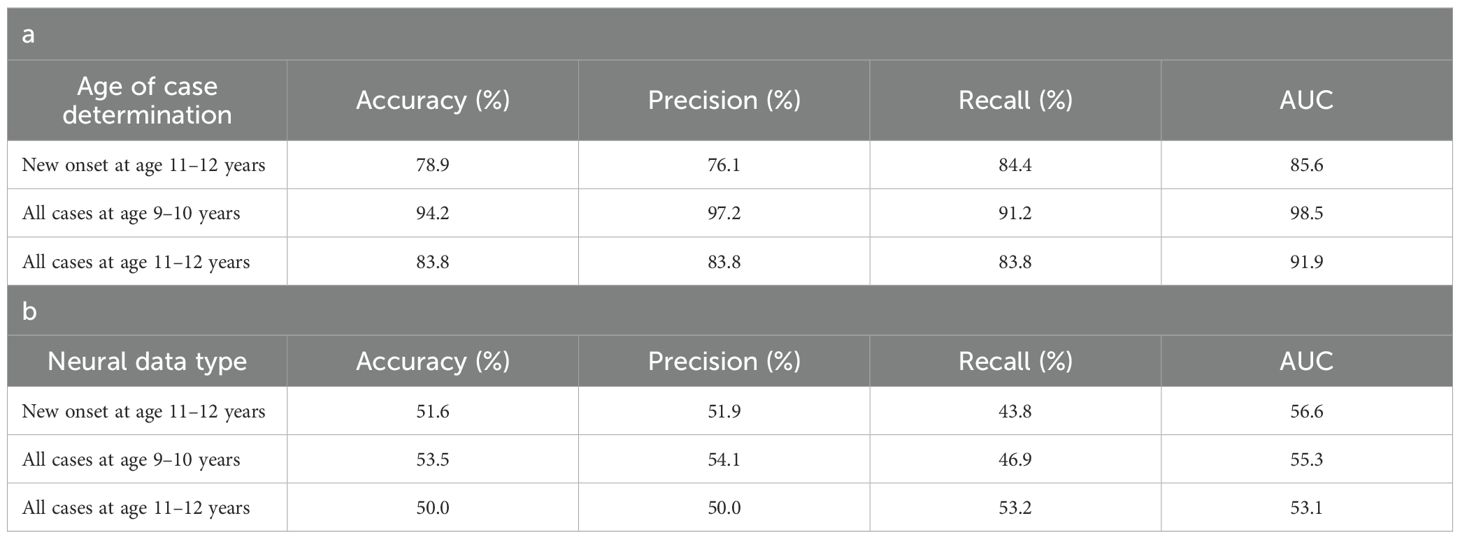

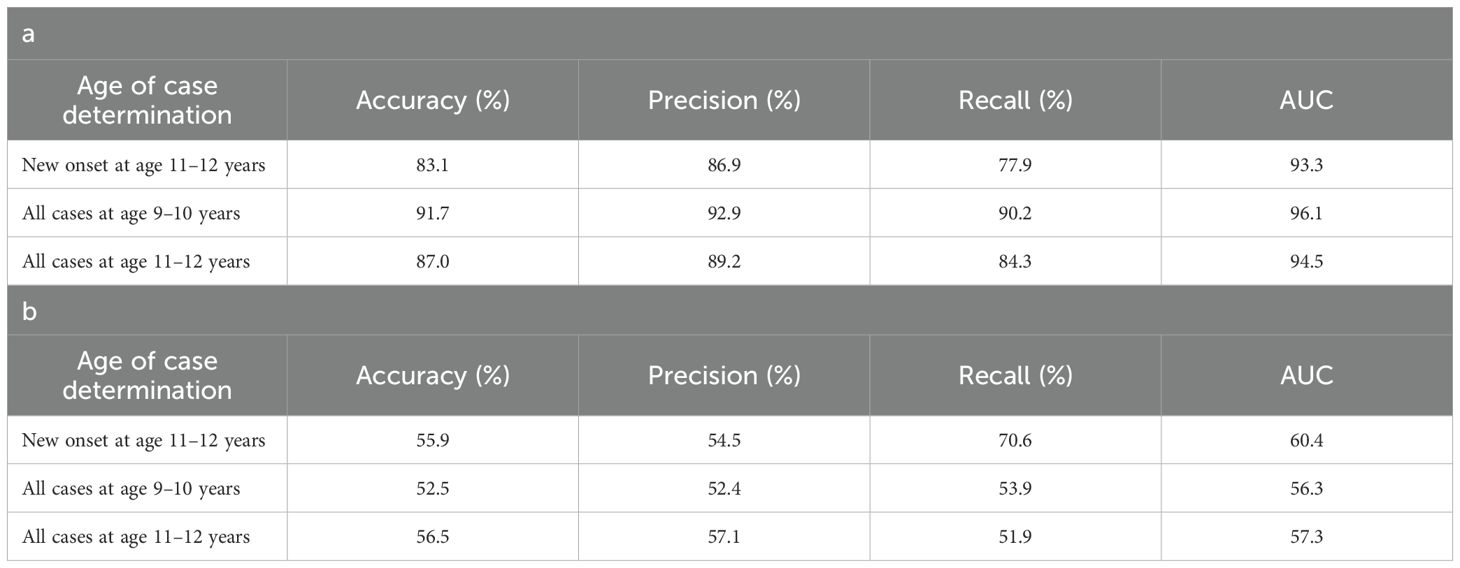

Table 5. Performance of deep learning optimized with integrated evolutionary learning in predicting cases of depression using multimodal and neural-only feature types.

Table 7. Performance of deep learning optimized with integrated evolutionary learning in predicting cases of somatic symptom disorder.

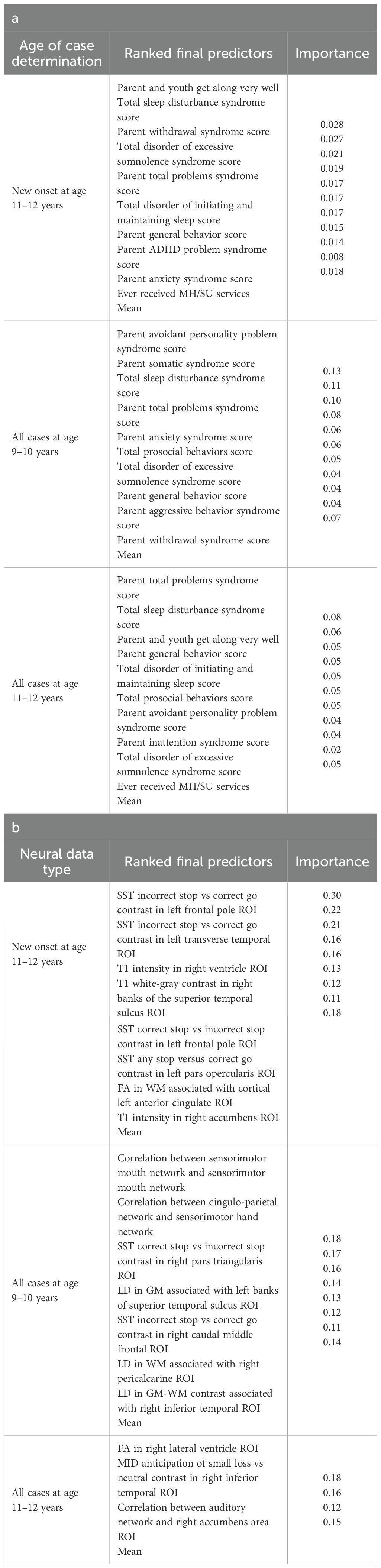

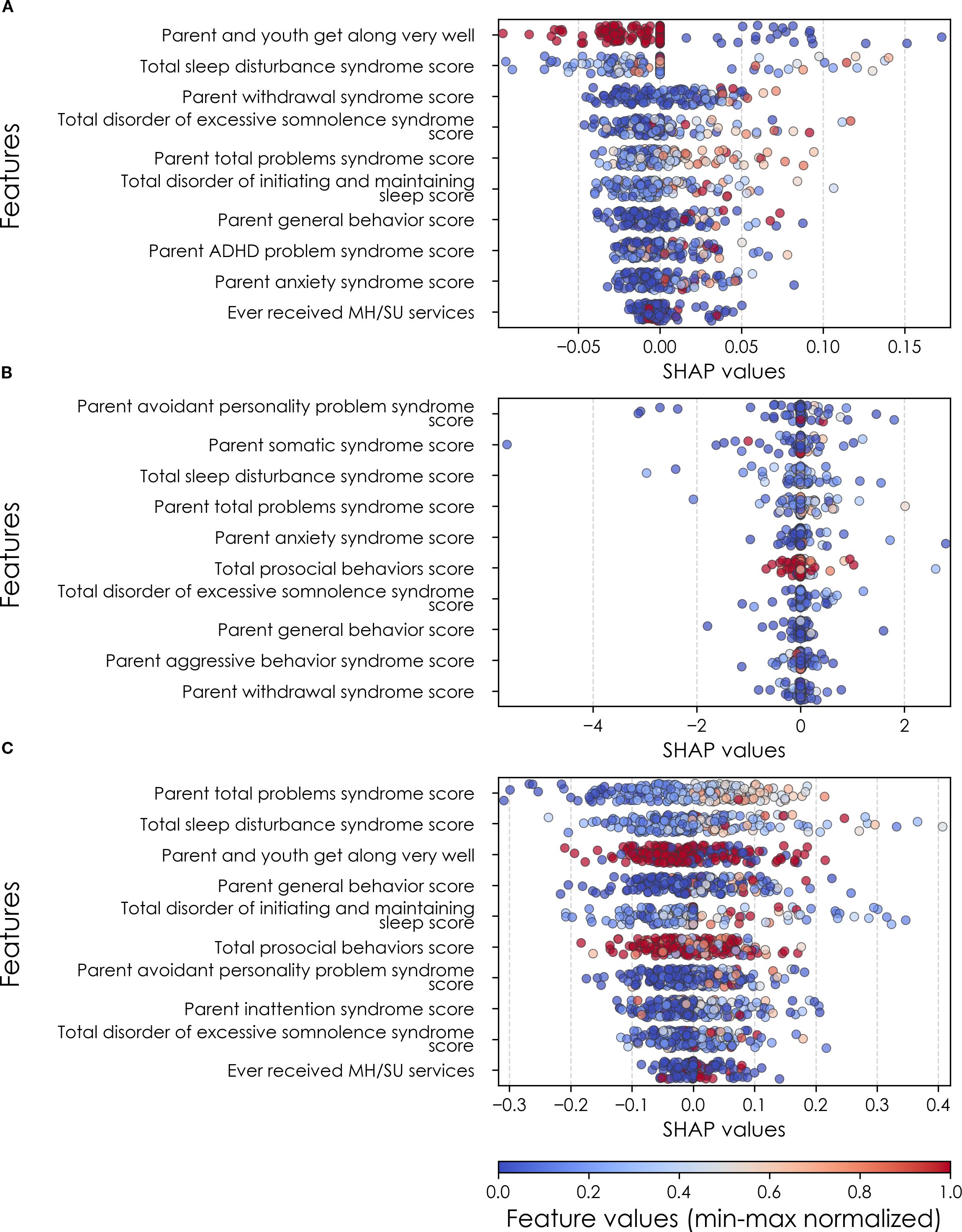

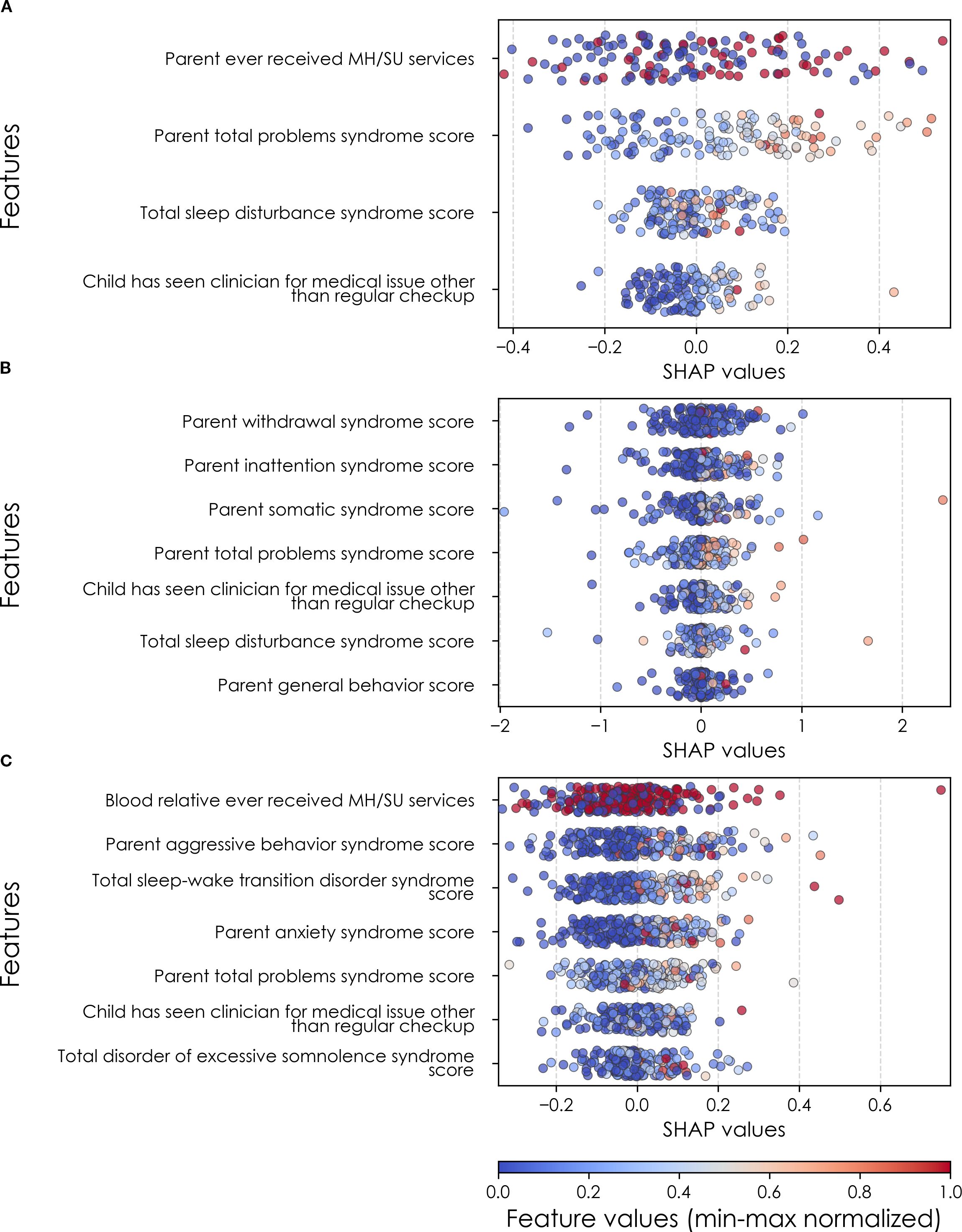

Figure 6. Individual-level importances of depression predictors in multimodal predictive models. Summary plots are presented of the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting depression (A) with new onset at 11–12 yrs; (B) in all cases at 9–10 yrs; and (C) in all cases at 11–12 yrs. The color gradient represents the original value of each feature (metric) where red = high and blue = low. Discrete (binary) features appear as red or blue, while continuous features appear as a color gradient from low to high. MH, mental health; SU, substance use.

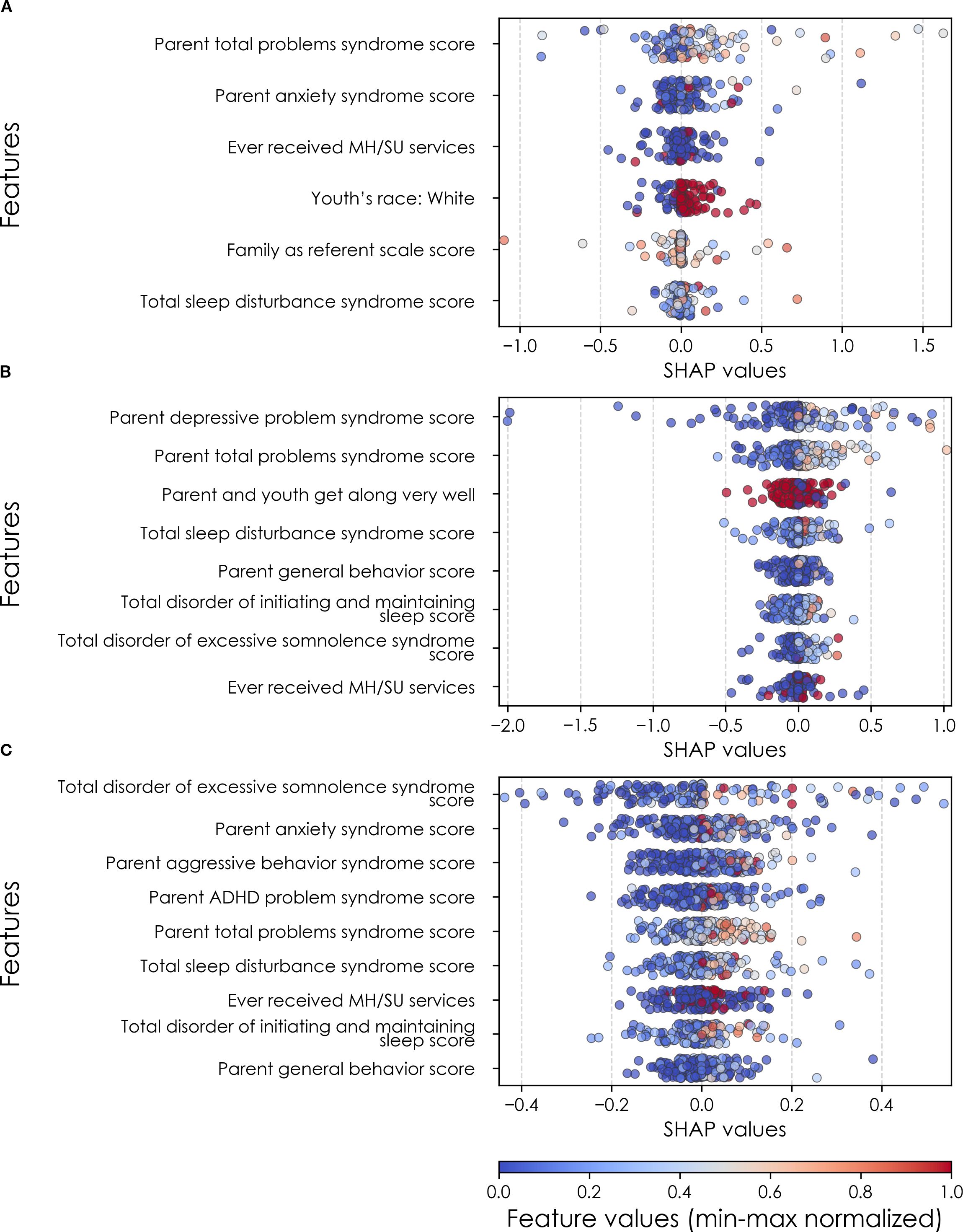

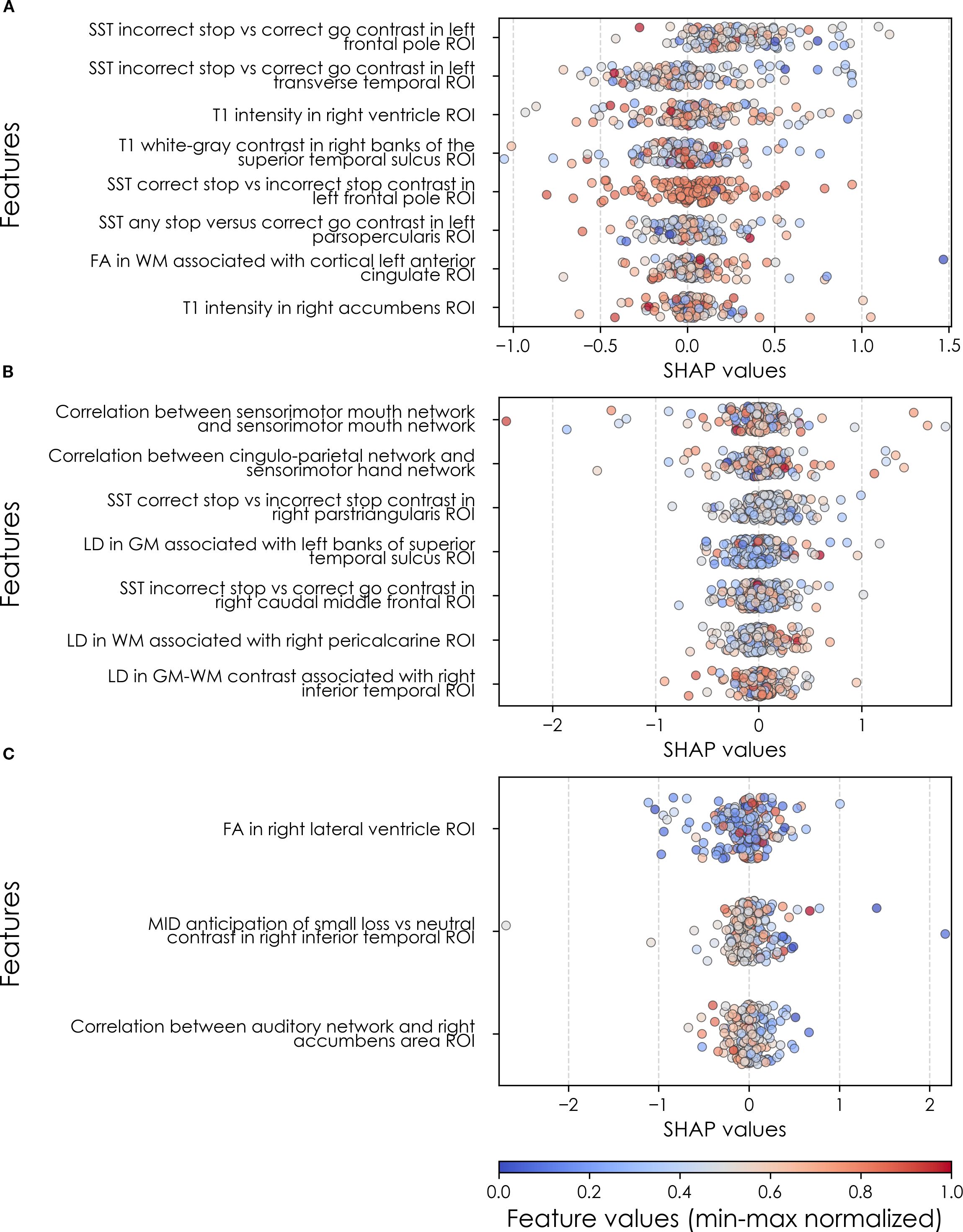

Figure 7. Individual-level importances of final predictors of anxiety in early adolescence. Summary plots are presented of the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting anxiety (A) with new onset at 11–12 yrs; (B) in all cases at 9–10 yrs; and (C) in all cases at 11–12 yrs. The color gradient represents the original value of each feature (metric) where red = high and blue = low. Discrete (binary) features appear as red or blue, while continuous features appear as a color gradient. MH, mental health; SU, substance use; SST, Standard Stop Signal task; MID, Monetary Incentive Delay task; ROI, region of interest; FA, fractional anisotropy; LD, longitudinal diffusivity; WM, white matter; GM, gray matter.

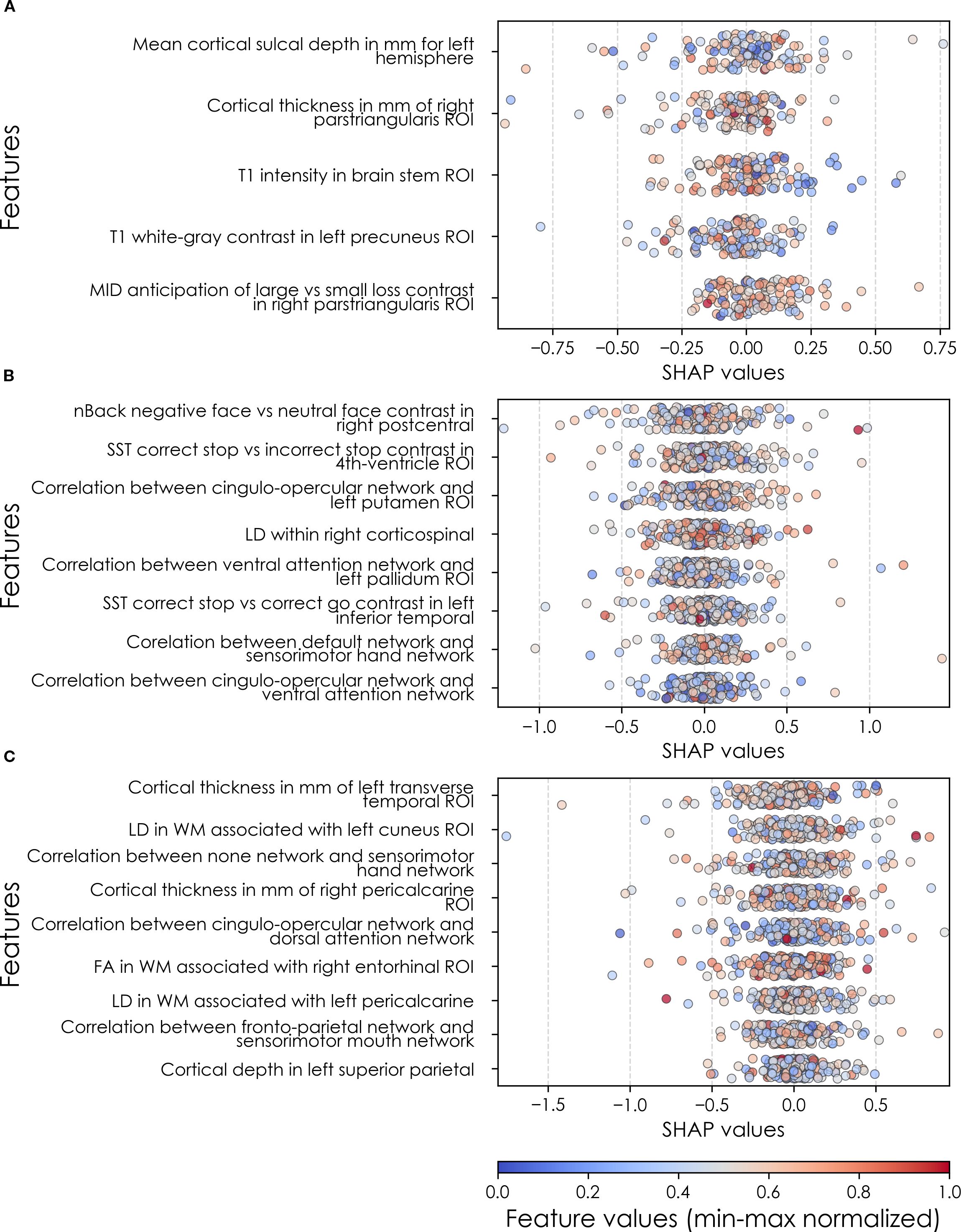

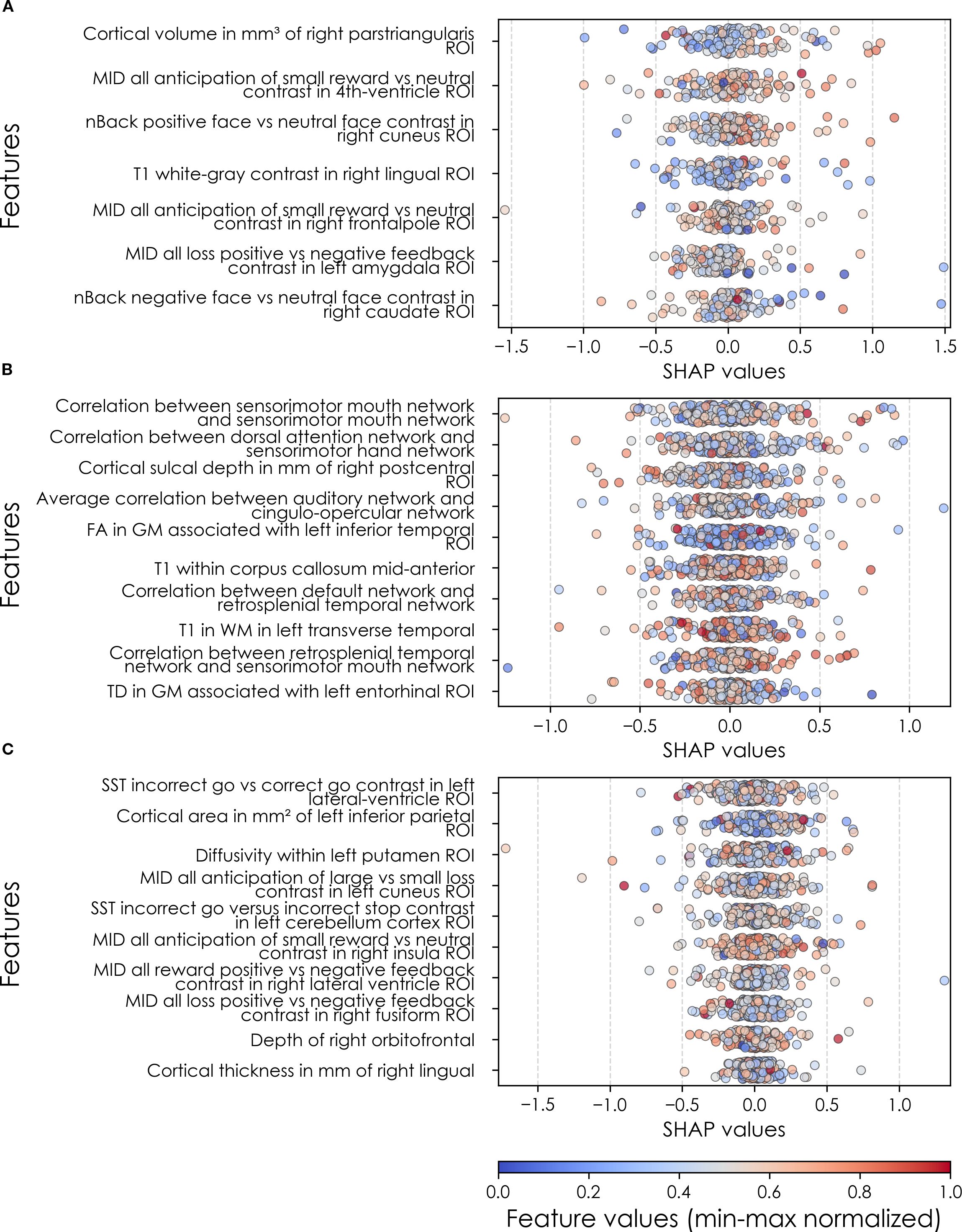

Figure 8. Individual-level importances of final predictors of somatic disorder in early adolescence. Summary plots are presented of the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting SSD (A) with new onset at 11–12 yrs; (B) in all cases at 9–10 yrs; and (C) in all cases at 11–12 yrs. The color gradient represents the original value of each feature (metric) where red = high and blue = low. Discrete (binary) features appear as red or blue, while continuous features appear as a color gradient. MH, mental health; SU, substance use.

Depression

Deep learning optimized with IEL predicted depression in early adolescence with >80% accuracy and recall and ≥90% AUROC across all experiments (Table 5a), with precision of ~77-84%. Performance was slightly worse by a few percentage points in predicting new onset cases in the future (at 11–12 yrs) than either contemporaneous or all prevailing cases at 11–12 yrs. When each experiment was recapitulated using only neural candidate predictors, we found that final optimized predictive models displayed substantially lower performance (Table 5b) than those obtained with multimodal predictors with accuracy of ~52-56% and AUROC of ~56-60%, or some 27-39 percentage points lower than with multimodal predictors. Similar differentials were seen in precision and recall. In depression, multimodal models achieved somewhat better performance when predicting prevailing cases at 9-10yrs and11–12 vs new onset cases at 11–12 yrs. In neural-only models this was reversed, with a substantially stronger model obtained for new onset cases.

Performance statistics of accuracy, precision, recall and the AUROC are shown for the most accurate model obtained with deep learning optimized with Integrated Evolutionary Learning using a) multimodal features and b) only neural features. We used features obtained at 9–10 years of age to predict new onset cases of depression at 11–12 years of age as well as all prevailing contemporaneous cases (9–10 yrs) and all prevailing cases at 11–12 years of age. Corresponding ROC curves may be viewed in Supplementary Figures 1 and 2.

In interpreting multimodal models (Table 8), we found that parent problem behaviors were the most important predictors of early adolescent depression in each participant sample. Specific parental behavioral drivers of youth cases differed by age and case type. In new onset cases at 11–12 yrs, positive parent-youth relationships were the top predictor, followed by parent sleep disturbance, withdrawal traits, and excessive somnolence, while indicators of overall parental behavioral burden and prior mental health/substance use (MH/SU) services were also present. In all cases at 9–10 yrs, parent avoidant and somatic traits were most important, along with sleep disturbances and prosocial behaviors. In all cases at 11–12 yrs, parent behavioral problems and sleep disturances were most predictive, with positive parent-youth relationships and prosocial behaviors appearing among the top-ranked features. Group-level importances for multimodal model predictors (averaged across the participant sample) were in the range [0.05, 0.07] and the mean importance for each experiment in the range [0.018, 0.05].

Final predictors of cases of all prevailing cases of depression at ages 9–10 and 11–12 years as well as new onset cases only at 11–12 years of age are shown for the most accurate models obtained using deep learning optimized with IEL obtained with a) multimodal features and b) only neural features. Final predictors are ranked in order of importance where the relative importance of each predictor is computed with the Shapley Additive Explanations technique and presented here averaged across all participants in the sample. Features in blue indicate an inverse relationship with depression verified with the Shapley method. MH = mental health; SU = substance use; SST = Standard Stop Signal task; MID = Monetary Incentive Delay task; ROI = region of interest; FA = fractional anisotropy; LD = longitudinal diffusivity; WM = white matter; GM = gray matter.

Final predictors of new onset cases at 11–12 yrs obtained in neural-only models (Table 8b) were dominated by features derived from the Standard Stop Signal fMRI task, which measures response inhibition. Here, SST ROIs emphasized the left hemisphere. Specifically, SST responses in pars opercularis (Broca’s area), left frontal pole, anterior cingulate and transverse temporal area. Certain structural metrics also appeared as final predictors of new onset cases. Specifically, white-gray contrast in the right superior temporal sulcus, right accumbens T1 intensity and white matter structural integrity in the left anterior cingulate.

Connectivity metrics in sensorimotor and cingulo-parietal brain networks were prominent in predicting contemporaneous prevailing cases of depression at 9-10yrs as were, again, metrics associated with the Stop Signal task – once again in Broca’s area (pars triangularis) and frontal regions. The final predictive model for all prevailing future cases of depression at 11–12 yrs was parsimonious and included contrast differences in the right inferior temporal ROI in the Monetary Incentive Delay task, which measures approach and avoidance during reward processing, and correlation between the auditory functional connectivity neural network and the right accumbens. Group-level importances for neural-only model predictors were in the range [0.11,0.30] and the mean importance for each experiment in the range [0.14, 0.18]. As indicated by the color-coding of the feature values in Figures 6 and 9, feature values of the neural predictors generally have a smaller variance than the psychosocial predictors in the multimodal models.

Figure 9. Individual-level importances of depression predictors in neural-only predictive models. Summary plots are presented of the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting depression (A) with new onset at 11–12 yrs; (B) in all cases at 9–10 yrs; and (C) in all cases at 11–12 yrs. The color gradient represents the original value of each feature (metric) where red = high and blue = low. Discrete (binary) features appear as red or blue, while continuous features appear as a color gradient. MH, mental health; SU, substance use.

Where Table 8 presents the importance of final predictors as summarized (mean absolute value) across the requisite experimental participant sample, we were also interested in predictor importance at the individual participant level. We computed and plotted individual-level SHAP values to understand both the dispersion of predictor importances across individuals and the directionality of the relationship between final predictors and clinical case status (Figure 6). In SHAP summary plots, each data point represents an individual participant and the colorization reflects the original value of the predictor as an input feature. Thus, discrete-valued features appear as red or blue, whereas a continuous feature appears as a color gradient from low to high. The directionality of the relationship between predictors and depression case status obtained in these plots was further compared with coefficients obtained during LASSO regression for Coarse Feature Selection (Supplementary Table S3) and found to be in agreement.

Figure 6 reveals that individual-level importance of final predictors in early adolescent depression are typically widely dispersed. For example, when predicting new onset cases of depression at 11–12 yrs, the leading predictor of parent externalizing traits has a large range of ~[-0.4,0.6] across individual participants. Further, dispersion is typically greater for the more important predictors. Overall, these plots also indicate that all final predictors obtained have a positive relationship with depression case status, with the exception of secondary caregiver acceptance and prosocial behaviors in predicting new onset cases (see also Table 8). We also computed individual-level importances of final predictors for neural-only experiments (Figure 9). Here, the dispersion of individual-level predictor importances across participants were consistently smaller in neural-only versus multimodal prediction of early adolescent depression. In addition, Figure 3 visualized the individual-level predictor importances of two selected contrasting participants studied at the new onset cases at 11–12 yrs, which further differentiate clearly the protective factors (e.g., parents and youth getting along very well) from the risk factors (e.g., parents’ syndrome scores of multiple mental illnesses).

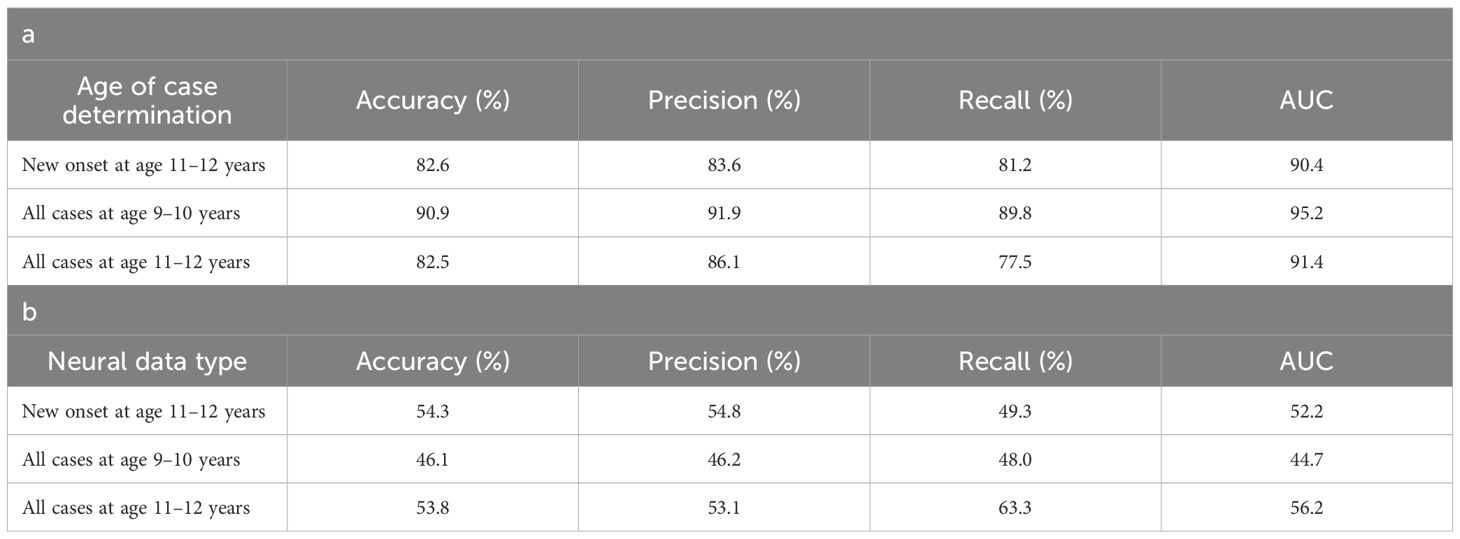

Anxiety

Deep learning optimized with IEL performed very well in predicting both new onset and prevailing cases of anxiety in early adolescence. In anxiety, ~79% accuracy and ~86% AUROC was achieved in predicting new onset cases versus ~84% accuracy and ~92% AUROC in predicting prevailing cases using data obtained at 9–10 yrs to predict cases at the future time point of 11–12 yrs. The best overall performance was observed using data at 9–10 yrs to predict contemporaneous prevailing cases, with ~94% accuracy and nearly 100% AUROC achieved (Table 6a). Similar to depression, neural-only models did not perform as well as multimodal models in predicting anxiety cases, being ~24-40% less accurate. Best performance was obtained when predicting all cases of anxiety at 11–12 yrs, where the final, optimized neural-only model achieved 54% accuracy and ~55% AUROC. Neural-only predictive models of all prevailing cases at 9–10 yrs and 11–12 yrs also showed inferior performance with accuracy of ~52 and ~50% and AUROC of 57 and 53% respectively (Table 6b).

Performance statistics of accuracy, precision, recall and the AUROC are shown for the most accurate model obtained with deep learning optimized with Integrated Evolutionary Learning using a) multimodal features and b) only neural features. We used features obtained at 9–10 years of age to predict new onset cases of anxiety at 11–12 years of age as well as all prevailing contemporaneous cases (9–10 yrs) and all prevailing cases at 11–12 years of age. Corresponding ROC curves may be viewed in Supplementary Figures 1 and 2.

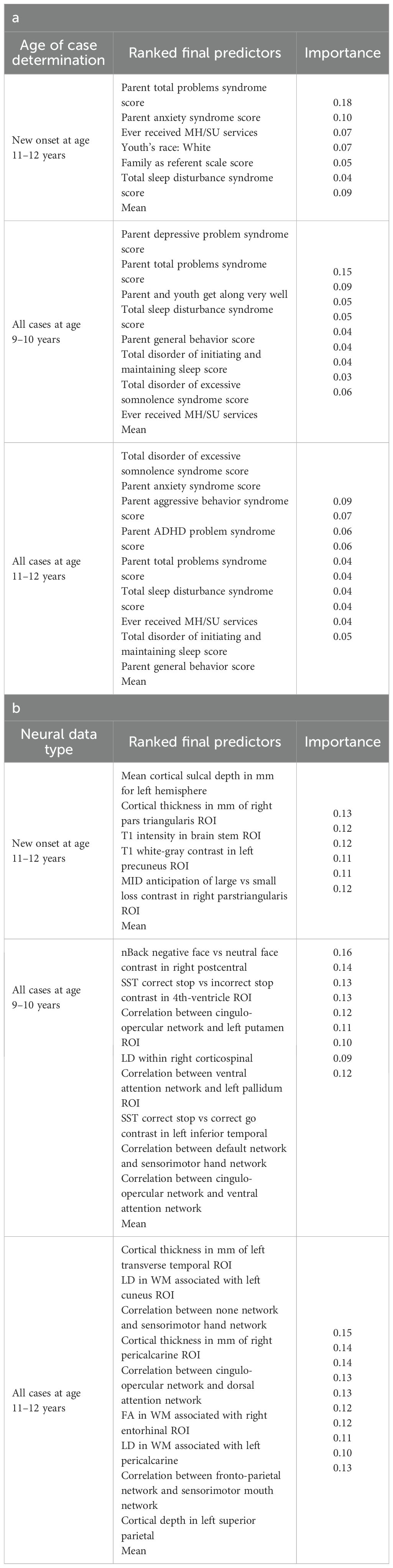

In anxiety, new onset cases were predicted with a relatively complex final model comprising 5 predictors (Table 9a). Here, the most important predictor was the parent’s total burden of behavioral problems, followed by parent anxiety traits, whether the youth had ever received mental health or substance use (MH/SU) services, the youth’s race (White), and the family’s referent scale score. Total sleep disturbance also appeared but with lower importance.

We detected overlap between the final predictors of new onset cases of anxiety at 11–12 yrs and those which predicted prevailing cases at 9–10 and 11–12 yrs. At 9–10 yrs, parent depressive and total behavioral problem scores were the top predictors, with sleep disturbances and parent–youth relationship quality also appearing. For all prevailing cases at 11–12 yrs, sleep disturbances, parent anxiety and aggressive traits, and the mother’s history of clinical treatment were prominent, along with overall parent behavioral burden. Group-level importances for multimodal model predictors were in the range [0.03, 0.18] and the mean importance for each experiment in the range [0.05, 0.09].

Final predictors of cases of all prevailing cases of anxiety at ages 9–10 and 11–12 years as well as new onset cases only at 11–12 years of age are shown for the most accurate models obtained using deep learning optimized with IEL obtained with a) multimodal features and b) only neural features. Final predictors are ranked in order of importance where the relative importance of each predictor is computed with the Shapley Additive Explanations technique and presented here averaged across all participants in the sample. Features in blue indicate an inverse relationship with depression verified with the Shapley method. MH = mental health; SU = substance use; SST = Standard Stop Signal task; MID = Monetary Incentive Delay task; ROI = region of interest; FA = fractional anisotropy; LD = longitudinal diffusivity; WM = white matter; GM = gray matter.

In neural-only models predicting new onset anxiety cases, features from the MID fMRI task and structural metrics predominated (Table 9b). Important final predictors were cortical depth in the left hemisphere and right pars triangularis (Broca’s area) as well as MID anticipation in the latter. Final, optimized models predicting prevailing cases at 9–10 years emphasized measures of brain function including SST and nBack task metrics in the right postcentral and left temporal, as well as multiple connectivity measures including the ventral attention, default mode, sensorimotor and cingulo-opercular networks. At 11–12 yrs, results again emphasized connectivity metrics among control and sensorimotor networks as well as structural measures. Group-level importances for neural-only model predictors were in the range [0.09, 0.16] and the mean importance for each experiment in the range [0.12, 0.13]. Similar to the case of depression, the feature values (as visualized in Figures 7, 10) of the neural predictors had a relatively smaller variance than the psychosocial predictors in the multimodal models.

Figure 10. Individual-level importances of neural final predictors of anxiety in early adolescence. Summary plots are presented of the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting anxiety (A) with new onset at 11–12 yrs; (B) in all cases at 9–10 yrs; and (C) in all cases at 11–12 yrs. The color gradient represents the original value of each feature (metric) where red = high and blue = low. Discrete (binary) features appear as red or blue, while continuous features appear as a color gradient. MH, mental health; SU, substance use; SST, Standard Stop Signal task; MID, Monetary Incentive Delay task; ROI, region of interest; FA, fractional anisotropy; LD, longitudinal diffusivity; WM, white matter; GM, gray matter.

To probe the dispersion of predictor importances at the individual level, we again developed summary plots of individual-level importances (Figures 7, 10). Similarly to depression, we observed relatively more widely dispersed individual-level importances over the participant sample in multimodal vs neural-only models, and the trend for wider dispersion of predictor importance in the more important final predictors. The directionality of the relationship between predictors and depression case status obtained in these plots was further compared with coefficients obtained during LASSO regression for Coarse Feature Selection (Supplementary Table S3) and found to be in agreement.

Individual-level predictor importances for the best-performing mixed-type neural models of anxiety again showed reduced dispersion across the participant group (Figure 10) when compared with multimodal models (Figure 7). The widest dispersion was observed when predicting new onset cases of anxiety. Figure 4 presents the individual-level predictor importances of two selected contrasting participants studied at the new onset cases at 11–12 yrs. Unlike the case study for depression, the current case study provides a less obvious disparity, which can potentially be explained by the relatively weaker predictive performance of the model predicting anxiety onset at 11–12 yrs.

Somatic symptom disorder

Deep learning optimized with IEL performed well using multimodal data in predicting both new onset and prevailing cases of SSD in early adolescence. Here, ~83% accuracy and ~90% AUROC was achieved in predicting future, new onset cases at 11–12 yrs with data obtained at 9–10 yrs. The best overall performance was observed using data at 9–10 yrs to predict contemporaneous prevailing cases, with ~91% accuracy and ~95% AUROC. Predictive performance of all prevailing cases at 11–12 yrs using data from 9-10yrs was comparable to new onset predictions, with accuracy of ~83% and AUROC of ~91% (Table 7a). As with depression and anxiety, neural-only models did not perform as well as multimodal models (Table 7b), being ~29-45% less accurate. The best performance was seen in predicting new onset cases at 11–12 yrs with accuracy of ~54% and AUROC of ~52% and all prevailing cases at 11-12 yrs with accuracy of ~54% and AUROC of ~56%. Accuracy in the model predicting prevailing cases at 9-10 yrs dropped to ~46% with a low AUROC value of ~45%.

Performance statistics of accuracy, precision, recall and the AUC are shown for the most accurate model obtained with deep learning optimized with Integrated Evolutionary Learning using a) multimodal features and b) only neural features. We used features obtained at 9–10 years of age to predict new onset cases of somatic symptom disorder at 11–12 years of age as well as all prevailing contemporaneous cases (9–10 yrs) and all prevailing cases at 11–12 years of age. Corresponding ROC curves may be viewed in Supplementary Figures 1 and 2.

In interpreting optimized multimodal predictive models for early adolescent SSD, we observed that new onset cases were predicted by whether the youth had ever received mental health or substance use (MH/SU) services, the parent’s total burden of behavioral problems, total sleep disturbances, and whether the child had seen a clinician for a medical issue other than a regular checkup (Table 10a). While sets of specific predictors were not the same, overlap was observed among age groups. At 9–10 yrs, prevailing cases were predicted by parent withdrawal, inattention, somatic, and total behavioral problem scores, along with sleep disturbance, visits to a clinician for non-routine medical issues, and parent general behavior. For prevailing cases at 11–12 yrs, predictors included a relative’s history of MH/SU services, parent aggressive and anxiety traits, sleep-wake transition disturbances, and total behavioral problems, as well as non-routine clinical visits and excessive somnolence disorders. Group-level importances for multimodal model predictors were in the range [0.05, 0.15] and the mean importance for each experiment in the range [0.07, 0.11].

Final predictors of cases of all prevailing cases of SSD at ages 9–10 and 11–12 years as well as new onset cases only at 11–12 years of age are shown for the most accurate models obtained using deep learning optimized with IEL obtained with a) multimodal features and b) only neural features. Final predictors are ranked in order of importance where the relative importance of each predictor is computed with the Shapley Additive Explanations technique and presented here averaged across all participants in the sample. Features in blue indicate an inverse relationship with depression verified with the Shapley method. MH = mental health; SU = substance use; SST = Standard Stop Signal task; MID = Monetary Incentive Delay task; ROI = region of interest; FA = fractional anisotropy; LD = longitudinal diffusivity; WM = white matter; GM = gray matter.

In neural-only models, we found that MID and nBack fMRI task features were emphasized in predicting new onset cases, here emphasizing the frontal pole, left amygdala, cuneus and caudate. Important structural predictors of new onset cases were cortical volume in the right pars triangularis and contrast in the right lingual. As with new onset cases, final predictors of prevailing cases of SSD at 11–12 yrs centered on task fMRI metrics, again the MID with the addition of SST measures. Specific neural predictors of all prevailing cases at 11–12 yrs centered on the cuneus, insula and fusiform along with structural measures in parietal, putamen and orbitfrontal regions. In contrast, the final, optimized model predicting all prevailing cases at 9–10 yrs was dominated by connectivity metrics derived from rsfMRI, again emphasizing sensorimotor-control network connections (Figure 8).

When examined at the individual level, final predictors of SSD in each participant sample showed the same patterns as we observed in depression and anxiety. Individual-level predictor importances were widely dispersed, where typically the more important predictors exhibited wider dispersions (Figures 8, 11). Further, the dispersion of individual-level importances was greater in the more accurate multimodal models.

Figure 11. Individual-level importances of neural final predictors of somatic symptom disorder in early adolescence. Summary plots are presented of the importance of each final predictor (computed with the Shapley Additive Explanations technique) on an individual subject level to predicting SSD (A) with new onset at 11–12 yrs; (B) in all cases at 9–10 yrs; and (C) in all cases at 11–12 yrs. The color gradient represents the original value of each feature (metric) where red = high and blue = low. Discrete (binary) features appear as red or blue, while continuous features appear as a color gradient. MH, mental health; SU, substance use.

Similarly to depression and anxiety, individual-level importances of final neural predictors of somatic symptom disorder had a generally smaller variance in terms of feature values compared to the psychosocial predictors in the multimodal models, as indicated in Figures 8, 11. Their group-level importances were in the range [0.07, 0.20] where the disparity across important predictors were similar (Figure 8), suggesting no essential differences of explanatory power between them. The directionality of the relationship between predictors and SSD case status obtained in these plots was further compared with coefficients obtained during LASSO regression for Coarse Feature Selection (Supplementary Table S3) and found to be in agreement. Further, Figure 5 visualizes the case study with two contrasting participants at the onset cases of 11–12 yrs, where parents’ total problems syndrome score and their history of receiving mental health or substance use services are a strong risk factors for the children’s SSD.

Fairness subgroup performance analysis

To robustly evaluate the model performance from a fairness perspective, we conducted a subgroup performance analysis on the held-out test set, stratified by race and annual family income level. Performance statistics are reported in Supplementary Table S6.

For the stratification of race, 58.8-68.0% of the individuals in the held-out test set identified as White, 12.5-19.9% as Black, and 19.5-21.0% as other races. Accuracy scores for anxiety (79.3-84.0%) and somatic problems (83.3-86.2%) were largely consistent across different racial groups. However, in the case of depression, a larger disparity was observed: predictive accuracy was 92.6% for Black participants but 79.3% for participants categorized as other races, despite their similar sample sizes.

For the annual family income level stratification, 8.1-11.9% of the individuals in the held-out test set reported an annual income below $15,999, 9.0-17.1% earned $16,000-$34,999, 17.1-22.5% earned $35,000-$74,999, and 57.7-58.6% earned $75,000 or above. This stratification revealed larger performance disparities across income groups. The most extreme case was observed when predicting somatic problems, where the accuracy was 60.0% for the lowest income group compared to 91.3% for the $35,000-$74,999 group — a difference exceeding 30 percentage points.

Baseline comparison

We compared our IEL approach with three traditional ML modeling methods: logistic regression, random forest, and SVM. The performance statistics, defined in terms of accuracy, precision, recall, and AUROC, of these models, trained with the multimodal feature sets, are reported in Supplementary Table S7. The results demonstrate that the baseline models achieved highly comparable performance with IEL consistently. The average accuracy (across targets and cases of determination) of IEL is 86.1% whereas the baseline models, which include roughly 10 times more features, have an average accuracy of 87.5-88.7%. Student t-tests verified that IEL does not have a statistically significantly different accuracy performance compared to logistic regression (p-value = 0.36), random forest (p-value = 0.54), or SVM (p-value = 0.23).

Discussion

Common and specific themes across internalizing disorders

We analyzed ~6,000 candidate predictors from multiple knowledge domains (cognitive, psychosocial, neural, biological) contributed by children of late elementary school age (9–10 yrs) and their parents and constructed robust, individual-level models predicting the later (11–12 yrs) onset of depression, anxiety and SSD. Leveraging an optimization pipeline that included AI-guided automated feature selection allowed us to extend prior work by analyzing a wider variety of predictor types and ~40x more candidate predictors than previous comparable ML studies. A common pre-processing and analytic design across all three internalizing disorders in the same youth cohort allows the direct comparison of results to elicit their diagnostic specificity and identify common themes. In addition, we wanted to quantify the relative predictive performance of multimodal vs neural features and examine the relationship between predictor importance and model accuracy. To our knowledge, this is the first ML study in adolescent internalizing disorders to include multiple types of neural predictors (rsfMRI connectivity; task fMRI effects; diffusion and structural metrics), analyze >200 multimodal features and quantify the relationship between predictor importance and accuracy. The iterative feature sampling approach adopted in our genetic algorithm offers additional robustness to this quantification by reducing the risk that predictive accuracy hinges on a single subset or on correlated predictors retained in the pre-processing phase.

Comparing across results, we found that the relative predictive performance of our models varied according to the specific disorder and type of predictor (psychosocial vs neural). Deep learning optimized with IEL rendered robust individual-level predictions of all three internalizing disorders with AUROCs of 86-99% and 79-94% accuracy. Precision and recall were also consistently ≥~80% with scattered exceptions in precision (new onset anxiety: 76%) and recall (new onset depression: 78%; prevailing SSD at 11–12 yrs: 78%). Our primary focus was in predicting future, new onset cases of each internalizing disorder in early adolescence. We found that new onset cases of depression could be most reliably predicted (AUROC ~93%), followed by SSD (AUROC ~90%) and anxiety (AUROC 86%).

An important result is that we found that predicting early adolescent internalizing disorders with multimodal features resulted in substantially better performance than exclusively neural-based models, and that psychosocial predictors were preferentially selected in multimodal modeling. Our pipeline includes automated feature selection with a genetic algorithm (IEL) that progressively selects among features as it learns how to optimize predictive models over a principled training process (typically ~40,000 models). Cognitive, neural and biological features failed to outcompete psychosocial features in training with multimodal. Further targeted experiments specifically assessed the standalone predictive ability of multiple neural feature types derived from MRI. These experiments demonstrated that neural-only models achieved a close to 50% performance, sacrificing 24-45% performance compared to the multimodal models, across statistics (accuracy, AUROC, precision, recall). While little extant research has directly compared psychosocial to neural features in youth internalizing disorders, our results are congruent with studies that have used multimodal feature types including MRI metrics (18, 19). Our design extended prior work by allowing us to examine more and wider feature types and disorders and the prediction of new onset vs prevailing cases. Neural-only models of new onset cases achieved superior performance to other participant samples and selectively comprised task fMRI and structural metrics, though more neural feature types (rsfMRI connectivity, diffusion-based) were available for selection, suggesting structural and task fMRI neural features may have particular promise in predicting adolescent onset of internalizing disorders.